modelId

string | author

string | last_modified

timestamp[us, tz=UTC] | downloads

int64 | likes

int64 | library_name

string | tags

list | pipeline_tag

string | createdAt

timestamp[us, tz=UTC] | card

string |

|---|---|---|---|---|---|---|---|---|---|

Zaib/Vulnerability-detection

|

Zaib

| 2022-08-05T08:47:07Z

| 13

| 5

|

transformers

|

[

"transformers",

"pytorch",

"roberta",

"text-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-07-16T09:16:45Z

|

---

tags:

- generated_from_trainer

model-index:

- name: Vulnerability-detection

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Vulnerability-detection

This model is a fine-tuned version of [mrm8488/codebert-base-finetuned-detect-insecure-code](https://huggingface.co/mrm8488/codebert-base-finetuned-detect-insecure-code) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5778

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 3

### Training results

### Framework versions

- Transformers 4.21.1

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

jefsnacker/testpyramidsrnd

|

jefsnacker

| 2022-08-05T07:52:18Z

| 4

| 0

|

ml-agents

|

[

"ml-agents",

"tensorboard",

"onnx",

"unity-ml-agents",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Pyramids",

"region:us"

] |

reinforcement-learning

| 2022-08-05T07:52:11Z

|

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Pyramids

library_name: ml-agents

---

# **ppo** Agent playing **Pyramids**

This is a trained model of a **ppo** agent playing **Pyramids** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser:**.

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Pyramids

2. Step 1: Write your model_id: jefsnacker/testpyramidsrnd

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

abdulmatinomotoso/multi_news_article_title_1200

|

abdulmatinomotoso

| 2022-08-05T07:14:16Z

| 15

| 0

|

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"pegasus",

"text2text-generation",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-08-05T06:42:59Z

|

---

tags:

- generated_from_trainer

model-index:

- name: multi_news_article_title_1200

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# multi_news_article_title_1200

This model is a fine-tuned version of [google/pegasus-multi_news](https://huggingface.co/google/pegasus-multi_news) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- gradient_accumulation_steps: 16

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 3

### Training results

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

sagar122/xperimentalilst_hackathon_2022

|

sagar122

| 2022-08-05T06:19:19Z

| 0

| 1

| null |

[

"arxiv:2205.02455",

"license:cc-by-nc-4.0",

"region:us"

] | null | 2022-08-04T08:49:20Z

|

---

license: cc-by-nc-4.0

---

## COGMEN; Official Pytorch Implementation

[](https://paperswithcode.com/sota/multimodal-emotion-recognition-on-iemocap?p=cogmen-contextualized-gnn-based-multimodal)

**CO**ntextualized **G**NN based **M**ultimodal **E**motion recognitio**N**

**Picture:** *My sample picture for logo*

This repository contains the official Pytorch implementation of the following paper:

> **COGMEN: COntextualized GNN based Multimodal Emotion recognitioN**<br>

> **Paper:** https://arxiv.org/abs/2205.02455

> **Authors:** Abhinav Joshi, Ashwani Bhat, Ayush Jain, Atin Vikram Singh, Ashutosh Modi<br>

>

> **Abstract:** *Emotions are an inherent part of human interactions, and consequently, it is imperative to develop AI systems that understand and recognize human emotions. During a conversation involving various people, a person’s emotions are influenced by the other speaker’s utterances and their own emotional state over the utterances. In this paper, we propose COntextualized Graph Neural Network based Multimodal Emotion recognitioN (COGMEN) system that leverages local information (i.e., inter/intra dependency between speakers) and global information (context). The proposed model uses Graph Neural Network (GNN) based architecture to model the complex dependencies (local and global information) in a conversation. Our model gives state-of-theart (SOTA) results on IEMOCAP and MOSEI datasets, and detailed ablation experiments

show the importance of modeling information at both levels*

## Requirements

- We use PyG (PyTorch Geometric) for the GNN component in our architecture. [RGCNConv](https://pytorch-geometric.readthedocs.io/en/latest/modules/nn.html#torch_geometric.nn.conv.RGCNConv) and [TransformerConv](https://pytorch-geometric.readthedocs.io/en/latest/modules/nn.html#torch_geometric.nn.conv.TransformerConv)

- We use [comet](https://comet.ml) for logging all our experiments and its Bayesian optimizer for hyperparameter tuning.

- For textual features we use [SBERT](https://www.sbert.net/).

### Installations

- [Install PyTorch Geometric](https://pytorch-geometric.readthedocs.io/en/latest/notes/installation.html)

- [Install Comet.ml](https://www.comet.ml/docs/python-sdk/advanced/)

- [Install SBERT](https://www.sbert.net/)

## Preparing datasets for training

python preprocess.py --dataset="iemocap_4"

## Training networks

python train.py --dataset="iemocap_4" --modalities="atv" --from_begin --epochs=55

## Run Evaluation [](https://colab.research.google.com/drive/1biIvonBdJWo2TiYyTiQkxZ_V88JEXa_d?usp=sharing)

python eval.py --dataset="iemocap_4" --modalities="atv"

Please cite the paper using following citation:

## Citation

@inproceedings{joshi-etal-2022-cogmen,

title = "{COGMEN}: {CO}ntextualized {GNN} based Multimodal Emotion recognitio{N}",

author = "Joshi, Abhinav and

Bhat, Ashwani and

Jain, Ayush and

Singh, Atin and

Modi, Ashutosh",

booktitle = "Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies",

month = jul,

year = "2022",

address = "Seattle, United States",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.naacl-main.306",

pages = "4148--4164",

abstract = "Emotions are an inherent part of human interactions, and consequently, it is imperative to develop AI systems that understand and recognize human emotions. During a conversation involving various people, a person{'}s emotions are influenced by the other speaker{'}s utterances and their own emotional state over the utterances. In this paper, we propose COntextualized Graph Neural Network based Multi- modal Emotion recognitioN (COGMEN) system that leverages local information (i.e., inter/intra dependency between speakers) and global information (context). The proposed model uses Graph Neural Network (GNN) based architecture to model the complex dependencies (local and global information) in a conversation. Our model gives state-of-the- art (SOTA) results on IEMOCAP and MOSEI datasets, and detailed ablation experiments show the importance of modeling information at both levels.",}

## Acknowledgments

The structure of our code is inspired by [pytorch-DialogueGCN-mianzhang](https://github.com/mianzhang/dialogue_gcn).

|

okho0653/Bio_ClinicalBERT-zero-shot-finetuned-50cad-50noncad-optimal

|

okho0653

| 2022-08-05T05:29:50Z

| 4

| 0

|

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"text-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-08-05T05:12:27Z

|

---

license: mit

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

model-index:

- name: Bio_ClinicalBERT-zero-shot-finetuned-50cad-50noncad-optimal

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Bio_ClinicalBERT-zero-shot-finetuned-50cad-50noncad-optimal

This model is a fine-tuned version of [emilyalsentzer/Bio_ClinicalBERT](https://huggingface.co/emilyalsentzer/Bio_ClinicalBERT) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 9.8836

- Accuracy: 0.5

- F1: 0.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.2

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

### Framework versions

- Transformers 4.21.1

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

okho0653/Bio_ClinicalBERT-zero-shot-finetuned-all-cad

|

okho0653

| 2022-08-05T04:50:14Z

| 4

| 0

|

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"text-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-08-05T04:33:44Z

|

---

license: mit

tags:

- generated_from_trainer

model-index:

- name: Bio_ClinicalBERT-zero-shot-finetuned-all-cad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Bio_ClinicalBERT-zero-shot-finetuned-all-cad

This model is a fine-tuned version of [emilyalsentzer/Bio_ClinicalBERT](https://huggingface.co/emilyalsentzer/Bio_ClinicalBERT) on the None dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.21.1

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

zhiguoxu/chinese-roberta-wwm-ext-finetuned2

|

zhiguoxu

| 2022-08-05T03:45:08Z

| 5

| 0

|

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-08-03T07:54:52Z

|

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

model-index:

- name: chinese-roberta-wwm-ext-finetuned2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# chinese-roberta-wwm-ext-finetuned2

This model is a fine-tuned version of [hfl/chinese-roberta-wwm-ext](https://huggingface.co/hfl/chinese-roberta-wwm-ext) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1448

- Accuracy: 1.0

- F1: 1.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 1.4081 | 1.0 | 3 | 0.9711 | 0.7273 | 0.6573 |

| 0.9516 | 2.0 | 6 | 0.8174 | 0.8182 | 0.8160 |

| 0.8945 | 3.0 | 9 | 0.6617 | 0.9091 | 0.9124 |

| 0.7042 | 4.0 | 12 | 0.5308 | 1.0 | 1.0 |

| 0.6641 | 5.0 | 15 | 0.4649 | 1.0 | 1.0 |

| 0.5731 | 6.0 | 18 | 0.4046 | 1.0 | 1.0 |

| 0.5132 | 7.0 | 21 | 0.3527 | 1.0 | 1.0 |

| 0.3999 | 8.0 | 24 | 0.3070 | 1.0 | 1.0 |

| 0.4198 | 9.0 | 27 | 0.2673 | 1.0 | 1.0 |

| 0.3677 | 10.0 | 30 | 0.2378 | 1.0 | 1.0 |

| 0.3545 | 11.0 | 33 | 0.2168 | 1.0 | 1.0 |

| 0.3237 | 12.0 | 36 | 0.1980 | 1.0 | 1.0 |

| 0.3122 | 13.0 | 39 | 0.1860 | 1.0 | 1.0 |

| 0.2802 | 14.0 | 42 | 0.1759 | 1.0 | 1.0 |

| 0.2552 | 15.0 | 45 | 0.1671 | 1.0 | 1.0 |

| 0.2475 | 16.0 | 48 | 0.1598 | 1.0 | 1.0 |

| 0.2259 | 17.0 | 51 | 0.1541 | 1.0 | 1.0 |

| 0.201 | 18.0 | 54 | 0.1492 | 1.0 | 1.0 |

| 0.2083 | 19.0 | 57 | 0.1461 | 1.0 | 1.0 |

| 0.2281 | 20.0 | 60 | 0.1448 | 1.0 | 1.0 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.12.0+cu102

- Datasets 2.1.0

- Tokenizers 0.12.1

|

ariesutiono/scibert-lm-v1-finetuned-20

|

ariesutiono

| 2022-08-05T03:07:59Z

| 4

| 0

|

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"fill-mask",

"generated_from_trainer",

"dataset:conll2003",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-08-04T01:57:31Z

|

---

tags:

- generated_from_trainer

datasets:

- conll2003

model-index:

- name: scibert-lm-v1-finetuned-20

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# scibert-lm-v1-finetuned-20

This model is a fine-tuned version of [allenai/scibert_scivocab_cased](https://huggingface.co/allenai/scibert_scivocab_cased) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 22.6145

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 0.0118 | 1.0 | 1756 | 15.0609 |

| 0.0001 | 2.0 | 3512 | 17.9265 |

| 0.0 | 3.0 | 5268 | 18.6256 |

| 0.0001 | 4.0 | 7024 | 19.5144 |

| 0.0002 | 5.0 | 8780 | 19.8926 |

| 0.0 | 6.0 | 10536 | 21.6975 |

| 0.0 | 7.0 | 12292 | 22.2388 |

| 0.0 | 8.0 | 14048 | 21.0441 |

| 0.0 | 9.0 | 15804 | 21.6852 |

| 0.0 | 10.0 | 17560 | 22.4439 |

| 0.0 | 11.0 | 19316 | 20.9994 |

| 0.0 | 12.0 | 21072 | 21.7275 |

| 0.0 | 13.0 | 22828 | 22.1329 |

| 0.0 | 14.0 | 24584 | 22.4599 |

| 0.0 | 15.0 | 26340 | 22.5726 |

| 0.0 | 16.0 | 28096 | 22.7823 |

| 0.0 | 17.0 | 29852 | 22.4167 |

| 0.0 | 18.0 | 31608 | 22.4075 |

| 0.0 | 19.0 | 33364 | 22.5731 |

| 0.0 | 20.0 | 35120 | 22.6145 |

### Framework versions

- Transformers 4.21.1

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

tals/roberta_python

|

tals

| 2022-08-05T02:30:51Z

| 5

| 2

|

transformers

|

[

"transformers",

"pytorch",

"roberta",

"fill-mask",

"arxiv:2106.05784",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-03-02T23:29:05Z

|

# roberta_python

---

language: code

datasets:

- code_search_net

- Fraser/python-lines

tags:

- python

- code

- masked-lm

widget:

- text "assert 6 == sum([i for i in range(<mask>)])"

---

# Details

This is a roBERTa-base model trained on the python part of [CodeSearchNet](https://github.com/github/CodeSearchNet) and reached a dev perplexity of 3.296

This model was used for the Programming Puzzles enumerative solver baseline detailed in [Programming Puzzles paper](https://arxiv.org/abs/2106.05784).

See also the [Python Programming Puzzles (P3) Repository](https://github.com/microsoft/PythonProgrammingPuzzles) for more details.

# Usage

You can either load the model and further fine-tune it for a target task (as done for the puzzle solver), or you can experiment with mask-filling directly with this model as in the following example:

```python

from transformers import AutoTokenizer, AutoModelWithLMHead, pipeline

tokenizer = AutoTokenizer.from_pretrained("tals/roberta_python")

model = AutoModelWithLMHead.from_pretrained("tals/roberta_python")

demo = pipeline("fill-mask", model=model, tokenizer=tokenizer)

code = """sum= 0

for i in range(<mask>):

sum += i

assert sum == 6

"""

demo(code)

```

# BibTeX entry and citation info

```bibtex

@inproceedings{

schuster2021programming,

title={Programming Puzzles},

author={Tal Schuster and Ashwin Kalyan and Alex Polozov and Adam Tauman Kalai},

booktitle={Thirty-fifth Conference on Neural Information Processing Systems Datasets and Benchmarks Track (Round 1)},

year={2021},

url={https://openreview.net/forum?id=fe_hCc4RBrg}

}

```

|

tals/albert-base-vitaminc_wnei-fever

|

tals

| 2022-08-05T02:25:41Z

| 6

| 1

|

transformers

|

[

"transformers",

"pytorch",

"albert",

"text-classification",

"dataset:tals/vitaminc",

"dataset:fever",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-03-02T23:29:05Z

|

---

datasets:

- tals/vitaminc

- fever

---

# Details

Model used in [Get Your Vitamin C! Robust Fact Verification with Contrastive Evidence](https://aclanthology.org/2021.naacl-main.52/) (Schuster et al., NAACL 21`).

For more details see: https://github.com/TalSchuster/VitaminC

When using this model, please cite the paper.

# BibTeX entry and citation info

```bibtex

@inproceedings{schuster-etal-2021-get,

title = "Get Your Vitamin {C}! Robust Fact Verification with Contrastive Evidence",

author = "Schuster, Tal and

Fisch, Adam and

Barzilay, Regina",

booktitle = "Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies",

month = jun,

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2021.naacl-main.52",

doi = "10.18653/v1/2021.naacl-main.52",

pages = "624--643",

abstract = "Typical fact verification models use retrieved written evidence to verify claims. Evidence sources, however, often change over time as more information is gathered and revised. In order to adapt, models must be sensitive to subtle differences in supporting evidence. We present VitaminC, a benchmark infused with challenging cases that require fact verification models to discern and adjust to slight factual changes. We collect over 100,000 Wikipedia revisions that modify an underlying fact, and leverage these revisions, together with additional synthetically constructed ones, to create a total of over 400,000 claim-evidence pairs. Unlike previous resources, the examples in VitaminC are contrastive, i.e., they contain evidence pairs that are nearly identical in language and content, with the exception that one supports a given claim while the other does not. We show that training using this design increases robustness{---}improving accuracy by 10{\%} on adversarial fact verification and 6{\%} on adversarial natural language inference (NLI). Moreover, the structure of VitaminC leads us to define additional tasks for fact-checking resources: tagging relevant words in the evidence for verifying the claim, identifying factual revisions, and providing automatic edits via factually consistent text generation.",

}

```

|

fzwd6666/NLTBert_multi_fine_tune_new

|

fzwd6666

| 2022-08-05T00:22:54Z

| 4

| 0

|

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-08-05T00:04:38Z

|

This model is a fine-tuned version of fzwd6666/Ged_bert_new with 4 layers on an NLT dataset. It achieves the following results on the evaluation set:

{'precision': 0.9795081967213115} {'recall': 0.989648033126294} {'f1': 0.984552008238929} {'accuracy': 0.9843227424749164}

Training hyperparameters:

learning_rate: 1e-4

train_batch_size: 8

eval_batch_size: 8

optimizer: AdamW with betas=(0.9,0.999) and epsilon=1e-08

weight_decay= 0.01

lr_scheduler_type: linear

num_epochs: 3

It achieves the following results on the test set:

Incorrect UD Padded:

{'precision': 0.6878048780487804} {'recall': 0.2863913337846987} {'f1': 0.4043977055449331} {'accuracy': 0.4722575180008471}

Incorrect UD Unigram:

{'precision': 0.6348314606741573} {'recall': 0.3060257278266757} {'f1': 0.4129739607126542} {'accuracy': 0.4557390936044049}

Incorrect UD Bigram:

{'precision': 0.6588419405320813} {'recall': 0.28503723764387273} {'f1': 0.3979206049149338} {'accuracy': 0.4603981363828886}

Incorrect UD All:

{'precision': 0.4} {'recall': 0.0013540961408259986} {'f1': 0.002699055330634278} {'accuracy': 0.373994070309191}

Incorrect Sentence:

{'precision': 0.5} {'recall': 0.012186865267433988} {'f1': 0.02379378717779247} {'accuracy': 0.37441761965268955}

|

SharpAI/mal-tls-bert-base-w8a8

|

SharpAI

| 2022-08-04T23:39:11Z

| 4

| 0

|

transformers

|

[

"transformers",

"pytorch",

"tf",

"bert",

"text-classification",

"generated_from_keras_callback",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-07-27T21:02:28Z

|

---

tags:

- generated_from_keras_callback

model-index:

- name: mal-tls-bert-base-w8a8

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# mal-tls-bert-base-w8a8

This model was trained from scratch on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.15.0

- TensorFlow 2.6.4

- Datasets 2.1.0

- Tokenizers 0.10.3

|

fzwd6666/NLI_new

|

fzwd6666

| 2022-08-04T22:33:38Z

| 5

| 0

|

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-08-04T21:42:12Z

|

This model is a fine-tuned version of bert-base-uncased on an NLI dataset. It achieves the following results on the evaluation set:

{'precision': 0.9690210656753407} {'recall': 0.9722337339411521} {'f1': 0.9706247414149772} {'accuracy': 0.9535340314136126}

Training hyperparameters:

learning_rate: 2e-5

train_batch_size: 8

eval_batch_size: 8

optimizer: AdamW with betas=(0.9,0.999) and epsilon=1e-08

weight_decay= 0.01

lr_scheduler_type: linear

num_epochs: 3

It achieves the following results on the test set:

Incorrect UD Padded:

{'precision': 0.623370110330993} {'recall': 0.8415707515233581} {'f1': 0.7162201094785364} {'accuracy': 0.5828038966539602}

Incorrect UD Unigram:

{'precision': 0.6211431461810825} {'recall': 0.8314150304671631} {'f1': 0.7110596409959468} {'accuracy': 0.5772977551884795}

Incorrect UD Bigram:

{'precision': 0.6203980099502487} {'recall': 0.8442789438050101} {'f1': 0.7152279896759391} {'accuracy': 0.579415501905972}

Incorrect UD All:

{'precision': 0.605543710021322} {'recall': 0.1922816519972918} {'f1': 0.2918807810894142} {'accuracy': 0.4163490046590428}

Incorrect Sentence:

{'precision': 0.6411042944785276} {'recall': 0.4245091401489506} {'f1': 0.5107942973523422} {'accuracy': 0.4913172384582804}

|

fzwd6666/Ged_bert_new

|

fzwd6666

| 2022-08-04T22:32:48Z

| 4

| 0

|

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-08-04T22:14:19Z

|

This model is a fine-tuned version of bert-base-uncased on an NLI dataset. It achieves the following results on the evaluation set:

{'precision': 0.8384560400285919} {'recall': 0.9536585365853658} {'f1': 0.892354507417269} {'accuracy': 0.8345996493278784}

Training hyperparameters:

learning_rate=2e-5

batch_size=32

epochs = 4

warmup_steps=10% training data number

optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

lr_scheduler_type: linear

|

SharpAI/mal-tls-bert-large-relu-w8a8

|

SharpAI

| 2022-08-04T22:20:15Z

| 4

| 0

|

transformers

|

[

"transformers",

"pytorch",

"tf",

"bert",

"text-classification",

"generated_from_keras_callback",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-08-04T21:31:59Z

|

---

tags:

- generated_from_keras_callback

model-index:

- name: mal-tls-bert-large-relu-w8a8

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# mal-tls-bert-large-relu-w8a8

This model was trained from scratch on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.15.0

- TensorFlow 2.6.4

- Datasets 2.1.0

- Tokenizers 0.10.3

|

SharpAI/mal-tls-bert-large-w8a8

|

SharpAI

| 2022-08-04T22:03:00Z

| 6

| 0

|

transformers

|

[

"transformers",

"pytorch",

"tf",

"bert",

"text-classification",

"generated_from_keras_callback",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-07-27T17:48:37Z

|

---

tags:

- generated_from_keras_callback

model-index:

- name: mal-tls-bert-large-w8a8

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# mal-tls-bert-large-w8a8

This model was trained from scratch on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.15.0

- TensorFlow 2.6.4

- Datasets 2.1.0

- Tokenizers 0.10.3

|

SharpAI/mal-tls-bert-large-relu

|

SharpAI

| 2022-08-04T21:41:21Z

| 4

| 0

|

transformers

|

[

"transformers",

"pytorch",

"tf",

"bert",

"text-classification",

"generated_from_keras_callback",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-08-04T17:58:24Z

|

---

tags:

- generated_from_keras_callback

model-index:

- name: mal-tls-bert-large-relu

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# mal-tls-bert-large-relu

This model is a fine-tuned version of [](https://huggingface.co/) on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.20.1

- TensorFlow 2.6.4

- Datasets 2.1.0

- Tokenizers 0.12.1

|

SharpAI/mal-tls-bert-large

|

SharpAI

| 2022-08-04T21:04:08Z

| 4

| 0

|

transformers

|

[

"transformers",

"pytorch",

"tf",

"bert",

"text-classification",

"generated_from_keras_callback",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-07-25T22:26:09Z

|

---

tags:

- generated_from_keras_callback

model-index:

- name: mal-tls-bert-large

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# mal-tls-bert-large

This model is a fine-tuned version of [](https://huggingface.co/) on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.20.1

- TensorFlow 2.6.4

- Datasets 2.1.0

- Tokenizers 0.12.1

|

DOOGLAK/wikigold_trained_no_DA_testing2

|

DOOGLAK

| 2022-08-04T20:30:35Z

| 4

| 0

|

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"token-classification",

"generated_from_trainer",

"dataset:wikigold_splits",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-08-04T19:39:03Z

|

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- wikigold_splits

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: wikigold_trained_no_DA_testing2

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: wikigold_splits

type: wikigold_splits

args: default

metrics:

- name: Precision

type: precision

value: 0.8410852713178295

- name: Recall

type: recall

value: 0.84765625

- name: F1

type: f1

value: 0.8443579766536965

- name: Accuracy

type: accuracy

value: 0.9571820972693489

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wikigold_trained_no_DA_testing2

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the wikigold_splits dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1431

- Precision: 0.8411

- Recall: 0.8477

- F1: 0.8444

- Accuracy: 0.9572

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 1.0 | 167 | 0.1618 | 0.7559 | 0.75 | 0.7529 | 0.9410 |

| No log | 2.0 | 334 | 0.1488 | 0.8384 | 0.8242 | 0.8313 | 0.9530 |

| 0.1589 | 3.0 | 501 | 0.1431 | 0.8411 | 0.8477 | 0.8444 | 0.9572 |

### Framework versions

- Transformers 4.17.0

- Pytorch 1.11.0+cu113

- Datasets 2.4.0

- Tokenizers 0.11.6

|

aliprf/ASMNet

|

aliprf

| 2022-08-04T19:48:14Z

| 0

| 1

| null |

[

"cvpr2021",

"computer vision",

"face alignment",

"facial landmark point",

"pose estimation",

"face pose tracking",

"CNN",

"loss",

"custom loss",

"ASMNet",

"Tensor Flow",

"en",

"license:mit",

"region:us"

] | null | 2022-08-04T19:19:41Z

|

---

language: en

tags: [cvpr2021, computer vision, face alignment, facial landmark point, pose estimation, face pose tracking, CNN, loss, custom loss, ASMNet, Tensor Flow]

license: mit

---

[](https://paperswithcode.com/sota/pose-estimation-on-300w-full?p=deep-active-shape-model-for-face-alignment)

[](https://paperswithcode.com/sota/face-alignment-on-wflw?p=deep-active-shape-model-for-face-alignment)

[](https://paperswithcode.com/sota/face-alignment-on-300w?p=deep-active-shape-model-for-face-alignment)

```diff

! plaese STAR the repo if you like it.

```

# [ASMNet](https://scholar.google.com/scholar?oi=bibs&cluster=3428857185978099736&btnI=1&hl=en)

## a Lightweight Deep Neural Network for Face Alignment and Pose Estimation

#### Link to the paper:

https://scholar.google.com/scholar?oi=bibs&cluster=3428857185978099736&btnI=1&hl=en

#### Link to the paperswithcode.com:

https://paperswithcode.com/paper/asmnet-a-lightweight-deep-neural-network-for

#### Link to the article on Towardsdatascience.com:

https://aliprf.medium.com/asmnet-a-lightweight-deep-neural-network-for-face-alignment-and-pose-estimation-9e9dfac07094

```

Please cite this work as:

@inproceedings{fard2021asmnet,

title={ASMNet: A Lightweight Deep Neural Network for Face Alignment and Pose Estimation},

author={Fard, Ali Pourramezan and Abdollahi, Hojjat and Mahoor, Mohammad},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={1521--1530},

year={2021}

}

```

## Introduction

ASMNet is a lightweight Convolutional Neural Network (CNN) which is designed to perform face alignment and pose estimation efficiently while having acceptable accuracy. ASMNet proposed inspired by MobileNetV2, modified to be suitable for face alignment and pose

estimation, while being about 2 times smaller in terms of number of the parameters. Moreover, Inspired by Active Shape Model (ASM), ASM-assisted loss function is proposed in order to improve the accuracy of facial landmark points detection and pose estimation.

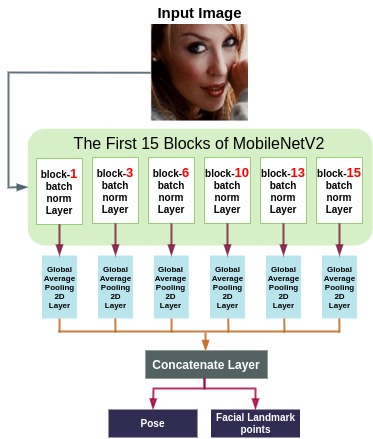

## ASMnet Architecture

Features in a CNN are distributed hierarchically. In other words, the lower layers have features such as edges, and corners which are more suitable for tasks like landmark localization and pose estimation, and deeper layers contain more abstract features that are more suitable for tasks like image classification and image detection. Furthermore, training a network for correlated tasks simultaneously builds a synergy that can improve the performance of each task.

Having said that, we designed ASMNe by fusing the features that are available if different layers of the model. Furthermore, by concatenating the features that are collected after each global average pooling layer in the back-propagation process, it will be possible for the network to evaluate the effect of each shortcut path. Following is the ASMNet architecture:

The implementation of ASMNet in TensorFlow is provided in the following path:

https://github.com/aliprf/ASMNet/blob/master/cnn_model.py

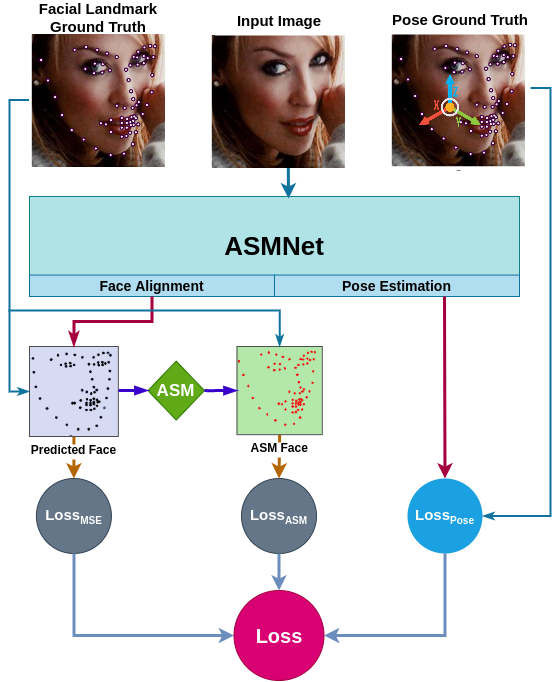

## ASM Loss

We proposed a new loss function called ASM-LOSS which utilizes ASM to improve the accuracy of the network. In other words, during the training process, the loss function compares the predicted facial landmark points with their corresponding ground truth as well as the smoothed version the ground truth which is generated using ASM operator. Accordingly, ASM-LOSS guides the network to first learn the smoothed distribution of the facial landmark points. Then, it leads the network to learn the original landmark points. For more detail please refer to the paper.

Following is the ASM Loss diagram:

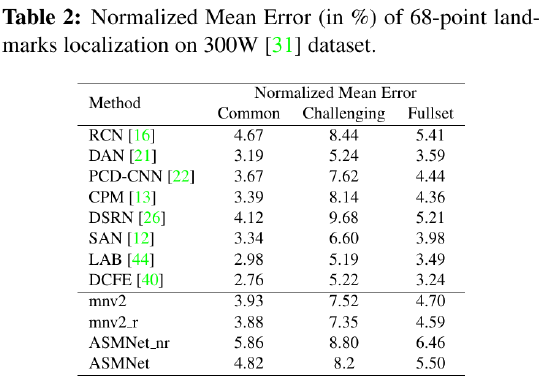

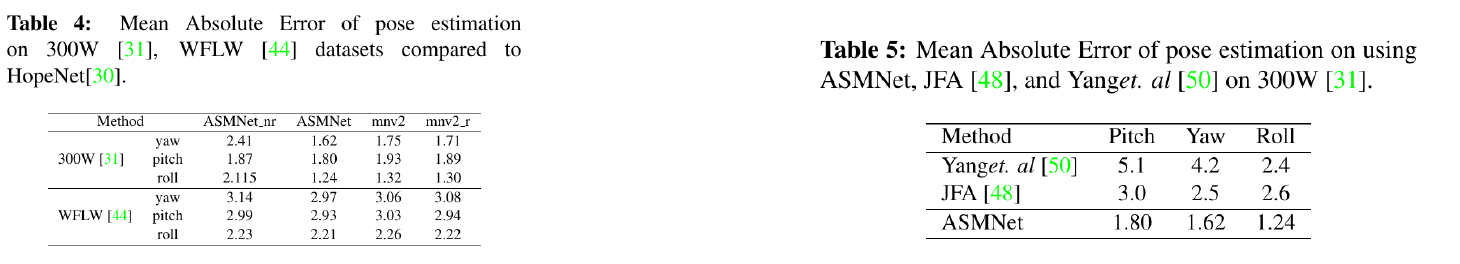

## Evaluation

As you can see in the following tables, ASMNet has only 1.4 M parameters which is the smallets comparing to the similar Facial landmark points detection models. Moreover, ASMNet designed to performs Face alignment as well as Pose estimation with a very small CNN while having an acceptable accuracy.

Although ASMNet is much smaller than the state-of-the-art methods on face alignment, it's performance is also very good and acceptable for many real-world applications:

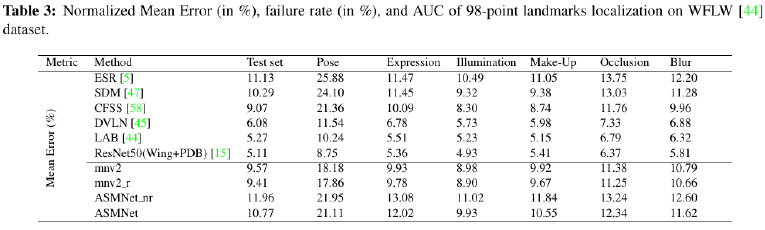

As shown in the following table, ASMNet performs much better that the state-of-the-art models on 300W dataseton Pose estimation task:

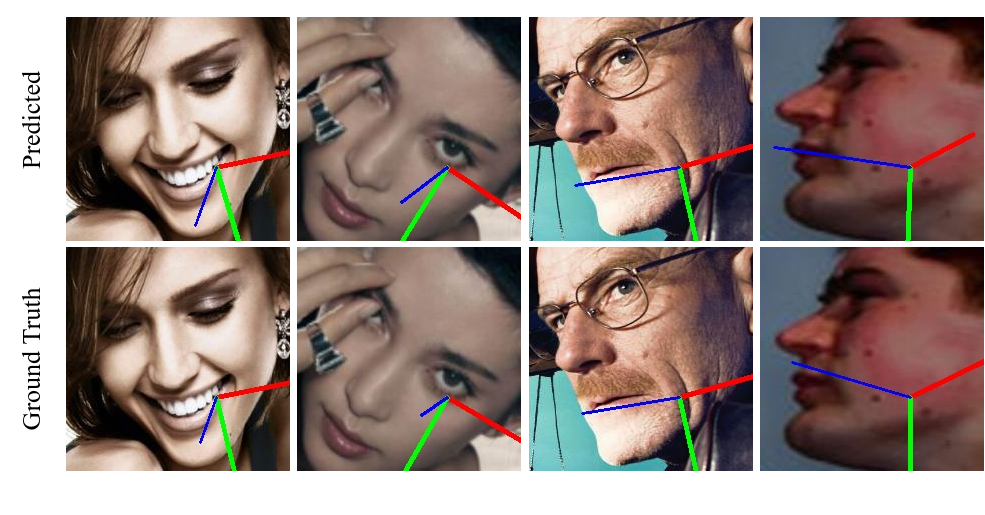

Following are some samples in order to show the visual performance of ASMNet on 300W and WFLW datasets:

The visual performance of Pose estimation task using ASMNet is very accurate and the results also are much better than the state-of-the-art pose estimation over 300W dataset:

----------------------------------------------------------------------------------------------------------------------------------

## Installing the requirements

In order to run the code you need to install python >= 3.5.

The requirements and the libraries needed to run the code can be installed using the following command:

```

pip install -r requirements.txt

```

## Using the pre-trained models

You can test and use the preetrained models using the following codes which are available in the following file:

https://github.com/aliprf/ASMNet/blob/master/main.py

```

tester = Test()

tester.test_model(ds_name=DatasetName.w300,

pretrained_model_path='./pre_trained_models/ASMNet/ASM_loss/ASMNet_300W_ASMLoss.h5')

```

## Training Network from scratch

### Preparing Data

Data needs to be normalized and saved in npy format.

### PCA creation

you can you the pca_utility.py class to create the eigenvalues, eigenvectors, and the meanvector:

```

pca_calc = PCAUtility()

pca_calc.create_pca_from_npy(dataset_name=DatasetName.w300,

labels_npy_path='./data/w300/normalized_labels/',

pca_percentages=90)

```

### Training

The training implementation is located in train.py class. You can use the following code to start the training:

```

trainer = Train(arch=ModelArch.ASMNet,

dataset_name=DatasetName.w300,

save_path='./',

asm_accuracy=90)

```

Please cite this work as:

@inproceedings{fard2021asmnet,

title={ASMNet: A Lightweight Deep Neural Network for Face Alignment and Pose Estimation},

author={Fard, Ali Pourramezan and Abdollahi, Hojjat and Mahoor, Mohammad},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={1521--1530},

year={2021}

}

```diff

@@plaese STAR the repo if you like it.@@

```

|

aliprf/ACR-Loss

|

aliprf

| 2022-08-04T19:47:19Z

| 0

| 0

| null |

[

"ICPR",

"ICPR2022",

"computer vision",

"face alignment",

"facial landmark point",

"CNN",

"loss",

"Tensor Flow",

"en",

"arxiv:2203.15835",

"license:mit",

"region:us"

] | null | 2022-08-04T18:26:32Z

|

---

language: en

tags: [ICPR, ICPR2022, computer vision, face alignment, facial landmark point, CNN, loss, Tensor Flow ]

thumbnail:

license: mit

---

# [ACR-Loss](https://scholar.google.com/citations?view_op=view_citation&hl=en&user=96lS6HIAAAAJ&citation_for_view=96lS6HIAAAAJ:eQOLeE2rZwMC)

### Accepted in ICPR 2022

ACR Loss: Adaptive Coordinate-based Regression Loss for Face Alignment

#### Link to the paper:

https://arxiv.org/pdf/2203.15835.pdf

```diff

@@plaese STAR the repo if you like it.@@

```

```

Please cite this work as:

@article{fard2022acr,

title={ACR Loss: Adaptive Coordinate-based Regression Loss for Face Alignment},

author={Fard, Ali Pourramezan and Mahoor, Mohammah H},

journal={arXiv preprint arXiv:2203.15835},

year={2022}

}

```

## Introduction

Although deep neural networks have achieved reasonable accuracy in solving face alignment, it is still a challenging task, specifically when we deal with facial images, under occlusion, or extreme head poses. Heatmap-based Regression (HBR) and Coordinate-based Regression (CBR) are among the two mainly used methods for face alignment. CBR methods require less computer memory, though their performance is less than HBR methods. In this paper, we propose an Adaptive Coordinatebased Regression (ACR) loss to improve the accuracy of CBR for face alignment. Inspired by the Active Shape Model (ASM), we generate Smooth-Face objects, a set of facial landmark points with less variations compared to the ground truth landmark points. We then introduce a method to estimate the level of difficulty in predicting each landmark point for the network by comparing the distribution of the ground truth landmark points

and the corresponding Smooth-Face objects. Our proposed ACR Loss can adaptively modify its curvature and the influence of the loss based on the difficulty level of predicting each landmark point in a face. Accordingly, the ACR Loss guides the network toward challenging points than easier points, which improves the accuracy of the face alignment task. Our extensive evaluation shows the capabilities of the proposed ACR Loss in predicting facial landmark points in various facial images.

We evaluated our ACR Loss using MobileNetV2, EfficientNetB0, and EfficientNet-B3 on widely used 300W, and COFW datasets and showed that the performance of face alignment using the ACR Loss is much better than the widely-used L2 loss. Moreover, on the COFW dataset, we achieved state-of-theart accuracy. In addition, on 300W the ACR Loss performance is comparable to the state-of-the-art methods. We also compared the performance of MobileNetV2 trained using the ACR Loss with the lightweight state-of-the-art methods, and we achieved the best accuracy, highlighting the effectiveness of our ACR Loss for face alignment specifically for the lightweight models.

----------------------------------------------------------------------------------------------------------------------------------

## Installing the requirements

In order to run the code you need to install python >= 3.5.

The requirements and the libraries needed to run the code can be installed using the following command:

```

pip install -r requirements.txt

```

## Using the pre-trained models

You can test and use the preetrained models using the following codes:

```

tester = Test()

tester.test_model(ds_name=DatasetName.w300,

pretrained_model_path='./pre_trained_models/ACRLoss/300w/EF_3/300w_EF3_ACRLoss.h5')

```

## Training Network from scratch

### Preparing Data

Data needs to be normalized and saved in npy format.

### PCA creation

you can you the pca_utility.py class to create the eigenvalues, eigenvectors, and the meanvector:

```

pca_calc = PCAUtility()

pca_calc.create_pca_from_npy(dataset_name=DatasetName.w300,

labels_npy_path='./data/w300/normalized_labels/',

pca_percentages=90)

```

### Training

The training implementation is located in train.py class. You can use the following code to start the training:

```

trainer = Train(arch=ModelArch.MNV2,

dataset_name=DatasetName.w300,

save_path='./')

```

|

aliprf/Ad-Corre

|

aliprf

| 2022-08-04T19:46:42Z

| 0

| 2

| null |

[

"Ad-Corre",

"facial expression recognition",

"emotion recognition",

"expression recognition",

"computer vision",

"CNN",

"loss",

"IEEE Access",

"Tensor Flow",

"en",

"license:mit",

"region:us"

] | null | 2022-08-04T19:11:54Z

|

---

language: en

tags: [Ad-Corre, facial expression recognition, emotion recognition, expression recognition, computer vision, CNN, loss, IEEE Access, Tensor Flow ]

thumbnail:

license: mit

---

# Ad-Corre

Ad-Corre: Adaptive Correlation-Based Loss for Facial Expression Recognition in the Wild

[](https://paperswithcode.com/sota/facial-expression-recognition-on-raf-db?p=ad-corre-adaptive-correlation-based-loss-for)

<!--

[](https://paperswithcode.com/sota/facial-expression-recognition-on-affectnet?p=ad-corre-adaptive-correlation-based-loss-for)

[](https://paperswithcode.com/sota/facial-expression-recognition-on-fer2013?p=ad-corre-adaptive-correlation-based-loss-for)

-->

#### Link to the paper (open access):

https://ieeexplore.ieee.org/document/9727163

#### Link to the paperswithcode.com:

https://paperswithcode.com/paper/ad-corre-adaptive-correlation-based-loss-for

```

Please cite this work as:

@ARTICLE{9727163,

author={Fard, Ali Pourramezan and Mahoor, Mohammad H.},

journal={IEEE Access},

title={Ad-Corre: Adaptive Correlation-Based Loss for Facial Expression Recognition in the Wild},

year={2022},

volume={},

number={},

pages={1-1},

doi={10.1109/ACCESS.2022.3156598}}

```

## Introduction

Automated Facial Expression Recognition (FER) in the wild using deep neural networks is still challenging due to intra-class variations and inter-class similarities in facial images. Deep Metric Learning (DML) is among the widely used methods to deal with these issues by improving the discriminative power of the learned embedded features. This paper proposes an Adaptive Correlation (Ad-Corre) Loss to guide the network towards generating embedded feature vectors with high correlation for within-class samples and less correlation for between-class samples. Ad-Corre consists of 3 components called Feature Discriminator, Mean Discriminator, and Embedding Discriminator. We design the Feature Discriminator component to guide the network to create the embedded feature vectors to be highly correlated if they belong to a similar class, and less correlated if they belong to different classes. In addition, the Mean Discriminator component leads the network to make the mean embedded feature vectors of different classes to be less similar to each other.We use Xception network as the backbone of our model, and contrary to previous work, we propose an embedding feature space that contains k feature vectors. Then, the Embedding Discriminator component penalizes the network to generate the embedded feature vectors, which are dissimilar.We trained our model using the combination of our proposed loss functions called Ad-Corre Loss jointly with the cross-entropy loss. We achieved a very promising recognition accuracy on AffectNet, RAF-DB, and FER-2013. Our extensive experiments and ablation study indicate the power of our method to cope well with challenging FER tasks in the wild.

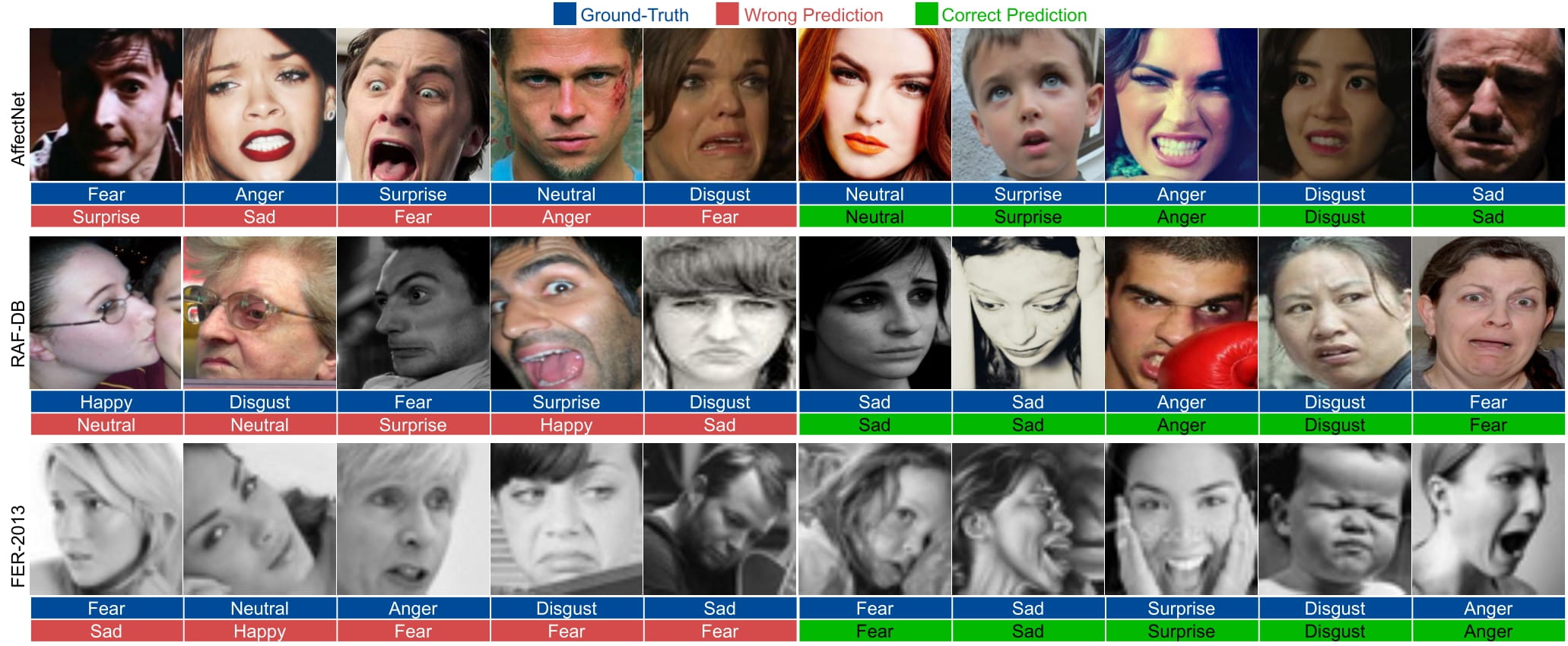

## Evaluation and Samples

The following samples are taken from the paper:

----------------------------------------------------------------------------------------------------------------------------------

## Installing the requirements

In order to run the code you need to install python >= 3.5.

The requirements and the libraries needed to run the code can be installed using the following command:

```

pip install -r requirements.txt

```

## Using the pre-trained models

The pretrained models for Affectnet, RafDB, and Fer2013 are provided in the [Trained_Models](https://github.com/aliprf/Ad-Corre/tree/main/Trained_Models) folder. You can use the following code to predict the facial emotionn of a facial image:

```

tester = TestModels(h5_address='./trained_models/AffectNet_6336.h5')

tester.recognize_fer(img_path='./img.jpg')

```

plaese see the following [main.py](https://github.com/aliprf/Ad-Corre/tree/main/main.py) file.

## Training Network from scratch

The information and the code to train the model is provided in train.py .Plaese see the following [main.py](https://github.com/aliprf/Ad-Corre/tree/main/main.py) file:

```

'''training part'''

trainer = TrainModel(dataset_name=DatasetName.affectnet, ds_type=DatasetType.train_7)

trainer.train(arch="xcp", weight_path="./")

```

### Preparing Data

Data needs to be normalized and saved in npy format.

---------------------------------------------------------------

```

Please cite this work as:

@ARTICLE{9727163,

author={Fard, Ali Pourramezan and Mahoor, Mohammad H.},

journal={IEEE Access},

title={Ad-Corre: Adaptive Correlation-Based Loss for Facial Expression Recognition in the Wild},

year={2022},

volume={},

number={},

pages={1-1},

doi={10.1109/ACCESS.2022.3156598}}

```

|

Talha/URDU-ASR

|

Talha

| 2022-08-04T19:27:04Z

| 113

| 0

|

transformers

|

[

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-08-03T19:50:46Z

|

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: output

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# output

This model is a fine-tuned version of [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2822

- Wer: 0.2423

- Cer: 0.0842

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

I have used dataset other than mozila common voice, thats why for fair evaluation, i do 80:20 split.

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 48

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 192

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 15

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Cer | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:------:|:---------------:|:------:|

| No log | 1.0 | 174 | 0.9860 | 3.1257 | 1.0 |

| No log | 2.0 | 348 | 0.9404 | 2.4914 | 0.9997 |

| No log | 3.0 | 522 | 0.1889 | 0.5970 | 0.5376 |

| No log | 4.0 | 696 | 0.1428 | 0.4462 | 0.4121 |

| No log | 5.0 | 870 | 0.1211 | 0.3775 | 0.3525 |

| 1.7 | 6.0 | 1044 | 0.1113 | 0.3594 | 0.3264 |

| 1.7 | 7.0 | 1218 | 0.1032 | 0.3354 | 0.3013 |

| 1.7 | 8.0 | 1392 | 0.1005 | 0.3171 | 0.2843 |

| 1.7 | 9.0 | 1566 | 0.0953 | 0.3115 | 0.2717 |

| 1.7 | 10.0 | 1740 | 0.0934 | 0.3058 | 0.2671 |

| 1.7 | 11.0 | 1914 | 0.0926 | 0.3060 | 0.2656 |

| 0.3585 | 12.0 | 2088 | 0.0899 | 0.3070 | 0.2566 |

| 0.3585 | 13.0 | 2262 | 0.0888 | 0.2979 | 0.2509 |

| 0.3585 | 14.0 | 2436 | 0.0868 | 0.3005 | 0.2473 |

| 0.3585 | 15.0 | 2610 | 0.2822 | 0.2423 | 0.0842 |

### Framework versions

- Transformers 4.21.0

- Pytorch 1.12.0

- Datasets 2.4.0

- Tokenizers 0.12.1

|

keepitreal/mini-phobert-v2.1

|

keepitreal

| 2022-08-04T16:42:05Z

| 3

| 0

|

transformers

|

[

"transformers",

"pytorch",

"roberta",

"fill-mask",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-08-04T14:49:57Z

|

---

tags:

- generated_from_trainer

model-index:

- name: mini-phobert-v2.1

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mini-phobert-v2.1

This model is a fine-tuned version of [](https://huggingface.co/) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 6.3279

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 64

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

### Framework versions

- Transformers 4.21.1

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

dquisi/story_spanish_category

|

dquisi

| 2022-08-04T15:44:12Z

| 5

| 0

|

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-08-03T20:01:25Z

|

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: story_spanish_category

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# story_spanish_category

This model is a fine-tuned version of [datificate/gpt2-small-spanish](https://huggingface.co/datificate/gpt2-small-spanish) on the None dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

### Framework versions

- Transformers 4.21.0

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

yukseltron/lyrics-classifier

|

yukseltron

| 2022-08-04T15:42:31Z

| 0

| 0

| null |

[

"tensorboard",

"text-classification",

"lyrics",

"catboost",

"en",

"dataset:data",

"license:gpl-3.0",

"region:us"

] |

text-classification

| 2022-07-28T12:48:01Z

|

---

language:

- en

thumbnail: "http://s4.thingpic.com/images/Yx/zFbS5iJFJMYNxDp9HTR7TQtT.png"

tags:

- text-classification

- lyrics

- catboost

license: gpl-3.0

datasets:

- data

metrics:

- accuracy

widget:

- text: "I know when that hotline bling, that can only mean one thing"

---

# Lyrics Classifier

This submission uses [CatBoost](https://catboost.ai/).

CatBoost was chosen for its listed benefits, mainly in requiring less hyperparameter tuning and preprocessing of categorical and text features. It is also fast and fairly easy to set up.

<img src="http://s4.thingpic.com/images/Yx/zFbS5iJFJMYNxDp9HTR7TQtT.png"

alt="Markdown Monster icon"

style="float: left; margin-right: 10px;" />

|

tj-solergibert/xlm-roberta-base-finetuned-panx-it

|

tj-solergibert

| 2022-08-04T15:36:59Z

| 6

| 0

|

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"dataset:xtreme",

"license:mit",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-08-04T15:21:38Z

|

---

license: mit

tags:

- generated_from_trainer

datasets:

- xtreme

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-it

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: xtreme

type: xtreme

config: PAN-X.it

split: train

args: PAN-X.it

metrics:

- name: F1

type: f1

value: 0.8124233755619126

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-it

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the xtreme dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2630

- F1: 0.8124

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.8193 | 1.0 | 70 | 0.3200 | 0.7356 |

| 0.2773 | 2.0 | 140 | 0.2841 | 0.7882 |

| 0.1807 | 3.0 | 210 | 0.2630 | 0.8124 |

### Framework versions

- Transformers 4.21.0

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Jacobsith/autotrain-Hello_there-1209845735

|

Jacobsith

| 2022-08-04T15:30:19Z

| 14

| 0

|

transformers

|

[

"transformers",

"pytorch",

"longt5",

"text2text-generation",

"autotrain",

"summarization",

"unk",

"dataset:Jacobsith/autotrain-data-Hello_there",

"model-index",

"co2_eq_emissions",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

summarization

| 2022-08-02T06:38:58Z

|

---

tags:

- autotrain

- summarization

language:

- unk

widget:

- text: "I love AutoTrain \U0001F917"

datasets:

- Jacobsith/autotrain-data-Hello_there

co2_eq_emissions:

emissions: 3602.3174355473616

model-index:

- name: Jacobsith/autotrain-Hello_there-1209845735

results:

- task:

type: summarization

name: Summarization

dataset:

name: Blaise-g/SumPubmed

type: Blaise-g/SumPubmed

config: Blaise-g--SumPubmed

split: test

metrics:

- name: ROUGE-1

type: rouge

value: 38.2084

verified: true

- name: ROUGE-2

type: rouge

value: 12.4744

verified: true

- name: ROUGE-L

type: rouge

value: 21.5536

verified: true

- name: ROUGE-LSUM

type: rouge

value: 34.229

verified: true

- name: loss

type: loss

value: 2.0952045917510986

verified: true

- name: gen_len

type: gen_len

value: 126.3001

verified: true

---

# Model Trained Using AutoTrain

- Problem type: Summarization

- Model ID: 1209845735

- CO2 Emissions (in grams): 3602.3174

## Validation Metrics

- Loss: 2.484

- Rouge1: 38.448

- Rouge2: 10.900

- RougeL: 22.080

- RougeLsum: 33.458

- Gen Len: 115.982

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_HUGGINGFACE_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/Jacobsith/autotrain-Hello_there-1209845735

```

|

mindwrapped/collaborative-filtering-movielens-copy

|

mindwrapped

| 2022-08-04T15:17:05Z

| 0

| 1

|

keras

|

[

"keras",

"tensorboard",

"tf-keras",

"collaborative-filtering",

"recommender",

"tabular-classification",

"license:cc0-1.0",

"region:us"

] |

tabular-classification

| 2022-06-08T16:15:46Z

|

---

library_name: keras

tags:

- collaborative-filtering

- recommender

- tabular-classification

license:

- cc0-1.0

---

## Model description

This repo contains the model and the notebook on [how to build and train a Keras model for Collaborative Filtering for Movie Recommendations](https://keras.io/examples/structured_data/collaborative_filtering_movielens/).

Full credits to [Siddhartha Banerjee](https://twitter.com/sidd2006).

## Intended uses & limitations

Based on a user and movies they have rated highly in the past, this model outputs the predicted rating a user would give to a movie they haven't seen yet (between 0-1). This information can be used to find out the top recommended movies for this user.

## Training and evaluation data

The dataset consists of user's ratings on specific movies. It also consists of the movie's specific genres.

## Training procedure

The model was trained for 5 epochs with a batch size of 64.

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'learning_rate': 0.001, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False}

- training_precision: float32

## Training Metrics

| Epochs | Train Loss | Validation Loss |

|--- |--- |--- |

| 1| 0.637| 0.619|

| 2| 0.614| 0.616|

| 3| 0.609| 0.611|

| 4| 0.608| 0.61|

| 5| 0.608| 0.609|

## Model Plot

<details>

<summary>View Model Plot</summary>

</details>

|

Ilyes/wav2vec2-large-xlsr-53-french

|

Ilyes

| 2022-08-04T14:51:35Z

| 29

| 4

|

transformers

|

[

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"audio",

"speech",

"xlsr-fine-tuning-week",

"fr",

"dataset:common_voice",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-03-02T23:29:04Z

|

---

language: fr

datasets:

- common_voice

tags:

- audio

- automatic-speech-recognition

- speech

- xlsr-fine-tuning-week

license: apache-2.0

model-index:

- name: wav2vec2-large-xlsr-53-French by Ilyes Rebai

results:

- task:

name: Speech Recognition

type: automatic-speech-recognition

dataset:

name: Common Voice fr

type: common_voice

args: fr

metrics:

- name: Test WER

type: wer

value: 12.82

---

## Evaluation on Common Voice FR Test

The script used for training and evaluation can be found here: https://github.com/irebai/wav2vec2

```python

import torch

import torchaudio

from datasets import load_dataset, load_metric

from transformers import (

Wav2Vec2ForCTC,

Wav2Vec2Processor,

)

import re

model_name = "Ilyes/wav2vec2-large-xlsr-53-french"

device = "cpu" # "cuda"

model = Wav2Vec2ForCTC.from_pretrained(model_name).to(device)

processor = Wav2Vec2Processor.from_pretrained(model_name)

ds = load_dataset("common_voice", "fr", split="test", cache_dir="./data/fr")

chars_to_ignore_regex = '[\,\?\.\!\;\:\"\“\%\‘\”\�\‘\’\’\’\‘\…\·\!\ǃ\?\«\‹\»\›“\”\\ʿ\ʾ\„\∞\\|\.\,\;\:\*\—\–\─\―\_\/\:\ː\;\,\=\«\»\→]'

def map_to_array(batch):

speech, _ = torchaudio.load(batch["path"])

batch["speech"] = resampler.forward(speech.squeeze(0)).numpy()

batch["sampling_rate"] = resampler.new_freq

batch["sentence"] = re.sub(chars_to_ignore_regex, '', batch["sentence"]).lower().replace("’", "'")

return batch

resampler = torchaudio.transforms.Resample(48_000, 16_000)

ds = ds.map(map_to_array)

def map_to_pred(batch):

features = processor(batch["speech"], sampling_rate=batch["sampling_rate"][0], padding=True, return_tensors="pt")

input_values = features.input_values.to(device)

attention_mask = features.attention_mask.to(device)

with torch.no_grad():

logits = model(input_values, attention_mask=attention_mask).logits

pred_ids = torch.argmax(logits, dim=-1)

batch["predicted"] = processor.batch_decode(pred_ids)

batch["target"] = batch["sentence"]

return batch

result = ds.map(map_to_pred, batched=True, batch_size=16, remove_columns=list(ds.features.keys()))

wer = load_metric("wer")

print(wer.compute(predictions=result["predicted"], references=result["target"]))

```

## Results

WER=12.82%

CER=4.40%

|

nikitakapitan/FrozenLake-v2-4x4-Slippery

|

nikitakapitan

| 2022-08-04T14:36:18Z

| 0

| 0

| null |

[

"FrozenLake-v1-4x4",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-07-21T20:31:46Z

|

---

tags:

- FrozenLake-v1-4x4

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: FrozenLake-v2-4x4-Slippery

results:

- metrics:

- type: mean_reward

value: 0.73 +/- 0.45

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4

type: FrozenLake-v1-4x4

---

# **Q-Learning** Agent playing **FrozenLake-v2-4x4-Slippery**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v2-4x4-Slippery** .

## Usage

```python

model = load_from_hub(repo_id="nikitakapitan/FrozenLake-v2-4x4-Slippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

29thDay/PPO-MountainCar-v0

|

29thDay

| 2022-08-04T14:07:15Z

| 4

| 0

|

stable-baselines3

|

[

"stable-baselines3",

"MountainCar-v0",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-08-04T12:08:40Z

|

---

library_name: stable-baselines3

tags:

- MountainCar-v0

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- metrics:

- type: mean_reward

value: -91.30 +/- 7.04

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: MountainCar-v0

type: MountainCar-v0

---

# **PPO** Agent playing **MountainCar-v0**

This is a trained model of a **PPO** agent playing **MountainCar-v0**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

schnell/bert-small-ipadic_bpe

|

schnell

| 2022-08-04T13:37:42Z

| 4

| 0

|

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"fill-mask",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-08-01T15:40:13Z

|

---

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: bert-small-ipadic_bpe

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-small-ipadic_bpe

This model is a fine-tuned version of [](https://huggingface.co/) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 1.6777

- Accuracy: 0.6519

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 256

- eval_batch_size: 8

- seed: 42

- distributed_type: multi-GPU

- num_devices: 3

- total_train_batch_size: 768