modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-08-02 00:43:11

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 548

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-08-02 00:35:11

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

vocabtrimmer/mbart-large-cc25-squad-qa-trimmed-en-10000

|

vocabtrimmer

| 2023-04-01T02:05:26Z | 106 | 0 |

transformers

|

[

"transformers",

"pytorch",

"mbart",

"text2text-generation",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2023-04-01T01:39:20Z |

# Vocabulary Trimmed [lmqg/mbart-large-cc25-squad-qa](https://huggingface.co/lmqg/mbart-large-cc25-squad-qa): `vocabtrimmer/mbart-large-cc25-squad-qa-trimmed-en-10000`

This model is a trimmed version of [lmqg/mbart-large-cc25-squad-qa](https://huggingface.co/lmqg/mbart-large-cc25-squad-qa) by [`vocabtrimmer`](https://github.com/asahi417/lm-vocab-trimmer), a tool for trimming vocabulary of language models to compress the model size.

Following table shows a summary of the trimming process.

| | lmqg/mbart-large-cc25-squad-qa | vocabtrimmer/mbart-large-cc25-squad-qa-trimmed-en-10000 |

|:---------------------------|:---------------------------------|:----------------------------------------------------------|

| parameter_size_full | 610,852,864 | 365,068,288 |

| parameter_size_embedding | 512,057,344 | 20,488,192 |

| vocab_size | 250,028 | 10,004 |

| compression_rate_full | 100.0 | 59.76 |

| compression_rate_embedding | 100.0 | 4.0 |

Following table shows the parameter used to trim vocabulary.

| language | dataset | dataset_column | dataset_name | dataset_split | target_vocab_size | min_frequency |

|:-----------|:----------------------------|:-----------------|:---------------|:----------------|--------------------:|----------------:|

| en | vocabtrimmer/mc4_validation | text | en | validation | 10000 | 2 |

|

wjmm/q-FrozenLake-v1-4x4-noSlippery

|

wjmm

| 2023-04-01T01:43:56Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-04-01T01:43:53Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing1 **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1** .

## Usage

```python

model = load_from_hub(repo_id="wjmm/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```

|

proleetops/a2c-PandaReachDense-v2

|

proleetops

| 2023-04-01T01:38:33Z | 3 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"PandaReachDense-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-04-01T01:36:09Z |

---

library_name: stable-baselines3

tags:

- PandaReachDense-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: A2C

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: PandaReachDense-v2

type: PandaReachDense-v2

metrics:

- type: mean_reward

value: -2.97 +/- 1.73

name: mean_reward

verified: false

---

# **A2C** Agent playing **PandaReachDense-v2**

This is a trained model of a **A2C** agent playing **PandaReachDense-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

keemooo/9898

|

keemooo

| 2023-04-01T01:25:58Z | 0 | 0 | null |

[

"chemistry",

"music",

"art",

"text-generation-inference",

"hr",

"dataset:gsdf/EasyNegative",

"region:us"

] | null | 2023-04-01T01:23:30Z |

---

datasets:

- gsdf/EasyNegative

language:

- hr

tags:

- chemistry

- music

- art

- text-generation-inference

---

|

Brizape/Yepes_0.0001_250

|

Brizape

| 2023-04-01T00:55:10Z | 104 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"token-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2023-03-31T23:25:32Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: Yepes_0.0001_250

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Yepes_0.0001_250

This model is a fine-tuned version of [microsoft/BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext](https://huggingface.co/microsoft/BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1555

- Precision: 0.5922

- Recall: 0.4552

- F1: 0.5148

- Accuracy: 0.9768

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 500

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.4065 | 1.39 | 25 | 0.2115 | 0.0 | 0.0 | 0.0 | 0.9672 |

| 0.1995 | 2.78 | 50 | 0.2120 | 0.0 | 0.0 | 0.0 | 0.9672 |

| 0.1995 | 4.17 | 75 | 0.2108 | 0.0 | 0.0 | 0.0 | 0.9672 |

| 0.1694 | 5.56 | 100 | 0.1646 | 0.0 | 0.0 | 0.0 | 0.9672 |

| 0.1493 | 6.94 | 125 | 0.1513 | 0.0 | 0.0 | 0.0 | 0.9672 |

| 0.1266 | 8.33 | 150 | 0.1446 | 0.0 | 0.0 | 0.0 | 0.9672 |

| 0.106 | 9.72 | 175 | 0.1396 | 0.4019 | 0.2139 | 0.2792 | 0.9704 |

| 0.086 | 11.11 | 200 | 0.1162 | 0.5037 | 0.3408 | 0.4065 | 0.9740 |

| 0.0613 | 12.5 | 225 | 0.1230 | 0.5015 | 0.4104 | 0.4514 | 0.9740 |

| 0.047 | 13.89 | 250 | 0.1306 | 0.5333 | 0.4378 | 0.4809 | 0.9753 |

| 0.0351 | 15.28 | 275 | 0.1351 | 0.5629 | 0.4453 | 0.4972 | 0.9757 |

| 0.0266 | 16.67 | 300 | 0.1453 | 0.5617 | 0.4303 | 0.4873 | 0.9765 |

| 0.02 | 18.06 | 325 | 0.1441 | 0.5573 | 0.4478 | 0.4966 | 0.9757 |

| 0.0153 | 19.44 | 350 | 0.1555 | 0.5922 | 0.4552 | 0.5148 | 0.9768 |

### Framework versions

- Transformers 4.27.4

- Pytorch 1.13.1+cu116

- Datasets 2.11.0

- Tokenizers 0.13.2

|

saif-daoud/whisper-small-hi-2400_500_133

|

saif-daoud

| 2023-04-01T00:54:00Z | 75 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"whisper",

"automatic-speech-recognition",

"generated_from_trainer",

"dataset:afrispeech-200",

"model-index",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2023-03-31T22:23:54Z |

---

tags:

- generated_from_trainer

datasets:

- afrispeech-200

metrics:

- wer

model-index:

- name: whisper-small-hi-2400_500_133

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: afrispeech-200

type: afrispeech-200

config: hausa

split: train

args: hausa

metrics:

- name: Wer

type: wer

value: 0.32728583443469905

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# whisper-small-hi-2400_500_133

This model is a fine-tuned version of [saif-daoud/whisper-small-hi-2400_500_132](https://huggingface.co/saif-daoud/whisper-small-hi-2400_500_132) on the afrispeech-200 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7843

- Wer: 0.3273

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-06

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 150

- training_steps: 540

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.9568 | 0.5 | 270 | 0.7916 | 0.3298 |

| 0.9337 | 1.5 | 540 | 0.7843 | 0.3273 |

### Framework versions

- Transformers 4.28.0.dev0

- Pytorch 1.13.1+cu116

- Datasets 2.11.0

- Tokenizers 0.13.2

|

Brizape/Variome_0.0005_250

|

Brizape

| 2023-04-01T00:48:48Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"token-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2023-04-01T00:32:57Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: Variome_0.0005_250

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Variome_0.0005_250

This model is a fine-tuned version of [microsoft/BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext](https://huggingface.co/microsoft/BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1812

- Precision: 0.0

- Recall: 0.0

- F1: 0.0

- Accuracy: 0.9760

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 500

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:---:|:--------:|

| 0.3356 | 0.35 | 25 | 0.1809 | 0.0 | 0.0 | 0.0 | 0.9760 |

| 0.1851 | 0.69 | 50 | 0.1807 | 0.0 | 0.0 | 0.0 | 0.9760 |

| 0.1635 | 1.04 | 75 | 0.1863 | 0.0 | 0.0 | 0.0 | 0.9760 |

| 0.1848 | 1.39 | 100 | 0.1810 | 0.0 | 0.0 | 0.0 | 0.9760 |

| 0.1697 | 1.74 | 125 | 0.1817 | 0.0 | 0.0 | 0.0 | 0.9760 |

| 0.1735 | 2.08 | 150 | 0.1802 | 0.0 | 0.0 | 0.0 | 0.9760 |

| 0.1576 | 2.43 | 175 | 0.1833 | 0.0 | 0.0 | 0.0 | 0.9760 |

| 0.178 | 2.78 | 200 | 0.1811 | 0.0 | 0.0 | 0.0 | 0.9760 |

| 0.18 | 3.12 | 225 | 0.1815 | 0.0 | 0.0 | 0.0 | 0.9760 |

| 0.1809 | 3.47 | 250 | 0.1825 | 0.0 | 0.0 | 0.0 | 0.9760 |

| 0.1616 | 3.82 | 275 | 0.1828 | 0.0 | 0.0 | 0.0 | 0.9760 |

| 0.1682 | 4.17 | 300 | 0.1812 | 0.0 | 0.0 | 0.0 | 0.9760 |

### Framework versions

- Transformers 4.27.4

- Pytorch 1.13.1+cu116

- Datasets 2.11.0

- Tokenizers 0.13.2

|

takinai/DreamShaper

|

takinai

| 2023-04-01T00:44:52Z | 0 | 0 | null |

[

"stable_diffusion",

"checkpoint",

"region:us"

] | null | 2023-03-31T23:16:08Z |

---

tags:

- stable_diffusion

- checkpoint

---

The source of the models is listed below. Please check the original licenses from the source.

https://civitai.com/models/4384

|

vocabtrimmer/xlm-v-base-trimmed-ar-30000

|

vocabtrimmer

| 2023-04-01T00:31:18Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"fill-mask",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2023-04-01T00:30:06Z |

# Vocabulary Trimmed [facebook/xlm-v-base](https://huggingface.co/facebook/xlm-v-base): `vocabtrimmer/xlm-v-base-trimmed-ar-30000`

This model is a trimmed version of [facebook/xlm-v-base](https://huggingface.co/facebook/xlm-v-base) by [`vocabtrimmer`](https://github.com/asahi417/lm-vocab-trimmer), a tool for trimming vocabulary of language models to compress the model size.

Following table shows a summary of the trimming process.

| | facebook/xlm-v-base | vocabtrimmer/xlm-v-base-trimmed-ar-30000 |

|:---------------------------|:----------------------|:-------------------------------------------|

| parameter_size_full | 779,396,349 | 109,115,186 |

| parameter_size_embedding | 692,451,072 | 23,041,536 |

| vocab_size | 901,629 | 30,002 |

| compression_rate_full | 100.0 | 14.0 |

| compression_rate_embedding | 100.0 | 3.33 |

Following table shows the parameter used to trim vocabulary.

| language | dataset | dataset_column | dataset_name | dataset_split | target_vocab_size | min_frequency |

|:-----------|:----------------------------|:-----------------|:---------------|:----------------|--------------------:|----------------:|

| ar | vocabtrimmer/mc4_validation | text | ar | validation | 30000 | 2 |

|

vocabtrimmer/xlm-v-base-trimmed-ar-15000-tweet-sentiment-ar

|

vocabtrimmer

| 2023-04-01T00:25:41Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2023-04-01T00:24:35Z |

# `vocabtrimmer/xlm-v-base-trimmed-ar-15000-tweet-sentiment-ar`

This model is a fine-tuned version of [/home/asahi/lm-vocab-trimmer/ckpts/xlm-v-base-trimmed-ar-15000](https://huggingface.co//home/asahi/lm-vocab-trimmer/ckpts/xlm-v-base-trimmed-ar-15000) on the

[cardiffnlp/tweet_sentiment_multilingual](https://huggingface.co/datasets/cardiffnlp/tweet_sentiment_multilingual) (arabic).

Following metrics are computed on the `test` split of

[cardiffnlp/tweet_sentiment_multilingual](https://huggingface.co/datasets/cardiffnlp/tweet_sentiment_multilingual)(arabic).

| | eval_f1_micro | eval_recall_micro | eval_precision_micro | eval_f1_macro | eval_recall_macro | eval_precision_macro | eval_accuracy |

|---:|----------------:|--------------------:|-----------------------:|----------------:|--------------------:|-----------------------:|----------------:|

| 0 | 52.87 | 52.87 | 52.87 | 46.59 | 52.87 | 50.88 | 52.87 |

Check the result file [here](https://huggingface.co/vocabtrimmer/xlm-v-base-trimmed-ar-15000-tweet-sentiment-ar/raw/main/eval.json).

|

vocabtrimmer/xlm-v-base-tweet-sentiment-fr-trimmed-fr-5000

|

vocabtrimmer

| 2023-04-01T00:25:41Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2023-04-01T00:22:55Z |

# Vocabulary Trimmed [cardiffnlp/xlm-v-base-tweet-sentiment-fr](https://huggingface.co/cardiffnlp/xlm-v-base-tweet-sentiment-fr): `vocabtrimmer/xlm-v-base-tweet-sentiment-fr-trimmed-fr-5000`

This model is a trimmed version of [cardiffnlp/xlm-v-base-tweet-sentiment-fr](https://huggingface.co/cardiffnlp/xlm-v-base-tweet-sentiment-fr) by [`vocabtrimmer`](https://github.com/asahi417/lm-vocab-trimmer), a tool for trimming vocabulary of language models to compress the model size.

Following table shows a summary of the trimming process.

| | cardiffnlp/xlm-v-base-tweet-sentiment-fr | vocabtrimmer/xlm-v-base-tweet-sentiment-fr-trimmed-fr-5000 |

|:---------------------------|:-------------------------------------------|:-------------------------------------------------------------|

| parameter_size_full | 778,495,491 | 89,885,955 |

| parameter_size_embedding | 692,451,072 | 3,841,536 |

| vocab_size | 901,629 | 5,002 |

| compression_rate_full | 100.0 | 11.55 |

| compression_rate_embedding | 100.0 | 0.55 |

Following table shows the parameter used to trim vocabulary.

| language | dataset | dataset_column | dataset_name | dataset_split | target_vocab_size | min_frequency |

|:-----------|:----------------------------|:-----------------|:---------------|:----------------|--------------------:|----------------:|

| fr | vocabtrimmer/mc4_validation | text | fr | validation | 5000 | 2 |

|

vocabtrimmer/mt5-small-trimmed-en-90000-squad-qa

|

vocabtrimmer

| 2023-04-01T00:05:28Z | 106 | 0 |

transformers

|

[

"transformers",

"pytorch",

"mt5",

"text2text-generation",

"question answering",

"en",

"dataset:lmqg/qg_squad",

"arxiv:2210.03992",

"license:cc-by-4.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2023-04-01T00:03:14Z |

---

license: cc-by-4.0

metrics:

- bleu4

- meteor

- rouge-l

- bertscore

- moverscore

language: en

datasets:

- lmqg/qg_squad

pipeline_tag: text2text-generation

tags:

- question answering

widget:

- text: "question: What is a person called is practicing heresy?, context: Heresy is any provocative belief or theory that is strongly at variance with established beliefs or customs. A heretic is a proponent of such claims or beliefs. Heresy is distinct from both apostasy, which is the explicit renunciation of one's religion, principles or cause, and blasphemy, which is an impious utterance or action concerning God or sacred things."

example_title: "Question Answering Example 1"

- text: "question: who created the post as we know it today?, context: 'So much of The Post is Ben,' Mrs. Graham said in 1994, three years after Bradlee retired as editor. 'He created it as we know it today.'— Ed O'Keefe (@edatpost) October 21, 2014"

example_title: "Question Answering Example 2"

model-index:

- name: vocabtrimmer/mt5-small-trimmed-en-90000-squad-qa

results:

- task:

name: Text2text Generation

type: text2text-generation

dataset:

name: lmqg/qg_squad

type: default

args: default

metrics:

- name: BLEU4 (Question Answering)

type: bleu4_question_answering

value: 33.47

- name: ROUGE-L (Question Answering)

type: rouge_l_question_answering

value: 67.38

- name: METEOR (Question Answering)

type: meteor_question_answering

value: 39.13

- name: BERTScore (Question Answering)

type: bertscore_question_answering

value: 91.86

- name: MoverScore (Question Answering)

type: moverscore_question_answering

value: 81.36

- name: AnswerF1Score (Question Answering)

type: answer_f1_score__question_answering

value: 68.65

- name: AnswerExactMatch (Question Answering)

type: answer_exact_match_question_answering

value: 54.26

---

# Model Card of `vocabtrimmer/mt5-small-trimmed-en-90000-squad-qa`

This model is fine-tuned version of [ckpts/mt5-small-trimmed-en-90000](https://huggingface.co/ckpts/mt5-small-trimmed-en-90000) for question answering task on the [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) (dataset_name: default) via [`lmqg`](https://github.com/asahi417/lm-question-generation).

### Overview

- **Language model:** [ckpts/mt5-small-trimmed-en-90000](https://huggingface.co/ckpts/mt5-small-trimmed-en-90000)

- **Language:** en

- **Training data:** [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) (default)

- **Online Demo:** [https://autoqg.net/](https://autoqg.net/)

- **Repository:** [https://github.com/asahi417/lm-question-generation](https://github.com/asahi417/lm-question-generation)

- **Paper:** [https://arxiv.org/abs/2210.03992](https://arxiv.org/abs/2210.03992)

### Usage

- With [`lmqg`](https://github.com/asahi417/lm-question-generation#lmqg-language-model-for-question-generation-)

```python

from lmqg import TransformersQG

# initialize model

model = TransformersQG(language="en", model="vocabtrimmer/mt5-small-trimmed-en-90000-squad-qa")

# model prediction

answers = model.answer_q(list_question="What is a person called is practicing heresy?", list_context=" Heresy is any provocative belief or theory that is strongly at variance with established beliefs or customs. A heretic is a proponent of such claims or beliefs. Heresy is distinct from both apostasy, which is the explicit renunciation of one's religion, principles or cause, and blasphemy, which is an impious utterance or action concerning God or sacred things.")

```

- With `transformers`

```python

from transformers import pipeline

pipe = pipeline("text2text-generation", "vocabtrimmer/mt5-small-trimmed-en-90000-squad-qa")

output = pipe("question: What is a person called is practicing heresy?, context: Heresy is any provocative belief or theory that is strongly at variance with established beliefs or customs. A heretic is a proponent of such claims or beliefs. Heresy is distinct from both apostasy, which is the explicit renunciation of one's religion, principles or cause, and blasphemy, which is an impious utterance or action concerning God or sacred things.")

```

## Evaluation

- ***Metric (Question Answering)***: [raw metric file](https://huggingface.co/vocabtrimmer/mt5-small-trimmed-en-90000-squad-qa/raw/main/eval/metric.first.answer.paragraph_question.answer.lmqg_qg_squad.default.json)

| | Score | Type | Dataset |

|:-----------------|--------:|:--------|:---------------------------------------------------------------|

| AnswerExactMatch | 54.26 | default | [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) |

| AnswerF1Score | 68.65 | default | [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) |

| BERTScore | 91.86 | default | [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) |

| Bleu_1 | 49.27 | default | [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) |

| Bleu_2 | 43.25 | default | [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) |

| Bleu_3 | 37.89 | default | [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) |

| Bleu_4 | 33.47 | default | [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) |

| METEOR | 39.13 | default | [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) |

| MoverScore | 81.36 | default | [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) |

| ROUGE_L | 67.38 | default | [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) |

## Training hyperparameters

The following hyperparameters were used during fine-tuning:

- dataset_path: lmqg/qg_squad

- dataset_name: default

- input_types: ['paragraph_question']

- output_types: ['answer']

- prefix_types: None

- model: ckpts/mt5-small-trimmed-en-90000

- max_length: 512

- max_length_output: 32

- epoch: 10

- batch: 32

- lr: 0.0005

- fp16: False

- random_seed: 1

- gradient_accumulation_steps: 2

- label_smoothing: 0.15

The full configuration can be found at [fine-tuning config file](https://huggingface.co/vocabtrimmer/mt5-small-trimmed-en-90000-squad-qa/raw/main/trainer_config.json).

## Citation

```

@inproceedings{ushio-etal-2022-generative,

title = "{G}enerative {L}anguage {M}odels for {P}aragraph-{L}evel {Q}uestion {G}eneration",

author = "Ushio, Asahi and

Alva-Manchego, Fernando and

Camacho-Collados, Jose",

booktitle = "Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing",

month = dec,

year = "2022",

address = "Abu Dhabi, U.A.E.",

publisher = "Association for Computational Linguistics",

}

```

|

Brizape/SETH_5e-05_250

|

Brizape

| 2023-04-01T00:00:15Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"token-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2023-03-31T23:49:57Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: SETH_5e-05_250

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# SETH_5e-05_250

This model is a fine-tuned version of [microsoft/BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext](https://huggingface.co/microsoft/BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0716

- Precision: 0.7964

- Recall: 0.8036

- F1: 0.8000

- Accuracy: 0.9849

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 500

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.3757 | 0.76 | 25 | 0.1924 | 0.0 | 0.0 | 0.0 | 0.9625 |

| 0.1119 | 1.52 | 50 | 0.0723 | 0.6237 | 0.7473 | 0.6799 | 0.9775 |

| 0.0565 | 2.27 | 75 | 0.0614 | 0.6569 | 0.7727 | 0.7101 | 0.9794 |

| 0.048 | 3.03 | 100 | 0.0586 | 0.6667 | 0.8655 | 0.7532 | 0.9801 |

| 0.0355 | 3.79 | 125 | 0.0519 | 0.7206 | 0.8345 | 0.7734 | 0.9835 |

| 0.0328 | 4.55 | 150 | 0.0532 | 0.7165 | 0.8455 | 0.7756 | 0.9831 |

| 0.0258 | 5.3 | 175 | 0.0539 | 0.7460 | 0.8382 | 0.7894 | 0.9835 |

| 0.022 | 6.06 | 200 | 0.0561 | 0.7612 | 0.7709 | 0.7660 | 0.9836 |

| 0.0189 | 6.82 | 225 | 0.0564 | 0.7636 | 0.74 | 0.7516 | 0.9828 |

| 0.0166 | 7.58 | 250 | 0.0597 | 0.7274 | 0.8491 | 0.7836 | 0.9836 |

| 0.0128 | 8.33 | 275 | 0.0626 | 0.8251 | 0.7636 | 0.7932 | 0.9854 |

| 0.0113 | 9.09 | 300 | 0.0603 | 0.8029 | 0.8 | 0.8015 | 0.9854 |

| 0.009 | 9.85 | 325 | 0.0687 | 0.8026 | 0.7909 | 0.7967 | 0.9857 |

| 0.0075 | 10.61 | 350 | 0.0716 | 0.7964 | 0.8036 | 0.8000 | 0.9849 |

### Framework versions

- Transformers 4.27.4

- Pytorch 1.13.1+cu116

- Datasets 2.11.0

- Tokenizers 0.13.2

|

vocabtrimmer/xlm-v-base-trimmed-ar-10000

|

vocabtrimmer

| 2023-03-31T23:49:56Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"fill-mask",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2023-03-31T23:48:49Z |

# Vocabulary Trimmed [facebook/xlm-v-base](https://huggingface.co/facebook/xlm-v-base): `vocabtrimmer/xlm-v-base-trimmed-ar-10000`

This model is a trimmed version of [facebook/xlm-v-base](https://huggingface.co/facebook/xlm-v-base) by [`vocabtrimmer`](https://github.com/asahi417/lm-vocab-trimmer), a tool for trimming vocabulary of language models to compress the model size.

Following table shows a summary of the trimming process.

| | facebook/xlm-v-base | vocabtrimmer/xlm-v-base-trimmed-ar-10000 |

|:---------------------------|:----------------------|:-------------------------------------------|

| parameter_size_full | 779,396,349 | 93,735,186 |

| parameter_size_embedding | 692,451,072 | 7,681,536 |

| vocab_size | 901,629 | 10,002 |

| compression_rate_full | 100.0 | 12.03 |

| compression_rate_embedding | 100.0 | 1.11 |

Following table shows the parameter used to trim vocabulary.

| language | dataset | dataset_column | dataset_name | dataset_split | target_vocab_size | min_frequency |

|:-----------|:----------------------------|:-----------------|:---------------|:----------------|--------------------:|----------------:|

| ar | vocabtrimmer/mc4_validation | text | ar | validation | 10000 | 2 |

|

vocabtrimmer/xlm-v-base-trimmed-ar-5000

|

vocabtrimmer

| 2023-03-31T23:30:41Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"fill-mask",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2023-03-31T23:29:39Z |

# Vocabulary Trimmed [facebook/xlm-v-base](https://huggingface.co/facebook/xlm-v-base): `vocabtrimmer/xlm-v-base-trimmed-ar-5000`

This model is a trimmed version of [facebook/xlm-v-base](https://huggingface.co/facebook/xlm-v-base) by [`vocabtrimmer`](https://github.com/asahi417/lm-vocab-trimmer), a tool for trimming vocabulary of language models to compress the model size.

Following table shows a summary of the trimming process.

| | facebook/xlm-v-base | vocabtrimmer/xlm-v-base-trimmed-ar-5000 |

|:---------------------------|:----------------------|:------------------------------------------|

| parameter_size_full | 779,396,349 | 89,890,186 |

| parameter_size_embedding | 692,451,072 | 3,841,536 |

| vocab_size | 901,629 | 5,002 |

| compression_rate_full | 100.0 | 11.53 |

| compression_rate_embedding | 100.0 | 0.55 |

Following table shows the parameter used to trim vocabulary.

| language | dataset | dataset_column | dataset_name | dataset_split | target_vocab_size | min_frequency |

|:-----------|:----------------------------|:-----------------|:---------------|:----------------|--------------------:|----------------:|

| ar | vocabtrimmer/mc4_validation | text | ar | validation | 5000 | 2 |

|

vocabtrimmer/xlm-v-base-trimmed-ar-tweet-sentiment-ar

|

vocabtrimmer

| 2023-03-31T23:28:30Z | 113 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2023-03-31T23:26:50Z |

# `vocabtrimmer/xlm-v-base-trimmed-ar-tweet-sentiment-ar`

This model is a fine-tuned version of [/home/asahi/lm-vocab-trimmer/ckpts/xlm-v-base-trimmed-ar](https://huggingface.co//home/asahi/lm-vocab-trimmer/ckpts/xlm-v-base-trimmed-ar) on the

[cardiffnlp/tweet_sentiment_multilingual](https://huggingface.co/datasets/cardiffnlp/tweet_sentiment_multilingual) (arabic).

Following metrics are computed on the `test` split of

[cardiffnlp/tweet_sentiment_multilingual](https://huggingface.co/datasets/cardiffnlp/tweet_sentiment_multilingual)(arabic).

| | eval_f1_micro | eval_recall_micro | eval_precision_micro | eval_f1_macro | eval_recall_macro | eval_precision_macro | eval_accuracy |

|---:|----------------:|--------------------:|-----------------------:|----------------:|--------------------:|-----------------------:|----------------:|

| 0 | 65.4 | 65.4 | 65.4 | 64.72 | 65.4 | 65.15 | 65.4 |

Check the result file [here](https://huggingface.co/vocabtrimmer/xlm-v-base-trimmed-ar-tweet-sentiment-ar/raw/main/eval.json).

|

Brizape/tmvar_0.0001_250

|

Brizape

| 2023-03-31T23:23:08Z | 121 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"token-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2023-03-31T23:14:03Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: tmvar_0.0001_250

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# tmvar_0.0001_250

This model is a fine-tuned version of [microsoft/BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext](https://huggingface.co/microsoft/BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0142

- Precision: 0.8520

- Recall: 0.9027

- F1: 0.8766

- Accuracy: 0.9972

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 500

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.2033 | 1.0 | 25 | 0.0313 | 0.6273 | 0.3730 | 0.4678 | 0.9899 |

| 0.0336 | 2.0 | 50 | 0.0197 | 0.6723 | 0.8541 | 0.7524 | 0.9946 |

| 0.0133 | 3.0 | 75 | 0.0134 | 0.8763 | 0.8811 | 0.8787 | 0.9969 |

| 0.0075 | 4.0 | 100 | 0.0192 | 0.7110 | 0.8378 | 0.7692 | 0.9952 |

| 0.0065 | 5.0 | 125 | 0.0126 | 0.8681 | 0.8541 | 0.8610 | 0.9969 |

| 0.0029 | 6.0 | 150 | 0.0130 | 0.8513 | 0.8973 | 0.8737 | 0.9974 |

| 0.002 | 7.0 | 175 | 0.0121 | 0.8446 | 0.8811 | 0.8624 | 0.9969 |

| 0.0017 | 8.0 | 200 | 0.0103 | 0.8462 | 0.8919 | 0.8684 | 0.9974 |

| 0.0011 | 9.0 | 225 | 0.0148 | 0.8299 | 0.8703 | 0.8496 | 0.9967 |

| 0.0007 | 10.0 | 250 | 0.0150 | 0.8426 | 0.8973 | 0.8691 | 0.9971 |

| 0.0005 | 11.0 | 275 | 0.0142 | 0.8376 | 0.8919 | 0.8639 | 0.9970 |

| 0.0004 | 12.0 | 300 | 0.0142 | 0.8513 | 0.8973 | 0.8737 | 0.9972 |

| 0.0003 | 13.0 | 325 | 0.0143 | 0.8469 | 0.8973 | 0.8714 | 0.9971 |

| 0.0003 | 14.0 | 350 | 0.0142 | 0.8520 | 0.9027 | 0.8766 | 0.9972 |

### Framework versions

- Transformers 4.27.4

- Pytorch 1.13.1+cu116

- Datasets 2.11.0

- Tokenizers 0.13.2

|

wjmm/ppo-LunarLander-v2

|

wjmm

| 2023-03-31T23:19:34Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-03-31T23:06:50Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 246.05 +/- 22.92

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

|

Mentatko/bert-finetuned-squad

|

Mentatko

| 2023-03-31T23:18:19Z | 61 | 0 |

transformers

|

[

"transformers",

"tf",

"bert",

"question-answering",

"generated_from_keras_callback",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2023-03-28T06:21:06Z |

---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: Mentatko/bert-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# Mentatko/bert-finetuned-squad

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.7318

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 5545, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: mixed_float16

### Training results

| Train Loss | Epoch |

|:----------:|:-----:|

| 0.7318 | 0 |

### Framework versions

- Transformers 4.27.3

- TensorFlow 2.10.0

- Datasets 2.10.1

- Tokenizers 0.13.2

|

vocabtrimmer/xlm-v-base-trimmed-ar

|

vocabtrimmer

| 2023-03-31T23:09:15Z | 124 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"fill-mask",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2023-03-31T23:07:34Z |

# Vocabulary Trimmed [facebook/xlm-v-base](https://huggingface.co/facebook/xlm-v-base): `vocabtrimmer/xlm-v-base-trimmed-ar`

This model is a trimmed version of [facebook/xlm-v-base](https://huggingface.co/facebook/xlm-v-base) by [`vocabtrimmer`](https://github.com/asahi417/lm-vocab-trimmer), a tool for trimming vocabulary of language models to compress the model size.

Following table shows a summary of the trimming process.

| | facebook/xlm-v-base | vocabtrimmer/xlm-v-base-trimmed-ar |

|:---------------------------|:----------------------|:-------------------------------------|

| parameter_size_full | 779,396,349 | 157,554,496 |

| parameter_size_embedding | 692,451,072 | 71,417,856 |

| vocab_size | 901,629 | 92,992 |

| compression_rate_full | 100.0 | 20.21 |

| compression_rate_embedding | 100.0 | 10.31 |

Following table shows the parameter used to trim vocabulary.

| language | dataset | dataset_column | dataset_name | dataset_split | target_vocab_size | min_frequency |

|:-----------|:----------------------------|:-----------------|:---------------|:----------------|:--------------------|----------------:|

| ar | vocabtrimmer/mc4_validation | text | ar | validation | | 2 |

|

Brizape/tmvar_2e-05_250

|

Brizape

| 2023-03-31T23:04:44Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"token-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2023-03-31T22:55:34Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: tmvar_2e-05_250

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# tmvar_2e-05_250

This model is a fine-tuned version of [microsoft/BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext](https://huggingface.co/microsoft/BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0128

- Precision: 0.8756

- Recall: 0.9135

- F1: 0.8942

- Accuracy: 0.9974

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 500

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.486 | 1.0 | 25 | 0.0910 | 0.0 | 0.0 | 0.0 | 0.9858 |

| 0.0765 | 2.0 | 50 | 0.0410 | 0.6267 | 0.2541 | 0.3615 | 0.9889 |

| 0.0399 | 3.0 | 75 | 0.0230 | 0.6513 | 0.6865 | 0.6684 | 0.9941 |

| 0.0254 | 4.0 | 100 | 0.0176 | 0.7170 | 0.8216 | 0.7657 | 0.9957 |

| 0.0139 | 5.0 | 125 | 0.0129 | 0.8710 | 0.8757 | 0.8733 | 0.9968 |

| 0.0078 | 6.0 | 150 | 0.0107 | 0.9027 | 0.9027 | 0.9027 | 0.9974 |

| 0.0057 | 7.0 | 175 | 0.0110 | 0.8763 | 0.9189 | 0.8971 | 0.9975 |

| 0.0042 | 8.0 | 200 | 0.0113 | 0.8718 | 0.9189 | 0.8947 | 0.9971 |

| 0.003 | 9.0 | 225 | 0.0118 | 0.8802 | 0.9135 | 0.8966 | 0.9974 |

| 0.0022 | 10.0 | 250 | 0.0121 | 0.8877 | 0.8973 | 0.8925 | 0.9972 |

| 0.0019 | 11.0 | 275 | 0.0126 | 0.8756 | 0.9135 | 0.8942 | 0.9972 |

| 0.0016 | 12.0 | 300 | 0.0126 | 0.8802 | 0.9135 | 0.8966 | 0.9974 |

| 0.0015 | 13.0 | 325 | 0.0129 | 0.8769 | 0.9243 | 0.9 | 0.9974 |

| 0.0013 | 14.0 | 350 | 0.0128 | 0.8756 | 0.9135 | 0.8942 | 0.9974 |

### Framework versions

- Transformers 4.27.4

- Pytorch 1.13.1+cu116

- Datasets 2.11.0

- Tokenizers 0.13.2

|

kfahn/dreambooth_diffusion_model_gesture

|

kfahn

| 2023-03-31T23:01:59Z | 5 | 0 |

keras

|

[

"keras",

"tf-keras",

"region:us"

] | null | 2023-03-30T15:57:59Z |

---

library_name: keras

---

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

| Hyperparameters | Value |

| :-- | :-- |

| inner_optimizer.class_name | Custom>RMSprop |

| inner_optimizer.config.name | RMSprop |

| inner_optimizer.config.weight_decay | None |

| inner_optimizer.config.clipnorm | None |

| inner_optimizer.config.global_clipnorm | None |

| inner_optimizer.config.clipvalue | None |

| inner_optimizer.config.use_ema | False |

| inner_optimizer.config.ema_momentum | 0.99 |

| inner_optimizer.config.ema_overwrite_frequency | 100 |

| inner_optimizer.config.jit_compile | True |

| inner_optimizer.config.is_legacy_optimizer | False |

| inner_optimizer.config.learning_rate | 0.0010000000474974513 |

| inner_optimizer.config.rho | 0.9 |

| inner_optimizer.config.momentum | 0.0 |

| inner_optimizer.config.epsilon | 1e-07 |

| inner_optimizer.config.centered | False |

| dynamic | True |

| initial_scale | 32768.0 |

| dynamic_growth_steps | 2000 |

| training_precision | mixed_float16 |

## Model Plot

<details>

<summary>View Model Plot</summary>

</details>

|

dvruette/oasst-llama-13b-2-epochs

|

dvruette

| 2023-03-31T22:44:54Z | 1,494 | 7 |

transformers

|

[

"transformers",

"pytorch",

"llama",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-03-31T22:26:30Z |

https://wandb.ai/open-assistant/supervised-finetuning/runs/lguuq2c1

|

takinai/xiaorenshu

|

takinai

| 2023-03-31T22:31:21Z | 0 | 1 | null |

[

"stable_diffusion",

"lora",

"region:us"

] | null | 2023-03-31T22:30:57Z |

---

tags:

- stable_diffusion

- lora

---

The source of the models is listed below. Please check the original licenses from the source.

https://civitai.com/models/18323

|

amannlp/ppo-LunarLander-v2

|

amannlp

| 2023-03-31T22:22:59Z | 4 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-03-31T22:22:34Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 249.08 +/- 34.67

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

letingliu/my_awesome_model_tweets

|

letingliu

| 2023-03-31T22:22:34Z | 61 | 0 |

transformers

|

[

"transformers",

"tf",

"distilbert",

"text-classification",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2023-03-07T05:40:01Z |

---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: letingliu/my_awesome_model_tweets

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# letingliu/my_awesome_model_tweets

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.5490

- Validation Loss: 0.5429

- Train Accuracy: 0.6692

- Epoch: 19

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'weight_decay': None, 'clipnorm': None, 'global_clipnorm': None, 'clipvalue': None, 'use_ema': False, 'ema_momentum': 0.99, 'ema_overwrite_frequency': None, 'jit_compile': False, 'is_legacy_optimizer': False, 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 40, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False}

- training_precision: float32

### Training results

| Train Loss | Validation Loss | Train Accuracy | Epoch |

|:----------:|:---------------:|:--------------:|:-----:|

| 0.6582 | 0.6337 | 0.6692 | 0 |

| 0.6230 | 0.6035 | 0.6692 | 1 |

| 0.6015 | 0.5766 | 0.6692 | 2 |

| 0.5738 | 0.5533 | 0.6692 | 3 |

| 0.5540 | 0.5429 | 0.6692 | 4 |

| 0.5534 | 0.5429 | 0.6692 | 5 |

| 0.5515 | 0.5429 | 0.6692 | 6 |

| 0.5524 | 0.5429 | 0.6692 | 7 |

| 0.5455 | 0.5429 | 0.6692 | 8 |

| 0.5463 | 0.5429 | 0.6692 | 9 |

| 0.5380 | 0.5429 | 0.6692 | 10 |

| 0.5494 | 0.5429 | 0.6692 | 11 |

| 0.5467 | 0.5429 | 0.6692 | 12 |

| 0.5382 | 0.5429 | 0.6692 | 13 |

| 0.5562 | 0.5429 | 0.6692 | 14 |

| 0.5517 | 0.5429 | 0.6692 | 15 |

| 0.5462 | 0.5429 | 0.6692 | 16 |

| 0.5456 | 0.5429 | 0.6692 | 17 |

| 0.5499 | 0.5429 | 0.6692 | 18 |

| 0.5490 | 0.5429 | 0.6692 | 19 |

### Framework versions

- Transformers 4.27.4

- TensorFlow 2.12.0

- Datasets 2.11.0

- Tokenizers 0.13.2

|

dvruette/oasst-llama-13b-1000-steps

|

dvruette

| 2023-03-31T22:22:28Z | 1,494 | 0 |

transformers

|

[

"transformers",

"pytorch",

"llama",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-03-31T19:22:45Z |

https://wandb.ai/open-assistant/supervised-finetuning/runs/17boywm8?workspace=

|

Inzamam567/Useless-MORIMORImix

|

Inzamam567

| 2023-03-31T22:19:47Z | 0 | 1 | null |

[

"region:us"

] | null | 2023-03-31T22:19:47Z |

---

duplicated_from: morit-00/MORIMORImix

---

|

Inzamam567/Useless-somethingv3

|

Inzamam567

| 2023-03-31T22:14:27Z | 0 | 1 | null |

[

"region:us"

] | null | 2023-03-31T22:14:26Z |

---

duplicated_from: NoCrypt/SomethingV3

---

|

Inzamam567/Useless-SukiyakiMix-v1.0

|

Inzamam567

| 2023-03-31T22:01:54Z | 0 | 5 | null |

[

"stable-diffusion",

"text-to-image",

"ja",

"license:creativeml-openrail-m",

"region:us"

] |

text-to-image

| 2023-03-31T22:01:54Z |

---

license: creativeml-openrail-m

language:

- ja

tags:

- stable-diffusion

- text-to-image

duplicated_from: Vsukiyaki/SukiyakiMix-v1.0

---

# ◆ SukiyakiMix-v1.0

**SukiyakiMix-v1.0** は、**pastel-mix** をベースに **AbyssOrangeMix2** をマージしたモデルです。

## VAE:

VAE はお好きなものをお使いください。推奨は、 [WarriorMama777/OrangeMixs](https://huggingface.co/WarriorMama777/OrangeMixs) の **orangemix.vae.pt** です。

<hr>

# ◆ Recipe

このモデルは、以下の 2 つのモデルを**単純**にマージして生成されたモデルです。

<dl>

<dt><a href="https://huggingface.co/andite/pastel-mix">andite/pastel-mix</a></dt>

<dd>└ pastel-mix</dd>

<dt><a href="https://huggingface.co/WarriorMama777/OrangeMixs">WarriorMama777/OrangeMixs</a></dt>

<dd>└ AbyssOrangeMix2_sfw (AOM2s)</dd>

</dl>

| Model A | Model B | Ratio |

| :--------: | :-------------------------: | :-----: |

| pastel-mix | AbyssOrangeMix2_sfw (AOM2s) | 60 : 40 |

※U-Net の階層ごとの重みは変化させていません。<br>

※マージには[merge-models

](https://github.com/eyriewow/merge-models)のマージ用スクリプトを使用しています。

<hr>

# ◆ Licence

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage. The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce nor share illegal or harmful outputs or content

2. The authors claims no rights on the outputs you generate, you are free to use them and are accountable for their use which must not go against the provisions set in the license

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully) Please read the full license here :https://huggingface.co/spaces/CompVis/stable-diffusion-license

<br>

#### 【和訳】

このモデルはオープンアクセスであり、すべての人が利用できます。CreativeML OpenRAIL-M ライセンスにより、権利と使用方法がさらに規定されています。CreativeML OpenRAIL ライセンスでは、次のことが規定されています。

1. モデルを使用して、違法または有害な出力またはコンテンツを意図的に作成または共有することはできません。

2. 作成者は、あなたが生成した出力に対していかなる権利も主張しません。あなたはそれらを自由に使用でき、ライセンスに設定された規定に違反してはならない使用について説明責任を負います。

3. 重みを再配布し、モデルを商用および/またはサービスとして使用することができます。その場合、ライセンスに記載されているのと同じ使用制限を含め、CreativeML OpenRAIL-M のコピーをすべてのユーザーと共有する必要があることに注意してください。 (ライセンスを完全にかつ慎重にお読みください。) [こちらからライセンス全文をお読みください。](https://huggingface.co/spaces/CompVis/stable-diffusion-license)

<br>

🚫 本モデルを商用の画像生成サービスで利用する行為 <br>

Use of this model for commercial image generation services

🚫 本モデルや本モデルをマージしたモデルを販売する行為<br>

The act of selling this model or a model merged with this model

🚫 本モデルを使用し意図的に違法な出力をする行為 <br>

Intentionally using this model to produce illegal output

🚫 本モデルをマージしたモデルに異なる権限を与える行為 <br>

Have different permissions when sharing

🚫 本モデルをマージしたモデルを配布または本モデルを再配布した際に同じ使用制限を含め、CreativeML OpenRAIL-M のコピーをすべてのユーザーと共有しない行為 <br>

The act of not sharing a copy of CreativeML OpenRAIL-M with all users, including the same usage restrictions when distributing or redistributing a merged model of this model.

⭕ 本モデルで生成した画像を商用利用する行為 <br>

Commercial use of images generated by this model

⭕ 本モデルを使用したマージモデルを使用または再配布する行為 <br>

Use or redistribution of merged models using this model

⭕ 本モデルのクレジット表記をせずに使用する行為 <br>

Use of this model without crediting the model

<hr>

# ◆ Examples

### NMKD SD-GUI-1.8.1-NoMdl

- VAE: orangemix.vae.pt

<img src="https://huggingface.co/Vsukiyaki/SukiyakiMix-v1.0/resolve/main/imgs/Example1.png" width="512px">

```

Positive:

(best quality)+,(masterpiece)++,(ultra detailed)++,cute girl,

Negative:

(low quality, worst quality)1.4, (bad anatomy)+, (inaccurate limb)1.3,bad composition, inaccurate eyes, extra digit,fewer digits,(extra arms)1.2,logo,text

Steps: 20

CFG Scale: 8

Size: 1024x1024 (High-Resolution Fix)

Seed: 1696068555

Sampler: PLMS

```

<br>

<img src="https://huggingface.co/Vsukiyaki/SukiyakiMix-v1.0/resolve/main/imgs/Example2.png" width="512px">

```

Positive:

(best quality)+,(masterpiece)++,(ultra detailed)++,cute girl,

Negative:

(low quality, worst quality)1.4, (bad anatomy)+, (inaccurate limb)1.3,bad composition, inaccurate eyes, extra digit,fewer digits,(extra arms)1.2,logo,text

Steps: 20

CFG Scale: 8

Size: 1024x1024 (High-Resolution Fix)

Seed: 1596727034

Sampler: DDIM

```

<br>

<img src="https://huggingface.co/Vsukiyaki/SukiyakiMix-v1.0/resolve/main/imgs/Example3.png" width="512px">

```

Positive:

(best quality)+,(masterpiece)++,(ultra detailed)++,sharp focus,cute little girl sitting in a messy room,Roomful of sundries,black hair,long hair,blush,clutter,miscellaneous goods are placed in a mess,wide shot,smile,light particles,hoodie,Bookshelves, drink, cushions, chairs, desks, game equipment, crayons, drawing paper

Negative:

(low quality, worst quality)1.4, (bad anatomy)+, (inaccurate limb)1.3,bad composition, inaccurate eyes, extra digit,fewer digits,(extra arms)1.2,logo,text

Steps: 80

CFG Scale: 8

Size: 1024x1024 (High-Resolution Fix)

Seed: 629024761

Sampler: DPM++ 2

```

<br>

<img src="https://huggingface.co/Vsukiyaki/SukiyakiMix-v1.0/resolve/main/imgs/Example4.png" width="512px">

```

Positive:

(masterpiece, best quality, ultra detailed)++,cute girl sitting at a desk in a girlish room filled with furniture, surrounded by various gaming devices and other tech,Include details such as the room's vibrant,pink hair,blue eyes,short hair,cat ears,smile,playful,creative

Negative:

(low quality, worst quality)1.4, (bad anatomy)+, (inaccurate limb)1.2,bad composition, inaccurate eyes, extra digit,fewer digits,(extra arms)1.2,(2 girl)

Steps: 80

CFG Scale: 8

Size: 1024x768 (High-Resolution Fix)

Seed: 1887602021

Sampler: DPM++ 2

```

<br>

### stable-diffusion-webui

- VAE: orangemix.vae.pt

<img src="https://huggingface.co/Vsukiyaki/SukiyakiMix-v1.0/resolve/main/imgs/Example5.png" width="512px">

```

Positive:

(best quality)+,(masterpiece)++,(ultra detailed)++,cute girl,school uniform

Negative:

(low quality, worst quality)1.4, (bad anatomy)+, (inaccurate limb)1.3,bad composition, inaccurate eyes, extra digit,fewer digits,(extra arms)1.2,logo,text

Steps: 50

CFG Scale: 8

Size: 512x768

Seed: 3357075383

Sampler: DPM++ SDE Karras

```

<br>

<img src="https://huggingface.co/Vsukiyaki/SukiyakiMix-v1.0/resolve/main/imgs/Example6.png" width="512px">

```

Positive:

(best quality)+,(masterpiece)++,(ultra detailed)++,a girl,messy room

Negative:

(low quality, worst quality)1.4, (bad anatomy)+, (inaccurate limb)1.3,bad composition, inaccurate eyes, extra digit,fewer digits,(extra arms)1.2,logo,text

Steps: 20

CFG Scale: 7

Size: 1024x1024

Seed: 1103020084

Sampler: DPM++ SDE Karras

```

<hr>

Twiter: [@Vsukiyaki_AIArt](https://twitter.com/Vsukiyaki_AIArt)

|

Inzamam567/Useless-TriPhaze

|

Inzamam567

| 2023-03-31T22:00:09Z | 6 | 1 |

diffusers

|

[

"diffusers",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"license:creativeml-openrail-m",

"region:us"

] |

text-to-image

| 2023-03-31T22:00:08Z |

---

license: creativeml-openrail-m

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

inference: true

duplicated_from: Lucetepolis/TriPhaze

---

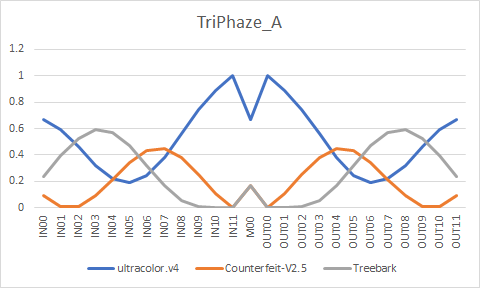

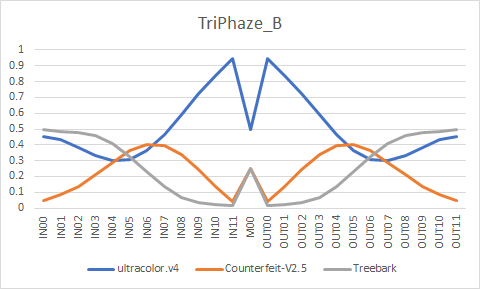

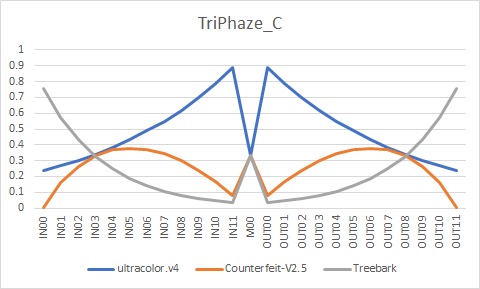

# TriPhaze

ultracolor.v4 - <a href="https://huggingface.co/xdive/ultracolor.v4">Download</a> / <a href="https://arca.live/b/aiart/68609290">Sample</a><br/>

Counterfeit-V2.5 - <a href="https://huggingface.co/gsdf/Counterfeit-V2.5">Download / Sample</a><br/>

Treebark - <a href="https://huggingface.co/HIZ/aichan_pick">Download</a> / <a href="https://arca.live/b/aiart/67648642">Sample</a><br/>

EasyNegative and pastelmix-lora seem to work well with the models.

EasyNegative - <a href="https://huggingface.co/datasets/gsdf/EasyNegative">Download / Sample</a><br/>

pastelmix-lora - <a href="https://huggingface.co/andite/pastel-mix">Download / Sample</a>

# Formula

```

ultracolor.v4 + Counterfeit-V2.5 = temp1

U-Net Merge - 0.870333, 0.980430, 0.973645, 0.716758, 0.283242, 0.026355, 0.019570, 0.129667, 0.273791, 0.424427, 0.575573, 0.726209, 0.5, 0.726209, 0.575573, 0.424427, 0.273791, 0.129667, 0.019570, 0.026355, 0.283242, 0.716758, 0.973645, 0.980430, 0.870333

temp1 + Treebark = temp2

U-Net Merge - 0.752940, 0.580394, 0.430964, 0.344691, 0.344691, 0.430964, 0.580394, 0.752940, 0.902369, 0.988642, 0.988642, 0.902369, 0.666667, 0.902369, 0.988642, 0.988642, 0.902369, 0.752940, 0.580394, 0.430964, 0.344691, 0.344691, 0.430964, 0.580394, 0.752940

temp2 + ultracolor.v4 = TriPhaze_A

U-Net Merge - 0.042235, 0.056314, 0.075085, 0.100113, 0.133484, 0.177979, 0.237305, 0.316406, 0.421875, 0.5625, 0.75, 1, 0.5, 1, 0.75, 0.5625, 0.421875, 0.316406, 0.237305, 0.177979, 0.133484, 0.100113, 0.075085, 0.056314, 0.042235

ultracolor.v4 + Counterfeit-V2.5 = temp3

U-Net Merge - 0.979382, 0.628298, 0.534012, 0.507426, 0.511182, 0.533272, 0.56898, 0.616385, 0.674862, 0.7445, 0.825839, 0.919748, 0.5, 0.919748, 0.825839, 0.7445, 0.674862, 0.616385, 0.56898, 0.533272, 0.511182, 0.507426, 0.534012, 0.628298, 0.979382

temp3 + Treebark = TriPhaze_C

U-Net Merge - 0.243336, 0.427461, 0.566781, 0.672199, 0.751965, 0.812321, 0.857991, 0.892547, 0.918694, 0.938479, 0.953449, 0.964777, 0.666667, 0.964777, 0.953449, 0.938479, 0.918694, 0.892547, 0.857991, 0.812321, 0.751965, 0.672199, 0.566781, 0.427461, 0.243336

TriPhaze_A + TriPhaze_C = TriPhaze_B

U-Net Merge - 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5

```

# Converted weights

# Samples

All of the images use following negatives/settings. EXIF preserved.

```

Negative prompt: (worst quality, low quality:1.4), easynegative, bad anatomy, bad hands, error, missing fingers, extra digit, fewer digits, nsfw

Steps: 20, Sampler: DPM++ SDE Karras, CFG scale: 7, Seed: 1853114200, Size: 768x512, Model hash: 6bad0b419f, Denoising strength: 0.6, Clip skip: 2, ENSD: 31337, Hires upscale: 2, Hires upscaler: R-ESRGAN 4x+ Anime6B

```

# TriPhaze_A

# TriPhaze_B

# TriPhaze_C

|

NiltonAlf18/eros

|

NiltonAlf18

| 2023-03-31T21:54:15Z | 0 | 0 | null |

[

"license:creativeml-openrail-m",

"region:us"

] | null | 2023-03-31T21:52:45Z |

---

license: creativeml-openrail-m

---

|

lunnan/Reinforce-CartPole-v1

|

lunnan

| 2023-03-31T21:46:43Z | 0 | 0 | null |

[

"CartPole-v1",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-03-31T21:46:32Z |

---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Reinforce-CartPole-v1

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

metrics:

- type: mean_reward

value: 500.00 +/- 0.00

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1** .

To learn to use this model and train yours check Unit 4 of the Deep Reinforcement Learning Course: https://huggingface.co/deep-rl-course/unit4/introduction

|

Inzamam567/Useless-X-mix

|

Inzamam567

| 2023-03-31T21:34:49Z | 24 | 2 |

diffusers

|

[

"diffusers",

"stable-diffusion",

"text-to-image",

"license:creativeml-openrail-m",

"region:us"

] |

text-to-image

| 2023-03-31T21:34:49Z |

---

license: creativeml-openrail-m

library_name: diffusers

tags:

- stable-diffusion

pipeline_tag: text-to-image

duplicated_from: les-chien/X-mix

---

# X-mix

**Civitai**: [X-mix | Stable Diffusion Checkpoint | Civitai](https://civitai.com/models/13069/x-mix)

X-mix is a merging model used to generate anime images. My English is not very good, so there may be some parts of this article that are unclear.

## V2.0

V2.0 is a merged model based on V1.0. This model supports nsfw.

### Difference from V1.0

- The performance of V2.0 is not better than that of V1.0, but the generated images now exhibit a different artistic style.

- V2.0 offers better support for nsfw than V1.0, but the drawback is that even when you do not intend to generate an nsfw image, there is still a possibility of generating one. If you are more interested in the sfw model, I will provide a detailed explanation in the recipe section.

- In my opinion, V2.0 is not as user-friendly as V1.0, and it appears to be more challenging to generate an excellent image.

### Recommended Settings

- Sampler: DPM++ SDE Karras (sfw), DDIM (nsfw)

- Steps: 20 (DDIM may require more steps)

- CFG Scale: 5

- Hires upscale: Latent (bicubic antialiased), Latent (nearest-exact), Denoising strength: 0.4~0.7

- vae: NAI.vae

- Clip skip: 2

- ENSD: 31337

- Eta: 0.67

### Example

```

masterpiece, best quality, ultra-detailed, illustration, portrait, 1girl

Negative prompt: EasyNegative, photograph by bad-artist, bad_prompt_version2, DeepNegative-V1-75T

Steps: 20, Sampler: DPM++ SDE Karras, CFG scale: 5, Seed: 4291846267, Size: 512x512, Model hash: 7bc4c05c90, Denoising strength: 0.55, Clip skip: 2, ENSD: 31337, Hires upscale: 2, Hires upscaler: Latent (nearest-exact), Eta: 0.67

```

```

Indoor, bright, 1Girl, gray hair, amber eyes, smile, black dress, barefoot, sitting posture,

Negative prompt: EasyNegative, by bad-artist, bad_prompt_version2

Steps: 20, Sampler: DPM++ SDE Karras, CFG scale: 5, Seed: 2118045521, Size: 600x400, Model hash: 7961a4960e, Denoising strength: 0.55, Clip skip: 2, ENSD: 31337, Hires upscale: 2, Hires upscaler: Latent (bicubic antialiased), Eta: 0.67

```

%2C%20white%20t.png)

```

landscape, in spring, cherry blossoms, cloudy sky, 1girl, solo, long blue hair, smirk, pink eyes, (school uniform:1.05), white thighhighs,

Negative prompt: EasyNegative, by bad-artist, bad_prompt_version2, DeepNegative-V1-75T

Steps: 20, Sampler: DPM++ SDE Karras, CFG scale: 5, Seed: 3093571233, Size: 400x600, Model hash: 7961a4960e, Denoising strength: 0.55, Clip skip: 2, ENSD: 31337, Hires upscale: 2, Hires upscaler: Latent (bicubic antialiased), Eta: 0.67

```

```

1girl, on bed, wet, see-through shirt, thighhighs, cleavage, collarbone, full body,

Negative prompt: EasyNegative, photograph by bad-artist, bad_prompt_version2, DeepNegative-V1-75T

Steps: 20, Sampler: DPM++ SDE Karras, CFG scale: 5, Seed: 986400693, Size: 512x512, Model hash: 7961a4960e, Denoising strength: 0.55, Clip skip: 2, ENSD: 31337, Hires upscale: 2, Hires upscaler: Latent (bicubic antialiased), Eta: 0.67

```

%2C%20solo%2C%20Flowery%20meadow%2C%20cloudy%20sky%2C%20aqua%20eyes%2C%20white%20pantyhose%2C%20blonde%20hair%2C.png)

```

Alice \(Alice in wonderland\), solo, Flowery meadow, cloudy sky, aqua eyes, white pantyhose, blonde hair,

Negative prompt: EasyNegative, photograph by bad-artist, bad_prompt_version2, DeepNegative-V1-75T

Steps: 20, Sampler: DPM++ SDE Karras, CFG scale: 5, Seed: 273840053, Size: 512x512, Model hash: 7961a4960e, Denoising strength: 0.55, Clip skip: 2, ENSD: 31337, Hires upscale: 2, Hires upscaler: Latent (bicubic antialiased), Eta: 0.67

```

```

masterpiece, best quality, ultra-detailed, illustration, portrait, hakurei reimu, 1girl, throne room, dimly lit

Negative prompt: EasyNegative, by bad-artist, bad_prompt_version2, DeepNegative-V1-75T

Steps: 20, Sampler: DPM++ SDE Karras, CFG scale: 5, Seed: 2212365348, Size: 512x512, Model hash: 7961a4960e, Denoising strength: 0.55, Clip skip: 2, ENSD: 31337, Hires upscale: 2, Hires upscaler: Latent (nearest-exact), Eta: 0.67

```

```

masterpiece, best quality, ultra-detailed, illustration, 1girl, witch hat, purple eyes, blonde hair, wielding a purple staff blasting purple energy, purple beam, purple effects, dragons, chaos

Negative prompt: EasyNegative, photograph by bad-artist, bad_prompt_version2, DeepNegative-V1-75T

Steps: 20, Sampler: DDIM, CFG scale: 5, Seed: 293615512, Size: 512x512, Model hash: 7961a4960e, Denoising strength: 0.55, Clip skip: 2, ENSD: 31337, Hires upscale: 2, Hires upscaler: Latent (nearest-exact)

```

```

1girl, solo, black skirt, blue eyes, electric guitar, guitar, headphones, holding, holding plectrum, instrument, long hair, , music, one side up, pink hair, playing guitar, pleated skirt, black shirt, indoors

Negative prompt: EasyNegative, photograph by bad-artist, bad_prompt_version2, DeepNegative-V1-75T

Steps: 20, Sampler: DPM++ SDE Karras, CFG scale: 5, Seed: 3442031040, Size: 512x512, Model hash: 7961a4960e, Denoising strength: 0.6, Clip skip: 2, ENSD: 31337, Hires upscale: 2, Hires upscaler: Latent (nearest-exact), Eta: 0.67

```

### Recipe

**Step 1:** animefull-latest (model) + pastelmix-lora (lora) + ligneClaireStyleCogecha (lora) = pastel-Cogecha

You can try replacing animefull-latest with Anything-V3.0 or your preferred model. However, I cannot confirm if this will yield better results and it requires you to experiment with it on your own.

**Step 2:** MBW: Chilloutmix + X-mix-V1.0

| Model A | Model B | base_alpha | Weight | Merge Name |

| ----------- | ---------- | ---------- | ------------------------------------------------- | --------------- |

| Chilloutmix | X-mix-V1.0 | 1 | 1,1,1,1,1,1,1,1,0,0,0,0,1,0,0,0,0,1,1,1,1,1,1,1,1 | X-mix-V2.0-base |

This is the step of the sfw version. The steps for the nsfw version are as follows: I merged several LoRAs into Chilloutmix to obtain Chilloutmix-nsfw. Then I merged Chilloutmix-nsfw and X-mix-V1.0 to get X-mix-V2.0-nsfwBase1. Finally, I merged several LoRAs into X-mix-V2.0-nsfwBase1 to get X-mix-V2.0-nsfwBase2.

LoRAs related to real people should be merged into Chilloutmix or other photo-realistic models that you like, while LoRAs related to anime should be merged into X-mix-V2.0-base. Which LoRAs to use depends on your preference.

**Step 3:** MBW: pastel-Cogecha + X-mix-V2.0-base

| Model A | Model B | base_alpha | Weight | Merge Name |

| -------------- | --------------- | ---------- | ------------------------------------------------------- | -------------- |

| pastel-Cogecha | X-mix-V2.0-base | 0 | 1,1,1,1,1,0.3,0,0,0,1,0.1,1,1,1,1,1,0,1,0,1,1,0.2,1,1,1 | X-mix-V2.0-sfw |

In fact, I never tried to obtain the sfw version because I didn't plan on using it from the beginning. So this process is for reference only, and I am not sure about the actual effect of the sfw model.

## V1.0

I have forgotten the recipe for X-mix-V1.0, as too many models were used for merging. This model supports nsfw, but the effect may not be very good.

### Recommended Settings

- Sampler: DPM++ SDE Karras

- Steps: 20

- CFG Scale: 5

- Hires upscaler: Latent (bicubic antialiased), Denoising strength: 0.5~0.6

- vae: NAI.vae

- Clip skip: 2

- ENSD: 31337

- Eta: 0.67

### Examples

```

masterpiece, best quality, ultra-detailed, illustration, portrait, 1girl

Negative prompt: EasyNegative, by bad-artist, bad_prompt_version2

Steps: 20, Sampler: DPM++ SDE Karras, CFG scale: 5, Seed: 1906918205, Size: 512x512, Model hash: 7bc4c05c90, Denoising strength: 0.55, Clip skip: 2, ENSD: 31337, Hires upscale: 2, Hires upscaler: Latent (bicubic antialiased), Eta: 0.67

```

```

Indoor, bright, 1girl, gray hair, amber eyes, smile, black dress, barefoot, sitting posture,

Negative prompt: EasyNegative, by bad-artist, bad_prompt_version2

Steps: 20, Sampler: DPM++ SDE Karras, CFG scale: 5, Seed: 2118045521, Size: 600x400, Model hash: 7bc4c05c90, Denoising strength: 0.55, Clip skip: 2, ENSD: 31337, Hires upscale: 2, Hires upscaler: Latent (bicubic antialiased), Eta: 0.67

```

```

landscape, in spring, cherry blossoms, cloudy sky, 1girl, solo, long blue hair, smirk, pink eyes, (school uniform:1.05), white thighhighs,

Negative prompt: EasyNegative, by bad-artist, bad_prompt_version2

Steps: 20, Sampler: DPM++ SDE Karras, CFG scale: 5, Seed: 3093571233, Size: 400x600, Model hash: 7bc4c05c90, Denoising strength: 0.55, Clip skip: 2, ENSD: 31337, Hires upscale: 2, Hires upscaler: Latent (bicubic antialiased), Eta: 0.67

```

```

1girl, on bed, wet, see-through shirt, thighhighs, cleavage, collarbone, full body,

Negative prompt: EasyNegative, photograph by bad-artist, bad_prompt_version2

Steps: 20, Sampler: DPM++ SDE Karras, CFG scale: 5, Seed: 1666118295, Size: 512x512, Model hash: 7bc4c05c90, Denoising strength: 0.55, Clip skip: 2, ENSD: 31337, Hires upscale: 2, Hires upscaler: Latent (bicubic antialiased), Eta: 0.67

```

```

Alice \(Alice in wonderland\), solo, Flowery meadow, cloudy sky, aqua eyes, white pantyhose, blonde hair,

Negative prompt: EasyNegative, sketch by bad-artist

Steps: 20, Sampler: DPM++ SDE Karras, CFG scale: 5, Seed: 807449917, Size: 512x512, Model hash: 7bc4c05c90, Denoising strength: 0.5, Clip skip: 2, ENSD: 31337, Hires upscale: 2, Hires upscaler: Latent (nearest-exact), Eta: 0.67

```

```

masterpiece, best quality, ultra-detailed, illustration, portrait, hakurei reimu, 1girl, throne room, dimly lit

Negative prompt: EasyNegative, by bad-artist, bad_prompt_version2

Steps: 20, Sampler: DPM++ SDE Karras, CFG scale: 5, Seed: 116927034, Size: 512x512, Model hash: 7bc4c05c90, Denoising strength: 0.55, Clip skip: 2, ENSD: 31337, Hires upscale: 2, Hires upscaler: Latent (bicubic antialiased), Eta: 0.67

```

```

masterpiece, best quality, ultra-detailed, illustration, 1girl, witch hat, purple eyes, blonde hair, wielding a purple staff blasting purple energy, purple beam, purple effects, dragons, chaos

Negative prompt: EasyNegative, photograph by bad-artist, bad_prompt_version2

Steps: 20, Sampler: DPM++ SDE Karras, CFG scale: 5, Seed: 1705759664, Size: 512x512, Model hash: 7bc4c05c90, Denoising strength: 0.55, Clip skip: 2, ENSD: 31337, Hires upscale: 2, Hires upscaler: Latent (bicubic antialiased), Eta: 0.67

```

```

1girl, solo, black skirt, blue eyes, electric guitar, guitar, headphones, holding, holding plectrum, instrument, long hair, , music, one side up, pink hair, playing guitar, pleated skirt, black shirt, indoors

Negative prompt: EasyNegative, by bad-artist, bad_prompt_version2

Steps: 20, Sampler: DPM++ SDE Karras, CFG scale: 5, Seed: 2548407675, Size: 512x512, Model hash: 7bc4c05c90, Denoising strength: 0.55, Clip skip: 2, ENSD: 31337, Hires upscale: 2, Hires upscaler: Latent (bicubic antialiased), Eta: 0.67

```

## Embedding

If you need the embedding used in examples, click them:

- **EasyNegative:** [embed/EasyNegative · Hugging Face](https://huggingface.co/embed/EasyNegative)

- **bad-artist:** [nick-x-hacker/bad-artist · Hugging Face](https://huggingface.co/nick-x-hacker/bad-artist)