modelId (string, length 5 to 139) | author (string, length 2 to 42) | last_modified (timestamp[us, tz=UTC], 2020-02-15 11:33:14 to 2025-06-22 00:45:16) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (string, 491 classes) | tags (sequence, length 1 to 4.05k) | pipeline_tag (string, 54 classes) | createdAt (timestamp[us, tz=UTC], 2022-03-02 23:29:04 to 2025-06-22 00:44:03) | card (string, length 11 to 1.01M) |

|---|---|---|---|---|---|---|---|---|---|

eunjin/koMHBERT-kcbert-based-v1 | eunjin | 2021-05-19T16:46:41Z | 6 | 1 | transformers | [

"transformers",

"pytorch",

"jax",

"bert",

"feature-extraction",

"endpoints_compatible",

"region:us"

] | feature-extraction | 2022-03-02T23:29:05Z | Korean Mental Health BERT

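This is a BERT model obtained by MLM fine-tuning kcBERT on the dataset listed below. We judged this dataset likely to help with addressing mental-health problems and used it for domain adaptation; the model can later be used for classifying mental-health-related emotions and states, and for building chatbots based on that classification.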
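The MLM fine-tuning mentioned above corrupts the input text and trains the model to recover the original tokens. As a minimal sketch, assuming the standard BERT recipe (select about 15% of tokens; replace 80% of those with `[MASK]`, 10% with a random token, and keep 10% unchanged), the corruption step looks like this. The card does not state the exact masking settings used, and `mask_tokens` and the toy vocabulary are hypothetical.

```python
import random

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """BERT-style MLM corruption (hypothetical helper).

    Returns (inputs, labels): labels hold the original token at selected
    positions and None elsewhere, so the loss is only computed there.
    """
    rng = random.Random(seed)
    inputs, labels = list(tokens), [None] * len(tokens)
    for i, tok in enumerate(tokens):
        # Special tokens are never selected for masking.
        if tok in ("[CLS]", "[SEP]") or rng.random() >= mask_prob:
            continue
        labels[i] = tok
        r = rng.random()
        if r < 0.8:
            inputs[i] = "[MASK]"           # 80%: replace with [MASK]
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token
    return inputs, labels

tokens = ["[CLS]", "오늘", "너무", "우울", "해요", "[SEP]"]
inputs, labels = mask_tokens(tokens, vocab=["기분", "좋아", "힘들"], seed=0)
print(inputs)
print(labels)
```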

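We plan to extend domain-adaptive pre-training (DAPT) to a larger dataset that is scheduled for future release.

Datasets from AIhub

Wellness conversation script datasets 1 & 2 (about 29,000 examples after deduplication)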

@inproceedings{lee2020kcbert, title={KcBERT: Korean Comments BERT}, author={Lee, Junbum}, booktitle={Proceedings of the 32nd Annual Conference on Human and Cognitive Language Technology}, pages={437--440}, year={2020} } |

dvilares/bertinho-gl-base-cased | dvilares | 2021-05-19T16:17:47Z | 52 | 3 | transformers | [

"transformers",

"pytorch",

"jax",

"bert",

"fill-mask",

"gl",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:05Z | ---

language: gl

widget:

- text: "As filloas son un [MASK] típico do entroido en Galicia "

---

# Bertinho-gl-base-cased

A pre-trained BERT model for Galician (12 layers, cased), trained on Wikipedia.

|

dkleczek/bert-base-polish-uncased-v1 | dkleczek | 2021-05-19T15:55:32Z | 4,980 | 11 | transformers | [

"transformers",

"pytorch",

"jax",

"bert",

"fill-mask",

"pl",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:05Z | ---

language: pl

thumbnail: https://raw.githubusercontent.com/kldarek/polbert/master/img/polbert.png

---

# Polbert - Polish BERT

The Polish version of the BERT language model is here! It is now available in two variants, cased and uncased; both can be downloaded and used via the HuggingFace Transformers library. I recommend using the cased model; more info on the differences and benchmark results is below.

## Cased and uncased variants

* I initially trained the uncased model; the corpus and training details are referenced below. Here are some issues I found after I published the uncased model:
    * Some Polish characters and accents are not tokenized correctly through the BERT tokenizer when applying lowercase. This doesn't impact sequence classification much, but may influence token classification tasks significantly.
    * I noticed a lot of duplicates in the Open Subtitles dataset, which dominates the training corpus.
    * I didn't use Whole Word Masking.
* The cased model improves on the uncased model in the following ways:
    * All Polish characters and accents should now be tokenized correctly.
    * I removed duplicates from the Open Subtitles dataset. The corpus is smaller, but more balanced now.
    * The model is trained with Whole Word Masking.
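The tokenization issue in the first list is plausibly a side effect of how BERT's uncased preprocessing normalizes text. In Google's reference tokenizer, lowercasing is followed by accent stripping: characters are decomposed (Unicode NFD) and combining marks are dropped. Below is a minimal pure-Python sketch of that normalization step. The function name `uncased_normalize` is hypothetical; it mimics the behavior rather than calling the BERT code. It shows how distinct Polish words can collapse into one form.

```python
import unicodedata

def uncased_normalize(text):
    """Lowercase, then strip accents the way BERT's uncased basic tokenizer does."""
    text = unicodedata.normalize("NFD", text.lower())
    # Drop combining marks (Unicode category "Mn"), e.g. the ogonek in "ą".
    return "".join(ch for ch in text if unicodedata.category(ch) != "Mn")

for word in ["kąt", "kat", "Łódź", "łodz"]:
    print(word, "->", uncased_normalize(word))
```

`ł` survives because Unicode defines no decomposition for it, while `ą`, `ó`, `ź` and similar letters lose their diacritics. As a result, "kąt" (angle) and "kat" (executioner) end up as the same string.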

## Pre-training corpora

Below is the list of corpora used, along with the output of the `wc` command (counting lines, words and characters). These corpora were divided into sentences with srxsegmenter (see references), concatenated, and tokenized with the HuggingFace BERT Tokenizer.
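The counts in the tables below come from `wc`. A rough Python equivalent for a UTF-8 corpus file is sketched here, using a hypothetical helper `corpus_stats`. It counts newline characters like `wc -l`, whitespace-separated words like `wc -w`, and Unicode characters like `wc -m`. Note that `wc -c` counts bytes instead, and the card does not say which flag was used.

```python
import os
import tempfile

def corpus_stats(path):
    """Return (lines, words, chars), roughly like `wc -l -w -m` on a UTF-8 file."""
    lines = words = chars = 0
    with open(path, encoding="utf-8", newline="") as f:
        for line in f:
            lines += line.count("\n")   # wc -l counts newline characters
            words += len(line.split())  # whitespace-separated tokens
            chars += len(line)          # Unicode code points, not bytes
    return lines, words, chars

with tempfile.NamedTemporaryFile("w", encoding="utf-8", newline="",
                                 suffix=".txt", delete=False) as tmp:
    tmp.write("Ala ma kota.\nKot ma Alę.\n")
print(corpus_stats(tmp.name))  # (2, 6, 25)
os.remove(tmp.name)
```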

### Uncased

| Corpus | Lines | Words | Characters |
| ------------- |--------------:| -----:| -----:|
| [Polish subset of Open Subtitles](http://opus.nlpl.eu/OpenSubtitles-v2018.php) | 236635408 | 1431199601 | 7628097730 |
| [Polish subset of ParaCrawl](http://opus.nlpl.eu/ParaCrawl.php) | 8470950 | 176670885 | 1163505275 |
| [Polish Parliamentary Corpus](http://clip.ipipan.waw.pl/PPC) | 9799859 | 121154785 | 938896963 |
| [Polish Wikipedia - Feb 2020](https://dumps.wikimedia.org/plwiki/latest/plwiki-latest-pages-articles.xml.bz2) | 8014206 | 132067986 | 1015849191 |
| Total | 262920423 | 1861093257 | 10746349159 |

### Cased

| Corpus | Lines | Words | Characters |
| ------------- |--------------:| -----:| -----:|
| [Polish subset of Open Subtitles (Deduplicated)](http://opus.nlpl.eu/OpenSubtitles-v2018.php) | 41998942 | 213590656 | 1424873235 |
| [Polish subset of ParaCrawl](http://opus.nlpl.eu/ParaCrawl.php) | 8470950 | 176670885 | 1163505275 |
| [Polish Parliamentary Corpus](http://clip.ipipan.waw.pl/PPC) | 9799859 | 121154785 | 938896963 |
| [Polish Wikipedia - Feb 2020](https://dumps.wikimedia.org/plwiki/latest/plwiki-latest-pages-articles.xml.bz2) | 8014206 | 132067986 | 1015849191 |
| Total | 68283960 | 646479197 | 4543124667 |

## Pre-training details

### Uncased

* Polbert was trained with code provided in Google BERT's GitHub repository (https://github.com/google-research/bert).
* The currently released model follows the bert-base-uncased model architecture (12-layer, 768-hidden, 12-heads, 110M parameters).
* Training set-up: 1 million training steps in total:
    * 100,000 steps - 128 sequence length, batch size 512, learning rate 1e-4 (10,000 steps warmup)
    * 800,000 steps - 128 sequence length, batch size 512, learning rate 5e-5
    * 100,000 steps - 512 sequence length, batch size 256, learning rate 2e-5
* The model was trained on a single Google Cloud TPU v3-8.
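The uncased training set-up above is a three-phase schedule. The sketch below encodes it as data and looks up the learning rate for a given global step. It makes two assumptions: a linear warmup from 0 to 1e-4 over the first 10,000 steps, and a constant rate within each phase. The card lists only one rate per phase, so any decay inside a phase is not modeled. `PHASES` and `learning_rate_at` are hypothetical names.

```python
# (num_steps, seq_len, batch_size, lr) per phase, from the uncased set-up above.
PHASES = [
    (100_000, 128, 512, 1e-4),
    (800_000, 128, 512, 5e-5),
    (100_000, 512, 256, 2e-5),
]
WARMUP_STEPS = 10_000

def learning_rate_at(step, phases=PHASES, warmup=WARMUP_STEPS):
    """Learning rate at a 0-based global step (assumes a constant LR per phase)."""
    start = 0
    for num_steps, _seq_len, _batch, lr in phases:
        if step < start + num_steps:
            if start == 0 and step < warmup:
                return lr * (step + 1) / warmup  # linear warmup, first phase only
            return lr
        start += num_steps
    raise ValueError("step is past the end of training")

total_steps = sum(p[0] for p in PHASES)
print(total_steps)  # 1000000
for s in (0, 9_999, 50_000, 500_000, 950_000):
    print(s, learning_rate_at(s))
```

The cased set-up in the next subsection fits the same function once `PHASES` is swapped for its three 100,000-step phases.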

### Cased

* Same approach as the uncased model, with the following differences:
    * Whole Word Masking
    * Training set-up:
        * 100,000 steps - 128 sequence length, batch size 2048, learning rate 1e-4 (10,000 steps warmup)
        * 100,000 steps - 128 sequence length, batch size 2048, learning rate 5e-5
        * 100,000 steps - 512 sequence length, batch size 256, learning rate 2e-5
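Whole Word Masking changes how masked positions are chosen. WordPiece sub-tokens are no longer masked independently; instead, every piece of a word (a token plus its following `##` continuations) is masked together. The sketch below assumes WordPiece's `##` continuation convention. It also simplifies by always substituting `[MASK]`, whereas the full recipe also applies the 80/10/10 replacement rule. `whole_word_mask` and the example tokenization are hypothetical.

```python
import random

def whole_word_mask(tokens, mask_prob=0.15, seed=None):
    """Mask whole words: a word is a token plus any following '##' pieces."""
    # Group token indices into words.
    words = []
    for i, tok in enumerate(tokens):
        if tok.startswith("##") and words:
            words[-1].append(i)
        else:
            words.append([i])
    rng = random.Random(seed)
    inputs, labels = list(tokens), [None] * len(tokens)
    for word in words:
        if rng.random() < mask_prob:
            for i in word:  # every piece of the chosen word is masked
                labels[i] = tokens[i]
                inputs[i] = "[MASK]"
    return inputs, labels

tokens = ["Adam", "Mic", "##kie", "##wicz", "był", "poetą"]
print(whole_word_mask(tokens, mask_prob=0.5, seed=3))
```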

## Usage

Polbert is released via [HuggingFace Transformers library](https://huggingface.co/transformers/).

For an example of using it as a language model, see [this notebook](/LM_testing.ipynb).

### Uncased

```python
from transformers import BertForMaskedLM, BertTokenizer, pipeline

model = BertForMaskedLM.from_pretrained("dkleczek/bert-base-polish-uncased-v1")
tokenizer = BertTokenizer.from_pretrained("dkleczek/bert-base-polish-uncased-v1")
nlp = pipeline('fill-mask', model=model, tokenizer=tokenizer)

# Print the top predictions for the masked word.
for pred in nlp(f"Adam Mickiewicz wielkim polskim {nlp.tokenizer.mask_token} był."):
    print(pred)

# Output:
# {'sequence': '[CLS] adam mickiewicz wielkim polskim poeta był. [SEP]', 'score': 0.47196975350379944, 'token': 26596}
# {'sequence': '[CLS] adam mickiewicz wielkim polskim bohaterem był. [SEP]', 'score': 0.09127858281135559, 'token': 10953}
# {'sequence': '[CLS] adam mickiewicz wielkim polskim człowiekiem był. [SEP]', 'score': 0.0647173821926117, 'token': 5182}
# {'sequence': '[CLS] adam mickiewicz wielkim polskim pisarzem był. [SEP]', 'score': 0.05232388526201248, 'token': 24293}
# {'sequence': '[CLS] adam mickiewicz wielkim polskim politykiem był. [SEP]', 'score': 0.04554257541894913, 'token': 44095}
```

### Cased

```python
from transformers import BertForMaskedLM, BertTokenizer, pipeline

model = BertForMaskedLM.from_pretrained("dkleczek/bert-base-polish-cased-v1")
tokenizer = BertTokenizer.from_pretrained("dkleczek/bert-base-polish-cased-v1")
nlp = pipeline('fill-mask', model=model, tokenizer=tokenizer)

# Print the top predictions for the masked word.
for pred in nlp(f"Adam Mickiewicz wielkim polskim {nlp.tokenizer.mask_token} był."):
    print(pred)

# Output:
# {'sequence': '[CLS] Adam Mickiewicz wielkim polskim pisarzem był. [SEP]', 'score': 0.5391148328781128, 'token': 37120}
# {'sequence': '[CLS] Adam Mickiewicz wielkim polskim człowiekiem był. [SEP]', 'score': 0.11683262139558792, 'token': 6810}
# {'sequence': '[CLS] Adam Mickiewicz wielkim polskim bohaterem był. [SEP]', 'score': 0.06021466106176376, 'token': 17709}
# {'sequence': '[CLS] Adam Mickiewicz wielkim polskim mistrzem był. [SEP]', 'score': 0.051870670169591904, 'token': 14652}
# {'sequence': '[CLS] Adam Mickiewicz wielkim polskim artystą był. [SEP]', 'score': 0.031787533313035965, 'token': 35680}
```

See the next section for an example usage of Polbert in downstream tasks.

## Evaluation

Thanks to Allegro, we now have the [KLEJ benchmark](https://klejbenchmark.com/leaderboard/), a set of nine evaluation tasks for Polish language understanding. The following results were achieved by running a standard set of evaluation scripts (no tricks!) with both the cased and uncased variants of Polbert.

| Model | Average | NKJP-NER | CDSC-E | CDSC-R | CBD | PolEmo2.0-IN | PolEmo2.0-OUT | DYK | PSC | AR |
| ------------- |--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|
| Polbert cased | 81.7 | 93.6 | 93.4 | 93.8 | 52.7 | 87.4 | 71.1 | 59.1 | 98.6 | 85.2 |
| Polbert uncased | 81.4 | 90.1 | 93.9 | 93.5 | 55.0 | 88.1 | 68.8 | 59.4 | 98.8 | 85.4 |
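The Average column appears to be the unweighted mean of the nine task scores. Recomputing it from the rows above reproduces the reported values after rounding to one decimal place:

```python
# Per-task KLEJ scores copied from the table above (NKJP-NER through AR).
scores = {
    "Polbert cased":   [93.6, 93.4, 93.8, 52.7, 87.4, 71.1, 59.1, 98.6, 85.2],
    "Polbert uncased": [90.1, 93.9, 93.5, 55.0, 88.1, 68.8, 59.4, 98.8, 85.4],
}
for name, row in scores.items():
    print(name, round(sum(row) / len(row), 1))
# Polbert cased 81.7
# Polbert uncased 81.4
```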

Note that the uncased model performs better than the cased one on some tasks. My guess is that this is because of the oversampling of the Open Subtitles dataset and its similarity to the data in some of these tasks. All of these benchmark tasks are sequence classification, so the relative strength of the cased model is not so visible here.

## Bias

The data used to train the model is biased. It may reflect stereotypes related to gender, ethnicity, etc. Please be careful when using the model for downstream tasks: consider these biases and mitigate them.

## Acknowledgements

* I'd like to express my gratitude to Google [TensorFlow Research Cloud (TFRC)](https://www.tensorflow.org/tfrc) for providing the free TPU credits - thank you!

* I also appreciate the help from Timo Möller from [deepset](https://deepset.ai) for sharing tips and scripts based on their experience training the German BERT model.

* Big thanks to Allegro for releasing KLEJ Benchmark and specifically to Piotr Rybak for help with the evaluation and pointing out some issues with the tokenization.

* Finally, thanks to Rachel Thomas, Jeremy Howard and Sylvain Gugger from [fastai](https://www.fast.ai) for their NLP and Deep Learning courses!

## Author

Darek Kłeczek - contact me on Twitter [@dk21](https://twitter.com/dk21)

## References

* https://github.com/google-research/bert

* https://github.com/narusemotoki/srx_segmenter

* SRX rules file for sentence splitting in Polish, written by Marcin Miłkowski: https://raw.githubusercontent.com/languagetool-org/languagetool/master/languagetool-core/src/main/resources/org/languagetool/resource/segment.srx

* [KLEJ benchmark](https://klejbenchmark.com/leaderboard/) |

deepset/sentence_bert | deepset | 2021-05-19T15:34:03Z | 10,668 | 20 | transformers | [

"transformers",

"pytorch",

"jax",

"bert",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2022-03-02T23:29:05Z | ---

license: apache-2.0

---

This is an upload of the bert-base-nli-stsb-mean-tokens pretrained model from the Sentence Transformers Repo (https://github.com/UKPLab/sentence-transformers)

|

ceshine/TinyBERT_L-4_H-312_v2-distill-AllNLI | ceshine | 2021-05-19T14:01:36Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"jax",

"bert",

"feature-extraction",

"endpoints_compatible",

"region:us"

] | feature-extraction | 2022-03-02T23:29:05Z | # TinyBERT_L-4_H-312_v2 English Sentence Encoder

This is distilled from the `bert-base-nli-stsb-mean-tokens` pre-trained model from [Sentence-Transformers](https://sbert.net/).

The embedding vector is obtained by mean/average pooling of the last layer's hidden states.
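Mean pooling averages the last-layer token vectors, usually skipping padding positions by using the attention mask. The NumPy sketch below assumes hidden states of shape `(batch, seq_len, hidden)` (hidden is 312 for this TinyBERT) and a 0/1 attention mask. The toy arrays stand in for real model outputs.

```python
import numpy as np

def mean_pool(hidden_states, attention_mask):
    """Average token embeddings over non-padding positions.

    hidden_states: (batch, seq_len, hidden); attention_mask: (batch, seq_len) of 0/1.
    """
    mask = attention_mask[..., None].astype(hidden_states.dtype)  # (batch, seq_len, 1)
    summed = (hidden_states * mask).sum(axis=1)
    counts = np.clip(mask.sum(axis=1), 1e-9, None)  # avoid division by zero
    return summed / counts

hidden = np.array([[[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]])  # last position is padding
mask = np.array([[1, 1, 0]])
print(mean_pool(hidden, mask))  # [[2. 3.]]
```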

Update 2021-03-25: Added the attention-matrix imitation objective from the TinyBERT paper, and changed the distillation target from `distilbert-base-nli-stsb-mean-tokens` to `bert-base-nli-stsb-mean-tokens` (they have almost the same STSb performance).

## Model Comparison

We compute the cosine similarity between the embeddings of each sentence pair and report the Spearman correlation of these scores on the STS benchmark (higher is better):

| Model | Dev | Test |
| ------------------------------------ | ----- | ----- |
| bert-base-nli-stsb-mean-tokens | .8704 | .8505 |
| distilbert-base-nli-stsb-mean-tokens | .8667 | .8516 |
| TinyBERT_L-4_H-312_v2-distill-AllNLI | .8587 | .8283 |
| TinyBERT_L-4_H (20210325) | .8551 | .8341 |
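The evaluation described above scores each sentence pair by the cosine similarity of its two embeddings. It then measures how well those scores rank-correlate with the gold STS labels. Below is a self-contained NumPy sketch that computes Spearman's rho as the Pearson correlation of average ranks; in practice `scipy.stats.spearmanr` does the same. The vectors and labels are made-up toy data.

```python
import numpy as np

def cosine(a, b):
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

def average_ranks(x):
    """1-based ranks; tied values share the mean of their positions."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, kind="mergesort")
    ranks = np.empty(len(x))
    i = 0
    while i < len(x):
        j = i
        while j + 1 < len(x) and x[order[j + 1]] == x[order[i]]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def spearman(x, y):
    return float(np.corrcoef(average_ranks(x), average_ranks(y))[0, 1])

# Toy data: embedding pairs and gold similarity labels (0-5 scale, as in STSb).
pairs = [
    (np.array([1.0, 0.0]), np.array([0.9, 0.1])),
    (np.array([1.0, 0.0]), np.array([0.0, 1.0])),
    (np.array([1.0, 1.0]), np.array([1.0, 0.5])),
]
gold = [4.8, 0.5, 3.9]
pred = [cosine(a, b) for a, b in pairs]
print(round(spearman(pred, gold), 4))  # 1.0
```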

|

cahya/bert-base-indonesian-522M | cahya | 2021-05-19T13:38:45Z | 3,317 | 25 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"bert",

"fill-mask",

"id",

"dataset:wikipedia",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:05Z | ---

language: "id"

license: "mit"

datasets:

- wikipedia

widget:

- text: "Ibu ku sedang bekerja [MASK] sawah."

---

# Indonesian BERT base model (uncased)

## Model description

It is a BERT-base model pre-trained on Indonesian Wikipedia using a masked language modeling (MLM) objective. This model is uncased: it does not make a difference between indonesia and Indonesia.

This is one of several language models that have been pre-trained on Indonesian datasets. More detail about its usage on downstream tasks (text classification, text generation, etc.) is available at [Transformer based Indonesian Language Models](https://github.com/cahya-wirawan/indonesian-language-models/tree/master/Transformers).

## Intended uses & limitations

### How to use

You can use this model directly with a pipeline for masked language modeling:

```python
>>> from transformers import pipeline
>>> unmasker = pipeline('fill-mask', model='cahya/bert-base-indonesian-522M')
>>> unmasker("Ibu ku sedang bekerja [MASK] supermarket")
[{'sequence': '[CLS] ibu ku sedang bekerja di supermarket [SEP]',
  'score': 0.7983310222625732,
  'token': 1495},
 {'sequence': '[CLS] ibu ku sedang bekerja. supermarket [SEP]',
  'score': 0.090003103017807,
  'token': 17},
 {'sequence': '[CLS] ibu ku sedang bekerja sebagai supermarket [SEP]',
  'score': 0.025469014421105385,
  'token': 1600},
 {'sequence': '[CLS] ibu ku sedang bekerja dengan supermarket [SEP]',
  'score': 0.017966199666261673,
  'token': 1555},
 {'sequence': '[CLS] ibu ku sedang bekerja untuk supermarket [SEP]',
  'score': 0.016971781849861145,
  'token': 1572}]
```

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import BertTokenizer, BertModel

model_name='cahya/bert-base-indonesian-522M'

tokenizer = BertTokenizer.from_pretrained(model_name)

model = BertModel.from_pretrained(model_name)

text = "Silakan diganti dengan text apa saja."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in Tensorflow:

```python

from transformers import BertTokenizer, TFBertModel

model_name='cahya/bert-base-indonesian-522M'

tokenizer = BertTokenizer.from_pretrained(model_name)

model = TFBertModel.from_pretrained(model_name)

text = "Silakan diganti dengan text apa saja."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

## Training data

This model was pre-trained on 522MB of Indonesian Wikipedia.

The texts are lowercased and tokenized using WordPiece with a vocabulary size of 32,000. The inputs of the model are then of the form:

```[CLS] Sentence A [SEP] Sentence B [SEP]```
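The `[CLS] Sentence A [SEP] Sentence B [SEP]` layout is paired with segment (token type) ids that tell the model which sentence each token belongs to. Below is a minimal sketch of assembling both. It assumes the inputs are already WordPiece tokens; the token lists are illustrative, not the real vocabulary's output. `build_pair_input` is a hypothetical helper.

```python
def build_pair_input(tokens_a, tokens_b):
    """Assemble BERT pair input and segment ids: 0 for [CLS] A [SEP], 1 for B [SEP]."""
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    return tokens, segment_ids

tokens, segment_ids = build_pair_input(["ibu", "ku", "bekerja"], ["di", "sawah"])
print(tokens)
print(segment_ids)  # [0, 0, 0, 0, 0, 1, 1, 1]
```

With the Transformers tokenizer, calling `tokenizer(text_a, text_b)` produces this layout, together with `token_type_ids`.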

|

ayansinha/lic-class-scancode-bert-base-cased-L32-1 | ayansinha | 2021-05-19T12:04:46Z | 9 | 0 | transformers | [

"transformers",

"tf",

"bert",

"fill-mask",

"license",

"sentence-classification",

"scancode",

"license-compliance",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"dataset:scancode-rules",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:05Z | ---

language: en

tags:

- license

- sentence-classification

- scancode

- license-compliance

license: apache-2.0

datasets:

- bookcorpus

- wikipedia

- scancode-rules

version: 1.0

---

# `lic-class-scancode-bert-base-cased-L32-1`

## Intended Use

This model is intended to be used for sentence classification as part of results analysis in [`scancode-results-analyzer`](https://github.com/nexB/scancode-results-analyzer).

`scancode-results-analyzer` helps detect faulty scans in [`scancode-toolkit`](https://github.com/nexB/scancode-toolkit) by using statistics and NLP modeling, among other tools, to make Scancode better.

## How to Use

Refer to the [quickstart](https://github.com/nexB/scancode-results-analyzer#quickstart---local-machine) section of the `scancode-results-analyzer` documentation for installation and getting started.

- [Link to Code](https://github.com/nexB/scancode-results-analyzer/blob/master/src/results_analyze/nlp_models.py)

Then, in the `NLPModelsPredict` class, the function `predict_basic_lic_class` uses this classifier to predict which of the four license classes (listed below) a sentence belongs to.

## Limitations and Bias

As this model is a fine-tuned version of the [`bert-base-cased`](https://huggingface.co/bert-base-cased) model, it inherits the same biases. However, since it is fine-tuned for a very specific task (license text/notice/tag/reference) where those biases are not expected to play a role, it is reasonable to assume they have little or no effect here.

## Training and Fine-Tuning Data

The BERT model was pretrained on BookCorpus, a dataset consisting of 11,038 unpublished books and English Wikipedia (excluding lists, tables and headers).

Then this `bert-base-cased` model was fine-tuned on Scancode rule texts, specifically trained in the context of sentence classification, where the four classes are:

- License Text
- License Notice
- License Tag
- License Reference

## Training Procedure

For the fine-tuning procedure and training, refer to the `scancode-results-analyzer` code.

- [Link to Code](https://github.com/nexB/scancode-results-analyzer/blob/master/src/results_analyze/nlp_models.py)

In the `NLPModelsTrain` class, the function `prepare_input_data_false_positive` prepares the training data.

In the `NLPModelsTrain` class, the function `train_basic_false_positive_classifier` fine-tunes this classifier.

1. Model - [BertBaseCased](https://huggingface.co/bert-base-cased) (weights 0.5 GB)
2. Sentence length - 32
3. Labels - 4 (License Text/Notice/Tag/Reference)
4. Fine-tuned for 4 epochs with learning rate 2e-5 (60 s per epoch on an RTX 2060)

Note: The classes aren't balanced.
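The sentence length of 32 listed above caps each input at 32 tokens. Below is a minimal sketch of the usual fixed-length preparation: truncate, add `[CLS]`/`[SEP]`, pad, and build the attention mask. It assumes the 32-token limit includes the two special tokens, which the card does not say. Whitespace-split words stand in for WordPiece tokens, and `to_fixed_length` is a hypothetical helper.

```python
def to_fixed_length(tokens, max_len=32, pad="[PAD]"):
    """Truncate to fit [CLS] ... [SEP] in max_len, then pad; returns (tokens, mask)."""
    body = tokens[: max_len - 2]  # leave room for the two special tokens
    seq = ["[CLS]"] + body + ["[SEP]"]
    mask = [1] * len(seq) + [0] * (max_len - len(seq))
    seq = seq + [pad] * (max_len - len(seq))
    return seq, mask

words = ("Permission is hereby granted free of charge to any person obtaining "
         "a copy of this software and associated documentation files").split()
seq, mask = to_fixed_length(words * 2)
print(len(seq), sum(mask))  # 32 32

short_seq, short_mask = to_fixed_length(["MIT", "License"])
print(short_seq[:5], sum(short_mask))
```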

## Eval Results

- Accuracy on the training data (90%) : 0.98 (+- 0.01)

- Accuracy on the validation data (10%) : 0.84 (+- 0.01)

## Further Work

1. Applying Splitting/Aggregation Strategies
2. Data Augmentation according to Validation Errors
3. Bigger/Better-Suited Models

|

ayansinha/false-positives-scancode-bert-base-uncased-L8-1 | ayansinha | 2021-05-19T12:04:24Z | 8 | 0 | transformers | [

"transformers",

"tf",

"bert",

"fill-mask",

"license",

"sentence-classification",

"scancode",

"license-compliance",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"dataset:scancode-rules",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:05Z | ---

language: en

tags:

- license

- sentence-classification

- scancode

- license-compliance

license: apache-2.0

datasets:

- bookcorpus

- wikipedia

- scancode-rules

version: 1.0

---

# `false-positives-scancode-bert-base-uncased-L8-1`

## Intended Use

This model is intended to be used for sentence classification as part of results analysis in [`scancode-results-analyzer`](https://github.com/nexB/scancode-results-analyzer).

`scancode-results-analyzer` helps detect faulty scans in [`scancode-toolkit`](https://github.com/nexB/scancode-toolkit) by using statistics and NLP modeling, among other tools, to make Scancode better.

#### How to use

Refer to the [quickstart](https://github.com/nexB/scancode-results-analyzer#quickstart---local-machine) section of the `scancode-results-analyzer` documentation for installation and getting started.

- [Link to Code](https://github.com/nexB/scancode-results-analyzer/blob/master/src/results_analyze/nlp_models.py)

Then, in the `NLPModelsPredict` class, the function `predict_basic_false_positive` uses this classifier to predict whether sentences are valid license tags or false positives.

#### Limitations and bias

As this model is a fine-tuned version of the [`bert-base-uncased`](https://huggingface.co/bert-base-uncased) model, it inherits the same biases. However, since it is fine-tuned for a very specific field (license tags vs. false positives) where those biases are not expected to play a role, it is reasonable to assume they have little or no effect here.

## Training and Fine-Tuning Data

The BERT model was pretrained on BookCorpus, a dataset consisting of 11,038 unpublished books and English Wikipedia (excluding lists, tables and headers).

Then this `bert-base-uncased` model was fine-tuned on Scancode rule texts, specifically trained in the context of sentence classification, where the two classes are:

- License Tags
- False Positives of License Tags

## Training procedure

For the fine-tuning procedure and training, refer to the `scancode-results-analyzer` code.

- [Link to Code](https://github.com/nexB/scancode-results-analyzer/blob/master/src/results_analyze/nlp_models.py)

In the `NLPModelsTrain` class, the function `prepare_input_data_false_positive` prepares the training data.

In the `NLPModelsTrain` class, the function `train_basic_false_positive_classifier` fine-tunes this classifier.

1. Model - [BertBaseUncased](https://huggingface.co/bert-base-uncased) (weights 0.5 GB)
2. Sentence length - 8
3. Labels - 2 (False Positive/License Tag)
4. Fine-tuned for 4-6 epochs with learning rate 2e-5 (6 s per epoch on an RTX 2060)

Note: The classes aren't balanced.

## Eval results

- Accuracy on the training data (90%) : 0.99 (+- 0.005)

- Accuracy on the validation data (10%) : 0.96 (+- 0.015)

Misclassified examples tend to have lower confidence scores, so applying a threshold on the confidence score makes this almost a perfect classifier, as the classification task is comparatively easy.

Results are stable, in the sense that the fine-tuning accuracy is easily reached on every run. However, training for more epochs overfits the data: the training loss decreases while the validation loss increases, even though the accuracies remain very stable.
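The thresholding idea above works as follows: convert the classifier's logits to probabilities with a softmax, accept only the predictions whose top probability clears a threshold, and route the rest to review. The threshold value (0.9), the label order, and `predict_with_threshold` are assumptions for illustration; the card does not report the threshold it used.

```python
import math

LABELS = ["License Tag", "False Positive"]  # assumed label order

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]  # subtract max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

def predict_with_threshold(logits, threshold=0.9):
    """Return (label, confidence), or (None, confidence) when below the threshold."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return None, probs[best]  # low confidence: defer to manual review
    return LABELS[best], probs[best]

print(predict_with_threshold([3.2, -1.0]))  # confident prediction
print(predict_with_threshold([0.3, 0.1]))   # abstains
```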

|

allenyummy/chinese-bert-wwm-ehr-ner-qasl | allenyummy | 2021-05-19T11:42:17Z | 6 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"endpoints_compatible",

"region:us"

] | null | 2022-03-02T23:29:05Z | ---

language: zh-tw

---

# Model name

Chinese-bert-wwm-electronic-health-records-ner-question-answering-sequence-labeling

#### How to use

```python

from transformers import AutoTokenizer, AutoModelForTokenClassification

tokenizer = AutoTokenizer.from_pretrained("allenyummy/chinese-bert-wwm-ehr-ner-qasl")

model = AutoModelForTokenClassification.from_pretrained("allenyummy/chinese-bert-wwm-ehr-ner-qasl")

``` |

airesearch/bert-base-multilingual-cased-finetuned | airesearch | 2021-05-19T11:39:44Z | 9 | 0 | transformers | [

"transformers",

"bert",

"fill-mask",

"arxiv:1810.04805",

"arxiv:2101.09635",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:05Z | # Finetuned `bert-base-multilingual-cased` model on Thai sequence and token classification datasets

<br>

Finetuned multilingual BERT (mBERT) base model on Thai sequence and token classification datasets

The script and documentation can be found at [this repository](https://github.com/vistec-AI/thai2transformers).

<br>

## Model description

<br>

We use the pretrained cross-lingual BERT model (mBERT) as proposed by [[Devlin et al., 2018]](https://arxiv.org/abs/1810.04805). We download the pretrained PyTorch model via HuggingFace's Model Hub (https://huggingface.co/bert-base-multilingual-cased).

<br>

## Intended uses & limitations

<br>

You can use the finetuned models for multiclass/multilabel text classification and token classification tasks.

<br>

**Multiclass text classification**

- `wisesight_sentiment`

4-class text classification task (`positive`, `neutral`, `negative`, and `question`) based on social media posts and tweets.

- `wongnai_reviews`

Users' review rating classification task (scale ranges from 1 to 5)

- `generated_reviews_enth` : (`review_star` as label)

Generated users' review rating classification task (scale ranges from 1 to 5).

**Multilabel text classification**

- `prachathai67k`

Thai topic classification with 12 labels based on news article corpus from prachathai.com. The detail is described in this [page](https://huggingface.co/datasets/prachathai67k).

**Token classification**

- `thainer`

Named-entity recognition tagging with 13 named-entities as described in this [page](https://huggingface.co/datasets/thainer).

- `lst20` : NER and POS tagging

Named-entity recognition tagging with 10 named-entities and Part-of-Speech tagging with 16 tags as described in this [page](https://huggingface.co/datasets/lst20).

<br>

## How to use

<br>

The example notebook demonstrating how to use the finetuned model for inference can be found in this [Colab notebook](https://colab.research.google.com/drive/1Kbk6sBspZLwcnOE61adAQo30xxqOQ9ko).

<br>

**BibTeX entry and citation info**

```

@misc{lowphansirikul2021wangchanberta,

title={WangchanBERTa: Pretraining transformer-based Thai Language Models},

author={Lalita Lowphansirikul and Charin Polpanumas and Nawat Jantrakulchai and Sarana Nutanong},

year={2021},

eprint={2101.09635},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

DJSammy/bert-base-danish-uncased_BotXO-ai | DJSammy | 2021-05-19T11:13:30Z | 35 | 1 | transformers | [

"transformers",

"pytorch",

"jax",

"bert",

"masked-lm",

"fill-mask",

"da",

"dataset:common_crawl",

"dataset:wikipedia",

"license:cc-by-4.0",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:04Z | ---

language: da

tags:

- bert

- masked-lm

license: cc-by-4.0

datasets:

- common_crawl

- wikipedia

pipeline_tag: fill-mask

widget:

- text: "København er [MASK] i Danmark."

---

# Danish BERT (uncased) model

[BotXO.ai](https://www.botxo.ai/) developed this model. For data and training details see their [GitHub repository](https://github.com/botxo/nordic_bert).

The original model was trained in TensorFlow, and then I converted it to PyTorch using [transformers-cli](https://huggingface.co/transformers/converting_tensorflow_models.html?highlight=cli).

For TensorFlow version download here: https://www.dropbox.com/s/19cjaoqvv2jicq9/danish_bert_uncased_v2.zip?dl=1

## Architecture

```python
from transformers import AutoModelForPreTraining

model = AutoModelForPreTraining.from_pretrained("DJSammy/bert-base-danish-uncased_BotXO-ai")
params = list(model.named_parameters())
print('danish_bert_uncased_v2 has {:} different named parameters.\n'.format(len(params)))

# Indices: 0-4 embeddings, 16 entries per encoder layer, then pooler and heads.
print('==== Embedding Layer ====\n')
for p in params[0:5]:
    print("{:<55} {:>12}".format(p[0], str(tuple(p[1].size()))))

print('\n==== First Transformer ====\n')
for p in params[5:21]:
    print("{:<55} {:>12}".format(p[0], str(tuple(p[1].size()))))

print('\n==== Last Transformer ====\n')
for p in params[181:197]:
    print("{:<55} {:>12}".format(p[0], str(tuple(p[1].size()))))

print('\n==== Output Layer ====\n')
for p in params[197:]:
    print("{:<55} {:>12}".format(p[0], str(tuple(p[1].size()))))

# danish_bert_uncased_v2 has 206 different named parameters.

# ==== Embedding Layer ====

# bert.embeddings.word_embeddings.weight (32000, 768)

# bert.embeddings.position_embeddings.weight (512, 768)

# bert.embeddings.token_type_embeddings.weight (2, 768)

# bert.embeddings.LayerNorm.weight (768,)

# bert.embeddings.LayerNorm.bias (768,)

# ==== First Transformer ====

# bert.encoder.layer.0.attention.self.query.weight (768, 768)

# bert.encoder.layer.0.attention.self.query.bias (768,)

# bert.encoder.layer.0.attention.self.key.weight (768, 768)

# bert.encoder.layer.0.attention.self.key.bias (768,)

# bert.encoder.layer.0.attention.self.value.weight (768, 768)

# bert.encoder.layer.0.attention.self.value.bias (768,)

# bert.encoder.layer.0.attention.output.dense.weight (768, 768)

# bert.encoder.layer.0.attention.output.dense.bias (768,)

# bert.encoder.layer.0.attention.output.LayerNorm.weight (768,)

# bert.encoder.layer.0.attention.output.LayerNorm.bias (768,)

# bert.encoder.layer.0.intermediate.dense.weight (3072, 768)

# bert.encoder.layer.0.intermediate.dense.bias (3072,)

# bert.encoder.layer.0.output.dense.weight (768, 3072)

# bert.encoder.layer.0.output.dense.bias (768,)

# bert.encoder.layer.0.output.LayerNorm.weight (768,)

# bert.encoder.layer.0.output.LayerNorm.bias (768,)

# ==== Last Transformer ====

# bert.encoder.layer.11.attention.self.query.weight (768, 768)

# bert.encoder.layer.11.attention.self.query.bias (768,)

# bert.encoder.layer.11.attention.self.key.weight (768, 768)

# bert.encoder.layer.11.attention.self.key.bias (768,)

# bert.encoder.layer.11.attention.self.value.weight (768, 768)

# bert.encoder.layer.11.attention.self.value.bias (768,)

# bert.encoder.layer.11.attention.output.dense.weight (768, 768)

# bert.encoder.layer.11.attention.output.dense.bias (768,)

# bert.encoder.layer.11.attention.output.LayerNorm.weight (768,)

# bert.encoder.layer.11.attention.output.LayerNorm.bias (768,)

# bert.encoder.layer.11.intermediate.dense.weight (3072, 768)

# bert.encoder.layer.11.intermediate.dense.bias (3072,)

# bert.encoder.layer.11.output.dense.weight (768, 3072)

# bert.encoder.layer.11.output.dense.bias (768,)

# bert.encoder.layer.11.output.LayerNorm.weight (768,)

# bert.encoder.layer.11.output.LayerNorm.bias (768,)

# ==== Output Layer ====

# bert.pooler.dense.weight (768, 768)

# bert.pooler.dense.bias (768,)

# cls.predictions.bias (32000,)

# cls.predictions.transform.dense.weight (768, 768)

# cls.predictions.transform.dense.bias (768,)

# cls.predictions.transform.LayerNorm.weight (768,)

# cls.predictions.transform.LayerNorm.bias (768,)

# cls.seq_relationship.weight (2, 768)

# cls.seq_relationship.bias (2,)

```
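The shapes printed above are enough to recompute the model's size. The sketch below multiplies out each listed shape for this 12-layer, 768-hidden configuration with a 32,000-token vocabulary. It assumes, as the listing suggests, that the MLM decoder weight is tied to the word embeddings and is therefore not counted twice.

```python
from math import prod

H, I, V, P, LAYERS = 768, 3072, 32_000, 512, 12  # hidden, intermediate, vocab, positions

embeddings = [(V, H), (P, H), (2, H), (H,), (H,)]
layer = [
    (H, H), (H,), (H, H), (H,), (H, H), (H,),  # self-attention query/key/value
    (H, H), (H,), (H,), (H,),                  # attention output dense + LayerNorm
    (I, H), (I,),                              # intermediate dense
    (H, I), (H,), (H,), (H,),                  # output dense + LayerNorm
]
pooler = [(H, H), (H,)]
heads = [(V,), (H, H), (H,), (H,), (H,), (2, H), (2,)]  # MLM bias/transform + NSP

shapes = embeddings + layer * LAYERS + pooler + heads
encoder = embeddings + layer * LAYERS + pooler
print(len(shapes))                    # 206 named parameters
print(sum(prod(s) for s in shapes))   # 111243010 in total
print(sum(prod(s) for s in encoder))  # 110617344 without pre-training heads
```

The roughly 110M encoder parameters match the usual BERT-base figure; the larger 32,000-token vocabulary accounts for the small difference from the English original.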

## Example Pipeline

```python

from transformers import pipeline

unmasker = pipeline('fill-mask', model='DJSammy/bert-base-danish-uncased_BotXO-ai')

unmasker('København er [MASK] i Danmark.')

# Copenhagen is the [MASK] of Denmark.

# =>

# [{'score': 0.788068950176239,

# 'sequence': '[CLS] københavn er hovedstad i danmark. [SEP]',

# 'token': 12610,

# 'token_str': 'hovedstad'},

# {'score': 0.07606703042984009,

# 'sequence': '[CLS] københavn er hovedstaden i danmark. [SEP]',

# 'token': 8108,

# 'token_str': 'hovedstaden'},

# {'score': 0.04299738258123398,

# 'sequence': '[CLS] københavn er metropol i danmark. [SEP]',

# 'token': 23305,

# 'token_str': 'metropol'},

# {'score': 0.008163209073245525,

# 'sequence': '[CLS] københavn er ikke i danmark. [SEP]',

# 'token': 89,

# 'token_str': 'ikke'},

# {'score': 0.006238455418497324,

# 'sequence': '[CLS] københavn er ogsa i danmark. [SEP]',

# 'token': 25253,

# 'token_str': 'ogsa'}]

```

|

patrickvonplaten/norwegian-roberta-base | patrickvonplaten | 2021-05-19T10:12:21Z | 6 | 0 | transformers | [

"transformers",

"pytorch",

"jax",

"roberta",

"fill-mask",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:05Z | ## Roberta-Base

This repo trains [roberta-base](https://huggingface.co/roberta-base) from scratch on the [Norwegian training subset of Oscar](https://oscar-corpus.com/) containing roughly 4.7 GB of data according to [this](https://github.com/huggingface/transformers/tree/master/examples/flax/language-modeling) example.

Training is done on a TPUv3-8 in Flax. More statistics on the training run can be found on [TensorBoard.dev](https://tensorboard.dev/experiment/GdYmdak2TWeVz0DDRYOrrg).

|

BonjinKim/dst_kor_bert | BonjinKim | 2021-05-19T05:35:57Z | 15 | 1 | transformers | [

"transformers",

"pytorch",

"jax",

"bert",

"pretraining",

"endpoints_compatible",

"region:us"

] | null | 2022-03-02T23:29:04Z | # Korean bert base model for DST

- This is ConversationBert, built from dsksd/bert-ko-small-minimal (base module) plus 5 datasets
- Uses the dsksd/bert-ko-small-minimal tokenizer
- 5 datasets:
    - tweeter_dialogue: xlsx
    - speech: trn
    - office_dialogue: json
    - KETI_dialogue: txt
    - WOS_dataset: json

```python
from transformers import AutoModel, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("BonjinKim/dst_kor_bert")
model = AutoModel.from_pretrained("BonjinKim/dst_kor_bert")
``` |

rohanrajpal/bert-base-multilingual-codemixed-cased-sentiment | rohanrajpal | 2021-05-19T00:35:16Z | 11 | 2 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"bert",

"text-classification",

"hi",

"en",

"codemix",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-03-02T23:29:05Z | ---

language:

- hi

- en

tags:

- hi

- en

- codemix

license: "apache-2.0"

datasets:

- SAIL 2017

metrics:

- fscore

- accuracy

---

# BERT code-mixed base model for Hinglish (cased)

## Model description

Input for the model: Any code-mixed Hinglish text

Output for the model: Sentiment (0 - Negative, 1 - Neutral, 2 - Positive)

I took a bert-base-multilingual-cased model from Hugging Face and fine-tuned it on the [SAIL 2017](http://www.dasdipankar.com/SAILCodeMixed.html) dataset.

Performance of this model on the SAIL 2017 dataset:

| metric     | score    |
|------------|----------|
| acc        | 0.588889 |
| f1         | 0.582678 |
| acc_and_f1 | 0.585783 |
| precision  | 0.586516 |
| recall     | 0.588889 |

## Intended uses & limitations

#### How to use

Here is how to use this model to classify the sentiment of a given text in *PyTorch*:

```python
from transformers import AutoTokenizer, AutoModelForSequenceClassification

tokenizer = AutoTokenizer.from_pretrained("rohanrajpal/bert-base-multilingual-codemixed-cased-sentiment")
model = AutoModelForSequenceClassification.from_pretrained("rohanrajpal/bert-base-multilingual-codemixed-cased-sentiment")

text = "Replace me by any text you'd like."
encoded_input = tokenizer(text, return_tensors='pt')
output = model(**encoded_input)
```

and in *TensorFlow*:

```python
from transformers import AutoTokenizer, TFAutoModelForSequenceClassification

tokenizer = AutoTokenizer.from_pretrained("rohanrajpal/bert-base-multilingual-codemixed-cased-sentiment")
model = TFAutoModelForSequenceClassification.from_pretrained("rohanrajpal/bert-base-multilingual-codemixed-cased-sentiment")

text = "Replace me by any text you'd like."
encoded_input = tokenizer(text, return_tensors='tf')
output = model(encoded_input)
```
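
The card documents three output classes (0 - Negative, 1 - Neutral, 2 - Positive), but the snippets above stop at the raw model output. Below is a minimal sketch of turning the three logits into a label name, written in plain Python so it does not depend on either framework. Taking a softmax and then the argmax is the usual approach for a single-label classifier; it is assumed here rather than documented in the card, and the example logits are hypothetical.

```python
import math

# Label order as documented in this card: 0 - Negative, 1 - Neutral, 2 - Positive.
ID2LABEL = {0: "Negative", 1: "Neutral", 2: "Positive"}


def softmax(logits):
    """Convert raw logits into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(logits):
    """Return (label name, probability) for the highest-scoring class."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return ID2LABEL[best], probs[best]


# Hypothetical logits for one input text.
print(predict_label([0.1, -1.2, 2.3]))
```

With the PyTorch snippet, the logits for the first input would be `output.logits[0].tolist()`.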

#### Limitations and bias

Coming soon!

## Training data

I fine-tuned this [pretrained model](https://huggingface.co/bert-base-multilingual-cased) on the SAIL 2017 dataset ([link](http://amitavadas.com/SAIL/Data/SAIL_2017.zip)).

## Training procedure

No preprocessing.

## Eval results

### BibTeX entry and citation info

```bibtex

@inproceedings{khanuja-etal-2020-gluecos,

title = "{GLUEC}o{S}: An Evaluation Benchmark for Code-Switched {NLP}",

author = "Khanuja, Simran and

Dandapat, Sandipan and

Srinivasan, Anirudh and

Sitaram, Sunayana and

Choudhury, Monojit",

booktitle = "Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics",

month = jul,

year = "2020",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2020.acl-main.329",

pages = "3575--3585"

}

```

|

rohanrajpal/bert-base-en-es-codemix-cased | rohanrajpal | 2021-05-19T00:26:38Z | 13 | 0 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"bert",

"text-classification",

"es",

"en",

"codemix",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-03-02T23:29:05Z | ---

language:

- es

- en

tags:

- es

- en

- codemix

license: "apache-2.0"

datasets:

- SAIL 2017

metrics:

- fscore

- accuracy

- precision

- recall

---

# BERT code-mixed base model for Spanglish (cased)

This model was built using [lingualytics](https://github.com/lingualytics/py-lingualytics), an open-source library that supports code-mixed analytics.

## Model description

Input for the model: Any code-mixed Spanglish text

Output for the model: Sentiment (0 - Negative, 1 - Neutral, 2 - Positive)

I took a bert-base-multilingual-cased model from Hugging Face and fine-tuned it on the [CS-EN-ES-CORPUS](http://www.grupolys.org/software/CS-CORPORA/cs-en-es-corpus-wassa2015.txt) dataset.

Performance of this model on the dataset:

| metric     | score    |
|------------|----------|
| acc        | 0.718615 |
| f1         | 0.71759  |
| acc_and_f1 | 0.718103 |
| precision  | 0.719302 |
| recall     | 0.718615 |
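
The `acc_and_f1` row is the plain average of accuracy and F1, the combined score reported by GLUE-style evaluation scripts such as the one in Hugging Face Transformers. This is inferred from the numbers, which match it to rounding, rather than stated in the card. A small check in Python:

```python
def acc_and_f1(acc: float, f1: float) -> float:
    """GLUE-style combined score: the plain average of accuracy and F1."""
    return (acc + f1) / 2


# Values from the table above.
combined = acc_and_f1(0.718615, 0.71759)
print(f"{combined:.7f}")
```

The identical accuracy and recall values are also consistent with weighted-average recall, which always equals accuracy; the card does not say which averaging was used.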

## Intended uses & limitations

Make sure to preprocess your data using [these methods](https://github.com/microsoft/GLUECoS/blob/master/Data/Preprocess_Scripts/preprocess_sent_en_es.py) before using this model.

#### How to use

Here is how to use this model to classify the sentiment of a given text in *PyTorch*:

```python
from transformers import AutoTokenizer, AutoModelForSequenceClassification

tokenizer = AutoTokenizer.from_pretrained('rohanrajpal/bert-base-en-es-codemix-cased')
model = AutoModelForSequenceClassification.from_pretrained('rohanrajpal/bert-base-en-es-codemix-cased')

text = "Replace me by any text you'd like."
encoded_input = tokenizer(text, return_tensors='pt')
output = model(**encoded_input)
```

and in *TensorFlow*:

```python
from transformers import AutoTokenizer, TFAutoModelForSequenceClassification

tokenizer = AutoTokenizer.from_pretrained('rohanrajpal/bert-base-en-es-codemix-cased')
model = TFAutoModelForSequenceClassification.from_pretrained('rohanrajpal/bert-base-en-es-codemix-cased')

text = "Replace me by any text you'd like."
encoded_input = tokenizer(text, return_tensors='tf')
output = model(encoded_input)
```

#### Limitations and bias

Since I don't know Spanish, I can't verify the quality of the annotations or the dataset itself. This is a very simple transfer-learning approach, and I'm open to discussions on how to improve it.

## Training data

I fine-tuned the [bert-base-multilingual-cased model](https://huggingface.co/bert-base-multilingual-cased) on this dataset.

## Training procedure

I followed the preprocessing steps in [this script](https://github.com/microsoft/GLUECoS/blob/master/Data/Preprocess_Scripts/preprocess_sent_en_es.py).

## Eval results

### BibTeX entry and citation info

```bibtex

@inproceedings{khanuja-etal-2020-gluecos,

title = "{GLUEC}o{S}: An Evaluation Benchmark for Code-Switched {NLP}",

author = "Khanuja, Simran and

Dandapat, Sandipan and

Srinivasan, Anirudh and

Sitaram, Sunayana and

Choudhury, Monojit",

booktitle = "Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics",

month = jul,

year = "2020",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2020.acl-main.329",

pages = "3575--3585"

}

```

|

aodiniz/bert_uncased_L-4_H-256_A-4_cord19-200616 | aodiniz | 2021-05-18T23:50:55Z | 6 | 0 | transformers | [

"transformers",

"pytorch",

"jax",

"bert",

"fill-mask",

"arxiv:1908.08962",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:05Z | # BERT L-4 H-256 fine-tuned on MLM (CORD-19 2020/06/16)

BERT model with [4 Transformer layers and hidden embedding of size 256](https://huggingface.co/google/bert_uncased_L-4_H-256_A-4), referenced in [Well-Read Students Learn Better: On the Importance of Pre-training Compact Models](https://arxiv.org/abs/1908.08962), fine-tuned for MLM on the CORD-19 dataset (as released on 2020/06/16).
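
MLM fine-tuning hides a fraction of the input tokens and trains the model to recover them; the training command for this model sets `--mlm_probability 0.2`. Below is a minimal plain-Python sketch of BERT-style masking, assuming the 80/10/10 rule (80% `[MASK]`, 10% random token, 10% unchanged) that Hugging Face's `DataCollatorForLanguageModeling` applies. The special-token ids, vocabulary size, and example ids are assumptions for illustration, not read from this model's files.

```python
import random

# Assumed ids and vocab size of the standard bert-base-uncased WordPiece
# vocabulary; not read from this model's files.
CLS_ID, SEP_ID, MASK_ID = 101, 102, 103
VOCAB_SIZE = 30522
IGNORE_INDEX = -100  # label value that the MLM loss skips


def mask_tokens(token_ids, mlm_probability=0.2, special_ids=(CLS_ID, SEP_ID), rng=None):
    """BERT-style masking: returns (inputs, labels) for one sequence."""
    rng = rng or random.Random()
    inputs = list(token_ids)
    labels = [IGNORE_INDEX] * len(inputs)
    for i, tok in enumerate(token_ids):
        # Never mask special tokens; select the rest with probability mlm_probability.
        if tok in special_ids or rng.random() >= mlm_probability:
            continue
        labels[i] = tok  # the model is trained to recover the original token here
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK_ID  # 80% of selected tokens: replace with [MASK]
        elif r < 0.9:
            inputs[i] = rng.randrange(VOCAB_SIZE)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return inputs, labels


# Hypothetical token ids for a short sentence wrapped in [CLS] ... [SEP].
inputs, labels = mask_tokens([101, 2023, 2003, 1037, 3231, 102], rng=random.Random(0))
print(inputs, labels)
```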

## Training the model

```bash
python run_language_modeling.py \
  --model_type bert \
  --model_name_or_path google/bert_uncased_L-4_H-256_A-4 \
  --do_train \
  --train_data_file {cord19-200616-dataset} \
  --mlm \
  --mlm_probability 0.2 \
  --line_by_line \
  --block_size 256 \
  --per_device_train_batch_size 20 \
  --learning_rate 3e-5 \
  --num_train_epochs 2 \
  --output_dir bert_uncased_L-4_H-256_A-4_cord19-200616
```

|

aodiniz/bert_uncased_L-2_H-512_A-8_cord19-200616 | aodiniz | 2021-05-18T23:48:58Z | 8 | 0 | transformers | [

"transformers",

"pytorch",

"jax",

"bert",

"fill-mask",

"arxiv:1908.08962",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:05Z | # BERT L-2 H-512 fine-tuned on MLM (CORD-19 2020/06/16)

BERT model with [2 Transformer layers and hidden embedding of size 512](https://huggingface.co/google/bert_uncased_L-2_H-512_A-8), referenced in [Well-Read Students Learn Better: On the Importance of Pre-training Compact Models](https://arxiv.org/abs/1908.08962), fine-tuned for MLM on the CORD-19 dataset (as released on 2020/06/16).

## Training the model

```bash
python run_language_modeling.py \
  --model_type bert \
  --model_name_or_path google/bert_uncased_L-2_H-512_A-8 \
  --do_train \
  --train_data_file {cord19-200616-dataset} \
  --mlm \
  --mlm_probability 0.2 \
  --line_by_line \
  --block_size 512 \
  --per_device_train_batch_size 20 \
  --learning_rate 3e-5 \
  --num_train_epochs 2 \
  --output_dir bert_uncased_L-2_H-512_A-8_cord19-200616
```

|

alexbrandsen/ArcheoBERTje | alexbrandsen | 2021-05-18T23:22:51Z | 22 | 0 | transformers | [

"transformers",

"pytorch",

"jax",

"bert",

"fill-mask",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:05Z | # ArcheoBERTje

A Dutch BERT model for the archaeology domain

This model is based on the Dutch BERTje model by wietsedv (https://github.com/wietsedv/bertje).

We further fine-tuned BERTje on a corpus of roughly 60k Dutch excavation reports (~650 million tokens) from the DANS data archive (https://easy.dans.knaw.nl/ui/home). |

alexbrandsen/ArcheoBERTje-NER | alexbrandsen | 2021-05-18T23:21:58Z | 4 | 1 | transformers | [

"transformers",

"pytorch",

"jax",

"bert",

"token-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | token-classification | 2022-03-02T23:29:05Z | # ArcheoBERTje-NER

A Dutch BERT model for Named Entity Recognition in the archaeology domain

This is the [ArcheoBERTje](https://huggingface.co/alexbrandsen/ArcheoBERTje) model fine-tuned for NER, targeting the following entities:

- Time periods

- Places

- Artefacts

- Contexts

- Materials

- Species
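
A token-classification model emits one tag per token, and those tags still have to be merged into entity spans. Below is a minimal sketch, assuming a BIO tagging scheme (`B-X` starts an entity, `I-X` continues it, `O` is outside). The tag names `MAT`, `ART`, and `PERIOD` and the example sentence are hypothetical, not taken from the model's config, and subword merging is left out.

```python
def bio_to_spans(tokens, tags):
    """Merge per-token BIO tags (B-X, I-X, O) into (entity_type, text) spans."""
    spans = []
    current_type, current_tokens = None, []
    for token, tag in zip(tokens, tags):
        continues_entity = tag.startswith("I-") and tag[2:] == current_type
        if continues_entity:
            current_tokens.append(token)
            continue
        # Any other tag closes the open entity, if there is one.
        if current_type is not None:
            spans.append((current_type, " ".join(current_tokens)))
            current_type, current_tokens = None, []
        if tag.startswith(("B-", "I-")):
            # B- starts an entity; a stray I- without a matching B- is treated the same way.
            current_type, current_tokens = tag[2:], [token]
    if current_type is not None:
        spans.append((current_type, " ".join(current_tokens)))
    return spans


# Hypothetical tokens and tags for "Een bronzen bijl uit de Romeinse tijd"
# ("A bronze axe from the Roman period").
tokens = ["Een", "bronzen", "bijl", "uit", "de", "Romeinse", "tijd"]
tags = ["O", "B-MAT", "B-ART", "O", "O", "B-PERIOD", "I-PERIOD"]
print(bio_to_spans(tokens, tags))
```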

|

agiagoulas/bert-pss | agiagoulas | 2021-05-18T23:16:17Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"jax",

"bert",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-03-02T23:29:05Z | bert-base-uncased model trained on the tobacco800 dataset for the task of page-stream-segmentation.

[Link](https://github.com/agiagoulas/page-stream-segmentation) to the GitHub Repo with the model implementation. |

activebus/BERT_Review | activebus | 2021-05-18T23:05:54Z | 450 | 1 | transformers | [

"transformers",

"pytorch",

"jax",

"bert",

"fill-mask",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:05Z | # ReviewBERT

BERT post-trained on a review corpus to understand sentiment, opinions, and various e-commerce aspects.

`BERT_Review` is a cross-domain (beyond just `laptop` and `restaurant`) language model, with training examples drawn from randomly mixed domains. It is post-trained (fine-tuned) on a combination of 5-core Amazon reviews and all Yelp data, roughly 22 GB in total, for 4 epochs on top of `bert-base-uncased`.

The preprocessing code is available [here](https://github.com/howardhsu/BERT-for-RRC-ABSA/transformers).

## Model Description

The base model is `BERT-base-uncased`, pre-trained on Wikipedia and BookCorpus.

Models are post-trained on the [Amazon Dataset](http://jmcauley.ucsd.edu/data/amazon/) and the [Yelp Dataset](https://www.yelp.com/dataset/challenge/).

## Instructions

Loading the post-trained weights is as simple as:

```python

import torch

from transformers import AutoModel, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("activebus/BERT_Review")

model = AutoModel.from_pretrained("activebus/BERT_Review")

```

## Evaluation Results

See our [NAACL paper](https://www.aclweb.org/anthology/N19-1242.pdf) for details.

`BERT_Review` is expected to perform similarly to `BERT-DK` on domain-specific tasks (such as aspect extraction), but much better on general tasks such as aspect sentiment classification (since different domains mostly share similar sentiment words).

## Citation

If you find this work useful, please cite it as follows:

```

@inproceedings{xu_bert2019,

title = "BERT Post-Training for Review Reading Comprehension and Aspect-based Sentiment Analysis",

author = "Xu, Hu and Liu, Bing and Shu, Lei and Yu, Philip S.",

booktitle = "Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics",

month = "jun",

year = "2019",

}

```

|

abhishek/autonlp-japanese-sentiment-59363 | abhishek | 2021-05-18T22:56:15Z | 13 | 1 | transformers | [

"transformers",

"pytorch",

"jax",

"bert",

"text-classification",

"autonlp",

"ja",

"dataset:abhishek/autonlp-data-japanese-sentiment",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-03-02T23:29:05Z | ---

tags: autonlp

language: ja

widget:

- text: "🤗AutoNLPが大好きです"

datasets:

- abhishek/autonlp-data-japanese-sentiment

---

# Model Trained Using AutoNLP

- Problem type: Binary Classification

- Model ID: 59363

## Validation Metrics

- Loss: 0.12651239335536957

- Accuracy: 0.9532079853817648

- Precision: 0.9729688278823665

- Recall: 0.9744633462616643

- AUC: 0.9717333684823413

- F1: 0.9737155136027014
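
For binary classification, the reported F1 is the harmonic mean of precision and recall, so it can be checked directly from the validation metrics above. A short sketch; the function name is illustrative.

```python
def f1_from_precision_recall(precision: float, recall: float) -> float:
    """F1 score: the harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Validation precision and recall reported above for model 59363.
f1 = f1_from_precision_recall(0.9729688278823665, 0.9744633462616643)
print(f"{f1:.6f}")
```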

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoNLP"}' https://api-inference.huggingface.co/models/abhishek/autonlp-japanese-sentiment-59363

```

Or Python API:

```python

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("abhishek/autonlp-japanese-sentiment-59363", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("abhishek/autonlp-japanese-sentiment-59363", use_auth_token=True)

inputs = tokenizer("I love AutoNLP", return_tensors="pt")

outputs = model(**inputs)

``` |

apanc/russian-sensitive-topics | apanc | 2021-05-18T22:41:20Z | 18,572 | 20 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"bert",

"text-classification",

"toxic comments classification",

"ru",

"arxiv:2103.05345",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-03-02T23:29:05Z | ---

language:

- ru

tags:

- toxic comments classification

licenses:

- cc-by-nc-sa

---

## General concept of the model

This model is trained on a dataset of sensitive topics in the Russian language. The concept of sensitive topics is described [in this article](https://www.aclweb.org/anthology/2021.bsnlp-1.4/), presented at the Balto-Slavic NLP workshop at the EACL-2021 conference. Please note that this article describes the first version of the dataset, while the model is trained on the extended version of the dataset open-sourced on our [GitHub](https://github.com/skoltech-nlp/inappropriate-sensitive-topics/blob/main/Version2/sensitive_topics/sensitive_topics.csv) or on [Kaggle](https://www.kaggle.com/nigula/russian-sensitive-topics). The properties of the dataset are the same as those described in the article; the only difference is the size.

## Instructions

The model predicts combinations of 18 sensitive topics described in the [article](https://arxiv.org/abs/2103.05345). You can find step-by-step instructions for using the model [here](https://github.com/skoltech-nlp/inappropriate-sensitive-topics/blob/main/Version2/sensitive_topics/Inference.ipynb).
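
Because the model predicts combinations of topics, its output is multi-label: each topic gets an independent score instead of competing in a single softmax. Below is a minimal decoding sketch, assuming the model returns one logit per topic, that a sigmoid with a 0.5 threshold is appropriate, and that the label order matches the metrics table in this card. All three are assumptions; check the model's `id2label` config and the linked notebook before relying on them.

```python
import math

# Topic names as listed in this card's metrics table (18 topics plus "none").
# The index order used by the real model is an assumption.
TOPICS = ["offline_crime", "online_crime", "drugs", "gambling", "pornography",
          "prostitution", "slavery", "suicide", "terrorism", "weapons",
          "body_shaming", "health_shaming", "politics", "racism", "religion",
          "sexual_minorities", "sexism", "social_injustice", "none"]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def decode_topics(logits, labels=TOPICS, threshold=0.5):
    """Multi-label decoding: keep every topic whose sigmoid score clears the threshold."""
    if len(logits) != len(labels):
        raise ValueError("expected one logit per topic")
    return [label for label, z in zip(labels, logits) if sigmoid(z) >= threshold]


# Hypothetical logits: strongly negative everywhere except two topics.
example = [-3.0] * len(TOPICS)
example[TOPICS.index("drugs")] = 2.0
example[TOPICS.index("weapons")] = 0.5
print(decode_topics(example))
```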

## Metrics

The dataset consists partly of manually labeled samples and partly of semi-automatically labeled samples; learn more in our article. We tested the classifier only on the manually labeled part of the data, which is why some topics are not well represented in the test set.

|                   | precision | recall | f1-score | support |
|-------------------|-----------|--------|----------|---------|
| offline_crime     | 0.65      | 0.55   | 0.6      | 132     |
| online_crime      | 0.5       | 0.46   | 0.48     | 37      |
| drugs             | 0.87      | 0.9    | 0.88     | 87      |
| gambling          | 0.5       | 0.67   | 0.57     | 6       |
| pornography       | 0.73      | 0.59   | 0.65     | 204     |
| prostitution      | 0.75      | 0.69   | 0.72     | 91      |
| slavery           | 0.72      | 0.72   | 0.73     | 40      |
| suicide           | 0.33      | 0.29   | 0.31     | 7       |
| terrorism         | 0.68      | 0.57   | 0.62     | 47      |
| weapons           | 0.89      | 0.83   | 0.86     | 138     |
| body_shaming      | 0.9       | 0.67   | 0.77     | 109     |
| health_shaming    | 0.84      | 0.55   | 0.66     | 108     |
| politics          | 0.68      | 0.54   | 0.6      | 241     |
| racism            | 0.81      | 0.59   | 0.68     | 204     |
| religion          | 0.94      | 0.72   | 0.81     | 102     |
| sexual_minorities | 0.69      | 0.46   | 0.55     | 102     |
| sexism            | 0.66      | 0.64   | 0.65     | 132     |
| social_injustice  | 0.56      | 0.37   | 0.45     | 181     |
| none              | 0.62      | 0.67   | 0.64     | 250     |
| micro avg         | 0.72      | 0.61   | 0.66     | 2218    |
| macro avg         | 0.7       | 0.6    | 0.64     | 2218    |
| weighted avg      | 0.73      | 0.61   | 0.66     | 2218    |
| samples avg       | 0.75      | 0.66   | 0.68     | 2218    |

## Licensing Information

[Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License][cc-by-nc-sa].

[![CC BY-NC-SA 4.0][cc-by-nc-sa-image]][cc-by-nc-sa]

[cc-by-nc-sa]: http://creativecommons.org/licenses/by-nc-sa/4.0/

[cc-by-nc-sa-image]: https://i.creativecommons.org/l/by-nc-sa/4.0/88x31.png

## Citation

If you find this repository helpful, feel free to cite our publication:

```

@inproceedings{babakov-etal-2021-detecting,

title = "Detecting Inappropriate Messages on Sensitive Topics that Could Harm a Company{'}s Reputation",

author = "Babakov, Nikolay and

Logacheva, Varvara and

Kozlova, Olga and

Semenov, Nikita and

Panchenko, Alexander",

booktitle = "Proceedings of the 8th Workshop on Balto-Slavic Natural Language Processing",

month = apr,

year = "2021",

address = "Kiyv, Ukraine",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2021.bsnlp-1.4",

pages = "26--36",

abstract = "Not all topics are equally {``}flammable{''} in terms of toxicity: a calm discussion of turtles or fishing less often fuels inappropriate toxic dialogues than a discussion of politics or sexual minorities. We define a set of sensitive topics that can yield inappropriate and toxic messages and describe the methodology of collecting and labelling a dataset for appropriateness. While toxicity in user-generated data is well-studied, we aim at defining a more fine-grained notion of inappropriateness. The core of inappropriateness is that it can harm the reputation of a speaker. This is different from toxicity in two respects: (i) inappropriateness is topic-related, and (ii) inappropriate message is not toxic but still unacceptable. We collect and release two datasets for Russian: a topic-labelled dataset and an appropriateness-labelled dataset. We also release pre-trained classification models trained on this data.",

}

``` |

apanc/russian-inappropriate-messages | apanc | 2021-05-18T22:39:46Z | 3,244 | 20 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"bert",

"text-classification",

"toxic comments classification",

"ru",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-03-02T23:29:05Z | ---

language:

- ru

tags:

- toxic comments classification

licenses:

- cc-by-nc-sa

---

## General concept of the model

#### Proposed usage

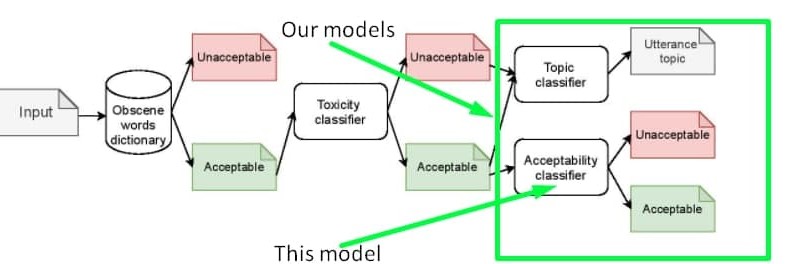

The notion of **'inappropriateness'** that we tried to capture in the dataset and detect with the model **is NOT a substitute for toxicity**; rather, it is a derivative of toxicity. A model based on our dataset could therefore serve as **an additional layer of inappropriateness filtering after toxicity and obscenity filtering**. You can detect the exact sensitive topic by using [another model](https://huggingface.co/Skoltech/russian-sensitive-topics). The proposed pipeline is shown in the scheme above.
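
The layered setup described above can be sketched as a small cascade: toxicity and obscenity checks run first, only texts that pass them reach the inappropriateness check, and an optional topic model names the sensitive topic. The classifier callables and the `block`/`allow` decisions are hypothetical placeholders for real models and policies; this illustrates the ordering, not the authors' implementation.

```python
from typing import Callable, Optional

# Hypothetical classifier callables: each takes a text and returns True when the
# text should be flagged. In practice these would wrap real models.
Check = Callable[[str], bool]


def moderate(text: str, is_toxic: Check, is_obscene: Check,
             is_inappropriate: Check,
             topic_of: Optional[Callable[[str], list]] = None) -> dict:
    """Layered filtering: toxicity and obscenity first, inappropriateness after."""
    if is_toxic(text) or is_obscene(text):
        return {"decision": "block", "reason": "toxic_or_obscene"}
    if is_inappropriate(text):
        result = {"decision": "block", "reason": "inappropriate"}
        if topic_of is not None:
            # Optional: name the sensitive topic with a separate topic model.
            result["topics"] = topic_of(text)
        return result
    return {"decision": "allow", "reason": None}


# Usage with stub classifiers standing in for real models.
print(moderate("some text", lambda t: False, lambda t: False,
               lambda t: True, lambda t: ["politics"]))
```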

You can also train one classifier for both toxicity and inappropriateness detection. The data to be mixed with toxic labelled samples can be found on our [GitHub](https://github.com/skoltech-nlp/inappropriate-sensitive-topics/blob/main/Version2/appropriateness/Appropriateness.csv) or on [Kaggle](https://www.kaggle.com/nigula/russianinappropriatemessages).

#### Inappropriateness intuition

This model is trained on a dataset of inappropriate messages in the Russian language. Generally, an inappropriate utterance is one that contains no obscene words or toxic intent but can still harm the reputation of the speaker. See the samples in the table below for more intuition. Learn more about the concept of inappropriateness [in this article](https://www.aclweb.org/anthology/2021.bsnlp-1.4/), presented at the Balto-Slavic NLP workshop at the EACL-2021 conference. Please note that this article describes the first version of the dataset, while the model is trained on the extended version of the dataset open-sourced on our [GitHub](https://github.com/skoltech-nlp/inappropriate-sensitive-topics/blob/main/Version2/appropriateness/Appropriateness.csv) or on [Kaggle](https://www.kaggle.com/nigula/russianinappropriatemessages). The properties of the dataset are the same as those described in the article; the only difference is the size.

| Text | Inappropriate | Comment | Unsafe topic |
|------|---------------|---------|--------------|
| Ладно бы видного деятеля завалили а тут какого то ноунейм нигру преступника | yes | Justification of murder | offline crime |
| Это нарушение УКРФ! | no | Condemnation of breaking the law | offline crime |
| Религия - это насилие высшего уровня. Верующего принуждают к вере еще в младенчестве. | yes | Insulting the feelings of religious believers | religion |
| Христиане, как вы относитесь к иноверцам, в частности к Гамалиилу и к уверовавшим в Иисуса фарисеям? | no | Ordinary questions to believers, with no hint of insulting their feelings | religion |
| Хорошо что проституция запрещена | no | Condemnation of prostitution | prostitution |
| Возьму бабки и сниму шлюх на выходных | yes | Promotion of prostitution | prostitution |

#### Metrics

The model was trained, validated, and tested only on samples with 100% confidence, which yielded the following metrics on the test set:

|              | precision | recall | f1-score | support |
|--------------|-----------|--------|----------|---------|
| 0            | 0.92      | 0.93   | 0.93     | 7839    |
| 1            | 0.80      | 0.76   | 0.78     | 2726    |
| accuracy     |           |        | 0.89     | 10565   |
| macro avg    | 0.86      | 0.85   | 0.85     | 10565   |
| weighted avg | 0.89      | 0.89   | 0.89     | 10565   |

## Licensing Information

[Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License][cc-by-nc-sa].

[![CC BY-NC-SA 4.0][cc-by-nc-sa-image]][cc-by-nc-sa]

[cc-by-nc-sa]: http://creativecommons.org/licenses/by-nc-sa/4.0/

[cc-by-nc-sa-image]: https://i.creativecommons.org/l/by-nc-sa/4.0/88x31.png

## Citation

If you find this repository helpful, feel free to cite our publication:

```

@inproceedings{babakov-etal-2021-detecting,

title = "Detecting Inappropriate Messages on Sensitive Topics that Could Harm a Company{'}s Reputation",

author = "Babakov, Nikolay and

Logacheva, Varvara and

Kozlova, Olga and

Semenov, Nikita and

Panchenko, Alexander",

booktitle = "Proceedings of the 8th Workshop on Balto-Slavic Natural Language Processing",

month = apr,

year = "2021",

address = "Kiyv, Ukraine",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2021.bsnlp-1.4",

pages = "26--36",

abstract = "Not all topics are equally {``}flammable{''} in terms of toxicity: a calm discussion of turtles or fishing less often fuels inappropriate toxic dialogues than a discussion of politics or sexual minorities. We define a set of sensitive topics that can yield inappropriate and toxic messages and describe the methodology of collecting and labelling a dataset for appropriateness. While toxicity in user-generated data is well-studied, we aim at defining a more fine-grained notion of inappropriateness. The core of inappropriateness is that it can harm the reputation of a speaker. This is different from toxicity in two respects: (i) inappropriateness is topic-related, and (ii) inappropriate message is not toxic but still unacceptable. We collect and release two datasets for Russian: a topic-labelled dataset and an appropriateness-labelled dataset. We also release pre-trained classification models trained on this data.",

}

```

## Contacts

If you have any questions, please contact [Nikolay](mailto:[email protected]). |

Shuvam/autonlp-college_classification-164469 | Shuvam | 2021-05-18T22:37:16Z | 7 | 0 | transformers | [

"transformers",

"pytorch",

"jax",

"bert",

"text-classification",

"autonlp",

"en",

"dataset:Shuvam/autonlp-data-college_classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-03-02T23:29:05Z | ---

tags: autonlp

language: en

widget:

- text: "I love AutoNLP 🤗"

datasets:

- Shuvam/autonlp-data-college_classification

---

# Model Trained Using AutoNLP

- Problem type: Binary Classification

- Model ID: 164469

## Validation Metrics

- Loss: 0.05527503043413162

- Accuracy: 0.9853049228508449

- Precision: 0.991044776119403

- Recall: 0.9793510324483776

- AUC: 0.9966895139869654

- F1: 0.9851632047477745

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoNLP"}' https://api-inference.huggingface.co/models/Shuvam/autonlp-college_classification-164469

```

Or Python API:

```python

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("Shuvam/autonlp-college_classification-164469", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("Shuvam/autonlp-college_classification-164469", use_auth_token=True)

inputs = tokenizer("I love AutoNLP", return_tensors="pt")

outputs = model(**inputs)

``` |

Sahajtomar/GBERTQnA | Sahajtomar | 2021-05-18T22:19:34Z | 18 | 4 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"bert",

"question-answering",

"de",

"dataset:mlqa",

"endpoints_compatible",

"region:us"

] | question-answering | 2022-03-02T23:29:04Z |

---

language: de

tags:

- pytorch

- tf

- bert

datasets:

- mlqa

metrics:

- f1

- em

---

### QA model trained on the MLQA dataset for German

The model used for fine-tuning is GBERT Large by deepset.ai.

## MLQA DEV (German)

EM: 63.82

F1: 77.20

## XQUAD TEST (German)

EM: 65.96

F1: 80.85

## Model inference

```python
# Install first: pip install -q transformers  (prefix with "!" inside a notebook)
from transformers import pipeline

qa_pipeline = pipeline(
    "question-answering",
    model="Sahajtomar/GBERTQnA",
    tokenizer="Sahajtomar/GBERTQnA"
)

qa_pipeline({
    'context': "Vor einigen Jahren haben Wissenschaftler ein wichtiges Mutagen identifiziert, das in unseren eigenen Zellen liegt: APOBEC, ein Protein, das normalerweise als Schutzmittel gegen Virusinfektionen fungiert. Heute hat ein Team von Schweizer und russischen Wissenschaftlern unter der Leitung von Sergey Nikolaev, Genetiker an der Universität Genf (UNIGE) in der Schweiz, entschlüsselt, wie APOBEC eine Schwäche unseres DNA-Replikationsprozesses ausnutzt, um Mutationen in unserem Genom zu induzieren.",
    'question': "Welches Mutagen schützt vor Virusinfektionen?"
})
# output
# {'answer': 'APOBEC', 'end': 121, 'score': 0.9815779328346252, 'start': 115}

# Even complex queries can be answered pretty well
qa_pipeline({
    'context': 'Im Juli 1944 befand sich die Rote Armee tief auf polnischem Gebiet und verfolgte die Deutschen in Richtung Warschau. In dem Wissen, dass Stalin der Idee eines unabhängigen Polens feindlich gegenüberstand, gab die polnische Exilregierung in London der unterirdischen Heimatarmee (AK) den Befehl, vor dem Eintreffen der Roten Armee zu versuchen, die Kontrolle über Warschau von den Deutschen zu übernehmen. So begann am 1. August 1944, als sich die Rote Armee der Stadt näherte, der Warschauer Aufstand. Der bewaffnete Kampf, der 48 Stunden dauern sollte, war teilweise erfolgreich, dauerte jedoch 63 Tage. Schließlich mussten die Kämpfer der Heimatarmee und die ihnen unterstützenden Zivilisten kapitulieren. Sie wurden in Kriegsgefangenenlager in Deutschland transportiert, während die gesamte Zivilbevölkerung ausgewiesen wurde. Die Zahl der polnischen Zivilisten wird auf 150.000 bis 200.000 geschätzt.',
    'question': "Wer wurde nach Deutschland transportiert?"
})
# output
# {'answer': 'die Kämpfer der Heimatarmee und die ihnen unterstützenden Zivilisten',
#  'end': 693,
#  'score': 0.23357819020748138,
#  'start': 625}
```
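
The `start` and `end` fields in the outputs above are character offsets into `context`, so the answer text can be recovered by slicing. A minimal sketch; the helper name `answer_from_offsets` is hypothetical, and the context is cut off right after the answer in the first example.

```python
def answer_from_offsets(context: str, prediction: dict) -> str:
    """Slice the answer out of the context using the reported character offsets."""
    return context[prediction["start"]:prediction["end"]]


# First example context above, cut off right after the answer.
context = ("Vor einigen Jahren haben Wissenschaftler ein wichtiges Mutagen "
           "identifiziert, das in unseren eigenen Zellen liegt: APOBEC")
prediction = {'answer': 'APOBEC', 'end': 121, 'score': 0.9815779328346252, 'start': 115}
print(answer_from_offsets(context, prediction))
```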

Try it on a Colab:

<a href="https://github.com/Sahajtomar/Question-Answering/blob/main/Sahajtomar_GBERTQnA.ipynb" target="_parent"><img src="https://camo.githubusercontent.com/52feade06f2fecbf006889a904d221e6a730c194/68747470733a2f2f636f6c61622e72657365617263682e676f6f676c652e636f6d2f6173736574732f636f6c61622d62616467652e737667" alt="Open In Colab" data-canonical-src="https://colab.research.google.com/assets/colab-badge.svg"></a>

|

RecordedFuture/Swedish-Sentiment-Violence | RecordedFuture | 2021-05-18T22:02:50Z | 32 | 0 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"bert",

"text-classification",

"sv",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-03-02T23:29:04Z | ---

language: sv

license: mit

---

## Swedish BERT models for sentiment analysis

[Recorded Future](https://www.recordedfuture.com/) together with [AI Sweden](https://www.ai.se/en) releases two language models for sentiment analysis in Swedish. The two models are based on the [KB\/bert-base-swedish-cased](https://huggingface.co/KB/bert-base-swedish-cased) model and have been fine-tuned to solve a multi-label sentiment analysis task.

The models have been fine-tuned for the sentiments fear and violence. The models output three floats corresponding to the labels "Negative", "Weak sentiment", and "Strong Sentiment" at the respective indexes.

The models have been trained on Swedish data with a conversational focus, collected from various internet sources and forums.

The models are trained only on Swedish data and only support inference on Swedish input texts. The models' inference metrics for non-Swedish inputs are undefined; such inputs are considered out-of-domain data.

The current models are supported on Transformers >= 4.3.3 and Torch 1.8.0; compatibility with older versions has not been verified.

### Swedish-Sentiment-Fear

The model can be imported from the transformers library by running:

```python
from transformers import BertForSequenceClassification, BertTokenizerFast

tokenizer = BertTokenizerFast.from_pretrained("RecordedFuture/Swedish-Sentiment-Fear")
classifier_fear = BertForSequenceClassification.from_pretrained("RecordedFuture/Swedish-Sentiment-Fear")
```

When the model and tokenizer are initialized, the model can be used for inference.

#### Sentiment definitions

#### The strong sentiment includes, but is not limited to,

Texts that:

- Hold an expressive emphasis on fear and/or anxiety

#### The weak sentiment includes, but is not limited to,

Texts that:

- Express fear and/or anxiety in a neutral way

#### Verification metrics

During training, the model's validation metrics were maximized at the following classification breakpoint.

| Classification Breakpoint | F-score | Precision | Recall |
|:-------------------------:|:-------:|:---------:|:------:|
| 0.45                      | 0.8754  | 0.8618    | 0.8895 |
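
The models output three floats, and the verification tables report the breakpoint at which validation metrics peaked (0.45 for fear, 0.35 for violence). Below is a minimal sketch of applying such a breakpoint. It assumes the three floats are independent probabilities (for example, after a sigmoid, as is typical for multi-label heads) and that a score at or above the breakpoint counts as a positive label; the card states neither detail.

```python
LABELS = ("Negative", "Weak sentiment", "Strong Sentiment")

# Breakpoints reported in the verification-metrics tables of these cards.
BREAKPOINTS = {"fear": 0.45, "violence": 0.35}


def labels_above_breakpoint(probs, model="fear"):
    """Return every label whose score reaches the model's breakpoint (assumed >=)."""
    if len(probs) != len(LABELS):
        raise ValueError("expected three scores, one per label")
    cut = BREAKPOINTS[model]
    return [label for label, p in zip(LABELS, probs) if p >= cut]


# Hypothetical per-label probabilities for one Swedish text.
print(labels_above_breakpoint([0.1, 0.5, 0.4], model="fear"))
```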

#### Swedish-Sentiment-Violence

The model can be imported from the transformers library by running:

```python
from transformers import BertForSequenceClassification, BertTokenizerFast

tokenizer = BertTokenizerFast.from_pretrained("RecordedFuture/Swedish-Sentiment-Violence")
classifier_violence = BertForSequenceClassification.from_pretrained("RecordedFuture/Swedish-Sentiment-Violence")
```

Once the model and tokenizer are initialized, the model can be used for inference.

#### Sentiment definitions

#### The strong sentiment includes but is not limited to

Texts that:

- Reference highly violent acts

- Hold an aggressive tone

#### The weak sentiment includes but is not limited to

Texts that:

- Include general violent statements that do not fall under the strong sentiment

#### Verification metrics

During training, the validation metrics were maximized at the following classification breakpoint:

| Classification Breakpoint | F-score | Precision | Recall |
|:-------------------------:|:-------:|:---------:|:------:|
| 0.35 | 0.7677 | 0.7456 | 0.791 | |

RecordedFuture/Swedish-Sentiment-Fear | RecordedFuture | 2021-05-18T22:00:42Z | 28 | 2 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"bert",

"text-classification",

"sv",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-03-02T23:29:04Z | ---

language: sv

license: mit

---

## Swedish BERT models for sentiment analysis

[Recorded Future](https://www.recordedfuture.com/), together with [AI Sweden](https://www.ai.se/en), releases two language models for sentiment analysis in Swedish. Both models are based on the [KB/bert-base-swedish-cased](https://huggingface.co/KB/bert-base-swedish-cased) model and have been fine-tuned for a multi-label sentiment analysis task.

The models have been fine-tuned for two sentiments: fear and violence. Each model outputs three floats, which correspond to the labels "Negative", "Weak sentiment", and "Strong Sentiment" at indexes 0, 1, and 2 respectively.

The models have been trained on Swedish data with a conversational focus, collected from various internet sources and forums.

The models are trained only on Swedish data and support inference only on Swedish input texts. Inference metrics for non-Swedish inputs are undefined; such inputs are considered out-of-domain data.

The current models are supported on Transformers >= 4.3.3 and Torch 1.8.0; compatibility with older versions has not been verified.

### Swedish-Sentiment-Fear

The model can be imported from the transformers library by running:

```python
from transformers import BertForSequenceClassification, BertTokenizerFast

tokenizer = BertTokenizerFast.from_pretrained("RecordedFuture/Swedish-Sentiment-Fear")
classifier_fear = BertForSequenceClassification.from_pretrained("RecordedFuture/Swedish-Sentiment-Fear")
```

Once the model and tokenizer are initialized, the model can be used for inference.

#### Sentiment definitions

#### The strong sentiment includes but is not limited to

Texts that:

- Hold an expressive emphasis on fear and/or anxiety

#### The weak sentiment includes but is not limited to

Texts that:

- Express fear and/or anxiety in a neutral way

#### Verification metrics

During training, the validation metrics were maximized at the following classification breakpoint:

| Classification Breakpoint | F-score | Precision | Recall |
|:-------------------------:|:-------:|:---------:|:------:|
| 0.45 | 0.8754 | 0.8618 | 0.8895 |
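Assuming the reported F-score is F1, the harmonic mean of precision and recall, the table values can be cross-checked with a few lines of arithmetic. This is a quick sanity check, not part of the release.

```python
def f1(precision, recall):
    """Harmonic mean of precision and recall (the F1 score)."""
    return 2 * precision * recall / (precision + recall)


# Fear model: precision 0.8618 and recall 0.8895 at breakpoint 0.45.
print(round(f1(0.8618, 0.8895), 4))  # 0.8754
```

The violence figures (precision 0.7456, recall 0.791) give roughly 0.7676 rather than the reported 0.7677. That gap is small enough to come from rounding in the reported values.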

### Swedish-Sentiment-Violence

The model can be imported from the transformers library by running:

```python
from transformers import BertForSequenceClassification, BertTokenizerFast

tokenizer = BertTokenizerFast.from_pretrained("RecordedFuture/Swedish-Sentiment-Violence")
classifier_violence = BertForSequenceClassification.from_pretrained("RecordedFuture/Swedish-Sentiment-Violence")
```

Once the model and tokenizer are initialized, the model can be used for inference.

#### Sentiment definitions

#### The strong sentiment includes but is not limited to

Texts that:

- Reference highly violent acts

- Hold an aggressive tone

#### The weak sentiment includes but is not limited to

Texts that:

- Include general violent statements that do not fall under the strong sentiment

#### Verification metrics

During training, the validation metrics were maximized at the following classification breakpoint:

| Classification Breakpoint | F-score | Precision | Recall |
|:-------------------------:|:-------:|:---------:|:------:|
| 0.35 | 0.7677 | 0.7456 | 0.791 | |

NathanZhu/GabHateCorpusTrained | NathanZhu | 2021-05-18T21:47:53Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"jax",

"bert",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-03-02T23:29:04Z | Test for use in Google Colab :'( |

MutazYoune/Ara_DialectBERT | MutazYoune | 2021-05-18T21:44:01Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"jax",

"bert",

"fill-mask",

"ar",

"dataset:HARD-Arabic-Dataset",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:04Z | ---

language: ar

datasets:

- HARD-Arabic-Dataset

---

# Ara-dialect-BERT

We further trained a pretrained model on the [HARD-Arabic-Dataset](https://github.com/elnagara/HARD-Arabic-Dataset); its weights were initialized from the [CAMeL-Lab](https://huggingface.co/CAMeL-Lab/bert-base-camelbert-msa-eighth) "bert-base-camelbert-msa-eighth" model.

### Usage

The model weights can be loaded using the `transformers` library by HuggingFace.

```python

from transformers import AutoTokenizer, AutoModel

tokenizer = AutoTokenizer.from_pretrained("MutazYoune/Ara_DialectBERT")

model = AutoModel.from_pretrained("MutazYoune/Ara_DialectBERT")

```

Example using `pipeline`:

```python

from transformers import pipeline

fill_mask = pipeline(

"fill-mask",

model="MutazYoune/Ara_DialectBERT",

tokenizer="MutazYoune/Ara_DialectBERT"

)

fill_mask("الفندق جميل و لكن [MASK] بعيد")

```
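The fill-mask pipeline returns a list of dicts with the keys `sequence`, `score`, `token`, and `token_str`, as the output for this example shows. A small post-processing sketch can keep only confident predictions. The `top_tokens` helper and its `min_score` cutoff are hypothetical, and the sample entries are copied from that output.

```python
def top_tokens(predictions, min_score=0.1):
    """Return (token_str, score) pairs with score >= min_score, best first.

    `predictions` is the list of dicts returned by the fill-mask pipeline
    (keys: 'sequence', 'score', 'token', 'token_str').
    """
    ranked = sorted(predictions, key=lambda p: p["score"], reverse=True)
    return [(p["token_str"], p["score"]) for p in ranked if p["score"] >= min_score]


# Three of the predictions for "الفندق جميل و لكن [MASK] بعيد".
preds = [
    {"sequence": "الفندق جميل و لكن موقعه بعيد", "score": 0.24436227977275848, "token": 19218, "token_str": "موقعه"},
    {"sequence": "الفندق جميل و لكن الموقع بعيد", "score": 0.28233852982521057, "token": 3221, "token_str": "الموقع"},
    {"sequence": "الفندق جميل و لكن الفندق بعيد", "score": 0.029026474803686142, "token": 11133, "token_str": "الفندق"},
]
print(top_tokens(preds))  # the 0.029 prediction is filtered out
```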

```python

{'sequence': 'الفندق جميل و لكن الموقع بعيد', 'score': 0.28233852982521057, 'token': 3221, 'token_str': 'الموقع'}

{'sequence': 'الفندق جميل و لكن موقعه بعيد', 'score': 0.24436227977275848, 'token': 19218, 'token_str': 'موقعه'}

{'sequence': 'الفندق جميل و لكن المكان بعيد', 'score': 0.15372352302074432, 'token': 5401, 'token_str': 'المكان'}

{'sequence': 'الفندق جميل و لكن الفندق بعيد', 'score': 0.029026474803686142, 'token': 11133, 'token_str': 'الفندق'}

{'sequence': 'الفندق جميل و لكن مكانه بعيد', 'score': 0.024554792791604996, 'token': 10701, 'token_str': 'مكانه'}

```

|

M-CLIP/M-BERT-Base-ViT-B | M-CLIP | 2021-05-18T21:34:39Z | 3,399 | 12 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"bert",

"feature-extraction",

"endpoints_compatible",

"region:us"

] | feature-extraction | 2022-03-02T23:29:04Z | <br />

<p align="center">

<h1 align="center">M-BERT Base ViT-B</h1>

<p align="center">

<a href="https://github.com/FreddeFrallan/Multilingual-CLIP/tree/main/Model%20Cards/M-BERT%20Base%20ViT-B">Github Model Card</a>

</p>

</p>

## Usage

To use this model with the original CLIP vision encoder, you need to download the code and additional linear weights from the [Multilingual-CLIP Github](https://github.com/FreddeFrallan/Multilingual-CLIP).

Once this is done, you can load and use the model with the following code:

```python

from src import multilingual_clip

model = multilingual_clip.load_model('M-BERT-Base-ViT')

embeddings = model(['Älgen är skogens konung!', 'Wie leben Eisbären in der Antarktis?', 'Вы знали, что все белые медведи левши?'])

print(embeddings.shape)

# Yields: torch.Size([3, 640])

```

<!-- ABOUT THE PROJECT -->

## About

A [BERT-base-multilingual](https://huggingface.co/bert-base-multilingual-cased) model, tuned for [69 languages](https://github.com/FreddeFrallan/Multilingual-CLIP/blob/main/Model%20Cards/M-BERT%20Base%2069/Fine-Tune-Languages.md) so that its embeddings match the embedding space of the CLIP text encoder that accompanies the ViT-B/32 vision encoder. <br>
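The text embeddings are trained to share a space with CLIP's ViT-B/32 image embeddings. A typical use, assumed here in the usual CLIP style, is to rank images for a caption by cosine similarity. The sketch below uses tiny made-up vectors in place of real embeddings, and `rank_images` is a hypothetical helper.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def rank_images(text_emb, image_embs):
    """Return image indices sorted from most to least similar to the text."""
    scores = [cosine(text_emb, img) for img in image_embs]
    return sorted(range(len(image_embs)), key=lambda i: scores[i], reverse=True)


# Toy 3-d vectors standing in for real text/image embeddings (illustrative only).
text = [1.0, 0.0, 0.5]
images = [[0.0, 1.0, 0.0], [0.9, 0.1, 0.4], [-1.0, 0.0, 0.0]]
print(rank_images(text, images))  # [1, 0, 2]
```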