modelId (string, length 5 to 139) | author (string, length 2 to 42) | last_modified (timestamp[us, tz=UTC], 2020-02-15 11:33:14 to 2025-06-25 06:27:54) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (string, 495 classes) | tags (sequence, length 1 to 4.05k) | pipeline_tag (string, 54 classes) | createdAt (timestamp[us, tz=UTC], 2022-03-02 23:29:04 to 2025-06-25 06:24:22) | card (string, length 11 to 1.01M) |

|---|---|---|---|---|---|---|---|---|---|

kparker/ppo-Huggy | kparker | 2023-01-18T12:54:21Z | 13 | 0 | ml-agents | [

"ml-agents",

"tensorboard",

"onnx",

"unity-ml-agents",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Huggy",

"region:us"

] | reinforcement-learning | 2023-01-18T12:54:12Z |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

library_name: ml-agents

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Huggy

2. Step 1: Write your model_id: kparker/ppo-Huggy

3. Step 2: Select your `*.nn` / `*.onnx` file

4. Click on Watch the agent play 👀

|

nabdan/mnist | nabdan | 2023-01-18T12:39:37Z | 6 | 0 | diffusers | [

"diffusers",

"pytorch",

"unconditional-image-generation",

"diffusion-models-class",

"license:mit",

"diffusers:DDPMPipeline",

"region:us"

] | unconditional-image-generation | 2023-01-18T12:38:08Z | ---

license: mit

tags:

- pytorch

- diffusers

- unconditional-image-generation

- diffusion-models-class

---

# Model Card for Unit 1 of the [Diffusion Models Class 🧨](https://github.com/huggingface/diffusion-models-class)

This model is a diffusion model for unconditional image generation of cute 🦋.

## Usage

```python

from diffusers import DDPMPipeline

pipeline = DDPMPipeline.from_pretrained('nabdan/mnist')

image = pipeline().images[0]

image

```

|

mqy/mt5-small-finetuned-18jan-4 | mqy | 2023-01-18T12:29:36Z | 8 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"mt5",

"text2text-generation",

"summarization",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | summarization | 2023-01-18T11:02:26Z | ---

license: apache-2.0

tags:

- summarization

- generated_from_trainer

metrics:

- rouge

model-index:

- name: mt5-small-finetuned-18jan-4

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mt5-small-finetuned-18jan-4

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 2.6070

- Rouge1: 5.8518

- Rouge2: 0.3333

- Rougel: 5.8423

- Rougelsum: 5.7268

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 10

- eval_batch_size: 10

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10
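As a rough illustration of the `linear` scheduler listed above, the sketch below computes the learning rate at a given optimizer step. It assumes no warmup (none is listed on the card) and 600 total steps, which matches the 60 steps per epoch over 10 epochs reported in the training results table. The function name `linear_lr` is hypothetical, and this is not the Trainer's own code.

```python
def linear_lr(step: int, base_lr: float = 2e-4, total_steps: int = 600,
              warmup_steps: int = 0) -> float:
    """Learning rate after `step` optimizer steps: optional linear warmup,
    then linear decay to zero at `total_steps`."""
    if warmup_steps > 0 and step < warmup_steps:
        # Warmup phase (unused with the assumed default of 0 warmup steps).
        return base_lr * step / warmup_steps
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Learning rate at the start, midpoint and end of the assumed 600-step run.
for step in (0, 300, 600):
    print(step, linear_lr(step))
```

Under these assumptions the rate falls to half of its initial value by the end of epoch 5 and reaches zero at the final step.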

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum |

|:-------------:|:-----:|:----:|:---------------:|:------:|:------:|:------:|:---------:|

| 7.6303 | 1.0 | 60 | 3.0842 | 6.1768 | 1.2345 | 6.2047 | 6.1838 |

| 3.8899 | 2.0 | 120 | 2.7540 | 7.9407 | 1.0 | 7.8852 | 7.9087 |

| 3.4335 | 3.0 | 180 | 2.7391 | 8.5431 | 0.5667 | 8.5448 | 8.4406 |

| 3.2524 | 4.0 | 240 | 2.6775 | 8.7375 | 0.4167 | 8.6926 | 8.569 |

| 3.0853 | 5.0 | 300 | 2.6776 | 7.7823 | 0.1667 | 7.7548 | 7.6573 |

| 2.974 | 6.0 | 360 | 2.6641 | 8.375 | 0.1667 | 8.3333 | 8.2167 |

| 2.9018 | 7.0 | 420 | 2.6233 | 7.2137 | 0.3333 | 7.147 | 7.0595 |

| 2.859 | 8.0 | 480 | 2.6238 | 6.6125 | 0.4167 | 6.656 | 6.4595 |

| 2.8123 | 9.0 | 540 | 2.5961 | 6.4262 | 0.3333 | 6.3682 | 6.2131 |

| 2.7843 | 10.0 | 600 | 2.6070 | 5.8518 | 0.3333 | 5.8423 | 5.7268 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.1+cu116

- Datasets 2.8.0

- Tokenizers 0.13.2

|

justinpinkney/lhq-sg2-1024 | justinpinkney | 2023-01-18T12:28:25Z | 0 | 6 | null | [

"license:mit",

"region:us"

] | null | 2023-01-18T12:12:44Z | ---

license: mit

---

# StyleGAN2 LHQ 1024

A [StyleGAN2 config-f](https://github.com/NVlabs/stylegan2-ada-pytorch) model trained on the [LHQ dataset](https://github.com/universome/alis).

Trained for 2.06 million images; FID = 2.56.

|

Rakib/roberta-base-on-cuad | Rakib | 2023-01-18T12:18:53Z | 19,027 | 7 | transformers | [

"transformers",

"pytorch",

"roberta",

"question-answering",

"legal-contract-review",

"cuad",

"en",

"dataset:cuad",

"license:mit",

"endpoints_compatible",

"region:us"

] | question-answering | 2022-03-02T23:29:04Z | ---

language:

- en

license: mit

datasets:

- cuad

pipeline_tag: question-answering

tags:

- legal-contract-review

- roberta

- cuad

library_name: transformers

---

# Model Card for roberta-base-on-cuad

# Model Details

## Model Description

- **Developed by:** Mohammed Rakib

- **Shared by [Optional]:** More information needed

- **Model type:** Question Answering

- **Language(s) (NLP):** en

- **License:** MIT

- **Related Models:**

- **Parent Model:** RoBERTa

- **Resources for more information:**

- GitHub Repo: [defactolaw](https://github.com/afra-tech/defactolaw)

- Associated Paper: [An Open Source Contractual Language Understanding Application Using Machine Learning](https://aclanthology.org/2022.lateraisse-1.6/)

# Uses

## Direct Use

This model can be used for the task of Question Answering on Legal Documents.

# Training Details

Read [An Open Source Contractual Language Understanding Application Using Machine Learning](https://aclanthology.org/2022.lateraisse-1.6/) for detailed information on the training procedure, dataset preprocessing and evaluation.

## Training Data

See [CUAD dataset card](https://huggingface.co/datasets/cuad) for more information.

## Training Procedure

### Preprocessing

More information needed

### Speeds, Sizes, Times

More information needed

# Evaluation

## Testing Data, Factors & Metrics

### Testing Data

See [CUAD dataset card](https://huggingface.co/datasets/cuad) for more information.

### Factors

### Metrics

More information needed

## Results

More information needed

# Model Examination

More information needed

- **Hardware Type:** More information needed

- **Hours used:** More information needed

- **Cloud Provider:** More information needed

- **Compute Region:** More information needed

- **Carbon Emitted:** More information needed

# Technical Specifications [optional]

## Model Architecture and Objective

More information needed

## Compute Infrastructure

More information needed

### Hardware

Used V100/P100 from Google Colab Pro

### Software

Python, Transformers

# Citation

**BibTeX:**

```

@inproceedings{nawar-etal-2022-open,

title = "An Open Source Contractual Language Understanding Application Using Machine Learning",

author = "Nawar, Afra and

Rakib, Mohammed and

Hai, Salma Abdul and

Haq, Sanaulla",

booktitle = "Proceedings of the First Workshop on Language Technology and Resources for a Fair, Inclusive, and Safe Society within the 13th Language Resources and Evaluation Conference",

month = jun,

year = "2022",

address = "Marseille, France",

publisher = "European Language Resources Association",

url = "https://aclanthology.org/2022.lateraisse-1.6",

pages = "42--50",

abstract = "Legal field is characterized by its exclusivity and non-transparency. Despite the frequency and relevance of legal dealings, legal documents like contracts remains elusive to non-legal professionals for the copious usage of legal jargon. There has been little advancement in making legal contracts more comprehensible. This paper presents how Machine Learning and NLP can be applied to solve this problem, further considering the challenges of applying ML to the high length of contract documents and training in a low resource environment. The largest open-source contract dataset so far, the Contract Understanding Atticus Dataset (CUAD) is utilized. Various pre-processing experiments and hyperparameter tuning have been carried out and we successfully managed to eclipse SOTA results presented for models in the CUAD dataset trained on RoBERTa-base. Our model, A-type-RoBERTa-base achieved an AUPR score of 46.6{\%} compared to 42.6{\%} on the original RoBERT-base. This model is utilized in our end to end contract understanding application which is able to take a contract and highlight the clauses a user is looking to find along with it{'}s descriptions to aid due diligence before signing. Alongside digital, i.e. searchable, contracts the system is capable of processing scanned, i.e. non-searchable, contracts using tesseract OCR. This application is aimed to not only make contract review a comprehensible process to non-legal professionals, but also to help lawyers and attorneys more efficiently review contracts.",

}

```

# Glossary [optional]

More information needed

# More Information [optional]

More information needed

# Model Card Authors [optional]

Mohammed Rakib in collaboration with Ezi Ozoani and the Hugging Face team

# Model Card Contact

More information needed

# How to Get Started with the Model

Use the code below to get started with the model.

<details>

<summary> Click to expand </summary>

```python

from transformers import AutoTokenizer, AutoModelForQuestionAnswering

tokenizer = AutoTokenizer.from_pretrained("Rakib/roberta-base-on-cuad")

model = AutoModelForQuestionAnswering.from_pretrained("Rakib/roberta-base-on-cuad")

```

</details> |

Kushrjain/results_6_to_11_with_embedding2 | Kushrjain | 2023-01-18T12:09:13Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"text-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2023-01-16T05:35:14Z | ---

tags:

- generated_from_trainer

metrics:

- accuracy

- precision

- recall

- f1

model-index:

- name: bert-base-codemixed-uncased-sentiment-hatespeech-multilanguage

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# results_6_to_11_with_embedding2

This model is a fine-tuned version of [rohanrajpal/bert-base-codemixed-uncased-sentiment](https://huggingface.co/rohanrajpal/bert-base-codemixed-uncased-sentiment) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3507

- Accuracy: 0.8759

- Precision: 0.8751

- Recall: 0.8759

- F1: 0.8755
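Recall equals accuracy here and in every row of the training results table. That is what support-weighted averaging of per-class recall produces, although the card does not state which averaging was used, so treat that reading as an inference. A minimal pure-Python sketch with made-up labels shows why the two numbers coincide (the helper names are hypothetical):

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def weighted_recall(y_true, y_pred):
    """Per-class recall averaged with weights proportional to class support."""
    support = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    n = len(y_true)
    # weight (support / n) times recall (correct / support) per class
    return sum((support[c] / n) * (correct[c] / support[c]) for c in support)


# Made-up three-class example, not data from this model.
y_true = [0, 0, 0, 1, 1, 2, 2, 2, 2, 2]
y_pred = [0, 0, 1, 1, 2, 2, 2, 2, 0, 2]
print(accuracy(y_true, y_pred), weighted_recall(y_true, y_pred))
```

The support terms cancel, leaving the total number of correct predictions divided by the number of samples, which is the definition of accuracy.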

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Precision | Recall | F1 |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|:---------:|:------:|:------:|

| 0.3889 | 1.0 | 1460 | 0.3761 | 0.8335 | 0.8484 | 0.8335 | 0.8371 |

| 0.3273 | 2.0 | 2920 | 0.3196 | 0.8542 | 0.8602 | 0.8542 | 0.8561 |

| 0.2955 | 3.0 | 4380 | 0.3116 | 0.8645 | 0.8644 | 0.8645 | 0.8645 |

| 0.27 | 4.0 | 5840 | 0.3014 | 0.8704 | 0.8695 | 0.8704 | 0.8699 |

| 0.2601 | 5.0 | 7300 | 0.3285 | 0.8676 | 0.8714 | 0.8676 | 0.8689 |

| 0.2376 | 6.0 | 8760 | 0.3147 | 0.8726 | 0.8737 | 0.8726 | 0.8731 |

| 0.213 | 7.0 | 10220 | 0.3103 | 0.8699 | 0.8714 | 0.8699 | 0.8706 |

| 0.2013 | 8.0 | 11680 | 0.3424 | 0.8737 | 0.8733 | 0.8737 | 0.8735 |

| 0.192 | 9.0 | 13140 | 0.3398 | 0.8758 | 0.8746 | 0.8758 | 0.8750 |

| 0.1763 | 10.0 | 14600 | 0.3507 | 0.8759 | 0.8751 | 0.8759 | 0.8755 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

TomUdale/sec_example | TomUdale | 2023-01-18T11:56:19Z | 19 | 0 | transformers | [

"transformers",

"pytorch",

"distilbert",

"token-classification",

"finance",

"legal",

"en",

"dataset:tner/fin",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | token-classification | 2023-01-18T10:35:15Z | ---

datasets:

- tner/fin

language:

- en

tags:

- finance

- legal

--- |

rishipatel92/a2c-PandaReachDense-v2 | rishipatel92 | 2023-01-18T11:55:54Z | 1 | 0 | stable-baselines3 | [

"stable-baselines3",

"PandaReachDense-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2023-01-18T11:53:36Z | ---

library_name: stable-baselines3

tags:

- PandaReachDense-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: A2C

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: PandaReachDense-v2

type: PandaReachDense-v2

metrics:

- type: mean_reward

value: -0.89 +/- 0.29

name: mean_reward

verified: false

---

# **A2C** Agent playing **PandaReachDense-v2**

This is a trained model of an **A2C** agent playing **PandaReachDense-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

Dharkelf/a2c-PandaReachDense-v2_2 | Dharkelf | 2023-01-18T11:51:17Z | 1 | 0 | stable-baselines3 | [

"stable-baselines3",

"PandaReachDense-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2023-01-18T11:49:06Z | ---

library_name: stable-baselines3

tags:

- PandaReachDense-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: A2C

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: PandaReachDense-v2

type: PandaReachDense-v2

metrics:

- type: mean_reward

value: -9.66 +/- 5.01

name: mean_reward

verified: false

---

# **A2C** Agent playing **PandaReachDense-v2**

This is a trained model of an **A2C** agent playing **PandaReachDense-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

sd-concepts-library/barbosa | sd-concepts-library | 2023-01-18T11:28:06Z | 0 | 2 | null | [

"license:mit",

"region:us"

] | null | 2023-01-18T11:26:06Z | ---

license: mit

---

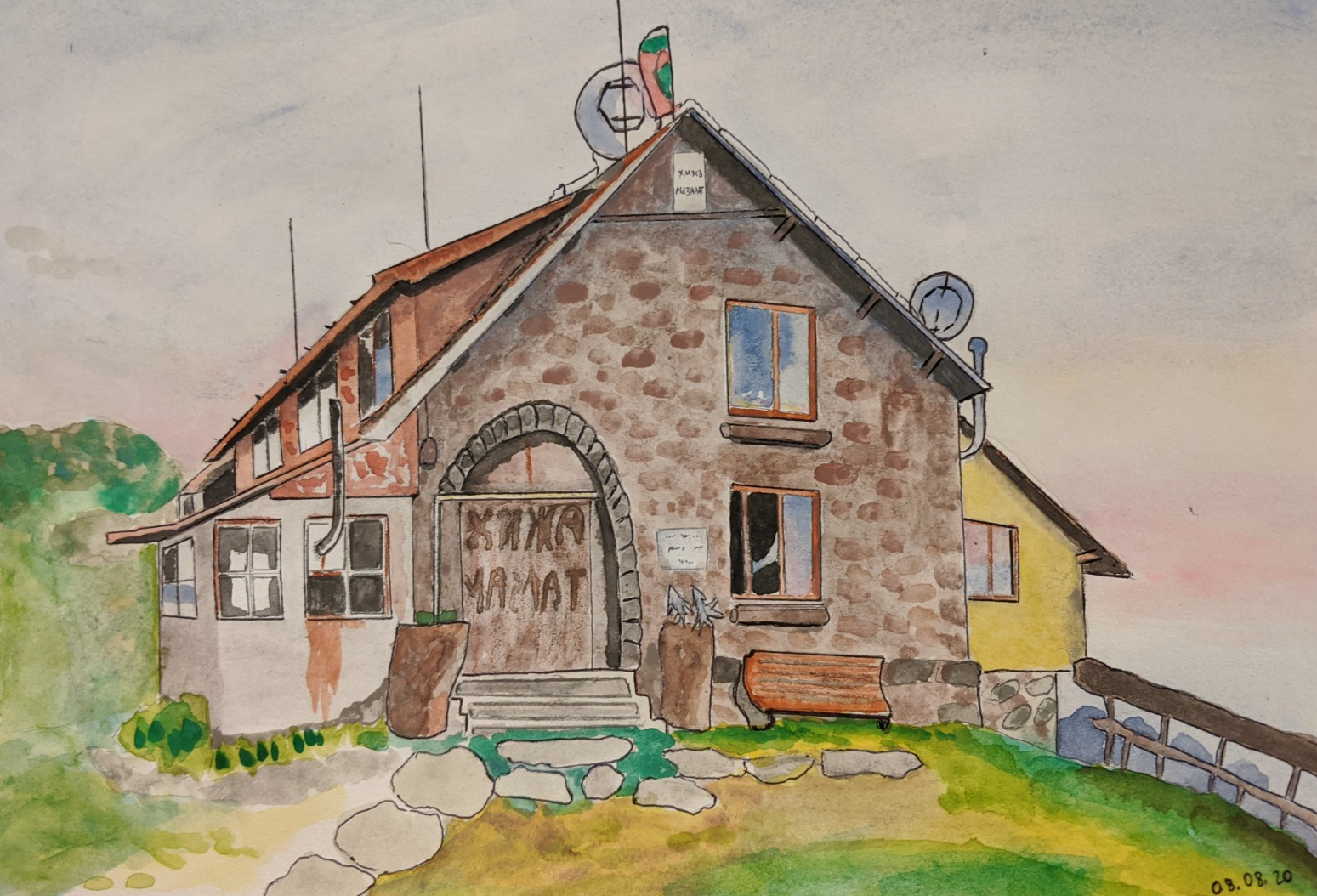

### barbosa on Stable Diffusion

This is the `<barbosa>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

---

These are watercolor paintings by Veronika Ulychny.

Here is the new concept you will be able to use as a `style`:

|

jimypbr/bart-base-finetuned-xsum | jimypbr | 2023-01-18T11:16:54Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"optimum_graphcore",

"bart",

"text2text-generation",

"generated_from_trainer",

"dataset:xsum",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2023-01-18T10:29:10Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- xsum

model-index:

- name: bart-base-finetuned-xsum

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bart-base-finetuned-xsum

This model is a fine-tuned version of [facebook/bart-base](https://huggingface.co/facebook/bart-base) on the xsum dataset.

It achieves the following results on the evaluation set:

- Loss: 1.8584

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- distributed_type: IPU

- gradient_accumulation_steps: 16

- total_train_batch_size: 64

- total_eval_batch_size: 24

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- training precision: Mixed Precision
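The total training batch size can be read as the per-device batch size multiplied by the gradient accumulation steps and a parallelism factor (for example, data-parallel replicas). The card does not list that factor; the value 4 below is inferred from 64 / (1 × 16) and is an assumption, as are the helper names.

```python
def effective_batch_size(per_device: int, grad_accum_steps: int,
                         parallel_factor: int) -> int:
    """Samples contributing to one optimizer update."""
    return per_device * grad_accum_steps * parallel_factor


def implied_parallel_factor(total: int, per_device: int,
                            grad_accum_steps: int) -> int:
    """Parallelism factor implied by a reported total batch size."""
    factor, remainder = divmod(total, per_device * grad_accum_steps)
    if remainder:
        raise ValueError("total is not a multiple of per_device * grad_accum_steps")
    return factor


# Values from the hyperparameter list; the factor itself is inferred.
factor = implied_parallel_factor(total=64, per_device=1, grad_accum_steps=16)
print(factor, effective_batch_size(1, 16, factor))
```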

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 2.1176 | 1.0 | 3188 | 1.8584 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.13.0+cpu

- Datasets 2.8.0

- Tokenizers 0.12.1

|

Adapting/KeyBartAdapter | Adapting | 2023-01-18T11:12:55Z | 2 | 0 | null | [

"pytorch",

"Keyphrase Generation",

"license:mit",

"region:us"

] | null | 2022-10-19T09:35:10Z | ---

license: mit

tags:

- Keyphrase Generation

---

# Usage

```python

# Install the package first (shell command; prefix with `!` in a notebook):
#   pip install KeyBartAdapter

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

from models import KeyBartAdapter

model = KeyBartAdapter.from_pretrained('Adapting/KeyBartAdapter', revision='3aee5ecf1703b9955ab0cd1b23208cc54eb17fce', adapter_hid_dim=32)

tokenizer = AutoTokenizer.from_pretrained("bloomberg/KeyBART")

```

- adapter layer hd 512 init model: `e38c77df86e0e289e5846455e226f4e9af09ef8e`

- adapter layer hd 256 init model: `c6f3b357d953dcb5943b6333a0f9f941b832477`

- adapter layer hd 128 init model: `f88116fa1c995f07ccd5ad88862e0aa4f162b1ea`

- adapter layer hd 64 init model: `f7e8c6323b8d5822667ddc066ffe19ac7b810f4a`

- adapter layer hd 32 init model: `24ec15daef1670fb9849a56517a6886b69b652f6`
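For convenience, the revision list above can be kept as a lookup table and the result passed to `from_pretrained` through its `revision` argument. This is only a sketch: `INIT_REVISIONS` and `init_revision` are hypothetical names, and the hashes are copied verbatim from the list. The entry for 256 appears to be one character shorter than the others, so check it against the repository before relying on it.

```python
# Adapter hidden dimension -> revision of the corresponding init model,
# copied as listed on the card.
INIT_REVISIONS = {
    512: "e38c77df86e0e289e5846455e226f4e9af09ef8e",
    256: "c6f3b357d953dcb5943b6333a0f9f941b832477",  # as listed; looks truncated
    128: "f88116fa1c995f07ccd5ad88862e0aa4f162b1ea",
    64: "f7e8c6323b8d5822667ddc066ffe19ac7b810f4a",
    32: "24ec15daef1670fb9849a56517a6886b69b652f6",
}


def init_revision(adapter_hid_dim: int) -> str:
    """Return the listed init-model revision for an adapter hidden dimension."""
    try:
        return INIT_REVISIONS[adapter_hid_dim]
    except KeyError:
        raise ValueError(f"no init model listed for hidden dim {adapter_hid_dim}") from None


print(init_revision(32))
```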

**1. inference**

```python

from transformers import Text2TextGenerationPipeline

pipe = Text2TextGenerationPipeline(model=model, tokenizer=tokenizer)

abstract = '''Non-referential face image quality assessment methods have gained popularity as a pre-filtering step on face recognition systems. In most of them, the quality score is usually designed with face matching in mind. However, a small amount of work has been done on measuring their impact and usefulness on Presentation Attack Detection (PAD). In this paper, we study the effect of quality assessment methods on filtering bona fide and attack samples, their impact on PAD systems, and how the performance of such systems is improved when training on a filtered (by quality) dataset. On a Vision Transformer PAD algorithm, a reduction of 20% of the training dataset by removing lower quality samples allowed us to improve the BPCER by 3% in a cross-dataset test.'''

pipe(abstract)

``` |

MilaNLProc/bert-base-uncased-ear-misogyny | MilaNLProc | 2023-01-18T11:02:51Z | 1,901 | 1 | transformers | [

"transformers",

"pytorch",

"bert",

"text-classification",

"misogyny detection",

"abusive language",

"hate speech",

"offensive language",

"en",

"license:gpl-3.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2023-01-16T23:31:46Z | ---

language:

- en

license: gpl-3.0

tags:

- misogyny detection

- abusive language

- hate speech

- offensive language

widget:

- text: I believe women need to be protected more.

example_title: Misogyny Detection Example 1

pipeline_tag: text-classification

---

# Entropy-based Attention Regularization 👂

This is an English BERT fine-tuned with [Entropy-based Attention Regularization](https://aclanthology.org/2022.findings-acl.88/) to reduce lexical overfitting to specific words on the task of Misogyny Identification.

Use this model if you want a debiased alternative to a BERT classifier.

Please refer to the paper for all the training details.
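To make the idea concrete, here is a simplified pure-Python sketch of attention entropy. Each token's attention weights form a probability distribution, and a regularizer can penalize low entropy, that is, attention concentrated on very few tokens. This is an illustration under simplifying assumptions (a single attention head, hand-written attention rows, and a hypothetical `ear_penalty` helper), not the authors' implementation; the paper gives the exact objective.

```python
import math


def attention_entropy(weights):
    """Shannon entropy (in nats) of one token's attention distribution."""
    return -sum(w * math.log(w) for w in weights if w > 0)


def ear_penalty(attention_rows):
    """Negative mean entropy over tokens.

    Adding this term to a training loss rewards higher attention entropy.
    """
    entropies = [attention_entropy(row) for row in attention_rows]
    return -sum(entropies) / len(entropies)


# Two toy attention patterns over four tokens (made-up values).
peaked = [[0.97, 0.01, 0.01, 0.01]] * 4   # each token attends to one token
uniform = [[0.25, 0.25, 0.25, 0.25]] * 4  # attention spread evenly
print(ear_penalty(peaked), ear_penalty(uniform))
```

The evenly spread pattern gets the lower (more negative) penalty, so minimizing the combined loss pushes the model away from fixating on individual terms.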

## Dataset

The model was fine-tuned on the [Automatic Misogyny Identification dataset](https://ceur-ws.org/Vol-2263/paper009.pdf).

## Model

This model is the fine-tuned version of the [bert-base-uncased](https://huggingface.co/bert-base-uncased) model.

We trained a total of three versions for Italian and English.

| Model | Download |

| ------ | -------------------------|

| `bert-base-uncased-ear-misogyny` | [Link](https://huggingface.co/MilaNLProc/bert-base-uncased-ear-misogyny) |

| `bert-base-uncased-ear-mlma` | [Link](https://huggingface.co/MilaNLProc/bert-base-uncased-ear-mlma) |

| `bert-base-uncased-ear-misogyny-italian` | [Link](https://huggingface.co/MilaNLProc/bert-base-uncased-ear-misogyny-italian) |

# Authors

- [Giuseppe Attanasio](https://gattanasio.cc/)

- [Debora Nozza](http://dnozza.github.io/)

- [Dirk Hovy](https://federicobianchi.io/)

- [Elena Baralis](https://dbdmg.polito.it/wordpress/people/elena-baralis/)

# Citation

Please use the following BibTeX entry if you use this model in your project:

```

@inproceedings{attanasio-etal-2022-entropy,

title = "Entropy-based Attention Regularization Frees Unintended Bias Mitigation from Lists",

author = "Attanasio, Giuseppe and

Nozza, Debora and

Hovy, Dirk and

Baralis, Elena",

booktitle = "Findings of the Association for Computational Linguistics: ACL 2022",

month = may,

year = "2022",

address = "Dublin, Ireland",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.findings-acl.88",

doi = "10.18653/v1/2022.findings-acl.88",

pages = "1105--1119",

abstract = "Natural Language Processing (NLP) models risk overfitting to specific terms in the training data, thereby reducing their performance, fairness, and generalizability. E.g., neural hate speech detection models are strongly influenced by identity terms like gay, or women, resulting in false positives, severe unintended bias, and lower performance.Most mitigation techniques use lists of identity terms or samples from the target domain during training. However, this approach requires a-priori knowledge and introduces further bias if important terms are neglected.Instead, we propose a knowledge-free Entropy-based Attention Regularization (EAR) to discourage overfitting to training-specific terms. An additional objective function penalizes tokens with low self-attention entropy.We fine-tune BERT via EAR: the resulting model matches or exceeds state-of-the-art performance for hate speech classification and bias metrics on three benchmark corpora in English and Italian.EAR also reveals overfitting terms, i.e., terms most likely to induce bias, to help identify their effect on the model, task, and predictions.",

}

```

# Limitations

Entropy-Attention Regularization mitigates lexical overfitting but does not completely remove it. We expect the model still to show biases, e.g., peculiar keywords that induce a specific prediction regardless of the context.

Please refer to our paper for a quantitative evaluation of this mitigation.

## License

[GNU GPLv3](https://choosealicense.com/licenses/gpl-3.0/) |

MilaNLProc/bert-base-uncased-ear-misogyny-italian | MilaNLProc | 2023-01-18T11:02:33Z | 7 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"text-classification",

"misogyny detection",

"abusive language",

"hate speech",

"offensive language",

"it",

"license:gpl-3.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2023-01-16T23:39:00Z | ---

language: it

license: gpl-3.0

tags:

- misogyny detection

- abusive language

- hate speech

- offensive language

widget:

- text: Apprezzo il lavoro delle donne nella nostra comunità.

example_title: Misogyny Detection Example 1

pipeline_tag: text-classification

---

# Entropy-based Attention Regularization 👂

This is an Italian BERT fine-tuned with [Entropy-based Attention Regularization](https://aclanthology.org/2022.findings-acl.88/) to reduce lexical overfitting to specific words on the task of Misogyny Identification.

Use this model if you want a debiased alternative to a BERT classifier.

Please refer to the paper for all the training details.

## Dataset

The model was fine-tuned on the [Italian Automatic Misogyny Identification dataset](https://ceur-ws.org/Vol-2765/paper161.pdf).

## Model

This model is the fine-tuned version of the Italian [dbmdz/bert-base-italian-uncased](https://huggingface.co/dbmdz/bert-base-italian-uncased) model.

We trained a total of three versions for Italian and English.

| Model | Download |

| ------ | -------------------------|

| `bert-base-uncased-ear-misogyny` | [Link](https://huggingface.co/MilaNLProc/bert-base-uncased-ear-misogyny) |

| `bert-base-uncased-ear-mlma` | [Link](https://huggingface.co/MilaNLProc/bert-base-uncased-ear-mlma) |

| `bert-base-uncased-ear-misogyny-italian` | [Link](https://huggingface.co/MilaNLProc/bert-base-uncased-ear-misogyny-italian) |

# Authors

- [Giuseppe Attanasio](https://gattanasio.cc/)

- [Debora Nozza](http://dnozza.github.io/)

- [Dirk Hovy](https://federicobianchi.io/)

- [Elena Baralis](https://dbdmg.polito.it/wordpress/people/elena-baralis/)

# Citation

Please use the following BibTeX entry if you use this model in your project:

```

@inproceedings{attanasio-etal-2022-entropy,

title = "Entropy-based Attention Regularization Frees Unintended Bias Mitigation from Lists",

author = "Attanasio, Giuseppe and

Nozza, Debora and

Hovy, Dirk and

Baralis, Elena",

booktitle = "Findings of the Association for Computational Linguistics: ACL 2022",

month = may,

year = "2022",

address = "Dublin, Ireland",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.findings-acl.88",

doi = "10.18653/v1/2022.findings-acl.88",

pages = "1105--1119",

abstract = "Natural Language Processing (NLP) models risk overfitting to specific terms in the training data, thereby reducing their performance, fairness, and generalizability. E.g., neural hate speech detection models are strongly influenced by identity terms like gay, or women, resulting in false positives, severe unintended bias, and lower performance.Most mitigation techniques use lists of identity terms or samples from the target domain during training. However, this approach requires a-priori knowledge and introduces further bias if important terms are neglected.Instead, we propose a knowledge-free Entropy-based Attention Regularization (EAR) to discourage overfitting to training-specific terms. An additional objective function penalizes tokens with low self-attention entropy.We fine-tune BERT via EAR: the resulting model matches or exceeds state-of-the-art performance for hate speech classification and bias metrics on three benchmark corpora in English and Italian.EAR also reveals overfitting terms, i.e., terms most likely to induce bias, to help identify their effect on the model, task, and predictions.",

}

```

# Limitations

Entropy-Attention Regularization mitigates lexical overfitting but does not completely remove it. We expect the model still to show biases, e.g., peculiar keywords that induce a specific prediction regardless of the context.

Please refer to our paper for a quantitative evaluation of this mitigation.

## License

[GNU GPLv3](https://choosealicense.com/licenses/gpl-3.0/) |

MilaNLProc/bert-base-uncased-ear-mlma | MilaNLProc | 2023-01-18T11:02:12Z | 6 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"text-classification",

"misogyny detection",

"abusive language",

"hate speech",

"offensive language",

"en",

"dataset:nedjmaou/MLMA_hate_speech",

"license:gpl-3.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2023-01-17T11:25:19Z | ---

language:

- en

license: gpl-3.0

tags:

- misogyny detection

- abusive language

- hate speech

- offensive language

widget:

- text: I believe religious minorities need to be protected more.

example_title: Hate Speech Detection Example 1

pipeline_tag: text-classification

datasets:

- nedjmaou/MLMA_hate_speech

---

# Entropy-based Attention Regularization 👂

This is an English BERT fine-tuned with [Entropy-based Attention Regularization](https://aclanthology.org/2022.findings-acl.88/) to reduce lexical overfitting to specific words on the task of Misogyny Identification.

Use this model if you want a debiased alternative to a BERT classifier.

Please refer to the paper for all the training details.

## Dataset

The model was fine-tuned on the English part of the [MLMA dataset](https://aclanthology.org/D19-1474/).

## Model

This model is the fine-tuned version of the [bert-base-uncased](https://huggingface.co/bert-base-uncased) model.

We trained a total of three versions for Italian and English.

| Model | Download |

| ------ | -------------------------|

| `bert-base-uncased-ear-misogyny` | [Link](https://huggingface.co/MilaNLProc/bert-base-uncased-ear-misogyny) |

| `bert-base-uncased-ear-mlma` | [Link](https://huggingface.co/MilaNLProc/bert-base-uncased-ear-mlma) |

| `bert-base-uncased-ear-misogyny-italian` | [Link](https://huggingface.co/MilaNLProc/bert-base-uncased-ear-misogyny-italian) |

# Authors

- [Giuseppe Attanasio](https://gattanasio.cc/)

- [Debora Nozza](http://dnozza.github.io/)

- [Dirk Hovy](https://federicobianchi.io/)

- [Elena Baralis](https://dbdmg.polito.it/wordpress/people/elena-baralis/)

# Citation

Please use the following BibTeX entry if you use this model in your project:

```

@inproceedings{attanasio-etal-2022-entropy,

title = "Entropy-based Attention Regularization Frees Unintended Bias Mitigation from Lists",

author = "Attanasio, Giuseppe and

Nozza, Debora and

Hovy, Dirk and

Baralis, Elena",

booktitle = "Findings of the Association for Computational Linguistics: ACL 2022",

month = may,

year = "2022",

address = "Dublin, Ireland",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.findings-acl.88",

doi = "10.18653/v1/2022.findings-acl.88",

pages = "1105--1119",

abstract = "Natural Language Processing (NLP) models risk overfitting to specific terms in the training data, thereby reducing their performance, fairness, and generalizability. E.g., neural hate speech detection models are strongly influenced by identity terms like gay, or women, resulting in false positives, severe unintended bias, and lower performance.Most mitigation techniques use lists of identity terms or samples from the target domain during training. However, this approach requires a-priori knowledge and introduces further bias if important terms are neglected.Instead, we propose a knowledge-free Entropy-based Attention Regularization (EAR) to discourage overfitting to training-specific terms. An additional objective function penalizes tokens with low self-attention entropy.We fine-tune BERT via EAR: the resulting model matches or exceeds state-of-the-art performance for hate speech classification and bias metrics on three benchmark corpora in English and Italian.EAR also reveals overfitting terms, i.e., terms most likely to induce bias, to help identify their effect on the model, task, and predictions.",

}

```

# Limitations

Entropy-based Attention Regularization (EAR) mitigates lexical overfitting but does not completely remove it. We expect the model to still show biases, e.g., particular keywords that induce a specific prediction regardless of context.

Please refer to our paper for a quantitative evaluation of this mitigation.
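
As a rough illustration of the quantity EAR works with, the sketch below computes the entropy of a single token's attention distribution in plain Python. It is not the authors' implementation: the function name `attention_entropy` and the toy weights are assumptions made for illustration, and the paper defines the exact regularization term.

```python
import math


def attention_entropy(weights):
    """Shannon entropy (in nats) of one token's attention distribution.

    `weights` are assumed to be non-negative and to sum to 1,
    as the output of a softmax would.
    """
    return -sum(w * math.log(w) for w in weights if w > 0.0)


# Toy example: attention spread evenly vs. concentrated on one position.
spread = [0.25, 0.25, 0.25, 0.25]
peaked = [0.97, 0.01, 0.01, 0.01]

print(attention_entropy(spread))  # higher entropy
print(attention_entropy(peaked))  # lower entropy, the case the abstract says is penalized
```

A token whose attention collapses onto very few positions has low entropy, which is the situation the abstract describes as being penalized.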

# License

[GNU GPLv3](https://choosealicense.com/licenses/gpl-3.0/) |

NoNameFound/Pyramids-ppo | NoNameFound | 2023-01-18T10:59:41Z | 6 | 0 | ml-agents | [

"ml-agents",

"tensorboard",

"onnx",

"unity-ml-agents",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Pyramids",

"region:us"

] | reinforcement-learning | 2023-01-18T10:59:33Z |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Pyramids

library_name: ml-agents

---

# **ppo** Agent playing **Pyramids**

This is a trained model of a **ppo** agent playing **Pyramids** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Pyramids

2. Step 1: Write your model_id: Kaushik3497/Pyramids-ppo

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

mqy/mt5-small-finetuned-18jan-3 | mqy | 2023-01-18T10:42:58Z | 9 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"mt5",

"text2text-generation",

"summarization",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | summarization | 2023-01-18T06:43:00Z | ---

license: apache-2.0

tags:

- summarization

- generated_from_trainer

metrics:

- rouge

model-index:

- name: mt5-small-finetuned-18jan-3

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mt5-small-finetuned-18jan-3

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 2.6115

- Rouge1: 7.259

- Rouge2: 0.3667

- Rougel: 7.1595

- Rougelsum: 7.156

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 10

- eval_batch_size: 10

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum |
|:-------------:|:-----:|:----:|:---------------:|:------:|:------:|:------:|:---------:|
| 7.1947 | 1.0 | 60 | 3.1045 | 5.91 | 0.8583 | 5.8687 | 5.8123 |
| 3.8567 | 2.0 | 120 | 2.7744 | 8.0065 | 0.4524 | 8.0204 | 7.85 |
| 3.4346 | 3.0 | 180 | 2.7319 | 7.5954 | 0.4524 | 7.5204 | 7.4833 |
| 3.219 | 4.0 | 240 | 2.6736 | 8.5329 | 0.3333 | 8.487 | 8.312 |
| 3.0836 | 5.0 | 300 | 2.6583 | 8.3405 | 0.5667 | 8.2003 | 8.0543 |
| 2.9713 | 6.0 | 360 | 2.6516 | 8.8421 | 0.1667 | 8.7597 | 8.6754 |
| 2.9757 | 7.0 | 420 | 2.6369 | 8.04 | 0.3667 | 8.0018 | 7.8489 |
| 2.8321 | 8.0 | 480 | 2.6215 | 6.8739 | 0.3667 | 6.859 | 6.7917 |
| 2.794 | 9.0 | 540 | 2.6090 | 7.0738 | 0.4167 | 7.0232 | 6.9619 |
| 2.7695 | 10.0 | 600 | 2.6115 | 7.259 | 0.3667 | 7.1595 | 7.156 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.1+cu116

- Datasets 2.8.0

- Tokenizers 0.13.2

|

zhenyabeznisko/sd-class-butterflies-32 | zhenyabeznisko | 2023-01-18T10:26:54Z | 0 | 0 | diffusers | [

"diffusers",

"pytorch",

"unconditional-image-generation",

"diffusion-models-class",

"license:mit",

"diffusers:DDPMPipeline",

"region:us"

] | unconditional-image-generation | 2023-01-18T10:26:37Z | ---

license: mit

tags:

- pytorch

- diffusers

- unconditional-image-generation

- diffusion-models-class

---

# Model Card for Unit 1 of the [Diffusion Models Class 🧨](https://github.com/huggingface/diffusion-models-class)

This model is a diffusion model for unconditional image generation of cute 🦋.

## Usage

```python

from diffusers import DDPMPipeline

pipeline = DDPMPipeline.from_pretrained('zhenyabeznisko/sd-class-butterflies-32')

image = pipeline().images[0]

image

```

|

eolang/DRL-SpaceInvadersNoFrameskip-v4 | eolang | 2023-01-18T10:26:49Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2023-01-18T10:26:14Z | ---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

metrics:

- type: mean_reward

value: 513.00 +/- 148.75

name: mean_reward

verified: false

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

Install the RL Zoo (with SB3 and SB3-Contrib):

```bash

pip install rl_zoo3

```

```

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga eolang -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

If you installed the RL Zoo3 via pip (`pip install rl_zoo3`), from anywhere you can do:

```

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga eolang -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```

python -m rl_zoo3.train --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga eolang

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 1000000.0),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```
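
To make the exploration settings above concrete, here is a minimal sketch of a linear epsilon schedule driven by `exploration_fraction`, `exploration_final_eps`, and `n_timesteps`. The starting value of 1.0 is an assumption (it is not listed in the hyperparameters above), and `epsilon_at` is a hypothetical helper, not part of Stable Baselines3.

```python
def epsilon_at(step, n_timesteps=1_000_000, exploration_fraction=0.1,
               initial_eps=1.0, final_eps=0.01):
    """Linearly anneal epsilon over the first `exploration_fraction` of training.

    `initial_eps=1.0` is assumed; the other defaults come from the card.
    """
    decay_steps = exploration_fraction * n_timesteps
    progress = min(step / decay_steps, 1.0)
    return initial_eps + progress * (final_eps - initial_eps)


for step in (0, 50_000, 100_000, 500_000):
    print(step, round(epsilon_at(step), 4))
```

Under these assumptions epsilon reaches its final value after 100,000 steps, the same point at which `learning_starts` allows the first gradient update.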

|

happycoding/a2c-AntBulletEnv-v0 | happycoding | 2023-01-18T10:24:50Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"AntBulletEnv-v0",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2023-01-18T10:23:42Z | ---

library_name: stable-baselines3

tags:

- AntBulletEnv-v0

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: A2C

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: AntBulletEnv-v0

type: AntBulletEnv-v0

metrics:

- type: mean_reward

value: 1667.55 +/- 75.52

name: mean_reward

verified: false

---

# **A2C** Agent playing **AntBulletEnv-v0**

This is a trained model of an **A2C** agent playing **AntBulletEnv-v0**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```
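
Until the placeholder above is filled in, the following sketch shows one way the checkpoint might be loaded. The checkpoint filename `a2c-AntBulletEnv-v0.zip` is an assumption (check the repository's file list), and `load_a2c_agent` is a hypothetical helper introduced here for illustration.

```python
def load_a2c_agent(repo_id="happycoding/a2c-AntBulletEnv-v0",
                   filename="a2c-AntBulletEnv-v0.zip"):
    """Download a checkpoint from the Hub and load it as an SB3 A2C model.

    `filename` is an assumed name; verify it against the repository contents.
    """
    # Imported lazily so the helper can be defined without the packages installed.
    from huggingface_sb3 import load_from_hub
    from stable_baselines3 import A2C

    checkpoint_path = load_from_hub(repo_id=repo_id, filename=filename)
    return A2C.load(checkpoint_path)
```

Calling `load_a2c_agent()` downloads the file on first use; the returned model can then be queried with `model.predict(observation, deterministic=True)`.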

|

babakc/Reinforce-Pixelcopter-PLE-v0 | babakc | 2023-01-18T10:09:43Z | 0 | 0 | null | [

"Pixelcopter-PLE-v0",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] | reinforcement-learning | 2023-01-18T06:14:28Z | ---

tags:

- Pixelcopter-PLE-v0

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Reinforce-Pixelcopter-PLE-v0

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Pixelcopter-PLE-v0

type: Pixelcopter-PLE-v0

metrics:

- type: mean_reward

value: 21.50 +/- 12.61

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **Pixelcopter-PLE-v0**

This is a trained model of a **Reinforce** agent playing **Pixelcopter-PLE-v0**.

To learn to use this model and train yours, check Unit 4 of the Deep Reinforcement Learning Course: https://huggingface.co/deep-rl-course/unit4/introduction

|

Rakib/whisper-tiny-bn | Rakib | 2023-01-18T10:05:55Z | 17 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"whisper",

"automatic-speech-recognition",

"whisper-event",

"generated_from_trainer",

"bn",

"dataset:mozilla-foundation/common_voice_11_0",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2023-01-17T08:34:18Z | ---

language:

- bn

license: apache-2.0

tags:

- whisper-event

- generated_from_trainer

datasets:

- mozilla-foundation/common_voice_11_0

metrics:

- wer

model-index:

- name: Whisper Tiny Bengali

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: mozilla-foundation/common_voice_11_0 bn

type: mozilla-foundation/common_voice_11_0

config: bn

split: test

args: bn

metrics:

- name: Wer

type: wer

value: 32.89771261927907

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Tiny Bengali

This model is a fine-tuned version of [openai/whisper-tiny](https://huggingface.co/openai/whisper-tiny) on the mozilla-foundation/common_voice_11_0 bn dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2314

- Wer: 32.8977
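
For readers unfamiliar with the metric, the sketch below shows how word error rate is commonly computed: word-level edit distance divided by the number of reference words. It is a plain-Python illustration with a hypothetical `word_error_rate` function, not the evaluation code used for this model, and it assumes a non-empty reference.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j] holds the edit distance between the processed reference prefix and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
```

The value reported above appears to be expressed as a percentage, so 32.8977 would correspond to roughly 33 word errors per 100 reference words.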

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 5000

- mixed_precision_training: Native AMP
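
The interaction between `learning_rate`, `lr_scheduler_warmup_steps`, and `training_steps` can be sketched as follows. This assumes the `linear` scheduler ramps up linearly during warmup and then decays linearly to zero; `linear_lr` is a hypothetical helper written for illustration, not a Transformers function.

```python
def linear_lr(step, peak_lr=1e-05, warmup_steps=500, total_steps=5000):
    """Learning rate at `step` for linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / (total_steps - warmup_steps)


for step in (0, 250, 500, 2750, 5000):
    print(step, linear_lr(step))
```

Under this assumption the learning rate peaks at step 500 and falls back to zero at step 5000.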

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |
|:-------------:|:-----:|:----:|:---------------:|:-------:|
| 0.3362 | 0.96 | 1000 | 0.3536 | 45.0860 |
| 0.2395 | 1.91 | 2000 | 0.2745 | 37.1714 |
| 0.205 | 2.87 | 3000 | 0.2485 | 34.7353 |
| 0.1795 | 3.83 | 4000 | 0.2352 | 33.2469 |
| 0.1578 | 4.78 | 5000 | 0.2314 | 32.8977 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.1+cu117

- Datasets 2.8.1.dev0

- Tokenizers 0.13.2

|

Tomor0720/deberta-base-finetuned-sst2 | Tomor0720 | 2023-01-18T10:00:44Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"deberta",

"text-classification",

"generated_from_trainer",

"dataset:glue",

"license:mit",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2023-01-18T09:22:08Z | ---

license: mit

tags:

- generated_from_trainer

datasets:

- glue

metrics:

- accuracy

model-index:

- name: deberta-base-finetuned-sst2

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: glue

type: glue

config: sst2

split: train

args: sst2

metrics:

- name: Accuracy

type: accuracy

value: 0.9495412844036697

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# deberta-base-finetuned-sst2

This model is a fine-tuned version of [microsoft/deberta-base](https://huggingface.co/microsoft/deberta-base) on the glue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2411

- Accuracy: 0.9495

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:-----:|:---------------:|:--------:|
| 0.1946 | 1.0 | 4210 | 0.2586 | 0.9278 |
| 0.1434 | 2.0 | 8420 | 0.2296 | 0.9472 |
| 0.1025 | 3.0 | 12630 | 0.2411 | 0.9495 |
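
The step counts in the table can be cross-checked against the batch size with a little arithmetic. The sketch assumes the GLUE SST-2 training split has 67,349 examples and that training ran on a single device without gradient accumulation; neither is stated in the card.

```python
import math

SST2_TRAIN_EXAMPLES = 67_349  # assumed size of the GLUE SST-2 training split
TRAIN_BATCH_SIZE = 16         # from the hyperparameters above
NUM_EPOCHS = 3                # from the hyperparameters above

steps_per_epoch = math.ceil(SST2_TRAIN_EXAMPLES / TRAIN_BATCH_SIZE)
total_steps = steps_per_epoch * NUM_EPOCHS
print(steps_per_epoch, total_steps)
```

Under these assumptions the result agrees with the 4210 steps per epoch and 12630 total steps shown in the table.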

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.1+cu116

- Datasets 2.8.0

- Tokenizers 0.13.2

|

GDJ1978/reddit-top-posts-jan23 | GDJ1978 | 2023-01-18T09:59:56Z | 0 | 0 | null | [

"region:us"

] | null | 2023-01-14T10:31:17Z | midjourneygirl, genderrevealnuke, stelfiepics, mods, animegirl, analog, comiccowboys, oldman, spacegirl, protogirls, propaganda |

smko77/LunarLander-v2 | smko77 | 2023-01-18T09:14:02Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2023-01-18T09:13:39Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 259.68 +/- 22.31

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

keshan/a2c-PandaReachDense-v2 | keshan | 2023-01-18T09:10:55Z | 1 | 0 | stable-baselines3 | [

"stable-baselines3",

"PandaReachDense-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2023-01-18T04:51:45Z | ---

library_name: stable-baselines3

tags:

- PandaReachDense-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: A2C

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: PandaReachDense-v2

type: PandaReachDense-v2

metrics:

- type: mean_reward

value: -1.37 +/- 0.36

name: mean_reward

verified: false

---

# **A2C** Agent playing **PandaReachDense-v2**

This is a trained model of an **A2C** agent playing **PandaReachDense-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

mbdmbs/Marsey-Diffusion-v1 | mbdmbs | 2023-01-18T09:04:34Z | 0 | 0 | null | [

"art",

"text-to-image",

"en",

"license:creativeml-openrail-m",

"region:us"

] | text-to-image | 2023-01-18T08:26:12Z | ---

license: creativeml-openrail-m

language:

- en

tags:

- art

pipeline_tag: text-to-image

---

# Marsey Diffusion v1

Marsey Diffusion is a [Dreambooth](https://dreambooth.github.io/) model trained on Marsey emotes from rDrama.net. It is based on [Stable Diffusion v1.5](https://huggingface.co/runwayml/stable-diffusion-v1-5).

## Usage

To generate novel Marseys, use the keyword `rdmarsey` in your prompt.

**Example prompt:** `rdmarsey giving a thumbs up`

## Samples

**Sample of input images:**

**Sample of outputs (cherrypicked):**

|

kjmann/ppo-Huggy | kjmann | 2023-01-18T09:02:01Z | 12 | 0 | ml-agents | [

"ml-agents",

"tensorboard",

"onnx",

"unity-ml-agents",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Huggy",

"region:us"

] | reinforcement-learning | 2023-01-18T09:01:53Z |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

library_name: ml-agents

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Huggy

2. Step 1: Write your model_id: kjmann/ppo-Huggy

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

96harsh56/upload_test | 96harsh56 | 2023-01-18T08:31:41Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"distilbert",

"question-answering",

"en",

"dataset:squad_v2",

"arxiv:1910.09700",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | question-answering | 2023-01-18T04:54:50Z | ---

license: apache-2.0

datasets:

- squad_v2

language:

- en

metrics:

- squad_v2

pipeline_tag: question-answering

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

This modelcard aims to be a base template for new models. It has been generated using [this raw template](https://github.com/huggingface/huggingface_hub/blob/main/src/huggingface_hub/templates/modelcard_template.md?plain=1).

# Model Details

## Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

## Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

# Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

## Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

## Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

## Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

# Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

## Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

# Training Details

## Training Data

<!-- This should link to a Data Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

## Training Procedure [optional]

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

### Preprocessing

[More Information Needed]

### Speeds, Sizes, Times

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

# Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

## Testing Data, Factors & Metrics

### Testing Data

<!-- This should link to a Data Card if possible. -->

[More Information Needed]

### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

## Results

[More Information Needed]

### Summary

# Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

# Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

# Technical Specifications [optional]

## Model Architecture and Objective

[More Information Needed]

## Compute Infrastructure

[More Information Needed]

### Hardware

[More Information Needed]

### Software

[More Information Needed]

# Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

# Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

# More Information [optional]

[More Information Needed]

# Model Card Authors [optional]

[More Information Needed]

# Model Card Contact

[More Information Needed]

|

Srivathsava/ddpm-celb-faces | Srivathsava | 2023-01-18T08:19:27Z | 0 | 0 | diffusers | [

"diffusers",

"tensorboard",

"en",

"license:apache-2.0",

"diffusers:DDPMPipeline",

"region:us"

] | null | 2023-01-12T10:29:15Z | ---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: /content/drive/MyDrive/ColabNotebooks/img_align_celeba

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-celb-faces

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `/content/drive/MyDrive/ColabNotebooks/img_align_celeba` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```
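
Until the placeholder above is completed, a minimal sketch along the following lines may work. It assumes the repository loads with `DDPMPipeline`, as the repository's `diffusers:DDPMPipeline` tag suggests; `generate_face` is a hypothetical helper introduced for illustration.

```python
def generate_face(repo_id="Srivathsava/ddpm-celb-faces"):
    """Sample one image from the DDPM checkpoint (downloads weights on first call)."""
    # Imported lazily so the helper can be defined without diffusers installed.
    from diffusers import DDPMPipeline

    pipeline = DDPMPipeline.from_pretrained(repo_id)
    return pipeline().images[0]
```

Calling `generate_face()` runs the full denoising loop, which can be slow without a GPU.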

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 500

- ema_inv_gamma: None

- mixed_precision: fp16

### Training results

📈 [TensorBoard logs](https://huggingface.co/Srivathsava/ddpm-celb-faces/tensorboard?#scalars)

|

caffsean/gpt2-the-economist | caffsean | 2023-01-18T08:14:27Z | 10 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2023-01-18T07:35:46Z | ---

license: mit

tags:

- generated_from_trainer

model-index:

- name: gpt2-the-economist

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# gpt2-the-economist

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 3.5285
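
For language models, the evaluation loss is often easier to interpret as perplexity. The sketch below assumes the reported loss is the mean per-token cross-entropy in nats, which the card does not state explicitly.

```python
import math

eval_loss = 3.5285  # evaluation loss reported above
perplexity = math.exp(eval_loss)  # assumes mean per-token cross-entropy in nats
print(f"{perplexity:.1f}")
```

Under that assumption the model's perplexity on the evaluation set is roughly 34.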

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 9820

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:-----:|:----:|:---------------:|
| 3.8737 | 1.0 | 1228 | 3.7960 |
| 3.6767 | 2.0 | 2456 | 3.6544 |
| 3.5561 | 3.0 | 3684 | 3.5948 |
| 3.431 | 4.0 | 4912 | 3.5495 |
| 3.3127 | 5.0 | 6140 | 3.5285 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.1+cu116

- Tokenizers 0.13.2

|

imflash217/SpaceInvaders_NoFrameskip_v4 | imflash217 | 2023-01-18T07:25:05Z | 3 | 0 | stable-baselines3 | [

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2023-01-18T07:24:18Z | ---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

metrics:

- type: mean_reward

value: 807.00 +/- 311.73

name: mean_reward

verified: false

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

Install the RL Zoo (with SB3 and SB3-Contrib):

```bash

pip install rl_zoo3

```

```

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga imflash217 -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

If you installed the RL Zoo3 via pip (`pip install rl_zoo3`), from anywhere you can do:

```

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga imflash217 -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```

python -m rl_zoo3.train --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga imflash217

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 1000000.0),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```
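
The hyperparameters above also determine roughly how many gradient updates the agent receives. The arithmetic below is an approximation that assumes one batch of `gradient_steps` updates after every `train_freq` environment steps once `learning_starts` has been passed; exact counts depend on the implementation.

```python
n_timesteps = 1_000_000
learning_starts = 100_000
train_freq = 4
gradient_steps = 1

# Environment steps during which the replay buffer is only being filled.
warmup_fraction = learning_starts / n_timesteps

# Approximate number of gradient updates over the remaining steps.
gradient_updates = (n_timesteps - learning_starts) // train_freq * gradient_steps
print(warmup_fraction, gradient_updates)
```

Because `buffer_size` equals `learning_starts`, the replay buffer would already be full by the time the first update is made under these assumptions.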

|

msgerasyov/a2c-AntBulletEnv-v0 | msgerasyov | 2023-01-18T07:13:35Z | 1 | 0 | stable-baselines3 | [

"stable-baselines3",

"AntBulletEnv-v0",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2023-01-18T07:12:25Z | ---

library_name: stable-baselines3

tags:

- AntBulletEnv-v0

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: A2C

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: AntBulletEnv-v0

type: AntBulletEnv-v0

metrics:

- type: mean_reward

value: 1189.62 +/- 486.75

name: mean_reward

verified: false

---

# **A2C** Agent playing **AntBulletEnv-v0**

This is a trained model of an **A2C** agent playing **AntBulletEnv-v0**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

gzerveas/CODER-CoCondenser | gzerveas | 2023-01-18T07:09:39Z | 6 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"feature-extraction",

"Information Retrieval",

"en",

"dataset:ms_marco",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] | feature-extraction | 2023-01-18T06:40:43Z | ---

datasets:

- ms_marco

language:

- en

metrics:

- MRR

- nDCG

tags:

- Information Retrieval

--- |

farukbuldur/ppo-LunarLander-v2 | farukbuldur | 2023-01-18T07:07:23Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2023-01-18T07:06:58Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 266.42 +/- 21.65

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

rootacess/q-FrozenLake-v1-4x4-noSlippery | rootacess | 2023-01-18T06:47:31Z | 0 | 0 | null | [

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] | reinforcement-learning | 2023-01-18T06:47:27Z | ---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

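import gym

# Assumption: `load_from_hub` is a user-defined helper that downloads the pickle
# from the Hub and returns the unpickled dict; it is not defined in this card.
model = load_from_hub(repo_id="rootacess/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)
env = gym.make(model["env_id"])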

```
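To illustrate what a `mean_reward` of 1.00 +/- 0.00 means for this environment, here is a self-contained sketch that does not use Gym or the published pickle. It hand-codes the standard 4x4 map with deterministic (non-slippery) moves, fills a Q-table by sweeping the Q-learning target over every state-action pair (a deterministic simplification, not the author's training code), and then evaluates the greedy policy. All function names are hypothetical.

```python
import statistics

# Standard 4x4 FrozenLake layout, flattened (S=start, F=frozen, H=hole, G=goal).
GRID = "SFFFFHFHFFFHHFFG"
N = 4
MOVES = {0: (0, -1), 1: (1, 0), 2: (0, 1), 3: (-1, 0)}  # left, down, right, up


def step(state, action):
    """Deterministic (non-slippery) transition: (next_state, reward, done)."""
    row, col = divmod(state, N)
    d_row, d_col = MOVES[action]
    row = min(max(row + d_row, 0), N - 1)
    col = min(max(col + d_col, 0), N - 1)
    nxt = row * N + col
    tile = GRID[nxt]
    return nxt, (1.0 if tile == "G" else 0.0), tile in "HG"


def build_q_table(gamma=0.95, sweeps=20):
    """Apply the Q-learning target to every (state, action) pair, repeatedly."""
    q = [[0.0] * 4 for _ in range(N * N)]
    for _ in range(sweeps):
        for s in range(N * N):
            if GRID[s] in "HG":
                continue  # terminal states keep Q = 0
            for a in range(4):
                nxt, reward, done = step(s, a)
                # A learning rate of 1 is exact because transitions are deterministic.
                q[s][a] = reward + (0.0 if done else gamma * max(q[nxt]))
    return q


def evaluate(q, episodes=10, max_steps=100):
    """Roll out the greedy policy and return (mean, std) of episode returns."""
    returns = []
    for _ in range(episodes):
        state, total = 0, 0.0
        for _ in range(max_steps):
            action = max(range(4), key=lambda a: q[state][a])
            state, reward, done = step(state, action)
            total += reward
            if done:
                break
        returns.append(total)
    return statistics.mean(returns), statistics.pstdev(returns)


q_table = build_q_table()
mean_reward, std_reward = evaluate(q_table)
print(f"mean_reward = {mean_reward:.2f} +/- {std_reward:.2f}")
```

Because both the environment and the greedy policy are deterministic, every evaluation episode follows the same path to the goal, which is why the standard deviation is zero.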

|

NoCrypt/SomethingV1 | NoCrypt | 2023-01-18T06:25:09Z | 0 | 4 | null | [

"region:us"

] | null | 2023-01-17T06:15:09Z | Anime text-to-image model that focuses on very vibrant and saturated images

|

aplnestrella/pegasus-samsum-18-2 | aplnestrella | 2023-01-18T05:49:45Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"pegasus",

"text2text-generation",

"generated_from_trainer",

"dataset:samsum",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2023-01-18T04:42:38Z | ---

tags:

- generated_from_trainer

datasets:

- samsum

model-index:

- name: pegasus-samsum-18-2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# pegasus-samsum-18-2

This model is a fine-tuned version of [google/pegasus-cnn_dailymail](https://huggingface.co/google/pegasus-cnn_dailymail) on the samsum dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 18

- eval_batch_size: 1

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 36

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 1
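
The relationship between the batch-size entries, and the shape of a linear schedule with warmup, can be sketched as follows. This is an illustration under stated assumptions, not the Trainer's code: it assumes a single device, and `total_steps` is a hypothetical value because the card does not report the number of optimizer steps.

```python
def effective_batch_size(per_device_batch, accumulation_steps, num_devices=1):
    """Samples contributing to one optimizer update (single device assumed)."""
    return per_device_batch * accumulation_steps * num_devices


def linear_warmup_lr(step, base_lr=5e-5, warmup_steps=500, total_steps=1000):
    """Linear ramp from 0 to base_lr, then linear decay to 0 at total_steps.

    total_steps=1000 is a hypothetical placeholder, not a value from the card.
    """
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


print(effective_batch_size(18, 2))   # train_batch_size x gradient_accumulation_steps
print(linear_warmup_lr(250))         # halfway through warmup
print(linear_warmup_lr(1000))        # end of the hypothetical schedule
```

With `train_batch_size` 18 and `gradient_accumulation_steps` 2, the product matches the reported `total_train_batch_size` of 36.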

### Training results

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.1+cu116

- Datasets 2.8.0

- Tokenizers 0.13.2

|

shi-labs/dinat-large-in22k-in1k-224 | shi-labs | 2023-01-18T05:16:44Z | 15 | 0 | transformers | [

"transformers",

"pytorch",

"dinat",

"image-classification",

"vision",

"dataset:imagenet-21k",

"dataset:imagenet-1k",

"arxiv:2209.15001",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | image-classification | 2022-11-18T22:05:43Z | ---

license: mit

tags:

- vision

- image-classification

datasets:

- imagenet-21k

- imagenet-1k

widget:

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/tiger.jpg

example_title: Tiger

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/teapot.jpg

example_title: Teapot

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/palace.jpg

example_title: Palace

---

# DiNAT (large variant)

DiNAT-Large with a 7x7 kernel pre-trained on ImageNet-21K, and fine-tuned on ImageNet-1K at 224x224.

It was introduced in the paper [Dilated Neighborhood Attention Transformer](https://arxiv.org/abs/2209.15001) by Hassani et al. and first released in [this repository](https://github.com/SHI-Labs/Neighborhood-Attention-Transformer).

## Model description

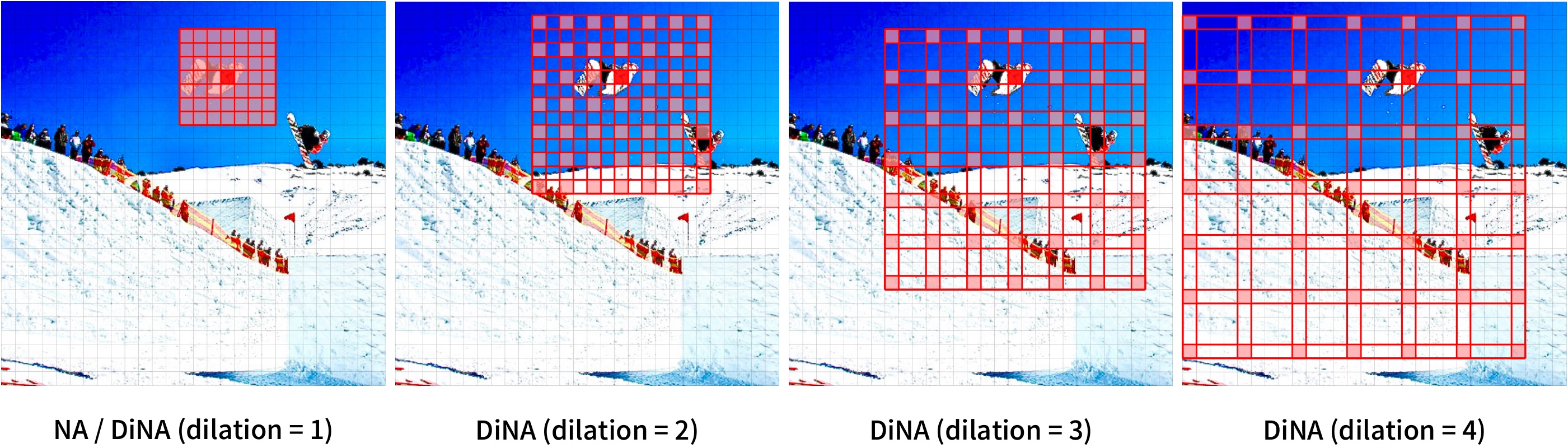

DiNAT is a hierarchical vision transformer based on Neighborhood Attention (NA) and its dilated variant (DiNA).

Neighborhood Attention is a restricted self-attention pattern in which each token's receptive field is limited to its nearest neighboring pixels.

NA and DiNA are therefore sliding-window attention patterns, and as a result are highly flexible and maintain translational equivariance.

They come with PyTorch implementations through the [NATTEN](https://github.com/SHI-Labs/NATTEN/) package.

[Source](https://paperswithcode.com/paper/dilated-neighborhood-attention-transformer)
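
As a rough one-dimensional illustration of the sliding-window pattern described above, the sketch below lists which positions a token would attend to. It is not NATTEN's implementation: the function name is hypothetical, and it assumes that windows are shifted inward at the borders so every token keeps `kernel_size` neighbors, and that dilation applies the same rule within each group of positions sharing `i % dilation`.

```python
from typing import List


def neighborhood_indices(i: int, length: int, kernel_size: int, dilation: int = 1) -> List[int]:
    """Positions attended to by token i in a 1D (dilated) neighborhood window."""
    if kernel_size * dilation > length:
        raise ValueError("kernel_size * dilation must not exceed the sequence length")
    offset = i % dilation                       # which dilation group token i is in
    group_len = len(range(offset, length, dilation))
    pos = i // dilation                         # index of token i inside its group
    # Centre the window on pos, then clamp it so it stays inside the group.
    start = min(max(pos - kernel_size // 2, 0), group_len - kernel_size)
    return [offset + dilation * (start + j) for j in range(kernel_size)]


print(neighborhood_indices(5, 10, 3))               # interior token
print(neighborhood_indices(0, 10, 3))               # border token, window shifted inward
print(neighborhood_indices(5, 10, 3, dilation=2))   # dilated neighborhood
```

The model itself uses two-dimensional 7x7 windows; the same idea applies per axis.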

## Intended uses & limitations

You can use the raw model for image classification. See the [model hub](https://huggingface.co/models?search=dinat) to look for

fine-tuned versions on a task that interests you.

### Example