modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-06-28 00:40:13

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 500

values | tags

sequencelengths 1

4.05k

| pipeline_tag

stringclasses 54

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-06-28 00:36:54

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

akolov/vasko-style-second-try | akolov | 2023-05-16T09:22:06Z | 8 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-15T08:11:26Z | ---

license: mit

---

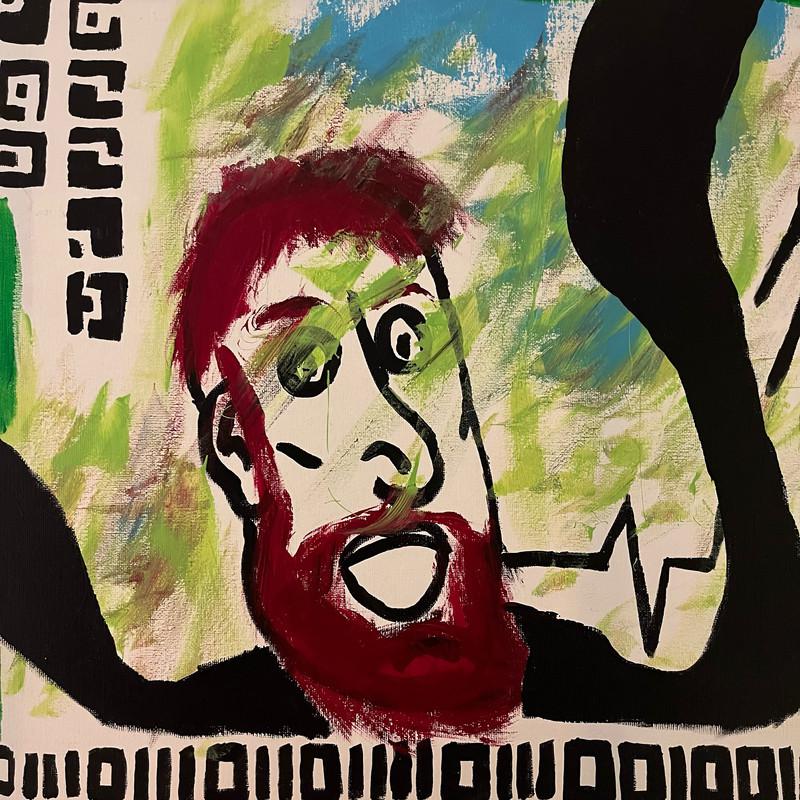

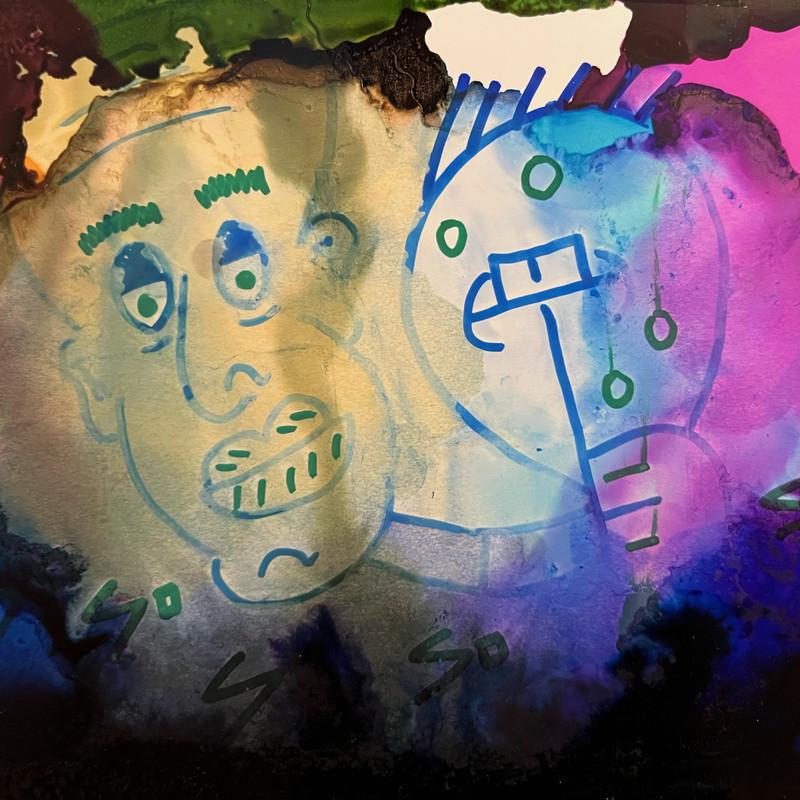

### Vasko style second try on Stable Diffusion via Dreambooth

#### model by akolov

This your the Stable Diffusion model fine-tuned the Vasko style second try concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a painting by vasko style**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

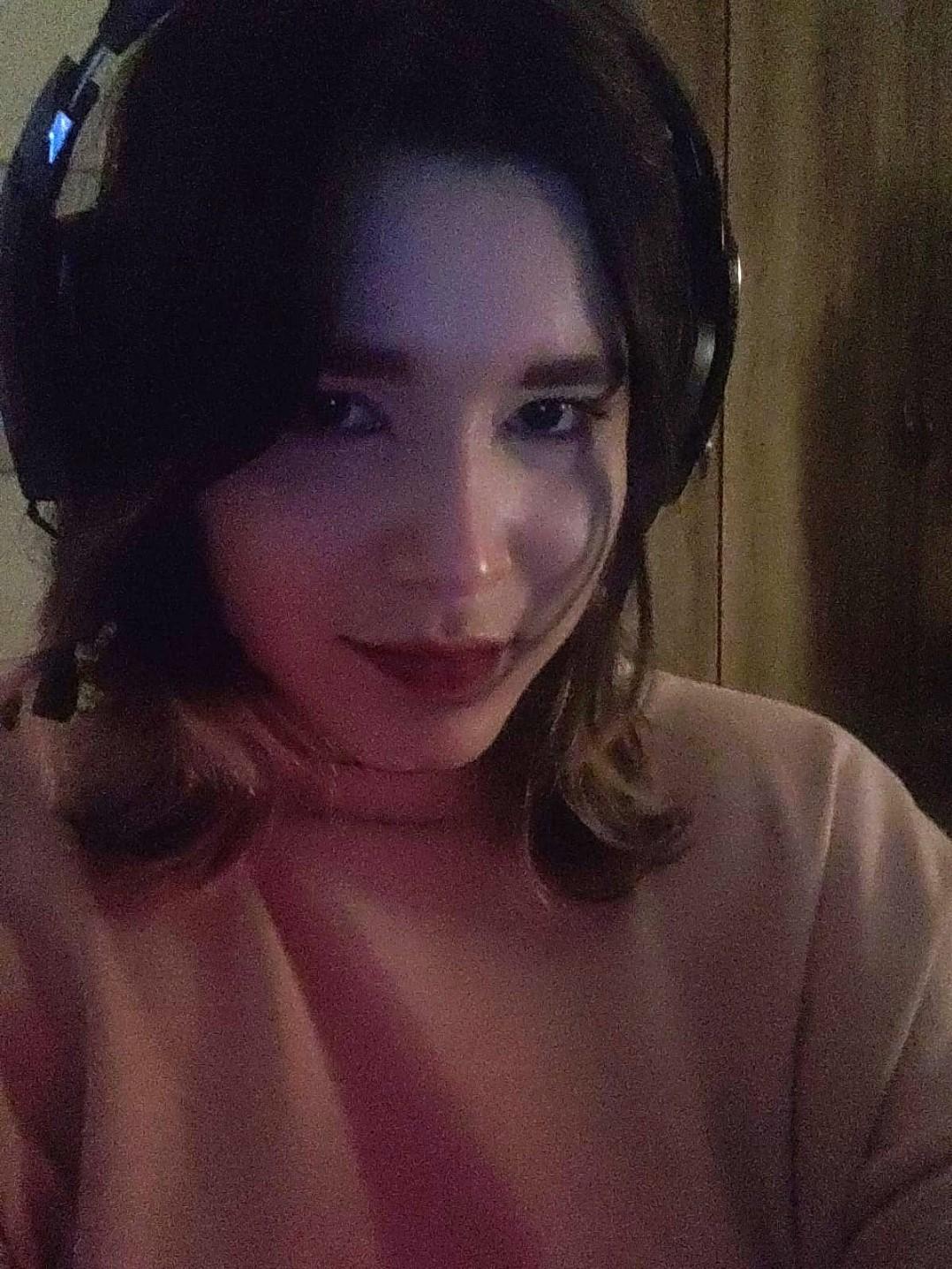

Here are the images used for training this concept:

|

sd-dreambooth-library/rajj | sd-dreambooth-library | 2023-05-16T09:22:03Z | 38 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-14T17:51:11Z | ---

license: mit

---

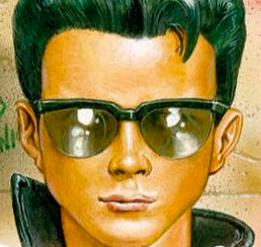

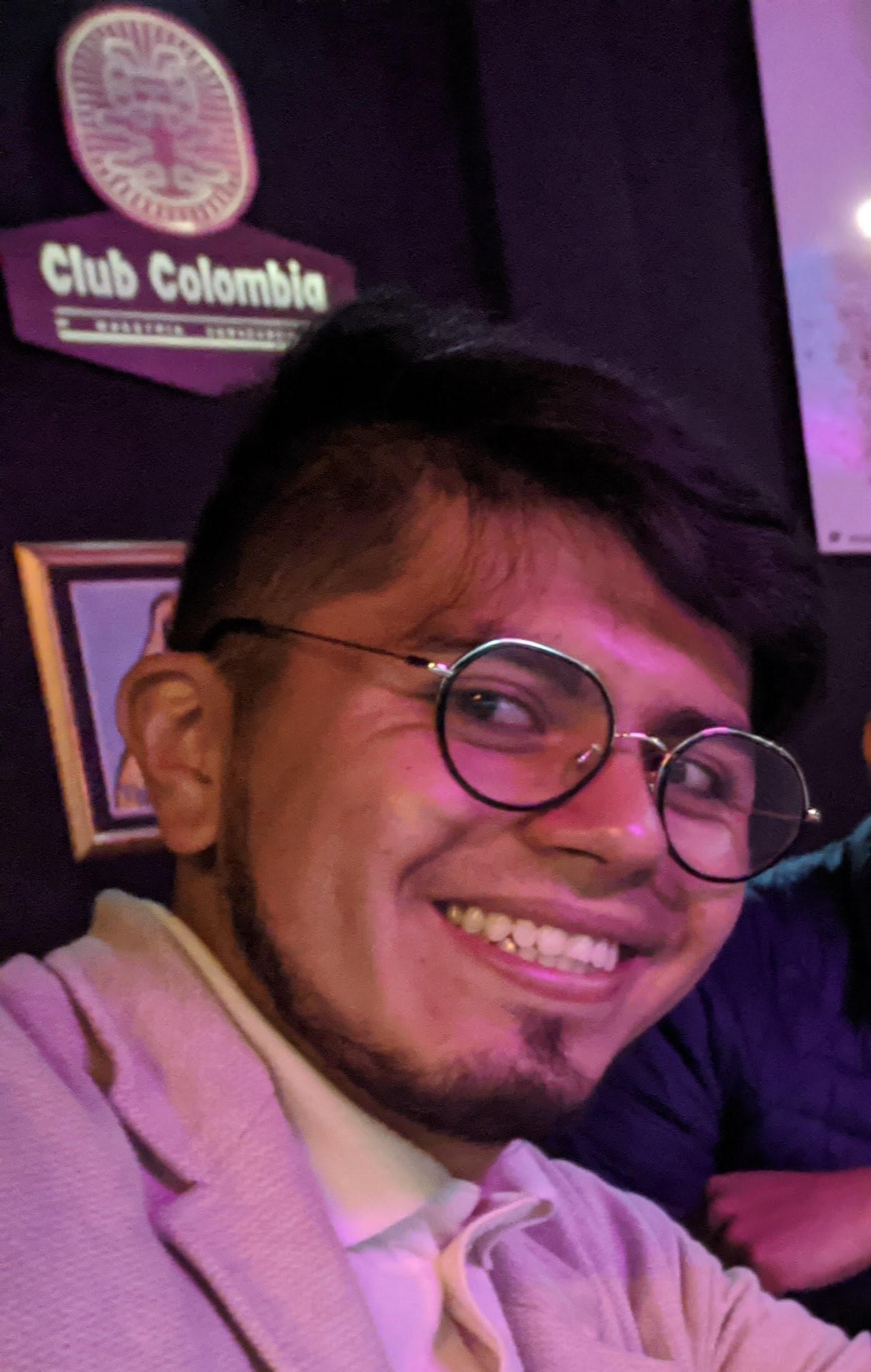

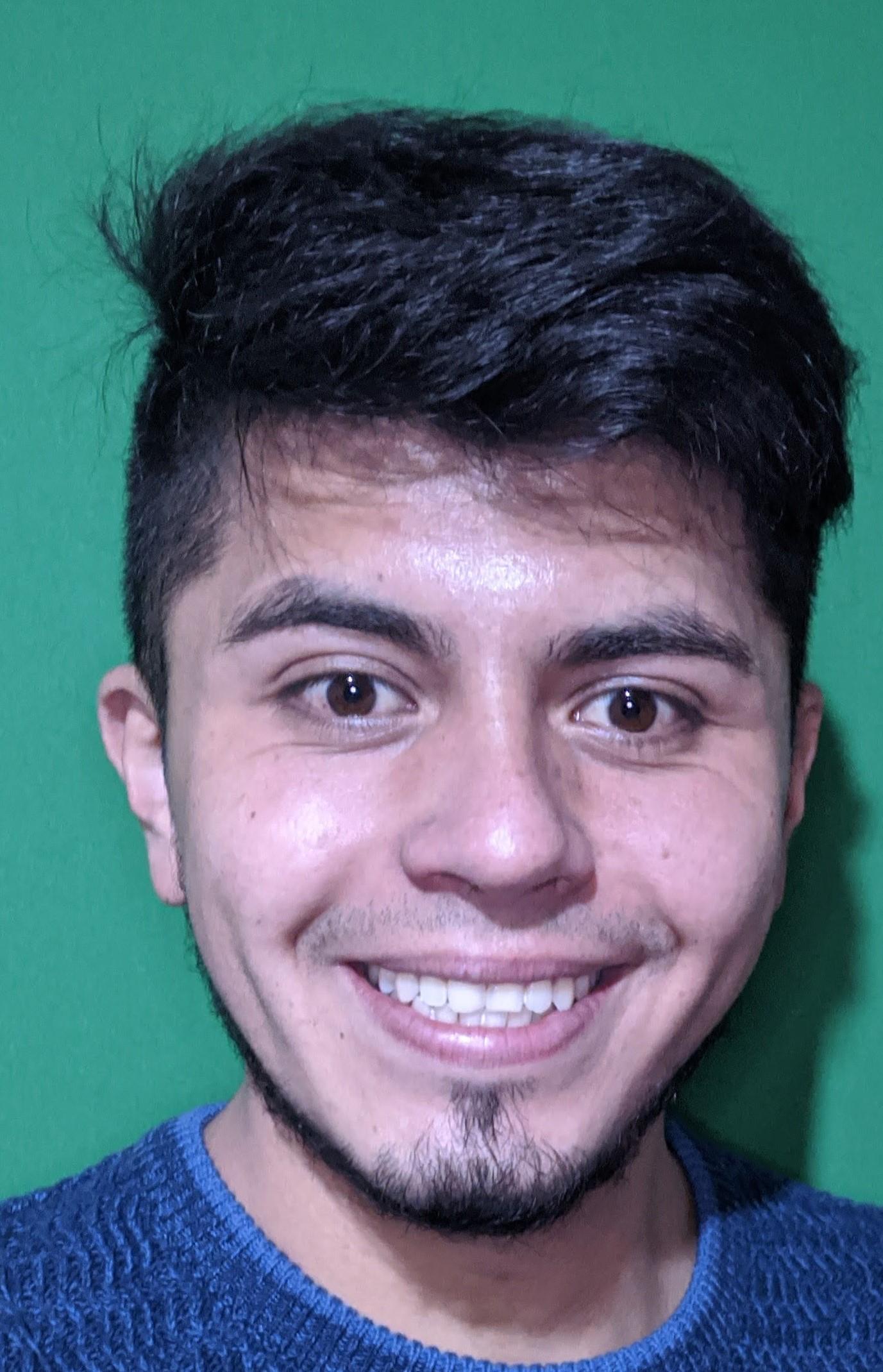

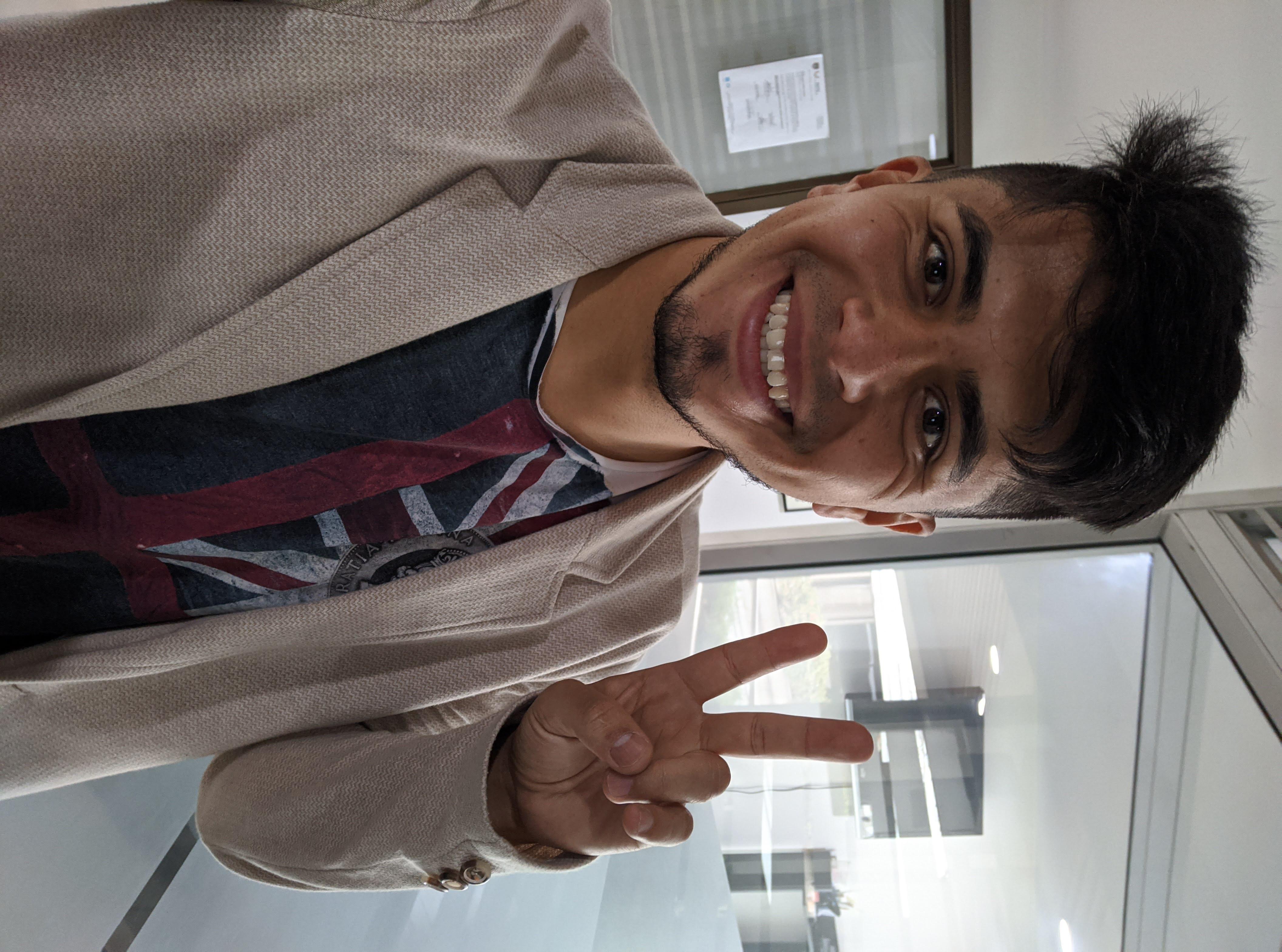

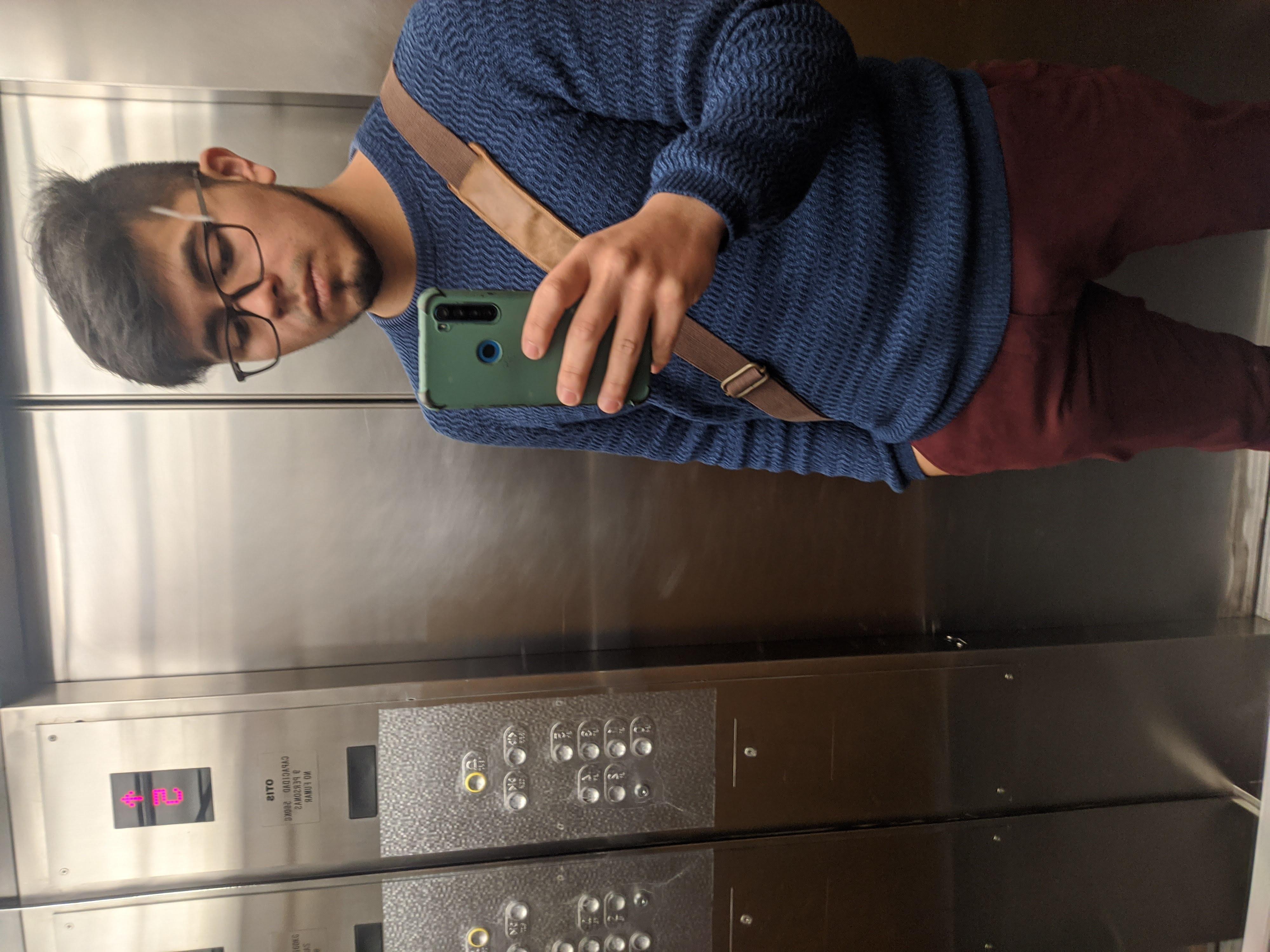

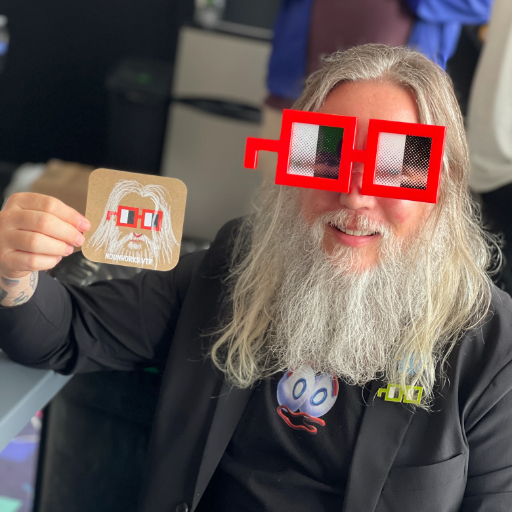

### Rajj on Stable Diffusion via Dreambooth

#### model by Rodrigoajj

This your the Stable Diffusion model fine-tuned the Rajj concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of sks man face**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

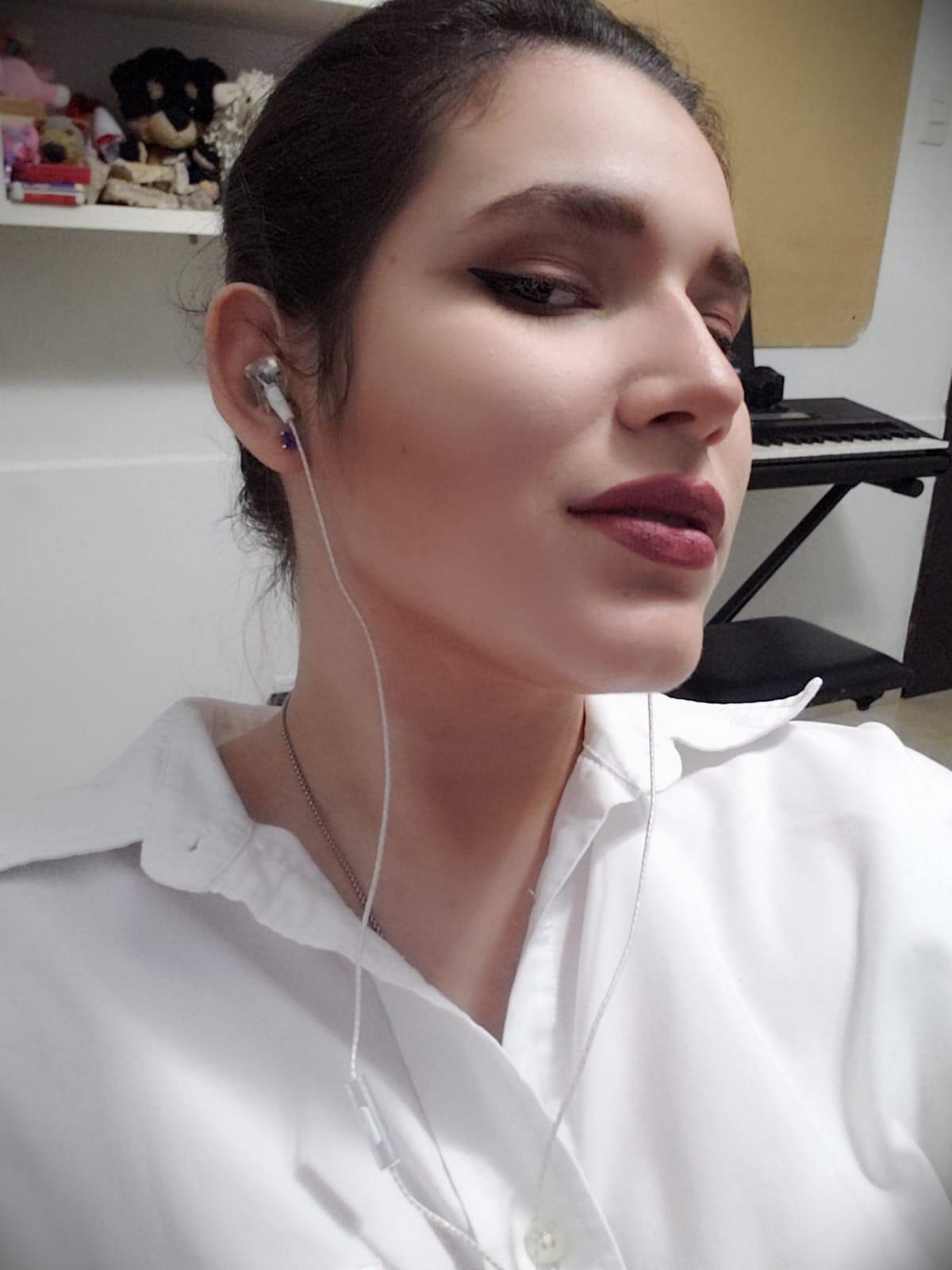

Here are the images used for training this concept:

|

sd-dreambooth-library/tails-from-sonic | sd-dreambooth-library | 2023-05-16T09:22:01Z | 29 | 2 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-13T19:54:57Z | ---

license: mit

---

### Tails from Sonic on Stable Diffusion via Dreambooth

#### model by Skittleology

This your the Stable Diffusion model fine-tuned the Tails from Sonic concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **tails**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

ejcho623/shoe | ejcho623 | 2023-05-16T09:21:57Z | 37 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-13T17:30:29Z | ---

license: mit

---

### Shoe on Stable Diffusion via Dreambooth

#### model by ejcho623

This your the Stable Diffusion model fine-tuned the Shoe concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **sks shoe**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

Gazoche/sd-gundam-diffusers | Gazoche | 2023-05-16T09:21:55Z | 0 | 1 | diffusers | [

"diffusers",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-12T11:06:25Z | See https://github.com/Askannz/gundam-stable-diffusion |

Bioskop/lucyedge | Bioskop | 2023-05-16T09:21:45Z | 30 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-10T23:09:26Z | ---

license: mit

---

### LucyEdge on Stable Diffusion via Dreambooth

#### model by Bioskop

This your the Stable Diffusion model fine-tuned the LucyEdge concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **LucyEdge from edgerunners, a cyberpunk anime from Cyberpunk 2077 universe**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

muchojarabe/muxoyara | muchojarabe | 2023-05-16T09:21:44Z | 35 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-10T22:07:45Z | ---

license: mit

---

### muxoyara on Stable Diffusion via Dreambooth

#### model by muchojarabe

This your the Stable Diffusion model fine-tuned the muxoyara concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **muxoyara**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

waterplayfire/MyModel | waterplayfire | 2023-05-16T09:21:41Z | 32 | 0 | diffusers | [

"diffusers",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-10T09:59:22Z | language:

- "List of ISO 639-1 code for your language"

- lang1

- lang2

thumbnail: "url to a thumbnail used in social sharing"

tags:

- tag1

- tag2

license: "any valid license identifier"

datasets:

- dataset1

- dataset2

metrics:

- metric1

- metric2 |

Bioskop/rebeccaedgerunners | Bioskop | 2023-05-16T09:21:39Z | 35 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-10T03:35:27Z | ---

license: mit

---

### RebeccaEdgerunners on Stable Diffusion via Dreambooth

#### model by Bioskop

This your the Stable Diffusion model fine-tuned the RebeccaEdgerunners concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **RebeccaEdge**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

sd-dreambooth-library/pikachu | sd-dreambooth-library | 2023-05-16T09:21:36Z | 40 | 7 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-09T20:39:26Z | ---

license: mit

---

### Pikachu on Stable Diffusion via Dreambooth

#### model by Skittleology

This your the Stable Diffusion model fine-tuned the Pikachu concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **pikachu**

Model requested by Pikachu, an Uberduck admin/user.

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

okale/i-am | okale | 2023-05-16T09:21:29Z | 34 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-07T19:36:20Z | ---

license: mit

---

### i am on Stable Diffusion via Dreambooth

#### model by okale

This your the Stable Diffusion model fine-tuned the i am concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **iggy**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

Xmuzz/xordixx | Xmuzz | 2023-05-16T09:21:19Z | 34 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-06T21:48:43Z | ---

license: mit

---

### xordixx on Stable Diffusion via Dreambooth

#### model by Xmuzz

This your the Stable Diffusion model fine-tuned the xordixx concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **xordizz**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

Bitset/person | Bitset | 2023-05-16T09:21:13Z | 29 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-06T07:18:47Z | ---

license: mit

---

### person on Stable Diffusion via Dreambooth

#### model by Bitset

This your the Stable Diffusion model fine-tuned the person concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of sks person**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

sd-dreambooth-library/mexican-concha | sd-dreambooth-library | 2023-05-16T09:21:11Z | 39 | 1 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-06T05:17:22Z | ---

license: mit

---

### mexican_concha on Stable Diffusion via Dreambooth

#### model by MrHidden

This your the Stable Diffusion model fine-tuned the mexican_concha concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of sks Mexican Concha**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

nitrosocke/elden-ring-diffusion | nitrosocke | 2023-05-16T09:21:07Z | 2,082 | 322 | diffusers | [

"diffusers",

"stable-diffusion",

"text-to-image",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-05T22:55:13Z | ---

license: creativeml-openrail-m

tags:

- stable-diffusion

- text-to-image

---

**Elden Ring Diffusion**

This is the fine-tuned Stable Diffusion model trained on the game art from Elden Ring.

Use the tokens **_elden ring style_** in your prompts for the effect.

You can download the latest version here: [eldenRing-v3-pruned.ckpt](https://huggingface.co/nitrosocke/elden-ring-diffusion/resolve/main/eldenRing-v3-pruned.ckpt)

**If you enjoy my work, please consider supporting me**

[](https://patreon.com/user?u=79196446)

### 🧨 Diffusers

This model can be used just like any other Stable Diffusion model. For more information,

please have a look at the [Stable Diffusion](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion).

You can also export the model to [ONNX](https://huggingface.co/docs/diffusers/optimization/onnx), [MPS](https://huggingface.co/docs/diffusers/optimization/mps) and/or [FLAX/JAX]().

```python

#!pip install diffusers transformers scipy torch

from diffusers import StableDiffusionPipeline

import torch

model_id = "nitrosocke/elden-ring-diffusion"

pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)

pipe = pipe.to("cuda")

prompt = "a magical princess with golden hair, elden ring style"

image = pipe(prompt).images[0]

image.save("./magical_princess.png")

```

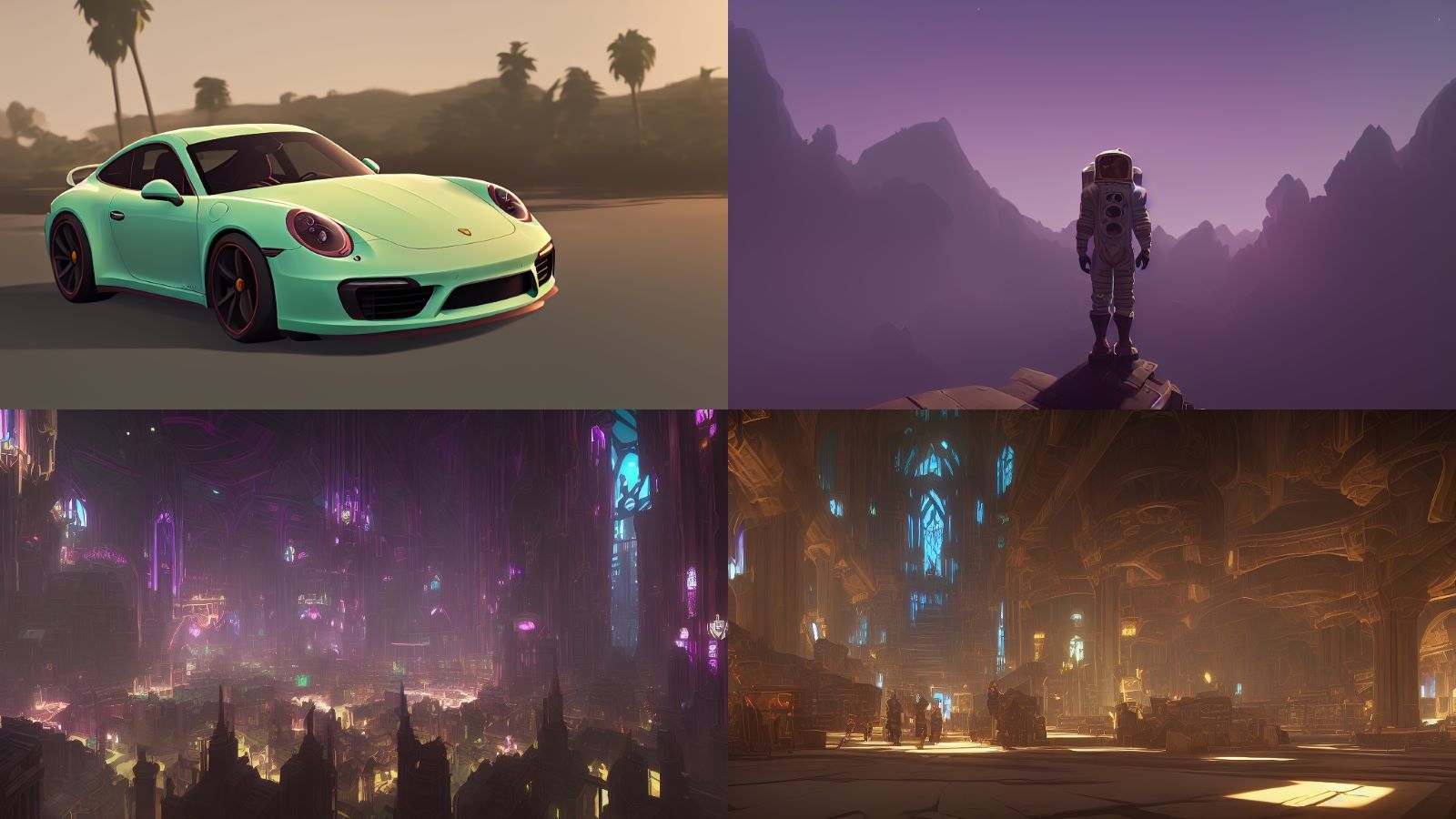

**Portraits rendered with the model:**

**Landscape Shots rendered with the model:**

**Sample images used for training:**

This model was trained using the diffusers based dreambooth training and prior-preservation loss in 3.000 steps.

#### Prompt and settings for portraits:

**elden ring style portrait of a beautiful woman highly detailed 8k elden ring style**

_Steps: 35, Sampler: DDIM, CFG scale: 7, Seed: 3289503259, Size: 512x704_

#### Prompt and settings for landscapes:

**elden ring style dark blue night (castle) on a cliff dark night (giant birds) elden ring style Negative prompt: bright day**

_Steps: 30, Sampler: DDIM, CFG scale: 7, Seed: 350813576, Size: 1024x576_

## License

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce nor share illegal or harmful outputs or content

2. The authors claims no rights on the outputs you generate, you are free to use them and are accountable for their use which must not go against the provisions set in the license

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)

[Please read the full license here](https://huggingface.co/spaces/CompVis/stable-diffusion-license) |

mauromauro/mochoa | mauromauro | 2023-05-16T09:21:05Z | 35 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-05T21:23:41Z | ---

license: mit

---

### mochoa on Stable Diffusion via Dreambooth

#### model by mauromauro

This your the Stable Diffusion model fine-tuned the mochoa concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of sks mochoa**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

bosnakdev/turkishReviews-ds-mini | bosnakdev | 2023-05-16T09:21:03Z | 61 | 0 | transformers | [

"transformers",

"tf",

"gpt2",

"text-generation",

"generated_from_keras_callback",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-generation | 2023-05-16T08:44:13Z | ---

license: mit

tags:

- generated_from_keras_callback

model-index:

- name: turkishReviews-ds-mini

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# turkishReviews-ds-mini

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 9.1786

- Validation Loss: 9.2546

- Epoch: 2

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'inner_optimizer': {'class_name': 'AdamWeightDecay', 'config': {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'WarmUp', 'config': {'initial_learning_rate': 5e-05, 'decay_schedule_fn': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 5e-05, 'decay_steps': -896, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}, '__passive_serialization__': True}, 'warmup_steps': 1000, 'power': 1.0, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}}, 'dynamic': True, 'initial_scale': 32768.0, 'dynamic_growth_steps': 2000}

- training_precision: mixed_float16

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 10.3061 | 9.9746 | 0 |

| 9.6620 | 9.6315 | 1 |

| 9.1786 | 9.2546 | 2 |

### Framework versions

- Transformers 4.29.1

- TensorFlow 2.12.0

- Datasets 2.12.0

- Tokenizers 0.13.3

|

tyler274/waifu-diffusion-testing | tyler274 | 2023-05-16T09:21:01Z | 0 | 0 | diffusers | [

"diffusers",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-05T07:35:32Z | ---

license: creativeml-openrail-m

---

|

Seonauta/jfj | Seonauta | 2023-05-16T09:20:57Z | 29 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-04T14:45:13Z | ---

license: mit

---

### jfj on Stable Diffusion via Dreambooth

#### model by Seonauta

This your the Stable Diffusion model fine-tuned the jfj concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of sks jfj**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

yuk/asahi-waifu-diffusion | yuk | 2023-05-16T09:20:55Z | 38 | 7 | diffusers | [

"diffusers",

"tensorboard",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"en",

"license:bigscience-bloom-rail-1.0",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-04T11:01:52Z | ---

license: bigscience-bloom-rail-1.0

language:

- en

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

---

このモデルは、アイドルマスター シャイニーカラーズに登場するアイドル、芹沢あさひのイラストを生成するのに特化したStable-DiffusionのDiffuser用のモデルです。

This model is for Diffuser, a Stable-Diffusion specialized for generating illustrations of Asahi Serizawa, an idol from THE iDOLM@STER SHINY COLORS.

DreamBoothを利用して、WaifuDiffusionを追加学習し作成されました。

It was created using DreamBooth with additional learning of WaifuDiffusion.

生成した画像が芹沢あさひに類似していた場合、その著作権はBandai Namco Entertainment Inc.に所属する可能性があります。

If the generated image resembles Asahi Serizawa, the copyright may belong to Bandai Namco Entertainment Inc.

その他の利用上の注意点は bigscience-bloom-rail-1.0のライセンスを御覧ください。

For other usage notes, please refer to the license of bigscience-bloom-rail-1.0.

https://hf.space/static/bigscience/license/index.html

|

sd-dreambooth-library/face2contra | sd-dreambooth-library | 2023-05-16T09:20:47Z | 32 | 2 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-03T18:54:41Z | ---

license: mit

---

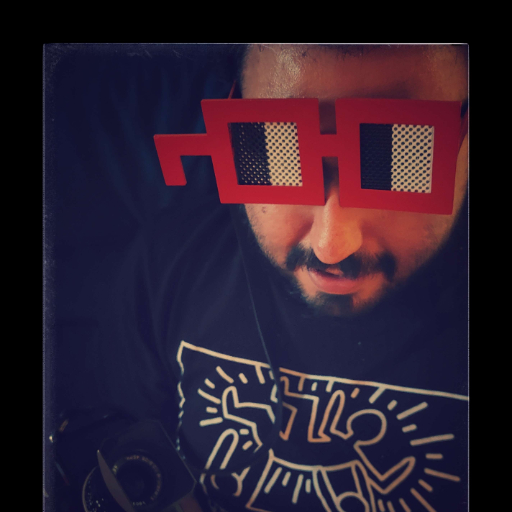

### face2contra-sd-dreambooth on Stable Diffusion via Dreambooth

#### model by avantcontra

This your the Stable Diffusion model fine-tuned the face2contra-sd-dreambooth concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of sks face2contra**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

nitrosocke/Arcane-Diffusion | nitrosocke | 2023-05-16T09:20:36Z | 1,020 | 752 | diffusers | [

"diffusers",

"stable-diffusion",

"text-to-image",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-02T11:41:27Z | ---

license: creativeml-openrail-m

tags:

- stable-diffusion

- text-to-image

---

# Arcane Diffusion

This is the fine-tuned Stable Diffusion model trained on images from the TV Show Arcane.

Use the tokens **_arcane style_** in your prompts for the effect.

**If you enjoy my work, please consider supporting me**

[](https://patreon.com/user?u=79196446)

### 🧨 Diffusers

This model can be used just like any other Stable Diffusion model. For more information,

please have a look at the [Stable Diffusion](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion).

You can also export the model to [ONNX](https://huggingface.co/docs/diffusers/optimization/onnx), [MPS](https://huggingface.co/docs/diffusers/optimization/mps) and/or [FLAX/JAX]().

```python

#!pip install diffusers transformers scipy torch

from diffusers import StableDiffusionPipeline

import torch

model_id = "nitrosocke/Arcane-Diffusion"

pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)

pipe = pipe.to("cuda")

prompt = "arcane style, a magical princess with golden hair"

image = pipe(prompt).images[0]

image.save("./magical_princess.png")

```

# Gradio & Colab

We also support a [Gradio](https://github.com/gradio-app/gradio) Web UI and Colab with Diffusers to run fine-tuned Stable Diffusion models:

[](https://huggingface.co/spaces/anzorq/finetuned_diffusion)

[](https://colab.research.google.com/drive/1j5YvfMZoGdDGdj3O3xRU1m4ujKYsElZO?usp=sharing)

### Sample images from v3:

### Sample images from the model:

### Sample images used for training:

**Version 3** (arcane-diffusion-v3): This version uses the new _train-text-encoder_ setting and improves the quality and edibility of the model immensely. Trained on 95 images from the show in 8000 steps.

**Version 2** (arcane-diffusion-v2): This uses the diffusers based dreambooth training and prior-preservation loss is way more effective. The diffusers where then converted with a script to a ckpt file in order to work with automatics repo.

Training was done with 5k steps for a direct comparison to v1 and results show that it needs more steps for a more prominent result. Version 3 will be tested with 11k steps.

**Version 1** (arcane-diffusion-5k): This model was trained using _Unfrozen Model Textual Inversion_ utilizing the _Training with prior-preservation loss_ methods. There is still a slight shift towards the style, while not using the arcane token.

|

yuk/fuyuko-waifu-diffusion | yuk | 2023-05-16T09:20:35Z | 13 | 16 | diffusers | [

"diffusers",

"tensorboard",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"en",

"license:bigscience-bloom-rail-1.0",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-02T09:51:38Z | ---

license: bigscience-bloom-rail-1.0

language:

- en

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

---

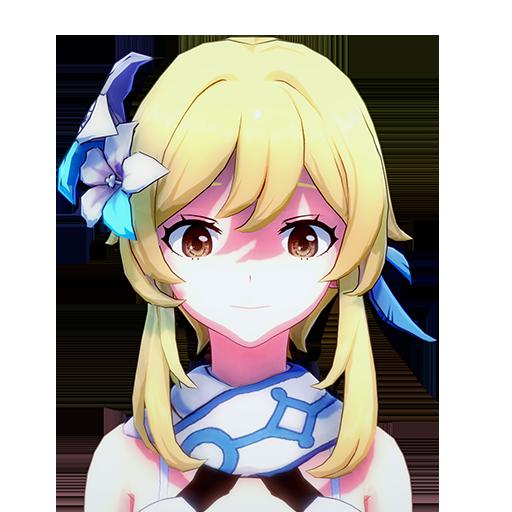

このモデルは、アイドルマスター シャイニーカラーズに登場するアイドル、黛冬優子のイラストを生成するのに特化したStable-DiffusionのDiffuser用のモデルです。

This model is for Diffuser, a Stable-Diffusion specialized for generating illustrations of Fuyuko Mayuzumi Fuyu, an idol from THE iDOLM@STER SHINY COLORS.

DreamBoothを利用して、WaifuDiffusionを追加学習し作成されました。

It was created using DreamBooth with additional learning of WaifuDiffusion.

生成した画像が黛冬優子に類似していた場合、その著作権はBandai Namco Entertainment Inc.に所属する可能性があります。

If the generated image resembles Fuyuko Mayuzumi, the copyright may belong to Bandai Namco Entertainment Inc.

その他の利用上の注意点は bigscience-bloom-rail-1.0のライセンスを御覧ください。

For other usage notes, please refer to the license of bigscience-bloom-rail-1.0.

https://hf.space/static/bigscience/license/index.html

|

Zack3D/Zack3D_Kinky-v1 | Zack3D | 2023-05-16T09:20:31Z | 58 | 35 | diffusers | [

"diffusers",

"stable-diffusion",

"text-to-image",

"en",

"license:creativeml-openrail-m",

"autotrain_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-02T00:07:37Z | ---

language:

- en

tags:

- stable-diffusion

- text-to-image

license: creativeml-openrail-m

inference: false

---

Stable Diffusion model trained on E621 data, specializing on the kinkier side.

Model is also live in my discord server on a free-to-use bot. [The Gooey Pack](https://discord.gg/WBjvffyJZf)

## License

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce nor share illegal or harmful outputs or content

2. The authors claims no rights on the outputs you generate, you are free to use them and are accountable for their use which must not go against the provisions set in the license

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)

[Please read the full license here](https://huggingface.co/spaces/CompVis/stable-diffusion-license) |

sd-dreambooth-library/kaltsit | sd-dreambooth-library | 2023-05-16T09:20:27Z | 49 | 5 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-01T22:59:10Z | ---

license: mit

---

### kaltsit_v2 on Stable Diffusion via Dreambooth

This your the Stable Diffusion model fine-tuned the kaltsit_v2 concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **kaltsit**

v2 update: 1. increase sample size. more stable results. 2. prompt update: kaltsit. 3. prior update: cat girl

Use the model in Google Colab:

[](https://colab.research.google.com/drive/11yzVX9rNEkzMBq6rj1HyQxkDjllI4P1-)

Here is an example output:

prompt = "detailed wallpaper of kaltsit on beach, green animal ears, white hair, green eyes, cleavage breasts and thigh, by ilya kuvshinov and alphonse mucha, strong rim light, splash particles, intense shadows, by Canon EOS, SIGMA Art Lens"

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts) |

sd-dreambooth-library/leone-from-akame-ga-kill-v2 | sd-dreambooth-library | 2023-05-16T09:20:21Z | 31 | 2 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-01T18:16:14Z | ---

license: mit

---

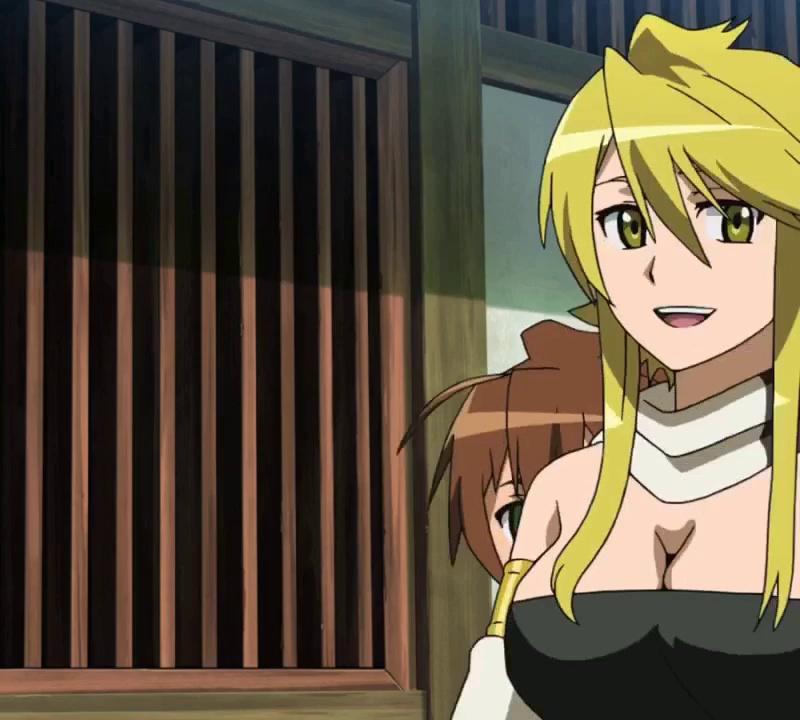

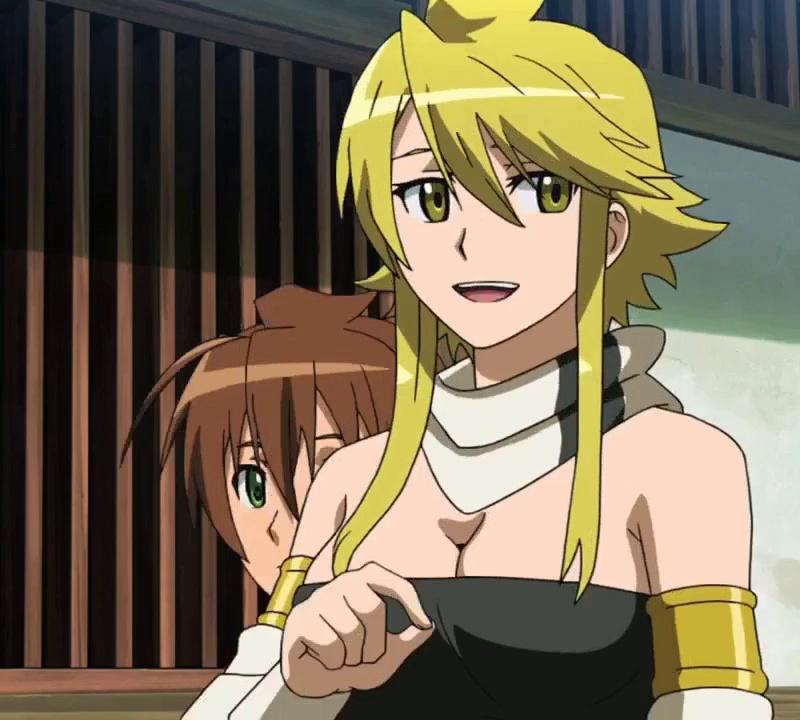

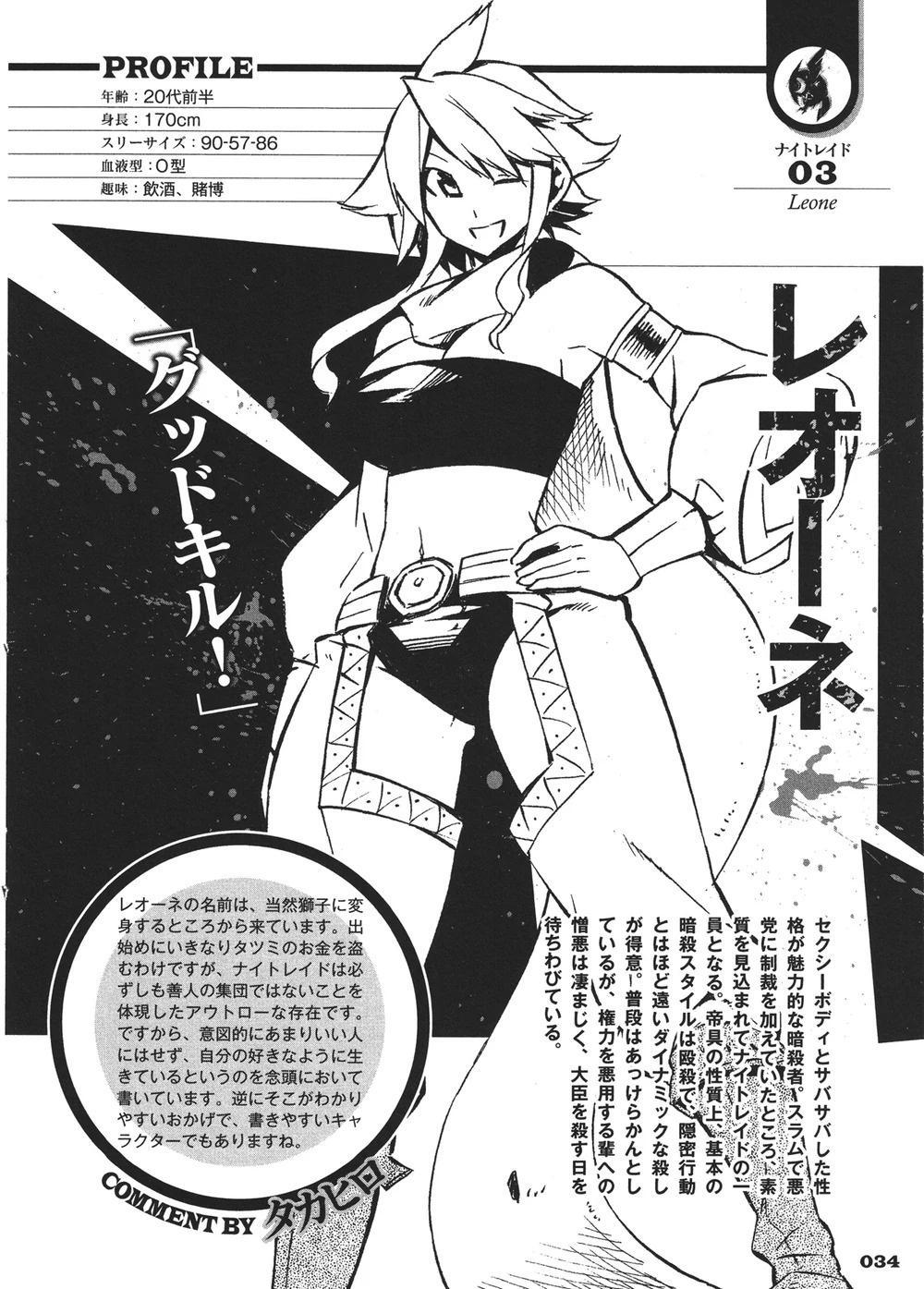

### Leone From Akame Ga Kill V2 on Stable Diffusion via Dreambooth

#### model by Mrkimmon

This your the Stable Diffusion model fine-tuned the Leone From Akame Ga Kill V2 concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **an anime woman character of sks**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

dadosdq/chairtest | dadosdq | 2023-05-16T09:20:12Z | 35 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-10-01T07:21:27Z | ---

license: mit

---

### ChairTest on Stable Diffusion via Dreambooth

#### model by dadosdq

This your the Stable Diffusion model fine-tuned the ChairTest concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **ChA1r**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

sd-dreambooth-library/yagami-taichi-from-digimon-adventure-1999 | sd-dreambooth-library | 2023-05-16T09:20:06Z | 34 | 1 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-30T21:16:43Z | ---

license: mit

---

### Yagami Taichi from Digimon Adventure (1999) on Stable Diffusion via Dreambooth

#### model by KnightMichael

This your the Stable Diffusion model fine-tuned the Yagami Taichi from Digimon Adventure (1999) concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **an anime boy character of sks**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

DavLeonardo/sofi | DavLeonardo | 2023-05-16T09:19:59Z | 30 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-30T18:11:09Z | ---

license: mit

---

### sofi on Stable Diffusion via Dreambooth

#### model by DavLeonardo

This your the Stable Diffusion model fine-tuned the sofi concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **sofi**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

shoya140/mitou-symbol-v0-2 | shoya140 | 2023-05-16T09:19:57Z | 34 | 1 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-30T17:23:40Z | ---

license: mit

---

### mitou-symbol v0.2 on Stable Diffusion via Dreambooth

#### model by shoya140

This your the Stable Diffusion model fine-tuned the mitou-symbol v0.2 concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **an illustration of sks symbol**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

sd-dreambooth-library/mate | sd-dreambooth-library | 2023-05-16T09:19:55Z | 32 | 2 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-30T15:18:55Z | ---

license: mit

---

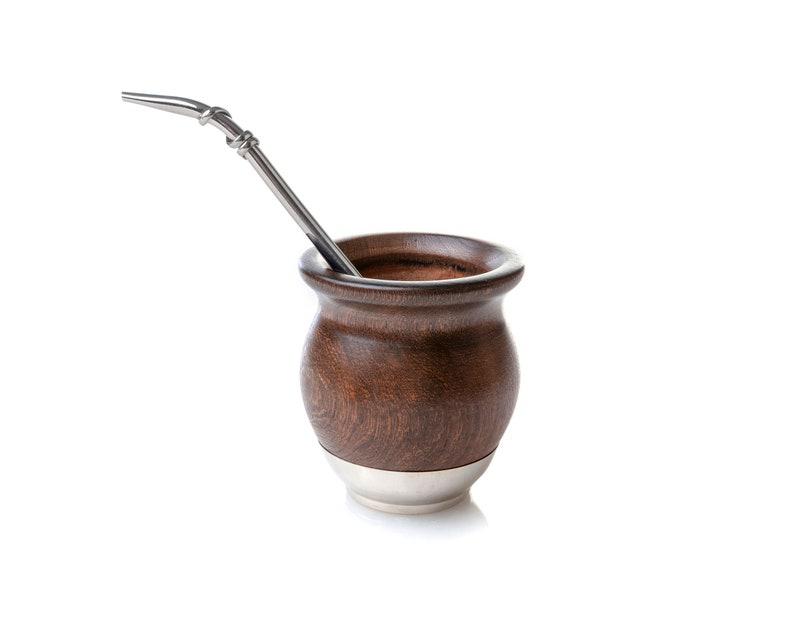

### mate on Stable Diffusion via Dreambooth

#### model by machinelearnear

This your the Stable Diffusion model fine-tuned the mate concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of sks mate**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

sd-dreambooth-library/hensley-art-style | sd-dreambooth-library | 2023-05-16T09:19:51Z | 35 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-30T00:43:56Z | ---

license: mit

---

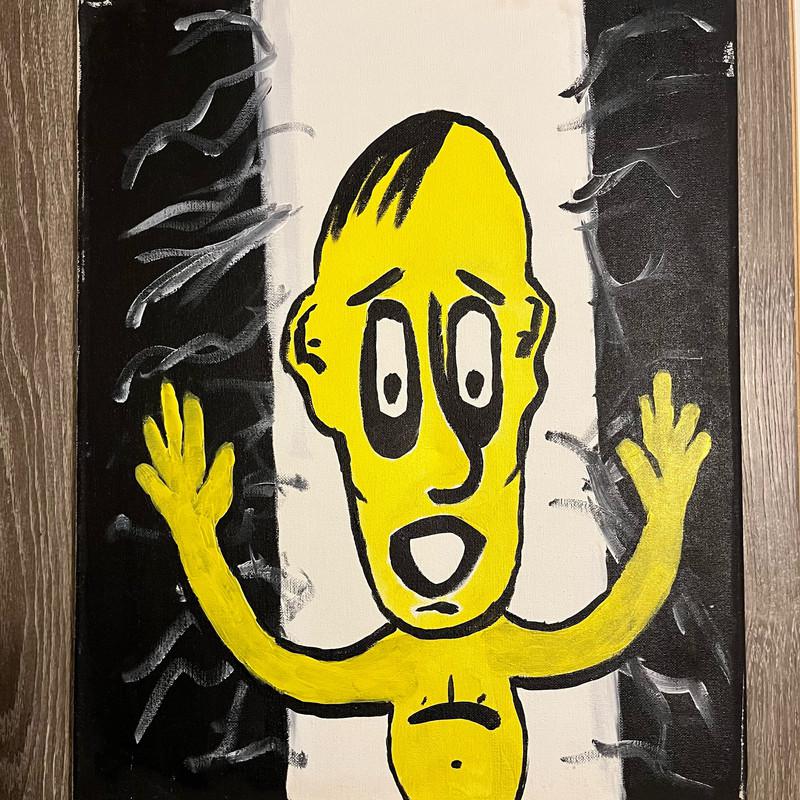

### Hensley art style on Stable Diffusion via Dreambooth

#### model by Pinguin

This your the Stable Diffusion model fine-tuned the Hensley art style concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a painting in style of sks **

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

sd-dreambooth-library/beard-oil-big-sur | sd-dreambooth-library | 2023-05-16T09:19:45Z | 34 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-29T18:44:22Z | ---

license: mit

---

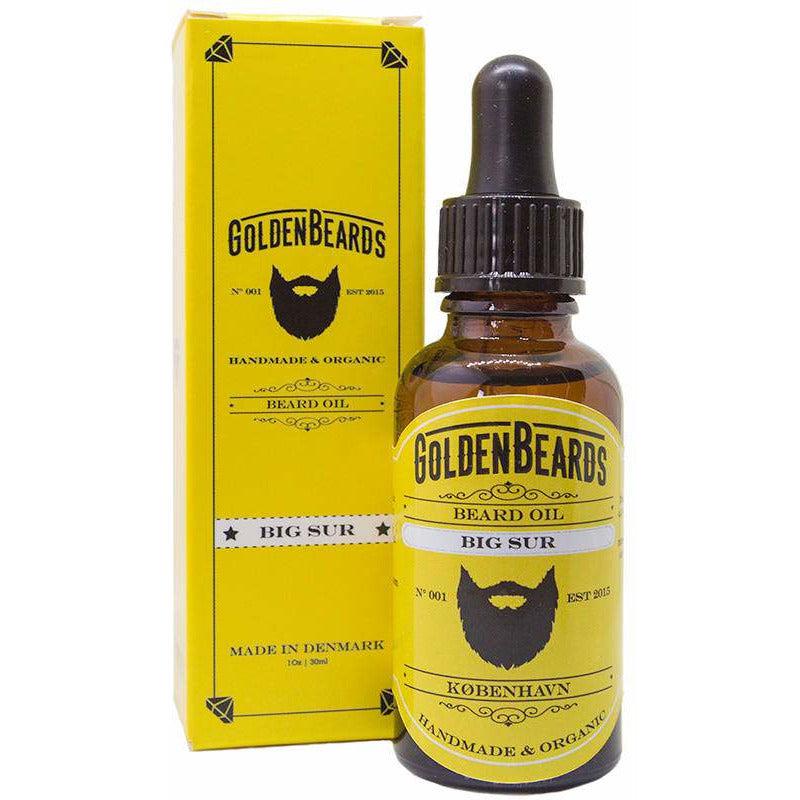

### beard oil big sur on Stable Diffusion via Dreambooth

#### model by soulpawa

This your the Stable Diffusion model fine-tuned the beard oil big sur concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of sks beard oil**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

sd-dreambooth-library/vaporfades | sd-dreambooth-library | 2023-05-16T09:19:41Z | 27 | 3 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-29T18:34:16Z | ---

license: mit

---

### VaporFades on Stable Diffusion via Dreambooth

#### model by nlatina

This your the Stable Diffusion model fine-tuned the VaporFades concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **an image in the style of sks**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/mario-action-figure | sd-dreambooth-library | 2023-05-16T09:19:40Z | 32 | 7 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-29T18:25:01Z | ---

license: mit

---

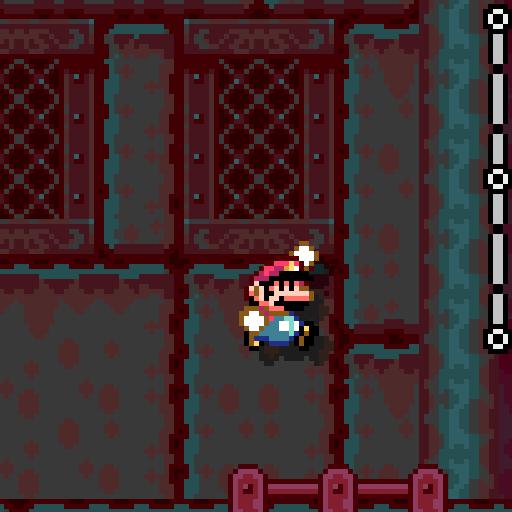

### mario action figure on Stable Diffusion via Dreambooth

#### model by misas4444

This your the Stable Diffusion model fine-tuned the mario action figure concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of sks action figure**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

.. |

sd-dreambooth-library/road-to-ruin | sd-dreambooth-library | 2023-05-16T09:19:32Z | 31 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-29T15:14:14Z | ---

license: mit

---

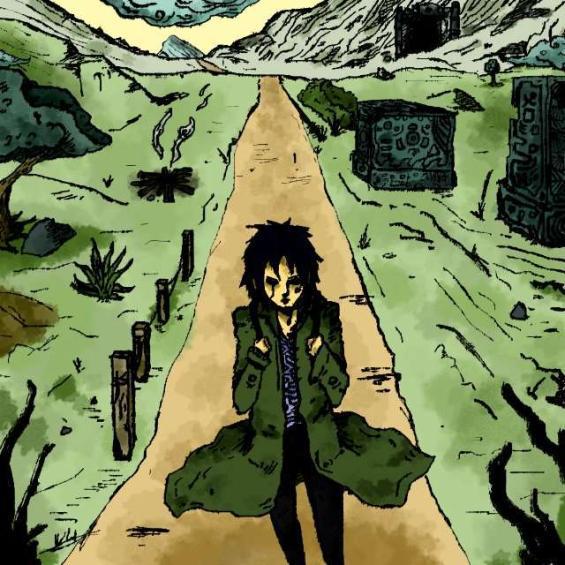

### Road to Ruin on Stable Diffusion via Dreambooth

#### model by nlatina

This your the Stable Diffusion model fine-tuned the Road to Ruin concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **starry night. sks themed level design. tiki ruins, stone statues, night sky and black silhouettes **

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/neff-voice-amp-2 | sd-dreambooth-library | 2023-05-16T09:19:29Z | 30 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-29T14:23:17Z | ---

license: mit

---

### neff voice amp #2 on Stable Diffusion via Dreambooth

#### model by Crazycloud

This your the Stable Diffusion model fine-tuned the neff voice amp #2 concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of sks neff voice amp #1**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/kid-chameleon-character | sd-dreambooth-library | 2023-05-16T09:19:21Z | 36 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-29T09:11:01Z | ---

license: mit

---

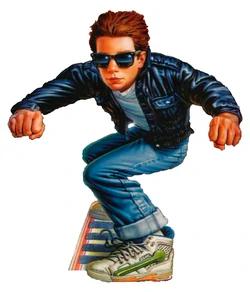

### kid-chameleon-character on Stable Diffusion via Dreambooth

#### model by gregfargo

This your the Stable Diffusion model fine-tuned the kid-chameleon-character concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **kid-chameleon-character**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/yingdream | sd-dreambooth-library | 2023-05-16T09:19:16Z | 29 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-29T07:48:36Z | ---

license: mit

---

### yingdream on Stable Diffusion via Dreambooth

#### model by Worldwars

This your the Stable Diffusion model fine-tuned the yingdream concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of an anime girl**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/arthur-leywin | sd-dreambooth-library | 2023-05-16T09:19:14Z | 30 | 1 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-29T06:25:19Z | ---

license: mit

---

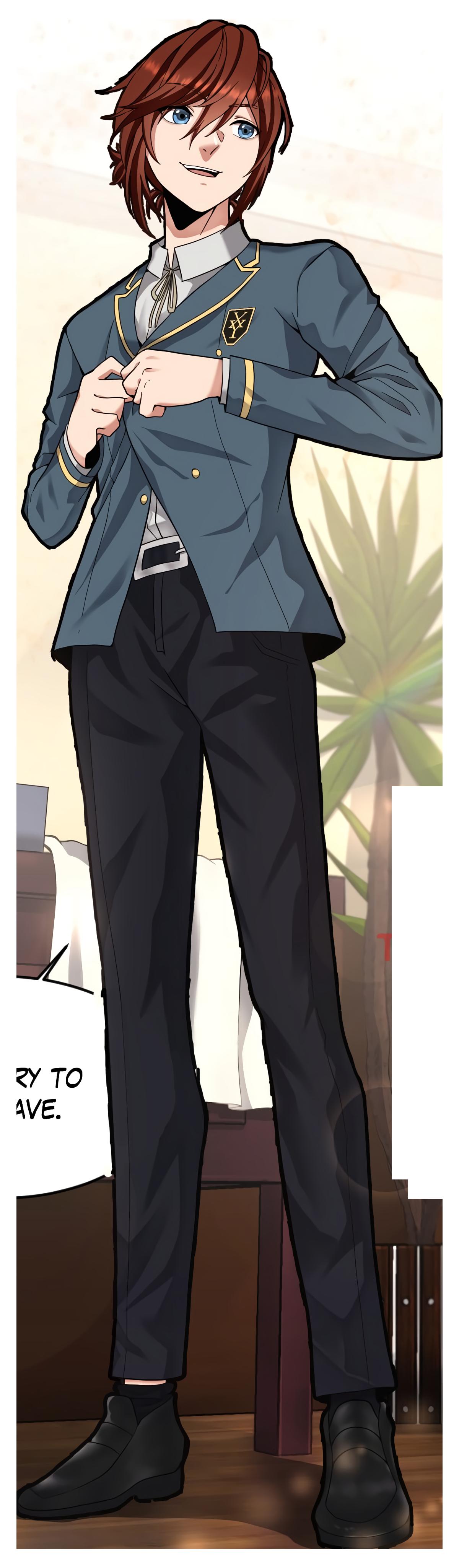

### Arthur Leywin on Stable Diffusion via Dreambooth

#### model by deref

This your the Stable Diffusion model fine-tuned the Arthur Leywin concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of sks guy**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/langel | sd-dreambooth-library | 2023-05-16T09:19:07Z | 34 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-29T03:54:24Z | ---

license: mit

---

### Langel on Stable Diffusion via Dreambooth

#### model by Kasuzu

This your the Stable Diffusion model fine-tuned the Langel concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **Langel**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/gomber | sd-dreambooth-library | 2023-05-16T09:19:05Z | 56 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-29T03:52:15Z | ---

license: mit

---

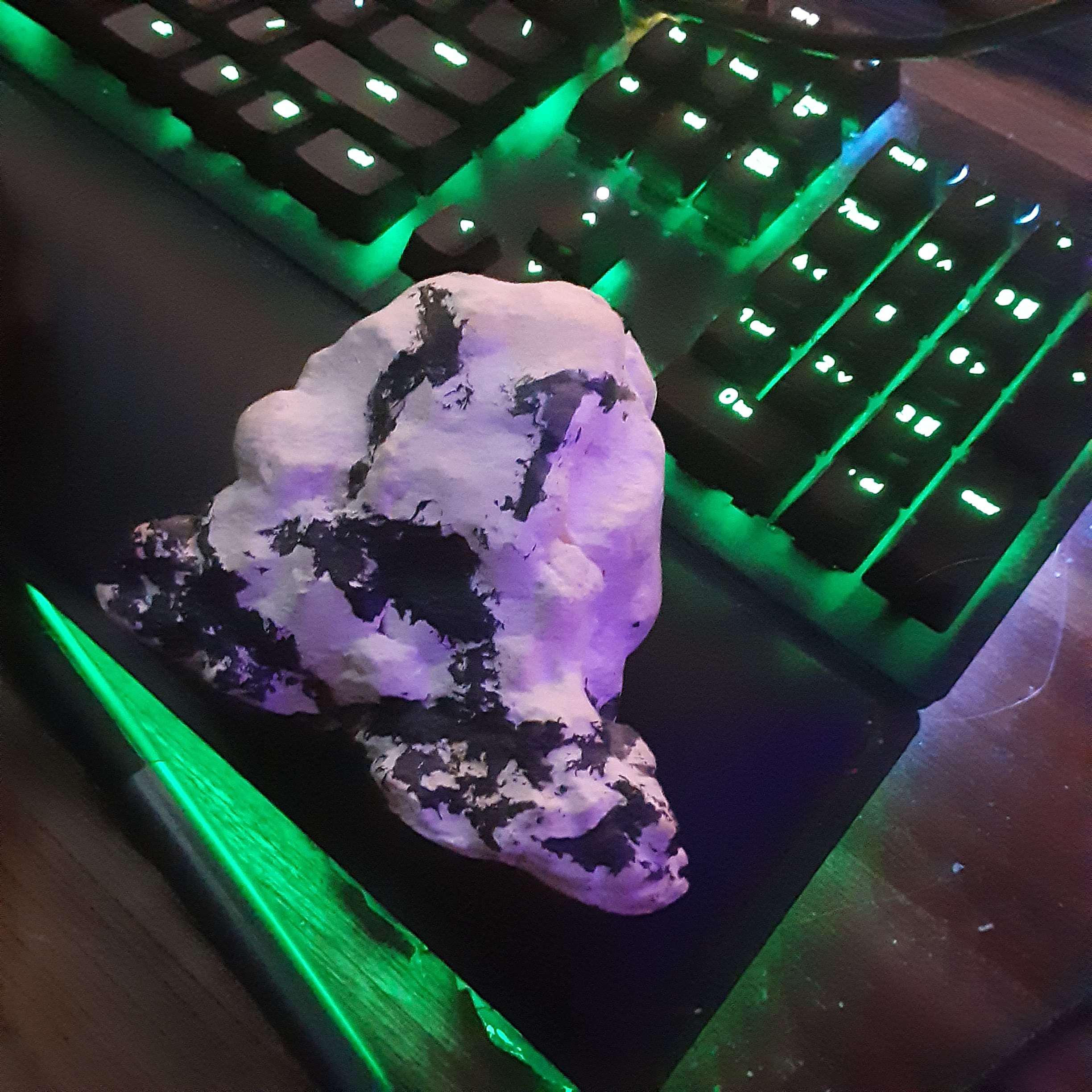

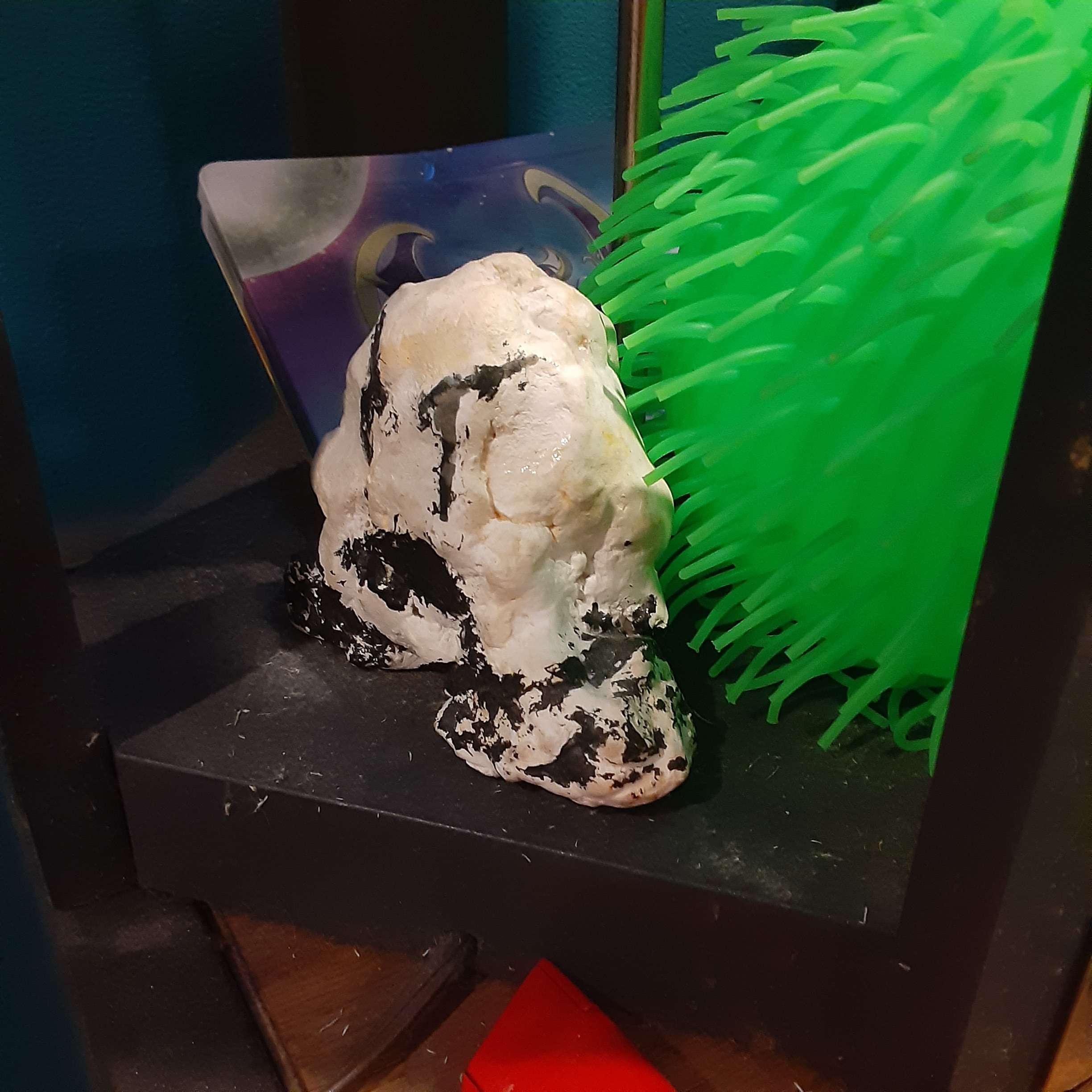

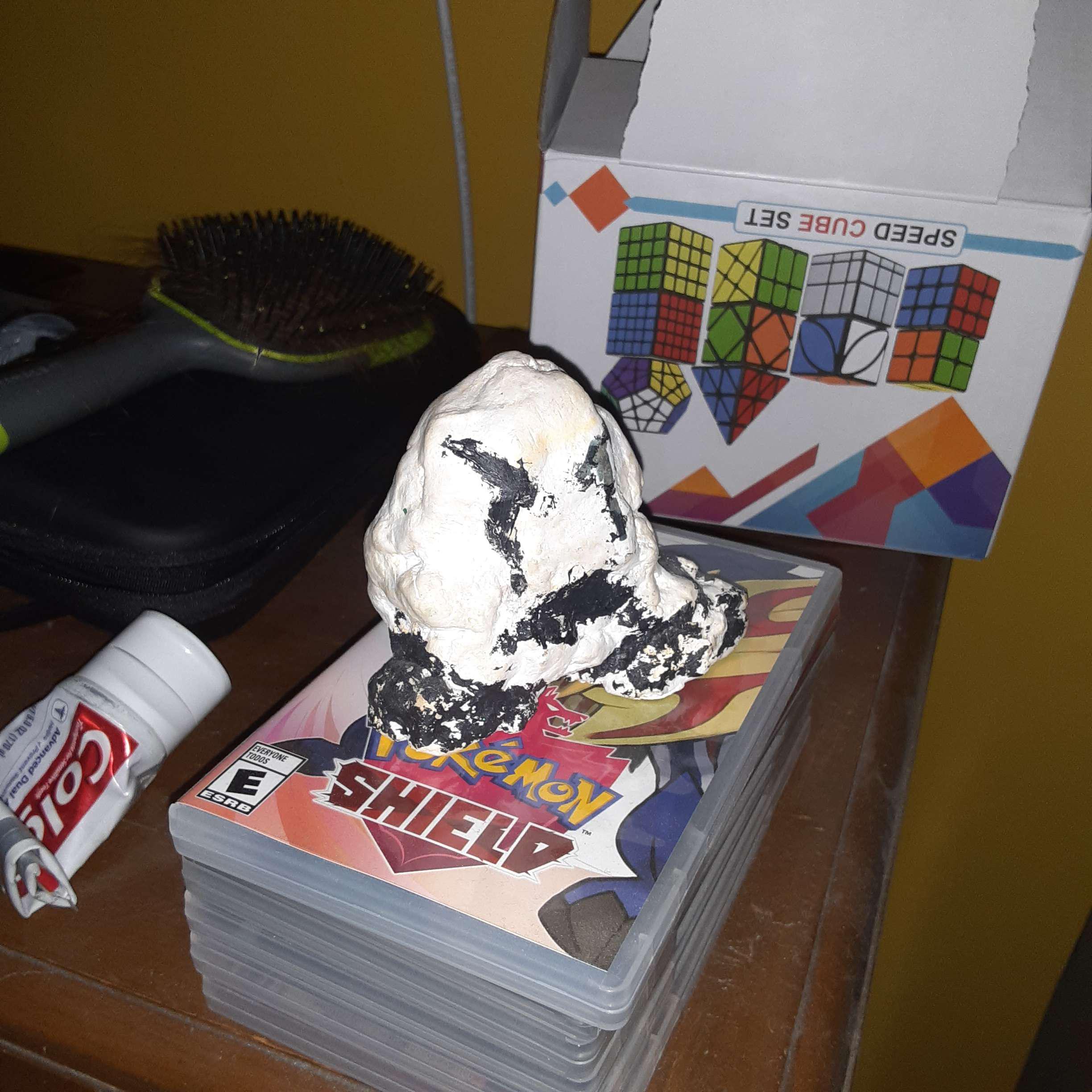

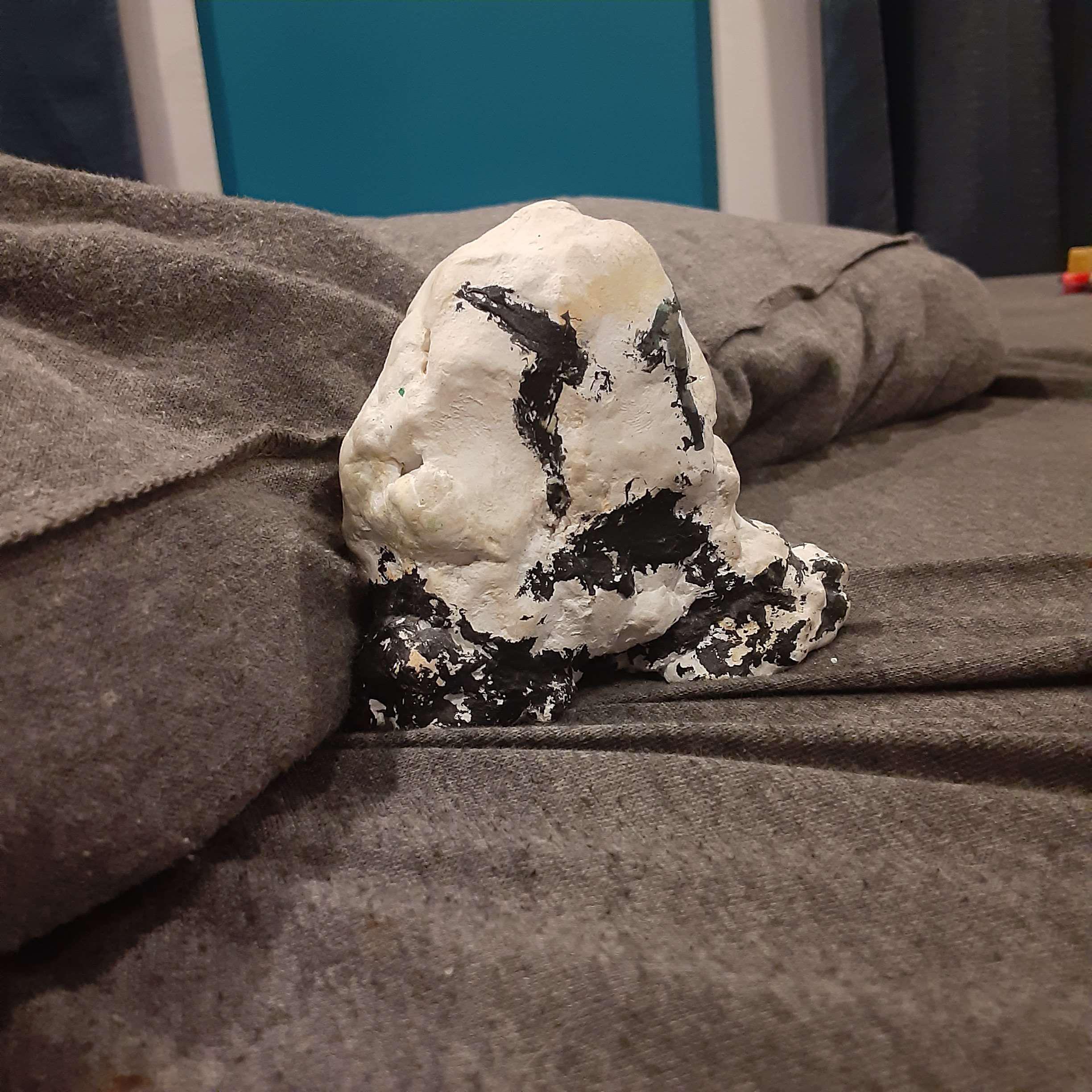

### Gomber on Stable Diffusion via Dreambooth

#### model by chelunderscore

This your the Stable Diffusion model fine-tuned the Gomber concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of sks toy**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/little-mario-jumping | sd-dreambooth-library | 2023-05-16T09:18:56Z | 30 | 1 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-29T01:52:32Z | ---

license: mit

---

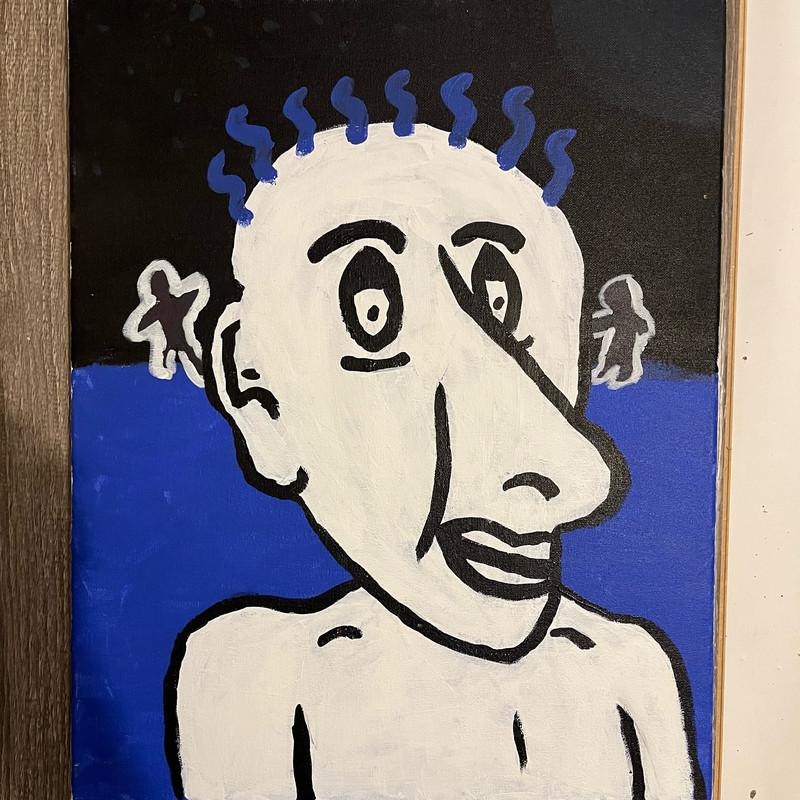

### little mario jumping on Stable Diffusion via Dreambooth

#### model by Pinguin

This your the Stable Diffusion model fine-tuned the little mario jumping concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a screenshot of tiny sks character**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/robeez-baby-girl-water-shoes | sd-dreambooth-library | 2023-05-16T09:18:55Z | 29 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-28T17:21:25Z | ---

license: mit

---

### robeez baby girl water shoes on Stable Diffusion via Dreambooth

#### model by chrisemoody

This your the Stable Diffusion model fine-tuned the robeez baby girl water shoes concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of sks shoes**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/the-child | sd-dreambooth-library | 2023-05-16T09:18:54Z | 31 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-29T01:10:23Z | ---

license: mit

---

### the child on Stable Diffusion via Dreambooth

#### model by jGatzB

This your the Stable Diffusion model fine-tuned the the child concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of a mini australian shepherd with a slight underbite sks**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/alien-coral | sd-dreambooth-library | 2023-05-16T09:18:52Z | 31 | 6 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-29T00:50:46Z | ---

license: mit

---

### Alien Coral on Stable Diffusion via Dreambooth

#### model by A-Merk

This your the Stable Diffusion model fine-tuned the Alien Coral concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of sks alien coral**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/mirtha-legrand | sd-dreambooth-library | 2023-05-16T09:18:46Z | 31 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-28T23:31:03Z | ---

license: mit

---

### mirtha legrand on Stable Diffusion via Dreambooth

#### model by machinelearnear

This your the Stable Diffusion model fine-tuned the mirtha legrand concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of sks mirtha legrand**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/a-hat-in-time-girl | sd-dreambooth-library | 2023-05-16T09:18:42Z | 45 | 2 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-28T22:01:58Z | ---

license: mit

---

### a hat in time girl on Stable Diffusion via Dreambooth

#### model by Pinguin

This your the Stable Diffusion model fine-tuned the a hat in time girl concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a render of sks **

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/justinkrane-artwork | sd-dreambooth-library | 2023-05-16T09:18:40Z | 31 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-28T21:43:31Z | ---

license: mit

---

### JustinKrane_artwork on Stable Diffusion via Dreambooth

#### model by JetJaguar

This your the Stable Diffusion model fine-tuned the JustinKrane_artwork concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **art by sks JustinKrane**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

touch20032003/xuyuan-trial-sentiment-bert-chinese | touch20032003 | 2023-05-16T09:18:37Z | 68 | 12 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"text-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2023-04-28T05:36:41Z | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: xuyuan-trial-sentiment-bert-chinese

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xuyuan-trial-sentiment-bert-chinese

This model is a fine-tuned version of [hfl/chinese-bert-wwm-ext](https://huggingface.co/hfl/chinese-bert-wwm-ext) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0247

- F1 Macro: 0.9899

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

### Framework versions

- Transformers 4.28.0

- Pytorch 2.0.0+cu118

- Datasets 2.12.0

- Tokenizers 0.13.3

|

sd-dreambooth-library/noggles-glasses-1200 | sd-dreambooth-library | 2023-05-16T09:18:34Z | 43 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-28T20:44:25Z | ---

license: mit

---

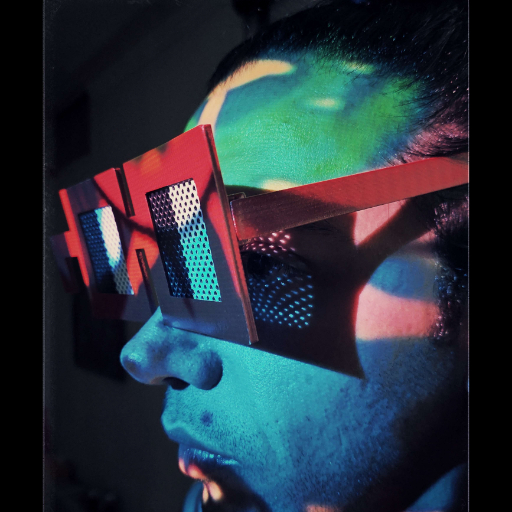

### noggles_glasses_1200 on Stable Diffusion via Dreambooth

#### model by alxdfy

This your the Stable Diffusion model fine-tuned the noggles_glasses_1200 concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of a person wearing sks glasses**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/edd | sd-dreambooth-library | 2023-05-16T09:18:32Z | 35 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-28T20:17:35Z | ---

license: mit

---

### edd on Stable Diffusion via Dreambooth

#### model by mangooo

This your the Stable Diffusion model fine-tuned the edd concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **sks boy smiles**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/smario-world-map | sd-dreambooth-library | 2023-05-16T09:18:30Z | 50 | 5 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-28T20:01:22Z | ---

license: mit

---

### Smario world Map on Stable Diffusion via Dreambooth

#### model by Pinguin

This your the Stable Diffusion model fine-tuned the Smario world Map concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a map in style of sks **

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/froggewut | sd-dreambooth-library | 2023-05-16T09:18:26Z | 33 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-28T19:22:37Z | ---

license: mit

---

### FroggeWut on Stable Diffusion via Dreambooth

#### model by nlatina

This your the Stable Diffusion model fine-tuned the FroggeWut concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a painting in the style of sks**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/homelander | sd-dreambooth-library | 2023-05-16T09:18:22Z | 29 | 3 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-28T18:36:00Z | ---

license: mit

---

### Homelander on Stable Diffusion via Dreambooth

#### model by Abdifatah

This your the Stable Diffusion model fine-tuned the Homelander concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of homelander guy**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/paolo-bonolis | sd-dreambooth-library | 2023-05-16T09:18:17Z | 31 | 1 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-28T17:48:56Z | ---

license: mit

---

### paolo-bonolis on Stable Diffusion via Dreambooth

#### model by thesun1094224

This your the Stable Diffusion model fine-tuned the paolo-bonolis concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of sks paolo bonolis**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/tempa | sd-dreambooth-library | 2023-05-16T09:18:11Z | 39 | 0 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-28T16:50:04Z | ---

license: mit

---

### Tempa on Stable Diffusion via Dreambooth

#### model by Giordyman

This your the Stable Diffusion model fine-tuned the Tempa concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of sks Tempa**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

sd-dreambooth-library/cat-toy | sd-dreambooth-library | 2023-05-16T09:18:06Z | 47 | 3 | diffusers | [

"diffusers",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-28T10:47:05Z | ---

license: mit

---

### Cat toy on Stable Diffusion via Dreambooth

#### model by multimodalart

This your the Stable Diffusion model fine-tuned the Cat toy concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of sks toy**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

Here are the images used for training this concept:

|

jcplus/waifu-diffusion | jcplus | 2023-05-16T09:18:02Z | 38 | 5 | diffusers | [

"diffusers",

"stable-diffusion",

"text-to-image",

"en",

"license:bigscience-bloom-rail-1.0",

"autotrain_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-09-22T09:39:42Z | ---

language:

- en

tags:

- stable-diffusion

- text-to-image

license: bigscience-bloom-rail-1.0

inference: false

---

# waifu-diffusion - Diffusion for Weebs

waifu-diffusion is a latent text-to-image diffusion model that has been conditioned on high-quality anime images through fine-tuning.

# Gradio

We also support a [Gradio](https://github.com/gradio-app/gradio) web ui with diffusers to run inside a colab notebook:

[](https://colab.research.google.com/drive/1_8wPN7dJO746QXsFnB09Uq2VGgSRFuYE#scrollTo=1HaCauSq546O)

<img src=https://cdn.discordapp.com/attachments/930559077170421800/1017265913231327283/unknown.png width=40% height=40%>

[Original PyTorch Model Download Link](https://thisanimedoesnotexist.ai/downloads/wd-v1-2-full-ema.ckpt)

## Model Description

The model originally used for fine-tuning is [Stable Diffusion V1-4](https://huggingface.co/CompVis/stable-diffusion-v1-4), which is a latent image diffusion model trained on [LAION2B-en](https://huggingface.co/datasets/laion/laion2B-en).

The current model has been fine-tuned with a learning rate of 5.0e-6 for 4 epochs on 56k text-image pairs obtained through Danbooru which all have an aesthetic rating greater than `6.0`.

**Note:** This project has **no affiliation with Danbooru.**

## Training Data & Annotative Prompting

The data used for fine-tuning has come from a random sample of 56k Danbooru images, which were filtered based on [CLIP Aesthetic Scoring](https://github.com/christophschuhmann/improved-aesthetic-predictor) where only images with an aesthetic score greater than `6.0` were used.

## License

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce nor share illegal or harmful outputs or content

2. The authors claims no rights on the outputs you generate, you are free to use them and are accountable for their use which must not go against the provisions set in the license

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)

[Please read the full license here](https://huggingface.co/spaces/CompVis/stable-diffusion-license)

## Downstream Uses

This model can be used for entertainment purposes and as a generative art assistant.

## Example Code

```python

import torch

from torch import autocast

from diffusers import StableDiffusionPipeline, DDIMScheduler

model_id = "hakurei/waifu-diffusion"

device = "cuda"

pipe = StableDiffusionPipeline.from_pretrained(

model_id,

torch_dtype=torch.float16,

revision="fp16",

scheduler=DDIMScheduler(

beta_start=0.00085,

beta_end=0.012,

beta_schedule="scaled_linear",

clip_sample=False,

set_alpha_to_one=False,

),

)

pipe = pipe.to(device)

prompt = "touhou hakurei_reimu 1girl solo portrait"

with autocast("cuda"):

image = pipe(prompt, guidance_scale=7.5)["sample"][0]

image.save("reimu_hakurei.png")

```

## Team Members and Acknowledgements

This project would not have been possible without the incredible work by the [CompVis Researchers](https://ommer-lab.com/).

- [Anthony Mercurio](https://github.com/harubaru)

- [Salt](https://github.com/sALTaccount/)

- [Sta @ Bit192](https://twitter.com/naclbbr)

In order to reach us, you can join our [Discord server](https://discord.gg/touhouai).

[](https://discord.gg/touhouai) |

Ryosuke/noumison | Ryosuke | 2023-05-16T09:11:08Z | 26 | 0 | diffusers | [

"diffusers",

"safetensors",

"text-to-image",

"stable-diffusion",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2023-05-16T09:01:23Z | ---

license: creativeml-openrail-m

tags:

- text-to-image

- stable-diffusion

---

### noumison Dreambooth model trained by Ryosuke with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Sample pictures of this concept:

|

OscarCH95/LANA | OscarCH95 | 2023-05-16T09:09:35Z | 0 | 0 | null | [

"arxiv:1910.09700",

"region:us"

] | null | 2023-05-16T09:08:06Z | ---

# For reference on model card metadata, see the spec: https://github.com/huggingface/hub-docs/blob/main/modelcard.md?plain=1

# Doc / guide: https://huggingface.co/docs/hub/model-cards

{}

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

This modelcard aims to be a base template for new models. It has been generated using [this raw template](https://github.com/huggingface/huggingface_hub/blob/main/src/huggingface_hub/templates/modelcard_template.md?plain=1).

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Data Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Data Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics