modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-06-05 06:27:31

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 468

values | tags

sequencelengths 1

4.05k

| pipeline_tag

stringclasses 54

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-06-05 06:26:36

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

msavel-prnt/distilbert-base-uncased-finetuned-clinc | msavel-prnt | 2022-01-05T15:37:05Z | 105 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:clinc_oos",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-03-02T23:29:05Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- clinc_oos

metrics:

- accuracy

model_index:

- name: distilbert-base-uncased-finetuned-clinc

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: clinc_oos

type: clinc_oos

args: plus

metric:

name: Accuracy

type: accuracy

value: 0.9180645161290323

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-clinc

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the clinc_oos dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7528

- Accuracy: 0.9181

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 48

- eval_batch_size: 48

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 318 | 3.3044 | 0.7623 |

| 3.7959 | 2.0 | 636 | 1.8674 | 0.8597 |

| 3.7959 | 3.0 | 954 | 1.1377 | 0.8948 |

| 1.6819 | 4.0 | 1272 | 0.8351 | 0.9126 |

| 0.8804 | 5.0 | 1590 | 0.7528 | 0.9181 |

### Framework versions

- Transformers 4.8.2

- Pytorch 1.9.0+cu102

- Datasets 1.9.0

- Tokenizers 0.10.3

|

tadejmagajna/flair-sl-pos | tadejmagajna | 2022-01-05T15:07:06Z | 2 | 0 | flair | [

"flair",

"pytorch",

"token-classification",

"sequence-tagger-model",

"sl",

"region:us"

] | token-classification | 2022-03-02T23:29:05Z | ---

tags:

- flair

- token-classification

- sequence-tagger-model

language: sl

widget:

- text: "Danes je lep dan."

---

## Slovene Part-of-speech (PoS) Tagging for Flair

This is a Slovene part-of-speech (PoS) tagger trained on the [Slovenian UD Treebank](https://github.com/UniversalDependencies/UD_Slovenian-SSJ) using Flair NLP framework.

The tagger is trained using a combination of forward Slovene contextual string embeddings, backward Slovene contextual string embeddings and classic Slovene FastText embeddings.

F-score (micro): **94,96**

The model is trained on a large (500+) number of different tags that described at [https://universaldependencies.org/tagset-conversion/sl-multext-uposf.html](https://universaldependencies.org/tagset-conversion/sl-multext-uposf.html).

Based on [Flair embeddings](https://www.aclweb.org/anthology/C18-1139/) and LSTM-CRF.

---

### Demo: How to use in Flair

Requires: **[Flair](https://github.com/flairNLP/flair/)** (`pip install flair`)

```python

from flair.data import Sentence

from flair.models import SequenceTagger

# load tagger

tagger = SequenceTagger.load("tadejmagajna/flair-sl-pos")

# make example sentence

sentence = Sentence("Danes je lep dan.")

# predict PoS tags

tagger.predict(sentence)

# print sentence

print(sentence)

# print predicted PoS spans

print('The following PoS tags are found:')

# iterate over parts of speech and print

for tag in sentence.get_spans('pos'):

print(tag)

```

This prints out the following output:

```

Sentence: "Danes je lep dan ." [− Tokens: 5 − Token-Labels: "Danes <Rgp> je <Va-r3s-n> lep <Agpmsnn> dan <Ncmsn> . <Z>"]

The following PoS tags are found:

Span [1]: "Danes" [− Labels: Rgp (1.0)]

Span [2]: "je" [− Labels: Va-r3s-n (1.0)]

Span [3]: "lep" [− Labels: Agpmsnn (0.9999)]

Span [4]: "dan" [− Labels: Ncmsn (1.0)]

Span [5]: "." [− Labels: Z (1.0)]

```

---

### Training: Script to train this model

The following standard Flair script was used to train this model:

```python

from flair.data import Corpus

from flair.datasets import UD_SLOVENIAN

from flair.embeddings import WordEmbeddings, StackedEmbeddings, FlairEmbeddings

# 1. get the corpus

corpus: Corpus = UD_SLOVENIAN()

# 2. what tag do we want to predict?

tag_type = 'pos'

# 3. make the tag dictionary from the corpus

tag_dictionary = corpus.make_tag_dictionary(tag_type=tag_type)

# 4. initialize embeddings

embedding_types = [

WordEmbeddings('sl'),

FlairEmbeddings('sl-forward'),

FlairEmbeddings('sl-backward'),

]

embeddings: StackedEmbeddings = StackedEmbeddings(embeddings=embedding_types)

# 5. initialize sequence tagger

from flair.models import SequenceTagger

tagger: SequenceTagger = SequenceTagger(hidden_size=256,

embeddings=embeddings,

tag_dictionary=tag_dictionary,

tag_type=tag_type)

# 6. initialize trainer

from flair.trainers import ModelTrainer

trainer: ModelTrainer = ModelTrainer(tagger, corpus)

# 7. start training

trainer.train('resources/taggers/pos-slovene',

train_with_dev=True,

max_epochs=150)

```

---

### Cite

Please cite the following paper when using this model.

```

@inproceedings{akbik2018coling,

title={Contextual String Embeddings for Sequence Labeling},

author={Akbik, Alan and Blythe, Duncan and Vollgraf, Roland},

booktitle = {{COLING} 2018, 27th International Conference on Computational Linguistics},

pages = {1638--1649},

year = {2018}

}

```

---

### Issues?

The Flair issue tracker is available [here](https://github.com/flairNLP/flair/issues/). |

Icelandic-lt/electra-small-igc-is | Icelandic-lt | 2022-01-05T14:56:02Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"electra",

"pretraining",

"is",

"dataset:igc",

"license:cc-by-4.0",

"endpoints_compatible",

"region:us"

] | null | 2024-05-27T11:38:14Z | ---

language:

- is

license: cc-by-4.0

datasets:

- igc

---

# Icelandic ELECTRA-Small

This model was pretrained on the [Icelandic Gigaword Corpus](http://igc.arnastofnun.is/), which contains approximately 1.69B tokens, using default settings. The model uses a WordPiece tokenizer with a vocabulary size of 32,105.

# Acknowledgments

This research was supported with Cloud TPUs from Google's TPU Research Cloud (TRC).

This project was funded by the Language Technology Programme for Icelandic 2019-2023. The programme, which is managed and coordinated by [Almannarómur](https://almannaromur.is/), is funded by the Icelandic Ministry of Education, Science and Culture. |

jonfd/electra-small-igc-is | jonfd | 2022-01-05T14:56:02Z | 47 | 0 | transformers | [

"transformers",

"pytorch",

"electra",

"pretraining",

"is",

"dataset:igc",

"license:cc-by-4.0",

"endpoints_compatible",

"region:us"

] | null | 2022-03-02T23:29:05Z | ---

language:

- is

license: cc-by-4.0

datasets:

- igc

---

# Icelandic ELECTRA-Small

This model was pretrained on the [Icelandic Gigaword Corpus](http://igc.arnastofnun.is/), which contains approximately 1.69B tokens, using default settings. The model uses a WordPiece tokenizer with a vocabulary size of 32,105.

# Acknowledgments

This research was supported with Cloud TPUs from Google's TPU Research Cloud (TRC).

This project was funded by the Language Technology Programme for Icelandic 2019-2023. The programme, which is managed and coordinated by [Almannarómur](https://almannaromur.is/), is funded by the Icelandic Ministry of Education, Science and Culture. |

jonfd/electra-base-igc-is | jonfd | 2022-01-05T14:54:23Z | 10 | 0 | transformers | [

"transformers",

"pytorch",

"electra",

"pretraining",

"is",

"dataset:igc",

"license:cc-by-4.0",

"endpoints_compatible",

"region:us"

] | null | 2022-03-02T23:29:05Z | ---

language:

- is

license: cc-by-4.0

datasets:

- igc

---

# Icelandic ELECTRA-Base

This model was pretrained on the [Icelandic Gigaword Corpus](http://igc.arnastofnun.is/), which contains approximately 1.69B tokens, using default settings. The model uses a WordPiece tokenizer with a vocabulary size of 32,105.

# Acknowledgments

This research was supported with Cloud TPUs from Google's TPU Research Cloud (TRC).

This project was funded by the Language Technology Programme for Icelandic 2019-2023. The programme, which is managed and coordinated by [Almannarómur](https://almannaromur.is/), is funded by the Icelandic Ministry of Education, Science and Culture. |

kurone/cp_tags_prediction | kurone | 2022-01-05T13:32:49Z | 0 | 0 | null | [

"region:us"

] | null | 2022-03-02T23:29:05Z | This model can predict which categories a specific competitive problem falls into |

huggingtweets/sporeball | huggingtweets | 2022-01-05T08:02:01Z | 105 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2022-03-02T23:29:05Z | ---

language: en

thumbnail: http://www.huggingtweets.com/sporeball/1641369716297/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1365405536401776642/Z17NbuYy_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">lux</div>

<div style="text-align: center; font-size: 14px;">@sporeball</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from lux.

| Data | lux |

| --- | --- |

| Tweets downloaded | 1150 |

| Retweets | 171 |

| Short tweets | 120 |

| Tweets kept | 859 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/2w9y6gn1/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @sporeball's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/2tg3n5a5) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/2tg3n5a5/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/sporeball')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

Prasadi/wav2vec2-base-timit-demo-colab-1 | Prasadi | 2022-01-05T06:18:01Z | 109 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:04Z | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: wav2vec2-base-timit-demo-colab-1

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-base-timit-demo-colab-1

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3857

- Wer: 0.3874

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 10

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 3.4285 | 2.01 | 500 | 1.4732 | 0.9905 |

| 0.7457 | 4.02 | 1000 | 0.5278 | 0.4960 |

| 0.3463 | 6.02 | 1500 | 0.4245 | 0.4155 |

| 0.2034 | 8.03 | 2000 | 0.3857 | 0.3874 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu111

- Datasets 1.13.3

- Tokenizers 0.10.3

|

rdpatilds/con-nlu | rdpatilds | 2022-01-05T05:31:42Z | 5 | 0 | transformers | [

"transformers",

"tf",

"bert",

"text-classification",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-03-02T23:29:05Z | ---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: con-nlu

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# con-nlu

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.15.0

- TensorFlow 2.7.0

- Datasets 1.17.0

- Tokenizers 0.10.3

|

abdelkader/distilbert-base-uncased-finetuned-emotion | abdelkader | 2022-01-04T23:18:05Z | 107 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:emotion",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-03-02T23:29:05Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- emotion

metrics:

- accuracy

- f1

model-index:

- name: distilbert-base-uncased-finetuned-emotion

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: emotion

type: emotion

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.9215

- name: F1

type: f1

value: 0.9215604730468001

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2162

- Accuracy: 0.9215

- F1: 0.9216

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.8007 | 1.0 | 250 | 0.3082 | 0.907 | 0.9045 |

| 0.2438 | 2.0 | 500 | 0.2162 | 0.9215 | 0.9216 |

### Framework versions

- Transformers 4.15.0

- Pytorch 1.10.0+cu111

- Datasets 1.17.0

- Tokenizers 0.10.3

|

mbateman/bert-finetuned-ner | mbateman | 2022-01-04T20:30:26Z | 6 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"token-classification",

"generated_from_trainer",

"dataset:conll2003",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | token-classification | 2022-03-02T23:29:05Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- conll2003

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: bert-finetuned-ner

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: conll2003

type: conll2003

args: conll2003

metrics:

- name: Precision

type: precision

value: 0.9333553828344634

- name: Recall

type: recall

value: 0.9498485358465163

- name: F1

type: f1

value: 0.9415297355909584

- name: Accuracy

type: accuracy

value: 0.9868281627126626

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-finetuned-ner

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0622

- Precision: 0.9334

- Recall: 0.9498

- F1: 0.9415

- Accuracy: 0.9868

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.0881 | 1.0 | 1756 | 0.0683 | 0.9136 | 0.9322 | 0.9228 | 0.9826 |

| 0.0383 | 2.0 | 3512 | 0.0641 | 0.9277 | 0.9456 | 0.9366 | 0.9854 |

| 0.0229 | 3.0 | 5268 | 0.0622 | 0.9334 | 0.9498 | 0.9415 | 0.9868 |

### Framework versions

- Transformers 4.12.5

- Pytorch 1.10.0+cu102

- Datasets 1.15.1

- Tokenizers 0.10.1

|

huawei-noah/JABER | huawei-noah | 2022-01-04T20:19:57Z | 1 | 0 | null | [

"pytorch",

"arxiv:2112.04329",

"region:us"

] | null | 2022-03-02T23:29:05Z | # Overview

<p align="center">

<img src="https://avatars.githubusercontent.com/u/12619994?s=200&v=4" width="150">

</p>

<!-- -------------------------------------------------------------------------------- -->

JABER (Junior Arabic BERt) is a 12-layer Arabic pretrained Language Model.

JABER obtained rank one on [ALUE leaderboard](https://www.alue.org/leaderboard) at `01/09/2021`.

This model is **only compatible** with the code in [this github repo](https://github.com/huawei-noah/Pretrained-Language-Model/tree/master/JABER-PyTorch) (not supported by the [Transformers](https://github.com/huggingface/transformers) library)

## Citation

Please cite the following [paper](https://arxiv.org/abs/2112.04329) when using our code and model:

``` bibtex

@misc{ghaddar2021jaber,

title={JABER: Junior Arabic BERt},

author={Abbas Ghaddar and Yimeng Wu and Ahmad Rashid and Khalil Bibi and Mehdi Rezagholizadeh and Chao Xing and Yasheng Wang and Duan Xinyu and Zhefeng Wang and Baoxing Huai and Xin Jiang and Qun Liu and Philippe Langlais},

year={2021},

eprint={2112.04329},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

``` |

ncoop57/athena | ncoop57 | 2022-01-04T19:24:11Z | 5 | 0 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"longformer",

"feature-extraction",

"sentence-similarity",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | sentence-similarity | 2022-03-02T23:29:05Z | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

---

# ncoop57/athena

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 256 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('ncoop57/athena')

embeddings = model.encode(sentences)

print(embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=ncoop57/athena)

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 50 with parameters:

```

{'batch_size': 2, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.MultipleNegativesRankingLoss.MultipleNegativesRankingLoss` with parameters:

```

{'scale': 20.0, 'similarity_fct': 'cos_sim'}

```

Parameters of the fit()-Method:

```

{

"epochs": 1,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'transformers.optimization.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": null,

"warmup_steps": 100,

"weight_decay": 0.01

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 1024, 'do_lower_case': False}) with Transformer model: LongformerModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

(2): Dense({'in_features': 768, 'out_features': 256, 'bias': True, 'activation_function': 'torch.nn.modules.activation.Tanh'})

)

```

## Citing & Authors

<!--- Describe where people can find more information --> |

huggingtweets/darth | huggingtweets | 2022-01-04T19:21:54Z | 106 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2022-03-02T23:29:05Z | ---

language: en

thumbnail: http://www.huggingtweets.com/darth/1641324110436/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1459410046169731073/gRiEO9Yd_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">darth™</div>

<div style="text-align: center; font-size: 14px;">@darth</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from darth™.

| Data | darth™ |

| --- | --- |

| Tweets downloaded | 3189 |

| Retweets | 1278 |

| Short tweets | 677 |

| Tweets kept | 1234 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/3t5g6hcx/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @darth's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/c56rnej9) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/c56rnej9/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/darth')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

Khanh/distilbert-base-multilingual-cased-finetuned-viquad | Khanh | 2022-01-04T19:19:15Z | 106 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"question-answering",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | question-answering | 2022-03-02T23:29:04Z | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: distilbert-base-multilingual-cased-finetuned-viquad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-multilingual-cased-finetuned-viquad

This model is a fine-tuned version of [distilbert-base-multilingual-cased](https://huggingface.co/distilbert-base-multilingual-cased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 3.4241

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 65 | 4.0975 |

| No log | 2.0 | 130 | 3.9315 |

| No log | 3.0 | 195 | 3.6742 |

| No log | 4.0 | 260 | 3.4878 |

| No log | 5.0 | 325 | 3.4241 |

### Framework versions

- Transformers 4.15.0

- Pytorch 1.10.0+cu111

- Datasets 1.17.0

- Tokenizers 0.10.3

|

ericRosello/distilbert-base-uncased-finetuned-squad-frozen-v2 | ericRosello | 2022-01-04T18:06:41Z | 11 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"question-answering",

"generated_from_trainer",

"dataset:squad",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | question-answering | 2022-03-02T23:29:05Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: distilbert-base-uncased-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-squad

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the squad dataset.

It achieves the following results on the evaluation set:

- Loss: 1.2104

## Model description

Most base model weights were frozen leaving only to finetune the last layer (qa outputs) and 3 last layers of the encoder.

## Training and evaluation data

Achieved EM: 73.519394512772, F1: 82.71779517079237

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 1.3937 | 1.0 | 5533 | 1.2915 |

| 1.1522 | 2.0 | 11066 | 1.2227 |

| 1.0055 | 3.0 | 16599 | 1.2104 |

### Framework versions

- Transformers 4.15.0

- Pytorch 1.10.0+cu111

- Datasets 1.17.0

- Tokenizers 0.10.3

|

yosemite/autonlp-imdb-sentiment-analysis-english-470512388 | yosemite | 2022-01-04T17:34:50Z | 18 | 2 | transformers | [

"transformers",

"pytorch",

"bert",

"text-classification",

"autonlp",

"en",

"dataset:yosemite/autonlp-data-imdb-sentiment-analysis-english",

"co2_eq_emissions",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-03-02T23:29:05Z | ---

tags: autonlp

language: en

widget:

- text: "I love AutoNLP 🤗"

datasets:

- yosemite/autonlp-data-imdb-sentiment-analysis-english

co2_eq_emissions: 256.38650494338367

---

# Model Trained Using AutoNLP

- Problem type: Binary Classification

- Model ID: 470512388

- CO2 Emissions (in grams): 256.38650494338367

## Validation Metrics

- Loss: 0.18712733685970306

- Accuracy: 0.9388

- Precision: 0.9300274402195218

- Recall: 0.949

- AUC: 0.98323192

- F1: 0.9394179370421698

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoNLP"}' https://api-inference.huggingface.co/models/yosemite/autonlp-imdb-sentiment-analysis-english-470512388

```

Or Python API:

```

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("yosemite/autonlp-imdb-sentiment-analysis-english-470512388", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("yosemite/autonlp-imdb-sentiment-analysis-english-470512388", use_auth_token=True)

inputs = tokenizer("I love AutoNLP", return_tensors="pt")

outputs = model(**inputs)

``` |

nvidia/megatron-bert-uncased-345m | nvidia | 2022-01-04T15:16:39Z | 0 | 7 | null | [

"arxiv:1909.08053",

"region:us"

] | null | 2022-03-02T23:29:05Z | <!---

# ##############################################################################################

#

# Copyright (c) 2021-, NVIDIA CORPORATION. All rights reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

# ##############################################################################################

-->

[Megatron](https://arxiv.org/pdf/1909.08053.pdf) is a large, powerful transformer developed by the Applied Deep Learning Research team at NVIDIA. This particular Megatron model was trained from a bidirectional transformer in the style of BERT with text sourced from Wikipedia, RealNews, OpenWebText, and CC-Stories. This model contains 345 million parameters. It is made up of 24 layers, 16 attention heads with a hidden size of 1024.

Find more information at [https://github.com/NVIDIA/Megatron-LM](https://github.com/NVIDIA/Megatron-LM)

# How to run Megatron BERT using Transformers

## Prerequisites

In that guide, we run all the commands from a folder called `$MYDIR` and defined as (in `bash`):

```

export MYDIR=$HOME

```

Feel free to change the location at your convenience.

To run some of the commands below, you'll have to clone `Transformers`.

```

git clone https://github.com/huggingface/transformers.git $MYDIR/transformers

```

## Get the checkpoint from the NVIDIA GPU Cloud

You must create a directory called `nvidia/megatron-bert-uncased-345m`.

```

mkdir -p $MYDIR/nvidia/megatron-bert-uncased-345m

```

You can download the checkpoint from the [NVIDIA GPU Cloud (NGC)](https://ngc.nvidia.com/catalog/models/nvidia:megatron_bert_345m). For that you

have to [sign up](https://ngc.nvidia.com/signup) for and setup the NVIDIA GPU

Cloud (NGC) Registry CLI. Further documentation for downloading models can be

found in the [NGC

documentation](https://docs.nvidia.com/dgx/ngc-registry-cli-user-guide/index.html#topic_6_4_1).

Alternatively, you can directly download the checkpoint using:

```

wget --content-disposition https://api.ngc.nvidia.com/v2/models/nvidia/megatron_bert_345m/versions/v0.1_uncased/zip -O $MYDIR/nvidia/megatron-bert-uncased-345m/checkpoint.zip

```

## Converting the checkpoint

In order to be loaded into `Transformers`, the checkpoint has to be converted. You should run the following commands for that purpose.

Those commands will create `config.json` and `pytorch_model.bin` in `$MYDIR/nvidia/megatron-bert-{cased,uncased}-345m`.

You can move those files to different directories if needed.

```

python3 $MYDIR/transformers/src/transformers/models/megatron_bert/convert_megatron_bert_checkpoint.py $MYDIR/nvidia/megatron-bert-uncased-345m/checkpoint.zip

```

As explained in [PR #14956](https://github.com/huggingface/transformers/pull/14956), if when running this conversion

script and you're getting an exception:

```

ModuleNotFoundError: No module named 'megatron.model.enums'

```

you need to tell python where to find the clone of Megatron-LM, e.g.:

```

cd /tmp

git clone https://github.com/NVIDIA/Megatron-LM

PYTHONPATH=/tmp/Megatron-LM python src/transformers/models/megatron_bert/convert_megatron_bert_checkpoint.py ...

```

Or, if you already have it cloned elsewhere, simply adjust the path to the existing path.

If the training was done using a Megatron-LM fork, e.g. [Megatron-DeepSpeed](https://github.com/microsoft/Megatron-DeepSpeed/) then

you may need to have that one in your path, i.e., /path/to/Megatron-DeepSpeed.

## Masked LM

The following code shows how to use the Megatron BERT checkpoint and the Transformers API to perform a `Masked LM` task.

```

import os

import torch

from transformers import BertTokenizer, MegatronBertForMaskedLM

# The tokenizer. Megatron was trained with standard tokenizer(s).

tokenizer = BertTokenizer.from_pretrained('nvidia/megatron-bert-uncased-345m')

# The path to the config/checkpoint (see the conversion step above).

directory = os.path.join(os.environ['MYDIR'], 'nvidia/megatron-bert-uncased-345m')

# Load the model from $MYDIR/nvidia/megatron-bert-uncased-345m.

model = MegatronBertForMaskedLM.from_pretrained(directory)

# Copy to the device and use FP16.

assert torch.cuda.is_available()

device = torch.device("cuda")

model.to(device)

model.eval()

model.half()

# Create inputs (from the BERT example page).

input = tokenizer("The capital of France is [MASK]", return_tensors="pt").to(device)

label = tokenizer("The capital of France is Paris", return_tensors="pt")["input_ids"].to(device)

# Run the model.

with torch.no_grad():

output = model(**input, labels=label)

print(output)

```

## Next sentence prediction

The following code shows how to use the Megatron BERT checkpoint and the Transformers API to perform next

sentence prediction.

```

import os

import torch

from transformers import BertTokenizer, MegatronBertForNextSentencePrediction

# The tokenizer. Megatron was trained with standard tokenizer(s).

tokenizer = BertTokenizer.from_pretrained('nvidia/megatron-bert-uncased-345m')

# The path to the config/checkpoint (see the conversion step above).

directory = os.path.join(os.environ['MYDIR'], 'nvidia/megatron-bert-uncased-345m')

# Load the model from $MYDIR/nvidia/megatron-bert-uncased-345m.

model = MegatronBertForNextSentencePrediction.from_pretrained(directory)

# Copy to the device and use FP16.

assert torch.cuda.is_available()

device = torch.device("cuda")

model.to(device)

model.eval()

model.half()

# Create inputs (from the BERT example page).

input = tokenizer('In Italy, pizza served in formal settings is presented unsliced.',

'The sky is blue due to the shorter wavelength of blue light.',

return_tensors='pt').to(device)

label = torch.LongTensor([1]).to(device)

# Run the model.

with torch.no_grad():

output = model(**input, labels=label)

print(output)

```

# Original code

The original code for Megatron can be found here: [https://github.com/NVIDIA/Megatron-LM](https://github.com/NVIDIA/Megatron-LM).

|

nvidia/megatron-bert-cased-345m | nvidia | 2022-01-04T15:15:44Z | 0 | 4 | null | [

"arxiv:1909.08053",

"region:us"

] | null | 2022-03-02T23:29:05Z | <!---

# ##############################################################################################

#

# Copyright (c) 2021-, NVIDIA CORPORATION. All rights reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

# ##############################################################################################

-->

[Megatron](https://arxiv.org/pdf/1909.08053.pdf) is a large, powerful transformer developed by the Applied Deep Learning Research team at NVIDIA. This particular Megatron model was trained from a bidirectional transformer in the style of BERT with text sourced from Wikipedia, RealNews, OpenWebText, and CC-Stories. This model contains 345 million parameters. It is made up of 24 layers, 16 attention heads with a hidden size of 1024.

Find more information at [https://github.com/NVIDIA/Megatron-LM](https://github.com/NVIDIA/Megatron-LM)

# How to run Megatron BERT using Transformers

## Prerequisites

In that guide, we run all the commands from a folder called `$MYDIR` and defined as (in `bash`):

```

export MYDIR=$HOME

```

Feel free to change the location at your convenience.

To run some of the commands below, you'll have to clone `Transformers`.

```

git clone https://github.com/huggingface/transformers.git $MYDIR/transformers

```

## Get the checkpoint from the NVIDIA GPU Cloud

You must create a directory called `nvidia/megatron-bert-cased-345m`.

```

mkdir -p $MYDIR/nvidia/megatron-bert-cased-345m

```

You can download the checkpoint from the [NVIDIA GPU Cloud (NGC)](https://ngc.nvidia.com/catalog/models/nvidia:megatron_bert_345m). For that you

have to [sign up](https://ngc.nvidia.com/signup) for and setup the NVIDIA GPU

Cloud (NGC) Registry CLI. Further documentation for downloading models can be

found in the [NGC

documentation](https://docs.nvidia.com/dgx/ngc-registry-cli-user-guide/index.html#topic_6_4_1).

Alternatively, you can directly download the checkpoint using:

```

wget --content-disposition https://api.ngc.nvidia.com/v2/models/nvidia/megatron_bert_345m/versions/v0.1_cased/zip -O $MYDIR/nvidia/megatron-bert-cased-345m/checkpoint.zip

```

## Converting the checkpoint

In order to be loaded into `Transformers`, the checkpoint has to be converted. You should run the following commands for that purpose.

Those commands will create `config.json` and `pytorch_model.bin` in `$MYDIR/nvidia/megatron-bert-cased-345m`.

You can move those files to different directories if needed.

```

python3 $MYDIR/transformers/src/transformers/models/megatron_bert/convert_megatron_bert_checkpoint.py $MYDIR/nvidia/megatron-bert-cased-345m/checkpoint.zip

```

As explained in [PR #14956](https://github.com/huggingface/transformers/pull/14956), if when running this conversion

script and you're getting an exception:

```

ModuleNotFoundError: No module named 'megatron.model.enums'

```

you need to tell python where to find the clone of Megatron-LM, e.g.:

```

cd /tmp

git clone https://github.com/NVIDIA/Megatron-LM

PYTHONPATH=/tmp/Megatron-LM python src/transformers/models/megatron_bert/convert_megatron_bert_checkpoint.py ...

```

Or, if you already have it cloned elsewhere, simply adjust the path to the existing path.

If the training was done using a Megatron-LM fork, e.g. [Megatron-DeepSpeed](https://github.com/microsoft/Megatron-DeepSpeed/) then

you may need to have that one in your path, i.e., /path/to/Megatron-DeepSpeed.

## Masked LM

The following code shows how to use the Megatron BERT checkpoint and the Transformers API to perform a `Masked LM` task.

```

import os

import torch

from transformers import BertTokenizer, MegatronBertForMaskedLM

# The tokenizer. Megatron was trained with standard tokenizer(s).

tokenizer = BertTokenizer.from_pretrained('nvidia/megatron-bert-cased-345m')

# The path to the config/checkpoint (see the conversion step above).

directory = os.path.join(os.environ['MYDIR'], 'nvidia/megatron-bert-cased-345m')

# Load the model from $MYDIR/nvidia/megatron-bert-cased-345m.

model = MegatronBertForMaskedLM.from_pretrained(directory)

# Copy to the device and use FP16.

assert torch.cuda.is_available()

device = torch.device("cuda")

model.to(device)

model.eval()

model.half()

# Create inputs (from the BERT example page).

input = tokenizer("The capital of France is [MASK]", return_tensors="pt").to(device)

label = tokenizer("The capital of France is Paris", return_tensors="pt")["input_ids"].to(device)

# Run the model.

with torch.no_grad():

output = model(**input, labels=label)

print(output)

```

## Next sentence prediction

The following code shows how to use the Megatron BERT checkpoint and the Transformers API to perform next

sentence prediction.

```

import os

import torch

from transformers import BertTokenizer, MegatronBertForNextSentencePrediction

# The tokenizer. Megatron was trained with standard tokenizer(s).

tokenizer = BertTokenizer.from_pretrained('nvidia/megatron-bert-cased-345m')

# The path to the config/checkpoint (see the conversion step above).

directory = os.path.join(os.environ['MYDIR'], 'nvidia/megatron-bert-cased-345m')

# Load the model from $MYDIR/nvidia/megatron-bert-cased-345m.

model = MegatronBertForNextSentencePrediction.from_pretrained(directory)

# Copy to the device and use FP16.

assert torch.cuda.is_available()

device = torch.device("cuda")

model.to(device)

model.eval()

model.half()

# Create inputs (from the BERT example page).

input = tokenizer('In Italy, pizza served in formal settings is presented unsliced.',

'The sky is blue due to the shorter wavelength of blue light.',

return_tensors='pt').to(device)

label = torch.LongTensor([1]).to(device)

# Run the model.

with torch.no_grad():

output = model(**input, labels=label)

print(output)

```

# Original code

The original code for Megatron can be found here: [https://github.com/NVIDIA/Megatron-LM](https://github.com/NVIDIA/Megatron-LM).

|

scasutt/Prototype_training | scasutt | 2022-01-04T14:59:34Z | 13 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: Prototype_training

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Prototype_training

This model is a fine-tuned version of [scasutt/Prototype_training](https://huggingface.co/scasutt/Prototype_training) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3719

- Wer: 0.4626

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 4

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.3853 | 1.47 | 100 | 0.3719 | 0.4626 |

| 0.3867 | 2.94 | 200 | 0.3719 | 0.4626 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu111

- Datasets 1.17.0

- Tokenizers 0.10.3

|

Khanh/bert-base-multilingual-cased-finetuned-squad | Khanh | 2022-01-04T14:51:33Z | 54 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"question-answering",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | question-answering | 2022-03-02T23:29:04Z | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: bert-base-multilingual-cased-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-multilingual-cased-finetuned-squad

This model is a fine-tuned version of [bert-base-multilingual-cased](https://huggingface.co/bert-base-multilingual-cased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4919

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.1782 | 1.0 | 579 | 0.5258 |

| 0.4938 | 2.0 | 1158 | 0.4639 |

| 0.32 | 3.0 | 1737 | 0.4919 |

### Framework versions

- Transformers 4.15.0

- Pytorch 1.10.0+cu111

- Datasets 1.17.0

- Tokenizers 0.10.3

|

sshasnain/wav2vec2-xls-r-timit-trainer | sshasnain | 2022-01-04T14:49:41Z | 161 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: wav2vec2-xls-r-timit-trainer

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-xls-r-timit-trainer

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 1.1064

- Wer: 1.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 100

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 3.5537 | 4.03 | 500 | 0.6078 | 1.0 |

| 0.5444 | 8.06 | 1000 | 0.4990 | 0.9994 |

| 0.3744 | 12.1 | 1500 | 0.5530 | 1.0 |

| 0.2863 | 16.13 | 2000 | 0.6401 | 1.0 |

| 0.2357 | 20.16 | 2500 | 0.6485 | 1.0 |

| 0.1933 | 24.19 | 3000 | 0.7448 | 0.9994 |

| 0.162 | 28.22 | 3500 | 0.7502 | 1.0 |

| 0.1325 | 32.26 | 4000 | 0.7801 | 1.0 |

| 0.1169 | 36.29 | 4500 | 0.8334 | 1.0 |

| 0.1031 | 40.32 | 5000 | 0.8269 | 1.0 |

| 0.0913 | 44.35 | 5500 | 0.8432 | 1.0 |

| 0.0793 | 48.39 | 6000 | 0.8738 | 1.0 |

| 0.0694 | 52.42 | 6500 | 0.8897 | 1.0 |

| 0.0613 | 56.45 | 7000 | 0.8966 | 1.0 |

| 0.0548 | 60.48 | 7500 | 0.9398 | 1.0 |

| 0.0444 | 64.51 | 8000 | 0.9548 | 1.0 |

| 0.0386 | 68.55 | 8500 | 0.9647 | 1.0 |

| 0.0359 | 72.58 | 9000 | 0.9901 | 1.0 |

| 0.0299 | 76.61 | 9500 | 1.0151 | 1.0 |

| 0.0259 | 80.64 | 10000 | 1.0526 | 1.0 |

| 0.022 | 84.67 | 10500 | 1.0754 | 1.0 |

| 0.0189 | 88.71 | 11000 | 1.0688 | 1.0 |

| 0.0161 | 92.74 | 11500 | 1.0914 | 1.0 |

| 0.0138 | 96.77 | 12000 | 1.1064 | 1.0 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu111

- Datasets 1.13.3

- Tokenizers 0.10.3

|

Bhuvana/t5-base-spellchecker | Bhuvana | 2022-01-04T12:46:55Z | 192 | 13 | transformers | [

"transformers",

"pytorch",

"t5",

"text2text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2022-03-02T23:29:04Z | ---

widget:

- text: "christmas is celbrated on decembr 25 evry ear"

---

# Spell checker using T5 base transformer

A simple spell checker built using T5-Base transformer. To use this model

```

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("Bhuvana/t5-base-spellchecker")

model = AutoModelForSeq2SeqLM.from_pretrained("Bhuvana/t5-base-spellchecker")

def correct(inputs):

input_ids = tokenizer.encode(inputs,return_tensors='pt')

sample_output = model.generate(

input_ids,

do_sample=True,

max_length=50,

top_p=0.99,

num_return_sequences=1

)

res = tokenizer.decode(sample_output[0], skip_special_tokens=True)

return res

text = "christmas is celbrated on decembr 25 evry ear"

print(correct(text))

```

This should print the corrected statement

```

christmas is celebrated on december 25 every year

```

You can also type the text under the Hosted inference API and get predictions online.

|

junnyu/roformer_chinese_char_base | junnyu | 2022-01-04T11:45:40Z | 5 | 0 | paddlenlp | [

"paddlenlp",

"pytorch",

"tf",

"jax",

"paddlepaddle",

"roformer",

"tf2.0",

"zh",

"arxiv:2104.09864",

"region:us"

] | null | 2022-03-02T23:29:05Z | ---

language: zh

tags:

- roformer

- pytorch

- tf2.0

widget:

- text: "今天[MASK]很好,我想去公园玩!"

---

## 介绍

### tf版本

https://github.com/ZhuiyiTechnology/roformer

### pytorch版本+tf2.0版本

https://github.com/JunnYu/RoFormer_pytorch

## pytorch使用

```python

import torch

from transformers import RoFormerForMaskedLM, RoFormerTokenizer

text = "今天[MASK]很好,我[MASK]去公园玩。"

tokenizer = RoFormerTokenizer.from_pretrained("junnyu/roformer_chinese_char_base")

pt_model = RoFormerForMaskedLM.from_pretrained("junnyu/roformer_chinese_char_base")

pt_inputs = tokenizer(text, return_tensors="pt")

with torch.no_grad():

pt_outputs = pt_model(**pt_inputs).logits[0]

pt_outputs_sentence = "pytorch: "

for i, id in enumerate(tokenizer.encode(text)):

if id == tokenizer.mask_token_id:

tokens = tokenizer.convert_ids_to_tokens(pt_outputs[i].topk(k=5)[1])

pt_outputs_sentence += "[" + "||".join(tokens) + "]"

else:

pt_outputs_sentence += "".join(

tokenizer.convert_ids_to_tokens([id], skip_special_tokens=True))

print(pt_outputs_sentence)

# pytorch: 今天[天||气||都||风||人]很好,我[想||要||就||也||还]去公园玩。

```

## tensorflow2.0使用

```python

import tensorflow as tf

from transformers import RoFormerTokenizer, TFRoFormerForMaskedLM

text = "今天[MASK]很好,我[MASK]去公园玩。"

tokenizer = RoFormerTokenizer.from_pretrained("junnyu/roformer_chinese_char_base")

tf_model = TFRoFormerForMaskedLM.from_pretrained("junnyu/roformer_chinese_char_base")

tf_inputs = tokenizer(text, return_tensors="tf")

tf_outputs = tf_model(**tf_inputs, training=False).logits[0]

tf_outputs_sentence = "tf2.0: "

for i, id in enumerate(tokenizer.encode(text)):

if id == tokenizer.mask_token_id:

tokens = tokenizer.convert_ids_to_tokens(

tf.math.top_k(tf_outputs[i], k=5)[1])

tf_outputs_sentence += "[" + "||".join(tokens) + "]"

else:

tf_outputs_sentence += "".join(

tokenizer.convert_ids_to_tokens([id], skip_special_tokens=True))

print(tf_outputs_sentence)

# tf2.0 今天[天||气||都||风||人]很好,我[想||要||就||也||还]去公园玩。

```

## 引用

Bibtex:

```tex

@misc{su2021roformer,

title={RoFormer: Enhanced Transformer with Rotary Position Embedding},

author={Jianlin Su and Yu Lu and Shengfeng Pan and Bo Wen and Yunfeng Liu},

year={2021},

eprint={2104.09864},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

``` |

junnyu/roformer_chinese_char_small | junnyu | 2022-01-04T11:45:10Z | 8 | 0 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"roformer",

"fill-mask",

"tf2.0",

"zh",

"arxiv:2104.09864",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:05Z | ---

language: zh

tags:

- roformer

- pytorch

- tf2.0

widget:

- text: "今天[MASK]很好,我想去公园玩!"

---

## 介绍

### tf版本

https://github.com/ZhuiyiTechnology/roformer

### pytorch版本+tf2.0版本

https://github.com/JunnYu/RoFormer_pytorch

## pytorch使用

```python

import torch

from transformers import RoFormerForMaskedLM, RoFormerTokenizer

text = "今天[MASK]很好,我[MASK]去公园玩。"

tokenizer = RoFormerTokenizer.from_pretrained("junnyu/roformer_chinese_char_small")

pt_model = RoFormerForMaskedLM.from_pretrained("junnyu/roformer_chinese_char_small")

pt_inputs = tokenizer(text, return_tensors="pt")

with torch.no_grad():

pt_outputs = pt_model(**pt_inputs).logits[0]

pt_outputs_sentence = "pytorch: "

for i, id in enumerate(tokenizer.encode(text)):

if id == tokenizer.mask_token_id:

tokens = tokenizer.convert_ids_to_tokens(pt_outputs[i].topk(k=5)[1])

pt_outputs_sentence += "[" + "||".join(tokens) + "]"

else:

pt_outputs_sentence += "".join(

tokenizer.convert_ids_to_tokens([id], skip_special_tokens=True))

print(pt_outputs_sentence)

# pytorch: 今天[也||都||又||还||我]很好,我[就||想||去||也||又]去公园玩。

```

## tensorflow2.0使用

```python

import tensorflow as tf

from transformers import RoFormerTokenizer, TFRoFormerForMaskedLM

text = "今天[MASK]很好,我[MASK]去公园玩。"

tokenizer = RoFormerTokenizer.from_pretrained("junnyu/roformer_chinese_char_small")

tf_model = TFRoFormerForMaskedLM.from_pretrained("junnyu/roformer_chinese_char_small")

tf_inputs = tokenizer(text, return_tensors="tf")

tf_outputs = tf_model(**tf_inputs, training=False).logits[0]

tf_outputs_sentence = "tf2.0: "

for i, id in enumerate(tokenizer.encode(text)):

if id == tokenizer.mask_token_id:

tokens = tokenizer.convert_ids_to_tokens(

tf.math.top_k(tf_outputs[i], k=5)[1])

tf_outputs_sentence += "[" + "||".join(tokens) + "]"

else:

tf_outputs_sentence += "".join(

tokenizer.convert_ids_to_tokens([id], skip_special_tokens=True))

print(tf_outputs_sentence)

# tf2.0: 今天[也||都||又||还||我]很好,我[就||想||去||也||又]去公园玩。

```

## 引用

Bibtex:

```tex

@misc{su2021roformer,

title={RoFormer: Enhanced Transformer with Rotary Position Embedding},

author={Jianlin Su and Yu Lu and Shengfeng Pan and Bo Wen and Yunfeng Liu},

year={2021},

eprint={2104.09864},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

``` |

pierreguillou/bert-base-cased-squad-v1.1-portuguese | pierreguillou | 2022-01-04T09:57:53Z | 2,742 | 35 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"bert",

"question-answering",

"bert-base",

"pt",

"dataset:brWaC",

"dataset:squad",

"dataset:squad_v1_pt",

"license:mit",

"endpoints_compatible",

"region:us"

] | question-answering | 2022-03-02T23:29:05Z | ---

language: pt

license: mit

tags:

- question-answering

- bert

- bert-base

- pytorch

datasets:

- brWaC

- squad

- squad_v1_pt

metrics:

- squad

widget:

- text: "Quando começou a pandemia de Covid-19 no mundo?"

context: "A pandemia de COVID-19, também conhecida como pandemia de coronavírus, é uma pandemia em curso de COVID-19, uma doença respiratória aguda causada pelo coronavírus da síndrome respiratória aguda grave 2 (SARS-CoV-2). A doença foi identificada pela primeira vez em Wuhan, na província de Hubei, República Popular da China, em 1 de dezembro de 2019, mas o primeiro caso foi reportado em 31 de dezembro do mesmo ano."

- text: "Onde foi descoberta a Covid-19?"

context: "A pandemia de COVID-19, também conhecida como pandemia de coronavírus, é uma pandemia em curso de COVID-19, uma doença respiratória aguda causada pelo coronavírus da síndrome respiratória aguda grave 2 (SARS-CoV-2). A doença foi identificada pela primeira vez em Wuhan, na província de Hubei, República Popular da China, em 1 de dezembro de 2019, mas o primeiro caso foi reportado em 31 de dezembro do mesmo ano."

---

# Portuguese BERT base cased QA (Question Answering), finetuned on SQUAD v1.1

## Introduction

The model was trained on the dataset SQUAD v1.1 in portuguese from the [Deep Learning Brasil group](http://www.deeplearningbrasil.com.br/) on Google Colab.

The language model used is the [BERTimbau Base](https://huggingface.co/neuralmind/bert-base-portuguese-cased) (aka "bert-base-portuguese-cased") from [Neuralmind.ai](https://neuralmind.ai/): BERTimbau Base is a pretrained BERT model for Brazilian Portuguese that achieves state-of-the-art performances on three downstream NLP tasks: Named Entity Recognition, Sentence Textual Similarity and Recognizing Textual Entailment. It is available in two sizes: Base and Large.

## Informations on the method used

All the informations are in the blog post : [NLP | Modelo de Question Answering em qualquer idioma baseado no BERT base (estudo de caso em português)](https://medium.com/@pierre_guillou/nlp-modelo-de-question-answering-em-qualquer-idioma-baseado-no-bert-base-estudo-de-caso-em-12093d385e78)

## Notebooks in Google Colab & GitHub

- Google Colab: [colab_question_answering_BERT_base_cased_squad_v11_pt.ipynb](https://colab.research.google.com/drive/18ueLdi_V321Gz37x4gHq8mb4XZSGWfZx?usp=sharing)

- GitHub: [colab_question_answering_BERT_base_cased_squad_v11_pt.ipynb](https://github.com/piegu/language-models/blob/master/colab_question_answering_BERT_base_cased_squad_v11_pt.ipynb)

## Performance

The results obtained are the following:

```

f1 = 82.50

exact match = 70.49

```

## How to use the model... with Pipeline

```python

import transformers

from transformers import pipeline

# source: https://pt.wikipedia.org/wiki/Pandemia_de_COVID-19

context = r"""

A pandemia de COVID-19, também conhecida como pandemia de coronavírus, é uma pandemia em curso de COVID-19,

uma doença respiratória aguda causada pelo coronavírus da síndrome respiratória aguda grave 2 (SARS-CoV-2).

A doença foi identificada pela primeira vez em Wuhan, na província de Hubei, República Popular da China,

em 1 de dezembro de 2019, mas o primeiro caso foi reportado em 31 de dezembro do mesmo ano.

Acredita-se que o vírus tenha uma origem zoonótica, porque os primeiros casos confirmados

tinham principalmente ligações ao Mercado Atacadista de Frutos do Mar de Huanan, que também vendia animais vivos.

Em 11 de março de 2020, a Organização Mundial da Saúde declarou o surto uma pandemia. Até 8 de fevereiro de 2021,

pelo menos 105 743 102 casos da doença foram confirmados em pelo menos 191 países e territórios,

com cerca de 2 308 943 mortes e 58 851 440 pessoas curadas.

"""

model_name = 'pierreguillou/bert-base-cased-squad-v1.1-portuguese'

nlp = pipeline("question-answering", model=model_name)

question = "Quando começou a pandemia de Covid-19 no mundo?"

result = nlp(question=question, context=context)

print(f"Answer: '{result['answer']}', score: {round(result['score'], 4)}, start: {result['start']}, end: {result['end']}")

# Answer: '1 de dezembro de 2019', score: 0.713, start: 328, end: 349

```

## How to use the model... with the Auto classes

```python

from transformers import AutoTokenizer, AutoModelForQuestionAnswering

tokenizer = AutoTokenizer.from_pretrained("pierreguillou/bert-base-cased-squad-v1.1-portuguese")

model = AutoModelForQuestionAnswering.from_pretrained("pierreguillou/bert-base-cased-squad-v1.1-portuguese")

```

Or just clone the model repo:

```python

git lfs install

git clone https://huggingface.co/pierreguillou/bert-base-cased-squad-v1.1-portuguese

# if you want to clone without large files – just their pointers

# prepend your git clone with the following env var:

GIT_LFS_SKIP_SMUDGE=1

```

## Limitations and bias

The training data used for this model come from Portuguese SQUAD. It could contain a lot of unfiltered content, which is far from neutral, and biases.

## Author

Portuguese BERT base cased QA (Question Answering), finetuned on SQUAD v1.1 was trained and evaluated by [Pierre GUILLOU](https://www.linkedin.com/in/pierreguillou/) thanks to the Open Source code, platforms and advices of many organizations ([link to the list](https://medium.com/@pierre_guillou/nlp-modelo-de-question-answering-em-qualquer-idioma-baseado-no-bert-base-estudo-de-caso-em-12093d385e78#c572)). In particular: [Hugging Face](https://huggingface.co/), [Neuralmind.ai](https://neuralmind.ai/), [Deep Learning Brasil group](http://www.deeplearningbrasil.com.br/), [Google Colab](https://colab.research.google.com/) and [AI Lab](https://ailab.unb.br/).

## Citation

If you use our work, please cite:

```bibtex

@inproceedings{pierreguillou2021bertbasecasedsquadv11portuguese,

title={Portuguese BERT base cased QA (Question Answering), finetuned on SQUAD v1.1},

author={Pierre Guillou},

year={2021}

}

``` |

pierreguillou/bert-large-cased-squad-v1.1-portuguese | pierreguillou | 2022-01-04T09:57:00Z | 1,067 | 45 | transformers | [

"transformers",

"pytorch",

"tf",

"bert",

"question-answering",

"bert-large",

"pt",

"dataset:brWaC",

"dataset:squad",

"dataset:squad_v1_pt",

"license:mit",

"endpoints_compatible",

"region:us"

] | question-answering | 2022-03-02T23:29:05Z | ---

language: pt

license: mit

tags:

- question-answering

- bert

- bert-large

- pytorch

datasets:

- brWaC

- squad

- squad_v1_pt

metrics:

- squad

widget:

- text: "Quando começou a pandemia de Covid-19 no mundo?"

context: "A pandemia de COVID-19, também conhecida como pandemia de coronavírus, é uma pandemia em curso de COVID-19, uma doença respiratória causada pelo coronavírus da síndrome respiratória aguda grave 2 (SARS-CoV-2). O vírus tem origem zoonótica e o primeiro caso conhecido da doença remonta a dezembro de 2019 em Wuhan, na China."

- text: "Onde foi descoberta a Covid-19?"

context: "A pandemia de COVID-19, também conhecida como pandemia de coronavírus, é uma pandemia em curso de COVID-19, uma doença respiratória causada pelo coronavírus da síndrome respiratória aguda grave 2 (SARS-CoV-2). O vírus tem origem zoonótica e o primeiro caso conhecido da doença remonta a dezembro de 2019 em Wuhan, na China."

---

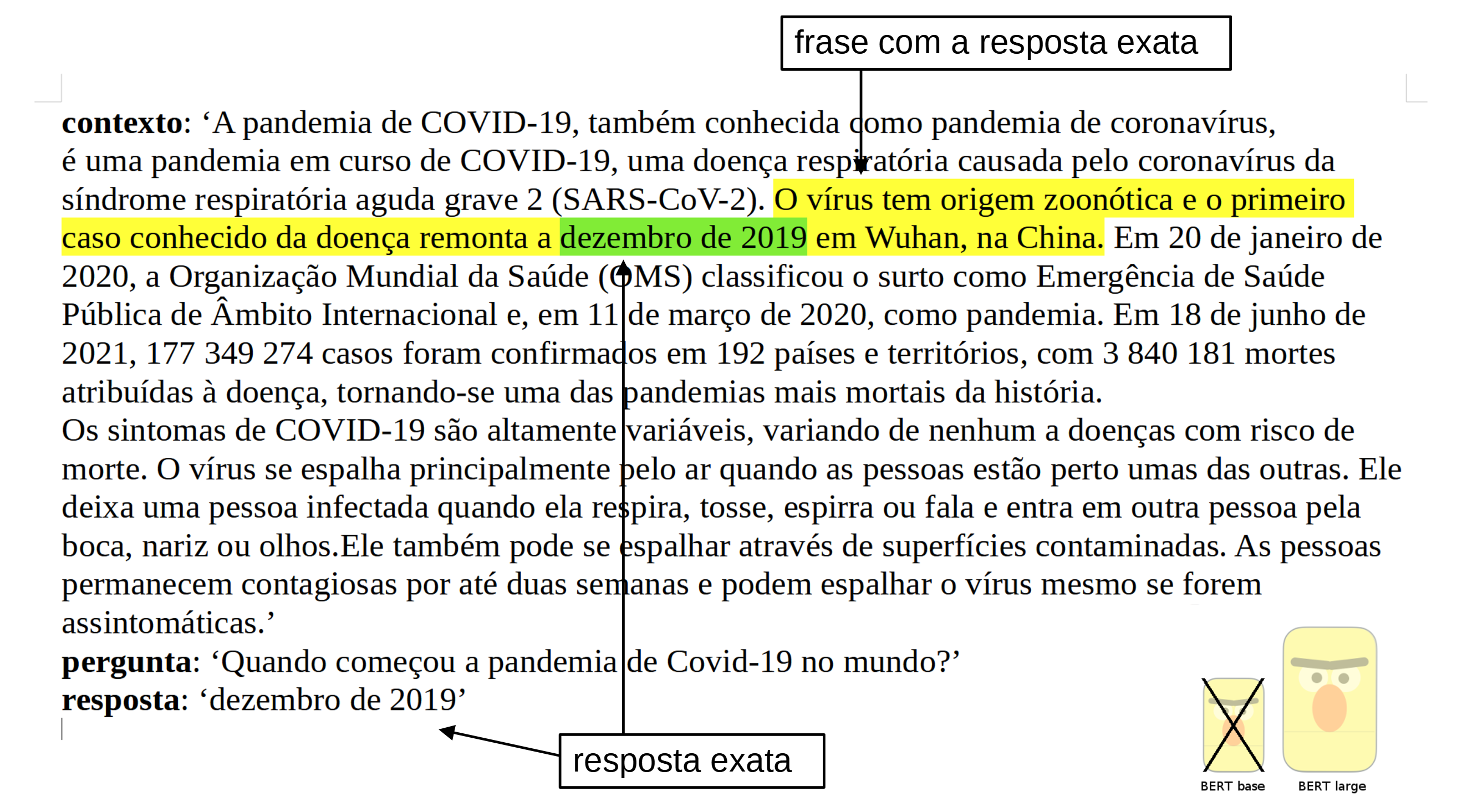

# Portuguese BERT large cased QA (Question Answering), finetuned on SQUAD v1.1

## Introduction

The model was trained on the dataset SQUAD v1.1 in portuguese from the [Deep Learning Brasil group](http://www.deeplearningbrasil.com.br/).

The language model used is the [BERTimbau Large](https://huggingface.co/neuralmind/bert-large-portuguese-cased) (aka "bert-large-portuguese-cased") from [Neuralmind.ai](https://neuralmind.ai/): BERTimbau is a pretrained BERT model for Brazilian Portuguese that achieves state-of-the-art performances on three downstream NLP tasks: Named Entity Recognition, Sentence Textual Similarity and Recognizing Textual Entailment. It is available in two sizes: Base and Large.

## Informations on the method used

All the informations are in the blog post : [NLP | Como treinar um modelo de Question Answering em qualquer linguagem baseado no BERT large, melhorando o desempenho do modelo utilizando o BERT base? (estudo de caso em português)](https://medium.com/@pierre_guillou/nlp-como-treinar-um-modelo-de-question-answering-em-qualquer-linguagem-baseado-no-bert-large-1c899262dd96)

## Notebook in GitHub

[question_answering_BERT_large_cased_squad_v11_pt.ipynb](https://github.com/piegu/language-models/blob/master/question_answering_BERT_large_cased_squad_v11_pt.ipynb) ([nbviewer version](https://nbviewer.jupyter.org/github/piegu/language-models/blob/master/question_answering_BERT_large_cased_squad_v11_pt.ipynb))

## Performance

The results obtained are the following:

```

f1 = 84.43 (against 82.50 for the base model)

exact match = 72.68 (against 70.49 for the base model)

```

## How to use the model... with Pipeline

```python

import transformers

from transformers import pipeline

# source: https://pt.wikipedia.org/wiki/Pandemia_de_COVID-19

context = r"""

A pandemia de COVID-19, também conhecida como pandemia de coronavírus, é uma pandemia em curso de COVID-19,

uma doença respiratória causada pelo coronavírus da síndrome respiratória aguda grave 2 (SARS-CoV-2).

O vírus tem origem zoonótica e o primeiro caso conhecido da doença remonta a dezembro de 2019 em Wuhan, na China.

Em 20 de janeiro de 2020, a Organização Mundial da Saúde (OMS) classificou o surto

como Emergência de Saúde Pública de Âmbito Internacional e, em 11 de março de 2020, como pandemia.

Em 18 de junho de 2021, 177 349 274 casos foram confirmados em 192 países e territórios,

com 3 840 181 mortes atribuídas à doença, tornando-se uma das pandemias mais mortais da história.

Os sintomas de COVID-19 são altamente variáveis, variando de nenhum a doenças com risco de morte.

O vírus se espalha principalmente pelo ar quando as pessoas estão perto umas das outras.

Ele deixa uma pessoa infectada quando ela respira, tosse, espirra ou fala e entra em outra pessoa pela boca, nariz ou olhos.

Ele também pode se espalhar através de superfícies contaminadas.

As pessoas permanecem contagiosas por até duas semanas e podem espalhar o vírus mesmo se forem assintomáticas.

"""

model_name = 'pierreguillou/bert-large-cased-squad-v1.1-portuguese'

nlp = pipeline("question-answering", model=model_name)

question = "Quando começou a pandemia de Covid-19 no mundo?"

result = nlp(question=question, context=context)

print(f"Answer: '{result['answer']}', score: {round(result['score'], 4)}, start: {result['start']}, end: {result['end']}")

# Answer: 'dezembro de 2019', score: 0.5087, start: 290, end: 306

```

## How to use the model... with the Auto classes

```python

from transformers import AutoTokenizer, AutoModelForQuestionAnswering

tokenizer = AutoTokenizer.from_pretrained("pierreguillou/bert-large-cased-squad-v1.1-portuguese")

model = AutoModelForQuestionAnswering.from_pretrained("pierreguillou/bert-large-cased-squad-v1.1-portuguese")

```

Or just clone the model repo:

```python

git lfs install

git clone https://huggingface.co/pierreguillou/bert-large-cased-squad-v1.1-portuguese