modelId

string | author

string | last_modified

timestamp[us, tz=UTC] | downloads

int64 | likes

int64 | library_name

string | tags

sequence | pipeline_tag

string | createdAt

timestamp[us, tz=UTC] | card

string |

|---|---|---|---|---|---|---|---|---|---|

marcoyang/librispeech_bigram | marcoyang | 2023-07-05T06:45:19Z | 0 | 0 | null | [

"region:us"

] | null | 2022-11-14T04:19:15Z | This is a token bi-gram trained on LibriSpeech 960h text. It is used for LODR decoding in `icefall`.

Please refer to https://github.com/k2-fsa/icefall/pull/678 for more details. |

stlxx/vit-base-beans | stlxx | 2023-07-05T06:44:02Z | 223 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"vit",

"image-classification",

"vision",

"generated_from_trainer",

"dataset:beans",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | image-classification | 2023-06-28T07:51:20Z | ---

license: apache-2.0

tags:

- image-classification

- vision

- generated_from_trainer

datasets:

- beans

metrics:

- accuracy

model-index:

- name: vit-base-beans

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: beans

type: beans

config: default

split: validation

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.8195488721804511

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# vit-base-beans

This model is a fine-tuned version of [google/vit-huge-patch14-224-in21k](https://huggingface.co/google/vit-huge-patch14-224-in21k) on the beans dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9760

- Accuracy: 0.8195

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 1337

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 1.0596 | 1.0 | 259 | 1.0507 | 0.7143 |

| 1.0165 | 2.0 | 518 | 1.0165 | 0.7895 |

| 1.0113 | 3.0 | 777 | 0.9941 | 0.8045 |

| 1.0067 | 4.0 | 1036 | 0.9804 | 0.8195 |

| 0.9746 | 5.0 | 1295 | 0.9760 | 0.8195 |

### Framework versions

- Transformers 4.31.0.dev0

- Pytorch 1.13.1+cu117-with-pypi-cudnn

- Datasets 2.12.0

- Tokenizers 0.13.3

|

heka-ai/tasb-bert-100k | heka-ai | 2023-07-05T06:42:30Z | 2 | 1 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"distilbert",

"feature-extraction",

"sentence-similarity",

"transformers",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] | sentence-similarity | 2023-07-05T06:42:26Z | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# heka-ai/tasb-bert-100k

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('heka-ai/tasb-bert-100k')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

def cls_pooling(model_output, attention_mask):

return model_output[0][:,0]

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('heka-ai/tasb-bert-100k')

model = AutoModel.from_pretrained('heka-ai/tasb-bert-100k')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():

model_output = model(**encoded_input)

# Perform pooling. In this case, cls pooling.

sentence_embeddings = cls_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=heka-ai/tasb-bert-100k)

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 10000 with parameters:

```

{'batch_size': 32, 'sampler': 'torch.utils.data.sampler.SequentialSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`gpl.toolkit.loss.MarginDistillationLoss`

Parameters of the fit()-Method:

```

{

"epochs": 1,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": 100000,

"warmup_steps": 1000,

"weight_decay": 0.01

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 350, 'do_lower_case': False}) with Transformer model: DistilBertModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': True, 'pooling_mode_mean_tokens': False, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False, 'pooling_mode_weightedmean_tokens': False, 'pooling_mode_lasttoken': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information --> |

nolanaatama/ktysksngngrvcv2360pchktgwsn | nolanaatama | 2023-07-05T06:42:24Z | 0 | 0 | null | [

"license:creativeml-openrail-m",

"region:us"

] | null | 2023-07-05T06:38:12Z | ---

license: creativeml-openrail-m

---

|

linlinlin/peft-dialogue-summary-0705 | linlinlin | 2023-07-05T06:40:42Z | 0 | 0 | null | [

"pytorch",

"tensorboard",

"generated_from_trainer",

"license:apache-2.0",

"region:us"

] | null | 2023-07-05T06:01:40Z | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: peft-dialogue-summary-0705

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# peft-dialogue-summary-0705

This model is a fine-tuned version of [google/flan-t5-base](https://huggingface.co/google/flan-t5-base) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 50

### Training results

### Framework versions

- Transformers 4.27.2

- Pytorch 2.0.1+cu118

- Datasets 2.11.0

- Tokenizers 0.13.3

|

cerspense/zeroscope_v2_1111models | cerspense | 2023-07-05T06:39:40Z | 0 | 24 | null | [

"license:cc-by-nc-4.0",

"region:us"

] | null | 2023-07-03T23:09:54Z | ---

license: cc-by-nc-4.0

---

[example outputs](https://www.youtube.com/watch?v=HO3APT_0UA4) (courtesy of [dotsimulate](https://www.instagram.com/dotsimulate/))

# zeroscope_v2 1111 models

A collection of watermark-free Modelscope-based video models capable of generating high quality video at [448x256](https://huggingface.co/cerspense/zeroscope_v2_dark_30x448x256), [576x320](https://huggingface.co/cerspense/zeroscope_v2_576w) and [1024 x 576](https://huggingface.co/cerspense/zeroscope_v2_XL). These models were trained from the [original weights](https://huggingface.co/damo-vilab/modelscope-damo-text-to-video-synthesis) with offset noise using 9,923 clips and 29,769 tagged frames.<br />

This collection makes it easy to switch between models with the new dropdown menu in the 1111 extension.

### Using it with the 1111 text2video extension

Simply download the contents of this repo to 'stable-diffusion-webui\models\text2video'

Or, manually download the model folders you want, along with VQGAN_autoencoder.pth.

Thanks to [dotsimulate](https://www.instagram.com/dotsimulate/) for the config files.

Thanks to [camenduru](https://github.com/camenduru), [kabachuha](https://github.com/kabachuha), [ExponentialML](https://github.com/ExponentialML), [VANYA](https://twitter.com/veryVANYA), [polyware](https://twitter.com/polyware_ai), [tin2tin](https://github.com/tin2tin)<br /> |

PD0AUTOMATIONAL/blip-large-endpoint | PD0AUTOMATIONAL | 2023-07-05T06:38:22Z | 95 | 0 | transformers | [

"transformers",

"pytorch",

"tf",

"blip",

"image-text-to-text",

"image-captioning",

"image-to-text",

"arxiv:2201.12086",

"license:bsd-3-clause",

"endpoints_compatible",

"region:us"

] | image-to-text | 2023-07-05T06:37:51Z | ---

pipeline_tag: image-to-text

tags:

- image-captioning

languages:

- en

license: bsd-3-clause

duplicated_from: Salesforce/blip-image-captioning-large

---

# BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation

Model card for image captioning pretrained on COCO dataset - base architecture (with ViT large backbone).

|  |

|:--:|

| <b> Pull figure from BLIP official repo | Image source: https://github.com/salesforce/BLIP </b>|

## TL;DR

Authors from the [paper](https://arxiv.org/abs/2201.12086) write in the abstract:

*Vision-Language Pre-training (VLP) has advanced the performance for many vision-language tasks. However, most existing pre-trained models only excel in either understanding-based tasks or generation-based tasks. Furthermore, performance improvement has been largely achieved by scaling up the dataset with noisy image-text pairs collected from the web, which is a suboptimal source of supervision. In this paper, we propose BLIP, a new VLP framework which transfers flexibly to both vision-language understanding and generation tasks. BLIP effectively utilizes the noisy web data by bootstrapping the captions, where a captioner generates synthetic captions and a filter removes the noisy ones. We achieve state-of-the-art results on a wide range of vision-language tasks, such as image-text retrieval (+2.7% in average recall@1), image captioning (+2.8% in CIDEr), and VQA (+1.6% in VQA score). BLIP also demonstrates strong generalization ability when directly transferred to videolanguage tasks in a zero-shot manner. Code, models, and datasets are released.*

## Usage

You can use this model for conditional and un-conditional image captioning

### Using the Pytorch model

#### Running the model on CPU

<details>

<summary> Click to expand </summary>

```python

import requests

from PIL import Image

from transformers import BlipProcessor, BlipForConditionalGeneration

processor = BlipProcessor.from_pretrained("Salesforce/blip-image-captioning-large")

model = BlipForConditionalGeneration.from_pretrained("Salesforce/blip-image-captioning-large")

img_url = 'https://storage.googleapis.com/sfr-vision-language-research/BLIP/demo.jpg'

raw_image = Image.open(requests.get(img_url, stream=True).raw).convert('RGB')

# conditional image captioning

text = "a photography of"

inputs = processor(raw_image, text, return_tensors="pt")

out = model.generate(**inputs)

print(processor.decode(out[0], skip_special_tokens=True))

# unconditional image captioning

inputs = processor(raw_image, return_tensors="pt")

out = model.generate(**inputs)

print(processor.decode(out[0], skip_special_tokens=True))

```

</details>

#### Running the model on GPU

##### In full precision

<details>

<summary> Click to expand </summary>

```python

import requests

from PIL import Image

from transformers import BlipProcessor, BlipForConditionalGeneration

processor = BlipProcessor.from_pretrained("Salesforce/blip-image-captioning-large")

model = BlipForConditionalGeneration.from_pretrained("Salesforce/blip-image-captioning-large").to("cuda")

img_url = 'https://storage.googleapis.com/sfr-vision-language-research/BLIP/demo.jpg'

raw_image = Image.open(requests.get(img_url, stream=True).raw).convert('RGB')

# conditional image captioning

text = "a photography of"

inputs = processor(raw_image, text, return_tensors="pt").to("cuda")

out = model.generate(**inputs)

print(processor.decode(out[0], skip_special_tokens=True))

# unconditional image captioning

inputs = processor(raw_image, return_tensors="pt").to("cuda")

out = model.generate(**inputs)

print(processor.decode(out[0], skip_special_tokens=True))

```

</details>

##### In half precision (`float16`)

<details>

<summary> Click to expand </summary>

```python

import torch

import requests

from PIL import Image

from transformers import BlipProcessor, BlipForConditionalGeneration

processor = BlipProcessor.from_pretrained("Salesforce/blip-image-captioning-large")

model = BlipForConditionalGeneration.from_pretrained("Salesforce/blip-image-captioning-large", torch_dtype=torch.float16).to("cuda")

img_url = 'https://storage.googleapis.com/sfr-vision-language-research/BLIP/demo.jpg'

raw_image = Image.open(requests.get(img_url, stream=True).raw).convert('RGB')

# conditional image captioning

text = "a photography of"

inputs = processor(raw_image, text, return_tensors="pt").to("cuda", torch.float16)

out = model.generate(**inputs)

print(processor.decode(out[0], skip_special_tokens=True))

# >>> a photography of a woman and her dog

# unconditional image captioning

inputs = processor(raw_image, return_tensors="pt").to("cuda", torch.float16)

out = model.generate(**inputs)

print(processor.decode(out[0], skip_special_tokens=True))

>>> a woman sitting on the beach with her dog

```

</details>

## BibTex and citation info

```

@misc{https://doi.org/10.48550/arxiv.2201.12086,

doi = {10.48550/ARXIV.2201.12086},

url = {https://arxiv.org/abs/2201.12086},

author = {Li, Junnan and Li, Dongxu and Xiong, Caiming and Hoi, Steven},

keywords = {Computer Vision and Pattern Recognition (cs.CV), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation},

publisher = {arXiv},

year = {2022},

copyright = {Creative Commons Attribution 4.0 International}

}

``` |

PD0AUTOMATIONAL/blip2-endpoint | PD0AUTOMATIONAL | 2023-07-05T06:35:31Z | 9 | 2 | transformers | [

"transformers",

"pytorch",

"blip-2",

"visual-question-answering",

"vision",

"image-to-text",

"image-captioning",

"en",

"arxiv:2301.12597",

"license:mit",

"endpoints_compatible",

"region:us"

] | image-to-text | 2023-07-05T06:23:12Z | ---

language: en

license: mit

tags:

- vision

- image-to-text

- image-captioning

- visual-question-answering

pipeline_tag: image-to-text

duplicated_from: Salesforce/blip2-opt-6.7b-coco

---

# BLIP-2, OPT-6.7b, fine-tuned on COCO

BLIP-2 model, leveraging [OPT-6.7b](https://huggingface.co/facebook/opt-6.7b) (a large language model with 6.7 billion parameters).

It was introduced in the paper [BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models](https://arxiv.org/abs/2301.12597) by Li et al. and first released in [this repository](https://github.com/salesforce/LAVIS/tree/main/projects/blip2).

Disclaimer: The team releasing BLIP-2 did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

BLIP-2 consists of 3 models: a CLIP-like image encoder, a Querying Transformer (Q-Former) and a large language model.

The authors initialize the weights of the image encoder and large language model from pre-trained checkpoints and keep them frozen

while training the Querying Transformer, which is a BERT-like Transformer encoder that maps a set of "query tokens" to query embeddings,

which bridge the gap between the embedding space of the image encoder and the large language model.

The goal for the model is simply to predict the next text token, giving the query embeddings and the previous text.

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/transformers/model_doc/blip2_architecture.jpg"

alt="drawing" width="600"/>

This allows the model to be used for tasks like:

- image captioning

- visual question answering (VQA)

- chat-like conversations by feeding the image and the previous conversation as prompt to the model

## Direct Use and Downstream Use

You can use the raw model for conditional text generation given an image and optional text. See the [model hub](https://huggingface.co/models?search=Salesforce/blip) to look for

fine-tuned versions on a task that interests you.

## Bias, Risks, Limitations, and Ethical Considerations

BLIP2-OPT uses off-the-shelf OPT as the language model. It inherits the same risks and limitations as mentioned in Meta's model card.

> Like other large language models for which the diversity (or lack thereof) of training

> data induces downstream impact on the quality of our model, OPT-175B has limitations in terms

> of bias and safety. OPT-175B can also have quality issues in terms of generation diversity and

> hallucination. In general, OPT-175B is not immune from the plethora of issues that plague modern

> large language models.

>

BLIP2 is fine-tuned on image-text datasets (e.g. [LAION](https://laion.ai/blog/laion-400-open-dataset/) ) collected from the internet. As a result the model itself is potentially vulnerable to generating equivalently inappropriate content or replicating inherent biases in the underlying data.

BLIP2 has not been tested in real world applications. It should not be directly deployed in any applications. Researchers should first carefully assess the safety and fairness of the model in relation to the specific context they’re being deployed within.

### How to use

For code examples, we refer to the [documentation](https://huggingface.co/docs/transformers/main/en/model_doc/blip-2#transformers.Blip2ForConditionalGeneration.forward.example). |

sagorsarker/codeswitch-spaeng-pos-lince | sagorsarker | 2023-07-05T06:32:02Z | 118 | 1 | transformers | [

"transformers",

"pytorch",

"jax",

"safetensors",

"bert",

"token-classification",

"codeswitching",

"spanish-english",

"pos",

"es",

"en",

"multilingual",

"dataset:lince",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | token-classification | 2022-03-02T23:29:05Z | ---

language:

- es

- en

- multilingual

license: mit

tags:

- codeswitching

- spanish-english

- pos

datasets:

- lince

---

# codeswitch-spaeng-pos-lince

This is a pretrained model for **Part of Speech Tagging** of `spanish-english` code-mixed data used from [LinCE](https://ritual.uh.edu/lince/home)

This model is trained for this below repository.

[https://github.com/sagorbrur/codeswitch](https://github.com/sagorbrur/codeswitch)

To install codeswitch:

```

pip install codeswitch

```

## Part-of-Speech Tagging of Spanish-English Mixed Data

* **Method-1**

```py

from transformers import AutoTokenizer, AutoModelForTokenClassification, pipeline

tokenizer = AutoTokenizer.from_pretrained("sagorsarker/codeswitch-spaeng-pos-lince")

model = AutoModelForTokenClassification.from_pretrained("sagorsarker/codeswitch-spaeng-pos-lince")

pos_model = pipeline('ner', model=model, tokenizer=tokenizer)

pos_model("put any spanish english code-mixed sentence")

```

* **Method-2**

```py

from codeswitch.codeswitch import POS

pos = POS('spa-eng')

text = "" # your mixed sentence

result = pos.tag(text)

print(result)

```

|

sunil18p31a0101/PoleCopter | sunil18p31a0101 | 2023-07-05T06:29:25Z | 0 | 0 | null | [

"Pixelcopter-PLE-v0",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] | reinforcement-learning | 2023-07-05T06:29:23Z | ---

tags:

- Pixelcopter-PLE-v0

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: PoleCopter

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Pixelcopter-PLE-v0

type: Pixelcopter-PLE-v0

metrics:

- type: mean_reward

value: 9.20 +/- 8.61

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **Pixelcopter-PLE-v0**

This is a trained model of a **Reinforce** agent playing **Pixelcopter-PLE-v0** .

To learn to use this model and train yours check Unit 4 of the Deep Reinforcement Learning Course: https://huggingface.co/deep-rl-course/unit4/introduction

|

alsonlai/q-FrozenLake-v1-4x4-Slippery2 | alsonlai | 2023-07-05T06:16:38Z | 0 | 0 | null | [

"FrozenLake-v1-4x4",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] | reinforcement-learning | 2023-07-05T06:16:32Z | ---

tags:

- FrozenLake-v1-4x4

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-Slippery2

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4

type: FrozenLake-v1-4x4

metrics:

- type: mean_reward

value: 0.74 +/- 0.44

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing1 **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1** .

## Usage

```python

model = load_from_hub(repo_id="alsonlai/q-FrozenLake-v1-4x4-Slippery2", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```

|

IMJONEZZ/ggml-openchat-8192-q4_0 | IMJONEZZ | 2023-07-05T06:04:27Z | 0 | 8 | null | [

"llama",

"openchat",

"en",

"license:apache-2.0",

"region:us"

] | null | 2023-07-02T19:40:18Z | ---

license: apache-2.0

language:

- en

tags:

- llama

- openchat

---

Since this is an OpenChat model, here's the OpenChat card.

# OpenChat: Less is More for Open-source Models

OpenChat is a series of open-source language models fine-tuned on a diverse and high-quality dataset of multi-round conversations. With only ~6K GPT-4 conversations filtered from the ~90K ShareGPT conversations, OpenChat is designed to achieve high performance with limited data.

**Generic models:**

- OpenChat: based on LLaMA-13B (2048 context length)

- **🚀 105.7%** of ChatGPT score on Vicuna GPT-4 evaluation

- **🔥 80.9%** Win-rate on AlpacaEval

- **🤗 Only used 6K data for finetuning!!!**

- OpenChat-8192: based on LLaMA-13B (extended to 8192 context length)

- **106.6%** of ChatGPT score on Vicuna GPT-4 evaluation

- **79.5%** of ChatGPT score on Vicuna GPT-4 evaluation

**Code models:**

- OpenCoderPlus: based on StarCoderPlus (native 8192 context length)

- **102.5%** of ChatGPT score on Vicuna GPT-4 evaluation

- **78.7%** Win-rate on AlpacaEval

*Note:* Please load the pretrained models using *bfloat16*

## Code and Inference Server

We provide the full source code, including an inference server compatible with the "ChatCompletions" API, in the [OpenChat](https://github.com/imoneoi/openchat) GitHub repository.

## Web UI

OpenChat also includes a web UI for a better user experience. See the GitHub repository for instructions.

## Conversation Template

The conversation template **involves concatenating tokens**.

Besides base model vocabulary, an end-of-turn token `<|end_of_turn|>` is added, with id `eot_token_id`.

```python

# OpenChat

[bos_token_id] + tokenize("Human: ") + tokenize(user_question) + [eot_token_id] + tokenize("Assistant: ")

# OpenCoder

tokenize("User:") + tokenize(user_question) + [eot_token_id] + tokenize("Assistant:")

```

*Hint: In BPE, `tokenize(A) + tokenize(B)` does not always equals to `tokenize(A + B)`*

Following is the code for generating the conversation templates:

```python

@dataclass

class ModelConfig:

# Prompt

system: Optional[str]

role_prefix: dict

ai_role: str

eot_token: str

bos_token: Optional[str] = None

# Get template

def generate_conversation_template(self, tokenize_fn, tokenize_special_fn, message_list):

tokens = []

masks = []

# begin of sentence (bos)

if self.bos_token:

t = tokenize_special_fn(self.bos_token)

tokens.append(t)

masks.append(False)

# System

if self.system:

t = tokenize_fn(self.system) + [tokenize_special_fn(self.eot_token)]

tokens.extend(t)

masks.extend([False] * len(t))

# Messages

for idx, message in enumerate(message_list):

# Prefix

t = tokenize_fn(self.role_prefix[message["from"]])

tokens.extend(t)

masks.extend([False] * len(t))

# Message

if "value" in message:

t = tokenize_fn(message["value"]) + [tokenize_special_fn(self.eot_token)]

tokens.extend(t)

masks.extend([message["from"] == self.ai_role] * len(t))

else:

assert idx == len(message_list) - 1, "Empty message for completion must be on the last."

return tokens, masks

MODEL_CONFIG_MAP = {

# OpenChat / OpenChat-8192

"openchat": ModelConfig(

# Prompt

system=None,

role_prefix={

"human": "Human: ",

"gpt": "Assistant: "

},

ai_role="gpt",

eot_token="<|end_of_turn|>",

bos_token="<s>",

),

# OpenCoder / OpenCoderPlus

"opencoder": ModelConfig(

# Prompt

system=None,

role_prefix={

"human": "User:",

"gpt": "Assistant:"

},

ai_role="gpt",

eot_token="<|end_of_turn|>",

bos_token=None,

)

}

``` |

NasimB/gpt2-concat-cl-log-rarity-10-220k-mod-datasets-rarity1-root3 | NasimB | 2023-07-05T05:59:58Z | 126 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"generated_from_trainer",

"dataset:generator",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2023-07-05T03:41:14Z | ---

license: mit

tags:

- generated_from_trainer

datasets:

- generator

model-index:

- name: gpt2-concat-cl-log-rarity-10-220k-mod-datasets-rarity1-root3

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# gpt2-concat-cl-log-rarity-10-220k-mod-datasets-rarity1-root3

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on the generator dataset.

It achieves the following results on the evaluation set:

- Loss: 5.0416

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 1000

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 6.3754 | 0.06 | 500 | 5.9052 |

| 5.0899 | 0.12 | 1000 | 5.5421 |

| 4.8108 | 0.18 | 1500 | 5.3468 |

| 4.6258 | 0.24 | 2000 | 5.2562 |

| 4.4818 | 0.3 | 2500 | 5.1938 |

| 4.3762 | 0.36 | 3000 | 5.1291 |

| 4.2781 | 0.42 | 3500 | 5.0818 |

| 4.184 | 0.48 | 4000 | 5.0492 |

| 4.0944 | 0.54 | 4500 | 5.0293 |

| 4.0096 | 0.6 | 5000 | 5.0134 |

| 3.9209 | 0.66 | 5500 | 4.9953 |

| 3.8449 | 0.72 | 6000 | 4.9897 |

| 3.7748 | 0.78 | 6500 | 4.9793 |

| 3.7162 | 0.84 | 7000 | 4.9719 |

| 3.6813 | 0.9 | 7500 | 4.9687 |

| 3.6592 | 0.96 | 8000 | 4.9669 |

### Framework versions

- Transformers 4.26.1

- Pytorch 1.11.0+cu113

- Datasets 2.13.0

- Tokenizers 0.13.3

|

dlabs-matic-leva/segformer-b0-finetuned-segments-sidewalk-2 | dlabs-matic-leva | 2023-07-05T05:54:12Z | 186 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"segformer",

"vision",

"image-segmentation",

"dataset:segments/sidewalk-semantic",

"endpoints_compatible",

"region:us"

] | image-segmentation | 2023-07-04T07:03:03Z | ---

tags:

- vision

- image-segmentation

datasets:

- segments/sidewalk-semantic

finetuned_from: nvidia/mit-b0

widget:

- src: >-

https://datasets-server.huggingface.co/assets/segments/sidewalk-semantic/--/segments--sidewalk-semantic-2/train/3/pixel_values/image.jpg

example_title: Sidewalk example

--- |

ireneli1024/biobart-v2-base-elife-finetuned | ireneli1024 | 2023-07-05T05:52:51Z | 124 | 0 | transformers | [

"transformers",

"pytorch",

"bart",

"text2text-generation",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2023-07-05T05:50:02Z | ---

license: other

---

This is the finetuned model based on the [biobart-v2-base](https://huggingface.co/GanjinZero/biobart-v2-base) model.

The data is from BioLaySumm 2023 [shared task 1](https://biolaysumm.org/#data). |

niansong1996/lever-gsm8k-codex | niansong1996 | 2023-07-05T05:48:36Z | 103 | 0 | transformers | [

"transformers",

"pytorch",

"roberta",

"text-classification",

"dataset:gsm8k",

"arxiv:2302.08468",

"arxiv:1910.09700",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2023-07-03T03:42:52Z | ---

license: apache-2.0

datasets:

- gsm8k

metrics:

- accuracy

model-index:

- name: lever-gsm8k-codex

results:

- task:

type: code generation # Required. Example: automatic-speech-recognition

# name: {task_name} # Optional. Example: Speech Recognition

dataset:

type: gsm8k # Required. Example: common_voice. Use dataset id from https://hf.co/datasets

name: GSM8K (Math Reasoning) # Required. A pretty name for the dataset. Example: Common Voice (French)

# config: {dataset_config} # Optional. The name of the dataset configuration used in `load_dataset()`. Example: fr in `load_dataset("common_voice", "fr")`. See the `datasets` docs for more info: https://huggingface.co/docs/datasets/package_reference/loading_methods#datasets.load_dataset.name

# split: {dataset_split} # Optional. Example: test

# revision: {dataset_revision} # Optional. Example: 5503434ddd753f426f4b38109466949a1217c2bb

# args:

# {arg_0}: {value_0} # Optional. Additional arguments to `load_dataset()`. Example for wikipedia: language: en

# {arg_1}: {value_1} # Optional. Example for wikipedia: date: 20220301

metrics:

- type: accuracy # Required. Example: wer. Use metric id from https://hf.co/metrics

value: 84.5 # Required. Example: 20.90

# name: {metric_name} # Optional. Example: Test WER

# config: {metric_config} # Optional. The name of the metric configuration used in `load_metric()`. Example: bleurt-large-512 in `load_metric("bleurt", "bleurt-large-512")`. See the `datasets` docs for more info: https://huggingface.co/docs/datasets/v2.1.0/en/loading#load-configurations

# args:

# {arg_0}: {value_0} # Optional. The arguments passed during `Metric.compute()`. Example for `bleu`: max_order: 4

verified: false # Optional. If true, indicates that evaluation was generated by Hugging Face (vs. self-reported).

---

# LEVER (for Codex on GSM8K)

This is one of the models produced by the paper ["LEVER: Learning to Verify Language-to-Code Generation with Execution"](https://arxiv.org/abs/2302.08468).

**Authors:** [Ansong Ni](https://niansong1996.github.io), Srini Iyer, Dragomir Radev, Ves Stoyanov, Wen-tau Yih, Sida I. Wang*, Xi Victoria Lin*

**Note**: This specific model is for Codex on the [GSM8K](https://github.com/openai/grade-school-math) dataset, for the models pretrained on other datasets, please see:

* [lever-spider-codex](https://huggingface.co/niansong1996/lever-spider-codex)

* [lever-wikitq-codex](https://huggingface.co/niansong1996/lever-wikitq-codex)

* [lever-mbpp-codex](https://huggingface.co/niansong1996/lever-mbpp-codex)

# Model Details

## Model Description

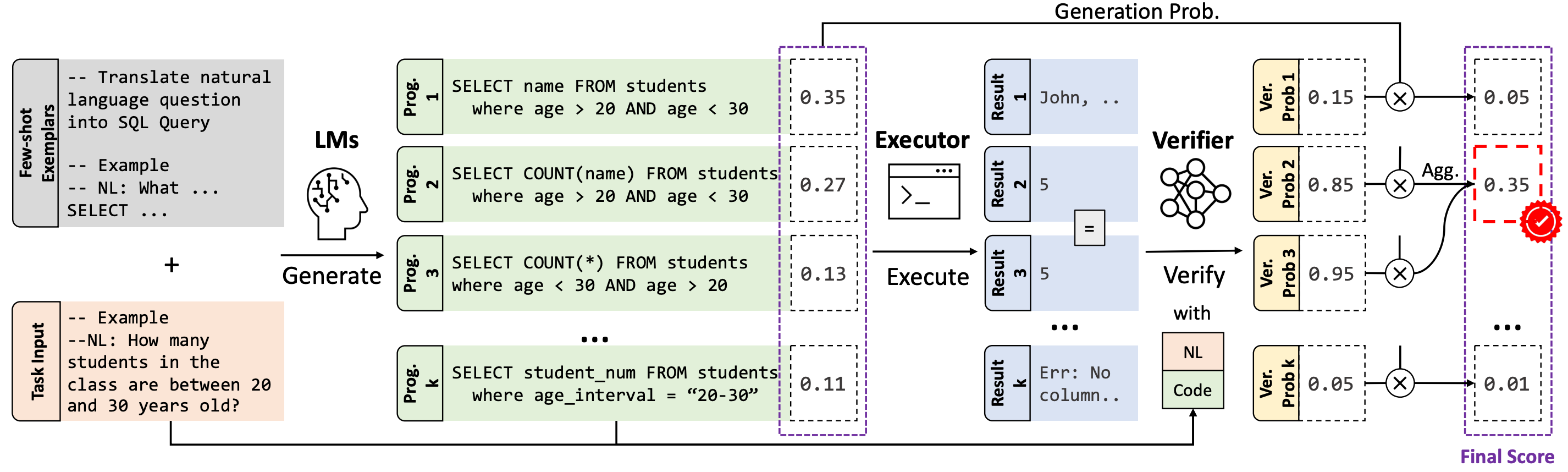

The advent of pre-trained code language models (Code LLMs) has led to significant progress in language-to-code generation. State-of-the-art approaches in this area combine CodeLM decoding with sample pruning and reranking using test cases or heuristics based on the execution results. However, it is challenging to obtain test cases for many real-world language-to-code applications, and heuristics cannot well capture the semantic features of the execution results, such as data type and value range, which often indicates the correctness of the program. In this work, we propose LEVER, a simple approach to improve language-to-code generation by learning to verify the generated programs with their execution results. Specifically, we train verifiers to determine whether a program sampled from the CodeLM is correct or not based on the natural language input, the program itself and its execution results. The sampled programs are reranked by combining the verification score with the CodeLM generation probability, and marginalizing over programs with the same execution results. On four datasets across the domains of table QA, math QA and basic Python programming, LEVER consistently improves over the base CodeLMs (4.6% to 10.9% with code-davinci-002) and achieves new state-of-the-art results on all of them.

- **Developed by:** Yale University and Meta AI

- **Shared by:** Ansong Ni

- **Model type:** Text Classification

- **Language(s) (NLP):** More information needed

- **License:** Apache-2.0

- **Parent Model:** RoBERTa-large

- **Resources for more information:**

- [Github Repo](https://github.com/niansong1996/lever)

- [Associated Paper](https://arxiv.org/abs/2302.08468)

# Uses

## Direct Use

This model is *not* intended to be directly used. LEVER is used to verify and rerank the programs generated by code LLMs (e.g., Codex). We recommend checking out our [Github Repo](https://github.com/niansong1996/lever) for more details.

## Downstream Use

LEVER is learned to verify and rerank the programs sampled from code LLMs for different tasks.

More specifically, for `lever-gsm8k-codex`, it was trained on the outputs of `code-davinci-002` on the [GSM8K](https://github.com/openai/grade-school-math) dataset. It can be used to rerank the SQL programs generated by Codex out-of-box.

Moreover, it may also be applied to other model's outputs on the GSM8K dataset, as studied in the [Original Paper](https://arxiv.org/abs/2302.08468).

## Out-of-Scope Use

The model should not be used to intentionally create hostile or alienating environments for people.

# Bias, Risks, and Limitations

Significant research has explored bias and fairness issues with language models (see, e.g., [Sheng et al. (2021)](https://aclanthology.org/2021.acl-long.330.pdf) and [Bender et al. (2021)](https://dl.acm.org/doi/pdf/10.1145/3442188.3445922)). Predictions generated by the model may include disturbing and harmful stereotypes across protected classes; identity characteristics; and sensitive, social, and occupational groups.

## Recommendations

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

# Training Details

## Training Data

The model is trained with the outputs from `code-davinci-002` model on the [GSM8K](https://github.com/openai/grade-school-math) dataset.

## Training Procedure

20 program samples are drawn from the Codex model on the training examples of the GSM8K dataset, those programs are later executed to obtain the execution information.

And for each example and its program sample, the natural language description and execution information are also part of the inputs that used to train the RoBERTa-based model to predict "yes" or "no" as the verification labels.

### Preprocessing

Please follow the instructions in the [Github Repo](https://github.com/niansong1996/lever) to reproduce the results.

### Speeds, Sizes, Times

More information needed

# Evaluation

## Testing Data, Factors & Metrics

### Testing Data

Dev and test set of the [GSM8K](https://github.com/openai/grade-school-math) dataset.

### Factors

More information needed

### Metrics

Execution accuracy (i.e., pass@1)

## Results

### GSM8K Math Reasoning via Python Code Generation

| | Exec. Acc. (Dev) | Exec. Acc. (Test) |

|-----------------|------------------|-------------------|

| Codex | 68.1 | 67.2 |

| Codex+LEVER | 84.1 | 84.5 |

# Model Examination

More information needed

# Environmental Impact

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** More information needed

- **Hours used:** More information needed

- **Cloud Provider:** More information needed

- **Compute Region:** More information needed

- **Carbon Emitted:** More information needed

# Technical Specifications [optional]

## Model Architecture and Objective

`lever-gsm8k-codex` is based on RoBERTa-large.

## Compute Infrastructure

More information needed

### Hardware

More information needed

### Software

More information needed.

# Citation

**BibTeX:**

```bibtex

@inproceedings{ni2023lever,

title={Lever: Learning to verify language-to-code generation with execution},

author={Ni, Ansong and Iyer, Srini and Radev, Dragomir and Stoyanov, Ves and Yih, Wen-tau and Wang, Sida I and Lin, Xi Victoria},

booktitle={Proceedings of the 40th International Conference on Machine Learning (ICML'23)},

year={2023}

}

```

# Glossary [optional]

More information needed

# More Information [optional]

More information needed

# Model Card Author and Contact

Ansong Ni, contact info on [personal website](https://niansong1996.github.io)

# How to Get Started with the Model

This model is *not* intended to be directly used, please follow the instructions in the [Github Repo](https://github.com/niansong1996/lever). |

thenewcompany/reinforce-CartPole-v1 | thenewcompany | 2023-07-05T05:47:20Z | 0 | 0 | null | [

"CartPole-v1",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] | reinforcement-learning | 2023-07-05T05:47:12Z | ---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: reinforce-CartPole-v1

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

metrics:

- type: mean_reward

value: 500.00 +/- 0.00

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1** .

To learn to use this model and train yours check Unit 4 of the Deep Reinforcement Learning Course: https://huggingface.co/deep-rl-course/unit4/introduction

|

NasimB/gpt2-concat-mod-datasets-rarity1 | NasimB | 2023-07-05T05:41:11Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"generated_from_trainer",

"dataset:generator",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2023-07-05T02:36:15Z | ---

license: mit

tags:

- generated_from_trainer

datasets:

- generator

model-index:

- name: gpt2-concat-mod-datasets-rarity1

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# gpt2-concat-mod-datasets-rarity1

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on the generator dataset.

It achieves the following results on the evaluation set:

- Loss: 3.0210

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 1000

- num_epochs: 7

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 6.7299 | 0.3 | 500 | 5.6367 |

| 5.3814 | 0.59 | 1000 | 5.2097 |

| 5.0305 | 0.89 | 1500 | 4.9565 |

| 4.7532 | 1.18 | 2000 | 4.8178 |

| 4.6062 | 1.48 | 2500 | 4.6913 |

| 4.4987 | 1.78 | 3000 | 4.5883 |

| 4.3593 | 2.07 | 3500 | 4.5246 |

| 4.1845 | 2.37 | 4000 | 4.4796 |

| 4.1539 | 2.66 | 4500 | 4.4191 |

| 4.1258 | 2.96 | 5000 | 4.3681 |

| 3.898 | 3.26 | 5500 | 4.3751 |

| 3.8758 | 3.55 | 6000 | 4.3495 |

| 3.8598 | 3.85 | 6500 | 4.3088 |

| 3.7173 | 4.14 | 7000 | 4.3340 |

| 3.5968 | 4.44 | 7500 | 4.3170 |

| 3.5934 | 4.74 | 8000 | 4.3049 |

| 3.5491 | 5.03 | 8500 | 4.3103 |

| 3.3358 | 5.33 | 9000 | 4.3192 |

| 3.3363 | 5.62 | 9500 | 4.3181 |

| 3.3409 | 5.92 | 10000 | 4.3105 |

| 3.2189 | 6.22 | 10500 | 4.3290 |

| 3.1812 | 6.51 | 11000 | 4.3286 |

| 3.1879 | 6.81 | 11500 | 4.3297 |

### Framework versions

- Transformers 4.26.1

- Pytorch 1.11.0+cu113

- Datasets 2.13.0

- Tokenizers 0.13.3

|

anejaisha/output1 | anejaisha | 2023-07-05T05:32:35Z | 1 | 0 | null | [

"tensorboard",

"generated_from_trainer",

"base_model:google/flan-t5-large",

"base_model:finetune:google/flan-t5-large",

"license:apache-2.0",

"region:us"

] | null | 2023-07-05T05:10:41Z | ---

license: apache-2.0

base_model: google/flan-t5-large

tags:

- generated_from_trainer

model-index:

- name: output1

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# output1

This model is a fine-tuned version of [google/flan-t5-large](https://huggingface.co/google/flan-t5-large) on the None dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Framework versions

- Transformers 4.31.0.dev0

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

modelmaker/melanie | modelmaker | 2023-07-05T05:26:44Z | 0 | 0 | diffusers | [

"diffusers",

"text-to-image",

"am",

"dataset:Open-Orca/OpenOrca",

"license:openrail",

"region:us"

] | text-to-image | 2023-07-05T05:15:40Z | ---

license: openrail

datasets:

- Open-Orca/OpenOrca

language:

- am

metrics:

- accuracy

library_name: diffusers

pipeline_tag: text-to-image

--- |

thirupathibandam/bloom560 | thirupathibandam | 2023-07-05T05:22:00Z | 116 | 0 | transformers | [

"transformers",

"pytorch",

"bloom",

"feature-extraction",

"text-generation",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2023-07-05T03:51:48Z | ---

pipeline_tag: text-generation

--- |

ConnorAzure/BillieJoeArmstrong_300_Epoch_Version | ConnorAzure | 2023-07-05T05:21:30Z | 0 | 0 | null | [

"license:cc-by-nc-sa-4.0",

"region:us"

] | null | 2023-07-05T05:19:38Z | ---

license: cc-by-nc-sa-4.0

---

|

Chattiori/RandMix | Chattiori | 2023-07-05T05:07:47Z | 0 | 1 | null | [

"license:creativeml-openrail-m",

"region:us"

] | null | 2023-07-03T02:36:10Z | ---

license: creativeml-openrail-m

---

<span style="font-size: 250%; font-weight:bold; color:#A00000; -webkit-text-stroke: 1.5px #8080FF;">$()RandMix()$</span>

*Merging random fetched amounts of realistic models with random alpha values.*

*<a href="https://github.com/Faildes/CivitAI-ModelFetch-RandomScripter" style="font-size: 250%; font-weight:bold; color:#A00000;">Link for the Tool</span>*

# attemptD

## Authors

[CalicoMixReal-v2.0](https://civitai.com/models/83593/) by [Kybalico](https://civitai.com/user/Kybalico)

[ThisIsReal-v2.0](https://civitai.com/models/93529/) by [ChangeMeNot](https://civitai.com/user/ChangeMeNot)

[donGmiXX_realistic-v1.0](https://civitai.com/models/72745/) by [Dong09](https://civitai.com/user/Dong09)

[fantasticmix-v6.5](https://civitai.com/models/22402/) by [michin](https://civitai.com/user/michin)

[XtReMiX UltiMate Merge-v1.8](https://civitai.com/models/93589/) by [creatumundo399](https://civitai.com/user/creatumundo399)

[Blessing Mix-V1-VAE](https://civitai.com/models/94179/) by [mixboy](https://civitai.com/user/mixboy)

[Magical woman-v1.0](https://civitai.com/models/87659/) by [Aderek514](https://civitai.com/user/Aderek514)

[X-Flare Mix-Real](https://civitai.com/models/87533/) by [noah4u](https://civitai.com/user/noah4u)

[Kawaii Realistic European Mix-v0.2](https://civitai.com/models/90694/) by [szxex](https://civitai.com/user/szxex)

[midmix-v2.0](https://civitai.com/models/91837/) by [aigirl951877](https://civitai.com/user/aigirl951877)

[NextPhoto-v2.0](https://civitai.com/models/84335/) by [bigbeanboiler](https://civitai.com/user/bigbeanboiler)

[epiCRealism-pure Evolution V3](https://civitai.com/models/25694/) by [epinikion](https://civitai.com/user/epinikion)

## Mergition

Sum Twice, [Magicalwoman-v1.0](https://civitai.com/models/87659/) + [NextPhoto-v2.0](https://civitai.com/models/84335/) + [BlessingMix-V1-VAE](https://civitai.com/models/94179/),rand_alpha(0.0, 1.0, 362988133) rand_beta(0.0, 1.0, 2503978625) >> TEMP_0

Sum Twice, [ThisIsReal-v2.0-pruned](https://civitai.com/models/93529/) + [epiCRealism-pureEvolutionV3](https://civitai.com/models/25694/) + [X-FlareMix-Real](https://civitai.com/models/87533/),rand_alpha(0.0, 1.0, 1164438173) rand_beta(0.0, 1.0, 2889722594) >> TEMP_1

Sum Twice, TEMP_1 + [XtReMiXUltiMateMerge-v1.8-pruned](https://civitai.com/models/93589/) + [KawaiiRealisticEuropeanMix-v0.2](https://civitai.com/models/90694/),rand_alpha(0.0, 1.0, 2548759651) rand_beta(0.0, 1.0, 939190814) >> TEMP_2

Sum Twice, [donGmiXX_realistic-v1.0-pruned](https://civitai.com/models/72745/) + TEMP_2 + TEMP_0,rand_alpha(0.0, 1.0, 4211068902) rand_beta(0.0, 1.0, 2851752676) >> TEMP_3

Sum Twice, [CalicoMixReal-v2.0](https://civitai.com/models/83593/) + [midmix-v2.0](https://civitai.com/models/91837/) + [fantasticmix-v6.5](https://civitai.com/models/22402/),rand_alpha(0.0, 1.0, 1155017101) rand_beta(0.0, 1.0, 1186832395) >> TEMP_4

Weighted Sum, TEMP_4 + TEMP_3,rand_alpha(0.0, 1.0, 4170699435) >> RandMix-attemptD

# attemptE

## Authors

[ThisIsReal-v2.0](https://civitai.com/models/93529/) by [ChangeMeNot](https://civitai.com/user/ChangeMeNot)

[WaffleMix-v3](https://civitai.com/models/82657/) by [WaffleAbyss](https://civitai.com/user/WaffleAbyss)

[AddictiveFuture_Realistic_SemiAsian-V1](https://civitai.com/models/94725/) by [AddictiveFuture](https://civitai.com/user/AddictiveFuture)

[UltraReal-v1.0](https://civitai.com/models/101116/) by [ndsempai872](https://civitai.com/user/ndsempai872)

[Opiate-Opiate.v2.0-pruned-fp16](https://civitai.com/models/69587/) by [DominoPrincip](https://civitai.com/user/DominoPrincip)

[Milky-Chicken-v1.1](https://civitai.com/models/91662/) by [ArcticFlamingo](https://civitai.com/user/ArcticFlamingo)

[LOFA_RealMIX-v2.1](https://civitai.com/models/97203/) by [XSELE](https://civitai.com/user/XSELE)

[epiCRealism-pure Evolution V3](https://civitai.com/models/25694/) by [epinikion](https://civitai.com/user/epinikion)

[CalicoMixReal-v2.0](https://civitai.com/models/83593/) by [Kybalico](https://civitai.com/user/Kybalico)

[fantasticmix-v6.5](https://civitai.com/models/22402/) by [michin](https://civitai.com/user/michin)

[Sensual Visions-v1.0](https://civitai.com/models/96147/) by [Chik](https://civitai.com/user/Chik)

[yayoi_mix-v1.31](https://civitai.com/models/83096/) by [kotajiro001](https://civitai.com/user/kotajiro001)

[cbimix-v1.2](https://civitai.com/models/21341/) by [RobertoGonzalez](https://civitai.com/user/RobertoGonzalez)

[kisaragi_mix-v2.2](https://civitai.com/models/45757/) by [kotajiro001](https://civitai.com/user/kotajiro001)

[OS-AmberGlow-v1.0](https://civitai.com/models/96715/) by [BakingBeans](https://civitai.com/user/BakingBeans)

[CyberRealistic -v3.1](https://civitai.com/models/15003/) by [Cyberdelia](https://civitai.com/user/Cyberdelia)

[MoYouMIX_nature-v10.2](https://civitai.com/models/86232/) by [MoYou](https://civitai.com/user/MoYou)

[XXMix_9realistic-v4.0](https://civitai.com/models/47274/) by [Zyx_xx](https://civitai.com/user/Zyx_xx)

[puremix-v2.0](https://civitai.com/models/63558/) by [aigirl951877](https://civitai.com/user/aigirl951877)

[Shampoo Mix-v4](https://civitai.com/models/33918/) by [handcleanmists](https://civitai.com/user/handcleanmists)

[mutsuki_mix-v2](https://civitai.com/models/45614/) by [kotajiro001](https://civitai.com/user/kotajiro001)

## Mergition

Sum Twice, [ThisIsReal-v2.0-pruned](https://civitai.com/models/93529/) + [kisaragi_mix-v2.2](https://civitai.com/models/45757/) + [XXMix_9realistic-v4.0](https://civitai.com/models/47274/),rand_alpha(0.0, 1.0, 443960021) rand_beta(0.0, 1.0, 3789696212) >> TEMP_0

Sum Twice, [MoYouMIX_nature-v10.2](https://civitai.com/models/86232/) + [OS-AmberGlow-v1.0](https://civitai.com/models/96715/) + [WaffleMix-v3-pruned](https://civitai.com/models/82657/),rand_alpha(0.0, 1.0, 1803109590) rand_beta(0.0, 1.0, 1453385409) >> TEMP_1

Sum Twice, [LOFA_RealMIX-v2.1](https://civitai.com/models/97203/) + [cbimix-v1.2-pruned](https://civitai.com/models/21341/) + [UltraReal-v1.0](https://civitai.com/models/101116/),rand_alpha(0.0, 1.0, 4256340902) rand_beta(0.0, 1.0, 807878137) >> TEMP_2

Sum Twice, TEMP_2 + [epiCRealism-pureEvolutionV3](https://civitai.com/models/25694/) + [fantasticmix-v6.5](https://civitai.com/models/22402/),rand_alpha(0.0, 1.0, 3772562984) rand_beta(0.0, 1.0, 4240203753) >> TEMP_3

Sum Twice, [Milky-Chicken-v1.1](https://civitai.com/models/91662/) + [SensualVisions-v1.0](https://civitai.com/models/96147/) + [CyberRealistic-v3.1](https://civitai.com/models/15003/),rand_alpha(0.0, 1.0, 4126859432) rand_beta(0.0, 1.0, 2028377392) >> TEMP_4

Sum Twice, [Opiate-Opiate.v2.0-pruned-fp16](https://civitai.com/models/69587/) + [mutsuki_mix-v2](https://civitai.com/models/45614/) + [CalicoMixReal-v2.0](https://civitai.com/models/83593/),rand_alpha(0.0, 1.0, 2593256182) rand_beta(0.0, 1.0, 1602942704) >> TEMP_5

Sum Twice, [puremix-v2.0](https://civitai.com/models/63558/) + TEMP_5 + TEMP_0,rand_alpha(0.0, 1.0, 1463835870) rand_beta(0.0, 1.0, 1919004708) >> TEMP_6

Sum Twice, TEMP_6 + [ShampooMix-v4](https://civitai.com/models/33918/) + TEMP_4,rand_alpha(0.0, 1.0, 2771317666) rand_beta(0.0, 1.0, 3798261900) >> TEMP_7

Sum Twice, TEMP_1 + [yayoi_mix-v1.31](https://civitai.com/models/83096/) + TEMP_3,rand_alpha(0.0, 1.0, 2433722680) rand_beta(0.0, 1.0, 3707256183) >> TEMP_8

Sum Twice, TEMP_7 + [AddictiveFuture_Realistic_SemiAsian-V1](https://civitai.com/models/94725/) + TEMP_8,rand_alpha(0.0, 1.0, 2818401144) rand_beta(0.0, 1.0, 4137586985) >> RandMix-attemptE

# attemptF

## Authors

[cineMaErosPG_V4-cineMaErosPG_V4_ UF](https://civitai.com/models/74426/) by [Filly](https://civitai.com/user/Filly)

[Fresh Photo-v2.0](https://civitai.com/models/63149/) by [eddiemauro](https://civitai.com/user/eddiemauro)

[LRM - Liangyius Realistic Mix-v1.5](https://civitai.com/models/81304/) by [liangyiu](https://civitai.com/user/liangyiu)

[Nobmodel-v1.0](https://civitai.com/models/99326/) by [Nobdy](https://civitai.com/user/Nobdy)

[cbimix-v1.2](https://civitai.com/models/21341/) by [RobertoGonzalez](https://civitai.com/user/RobertoGonzalez)

[ChillyMix-chillymix V2 VAE Fp16](https://civitai.com/models/58772/) by [mixboy](https://civitai.com/user/mixboy)

[XXMix_9realistic-v4.0](https://civitai.com/models/47274/) by [Zyx_xx](https://civitai.com/user/Zyx_xx)

[X-Flare Mix-Real](https://civitai.com/models/87533/) by [noah4u](https://civitai.com/user/noah4u)

[Nymph Mix-v1.0_pruned](https://civitai.com/models/96374/) by [NymphMix](https://civitai.com/user/NymphMix)

[NeverEnding Dream-v1.22](https://civitai.com/models/10028/) by [Lykon](https://civitai.com/user/Lykon)

[ICBINP - I Cannot Believe ItIs Not Photography-Afterburn](https://civitai.com/models/28059/) by [residentchiefnz](https://civitai.com/user/residentchiefnz)

[epiCRealism-pure Evolution V3](https://civitai.com/models/25694/) by [epinikion](https://civitai.com/user/epinikion)

[kisaragi_mix-v2.2](https://civitai.com/models/45757/) by [kotajiro001](https://civitai.com/user/kotajiro001)

[Opiate-Opiate.v2.0-pruned-fp16](https://civitai.com/models/69587/) by [DominoPrincip](https://civitai.com/user/DominoPrincip)

[AIbijoModel-no47p22](https://civitai.com/models/65155/) by [AIbijo](https://civitai.com/user/AIbijo)

[LazyMix+-v3.0a](https://civitai.com/models/10961/) by [kaylazy](https://civitai.com/user/kaylazy)

[Kawaii Realistic European Mix-v0.2](https://civitai.com/models/90694/) by [szxex](https://civitai.com/user/szxex)

[fantasticmix-v6.5](https://civitai.com/models/22402/) by [michin](https://civitai.com/user/michin)

[CyberRealistic Classic-Classic V1.4](https://civitai.com/models/71185/) by [Cyberdelia](https://civitai.com/user/Cyberdelia)

[blue_pencil_realistic-v0.5](https://civitai.com/models/88941/) by [blue_pen5805](https://civitai.com/user/blue_pen5805)

## Mergition

Sum Twice, [ChillyMix-chillymixV2VAEFp16](https://civitai.com/models/58772/) + [AIbijoModel-no47p22](https://civitai.com/models/65155/) + [KawaiiRealisticEuropeanMix-v0.2](https://civitai.com/models/90694/),rand_alpha(0.0, 1.0, 1448726226) rand_beta(0.0, 1.0, 1612718918) >> TEMP_0

Sum Twice, [NymphMix-v1.0_pruned](https://civitai.com/models/96374/) + [blue_pencil_realistic-v0.5](https://civitai.com/models/88941/) + [XXMix_9realistic-v4.0](https://civitai.com/models/47274/),rand_alpha(0.0, 1.0, 3996249117) rand_beta(0.0, 1.0, 1325610322) >> TEMP_1

Sum Twice, [NeverEndingDream-v1.22](https://civitai.com/models/10028/) + [Nobmodel-v1.0-pruned](https://civitai.com/models/99326/) + [cineMaErosPG_V4-cineMaErosPG_V4_UF](https://civitai.com/models/74426/),rand_alpha(0.0, 1.0, 3380603779) rand_beta(0.0, 1.0, 3034448733) >> TEMP_2

Sum Twice, [CyberRealisticClassic-ClassicV1.4](https://civitai.com/models/71185/) + [LRM-LiangyiusRealisticMix-v1.5](https://civitai.com/models/81304/) + TEMP_1,rand_alpha(0.0, 1.0, 3442830754) rand_beta(0.0, 1.0, 3394049346) >> TEMP_3

Sum Twice, [epiCRealism-pureEvolutionV3](https://civitai.com/models/25694/) + [ICBINP-ICannotBelieveItIsNotPhotography-Afterburn](https://civitai.com/models/28059/) + [FreshPhoto-v2.0-pruned](https://civitai.com/models/63149/),rand_alpha(0.0, 1.0, 3406789958) rand_beta(0.0, 1.0, 2616453593) >> TEMP_4

Sum Twice, [fantasticmix-v6.5](https://civitai.com/models/22402/) + [cbimix-v1.2-pruned](https://civitai.com/models/21341/) + TEMP_0,rand_alpha(0.0, 1.0, 636301224) rand_beta(0.0, 1.0, 1333752761) >> TEMP_5

Sum Twice, [Opiate-Opiate.v2.0-pruned-fp16](https://civitai.com/models/69587/) + TEMP_5 + [kisaragi_mix-v2.2](https://civitai.com/models/45757/),rand_alpha(0.0, 1.0, 3025193242) rand_beta(0.0, 1.0, 1994900822) >> TEMP_6

Sum Twice, TEMP_6 + TEMP_2 + TEMP_4,rand_alpha(0.0, 1.0, 1437849591) rand_beta(0.0, 1.0, 1280504514) >> TEMP_7

Sum Twice, [LazyMix+-v3.0a](https://civitai.com/models/10961/) + TEMP_7 + [X-FlareMix-Real](https://civitai.com/models/87533/),rand_alpha(0.0, 1.0, 2116550821) rand_beta(0.0, 1.0, 1220687392) >> TEMP_8

Weighted Sum, TEMP_3 + TEMP_8,rand_alpha(0.0, 1.0, 2376068494) >> RandMix-attemptF

|

fanlino/distilbert-base-uncased-finetuned-emotion | fanlino | 2023-07-05T05:04:53Z | 105 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:emotion",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2023-06-19T05:59:35Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- emotion

metrics:

- accuracy

- f1

model-index:

- name: distilbert-base-uncased-finetuned-emotion

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: emotion

type: emotion

config: split

split: validation

args: split

metrics:

- name: Accuracy

type: accuracy

value: 0.9255

- name: F1

type: f1

value: 0.9255469274059955

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2134

- Accuracy: 0.9255

- F1: 0.9255

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.8099 | 1.0 | 250 | 0.3119 | 0.907 | 0.9039 |

| 0.2425 | 2.0 | 500 | 0.2134 | 0.9255 | 0.9255 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

digiplay/PeachMixsRelistic_R0 | digiplay | 2023-07-05T05:00:28Z | 393 | 4 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2023-07-04T06:31:42Z | ---

license: other

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

inference: true

---

Model info :

https://civitai.com/models/99595/peachmixs-relistic

Original Author's DEMO image :

|

chenxingphh/marian-finetuned-kde4-en-to-fr | chenxingphh | 2023-07-05T04:59:29Z | 104 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"marian",

"text2text-generation",

"translation",

"generated_from_trainer",

"dataset:kde4",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | translation | 2023-07-05T03:16:55Z | ---

license: apache-2.0

tags:

- translation

- generated_from_trainer

datasets:

- kde4

model-index:

- name: marian-finetuned-kde4-en-to-fr

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# marian-finetuned-kde4-en-to-fr

This model is a fine-tuned version of [Helsinki-NLP/opus-mt-en-fr](https://huggingface.co/Helsinki-NLP/opus-mt-en-fr) on the kde4 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

lIlBrother/ko-TextNumbarT | lIlBrother | 2023-07-05T04:36:42Z | 125 | 2 | transformers | [

"transformers",

"pytorch",

"safetensors",

"bart",

"text2text-generation",

"ko",

"dataset:aihub",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2022-10-31T07:02:36Z | ---

language:

- ko # Example: fr

license: apache-2.0 # Example: apache-2.0 or any license from https://hf.co/docs/hub/repositories-licenses

library_name: transformers # Optional. Example: keras or any library from https://github.com/huggingface/hub-docs/blob/main/js/src/lib/interfaces/Libraries.ts

tags:

- text2text-generation # Example: audio

datasets:

- aihub # Example: common_voice. Use dataset id from https://hf.co/datasets

metrics:

- bleu # Example: wer. Use metric id from https://hf.co/metrics

- rouge

# Optional. Add this if you want to encode your eval results in a structured way.

model-index:

- name: ko-TextNumbarT

results:

- task:

type: text2text-generation # Required. Example: automatic-speech-recognition

name: text2text-generation # Optional. Example: Speech Recognition

metrics:

- type: bleu # Required. Example: wer. Use metric id from https://hf.co/metrics

value: 0.958234790096092 # Required. Example: 20.90

name: eval_bleu # Optional. Example: Test WER

verified: false # Optional. If true, indicates that evaluation was generated by Hugging Face (vs. self-reported).

- type: rouge1 # Required. Example: wer. Use metric id from https://hf.co/metrics

value: 0.9735361877162854 # Required. Example: 20.90

name: eval_rouge1 # Optional. Example: Test WER

verified: false # Optional. If true, indicates that evaluation was generated by Hugging Face (vs. self-reported).

- type: rouge2 # Required. Example: wer. Use metric id from https://hf.co/metrics

value: 0.9493975212378124 # Required. Example: 20.90

name: eval_rouge2 # Optional. Example: Test WER

verified: false # Optional. If true, indicates that evaluation was generated by Hugging Face (vs. self-reported).

- type: rougeL # Required. Example: wer. Use metric id from https://hf.co/metrics

value: 0.9734558938864928 # Required. Example: 20.90

name: eval_rougeL # Optional. Example: Test WER

verified: false # Optional. If true, indicates that evaluation was generated by Hugging Face (vs. self-reported).

- type: rougeLsum # Required. Example: wer. Use metric id from https://hf.co/metrics

value: 0.9734350757552404 # Required. Example: 20.90

name: eval_rougeLsum # Optional. Example: Test WER

verified: false # Optional. If true, indicates that evaluation was generated by Hugging Face (vs. self-reported).

---

# ko-TextNumbarT(TNT Model🧨): Try Korean Reading To Number(한글을 숫자로 바꾸는 모델)

## Table of Contents

- [ko-TextNumbarT(TNT Model🧨): Try Korean Reading To Number(한글을 숫자로 바꾸는 모델)](#ko-textnumbarttnt-model-try-korean-reading-to-number한글을-숫자로-바꾸는-모델)

- [Table of Contents](#table-of-contents)

- [Model Details](#model-details)

- [Uses](#uses)

- [Evaluation](#evaluation)

- [How to Get Started With the Model](#how-to-get-started-with-the-model)

## Model Details

- **Model Description:**

뭔가 찾아봐도 모델이나 알고리즘이 딱히 없어서 만들어본 모델입니다. <br />

BartForConditionalGeneration Fine-Tuning Model For Korean To Number <br />

BartForConditionalGeneration으로 파인튜닝한, 한글을 숫자로 변환하는 Task 입니다. <br />

- Dataset use [Korea aihub](https://aihub.or.kr/aihubdata/data/list.do?currMenu=115&topMenu=100&srchDataRealmCode=REALM002&srchDataTy=DATA004) <br />

I can't open my fine-tuning datasets for my private issue <br />

데이터셋은 Korea aihub에서 받아서 사용하였으며, 파인튜닝에 사용된 모든 데이터를 사정상 공개해드릴 수는 없습니다. <br />

- Korea aihub data is ONLY permit to Korean!!!!!!! <br />

aihub에서 데이터를 받으실 분은 한국인일 것이므로, 한글로만 작성합니다. <br />

정확히는 철자전사를 음성전사로 번역하는 형태로 학습된 모델입니다. (ETRI 전사기준) <br />

- In case, ten million, some people use 10 million or some people use 10000000, so this model is crucial for training datasets <br />

천만을 1000만 혹은 10000000으로 쓸 수도 있기에, Training Datasets에 따라 결과는 상이할 수 있습니다. <br />

- **수관형사와 수 의존명사의 띄어쓰기에 따라 결과가 확연히 달라질 수 있습니다. (쉰살, 쉰 살 -> 쉰살, 50살)** https://eretz2.tistory.com/34 <br />

일단은 기준을 잡고 치우치게 학습시키기엔 어떻게 사용될지 몰라, 학습 데이터 분포에 맡기도록 했습니다. (쉰 살이 더 많을까 쉰살이 더 많을까!?)

- **Developed by:** Yoo SungHyun(https://github.com/YooSungHyun)

- **Language(s):** Korean

- **License:** apache-2.0

- **Parent Model:** See the [kobart-base-v2](https://huggingface.co/gogamza/kobart-base-v2) for more information about the pre-trained base model.

## Uses

Want see more detail follow this URL [KoGPT_num_converter](https://github.com/ddobokki/KoGPT_num_converter) <br /> and see `bart_inference.py` and `bart_train.py`

## Evaluation

Just using `evaluate-metric/bleu` and `evaluate-metric/rouge` in huggingface `evaluate` library <br />

[Training wanDB URL](https://wandb.ai/bart_tadev/BartForConditionalGeneration/runs/14hyusvf?workspace=user-bart_tadev)

## How to Get Started With the Model

```python

from transformers.pipelines import Text2TextGenerationPipeline

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

texts = ["그러게 누가 여섯시까지 술을 마시래?"]

tokenizer = AutoTokenizer.from_pretrained("lIlBrother/ko-TextNumbarT")

model = AutoModelForSeq2SeqLM.from_pretrained("lIlBrother/ko-TextNumbarT")

seq2seqlm_pipeline = Text2TextGenerationPipeline(model=model, tokenizer=tokenizer)

kwargs = {

"min_length": 0,

"max_length": 1206,

"num_beams": 100,

"do_sample": False,

"num_beam_groups": 1,

}

pred = seq2seqlm_pipeline(texts, **kwargs)

print(pred)

# 그러게 누가 6시까지 술을 마시래?

```

|

Madhav1988/candy-finetuned | Madhav1988 | 2023-07-05T04:20:23Z | 187 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"detr",

"object-detection",

"generated_from_trainer",

"dataset:imagefolder",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | object-detection | 2023-06-25T15:34:17Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imagefolder

model-index:

- name: candy-finetuned

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# candy-finetuned

This model is a fine-tuned version of [Madhav1988/candy-finetuned](https://huggingface.co/Madhav1988/candy-finetuned) on the imagefolder dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

OmarAboBakr/output_dir | OmarAboBakr | 2023-07-05T03:57:01Z | 105 | 0 | transformers | [

"transformers",

"pytorch",

"t5",

"text2text-generation",

"generated_from_trainer",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2023-07-05T03:53:59Z | ---

tags:

- generated_from_trainer

model-index:

- name: output_dir

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# output_dir

This model is a fine-tuned version of [ahmeddbahaa/AraT5-base-finetune-ar-xlsum](https://huggingface.co/ahmeddbahaa/AraT5-base-finetune-ar-xlsum) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 3.3896

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 35 | 2.7832 |

| No log | 2.0 | 70 | 2.8460 |

| No log | 3.0 | 105 | 2.9176 |

| No log | 4.0 | 140 | 3.0041 |

| No log | 5.0 | 175 | 3.0820 |

| No log | 6.0 | 210 | 3.1322 |

| No log | 7.0 | 245 | 3.2356 |

| No log | 8.0 | 280 | 3.2674 |

| No log | 9.0 | 315 | 3.3620 |

| No log | 10.0 | 350 | 3.3896 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

hiepnh/xgen-7b-8k-inst-8bit-sharded | hiepnh | 2023-07-05T03:47:32Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2023-07-05T03:33:54Z | sharded version of legendhasit/xgen-7b-8k-inst-8bit |

GabrielOnohara/distilbert-base-uncased-finetuned-cola | GabrielOnohara | 2023-07-05T03:44:22Z | 61 | 0 | transformers | [

"transformers",

"tf",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2023-07-05T01:15:19Z | ---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: GabrielOnohara/distilbert-base-uncased-finetuned-cola

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# GabrielOnohara/distilbert-base-uncased-finetuned-cola

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.1800

- Validation Loss: 0.5561

- Train Matthews Correlation: 0.5182

- Epoch: 2

## Model description

More information needed

## Intended uses & limitations

More information needed