modelId (string) | author (string) | last_modified (timestamp[us, tz=UTC]) | downloads (int64) | likes (int64) | library_name (string) | tags (sequence) | pipeline_tag (string) | createdAt (timestamp[us, tz=UTC]) | card (string) |
|---|---|---|---|---|---|---|---|---|---|

hazemOmrann14/AraBART-summ-finetuned-xsum-finetuned-xsum-finetuned-xsum | hazemOmrann14 | 2023-07-05T16:28:35Z | 106 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"mbart",

"text2text-generation",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2023-07-05T16:08:14Z | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: AraBART-summ-finetuned-xsum-finetuned-xsum-finetuned-xsum

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# AraBART-summ-finetuned-xsum-finetuned-xsum-finetuned-xsum

This model is a fine-tuned version of [hazemOmrann14/AraBART-summ-finetuned-xsum-finetuned-xsum](https://huggingface.co/hazemOmrann14/AraBART-summ-finetuned-xsum-finetuned-xsum) on an unspecified dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3.328889038158605e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:------:|:------:|:------:|:---------:|:-------:|

| No log | 1.0 | 10 | 2.5240 | 0.0 | 0.0 | 0.0 | 0.0 | 20.0 |
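The Rouge1 column reports unigram overlap between generated and reference summaries, so a value of 0.0 means the two shared no unigrams at all. As a rough illustration only, the sketch below computes a simplified ROUGE-1 F1 in plain Python. The lowercase whitespace tokenization and the name `rouge1_f1` are assumptions made for this example; it is not the scorer the Trainer used.

```python
from collections import Counter


def rouge1_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-1 F1 on lowercase whitespace tokens (illustrative only)."""
    cand_tokens = candidate.lower().split()
    ref_tokens = reference.lower().split()
    if not cand_tokens or not ref_tokens:
        return 0.0
    # Clipped overlap: a token counts at most as often as it occurs in both texts.
    overlap = sum((Counter(cand_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(cand_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)
```

Under this definition, any pair of texts with disjoint vocabularies scores exactly 0.0, which is one way a row like the one above can arise.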

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

ozsenior13/distilbert-base-uncased-finetuned-squad | ozsenior13 | 2023-07-05T16:24:38Z | 116 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"question-answering",

"generated_from_trainer",

"dataset:squad",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | question-answering | 2023-07-05T15:02:16Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: distilbert-base-uncased-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-squad

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the squad dataset.

It achieves the following results on the evaluation set:

- Loss: 1.2169

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1
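With `lr_scheduler_type: linear` and no warmup listed, the learning rate presumably decays linearly from 2e-05 towards zero over the run. The sketch below illustrates that shape, assuming zero warmup steps and the 5533 total steps reported in the training results. `linear_lr` is a hypothetical helper written for this example, not a Trainer API.

```python
def linear_lr(step: int, total_steps: int = 5533, base_lr: float = 2e-05) -> float:
    """Learning rate after `step` optimizer updates under linear decay to zero.

    Assumes no warmup; total_steps is taken from the training results.
    """
    remaining = max(0, total_steps - step)
    return base_lr * remaining / total_steps
```

Halfway through the run this gives roughly half the base learning rate, and the rate reaches zero at the final step.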

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.263 | 1.0 | 5533 | 1.2169 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

NasimB/gpt2-dp-cl-rarity-11-135k-mod-datasets-rarity1-root3 | NasimB | 2023-07-05T16:22:18Z | 7 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"generated_from_trainer",

"dataset:generator",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2023-07-05T13:27:11Z | ---

license: mit

tags:

- generated_from_trainer

datasets:

- generator

model-index:

- name: gpt2-dp-cl-rarity-11-135k-mod-datasets-rarity1-root3

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# gpt2-dp-cl-rarity-11-135k-mod-datasets-rarity1-root3

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on the generator dataset.

It achieves the following results on the evaluation set:

- Loss: 4.7616

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 1000

- num_epochs: 1

- mixed_precision_training: Native AMP
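The combination of `lr_scheduler_type: cosine` and `lr_scheduler_warmup_steps: 1000` suggests a linear warmup followed by a half-cosine decay. A minimal sketch of that shape follows. The total number of steps is not stated in the hyperparameters, so it is left as a caller-supplied argument, and `cosine_lr_with_warmup` is a hypothetical name used only here.

```python
import math


def cosine_lr_with_warmup(step, total_steps, base_lr=0.0005, warmup_steps=1000):
    """Linear warmup to base_lr, then half-cosine decay to zero."""
    if step < warmup_steps:
        # Warmup phase: ramp linearly from 0 up to base_lr.
        return base_lr * step / max(1, warmup_steps)
    # Decay phase: progress runs from 0 (end of warmup) to 1 (end of training).
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(1.0, progress)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))
```

The rate peaks at 0.0005 exactly when warmup ends (step 1000) and falls to zero at `total_steps`.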

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 6.7003 | 0.05 | 500 | 5.8421 |

| 5.4077 | 0.1 | 1000 | 5.4379 |

| 5.0667 | 0.15 | 1500 | 5.2282 |

| 4.8285 | 0.2 | 2000 | 5.0890 |

| 4.6639 | 0.25 | 2500 | 4.9968 |

| 4.5282 | 0.29 | 3000 | 4.9414 |

| 4.4194 | 0.34 | 3500 | 4.8843 |

| 4.3138 | 0.39 | 4000 | 4.8436 |

| 4.2135 | 0.44 | 4500 | 4.8229 |

| 4.1242 | 0.49 | 5000 | 4.7947 |

| 4.0388 | 0.54 | 5500 | 4.7670 |

| 3.952 | 0.59 | 6000 | 4.7585 |

| 3.8701 | 0.64 | 6500 | 4.7431 |

| 3.8026 | 0.69 | 7000 | 4.7273 |

| 3.7345 | 0.74 | 7500 | 4.7219 |

| 3.6661 | 0.79 | 8000 | 4.7135 |

| 3.6259 | 0.84 | 8500 | 4.7072 |

| 3.5927 | 0.88 | 9000 | 4.7052 |

| 3.5699 | 0.93 | 9500 | 4.7025 |

| 3.5638 | 0.98 | 10000 | 4.7018 |
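For a causal language model, a validation loss is often easier to interpret as perplexity. The sketch below converts the last row of the table, assuming the reported loss is the mean per-token cross-entropy in nats. The card does not state this explicitly, so treat the result as indicative.

```python
import math


def perplexity(mean_cross_entropy_nats: float) -> float:
    """Perplexity implied by a mean per-token cross-entropy given in nats."""
    return math.exp(mean_cross_entropy_nats)


final_validation_loss = 4.7018  # last row of the results table
final_perplexity = perplexity(final_validation_loss)
```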

### Framework versions

- Transformers 4.26.1

- Pytorch 1.11.0+cu113

- Datasets 2.13.0

- Tokenizers 0.13.3

|

omar-al-sharif/AlQalam-finetuned-mmj | omar-al-sharif | 2023-07-05T16:21:33Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2023-07-05T14:11:56Z | ---

tags:

- generated_from_trainer

model-index:

- name: AlQalam-finetuned-mmj

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# AlQalam-finetuned-mmj

This model is a fine-tuned version of [malmarjeh/t5-arabic-text-summarization](https://huggingface.co/malmarjeh/t5-arabic-text-summarization) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 1.0723

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.3745 | 1.0 | 1678 | 1.1947 |

| 1.219 | 2.0 | 3356 | 1.1176 |

| 1.065 | 3.0 | 5034 | 1.0895 |

| 0.9928 | 4.0 | 6712 | 1.0734 |

| 0.9335 | 5.0 | 8390 | 1.0723 |
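The Step column is consistent with a fixed number of optimizer steps per epoch: 1678 at epoch 1.0 and 8390 at epoch 5.0. The sketch below checks that arithmetic and derives a rough range for the training-set size. It assumes a single device, no gradient accumulation, and that the last partial batch is kept; none of these is stated in the card.

```python
import math

train_batch_size = 16   # from the hyperparameters list
steps_per_epoch = 1678  # Step value at epoch 1.0
num_epochs = 5

total_steps = steps_per_epoch * num_epochs

# Assumption: one device, no gradient accumulation, last partial batch kept.
max_examples = steps_per_epoch * train_batch_size
min_examples = (steps_per_epoch - 1) * train_batch_size + 1


def steps_for(num_examples: int, batch_size: int) -> int:
    """Optimizer steps per epoch when the last partial batch is kept."""
    return math.ceil(num_examples / batch_size)
```

Under these assumptions the training set would hold between 26,833 and 26,848 examples.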

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

stabilityai/stable-diffusion-2-1-base | stabilityai | 2023-07-05T16:19:20Z | 863,939 | 647 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion",

"text-to-image",

"arxiv:2112.10752",

"arxiv:2202.00512",

"arxiv:1910.09700",

"license:openrail++",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-12-06T17:25:36Z | ---

license: openrail++

tags:

- stable-diffusion

- text-to-image

---

# Stable Diffusion v2-1-base Model Card

This model card focuses on the model associated with the Stable Diffusion v2-1-base model.

This `stable-diffusion-2-1-base` model fine-tunes [stable-diffusion-2-base](https://huggingface.co/stabilityai/stable-diffusion-2-base) (`512-base-ema.ckpt`) with 220k extra steps taken, with `punsafe=0.98` on the same dataset.

- Use it with the [`stablediffusion`](https://github.com/Stability-AI/stablediffusion) repository: download the `v2-1_512-ema-pruned.ckpt` [here](https://huggingface.co/stabilityai/stable-diffusion-2-1-base/resolve/main/v2-1_512-ema-pruned.ckpt).

- Use it with 🧨 [`diffusers`](#examples)

## Model Details

- **Developed by:** Robin Rombach, Patrick Esser

- **Model type:** Diffusion-based text-to-image generation model

- **Language(s):** English

- **License:** [CreativeML Open RAIL++-M License](https://huggingface.co/stabilityai/stable-diffusion-2/blob/main/LICENSE-MODEL)

- **Model Description:** This is a model that can be used to generate and modify images based on text prompts. It is a [Latent Diffusion Model](https://arxiv.org/abs/2112.10752) that uses a fixed, pretrained text encoder ([OpenCLIP-ViT/H](https://github.com/mlfoundations/open_clip)).

- **Resources for more information:** [GitHub Repository](https://github.com/Stability-AI/).

- **Cite as:**

@InProceedings{Rombach_2022_CVPR,

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {10684-10695}

}

## Examples

Use the [🤗 Diffusers library](https://github.com/huggingface/diffusers) to run Stable Diffusion 2 in a simple and efficient manner.

```bash

pip install diffusers transformers accelerate scipy safetensors

```

Running the pipeline (if you don't swap the scheduler, it runs with the default PNDM/PLMS scheduler; this example swaps it for `EulerDiscreteScheduler`):

```python

import torch
from diffusers import StableDiffusionPipeline, EulerDiscreteScheduler

model_id = "stabilityai/stable-diffusion-2-1-base"

# Load EulerDiscreteScheduler from the model's scheduler config and use it instead of the default.
scheduler = EulerDiscreteScheduler.from_pretrained(model_id, subfolder="scheduler")
pipe = StableDiffusionPipeline.from_pretrained(model_id, scheduler=scheduler, torch_dtype=torch.float16)
pipe = pipe.to("cuda")

prompt = "a photo of an astronaut riding a horse on mars"
image = pipe(prompt).images[0]

image.save("astronaut_rides_horse.png")

```

**Notes**:

- Although it is not a dependency, we highly recommend installing [xformers](https://github.com/facebookresearch/xformers) for memory-efficient attention (better performance).

- If you have low GPU RAM available, add `pipe.enable_attention_slicing()` after sending the pipeline to `cuda` to reduce VRAM usage (at the cost of speed).

# Uses

## Direct Use

The model is intended for research purposes only. Possible research areas and tasks include

- Safe deployment of models which have the potential to generate harmful content.

- Probing and understanding the limitations and biases of generative models.

- Generation of artworks and use in design and other artistic processes.

- Applications in educational or creative tools.

- Research on generative models.

Excluded uses are described below.

### Misuse, Malicious Use, and Out-of-Scope Use

_Note: This section is originally taken from the [DALLE-MINI model card](https://huggingface.co/dalle-mini/dalle-mini), was used for Stable Diffusion v1, but applies in the same way to Stable Diffusion v2_.

The model should not be used to intentionally create or disseminate images that create hostile or alienating environments for people. This includes generating images that people would foreseeably find disturbing, distressing, or offensive; or content that propagates historical or current stereotypes.

#### Out-of-Scope Use

The model was not trained to produce factual or true representations of people or events, and therefore using the model to generate such content is out-of-scope for the abilities of this model.

#### Misuse and Malicious Use

Using the model to generate content that is cruel to individuals is a misuse of this model. This includes, but is not limited to:

- Generating demeaning, dehumanizing, or otherwise harmful representations of people or their environments, cultures, religions, etc.

- Intentionally promoting or propagating discriminatory content or harmful stereotypes.

- Impersonating individuals without their consent.

- Sexual content without consent of the people who might see it.

- Mis- and disinformation

- Representations of egregious violence and gore

- Sharing of copyrighted or licensed material in violation of its terms of use.

- Sharing content that is an alteration of copyrighted or licensed material in violation of its terms of use.

## Limitations and Bias

### Limitations

- The model does not achieve perfect photorealism

- The model cannot render legible text

- The model does not perform well on more difficult tasks which involve compositionality, such as rendering an image corresponding to “A red cube on top of a blue sphere”

- Faces and people in general may not be generated properly.

- The model was trained mainly with English captions and will not work as well in other languages.

- The autoencoding part of the model is lossy

- The model was trained on a subset of the large-scale dataset [LAION-5B](https://laion.ai/blog/laion-5b/), which contains adult, violent and sexual content. To partially mitigate this, we have filtered the dataset using LAION's NSFW detector (see Training section).

### Bias

While the capabilities of image generation models are impressive, they can also reinforce or exacerbate social biases.

Stable Diffusion v2 was primarily trained on subsets of [LAION-2B(en)](https://laion.ai/blog/laion-5b/),

which consists of images that are limited to English descriptions.

Texts and images from communities and cultures that use other languages are likely to be insufficiently accounted for.

This affects the overall output of the model, as white and western cultures are often set as the default. Further, the

ability of the model to generate content with non-English prompts is significantly worse than with English-language prompts.

Stable Diffusion v2 mirrors and exacerbates biases to such a degree that viewer discretion must be advised irrespective of the input or its intent.

## Training

**Training Data**

The model developers used the following dataset for training the model:

- LAION-5B and subsets (details below). The training data is further filtered using LAION's NSFW detector, with a "p_unsafe" score of 0.1 (conservative). For more details, please refer to LAION-5B's [NeurIPS 2022](https://openreview.net/forum?id=M3Y74vmsMcY) paper and reviewer discussions on the topic.

**Training Procedure**

Stable Diffusion v2 is a latent diffusion model which combines an autoencoder with a diffusion model that is trained in the latent space of the autoencoder. During training,

- Images are encoded through an encoder, which turns images into latent representations. The autoencoder uses a relative downsampling factor of 8 and maps images of shape H x W x 3 to latents of shape H/f x W/f x 4

- Text prompts are encoded through the OpenCLIP-ViT/H text-encoder.

- The output of the text encoder is fed into the UNet backbone of the latent diffusion model via cross-attention.

- The loss is a reconstruction objective between the noise that was added to the latent and the prediction made by the UNet. We also use the so-called _v-objective_, see https://arxiv.org/abs/2202.00512.
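The shape mapping in the first step above can be sketched as a small helper. The downsampling factor of 8 and the 4 latent channels come from the text; the function name `latent_shape` and the divisibility check are assumptions added for this illustration.

```python
def latent_shape(height: int, width: int, f: int = 8, latent_channels: int = 4):
    """Shape (H/f, W/f, C) of the latent for an H x W x 3 image."""
    # Assumption for this sketch: reject sizes the factor does not divide evenly.
    if height % f or width % f:
        raise ValueError("height and width must be divisible by the downsampling factor")
    return (height // f, width // f, latent_channels)
```

For example, a `512x512` image maps to a `64x64x4` latent and a `768x768` image to a `96x96x4` latent, so the diffusion model operates on far fewer values than the pixel image contains.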

We currently provide the following checkpoints, for various versions:

### Version 2.1

- `512-base-ema.ckpt`: Fine-tuned on `512-base-ema.ckpt` 2.0 with 220k extra steps taken, with `punsafe=0.98` on the same dataset.

- `768-v-ema.ckpt`: Resumed from `768-v-ema.ckpt` 2.0 with an additional 55k steps on the same dataset (`punsafe=0.1`), and then fine-tuned for another 155k extra steps with `punsafe=0.98`.

### Version 2.0

- `512-base-ema.ckpt`: 550k steps at resolution `256x256` on a subset of [LAION-5B](https://laion.ai/blog/laion-5b/) filtered for explicit pornographic material, using the [LAION-NSFW classifier](https://github.com/LAION-AI/CLIP-based-NSFW-Detector) with `punsafe=0.1` and an [aesthetic score](https://github.com/christophschuhmann/improved-aesthetic-predictor) >= `4.5`.

850k steps at resolution `512x512` on the same dataset with resolution `>= 512x512`.

- `768-v-ema.ckpt`: Resumed from `512-base-ema.ckpt` and trained for 150k steps using a [v-objective](https://arxiv.org/abs/2202.00512) on the same dataset. Resumed for another 140k steps on a `768x768` subset of our dataset.

- `512-depth-ema.ckpt`: Resumed from `512-base-ema.ckpt` and finetuned for 200k steps. Added an extra input channel to process the (relative) depth prediction produced by [MiDaS](https://github.com/isl-org/MiDaS) (`dpt_hybrid`) which is used as an additional conditioning.

The additional input channels of the U-Net which process this extra information were zero-initialized.

- `512-inpainting-ema.ckpt`: Resumed from `512-base-ema.ckpt` and trained for another 200k steps. Follows the mask-generation strategy presented in [LAMA](https://github.com/saic-mdal/lama) which, in combination with the latent VAE representations of the masked image, are used as an additional conditioning.

The additional input channels of the U-Net which process this extra information were zero-initialized. The same strategy was used to train the [1.5-inpainting checkpoint](https://github.com/saic-mdal/lama).

- `x4-upscaling-ema.ckpt`: Trained for 1.25M steps on a 10M subset of LAION containing images `>2048x2048`. The model was trained on crops of size `512x512` and is a text-guided [latent upscaling diffusion model](https://arxiv.org/abs/2112.10752).

In addition to the textual input, it receives a `noise_level` as an input parameter, which can be used to add noise to the low-resolution input according to a [predefined diffusion schedule](configs/stable-diffusion/x4-upscaling.yaml).

- **Hardware:** 32 x 8 x A100 GPUs

- **Optimizer:** AdamW

- **Gradient Accumulations**: 1

- **Batch:** 32 x 8 x 2 x 4 = 2048

- **Learning rate:** warmup to 0.0001 for 10,000 steps and then kept constant
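The learning-rate entry above describes a warmup to 0.0001 over 10,000 steps followed by a constant rate. A minimal sketch of such a schedule follows. The card does not say how the warmup is shaped, so the linear ramp from zero is an assumption, and `warmup_constant_lr` is a hypothetical name.

```python
def warmup_constant_lr(step: int, peak_lr: float = 1e-4, warmup_steps: int = 10_000) -> float:
    """Linear warmup from 0 to peak_lr, then constant (the linear shape is an assumption)."""
    if step >= warmup_steps:
        return peak_lr
    return peak_lr * step / warmup_steps
```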

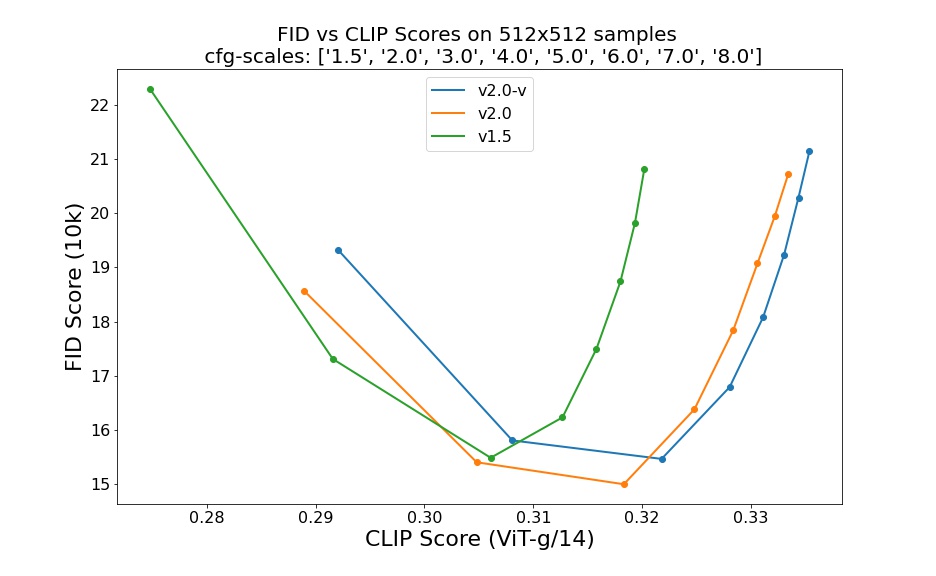

## Evaluation Results

Evaluations with different classifier-free guidance scales (1.5, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0) and 50 DDIM sampling steps show the relative improvements of the checkpoints:

Evaluated using 50 DDIM steps and 10000 random prompts from the COCO2017 validation set, evaluated at 512x512 resolution. Not optimized for FID scores.
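For readers unfamiliar with the guidance scale, the sketch below shows the usual classifier-free guidance combination of an unconditional and a text-conditioned noise prediction. It uses plain Python lists in place of tensors. The formula is the standard one from the classifier-free guidance literature rather than something quoted from this card, and `apply_guidance` is a hypothetical name.

```python
def apply_guidance(eps_uncond, eps_cond, scale):
    """Classifier-free guidance: eps_uncond + scale * (eps_cond - eps_uncond), elementwise."""
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


guidance_scales = [1.5, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]  # scales listed above
```

With a scale of 1.0 the result equals the conditional prediction; larger scales push the prediction further along the direction from the unconditional to the conditional output.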

## Environmental Impact

**Stable Diffusion v1** **Estimated Emissions**

Based on that information, we estimate the following CO2 emissions using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700). The hardware, runtime, cloud provider, and compute region were utilized to estimate the carbon impact.

- **Hardware Type:** A100 PCIe 40GB

- **Hours used:** 200000

- **Cloud Provider:** AWS

- **Compute Region:** US-east

- **Carbon Emitted (Power consumption x Time x Carbon produced based on location of power grid):** 15000 kg CO2 eq.
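The figures above imply an average emission rate per GPU-hour, which the following sketch works out. The helper `estimate_emissions_kg` simply restates the formula in the last bullet; its parameter names are assumptions, and the card gives no separate power draw or grid carbon intensity to plug into it.

```python
hours_used = 200_000        # GPU hours, from the list above
carbon_emitted_kg = 15_000  # kg CO2 eq., from the list above

# Implied emissions per A100-hour (power draw and grid carbon intensity combined).
kg_co2_per_gpu_hour = carbon_emitted_kg / hours_used


def estimate_emissions_kg(power_kw: float, hours: float, kg_co2_per_kwh: float) -> float:
    """Power consumption x time x carbon produced per unit of energy."""
    return power_kw * hours * kg_co2_per_kwh
```

The implied rate is 0.075 kg CO2 eq. per GPU-hour.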

## Citation

@InProceedings{Rombach_2022_CVPR,

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {10684-10695}

}

*This model card was written by: Robin Rombach, Patrick Esser and David Ha and is based on the [Stable Diffusion v1](https://github.com/CompVis/stable-diffusion/blob/main/Stable_Diffusion_v1_Model_Card.md) and [DALL-E Mini model card](https://huggingface.co/dalle-mini/dalle-mini).* |

stabilityai/stable-diffusion-2-depth | stabilityai | 2023-07-05T16:19:06Z | 8,224 | 386 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion",

"arxiv:2112.10752",

"arxiv:2202.00512",

"arxiv:1910.09700",

"license:openrail++",

"diffusers:StableDiffusionDepth2ImgPipeline",

"region:us"

] | null | 2022-11-23T17:41:46Z | ---

license: openrail++

tags:

- stable-diffusion

inference: false

---

# Stable Diffusion v2 Model Card

This model card focuses on the model associated with the Stable Diffusion v2 model, available [here](https://github.com/Stability-AI/stablediffusion).

This `stable-diffusion-2-depth` model is resumed from [stable-diffusion-2-base](https://huggingface.co/stabilityai/stable-diffusion-2-base) (`512-base-ema.ckpt`) and finetuned for 200k steps. Added an extra input channel to process the (relative) depth prediction produced by [MiDaS](https://github.com/isl-org/MiDaS) (`dpt_hybrid`) which is used as an additional conditioning.

- Use it with the [`stablediffusion`](https://github.com/Stability-AI/stablediffusion) repository: download the `512-depth-ema.ckpt` [here](https://huggingface.co/stabilityai/stable-diffusion-2-depth/resolve/main/512-depth-ema.ckpt).

- Use it with 🧨 [`diffusers`](#examples)

## Model Details

- **Developed by:** Robin Rombach, Patrick Esser

- **Model type:** Diffusion-based text-to-image generation model

- **Language(s):** English

- **License:** [CreativeML Open RAIL++-M License](https://huggingface.co/stabilityai/stable-diffusion-2/blob/main/LICENSE-MODEL)

- **Model Description:** This is a model that can be used to generate and modify images based on text prompts. It is a [Latent Diffusion Model](https://arxiv.org/abs/2112.10752) that uses a fixed, pretrained text encoder ([OpenCLIP-ViT/H](https://github.com/mlfoundations/open_clip)).

- **Resources for more information:** [GitHub Repository](https://github.com/Stability-AI/).

- **Cite as:**

@InProceedings{Rombach_2022_CVPR,

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {10684-10695}

}

## Examples

Use the [🤗 Diffusers library](https://github.com/huggingface/diffusers) to run Stable Diffusion 2 in a simple and efficient manner.

```bash

pip install -U git+https://github.com/huggingface/transformers.git

pip install diffusers transformers accelerate scipy safetensors

```

Running the pipeline (if you don't swap the scheduler, it runs with the default DDIM scheduler, as in this example):

```python

import requests
import torch
from PIL import Image
from diffusers import StableDiffusionDepth2ImgPipeline

# Load the depth-conditioned pipeline in half precision and move it to the GPU.
pipe = StableDiffusionDepth2ImgPipeline.from_pretrained(
    "stabilityai/stable-diffusion-2-depth",
    torch_dtype=torch.float16,
).to("cuda")

# Download the input image to transform.
url = "http://images.cocodataset.org/val2017/000000039769.jpg"
init_image = Image.open(requests.get(url, stream=True).raw)

prompt = "two tigers"
n_prompt = "bad, deformed, ugly, bad anatomy"
image = pipe(prompt=prompt, image=init_image, negative_prompt=n_prompt, strength=0.7).images[0]

```

**Notes**:

- Although it is not a dependency, we highly recommend installing [xformers](https://github.com/facebookresearch/xformers) for memory-efficient attention (better performance).

- If you have low GPU RAM available, add `pipe.enable_attention_slicing()` after sending the pipeline to `cuda` to reduce VRAM usage (at the cost of speed).

# Uses

## Direct Use

The model is intended for research purposes only. Possible research areas and tasks include

- Safe deployment of models which have the potential to generate harmful content.

- Probing and understanding the limitations and biases of generative models.

- Generation of artworks and use in design and other artistic processes.

- Applications in educational or creative tools.

- Research on generative models.

Excluded uses are described below.

### Misuse, Malicious Use, and Out-of-Scope Use

_Note: This section is originally taken from the [DALLE-MINI model card](https://huggingface.co/dalle-mini/dalle-mini), was used for Stable Diffusion v1, but applies in the same way to Stable Diffusion v2_.

The model should not be used to intentionally create or disseminate images that create hostile or alienating environments for people. This includes generating images that people would foreseeably find disturbing, distressing, or offensive; or content that propagates historical or current stereotypes.

#### Out-of-Scope Use

The model was not trained to produce factual or true representations of people or events, and therefore using the model to generate such content is out-of-scope for the abilities of this model.

#### Misuse and Malicious Use

Using the model to generate content that is cruel to individuals is a misuse of this model. This includes, but is not limited to:

- Generating demeaning, dehumanizing, or otherwise harmful representations of people or their environments, cultures, religions, etc.

- Intentionally promoting or propagating discriminatory content or harmful stereotypes.

- Impersonating individuals without their consent.

- Sexual content without consent of the people who might see it.

- Mis- and disinformation

- Representations of egregious violence and gore

- Sharing of copyrighted or licensed material in violation of its terms of use.

- Sharing content that is an alteration of copyrighted or licensed material in violation of its terms of use.

## Limitations and Bias

### Limitations

- The model does not achieve perfect photorealism

- The model cannot render legible text

- The model does not perform well on more difficult tasks which involve compositionality, such as rendering an image corresponding to “A red cube on top of a blue sphere”

- Faces and people in general may not be generated properly.

- The model was trained mainly with English captions and will not work as well in other languages.

- The autoencoding part of the model is lossy

- The model was trained on a subset of the large-scale dataset [LAION-5B](https://laion.ai/blog/laion-5b/), which contains adult, violent and sexual content. To partially mitigate this, we have filtered the dataset using LAION's NSFW detector (see Training section).

### Bias

While the capabilities of image generation models are impressive, they can also reinforce or exacerbate social biases.

Stable Diffusion v2 was primarily trained on subsets of [LAION-2B(en)](https://laion.ai/blog/laion-5b/),

which consists of images that are limited to English descriptions.

Texts and images from communities and cultures that use other languages are likely to be insufficiently accounted for.

This affects the overall output of the model, as white and western cultures are often set as the default. Further, the

ability of the model to generate content with non-English prompts is significantly worse than with English-language prompts.

Stable Diffusion v2 mirrors and exacerbates biases to such a degree that viewer discretion must be advised irrespective of the input or its intent.

## Training

**Training Data**

The model developers used the following dataset for training the model:

- LAION-5B and subsets (details below). The training data is further filtered using LAION's NSFW detector, with a "p_unsafe" score of 0.1 (conservative). For more details, please refer to LAION-5B's [NeurIPS 2022](https://openreview.net/forum?id=M3Y74vmsMcY) paper and reviewer discussions on the topic.

**Training Procedure**

Stable Diffusion v2 is a latent diffusion model which combines an autoencoder with a diffusion model that is trained in the latent space of the autoencoder. During training,

- Images are encoded through an encoder, which turns images into latent representations. The autoencoder uses a relative downsampling factor of 8 and maps images of shape H x W x 3 to latents of shape H/f x W/f x 4

- Text prompts are encoded through the OpenCLIP-ViT/H text-encoder.

- The output of the text encoder is fed into the UNet backbone of the latent diffusion model via cross-attention.

- The loss is a reconstruction objective between the noise that was added to the latent and the prediction made by the UNet. We also use the so-called _v-objective_, see https://arxiv.org/abs/2202.00512.
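The _v-objective_ mentioned in the last step replaces the noise target with a mix of noise and clean sample. The scalar sketch below follows the definition in the linked paper, `v = alpha * eps - sigma * x0`, and assumes a variance-preserving noise level with `alpha**2 + sigma**2 == 1`. The function names and the numeric values are illustrative and do not come from the Stable Diffusion training code.

```python
import math


def v_target(x0: float, eps: float, alpha: float, sigma: float) -> float:
    """v-prediction target: v = alpha * eps - sigma * x0."""
    return alpha * eps - sigma * x0


def x0_from_v(z: float, v: float, alpha: float, sigma: float) -> float:
    """Recover the clean sample from noisy z and v, assuming alpha**2 + sigma**2 == 1."""
    return alpha * z - sigma * v


# Scalar example with a variance-preserving noise level (values are illustrative).
alpha, sigma = math.cos(0.3), math.sin(0.3)
x0, eps = 0.8, -0.5
z = alpha * x0 + sigma * eps  # noised latent
v = v_target(x0, eps, alpha, sigma)
recovered = x0_from_v(z, v, alpha, sigma)
```

Because `alpha * z - sigma * v` simplifies to `(alpha**2 + sigma**2) * x0`, a network that predicts `v` accurately also determines the clean latent.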

We currently provide the following checkpoints:

- `512-base-ema.ckpt`: 550k steps at resolution `256x256` on a subset of [LAION-5B](https://laion.ai/blog/laion-5b/) filtered for explicit pornographic material, using the [LAION-NSFW classifier](https://github.com/LAION-AI/CLIP-based-NSFW-Detector) with `punsafe=0.1` and an [aesthetic score](https://github.com/christophschuhmann/improved-aesthetic-predictor) >= `4.5`.

850k steps at resolution `512x512` on the same dataset with resolution `>= 512x512`.

- `768-v-ema.ckpt`: Resumed from `512-base-ema.ckpt` and trained for 150k steps using a [v-objective](https://arxiv.org/abs/2202.00512) on the same dataset. Resumed for another 140k steps on a `768x768` subset of our dataset.

- `512-depth-ema.ckpt`: Resumed from `512-base-ema.ckpt` and finetuned for 200k steps. Added an extra input channel to process the (relative) depth prediction produced by [MiDaS](https://github.com/isl-org/MiDaS) (`dpt_hybrid`) which is used as an additional conditioning.

The additional input channels of the U-Net which process this extra information were zero-initialized.

- `512-inpainting-ema.ckpt`: Resumed from `512-base-ema.ckpt` and trained for another 200k steps. Follows the mask-generation strategy presented in [LAMA](https://github.com/saic-mdal/lama) which, in combination with the latent VAE representations of the masked image, are used as an additional conditioning.

The additional input channels of the U-Net which process this extra information were zero-initialized. The same strategy was used to train the [1.5-inpainting checkpoint](https://github.com/saic-mdal/lama).

- `x4-upscaling-ema.ckpt`: Trained for 1.25M steps on a 10M subset of LAION containing images `>2048x2048`. The model was trained on crops of size `512x512` and is a text-guided [latent upscaling diffusion model](https://arxiv.org/abs/2112.10752).

In addition to the textual input, it receives a `noise_level` as an input parameter, which can be used to add noise to the low-resolution input according to a [predefined diffusion schedule](configs/stable-diffusion/x4-upscaling.yaml).
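Zero-initializing the weights of the added input channels, as described for the depth and inpainting checkpoints, means that at the start of fine-tuning the first layer ignores the new conditioning and reproduces the output of the base checkpoint. The toy sketch below shows this with a single weighted sum standing in for one convolution output. All names and numbers are illustrative assumptions.

```python
def first_layer(inputs, weights, bias=0.0):
    """Weighted sum over input channels (a stand-in for one conv output at one pixel)."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias


base_weights = [0.2, -0.1, 0.4, 0.3]     # 4 latent channels (illustrative values)
extended_weights = base_weights + [0.0]  # extra depth channel, zero-initialized

latent = [1.0, 2.0, -1.0, 0.5]
depth = 0.7

out_base = first_layer(latent, base_weights)
out_extended = first_layer(latent + [depth], extended_weights)
```

Here `out_extended` matches `out_base` whatever the depth value, so fine-tuning starts from the base model's behavior and learns to use the extra channel gradually.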

- **Hardware:** 32 x 8 x A100 GPUs

- **Optimizer:** AdamW

- **Gradient Accumulations**: 1

- **Batch:** 32 x 8 x 2 x 4 = 2048

- **Learning rate:** warmup to 0.0001 for 10,000 steps and then kept constant

## Evaluation Results

Evaluations with different classifier-free guidance scales (1.5, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0) and 50 DDIM sampling steps show the relative improvements of the checkpoints:

Evaluated using 50 DDIM steps and 10000 random prompts from the COCO2017 validation set, evaluated at 512x512 resolution. Not optimized for FID scores.

## Environmental Impact

**Stable Diffusion v1** **Estimated Emissions**

Based on that information, we estimate the following CO2 emissions using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700). The hardware, runtime, cloud provider, and compute region were utilized to estimate the carbon impact.

- **Hardware Type:** A100 PCIe 40GB

- **Hours used:** 200000

- **Cloud Provider:** AWS

- **Compute Region:** US-east

- **Carbon Emitted (Power consumption x Time x Carbon produced based on location of power grid):** 15000 kg CO2 eq.

## Citation

@InProceedings{Rombach_2022_CVPR,

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {10684-10695}

}

*This model card was written by: Robin Rombach, Patrick Esser and David Ha and is based on the [Stable Diffusion v1](https://github.com/CompVis/stable-diffusion/blob/main/Stable_Diffusion_v1_Model_Card.md) and [DALL-E Mini model card](https://huggingface.co/dalle-mini/dalle-mini).*

|

stabilityai/stable-diffusion-2-base | stabilityai | 2023-07-05T16:19:03Z | 83,300 | 349 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion",

"text-to-image",

"arxiv:2112.10752",

"arxiv:2202.00512",

"arxiv:1910.09700",

"license:openrail++",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-11-23T17:41:31Z | ---

license: openrail++

tags:

- stable-diffusion

- text-to-image

---

# Stable Diffusion v2-base Model Card

This model card focuses on the model associated with the Stable Diffusion v2-base model, available [here](https://github.com/Stability-AI/stablediffusion).

The model is trained from scratch for 550k steps at resolution `256x256` on a subset of [LAION-5B](https://laion.ai/blog/laion-5b/) filtered for explicit pornographic material, using the [LAION-NSFW classifier](https://github.com/LAION-AI/CLIP-based-NSFW-Detector) with `punsafe=0.1` and an [aesthetic score](https://github.com/christophschuhmann/improved-aesthetic-predictor) >= `4.5`. Then it is further trained for 850k steps at resolution `512x512` on the same dataset on images with resolution `>= 512x512`.

- Use it with the [`stablediffusion`](https://github.com/Stability-AI/stablediffusion) repository: download the `512-base-ema.ckpt` [here](https://huggingface.co/stabilityai/stable-diffusion-2-base/resolve/main/512-base-ema.ckpt).

- Use it with 🧨 [`diffusers`](https://huggingface.co/stabilityai/stable-diffusion-2-base#examples)

## Model Details

- **Developed by:** Robin Rombach, Patrick Esser

- **Model type:** Diffusion-based text-to-image generation model

- **Language(s):** English

- **License:** [CreativeML Open RAIL++-M License](https://huggingface.co/stabilityai/stable-diffusion-2/blob/main/LICENSE-MODEL)

- **Model Description:** This is a model that can be used to generate and modify images based on text prompts. It is a [Latent Diffusion Model](https://arxiv.org/abs/2112.10752) that uses a fixed, pretrained text encoder ([OpenCLIP-ViT/H](https://github.com/mlfoundations/open_clip)).

- **Resources for more information:** [GitHub Repository](https://github.com/Stability-AI/).

- **Cite as:**

@InProceedings{Rombach_2022_CVPR,

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {10684-10695}

}

## Examples

Use [🤗's Diffusers library](https://github.com/huggingface/diffusers) to run Stable Diffusion 2 in a simple and efficient manner.

```bash

pip install diffusers transformers accelerate scipy safetensors

```

Running the pipeline (if you don't swap the scheduler, it will run with the default PNDM/PLMS scheduler; in this example we swap it for EulerDiscreteScheduler):

```python

from diffusers import StableDiffusionPipeline, EulerDiscreteScheduler

import torch

model_id = "stabilityai/stable-diffusion-2-base"

# Use the Euler scheduler here instead

scheduler = EulerDiscreteScheduler.from_pretrained(model_id, subfolder="scheduler")

pipe = StableDiffusionPipeline.from_pretrained(model_id, scheduler=scheduler, torch_dtype=torch.float16)

pipe = pipe.to("cuda")

prompt = "a photo of an astronaut riding a horse on mars"

image = pipe(prompt).images[0]

image.save("astronaut_rides_horse.png")

```

**Notes**:

- Although it is not a dependency, we highly recommend installing [xformers](https://github.com/facebookresearch/xformers) for memory-efficient attention (better performance).

- If you have low GPU RAM available, make sure to add `pipe.enable_attention_slicing()` after sending the pipeline to `cuda` for lower VRAM usage (at the cost of speed).

# Uses

## Direct Use

The model is intended for research purposes only. Possible research areas and tasks include

- Safe deployment of models which have the potential to generate harmful content.

- Probing and understanding the limitations and biases of generative models.

- Generation of artworks and use in design and other artistic processes.

- Applications in educational or creative tools.

- Research on generative models.

Excluded uses are described below.

### Misuse, Malicious Use, and Out-of-Scope Use

_Note: This section is originally taken from the [DALLE-MINI model card](https://huggingface.co/dalle-mini/dalle-mini), was used for Stable Diffusion v1, but applies in the same way to Stable Diffusion v2_.

The model should not be used to intentionally create or disseminate images that create hostile or alienating environments for people. This includes generating images that people would foreseeably find disturbing, distressing, or offensive; or content that propagates historical or current stereotypes.

#### Out-of-Scope Use

The model was not trained to be factual or true representations of people or events, and therefore using the model to generate such content is out-of-scope for the abilities of this model.

#### Misuse and Malicious Use

Using the model to generate content that is cruel to individuals is a misuse of this model. This includes, but is not limited to:

- Generating demeaning, dehumanizing, or otherwise harmful representations of people or their environments, cultures, religions, etc.

- Intentionally promoting or propagating discriminatory content or harmful stereotypes.

- Impersonating individuals without their consent.

- Sexual content without consent of the people who might see it.

- Mis- and disinformation

- Representations of egregious violence and gore

- Sharing of copyrighted or licensed material in violation of its terms of use.

- Sharing content that is an alteration of copyrighted or licensed material in violation of its terms of use.

## Limitations and Bias

### Limitations

- The model does not achieve perfect photorealism

- The model cannot render legible text

- The model does not perform well on more difficult tasks which involve compositionality, such as rendering an image corresponding to “A red cube on top of a blue sphere”

- Faces and people in general may not be generated properly.

- The model was trained mainly with English captions and will not work as well in other languages.

- The autoencoding part of the model is lossy

- The model was trained on a subset of the large-scale dataset [LAION-5B](https://laion.ai/blog/laion-5b/), which contains adult, violent and sexual content. To partially mitigate this, we have filtered the dataset using LAION's NSFW detector (see Training section).

### Bias

While the capabilities of image generation models are impressive, they can also reinforce or exacerbate social biases.

Stable Diffusion v2 was primarily trained on subsets of [LAION-2B(en)](https://laion.ai/blog/laion-5b/), which consists of images that are limited to English descriptions. Texts and images from communities and cultures that use other languages are likely to be insufficiently accounted for. This affects the overall output of the model, as white and western cultures are often set as the default. Further, the ability of the model to generate content with non-English prompts is significantly worse than with English-language prompts.

Stable Diffusion v2 mirrors and exacerbates biases to such a degree that viewer discretion must be advised irrespective of the input or its intent.

## Training

**Training Data**

The model developers used the following dataset for training the model:

- LAION-5B and subsets (details below). The training data is further filtered using LAION's NSFW detector, with a "p_unsafe" score of 0.1 (conservative). For more details, please refer to LAION-5B's [NeurIPS 2022](https://openreview.net/forum?id=M3Y74vmsMcY) paper and reviewer discussions on the topic.

**Training Procedure**

Stable Diffusion v2 is a latent diffusion model which combines an autoencoder with a diffusion model that is trained in the latent space of the autoencoder. During training,

- Images are encoded through an encoder, which turns images into latent representations. The autoencoder uses a relative downsampling factor of f = 8 and maps images of shape H x W x 3 to latents of shape H/f x W/f x 4.

- Text prompts are encoded through the OpenCLIP-ViT/H text-encoder.

- The output of the text encoder is fed into the UNet backbone of the latent diffusion model via cross-attention.

- The loss is a reconstruction objective between the noise that was added to the latent and the prediction made by the UNet. We also use the so-called _v-objective_, see https://arxiv.org/abs/2202.00512.
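To make the shape mapping in the first bullet concrete, here is a minimal sketch of the arithmetic only. The helper name `latent_shape` is hypothetical, and the divisibility check is an added assumption rather than something the card states.

```python
def latent_shape(height, width, f=8, latent_channels=4):
    """Map an H x W x 3 image shape to the H/f x W/f x 4 latent shape."""
    if height % f or width % f:
        # Assumption: inputs are expected to be multiples of the factor f.
        raise ValueError("height and width must be divisible by f")
    return (height // f, width // f, latent_channels)


print(latent_shape(512, 512))  # (64, 64, 4)
print(latent_shape(768, 768))  # (96, 96, 4)
```

A `512x512` image is therefore represented by a `64x64x4` latent, which is the tensor the UNet actually denoises.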

We currently provide the following checkpoints:

- `512-base-ema.ckpt`: 550k steps at resolution `256x256` on a subset of [LAION-5B](https://laion.ai/blog/laion-5b/) filtered for explicit pornographic material, using the [LAION-NSFW classifier](https://github.com/LAION-AI/CLIP-based-NSFW-Detector) with `punsafe=0.1` and an [aesthetic score](https://github.com/christophschuhmann/improved-aesthetic-predictor) >= `4.5`. Then 850k steps at resolution `512x512` on the same dataset on images with resolution `>= 512x512`.

- `768-v-ema.ckpt`: Resumed from `512-base-ema.ckpt` and trained for 150k steps using a [v-objective](https://arxiv.org/abs/2202.00512) on the same dataset. Resumed for another 140k steps on a `768x768` subset of our dataset.

- `512-depth-ema.ckpt`: Resumed from `512-base-ema.ckpt` and finetuned for 200k steps. Added an extra input channel to process the (relative) depth prediction produced by [MiDaS](https://github.com/isl-org/MiDaS) (`dpt_hybrid`) which is used as an additional conditioning.

The additional input channels of the U-Net which process this extra information were zero-initialized.

- `512-inpainting-ema.ckpt`: Resumed from `512-base-ema.ckpt` and trained for another 200k steps. Follows the mask-generation strategy presented in [LAMA](https://github.com/saic-mdal/lama) which, in combination with the latent VAE representations of the masked image, are used as an additional conditioning.

The additional input channels of the U-Net which process this extra information were zero-initialized. The same strategy was used to train the [1.5-inpainting checkpoint](https://github.com/saic-mdal/lama).

- `x4-upscaling-ema.ckpt`: Trained for 1.25M steps on a 10M subset of LAION containing images `>2048x2048`. The model was trained on crops of size `512x512` and is a text-guided [latent upscaling diffusion model](https://arxiv.org/abs/2112.10752).

In addition to the textual input, it receives a `noise_level` as an input parameter, which can be used to add noise to the low-resolution input according to a [predefined diffusion schedule](configs/stable-diffusion/x4-upscaling.yaml).

- **Hardware:** 32 x 8 x A100 GPUs

- **Optimizer:** AdamW

- **Gradient Accumulations**: 1

- **Batch:** 32 x 8 x 2 x 4 = 2048

- **Learning rate:** warmup to 0.0001 for 10,000 steps and then kept constant

## Evaluation Results

Evaluations with different classifier-free guidance scales (1.5, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0) and 50 DDIM sampling steps show the relative improvements of the checkpoints:

Evaluated using 50 DDIM steps and 10000 random prompts from the COCO2017 validation set, evaluated at 512x512 resolution. Not optimized for FID scores.
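For readers unfamiliar with the guidance scale swept in these evaluations, the sketch below shows the commonly used classifier-free guidance combination of an unconditional and a text-conditional prediction. It is an illustration on plain Python lists, not the evaluation code; the function name `apply_guidance` and the toy values are hypothetical.

```python
def apply_guidance(uncond, cond, scale):
    """Classifier-free guidance: uncond + scale * (cond - uncond), element-wise."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


uncond = [0.0, 1.0, -1.0]  # toy unconditional prediction
cond = [1.0, 1.0, 0.0]     # toy text-conditional prediction

for scale in (1.0, 1.5, 8.0):
    print(scale, apply_guidance(uncond, cond, scale))
```

A scale of 1.0 returns the conditional prediction unchanged; larger scales push the result further away from the unconditional one.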

## Environmental Impact

**Stable Diffusion v1** **Estimated Emissions**

Based on that information, we estimate the following CO2 emissions using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700). The hardware, runtime, cloud provider, and compute region were utilized to estimate the carbon impact.

- **Hardware Type:** A100 PCIe 40GB

- **Hours used:** 200000

- **Cloud Provider:** AWS

- **Compute Region:** US-east

- **Carbon Emitted (Power consumption x Time x Carbon produced based on location of power grid):** 15000 kg CO2 eq.
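As a quick sanity check on the figures in the list above, the implied average emission rate can be computed directly. This is plain arithmetic on the two reported numbers and does not reproduce the calculator's internal model.

```python
hours_used = 200_000  # "Hours used" from the list above
carbon_kg = 15_000    # "Carbon Emitted" in kg CO2 eq. from the list above

# Average emissions per hour of use implied by the two reported values.
kg_per_hour = carbon_kg / hours_used
print(f"{kg_per_hour:.3f} kg CO2 eq. per hour used")
```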

## Citation

@InProceedings{Rombach_2022_CVPR,

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {10684-10695}

}

*This model card was written by: Robin Rombach, Patrick Esser and David Ha and is based on the [Stable Diffusion v1](https://github.com/CompVis/stable-diffusion/blob/main/Stable_Diffusion_v1_Model_Card.md) and [DALL-E Mini model card](https://huggingface.co/dalle-mini/dalle-mini).* |

stabilityai/stable-diffusion-2 | stabilityai | 2023-07-05T16:19:01Z | 237,820 | 1,856 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion",

"text-to-image",

"arxiv:2202.00512",

"arxiv:2112.10752",

"arxiv:1910.09700",

"license:openrail++",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-11-23T11:54:34Z | ---

license: openrail++

tags:

- stable-diffusion

- text-to-image

---

# Stable Diffusion v2 Model Card

This model card focuses on the model associated with the Stable Diffusion v2 model, available [here](https://github.com/Stability-AI/stablediffusion).

This `stable-diffusion-2` model is resumed from [stable-diffusion-2-base](https://huggingface.co/stabilityai/stable-diffusion-2-base) (`512-base-ema.ckpt`) and trained for 150k steps using a [v-objective](https://arxiv.org/abs/2202.00512) on the same dataset. Resumed for another 140k steps on `768x768` images.

- Use it with the [`stablediffusion`](https://github.com/Stability-AI/stablediffusion) repository: download the `768-v-ema.ckpt` [here](https://huggingface.co/stabilityai/stable-diffusion-2/blob/main/768-v-ema.ckpt).

- Use it with 🧨 [`diffusers`](https://huggingface.co/stabilityai/stable-diffusion-2#examples)

## Model Details

- **Developed by:** Robin Rombach, Patrick Esser

- **Model type:** Diffusion-based text-to-image generation model

- **Language(s):** English

- **License:** [CreativeML Open RAIL++-M License](https://huggingface.co/stabilityai/stable-diffusion-2/blob/main/LICENSE-MODEL)

- **Model Description:** This is a model that can be used to generate and modify images based on text prompts. It is a [Latent Diffusion Model](https://arxiv.org/abs/2112.10752) that uses a fixed, pretrained text encoder ([OpenCLIP-ViT/H](https://github.com/mlfoundations/open_clip)).

- **Resources for more information:** [GitHub Repository](https://github.com/Stability-AI/).

- **Cite as:**

@InProceedings{Rombach_2022_CVPR,

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {10684-10695}

}

## Examples

Use [🤗's Diffusers library](https://github.com/huggingface/diffusers) to run Stable Diffusion 2 in a simple and efficient manner.

```bash

pip install diffusers transformers accelerate scipy safetensors

```

Running the pipeline (if you don't swap the scheduler, it will run with the default DDIM scheduler; in this example we swap it for EulerDiscreteScheduler):

```python

import torch

from diffusers import StableDiffusionPipeline, EulerDiscreteScheduler

model_id = "stabilityai/stable-diffusion-2"

# Use the Euler scheduler here instead

scheduler = EulerDiscreteScheduler.from_pretrained(model_id, subfolder="scheduler")

pipe = StableDiffusionPipeline.from_pretrained(model_id, scheduler=scheduler, torch_dtype=torch.float16)

pipe = pipe.to("cuda")

prompt = "a photo of an astronaut riding a horse on mars"

image = pipe(prompt).images[0]

image.save("astronaut_rides_horse.png")

```

**Notes**:

- Although it is not a dependency, we highly recommend installing [xformers](https://github.com/facebookresearch/xformers) for memory-efficient attention (better performance).

- If you have low GPU RAM available, make sure to add `pipe.enable_attention_slicing()` after sending the pipeline to `cuda` for lower VRAM usage (at the cost of speed).

# Uses

## Direct Use

The model is intended for research purposes only. Possible research areas and tasks include

- Safe deployment of models which have the potential to generate harmful content.

- Probing and understanding the limitations and biases of generative models.

- Generation of artworks and use in design and other artistic processes.

- Applications in educational or creative tools.

- Research on generative models.

Excluded uses are described below.

### Misuse, Malicious Use, and Out-of-Scope Use

_Note: This section is originally taken from the [DALLE-MINI model card](https://huggingface.co/dalle-mini/dalle-mini), was used for Stable Diffusion v1, but applies in the same way to Stable Diffusion v2_.

The model should not be used to intentionally create or disseminate images that create hostile or alienating environments for people. This includes generating images that people would foreseeably find disturbing, distressing, or offensive; or content that propagates historical or current stereotypes.

#### Out-of-Scope Use

The model was not trained to be factual or true representations of people or events, and therefore using the model to generate such content is out-of-scope for the abilities of this model.

#### Misuse and Malicious Use

Using the model to generate content that is cruel to individuals is a misuse of this model. This includes, but is not limited to:

- Generating demeaning, dehumanizing, or otherwise harmful representations of people or their environments, cultures, religions, etc.

- Intentionally promoting or propagating discriminatory content or harmful stereotypes.

- Impersonating individuals without their consent.

- Sexual content without consent of the people who might see it.

- Mis- and disinformation

- Representations of egregious violence and gore

- Sharing of copyrighted or licensed material in violation of its terms of use.

- Sharing content that is an alteration of copyrighted or licensed material in violation of its terms of use.

## Limitations and Bias

### Limitations

- The model does not achieve perfect photorealism

- The model cannot render legible text

- The model does not perform well on more difficult tasks which involve compositionality, such as rendering an image corresponding to “A red cube on top of a blue sphere”

- Faces and people in general may not be generated properly.

- The model was trained mainly with English captions and will not work as well in other languages.

- The autoencoding part of the model is lossy

- The model was trained on a subset of the large-scale dataset [LAION-5B](https://laion.ai/blog/laion-5b/), which contains adult, violent and sexual content. To partially mitigate this, we have filtered the dataset using LAION's NSFW detector (see Training section).

### Bias

While the capabilities of image generation models are impressive, they can also reinforce or exacerbate social biases.

Stable Diffusion was primarily trained on subsets of [LAION-2B(en)](https://laion.ai/blog/laion-5b/), which consists of images that are limited to English descriptions. Texts and images from communities and cultures that use other languages are likely to be insufficiently accounted for. This affects the overall output of the model, as white and western cultures are often set as the default. Further, the ability of the model to generate content with non-English prompts is significantly worse than with English-language prompts.

Stable Diffusion v2 mirrors and exacerbates biases to such a degree that viewer discretion must be advised irrespective of the input or its intent.

## Training

**Training Data**

The model developers used the following dataset for training the model:

- LAION-5B and subsets (details below). The training data is further filtered using LAION's NSFW detector, with a "p_unsafe" score of 0.1 (conservative). For more details, please refer to LAION-5B's [NeurIPS 2022](https://openreview.net/forum?id=M3Y74vmsMcY) paper and reviewer discussions on the topic.

**Training Procedure**

Stable Diffusion v2 is a latent diffusion model which combines an autoencoder with a diffusion model that is trained in the latent space of the autoencoder. During training,

- Images are encoded through an encoder, which turns images into latent representations. The autoencoder uses a relative downsampling factor of f = 8 and maps images of shape H x W x 3 to latents of shape H/f x W/f x 4.

- Text prompts are encoded through the OpenCLIP-ViT/H text-encoder.

- The output of the text encoder is fed into the UNet backbone of the latent diffusion model via cross-attention.

- The loss is a reconstruction objective between the noise that was added to the latent and the prediction made by the UNet. We also use the so-called _v-objective_, see https://arxiv.org/abs/2202.00512.
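The _v-objective_ mentioned in the last bullet can be illustrated with a small numeric sketch. It follows the definition in the linked paper (arXiv:2202.00512), where the noisy latent is `z_t = alpha * x0 + sigma * eps` and the target is `v = alpha * eps - sigma * x0`. The code assumes a variance-preserving schedule (`alpha**2 + sigma**2 == 1`), works on toy Python lists rather than tensors, and uses hypothetical helper names; it is not the training code.

```python
import math


def noisy_latent(x0, eps, alpha, sigma):
    """Forward diffusion: z_t = alpha * x0 + sigma * eps."""
    return [alpha * x + sigma * e for x, e in zip(x0, eps)]


def v_target(x0, eps, alpha, sigma):
    """v-objective target: v = alpha * eps - sigma * x0."""
    return [alpha * e - sigma * x for x, e in zip(x0, eps)]


def recover_x0(z_t, v, alpha, sigma):
    """Invert the parameterization: x0 = alpha * z_t - sigma * v."""
    return [alpha * z - sigma * vi for z, vi in zip(z_t, v)]


# Assumption: variance-preserving schedule, so alpha = cos(phi), sigma = sin(phi).
phi = 0.3
alpha, sigma = math.cos(phi), math.sin(phi)

x0 = [0.5, -1.0]   # toy clean latent
eps = [0.1, 0.7]   # toy noise sample

z_t = noisy_latent(x0, eps, alpha, sigma)
v = v_target(x0, eps, alpha, sigma)
print(recover_x0(z_t, v, alpha, sigma))  # matches x0 up to rounding
```

The round trip shows why a network that predicts `v` carries the same information as one that predicts the noise or the clean latent.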

We currently provide the following checkpoints:

- `512-base-ema.ckpt`: 550k steps at resolution `256x256` on a subset of [LAION-5B](https://laion.ai/blog/laion-5b/) filtered for explicit pornographic material, using the [LAION-NSFW classifier](https://github.com/LAION-AI/CLIP-based-NSFW-Detector) with `punsafe=0.1` and an [aesthetic score](https://github.com/christophschuhmann/improved-aesthetic-predictor) >= `4.5`. Then 850k steps at resolution `512x512` on the same dataset on images with resolution `>= 512x512`.

- `768-v-ema.ckpt`: Resumed from `512-base-ema.ckpt` and trained for 150k steps using a [v-objective](https://arxiv.org/abs/2202.00512) on the same dataset. Resumed for another 140k steps on a `768x768` subset of our dataset.

- `512-depth-ema.ckpt`: Resumed from `512-base-ema.ckpt` and finetuned for 200k steps. Added an extra input channel to process the (relative) depth prediction produced by [MiDaS](https://github.com/isl-org/MiDaS) (`dpt_hybrid`) which is used as an additional conditioning.

The additional input channels of the U-Net which process this extra information were zero-initialized.

- `512-inpainting-ema.ckpt`: Resumed from `512-base-ema.ckpt` and trained for another 200k steps. Follows the mask-generation strategy presented in [LAMA](https://github.com/saic-mdal/lama) which, in combination with the latent VAE representations of the masked image, are used as an additional conditioning.

The additional input channels of the U-Net which process this extra information were zero-initialized. The same strategy was used to train the [1.5-inpainting checkpoint](https://github.com/saic-mdal/lama).

- `x4-upscaling-ema.ckpt`: Trained for 1.25M steps on a 10M subset of LAION containing images `>2048x2048`. The model was trained on crops of size `512x512` and is a text-guided [latent upscaling diffusion model](https://arxiv.org/abs/2112.10752).

In addition to the textual input, it receives a `noise_level` as an input parameter, which can be used to add noise to the low-resolution input according to a [predefined diffusion schedule](configs/stable-diffusion/x4-upscaling.yaml).

- **Hardware:** 32 x 8 x A100 GPUs

- **Optimizer:** AdamW

- **Gradient Accumulations**: 1

- **Batch:** 32 x 8 x 2 x 4 = 2048

- **Learning rate:** warmup to 0.0001 for 10,000 steps and then kept constant
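The hardware and batch bullets above can be cross-checked with a few lines of arithmetic. Reading `32 x 8` as nodes times GPUs per node is an assumption, and the card does not say how the remaining `2 x 4` factor is split, so the sketch only derives the number of samples each GPU contributes per optimizer step.

```python
nodes, gpus_per_node = 32, 8   # assumed reading of "32 x 8 x A100 GPUs"
total_batch = 32 * 8 * 2 * 4   # product given in the "Batch" bullet

num_gpus = nodes * gpus_per_node
samples_per_gpu = total_batch // num_gpus

print(num_gpus, total_batch, samples_per_gpu)
```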

## Evaluation Results

Evaluations with different classifier-free guidance scales (1.5, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0) and 50 DDIM sampling steps show the relative improvements of the checkpoints:

Evaluated using 50 DDIM steps and 10000 random prompts from the COCO2017 validation set, evaluated at 512x512 resolution. Not optimized for FID scores.

## Environmental Impact

**Stable Diffusion v1** **Estimated Emissions**

Based on that information, we estimate the following CO2 emissions using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700). The hardware, runtime, cloud provider, and compute region were utilized to estimate the carbon impact.

- **Hardware Type:** A100 PCIe 40GB

- **Hours used:** 200000

- **Cloud Provider:** AWS

- **Compute Region:** US-east

- **Carbon Emitted (Power consumption x Time x Carbon produced based on location of power grid):** 15000 kg CO2 eq.

## Citation

@InProceedings{Rombach_2022_CVPR,

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {10684-10695}

}

*This model card was written by: Robin Rombach, Patrick Esser and David Ha and is based on the [Stable Diffusion v1](https://github.com/CompVis/stable-diffusion/blob/main/Stable_Diffusion_v1_Model_Card.md) and [DALL-E Mini model card](https://huggingface.co/dalle-mini/dalle-mini).*

|

CompVis/ldm-super-resolution-4x-openimages | CompVis | 2023-07-05T16:18:48Z | 4,364 | 112 | diffusers | [

"diffusers",

"pytorch",

"super-resolution",

"diffusion-super-resolution",

"arxiv:2112.10752",

"license:apache-2.0",

"diffusers:LDMSuperResolutionPipeline",

"region:us"

] | null | 2022-11-09T12:35:04Z | ---

license: apache-2.0

tags:

- pytorch

- diffusers

- super-resolution

- diffusion-super-resolution

---

# Latent Diffusion Models (LDM) for super-resolution

**Paper**: [High-Resolution Image Synthesis with Latent Diffusion Models](https://arxiv.org/abs/2112.10752)

**Abstract**:

*By decomposing the image formation process into a sequential application of denoising autoencoders, diffusion models (DMs) achieve state-of-the-art synthesis results on image data and beyond. Additionally, their formulation allows for a guiding mechanism to control the image generation process without retraining. However, since these models typically operate directly in pixel space, optimization of powerful DMs often consumes hundreds of GPU days and inference is expensive due to sequential evaluations. To enable DM training on limited computational resources while retaining their quality and flexibility, we apply them in the latent space of powerful pretrained autoencoders. In contrast to previous work, training diffusion models on such a representation allows for the first time to reach a near-optimal point between complexity reduction and detail preservation, greatly boosting visual fidelity. By introducing cross-attention layers into the model architecture, we turn diffusion models into powerful and flexible generators for general conditioning inputs such as text or bounding boxes and high-resolution synthesis becomes possible in a convolutional manner. Our latent diffusion models (LDMs) achieve a new state of the art for image inpainting and highly competitive performance on various tasks, including unconditional image generation, semantic scene synthesis, and super-resolution, while significantly reducing computational requirements compared to pixel-based DMs.*

**Authors**

*Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, Björn Ommer*

## Usage

### Inference with a pipeline

```python

# Install diffusers first, e.g. in a notebook cell: !pip install git+https://github.com/huggingface/diffusers.git

import requests

from PIL import Image

from io import BytesIO

from diffusers import LDMSuperResolutionPipeline

import torch

device = "cuda" if torch.cuda.is_available() else "cpu"

model_id = "CompVis/ldm-super-resolution-4x-openimages"

# load model and scheduler

pipeline = LDMSuperResolutionPipeline.from_pretrained(model_id)

pipeline = pipeline.to(device)

# let's download an image

url = "https://user-images.githubusercontent.com/38061659/199705896-b48e17b8-b231-47cd-a270-4ffa5a93fa3e.png"

response = requests.get(url)

low_res_img = Image.open(BytesIO(response.content)).convert("RGB")

low_res_img = low_res_img.resize((128, 128))

# run pipeline in inference (sample random noise and denoise)

upscaled_image = pipeline(low_res_img, num_inference_steps=100, eta=1).images[0]

# save image

upscaled_image.save("ldm_generated_image.png")

```

|

CompVis/stable-diffusion-v1-1 | CompVis | 2023-07-05T16:18:08Z | 10,189 | 69 | diffusers | [

"diffusers",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"arxiv:2112.10752",

"arxiv:2103.00020",

"arxiv:2205.11487",

"arxiv:2207.12598",

"arxiv:1910.09700",

"license:creativeml-openrail-m",

"autotrain_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-08-19T10:24:23Z | ---

license: creativeml-openrail-m

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

inference: false

extra_gated_prompt: |-

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce nor share illegal or harmful outputs or content

2. The authors claim no rights on the outputs you generate, you are free to use them and are accountable for their use which must not go against the provisions set in the license

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)

Please read the full license carefully here: https://huggingface.co/spaces/CompVis/stable-diffusion-license

extra_gated_heading: Please read the LICENSE to access this model

---

# Stable Diffusion v1-1 Model Card

Stable Diffusion is a latent text-to-image diffusion model capable of generating photo-realistic images given any text input.

For more information about how Stable Diffusion functions, please have a look at [🤗's Stable Diffusion with D🧨iffusers blog](https://huggingface.co/blog/stable_diffusion).

The **Stable-Diffusion-v1-1** was trained for 237,000 steps at resolution `256x256` on [laion2B-en](https://huggingface.co/datasets/laion/laion2B-en), followed by 194,000 steps at resolution `512x512` on [laion-high-resolution](https://huggingface.co/datasets/laion/laion-high-resolution) (170M examples from LAION-5B with resolution `>= 1024x1024`). For more information, please refer to [Training](#training).

The weights here are intended to be used with the D🧨iffusers library. If you are looking for the weights to be loaded into the CompVis Stable Diffusion codebase, [come here](https://huggingface.co/CompVis/stable-diffusion-v-1-1-original).

## Model Details

- **Developed by:** Robin Rombach, Patrick Esser

- **Model type:** Diffusion-based text-to-image generation model

- **Language(s):** English

- **License:** [The CreativeML OpenRAIL M license](https://huggingface.co/spaces/CompVis/stable-diffusion-license) is an [Open RAIL M license](https://www.licenses.ai/blog/2022/8/18/naming-convention-of-responsible-ai-licenses), adapted from the work that [BigScience](https://bigscience.huggingface.co/) and [the RAIL Initiative](https://www.licenses.ai/) are jointly carrying out in the area of responsible AI licensing. See also [the article about the BLOOM Open RAIL license](https://bigscience.huggingface.co/blog/the-bigscience-rail-license) on which our license is based.

- **Model Description:** This is a model that can be used to generate and modify images based on text prompts. It is a [Latent Diffusion Model](https://arxiv.org/abs/2112.10752) that uses a fixed, pretrained text encoder ([CLIP ViT-L/14](https://arxiv.org/abs/2103.00020)) as suggested in the [Imagen paper](https://arxiv.org/abs/2205.11487).

- **Resources for more information:** [GitHub Repository](https://github.com/CompVis/stable-diffusion), [Paper](https://arxiv.org/abs/2112.10752).

- **Cite as:**

@InProceedings{Rombach_2022_CVPR,

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {10684-10695}

}

## Examples

We recommend using [🤗's Diffusers library](https://github.com/huggingface/diffusers) to run Stable Diffusion.

```bash

pip install --upgrade diffusers transformers scipy

```

Running the pipeline with the default PNDM scheduler:

```python
import torch
from torch import autocast
from diffusers import StableDiffusionPipeline

model_id = "CompVis/stable-diffusion-v1-1"
device = "cuda"

pipe = StableDiffusionPipeline.from_pretrained(model_id)
pipe = pipe.to(device)

prompt = "a photo of an astronaut riding a horse on mars"
with autocast("cuda"):
    # Recent diffusers releases expose the generated images on `.images`;
    # very old releases returned them under the "sample" key instead.
    image = pipe(prompt).images[0]

image.save("astronaut_rides_horse.png")
```

**Note**:

If you are limited by GPU memory and have less than 10GB of GPU RAM available, please make sure to load the StableDiffusionPipeline in float16 precision instead of the default float32 precision as done above. You can do so by telling diffusers to expect the weights to be in float16 precision:

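```py
import torch
from torch import autocast
from diffusers import StableDiffusionPipeline

model_id = "CompVis/stable-diffusion-v1-1"
device = "cuda"

# Load the weights in half precision to reduce GPU memory use
pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)
pipe = pipe.to(device)

prompt = "a photo of an astronaut riding a horse on mars"
with autocast("cuda"):
    image = pipe(prompt, guidance_scale=7.5).images[0]

image.save("astronaut_rides_horse.png")
```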

To swap out the noise scheduler, pass it to `from_pretrained`:

```python
from torch import autocast
from diffusers import StableDiffusionPipeline, LMSDiscreteScheduler

model_id = "CompVis/stable-diffusion-v1-1"

# Use the K-LMS scheduler here instead
scheduler = LMSDiscreteScheduler(beta_start=0.00085, beta_end=0.012, beta_schedule="scaled_linear", num_train_timesteps=1000)
pipe = StableDiffusionPipeline.from_pretrained(model_id, scheduler=scheduler, use_auth_token=True)
pipe = pipe.to("cuda")

prompt = "a photo of an astronaut riding a horse on mars"
with autocast("cuda"):
    image = pipe(prompt, guidance_scale=7.5).images[0]

image.save("astronaut_rides_horse.png")
```
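The scheduler arguments in the snippet above define the noise schedule. As a rough illustration, the sketch below computes the per-step noise variances (betas) in plain Python, assuming `scaled_linear` means values spaced linearly in square-root space and then squared, which is how we understand the Diffusers option to behave. The helper name `scaled_linear_betas` is hypothetical and not part of any library.

```python
def scaled_linear_betas(beta_start=0.00085, beta_end=0.012, num_train_timesteps=1000):
    """Betas spaced linearly between sqrt(beta_start) and sqrt(beta_end), then squared.

    Assumption: this mirrors the "scaled_linear" option; it is a sketch, not library code.
    """
    lo, hi = beta_start ** 0.5, beta_end ** 0.5
    step = (hi - lo) / (num_train_timesteps - 1)
    return [(lo + i * step) ** 2 for i in range(num_train_timesteps)]


betas = scaled_linear_betas()

# Cumulative product of (1 - beta_t): the share of the original signal
# variance that is still present after t noising steps.
alphas_cumprod = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alphas_cumprod.append(running)

print(f"first beta = {betas[0]:.5f}, last beta = {betas[-1]:.5f}")
print(f"signal kept at the final step: {alphas_cumprod[-1]:.4f}")
```

The schedule starts with very small noise increments and ends with larger ones, so most of the signal is destroyed only towards the final timesteps.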

# Uses

## Direct Use

The model is intended for research purposes only. Possible research areas and tasks include:

- Safe deployment of models which have the potential to generate harmful content.

- Probing and understanding the limitations and biases of generative models.

- Generation of artworks and use in design and other artistic processes.

- Applications in educational or creative tools.

- Research on generative models.

Excluded uses are described below.

### Misuse, Malicious Use, and Out-of-Scope Use

_Note: This section is taken from the [DALLE-MINI model card](https://huggingface.co/dalle-mini/dalle-mini), but applies in the same way to Stable Diffusion v1_.

The model should not be used to intentionally create or disseminate images that create hostile or alienating environments for people. This includes generating images that people would foreseeably find disturbing, distressing, or offensive; or content that propagates historical or current stereotypes.

#### Out-of-Scope Use

The model was not trained to produce factual or true representations of people or events, and therefore using the model to generate such content is out-of-scope for the abilities of this model.

#### Misuse and Malicious Use

Using the model to generate content that is cruel to individuals is a misuse of this model. This includes, but is not limited to:

- Generating demeaning, dehumanizing, or otherwise harmful representations of people or their environments, cultures, religions, etc.

- Intentionally promoting or propagating discriminatory content or harmful stereotypes.

- Impersonating individuals without their consent.

- Sexual content without consent of the people who might see it.

- Mis- and disinformation

- Representations of egregious violence and gore

- Sharing of copyrighted or licensed material in violation of its terms of use.

- Sharing content that is an alteration of copyrighted or licensed material in violation of its terms of use.

## Limitations and Bias

### Limitations

- The model does not achieve perfect photorealism

- The model cannot render legible text

- The model does not perform well on more difficult tasks which involve compositionality, such as rendering an image corresponding to “A red cube on top of a blue sphere”

- Faces and people in general may not be generated properly.

- The model was trained mainly with English captions and will not work as well in other languages.

- The autoencoding part of the model is lossy

- The model was trained on a large-scale dataset, [LAION-5B](https://laion.ai/blog/laion-5b/), which contains adult material and is not fit for product use without additional safety mechanisms and considerations.

- No additional measures were used to deduplicate the dataset. As a result, we observe some degree of memorization for images that are duplicated in the training data.

The training data can be searched at [https://rom1504.github.io/clip-retrieval/](https://rom1504.github.io/clip-retrieval/) to possibly assist in the detection of memorized images.

### Bias

While the capabilities of image generation models are impressive, they can also reinforce or exacerbate social biases. Stable Diffusion v1 was trained on subsets of [LAION-2B(en)](https://laion.ai/blog/laion-5b/), which consists of images that are primarily limited to English descriptions. Texts and images from communities and cultures that use other languages are likely to be insufficiently accounted for. This affects the overall output of the model, as white and western cultures are often set as the default. Further, the ability of the model to generate content with non-English prompts is significantly worse than with English-language prompts.

## Training

### Training Data

The model developers used the following dataset for training the model:

- LAION-2B (en) and subsets thereof (see next section)

### Training Procedure

Stable Diffusion v1 is a latent diffusion model which combines an autoencoder with a diffusion model that is trained in the latent space of the autoencoder. During training:

- Images are encoded through an encoder, which turns images into latent representations. The autoencoder uses a relative downsampling factor of f = 8 and maps images of shape H x W x 3 to latents of shape H/f x W/f x 4.

- Text prompts are encoded through a CLIP ViT-L/14 text encoder.

- The non-pooled output of the text encoder is fed into the UNet backbone of the latent diffusion model via cross-attention.

- The loss is a reconstruction objective between the noise that was added to the latent and the prediction made by the UNet.
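To make the shapes and the objective in the list above concrete, here is a minimal sketch in plain Python. It assumes the downsampling factor of 8 and the 4 latent channels stated above, and it assumes the reconstruction objective is a mean squared error between the added noise and the predicted noise, which the card does not spell out. The function names are hypothetical, and the zero prediction stands in for a real UNet output.

```python
import random


def latent_shape(height, width, f=8, latent_channels=4):
    """Latent shape for an H x W x 3 image: (H/f, W/f, latent_channels)."""
    if height % f or width % f:
        raise ValueError("height and width must be divisible by the downsampling factor")
    return (height // f, width // f, latent_channels)


def noise_prediction_loss(noise, predicted_noise):
    """Mean squared error between the added noise and the predicted noise (assumed form)."""
    if len(noise) != len(predicted_noise):
        raise ValueError("noise and prediction must have the same number of elements")
    return sum((n - p) ** 2 for n, p in zip(noise, predicted_noise)) / len(noise)


print(latent_shape(512, 512))  # (64, 64, 4)

rng = random.Random(0)
h, w, c = latent_shape(256, 256)
noise = [rng.gauss(0.0, 1.0) for _ in range(h * w * c)]

# Hypothetical stand-in for the UNet: predict zero noise everywhere.
zero_prediction = [0.0] * len(noise)

print(noise_prediction_loss(noise, noise))            # 0.0 for a perfect prediction
print(noise_prediction_loss(noise, zero_prediction))  # roughly the variance of the noise
```

Working in the latent space means the diffusion model sees 64 x 64 x 4 tensors for 512 x 512 images, far fewer values than the 512 x 512 x 3 pixels themselves.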

We currently provide four checkpoints, which were trained as follows.

- [`stable-diffusion-v1-1`](https://huggingface.co/CompVis/stable-diffusion-v1-1): 237,000 steps at resolution `256x256` on [laion2B-en](https://huggingface.co/datasets/laion/laion2B-en), followed by 194,000 steps at resolution `512x512` on [laion-high-resolution](https://huggingface.co/datasets/laion/laion-high-resolution) (170M examples from LAION-5B with resolution `>= 1024x1024`).

- [`stable-diffusion-v1-2`](https://huggingface.co/CompVis/stable-diffusion-v1-2): Resumed from `stable-diffusion-v1-1`. 515,000 steps at resolution `512x512` on "laion-improved-aesthetics" (a subset of laion2B-en, filtered to images with an original size `>= 512x512`, an estimated aesthetics score `> 5.0`, and an estimated watermark probability `< 0.5`). The watermark estimate is from the LAION-5B metadata; the aesthetics score is estimated using an [improved aesthetics estimator](https://github.com/christophschuhmann/improved-aesthetic-predictor).

- [`stable-diffusion-v1-3`](https://huggingface.co/CompVis/stable-diffusion-v1-3): Resumed from `stable-diffusion-v1-2`. 195,000 steps at resolution `512x512` on "laion-improved-aesthetics" and 10% dropping of the text-conditioning to improve [classifier-free guidance sampling](https://arxiv.org/abs/2207.12598).

- [**`stable-diffusion-v1-4`**](https://huggingface.co/CompVis/stable-diffusion-v1-4): Resumed from `stable-diffusion-v1-2`. 225,000 steps at resolution `512x512` on "laion-aesthetics v2 5+" and 10% dropping of the text-conditioning to improve [classifier-free guidance sampling](https://arxiv.org/abs/2207.12598).
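The 10% dropping of the text-conditioning mentioned for the later checkpoints, and the `guidance_scale` argument used in the examples, are two sides of classifier-free guidance. The sketch below illustrates both in plain Python. It assumes that a dropped caption is replaced by an empty prompt and that guidance extrapolates from the unconditional noise prediction towards the text-conditioned one; the function names are hypothetical and the numbers are toy values, not model outputs.

```python
import random


def maybe_drop_caption(caption, rng, drop_prob=0.10):
    """Training side: replace the caption by an empty prompt with probability drop_prob."""
    return "" if rng.random() < drop_prob else caption


def guided_noise(noise_uncond, noise_cond, guidance_scale):
    """Sampling side: move from the unconditional prediction towards the conditional one."""
    return [u + guidance_scale * (c - u) for u, c in zip(noise_uncond, noise_cond)]


rng = random.Random(0)
captions = [maybe_drop_caption("a photo of an astronaut", rng) for _ in range(10_000)]
dropped_fraction = captions.count("") / len(captions)
print(f"dropped {dropped_fraction:.1%} of captions")

# Toy noise predictions for a two-element latent.
print(guided_noise([0.0, 1.0], [1.0, 3.0], guidance_scale=7.5))  # [7.5, 16.0]
```

A guidance scale of 1.0 reproduces the conditional prediction, and larger values push the sample further towards the prompt.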

### Training details

- **Hardware:** 32 x 8 x A100 GPUs

- **Optimizer:** AdamW

- **Gradient Accumulations:** 2

- **Batch:** 32 x 8 x 2 x 4 = 2048

- **Learning rate:** warmup to 0.0001 for 10,000 steps and then kept constant
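The batch-size arithmetic and the learning-rate schedule above can be checked with a few lines of Python. This sketch assumes that `32 x 8` refers to 32 nodes with 8 GPUs each, that the remaining factor of 4 is the per-GPU batch size, and that the warmup is linear; the card states none of these explicitly, and all names are hypothetical.

```python
NODES = 32             # assumption: "32 x 8 x A100" means 32 nodes ...
GPUS_PER_NODE = 8      # ... with 8 A100 GPUs each
GRAD_ACCUM_STEPS = 2   # gradient accumulations, as listed above
PER_GPU_BATCH = 4      # assumption: the remaining factor is the per-GPU batch size

effective_batch = NODES * GPUS_PER_NODE * GRAD_ACCUM_STEPS * PER_GPU_BATCH
print(effective_batch)  # 2048


def learning_rate(step, peak_lr=1e-4, warmup_steps=10_000):
    """Warm up to peak_lr over warmup_steps (linear shape assumed), then stay constant."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    return peak_lr


for step in (0, 5_000, 10_000, 237_000):
    print(step, learning_rate(step))
```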

## Evaluation Results

Evaluations with different classifier-free guidance scales (1.5, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0) and 50 PLMS sampling steps show the relative improvements of the checkpoints.

The checkpoints were evaluated using 50 PLMS steps and 10,000 random prompts from the COCO2017 validation set at 512x512 resolution. They were not optimized for FID scores.

## Environmental Impact

**Stable Diffusion v1** **Estimated Emissions**