modelId (string, length 5 to 139) | author (string, length 2 to 42) | last_modified (timestamp[us, tz=UTC], 2020-02-15 11:33:14 to 2025-06-28 06:27:35) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (string, 500 classes) | tags (sequence, length 1 to 4.05k) | pipeline_tag (string, 54 classes) | createdAt (timestamp[us, tz=UTC], 2022-03-02 23:29:04 to 2025-06-28 06:24:42) | card (string, length 11 to 1.01M) |

|---|---|---|---|---|---|---|---|---|---|

zaanind/gpt2_nmt_tune | zaanind | 2024-10-28T14:59:57Z | 134 | 0 | transformers | [

"transformers",

"safetensors",

"gpt2",

"text-generation",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-10-28T11:04:55Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

mradermacher/Qwenslerp1-7B-i1-GGUF | mradermacher | 2024-10-28T14:59:05Z | 35 | 1 | transformers | [

"transformers",

"gguf",

"mergekit",

"merge",

"en",

"base_model:allknowingroger/Qwenslerp1-7B",

"base_model:quantized:allknowingroger/Qwenslerp1-7B",

"license:apache-2.0",

"endpoints_compatible",

"region:us",

"imatrix",

"conversational"

] | null | 2024-10-28T13:48:38Z | ---

base_model: allknowingroger/Qwenslerp1-7B

language:

- en

library_name: transformers

license: apache-2.0

quantized_by: mradermacher

tags:

- mergekit

- merge

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: nicoboss -->

weighted/imatrix quants of https://huggingface.co/allknowingroger/Qwenslerp1-7B

<!-- provided-files -->

static quants are available at https://huggingface.co/mradermacher/Qwenslerp1-7B-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.
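For the multi-part case mentioned above, a minimal sketch of the concatenation step follows. It assumes the parts are plain byte-wise splits that only need to be joined in order; the file names in the usage note are hypothetical, and shards produced by other splitting tools may need a different procedure.

```python
import shutil
from pathlib import Path


def concatenate_parts(part_paths, output_path):
    """Join byte-wise split files, in the order given, into one file."""
    output_path = Path(output_path)
    with output_path.open("wb") as out_file:
        for part in part_paths:
            with Path(part).open("rb") as in_file:
                # Stream in chunks so a large model file never has to fit in memory.
                shutil.copyfileobj(in_file, out_file)
    return output_path
```

A call might look like `concatenate_parts(["model.gguf.part1of2", "model.gguf.part2of2"], "model.gguf")`, where both part names are placeholders rather than files from this repository.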

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-IQ1_S.gguf) | i1-IQ1_S | 2.0 | for the desperate |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-IQ1_M.gguf) | i1-IQ1_M | 2.1 | mostly desperate |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-IQ2_XXS.gguf) | i1-IQ2_XXS | 2.4 | |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-IQ2_XS.gguf) | i1-IQ2_XS | 2.6 | |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-IQ2_S.gguf) | i1-IQ2_S | 2.7 | |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-IQ2_M.gguf) | i1-IQ2_M | 2.9 | |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-Q2_K.gguf) | i1-Q2_K | 3.1 | IQ3_XXS probably better |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-IQ3_XXS.gguf) | i1-IQ3_XXS | 3.2 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-IQ3_XS.gguf) | i1-IQ3_XS | 3.4 | |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-Q3_K_S.gguf) | i1-Q3_K_S | 3.6 | IQ3_XS probably better |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-IQ3_S.gguf) | i1-IQ3_S | 3.6 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-IQ3_M.gguf) | i1-IQ3_M | 3.7 | |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-Q3_K_M.gguf) | i1-Q3_K_M | 3.9 | IQ3_S probably better |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-Q3_K_L.gguf) | i1-Q3_K_L | 4.2 | IQ3_M probably better |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-IQ4_XS.gguf) | i1-IQ4_XS | 4.3 | |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-Q4_0_4_4.gguf) | i1-Q4_0_4_4 | 4.5 | fast on arm, low quality |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-Q4_0_4_8.gguf) | i1-Q4_0_4_8 | 4.5 | fast on arm+i8mm, low quality |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-Q4_0_8_8.gguf) | i1-Q4_0_8_8 | 4.5 | fast on arm+sve, low quality |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-Q4_0.gguf) | i1-Q4_0 | 4.5 | fast, low quality |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-Q4_K_S.gguf) | i1-Q4_K_S | 4.6 | optimal size/speed/quality |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-Q4_K_M.gguf) | i1-Q4_K_M | 4.8 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-Q5_K_S.gguf) | i1-Q5_K_S | 5.4 | |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-Q5_K_M.gguf) | i1-Q5_K_M | 5.5 | |

| [GGUF](https://huggingface.co/mradermacher/Qwenslerp1-7B-i1-GGUF/resolve/main/Qwenslerp1-7B.i1-Q6_K.gguf) | i1-Q6_K | 6.4 | practically like static Q6_K |

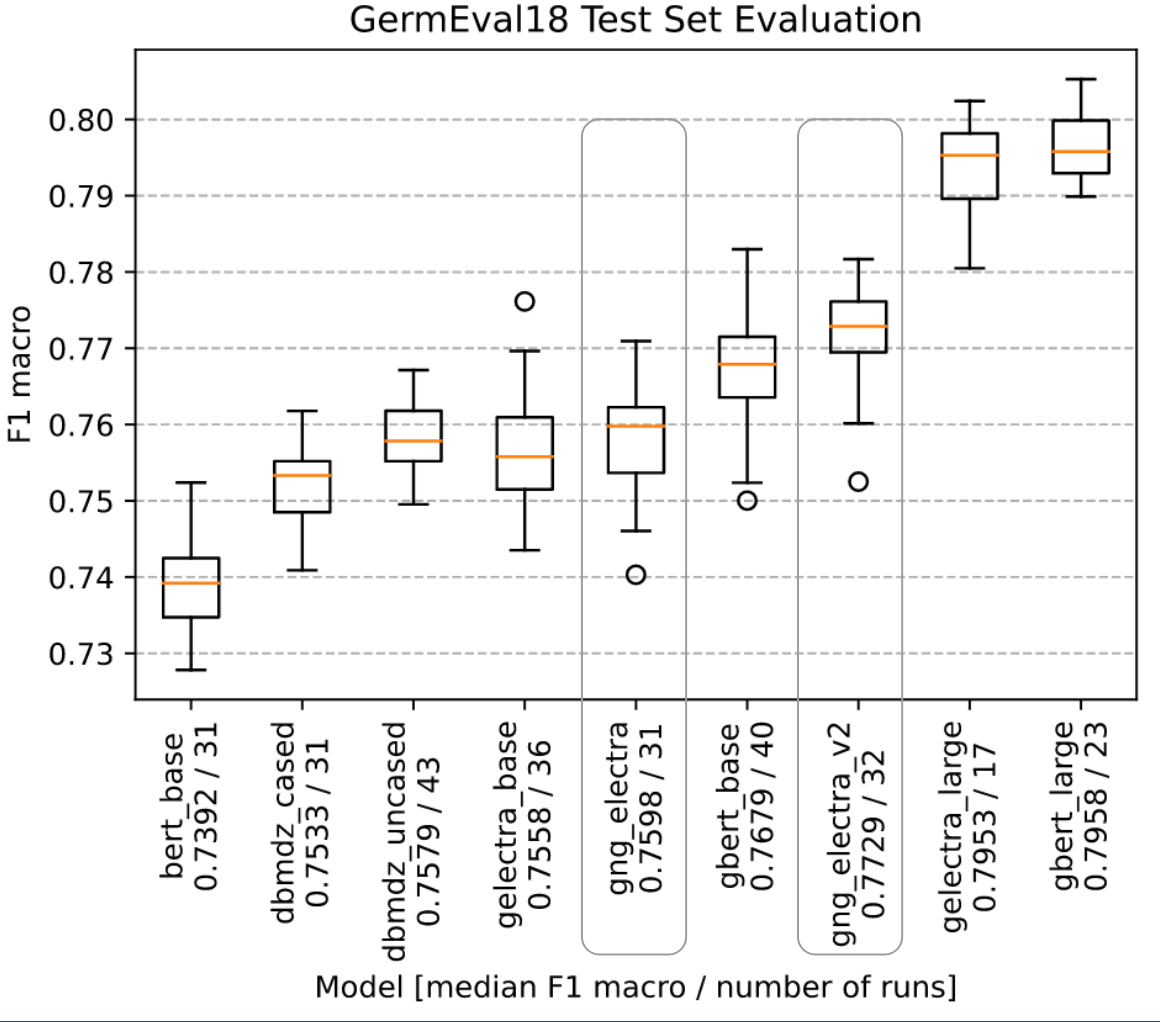

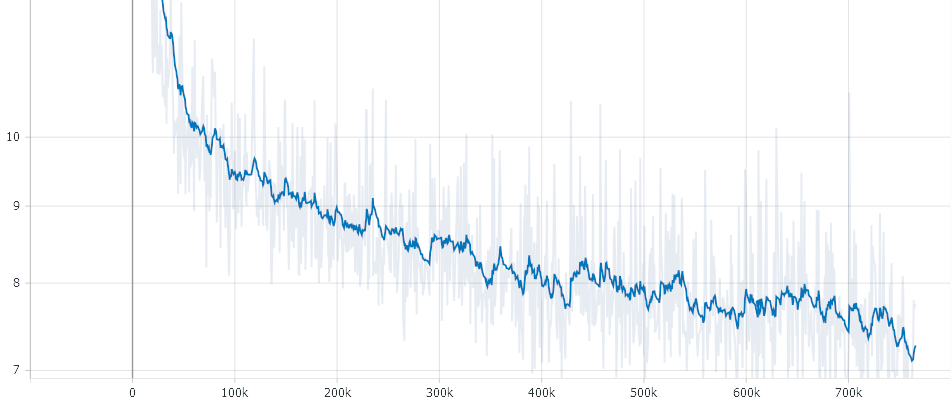

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9
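Since the table is sorted by size, one simple way to choose a file is to take the largest quant that fits a given size budget. The sketch below copies a few rows from the table; the helper name `largest_quant_within` is illustrative, and the file size is only a lower bound on memory use, since the runtime also needs room for context.

```python
# (type, size in GB) pairs copied from a subset of the table rows above.
QUANTS = [
    ("i1-IQ2_M", 2.9),
    ("i1-IQ3_M", 3.7),
    ("i1-Q3_K_M", 3.9),
    ("i1-IQ4_XS", 4.3),
    ("i1-Q4_K_S", 4.6),
    ("i1-Q4_K_M", 4.8),
    ("i1-Q5_K_M", 5.5),
    ("i1-Q6_K", 6.4),
]


def largest_quant_within(budget_gb, quants=QUANTS):
    """Return the type of the largest quant whose file size fits the budget."""
    fitting = [entry for entry in quants if entry[1] <= budget_gb]
    if not fitting:
        return None  # nothing in the list is small enough
    return max(fitting, key=lambda entry: entry[1])[0]


print(largest_quant_within(5.0))
```

Size is not the same as quality, as the notes column points out, so this is a starting point rather than a recommendation.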

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time. Additional thanks to [@nicoboss](https://huggingface.co/nicoboss) for giving me access to his private supercomputer, enabling me to provide many more imatrix quants, at much higher quality, than I would otherwise be able to.

<!-- end -->

|

mradermacher/LongWriter-llama3.1-8b-abliterated-GGUF | mradermacher | 2024-10-28T14:58:09Z | 39 | 0 | transformers | [

"transformers",

"gguf",

"en",

"base_model:wangrongsheng/LongWriter-llama3.1-8b-abliterated",

"base_model:quantized:wangrongsheng/LongWriter-llama3.1-8b-abliterated",

"endpoints_compatible",

"region:us"

] | null | 2024-10-28T12:46:04Z | ---

base_model: wangrongsheng/LongWriter-llama3.1-8b-abliterated

language:

- en

library_name: transformers

quantized_by: mradermacher

tags: []

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

static quants of https://huggingface.co/wangrongsheng/LongWriter-llama3.1-8b-abliterated

<!-- provided-files -->

weighted/imatrix quants are available at https://huggingface.co/mradermacher/LongWriter-llama3.1-8b-abliterated-i1-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/LongWriter-llama3.1-8b-abliterated-GGUF/resolve/main/LongWriter-llama3.1-8b-abliterated.Q2_K.gguf) | Q2_K | 3.3 | |

| [GGUF](https://huggingface.co/mradermacher/LongWriter-llama3.1-8b-abliterated-GGUF/resolve/main/LongWriter-llama3.1-8b-abliterated.Q3_K_S.gguf) | Q3_K_S | 3.8 | |

| [GGUF](https://huggingface.co/mradermacher/LongWriter-llama3.1-8b-abliterated-GGUF/resolve/main/LongWriter-llama3.1-8b-abliterated.Q3_K_M.gguf) | Q3_K_M | 4.1 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/LongWriter-llama3.1-8b-abliterated-GGUF/resolve/main/LongWriter-llama3.1-8b-abliterated.Q3_K_L.gguf) | Q3_K_L | 4.4 | |

| [GGUF](https://huggingface.co/mradermacher/LongWriter-llama3.1-8b-abliterated-GGUF/resolve/main/LongWriter-llama3.1-8b-abliterated.IQ4_XS.gguf) | IQ4_XS | 4.6 | |

| [GGUF](https://huggingface.co/mradermacher/LongWriter-llama3.1-8b-abliterated-GGUF/resolve/main/LongWriter-llama3.1-8b-abliterated.Q4_K_S.gguf) | Q4_K_S | 4.8 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/LongWriter-llama3.1-8b-abliterated-GGUF/resolve/main/LongWriter-llama3.1-8b-abliterated.Q4_K_M.gguf) | Q4_K_M | 5.0 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/LongWriter-llama3.1-8b-abliterated-GGUF/resolve/main/LongWriter-llama3.1-8b-abliterated.Q5_K_S.gguf) | Q5_K_S | 5.7 | |

| [GGUF](https://huggingface.co/mradermacher/LongWriter-llama3.1-8b-abliterated-GGUF/resolve/main/LongWriter-llama3.1-8b-abliterated.Q5_K_M.gguf) | Q5_K_M | 5.8 | |

| [GGUF](https://huggingface.co/mradermacher/LongWriter-llama3.1-8b-abliterated-GGUF/resolve/main/LongWriter-llama3.1-8b-abliterated.Q6_K.gguf) | Q6_K | 6.7 | very good quality |

| [GGUF](https://huggingface.co/mradermacher/LongWriter-llama3.1-8b-abliterated-GGUF/resolve/main/LongWriter-llama3.1-8b-abliterated.Q8_0.gguf) | Q8_0 | 8.6 | fast, best quality |

| [GGUF](https://huggingface.co/mradermacher/LongWriter-llama3.1-8b-abliterated-GGUF/resolve/main/LongWriter-llama3.1-8b-abliterated.f16.gguf) | f16 | 16.2 | 16 bpw, overkill |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time. Additional thanks to [@nicoboss](https://huggingface.co/nicoboss) for giving me access to his private supercomputer, enabling me to provide many more imatrix quants, at much higher quality, than I would otherwise be able to.

<!-- end -->

|

mav23/dolly-v2-12b-GGUF | mav23 | 2024-10-28T14:52:53Z | 19 | 0 | transformers | [

"transformers",

"gguf",

"en",

"dataset:databricks/databricks-dolly-15k",

"license:mit",

"region:us"

] | null | 2024-10-28T13:23:26Z | ---

license: mit

language:

- en

library_name: transformers

inference: false

datasets:

- databricks/databricks-dolly-15k

---

# dolly-v2-12b Model Card

## Summary

Databricks' `dolly-v2-12b` is an instruction-following large language model trained on the Databricks machine learning platform

and licensed for commercial use. Based on `pythia-12b`, Dolly is trained on ~15k instruction/response fine-tuning records,

[`databricks-dolly-15k`](https://github.com/databrickslabs/dolly/tree/master/data), generated

by Databricks employees in capability domains from the InstructGPT paper, including brainstorming, classification, closed QA, generation,

information extraction, open QA and summarization. `dolly-v2-12b` is not a state-of-the-art model, but it does exhibit surprisingly

high-quality instruction-following behavior not characteristic of the foundation model on which it is based.

Dolly v2 is also available in these smaller model sizes:

* [dolly-v2-7b](https://huggingface.co/databricks/dolly-v2-7b), a 6.9 billion parameter model based on `pythia-6.9b`

* [dolly-v2-3b](https://huggingface.co/databricks/dolly-v2-3b), a 2.8 billion parameter model based on `pythia-2.8b`

Please refer to the [dolly GitHub repo](https://github.com/databrickslabs/dolly#getting-started-with-response-generation) for tips on

running inference for various GPU configurations.

**Owner**: Databricks, Inc.

## Model Overview

`dolly-v2-12b` is a 12 billion parameter causal language model created by [Databricks](https://databricks.com/) that is derived from

[EleutherAI's](https://www.eleuther.ai/) [Pythia-12b](https://huggingface.co/EleutherAI/pythia-12b) and fine-tuned

on a [~15K record instruction corpus](https://github.com/databrickslabs/dolly/tree/master/data) generated by Databricks employees and released under a permissive license (CC-BY-SA).

## Usage

To use the model with the `transformers` library on a machine with GPUs, first make sure you have the `transformers` and `accelerate` libraries installed.

In a Databricks notebook you could run:

```python

%pip install "accelerate>=0.16.0,<1" "transformers[torch]>=4.28.1,<5" "torch>=1.13.1,<2"

```

The instruction following pipeline can be loaded using the `pipeline` function as shown below. This loads a custom `InstructionTextGenerationPipeline`

found in the model repo [here](https://huggingface.co/databricks/dolly-v2-3b/blob/main/instruct_pipeline.py), which is why `trust_remote_code=True` is required.

Including `torch_dtype=torch.bfloat16` is generally recommended if this type is supported in order to reduce memory usage. It does not appear to impact output quality.

It is also fine to remove it if there is sufficient memory.

```python

import torch

from transformers import pipeline

generate_text = pipeline(model="databricks/dolly-v2-12b", torch_dtype=torch.bfloat16, trust_remote_code=True, device_map="auto")

```
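To see why `torch.bfloat16` reduces memory usage, a rough back-of-the-envelope estimate of the weight footprint can help. The sketch below assumes a round figure of 12 billion parameters and counts only the weights, ignoring activations and framework overhead; the helper name is illustrative.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter):
    """Approximate memory needed to hold the model weights, in gigabytes."""
    return num_parameters * bytes_per_parameter / 1e9


APPROX_PARAMETERS = 12e9  # assumption: "12 billion" taken as a round number

fp32_gb = weight_memory_gb(APPROX_PARAMETERS, 4)  # float32 uses 4 bytes per value
bf16_gb = weight_memory_gb(APPROX_PARAMETERS, 2)  # bfloat16 uses 2 bytes per value
print(f"float32: ~{fp32_gb:.0f} GB, bfloat16: ~{bf16_gb:.0f} GB")
```

Under these assumptions the half-precision weights need about half the memory of the float32 weights.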

You can then use the pipeline to answer instructions:

```python

res = generate_text("Explain to me the difference between nuclear fission and fusion.")

print(res[0]["generated_text"])

```

Alternatively, if you prefer to not use `trust_remote_code=True` you can download [instruct_pipeline.py](https://huggingface.co/databricks/dolly-v2-3b/blob/main/instruct_pipeline.py),

store it alongside your notebook, and construct the pipeline yourself from the loaded model and tokenizer:

```python

import torch

from instruct_pipeline import InstructionTextGenerationPipeline

from transformers import AutoModelForCausalLM, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("databricks/dolly-v2-12b", padding_side="left")

model = AutoModelForCausalLM.from_pretrained("databricks/dolly-v2-12b", device_map="auto", torch_dtype=torch.bfloat16)

generate_text = InstructionTextGenerationPipeline(model=model, tokenizer=tokenizer)

```

### LangChain Usage

To use the pipeline with LangChain, you must set `return_full_text=True`, as LangChain expects the full text to be returned

and the default for the pipeline is to only return the new text.

```python

import torch
from transformers import pipeline

generate_text = pipeline(model="databricks/dolly-v2-12b", torch_dtype=torch.bfloat16,
                         trust_remote_code=True, device_map="auto", return_full_text=True)

```

You can create a prompt that either has only an instruction or has an instruction with context:

```python

from langchain import PromptTemplate, LLMChain
from langchain.llms import HuggingFacePipeline

# template for an instruction with no input
prompt = PromptTemplate(
    input_variables=["instruction"],
    template="{instruction}")

# template for an instruction with input
prompt_with_context = PromptTemplate(
    input_variables=["instruction", "context"],
    template="{instruction}\n\nInput:\n{context}")

# wrap the transformers pipeline so LangChain can call it as an LLM
hf_pipeline = HuggingFacePipeline(pipeline=generate_text)

llm_chain = LLMChain(llm=hf_pipeline, prompt=prompt)
llm_context_chain = LLMChain(llm=hf_pipeline, prompt=prompt_with_context)

```

Example predicting using a simple instruction:

```python

print(llm_chain.predict(instruction="Explain to me the difference between nuclear fission and fusion.").lstrip())

```

Example predicting using an instruction with context:

```python

context = """George Washington (February 22, 1732[b] - December 14, 1799) was an American military officer, statesman,

and Founding Father who served as the first president of the United States from 1789 to 1797."""

print(llm_context_chain.predict(instruction="When was George Washington president?", context=context).lstrip())

```
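To make the context template concrete, the sketch below renders the same template string with plain `str.format`, without LangChain or the model. It assumes the template is filled by simple placeholder substitution; the helper name `render_prompt` and the shortened context sentence are illustrative.

```python
# Same template string as prompt_with_context above.
TEMPLATE_WITH_CONTEXT = "{instruction}\n\nInput:\n{context}"


def render_prompt(instruction, context):
    """Fill the two placeholders to produce the text sent to the pipeline."""
    return TEMPLATE_WITH_CONTEXT.format(instruction=instruction, context=context)


print(render_prompt(
    "When was George Washington president?",
    "George Washington served as the first president of the United States from 1789 to 1797.",
))
```

The instruction comes first, followed by a blank line, the literal `Input:` label, and then the context passage.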

## Known Limitations

### Performance Limitations

**`dolly-v2-12b` is not a state-of-the-art generative language model** and, though quantitative benchmarking is ongoing, is not designed to perform

competitively with more modern model architectures or models subject to larger pretraining corpuses.

The Dolly model family is under active development, and so any list of shortcomings is unlikely to be exhaustive, but we include known limitations and misfires here as a means to document and share our preliminary findings with the community.

In particular, `dolly-v2-12b` struggles with: syntactically complex prompts, programming problems, mathematical operations, factual errors,

dates and times, open-ended question answering, hallucination, enumerating lists of specific length, stylistic mimicry, having a sense of humor, etc.

Moreover, we find that `dolly-v2-12b` does not have some capabilities, such as well-formatted letter writing, present in the original model.

### Dataset Limitations

Like all language models, `dolly-v2-12b` reflects the content and limitations of its training corpuses.

- **The Pile**: Pythia's pre-training corpus contains content mostly collected from the public internet, and like most web-scale datasets,

it contains content many users would find objectionable. As such, the model is likely to reflect these shortcomings, potentially overtly

in the case it is explicitly asked to produce objectionable content, and sometimes subtly, as in the case of biased or harmful implicit

associations.

- **`databricks-dolly-15k`**: The training data on which `dolly-v2-12b` is instruction tuned represents natural language instructions generated

by Databricks employees during a period spanning March and April 2023 and includes passages from Wikipedia as reference passages

for instruction categories like closed QA and summarization. To our knowledge it does not contain obscenity, intellectual property or

personally identifying information about non-public figures, but it may contain typos and factual errors.

The dataset may also reflect biases found in Wikipedia. Finally, the dataset likely reflects

the interests and semantic choices of Databricks employees, a demographic which is not representative of the global population at large.

Databricks is committed to ongoing research and development efforts to develop helpful, honest and harmless AI technologies that

maximize the potential of all individuals and organizations.

### Benchmark Metrics

Below you'll find the benchmark performance of various models on the [EleutherAI LLM Evaluation Harness](https://github.com/EleutherAI/lm-evaluation-harness);

model results are sorted by geometric mean to produce an intelligible ordering. As outlined above, these results demonstrate that `dolly-v2-12b` is not state of the art,

and in fact underperforms `dolly-v1-6b` in some evaluation benchmarks. We believe this owes to the composition and size of the underlying fine tuning datasets,

but a robust statement as to the sources of these variations requires further study.

| model | openbookqa | arc_easy | winogrande | hellaswag | arc_challenge | piqa | boolq | gmean |

| --------------------------------- | ------------ | ---------- | ------------ | ----------- | --------------- | -------- | -------- | ---------|

| EleutherAI/pythia-2.8b | 0.348 | 0.585859 | 0.589582 | 0.591217 | 0.323379 | 0.73395 | 0.638226 | 0.523431 |

| EleutherAI/pythia-6.9b | 0.368 | 0.604798 | 0.608524 | 0.631548 | 0.343857 | 0.761153 | 0.6263 | 0.543567 |

| databricks/dolly-v2-3b | 0.384 | 0.611532 | 0.589582 | 0.650767 | 0.370307 | 0.742655 | 0.575535 | 0.544886 |

| EleutherAI/pythia-12b | 0.364 | 0.627104 | 0.636148 | 0.668094 | 0.346416 | 0.760065 | 0.673394 | 0.559676 |

| EleutherAI/gpt-j-6B | 0.382 | 0.621633 | 0.651144 | 0.662617 | 0.363481 | 0.761153 | 0.655963 | 0.565936 |

| databricks/dolly-v2-12b | 0.408 | 0.63931 | 0.616417 | 0.707927 | 0.388225 | 0.757889 | 0.568196 | 0.56781 |

| databricks/dolly-v2-7b | 0.392 | 0.633838 | 0.607735 | 0.686517 | 0.406997 | 0.750816 | 0.644037 | 0.573487 |

| databricks/dolly-v1-6b | 0.41 | 0.62963 | 0.643252 | 0.676758 | 0.384812 | 0.773667 | 0.687768 | 0.583431 |

| EleutherAI/gpt-neox-20b | 0.402 | 0.683923 | 0.656669 | 0.7142 | 0.408703 | 0.784004 | 0.695413 | 0.602236 |
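The `gmean` column used for the ordering can be reproduced from the seven task scores in a row. The sketch below assumes it is the plain, unweighted geometric mean and checks this against the `databricks/dolly-v2-12b` row.

```python
import math


def geometric_mean(scores):
    """Unweighted geometric mean, computed via logs for numerical stability."""
    return math.exp(sum(math.log(score) for score in scores) / len(scores))


# openbookqa, arc_easy, winogrande, hellaswag, arc_challenge, piqa, boolq
dolly_v2_12b = [0.408, 0.63931, 0.616417, 0.707927, 0.388225, 0.757889, 0.568196]
print(round(geometric_mean(dolly_v2_12b), 5))
```

The result should land close to the 0.56781 reported in the table. Because a geometric mean is pulled down by weak scores more than an arithmetic mean would be, a low `boolq` value costs a model noticeably in this ranking.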

# Citation

```

@online{DatabricksBlog2023DollyV2,

author = {Mike Conover and Matt Hayes and Ankit Mathur and Jianwei Xie and Jun Wan and Sam Shah and Ali Ghodsi and Patrick Wendell and Matei Zaharia and Reynold Xin},

title = {Free Dolly: Introducing the World's First Truly Open Instruction-Tuned LLM},

year = {2023},

url = {https://www.databricks.com/blog/2023/04/12/dolly-first-open-commercially-viable-instruction-tuned-llm},

urldate = {2023-06-30}

}

```

# Happy Hacking! |

g-assismoraes/deberta-large-semeval25_EN08_fold4 | g-assismoraes | 2024-10-28T14:52:31Z | 9 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"deberta-v2",

"text-classification",

"generated_from_trainer",

"base_model:microsoft/deberta-v3-large",

"base_model:finetune:microsoft/deberta-v3-large",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2024-10-28T14:37:49Z | ---

library_name: transformers

license: mit

base_model: microsoft/deberta-v3-large

tags:

- generated_from_trainer

model-index:

- name: deberta-large-semeval25_EN08_fold4

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# deberta-large-semeval25_EN08_fold4

This model is a fine-tuned version of [microsoft/deberta-v3-large](https://huggingface.co/microsoft/deberta-v3-large) on an unspecified dataset (the Trainer recorded the dataset name as `None`).

It achieves the following results on the evaluation set:

- Loss: 8.0968

- Precision Samples: 0.1277

- Recall Samples: 0.8179

- F1 Samples: 0.2131

- Precision Macro: 0.3800

- Recall Macro: 0.7101

- F1 Macro: 0.2707

- Precision Micro: 0.1256

- Recall Micro: 0.7889

- F1 Micro: 0.2167

- Precision Weighted: 0.2111

- Recall Weighted: 0.7889

- F1 Weighted: 0.2494
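As a sanity check on the figures above, the micro-averaged F1 can be recomputed from the micro precision and recall, because micro averaging pools all decisions before computing the score. The sketch below uses the standard harmonic-mean formula. The same shortcut is not expected to reproduce the macro, weighted, or samples F1 values, which are typically averages of per-class or per-sample F1 scores.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Micro precision and recall from the evaluation results above.
print(round(f1_score(0.1256, 0.7889), 4))
```

The large gap between recall (about 0.79) and precision (about 0.13) suggests the model predicts many labels per example, which keeps F1 low despite the high recall.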

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Use OptimizerNames.ADAMW_TORCH with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 10
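To illustrate what `lr_scheduler_type: linear` implies for these settings, the sketch below computes the learning rate at a given optimizer step. It assumes no warmup, since none is listed, and takes the total of 730 steps from the final row of the training results table; the function name is illustrative.

```python
def linear_lr(step, total_steps, base_lr=2e-5):
    """Learning rate under linear decay to zero, assuming zero warmup steps."""
    remaining_fraction = max(0.0, 1.0 - step / total_steps)
    return base_lr * remaining_fraction


TOTAL_STEPS = 730  # 10 epochs x 73 steps per epoch, per the results table

for step in (0, 365, 730):
    print(step, linear_lr(step, TOTAL_STEPS))
```

Under this assumption the rate starts at 2e-05, is halved by the end of epoch 5, and reaches zero at the last step.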

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision Samples | Recall Samples | F1 Samples | Precision Macro | Recall Macro | F1 Macro | Precision Micro | Recall Micro | F1 Micro | Precision Weighted | Recall Weighted | F1 Weighted |

|:-------------:|:-----:|:----:|:---------------:|:-----------------:|:--------------:|:----------:|:---------------:|:------------:|:--------:|:---------------:|:------------:|:--------:|:------------------:|:---------------:|:-----------:|

| 10.3595 | 1.0 | 73 | 10.0150 | 0.1187 | 0.4154 | 0.1640 | 0.9017 | 0.3154 | 0.2532 | 0.1115 | 0.3167 | 0.1650 | 0.6711 | 0.3167 | 0.0826 |

| 9.1971 | 2.0 | 146 | 9.4160 | 0.1191 | 0.6148 | 0.1778 | 0.7693 | 0.4444 | 0.2773 | 0.1049 | 0.5417 | 0.1758 | 0.4587 | 0.5417 | 0.1310 |

| 7.9996 | 3.0 | 219 | 8.8114 | 0.1176 | 0.7117 | 0.1924 | 0.5851 | 0.5468 | 0.2806 | 0.1088 | 0.6667 | 0.1871 | 0.3031 | 0.6667 | 0.1706 |

| 7.463 | 4.0 | 292 | 8.5503 | 0.1224 | 0.7819 | 0.1931 | 0.5197 | 0.6480 | 0.2805 | 0.1125 | 0.7472 | 0.1955 | 0.2758 | 0.7472 | 0.1944 |

| 8.4991 | 5.0 | 365 | 8.3932 | 0.1203 | 0.7938 | 0.2006 | 0.4699 | 0.6469 | 0.2725 | 0.1138 | 0.7472 | 0.1976 | 0.2545 | 0.7472 | 0.2056 |

| 5.8266 | 6.0 | 438 | 8.2974 | 0.1222 | 0.8157 | 0.2042 | 0.4218 | 0.6797 | 0.2494 | 0.1148 | 0.7778 | 0.2001 | 0.2412 | 0.7778 | 0.2214 |

| 6.4555 | 7.0 | 511 | 8.2044 | 0.1241 | 0.7945 | 0.2076 | 0.3889 | 0.6770 | 0.2569 | 0.1224 | 0.7667 | 0.2111 | 0.2286 | 0.7667 | 0.2450 |

| 6.1701 | 8.0 | 584 | 8.2297 | 0.1285 | 0.8057 | 0.2131 | 0.3902 | 0.7018 | 0.2765 | 0.1267 | 0.7722 | 0.2176 | 0.2159 | 0.7722 | 0.2478 |

| 6.2618 | 9.0 | 657 | 8.1061 | 0.1281 | 0.8229 | 0.2137 | 0.3794 | 0.7040 | 0.2694 | 0.1243 | 0.7833 | 0.2145 | 0.2090 | 0.7833 | 0.2462 |

| 6.6155 | 10.0 | 730 | 8.0968 | 0.1277 | 0.8179 | 0.2131 | 0.3800 | 0.7101 | 0.2707 | 0.1256 | 0.7889 | 0.2167 | 0.2111 | 0.7889 | 0.2494 |

### Framework versions

- Transformers 4.46.0

- Pytorch 2.3.1

- Datasets 2.21.0

- Tokenizers 0.20.1

|

Cloyne/vietnamese-sbert | Cloyne | 2024-10-28T14:49:54Z | 56 | 0 | sentence-transformers | [

"sentence-transformers",

"safetensors",

"roberta",

"sentence-similarity",

"feature-extraction",

"generated_from_trainer",

"dataset_size:120210",

"loss:MultipleNegativesRankingLoss",

"arxiv:1908.10084",

"arxiv:1705.00652",

"base_model:keepitreal/vietnamese-sbert",

"base_model:finetune:keepitreal/vietnamese-sbert",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | sentence-similarity | 2024-10-28T14:49:39Z | ---

base_model: keepitreal/vietnamese-sbert

library_name: sentence-transformers

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- sentence-similarity

- feature-extraction

- generated_from_trainer

- dataset_size:120210

- loss:MultipleNegativesRankingLoss

widget:

- source_sentence: Chủ tịch Ủy ban nhân dân xã có quyền ra quyết định cưỡng chế tháo

dỡ công trình xây dựng trên đất nông nghiệp khi chưa chuyển mục đích sử dụng đất

hay không?

sentences:

- 'Đối tượng, điều kiện kéo dài tuổi phục vụ tại ngũ

1. Đối tượng:

a) Quân nhân chuyên nghiệp có trình độ cao đẳng trở lên đang đảm nhiệm các chức

danh: Kỹ thuật viên, Nhân viên Kỹ thuật, Huấn luyện viên, Nghệ sĩ, Nhạc sĩ, Diễn

viên làm việc đúng chuyên ngành đào tạo ở các cơ sở nghiên cứu, nhà trường, bệnh

viện, trung tâm thể dục thể thao, đoàn nghệ thuật, nhà máy, doanh nghiệp quốc

phòng; đơn vị đóng quân ở địa bàn vùng sâu, vùng xa, biên giới, hải đảo.

b) Quân nhân chuyên nghiệp đang làm việc thuộc các chuyên ngành hẹp được đào tạo

công phu hoặc chuyên ngành Quân đội chưa đào tạo được; thợ bậc cao.

c) Quân nhân chuyên nghiệp đang đảm nhiệm chức vụ chỉ huy, quản lý ở các nhà máy,

doanh nghiệp quốc phòng.

d) Quân nhân chuyên nghiệp không thuộc đối tượng quy định tại điểm a, điểm b,

điểm c khoản này do Bộ trưởng Bộ Quốc phòng quyết định.

2. Điều kiện:

Quân nhân chuyên nghiệp thuộc đối tượng quy định tại khoản 1 Điều này được kéo

dài tuổi phục vụ tại ngũ khi có đủ các điều kiện sau:

a) Đơn vị có biên chế và nhu cầu sử dụng;

b) Hết hạn tuổi phục vụ tại ngũ cao nhất theo cấp bậc quân hàm quy định tại khoản

2 Điều 17 Luật Quân nhân chuyên nghiệp, công nhân và viên chức quốc phòng; chưa

có người thay thế; tự nguyện tiếp tục phục vụ tại ngũ;

c) Có đủ phẩm chất chính trị, đạo đức, sức khỏe để hoàn thành nhiệm vụ được giao;

d) Có trình độ chuyên môn kỹ thuật, nghiệp vụ giỏi; tay nghề cao; chất lượng,

hiệu quả công tác tốt.'

- 'Thi hành quyết định cưỡng chế

1. Người ra quyết định cưỡng chế có trách nhiệm gửi ngay quyết định cưỡng chế

cho các cá nhân, tổ chức liên quan và tổ chức thực hiện việc cưỡng chế thi hành

quyết định xử phạt của mình và của cấp dưới.

..."'

- 'Trình tự, thủ tục đăng ký tài khoản định danh điện tử đối với công dân Việt Nam

1. Đăng ký tài khoản định danh điện tử mức độ 1 qua ứng dụng VNelD đối với công

dân đã có thẻ Căn cước công dân gắn chíp điện tử

a) Công dân sử dụng thiết bị di động tải và cài đặt ứng dụng VNelD.

b) Công dân sử dụng ứng dụng VNelD để nhập thông tin về số định danh cá nhân và

số điện thoại hoặc địa chỉ thư điện tử; cung cấp các thông tin theo hướng dẫn

trên ứng dụng VNelD; thu nhận ảnh chân dung bằng thiết bị di động và gửi yêu cầu

đề nghị cấp tài khoản định danh điện tử tới cơ quan quản lý định danh và xác thực

điện tử qua ứng dụng VNelD.

c) Cơ quan quản lý định danh điện tử thông báo kết quả đăng ký tài khoản qua ứng

dụng VNelD hoặc tin nhắn SMS hoặc địa chỉ thư điện tử.

2. Đăng ký tài khoản định danh điện tử mức độ 2

a) Đối với công dân đã được cấp thẻ Căn cước công dân gắn chíp điện tử:

Công dân đến Công an xã, phường, thị trấn hoặc nơi làm thủ tục cấp thẻ Căn cước

công dân để làm thủ tục cấp tài khoản định danh điện tử. Công dân xuất trình thẻ

Căn cước công dân gắn chíp điện tử, cung cấp thông tin về số điện thoại hoặc địa

chỉ thư điện tử và đề nghị bổ sung thông tin được tích hợp vào tài khoản định

danh điện tử.

Cán bộ tiếp nhận nhập thông tin công dân cung cấp vào hệ thống định danh và xác

thực điện tử; chụp ảnh chân dung, thu nhận vân tay của công dân đến làm thủ tục

để xác thực với Cơ sở dữ liệu căn cước công dân và khẳng định sự đồng ý đăng ký

tạo lập tài khoản định danh điện tử.

Cơ quan quản lý định danh điện tử thông báo kết quả đăng ký tài khoản qua ứng

dụng VNelD hoặc tin nhắn SMS hoặc địa chỉ thư điện tử.

b) Cơ quan Công an tiến hành cấp tài khoản định danh điện tử mức độ 2 cùng với

cấp thẻ Căn cước công dân với trường hợp công dân chưa được cấp Căn cước công

dân gắn chíp điện tử.'

- source_sentence: Mức hưởng chế độ thai sản đối với lao động nam là người nước ngoài

được pháp luật quy định như thế nào?

sentences:

- '"Điều 21. Thông báo kết quả và xác nhận nhập học

1. Cơ sở đào tạo gửi giấy báo trúng tuyển cho những thí sinh trúng tuyển, trong

đó ghi rõ những thủ tục cần thiết đối với thí sinh khi nhập học và phương thức

nhập học của thí sinh.

2. Thí sinh xác nhận nhập học bằng hình thức trực tuyến trên hệ thống, trước khi

nhập học tại cơ sở đào tạo.

3. Đối với những thí sinh không xác nhận nhập học trong thời hạn quy định:

a) Nếu không có lý do chính đáng thì coi như thí sinh từ chối nhập học và cơ sở

đào tạo có quyền không tiếp nhận;

b) Nếu do ốm đau, tai nạn, có giấy xác nhận của bệnh viện quận, huyện trở lên

hoặc do thiên tai có xác nhận của UBND quận, huyện trở lên, cơ sở đào tạo xem

xét quyết định tiếp nhận thí sinh vào học hoặc bảo lưu kết quả tuyển sinh để thí

sinh vào học sau;

c) Nếu do sai sót, nhầm lẫn của cán bộ thực hiện công tác tuyển sinh hoặc cá nhân

thí sinh gây ra, cơ sở đào tạo chủ động phối hợp với các cá nhân, tổ chức liên

quan xem xét các minh chứng và quyết định việc tiếp nhận thí sinh vào học hoặc

bảo lưu kết quả tuyển sinh để thí sinh vào học sau.

4. Thí sinh đã xác nhận nhập học tại một cơ sở đào tạo không được tham gia xét

tuyển ở nơi khác hoặc ở các đợt xét tuyển bổ sung, trừ trường hợp được cơ sở đào

tạo cho phép."'

- 'Tổ chức, nhiệm vụ, quyền hạn của Ban Chỉ huy

...

2. Nhiệm vụ, quyền hạn của Ban Chỉ huy:

a) Chỉ đạo xây dựng, ban hành quy định về công tác bảo đảm an toàn PCCC và CNCH

tại Trụ sở cơ quan Bộ Tư pháp.

b) Hướng dẫn, phối hợp với các đơn vị thuộc Bộ và chỉ đạo Đội PCCC và CNCH cơ

sở tổ chức tuyên truyền, bồi dưỡng nghiệp vụ PCCC và CNCH.

c) Chỉ đạo Đội PCCC và CNCH cơ sở tại Trụ sở cơ quan Bộ Tư pháp xây dựng, trình

cấp có thẩm quyền phê duyệt và tổ chức thực tập phương án PCCC, phương án CNCH.

d) Chỉ đạo Đội PCCC và CNCH cơ sở tại Trụ sở cơ quan Bộ Tư pháp quản lý các trang

thiết bị PCCC và CNCH.

đ) Chỉ đạo chữa cháy, CNCH khi xảy ra cháy, sự cố, tai nạn tại Trụ sở cơ quan

Bộ Tư pháp.

e) Chỉ đạo việc tổ chức lập và lưu giữ hồ sơ quản lý, theo dõi hoạt động PCCC,

CNCH tại Trụ sở cơ quan Bộ Tư pháp.

g) Chỉ đạo việc sơ kết, tổng kết các hoạt động về PCCC và CNCH của cơ quan; kiểm

tra, đôn đốc việc chấp hành các quy định về PCCC và CNCH.

h) Đề xuất việc khen thưởng, kỷ luật các tập thể, cá nhân trong việc thực hiện

công tác PCCC, CNCH.

i) Chỉ đạo Đội PCCC và CNCH cơ sở dự trù kinh phí cho các hoạt động PCCC và CNCH

tại Trụ sở cơ quan Bộ Tư pháp.

k) Thực hiện các nhiệm vụ khác do Bộ trưởng giao và theo quy định của pháp luật.'

- 'Mức hưởng chế độ thai sản

...

b) Mức hưởng một ngày đối với trường hợp quy định tại Điều 32 và khoản 2 Điều

34 của Luật này được tính bằng mức hưởng chế độ thai sản theo tháng chia cho 24

ngày.'

- source_sentence: Doanh nghiệp được áp dụng chế độ ưu tiên không cung cấp báo cáo

kiểm toán đúng thời hạn bị phạt bao nhiêu tiền?

sentences:

- 'Thay đổi Thẩm phán, Hội thẩm

1. Thẩm phán, Hội thẩm phải từ chối tham gia xét xử hoặc bị thay đổi khi thuộc

một trong các trường hợp:

a) Trường hợp quy định tại Điều 49 của Bộ luật này;

b) Họ cùng trong một Hội đồng xét xử và là người thân thích với nhau;

c) Đã tham gia xét xử sơ thẩm hoặc phúc thẩm hoặc tiến hành tố tụng vụ án đó với

tư cách là Điều tra viên, Cán bộ điều tra, Kiểm sát viên, Kiểm tra viên, Thẩm

tra viên, Thư ký Tòa án.

2. Việc thay đổi Thẩm phán, Hội thẩm trước khi mở phiên tòa do Chánh án hoặc Phó

Chánh án Tòa án được phân công giải quyết vụ án quyết định.

Thẩm phán bị thay đổi là Chánh án Tòa án thì do Chánh án Tòa án trên một cấp quyết

định.

Việc thay đổi Thẩm phán, Hội thẩm tại phiên tòa do Hội đồng xét xử quyết định

trước khi bắt đầu xét hỏi bằng cách biểu quyết tại phòng nghị án. Khi xem xét

thay đổi thành viên nào thì thành viên đó được trình bày ý kiến của mình, Hội

đồng quyết định theo đa số.

Trường hợp phải thay đổi Thẩm phán, Hội thẩm tại phiên tòa thì Hội đồng xét xử

ra quyết định hoãn phiên tòa.'

- '“Điều 21. Chấm dứt hưởng trợ cấp thất nghiệp

1. Các trường hợp người lao động đang hưởng trợ cấp thất nghiệp bị chấm dứt hưởng

trợ cấp thất nghiệp được quy định như sau:

e) Trong thời gian hưởng trợ cấp thất nghiệp, 03 tháng liên tục không thực hiện

thông báo hằng tháng về việc tìm kiếm việc làm với trung tâm dịch vụ việc làm

theo quy định

Ngày mà người lao động được xác định bị chấm dứt hưởng trợ cấp thất nghiệp là

ngày kết thúc của thời hạn thông báo tìm kiếm việc làm của tháng thứ 3 liên tục

mà người lao động không thực hiện thông báo hằng tháng về việc tìm kiếm việc làm."'

- 'Vi phạm quy định về thời hạn làm thủ tục hải quan, nộp hồ sơ thuế

...

2. Phạt tiền từ 1.000.000 đồng đến 2.000.000 đồng đối với hành vi không thực hiện

đúng thời hạn quy định thuộc một trong các trường hợp sau:

a) Cung cấp báo cáo kiểm toán, báo cáo tài chính của doanh nghiệp được áp dụng

chế độ ưu tiên;

b) Thông báo cho cơ quan hải quan quyết định xử lý vi phạm pháp luật về quản lý

thuế, kế toán đối với doanh nghiệp được áp dụng chế độ ưu tiên;

c) Báo cáo về lượng hàng hóa nhập khẩu phục vụ xây dựng nhà xưởng, hàng hóa gửi

kho bên ngoài của doanh nghiệp chế xuất;

d) Báo cáo về lượng hàng hóa trung chuyển đưa vào, đưa ra, còn lưu tại cảng;

đ) Báo cáo thống kê thông quan hàng bưu chính đưa vào Việt Nam để chuyển tiếp

đi quốc tế.

...'

- source_sentence: Tài chính của Hội Kiểm toán viên hành nghề Việt Nam được chi cho

những khoản nào?

sentences:

- 'Giải thể và xử lý tài chính khi giải thể

1. Khi xét thấy hoạt động của Hội không có hiệu quả, không mang lại lợi ích cho

Hội viên hoặc gây phiền hà, cản trở cho Hội viên thì BCH Hội quyết định triệu

tập Đại hội để bàn biện pháp củng cố tổ chức hoặc giải thể Hội. Nếu giải thể Hội

thì do Đại hội đại biểu hoặc Đại hội toàn quốc của Hội thông qua và đề nghị cơ

quan Nhà nước có thẩm quyền xem xét, quyết định.

2. Khi Hội bị giải thể, Ban Thường trực và Ban Kiểm tra của Hội phải tiến hành

kiểm kê tài sản, kiểm quỹ và báo cáo BCH Hội quyết định việc xử lý tài sản, tiền

tồn quỹ và tiến hành thủ tục giải thể theo quy định của pháp luật.'

- '"Điều 14. Miễn trừ đối với thỏa thuận hạn chế cạnh tranh bị cấm

1. Thỏa thuận hạn chế cạnh tranh quy định tại các khoản 1, 2, 3, 7, 8, 9, 10 và

11 Điều 11 bị cấm theo quy định tại Điều 12 của Luật này được miễn trừ có thời

hạn nếu có lợi cho người tiêu dùng và đáp ứng một trong các điều kiện sau đây:

a) Tác động thúc đẩy tiến bộ kỹ thuật, công nghệ, nâng cao chất lượng hàng hóa,

dịch vụ;

b) Tăng cường sức cạnh tranh của doanh nghiệp Việt Nam trên thị trường quốc tế;

c) Thúc đẩy việc áp dụng thống nhất tiêu chuẩn chất lượng, định mức kỹ thuật của

chủng loại sản phẩm;

d) Thống nhất các điều kiện thực hiện hợp đồng, giao hàng, thanh toán nhưng không

liên quan đến giá và các yếu tố của giá.

2. Thỏa thuận lao động, thỏa thuận hợp tác trong các ngành, lĩnh vực đặc thù được

thực hiện theo quy định của luật khác thì thực hiện theo quy định của luật đó".'

- '"Điều 2. Sửa đổi, bổ sung một số điều của Nghị định số 15/2019/NĐ-CP ngày 01

tháng 02 năm 2019 của Chính phủ quy định chi tiết một số điều và biện pháp thi

hành Luật Giáo dục nghề nghiệp

...

12. Sửa đổi, bổ sung Điều 24 như sau:

Điều 24. Thẩm quyền cấp giấy chứng nhận đăng ký hoạt động liên kết đào tạo với

nước ngoài

1. Tổng cục Giáo dục nghề nghiệp cấp giấy chứng nhận đăng ký hoạt động liên kết

đào tạo với nước ngoài đối với trường cao đẳng.

2. Sở Lao động - Thương binh và Xã hội nơi trường trung cấp, trung tâm giáo dục

nghề nghiệp, trung tâm giáo dục nghề nghiệp - giáo dục thường xuyên và doanh nghiệp

tổ chức hoạt động liên kết đào tạo với nước ngoài cấp giấy chứng nhận đăng ký

hoạt động liên kết đào tạo với nước ngoài đối với trường trung cấp, trung tâm

giáo dục nghề nghiệp, trung tâm giáo dục nghề nghiệp - giáo dục thường xuyên và

doanh nghiệp."'

- source_sentence: NLĐ ký nhiều hợp đồng lao động thì đóng BHYT như thế nào?

sentences:

- 'Hồ sơ, thủ tục xác định trường hợp được bồi thường

[...]

3. Trong thời hạn 05 ngày làm việc, kể từ ngày nhận được đơn và các giấy tờ hợp

lệ, nếu xác định yêu cầu thuộc trách nhiệm giải quyết của mình thì Sở Y tế phải

thụ lý và thông báo bằng văn bản về việc thụ lý đơn cho người bị thiệt hại hoặc

thân nhân của người bị thiệt hại (sau đây gọi tắt là người bị thiệt hại). Trường

hợp hồ sơ không đầy đủ thì Sở Y tế có văn bản hướng dẫn người bị thiệt hại bổ

sung.

4. Trong thời hạn 15 ngày, kể từ ngày nhận được đơn yêu cầu của người bị thiệt

hại, Sở Y tế phải hoàn thành việc xác định nguyên nhân gây tai biến, mức độ tổn

thương và thông báo bằng văn bản cho người yêu cầu đồng thời báo cáo Bộ Y tế.'

- 'Chuyển nhượng quyền thăm dò khoáng sản

1. Tổ chức, cá nhân nhận chuyển nhượng quyền thăm dò khoáng sản phải có đủ điều

kiện để được cấp Giấy phép thăm dò khoáng sản theo quy định của Luật này.

2. Việc chuyển nhượng quyền thăm dò khoáng sản phải được cơ quan quản lý nhà nước

có thẩm quyền cấp Giấy phép thăm dò khoáng sản chấp thuận; trường hợp được chấp

thuận, tổ chức, cá nhân nhận chuyển nhượng quyền thăm dò khoáng sản được cấp Giấy

phép thăm dò khoáng sản mới.

3. Tổ chức, cá nhân chuyển nhượng quyền thăm dò khoáng sản đã thực hiện được ít

nhất 50% dự toán của đề án thăm dò khoáng sản.

4. Chính phủ quy định chi tiết việc chuyển nhượng quyền thăm dò khoáng sản.'

- '"Sửa đổi, bổ sung một số điều của Luật bảo hiểm y tế:

...

6. Sửa đổi, bổ sung Điều 12 như sau:

“Điều 12. Đối tượng tham gia bảo hiểm y tế

1. Nhóm do người lao động và người sử dụng lao động đóng, bao gồm:

a) Người lao động làm việc theo hợp đồng lao động không xác định thời hạn, hợp

đồng lao động có thời hạn từ đủ 3 tháng trở lên; người lao động là người quản

lý doanh nghiệp hưởng tiền lương; cán bộ, công chức, viên chức (sau đây gọi chung

là người lao động);

b) Người hoạt động không chuyên trách ở xã, phường, thị trấn theo quy định của

pháp luật.=

...

4. Nhóm được ngân sách nhà nước hỗ trợ mức đóng, bao gồm:

a) Người thuộc hộ gia đình cận nghèo;

b) Học sinh, sinh viên.

5. Nhóm tham gia bảo hiểm y tế theo hộ gia đình gồm những người thuộc hộ gia đình,

trừ đối tượng quy định tại các khoản 1, 2, 3 và 4 Điều này.

6. Chính phủ quy định các đối tượng khác ngoài các đối tượng quy định tại các

khoản 3, 4 và 5 Điều này; quy định việc cấp thẻ bảo hiểm y tế đối với đối tượng

do Bộ Quốc phòng, Bộ Công an quản lý và đối tượng quy định tại điểm 1 khoản 3

Điều này; quy định lộ trình thực hiện bảo hiểm y tế, phạm vi quyền lợi, mức hưởng

bảo hiểm y tế, khám bệnh, chữa bệnh bảo hiểm y tế, quản lý, sử dụng phần kinh

phí dành cho khám bệnh, chữa bệnh bảo hiểm y tế, giám định bảo hiểm y tế, thanh

toán, quyết toán bảo hiểm y tế đối với các đối tượng quy định tại điểm a khoản

3 Điều này.”'

---

# SentenceTransformer based on keepitreal/vietnamese-sbert

This is a [sentence-transformers](https://www.SBERT.net) model finetuned from [keepitreal/vietnamese-sbert](https://huggingface.co/keepitreal/vietnamese-sbert) on the csv dataset. It maps sentences & paragraphs to a 768-dimensional dense vector space and can be used for semantic textual similarity, semantic search, paraphrase mining, text classification, clustering, and more.

## Model Details

### Model Description

- **Model Type:** Sentence Transformer

- **Base model:** [keepitreal/vietnamese-sbert](https://huggingface.co/keepitreal/vietnamese-sbert) <!-- at revision a9467ef2ef47caa6448edeabfd8e5e5ce0fa2a23 -->

- **Maximum Sequence Length:** 256 tokens

- **Output Dimensionality:** 768 dimensions

- **Similarity Function:** Cosine Similarity

- **Training Dataset:**

- csv

<!-- - **Language:** Unknown -->

<!-- - **License:** Unknown -->

### Model Sources

- **Documentation:** [Sentence Transformers Documentation](https://sbert.net)

- **Repository:** [Sentence Transformers on GitHub](https://github.com/UKPLab/sentence-transformers)

- **Hugging Face:** [Sentence Transformers on Hugging Face](https://huggingface.co/models?library=sentence-transformers)

### Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 256, 'do_lower_case': False}) with Transformer model: RobertaModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False, 'pooling_mode_weightedmean_tokens': False, 'pooling_mode_lasttoken': False, 'include_prompt': True})

)

```
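The `Pooling` module above has only `pooling_mode_mean_tokens` enabled, so each sentence vector is the average of its token vectors. The following is a minimal NumPy sketch of that idea, assuming padding positions are marked with 0 in an attention mask; the function name `mean_pool` and the toy inputs are illustrative and not part of the library.

```python
import numpy as np


def mean_pool(token_embeddings: np.ndarray, attention_mask: np.ndarray) -> np.ndarray:
    """Average token vectors over non-padding positions.

    token_embeddings: (batch, seq_len, hidden) array of per-token vectors.
    attention_mask:   (batch, seq_len) array, 1 for real tokens and 0 for padding.
    """
    mask = attention_mask[..., None].astype(token_embeddings.dtype)  # (batch, seq_len, 1)
    summed = (token_embeddings * mask).sum(axis=1)                   # (batch, hidden)
    counts = np.clip(mask.sum(axis=1), 1e-9, None)                   # avoid division by zero
    return summed / counts


# Toy input: 2 sentences, 4 token positions, 768-dimensional token vectors.
rng = np.random.default_rng(0)
tokens = rng.normal(size=(2, 4, 768)).astype(np.float32)
mask = np.array([[1, 1, 1, 0], [1, 1, 0, 0]])

pooled = mean_pool(tokens, mask)
print(pooled.shape)
```

Masking before averaging matters because padded positions would otherwise pull short sentences toward whatever vector the padding token produces.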

## Usage

### Direct Usage (Sentence Transformers)

First install the Sentence Transformers library:

```bash

pip install -U sentence-transformers

```

Then you can load this model and run inference.

```python

from sentence_transformers import SentenceTransformer

# Download from the 🤗 Hub

model = SentenceTransformer("Cloyne/vietnamese-sbert")

# Run inference

sentences = [

'NLĐ ký nhiều hợp đồng lao động thì đóng BHYT như thế nào?',

'"Sửa đổi, bổ sung một số điều của Luật bảo hiểm y tế:\n...\n6. Sửa đổi, bổ sung Điều 12 như sau:\n“Điều 12. Đối tượng tham gia bảo hiểm y tế\n1. Nhóm do người lao động và người sử dụng lao động đóng, bao gồm:\na) Người lao động làm việc theo hợp đồng lao động không xác định thời hạn, hợp đồng lao động có thời hạn từ đủ 3 tháng trở lên; người lao động là người quản lý doanh nghiệp hưởng tiền lương; cán bộ, công chức, viên chức (sau đây gọi chung là người lao động);\nb) Người hoạt động không chuyên trách ở xã, phường, thị trấn theo quy định của pháp luật.=\n...\n4. Nhóm được ngân sách nhà nước hỗ trợ mức đóng, bao gồm:\na) Người thuộc hộ gia đình cận nghèo;\nb) Học sinh, sinh viên.\n5. Nhóm tham gia bảo hiểm y tế theo hộ gia đình gồm những người thuộc hộ gia đình, trừ đối tượng quy định tại các khoản 1, 2, 3 và 4 Điều này.\n6. Chính phủ quy định các đối tượng khác ngoài các đối tượng quy định tại các khoản 3, 4 và 5 Điều này; quy định việc cấp thẻ bảo hiểm y tế đối với đối tượng do Bộ Quốc phòng, Bộ Công an quản lý và đối tượng quy định tại điểm 1 khoản 3 Điều này; quy định lộ trình thực hiện bảo hiểm y tế, phạm vi quyền lợi, mức hưởng bảo hiểm y tế, khám bệnh, chữa bệnh bảo hiểm y tế, quản lý, sử dụng phần kinh phí dành cho khám bệnh, chữa bệnh bảo hiểm y tế, giám định bảo hiểm y tế, thanh toán, quyết toán bảo hiểm y tế đối với các đối tượng quy định tại điểm a khoản 3 Điều này.”',

'Hồ sơ, thủ tục xác định trường hợp được bồi thường\n[...]\n3. Trong thời hạn 05 ngày làm việc, kể từ ngày nhận được đơn và các giấy tờ hợp lệ, nếu xác định yêu cầu thuộc trách nhiệm giải quyết của mình thì Sở Y tế phải thụ lý và thông báo bằng văn bản về việc thụ lý đơn cho người bị thiệt hại hoặc thân nhân của người bị thiệt hại (sau đây gọi tắt là người bị thiệt hại). Trường hợp hồ sơ không đầy đủ thì Sở Y tế có văn bản hướng dẫn người bị thiệt hại bổ sung.\n4. Trong thời hạn 15 ngày, kể từ ngày nhận được đơn yêu cầu của người bị thiệt hại, Sở Y tế phải hoàn thành việc xác định nguyên nhân gây tai biến, mức độ tổn thương và thông báo bằng văn bản cho người yêu cầu đồng thời báo cáo Bộ Y tế.',

]

embeddings = model.encode(sentences)

print(embeddings.shape)

# [3, 768]

# Get the similarity scores for the embeddings

similarities = model.similarity(embeddings, embeddings)

print(similarities.shape)

# [3, 3]

```
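Since the card lists cosine similarity as the similarity function, a common next step is to treat the first sentence as a query and rank the remaining passages against it. The sketch below shows that ranking with plain NumPy. The random vectors only stand in for the output of `model.encode`, and the helper names `cosine_similarity_matrix` and `rank_passages` are hypothetical.

```python
import numpy as np


def cosine_similarity_matrix(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between the rows of a and the rows of b."""
    a_norm = a / np.linalg.norm(a, axis=1, keepdims=True)
    b_norm = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a_norm @ b_norm.T


def rank_passages(query_embedding: np.ndarray, passage_embeddings: np.ndarray):
    """Return passage indices sorted from most to least similar, with their scores."""
    scores = cosine_similarity_matrix(query_embedding[None, :], passage_embeddings)[0]
    order = np.argsort(-scores)
    return order, scores[order]


# Placeholder embeddings with the same shape as the example above (3 sentences, 768 dims).
rng = np.random.default_rng(0)
embeddings = rng.normal(size=(3, 768)).astype(np.float32)

# Row 0 plays the role of the question, rows 1 and 2 the candidate passages.
order, scores = rank_passages(embeddings[0], embeddings[1:])
for idx, score in zip(order, scores):
    print(f"passage {idx}: cosine similarity {score:.4f}")
```

With real embeddings from this model, the passage that answers the question would be expected to score higher than the unrelated one, although the card reports no evaluation metrics to confirm this.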

<!--

### Direct Usage (Transformers)

<details><summary>Click to see the direct usage in Transformers</summary>

</details>

-->

<!--

### Downstream Usage (Sentence Transformers)

You can finetune this model on your own dataset.

<details><summary>Click to expand</summary>

</details>

-->

<!--

### Out-of-Scope Use

*List how the model may foreseeably be misused and address what users ought not to do with the model.*

-->

<!--

## Bias, Risks and Limitations

*What are the known or foreseeable issues stemming from this model? You could also flag here known failure cases or weaknesses of the model.*

-->

<!--

### Recommendations

*What are recommendations with respect to the foreseeable issues? For example, filtering explicit content.*

-->

## Training Details

### Training Dataset

#### csv

* Dataset: csv

* Size: 120,210 training samples

* Columns: <code>anchor</code> and <code>positive</code>

* Approximate statistics based on the first 1000 samples:

| | anchor | positive |

|:--------|:----------------------------------------------------------------------------------|:-------------------------------------------------------------------------------------|

| type | string | string |

| details | <ul><li>min: 8 tokens</li><li>mean: 25.08 tokens</li><li>max: 49 tokens</li></ul> | <ul><li>min: 21 tokens</li><li>mean: 206.98 tokens</li><li>max: 256 tokens</li></ul> |

* Samples:

| anchor | positive |

|:--------------------------------------------------------------------------------------------------------------------------------------------------------|:--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| <code>Nội dung lồng ghép vấn đề bình đẳng giới trong xây dựng văn bản quy phạm pháp luật được quy định thế nào?</code> | <code>Nội dung lồng ghép vấn đề bình đẳng giới trong xây dựng văn bản quy phạm pháp luật<br>Trong phạm vi điều chỉnh của văn bản quy phạm pháp luật:<br>1. Xác định nội dung liên quan đến vấn đề bình đẳng giới hoặc vấn đề bất bình đẳng giới, phân biệt đối xử về giới.<br>2. Quy định các biện pháp cần thiết để thực hiện bình đẳng giới hoặc để giải quyết vấn đề bất bình đẳng giới, phân biệt đối xử về giới; dự báo tác động của các quy định đó đối với nam và nữ sau khi được ban hành.<br>3. Xác định nguồn nhân lực, tài chính cần thiết để triển khai các biện pháp thực hiện bình đẳng giới hoặc để giải quyết vấn đề bất bình đẳng giới, phân biệt đối xử về giới.</code> |

| <code>Điều kiện để giáo viên trong cơ sở giáo dục mầm non, tiểu học ngoài công lập bị ảnh hưởng bởi Covid-19 được hưởng chính sách hỗ trợ là gì?</code> | <code>Điều kiện được hưởng<br>Cán bộ quản lý, giáo viên, nhân viên được hưởng chính sách khi bảo đảm các điều kiện sau:<br>1. Là người đang làm việc tại cơ sở giáo dục ngoài công lập trước khi cơ sở phải tạm dừng hoạt động theo yêu cầu của cơ quan nhà nước có thẩm quyền để phòng, chống dịch COVID-19 tính từ ngày 01 tháng 5 năm 2021 đến hết ngày 31 tháng 12 năm 2021.<br>2. Nghỉ việc không hưởng lương từ 01 tháng trở lên tính từ ngày 01 tháng 5 năm 2021 đến hết ngày 31 tháng 12 năm 2021.<br>3. Chưa được hưởng chính sách hỗ trợ đối với người lao động tạm hoãn hợp đồng lao động, nghỉ việc không hưởng lương theo quy định tại khoản 4, khoản 5, khoản 6 Mục II Nghị quyết số 68/NQ-CP ngày 01 tháng 7 năm 2021 của Chính phủ về một số chính sách hỗ trợ người lao động và người sử dụng lao động gặp khó khăn do đại dịch COVID-19, Nghị quyết số 126/NQ-CP ngày 08 tháng 10 năm 2021 của Chính phủ sửa đổi, bổ sung Nghị quyết số 68/NQ-CP ngày 01 tháng 7 năm 2021 của Chính phủ về một số chính sách hỗ trợ người lao động và người sử dụng lao động gặp khó khăn do đại dịch COVID-19 (sau đây gọi tắt là Nghị quyết số 68/NQ-CP) do không tham gia Bảo hiểm xã hội bắt buộc.<br>4. Có xác nhận làm việc tại cơ sở giáo dục ngoài công lập ít nhất hết năm học 2021 - 2022 theo kế hoạch năm học của địa phương, bao gồm cơ sở giáo dục ngoài công lập đã làm việc trước đây hoặc cơ sở giáo dục ngoài công lập khác trong trường hợp cơ sở giáo dục ngoài công lập trước đây làm việc không hoạt động trở lại.</code> |

| <code>Nguyên tắc áp dụng phụ cấp ưu đãi nghề y tế thế nào?</code> | <code>Nguyên tắc áp dụng<br>1. Trường hợp công chức, viên chức chuyên môn y tế thuộc đối tượng được hưởng các mức phụ cấp ưu đãi theo nghề khác nhau thì được hưởng một mức phụ cấp ưu đãi theo nghề cao nhất.<br>2. Công chức, viên chức đã hưởng phụ cấp ưu đãi theo nghề quy định tại Thông tư liên tịch số 06/2010/TTLT-BYT-BNV-BTC ngày 22/3/2010 của Bộ Y tế, Bộ Nội vụ, Bộ Tài chính hướng dẫn thực hiện Nghị định số 64/2009/NĐ-CP ngày 30/7/2009 của Chính phủ về chính sách đối với cán bộ, viên chức y tế công tác ở vùng có điều kiện kinh tế - xã hội đặc biệt khó khăn thì không hưởng phụ cấp ưu đãi theo nghề quy định tại Thông tư liên tịch này.</code> |

* Loss: [<code>MultipleNegativesRankingLoss</code>](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#multiplenegativesrankingloss) with these parameters:

```json

{

"scale": 20.0,

"similarity_fct": "cos_sim"

}

```
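To make the two parameters concrete: with in-batch negatives, every other `positive` in the batch serves as a negative for a given `anchor`, and the loss is a cross-entropy over cosine similarities multiplied by `scale`. The following NumPy sketch illustrates that computation under those assumptions. It is not the library's implementation, and the function name is chosen here for illustration.

```python
import numpy as np


def multiple_negatives_ranking_loss(anchors: np.ndarray, positives: np.ndarray, scale: float = 20.0) -> float:
    """Cross-entropy over scaled cosine similarities with in-batch negatives.

    Row i of `positives` is the correct match for row i of `anchors`;
    every other row in the batch is treated as a negative.
    """
    a = anchors / np.linalg.norm(anchors, axis=1, keepdims=True)
    p = positives / np.linalg.norm(positives, axis=1, keepdims=True)
    logits = scale * (a @ p.T)                            # (batch, batch) scaled cos_sim
    logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_probs)))            # target for row i is column i


rng = np.random.default_rng(0)
anchors = rng.normal(size=(4, 768))
positives = anchors + 0.01 * rng.normal(size=(4, 768))    # well-aligned pairs

aligned_loss = multiple_negatives_ranking_loss(anchors, positives)
shuffled_loss = multiple_negatives_ranking_loss(anchors, np.roll(positives, 1, axis=0))
print(aligned_loss, shuffled_loss)
```

Because negatives come from the rest of the batch, a batch that contains the same passage twice would penalize a correct match, which is presumably why the training run uses the `no_duplicates` batch sampler.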

### Evaluation Dataset

#### train

* Dataset: train

* Size: 13,357 evaluation samples

* Columns: <code>anchor</code> and <code>positive</code>

* Approximate statistics based on the first 1000 samples:

| | anchor | positive |

|:--------|:----------------------------------------------------------------------------------|:-------------------------------------------------------------------------------------|

| type | string | string |

| details | <ul><li>min: 7 tokens</li><li>mean: 24.61 tokens</li><li>max: 51 tokens</li></ul> | <ul><li>min: 17 tokens</li><li>mean: 202.71 tokens</li><li>max: 256 tokens</li></ul> |

* Samples:

| anchor | positive |

|:-------------------------------------------------------------------------------------------------------------------------------------------|:----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| <code>Toà án cấp nào có thẩm quyền giải quyết việc đòi tài sản đã cho người khác vay theo hợp đồng cho vay?</code> | <code>"Điều 35. Thẩm quyền của Tòa án nhân dân cấp huyện<br>1. Tòa án nhân dân cấp huyện có thẩm quyền giải quyết theo thủ tục sơ thẩm những tranh chấp sau đây:<br>a) Tranh chấp về dân sự, hôn nhân và gia đình quy định tại Điều 26 và Điều 28 của Bộ luật này, trừ tranh chấp quy định tại khoản 7 Điều 26 của Bộ luật này;<br>b) Tranh chấp về kinh doanh, thương mại quy định tại khoản 1 Điều 30 của Bộ luật này;<br>c) Tranh chấp về lao động quy định tại Điều 32 của Bộ luật này.<br>2. Tòa án nhân dân cấp huyện có thẩm quyền giải quyết những yêu cầu sau đây:<br>a) Yêu cầu về dân sự quy định tại các khoản 1, 2, 3, 4, 6, 7, 8, 9 và 10 Điều 27 của Bộ luật này;<br>b) Yêu cầu về hôn nhân và gia đình quy định tại các khoản 1, 2, 3, 4, 5, 6, 7, 8, 10 và 11 Điều 29 của Bộ luật này;<br>c) Yêu cầu về kinh doanh, thương mại quy định tại khoản 1 và khoản 6 Điều 31 của Bộ luật này;<br>d) Yêu cầu về lao động quy định tại khoản 1 và khoản 5 Điều 33 của Bộ luật này.<br>3. Những tranh chấp, yêu cầu quy định tại khoản 1 và khoản 2 Điều này mà có đương sự hoặc tài sản ở nước ngoài hoặc cần phải ủy thác tư pháp cho cơ quan đại diện nước Cộng hòa xã hội chủ nghĩa Việt Nam ở nước ngoài, cho Tòa án, cơ quan có thẩm quyền của nước ngoài không thuộc thẩm quyền giải quyết của Tòa án nhân dân cấp huyện, trừ trường hợp quy định tại khoản 4 Điều này.<br>4. Tòa án nhân dân cấp huyện nơi cư trú của công dân Việt Nam hủy việc kết hôn trái pháp luật, giải quyết việc ly hôn, các tranh chấp về quyền và nghĩa vụ của vợ chồng, cha mẹ và con, về nhận cha, mẹ, con, nuôi con nuôi và giám hộ giữa công dân Việt Nam cư trú ở khu vực biên giới với công dân của nước láng giềng cùng cư trú ở khu vực biên giới với Việt Nam theo quy định của Bộ luật này và các quy định khác của pháp luật Việt Nam."</code> |

| <code>Những phiếu bầu nào được xem là không hợp lệ?</code> | <code>Phiếu bầu không hợp lệ<br>1. Những phiếu bầu sau đây là phiếu bầu không hợp lệ:<br>a) Phiếu không theo mẫu quy định do Tổ bầu cử phát ra;<br>b) Phiếu không có dấu của Tổ bầu cử;<br>c) Phiếu để số người được bầu nhiều hơn số lượng đại biểu được bầu đã ấn định cho đơn vị bầu cử;<br>d) Phiếu gạch xóa hết tên những người ứng cử;<br>đ) Phiếu ghi thêm tên người ngoài danh sách những người ứng cử hoặc phiếu có ghi thêm nội dung khác.<br>2. Trường hợp có phiếu bầu được cho là không hợp lệ thì Tổ trường Tổ bầu cử đưa ra để toàn Tổ xem xét, quyết định. Tổ bầu cử không được gạch xóa hoặc sửa các tên ghi trên phiếu bầu.</code> |

| <code>Đề nghị tạm đình chỉ chấp hành quyết định áp dụng biện pháp đưa vào trường giáo dưỡng cho học sinh cần đảm bảo nguyên tắc gì?</code> | <code>Nguyên tắc xét duyệt, đề nghị giảm thời hạn, tạm đình chỉ chấp hành quyết định, miễn chấp hành phần thời gian còn lại cho học sinh trường giáo dưỡng, trại viên cơ sở giáo dục bắt buộc<br>1. Tuân thủ quy định của pháp luật về thi hành biện pháp xử lý hành chính đưa vào trường giáo dưỡng, cơ sở giáo dục bắt buộc, quy định tại Thông tư này và quy định của pháp luật có liên quan.<br>2. Bảo đảm khách quan, công khai, minh bạch, đúng trình tự, thủ tục, thẩm quyền; tôn trọng và bảo vệ quyền, lợi ích hợp pháp của học sinh trường giáo dưỡng, trại viên cơ sở giáo dục bắt buộc.</code> |

* Loss: [<code>MultipleNegativesRankingLoss</code>](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#multiplenegativesrankingloss) with these parameters:

```json

{

"scale": 20.0,

"similarity_fct": "cos_sim"

}

```

### Training Hyperparameters

#### Non-Default Hyperparameters

- `eval_strategy`: steps

- `per_device_train_batch_size`: 16

- `per_device_eval_batch_size`: 32

- `num_train_epochs`: 4

- `warmup_ratio`: 0.1

- `fp16`: True

- `batch_sampler`: no_duplicates
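As a rough illustration of what `warmup_ratio: 0.1` implies, the sketch below estimates the number of optimizer steps and the learning rate at a given step. It assumes a single device, the 120,210 training samples stated above, and the `learning_rate` of 5e-05 and `linear` scheduler listed under All Hyperparameters. The `no_duplicates` sampler may change the real number of batches slightly, and the helper names are hypothetical.

```python
import math


def total_training_steps(num_samples: int, batch_size: int, num_epochs: int) -> int:
    """Optimizer steps for a single device without gradient accumulation."""
    return math.ceil(num_samples / batch_size) * num_epochs


def linear_warmup_decay_lr(step: int, total_steps: int, warmup_steps: int, peak_lr: float) -> float:
    """Linear ramp from 0 to peak_lr, then linear decay back to 0."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)


total_steps = total_training_steps(num_samples=120_210, batch_size=16, num_epochs=4)
warmup_steps = math.ceil(0.1 * total_steps)

print(total_steps, warmup_steps)
for step in (0, warmup_steps // 2, warmup_steps, total_steps // 2, total_steps):
    print(step, linear_warmup_decay_lr(step, total_steps, warmup_steps, peak_lr=5e-5))
```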

#### All Hyperparameters

<details><summary>Click to expand</summary>

- `overwrite_output_dir`: False

- `do_predict`: False

- `eval_strategy`: steps

- `prediction_loss_only`: True

- `per_device_train_batch_size`: 16

- `per_device_eval_batch_size`: 32

- `per_gpu_train_batch_size`: None

- `per_gpu_eval_batch_size`: None

- `gradient_accumulation_steps`: 1

- `eval_accumulation_steps`: None

- `torch_empty_cache_steps`: None

- `learning_rate`: 5e-05

- `weight_decay`: 0.0

- `adam_beta1`: 0.9

- `adam_beta2`: 0.999

- `adam_epsilon`: 1e-08

- `max_grad_norm`: 1.0

- `num_train_epochs`: 4

- `max_steps`: -1

- `lr_scheduler_type`: linear

- `lr_scheduler_kwargs`: {}

- `warmup_ratio`: 0.1

- `warmup_steps`: 0

- `log_level`: passive

- `log_level_replica`: warning

- `log_on_each_node`: True

- `logging_nan_inf_filter`: True

- `save_safetensors`: True

- `save_on_each_node`: False

- `save_only_model`: False

- `restore_callback_states_from_checkpoint`: False

- `no_cuda`: False

- `use_cpu`: False

- `use_mps_device`: False

- `seed`: 42

- `data_seed`: None

- `jit_mode_eval`: False

- `use_ipex`: False

- `bf16`: False

- `fp16`: True

- `fp16_opt_level`: O1

- `half_precision_backend`: auto

- `bf16_full_eval`: False

- `fp16_full_eval`: False

- `tf32`: None

- `local_rank`: 0

- `ddp_backend`: None

- `tpu_num_cores`: None

- `tpu_metrics_debug`: False

- `debug`: []

- `dataloader_drop_last`: False

- `dataloader_num_workers`: 0

- `dataloader_prefetch_factor`: None

- `past_index`: -1

- `disable_tqdm`: False

- `remove_unused_columns`: True

- `label_names`: None

- `load_best_model_at_end`: False

- `ignore_data_skip`: False

- `fsdp`: []

- `fsdp_min_num_params`: 0

- `fsdp_config`: {'min_num_params': 0, 'xla': False, 'xla_fsdp_v2': False, 'xla_fsdp_grad_ckpt': False}

- `fsdp_transformer_layer_cls_to_wrap`: None

- `accelerator_config`: {'split_batches': False, 'dispatch_batches': None, 'even_batches': True, 'use_seedable_sampler': True, 'non_blocking': False, 'gradient_accumulation_kwargs': None}

- `deepspeed`: None

- `label_smoothing_factor`: 0.0

- `optim`: adamw_torch

- `optim_args`: None

- `adafactor`: False

- `group_by_length`: False

- `length_column_name`: length

- `ddp_find_unused_parameters`: None

- `ddp_bucket_cap_mb`: None

- `ddp_broadcast_buffers`: False

- `dataloader_pin_memory`: True

- `dataloader_persistent_workers`: False

- `skip_memory_metrics`: True

- `use_legacy_prediction_loop`: False

- `push_to_hub`: False

- `resume_from_checkpoint`: None

- `hub_model_id`: None

- `hub_strategy`: every_save

- `hub_private_repo`: False

- `hub_always_push`: False

- `gradient_checkpointing`: False

- `gradient_checkpointing_kwargs`: None

- `include_inputs_for_metrics`: False

- `eval_do_concat_batches`: True

- `fp16_backend`: auto

- `push_to_hub_model_id`: None

- `push_to_hub_organization`: None

- `mp_parameters`:

- `auto_find_batch_size`: False

- `full_determinism`: False

- `torchdynamo`: None

- `ray_scope`: last

- `ddp_timeout`: 1800

- `torch_compile`: False

- `torch_compile_backend`: None

- `torch_compile_mode`: None

- `dispatch_batches`: None

- `split_batches`: None

- `include_tokens_per_second`: False

- `include_num_input_tokens_seen`: False

- `neftune_noise_alpha`: None

- `optim_target_modules`: None

- `batch_eval_metrics`: False

- `eval_on_start`: False

- `use_liger_kernel`: False

- `eval_use_gather_object`: False

- `batch_sampler`: no_duplicates

- `multi_dataset_batch_sampler`: proportional

</details>
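With `lr_scheduler_type: linear`, `warmup_ratio: 0.1` and `learning_rate: 5e-05`, the learning rate ramps up linearly over the first 10% of optimizer steps and then decays linearly to zero. The sketch below reproduces that shape in plain Python. The total of 15,028 steps is an assumption (4 epochs at roughly 3,757 steps per epoch, inferred from the training logs), and `linear_lr` is a hypothetical helper, not a Transformers function.

```python
import math

def linear_lr(step, total_steps, base_lr=5e-05, warmup_ratio=0.1):
    """Learning rate at `step` for linear warmup followed by linear decay to zero."""
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    decay_steps = max(1, total_steps - warmup_steps)
    return base_lr * max(0.0, (total_steps - step) / decay_steps)

TOTAL_STEPS = 15028  # assumed: 4 epochs x ~3757 steps per epoch
for step in (0, 500, 1503, 7500, 15028):
    print(step, f"{linear_lr(step, TOTAL_STEPS):.2e}")
```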

### Training Logs

| Epoch | Step | Training Loss | train loss |
|:------:|:-----:|:-------------:|:----------:|
| 0.1331 | 500 | 0.3247 | 0.2239 |
| 0.2662 | 1000 | 0.1513 | 0.1605 |
| 0.3993 | 1500 | 0.119 | 0.1664 |
| 0.5323 | 2000 | 0.1047 | 0.1384 |
| 0.6654 | 2500 | 0.0915 | 0.1269 |
| 0.7985 | 3000 | 0.0861 | 0.1140 |
| 0.9316 | 3500 | 0.0839 | 0.1091 |
| 1.0647 | 4000 | 0.0693 | 0.0989 |
| 1.1978 | 4500 | 0.0582 | 0.0931 |
| 1.3308 | 5000 | 0.0457 | 0.0953 |
| 1.4639 | 5500 | 0.0284 | 0.0826 |
| 1.5970 | 6000 | 0.0233 | 0.0848 |
| 1.7301 | 6500 | 0.0256 | 0.0785 |
| 1.8632 | 7000 | 0.0236 | 0.0829 |
| 1.9963 | 7500 | 0.0203 | 0.0827 |
| 2.1294 | 8000 | 0.0182 | 0.0730 |
| 2.2624 | 8500 | 0.0143 | 0.0718 |
| 2.3955 | 9000 | 0.0103 | 0.0720 |
| 2.5286 | 9500 | 0.0086 | 0.0720 |
| 2.6617 | 10000 | 0.0058 | 0.0706 |
| 2.7948 | 10500 | 0.0074 | 0.0675 |
| 2.9279 | 11000 | 0.0073 | 0.0650 |
| 3.0610 | 11500 | 0.0054 | 0.0651 |
| 3.1940 | 12000 | 0.0043 | 0.0639 |
| 3.3271 | 12500 | 0.004 | 0.0626 |
| 3.4602 | 13000 | 0.0035 | 0.0617 |
| 3.5933 | 13500 | 0.0022 | 0.0614 |
| 3.7264 | 14000 | 0.003 | 0.0624 |
| 3.8595 | 14500 | 0.0022 | 0.0616 |
| 3.9925 | 15000 | 0.0028 | 0.0606 |
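The Epoch column is the step count divided by the number of optimizer steps per epoch, so the logs can be used to back out the approximate size of the run. The sketch below does that arithmetic. The figure of 3,757 steps per epoch is inferred from the table rather than stated in the card, and the sample estimate assumes a single device with `gradient_accumulation_steps` of 1.

```python
# (epoch, step) pairs copied from three rows of the training log
logged = [(0.1331, 500), (1.0647, 4000), (3.9925, 15000)]

# each row gives an estimate of steps per epoch; the last row is the most precise
estimates = [step / epoch for epoch, step in logged]
steps_per_epoch = round(estimates[-1])

batch_size = 16  # per_device_train_batch_size from the card
approx_samples = steps_per_epoch * batch_size  # upper bound, assumes one device

print(steps_per_epoch, approx_samples)
```

The result is only an upper bound on the number of training pairs: the last batch of an epoch may be partial, and the `no_duplicates` sampler can change how pairs are grouped into batches.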

### Framework Versions

- Python: 3.10.14

- Sentence Transformers: 3.2.1

- Transformers: 4.45.1

- PyTorch: 2.4.0

- Accelerate: 0.34.2

- Datasets: 3.0.1

- Tokenizers: 0.20.0

## Citation

### BibTeX

#### Sentence Transformers

```bibtex
@inproceedings{reimers-2019-sentence-bert,
    title = "Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks",
    author = "Reimers, Nils and Gurevych, Iryna",
    booktitle = "Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing",
    month = "11",
    year = "2019",
    publisher = "Association for Computational Linguistics",
    url = "https://arxiv.org/abs/1908.10084",
}
```

#### MultipleNegativesRankingLoss

```bibtex
@misc{henderson2017efficient,
    title={Efficient Natural Language Response Suggestion for Smart Reply},
    author={Matthew Henderson and Rami Al-Rfou and Brian Strope and Yun-hsuan Sung and Laszlo Lukacs and Ruiqi Guo and Sanjiv Kumar and Balint Miklos and Ray Kurzweil},
    year={2017},
    eprint={1705.00652},
    archivePrefix={arXiv},
    primaryClass={cs.CL}
}
```

<!--

## Glossary

*Clearly define terms in order to be accessible across audiences.*

-->

<!--

## Model Card Authors

*Lists the people who create the model card, providing recognition and accountability for the detailed work that goes into its construction.*

-->

<!--

## Model Card Contact

*Provides a way for people who have updates to the Model Card, suggestions, or questions, to contact the Model Card authors.*

--> |

g-assismoraes/deberta-large-semeval25_EN08_fold3 | g-assismoraes | 2024-10-28T14:37:33Z | 5 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"deberta-v2",

"text-classification",

"generated_from_trainer",

"base_model:microsoft/deberta-v3-large",

"base_model:finetune:microsoft/deberta-v3-large",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2024-10-28T14:23:46Z | ---

library_name: transformers

license: mit

base_model: microsoft/deberta-v3-large

tags:

- generated_from_trainer

model-index:

- name: deberta-large-semeval25_EN08_fold3

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# deberta-large-semeval25_EN08_fold3

This model is a fine-tuned version of [microsoft/deberta-v3-large](https://huggingface.co/microsoft/deberta-v3-large) on an unspecified dataset (the auto-generated card records the dataset as `None`).

It achieves the following results on the evaluation set:

- Loss: 8.2442

- Precision Samples: 0.1144

- Recall Samples: 0.7997

- F1 Samples: 0.1930

- Precision Macro: 0.3896

- Recall Macro: 0.6167

- F1 Macro: 0.2236

- Precision Micro: 0.1104

- Recall Micro: 0.7507

- F1 Micro: 0.1924

- Precision Weighted: 0.2237

- Recall Weighted: 0.7507

- F1 Weighted: 0.2130
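The averaging modes listed above differ in how per-label counts are combined in a multi-label setting. As a hedged illustration, the sketch below computes micro- and macro-averaged F1 from scratch on a tiny made-up label matrix. The data and helper names are hypothetical and unrelated to this model's evaluation set.

```python
def f1(tp, fp, fn):
    """F1 from raw counts; defined as 0.0 when there are no true positives."""
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

def label_counts(y_true, y_pred, label):
    """True-positive, false-positive and false-negative counts for one label column."""
    tp = sum(t[label] == 1 and p[label] == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t[label] == 0 and p[label] == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t[label] == 1 and p[label] == 0 for t, p in zip(y_true, y_pred))
    return tp, fp, fn

# rows are samples, columns are labels (toy data)
y_true = [[1, 0, 0], [0, 1, 0], [1, 0, 1]]
y_pred = [[1, 1, 0], [1, 1, 0], [1, 1, 0]]

counts = [label_counts(y_true, y_pred, j) for j in range(3)]
macro_f1 = sum(f1(*c) for c in counts) / len(counts)   # mean of per-label F1
micro_f1 = f1(*[sum(col) for col in zip(*counts)])     # F1 of the pooled counts
print(macro_f1, micro_f1)
```

The toy predictions over-assign labels, which gives high recall and low precision, the same pattern as the reported scores. As a consistency check on the card's own numbers, 2 · 0.1104 · 0.7507 / (0.1104 + 0.7507) ≈ 0.1925, which agrees with the reported F1 Micro of 0.1924 up to rounding.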

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: AdamW (`OptimizerNames.ADAMW_TORCH`) with betas=(0.9, 0.999) and epsilon=1e-08; no additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision Samples | Recall Samples | F1 Samples | Precision Macro | Recall Macro | F1 Macro | Precision Micro | Recall Micro | F1 Micro | Precision Weighted | Recall Weighted | F1 Weighted |
|:-------------:|:-----:|:----:|:---------------:|:-----------------:|:--------------:|:----------:|:---------------:|:------------:|:--------:|:---------------:|:------------:|:--------:|:------------------:|:---------------:|:-----------:|
| 8.2513 | 1.0 | 73 | 9.8237 | 0.1093 | 0.4542 | 0.1631 | 0.8860 | 0.2559 | 0.1808 | 0.1110 | 0.3399 | 0.1674 | 0.6512 | 0.3399 | 0.0922 |
| 6.8883 | 2.0 | 146 | 9.3725 | 0.1082 | 0.6445 | 0.1710 | 0.7810 | 0.3726 | 0.1997 | 0.0995 | 0.5637 | 0.1692 | 0.4732 | 0.5637 | 0.1274 |
| 8.4363 | 3.0 | 219 | 8.8450 | 0.1195 | 0.7090 | 0.1933 | 0.6684 | 0.4525 | 0.2167 | 0.1073 | 0.6374 | 0.1837 | 0.3811 | 0.6374 | 0.1603 |
| 8.6787 | 4.0 | 292 | 8.5427 | 0.1068 | 0.7465 | 0.1790 | 0.5303 | 0.5162 | 0.1950 | 0.0967 | 0.6941 | 0.1697 | 0.2823 | 0.6941 | 0.1599 |
| 6.8889 | 5.0 | 365 | 8.5407 | 0.1100 | 0.7823 | 0.1854 | 0.4867 | 0.5780 | 0.2249 | 0.1022 | 0.7337 | 0.1794 | 0.2365 | 0.7337 | 0.1842 |
| 7.9121 | 6.0 | 438 | 8.4019 | 0.1096 | 0.7957 | 0.1858 | 0.4441 | 0.5804 | 0.2166 | 0.1041 | 0.7365 | 0.1825 | 0.2387 | 0.7365 | 0.1936 |
| 7.1827 | 7.0 | 511 | 8.3315 | 0.1085 | 0.8046 | 0.1846 | 0.4158 | 0.6204 | 0.2251 | 0.1042 | 0.7507 | 0.1831 | 0.2210 | 0.7507 | 0.2018 |
| 5.9674 | 8.0 | 584 | 8.1923 | 0.1100 | 0.8047 | 0.1857 | 0.3929 | 0.6172 | 0.2292 | 0.1046 | 0.7620 | 0.1839 | 0.2236 | 0.7620 | 0.2136 |
| 6.397 | 9.0 | 657 | 8.2536 | 0.1113 | 0.8023 | 0.1884 | 0.3999 | 0.6139 | 0.2328 | 0.1077 | 0.7507 | 0.1883 | 0.2269 | 0.7507 | 0.2148 |
| 6.4848 | 10.0 | 730 | 8.2442 | 0.1144 | 0.7997 | 0.1930 | 0.3896 | 0.6167 | 0.2236 | 0.1104 | 0.7507 | 0.1924 | 0.2237 | 0.7507 | 0.2130 |

### Framework versions

- Transformers 4.46.0

- Pytorch 2.3.1

- Datasets 2.21.0

- Tokenizers 0.20.1

|

devilteo911/whisper-small-ita-ct2 | devilteo911 | 2024-10-28T14:35:05Z | 20 | 0 | ctranslate2 | [

"ctranslate2",

"audio",

"automatic-speech-recognition",

"hf-asr-leaderboard",

"it",

"en",

"arxiv:2212.04356",

"license:apache-2.0",

"region:us"

] | automatic-speech-recognition | 2024-10-28T14:21:50Z | ---

license: apache-2.0

language:

- it

- en

metrics:

- wer

pipeline_tag: automatic-speech-recognition

tags:

- audio

- automatic-speech-recognition