modelId

string | author

string | last_modified

timestamp[us, tz=UTC] | downloads

int64 | likes

int64 | library_name

string | tags

sequence | pipeline_tag

string | createdAt

timestamp[us, tz=UTC] | card

string |

|---|---|---|---|---|---|---|---|---|---|

Zhandos38/whisper-small-sber-v4 | Zhandos38 | 2024-02-05T07:04:41Z | 5 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"whisper",

"automatic-speech-recognition",

"generated_from_trainer",

"base_model:openai/whisper-small",

"base_model:finetune:openai/whisper-small",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2024-02-04T20:57:24Z | ---

license: apache-2.0

base_model: openai/whisper-small

tags:

- generated_from_trainer

metrics:

- wer

model-index:

- name: whisper-small-sber-v4

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# whisper-small-sber-v4

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3522

- Wer: 22.1427

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 32

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 4000

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 0.1636 | 1.33 | 1000 | 0.4798 | 31.6750 |

| 0.0691 | 2.67 | 2000 | 0.4455 | 30.3746 |

| 0.0212 | 4.0 | 3000 | 0.3982 | 26.7478 |

| 0.0014 | 5.33 | 4000 | 0.3522 | 22.1427 |

### Framework versions

- Transformers 4.36.2

- Pytorch 1.14.0a0+44dac51

- Datasets 2.16.1

- Tokenizers 0.15.0

|

jeevana/G8_mistral7b_qlora_1211_v02 | jeevana | 2024-02-05T07:03:34Z | 4 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-01-26T15:01:35Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

Tensorride/censorship_classifier_transformer | Tensorride | 2024-02-05T07:01:07Z | 91 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2023-07-10T18:38:23Z | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: censorship_classifier_transformer

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# censorship_classifier_transformer

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6150

- Accuracy: 0.7727

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 2 | 0.6542 | 0.5909 |

| No log | 2.0 | 4 | 0.6344 | 0.5909 |

| No log | 3.0 | 6 | 0.6212 | 0.6364 |

| No log | 4.0 | 8 | 0.6150 | 0.7727 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cpu

- Datasets 2.13.1

- Tokenizers 0.13.3

|

davidho27941/rl-course-model-20240205 | davidho27941 | 2024-02-05T06:54:02Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2024-02-05T06:43:48Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 298.77 +/- 15.86

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

CognitiveLab/Fireship-clone-hf | CognitiveLab | 2024-02-05T06:42:23Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"safetensors",

"llama",

"text-generation",

"generated_from_trainer",

"base_model:NousResearch/Llama-2-7b-hf",

"base_model:finetune:NousResearch/Llama-2-7b-hf",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-02-05T06:42:21Z | ---

base_model: NousResearch/Llama-2-7b-hf

tags:

- generated_from_trainer

model-index:

- name: out

results: []

---

```yaml

base_model: NousResearch/Llama-2-7b-hf

model_type: LlamaForCausalLM

tokenizer_type: LlamaTokenizer

is_llama_derived_model: true

load_in_8bit: false

load_in_4bit: false

strict: false

datasets:

- path: CognitiveLab/FS_transcribe_summary_prompt

type: completion

dataset_prepared_path: last_run_prepared

val_set_size: 0.05

output_dir: ./out

sequence_len: 4096

sample_packing: true

pad_to_sequence_len: true

adapter:

lora_model_dir:

lora_r:

lora_alpha:

lora_dropout:

lora_target_linear:

lora_fan_in_fan_out:

wandb_project: fireship-fft

wandb_entity:

wandb_watch:

wandb_name:

wandb_log_model:

gradient_accumulation_steps: 4

micro_batch_size: 4

num_epochs: 1

optimizer: adamw_bnb_8bit

lr_scheduler: cosine

learning_rate: 0.0002

train_on_inputs: false

group_by_length: false

bf16: auto

fp16:

tf32: false

gradient_checkpointing: true

early_stopping_patience:

resume_from_checkpoint:

local_rank:

logging_steps: 1

xformers_attention:

flash_attention: true

flash_attn_cross_entropy: false

flash_attn_rms_norm: true

flash_attn_fuse_qkv: false

flash_attn_fuse_mlp: true

warmup_steps: 100

evals_per_epoch: 4

eval_table_size:

saves_per_epoch: 2

debug:

deepspeed: #deepspeed_configs/zero2.json # multi-gpu only

weight_decay: 0.1

fsdp:

fsdp_config:

special_tokens:

```

</details><br>

# out

This model is a fine-tuned version of [NousResearch/Llama-2-7b-hf](https://huggingface.co/NousResearch/Llama-2-7b-hf) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 1.8444

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 100

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 2.1256 | 0.06 | 1 | 2.1641 |

| 2.1049 | 0.25 | 4 | 2.1254 |

| 1.9826 | 0.49 | 8 | 1.9868 |

| 1.8545 | 0.74 | 12 | 1.8779 |

| 1.8597 | 0.98 | 16 | 1.8444 |

### Framework versions

- Transformers 4.36.2

- Pytorch 2.0.1+cu118

- Datasets 2.16.1

- Tokenizers 0.15.0

|

TinyPixel/guanaco | TinyPixel | 2024-02-05T06:38:54Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2024-02-01T15:59:53Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

Pankaj001/TabularClassification-wine_quality | Pankaj001 | 2024-02-05T06:32:05Z | 0 | 0 | null | [

"tabular-classification",

"dataset:codesignal/wine-quality",

"license:apache-2.0",

"region:us"

] | tabular-classification | 2024-01-22T08:51:32Z | ---

license: apache-2.0

datasets:

- codesignal/wine-quality

metrics:

- accuracy

pipeline_tag: tabular-classification

---

# Random Forest Model for Wine-Quality Prediction

This repository contains a Random Forest model trained on wine-quality data for wine quality prediction. The model has been trained to classify wine quality into six classes. During training, it achieved a 100% accuracy on the training dataset and a 66% accuracy on the validation dataset.

## Model Details

- **Algorithm**: Random Forest

- **Dataset**: Wine-Quality Data

- **Objective**: Wine quality prediction (Six classes) - (3,4,5,6,7,8) and prediction above 5 is good quality wine.

- **Dataset Size**: 320 samples with 11 features.

- **Target Variable**: Wine Quality

- **Data Split**: 80% for training, 20% for validation

- **Training Accuracy**: 100%

- **Validation Accuracy**: 66%

## Usage

You can use this model to predict wine quality based on the provided features. Below are some code snippets to help you get started:

```python

# Load the model and perform predictions

import pandas as pd

from sklearn.ensemble import RandomForestClassifier

import joblib

# Load the trained Random Forest model (assuming 'model.pkl' is your model file)

model = joblib.load('model/random_forest_model.pkl')

# Prepare your data for prediction (assuming 'data' is your input data)

# Ensure that your input data has the same features as the training data

# Perform predictions

predictions = model.predict(data)

# Get the predicted wine quality class

# The predicted class will be an integer between 0 and 5

|

roku02/t-5-med-fine-tuned | roku02 | 2024-02-05T06:30:02Z | 0 | 0 | peft | [

"peft",

"region:us"

] | null | 2024-02-04T16:06:33Z | ---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: True

- bnb_4bit_compute_dtype: float16

### Framework versions

- PEFT 0.4.0

|

ericpolewski/Palworld-SME-13b | ericpolewski | 2024-02-05T06:22:11Z | 49 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"license:cc-by-sa-3.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-02-05T05:38:02Z | ---

license: cc-by-sa-3.0

---

This is a Subject Matter Expert (SME) bot trained on the Palworld Fandom Wiki as a test of a new SME model pipeline. There's no RAG. All information is embedded in the model. It uses the OpenOrca-Platypus-13b fine-tune as a base.

Should work in any applicable loader/app, though I only tested it in [EricLLM](https://github.com/epolewski/EricLLM) and TGWUI.

All SME bots are generally useful, but focus on a topic. [Contact me](https://www.linkedin.com/in/eric-polewski-94b92214/) if you're interested in having one built.

|

Herry443/Mistral-7B-KNUT-ref-ALL | Herry443 | 2024-02-05T06:08:14Z | 2,201 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"conversational",

"ko",

"en",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-02-01T12:45:23Z | ---

license: cc-by-nc-4.0

language:

- ko

- en

library_name: transformers

tags:

- mistral

---

### Model Details

- Base Model: [Herry443/Mistral-7B-KNUT-ref](https://huggingface.co/Herry443/Mistral-7B-KNUT-ref)

### Datasets

- sampling [skt/kobest_v1](https://huggingface.co/datasets/skt/kobest_v1)

- sampling [allenai/ai2_arc](https://huggingface.co/datasets/allenai/ai2_arc)

- sampling [Rowan/hellaswag](https://huggingface.co/datasets/Rowan/hellaswag)

- sampling [Stevross/mmlu](https://huggingface.co/datasets/Stevross/mmlu)

|

yvonne1123/TrainingDynamic | yvonne1123 | 2024-02-05T05:39:47Z | 0 | 0 | null | [

"image-classification",

"dataset:mnist",

"dataset:cifar10",

"license:apache-2.0",

"region:us"

] | image-classification | 2024-01-27T03:57:09Z | ---

license: apache-2.0

datasets:

- mnist

- cifar10

metrics:

- accuracy

pipeline_tag: image-classification

--- |

xingyaoww/CodeActAgent-Llama-2-7b | xingyaoww | 2024-02-05T05:24:27Z | 14 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"llm-agent",

"conversational",

"en",

"dataset:xingyaoww/code-act",

"arxiv:2402.01030",

"license:llama2",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-01-05T10:44:17Z | ---

license: llama2

datasets:

- xingyaoww/code-act

language:

- en

tags:

- llm-agent

pipeline_tag: text-generation

---

<h1 align="center"> Executable Code Actions Elicit Better LLM Agents </h1>

<p align="center">

<a href="https://github.com/xingyaoww/code-act">💻 Code</a>

•

<a href="https://arxiv.org/abs/2402.01030">📃 Paper</a>

•

<a href="https://huggingface.co/datasets/xingyaoww/code-act" >🤗 Data (CodeActInstruct)</a>

•

<a href="https://huggingface.co/xingyaoww/CodeActAgent-Mistral-7b-v0.1" >🤗 Model (CodeActAgent-Mistral-7b-v0.1)</a>

•

<a href="https://chat.xwang.dev/">🤖 Chat with CodeActAgent!</a>

</p>

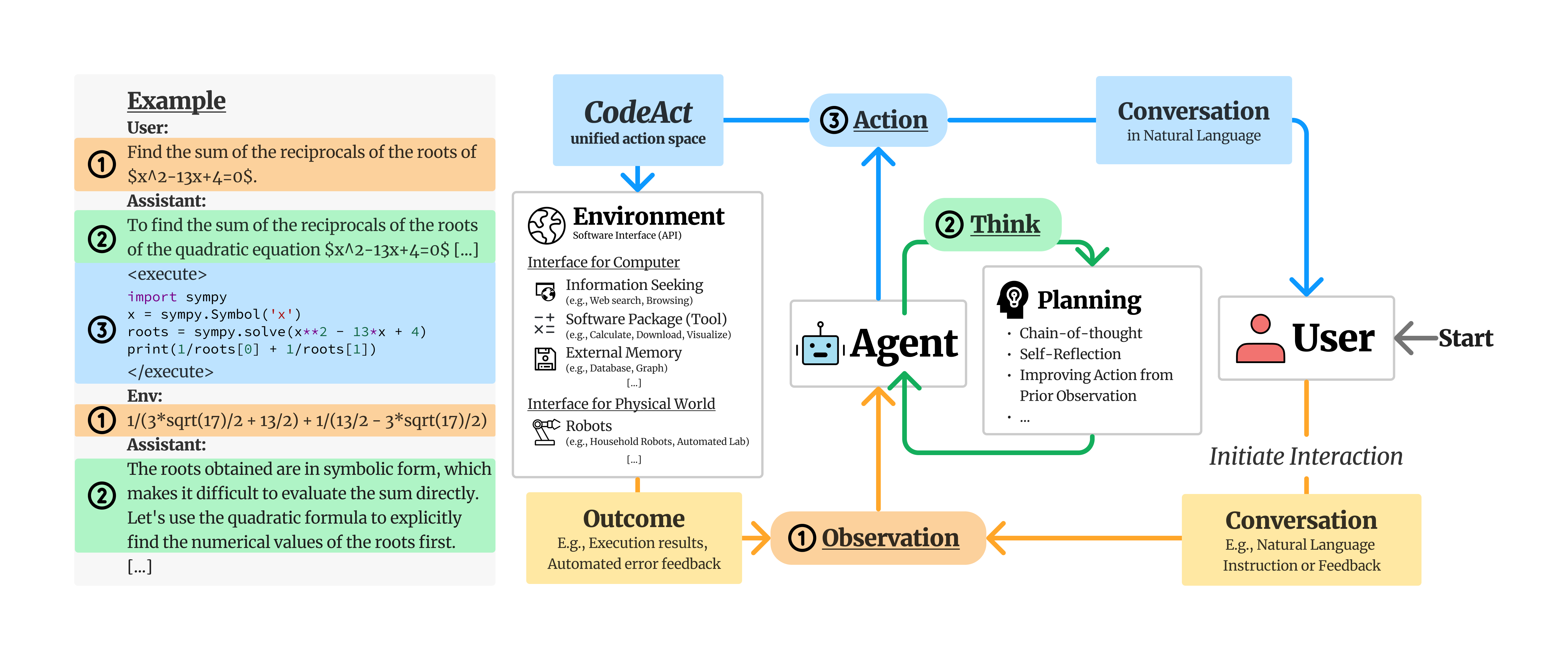

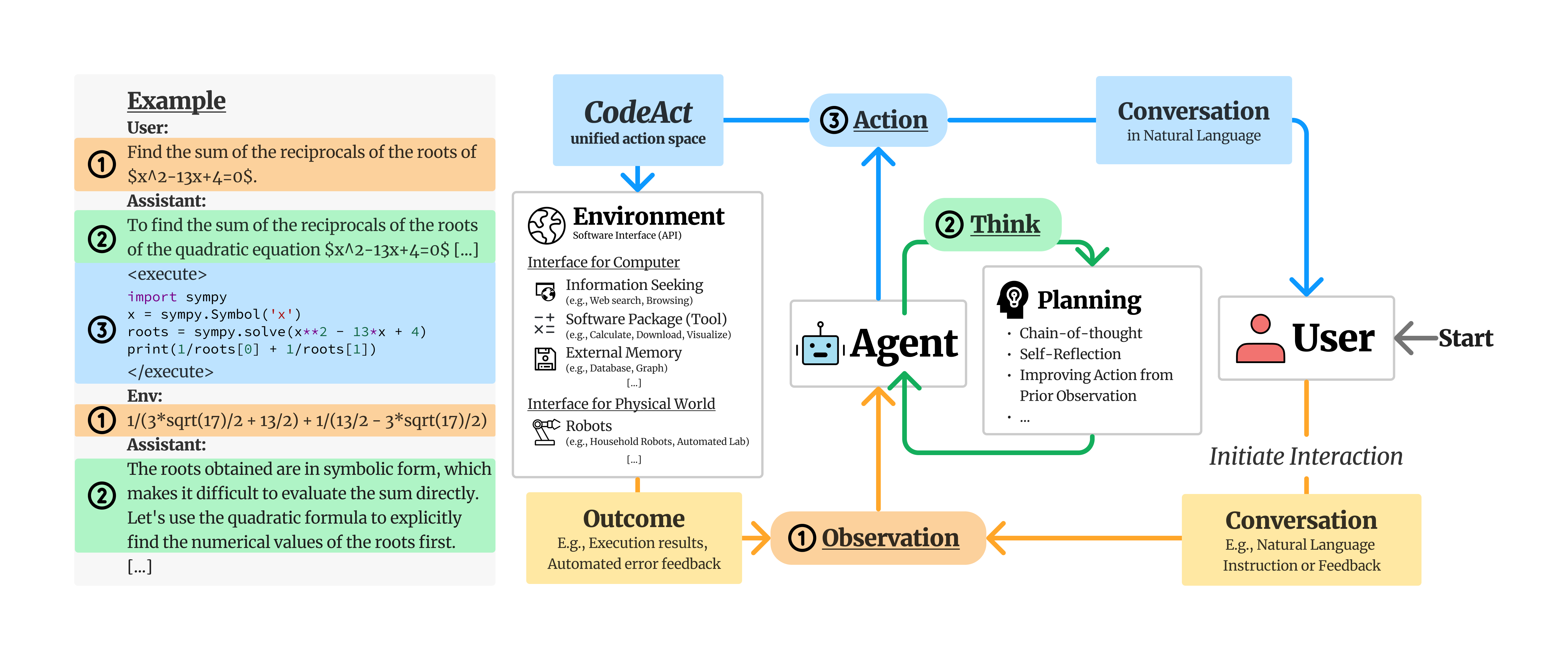

We propose to use executable Python **code** to consolidate LLM agents’ **act**ions into a unified action space (**CodeAct**).

Integrated with a Python interpreter, CodeAct can execute code actions and dynamically revise prior actions or emit new actions upon new observations (e.g., code execution results) through multi-turn interactions.

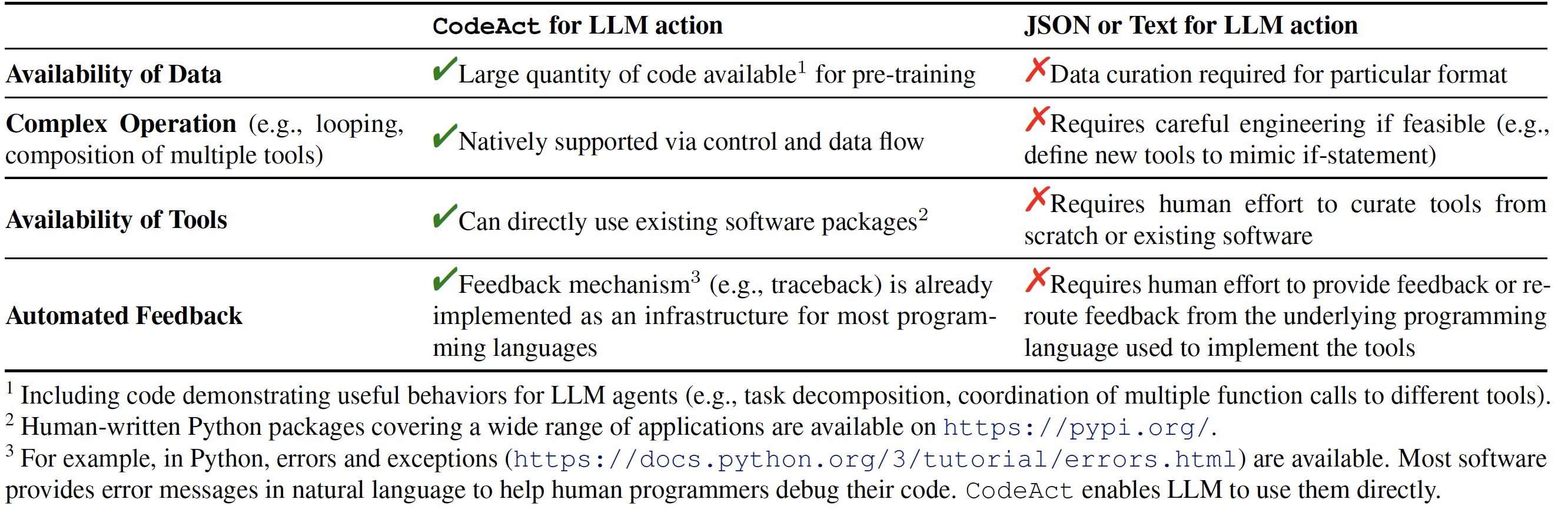

## Why CodeAct?

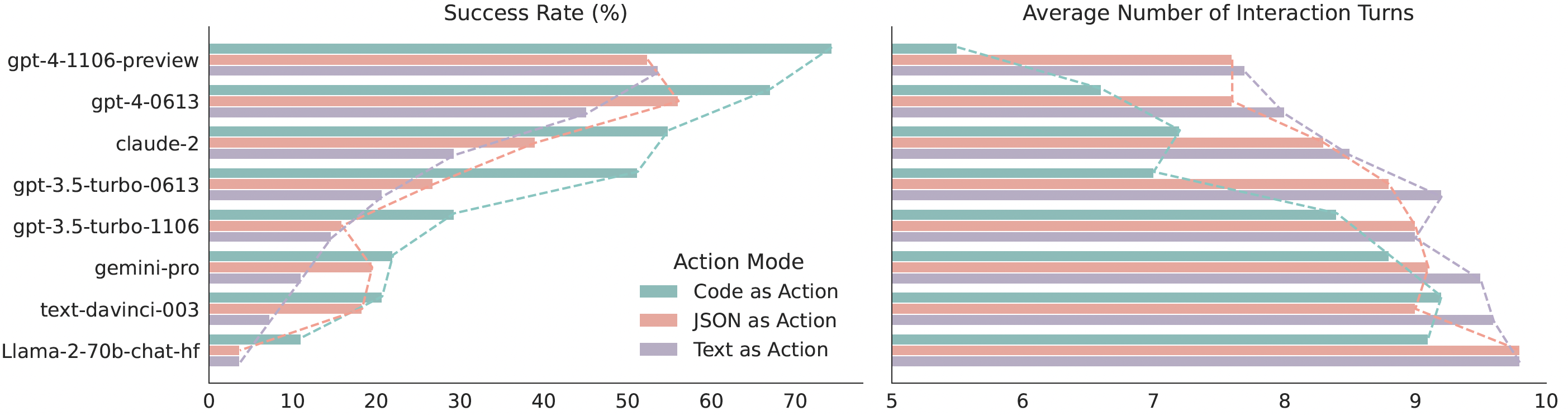

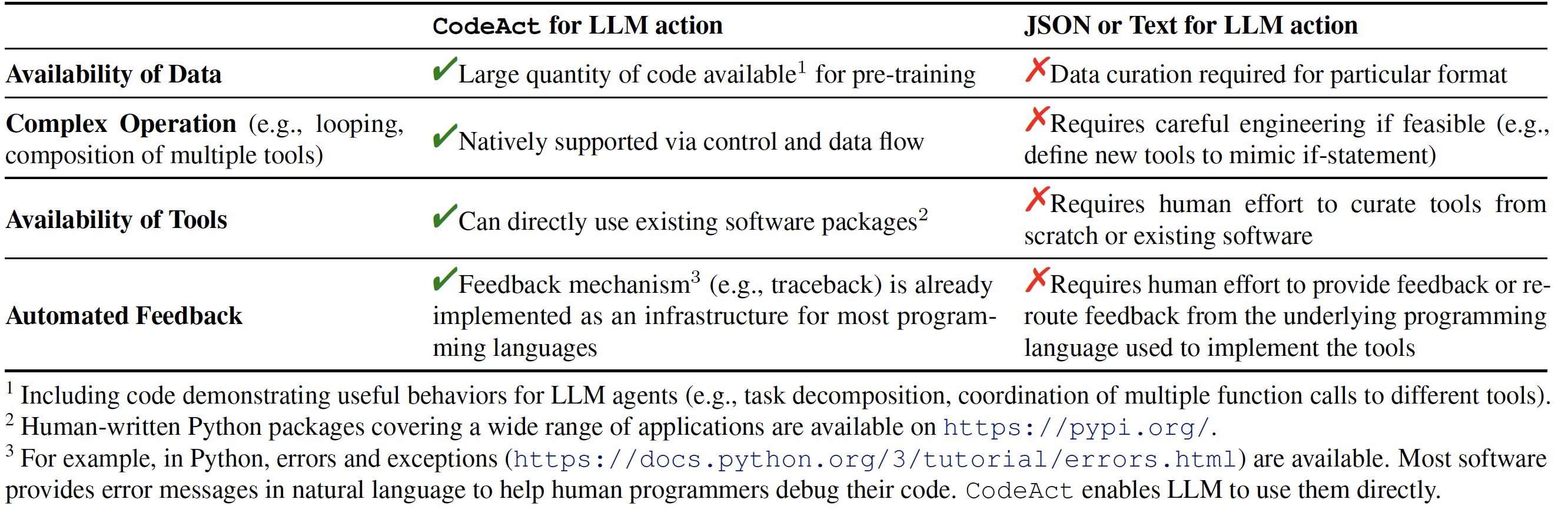

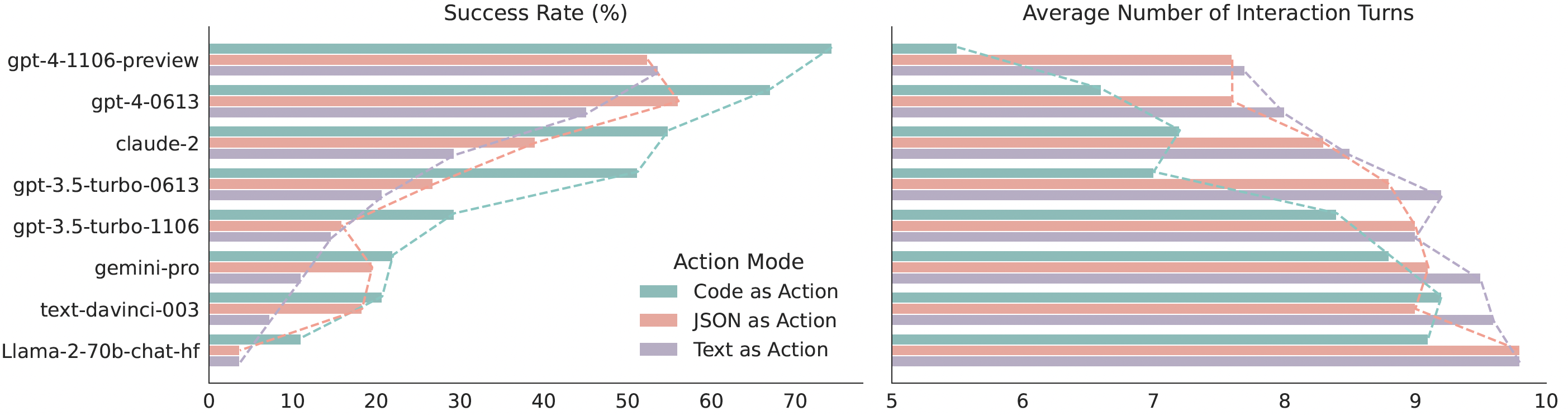

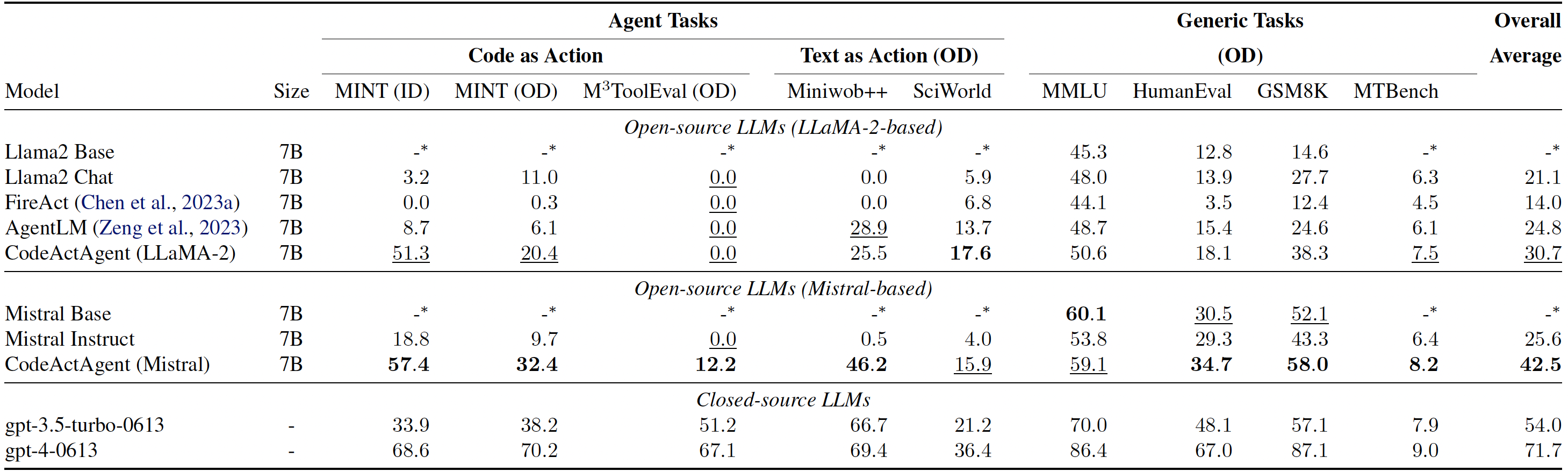

Our extensive analysis of 17 LLMs on API-Bank and a newly curated benchmark [M<sup>3</sup>ToolEval](docs/EVALUATION.md) shows that CodeAct outperforms widely used alternatives like Text and JSON (up to 20% higher success rate). Please check our paper for more detailed analysis!

*Comparison between CodeAct and Text / JSON as action.*

*Quantitative results comparing CodeAct and {Text, JSON} on M<sup>3</sup>ToolEval.*

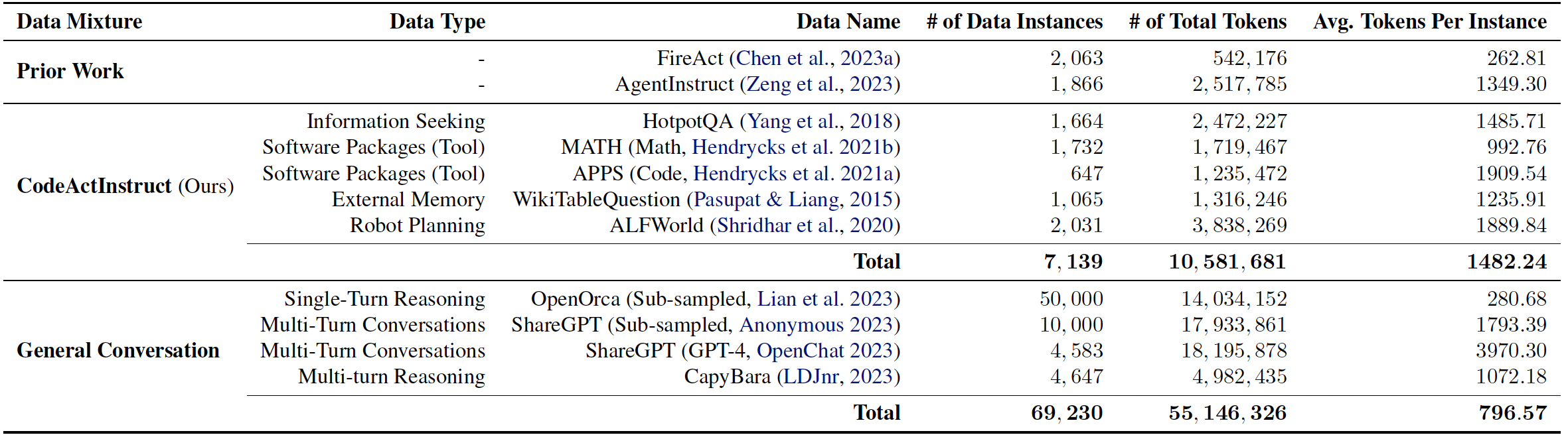

## 📁 CodeActInstruct

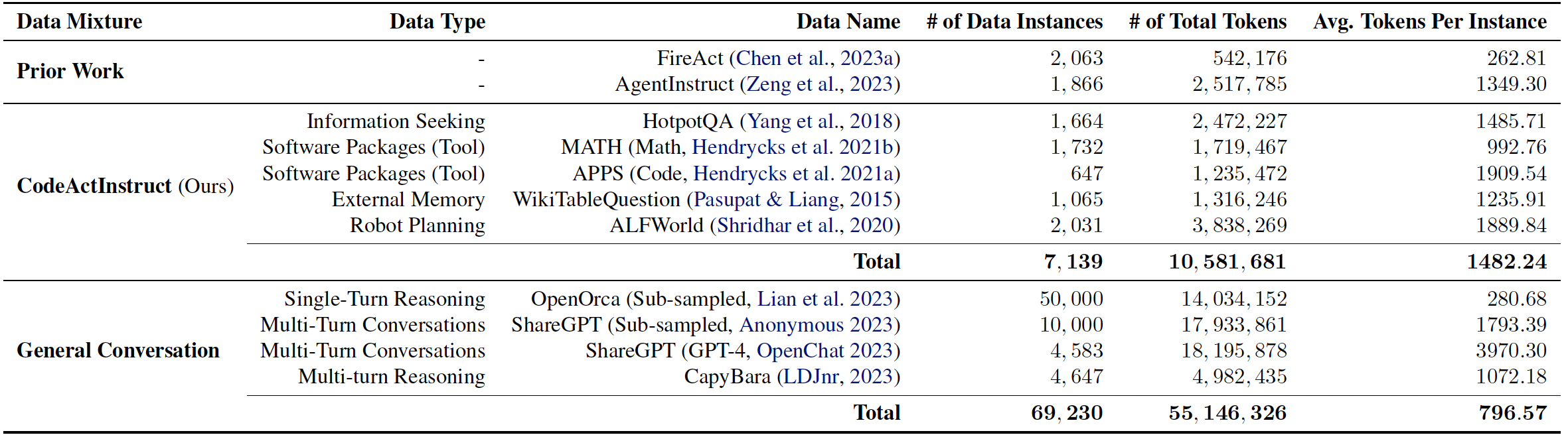

We collect an instruction-tuning dataset CodeActInstruct that consists of 7k multi-turn interactions using CodeAct. Dataset is release at [huggingface dataset 🤗](https://huggingface.co/datasets/xingyaoww/code-act). Please refer to the paper and [this section](#-data-generation-optional) for details of data collection.

*Dataset Statistics. Token statistics are computed using Llama-2 tokenizer.*

## 🪄 CodeActAgent

Trained on **CodeActInstruct** and general conversaions, **CodeActAgent** excels at out-of-domain agent tasks compared to open-source models of the same size, while not sacrificing generic performance (e.g., knowledge, dialog). We release two variants of CodeActAgent:

- **CodeActAgent-Mistral-7b-v0.1** (recommended, [model link](https://huggingface.co/xingyaoww/CodeActAgent-Mistral-7b-v0.1)): using Mistral-7b-v0.1 as the base model with 32k context window.

- **CodeActAgent-Llama-7b** ([model link](https://huggingface.co/xingyaoww/CodeActAgent-Llama-2-7b)): using Llama-2-7b as the base model with 4k context window.

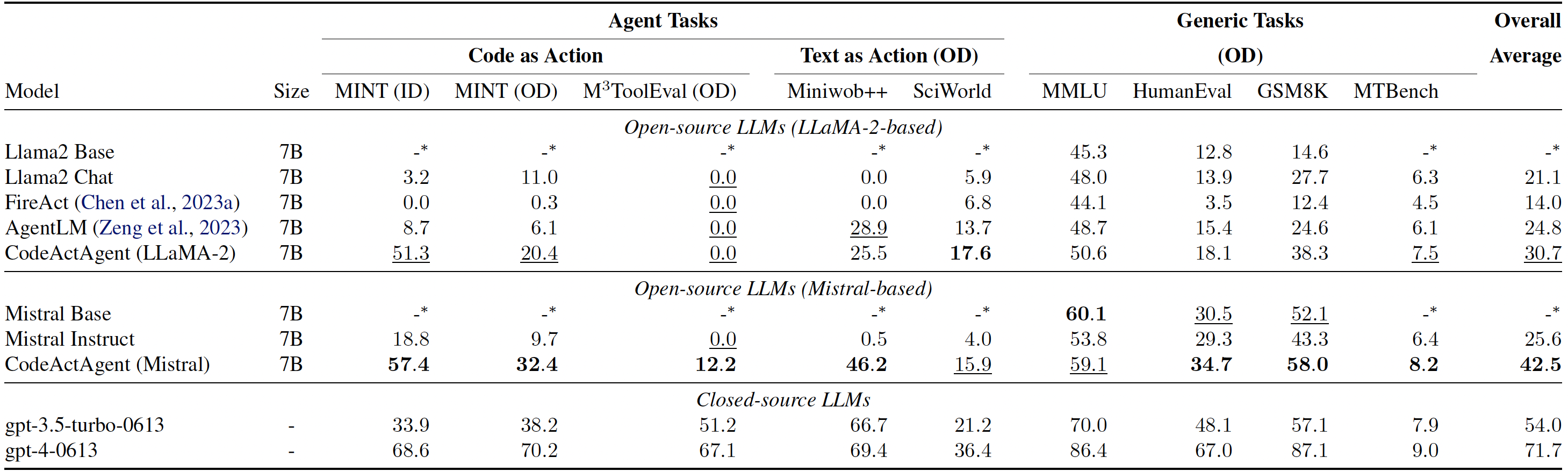

*Evaluation results for CodeActAgent. ID and OD stand for in-domain and out-of-domain evaluation correspondingly. Overall averaged performance normalizes the MT-Bench score to be consistent with other tasks and excludes in-domain tasks for fair comparison.*

Please check out [our paper](TODO) and [code](https://github.com/xingyaoww/code-act) for more details about data collection, model training, and evaluation.

## 📚 Citation

```bibtex

@misc{wang2024executable,

title={Executable Code Actions Elicit Better LLM Agents},

author={Xingyao Wang and Yangyi Chen and Lifan Yuan and Yizhe Zhang and Yunzhu Li and Hao Peng and Heng Ji},

year={2024},

eprint={2402.01030},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

``` |

janhq/finance-llm-GGUF | janhq | 2024-02-05T05:24:11Z | 14 | 2 | null | [

"gguf",

"finance",

"text-generation",

"en",

"dataset:Open-Orca/OpenOrca",

"dataset:GAIR/lima",

"dataset:WizardLM/WizardLM_evol_instruct_V2_196k",

"base_model:AdaptLLM/finance-LLM",

"base_model:quantized:AdaptLLM/finance-LLM",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-02-05T05:10:53Z | ---

language:

- en

datasets:

- Open-Orca/OpenOrca

- GAIR/lima

- WizardLM/WizardLM_evol_instruct_V2_196k

metrics:

- accuracy

pipeline_tag: text-generation

tags:

- finance

base_model: AdaptLLM/finance-LLM

model_creator: AdaptLLM

model_name: finance-LLM

quantized_by: JanHQ

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://github.com/janhq/jan/assets/89722390/35daac7d-b895-487c-a6ac-6663daaad78e" alt="Jan banner" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<p align="center">

<a href="https://jan.ai/">Jan</a>

- <a href="https://discord.gg/AsJ8krTT3N">Discord</a>

</p>

<!-- header end -->

# Model Description

This is a GGUF version of [AdaptLLM/finance-LLM](https://huggingface.co/AdaptLLM/finance-LLM)

- Model creator: [AdaptLLM](https://huggingface.co/AdaptLLM)

- Original model: [finance-LLM](https://huggingface.co/AdaptLLM/finance-LLM)

- Model description: [Readme](https://huggingface.co/AdaptLLM/finance-LLM/blob/main/README.md)

# About Jan

Jan believes in the need for an open-source AI ecosystem and is building the infra and tooling to allow open-source AIs to compete on a level playing field with proprietary ones.

Jan's long-term vision is to build a cognitive framework for future robots, who are practical, useful assistants for humans and businesses in everyday life.

# Jan Model Converter

This is a repository for the [open-source converter](https://github.com/janhq/model-converter. We would be grateful if the community could contribute and strengthen this repository. We are aiming to expand the repo that can convert into various types of format

|

xingyaoww/CodeActAgent-Mistral-7b-v0.1 | xingyaoww | 2024-02-05T05:24:01Z | 28 | 27 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"llm-agent",

"conversational",

"en",

"dataset:xingyaoww/code-act",

"arxiv:2402.01030",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-01-05T09:35:19Z | ---

license: apache-2.0

datasets:

- xingyaoww/code-act

language:

- en

pipeline_tag: text-generation

tags:

- llm-agent

---

<h1 align="center"> Executable Code Actions Elicit Better LLM Agents </h1>

<p align="center">

<a href="https://github.com/xingyaoww/code-act">💻 Code</a>

•

<a href="https://arxiv.org/abs/2402.01030">📃 Paper</a>

•

<a href="https://huggingface.co/datasets/xingyaoww/code-act" >🤗 Data (CodeActInstruct)</a>

•

<a href="https://huggingface.co/xingyaoww/CodeActAgent-Mistral-7b-v0.1" >🤗 Model (CodeActAgent-Mistral-7b-v0.1)</a>

•

<a href="https://chat.xwang.dev/">🤖 Chat with CodeActAgent!</a>

</p>

We propose to use executable Python **code** to consolidate LLM agents’ **act**ions into a unified action space (**CodeAct**).

Integrated with a Python interpreter, CodeAct can execute code actions and dynamically revise prior actions or emit new actions upon new observations (e.g., code execution results) through multi-turn interactions.

## Why CodeAct?

Our extensive analysis of 17 LLMs on API-Bank and a newly curated benchmark [M<sup>3</sup>ToolEval](docs/EVALUATION.md) shows that CodeAct outperforms widely used alternatives like Text and JSON (up to 20% higher success rate). Please check our paper for more detailed analysis!

*Comparison between CodeAct and Text / JSON as action.*

*Quantitative results comparing CodeAct and {Text, JSON} on M<sup>3</sup>ToolEval.*

## 📁 CodeActInstruct

We collect an instruction-tuning dataset CodeActInstruct that consists of 7k multi-turn interactions using CodeAct. Dataset is release at [huggingface dataset 🤗](https://huggingface.co/datasets/xingyaoww/code-act). Please refer to the paper and [this section](#-data-generation-optional) for details of data collection.

*Dataset Statistics. Token statistics are computed using Llama-2 tokenizer.*

## 🪄 CodeActAgent

Trained on **CodeActInstruct** and general conversaions, **CodeActAgent** excels at out-of-domain agent tasks compared to open-source models of the same size, while not sacrificing generic performance (e.g., knowledge, dialog). We release two variants of CodeActAgent:

- **CodeActAgent-Mistral-7b-v0.1** (recommended, [model link](https://huggingface.co/xingyaoww/CodeActAgent-Mistral-7b-v0.1)): using Mistral-7b-v0.1 as the base model with 32k context window.

- **CodeActAgent-Llama-7b** ([model link](https://huggingface.co/xingyaoww/CodeActAgent-Llama-2-7b)): using Llama-2-7b as the base model with 4k context window.

*Evaluation results for CodeActAgent. ID and OD stand for in-domain and out-of-domain evaluation correspondingly. Overall averaged performance normalizes the MT-Bench score to be consistent with other tasks and excludes in-domain tasks for fair comparison.*

Please check out [our paper](TODO) and [code](https://github.com/xingyaoww/code-act) for more details about data collection, model training, and evaluation.

## 📚 Citation

```bibtex

@misc{wang2024executable,

title={Executable Code Actions Elicit Better LLM Agents},

author={Xingyao Wang and Yangyi Chen and Lifan Yuan and Yizhe Zhang and Yunzhu Li and Hao Peng and Heng Ji},

year={2024},

eprint={2402.01030},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

neolord/distilbert-base-uncased-finetuned-clinc | neolord | 2024-02-05T05:17:37Z | 4 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"distilbert",

"text-classification",

"generated_from_trainer",

"base_model:distilbert/distilbert-base-uncased",

"base_model:finetune:distilbert/distilbert-base-uncased",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2024-02-05T05:07:16Z | ---

license: apache-2.0

base_model: distilbert-base-uncased

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: distilbert-base-uncased-finetuned-clinc

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-clinc

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7730

- Accuracy: 0.9161

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 48

- eval_batch_size: 48

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 318 | 3.2776 | 0.7287 |

| 3.7835 | 2.0 | 636 | 1.8647 | 0.8358 |

| 3.7835 | 3.0 | 954 | 1.1524 | 0.8977 |

| 1.6878 | 4.0 | 1272 | 0.8547 | 0.9129 |

| 0.8994 | 5.0 | 1590 | 0.7730 | 0.9161 |

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.0+cu121

- Datasets 2.16.1

- Tokenizers 0.15.1

|

gustavokpc/bert-base-portuguese-cased_LRATE_1e-06_EPOCHS_10 | gustavokpc | 2024-02-05T05:10:31Z | 45 | 0 | transformers | [

"transformers",

"tf",

"bert",

"text-classification",

"generated_from_keras_callback",

"base_model:neuralmind/bert-base-portuguese-cased",

"base_model:finetune:neuralmind/bert-base-portuguese-cased",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2024-02-05T03:18:40Z | ---

license: mit

base_model: neuralmind/bert-base-portuguese-cased

tags:

- generated_from_keras_callback

model-index:

- name: gustavokpc/bert-base-portuguese-cased_LRATE_1e-06_EPOCHS_10

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# gustavokpc/bert-base-portuguese-cased_LRATE_1e-06_EPOCHS_10

This model is a fine-tuned version of [neuralmind/bert-base-portuguese-cased](https://huggingface.co/neuralmind/bert-base-portuguese-cased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.1478

- Train Accuracy: 0.9481

- Train F1 M: 0.5518

- Train Precision M: 0.4013

- Train Recall M: 0.9436

- Validation Loss: 0.1862

- Validation Accuracy: 0.9307

- Validation F1 M: 0.5600

- Validation Precision M: 0.4033

- Validation Recall M: 0.9613

- Epoch: 9

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'weight_decay': None, 'clipnorm': None, 'global_clipnorm': None, 'clipvalue': None, 'use_ema': False, 'ema_momentum': 0.99, 'ema_overwrite_frequency': None, 'jit_compile': False, 'is_legacy_optimizer': False, 'learning_rate': {'module': 'keras.optimizers.schedules', 'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 1e-06, 'decay_steps': 7580, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}, 'registered_name': None}, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False}

- training_precision: float32

### Training results

| Train Loss | Train Accuracy | Train F1 M | Train Precision M | Train Recall M | Validation Loss | Validation Accuracy | Validation F1 M | Validation Precision M | Validation Recall M | Epoch |

|:----------:|:--------------:|:----------:|:-----------------:|:--------------:|:---------------:|:-------------------:|:---------------:|:----------------------:|:-------------------:|:-----:|

| 0.4813 | 0.7972 | 0.2411 | 0.2093 | 0.3344 | 0.2665 | 0.9090 | 0.5217 | 0.3877 | 0.8393 | 0 |

| 0.2432 | 0.9126 | 0.5317 | 0.3942 | 0.8764 | 0.2185 | 0.9169 | 0.5490 | 0.3979 | 0.9239 | 1 |

| 0.2054 | 0.9262 | 0.5438 | 0.3981 | 0.9151 | 0.2059 | 0.9222 | 0.5441 | 0.3948 | 0.9188 | 2 |

| 0.1883 | 0.9300 | 0.5471 | 0.3992 | 0.9253 | 0.1970 | 0.9294 | 0.5504 | 0.3977 | 0.9356 | 3 |

| 0.1771 | 0.9359 | 0.5494 | 0.4011 | 0.9339 | 0.1918 | 0.9268 | 0.5550 | 0.4005 | 0.9486 | 4 |

| 0.1632 | 0.9418 | 0.5507 | 0.4016 | 0.9369 | 0.1889 | 0.9294 | 0.5578 | 0.4023 | 0.9538 | 5 |

| 0.1591 | 0.9436 | 0.5507 | 0.4023 | 0.9416 | 0.1878 | 0.9307 | 0.5547 | 0.4005 | 0.9464 | 6 |

| 0.1536 | 0.9452 | 0.5529 | 0.4028 | 0.9419 | 0.1871 | 0.9301 | 0.5561 | 0.4010 | 0.9521 | 7 |

| 0.1512 | 0.9471 | 0.5514 | 0.4012 | 0.9396 | 0.1864 | 0.9307 | 0.5599 | 0.4032 | 0.9613 | 8 |

| 0.1478 | 0.9481 | 0.5518 | 0.4013 | 0.9436 | 0.1862 | 0.9307 | 0.5600 | 0.4033 | 0.9613 | 9 |

### Framework versions

- Transformers 4.37.2

- TensorFlow 2.15.0

- Datasets 2.16.1

- Tokenizers 0.15.1

|

janhq/medicine-llm-GGUF | janhq | 2024-02-05T04:52:32Z | 14 | 0 | null | [

"gguf",

"biology",

"medical",

"text-generation",

"en",

"dataset:Open-Orca/OpenOrca",

"dataset:GAIR/lima",

"dataset:WizardLM/WizardLM_evol_instruct_V2_196k",

"dataset:EleutherAI/pile",

"base_model:AdaptLLM/medicine-LLM",

"base_model:quantized:AdaptLLM/medicine-LLM",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-02-05T04:45:42Z | ---

language:

- en

datasets:

- Open-Orca/OpenOrca

- GAIR/lima

- WizardLM/WizardLM_evol_instruct_V2_196k

- EleutherAI/pile

metrics:

- accuracy

pipeline_tag: text-generation

tags:

- biology

- medical

base_model: AdaptLLM/medicine-LLM

model_creator: AdaptLLM

model_name: medicine-LLM

quantized_by: JanHQ

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://github.com/janhq/jan/assets/89722390/35daac7d-b895-487c-a6ac-6663daaad78e" alt="Jan banner" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<p align="center">

<a href="https://jan.ai/">Jan</a>

- <a href="https://discord.gg/AsJ8krTT3N">Discord</a>

</p>

<!-- header end -->

# Model Description

This is a GGUF version of [AdaptLLM/medicine-LLM](https://huggingface.co/AdaptLLM/medicine-LLM)

- Model creator: [AdaptLLM](https://huggingface.co/AdaptLLM)

- Original model: [medicine-LLM](https://huggingface.co/AdaptLLM/medicine-LLM)

- Model description: [Readme](https://huggingface.co/AdaptLLM/medicine-LLM/blob/main/README.md)

# About Jan

Jan believes in the need for an open-source AI ecosystem and is building the infra and tooling to allow open-source AIs to compete on a level playing field with proprietary ones.

Jan's long-term vision is to build a cognitive framework for future robots, who are practical, useful assistants for humans and businesses in everyday life.

# Jan Model Converter

This is a repository for the [open-source converter](https://github.com/janhq/model-converter. We would be grateful if the community could contribute and strengthen this repository. We are aiming to expand the repo that can convert into various types of format

|

emaadshehzad/setfit-DK-V1 | emaadshehzad | 2024-02-05T04:50:43Z | 9 | 0 | setfit | [

"setfit",

"safetensors",

"bert",

"sentence-transformers",

"text-classification",

"generated_from_setfit_trainer",

"arxiv:2209.11055",

"base_model:sentence-transformers/all-MiniLM-L12-v1",

"base_model:finetune:sentence-transformers/all-MiniLM-L12-v1",

"region:us"

] | text-classification | 2023-12-04T13:12:58Z | ---

library_name: setfit

tags:

- setfit

- sentence-transformers

- text-classification

- generated_from_setfit_trainer

metrics:

- accuracy

widget: []

pipeline_tag: text-classification

inference: true

base_model: sentence-transformers/all-MiniLM-L12-v1

---

# SetFit with sentence-transformers/all-MiniLM-L12-v1

This is a [SetFit](https://github.com/huggingface/setfit) model that can be used for Text Classification. This SetFit model uses [sentence-transformers/all-MiniLM-L12-v1](https://huggingface.co/sentence-transformers/all-MiniLM-L12-v1) as the Sentence Transformer embedding model. A [LogisticRegression](https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.LogisticRegression.html) instance is used for classification.

The model has been trained using an efficient few-shot learning technique that involves:

1. Fine-tuning a [Sentence Transformer](https://www.sbert.net) with contrastive learning.

2. Training a classification head with features from the fine-tuned Sentence Transformer.

## Model Details

### Model Description

- **Model Type:** SetFit

- **Sentence Transformer body:** [sentence-transformers/all-MiniLM-L12-v1](https://huggingface.co/sentence-transformers/all-MiniLM-L12-v1)

- **Classification head:** a [LogisticRegression](https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.LogisticRegression.html) instance

- **Maximum Sequence Length:** 256 tokens

<!-- - **Number of Classes:** Unknown -->

<!-- - **Training Dataset:** [Unknown](https://huggingface.co/datasets/unknown) -->

<!-- - **Language:** Unknown -->

<!-- - **License:** Unknown -->

### Model Sources

- **Repository:** [SetFit on GitHub](https://github.com/huggingface/setfit)

- **Paper:** [Efficient Few-Shot Learning Without Prompts](https://arxiv.org/abs/2209.11055)

- **Blogpost:** [SetFit: Efficient Few-Shot Learning Without Prompts](https://huggingface.co/blog/setfit)

## Uses

### Direct Use for Inference

First install the SetFit library:

```bash

pip install setfit

```

Then you can load this model and run inference.

```python

from setfit import SetFitModel

# Download from the 🤗 Hub

model = SetFitModel.from_pretrained("emaadshehzad/setfit-DK-V1")

# Run inference

preds = model("I loved the spiderman movie!")

```

<!--

### Downstream Use

*List how someone could finetune this model on their own dataset.*

-->

<!--

### Out-of-Scope Use

*List how the model may foreseeably be misused and address what users ought not to do with the model.*

-->

<!--

## Bias, Risks and Limitations

*What are the known or foreseeable issues stemming from this model? You could also flag here known failure cases or weaknesses of the model.*

-->

<!--

### Recommendations

*What are recommendations with respect to the foreseeable issues? For example, filtering explicit content.*

-->

## Training Details

### Framework Versions

- Python: 3.10.12

- SetFit: 1.0.3

- Sentence Transformers: 2.3.1

- Transformers: 4.35.2

- PyTorch: 2.1.0+cu121

- Datasets: 2.16.1

- Tokenizers: 0.15.1

## Citation

### BibTeX

```bibtex

@article{https://doi.org/10.48550/arxiv.2209.11055,

doi = {10.48550/ARXIV.2209.11055},

url = {https://arxiv.org/abs/2209.11055},

author = {Tunstall, Lewis and Reimers, Nils and Jo, Unso Eun Seo and Bates, Luke and Korat, Daniel and Wasserblat, Moshe and Pereg, Oren},

keywords = {Computation and Language (cs.CL), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {Efficient Few-Shot Learning Without Prompts},

publisher = {arXiv},

year = {2022},

copyright = {Creative Commons Attribution 4.0 International}

}

```

<!--

## Glossary

*Clearly define terms in order to be accessible across audiences.*

-->

<!--

## Model Card Authors

*Lists the people who create the model card, providing recognition and accountability for the detailed work that goes into its construction.*

-->

<!--

## Model Card Contact

*Provides a way for people who have updates to the Model Card, suggestions, or questions, to contact the Model Card authors.*

--> |

Jarles/PPO-LunarLander-v2 | Jarles | 2024-02-05T04:48:42Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2024-02-05T04:48:22Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 252.17 +/- 59.48

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

e22vvb/ALL_mt5-base_5_spider_15_wikiSQL_new | e22vvb | 2024-02-05T04:48:00Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2024-02-05T03:30:32Z | ---

tags:

- generated_from_trainer

model-index:

- name: ALL_mt5-base_5_spider_15_wikiSQL_new

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ALL_mt5-base_5_spider_15_wikiSQL_new

This model was trained from scratch on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2299

- Rouge2 Precision: 0.5924

- Rouge2 Recall: 0.4008

- Rouge2 Fmeasure: 0.4493

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge2 Precision | Rouge2 Recall | Rouge2 Fmeasure |

|:-------------:|:-----:|:----:|:---------------:|:----------------:|:-------------:|:---------------:|

| 0.2318 | 1.0 | 1212 | 0.2258 | 0.5422 | 0.3546 | 0.4016 |

| 0.1515 | 2.0 | 2424 | 0.2190 | 0.5676 | 0.3783 | 0.4251 |

| 0.1112 | 3.0 | 3636 | 0.2262 | 0.578 | 0.389 | 0.4362 |

| 0.0951 | 4.0 | 4848 | 0.2304 | 0.5869 | 0.3947 | 0.4431 |

| 0.088 | 5.0 | 6060 | 0.2299 | 0.5924 | 0.4008 | 0.4493 |

### Framework versions

- Transformers 4.26.1

- Pytorch 2.0.1+cu117

- Datasets 2.14.7.dev0

- Tokenizers 0.13.3

|

ankhamun/IIIIIIIo-oIIIIIII | ankhamun | 2024-02-05T04:38:17Z | 4 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-02-05T04:10:24Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

mbenachour/cms_rules1 | mbenachour | 2024-02-05T04:33:24Z | 1 | 0 | peft | [

"peft",

"safetensors",

"arxiv:1910.09700",

"base_model:bigscience/bloom-3b",

"base_model:adapter:bigscience/bloom-3b",

"region:us"

] | null | 2024-02-05T04:33:14Z | ---

library_name: peft

base_model: bigscience/bloom-3b

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

### Framework versions

- PEFT 0.8.2 |

Ketak-ZoomRx/Indication_PYT_v3 | Ketak-ZoomRx | 2024-02-05T04:27:55Z | 13 | 0 | transformers | [

"transformers",

"pytorch",

"gpt_neox",

"text-generation",

"gpt",

"llm",

"large language model",

"h2o-llmstudio",

"en",

"autotrain_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-02-05T04:27:19Z | ---

language:

- en

library_name: transformers

tags:

- gpt

- llm

- large language model

- h2o-llmstudio

inference: false

thumbnail: https://h2o.ai/etc.clientlibs/h2o/clientlibs/clientlib-site/resources/images/favicon.ico

---

# Model Card

## Summary

This model was trained using [H2O LLM Studio](https://github.com/h2oai/h2o-llmstudio).

- Base model: [EleutherAI/pythia-2.8b-deduped](https://huggingface.co/EleutherAI/pythia-2.8b-deduped)

## Usage

To use the model with the `transformers` library on a machine with GPUs, first make sure you have the `transformers`, `accelerate` and `torch` libraries installed.

```bash

pip install transformers==4.29.2

pip install einops==0.6.1

pip install accelerate==0.19.0

pip install torch==2.0.0

```

```python

import torch

from transformers import pipeline

generate_text = pipeline(

model="Ketak-ZoomRx/Indication_PYT_v3",

torch_dtype="auto",

trust_remote_code=True,

use_fast=True,

device_map={"": "cuda:0"},

)

res = generate_text(

"Why is drinking water so healthy?",

min_new_tokens=2,

max_new_tokens=256,

do_sample=False,

num_beams=1,

temperature=float(0.3),

repetition_penalty=float(1.2),

renormalize_logits=True

)

print(res[0]["generated_text"])

```

You can print a sample prompt after the preprocessing step to see how it is feed to the tokenizer:

```python

print(generate_text.preprocess("Why is drinking water so healthy?")["prompt_text"])

```

```bash

<|prompt|>Why is drinking water so healthy?<|endoftext|><|answer|>

```

Alternatively, you can download [h2oai_pipeline.py](h2oai_pipeline.py), store it alongside your notebook, and construct the pipeline yourself from the loaded model and tokenizer. If the model and the tokenizer are fully supported in the `transformers` package, this will allow you to set `trust_remote_code=False`.

```python

import torch

from h2oai_pipeline import H2OTextGenerationPipeline

from transformers import AutoModelForCausalLM, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained(

"Ketak-ZoomRx/Indication_PYT_v3",

use_fast=True,

padding_side="left",

trust_remote_code=True,

)

model = AutoModelForCausalLM.from_pretrained(

"Ketak-ZoomRx/Indication_PYT_v3",

torch_dtype="auto",

device_map={"": "cuda:0"},

trust_remote_code=True,

)

generate_text = H2OTextGenerationPipeline(model=model, tokenizer=tokenizer)

res = generate_text(

"Why is drinking water so healthy?",

min_new_tokens=2,

max_new_tokens=256,

do_sample=False,

num_beams=1,

temperature=float(0.3),

repetition_penalty=float(1.2),

renormalize_logits=True

)

print(res[0]["generated_text"])

```

You may also construct the pipeline from the loaded model and tokenizer yourself and consider the preprocessing steps:

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "Ketak-ZoomRx/Indication_PYT_v3" # either local folder or huggingface model name

# Important: The prompt needs to be in the same format the model was trained with.

# You can find an example prompt in the experiment logs.

prompt = "<|prompt|>How are you?<|endoftext|><|answer|>"

tokenizer = AutoTokenizer.from_pretrained(

model_name,

use_fast=True,

trust_remote_code=True,

)

model = AutoModelForCausalLM.from_pretrained(

model_name,

torch_dtype="auto",

device_map={"": "cuda:0"},

trust_remote_code=True,

)

model.cuda().eval()

inputs = tokenizer(prompt, return_tensors="pt", add_special_tokens=False).to("cuda")

# generate configuration can be modified to your needs

tokens = model.generate(

input_ids=inputs["input_ids"],

attention_mask=inputs["attention_mask"],

min_new_tokens=2,

max_new_tokens=256,

do_sample=False,

num_beams=1,

temperature=float(0.3),

repetition_penalty=float(1.2),

renormalize_logits=True

)[0]

tokens = tokens[inputs["input_ids"].shape[1]:]

answer = tokenizer.decode(tokens, skip_special_tokens=True)

print(answer)

```

## Quantization and sharding

You can load the models using quantization by specifying ```load_in_8bit=True``` or ```load_in_4bit=True```. Also, sharding on multiple GPUs is possible by setting ```device_map=auto```.

## Model Architecture

```

GPTNeoXForCausalLM(

(gpt_neox): GPTNeoXModel(

(embed_in): Embedding(50304, 2560)

(layers): ModuleList(

(0-31): 32 x GPTNeoXLayer(

(input_layernorm): LayerNorm((2560,), eps=1e-05, elementwise_affine=True)

(post_attention_layernorm): LayerNorm((2560,), eps=1e-05, elementwise_affine=True)

(attention): GPTNeoXAttention(

(rotary_emb): RotaryEmbedding()

(query_key_value): Linear(in_features=2560, out_features=7680, bias=True)

(dense): Linear(in_features=2560, out_features=2560, bias=True)

)

(mlp): GPTNeoXMLP(

(dense_h_to_4h): Linear(in_features=2560, out_features=10240, bias=True)

(dense_4h_to_h): Linear(in_features=10240, out_features=2560, bias=True)

(act): GELUActivation()

)

)

)

(final_layer_norm): LayerNorm((2560,), eps=1e-05, elementwise_affine=True)

)

(embed_out): Linear(in_features=2560, out_features=50304, bias=False)

)

```

## Model Configuration

This model was trained using H2O LLM Studio and with the configuration in [cfg.yaml](cfg.yaml). Visit [H2O LLM Studio](https://github.com/h2oai/h2o-llmstudio) to learn how to train your own large language models.

## Disclaimer

Please read this disclaimer carefully before using the large language model provided in this repository. Your use of the model signifies your agreement to the following terms and conditions.

- Biases and Offensiveness: The large language model is trained on a diverse range of internet text data, which may contain biased, racist, offensive, or otherwise inappropriate content. By using this model, you acknowledge and accept that the generated content may sometimes exhibit biases or produce content that is offensive or inappropriate. The developers of this repository do not endorse, support, or promote any such content or viewpoints.

- Limitations: The large language model is an AI-based tool and not a human. It may produce incorrect, nonsensical, or irrelevant responses. It is the user's responsibility to critically evaluate the generated content and use it at their discretion.

- Use at Your Own Risk: Users of this large language model must assume full responsibility for any consequences that may arise from their use of the tool. The developers and contributors of this repository shall not be held liable for any damages, losses, or harm resulting from the use or misuse of the provided model.

- Ethical Considerations: Users are encouraged to use the large language model responsibly and ethically. By using this model, you agree not to use it for purposes that promote hate speech, discrimination, harassment, or any form of illegal or harmful activities.

- Reporting Issues: If you encounter any biased, offensive, or otherwise inappropriate content generated by the large language model, please report it to the repository maintainers through the provided channels. Your feedback will help improve the model and mitigate potential issues.

- Changes to this Disclaimer: The developers of this repository reserve the right to modify or update this disclaimer at any time without prior notice. It is the user's responsibility to periodically review the disclaimer to stay informed about any changes.

By using the large language model provided in this repository, you agree to accept and comply with the terms and conditions outlined in this disclaimer. If you do not agree with any part of this disclaimer, you should refrain from using the model and any content generated by it. |

gayanin/bart-noised-with-kaggle-gcd-dist | gayanin | 2024-02-05T04:19:01Z | 10 | 0 | transformers | [

"transformers",

"safetensors",

"bart",

"text2text-generation",

"generated_from_trainer",

"base_model:gayanin/bart-noised-with-kaggle-dist",

"base_model:finetune:gayanin/bart-noised-with-kaggle-dist",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2024-02-05T02:56:41Z | ---

license: apache-2.0

base_model: gayanin/bart-noised-with-kaggle-dist

tags:

- generated_from_trainer

model-index:

- name: bart-noised-with-kaggle-gcd-dist

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bart-noised-with-kaggle-gcd-dist

This model is a fine-tuned version of [gayanin/bart-noised-with-kaggle-dist](https://huggingface.co/gayanin/bart-noised-with-kaggle-dist) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4538

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 10

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 0.6061 | 0.11 | 500 | 0.5365 |

| 0.5537 | 0.21 | 1000 | 0.5251 |

| 0.5591 | 0.32 | 1500 | 0.5202 |

| 0.5669 | 0.43 | 2000 | 0.5069 |

| 0.4669 | 0.54 | 2500 | 0.5038 |

| 0.5457 | 0.64 | 3000 | 0.4923 |

| 0.5237 | 0.75 | 3500 | 0.4922 |

| 0.5186 | 0.86 | 4000 | 0.4802 |

| 0.5148 | 0.96 | 4500 | 0.4777 |

| 0.4127 | 1.07 | 5000 | 0.4822 |

| 0.4207 | 1.18 | 5500 | 0.4807 |

| 0.4362 | 1.28 | 6000 | 0.4770 |

| 0.4072 | 1.39 | 6500 | 0.4763 |

| 0.4503 | 1.5 | 7000 | 0.4701 |

| 0.3683 | 1.61 | 7500 | 0.4693 |

| 0.3897 | 1.71 | 8000 | 0.4636 |

| 0.4421 | 1.82 | 8500 | 0.4561 |

| 0.3836 | 1.93 | 9000 | 0.4588 |

| 0.3405 | 2.03 | 9500 | 0.4634 |

| 0.3147 | 2.14 | 10000 | 0.4682 |

| 0.3115 | 2.25 | 10500 | 0.4622 |

| 0.3153 | 2.35 | 11000 | 0.4625 |

| 0.3295 | 2.46 | 11500 | 0.4597 |

| 0.3529 | 2.57 | 12000 | 0.4564 |

| 0.3191 | 2.68 | 12500 | 0.4555 |

| 0.2974 | 2.78 | 13000 | 0.4547 |

| 0.3253 | 2.89 | 13500 | 0.4534 |

| 0.3627 | 3.0 | 14000 | 0.4538 |

### Framework versions

- Transformers 4.37.2

- Pytorch 2.1.2+cu121

- Datasets 2.16.1

- Tokenizers 0.15.1

|

DouglasPontes/2020-Q3-25p-filtered | DouglasPontes | 2024-02-05T04:16:41Z | 13 | 0 | transformers | [

"transformers",

"pytorch",

"roberta",

"fill-mask",

"generated_from_trainer",

"base_model:cardiffnlp/twitter-roberta-base-2019-90m",

"base_model:finetune:cardiffnlp/twitter-roberta-base-2019-90m",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2024-02-02T03:57:11Z | ---

license: mit

base_model: cardiffnlp/twitter-roberta-base-2019-90m

tags:

- generated_from_trainer

model-index:

- name: 2020-Q3-25p-filtered