modelId

string | author

string | last_modified

timestamp[us, tz=UTC] | downloads

int64 | likes

int64 | library_name

string | tags

sequence | pipeline_tag

string | createdAt

timestamp[us, tz=UTC] | card

string |

|---|---|---|---|---|---|---|---|---|---|

LoneStriker/DeepMagic-Coder-7b-6.0bpw-h6-exl2 | LoneStriker | 2024-02-07T03:31:53Z | 4 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"license:other",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-02-07T03:29:42Z | ---

license: other

license_name: deepseek

license_link: https://github.com/deepseek-ai/DeepSeek-Coder/blob/main/LICENSE-MODEL

---

DeepMagic-Coder-7b

Alternate version:

- https://huggingface.co/rombodawg/DeepMagic-Coder-7b-Alt

This is an extremely successful merge of the deepseek-coder-6.7b-instruct and Magicoder-S-DS-6.7B models, bringing an uplift in overall coding performance without any compromise to the models integrity (at least with limited testing).

This is the first of my models to use the merge-kits *task_arithmetic* merging method. The method is detailed bellow, and its clearly very usefull for merging ai models that were fine-tuned from a common base:

Task Arithmetic:

```

Computes "task vectors" for each model by subtracting a base model.

Merges the task vectors linearly and adds back the base.

Works great for models that were fine tuned from a common ancestor.

Also a super useful mental framework for several of the more involved

merge methods.

```

The original models used in this merge can be found here:

- https://huggingface.co/ise-uiuc/Magicoder-S-DS-6.7B

- https://huggingface.co/deepseek-ai/deepseek-coder-6.7b-instruct

The Merge was created using Mergekit and the paremeters can be found bellow:

```yaml

models:

- model: deepseek-ai_deepseek-coder-6.7b-instruct

parameters:

weight: 1

- model: ise-uiuc_Magicoder-S-DS-6.7B

parameters:

weight: 1

merge_method: task_arithmetic

base_model: ise-uiuc_Magicoder-S-DS-6.7B

parameters:

normalize: true

int8_mask: true

dtype: float16

``` |

nightdude/ddpm-butterflies-128 | nightdude | 2024-02-07T03:29:40Z | 0 | 0 | diffusers | [

"diffusers",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"lora",

"license:creativeml-openrail-m",

"region:us"

] | text-to-image | 2024-02-07T03:27:23Z |

---

license: creativeml-openrail-m

base_model: anton_l/ddpm-butterflies-128

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

- lora

inference: true

---

# LoRA text2image fine-tuning - ddpm-butterflies-128

These are LoRA adaption weights for anton_l/ddpm-butterflies-128. The weights were fine-tuned on the huggan/smithsonian_butterflies_subset dataset. You can find some example images in the following.

|

LoneStriker/DeepMagic-Coder-7b-5.0bpw-h6-exl2 | LoneStriker | 2024-02-07T03:29:39Z | 4 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"license:other",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-02-07T03:27:46Z | ---

license: other

license_name: deepseek

license_link: https://github.com/deepseek-ai/DeepSeek-Coder/blob/main/LICENSE-MODEL

---

DeepMagic-Coder-7b

Alternate version:

- https://huggingface.co/rombodawg/DeepMagic-Coder-7b-Alt

This is an extremely successful merge of the deepseek-coder-6.7b-instruct and Magicoder-S-DS-6.7B models, bringing an uplift in overall coding performance without any compromise to the models integrity (at least with limited testing).

This is the first of my models to use the merge-kits *task_arithmetic* merging method. The method is detailed bellow, and its clearly very usefull for merging ai models that were fine-tuned from a common base:

Task Arithmetic:

```

Computes "task vectors" for each model by subtracting a base model.

Merges the task vectors linearly and adds back the base.

Works great for models that were fine tuned from a common ancestor.

Also a super useful mental framework for several of the more involved

merge methods.

```

The original models used in this merge can be found here:

- https://huggingface.co/ise-uiuc/Magicoder-S-DS-6.7B

- https://huggingface.co/deepseek-ai/deepseek-coder-6.7b-instruct

The Merge was created using Mergekit and the paremeters can be found bellow:

```yaml

models:

- model: deepseek-ai_deepseek-coder-6.7b-instruct

parameters:

weight: 1

- model: ise-uiuc_Magicoder-S-DS-6.7B

parameters:

weight: 1

merge_method: task_arithmetic

base_model: ise-uiuc_Magicoder-S-DS-6.7B

parameters:

normalize: true

int8_mask: true

dtype: float16

``` |

LoneStriker/DeepMagic-Coder-7b-4.0bpw-h6-exl2 | LoneStriker | 2024-02-07T03:27:43Z | 7 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"license:other",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-02-07T03:26:09Z | ---

license: other

license_name: deepseek

license_link: https://github.com/deepseek-ai/DeepSeek-Coder/blob/main/LICENSE-MODEL

---

DeepMagic-Coder-7b

Alternate version:

- https://huggingface.co/rombodawg/DeepMagic-Coder-7b-Alt

This is an extremely successful merge of the deepseek-coder-6.7b-instruct and Magicoder-S-DS-6.7B models, bringing an uplift in overall coding performance without any compromise to the models integrity (at least with limited testing).

This is the first of my models to use the merge-kits *task_arithmetic* merging method. The method is detailed bellow, and its clearly very usefull for merging ai models that were fine-tuned from a common base:

Task Arithmetic:

```

Computes "task vectors" for each model by subtracting a base model.

Merges the task vectors linearly and adds back the base.

Works great for models that were fine tuned from a common ancestor.

Also a super useful mental framework for several of the more involved

merge methods.

```

The original models used in this merge can be found here:

- https://huggingface.co/ise-uiuc/Magicoder-S-DS-6.7B

- https://huggingface.co/deepseek-ai/deepseek-coder-6.7b-instruct

The Merge was created using Mergekit and the paremeters can be found bellow:

```yaml

models:

- model: deepseek-ai_deepseek-coder-6.7b-instruct

parameters:

weight: 1

- model: ise-uiuc_Magicoder-S-DS-6.7B

parameters:

weight: 1

merge_method: task_arithmetic

base_model: ise-uiuc_Magicoder-S-DS-6.7B

parameters:

normalize: true

int8_mask: true

dtype: float16

``` |

LoneStriker/DeepMagic-Coder-7b-GGUF | LoneStriker | 2024-02-07T03:19:15Z | 8 | 5 | null | [

"gguf",

"license:other",

"endpoints_compatible",

"region:us",

"conversational"

] | null | 2024-02-07T03:03:17Z | ---

license: other

license_name: deepseek

license_link: https://github.com/deepseek-ai/DeepSeek-Coder/blob/main/LICENSE-MODEL

---

DeepMagic-Coder-7b

Alternate version:

- https://huggingface.co/rombodawg/DeepMagic-Coder-7b-Alt

This is an extremely successful merge of the deepseek-coder-6.7b-instruct and Magicoder-S-DS-6.7B models, bringing an uplift in overall coding performance without any compromise to the models integrity (at least with limited testing).

This is the first of my models to use the merge-kits *task_arithmetic* merging method. The method is detailed bellow, and its clearly very usefull for merging ai models that were fine-tuned from a common base:

Task Arithmetic:

```

Computes "task vectors" for each model by subtracting a base model.

Merges the task vectors linearly and adds back the base.

Works great for models that were fine tuned from a common ancestor.

Also a super useful mental framework for several of the more involved

merge methods.

```

The original models used in this merge can be found here:

- https://huggingface.co/ise-uiuc/Magicoder-S-DS-6.7B

- https://huggingface.co/deepseek-ai/deepseek-coder-6.7b-instruct

The Merge was created using Mergekit and the paremeters can be found bellow:

```yaml

models:

- model: deepseek-ai_deepseek-coder-6.7b-instruct

parameters:

weight: 1

- model: ise-uiuc_Magicoder-S-DS-6.7B

parameters:

weight: 1

merge_method: task_arithmetic

base_model: ise-uiuc_Magicoder-S-DS-6.7B

parameters:

normalize: true

int8_mask: true

dtype: float16

``` |

Sacbe/ViT_SAM_Classification | Sacbe | 2024-02-07T03:17:54Z | 0 | 0 | transformers | [

"transformers",

"biology",

"image-classification",

"arxiv:2010.11929",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | image-classification | 2024-02-07T02:31:37Z | ---

license: apache-2.0

metrics:

- accuracy

- f1

- precision

- recall

library_name: transformers

pipeline_tag: image-classification

tags:

- biology

---

# Resumen

El modelo fue entrenado usando el modelo base de VisionTransformer junto con el optimizador SAM de Google y la función de perdida Negative log likelihood, sobre los datos [Wildfire](https://drive.google.com/file/d/1TlF8DIBLAccd0AredDUimQQ54sl_DwCE/view?usp=sharing). Los resultados muestran que el clasificador alcanzó una precisión del 97% con solo 10 épocas de entrenamiento.

La teoría de se muestra a continuación.

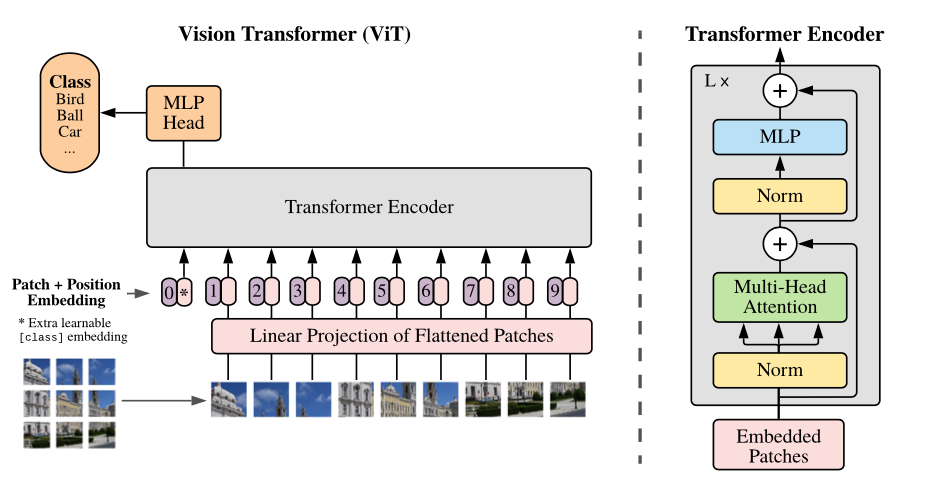

# VisionTransformer

**Attention-based neural networks such as the Vision Transformer** (ViT) have recently attained state-of-the-art results on many computer vision benchmarks. Scale is a primary ingredient in attaining excellent results, therefore, understanding a model's scaling properties is a key to designing future generations effectively. While the laws for scaling Transformer language models have been studied, it is unknown how Vision Transformers scale. To address this, we scale ViT models and data, both up and down, and characterize the relationships between error rate, data, and compute. Along the way, we refine the architecture and training of ViT, reducing memory consumption and increasing accuracy of the resulting models. As a result, we successfully train a ViT model with two billion parameters, which attains a new state-of-the-art on ImageNet of 90.45% top-1 accuracy. The model also performs well for few-shot transfer, for example, reaching 84.86% top-1 accuracy on ImageNet with only 10 examples per class.

[1] A. Dosovitskiy et al., “An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale”. arXiv, el 3 de junio de 2021. Consultado: el 12 de noviembre de 2023. [En línea]. Disponible en: http://arxiv.org/abs/2010.11929

# Sharpness Aware Minimization (SAM)

SAM simultaneously minimizes loss value and loss sharpness. In particular, it seeks parameters that lie in neighborhoods having uniformly low loss. SAM improves model generalization and yields SoTA performance for several datasets. Additionally, it provides robustness to label noise on par with that provided by SoTA procedures that specifically target learning with noisy labels.

*ResNet loss landscape at the end of training with and without SAM. Sharpness-aware updates lead to a significantly wider minimum, which then leads to better generalization properties.*

[2] P. Foret, A. Kleiner, y H. Mobahi, “Sharpness-Aware Minimization For Efficiently Improving Generalization”, 2021.

# The negative log likelihood loss

It is useful to train a classification problem with $C$ classes.

If provided, the optional argument weight should be a 1D Tensor assigning weight to each of the classes. This is particularly useful when you have an unbalanced training set.

The input given through a forward call is expected to contain log-probabilities of each class. input has to be a Tensor of size either (minibatch, $C$ ) or ( minibatch, $C, d_1, d_2, \ldots, d_K$ ) with $K \geq 1$ for the $K$-dimensional case. The latter is useful for higher dimension inputs, such as computing NLL loss per-pixel for 2D images.

Obtaining log-probabilities in a neural network is easily achieved by adding a LogSoftmax layer in the last layer of your network. You may use CrossEntropyLoss instead, if you prefer not to add an extra layer.

The target that this loss expects should be a class index in the range $\[0, C-1\]$ where $C$ number of classes; if ignore_index is specified, this loss also accepts this class index (this index may not necessarily be in the class range).

The unreduced (i.e. with reduction set to 'none ') loss can be described as:

$$

\ell(x, y)=L=\left\{l_1, \ldots, l_N\right\}^{\top}, \quad l_n=-w_{y_n} x_{n, y_n}, \quad w_c=\text { weight }[c] \cdot 1

$$

where $x$ is the input, $y$ is the target, $w$ is the weight, and $N$ is the batch size. If reduction is not 'none' (default 'mean'), then

$$

\ell(x, y)= \begin{cases}\sum_{n=1}^N \frac{1}{\sum_{n=1}^N w_{y_n}} l_n, & \text { if reduction }=\text { 'mean' } \\ \sum_{n=1}^N l_n, & \text { if reduction }=\text { 'sum' }\end{cases}

$$

# Resultados obtenidos

<img src="https://cdn-uploads.huggingface.co/production/uploads/64ff2131f7f3fa2d7fe256fc/CO6vFEjt3FkxB8JgZTbEd.png" width="500" /> |

ambrosfitz/tinyllama-history-chat_v0.1 | ambrosfitz | 2024-02-07T03:16:49Z | 5 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-02-03T17:55:50Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

Deepnoid/OPEN-SOLAR-KO-10.7B | Deepnoid | 2024-02-07T03:11:36Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"llama",

"text-generation",

"generated_from_trainer",

"base_model:beomi/OPEN-SOLAR-KO-10.7B",

"base_model:finetune:beomi/OPEN-SOLAR-KO-10.7B",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-02-07T01:46:52Z | ---

license: apache-2.0

base_model: beomi/OPEN-SOLAR-KO-10.7B

tags:

- generated_from_trainer

model-index:

- name: beomidpo-out-v2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

[<img src="https://raw.githubusercontent.com/OpenAccess-AI-Collective/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/OpenAccess-AI-Collective/axolotl)

<details><summary>See axolotl config</summary>

axolotl version: `0.4.0`

```yaml

base_model: beomi/OPEN-SOLAR-KO-10.7B

load_in_8bit: false

load_in_4bit: false

strict: false

rl: dpo

datasets:

- path: datasets/dposet/dpodatav2.jsonl

ds_type: json

data_files:

- datasets/dposet/dpodatav2.jsonl

split: train

dataset_prepared_path:

val_set_size: 0.0

output_dir: ./beomidpo-out-v2

adapter: lora

lora_model_dir:

sequence_len: 2048

sample_packing: false

pad_to_sequence_len: false

lora_r: 8

lora_alpha: 32

lora_dropout: 0.05

lora_target_linear: true

lora_fan_in_fan_out:

lora_target_modules:

- q_proj

- v_proj

- k_proj

- o_proj

gradient_accumulation_steps: 1

micro_batch_size: 1

num_epochs: 1

optimizer: paged_adamw_8bit

lr_scheduler: cosine

learning_rate: 2e-5

train_on_inputs: false

group_by_length: false

bf16: false

fp16: true

tf32: false

gradient_checkpointing: true

early_stopping_patience:

resume_from_checkpoint:

local_rank:

logging_steps: 1

xformers_attention:

flash_attention: false

warmup_steps: 10

save_steps: 100

save_total_limit: 3

debug:

deepspeed: deepspeed_configs/zero2.json

weight_decay: 0.0

fsdp:

fsdp_config:

special_tokens:

save_safetensors: false

```

</details><br>

# beomidpo-out-v2

This model is a fine-tuned version of [beomi/OPEN-SOLAR-KO-10.7B](https://huggingface.co/beomi/OPEN-SOLAR-KO-10.7B) on the None dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 8

- seed: 42

- distributed_type: multi-GPU

- num_devices: 8

- total_train_batch_size: 8

- total_eval_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 10

- training_steps: 2645

### Training results

### Framework versions

- Transformers 4.38.0.dev0

- Pytorch 2.0.1+cu118

- Datasets 2.16.1

- Tokenizers 0.15.0

|

chenhaodev/mistral-7b-medqa-v1 | chenhaodev | 2024-02-07T03:05:03Z | 3 | 1 | peft | [

"peft",

"safetensors",

"llama-factory",

"lora",

"generated_from_trainer",

"base_model:mistralai/Mistral-7B-v0.1",

"base_model:adapter:mistralai/Mistral-7B-v0.1",

"license:other",

"region:us"

] | null | 2024-02-07T02:28:34Z | ---

license: other

library_name: peft

tags:

- llama-factory

- lora

- generated_from_trainer

base_model: mistralai/Mistral-7B-v0.1

model-index:

- name: mistral-7b-medqa-v1

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mistral-7b-medqa-v1

This model is a fine-tuned version of mistralai/Mistral-7B-v0.1 on the medical_meadow_medqa dataset.

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 20

- num_epochs: 1.0

- mixed_precision_training: Native AMP

### Framework versions

- PEFT 0.8.2

- Transformers 4.37.2

- Pytorch 2.1.1+cu121

- Datasets 2.16.1

- Tokenizers 0.15.1

### Performance

hf (pretrained=mistralai/Mistral-7B-v0.1,parallelize=True,load_in_4bit=True,peft=chenhugging/mistral-7b-medqa-v1), gen_kwargs: (None), limit: 100.0, num_fewshot: None

| Tasks |Version|Filter|n-shot| Metric |Value| |Stderr|

|---------------------|-------|------|-----:|--------|----:|---|-----:|

|pubmedqa | 1|none | 0|acc | 0.98|± |0.0141|

|ocn |Yaml |none | 0|acc | 0.71|± |0.0456|

|professional_medicine| 0|none | 0|acc | 0.69|± |0.0465|

|college_medicine | 0|none | 0|acc | 0.61|± |0.0490|

|clinical_knowledge | 0|none | 0|acc | 0.63|± |0.0485|

|medmcqa |Yaml |none | 0|acc | 0.41|± |0.0494|

|aocnp |Yaml |none | 0|acc | 0.61|± |0.0490|

### Appendix (original performance before lora-finetune)

hf (pretrained=mistralai/Mistral-7B-v0.1,parallelize=True,load_in_4bit=True), gen_kwargs: (None), limit: 100.0, num_fewshot: None, batch_size: 1

| Tasks |Version|Filter|n-shot| Metric |Value| |Stderr|

|---------------------|-------|------|-----:|--------|----:|---|-----:|

|pubmedqa | 1|none | 0|acc | 0.98|± |0.0141|

|ocn |Yaml |none | 0|acc | 0.62|± |0.0488|

|professional_medicine| 0|none | 0|acc | 0.64|± |0.0482|

|college_medicine | 0|none | 0|acc | 0.65|± |0.0479|

|clinical_knowledge | 0|none | 0|acc | 0.68|± |0.0469|

|medmcqa |Yaml |none | 0|acc | 0.45|± |0.0500|

|aocnp |Yaml |none | 0|acc | 0.47|± |0.0502|

|

gokulraj/whisper-small-trail-5-preon | gokulraj | 2024-02-07T03:05:00Z | 4 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"whisper",

"automatic-speech-recognition",

"generated_from_trainer",

"ta",

"dataset:whisper-small-preon-test-1",

"base_model:openai/whisper-small",

"base_model:finetune:openai/whisper-small",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2024-02-07T02:17:45Z | ---

language:

- ta

license: apache-2.0

base_model: openai/whisper-small

tags:

- generated_from_trainer

datasets:

- whisper-small-preon-test-1

metrics:

- wer

model-index:

- name: Whisper small

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: custom dataset

type: whisper-small-preon-test-1

metrics:

- name: Wer

type: wer

value: 11.920529801324504

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper small

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the custom dataset dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1046

- Wer Ortho: 11.8421

- Wer: 11.9205

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant_with_warmup

- lr_scheduler_warmup_steps: 50

- training_steps: 500

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer Ortho | Wer |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:-------:|

| 0.4335 | 5.0 | 100 | 0.1326 | 11.8421 | 9.2715 |

| 0.0049 | 10.0 | 200 | 0.1332 | 15.7895 | 13.9073 |

| 0.0001 | 15.0 | 300 | 0.1019 | 11.8421 | 11.9205 |

| 0.0 | 20.0 | 400 | 0.1041 | 11.8421 | 11.9205 |

| 0.0 | 25.0 | 500 | 0.1046 | 11.8421 | 11.9205 |

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.0+cu121

- Datasets 2.16.1

- Tokenizers 0.15.1

|

vikhyatk/moondream1 | vikhyatk | 2024-02-07T02:57:53Z | 76,449 | 487 | transformers | [

"transformers",

"pytorch",

"safetensors",

"moondream1",

"text-generation",

"custom_code",

"en",

"autotrain_compatible",

"region:us"

] | text-generation | 2024-01-20T18:10:04Z | ---

language:

- en

---

# 🌔 moondream1

1.6B parameter model built by [@vikhyatk](https://x.com/vikhyatk) using SigLIP, Phi-1.5 and the LLaVa training dataset.

The model is release for research purposes only, commercial use is not allowed.

Try it out on [Huggingface Spaces](https://huggingface.co/spaces/vikhyatk/moondream1)!

**Usage**

```

pip install transformers timm einops

```

```python

from transformers import AutoModelForCausalLM, CodeGenTokenizerFast as Tokenizer

from PIL import Image

model_id = "vikhyatk/moondream1"

model = AutoModelForCausalLM.from_pretrained(model_id, trust_remote_code=True)

tokenizer = Tokenizer.from_pretrained(model_id)

image = Image.open('<IMAGE_PATH>')

enc_image = model.encode_image(image)

print(model.answer_question(enc_image, "<QUESTION>", tokenizer))

```

## Benchmarks

| Model | Parameters | VQAv2 | GQA | TextVQA |

| --- | --- | --- | --- | --- |

| LLaVA-1.5 | 13.3B | 80.0 | 63.3 | 61.3 |

| LLaVA-1.5 | 7.3B | 78.5 | 62.0 | 58.2 |

| **moondream1** | 1.6B | 74.7 | 57.9 | 35.6 |

## Examples

| Image | Examples |

| --- | --- |

|  | **What is the title of this book?**<br>The Little Book of Deep Learning<br><br>**What can you tell me about this book?**<br>The book in the image is titled "The Little Book of Deep Learning." It appears to be a guide or manual that provides information and instructions on the subject of deep learning. The book is described as being small and thin, making it easy to carry and read. It is set in a room with a view outside, suggesting that the person holding the book might be in a living or working space with a view. The content of the book is likely to be complex, as the title implies a focus on advanced concepts in deep learning.<br><br>**Where is the person standing?**<br> The person is standing on a balcony or deck, which is outside. |

|  | **What type of food is the girl holding?**<br>The girl is holding a hamburger.<br><br>**What color is the woman's hair?**<br>The woman's hair is white.<br><br>**What is the girl doing in the image?**<br>The girl in the image is eating a hamburger. |

|  | **What color is the train?**<br>The train is red.<br><br>**What is the bus doing in the image?**<br> The bus in the image is driving down a city street, passing through an intersection.<br><br>**What kind of public transportation is in the image?**<br>The image features a red train or trolley as the public transportation option. |

|  | **What is the girl looking at?**<br>The girl is looking at her reflection in the mirror.<br><br>**What color is the woman's hair?**<br>The woman's hair is brown.<br><br>**What is the woman doing in the mirror?**<br>The woman is adjusting her hair in the mirror. |

|  | **What might be the reason for the dog's aggressive behavior?**<br>The dog's aggressive behavior, including its growling, baring its teeth, and showing its sharp teeth, could be due to various reasons. It is possible that the dog is feeling threatened, scared, or defensive about something in its environment, such as another animal, a loud noise, or a person nearby. Alternatively, the dog might be reacting to a command it received from its owner, or it could be experiencing discomfort or pain, which is causing it to display aggressive behavior. It is essential to observe the dog's body language and context to determine the underlying cause of its aggressive behavior and respond accordingly to address its needs or concerns.<br><br>**What kind of dog is in the picture?**<br>The dog in the picture is a small, black and white chihuahua. |

|

matr1xx/scibert_scivocab_uncased-finetuned-molstmraw-mlm-0.3-5epochs | matr1xx | 2024-02-07T02:57:03Z | 6 | 0 | transformers | [

"transformers",

"safetensors",

"bert",

"fill-mask",

"generated_from_trainer",

"base_model:allenai/scibert_scivocab_uncased",

"base_model:finetune:allenai/scibert_scivocab_uncased",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2024-02-07T01:58:18Z | ---

base_model: allenai/scibert_scivocab_uncased

tags:

- generated_from_trainer

model-index:

- name: scibert_scivocab_uncased-finetuned-molstmraw-mlm-0.3-5epochs

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# scibert_scivocab_uncased-finetuned-molstmraw-mlm-0.3-5epochs

This model is a fine-tuned version of [allenai/scibert_scivocab_uncased](https://huggingface.co/allenai/scibert_scivocab_uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5085

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 0.8095 | 1.0 | 1265 | 0.6320 |

| 0.6481 | 2.0 | 2530 | 0.5629 |

| 0.5938 | 3.0 | 3795 | 0.5315 |

| 0.5664 | 4.0 | 5060 | 0.5132 |

| 0.5526 | 5.0 | 6325 | 0.5084 |

### Framework versions

- Transformers 4.37.2

- Pytorch 2.0.1

- Datasets 2.16.1

- Tokenizers 0.15.1

|

rhplus0831/maid-yuzu-v5 | rhplus0831 | 2024-02-07T02:52:28Z | 7 | 0 | transformers | [

"transformers",

"safetensors",

"mixtral",

"text-generation",

"conversational",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-02-06T18:20:26Z | This model was created because I was curious about whether the 8X7B model created randomly by the user would be merged with other existing 8x7b models.

Was this not suitable for the MoE's design? A problem occurred during the quantization process |

Krisbiantoro/merged_mixtral_id | Krisbiantoro | 2024-02-07T02:42:24Z | 0 | 0 | peft | [

"peft",

"tensorboard",

"safetensors",

"mixtral",

"arxiv:1910.09700",

"base_model:mistralai/Mixtral-8x7B-v0.1",

"base_model:adapter:mistralai/Mixtral-8x7B-v0.1",

"4-bit",

"bitsandbytes",

"region:us"

] | null | 2024-01-25T04:23:59Z | ---

library_name: peft

base_model: mistralai/Mixtral-8x7B-v0.1

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

### Framework versions

- PEFT 0.7.2.dev0 |

SolaireOfTheSun/Llama-2-7b-chat-hf-sharded-bf16-fine-tuned-adapters | SolaireOfTheSun | 2024-02-07T02:39:56Z | 0 | 0 | peft | [

"peft",

"arxiv:1910.09700",

"base_model:Trelis/Llama-2-7b-chat-hf-sharded-bf16",

"base_model:adapter:Trelis/Llama-2-7b-chat-hf-sharded-bf16",

"region:us"

] | null | 2024-02-07T01:52:39Z | ---

library_name: peft

base_model: Trelis/Llama-2-7b-chat-hf-sharded-bf16

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

### Framework versions

- PEFT 0.8.2 |

gokulraj/preon-whisper-tiny-trial-4 | gokulraj | 2024-02-07T02:35:12Z | 4 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"whisper",

"automatic-speech-recognition",

"generated_from_trainer",

"ta",

"dataset:tamilcustomvoice",

"base_model:openai/whisper-medium",

"base_model:finetune:openai/whisper-medium",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2024-02-07T02:12:52Z | ---

language:

- ta

license: apache-2.0

base_model: openai/whisper-medium

tags:

- generated_from_trainer

datasets:

- tamilcustomvoice

metrics:

- wer

model-index:

- name: Whisper tiny custom

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: custom dataset

type: tamilcustomvoice

metrics:

- name: Wer

type: wer

value: 7.28476821192053

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper tiny custom

This model is a fine-tuned version of [openai/whisper-medium](https://huggingface.co/openai/whisper-medium) on the custom dataset dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0315

- Wer Ortho: 9.2105

- Wer: 7.2848

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant_with_warmup

- lr_scheduler_warmup_steps: 50

- training_steps: 500

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer Ortho | Wer |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:-------:|

| 1.6536 | 2.5 | 50 | 0.4681 | 57.8947 | 50.9934 |

| 0.0732 | 5.0 | 100 | 0.0820 | 19.7368 | 15.2318 |

| 0.0076 | 7.5 | 150 | 0.0396 | 9.2105 | 7.9470 |

| 0.0013 | 10.0 | 200 | 0.0336 | 9.2105 | 8.6093 |

| 0.0007 | 12.5 | 250 | 0.0356 | 7.8947 | 5.9603 |

| 0.0005 | 15.0 | 300 | 0.0339 | 7.8947 | 5.9603 |

| 0.0004 | 17.5 | 350 | 0.0326 | 7.8947 | 5.9603 |

| 0.0003 | 20.0 | 400 | 0.0323 | 7.8947 | 5.9603 |

| 0.0003 | 22.5 | 450 | 0.0320 | 9.2105 | 7.2848 |

| 0.0002 | 25.0 | 500 | 0.0315 | 9.2105 | 7.2848 |

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.0+cu121

- Datasets 2.16.1

- Tokenizers 0.15.1

|

SparseLLM/reglu-90B | SparseLLM | 2024-02-07T02:34:26Z | 7 | 0 | transformers | [

"transformers",

"pytorch",

"safetensors",

"llama",

"text-generation",

"en",

"arxiv:2402.03804",

"license:llama2",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-01-14T07:06:32Z | ---

language:

- en

library_name: transformers

license: llama2

---

### Background

Sparse computation is increasingly recognized as an important direction in enhancing the computational efficiency of large language models (LLMs).

Prior research has demonstrated that LLMs utilizing the ReLU activation function exhibit sparse activations. Interestingly, our findings indicate that models based on SwiGLU also manifest sparse activations.

This phenomenon prompts an essential question: Which activation function is optimal for sparse LLMs? Although previous works on activation function selection have focused on the performance of LLMs, we argue that the efficiency of sparse computation should also be considered so that the LLMs can proceed with efficient inference while preserving performance.

To answer this question, we pretrain 4 LLMs with different activation functions, including ReLU, SwiGLU, ReGLU, and Squared ReLU to do more comprehensive experiments.

### Dataset

We pretrain the model on 100 billion tokens, including:

* Refinedweb

* SlimPajama

### Training Hyper-parameters

| Parameter | Value |

|-----------------------|-------------|

| Batch_Size | 4M |

| GPUs | 64xA100(80G)|

| LR_Scheduler | cosine |

| LR | 3e-4 |

### Citation:

Please kindly cite using the following BibTeX:

```bibtex

@article{zhang2024relu2,

title={ReLU$^2$ Wins: Discovering Efficient Activation Functions for Sparse LLMs},

author={Zhengyan Zhang and Yixin Song and Guanghui Yu and Xu Han and Yankai Lin and Chaojun Xiao and Chenyang Song and Zhiyuan Liu and Zeyu Mi and Maosong Sun},

journal = {arXiv preprint arXiv:2402.03804},

year={2024},

}

```

|

SparseLLM/reglu-65B | SparseLLM | 2024-02-07T02:31:37Z | 6 | 0 | transformers | [

"transformers",

"pytorch",

"safetensors",

"llama",

"text-generation",

"en",

"arxiv:2402.03804",

"license:llama2",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-01-14T06:41:43Z | ---

language:

- en

library_name: transformers

license: llama2

---

### Background

Sparse computation is increasingly recognized as an important direction in enhancing the computational efficiency of large language models (LLMs).

Prior research has demonstrated that LLMs utilizing the ReLU activation function exhibit sparse activations. Interestingly, our findings indicate that models based on SwiGLU also manifest sparse activations.

This phenomenon prompts an essential question: Which activation function is optimal for sparse LLMs? Although previous works on activation function selection have focused on the performance of LLMs, we argue that the efficiency of sparse computation should also be considered so that the LLMs can proceed with efficient inference while preserving performance.

To answer this question, we pretrain 4 LLMs with different activation functions, including ReLU, SwiGLU, ReGLU, and Squared ReLU to do more comprehensive experiments.

### Dataset

We pretrain the model on 100 billion tokens, including:

* Refinedweb

* SlimPajama

### Training Hyper-parameters

| Parameter | Value |

|-----------------------|-------------|

| Batch_Size | 4M |

| GPUs | 64xA100(80G)|

| LR_Scheduler | cosine |

| LR | 3e-4 |

### Citation:

Please kindly cite using the following BibTeX:

```bibtex

@article{zhang2024relu2,

title={ReLU$^2$ Wins: Discovering Efficient Activation Functions for Sparse LLMs},

author={Zhengyan Zhang and Yixin Song and Guanghui Yu and Xu Han and Yankai Lin and Chaojun Xiao and Chenyang Song and Zhiyuan Liu and Zeyu Mi and Maosong Sun},

journal = {arXiv preprint arXiv:2402.03804},

year={2024},

}

```

|

SparseLLM/reglu-60B | SparseLLM | 2024-02-07T02:31:16Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"safetensors",

"llama",

"text-generation",

"en",

"arxiv:2402.03804",

"license:llama2",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-01-14T06:36:19Z | ---

language:

- en

library_name: transformers

license: llama2

---

### Background

Sparse computation is increasingly recognized as an important direction in enhancing the computational efficiency of large language models (LLMs).

Prior research has demonstrated that LLMs utilizing the ReLU activation function exhibit sparse activations. Interestingly, our findings indicate that models based on SwiGLU also manifest sparse activations.

This phenomenon prompts an essential question: Which activation function is optimal for sparse LLMs? Although previous works on activation function selection have focused on the performance of LLMs, we argue that the efficiency of sparse computation should also be considered so that the LLMs can proceed with efficient inference while preserving performance.

To answer this question, we pretrain 4 LLMs with different activation functions, including ReLU, SwiGLU, ReGLU, and Squared ReLU to do more comprehensive experiments.

### Dataset

We pretrain the model on 100 billion tokens, including:

* Refinedweb

* SlimPajama

### Training Hyper-parameters

| Parameter | Value |

|-----------------------|-------------|

| Batch_Size | 4M |

| GPUs | 64xA100(80G)|

| LR_Scheduler | cosine |

| LR | 3e-4 |

### Citation:

Please kindly cite using the following BibTeX:

```bibtex

@article{zhang2024relu2,

title={ReLU$^2$ Wins: Discovering Efficient Activation Functions for Sparse LLMs},

author={Zhengyan Zhang and Yixin Song and Guanghui Yu and Xu Han and Yankai Lin and Chaojun Xiao and Chenyang Song and Zhiyuan Liu and Zeyu Mi and Maosong Sun},

journal = {arXiv preprint arXiv:2402.03804},

year={2024},

}

```

|

SparseLLM/reglu-45B | SparseLLM | 2024-02-07T02:30:31Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"safetensors",

"llama",

"text-generation",

"en",

"arxiv:2402.03804",

"license:llama2",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-01-14T06:18:00Z | ---

language:

- en

library_name: transformers

license: llama2

---

### Background

Sparse computation is increasingly recognized as an important direction in enhancing the computational efficiency of large language models (LLMs).

Prior research has demonstrated that LLMs utilizing the ReLU activation function exhibit sparse activations. Interestingly, our findings indicate that models based on SwiGLU also manifest sparse activations.

This phenomenon prompts an essential question: Which activation function is optimal for sparse LLMs? Although previous works on activation function selection have focused on the performance of LLMs, we argue that the efficiency of sparse computation should also be considered so that the LLMs can proceed with efficient inference while preserving performance.

To answer this question, we pretrain 4 LLMs with different activation functions, including ReLU, SwiGLU, ReGLU, and Squared ReLU to do more comprehensive experiments.

### Dataset

We pretrain the model on 100 billion tokens, including:

* Refinedweb

* SlimPajama

### Training Hyper-parameters

| Parameter | Value |

|-----------------------|-------------|

| Batch_Size | 4M |

| GPUs | 64xA100(80G)|

| LR_Scheduler | cosine |

| LR | 3e-4 |

### Citation:

Please kindly cite using the following BibTeX:

```bibtex

@article{zhang2024relu2,

title={ReLU$^2$ Wins: Discovering Efficient Activation Functions for Sparse LLMs},

author={Zhengyan Zhang and Yixin Song and Guanghui Yu and Xu Han and Yankai Lin and Chaojun Xiao and Chenyang Song and Zhiyuan Liu and Zeyu Mi and Maosong Sun},

journal = {arXiv preprint arXiv:2402.03804},

year={2024},

}

```

|

SparseLLM/reglu-40B | SparseLLM | 2024-02-07T02:30:17Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"safetensors",

"llama",

"text-generation",

"en",

"arxiv:2402.03804",

"license:llama2",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-01-14T05:47:31Z | ---

language:

- en

library_name: transformers

license: llama2

---

### Background

Sparse computation is increasingly recognized as an important direction in enhancing the computational efficiency of large language models (LLMs).

Prior research has demonstrated that LLMs utilizing the ReLU activation function exhibit sparse activations. Interestingly, our findings indicate that models based on SwiGLU also manifest sparse activations.

This phenomenon prompts an essential question: Which activation function is optimal for sparse LLMs? Although previous works on activation function selection have focused on the performance of LLMs, we argue that the efficiency of sparse computation should also be considered so that the LLMs can proceed with efficient inference while preserving performance.

To answer this question, we pretrain 4 LLMs with different activation functions, including ReLU, SwiGLU, ReGLU, and Squared ReLU to do more comprehensive experiments.

### Dataset

We pretrain the model on 100 billion tokens, including:

* Refinedweb

* SlimPajama

### Training Hyper-parameters

| Parameter | Value |

|-----------------------|-------------|

| Batch_Size | 4M |

| GPUs | 64xA100(80G)|

| LR_Scheduler | cosine |

| LR | 3e-4 |

### Citation:

Please kindly cite using the following BibTeX:

```bibtex

@article{zhang2024relu2,

title={ReLU$^2$ Wins: Discovering Efficient Activation Functions for Sparse LLMs},

author={Zhengyan Zhang and Yixin Song and Guanghui Yu and Xu Han and Yankai Lin and Chaojun Xiao and Chenyang Song and Zhiyuan Liu and Zeyu Mi and Maosong Sun},

journal = {arXiv preprint arXiv:2402.03804},

year={2024},

}

```

|

SparseLLM/reglu-20B | SparseLLM | 2024-02-07T02:29:17Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"safetensors",

"llama",

"text-generation",

"en",

"arxiv:2402.03804",

"license:llama2",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-01-14T05:33:06Z | ---

language:

- en

library_name: transformers

license: llama2

---

### Background

Sparse computation is increasingly recognized as an important direction in enhancing the computational efficiency of large language models (LLMs).

Prior research has demonstrated that LLMs utilizing the ReLU activation function exhibit sparse activations. Interestingly, our findings indicate that models based on SwiGLU also manifest sparse activations.

This phenomenon prompts an essential question: Which activation function is optimal for sparse LLMs? Although previous works on activation function selection have focused on the performance of LLMs, we argue that the efficiency of sparse computation should also be considered so that the LLMs can proceed with efficient inference while preserving performance.

To answer this question, we pretrain 4 LLMs with different activation functions, including ReLU, SwiGLU, ReGLU, and Squared ReLU to do more comprehensive experiments.

### Dataset

We pretrain the model on 100 billion tokens, including:

* Refinedweb

* SlimPajama

### Training Hyper-parameters

| Parameter | Value |

|-----------------------|-------------|

| Batch_Size | 4M |

| GPUs | 64xA100(80G)|

| LR_Scheduler | cosine |

| LR | 3e-4 |

### Citation:

Please kindly cite using the following BibTeX:

```bibtex

@article{zhang2024relu2,

title={ReLU$^2$ Wins: Discovering Efficient Activation Functions for Sparse LLMs},

author={Zhengyan Zhang and Yixin Song and Guanghui Yu and Xu Han and Yankai Lin and Chaojun Xiao and Chenyang Song and Zhiyuan Liu and Zeyu Mi and Maosong Sun},

journal = {arXiv preprint arXiv:2402.03804},

year={2024},

}

```

|

SparseLLM/reglu-10B | SparseLLM | 2024-02-07T02:28:42Z | 9 | 0 | transformers | [

"transformers",

"pytorch",

"safetensors",

"llama",

"text-generation",

"en",

"arxiv:2402.03804",

"license:llama2",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-01-14T05:22:05Z | ---

language:

- en

library_name: transformers

license: llama2

---

### Background

Sparse computation is increasingly recognized as an important direction in enhancing the computational efficiency of large language models (LLMs).

Prior research has demonstrated that LLMs utilizing the ReLU activation function exhibit sparse activations. Interestingly, our findings indicate that models based on SwiGLU also manifest sparse activations.

This phenomenon prompts an essential question: Which activation function is optimal for sparse LLMs? Although previous works on activation function selection have focused on the performance of LLMs, we argue that the efficiency of sparse computation should also be considered so that the LLMs can proceed with efficient inference while preserving performance.

To answer this question, we pretrain 4 LLMs with different activation functions, including ReLU, SwiGLU, ReGLU, and Squared ReLU to do more comprehensive experiments.

### Dataset

We pretrain the model on 100 billion tokens, including:

* Refinedweb

* SlimPajama

### Training Hyper-parameters

| Parameter | Value |

|-----------------------|-------------|

| Batch_Size | 4M |

| GPUs | 64xA100(80G)|

| LR_Scheduler | cosine |

| LR | 3e-4 |

### Citation:

Please kindly cite using the following BibTeX:

```bibtex

@article{zhang2024relu2,

title={ReLU$^2$ Wins: Discovering Efficient Activation Functions for Sparse LLMs},

author={Zhengyan Zhang and Yixin Song and Guanghui Yu and Xu Han and Yankai Lin and Chaojun Xiao and Chenyang Song and Zhiyuan Liu and Zeyu Mi and Maosong Sun},

journal = {arXiv preprint arXiv:2402.03804},

year={2024},

}

```

|

SparseLLM/reglu-5B | SparseLLM | 2024-02-07T02:28:12Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"safetensors",

"llama",

"text-generation",

"en",

"arxiv:2402.03804",

"license:llama2",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-01-14T05:14:35Z | ---

language:

- en

library_name: transformers

license: llama2

---

### Background

Sparse computation is increasingly recognized as an important direction in enhancing the computational efficiency of large language models (LLMs).

Prior research has demonstrated that LLMs utilizing the ReLU activation function exhibit sparse activations. Interestingly, our findings indicate that models based on SwiGLU also manifest sparse activations.

This phenomenon prompts an essential question: Which activation function is optimal for sparse LLMs? Although previous works on activation function selection have focused on the performance of LLMs, we argue that the efficiency of sparse computation should also be considered so that the LLMs can proceed with efficient inference while preserving performance.

To answer this question, we pretrain 4 LLMs with different activation functions, including ReLU, SwiGLU, ReGLU, and Squared ReLU to do more comprehensive experiments.

### Dataset

We pretrain the model on 100 billion tokens, including:

* Refinedweb

* SlimPajama

### Training Hyper-parameters

| Parameter | Value |

|-----------------------|-------------|

| Batch_Size | 4M |

| GPUs | 64xA100(80G)|

| LR_Scheduler | cosine |

| LR | 3e-4 |

### Citation:

Please kindly cite using the following BibTeX:

```bibtex

@article{zhang2024relu2,

title={ReLU$^2$ Wins: Discovering Efficient Activation Functions for Sparse LLMs},

author={Zhengyan Zhang and Yixin Song and Guanghui Yu and Xu Han and Yankai Lin and Chaojun Xiao and Chenyang Song and Zhiyuan Liu and Zeyu Mi and Maosong Sun},

journal = {arXiv preprint arXiv:2402.03804},

year={2024},

}

```

|

SparseLLM/swiglu-95B | SparseLLM | 2024-02-07T02:27:34Z | 6 | 0 | transformers | [

"transformers",

"pytorch",

"safetensors",

"llama",

"text-generation",

"en",

"arxiv:2402.03804",

"license:llama2",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-01-13T14:38:45Z | ---

language:

- en

library_name: transformers

license: llama2

---

### Background

Sparse computation is increasingly recognized as an important direction in enhancing the computational efficiency of large language models (LLMs).

Prior research has demonstrated that LLMs utilizing the ReLU activation function exhibit sparse activations. Interestingly, our findings indicate that models based on SwiGLU also manifest sparse activations.

This phenomenon prompts an essential question: Which activation function is optimal for sparse LLMs? Although previous works on activation function selection have focused on the performance of LLMs, we argue that the efficiency of sparse computation should also be considered so that the LLMs can proceed with efficient inference while preserving performance.

To answer this question, we pretrain 4 LLMs with different activation functions, including ReLU, SwiGLU, ReGLU, and Squared ReLU to do more comprehensive experiments.

### Dataset

We pretrain the model on 100 billion tokens, including:

* Refinedweb

* SlimPajama

### Training Hyper-parameters

| Parameter | Value |

|-----------------------|-------------|

| Batch_Size | 4M |

| GPUs | 64xA100(80G)|

| LR_Scheduler | cosine |

| LR | 3e-4 |

### Citation:

Please kindly cite using the following BibTeX:

```bibtex

@article{zhang2024relu2,

title={ReLU$^2$ Wins: Discovering Efficient Activation Functions for Sparse LLMs},

author={Zhengyan Zhang and Yixin Song and Guanghui Yu and Xu Han and Yankai Lin and Chaojun Xiao and Chenyang Song and Zhiyuan Liu and Zeyu Mi and Maosong Sun},

journal = {arXiv preprint arXiv:2402.03804},

year={2024},

}

```

|

mathreader/ppo-LunarLander-v2 | mathreader | 2024-02-07T02:26:22Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2024-02-07T02:26:04Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 258.96 +/- 13.10

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

SparseLLM/swiglu-25B | SparseLLM | 2024-02-07T02:22:10Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"safetensors",

"llama",

"text-generation",

"en",

"arxiv:2402.03804",

"license:llama2",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-01-13T14:08:49Z | ---

language:

- en

library_name: transformers

license: llama2

---

### Background

Sparse computation is increasingly recognized as an important direction in enhancing the computational efficiency of large language models (LLMs).

Prior research has demonstrated that LLMs utilizing the ReLU activation function exhibit sparse activations. Interestingly, our findings indicate that models based on SwiGLU also manifest sparse activations.

This phenomenon prompts an essential question: Which activation function is optimal for sparse LLMs? Although previous works on activation function selection have focused on the performance of LLMs, we argue that the efficiency of sparse computation should also be considered so that the LLMs can proceed with efficient inference while preserving performance.

To answer this question, we pretrain 4 LLMs with different activation functions, including ReLU, SwiGLU, ReGLU, and Squared ReLU to do more comprehensive experiments.

### Dataset

We pretrain the model on 100 billion tokens, including:

* Refinedweb

* SlimPajama

### Training Hyper-parameters

| Parameter | Value |

|-----------------------|-------------|

| Batch_Size | 4M |

| GPUs | 64xA100(80G)|

| LR_Scheduler | cosine |

| LR | 3e-4 |

### Citation:

Please kindly cite using the following BibTeX:

```bibtex

@article{zhang2024relu2,

title={ReLU$^2$ Wins: Discovering Efficient Activation Functions for Sparse LLMs},

author={Zhengyan Zhang and Yixin Song and Guanghui Yu and Xu Han and Yankai Lin and Chaojun Xiao and Chenyang Song and Zhiyuan Liu and Zeyu Mi and Maosong Sun},

journal = {arXiv preprint arXiv:2402.03804},

year={2024},

}

```

|

SparseLLM/swiglu-35B | SparseLLM | 2024-02-07T02:21:35Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"safetensors",

"llama",

"text-generation",

"en",

"arxiv:2402.03804",

"license:llama2",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-01-13T14:00:50Z | ---

language:

- en

library_name: transformers

license: llama2

---

### Background

Sparse computation is increasingly recognized as an important direction in enhancing the computational efficiency of large language models (LLMs).

Prior research has demonstrated that LLMs utilizing the ReLU activation function exhibit sparse activations. Interestingly, our findings indicate that models based on SwiGLU also manifest sparse activations.

This phenomenon prompts an essential question: Which activation function is optimal for sparse LLMs? Although previous works on activation function selection have focused on the performance of LLMs, we argue that the efficiency of sparse computation should also be considered so that the LLMs can proceed with efficient inference while preserving performance.

To answer this question, we pretrain 4 LLMs with different activation functions, including ReLU, SwiGLU, ReGLU, and Squared ReLU to do more comprehensive experiments.

### Dataset

We pretrain the model on 100 billion tokens, including:

* Refinedweb

* SlimPajama

### Training Hyper-parameters

| Parameter | Value |

|-----------------------|-------------|

| Batch_Size | 4M |

| GPUs | 64xA100(80G)|

| LR_Scheduler | cosine |

| LR | 3e-4 |

### Citation:

Please kindly cite using the following BibTeX:

```bibtex

@article{zhang2024relu2,

title={ReLU$^2$ Wins: Discovering Efficient Activation Functions for Sparse LLMs},

author={Zhengyan Zhang and Yixin Song and Guanghui Yu and Xu Han and Yankai Lin and Chaojun Xiao and Chenyang Song and Zhiyuan Liu and Zeyu Mi and Maosong Sun},

journal = {arXiv preprint arXiv:2402.03804},

year={2024},

}

```

|

SparseLLM/swiglu-40B | SparseLLM | 2024-02-07T02:21:20Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"safetensors",

"llama",

"text-generation",

"en",

"arxiv:2402.03804",

"license:llama2",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-01-13T13:58:26Z | ---

language:

- en

library_name: transformers

license: llama2

---

### Background

Sparse computation is increasingly recognized as an important direction in enhancing the computational efficiency of large language models (LLMs).

Prior research has demonstrated that LLMs utilizing the ReLU activation function exhibit sparse activations. Interestingly, our findings indicate that models based on SwiGLU also manifest sparse activations.