modelId (string, lengths 5 to 139) | author (string, lengths 2 to 42) | last_modified (timestamp[us, tz=UTC], 2020-02-15 11:33:14 to 2025-06-22 00:45:16) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (string, 491 classes) | tags (sequence, lengths 1 to 4.05k) | pipeline_tag (string, 54 classes) | createdAt (timestamp[us, tz=UTC], 2022-03-02 23:29:04 to 2025-06-22 00:44:03) | card (string, lengths 11 to 1.01M) |

|---|---|---|---|---|---|---|---|---|---|

ws11yrin/dqn-SpaceInvadersNoFrameskip-v4 | ws11yrin | 2024-05-13T19:13:45Z | 1 | 0 | stable-baselines3 | [

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2024-05-13T19:05:24Z | ---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

metrics:

- type: mean_reward

value: 832.00 +/- 383.77

name: mean_reward

verified: false

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

Install the RL Zoo (with SB3 and SB3-Contrib):

```bash

pip install rl_zoo3

```

```

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga ws11yrin -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```


## Training (with the RL Zoo)

```

python -m rl_zoo3.train --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga ws11yrin

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 10000000.0),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```
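
As a rough illustration of what the exploration settings above imply, the sketch below computes a linearly decaying epsilon. It assumes an initial epsilon of 1.0, which is not listed in the hyperparameters (it is the documented Stable-Baselines3 default for `exploration_initial_eps`), and a decay spread over the first `exploration_fraction * n_timesteps` steps. The function name `epsilon_at` is hypothetical and not part of any library.

```python
# Values taken from the hyperparameters listed above.
N_TIMESTEPS = 10_000_000
EXPLORATION_FRACTION = 0.1
EXPLORATION_FINAL_EPS = 0.01
# Assumption: the initial epsilon is not listed in the card.
EXPLORATION_INITIAL_EPS = 1.0


def epsilon_at(step: int) -> float:
    """Linearly interpolate epsilon from its initial to its final value."""
    decay_steps = EXPLORATION_FRACTION * N_TIMESTEPS
    progress = min(step / decay_steps, 1.0)  # clamp once the decay is over
    return EXPLORATION_INITIAL_EPS + progress * (
        EXPLORATION_FINAL_EPS - EXPLORATION_INITIAL_EPS
    )


for step in (0, 500_000, 1_000_000, 5_000_000):
    print(step, round(epsilon_at(step), 4))
```

Under these assumptions, epsilon reaches its final value after the first 1,000,000 environment steps and stays there for the rest of training.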

## Environment Arguments

```python

{'render_mode': 'rgb_array'}

```

|

fahad0071/Therapist-2 | fahad0071 | 2024-05-13T19:11:38Z | 0 | 0 | peft | [

"peft",

"safetensors",

"trl",

"sft",

"generated_from_trainer",

"dataset:generator",

"base_model:meta-llama/Llama-2-7b-chat-hf",

"base_model:adapter:meta-llama/Llama-2-7b-chat-hf",

"license:llama2",

"region:us"

] | null | 2024-05-13T19:02:18Z | ---

license: llama2

library_name: peft

tags:

- trl

- sft

- generated_from_trainer

base_model: meta-llama/Llama-2-7b-chat-hf

datasets:

- generator

model-index:

- name: Therapist-2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Therapist-2

This model is a fine-tuned version of [meta-llama/Llama-2-7b-chat-hf](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf) on the generator dataset.

It achieves the following results on the evaluation set:

- Loss: 1.2547
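
For readers who prefer perplexity to raw loss, the sketch below converts the evaluation loss above. It assumes the reported loss is a mean per-token cross-entropy measured in nats, which is typical for causal language model fine-tuning but is not stated in the card.

```python
import math

eval_loss = 1.2547  # evaluation loss reported above

# Assumption: the loss is a mean per-token cross-entropy in nats,
# so perplexity is simply its exponential.
perplexity = math.exp(eval_loss)
print(f"perplexity: {perplexity:.2f}")
```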

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- lr_scheduler_warmup_ratio: 0.03

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.188 | 1.0 | 272 | 1.2547 |

### Framework versions

- PEFT 0.10.0

- Transformers 4.39.3

- Pytorch 2.1.2

- Datasets 2.18.0

- Tokenizers 0.15.2 |

ulasfiliz954/ppo-LunarLander-v1 | ulasfiliz954 | 2024-05-13T19:11:30Z | 0 | 0 | null | [

"tensorboard",

"LunarLander-v2",

"ppo",

"deep-reinforcement-learning",

"reinforcement-learning",

"custom-implementation",

"deep-rl-course",

"model-index",

"region:us"

] | reinforcement-learning | 2024-05-13T19:11:23Z | ---

tags:

- LunarLander-v2

- ppo

- deep-reinforcement-learning

- reinforcement-learning

- custom-implementation

- deep-rl-course

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: -153.34 +/- 69.65

name: mean_reward

verified: false

---

# PPO Agent Playing LunarLander-v2

This is a trained model of a PPO agent playing LunarLander-v2.

# Hyperparameters

|

Sonatafyai/scibert-finetuned_ADEs_SonatafyAI | Sonatafyai | 2024-05-13T19:07:26Z | 222 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"bert",

"token-classification",

"generated_from_trainer",

"base_model:jsylee/scibert_scivocab_uncased-finetuned-ner",

"base_model:finetune:jsylee/scibert_scivocab_uncased-finetuned-ner",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | token-classification | 2024-05-13T19:00:12Z | ---

base_model: jsylee/scibert_scivocab_uncased-finetuned-ner

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: scibert-finetuned_ADEs_SonatafyAI

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# scibert-finetuned_ADEs_SonatafyAI

This model is a fine-tuned version of [jsylee/scibert_scivocab_uncased-finetuned-ner](https://huggingface.co/jsylee/scibert_scivocab_uncased-finetuned-ner) on an unspecified dataset (the Trainer recorded the dataset name as `None`).

It achieves the following results on the evaluation set:

- Loss: 0.2004

- Precision: 0.6454

- Recall: 0.6962

- F1: 0.6698

- Accuracy: 0.9095
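
The F1 value above can be cross-checked against the reported precision and recall. The sketch below uses the standard harmonic-mean definition; the helper name `f1_score` is illustrative only, and the inputs are the rounded figures from the list above, so small rounding differences are possible in general.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall as reported for the evaluation set.
f1 = f1_score(0.6454, 0.6962)
print(round(f1, 4))
```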

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-07

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.2918 | 1.0 | 640 | 0.2240 | 0.6095 | 0.7148 | 0.6579 | 0.9029 |

| 0.2305 | 2.0 | 1280 | 0.2064 | 0.6354 | 0.6896 | 0.6614 | 0.9079 |

| 0.2223 | 3.0 | 1920 | 0.2031 | 0.636 | 0.6951 | 0.6642 | 0.9082 |

| 0.2145 | 4.0 | 2560 | 0.2010 | 0.6419 | 0.6973 | 0.6684 | 0.9089 |

| 0.2081 | 5.0 | 3200 | 0.2004 | 0.6454 | 0.6962 | 0.6698 | 0.9095 |

### Framework versions

- Transformers 4.40.2

- Pytorch 2.2.1+cu121

- Datasets 2.19.1

- Tokenizers 0.19.1

|

RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf | RichardErkhov | 2024-05-13T19:05:37Z | 212 | 2 | null | [

"gguf",

"endpoints_compatible",

"region:us",

"conversational"

] | null | 2024-05-13T14:45:54Z | Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

Qwen1.5-MoE-A2.7B-Chat - GGUF

- Model creator: https://huggingface.co/Qwen/

- Original model: https://huggingface.co/Qwen/Qwen1.5-MoE-A2.7B-Chat/

| Name | Quant method | Size |

| ---- | ---- | ---- |

| [Qwen1.5-MoE-A2.7B-Chat.Q2_K.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.Q2_K.gguf) | Q2_K | 5.49GB |

| [Qwen1.5-MoE-A2.7B-Chat.IQ3_XS.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.IQ3_XS.gguf) | IQ3_XS | 6.07GB |

| [Qwen1.5-MoE-A2.7B-Chat.IQ3_S.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.IQ3_S.gguf) | IQ3_S | 6.37GB |

| [Qwen1.5-MoE-A2.7B-Chat.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.Q3_K_S.gguf) | Q3_K_S | 6.37GB |

| [Qwen1.5-MoE-A2.7B-Chat.IQ3_M.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.IQ3_M.gguf) | IQ3_M | 6.46GB |

| [Qwen1.5-MoE-A2.7B-Chat.Q3_K.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.Q3_K.gguf) | Q3_K | 6.93GB |

| [Qwen1.5-MoE-A2.7B-Chat.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.Q3_K_M.gguf) | Q3_K_M | 6.93GB |

| [Qwen1.5-MoE-A2.7B-Chat.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.Q3_K_L.gguf) | Q3_K_L | 7.21GB |

| [Qwen1.5-MoE-A2.7B-Chat.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.IQ4_XS.gguf) | IQ4_XS | 7.4GB |

| [Qwen1.5-MoE-A2.7B-Chat.Q4_0.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.Q4_0.gguf) | Q4_0 | 7.59GB |

| [Qwen1.5-MoE-A2.7B-Chat.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.IQ4_NL.gguf) | IQ4_NL | 7.68GB |

| [Qwen1.5-MoE-A2.7B-Chat.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.Q4_K_S.gguf) | Q4_K_S | 8.11GB |

| [Qwen1.5-MoE-A2.7B-Chat.Q4_K.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.Q4_K.gguf) | Q4_K | 8.84GB |

| [Qwen1.5-MoE-A2.7B-Chat.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.Q4_K_M.gguf) | Q4_K_M | 8.84GB |

| [Qwen1.5-MoE-A2.7B-Chat.Q4_1.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.Q4_1.gguf) | Q4_1 | 8.41GB |

| [Qwen1.5-MoE-A2.7B-Chat.Q5_0.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.Q5_0.gguf) | Q5_0 | 9.22GB |

| [Qwen1.5-MoE-A2.7B-Chat.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.Q5_K_S.gguf) | Q5_K_S | 9.46GB |

| [Qwen1.5-MoE-A2.7B-Chat.Q5_K.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.Q5_K.gguf) | Q5_K | 10.09GB |

| [Qwen1.5-MoE-A2.7B-Chat.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.Q5_K_M.gguf) | Q5_K_M | 10.09GB |

| [Qwen1.5-MoE-A2.7B-Chat.Q5_1.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.Q5_1.gguf) | Q5_1 | 10.04GB |

| [Qwen1.5-MoE-A2.7B-Chat.Q6_K.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.Q6_K.gguf) | Q6_K | 11.89GB |

| [Qwen1.5-MoE-A2.7B-Chat.Q8_0.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen1.5-MoE-A2.7B-Chat-gguf/blob/main/Qwen1.5-MoE-A2.7B-Chat.Q8_0.gguf) | Q8_0 | 14.18GB |
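
To compare the quantization levels in the table on a common scale, the sketch below converts file size to approximate bits per weight. It relies on the 14.3B total parameter count quoted in the original model description further down, and it treats the meaning of "GB" as unknown: the card does not say whether sizes are in units of 10^9 or 2^30 bytes, so both are computed. File metadata overhead is ignored, and the helper name `bits_per_weight` is hypothetical.

```python
TOTAL_PARAMS = 14.3e9  # total parameters, as stated in the original model description


def bits_per_weight(size_gb: float, bytes_per_gb: int) -> float:
    """Approximate average bits stored per parameter for a given file size."""
    return size_gb * bytes_per_gb * 8 / TOTAL_PARAMS


# A few rows from the table above: (quant method, size in "GB").
for name, size in [("Q2_K", 5.49), ("Q4_K_M", 8.84), ("Q8_0", 14.18)]:
    decimal = bits_per_weight(size, 10**9)  # if GB means 10^9 bytes
    binary = bits_per_weight(size, 2**30)   # if GB means 2^30 bytes
    print(f"{name}: {decimal:.2f} to {binary:.2f} bits per weight")
```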

Original model description:

---

license: other

license_name: tongyi-qianwen

license_link: >-

https://huggingface.co/Qwen/Qwen1.5-MoE-A2.7B-Chat/blob/main/LICENSE

language:

- en

pipeline_tag: text-generation

tags:

- chat

---

# Qwen1.5-MoE-A2.7B-Chat

## Introduction

Qwen1.5-MoE is a transformer-based MoE decoder-only language model pretrained on a large amount of data.

For more details, please refer to our [blog post](https://qwenlm.github.io/blog/qwen-moe/) and [GitHub repo](https://github.com/QwenLM/Qwen1.5).

## Model Details

Qwen1.5-MoE employs a Mixture of Experts (MoE) architecture in which the models are upcycled from dense language models. For instance, `Qwen1.5-MoE-A2.7B` is upcycled from `Qwen-1.8B`. It has 14.3B parameters in total and 2.7B activated parameters at runtime. While achieving performance comparable to `Qwen1.5-7B`, it requires only 25% of the training resources. We also observed that its inference speed is 1.74 times that of `Qwen1.5-7B`.
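
As a quick piece of arithmetic on the figures in this paragraph, the sketch below computes the share of parameters that is active per token. It uses only the two parameter counts quoted above and makes no claim about how the experts are routed.

```python
total_params = 14.3e9   # total parameters quoted above
active_params = 2.7e9   # activated parameters quoted above

active_fraction = active_params / total_params
print(f"about {active_fraction:.1%} of the parameters are active per token")
```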

## Training details

We pretrained the models with a large amount of data, and we post-trained the models with both supervised finetuning and direct preference optimization.

## Requirements

The code for Qwen1.5-MoE is available in the latest Hugging Face transformers. We advise you to build from source with the command `pip install git+https://github.com/huggingface/transformers`; otherwise you might encounter the following error:

```

KeyError: 'qwen2_moe'.

```

## Quickstart

The following code snippet uses `apply_chat_template` to show how to load the tokenizer and model and how to generate content.

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

device = "cuda" # the device to load the model onto

model = AutoModelForCausalLM.from_pretrained(

"Qwen/Qwen1.5-MoE-A2.7B-Chat",

torch_dtype="auto",

device_map="auto"

)

tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen1.5-MoE-A2.7B-Chat")

prompt = "Give me a short introduction to large language model."

messages = [

{"role": "system", "content": "You are a helpful assistant."},

{"role": "user", "content": prompt}

]

text = tokenizer.apply_chat_template(

messages,

tokenize=False,

add_generation_prompt=True

)

model_inputs = tokenizer([text], return_tensors="pt").to(device)

generated_ids = model.generate(

model_inputs.input_ids,

max_new_tokens=512

)

generated_ids = [

output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)

]

response = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]

```
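
The list comprehension near the end of the snippet is easy to misread, so here is a minimal sketch of the same slicing on plain Python lists. It assumes, as is usual for decoder-only generation, that each generated sequence begins with its prompt tokens; the token IDs and the helper name `strip_prompt` are made up for illustration.

```python
def strip_prompt(input_ids, generated_ids):
    """Drop the leading prompt tokens from each generated sequence."""
    return [
        output[len(prompt):]
        for prompt, output in zip(input_ids, generated_ids)
    ]


# Hypothetical token IDs: a 3-token prompt followed by 3 newly generated tokens.
prompt_batch = [[101, 7, 8]]
full_batch = [[101, 7, 8, 42, 43, 2]]

new_tokens = strip_prompt(prompt_batch, full_batch)
print(new_tokens)
```

Only the newly generated tokens remain, which is why the decoded `response` in the snippet does not repeat the prompt.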

For quantized models, we advise you to use the GPTQ counterparts, namely `Qwen1.5-MoE-A2.7B-Chat-GPTQ-Int4`.

## Tips

* If you encounter code switching or other bad cases, we advise you to use our provided hyper-parameters in `generation_config.json`.

*

|

infekt/virtue_id_03 | infekt | 2024-05-13T19:04:11Z | 5 | 0 | transformers | [

"transformers",

"safetensors",

"gguf",

"llama",

"text-generation-inference",

"unsloth",

"trl",

"en",

"base_model:unsloth/llama-3-8b-bnb-4bit",

"base_model:quantized:unsloth/llama-3-8b-bnb-4bit",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2024-05-13T18:12:28Z | ---

language:

- en

license: apache-2.0

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- trl

base_model: unsloth/llama-3-8b-bnb-4bit

---

# Uploaded model

- **Developed by:** infekt

- **License:** apache-2.0

- **Finetuned from model:** unsloth/llama-3-8b-bnb-4bit

This llama model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

manusehgal/llamafinetuned | manusehgal | 2024-05-13T19:02:32Z | 2 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"text-generation-inference",

"unsloth",

"trl",

"sft",

"en",

"base_model:unsloth/llama-3-8b-bnb-4bit",

"base_model:finetune:unsloth/llama-3-8b-bnb-4bit",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-05-13T18:01:22Z | ---

language:

- en

license: apache-2.0

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- trl

- sft

base_model: unsloth/llama-3-8b-bnb-4bit

---

# Uploaded model

- **Developed by:** manusehgal

- **License:** apache-2.0

- **Finetuned from model:** unsloth/llama-3-8b-bnb-4bit

This llama model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

veronica-girolimetti/mistral-ft-lora04 | veronica-girolimetti | 2024-05-13T18:57:19Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"text-generation-inference",

"unsloth",

"mistral",

"trl",

"en",

"base_model:unsloth/mistral-7b-instruct-v0.2-bnb-4bit",

"base_model:finetune:unsloth/mistral-7b-instruct-v0.2-bnb-4bit",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2024-05-13T18:49:25Z | ---

language:

- en

license: apache-2.0

tags:

- text-generation-inference

- transformers

- unsloth

- mistral

- trl

base_model: unsloth/mistral-7b-instruct-v0.2-bnb-4bit

---

# Uploaded model

- **Developed by:** veronica-girolimetti

- **License:** apache-2.0

- **Finetuned from model:** unsloth/mistral-7b-instruct-v0.2-bnb-4bit

This mistral model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

veronica-girolimetti/mistral-ft-04 | veronica-girolimetti | 2024-05-13T18:55:02Z | 4 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"text-generation-inference",

"unsloth",

"trl",

"sft",

"conversational",

"en",

"base_model:unsloth/mistral-7b-instruct-v0.2-bnb-4bit",

"base_model:finetune:unsloth/mistral-7b-instruct-v0.2-bnb-4bit",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-05-13T18:43:39Z | ---

language:

- en

license: apache-2.0

tags:

- text-generation-inference

- transformers

- unsloth

- mistral

- trl

- sft

base_model: unsloth/mistral-7b-instruct-v0.2-bnb-4bit

---

# Uploaded model

- **Developed by:** veronica-girolimetti

- **License:** apache-2.0

- **Finetuned from model:** unsloth/mistral-7b-instruct-v0.2-bnb-4bit

This mistral model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

danyoung/leonardo | danyoung | 2024-05-13T18:54:40Z | 4 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-05-13T18:45:58Z | ---

license: apache-2.0

---

|

Sonatafyai/bert-base-cased-finetuned_ADEs_SonatafyAI | Sonatafyai | 2024-05-13T18:54:21Z | 117 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"bert",

"token-classification",

"generated_from_trainer",

"base_model:google-bert/bert-base-cased",

"base_model:finetune:google-bert/bert-base-cased",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | token-classification | 2024-05-13T18:46:04Z | ---

license: apache-2.0

base_model: bert-base-cased

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: bert-base-cased-finetuned_ADEs_SonatafyAI

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-cased-finetuned_ADEs_SonatafyAI

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on an unspecified dataset (the Trainer recorded the dataset name as `None`).

It achieves the following results on the evaluation set:

- Loss: 0.3543

- Precision: 0.3857

- Recall: 0.4776

- F1: 0.4268

- Accuracy: 0.8554

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-07

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.5644 | 1.0 | 640 | 0.4536 | 0.2717 | 0.3148 | 0.2916 | 0.8285 |

| 0.4695 | 2.0 | 1280 | 0.3977 | 0.3292 | 0.4109 | 0.3656 | 0.8462 |

| 0.4253 | 3.0 | 1920 | 0.3717 | 0.3653 | 0.4536 | 0.4047 | 0.8509 |

| 0.3872 | 4.0 | 2560 | 0.3578 | 0.3747 | 0.4623 | 0.4139 | 0.8544 |

| 0.3758 | 5.0 | 3200 | 0.3543 | 0.3857 | 0.4776 | 0.4268 | 0.8554 |
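
The step counts in the table give a rough handle on the size of the training set. The sketch below bounds it from 640 steps per epoch and a batch size of 8, assuming single-device training, no gradient accumulation (none is listed above), and a final partial batch that is kept rather than dropped. The helper name `dataset_size_bounds` is hypothetical.

```python
def dataset_size_bounds(steps_per_epoch: int, batch_size: int) -> tuple[int, int]:
    """Smallest and largest example counts that need exactly this many batches."""
    lower = (steps_per_epoch - 1) * batch_size + 1  # last batch holds one example
    upper = steps_per_epoch * batch_size            # last batch is full
    return lower, upper


low, high = dataset_size_bounds(steps_per_epoch=640, batch_size=8)
print(f"between {low} and {high} training examples")
```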

### Framework versions

- Transformers 4.40.2

- Pytorch 2.2.1+cu121

- Datasets 2.19.1

- Tokenizers 0.19.1

|

Amo/so-vits-svc-4.0_GA | Amo | 2024-05-13T18:53:45Z | 0 | 4 | null | [

"audio-to-audio",

"region:us"

] | audio-to-audio | 2023-03-07T12:28:07Z | ---

pipeline_tag: audio-to-audio

---

Models created with, and for use in, the Vul So-Vits-SVC 4.0 UI.

**_List of models:**

**----Pony:**

CakeMrs_70<br>Derpy_100000<br>Spike_45000<br>TreeHugger_69k<br>ddm_DaringDo_100k (+ Lighting Dust and Moondancer)<br>djPon3_Dash_Mix_85000<br>Tirek_100k

<br>DJpon3_V3_120k<br>OctaviaBrit (trained on 15ai tts audio)

**----Non-Pony:**

DRD_60000<br>Dagoth_Ur_50k<br>Dagoth_Ur_80k<br>Glados_50k<br>Gwenpool_50000 (multilingual)<br>NamelessHero_eng<br>Saul_Goodman_80000

TF2_SaxtonHale_100k<br>TF2_demoman_75k<br>TF2_engineer_60k<br>TF2_heavy_100k<br>TF2_medic_100k<br>TF2_scout_60k<br>TF2_sniper_60k<br>TF2_soldier_60k<br>TF2_spy_60k

g1_Diego_PL_60000<br>Boss_MGS_80k<br>Gaunter ODimm<br>B1_BattleDroid<br>Frank Sinatra

**_List of datasets:**

TF2_SaxtonHale_100k, TF2_demoman_75k, TF2_engineer_60k, TF2_heavy_100k, TF2_medic_100k, TF2_scout_60k, TF2_sniper_60k, TF2_soldier_60k, TF2_spy_60k

DRD_MLP<br>Daring_Do_multiple<br>DerpyWavExpanded<br>djPon3_Dash_Mix_(audio_dataset) (OLD; I'm working on an update to this)<br>Dagoth Ur<br>Boss_MGS<br>Gaunter ODimm

<br><br>OctaviaBrit (15ai tts audio)

B1_BattleDroid<br>DJpon3_V3 (NEW dataset, better than old one but still not perfect)<br>saul_goodman<br>Frank Sinatra<br>StarTrekComputer

|

Ramikan-BR/tinyllama_PY-CODER-4bit-lora_4k-v8 | Ramikan-BR | 2024-05-13T18:53:27Z | 5 | 0 | transformers | [

"transformers",

"safetensors",

"gguf",

"llama",

"text-generation",

"text-generation-inference",

"unsloth",

"en",

"base_model:Ramikan-BR/tinyllama_PY-CODER-4bit-lora_4k-v7",

"base_model:quantized:Ramikan-BR/tinyllama_PY-CODER-4bit-lora_4k-v7",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-05-13T06:14:45Z | ---

language:

- en

license: apache-2.0

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- gguf

base_model: Ramikan-BR/tinyllama_PY-CODER-4bit-lora_4k-v7

---

# Uploaded model

- **Developed by:** Ramikan-BR

- **License:** apache-2.0

- **Finetuned from model:** Ramikan-BR/tinyllama_PY-CODER-4bit-lora_4k-v7

This llama model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

Angy309/swinv2-tiny-patch4-window8-256-prueba2 | Angy309 | 2024-05-13T18:48:10Z | 151 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"swinv2",

"image-classification",

"generated_from_trainer",

"dataset:imagefolder",

"base_model:microsoft/swinv2-tiny-patch4-window8-256",

"base_model:finetune:microsoft/swinv2-tiny-patch4-window8-256",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | image-classification | 2024-05-13T18:47:11Z | ---

license: apache-2.0

base_model: microsoft/swinv2-tiny-patch4-window8-256

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:

- name: swinv2-tiny-patch4-window8-256-prueba2

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.7948717948717948

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swinv2-tiny-patch4-window8-256-prueba2

This model is a fine-tuned version of [microsoft/swinv2-tiny-patch4-window8-256](https://huggingface.co/microsoft/swinv2-tiny-patch4-window8-256) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4983

- Accuracy: 0.7949

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:------:|:----:|:---------------:|:--------:|

| No log | 0.9091 | 5 | 0.5855 | 0.7692 |

| 0.6548 | 2.0 | 11 | 0.4983 | 0.7949 |

| 0.6548 | 2.7273 | 15 | 0.4941 | 0.7692 |
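
The fractional epoch values in the table follow from gradient accumulation. The sketch below reproduces them, assuming 5.5 optimizer steps per epoch; that figure is inferred from step 11 coinciding with epoch 2.0 and is not stated in the card.

```python
train_batch_size = 32
gradient_accumulation_steps = 4

# Matches the total_train_batch_size listed in the hyperparameters.
total_train_batch_size = train_batch_size * gradient_accumulation_steps

# Assumption: inferred from the table row where step 11 corresponds to epoch 2.0.
steps_per_epoch = 11 / 2.0

epochs = [round(step / steps_per_epoch, 4) for step in (5, 11, 15)]
print(total_train_batch_size, epochs)
```

A non-integer number of optimizer steps per epoch is what one would expect when the number of micro-batches per epoch is not a multiple of the accumulation factor.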

### Framework versions

- Transformers 4.40.2

- Pytorch 2.2.1+cu121

- Datasets 2.19.1

- Tokenizers 0.19.1

|

Angy309/swin-tiny-patch4-window7-224-pueba1 | Angy309 | 2024-05-13T18:47:06Z | 217 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"swin",

"image-classification",

"generated_from_trainer",

"dataset:imagefolder",

"base_model:microsoft/swin-tiny-patch4-window7-224",

"base_model:finetune:microsoft/swin-tiny-patch4-window7-224",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | image-classification | 2024-05-13T18:33:06Z | ---

license: apache-2.0

base_model: microsoft/swin-tiny-patch4-window7-224

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:

- name: swin-tiny-patch4-window7-224-pueba1

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.7948717948717948

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swin-tiny-patch4-window7-224-pueba1

This model is a fine-tuned version of [microsoft/swin-tiny-patch4-window7-224](https://huggingface.co/microsoft/swin-tiny-patch4-window7-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4784

- Accuracy: 0.7949

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:------:|:----:|:---------------:|:--------:|

| No log | 0.9091 | 5 | 0.6238 | 0.6410 |

| 0.653 | 2.0 | 11 | 0.5156 | 0.7692 |

| 0.653 | 2.7273 | 15 | 0.4784 | 0.7949 |
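
The accuracy figures hint at how small the evaluation set is. The sketch below recovers a simple fraction from the full-precision accuracy in the card's metadata (0.7948717948717948) and checks that the same denominator reproduces the rounded values in the table. The cap of 100 on the denominator and the correct-prediction counts 25, 30 and 31 are assumptions made for illustration; a multiple of the recovered denominator would fit equally well.

```python
from fractions import Fraction

reported_accuracy = 0.7948717948717948  # value from the card's model-index metadata

# Assumption: the evaluation set has at most 100 examples.
ratio = Fraction(reported_accuracy).limit_denominator(100)
print(f"{ratio.numerator} correct out of {ratio.denominator}")

# Hypothetical correct-prediction counts that would yield the table's accuracies.
table_values = [round(correct / ratio.denominator, 4) for correct in (25, 30, 31)]
print(table_values)
```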

### Framework versions

- Transformers 4.40.2

- Pytorch 2.2.1+cu121

- Datasets 2.19.1

- Tokenizers 0.19.1

|

davelotito/donut-base-sroie-metrics-combined-new | davelotito | 2024-05-13T18:45:55Z | 7 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"vision-encoder-decoder",

"image-text-to-text",

"generated_from_trainer",

"base_model:naver-clova-ix/donut-base",

"base_model:finetune:naver-clova-ix/donut-base",

"license:mit",

"endpoints_compatible",

"region:us"

] | image-text-to-text | 2024-04-24T15:05:08Z | ---

license: mit

base_model: naver-clova-ix/donut-base

tags:

- generated_from_trainer

metrics:

- bleu

- wer

model-index:

- name: donut-base-sroie-metrics-combined-new

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# donut-base-sroie-metrics-combined-new

This model is a fine-tuned version of [naver-clova-ix/donut-base](https://huggingface.co/naver-clova-ix/donut-base) on an unspecified dataset (the Trainer recorded the dataset name as `None`).

It achieves the following results on the evaluation set:

- Loss: 0.4671

- Bleu: 0.0662

- Precisions: [0.785140562248996, 0.6825396825396826, 0.6197916666666666, 0.5626911314984709]

- Brevity Penalty: 0.1007

- Length Ratio: 0.3035

- Translation Length: 498

- Reference Length: 1641

- Cer: 0.7528

- Wer: 0.8385
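
The BLEU-related figures above are mutually consistent, which the sketch below makes explicit. It assumes the standard corpus-level BLEU definition: the brevity penalty multiplied by the geometric mean of the four n-gram precisions, with uniform weights. Which BLEU implementation produced the card's numbers is not stated.

```python
import math

# Values reported for the evaluation set above.
precisions = [0.785140562248996, 0.6825396825396826,
              0.6197916666666666, 0.5626911314984709]
translation_length = 498
reference_length = 1641

# Brevity penalty: 1 if the output is at least as long as the reference,
# otherwise exp(1 - reference_length / translation_length).
if translation_length >= reference_length:
    brevity_penalty = 1.0
else:
    brevity_penalty = math.exp(1 - reference_length / translation_length)

# Geometric mean of the n-gram precisions (uniform weights assumed).
geo_mean = math.exp(sum(math.log(p) for p in precisions) / len(precisions))

bleu = brevity_penalty * geo_mean
length_ratio = translation_length / reference_length
print(round(brevity_penalty, 4), round(length_ratio, 4), round(bleu, 4))
```

The n-gram precisions are fairly high, so the low BLEU score is driven mostly by the brevity penalty: the generated text is only about 30% as long as the reference.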

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 2

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Bleu | Precisions | Brevity Penalty | Length Ratio | Translation Length | Reference Length | Cer | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|:----------------------------------------------------------------------------------:|:---------------:|:------------:|:------------------:|:----------------:|:------:|:------:|

| 3.6559 | 1.0 | 253 | 1.5613 | 0.0007 | [0.5056179775280899, 0.1943127962085308, 0.07692307692307693, 0.02830188679245283] | 0.0058 | 0.1627 | 267 | 1641 | 0.8768 | 0.9436 |

| 1.2493 | 2.0 | 506 | 0.6697 | 0.0409 | [0.6560509554140127, 0.5048309178743962, 0.4481792717086835, 0.39] | 0.0834 | 0.2870 | 471 | 1641 | 0.7766 | 0.8837 |

| 0.9257 | 3.0 | 759 | 0.5168 | 0.0594 | [0.75, 0.6275862068965518, 0.5714285714285714, 0.5264797507788161] | 0.0968 | 0.2998 | 492 | 1641 | 0.7570 | 0.8499 |

| 0.6416 | 4.0 | 1012 | 0.4671 | 0.0662 | [0.785140562248996, 0.6825396825396826, 0.6197916666666666, 0.5626911314984709] | 0.1007 | 0.3035 | 498 | 1641 | 0.7528 | 0.8385 |
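The final-epoch BLEU can be re-derived from the other columns, which also shows why it stays low despite fairly high n-gram precisions: the brevity penalty dominates. The sketch below assumes the standard BLEU definition (geometric mean of the four n-gram precisions, multiplied by a brevity penalty of exp(1 - reference_length / translation_length) when the output is shorter than the reference). The helper name `bleu_from_parts` is made up for illustration and is not part of any library.

```python
import math

def bleu_from_parts(precisions, translation_length, reference_length):
    """Recompute corpus BLEU from n-gram precisions and corpus lengths."""
    if translation_length >= reference_length:
        brevity_penalty = 1.0
    else:
        # Outputs shorter than the reference are penalised exponentially.
        brevity_penalty = math.exp(1.0 - reference_length / translation_length)
    # Geometric mean of the n-gram precisions (uniform weights).
    geo_mean = math.exp(sum(math.log(p) for p in precisions) / len(precisions))
    return brevity_penalty, brevity_penalty * geo_mean

# Values copied from the epoch 4 row of the table above.
precisions = [0.785140562248996, 0.6825396825396826,
              0.6197916666666666, 0.5626911314984709]
brevity_penalty, bleu = bleu_from_parts(precisions, 498, 1641)
length_ratio = 498 / 1641

print(f"{brevity_penalty:.4f} {length_ratio:.4f} {bleu:.4f}")
```

Under these assumptions the recomputed values agree with the reported Brevity Penalty, Length Ratio, and Bleu columns to four decimals: the model emits roughly 30% as many tokens as the references contain, and that alone caps BLEU at about 0.10.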

### Framework versions

- Transformers 4.41.0.dev0

- Pytorch 2.1.0

- Datasets 2.19.1

- Tokenizers 0.19.1

|

mjrdbds/llama3-4b-classifierunsloth-130524 | mjrdbds | 2024-05-13T18:45:26Z | 6 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"text-generation-inference",

"unsloth",

"trl",

"sft",

"en",

"base_model:unsloth/llama-3-8b-bnb-4bit",

"base_model:finetune:unsloth/llama-3-8b-bnb-4bit",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-05-13T16:58:37Z | ---

language:

- en

license: apache-2.0

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- trl

- sft

base_model: unsloth/llama-3-8b-bnb-4bit

---

# Uploaded model

- **Developed by:** mjrdbds

- **License:** apache-2.0

- **Finetuned from model :** unsloth/llama-3-8b-bnb-4bit

This llama model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

snigdhachandan/ganeet-V2 | snigdhachandan | 2024-05-13T18:45:10Z | 10 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"merge",

"mergekit",

"lazymergekit",

"deepseek-ai/deepseek-math-7b-rl",

"meta-math/MetaMath-7B-V1.0",

"conversational",

"base_model:deepseek-ai/deepseek-math-7b-rl",

"base_model:merge:deepseek-ai/deepseek-math-7b-rl",

"base_model:meta-math/MetaMath-7B-V1.0",

"base_model:merge:meta-math/MetaMath-7B-V1.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-05-13T18:42:48Z | ---

tags:

- merge

- mergekit

- lazymergekit

- deepseek-ai/deepseek-math-7b-rl

- meta-math/MetaMath-7B-V1.0

base_model:

- deepseek-ai/deepseek-math-7b-rl

- meta-math/MetaMath-7B-V1.0

---

# ganeet-V2

ganeet-V2 is a merge of the following models using [LazyMergekit](https://colab.research.google.com/drive/1obulZ1ROXHjYLn6PPZJwRR6GzgQogxxb?usp=sharing):

* [deepseek-ai/deepseek-math-7b-rl](https://huggingface.co/deepseek-ai/deepseek-math-7b-rl)

* [meta-math/MetaMath-7B-V1.0](https://huggingface.co/meta-math/MetaMath-7B-V1.0)

## 🧩 Configuration

```yaml

slices:

- sources:

- model: deepseek-ai/deepseek-math-7b-rl

layer_range: [0, 30]

- model: meta-math/MetaMath-7B-V1.0

layer_range: [0, 30]

merge_method: slerp

base_model: deepseek-ai/deepseek-math-7b-rl

parameters:

t:

- filter: self_attn

value: [0, 0.5, 0.3, 0.7, 1]

- filter: mlp

value: [1, 0.5, 0.7, 0.3, 0]

- value: 0.4

dtype: bfloat16

```
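For readers unfamiliar with `merge_method: slerp`, the following is a minimal sketch of spherical linear interpolation between two weight vectors, where `t = 0` returns the first vector and `t = 1` the second. It is a plain-Python illustration of the textbook SLERP formula, not mergekit's actual implementation; the function name `slerp` and the toy vectors are assumptions made for this example.

```python
import math

def slerp(t, v0, v1, eps=1e-8):
    """Spherical linear interpolation between two non-zero, equal-length vectors."""
    norm0 = math.sqrt(sum(x * x for x in v0))
    norm1 = math.sqrt(sum(x * x for x in v1))
    # Angle between the two directions, clamped for numerical safety.
    cos_theta = sum(a * b for a, b in zip(v0, v1)) / (norm0 * norm1)
    cos_theta = max(-1.0, min(1.0, cos_theta))
    theta = math.acos(cos_theta)
    if math.sin(theta) < eps:
        # Nearly (anti)parallel vectors: fall back to ordinary linear interpolation.
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    w0 = math.sin((1 - t) * theta) / math.sin(theta)
    w1 = math.sin(t * theta) / math.sin(theta)
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]

# Toy 2-D "weights": two orthogonal unit vectors.
base = [1.0, 0.0]
other = [0.0, 1.0]
midpoint = slerp(0.5, base, other)
print(midpoint)
```

Unlike plain averaging, the interpolated point stays on the arc between the two directions, so the midpoint of two orthogonal unit vectors still has unit length. In the configuration above, `t` plays the role of the interpolation factor, with separate values for `self_attn` and `mlp` tensors and 0.4 as the value for everything else.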

## 💻 Usage

```python

# Install dependencies first (in a notebook cell: !pip install -qU transformers accelerate)

from transformers import AutoTokenizer

import transformers

import torch

model = "snigdhachandan/ganeet-V2"

messages = [{"role": "user", "content": "What is a large language model?"}]

tokenizer = AutoTokenizer.from_pretrained(model)

prompt = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

pipeline = transformers.pipeline(

"text-generation",

model=model,

torch_dtype=torch.float16,

device_map="auto",

)

outputs = pipeline(prompt, max_new_tokens=256, do_sample=True, temperature=0.7, top_k=50, top_p=0.95)

print(outputs[0]["generated_text"])

``` |

binhK/flan_t5_finetuned_sarcastic | binhK | 2024-05-13T18:43:55Z | 169 | 0 | transformers | [

"transformers",

"safetensors",

"t5",

"text2text-generation",

"generated_from_trainer",

"base_model:google/flan-t5-base",

"base_model:finetune:google/flan-t5-base",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2024-05-13T18:16:36Z | ---

license: apache-2.0

base_model: google/flan-t5-base

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: flan_t5_finetuned_sarcastic

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# flan_t5_finetuned_sarcastic

This model is a fine-tuned version of [google/flan-t5-base](https://huggingface.co/google/flan-t5-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 4.5109

- Rouge1: 19.0525

- Rouge2: 6.5322

- Rougel: 17.439

- Rougelsum: 17.4744

- Gen Len: 17.4448

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2
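To make `lr_scheduler_type: linear` concrete, here is a small sketch of a learning rate that decays linearly from its initial value to zero over the 1668 optimizer steps shown in the training results table. It assumes zero warmup steps, since the card lists none; the function name `linear_lr` is hypothetical and only meant to illustrate the shape of the schedule.

```python
def linear_lr(step, total_steps, initial_lr, warmup_steps=0):
    """Learning rate under linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        return initial_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return initial_lr * remaining / max(1, total_steps - warmup_steps)

TOTAL_STEPS = 1668   # 2 epochs x 834 steps, from the training results table
INITIAL_LR = 5e-05   # learning_rate from the hyperparameter list

# Start of training, end of epoch 1, end of epoch 2.
for step in (0, 834, 1668):
    print(step, linear_lr(step, TOTAL_STEPS, INITIAL_LR))
```

Under this assumption the learning rate has already halved by the end of the first epoch and reaches zero at the final step.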

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:------:|:-------:|:---------:|:-------:|

| 1.6482 | 1.0 | 834 | 4.4165 | 18.9525 | 6.7215 | 17.5456 | 17.4554 | 17.1070 |

| 1.4688 | 2.0 | 1668 | 4.5109 | 19.0525 | 6.5322 | 17.439 | 17.4744 | 17.4448 |

### Framework versions

- Transformers 4.40.2

- Pytorch 2.3.0

- Datasets 2.19.1

- Tokenizers 0.19.1

|

kyl23/hw3_RTE_bitfit_1e-4 | kyl23 | 2024-05-13T18:41:47Z | 163 | 0 | transformers | [

"transformers",

"safetensors",

"roberta",

"text-classification",

"arxiv:1910.09700",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2024-05-13T13:34:30Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

veronica-girolimetti/mistral_qt_finetuned_LoRA_04 | veronica-girolimetti | 2024-05-13T18:39:43Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"text-generation-inference",

"unsloth",

"mistral",

"trl",

"en",

"base_model:unsloth/mistral-7b-instruct-v0.2-bnb-4bit",

"base_model:finetune:unsloth/mistral-7b-instruct-v0.2-bnb-4bit",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2024-05-13T18:37:24Z | ---

language:

- en

license: apache-2.0

tags:

- text-generation-inference

- transformers

- unsloth

- mistral

- trl

base_model: unsloth/mistral-7b-instruct-v0.2-bnb-4bit

---

# Uploaded model

- **Developed by:** veronica-girolimetti

- **License:** apache-2.0

- **Finetuned from model :** unsloth/mistral-7b-instruct-v0.2-bnb-4bit

This mistral model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

AndiB93/ppo-Huggy | AndiB93 | 2024-05-13T18:38:46Z | 2 | 0 | ml-agents | [

"ml-agents",

"tensorboard",

"onnx",

"Huggy",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Huggy",

"region:us"

] | reinforcement-learning | 2024-05-13T18:36:33Z | ---

library_name: ml-agents

tags:

- Huggy

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy**

using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://unity-technologies.github.io/ml-agents/ML-Agents-Toolkit-Documentation/

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

- A *short tutorial* where you teach Huggy the Dog 🐶 to fetch the stick and then play with him directly in your

browser: https://huggingface.co/learn/deep-rl-course/unitbonus1/introduction

- A *longer tutorial* to understand how ML-Agents works:

https://huggingface.co/learn/deep-rl-course/unit5/introduction

### Resume the training

```bash

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**

1. If the environment is part of the official ML-Agents environments, go to https://huggingface.co/unity

2. Find your model_id: AndiB93/ppo-Huggy

3. Select your *.nn / *.onnx file

4. Click on Watch the agent play 👀

|

Ziyu25/dqn-SpaceInvadersNoFrameskip-v4 | Ziyu25 | 2024-05-13T18:37:59Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2024-05-13T18:37:24Z | ---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

metrics:

- type: mean_reward

value: 149.00 +/- 123.18

name: mean_reward

verified: false

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

Install the RL Zoo (with SB3 and SB3-Contrib):

```bash

pip install rl_zoo3

```

```bash

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga Ziyu25 -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```


## Training (with the RL Zoo)

```bash

python -m rl_zoo3.train --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga Ziyu25

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 100000.0),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```
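The `exploration_fraction` and `exploration_final_eps` entries describe a linear epsilon-greedy schedule. The sketch below mirrors that idea in plain Python. It assumes an initial epsilon of 1.0 (the Stable Baselines3 default for DQN, which is not listed in the card) and uses a hypothetical helper name, `epsilon_at`.

```python
def epsilon_at(step, total_timesteps, exploration_fraction, final_eps, initial_eps=1.0):
    """Linearly anneal epsilon, then hold it at final_eps."""
    progress = step / total_timesteps
    if progress >= exploration_fraction:
        return final_eps
    # Fraction of the annealing window that has elapsed so far.
    return initial_eps + (progress / exploration_fraction) * (final_eps - initial_eps)

N_TIMESTEPS = 100_000  # n_timesteps from the hyperparameters above

for step in (0, 5_000, 10_000, 50_000):
    print(step, epsilon_at(step, N_TIMESTEPS, 0.1, 0.01))
```

With these settings the agent acts almost entirely at random at the start and reaches the final 1% exploration rate after the first 10,000 of its 100,000 timesteps.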

## Environment Arguments

```python

{'render_mode': 'rgb_array'}

```

|

blueprintninja/dolphin-2.9.1-llama-3-8b-llamafile-nonAVX | blueprintninja | 2024-05-13T18:35:37Z | 4 | 1 | null | [

"llamafile",

"GGUF",

"base_model:crusoeai/dolphin-2.9.1-llama-3-8b-GGUF",

"base_model:finetune:crusoeai/dolphin-2.9.1-llama-3-8b-GGUF",

"region:us"

] | null | 2024-05-13T18:33:32Z |

---

tags:

- llamafile

- GGUF

base_model: crusoeai/dolphin-2.9.1-llama-3-8b-GGUF

---

## dolphin-2.9.1-llama-3-8b-llamafile-nonAVX

llamafile lets you distribute and run LLMs with a single file. [announcement blog post](https://hacks.mozilla.org/2023/11/introducing-llamafile/)

#### Downloads

- [dolphin-2.9.1-llama-3-8b.Q4_0.llamafile](https://huggingface.co/blueprintninja/dolphin-2.9.1-llama-3-8b-llamafile-nonAVX/resolve/main/dolphin-2.9.1-llama-3-8b.Q4_0.llamafile)

This repository was created using the [llamafile-builder](https://github.com/rabilrbl/llamafile-builder)

|

AnnaLissa/fine_tuned_model | AnnaLissa | 2024-05-13T18:33:56Z | 64 | 0 | transformers | [

"transformers",

"tf",

"bert",

"text-classification",

"code",

"en",

"dataset:HuggingFaceFW/fineweb",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2024-05-13T17:59:16Z | ---

license: mit

datasets:

- HuggingFaceFW/fineweb

language:

- en

metrics:

- bertscore

tags:

- code

--- |

Sonatafyai/roberta-large-finetuned_ADEs_SonatafyAI | Sonatafyai | 2024-05-13T18:29:37Z | 125 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"roberta",

"token-classification",

"generated_from_trainer",

"base_model:FacebookAI/roberta-large",

"base_model:finetune:FacebookAI/roberta-large",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | token-classification | 2024-05-13T18:03:41Z | ---

license: mit

base_model: roberta-large

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: roberta-large-finetuned_ADEs_SonatafyAI

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-large-finetuned_ADEs_SonatafyAI

This model is a fine-tuned version of [roberta-large](https://huggingface.co/roberta-large) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2571

- Precision: 0.5269

- Recall: 0.6208

- F1: 0.5700

- Accuracy: 0.8859

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-07

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.7192 | 1.0 | 640 | 0.3366 | 0.4491 | 0.5202 | 0.4820 | 0.8653 |

| 0.3549 | 2.0 | 1280 | 0.2814 | 0.4982 | 0.6066 | 0.5471 | 0.8803 |

| 0.3118 | 3.0 | 1920 | 0.2653 | 0.5178 | 0.6186 | 0.5637 | 0.8831 |

| 0.2827 | 4.0 | 2560 | 0.2624 | 0.5276 | 0.6372 | 0.5772 | 0.8833 |

| 0.2741 | 5.0 | 3200 | 0.2571 | 0.5269 | 0.6208 | 0.5700 | 0.8859 |
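As a consistency check on the table above, F1 is the harmonic mean of precision and recall, so each F1 entry can be recomputed from the two columns before it. The snippet below does this for every epoch. The rows are copied from the table, the helper name `f1_from_pr` is illustrative, and small differences are expected because the inputs are already rounded to four decimals.

```python
def f1_from_pr(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# (epoch, precision, recall, reported F1) copied from the training results table.
rows = [
    (1, 0.4491, 0.5202, 0.4820),
    (2, 0.4982, 0.6066, 0.5471),
    (3, 0.5178, 0.6186, 0.5637),
    (4, 0.5276, 0.6372, 0.5772),
    (5, 0.5269, 0.6208, 0.5700),
]

for epoch, precision, recall, reported in rows:
    print(epoch, round(f1_from_pr(precision, recall), 4), reported)
```

The recomputed values also make it easy to see that epoch 4 has the highest F1, while the final checkpoint at epoch 5 has the lowest validation loss and the highest accuracy.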

### Framework versions

- Transformers 4.40.2

- Pytorch 2.2.1+cu121

- Datasets 2.19.1

- Tokenizers 0.19.1

|

rushdiodeh/Multi-dialect-Arabicrush | rushdiodeh | 2024-05-13T18:24:55Z | 0 | 0 | null | [

"text-classification",

"license:apache-2.0",

"region:us"

] | text-classification | 2024-04-13T20:27:24Z | ---

license: apache-2.0

metrics:

- accuracy

pipeline_tag: text-classification

---

# Arabic-Dialects-Identification-Model

The accompanying Python code identifies Arabic dialects using Multinomial Naive Bayes (`MultinomialNB`) and Random Forest classifiers, and evaluates the models with several performance metrics.

The code creates a dataframe, `comparison_df`, with three columns: 'Actual', 'Multinomial NB', and 'Random Forest'. The 'Actual' column contains the true labels for the test data, while the 'Multinomial NB' and 'Random Forest' columns contain the labels predicted for the test data by the corresponding classifier.

Finally, the comparison dataframe is saved to an Excel file called `arabic_dialects_comparison.xlsx` using the `to_excel()` method, with the `index=False` argument excluding the row index from the file.

|

RichardErkhov/TeeZee_-_Bielik-SOLAR-LIKE-10.7B-Instruct-v0.1-8bits | RichardErkhov | 2024-05-13T18:24:32Z | 4 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"conversational",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"8-bit",

"bitsandbytes",

"region:us"

] | text-generation | 2024-05-13T18:12:06Z | Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

Bielik-SOLAR-LIKE-10.7B-Instruct-v0.1 - bnb 8bits

- Model creator: https://huggingface.co/TeeZee/

- Original model: https://huggingface.co/TeeZee/Bielik-SOLAR-LIKE-10.7B-Instruct-v0.1/

Original model description:

---

license: cc-by-nc-4.0

---

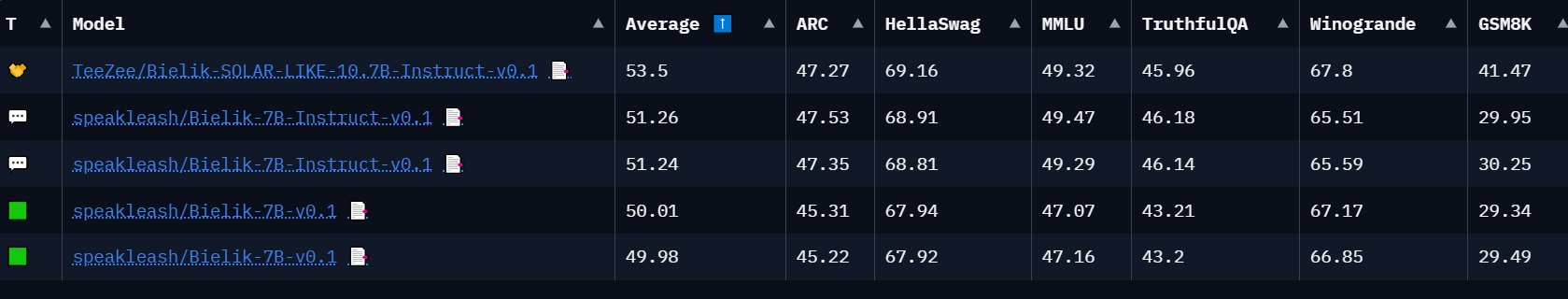

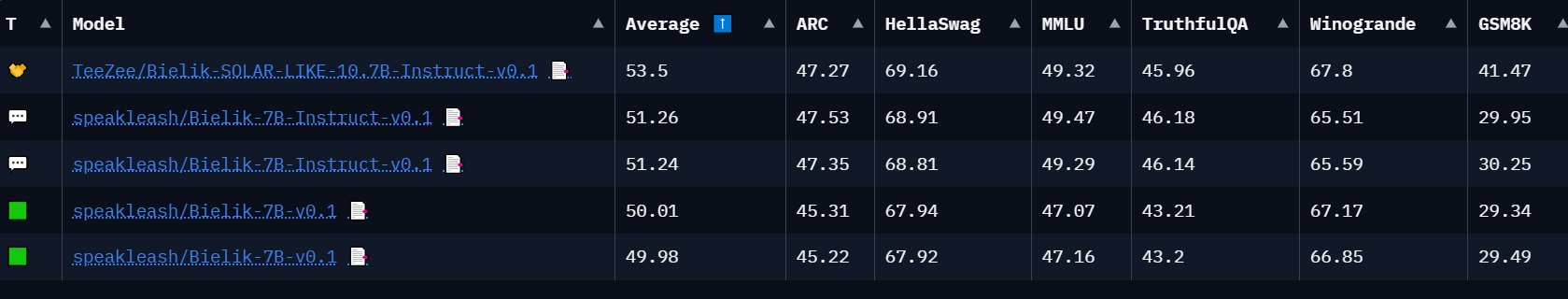

### TeeZee/Bielik-SOLAR-LIKE-10.7B-Instruct-v0.1 ###

The precise recipe used by Upstage to create [SOLAR](https://huggingface.co/upstage/SOLAR-10.7B-v1.0) was applied to https://huggingface.co/speakleash/Bielik-7B-Instruct-v0.1

(merge only, no fine-tuning)

### Results ###

- the model is still coherent in Polish, even without fine-tuning after the merge

- instruct mode works in ooba without issues

- the model is censored and aligned

- this model seems to score highest among all versions of the original Bielik models; further fine-tuning should improve results even more.

- on leaderboards dedicated to Polish-speaking LLMs, it ranks 2nd, just behind the instruct version used for this merge, which is to be expected when applying a DUS merge: very small quality loss.

[Polish LLMs leaderboards](https://huggingface.co/spaces/speakleash/open_pl_llm_leaderboard)

- overall, it seems like a good base for further fine-tuning in Polish.

|

snigdhachandan/ganeet-V1 | snigdhachandan | 2024-05-13T18:23:57Z | 5 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"merge",

"mergekit",

"lazymergekit",

"deepseek-ai/deepseek-math-7b-rl",

"deepseek-ai/deepseek-math-7b-instruct",

"conversational",

"base_model:deepseek-ai/deepseek-math-7b-instruct",

"base_model:merge:deepseek-ai/deepseek-math-7b-instruct",

"base_model:deepseek-ai/deepseek-math-7b-rl",

"base_model:merge:deepseek-ai/deepseek-math-7b-rl",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-05-13T18:20:51Z | ---

tags:

- merge

- mergekit

- lazymergekit

- deepseek-ai/deepseek-math-7b-rl

- deepseek-ai/deepseek-math-7b-instruct

base_model:

- deepseek-ai/deepseek-math-7b-rl

- deepseek-ai/deepseek-math-7b-instruct

---

# ganeet-V1

ganeet-V1 is a merge of the following models using [LazyMergekit](https://colab.research.google.com/drive/1obulZ1ROXHjYLn6PPZJwRR6GzgQogxxb?usp=sharing):

* [deepseek-ai/deepseek-math-7b-rl](https://huggingface.co/deepseek-ai/deepseek-math-7b-rl)

* [deepseek-ai/deepseek-math-7b-instruct](https://huggingface.co/deepseek-ai/deepseek-math-7b-instruct)

## 🧩 Configuration

```yaml

slices:

- sources:

- model: deepseek-ai/deepseek-math-7b-rl

layer_range: [0, 30]

- model: deepseek-ai/deepseek-math-7b-instruct

layer_range: [0, 30]

merge_method: slerp

base_model: deepseek-ai/deepseek-math-7b-rl

parameters:

t:

- filter: self_attn

value: [0, 0.5, 0.3, 0.7, 1]

- filter: mlp

value: [1, 0.5, 0.7, 0.3, 0]

- value: 0.4

dtype: bfloat16

```

## 💻 Usage

```python

# Install dependencies first (in a notebook cell: !pip install -qU transformers accelerate)

from transformers import AutoTokenizer

import transformers

import torch

model = "snigdhachandan/ganeet-V1"

messages = [{"role": "user", "content": "What is a large language model?"}]

tokenizer = AutoTokenizer.from_pretrained(model)

prompt = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

pipeline = transformers.pipeline(

"text-generation",

model=model,

torch_dtype=torch.float16,

device_map="auto",

)

outputs = pipeline(prompt, max_new_tokens=256, do_sample=True, temperature=0.7, top_k=50, top_p=0.95)

print(outputs[0]["generated_text"])

``` |

Sufiyan11919/dqn-AsteroidsNoFrameskip-v4 | Sufiyan11919 | 2024-05-13T18:22:32Z | 9 | 0 | stable-baselines3 | [

"stable-baselines3",

"AsteroidsNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2024-05-13T18:22:11Z | ---

library_name: stable-baselines3

tags:

- AsteroidsNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: AsteroidsNoFrameskip-v4

type: AsteroidsNoFrameskip-v4

metrics:

- type: mean_reward

value: 688.00 +/- 189.94

name: mean_reward

verified: false

---

# **DQN** Agent playing **AsteroidsNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **AsteroidsNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

Install the RL Zoo (with SB3 and SB3-Contrib):

```bash

pip install rl_zoo3

```

```bash

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env AsteroidsNoFrameskip-v4 -orga Sufiyan11919 -f logs/

python -m rl_zoo3.enjoy --algo dqn --env AsteroidsNoFrameskip-v4 -f logs/

```


## Training (with the RL Zoo)

```bash

python -m rl_zoo3.train --algo dqn --env AsteroidsNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo dqn --env AsteroidsNoFrameskip-v4 -f logs/ -orga Sufiyan11919

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 1000000.0),

('normalize', False),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4)])

```

## Environment Arguments

```python

{'render_mode': 'rgb_array'}

```

|

kanaluvu/bloomz-560m-prompted-finetuned | kanaluvu | 2024-05-13T18:18:53Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2024-05-13T18:18:16Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]
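As a rough illustration of how the fields above combine, emissions are commonly approximated as energy consumed multiplied by the carbon intensity of the grid. The sketch below assumes that simple model; the function name and every number in it are hypothetical placeholders, not values for this model, and the linked calculator may apply additional factors.

```python
def estimate_emissions_g(power_watts: float, hours: float, grid_g_per_kwh: float) -> float:
    """Approximate emissions in grams of CO2eq.

    Simplifying assumption: constant power draw, no datacenter overhead (PUE),
    and a single average carbon intensity for the compute region.
    """
    energy_kwh = power_watts / 1000.0 * hours
    return energy_kwh * grid_g_per_kwh


# Hypothetical inputs: one 300 W accelerator, 10 hours, 400 gCO2eq/kWh grid.
example_g = estimate_emissions_g(power_watts=300, hours=10, grid_g_per_kwh=400)
print(f"{example_g:.0f} g CO2eq")
```

The hardware type and hours used determine the energy term, while the cloud provider and compute region determine the grid intensity.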

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

abhishek/autotrain-w77ed-kah7g | abhishek | 2024-05-13T18:17:22Z | 195 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"detr",

"object-detection",

"autotrain",

"vision",

"dataset:cppe-5",

"endpoints_compatible",

"region:us"

] | object-detection | 2024-05-13T17:25:50Z |

---

tags:

- autotrain

- object-detection

- vision

widget:

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/tiger.jpg

  example_title: Tiger

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/teapot.jpg

  example_title: Teapot

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/palace.jpg

  example_title: Palace

datasets:

- cppe-5

---

# Model Trained Using AutoTrain

- Problem type: Object Detection

## Validation Metrics

loss: 1.1950929164886475

map: 0.3186

map_50: 0.638

map_75: 0.2701

map_small: 0.1709

map_medium: 0.2412

map_large: 0.4688

mar_1: 0.2876

mar_10: 0.4874

mar_100: 0.4997

mar_small: 0.2254

mar_medium: 0.4182

mar_large: 0.6428

map_Coverall: 0.5105

mar_100_Coverall: 0.6889

map_Face_Shield: 0.2804

mar_100_Face_Shield: 0.5765

map_Gloves: 0.2836

mar_100_Gloves: 0.4246

map_Goggles: 0.1154

mar_100_Goggles: 0.3375

map_Mask: 0.4031

mar_100_Mask: 0.4712
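
The overall `map` and `mar_100` values above appear to be unweighted means of the per-class values. That is an observation from the reported numbers, not something the card states, and the sketch below simply checks the arithmetic using the figures copied from the list (the helper name `macro_average` is illustrative).

```python
# Per-class values copied from the validation metrics above.
per_class_map = {
    "Coverall": 0.5105,
    "Face_Shield": 0.2804,
    "Gloves": 0.2836,
    "Goggles": 0.1154,
    "Mask": 0.4031,
}
per_class_mar_100 = {
    "Coverall": 0.6889,
    "Face_Shield": 0.5765,
    "Gloves": 0.4246,
    "Goggles": 0.3375,
    "Mask": 0.4712,
}


def macro_average(scores: dict) -> float:
    """Unweighted mean over classes (each class counts equally)."""
    return sum(scores.values()) / len(scores)


print(round(macro_average(per_class_map), 4))      # compare with map: 0.3186
print(round(macro_average(per_class_mar_100), 4))  # compare with mar_100: 0.4997
```

Read this way, the low Goggles score (0.1154) pulls the overall mAP down noticeably, since every class carries the same weight regardless of how many instances it has.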

|

ISTA-DASLab/Meta-Llama-3-70B-Instruct-AQLM-2Bit-1x16 | ISTA-DASLab | 2024-05-13T18:14:11Z | 135 | 20 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"facebook",

"meta",

"llama-3",

"conversational",

"text-generation-inference",

"arxiv:2401.06118",

"autotrain_compatible",

"endpoints_compatible",

"aqlm",

"region:us"

] | text-generation | 2024-05-03T09:45:59Z | ---

library_name: transformers

tags:

- llama

- facebook

- meta

- llama-3

- conversational

- text-generation-inference

---

Official [AQLM](https://arxiv.org/abs/2401.06118) quantization of [meta-llama/Meta-Llama-3-70B-Instruct](https://huggingface.co/meta-llama/Meta-Llama-3-70B-Instruct).

For this quantization, we used 1 codebook of 16 bits.
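
To relate "1 codebook of 16 bits" to the "2Bit" in the model name, here is a minimal arithmetic sketch. It assumes each code indexes a group of 8 weights; the group size is not stated in this card and is an assumption here. The function names and the round figure of 70e9 quantized weights are also illustrative.

```python
def bits_per_weight(num_codebooks: int, codebook_bits: int, group_size: int) -> float:
    """Nominal code bits stored per weight: one index per codebook per group."""
    return num_codebooks * codebook_bits / group_size


def code_storage_gb(num_weights: float, bpw: float) -> float:
    """Storage for the codes alone, in GB (10^9 bytes)."""
    return num_weights * bpw / 8 / 1e9


# Assumed group size of 8 weights per code (not stated in the card).
bpw = bits_per_weight(num_codebooks=1, codebook_bits=16, group_size=8)
print(bpw)

# Rough, hypothetical count of quantized weights.
print(code_storage_gb(70e9, bpw))
```

This counts only the codes. Codebooks, scales, and any layers kept in higher precision add to the total, so the estimate is expected to fall below the model size reported in the results table.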

Results (measured with `lm_eval==4.0`):

| Model | Quantization | MMLU (5-shot) | ArcC | ArcE | Hellaswag | Winogrande | PiQA | Model size, GB |
|------|------|-------|------|------|------|------|------|------|
| meta-llama/Meta-Llama-3-70B | - | 0.7980 | 0.6160 | 0.8624 | 0.6367 | 0.8183 | 0.7632 | 141.2 |
| | 1x16 | 0.7587 | 0.4863 | 0.7668 | 0.6159 | 0.7481 | 0.7537 | 21.9 | |

npvinHnivqn/rag_healthcare_vietnamese_vinallama_xattn_extractor_v08 | npvinHnivqn | 2024-05-13T18:12:35Z | 49 | 0 | transformers | [

"transformers",

"safetensors",

"blip_2_qformer",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2024-05-13T18:12:08Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed to the Hub. This model card was generated automatically.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses