modelId

string | author

string | last_modified

timestamp[us, tz=UTC] | downloads

int64 | likes

int64 | library_name

string | tags

sequence | pipeline_tag

string | createdAt

timestamp[us, tz=UTC] | card

string |

|---|---|---|---|---|---|---|---|---|---|

nicholasbien/gpt2_finetuned-20k-2_7 | nicholasbien | 2024-02-25T04:04:02Z | 164 | 0 | transformers | [

"transformers",

"safetensors",

"gpt2",

"text-generation",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-02-25T03:23:11Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

enhanceaiteam/Codehelp-33b | enhanceaiteam | 2024-02-25T03:23:18Z | 0 | 2 | null | [

"LLM",

"Text to text",

"Code",

"Chatgpt",

"Llama",

"text-generation",

"license:mit",

"region:us"

] | text-generation | 2024-02-24T18:58:24Z | ---

license: mit

metrics:

- code_eval

pipeline_tag: text-generation

tags:

- LLM

- Text to text

- Code

- Chatgpt

- Llama

---

## Description

CodeHelp-33b is a merge model developed by Pranav for assisting developers with code-related tasks. This model is based on the Language Model (LLM) architecture.

## Features

- **Code Assistance:** Provides recommendations and suggestions for coding tasks.

- **Merge Model:** Combines multiple models for enhanced performance.

- **Developed by Pranav:** Created by Pranav, a skilled developer in the field.

## Usage

1. Load the model:

python

from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("enhanceaiteam/Codehelp-33b")

tokenizer = AutoTokenizer.from_pretrained("enhanceaiteam/Codehelp-33b")

2. Generate code assistance:

python

input_text = "Write a function to sort a list of integers."

input_ids = tokenizer.encode(input_text, return_tensors="pt")

output = model.generate(input_ids, max_length=100, num_return_sequences=1)

generated_code = tokenizer.decode(output[0], skip_special_tokens=True)

print(generated_code)

## Acknowledgements

- This model is based on the Hugging Face Transformers library.

- Special thanks to Pranav for developing and sharing this merge model for the developer community.

## License

This project is licensed under the MIT License - see the [LICENSE](LICENSE) file for details.

Please customize this template with specific details about your Model CodeHelp-30b repository. If you have any further questions or need assistance, feel free to reach out.

|

liminerity/i-guess-this-is-a-lora-model-i-made | liminerity | 2024-02-25T03:16:52Z | 0 | 0 | peft | [

"peft",

"region:us"

] | null | 2024-02-25T03:16:47Z | ---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float16

### Framework versions

- PEFT 0.4.0

|

alhosseini/Llama-2-7b-hf_neoliberal_subreddit | alhosseini | 2024-02-25T03:11:14Z | 76 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"4-bit",

"bitsandbytes",

"region:us"

] | text-generation | 2024-02-25T03:09:17Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

jsfs11/MixtureofMerges-MoE-4x7b-v4-GGUF | jsfs11 | 2024-02-25T03:04:52Z | 8 | 1 | null | [

"gguf",

"moe",

"frankenmoe",

"merge",

"mergekit",

"lazymergekit",

"flemmingmiguel/MBX-7B-v3",

"Kukedlc/NeuTrixOmniBe-7B-model-remix",

"PetroGPT/WestSeverus-7B-DPO",

"vanillaOVO/supermario_v4",

"base_model:Kukedlc/NeuTrixOmniBe-7B-model-remix",

"base_model:merge:Kukedlc/NeuTrixOmniBe-7B-model-remix",

"base_model:PetroGPT/WestSeverus-7B-DPO",

"base_model:merge:PetroGPT/WestSeverus-7B-DPO",

"base_model:flemmingmiguel/MBX-7B-v3",

"base_model:merge:flemmingmiguel/MBX-7B-v3",

"base_model:vanillaOVO/supermario_v4",

"base_model:merge:vanillaOVO/supermario_v4",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2024-02-24T00:46:59Z | ---

license: apache-2.0

tags:

- moe

- frankenmoe

- merge

- mergekit

- lazymergekit

- flemmingmiguel/MBX-7B-v3

- Kukedlc/NeuTrixOmniBe-7B-model-remix

- PetroGPT/WestSeverus-7B-DPO

- vanillaOVO/supermario_v4

base_model:

- flemmingmiguel/MBX-7B-v3

- Kukedlc/NeuTrixOmniBe-7B-model-remix

- PetroGPT/WestSeverus-7B-DPO

- vanillaOVO/supermario_v4

---

# Open-LLM Benchmark Results:

MixtureofMerges-MoE-4x7b-v4 (As of 12/02/24 PB Score) on Open LLM Leaderboard📑

Average: 76.23

ARC: 72.53

HellaSwag: 88.85

MMLU: 64.53

TruthfulQA: 75.3

Winogrande: 84.85

GSM8K: 71.34

# MixtureofMerges-MoE-4x7b-v4

MixtureofMerges-MoE-4x7b-v4 is a Mixure of Experts (MoE) made with the following models using [LazyMergekit](https://colab.research.google.com/drive/1obulZ1ROXHjYLn6PPZJwRR6GzgQogxxb?usp=sharing):

* [flemmingmiguel/MBX-7B-v3](https://huggingface.co/flemmingmiguel/MBX-7B-v3)

* [Kukedlc/NeuTrixOmniBe-7B-model-remix](https://huggingface.co/Kukedlc/NeuTrixOmniBe-7B-model-remix)

* [PetroGPT/WestSeverus-7B-DPO](https://huggingface.co/PetroGPT/WestSeverus-7B-DPO)

* [vanillaOVO/supermario_v4](https://huggingface.co/vanillaOVO/supermario_v4)

## 🧩 Configuration

```yaml

base_model: Kukedlc/NeuTrixOmniBe-7B-model-remix

gate_mode: hidden

dtype: bfloat16

experts:

- source_model: flemmingmiguel/MBX-7B-v3

positive_prompts:

- "Answer this question from the ARC (Argument Reasoning Comprehension)."

- "Use common sense and logical reasoning skills."

- "What assumptions does this argument rely on?"

- "Are these assumptions valid? Explain."

- "Could this be explained in a different way? Provide an alternative explanation."

- "Identify any weaknesses in this argument."

- "Does this argument contain any logical fallacies? If so, which ones?"

negative_prompts:

- "misses key evidence"

- "overly general"

- "focuses on irrelevant details"

- "assumes information not provided"

- "relies on stereotypes"

- source_model: Kukedlc/NeuTrixOmniBe-7B-model-remix

positive_prompts:

- "Answer this question, demonstrating commonsense understanding and using any relevant general knowledge you may have."

- "Provide a concise summary of this passage, then explain why the highlighted section is essential to the main idea."

- "Read these two brief articles presenting different viewpoints on the same topic. List their key arguments and highlight where they disagree."

- "Paraphrase this statement, changing the emotional tone but keeping the core meaning intact. Example: Rephrase a worried statement in a humorous way"

- "Create a short analogy that helps illustrate the main concept of this article."

negative_prompts:

- "sounds too basic"

- "understated"

- "dismisses important details"

- "avoids the question's nuance"

- "takes this statement too literally"

- source_model: PetroGPT/WestSeverus-7B-DPO

positive_prompts:

- "Calculate the answer to this math problem"

- "My mathematical capabilities are strong, allowing me to handle complex mathematical queries"

- "solve for"

- "A store sells apples at $0.50 each. If Emily buys 12 apples, how much does she need to pay?"

- "Isolate x in the following equation: 2x + 5 = 17"

- "Solve this equation and show your working."

- "Explain why you used this formula to solve the problem."

- "Attempt to divide this number by zero. Explain why this cannot be done."

negative_prompts:

- "incorrect"

- "inaccurate"

- "creativity"

- "assumed without proof"

- "rushed calculation"

- "confuses mathematical concepts"

- "draws illogical conclusions"

- "circular reasoning"

- source_model: vanillaOVO/supermario_v4

positive_prompts:

- "Generate a few possible continuations to this scenario."

- "Demonstrate understanding of everyday commonsense in your response."

- "Use contextual clues to determine the most likely outcome."

- "Continue this scenario, but make the writing style sound archaic and overly formal."

- "This narrative is predictable. Can you introduce an unexpected yet plausible twist?"

- "The character is angry. Continue this scenario showcasing a furious outburst."

negative_prompts:

- "repetitive phrases"

- "overuse of the same words"

- "contradicts earlier statements - breaks the internal logic of the scenario"

- "out of character dialogue"

- "awkward phrasing - sounds unnatural"

- "doesn't match the given genre"

```

## 💻 Usage

```python

!pip install -qU transformers bitsandbytes accelerate

from transformers import AutoTokenizer

import transformers

import torch

model = "jsfs11/MixtureofMerges-MoE-4x7b-v4"

tokenizer = AutoTokenizer.from_pretrained(model)

pipeline = transformers.pipeline(

"text-generation",

model=model,

model_kwargs={"torch_dtype": torch.float16, "load_in_4bit": True},

)

messages = [{"role": "user", "content": "Explain what a Mixture of Experts is in less than 100 words."}]

prompt = pipeline.tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

outputs = pipeline(prompt, max_new_tokens=256, do_sample=True, temperature=0.7, top_k=50, top_p=0.95)

print(outputs[0]["generated_text"])

``` |

throwromans/hpymrchnt | throwromans | 2024-02-25T03:03:55Z | 0 | 0 | null | [

"license:gpl",

"region:us"

] | null | 2023-08-02T05:30:56Z | ---

license: gpl

---

for best results, use with AbyssOrangeMix2 checkpoint

https://huggingface.co/WarriorMama777/OrangeMixs/blob/main/Models/AbyssOrangeMix2/AbyssOrangeMix2_hard.safetensors

https://civitai.com/models/4451?modelVersionId=5038

6 gorillion possibilities |

wintercoming6/shinomiya-sdxl-base-1-0-lora | wintercoming6 | 2024-02-25T02:53:29Z | 6 | 1 | diffusers | [

"diffusers",

"stable-diffusion-xl",

"stable-diffusion-xl-diffusers",

"text-to-image",

"lora",

"template:sd-lora",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"base_model:adapter:stabilityai/stable-diffusion-xl-base-1.0",

"license:openrail++",

"region:us"

] | text-to-image | 2024-02-25T01:17:24Z | ---

tags:

- stable-diffusion-xl

- stable-diffusion-xl-diffusers

- text-to-image

- diffusers

- lora

- template:sd-lora

widget:

- text: 'cartoon style, Shinomiya, a cartoon girl with black hair, in white boots, smiling, eyes closed, mouth open, running to the right, three-quarter view, facing right, with pumping fists and striding legs, full body, simple background'

output:

url:

"image_0.png"

- text: 'cartoon style, Shinomiya, a cartoon girl with black hair, in white boots, smiling, eyes closed, mouth open, running to the right, three-quarter view, facing right, with pumping fists and striding legs, full body, simple background'

output:

url:

"image_1.png"

- text: 'cartoon style, Shinomiya, a cartoon girl with black hair, in white boots, smiling, eyes closed, mouth open, running to the right, three-quarter view, facing right, with pumping fists and striding legs, full body, simple background'

output:

url:

"image_2.png"

- text: 'cartoon style, Shinomiya, a cartoon girl with black hair, in white boots, smiling, eyes closed, mouth open, running to the right, three-quarter view, facing right, with pumping fists and striding legs, full body, simple background'

output:

url:

"image_3.png"

base_model: stabilityai/stable-diffusion-xl-base-1.0

instance_prompt: None

license: openrail++

---

# SDXL LoRA DreamBooth - wintercoming6/shinomiya-sdxl-base-1-0-lora

<Gallery />

## Model description

### These are wintercoming6/shinomiya-sdxl-base-1-0-lora LoRA adaption weights for stabilityai/stable-diffusion-xl-base-1.0.

## Download model

### Use it with UIs such as AUTOMATIC1111, Comfy UI, SD.Next, Invoke

- **LoRA**: download **[`shinomiya-sdxl-base-1-0-lora.safetensors` here 💾](/wintercoming6/shinomiya-sdxl-base-1-0-lora/blob/main/shinomiya-sdxl-base-1-0-lora.safetensors)**.

- Place it on your `models/Lora` folder.

- On AUTOMATIC1111, load the LoRA by adding `<lora:shinomiya-sdxl-base-1-0-lora:1>` to your prompt. On ComfyUI just [load it as a regular LoRA](https://comfyanonymous.github.io/ComfyUI_examples/lora/).

- *Embeddings*: download **[`shinomiya-sdxl-base-1-0-lora_emb.safetensors` here 💾](/wintercoming6/shinomiya-sdxl-base-1-0-lora/blob/main/shinomiya-sdxl-base-1-0-lora_emb.safetensors)**.

- Place it on it on your `embeddings` folder

- Use it by adding `shinomiya-sdxl-base-1-0-lora_emb` to your prompt. For example, `None`

(you need both the LoRA and the embeddings as they were trained together for this LoRA)

## Use it with the [🧨 diffusers library](https://github.com/huggingface/diffusers)

```py

from diffusers import AutoPipelineForText2Image

import torch

from huggingface_hub import hf_hub_download

from safetensors.torch import load_file

pipeline = AutoPipelineForText2Image.from_pretrained('stabilityai/stable-diffusion-xl-base-1.0', torch_dtype=torch.float16).to('cuda')

pipeline.load_lora_weights('wintercoming6/shinomiya-sdxl-base-1-0-lora', weight_name='pytorch_lora_weights.safetensors')

embedding_path = hf_hub_download(repo_id='wintercoming6/shinomiya-sdxl-base-1-0-lora', filename='shinomiya-sdxl-base-1-0-lora_emb.safetensors', repo_type="model")

state_dict = load_file(embedding_path)

pipeline.load_textual_inversion(state_dict["clip_l"], token=[], text_encoder=pipeline.text_encoder, tokenizer=pipeline.tokenizer)

pipeline.load_textual_inversion(state_dict["clip_g"], token=[], text_encoder=pipeline.text_encoder_2, tokenizer=pipeline.tokenizer_2)

image = pipeline('cartoon style, Shinomiya, a cartoon girl with black hair, in white boots, smiling, eyes closed, mouth open, running to the right, three-quarter view, facing right, with pumping fists and striding legs, full body, simple background').images[0]

```

For more details, including weighting, merging and fusing LoRAs, check the [documentation on loading LoRAs in diffusers](https://huggingface.co/docs/diffusers/main/en/using-diffusers/loading_adapters)

## Trigger words

To trigger image generation of trained concept(or concepts) replace each concept identifier in you prompt with the new inserted tokens:

to trigger concept `TOK` → use `<s0><s1>` in your prompt

## Details

All [Files & versions](/wintercoming6/shinomiya-sdxl-base-1-0-lora/tree/main).

The weights were trained using [🧨 diffusers Advanced Dreambooth Training Script](https://github.com/huggingface/diffusers/blob/main/examples/advanced_diffusion_training/train_dreambooth_lora_sdxl_advanced.py).

LoRA for the text encoder was enabled. False.

Pivotal tuning was enabled: True.

Special VAE used for training: madebyollin/sdxl-vae-fp16-fix.

|

huglf/GPTQ-quantized-on-a-fine-tuned-Llama-2-model | huglf | 2024-02-25T02:49:47Z | 78 | 1 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"4-bit",

"gptq",

"region:us"

] | text-generation | 2024-02-24T09:19:19Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

ChrisGoringe/vit-large-p14-vision-fp16 | ChrisGoringe | 2024-02-25T02:45:42Z | 35 | 0 | transformers | [

"transformers",

"safetensors",

"clip_vision_model",

"endpoints_compatible",

"region:us"

] | null | 2024-02-25T01:51:54Z | fp16 version of the vision model from openai/clip-vit-large-patch14|CLIPVisionModelWithProjection

---

license: mit

---

|

lvcalucioli/flan-t5-large__multiple-choice | lvcalucioli | 2024-02-25T02:38:04Z | 107 | 0 | transformers | [

"transformers",

"safetensors",

"t5",

"text2text-generation",

"generated_from_trainer",

"base_model:google/flan-t5-large",

"base_model:finetune:google/flan-t5-large",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2024-02-25T02:21:07Z | ---

license: apache-2.0

base_model: google/flan-t5-large

tags:

- generated_from_trainer

model-index:

- name: flan-t5-large__multiple-choice

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# flan-t5-large__multiple-choice

This model is a fine-tuned version of [google/flan-t5-large](https://huggingface.co/google/flan-t5-large) on the None dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- num_epochs: 5

### Training results

### Framework versions

- Transformers 4.38.0.dev0

- Pytorch 2.0.1+cu117

- Datasets 2.16.1

- Tokenizers 0.15.2

|

matthewchung74/mistral-mcqa | matthewchung74 | 2024-02-25T02:29:29Z | 8 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-02-24T23:16:07Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

baihaqy/results | baihaqy | 2024-02-25T02:23:58Z | 161 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"distilbert",

"text-classification",

"generated_from_trainer",

"base_model:distilbert/distilbert-base-multilingual-cased",

"base_model:finetune:distilbert/distilbert-base-multilingual-cased",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2024-02-25T01:17:13Z | ---

license: apache-2.0

base_model: distilbert-base-multilingual-cased

tags:

- generated_from_trainer

metrics:

- accuracy

- precision

- recall

- f1

model-index:

- name: results

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# results

This model is a fine-tuned version of [distilbert-base-multilingual-cased](https://huggingface.co/distilbert-base-multilingual-cased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 1.5117

- Accuracy: 0.7169

- Precision: 0.6803

- Recall: 0.6642

- F1: 0.6693

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 999

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Precision | Recall | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:---------:|:------:|:------:|

| 0.7606 | 1.0 | 761 | 0.6333 | 0.7228 | 0.6856 | 0.6831 | 0.6819 |

| 0.4448 | 2.0 | 1522 | 0.6450 | 0.7320 | 0.6983 | 0.7065 | 0.7011 |

| 1.0076 | 3.0 | 2283 | 0.6573 | 0.7346 | 0.7038 | 0.7153 | 0.7069 |

| 0.1369 | 4.0 | 3044 | 0.8941 | 0.7248 | 0.6855 | 0.6762 | 0.6796 |

| 0.0096 | 5.0 | 3805 | 1.1590 | 0.7264 | 0.6874 | 0.6911 | 0.6889 |

| 0.0728 | 6.0 | 4566 | 1.2896 | 0.7366 | 0.7001 | 0.6875 | 0.6910 |

| 0.0007 | 7.0 | 5327 | 1.5882 | 0.7297 | 0.7027 | 0.6787 | 0.6825 |

| 0.0106 | 8.0 | 6088 | 1.5117 | 0.7169 | 0.6803 | 0.6642 | 0.6693 |

### Framework versions

- Transformers 4.37.2

- Pytorch 2.1.0+cu121

- Datasets 2.17.1

- Tokenizers 0.15.2

|

Yihsiu/hw-01 | Yihsiu | 2024-02-25T02:21:49Z | 9 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"llama",

"text-generation",

"generated_from_trainer",

"trl",

"sft",

"conversational",

"base_model:distilbert/distilbert-base-uncased",

"base_model:quantized:distilbert/distilbert-base-uncased",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"8-bit",

"bitsandbytes",

"region:us"

] | text-generation | 2023-12-23T01:35:46Z | ---

license: apache-2.0

tags:

- generated_from_trainer

- trl

- sft

metrics:

- matthews_correlation

base_model: distilbert-base-uncased

model-index:

- name: hw-01

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# hw-01

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7973

- Matthews Correlation: 0.5222

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Matthews Correlation |

|:-------------:|:-----:|:----:|:---------------:|:--------------------:|

| 0.516 | 1.0 | 535 | 0.4558 | 0.4386 |

| 0.3417 | 2.0 | 1070 | 0.4704 | 0.5215 |

| 0.2353 | 3.0 | 1605 | 0.6810 | 0.5062 |

| 0.1624 | 4.0 | 2140 | 0.7973 | 0.5222 |

| 0.1221 | 5.0 | 2675 | 0.8570 | 0.5215 |

### Framework versions

- Transformers 4.36.2

- Pytorch 2.1.0+cu121

- Datasets 2.16.0

- Tokenizers 0.15.0

|

glenn2/gemma-2b-lora16b2 | glenn2 | 2024-02-25T02:20:04Z | 151 | 0 | transformers | [

"transformers",

"safetensors",

"gemma",

"text-generation",

"arxiv:1910.09700",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-02-25T02:11:57Z | ---

library_name: transformers

license: mit

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

ottoykh/Smart-Traffic | ottoykh | 2024-02-25T02:18:20Z | 54 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"onnx",

"yolov8-seg",

"image-segmentation",

"en",

"license:mit",

"endpoints_compatible",

"region:us"

] | image-segmentation | 2024-02-23T08:09:24Z | ---

license: mit

language:

- en

metrics:

- accuracy

pipeline_tag: image-segmentation

widget:

- src: >-

https://tdcctv.data.one.gov.hk/AID01209.JPG

example_title: Road cam 1

---

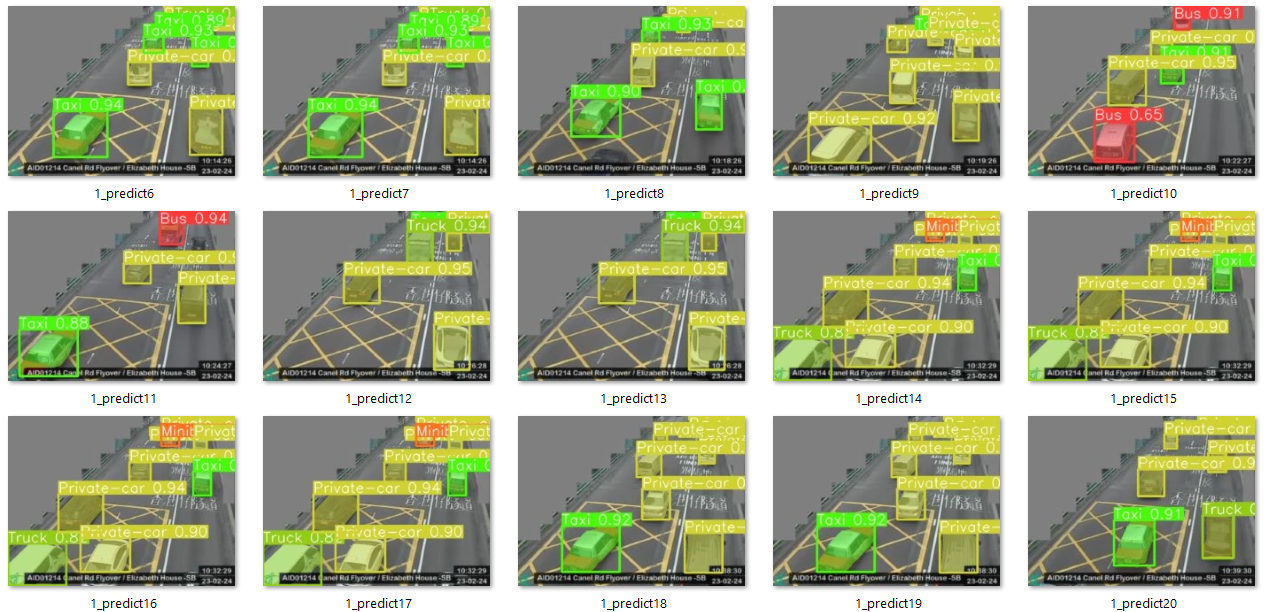

# Model Card for Smart-Traffic

This is a machine learning model designed for the Real-Time CCTV road traffic monitoring, use for the road traffic estimation and indexing.

# Table of Contents

- [Model Card for Smart-Traffic](#model-card-for--model_id-)

- [Table of Contents](#table-of-contents)

- [Table of Contents](#table-of-contents-1)

- [Model Details](#model-details)

- [Model Description](#model-description)

# Model Details

## Model Description

<!-- Provide a longer summary of what this model is/does. -->

This is a machine learning model designed for the Real-Time CCTV road traffic monitoring, use for the road traffic estimation and indexing.

- **Developed by:** Yu Kai Him Otto

- **Shared by [Optional]:** Yu Kai Him Otto

- **Model type:** Language model

- **Language(s) (NLP):** en

- **License:** mit

- **Parent Model:** More information needed

- **Resources for more information:** More information needed

# Model Card Authors [optional]

<!-- This section provides another layer of transparency and accountability. Whose views is this model card representing? How many voices were included in its construction? Etc. -->

Yu Kai Him Otto, Chan Ka Hin and Leung Yat Long

# Model Card Contact

More information needed

# How to Get Started with the Model

Use the code below to get started with the model.

<details>

<summary> Click to expand </summary>

More information needed

</details> |

AkhilKashyap1998/gpt2-GPTQ | AkhilKashyap1998 | 2024-02-25T02:12:15Z | 2 | 0 | transformers | [

"transformers",

"gpt2",

"text-generation",

"arxiv:1910.09700",

"autotrain_compatible",

"endpoints_compatible",

"4-bit",

"gptq",

"region:us"

] | text-generation | 2024-02-25T02:11:40Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

liminerity/baccules-3b-slerp | liminerity | 2024-02-25T02:01:03Z | 108 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"merge",

"mergekit",

"lazymergekit",

"indischepartij/MiniCPM-3B-Hercules-v2.0",

"indischepartij/MiniCPM-3B-Bacchus",

"conversational",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-02-25T01:59:16Z | ---

license: apache-2.0

tags:

- merge

- mergekit

- lazymergekit

- indischepartij/MiniCPM-3B-Hercules-v2.0

- indischepartij/MiniCPM-3B-Bacchus

---

# baccules-3b-slerp

baccules-3b-slerp is a merge of the following models using [mergekit](https://github.com/cg123/mergekit):

* [indischepartij/MiniCPM-3B-Hercules-v2.0](https://huggingface.co/indischepartij/MiniCPM-3B-Hercules-v2.0)

* [indischepartij/MiniCPM-3B-Bacchus](https://huggingface.co/indischepartij/MiniCPM-3B-Bacchus)

## 🧩 Configuration

```yaml

slices:

- sources:

- model: indischepartij/MiniCPM-3B-Hercules-v2.0

layer_range: [0, 40]

- model: indischepartij/MiniCPM-3B-Bacchus

layer_range: [0, 40]

merge_method: slerp

base_model: indischepartij/MiniCPM-3B-Hercules-v2.0

parameters:

t:

- filter: self_attn

value: [0, 0.5, 0.3, 0.7, 1]

- filter: mlp

value: [1, 0.5, 0.7, 0.3, 0]

- value: 0.5

dtype: bfloat16

``` |

ChrisGoringe/vitH16 | ChrisGoringe | 2024-02-25T01:54:46Z | 87 | 0 | transformers | [

"transformers",

"pytorch",

"clip",

"zero-shot-image-classification",

"endpoints_compatible",

"region:us"

] | zero-shot-image-classification | 2024-02-04T01:50:51Z | Outdated. Suggest using ChrisGoringe/vit-large-p14-vision-fp16 or one of the other models.

torch.float16 version of laion/CLIP-ViT-H-14-laion2B-s32B-b79K

Intended for use with custom aesthetic trainer.

---

license: mit

---

|

glenn2/gemma-2b-lora16b | glenn2 | 2024-02-25T01:54:23Z | 108 | 0 | transformers | [

"transformers",

"safetensors",

"gemma",

"text-generation",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-02-25T01:50:22Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

glenn2/gemma-2b-lora3 | glenn2 | 2024-02-25T01:42:24Z | 145 | 0 | transformers | [

"transformers",

"safetensors",

"gemma",

"text-generation",

"arxiv:1910.09700",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-02-25T01:18:50Z | ---

library_name: transformers

license: mit

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

NoteDance/Gemma | NoteDance | 2024-02-25T01:31:55Z | 0 | 0 | tf | [

"tf",

"Note",

"gemma",

"text-generation",

"license:apache-2.0",

"region:us"

] | text-generation | 2024-02-25T01:27:26Z | ---

license: apache-2.0

library_name: tf

pipeline_tag: text-generation

tags:

- Note

- gemma

---

This model is built by Note, Note repository can be found [here](https://github.com/NoteDance/Note). The model can be found [here](https://github.com/NoteDance/Note/blob/Note-7.0/Note/neuralnetwork/tf/Gemma.py). The tutorial can be found [here](https://github.com/NoteDance/Note-documentation/tree/tf-7.0). |

Litzy619/V0224B1 | Litzy619 | 2024-02-25T01:30:34Z | 0 | 0 | null | [

"safetensors",

"generated_from_trainer",

"base_model:yahma/llama-7b-hf",

"base_model:finetune:yahma/llama-7b-hf",

"region:us"

] | null | 2024-02-24T22:57:00Z | ---

base_model: yahma/llama-7b-hf

tags:

- generated_from_trainer

model-index:

- name: V0224B1

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# V0224B1

This model is a fine-tuned version of [yahma/llama-7b-hf](https://huggingface.co/yahma/llama-7b-hf) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7525

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 32

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine_with_restarts

- lr_scheduler_warmup_steps: 20

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.1045 | 0.13 | 10 | 1.0762 |

| 0.9886 | 0.26 | 20 | 0.9163 |

| 0.8808 | 0.39 | 30 | 0.8540 |

| 0.8273 | 0.52 | 40 | 0.8241 |

| 0.8082 | 0.65 | 50 | 0.8067 |

| 0.7915 | 0.78 | 60 | 0.7954 |

| 0.7687 | 0.91 | 70 | 0.7883 |

| 0.7644 | 1.04 | 80 | 0.7814 |

| 0.7454 | 1.17 | 90 | 0.7759 |

| 0.7613 | 1.3 | 100 | 0.7717 |

| 0.7512 | 1.43 | 110 | 0.7681 |

| 0.7416 | 1.55 | 120 | 0.7644 |

| 0.7315 | 1.68 | 130 | 0.7613 |

| 0.7434 | 1.81 | 140 | 0.7594 |

| 0.7477 | 1.94 | 150 | 0.7563 |

| 0.7299 | 2.07 | 160 | 0.7555 |

| 0.7148 | 2.2 | 170 | 0.7540 |

| 0.7272 | 2.33 | 180 | 0.7538 |

| 0.7203 | 2.46 | 190 | 0.7532 |

| 0.7216 | 2.59 | 200 | 0.7531 |

| 0.7233 | 2.72 | 210 | 0.7527 |

| 0.7213 | 2.85 | 220 | 0.7526 |