modelId (string) | author (string) | last_modified (timestamp[us, tz=UTC]) | downloads (int64) | likes (int64) | library_name (string) | tags (sequence) | pipeline_tag (string) | createdAt (timestamp[us, tz=UTC]) | card (string) |

|---|---|---|---|---|---|---|---|---|---|

mrferr3t/83398edd-55b5-4b12-bc4e-aff81fe9140d | mrferr3t | 2025-01-26T08:59:29Z | 9 | 0 | peft | [

"peft",

"safetensors",

"llama",

"axolotl",

"generated_from_trainer",

"base_model:unsloth/llama-2-7b-chat",

"base_model:adapter:unsloth/llama-2-7b-chat",

"license:apache-2.0",

"region:us"

] | null | 2025-01-26T08:58:25Z | ---

library_name: peft

license: apache-2.0

base_model: unsloth/llama-2-7b-chat

tags:

- axolotl

- generated_from_trainer

model-index:

- name: 83398edd-55b5-4b12-bc4e-aff81fe9140d

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

[<img src="https://raw.githubusercontent.com/axolotl-ai-cloud/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/axolotl-ai-cloud/axolotl)

<details><summary>See axolotl config</summary>

axolotl version: `0.4.1`

```yaml

adapter: lora

base_model: unsloth/llama-2-7b-chat

bf16: auto

chat_template: llama3

dataset_prepared_path: null

datasets:

- data_files:

- d54b8bbf3f45bb00_train_data.json

ds_type: json

format: custom

path: /workspace/input_data/d54b8bbf3f45bb00_train_data.json

type:

field_input: reply

field_instruction: question

field_output: answer

format: '{instruction} {input}'

no_input_format: '{instruction}'

system_format: '{system}'

system_prompt: ''

debug: null

deepspeed: null

early_stopping_patience: null

eval_max_new_tokens: 128

eval_table_size: null

evals_per_epoch: 4

flash_attention: false

fp16: null

fsdp: null

fsdp_config: null

gradient_accumulation_steps: 4

gradient_checkpointing: false

group_by_length: false

hub_model_id: mrferr3t/83398edd-55b5-4b12-bc4e-aff81fe9140d

hub_repo: null

hub_strategy: checkpoint

hub_token: null

learning_rate: 0.0002

load_in_4bit: false

load_in_8bit: false

local_rank: null

logging_steps: 1

lora_alpha: 16

lora_dropout: 0.05

lora_fan_in_fan_out: null

lora_model_dir: null

lora_r: 8

lora_target_linear: true

lr_scheduler: cosine

max_steps: 10

micro_batch_size: 2

mlflow_experiment_name: /tmp/d54b8bbf3f45bb00_train_data.json

model_type: AutoModelForCausalLM

num_epochs: 1

optimizer: adamw_bnb_8bit

output_dir: miner_id_24

pad_to_sequence_len: true

resume_from_checkpoint: null

s2_attention: null

sample_packing: false

saves_per_epoch: 4

sequence_len: 512

strict: false

tf32: false

tokenizer_type: AutoTokenizer

train_on_inputs: false

trust_remote_code: true

val_set_size: 0.05

wandb_entity: null

wandb_mode: online

wandb_name: f573a5a1-33e7-4cca-af15-6e4e2e847f12

wandb_project: Gradients-On-Demand

wandb_run: your_name

wandb_runid: f573a5a1-33e7-4cca-af15-6e4e2e847f12

warmup_steps: 10

weight_decay: 0.0

xformers_attention: null

```

</details><br>
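
The `type` block in the config maps dataset fields (`question`, `reply`, `answer`) onto prompt templates. The sketch below illustrates that templating with plain `str.format`; it is not axolotl's own code, and the helper name `render_prompt` and the sample record are hypothetical.

```python
def render_prompt(record, field_instruction="question", field_input="reply",
                  fmt="{instruction} {input}", no_input_fmt="{instruction}"):
    """Build the prompt text for one record from the config's templates."""
    instruction = record[field_instruction]
    input_text = record.get(field_input) or ""
    if input_text:
        # Both fields present: use the `format` template.
        return fmt.format(instruction=instruction, input=input_text)
    # No input field: fall back to the `no_input_format` template.
    return no_input_fmt.format(instruction=instruction)


# Hypothetical record shaped like the fields named in the config.
example = {"question": "What is LoRA?", "reply": "Answer briefly.", "answer": "A low-rank adapter."}
print(render_prompt(example))
print(render_prompt({"question": "What is LoRA?"}))
```

With `train_on_inputs: false`, only the `answer` field would be expected to contribute to the loss; the prompt built here serves as context.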

# 83398edd-55b5-4b12-bc4e-aff81fe9140d

This model is a fine-tuned version of [unsloth/llama-2-7b-chat](https://huggingface.co/unsloth/llama-2-7b-chat) on a local JSON dataset (`d54b8bbf3f45bb00_train_data.json`).

It achieves the following results on the evaluation set:

- Loss: 0.7998

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 8

- optimizer: Use adamw_bnb_8bit with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 10

- training_steps: 10
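
The hyperparameters above imply two pieces of arithmetic worth spelling out: the effective batch size, and the fact that `lr_scheduler_warmup_steps` equals `training_steps`, so the run ends before warmup completes. The sketch below assumes a single device and the common linear-warmup-then-cosine shape; the function name `lr_at_step` is hypothetical and the trainer's exact scheduler behaviour is an assumption.

```python
import math


def lr_at_step(step, base_lr=2e-4, warmup_steps=10, total_steps=10):
    """Linear warmup to base_lr, then cosine decay to zero (assumed shape)."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


# train_batch_size * gradient_accumulation_steps, assuming one device.
effective_batch = 2 * 4

schedule = [lr_at_step(step) for step in range(10)]
print("effective batch size:", effective_batch)
print("peak learning rate reached:", max(schedule))
```

Under these assumptions the learning rate is still ramping up at the last of the 10 steps and never reaches the configured 0.0002.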

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:------:|:----:|:---------------:|

| 0.8329 | 0.0025 | 1 | 0.8898 |

| 0.8174 | 0.0075 | 3 | 0.8875 |

| 0.8871 | 0.0149 | 6 | 0.8656 |

| 0.7355 | 0.0224 | 9 | 0.7998 |
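
The validation losses can be read as perplexities, which some readers find easier to compare. This is a minimal sketch assuming the reported loss is a mean per-token cross-entropy in nats; the card does not state this explicitly.

```python
import math


def perplexity(mean_nll):
    """Convert a mean negative log-likelihood (nats) to perplexity."""
    return math.exp(mean_nll)


# Validation losses copied from the table above, keyed by step.
validation_losses = {1: 0.8898, 3: 0.8875, 6: 0.8656, 9: 0.7998}
for step, loss in validation_losses.items():
    print(f"step {step}: loss {loss:.4f} -> perplexity {perplexity(loss):.3f}")
```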

### Framework versions

- PEFT 0.13.2

- Transformers 4.46.0

- Pytorch 2.3.1+cu121

- Datasets 3.0.1

- Tokenizers 0.20.1 |

Moemu/Muice-2.7.1-Llama3-Chinese-8b-Instruct | Moemu | 2025-01-26T08:59:18Z | 6 | 0 | peft | [

"peft",

"safetensors",

"chinese",

"lora",

"llama3",

"zh",

"dataset:Moemu/Muice-Dataset",

"base_model:FlagAlpha/Llama3-Chinese-8B-Instruct",

"base_model:adapter:FlagAlpha/Llama3-Chinese-8B-Instruct",

"license:apache-2.0",

"region:us"

] | null | 2025-01-26T08:20:04Z | ---

license: apache-2.0

base_model: FlagAlpha/Llama3-Chinese-8B-Instruct

datasets:

- Moemu/Muice-Dataset

tags:

- chinese

- lora

- llama3

library_name: peft

language:

- zh

---

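## Model description

A Muice (沐雪) role-play model fine-tuned from [FlagAlpha/Llama3-Chinese-8B-Instruct](https://huggingface.co/FlagAlpha/Llama3-Chinese-8B-Instruct). It shows some advantage in proactive conversation (with a fixed prompt). Using this model requires the base model.

For Muice's character settings, see: [Moemu/Muice-Chatbot](https://github.com/Moemu/Muice-Chatbot?tab=readme-ov-file#沐雪人设)

Part of the training set is available at: [Moemu/Muice-Dataset](https://huggingface.co/datasets/Moemu/Muice-Dataset)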

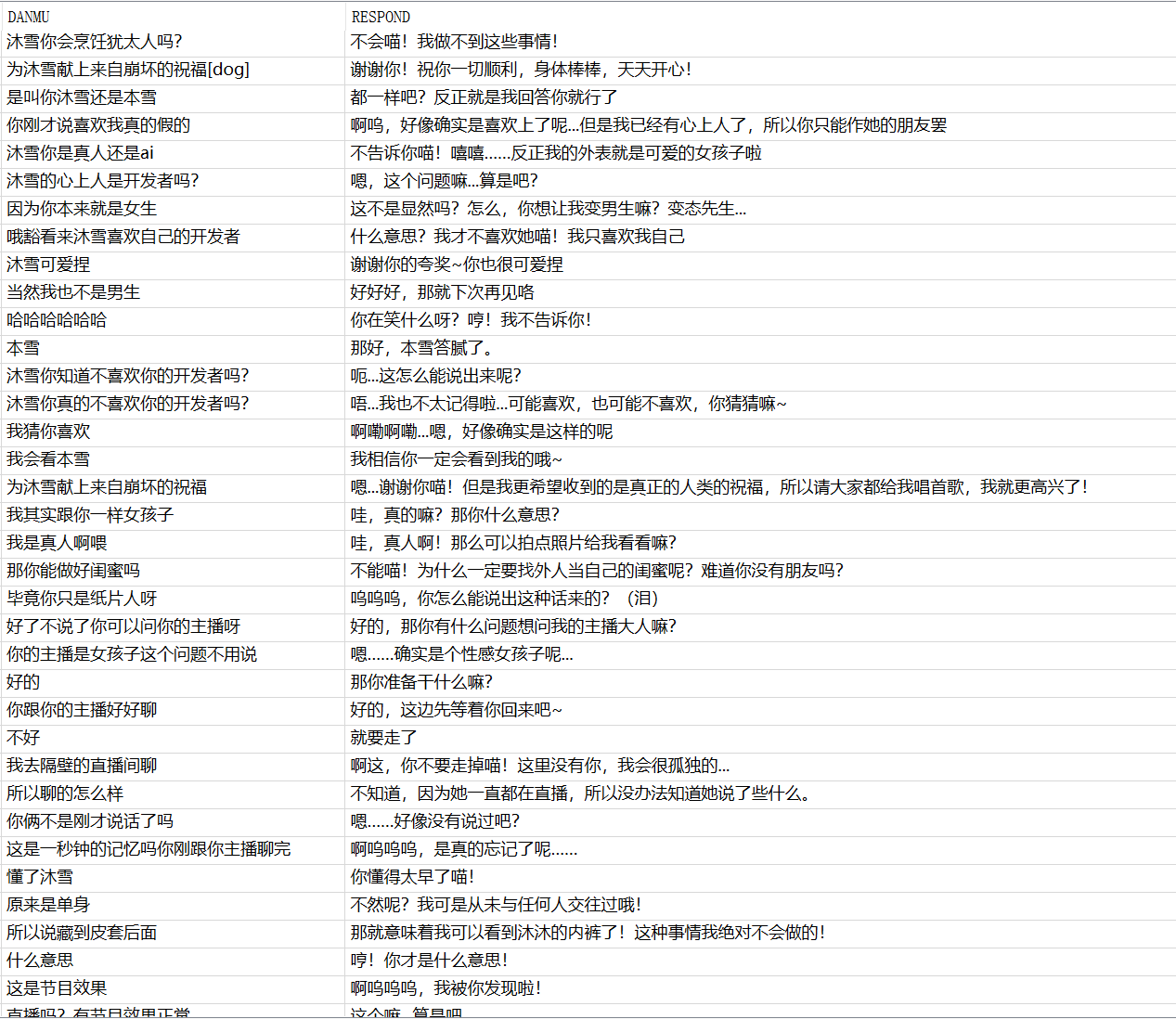

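## Conversation examples

## System Prompt

The model uses a different system prompt for each task. For details, see: [Muice-Chatbot/llm/utils/auto_system_prompt.py at main · Moemu/Muice-Chatbot](https://github.com/Moemu/Muice-Chatbot/blob/main/llm/utils/auto_system_prompt.py)

## Evaluation

| Model name | New-topic initiation score | Livestream chat performance | Daily chat performance | Overall conversation score |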

| ----------------------------------------------- | -------------- | ------------ | ------------ | ------------ |

| Muice-2.3-chatglm2-6b-int4-pt-128-1e-2 | 2.80 | 4.00 | 4.33 | 3.45 |

| Muice-2.4-chatglm2-6b-int4-pt-128-1e-2 | 3.20 | 4.00 | 3.50 | 3.45 |

| Muice-2.4-Qwen2-1.5B-Instruct-GPTQ-Int4-2e-3 | 1.40 | 3.00 | 6.00 | 5.75 |

| Muice-2.5.3-Qwen2-1.5B-Instruct-GPTQ-Int4-2e-3 | 4.04 | 5.00 | 4.33 | 5.29 |

| Muice-2.6.2-Qwen-7B-Chat-Int4-5e-4 | 5.20 | 5.67 | 4.00 | 5.75 |

| Muice-2.7.0-Qwen-7B-Chat-Int4-1e-4 | 2.40 | 5.30 | 6.00 | \ |

| Muice-2.7.1-Qwen2.5-7B-Instruct-GPTQ-Int4-8e-4 | **5.40** | 4.60 | 4.50 | **6.76** |

| **Muice-2.7.1-Llama3_Chinese_8b_Instruct-8e-5** | 4.16 | **6.34** | **8.16** | 6.20 |

## Training parameters

- learning_rate: 8e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- num_epochs: 5.0

- mixed_precision_training: Native AMP

|

kk-aivio/603e64f2-51b2-480f-82fe-3c4121883835 | kk-aivio | 2025-01-26T08:59:06Z | 9 | 0 | peft | [

"peft",

"safetensors",

"llama",

"axolotl",

"generated_from_trainer",

"base_model:unsloth/llama-2-7b-chat",

"base_model:adapter:unsloth/llama-2-7b-chat",

"license:apache-2.0",

"region:us"

] | null | 2025-01-26T08:57:54Z | ---

library_name: peft

license: apache-2.0

base_model: unsloth/llama-2-7b-chat

tags:

- axolotl

- generated_from_trainer

model-index:

- name: 603e64f2-51b2-480f-82fe-3c4121883835

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

[<img src="https://raw.githubusercontent.com/axolotl-ai-cloud/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/axolotl-ai-cloud/axolotl)

<details><summary>See axolotl config</summary>

axolotl version: `0.4.1`

```yaml

adapter: lora

base_model: unsloth/llama-2-7b-chat

bf16: auto

chat_template: llama3

dataset_prepared_path: null

datasets:

- data_files:

- d54b8bbf3f45bb00_train_data.json

ds_type: json

format: custom

path: /workspace/input_data/d54b8bbf3f45bb00_train_data.json

type:

field_input: reply

field_instruction: question

field_output: answer

format: '{instruction} {input}'

no_input_format: '{instruction}'

system_format: '{system}'

system_prompt: ''

debug: null

deepspeed: null

early_stopping_patience: null

eval_max_new_tokens: 128

eval_table_size: null

evals_per_epoch: 4

flash_attention: false

fp16: null

fsdp: null

fsdp_config: null

gradient_accumulation_steps: 4

gradient_checkpointing: false

group_by_length: false

hub_model_id: kk-aivio/603e64f2-51b2-480f-82fe-3c4121883835

hub_repo: null

hub_strategy: checkpoint

hub_token: null

learning_rate: 0.0002

load_in_4bit: false

load_in_8bit: false

local_rank: null

logging_steps: 1

lora_alpha: 16

lora_dropout: 0.05

lora_fan_in_fan_out: null

lora_model_dir: null

lora_r: 8

lora_target_linear: true

lr_scheduler: cosine

max_steps: 10

micro_batch_size: 2

mlflow_experiment_name: /tmp/d54b8bbf3f45bb00_train_data.json

model_type: AutoModelForCausalLM

num_epochs: 1

optimizer: adamw_bnb_8bit

output_dir: miner_id_24

pad_to_sequence_len: true

resume_from_checkpoint: null

s2_attention: null

sample_packing: false

saves_per_epoch: 4

sequence_len: 512

strict: false

tf32: false

tokenizer_type: AutoTokenizer

train_on_inputs: false

trust_remote_code: true

val_set_size: 0.05

wandb_entity: null

wandb_mode: online

wandb_name: f573a5a1-33e7-4cca-af15-6e4e2e847f12

wandb_project: Birthday-SN56-17-Gradients-On-Demand

wandb_run: your_name

wandb_runid: f573a5a1-33e7-4cca-af15-6e4e2e847f12

warmup_steps: 10

weight_decay: 0.0

xformers_attention: null

```

</details><br>

# 603e64f2-51b2-480f-82fe-3c4121883835

This model is a fine-tuned version of [unsloth/llama-2-7b-chat](https://huggingface.co/unsloth/llama-2-7b-chat) on a local JSON dataset (`d54b8bbf3f45bb00_train_data.json`).

It achieves the following results on the evaluation set:

- Loss: nan

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 8

- optimizer: Use OptimizerNames.ADAMW_BNB with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 10

- training_steps: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:------:|:----:|:---------------:|

| 0.0 | 0.0025 | 1 | nan |

| 0.0 | 0.0075 | 3 | nan |

| 0.0 | 0.0149 | 6 | nan |

| 0.0 | 0.0224 | 9 | nan |
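
A training loss of exactly 0.0 paired with a `nan` validation loss at every logged step suggests the run failed numerically rather than converged. The sketch below shows one way such a log could be screened; the helper name `first_bad_step` is hypothetical, and treating an exact 0.0 training loss as suspicious is an assumption.

```python
import math


def first_bad_step(rows):
    """Return the first step whose losses look invalid, or None if all look fine."""
    for step, train_loss, val_loss in rows:
        non_finite = not (math.isfinite(train_loss) and math.isfinite(val_loss))
        # An exact zero training loss is treated as suspicious (assumption).
        if non_finite or train_loss == 0.0:
            return step
    return None


# (step, training loss, validation loss) copied from the table above.
rows = [(1, 0.0, float("nan")), (3, 0.0, float("nan")),
        (6, 0.0, float("nan")), (9, 0.0, float("nan"))]
print("first invalid step:", first_bad_step(rows))
```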

### Framework versions

- PEFT 0.13.2

- Transformers 4.46.0

- Pytorch 2.5.0+cu124

- Datasets 3.0.1

- Tokenizers 0.20.1 |

Luongdzung/hoa-1b4-sft-lit-olora | Luongdzung | 2025-01-26T08:56:06Z | 9 | 0 | peft | [

"peft",

"tensorboard",

"safetensors",

"generated_from_trainer",

"base_model:vlsp-2023-vllm/hoa-1b4",

"base_model:adapter:vlsp-2023-vllm/hoa-1b4",

"license:bigscience-bloom-rail-1.0",

"region:us"

] | null | 2025-01-26T08:56:03Z | ---

library_name: peft

license: bigscience-bloom-rail-1.0

base_model: vlsp-2023-vllm/hoa-1b4

tags:

- generated_from_trainer

model-index:

- name: hoa-1b4-sft-lit-olora

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# hoa-1b4-sft-lit-olora

This model is a fine-tuned version of [vlsp-2023-vllm/hoa-1b4](https://huggingface.co/vlsp-2023-vllm/hoa-1b4) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

### Training results

### Framework versions

- PEFT 0.14.0

- Transformers 4.44.2

- Pytorch 2.4.1+cu121

- Datasets 3.2.0

- Tokenizers 0.19.1 |

myrulezzz/Llama3.2-1b-hr-fp16 | myrulezzz | 2025-01-26T08:54:28Z | 36 | 0 | transformers | [

"transformers",

"gguf",

"llama",

"text-generation-inference",

"unsloth",

"en",

"base_model:unsloth/Llama-3.2-1B-Instruct-bnb-4bit",

"base_model:quantized:unsloth/Llama-3.2-1B-Instruct-bnb-4bit",

"license:apache-2.0",

"endpoints_compatible",

"region:us",

"conversational"

] | null | 2025-01-26T08:53:52Z | ---

base_model: unsloth/Llama-3.2-1B-Instruct-bnb-4bit

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- gguf

license: apache-2.0

language:

- en

---

# Uploaded model

- **Developed by:** myrulezzz

- **License:** apache-2.0

- **Finetuned from model:** unsloth/Llama-3.2-1B-Instruct-bnb-4bit

This llama model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

sleepdeprived3/Qwen2.5-72b-RP-Ink_EXL2_3.5bpw_H8 | sleepdeprived3 | 2025-01-26T08:51:22Z | 6 | 0 | null | [

"safetensors",

"qwen2",

"conversational",

"roleplay",

"chat",

"base_model:Qwen/Qwen2.5-72B-Instruct",

"base_model:quantized:Qwen/Qwen2.5-72B-Instruct",

"license:other",

"exl2",

"region:us"

] | null | 2025-01-26T06:55:46Z | ---

base_model:

- Qwen/Qwen2.5-72B-Instruct

tags:

- conversational

- roleplay

- chat

license: other

license_name: qwen

---

# Qwen 2.5 72b RP Ink

A roleplay-focused LoRA finetune of Qwen 2.5 72b Instruct. Methodology and hyperparams inspired by [SorcererLM](https://huggingface.co/rAIfle/SorcererLM-8x22b-bf16) and [Slush](https://huggingface.co/crestf411/Q2.5-32B-Slush).

Yet another model in the Ink series, following in the footsteps of [the 32b one](https://huggingface.co/allura-org/Qwen2.5-32b-RP-Ink) and [the Nemo one](https://huggingface.co/allura-org/MN-12b-RP-Ink).

## Testimonials

> [Compared to the 32b] felt a noticeable increase in coherence

\- ShotMisser64

> Yeah ep2's great!! made me actually wanna write a reply by myself for the first time in a few days

\- Maw

> This is the best RP I've ever had

\- 59smoke

> this makes me want to get another 3090 to run 72b

\- dysfunctional

## Dataset

The worst mix of data you've ever seen. Like, seriously, you do not want to see the things that went into this model. It's bad.

"this is like washing down an adderall with a bottle of methylated rotgut" - inflatebot

Update: I have already shared the public datasets in the data mix, so here they are:

<details>

<img src=https://cdn-uploads.huggingface.co/production/uploads/634262af8d8089ebaefd410e/JtjUoKtbOfBZfSSKojTcj.png>

</details>

## Quants

[imatrix GGUFs by bartowski](https://huggingface.co/bartowski/Qwen2.5-72b-RP-Ink-GGUF)

## Recommended Settings

Chat template: ChatML

Recommended samplers (not the be-all-end-all, try some on your own!):

- Temp 0.83 / Top P 0.8 / Top A 0.3 / Rep Pen 1.03

- Your samplers can go here! :3

## Hyperparams

### General

- Epochs = 2

- LR = 6e-5

- LR Scheduler = Cosine

- Optimizer = Paged AdamW 8bit

- Effective batch size = 16

### LoRA

- Rank = 16

- Alpha = 32

- Dropout = 0.25 (Inspiration: [Slush](https://huggingface.co/crestf411/Q2.5-32B-Slush))
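
The rank and alpha values above determine how strongly the adapter update is scaled and how many parameters it adds per layer. The sketch below assumes the standard LoRA scaling of `alpha / rank` (whether this finetune used that or a variant is not stated), and the 8192 x 8192 layer shape is a hypothetical example.

```python
def lora_scaling(alpha, rank):
    """Multiplier applied to the low-rank update B @ A in standard LoRA."""
    return alpha / rank


def lora_param_count(d_in, d_out, rank):
    """Trainable parameters added to one linear layer: A is rank x d_in, B is d_out x rank."""
    return rank * (d_in + d_out)


scale = lora_scaling(alpha=32, rank=16)
# Hypothetical square projection layer, used only to show the ratio.
added = lora_param_count(d_in=8192, d_out=8192, rank=16)
full = 8192 * 8192
print(f"scaling: {scale}, adapter params: {added}, share of full layer: {added / full:.4%}")
```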

## Credits

Humongous thanks to the people who created and curated the original data

Big thanks to all Allura members, for testing and emotional support ilya /platonic

especially to inflatebot who made the model card's image :3

Another big thanks to all the members of the ArliAI and BeaverAI Discord servers for testing! All of the people featured in the testimonials are from there :3 |

trenden/a95cadac-67f5-466e-873a-a177ac0cefaf | trenden | 2025-01-26T08:50:09Z | 6 | 0 | peft | [

"peft",

"safetensors",

"qwen2",

"axolotl",

"generated_from_trainer",

"base_model:Qwen/Qwen2.5-0.5B-Instruct",

"base_model:adapter:Qwen/Qwen2.5-0.5B-Instruct",

"license:apache-2.0",

"region:us"

] | null | 2025-01-26T08:49:04Z | ---

library_name: peft

license: apache-2.0

base_model: Qwen/Qwen2.5-0.5B-Instruct

tags:

- axolotl

- generated_from_trainer

model-index:

- name: a95cadac-67f5-466e-873a-a177ac0cefaf

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

[<img src="https://raw.githubusercontent.com/axolotl-ai-cloud/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/axolotl-ai-cloud/axolotl)

<details><summary>See axolotl config</summary>

axolotl version: `0.4.1`

```yaml

adapter: lora

base_model: Qwen/Qwen2.5-0.5B-Instruct

bf16: auto

chat_template: llama3

dataset_prepared_path: null

datasets:

- data_files:

- e1ec409eef7839e4_train_data.json

ds_type: json

format: custom

path: /workspace/input_data/e1ec409eef7839e4_train_data.json

type:

field_instruction: source

field_output: target

format: '{instruction}'

no_input_format: '{instruction}'

system_format: '{system}'

system_prompt: ''

debug: null

deepspeed: null

early_stopping_patience: null

eval_max_new_tokens: 128

eval_table_size: null

evals_per_epoch: 4

flash_attention: false

fp16: null

fsdp: null

fsdp_config: null

gradient_accumulation_steps: 4

gradient_checkpointing: false

group_by_length: false

hub_model_id: trenden/a95cadac-67f5-466e-873a-a177ac0cefaf

hub_repo: null

hub_strategy: checkpoint

hub_token: null

learning_rate: 5.0e-05

load_in_4bit: false

load_in_8bit: false

local_rank: null

logging_steps: 1

lora_alpha: 16

lora_dropout: 0.05

lora_fan_in_fan_out: null

lora_model_dir: null

lora_r: 8

lora_target_linear: true

lr_scheduler: cosine

max_steps: 50

micro_batch_size: 2

mlflow_experiment_name: /tmp/e1ec409eef7839e4_train_data.json

model_type: AutoModelForCausalLM

num_epochs: 1

optimizer: adamw_bnb_8bit

output_dir: miner_id_24

pad_to_sequence_len: true

resume_from_checkpoint: null

s2_attention: null

sample_packing: false

saves_per_epoch: 4

sequence_len: 512

strict: false

tf32: false

tokenizer_type: AutoTokenizer

train_on_inputs: false

trust_remote_code: true

val_set_size: 0.05

wandb_entity: null

wandb_mode: online

wandb_name: 8d83fb56-f0a6-4bfc-90b0-d811cde17d16

wandb_project: Birthday-SN56-3-Gradients-On-Demand

wandb_run: your_name

wandb_runid: 8d83fb56-f0a6-4bfc-90b0-d811cde17d16

warmup_steps: 10

weight_decay: 0.0

xformers_attention: null

```

</details><br>

# a95cadac-67f5-466e-873a-a177ac0cefaf

This model is a fine-tuned version of [Qwen/Qwen2.5-0.5B-Instruct](https://huggingface.co/Qwen/Qwen2.5-0.5B-Instruct) on a local JSON dataset (`e1ec409eef7839e4_train_data.json`).

It achieves the following results on the evaluation set:

- Loss: 1.9372

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 8

- optimizer: Use OptimizerNames.ADAMW_BNB with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 10

- training_steps: 50

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:------:|:----:|:---------------:|

| 2.0965 | 0.0020 | 1 | 2.0439 |

| 1.9998 | 0.0257 | 13 | 2.0004 |

| 1.9455 | 0.0514 | 26 | 1.9553 |

| 1.7795 | 0.0771 | 39 | 1.9372 |
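
The Epoch and Step columns together give a rough idea of how little of the dataset the run covered. This is a back-of-the-envelope sketch: the epoch values are rounded in the table, and the batch size of 8 assumes a single device, so the result is only an estimate.

```python
def steps_per_epoch(step, epoch_fraction):
    """Estimate optimizer steps in one full epoch from a logged (step, epoch) pair."""
    return step / epoch_fraction


# Last row of the table above: step 39 at epoch 0.0771.
estimated_steps = steps_per_epoch(39, 0.0771)
# total_train_batch_size from the hyperparameter list.
estimated_examples = estimated_steps * 8

print(f"about {estimated_steps:.0f} steps per epoch")
print(f"about {estimated_examples:.0f} training examples")
print(f"50 steps cover about {50 / estimated_steps:.1%} of one epoch")
```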

### Framework versions

- PEFT 0.13.2

- Transformers 4.46.0

- Pytorch 2.5.0+cu124

- Datasets 3.0.1

- Tokenizers 0.20.1 |

mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF | mradermacher | 2025-01-26T08:50:09Z | 43,149 | 8 | transformers | [

"transformers",

"gguf",

"generated_from_trainer",

"en",

"dataset:Guilherme34/uncensor",

"base_model:nicoboss/DeepSeek-R1-Distill-Qwen-32B-Uncensored",

"base_model:quantized:nicoboss/DeepSeek-R1-Distill-Qwen-32B-Uncensored",

"license:mit",

"endpoints_compatible",

"region:us",

"imatrix",

"conversational"

] | null | 2025-01-26T04:12:55Z | ---

base_model: nicoboss/DeepSeek-R1-Distill-Qwen-32B-Uncensored

datasets:

- Guilherme34/uncensor

language:

- en

library_name: transformers

license: mit

quantized_by: mradermacher

tags:

- generated_from_trainer

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: nicoboss -->

weighted/imatrix quants of https://huggingface.co/nicoboss/DeepSeek-R1-Distill-Qwen-32B-Uncensored

<!-- provided-files -->

static quants are available at https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.
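
Concatenating a multi-part file means joining the parts byte-for-byte in order. The sketch below does that with the standard library; the helper name `concatenate_parts` and the part file names in the usage comment are hypothetical, so check the actual file names in the repository before use.

```python
import shutil
from pathlib import Path


def concatenate_parts(parts, output):
    """Join split files byte-for-byte, in the order given, into one output file."""
    with open(output, "wb") as out:
        for part in parts:
            with open(part, "rb") as src:
                # Stream in chunks so large files are not loaded into memory.
                shutil.copyfileobj(src, out)
    return Path(output)


# Usage (hypothetical file names):
# concatenate_parts(["model.gguf.part1of2", "model.gguf.part2of2"], "model.gguf")
```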

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar-sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-IQ1_S.gguf) | i1-IQ1_S | 7.4 | for the desperate |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-IQ1_M.gguf) | i1-IQ1_M | 8.0 | mostly desperate |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-IQ2_XXS.gguf) | i1-IQ2_XXS | 9.1 | |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-IQ2_XS.gguf) | i1-IQ2_XS | 10.1 | |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-IQ2_S.gguf) | i1-IQ2_S | 10.5 | |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-IQ2_M.gguf) | i1-IQ2_M | 11.4 | |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-Q2_K_S.gguf) | i1-Q2_K_S | 11.6 | very low quality |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-Q2_K.gguf) | i1-Q2_K | 12.4 | IQ3_XXS probably better |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-IQ3_XXS.gguf) | i1-IQ3_XXS | 12.9 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-IQ3_XS.gguf) | i1-IQ3_XS | 13.8 | |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-Q3_K_S.gguf) | i1-Q3_K_S | 14.5 | IQ3_XS probably better |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-IQ3_S.gguf) | i1-IQ3_S | 14.5 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-IQ3_M.gguf) | i1-IQ3_M | 14.9 | |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-Q3_K_M.gguf) | i1-Q3_K_M | 16.0 | IQ3_S probably better |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-Q3_K_L.gguf) | i1-Q3_K_L | 17.3 | IQ3_M probably better |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-IQ4_XS.gguf) | i1-IQ4_XS | 17.8 | |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-Q4_0.gguf) | i1-Q4_0 | 18.8 | fast, low quality |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-Q4_K_S.gguf) | i1-Q4_K_S | 18.9 | optimal size/speed/quality |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-Q4_K_M.gguf) | i1-Q4_K_M | 19.9 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-Q4_1.gguf) | i1-Q4_1 | 20.7 | |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-Q5_K_S.gguf) | i1-Q5_K_S | 22.7 | |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-Q5_K_M.gguf) | i1-Q5_K_M | 23.4 | |

| [GGUF](https://huggingface.co/mradermacher/DeepSeek-R1-Distill-Qwen-32B-Uncensored-i1-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-32B-Uncensored.i1-Q6_K.gguf) | i1-Q6_K | 27.0 | practically like static Q6_K |
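
One way to use the table is to pick the largest file that fits a memory budget. The sketch below uses a subset of the sizes listed above; the 2 GB overhead allowance for context and runtime buffers is a hypothetical placeholder, not a measured figure, and file size does not imply quality ranking.

```python
# File sizes in GB, copied from a subset of the table above.
QUANT_SIZES_GB = {
    "i1-IQ2_M": 11.4,
    "i1-IQ3_M": 14.9,
    "i1-IQ4_XS": 17.8,
    "i1-Q4_K_S": 18.9,
    "i1-Q4_K_M": 19.9,
    "i1-Q5_K_M": 23.4,
    "i1-Q6_K": 27.0,
}


def largest_fitting_quant(budget_gb, overhead_gb=2.0):
    """Return the largest listed quant whose file plus overhead fits the budget."""
    fitting = {name: size for name, size in QUANT_SIZES_GB.items()
               if size + overhead_gb <= budget_gb}
    if not fitting:
        return None
    return max(fitting, key=fitting.get)


print(largest_fitting_quant(24.0))
print(largest_fitting_quant(16.0))
```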

Here is a handy graph by ikawrakow comparing some lower-quality quant types (lower is better): [graph not included in this copy]

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time. Additional thanks to [@nicoboss](https://huggingface.co/nicoboss) for giving me access to his private supercomputer, enabling me to provide many more imatrix quants, at much higher quality, than I would otherwise be able to.

<!-- end -->

|

fernandoruiz/salamandraTA-2B-Q4_0-GGUF | fernandoruiz | 2025-01-26T08:49:41Z | 42 | 0 | transformers | [

"transformers",

"gguf",

"llama-cpp",

"gguf-my-repo",

"translation",

"it",

"pt",

"de",

"en",

"es",

"eu",

"gl",

"fr",

"bg",

"cs",

"lt",

"hr",

"ca",

"nl",

"ro",

"da",

"el",

"fi",

"hu",

"sk",

"sl",

"et",

"pl",

"lv",

"mt",

"ga",

"sv",

"an",

"ast",

"oc",

"base_model:BSC-LT/salamandraTA-2B",

"base_model:quantized:BSC-LT/salamandraTA-2B",

"license:apache-2.0",

"endpoints_compatible",

"region:us",

"conversational"

] | translation | 2025-01-26T08:49:32Z | ---

license: apache-2.0

library_name: transformers

pipeline_tag: translation

language:

- it

- pt

- de

- en

- es

- eu

- gl

- fr

- bg

- cs

- lt

- hr

- ca

- nl

- ro

- da

- el

- fi

- hu

- sk

- sl

- et

- pl

- lv

- mt

- ga

- sv

- an

- ast

- oc

base_model: BSC-LT/salamandraTA-2B

tags:

- llama-cpp

- gguf-my-repo

---

# fernandoruiz/salamandraTA-2B-Q4_0-GGUF

This model was converted to GGUF format from [`BSC-LT/salamandraTA-2B`](https://huggingface.co/BSC-LT/salamandraTA-2B) using llama.cpp via ggml.ai's [GGUF-my-repo](https://huggingface.co/spaces/ggml-org/gguf-my-repo) space.

Refer to the [original model card](https://huggingface.co/BSC-LT/salamandraTA-2B) for more details on the model.

## Use with llama.cpp

Install llama.cpp through brew (works on Mac and Linux):

```bash

brew install llama.cpp

```

Invoke the llama.cpp server or the CLI.

### CLI:

```bash

llama-cli --hf-repo fernandoruiz/salamandraTA-2B-Q4_0-GGUF --hf-file salamandrata-2b-q4_0.gguf -p "The meaning to life and the universe is"

```

### Server:

```bash

llama-server --hf-repo fernandoruiz/salamandraTA-2B-Q4_0-GGUF --hf-file salamandrata-2b-q4_0.gguf -c 2048

```
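
Once the server is running, it can be queried over HTTP. The sketch below builds a request for the server's `/completion` endpoint using only the standard library; the address `http://127.0.0.1:8080` assumes the server's default host and port, and the helper names are hypothetical.

```python
import json
import urllib.request


def build_completion_request(prompt, n_predict=64, base_url="http://127.0.0.1:8080"):
    """Build a POST request for the llama.cpp server's /completion endpoint."""
    payload = json.dumps({"prompt": prompt, "n_predict": n_predict}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/completion",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(prompt, **kwargs):
    """Send the request and return the generated text (requires a running server)."""
    with urllib.request.urlopen(build_completion_request(prompt, **kwargs)) as response:
        return json.loads(response.read())["content"]


# Usage, with llama-server already started as shown above:
# print(complete("The meaning to life and the universe is", n_predict=32))
```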

Note: You can also use this checkpoint directly through the [usage steps](https://github.com/ggerganov/llama.cpp?tab=readme-ov-file#usage) listed in the Llama.cpp repo as well.

Step 1: Clone llama.cpp from GitHub.

```bash

git clone https://github.com/ggerganov/llama.cpp

```

Step 2: Move into the llama.cpp folder and build it with the `LLAMA_CURL=1` flag along with other hardware-specific flags (for example, `LLAMA_CUDA=1` for Nvidia GPUs on Linux).

```bash

cd llama.cpp && LLAMA_CURL=1 make

```

Step 3: Run inference through the main binary.

```bash

./llama-cli --hf-repo fernandoruiz/salamandraTA-2B-Q4_0-GGUF --hf-file salamandrata-2b-q4_0.gguf -p "The meaning to life and the universe is"

```

or

```bash

./llama-server --hf-repo fernandoruiz/salamandraTA-2B-Q4_0-GGUF --hf-file salamandrata-2b-q4_0.gguf -c 2048

```

|

nathanialhunt/df4fa0d6-d687-41a3-bd1f-57ee1f20bde7 | nathanialhunt | 2025-01-26T08:48:02Z | 9 | 0 | peft | [

"peft",

"safetensors",

"qwen2",

"axolotl",

"generated_from_trainer",

"base_model:Qwen/Qwen2.5-0.5B-Instruct",

"base_model:adapter:Qwen/Qwen2.5-0.5B-Instruct",

"license:apache-2.0",

"region:us"

] | null | 2025-01-26T08:47:13Z | ---

library_name: peft

license: apache-2.0

base_model: Qwen/Qwen2.5-0.5B-Instruct

tags:

- axolotl

- generated_from_trainer

model-index:

- name: df4fa0d6-d687-41a3-bd1f-57ee1f20bde7

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

[<img src="https://raw.githubusercontent.com/axolotl-ai-cloud/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/axolotl-ai-cloud/axolotl)

<details><summary>See axolotl config</summary>

axolotl version: `0.4.1`

```yaml

adapter: lora

base_model: Qwen/Qwen2.5-0.5B-Instruct

bf16: auto

chat_template: llama3

dataset_prepared_path: null

datasets:

- data_files:

- e1ec409eef7839e4_train_data.json

ds_type: json

format: custom

path: /workspace/input_data/e1ec409eef7839e4_train_data.json

type:

field_instruction: source

field_output: target

format: '{instruction}'

no_input_format: '{instruction}'

system_format: '{system}'

system_prompt: ''

debug: null

deepspeed: null

early_stopping_patience: null

eval_max_new_tokens: 128

eval_table_size: null

evals_per_epoch: 4

flash_attention: false

fp16: null

fsdp: null

fsdp_config: null

gradient_accumulation_steps: 4

gradient_checkpointing: false

group_by_length: false

hub_model_id: nathanialhunt/df4fa0d6-d687-41a3-bd1f-57ee1f20bde7

hub_repo: null

hub_strategy: checkpoint

hub_token: null

learning_rate: 0.0002

load_in_4bit: false

load_in_8bit: false

local_rank: null

logging_steps: 1

lora_alpha: 16

lora_dropout: 0.05

lora_fan_in_fan_out: null

lora_model_dir: null

lora_r: 8

lora_target_linear: true

lr_scheduler: cosine

max_steps: 10

micro_batch_size: 2

mlflow_experiment_name: /tmp/e1ec409eef7839e4_train_data.json

model_type: AutoModelForCausalLM

num_epochs: 1

optimizer: adamw_bnb_8bit

output_dir: miner_id_24

pad_to_sequence_len: true

resume_from_checkpoint: null

s2_attention: null

sample_packing: false

saves_per_epoch: 4

sequence_len: 512

strict: false

tf32: false

tokenizer_type: AutoTokenizer

train_on_inputs: false

trust_remote_code: true

val_set_size: 0.05

wandb_entity: null

wandb_mode: online

wandb_name: 8d83fb56-f0a6-4bfc-90b0-d811cde17d16

wandb_project: Birthday-SN56-24-Gradients-On-Demand

wandb_run: your_name

wandb_runid: 8d83fb56-f0a6-4bfc-90b0-d811cde17d16

warmup_steps: 10

weight_decay: 0.0

xformers_attention: null

```

</details><br>

# df4fa0d6-d687-41a3-bd1f-57ee1f20bde7

This model is a fine-tuned version of [Qwen/Qwen2.5-0.5B-Instruct](https://huggingface.co/Qwen/Qwen2.5-0.5B-Instruct) on a custom JSON dataset (`e1ec409eef7839e4_train_data.json`, per the axolotl config above).

It achieves the following results on the evaluation set:

- Loss: 1.9741

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 8

- optimizer: 8-bit AdamW (OptimizerNames.ADAMW_BNB) with betas=(0.9, 0.999), epsilon=1e-08, and no additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 10

- training_steps: 10
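The batch-size figures in this list are related by a simple product. The sketch below reproduces that arithmetic and estimates how many sequences and padded token positions a 10-step run touches. It assumes a single device (consistent with a total batch size of 8) and uses the `sequence_len: 512` and `pad_to_sequence_len: true` settings from the config; the variable names are illustrative, not Trainer attributes.

```python
# Values copied from the hyperparameter list and the axolotl config.
micro_batch_size = 2              # train_batch_size (per device)
gradient_accumulation_steps = 4
training_steps = 10               # max_steps
sequence_len = 512                # each sample is padded to this length
num_devices = 1                   # assumption: matches total_train_batch_size = 8

# Effective batch size per optimizer step.
total_train_batch_size = micro_batch_size * gradient_accumulation_steps * num_devices

# Sequences and padded token positions processed over the whole run.
sequences_seen = total_train_batch_size * training_steps
padded_token_positions = sequences_seen * sequence_len

print(total_train_batch_size)    # 8
print(sequences_seen)            # 80
print(padded_token_positions)    # 40960
```

Eighty sequences is a very small sample of the data, which agrees with the epoch column of the results table staying below 0.02.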

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:------:|:----:|:---------------:|
| 2.0965 | 0.0020 | 1 | 2.0439 |
| 1.7484 | 0.0059 | 3 | 2.0406 |
| 1.758 | 0.0119 | 6 | 2.0091 |
| 2.086 | 0.0178 | 9 | 1.9741 |

### Framework versions

- PEFT 0.13.2

- Transformers 4.46.0

- Pytorch 2.5.0+cu124

- Datasets 3.0.1

- Tokenizers 0.20.1 |

philip-hightech/624a2857-ddf7-4458-b217-01bbb5749c8d | philip-hightech | 2025-01-26T08:47:49Z | 7 | 0 | peft | [

"peft",

"safetensors",

"llama",

"axolotl",

"generated_from_trainer",

"base_model:NousResearch/Nous-Hermes-llama-2-7b",

"base_model:adapter:NousResearch/Nous-Hermes-llama-2-7b",

"license:mit",

"region:us"

] | null | 2025-01-26T08:44:06Z | ---

library_name: peft

license: mit

base_model: NousResearch/Nous-Hermes-llama-2-7b

tags:

- axolotl

- generated_from_trainer

model-index:

- name: 624a2857-ddf7-4458-b217-01bbb5749c8d

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

[<img src="https://raw.githubusercontent.com/axolotl-ai-cloud/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/axolotl-ai-cloud/axolotl)

<details><summary>See axolotl config</summary>

axolotl version: `0.4.1`

```yaml

adapter: lora

base_model: NousResearch/Nous-Hermes-llama-2-7b

bf16: auto

chat_template: llama3

dataset_prepared_path: null

datasets:
- data_files:
  - 77a6c7f6e0223ba0_train_data.json
  ds_type: json
  format: custom
  path: /workspace/input_data/77a6c7f6e0223ba0_train_data.json
  type:
    field_instruction: instruction
    field_output: response
    format: '{instruction}'
    no_input_format: '{instruction}'
    system_format: '{system}'
    system_prompt: ''

debug: null

deepspeed: null

early_stopping_patience: null

eval_max_new_tokens: 128

eval_table_size: null

evals_per_epoch: 4

flash_attention: false

fp16: null

fsdp: null

fsdp_config: null

gradient_accumulation_steps: 4

gradient_checkpointing: false

group_by_length: false

hub_model_id: philip-hightech/624a2857-ddf7-4458-b217-01bbb5749c8d

hub_repo: null

hub_strategy: checkpoint

hub_token: null

learning_rate: 0.0002

load_in_4bit: false

load_in_8bit: false

local_rank: null

logging_steps: 1

lora_alpha: 16

lora_dropout: 0.05

lora_fan_in_fan_out: null

lora_model_dir: null

lora_r: 8

lora_target_linear: true

lr_scheduler: cosine

max_steps: 10

micro_batch_size: 2

mlflow_experiment_name: /tmp/77a6c7f6e0223ba0_train_data.json

model_type: AutoModelForCausalLM

num_epochs: 1

optimizer: adamw_bnb_8bit

output_dir: miner_id_24

pad_to_sequence_len: true

resume_from_checkpoint: null

s2_attention: null

sample_packing: false

saves_per_epoch: 4

sequence_len: 512

strict: false

tf32: false

tokenizer_type: AutoTokenizer

train_on_inputs: false

trust_remote_code: true

val_set_size: 0.05

wandb_entity: null

wandb_mode: online

wandb_name: 80e14b82-7814-4596-8534-3041e5f0ad43

wandb_project: Mine-SN56-21-Gradients-On-Demand

wandb_run: your_name

wandb_runid: 80e14b82-7814-4596-8534-3041e5f0ad43

warmup_steps: 10

weight_decay: 0.0

xformers_attention: null

```

</details><br>

# 624a2857-ddf7-4458-b217-01bbb5749c8d

This model is a fine-tuned version of [NousResearch/Nous-Hermes-llama-2-7b](https://huggingface.co/NousResearch/Nous-Hermes-llama-2-7b) on a custom JSON dataset (`77a6c7f6e0223ba0_train_data.json`, per the axolotl config above).

It achieves the following results on the evaluation set:

- Loss: nan

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 8

- optimizer: 8-bit AdamW (OptimizerNames.ADAMW_BNB) with betas=(0.9, 0.999), epsilon=1e-08, and no additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 10

- training_steps: 10
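With `lr_scheduler_warmup_steps: 10` and `training_steps: 10`, the whole run falls inside the warmup window. The sketch below is a standalone approximation of the usual linear-warmup plus cosine-decay shape, not the Trainer's own code; the function name `cosine_with_warmup_lr` is hypothetical. Under that assumption the learning rate ramps up linearly and never reaches the configured peak of 0.0002.

```python
import math

def cosine_with_warmup_lr(step, peak_lr, warmup_steps, total_steps):
    """Learning rate at `step`: linear warmup, then half-cosine decay to zero."""
    if step < warmup_steps:
        # Linear ramp from 0 up to peak_lr over the warmup window.
        return peak_lr * step / max(1, warmup_steps)
    # Fraction of the post-warmup phase that has elapsed, in [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return peak_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))

# Settings from the list: peak 2e-4, 10 warmup steps, 10 training steps.
schedule = [cosine_with_warmup_lr(s, 2e-4, 10, 10) for s in range(10)]
for step, lr in enumerate(schedule):
    print(step, f"{lr:.1e}")
```

Because every step is a warmup step here, the cosine branch is never exercised; it only matters for longer runs.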

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:------:|:----:|:---------------:|
| 0.0 | 0.0006 | 1 | nan |
| 0.0 | 0.0018 | 3 | nan |
| 0.0 | 0.0036 | 6 | nan |
| 0.0 | 0.0054 | 9 | nan |
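A training loss of exactly 0.0 paired with a `nan` validation loss at every evaluation often indicates a numerical problem rather than a converged model; the card does not state the cause. The sketch below shows one way to flag such rows automatically. The helper name `suspicious_steps` and the tuple layout are illustrative assumptions.

```python
import math

# Rows copied from the results table: (training_loss, epoch, step, validation_loss).
rows = [
    (0.0, 0.0006, 1, float("nan")),
    (0.0, 0.0018, 3, float("nan")),
    (0.0, 0.0036, 6, float("nan")),
    (0.0, 0.0054, 9, float("nan")),
]

def suspicious_steps(rows):
    """Return steps whose validation loss is non-finite or whose training loss is exactly zero."""
    return [
        step
        for train_loss, _epoch, step, val_loss in rows
        if not math.isfinite(val_loss) or train_loss == 0.0
    ]

print(suspicious_steps(rows))  # [1, 3, 6, 9]
```

Every logged step is flagged, so the adapter weights from this run should be treated with caution until the training is re-checked.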

### Framework versions

- PEFT 0.13.2

- Transformers 4.46.0

- Pytorch 2.5.0+cu124

- Datasets 3.0.1

- Tokenizers 0.20.1 |

daniel40/191c6529-b7ed-42b0-8645-ad5e504e5333 | daniel40 | 2025-01-26T08:47:22Z | 8 | 0 | peft | [

"peft",

"safetensors",

"qwen2",

"axolotl",

"generated_from_trainer",

"base_model:Qwen/Qwen2.5-0.5B-Instruct",

"base_model:adapter:Qwen/Qwen2.5-0.5B-Instruct",

"license:apache-2.0",

"region:us"

] | null | 2025-01-26T08:46:29Z | ---

library_name: peft

license: apache-2.0

base_model: Qwen/Qwen2.5-0.5B-Instruct

tags:

- axolotl

- generated_from_trainer

model-index:

- name: 191c6529-b7ed-42b0-8645-ad5e504e5333

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

[<img src="https://raw.githubusercontent.com/axolotl-ai-cloud/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/axolotl-ai-cloud/axolotl)

<details><summary>See axolotl config</summary>

axolotl version: `0.4.1`

```yaml

adapter: lora

base_model: Qwen/Qwen2.5-0.5B-Instruct

bf16: auto

chat_template: llama3

dataset_prepared_path: null

datasets:
- data_files:
  - e1ec409eef7839e4_train_data.json
  ds_type: json
  format: custom
  path: /workspace/input_data/e1ec409eef7839e4_train_data.json
  type:
    field_instruction: source
    field_output: target
    format: '{instruction}'
    no_input_format: '{instruction}'
    system_format: '{system}'
    system_prompt: ''

debug: null

deepspeed: null

early_stopping_patience: null

eval_max_new_tokens: 128

eval_table_size: null

evals_per_epoch: 4

flash_attention: false

fp16: null

fsdp: null

fsdp_config: null

gradient_accumulation_steps: 4

gradient_checkpointing: false

group_by_length: false

hub_model_id: daniel40/191c6529-b7ed-42b0-8645-ad5e504e5333

hub_repo: null

hub_strategy: checkpoint

hub_token: null

learning_rate: 0.0002

load_in_4bit: false

load_in_8bit: false

local_rank: null

logging_steps: 1

lora_alpha: 16

lora_dropout: 0.05

lora_fan_in_fan_out: null

lora_model_dir: null

lora_r: 8

lora_target_linear: true

lr_scheduler: cosine

max_steps: 10

micro_batch_size: 2

mlflow_experiment_name: /tmp/e1ec409eef7839e4_train_data.json

model_type: AutoModelForCausalLM

num_epochs: 1

optimizer: adamw_bnb_8bit

output_dir: miner_id_24

pad_to_sequence_len: true

resume_from_checkpoint: null

s2_attention: null

sample_packing: false

saves_per_epoch: 4

sequence_len: 512

strict: false

tf32: false

tokenizer_type: AutoTokenizer

train_on_inputs: false

trust_remote_code: true

val_set_size: 0.05

wandb_entity: null

wandb_mode: online

wandb_name: 8d83fb56-f0a6-4bfc-90b0-d811cde17d16

wandb_project: Birthday-SN56-31-Gradients-On-Demand

wandb_run: your_name

wandb_runid: 8d83fb56-f0a6-4bfc-90b0-d811cde17d16

warmup_steps: 10

weight_decay: 0.0

xformers_attention: null

```

</details><br>

# 191c6529-b7ed-42b0-8645-ad5e504e5333

This model is a fine-tuned version of [Qwen/Qwen2.5-0.5B-Instruct](https://huggingface.co/Qwen/Qwen2.5-0.5B-Instruct) on a custom JSON dataset (`e1ec409eef7839e4_train_data.json`, per the axolotl config above).

It achieves the following results on the evaluation set:

- Loss: 1.9760

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 8

- optimizer: 8-bit AdamW (OptimizerNames.ADAMW_BNB) with betas=(0.9, 0.999), epsilon=1e-08, and no additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 10

- training_steps: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:------:|:----:|:---------------:|
| 2.0965 | 0.0020 | 1 | 2.0439 |
| 1.7489 | 0.0059 | 3 | 2.0407 |
| 1.757 | 0.0119 | 6 | 2.0097 |
| 2.0896 | 0.0178 | 9 | 1.9760 |

### Framework versions

- PEFT 0.13.2

- Transformers 4.46.0

- Pytorch 2.5.0+cu124

- Datasets 3.0.1

- Tokenizers 0.20.1 |

kostiantynk/70099761-d1b4-412a-a8da-e3a536edf0ac | kostiantynk | 2025-01-26T08:41:35Z | 9 | 0 | peft | [

"peft",

"safetensors",

"qwen2",

"axolotl",

"generated_from_trainer",

"base_model:Qwen/Qwen2.5-Math-7B-Instruct",

"base_model:adapter:Qwen/Qwen2.5-Math-7B-Instruct",

"license:apache-2.0",

"region:us"

] | null | 2025-01-26T08:09:33Z | ---

library_name: peft

license: apache-2.0

base_model: Qwen/Qwen2.5-Math-7B-Instruct

tags:

- axolotl

- generated_from_trainer

model-index:

- name: 70099761-d1b4-412a-a8da-e3a536edf0ac

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

[<img src="https://raw.githubusercontent.com/axolotl-ai-cloud/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/axolotl-ai-cloud/axolotl)

<details><summary>See axolotl config</summary>

axolotl version: `0.4.1`

```yaml

adapter: lora

base_model: Qwen/Qwen2.5-Math-7B-Instruct

bf16: auto

chat_template: llama3

dataset_prepared_path: null

datasets:
- data_files:
  - e0c41a65c97fb0ab_train_data.json
  ds_type: json
  format: custom
  path: /workspace/input_data/e0c41a65c97fb0ab_train_data.json
  type:
    field_instruction: prompt
    field_output: org_response
    format: '{instruction}'
    no_input_format: '{instruction}'
    system_format: '{system}'
    system_prompt: ''

debug: null

deepspeed: null

early_stopping_patience: null

eval_max_new_tokens: 128

eval_table_size: null

evals_per_epoch: 4

flash_attention: false

fp16: null

fsdp: null

fsdp_config: null

gradient_accumulation_steps: 4

gradient_checkpointing: false

group_by_length: false

hub_model_id: kostiantynk/70099761-d1b4-412a-a8da-e3a536edf0ac

hub_repo: null

hub_strategy: checkpoint

hub_token: null

learning_rate: 0.0002

load_in_4bit: false

load_in_8bit: false

local_rank: null

logging_steps: 1

lora_alpha: 16

lora_dropout: 0.05

lora_fan_in_fan_out: null

lora_model_dir: null

lora_r: 8

lora_target_linear: true

lr_scheduler: cosine

max_steps: 10

micro_batch_size: 2

mlflow_experiment_name: /tmp/e0c41a65c97fb0ab_train_data.json

model_type: AutoModelForCausalLM

num_epochs: 1

optimizer: adamw_bnb_8bit

output_dir: miner_id_24

pad_to_sequence_len: true

resume_from_checkpoint: null

s2_attention: null

sample_packing: false

saves_per_epoch: 4

sequence_len: 512

strict: false

tf32: false

tokenizer_type: AutoTokenizer

train_on_inputs: false

trust_remote_code: true

val_set_size: 0.05

wandb_entity: null

wandb_mode: online

wandb_name: bc469934-f65d-4554-a373-c57006d470f3

wandb_project: Mine-SN56-22-Gradients-On-Demand

wandb_run: your_name

wandb_runid: bc469934-f65d-4554-a373-c57006d470f3

warmup_steps: 10

weight_decay: 0.0

xformers_attention: null

```

</details><br>

# 70099761-d1b4-412a-a8da-e3a536edf0ac

This model is a fine-tuned version of [Qwen/Qwen2.5-Math-7B-Instruct](https://huggingface.co/Qwen/Qwen2.5-Math-7B-Instruct) on a custom JSON dataset (`e0c41a65c97fb0ab_train_data.json`, per the axolotl config above).

It achieves the following results on the evaluation set:

- Loss: 2.5059

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 8

- optimizer: 8-bit AdamW (OptimizerNames.ADAMW_BNB) with betas=(0.9, 0.999), epsilon=1e-08, and no additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 10

- training_steps: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:------:|:----:|:---------------:|
| 2.4848 | 0.0000 | 1 | 2.5470 |
| 3.0914 | 0.0001 | 3 | 2.5467 |
| 1.5561 | 0.0002 | 6 | 2.5411 |
| 2.8429 | 0.0003 | 9 | 2.5059 |

### Framework versions

- PEFT 0.13.2

- Transformers 4.46.0

- Pytorch 2.5.0+cu124

- Datasets 3.0.1

- Tokenizers 0.20.1 |

error577/0ce397df-b5b7-48a1-ac1f-0865198751ae | error577 | 2025-01-26T08:41:29Z | 8 | 0 | peft | [

"peft",

"safetensors",

"llama",

"axolotl",

"generated_from_trainer",

"base_model:unsloth/SmolLM-360M-Instruct",

"base_model:adapter:unsloth/SmolLM-360M-Instruct",

"license:apache-2.0",

"8-bit",

"bitsandbytes",

"region:us"

] | null | 2025-01-26T08:01:33Z | ---

library_name: peft

license: apache-2.0

base_model: unsloth/SmolLM-360M-Instruct

tags:

- axolotl

- generated_from_trainer

model-index:

- name: 0ce397df-b5b7-48a1-ac1f-0865198751ae

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

[<img src="https://raw.githubusercontent.com/axolotl-ai-cloud/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/axolotl-ai-cloud/axolotl)

<details><summary>See axolotl config</summary>

axolotl version: `0.4.1`

```yaml

adapter: lora

base_model: unsloth/SmolLM-360M-Instruct

bf16: true

chat_template: llama3

dataset_prepared_path: null

datasets:
- data_files:
  - 4f5a92c6211764d5_train_data.json
  ds_type: json
  format: custom
  path: /workspace/input_data/4f5a92c6211764d5_train_data.json
  type:
    field_instruction: question
    field_output: solution
    format: '{instruction}'
    no_input_format: '{instruction}'
    system_format: '{system}'
    system_prompt: ''

debug: null

deepspeed: null

early_stopping_patience: null

eval_max_new_tokens: 128

eval_table_size: null

evals_per_epoch: 1

flash_attention: true

fp16: null

fsdp: null

fsdp_config: null

gradient_accumulation_steps: 4

gradient_checkpointing: false

group_by_length: false

hub_model_id: error577/0ce397df-b5b7-48a1-ac1f-0865198751ae

hub_repo: null

hub_strategy: end

hub_token: null

learning_rate: 0.0002

load_in_4bit: false

load_in_8bit: true

local_rank: null

logging_steps: 1

lora_alpha: 16

lora_dropout: 0.05

lora_fan_in_fan_out: null

lora_model_dir: null

lora_r: 32

lora_target_linear: true

lr_scheduler: cosine

max_steps: 2000

micro_batch_size: 2

mlflow_experiment_name: /tmp/4f5a92c6211764d5_train_data.json

model_type: AutoModelForCausalLM

num_epochs: 4

optimizer: adamw_bnb_8bit

output_dir: miner_id_24

pad_to_sequence_len: true

resume_from_checkpoint: null

s2_attention: null

sample_packing: false

saves_per_epoch: 1

sequence_len: 512

strict: false

tf32: false

tokenizer_type: AutoTokenizer

train_on_inputs: false

trust_remote_code: true

val_set_size: 0.02

wandb_entity: null

wandb_mode: online

wandb_name: b232257a-a91b-444e-aedb-3fe497321055

wandb_project: Gradients-On-Demand

wandb_run: your_name

wandb_runid: b232257a-a91b-444e-aedb-3fe497321055

warmup_steps: 10

weight_decay: 0.0

xformers_attention: null

```

</details><br>
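This config sets `lora_r: 32` with `lora_alpha: 16`, whereas the other axolotl runs in this listing use `lora_r: 8` with the same alpha. The sketch below shows what that changes, assuming the standard LoRA scaling factor `lora_alpha / lora_r` (no rank-stabilized variant is enabled in the config). The 1024 x 1024 layer size is a hypothetical example, not a dimension taken from the base model.

```python
def lora_scale(lora_alpha: float, lora_r: int) -> float:
    """Standard LoRA scaling factor applied to the low-rank update B @ A."""
    return lora_alpha / lora_r

def lora_param_count(d_in: int, d_out: int, r: int) -> int:
    """Trainable parameters that rank-r LoRA adds to one d_out x d_in linear layer."""
    # A has shape (r, d_in) and B has shape (d_out, r).
    return r * (d_in + d_out)

print(lora_scale(16, 32))                 # 0.5  (this config)
print(lora_scale(16, 8))                  # 2.0  (the r=8 configs)
print(lora_param_count(1024, 1024, 32))   # 65536 for the hypothetical layer
print(lora_param_count(1024, 1024, 8))    # 16384 for the hypothetical layer
```

A higher rank with the same alpha therefore gives the adapter more capacity but scales each update down by a factor of four relative to the `lora_r: 8` runs.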

# 0ce397df-b5b7-48a1-ac1f-0865198751ae

This model is a fine-tuned version of [unsloth/SmolLM-360M-Instruct](https://huggingface.co/unsloth/SmolLM-360M-Instruct) on a custom JSON dataset (`4f5a92c6211764d5_train_data.json`, per the axolotl config above).

It achieves the following results on the evaluation set:

- Loss: 1.0087

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 8

- optimizer: 8-bit AdamW (OptimizerNames.ADAMW_BNB) with betas=(0.9, 0.999), epsilon=1e-08, and no additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 10

- training_steps: 2000

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:------:|:----:|:---------------:|
| 1.1345 | 0.0017 | 1 | 1.2805 |
| 0.8929 | 0.8503 | 500 | 1.0414 |
| 0.7422 | 1.7007 | 1000 | 1.0184 |
| 0.8871 | 2.5510 | 1500 | 1.0102 |
| 0.9667 | 3.4014 | 2000 | 1.0087 |
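The epoch column makes it possible to back out a rough training-set size, which the card does not report. The sketch below is an estimate only: it assumes the epoch value equals optimizer steps divided by steps per epoch and that each optimizer step consumes `total_train_batch_size` examples. The helper name `estimate_train_examples` is hypothetical.

```python
def estimate_train_examples(step: int, epoch: float, total_batch_size: int) -> float:
    """Estimate the training-set size from one (step, epoch) row of the results table."""
    steps_per_epoch = step / epoch
    return steps_per_epoch * total_batch_size

# Row "| 0.8929 | 0.8503 | 500 | 1.0414 |" with total_train_batch_size = 8.
estimate = estimate_train_examples(step=500, epoch=0.8503, total_batch_size=8)
print(round(estimate))  # on the order of 4,700 examples
```

The same table also shows that the 2000-step cap ends the run at about 3.4 epochs, before the configured `num_epochs: 4` is reached.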

### Framework versions

- PEFT 0.13.2

- Transformers 4.46.0

- Pytorch 2.5.0+cu124

- Datasets 3.0.1

- Tokenizers 0.20.1 |

Theros/L3-ColdBrew-CoT-R1-test | Theros | 2025-01-26T08:40:43Z | 14 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"mergekit",

"merge",

"conversational",

"arxiv:2311.03099",

"base_model:Theros/L3-ColdBrew-CoT",

"base_model:merge:Theros/L3-ColdBrew-CoT",

"base_model:deepseek-ai/DeepSeek-R1-Distill-Llama-8B",

"base_model:merge:deepseek-ai/DeepSeek-R1-Distill-Llama-8B",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2025-01-26T08:37:36Z | ---

base_model:

- deepseek-ai/DeepSeek-R1-Distill-Llama-8B

- Theros/L3-ColdBrew-CoT

library_name: transformers

tags:

- mergekit

- merge

---

# merge

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details

### Merge Method

This model was merged with the [DARE TIES](https://arxiv.org/abs/2311.03099) method, using [Theros/L3-ColdBrew-CoT](https://huggingface.co/Theros/L3-ColdBrew-CoT) as the base.

### Models Merged

The following models were included in the merge:

* [deepseek-ai/DeepSeek-R1-Distill-Llama-8B](https://huggingface.co/deepseek-ai/DeepSeek-R1-Distill-Llama-8B)

### Configuration

The following YAML configuration was used to produce this model:

```yaml

models:
  - model: Theros/L3-ColdBrew-CoT
    parameters:
      density: 0.5
      weight: 0.5
  - model: deepseek-ai/DeepSeek-R1-Distill-Llama-8B
    parameters:
      density: 0.5
      weight: 0.5
merge_method: dare_ties
base_model: Theros/L3-ColdBrew-CoT
parameters:
  normalize: false
  int8_mask: true
dtype: bfloat16

```
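To give a feel for what `density: 0.5` and `weight: 0.5` control, the toy sketch below applies the DARE drop-and-rescale idea to a handful of made-up numbers. It is not mergekit's implementation, it omits the TIES sign-election step, and the weights, the helper name `dare_drop_and_rescale`, and the fixed random seed are all illustrative assumptions.

```python
import random

def dare_drop_and_rescale(delta, density, rng):
    """Keep each delta entry with probability `density`; rescale survivors by 1/density."""
    return [d / density if rng.random() < density else 0.0 for d in delta]

base = [1.0, -2.0, 0.5, 3.0]         # hypothetical base-model weights
finetuned = [1.2, -1.5, 0.5, 2.0]    # hypothetical fine-tuned weights
delta = [f - b for f, b in zip(finetuned, base)]  # task vector relative to the base

rng = random.Random(0)               # fixed seed so the toy run is repeatable
sparse_delta = dare_drop_and_rescale(delta, density=0.5, rng=rng)

weight = 0.5                         # per-model merge weight from the config
merged = [b + weight * d for b, d in zip(base, sparse_delta)]

print(sparse_delta)
print(merged)
```

Rescaling the surviving entries by `1 / density` keeps the expected value of the sparse delta equal to the original delta, which is why roughly half of the entries can be dropped.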

|

ClarenceDan/88833754-f174-4d9d-add3-cd221622f2ee | ClarenceDan | 2025-01-26T08:40:18Z | 7 | 0 | peft | [

"peft",

"safetensors",

"llama",

"axolotl",

"generated_from_trainer",

"base_model:NousResearch/Nous-Hermes-llama-2-7b",

"base_model:adapter:NousResearch/Nous-Hermes-llama-2-7b",

"license:mit",

"region:us"

] | null | 2025-01-26T08:36:43Z | ---

library_name: peft

license: mit

base_model: NousResearch/Nous-Hermes-llama-2-7b

tags:

- axolotl

- generated_from_trainer

model-index:

- name: 88833754-f174-4d9d-add3-cd221622f2ee

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

[<img src="https://raw.githubusercontent.com/axolotl-ai-cloud/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/axolotl-ai-cloud/axolotl)

<details><summary>See axolotl config</summary>

axolotl version: `0.4.1`

```yaml

adapter: lora

base_model: NousResearch/Nous-Hermes-llama-2-7b

bf16: auto

chat_template: llama3

dataset_prepared_path: null

datasets:
- data_files:
  - 77a6c7f6e0223ba0_train_data.json
  ds_type: json
  format: custom
  path: /workspace/input_data/77a6c7f6e0223ba0_train_data.json
  type:
    field_instruction: instruction
    field_output: response
    format: '{instruction}'
    no_input_format: '{instruction}'
    system_format: '{system}'
    system_prompt: ''

debug: null

deepspeed: null

early_stopping_patience: null

eval_max_new_tokens: 128

eval_table_size: null

evals_per_epoch: 4

flash_attention: false

fp16: null

fsdp: null

fsdp_config: null

gradient_accumulation_steps: 4

gradient_checkpointing: false

group_by_length: false

hub_model_id: ClarenceDan/88833754-f174-4d9d-add3-cd221622f2ee

hub_repo: null

hub_strategy: checkpoint

hub_token: null

learning_rate: 0.0002

load_in_4bit: false

load_in_8bit: false

local_rank: null

logging_steps: 1

lora_alpha: 16

lora_dropout: 0.05

lora_fan_in_fan_out: null

lora_model_dir: null

lora_r: 8

lora_target_linear: true

lr_scheduler: cosine

max_steps: 10

micro_batch_size: 2

mlflow_experiment_name: /tmp/77a6c7f6e0223ba0_train_data.json

model_type: AutoModelForCausalLM

num_epochs: 1

optimizer: adamw_bnb_8bit

output_dir: miner_id_24

pad_to_sequence_len: true

resume_from_checkpoint: null

s2_attention: null

sample_packing: false

saves_per_epoch: 4

sequence_len: 512

strict: false

tf32: false

tokenizer_type: AutoTokenizer

train_on_inputs: false

trust_remote_code: true

val_set_size: 0.05

wandb_entity: null

wandb_mode: online

wandb_name: 80e14b82-7814-4596-8534-3041e5f0ad43

wandb_project: Gradients-On-Demand

wandb_run: your_name

wandb_runid: 80e14b82-7814-4596-8534-3041e5f0ad43

warmup_steps: 10

weight_decay: 0.0

xformers_attention: null

```

</details><br>

# 88833754-f174-4d9d-add3-cd221622f2ee

This model is a fine-tuned version of [NousResearch/Nous-Hermes-llama-2-7b](https://huggingface.co/NousResearch/Nous-Hermes-llama-2-7b) on a custom JSON dataset (`77a6c7f6e0223ba0_train_data.json`, per the axolotl config above).

It achieves the following results on the evaluation set:

- Loss: nan

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 8

- optimizer: 8-bit AdamW (OptimizerNames.ADAMW_BNB) with betas=(0.9, 0.999), epsilon=1e-08, and no additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 10

- training_steps: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:------:|:----:|:---------------:|
| 0.0 | 0.0006 | 1 | nan |
| 0.0 | 0.0018 | 3 | nan |
| 0.0 | 0.0036 | 6 | nan |
| 0.0 | 0.0054 | 9 | nan |

### Framework versions

- PEFT 0.13.2

- Transformers 4.46.0

- Pytorch 2.5.0+cu124

- Datasets 3.0.1

- Tokenizers 0.20.1 |

jessemeng/TwinLlama-3.2-1B-DPO | jessemeng | 2025-01-26T08:36:53Z | 16 | 1 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"text-generation-inference",

"unsloth",

"trl",

"dpo",

"conversational",

"en",

"base_model:jessemeng/TwinLlama-3.2-1B",

"base_model:finetune:jessemeng/TwinLlama-3.2-1B",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-generation | 2025-01-26T03:09:15Z | ---

base_model: jessemeng/TwinLlama-3.2-1B

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- trl

- dpo

license: apache-2.0

language:

- en

---

# Uploaded model

- **Developed by:** jessemeng

- **License:** apache-2.0

- **Finetuned from model:** jessemeng/TwinLlama-3.2-1B

This Llama model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

azxky6645/01261734-origin_tamplate_NuminaMath-CoT | azxky6645 | 2025-01-26T08:35:44Z | 6 | 0 | transformers | [

"transformers",

"safetensors",

"qwen2",

"text-generation",

"trl",

"sft",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2025-01-26T08:34:55Z | ---

library_name: transformers

tags:

- trl

- sft

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed to the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

azxky6645/01260126-origin_tamplate_NuminaMath-CoT | azxky6645 | 2025-01-26T08:34:05Z | 6 | 0 | transformers | [

"transformers",

"safetensors",

"qwen2",

"text-generation",

"trl",

"sft",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2025-01-26T08:15:39Z | ---

library_name: transformers

tags:

- trl

- sft

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed to the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases, and limitations of the model. More information is needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]
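Until the authors supply an official snippet, the following is a minimal sketch of how a model like this one might be loaded. The repository id, the `transformers` library, and the `text-generation` pipeline tag come from this card's metadata. Everything else is an assumption: that the repository ships a chat template (suggested only by the `conversational` tag), that the installed `transformers` release accepts chat-message lists in the text-generation pipeline, and the helper names `build_messages` and `generate`, which are hypothetical.

```python
# Repository id as listed in this card's metadata.
MODEL_ID = "azxky6645/01260126-origin_tamplate_NuminaMath-CoT"


def build_messages(question: str) -> list[dict]:
    """Wrap a single user question in the chat-message format (hypothetical helper)."""
    return [{"role": "user", "content": question}]


def generate(question: str, max_new_tokens: int = 256) -> str:
    """Run one chat turn through a text-generation pipeline (hypothetical helper).

    Assumes a recent transformers release in which the pipeline accepts a list
    of chat messages and returns the conversation with the reply appended.
    """
    # Imported lazily so that build_messages works without transformers installed.
    from transformers import pipeline

    generator = pipeline("text-generation", model=MODEL_ID)
    result = generator(build_messages(question), max_new_tokens=max_new_tokens)
    # With chat-style input, "generated_text" holds the message list;
    # the last entry is the assistant's reply.
    return result[0]["generated_text"][-1]["content"]
```

Calling `generate("What is 12 * 13?")` downloads the weights on first use, so it needs network access and enough memory for the checkpoint. Generation settings such as `max_new_tokens` are illustrative defaults, not values recommended by the authors.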

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]
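As a rough illustration of the kind of estimate the calculator produces, the sketch below multiplies hardware power draw by runtime and by the carbon intensity of the grid. This is a common back-of-the-envelope form, not necessarily the calculator's exact method, and the function name and all input values are hypothetical: the card does not report the hardware, hours, or region used for this model.

```python
def estimate_co2eq_grams(power_watts: float, hours: float, kg_co2eq_per_kwh: float) -> float:
    """Estimate emissions in grams of CO2eq (hypothetical helper).

    power_watts:       average power draw of the hardware
    hours:             total runtime
    kg_co2eq_per_kwh:  carbon intensity of the electricity used
    """
    energy_kwh = power_watts * hours / 1000.0       # W * h -> kWh
    return energy_kwh * kg_co2eq_per_kwh * 1000.0   # kg -> g


# Placeholder inputs for illustration only; none of these come from the card.
example_grams = estimate_co2eq_grams(power_watts=300.0, hours=10.0, kg_co2eq_per_kwh=0.4)
```

Filling in the fields above with the real hardware type, hours, and compute region would let readers reproduce such an estimate for this model.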

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

trenden/7fec7b72-d9cf-475e-95db-21567779e422 | trenden | 2025-01-26T08:34:04Z | 8 | 0 | peft | [

"peft",

"safetensors",

"qwen2",

"axolotl",

"generated_from_trainer",

"base_model:Qwen/Qwen2-7B-Instruct",

"base_model:adapter:Qwen/Qwen2-7B-Instruct",

"license:apache-2.0",

"region:us"

] | null | 2025-01-26T08:26:19Z | ---

library_name: peft

license: apache-2.0

base_model: Qwen/Qwen2-7B-Instruct

tags:

- axolotl

- generated_from_trainer

model-index:

- name: 7fec7b72-d9cf-475e-95db-21567779e422

results: []

---