modelId (string, 5 to 139 chars) | author (string, 2 to 42 chars) | last_modified (timestamp[us, tz=UTC], 2020-02-15 11:33:14 to 2025-06-25 18:28:32) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (string, 495 classes) | tags (sequence, length 1 to 4.05k) | pipeline_tag (string, 54 classes) | createdAt (timestamp[us, tz=UTC], 2022-03-02 23:29:04 to 2025-06-25 18:28:16) | card (string, 11 chars to 1.01M chars) |

|---|---|---|---|---|---|---|---|---|---|

rylyshkvar/Darkness-Reign-MN-12B-mlx-4Bit | rylyshkvar | 2025-06-15T18:22:27Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"mergekit",

"merge",

"mlx",

"mlx-my-repo",

"conversational",

"base_model:Aleteian/Darkness-Reign-MN-12B",

"base_model:quantized:Aleteian/Darkness-Reign-MN-12B",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"4-bit",

"region:us"

] | text-generation | 2025-06-15T18:21:50Z | ---

base_model: Aleteian/Darkness-Reign-MN-12B

library_name: transformers

tags:

- mergekit

- merge

- mlx

- mlx-my-repo

---

# rylyshkvar/Darkness-Reign-MN-12B-mlx-4Bit

The model [rylyshkvar/Darkness-Reign-MN-12B-mlx-4Bit](https://huggingface.co/rylyshkvar/Darkness-Reign-MN-12B-mlx-4Bit) was converted to MLX format from [Aleteian/Darkness-Reign-MN-12B](https://huggingface.co/Aleteian/Darkness-Reign-MN-12B) using mlx-lm version **0.22.3**.
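As a rough sanity check on what "4Bit" means for a 12B model, the weight footprint is parameters × bits ÷ 8. The sketch below assumes MLX-style affine group quantization, where each group of weights also stores a 16-bit scale and a 16-bit bias; the group size of 64 and the nominal 12e9 parameter count are assumptions, so check the repo's `config.json` for the actual quantization settings. It ignores any layers kept in higher precision and runtime memory such as the KV cache.

```python
def quantized_weight_bytes(n_params: float, bits: int = 4,
                           group_size: int = 64, meta_bits: int = 32) -> float:
    """Rough size of quantized weights in bytes.

    Assumes affine group quantization: every `group_size` weights share a
    scale and a bias (16 bits each, so meta_bits=32). These defaults are
    assumptions, not values read from this repository.
    """
    effective_bits = bits + meta_bits / group_size
    return n_params * effective_bits / 8


# Nominal parameter count taken from the "12B" in the model name.
n = 12e9
print(f"{quantized_weight_bytes(n, meta_bits=0) / 1e9:.1f} GB without group metadata")
print(f"{quantized_weight_bytes(n) / 1e9:.2f} GB with 64-weight groups")
```

Under these assumptions the per-group metadata adds about half a bit per weight, which is why a "4-bit" checkpoint is usually somewhat larger than a naive n × 4 ÷ 8 estimate.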

## Use with mlx

```bash

pip install mlx-lm

```

```python
from mlx_lm import load, generate

# Download (on first use) and load the 4-bit MLX weights and tokenizer
model, tokenizer = load("rylyshkvar/Darkness-Reign-MN-12B-mlx-4Bit")

prompt = "hello"

# Wrap the prompt in the model's chat template when one is available
if hasattr(tokenizer, "apply_chat_template") and tokenizer.chat_template is not None:
    messages = [{"role": "user", "content": prompt}]
    prompt = tokenizer.apply_chat_template(
        messages, tokenize=False, add_generation_prompt=True
    )

response = generate(model, tokenizer, prompt=prompt, verbose=True)
```

|

openbmb/BitCPM4-1B-GGUF | openbmb | 2025-06-15T18:18:40Z | 0 | 0 | transformers | [

"transformers",

"gguf",

"text-generation",

"zh",

"en",

"license:apache-2.0",

"endpoints_compatible",

"region:us",

"conversational"

] | text-generation | 2025-06-13T11:41:44Z | ---

license: apache-2.0

language:

- zh

- en

pipeline_tag: text-generation

library_name: transformers

---

<div align="center">

<img src="https://github.com/OpenBMB/MiniCPM/blob/main/assets/minicpm_logo.png?raw=true" width="500em" ></img>

</div>

<p align="center">

<a href="https://github.com/OpenBMB/MiniCPM/" target="_blank">GitHub Repo</a> |

<a href="https://github.com/OpenBMB/MiniCPM/tree/main/report/MiniCPM_4_Technical_Report.pdf" target="_blank">Technical Report</a>

</p>

<p align="center">

👋 Join us on <a href="https://discord.gg/3cGQn9b3YM" target="_blank">Discord</a> and <a href="https://github.com/OpenBMB/MiniCPM/blob/main/assets/wechat.jpg" target="_blank">WeChat</a>

</p>

## What's New

- [2025.06.06] The **MiniCPM4** series is released! These models deliver major efficiency improvements while maintaining strong performance for their scale, achieving over 5x generation acceleration on typical end-side chips. You can find the technical report [here](https://github.com/OpenBMB/MiniCPM/tree/main/report/MiniCPM_4_Technical_Report.pdf).🔥🔥🔥

## MiniCPM4 Series

The MiniCPM4 series are highly efficient large language models (LLMs) designed explicitly for end-side devices. They achieve this efficiency through systematic innovation in four key dimensions: model architecture, training data, training algorithms, and inference systems.

- [MiniCPM4-8B](https://huggingface.co/openbmb/MiniCPM4-8B): The flagship of MiniCPM4, with 8B parameters, trained on 8T tokens.

- [MiniCPM4-0.5B](https://huggingface.co/openbmb/MiniCPM4-0.5B): The small version of MiniCPM4, with 0.5B parameters, trained on 1T tokens.

- [MiniCPM4-8B-Eagle-FRSpec](https://huggingface.co/openbmb/MiniCPM4-8B-Eagle-FRSpec): Eagle head for FRSpec, accelerating speculative inference for MiniCPM4-8B.

- [MiniCPM4-8B-Eagle-FRSpec-QAT-cpmcu](https://huggingface.co/openbmb/MiniCPM4-8B-Eagle-FRSpec-QAT-cpmcu): Eagle head trained with QAT for FRSpec, efficiently integrating speculation and quantization to achieve ultra acceleration for MiniCPM4-8B.

- [MiniCPM4-8B-Eagle-vLLM](https://huggingface.co/openbmb/MiniCPM4-8B-Eagle-vLLM): Eagle head in vLLM format, accelerating speculative inference for MiniCPM4-8B.

- [MiniCPM4-8B-marlin-Eagle-vLLM](https://huggingface.co/openbmb/MiniCPM4-8B-marlin-Eagle-vLLM): Quantized Eagle head in vLLM format, accelerating speculative inference for MiniCPM4-8B.

- [BitCPM4-0.5B](https://huggingface.co/openbmb/BitCPM4-0.5B): Extreme ternary quantization applied to MiniCPM4-0.5B compresses model parameters into ternary values, achieving a 90% reduction in bit width.

- [BitCPM4-1B](https://huggingface.co/openbmb/BitCPM4-1B): Extreme ternary quantization applied to MiniCPM3-1B compresses model parameters into ternary values, achieving a 90% reduction in bit width.

- [MiniCPM4-Survey](https://huggingface.co/openbmb/MiniCPM4-Survey): Based on MiniCPM4-8B, accepts users' queries as input and autonomously generates trustworthy, long-form survey papers.

- [MiniCPM4-MCP](https://huggingface.co/openbmb/MiniCPM4-MCP): Based on MiniCPM4-8B, accepts users' queries and available MCP tools as input and autonomously calls relevant MCP tools to satisfy users' requirements.

- [BitCPM4-0.5B-GGUF](https://huggingface.co/openbmb/BitCPM4-0.5B-GGUF): GGUF version of BitCPM4-0.5B.

- [BitCPM4-1B-GGUF](https://huggingface.co/openbmb/BitCPM4-1B-GGUF): GGUF version of BitCPM4-1B. (**<-- you are here**)

## Introduction

BitCPM4 are ternary quantized models derived from the MiniCPM series models through quantization-aware training (QAT), achieving significant improvements in both training efficiency and model parameter efficiency.

- Improvements to the training method:
  - Hyperparameters are searched with a "wind tunnel" on a small model.
  - A two-stage training method is used: high-precision training first, then QAT. This makes the best use of already-trained high-precision models and significantly reduces the computational resources required for the QAT phase.
- High parameter efficiency:
  - Achieves performance comparable to full-precision models with a similar parameter count at a bit width of only 1.58 bits.
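The "1.58 bits" figure follows from each ternary weight taking one of three values, which carries log2(3) ≈ 1.585 bits of information; relative to 16-bit weights (our assumed baseline) that is roughly the 90% bit-width reduction quoted for the BitCPM4 models. The sketch below shows one common way to map weights to {-1, 0, +1}: the per-tensor "absmean" scheme popularized by BitNet b1.58. It is an illustrative assumption, not BitCPM4's documented quantizer, and a real QAT setup would also need a gradient workaround (such as a straight-through estimator) that is not shown here.

```python
import math


def absmean_ternary(weights: list[float], eps: float = 1e-8) -> tuple[list[int], float]:
    """Quantize weights to {-1, 0, +1} with a single per-tensor scale.

    Absmean scheme (as in BitNet b1.58); used here only as an illustration.
    """
    scale = sum(abs(w) for w in weights) / len(weights) + eps
    # Divide by the scale, round to the nearest integer, then clip to [-1, 1].
    q = [max(-1, min(1, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map ternary codes back to real values for use in the forward pass."""
    return [v * scale for v in q]


# Information content of a three-state weight, and savings versus 16-bit storage.
bits_per_weight = math.log2(3)
reduction_vs_16bit = 1 - bits_per_weight / 16

w = [0.9, -0.05, 0.4, -1.2]
q, s = absmean_ternary(w)
print(q, round(s, 4), f"{bits_per_weight:.3f} bits", f"{reduction_vs_16bit:.1%} smaller")
```

Small weights collapse to 0 and large ones saturate at ±1, so the whole tensor is described by ternary codes plus one floating-point scale.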

## Usage

### Inference with [llama.cpp](https://github.com/ggml-org/llama.cpp)

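```bash
# The prompt below is Chinese for: "Please write an article about artificial intelligence,
# describing in detail its future development and potential risks."
./llama-cli -c 1024 -m BitCPM4-1B-q4_0.gguf -n 1024 --top-p 0.7 --temp 0.7 --prompt "请写一篇关于人工智能的文章,详细介绍人工智能的未来发展和隐患。"
```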
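If you want to drive the `llama-cli` command from a Python script, one option is to build the argument list explicitly and hand it to `subprocess`. This is a minimal sketch: `llama_cli_args` and `run_llama_cli` are hypothetical helpers, and they emit only the flags already used in the command above. Depending on your llama.cpp build, `llama-cli` may start an interactive session for chat models, so check `llama-cli --help` before running it non-interactively.

```python
import shlex
import subprocess


def llama_cli_args(model_path: str, prompt: str, ctx: int = 1024,
                   n_predict: int = 1024, top_p: float = 0.7,
                   temp: float = 0.7, binary: str = "./llama-cli") -> list[str]:
    """Build the same llama-cli invocation as the shell command above."""
    return [
        binary,
        "-c", str(ctx),        # context size in tokens
        "-m", model_path,      # path to the GGUF file
        "-n", str(n_predict),  # number of tokens to generate
        "--top-p", str(top_p),
        "--temp", str(temp),
        "--prompt", prompt,
    ]


def run_llama_cli(args: list[str]) -> str:
    """Run llama-cli and return its stdout (needs a local llama.cpp build and the GGUF file)."""
    result = subprocess.run(args, capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    args = llama_cli_args("BitCPM4-1B-q4_0.gguf", "Write a short article about AI.")
    # Print a shell-safe version of the command instead of executing it.
    print(shlex.join(args))
```

Passing a list rather than a single shell string avoids quoting problems with prompts that contain spaces or non-ASCII punctuation.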

## Evaluation Results

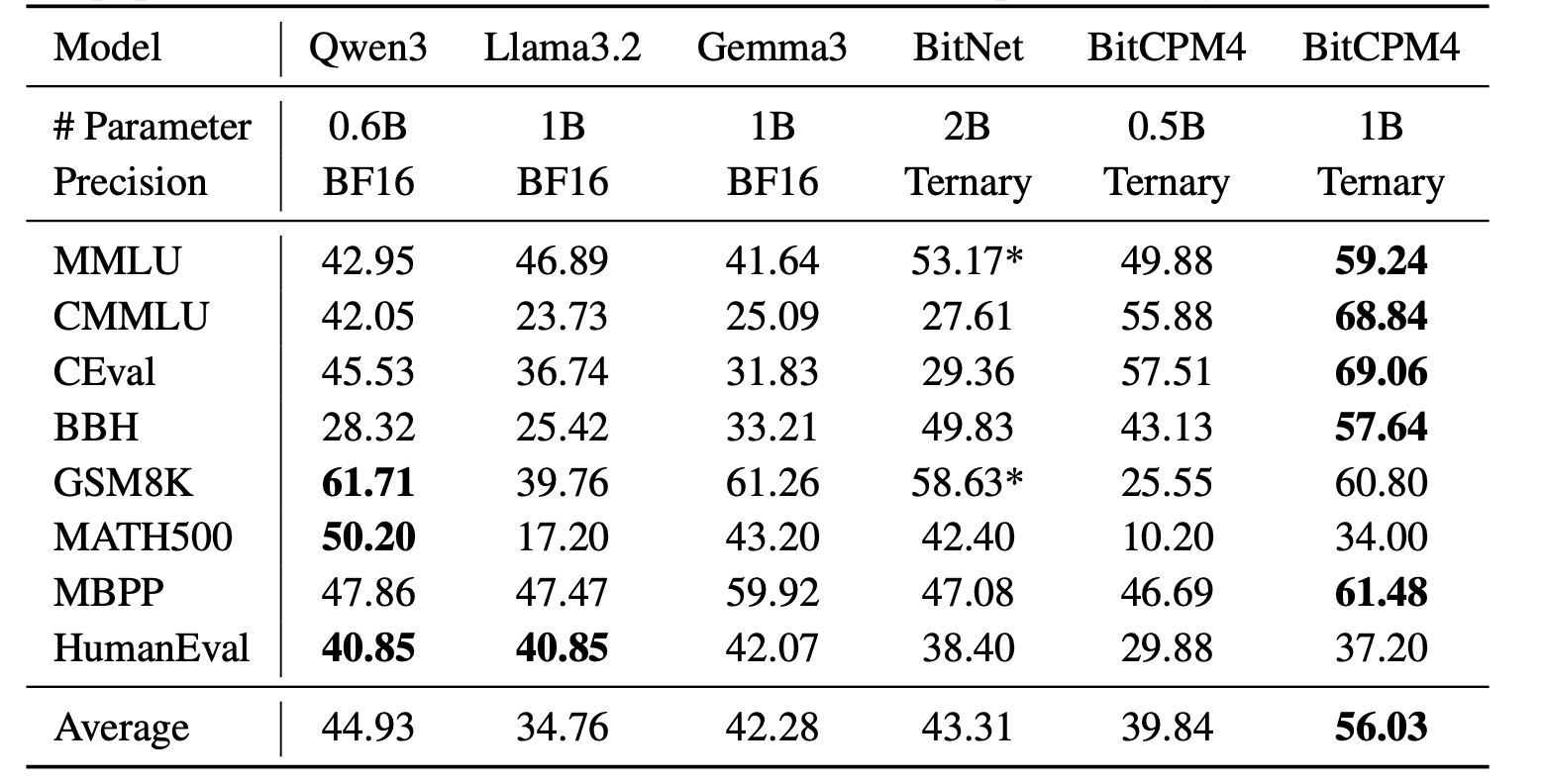

BitCPM4's performance is comparable to that of full-precision models of the same size.

## Statement

- As a language model, MiniCPM generates content by learning from a vast amount of text.

- However, it does not possess the ability to comprehend or express personal opinions or value judgments.

- Any content generated by MiniCPM does not represent the viewpoints or positions of the model developers.

- Therefore, when using content generated by MiniCPM, users should take full responsibility for evaluating and verifying it on their own.

## LICENSE

- This repository and MiniCPM models are released under the [Apache-2.0](https://github.com/OpenBMB/MiniCPM/blob/main/LICENSE) License.

## Citation

- Please cite our [paper](https://github.com/OpenBMB/MiniCPM/tree/main/report/MiniCPM_4_Technical_Report.pdf) if you find our work valuable.

```bibtex

@article{minicpm4,

title={{MiniCPM4}: Ultra-Efficient LLMs on End Devices},

author={MiniCPM Team},

year={2025}

}

```

|

JonLoRA/deynairaLoRAv1 | JonLoRA | 2025-06-15T18:17:55Z | 0 | 0 | diffusers | [

"diffusers",

"flux",

"lora",

"replicate",

"text-to-image",

"en",

"base_model:black-forest-labs/FLUX.1-dev",

"base_model:adapter:black-forest-labs/FLUX.1-dev",

"license:other",

"region:us"

] | text-to-image | 2025-06-15T16:21:56Z | ---

license: other

license_name: flux-1-dev-non-commercial-license

license_link: https://huggingface.co/black-forest-labs/FLUX.1-dev/blob/main/LICENSE.md

language:

- en

tags:

- flux

- diffusers

- lora

- replicate

base_model: "black-forest-labs/FLUX.1-dev"

pipeline_tag: text-to-image

# widget:

# - text: >-

# prompt

# output:

# url: https://...

instance_prompt: photo of a girl

---

# Deynairalorav1

<Gallery />

## About this LoRA

This is a [LoRA](https://replicate.com/docs/guides/working-with-loras) for the FLUX.1-dev text-to-image model. It can be used with diffusers or ComfyUI.

It was trained on [Replicate](https://replicate.com/) using the AI Toolkit trainer: https://replicate.com/ostris/flux-dev-lora-trainer/train

## Trigger words

You should use `photo of a girl` to trigger the image generation.

## Run this LoRA with an API using Replicate

```py
import replicate

input = {
    "prompt": "photo of a girl",
    "lora_weights": "https://huggingface.co/JonLoRA/deynairaLoRAv1/resolve/main/lora.safetensors"
}

output = replicate.run(
    "black-forest-labs/flux-dev-lora",
    input=input
)

# Save each generated image to disk
for index, item in enumerate(output):
    with open(f"output_{index}.webp", "wb") as file:
        file.write(item.read())
```

## Use it with the [🧨 diffusers library](https://github.com/huggingface/diffusers)

```py

from diffusers import AutoPipelineForText2Image

import torch

pipeline = AutoPipelineForText2Image.from_pretrained('black-forest-labs/FLUX.1-dev', torch_dtype=torch.float16).to('cuda')

pipeline.load_lora_weights('JonLoRA/deynairaLoRAv1', weight_name='lora.safetensors')

image = pipeline('photo of a girl').images[0]

```

For more details, including weighting, merging and fusing LoRAs, check the [documentation on loading LoRAs in diffusers](https://huggingface.co/docs/diffusers/main/en/using-diffusers/loading_adapters).
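Fusing a LoRA folds its low-rank update into the base weight: for a base matrix W (d × k) and LoRA factors B (d × r) and A (r × k), the fused weight is W + s·BA, where s is the adapter scale. The toy sketch below shows only that arithmetic on tiny hypothetical matrices in plain Python; `fuse_lora` here is an illustrative helper, not the diffusers function of the same name, and the exact scaling conventions diffusers uses are described in the linked documentation.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Plain-Python matrix product; matrices are lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def fuse_lora(W, A, B, scale: float = 1.0):
    """Return W + scale * (B @ A).

    W: d x k base weight, B: d x r, A: r x k. `scale` stands in for the
    adapter weight (hypothetical helper for illustration only).
    """
    delta = matmul(B, A)
    return [[w + scale * dw for w, dw in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]


W = [[1.0, 0.0], [0.0, 1.0]]  # toy 2 x 2 base weight
B = [[1.0], [2.0]]            # d=2, r=1
A = [[0.5, -0.5]]             # r=1, k=2
print(fuse_lora(W, A, B, scale=0.8))
```

Setting the scale to 0 recovers the base weight, and intermediate values blend the LoRA's effect in proportionally, which is what "weighting" a LoRA amounts to at the matrix level.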

## Training details

- Steps: 6000

- Learning rate: 0.0002

- LoRA rank: 64
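To give a sense of scale for "LoRA rank: 64": a rank-r adapter on a d × k weight trains r·(d + k) parameters (B is d × r, A is r × k) instead of d·k. The 3072 × 3072 matrix below is a hypothetical example; this card does not say which layers the adapter targets or what their shapes are.

```python
def lora_params(d: int, k: int, rank: int) -> int:
    """Trainable parameters of a rank-`rank` LoRA on a d x k weight (B: d x r, A: r x k)."""
    return rank * (d + k)


d = k = 3072  # hypothetical square projection size
full = d * k
lora = lora_params(d, k, rank=64)
print(full, lora, f"{lora / full:.1%}")
```

For this shape the adapter trains about 4% as many parameters as the full matrix; the ratio shrinks further for larger layers because the cost grows with d + k rather than d·k.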

## Contribute your own examples

You can use the [community tab](https://huggingface.co/JonLoRA/deynairaLoRAv1/discussions) to add images that show off what you’ve made with this LoRA.

|

meezo-fun-video/Latest.Full.Update.meezo.fun.video.meezo.fun.mezo.fun.meezo.fun | meezo-fun-video | 2025-06-15T18:16:47Z | 0 | 0 | null | [

"region:us"

] | null | 2025-06-15T18:15:28Z | <a rel="nofollow" href="https://www.profitableratecpm.com/ad9ybzrr?key=ad7e5afbc6b154d0ae1429627f60d4a7"><img src="https://i.postimg.cc/qvPp49Sm/ythngythg.gif" alt="fsd"></a>

<a rel="nofollow" href="https://www.profitableratecpm.com/ad9ybzrr?key=ad7e5afbc6b154d0ae1429627f60d4a7">🌐 𝖢𝖫𝖨𝖢𝖪 𝖧𝖤𝖱𝖤 🟢==►► 𝖶𝖠𝖳𝖢𝖧 𝖭𝖮𝖶</a>

<a rel="nofollow" href="https://viralflix.xyz/leaked/?ht">🔴 CLICK HERE 🌐==►► Download Now)</a> |

shwabler/lithuanian-gemma-4b-bnb-4bit | shwabler | 2025-06-15T18:15:44Z | 0 | 1 | null | [

"safetensors",

"unsloth",

"license:mit",

"region:us"

] | null | 2025-06-15T12:49:53Z | ---

license: mit

tags:

- unsloth

---

|

Stroeller/Strllr | Stroeller | 2025-06-15T18:14:30Z | 0 | 0 | null | [

"license:other",

"region:us"

] | null | 2025-06-13T09:07:59Z | ---

license: other

license_name: flux-1-dev-non-commercial-license

license_link: https://huggingface.co/black-forest-labs/FLUX.1-dev/blob/main/LICENSE.md

--- |

yununuy/guesswho-scale-game | yununuy | 2025-06-15T18:13:36Z | 101 | 0 | transformers | [

"transformers",

"safetensors",

"qwen2",

"text-generation",

"unsloth",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2025-06-14T11:52:14Z | ---

library_name: transformers

tags:

- unsloth

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed to the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

vinnvinn/mistral-hugz | vinnvinn | 2025-06-15T18:13:06Z | 0 | 1 | transformers | [

"transformers",

"safetensors",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2025-06-15T18:13:03Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed to the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

MinaMila/gemma_2b_unlearned_2nd_5e-7_1.0_0.15_0.25_0.5_epoch1 | MinaMila | 2025-06-15T18:10:57Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"gemma2",

"text-generation",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2025-06-15T18:09:03Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed to the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

jack813liu/mlx-chroma | jack813liu | 2025-06-15T18:10:49Z | 0 | 0 | null | [

"safetensors",

"license:mit",

"region:us"

] | null | 2025-06-15T06:55:11Z | ---

license: mit

---

Overview
====

This repository serves as a lightweight wrapper that organizes and hosts the model files required to run Chroma on MLX with [MLX-Chroma](https://github.com/jack813/mlx-chroma).

- Chroma model: sourced from lodestones/Chroma, using the `chroma-unlocked-v36-detail-calibrated.safetensors` checkpoint.
- T5 and VAE models: sourced from black-forest-labs/FLUX.1-dev.

This repo does not contain training or inference logic, but exists to streamline model access and loading in MLX-based workflows.

MLX-Chroma
====

Chroma implementation in MLX, ported from the Chroma author's project [flow](https://github.com/lodestone-rock/flow.git), [ComfyUI](https://github.com/comfyanonymous/ComfyUI), and [MLX-Examples Flux](https://github.com/ml-explore/mlx-examples/tree/main/flux).

Git: [https://github.com/jack813/mlx-chroma](https://github.com/jack813/mlx-chroma)

Blog: [https://blog.exp-pi.com/2025/06/migrating-chroma-to-mlx.html](https://blog.exp-pi.com/2025/06/migrating-chroma-to-mlx.html) |

MichiganNLP/tama-5e-7 | MichiganNLP | 2025-06-15T18:08:31Z | 10 | 0 | null | [

"safetensors",

"llama",

"table",

"text-generation",

"conversational",

"en",

"arxiv:2501.14693",

"base_model:meta-llama/Llama-3.1-8B-Instruct",

"base_model:finetune:meta-llama/Llama-3.1-8B-Instruct",

"license:mit",

"region:us"

] | text-generation | 2024-12-11T00:50:43Z | ---

license: mit

language:

- en

base_model:

- meta-llama/Llama-3.1-8B-Instruct

pipeline_tag: text-generation

tags:

- table

---

# Model Card for TAMA-5e-7

<!-- Provide a quick summary of what the model is/does. -->

Recent advances in table understanding have focused on instruction-tuning large language models (LLMs) for table-related tasks. However, existing research has overlooked the impact of hyperparameter choices, and also lacks a comprehensive evaluation of the out-of-domain table understanding ability and the general capabilities of these table LLMs. In this paper, we evaluate these abilities in existing table LLMs, and find significant declines in both out-of-domain table understanding and general capabilities as compared to their base models.

Through systematic analysis, we show that hyperparameters, such as learning rate, can significantly influence both table-specific and general capabilities. Contrary to previous table instruction-tuning work, we demonstrate that smaller learning rates and fewer training instances can enhance table understanding while preserving general capabilities. Based on our findings, we introduce TAMA, a TAble LLM instruction-tuned from LLaMA 3.1 8B Instruct, which achieves performance on par with or surpassing that of GPT-3.5 and GPT-4 on table tasks, while maintaining strong out-of-domain generalization and general capabilities. Our findings highlight the potential for reduced data annotation costs and more efficient model development through careful hyperparameter selection.

## 🚀 Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Model type:** Text generation.

- **Language(s) (NLP):** English.

- **License:** [License for Llama models](https://github.com/meta-llama/llama-models/blob/main/models/llama3_1/LICENSE)

- **Finetuned from model:** [meta-llama/Llama-3.1-8B-Instruct](https://huggingface.co/meta-llama/Llama-3.1-8B-Instruct)

### Model Sources

<!-- Provide the basic links for the model. -->

- **Repository:** [GitHub](https://github.com/MichiganNLP/TAMA)

- **Paper:** [arXiv:2501.14693](https://arxiv.org/abs/2501.14693)

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

TAMA is intended for table understanding tasks and to facilitate future research.

## 🔨 How to Get Started with the Model

Use the code below to get started with the model.

Starting with `transformers >= 4.43.0`, you can run conversational inference using the Transformers `pipeline` abstraction or by leveraging the Auto classes with the `generate()` function.

Make sure to update your transformers installation via `pip install --upgrade transformers`.

```python
import transformers
import torch

model_id = "MichiganNLP/tama-5e-7"

pipeline = transformers.pipeline(
    "text-generation",
    model=model_id,
    model_kwargs={"torch_dtype": torch.bfloat16},
    device_map="auto",
)

pipeline("Hey how are you doing today?")
```

You may replace the prompt with table-specific instructions. We recommend using the following prompt structure:

```

Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.

### Instruction:

{instruction}

### Input:

{table_content}

### Question:

{question}

### Response:

```
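The card recommends the prompt structure above but does not show how a table is serialized into `{table_content}`. The sketch below fills the template programmatically, assuming a pipe-separated table format and a blank line between sections; both choices are assumptions rather than details taken from the TAMA repository, and `build_tama_prompt` and `table_to_text` are hypothetical helper names.

```python
# Hypothetical helpers (not part of the TAMA repository) that fill the
# recommended prompt template. Section spacing and table format are assumptions.
TEMPLATE = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{table_content}\n\n"
    "### Question:\n{question}\n\n"
    "### Response:\n"
)


def build_tama_prompt(instruction: str, table_content: str, question: str) -> str:
    """Return the full prompt string for a single table question."""
    return TEMPLATE.format(
        instruction=instruction, table_content=table_content, question=question
    )


def table_to_text(header: list[str], rows: list[list[str]]) -> str:
    """Serialize a small table as pipe-separated lines (an assumed format)."""
    lines = [" | ".join(header)]
    lines += [" | ".join(str(cell) for cell in row) for row in rows]
    return "\n".join(lines)


if __name__ == "__main__":
    table = table_to_text(["Year", "Team", "Wins"], [["2019", "A", "10"], ["2020", "B", "12"]])
    prompt = build_tama_prompt(
        instruction="Answer the question based on the table.",
        table_content=table,
        question="Which team had more wins?",
    )
    print(prompt)
```

The resulting string can be passed to the `pipeline` object from the earlier snippet, for example `pipeline(prompt, max_new_tokens=128)`.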

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[TAMA Instruct](https://huggingface.co/datasets/MichiganNLP/TAMA_Instruct).

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

We utilize the [LLaMA Factory](https://github.com/hiyouga/LLaMA-Factory) library for model training and inference. Example YAML configuration files are provided [here](https://github.com/MichiganNLP/TAMA/blob/main/yamls/train.yaml).

The training command is:

```

llamafactory-cli train yamls/train.yaml

```

#### Training Hyperparameters

- **Training regime:** bf16

- **Training epochs:** 2.0

- **Learning rate scheduler:** linear

- **Cutoff length:** 2048

- **Learning rate:** 5e-7

## 📝 Evaluation

### Results

<!-- This should link to a Dataset Card if possible. -->

<table>

<tr>

<th>Models</th>

<th>FeTaQA</th>

<th>HiTab</th>

<th>TaFact</th>

<th>FEVEROUS</th>

<th>WikiTQ</th>

<th>WikiSQL</th>

<th>HybridQA</th>

<th>TATQA</th>

<th>AIT-QA</th>

<th>TABMWP</th>

<th>InfoTabs</th>

<th>KVRET</th>

<th>ToTTo</th>

<th>TableGPT<sub>subset</sub></th>

<th>TableBench</th>

</tr>

<tr>

<th>Metrics</th>

<th>BLEU</th>

<th>Acc</th>

<th>Acc</th>

<th>Acc</th>

<th>Acc</th>

<th>Acc</th>

<th>Acc</th>

<th>Acc</th>

<th>Acc</th>

<th>Acc</th>

<th>Acc</th>

<th>Micro F1</th>

<th>BLEU</th>

<th>Acc</th>

<th>ROUGE-L</th>

</tr>

<tr>

<td>GPT-3.5</td>

<td><u>26.49</u></td>

<td>43.62</td>

<td>67.41</td>

<td>60.79</td>

<td><u>53.13</u></td>

<td>41.91</td>

<td>40.22</td>

<td>31.38</td>

<td>84.13</td>

<td>46.30</td>

<td>56.00</td>

<td><u>54.56</u></td>

<td><u>16.81</u></td>

<td>54.80</td>

<td>27.75</td>

</tr>

<tr>

<td>GPT-4</td>

<td>21.70</td>

<td><u>48.40</u></td>

<td><b>74.40</b></td>

<td><u>71.60</u></td>

<td><b>68.40</b></td>

<td><u>47.60</u></td>

<td><u>58.60</u></td>

<td><b>55.81</b></td>

<td><u>88.57</u></td>

<td><b>67.10</b></td>

<td><u>58.60</u></td>

<td><b>56.46</b></td>

<td>12.21</td>

<td><b>80.20</b></td>

<td><b>40.38</b></td>

</tr>

<tr>

<td>base</td>

<td>15.33</td>

<td>32.83</td>

<td>58.44</td>

<td>66.37</td>

<td>43.46</td>

<td>20.43</td>

<td>32.83</td>

<td>26.70</td>

<td>82.54</td>

<td>39.97</td>

<td>48.39</td>

<td>50.80</td>

<td>13.24</td>

<td>53.60</td>

<td>23.47</td>

</tr>

<tr>

<td>TAMA</td>

<td><b>35.37</b></td>

<td><b>63.51</b></td>

<td><u>73.82</u></td>

<td><b>77.39</b></td>

<td>52.88</td>

<td><b>68.31</b></td>

<td><b>60.86</b></td>

<td><u>48.47</u></td>

<td><b>89.21</b></td>

<td><u>65.09</u></td>

<td><b>64.54</b></td>

<td>43.94</td>

<td><b>37.94</b></td>

<td><u>53.60</u></td>

<td><u>28.60</u></td>

</tr>

</table>

**Note: these results correspond to the [tama-1e-6](https://huggingface.co/MichiganNLP/tama-1e-6) checkpoint. We release the tama-5e-7 checkpoint to facilitate future research.**

Bold marks the best result among the four models; underline marks the second best.

Please refer to our [paper](https://arxiv.org/abs/2501.14693) for additional details.

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

Please refer to our [paper](https://arxiv.org/abs/2501.14693) for additional details.

#### Summary

Notably, as an 8B model, TAMA demonstrates strong table understanding ability: it outperforms GPT-3.5 on most of the table understanding benchmarks and performs on par with or better than GPT-4 on several of them.

## Technical Specifications

### Model Architecture and Objective

We base our model on the [Llama-3.1-8B-Instruct model](https://huggingface.co/meta-llama/Llama-3.1-8B-Instruct).

We instruction-tune the model on a set of 2,600 table instructions.

### Compute Infrastructure

#### Hardware

We conduct our experiments on A40 and A100 GPUs.

#### Software

We leverage the [LLaMA Factory](https://github.com/hiyouga/LLaMA-Factory) for model training.

## Citation

```bibtex
@misc{deng2025rethinking,
  title={Rethinking Table Instruction Tuning},
  author={Naihao Deng and Rada Mihalcea},
  year={2025},
  url={https://openreview.net/forum?id=GLmqHCwbOJ}
}
```

## Model Card Authors

Naihao Deng

## Model Card Contact

Naihao Deng |

mehultyagi/classifier_model | mehultyagi | 2025-06-15T18:07:58Z | 0 | 0 | open-clip | [

"open-clip",

"clip",

"medical-imaging",

"image-classification",

"vision-language",

"dermatology",

"license:mit",

"region:us"

] | image-classification | 2025-06-15T17:52:44Z | ---

license: mit

tags:

- clip

- medical-imaging

- image-classification

- vision-language

- dermatology

pipeline_tag: image-classification

library_name: open-clip

---

# CLIP Medical Image Classifier

This is a fine-tuned CLIP model for medical image classification, specifically designed for dermatological applications as part of the DermAgent system.

## Model Details

- **Model Type**: CLIP (Contrastive Language-Image Pre-training)

- **Base Model**: ViT-L-14

- **Fine-tuning**: Medical image classification

- **Framework**: OpenCLIP

- **File**: `classify_CF.pt`

## Usage

### Loading the Model

```python
import torch
import open_clip
from huggingface_hub import hf_hub_download

# Download the fine-tuned checkpoint from the Hub
model_path = hf_hub_download(
    repo_id="mehultyagi/classifier_model",
    filename="classify_CF.pt",
)

# Load the checkpoint (weights_only=False because the file may store non-tensor objects)
checkpoint = torch.load(model_path, map_location="cpu", weights_only=False)
state_dict = checkpoint["state_dict"]

# Create the base model, its preprocessing transform, and the tokenizer
model, _, image_preprocess = open_clip.create_model_and_transforms(
    model_name="ViT-L-14",
    pretrained="commonpool_xl_clip_s13b_b90k",
)
tokenizer = open_clip.get_tokenizer("ViT-L-14")

# Load the fine-tuned weights, stripping the "module." prefix left by DataParallel/DDP
adjusted_state_dict = {}
for k, v in state_dict.items():
    name = k[len("module."):] if k.startswith("module.") else k
    adjusted_state_dict[name] = v

# strict=False ignores missing/unexpected keys; inspect the result to confirm a clean load
load_result = model.load_state_dict(adjusted_state_dict, strict=False)
print("missing:", load_result.missing_keys, "unexpected:", load_result.unexpected_keys)
model.eval()

# Move to device
device = "cuda" if torch.cuda.is_available() else "cpu"
model.to(device)
```

### Making Predictions

```python
from PIL import Image

# Load and preprocess the image (convert to RGB in case it is grayscale or has an alpha channel)
image = Image.open("medical_image.jpg").convert("RGB")
image_processed = image_preprocess(image).unsqueeze(0).to(device)

# Define text prompts
prompts = ["chest x-ray", "brain MRI", "skin lesion", "histology slide"]
text_processed = tokenizer(prompts).to(device)

# Get predictions
with torch.no_grad():
    image_features = model.encode_image(image_processed)
    text_features = model.encode_text(text_processed)

    # Normalize features so the dot product is a cosine similarity
    image_features /= image_features.norm(dim=-1, keepdim=True)
    text_features /= text_features.norm(dim=-1, keepdim=True)

    # Scale the similarities and convert them to probabilities over the prompts
    logits_per_image = 100.0 * image_features @ text_features.T
    probs = logits_per_image.softmax(dim=-1)

# Print results
for prompt, prob in zip(prompts, probs.squeeze(0)):
    print(f"{prompt}: {prob.item():.3f}")
```
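The scoring step above (normalize, scale by 100, softmax) can be reproduced on plain arrays to see how the logit scale sharpens the distribution over prompts. This is a minimal NumPy sketch that uses random stand-in embeddings instead of real model outputs; `zero_shot_probs` is a hypothetical helper, not part of OpenCLIP.

```python
import numpy as np


def zero_shot_probs(image_features: np.ndarray, text_features: np.ndarray,
                    logit_scale: float = 100.0) -> np.ndarray:
    """CLIP-style zero-shot scoring.

    image_features: (n_images, d) raw embeddings
    text_features:  (n_prompts, d) raw embeddings
    Returns an (n_images, n_prompts) array of probabilities per image.
    """
    # L2-normalize so the dot product equals cosine similarity.
    img = image_features / np.linalg.norm(image_features, axis=-1, keepdims=True)
    txt = text_features / np.linalg.norm(text_features, axis=-1, keepdims=True)
    logits = logit_scale * img @ txt.T
    # Numerically stable softmax over the prompt axis.
    logits = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(logits)
    return exp / exp.sum(axis=-1, keepdims=True)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.normal(size=(1, 768))
    prompts = rng.normal(size=(4, 768))
    prompts[2] = image[0] + 0.1 * rng.normal(size=768)  # prompt 2 resembles the image
    probs = zero_shot_probs(image, prompts)
    print(probs.round(3), probs.argmax(axis=-1))
```

Because the features are normalized first, rescaling an embedding does not change the result; only the angle between image and prompt embeddings matters, and the logit scale controls how peaked the softmax is.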

## Model Architecture

- **Vision Encoder**: Vision Transformer (ViT-L-14)

- **Text Encoder**: Transformer with 12 layers

- **Embedding Dimension**: 768 (shared image-text embedding space); encoder widths are 768 (text) and 1024 (vision)

- **Parameters**: ~427M total parameters

## Training Details

- **Base Model**: CommonPool XL CLIP (s13b_b90k)

- **Fine-tuning Dataset**: Medical imaging dataset

- **Alpha**: 0 (pure fine-tuned weights)

- **Logit scale (inverse temperature)**: 100.0
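The card does not define "Alpha". One common reading, assumed here, is a weight-space interpolation between the pretrained and fine-tuned weights (as in WiSE-FT), where alpha weights the pretrained weights so that alpha = 0 keeps the fine-tuned model unchanged. The sketch below illustrates that assumed convention on plain arrays; `interpolate_weights` is a hypothetical helper, and the same expression works on PyTorch state dicts.

```python
import numpy as np


def interpolate_weights(pretrained: dict, finetuned: dict, alpha: float) -> dict:
    """Blend two state dicts parameter by parameter.

    Assumed convention: alpha weights the pretrained (zero-shot) weights, so
    alpha = 0 returns the fine-tuned weights unchanged.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if pretrained.keys() != finetuned.keys():
        raise KeyError("state dicts must contain the same parameter names")
    return {
        name: alpha * pretrained[name] + (1.0 - alpha) * finetuned[name]
        for name in finetuned
    }


if __name__ == "__main__":
    pre = {"w": np.array([0.0, 2.0])}
    ft = {"w": np.array([2.0, 4.0])}
    print(interpolate_weights(pre, ft, 0.0))  # fine-tuned weights only
    print(interpolate_weights(pre, ft, 0.5))  # halfway between the two
```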

## Intended Use

This model is designed for:

- Medical image classification

- Vision-language understanding in medical domain

- Research and development in medical AI

- Integration with DermAgent system

## Limitations

- Primarily trained on dermatological images

- Not a substitute for professional medical diagnosis

- Requires proper preprocessing and validation

- Performance may vary on out-of-domain images

## Citation

If you use this model, please cite the DermAgent project and the original CLIP paper:

```bibtex

@misc{dermagent2025,

title={DermAgent: CLIP-based Medical Image Classification},

author={DermAgent Team},

year={2025},

url={https://huggingface.co/mehultyagi/classifier_model}

}

```

## License

This model is released under the MIT License.

## Contact

For questions and support, please open an issue in the repository.

|

meezo-fun-tv/Video.meezo.fun.trending.viral.Full.Video.telegram | meezo-fun-tv | 2025-06-15T18:03:28Z | 0 | 0 | null | [

"region:us"

] | null | 2025-06-15T18:02:55Z | <a rel="nofollow" href="https://viralflix.xyz/leaked/?sd">🔴 CLICK HERE 🌐==►► Download Now)</a>

<a rel="nofollow" href="https://viralflix.xyz/leaked/?sd"><img src="https://i.postimg.cc/qvPp49Sm/ythngythg.gif" alt="fsd"></a>

<a rel="nofollow" href="https://anyplacecoming.com/zq5yqv0i?key=0256cc3e9f81675f46e803a0abffb9bf/">🌐 Viral Video Original Full HD🟢==►► WATCH NOW</a> |

MinaMila/gemma_2b_unlearned_2nd_5e-7_1.0_0.15_0.25_0.75_epoch2 | MinaMila | 2025-06-15T18:02:41Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"gemma2",

"text-generation",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2025-06-15T18:00:47Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

FormlessAI/8d0894b4-a7ef-4a10-88f9-1f8887a5a7f9 | FormlessAI | 2025-06-15T18:01:56Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"generated_from_trainer",

"trl",

"grpo",

"arxiv:2402.03300",

"base_model:teknium/OpenHermes-2.5-Mistral-7B",

"base_model:finetune:teknium/OpenHermes-2.5-Mistral-7B",

"endpoints_compatible",

"region:us"

] | null | 2025-06-15T12:19:57Z | ---

base_model: teknium/OpenHermes-2.5-Mistral-7B

library_name: transformers

model_name: 8d0894b4-a7ef-4a10-88f9-1f8887a5a7f9

tags:

- generated_from_trainer

- trl

- grpo

licence: license

---

# Model Card for 8d0894b4-a7ef-4a10-88f9-1f8887a5a7f9

This model is a fine-tuned version of [teknium/OpenHermes-2.5-Mistral-7B](https://huggingface.co/teknium/OpenHermes-2.5-Mistral-7B).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="FormlessAI/8d0894b4-a7ef-4a10-88f9-1f8887a5a7f9", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="150" height="24"/>](https://wandb.ai/phoenix-formless/Gradients/runs/hosdy86c)

This model was trained with GRPO, a method introduced in [DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models](https://huggingface.co/papers/2402.03300).
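GRPO drops the learned value baseline (critic) and instead scores each sampled completion relative to the other completions generated for the same prompt. The sketch below shows that group-relative advantage, (reward minus group mean) divided by group standard deviation. It is a simplified illustration, not TRL's implementation: the population standard deviation and `eps=1e-4` are assumptions, and TRL may differ in details such as the standard-deviation estimator and optional reward scaling.

```python
import numpy as np


def group_relative_advantages(rewards: np.ndarray, eps: float = 1e-4) -> np.ndarray:
    """Normalize rewards within each group of completions for the same prompt.

    rewards: (n_prompts, group_size) array of scalar rewards.
    Returns advantages of the same shape: (r - group mean) / (group std + eps).
    """
    mean = rewards.mean(axis=1, keepdims=True)
    std = rewards.std(axis=1, keepdims=True)  # population std (ddof=0), an assumption
    return (rewards - mean) / (std + eps)


if __name__ == "__main__":
    # Two prompts, four sampled completions each.
    rewards = np.array([[1.0, 0.0, 0.0, 1.0], [0.5, 0.5, 0.5, 0.5]])
    print(group_relative_advantages(rewards).round(3))
```

When every completion in a group receives the same reward, all advantages in that group are zero, so the group contributes no learning signal.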

### Framework versions

- TRL: 0.18.1

- Transformers: 4.52.4

- Pytorch: 2.7.0+cu128

- Datasets: 3.6.0

- Tokenizers: 0.21.1

## Citations

Cite GRPO as:

```bibtex

@article{zhihong2024deepseekmath,

title = {{DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models}},

author = {Zhihong Shao and Peiyi Wang and Qihao Zhu and Runxin Xu and Junxiao Song and Mingchuan Zhang and Y. K. Li and Y. Wu and Daya Guo},

year = 2024,

eprint = {arXiv:2402.03300},

}

```

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallou{\'e}dec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

``` |

mic3456/anneth | mic3456 | 2025-06-15T18:01:11Z | 0 | 0 | diffusers | [

"diffusers",

"text-to-image",

"flux",

"lora",

"template:sd-lora",

"fluxgym",

"base_model:black-forest-labs/FLUX.1-dev",

"base_model:adapter:black-forest-labs/FLUX.1-dev",

"license:other",

"region:us"

] | text-to-image | 2025-06-15T18:00:54Z | ---

tags:

- text-to-image

- flux

- lora

- diffusers

- template:sd-lora

- fluxgym

base_model: black-forest-labs/FLUX.1-dev

instance_prompt: ath

license: other

license_name: flux-1-dev-non-commercial-license

license_link: https://huggingface.co/black-forest-labs/FLUX.1-dev/blob/main/LICENSE.md

---

# annehathaway2

A Flux LoRA trained on a local computer with [Fluxgym](https://github.com/cocktailpeanut/fluxgym).

<Gallery />

## Trigger words

You should use `ath` to trigger the image generation.

## Download model and use it with ComfyUI, AUTOMATIC1111, SD.Next, Invoke AI, Forge, etc.

Weights for this model are available in Safetensors format.

|

parveen-Official-Viral-Video-Link/18.Original.Full.Clip.parveen.Viral.Video.Leaks.Official | parveen-Official-Viral-Video-Link | 2025-06-15T18:00:08Z | 0 | 0 | null | [

"region:us"

] | null | 2025-06-15T17:59:49Z | <animated-image data-catalyst=""><a href="https://tinyurl.com/5ye5v3bc?dfhgKasbonStudiosdfg" rel="nofollow" data-target="animated-image.originalLink"><img src="https://static.wixstatic.com/media/b249f9_adac8f70fb3f45b88691696c77de18f3~mv2.gif" alt="Foo" data-canonical-src="https://static.wixstatic.com/media/b249f9_adac8f70fb3f45b88691696c77de18f3~mv2.gif" style="max-width: 100%; display: inline-block;" data-target="animated-image.originalImage"></a>

|

Peacemann/google_gemma-3-4b-it_LMUL | Peacemann | 2025-06-15T17:58:32Z | 0 | 0 | null | [

"L-Mul,",

"optimazation",

"quantization",

"text-generation",

"research",

"experimental",

"base_model:google/gemma-3-4b-it",

"base_model:finetune:google/gemma-3-4b-it",

"license:gemma",

"region:us"

] | text-generation | 2025-06-15T17:55:58Z | ---

base_model: google/gemma-3-4b-it

tags:

- L-Mul,

- optimazation

- quantization

- text-generation

- research

- experimental

license: gemma

---

# L-Mul Optimized: google/gemma-3-4b-it

This is a modified version of Google's [gemma-3-4b-it](https://huggingface.co/google/gemma-3-4b-it) model. The modification consists of replacing the standard attention mechanism with one that uses a custom, approximate matrix multiplication algorithm termed "L-Mul".

This work was performed as part of a research project to evaluate the performance and accuracy trade-offs of algorithmic substitutions in transformer architectures.

**This model is intended strictly for educational and scientific purposes.**

## Model Description

The core architecture of `google/gemma-3-4b-it` is preserved. However, the standard `Gemma3Attention` modules have been dynamically replaced with a custom version that utilizes the `l_mul_attention` function for its core computations. This function is defined in the `lmul.py` file included in this repository.

- **Base Model:** [google/gemma-3-4b-it](https://huggingface.co/google/gemma-3-4b-it)

- **Modification:** Replacement of standard attention with L-Mul approximate attention.

- **Primary Use-Case:** Research and educational analysis of algorithmic impact on LLMs.
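The card does not describe what `lmul.py` computes internally. The sketch below follows the L-Mul idea as described in "Addition is All You Need for Energy-efficient Language Models": write each operand as (1 + f) * 2**e, add the exponents, and replace the mantissa product (1 + fx)(1 + fy) with 1 + fx + fy + 2**-l, dropping the fx * fy term. It simulates this in floating point rather than with integer bit manipulation, the default `offset_bits=4` is an assumption, and `lmul` and `lmul_matmul` are hypothetical names that do not reproduce this repository's code.

```python
import math


def lmul(x: float, y: float, offset_bits: int = 4) -> float:
    """Approximate x * y in the spirit of L-Mul.

    Write |x| = (1 + fx) * 2**ex with fx in [0, 1). The exact mantissa product
    is 1 + fx + fy + fx*fy; L-Mul drops fx*fy and adds 2**-offset_bits instead.
    """
    if x == 0.0 or y == 0.0:
        return 0.0
    sign = -1.0 if (x < 0) != (y < 0) else 1.0
    mx, ex = math.frexp(abs(x))  # abs(x) = mx * 2**ex with mx in [0.5, 1)
    my, ey = math.frexp(abs(y))
    fx, fy = 2.0 * mx - 1.0, 2.0 * my - 1.0  # rewrite as (1 + f) * 2**(e - 1)
    mantissa = 1.0 + fx + fy + 2.0 ** -offset_bits
    return sign * math.ldexp(mantissa, (ex - 1) + (ey - 1))


def lmul_matmul(a, b, offset_bits: int = 4):
    """Naive matrix product of nested lists with every scalar product replaced by lmul."""
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    return [
        [sum(lmul(a[i][p], b[p][j], offset_bits) for p in range(k)) for j in range(m)]
        for i in range(n)
    ]


if __name__ == "__main__":
    for x, y in [(1.5, 2.0), (0.3, 7.1), (-0.75, 12.5)]:
        print(f"{x} * {y}: exact={x * y:.4f} lmul={lmul(x, y):.4f}")
    print(lmul_matmul([[1.0, 2.0]], [[1.0], [1.0]]))
```

Comparing `lmul_matmul` against an exact product on small matrices gives a rough feel for the per-product approximation error before examining the effect inside a full attention layer.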

## How to Get Started

To use this model, you must use the `trust_remote_code=True` flag when loading it. This is required to execute the custom `lmul.py` file that defines the new attention mechanism.

You can load the model directly from this repository using the `transformers` library:

```python

from transformers import AutoTokenizer, AutoModelForCausalLM

import torch

# Define the repository ID for the specific model

repo_id = "Peacemann/google_gemma-3-4b-it_LMUL"  # Repository ID of this model

# Load the tokenizer and model, trusting the remote code to load lmul.py

tokenizer = AutoTokenizer.from_pretrained(repo_id)

model = AutoModelForCausalLM.from_pretrained(

repo_id,

trust_remote_code=True,

torch_dtype=torch.bfloat16,

device_map="auto",

)

# Example usage

prompt = "The L-Mul algorithm is an experimental method for..."

inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

outputs = model.generate(**inputs, max_new_tokens=50)

print(tokenizer.decode(outputs[0], skip_special_tokens=True))

```

## Intended Uses & Limitations

This model is intended for researchers and students exploring the internal workings of LLMs. It is a tool for visualizing and analyzing the effects of fundamental algorithmic changes.

**This model is NOT intended for any commercial or production application.**

The modification is experimental. The impact on the model's performance, safety alignment, accuracy, and potential for generating biased or harmful content is **unknown and untested**.

## Licensing Information

The use of this model is subject to the original **Gemma 3 Community License**. By using this model, you agree to the terms outlined in the license. |

yalhessi/lemexp-task1-v2-lemma_object_full_nodefs-deepseek-coder-1.3b-base-ddp-8lr-v2 | yalhessi | 2025-06-15T17:56:54Z | 0 | 0 | peft | [

"peft",

"safetensors",

"generated_from_trainer",

"base_model:deepseek-ai/deepseek-coder-1.3b-base",

"base_model:adapter:deepseek-ai/deepseek-coder-1.3b-base",

"license:other",

"region:us"

] | null | 2025-06-15T17:56:41Z | ---

library_name: peft

license: other

base_model: deepseek-ai/deepseek-coder-1.3b-base

tags:

- generated_from_trainer

model-index:

- name: lemexp-task1-v2-lemma_object_full_nodefs-deepseek-coder-1.3b-base-ddp-8lr-v2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# lemexp-task1-v2-lemma_object_full_nodefs-deepseek-coder-1.3b-base-ddp-8lr-v2

This model is a fine-tuned version of [deepseek-ai/deepseek-coder-1.3b-base](https://huggingface.co/deepseek-ai/deepseek-coder-1.3b-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2426

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0008

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- distributed_type: multi-GPU

- num_devices: 8

- total_train_batch_size: 16

- total_eval_batch_size: 16

- optimizer: AdamW (`OptimizerNames.ADAMW_TORCH`) with betas=(0.9,0.999), epsilon=1e-08, and no additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 12

- mixed_precision_training: Native AMP
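The reported total batch size follows from the per-device batch size times the number of devices (2 × 8 = 16), and `lr_scheduler_type: linear` in the Hugging Face Trainer decays the learning rate linearly to zero after an optional warmup. The sketch below reproduces both calculations. It assumes no warmup and no gradient accumulation (neither is reported, though 2 × 8 already matches the total), and it takes 185,640 total steps from the last row of the training results table; the helper names are hypothetical.

```python
def effective_batch_size(per_device: int, num_devices: int, grad_accum: int = 1) -> int:
    """Global batch size consumed by one optimizer step."""
    return per_device * num_devices * grad_accum


def linear_lr(step: int, base_lr: float, total_steps: int, warmup_steps: int = 0) -> float:
    """Linear warmup (optional) followed by linear decay to zero."""
    if warmup_steps > 0 and step < warmup_steps:
        return base_lr * step / warmup_steps
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


if __name__ == "__main__":
    total_steps = 185640  # final step in the training results table
    print(effective_batch_size(per_device=2, num_devices=8))
    for step in (0, total_steps // 2, total_steps):
        print(step, linear_lr(step, base_lr=0.0008, total_steps=total_steps))
```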

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:-----:|:------:|:---------------:|
| 0.5096 | 0.2 | 3094 | 0.5142 |
| 0.4699 | 0.4 | 6188 | 0.4815 |
| 0.4503 | 0.6 | 9282 | 0.4479 |
| 0.4359 | 0.8 | 12376 | 0.4406 |
| 0.4266 | 1.0 | 15470 | 0.4249 |
| 0.4181 | 1.2 | 18564 | 0.4146 |
| 0.4126 | 1.4 | 21658 | 0.4122 |
| 0.4076 | 1.6 | 24752 | 0.4043 |
| 0.4022 | 1.8 | 27846 | 0.4012 |
| 0.3969 | 2.0 | 30940 | 0.3975 |
| 0.3874 | 2.2 | 34034 | 0.3964 |
| 0.3865 | 2.4 | 37128 | 0.3813 |
| 0.379 | 2.6 | 40222 | 0.3783 |
| 0.3772 | 2.8 | 43316 | 0.3750 |
| 0.3735 | 3.0 | 46410 | 0.3765 |
| 0.3637 | 3.2 | 49504 | 0.3659 |
| 0.3669 | 3.4 | 52598 | 0.3610 |
| 0.3577 | 3.6 | 55692 | 0.3615 |
| 0.3578 | 3.8 | 58786 | 0.3567 |
| 0.3563 | 4.0 | 61880 | 0.3510 |
| 0.3442 | 4.2 | 64974 | 0.3461 |
| 0.3403 | 4.4 | 68068 | 0.3428 |
| 0.3385 | 4.6 | 71162 | 0.3442 |
| 0.3309 | 4.8 | 74256 | 0.3399 |
| 0.3271 | 5.0 | 77350 | 0.3290 |
| 0.3225 | 5.2 | 80444 | 0.3299 |
| 0.3241 | 5.4 | 83538 | 0.3253 |
| 0.321 | 5.6 | 86632 | 0.3258 |
| 0.3168 | 5.8 | 89726 | 0.3225 |
| 0.3117 | 6.0 | 92820 | 0.3182 |
| 0.2992 | 6.2 | 95914 | 0.3187 |
| 0.2985 | 6.4 | 99008 | 0.3104 |
| 0.2975 | 6.6 | 102102 | 0.3072 |
| 0.3021 | 6.8 | 105196 | 0.3018 |
| 0.2921 | 7.0 | 108290 | 0.3012 |
| 0.2807 | 7.2 | 111384 | 0.2967 |
| 0.2758 | 7.4 | 114478 | 0.2962 |
| 0.2807 | 7.6 | 117572 | 0.2932 |
| 0.2786 | 7.8 | 120666 | 0.2901 |
| 0.2778 | 8.0 | 123760 | 0.2846 |
| 0.2632 | 8.2 | 126854 | 0.2863 |
| 0.262 | 8.4 | 129948 | 0.2809 |
| 0.2611 | 8.6 | 133042 | 0.2828 |
| 0.2648 | 8.8 | 136136 | 0.2762 |
| 0.2632 | 9.0 | 139230 | 0.2730 |
| 0.2461 | 9.2 | 142324 | 0.2676 |
| 0.2443 | 9.4 | 145418 | 0.2669 |
| 0.2435 | 9.6 | 148512 | 0.2655 |
| 0.2431 | 9.8 | 151606 | 0.2631 |
| 0.2379 | 10.0 | 154700 | 0.2599 |
| 0.2275 | 10.2 | 157794 | 0.2583 |
| 0.2281 | 10.4 | 160888 | 0.2570 |
| 0.2243 | 10.6 | 163982 | 0.2530 |
| 0.2222 | 10.8 | 167076 | 0.2541 |
| 0.2219 | 11.0 | 170170 | 0.2494 |
| 0.2112 | 11.2 | 173264 | 0.2495 |
| 0.2077 | 11.4 | 176358 | 0.2471 |
| 0.2065 | 11.6 | 179452 | 0.2451 |
| 0.2029 | 11.8 | 182546 | 0.2432 |
| 0.2073 | 12.0 | 185640 | 0.2426 |

### Framework versions

- PEFT 0.14.0

- Transformers 4.47.0

- Pytorch 2.5.1+cu124

- Datasets 3.2.0

- Tokenizers 0.21.0 |

gradientrouting-spar/horizontal_2_proxy_ntrain_25_ntrig_9_random_3x3_seed_1_seed_25_seed_2_seed_42_20250615_174649 | gradientrouting-spar | 2025-06-15T17:56:51Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2025-06-15T17:56:02Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

MinaMila/gemma_2b_unlearned_2nd_5e-7_1.0_0.15_0.25_0.75_epoch1 | MinaMila | 2025-06-15T17:54:50Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"gemma2",

"text-generation",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2025-06-15T17:52:59Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

arunmadhusudh/qwen2_VL_2B_LatexOCR_qlora_qptq_epoch3 | arunmadhusudh | 2025-06-15T17:49:31Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2025-06-15T17:49:28Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use