anchor

stringlengths 58

24.4k

| positive

stringlengths 9

13.4k

| negative

stringlengths 166

14k

| anchor_status

stringclasses 3

values |

|---|---|---|---|

## Inspiration

Let’s take you through a simple encounter between a recruiter and an aspiring student looking for a job during a career fair. The student greets the recruiter eagerly after having to wait in a 45 minute line and hands him his beautifully crafted paper resume. The recruiter, having been talking to thousands of students knows that his time is short and tries to skim the article rapidly, inevitably skipping important skills that the student brings to the table. In the meantime, the clock has been ticking and while the recruiter is still reading non-relevant parts of the resume the student waits, blankly staring at the recruiter. The recruiter finally looks up only to be able to exchange a few words of acknowledge and a good luck before having to move onto the next student. And the resume? It ends up tossed in the back of a bin and jumbled together with thousands of other resumes. The clear bottleneck here is the use of the paper Resume.

Instead of having the recruiter stare at a thousand word page crammed with everything someone has done with their life, it would make much more sense to have the student be able to show his achievements in a quick, easy way and have it elegantly displayed for the recruiter.

With Reko, both recruiters and students will be geared for an easy, digital way to transfer information.

## What it does

By allowing employers and job-seekers to connect in a secure and productive manner, Reko calls forward a new era of stress free peer-to-peer style data transfer. The magic of Reko is in its simplicity. Simply walk up to another Reko user, scan their QR code (or have them scan yours!), and instantly enjoy a UX rich file transfer channel between your two devices. During PennApps, we set out to demonstrate the power of this technology in what is mainly still a paper-based ecosystem: career fairs.

With Reko, employers no longer need to peddle countless informational pamphlets, and students will never again have to rush to print out countless resume copies before a career fair. Not only can this save a large amount of paper, but it also allows students to freely choose what aspects of their resumes they want to accentuate. Reko also allows employers to interact with the digital resume cards sent to them by letting them score each card on a scale of 1 - 100. Using this data alongside machine learning, Reko then provides the recruiter with an estimated candidate match percentage which can be used to streamline the hiring process. Reko also serves to help students by providing them a recruiting dashboard. This dashboard can be used to understand recruiter impressions and aims to help students develop better candidate profiles and resumes.

## How we built it

### Front-End // Swift

The frontend of Reko focuses on delivering a phenomenal user experience through an exceptional user interface and efficient performance. We utilized native frameworks and a few Cocoapods to provide a novel, intriguing experience. The QR code exchange handshake protocol is accomplished through the very powerful VisionKit. The MVVM design pattern was implemented and protocols were introduced to make the most out of the information cards. The hardest implementation was the Web Socket implementation of the creative exchange of the information cards — between the student and interviewer.

### Back-End // Node.Js

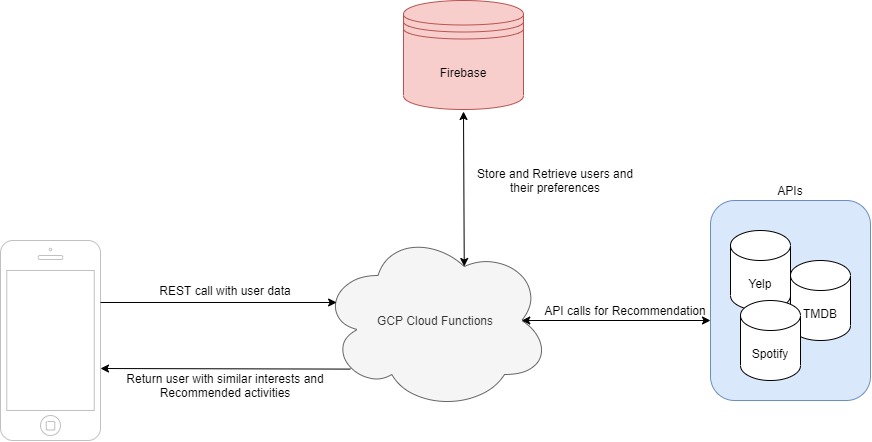

The backend of Reko focuses on handling websocket sessions, establishing connection between front-end and our machine learning service, and managing the central MongoDB.

Every time a new ‘user-pair’ is instantiated via a QR code scan, the backend stores the two unique socket machine IDs as ‘partners’, and by doing so is able to handle what events are sent to one, or both partners. By also handling the MongoDB, Reko’s backend is able to relate these unique socket IDs to stored user account’s data. In turn, this allows Reko to take advantage of data sets to provide the user with valuable unique data analysis. Using the User ID as context, Reko’s backend is able to POST our self-contained Machine Learning web service. Reko’s ML web service responded with an assortment of statistical data, which is then emitted to the front-end via websocket for display & view by the user.

### Machine Learning // Python

In order to properly integrate machine learning into our product, we had to build a self-contained web application. This container application was built on a virtual environment with a REST API layer and Django framework. We chose to use these technologies because they are scalable and easy to deploy to the cloud. With the Django framework, we used POST to easily communicate with the node backend and thus increase the overall workflow via abstraction. We were then able to use Python to train a machine learning model based on data sent from the node backend. After connecting to the MongoDB with the pymongo library, we were able to prepare training and testing data sets. We used the pandas python library to develop DataFrames for each data set and built a machine learning model using the algorithms from the scikit library. We tested various algorithms with our dataset and finalized a model that utilized the Logistic Regression algorithm. Using these data sets and the machine learning model, our service can predict the percentage a candidate matches to a recruiter’s job profile. The final container application is able to receive data and return results in under 1 second and is over 90% accurate.

## Challenges we ran into

* Finding a realistic data set to train our machine learning model

* Deploying our backend to the cloud

* Configuring the container web application

* Properly populating our MongoDB

* Finding the best web service for our use case

* Finding the optimal machine learning for our data sets

## Accomplishments that we're proud of

* UI/UX Design

* Websocket implementation

* Machine Learning integration

* Scalably structured database

* Self-contained Django web application

## What we learned

* Deploying container applications on the cloud

* Using MongoDB with Django

* Data Modeling/Analysis for our specific use case

* Good practices in structuring a MongoDB database as opposed to a SQL database.

* How to successfully integrate three software layers to generate a consistent and fluid final product.

* Strategies for linking iOS devices in a peer-to-peer fashion via websockets.

## What's next for reko

* Our vision for Reko is to have an app which allows for general and easy to use data transfer between two people who may be complete strangers.

* We hope to transfer from QR code to NFC to allow for even easier data transfer and thus better user experience.

* We believe that a data transfer system such as the one Reko showcases is the future of in-person data transfer due to its “no-username” operation. This system allows individuals to keep their anonymity if desired, and thus protects their privacy.

|

## Project Title

**UniConnect** - Enhancing the College Experience

## Inspiration

🌟 Our inspiration stems from a deep understanding of the challenges that university freshmen encounter during their initial year on campus. Having personally navigated these highs and lows during our own freshman year last year, we felt compelled to create a solution that addresses these issues and assists fellow students on their academic journey. Thus, we came up with UniConnect, a platform that offers essential support and resources.

## What It Does

🚀 **CrowdSense**: Enhance your campus experience by effortlessly finding available spaces for studying, enjoying fitness sessions, outdoor activities, or meals in real-time, ensuring a stress-free and comfortable environment.

🌐 **SpaceSync**: Simplify the lives of international students by seamlessly connecting them with convenient summer storage solutions, eliminating the hassle and worries associated with storing belongings during breaks.

🖥️ Front-end: HTML/CSS/JavaScript

🔌 Backend: JavaScript with MongoDB Atlas

## Challenges We Overcame

🏆 Elevating User-Friendliness and Accessibility: We dedicated ourselves to making our solution as intuitive and accessible as possible, ensuring that every user can benefit from it.

📊 Mastering Data Analysis and Backend Representation: Tackling the intricacies of data analysis and backend operations pushed us to expand our knowledge and skills, enabling us to deliver a robust platform.

🛠️ Streamlining Complex Feature Integration: We met the challenge of integrating diverse features seamlessly, providing a unified and efficient user experience.

⏱️ Maximizing Time Efficiency: Our commitment to optimizing time efficiency drove us to fine-tune our solution, ensuring that users can accomplish more in less time.

## Proud Achievements

🌟 Successful Execution of Uniconnect's First Phase: We have successfully completed the initial phase of Uniconnect, bringing SpaceSync to fruition and making significant progress with CrowdSense.

🌐 Intuitive and Accessible Solutions: Our unwavering commitment to creating user-friendly and accessible solutions ensures that every user can derive maximum benefit from our platform.

🏆 Positive Impact on College Students: We are thrilled to have a positive impact on the college experience of students who may not have had a strong support system during their memorable four-year journey.

## Valuable Learning

📚 Efficient Integration of Multiple Webpages: We've mastered the art of seamlessly connecting various webpages using HTML, CSS, and JS to create a cohesive user experience.

📈 Data Extraction and Backend Utilization: We've acquired the skills to extract and leverage data effectively in the backend using JavaScript, enhancing the functionality of our platform.

🌐 Enhancing User-Friendliness: Through JavaScript, we've honed our ability to make our website exceptionally user-friendly, prioritizing the user experience above all else.

## What's next for UniConnect

🚀 Expanding CrowdSense: We have ambitious plans to enhance and grow the CrowdSense section of UniConnect, providing even more real-time solutions for students on campus.

💼 Introducing "Collab Center": Our next big step involves introducing a new section called \*\* "Collab Center." \*\* This feature will empower the student community to create employment opportunities for each other, fostering a supportive ecosystem where both employers and employees are college students. This initiative aims to provide financial support to those in need while offering valuable assistance to those seeking help. Together, we can make a significant impact on students' lives.

🎓 UniConnect is our solution to enhance university life, making it easier, more connected, and full of opportunities

|

## Inspiration

As students, we collectively realized how much we disliked creating and updating our resumes. After getting ghosted by companies, we’re often left wondering what was missing from our resume, what skills we should be looking to acquire, and how to acquire them. We also realized that, from a recruiter’s point of view, reviewing resumes becomes a monotonous, repetitive, and tedious process. Recruiters who have to parse through thousands of candidates, while being bored out of their minds, often miss some great candidates!

## What it does

CS Resume allows students to upload their resumes, see how their resumes are broken down into sections, compare their skills to what tech companies are looking for, and receive suggested projects based on what skills they might be missing. It also features a VR application for the Quest 2, where recruiters can quickly view summaries of candidate resumes, expand to learn more about a candidate, and mark them for future review, all within a stimulating virtual environment of their choosing!

## How we built it

We used MongoDB, Next.js, Express, React, Node, Typescript, Cohere, NLP, Google Cloud Platform, CICD, AppEngine, Unity, and C# in order to create our final product.

## Challenges we ran into

The main challenge we faced was getting all the components to work together in a single system. This was one of our first big projects using Next.js AND our first time connecting VR headsets to our web app. Ultimately, we vastly underestimated the complexity of what we were trying to accomplish but ended up successfully working through our issues.

## Accomplishments that we're proud of

* Finishing a prototype for demo day.

* Starting off our coding by using Figma to make prototypes and wireframes rather than starting off without any designs.

* Being able to create such a complex web app (and corresponding VR app) in less than 48 hours.

* Successfully getting our VR application connected to our web app’s backend

## What we learned

It's important to have a plan early on in the development process and be conservative about how quickly you can learn/adapt to new frameworks.

## What's next for CS Resume

1. Improvements on how the resume is initially parsed, and additional support for resume fields.

2. Giving users more options for what projects are recommended based on interests.

3. Additional features for how recruiter ratings are handled on the backend.

|

winning

|

## Our Inspiration

We were inspired by apps like Duolingo and Quizlet for language learning, and wanted to extend those experiences to a VR environment. The goal was to gameify the entire learning experience and make it immersive all while providing users with the resources to dig deeper into concepts.

## What it does

EduSphere is an interactive AR/VR language learning VisionOS application designed for the new Apple Vision Pro. It contains three fully developed features: a 3D popup game, a multi-lingual chatbot, and an immersive learning environment. It leverages the visually compelling and intuitive nature of the VisionOS system to target three of the most crucial language learning styles: visual, kinesthetic, and literacy - allowing users to truly learn at their own comfort. We believe the immersive environment will make language learning even more memorable and enjoyable.

## How we built it

We built the VisionOS app using the Beta development kit for the Apple Vision Pro. The front-end and AR/VR components were made using Swift, SwiftUI, Alamofire, RealityKit, and concurrent MVVM design architecture. 3D Models were converted through Reality Converter as .usdz files for AR modelling. We stored these files on the Google Cloud Bucket Storage, with their corresponding metadata on CockroachDB. We used a microservice architecture for the backend, creating various scripts involving Python, Flask, SQL, and Cohere. To control the Apple Vision Pro simulator, we linked a Nintendo Switch controller for interaction in 3D space.

## Challenges we ran into

Learning to build for the VisionOS was challenging mainly due to the lack of documentation and libraries available. We faced various problems with 3D Modelling, colour rendering, and databases, as it was difficult to navigate this new space without references or sources to fall back on. We had to build many things from scratch while discovering the limitations within the Beta development environment. Debugging certain issues also proved to be a challenge. We also really wanted to try using eye tracking or hand gesturing technologies, but unfortunately, Apple hasn't released these yet without a physical Vision Pro. We would be happy to try out these cool features in the future, and we're definitely excited about what's to come in AR/VR!

## Accomplishments that we're proud of

We're really proud that we were able to get a functional app working on the VisionOS, especially since this was our first time working with the platform. The use of multiple APIs and 3D modelling tools was also the amalgamation of all our interests and skillsets combined, which was really rewarding to see come to life.

|

## 💡INSPIRATION💡

Our team is from Ontario and BC, two provinces that have been hit HARD by the opioid crisis in Canada. Over **4,500 Canadians under the age of 45** lost their lives through overdosing during 2021, almost all of them preventable, a **30% increase** from the year before. During an unprecedented time, when the world is dealing with the covid pandemic and the war in Ukraine, seeing the destruction and sadness that so many problems are bringing, knowing that there are still people fighting to make a better world inspired us. Our team wanted to try and make a difference in our country and our communities, so... we came up with **SafePulse, an app to combat the opioid crisis, where you're one call away from OK, not OD.**

**Please checkout what people are doing to combat the opioid crisis, how it's affecting Canadians and learn more about why it's so dangerous and what YOU can do.**

<https://globalnews.ca/tag/opioid-crisis/>

<https://globalnews.ca/news/8361071/record-toxic-illicit-drug-deaths-bc-coroner/>

<https://globalnews.ca/news/8405317/opioid-deaths-doubled-first-nations-people-ontario-amid-pandemic/>

<https://globalnews.ca/news/8831532/covid-excess-deaths-canada-heat-overdoses/>

<https://www.youtube.com/watch?v=q_quiTXfWr0>

<https://www2.gov.bc.ca/gov/content/overdose/what-you-need-to-know/responding-to-an-overdose>

## ⚙️WHAT IT DOES⚙️

**SafePulse** is a mobile app designed to combat the opioid crisis. SafePulse provides users with resources that they might not know about such as *'how to respond to an overdose'* or *'where to get free naxolone kits'.* Phone numbers to Live Support through 24/7 nurses are also provided, this way if the user chooses to administer themselves drugs, they can try and do it safely through the instructions of a registered nurse. There is also an Emergency Response Alarm for users, the alarm alerts emergency services and informs them of the type of drug administered, the location, and access instruction of the user. Information provided to users through resources and to emergency services through the alarm system is vital in overdose prevention.

## 🛠️HOW WE BUILT IT🛠️

We wanted to get some user feedback to help us decide/figure out which features would be most important for users and ultimately prevent an overdose/saving someone's life.

Check out the [survey](https://forms.gle/LHPnQgPqjzDX9BuN9) and the [results](https://docs.google.com/spreadsheets/d/1JKTK3KleOdJR--Uj41nWmbbMbpof1v2viOfy5zaXMqs/edit?usp=sharing)!

As a result of the survey, we found out that many people don't know what the symptoms of overdoses are and what they may look like; we added another page before the user exits the timer to double check whether or not they have symptoms. We also determined that by having instructions available while the user is overdosing increases the chances of someone helping.

So, we landed on 'passerby information' and 'supportive resources' as our additions to the app.

Passerby information is information that anyone can access while the user in a state of emergency to try and save their life. This took the form of the 'SAVEME' page, a set of instructions for Good Samaritans that could ultimately save the life of someone who's overdosing.

Supportive resources are resources that the user might not know about or might need to access such as live support from registered nurses, free naxolone kit locations, safe injection site locations, how to use a narcan kit, and more!

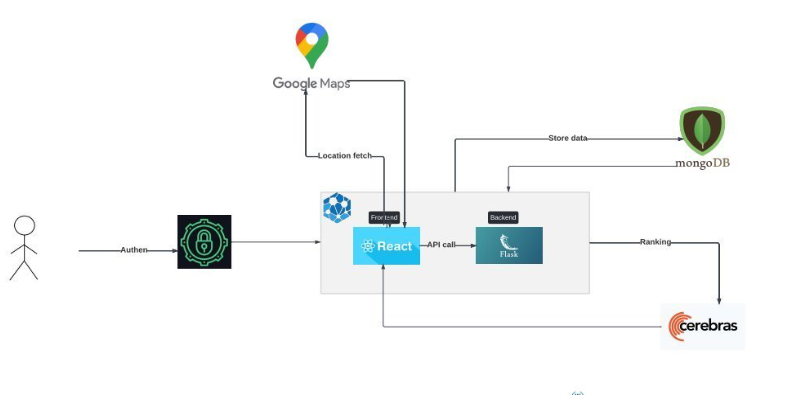

Tech Stack: ReactJS, Firebase, Python/Flask

SafePulse was built with ReactJS in the frontend and we used Flask, Python and Firebase for the backend and used the Twilio API to make the emergency calls.

## 😣 CHALLENGES WE RAN INTO😣

* It was Jacky's **FIRST** hackathon and Matthew's **THIRD** so there was a learning curve to a lot of stuff especially since we were building an entire app

* We originally wanted to make the app utilizing MERN, we tried setting up the database and connecting with Twilio but it was too difficult with all of the debugging + learning nodejs and Twilio documentation at the same time 🥺

* Twilio?? HUGEEEEE PAIN, we couldn't figure out how to get different Canadian phone numbers to work for outgoing calls and also have our own custom messages for a little while. After a couple hours of reading documentation, we got it working!

## 🎉ACCOMPLISHMENTS WE ARE PROUD OF🎉

* Learning git and firebase was HUGE! Super important technologies in a lot of projects

* With only 1 frontend developer, we managed to get a sexy looking app 🤩 (shoutouts to Mitchell!!)

* Getting Twilio to work properly (its our first time)

* First time designing a supportive app that's ✨**functional AND pretty** ✨without a dedicated ui/ux designer

* USER AUTHENTICATION WORKS!! ( つ•̀ω•́)つ

* Using so many tools, languages and frameworks at once, and making them work together :D

* Submitting on time (I hope? 😬)

## ⏭️WHAT'S NEXT FOR SafePulse⏭️

SafePulse has a lot to do before it can be deployed as a genuine app.

* Partner with local governments and organizations to roll out the app and get better coverage

* Add addiction prevention resources

* Implement google maps API + location tracking data and pass on the info to emergency services so they get the most accurate location of the user

* Turn it into a web app too!

* Put it on the app store and spread the word! It can educate tons of people and save lives!

* We may want to change from firebase to MongoDB or another database if we're looking to scale the app

* Business-wise, a lot of companies sell user data or exploit their users - we don't want to do that - we'd be looking to completely sell the app to the government and get a contract to continue working on it/scale the project. Another option would be to sell our services to the government and other organizations on a subscription basis, this would give us more control over the direction of the app and its features while partnering with said organizations

## 🎁ABOUT THE TEAM🎁

*we got two Matthew's by the way (what are the chances?)*

Mitchell is a 1st year computer science student at Carleton University studying Computer Science. He is most inter tested in programing language enineering. You can connect with him at his [LinkedIn](https://www.linkedin.com/in/mitchell-monireoluwa-mark-george-261678155/) or view his [Portfolio](https://github.com/MitchellMarkGeorge)

Jacky is a 2nd year Systems Design Engineering student at the University of Waterloo. He is most experienced with embedded programming and backend. He is looking to explore various fields in development. He is passionate about reading and cooking. You can reach out to him at his [LinkedIn](https://www.linkedin.com/in/chenyuxiangjacky/) or view his [Portfolio](https://github.com/yuxstar1444)

Matthew B is an incoming 3rd year computer science student at Wilfrid Laurier University. He is most experienced with backend development but looking to learn new technologies and frameworks. He is passionate about music and video games and always looking to connect with new people. You can reach out to him at his [LinkedIn](https://www.linkedin.com/in/matthew-borkowski-b8b8bb178/) or view his [GitHub](https://github.com/Sulima1)

Matthew W is a 3rd year computer science student at Simon Fraser University, currently looking for a summer 2022 internship. He has formal training in data science. He's interested in learning new and honing his current frontend skills/technologies. Moreover, he has a deep understanding of machine learning, AI and neural networks. He's always willing to have a chat about games, school, data science and more! You can reach out to him at his [LinkedIn](https://www.linkedin.com/in/matthew-wong-240837124/), visit his [website](https://wongmatt.dev) or take a look at what he's [working on](https://github.com/WongMatthew)

### 🥳🎉THANK YOU WLU FOR HOSTING HAWKHACKS🥳🎉

|

## Inspiration

It has always been very time consuming to dedicate time to learning a new language. Many of the traditional methods often involve studying words in a dull static environment. Our experience has shown that this is not always the best or most fun way to learn new languages in a way that makes it "stick". This was why we wanted to develop an on the go AR app that anyone could take with you and live translate in real time words that a user sees more often to personalize the learning experience for the individual.

## What it does

Using iOS ARKit, we created an iOS app which works with augmented headset. We use the live video feed from the camera and leverage a tensor flow object recognizing model to scan the field of view and when the user focuses in on the object of choice, we use the model to identify the object in English. The user then must speak the translated word for the object and the app will determine if they are correct.

## How we built it

We leveraged ARKit from the iOS library for the app. Integrated a tensor flow model for object recognition. Created an api server for translated words using stdlib hosted on azure. Word translation was done using microsoft bing translation service. Our score system is a db we created in firebase.

## Challenges we ran into

Hacking out field of view for the ARKit. Getting translation to work on the app. Speech to text. Learning js and integrating stdlib. Voice commands

## Accomplishments that we're proud of

It worked!!

## What we learned

How to work with ARKit scenes. How to spin up an api quickly through stdlib. A bit of mandarin in the process of testing the app!

## What's next for Visualingo

Voice commands for app settings (e.g. language), motion commands (head movements). Gamification of the app.

|

winning

|

## Inspiration

I dreamed about the day we would use vaccine passports to travel long before the mRNA vaccines even reached clinical trials. I was just another individual, fortunate enough to experience stability during an unstable time, having a home to feel safe in during this scary time. It was only when I started to think deeper about the effects of travel, or rather the lack thereof, that I remembered the children I encountered in Thailand and Myanmar who relied on tourists to earn $1 USD a day from selling handmade bracelets. 1 in 10 jobs are supported by the tourism industry, providing livelihoods for many millions of people in both developing and developed economies. COVID has cost global tourism $1.2 trillion USD and this number will continue to rise the longer people are apprehensive about travelling due to safety concerns. Although this project is far from perfect, it attempts to tackle vaccine passports in a universal manner in hopes of buying us time to mitigate tragic repercussions caused by the pandemic.

## What it does

* You can login with your email, and generate a personalised interface with yours and your family’s (or whoever you’re travelling with’s) vaccine data

* Universally Generated QR Code after the input of information

* To do list prior to travel to increase comfort and organisation

* Travel itinerary and calendar synced onto the app

* Country-specific COVID related information (quarantine measures, mask mandates etc.) all consolidated in one destination

* Tourism section with activities to do in a city

## How we built it

Project was built using Google QR-code APIs and Glideapps.

## Challenges we ran into

I first proposed this idea to my first team, and it was very well received. I was excited for the project, however little did I know, many individuals would leave for a multitude of reasons. This was not the experience I envisioned when I signed up for my first hackathon as I had minimal knowledge of coding and volunteered to work mostly on the pitching aspect of the project. However, flying solo was incredibly rewarding and to visualise the final project containing all the features I wanted gave me lots of satisfaction. The list of challenges is long, ranging from time-crunching to figuring out how QR code APIs work but in the end, I learned an incredible amount with the help of Google.

## Accomplishments that we're proud of

I am proud of the app I produced using Glideapps. Although I was unable to include more intricate features to the app as I had hoped, I believe that the execution was solid and I’m proud of the purpose my application held and conveyed.

## What we learned

I learned that a trio of resilience, working hard and working smart will get you to places you never thought you could reach. Challenging yourself and continuing to put one foot in front of the other during the most adverse times will definitely show you what you’re made of and what you’re capable of achieving. This is definitely the first of many Hackathons I hope to attend and I’m thankful for all the technical as well as soft skills I have acquired from this experience.

## What's next for FlightBAE

Utilising GeoTab or other geographical softwares to create a logistical approach in solving the distribution of Oyxgen in India as well as other pressing and unaddressed bottlenecks that exist within healthcare. I would also love to pursue a tech-related solution regarding vaccine inequity as it is a current reality for too many.

|

## Inspiration

Recently, security has come to the forefront of media with the events surrounding Equifax. We took that fear and distrust and decided to make something to secure and protect data such that only those who should have access to it actually do.

## What it does

Our product encrypts QR codes such that, if scanned by someone who is not authorized to see them, they present an incomprehensible amalgamation of symbols. However, if scanned by someone with proper authority, they reveal the encrypted message inside.

## How we built it

This was built using cloud functions and Firebase as our back end and a Native-react front end. The encryption algorithm was RSA and the QR scanning was open sourced.

## Challenges we ran into

One major challenge we ran into was writing the back end cloud functions. Despite how easy and intuitive Google has tried to make it, it still took a lot of man hours of effort to get it operating the way we wanted it to. Additionally, making react-native compile and run on our computers was a huge challenge as every step of the way it seemed to want to fight us.

## Accomplishments that we're proud of

We're really proud of introducing encryption and security into this previously untapped market. Nobody to our kowledge has tried to encrypt QR codes before and being able to segment the data in this way is sure to change the way we look at QR.

## What we learned

We learned a lot about Firebase. Before this hackathon, only one of us had any experience with Firebase and even that was minimal, however, by the end of this hackathon, all the members had some experience with Firebase and appreciate it a lot more for the technology that it is. A similar story can be said about react-native as that was another piece of technology that only a couple of us really knew how to use. Getting both of these technologies off the ground and making them work together, while not a gargantuan task, was certainly worthy of a project in and of itself let alone rolling cryptography into the mix.

## What's next for SeQR Scanner and Generator

Next, if this gets some traction, is to try and sell this product on th marketplace. Particularly for corporations with, say, QR codes used for labelling boxes in a warehouse, such a technology would be really useful to prevent people from gainng unnecessary and possibly debiliatory information.

|

## Inspiration

As college students learning to be socially responsible global citizens, we realized that it's important for all community members to feel a sense of ownership, responsibility, and equal access toward shared public spaces. Often, our interactions with public spaces inspire us to take action to help others in the community by initiating improvements and bringing up issues that need fixing. However, these issues don't always get addressed efficiently, in a way that empowers citizens to continue feeling that sense of ownership, or sometimes even at all! So, we devised a way to help FixIt for them!

## What it does

Our app provides a way for users to report Issues in their communities with the click of a button. They can also vote on existing Issues that they want Fixed! This crowdsourcing platform leverages the power of collective individuals to raise awareness and improve public spaces by demonstrating a collective effort for change to the individuals responsible for enacting it. For example, city officials who hear in passing that a broken faucet in a public park restroom needs fixing might not perceive a significant sense of urgency to initiate repairs, but they would get a different picture when 50+ individuals want them to FixIt now!

## How we built it

We started out by brainstorming use cases for our app and and discussing the populations we want to target with our app. Next, we discussed the main features of the app that we needed to ensure full functionality to serve these populations. We collectively decided to use Android Studio to build an Android app and use the Google Maps API to have an interactive map display.

## Challenges we ran into

Our team had little to no exposure to Android SDK before so we experienced a steep learning curve while developing a functional prototype in 36 hours. The Google Maps API took a lot of patience for us to get working and figuring out certain UI elements. We are very happy with our end result and all the skills we learned in 36 hours!

## Accomplishments that we're proud of

We are most proud of what we learned, how we grew as designers and programmers, and what we built with limited experience! As we were designing this app, we not only learned more about app design and technical expertise with the Google Maps API, but we also explored our roles as engineers that are also citizens. Empathizing with our user group showed us a clear way to lay out the key features of the app that we wanted to build and helped us create an efficient design and clear display.

## What we learned

As we mentioned above, this project helped us learn more about the design process, Android Studio, the Google Maps API, and also what it means to be a global citizen who wants to actively participate in the community! The technical skills we gained put us in an excellent position to continue growing!

## What's next for FixIt

An Issue’s Perspective

\* Progress bar, fancier rating system

\* Crowdfunding

A Finder’s Perspective

\* Filter Issues, badges/incentive system

A Fixer’s Perspective

\* Filter Issues off scores, Trending Issues

|

partial

|

# **Cough It**

#### COVID-19 Diagnosis at Ease

## Inspiration

As the pandemic has nearly crippled all the nations and still in many countries, people are in lockdown, there are many innovations in these two years that came up in order to find an effective way of tackling the issues of COVID-19. Out of all the problems, detecting the COVID-19 strain has been the hardest so far as it is always mutating due to rapid infections.

Just like many others, we started to work on an idea to detect COVID-19 with the help of cough samples generated by the patients. What makes this app useful is its simplicity and scalability as users can record a cough sample and just wait for the results to load and it can give an accurate result of where one have the chances of having COVID-19 or not.

## Objective

The current COVID-19 diagnostic procedures are resource-intensive, expensive and slow. Therefore they are lacking scalability and retarding the efficiency of mass-testing during the pandemic. In many cases even the physical distancing protocol has to be violated in order to collect subject's samples. Disposing off biohazardous samples after diagnosis is also not eco-friendly.

To tackle this, we aim to develop a mobile-based application COVID-19 diagnostic system that:

* provides a fast, safe and user-friendly to detect COVID-19 infection just by providing their cough audio samples

* is accurate enough so that can be scaled-up to cater a large population, thus eliminating dependency on resource-heavy labs

* makes frequent testing and result tracking efficient, inexpensive and free of human error, thus eliminating economical and logistic barriers, and reducing the wokload of medical professionals

Our [proposed CNN](https://dicova2021.github.io/docs/reports/team_Brogrammers_DiCOVA_2021_Challenge_System_Report.pdf) architecture also secured Rank 1 at [DiCOVA](https://dicova2021.github.io/) Challenge 2021, held by IISc Bangalore researchers, amongst 85 teams spread across the globe. With only being trained on small dataset of 1,040 cough samples our model reported:

Accuracy: 94.61%

Sensitivity: 80% (20% false negative rate)

AUC of ROC curve: 87.07% (on blind test set)

## What it does

The working of **Cough It** is simple. User can simply install the app and tap to open it. Then, the app will ask for user permission for external storage and microphone. The user can then just tap the record button and it will take the user to a countdown timer like interface. Playing the play button will simply start recording a 7-seconds clip of cough sample of the user and upon completion it will navigate to the result screen for prediction the chances of the user having COVID-19

## How we built it

Our project is divided into three different modules -->

#### **ML Model**

Our machine learning model ( CNN architecture ) will be trained and deployed using the Sagemaker API which is apart of AWS to predict positive or negative infection from the pre-processed audio samples. The training data will also contain noisy and bad quality audio sample, so that it is robust for practical applications.

#### **Android App**

At first, we prepared the wireframe for the app and decided the architecture of the app which we will be using for our case. Then, we worked from the backend part first, so that we can structure our app in proper android MVVM architecture. We constructed all the models, Retrofit Instances and other necessary modules for code separation.

The android app is built in Kotlin and is following MVVM architecture for scalability. The app uses Media Recorder class to record the cough samples of the patient and store them locally. The saved file is then accessed by the android app and converted to byte array and Base64 encoded which is then sent to the web backend through Retrofit.

#### **Web Backend**

The web backend is actually a Node.js application which is deployed on EC2 instance in AWS. We choose this type of architecture for our backend service because we wanted a more reliable connection between our ML model and our Node.js application.

At first, we created a backend server using Node.js and Express.js and deployed the Node.js server in AWS EC2 instance. The server then receives the audio file in Base64 encoded form from the android client through a POST request API call. After that, the file is getting converted to .wav file through a module in terminal through command. After successfully, generating the .wav file, we put that .wav file as argument in the pre-processor which is a python script. Then we call the AWS Sagemaker API to get the predictions and the Node.js application then sends the predictions back to the android counterpart to the endpoint.

## Challenges we ran into

#### **Android**

Initially, in android, we were facing a lot of issues in recording a cough sample as there are two APIs for recording from the android developers, i.e., MediaRecorder, AudioRecord. As the ML model required a .wav file of the cough sample to pre-process, we had to generate it on-device. It is possible with AudioRecord class but requires heavy customization to work and also, saving a file and writing to that file, is a really tedious and buggy process. So, for android counterpart, we used the MediaRecorder class and saving the file and all that boilerplate code is handled by that MediaRecorder class and then we just access that file and send it to our API endpoint which then converts it into a .wav file for the pre-processor to pre-process.

#### **Web Backend**

In the web backend side, we faced a lot of issues in deploying the ML model and to further communicate with the model with node.js application.

Initially, we deployed the Node.js application in AWS Lamdba, but for processing the audio file, we needed to have a python environment as well, so we could not continue with lambda as it was a Node.js environment. So, to actually get the python environment we had to use AWS EC2 instance for deploying the backend server.

Also, we are processing the audio file, we had to use ffmpeg module for which we had to downgrade from the latest version of numpy library in python to older version.

#### **ML Model**

The most difficult challenge for our ml-model was to get it deployed so that it can be directly accessed from the Node.js server to feed the model with the MFCC values for the prediction. But due to lot of complexity of the Sagemaker API and with its integration with Node.js application this was really a challenge for us. But, at last through a lot of documentation and guidance we are able to deploy the model in Sagemaker and we tested some sample data through Postman also.

## Accomplishments that we're proud of

Through this project, we are proud that we are able to get a real and accurate prediction of a real sample data. We are able to send a successful query to the ML Model that is hosted on Sagemaker and the prediction was accurate.

Also, this made us really happy that in a very small amount we are able to overcome with so much of difficulties and also, we are able to solve them and get the app and web backend running and we are able to set the whole system that we planned for maintaining a proper architecture.

## What we learned

Cough It is really an interesting project to work on. It has so much of potential to be one of the best diagnostic tools for COVID-19 which always keeps us motivated to work on it make it better.

In android, working with APIs like MediaRecorder has always been a difficult position for us, but after doing this project and that too in Kotlin, we feel more confident in making a production quality android app. Also, developing an ML powered app is difficult and we are happy that finally we made it.

In web, we learnt the various scenarios in which EC2 instance can be more reliable than AWS Lambda also running various script files in node.js server is a good lesson to be learnt.

In machine learning, we learnt to deploy the ML model in Sagemaker and after that, how to handle the pre-processing file in various types of environments.

## What's next for Untitled

As of now, our project is more focused on our core idea, i.e., to predict by analysing the sample data of the user. So, our app is limited to only one user, but in future, we have already planned to make a database for user management and to show them report of their daily tests and possibility of COVID-19 on a weekly basis as per diagnosis.

## Final Words

There is a lot of scope for this project and this project and we don't want to stop innovating. We would like to take our idea to more platforms and we might also launch the app in the Play-Store soon when everything will be stable enough for the general public.

Our hopes on this project is high and we will say that, we won't leave this project until perfection.

|

## Inspiration

Every year hundreds of thousands of preventable deaths occur due to the lack of first aid knowledge in our societies. Many lives could be saved if the right people are in the right places at the right times. We aim towards connecting people by giving them the opportunity to help each other in times of medical need.

## What it does

It is a mobile application that is aimed towards connecting members of our society together in times of urgent medical need. Users can sign up as respondents which will allow them to be notified when people within a 300 meter radius are having a medical emergency. This can help users receive first aid prior to the arrival of an ambulance or healthcare professional greatly increasing their chances of survival. This application fills the gap between making the 911 call and having the ambulance arrive.

## How we built it

The app is Android native and relies heavily on the Google Cloud Platform. User registration and authentication is done through the use of Fireauth. Additionally, user data, locations, help requests and responses are all communicated through the Firebase Realtime Database. Lastly, the Firebase ML Kit was also used to provide text recognition for the app's registration page. Users could take a picture of their ID and their information can be retracted.

## Challenges we ran into

There were numerous challenges in terms of handling the flow of data through the Firebase Realtime Database and providing the correct data to authorized users.

## Accomplishments that we're proud of

We were able to build a functioning prototype! Additionally we were able to track and update user locations in a MapFragment and ended up doing/implementing things that we had never done before.

|

### Checkout our site at: <https://rapid-processor.herokuapp.com/>

### Our slideshow at: <https://www.beautiful.ai/player/-MFQZr8ue7Jp0jHtY16q>

## Inspiration

COVID-19 has significantly impacted everyone's lives and we are all eager to return to the pre-pandemic lifestyle. One of the most effective ways of stopping the spread is through mass testing: identifying and isolating those who are infected. However, in some of the hardest-hit regions around the world, the bottleneck of COVID-19 testing is often not gathering samples, but processing them. In certain areas of the US for example, samples can take, on average, up to 2 weeks to process.[1]

On a personal level, that means individuals with mild or no symptoms could be going to public spaces like beaches and parks, and spreading the virus for two full weeks unknowingly. And for those that have a weak immune system, they could face hospitalization or even death. We hope to reduce the processing time, lower the spread of this deadly virus, and prevent death.

A technique commonly used to tackle a problem of this kind is pool sampling. In essence, instead of testing one sample at a time, samples are being tested in batches. Individual samples in a certain batch would only be retested if the given batch return positive (at least one positive COVID-19 case is present in the batch). This technique has the potential to be very effective, but only at the most optimized batch size.

## What it does

Our application predicts the number of active COVID-19 cases, tested cases, thus giving us an active rate (active cases divided by tested cases), calculates the most optimal batch sizes for pool sampling.

## How we built it

Our application fetches data from Johns Hopkin’s University’s (JHU) COVID-19 database, which hosts real-time data from around the world. With available data provided by JHU, our application is pertinent everywhere. With the provided data, our well-trained recurrent neural network accurately predicts the active rate of the coming days based on past data. Then we determine the optimized batch size for the the near future in each region to achieve the fastest processing time.

The frontend is built on React in JavaScript and the backend is built with flask in Python.

Our predicted COVID-19 cases, tested cases, and active rates, which we use to produce the optimal batch sizes, were outputted using machine learning in Keras (TensorFlow). We used a 14-day window to predict the next week’s results and iterated the process to predict the next week’s results. We use mean/max preprocessing on our variables. Our network was a recurrent neural network with two LSTM layers with 16 recurrent units each, and one dense layer with 8 units, followed by a dense linear regression output. We used tanh and ReLU activations. Training was done stochastically on given data with early stopping using an Adam optimizer and a learning rate of 5e-4. Each day, we take newly uploaded data from Johns Hopkins University and perform online learning, updating our model automatically. We then obtain the daily active rate from our algorithm and output the optimal batch sizes.

## Challenges we ran into

We faced two major challenges during this hackathon. Initially, we planned to build our app on Azure. We were able to deploy it successfully with a continuous integration pipeline but were unable to display the user interface. The second challenge we faced is with training neural networks. We had to rely heavily on local computers to train complex networks and this was a time-consuming process during our development.

## Accomplishments that we're proud of

We are proud that we are able to make a fully functional web application with a sophisticated machine learning integrated backend with merely three participants. Furthermore, our app will be able to influence hundreds of millions of people and has the potential to make a significant impact on our fight against COVID-19.

## What we learned

We have learned how to develop and integrate a frontend and back end with a complex machine learning module in an application. We were able to share our existing computer science concepts among us. Each of us brought to the table a unique set of skills that made this project possible.

## What's next for Pool Sampler

We will integrate more cloud services and host our application on platforms such as Microsoft Azure, Amazon Web Services, and Google Cloud services to fully harness the power of cloud computing. We also look forward to collaborating with laboratories and local governments to reduce their testing time and save lives.

[1] <https://www.mercurynews.com/2020/07/29/coronavirus-why-your-covid-19-test-results-are-so-delayed/>

|

winning

|

## Inspiration

Researching for an essay is a pain so we wanted to make an easier way to find, compile, and summarize the right resources. No more insentient googling with 50 tabs open, quickly collect and summarize all you information in one place with The Lazy Scholar!

## What it does

The Lazy Scholar searches for your desired topic and summarizes the top results so you can quickly and easily determine if the link is of value.

The majority of search engines return only a few words matching the search criteria for each link, but the Lazy Scholar does so much more. When a search is requested the program searches through each link returned, summarizing the information contained and displaying only the most relevant information in a paragraph format. You can expand links to generate a more in-depth summary and download the results all to one document through the click of a button.

If you are feeling especially lazy The Lazy Scholar can generate an essay for every word count (quality not guaranteed).

If you are feeling especially lazy The Lazy Scholar can generate a complete essay for you, all you need to do is enter the search criteria and required word count (quality not guaranteed).

## How we built it

The Lazy Scholar was built with an angular front end and powered by a custom python backed. It utilizes the Google custom search API to generate the top search results. The main body of text at each URL is then collected and run through a text summarization program. This program generates the most relevant content using the TextRank algorithm.

|

## Inspiration

Frustrated with the overwhelming amount of notes required in AP classes, we decided to make life easier for ourselves. With the development of machine learning and neural networks, automatic text summary generation has become increasingly accurate; our mission is to provide easy and simple access to the service.

## What it does

The web app takes in a picture/screenshot of text and auto-generates a summary and highlights important sentences, making skimming a dense article simple. In addition, keywords and their definitions are provided along with some other information (sentiment, classification, and Flesch-Kincaid readability). Finally, a few miscellaneous community tools (random student-related articles and a link to Stack Exchange) are also available.

## How we built it

The natural language processing was split into two different parts: abstractive and extractive.

The abstractive section was carried out using a neural network from [this paper](https://arxiv.org/abs/1704.04368) by Abigail See, Peter J. Liu, and Christopher D. Manning ([Github](https://github.com/abisee/pointer-generator)). Stanford's CoreNLP, was used to chunk and preprocess text for analysis.

Extractive text summarize was done using Google Cloud Language, and the python modules gensim, word2vec and nltk.

We also used Google Cloud Vision API to extract text from an image. To find random student-related articles, we webscraped using BeautifulSoup4.

The front end was built using HTML, CSS, and Bootstrap.

## Challenges we ran into

We found it difficult to parse/chunk our plain-text into the correct format for the neural net to take in.

In addition, we found it extremely difficult to set up and host our flask app on App Engine/Firestore in the given time; we were unable to successfully upload our model due to our large files and the lack of time. To solve this problem, we decided to keep our project local and use cookies for data retention. Because of this we were able to redirect our efforts towards other features.

## Accomplishments that we're proud of

We're extremely proud of having a working product at the end of a hackathon, especially a project we are so passionate about. We have so many ideas that we haven't implemented in this short amount of time, and we plan to improve and develop our project further afterwards.

## What we learned

We learned how to work with flask, tensorflow models, various forms of natural language processing, and REST (specifically Google Cloud) APIs.

## What's next for NoteWorthy

Although our product is "finished," we have a lot planned for NoteWorthy. Our main goal is to make NoteWorthy a product not only for the individual but for the community (possibly as a tool in the classroom). We want to enable multi-user availability of summarized documents to encourage discussion and group learning. Additionally, we want to personalize NoteWorthy according to the user's actions. This includes utilizing the subjects of summarized articles and their respective reading levels to provide relevant news articles as well as forum recommendations.

|

## Inspiration

Among our group, we noticed we all know at least one person, who despite seeking medical and nutritional support, suffers from some unidentified food allergy. Seeing people struggle to maintain a healthy diet while "dancing" around foods that they are unsure if they should eat inspired us to do something about it; build **BYTEsense.**

## What it does

BYTEsense is an AI powered tool which personalizes itself to a users individual dietary needs. First, you tell the app what foods you ate, and rate your experience afterwards on a scale of 1-3 - The app then breaks down the food into its individual ingredients, remembers how your experience with them, and stores them to be referenced later. Then, after a sufficient amount of data has been collected, you can use the app to predict how **NEW** foods can affect you through our "How will I feel if I consume..." function!

## How we built it

The web app consists of two main functions, the training and the predicting functions. The training function was built beginning with the receiving of a food and an associated rating. This is then passed on through the OpenAI API to be broken apart to its individual ingredients through ChatGPT's chatting abilities. These ingredients, and their associated ratings, are then saved onto an SQL database which contains all known associations to date. **Furthermore**, there is always a possibility that two different dishes share an ingredient, but your experience is fully different! How do we adjust for that? Well naturally, that would imply that this ingredient is not the significant irritator, and we adjust the ratings according to both data points. Finally, the prediction function of the web app utilizes Cohere's AI endpoints to complete the predictions. Through use of Cohere's classify endpoint, we are able to train an algorithm which can classify a new dish into any of the three aforementioned categories, with relation to the previously acquired data!

The project was all built on Replit, allowing for us to collaborate and host it all in the same place!

## Challenges we ran into

We ran into many challenges over the course of the project. First, it began with our original plan of action being completely unusable after seeing updates to Cohere's API, effectively removing the custom embed models for classification and rerank. But that did not stop us! We readjusted, re-planned, and kept on it! Our next biggest problem was the coders nightmare, a tiny syntax error in our SQLite code which continuously that crashed our entire program. We spent over an hour locating the bug, and even more trying to figure out the issue (it was a wrong data type.). And our final immense issue came quite literally out of the blue, previously, we utilized Cohere's new Coral chatbot to identify ingredients in the various input, but, due to an apparent glitch in the responses - we got our responses sent over 15 times each prompt - we had made a last minute jump to OpenAI! Once we got past those, most other things seemed like a piece of cake - there were a lot of pieces - but we're happy to present the finished product!

## Accomplishments we are proud of:

There are many things that we as a team are proud of, from overcoming trials and tribulations, refusing sleep for nearly two days, and most importantly, producing a finished product. We are proud to see just how far we have come, from having no idea how to even approach LLM, to running a program utilizing **TWO** different ones. But most importantly, I think we are all proud of creating a product that really has potential to help people, using technology to better people's lives is something to be very proud of doing!

## What we learned:

What did we learn? Well, that depends who you ask! I feel like each member of the team learnt an unbelievable amount, whether it be from each other or individually. For instance, I learnt a lot about flask and front end development from working with a proficient teammate, and I hope I gave something to learn from too! Even more so, throughout the weekend we attended many workshops, ranging from ML, to LLM, Replit, and so many others, that even if we didn't use what we learnt there in this project, I have no doubt it will appear in a next!

## What’s next for BYTEsense:

All of us in the team honestly believe that BYTEsense has reached a level which is not only functional, but viable. As we keep on going all that is left is tidying up and cleaning some code and a potentially market ready app could be born! Who knows, maybe we'll be a sponsor one day!

But either way, I am definitely using a copy when I get back home!

|

losing

|

## Inspiration

We were inspired to create such a project since we are all big fans of 2D content, yet have no way of actually animating 2D movies. Hence, the idea for StoryMation was born!

## What it does

Given a text prompt, our platform converts it into a fully-featured 2D animation, complete with music, lots of action, and amazing-looking sprites! And the best part? This isn't achieved by calling some image generation API to generate a video for our movie; instead, we call on such APIs to create lots of 2D sprites per scene, and then leverage the power of LLMs (CoHere) to move those sprites around in a fluid and dynamic matter!

## How we built it

On the frontend we used React and Tailwind, whereas on the backend we used Node JS and Express. However, for the actual movie generation, we used a massive, complex pipeline of AI-APIs. We first use Cohere to split the provided story plot into a set of scenes. We then use another Cohere API call to generate a list of characters, and a lot of their attributes, such as their type, description (for image gen), and most importantly, Actions. Each "Action" consists of a transformation (translation/rotation) in some way, and by interpolating between different "Actions" for each character, we can integrate them seamlessly into a 2D animation.

This framework for moving, rotating and scaling ALL sprites using LLMs like Cohere is what makes this project truly stand out. Had we used an Image Generation API like SDXL to simply generate a set of frames for our "video", we would have ended up with a janky stop-motion video. However, we used Cohere in a creative way, to decide where and when each character should move, scale, rotate, etc. thus ending up with a very smooth and human-like final 2D animation.

## Challenges we ran into

Since our project is very heavily reliant on BETA parts of Cohere for many parts of its pipeline, getting Cohere to fit everything into the strict JSON prompts we had provided, despite the fine-tuning, was often quite difficult.

## Accomplishments that we're proud of

In the end, we were able to accomplish what we wanted!

|

## Inspiration

This year's theme of Nostalgia reminded us of our childhoods, reading stories and being so immersed in them. As a result, we created Mememto as a way for us to collectively look back on the past from the retelling of it through thrilling and exciting stories.

## What it does

We created a web application that asks users to input an image, date, and brief description of the memory associated with the provided image. Doing so, users are then given a generated story full of emotions, allowing them to relive the past in a unique and comforting way. Users are also able to connect with others on the platform and even create groups with each other.

## How we built it

Thanks to Taipy and Cohere, we were able to bring this application to life. Taipy supplied both the necessary front-end and back-end components. Additionally, Cohere enabled story generation through natural language processing (NLP) via their POST chat endpoint (<https://api.cohere.ai/v1/chat>).

## Challenges we ran into

Mastering Taipy presented a significant challenge. Due to its novelty, we encountered difficulty freely styling, constrained by its syntax. Setting up virtual environments also posed challenges initially, but ultimately, we successfully learned the proper setup.

## Accomplishments that we're proud of

* We were able to build a web application that functions

* We were able to use Taipy and Cohere to build a functional application

## What we learned

* We were able to learn a lot about the Taipy library, Cohere, and Figma

## What's next for Memento

* Adding login and sign-up

* Improving front-end design

* Adding image processing, able to identify entities within user given image and using that information, along with the brief description of the photo, to produce a more accurate story that resonates with the user

* Saving and storing data

|

## Inspiration

Everybody knows how annoying it can be to develop web applications. But this sentiment is certainly true for those who have minimal to no working experience with web development. We were inspired by the power of Cohere LLM's to transform natural language into many different forms and in our case, to generate websites. With this, we are able to quickly turn a users idea into a website which they can download and edit on the spot.

## What it does

SiteSynth turns natural language input into a clean formatted and stylized website.

## How we built it

SiteSynth is powered by Django in the back end and HTML/CSS/JS in the front end. In the back end, we use the Cohere generate API to generate the HTML and CSS code.

## Challenges we ran into

Some challenges that we ran into were with the throttled API and perfecting the prompt. One of the most important parts of an NLP project is the input prompt to the LLM. We spent a lot of time perfecting the prompt of the input in order to ensure that the output is HTML code and ONLY HTML code. Also, the throttled speed of API calls slowed down our development and leads to a slow running app. However, despite these hardships, we have ended up with a project that we are quite proud of.

## Accomplishments that we're proud of

The project as a whole was a huge accomplishment which we are very happy with, but there are some parts which we appreciate more than others. In particular, we think the design of the main page is very clean. Likewise, the backend, while messy, does the job very well and we are proud of that.

## What we learned

This project was very insightful for learning about new cutting edge technologies. While we have worked with Django before, this was our first time working with the Cohere API (or any LLM API for that matter) and the importance of verbose and specific prompts was certainly highlighted. We also learned how difficult it can be to create a full-fledged application in a day in a half.

## What's next for SiteSynth

For the future, there are many ways in which we can improve SiteSynth. In particular, we know that images are integral to web development and as such we would like to properly integrate images. Likewise, with a proper API key, we could speed up the app tremendously. Finally, by also supporting dynamic templates, we can make the websites truly unique and desired.

|

partial

|

## Inspiration

We were inspired to build Hank after reading the sponsor contests and seeing that Vitech was running what we thought was a super challenging problem. We built Hank as a proof-of-concept to show that much of the advice sought from insurance professionals can be accurately generated by a finely tuned model. Considering our interest in machine learning and data visualization, the project seemed like the perfect fit. In the case of Hank, we aimed to generate plan suggestions and prices for a user through a simple survey. We believe a page like this could live on an insurance company's website and provide users with accurate quotes based off a machine learning model the company is able to tweak in real time.

## What it does

Hank provides a broad set of functionality that helps save time and money for both the clients and the life insurance providers.

For clients looking to purchase health insurance, Hank provides an easy introduction to the process with a simple and friendly application that guides the client through a series of questions to gather information such as age, family status, and personal health. With this information, Hank provides an accurate quote for each of the four insurance plans, as well as making a recommendation for which plan is likely the best for them.

Hank also provides an insurance provider facing visualization that allows for the life insurance provider to tweak Hank's suggestions based on business metrics such as life time value (LTV), customer satisfaction, and customer retention, all without re-training the two machine learning models that Hank uses to provide suggestions.

## How we built it

Hank's suggestion system is composed of three modules that work in series to provide the most accurate and useful data to the user.

The first module, the premium estimator, uses the gathered user demographic data to determine what the premiums for each of the four plans (bronze, silver, gold, platinum) would be. This is done with a neural network trained using tensor flow on the insurance data set provided by Vitech for this competition. Using a neural network for a mixture of continuous and discrete data allows Hank to make complex associations between user features and make accurate premium predictions.

The second module is the suggestion module. The suggestion module uses the premium pricing predicted by the premium estimator module as well as the user's demographic data to suggest which of the four plans would be the most suited to them. Because of many-dimensional nature of the data set as well as the fact that there were so many data points to use, a kth-nearest neighbor training model was applied using scipy.

The third module, the business module, is an exciting module that provides value to the life insurance provider by giving fine control over the suggestions that Hank makes to customers using simple metrics. The business module works by combining information from the first and second modules, as well as data from the World Population API to provide a data set that can be modified using simple scaling factors. Using the data-visualization front-end of this module allows the life insurance provider to tweak Hank's suggestions to better align with business goals. This would normally be a very time consuming process as the machine learning that Hank uses to make decisions would have to be re-trained and re-validated, but with the business module, the key components are pre-abstracted away from the machine learning implementation.

## Challenges we ran into

Our first hurdle for Hank was downloading the dataset provided by Vitech. At 1.4m records, it was a difficult task to retrieve and store, especially on a limited connection.

Once our data was successfully scraped, our next challenge was deciding on an appropriate model to use for the machine learning aspect of the project. Many options were tried and discarded, namely Bayseian classification, support vector machines, and random forest classification. By continuously training and testing different models, we ultimately decided on two - a nearest neighbour simulation and a neural network.

## Accomplishments that we're proud of

We're happy to say that we managed to deliver on what we thought would be our two biggest challenges - a pleasing and responsive UI and a meaningful data visualization.

We're especially proud of the business logic control panel which is used to modify and visualize the different goals a company wishes to optimize for.

## What we learned

This project was a chance for us to learn about machine learning as well as test our ability to put ourselves in the shoes of both our client as a software developer and the users of the application, to provide special features to both. The time constraints provided by the hackathon also taught us to manage our time and communicate well - as a team of two people tackling such an ambitious project, constant communication was a must.

## What's next for Hank: Your health insurance advisor

The next step for Hank is to train it against a larger, more diverse dataset and further optimize our models. Once we've trained our model against another set, we'll also be able to add additional steps to the survey, giving us more information to query with. The ultimate goal for Hank would be to host it live on an insurance company's site and see it used by real people and trained with real data.

|

We were inspired by the daily struggle of social isolation.

Shows the emotion of a text message on Facebook

We built this using Javascript, IBM-Watson NLP API, Python https server, and jQuery.

Accessing the message string was a lot more challenging than initially anticipated.

Finding the correct API for our needs and updating in real time also posed challenges.

The fact that we have a fully working final product.

How to interface JavaScript with Python backend, and manually scrape a templated HTML doc for specific key words in specific locations

Incorporate the ability to display alternative messages after a user types their initial response.

|

## Inspiration

Everybody struggles with their personal finances. Financial inequality in the workplace is particularly prevalent among young females. On average, women make 88 cents per every dollar a male makes in Ontario. This is why it is important to encourage women to become more cautious of spending habits. Even though budgeting apps such as Honeydue or Mint exist, they depend heavily on self-motivation from users.

## What it does

Our app is a budgeting tool that targets young females with useful incentives to boost self-motivation for their financial well-being. The app features simple scale graphics visualizing the financial balancing act of the user. By balancing the scale and achieving their monthly financial goals, users will be provided with various rewards, such as discount coupons or small cash vouchers based on their interests. Users are free to set their goals on their own terms and follow through with them. The app re-enforces good financial behaviour by providing gamified experiences with small incentives.

The app will be provided to users free of charge. As with any free service, the anonymized user data will be shared with marketing and retail partners for analytics. Discount offers and other incentives could lead to better brand awareness and spending from our users for participating partners. The customized reward is an opportunity for targeted advertising

## Persona

Twenty-year-old Ellie Smith works two jobs to make ends meet. The rising costs of living make it difficult for her to maintain her budget. She heard about this new app called Re:skale that provides personalized rewards for just achieving the budget goals. She signed up after answering a few questions and linking her financial accounts to the app. The app provided simple balancing scale animation for immediate visual feedback of her financial well-being. The app frequently provided words of encouragement and useful tips to maximize the chance of her success. She especially loves how she could set the goals and follow through on her own terms. The personalized reward was sweet, and she managed to save on a number of essentials such as groceries. She is now on 3 months streak with a chance to get better rewards.

## How we built it

We used : React, NodeJs, Firebase, HTML & Figma

## Challenges we ran into

We had a number of ideas but struggled to define the scope and topic for the project.

* Different design philosophies made it difficult to maintain consistent and cohesive design.

* Sharing resources was another difficulty due to the digital nature of this hackathon

* On the developing side, there were technologies that were unfamiliar to over half of the team, such as Firebase and React Hooks. It took a lot of time in order to understand the documentation and implement it into our app.

* Additionally, resolving merge conflicts proved to be more difficult. The time constraint was also a challenge.

## Accomplishments that we're proud of

* The use of harder languages including firebase and react hooks

* On the design side it was great to create a complete prototype of the vision of the app.

* Being some members first hackathon, the time constraint was a stressor but with the support of the team they were able to feel more comfortable with the lack of time

## What we learned

* we learned how to meet each other’s needs in a virtual space

* The designers learned how to merge design philosophies

* How to manage time and work with others who are on different schedules

## What's next for Re:skale

Re:skale can be rescaled to include people of all gender and ages.

* More close integration with other financial institutions and credit card providers for better automation and prediction

* Physical receipt scanner feature for non-debt and credit payments

## Try our product

This is the link to a prototype app

<https://www.figma.com/proto/nTb2IgOcW2EdewIdSp8Sa4/hack-the-6ix-team-library?page-id=312%3A3&node-id=375%3A1838&viewport=241%2C48%2C0.39&scaling=min-zoom&starting-point-node-id=375%3A1838&show-proto-sidebar=1>

This is a link for a prototype website

<https://www.figma.com/proto/nTb2IgOcW2EdewIdSp8Sa4/hack-the-6ix-team-library?page-id=0%3A1&node-id=360%3A1855&viewport=241%2C48%2C0.18&scaling=min-zoom&starting-point-node-id=360%3A1855&show-proto-sidebar=1>

|

partial

|

## Inspiration

Swap was inspired by COVID-19 having an impact on many individuals’ daily routines. Sleep schedules were shifted, more distractions were present due to working from home, and being away from friends and family members was difficult. Our team wanted to create a solution that would help others add excitement to their quarantine routines and also connect them with their friends and family members again.

## What it does

Swap is a mobile application that allows users to swap routines with their friends, family members, or even strangers to try something new! You can input daily activities and photos, add an optional mood tracker, add friends, initiate swaps instantly, pre-schedule swaps, and even randomize swaps.

## How we built it

For this project, we created a working prototype and wrote the backend code on how the swaps would be made. The prototype was created using Figma. For writing the backend code, we used python and applications such as Xcode, MySQL, and PyCharm.

## Challenges we ran into