| repo (string, 856 distinct values) | pull_number (int64, 3 to 127k) | instance_id (string, length 12 to 58) | issue_numbers (sequence, length 1 to 5) | base_commit (string, length 40) | patch (string, length 67 to 1.54M) | test_patch (string, length 0 to 107M) | problem_statement (string, length 3 to 307k) | hints_text (string, length 0 to 908k) | created_at (timestamp[s]) |

|---|---|---|---|---|---|---|---|---|---|

gradio-app/gradio | 3,011 | gradio-app__gradio-3011 | [

"2982"

] | f7f5398e4c57ec174466fa5160c21bcfb8d83fe6 | diff --git a/gradio/external.py b/gradio/external.py

--- a/gradio/external.py

+++ b/gradio/external.py

@@ -63,6 +63,32 @@ def load_blocks_from_repo(

return blocks

+def chatbot_preprocess(text, state):

+ payload = {

+ "inputs": {"generated_responses": None, "past_user_inputs": None, "text": text}

+ }

+ if state is not None:

+ payload["inputs"]["generated_responses"] = state["conversation"][

+ "generated_responses"

+ ]

+ payload["inputs"]["past_user_inputs"] = state["conversation"][

+ "past_user_inputs"

+ ]

+

+ return payload

+

+

+def chatbot_postprocess(response):

+ response_json = response.json()

+ chatbot_value = list(

+ zip(

+ response_json["conversation"]["past_user_inputs"],

+ response_json["conversation"]["generated_responses"],

+ )

+ )

+ return chatbot_value, response_json

+

+

def from_model(model_name: str, api_key: str | None, alias: str | None, **kwargs):

model_url = "https://huggingface.co/{}".format(model_name)

api_url = "https://api-inference.huggingface.co/models/{}".format(model_name)

@@ -76,7 +102,6 @@ def from_model(model_name: str, api_key: str | None, alias: str | None, **kwargs

response.status_code == 200

), f"Could not find model: {model_name}. If it is a private or gated model, please provide your Hugging Face access token (https://huggingface.co/settings/tokens) as the argument for the `api_key` parameter."

p = response.json().get("pipeline_tag")

-

pipelines = {

"audio-classification": {

# example model: ehcalabres/wav2vec2-lg-xlsr-en-speech-emotion-recognition

@@ -101,6 +126,12 @@ def from_model(model_name: str, api_key: str | None, alias: str | None, **kwargs

"preprocess": to_binary,

"postprocess": lambda r: r.json()["text"],

},

+ "conversational": {

+ "inputs": [components.Textbox(), components.State()], # type: ignore

+ "outputs": [components.Chatbot(), components.State()], # type: ignore

+ "preprocess": chatbot_preprocess,

+ "postprocess": chatbot_postprocess,

+ },

"feature-extraction": {

# example model: julien-c/distilbert-feature-extraction

"inputs": components.Textbox(label="Input"),

@@ -125,6 +156,12 @@ def from_model(model_name: str, api_key: str | None, alias: str | None, **kwargs

{i["label"].split(", ")[0]: i["score"] for i in r.json()}

),

},

+ "image-to-text": {

+ "inputs": components.Image(type="filepath", label="Input Image"),

+ "outputs": components.Textbox(),

+ "preprocess": to_binary,

+ "postprocess": lambda r: r.json()[0]["generated_text"],

+ },

"question-answering": {

# Example: deepset/xlm-roberta-base-squad2

"inputs": [

@@ -311,7 +348,12 @@ def query_huggingface_api(*params):

}

kwargs = dict(interface_info, **kwargs)

- kwargs["_api_mode"] = True # So interface doesn't run pre/postprocess.

+

+ # So interface doesn't run pre/postprocess

+ # except for conversational interfaces which

+ # are stateful

+ kwargs["_api_mode"] = p != "conversational"

+

interface = gradio.Interface(**kwargs)

return interface

| diff --git a/test/test_external.py b/test/test_external.py

--- a/test/test_external.py

+++ b/test/test_external.py

@@ -228,6 +228,28 @@ def test_numerical_to_label_space(self):

except TooManyRequestsError:

pass

+ def test_image_to_text(self):

+ io = gr.Interface.load("models/nlpconnect/vit-gpt2-image-captioning")

+ try:

+ output = io("gradio/test_data/lion.jpg")

+ assert isinstance(output, str)

+ except TooManyRequestsError:

+ pass

+

+ def test_conversational(self):

+ io = gr.Interface.load("models/microsoft/DialoGPT-medium")

+ app, _, _ = io.launch(prevent_thread_lock=True)

+ client = TestClient(app)

+ assert app.state_holder == {}

+ response = client.post(

+ "/api/predict/",

+ json={"session_hash": "foo", "data": ["Hi!", None], "fn_index": 0},

+ )

+ output = response.json()

+ assert isinstance(output["data"], list)

+ assert isinstance(output["data"][0], list)

+ assert isinstance(app.state_holder["foo"], dict)

+

def test_speech_recognition_model(self):

io = gr.Interface.load("models/facebook/wav2vec2-base-960h")

try:

| Add conversational pipeline to Interface.load

- [X] I have searched to see if a similar issue already exists.

When trying to use chatbot models hosted on Hugging Face, `Interface.load` fails with the error:

`ValueError: Unsupported pipeline type: conversational`

The models hosted on Hugging Face are really useful for testing out models without having to download them, and they also make it possible to use large models while developing on a laptop.

So it would be very useful to be able to use conversational models via the HTTP load functionality.

| 2023-01-18T17:52:59 |

|
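The `conversational` entry in the patch above threads conversation history through a `State` component: the request payload replays earlier turns, and the response is zipped into (user, bot) pairs for the `Chatbot`. The sketch below restates that round trip in plain Python so it runs without `gradio` or network access. The names `build_conversational_payload` and `to_chatbot_pairs` are hypothetical, and the response shape (a `conversation` object holding `past_user_inputs` and `generated_responses`) is taken from the patch, not from Inference API documentation.

```python
from __future__ import annotations

from typing import Any


def build_conversational_payload(text: str, state: dict | None) -> dict:
    """Build the request body for a conversational model (hypothetical helper)."""
    payload: dict[str, Any] = {
        "inputs": {"generated_responses": None, "past_user_inputs": None, "text": text}
    }
    if state is not None:
        # Replay earlier turns so the model sees the whole conversation.
        conversation = state["conversation"]
        payload["inputs"]["generated_responses"] = conversation["generated_responses"]
        payload["inputs"]["past_user_inputs"] = conversation["past_user_inputs"]
    return payload


def to_chatbot_pairs(response_json: dict) -> tuple[list[tuple[str, str]], dict]:
    """Pair each user message with the reply; return the JSON as the new state."""
    conversation = response_json["conversation"]
    pairs = list(zip(conversation["past_user_inputs"], conversation["generated_responses"]))
    return pairs, response_json


# Example round trip, with a hard-coded dict standing in for the API response.
first = build_conversational_payload("Hi!", None)
fake_response = {
    "conversation": {"past_user_inputs": ["Hi!"], "generated_responses": ["Hello there!"]}
}
pairs, state = to_chatbot_pairs(fake_response)
second = build_conversational_payload("How are you?", state)
```

Feeding the returned `state` into the next call is what lets the model see earlier turns, which is also why the patch sets `_api_mode` to `False` only for conversational models: the Interface must run these stateful pre/postprocess steps.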

gradio-app/gradio | 3,048 | gradio-app__gradio-3048 | [

"3043"

] | c85572192e28f85d4d75fda9c6b3431c2ecf352d | diff --git a/gradio/components.py b/gradio/components.py

--- a/gradio/components.py

+++ b/gradio/components.py

@@ -2250,6 +2250,10 @@ def __init__(

"""

self.file_count = file_count

self.file_types = file_types

+ if file_types is not None and not isinstance(file_types, list):

+ raise ValueError(

+ f"Parameter file_types must be a list. Received {file_types.__class__.__name__}"

+ )

valid_types = [

"file",

"binary",

@@ -2982,6 +2986,10 @@ def __init__(

"""

self.type = type

self.file_count = file_count

+ if file_types is not None and not isinstance(file_types, list):

+ raise ValueError(

+ f"Parameter file_types must be a list. Received {file_types.__class__.__name__}"

+ )

self.file_types = file_types

self.label = label

TempFileManager.__init__(self)

| diff --git a/test/test_components.py b/test/test_components.py

--- a/test/test_components.py

+++ b/test/test_components.py

@@ -942,6 +942,12 @@ def test_component_functions(self):

output2 = file_input.postprocess("test/test_files/sample_file.pdf")

assert output1 == output2

+ def test_file_type_must_be_list(self):

+ with pytest.raises(

+ ValueError, match="Parameter file_types must be a list. Received str"

+ ):

+ gr.File(file_types=".json")

+

def test_in_interface_as_input(self):

"""

Interface, process

@@ -983,6 +989,12 @@ def test_component_functions(self):

input2 = upload_input.preprocess(x_file)

assert input1.name == input2.name

+ def test_raises_if_file_types_is_not_list(self):

+ with pytest.raises(

+ ValueError, match="Parameter file_types must be a list. Received int"

+ ):

+ gr.UploadButton(file_types=2)

+

class TestDataframe:

def test_component_functions(self):

| `gr.File` with `file_types="image"` breaks front-end rendering

### Describe the bug

If a `gr.File` is created with `file_types="image"`, the front end never renders, due to an `Uncaught (in promise) TypeError: i.map is not a function` error in the browser console.

### Is there an existing issue for this?

- [X] I have searched the existing issues

### Reproduction

```py
import gradio as gr

with gr.Blocks() as demo:
    gr.File(label="Test", file_count="multiple", interactive=True, file_types="image")

demo.launch()
```

https://huggingface.co/spaces/multimodalart/grFileBug

### Screenshot

_No response_

### Logs

```shell
FileUpload.svelte:49 Uncaught (in promise) TypeError: i.map is not a function
    at Ce (FileUpload.svelte:49:27)
    at Pt (index.4395ab38.js:4:6005)
    at new Ee (FileUpload.svelte:60:30)
    at Array.Me (File.svelte:57:16)
    at Array.Se (File.svelte:40:16)
    at jn (index.4395ab38.js:1:1425)
    at _n (index.4395ab38.js:76:13914)
    at Wc (index.4395ab38.js:76:14690)
    at Pt (index.4395ab38.js:4:6206)
    at new Zc (index.4395ab38.js:76:16107)
```

### System Info

```shell

gradio==3.16.2

```

### Severity

blocking upgrade to latest gradio version

| PS: Works perfectly in Gradio 3.15, but breaks from `3.16.0` to `3.16.2`

@apolinario Can you try with

`gr.File(label="Test", file_count="multiple", interactive=True, file_types=["image"]) `

Works for me on main right now

<img width="1301" alt="image" src="https://user-images.githubusercontent.com/41651716/214081132-b311bef4-5183-4cbe-bc51-40ed94c9b2b8.png">

That works! Somehow I had old code that did not use a list and it used to work - so that broke on 3.16.x - but as a list it did work!

Glad it works! Will put up a PR to check that `file_types` is a list and raise an error to prevent front-end crashing | 2023-01-24T14:17:28 |
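The fix validates `file_types` in Python, so a bare string fails when the component is constructed instead of reaching the front end, where the trace above shows a `.map` call failing. A standalone version of that check might look like the sketch below; `check_file_types` is a hypothetical name, while the error message matches the one in the patch.

```python
from typing import List, Optional


def check_file_types(file_types: Optional[List[str]]) -> Optional[List[str]]:
    """Fail fast if file_types is not a list (e.g. the bare string "image")."""
    # A non-list value would be passed through to the front end, which expects a list.
    if file_types is not None and not isinstance(file_types, list):
        raise ValueError(
            f"Parameter file_types must be a list. Received {file_types.__class__.__name__}"
        )
    return file_types


accepted = check_file_types(["image", ".json"])  # a list passes through unchanged
try:
    check_file_types("image")
except ValueError as err:
    message = str(err)  # "Parameter file_types must be a list. Received str"
```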

gradio-app/gradio | 3,049 | gradio-app__gradio-3049 | [

"2472"

] | 9599772fd6ceb822846a7096ea55912d2b513e6a | diff --git a/gradio/routes.py b/gradio/routes.py

--- a/gradio/routes.py

+++ b/gradio/routes.py

@@ -184,8 +184,14 @@ def login(form_data: OAuth2PasswordRequestForm = Depends()):

) or (callable(app.auth) and app.auth.__call__(username, password)):

token = secrets.token_urlsafe(16)

app.tokens[token] = username

- response = RedirectResponse(url="/", status_code=status.HTTP_302_FOUND)

- response.set_cookie(key="access-token", value=token, httponly=True)

+ response = JSONResponse(content={"success": True})

+ response.set_cookie(

+ key="access-token",

+ value=token,

+ httponly=True,

+ samesite="none",

+ secure=True,

+ )

return response

else:

raise HTTPException(status_code=400, detail="Incorrect credentials.")

@@ -206,6 +212,7 @@ def main(request: fastapi.Request, user: str = Depends(get_current_user)):

config = {

"auth_required": True,

"auth_message": blocks.auth_message,

+ "is_space": app.get_blocks().is_space,

}

try:

| diff --git a/test/test_blocks.py b/test/test_blocks.py

--- a/test/test_blocks.py

+++ b/test/test_blocks.py

@@ -1338,7 +1338,7 @@ async def say_hello(name):

data={"username": "abc", "password": "123"},

follow_redirects=False,

)

- assert resp.status_code == 302

+ assert resp.status_code == 200

token = resp.cookies.get("access-token")

assert token

| Authentication doesn't work if the app is embedded in a frame

### Describe the bug

Pretty self-explanatory: everything works fine when I embed the Gradio app in a div element except the login, which fails without any error message in the browser console.

### Is there an existing issue for this?

- [X] I have searched the existing issues

### Reproduction

Any gradio app that has authentication and is embedded as follows:

```
<html>
  <head>
    <meta name="keywords" content="" />
    <meta name="description" content="" />
    <meta http-equiv="content-type" content="text/html; charset=UTF-8" />
  </head>
  <frameset rows="100%">
    <frame src="http://18.195.21.198:7860/" title="mytitle" frameborder="0" noresize="noresize"/>
    <noframes>
      <body>
        <p><a href="http://mygradiohosting:7860">http://mywebsite.com</a> </p>
      </body>
    </noframes>
  </frameset>
</html>
```

### Screenshot

_No response_

### Logs

```shell

No logs in gradio nor in the browser console.

```

### System Info

```shell

Gradio 3.4, Google Chrome

```

### Severity

annoying

| Thanks @ValentinKoch! @aliabid94 do you know if this is fixed by #2112?

I should add that I am hosting on AWS EC2, in case that changes things :)

Could be related to #2266, probably a CORS issue. | 2023-01-24T14:41:05 |
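The patch replaces the redirect with a JSON response and sets the `access-token` cookie with `SameSite=None` and `Secure`. Chromium-based browsers treat cookies without a `SameSite` attribute as `Lax`, which keeps them out of cross-site iframes, and they reject `SameSite=None` cookies that lack `Secure`. The sketch below uses only the standard library's `http.cookies` (assuming Python 3.8 or later, where the `samesite` attribute is supported) to show the resulting header; `build_auth_cookie` is a hypothetical helper, not part of Gradio.

```python
import secrets
from http.cookies import SimpleCookie


def build_auth_cookie(token: str) -> str:
    """Return a Set-Cookie value that a cross-site iframe can send back (sketch)."""
    cookie = SimpleCookie()
    cookie["access-token"] = token
    morsel = cookie["access-token"]
    morsel["httponly"] = True    # not readable from page JavaScript
    morsel["samesite"] = "None"  # allow the cookie on cross-site (embedded) requests
    morsel["secure"] = True      # required by browsers alongside SameSite=None
    return morsel.OutputString()


header = build_auth_cookie(secrets.token_urlsafe(16))
```

Because `Secure` cookies are only accepted and sent over HTTPS, an app served over plain `http://` (as in the reproduction above) would likely still not get the cookie back.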

gradio-app/gradio | 3,089 | gradio-app__gradio-3089 | [

"1883"

] | 431a987d612d5e43097898d13523fb72bdf84214 | diff --git a/gradio/blocks.py b/gradio/blocks.py

--- a/gradio/blocks.py

+++ b/gradio/blocks.py

@@ -1232,6 +1232,7 @@ def queue(

blocks_dependencies=self.dependencies,

)

self.config = self.get_config_file()

+ self.app = routes.App.create_app(self)

return self

def launch(

diff --git a/gradio/routes.py b/gradio/routes.py

--- a/gradio/routes.py

+++ b/gradio/routes.py

@@ -481,6 +481,7 @@ async def join_queue(

async def get_queue_status():

return app.get_blocks()._queue.get_estimation()

+ @app.on_event("startup")

@app.get("/startup-events")

async def startup_events():

if not app.startup_events_triggered:

| diff --git a/test/test_blocks.py b/test/test_blocks.py

--- a/test/test_blocks.py

+++ b/test/test_blocks.py

@@ -16,12 +16,14 @@

import mlflow

import pytest

+import uvicorn

import wandb

import websockets

from fastapi.testclient import TestClient

import gradio as gr

from gradio.exceptions import DuplicateBlockError

+from gradio.networking import Server, get_first_available_port

from gradio.test_data.blocks_configs import XRAY_CONFIG

from gradio.utils import assert_configs_are_equivalent_besides_ids

@@ -323,6 +325,39 @@ async def test_restart_after_close(self):

completed = True

assert msg["output"]["data"][0] == "Victor"

+ @pytest.mark.asyncio

+ async def test_run_without_launching(self):

+ """Test that we can start the app and use queue without calling .launch().

+

+ This is essentially what the 'gradio' reload mode does

+ """

+

+ port = get_first_available_port(7860, 7870)

+

+ io = gr.Interface(lambda s: s, gr.Textbox(), gr.Textbox()).queue()

+

+ config = uvicorn.Config(app=io.app, port=port, log_level="warning")

+

+ server = Server(config=config)

+ server.run_in_thread()

+

+ try:

+ async with websockets.connect(f"ws://localhost:{port}/queue/join") as ws:

+ completed = False

+ while not completed:

+ msg = json.loads(await ws.recv())

+ if msg["msg"] == "send_data":

+ await ws.send(json.dumps({"data": ["Victor"], "fn_index": 0}))

+ if msg["msg"] == "send_hash":

+ await ws.send(

+ json.dumps({"fn_index": 0, "session_hash": "shdce"})

+ )

+ if msg["msg"] == "process_completed":

+ completed = True

+ assert msg["output"]["data"][0] == "Victor"

+ finally:

+ server.close()

+

class TestComponentsInBlocks:

def test_slider_random_value_config(self):

| Warn enable_queue in reload mode

**Is your feature request related to a problem? Please describe.**

Warn the user when `enable_queue` is true in reload mode (`gradio app.py`).

**Additional context**

Since we launch the app with uvicorn in reload mode, only the FastAPI app is run. We could work around this, for example by triggering an endpoint to start the queue; however, I don't think it is necessary.

| 2023-01-30T21:36:35 |

|

gradio-app/gradio | 3,091 | gradio-app__gradio-3091 | [

"2287"

] | 5264b4c6ff086844946d5e17e4bbc4554766bcb3 | diff --git a/gradio/processing_utils.py b/gradio/processing_utils.py

--- a/gradio/processing_utils.py

+++ b/gradio/processing_utils.py

@@ -52,7 +52,12 @@ def to_binary(x: str | Dict) -> bytes:

def decode_base64_to_image(encoding: str) -> Image.Image:

content = encoding.split(";")[1]

image_encoded = content.split(",")[1]

- return Image.open(BytesIO(base64.b64decode(image_encoded)))

+ img = Image.open(BytesIO(base64.b64decode(image_encoded)))

+ exif = img.getexif()

+ # 274 is the code for image rotation and 1 means "correct orientation"

+ if exif.get(274, 1) != 1 and hasattr(ImageOps, "exif_transpose"):

+ img = ImageOps.exif_transpose(img)

+ return img

def encode_url_or_file_to_base64(path: str | Path, encryption_key: bytes | None = None):

| diff --git a/test/test_processing_utils.py b/test/test_processing_utils.py

--- a/test/test_processing_utils.py

+++ b/test/test_processing_utils.py

@@ -73,6 +73,14 @@ def test_encode_pil_to_base64_keeps_pnginfo(self):

assert decoded_image.info == input_img.info

+ @patch("PIL.Image.Image.getexif", return_value={274: 3})

+ @patch("PIL.ImageOps.exif_transpose")

+ def test_base64_to_image_does_rotation(self, mock_rotate, mock_exif):

+ input_img = Image.open("gradio/test_data/test_image.png")

+ base64 = processing_utils.encode_pil_to_base64(input_img)

+ processing_utils.decode_base64_to_image(base64)

+ mock_rotate.assert_called_once()

+

def test_resize_and_crop(self):

img = Image.open("gradio/test_data/test_image.png")

new_img = processing_utils.resize_and_crop(img, (20, 20))

| images rotated

### Describe the bug

When I upload an image from my iPhone, it gets rotated compared with uploading the same image on desktop.

I assume this might have something to do with the iPhone image upload being HEIC.

### Is there an existing issue for this?

- [X] I have searched the existing issues

### Reproduction

https://huggingface.co/spaces/krrishD/suitify_v1

### Screenshot

_No response_

### Logs

```shell

no debug output for this

```

### System Info

```shell

Using gradio on my iphone

```

### Severity

serious, I can't work around it

| I've noticed this issue before; it seems to be a general problem with image uploading systems. @dawoodkhan82 would you be able to take a look at this?

I only run into this issue when I select the "Take Photo or Video" option:

This doesn't happen to me when uploading via an image component

But it does happen to me when using UploadButton + Chatbot in https://huggingface.co/spaces/ysharma/InstructPix2Pix_Chatbot

Reproduced here: https://www.loom.com/share/d62c8614873f4294b6169fa637976b97

This is the space I used in the loom (its public): https://huggingface.co/spaces/lakshman111/imageprocessingdebugging

The space just echoes the user's image back to them, and it comes back horizontal when I use an iPhone and take a vertical image.

@freddyaboulton it looks like the issue isn't happening on upload, but rather happening during pre or post processing | 2023-01-30T23:20:20 |
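The patch reads EXIF tag 274 (Orientation) and, when it is not 1, hands the image to Pillow's `ImageOps.exif_transpose`. Phone cameras commonly store pixels unrotated and record the intended rotation in this tag, which fits the iPhone reports above. The pure-Python sketch below uses a nested list as a stand-in for pixel data (an assumption made so it runs without Pillow) to show which transform undoes each orientation value. The mapping mirrors the table Pillow uses internally; all function names are hypothetical, and Pillow remains the real implementation.

```python
from typing import Callable, Dict, List

Grid = List[List[int]]

ORIENTATION_TAG = 274  # EXIF "Orientation"; 1 means the pixels are already upright


def flip_left_right(g: Grid) -> Grid:
    return [row[::-1] for row in g]


def flip_top_bottom(g: Grid) -> Grid:
    return [list(row) for row in g[::-1]]


def transpose(g: Grid) -> Grid:
    return [list(col) for col in zip(*g)]


def rotate_cw(g: Grid) -> Grid:
    # 90 degrees clockwise (Pillow's Transpose.ROTATE_270)
    return [list(col) for col in zip(*g[::-1])]


def rotate_ccw(g: Grid) -> Grid:
    # 90 degrees counter-clockwise (Pillow's Transpose.ROTATE_90)
    return transpose(g)[::-1]


def rotate_180(g: Grid) -> Grid:
    return flip_left_right(flip_top_bottom(g))


# Transform that undoes each orientation value (same mapping as exif_transpose).
FIXES: Dict[int, Callable[[Grid], Grid]] = {
    2: flip_left_right,
    3: rotate_180,
    4: flip_top_bottom,
    5: transpose,
    6: rotate_cw,
    7: lambda g: rotate_180(transpose(g)),  # "transverse"
    8: rotate_ccw,
}


def apply_exif_orientation(pixels: Grid, exif: Dict[int, int]) -> Grid:
    """Return pixels rotated/flipped upright; orientation 1 or missing is a no-op."""
    fix = FIXES.get(exif.get(ORIENTATION_TAG, 1))
    return fix(pixels) if fix else pixels
```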

gradio-app/gradio | 3,117 | gradio-app__gradio-3117 | [

"3119"

] | 63d5efcfc464ff82877cd89b778231c23c3109ad | diff --git a/demo/video_component/run.py b/demo/video_component/run.py

--- a/demo/video_component/run.py

+++ b/demo/video_component/run.py

@@ -1,8 +1,22 @@

-import gradio as gr

+import gradio as gr

+import os

-css = "footer {display: none !important;} .gradio-container {min-height: 0px !important;}"

-with gr.Blocks(css=css) as demo:

- gr.Video()

+a = os.path.join(os.path.dirname(__file__), "files/world.mp4") # Video

+b = os.path.join(os.path.dirname(__file__), "files/a.mp4") # Video

+c = os.path.join(os.path.dirname(__file__), "files/b.mp4") # Video

-demo.launch()

\ No newline at end of file

+

+demo = gr.Interface(

+ fn=lambda x: x,

+ inputs=gr.Video(type="file"),

+ outputs=gr.Video(),

+ examples=[

+ [a],

+ [b],

+ [c],

+ ],

+)

+

+if __name__ == "__main__":

+ demo.launch()

diff --git a/scripts/copy_demos.py b/scripts/copy_demos.py

--- a/scripts/copy_demos.py

+++ b/scripts/copy_demos.py

@@ -34,6 +34,7 @@ def copy_all_demos(source_dir: str, dest_dir: str):

"stt_or_tts",

"stream_audio",

"stream_frames",

+ "video_component",

"zip_files",

]

for demo in demos_to_copy:

| Video ui is jumpy when the value is changed

### Describe the bug

This video shows the issue:

https://user-images.githubusercontent.com/12937446/216451342-492e8d36-1f9d-4d23-a64c-abdaa8aa8a39.mov

I think the video is jumping from video size -> default size -> video size. It would be good if it at least didn't jump down to that in-between size.

### Is there an existing issue for this?

- [X] I have searched the existing issues

### Reproduction

https://user-images.githubusercontent.com/12937446/216451342-492e8d36-1f9d-4d23-a64c-abdaa8aa8a39.mov

### Screenshot

https://user-images.githubusercontent.com/12937446/216451342-492e8d36-1f9d-4d23-a64c-abdaa8aa8a39.mov

### Logs

```shell

no

```

### System Info

```shell

no

```

### Severity

annoying

| 2023-02-02T19:19:33 |

||

gradio-app/gradio | 3,124 | gradio-app__gradio-3124 | [

"2497"

] | ec2b68f554499f6cc4134fe2da59da6e0868320a | diff --git a/gradio/interface.py b/gradio/interface.py

--- a/gradio/interface.py

+++ b/gradio/interface.py

@@ -547,7 +547,7 @@ def render_input_column(

# as a proxy of whether the queue will be enabled.

# Using a generator function without the queue will raise an error.

if inspect.isgeneratorfunction(self.fn):

- stop_btn = Button("Stop", variant="stop")

+ stop_btn = Button("Stop", variant="stop", visible=False)

elif self.interface_type == InterfaceTypes.UNIFIED:

clear_btn = Button("Clear")

submit_btn = Button("Submit", variant="primary")

@@ -588,7 +588,7 @@ def render_output_column(

# is created. We use whether a generator function is provided

# as a proxy of whether the queue will be enabled.

# Using a generator function without the queue will raise an error.

- stop_btn = Button("Stop", variant="stop")

+ stop_btn = Button("Stop", variant="stop", visible=False)

if self.allow_flagging == "manual":

flag_btns = self.render_flag_btns()

elif self.allow_flagging == "auto":

@@ -643,10 +643,38 @@ def attach_submit_events(self, submit_btn: Button | None, stop_btn: Button | Non

)

else:

assert submit_btn is not None, "Submit button not rendered"

+ fn = self.fn

+ extra_output = []

+ if stop_btn:

+

+ # Wrap the original function to show/hide the "Stop" button

+ def fn(*args):

+ # The main idea here is to call the original function

+ # and append some updates to keep the "Submit" button

+ # hidden and the "Stop" button visible

+ # The 'finally' block hides the "Stop" button and

+ # shows the "submit" button. Having a 'finally' block

+ # will make sure the UI is "reset" even if there is an exception

+ try:

+ for output in self.fn(*args):

+ if len(self.output_components) == 1 and not self.batch:

+ output = [output]

+ output = [o for o in output]

+ yield output + [

+ Button.update(visible=False),

+ Button.update(visible=True),

+ ]

+ finally:

+ yield [

+ {"__type__": "generic_update"}

+ for _ in self.output_components

+ ] + [Button.update(visible=True), Button.update(visible=False)]

+

+ extra_output = [submit_btn, stop_btn]

pred = submit_btn.click(

- self.fn,

+ fn,

self.input_components,

- self.output_components,

+ self.output_components + extra_output,

api_name="predict",

scroll_to_output=True,

preprocess=not (self.api_mode),

@@ -655,11 +683,24 @@ def attach_submit_events(self, submit_btn: Button | None, stop_btn: Button | Non

max_batch_size=self.max_batch_size,

)

if stop_btn:

+ submit_btn.click(

+ lambda: (

+ submit_btn.update(visible=False),

+ stop_btn.update(visible=True),

+ ),

+ inputs=None,

+ outputs=[submit_btn, stop_btn],

+ queue=False,

+ )

stop_btn.click(

- None,

+ lambda: (

+ submit_btn.update(visible=True),

+ stop_btn.update(visible=False),

+ ),

inputs=None,

- outputs=None,

+ outputs=[submit_btn, stop_btn],

cancels=[pred],

+ queue=False,

)

def attach_clear_events(

| diff --git a/test/test_blocks.py b/test/test_blocks.py

--- a/test/test_blocks.py

+++ b/test/test_blocks.py

@@ -1039,6 +1039,51 @@ def iteration(a):

cancel.click(None, None, None, cancels=[click])

demo.queue().launch(prevent_thread_lock=True)

+ @pytest.mark.asyncio

+ async def test_cancel_button_for_interfaces(self):

+ def generate(x):

+ for i in range(4):

+ yield i

+ time.sleep(0.2)

+

+ io = gr.Interface(generate, gr.Textbox(), gr.Textbox()).queue()

+ stop_btn_id = next(

+ i for i, k in io.blocks.items() if getattr(k, "value", None) == "Stop"

+ )

+ assert not io.blocks[stop_btn_id].visible

+

+ io.launch(prevent_thread_lock=True)

+

+ async with websockets.connect(

+ f"{io.local_url.replace('http', 'ws')}queue/join"

+ ) as ws:

+ completed = False

+ checked_iteration = False

+ while not completed:

+ msg = json.loads(await ws.recv())

+ if msg["msg"] == "send_data":

+ await ws.send(json.dumps({"data": ["freddy"], "fn_index": 0}))

+ if msg["msg"] == "send_hash":

+ await ws.send(json.dumps({"fn_index": 0, "session_hash": "shdce"}))

+ if msg["msg"] == "process_generating" and isinstance(

+ msg["output"]["data"][0], str

+ ):

+ checked_iteration = True

+ assert msg["output"]["data"][1:] == [

+ {"visible": False, "__type__": "update"},

+ {"visible": True, "__type__": "update"},

+ ]

+ if msg["msg"] == "process_completed":

+ assert msg["output"]["data"] == [

+ {"__type__": "update"},

+ {"visible": True, "__type__": "update"},

+ {"visible": False, "__type__": "update"},

+ ]

+ completed = True

+ assert checked_iteration

+

+ io.close()

+

class TestEvery:

def test_raise_exception_if_parameters_invalid(self):

diff --git a/test/test_routes.py b/test/test_routes.py

--- a/test/test_routes.py

+++ b/test/test_routes.py

@@ -277,7 +277,7 @@ def generator(string):

headers={"Authorization": f"Bearer {app.queue_token}"},

)

output = dict(response.json())

- assert output["data"] == ["a"]

+ assert output["data"][0] == "a"

response = client.post(

"/api/predict/",

@@ -285,7 +285,7 @@ def generator(string):

headers={"Authorization": f"Bearer {app.queue_token}"},

)

output = dict(response.json())

- assert output["data"] == ["b"]

+ assert output["data"][0] == "b"

response = client.post(

"/api/predict/",

@@ -293,7 +293,7 @@ def generator(string):

headers={"Authorization": f"Bearer {app.queue_token}"},

)

output = dict(response.json())

- assert output["data"] == ["c"]

+ assert output["data"][0] == "c"

response = client.post(

"/api/predict/",

@@ -301,7 +301,11 @@ def generator(string):

headers={"Authorization": f"Bearer {app.queue_token}"},

)

output = dict(response.json())

- assert output["data"] == [None]

+ assert output["data"] == [

+ {"__type__": "update"},

+ {"__type__": "update", "visible": True},

+ {"__type__": "update", "visible": False},

+ ]

response = client.post(

"/api/predict/",

@@ -309,7 +313,15 @@ def generator(string):

headers={"Authorization": f"Bearer {app.queue_token}"},

)

output = dict(response.json())

- assert output["data"] == ["a"]

+ assert output["data"][0] is None

+

+ response = client.post(

+ "/api/predict/",

+ json={"data": ["abc"], "fn_index": 0, "session_hash": "11"},

+ headers={"Authorization": f"Bearer {app.queue_token}"},

+ )

+ output = dict(response.json())

+ assert output["data"][0] == "a"

class TestApp:

| Modify interfaces with generators so that submit button turns into stop button

- [x] I have searched to see if a similar issue already exists.

**Is your feature request related to a problem? Please describe.**

As of gradio 3.6, interfaces that use generators will automatically have a Stop button added. It would be nicer if the submit button turned into a stop button when you clicked it and the stop button automatically turned back into a submit button when the prediction finished or when it was clicked.

| 2023-02-03T16:52:42 |

|
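The core of this change is a wrapper around the user's generator that appends two button updates to every yielded output and resets them at the end. The sketch below restates that idea in plain Python for a single output component; `with_stop_button`, `visibility`, and `NO_CHANGE` are hypothetical names, while the update dictionaries copy the shapes used in the patch and its tests.

```python
from typing import Any, Callable, Dict, Iterator, List

# Mirrors the {"__type__": "generic_update"} placeholder the patch yields for outputs.
NO_CHANGE: Dict[str, str] = {"__type__": "generic_update"}


def visibility(visible: bool) -> Dict[str, Any]:
    """Same shape as a serialized Button.update(visible=...)."""
    return {"visible": visible, "__type__": "update"}


def with_stop_button(fn: Callable[..., Iterator[Any]]) -> Callable[..., Iterator[List[Any]]]:
    """Wrap a single-output generator so every step also toggles Submit and Stop."""

    def wrapped(*args: Any) -> Iterator[List[Any]]:
        try:
            for output in fn(*args):
                # Running: hide Submit, show Stop.
                yield [output, visibility(False), visibility(True)]
        except Exception:
            # Reset the buttons even if fn fails, then re-raise the error.
            yield [NO_CHANGE, visibility(True), visibility(False)]
            raise
        # Finished normally: show Submit again, hide Stop.
        yield [NO_CHANGE, visibility(True), visibility(False)]

    return wrapped


def count_up(name: str) -> Iterator[str]:
    for i in range(2):
        yield f"{name}-{i}"


steps = list(with_stop_button(count_up)("demo"))
```

Unlike the patch, this sketch does not `yield` inside a `finally` block: in plain Python, if a consumer calls `close()` on the generator while it is suspended, a `yield` in `finally` raises `RuntimeError: generator ignored GeneratorExit`. Catching `Exception` still resets the buttons on failure while letting `GeneratorExit` propagate.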

gradio-app/gradio | 3,126 | gradio-app__gradio-3126 | [

"3035"

] | 84afc51484a54cb1250186f74ce4b2eeaaa79da3 | diff --git a/gradio/ipython_ext.py b/gradio/ipython_ext.py

--- a/gradio/ipython_ext.py

+++ b/gradio/ipython_ext.py

@@ -3,6 +3,8 @@

except ImportError:

pass

+import warnings

+

import gradio

@@ -12,6 +14,8 @@ def load_ipython_extension(ipython):

@register_cell_magic

@needs_local_scope

def blocks(line, cell, local_ns=None):

+ if "gr.Interface" in cell:

+ warnings.warn("Usage of gr.Interface with %%blocks may result in errors.")

with __demo.clear():

exec(cell, None, local_ns)

__demo.launch(quiet=True)

| %%blocks cell magic doesn't work

### Describe the bug

Unable to use %%blocks in Colab. The following code snippet is just a random one copied from a Gradio example.

```py
%%blocks

def generate(text):
    "hi"

examples = [
    ["The Moon's orbit around Earth has"],
    ["The smooth Borealis basin in the Northern Hemisphere covers 40%"],
]

demo = gr.Interface(
    fn=generate,
    inputs=gr.inputs.Textbox(lines=5, label="Input Text"),
    outputs=gr.outputs.Textbox(label="Generated Text"),
    examples=examples
)
```

### Is there an existing issue for this?

- [X] I have searched the existing issues

### Reproduction

https://colab.research.google.com/drive/1ziLOFs-nSyUgUeo4WBkf1TIfrLPxkgDu?usp=sharing

### Screenshot

<img width="939" alt="image" src="https://user-images.githubusercontent.com/38108242/213739638-7853b185-f769-4c3d-8005-c65902fb5185.png">

### Logs

```shell
---------------------------------------------------------------------------
DuplicateBlockError Traceback (most recent call last)
<ipython-input-18-0e7a87c35f47> in <module>
----> 1 get_ipython().run_cell_magic('blocks', '', '\n\n\ndef generate(text):\n "hi"\n\nexamples = [\n ["The Moon\'s orbit around Earth has"],\n ["The smooth Borealis basin in the Northern Hemisphere covers 40%"],\n]\n\ndemo = gr.Interface(\n fn=generate,\n inputs=gr.inputs.Textbox(lines=5, label="Input Text"),\n outputs=gr.outputs.Textbox(label="Generated Text"),\n examples=examples\n)\n')

5 frames

/usr/local/lib/python3.8/dist-packages/gradio/blocks.py in render(self)
85 """
86 if Context.root_block is not None and self._id in Context.root_block.blocks:
---> 87 raise DuplicateBlockError(
88 f"A block with id: {self._id} has already been rendered in the current Blocks."
89 )

DuplicateBlockError: A block with id: 62 has already been rendered in the current Blocks.
```

### System Info

```shell

Colab, no GPU

```

### Severity

serious, but I can work around it

| Can confirm the issue, will look into it!

I think this only fails if you're running an interface.

<img width="1452" alt="image" src="https://user-images.githubusercontent.com/41651716/214561126-d1248e3b-7b74-4ff1-a0d9-10f180bba2a5.png">

Hmm this is kinda tricky since the cell magic defines a global blocks context that conflicts with the one created with the gr.Interface. | 2023-02-03T22:03:38 |

|
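The fix does not resolve the conflict between the global Blocks context opened by `%%blocks` and the one `gr.Interface` creates; it only warns when the cell text mentions `gr.Interface`. A standalone version of that check is sketched below with the standard `warnings` module; `warn_if_interface` is a hypothetical helper that returns whether it warned, so the behavior is easy to test.

```python
import warnings


def warn_if_interface(cell: str) -> bool:
    """Emit the patch's warning if a %%blocks cell mentions gr.Interface."""
    # %%blocks already opens a global Blocks context and gr.Interface creates its
    # own, so components can be rendered twice (the DuplicateBlockError above).
    if "gr.Interface" in cell:
        warnings.warn("Usage of gr.Interface with %%blocks may result in errors.")
        return True
    return False
```

Because this is a plain substring test, a cell that builds an Interface under another name (for example after `from gradio import Interface`) will not trigger the warning.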

gradio-app/gradio | 3,196 | gradio-app__gradio-3196 | [

"3187"

] | fa094a03e231da55a2b1504780f707cba436178c | diff --git a/gradio/queueing.py b/gradio/queueing.py

--- a/gradio/queueing.py

+++ b/gradio/queueing.py

@@ -4,6 +4,7 @@

import copy

import sys

import time

+from asyncio import TimeoutError as AsyncTimeOutError

from collections import deque

from typing import Any, Deque, Dict, List, Tuple

@@ -205,7 +206,7 @@ async def broadcast_live_estimations(self) -> None:

if self.live_updates:

await self.broadcast_estimations()

- async def gather_event_data(self, event: Event) -> bool:

+ async def gather_event_data(self, event: Event, receive_timeout=60) -> bool:

"""

Gather data for the event

@@ -216,7 +217,20 @@ async def gather_event_data(self, event: Event) -> bool:

client_awake = await self.send_message(event, {"msg": "send_data"})

if not client_awake:

return False

- event.data = await self.get_message(event)

+ data, client_awake = await self.get_message(event, timeout=receive_timeout)

+ if not client_awake:

+ # In the event, we timeout due to large data size

+ # Let the client know, otherwise will hang

+ await self.send_message(

+ event,

+ {

+ "msg": "process_completed",

+ "output": {"error": "Time out uploading data to server"},

+ "success": False,

+ },

+ )

+ return False

+ event.data = data

return True

async def notify_clients(self) -> None:

@@ -424,21 +438,25 @@ async def process_events(self, events: List[Event], batch: bool) -> None:

# to start "from scratch"

await self.reset_iterators(event.session_hash, event.fn_index)

- async def send_message(self, event, data: Dict) -> bool:

+ async def send_message(self, event, data: Dict, timeout: float | int = 1) -> bool:

try:

- await event.websocket.send_json(data=data)

+ await asyncio.wait_for(

+ event.websocket.send_json(data=data), timeout=timeout

+ )

return True

except:

await self.clean_event(event)

return False

- async def get_message(self, event) -> PredictBody | None:

+ async def get_message(self, event, timeout=5) -> Tuple[PredictBody | None, bool]:

try:

- data = await event.websocket.receive_json()

- return PredictBody(**data)

- except:

+ data = await asyncio.wait_for(

+ event.websocket.receive_json(), timeout=timeout

+ )

+ return PredictBody(**data), True

+ except AsyncTimeOutError:

await self.clean_event(event)

- return None

+ return None, False

async def reset_iterators(self, session_hash: str, fn_index: int):

await AsyncRequest(

diff --git a/gradio/routes.py b/gradio/routes.py

--- a/gradio/routes.py

+++ b/gradio/routes.py

@@ -12,6 +12,7 @@

import secrets

import tempfile

import traceback

+from asyncio import TimeoutError as AsyncTimeOutError

from collections import defaultdict

from copy import deepcopy

from typing import Any, Dict, List, Optional, Type

@@ -479,8 +480,20 @@ async def join_queue(

await websocket.accept()

# In order to cancel jobs, we need the session_hash and fn_index

# to create a unique id for each job

- await websocket.send_json({"msg": "send_hash"})

- session_info = await websocket.receive_json()

+ try:

+ await asyncio.wait_for(

+ websocket.send_json({"msg": "send_hash"}), timeout=1

+ )

+ except AsyncTimeOutError:

+ return

+

+ try:

+ session_info = await asyncio.wait_for(

+ websocket.receive_json(), timeout=1

+ )

+ except AsyncTimeOutError:

+ return

+

event = Event(

websocket, session_info["session_hash"], session_info["fn_index"]

)

| diff --git a/test/test_queueing.py b/test/test_queueing.py

--- a/test/test_queueing.py

+++ b/test/test_queueing.py

@@ -1,3 +1,4 @@

+import asyncio

import os

import sys

from collections import deque

@@ -31,7 +32,7 @@ def queue() -> Queue:

@pytest.fixture()

def mock_event() -> Event:

- websocket = MagicMock()

+ websocket = AsyncMock()

event = Event(websocket=websocket, session_hash="test", fn_index=0)

yield event

@@ -53,9 +54,20 @@ async def test_stop_resume(self, queue: Queue):

@pytest.mark.asyncio

async def test_receive(self, queue: Queue, mock_event: Event):

+ mock_event.websocket.receive_json.return_value = {"data": ["test"], "fn": 0}

await queue.get_message(mock_event)

assert mock_event.websocket.receive_json.called

+ @pytest.mark.asyncio

+ async def test_receive_timeout(self, queue: Queue, mock_event: Event):

+ async def take_too_long():

+ await asyncio.sleep(1)

+

+ mock_event.websocket.receive_json = take_too_long

+ data, is_awake = await queue.get_message(mock_event, timeout=0.5)

+ assert data is None

+ assert not is_awake

+

@pytest.mark.asyncio

async def test_send(self, queue: Queue, mock_event: Event):

await queue.send_message(mock_event, {})

@@ -85,7 +97,7 @@ async def test_gather_event_data(self, queue: Queue, mock_event: Event):

queue.send_message = AsyncMock()

queue.get_message = AsyncMock()

queue.send_message.return_value = True

- queue.get_message.return_value = {"data": ["test"], "fn": 0}

+ queue.get_message.return_value = {"data": ["test"], "fn": 0}, True

assert await queue.gather_event_data(mock_event)

assert queue.send_message.called

@@ -95,6 +107,25 @@ async def test_gather_event_data(self, queue: Queue, mock_event: Event):

assert await queue.gather_event_data(mock_event)

assert not (queue.send_message.called)

+ @pytest.mark.asyncio

+ async def test_gather_event_data_timeout(self, queue: Queue, mock_event: Event):

+ async def take_too_long():

+ await asyncio.sleep(1)

+

+ queue.send_message = AsyncMock()

+ queue.send_message.return_value = True

+

+ mock_event.websocket.receive_json = take_too_long

+ is_awake = await queue.gather_event_data(mock_event, receive_timeout=0.5)

+ assert not is_awake

+

+ # Have to use awful [1][0][1] syntax cause of python 3.7

+ assert queue.send_message.call_args_list[1][0][1] == {

+ "msg": "process_completed",

+ "output": {"error": "Time out uploading data to server"},

+ "success": False,

+ }

+

class TestQueueEstimation:

def test_get_update_estimation(self, queue: Queue):

@@ -193,6 +224,8 @@ async def test_process_event_handles_error_sending_process_start_msg(

self, queue: Queue, mock_event: Event

):

mock_event.websocket.send_json = AsyncMock()

+ mock_event.websocket.receive_json.return_value = {"data": ["test"], "fn": 0}

+

mock_event.websocket.send_json.side_effect = ["2", ValueError("Can't connect")]

queue.call_prediction = AsyncMock()

mock_event.disconnect = AsyncMock()

@@ -260,6 +293,7 @@ async def test_process_event_handles_exception_in_is_generating_request(

async def test_process_event_handles_error_sending_process_completed_msg(

self, queue: Queue, mock_event: Event

):

+ mock_event.websocket.receive_json.return_value = {"data": ["test"], "fn": 0}

mock_event.websocket.send_json = AsyncMock()

mock_event.websocket.send_json.side_effect = [

"2",

@@ -289,6 +323,7 @@ async def test_process_event_handles_error_sending_process_completed_msg(

async def test_process_event_handles_exception_during_disconnect(

self, mock_request, queue: Queue, mock_event: Event

):

+ mock_event.websocket.receive_json.return_value = {"data": ["test"], "fn": 0}

mock_event.websocket.send_json = AsyncMock()

queue.call_prediction = AsyncMock(

return_value=MagicMock(has_exception=False, json=dict(is_generating=False))

| load events don't work well on HF spaces if queue enabled

### Describe the bug

I've noticed that some demos don't work well on HF spaces. If you go to them, you'll see that the queue is really large and doesn't go down.

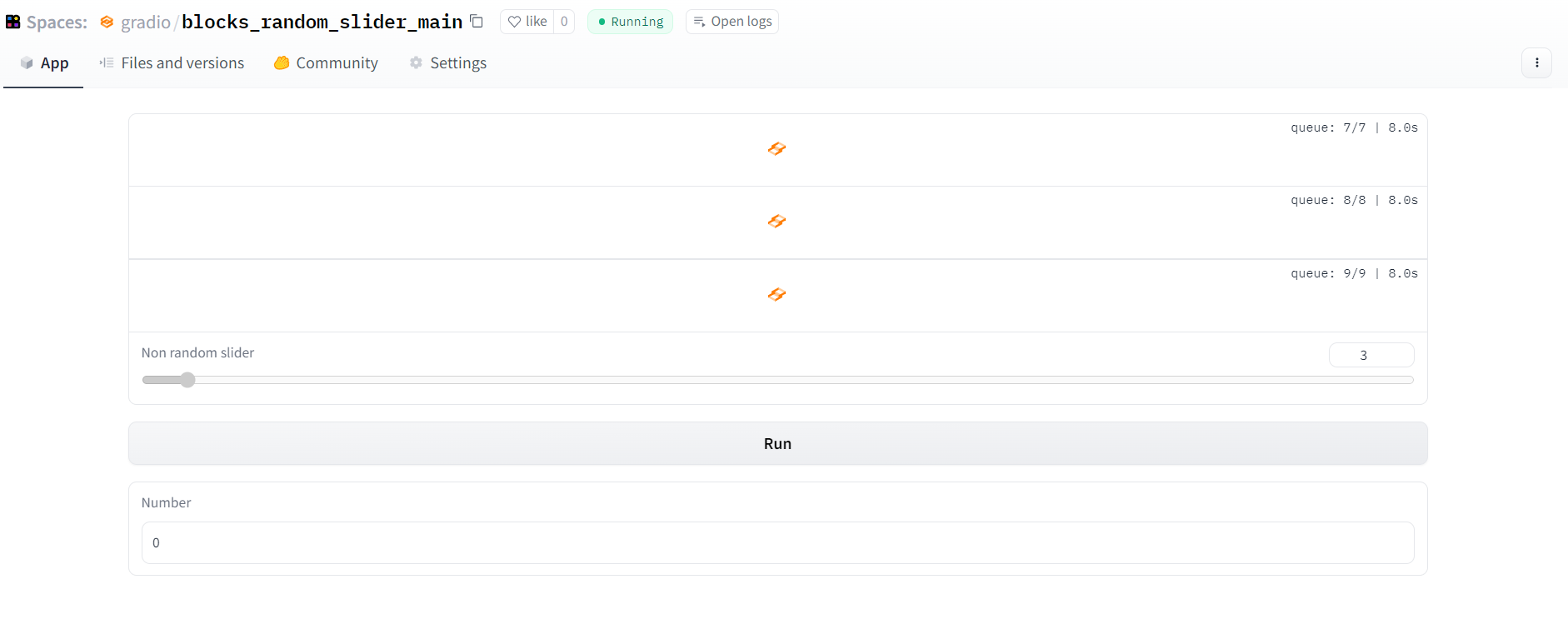

The one thing these demos all have in common is that they have load events and the queue enabled.

* [blocks_random_slider](https://huggingface.co/spaces/gradio/blocks_random_slider)

* https://huggingface.co/spaces/gradio/blocks_random_slider_main

* https://huggingface.co/spaces/gradio/xgboost-income-prediction-with-explainability

* https://huggingface.co/spaces/gradio/native_plots

* https://huggingface.co/spaces/gradio/altair_plot

Running these demos locally works as expected.

The reason I think this is related to the queue is that this demo has load events with the queue disabled and it works well:

https://huggingface.co/spaces/gradio/timeseries-forecasting-with-prophet
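The patch for this report wraps each websocket send and receive in the queue with `asyncio.wait_for`. The intent, per its own comment, is that a client that never answers cannot leave the queue hanging. Below is a stdlib-only sketch of that receive-with-timeout pattern. Like the patched `get_message`, it returns a `(data, client_awake)` pair. The `fast_receive` and `slow_receive` coroutines are hypothetical stand-ins for `websocket.receive_json`, not gradio code.

```python
import asyncio
from typing import Any, Awaitable, Callable, Optional, Tuple


async def receive_with_timeout(
    receive: Callable[[], Awaitable[Any]], timeout: float
) -> Tuple[Optional[Any], bool]:
    """Return (data, True) on success, or (None, False) if `receive` times out."""
    try:
        data = await asyncio.wait_for(receive(), timeout=timeout)
        return data, True
    except asyncio.TimeoutError:
        # In the patched queue, this is also where the event is cleaned up.
        return None, False


async def fast_receive() -> dict:
    # Hypothetical stand-in for a client that answers immediately.
    return {"data": ["test"], "fn": 0}


async def slow_receive() -> dict:
    # Hypothetical stand-in for a client that takes too long to upload its data.
    await asyncio.sleep(1)
    return {"data": ["late"], "fn": 0}


if __name__ == "__main__":
    print(asyncio.run(receive_with_timeout(fast_receive, timeout=0.5)))
    print(asyncio.run(receive_with_timeout(slow_receive, timeout=0.05)))
```

Returning a flag alongside the data lets the caller tell a timed-out client apart from one that sent an empty payload.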

### Is there an existing issue for this?

- [X] I have searched the existing issues

### Reproduction

-

### Screenshot

-

### Logs

```shell

-

```

### System Info

```shell

-

```

### Severity

serious, but I can work around it

| The weird thing is that I duplicated one of these spaces and it's been working fine all day?

https://huggingface.co/spaces/freddyaboulton/blocks_random_slider | 2023-02-14T22:11:04 |

gradio-app/gradio | 3,212 | gradio-app__gradio-3212 | [

"1942"

] | a2b80ca5c6a73de6967fbba73c2e454dc35eb332 | diff --git a/demo/bokeh_plot/run.py b/demo/bokeh_plot/run.py

new file mode 100644

--- /dev/null

+++ b/demo/bokeh_plot/run.py

@@ -0,0 +1,94 @@

+import gradio as gr

+import xyzservices.providers as xyz

+from bokeh.plotting import figure

+from bokeh.tile_providers import get_provider

+from bokeh.models import ColumnDataSource, Whisker

+from bokeh.plotting import figure

+from bokeh.sampledata.autompg2 import autompg2 as df

+from bokeh.sampledata.penguins import data

+from bokeh.transform import factor_cmap, jitter, factor_mark

+

+

+def get_plot(plot_type):

+ if plot_type == "map":

+ tile_provider = get_provider(xyz.OpenStreetMap.Mapnik)

+ plot = figure(

+ x_range=(-2000000, 6000000),

+ y_range=(-1000000, 7000000),

+ x_axis_type="mercator",

+ y_axis_type="mercator",

+ )

+ plot.add_tile(tile_provider)

+ return plot

+ elif plot_type == "whisker":

+ classes = list(sorted(df["class"].unique()))

+

+ p = figure(

+ height=400,

+ x_range=classes,

+ background_fill_color="#efefef",

+ title="Car class vs HWY mpg with quintile ranges",

+ )

+ p.xgrid.grid_line_color = None

+

+ g = df.groupby("class")

+ upper = g.hwy.quantile(0.80)

+ lower = g.hwy.quantile(0.20)

+ source = ColumnDataSource(data=dict(base=classes, upper=upper, lower=lower))

+

+ error = Whisker(

+ base="base",

+ upper="upper",

+ lower="lower",

+ source=source,

+ level="annotation",

+ line_width=2,

+ )

+ error.upper_head.size = 20

+ error.lower_head.size = 20

+ p.add_layout(error)

+

+ p.circle(

+ jitter("class", 0.3, range=p.x_range),

+ "hwy",

+ source=df,

+ alpha=0.5,

+ size=13,

+ line_color="white",

+ color=factor_cmap("class", "Light6", classes),

+ )

+ return p

+ elif plot_type == "scatter":

+

+ SPECIES = sorted(data.species.unique())

+ MARKERS = ["hex", "circle_x", "triangle"]

+

+ p = figure(title="Penguin size", background_fill_color="#fafafa")

+ p.xaxis.axis_label = "Flipper Length (mm)"

+ p.yaxis.axis_label = "Body Mass (g)"

+

+ p.scatter(

+ "flipper_length_mm",

+ "body_mass_g",

+ source=data,

+ legend_group="species",

+ fill_alpha=0.4,

+ size=12,

+ marker=factor_mark("species", MARKERS, SPECIES),

+ color=factor_cmap("species", "Category10_3", SPECIES),

+ )

+

+ p.legend.location = "top_left"

+ p.legend.title = "Species"

+ return p

+

+with gr.Blocks() as demo:

+ with gr.Row():

+ plot_type = gr.Radio(value="scatter", choices=["scatter", "whisker", "map"])

+ plot = gr.Plot()

+ plot_type.change(get_plot, inputs=[plot_type], outputs=[plot])

+ demo.load(get_plot, inputs=[plot_type], outputs=[plot])

+

+

+if __name__ == "__main__":

+ demo.launch()

\ No newline at end of file

diff --git a/gradio/components.py b/gradio/components.py

--- a/gradio/components.py

+++ b/gradio/components.py

@@ -4132,7 +4132,17 @@ def __init__(

)

def get_config(self):

- return {"value": self.value, **IOComponent.get_config(self)}

+ try:

+ import bokeh # type: ignore

+

+ bokeh_version = bokeh.__version__

+ except ImportError:

+ bokeh_version = None

+ return {

+ "value": self.value,

+ "bokeh_version": bokeh_version,

+ **IOComponent.get_config(self),

+ }

@staticmethod

def update(

@@ -4162,9 +4172,11 @@ def postprocess(self, y) -> Dict[str, str] | None:

if isinstance(y, (ModuleType, matplotlib.figure.Figure)):

dtype = "matplotlib"

out_y = processing_utils.encode_plot_to_base64(y)

- elif isinstance(y, dict):

+ elif "bokeh" in y.__module__:

dtype = "bokeh"

- out_y = json.dumps(y)

+ from bokeh.embed import json_item # type: ignore

+

+ out_y = json.dumps(json_item(y))

else:

is_altair = "altair" in y.__module__

if is_altair:

| diff --git a/test/test_components.py b/test/test_components.py

--- a/test/test_components.py

+++ b/test/test_components.py

@@ -2017,7 +2017,9 @@ def test_dataset_calls_as_example(*mocks):

class TestScatterPlot:

+ @patch.dict("sys.modules", {"bokeh": MagicMock(__version__="3.0.3")})

def test_get_config(self):

+

assert gr.ScatterPlot().get_config() == {

"caption": None,

"elem_id": None,

@@ -2029,6 +2031,7 @@ def test_get_config(self):

"style": {},

"value": None,

"visible": True,

+ "bokeh_version": "3.0.3",

}

def test_no_color(self):

@@ -2199,6 +2202,7 @@ def test_scatterplot_accepts_fn_as_value(self):

class TestLinePlot:

+ @patch.dict("sys.modules", {"bokeh": MagicMock(__version__="3.0.3")})

def test_get_config(self):

assert gr.LinePlot().get_config() == {

"caption": None,

@@ -2211,6 +2215,7 @@ def test_get_config(self):

"style": {},

"value": None,

"visible": True,

+ "bokeh_version": "3.0.3",

}

def test_no_color(self):

@@ -2360,6 +2365,7 @@ def test_lineplot_accepts_fn_as_value(self):

class TestBarPlot:

+ @patch.dict("sys.modules", {"bokeh": MagicMock(__version__="3.0.3")})

def test_get_config(self):

assert gr.BarPlot().get_config() == {

"caption": None,

@@ -2372,6 +2378,7 @@ def test_get_config(self):

"style": {},

"value": None,

"visible": True,

+ "bokeh_version": "3.0.3",

}

def test_no_color(self):

| Bokeh plots do not appear

### Describe the bug

When using the `Plot` component, bokeh plots do not appear at all.

This is documented here: #1632

> Pictures cannot be generated in Bokeh mode.

And in this PR: #1609

> The Bokeh plots are currently broken. The reason for this is that bokehJS internally uses getElementById to get the container of the plot and render it. Since Gradio UI is using the shadow DOM to render, this step fails.

> I have tried here a workaround where I added a new hidden div in the index.html file to use as a helper to render the plot, once it is rendered, then the content is appended to the actual div that should have the plot. This part is all working, unfortunately although I can see the div with the expected content, the plot is still not showing.

The PR was closed because it was an attempt to fix the problem using an older version of bokeh. We still need to find a solution using the latest version of bokeh.
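The patch in this row changes how `Plot.postprocess` picks the bokeh branch. It checks whether `"bokeh"` appears in the value's module name, then serializes the figure with `bokeh.embed.json_item`. Below is a stdlib-only sketch of just the module-name dispatch step.

- `KNOWN_PLOT_LIBRARIES` and `FakeFigure` are hypothetical.
- This version compares the top-level package name instead of doing a substring match.
- It reads the module from `type(obj)`, so it works for any value.

```python
from typing import Any

# Hypothetical list of plot libraries the dispatcher recognizes.
KNOWN_PLOT_LIBRARIES = ("matplotlib", "bokeh", "altair", "plotly")


def plot_library(obj: Any) -> str:
    """Return the top-level package that defines obj's class, e.g. "bokeh"."""
    module = type(obj).__module__ or ""
    return module.split(".")[0]


def classify_plot(obj: Any) -> str:
    """Map a plot object to the name of the library that produced it."""
    library = plot_library(obj)
    if library not in KNOWN_PLOT_LIBRARIES:
        raise ValueError(f"Unsupported plot object from module {library!r}")
    return library


class FakeFigure:
    """Stand-in for a bokeh figure, so the sketch runs without bokeh installed."""


# Pretend the class was defined inside bokeh.
FakeFigure.__module__ = "bokeh.plotting._figure"
```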

### Is there an existing issue for this?

- [X] I have searched the existing issues

### Reproduction

https://github.com/gradio-app/gradio/blob/main/demo/outbreak_forecast/run.py

### Screenshot

_No response_

### Logs

```shell

N/A

```

### System Info

```shell

3.1.3

```

### Severity

serious, but I can work around it

| cc @dawoodkhan82 @Ian-GL

Is this Bug resolved in the latest release 3.4.0?

> Is this Bug resolved in the latest release 3.4.0?

Unfortunately not yet. It is on our radar though. Will update this issue when we do resolve this.

It looks like bokeh 3.0.3 is out, so it might be good to revisit this @dawoodkhan82

Will revisit!

I was trying to use bokeh maps. Got an error `TypeError: Model.to_json() missing 1 required positional argument: 'include_defaults'`

```python
import gradio as gr
import xyzservices.providers as xyz
from bokeh.plotting import figure
from bokeh.tile_providers import get_provider

def create_map(text):
    tile_provider = get_provider(xyz.OpenStreetMap.Mapnik)
    p = figure(x_range=(-2000000, 6000000), y_range=(-1000000, 7000000),
               x_axis_type="mercator", y_axis_type="mercator")
    p.add_tile(tile_provider)
    return p

demo = gr.Interface(
    fn=create_map,
    inputs='text',
    outputs=gr.Plot().style(),
)

demo.launch()
```

Will take a look @giswqs ! My guess is that the gradio library is using an outdated bokeh api that's not compatible with the bokeh version in your demo.

Mind sharing your bokeh version? As well as xyzservices? BTW what is that library?

See the example at https://docs.bokeh.org/en/latest/docs/examples/topics/geo/tile_xyzservices.html

[xyzservices](https://github.com/geopandas/xyzservices) is a lightweight library providing a repository of available XYZ services offering raster basemap tiles.

- gradio: 3.18.0

- bokeh: 2.4.3

- xyzservices: 2022.9.0
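The patch in this row reports the installed bokeh version through `Plot.get_config()`. It imports bokeh inside a `try`/`except ImportError` and reads `bokeh.__version__`, falling back to `None`. Below is that probe generalized into a hypothetical helper. It is exercised against stdlib modules so it runs without bokeh installed.

```python
import importlib
from typing import Optional


def optional_version(module_name: str) -> Optional[str]:
    """Return module_name.__version__, or None if the module is missing or unversioned."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:  # also covers ModuleNotFoundError
        return None
    return getattr(module, "__version__", None)


if __name__ == "__main__":
    print(optional_version("bokeh"))  # a version string, or None if bokeh is absent
```

Sending the version (or `None`) to the front end leaves the choice of which BokehJS build to load to the client. That matters given the bokeh 2.x vs 3.x differences discussed here.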

Thanks @giswqs ! So the issue about `to_json() missing 1 required positional argument` should be easy to fix but the problem about the UI not displaying the plots persists. What's more, looks like there's a big difference between bokeh 2.0 and bokeh 3.0. Might be hard to support both? If we had to choose one, would 3.0 be better?

Yes, prefer bokeh 3.x. | 2023-02-16T16:50:48 |

gradio-app/gradio | 3,223 | gradio-app__gradio-3223 | [

"3171"

] | 3530a86433ef7a27053dd6bd97a2d62947506d24 | diff --git a/gradio/routes.py b/gradio/routes.py

--- a/gradio/routes.py

+++ b/gradio/routes.py

@@ -148,7 +148,9 @@ def create_app(blocks: gradio.Blocks) -> App:

@app.get("/user")

@app.get("/user/")

def get_current_user(request: fastapi.Request) -> Optional[str]:

- token = request.cookies.get("access-token")

+ token = request.cookies.get("access-token") or request.cookies.get(

+ "access-token-unsecure"

+ )

return app.tokens.get(token)

@app.get("/login_check")

@@ -196,6 +198,9 @@ def login(form_data: OAuth2PasswordRequestForm = Depends()):

samesite="none",

secure=True,

)

+ response.set_cookie(

+ key="access-token-unsecure", value=token, httponly=True

+ )

return response

else:

raise HTTPException(status_code=400, detail="Incorrect credentials.")

| Cannot login to application when deployed on-premise

### Describe the bug

I cannot log in to my gradio app when it's deployed in a Docker container on any server. I've tried on 2 different machines with different Docker versions. After I submit my credentials, I'm not redirected to the main page; the login page just refreshes. I've encountered this problem only on versions 3.17.x and 3.18.0, and only when the app is deployed on a server. Everything works perfectly fine on my local machine, whether I run it directly or via Docker.

### Is there an existing issue for this?

- [X] I have searched the existing issues

### Reproduction

1. Dockerize gradio app with gradio authentication enabled and deploy on some server while exposing it

2. Open the app in your browser

3. Log in

4. Encounter page refresh with no redirection to actual app

### Screenshot

_No response_

### Logs

```shell

I have no logs for this. There are no errors or logs on either the Python side or in the browser.

```

### System Info

```shell

Gradio version: 3.17.x, 3.18.0 (it worked previously on 3.16.2)

```

### Severity

blocking upgrade to latest gradio version

| Hi @Norbiox !

Do you have third party cookies enabled in your browser?

If you open the developer tools, there should be a request to the `login` route, would be helpful to see what that looks like to debug!

Hi,

Yes, I have enabled third party cookies.

I can't find this request. I'm attaching screenshots from before login and immediately after.

Before:

After:

I had the same issue and I was getting this warning in the firefox console:

`Cookie “access-token” has been rejected because a non-HTTPS cookie can’t be set as “secure”.`

Downgrading gradio to 3.16.2 allows login but obviously it is not a very good solution.

If I check the cookie set by gradio with version 3.16.2 it is indeed *not* set as "secure"

The change to make the cookie secure and "sameSite" was added in [this commit ](https://github.com/gradio-app/gradio/commit/9ccfef05421d1733400e3e73d83c756213f2ef29)

However, as far as I can tell, the commit message does not mention this change or explain why it was made.
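The Firefox warning above matches what the patch in this row addresses. A cookie flagged `Secure` is rejected on a plain-HTTP origin. The patch therefore makes two changes:

- The login route also sets an `access-token-unsecure` cookie without that flag.
- `/user` reads whichever of the two cookies is present.

Below is a stdlib sketch of both halves using `http.cookies.SimpleCookie` (the `samesite` attribute needs Python 3.8+). The function names are hypothetical. A real app would set these cookies through its web framework rather than by hand.

```python
from http.cookies import SimpleCookie
from typing import Optional


def build_login_cookies(token: str) -> SimpleCookie:
    """Build the two login cookies: a Secure one and a plain-HTTP fallback."""
    cookies = SimpleCookie()
    # Cookie intended for HTTPS deployments; browsers drop it on plain HTTP.
    cookies["access-token"] = token
    cookies["access-token"]["httponly"] = True
    cookies["access-token"]["samesite"] = "None"
    cookies["access-token"]["secure"] = True
    # Fallback cookie without the Secure flag, so HTTP-only servers still work.
    cookies["access-token-unsecure"] = token
    cookies["access-token-unsecure"]["httponly"] = True
    return cookies


def token_from_cookie_header(header: str) -> Optional[str]:
    """Prefer the secure cookie and fall back to the unsecure one."""
    jar = SimpleCookie()
    jar.load(header)
    for name in ("access-token", "access-token-unsecure"):
        if name in jar:
            return jar[name].value
    return None


if __name__ == "__main__":
    print(build_login_cookies("abc").output())
```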

Thanks for getting back to me @Norbiox and @nerochiaro !

I'm having trouble reproducing this issue.

I created this simple [app ](https://github.com/freddyaboulton/graido-auth) with auth (ignore the typo in the repo name 🙈). It is packaged with Docker and works well for me locally and when I deployed it to this url: https://gradio-auth.onrender.com/ . Credentials `admin/admin`

I tried in Chrome, with and without incognito mode, and also in Microsoft Edge. I was able to log in correctly. There's a gif of that here:

My guess is that for some reason, your browser is rejecting the gradio cookie set during a successful login?

| 2023-02-17T21:32:20 |

|

gradio-app/gradio | 3,233 | gradio-app__gradio-3233 | [

"3229"

] | 56245276e701f7e4f81228af6e523d4c305af4ed | diff --git a/demo/calculator/run.py b/demo/calculator/run.py

--- a/demo/calculator/run.py

+++ b/demo/calculator/run.py

@@ -27,7 +27,7 @@ def calculator(num1, operation, num2):

[0, "subtract", 1.2],

],

title="Toy Calculator",

- description="Here's a sample toy calculator. Enjoy!",

+ description="Here's a sample toy calculator. Allows you to calculate things like $2+2=4$",

)

if __name__ == "__main__":

demo.launch()

diff --git a/gradio/interface.py b/gradio/interface.py

--- a/gradio/interface.py

+++ b/gradio/interface.py

@@ -307,7 +307,7 @@ def clean_html(raw_html):

"html": True,

},

)

- .use(dollarmath_plugin)

+ .use(dollarmath_plugin, renderer=utils.tex2svg, allow_digits=False)

.use(footnote_plugin)

.enable("table")

)

| Markdown: LaTeX font is black and unreadable in the dark theme

### Describe the bug

The LaTeX font is dark instead of light in the dark theme, rendering it unreadable.

### Is there an existing issue for this?

- [X] I have searched the existing issues

### Reproduction

```python
import gradio as gr

with gr.Blocks() as interface:
    gr.Markdown(value="$ x = 1+1$")

interface.launch()
```

Run the script above.

* Under `http://127.0.0.1:7860/`, the equation is readable.

* Under `http://127.0.0.1:7860/?__theme=dark`, the equation is present but unreadable.

### Screenshot

_No response_

### Logs

```shell

-

```

### System Info

```shell

gradio==3.18.0

```

### Severity

annoying

| 2023-02-18T12:02:21 |

||

gradio-app/gradio | 3,277 | gradio-app__gradio-3277 | [

"3166",

"3248",

"3166"

] | 9c811ed8928c461610fa47e9b0fc450463225881 | diff --git a/demo/blocks_flipper/run.py b/demo/blocks_flipper/run.py

--- a/demo/blocks_flipper/run.py

+++ b/demo/blocks_flipper/run.py

@@ -1,12 +1,15 @@

import numpy as np

import gradio as gr

+

def flip_text(x):

return x[::-1]

+

def flip_image(x):

return np.fliplr(x)

+

with gr.Blocks() as demo:

gr.Markdown("Flip text or image files using this demo.")

with gr.Tab("Flip Text"):

@@ -24,6 +27,6 @@ def flip_image(x):

text_button.click(flip_text, inputs=text_input, outputs=text_output)

image_button.click(flip_image, inputs=image_input, outputs=image_output)

-

+

if __name__ == "__main__":

- demo.launch()

\ No newline at end of file

+ demo.launch()

diff --git a/demo/blocks_mask/run.py b/demo/blocks_mask/run.py

--- a/demo/blocks_mask/run.py

+++ b/demo/blocks_mask/run.py

@@ -1,5 +1,6 @@

import gradio as gr

from gradio.components import Markdown as md

+from PIL import Image

demo = gr.Blocks()

@@ -8,6 +9,9 @@

io2a = gr.Interface(lambda x: x, gr.Image(source="canvas"), gr.Image())

io2b = gr.Interface(lambda x: x, gr.Sketchpad(), gr.Image())

+io2c = gr.Interface(

+ lambda x: x, gr.Image(source="canvas", shape=(512, 512)), gr.Image()

+)

io3a = gr.Interface(

lambda x: [x["mask"], x["image"]],

@@ -53,6 +57,20 @@

)

+def save_image(image):

+ image.save("colorede.png")

+ return image

+

+

+img = Image.new("RGB", (512, 512), (150, 150, 150))

+img.save("image.png", "PNG")

+

+io5d = gr.Interface(

+ save_image,

+ gr.Image("image.png", source="upload", tool="color-sketch", type="pil"),

+ gr.Image(),

+)

+

with demo:

md("# Different Ways to Use the Image Input Component")

md(

@@ -71,6 +89,8 @@

"**2b. Black and White Sketchpad: `gr.Interface(lambda x: x, gr.Sketchpad(), gr.Image())`**"

)

io2b.render()

+ md("**2c. Black and White Sketchpad with `shape=(512,512)`**")

+ io2c.render()

md("**3a. Binary Mask with image upload:**")

md(

"""```python

@@ -130,7 +150,8 @@

io3b2.render()

with gr.Tab("Two"):

io3b3.render()

-

+ md("**5d. Color Sketchpad with image upload and a default images**")

+ io5d.render()

if __name__ == "__main__":

demo.launch()

diff --git a/gradio/components.py b/gradio/components.py

--- a/gradio/components.py

+++ b/gradio/components.py

@@ -1379,6 +1379,7 @@ def __init__(

streaming: bool = False,

elem_id: str | None = None,

mirror_webcam: bool = True,

+ brush_radius: int | None = None,

**kwargs,

):

"""

@@ -1398,7 +1399,9 @@ def __init__(

streaming: If True when used in a `live` interface, will automatically stream webcam feed. Only valid is source is 'webcam'.

elem_id: An optional string that is assigned as the id of this component in the HTML DOM. Can be used for targeting CSS styles.

mirror_webcam: If True webcam will be mirrored. Default is True.

+ brush_radius: Size of the brush for Sketch. Default is None which chooses a sensible default

"""

+ self.brush_radius = brush_radius

self.mirror_webcam = mirror_webcam

valid_types = ["numpy", "pil", "filepath"]

if type not in valid_types:

@@ -1446,6 +1449,7 @@ def get_config(self):

"value": self.value,

"streaming": self.streaming,

"mirror_webcam": self.mirror_webcam,

+ "brush_radius": self.brush_radius,

**IOComponent.get_config(self),

}

@@ -1456,6 +1460,7 @@ def update(

show_label: bool | None = None,

interactive: bool | None = None,

visible: bool | None = None,

+ brush_radius: int | None = None,

):

updated_config = {

"label": label,

@@ -1463,6 +1468,7 @@ def update(

"interactive": interactive,

"visible": visible,

"value": value,

+ "brush_radius": brush_radius,

"__type__": "update",

}

return IOComponent.add_interactive_to_config(updated_config, interactive)

| diff --git a/test/test_components.py b/test/test_components.py

--- a/test/test_components.py

+++ b/test/test_components.py

@@ -625,6 +625,7 @@ def test_component_functions(self):

source="upload", tool="editor", type="pil", label="Upload Your Image"

)

assert image_input.get_config() == {

+ "brush_radius": None,

"image_mode": "RGB",

"shape": None,

"source": "upload",

| Canvas drawing is cut off when the shape is explicitly set to be a square

- [x] I have searched to see if a similar issue already exists.

**Is your feature request related to a problem? Please describe.**

Right now, if we want to make a 512\*512 drawing canvas, the only method is to (1) ask users to create a pure white 512\*512 image using third-party software like Photoshop, then (2) ask users to import that blank image into gr.Image(source='upload', tool='sketch'), then (3) use the resulting mask as the user drawing for any application. (Besides, the initial width of the scribble cannot be set in code.)

This is over-complicated. We should have a one-line function to make a simple drawing canvas.

**Describe the solution you'd like**

We may consider something like

gr.Image(source='blank', tool='sketch', size=(512, 512))

UI crashes in `gr.Image` with `tool="color-sketch"` and a pre-loaded image

### Describe the bug

Running the following code

```py
from PIL import Image
import gradio as gr

# Create a black image
img = Image.new("RGB", (512, 512), (0, 0, 0))
img.save("image.png", "PNG")

# Pre-load the black image in the component
gr.Image(value="image.png", interactive=True, tool="color-sketch")
```

Crashes the UI

<img width="635" alt="image" src="https://user-images.githubusercontent.com/788417/220176049-977ac494-1587-41a8-aafb-b2a961131925.png">

### Reproduction

Here's a colab with an example: https://colab.research.google.com/drive/1Gp5pp51P14B0tM331dS7476-Oqe-Bi04

### System Info

```shell

3.19.0

```

### Severity

serious, but I can work around it


| Hi @lllyasviel this is already possible by doing:

```py

gr.Image(source="canvas", shape=(512, 512))

```

For example, you can test this with this Blocks demo:

```py
import gradio as gr

with gr.Blocks() as demo:
    i = gr.Image(source="canvas", shape=(512, 512))
    o = gr.Image()
    i.change(lambda x:x, i, o)

demo.launch()
```

@abidlabs

No. Right now gradio does not support a simple 512\*512 drawing canvas.

pip install --upgrade gradio

then

Then the result will be like

As we can see, this has nothing to do with "shape=(512, 512)": the shape parameter does not control the resolution, it controls a "gradio-style dpi". You can even draw in this long rectangle:

When it is processed, the results provide strong evidence that "shape" is related to dpi and cropping, not shape of canvas

If this is an intentional design, it may be worthwhile to reopen this issue to target a more straightforward drawing board.
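One reading of the behaviour described above: `shape` sets the size of the processed image, not the size of the drawing surface. Under that reading, the image is cropped to the target aspect ratio and then resized. Below is the arithmetic of a centered crop to a target aspect ratio, as a hypothetical stdlib-only helper. It is not gradio's own implementation.

```python
from typing import Tuple


def center_crop_box(
    width: int, height: int, target_w: int, target_h: int
) -> Tuple[int, int, int, int]:
    """Return a (left, top, right, bottom) box with the target aspect ratio, centered."""
    target_ratio = target_w / target_h
    if width / height > target_ratio:
        # Too wide: keep the full height and trim the left and right edges.
        new_w = round(height * target_ratio)
        left = (width - new_w) // 2
        return (left, 0, left + new_w, height)
    # Too tall (or already matching): keep the full width and trim top and bottom.
    new_h = round(width / target_ratio)
    top = (height - new_h) // 2
    return (0, top, width, top + new_h)
```

After the crop, the region would be resized to `target_w` x `target_h`. That would explain why a drawing on a long rectangle comes back with its ends cut off.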

Hi @lllyasviel, to achieve what you want, I think we need to control both the image resolution (which is controlled via the `shape` parameter above) and the display size of the Image component, which is controlled via the `.style()` method of the `Image` component. I agree that it's a bit confusing, but I think this should achieve what you want:

```py
import gradio as gr

with gr.Blocks() as demo:
    i = gr.Image(source="canvas", shape=(512, 512)).style(width=512, height=512)
    o = gr.Image().style(width=512, height=512)
    i.change(lambda x:x, i, o)

demo.launch()
```

However, when I tested this, I got the canvas being cut off at the halfway point, preventing me from drawing in the bottom half of the canvas, which is very strange.

<img width="457" alt="image" src="https://user-images.githubusercontent.com/1778297/218155557-8f766207-6bd1-4848-9344-23380dd41a51.png">

I'm going to reopen this issue so that we can fix this. cc @pngwn

The UI equally crashes if I do something like:

```py
def update_image():
    return gr.update(value="image.png")

image = gr.Image(interactive=True, tool="color-sketch")
# ...
demo.load(update_image, inputs=[], outputs=image)
```

And given that the `source="canvas"` can only initialize with a white canvas, I'm currently failing to see any way to initialise a `color-sketch` with a black image to be drawn on top

Actually uploading the image **does** work tho.

This is due to a resize loop: the canvas gets too big and eventually crashes. I'm surprised it isn't happening when uploading an image, though.
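Below is a toy model of the kind of feedback loop described above, with invented numbers. Suppose each resize measures a container that is slightly larger than the canvas it holds, then sizes the canvas to match. The canvas then grows on every event. Deriving the size from the image and skipping no-op resizes converges instead. This is a hypothetical Python illustration, not gradio's front-end code; `BORDER` and both functions are made up.

```python
BORDER = 2  # hypothetical border width (px) the container adds on each side


def naive_resize(canvas_width: int, events: int) -> int:
    """Size the canvas from the measured container, which includes the border."""
    for _ in range(events):
        container_width = canvas_width + 2 * BORDER  # re-measure after each resize
        canvas_width = container_width  # so the canvas grows on every event
    return canvas_width


def guarded_resize(image_width: int, canvas_width: int, events: int) -> int:
    """Size the canvas from the image and stop once nothing changes."""
    for _ in range(events):
        if canvas_width == image_width:
            break  # no-op resize: do not trigger another resize event
        canvas_width = image_width
    return canvas_width
```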

| 2023-02-22T01:01:15 |

gradio-app/gradio | 3,315 | gradio-app__gradio-3315 | [

"3314"

] | e54042b43b4e4844a46632011a093ca02a5d603a | diff --git a/gradio/blocks.py b/gradio/blocks.py

--- a/gradio/blocks.py

+++ b/gradio/blocks.py

@@ -236,6 +236,10 @@ def set_event_trigger(

"batch": batch,

"max_batch_size": max_batch_size,

"cancels": cancels or [],

+ "types": {

+ "continuous": bool(every),

+ "generator": inspect.isgeneratorfunction(fn) or bool(every),

+ },

}

Context.root_block.dependencies.append(dependency)

return dependency

@@ -579,6 +583,8 @@ def iterate_over_children(children_list):

with block:

iterate_over_children(children)

+ derived_fields = ["types"]

+

with Blocks(theme=config["theme"], css=config["theme"]) as blocks:

# ID 0 should be the root Blocks component

original_mapping[0] = Context.root_block or blocks

@@ -596,6 +602,8 @@ def iterate_over_children(children_list):

# older demos

if dependency["trigger"] == "fake_event":

continue

+ for field in derived_fields:

+ dependency.pop(field, None)

targets = dependency.pop("targets")

trigger = dependency.pop("trigger")

dependency.pop("backend_fn")

| diff --git a/gradio/test_data/blocks_configs.py b/gradio/test_data/blocks_configs.py

--- a/gradio/test_data/blocks_configs.py

+++ b/gradio/test_data/blocks_configs.py

@@ -196,6 +196,7 @@

"max_batch_size": 4,

"cancels": [],

"every": None,

+ "types": {"continuous": False, "generator": False},

},

{

"targets": [39],

@@ -212,6 +213,7 @@

"max_batch_size": 4,

"cancels": [],

"every": None,

+ "types": {"continuous": False, "generator": False},

},

{

"targets": [],

@@ -228,6 +230,7 @@

"max_batch_size": 4,

"cancels": [],

"every": None,

+ "types": {"continuous": False, "generator": False},

},

],

}

@@ -431,6 +434,7 @@

"max_batch_size": 4,

"cancels": [],

"every": None,

+ "types": {"continuous": False, "generator": False},

},

{

"targets": [933],

@@ -447,6 +451,7 @@

"max_batch_size": 4,

"cancels": [],

"every": None,

+ "types": {"continuous": False, "generator": False},

},

{

"targets": [],

@@ -463,6 +468,7 @@

"max_batch_size": 4,

"cancels": [],

"every": None,

+ "types": {"continuous": False, "generator": False},

},

],

}

diff --git a/test/test_blocks.py b/test/test_blocks.py

--- a/test/test_blocks.py

+++ b/test/test_blocks.py

@@ -325,6 +325,37 @@ async def test_restart_after_close(self):

completed = True

assert msg["output"]["data"][0] == "Victor"

+ def test_function_types_documented_in_config(self):

+ def continuous_fn():

+ return 42

+

+ def generator_function():

+ for index in range(10):

+ yield index

+

+ with gr.Blocks() as demo:

+

+ gr.Number(value=lambda: 2, every=2)

+ meaning_of_life = gr.Number()

+ counter = gr.Number()

+ generator_btn = gr.Button(value="Generate")

+ greeting = gr.Textbox()

+ greet_btn = gr.Button(value="Greet")

+

+ greet_btn.click(lambda: "Hello!", inputs=None, outputs=[greeting])

+ generator_btn.click(generator_function, inputs=None, outputs=[counter])

+ demo.load(continuous_fn, inputs=None, outputs=[meaning_of_life], every=1)

+

+ for i, dependency in enumerate(demo.config["dependencies"]):

+ if i == 0:

+ assert dependency["types"] == {"continuous": True, "generator": True}

+ if i == 1:

+ assert dependency["types"] == {"continuous": False, "generator": False}

+ if i == 2:

+ assert dependency["types"] == {"continuous": False, "generator": True}

+ if i == 3:

+ assert dependency["types"] == {"continuous": True, "generator": True}

+

@pytest.mark.asyncio

async def test_run_without_launching(self):

"""Test that we can start the app and use queue without calling .launch().

| Document in the config if the block function is a generator and if it runs forever

- [x] I have searched to see if a similar issue already exists.

**Is your feature request related to a problem? Please describe.**

Needed for the several clients as discussed in this thread: https://huggingface.slack.com/archives/C02SPHC1KD1/p1677190325992569


| We actually already track `every`, I think; it is just other generating functions that aren't tracked. Maybe we can combine them in the config. Although it would be good to know if they run forever or have a finite number of runs, if we have that info. It might also be good to know if a prediction is the last one, although I don't think that is essential.

> Although it would be good to know if they run forever or have a finite number of runs, if we have that info.

Yea I was thinking of adding two fields to the dependency config

1. `continuous`: True if runs forever.

2. `generator`: True if it is a generating function.

1 implies 2 but not the other way around.
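A minimal sketch of the two proposed fields, mirroring the expression the patch above adds to `set_event_trigger` (`continuous = bool(every)`, `generator = inspect.isgeneratorfunction(fn) or bool(every)`). `function_types` is a hypothetical helper name used only for illustration; it is not part of Gradio's API.

```python
import inspect
from typing import Callable, Optional


def function_types(fn: Callable, every: Optional[float] = None) -> dict:
    """Derive a `types` entry for a dependency config (hypothetical helper).

    continuous: the event re-runs on a timer (`every` is set), i.e. runs forever.
    generator:  fn is a generator function, or the event is continuous,
                so "continuous implies generator" holds by construction.
    """
    continuous = bool(every)
    generator = inspect.isgeneratorfunction(fn) or continuous
    return {"continuous": continuous, "generator": generator}


def answer():
    return 42


def count_up():
    for i in range(10):
        yield i


print(function_types(answer))           # {'continuous': False, 'generator': False}
print(function_types(count_up))         # {'continuous': False, 'generator': True}
print(function_types(answer, every=1))  # {'continuous': True, 'generator': True}
```

Because `generator` is computed with `or continuous`, a timed event is always reported as a generator, while a finite generator is not reported as continuous.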

Would we remove `every` in this case? I think those names are better. Or we could add a `types` field with the function type(s), either a list or an 'object'. Some degree of namespacing might be nice.

I guess we can't remove anything as that might be breaking.

I like the suggestion to use a `types` field! Let's not remove `every` for the reason you mentioned, and I think it's good to know the expected frequency anyway. | 2023-02-24T17:42:21 |

gradio-app/gradio | 3,338 | gradio-app__gradio-3338 | [

"3319"

] | 9fbe7a06fbd1ff9f49318cb4d16b8e827420f206 | diff --git a/gradio/components.py b/gradio/components.py

--- a/gradio/components.py

+++ b/gradio/components.py

@@ -1259,7 +1259,8 @@ def __init__(

value=value,

**kwargs,

)

- self.cleared_value = self.value

+

+ self.cleared_value = self.value or ([] if multiselect else "")

def get_config(self):

return {

diff --git a/gradio/utils.py b/gradio/utils.py

--- a/gradio/utils.py

+++ b/gradio/utils.py

@@ -886,11 +886,11 @@ def tex2svg(formula, *args):

svg_start = xml_code.index("<svg ")

svg_code = xml_code[svg_start:]

svg_code = re.sub(r"<metadata>.*<\/metadata>", "", svg_code, flags=re.DOTALL)

- svg_code = re.sub(r' width="[^"]+"', '', svg_code)

+ svg_code = re.sub(r' width="[^"]+"', "", svg_code)

height_match = re.search(r'height="([\d.]+)pt"', svg_code)

if height_match:

height = float(height_match.group(1))

- new_height = height / FONTSIZE # conversion from pt to em

+ new_height = height / FONTSIZE # conversion from pt to em

svg_code = re.sub(r'height="[\d.]+pt"', f'height="{new_height}em"', svg_code)

copy_code = f"<span style='font-size: 0px'>{formula}</span>"

return f"{copy_code}{svg_code}"

| 3.16.0 introduced breaking change for Dropdown

### Describe the bug

The addition of the `multiselect` option to `Dropdown` introduced a breaking change to the Dropdown component's output signature. The default value when nothing is selected (for example, an optional dropdown that the user does not interact with before submitting) is now an array (a list on the Python side) instead of an empty string. This means checks like `dropdown == ""` now evaluate to False rather than True.
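A minimal sketch of why the comparison breaks, plus one defensive check for user code that behaves the same whether the component sends `""`, `[]`, or `None`. `is_empty_selection` is a hypothetical helper for illustration, not part of Gradio.

```python
from typing import List, Union

DropdownValue = Union[str, List[str], None]


def is_empty_selection(value: DropdownValue) -> bool:
    """True when nothing was selected; relies on "", [] and None all being falsy."""
    return not value


print("" == [])                     # False: an `x == ""` check fails once x is []
print(is_empty_selection(""))       # True
print(is_empty_selection([]))       # True
print(is_empty_selection(None))     # True
print(is_empty_selection("cat"))    # False
print(is_empty_selection(["cat"]))  # False
```

This only works around the change on the caller's side; it does not restore the previous `""` default.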

I think it is fine if users select an option and _then_ select nothing.

This is the offending line: https://github.com/gradio-app/gradio/pull/2871/files#diff-f0b03055e354ad5f6f0c4c006adba9a9fe60a07ca1790b75e20cd0b69737ede8R11

### Is there an existing issue for this?

- [X] I have searched the existing issues

### Reproduction

Above.

### Screenshot

-

### Logs

```shell

-

```

### System Info

```shell

-

```

### Severity

blocking upgrade to latest gradio version

| Wouldn't it make sense to have the default be `""` if `multiselect=False` and be `[]` if `multiselect=True`?
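The suggestion above corresponds to the expression in the `components.py` patch for this row, `self.cleared_value = self.value or ([] if multiselect else "")`. Below is a standalone sketch of that fallback. `cleared_value` is a hypothetical function name, not Gradio's actual method, and `multiselect` is typed as `Optional[bool]` on the assumption that it may be left unset (falsy).

```python
from typing import List, Optional, Union


def cleared_value(
    value: Union[str, List[str], None], multiselect: Optional[bool]
) -> Union[str, List[str]]:
    """Fall back to [] for multiselect dropdowns and "" otherwise when value is empty."""
    return value or ([] if multiselect else "")


print(repr(cleared_value(None, False)))        # ''
print(repr(cleared_value(None, None)))         # ''
print(repr(cleared_value(None, True)))         # []
print(repr(cleared_value("a", False)))         # 'a'
print(repr(cleared_value(["a", "b"], True)))   # ['a', 'b']
```

Because the expression uses `or`, an explicit empty value (`""` or `[]`) is also replaced by the type-appropriate default, so single-select dropdowns keep the pre-3.16.0 `""` behaviour.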