| repo (stringclasses, 856 values) | pull_number (int64, 3 to 127k) | instance_id (stringlengths 12 to 58) | issue_numbers (sequencelengths 1 to 5) | base_commit (stringlengths 40 to 40) | patch (stringlengths 67 to 1.54M) | test_patch (stringlengths 0 to 107M) | problem_statement (stringlengths 3 to 307k) | hints_text (stringlengths 0 to 908k) | created_at (timestamp[s]) |
|---|---|---|---|---|---|---|---|---|---|

gradio-app/gradio | 66 | gradio-app__gradio-66 | [

"64"

] | 6942d2e44db1eba136f8f2d5103f0c0cf17e0289 | diff --git a/gradio/interface.py b/gradio/interface.py

--- a/gradio/interface.py

+++ b/gradio/interface.py

@@ -3,8 +3,6 @@

interface using the input and output types.

"""

-import tempfile

-import webbrowser

from gradio.inputs import InputComponent

from gradio.outputs import OutputComponent

from gradio import networking, strings, utils

@@ -12,8 +10,8 @@

import requests

import random

import time

+import webbrowser

import inspect

-from IPython import get_ipython

import sys

import weakref

import analytics

@@ -209,21 +207,14 @@ def run_prediction(self, processed_input, return_duration=False):

start = time.time()

if self.capture_session and self.session is not None:

graph, sess = self.session

- with graph.as_default():

- with sess.as_default():

- prediction = predict_fn(*processed_input)

+ with graph.as_default(), sess.as_default():

+ prediction = predict_fn(*processed_input)

else:

try:

prediction = predict_fn(*processed_input)

except ValueError as exception:

- if str(exception).endswith("is not an element of this "

- "graph."):

- raise ValueError("It looks like you might be using "

- "tensorflow < 2.0. Please "

- "pass capture_session=True in "

- "Interface to avoid the 'Tensor is "

- "not an element of this graph.' "

- "error.")

+ if str(exception).endswith("is not an element of this graph."):

+ raise ValueError(strings.en["TF1_ERROR"])

else:

raise exception

duration = time.time() - start

@@ -238,17 +229,11 @@ def run_prediction(self, processed_input, return_duration=False):

else:

return predictions

-

- def process(self, raw_input, predict_fn=None):

+ def process(self, raw_input):

"""

- :param raw_input: a list of raw inputs to process and apply the

- prediction(s) on.

- :param predict_fn: which function to process. If not provided, all of the model functions are used.

- :return:

- processed output: a list of processed outputs to return as the

- prediction(s).

- duration: a list of time deltas measuring inference time for each

- prediction fn.

+ :param raw_input: a list of raw inputs to process and apply the prediction(s) on.

+ processed output: a list of processed outputs to return as the prediction(s).

+ duration: a list of time deltas measuring inference time for each prediction fn.

"""

processed_input = [input_interface.preprocess(raw_input[i])

for i, input_interface in enumerate(self.input_interfaces)]

@@ -258,6 +243,11 @@ def process(self, raw_input, predict_fn=None):

return processed_output, durations

def interpret(self, raw_input):

+ """

+ Runs the interpretation command for the machine learning model. Handles both the "default" out-of-the-box

+ interpretation for a certain set of UI component types, as well as the custom interpretation case.

+ :param raw_input: a list of raw inputs to apply the interpretation(s) on.

+ """

if self.interpretation == "default":

interpreter = gradio.interpretation.default()

processed_input = []

@@ -270,9 +260,22 @@ def interpret(self, raw_input):

interpretation = interpreter(self, processed_input)

else:

processed_input = [input_interface.preprocess(raw_input[i])

- for i, input_interface in enumerate(self.input_interfaces)]

+ for i, input_interface in enumerate(self.input_interfaces)]

interpreter = self.interpretation

- interpretation = interpreter(*processed_input)

+

+ if self.capture_session and self.session is not None:

+ graph, sess = self.session

+ with graph.as_default(), sess.as_default():

+ interpretation = interpreter(*processed_input)

+ else:

+ try:

+ interpretation = interpreter(*processed_input)

+ except ValueError as exception:

+ if str(exception).endswith("is not an element of this graph."):

+ raise ValueError(strings.en["TF1_ERROR"])

+ else:

+ raise exception

+

if len(raw_input) == 1:

interpretation = [interpretation]

return interpretation

@@ -422,9 +425,9 @@ def launch(self, inline=None, inbrowser=None, share=False, debug=False):

if not is_in_interactive_mode:

self.run_until_interrupted(thread, path_to_local_server)

-

return app, path_to_local_server, share_url

+

def reset_all():

for io in Interface.get_instances():

io.close()

diff --git a/gradio/interpretation.py b/gradio/interpretation.py

--- a/gradio/interpretation.py

+++ b/gradio/interpretation.py

@@ -1,6 +1,5 @@

from gradio.inputs import Image, Textbox

from gradio.outputs import Label

-from gradio import processing_utils

from skimage.segmentation import slic

import numpy as np

@@ -8,6 +7,7 @@

Image: "numpy",

}

+

def default(separator=" ", n_segments=20):

"""

Basic "default" interpretation method that uses "leave-one-out" to explain predictions for

diff --git a/gradio/strings.py b/gradio/strings.py

--- a/gradio/strings.py

+++ b/gradio/strings.py

@@ -7,4 +7,6 @@

"PUBLIC_SHARE_TRUE": "To create a public link, set `share=True` in the argument to `launch()`.",

"MODEL_PUBLICLY_AVAILABLE_URL": "Model available publicly at: {} (may take up to a minute for link to be usable)",

"GENERATING_PUBLIC_LINK": "Generating public link (may take a few seconds...):",

+ "TF1_ERROR": "It looks like you might be using tensorflow < 2.0. Please pass capture_session=True in Interface() to"

+ " avoid the 'Tensor is not an element of this graph.' error."

}

| diff --git a/test/test_interpretation.py b/test/test_interpretation.py

new file mode 100644

--- /dev/null

+++ b/test/test_interpretation.py

@@ -0,0 +1,47 @@

+import unittest

+import gradio.interpretation

+import gradio.test_data

+from gradio.processing_utils import decode_base64_to_image

+from gradio import Interface

+import numpy as np

+

+

+class TestDefault(unittest.TestCase):

+ def setUp(self):

+ self.default_method = gradio.interpretation.default()

+

+ def test_default_text(self):

+ max_word_len = lambda text: max([len(word) for word in text.split(" ")])

+ text_interface = Interface(max_word_len, "textbox", "label")

+ interpretation = self.default_method(text_interface, ["quickest brown fox"])[0]

+ self.assertGreater(interpretation[0][1], 0) # Checks to see if the first letter has >0 score.

+ self.assertEqual(interpretation[-1][1], 0) # Checks to see if the last letter has 0 score.

+

+ def test_default_image(self):

+ max_pixel_value = lambda img: img.max()

+ img_interface = Interface(max_pixel_value, "image", "label")

+ array = np.zeros((100,100))

+ array[0, 0] = 1

+ interpretation = self.default_method(img_interface, [array])[0]

+ self.assertGreater(interpretation[0][0], 0) # Checks to see if the top-left has >0 score.

+

+

+class TestCustom(unittest.TestCase):

+ def test_custom_text(self):

+ max_word_len = lambda text: max([len(word) for word in text.split(" ")])

+ custom = lambda text: [(char, 1) for char in text]

+ text_interface = Interface(max_word_len, "textbox", "label", interpretation=custom)

+ result = text_interface.interpret(["quickest brown fox"])[0]

+ self.assertEqual(result[0][1], 1) # Checks to see if the first letter has score of 1.

+

+ def test_custom_img(self):

+ max_pixel_value = lambda img: img.max()

+ custom = lambda img: img.tolist()

+ img_interface = Interface(max_pixel_value, "image", "label", interpretation=custom)

+ result = img_interface.interpret([gradio.test_data.BASE64_IMAGE])[0]

+ expected_result = np.asarray(decode_base64_to_image(gradio.test_data.BASE64_IMAGE).convert('RGB')).tolist()

+ self.assertEqual(result, expected_result)

+

+

+if __name__ == '__main__':

+ unittest.main()

\ No newline at end of file

| Interpretation doesn't work in Tensorflow 1.x

For TF 1.x models, interpretation doesn't set up the correct session

| 2020-10-05T12:44:53 |

|
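The patch above repeats one error-translation pattern in both `run_prediction` and `interpret`: call the user function, and if it raises a `ValueError` whose message ends with "is not an element of this graph.", re-raise it with the friendlier `TF1_ERROR` text. A minimal sketch of that pattern in plain Python follows. The helper name `call_with_tf1_hint` is hypothetical (it does not exist in gradio), and the sketch deliberately leaves out the TensorFlow graph and session handling.

```python
# Message text copied from the TF1_ERROR entry added to gradio/strings.py in the patch.
TF1_HINT = (
    "It looks like you might be using tensorflow < 2.0. Please pass "
    "capture_session=True in Interface() to avoid the 'Tensor is not an "
    "element of this graph.' error."
)


def call_with_tf1_hint(fn, *args):
    """Hypothetical helper: call fn(*args) and translate the TF 1.x graph error."""
    try:
        return fn(*args)
    except ValueError as exception:
        # Only the specific TF 1.x message is rewritten.
        if str(exception).endswith("is not an element of this graph."):
            raise ValueError(TF1_HINT) from exception
        # Any other ValueError propagates unchanged.
        raise
```

Factoring the check into one helper is only an illustration of the shared logic; the patch itself keeps the `try`/`except` inline in both methods.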

gradio-app/gradio | 365 | gradio-app__gradio-365 | [

"343"

] | f361f36625d8c2aab5713ce9f073feb741284003 | diff --git a/gradio/mix.py b/gradio/mix.py

--- a/gradio/mix.py

+++ b/gradio/mix.py

@@ -45,18 +45,20 @@ def __init__(self, *interfaces, **options):

def connected_fn(*data): # Run each function with the appropriate preprocessing and postprocessing

for idx, io in enumerate(interfaces):

- # skip preprocessing for first interface since the compound interface will include it

- if idx > 0:

- data = [input_interface.preprocess(data[i]) for i, input_interface in enumerate(io.input_components)]

+ # skip preprocessing for first interface since the Series interface will include it

+ if idx > 0 and not(io.api_mode):

+ data = [input_component.preprocess(data[i]) for i, input_component in enumerate(io.input_components)]

+

# run all of predictions sequentially

predictions = []

for predict_fn in io.predict:

prediction = predict_fn(*data)

predictions.append(prediction)

data = predictions

- # skip postprocessing for final interface since the compound interface will include it

- if idx < len(interfaces) - 1:

- data = [output_interface.postprocess(data[i]) for i, output_interface in enumerate(io.output_components)]

+ # skip postprocessing for final interface since the Series interface will include it

+ if idx < len(interfaces) - 1 and not(io.api_mode):

+ data = [output_component.postprocess(data[i]) for i, output_component in enumerate(io.output_components)]

+

return data[0]

connected_fn.__name__ = " => ".join([f[0].__name__ for f in fns])

@@ -65,6 +67,7 @@ def connected_fn(*data): # Run each function with the appropriate preprocessing

"fn": connected_fn,

"inputs": interfaces[0].input_components,

"outputs": interfaces[-1].output_components,

+ "api_mode": interfaces[0].api_mode, # TODO(abidlabs): allow mixing api_mode and non-api_mode interfaces

}

kwargs.update(options)

super().__init__(**kwargs)

| diff --git a/test/test_mix.py b/test/test_mix.py

--- a/test/test_mix.py

+++ b/test/test_mix.py

@@ -4,6 +4,11 @@

import os

+"""

+WARNING: Some of these tests have an external dependency: namely that Hugging Face's Hub and Space APIs do not change, and they keep their most famous models up. So if, e.g. Spaces is down, then these test will not pass.

+"""

+

+

os.environ["GRADIO_ANALYTICS_ENABLED"] = "False"

@@ -15,6 +20,14 @@ def test_in_interface(self):

series = mix.Series(io1, io2)

self.assertEqual(series.process(["Hello"])[0], ["Hello World!"])

+ def test_with_external(self):

+ io1 = gr.Interface.load("spaces/abidlabs/image-identity")

+ io2 = gr.Interface.load("spaces/abidlabs/image-classifier")

+ series = mix.Series(io1, io2)

+ output = series("test/test_data/lion.jpg")

+ self.assertGreater(output['lion'], 0.5)

+

+

class TestParallel(unittest.TestCase):

def test_in_interface(self):

io1 = gr.Interface(lambda x: x + " World 1!", "textbox",

@@ -24,6 +37,13 @@ def test_in_interface(self):

parallel = mix.Parallel(io1, io2)

self.assertEqual(parallel.process(["Hello"])[0], ["Hello World 1!",

"Hello World 2!"])

+ def test_with_external(self):

+ io1 = gr.Interface.load("spaces/abidlabs/english_to_spanish")

+ io2 = gr.Interface.load("spaces/abidlabs/english2german")

+ parallel = mix.Parallel(io1, io2)

+ hello_es, hello_de = parallel("Hello")

+ self.assertIn("hola", hello_es.lower())

+ self.assertIn("hallo", hello_de.lower())

if __name__ == '__main__':

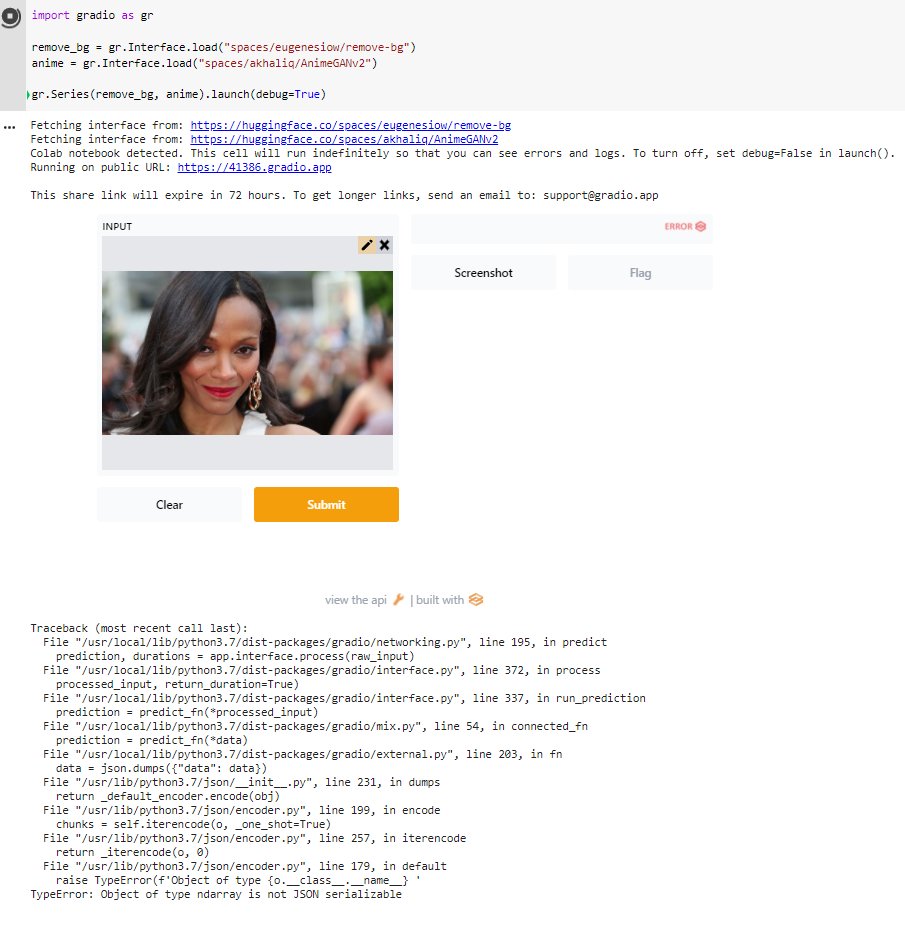

| Series and Parallel don't work if prediction contains an ndarray when using Spaces

I was trying to recreate the example from this tweet:

https://twitter.com/abidlabs/status/1457753971075002376

```

import gradio as gr

general_classifier = gr.Interface.load("spaces/abidlabs/vision-transformer")

bird_classifier = gr.Interface.load("spaces/akhaliq/bird_classifier")

gr.Parallel(general_classifier, bird_classifier).launch(debug=True)

```

But this fails with the following error:

```

Traceback (most recent call last):

File "/usr/local/lib/python3.7/dist-packages/gradio/networking.py", line 193, in predict

prediction, durations = app.interface.process(raw_input)

File "/usr/local/lib/python3.7/dist-packages/gradio/interface.py", line 364, in process

processed_input, return_duration=True)

File "/usr/local/lib/python3.7/dist-packages/gradio/interface.py", line 332, in run_prediction

prediction = predict_fn(*processed_input)

File "/usr/local/lib/python3.7/dist-packages/gradio/external.py", line 203, in fn

data = json.dumps({"data": data})

File "/usr/lib/python3.7/json/__init__.py", line 231, in dumps

return _default_encoder.encode(obj)

File "/usr/lib/python3.7/json/encoder.py", line 199, in encode

chunks = self.iterencode(o, _one_shot=True)

File "/usr/lib/python3.7/json/encoder.py", line 257, in iterencode

return _iterencode(o, 0)

File "/usr/lib/python3.7/json/encoder.py", line 179, in default

raise TypeError(f'Object of type {o.__class__.__name__} '

TypeError: Object of type ndarray is not JSON serializable

```

| You’re right! We’re working on patching Parallel() as soon as possible



Should work now!

Thanks for the quick fix! I can confirm that it now works for Parallel, but on gradio 2.4.5 Series is still broken. For example:

```

import gradio as gr

remove_bg = gr.Interface.load("spaces/eugenesiow/remove-bg")

anime = gr.Interface.load("spaces/akhaliq/AnimeGANv2")

gr.Series(remove_bg, anime).launch()

```

fails with:

```

Traceback (most recent call last):

File "/usr/local/lib/python3.7/dist-packages/gradio/networking.py", line 195, in predict

prediction, durations = app.interface.process(raw_input)

File "/usr/local/lib/python3.7/dist-packages/gradio/interface.py", line 372, in process

processed_input, return_duration=True)

File "/usr/local/lib/python3.7/dist-packages/gradio/interface.py", line 337, in run_prediction

prediction = predict_fn(*processed_input)

File "/usr/local/lib/python3.7/dist-packages/gradio/mix.py", line 54, in connected_fn

prediction = predict_fn(*data)

File "/usr/local/lib/python3.7/dist-packages/gradio/external.py", line 203, in fn

data = json.dumps({"data": data})

File "/usr/lib/python3.7/json/__init__.py", line 231, in dumps

return _default_encoder.encode(obj)

File "/usr/lib/python3.7/json/encoder.py", line 199, in encode

chunks = self.iterencode(o, _one_shot=True)

File "/usr/lib/python3.7/json/encoder.py", line 257, in iterencode

return _iterencode(o, 0)

File "/usr/lib/python3.7/json/encoder.py", line 179, in default

raise TypeError(f'Object of type {o.__class__.__name__} '

TypeError: Object of type ndarray is not JSON serializable

```

Ah you're right, thanks will fix this issue | 2021-11-15T14:42:30 |
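The tracebacks in this row all end in the same place: `json.dumps({"data": data})` receives an object that the standard encoder cannot handle. The sketch below reproduces that failure and shows one generic workaround, a `default=` hook that converts array-like objects via `tolist()`. This is not the fix applied in the patch (which skips pre- and postprocessing for `api_mode` interfaces). `FakeArray` and `to_jsonable` are hypothetical names, and `FakeArray` is only a stand-in for `numpy.ndarray` so that the example needs nothing beyond the standard library.

```python
import json


class FakeArray:
    """Hypothetical stand-in for numpy.ndarray: wraps nested lists and exposes tolist()."""

    def __init__(self, values):
        self._values = values

    def tolist(self):
        return self._values


def to_jsonable(obj):
    """Fallback for json.dumps: convert anything array-like through tolist()."""
    if hasattr(obj, "tolist"):
        return obj.tolist()
    raise TypeError(
        "Object of type {} is not JSON serializable".format(type(obj).__name__)
    )


payload = {"data": [FakeArray([[0, 1], [2, 3]])]}

# json.dumps(payload) raises TypeError, as in the tracebacks above.
# With the hook, the array-like value is converted to nested lists first.
encoded = json.dumps(payload, default=to_jsonable)
```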

gradio-app/gradio | 420 | gradio-app__gradio-420 | [

"419"

] | eb7194f7563def402d5efa6c3afff781340065a5 | diff --git a/gradio/interface.py b/gradio/interface.py

--- a/gradio/interface.py

+++ b/gradio/interface.py

@@ -30,7 +30,7 @@ class Interface:

Interfaces are created with Gradio by constructing a `gradio.Interface()` object or by calling `gradio.Interface.load()`.

"""

- instances = weakref.WeakSet() # stores all currently existing Interface instances

+ instances = weakref.WeakSet() # stores references to all currently existing Interface instances

@classmethod

def get_instances(cls):

@@ -76,9 +76,8 @@ def __init__(self, fn, inputs=None, outputs=None, verbose=None, examples=None,

examples_per_page=10, live=False, layout="unaligned", show_input=True, show_output=True,

capture_session=None, interpretation=None, num_shap=2.0, theme=None, repeat_outputs_per_model=True,

title=None, description=None, article=None, thumbnail=None,

- css=None, server_port=None, server_name=None, height=500, width=900,

- allow_screenshot=True, allow_flagging=None, flagging_options=None, encrypt=False,

- show_tips=None, flagging_dir="flagged", analytics_enabled=None, enable_queue=None, api_mode=None):

+ css=None, height=500, width=900, allow_screenshot=True, allow_flagging=None, flagging_options=None,

+ encrypt=False, show_tips=None, flagging_dir="flagged", analytics_enabled=None, enable_queue=None, api_mode=None):

"""

Parameters:

fn (Callable): the function to wrap an interface around.

@@ -175,12 +174,7 @@ def __init__(self, fn, inputs=None, outputs=None, verbose=None, examples=None,

"Examples argument must either be a directory or a nested list, where each sublist represents a set of inputs.")

self.num_shap = num_shap

self.examples_per_page = examples_per_page

-

- self.server_name = server_name

- self.server_port = server_port

- if server_name is not None or server_port is not None:

- warnings.warn("The server_name and server_port parameters in the `Interface` class will be deprecated. Please provide them in the `launch()` method instead.")

-

+

self.simple_server = None

self.allow_screenshot = allow_screenshot

# For allow_flagging and analytics_enabled: (1) first check for parameter, (2) check for environment variable, (3) default to True

@@ -586,7 +580,6 @@ def launch(self, inline=None, inbrowser=None, share=False, debug=False,

path_to_local_server (str): Locally accessible link

share_url (str): Publicly accessible link (if share=True)

"""

-

# Set up local flask server

config = self.get_config_file()

self.config = config

@@ -595,6 +588,7 @@ def launch(self, inline=None, inbrowser=None, share=False, debug=False,

self.auth = auth

self.auth_message = auth_message

self.show_tips = show_tips

+ self.show_error = show_error

# Request key for encryption

if self.encrypt:

@@ -602,19 +596,18 @@ def launch(self, inline=None, inbrowser=None, share=False, debug=False,

getpass.getpass("Enter key for encryption: "))

# Store parameters

- server_name = server_name or self.server_name or networking.LOCALHOST_NAME

- server_port = server_port or self.server_port or networking.INITIAL_PORT_VALUE

if self.enable_queue is None:

self.enable_queue = enable_queue

# Launch local flask server

- server_port, path_to_local_server, app, thread = networking.start_server(

+ server_port, path_to_local_server, app, thread, server = networking.start_server(

self, server_name, server_port, self.auth)

self.local_url = path_to_local_server

self.server_port = server_port

self.status = "RUNNING"

- self.server = app

- self.show_error = show_error

+ self.server = server

+ self.server_app = app

+ self.server_thread = thread

# Count number of launches

utils.launch_counter()

@@ -709,21 +702,18 @@ def launch(self, inline=None, inbrowser=None, share=False, debug=False,

return app, path_to_local_server, share_url

- def close(self):

+ def close(self, verbose=True):

"""

Closes the Interface that was launched. This will close the server and free the port.

"""

try:

- if self.share_url:

- requests.get("{}/shutdown".format(self.share_url))

- print("Closing Gradio server on port {}...".format(self.server_port))

- elif self.local_url:

- requests.get("{}shutdown".format(self.local_url))

- print("Closing Gradio server on port {}...".format(self.server_port))

- else:

- pass # server not running

- except (requests.ConnectionError, ConnectionResetError):

- pass # server is already closed

+ self.server.shutdown()

+ self.server_thread.join()

+ print("Closing server running on port: {}".format(self.server_port))

+ except AttributeError: # can't close if not running

+ pass

+ except OSError: # sometimes OSError is thrown when shutting down

+ pass

def integrate(self, comet_ml=None, wandb=None, mlflow=None):

"""

@@ -764,11 +754,13 @@ def integrate(self, comet_ml=None, wandb=None, mlflow=None):

utils.integration_analytics(data)

-def close_all():

+def close_all(verbose=True):

+ # Tries to close all running interfaces, but method is a little flaky.

for io in Interface.get_instances():

- io.close()

+ io.close(verbose)

def reset_all():

- warnings.warn("The `reset_all()` method has been renamed to `close_all()`. Please use `close_all()` instead.")

+ warnings.warn("The `reset_all()` method has been renamed to `close_all()` "

+ "and will be deprecated. Please use `close_all()` instead.")

close_all()

diff --git a/gradio/networking.py b/gradio/networking.py

--- a/gradio/networking.py

+++ b/gradio/networking.py

@@ -2,45 +2,44 @@

Defines helper methods useful for setting up ports, launching servers, and handling `ngrok`

"""

-import os

-import socket

-import threading

+import csv

+import datetime

from flask import Flask, request, session, jsonify, abort, send_file, render_template, redirect

from flask_cachebuster import CacheBuster

from flask_login import LoginManager, login_user, current_user, login_required

from flask_cors import CORS

-import threading

-import pkg_resources

-import datetime

-import time

+from functools import wraps

+import inspect

+import io

import json

-import urllib.request

-from shutil import copyfile

+import logging

+import os

+import pkg_resources

import requests

+import socket

import sys

-import csv

-import logging

-from gradio.tunneling import create_tunnel

-from gradio import encryptor

-from gradio import queue

-from functools import wraps

-import io

-import inspect

+import threading

+import time

import traceback

+import urllib.request

from werkzeug.security import safe_join

+from werkzeug.serving import make_server

+from gradio import encryptor

+from gradio import queue

+from gradio.tunneling import create_tunnel

-INITIAL_PORT_VALUE = int(os.getenv(

- 'GRADIO_SERVER_PORT', "7860")) # The http server will try to open on port 7860. If not available, 7861, 7862, etc.

-TRY_NUM_PORTS = int(os.getenv(

- 'GRADIO_NUM_PORTS', "100")) # Number of ports to try before giving up and throwing an exception.

-LOCALHOST_NAME = os.getenv(

- 'GRADIO_SERVER_NAME', "127.0.0.1")

+# By default, the http server will try to open on port 7860. If not available, 7861, 7862, etc.

+INITIAL_PORT_VALUE = int(os.getenv('GRADIO_SERVER_PORT', "7860"))

+# Number of ports to try before giving up and throwing an exception.

+TRY_NUM_PORTS = int(os.getenv('GRADIO_NUM_PORTS', "100"))

+LOCALHOST_NAME = os.getenv('GRADIO_SERVER_NAME', "127.0.0.1")

GRADIO_API_SERVER = "https://api.gradio.app/v1/tunnel-request"

GRADIO_FEATURE_ANALYTICS_URL = "https://api.gradio.app/gradio-feature-analytics/"

STATIC_TEMPLATE_LIB = pkg_resources.resource_filename("gradio", "templates/")

STATIC_PATH_LIB = pkg_resources.resource_filename("gradio", "templates/frontend/static")

VERSION_FILE = pkg_resources.resource_filename("gradio", "version.txt")

+

with open(VERSION_FILE) as version_file:

GRADIO_STATIC_ROOT = "https://gradio.s3-us-west-2.amazonaws.com/" + \

version_file.read().strip() + "/static/"

@@ -426,10 +425,22 @@ def queue_thread(path_to_local_server, test_mode=False):

break

-def start_server(interface, server_name, server_port, auth=None, ssl=None):

- port = get_first_available_port(

- server_port, server_port + TRY_NUM_PORTS

- )

+def start_server(interface, server_name=None, server_port=None, auth=None, ssl=None):

+ if server_name is None:

+ server_name = LOCALHOST_NAME

+ if server_port is None: # if port is not specified, start at 7860 and search for first available port

+ port = get_first_available_port(

+ INITIAL_PORT_VALUE, INITIAL_PORT_VALUE + TRY_NUM_PORTS

+ )

+ else:

+ try:

+ s = socket.socket() # create a socket object

+ s.bind((LOCALHOST_NAME, server_port)) # Bind to the port to see if it's available (otherwise, raise OSError)

+ s.close()

+ except OSError:

+ raise OSError("Port {} is in use. If a gradio.Interface is running on the port, you can close() it or gradio.close_all().".format(server_port))

+ port = server_port

+

url_host_name = "localhost" if server_name == "0.0.0.0" else server_name

path_to_local_server = "http://{}:{}/".format(url_host_name, port)

if auth is not None:

@@ -451,15 +462,13 @@ def start_server(interface, server_name, server_port, auth=None, ssl=None):

app.queue_thread.start()

if interface.save_to is not None:

interface.save_to["port"] = port

- app_kwargs = {"port": port, "host": server_name}

+ app_kwargs = {"app": app, "port": port, "host": server_name}

if ssl:

app_kwargs["ssl_context"] = ssl

- thread = threading.Thread(target=app.run,

- kwargs=app_kwargs,

- daemon=True)

+ server = make_server(**app_kwargs)

+ thread = threading.Thread(target=server.serve_forever, daemon=True)

thread.start()

-

- return port, path_to_local_server, app, thread

+ return port, path_to_local_server, app, thread, server

def get_state():

return session.get("state")

| diff --git a/test/test_interfaces.py b/test/test_interfaces.py

--- a/test/test_interfaces.py

+++ b/test/test_interfaces.py

@@ -10,6 +10,7 @@

from comet_ml import Experiment

import mlflow

import wandb

+import socket

os.environ["GRADIO_ANALYTICS_ENABLED"] = "False"

@@ -24,10 +25,19 @@ def captured_output():

sys.stdout, sys.stderr = old_out, old_err

class TestInterface(unittest.TestCase):

- def test_reset_all(self):

+ def test_close(self):

+ io = Interface(lambda input: None, "textbox", "label")

+ _, local_url, _ = io.launch(prevent_thread_lock=True)

+ response = requests.get(local_url)

+ self.assertEqual(response.status_code, 200)

+ io.close()

+ with self.assertRaises(Exception):

+ response = requests.get(local_url)

+

+ def test_close_all(self):

interface = Interface(lambda input: None, "textbox", "label")

interface.close = mock.MagicMock()

- reset_all()

+ close_all()

interface.close.assert_called()

def test_examples_invalid_input(self):

diff --git a/test/test_networking.py b/test/test_networking.py

--- a/test/test_networking.py

+++ b/test/test_networking.py

@@ -199,7 +199,6 @@ def test_state_initialization(self):

def test_state_value(self):

io = gr.Interface(lambda x: len(x), "text", "label")

- io.launch(prevent_thread_lock=True)

app, _, _ = io.launch(prevent_thread_lock=True)

with app.test_request_context():

networking.set_state("test")

diff --git a/test/test_utils.py b/test/test_utils.py

--- a/test/test_utils.py

+++ b/test/test_utils.py

@@ -101,20 +101,8 @@ def test_readme_to_html_doesnt_crash_on_connection_error(self, mock_get):

readme_to_html("placeholder")

def test_readme_to_html_correct_parse(self):

- readme_to_html("https://github.com/gradio-app/gradio/blob/master/README.md")

-

- def test_launch_counter(self):

- with tempfile.NamedTemporaryFile() as tmp:

- with mock.patch('gradio.utils.JSON_PATH', tmp.name):

- interface = gradio.Interface(lambda x: x, "textbox", "label")

- os.remove(tmp.name)

- interface.launch(prevent_thread_lock=True)

- with open(tmp.name) as j:

- self.assertEqual(json.load(j)['launches'], 1)

- interface.launch(prevent_thread_lock=True)

- with open(tmp.name) as j:

- self.assertEqual(json.load(j)['launches'], 2)

-

+ readme_to_html("https://github.com/gradio-app/gradio/blob/master/README.md")

+

if __name__ == '__main__':

unittest.main()

| [Suggestions] Don't autofind a port when a port is user specified

**Is your feature request related to a problem? Please describe.**

When using `iface = gr.Interface(.., server_port=5000)`, if port `5000` is already in use, it will use port `5001` instead.

**Describe the solution you'd like**

It would be better if it crashed instead, with an error saying the port is already in use.

**Additional context**

This was an issue when regularly reloading the gradio app and reloading pages in the browser.

| Makes sense, we'll fix it! | 2021-12-17T16:44:31 |
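The behavior requested here, failing loudly when a user-specified port is taken, comes down to a bind probe: try to bind a socket to the port and let the `OSError` surface. A minimal sketch using only the standard library follows. The function name `ensure_port_is_free` is hypothetical, and the error text is shortened relative to the message in the patch.

```python
import socket


def ensure_port_is_free(port, host="127.0.0.1"):
    """Hypothetical helper: return `port` if it can be bound on `host`, else raise OSError."""
    probe = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        # bind() raises OSError (address already in use) if another socket holds the port.
        probe.bind((host, port))
    except OSError as error:
        raise OSError("Port {} is in use.".format(port)) from error
    finally:
        # Release the probe socket so the real server can bind afterwards.
        probe.close()
    return port
```

A probe like this is inherently racy, since another process could take the port between the check and the real server's bind, so it is best read as an early, clearer error rather than a guarantee.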

gradio-app/gradio | 472 | gradio-app__gradio-472 | [

"447"

] | c1a23a75a4d679fd79e255955e453c0474c91ecb | diff --git a/gradio/app.py b/gradio/app.py

--- a/gradio/app.py

+++ b/gradio/app.py

@@ -2,6 +2,7 @@

from __future__ import annotations

from fastapi import FastAPI, Request, Depends, HTTPException, status

+from fastapi.concurrency import run_in_threadpool

from fastapi.middleware.cors import CORSMiddleware

from fastapi.responses import JSONResponse, HTMLResponse, FileResponse

from fastapi.security import OAuth2PasswordRequestForm

@@ -37,6 +38,7 @@

allow_headers=["*"],

)

+

templates = Jinja2Templates(directory=STATIC_TEMPLATE_LIB)

@@ -178,23 +180,28 @@ async def predict(

if body.get("example_id") != None:

example_id = body["example_id"]

if app.interface.cache_examples:

- prediction = load_from_cache(app.interface, example_id)

+ prediction = await run_in_threadpool(

+ load_from_cache, app.interface, example_id)

durations = None

else:

- prediction, durations = process_example(app.interface, example_id)

+ prediction, durations = await run_in_threadpool(

+ process_example, app.interface, example_id)

else:

raw_input = body["data"]

if app.interface.show_error:

try:

- prediction, durations = app.interface.process(raw_input)

+ prediction, durations = await run_in_threadpool(

+ app.interface.process, raw_input)

except BaseException as error:

traceback.print_exc()

return JSONResponse(content={"error": str(error)},

status_code=500)

else:

- prediction, durations = app.interface.process(raw_input)

+ prediction, durations = await run_in_threadpool(

+ app.interface.process, raw_input)

if app.interface.allow_flagging == "auto":

- flag_index = app.interface.flagging_callback.flag(

+ flag_index = await run_in_threadpool(

+ app.interface.flagging_callback.flag,

app.interface, raw_input, prediction,

flag_option="" if app.interface.flagging_options else None,

username=username)

@@ -216,7 +223,8 @@ async def flag(

await utils.log_feature_analytics(app.interface.ip_address, 'flag')

body = await request.json()

data = body['data']

- app.interface.flagging_callback.flag(

+ await run_in_threadpool(

+ app.interface.flagging_callback.flag,

app.interface, data['input_data'], data['output_data'],

flag_option=data.get("flag_option"), flag_index=data.get("flag_index"),

username=username)

@@ -229,8 +237,8 @@ async def interpret(request: Request):

await utils.log_feature_analytics(app.interface.ip_address, 'interpret')

body = await request.json()

raw_input = body["data"]

- interpretation_scores, alternative_outputs = app.interface.interpret(

- raw_input)

+ interpretation_scores, alternative_outputs = await run_in_threadpool(

+ app.interface.interpret, raw_input)

return {

"interpretation_scores": interpretation_scores,

"alternative_outputs": alternative_outputs

diff --git a/gradio/networking.py b/gradio/networking.py

--- a/gradio/networking.py

+++ b/gradio/networking.py

@@ -147,7 +147,7 @@ def start_server(

app.queue_thread.start()

if interface.save_to is not None: # Used for selenium tests

interface.save_to["port"] = port

-

+

config = uvicorn.Config(app=app, port=port, host=server_name,

log_level="warning")

server = Server(config=config)

| Proper async handling in FastAPI app

**Is your feature request related to a problem? Please describe.**

In reviewing #440, @FarukOzderim brought up the fact that we currently make several synchronous blocking calls from within the FastAPI server. This reduces the benefits we get from migrating to the asynchronous FastAPI server.

**Describe the solution you'd like**

* call `log_feature_analytics()` asynchronously from `api/interpretation` and `api/flag`

* **the biggest one**: calling the prediction function itself asynchronously by spinning up another thread (https://www.aeracode.org/2018/02/19/python-async-simplified/)
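
The second bullet can be sketched with the standard library alone. This is a minimal illustration, not gradio's implementation: `predict` and `handle_request` are hypothetical names, and `asyncio`'s default thread pool stands in for whatever mechanism the server ends up using.

```python
import asyncio
import time


def predict(x):
    # Hypothetical blocking prediction function standing in for a model call.
    time.sleep(0.1)
    return x * 2


async def handle_request(x):
    # Offload the blocking call to the default thread pool so the event loop
    # stays free to serve other requests in the meantime.
    loop = asyncio.get_running_loop()
    return await loop.run_in_executor(None, predict, x)


async def main():
    # Three requests overlap instead of running back to back.
    return await asyncio.gather(*(handle_request(i) for i in range(3)))


results = asyncio.run(main())
```

`asyncio.gather` returns results in the order the awaitables were passed, so the output order does not depend on which thread finishes first.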

| 2022-01-20T23:04:40 |

||

gradio-app/gradio | 601 | gradio-app__gradio-601 | [

"592"

] | 01f52bbd38cde7150e8e7d3ba2cc8bc1b1db6676 | diff --git a/website/homepage/render_html.py b/website/homepage/render_html.py

--- a/website/homepage/render_html.py

+++ b/website/homepage/render_html.py

@@ -151,14 +151,7 @@ def render_guides():

guide_output = guide_output.replace("<pre>", "<div class='code-block' style='display: flex'><pre>")

guide_output = guide_output.replace("</pre>", f"</pre>{copy_button}</div>")

- output_html = markdown2.markdown(guide_output, extras=["target-blank-links"])

-

- for match in re.findall(r"<h3>([A-Za-z0-9 ]*)<\/h3>", output_html):

- output_html = output_html.replace(

- f"<h3>{match}</h3>",

- f"<h3 id={match.lower().replace(' ', '_')}>{match}</h3>",

- )

-

+ output_html = markdown2.markdown(guide_output, extras=["target-blank-links", "header-ids"])

os.makedirs("generated", exist_ok=True)

os.makedirs(os.path.join("generated", guide["name"]), exist_ok=True)

with open(

| Add automatic anchor links to the different sections of getting started and guides

Many times when I'm responding to issues, I want to link to a specific section in our getting started page. For example, the section related to "State"

We could manually add anchor links, but I'm sure there is a way to automatically turn any header tag into an anchorable link. Sometimes I've seen a little symbol next to the heading that you can click on to get the anchor link for that section, which would be super convenient.

Applies to the getting started as well as the guides.

| 2022-02-11T17:15:54 |

||

gradio-app/gradio | 660 | gradio-app__gradio-660 | [

"659"

] | cecb942263b6269e5b2698d5027a2899801560e1 | diff --git a/gradio/networking.py b/gradio/networking.py

--- a/gradio/networking.py

+++ b/gradio/networking.py

@@ -1,181 +1,180 @@

-"""

-Defines helper methods useful for setting up ports, launching servers, and

-creating tunnels.

-"""

-from __future__ import annotations

-

-import http

-import json

-import os

-import socket

-import threading

-import time

-import urllib.parse

-import urllib.request

-from typing import TYPE_CHECKING, Optional, Tuple

-

-import fastapi

-import requests

-import uvicorn

-

-from gradio import queueing

-from gradio.routes import app

-from gradio.tunneling import create_tunnel

-

-if TYPE_CHECKING: # Only import for type checking (to avoid circular imports).

- from gradio import Interface

-

-

-# By default, the local server will try to open on localhost, port 7860.

-# If that is not available, then it will try 7861, 7862, ... 7959.

-INITIAL_PORT_VALUE = int(os.getenv("GRADIO_SERVER_PORT", "7860"))

-TRY_NUM_PORTS = int(os.getenv("GRADIO_NUM_PORTS", "100"))

-LOCALHOST_NAME = os.getenv("GRADIO_SERVER_NAME", "127.0.0.1")

-GRADIO_API_SERVER = "https://api.gradio.app/v1/tunnel-request"

-

-

-class Server(uvicorn.Server):

- def install_signal_handlers(self):

- pass

-

- def run_in_thread(self):

- self.thread = threading.Thread(target=self.run, daemon=True)

- self.thread.start()

- while not self.started:

- time.sleep(1e-3)

-

- def close(self):

- self.should_exit = True

- self.thread.join()

-

-

-def get_first_available_port(initial: int, final: int) -> int:

- """

- Gets the first open port in a specified range of port numbers

- Parameters:

- initial: the initial value in the range of port numbers

- final: final (exclusive) value in the range of port numbers, should be greater than `initial`

- Returns:

- port: the first open port in the range

- """

- for port in range(initial, final):

- try:

- s = socket.socket() # create a socket object

- s.bind((LOCALHOST_NAME, port)) # Bind to the port

- s.close()

- return port

- except OSError:

- pass

- raise OSError(

- "All ports from {} to {} are in use. Please close a port.".format(

- initial, final

- )

- )

-

-

-def start_server(

- interface: Interface,

- server_name: Optional[str] = None,

- server_port: Optional[int] = None,

- ssl_keyfile: Optional[str] = None,

- ssl_certfile: Optional[str] = None,

-) -> Tuple[int, str, fastapi.FastAPI, threading.Thread, None]:

- """Launches a local server running the provided Interface

- Parameters:

- interface: The interface object to run on the server

- server_name: to make app accessible on local network, set this to "0.0.0.0". Can be set by environment variable GRADIO_SERVER_NAME.

- server_port: will start gradio app on this port (if available). Can be set by environment variable GRADIO_SERVER_PORT.

- auth: If provided, username and password (or list of username-password tuples) required to access interface. Can also provide function that takes username and password and returns True if valid login.

- ssl_keyfile: If a path to a file is provided, will use this as the private key file to create a local server running on https.

- ssl_certfile: If a path to a file is provided, will use this as the signed certificate for https. Needs to be provided if ssl_keyfile is provided.

- """

- server_name = server_name or LOCALHOST_NAME

- # if port is not specified, search for first available port

- if server_port is None:

- port = get_first_available_port(

- INITIAL_PORT_VALUE, INITIAL_PORT_VALUE + TRY_NUM_PORTS

- )

- else:

- try:

- s = socket.socket()

- s.bind((LOCALHOST_NAME, server_port))

- s.close()

- except OSError:

- raise OSError(

- "Port {} is in use. If a gradio.Interface is running on the port, you can close() it or gradio.close_all().".format(

- server_port

- )

- )

- port = server_port

-

- url_host_name = "localhost" if server_name == "0.0.0.0" else server_name

-

- if ssl_keyfile is not None:

- if ssl_certfile is None:

- raise ValueError(

- "ssl_certfile must be provided if ssl_keyfile is provided."

- )

- path_to_local_server = "https://{}:{}/".format(url_host_name, port)

- else:

- path_to_local_server = "http://{}:{}/".format(url_host_name, port)

-

- auth = interface.auth

- if auth is not None:

- if not callable(auth):

- app.auth = {account[0]: account[1] for account in auth}

- else:

- app.auth = auth

- else:

- app.auth = None

- app.interface = interface

- app.cwd = os.getcwd()

- app.favicon_path = interface.favicon_path

- app.tokens = {}

-

- if app.interface.enable_queue:

- if auth is not None or app.interface.encrypt:

- raise ValueError("Cannot queue with encryption or authentication enabled.")

- queueing.init()

- app.queue_thread = threading.Thread(

- target=queueing.queue_thread, args=(path_to_local_server,)

- )

- app.queue_thread.start()

- if interface.save_to is not None: # Used for selenium tests

- interface.save_to["port"] = port

-

- config = uvicorn.Config(

- app=app,

- port=port,

- host=server_name,

- log_level="warning",

- ssl_keyfile=ssl_keyfile,

- ssl_certfile=ssl_certfile,

- )

- server = Server(config=config)

- server.run_in_thread()

- return port, path_to_local_server, app, server

-

-

-def setup_tunnel(local_server_port: int, endpoint: str) -> str:

- response = requests.get(

- endpoint + "/v1/tunnel-request" if endpoint is not None else GRADIO_API_SERVER

- )

- if response and response.status_code == 200:

- try:

- payload = response.json()[0]

- return create_tunnel(payload, LOCALHOST_NAME, local_server_port)

- except Exception as e:

- raise RuntimeError(str(e))

- else:

- raise RuntimeError("Could not get share link from Gradio API Server.")

-

-

-def url_ok(url: str) -> bool:

- try:

- for _ in range(5):

- time.sleep(0.500)

- r = requests.head(url, timeout=3, verify=False)

- if r.status_code in (200, 401, 302): # 401 or 302 if auth is set

- return True

- except (ConnectionError, requests.exceptions.ConnectionError):

- return False

+"""

+Defines helper methods useful for setting up ports, launching servers, and

+creating tunnels.

+"""

+from __future__ import annotations

+

+import os

+import socket

+import threading

+import time

+import warnings

+from typing import TYPE_CHECKING, Optional, Tuple

+

+import fastapi

+import requests

+import uvicorn

+

+from gradio import queueing

+from gradio.routes import app

+from gradio.tunneling import create_tunnel

+

+if TYPE_CHECKING: # Only import for type checking (to avoid circular imports).

+ from gradio import Interface

+

+

+# By default, the local server will try to open on localhost, port 7860.

+# If that is not available, then it will try 7861, 7862, ... 7959.

+INITIAL_PORT_VALUE = int(os.getenv("GRADIO_SERVER_PORT", "7860"))

+TRY_NUM_PORTS = int(os.getenv("GRADIO_NUM_PORTS", "100"))

+LOCALHOST_NAME = os.getenv("GRADIO_SERVER_NAME", "127.0.0.1")

+GRADIO_API_SERVER = "https://api.gradio.app/v1/tunnel-request"

+

+

+class Server(uvicorn.Server):

+ def install_signal_handlers(self):

+ pass

+

+ def run_in_thread(self):

+ self.thread = threading.Thread(target=self.run, daemon=True)

+ self.thread.start()

+ while not self.started:

+ time.sleep(1e-3)

+

+ def close(self):

+ self.should_exit = True

+ self.thread.join()

+

+

+def get_first_available_port(initial: int, final: int) -> int:

+ """

+ Gets the first open port in a specified range of port numbers

+ Parameters:

+ initial: the initial value in the range of port numbers

+ final: final (exclusive) value in the range of port numbers, should be greater than `initial`

+ Returns:

+ port: the first open port in the range

+ """

+ for port in range(initial, final):

+ try:

+ s = socket.socket() # create a socket object

+ s.bind((LOCALHOST_NAME, port)) # Bind to the port

+ s.close()

+ return port

+ except OSError:

+ pass

+ raise OSError(

+ "All ports from {} to {} are in use. Please close a port.".format(

+ initial, final

+ )

+ )

+

+

+def start_server(

+ interface: Interface,

+ server_name: Optional[str] = None,

+ server_port: Optional[int] = None,

+ ssl_keyfile: Optional[str] = None,

+ ssl_certfile: Optional[str] = None,

+) -> Tuple[int, str, fastapi.FastAPI, threading.Thread, None]:

+ """Launches a local server running the provided Interface

+ Parameters:

+ interface: The interface object to run on the server

+ server_name: to make app accessible on local network, set this to "0.0.0.0". Can be set by environment variable GRADIO_SERVER_NAME.

+ server_port: will start gradio app on this port (if available). Can be set by environment variable GRADIO_SERVER_PORT.

+ auth: If provided, username and password (or list of username-password tuples) required to access interface. Can also provide function that takes username and password and returns True if valid login.

+ ssl_keyfile: If a path to a file is provided, will use this as the private key file to create a local server running on https.

+ ssl_certfile: If a path to a file is provided, will use this as the signed certificate for https. Needs to be provided if ssl_keyfile is provided.

+ """

+ server_name = server_name or LOCALHOST_NAME

+ # if port is not specified, search for first available port

+ if server_port is None:

+ port = get_first_available_port(

+ INITIAL_PORT_VALUE, INITIAL_PORT_VALUE + TRY_NUM_PORTS

+ )

+ else:

+ try:

+ s = socket.socket()

+ s.bind((LOCALHOST_NAME, server_port))

+ s.close()

+ except OSError:

+ raise OSError(

+ "Port {} is in use. If a gradio.Interface is running on the port, you can close() it or gradio.close_all().".format(

+ server_port

+ )

+ )

+ port = server_port

+

+ url_host_name = "localhost" if server_name == "0.0.0.0" else server_name

+

+ if ssl_keyfile is not None:

+ if ssl_certfile is None:

+ raise ValueError(

+ "ssl_certfile must be provided if ssl_keyfile is provided."

+ )

+ path_to_local_server = "https://{}:{}/".format(url_host_name, port)

+ else:

+ path_to_local_server = "http://{}:{}/".format(url_host_name, port)

+

+ auth = interface.auth

+ if auth is not None:

+ if not callable(auth):

+ app.auth = {account[0]: account[1] for account in auth}

+ else:

+ app.auth = auth

+ else:

+ app.auth = None

+ app.interface = interface

+ app.cwd = os.getcwd()

+ app.favicon_path = interface.favicon_path

+ app.tokens = {}

+

+ if app.interface.enable_queue:

+ if auth is not None or app.interface.encrypt:

+ raise ValueError("Cannot queue with encryption or authentication enabled.")

+ queueing.init()

+ app.queue_thread = threading.Thread(

+ target=queueing.queue_thread, args=(path_to_local_server,)

+ )

+ app.queue_thread.start()

+ if interface.save_to is not None: # Used for selenium tests

+ interface.save_to["port"] = port

+

+ config = uvicorn.Config(

+ app=app,

+ port=port,

+ host=server_name,

+ log_level="warning",

+ ssl_keyfile=ssl_keyfile,

+ ssl_certfile=ssl_certfile,

+ )

+ server = Server(config=config)

+ server.run_in_thread()

+ return port, path_to_local_server, app, server

+

+

+def setup_tunnel(local_server_port: int, endpoint: str) -> str:

+ response = requests.get(

+ endpoint + "/v1/tunnel-request" if endpoint is not None else GRADIO_API_SERVER

+ )

+ if response and response.status_code == 200:

+ try:

+ payload = response.json()[0]

+ return create_tunnel(payload, LOCALHOST_NAME, local_server_port)

+ except Exception as e:

+ raise RuntimeError(str(e))

+ else:

+ raise RuntimeError("Could not get share link from Gradio API Server.")

+

+

+def url_ok(url: str) -> bool:

+ try:

+ for _ in range(5):

+ time.sleep(0.500)

+ with warnings.catch_warnings():

+ warnings.filterwarnings("ignore")

+ r = requests.head(url, timeout=3, verify=False)

+ if r.status_code in (200, 401, 302): # 401 or 302 if auth is set

+ return True

+ except (ConnectionError, requests.exceptions.ConnectionError):

+ return False

| Warning pops up with `share=True` with gradio 2.8.0

Launching an Interface with `share=True` causes this:

To reproduce, run

`gr.Interface(lambda x:x, "text", "text").launch(share=True)`

| 2022-02-18T00:17:43 |

||

gradio-app/gradio | 691 | gradio-app__gradio-691 | [

"663"

] | 80ddcf2e760ba5e99ee5ff07896c8f703a4de55a | diff --git a/gradio/interface.py b/gradio/interface.py

--- a/gradio/interface.py

+++ b/gradio/interface.py

@@ -629,6 +629,7 @@ def launch(

favicon_path: Optional[str] = None,

ssl_keyfile: Optional[str] = None,

ssl_certfile: Optional[str] = None,

+ ssl_keyfile_password: Optional[str] = None,

) -> Tuple[flask.Flask, str, str]:

"""

Launches the webserver that serves the UI for the interface.

@@ -653,6 +654,7 @@ def launch(

favicon_path (str): If a path to a file (.png, .gif, or .ico) is provided, it will be used as the favicon for the web page.

ssl_keyfile (str): If a path to a file is provided, will use this as the private key file to create a local server running on https.

ssl_certfile (str): If a path to a file is provided, will use this as the signed certificate for https. Needs to be provided if ssl_keyfile is provided.

+ ssl_keyfile_password (str): If a password is provided, will use this with the ssl certificate for https.

Returns:

app (flask.Flask): Flask app object

path_to_local_server (str): Locally accessible link

@@ -694,7 +696,12 @@ def launch(

cache_interface_examples(self)

server_port, path_to_local_server, app, server = networking.start_server(

- self, server_name, server_port, ssl_keyfile, ssl_certfile

+ self,

+ server_name,

+ server_port,

+ ssl_keyfile,

+ ssl_certfile,

+ ssl_keyfile_password,

)

self.local_url = path_to_local_server

diff --git a/gradio/networking.py b/gradio/networking.py

--- a/gradio/networking.py

+++ b/gradio/networking.py

@@ -76,6 +76,7 @@ def start_server(

server_port: Optional[int] = None,

ssl_keyfile: Optional[str] = None,

ssl_certfile: Optional[str] = None,

+ ssl_keyfile_password: Optional[str] = None,

) -> Tuple[int, str, fastapi.FastAPI, threading.Thread, None]:

"""Launches a local server running the provided Interface

Parameters:

@@ -85,6 +86,7 @@ def start_server(

auth: If provided, username and password (or list of username-password tuples) required to access interface. Can also provide function that takes username and password and returns True if valid login.

ssl_keyfile: If a path to a file is provided, will use this as the private key file to create a local server running on https.

ssl_certfile: If a path to a file is provided, will use this as the signed certificate for https. Needs to be provided if ssl_keyfile is provided.

+ ssl_keyfile_password (str): If a password is provided, will use this with the ssl certificate for https.

"""

server_name = server_name or LOCALHOST_NAME

# if port is not specified, search for first available port

@@ -147,6 +149,7 @@ def start_server(

log_level="warning",

ssl_keyfile=ssl_keyfile,

ssl_certfile=ssl_certfile,

+ ssl_keyfile_password=ssl_keyfile_password,

)

server = Server(config=config)

server.run_in_thread()

| about ssl_keyfile parameter in 2.8.0 version

I'm setting up HTTPS with gradio. How can I set an SSL password?

| Thanks for the catch @byeolkady. It looks like we need to add support for that so that it gets passed into the server.

Besides

`ssl_keyfile_password`

Do you need any of these parameters?

`ssl_version,`

`ssl_cert_reqs,`

`ssl_ca_certs,`

`ssl_ciphers`

First of all, I think I only need the password-related part. Will that part be included in version 2.9.0? | 2022-02-21T12:54:08 |

|

gradio-app/gradio | 710 | gradio-app__gradio-710 | [

"371"

] | 36045f5a95f940579619bceba9473cc4aeea69f5 | diff --git a/gradio/routes.py b/gradio/routes.py

--- a/gradio/routes.py

+++ b/gradio/routes.py

@@ -9,8 +9,9 @@

import secrets

import traceback

import urllib

-from typing import List, Optional, Type

+from typing import Any, List, Optional, Type

+import orjson

import pkg_resources

import uvicorn

from fastapi import Depends, FastAPI, HTTPException, Request, status

@@ -19,6 +20,7 @@

from fastapi.responses import FileResponse, HTMLResponse, JSONResponse

from fastapi.security import OAuth2PasswordRequestForm

from fastapi.templating import Jinja2Templates

+from jinja2.exceptions import TemplateNotFound

from starlette.responses import RedirectResponse

from gradio import encryptor, queueing, utils

@@ -37,7 +39,15 @@

VERSION

)

-app = FastAPI()

+

+class ORJSONResponse(JSONResponse):

+ media_type = "application/json"

+

+ def render(self, content: Any) -> bytes:

+ return orjson.dumps(content)

+

+

+app = FastAPI(default_response_class=ORJSONResponse)

app.add_middleware(

CORSMiddleware,

allow_origins=["*"],

@@ -108,9 +118,15 @@ def main(request: Request, user: str = Depends(get_current_user)):

else:

config = {"auth_required": True, "auth_message": app.interface.auth_message}

- return templates.TemplateResponse(

- "frontend/index.html", {"request": request, "config": config}

- )

+ try:

+ return templates.TemplateResponse(

+ "frontend/index.html", {"request": request, "config": config}

+ )

+ except TemplateNotFound:

+ raise ValueError(

+ "Did you install Gradio from source files? You need to build "

+ "the frontend by running /scripts/build_frontend.sh"

+ )

@app.get("/config/", dependencies=[Depends(login_check)])

diff --git a/setup.py b/setup.py

--- a/setup.py

+++ b/setup.py

@@ -22,6 +22,7 @@

"markdown-it-py[linkify,plugins]",

"matplotlib",

"numpy",

+ "orjson",

"pandas",

"paramiko",

"pillow",

| BUG: DataFrame output cannot handle null values

#### Result:

pandas DataFrame doesn't display if more than 10 cells are to be shown initially

#### Expectation:

The DataFrame will be displayed

#### Versions:

gradio 2.2.15

Python 3.9.6

#### Reproducible Example

```python
import gradio as gr
import pandas as pd
import plotly.express as px

def show_pens(alpha):
    df_pens = pd.read_csv('https://raw.githubusercontent.com/mwaskom/seaborn-data/master/penguins.csv')
    print(df_pens)
    return df_pens.iloc[:5, :3]

iface = gr.Interface(
    fn=show_pens,
    inputs=['text'],
    outputs=[gr.outputs.Dataframe()],
    description="Table of Palmer Penguins"
)

iface.launch()
```

### Screenshot of failing output

<img width="1312" alt="Screen Shot 2021-11-16 at 7 59 47 AM" src="https://user-images.githubusercontent.com/7703961/141990015-616ed983-0c78-4b2c-9b35-9b031d7ec735.png">

### Console error screenshot

<img width="544" alt="Screen Shot 2021-11-16 at 8 05 56 AM" src="https://user-images.githubusercontent.com/7703961/141990591-d00b6bcd-7492-4628-ad40-47264e638f2a.png">

### Working example

Change the return statement to ` return df_pens.iloc[:3, :3]`

### Screenshot of working output

<img width="1286" alt="Screen Shot 2021-11-16 at 8 00 24 AM" src="https://user-images.githubusercontent.com/7703961/141990208-e9d1e011-c9e4-4117-a4ce-15f4099975b7.png">

| Interesting, @aliabid94 can you look into this?

Will take a look, thanks @discdiver

Ah, it seems there is a null value in the dataframe that's throwing the renderer off; it's not related to the size of the output. See image:

Fixing the null value issue now.
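
One plausible reading of the failure, offered as an assumption rather than something this thread confirms: pandas represents nulls as `NaN`, and Python's standard `json` module writes `NaN` as a bare token that strict JSON parsers reject. The sketch below uses made-up row data and a hypothetical helper, `nan_to_none`, to show the difference.

```python
import json
import math

# Made-up row with a missing numeric value, as pandas would represent it.
row = ["Adelie", "Torgersen", float("nan")]

# The default encoder emits the bare token NaN, which is not valid JSON.
raw = json.dumps(row)


def nan_to_none(value):
    # Hypothetical helper: map float NaN to None so it serializes as null.
    return None if isinstance(value, float) and math.isnan(value) else value


clean = json.dumps([nan_to_none(v) for v in row])
```

Passing `allow_nan=False` to `json.dumps` raises a `ValueError` instead of emitting the token, which makes the problem visible on the server side.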

Thanks. Yep, there are a few nulls. So gradio can't display DataFrames with

null values? If that's the case, probably worth adding to the docs.

FWIW, there's probably a fix as that's not an issue on Streamlit.

Yes there's a fix in the works, will be in the next release. Will update here when it's in.

Thanks!

| 2022-02-22T20:32:35 |

|

gradio-app/gradio | 712 | gradio-app__gradio-712 | [

"707"

] | 93f372d98875c7b736c2a29e157f9c301bdbd2c1 | diff --git a/gradio/strings.py b/gradio/strings.py

--- a/gradio/strings.py

+++ b/gradio/strings.py

@@ -24,11 +24,12 @@

"INLINE_DISPLAY_BELOW": "Interface loading below...",

"MEDIA_PERMISSIONS_IN_COLAB": "Your interface requires microphone or webcam permissions - this may cause issues in Colab. Use the External URL in case of issues.",

"TIPS": [

- "You can add authentication to your app with the auth= kwarg in the launch command; for example: gr.Interface(...).launch(auth=('username', 'password'))",

- "Let users specify why they flagged input with the flagging_options= kwarg; for example: gr.Interface(..., flagging_options=['too slow', 'incorrect output', 'other'])",

- "You can show or hide the buttons for flagging, screenshots, and interpretation with the allow_*= kwargs; for example: gr.Interface(..., allow_screenshot=True, allow_flagging=False)",

+ "You can add authentication to your app with the `auth=` kwarg in the `launch()` command; for example: `gr.Interface(...).launch(auth=('username', 'password'))`",

+ "Let users specify why they flagged input with the `flagging_options=` kwarg; for example: `gr.Interface(..., flagging_options=['too slow', 'incorrect output', 'other'])`",

+ "You can show or hide the button for flagging with the `allow_flagging=` kwarg; for example: gr.Interface(..., allow_flagging=False)",

"The inputs and outputs flagged by the users are stored in the flagging directory, specified by the flagging_dir= kwarg. You can view this data through the interface by setting the examples= kwarg to the flagging directory; for example gr.Interface(..., examples='flagged')",

- "You can add a title and description to your interface using the title= and description= kwargs. The article= kwarg can be used to add markdown or HTML under the interface; for example gr.Interface(..., title='My app', description='Lorem ipsum')",

+ "You can add a title and description to your interface using the `title=` and `description=` kwargs. The `article=` kwarg can be used to add a description under the interface; for example gr.Interface(..., title='My app', description='Lorem ipsum'). Try using Markdown!",

+ "For a classification or regression model, set `interpretation='default'` to see why the model made a prediction.",

],

}

diff --git a/gradio/utils.py b/gradio/utils.py

--- a/gradio/utils.py

+++ b/gradio/utils.py

@@ -156,9 +156,9 @@ def readme_to_html(article: str) -> str:

def show_tip(interface: Interface) -> None:

- # Only show tip every other use.

- if interface.show_tips and random.random() < 0.5:

- print(random.choice(gradio.strings.en.TIPS))

+ if interface.show_tips and random.random() < 1.5:

+ tip: str = random.choice(gradio.strings.en["TIPS"])

+ print(f"Tip: {tip}")

def launch_counter() -> None:

| `Interface.launch()` with `show_tips=True` yields error

### Problem:

Using [this gradio colab](https://colab.research.google.com/drive/18ODkJvyxHutTN0P5APWyGFO_xwNcgHDZ?usp=sharing#scrollTo=BtS4nqLIW-dv), I added a few modifications, notably `show_tips=True` to the `launch` method of gradio.Interface.

Without the try | except block (as in the `main1` function below), this error shows up:

```

/usr/local/lib/python3.7/dist-packages/gradio/utils.py in show_tip(interface)

159 # Only show tip every other use.

160 if interface.show_tips and random.random() < 0.5:

--> 161 print(random.choice(gradio.strings.en.TIPS))

162

163

AttributeError: 'dict' object has no attribute 'TIPS'

```
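
The traceback comes down to attribute access on a plain dict. A minimal sketch, where `en` is a stand-in for `gradio.strings.en` with made-up tip strings:

```python
# Stand-in for gradio.strings.en, which the traceback shows is a plain dict.
en = {"TIPS": ["tip one", "tip two"]}

try:
    en.TIPS  # attribute access: dict keys are not attributes
except AttributeError as err:
    message = str(err)

tips = en["TIPS"]  # key lookup is the form that works
```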

### Reproducible example:

```python
import gradio

def greet(name):
    return f"Hello {name}!"  # py3.7!

# Original code in colab:
#gradio.Interface(greet, "text", "text").launch(share=True)

# My modifications:
iface = gradio.Interface(fn=greet,
                         inputs=gradio.inputs.Textbox(lines=2,
                                                      placeholder="Name Here"),
                         outputs="text",
                         examples=[['Tiger'],['Puma']]
                         )

# Functions to use to yield the error or trap it:
def main1():
    iface.launch(show_tips=True)

def main2():
    try:
        iface.launch(show_tips=True)
    except AttributeError:
        pass

# Uncomment and run (possibly twice, re: traceback line 160) to get the error:
#main1()

# No issue when exception is trapped:
main2()
```

#### Device information:

- OS: Windows 10

- Browser: chrome

- Gradio version: 2.8.2

#### Additional context

- Context: gradio colab

| 2022-02-22T21:09:30 |

||

gradio-app/gradio | 724 | gradio-app__gradio-724 | [

"454"

] | a2b89ab2d249fe7434d180c2d0a1552003fd8a90 | diff --git a/demo/diff_texts/run.py b/demo/diff_texts/run.py

--- a/demo/diff_texts/run.py

+++ b/demo/diff_texts/run.py

@@ -19,7 +19,7 @@ def diff_texts(text1, text2):

),

gr.inputs.Textbox(lines=3, default="The fast brown fox jumps over lazy dogs."),

],

- gr.outputs.HighlightedText(),

+ gr.outputs.HighlightedText(color_map={'+': 'green', '-': 'pink'}),

)

if __name__ == "__main__":

iface.launch()

| diff --git a/test/golden/diff_texts/magic_trick.png b/test/golden/diff_texts/magic_trick.png

Binary files a/test/golden/diff_texts/magic_trick.png and b/test/golden/diff_texts/magic_trick.png differ

| Display bug with the version gradio 2.7.0 on HighlightedText

**Describe the bug**

I did describe the bug with notebook and screenshots in the Hugging Face forum: https://discuss.huggingface.co/t/how-to-install-a-specific-version-of-gradio-in-spaces/13552

**To Reproduce**

Run the notebook [diff_texts.ipynb](https://colab.research.google.com/drive/1NPdrvEVzt03GUrWVHGQGDx4TB0ZLPus6?usp=sharing).

**Screenshots**

With gradio 2.7.0

With gradio 2.6.4

| Thank you for raising the issue! We are fixing this issue right now

Thanks again for creating the issue. In case you need a workaround while we fix the issue, you can just run `gr.outputs.HighlightedText()` without passing in a custom `color_map` and it should work fine

The issue happens when you pass in a custom `color_map` to `gr.outputs.HighlightedText()`.

**Working example**:

```python
from difflib import Differ

import gradio as gr

def diff_texts(text1, text2):
    d = Differ()
    return [
        (token[2:], token[0] if token[0] != " " else None)
        for token in d.compare(text1, text2)
    ]

iface = gr.Interface(
    diff_texts,
    [
        gr.inputs.Textbox(
            lines=3, default="The quick brown fox jumped over the lazy dogs."
        ),
        gr.inputs.Textbox(lines=3, default="The fast brown fox jumps over lazy dogs."),
    ],
    gr.outputs.HighlightedText(),
)
```

**Broken example**:

```python
from difflib import Differ

import gradio as gr

def diff_texts(text1, text2):
    d = Differ()
    return [
        (token[2:], token[0] if token[0] != " " else None)
        for token in d.compare(text1, text2)
    ]

iface = gr.Interface(
    diff_texts,
    [
        gr.inputs.Textbox(
            lines=3, default="The quick brown fox jumped over the lazy dogs."
        ),
        gr.inputs.Textbox(lines=3, default="The fast brown fox jumps over lazy dogs."),
    ],
    gr.outputs.HighlightedText(color_map={'+': 'brown', '-': 'pink'}),
)
```

in which case the words that are being highlighted no longer appear:

| 2022-02-23T15:19:56 |

gradio-app/gradio | 739 | gradio-app__gradio-739 | [

"734"

] | cc700b80042e576669979eda8ef4531983b62677 | diff --git a/gradio/interface.py b/gradio/interface.py

--- a/gradio/interface.py

+++ b/gradio/interface.py

@@ -123,7 +123,7 @@ def __init__(

css: Optional[str] = None,

height=None,

width=None,

- allow_screenshot: bool = True,

+ allow_screenshot: bool = False,

allow_flagging: Optional[str] = None,

flagging_options: List[str] = None,

encrypt=None,

@@ -135,7 +135,7 @@ def __init__(

enable_queue=None,

api_mode=None,

flagging_callback: FlaggingCallback = CSVLogger(),

- ):

+ ): # TODO: (faruk) Let's remove depreceated parameters in the version 3.0.0

"""

Parameters:

fn (Union[Callable, List[Callable]]): the function to wrap an interface around.

@@ -155,7 +155,7 @@ def __init__(

thumbnail (str): path to image or src to use as display picture for models listed in gradio.app/hub

theme (str): Theme to use - one of "default", "huggingface", "seafoam", "grass", "peach". Add "dark-" prefix, e.g. "dark-peach" for dark theme (or just "dark" for the default dark theme).

css (str): custom css or path to custom css file to use with interface.

- allow_screenshot (bool): if False, users will not see a button to take a screenshot of the interface.

+ allow_screenshot (bool): DEPRECATED if False, users will not see a button to take a screenshot of the interface.

allow_flagging (str): one of "never", "auto", or "manual". If "never" or "auto", users will not see a button to flag an input and output. If "manual", users will see a button to flag. If "auto", every prediction will be automatically flagged. If "manual", samples are flagged when the user clicks flag button. Can be set with environmental variable GRADIO_ALLOW_FLAGGING.

flagging_options (List[str]): if provided, allows user to select from the list of options when flagging. Only applies if allow_flagging is "manual".

encrypt (bool): DEPRECATED. If True, flagged data will be encrypted by key provided by creator at launch

@@ -217,6 +217,11 @@ def __init__(

"The `verbose` parameter in the `Interface`"

"is deprecated and has no effect."

)

+ if allow_screenshot:

+ warnings.warn(

+ "The `allow_screenshot` parameter in the `Interface`"

+ "is deprecated and has no effect."

+ )

self.status = "OFF"

self.live = live

@@ -277,6 +282,7 @@ def clean_html(raw_html):

self.thumbnail = thumbnail

theme = theme if theme is not None else os.getenv("GRADIO_THEME", "default")

+ self.is_space = True if os.getenv("SYSTEM") == "spaces" else False

DEPRECATED_THEME_MAP = {

"darkdefault": "default",

"darkhuggingface": "dark-huggingface",

@@ -731,6 +737,8 @@ def launch(

share = True

if share:

+ if self.is_space:

+ raise RuntimeError("Share is not supported when you are in Spaces")

try:

share_url = networking.setup_tunnel(server_port, private_endpoint)

self.share_url = share_url

@@ -791,6 +799,7 @@ def launch(

"api_mode": self.api_mode,

"server_name": server_name,

"server_port": server_port,

+ "is_spaces": self.is_space,

}

if self.analytics_enabled:

utils.launch_analytics(data)

| Share Breaks In Spaces

### Describe the bug

Using `share=True` in `launch()` gives an error in Spaces.

### Reproduction

https://huggingface.co/spaces/ruhwang2001/wonder-app/blob/main/app.py

`interface.launch(debug=True, share=True)`

### Screenshot

_No response_

### Logs

```shell

Running on local URL: http://localhost:7860/

█

*** Failed to connect to ec2.gradio.app:22: [Errno 110] Connection timed out

```

### System Info

```shell

Spaces

```

### Severity

critical

| @aliabid94 @aliabd @abidlabs As far as I can see in the codebase, the interface class has no way of knowing whether we are running on HF Spaces.

Shall we have an environment variable in Spaces that tells us we are running on HF Spaces? Just like utils.colab_check().

That makes sense. We can coordinate with the Spaces team to set up that environmental variable.

My suggestion would be that if this environmental variable is set **and `share=True`**, then we print a helpful warning to the user saying that for Spaces, `share` should be set to `False`.

(Instead of overriding `share` as I don't think we should override an explicit parameter with an implicit environmental variable)
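
The suggestion above (warn, but leave the explicit `share` value alone) could look roughly like the following. This is only a sketch: `running_on_spaces` and `resolve_share` are hypothetical helper names, not gradio functions, and the `SYSTEM == "spaces"` check is borrowed from the patch in this row. Note that the merged patch raises a `RuntimeError` rather than warning.

```python
import os
import warnings


def running_on_spaces(environ=os.environ):
    """Return True when the SYSTEM variable marks a Spaces runtime."""
    return environ.get("SYSTEM") == "spaces"


def resolve_share(share, environ=os.environ):
    """Warn when share=True is requested on Spaces, without overriding it."""
    if share and running_on_spaces(environ):
        warnings.warn("share=True is not supported on Spaces; set share=False.")
    return share  # the caller's explicit choice is returned unchanged
```

Passing the environment in as a mapping keeps the check easy to exercise without changing real environment variables, e.g. `resolve_share(True, {"SYSTEM": "spaces"})`.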

Going to make use of these env variables for this issue.

good point! cc @cbensimon | 2022-02-25T08:26:09 |

|

gradio-app/gradio | 752 | gradio-app__gradio-752 | [

"751"

] | 907839926b3298cc3513920103def320e8f9d9e6 | diff --git a/demo/hello_world/run.py b/demo/hello_world/run.py

--- a/demo/hello_world/run.py

+++ b/demo/hello_world/run.py

@@ -7,4 +7,4 @@ def greet(name):

iface = gr.Interface(fn=greet, inputs="text", outputs="text")

if __name__ == "__main__":

- iface.launch()

+ iface.launch();

diff --git a/demo/hello_world_2/run.py b/demo/hello_world_2/run.py

--- a/demo/hello_world_2/run.py

+++ b/demo/hello_world_2/run.py

@@ -11,4 +11,5 @@ def greet(name):

outputs="text",

)

if __name__ == "__main__":

- iface.launch()

+ app, path_to_local_server, share_url = iface.launch()

+

diff --git a/demo/hello_world_3/run.py b/demo/hello_world_3/run.py

--- a/demo/hello_world_3/run.py

+++ b/demo/hello_world_3/run.py

@@ -14,4 +14,5 @@ def greet(name, is_morning, temperature):

outputs=["text", "number"],

)

if __name__ == "__main__":

- iface.launch()

+ app, path_to_local_server, share_url = iface.launch()

+

| Update Launch Getting Started Guides

Usage of `iface.launch()` prints out `(<fastapi.applications.FastAPI at 0x7fef0dbbad10>,` in Google Colab and it is not fancy.

Let's update the first deliveries of `launch` in the getting_started guides so that people can adopt those usages.
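
For context on why the patch's `iface.launch();` and `app, path_to_local_server, share_url = iface.launch()` both avoid the printed tuple: a notebook displays the value of a cell's last statement only when that statement is a bare expression, and a trailing semicolon suppresses the display. The sketch below approximates that rule with the standard `ast` module. `would_echo` is a hypothetical helper written for illustration, not part of IPython or gradio, and it ignores edge cases such as trailing comments.

```python
import ast


def would_echo(cell_source):
    """Approximate whether a notebook would display the cell's last value."""
    source = cell_source.rstrip()
    if not source or source.endswith(";"):
        return False  # a trailing semicolon suppresses the display
    body = ast.parse(source).body
    # Only a bare expression is echoed; an assignment is not.
    return bool(body) and isinstance(body[-1], ast.Expr)


for cell in ("iface.launch()", "iface.launch();", "app, path, url = iface.launch()"):
    print(f"{cell!r}: echoed={would_echo(cell)}")
```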

| 2022-02-28T14:52:04 |

||

gradio-app/gradio | 782 | gradio-app__gradio-782 | [

"777"

] | 9e2cac6e4c1ed9b62968060bd5bf63e61f4e9c43 | diff --git a/gradio/interface.py b/gradio/interface.py

--- a/gradio/interface.py

+++ b/gradio/interface.py

@@ -28,7 +28,7 @@

from gradio.outputs import State as o_State # type: ignore

from gradio.outputs import get_output_instance

from gradio.process_examples import load_from_cache, process_example

-from gradio.routes import PredictRequest

+from gradio.routes import PredictBody

if TYPE_CHECKING: # Only import for type checking (is False at runtime).

import flask

@@ -559,7 +559,7 @@ def run_prediction(

else:

return predictions

- def process_api(self, data: PredictRequest, username: str = None) -> Dict[str, Any]:

+ def process_api(self, data: PredictBody, username: str = None) -> Dict[str, Any]:

flag_index = None

if data.example_id is not None:

if self.cache_examples:

diff --git a/gradio/queueing.py b/gradio/queueing.py