repo (stringclasses, 856 values) | pull_number (int64, 3 to 127k) | instance_id (string, length 12 to 58) | issue_numbers (list, length 1 to 5) | base_commit (string, length 40) | patch (string, length 67 to 1.54M) | test_patch (string, length 0 to 107M) | problem_statement (string, length 3 to 307k) | hints_text (string, length 0 to 908k) | created_at (timestamp[s]) |

|---|---|---|---|---|---|---|---|---|---|

feast-dev/feast | 2,485 | feast-dev__feast-2485 | [

"2358"

]

| 0c9e5b7e2132b619056e9b41519d54a93e977f6c | diff --git a/sdk/python/feast/infra/online_stores/dynamodb.py b/sdk/python/feast/infra/online_stores/dynamodb.py

--- a/sdk/python/feast/infra/online_stores/dynamodb.py

+++ b/sdk/python/feast/infra/online_stores/dynamodb.py

@@ -17,7 +17,7 @@

from typing import Any, Callable, Dict, List, Optional, Sequence, Tuple

from pydantic import StrictStr

-from pydantic.typing import Literal

+from pydantic.typing import Literal, Union

from feast import Entity, FeatureView, utils

from feast.infra.infra_object import DYNAMODB_INFRA_OBJECT_CLASS_TYPE, InfraObject

@@ -50,17 +50,20 @@ class DynamoDBOnlineStoreConfig(FeastConfigBaseModel):

type: Literal["dynamodb"] = "dynamodb"

"""Online store type selector"""

+ batch_size: int = 40

+ """Number of items to retrieve in a DynamoDB BatchGetItem call."""

+

+ endpoint_url: Union[str, None] = None

+ """DynamoDB local development endpoint Url, i.e. http://localhost:8000"""

+

region: StrictStr

"""AWS Region Name"""

- table_name_template: StrictStr = "{project}.{table_name}"

- """DynamoDB table name template"""

-

sort_response: bool = True

"""Whether or not to sort BatchGetItem response."""

- batch_size: int = 40

- """Number of items to retrieve in a DynamoDB BatchGetItem call."""

+ table_name_template: StrictStr = "{project}.{table_name}"

+ """DynamoDB table name template"""

class DynamoDBOnlineStore(OnlineStore):

@@ -95,8 +98,12 @@ def update(

"""

online_config = config.online_store

assert isinstance(online_config, DynamoDBOnlineStoreConfig)

- dynamodb_client = self._get_dynamodb_client(online_config.region)

- dynamodb_resource = self._get_dynamodb_resource(online_config.region)

+ dynamodb_client = self._get_dynamodb_client(

+ online_config.region, online_config.endpoint_url

+ )

+ dynamodb_resource = self._get_dynamodb_resource(

+ online_config.region, online_config.endpoint_url

+ )

for table_instance in tables_to_keep:

try:

@@ -141,7 +148,9 @@ def teardown(

"""

online_config = config.online_store

assert isinstance(online_config, DynamoDBOnlineStoreConfig)

- dynamodb_resource = self._get_dynamodb_resource(online_config.region)

+ dynamodb_resource = self._get_dynamodb_resource(

+ online_config.region, online_config.endpoint_url

+ )

for table in tables:

_delete_table_idempotent(

@@ -175,7 +184,9 @@ def online_write_batch(

"""

online_config = config.online_store

assert isinstance(online_config, DynamoDBOnlineStoreConfig)

- dynamodb_resource = self._get_dynamodb_resource(online_config.region)

+ dynamodb_resource = self._get_dynamodb_resource(

+ online_config.region, online_config.endpoint_url

+ )

table_instance = dynamodb_resource.Table(

_get_table_name(online_config, config, table)

@@ -217,7 +228,9 @@ def online_read(

"""

online_config = config.online_store

assert isinstance(online_config, DynamoDBOnlineStoreConfig)

- dynamodb_resource = self._get_dynamodb_resource(online_config.region)

+ dynamodb_resource = self._get_dynamodb_resource(

+ online_config.region, online_config.endpoint_url

+ )

table_instance = dynamodb_resource.Table(

_get_table_name(online_config, config, table)

)

@@ -260,14 +273,16 @@ def online_read(

result.extend(batch_size_nones)

return result

- def _get_dynamodb_client(self, region: str):

+ def _get_dynamodb_client(self, region: str, endpoint_url: Optional[str] = None):

if self._dynamodb_client is None:

- self._dynamodb_client = _initialize_dynamodb_client(region)

+ self._dynamodb_client = _initialize_dynamodb_client(region, endpoint_url)

return self._dynamodb_client

- def _get_dynamodb_resource(self, region: str):

+ def _get_dynamodb_resource(self, region: str, endpoint_url: Optional[str] = None):

if self._dynamodb_resource is None:

- self._dynamodb_resource = _initialize_dynamodb_resource(region)

+ self._dynamodb_resource = _initialize_dynamodb_resource(

+ region, endpoint_url

+ )

return self._dynamodb_resource

def _sort_dynamodb_response(self, responses: list, order: list):

@@ -285,12 +300,12 @@ def _sort_dynamodb_response(self, responses: list, order: list):

return table_responses_ordered

-def _initialize_dynamodb_client(region: str):

- return boto3.client("dynamodb", region_name=region)

+def _initialize_dynamodb_client(region: str, endpoint_url: Optional[str] = None):

+ return boto3.client("dynamodb", region_name=region, endpoint_url=endpoint_url)

-def _initialize_dynamodb_resource(region: str):

- return boto3.resource("dynamodb", region_name=region)

+def _initialize_dynamodb_resource(region: str, endpoint_url: Optional[str] = None):

+ return boto3.resource("dynamodb", region_name=region, endpoint_url=endpoint_url)

# TODO(achals): This form of user-facing templating is experimental.

@@ -327,13 +342,20 @@ class DynamoDBTable(InfraObject):

Attributes:

name: The name of the table.

region: The region of the table.

+ endpoint_url: Local DynamoDB Endpoint Url.

+ _dynamodb_client: Boto3 DynamoDB client.

+ _dynamodb_resource: Boto3 DynamoDB resource.

"""

region: str

+ endpoint_url = None

+ _dynamodb_client = None

+ _dynamodb_resource = None

- def __init__(self, name: str, region: str):

+ def __init__(self, name: str, region: str, endpoint_url: Optional[str] = None):

super().__init__(name)

self.region = region

+ self.endpoint_url = endpoint_url

def to_infra_object_proto(self) -> InfraObjectProto:

dynamodb_table_proto = self.to_proto()

@@ -362,8 +384,8 @@ def from_proto(dynamodb_table_proto: DynamoDBTableProto) -> Any:

)

def update(self):

- dynamodb_client = _initialize_dynamodb_client(region=self.region)

- dynamodb_resource = _initialize_dynamodb_resource(region=self.region)

+ dynamodb_client = self._get_dynamodb_client(self.region, self.endpoint_url)

+ dynamodb_resource = self._get_dynamodb_resource(self.region, self.endpoint_url)

try:

dynamodb_resource.create_table(

@@ -384,5 +406,17 @@ def update(self):

dynamodb_client.get_waiter("table_exists").wait(TableName=f"{self.name}")

def teardown(self):

- dynamodb_resource = _initialize_dynamodb_resource(region=self.region)

+ dynamodb_resource = self._get_dynamodb_resource(self.region, self.endpoint_url)

_delete_table_idempotent(dynamodb_resource, self.name)

+

+ def _get_dynamodb_client(self, region: str, endpoint_url: Optional[str] = None):

+ if self._dynamodb_client is None:

+ self._dynamodb_client = _initialize_dynamodb_client(region, endpoint_url)

+ return self._dynamodb_client

+

+ def _get_dynamodb_resource(self, region: str, endpoint_url: Optional[str] = None):

+ if self._dynamodb_resource is None:

+ self._dynamodb_resource = _initialize_dynamodb_resource(

+ region, endpoint_url

+ )

+ return self._dynamodb_resource

| diff --git a/sdk/python/tests/unit/infra/online_store/test_dynamodb_online_store.py b/sdk/python/tests/unit/infra/online_store/test_dynamodb_online_store.py

--- a/sdk/python/tests/unit/infra/online_store/test_dynamodb_online_store.py

+++ b/sdk/python/tests/unit/infra/online_store/test_dynamodb_online_store.py

@@ -7,6 +7,7 @@

from feast.infra.online_stores.dynamodb import (

DynamoDBOnlineStore,

DynamoDBOnlineStoreConfig,

+ DynamoDBTable,

)

from feast.repo_config import RepoConfig

from tests.utils.online_store_utils import (

@@ -38,6 +39,121 @@ def repo_config():

)

+def test_online_store_config_default():

+ """Test DynamoDBOnlineStoreConfig default parameters."""

+ aws_region = "us-west-2"

+ dynamodb_store_config = DynamoDBOnlineStoreConfig(region=aws_region)

+ assert dynamodb_store_config.type == "dynamodb"

+ assert dynamodb_store_config.batch_size == 40

+ assert dynamodb_store_config.endpoint_url is None

+ assert dynamodb_store_config.region == aws_region

+ assert dynamodb_store_config.sort_response is True

+ assert dynamodb_store_config.table_name_template == "{project}.{table_name}"

+

+

+def test_dynamodb_table_default_params():

+ """Test DynamoDBTable default parameters."""

+ tbl_name = "dynamodb-test"

+ aws_region = "us-west-2"

+ dynamodb_table = DynamoDBTable(tbl_name, aws_region)

+ assert dynamodb_table.name == tbl_name

+ assert dynamodb_table.region == aws_region

+ assert dynamodb_table.endpoint_url is None

+ assert dynamodb_table._dynamodb_client is None

+ assert dynamodb_table._dynamodb_resource is None

+

+

+def test_online_store_config_custom_params():

+ """Test DynamoDBOnlineStoreConfig custom parameters."""

+ aws_region = "us-west-2"

+ batch_size = 20

+ endpoint_url = "http://localhost:8000"

+ sort_response = False

+ table_name_template = "feast_test.dynamodb_table"

+ dynamodb_store_config = DynamoDBOnlineStoreConfig(

+ region=aws_region,

+ batch_size=batch_size,

+ endpoint_url=endpoint_url,

+ sort_response=sort_response,

+ table_name_template=table_name_template,

+ )

+ assert dynamodb_store_config.type == "dynamodb"

+ assert dynamodb_store_config.batch_size == batch_size

+ assert dynamodb_store_config.endpoint_url == endpoint_url

+ assert dynamodb_store_config.region == aws_region

+ assert dynamodb_store_config.sort_response == sort_response

+ assert dynamodb_store_config.table_name_template == table_name_template

+

+

+def test_dynamodb_table_custom_params():

+ """Test DynamoDBTable custom parameters."""

+ tbl_name = "dynamodb-test"

+ aws_region = "us-west-2"

+ endpoint_url = "http://localhost:8000"

+ dynamodb_table = DynamoDBTable(tbl_name, aws_region, endpoint_url)

+ assert dynamodb_table.name == tbl_name

+ assert dynamodb_table.region == aws_region

+ assert dynamodb_table.endpoint_url == endpoint_url

+ assert dynamodb_table._dynamodb_client is None

+ assert dynamodb_table._dynamodb_resource is None

+

+

+def test_online_store_config_dynamodb_client():

+ """Test DynamoDBOnlineStoreConfig configure DynamoDB client with endpoint_url."""

+ aws_region = "us-west-2"

+ endpoint_url = "http://localhost:8000"

+ dynamodb_store = DynamoDBOnlineStore()

+ dynamodb_store_config = DynamoDBOnlineStoreConfig(

+ region=aws_region, endpoint_url=endpoint_url

+ )

+ dynamodb_client = dynamodb_store._get_dynamodb_client(

+ dynamodb_store_config.region, dynamodb_store_config.endpoint_url

+ )

+ assert dynamodb_client.meta.region_name == aws_region

+ assert dynamodb_client.meta.endpoint_url == endpoint_url

+

+

+def test_dynamodb_table_dynamodb_client():

+ """Test DynamoDBTable configure DynamoDB client with endpoint_url."""

+ tbl_name = "dynamodb-test"

+ aws_region = "us-west-2"

+ endpoint_url = "http://localhost:8000"

+ dynamodb_table = DynamoDBTable(tbl_name, aws_region, endpoint_url)

+ dynamodb_client = dynamodb_table._get_dynamodb_client(

+ dynamodb_table.region, dynamodb_table.endpoint_url

+ )

+ assert dynamodb_client.meta.region_name == aws_region

+ assert dynamodb_client.meta.endpoint_url == endpoint_url

+

+

+def test_online_store_config_dynamodb_resource():

+ """Test DynamoDBOnlineStoreConfig configure DynamoDB Resource with endpoint_url."""

+ aws_region = "us-west-2"

+ endpoint_url = "http://localhost:8000"

+ dynamodb_store = DynamoDBOnlineStore()

+ dynamodb_store_config = DynamoDBOnlineStoreConfig(

+ region=aws_region, endpoint_url=endpoint_url

+ )

+ dynamodb_resource = dynamodb_store._get_dynamodb_resource(

+ dynamodb_store_config.region, dynamodb_store_config.endpoint_url

+ )

+ assert dynamodb_resource.meta.client.meta.region_name == aws_region

+ assert dynamodb_resource.meta.client.meta.endpoint_url == endpoint_url

+

+

+def test_dynamodb_table_dynamodb_resource():

+ """Test DynamoDBTable configure DynamoDB resource with endpoint_url."""

+ tbl_name = "dynamodb-test"

+ aws_region = "us-west-2"

+ endpoint_url = "http://localhost:8000"

+ dynamodb_table = DynamoDBTable(tbl_name, aws_region, endpoint_url)

+ dynamodb_resource = dynamodb_table._get_dynamodb_resource(

+ dynamodb_table.region, dynamodb_table.endpoint_url

+ )

+ assert dynamodb_resource.meta.client.meta.region_name == aws_region

+ assert dynamodb_resource.meta.client.meta.endpoint_url == endpoint_url

+

+

@mock_dynamodb2

@pytest.mark.parametrize("n_samples", [5, 50, 100])

def test_online_read(repo_config, n_samples):

| [DynamoDB] - Allow passing ddb endpoint_url to enable feast local testing

**Is your feature request related to a problem? Please describe.**

Currently in feature_store.yaml, we can only specify a region for DynamoDB provider. As a result, it requires an actual DynamoDB to be available when we want to do local development/testing or integration testing in a sandbox environment.

**Describe the solution you'd like**

A way to solve this is to let user pass an endpoint_url. More information can be found [here](https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/DynamoDBLocal.UsageNotes.html).

This way, users can install and run a [local dynamoDB](https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/DynamoDBLocal.html), and use it as an online store locally.

This is especially useful when:

- accessing real DynamoDB requires tedious and time-consuming steps (e.g., IAM role setup, permissions, etc.) and these steps can be deferred until later,

- integration testing locally, in docker, in Jenkins

**Describe alternatives you've considered**

N/A

**Additional context**

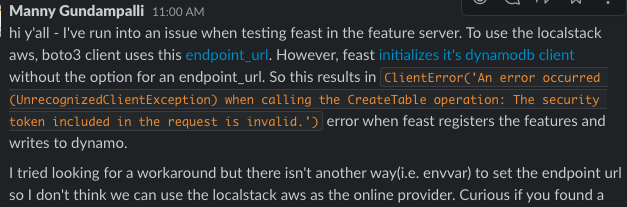

Not much, but the initial Slack thread can be found [here](https://tectonfeast.slack.com/archives/C01MSKCMB37/p1646166683447239), which was followed by a Slack message from our team member.

| @vlin-lgtm I think this one is fairly easy: [_initialize_dynamodb_client](https://github.com/feast-dev/feast/blob/ea6a9b2034c35bf36ee5073fad93dde52279ebcd/sdk/python/feast/infra/online_stores/dynamodb.py#L288) and [_initialize_dynamodb_resource](_initialize_dynamodb_resource) would now accept `endpoint_url`

```python

def _initialize_dynamodb_client(region: str, url: str):
    return boto3.client("dynamodb", endpoint_url=url, region_name=region)

```

which can be passed through the [DynamoDBOnlineStoreConfig](https://github.com/feast-dev/feast/blob/ea6a9b2034c35bf36ee5073fad93dde52279ebcd/sdk/python/feast/infra/online_stores/dynamodb.py#L47).

But I'm not sure how this would work for local integration tests. As far as I know, we just need to change the [DYNAMODB_CONFIG](https://github.com/feast-dev/feast/blob/ea6a9b2034c35bf36ee5073fad93dde52279ebcd/sdk/python/tests/integration/feature_repos/repo_configuration.py#L48) to add an `endpoint_url` parameter and set an [IntegrationTestConfig](https://github.com/feast-dev/feast/blob/ea6a9b2034c35bf36ee5073fad93dde52279ebcd/sdk/python/tests/integration/feature_repos/repo_configuration.py#L93) with DynamoDB as the online store.

What are your thoughts?

P.S. I'm happy to contribute this.

Thanks so much, @TremaMiguel!

> But, I'm not sure how will this be for local integration tests, ....

My understanding is that one can run a [local dynamoDB](https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/DynamoDBLocal.html) on a development machine or in a Docker container, and by setting endpoint_url to, for example, `localhost:8080`, all DynamoDB invocations will go to the localhost instance instead of an actual one in AWS.

This is useful for integration testing as everything is still "local".
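
The idea above can be sketched as follows. This is only an illustration, not the code from the PR: the helper names `dynamodb_kwargs` and `make_dynamodb_resource` are hypothetical, and the endpoint `http://localhost:8000` is the example value used in the patch's docstring and tests (a full URL with scheme). The only boto3 call used is `boto3.resource("dynamodb", region_name=..., endpoint_url=...)`, which also appears in the patch.

```python
from typing import Any, Dict, Optional


def dynamodb_kwargs(region: str, endpoint_url: Optional[str] = None) -> Dict[str, Any]:
    """Build keyword arguments for boto3.client / boto3.resource (hypothetical helper)."""
    kwargs: Dict[str, Any] = {"region_name": region}
    # Only override the endpoint when one is configured, so the default
    # AWS endpoint for the region is used otherwise.
    if endpoint_url is not None:
        kwargs["endpoint_url"] = endpoint_url
    return kwargs


def make_dynamodb_resource(region: str, endpoint_url: Optional[str] = None):
    """Create a DynamoDB resource, pointed at a local instance if requested."""
    import boto3  # imported lazily so dynamodb_kwargs can be used without boto3

    return boto3.resource("dynamodb", **dynamodb_kwargs(region, endpoint_url))


# Local development: requests would go to the local DynamoDB instance.
local = dynamodb_kwargs("us-west-2", "http://localhost:8000")
# Default behaviour: no endpoint override, requests go to AWS.
aws = dynamodb_kwargs("us-west-2")
```

Keeping `endpoint_url` optional means existing configurations that only set a region keep working unchanged.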

Would love it if you can help contribute to this 🙏

@vlin-lgtm Thanks for replying

I agree about the local integration tests. Additionally, I think this development could help with #2400.

I'll start working on this.

| 2022-04-04T17:53:16 |

feast-dev/feast | 2,508 | feast-dev__feast-2508 | [

"2482"

]

| df51b942a6f5cae0e47da3206f68f58ea26502b4 | diff --git a/sdk/python/feast/infra/utils/snowflake_utils.py b/sdk/python/feast/infra/utils/snowflake_utils.py

--- a/sdk/python/feast/infra/utils/snowflake_utils.py

+++ b/sdk/python/feast/infra/utils/snowflake_utils.py

@@ -4,9 +4,11 @@

import string

from logging import getLogger

from tempfile import TemporaryDirectory

-from typing import Dict, Iterator, List, Optional, Tuple, cast

+from typing import Any, Dict, Iterator, List, Optional, Tuple, cast

import pandas as pd

+from cryptography.hazmat.backends import default_backend

+from cryptography.hazmat.primitives import serialization

from tenacity import (

retry,

retry_if_exception_type,

@@ -40,18 +42,17 @@ def execute_snowflake_statement(conn: SnowflakeConnection, query) -> SnowflakeCu

def get_snowflake_conn(config, autocommit=True) -> SnowflakeConnection:

- if config.type == "snowflake.offline":

- config_header = "connections.feast_offline_store"

+ assert config.type == "snowflake.offline"

+ config_header = "connections.feast_offline_store"

config_dict = dict(config)

# read config file

config_reader = configparser.ConfigParser()

config_reader.read([config_dict["config_path"]])

+ kwargs: Dict[str, Any] = {}

if config_reader.has_section(config_header):

kwargs = dict(config_reader[config_header])

- else:

- kwargs = {}

if "schema" in kwargs:

kwargs["schema_"] = kwargs.pop("schema")

@@ -67,6 +68,13 @@ def get_snowflake_conn(config, autocommit=True) -> SnowflakeConnection:

else:

kwargs["schema"] = '"PUBLIC"'

+ # https://docs.snowflake.com/en/user-guide/python-connector-example.html#using-key-pair-authentication-key-pair-rotation

+ # https://docs.snowflake.com/en/user-guide/key-pair-auth.html#configuring-key-pair-authentication

+ if "private_key" in kwargs:

+ kwargs["private_key"] = parse_private_key_path(

+ kwargs["private_key"], kwargs["private_key_passphrase"]

+ )

+

try:

conn = snowflake.connector.connect(

application="feast", autocommit=autocommit, **kwargs

@@ -288,3 +296,21 @@ def chunk_helper(lst: pd.DataFrame, n: int) -> Iterator[Tuple[int, pd.DataFrame]

"""Helper generator to chunk a sequence efficiently with current index like if enumerate was called on sequence."""

for i in range(0, len(lst), n):

yield int(i / n), lst[i : i + n]

+

+

+def parse_private_key_path(key_path: str, private_key_passphrase: str) -> bytes:

+

+ with open(key_path, "rb") as key:

+ p_key = serialization.load_pem_private_key(

+ key.read(),

+ password=private_key_passphrase.encode(),

+ backend=default_backend(),

+ )

+

+ pkb = p_key.private_bytes(

+ encoding=serialization.Encoding.DER,

+ format=serialization.PrivateFormat.PKCS8,

+ encryption_algorithm=serialization.NoEncryption(),

+ )

+

+ return pkb

| Allow for connecting to Snowflake with a private key

When connecting to Snowflake with an account that requires MFA, connecting with just the username and password credentials does not work (or at least requires extra work to use MFA devices).

Instead of using username and password we should be able to use a private key and a private key passphrase to connect to Snowflake. Snowflake already supports this method of authentication. See [here](https://docs.snowflake.com/en/user-guide/python-connector-example.html#label-python-key-pair-authn-rotation). Feast should add this as an option to the feature_store.yaml as part of the Snowflake connection config.

When trying to use a private_key_path and passphrase this error was raised:

```

raise FeastConfigError(e, config_path)

feast.repo_config.FeastConfigError: 2 validation errors for RepoConfig

__root__ -> offline_store -> private_key_passphrase

extra fields not permitted (type=value_error.extra)

__root__ -> offline_store -> private_key_path

extra fields not permitted (type=value_error.extra)

```

It seems like fields related to a passphrase are not permitted [here](https://github.com/feast-dev/feast/blob/b95f4410ee91069ff84e81d2d5f3e9329edc8626/sdk/python/feast/infra/offline_stores/snowflake.py#L56)
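
As a rough sketch of how such settings could be supplied outside of feature_store.yaml, the snippet below reads a private key path and passphrase from an INI-style connection file with `configparser`. It assumes the section name `connections.feast_offline_store` and the keys `private_key` and `private_key_passphrase` that appear in the patch; the helper name `read_key_pair_settings`, the other keys, and all values are placeholders for illustration. It does not load or decrypt the key itself.

```python
import configparser
from typing import Dict

# Section name taken from the patch; everything else here is illustrative.
SECTION = "connections.feast_offline_store"


def read_key_pair_settings(config_text: str) -> Dict[str, str]:
    """Return the connection settings, checking that key-pair fields are consistent."""
    reader = configparser.ConfigParser()
    reader.read_string(config_text)
    kwargs = dict(reader[SECTION]) if reader.has_section(SECTION) else {}
    # Fail early with a clear message instead of a KeyError later on.
    if "private_key" in kwargs and "private_key_passphrase" not in kwargs:
        raise ValueError("private_key is set but private_key_passphrase is missing")
    return kwargs


example = """
[connections.feast_offline_store]
accountname = my_account
username = my_user
private_key = /path/to/rsa_key.p8
private_key_passphrase = example-passphrase
"""

settings = read_key_pair_settings(example)
```

The explicit check is a design choice for the sketch: it surfaces a missing passphrase at configuration time rather than when the connection is opened.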

cc @sfc-gh-madkins

| After a Slack discussion, it was concluded that this could be done using the ~/.snowsql/config file and passing in the private key there. Unfortunately, the Python connector doesn't take the private key path; instead, it takes a bytes object of an unencrypted RSA private key. To create this private key, we should allow a user to set the private key path and private key passphrase in the config file and then convert that to the private key in code, using the method detailed in the Snowflake key-pair authentication documentation. | 2022-04-07T19:31:56 |

|

feast-dev/feast | 2,515 | feast-dev__feast-2515 | [

"2483"

]

| 83f3e0dc6c1df42950d1f808a9d6f3f7fc485825 | diff --git a/sdk/python/feast/infra/online_stores/dynamodb.py b/sdk/python/feast/infra/online_stores/dynamodb.py

--- a/sdk/python/feast/infra/online_stores/dynamodb.py

+++ b/sdk/python/feast/infra/online_stores/dynamodb.py

@@ -191,21 +191,7 @@ def online_write_batch(

table_instance = dynamodb_resource.Table(

_get_table_name(online_config, config, table)

)

- with table_instance.batch_writer() as batch:

- for entity_key, features, timestamp, created_ts in data:

- entity_id = compute_entity_id(entity_key)

- batch.put_item(

- Item={

- "entity_id": entity_id, # PartitionKey

- "event_ts": str(utils.make_tzaware(timestamp)),

- "values": {

- k: v.SerializeToString()

- for k, v in features.items() # Serialized Features

- },

- }

- )

- if progress:

- progress(1)

+ self._write_batch_non_duplicates(table_instance, data, progress)

@log_exceptions_and_usage(online_store="dynamodb")

def online_read(

@@ -299,6 +285,32 @@ def _sort_dynamodb_response(self, responses: list, order: list):

_, table_responses_ordered = zip(*table_responses_ordered)

return table_responses_ordered

+ @log_exceptions_and_usage(online_store="dynamodb")

+ def _write_batch_non_duplicates(

+ self,

+ table_instance,

+ data: List[

+ Tuple[EntityKeyProto, Dict[str, ValueProto], datetime, Optional[datetime]]

+ ],

+ progress: Optional[Callable[[int], Any]],

+ ):

+ """Deduplicate write batch request items on ``entity_id`` primary key."""

+ with table_instance.batch_writer(overwrite_by_pkeys=["entity_id"]) as batch:

+ for entity_key, features, timestamp, created_ts in data:

+ entity_id = compute_entity_id(entity_key)

+ batch.put_item(

+ Item={

+ "entity_id": entity_id, # PartitionKey

+ "event_ts": str(utils.make_tzaware(timestamp)),

+ "values": {

+ k: v.SerializeToString()

+ for k, v in features.items() # Serialized Features

+ },

+ }

+ )

+ if progress:

+ progress(1)

+

def _initialize_dynamodb_client(region: str, endpoint_url: Optional[str] = None):

return boto3.client("dynamodb", region_name=region, endpoint_url=endpoint_url)

| diff --git a/sdk/python/tests/unit/infra/online_store/test_dynamodb_online_store.py b/sdk/python/tests/unit/infra/online_store/test_dynamodb_online_store.py

--- a/sdk/python/tests/unit/infra/online_store/test_dynamodb_online_store.py

+++ b/sdk/python/tests/unit/infra/online_store/test_dynamodb_online_store.py

@@ -1,5 +1,7 @@

+from copy import deepcopy

from dataclasses import dataclass

+import boto3

import pytest

from moto import mock_dynamodb2

@@ -162,7 +164,7 @@ def test_online_read(repo_config, n_samples):

data = _create_n_customer_test_samples(n=n_samples)

_insert_data_test_table(data, PROJECT, f"{TABLE_NAME}_{n_samples}", REGION)

- entity_keys, features = zip(*data)

+ entity_keys, features, *rest = zip(*data)

dynamodb_store = DynamoDBOnlineStore()

returned_items = dynamodb_store.online_read(

config=repo_config,

@@ -171,3 +173,24 @@ def test_online_read(repo_config, n_samples):

)

assert len(returned_items) == len(data)

assert [item[1] for item in returned_items] == list(features)

+

+

+@mock_dynamodb2

+def test_write_batch_non_duplicates(repo_config):

+ """Test DynamoDBOnline Store deduplicate write batch request items."""

+ dynamodb_tbl = f"{TABLE_NAME}_batch_non_duplicates"

+ _create_test_table(PROJECT, dynamodb_tbl, REGION)

+ data = _create_n_customer_test_samples()

+ data_duplicate = deepcopy(data)

+ dynamodb_resource = boto3.resource("dynamodb", region_name=REGION)

+ table_instance = dynamodb_resource.Table(f"{PROJECT}.{dynamodb_tbl}")

+ dynamodb_store = DynamoDBOnlineStore()

+ # Insert duplicate data

+ dynamodb_store._write_batch_non_duplicates(

+ table_instance, data + data_duplicate, progress=None

+ )

+ # Request more items than inserted

+ response = table_instance.scan(Limit=20)

+ returned_items = response.get("Items", None)

+ assert returned_items is not None

+ assert len(returned_items) == len(data)

diff --git a/sdk/python/tests/utils/online_store_utils.py b/sdk/python/tests/utils/online_store_utils.py

--- a/sdk/python/tests/utils/online_store_utils.py

+++ b/sdk/python/tests/utils/online_store_utils.py

@@ -19,6 +19,8 @@ def _create_n_customer_test_samples(n=10):

"name": ValueProto(string_val="John"),

"age": ValueProto(int64_val=3),

},

+ datetime.utcnow(),

+ None,

)

for i in range(n)

]

@@ -42,13 +44,13 @@ def _delete_test_table(project, tbl_name, region):

def _insert_data_test_table(data, project, tbl_name, region):

dynamodb_resource = boto3.resource("dynamodb", region_name=region)

table_instance = dynamodb_resource.Table(f"{project}.{tbl_name}")

- for entity_key, features in data:

+ for entity_key, features, timestamp, created_ts in data:

entity_id = compute_entity_id(entity_key)

with table_instance.batch_writer() as batch:

batch.put_item(

Item={

"entity_id": entity_id,

- "event_ts": str(utils.make_tzaware(datetime.utcnow())),

+ "event_ts": str(utils.make_tzaware(timestamp)),

"values": {k: v.SerializeToString() for k, v in features.items()},

}

)

| [DynamoDB] BatchWriteItem operation: Provided list of item keys contains duplicates

## Expected Behavior

Duplication should be handled if a partition key already exists in the batch to be written to DynamoDB.

## Current Behavior

The following exception is raised when running the local test `test_online_retrieval[LOCAL:File:dynamodb-True]`

```bash

botocore.exceptions.ClientError: An error occurred (ValidationException) when calling the BatchWriteItem operation: Provided list of item keys contains duplicates

```

## Steps to reproduce

This is the output from the pytest log

```bash

environment = Environment(name='integration_test_63b98a_1', test_repo_config=LOCAL:File:dynamodb, feature_store=<feast.feature_store...sal.data_sources.file.FileDataSourceCreator object at 0x7fb91d38f730>, python_feature_server=False, worker_id='master')

universal_data_sources = (UniversalEntities(customer_vals=[1001, 1002, 1003, 1004, 1005, 1006, 1007, 1008, 1009, 1010, 1011, 1012, 1013, 1014, ...object at 0x7fb905f6b7c0>, field_mapping=<feast.infra.offline_stores.file_source.FileSource object at 0x7fb905f794f0>))

full_feature_names = True

@pytest.mark.integration

@pytest.mark.universal

@pytest.mark.parametrize("full_feature_names", [True, False], ids=lambda v: str(v))

def test_online_retrieval(environment, universal_data_sources, full_feature_names):

fs = environment.feature_store

entities, datasets, data_sources = universal_data_sources

feature_views = construct_universal_feature_views(data_sources)

feature_service = FeatureService(

"convrate_plus100",

features=[feature_views.driver[["conv_rate"]], feature_views.driver_odfv],

)

feature_service_entity_mapping = FeatureService(

name="entity_mapping",

features=[

feature_views.location.with_name("origin").with_join_key_map(

{"location_id": "origin_id"}

),

feature_views.location.with_name("destination").with_join_key_map(

{"location_id": "destination_id"}

),

],

)

feast_objects = []

feast_objects.extend(feature_views.values())

feast_objects.extend(

[

driver(),

customer(),

location(),

feature_service,

feature_service_entity_mapping,

]

)

fs.apply(feast_objects)

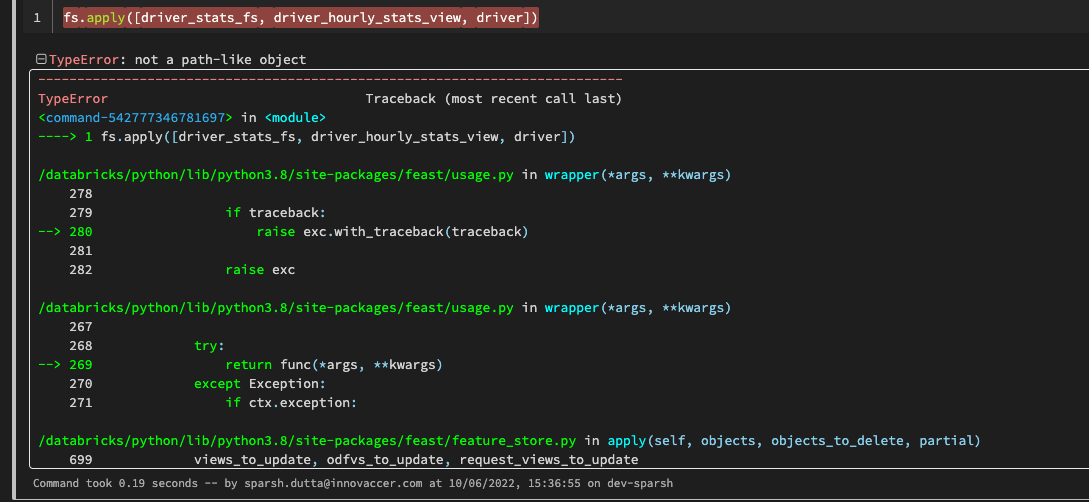

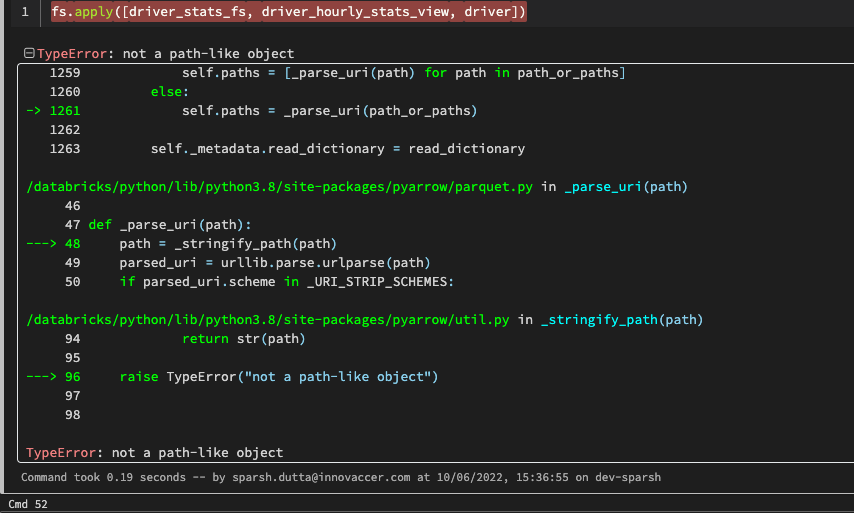

> fs.materialize(

environment.start_date - timedelta(days=1),

environment.end_date + timedelta(days=1),

)

sdk/python/tests/integration/online_store/test_universal_online.py:426:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

sdk/python/feast/feature_store.py:1165: in materialize

provider.materialize_single_feature_view(

sdk/python/feast/infra/passthrough_provider.py:164: in materialize_single_feature_view

self.online_write_batch(

sdk/python/feast/infra/passthrough_provider.py:86: in online_write_batch

self.online_store.online_write_batch(config, table, data, progress)

sdk/python/feast/infra/online_stores/dynamodb.py:208: in online_write_batch

progress(1)

../venv/lib/python3.9/site-packages/boto3/dynamodb/table.py:168: in __exit__

self._flush()

../venv/lib/python3.9/site-packages/boto3/dynamodb/table.py:144: in _flush

response = self._client.batch_write_item(

../venv/lib/python3.9/site-packages/botocore/client.py:395: in _api_call

return self._make_api_call(operation_name, kwargs)

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <botocore.client.DynamoDB object at 0x7fb9056a0eb0>, operation_name = 'BatchWriteItem'

api_params = {'RequestItems': {'integration_test_63b98a_1.global_stats': [{'PutRequest': {'Item': {'entity_id': '361ad244a817acdb9c...Item': {'entity_id': '361ad244a817acdb9cb041cf7ee8b4b0', 'event_ts': '2022-04-03 16:00:00+00:00', 'values': {...}}}}]}}

def _make_api_call(self, operation_name, api_params):

operation_model = self._service_model.operation_model(operation_name)

service_name = self._service_model.service_name

history_recorder.record('API_CALL', {

'service': service_name,

'operation': operation_name,

'params': api_params,

})

if operation_model.deprecated:

logger.debug('Warning: %s.%s() is deprecated',

service_name, operation_name)

request_context = {

'client_region': self.meta.region_name,

'client_config': self.meta.config,

'has_streaming_input': operation_model.has_streaming_input,

'auth_type': operation_model.auth_type,

}

request_dict = self._convert_to_request_dict(

api_params, operation_model, context=request_context)

resolve_checksum_context(request_dict, operation_model, api_params)

service_id = self._service_model.service_id.hyphenize()

handler, event_response = self.meta.events.emit_until_response(

'before-call.{service_id}.{operation_name}'.format(

service_id=service_id,

operation_name=operation_name),

model=operation_model, params=request_dict,

request_signer=self._request_signer, context=request_context)

if event_response is not None:

http, parsed_response = event_response

else:

apply_request_checksum(request_dict)

http, parsed_response = self._make_request(

operation_model, request_dict, request_context)

self.meta.events.emit(

'after-call.{service_id}.{operation_name}'.format(

service_id=service_id,

operation_name=operation_name),

http_response=http, parsed=parsed_response,

model=operation_model, context=request_context

)

if http.status_code >= 300:

error_code = parsed_response.get("Error", {}).get("Code")

error_class = self.exceptions.from_code(error_code)

> raise error_class(parsed_response, operation_name)

E botocore.exceptions.ClientError: An error occurred (ValidationException) when calling the BatchWriteItem operation: Provided list of item keys contains duplicates

```

### Specifications

- Version: `feast 0.18.1`

- Platform: `Windows`

## Possible Solution

Overwrite by partition keys in `DynamoDB.online_write_batch()` method

```python

with table_instance.batch_writer(overwrite_by_pkeys=["entity_id"]) as batch:
    for entity_key, features, timestamp, created_ts in data:
        entity_id = compute_entity_id(entity_key)

```

This solution comes from [StackOverflow](https://stackoverflow.com/questions/56632960/dynamodb-batchwriteitem-provided-list-of-item-keys-contains-duplicates)
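
To make the intended effect concrete, here is a small pure-Python sketch of "last write wins" deduplication on a primary key. It only mimics, under my understanding, what `overwrite_by_pkeys` does to items that are buffered together; it is not boto3's implementation, and the helper name `dedupe_by_key` and the sample items are hypothetical.

```python
from typing import Any, Dict, Iterable, List


def dedupe_by_key(items: Iterable[Dict[str, Any]], key: str = "entity_id") -> List[Dict[str, Any]]:
    """Keep only the most recent item for each value of `key`."""
    latest: Dict[Any, Dict[str, Any]] = {}
    for item in items:
        # Drop any earlier item with the same key, then append the new one,
        # so the newest item takes the latest position in the output.
        latest.pop(item[key], None)
        latest[item[key]] = item
    return list(latest.values())


batch = [
    {"entity_id": "a", "event_ts": "2022-04-03 15:00:00+00:00"},
    {"entity_id": "b", "event_ts": "2022-04-03 15:00:00+00:00"},
    {"entity_id": "a", "event_ts": "2022-04-03 16:00:00+00:00"},
]
unique = dedupe_by_key(batch)
```

After deduplication the batch contains one item per `entity_id`, so a single write request would no longer carry duplicate keys.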

## Other Comments

This error appeared while developing #2358; I can provide a fix for both in the same PR if possible.

| 2022-04-09T03:35:38 |

|

feast-dev/feast | 2,551 | feast-dev__feast-2551 | [

"2399"

]

| f136f8cc6c7feade73466aeb6267500377089485 | diff --git a/sdk/python/feast/infra/offline_stores/file.py b/sdk/python/feast/infra/offline_stores/file.py

--- a/sdk/python/feast/infra/offline_stores/file.py

+++ b/sdk/python/feast/infra/offline_stores/file.py

@@ -299,11 +299,25 @@ def evaluate_offline_job():

if created_timestamp_column

else [event_timestamp_column]

)

+ # try-catch block is added to deal with this issue https://github.com/dask/dask/issues/8939.

+ # TODO(kevjumba): remove try catch when fix is merged upstream in Dask.

+ try:

+ if created_timestamp_column:

+ source_df = source_df.sort_values(by=created_timestamp_column,)

+

+ source_df = source_df.sort_values(by=event_timestamp_column)

+

+ except ZeroDivisionError:

+ # Use 1 partition to get around case where everything in timestamp column is the same so the partition algorithm doesn't

+ # try to divide by zero.

+ if created_timestamp_column:

+ source_df = source_df.sort_values(

+ by=created_timestamp_column, npartitions=1

+ )

- if created_timestamp_column:

- source_df = source_df.sort_values(by=created_timestamp_column)

-

- source_df = source_df.sort_values(by=event_timestamp_column)

+ source_df = source_df.sort_values(

+ by=event_timestamp_column, npartitions=1

+ )

source_df = source_df[

(source_df[event_timestamp_column] >= start_date)

| Feast materialize throws an unhandled "ZeroDivisionError: division by zero" exception

## Expected Behavior

With feast version `0.19.3`, `feast materialize` should not throw an unhandled exception

In feast version `0.18.1`, everything works as expected.

```

→ python feast_materialize.py

Materializing 1 feature views from 2022-03-10 05:41:44-08:00 to 2022-03-11 05:41:44-08:00 into the dynamodb online store.

ryoung_division_by_zero_reproducer:

100%|█████████████████████████████████████████████████████████████████| 2/2 [00:00<00:00, 19.34it/s]

```

## Current Behavior

```

→ python feast_materialize.py

/Users/ryoung/.pyenv/versions/3.8.10/lib/python3.8/importlib/__init__.py:127: DeprecationWarning: The toolz.compatibility module is no longer needed in Python 3 and has been deprecated. Please import these utilities directly from the standard library. This module will be removed in a future release.

return _bootstrap._gcd_import(name[level:], package, level)

Materializing 1 feature views from 2022-03-10 05:42:56-08:00 to 2022-03-11 05:42:56-08:00 into the dynamodb online store.

ryoung_division_by_zero_reproducer:

Traceback (most recent call last):

File "feast_materialize.py", line 32, in <module>

fs.materialize(

File "/Users/ryoung/.pyenv/versions/3.8.10/envs/python-monorepo-3.8.10/lib/python3.8/site-packages/feast/usage.py", line 269, in wrapper

return func(*args, **kwargs)

File "/Users/ryoung/.pyenv/versions/3.8.10/envs/python-monorepo-3.8.10/lib/python3.8/site-packages/feast/feature_store.py", line 1130, in materialize

provider.materialize_single_feature_view(

File "/Users/ryoung/.pyenv/versions/3.8.10/envs/python-monorepo-3.8.10/lib/python3.8/site-packages/feast/infra/passthrough_provider.py", line 154, in materialize_single_feature_view

table = offline_job.to_arrow()

File "/Users/ryoung/.pyenv/versions/3.8.10/envs/python-monorepo-3.8.10/lib/python3.8/site-packages/feast/infra/offline_stores/offline_store.py", line 121, in to_arrow

return self._to_arrow_internal()

File "/Users/ryoung/.pyenv/versions/3.8.10/envs/python-monorepo-3.8.10/lib/python3.8/site-packages/feast/usage.py", line 280, in wrapper

raise exc.with_traceback(traceback)

File "/Users/ryoung/.pyenv/versions/3.8.10/envs/python-monorepo-3.8.10/lib/python3.8/site-packages/feast/usage.py", line 269, in wrapper

return func(*args, **kwargs)

File "/Users/ryoung/.pyenv/versions/3.8.10/envs/python-monorepo-3.8.10/lib/python3.8/site-packages/feast/infra/offline_stores/file.py", line 75, in _to_arrow_internal

df = self.evaluation_function().compute()

File "/Users/ryoung/.pyenv/versions/3.8.10/envs/python-monorepo-3.8.10/lib/python3.8/site-packages/feast/infra/offline_stores/file.py", line 309, in evaluate_offline_job

source_df = source_df.sort_values(by=event_timestamp_column)

File "/Users/ryoung/.pyenv/versions/3.8.10/envs/python-monorepo-3.8.10/lib/python3.8/site-packages/dask/dataframe/core.py", line 4388, in sort_values

return sort_values(

File "/Users/ryoung/.pyenv/versions/3.8.10/envs/python-monorepo-3.8.10/lib/python3.8/site-packages/dask/dataframe/shuffle.py", line 146, in sort_values

df = rearrange_by_divisions(

File "/Users/ryoung/.pyenv/versions/3.8.10/envs/python-monorepo-3.8.10/lib/python3.8/site-packages/dask/dataframe/shuffle.py", line 446, in rearrange_by_divisions

df3 = rearrange_by_column(

File "/Users/ryoung/.pyenv/versions/3.8.10/envs/python-monorepo-3.8.10/lib/python3.8/site-packages/dask/dataframe/shuffle.py", line 473, in rearrange_by_column

df = df.repartition(npartitions=npartitions)

File "/Users/ryoung/.pyenv/versions/3.8.10/envs/python-monorepo-3.8.10/lib/python3.8/site-packages/dask/dataframe/core.py", line 1319, in repartition

return repartition_npartitions(self, npartitions)

File "/Users/ryoung/.pyenv/versions/3.8.10/envs/python-monorepo-3.8.10/lib/python3.8/site-packages/dask/dataframe/core.py", line 6859, in repartition_npartitions

npartitions_ratio = df.npartitions / npartitions

ZeroDivisionError: division by zero

```

## Steps to reproduce

Create a list of feature records in PySpark and write them out as a parquet file.

```

from pyspark.sql import types as T

from datetime import datetime, timedelta

INPUT_SCHEMA = T.StructType(

[

T.StructField("id", T.StringType(), False),

T.StructField("feature1", T.FloatType(), False),

T.StructField("feature2", T.FloatType(), False),

T.StructField("event_timestamp", T.TimestampType(), False),

]

)

now = datetime.now()

one_hour_ago = now - timedelta(hours=1)

feature_records = [

{

"id": "foo",

"event_timestamp": one_hour_ago,

"feature1": 5.50,

"feature2": 7.50,

},

{

"id": "bar",

"event_timestamp": one_hour_ago,

"feature1": -1.10,

"feature2": 2.20,

},

]

df = spark.createDataFrame(data=feature_records, schema=INPUT_SCHEMA)

df.show(truncate=False)

df.write.parquet(mode="overwrite", path="s3://XXX/reproducer/2022-03-11T05:34:51.599215/")

```

The output should look something like:

```

+---+--------+--------+--------------------------+

|id |feature1|feature2|event_timestamp |

+---+--------+--------+--------------------------+

|foo|5.5 |7.5 |2022-03-11 04:35:39.318222|

|bar|-1.1 |2.2 |2022-03-11 04:35:39.318222|

+---+--------+--------+--------------------------+

```

Create a `feast_materialize.py` script.

```

from datetime import datetime, timedelta

from feast import FeatureStore, Entity, Feature, FeatureView, FileSource, ValueType

now = datetime.utcnow()

one_day_ago = now - timedelta(days=1)

s3_url = "s3://XXX/reproducer/2022-03-11T05:34:51.599215/"

offline_features_dump = FileSource(

path=s3_url,

event_timestamp_column="event_timestamp",

)

entity = Entity(name="id", value_type=ValueType.STRING)

feature_names = ["feature1", "feature2"]

feature_view = FeatureView(

name="ryoung_division_by_zero_reproducer",

entities=["id"],

ttl=timedelta(days=30),

features=[Feature(name=f, dtype=ValueType.FLOAT) for f in feature_names],

online=True,

batch_source=offline_features_dump,

)

fs = FeatureStore(".")

fs.apply(entity)

fs.apply(feature_view)

fs.materialize(

start_date=one_day_ago,

end_date=now,

feature_views=["ryoung_division_by_zero_reproducer"],

)

```

Note that you need to supply your own S3 bucket.

### Specifications

- Version: `0.19.3`

- Platform: `Darwin Kernel Version 21.3.0`

- Subsystem:

## Possible Solution

I downgraded back to feast version `0.18.1`.
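
Another possible workaround, sketched here as an assumption rather than a confirmed fix, is to retry the sort with a single partition when the division by zero occurs. The helper `sort_with_fallback` is hypothetical; `sort_values` stands for any callable that accepts `by` and an optional `npartitions` keyword, as the Dask `sort_values` in the traceback above appears to.

```python
from typing import Any, Callable


def sort_with_fallback(sort_values: Callable[..., Any], by: str) -> Any:
    """Sort normally, retrying with a single partition on ZeroDivisionError."""
    try:
        return sort_values(by=by)
    except ZeroDivisionError:
        # Assumption: forcing one partition avoids the zero partition
        # count that triggers the division by zero.
        return sort_values(by=by, npartitions=1)
```

With a Dask DataFrame this might be called as `sort_with_fallback(source_df.sort_values, "event_timestamp")`.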

| @tsotnet This looks like a p0 bug. I can always repro it. Should this be prioritized? What would be the quick fix I can apply?

thanks for calling this out @zhiyanshao . Will have someone take a look into this asap | 2022-04-15T00:37:49 |

|

feast-dev/feast | 2,554 | feast-dev__feast-2554 | [

"2552"

]

| 753bd8894a6783bd6e39cbe4cf2df5d8e89919ff | diff --git a/sdk/python/feast/infra/online_stores/redis.py b/sdk/python/feast/infra/online_stores/redis.py

--- a/sdk/python/feast/infra/online_stores/redis.py

+++ b/sdk/python/feast/infra/online_stores/redis.py

@@ -42,7 +42,7 @@

try:

from redis import Redis

- from rediscluster import RedisCluster

+ from redis.cluster import ClusterNode, RedisCluster

except ImportError as e:

from feast.errors import FeastExtrasDependencyImportError

@@ -164,7 +164,9 @@ def _get_client(self, online_store_config: RedisOnlineStoreConfig):

online_store_config.connection_string

)

if online_store_config.redis_type == RedisType.redis_cluster:

- kwargs["startup_nodes"] = startup_nodes

+ kwargs["startup_nodes"] = [

+ ClusterNode(**node) for node in startup_nodes

+ ]

self._client = RedisCluster(**kwargs)

else:

kwargs["host"] = startup_nodes[0]["host"]

diff --git a/sdk/python/setup.py b/sdk/python/setup.py

--- a/sdk/python/setup.py

+++ b/sdk/python/setup.py

@@ -72,7 +72,7 @@

]

GCP_REQUIRED = [

- "google-cloud-bigquery>=2.28.1",

+ "google-cloud-bigquery>=2,<3",

"google-cloud-bigquery-storage >= 2.0.0",

"google-cloud-datastore>=2.1.*",

"google-cloud-storage>=1.34.*,<1.41",

@@ -80,8 +80,7 @@

]

REDIS_REQUIRED = [

- "redis==3.5.3",

- "redis-py-cluster>=2.1.3",

+ "redis==4.2.2",

"hiredis>=2.0.0",

]

@@ -108,7 +107,7 @@

CI_REQUIRED = (

[

- "cryptography==3.3.2",

+ "cryptography==3.4.8",

"flake8",

"black==19.10b0",

"isort>=5",

| Switch from `redis-py-cluster` to `redis-py`

As [documented](https://github.com/Grokzen/redis-py-cluster#redis-py-cluster-eol), `redis-py-cluster` has reached EOL. It is now being merged into [`redis-py`](https://github.com/redis/redis-py#cluster-mode). We previously tried to switch from `redis-py-cluster` to `redis-py` but ran into various issues; see #2328. The upstream [bug](https://github.com/redis/redis-py/issues/2003) has since been fixed, so we can now switch from `redis-py-cluster` to `redis-py`.

| Related, we should try to get redis-cluster integration tests running using testcontainers somehow, and part of the normal configurations tested. | 2022-04-15T16:58:13 |

|

feast-dev/feast | 2,606 | feast-dev__feast-2606 | [

"2605"

]

| e4507ac16540cb3a7e29c31121963a0fe8f79fe4 | diff --git a/sdk/python/feast/infra/offline_stores/contrib/spark_offline_store/spark_source.py b/sdk/python/feast/infra/offline_stores/contrib/spark_offline_store/spark_source.py

--- a/sdk/python/feast/infra/offline_stores/contrib/spark_offline_store/spark_source.py

+++ b/sdk/python/feast/infra/offline_stores/contrib/spark_offline_store/spark_source.py

@@ -177,7 +177,8 @@ def get_table_query_string(self) -> str:

"""Returns a string that can directly be used to reference this table in SQL"""

if self.table:

# Backticks make sure that spark sql knows this a table reference.

- return f"`{self.table}`"

+ table = ".".join([f"`{x}`" for x in self.table.split(".")])

+ return table

if self.query:

return f"({self.query})"

| Spark source complains about a "table or view not found" error.

## Expected Behavior

spark offline store

## Current Behavior

If the `table` of a SparkSource is set with the pattern "db.table", Feast raises a "table or view not found" error.

## Steps to reproduce

1. feast init test_repo

2. modify example.py to use SparkSource, and set table of SparkSource with pattern "db.table"

3. configure feature_store.yaml

4. feast apply

### Specifications

- Version: 0.20.1

- Platform: Ubuntu 18.04

- Subsystem:

## Possible Solution
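
One possible approach, sketched under the assumption that `.` in the table name only separates the database from the table, is to wrap each part in backticks separately instead of quoting the whole string. The function name `quote_table_reference` is hypothetical.

```python
def quote_table_reference(table: str) -> str:
    """Wrap each dot-separated part of a table name in backticks."""
    return ".".join(f"`{part}`" for part in table.split("."))


print(quote_table_reference("db.table"))
# `db`.`table`
```

A name without a dot is quoted as a single identifier, so plain table names would keep working.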

| 2022-04-25T01:34:29 |

||

feast-dev/feast | 2,610 | feast-dev__feast-2610 | [

"2607"

]

| c9eda79c7b1169ef05a481a96f07960c014e88b9 | diff --git a/sdk/python/feast/feature.py b/sdk/python/feast/feature.py

--- a/sdk/python/feast/feature.py

+++ b/sdk/python/feast/feature.py

@@ -91,7 +91,7 @@ def to_proto(self) -> FeatureSpecProto:

value_type = ValueTypeProto.Enum.Value(self.dtype.name)

return FeatureSpecProto(

- name=self.name, value_type=value_type, labels=self.labels,

+ name=self.name, value_type=value_type, tags=self.labels,

)

@classmethod

@@ -106,7 +106,7 @@ def from_proto(cls, feature_proto: FeatureSpecProto):

feature = cls(

name=feature_proto.name,

dtype=ValueType(feature_proto.value_type),

- labels=dict(feature_proto.labels),

+ labels=dict(feature_proto.tags),

)

return feature

diff --git a/sdk/python/feast/field.py b/sdk/python/feast/field.py

--- a/sdk/python/feast/field.py

+++ b/sdk/python/feast/field.py

@@ -12,6 +12,8 @@

# See the License for the specific language governing permissions and

# limitations under the License.

+from typing import Dict, Optional

+

from feast.feature import Feature

from feast.protos.feast.core.Feature_pb2 import FeatureSpecV2 as FieldProto

from feast.types import FeastType, from_value_type

@@ -25,13 +27,15 @@ class Field:

Attributes:

name: The name of the field.

dtype: The type of the field, such as string or float.

+ tags: User-defined metadata in dictionary form.

"""

name: str

dtype: FeastType

+ tags: Dict[str, str]

def __init__(

- self, *, name: str, dtype: FeastType,

+ self, *, name: str, dtype: FeastType, tags: Optional[Dict[str, str]] = None,

):

"""

Creates a Field object.

@@ -39,12 +43,18 @@ def __init__(

Args:

name: The name of the field.

dtype: The type of the field, such as string or float.

+ tags (optional): User-defined metadata in dictionary form.

"""

self.name = name

self.dtype = dtype

+ self.tags = tags or {}

def __eq__(self, other):

- if self.name != other.name or self.dtype != other.dtype:

+ if (

+ self.name != other.name

+ or self.dtype != other.dtype

+ or self.tags != other.tags

+ ):

return False

return True

@@ -58,12 +68,12 @@ def __repr__(self):

return f"{self.name}-{self.dtype}"

def __str__(self):

- return f"Field(name={self.name}, dtype={self.dtype})"

+ return f"Field(name={self.name}, dtype={self.dtype}, tags={self.tags})"

def to_proto(self) -> FieldProto:

"""Converts a Field object to its protobuf representation."""

value_type = self.dtype.to_value_type()

- return FieldProto(name=self.name, value_type=value_type.value)

+ return FieldProto(name=self.name, value_type=value_type.value, tags=self.tags)

@classmethod

def from_proto(cls, field_proto: FieldProto):

@@ -74,7 +84,11 @@ def from_proto(cls, field_proto: FieldProto):

field_proto: FieldProto protobuf object

"""

value_type = ValueType(field_proto.value_type)

- return cls(name=field_proto.name, dtype=from_value_type(value_type=value_type))

+ return cls(

+ name=field_proto.name,

+ dtype=from_value_type(value_type=value_type),

+ tags=dict(field_proto.tags),

+ )

@classmethod

def from_feature(cls, feature: Feature):

@@ -84,4 +98,6 @@ def from_feature(cls, feature: Feature):

Args:

feature: Feature object to convert.

"""

- return cls(name=feature.name, dtype=from_value_type(feature.dtype))

+ return cls(

+ name=feature.name, dtype=from_value_type(feature.dtype), tags=feature.labels

+ )

| diff --git a/java/serving/src/test/java/feast/serving/util/DataGenerator.java b/java/serving/src/test/java/feast/serving/util/DataGenerator.java

--- a/java/serving/src/test/java/feast/serving/util/DataGenerator.java

+++ b/java/serving/src/test/java/feast/serving/util/DataGenerator.java

@@ -126,11 +126,11 @@ public static EntityProto.EntitySpecV2 createEntitySpecV2(

}

public static FeatureProto.FeatureSpecV2 createFeatureSpecV2(

- String name, ValueProto.ValueType.Enum valueType, Map<String, String> labels) {

+ String name, ValueProto.ValueType.Enum valueType, Map<String, String> tags) {

return FeatureProto.FeatureSpecV2.newBuilder()

.setName(name)

.setValueType(valueType)

- .putAllLabels(labels)

+ .putAllTags(tags)

.build();

}

@@ -140,7 +140,7 @@ public static FeatureTableSpec createFeatureTableSpec(

List<String> entities,

Map<String, ValueProto.ValueType.Enum> features,

int maxAgeSecs,

- Map<String, String> labels) {

+ Map<String, String> tags) {

return FeatureTableSpec.newBuilder()

.setName(name)

@@ -152,7 +152,7 @@ public static FeatureTableSpec createFeatureTableSpec(

FeatureSpecV2.newBuilder()

.setName(entry.getKey())

.setValueType(entry.getValue())

- .putAllLabels(labels)

+ .putAllTags(tags)

.build())

.collect(Collectors.toList()))

.setMaxAge(Duration.newBuilder().setSeconds(3600).build())

@@ -169,7 +169,7 @@ public static FeatureTableSpec createFeatureTableSpec(

.setUri("/dev/null")

.build())

.build())

- .putAllLabels(labels)

+ .putAllLabels(tags)

.build();

}

@@ -178,7 +178,7 @@ public static FeatureTableSpec createFeatureTableSpec(

List<String> entities,

ImmutableMap<String, ValueProto.ValueType.Enum> features,

int maxAgeSecs,

- Map<String, String> labels) {

+ Map<String, String> tags) {

return FeatureTableSpec.newBuilder()

.setName(name)

@@ -190,11 +190,11 @@ public static FeatureTableSpec createFeatureTableSpec(

FeatureSpecV2.newBuilder()

.setName(entry.getKey())

.setValueType(entry.getValue())

- .putAllLabels(labels)

+ .putAllTags(tags)

.build())

.collect(Collectors.toList()))

.setMaxAge(Duration.newBuilder().setSeconds(maxAgeSecs).build())

- .putAllLabels(labels)

+ .putAllLabels(tags)

.build();

}

| Keep labels in Field api

I found that the new API `Field` will take the place of `Feature` in Feast 0.21+, but `Field` only has `name` and `dtype` parameters. The `labels` parameter has disappeared.

In my use case `labels` is very important: it stores default values, descriptions, and other things. For example:

```python

comic_feature_view = FeatureView(

name="comic_featureV1",

entities=["item_id"],

ttl=Duration(seconds=86400 * 1),

features=[

Feature(name="channel_id", dtype=ValueType.INT32, labels={"default": "14", "desc":"channel"}),

Feature(name="keyword_weight", dtype=ValueType.FLOAT, labels={"default": "0.0", "desc":"keyword's weight"}),

Feature(name="comic_vectorv1", dtype=ValueType.FLOAT, labels={"default": ";".join(["0.0" for i in range(32)]), "desc":"deepwalk vector","faiss_index":"/data/faiss_index/comic_featureV1__comic_vectorv1.index"}),

Feature(name="comic_vectorv2", dtype=ValueType.FLOAT, labels={"default": ";".join(["0.0" for i in range(32)]), "desc":"word2vec vector","faiss_index":"/data/faiss_index/comic_featureV1__comic_vectorv2.index"}),

Feature(name="gender", dtype=ValueType.INT32, labels={"default": "0", "desc":" 0-femal 1-male"}),

Feature(name="pub_time", dtype=ValueType.STRING, labels={"default": "1970-01-01 00:00:00", "desc":"comic's publish time"}),

Feature(name="update_time", dtype=ValueType.STRING, labels={"default": "1970-01-01 00:00:00", "desc":"comic's update time"}),

Feature(name="view_cnt", dtype=ValueType.INT64, labels={"default": "0", "desc":"comic's hot score"}),

Feature(name="collect_cnt", dtype=ValueType.INT64, labels={"default": "0", "desc":"collect count"}),

Feature(name="source_id", dtype=ValueType.INT32, labels={"default": "0", "desc":"comic is from(0-unknown,1-japen,2-usa,3- other)"}),

```

So please keep the `labels` parameter in the `Field` API.

| Thanks for giving feedback and nice catch!

Thanks for the feedback @hsz1273327 , this was definitely an oversight. We're planning on adding this back in soon (but we may be possibly changing the name of this field to `tags` to more accurately convey the purpose of the field). | 2022-04-25T21:27:25 |

feast-dev/feast | 2,646 | feast-dev__feast-2646 | [

"2566"

]

| 41a1da4560bb09077f32c09d37f3304f8ae84f2a | diff --git a/sdk/python/feast/infra/passthrough_provider.py b/sdk/python/feast/infra/passthrough_provider.py

--- a/sdk/python/feast/infra/passthrough_provider.py

+++ b/sdk/python/feast/infra/passthrough_provider.py

@@ -39,12 +39,24 @@ def __init__(self, config: RepoConfig):

super().__init__(config)

self.repo_config = config

- self.offline_store = get_offline_store_from_config(config.offline_store)

- self.online_store = (

- get_online_store_from_config(config.online_store)

- if config.online_store

- else None

- )

+ self._offline_store = None

+ self._online_store = None

+

+ @property

+ def online_store(self):

+ if not self._online_store and self.repo_config.online_store:

+ self._online_store = get_online_store_from_config(

+ self.repo_config.online_store

+ )

+ return self._online_store

+

+ @property

+ def offline_store(self):

+ if not self._offline_store:

+ self._offline_store = get_offline_store_from_config(

+ self.repo_config.offline_store

+ )

+ return self._offline_store

def update_infra(

self,

diff --git a/sdk/python/feast/repo_config.py b/sdk/python/feast/repo_config.py

--- a/sdk/python/feast/repo_config.py

+++ b/sdk/python/feast/repo_config.py

@@ -6,6 +6,7 @@

import yaml

from pydantic import (

BaseModel,

+ Field,

StrictInt,

StrictStr,

ValidationError,

@@ -107,10 +108,10 @@ class RepoConfig(FeastBaseModel):

provider: StrictStr

""" str: local or gcp or aws """

- online_store: Any

+ _online_config: Any = Field(alias="online_store")

""" OnlineStoreConfig: Online store configuration (optional depending on provider) """

- offline_store: Any

+ _offline_config: Any = Field(alias="offline_store")

""" OfflineStoreConfig: Offline store configuration (optional depending on provider) """

feature_server: Optional[Any]

@@ -126,19 +127,27 @@ class RepoConfig(FeastBaseModel):

def __init__(self, **data: Any):

super().__init__(**data)

- if isinstance(self.online_store, Dict):

- self.online_store = get_online_config_from_type(self.online_store["type"])(

- **self.online_store

- )

- elif isinstance(self.online_store, str):

- self.online_store = get_online_config_from_type(self.online_store)()

-

- if isinstance(self.offline_store, Dict):

- self.offline_store = get_offline_config_from_type(

- self.offline_store["type"]

- )(**self.offline_store)

- elif isinstance(self.offline_store, str):

- self.offline_store = get_offline_config_from_type(self.offline_store)()

+ self._offline_store = None

+ if "offline_store" in data:

+ self._offline_config = data["offline_store"]

+ else:

+ if data["provider"] == "local":

+ self._offline_config = "file"

+ elif data["provider"] == "gcp":

+ self._offline_config = "bigquery"

+ elif data["provider"] == "aws":

+ self._offline_config = "redshift"

+

+ self._online_store = None

+ if "online_store" in data:

+ self._online_config = data["online_store"]

+ else:

+ if data["provider"] == "local":

+ self._online_config = "sqlite"

+ elif data["provider"] == "gcp":

+ self._online_config = "datastore"

+ elif data["provider"] == "aws":

+ self._online_config = "dynamodb"

if isinstance(self.feature_server, Dict):

self.feature_server = get_feature_server_config_from_type(

@@ -151,6 +160,35 @@ def get_registry_config(self):

else:

return self.registry

+ @property

+ def offline_store(self):

+ if not self._offline_store:

+ if isinstance(self._offline_config, Dict):

+ self._offline_store = get_offline_config_from_type(

+ self._offline_config["type"]

+ )(**self._offline_config)

+ elif isinstance(self._offline_config, str):

+ self._offline_store = get_offline_config_from_type(

+ self._offline_config

+ )()

+ elif self._offline_config:

+ self._offline_store = self._offline_config

+ return self._offline_store

+

+ @property

+ def online_store(self):

+ if not self._online_store:

+ if isinstance(self._online_config, Dict):

+ self._online_store = get_online_config_from_type(

+ self._online_config["type"]

+ )(**self._online_config)

+ elif isinstance(self._online_config, str):

+ self._online_store = get_online_config_from_type(self._online_config)()

+ elif self._online_config:

+ self._online_store = self._online_config

+

+ return self._online_store

+

@root_validator(pre=True)

@log_exceptions

def _validate_online_store_config(cls, values):

@@ -304,6 +342,9 @@ def write_to_path(self, repo_path: Path):

sort_keys=False,

)

+ class Config:

+ allow_population_by_field_name = True

+

class FeastConfigError(Exception):

def __init__(self, error_message, config_path):

| diff --git a/sdk/python/tests/integration/feature_repos/integration_test_repo_config.py b/sdk/python/tests/integration/feature_repos/integration_test_repo_config.py

--- a/sdk/python/tests/integration/feature_repos/integration_test_repo_config.py

+++ b/sdk/python/tests/integration/feature_repos/integration_test_repo_config.py

@@ -19,7 +19,7 @@ class IntegrationTestRepoConfig:

"""

provider: str = "local"

- online_store: Union[str, Dict] = "sqlite"

+ online_store: Optional[Union[str, Dict]] = "sqlite"

offline_store_creator: Type[DataSourceCreator] = FileDataSourceCreator

online_store_creator: Optional[Type[OnlineStoreCreator]] = None

@@ -38,8 +38,10 @@ def __repr__(self) -> str:

online_store_type = self.online_store.get("redis_type", "redis")

else:

online_store_type = self.online_store["type"]

- else:

+ elif self.online_store:

online_store_type = self.online_store.__name__

+ else:

+ online_store_type = "none"

else:

online_store_type = self.online_store_creator.__name__

diff --git a/sdk/python/tests/unit/infra/online_store/test_dynamodb_online_store.py b/sdk/python/tests/unit/infra/online_store/test_dynamodb_online_store.py

--- a/sdk/python/tests/unit/infra/online_store/test_dynamodb_online_store.py

+++ b/sdk/python/tests/unit/infra/online_store/test_dynamodb_online_store.py

@@ -39,6 +39,7 @@ def repo_config():

project=PROJECT,

provider=PROVIDER,

online_store=DynamoDBOnlineStoreConfig(region=REGION),

+ # online_store={"type": "dynamodb", "region": REGION},

offline_store=FileOfflineStoreConfig(),

)

| Implement lazy loading for offline and online stores

The offline and online stores are eagerly loaded in `FeatureStore`, causing unnecessary dependencies to be pulled in. For example, as #2560 reported, the AWS Lambda feature server does not work with a Snowflake offline store, even though the offline store is not strictly required for serving online features.

The offline and online stores should be lazily loaded instead. This will allow the Snowflake dependencies to be removed from the AWS Lambda feature server Dockerfile. Note that #2560 was fixed by #2565, but this issue tracks a longer-term solution.
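
As a rough illustration of the lazy-loading idea, the sketch below builds a store only on first access and caches it afterwards. `LazyProvider`, `make_store`, and the `created` list are hypothetical stand-ins, not Feast classes; the real store factories and config types are not shown here.

```python
from typing import Any, Callable, Optional


class LazyProvider:
    """Builds its store only when the store is first accessed."""

    def __init__(self, store_factory: Callable[[], Any]):
        self._store_factory = store_factory
        self._store: Optional[Any] = None

    @property
    def store(self) -> Any:
        if self._store is None:
            # The factory, and any heavy imports inside it, run only here.
            self._store = self._store_factory()
        return self._store


created = []


def make_store() -> dict:
    # Hypothetical factory; records each call so construction is observable.
    created.append("store")
    return {"type": "sqlite"}


provider = LazyProvider(make_store)
assert created == []  # nothing is built at construction time
provider.store
provider.store
assert created == ["store"]  # built once, then cached
```

A provider that never touches the offline store would, under this pattern, never import that store's dependencies.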

| 2022-05-06T00:17:12 |

|

feast-dev/feast | 2,647 | feast-dev__feast-2647 | [

"2557"

]

| 30e0bf3ef249d6e31450151701a5994012586934 | diff --git a/setup.py b/setup.py

--- a/setup.py

+++ b/setup.py

@@ -47,30 +47,30 @@

REQUIRED = [

"click>=7.0.0,<8.0.2",

- "colorama>=0.3.9",

+ "colorama>=0.3.9,<1",

"dill==0.3.*",

- "fastavro>=1.1.0",

- "google-api-core>=1.23.0",

- "googleapis-common-protos==1.52.*",

- "grpcio>=1.34.0",

- "grpcio-reflection>=1.34.0",

- "Jinja2>=2.0.0",

+ "fastavro>=1.1.0,<2",

+ "google-api-core>=1.23.0,<3",

+ "googleapis-common-protos==1.52.*,<2",

+ "grpcio>=1.34.0,<2",

+ "grpcio-reflection>=1.34.0,<2",

+ "Jinja2>=2,<4",

"jsonschema",

"mmh3",

- "numpy<1.22",

- "pandas>=1.0.0",

+ "numpy<1.22,<2",

+ "pandas>=1,<2",

"pandavro==1.5.*",

"protobuf>=3.10,<3.20",

"proto-plus<1.19.7",

- "pyarrow>=4.0.0",

- "pydantic>=1.0.0",

- "PyYAML>=5.4.*",

+ "pyarrow>=4,<7",

+ "pydantic>=1,<2",

+ "PyYAML>=5.4.*,<7",

"tabulate==0.8.*",

- "tenacity>=7.*",

+ "tenacity>=7,<9",

"toml==0.10.*",

"tqdm==4.*",

- "fastapi>=0.68.0",

- "uvicorn[standard]>=0.14.0",

+ "fastapi>=0.68.0,<1",

+ "uvicorn[standard]>=0.14.0,<1",

"proto-plus<1.19.7",

"tensorflow-metadata>=1.0.0,<2.0.0",

"dask>=2021.*,<2022.02.0",

@@ -78,15 +78,15 @@

GCP_REQUIRED = [

"google-cloud-bigquery>=2,<3",

- "google-cloud-bigquery-storage >= 2.0.0",

- "google-cloud-datastore>=2.1.*",

+ "google-cloud-bigquery-storage >= 2.0.0,<3",

+ "google-cloud-datastore>=2.1.*,<3",

"google-cloud-storage>=1.34.*,<1.41",

"google-cloud-core>=1.4.0,<2.0.0",

]

REDIS_REQUIRED = [

"redis==4.2.2",

- "hiredis>=2.0.0",

+ "hiredis>=2.0.0,<3",

]

AWS_REQUIRED = [

@@ -95,11 +95,11 @@

]

SNOWFLAKE_REQUIRED = [

- "snowflake-connector-python[pandas]>=2.7.3",

+ "snowflake-connector-python[pandas]>=2.7.3,<3",

]

SPARK_REQUIRED = [

- "pyspark>=3.0.0",

+ "pyspark>=3.0.0,<4",

]

TRINO_REQUIRED = [

@@ -107,11 +107,11 @@

]

POSTGRES_REQUIRED = [

- "psycopg2-binary>=2.8.3",

+ "psycopg2-binary>=2.8.3,<3",

]

HBASE_REQUIRED = [

- "happybase>=1.2.0",

+ "happybase>=1.2.0,<3",

]

GE_REQUIRED = [

@@ -119,7 +119,7 @@

]

GO_REQUIRED = [

- "cffi==1.15.*",

+ "cffi==1.15.*,<2",

]

CI_REQUIRED = (

@@ -128,7 +128,7 @@

"cryptography==3.4.8",

"flake8",

"black==19.10b0",

- "isort>=5",

+ "isort>=5,<6",

"grpcio-tools==1.44.0",

"grpcio-testing==1.44.0",

"minio==7.1.0",

@@ -138,19 +138,19 @@

"mypy-protobuf==3.1",

"avro==1.10.0",

"gcsfs",

- "urllib3>=1.25.4",

+ "urllib3>=1.25.4,<2",

"psutil==5.9.0",

- "pytest>=6.0.0",

+ "pytest>=6.0.0,<8",

"pytest-cov",

"pytest-xdist",

- "pytest-benchmark>=3.4.1",

+ "pytest-benchmark>=3.4.1,<4",

"pytest-lazy-fixture==0.6.3",

"pytest-timeout==1.4.2",

"pytest-ordering==0.6.*",

"pytest-mock==1.10.4",

"Sphinx!=4.0.0,<4.4.0",

"sphinx-rtd-theme",

- "testcontainers[postgresql]>=3.5",

+ "testcontainers>=3.5,<4",

"adlfs==0.5.9",

"firebase-admin==4.5.2",

"pre-commit",

| Pin dependencies to major version ranges

We have recently had a bunch of issues due to dependencies not being limited appropriately. For example, having `google-cloud-bigquery>=2.28.1` led to issues when `google-cloud-bigquery` released breaking changes in `v3.0.0`: see #2537 for the issue and #2554 which included the fix. Similarly, #2484 occurred since our `protobuf` dependency was not limited.

I think we should limit dependencies to the next major version. For example, if we currently use version N of a package, we should also limit it to v<(N+1). This way we are not exposed to breaking changes in all our upstream dependencies, while also maintaining a reasonable amount of flexibility for users. If a version N+1 is released and users want us to support it, they can let us know and we can add support; limiting to v<(N+1) just ensures that we aren't being broken all the time.
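
The proposed rule can be sketched as a small helper that turns a minimum version into a requirement capped below the next major version. The function `cap_to_next_major` is hypothetical and assumes the version string starts with an integer major component.

```python
def cap_to_next_major(name: str, min_version: str) -> str:
    """Return a requirement string bounded below the next major version."""
    major = int(min_version.split(".")[0])
    return f"{name}>={min_version},<{major + 1}"


print(cap_to_next_major("google-cloud-bigquery", "2.28.1"))
# google-cloud-bigquery>=2.28.1,<3
```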

| I like this way of declaring dependencies as well: https://stackoverflow.com/a/50080281/1735989

> I like this way of declaring dependencies as well: https://stackoverflow.com/a/50080281/1735989

Ooh this looks super neat, but it seems to only work for minor versions - e.g. if I want to specify `>=8.0.0,<9.0.0`, I can't do `~=8`. But this will definitely work for most of our dependencies.

Edit: oops I'm wrong, the below comment is right.

> if I want to specify >=8.0.0,<9.0.0

That's the same as `~=8.0` isn't it? Which is exactly identical to `>=8.0,==8.*`. But I suppose that still doesn't allow something even more specific like `>=8.0.1,==8.*`. | 2022-05-06T01:10:08 |

|

feast-dev/feast | 2,665 | feast-dev__feast-2665 | [

"2576"

]

| fc00ca8fc091ab2642121de69d4624783f11445c | diff --git a/sdk/python/feast/feature_store.py b/sdk/python/feast/feature_store.py

--- a/sdk/python/feast/feature_store.py

+++ b/sdk/python/feast/feature_store.py

@@ -875,7 +875,7 @@ def get_historical_features(

DeprecationWarning,

)

- # TODO(achal): _group_feature_refs returns the on demand feature views, but it's no passed into the provider.

+ # TODO(achal): _group_feature_refs returns the on demand feature views, but it's not passed into the provider.

# This is a weird interface quirk - we should revisit the `get_historical_features` to

# pass in the on demand feature views as well.

fvs, odfvs, request_fvs, request_fv_refs = _group_feature_refs(

@@ -2125,8 +2125,12 @@ def _group_feature_refs(

for ref in features:

view_name, feat_name = ref.split(":")

if view_name in view_index:

+ view_index[view_name].projection.get_feature(feat_name) # For validation

views_features[view_name].add(feat_name)

elif view_name in on_demand_view_index:

+ on_demand_view_index[view_name].projection.get_feature(

+ feat_name

+ ) # For validation

on_demand_view_features[view_name].add(feat_name)

# Let's also add in any FV Feature dependencies here.

for input_fv_projection in on_demand_view_index[

@@ -2135,6 +2139,9 @@ def _group_feature_refs(

for input_feat in input_fv_projection.features:

views_features[input_fv_projection.name].add(input_feat.name)

elif view_name in request_view_index:

+ request_view_index[view_name].projection.get_feature(

+ feat_name

+ ) # For validation

request_views_features[view_name].add(feat_name)

request_view_refs.add(ref)

else:

diff --git a/sdk/python/feast/feature_view_projection.py b/sdk/python/feast/feature_view_projection.py

--- a/sdk/python/feast/feature_view_projection.py

+++ b/sdk/python/feast/feature_view_projection.py

@@ -64,3 +64,11 @@ def from_definition(base_feature_view: "BaseFeatureView"):

name_alias=None,

features=base_feature_view.features,

)

+

+ def get_feature(self, feature_name: str) -> Field:

+ try:

+ return next(field for field in self.features if field.name == feature_name)

+ except StopIteration:

+ raise KeyError(

+ f"Feature {feature_name} not found in projection {self.name_to_use()}"

+ )

| diff --git a/sdk/python/tests/integration/offline_store/test_universal_historical_retrieval.py b/sdk/python/tests/integration/offline_store/test_universal_historical_retrieval.py

--- a/sdk/python/tests/integration/offline_store/test_universal_historical_retrieval.py

+++ b/sdk/python/tests/integration/offline_store/test_universal_historical_retrieval.py

@@ -21,7 +21,7 @@

from feast.infra.offline_stores.offline_utils import (

DEFAULT_ENTITY_DF_EVENT_TIMESTAMP_COL,

)

-from feast.types import Int32

+from feast.types import Float32, Int32

from feast.value_type import ValueType

from tests.integration.feature_repos.repo_configuration import (

construct_universal_feature_views,

@@ -410,6 +410,46 @@ def test_historical_features(environment, universal_data_sources, full_feature_n

)

+@pytest.mark.integration

+@pytest.mark.universal_offline_stores

+@pytest.mark.parametrize("full_feature_names", [True, False], ids=lambda v: str(v))

+def test_historical_features_with_shared_batch_source(

+ environment, universal_data_sources, full_feature_names

+):

+ # Addresses https://github.com/feast-dev/feast/issues/2576

+

+ store = environment.feature_store

+

+ entities, datasets, data_sources = universal_data_sources

+ driver_stats_v1 = FeatureView(

+ name="driver_stats_v1",

+ entities=["driver"],

+ schema=[Field(name="avg_daily_trips", dtype=Int32)],

+ source=data_sources.driver,

+ )

+ driver_stats_v2 = FeatureView(

+ name="driver_stats_v2",

+ entities=["driver"],

+ schema=[

+ Field(name="avg_daily_trips", dtype=Int32),

+ Field(name="conv_rate", dtype=Float32),

+ ],

+ source=data_sources.driver,

+ )

+

+ store.apply([driver(), driver_stats_v1, driver_stats_v2])

+

+ with pytest.raises(KeyError):

+ store.get_historical_features(

+ entity_df=datasets.entity_df,

+ features=[

+ # `driver_stats_v1` does not have `conv_rate`

+ "driver_stats_v1:conv_rate",

+ ],

+ full_feature_names=full_feature_names,

+ ).to_df()

+

+

@pytest.mark.integration

@pytest.mark.universal_offline_stores

def test_historical_features_with_missing_request_data(

diff --git a/sdk/python/tests/integration/online_store/test_universal_online.py b/sdk/python/tests/integration/online_store/test_universal_online.py

--- a/sdk/python/tests/integration/online_store/test_universal_online.py

+++ b/sdk/python/tests/integration/online_store/test_universal_online.py

@@ -19,7 +19,7 @@

RequestDataNotFoundInEntityRowsException,

)

from feast.online_response import TIMESTAMP_POSTFIX

-from feast.types import String

+from feast.types import Float32, Int32, String

from feast.wait import wait_retry_backoff

from tests.integration.feature_repos.repo_configuration import (

Environment,

@@ -324,6 +324,60 @@ def get_online_features_dict(

return dict1

+@pytest.mark.integration

+@pytest.mark.universal_online_stores

+def test_online_retrieval_with_shared_batch_source(environment, universal_data_sources):

+ # Addresses https://github.com/feast-dev/feast/issues/2576

+

+ fs = environment.feature_store

+

+ entities, datasets, data_sources = universal_data_sources

+ driver_stats_v1 = FeatureView(

+ name="driver_stats_v1",

+ entities=["driver"],

+ schema=[Field(name="avg_daily_trips", dtype=Int32)],

+ source=data_sources.driver,

+ )

+ driver_stats_v2 = FeatureView(

+ name="driver_stats_v2",

+ entities=["driver"],

+ schema=[

+ Field(name="avg_daily_trips", dtype=Int32),

+ Field(name="conv_rate", dtype=Float32),

+ ],

+ source=data_sources.driver,

+ )

+

+ fs.apply([driver(), driver_stats_v1, driver_stats_v2])

+

+ data = pd.DataFrame(

+ {

+ "driver_id": [1, 2],

+ "avg_daily_trips": [4, 5],

+ "conv_rate": [0.5, 0.3],

+ "event_timestamp": [

+ pd.to_datetime(1646263500, utc=True, unit="s"),

+ pd.to_datetime(1646263600, utc=True, unit="s"),

+ ],

+ "created": [

+ pd.to_datetime(1646263500, unit="s"),

+ pd.to_datetime(1646263600, unit="s"),

+ ],

+ }

+ )

+ fs.write_to_online_store("driver_stats_v1", data.drop("conv_rate", axis=1))

+ fs.write_to_online_store("driver_stats_v2", data)

+

+ with pytest.raises(KeyError):

+ fs.get_online_features(

+ features=[

+ # `driver_stats_v1` does not have `conv_rate`

+ "driver_stats_v1:conv_rate",

+ ],

+ entity_rows=[{"driver_id": 1}, {"driver_id": 2}],

+ )

+

+

@pytest.mark.integration

@pytest.mark.universal_online_stores

@pytest.mark.parametrize("full_feature_names", [True, False], ids=lambda v: str(v))

| Undefined features should be rejected when being fetched via `get_historical_features` / `get_online_features`

## Context

I want to create versioned feature views. Through various versions, features could be added or removed.

## Expected Behavior

When doing `feast.get_historical_features`, features that are not defined should be rejected.

## Current Behavior

The features get returned even though they have not been defined.
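
To make the expected behavior concrete, here is a minimal sketch of the kind of check I have in mind. It is not Feast's actual implementation: `Field`, `Projection`, and `validate_feature_refs` are hypothetical stand-ins, and the view names are only illustrative. The idea is that each `view:feature` reference is looked up against the features the view declares, and an undeclared feature raises a `KeyError` instead of being returned.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Field:
    """Hypothetical stand-in for a feature field; only the name matters here."""

    name: str


@dataclass
class Projection:
    """Hypothetical stand-in for the set of features a feature view exposes."""

    name: str
    features: List[Field]

    def get_feature(self, feature_name: str) -> Field:
        # Return the matching field, or raise KeyError if the view never declared it.
        for field in self.features:
            if field.name == feature_name:
                return field
        raise KeyError(f"Feature {feature_name} not found in projection {self.name}")


def validate_feature_refs(refs: List[str], views: Dict[str, Projection]) -> None:
    # Each reference has the form "<view_name>:<feature_name>".
    for ref in refs:
        view_name, feat_name = ref.split(":")
        if view_name not in views:
            raise KeyError(f"Feature view {view_name} not found")
        views[view_name].get_feature(feat_name)


views = {
    "driver_hourly_stats_v1": Projection(
        "driver_hourly_stats_v1", [Field("avg_daily_trips")]
    ),
    "driver_hourly_stats_v2": Projection(
        "driver_hourly_stats_v2",
        [Field("conv_rate"), Field("acc_rate"), Field("avg_daily_trips")],
    ),
}

# Declared in v2, so this passes silently.
validate_feature_refs(["driver_hourly_stats_v2:conv_rate"], views)

# Not declared in v1, so this is rejected.
try:
    validate_feature_refs(["driver_hourly_stats_v1:conv_rate"], views)
except KeyError as err:
    print(f"rejected: {err}")
```

Raising early like this would surface a typo or a stale reference at request time rather than silently returning a column that happens to exist in the shared batch source.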

## Steps to reproduce

1. Initialize a new feast repository

2. Define the features:

```python

driver_hourly_stats = FileSource(

path="/home/benjamintan/workspace/feast-workflow-demo/feature_repo/data/driver_stats.parquet",

timestamp_field="event_timestamp",

created_timestamp_column="created",

)

driver = Entity(name="driver_id", value_type=ValueType.INT64, description="driver id",)

driver_hourly_stats_view_v1 = FeatureView(

name="driver_hourly_stats_v1",

entities=["driver_id"],

ttl=timedelta(days=1),

schema=[

Field(name="avg_daily_trips", dtype=Int64),

],

online=True,

batch_source=driver_hourly_stats,

tags={},

)

driver_hourly_stats_view_v2 = FeatureView(

name="driver_hourly_stats_v2",

entities=["driver_id"],

ttl=timedelta(days=1),

schema=[

Field(name="conv_rate", dtype=Float32),

Field(name="acc_rate", dtype=Float32),

Field(name="avg_daily_trips", dtype=Int64),

],

online=True,

batch_source=driver_hourly_stats,

tags={},

)

```

3. `feast apply`

4. Querying Feast: