| sha null | last_modified null | library_name stringclasses 154 values | text stringlengths 1 900k | metadata stringlengths 2 348k | pipeline_tag stringclasses 45 values | id stringlengths 5 122 | tags listlengths 1 1.84k | created_at stringlengths 25 25 | arxiv listlengths 0 201 | languages listlengths 0 1.83k | tags_str stringlengths 17 9.34k | text_str stringlengths 0 389k | text_lists listlengths 0 722 | processed_texts listlengths 1 723 | tokens_length listlengths 1 723 | input_texts listlengths 1 61 | embeddings listlengths 768 768 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

null | null |

transformers

|

# DETR (End-to-End Object Detection) model with ResNet-50 backbone (dilated C5 stage)

DEtection TRansformer (DETR) model trained end-to-end on COCO 2017 panoptic (118k annotated images). It was introduced in the paper [End-to-End Object Detection with Transformers](https://arxiv.org/abs/2005.12872) by Carion et al. and first released in [this repository](https://github.com/facebookresearch/detr).

Disclaimer: The team releasing DETR did not write a model card for this model, so this model card has been written by the Hugging Face team.

## Model description

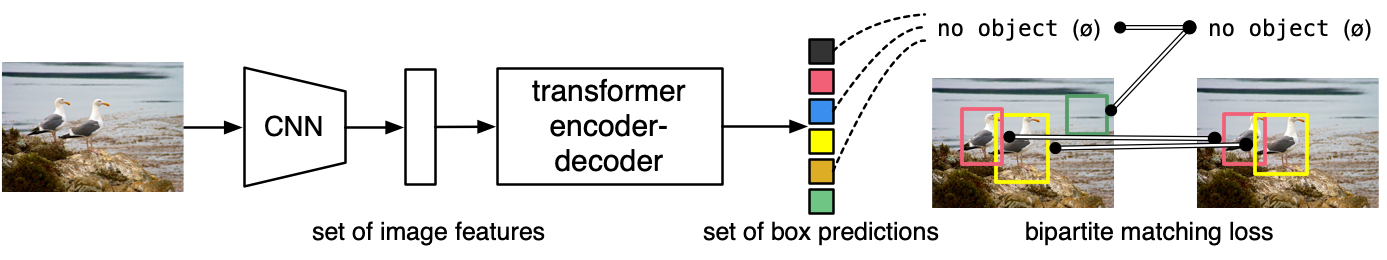

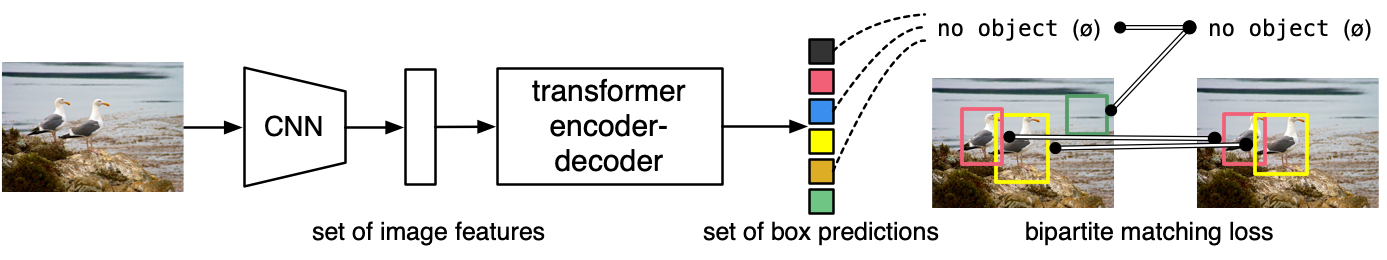

The DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and an MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100.

The model is trained using a "bipartite matching loss": the predicted classes and bounding boxes of each of the N = 100 object queries are compared to the ground truth annotations, padded up to the same length N (so if an image contains only 4 objects, the remaining 96 annotations have "no object" as the class and "no bounding box" as the bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU losses (for the bounding boxes) are used to optimize the parameters of the model.
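The matching step described above can be illustrated with a small, dependency-free sketch. It is not the DETR implementation: it brute-forces all assignments instead of running the Hungarian algorithm (a practical implementation would typically use a solver such as `scipy.optimize.linear_sum_assignment`), and the helper names, the toy data, and the cost weights `w_l1=5.0` and `w_giou=2.0` are assumptions chosen for illustration. Boxes are assumed to be normalized `(cx, cy, w, h)` tuples, and queries left unmatched play the role of the padded "no object" annotations.

```python
from itertools import permutations


def to_xyxy(box):
    """Convert a (cx, cy, w, h) box to (x0, y0, x1, y1) corners."""
    cx, cy, w, h = box
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def giou(a, b):
    """Generalized IoU of two (cx, cy, w, h) boxes with positive area."""
    ax0, ay0, ax1, ay1 = to_xyxy(a)
    bx0, by0, bx1, by1 = to_xyxy(b)
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h
    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - inter
    hull = (max(ax1, bx1) - min(ax0, bx0)) * (max(ay1, by1) - min(ay0, by0))
    return inter / union - (hull - union) / hull


def pair_cost(pred, target, w_l1=5.0, w_giou=2.0):
    """Cost of assigning one query to one annotation (weights are assumptions)."""
    probs, box = pred
    label, gt_box = target
    l1 = sum(abs(p - g) for p, g in zip(box, gt_box))
    return -probs[label] + w_l1 * l1 - w_giou * giou(box, gt_box)


def match(preds, targets):
    """Return {query index: target index} with minimal total cost.

    Brute force over all assignments; only feasible for toy sizes.
    """
    best_cost, best = float("inf"), ()
    for queries in permutations(range(len(preds)), len(targets)):
        cost = sum(pair_cost(preds[q], targets[t]) for t, q in enumerate(queries))
        if cost < best_cost:
            best_cost, best = cost, queries
    return {q: t for t, q in enumerate(best)}


# Toy example: 3 queries, 2 annotations; class probabilities are
# ordered as [class 0, class 1, "no object"].
preds = [
    ([0.10, 0.80, 0.10], (0.70, 0.70, 0.20, 0.20)),
    ([0.05, 0.05, 0.90], (0.50, 0.50, 0.10, 0.10)),
    ([0.90, 0.05, 0.05], (0.30, 0.30, 0.40, 0.40)),
]
targets = [(0, (0.32, 0.30, 0.40, 0.42)), (1, (0.70, 0.72, 0.20, 0.20))]
assignment = match(preds, targets)
```

In this toy case query 2 is paired with the first annotation and query 0 with the second, while query 1 stays unmatched and would be supervised toward "no object".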

DETR can be naturally extended to perform panoptic segmentation, by adding a mask head on top of the decoder outputs.

## Intended uses & limitations

You can use the raw model for panoptic segmentation. See the [model hub](https://huggingface.co/models?search=facebook/detr) to look for all available DETR models.

### How to use

Here is how to use this model:

```python
from transformers import DetrFeatureExtractor, DetrForSegmentation
from PIL import Image
import requests

# Download a sample image from the COCO 2017 validation set
url = 'http://images.cocodataset.org/val2017/000000039769.jpg'
image = Image.open(requests.get(url, stream=True).raw)

# Load the preprocessing pipeline and the pretrained panoptic model.
# Note: recent transformers releases deprecate DetrFeatureExtractor
# in favor of DetrImageProcessor.
feature_extractor = DetrFeatureExtractor.from_pretrained('facebook/detr-resnet-50-dc5-panoptic')
model = DetrForSegmentation.from_pretrained('facebook/detr-resnet-50-dc5-panoptic')

# Resize and normalize the image, returned as a batch of PyTorch tensors
inputs = feature_extractor(images=image, return_tensors="pt")
outputs = model(**inputs)

# model predicts COCO classes, bounding boxes, and masks
logits = outputs.logits
bboxes = outputs.pred_boxes
masks = outputs.pred_masks
```

Currently, both the feature extractor and model support PyTorch.
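The raw outputs contain one prediction per object query, most of which correspond to "no object". As a rough sketch of how one might keep only the confident queries, the snippet below applies a softmax per query, drops the last ("no object") entry, and thresholds the best remaining score. It uses plain Python lists rather than tensors, and the function names, the toy logits, and the `0.85` threshold are illustrative assumptions, not part of the model's API.

```python
import math


def softmax(row):
    """Numerically stable softmax over one query's class logits."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def keep_confident_queries(logits, threshold=0.85):
    """Return (query index, class index, score) for confident queries.

    Assumes the last logit of each row is the "no object" class.
    """
    kept = []
    for query_idx, row in enumerate(logits):
        probs = softmax(row)[:-1]  # ignore the "no object" probability
        score = max(probs)
        if score > threshold:
            kept.append((query_idx, probs.index(score), score))
    return kept


# Toy logits for 3 queries over 3 real classes plus "no object".
toy_logits = [
    [6.0, 0.5, 0.2, 0.1],  # confident about class 0
    [0.1, 0.2, 0.3, 5.0],  # confident about "no object"
    [0.3, 4.5, 0.2, 0.4],  # confident about class 1
]
kept = keep_confident_queries(toy_logits)
```

With real outputs, the same logic would be applied to `outputs.logits` for one image, and the surviving query indices would select the matching rows of `pred_boxes` and `pred_masks`.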

## Training data

The DETR model was trained on [COCO 2017 panoptic](https://cocodataset.org/#download), a dataset consisting of 118k/5k annotated images for training/validation respectively.

## Training procedure

### Preprocessing

The exact details of preprocessing of images during training/validation can be found [here](https://github.com/facebookresearch/detr/blob/master/datasets/coco_panoptic.py).

Images are resized/rescaled such that the shortest side is at least 800 pixels and the largest side at most 1333 pixels, and normalized across the RGB channels with the ImageNet mean (0.485, 0.456, 0.406) and standard deviation (0.229, 0.224, 0.225).
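One reading of the resizing rule above is sketched below: scale the image so its shorter side becomes 800 pixels, unless that would push the longer side past 1333 pixels, in which case the longer side is scaled to 1333 instead. The function names and the integer rounding (truncation) are assumptions; the linked preprocessing code is the authoritative reference. The normalization helper assumes pixel values already scaled to the range [0, 1].

```python
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def resized_size(width, height, shortest=800, longest=1333):
    """Return the (width, height) after aspect-preserving resizing."""
    short_side, long_side = min(width, height), max(width, height)
    # The scale factor is kept as a fraction num / den to avoid float error.
    num, den = shortest, short_side
    if long_side * num > longest * den:
        # Scaling the short side to `shortest` would overshoot `longest`.
        num, den = longest, long_side
    return width * num // den, height * num // den


def normalize_pixel(rgb):
    """Normalize one RGB pixel (values in [0, 1]) channel by channel."""
    return tuple((v - m) / s for v, m, s in zip(rgb, IMAGENET_MEAN, IMAGENET_STD))


# A 640x480 image: the short side is scaled up to 800.
size_standard = resized_size(640, 480)
# A very wide 2000x500 image: the long side is capped at 1333.
size_wide = resized_size(2000, 500)
```

Under this reading, a very wide or very tall image ends up with a shorter side below 800 pixels, because the 1333-pixel cap takes precedence.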

### Training

The model was trained for 300 epochs on 16 V100 GPUs. Training took 3 days, with 4 images per GPU (hence a total batch size of 16 × 4 = 64).

## Evaluation results

This model achieves the following results on COCO 2017 validation: a box AP (average precision) of **40.2**, a segmentation AP (average precision) of **31.9** and a PQ (panoptic quality) of **44.6**.

For more details regarding evaluation results, see Table 5 of the original paper.
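For readers unfamiliar with the PQ metric quoted above, the sketch below computes panoptic quality for a single class in its standard form: the sum of IoUs over matched segment pairs, divided by the number of matches plus half the unmatched predictions and half the unmatched ground-truth segments. The function name and the toy numbers are assumptions for illustration; the reported scores come from the official COCO panoptic evaluation, which also averages over classes.

```python
def panoptic_quality(matched_ious, num_false_positives, num_false_negatives):
    """Panoptic quality for one class.

    matched_ious: IoU of each predicted/ground-truth segment pair that
    counts as a true positive (by convention, pairs with IoU > 0.5).
    """
    true_positives = len(matched_ious)
    denominator = (
        true_positives + 0.5 * num_false_positives + 0.5 * num_false_negatives
    )
    if denominator == 0:
        return 0.0
    return sum(matched_ious) / denominator


# Two matched segments, one spurious prediction, one missed segment.
pq = panoptic_quality([0.8, 0.6], num_false_positives=1, num_false_negatives=1)
```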

### BibTeX entry and citation info

```bibtex
@article{DBLP:journals/corr/abs-2005-12872,
  author        = {Nicolas Carion and
                   Francisco Massa and
                   Gabriel Synnaeve and
                   Nicolas Usunier and
                   Alexander Kirillov and
                   Sergey Zagoruyko},
  title         = {End-to-End Object Detection with Transformers},
  journal       = {CoRR},
  volume        = {abs/2005.12872},
  year          = {2020},
  url           = {https://arxiv.org/abs/2005.12872},
  archivePrefix = {arXiv},
  eprint        = {2005.12872},
  timestamp     = {Thu, 28 May 2020 17:38:09 +0200},
  biburl        = {https://dblp.org/rec/journals/corr/abs-2005-12872.bib},
  bibsource     = {dblp computer science bibliography, https://dblp.org}
}
```

|

{"license": "apache-2.0", "tags": ["image-segmentation"], "datasets": ["coco"], "widget": [{"src": "https://huggingface.co/datasets/mishig/sample_images/resolve/main/dog-cat.jpg", "example_title": "Dog & Cat"}, {"src": "https://huggingface.co/datasets/mishig/sample_images/resolve/main/construction-site.jpg", "example_title": "Construction Site"}, {"src": "https://huggingface.co/datasets/mishig/sample_images/resolve/main/apple-orange.jpg", "example_title": "Apple & Orange"}]}

|

image-segmentation

|

facebook/detr-resnet-50-dc5-panoptic

|

[

"transformers",

"pytorch",

"safetensors",

"detr",

"image-segmentation",

"dataset:coco",

"arxiv:2005.12872",

"license:apache-2.0",

"endpoints_compatible",

"has_space",

"region:us"

] |

2022-03-02T23:29:05+00:00

|

[

"2005.12872"

] |

[] |

TAGS

#transformers #pytorch #safetensors #detr #image-segmentation #dataset-coco #arxiv-2005.12872 #license-apache-2.0 #endpoints_compatible #has_space #region-us

|

# DETR (End-to-End Object Detection) model with ResNet-50 backbone (dilated C5 stage)

DEtection TRansformer (DETR) model trained end-to-end on COCO 2017 panoptic (118k annotated images). It was introduced in the paper End-to-End Object Detection with Transformers by Carion et al. and first released in this repository.

Disclaimer: The team releasing DETR did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and a MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100.

The model is trained using a "bipartite matching loss": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a "no object" as class and "no bounding box" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.

DETR can be naturally extended to perform panoptic segmentation, by adding a mask head on top of the decoder outputs.

## Intended uses & limitations

You can use the raw model for panoptic segmentation. See the model hub to look for all available DETR models.

### How to use

Here is how to use this model:

Currently, both the feature extractor and model support PyTorch.

## Training data

The DETR model was trained on COCO 2017 panoptic, a dataset consisting of 118k/5k annotated images for training/validation respectively.

## Training procedure

### Preprocessing

The exact details of preprocessing of images during training/validation can be found here.

Images are resized/rescaled such that the shortest side is at least 800 pixels and the largest side at most 1333 pixels, and normalized across the RGB channels with the ImageNet mean (0.485, 0.456, 0.406) and standard deviation (0.229, 0.224, 0.225).

### Training

The model was trained for 300 epochs on 16 V100 GPUs. This takes 3 days, with 4 images per GPU (hence a total batch size of 64).

## Evaluation results

This model achieves the following results on COCO 2017 validation: a box AP (average precision) of 40.2, a segmentation AP (average precision) of 31.9 and a PQ (panoptic quality) of 44.6.

For more details regarding evaluation results, we refer to table 5 of the original paper.

### BibTeX entry and citation info

|

[

"# DETR (End-to-End Object Detection) model with ResNet-50 backbone (dilated C5 stage)\n\nDEtection TRansformer (DETR) model trained end-to-end on COCO 2017 panoptic (118k annotated images). It was introduced in the paper End-to-End Object Detection with Transformers by Carion et al. and first released in this repository. \n\nDisclaimer: The team releasing DETR did not write a model card for this model so this model card has been written by the Hugging Face team.",

"## Model description\n\nThe DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and a MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100. \n\nThe model is trained using a \"bipartite matching loss\": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a \"no object\" as class and \"no bounding box\" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.\n\nDETR can be naturally extended to perform panoptic segmentation, by adding a mask head on top of the decoder outputs.",

"## Intended uses & limitations\n\nYou can use the raw model for panoptic segmentation. See the model hub to look for all available DETR models.",

"### How to use\n\nHere is how to use this model:\n\n\n\nCurrently, both the feature extractor and model support PyTorch.",

"## Training data\n\nThe DETR model was trained on COCO 2017 panoptic, a dataset consisting of 118k/5k annotated images for training/validation respectively.",

"## Training procedure",

"### Preprocessing\n\nThe exact details of preprocessing of images during training/validation can be found here. \n\nImages are resized/rescaled such that the shortest side is at least 800 pixels and the largest side at most 1333 pixels, and normalized across the RGB channels with the ImageNet mean (0.485, 0.456, 0.406) and standard deviation (0.229, 0.224, 0.225).",

"### Training\n\nThe model was trained for 300 epochs on 16 V100 GPUs. This takes 3 days, with 4 images per GPU (hence a total batch size of 64).",

"## Evaluation results\n\nThis model achieves the following results on COCO 2017 validation: a box AP (average precision) of 40.2, a segmentation AP (average precision) of 31.9 and a PQ (panoptic quality) of 44.6.\n\nFor more details regarding evaluation results, we refer to table 5 of the original paper.",

"### BibTeX entry and citation info"

] |

[

"TAGS\n#transformers #pytorch #safetensors #detr #image-segmentation #dataset-coco #arxiv-2005.12872 #license-apache-2.0 #endpoints_compatible #has_space #region-us \n",

"# DETR (End-to-End Object Detection) model with ResNet-50 backbone (dilated C5 stage)\n\nDEtection TRansformer (DETR) model trained end-to-end on COCO 2017 panoptic (118k annotated images). It was introduced in the paper End-to-End Object Detection with Transformers by Carion et al. and first released in this repository. \n\nDisclaimer: The team releasing DETR did not write a model card for this model so this model card has been written by the Hugging Face team.",

"## Model description\n\nThe DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and a MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100. \n\nThe model is trained using a \"bipartite matching loss\": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a \"no object\" as class and \"no bounding box\" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.\n\nDETR can be naturally extended to perform panoptic segmentation, by adding a mask head on top of the decoder outputs.",

"## Intended uses & limitations\n\nYou can use the raw model for panoptic segmentation. See the model hub to look for all available DETR models.",

"### How to use\n\nHere is how to use this model:\n\n\n\nCurrently, both the feature extractor and model support PyTorch.",

"## Training data\n\nThe DETR model was trained on COCO 2017 panoptic, a dataset consisting of 118k/5k annotated images for training/validation respectively.",

"## Training procedure",

"### Preprocessing\n\nThe exact details of preprocessing of images during training/validation can be found here. \n\nImages are resized/rescaled such that the shortest side is at least 800 pixels and the largest side at most 1333 pixels, and normalized across the RGB channels with the ImageNet mean (0.485, 0.456, 0.406) and standard deviation (0.229, 0.224, 0.225).",

"### Training\n\nThe model was trained for 300 epochs on 16 V100 GPUs. This takes 3 days, with 4 images per GPU (hence a total batch size of 64).",

"## Evaluation results\n\nThis model achieves the following results on COCO 2017 validation: a box AP (average precision) of 40.2, a segmentation AP (average precision) of 31.9 and a PQ (panoptic quality) of 44.6.\n\nFor more details regarding evaluation results, we refer to table 5 of the original paper.",

"### BibTeX entry and citation info"

] |

[

61,

125,

325,

34,

28,

40,

3,

94,

42,

74,

11

] |

[

"passage: TAGS\n#transformers #pytorch #safetensors #detr #image-segmentation #dataset-coco #arxiv-2005.12872 #license-apache-2.0 #endpoints_compatible #has_space #region-us \n# DETR (End-to-End Object Detection) model with ResNet-50 backbone (dilated C5 stage)\n\nDEtection TRansformer (DETR) model trained end-to-end on COCO 2017 panoptic (118k annotated images). It was introduced in the paper End-to-End Object Detection with Transformers by Carion et al. and first released in this repository. \n\nDisclaimer: The team releasing DETR did not write a model card for this model so this model card has been written by the Hugging Face team."

] |

[

-0.07406463474035263,

0.0017924208659678698,

-0.0011195981642231345,

0.06568663567304611,

0.1125514879822731,

0.022018050774931908,

0.07789719104766846,

0.00818848330527544,

-0.09172855317592621,

0.03225686773657799,

0.14285790920257568,

0.11256001144647598,

0.03461400419473648,

0.1347421258687973,

-0.023337701335549355,

-0.1443895399570465,

0.0780131071805954,

0.11041366308927536,

0.0749502182006836,

0.1353522092103958,

0.07790866494178772,

-0.11358919739723206,

0.1252315491437912,

0.036433860659599304,

-0.2358856201171875,

-0.015579847618937492,

-0.027177046984434128,

-0.09360998868942261,

0.07530947029590607,

-0.019558152183890343,

0.18702499568462372,

0.054180245846509933,

0.10039637982845306,

0.024585073813796043,

0.027560515329241753,

0.04050358012318611,

-0.050507962703704834,

0.07022407650947571,

-0.005019110161811113,

-0.05995235964655876,

0.111955925822258,

0.01830870471894741,

0.06800612807273865,

-0.006820468697696924,

-0.03697502613067627,

-0.23511859774589539,

-0.09018747508525848,

0.18233785033226013,

0.06261236220598221,

0.09310821443796158,

0.03690968453884125,

0.15987934172153473,

0.04439638555049896,

0.06884703040122986,

0.11519897729158401,

-0.18736718595027924,

-0.07022390514612198,

0.09167104959487915,

0.023375799879431725,

-0.027684500440955162,

0.005457885563373566,

0.01001337356865406,

0.05897419899702072,

0.05434936285018921,

0.1251450926065445,

-0.02060658112168312,

0.006836488377302885,

-0.07216551154851913,

-0.14089959859848022,

-0.08896281570196152,

0.12719127535820007,

-0.023824546486139297,

-0.07976139336824417,

-0.054813094437122345,

-0.09763423353433609,

-0.07006461918354034,

-0.0014572071377187967,

-0.006482680793851614,

0.006830472499132156,

-0.004723612684756517,

-0.007686185650527477,

-0.018499234691262245,

-0.1032482460141182,

-0.10862067341804504,

-0.1635221242904663,

0.1457039713859558,

0.022133104503154755,

0.11826511472463608,

-0.091251440346241,

0.08704090863466263,

-0.008418885059654713,

-0.061255306005477905,

-0.037722792476415634,

-0.09759466350078583,

0.10997691005468369,

0.01187506876885891,

-0.04766375571489334,

-0.1159001812338829,

-0.022858822718262672,

0.11633475124835968,

-0.010036380030214787,

-0.021987061947584152,

-0.045397039502859116,

0.08686979115009308,

0.039872173219919205,

0.12467935681343079,

-0.1012938842177391,

0.1327947974205017,

0.022026514634490013,

0.00555067416280508,

0.12179706245660782,

-0.04144815728068352,

-0.0826210156083107,

0.010876779444515705,

0.05088960379362106,

-0.01772345043718815,

0.0321376696228981,

0.07203131914138794,

0.031205900013446808,

-0.019995952025055885,

0.24254226684570312,

-0.033895526081323624,

-0.05406412482261658,

0.010801952332258224,

-0.004684248473495245,

0.10526467114686966,

0.13466975092887878,

-0.05651372671127319,

0.02172859013080597,

0.09660438448190689,

-0.07922988384962082,

0.00632607564330101,

-0.04611680284142494,

-0.11264269053936005,

0.03514346480369568,

-0.16909274458885193,

-0.005673957988619804,

-0.22460800409317017,

-0.11759506165981293,

0.03912527859210968,

0.014933684840798378,

0.006291991099715233,

0.06015423312783241,

0.033807557076215744,

-0.04167911037802696,

0.031003830954432487,

0.028404975309967995,

-0.05555921420454979,

-0.02112269215285778,

0.08266407251358032,

-0.01589318737387657,

0.13602802157402039,

-0.2084343284368515,

0.025745060294866562,

-0.12561224400997162,

0.05150929465889931,

-0.15140222012996674,

-0.02067706547677517,

-0.018471326678991318,

0.06637632846832275,

-0.049007661640644073,

-0.05127529427409172,

-0.03417012840509415,

0.01344483532011509,

0.025523994117975235,

0.12258521467447281,

-0.06106194481253624,

-0.019576948136091232,

0.16619230806827545,

-0.19617804884910583,

-0.09883981943130493,

0.055151406675577164,

-0.012730422429740429,

0.008394002914428711,

0.0019827084615826607,

0.047009218484163284,

0.022137513384222984,

-0.1403609812259674,

-0.06349842995405197,

0.03496871143579483,

-0.15602000057697296,

-0.12319685518741608,

0.050857141613960266,

0.05938320979475975,

-0.17189927399158478,

0.011751962825655937,

-0.1356513798236847,

0.030698265880346298,

-0.053084224462509155,

-0.06198219954967499,

-0.051936015486717224,

-0.0867237001657486,

0.07027707993984222,

0.001915075583383441,

0.04321034997701645,

0.005476758349686861,

-0.03611289709806442,

0.02498422935605049,

0.054401397705078125,

-0.031913675367832184,

-0.022988470271229744,

-0.07873941957950592,

0.20608651638031006,

-0.13230398297309875,

-0.01633242890238762,

-0.13518908619880676,

-0.05970368534326553,

-0.019697541370987892,

0.0162306260317564,

-0.012409310787916183,

-0.0511859729886055,

0.04660551995038986,

0.052980002015829086,

0.02466088905930519,

-0.033434126526117325,

0.022887902334332466,

0.014054662548005581,

-0.018088746815919876,

-0.04723794385790825,

-0.01666839048266411,

-0.05540908873081207,

0.10146582871675491,

-0.000312572083203122,

0.027691805735230446,

0.00881414208561182,

0.06931851804256439,

-0.02383754961192608,

0.02416357211768627,

0.01554663572460413,

0.016435453668236732,

-0.007193034514784813,

-0.03769201785326004,

0.08125502616167068,

0.0643191710114479,

-0.021911917254328728,

0.07460945099592209,

-0.1226552352309227,

0.07464851438999176,

0.2007913440465927,

-0.11235356330871582,

-0.1135389432311058,

0.05394582822918892,

-0.020832225680351257,

0.004117976408451796,

-0.031181951984763145,

-0.001205160398967564,

0.02356678619980812,

-0.08392713218927383,

0.13449938595294952,

-0.08312956243753433,

0.05015679448843002,

0.10460883378982544,

-0.030251532793045044,

-0.0980464443564415,

0.010519263334572315,

0.11165156960487366,

-0.09275995194911957,

0.08687532693147659,

0.11627735197544098,

-0.1018579751253128,

0.06256759166717529,

0.010409610345959663,

-0.029558954760432243,

-0.007703033275902271,

0.005020993761718273,

0.01217904593795538,

0.12229098379611969,

-0.026684073731303215,

-0.051925692707300186,

0.055443327873945236,

-0.07608761638402939,

0.0048660277388989925,

-0.138926163315773,

0.000020181956642773002,

0.03829187527298927,

0.04245506599545479,

-0.1064106896519661,

0.06776092201471329,

-0.024699166417121887,

0.07087802141904831,

0.0230511836707592,

-0.11645114421844482,

0.06456971168518066,

0.024723120033740997,

-0.05435976758599281,

0.1428077518939972,

-0.04775706306099892,

-0.3221800625324249,

-0.09569154679775238,

-0.0351138673722744,

-0.014086706563830376,

0.035089608281850815,

0.04398581385612488,

-0.03916952386498451,

-0.0456903874874115,

-0.07868510484695435,

-0.08158275485038757,

-0.0034156206529587507,

0.03745725378394127,

0.06502517312765121,

-0.05942428857088089,

0.022979959845542908,

-0.06994087249040604,

0.007005765568464994,

-0.07400014996528625,

-0.07498175650835037,

0.10218455642461777,

-0.07612523436546326,

0.14805515110492706,

0.15768791735172272,

-0.07808469235897064,

0.014147828333079815,

-0.013735095039010048,

0.12281063199043274,

-0.02721424773335457,

-0.00009648047125665471,

0.21658167243003845,

-0.03651169314980507,

0.05326589196920395,

0.08111219853162766,

-0.011740493588149548,

-0.029327213764190674,

-0.017571378499269485,

-0.0612272210419178,

-0.15541696548461914,

-0.08934911340475082,

-0.05229315906763077,

0.009583539329469204,

0.06752070784568787,

0.09492315351963043,

0.03281343728303909,

0.13026772439479828,

0.14054027199745178,

0.009646696969866753,

0.0052553038112819195,

-0.05246695503592491,

0.08768011629581451,

0.0943574383854866,

-0.003002390032634139,

0.12681326270103455,

-0.1028486117720604,

-0.02936612442135811,

0.0691177174448967,

-0.00139047100674361,

0.15800796449184418,

-0.03910120576620102,

-0.07234498858451843,

0.04459509626030922,

0.11699866503477097,

0.07822231203317642,

0.11737770587205887,

-0.06493133306503296,

0.005053235217928886,

-0.00848686695098877,

-0.05416741222143173,

-0.04090951755642891,

-0.0012028736528009176,

-0.07015911489725113,

0.032985322177410126,

-0.07312159985303879,

0.04325412958860397,

0.011424342170357704,

0.07311739772558212,

0.029043152928352356,

-0.32318001985549927,

-0.07012312114238739,

-0.051177531480789185,

0.10307469964027405,

-0.1172296404838562,

0.030439643189311028,

0.005325948353856802,

-0.016715094447135925,

0.045435283333063126,

-0.051021795719861984,

0.03148247301578522,

-0.07130753993988037,

-0.0064109633676707745,

0.03707919269800186,

-0.016751699149608612,

0.04335654899477959,

-0.012295437045395374,

-0.09278670698404312,

0.15724554657936096,

-0.01386759802699089,

0.002636859891936183,

-0.06541408598423004,

0.0016307244077324867,

0.04175934940576553,

0.14871174097061157,

0.16969993710517883,

0.005338252056390047,

-0.0468486025929451,

-0.07418683916330338,

-0.07371275126934052,

0.009652958251535892,

0.0007308353087864816,

0.04215981438755989,

0.02689751237630844,

-0.01506325788795948,

-0.033641114830970764,

0.03524124622344971,

0.03187166154384613,

0.017099885269999504,

-0.06171676889061928,

0.04564560204744339,

0.023221449926495552,

-0.04788622260093689,

-0.033787891268730164,

-0.09692919999361038,

-0.07333458214998245,

0.0030465691816061735,

-0.008210376836359501,

-0.08880961686372757,

-0.10400338470935822,

-0.03394252434372902,

0.013732461258769035,

-0.047842781990766525,

0.13957209885120392,

-0.08584079891443253,

0.03728317469358444,

-0.013935493305325508,

-0.18048082292079926,

0.0965280532836914,

-0.0923072025179863,

-0.07367254793643951,

-0.007005015388131142,

0.032626163214445114,

-0.006450273562222719,

-0.003317341674119234,

0.038541603833436966,

0.032982390373945236,

-0.07467915117740631,

-0.04885666444897652,

0.09863066673278809,

0.09982751309871674,

0.07670482248067856,

0.007982928305864334,

0.05504996329545975,

-0.17366912961006165,

0.034194789826869965,

0.06661885976791382,

0.06473556160926819,

0.01739313080906868,

-0.10728584229946136,

0.07052833586931229,

0.08142416179180145,

-0.008985833264887333,

-0.31727927923202515,

-0.049905188381671906,

-0.04021130129694939,

-0.006754348054528236,

-0.09355010837316513,

-0.07536856830120087,

0.09099763631820679,

0.0543859526515007,

-0.06496214121580124,

0.11611486971378326,

-0.2168426513671875,

-0.04032086953520775,

0.16367119550704956,

0.10269485414028168,

0.3162699043750763,

-0.1304096281528473,

-0.03817145898938179,

-0.024639900773763657,

-0.23439539968967438,

0.09189357608556747,

-0.10513829439878464,

0.03783261775970459,

-0.0506134070456028,

0.02194903790950775,

-0.010722585953772068,

-0.08066533505916595,

0.0717356726527214,

-0.07581844180822372,

0.0536087304353714,

-0.10428912192583084,

-0.07992888242006302,

0.07234496623277664,

-0.04027671739459038,

0.12126360088586807,

0.01672251895070076,

0.10180195420980453,

0.012884645722806454,

-0.04758675396442413,

-0.1128588616847992,

0.07710694521665573,

0.004949660040438175,

-0.05604299157857895,

-0.08438187837600708,

-0.01406126283109188,

-0.003566100960597396,

-0.015444333665072918,

0.0678667426109314,

-0.0026883806567639112,

0.0644274652004242,

0.1172427162528038,

0.019893109798431396,

0.0037592786829918623,

-0.03770671784877777,

-0.073589988052845,

-0.10942120850086212,

0.1140415370464325,

-0.18297763168811798,

0.008955147117376328,

0.061815112829208374,

0.035165127366781235,

-0.01230630837380886,

0.0861603319644928,

0.014030033722519875,

0.029993338510394096,

0.06756620109081268,

-0.1352025419473648,

-0.03198840469121933,

-0.004256234038621187,

0.037165530025959015,

0.03973929584026337,

0.14682936668395996,

0.12814968824386597,

-0.11737632751464844,

-0.02579280361533165,

-0.026048438623547554,

0.010683713480830193,

-0.013423574157059193,

-0.013927036896348,

0.09357617050409317,

-0.005380117334425449,

-0.09626142680644989,

0.08038172125816345,

0.02794470824301243,

-0.008219449780881405,

-0.0068514542654156685,

-0.030973367393016815,

-0.12597456574440002,

-0.08636587858200073,

-0.08767731487751007,

0.051374368369579315,

-0.2416848987340927,

-0.09273097664117813,

-0.038794271647930145,

-0.1168900653719902,

0.030693676322698593,

0.27401718497276306,

0.09305267035961151,

0.053697191178798676,

0.010810954496264458,

0.003986313007771969,

-0.13160854578018188,

0.029155906289815903,

-0.11120632290840149,

0.06694042682647705,

-0.13783244788646698,

0.07761789858341217,

0.029897261410951614,

0.0944172590970993,

-0.09041322767734528,

-0.01858201064169407,

-0.06284847110509872,

-0.0012735376367345452,

-0.15189354121685028,

0.05302603915333748,

-0.04597907140851021,

-0.005633285269141197,

0.024017563089728355,

0.03696146607398987,

-0.03564395755529404,

0.06276624649763107,

-0.03913598507642746,

0.0317620187997818,

0.03159453719854355,

-0.026959367096424103,

-0.10698923468589783,

-0.022870300337672234,

-0.021380402147769928,

0.0014421845553442836,

0.06653931736946106,

0.08453765511512756,

-0.03628366068005562,

0.017657669261097908,

-0.09520646184682846,

-0.054126348346471786,

0.06100192666053772,

0.0010055368766188622,

0.009123093448579311,

-0.035446543246507645,

0.01715790666639805,

0.0505557619035244,

-0.12837134301662445,

0.011681094765663147,

0.09952018409967422,

-0.06872624158859253,

0.011979583650827408,

-0.015585007146000862,

0.014704596251249313,

-0.03311442583799362,

0.007701689377427101,

0.12007337808609009,

0.06048508360981941,

0.056523557752370834,

-0.021097246557474136,

0.020606674253940582,

-0.08814562857151031,

-0.0032661529257893562,

-0.028006864711642265,

-0.11187507212162018,

-0.0949309766292572,

-0.004781910218298435,

0.019447216764092445,

0.001322758849710226,

0.16465404629707336,

0.019127655774354935,

-0.06754894554615021,

-0.0007668090402148664,

0.14344245195388794,

0.006067346315830946,

0.00448446162045002,

0.24120181798934937,

0.01086181215941906,

-0.02676103077828884,

0.04760526493191719,

0.0710342526435852,

0.04036485403776169,

0.026676228269934654,

0.08699888736009598,

0.09771763533353806,

0.07307887077331543,

0.096872478723526,

0.09713389724493027,

0.03890369459986687,

-0.12145724147558212,

-0.12796857953071594,

-0.04311073571443558,

0.06435931473970413,

-0.026435434818267822,

0.005647442303597927,

0.15819722414016724,

0.02666281722486019,

-0.0404425673186779,

-0.02710367925465107,

-0.010716567747294903,

-0.06160486117005348,

-0.09688562899827957,

-0.07390163093805313,

-0.11132808774709702,

-0.003561462741345167,

0.00282685155980289,

-0.07852242887020111,

0.13898228108882904,

-0.013801426626741886,

-0.02925010211765766,

0.16334474086761475,

-0.057646144181489944,

0.017813144251704216,

0.07538945227861404,

0.015238654799759388,

-0.057093147188425064,

-0.004043663386255503,

-0.024121291935443878,

0.051255516707897186,

0.03939257934689522,

0.02786930464208126,

-0.01774763874709606,

0.07061560451984406,

0.0841827467083931,

-0.014611441642045975,

-0.11143475770950317,

-0.02530829980969429,

0.07860816270112991,

-0.007747482508420944,

0.04734061285853386,

-0.019631171599030495,

0.05486753210425377,

0.008307252079248428,

0.0837455466389656,

-0.04112447053194046,

-0.007050707470625639,

-0.09098802506923676,

0.11922571063041687,

-0.09373190999031067,

0.00260817795060575,

0.00007244787411764264,

-0.05063348636031151,

-0.014889499172568321,

0.21059855818748474,

0.1878896951675415,

0.017688684165477753,

0.01915803924202919,

0.019776109606027603,

0.016050025820732117,

-0.01627189852297306,

0.037807971239089966,

0.11428507417440414,

0.21434709429740906,

-0.048975929617881775,

-0.10133199393749237,

-0.09266582131385803,

0.032709553837776184,

-0.009132687933743,

-0.050773270428180695,

-0.004165216349065304,

-0.0228225439786911,

-0.08704821020364761,

0.011739271692931652,

-0.06883415579795837,

0.039222899824380875,

0.14652685821056366,

-0.02370455302298069,

-0.018016109243035316,

0.0029891154263168573,

0.05848865956068039,

0.019787820056080818,

0.011811424978077412,

-0.05721157044172287,

0.010597849264740944,

0.1715679168701172,

0.004206915386021137,

-0.15547403693199158,

-0.0529358796775341,

0.004613170400261879,

0.02753753401339054,

0.20630355179309845,

-0.009859784506261349,

0.05300268903374672,

0.04887590929865837,

0.06887627393007278,

-0.09994977712631226,

0.05979819595813751,

-0.011525912210345268,

-0.07767360657453537,

-0.007349001709371805,

0.05490737035870552,

-0.020795069634914398,

0.006533030420541763,

-0.012008575722575188,

-0.07719884812831879,

0.007672841195017099,

0.03441813215613365,

-0.05302200838923454,

-0.032992925494909286,

0.006891858763992786,

-0.07966234534978867,

0.13011404871940613,

0.10847253352403641,

0.010928702540695667,

-0.060314226895570755,

-0.0009841981809586287,

0.008036493323743343,

0.02853504754602909,

-0.08867693692445755,

0.027041852474212646,

-0.07735928148031235,

0.013174346648156643,

-0.040487825870513916,

0.02164696715772152,

-0.18573662638664246,

-0.029941167682409286,

-0.10008249431848526,

-0.05951394885778427,

-0.06116499751806259,

-0.04670485854148865,

0.0524461530148983,

0.028704963624477386,

-0.042688582092523575,

0.20302976667881012,

-0.021947268396615982,

0.08561836928129196,

-0.05185062438249588,

-0.11020298302173615

] |

null | null |

transformers

|

# DETR (End-to-End Object Detection) model with ResNet-50 backbone (dilated C5 stage)

DEtection TRansformer (DETR) model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper [End-to-End Object Detection with Transformers](https://arxiv.org/abs/2005.12872) by Carion et al. and first released in [this repository](https://github.com/facebookresearch/detr).

Disclaimer: The team releasing DETR did not write a model card for this model, so this model card has been written by the Hugging Face team.

## Model description

The DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs to perform object detection: a linear layer for the class labels and an MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100.

The model is trained using a "bipartite matching loss": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a "no object" as class and "no bounding box" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.
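The matching step described above can be sketched on a toy example. This is a minimal illustration, not the DETR implementation: the cost omits the generalized IoU term, the `box_weight` value and the `match_cost` / `best_assignment` helpers are hypothetical, and a brute-force search over permutations stands in for the Hungarian algorithm (it finds the same optimal assignment, but only scales to a handful of queries).

```python
from itertools import permutations

def l1(box_a, box_b):
    """L1 distance between two (cx, cy, w, h) boxes."""
    return sum(abs(a - b) for a, b in zip(box_a, box_b))

def match_cost(pred, target, box_weight=5.0):
    """Simplified matching cost: negative class probability plus weighted L1 box distance.
    The GIoU term is left out here; box_weight is an illustrative value."""
    class_cost = -pred["probs"][target["label"]]
    return class_cost + box_weight * l1(pred["box"], target["box"])

def best_assignment(preds, targets):
    """Return {query_index: target_index} minimizing the total cost.
    Brute force over permutations, used here in place of the Hungarian algorithm."""
    best_cost, best = float("inf"), ()
    for query_ids in permutations(range(len(preds)), len(targets)):
        cost = sum(match_cost(preds[q], t) for q, t in zip(query_ids, targets))
        if cost < best_cost:
            best_cost, best = cost, query_ids
    return {q: t_idx for t_idx, q in enumerate(best)}

# Three queries, two real classes plus a trailing "no object" class.
preds = [
    {"probs": [0.10, 0.80, 0.10], "box": (0.5, 0.5, 0.2, 0.2)},
    {"probs": [0.70, 0.20, 0.10], "box": (0.2, 0.3, 0.1, 0.1)},
    {"probs": [0.05, 0.05, 0.90], "box": (0.9, 0.9, 0.1, 0.1)},
]
targets = [
    {"label": 0, "box": (0.2, 0.3, 0.1, 0.1)},
    {"label": 1, "box": (0.5, 0.5, 0.2, 0.2)},
]

matching = best_assignment(preds, targets)
# Queries left out of the matching are the ones supervised as "no object".
unmatched = [q for q in range(len(preds)) if q not in matching]
```

With 100 queries the permutation search is infeasible; a polynomial-time solver such as `scipy.optimize.linear_sum_assignment` would take its place.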

## Intended uses & limitations

You can use the raw model for object detection. See the [model hub](https://huggingface.co/models?search=facebook/detr) to look for all available DETR models.

### How to use

Here is how to use this model:

```python
import requests
from PIL import Image
from transformers import DetrFeatureExtractor, DetrForObjectDetection

# Download a sample COCO validation image
url = 'http://images.cocodataset.org/val2017/000000039769.jpg'
image = Image.open(requests.get(url, stream=True).raw)

# Load the preprocessing pipeline and the pretrained detection model
feature_extractor = DetrFeatureExtractor.from_pretrained('facebook/detr-resnet-50-dc5')
model = DetrForObjectDetection.from_pretrained('facebook/detr-resnet-50-dc5')

# Resize and normalize the image, then run a forward pass
inputs = feature_extractor(images=image, return_tensors="pt")
outputs = model(**inputs)

# model predicts bounding boxes and corresponding COCO classes
logits = outputs.logits      # class scores per object query (last index is "no object")
bboxes = outputs.pred_boxes  # normalized (center_x, center_y, width, height) per query
```

Currently, both the feature extractor and model support PyTorch.
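The raw outputs still need decoding before they can be drawn on an image. The following is a rough sketch of that step in plain Python, assuming the last class index is "no object" and boxes are normalized (center_x, center_y, width, height). The `decode` helper and the 0.9 threshold are illustrative choices, not part of the library.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cxcywh_to_xyxy(box, width, height):
    """Convert a normalized (cx, cy, w, h) box to pixel (x0, y0, x1, y1)."""
    cx, cy, w, h = box
    return ((cx - w / 2) * width, (cy - h / 2) * height,
            (cx + w / 2) * width, (cy + h / 2) * height)

def decode(query_logits, query_boxes, image_size, threshold=0.9):
    """Keep queries whose best real-class probability reaches the threshold."""
    width, height = image_size
    detections = []
    for logits, box in zip(query_logits, query_boxes):
        probs = softmax(logits)[:-1]  # drop the trailing "no object" class
        score = max(probs)
        if score >= threshold:
            label = probs.index(score)
            detections.append((label, score, cxcywh_to_xyxy(box, width, height)))
    return detections

# Two toy queries over two real classes + "no object"; only the first is confident.
toy_logits = [[0.0, 8.0, 0.0], [0.0, 0.0, 8.0]]
toy_boxes = [(0.5, 0.5, 0.5, 0.5), (0.1, 0.1, 0.1, 0.1)]
detections = decode(toy_logits, toy_boxes, image_size=(640, 480))
```

To apply this to the model above, the tensors would first be converted to lists, for example `outputs.logits[0].tolist()` and `outputs.pred_boxes[0].tolist()`.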

## Training data

The DETR model was trained on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset consisting of 118k/5k annotated images for training/validation respectively.

## Training procedure

### Preprocessing

The exact details of preprocessing of images during training/validation can be found in the [original DETR repository](https://github.com/facebookresearch/detr).

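During training, images are resized with scale augmentation so that the shortest side is between 480 and 800 pixels and the longest side is at most 1333 pixels. For validation, the shortest side is resized to 800 pixels unless that would push the longest side beyond 1333 pixels. Images are then normalized across the RGB channels with the ImageNet mean (0.485, 0.456, 0.406) and standard deviation (0.229, 0.224, 0.225).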
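As a worked example of the resize and normalization arithmetic, the sketch below takes one reading of the rule: scale the image so the shortest side reaches 800 pixels, unless that would push the longest side past 1333. The helper names are hypothetical and the rounding may differ slightly from the actual pipeline.

```python
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)

def target_size(width, height, shortest=800, longest=1333):
    """Output (width, height) after aspect-preserving resize under both limits."""
    scale = min(shortest / min(width, height), longest / max(width, height))
    return round(width * scale), round(height * scale)

def normalize_pixel(rgb):
    """Map an 8-bit RGB pixel to per-channel standardized values."""
    return tuple((c / 255.0 - m) / s
                 for c, m, s in zip(rgb, IMAGENET_MEAN, IMAGENET_STD))

# A 640x480 image is limited by its short side; a 1000x300 panorama by its long side.
size_a = target_size(640, 480)
size_b = target_size(1000, 300)
white = normalize_pixel((255, 255, 255))
```

For the 640x480 sample image this gives 1067x800, while the wide 1000x300 image ends up at 1333x400 because the 1333-pixel cap takes effect first.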

### Training

The model was trained for 300 epochs on 16 V100 GPUs. Training takes 3 days, with 4 images per GPU (hence a total batch size of 16 × 4 = 64).

## Evaluation results

This model achieves an AP (average precision) of **43.3** on COCO 2017 validation. For more details regarding evaluation results, we refer to table 1 of the original paper.

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-2005-12872,

author = {Nicolas Carion and

Francisco Massa and

Gabriel Synnaeve and

Nicolas Usunier and

Alexander Kirillov and

Sergey Zagoruyko},

title = {End-to-End Object Detection with Transformers},

journal = {CoRR},

volume = {abs/2005.12872},

year = {2020},

url = {https://arxiv.org/abs/2005.12872},

archivePrefix = {arXiv},

eprint = {2005.12872},

timestamp = {Thu, 28 May 2020 17:38:09 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2005-12872.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

|

{"license": "apache-2.0", "tags": ["object-detection", "vision"], "datasets": ["coco"], "widget": [{"src": "https://huggingface.co/datasets/mishig/sample_images/resolve/main/savanna.jpg", "example_title": "Savanna"}, {"src": "https://huggingface.co/datasets/mishig/sample_images/resolve/main/football-match.jpg", "example_title": "Football Match"}, {"src": "https://huggingface.co/datasets/mishig/sample_images/resolve/main/airport.jpg", "example_title": "Airport"}]}

|

object-detection

|

facebook/detr-resnet-50-dc5

|

[

"transformers",

"pytorch",

"safetensors",

"detr",

"object-detection",

"vision",

"dataset:coco",

"arxiv:2005.12872",

"license:apache-2.0",

"endpoints_compatible",

"has_space",

"region:us"

] |

2022-03-02T23:29:05+00:00

|

[

"2005.12872"

] |

[] |

TAGS

#transformers #pytorch #safetensors #detr #object-detection #vision #dataset-coco #arxiv-2005.12872 #license-apache-2.0 #endpoints_compatible #has_space #region-us

|

# DETR (End-to-End Object Detection) model with ResNet-50 backbone (dilated C5 stage)

DEtection TRansformer (DETR) model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper End-to-End Object Detection with Transformers by Carion et al. and first released in this repository.

Disclaimer: The team releasing DETR did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and a MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100.

The model is trained using a "bipartite matching loss": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a "no object" as class and "no bounding box" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.

## Intended uses & limitations

You can use the raw model for object detection. See the model hub to look for all available DETR models.

### How to use

Here is how to use this model:

Currently, both the feature extractor and model support PyTorch.

## Training data

The DETR model was trained on COCO 2017 object detection, a dataset consisting of 118k/5k annotated images for training/validation respectively.

## Training procedure

### Preprocessing

The exact details of preprocessing of images during training/validation can be found here.

Images are resized/rescaled such that the shortest side is at least 800 pixels and the largest side at most 1333 pixels, and normalized across the RGB channels with the ImageNet mean (0.485, 0.456, 0.406) and standard deviation (0.229, 0.224, 0.225).

### Training

The model was trained for 300 epochs on 16 V100 GPUs. This takes 3 days, with 4 images per GPU (hence a total batch size of 64).

## Evaluation results

This model achieves an AP (average precision) of 43.3 on COCO 2017 validation. For more details regarding evaluation results, we refer to table 1 of the original paper.

### BibTeX entry and citation info

|

[

"# DETR (End-to-End Object Detection) model with ResNet-50 backbone (dilated C5 stage)\n\nDEtection TRansformer (DETR) model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper End-to-End Object Detection with Transformers by Carion et al. and first released in this repository. \n\nDisclaimer: The team releasing DETR did not write a model card for this model so this model card has been written by the Hugging Face team.",

"## Model description\n\nThe DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and a MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100. \n\nThe model is trained using a \"bipartite matching loss\": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a \"no object\" as class and \"no bounding box\" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.",

"## Intended uses & limitations\n\nYou can use the raw model for object detection. See the model hub to look for all available DETR models.",

"### How to use\n\nHere is how to use this model:\n\n\n\nCurrently, both the feature extractor and model support PyTorch.",

"## Training data\n\nThe DETR model was trained on COCO 2017 object detection, a dataset consisting of 118k/5k annotated images for training/validation respectively.",

"## Training procedure",

"### Preprocessing\n\nThe exact details of preprocessing of images during training/validation can be found here. \n\nImages are resized/rescaled such that the shortest side is at least 800 pixels and the largest side at most 1333 pixels, and normalized across the RGB channels with the ImageNet mean (0.485, 0.456, 0.406) and standard deviation (0.229, 0.224, 0.225).",

"### Training\n\nThe model was trained for 300 epochs on 16 V100 GPUs. This takes 3 days, with 4 images per GPU (hence a total batch size of 64).",

"## Evaluation results\n\nThis model achieves an AP (average precision) of 43.3 on COCO 2017 validation. For more details regarding evaluation results, we refer to table 1 of the original paper.",

"### BibTeX entry and citation info"

] |

[

"TAGS\n#transformers #pytorch #safetensors #detr #object-detection #vision #dataset-coco #arxiv-2005.12872 #license-apache-2.0 #endpoints_compatible #has_space #region-us \n",

"# DETR (End-to-End Object Detection) model with ResNet-50 backbone (dilated C5 stage)\n\nDEtection TRansformer (DETR) model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper End-to-End Object Detection with Transformers by Carion et al. and first released in this repository. \n\nDisclaimer: The team releasing DETR did not write a model card for this model so this model card has been written by the Hugging Face team.",

"## Model description\n\nThe DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and a MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100. \n\nThe model is trained using a \"bipartite matching loss\": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a \"no object\" as class and \"no bounding box\" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.",

"## Intended uses & limitations\n\nYou can use the raw model for object detection. See the model hub to look for all available DETR models.",

"### How to use\n\nHere is how to use this model:\n\n\n\nCurrently, both the feature extractor and model support PyTorch.",

"## Training data\n\nThe DETR model was trained on COCO 2017 object detection, a dataset consisting of 118k/5k annotated images for training/validation respectively.",

"## Training procedure",

"### Preprocessing\n\nThe exact details of preprocessing of images during training/validation can be found here. \n\nImages are resized/rescaled such that the shortest side is at least 800 pixels and the largest side at most 1333 pixels, and normalized across the RGB channels with the ImageNet mean (0.485, 0.456, 0.406) and standard deviation (0.229, 0.224, 0.225).",

"### Training\n\nThe model was trained for 300 epochs on 16 V100 GPUs. This takes 3 days, with 4 images per GPU (hence a total batch size of 64).",

"## Evaluation results\n\nThis model achieves an AP (average precision) of 43.3 on COCO 2017 validation. For more details regarding evaluation results, we refer to table 1 of the original paper.",

"### BibTeX entry and citation info"

] |

[

62,

126,

295,

33,

28,

41,

3,

94,

42,

43,

11

] |

[

"passage: TAGS\n#transformers #pytorch #safetensors #detr #object-detection #vision #dataset-coco #arxiv-2005.12872 #license-apache-2.0 #endpoints_compatible #has_space #region-us \n# DETR (End-to-End Object Detection) model with ResNet-50 backbone (dilated C5 stage)\n\nDEtection TRansformer (DETR) model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper End-to-End Object Detection with Transformers by Carion et al. and first released in this repository. \n\nDisclaimer: The team releasing DETR did not write a model card for this model so this model card has been written by the Hugging Face team.## Model description\n\nThe DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and a MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100. \n\nThe model is trained using a \"bipartite matching loss\": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a \"no object\" as class and \"no bounding box\" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model."

] |

[

-0.043032120913267136,

0.06092856824398041,

-0.0065768081694841385,

0.072905994951725,

0.09476758539676666,

0.003933784086257219,

0.07610145956277847,

0.02473614364862442,

0.01496042124927044,

0.10092394798994064,

-0.018238618969917297,

0.033506862819194794,

0.10438618808984756,

0.06814130395650864,

0.05152986943721771,

-0.1645766645669937,

0.07984352111816406,

-0.04977186396718025,

0.004463928751647472,

0.03804612159729004,

0.05056659132242203,

-0.07684587687253952,

0.09156262129545212,

-0.015407482162117958,

-0.05411051958799362,

0.01307989563792944,

-0.03314966335892677,

-0.0020500055979937315,

0.08700483292341232,

0.05769665539264679,

0.061556845903396606,

-0.03645947203040123,

0.04021500051021576,

-0.034669239073991776,

0.01708575338125229,

0.13041208684444427,

-0.029401682317256927,

0.059820786118507385,

0.048190757632255554,

-0.03628791868686676,

-0.00363167398609221,

-0.07866171002388,

0.04236097261309624,

0.002174381399527192,

-0.08198560774326324,

-0.04813989996910095,

-0.09257790446281433,

0.060716137290000916,

0.13340973854064941,

0.03855655714869499,

-0.005888348910957575,

0.036732833832502365,

0.077363982796669,

0.05927896499633789,

0.1428077220916748,

-0.329274982213974,

-0.008557134307920933,

0.013446174561977386,

-0.015357195399701595,

0.0626409575343132,

-0.03646852821111679,

-0.056546930223703384,

-0.013842407613992691,

-0.0016872277483344078,

0.06523312628269196,

-0.02218976989388466,

0.054768156260252,

-0.08167809247970581,

-0.1296548992395401,

-0.0960979014635086,

0.07710036635398865,

0.015987690538167953,

-0.12741237878799438,

-0.05265618860721588,

-0.10604932904243469,

-0.09255266934633255,

0.027699928730726242,

-0.000448322796728462,

0.016897346824407578,

0.045880746096372604,

0.07127183675765991,

-0.00203902181237936,

-0.08680612593889236,

-0.01654043234884739,

-0.09922569990158081,

0.11145328730344772,

0.028815435245633125,

0.06190880760550499,

0.06555168330669403,

0.12490426003932953,

-0.03540473058819771,

-0.07574690878391266,

-0.09395246207714081,

-0.01802910678088665,

-0.1766476035118103,

-0.025987977162003517,

0.007974226027727127,

-0.1451214700937271,

-0.08937768638134003,

0.1353519856929779,

0.00016436762234661728,

0.06589744985103607,

-0.07272055745124817,

0.06568235158920288,

0.084256611764431,

0.1363188922405243,

-0.08037108927965164,

0.06826236099004745,

0.019777720794081688,

-0.0332387313246727,

0.08359003812074661,

-0.07153502106666565,

-0.04298773035407066,

-0.012956297025084496,

0.08151310682296753,

0.005068299360573292,

0.028622958809137344,

0.010306023061275482,

-0.031144753098487854,

-0.07095875591039658,

0.14546550810337067,

-0.1243588998913765,

0.024038570001721382,

0.018579604104161263,

-0.000855600752402097,

-0.01719525083899498,

0.043360382318496704,

0.017136620357632637,

-0.07522306591272354,

0.06244729831814766,

-0.04708131030201912,

0.01400473341345787,

-0.06775681674480438,

-0.10947864502668381,

0.027905110269784927,

-0.1468634456396103,

-0.054652079939842224,

-0.11917747557163239,

-0.07980094850063324,

-0.05576807260513306,

0.050622694194316864,

-0.03351235017180443,

0.022585904225707054,

-0.02105051837861538,

-0.03633284196257591,

-0.013445514254271984,

0.022095683962106705,

-0.05362369865179062,

-0.01589973270893097,

0.035979218780994415,

-0.12078951299190521,

0.07053235918283463,

-0.053476519882678986,

0.003582060569897294,

-0.09049481153488159,

0.05717703700065613,

-0.1234118640422821,

0.15280255675315857,

0.065300852060318,

0.035249024629592896,

-0.09444016218185425,

0.020382681861519814,

-0.09781606495380402,

-0.03689146786928177,

0.03188589587807655,

0.09273535013198853,

-0.191136434674263,

-0.014543878845870495,

0.07935749739408493,

-0.1572692096233368,

0.007772369310259819,

0.05710345506668091,

-0.03198873624205589,

0.02507423609495163,

0.0569152757525444,

-0.005820553749799728,

0.13268394768238068,

-0.04424086585640907,

-0.1550208330154419,

-0.06292282044887543,

-0.13484720885753632,

0.09556743502616882,

0.060245029628276825,

0.075560063123703,

-0.044032514095306396,

-0.013643995858728886,

-0.05781839042901993,

-0.05701068043708801,

0.027758996933698654,

-0.08143722265958786,

0.024946607649326324,

0.026492560282349586,

-0.041864100843667984,

-0.04007228463888168,

-0.0002158144925488159,

0.02059168554842472,

-0.05488782003521919,

-0.10434119403362274,

0.046324681490659714,

-0.05192472040653229,

0.05197683721780777,

-0.08723025023937225,

0.04363919794559479,

-0.1538049727678299,

0.017306771129369736,

-0.17181278765201569,

-0.0478002168238163,

0.07574043422937393,

-0.058571621775627136,

0.061191245913505554,

0.0007943347445689142,

0.04142411798238754,

0.09062088280916214,

0.01909659430384636,

-0.06905234605073929,

0.024892669171094894,

-0.0651489719748497,

-0.038271136581897736,

-0.14503416419029236,

-0.08696277439594269,

-0.051683954894542694,

0.04822462424635887,

0.034913599491119385,

-0.0022125307004898787,

0.054503846913576126,

0.11202497780323029,

0.054253898561000824,

-0.05685197934508324,

-0.033086370676755905,

0.01242495235055685,

-0.037482183426618576,

-0.06686004251241684,

-0.0033705118112266064,

0.03268483653664589,

-0.03770308941602707,

0.04510881379246712,

-0.13519322872161865,

-0.006873028352856636,

0.05332503467798233,

0.01240869052708149,

-0.05356617271900177,

0.017673226073384285,

-0.01808704063296318,

-0.018342915922403336,

-0.05715884640812874,

-0.025305435061454773,

0.23603452742099762,

0.026901310309767723,

0.05701655149459839,

-0.04433537274599075,

-0.004849104210734367,

0.04743685573339462,

0.0013350084191188216,

-0.11419226974248886,

-0.00974989403039217,

0.07934568077325821,

-0.17182829976081848,

0.04734095558524132,

0.00504817022010684,

0.043929148465394974,

0.12393565475940704,

0.03026050515472889,

-0.07023165374994278,

-0.042733084410429,

0.09395452588796616,

0.048309575766325,

0.04912881553173065,

0.026266567409038544,

0.012548538856208324,

0.0166653860360384,

0.03885798528790474,

-0.011300716549158096,

-0.07334022223949432,

0.08893783390522003,

0.01811910606920719,

-0.01837228238582611,

0.027005910873413086,

-0.047566551715135574,

-0.013735372573137283,

0.06515461206436157,

0.07851358503103256,

0.02090965397655964,

0.0051137590780854225,

-0.054838500916957855,

-0.12070688605308533,

0.11081820726394653,

-0.103434257209301,

-0.288949579000473,

-0.22125422954559326,

0.02459842711687088,

-0.05617634952068329,

0.0644032284617424,

0.025791915133595467,

-0.010426562279462814,

-0.034891050308942795,

-0.10118155926465988,

0.010426280088722706,

0.04836941882967949,

0.052863992750644684,

-0.006629844196140766,

-0.0017766578821465373,

0.0516175851225853,

-0.08950336277484894,

0.020841356366872787,

-0.017712052911520004,

-0.16689343750476837,

0.03127840906381607,

0.037784043699502945,

0.0602945014834404,

0.09201265871524811,

-0.02451927214860916,

-0.02089596726000309,

0.000086824300524313,

0.1531282216310501,

-0.04579088091850281,

0.05761139094829559,

0.04689757153391838,

-0.08273102343082428,

0.07107487320899963,

0.1803112030029297,

-0.03583097830414772,

-0.01765103079378605,

-0.002308144001290202,

0.020631352439522743,

-0.0958155170083046,

-0.08955121785402298,

-0.01418980024755001,

-0.035202838480472565,

-0.019126717001199722,

0.11124679446220398,

0.08486184477806091,

-0.010935183614492416,

0.044887393712997437,

-0.06938280910253525,

0.06646755337715149,

0.027539588510990143,

0.09920333325862885,

-0.05922280624508858,

0.01330292783677578,

0.07109848409891129,

-0.08187064528465271,

-0.018153026700019836,

0.0480927936732769,

0.11104150861501694,

0.1999373883008957,

-0.09346678107976913,

0.012202493846416473,

0.04881494492292404,

0.025591550394892693,

0.05486675351858139,

0.09882700443267822,

-0.08424505591392517,

0.054694924503564835,

-0.019112657755613327,

-0.07711199671030045,

-0.10823836177587509,

0.09168227016925812,

0.0026160134002566338,

0.036649323999881744,

-0.050181757658720016,

0.024811938405036926,

0.054012659937143326,

0.2649746835231781,

0.08683184534311295,

-0.20110628008842468,

-0.0545225515961647,

0.03993126377463341,

-0.018146436661481857,

-0.08725765347480774,

-0.0485864020884037,

0.10097119212150574,

-0.04636454954743385,

0.015169121325016022,

-0.04490093141794205,

0.04615209251642227,

-0.22075915336608887,

-0.03560374677181244,

-0.005152181722223759,

0.012464722618460655,

0.002386357868090272,

-0.0038355097640305758,

-0.07213397324085236,

-0.0037573024164885283,

0.024382583796977997,

0.0878940224647522,

-0.05778835713863373,

0.06521229445934296,

-0.005761154927313328,

-0.026499321684241295,

0.07573074847459793,

0.03135671466588974,

-0.12117867171764374,

-0.17188945412635803,

-0.10492429882287979,

0.0359795056283474,

0.04386734589934349,

-0.08813192695379257,

0.11385257542133331,

0.008003110066056252,

-0.02134816348552704,

-0.057404205203056335,

0.026847487315535545,

0.0507434643805027,

-0.1327534019947052,

0.07734983414411545,

-0.048726800829172134,

0.0596894733607769,

-0.04391619563102722,

-0.011001843959093094,

0.04556470736861229,

0.0894206315279007,

-0.24524416029453278,

-0.05330081284046173,

-0.07512710988521576,

-0.007698300294578075,

0.042688582092523575,

-0.06246780976653099,

0.0481736920773983,

-0.011955920606851578,

0.12915629148483276,

-0.010061852633953094,

-0.14626286923885345,

0.009883711114525795,

-0.032619815319776535,

-0.08085216581821442,

-0.06390237808227539,

0.1487494707107544,

0.07895161956548691,

-0.00191548524890095,

0.021026283502578735,

0.041960153728723526,

0.058556441217660904,

-0.0601031519472599,

0.03984931483864784,

0.18208985030651093,

0.03447351232171059,

0.1360936015844345,

-0.033862724900245667,

-0.09416281431913376,

-0.017099181190133095,

0.09600181877613068,

0.037146780639886856,

0.13345545530319214,

-0.03999847546219826,

0.14375661313533783,

0.08538250625133514,

-0.10876256972551346,

-0.16547586023807526,

-0.009460201486945152,

0.07525422424077988,

0.10062766820192337,

-0.0689077377319336,

-0.19381090998649597,

0.08218038082122803,

0.04407111927866936,

-0.020711926743388176,

0.10405685752630234,

-0.18790242075920105,

-0.06475035846233368,

0.02529072016477585,

0.060447484254837036,

0.22961413860321045,

-0.03677242621779442,

-0.0280742347240448,

0.002418778371065855,

-0.1328633576631546,

0.07782254368066788,

-0.027296457439661026,

0.07334037125110626,

0.04733303189277649,

-0.08159628510475159,

0.045052286237478256,

-0.015726363286376,

0.08528781682252884,

0.05200538784265518,

0.04867793619632721,

-0.03780416399240494,

0.07097949087619781,

0.018033713102340698,

-0.07796045392751694,

0.08503569662570953,

0.04351159557700157,

0.024420933797955513,

0.014245030470192432,

-0.03403797373175621,

0.03654259815812111,

0.02653130516409874,

-0.0006857659318484366,

-0.030524317175149918,

-0.07493103295564651,

0.053955335170030594,

0.07433357834815979,

0.028740495443344116,

0.05349939316511154,

0.004854342434555292,

-0.001423834590241313,

0.08971237391233444,

0.056195348501205444,

-0.07301326096057892,

-0.02694643847644329,

-0.02844618260860443,

-0.01716465689241886,

0.11324108392000198,

-0.04866257682442665,

0.11924321204423904,

0.08419344574213028,

-0.04752639681100845,

0.07405087351799011,

0.047315604984760284,

-0.12176738679409027,

0.04397813603281975,

0.09703604131937027,

-0.09918923676013947,

-0.07638294994831085,

0.023257838562130928,

-0.04778185859322548,

-0.047973744571208954,

0.06112914904952049,

0.21099235117435455,

-0.0016768730711191893,

-0.0008726005326025188,

0.008096910081803799,

0.05912114679813385,

0.0009140677866525948,

0.0399840846657753,

-0.05952104926109314,

0.0022112277802079916,

-0.06500687450170517,

0.10837570577859879,

0.08248471468687057,

-0.04168838635087013,

0.03727458044886589,

0.03454262763261795,

-0.09009455144405365,

-0.047789279371500015,

-0.1644449084997177,

0.05342024564743042,

0.016118304803967476,

-0.016469262540340424,

-0.02481214702129364,

-0.08256492763757706,

0.05040426552295685,

0.0642106905579567,

0.0047950660809874535,

0.13792718946933746,

-0.039655957370996475,

0.024012045934796333,

-0.09584823995828629,

0.028926309198141098,

-0.021439338102936745,

0.04728008434176445,

-0.12771043181419373,

0.03549298271536827,

0.017809925600886345,

0.047850076109170914,

-0.015326287597417831,

-0.10837822407484055,

-0.05065213888883591,

-0.022959405556321144,

-0.012110081501305103,

-0.004500050097703934,

-0.09388265758752823,

-0.012962128967046738,

0.039386894553899765,

0.03008231893181801,

-0.028025532141327858,

0.03791627660393715,

-0.001788661116734147,

-0.04500406235456467,

-0.09425922483205795,

0.02055421844124794,

-0.07425807416439056,

-0.010392893105745316,

0.057211704552173615,

-0.10595706105232239,

0.10623614490032196,

0.020426973700523376,

-0.021042196080088615,

0.017619628459215164,

-0.032797858119010925,

0.004643736407160759,

0.06434284895658493,

-0.0009091399842873216,

-0.0086573027074337,

-0.08951833099126816,

0.0455889068543911,

0.009157383814454079,

-0.05209900066256523,

-0.026215078309178352,

0.11530844867229462,

-0.04039948806166649,

0.05721990764141083,

-0.040404293686151505,

0.028340790420770645,

-0.08526456356048584,

0.07437371462583542,

0.0754191130399704,

0.08475188165903091,

0.09182819724082947,

-0.02517074905335903,

0.05392612889409065,

-0.11833114922046661,

-0.029303016141057014,

0.02906022220849991,

-0.022175300866365433,

0.06359508633613586,

-0.08818984776735306,

0.014943568035960197,

-0.005029052030295134,

0.05787607282400131,

0.03226497396826744,

0.0016653634374961257,

-0.009175805374979973,

-0.010817868635058403,

-0.006140075623989105,

0.07538700848817825,

0.03629929572343826,

0.05620722845196724,

-0.08626097440719604,

-0.0035086714196950197,

0.023756081238389015,

0.03489621728658676,

0.02630709856748581,

0.02726759947836399,

0.047468435019254684,

0.1275263875722885,

0.07375288754701614,

0.040632061660289764,

-0.055376678705215454,

-0.09544607251882553,

0.056246932595968246,

-0.08445755392313004,

0.021891813725233078,

0.021326817572116852,

0.03982386738061905,

0.1447495073080063,

-0.11817050725221634,

0.041465453803539276,

0.07928582280874252,

-0.0023238719440996647,

-0.0472266860306263,

-0.18608540296554565,

-0.04205169901251793,

-0.049233727157115936,

-0.018724968656897545,

-0.04882050305604935,

-0.03947072476148605,

0.026619883254170418,

-0.017965523526072502,

0.020241722464561462,

0.07614196836948395,

-0.07340880483388901,

-0.004756071139127016,

0.028832100331783295,

0.024831220507621765,

0.046694982796907425,

0.13456013798713684,

-0.01716814562678337,

0.08852389454841614,

0.0459250845015049,

0.06678016483783722,

0.03867829591035843,

0.17261020839214325,

0.09286431223154068,

-0.03550546616315842,

-0.09383387118577957,

0.0044916607439517975,

-0.030019909143447876,

-0.06614403426647186,

0.08274133503437042,

0.07715034484863281,

-0.04547959566116333,

-0.004862060304731131,

0.12010736763477325,

-0.03587011247873306,

0.04494931921362877,

-0.17304272949695587,

0.05710672214627266,

0.029104236513376236,

0.026334799826145172,

0.010970056988298893,

-0.12664711475372314,

-0.012858416885137558,

0.018270494416356087,

0.11565599590539932,

0.004935357719659805,

0.008683335967361927,

-0.0024798703379929066,

-0.009996075183153152,

-0.003858748357743025,

0.07253043353557587,

0.04294877126812935,

0.35743698477745056,

-0.030176056548953056,

0.05527234077453613,

-0.028421606868505478,

0.01936447061598301,

0.004651663359254599,

0.039971910417079926,

-0.0863257572054863,

0.03920663893222809,

-0.07466942816972733,

-0.0056128185242414474,

0.0037891860119998455,

-0.18696096539497375,

0.14840351045131683,

-0.025816824287176132,

-0.06648365408182144,

0.0447646901011467,

-0.04377903789281845,

-0.009722943417727947,

0.05943773686885834,

0.012524222023785114,

-0.056395530700683594,

0.19989070296287537,

0.02105688862502575,

-0.08485502004623413,

-0.0696401298046112,

-0.014304285869002342,

-0.05219463258981705,

0.23591791093349457,

-0.012581873685121536,

0.03014335036277771,

0.05537279695272446,

0.09097568690776825,

-0.14075496792793274,

-0.008001868613064289,

-0.07220706343650818,

-0.07525980472564697,

-0.012547293677926064,

0.15247401595115662,

-0.006130041554570198,

0.1439424753189087,

0.09622994065284729,

-0.026187460869550705,

-0.001967820804566145,

-0.12070741504430771,

0.02224971540272236,

-0.054036155343055725,

0.006110951770097017,

-0.08169424533843994,

0.10546886920928955,

0.10989375412464142,

0.03758713975548744,

0.028736520558595657,

-0.020082594826817513,

-0.011408098042011261,

0.009119261056184769,

0.05882297828793526,

0.025569966062903404,

-0.12196388840675354,

0.01951914094388485,

-0.06975618004798889,

0.011315739713609219,

-0.1874515414237976,

-0.15152348577976227,

0.035500917583703995,

-0.032695647329092026,

-0.024494921788573265,

0.04499196261167526,

0.0018187856767326593,

0.01725880429148674,

-0.07019434124231339,

-0.0018260997021570802,

0.019899968057870865,

0.07550474256277084,

-0.04743293672800064,

-0.10268337279558182

] |

null | null |

transformers

|

# DETR (End-to-End Object Detection) model with ResNet-50 backbone

DEtection TRansformer (DETR) model trained end-to-end on COCO 2017 panoptic (118k annotated images). It was introduced in the paper [End-to-End Object Detection with Transformers](https://arxiv.org/abs/2005.12872) by Carion et al. and first released in [this repository](https://github.com/facebookresearch/detr).

Disclaimer: The team releasing DETR did not write a model card for this model, so this model card has been written by the Hugging Face team.

## Model description

The DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and an MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100.
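
The two heads described above can be sketched in PyTorch as follows. This is a minimal illustration, not the code used in the released model: the class name `DetectionHeads`, the hidden size of 256, the 91 classes, the three-layer MLP, and the sigmoid on the box outputs are all assumptions made for the example.

```python
import torch
from torch import nn


class DetectionHeads(nn.Module):
    """Hypothetical sketch of the two heads applied to each decoder output."""

    def __init__(self, hidden_dim=256, num_classes=91, mlp_layers=3):
        super().__init__()
        # Linear classifier; the extra output stands for the "no object" class.
        self.class_head = nn.Linear(hidden_dim, num_classes + 1)
        # Small MLP that regresses 4 box coordinates per object query.
        layers = []
        for _ in range(mlp_layers - 1):
            layers += [nn.Linear(hidden_dim, hidden_dim), nn.ReLU()]
        layers.append(nn.Linear(hidden_dim, 4))
        self.box_head = nn.Sequential(*layers)

    def forward(self, decoder_output):
        # decoder_output: (batch, num_queries, hidden_dim)
        logits = self.class_head(decoder_output)
        # Sigmoid keeps the box coordinates in [0, 1] (assumed normalized boxes).
        boxes = self.box_head(decoder_output).sigmoid()
        return logits, boxes


heads = DetectionHeads()
decoder_output = torch.randn(1, 100, 256)  # one image, 100 object queries
logits, boxes = heads(decoder_output)
```

Each of the 100 object queries yields one row of class logits and one box, so the number of predictions per image is fixed regardless of how many objects the image contains.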

The model is trained using a "bipartite matching loss": the predicted classes and bounding boxes of each of the N = 100 object queries are compared to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, the remaining 96 annotations simply have "no object" as class and "no bounding box" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU losses (for the bounding boxes) are used to optimize the parameters of the model.
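
The matching step can be illustrated with a small sketch. It assumes a simplified cost (negative class probability plus L1 box distance, with the generalized IoU term left out) and uses SciPy's `linear_sum_assignment`, which solves the same assignment problem as the Hungarian algorithm. The function name `match_queries`, the `box_weight` parameter, and the toy inputs are hypothetical.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def match_queries(pred_probs, pred_boxes, gt_labels, gt_boxes, box_weight=1.0):
    """Return (query_idx, gt_idx) arrays minimizing the total matching cost.

    pred_probs: (N, C) class probabilities per query
    pred_boxes: (N, 4) predicted boxes
    gt_labels:  (M,) ground-truth class indices, with M <= N
    gt_boxes:   (M, 4) ground-truth boxes
    """
    # Classification cost: negative probability of the true class, shape (N, M).
    class_cost = -pred_probs[:, gt_labels]
    # Box cost: L1 distance between every prediction and every annotation.
    box_cost = np.abs(pred_boxes[:, None, :] - gt_boxes[None, :, :]).sum(-1)
    # Simplified total cost (the generalized IoU term is omitted here).
    cost = class_cost + box_weight * box_cost
    return linear_sum_assignment(cost)


# Toy example: 3 queries, 2 annotated objects.
pred_probs = np.array([[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]])
pred_boxes = np.array([[0.1, 0.1, 0.2, 0.2],
                       [0.5, 0.5, 0.3, 0.3],
                       [0.9, 0.9, 0.1, 0.1]])
gt_labels = np.array([1, 0])
gt_boxes = np.array([[0.5, 0.5, 0.3, 0.3],
                     [0.1, 0.1, 0.2, 0.2]])

query_idx, gt_idx = match_queries(pred_probs, pred_boxes, gt_labels, gt_boxes)
matches = dict(zip(query_idx.tolist(), gt_idx.tolist()))
# Queries left without a match are the ones supervised as "no object".
unmatched = sorted(set(range(len(pred_probs))) - set(matches))
```

In this toy case query 0 is paired with the second annotation and query 1 with the first, while query 2 stays unmatched. That plays the same role as the padded "no object" annotations described above.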

DETR can be naturally extended to perform panoptic segmentation, by adding a mask head on top of the decoder outputs.

## Intended uses & limitations

You can use the raw model for panoptic segmentation. See the [model hub](https://huggingface.co/models?search=facebook/detr) to look for all available DETR models.

### How to use

Here is how to use this model:

```python

import io

import requests

from PIL import Image

import torch

import numpy

from transformers import DetrFeatureExtractor, DetrForSegmentation

from transformers.models.detr.feature_extraction_detr import rgb_to_id

url = "http://images.cocodataset.org/val2017/000000039769.jpg"

image = Image.open(requests.get(url, stream=True).raw)