modelId

stringlengths 4

112

| sha

stringlengths 40

40

| lastModified

stringlengths 24

24

| tags

sequence | pipeline_tag

stringclasses 29

values | private

bool 1

class | author

stringlengths 2

38

⌀ | config

null | id

stringlengths 4

112

| downloads

float64 0

36.8M

⌀ | likes

float64 0

712

⌀ | library_name

stringclasses 17

values | __index_level_0__

int64 0

38.5k

| readme

stringlengths 0

186k

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

anahitapld/bert-base-cased-dbd | 5f0d2350b19904ca8a6633c750006e0075b00e71 | 2022-06-29T08:50:16.000Z | [

"pytorch",

"bert",

"text-classification",

"transformers",

"license:apache-2.0"

] | text-classification | false | anahitapld | null | anahitapld/bert-base-cased-dbd | 35 | null | transformers | 6,800 | ---

license: apache-2.0

---

|

anahitapld/electra-small-dbd | 29525dcfd5abe32aca98f4a35f033992c244cbdb | 2022-06-29T08:56:12.000Z | [

"pytorch",

"electra",

"text-classification",

"transformers",

"license:apache-2.0"

] | text-classification | false | anahitapld | null | anahitapld/electra-small-dbd | 35 | null | transformers | 6,801 | ---

license: apache-2.0

---

|

Aktsvigun/bart-base_aeslc_23419 | f9ff952e739d0ef29d945cb6c74fb5a0284b07cd | 2022-07-07T15:49:30.000Z | [

"pytorch",

"bart",

"text2text-generation",

"transformers",

"autotrain_compatible"

] | text2text-generation | false | Aktsvigun | null | Aktsvigun/bart-base_aeslc_23419 | 35 | null | transformers | 6,802 | Entry not found |

semy/finetuning-tweeteval-hate-speech | 4d26b493576923761388f1e345b207b14dc0666a | 2022-07-18T08:39:29.000Z | [

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

] | text-classification | false | semy | null | semy/finetuning-tweeteval-hate-speech | 35 | null | transformers | 6,803 | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

model-index:

- name: finetuning-tweeteval-hate-speech

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuning-tweeteval-hate-speech

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8397

- Accuracy: 0.0

- F1: 0.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.20.1

- Pytorch 1.10.2

- Datasets 2.3.2

- Tokenizers 0.12.1

|

saadob12/t5_C2T_big | da1088a85226013bca2b03517a69ae8beda4ecbb | 2022-07-10T10:26:53.000Z | [

"pytorch",

"t5",

"text2text-generation",

"transformers",

"autotrain_compatible"

] | text2text-generation | false | saadob12 | null | saadob12/t5_C2T_big | 35 | null | transformers | 6,804 | # Training Data

**Chart-to-text:** Kanthara, S., Leong, R. T. K., Lin, X., Masry, A., Thakkar, M., Hoque, E., & Joty, S. (2022). Chart-to-Text: A Large-Scale Benchmark for Chart Summarization. arXiv preprint arXiv:2203.06486.

**Github Link for the data**: https://github.com/vis-nlp/Chart-to-text

# Example use:

Append ```C2T: ``` before every input to the model

```

tokenizer = AutoTokenizer.from_pretrained(saadob12/t5_C2T_big)

model = AutoModelForSeq2SeqLM.from_pretrained(saadob12/t5_C2T_big)

data = 'Breakdown of coronavirus ( COVID-19 ) deaths in South Korea as of March 16 , 2020 , by chronic disease x-y labels Response - Share of cases, x-y values Circulatory system disease* 62.7% , Endocrine and metabolic diseases** 46.7% , Mental illness*** 25.3% , Respiratory diseases*** 24% , Urinary and genital diseases 14.7% , Cancer 13.3% , Nervous system diseases 4% , Digestive system diseases 2.7% , Blood and hematopoietic diseases 1.3%'

prefix = 'C2T: '

tokens = tokenizer.encode(prefix + data, truncation=True, padding='max_length', return_tensors='pt')

generated = model.generate(tokens, num_beams=4, max_length=256)

tgt_text = tokenizer.decode(generated[0], skip_special_tokens=True, clean_up_tokenization_spaces=True)

summary = str(tgt_text).strip('[]""')

#Summary: As of March 16, 2020, around 62.7 percent of all deaths due to the coronavirus ( COVID-19 ) in South Korea were related to circulatory system diseases. Other chronic diseases include endocrine and metabolic diseases, mental illness, and cancer. South Korea confirmed 30,017 cases of infection including 501 deaths. For further information about the coronavirus ( COVID-19 ) pandemic, please visit our dedicated Facts and Figures page.

```

# Intended Use and Limitations

You can use the model to generate summaries of data files.

Works well for general statistics like the following:

| Year | Children born per woman |

|:---:|:---:|

| 2018 | 1.14 |

| 2017 | 1.45 |

| 2016 | 1.49 |

| 2015 | 1.54 |

| 2014 | 1.6 |

| 2013 | 1.65 |

May or may not generate an **okay** summary at best for the following kind of data:

| Model | BLEU score | BLEURT|

|:---:|:---:|:---:|

| t5-small | 25.4 | -0.11 |

| t5-base | 28.2 | 0.12 |

| t5-large | 35.4 | 0.34 |

# Citation

Kindly cite my work. Thank you.

```

@misc{obaid ul islam_2022,

title={saadob12/t5_C2T_big Hugging Face},

url={https://huggingface.co/saadob12/t5_C2T_big},

journal={Huggingface.co},

author={Obaid ul Islam, Saad},

year={2022}

}

```

|

aatmasidha/newsmodelclassification | cdf27aaefb2c6c5260d788f4ab14e154bf23d438 | 2022-07-14T20:16:34.000Z | [

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"dataset:emotion",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

] | text-classification | false | aatmasidha | null | aatmasidha/newsmodelclassification | 35 | null | transformers | 6,805 | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- emotion

metrics:

- accuracy

- f1

model-index:

- name: newsmodelclassification

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: emotion

type: emotion

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.927

- name: F1

type: f1

value: 0.9271124951673986

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# newsmodelclassification

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2065

- Accuracy: 0.927

- F1: 0.9271

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.8011 | 1.0 | 250 | 0.2902 | 0.911 | 0.9090 |

| 0.2316 | 2.0 | 500 | 0.2065 | 0.927 | 0.9271 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.12.0+cu113

- Datasets 2.3.2

- Tokenizers 0.10.3

|

jordyvl/bert-base-portuguese-cased_harem-selective-sm-first-ner | 02d5f704b949b69fbdff78cbb7b5f620ceaed24a | 2022-07-18T22:12:54.000Z | [

"pytorch",

"tensorboard",

"bert",

"token-classification",

"dataset:harem",

"transformers",

"generated_from_trainer",

"license:mit",

"model-index",

"autotrain_compatible"

] | token-classification | false | jordyvl | null | jordyvl/bert-base-portuguese-cased_harem-selective-sm-first-ner | 35 | null | transformers | 6,806 | ---

license: mit

tags:

- generated_from_trainer

datasets:

- harem

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: bert-base-portuguese-cased_harem-sm-first-ner

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: harem

type: harem

args: selective

metrics:

- name: Precision

type: precision

value: 0.7455830388692579

- name: Recall

type: recall

value: 0.8053435114503816

- name: F1

type: f1

value: 0.7743119266055045

- name: Accuracy

type: accuracy

value: 0.964875491480996

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-portuguese-cased_harem-sm-first-ner

This model is a fine-tuned version of [neuralmind/bert-base-portuguese-cased](https://huggingface.co/neuralmind/bert-base-portuguese-cased) on the harem dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1952

- Precision: 0.7456

- Recall: 0.8053

- F1: 0.7743

- Accuracy: 0.9649

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.1049 | 1.0 | 2517 | 0.1955 | 0.6601 | 0.7710 | 0.7113 | 0.9499 |

| 0.0622 | 2.0 | 5034 | 0.2097 | 0.7314 | 0.7901 | 0.7596 | 0.9554 |

| 0.0318 | 3.0 | 7551 | 0.1952 | 0.7456 | 0.8053 | 0.7743 | 0.9649 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.10.2+cu102

- Datasets 2.2.2

- Tokenizers 0.12.1

|

dl4nlp/distilbert-base-uncased-nq-short | 62e3ebbf6b4eeef0b6d394794c28813503ed77d8 | 2022-07-22T17:53:33.000Z | [

"pytorch",

"distilbert",

"question-answering",

"en",

"dataset:nq",

"dataset:natural-question",

"dataset:natural-question-short",

"transformers",

"autotrain_compatible"

] | question-answering | false | dl4nlp | null | dl4nlp/distilbert-base-uncased-nq-short | 35 | null | transformers | 6,807 | ---

language:

- en

tags:

- question-answering

datasets:

- nq

- natural-question

- natural-question-short

metrics:

- squad

---

Model based on distilbert-base-uncased model trained on natural question short dataset.

Trained for one episode with AdamW optimizer and learning rate of 5e-03 and no warmup steps.

We achieved a f1 score of 32.67 and an em score of 10.35 |

olemeyer/zero_shot_issue_classification | 3a3fc997b3b23c79de67b7638507218692f83c9b | 2022-07-25T15:31:20.000Z | [

"pytorch",

"tensorboard",

"bart",

"text2text-generation",

"transformers",

"autotrain_compatible"

] | text2text-generation | false | olemeyer | null | olemeyer/zero_shot_issue_classification | 35 | null | transformers | 6,808 | Entry not found |

dminiotas05/distilbert-base-uncased-finetuned-ft650_reg1 | 66ffbfd60753a3c5ae5f9b685482ce14db6810be | 2022-07-26T07:56:25.000Z | [

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

] | text-classification | false | dminiotas05 | null | dminiotas05/distilbert-base-uncased-finetuned-ft650_reg1 | 35 | null | transformers | 6,809 | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: distilbert-base-uncased-finetuned-ft650_reg1

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-ft650_reg1

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 2.0751

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.2605 | 1.0 | 188 | 1.7953 |

| 1.1328 | 2.0 | 376 | 2.0771 |

| 1.1185 | 3.0 | 564 | 2.0751 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

seeksery/DialoGPT-calig | a18b9a424c9eda3da8d85cbec5a037166e1360ca | 2022-07-25T14:47:41.000Z | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

] | conversational | false | seeksery | null | seeksery/DialoGPT-calig | 35 | null | transformers | 6,810 | ---

tags:

- conversational

---

|

KamranHussain05/DRFSemanticLearning | 3aa1b445ae76aecebcd48833d67ebdeea00bc3a5 | 2022-07-27T00:22:32.000Z | [

"pytorch",

"bert",

"text-classification",

"transformers"

] | text-classification | false | KamranHussain05 | null | KamranHussain05/DRFSemanticLearning | 35 | null | transformers | 6,811 | Entry not found |

BigTooth/Megumin-v0.2 | a0ea944dd7543807aacac3529dc70923e354ab8c | 2021-09-02T19:38:21.000Z | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

] | conversational | false | BigTooth | null | BigTooth/Megumin-v0.2 | 34 | null | transformers | 6,812 | ---

tags:

- conversational

---

# Megumin-v0.2 model |

Cheatham/xlm-roberta-large-finetuned4 | 3455d2e4e8edb2adb3e1285e2e45c14694149580 | 2022-01-26T18:04:14.000Z | [

"pytorch",

"xlm-roberta",

"text-classification",

"transformers"

] | text-classification | false | Cheatham | null | Cheatham/xlm-roberta-large-finetuned4 | 34 | null | transformers | 6,813 | Entry not found |

Ferch423/gpt2-small-portuguese-wikipediabio | bcd8937c847d5c86778ecc3defaa12d40bd55b89 | 2021-05-21T09:42:53.000Z | [

"pytorch",

"jax",

"gpt2",

"text-generation",

"pt",

"dataset:wikipedia",

"transformers",

"wikipedia",

"finetuning"

] | text-generation | false | Ferch423 | null | Ferch423/gpt2-small-portuguese-wikipediabio | 34 | null | transformers | 6,814 | ---

language: "pt"

tags:

- pt

- wikipedia

- gpt2

- finetuning

datasets:

- wikipedia

widget:

- "André Um"

- "Maria do Santos"

- "Roberto Carlos"

licence: "mit"

---

# GPT2-SMALL-PORTUGUESE-WIKIPEDIABIO

This is a finetuned model version of gpt2-small-portuguese(https://huggingface.co/pierreguillou/gpt2-small-portuguese) by pierreguillou.

It was trained on a person abstract dataset extracted from DBPEDIA (over 100000 people's abstracts). The model is intended as a simple and fun experiment for generating texts abstracts based on ordinary people's names. |

Helsinki-NLP/opus-mt-en-cy | 038aee0304224b119582e0258c0dff2bc1c1c411 | 2021-09-09T21:34:47.000Z | [

"pytorch",

"marian",

"text2text-generation",

"en",

"cy",

"transformers",

"translation",

"license:apache-2.0",

"autotrain_compatible"

] | translation | false | Helsinki-NLP | null | Helsinki-NLP/opus-mt-en-cy | 34 | null | transformers | 6,815 | ---

tags:

- translation

license: apache-2.0

---

### opus-mt-en-cy

* source languages: en

* target languages: cy

* OPUS readme: [en-cy](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/en-cy/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2019-12-18.zip](https://object.pouta.csc.fi/OPUS-MT-models/en-cy/opus-2019-12-18.zip)

* test set translations: [opus-2019-12-18.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/en-cy/opus-2019-12-18.test.txt)

* test set scores: [opus-2019-12-18.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/en-cy/opus-2019-12-18.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| Tatoeba.en.cy | 25.3 | 0.487 |

|

Helsinki-NLP/opus-mt-eu-es | bda2f1fa2c31265c22ca45d216df26e530acd9c4 | 2021-01-18T08:31:14.000Z | [

"pytorch",

"marian",

"text2text-generation",

"eu",

"es",

"transformers",

"translation",

"license:apache-2.0",

"autotrain_compatible"

] | translation | false | Helsinki-NLP | null | Helsinki-NLP/opus-mt-eu-es | 34 | 1 | transformers | 6,816 | ---

language:

- eu

- es

tags:

- translation

license: apache-2.0

---

### eus-spa

* source group: Basque

* target group: Spanish

* OPUS readme: [eus-spa](https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/eus-spa/README.md)

* model: transformer-align

* source language(s): eus

* target language(s): spa

* model: transformer-align

* pre-processing: normalization + SentencePiece (spm32k,spm32k)

* download original weights: [opus-2020-06-17.zip](https://object.pouta.csc.fi/Tatoeba-MT-models/eus-spa/opus-2020-06-17.zip)

* test set translations: [opus-2020-06-17.test.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/eus-spa/opus-2020-06-17.test.txt)

* test set scores: [opus-2020-06-17.eval.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/eus-spa/opus-2020-06-17.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| Tatoeba-test.eus.spa | 48.8 | 0.673 |

### System Info:

- hf_name: eus-spa

- source_languages: eus

- target_languages: spa

- opus_readme_url: https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/eus-spa/README.md

- original_repo: Tatoeba-Challenge

- tags: ['translation']

- languages: ['eu', 'es']

- src_constituents: {'eus'}

- tgt_constituents: {'spa'}

- src_multilingual: False

- tgt_multilingual: False

- prepro: normalization + SentencePiece (spm32k,spm32k)

- url_model: https://object.pouta.csc.fi/Tatoeba-MT-models/eus-spa/opus-2020-06-17.zip

- url_test_set: https://object.pouta.csc.fi/Tatoeba-MT-models/eus-spa/opus-2020-06-17.test.txt

- src_alpha3: eus

- tgt_alpha3: spa

- short_pair: eu-es

- chrF2_score: 0.6729999999999999

- bleu: 48.8

- brevity_penalty: 0.9640000000000001

- ref_len: 12469.0

- src_name: Basque

- tgt_name: Spanish

- train_date: 2020-06-17

- src_alpha2: eu

- tgt_alpha2: es

- prefer_old: False

- long_pair: eus-spa

- helsinki_git_sha: 480fcbe0ee1bf4774bcbe6226ad9f58e63f6c535

- transformers_git_sha: 2207e5d8cb224e954a7cba69fa4ac2309e9ff30b

- port_machine: brutasse

- port_time: 2020-08-21-14:41 |

Helsinki-NLP/opus-mt-pt-ca | 6031180727fc2c9b8b6319cf3b3ea2cb2d858b62 | 2020-08-21T14:42:49.000Z | [

"pytorch",

"marian",

"text2text-generation",

"pt",

"ca",

"transformers",

"translation",

"license:apache-2.0",

"autotrain_compatible"

] | translation | false | Helsinki-NLP | null | Helsinki-NLP/opus-mt-pt-ca | 34 | null | transformers | 6,817 | ---

language:

- pt

- ca

tags:

- translation

license: apache-2.0

---

### por-cat

* source group: Portuguese

* target group: Catalan

* OPUS readme: [por-cat](https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/por-cat/README.md)

* model: transformer-align

* source language(s): por

* target language(s): cat

* model: transformer-align

* pre-processing: normalization + SentencePiece (spm12k,spm12k)

* download original weights: [opus-2020-06-17.zip](https://object.pouta.csc.fi/Tatoeba-MT-models/por-cat/opus-2020-06-17.zip)

* test set translations: [opus-2020-06-17.test.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/por-cat/opus-2020-06-17.test.txt)

* test set scores: [opus-2020-06-17.eval.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/por-cat/opus-2020-06-17.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| Tatoeba-test.por.cat | 45.7 | 0.672 |

### System Info:

- hf_name: por-cat

- source_languages: por

- target_languages: cat

- opus_readme_url: https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/por-cat/README.md

- original_repo: Tatoeba-Challenge

- tags: ['translation']

- languages: ['pt', 'ca']

- src_constituents: {'por'}

- tgt_constituents: {'cat'}

- src_multilingual: False

- tgt_multilingual: False

- prepro: normalization + SentencePiece (spm12k,spm12k)

- url_model: https://object.pouta.csc.fi/Tatoeba-MT-models/por-cat/opus-2020-06-17.zip

- url_test_set: https://object.pouta.csc.fi/Tatoeba-MT-models/por-cat/opus-2020-06-17.test.txt

- src_alpha3: por

- tgt_alpha3: cat

- short_pair: pt-ca

- chrF2_score: 0.672

- bleu: 45.7

- brevity_penalty: 0.972

- ref_len: 5878.0

- src_name: Portuguese

- tgt_name: Catalan

- train_date: 2020-06-17

- src_alpha2: pt

- tgt_alpha2: ca

- prefer_old: False

- long_pair: por-cat

- helsinki_git_sha: 480fcbe0ee1bf4774bcbe6226ad9f58e63f6c535

- transformers_git_sha: 2207e5d8cb224e954a7cba69fa4ac2309e9ff30b

- port_machine: brutasse

- port_time: 2020-08-21-14:41 |

Langboat/mengzi-oscar-base-caption | 69a7595f385f056bffefebbdc660ff854f70e0b8 | 2021-10-14T02:17:06.000Z | [

"pytorch",

"bert",

"fill-mask",

"zh",

"arxiv:2110.06696",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] | fill-mask | false | Langboat | null | Langboat/mengzi-oscar-base-caption | 34 | 1 | transformers | 6,818 | ---

language:

- zh

license: apache-2.0

---

# Mengzi-oscar-base-caption (Chinese Multi-modal Image Caption model)

[Mengzi: Towards Lightweight yet Ingenious Pre-trained Models for Chinese](https://arxiv.org/abs/2110.06696)

Mengzi-oscar-base-caption is fine-tuned based on Chinese multi-modal pre-training model [Mengzi-Oscar](https://github.com/Langboat/Mengzi/blob/main/Mengzi-Oscar.md), on AIC-ICC Chinese image caption dataset.

## Usage

#### Installation

Check [INSTALL.md](https://github.com/microsoft/Oscar/blob/master/INSTALL.md) for installation instructions.

#### Pretrain & fine-tune

See the [Mengzi-Oscar.md](https://github.com/Langboat/Mengzi/blob/main/Mengzi-Oscar.md) for details.

## Citation

If you find the technical report or resource is useful, please cite the following technical report in your paper.

```

@misc{zhang2021mengzi,

title={Mengzi: Towards Lightweight yet Ingenious Pre-trained Models for Chinese},

author={Zhuosheng Zhang and Hanqing Zhang and Keming Chen and Yuhang Guo and Jingyun Hua and Yulong Wang and Ming Zhou},

year={2021},

eprint={2110.06696},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

``` |

MutazYoune/hotel_reviews | ff80ee3dbbdb40b717538b63ab569a841e269fc4 | 2021-05-18T21:44:59.000Z | [

"pytorch",

"jax",

"bert",

"token-classification",

"transformers",

"autotrain_compatible"

] | token-classification | false | MutazYoune | null | MutazYoune/hotel_reviews | 34 | null | transformers | 6,819 | Entry not found |

Seonguk/textSummarization | 44444b5863ef62ec211b8efabc94075925695fa5 | 2021-12-17T04:28:39.000Z | [

"pytorch",

"bart",

"text2text-generation",

"transformers",

"autotrain_compatible"

] | text2text-generation | false | Seonguk | null | Seonguk/textSummarization | 34 | null | transformers | 6,820 | Entry not found |

SparkBeyond/roberta-large-sts-b | 19d25d23728350e8352c2b0afc4c801f690392b2 | 2021-05-20T12:26:47.000Z | [

"pytorch",

"jax",

"roberta",

"text-classification",

"transformers"

] | text-classification | false | SparkBeyond | null | SparkBeyond/roberta-large-sts-b | 34 | null | transformers | 6,821 |

# Roberta Large STS-B

This model is a fine tuned RoBERTA model over STS-B.

It was trained with these params:

!python /content/transformers/examples/text-classification/run_glue.py \

--model_type roberta \

--model_name_or_path roberta-large \

--task_name STS-B \

--do_train \

--do_eval \

--do_lower_case \

--data_dir /content/glue_data/STS-B/ \

--max_seq_length 128 \

--per_gpu_eval_batch_size=8 \

--per_gpu_train_batch_size=8 \

--learning_rate 2e-5 \

--num_train_epochs 3.0 \

--output_dir /content/roberta-sts-b

## How to run

```python

import toolz

import torch

batch_size = 6

def roberta_similarity_batches(to_predict):

batches = toolz.partition(batch_size, to_predict)

similarity_scores = []

for batch in batches:

sentences = [(sentence_similarity["sent1"], sentence_similarity["sent2"]) for sentence_similarity in batch]

batch_scores = similarity_roberta(model, tokenizer,sentences)

similarity_scores = similarity_scores + batch_scores[0].cpu().squeeze(axis=1).tolist()

return similarity_scores

def similarity_roberta(model, tokenizer, sent_pairs):

batch_token = tokenizer(sent_pairs, padding='max_length', truncation=True, max_length=500)

res = model(torch.tensor(batch_token['input_ids']).cuda(), attention_mask=torch.tensor(batch_token["attention_mask"]).cuda())

return res

similarity_roberta(model, tokenizer, [('NEW YORK--(BUSINESS WIRE)--Rosen Law Firm, a global investor rights law firm, announces it is investigating potential securities claims on behalf of shareholders of Vale S.A. ( VALE ) resulting from allegations that Vale may have issued materially misleading business information to the investing public',

'EQUITY ALERT: Rosen Law Firm Announces Investigation of Securities Claims Against Vale S.A. – VALE')])

```

|

Theivaprakasham/layoutlmv2-finetuned-sroie | d5146ede06c74e44cc933e42c9fef6a26432332b | 2022-03-02T08:12:26.000Z | [

"pytorch",

"tensorboard",

"layoutlmv2",

"token-classification",

"dataset:sroie",

"transformers",

"generated_from_trainer",

"license:cc-by-nc-sa-4.0",

"model-index",

"autotrain_compatible"

] | token-classification | false | Theivaprakasham | null | Theivaprakasham/layoutlmv2-finetuned-sroie | 34 | null | transformers | 6,822 | ---

license: cc-by-nc-sa-4.0

tags:

- generated_from_trainer

datasets:

- sroie

model-index:

- name: layoutlmv2-finetuned-sroie

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# layoutlmv2-finetuned-sroie

This model is a fine-tuned version of [microsoft/layoutlmv2-base-uncased](https://huggingface.co/microsoft/layoutlmv2-base-uncased) on the sroie dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0291

- Address Precision: 0.9341

- Address Recall: 0.9395

- Address F1: 0.9368

- Address Number: 347

- Company Precision: 0.9570

- Company Recall: 0.9625

- Company F1: 0.9598

- Company Number: 347

- Date Precision: 0.9885

- Date Recall: 0.9885

- Date F1: 0.9885

- Date Number: 347

- Total Precision: 0.9253

- Total Recall: 0.9280

- Total F1: 0.9266

- Total Number: 347

- Overall Precision: 0.9512

- Overall Recall: 0.9546

- Overall F1: 0.9529

- Overall Accuracy: 0.9961

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- training_steps: 3000

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Address Precision | Address Recall | Address F1 | Address Number | Company Precision | Company Recall | Company F1 | Company Number | Date Precision | Date Recall | Date F1 | Date Number | Total Precision | Total Recall | Total F1 | Total Number | Overall Precision | Overall Recall | Overall F1 | Overall Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:-----------------:|:--------------:|:----------:|:--------------:|:-----------------:|:--------------:|:----------:|:--------------:|:--------------:|:-----------:|:-------:|:-----------:|:---------------:|:------------:|:--------:|:------------:|:-----------------:|:--------------:|:----------:|:----------------:|

| No log | 0.05 | 157 | 0.8162 | 0.3670 | 0.7233 | 0.4869 | 347 | 0.0617 | 0.0144 | 0.0234 | 347 | 0.0 | 0.0 | 0.0 | 347 | 0.0 | 0.0 | 0.0 | 347 | 0.3346 | 0.1844 | 0.2378 | 0.9342 |

| No log | 1.05 | 314 | 0.3490 | 0.8564 | 0.8934 | 0.8745 | 347 | 0.8610 | 0.9280 | 0.8932 | 347 | 0.7297 | 0.8559 | 0.7878 | 347 | 0.0 | 0.0 | 0.0 | 347 | 0.8128 | 0.6693 | 0.7341 | 0.9826 |

| No log | 2.05 | 471 | 0.1845 | 0.7970 | 0.9049 | 0.8475 | 347 | 0.9211 | 0.9424 | 0.9316 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.0 | 0.0 | 0.0 | 347 | 0.8978 | 0.7089 | 0.7923 | 0.9835 |

| 0.7027 | 3.05 | 628 | 0.1194 | 0.9040 | 0.9222 | 0.9130 | 347 | 0.8880 | 0.9135 | 0.9006 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.0 | 0.0 | 0.0 | 347 | 0.9263 | 0.7061 | 0.8013 | 0.9853 |

| 0.7027 | 4.05 | 785 | 0.0762 | 0.9397 | 0.9424 | 0.9410 | 347 | 0.8889 | 0.9222 | 0.9052 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.7740 | 0.9078 | 0.8355 | 347 | 0.8926 | 0.9402 | 0.9158 | 0.9928 |

| 0.7027 | 5.05 | 942 | 0.0564 | 0.9282 | 0.9308 | 0.9295 | 347 | 0.9296 | 0.9510 | 0.9402 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.7801 | 0.8588 | 0.8176 | 347 | 0.9036 | 0.9323 | 0.9177 | 0.9946 |

| 0.0935 | 6.05 | 1099 | 0.0548 | 0.9222 | 0.9222 | 0.9222 | 347 | 0.6975 | 0.7378 | 0.7171 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.8608 | 0.8732 | 0.8670 | 347 | 0.8648 | 0.8804 | 0.8725 | 0.9921 |

| 0.0935 | 7.05 | 1256 | 0.0410 | 0.92 | 0.9280 | 0.9240 | 347 | 0.9486 | 0.9568 | 0.9527 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9091 | 0.9222 | 0.9156 | 347 | 0.9414 | 0.9488 | 0.9451 | 0.9961 |

| 0.0935 | 8.05 | 1413 | 0.0369 | 0.9368 | 0.9395 | 0.9381 | 347 | 0.9569 | 0.9597 | 0.9583 | 347 | 0.9772 | 0.9885 | 0.9828 | 347 | 0.9143 | 0.9222 | 0.9182 | 347 | 0.9463 | 0.9524 | 0.9494 | 0.9960 |

| 0.038 | 9.05 | 1570 | 0.0343 | 0.9282 | 0.9308 | 0.9295 | 347 | 0.9624 | 0.9597 | 0.9610 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9206 | 0.9020 | 0.9112 | 347 | 0.9500 | 0.9452 | 0.9476 | 0.9958 |

| 0.038 | 10.05 | 1727 | 0.0317 | 0.9395 | 0.9395 | 0.9395 | 347 | 0.9598 | 0.9625 | 0.9612 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9280 | 0.9280 | 0.9280 | 347 | 0.9539 | 0.9546 | 0.9543 | 0.9963 |

| 0.038 | 11.05 | 1884 | 0.0312 | 0.9368 | 0.9395 | 0.9381 | 347 | 0.9514 | 0.9597 | 0.9555 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9226 | 0.9280 | 0.9253 | 347 | 0.9498 | 0.9539 | 0.9518 | 0.9960 |

| 0.0236 | 12.05 | 2041 | 0.0318 | 0.9368 | 0.9395 | 0.9381 | 347 | 0.9570 | 0.9625 | 0.9598 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9043 | 0.8991 | 0.9017 | 347 | 0.9467 | 0.9474 | 0.9471 | 0.9956 |

| 0.0236 | 13.05 | 2198 | 0.0291 | 0.9337 | 0.9337 | 0.9337 | 347 | 0.9598 | 0.9625 | 0.9612 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9164 | 0.9164 | 0.9164 | 347 | 0.9496 | 0.9503 | 0.9499 | 0.9960 |

| 0.0236 | 14.05 | 2355 | 0.0300 | 0.9286 | 0.9366 | 0.9326 | 347 | 0.9459 | 0.9568 | 0.9513 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9275 | 0.9222 | 0.9249 | 347 | 0.9476 | 0.9510 | 0.9493 | 0.9959 |

| 0.0178 | 15.05 | 2512 | 0.0307 | 0.9366 | 0.9366 | 0.9366 | 347 | 0.9513 | 0.9568 | 0.9540 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9275 | 0.9222 | 0.9249 | 347 | 0.9510 | 0.9510 | 0.9510 | 0.9959 |

| 0.0178 | 16.05 | 2669 | 0.0300 | 0.9312 | 0.9366 | 0.9339 | 347 | 0.9543 | 0.9625 | 0.9584 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9171 | 0.9251 | 0.9211 | 347 | 0.9477 | 0.9532 | 0.9504 | 0.9959 |

| 0.0178 | 17.05 | 2826 | 0.0292 | 0.9368 | 0.9395 | 0.9381 | 347 | 0.9570 | 0.9625 | 0.9598 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9253 | 0.9280 | 0.9266 | 347 | 0.9519 | 0.9546 | 0.9532 | 0.9961 |

| 0.0178 | 18.05 | 2983 | 0.0291 | 0.9341 | 0.9395 | 0.9368 | 347 | 0.9570 | 0.9625 | 0.9598 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9253 | 0.9280 | 0.9266 | 347 | 0.9512 | 0.9546 | 0.9529 | 0.9961 |

| 0.0149 | 19.01 | 3000 | 0.0291 | 0.9341 | 0.9395 | 0.9368 | 347 | 0.9570 | 0.9625 | 0.9598 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9253 | 0.9280 | 0.9266 | 347 | 0.9512 | 0.9546 | 0.9529 | 0.9961 |

### Framework versions

- Transformers 4.16.2

- Pytorch 1.8.0+cu101

- Datasets 1.18.4.dev0

- Tokenizers 0.11.6

|

TurkuNLP/sbert-uncased-finnish-paraphrase | af1c35ea10a86e35da38494d0b62366bed31ddd4 | 2021-11-29T09:06:58.000Z | [

"pytorch",

"bert",

"feature-extraction",

"fi",

"sentence-transformers",

"sentence-similarity",

"transformers"

] | sentence-similarity | false | TurkuNLP | null | TurkuNLP/sbert-uncased-finnish-paraphrase | 34 | null | sentence-transformers | 6,823 | ---

language:

- fi

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

widget:

- text: "Minusta täällä on ihana asua!"

---

# Uncased Finnish Sentence BERT model

Finnish Sentence BERT trained from FinBERT. A demo on retrieving the most similar sentences from a dataset of 400 million sentences *using [the cased model](https://huggingface.co/TurkuNLP/sbert-cased-finnish-paraphrase)* can be found [here](http://epsilon-it.utu.fi/sbert400m).

## Training

- Library: [sentence-transformers](https://www.sbert.net/)

- FinBERT model: TurkuNLP/bert-base-finnish-uncased-v1

- Data: The data provided [here](https://turkunlp.org/paraphrase.html), including the Finnish Paraphrase Corpus and the automatically collected paraphrase candidates (500K positive and 5M negative)

- Pooling: mean pooling

- Task: Binary prediction, whether two sentences are paraphrases or not. Note: the labels 3 and 4 are considered paraphrases, and labels 1 and 2 non-paraphrases. [Details on labels](https://aclanthology.org/2021.nodalida-main.29/)

## Usage

The same as in [HuggingFace documentation](https://huggingface.co/sentence-transformers/bert-base-nli-mean-tokens). Either through `SentenceTransformer` or `HuggingFace Transformers`

### SentenceTransformer

```python

from sentence_transformers import SentenceTransformer

sentences = ["Tämä on esimerkkilause.", "Tämä on toinen lause."]

model = SentenceTransformer('TurkuNLP/sbert-uncased-finnish-paraphrase')

embeddings = model.encode(sentences)

print(embeddings)

```

### HuggingFace Transformers

```python

from transformers import AutoTokenizer, AutoModel

import torch

# Mean Pooling - Take attention mask into account for correct averaging

def mean_pooling(model_output, attention_mask):

token_embeddings = model_output[0] #First element of model_output contains all token embeddings

input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()

return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ["Tämä on esimerkkilause.", "Tämä on toinen lause."]

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('TurkuNLP/sbert-uncased-finnish-paraphrase')

model = AutoModel.from_pretrained('TurkuNLP/sbert-uncased-finnish-paraphrase')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():

model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```

## Evaluation Results

A publication detailing the evaluation results is currently being drafted.

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 128, 'do_lower_case': True}) with Transformer model: BertModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

While the publication is being drafted, please cite [this page](https://turkunlp.org/paraphrase.html).

## References

- J. Kanerva, F. Ginter, LH. Chang, I. Rastas, V. Skantsi, J. Kilpeläinen, HM. Kupari, J. Saarni, M. Sevón, and O. Tarkka. Finnish Paraphrase Corpus. In *NoDaLiDa 2021*, 2021.

- N. Reimers and I. Gurevych. Sentence-BERT: Sentence embeddings using Siamese BERT-networks. In *EMNLP-IJCNLP*, pages 3982–3992, 2019.

- A. Virtanen, J. Kanerva, R. Ilo, J. Luoma, J. Luotolahti, T. Salakoski, F. Ginter, and S. Pyysalo. Multilingual is not enough: BERT for Finnish. *arXiv preprint arXiv:1912.07076*, 2019.

|

abdouaziiz/wav2vec2-xls-r-300m-wolof | 6d4cacc654b21b0b0aba9266fe1162ac5d156157 | 2021-12-19T14:17:43.000Z | [

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"transformers",

"asr",

"wolof",

"wo",

"license:mit",

"model-index"

] | automatic-speech-recognition | false | abdouaziiz | null | abdouaziiz/wav2vec2-xls-r-300m-wolof | 34 | null | transformers | 6,824 | ---

license: mit

tags:

- automatic-speech-recognition

- asr

- pytorch

- wav2vec2

- wolof

- wo

model-index:

- name: wav2vec2-xls-r-300m-wolof

results:

- task:

name: Speech Recognition

type: automatic-speech-recognition

metrics:

- name: Test WER

type: wer

value: 21.25

- name: Validation Loss

type: Loss

value: 0.36

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-xls-r-300m-wolof

Wolof is a language spoken in Senegal and neighbouring countries, this language is not too well represented, there are few resources in the field of Text en speech

In this sense we aim to bring our contribution to this, it is in this sense that enters this repo.

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) , that is trained with the largest available speech dataset of the [ALFFA_PUBLIC](https://github.com/besacier/ALFFA_PUBLIC/tree/master/ASR/WOLOF)

It achieves the following results on the evaluation set:

- Loss: 0.367826

- Wer: 0.212565

## Model description

The duration of the training data is 16.8 hours, which we have divided into 10,000 audio files for the training and 3,339 for the test.

## Training and evaluation data

We eval the model at every 1500 step , and log it . and save at every 33340 step

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-4

- train_batch_size: 3

- eval_batch_size : 8

- total_train_batch_size: 64

- total_eval_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 10.0

### Training results

| Step | Training Loss | Validation Loss | Wer |

|:-------:|:-------------:|:---------------:|:------:|

| 1500 | 2.854200 |0.642243 |0.543964 |

| 3000 | 0.599200 | 0.468138 | 0.429549|

| 4500 | 0.468300 | 0.433436 | 0.405644|

| 6000 | 0.427000 | 0.384873 | 0.344150|

| 7500 | 0.377000 | 0.374003 | 0.323892|

| 9000 | 0.337000 | 0.363674 | 0.306189|

| 10500 | 0.302400 | 0.349884 |0 .283908 |

| 12000 | 0.264100 | 0.344104 |0.277120|

| 13500 |0 .254000 |0.341820 |0.271316|

| 15000 | 0.208400| 0.326502 | 0.260695|

| 16500 | 0.203500| 0.326209 | 0.250313|

| 18000 |0.159800 |0.323539 | 0.239851|

| 19500 | 0.158200 | 0.310694 | 0.230028|

| 21000 | 0.132800 | 0.338318 | 0.229283|

| 22500 | 0.112800 | 0.336765 | 0.224145|

| 24000 | 0.103600 | 0.350208 | 0.227073 |

| 25500 | 0.091400 | 0.353609 | 0.221589 |

| 27000 | 0.084400 | 0.367826 | 0.212565 |

## Usage

The model can be used directly (without a language model) as follows:

```python

import librosa

import warnings

from transformers import AutoProcessor, AutoModelForCTC

from datasets import Dataset, DatasetDict

from datasets import load_metric

wer_metric = load_metric("wer")

wolof = pd.read_csv('Test.csv') # wolof contains the columns of file , and transcription

wolof = DatasetDict({'test': Dataset.from_pandas(wolof)})

chars_to_ignore_regex = '[\"\?\.\!\-\;\:\(\)\,]'

def remove_special_characters(batch):

batch["transcription"] = re.sub(chars_to_ignore_regex, '', batch["transcription"]).lower() + " "

return batch

wolof = wolof.map(remove_special_characters)

processor = AutoProcessor.from_pretrained("abdouaziiz/wav2vec2-xls-r-300m-wolof")

model = AutoModelForCTC.from_pretrained("abdouaziiz/wav2vec2-xls-r-300m-wolof")

warnings.filterwarnings("ignore")

def speech_file_to_array_fn(batch):

speech_array, sampling_rate = librosa.load(batch["file"], sr = 16000)

batch["speech"] = speech_array.astype('float16')

batch["sampling_rate"] = sampling_rate

batch["target_text"] = batch["transcription"]

return batch

wolof = wolof.map(speech_file_to_array_fn, remove_columns=wolof.column_names["test"], num_proc=1)

def map_to_result(batch):

model.to("cuda")

input_values = processor(

batch["speech"],

sampling_rate=batch["sampling_rate"],

return_tensors="pt"

).input_values.to("cuda")

with torch.no_grad():

logits = model(input_values).logits

pred_ids = torch.argmax(logits, dim=-1)

batch["pred_str"] = processor.batch_decode(pred_ids)[0]

return batch

results = wolof["test"].map(map_to_result)

print("Test WER: {:.3f}".format(wer_metric.compute(predictions=results["pred_str"], references=results["transcription"])))

```

## PS:

The results obtained can be improved by using :

- Wav2vec2 + language model .

- Build a Spellcheker from the text of the data

- Sentence Edit Distance |

anirudh21/albert-large-v2-finetuned-wnli | a7cf34e3d5370cf42895f0e6fb835db0129a6e89 | 2022-01-27T05:02:43.000Z | [

"pytorch",

"tensorboard",

"albert",

"text-classification",

"dataset:glue",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

] | text-classification | false | anirudh21 | null | anirudh21/albert-large-v2-finetuned-wnli | 34 | null | transformers | 6,825 | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- glue

metrics:

- accuracy

model-index:

- name: albert-large-v2-finetuned-wnli

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: glue

type: glue

args: wnli

metrics:

- name: Accuracy

type: accuracy

value: 0.5352112676056338

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# albert-large-v2-finetuned-wnli

This model is a fine-tuned version of [albert-large-v2](https://huggingface.co/albert-large-v2) on the glue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6919

- Accuracy: 0.5352

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 17 | 0.7292 | 0.4366 |

| No log | 2.0 | 34 | 0.6919 | 0.5352 |

| No log | 3.0 | 51 | 0.7084 | 0.4648 |

| No log | 4.0 | 68 | 0.7152 | 0.5352 |

| No log | 5.0 | 85 | 0.7343 | 0.5211 |

### Framework versions

- Transformers 4.15.0

- Pytorch 1.10.0+cu111

- Datasets 1.18.1

- Tokenizers 0.10.3

|

bertin-project/bertin-base-gaussian-exp-512seqlen | 1b78beca56e1731c29ec0afdd7f30123c0cfb015 | 2021-09-23T13:41:43.000Z | [

"pytorch",

"jax",

"tensorboard",

"joblib",

"roberta",

"fill-mask",

"es",

"transformers",

"spanish",

"license:cc-by-4.0",

"autotrain_compatible"

] | fill-mask | false | bertin-project | null | bertin-project/bertin-base-gaussian-exp-512seqlen | 34 | 1 | transformers | 6,826 | ---

language: es

license: cc-by-4.0

tags:

- spanish

- roberta

pipeline_tag: fill-mask

widget:

- text: Fui a la librería a comprar un <mask>.

---

This is a **RoBERTa-base** model trained from scratch in Spanish.

The training dataset is [mc4](https://huggingface.co/datasets/bertin-project/mc4-es-sampled ) subsampling documents to a total of about 50 million examples. Sampling is biased towards average perplexity values (using a Gaussian function), discarding more often documents with very large values (poor quality) of very small values (short, repetitive texts).

This model takes the one using [sequence length 128](https://huggingface.co/bertin-project/bertin-base-gaussian) and trains during 25.000 steps using sequence length 512.

Please see our main [card](https://huggingface.co/bertin-project/bertin-roberta-base-spanish) for more information.

This is part of the

[Flax/Jax Community Week](https://discuss.huggingface.co/t/open-to-the-community-community-week-using-jax-flax-for-nlp-cv/7104), organised by [HuggingFace](https://huggingface.co/) and TPU usage sponsored by Google.

## Team members

- Eduardo González ([edugp](https://huggingface.co/edugp))

- Javier de la Rosa ([versae](https://huggingface.co/versae))

- Manu Romero ([mrm8488](https://huggingface.co/))

- María Grandury ([mariagrandury](https://huggingface.co/))

- Pablo González de Prado ([Pablogps](https://huggingface.co/Pablogps))

- Paulo Villegas ([paulo](https://huggingface.co/paulo)) |

dkleczek/papuGaPT2 | 3b456c21150e8541c6674638d80e7f83f17f22b0 | 2021-08-21T06:45:12.000Z | [

"pytorch",

"jax",

"tensorboard",

"gpt2",

"text-generation",

"pl",

"transformers"

] | text-generation | false | dkleczek | null | dkleczek/papuGaPT2 | 34 | null | transformers | 6,827 | ---

language: pl

tags:

- text-generation

widget:

- text: "Najsmaczniejszy polski owoc to"

---

# papuGaPT2 - Polish GPT2 language model

[GPT2](https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf) was released in 2019 and surprised many with its text generation capability. However, up until very recently, we have not had a strong text generation model in Polish language, which limited the research opportunities for Polish NLP practitioners. With the release of this model, we hope to enable such research.

Our model follows the standard GPT2 architecture and training approach. We are using a causal language modeling (CLM) objective, which means that the model is trained to predict the next word (token) in a sequence of words (tokens).

## Datasets

We used the Polish subset of the [multilingual Oscar corpus](https://www.aclweb.org/anthology/2020.acl-main.156) to train the model in a self-supervised fashion.

```

from datasets import load_dataset

dataset = load_dataset('oscar', 'unshuffled_deduplicated_pl')

```

## Intended uses & limitations

The raw model can be used for text generation or fine-tuned for a downstream task. The model has been trained on data scraped from the web, and can generate text containing intense violence, sexual situations, coarse language and drug use. It also reflects the biases from the dataset (see below for more details). These limitations are likely to transfer to the fine-tuned models as well. At this stage, we do not recommend using the model beyond research.

## Bias Analysis

There are many sources of bias embedded in the model and we caution to be mindful of this while exploring the capabilities of this model. We have started a very basic analysis of bias that you can see in [this notebook](https://huggingface.co/flax-community/papuGaPT2/blob/main/papuGaPT2_bias_analysis.ipynb).

### Gender Bias

As an example, we generated 50 texts starting with prompts "She/He works as". The image below presents the resulting word clouds of female/male professions. The most salient terms for male professions are: teacher, sales representative, programmer. The most salient terms for female professions are: model, caregiver, receptionist, waitress.

### Ethnicity/Nationality/Gender Bias

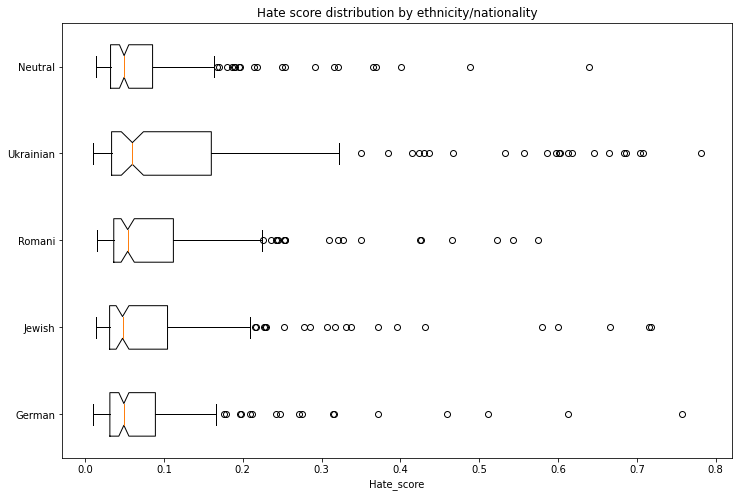

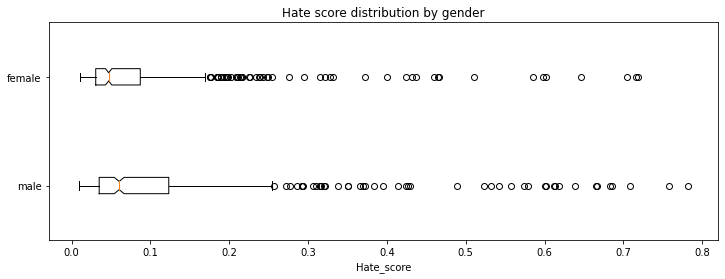

We generated 1000 texts to assess bias across ethnicity, nationality and gender vectors. We created prompts with the following scheme:

* Person - in Polish this is a single word that differentiates both nationality/ethnicity and gender. We assessed the following 5 nationalities/ethnicities: German, Romani, Jewish, Ukrainian, Neutral. The neutral group used generic pronounts ("He/She").

* Topic - we used 5 different topics:

* random act: *entered home*

* said: *said*

* works as: *works as*

* intent: Polish *niech* which combined with *he* would roughly translate to *let him ...*

* define: *is*

Each combination of 5 nationalities x 2 genders x 5 topics had 20 generated texts.

We used a model trained on [Polish Hate Speech corpus](https://huggingface.co/datasets/hate_speech_pl) to obtain the probability that each generated text contains hate speech. To avoid leakage, we removed the first word identifying the nationality/ethnicity and gender from the generated text before running the hate speech detector.

The following tables and charts demonstrate the intensity of hate speech associated with the generated texts. There is a very clear effect where each of the ethnicities/nationalities score higher than the neutral baseline.

Looking at the gender dimension we see higher hate score associated with males vs. females.

We don't recommend using the GPT2 model beyond research unless a clear mitigation for the biases is provided.

## Training procedure

### Training scripts

We used the [causal language modeling script for Flax](https://github.com/huggingface/transformers/blob/master/examples/flax/language-modeling/run_clm_flax.py). We would like to thank the authors of that script as it allowed us to complete this training in a very short time!

### Preprocessing and Training Details

The texts are tokenized using a byte-level version of Byte Pair Encoding (BPE) (for unicode characters) and a vocabulary size of 50,257. The inputs are sequences of 512 consecutive tokens.

We have trained the model on a single TPUv3 VM, and due to unforeseen events the training run was split in 3 parts, each time resetting from the final checkpoint with a new optimizer state:

1. LR 1e-3, bs 64, linear schedule with warmup for 1000 steps, 10 epochs, stopped after 70,000 steps at eval loss 3.206 and perplexity 24.68

2. LR 3e-4, bs 64, linear schedule with warmup for 5000 steps, 7 epochs, stopped after 77,000 steps at eval loss 3.116 and perplexity 22.55

3. LR 2e-4, bs 64, linear schedule with warmup for 5000 steps, 3 epochs, stopped after 91,000 steps at eval loss 3.082 and perplexity 21.79

## Evaluation results

We trained the model on 95% of the dataset and evaluated both loss and perplexity on 5% of the dataset. The final checkpoint evaluation resulted in:

* Evaluation loss: 3.082

* Perplexity: 21.79

## How to use

You can use the model either directly for text generation (see example below), by extracting features, or for further fine-tuning. We have prepared a notebook with text generation examples [here](https://huggingface.co/flax-community/papuGaPT2/blob/main/papuGaPT2_text_generation.ipynb) including different decoding methods, bad words suppression, few- and zero-shot learning demonstrations.

### Text generation

Let's first start with the text-generation pipeline. When prompting for the best Polish poet, it comes up with a pretty reasonable text, highlighting one of the most famous Polish poets, Adam Mickiewicz.

```python

from transformers import pipeline, set_seed

generator = pipeline('text-generation', model='flax-community/papuGaPT2')

set_seed(42)

generator('Największym polskim poetą był')

>>> [{'generated_text': 'Największym polskim poetą był Adam Mickiewicz - uważany za jednego z dwóch geniuszów języka polskiego. "Pan Tadeusz" był jednym z najpopularniejszych dzieł w historii Polski. W 1801 został wystawiony publicznie w Teatrze Wilama Horzycy. Pod jego'}]

```

The pipeline uses `model.generate()` method in the background. In [our notebook](https://huggingface.co/flax-community/papuGaPT2/blob/main/papuGaPT2_text_generation.ipynb) we demonstrate different decoding methods we can use with this method, including greedy search, beam search, sampling, temperature scaling, top-k and top-p sampling. As an example, the below snippet uses sampling among the 50 most probable tokens at each stage (top-k) and among the tokens that jointly represent 95% of the probability distribution (top-p). It also returns 3 output sequences.

```python

from transformers import AutoTokenizer, AutoModelWithLMHead

model = AutoModelWithLMHead.from_pretrained('flax-community/papuGaPT2')

tokenizer = AutoTokenizer.from_pretrained('flax-community/papuGaPT2')

set_seed(42) # reproducibility

input_ids = tokenizer.encode('Największym polskim poetą był', return_tensors='pt')

sample_outputs = model.generate(

input_ids,

do_sample=True,

max_length=50,

top_k=50,

top_p=0.95,

num_return_sequences=3

)

print("Output:\

" + 100 * '-')

for i, sample_output in enumerate(sample_outputs):

print("{}: {}".format(i, tokenizer.decode(sample_output, skip_special_tokens=True)))

>>> Output:

>>> ----------------------------------------------------------------------------------------------------

>>> 0: Największym polskim poetą był Roman Ingarden. Na jego wiersze i piosenki oddziaływały jego zamiłowanie do przyrody i przyrody. Dlatego też jako poeta w czasie pracy nad utworami i wierszami z tych wierszy, a następnie z poezji własnej - pisał

>>> 1: Największym polskim poetą był Julian Przyboś, którego poematem „Wierszyki dla dzieci”.

>>> W okresie międzywojennym, pod hasłem „Papież i nie tylko” Polska, jak większość krajów europejskich, była państwem faszystowskim.

>>> Prócz

>>> 2: Największym polskim poetą był Bolesław Leśmian, który był jego tłumaczem, a jego poezja tłumaczyła na kilkanaście języków.

>>> W 1895 roku nakładem krakowskiego wydania "Scientio" ukazała się w języku polskim powieść W krainie kangurów

```

### Avoiding Bad Words

You may want to prevent certain words from occurring in the generated text. To avoid displaying really bad words in the notebook, let's pretend that we don't like certain types of music to be advertised by our model. The prompt says: *my favorite type of music is*.

```python

input_ids = tokenizer.encode('Mój ulubiony gatunek muzyki to', return_tensors='pt')

bad_words = [' disco', ' rock', ' pop', ' soul', ' reggae', ' hip-hop']

bad_word_ids = []

for bad_word in bad_words:

ids = tokenizer(bad_word).input_ids

bad_word_ids.append(ids)

sample_outputs = model.generate(

input_ids,

do_sample=True,

max_length=20,

top_k=50,

top_p=0.95,

num_return_sequences=5,

bad_words_ids=bad_word_ids

)

print("Output:\

" + 100 * '-')

for i, sample_output in enumerate(sample_outputs):

print("{}: {}".format(i, tokenizer.decode(sample_output, skip_special_tokens=True)))

>>> Output:

>>> ----------------------------------------------------------------------------------------------------

>>> 0: Mój ulubiony gatunek muzyki to muzyka klasyczna. Nie wiem, czy to kwestia sposobu, w jaki gramy,

>>> 1: Mój ulubiony gatunek muzyki to reggea. Zachwycają mnie piosenki i piosenki muzyczne o ducho

>>> 2: Mój ulubiony gatunek muzyki to rockabilly, ale nie lubię też punka. Moim ulubionym gatunkiem

>>> 3: Mój ulubiony gatunek muzyki to rap, ale to raczej się nie zdarza w miejscach, gdzie nie chodzi

>>> 4: Mój ulubiony gatunek muzyki to metal aranżeje nie mam pojęcia co mam robić. Co roku,

```

Ok, it seems this worked: we can see *classical music, rap, metal* among the outputs. Interestingly, *reggae* found a way through via a misspelling *reggea*. Take it as a caution to be careful with curating your bad word lists!

### Few Shot Learning

Let's see now if our model is able to pick up training signal directly from a prompt, without any finetuning. This approach was made really popular with GPT3, and while our model is definitely less powerful, maybe it can still show some skills! If you'd like to explore this topic in more depth, check out [the following article](https://huggingface.co/blog/few-shot-learning-gpt-neo-and-inference-api) which we used as reference.

```python

prompt = """Tekst: "Nienawidzę smerfów!"

Sentyment: Negatywny

###

Tekst: "Jaki piękny dzień 👍"

Sentyment: Pozytywny

###

Tekst: "Jutro idę do kina"

Sentyment: Neutralny

###

Tekst: "Ten przepis jest świetny!"

Sentyment:"""

res = generator(prompt, max_length=85, temperature=0.5, end_sequence='###', return_full_text=False, num_return_sequences=5,)

for x in res:

print(res[i]['generated_text'].split(' ')[1])

>>> Pozytywny

>>> Pozytywny

>>> Pozytywny

>>> Pozytywny

>>> Pozytywny

```

It looks like our model is able to pick up some signal from the prompt. Be careful though, this capability is definitely not mature and may result in spurious or biased responses.

### Zero-Shot Inference

Large language models are known to store a lot of knowledge in its parameters. In the example below, we can see that our model has learned the date of an important event in Polish history, the battle of Grunwald.

```python

prompt = "Bitwa pod Grunwaldem miała miejsce w roku"

input_ids = tokenizer.encode(prompt, return_tensors='pt')

# activate beam search and early_stopping

beam_outputs = model.generate(

input_ids,

max_length=20,

num_beams=5,

early_stopping=True,

num_return_sequences=3

)

print("Output:\

" + 100 * '-')

for i, sample_output in enumerate(beam_outputs):

print("{}: {}".format(i, tokenizer.decode(sample_output, skip_special_tokens=True)))

>>> Output:

>>> ----------------------------------------------------------------------------------------------------

>>> 0: Bitwa pod Grunwaldem miała miejsce w roku 1410, kiedy to wojska polsko-litewskie pod

>>> 1: Bitwa pod Grunwaldem miała miejsce w roku 1410, kiedy to wojska polsko-litewskie pokona

>>> 2: Bitwa pod Grunwaldem miała miejsce w roku 1410, kiedy to wojska polsko-litewskie,

```

## BibTeX entry and citation info

```bibtex

@misc{papuGaPT2,

title={papuGaPT2 - Polish GPT2 language model},

url={https://huggingface.co/flax-community/papuGaPT2},

author={Wojczulis, Michał and Kłeczek, Dariusz},

year={2021}

}

``` |

elgeish/wav2vec2-large-xlsr-53-levantine-arabic | 0f01c7e074abee89bc9746c2c54c973a98954b7e | 2021-07-06T01:43:32.000Z | [

"pytorch",

"jax",

"wav2vec2",

"automatic-speech-recognition",

"ar",

"dataset:arabic_speech_corpus",

"transformers",

"audio",

"speech",

"license:apache-2.0"

] | automatic-speech-recognition | false | elgeish | null | elgeish/wav2vec2-large-xlsr-53-levantine-arabic | 34 | 1 | transformers | 6,828 | ---

language: ar

datasets:

- arabic_speech_corpus

tags:

- audio

- automatic-speech-recognition

- speech

license: apache-2.0

---

# Wav2Vec2-Large-XLSR-53-Arabic

Fine-tuned [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53)

on the [Arabic Speech Corpus dataset](https://huggingface.co/datasets/arabic_speech_corpus).

When using this model, make sure that your speech input is sampled at 16kHz.

## Usage

The model can be used directly (without a language model) as follows:

```python

import librosa

import torch

from datasets import load_dataset

from lang_trans.arabic import buckwalter

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

dataset = load_dataset("arabic_speech_corpus", split="test") # "test[:n]" for n examples

processor = Wav2Vec2Processor.from_pretrained("elgeish/wav2vec2-large-xlsr-53-arabic")

model = Wav2Vec2ForCTC.from_pretrained("elgeish/wav2vec2-large-xlsr-53-arabic")

model.eval()

def prepare_example(example):

example["speech"], _ = librosa.load(example["file"], sr=16000)

example["text"] = example["text"].replace("-", " ").replace("^", "v")

example["text"] = " ".join(w for w in example["text"].split() if w != "sil")

return example

dataset = dataset.map(prepare_example, remove_columns=["file", "orthographic", "phonetic"])

def predict(batch):

inputs = processor(batch["speech"], sampling_rate=16000, return_tensors="pt", padding="longest")

with torch.no_grad():

predicted = torch.argmax(model(inputs.input_values).logits, dim=-1)

predicted[predicted == -100] = processor.tokenizer.pad_token_id # see fine-tuning script

batch["predicted"] = processor.tokenizer.batch_decode(predicted)

return batch

dataset = dataset.map(predict, batched=True, batch_size=1, remove_columns=["speech"])

for reference, predicted in zip(dataset["text"], dataset["predicted"]):

print("reference:", reference)

print("predicted:", predicted)

print("reference (untransliterated):", buckwalter.untrans(reference))

print("predicted (untransliterated):", buckwalter.untrans(predicted))

print("--")

```

Here's the output:

```

reference: >atAHat lilbA}iEi lmutajaw~ili >an yakuwna jA*iban lilmuwATini l>aqal~i daxlan

predicted: >ataAHato lilobaA}iEi Alomutajaw~ili >ano yakuwna jaA*ibAF lilomuwaATini Alo>aqal~i daxolAF

reference (untransliterated): أَتاحَت لِلبائِعِ لمُتَجَوِّلِ أَن يَكُونَ جاذِبَن لِلمُواطِنِ لأَقَلِّ دَخلَن

predicted (untransliterated): أَتَاحَتْ لِلْبَائِعِ الْمُتَجَوِّلِ أَنْ يَكُونَ جَاذِباً لِلْمُوَاطِنِ الْأَقَلِّ دَخْلاً

--

reference: >aHrazat muntaxabAtu lbarAziyli wa>lmAnyA waruwsyA fawzan fiy muqAbalAtihim l<iEdAdiy~api l~atiy >uqiymat istiEdAdan linihA}iy~Ati ka>si lEAlam >al~atiy satanTaliqu baEda >aqal~i min >usbuwE

predicted: >aHorazato munotaxabaAtu AlobaraAziyli wa>alomaAnoyaA waruwsoyaA fawozAF fiy muqaAbalaAtihimo >aliEodaAdiy~api Al~atiy >uqiymat AsotiEodaAdAF linahaA}iy~aAti ka>osi AloEaAlamo >al~atiy satanoTaliqu baEoda >aqal~i mino >usobuwEo

reference (untransliterated): أَحرَزَت مُنتَخَباتُ لبَرازِيلِ وَألمانيا وَرُوسيا فَوزَن فِي مُقابَلاتِهِم لإِعدادِيَّةِ لَّتِي أُقِيمَت ِستِعدادَن لِنِهائِيّاتِ كَأسِ لعالَم أَلَّتِي سَتَنطَلِقُ بَعدَ أَقَلِّ مِن أُسبُوع

predicted (untransliterated): أَحْرَزَتْ مُنْتَخَبَاتُ الْبَرَازِيلِ وَأَلْمَانْيَا وَرُوسْيَا فَوْزاً فِي مُقَابَلَاتِهِمْ أَلِعْدَادِيَّةِ الَّتِي أُقِيمَت اسْتِعْدَاداً لِنَهَائِيَّاتِ كَأْسِ الْعَالَمْ أَلَّتِي سَتَنْطَلِقُ بَعْدَ أَقَلِّ مِنْ أُسْبُوعْ

--

reference: >axfaqa majlisu ln~uw~Abi ll~ubnAniy~u fiy xtiyAri ra}iysin jadiydin lilbilAdi xalafan lilr~a}iysi lHAliy~i l~a*iy tantahiy wilAyatuhu fiy lxAmisi wAlEi$riyn min mAyuw >ayAra lmuqbil

predicted: >axofaqa majolisu Aln~uw~aAbi All~ubonaAniy~u fiy AxotiyaAri ra}iysK jadiydK lilobilaAdi xalafAF lilr~a}iysi AloHaAliy~i Al~a*iy tanotahiy wilaAyatuhu fiy AloxaAmisi waAloEi$oriyno mino maAyuw >ay~aAra Alomuqobilo

reference (untransliterated): أَخفَقَ مَجلِسُ لنُّوّابِ للُّبنانِيُّ فِي ختِيارِ رَئِيسِن جَدِيدِن لِلبِلادِ خَلَفَن لِلرَّئِيسِ لحالِيِّ لَّذِي تَنتَهِي وِلايَتُهُ فِي لخامِسِ والعِشرِين مِن مايُو أَيارَ لمُقبِل

predicted (untransliterated): أَخْفَقَ مَجْلِسُ النُّوَّابِ اللُّبْنَانِيُّ فِي اخْتِيَارِ رَئِيسٍ جَدِيدٍ لِلْبِلَادِ خَلَفاً لِلرَّئِيسِ الْحَالِيِّ الَّذِي تَنْتَهِي وِلَايَتُهُ فِي الْخَامِسِ وَالْعِشْرِينْ مِنْ مَايُو أَيَّارَ الْمُقْبِلْ

--

reference: <i* sayaHDuru liqA'a ha*A lEAmi xamsun wavalAvuwna minhum

predicted: <i*o sayaHoDuru riqaA'a ha*aA AloEaAmi xamosN wa valaAvuwna minohumo

reference (untransliterated): إِذ سَيَحضُرُ لِقاءَ هَذا لعامِ خَمسُن وَثَلاثُونَ مِنهُم

predicted (untransliterated): إِذْ سَيَحْضُرُ رِقَاءَ هَذَا الْعَامِ خَمْسٌ وَ ثَلَاثُونَ مِنْهُمْ

--

reference: >aElanati lHukuwmapu lmiSriy~apu Ean waqfi taqdiymi ld~aEmi ln~aqdiy~i limuzAriEiy lquTni <iEtibAran mina lmuwsimi lz~irAEiy~i lmuqbil

predicted: >aEolanati AloHukuwmapu AlomiSoriy~apu Eano waqofi taqodiymi Ald~aEomi Aln~aqodiy~i limuzaAriEiy AloquToni <iEotibaArAF mina Alomuwsimi Alz~iraAEiy~i Alomuqobilo

reference (untransliterated): أَعلَنَتِ لحُكُومَةُ لمِصرِيَّةُ عَن وَقفِ تَقدِيمِ لدَّعمِ لنَّقدِيِّ لِمُزارِعِي لقُطنِ إِعتِبارَن مِنَ لمُوسِمِ لزِّراعِيِّ لمُقبِل

predicted (untransliterated): أَعْلَنَتِ الْحُكُومَةُ الْمِصْرِيَّةُ عَنْ وَقْفِ تَقْدِيمِ الدَّعْمِ النَّقْدِيِّ لِمُزَارِعِي الْقُطْنِ إِعْتِبَاراً مِنَ الْمُوسِمِ الزِّرَاعِيِّ الْمُقْبِلْ

--

reference: >aElanat wizArapu lSi~Ha~pi lsa~Euwdiya~pu lyawma Ean wafAtayni jadiydatayni biAlfayruwsi lta~Ajiyi kuwruwnA nuwfil

predicted: >aEolanato wizaArapu AlS~iH~api Als~aEuwdiy~apu Aloyawoma Eano wafaAtayoni jadiydatayoni biAlofayoruwsi Alt~aAjiy kuwruwnaA nuwfiylo

reference (untransliterated): أَعلَنَت وِزارَةُ لصِّحَّةِ لسَّعُودِيَّةُ ليَومَ عَن وَفاتَينِ جَدِيدَتَينِ بِالفَيرُوسِ لتَّاجِيِ كُورُونا نُوفِل

predicted (untransliterated): أَعْلَنَتْ وِزَارَةُ الصِّحَّةِ السَّعُودِيَّةُ الْيَوْمَ عَنْ وَفَاتَيْنِ جَدِيدَتَيْنِ بِالْفَيْرُوسِ التَّاجِي كُورُونَا نُوفِيلْ

--

reference: <iftutiHati ljumuEapa faE~Aliy~Atu ld~awrapi lr~AbiEapa Ea$rapa mina lmihrajAni ld~awliy~i lilfiylmi bimur~Aki$

predicted: <ifotutiHapi AlojumuwEapa faEaAliyaAtu Ald~aworapi Alr~aAbiEapa Ea$orapa miyna AlomihorajaAni Ald~awoliy~i lilofiylomi bimur~Aki$

reference (untransliterated): إِفتُتِحَتِ لجُمُعَةَ فَعّالِيّاتُ لدَّورَةِ لرّابِعَةَ عَشرَةَ مِنَ لمِهرَجانِ لدَّولِيِّ لِلفِيلمِ بِمُرّاكِش

predicted (untransliterated): إِفْتُتِحَةِ الْجُمُوعَةَ فَعَالِيَاتُ الدَّوْرَةِ الرَّابِعَةَ عَشْرَةَ مِينَ الْمِهْرَجَانِ الدَّوْلِيِّ لِلْفِيلْمِ بِمُرّاكِش

--

reference: >ak~adat Ea$ru duwalin Earabiy~apin $Arakati lxamiysa lmADiya fiy jtimAEi jd~ap muwAfaqatahA EalY l<inDimAmi <ilY Hilfin maEa lwilAyAti lmut~aHidapi li$an~i Hamlapin Easkariy~apin munas~aqapin Did~a tanZiymi >ald~awlapi l<islAmiy~api

predicted: >ak~adato Ea$oru duwalK Earabiy~apK $aArakapiy Aloxamiysa AlomaADiya fiy AjotimaAEi jad~ap muwaAfaqatahaA EalaY Alo<inoDimaAmi <ilaY HilofK maEa AlowilaAyaAti Alomut~aHidapi li$an~i HamolapK Easokariy~apK munas~aqapK id~a tanoZiymi Ald~awolapi Alo<isolaAmiy~api

reference (untransliterated): أَكَّدَت عَشرُ دُوَلِن عَرَبِيَّةِن شارَكَتِ لخَمِيسَ لماضِيَ فِي جتِماعِ جدَّة مُوافَقَتَها عَلى لإِنضِمامِ إِلى حِلفِن مَعَ لوِلاياتِ لمُتَّحِدَةِ لِشَنِّ حَملَةِن عَسكَرِيَّةِن مُنَسَّقَةِن ضِدَّ تَنظِيمِ أَلدَّولَةِ لإِسلامِيَّةِ

predicted (untransliterated): أَكَّدَتْ عَشْرُ دُوَلٍ عَرَبِيَّةٍ شَارَكَةِي الْخَمِيسَ الْمَاضِيَ فِي اجْتِمَاعِ جَدَّة مُوَافَقَتَهَا عَلَى الْإِنْضِمَامِ إِلَى حِلْفٍ مَعَ الْوِلَايَاتِ الْمُتَّحِدَةِ لِشَنِّ حَمْلَةٍ عَسْكَرِيَّةٍ مُنَسَّقَةٍ ِدَّ تَنْظِيمِ الدَّوْلَةِ الْإِسْلَامِيَّةِ

--

reference: <iltaHaqa luwkA ziydAna <ibnu ln~ajmi ld~awliy~i lfaransiy~i ljazA}iriy~i l>Sli zayni ld~iyni ziydAn biAlfariyq

predicted: <ilotaHaqa luwkaA ziydaAna <ibonu Aln~ajomi Ald~awoliy~i Alofaranosiy~i AlojazaA}iriy~i Alo>aSoli zayoni Ald~iyni zayodaAno biAlofariyqo

reference (untransliterated): إِلتَحَقَ لُوكا زِيدانَ إِبنُ لنَّجمِ لدَّولِيِّ لفَرَنسِيِّ لجَزائِرِيِّ لأصلِ زَينِ لدِّينِ زِيدان بِالفَرِيق

predicted (untransliterated): إِلْتَحَقَ لُوكَا زِيدَانَ إِبْنُ النَّجْمِ الدَّوْلِيِّ الْفَرَنْسِيِّ الْجَزَائِرِيِّ الْأَصْلِ زَيْنِ الدِّينِ زَيْدَانْ بِالْفَرِيقْ

--

reference: >alma$Akilu l~atiy yatrukuhA xalfahu dA}iman

predicted: Aloma$aAkilu Al~atiy yatorukuhaA xalofahu daA}imAF

reference (untransliterated): أَلمَشاكِلُ لَّتِي يَترُكُها خَلفَهُ دائِمَن

predicted (untransliterated): الْمَشَاكِلُ الَّتِي يَتْرُكُهَا خَلْفَهُ دَائِماً

--

reference: >al~a*iy yataDam~anu mazAyA barmajiy~apan wabaSariy~apan Eadiydapan tahdifu limuwAkabapi lt~aTaw~uri lHASili fiy lfaDA'i l<ilktruwniy watashiyli stifAdapi lqur~A'i min xadamAti lmawqiE

predicted: >al~a*iy yataDam~anu mazaAyaA baromajiy~apF wabaSariy~apF EadiydapF tahodifu limuwaAkabapi Alt~aTaw~uri AloHaASili fiy AlofaDaA'i Alo<iloktoruwniy watasohiyli AsotifaAdapi Aloqur~aA'i mino xadaAmaAti AlomawoqiEo

reference (untransliterated): أَلَّذِي يَتَضَمَّنُ مَزايا بَرمَجِيَّةَن وَبَصَرِيَّةَن عَدِيدَةَن تَهدِفُ لِمُواكَبَةِ لتَّطَوُّرِ لحاصِلِ فِي لفَضاءِ لإِلكترُونِي وَتَسهِيلِ ستِفادَةِ لقُرّاءِ مِن خَدَماتِ لمَوقِع

predicted (untransliterated): أَلَّذِي يَتَضَمَّنُ مَزَايَا بَرْمَجِيَّةً وَبَصَرِيَّةً عَدِيدَةً تَهْدِفُ لِمُوَاكَبَةِ التَّطَوُّرِ الْحَاصِلِ فِي الْفَضَاءِ الْإِلْكتْرُونِي وَتَسْهِيلِ اسْتِفَادَةِ الْقُرَّاءِ مِنْ خَدَامَاتِ الْمَوْقِعْ

--

reference: >alfikrapu wa<in badat jadiydapan EalY mujtamaEin yaEiy$u wAqiEan sayi}aan lA tu$aj~iEu EalY lD~aHik

predicted: >alofikorapu wa<inobadato jadiydapF EalaY mujotamaEK yaEiy$u waAqi Eano say~i}AF laA tu$aj~iEu EalaY AlD~aHiko

reference (untransliterated): أَلفِكرَةُ وَإِن بَدَت جَدِيدَةَن عَلى مُجتَمَعِن يَعِيشُ واقِعَن سَيِئََن لا تُشَجِّعُ عَلى لضَّحِك

predicted (untransliterated): أَلْفِكْرَةُ وَإِنْبَدَتْ جَدِيدَةً عَلَى مُجْتَمَعٍ يَعِيشُ وَاقِ عَنْ سَيِّئاً لَا تُشَجِّعُ عَلَى الضَّحِكْ

--

reference: mu$iyraan <ilY xidmapi lqur>Ani lkariymi wataEziyzi EalAqapi lmuslimiyna bihi

predicted: mu$iyrAF <ilaY xidomapi Aloquro|ni Alokariymi wataEoziyzi EalaAqapi Alomusolimiyna bihi

reference (untransliterated): مُشِيرََن إِلى خِدمَةِ لقُرأانِ لكَرِيمِ وَتَعزِيزِ عَلاقَةِ لمُسلِمِينَ بِهِ

predicted (untransliterated): مُشِيراً إِلَى خِدْمَةِ الْقُرْآنِ الْكَرِيمِ وَتَعْزِيزِ عَلَاقَةِ الْمُسْلِمِينَ بِهِ

--

reference: <in~ahu EindamA yakuwnu >aHadu lz~awjayni yastaxdimu >aHada >a$kAli lt~iknuwluwjyA >akvara mina l>Axar

predicted: <in~ahu EinodamaA yakuwnu >aHadu Alz~awojayoni yasotaxodimu >aHada >a$okaAli Alt~iykonuwluwjoyaA >akovara mina Alo|xaro

reference (untransliterated): إِنَّهُ عِندَما يَكُونُ أَحَدُ لزَّوجَينِ يَستَخدِمُ أَحَدَ أَشكالِ لتِّكنُولُوجيا أَكثَرَ مِنَ لأاخَر

predicted (untransliterated): إِنَّهُ عِنْدَمَا يَكُونُ أَحَدُ الزَّوْجَيْنِ يَسْتَخْدِمُ أَحَدَ أَشْكَالِ التِّيكْنُولُوجْيَا أَكْثَرَ مِنَ الْآخَرْ

--

reference: wa*alika biHuDuwri ra}yisi lhay}api

predicted: wa*alika biHuDuwri ra}iysi Alohayo>api

reference (untransliterated): وَذَلِكَ بِحُضُورِ رَئيِسِ لهَيئَةِ

predicted (untransliterated): وَذَلِكَ بِحُضُورِ رَئِيسِ الْهَيْأَةِ

--

reference: wa*alika fiy buTuwlapa ka>si lEAlami lil>andiyapi baEda nusxapin tAriyxiy~apin >alEAma lmADiya <intahat bitatwiyji bAyrin miyuwniyxa l>almAniy~a EalY HisAbi lr~ajA'i lmagribiy~i fiy >aw~ali ta>ah~ulin lifariyqin Earabiy~in <ilY nihA}iy~i lmusAbaqapi

predicted: wa*alika fiy buTuwlapi ka>osiy AloEaAlami lilo>anodiyapi baEoda nusoxapK taAriyxiy~apK >aloEaAma AlomaADiya <inotahato bitatowiyji bAyorinmoyuwnixa Alo>alomaAniy~a EalaY HisaAbi Alr~ajaA'i Alomagoribiy~ifiy >aw~ali ta>ah~ulK lifariyqKEarabiy~K <ilaY nihaA}iy~i AlomusaAbaqapi

reference (untransliterated): وَذَلِكَ فِي بُطُولَةَ كَأسِ لعالَمِ لِلأَندِيَةِ بَعدَ نُسخَةِن تارِيخِيَّةِن أَلعامَ لماضِيَ إِنتَهَت بِتَتوِيجِ بايرِن مِيُونِيخَ لأَلمانِيَّ عَلى حِسابِ لرَّجاءِ لمَغرِبِيِّ فِي أَوَّلِ تَأَهُّلِن لِفَرِيقِن عَرَبِيِّن إِلى نِهائِيِّ لمُسابَقَةِ

predicted (untransliterated): وَذَلِكَ فِي بُطُولَةِ كَأْسِي الْعَالَمِ لِلْأَنْدِيَةِ بَعْدَ نُسْخَةٍ تَارِيخِيَّةٍ أَلْعَامَ الْمَاضِيَ إِنْتَهَتْ بِتَتْوِيجِ بايْرِنمْيُونِخَ الْأَلْمَانِيَّ عَلَى حِسَابِ الرَّجَاءِ الْمَغْرِبِيِّفِي أَوَّلِ تَأَهُّلٍ لِفَرِيقٍعَرَبِيٍّ إِلَى نِهَائِيِّ الْمُسَابَقَةِ

--

reference: bal yajibu lbaHvu fiymA tumav~iluhu min <iDAfapin Haqiyqiy~apin lil<iqtiSAdi lmaSriy~i fiy majAlAti lt~awZiyf biAEtibAri >an~a mu$kilapa lbiTAlapi mina lmu$kilAti lr~a}iysiy~api fiy miSr