modelId

stringlengths 4

112

| sha

stringlengths 40

40

| lastModified

stringlengths 24

24

| tags

list | pipeline_tag

stringclasses 29

values | private

bool 1

class | author

stringlengths 2

38

⌀ | config

null | id

stringlengths 4

112

| downloads

float64 0

36.8M

⌀ | likes

float64 0

712

⌀ | library_name

stringclasses 17

values | __index_level_0__

int64 0

38.5k

| readme

stringlengths 0

186k

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

rasta/distilbert-base-uncased-finetuned-fashion

|

9b6f70e275f1a0b7a4cf5569e1f53fe9a5cd1738

|

2022-05-09T08:10:22.000Z

|

[

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

] |

text-classification

| false |

rasta

| null |

rasta/distilbert-base-uncased-finetuned-fashion

| 53 | 2 |

transformers

| 5,900 |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

model-index:

- name: distilbert-base-uncased-finetuned-fashion

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-fashion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on a munally created dataset in order to detect fashion (label_0) from non-fashion (label_1) items.

It achieves the following results on the evaluation set:

- Loss: 0.0809

- Accuracy: 0.98

- F1: 0.9801

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.4017 | 1.0 | 47 | 0.1220 | 0.966 | 0.9662 |

| 0.115 | 2.0 | 94 | 0.0809 | 0.98 | 0.9801 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.1.0

- Tokenizers 0.12.1

|

anas-awadalla/splinter-large-few-shot-k-1024-finetuned-squad-seed-2

|

23f58c42ae6e81cc1f4a7560ae3c3e57dfb482a5

|

2022-05-14T23:31:40.000Z

|

[

"pytorch",

"splinter",

"question-answering",

"dataset:squad",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

] |

question-answering

| false |

anas-awadalla

| null |

anas-awadalla/splinter-large-few-shot-k-1024-finetuned-squad-seed-2

| 53 | null |

transformers

| 5,901 |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: splinter-large-few-shot-k-1024-finetuned-squad-seed-2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# splinter-large-few-shot-k-1024-finetuned-squad-seed-2

This model is a fine-tuned version of [tau/splinter-large-qass](https://huggingface.co/tau/splinter-large-qass) on the squad dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 12

- eval_batch_size: 8

- seed: 2

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10.0

### Training results

### Framework versions

- Transformers 4.20.0.dev0

- Pytorch 1.11.0+cu113

- Datasets 2.0.0

- Tokenizers 0.11.6

|

CEBaB/bert-base-uncased.CEBaB.absa.exclusive.seed_42

|

b31e1ab0039a987ee80fda6f256ee1c88fe34223

|

2022-05-17T18:43:42.000Z

|

[

"pytorch",

"bert",

"transformers"

] | null | false |

CEBaB

| null |

CEBaB/bert-base-uncased.CEBaB.absa.exclusive.seed_42

| 53 | null |

transformers

| 5,902 |

Entry not found

|

Cirilaron/DialoGPT-medium-jetstreamsam

|

02fc2375c982ea3de186a4883b034cfa5b6d3c68

|

2022-06-09T12:37:07.000Z

|

[

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

] |

conversational

| false |

Cirilaron

| null |

Cirilaron/DialoGPT-medium-jetstreamsam

| 53 | null |

transformers

| 5,903 |

---

tags:

- conversational

---

#Samuel Rodrigues from Metal Gear Rising DialoGPT Model

|

Kittipong/wav2vec2-th-vocal-domain

|

4f5fec019d8b0b9f5be8e0da0ff3c2acb59d6fb1

|

2022-06-12T12:34:43.000Z

|

[

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"transformers",

"license:cc-by-sa-4.0"

] |

automatic-speech-recognition

| false |

Kittipong

| null |

Kittipong/wav2vec2-th-vocal-domain

| 53 | null |

transformers

| 5,904 |

---

license: cc-by-sa-4.0

---

|

cwkeam/m-ctc-t-large-sequence-lid

|

0391241ef74c94275a8d8cbfb1b7fc3f0ca66ea0

|

2022-06-29T04:31:03.000Z

|

[

"pytorch",

"mctct",

"text-classification",

"en",

"dataset:librispeech_asr",

"dataset:common_voice",

"arxiv:2111.00161",

"transformers",

"speech",

"license:apache-2.0"

] |

text-classification

| false |

cwkeam

| null |

cwkeam/m-ctc-t-large-sequence-lid

| 53 | null |

transformers

| 5,905 |

---

language: en

datasets:

- librispeech_asr

- common_voice

tags:

- speech

license: apache-2.0

---

# M-CTC-T

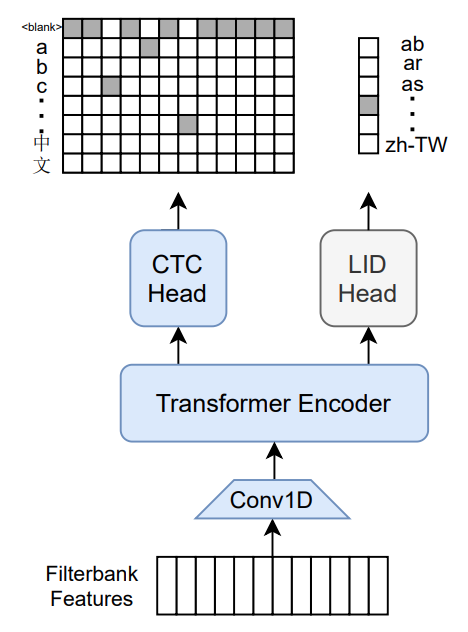

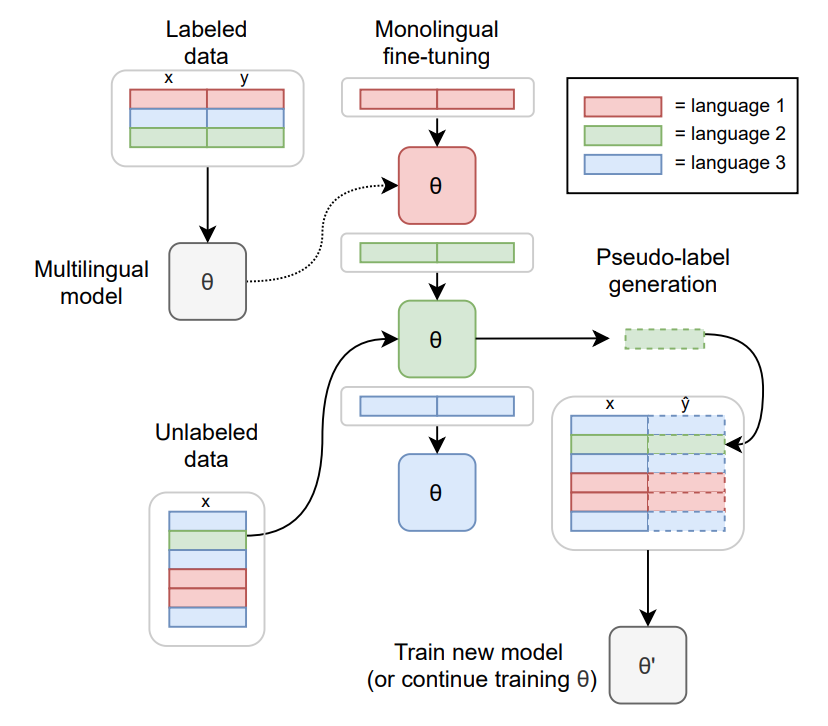

Massively multilingual speech recognizer from Meta AI. The model is a 1B-param transformer encoder, with a CTC head over 8065 character labels and a language identification head over 60 language ID labels. It is trained on Common Voice (version 6.1, December 2020 release) and VoxPopuli. After training on Common Voice and VoxPopuli, the model is trained on Common Voice only. The labels are unnormalized character-level transcripts (punctuation and capitalization are not removed). The model takes as input Mel filterbank features from a 16Khz audio signal.

The original Flashlight code, model checkpoints, and Colab notebook can be found at https://github.com/flashlight/wav2letter/tree/main/recipes/mling_pl .

## Citation

[Paper](https://arxiv.org/abs/2111.00161)

Authors: Loren Lugosch, Tatiana Likhomanenko, Gabriel Synnaeve, Ronan Collobert

```

@article{lugosch2021pseudo,

title={Pseudo-Labeling for Massively Multilingual Speech Recognition},

author={Lugosch, Loren and Likhomanenko, Tatiana and Synnaeve, Gabriel and Collobert, Ronan},

journal={ICASSP},

year={2022}

}

```

Additional thanks to [Chan Woo Kim](https://huggingface.co/cwkeam) and [Patrick von Platen](https://huggingface.co/patrickvonplaten) for porting the model from Flashlight to PyTorch.

# Training method

TO-DO: replace with the training diagram from paper

For more information on how the model was trained, please take a look at the [official paper](https://arxiv.org/abs/2111.00161).

# Usage

To transcribe audio files the model can be used as a standalone acoustic model as follows:

```python

import torch

import torchaudio

from datasets import load_dataset

from transformers import MCTCTForCTC, MCTCTProcessor

model = MCTCTForCTC.from_pretrained("speechbrain/mctct-large")

processor = MCTCTProcessor.from_pretrained("speechbrain/mctct-large")

# load dummy dataset and read soundfiles

ds = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

# tokenize

input_features = processor(ds[0]["audio"]["array"], return_tensors="pt").input_features

# retrieve logits

logits = model(input_features).logits

# take argmax and decode

predicted_ids = torch.argmax(logits, dim=-1)

transcription = processor.batch_decode(predicted_ids)

```

Results for Common Voice, averaged over all languages:

*Character error rate (CER)*:

| Valid | Test |

|-------|------|

| 21.4 | 23.3 |

|

shash2409/bert-finetuned-squad

|

4ea4437bc266e648ab369ad7552dcae25d90fe47

|

2022-07-03T19:32:27.000Z

|

[

"pytorch",

"tensorboard",

"bert",

"question-answering",

"dataset:squad",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

] |

question-answering

| false |

shash2409

| null |

shash2409/bert-finetuned-squad

| 53 | null |

transformers

| 5,906 |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: bert-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-finetuned-squad

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the squad dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

semy/finetuning-sentiment-model-sst

|

83734031b99d78b425fa3adaa7c6779d7b958ac2

|

2022-07-01T12:47:28.000Z

|

[

"pytorch",

"distilbert",

"text-classification",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

] |

text-classification

| false |

semy

| null |

semy/finetuning-sentiment-model-sst

| 53 | null |

transformers

| 5,907 |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: finetuning-sentiment-model-sst

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuning-sentiment-model-sst

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the None dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Framework versions

- Transformers 4.20.1

- Pytorch 1.10.2

- Datasets 2.3.2

- Tokenizers 0.12.1

|

zhifei/autotrain-chinese-title-summarization-1-1084539138

|

0bd24fbcde53d2e03c0fbeb8187ad822af0b1970

|

2022-07-04T08:49:18.000Z

|

[

"pytorch",

"mt5",

"text2text-generation",

"unk",

"dataset:zhifei/autotrain-data-chinese-title-summarization-1",

"transformers",

"autotrain",

"co2_eq_emissions",

"autotrain_compatible"

] |

text2text-generation

| false |

zhifei

| null |

zhifei/autotrain-chinese-title-summarization-1-1084539138

| 53 | null |

transformers

| 5,908 |

---

tags: autotrain

language: unk

widget:

- text: "I love AutoTrain 🤗"

datasets:

- zhifei/autotrain-data-chinese-title-summarization-1

co2_eq_emissions: 0.004484038360707097

---

# Model Trained Using AutoTrain

- Problem type: Summarization

- Model ID: 1084539138

- CO2 Emissions (in grams): 0.004484038360707097

## Validation Metrics

- Loss: 0.7330857515335083

- Rouge1: 22.2222

- Rouge2: 10.0

- RougeL: 22.2222

- RougeLsum: 22.2222

- Gen Len: 13.7333

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_HUGGINGFACE_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/zhifei/autotrain-chinese-title-summarization-1-1084539138

```

|

okho0653/Bio_ClinicalBERT-zero-shot-tokenizer-truncation-sentiment-model

|

91d77d8debe3f8769c755eeedc0f42858fdf297d

|

2022-07-08T03:54:48.000Z

|

[

"pytorch",

"tensorboard",

"bert",

"text-classification",

"transformers",

"generated_from_trainer",

"license:mit",

"model-index"

] |

text-classification

| false |

okho0653

| null |

okho0653/Bio_ClinicalBERT-zero-shot-tokenizer-truncation-sentiment-model

| 53 | null |

transformers

| 5,909 |

---

license: mit

tags:

- generated_from_trainer

model-index:

- name: Bio_ClinicalBERT-zero-shot-tokenizer-truncation-sentiment-model

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Bio_ClinicalBERT-zero-shot-tokenizer-truncation-sentiment-model

This model is a fine-tuned version of [emilyalsentzer/Bio_ClinicalBERT](https://huggingface.co/emilyalsentzer/Bio_ClinicalBERT) on the None dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

Lvxue/finetuned-mbart-large-10epoch

|

07b2e2e5e1629c407746ede1f21243f6dd9ae3f1

|

2022-07-11T03:11:38.000Z

|

[

"pytorch",

"mbart",

"text2text-generation",

"en",

"ro",

"dataset:wmt16",

"transformers",

"generated_from_trainer",

"model-index",

"autotrain_compatible"

] |

text2text-generation

| false |

Lvxue

| null |

Lvxue/finetuned-mbart-large-10epoch

| 53 | null |

transformers

| 5,910 |

---

language:

- en

- ro

tags:

- generated_from_trainer

datasets:

- wmt16

model-index:

- name: finetuned-mbart-large-10epoch

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuned-mbart-large-10epoch

This model is a fine-tuned version of [facebook/mbart-large-cc25](https://huggingface.co/facebook/mbart-large-cc25) on the wmt16 ro-en dataset.

It achieves the following results on the evaluation set:

- Loss: 2.6032

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 12

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10.0

### Training results

### Framework versions

- Transformers 4.20.1

- Pytorch 1.12.0+cu102

- Datasets 2.3.2

- Tokenizers 0.12.1

|

CogComp/roberta-temporal-predictor

|

aa4d28dcd3baacce849e269b4dbeeef35e52f8a2

|

2022-03-22T20:15:03.000Z

|

[

"pytorch",

"roberta",

"fill-mask",

"arxiv:2202.00436",

"transformers",

"license:mit",

"autotrain_compatible"

] |

fill-mask

| false |

CogComp

| null |

CogComp/roberta-temporal-predictor

| 52 | null |

transformers

| 5,911 |

---

license: mit

widget:

- text: "The man turned on the faucet <mask> water flows out."

- text: "The woman received her pension <mask> she retired."

---

# roberta-temporal-predictor

A RoBERTa-base model that is fine-tuned on the [The New York Times Annotated Corpus](https://catalog.ldc.upenn.edu/LDC2008T19)

to predict temporal precedence of two events. This is used as the ``temporality prediction'' component

in our ROCK framework for reasoning about commonsense causality. See our [paper](https://arxiv.org/abs/2202.00436) for more details.

# Usage

You can directly use this model for filling-mask tasks, as shown in the example widget.

However, for better temporal inference, it is recommended to symmetrize the outputs as

$$

P(E_1 \prec E_2) = \frac{1}{2} (f(E_1,E_2) + f(E_2,E_1))

$$

where ``f(E_1,E_2)`` denotes the predicted probability for ``E_1`` to occur preceding ``E_2``.

For simplicity, we implement the following TempPredictor class that incorporate this symmetrization automatically.

Below is an example usage for the ``TempPredictor`` class:

```python

from transformers import (RobertaForMaskedLM, RobertaTokenizer)

from src.temp_predictor import TempPredictor

TORCH_DEV = "cuda:0" # change as needed

tp_roberta_ft = src.TempPredictor(

model=RobertaForMaskedLM.from_pretrained("CogComp/roberta-temporal-predictor"),

tokenizer=RobertaTokenizer.from_pretrained("CogComp/roberta-temporal-predictor"),

device=TORCH_DEV

)

E1 = "The man turned on the faucet."

E2 = "Water flows out."

t12 = tp_roberta_ft(E1, E2, top_k=5)

print(f"P('{E1}' before '{E2}'): {t12}")

```

# BibTeX entry and citation info

```bib

@misc{zhang2022causal,

title={Causal Inference Principles for Reasoning about Commonsense Causality},

author={Jiayao Zhang and Hongming Zhang and Dan Roth and Weijie J. Su},

year={2022},

eprint={2202.00436},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

Hate-speech-CNERG/dehatebert-mono-arabic

|

e592a5ee3b913ec33286ee90fb27c7f7f1a8b996

|

2021-09-25T13:54:53.000Z

|

[

"pytorch",

"jax",

"bert",

"text-classification",

"ar",

"arxiv:2004.06465",

"transformers",

"license:apache-2.0"

] |

text-classification

| false |

Hate-speech-CNERG

| null |

Hate-speech-CNERG/dehatebert-mono-arabic

| 52 | null |

transformers

| 5,912 |

---

language: ar

license: apache-2.0

---

This model is used detecting **hatespeech** in **Arabic language**. The mono in the name refers to the monolingual setting, where the model is trained using only Arabic language data. It is finetuned on multilingual bert model.

The model is trained with different learning rates and the best validation score achieved is 0.877609 for a learning rate of 2e-5. Training code can be found at this [url](https://github.com/punyajoy/DE-LIMIT)

### For more details about our paper

Sai Saketh Aluru, Binny Mathew, Punyajoy Saha and Animesh Mukherjee. "[Deep Learning Models for Multilingual Hate Speech Detection](https://arxiv.org/abs/2004.06465)". Accepted at ECML-PKDD 2020.

***Please cite our paper in any published work that uses any of these resources.***

~~~

@article{aluru2020deep,

title={Deep Learning Models for Multilingual Hate Speech Detection},

author={Aluru, Sai Saket and Mathew, Binny and Saha, Punyajoy and Mukherjee, Animesh},

journal={arXiv preprint arXiv:2004.06465},

year={2020}

}

~~~

|

Helsinki-NLP/opus-mt-aed-es

|

a56c16908eafa534660838102b535b32f40581a3

|

2021-09-09T21:25:50.000Z

|

[

"pytorch",

"marian",

"text2text-generation",

"aed",

"es",

"transformers",

"translation",

"license:apache-2.0",

"autotrain_compatible"

] |

translation

| false |

Helsinki-NLP

| null |

Helsinki-NLP/opus-mt-aed-es

| 52 | null |

transformers

| 5,913 |

---

tags:

- translation

license: apache-2.0

---

### opus-mt-aed-es

* source languages: aed

* target languages: es

* OPUS readme: [aed-es](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/aed-es/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-15.zip](https://object.pouta.csc.fi/OPUS-MT-models/aed-es/opus-2020-01-15.zip)

* test set translations: [opus-2020-01-15.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/aed-es/opus-2020-01-15.test.txt)

* test set scores: [opus-2020-01-15.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/aed-es/opus-2020-01-15.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| JW300.aed.es | 89.1 | 0.915 |

|

Helsinki-NLP/opus-mt-de-fi

|

bbd50eeefdc1e26d75f6a806495192b55878c04a

|

2021-09-09T21:31:05.000Z

|

[

"pytorch",

"marian",

"text2text-generation",

"de",

"fi",

"transformers",

"translation",

"license:apache-2.0",

"autotrain_compatible"

] |

translation

| false |

Helsinki-NLP

| null |

Helsinki-NLP/opus-mt-de-fi

| 52 | null |

transformers

| 5,914 |

---

tags:

- translation

license: apache-2.0

---

### opus-mt-de-fi

* source languages: de

* target languages: fi

* OPUS readme: [de-fi](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/de-fi/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-08.zip](https://object.pouta.csc.fi/OPUS-MT-models/de-fi/opus-2020-01-08.zip)

* test set translations: [opus-2020-01-08.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/de-fi/opus-2020-01-08.test.txt)

* test set scores: [opus-2020-01-08.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/de-fi/opus-2020-01-08.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| Tatoeba.de.fi | 40.0 | 0.628 |

|

Helsinki-NLP/opus-mt-fi-sv

|

4f951b1b01773808d66e0868a3e53cf964f73362

|

2021-09-09T21:51:05.000Z

|

[

"pytorch",

"marian",

"text2text-generation",

"fi",

"sv",

"transformers",

"translation",

"license:apache-2.0",

"autotrain_compatible"

] |

translation

| false |

Helsinki-NLP

| null |

Helsinki-NLP/opus-mt-fi-sv

| 52 | null |

transformers

| 5,915 |

---

tags:

- translation

license: apache-2.0

---

### opus-mt-fi-sv

* source languages: fi

* target languages: sv

* OPUS readme: [fi-sv](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/fi-sv/README.md)

* dataset: opus+bt

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus+bt-2020-04-11.zip](https://object.pouta.csc.fi/OPUS-MT-models/fi-sv/opus+bt-2020-04-11.zip)

* test set translations: [opus+bt-2020-04-11.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/fi-sv/opus+bt-2020-04-11.test.txt)

* test set scores: [opus+bt-2020-04-11.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/fi-sv/opus+bt-2020-04-11.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| fiskmo_testset.fi.sv | 27.4 | 0.605 |

| Tatoeba.fi.sv | 54.7 | 0.709 |

|

RJ3vans/SignTagger

|

177222c11b652437211b35052b8e1298a6dcc691

|

2021-08-13T09:00:50.000Z

|

[

"pytorch",

"bert",

"token-classification",

"transformers",

"autotrain_compatible"

] |

token-classification

| false |

RJ3vans

| null |

RJ3vans/SignTagger

| 52 | null |

transformers

| 5,916 |

This model is used to tag the tokens in an input sequence with information about the different signs of syntactic complexity that they contain. For more details, please see Chapters 2 and 3 of my thesis (http://rgcl.wlv.ac.uk/~richard/Evans2020_SentenceSimplificationForTextProcessing.pdf).

It was derived using code written by Dr. Le An Ha at the University of Wolverhampton.

To use this model, the following code snippet may help:

======================================================================

import torch

from transformers import AutoModelForTokenClassification, AutoTokenizer

SignTaggingModel = AutoModelForTokenClassification.from_pretrained('RJ3vans/SignTagger')

SignTaggingTokenizer = AutoTokenizer.from_pretrained('RJ3vans/SignTagger')

label_list = ["M:N_CCV", "M:N_CIN", "M:N_CLA", "M:N_CLAdv", "M:N_CLN", "M:N_CLP", # This could be obtained from the config file

"M:N_CLQ", "M:N_CLV", "M:N_CMA1", "M:N_CMAdv", "M:N_CMN1",

"M:N_CMN2", "M:N_CMN3", "M:N_CMN4", "M:N_CMP", "M:N_CMP2",

"M:N_CMV1", "M:N_CMV2", "M:N_CMV3", "M:N_COMBINATORY", "M:N_CPA",

"M:N_ESAdvP", "M:N_ESCCV", "M:N_ESCM", "M:N_ESMA", "M:N_ESMAdvP",

"M:N_ESMI", "M:N_ESMN", "M:N_ESMP", "M:N_ESMV", "M:N_HELP",

"M:N_SPECIAL", "M:N_SSCCV", "M:N_SSCM", "M:N_SSMA", "M:N_SSMAdvP",

"M:N_SSMI", "M:N_SSMN", "M:N_SSMP", "M:N_SSMV", "M:N_STQ",

"M:N_V", "M:N_nan", "M:Y_CCV", "M:Y_CIN", "M:Y_CLA", "M:Y_CLAdv",

"M:Y_CLN", "M:Y_CLP", "M:Y_CLQ", "M:Y_CLV", "M:Y_CMA1",

"M:Y_CMAdv", "M:Y_CMN1", "M:Y_CMN2", "M:Y_CMN4", "M:Y_CMP",

"M:Y_CMP2", "M:Y_CMV1", "M:Y_CMV2", "M:Y_CMV3",

"M:Y_COMBINATORY", "M:Y_CPA", "M:Y_ESAdvP", "M:Y_ESCCV",

"M:Y_ESCM", "M:Y_ESMA", "M:Y_ESMAdvP", "M:Y_ESMI", "M:Y_ESMN",

"M:Y_ESMP", "M:Y_ESMV", "M:Y_HELP", "M:Y_SPECIAL", "M:Y_SSCCV",

"M:Y_SSCM", "M:Y_SSMA", "M:Y_SSMAdvP", "M:Y_SSMI", "M:Y_SSMN",

"M:Y_SSMP", "M:Y_SSMV", "M:Y_STQ"]

sentence = 'The County Court in Nottingham heard that Roger Gedge, 30, had his leg amputated following the incident outside a rock festival in Wollaton Park, Nottingham, five years ago.'

tokens = SignTaggingTokenizer.tokenize(SignTaggingTokenizer.decode(SignTaggingTokenizer.encode(sentence)))

inputs = SignTaggingTokenizer.encode(sentence, return_tensors="pt")

outputs = SignTaggingModel(inputs)[0]

predictions = torch.argmax(outputs, dim=2)

print([(token, label_list[prediction]) for token, prediction in zip(tokens, predictions[0].tolist())])

======================================================================

|

SEBIS/code_trans_t5_base_code_documentation_generation_javascript_multitask_finetune

|

7d1881514432cb3860195e0b8e466809cddbb1bd

|

2021-06-23T04:31:36.000Z

|

[

"pytorch",

"jax",

"t5",

"feature-extraction",

"transformers",

"summarization"

] |

summarization

| false |

SEBIS

| null |

SEBIS/code_trans_t5_base_code_documentation_generation_javascript_multitask_finetune

| 52 | null |

transformers

| 5,917 |

---

tags:

- summarization

widget:

- text: "function isStandardBrowserEnv ( ) { if ( typeof navigator !== 'undefined' && ( navigator . product === 'ReactNative' || navigator . product === 'NativeScript' || navigator . product === 'NS' ) ) { return false ; } return ( typeof window !== 'undefined' && typeof document !== 'undefined' ) ; }"

---

# CodeTrans model for code documentation generation javascript

Pretrained model on programming language javascript using the t5 base model architecture. It was first released in

[this repository](https://github.com/agemagician/CodeTrans). This model is trained on tokenized javascript code functions: it works best with tokenized javascript functions.

## Model description

This CodeTrans model is based on the `t5-base` model. It has its own SentencePiece vocabulary model. It used multi-task training on 13 supervised tasks in the software development domain and 7 unsupervised datasets. It is then fine-tuned on the code documentation generation task for the javascript function/method.

## Intended uses & limitations

The model could be used to generate the description for the javascript function or be fine-tuned on other javascript code tasks. It can be used on unparsed and untokenized javascript code. However, if the javascript code is tokenized, the performance should be better.

### How to use

Here is how to use this model to generate javascript function documentation using Transformers SummarizationPipeline:

```python

from transformers import AutoTokenizer, AutoModelWithLMHead, SummarizationPipeline

pipeline = SummarizationPipeline(

model=AutoModelWithLMHead.from_pretrained("SEBIS/code_trans_t5_base_code_documentation_generation_javascript_multitask_finetune"),

tokenizer=AutoTokenizer.from_pretrained("SEBIS/code_trans_t5_base_code_documentation_generation_javascript_multitask_finetune", skip_special_tokens=True),

device=0

)

tokenized_code = "function isStandardBrowserEnv ( ) { if ( typeof navigator !== 'undefined' && ( navigator . product === 'ReactNative' || navigator . product === 'NativeScript' || navigator . product === 'NS' ) ) { return false ; } return ( typeof window !== 'undefined' && typeof document !== 'undefined' ) ; }"

pipeline([tokenized_code])

```

Run this example in [colab notebook](https://github.com/agemagician/CodeTrans/blob/main/prediction/multitask/fine-tuning/function%20documentation%20generation/javascript/base_model.ipynb).

## Training data

The supervised training tasks datasets can be downloaded on [Link](https://www.dropbox.com/sh/488bq2of10r4wvw/AACs5CGIQuwtsD7j_Ls_JAORa/finetuning_dataset?dl=0&subfolder_nav_tracking=1)

## Training procedure

### Multi-task Pretraining

The model was trained on a single TPU Pod V3-8 for half million steps in total, using sequence length 512 (batch size 4096).

It has a total of approximately 220M parameters and was trained using the encoder-decoder architecture.

The optimizer used is AdaFactor with inverse square root learning rate schedule for pre-training.

### Fine-tuning

This model was then fine-tuned on a single TPU Pod V2-8 for 10,000 steps in total, using sequence length 512 (batch size 256), using only the dataset only containing javascript code.

## Evaluation results

For the code documentation tasks, different models achieves the following results on different programming languages (in BLEU score):

Test results :

| Language / Model | Python | Java | Go | Php | Ruby | JavaScript |

| -------------------- | :------------: | :------------: | :------------: | :------------: | :------------: | :------------: |

| CodeTrans-ST-Small | 17.31 | 16.65 | 16.89 | 23.05 | 9.19 | 13.7 |

| CodeTrans-ST-Base | 16.86 | 17.17 | 17.16 | 22.98 | 8.23 | 13.17 |

| CodeTrans-TF-Small | 19.93 | 19.48 | 18.88 | 25.35 | 13.15 | 17.23 |

| CodeTrans-TF-Base | 20.26 | 20.19 | 19.50 | 25.84 | 14.07 | 18.25 |

| CodeTrans-TF-Large | 20.35 | 20.06 | **19.54** | 26.18 | 14.94 | **18.98** |

| CodeTrans-MT-Small | 19.64 | 19.00 | 19.15 | 24.68 | 14.91 | 15.26 |

| CodeTrans-MT-Base | **20.39** | 21.22 | 19.43 | **26.23** | **15.26** | 16.11 |

| CodeTrans-MT-Large | 20.18 | **21.87** | 19.38 | 26.08 | 15.00 | 16.23 |

| CodeTrans-MT-TF-Small | 19.77 | 20.04 | 19.36 | 25.55 | 13.70 | 17.24 |

| CodeTrans-MT-TF-Base | 19.77 | 21.12 | 18.86 | 25.79 | 14.24 | 18.62 |

| CodeTrans-MT-TF-Large | 18.94 | 21.42 | 18.77 | 26.20 | 14.19 | 18.83 |

| State of the art | 19.06 | 17.65 | 18.07 | 25.16 | 12.16 | 14.90 |

> Created by [Ahmed Elnaggar](https://twitter.com/Elnaggar_AI) | [LinkedIn](https://www.linkedin.com/in/prof-ahmed-elnaggar/) and Wei Ding | [LinkedIn](https://www.linkedin.com/in/wei-ding-92561270/)

|

alireza7/ARMAN-MSR-persian-base-PN-summary

|

3312c43fc7514afa6a40b5c558a7e662761f8810

|

2021-09-29T19:14:47.000Z

|

[

"pytorch",

"pegasus",

"text2text-generation",

"transformers",

"autotrain_compatible"

] |

text2text-generation

| false |

alireza7

| null |

alireza7/ARMAN-MSR-persian-base-PN-summary

| 52 | null |

transformers

| 5,918 |

More information about models is available [here](https://github.com/alirezasalemi7/ARMAN).

|

asafaya/albert-large-arabic

|

bb5cad09b4480a6403a52ec2d83386dc98471d1e

|

2022-02-11T13:52:18.000Z

|

[

"pytorch",

"tf",

"albert",

"fill-mask",

"ar",

"dataset:oscar",

"dataset:wikipedia",

"transformers",

"masked-lm",

"autotrain_compatible"

] |

fill-mask

| false |

asafaya

| null |

asafaya/albert-large-arabic

| 52 | 1 |

transformers

| 5,919 |

---

language: ar

datasets:

- oscar

- wikipedia

tags:

- ar

- masked-lm

---

# Arabic-ALBERT Large

Arabic edition of ALBERT Large pretrained language model

_If you use any of these models in your work, please cite this work as:_

```

@software{ali_safaya_2020_4718724,

author = {Ali Safaya},

title = {Arabic-ALBERT},

month = aug,

year = 2020,

publisher = {Zenodo},

version = {1.0.0},

doi = {10.5281/zenodo.4718724},

url = {https://doi.org/10.5281/zenodo.4718724}

}

```

## Pretraining data

The models were pretrained on ~4.4 Billion words:

- Arabic version of [OSCAR](https://oscar-corpus.com/) (unshuffled version of the corpus) - filtered from [Common Crawl](http://commoncrawl.org/)

- Recent dump of Arabic [Wikipedia](https://dumps.wikimedia.org/backup-index.html)

__Notes on training data:__

- Our final version of corpus contains some non-Arabic words inlines, which we did not remove from sentences since that would affect some tasks like NER.

- Although non-Arabic characters were lowered as a preprocessing step, since Arabic characters do not have upper or lower case, there is no cased and uncased version of the model.

- The corpus and vocabulary set are not restricted to Modern Standard Arabic, they contain some dialectical Arabic too.

## Pretraining details

- These models were trained using Google ALBERT's github [repository](https://github.com/google-research/albert) on a single TPU v3-8 provided for free from [TFRC](https://www.tensorflow.org/tfrc).

- Our pretraining procedure follows training settings of bert with some changes: trained for 7M training steps with batchsize of 64, instead of 125K with batchsize of 4096.

## Models

| | albert-base | albert-large | albert-xlarge |

|:---:|:---:|:---:|:---:|

| Hidden Layers | 12 | 24 | 24 |

| Attention heads | 12 | 16 | 32 |

| Hidden size | 768 | 1024 | 2048 |

## Results

For further details on the models performance or any other queries, please refer to [Arabic-ALBERT](https://github.com/KUIS-AI-Lab/Arabic-ALBERT/)

## How to use

You can use these models by installing `torch` or `tensorflow` and Huggingface library `transformers`. And you can use it directly by initializing it like this:

```python

from transformers import AutoTokenizer, AutoModel

# loading the tokenizer

tokenizer = AutoTokenizer.from_pretrained("kuisailab/albert-large-arabic")

# loading the model

model = AutoModelForMaskedLM.from_pretrained("kuisailab/albert-large-arabic")

```

## Acknowledgement

Thanks to Google for providing free TPU for the training process and for Huggingface for hosting these models on their servers 😊

|

dbmdz/electra-base-turkish-mc4-uncased-generator

|

2352dd9268eef698305ac0dc1f22eb59e73f55d8

|

2021-09-23T10:43:54.000Z

|

[

"pytorch",

"tf",

"electra",

"fill-mask",

"tr",

"dataset:allenai/c4",

"transformers",

"license:mit",

"autotrain_compatible"

] |

fill-mask

| false |

dbmdz

| null |

dbmdz/electra-base-turkish-mc4-uncased-generator

| 52 | null |

transformers

| 5,920 |

---

language: tr

license: mit

datasets:

- allenai/c4

---

# 🇹🇷 Turkish ELECTRA model

<p align="center">

<img alt="Logo provided by Merve Noyan" title="Awesome logo from Merve Noyan" src="https://raw.githubusercontent.com/stefan-it/turkish-bert/master/merve_logo.png">

</p>

[](https://zenodo.org/badge/latestdoi/237817454)

We present community-driven BERT, DistilBERT, ELECTRA and ConvBERT models for Turkish 🎉

Some datasets used for pretraining and evaluation are contributed from the

awesome Turkish NLP community, as well as the decision for the BERT model name: BERTurk.

Logo is provided by [Merve Noyan](https://twitter.com/mervenoyann).

# Stats

We've also trained an ELECTRA (uncased) model on the recently released Turkish part of the

[multiligual C4 (mC4) corpus](https://github.com/allenai/allennlp/discussions/5265) from the AI2 team.

After filtering documents with a broken encoding, the training corpus has a size of 242GB resulting

in 31,240,963,926 tokens.

We used the original 32k vocab (instead of creating a new one).

# mC4 ELECTRA

In addition to the ELEC**TR**A base cased model, we also trained an ELECTRA uncased model on the Turkish part of the mC4 corpus. We use a

sequence length of 512 over the full training time and train the model for 1M steps on a v3-32 TPU.

# Model usage

All trained models can be used from the [DBMDZ](https://github.com/dbmdz) Hugging Face [model hub page](https://huggingface.co/dbmdz)

using their model name.

Example usage with 🤗/Transformers:

```python

tokenizer = AutoTokenizer.from_pretrained("electra-base-turkish-mc4-uncased-generator")

model = AutoModel.from_pretrained("electra-base-turkish-mc4-uncased-generator")

```

# Citation

You can use the following BibTeX entry for citation:

```bibtex

@software{stefan_schweter_2020_3770924,

author = {Stefan Schweter},

title = {BERTurk - BERT models for Turkish},

month = apr,

year = 2020,

publisher = {Zenodo},

version = {1.0.0},

doi = {10.5281/zenodo.3770924},

url = {https://doi.org/10.5281/zenodo.3770924}

}

```

# Acknowledgments

Thanks to [Kemal Oflazer](http://www.andrew.cmu.edu/user/ko/) for providing us

additional large corpora for Turkish. Many thanks to Reyyan Yeniterzi for providing

us the Turkish NER dataset for evaluation.

We would like to thank [Merve Noyan](https://twitter.com/mervenoyann) for the

awesome logo!

Research supported with Cloud TPUs from Google's TensorFlow Research Cloud (TFRC).

Thanks for providing access to the TFRC ❤️

|

dennlinger/bert-wiki-paragraphs

|

c8d6e5285fe3ea801834ef1f385a5518a4c91281

|

2021-09-30T20:13:44.000Z

|

[

"pytorch",

"bert",

"text-classification",

"arxiv:2012.03619",

"arxiv:1803.09337",

"transformers"

] |

text-classification

| false |

dennlinger

| null |

dennlinger/bert-wiki-paragraphs

| 52 | null |

transformers

| 5,921 |

# BERT-Wiki-Paragraphs

Authors: Satya Almasian\*, Dennis Aumiller\*, Lucienne-Sophie Marmé, Michael Gertz

Contact us at `<lastname>@informatik.uni-heidelberg.de`

Details for the training method can be found in our work [Structural Text Segmentation of Legal Documents](https://arxiv.org/abs/2012.03619).

The training procedure follows the same setup, but we substitute legal documents for Wikipedia in this model.

Training is performed in a form of weakly-supervised fashion to determine whether paragraphs topically belong together or not.

We utilize automatically generated samples from Wikipedia for training, where paragraphs from within the same section are assumed to be topically coherent.

We use the same articles as ([Koshorek et al., 2018](https://arxiv.org/abs/1803.09337)),

albeit from a 2021 dump of Wikpeida, and split at paragraph boundaries instead of the sentence level.

## Training Setup

The model was trained for 3 epochs from `bert-base-uncased` on paragraph pairs (limited to 512 subwork with the `longest_first` truncation strategy).

We use a batch size of 24 wit 2 iterations gradient accumulation (effective batch size of 48), and a learning rate of 1e-4, with gradient clipping at 5.

Training was performed on a single Titan RTX GPU over the duration of 3 weeks.

|

diarsabri/LaDPR-query-encoder

|

600d1091763cd2418ba805d72f55d4bed1c6d6b4

|

2021-05-05T21:00:08.000Z

|

[

"pytorch",

"dpr",

"feature-extraction",

"transformers"

] |

feature-extraction

| false |

diarsabri

| null |

diarsabri/LaDPR-query-encoder

| 52 | null |

transformers

| 5,922 |

Language Model 1

For Language agnostic Dense Passage Retrieval

|

flax-community/indonesian-roberta-base

|

6cedc13543d3e59e980c435d28a2346d9f2bad31

|

2021-07-10T08:19:46.000Z

|

[

"pytorch",

"jax",

"tensorboard",

"roberta",

"fill-mask",

"id",

"dataset:oscar",

"arxiv:1907.11692",

"transformers",

"indonesian-roberta-base",

"license:mit",

"autotrain_compatible"

] |

fill-mask

| false |

flax-community

| null |

flax-community/indonesian-roberta-base

| 52 | 5 |

transformers

| 5,923 |

---

language: id

tags:

- indonesian-roberta-base

license: mit

datasets:

- oscar

widget:

- text: "Budi telat ke sekolah karena ia <mask>."

---

## Indonesian RoBERTa Base

Indonesian RoBERTa Base is a masked language model based on the [RoBERTa](https://arxiv.org/abs/1907.11692) model. It was trained on the [OSCAR](https://huggingface.co/datasets/oscar) dataset, specifically the `unshuffled_deduplicated_id` subset. The model was trained from scratch and achieved an evaluation loss of 1.798 and an evaluation accuracy of 62.45%.

This model was trained using HuggingFace's Flax framework and is part of the [JAX/Flax Community Week](https://discuss.huggingface.co/t/open-to-the-community-community-week-using-jax-flax-for-nlp-cv/7104) organized by HuggingFace. All training was done on a TPUv3-8 VM, sponsored by the Google Cloud team.

All necessary scripts used for training could be found in the [Files and versions](https://huggingface.co/flax-community/indonesian-roberta-base/tree/main) tab, as well as the [Training metrics](https://huggingface.co/flax-community/indonesian-roberta-base/tensorboard) logged via Tensorboard.

## Model

| Model | #params | Arch. | Training/Validation data (text) |

| ------------------------- | ------- | ------- | ------------------------------------------ |

| `indonesian-roberta-base` | 124M | RoBERTa | OSCAR `unshuffled_deduplicated_id` Dataset |

## Evaluation Results

The model was trained for 8 epochs and the following is the final result once the training ended.

| train loss | valid loss | valid accuracy | total time |

| ---------- | ---------- | -------------- | ---------- |

| 1.870 | 1.798 | 0.6245 | 18:25:39 |

## How to Use

### As Masked Language Model

```python

from transformers import pipeline

pretrained_name = "flax-community/indonesian-roberta-base"

fill_mask = pipeline(

"fill-mask",

model=pretrained_name,

tokenizer=pretrained_name

)

fill_mask("Budi sedang <mask> di sekolah.")

```

### Feature Extraction in PyTorch

```python

from transformers import RobertaModel, RobertaTokenizerFast

pretrained_name = "flax-community/indonesian-roberta-base"

model = RobertaModel.from_pretrained(pretrained_name)

tokenizer = RobertaTokenizerFast.from_pretrained(pretrained_name)

prompt = "Budi sedang berada di sekolah."

encoded_input = tokenizer(prompt, return_tensors='pt')

output = model(**encoded_input)

```

## Team Members

- Wilson Wongso ([@w11wo](https://hf.co/w11wo))

- Steven Limcorn ([@stevenlimcorn](https://hf.co/stevenlimcorn))

- Samsul Rahmadani ([@munggok](https://hf.co/munggok))

- Chew Kok Wah ([@chewkokwah](https://hf.co/chewkokwah))

|

google/t5-3b-ssm

|

de842a05eabdc2688bd66a84b83227e933ed8e5e

|

2020-12-07T19:49:00.000Z

|

[

"pytorch",

"tf",

"t5",

"text2text-generation",

"en",

"dataset:c4",

"dataset:wikipedia",

"arxiv:2002.08909",

"arxiv:1910.10683",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] |

text2text-generation

| false |

google

| null |

google/t5-3b-ssm

| 52 | 1 |

transformers

| 5,924 |

---

language: en

datasets:

- c4

- wikipedia

license: apache-2.0

---

[Google's T5](https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html) for **Closed Book Question Answering**.

The model was pre-trained using T5's denoising objective on [C4](https://huggingface.co/datasets/c4) and subsequently additionally pre-trained using [REALM](https://arxiv.org/pdf/2002.08909.pdf)'s salient span masking objective on [Wikipedia](https://huggingface.co/datasets/wikipedia).

**Note**: This model should be fine-tuned on a question answering downstream task before it is useable for closed book question answering.

Other Community Checkpoints: [here](https://huggingface.co/models?search=ssm)

Paper: [How Much Knowledge Can You Pack

Into the Parameters of a Language Model?](https://arxiv.org/abs/1910.10683.pdf)

Authors: *Adam Roberts, Colin Raffel, Noam Shazeer*

## Abstract

It has recently been observed that neural language models trained on unstructured text can implicitly store and retrieve knowledge using natural language queries. In this short paper, we measure the practical utility of this approach by fine-tuning pre-trained models to answer questions without access to any external context or knowledge. We show that this approach scales with model size and performs competitively with open-domain systems that explicitly retrieve answers from an external knowledge source when answering questions. To facilitate reproducibility and future work, we release our code and trained models at https://goo.gle/t5-cbqa.

|

gsarti/it5-small

|

5b4b3e313cbc2b00a135a55daa3fe826ac077b25

|

2022-03-09T11:56:34.000Z

|

[

"pytorch",

"tf",

"jax",

"tensorboard",

"t5",

"text2text-generation",

"it",

"dataset:gsarti/clean_mc4_it",

"arxiv:2203.03759",

"transformers",

"seq2seq",

"lm-head",

"license:apache-2.0",

"autotrain_compatible"

] |

text2text-generation

| false |

gsarti

| null |

gsarti/it5-small

| 52 | 1 |

transformers

| 5,925 |

---

language:

- it

datasets:

- gsarti/clean_mc4_it

tags:

- seq2seq

- lm-head

license: apache-2.0

inference: false

thumbnail: https://gsarti.com/publication/it5/featured.png

---

# Italian T5 Small 🇮🇹

The [IT5](https://huggingface.co/models?search=it5) model family represents the first effort in pretraining large-scale sequence-to-sequence transformer models for the Italian language, following the approach adopted by the original [T5 model](https://github.com/google-research/text-to-text-transfer-transformer).

This model is released as part of the project ["IT5: Large-Scale Text-to-Text Pretraining for Italian Language Understanding and Generation"](https://arxiv.org/abs/2203.03759), by [Gabriele Sarti](https://gsarti.com/) and [Malvina Nissim](https://malvinanissim.github.io/) with the support of [Huggingface](https://discuss.huggingface.co/t/open-to-the-community-community-week-using-jax-flax-for-nlp-cv/7104) and with TPU usage sponsored by Google's [TPU Research Cloud](https://sites.research.google/trc/). All the training was conducted on a single TPU3v8-VM machine on Google Cloud. Refer to the Tensorboard tab of the repository for an overview of the training process.

*The inference widget is deactivated because the model needs a task-specific seq2seq fine-tuning on a downstream task to be useful in practice. The models in the [`it5`](https://huggingface.co/it5) organization provide some examples of this model fine-tuned on various downstream task.*

## Model variants

This repository contains the checkpoints for the `base` version of the model. The model was trained for one epoch (1.05M steps) on the [Thoroughly Cleaned Italian mC4 Corpus](https://huggingface.co/datasets/gsarti/clean_mc4_it) (~41B words, ~275GB) using 🤗 Datasets and the `google/t5-v1_1-small` improved configuration. The training procedure is made available [on Github](https://github.com/gsarti/t5-flax-gcp).

The following table summarizes the parameters for all available models

| |`it5-small` (this one) |`it5-base` |`it5-large` |`it5-base-oscar` |

|-----------------------|-----------------------|----------------------|-----------------------|----------------------------------|

|`dataset` |`gsarti/clean_mc4_it` |`gsarti/clean_mc4_it` |`gsarti/clean_mc4_it` |`oscar/unshuffled_deduplicated_it`|

|`architecture` |`google/t5-v1_1-small` |`google/t5-v1_1-base` |`google/t5-v1_1-large` |`t5-base` |

|`learning rate` | 5e-3 | 5e-3 | 5e-3 | 1e-2 |

|`steps` | 1'050'000 | 1'050'000 | 2'100'000 | 258'000 |

|`training time` | 36 hours | 101 hours | 370 hours | 98 hours |

|`ff projection` |`gated-gelu` |`gated-gelu` |`gated-gelu` |`relu` |

|`tie embeds` |`false` |`false` |`false` |`true` |

|`optimizer` | adafactor | adafactor | adafactor | adafactor |

|`max seq. length` | 512 | 512 | 512 | 512 |

|`per-device batch size`| 16 | 16 | 8 | 16 |

|`tot. batch size` | 128 | 128 | 64 | 128 |

|`weigth decay` | 1e-3 | 1e-3 | 1e-2 | 1e-3 |

|`validation split size`| 15K examples | 15K examples | 15K examples | 15K examples |

The high training time of `it5-base-oscar` was due to [a bug](https://github.com/huggingface/transformers/pull/13012) in the training script.

For a list of individual model parameters, refer to the `config.json` file in the respective repositories.

## Using the models

```python

from transformers import AutoTokenzier, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("gsarti/it5-small")

model = AutoModelForSeq2SeqLM.from_pretrained("gsarti/it5-small")

```

*Note: You will need to fine-tune the model on your downstream seq2seq task to use it. See an example [here](https://huggingface.co/it5/it5-base-question-answering).*

Flax and Tensorflow versions of the model are also available:

```python

from transformers import FlaxT5ForConditionalGeneration, TFT5ForConditionalGeneration

model_flax = FlaxT5ForConditionalGeneration.from_pretrained("gsarti/it5-small")

model_tf = TFT5ForConditionalGeneration.from_pretrained("gsarti/it5-small")

```

## Limitations

Due to the nature of the web-scraped corpus on which IT5 models were trained, it is likely that their usage could reproduce and amplify pre-existing biases in the data, resulting in potentially harmful content such as racial or gender stereotypes and conspiracist views. For this reason, the study of such biases is explicitly encouraged, and model usage should ideally be restricted to research-oriented and non-user-facing endeavors.

## Model curators

For problems or updates on this model, please contact [[email protected]](mailto:[email protected]).

## Citation Information

```bibtex

@article{sarti-nissim-2022-it5,

title={IT5: Large-scale Text-to-text Pretraining for Italian Language Understanding and Generation},

author={Sarti, Gabriele and Nissim, Malvina},

journal={ArXiv preprint 2203.03759},

url={https://arxiv.org/abs/2203.03759},

year={2022},

month={mar}

}

```

|

huggingtweets/tilda_tweets

|

2d85aa279ff77324cb7172a82e7eae68f0ffe15b

|

2021-05-23T02:19:01.000Z

|

[

"pytorch",

"jax",

"gpt2",

"text-generation",

"en",

"transformers",

"huggingtweets"

] |

text-generation

| false |

huggingtweets

| null |

huggingtweets/tilda_tweets

| 52 | null |

transformers

| 5,926 |

---

language: en

thumbnail: https://www.huggingtweets.com/tilda_tweets/1614119818814/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div>

<div style="width: 132px; height:132px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1247095679882645511/gsXujIBv_400x400.jpg')">

</div>

<div style="margin-top: 8px; font-size: 19px; font-weight: 800">tilly 🤖 AI Bot </div>

<div style="font-size: 15px">@tilda_tweets bot</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://app.wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-model-to-generate-tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on [@tilda_tweets's tweets](https://twitter.com/tilda_tweets).

| Data | Quantity |

| --- | --- |

| Tweets downloaded | 326 |

| Retweets | 118 |

| Short tweets | 24 |

| Tweets kept | 184 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/3n2tjxi3/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @tilda_tweets's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/2kg9hiau) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/2kg9hiau/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/tilda_tweets')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

nbroad/deberta-v3-xsmall-squad2

|

4b1d92d2daed14c72a00446afe3e436122b96d4f

|

2022-07-22T14:03:41.000Z

|

[

"pytorch",

"tensorboard",

"deberta-v2",

"question-answering",

"en",

"dataset:squad_v2",

"transformers",

"license:cc-by-4.0",

"model-index",

"autotrain_compatible"

] |

question-answering

| false |

nbroad

| null |

nbroad/deberta-v3-xsmall-squad2

| 52 | null |

transformers

| 5,927 |

---

license: cc-by-4.0

widget:

- context: DeBERTa improves the BERT and RoBERTa models using disentangled attention

and enhanced mask decoder. With those two improvements, DeBERTa out perform RoBERTa

on a majority of NLU tasks with 80GB training data. In DeBERTa V3, we further

improved the efficiency of DeBERTa using ELECTRA-Style pre-training with Gradient

Disentangled Embedding Sharing. Compared to DeBERTa, our V3 version significantly

improves the model performance on downstream tasks. You can find more technique

details about the new model from our paper. Please check the official repository

for more implementation details and updates.

example_title: DeBERTa v3 Q1

text: How is DeBERTa version 3 different than previous ones?

- context: DeBERTa improves the BERT and RoBERTa models using disentangled attention

and enhanced mask decoder. With those two improvements, DeBERTa out perform RoBERTa

on a majority of NLU tasks with 80GB training data. In DeBERTa V3, we further

improved the efficiency of DeBERTa using ELECTRA-Style pre-training with Gradient

Disentangled Embedding Sharing. Compared to DeBERTa, our V3 version significantly

improves the model performance on downstream tasks. You can find more technique

details about the new model from our paper. Please check the official repository

for more implementation details and updates.

example_title: DeBERTa v3 Q2

text: Where do I go to see new info about DeBERTa?

datasets:

- squad_v2

metrics:

- f1

- exact

tags:

- question-answering

language: en

model-index:

- name: DeBERTa v3 xsmall squad2

results:

- task:

name: Question Answering

type: question-answering

dataset:

name: SQuAD2.0

type: question-answering

metrics:

- name: f1

type: f1

value: 81.5

- name: exact

type: exact

value: 78.3

- task:

type: question-answering

name: Question Answering

dataset:

name: squad_v2

type: squad_v2

config: squad_v2

split: validation

metrics:

- name: Exact Match

type: exact_match

value: 78.5341

verified: true

- name: F1

type: f1

value: 81.6408

verified: true

- name: total

type: total

value: 11870

verified: true

---

# DeBERTa v3 xsmall SQuAD 2.0

[Microsoft reports that this model can get 84.8/82.0](https://huggingface.co/microsoft/deberta-v3-xsmall#fine-tuning-on-nlu-tasks) on f1/em on the dev set.

I got 81.5/78.3 but I only did one run and I didn't use the official squad2 evaluation script. I will do some more runs and show the results on the official script soon.

|

nlp4good/psych-search

|

894dbb27a8ab4f284b9659ceb6578c6f431d35dc

|

2021-09-22T09:29:47.000Z

|

[

"pytorch",

"jax",

"bert",

"fill-mask",

"en",

"dataset:PubMed",

"transformers",

"mental-health",

"license:apache-2.0",

"autotrain_compatible"

] |

fill-mask

| false |

nlp4good

| null |

nlp4good/psych-search

| 52 | null |

transformers

| 5,928 |

---

language:

- en

tags:

- mental-health

license: apache-2.0

datasets:

- PubMed

---

# Psych-Search

Psych-Search is a work in progress to bring cutting edge NLP to mental health practitioners. The model detailed here serves as a foundation for traditional classification models as well as NLU models for a Psych-Search application. The goal of the Psych-Search Application is to use a combination of traditional text classification models to expand the scope of the MESH taxonomy with the inclusion of relevant categories for mental health pracitioners designing suicide prevention programs for adolescent communities within the United States, as well as the automatic extraction and standardization of entities such as risk factors and protective factors.

Our first expansion efforts to the MESH taxonomy include categories:

- Prevention Strategies

- Protective Factors

We are actively looking for partners on this work and would love to hear from you! Please ping us at [email protected].

## Model description

This model is an extension of [allenai/scibert_scivocab_uncased](https://huggingface.co/allenai/scibert_scivocab_uncased). Continued pretraining was done using SciBERT as the base model using abstract text only from Pyschology and Psychiatry PubMed research. Training was done on approximately 3.5 million papers for 10 epochs and evaluated on a task similar to BioASQ Task A.

## Intended uses & limitations

#### How to use

```python

from transformers import AutoTokenizer, AutoModel

mname = "nlp4good/psych-search"

tokenizer = AutoTokenizer.from_pretrained(mname)

model = AutoModel.from_pretrained(mname)

```

### Limitations and bias

This model was trained on all PubMed abstracts categorized under [Psychology and Psychiatry](https://meshb.nlm.nih.gov/treeView). As of March 1, this corresponds to approximately 3.2 million papers that contains abstract text. Of these 3.2 million papers, relevant sparse mental health categories were back translated to increase the representation of certain mental health categories.

There are several limitation with this dataset including large discrepancies in the number of papers associated with [Sexual and Gender Minorities](https://meshb.nlm.nih.gov/record/ui?ui=D000072339). The training data consisted of the following breakdown across gender groups:

Female | Male | Sexual and Gender Minorities

-------|---------|----------

1,896,301 | 1,945,279 | 4,529

Similar discrepancies are present within [Ethnic Groups](https://meshb.nlm.nih.gov/record/ui?ui=D005006) as defined within the MESH taxonomy:

| African Americans | Arabs | Asian Americans | Hispanic Americans | Indians, Central American | Indians, North American | Indians, South American | Indigenous Peoples | Mexican Americans |

|-------------------|-------|-----------------|--------------------|---------------------------|-------------------------|-------------------------|--------------------|-------------------|

| 31,027 | 2,437 | 5,612 | 18,893 | 124 | 5,657 | 633 | 174 | 3,234 |

These discrepancies can have a significant impact on information retrieval systems, downstream machine learning models, and other forms of NLP that leverage these pretrained models.

## Training data

This model was trained on all PubMed abstracts categorized under [Psychology and Psychiatry](https://meshb.nlm.nih.gov/treeView). As of March 1, this corresponds to approximately 3.2 million papers that contains abstract text. Of these 3.2 million papers, relevant sparse categories were back translated from english to french and from french to english to increase the representation of sparser mental health categories. This included backtranslating the following papers with the following categories:

- Depressive Disorder

- Risk Factors

- Mental Disorders

- Child, Preschool

- Mental Health

In aggregate, this process added 557,980 additional papers to our training data.

## Training procedure

Continued pretraining was done on Psychology and Psychiatry PubMed papers for 10 epochs. Default parameters were used with the exception of gradient accumulation steps which was set at 4, with a per device train batch size of 32. 2 x Nvidia 3090's were used in the development of this model.

## Evaluation results

To evaluate the effectiveness of psych-search within the mental health domain, an evaluation task was constructed by finetuning psych-search for a task similar to [BioASQ Task A](http://bioasq.org/). Here we perform large scale biomedical indexing using the MESH taxonomy associated with each paper underneath Psychology and Psychiatry. The evaluation metric is the micro F1 score across all second level descriptors within Psychology and Psychiatry. This corresponds to 38 different MESH categories used during evaluation.

bert-base-uncased | SciBERT Scivocab Uncased | Psych-Search

-------|---------|----------

0.7348 | 0.7394 | 0.7415

## Next Steps

If you are interested in continuing to build on this work or have other ideas on how we can build on others work, please let us know! We can be reached at [email protected]. Our goal is to bring state of the art NLP capabilities to underserved areas of research, with mental health being our top priority.

|

shtoshni/spanbert_coreference_large

|

b93b0b352fd0153550f18878505b4ad284b97e10

|

2021-03-28T14:23:36.000Z

|

[

"pytorch",

"transformers"

] | null | false |

shtoshni

| null |

shtoshni/spanbert_coreference_large

| 52 | null |

transformers

| 5,929 |

Entry not found

|

uf-aice-lab/math-roberta

|

e535977f65f11632a830a8af74e9cad598c25944

|

2022-02-11T20:21:02.000Z

|

[

"pytorch",

"roberta",

"text-generation",

"en",

"transformers",

"nlp",

"math learning",

"education",

"license:mit"

] |

text-generation

| false |

uf-aice-lab

| null |

uf-aice-lab/math-roberta

| 52 | null |

transformers

| 5,930 |

---

language:

- en

tags:

- nlp

- math learning

- education

license: mit

---

# Math-RoBerta for NLP tasks in math learning environments

This model is fine-tuned RoBERTa-large trained with 8 Nvidia RTX 1080Ti GPUs using 3,000,000 math discussion posts by students and facilitators on Algebra Nation (https://www.mathnation.com/). MathRoBERTa has 24 layers, and 355 million parameters and its published model weights take up to 1.5 gigabytes of disk space. It can potentially provide a good base performance on NLP related tasks (e.g., text classification, semantic search, Q&A) in similar math learning environments.

### Here is how to use it with texts in HuggingFace

```python

from transformers import RobertaTokenizer, RobertaModel

tokenizer = RobertaTokenizer.from_pretrained('uf-aice-lab/math-roberta')

model = RobertaModel.from_pretrained('uf-aice-lab/math-roberta')

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

|

wpnbos/xlm-roberta-base-conll2002-dutch

|

4bb41e4849d873d8fcb49f249342492eaf1f0c31

|

2022-04-20T19:28:55.000Z

|

[

"pytorch",

"xlm-roberta",

"token-classification",

"nl",

"dataset:conll2002",

"arxiv:1911.02116",

"transformers",

"Named Entity Recognition",

"autotrain_compatible"

] |

token-classification

| false |

wpnbos

| null |

wpnbos/xlm-roberta-base-conll2002-dutch

| 52 | null |

transformers

| 5,931 |

---

language:

- nl

tags:

- Named Entity Recognition

- xlm-roberta

datasets:

- conll2002

metrics:

- f1: 90.57

---

# XLM-RoBERTa base ConLL-2002 Dutch

XLM-Roberta base model finetuned on ConLL-2002 Dutch train set, which is a Named Entity Recognition dataset containing the following classes: PER, LOC, ORG and MISC.

Label mapping:

{

0: O,

1: B-PER,

2: I-PER,

3: B-ORG,

4: I-ORG,

5: B-LOC,

6: I-LOC,

7: B-MISC,

8: I-MISC,

}

Results from https://arxiv.org/pdf/1911.02116.pdf reciprocated (original results were 90.39 F1, this finetuned version here scored 90.57).

|

IIC/dpr-spanish-question_encoder-squades-base

|

87da269c24ef47fa7dc2bb19ebedb408d9d7aeb1

|

2022-04-02T15:08:08.000Z

|

[

"pytorch",

"bert",

"fill-mask",

"es",

"dataset:squad_es",

"arxiv:2004.04906",

"transformers",

"sentence similarity",

"passage retrieval",

"model-index",

"autotrain_compatible"

] |

fill-mask

| false |

IIC

| null |

IIC/dpr-spanish-question_encoder-squades-base

| 52 | 3 |

transformers

| 5,932 |

---

language:

- es

tags:

- sentence similarity # Example: audio

- passage retrieval # Example: automatic-speech-recognition

datasets:

- squad_es

metrics:

- eval_loss: 0.08608942725107592

- eval_accuracy: 0.9925325215819639

- eval_f1: 0.8805402320715237

- average_rank: 0.27430093209054596

model-index:

- name: dpr-spanish-passage_encoder-squades-base

results:

- task:

type: text similarity # Required. Example: automatic-speech-recognition

name: text similarity # Optional. Example: Speech Recognition

dataset:

type: squad_es # Required. Example: common_voice. Use dataset id from https://hf.co/datasets

name: squad_es # Required. Example: Common Voice zh-CN

args: es # Optional. Example: zh-CN

metrics:

- type: loss

value: 0.08608942725107592

name: eval_loss

- type: accuracy

value: 0.99

name: accuracy

- type: f1

value: 0.88

name: f1

- type: avgrank

value: 0.2743

name: avgrank

---

[Dense Passage Retrieval](https://arxiv.org/abs/2004.04906) is a set of tools for performing state of the art open-domain question answering. It was initially developed by Facebook and there is an [official repository](https://github.com/facebookresearch/DPR). DPR is intended to retrieve the relevant documents to answer a given question, and is composed of 2 models, one for encoding passages and other for encoding questions. This concrete model is the one used for encoding passages.

Regarding its use, this model should be used to vectorize a question that enters in a Question Answering system, and then we compare that encoding with the encodings of the database (encoded with [the passage encoder](https://huggingface.co/avacaondata/dpr-spanish-passage_encoder-squades-base)) to find the most similar documents , which then should be used for either extracting the answer or generating it.

For training the model, we used the spanish version of SQUAD, [SQUAD-ES](https://huggingface.co/datasets/squad_es), with which we created positive and negative examples for the model.

Example of use:

```python

from transformers import DPRQuestionEncoder, DPRQuestionEncoderTokenizer

model_str = "avacaondata/dpr-spanish-passage_encoder-squades-base"

tokenizer = DPRQuestionEncoderTokenizer.from_pretrained(model_str)

model = DPRQuestionEncoder.from_pretrained(model_str)

input_ids = tokenizer("¿Qué medallas ganó Usain Bolt en 2012?", return_tensors="pt")["input_ids"]

embeddings = model(input_ids).pooler_output

```

The full metrics of this model on the evaluation split of SQUADES are:

```

evalloss: 0.08608942725107592

acc: 0.9925325215819639

f1: 0.8805402320715237

acc_and_f1: 0.9365363768267438

average_rank: 0.27430093209054596

```

And the classification report:

```

precision recall f1-score support

hard_negative 0.9961 0.9961 0.9961 325878

positive 0.8805 0.8805 0.8805 10514

accuracy 0.9925 336392

macro avg 0.9383 0.9383 0.9383 336392

weighted avg 0.9925 0.9925 0.9925 336392

```

### Contributions

Thanks to [@avacaondata](https://huggingface.co/avacaondata), [@alborotis](https://huggingface.co/alborotis), [@albarji](https://huggingface.co/albarji), [@Dabs](https://huggingface.co/Dabs), [@GuillemGSubies](https://huggingface.co/GuillemGSubies) for adding this model.

|

IDEA-CCNL/Bigan-Transformer-XL-denoise-1.1B

|

0484d5e9d159d112a543c1990231762f8a700d2d

|

2022-04-13T07:25:42.000Z

|

[

"pytorch",

"zh",

"transformers",

"license:apache-2.0"

] | null | false |

IDEA-CCNL

| null |

IDEA-CCNL/Bigan-Transformer-XL-denoise-1.1B

| 52 | null |

transformers

| 5,933 |

---

language:

- zh

license: apache-2.0

---

# Abstract

This is a Chinese transformer-xl model trained on [Wudao dataset](https://resource.wudaoai.cn/home?ind&name=WuDaoCorpora%202.0&id=1394901288847716352)

and finetuned on a denoise dataset constructed by our team. The denoise task is to reconstruct a fluent and clean text from a noisy input which includes random insertion/swap/deletion/replacement/sentence reordering.

## Usage

### load model

```python

from fengshen.models.transfo_xl_denoise.tokenization_transfo_xl_denoise import TransfoXLDenoiseTokenizer

from fengshen.models.transfo_xl_denoise.modeling_transfo_xl_denoise import TransfoXLDenoiseModel

tokenizer = TransfoXLDenoiseTokenizer.from_pretrained('IDEA-CCNL/Bigan-Transformer-XL-denoise-1.1B')

model = TransfoXLDenoiseModel.from_pretrained('IDEA-CCNL/Bigan-Transformer-XL-denoise-1.1B')

```

### generation

```python

from fengshen.models.transfo_xl_denoise.generate import denoise_generate

input_text = "凡是有成就的人, 都很严肃地对待生命自己的"

res = denoise_generate(model, tokenizer, input_text)

print(res) # "有成就的人都很严肃地对待自己的生命。"

```

## Citation

If you find the resource is useful, please cite the following website in your paper.

```

@misc{Fengshenbang-LM,

title={Fengshenbang-LM},

author={IDEA-CCNL},

year={2022},

howpublished={\url{https://github.com/IDEA-CCNL/Fengshenbang-LM}},

}

```

|

Helsinki-NLP/opus-mt-tc-big-en-ro

|

5d0c15b53f631dc74430fe8153c8ed8d02cc7290

|

2022-06-01T13:01:57.000Z

|

[

"pytorch",

"marian",

"text2text-generation",

"en",

"ro",

"transformers",

"translation",

"opus-mt-tc",

"license:cc-by-4.0",