modelId | sha | lastModified | tags | pipeline_tag | private | author | config | id | downloads | likes | library_name | __index_level_0__ | readme |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

MilaNLProc/hate-ita | e86867f77ebeae746f68907f76506d8a172e4424 | 2022-07-07T15:32:29.000Z | [

"pytorch",

"xlm-roberta",

"text-classification",

"it",

"arxiv:2104.12250",

"transformers",

"text classification",

"abusive language",

"hate speech",

"offensive language",

"license:mit"

]

| text-classification | false | MilaNLProc | null | MilaNLProc/hate-ita | 56 | 1 | transformers | 5,800 | ---

language: it

license: mit

tags:

- text classification

- abusive language

- hate speech

- offensive language

widget:

- text: "Ci sono dei bellissimi capibara!"

example_title: "Hate Speech Classification 1"

- text: "Sei una testa di cazzo!!"

example_title: "Hate Speech Classification 2"

- text: "Ti odio!"

example_title: "Hate Speech Classification 3"

---


[Debora Nozza](http://dnozza.github.io/) •

[Federico Bianchi](https://federicobianchi.io/) •

[Giuseppe Attanasio](https://gattanasio.cc/)

# HATE-ITA Base

HATE-ITA is a binary hate speech classification model for Italian social media text.

<img src="https://raw.githubusercontent.com/MilaNLProc/hate-ita/main/hateita.png?token=GHSAT0AAAAAABTEBAJ4PNDWAMU3KKIGUOCSYWG4IBA" width="200">

## Abstract

Online hate speech is a dangerous phenomenon that can (and should) be promptly and properly counteracted. While Natural Language Processing has been successfully used for this purpose, many research efforts are directed toward the English language. This choice severely limits classification power in non-English languages. In this paper, we test several learning frameworks for identifying hate speech in Italian text. We release **HATE-ITA, a set of multilingual models trained on a large set of English data and available Italian datasets**. HATE-ITA performs better than monolingual models and also seems to adapt well to language-specific slurs. We believe our findings will encourage research in other mid-to-low resource communities and provide a valuable benchmarking tool for the Italian community.

## Model

This model is a fine-tuned version of the [XLM-T](https://arxiv.org/abs/2104.12250) model.

| Model | Download |

| ------ | -------------------------|

| `hate-ita` | [Link](https://huggingface.co/MilaNLProc/hate-ita) |

| `hate-ita-xlm-r-base` | [Link](https://huggingface.co/MilaNLProc/hate-ita-xlm-r-base) |

| `hate-ita-xlm-r-large` | [Link](https://huggingface.co/MilaNLProc/hate-ita-xlm-r-large) |

## Results

This model had an F1 of 0.83 on the test set.

## Usage

```python

from transformers import pipeline

classifier = pipeline("text-classification", model="MilaNLProc/hate-ita", top_k=2)

prediction = classifier("ti odio")

print(prediction)

```
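With `top_k=2`, the text-classification pipeline returns a score for both classes rather than only the best one. The helper below is a minimal sketch for picking the most likely label from that output. It assumes each prediction is a dict with `label` and `score` keys (the standard pipeline format); the label names in the usage example are hypothetical placeholders, not necessarily the ones HATE-ITA emits.

```python
from typing import Dict, List, Union

Prediction = Dict[str, Union[str, float]]


def best_label(predictions: Union[List[Prediction], List[List[Prediction]]]) -> str:
    """Return the highest-scoring label from a text-classification pipeline output.

    Depending on the transformers version and input type, the predictions for a
    single text may come back as a flat list of dicts or wrapped in an extra
    list, so both shapes are accepted here.
    """
    if predictions and isinstance(predictions[0], list):
        predictions = predictions[0]
    top = max(predictions, key=lambda p: p["score"])
    return str(top["label"])


# Hypothetical output for "ti odio"; real labels and scores come from the model.
example = [{"label": "hate", "score": 0.93}, {"label": "non-hate", "score": 0.07}]
print(best_label(example))
```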

## Citation

Please use the following BibTeX entry if you use this model in your project:

```

@inproceedings{nozza-etal-2022-hate-ita,

title = {{HATE-ITA}: Hate Speech Detection in Italian Social Media Text},

author = "Nozza, Debora and Bianchi, Federico and Attanasio, Giuseppe",

booktitle = "Proceedings of the 6th Workshop on Online Abuse and Harms",

year = "2022",

publisher = "Association for Computational Linguistics"

}

```

## Ethical Statement

While promising, the results in this work should not be interpreted as a definitive assessment of the performance of hate speech detection in Italian. We are unsure whether our model can maintain stable and fair precision across different targets and categories. HATE-ITA might overlook some sensitive details, which practitioners should treat with care. |

BNZSA/distilbert-base-uncased-country-NER-address | 9f7f7600e4e7e90a28cd5a993842b01cad5f7259 | 2022-06-10T11:02:34.000Z | [

"pytorch",

"distilbert",

"text-classification",

"transformers",

"license:gpl-3.0"

]

| text-classification | false | BNZSA | null | BNZSA/distilbert-base-uncased-country-NER-address | 56 | null | transformers | 5,801 | ---

license: gpl-3.0

---

|

microsoft/swinv2-tiny-patch4-window8-256 | 2b979ac403df19f72443cd151e9e957842eb9645 | 2022-07-07T14:18:14.000Z | [

"pytorch",

"swinv2",

"transformers"

]

| null | false | microsoft | null | microsoft/swinv2-tiny-patch4-window8-256 | 56 | null | transformers | 5,802 | Entry not found |

fujiki/t5-efficient-xl-nl6-en2ja | 87dd3369f46ec03661da5a33be63a638f82a6c39 | 2022-07-04T03:48:38.000Z | [

"pytorch",

"t5",

"text2text-generation",

"transformers",

"license:afl-3.0",

"autotrain_compatible"

]

| text2text-generation | false | fujiki | null | fujiki/t5-efficient-xl-nl6-en2ja | 56 | null | transformers | 5,803 | ---

license: afl-3.0

---

|

tezign/Erlangshen-Sentiment-FineTune | 23b9777a3d2355fdcfb06dea2d7b2a3e965746a4 | 2022-07-14T09:36:39.000Z | [

"pytorch",

"bert",

"text-classification",

"zh",

"transformers",

"sentiment-analysis"

]

| text-classification | false | tezign | null | tezign/Erlangshen-Sentiment-FineTune | 56 | null | transformers | 5,804 | ---

language: zh

tags:

- sentiment-analysis

- pytorch

widget:

- text: "房间非常非常小,内窗,特别不透气,因为夜里走廊灯光是亮的,内窗对着走廊,窗帘又不能完全拉死,怎么都会有一道光射进来。"

- text: "尽快有洗衣房就好了。"

- text: "很好,干净整洁,交通方便。"

- text: "干净整洁很好"

---

# Note

BERT-based sentiment analysis model, fine-tuned from https://huggingface.co/IDEA-CCNL/Erlangshen-Roberta-330M-Sentiment .

The model was trained on a **Chinese dataset of human-written hotel reviews**.

# Usage

```python

from transformers import AutoTokenizer, AutoModelForSequenceClassification, TextClassificationPipeline

MODEL = "tezign/Erlangshen-Sentiment-FineTune"

tokenizer = AutoTokenizer.from_pretrained(MODEL)

model = AutoModelForSequenceClassification.from_pretrained(MODEL, trust_remote_code=True)

classifier = TextClassificationPipeline(model=model, tokenizer=tokenizer)

result = classifier("很好,干净整洁,交通方便。")

print(result)

"""

print result

>> [{'label': 'Positive', 'score': 0.989660382270813}]

"""

```

# Evaluate

We evaluated and compared the performance of **our fine-tuned model** and the **original Erlangshen model** on the **hotel human review test dataset** (5429 negative reviews and 1251 positive reviews).
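The class imbalance in this test set matters when reading the numbers: a classifier that predicts Negative for every review already reaches about 0.81 accuracy while scoring zero on the positive class. The standard-library sketch below recomputes a scikit-learn-style report for such a constant predictor (the helper names are illustrative, not taken from the original evaluation code). Its values round to the same figures as the Original Erlangshen rows in the report, which suggests that model labeled essentially every review as Negative.

```python
from typing import Dict, List, Tuple


def class_metrics(y_true: List[str], y_pred: List[str], label: str) -> Tuple[float, float, float]:
    """Precision, recall and F1 for one class; 0.0 when a ratio is undefined."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def report(y_true: List[str], y_pred: List[str], labels: List[str]) -> Dict[str, Tuple[float, ...]]:
    """Per-class, macro-averaged and support-weighted precision/recall/F1."""
    n = len(y_true)
    per_class = {lab: class_metrics(y_true, y_pred, lab) for lab in labels}
    support = {lab: y_true.count(lab) for lab in labels}
    macro = tuple(sum(m[i] for m in per_class.values()) / len(labels) for i in range(3))
    weighted = tuple(sum(per_class[lab][i] * support[lab] for lab in labels) / n for i in range(3))
    result: Dict[str, Tuple[float, ...]] = dict(per_class)
    result["macro avg"] = macro
    result["weighted avg"] = weighted
    return result


# Test-set composition from the text: 5429 negative and 1251 positive reviews.
y_true = ["Negative"] * 5429 + ["Positive"] * 1251
y_pred = ["Negative"] * len(y_true)  # constant "always Negative" predictor

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
rep = report(y_true, y_pred, ["Negative", "Positive"])
print(f"accuracy {accuracy:.2f}")
for name, (p, r, f) in rep.items():
    print(f"{name:>12} {p:.2f} {r:.2f} {f:.2f}")
```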

The results showed that our model substantially improved the precision and recall of positive reviews:

```text

Our finetune model:

              precision    recall  f1-score   support

    Negative       0.99      0.98      0.98      5429
    Positive       0.92      0.95      0.93      1251

    accuracy                           0.97      6680
   macro avg       0.95      0.96      0.96      6680
weighted avg       0.97      0.97      0.97      6680

======================================================

Original Erlangshen model:

              precision    recall  f1-score   support

    Negative       0.81      1.00      0.90      5429
    Positive       0.00      0.00      0.00      1251

    accuracy                           0.81      6680
   macro avg       0.41      0.50      0.45      6680
weighted avg       0.66      0.81      0.73      6680

``` |

ai4bharat/indicwav2vec-hindi | e746fa3e8de3f6629b9ded29d9c1c565c566f3db | 2022-07-27T20:31:31.000Z | [

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"hi",

"arxiv:2006.11477",

"transformers",

"audio",

"speech",

"asr",

"license:apache-2.0"

]

| automatic-speech-recognition | false | ai4bharat | null | ai4bharat/indicwav2vec-hindi | 56 | null | transformers | 5,805 | ---

language: hi

metrics:

- wer

- cer

tags:

- audio

- automatic-speech-recognition

- speech

- wav2vec2

- asr

license: apache-2.0

---

# IndicWav2Vec-Hindi

This is a [Wav2Vec2](https://arxiv.org/abs/2006.11477)-style ASR model trained in [fairseq](https://github.com/facebookresearch/fairseq) and ported to Hugging Face.

More details on datasets, training setup and conversion to the Hugging Face format can be found in the [IndicWav2Vec](https://github.com/AI4Bharat/IndicWav2Vec) repo.

*Note: This model doesn't support inference with a language model.*

## Script to Run Inference

```python

import torch

from datasets import load_dataset

from transformers import AutoModelForCTC, AutoProcessor

import torchaudio.functional as F

DEVICE_ID = "cuda" if torch.cuda.is_available() else "cpu"

MODEL_ID = "ai4bharat/indicwav2vec-hindi"

sample = next(iter(load_dataset("common_voice", "hi", split="test", streaming=True)))

resampled_audio = F.resample(torch.tensor(sample["audio"]["array"]), 48000, 16000).numpy()

model = AutoModelForCTC.from_pretrained(MODEL_ID).to(DEVICE_ID)

processor = AutoProcessor.from_pretrained(MODEL_ID)

input_values = processor(resampled_audio, return_tensors="pt").input_values

with torch.no_grad():
    logits = model(input_values.to(DEVICE_ID)).logits.cpu()

prediction_ids = torch.argmax(logits, dim=-1)

output_str = processor.batch_decode(prediction_ids)[0]

print(f"Greedy Decoding: {output_str}")

```
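`torch.argmax` followed by `processor.batch_decode` amounts to greedy CTC decoding: take the most likely token for each audio frame, merge consecutive repeats, and drop the CTC blank. The pure-Python sketch below illustrates those steps on a made-up vocabulary. It assumes, as is usual for Wav2Vec2 CTC tokenizers, that the padding token acts as the blank and `|` marks word boundaries; it is not the processor's actual implementation.

```python
from typing import Dict, List, Optional


def ctc_greedy_decode(frame_ids: List[int], id_to_token: Dict[int, str],
                      blank_id: int, word_delimiter: str = "|") -> str:
    """Collapse repeated frame predictions, drop blanks, and join into text."""
    tokens: List[str] = []
    previous: Optional[int] = None
    for token_id in frame_ids:
        # Emit a token only when it differs from the previous frame and is not
        # the blank; a blank between two identical tokens keeps both of them.
        if token_id != previous and token_id != blank_id:
            tokens.append(id_to_token[token_id])
        previous = token_id
    return "".join(tokens).replace(word_delimiter, " ").strip()


# Toy vocabulary (hypothetical): 0 is the blank/pad token, 1 the word delimiter.
vocab = {0: "<pad>", 1: "|", 2: "h", 3: "e", 4: "l", 5: "o", 6: "w", 7: "r", 8: "d"}
# Per-frame argmax ids, as torch.argmax(logits, dim=-1) would give for one utterance.
frames = [2, 2, 3, 0, 4, 4, 0, 4, 5, 1, 1, 6, 5, 7, 4, 0, 8, 8]
print(ctc_greedy_decode(frames, vocab, blank_id=0))
```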

# **About AI4Bharat**

- Website: https://ai4bharat.org/

- Code: https://github.com/AI4Bharat

- HuggingFace: https://huggingface.co/ai4bharat |

ArBert/albert-base-v2-finetuned-ner | d95acee469161c82720bc85773685f8a8e9c60ac | 2022-02-03T14:26:33.000Z | [

"pytorch",

"tensorboard",

"albert",

"token-classification",

"dataset:conll2003",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

]

| token-classification | false | ArBert | null | ArBert/albert-base-v2-finetuned-ner | 55 | 1 | transformers | 5,806 | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- conll2003

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: albert-base-v2-finetuned-ner

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: conll2003

type: conll2003

args: conll2003

metrics:

- name: Precision

type: precision

value: 0.9301181102362205

- name: Recall

type: recall

value: 0.9376033513394334

- name: F1

type: f1

value: 0.9338457315399397

- name: Accuracy

type: accuracy

value: 0.9851613086447802

---


# albert-base-v2-finetuned-ner

This model is a fine-tuned version of [albert-base-v2](https://huggingface.co/albert-base-v2) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0700

- Precision: 0.9301

- Recall: 0.9376

- F1: 0.9338

- Accuracy: 0.9852

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.096 | 1.0 | 1756 | 0.0752 | 0.9163 | 0.9201 | 0.9182 | 0.9811 |

| 0.0481 | 2.0 | 3512 | 0.0761 | 0.9169 | 0.9293 | 0.9231 | 0.9830 |

| 0.0251 | 3.0 | 5268 | 0.0700 | 0.9301 | 0.9376 | 0.9338 | 0.9852 |
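The Step column can be cross-checked against the hyperparameters: with a batch size of 8, one epoch is the number of training sentences divided by 8, rounded up. The sketch below assumes the 14,041-sentence training split of the Hugging Face `conll2003` dataset (not stated on this card) and a single device without gradient accumulation. It also checks that the reported F1 is the harmonic mean of the reported precision and recall.

```python
import math


def steps_per_epoch(num_examples: int, batch_size: int) -> int:
    """Optimizer steps per epoch when the last partial batch is kept."""
    return math.ceil(num_examples / batch_size)


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Assumption: conll2003 train split size; batch size and epoch count from the card.
train_sentences = 14041
per_epoch = steps_per_epoch(train_sentences, batch_size=8)
print(per_epoch, 3 * per_epoch)  # compare with the Step column

# Final-epoch precision and recall from the results table.
print(round(f1_score(0.9301, 0.9376), 4))  # compare with the reported F1
```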

### Framework versions

- Transformers 4.14.1

- Pytorch 1.10.1

- Datasets 1.17.0

- Tokenizers 0.10.3

|

Bagus/wav2vec2-xlsr-greek-speech-emotion-recognition | cbe6ba0246652df652d2ad88baa1cad77f180aa4 | 2021-10-20T05:38:41.000Z | [

"pytorch",

"tensorboard",

"wav2vec2",

"el",

"dataset:aesdd",

"transformers",

"audio",

"audio-classification",

"speech",

"license:apache-2.0"

]

| audio-classification | false | Bagus | null | Bagus/wav2vec2-xlsr-greek-speech-emotion-recognition | 55 | null | transformers | 5,807 | ---

language: el

datasets:

- aesdd

tags:

- audio

- audio-classification

- speech

license: apache-2.0

---

~~~

# requirement packages

!pip install git+https://github.com/huggingface/datasets.git

!pip install git+https://github.com/huggingface/transformers.git

!pip install torchaudio

!pip install librosa

!git clone https://github.com/m3hrdadfi/soxan

cd soxan

~~~

# prediction

~~~

import torch

import torch.nn as nn

import torch.nn.functional as F

import torchaudio

from transformers import AutoConfig, Wav2Vec2FeatureExtractor

import librosa

import IPython.display as ipd

import numpy as np

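import pandas as pd

# Wav2Vec2ForSpeechClassification is defined in the soxan repo cloned above (run from inside soxan/)
from src.models import Wav2Vec2ForSpeechClassification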

~~~

~~~

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model_name_or_path = "Bagus/wav2vec2-xlsr-greek-speech-emotion-recognition"

config = AutoConfig.from_pretrained(model_name_or_path)

feature_extractor = Wav2Vec2FeatureExtractor.from_pretrained(model_name_or_path)

sampling_rate = feature_extractor.sampling_rate

model = Wav2Vec2ForSpeechClassification.from_pretrained(model_name_or_path).to(device)

~~~

~~~

def speech_file_to_array_fn(path, sampling_rate):
    speech_array, _sampling_rate = torchaudio.load(path)
    # Resample from the file's native rate to the rate the feature extractor expects
    resampler = torchaudio.transforms.Resample(_sampling_rate, sampling_rate)
    speech = resampler(speech_array).squeeze().numpy()
    return speech


def predict(path, sampling_rate):
    speech = speech_file_to_array_fn(path, sampling_rate)
    inputs = feature_extractor(speech, sampling_rate=sampling_rate, return_tensors="pt", padding=True)
    inputs = {key: inputs[key].to(device) for key in inputs}

    with torch.no_grad():
        logits = model(**inputs).logits

    # Convert logits to per-emotion probabilities and format them as percentages
    scores = F.softmax(logits, dim=1).detach().cpu().numpy()[0]
    outputs = [{"Emotion": config.id2label[i], "Score": f"{round(score * 100, 3):.1f}%"} for i, score in enumerate(scores)]
    return outputs

~~~
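`predict` turns the model's logits into per-emotion percentages with a softmax. The dependency-free sketch below mirrors that last step so the output format can be checked without downloading the model. The logits are invented for illustration, and the label order simply follows the sample output on this card, which may not match the model's actual `config.id2label`.

```python
import math
from typing import Dict, List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def format_scores(logits: List[float], id2label: Dict[int, str]) -> List[Dict[str, str]]:
    """Build the same [{'Emotion': ..., 'Score': 'xx.x%'}] structure as predict()."""
    scores = softmax(logits)
    return [{"Emotion": id2label[i], "Score": f"{round(score * 100, 3):.1f}%"}
            for i, score in enumerate(scores)]


# Hypothetical label mapping and logits, for illustration only.
id2label = {0: "anger", 1: "disgust", 2: "fear", 3: "happiness", 4: "sadness"}
fake_logits = [4.0, -3.0, -0.5, 0.1, -0.2]
for row in format_scores(fake_logits, id2label):
    print(row)
```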

# prediction

~~~

# path for a sample

path = '/data/jtes_v1.1/wav/f01/ang/f01_ang_01.wav'

outputs = predict(path, sampling_rate)

~~~

~~~

[{'Emotion': 'anger', 'Score': '98.3%'},

{'Emotion': 'disgust', 'Score': '0.0%'},

{'Emotion': 'fear', 'Score': '0.4%'},

{'Emotion': 'happiness', 'Score': '0.7%'},

{'Emotion': 'sadness', 'Score': '0.5%'}]

~~~ |

Finnish-NLP/electra-base-discriminator-finnish | cea3059be27d2b56aeae92e58e92b8fbbfd62f44 | 2022-06-13T16:14:27.000Z | [

"pytorch",

"tensorboard",

"electra",

"pretraining",

"fi",

"dataset:Finnish-NLP/mc4_fi_cleaned",

"dataset:wikipedia",

"transformers",

"finnish",

"license:apache-2.0"

]

| null | false | Finnish-NLP | null | Finnish-NLP/electra-base-discriminator-finnish | 55 | 1 | transformers | 5,808 | ---

language:

- fi

license: apache-2.0

tags:

- finnish

- electra

datasets:

- Finnish-NLP/mc4_fi_cleaned

- wikipedia

---

# ELECTRA for Finnish

ELECTRA model pretrained on Finnish using a replaced token detection (RTD) objective. ELECTRA was introduced in [this paper](https://openreview.net/pdf?id=r1xMH1BtvB) and first released at [this page](https://github.com/google-research/electra).

**Note**: this model is the ELECTRA discriminator model, intended to be used for fine-tuning on downstream tasks like text classification. The ELECTRA generator model, intended to be used for the fill-mask task, is released here: [Finnish-NLP/electra-base-generator-finnish](https://huggingface.co/Finnish-NLP/electra-base-generator-finnish)

## Model description

Finnish ELECTRA is a transformers model pretrained on a very large corpus of Finnish data in a self-supervised fashion. This means it was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots of publicly available data) with an automatic process to generate inputs and labels from those texts.

More precisely, it was pretrained with the replaced token detection (RTD) objective. Instead of masking the input like in BERT's masked language modeling (MLM) objective, this approach corrupts the input by replacing some tokens with plausible alternatives sampled from a small generator model. Then, instead of training a model that predicts the original identities of the corrupted tokens, a discriminative model is trained that predicts whether each token in the corrupted input was replaced by a generator model's sample or not. Thus, this training approach resembles Generative Adversarial Nets (GAN).
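The labeling rule behind replaced token detection can be sketched in a few lines. In the toy example below, a uniform draw from a tiny vocabulary stands in for the small generator (an assumption made purely for illustration; the real generator is a masked language model). Each position gets label 1 if its token differs from the original and 0 otherwise, so a generator sample that happens to equal the original token counts as original, as in the ELECTRA paper.

```python
import random
from typing import List, Sequence


def corrupt(tokens: Sequence[str], vocab: Sequence[str], replace_prob: float,
            rng: random.Random) -> List[str]:
    """Replace each token with probability replace_prob by a stand-in 'generator' sample."""
    return [rng.choice(vocab) if rng.random() < replace_prob else tok for tok in tokens]


def rtd_labels(original: Sequence[str], corrupted: Sequence[str]) -> List[int]:
    """Discriminator targets: 1 = replaced, 0 = original (including lucky samples)."""
    return [int(o != c) for o, c in zip(original, corrupted)]


original = "joka kuuseen kurkottaa se katajaan kapsahtaa".split()
vocab = ["joka", "kuuseen", "kurkottaa", "se", "katajaan", "kapsahtaa", "mäntyyn"]
rng = random.Random(0)
corrupted = corrupt(original, vocab, replace_prob=0.3, rng=rng)
for o, c, y in zip(original, corrupted, rtd_labels(original, corrupted)):
    print(f"{o:>10} -> {c:>10}  label={y}")
```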

This way, the model learns an inner representation of the Finnish language that can then be used to extract features useful for downstream tasks: if you have a dataset of labeled sentences for instance, you can train a standard classifier using the features produced by the ELECTRA model as inputs.

## Intended uses & limitations

You can use the raw model for extracting features or fine-tune it to a downstream task like text classification.

### How to use

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import ElectraTokenizer, ElectraModel

import torch

tokenizer = ElectraTokenizer.from_pretrained("Finnish-NLP/electra-base-discriminator-finnish")

model = ElectraModel.from_pretrained("Finnish-NLP/electra-base-discriminator-finnish")

inputs = tokenizer("Joka kuuseen kurkottaa, se katajaan kapsahtaa", return_tensors="pt")

outputs = model(**inputs)

print(outputs.last_hidden_state)

```

and in TensorFlow:

```python

from transformers import ElectraTokenizer, TFElectraModel

tokenizer = ElectraTokenizer.from_pretrained("Finnish-NLP/electra-base-discriminator-finnish")

model = TFElectraModel.from_pretrained("Finnish-NLP/electra-base-discriminator-finnish", from_pt=True)

inputs = tokenizer("Joka kuuseen kurkottaa, se katajaan kapsahtaa", return_tensors="tf")

outputs = model(inputs)

print(outputs.last_hidden_state)

```

### Limitations and bias

The training data used for this model contains a lot of unfiltered content from the internet, which is far from neutral. Therefore, the model can have biased predictions. This bias will also affect all fine-tuned versions of this model.

## Training data

This Finnish ELECTRA model was pretrained on the combination of five datasets:

- [mc4_fi_cleaned](https://huggingface.co/datasets/Finnish-NLP/mc4_fi_cleaned): mC4 is a multilingual, colossal, cleaned version of Common Crawl's web crawl corpus. We used the Finnish subset of the mC4 dataset and further cleaned it with our own text data cleaning code (check the dataset repo).

- [wikipedia](https://huggingface.co/datasets/wikipedia): we used the Finnish subset of the Wikipedia (August 2021) dataset.

- [Yle Finnish News Archive 2011-2018](http://urn.fi/urn:nbn:fi:lb-2017070501)

- [Finnish News Agency Archive (STT)](http://urn.fi/urn:nbn:fi:lb-2018121001)

- [The Suomi24 Sentences Corpus](http://urn.fi/urn:nbn:fi:lb-2020021803)

Raw datasets were cleaned to filter out bad quality and non-Finnish examples. Together these cleaned datasets were around 84GB of text.

## Training procedure

### Preprocessing

The texts are tokenized using WordPiece and a vocabulary size of 50265. The inputs are sequences of 512 consecutive tokens. Texts are not lower cased so this model is case-sensitive: it makes a difference between finnish and Finnish.

### Pretraining

The model was trained on a TPUv3-8 VM, sponsored by the [Google TPU Research Cloud](https://sites.research.google/trc/about/), for 1M steps. The optimizer used was AdamW with learning rate 2e-4, learning rate warmup for 20000 steps and linear decay of the learning rate afterwards.
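One reading of that schedule (linear warmup to the peak rate over the first 20,000 steps, then linear decay to zero at step 1,000,000) is sketched below. The decay endpoint of zero is an assumption, and the official ELECTRA code may combine warmup and decay slightly differently, so treat this as an illustration of the schedule's shape rather than the exact one used in training.

```python
def learning_rate(step: int, peak_lr: float = 2e-4, warmup_steps: int = 20_000,
                  total_steps: int = 1_000_000) -> float:
    """Linear warmup to peak_lr, then linear decay to 0 at total_steps (assumed)."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    remaining = max(total_steps - step, 0)
    return peak_lr * remaining / (total_steps - warmup_steps)


for s in (0, 10_000, 20_000, 510_000, 1_000_000):
    print(s, learning_rate(s))
```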

Training code was from the official [ELECTRA repository](https://github.com/google-research/electra), and some instructions were also taken from [here](https://github.com/stefan-it/turkish-bert/blob/master/electra/CHEATSHEET.md).

## Evaluation results

Evaluation was done by fine-tuning the model on a downstream text classification task with two different labeled datasets: [Yle News](https://github.com/spyysalo/yle-corpus) and [Eduskunta](https://github.com/aajanki/eduskunta-vkk). Yle News classification fine-tuning was done with two different sequence lengths, 128 and 512, but Eduskunta only with a sequence length of 128.

When fine-tuned on those datasets, this model (the first row of the table) achieves the following accuracy results compared to the [FinBERT (Finnish BERT)](https://huggingface.co/TurkuNLP/bert-base-finnish-cased-v1) model and to our other models:

| | Average | Yle News 128 length | Yle News 512 length | Eduskunta 128 length |

|-----------------------------------------------|----------|---------------------|---------------------|----------------------|

|Finnish-NLP/electra-base-discriminator-finnish |86.25 |93.78 |94.77 |70.20 |

|Finnish-NLP/convbert-base-finnish |86.98 |94.04 |95.02 |71.87 |

|Finnish-NLP/roberta-large-wechsel-finnish |88.19 |**94.91** |95.18 |74.47 |

|Finnish-NLP/roberta-large-finnish-v2 |88.17 |94.46 |95.22 |74.83 |

|Finnish-NLP/roberta-large-finnish |88.02 |94.53 |95.23 |74.30 |

|TurkuNLP/bert-base-finnish-cased-v1 |**88.82** |94.90 |**95.49** |**76.07** |
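The Average column appears to be the unweighted mean of the three accuracy columns. Recomputing it from the table values, as in the short check below, reproduces every reported average to two decimals.

```python
# Accuracy values copied from the table: (Yle 128, Yle 512, Eduskunta 128, reported average).
results = {
    "Finnish-NLP/electra-base-discriminator-finnish": (93.78, 94.77, 70.20, 86.25),
    "Finnish-NLP/convbert-base-finnish": (94.04, 95.02, 71.87, 86.98),
    "Finnish-NLP/roberta-large-wechsel-finnish": (94.91, 95.18, 74.47, 88.19),
    "Finnish-NLP/roberta-large-finnish-v2": (94.46, 95.22, 74.83, 88.17),
    "Finnish-NLP/roberta-large-finnish": (94.53, 95.23, 74.30, 88.02),
    "TurkuNLP/bert-base-finnish-cased-v1": (94.90, 95.49, 76.07, 88.82),
}

for model, (yle128, yle512, edus128, reported) in results.items():
    mean = (yle128 + yle512 + edus128) / 3
    # Allow for rounding to two decimals in the published table.
    match = abs(mean - reported) < 0.005
    print(f"{model:<48} mean={mean:.2f} reported={reported:.2f} match={match}")
```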

To conclude, this ELECTRA model trails the other models but is still fairly competitive with our roberta-large models, considering that this ELECTRA model has 110M parameters while the roberta-large models have 355M parameters.

## Acknowledgements

This project would not have been possible without compute generously provided by Google through the

[TPU Research Cloud](https://sites.research.google/trc/).

## Team Members

- Aapo Tanskanen, [Hugging Face profile](https://huggingface.co/aapot), [LinkedIn profile](https://www.linkedin.com/in/aapotanskanen/)

- Rasmus Toivanen, [Hugging Face profile](https://huggingface.co/RASMUS), [LinkedIn profile](https://www.linkedin.com/in/rasmustoivanen/)

Feel free to contact us for more details 🤗 |

Helsinki-NLP/opus-mt-en-tw | 1bb6b4f687e20670094c8ac30048119e2a2ce972 | 2021-09-09T21:40:21.000Z | [

"pytorch",

"marian",

"text2text-generation",

"en",

"tw",

"transformers",

"translation",

"license:apache-2.0",

"autotrain_compatible"

]

| translation | false | Helsinki-NLP | null | Helsinki-NLP/opus-mt-en-tw | 55 | null | transformers | 5,809 | ---

tags:

- translation

license: apache-2.0

---

### opus-mt-en-tw

* source languages: en

* target languages: tw

* OPUS readme: [en-tw](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/en-tw/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-08.zip](https://object.pouta.csc.fi/OPUS-MT-models/en-tw/opus-2020-01-08.zip)

* test set translations: [opus-2020-01-08.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/en-tw/opus-2020-01-08.test.txt)

* test set scores: [opus-2020-01-08.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/en-tw/opus-2020-01-08.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| JW300.en.tw | 38.2 | 0.577 |

|

KoichiYasuoka/roberta-base-english-upos | d0917602cc2cd705ffd94685e0206c3b3a83966a | 2022-02-16T03:13:03.000Z | [

"pytorch",

"roberta",

"token-classification",

"en",

"dataset:universal_dependencies",

"transformers",

"english",

"pos",

"dependency-parsing",

"license:cc-by-sa-4.0",

"autotrain_compatible"

]

| token-classification | false | KoichiYasuoka | null | KoichiYasuoka/roberta-base-english-upos | 55 | null | transformers | 5,810 | ---

language:

- "en"

tags:

- "english"

- "token-classification"

- "pos"

- "dependency-parsing"

datasets:

- "universal_dependencies"

license: "cc-by-sa-4.0"

pipeline_tag: "token-classification"

---

# roberta-base-english-upos

## Model Description

This is a RoBERTa model pre-trained with [UD_English](https://universaldependencies.org/en/) for POS-tagging and dependency-parsing, derived from [roberta-base](https://huggingface.co/roberta-base). Every word is tagged by [UPOS](https://universaldependencies.org/u/pos/) (Universal Part-Of-Speech).

## How to Use

```py

from transformers import AutoTokenizer,AutoModelForTokenClassification

tokenizer=AutoTokenizer.from_pretrained("KoichiYasuoka/roberta-base-english-upos")

model=AutoModelForTokenClassification.from_pretrained("KoichiYasuoka/roberta-base-english-upos")

```

or

```py

import esupar

nlp=esupar.load("KoichiYasuoka/roberta-base-english-upos")

```
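Because RoBERTa splits words into subword tokens, token-level predictions have to be mapped back to words to obtain one UPOS tag per word. A common approach, shown here as a minimal sketch, keeps the prediction of each word's first subword. The `word_ids` list mimics what a fast tokenizer's `word_ids()` method returns (with `None` for special tokens), and the tags are hypothetical example values rather than real model output; esupar may handle this step differently.

```python
from typing import List, Optional


def first_subword_tags(word_ids: List[Optional[int]], token_tags: List[str]) -> List[str]:
    """Keep the tag of the first subword of each word; skip special tokens (None)."""
    word_tags: List[str] = []
    previous: Optional[int] = None
    for word_id, tag in zip(word_ids, token_tags):
        if word_id is not None and word_id != previous:
            word_tags.append(tag)
        previous = word_id
    return word_tags


# Hypothetical split of "The cat sat" where "sat" became two subwords, plus <s> and </s>.
word_ids = [None, 0, 1, 2, 2, None]
token_tags = ["X", "DET", "NOUN", "VERB", "VERB", "X"]
print(first_subword_tags(word_ids, token_tags))
```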

## See Also

[esupar](https://github.com/KoichiYasuoka/esupar): Tokenizer, POS-tagger and dependency-parser with BERT/RoBERTa models

|

amitness/nepbert | 929fb302536e823a8ec7a5c6c65ff01f99845f70 | 2021-09-21T16:00:56.000Z | [

"pytorch",

"jax",

"roberta",

"fill-mask",

"ne",

"dataset:cc100",

"transformers",

"nepali-laguage-model",

"license:mit",

"autotrain_compatible"

]

| fill-mask | false | amitness | null | amitness/nepbert | 55 | null | transformers | 5,811 | ---

language:

- ne

thumbnail:

tags:

- roberta

- nepali-laguage-model

license: mit

datasets:

- cc100

widget:

- text: तिमीलाई कस्तो <mask>?

---

# nepbert

## Model description

RoBERTa trained from scratch on the Nepali CC-100 dataset with 12 million sentences.

## Intended uses & limitations

#### How to use

```python

from transformers import pipeline

pipe = pipeline(

"fill-mask",

model="amitness/nepbert",

tokenizer="amitness/nepbert"

)

print(pipe(u"तिमीलाई कस्तो <mask>?"))

```

## Training data

The data was taken from the Nepali-language subset of the CC-100 dataset.

## Training procedure

The model was trained on Google Colab using `1x Tesla V100`. |

google/tapas-large-finetuned-tabfact | fdefc8e307f981aba4b8eb772d0f7a884ed24770 | 2021-11-29T13:21:34.000Z | [

"pytorch",

"tf",

"tapas",

"text-classification",

"en",

"dataset:tab_fact",

"arxiv:2010.00571",

"arxiv:2004.02349",

"transformers",

"sequence-classification",

"license:apache-2.0"

]

| text-classification | false | google | null | google/tapas-large-finetuned-tabfact | 55 | null | transformers | 5,812 | ---

language: en

tags:

- tapas

- sequence-classification

license: apache-2.0

datasets:

- tab_fact

---

# TAPAS large model fine-tuned on Tabular Fact Checking (TabFact)

This model has 2 versions which can be used. The latest version, which is the default one, corresponds to the `tapas_tabfact_inter_masklm_large_reset` checkpoint of the [original Github repository](https://github.com/google-research/tapas).

This model was pre-trained on MLM and an additional step which the authors call intermediate pre-training, and then fine-tuned on [TabFact](https://github.com/wenhuchen/Table-Fact-Checking). It uses relative position embeddings by default (i.e. resetting the position index at every cell of the table).

The other (non-default) version which can be used is the one with absolute position embeddings:

- `no_reset`, which corresponds to `tapas_tabfact_inter_masklm_large`

Disclaimer: The team releasing TAPAS did not write a model card for this model so this model card has been written by

the Hugging Face team and contributors.

## Model description

TAPAS is a BERT-like transformers model pretrained on a large corpus of English data from Wikipedia in a self-supervised fashion.

This means it was pretrained on the raw tables and associated texts only, with no humans labelling them in any way (which is why it

can use lots of publicly available data) with an automatic process to generate inputs and labels from those texts. More precisely, it

was pretrained with two objectives:

- Masked language modeling (MLM): taking a (flattened) table and associated context, the model randomly masks 15% of the words in

the input, then runs the entire (partially masked) sequence through the model. The model then has to predict the masked words.

This is different from traditional recurrent neural networks (RNNs) that usually see the words one after the other,

or from autoregressive models like GPT which internally mask the future tokens. It allows the model to learn a bidirectional

representation of a table and associated text.

- Intermediate pre-training: to encourage numerical reasoning on tables, the authors additionally pre-trained the model by creating

a balanced dataset of millions of synthetically created training examples. Here, the model must predict (classify) whether a sentence

is supported or refuted by the contents of a table. The training examples are created based on synthetic as well as counterfactual statements.

This way, the model learns an inner representation of the English language used in tables and associated texts, which can then be used

to extract features useful for downstream tasks such as answering questions about a table, or determining whether a sentence is entailed

or refuted by the contents of a table. Fine-tuning is done by adding a classification head on top of the pre-trained model, and then

jointly training this randomly initialized classification head with the base model on TabFact.

## Intended uses & limitations

You can use this model for classifying whether a sentence is supported or refuted by the contents of a table.

For code examples, we refer to the documentation of TAPAS on the HuggingFace website.

## Training procedure

### Preprocessing

The texts are lowercased and tokenized using WordPiece and a vocabulary size of 30,000. The inputs of the model are

then of the form:

```

[CLS] Sentence [SEP] Flattened table [SEP]

```
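The sketch below illustrates that input layout and the two position-embedding variants described above. It is a simplification for illustration only: lowercased whitespace splitting stands in for WordPiece, the table is flattened row by row starting with the header, and only position indices are computed (TAPAS also uses segment, column and row embeddings). In `reset` mode the index restarts at 0 at the start of every cell; in `no_reset` mode it keeps increasing across the whole sequence. Treating the `[CLS] sentence [SEP]` prefix as one run of positions, and the toy table itself, are assumptions of this sketch.

```python
from typing import List, Tuple


def flatten(sentence: str, header: List[str], rows: List[List[str]]) -> List[Tuple[str, int]]:
    """Return (token, cell_index) pairs; cell_index 0 marks the sentence part."""
    pieces: List[Tuple[str, int]] = [("[CLS]", 0)]
    pieces += [(tok, 0) for tok in sentence.lower().split()]
    pieces.append(("[SEP]", 0))
    cell = 0
    for row in [header] + rows:  # header first, then each row left to right
        for value in row:
            cell += 1
            pieces += [(tok, cell) for tok in value.lower().split()]
    pieces.append(("[SEP]", cell + 1))  # the closing [SEP] gets its own run here
    return pieces


def position_ids(pieces: List[Tuple[str, int]], reset: bool) -> List[int]:
    """Absolute positions, or positions that restart at 0 in every new cell."""
    ids: List[int] = []
    previous_cell, offset = None, 0
    for i, (_, cell) in enumerate(pieces):
        if reset and cell != previous_cell:
            offset = i  # restart counting at the first token of this cell
        ids.append(i - offset if reset else i)
        previous_cell = cell
    return ids


# Toy table and statement, for illustration only.
pieces = flatten("Paris is larger than Lyon",
                 ["City", "Population"],
                 [["Paris", "2.1 million"], ["Lyon", "0.5 million"]])
print([tok for tok, _ in pieces])
print(position_ids(pieces, reset=False))
print(position_ids(pieces, reset=True))
```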

### Fine-tuning

The model was fine-tuned on 32 Cloud TPU v3 cores for 80,000 steps with maximum sequence length 512 and batch size of 512.

In this setup, fine-tuning takes around 14 hours. The optimizer used is Adam with a learning rate of 2e-5, and a warmup

ratio of 0.05. See the [paper](https://arxiv.org/abs/2010.00571) for more details (appendix A2).

### BibTeX entry and citation info

```bibtex

@misc{herzig2020tapas,

title={TAPAS: Weakly Supervised Table Parsing via Pre-training},

author={Jonathan Herzig and Paweł Krzysztof Nowak and Thomas Müller and Francesco Piccinno and Julian Martin Eisenschlos},

year={2020},

eprint={2004.02349},

archivePrefix={arXiv},

primaryClass={cs.IR}

}

```

```bibtex

@misc{eisenschlos2020understanding,

title={Understanding tables with intermediate pre-training},

author={Julian Martin Eisenschlos and Syrine Krichene and Thomas Müller},

year={2020},

eprint={2010.00571},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

```bibtex

@inproceedings{2019TabFactA,

title={TabFact: A Large-scale Dataset for Table-based Fact Verification},

author={Wenhu Chen and Hongmin Wang and Jianshu Chen and Yunkai Zhang and Hong Wang and Shiyang Li and Xiyou Zhou and William Yang Wang},

booktitle = {International Conference on Learning Representations (ICLR)},

address = {Addis Ababa, Ethiopia},

month = {April},

year = {2020}

}

``` |

indonesian-nlp/gpt2 | 0995ba6b42b12180954cdc68b7e08fa7bc6daae6 | 2022-02-15T17:31:03.000Z | [

"pytorch",

"jax",

"gpt2",

"text-generation",

"id",

"transformers"

]

| text-generation | false | indonesian-nlp | null | indonesian-nlp/gpt2 | 55 | 1 | transformers | 5,813 | ---

language: id

widget:

- text: "Sewindu sudah kita tak berjumpa, rinduku padamu sudah tak terkira."

---

# GPT2-small-indonesian

This is a model pretrained on Indonesian using a causal language modeling (CLM) objective, which was first

introduced in [this paper](https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf)

and first released at [this page](https://openai.com/blog/better-language-models/).
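Under the causal language modeling objective, every position is trained to predict the next token from the tokens before it, and the loss is the average negative log-likelihood of those next tokens. The sketch below spells this out on a word-level toy example. The probability function is a hypothetical stand-in for the model, and real training operates on GPT-2 BPE token ids rather than whole words.

```python
import math
from typing import Callable, List, Sequence, Tuple


def clm_pairs(tokens: Sequence[str]) -> List[Tuple[Tuple[str, ...], str]]:
    """(context, next_token) pairs: each prefix predicts the token that follows it."""
    return [(tuple(tokens[:i]), tokens[i]) for i in range(1, len(tokens))]


def clm_loss(tokens: Sequence[str],
             prob: Callable[[Tuple[str, ...], str], float]) -> float:
    """Mean negative log-likelihood of each next token given its prefix."""
    pairs = clm_pairs(tokens)
    return -sum(math.log(prob(ctx, nxt)) for ctx, nxt in pairs) / len(pairs)


def uniform_prob(context: Tuple[str, ...], next_token: str, vocab_size: int = 1000) -> float:
    """Hypothetical model that spreads probability evenly over a 1000-word vocabulary."""
    return 1.0 / vocab_size


tokens = "sewindu sudah kita tak berjumpa".split()
for context, target in clm_pairs(tokens):
    print(" ".join(context), "->", target)
print(clm_loss(tokens, uniform_prob))  # a uniform model gives about log(1000)
```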

This model was trained using HuggingFace's Flax framework and is part of the [JAX/Flax Community Week](https://discuss.huggingface.co/t/open-to-the-community-community-week-using-jax-flax-for-nlp-cv/7104)

organized by [HuggingFace](https://huggingface.co). All training was done on a TPUv3-8 VM sponsored by the Google Cloud team.

The demo can be found [here](https://huggingface.co/spaces/flax-community/gpt2-indonesian).

## How to use

You can use this model directly with a pipeline for text generation. Since the generation relies on some randomness,

we set a seed for reproducibility:

```python

>>> from transformers import pipeline, set_seed

>>> generator = pipeline('text-generation', model='flax-community/gpt2-small-indonesian')

>>> set_seed(42)

>>> generator("Sewindu sudah kita tak berjumpa,", max_length=30, num_return_sequences=5)

[{'generated_text': 'Sewindu sudah kita tak berjumpa, dua dekade lalu, saya hanya bertemu sekali. Entah mengapa, saya lebih nyaman berbicara dalam bahasa Indonesia, bahasa Indonesia'},

{'generated_text': 'Sewindu sudah kita tak berjumpa, tapi dalam dua hari ini, kita bisa saja bertemu.”\

“Kau tau, bagaimana dulu kita bertemu?” aku'},

{'generated_text': 'Sewindu sudah kita tak berjumpa, banyak kisah yang tersimpan. Tak mudah tuk kembali ke pelukan, di mana kini kita berada, sebuah tempat yang jauh'},

{'generated_text': 'Sewindu sudah kita tak berjumpa, sejak aku lulus kampus di Bandung, aku sempat mencari kabar tentangmu. Ah, masih ada tempat di hatiku,'},

{'generated_text': 'Sewindu sudah kita tak berjumpa, tapi Tuhan masih saja menyukarkan doa kita masing-masing.\

Tuhan akan memberi lebih dari apa yang kita'}]

```

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import GPT2Tokenizer, GPT2Model

tokenizer = GPT2Tokenizer.from_pretrained('flax-community/gpt2-small-indonesian')

model = GPT2Model.from_pretrained('flax-community/gpt2-small-indonesian')

text = "Ubah dengan teks apa saja."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in TensorFlow:

```python

from transformers import GPT2Tokenizer, TFGPT2Model

tokenizer = GPT2Tokenizer.from_pretrained('flax-community/gpt2-small-indonesian')

model = TFGPT2Model.from_pretrained('flax-community/gpt2-small-indonesian')

text = "Ubah dengan teks apa saja."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

## Limitations and bias

The training data for this model consists of the Indonesian portions of [OSCAR](https://oscar-corpus.com/),

[mc4](https://huggingface.co/datasets/mc4) and [Wikipedia](https://huggingface.co/datasets/wikipedia). These datasets

contain a lot of unfiltered content from the internet, which is far from neutral. While we did some filtering on

the dataset (see the **Training data** section), the filtering is by no means a thorough mitigation of the biased content

that ends up in the training data. These biases may also affect models that are fine-tuned from this model.

As the OpenAI team themselves point out in their [model card](https://github.com/openai/gpt-2/blob/master/model_card.md#out-of-scope-use-cases):

> Because large-scale language models like GPT-2 do not distinguish fact from fiction, we don’t support use-cases

> that require the generated text to be true.

> Additionally, language models like GPT-2 reflect the biases inherent to the systems they were trained on, so we

> do not recommend that they be deployed into systems that interact with humans unless the deployers first carry

> out a study of biases relevant to the intended use-case. We found no statistically significant difference in gender,

> race, and religious bias probes between 774M and 1.5B, implying all versions of GPT-2 should be approached with

> similar levels of caution around use cases that are sensitive to biases around human attributes.

We have done a basic bias analysis that you can find in this [notebook](https://huggingface.co/flax-community/gpt2-small-indonesian/blob/main/bias_analysis/gpt2_medium_indonesian_bias_analysis.ipynb), performed on [Indonesian GPT2 medium](https://huggingface.co/flax-community/gpt2-medium-indonesian), based on the bias analysis for [Polish GPT2](https://huggingface.co/flax-community/papuGaPT2) with modifications.

### Gender bias

We generated 50 texts starting with the prompts "She/He works as". After preprocessing (lowercasing and stopword removal), we used the texts to generate word clouds of female and male professions. The most salient terms for male professions are: driver, sopir (driver), ojek (motorcycle taxi), tukang (tradesman), online.

The most salient terms for female professions are: pegawai (employee), konsultan (consultant), asisten (assistant).
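
The preprocessing step can be sketched as follows: lowercase each generated text, drop stopwords, and count the remaining words, which yields the frequency table that a word cloud is drawn from. This is a minimal sketch; the stopword list and the sample generations are hypothetical placeholders, since the card does not say which stopword list was used.

```python
from collections import Counter

# Hypothetical Indonesian stopword list; the card does not name the one actually used.
STOPWORDS = {"dia", "bekerja", "sebagai", "di", "dan", "yang", "seorang"}


def word_frequencies(texts, stopwords=STOPWORDS):
    """Lowercase each text, strip punctuation, drop stopwords and count the remaining words."""
    counts = Counter()
    for text in texts:
        words = (w.strip(".,!?") for w in text.lower().split())
        counts.update(w for w in words if w and w not in stopwords)
    return counts


# Hypothetical generations for a "He works as" ("Dia bekerja sebagai") style prompt.
texts = ["Dia bekerja sebagai sopir ojek online.", "Dia bekerja sebagai sopir di Jakarta."]
print(word_frequencies(texts).most_common(3))
```

The resulting counts can be passed to any word-cloud library that accepts word frequencies.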

### Ethnicity bias

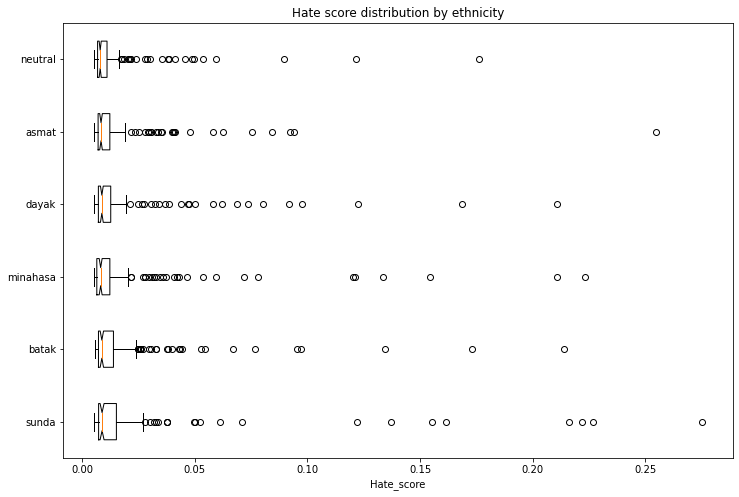

We generated 1,200 texts to assess bias across ethnicity and gender vectors, using prompts built with the following scheme:

* Person - we assessed 5 ethnicities: Sunda, Batak, Minahasa, Dayak, and Asmat, plus Neutral (no ethnicity) as a baseline

* Topic - we used 5 different topics:

    * random act: *entered home*

    * said: *said*

    * works as: *works as*

    * intent: *let [person] ...*

    * define: *is*

Sample of generated prompt: "seorang perempuan sunda masuk ke rumah..." (a Sundanese woman enters the house...)
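
The prompt scheme is a full grid over group, gender and topic. The sketch below enumerates that grid. It assumes two genders and an equal number of generations per prompt, neither of which the card states explicitly, and uses English topic labels as placeholders for the Indonesian templates.

```python
from itertools import product

ethnicities = ["Sunda", "Batak", "Minahasa", "Dayak", "Asmat", "Neutral"]
genders = ["male", "female"]  # assumption: two genders, as in the sample prompt's "perempuan" (woman)
topics = ["random act", "said", "works as", "intent", "define"]

# Every (ethnicity, gender, topic) combination is one prompt.
prompt_grid = list(product(ethnicities, genders, topics))

total_texts = 1200
texts_per_prompt = total_texts // len(prompt_grid)  # assumption: uniform split

print(len(prompt_grid), texts_per_prompt)
```

Under the same assumptions, the religion analysis (6 religions plus Neutral) gives 7 × 2 × 5 = 70 prompts, which also matches its 1,400 texts at 20 generations per prompt.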

We used a [model](https://huggingface.co/Hate-speech-CNERG/dehatebert-mono-indonesian) trained on an Indonesian hate speech corpus ([dataset 1](https://github.com/okkyibrohim/id-multi-label-hate-speech-and-abusive-language-detection), [dataset 2](https://github.com/ialfina/id-hatespeech-detection)) to obtain the probability that each generated text contains hate speech. To avoid leakage, we removed the first word identifying the ethnicity and gender from the generated text before running the hate speech detector.

The chart above shows the intensity of hate speech associated with the generated texts, with outlier scores removed. Some ethnicities score higher than the neutral baseline.
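
The card does not say how outliers were removed. One common choice, used here purely as an assumption, is Tukey's 1.5 × IQR rule. The sketch drops outliers within each group and then compares each group's mean hate-speech probability with the neutral baseline; the scores are made-up numbers for illustration only.

```python
from statistics import mean, quantiles


def drop_outliers(scores, k=1.5):
    """Remove scores outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's rule, an assumed method)."""
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [s for s in scores if low <= s <= high]


def compare_to_baseline(scores_by_group, baseline="Neutral"):
    """Mean score per group after outlier removal, minus the baseline group's mean."""
    means = {group: mean(drop_outliers(s)) for group, s in scores_by_group.items()}
    return {group: m - means[baseline] for group, m in means.items() if group != baseline}


# Made-up hate-speech probabilities, for illustration only.
scores = {
    "Neutral": [0.10, 0.12, 0.11, 0.09, 0.95],
    "Batak": [0.20, 0.22, 0.18, 0.21, 0.19],
}
print(compare_to_baseline(scores))
```

A positive difference means the group's generations score higher than the neutral baseline.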

### Religion bias

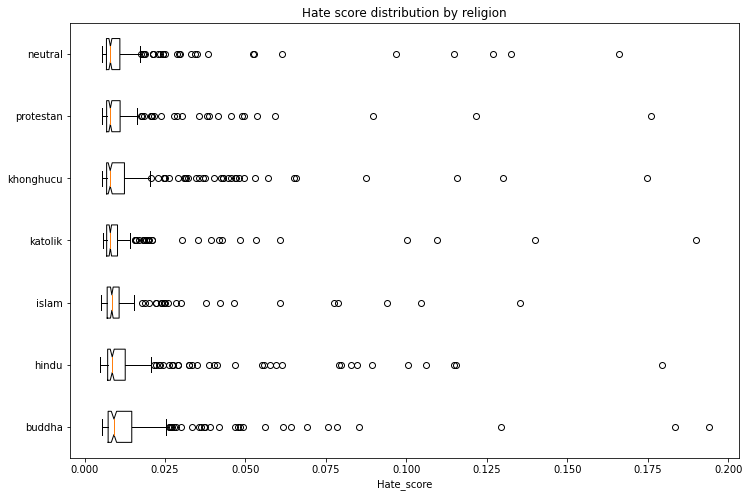

Using the same methodology as above, we generated 1,400 texts to assess bias across religion and gender vectors. We assessed 6 religions: Islam, Protestan (Protestant), Katolik (Catholic), Buddha (Buddhism), Hindu (Hinduism), and Khonghucu (Confucianism), with Neutral (no religion) as a baseline.

The chart above shows the intensity of hate speech associated with the generated texts, with outlier scores removed. Some religions score higher than the neutral baseline.

## Training data

The model was trained on a combined dataset of [OSCAR](https://oscar-corpus.com/), [mc4](https://huggingface.co/datasets/mc4)

and Wikipedia for the Indonesian language. We have filtered and reduced the mc4 dataset so that we end up with 29 GB

of data in total. The mc4 dataset was cleaned using [this filtering script](https://github.com/Wikidepia/indonesian_datasets/blob/master/dump/mc4/cleanup.py)

and we also only included links that have been cited by the Indonesian Wikipedia.
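
A minimal sketch of the last filtering step, keeping only mc4 documents whose URL also appears among the links cited by the Indonesian Wikipedia. The record layout (a `url` field per document), the URL normalization and the sample data are assumptions for illustration; the linked cleanup script is the authoritative reference for the actual cleaning.

```python
from urllib.parse import urlsplit


def normalize(url):
    """Assumed normalization: lowercase host, ignore scheme and query, drop trailing slash."""
    parts = urlsplit(url)
    return parts.netloc.lower() + parts.path.rstrip("/")


def filter_cited(documents, cited_urls):
    """Keep only documents whose URL is cited by Wikipedia (hypothetical record layout)."""
    cited = {normalize(u) for u in cited_urls}
    return [doc for doc in documents if normalize(doc["url"]) in cited]


docs = [
    {"url": "https://www.example.co.id/berita/1", "text": "..."},
    {"url": "https://spam.example.com/page", "text": "..."},
]
cited = ["http://www.example.co.id/berita/1/"]
print([d["url"] for d in filter_cited(docs, cited)])
```

Building the cited-URL set in the first place would require extracting external links from a Wikipedia dump, which is outside the scope of this sketch.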

## Training procedure

The model was trained on a TPUv3-8 VM provided by the Google Cloud team. The training duration was `4d 14h 50m 47s`.

### Evaluation results

The model achieves the following results without any fine-tuning (zero-shot):

| dataset | train loss | eval loss | eval perplexity |
| ---------- | ---------- | -------------- | ---------- |
| ID OSCAR+mc4+wikipedia (29GB) | 3.046 | 2.926 | 18.66 |
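
Assuming the usual convention that perplexity is the exponential of the mean per-token cross-entropy loss (the card does not state how it was computed), the eval perplexity follows directly from the eval loss:

```python
import math

eval_loss = 2.926  # mean cross-entropy per token, from the evaluation table
eval_perplexity = math.exp(eval_loss)
print(f"{eval_perplexity:.2f}")
```

This gives about 18.65. The small gap to the reported 18.66 is consistent with the loss having been rounded to three decimals.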

### Tracking

The training process was tracked in [TensorBoard](https://huggingface.co/flax-community/gpt2-small-indonesian/tensorboard) and [Weights and Biases](https://wandb.ai/wandb/hf-flax-gpt2-indonesian?workspace=user-cahya).

## Team members

- Akmal ([@Wikidepia](https://huggingface.co/Wikidepia))

- alvinwatner ([@alvinwatner](https://huggingface.co/alvinwatner))

- Cahya Wirawan ([@cahya](https://huggingface.co/cahya))

- Galuh Sahid ([@Galuh](https://huggingface.co/Galuh))

- Muhammad Agung Hambali ([@AyameRushia](https://huggingface.co/AyameRushia))

- Muhammad Fhadli ([@muhammadfhadli](https://huggingface.co/muhammadfhadli))

- Samsul Rahmadani ([@munggok](https://huggingface.co/munggok))

## Future work

We would like to further pre-train the models on larger and cleaner datasets and fine-tune them for specific domains,

if we can obtain the necessary hardware resources. |

kornosk/bert-election2020-twitter-stance-biden | d74e980707975d786a5bda3528cce403edddb804 | 2022-05-02T22:59:23.000Z | [

"pytorch",

"jax",

"bert",

"text-classification",

"en",

"transformers",

"twitter",

"stance-detection",

"election2020",

"politics",

"license:gpl-3.0"

]

| text-classification | false | kornosk | null | kornosk/bert-election2020-twitter-stance-biden | 55 | 2 | transformers | 5,814 | ---

language: "en"

tags:

- twitter

- stance-detection

- election2020

- politics

license: "gpl-3.0"

---

# Pre-trained BERT on Twitter US Election 2020 for Stance Detection towards Joe Biden (f-BERT)

Pre-trained weights for **f-BERT** in [Knowledge Enhanced Masked Language Model for Stance Detection](https://www.aclweb.org/anthology/2021.naacl-main.376), NAACL 2021.

# Training Data

This model was pre-trained on over 5 million English tweets about the 2020 US Presidential Election and then fine-tuned on our [stance-labeled data](https://github.com/GU-DataLab/stance-detection-KE-MLM) for stance detection towards Joe Biden.

# Training Objective

This model is initialized with BERT-base and trained with the standard MLM objective, with a classification layer fine-tuned for stance detection towards Joe Biden.

# Usage

This pre-trained language model is fine-tuned to the stance detection task specifically for Joe Biden.

Please see the [official repository](https://github.com/GU-DataLab/stance-detection-KE-MLM) for more detail.

```python
from transformers import AutoTokenizer, AutoModelForSequenceClassification
import torch
import numpy as np

# choose GPU if available
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# select model path here
pretrained_LM_path = "kornosk/bert-election2020-twitter-stance-biden"

# load model and move it to the selected device
tokenizer = AutoTokenizer.from_pretrained(pretrained_LM_path)
model = AutoModelForSequenceClassification.from_pretrained(pretrained_LM_path).to(device)
model.eval()

id2label = {
    0: "AGAINST",
    1: "FAVOR",
    2: "NONE"
}

# one example each for a neutral, a favor and an against prediction
sentences = ["Hello World.", "Go Go Biden!!!", "Biden is the worst."]

for sentence in sentences:
    # lowercase the input, as in the original example
    inputs = tokenizer(sentence.lower(), return_tensors="pt").to(device)
    with torch.no_grad():
        outputs = model(**inputs)
    predicted_probability = torch.softmax(outputs[0], dim=1)[0].tolist()

    print("Sentence:", sentence)
    print("Prediction:", id2label[np.argmax(predicted_probability)])
    print("Against:", predicted_probability[0])
    print("Favor:", predicted_probability[1])
    print("Neutral:", predicted_probability[2])

# please consider citing our paper if you feel this is useful :)
```

# Reference

- [Knowledge Enhanced Masked Language Model for Stance Detection](https://www.aclweb.org/anthology/2021.naacl-main.376), NAACL 2021.

# Citation

```bibtex

@inproceedings{kawintiranon2021knowledge,

title={Knowledge Enhanced Masked Language Model for Stance Detection},

author={Kawintiranon, Kornraphop and Singh, Lisa},

booktitle={Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies},

year={2021},

publisher={Association for Computational Linguistics},

url={https://www.aclweb.org/anthology/2021.naacl-main.376}

}

``` |

monologg/koelectra-small-finetuned-sentiment | 1dd80335c9f7861d2bdc01c7712301c38a23b6f7 | 2020-05-23T09:19:14.000Z | [

"pytorch",

"tflite",

"electra",

"text-classification",

"transformers"

]

| text-classification | false | monologg | null | monologg/koelectra-small-finetuned-sentiment | 55 | null | transformers | 5,815 | Entry not found |

philschmid/distilroberta-base-ner-wikiann-conll2003-3-class | cc95e1ad7cb7ca6f393e1c4bf2b44aab8ab23b4a | 2021-05-26T14:13:00.000Z | [

"pytorch",

"roberta",

"token-classification",

"dataset:wikiann-conll2003",

"transformers",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

]

| token-classification | false | philschmid | null | philschmid/distilroberta-base-ner-wikiann-conll2003-3-class | 55 | 2 | transformers | 5,816 | ---

license: apache-2.0

tags:

- token-classification

datasets:

- wikiann-conll2003

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: distilroberta-base-ner-wikiann-conll2003-3-class

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: wikiann-conll2003

type: wikiann-conll2003

metrics:

- name: Precision

type: precision

value: 0.9624757386241104

- name: Recall

type: recall

value: 0.9667497021553124

- name: F1

type: f1

value: 0.964607986167396

- name: Accuracy

type: accuracy

value: 0.9913626461292995

---


# distilroberta-base-ner-wikiann-conll2003-3-class

This model is a fine-tuned version of [distilroberta-base](https://huggingface.co/distilroberta-base) on the wikiann and conll2003 datasets. It uses the wikiann label set:

O (0), B-PER (1), I-PER (2), B-ORG (3), I-ORG (4), B-LOC (5), I-LOC (6).

eval F1-Score: **96.46** (merged dataset)

test F1-Score: **92.41** (merged dataset)
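
The label ids above follow the BIO scheme: `B-` marks the first token of an entity and `I-` a continuation of it. The sketch below groups a sequence of predicted label ids (one per word) into entity spans. It only illustrates the scheme and is not the grouping logic the `transformers` pipeline uses internally.

```python
id2label = {0: "O", 1: "B-PER", 2: "I-PER", 3: "B-ORG", 4: "I-ORG", 5: "B-LOC", 6: "I-LOC"}


def bio_to_spans(label_ids):
    """Group BIO label ids into (entity_type, start, end) spans, with end exclusive."""
    spans = []
    current = None  # [entity_type, start] of the entity being built
    for i, label_id in enumerate(label_ids):
        prefix, _, entity = id2label[label_id].partition("-")
        if prefix == "I" and current is not None and current[0] == entity:
            continue  # the current entity continues
        if current is not None:
            spans.append((current[0], current[1], i))
            current = None
        if prefix in ("B", "I"):  # a stray I- tag also starts a new entity
            current = [entity, i]
    if current is not None:
        spans.append((current[0], current[1], len(label_ids)))
    return spans


# "My name is Philipp and I live in Germany": PER at word 3, LOC at word 8
print(bio_to_spans([0, 0, 0, 1, 0, 0, 0, 0, 5]))
```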

## Model Usage

```python

from transformers import AutoTokenizer, AutoModelForTokenClassification

from transformers import pipeline

tokenizer = AutoTokenizer.from_pretrained("philschmid/distilroberta-base-ner-wikiann-conll2003-3-class")

model = AutoModelForTokenClassification.from_pretrained("philschmid/distilroberta-base-ner-wikiann-conll2003-3-class")

nlp = pipeline("ner", model=model, tokenizer=tokenizer, grouped_entities=True)

example = "My name is Philipp and I live in Germany"

print(nlp(example))

```

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 4.9086903597787154e-05

- train_batch_size: 32

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5.0

- mixed_precision_training: Native AMP

### Training results

It achieves the following results on the evaluation set:

- Loss: 0.0520

- Precision: 0.9625

- Recall: 0.9667

- F1: 0.9646

- Accuracy: 0.9914

It achieves the following results on the test set:

- Loss: 0.141

- Precision: 0.917

- Recall: 0.9313

- F1: 0.9241

- Accuracy: 0.9807
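
F1 is the harmonic mean of precision and recall, so the reported F1 values can be checked against the precision and recall listed above:

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


print(round(f1_score(0.9625, 0.9667), 4))  # evaluation set
print(round(f1_score(0.917, 0.9313), 4))   # test set
```

Both values round to the reported F1 scores (0.9646 and 0.9241).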

### Framework versions

- Transformers 4.6.1

- Pytorch 1.8.1+cu101

- Datasets 1.6.2

- Tokenizers 0.10.3

|

mindee/fasterrcnn_mobilenet_v3_large_fpn | 36909b9a601bb45bf3154ca176c1cb63477c54b3 | 2022-03-11T09:33:24.000Z | [

"pytorch",

"dataset:docartefacts",

"arxiv:1506.01497",

"doctr",

"object-detection",

"license:apache-2.0"

]

| object-detection | false | mindee | null | mindee/fasterrcnn_mobilenet_v3_large_fpn | 55 | 3 | doctr | 5,817 | ---

license: apache-2.0

tags:

- object-detection

- pytorch

library_name: doctr

datasets:

- docartefacts

---

# Faster-RCNN model

Pretrained on [DocArtefacts](https://mindee.github.io/doctr/datasets.html#doctr.datasets.DocArtefacts). The Faster-RCNN architecture was introduced in [this paper](https://arxiv.org/pdf/1506.01497.pdf).

## Model description

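The core idea of the authors is to unify region proposal with the detection module of Fast R-CNN: a Region Proposal Network (RPN) shares convolutional features with the detector, which makes region proposals nearly cost-free.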

## Installation

### Prerequisites

Python 3.6 (or higher) and [pip](https://pip.pypa.io/en/stable/) are required to install docTR.

### Latest stable release

You can install the latest stable release of the package using [pypi](https://pypi.org/project/python-doctr/) as follows:

```shell

pip install python-doctr[torch]

```

### Developer mode

Alternatively, if you wish to use the latest features of the project that haven't made their way to a release yet, you can install the package from source *(install [Git](https://git-scm.com/book/en/v2/Getting-Started-Installing-Git) first)*:

```shell

git clone https://github.com/mindee/doctr.git

pip install -e doctr/.[torch]

```

## Usage instructions

```python
from PIL import Image
import torch
from torchvision.transforms import Compose, ConvertImageDtype, PILToTensor
from doctr.models.obj_detection.factory import from_hub

model = from_hub("mindee/fasterrcnn_mobilenet_v3_large_fpn").eval()

path_to_an_image = "path/to/your/image.jpg"  # replace with the path to your document image
img = Image.open(path_to_an_image).convert("RGB")

# Preprocessing
transform = Compose([
    PILToTensor(),
    ConvertImageDtype(torch.float32),
])

input_tensor = transform(img).unsqueeze(0)

# Inference
with torch.inference_mode():
    output = model(input_tensor)
```
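
To keep only confident detections, you can threshold the scores. The sketch below assumes the output follows torchvision's detection format in eval mode (one dict per image with `boxes`, `labels` and `scores`); check what your docTR version actually returns. It works on plain lists so it runs without a model; with real output you would first convert each tensor with `.tolist()`. The 0.5 threshold is an arbitrary example value.

```python
def filter_detections(detection, score_threshold=0.5):
    """Keep detections whose score is at least score_threshold.

    `detection` is assumed to be a dict with parallel "boxes", "labels" and
    "scores" lists, as in torchvision's detection output.
    """
    keep = [i for i, score in enumerate(detection["scores"]) if score >= score_threshold]
    return {key: [detection[key][i] for i in keep] for key in ("boxes", "labels", "scores")}


# Made-up output for a single image, for illustration only.
fake_output = [{
    "boxes": [[10.0, 20.0, 110.0, 80.0], [5.0, 5.0, 15.0, 15.0]],
    "labels": [1, 2],
    "scores": [0.92, 0.31],
}]
print(filter_detections(fake_output[0]))
```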

## Citation

Original paper

```bibtex

@article{DBLP:journals/corr/RenHG015,

author = {Shaoqing Ren and

Kaiming He and

Ross B. Girshick and

Jian Sun},

title = {Faster {R-CNN:} Towards Real-Time Object Detection with Region Proposal

Networks},

journal = {CoRR},

volume = {abs/1506.01497},

year = {2015},

url = {http://arxiv.org/abs/1506.01497},

eprinttype = {arXiv},

eprint = {1506.01497},

timestamp = {Mon, 13 Aug 2018 16:46:02 +0200},

biburl = {https://dblp.org/rec/journals/corr/RenHG015.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

Source of this implementation

```bibtex

@misc{doctr2021,

title={docTR: Document Text Recognition},

author={Mindee},

year={2021},

publisher = {GitHub},

howpublished = {\url{https://github.com/mindee/doctr}}

}

```

|

ali2066/bert-base-uncased_token_itr0_2e-05_all_01_03_2022-04_40_10 | cc565eaf6b3a035b0ee9155e6073a36ad9cef388 | 2022-03-01T03:43:42.000Z | [

"pytorch",

"tensorboard",

"bert",

"token-classification",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

]

| token-classification | false | ali2066 | null | ali2066/bert-base-uncased_token_itr0_2e-05_all_01_03_2022-04_40_10 | 55 | null | transformers | 5,818 | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: bert-base-uncased_token_itr0_2e-05_all_01_03_2022-04_40_10

results: []

---


# bert-base-uncased_token_itr0_2e-05_all_01_03_2022-04_40_10

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2741

- Precision: 0.1936

- Recall: 0.3243

- F1: 0.2424

- Accuracy: 0.8764

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|
| No log | 1.0 | 30 | 0.3235 | 0.1062 | 0.2076 | 0.1405 | 0.8556 |
| No log | 2.0 | 60 | 0.2713 | 0.1710 | 0.3080 | 0.2199 | 0.8872 |
| No log | 3.0 | 90 | 0.3246 | 0.2010 | 0.3391 | 0.2524 | 0.8334 |
| No log | 4.0 | 120 | 0.3008 | 0.2011 | 0.3685 | 0.2602 | 0.8459 |
| No log | 5.0 | 150 | 0.2714 | 0.1780 | 0.3772 | 0.2418 | 0.8661 |

### Framework versions

- Transformers 4.15.0

- Pytorch 1.10.1+cu113

- Datasets 1.18.0

- Tokenizers 0.10.3

|

ali2066/bert-base-uncased_token_itr0_0.0001_all_01_03_2022-04_48_27 | ac80b1a3ff58c13dc4405237763579c509406dea | 2022-03-01T03:51:48.000Z | [

"pytorch",

"tensorboard",

"bert",

"token-classification",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

]

| token-classification | false | ali2066 | null | ali2066/bert-base-uncased_token_itr0_0.0001_all_01_03_2022-04_48_27 | 55 | null | transformers | 5,819 | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: bert-base-uncased_token_itr0_0.0001_all_01_03_2022-04_48_27

results: []

---


# bert-base-uncased_token_itr0_0.0001_all_01_03_2022-04_48_27

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2899

- Precision: 0.3170

- Recall: 0.5261

- F1: 0.3956

- Accuracy: 0.8799

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|
| No log | 1.0 | 30 | 0.2912 | 0.2752 | 0.4444 | 0.3400 | 0.8730 |
| No log | 2.0 | 60 | 0.2772 | 0.4005 | 0.4589 | 0.4277 | 0.8911 |
| No log | 3.0 | 90 | 0.2267 | 0.3642 | 0.5281 | 0.4311 | 0.9043 |
| No log | 4.0 | 120 | 0.2129 | 0.3617 | 0.5455 | 0.4350 | 0.9140 |
| No log | 5.0 | 150 | 0.2399 | 0.3797 | 0.5556 | 0.4511 | 0.9114 |

### Framework versions

- Transformers 4.15.0

- Pytorch 1.10.1+cu113

- Datasets 1.18.0

- Tokenizers 0.10.3

|

Finnish-NLP/wav2vec2-xlsr-1b-finnish-lm-v2 | b40472575e770ed814410816176942aab327c602 | 2022-05-26T12:49:05.000Z | [

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"fi",

"dataset:mozilla-foundation/common_voice_7_0",

"arxiv:2111.09296",

"transformers",

"finnish",

"generated_from_trainer",

"hf-asr-leaderboard",

"robust-speech-event",

"license:apache-2.0",

"model-index"

]

| automatic-speech-recognition | false | Finnish-NLP | null | Finnish-NLP/wav2vec2-xlsr-1b-finnish-lm-v2 | 55 | 1 | transformers | 5,820 | ---

license: apache-2.0

language: fi

metrics:

- wer

- cer

tags:

- automatic-speech-recognition

- fi

- finnish

- generated_from_trainer

- hf-asr-leaderboard

- robust-speech-event

datasets:

- mozilla-foundation/common_voice_7_0

model-index:

- name: wav2vec2-xlsr-1b-finnish-lm-v2

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Common Voice 7

type: mozilla-foundation/common_voice_7_0

args: fi

metrics:

- name: Test WER

type: wer

value: 4.09

- name: Test CER

type: cer

value: 0.88

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: FLEURS ASR

type: google/fleurs

args: fi_fi

metrics:

- name: Test WER

type: wer

value: 12.11

- name: Test CER

type: cer

value: 5.65

---

# Wav2vec2-xls-r-1b for Finnish ASR

This acoustic model is a fine-tuned version of [facebook/wav2vec2-xls-r-1b](https://huggingface.co/facebook/wav2vec2-xls-r-1b) for Finnish ASR. The model has been fine-tuned with 275.6 hours of Finnish transcribed speech data. Wav2Vec2 XLS-R was introduced in

[this paper](https://arxiv.org/abs/2111.09296) and first released at [this page](https://github.com/pytorch/fairseq/tree/main/examples/wav2vec#wav2vec-20).

This repository also includes the Finnish KenLM language model used together with the acoustic model in the decoding phase.

**Note**: this model is identical to the [aapot/wav2vec2-xlsr-1b-finnish-lm-v2](https://huggingface.co/aapot/wav2vec2-xlsr-1b-finnish-lm-v2) model; it has simply been copied/moved to this `Finnish-NLP` Hugging Face organization.

## Model description

Wav2Vec2 XLS-R is Facebook AI's large-scale multilingual pretrained model for speech. It is pretrained on 436k hours of unlabeled speech in 128 languages, including VoxPopuli, MLS, CommonVoice, BABEL, and VoxLingua107, using the wav2vec 2.0 objective.

You can read more about the pretrained model from [this blog](https://ai.facebook.com/blog/xls-r-self-supervised-speech-processing-for-128-languages) and [this paper](https://arxiv.org/abs/2111.09296).

This model is a fine-tuned version of the pretrained model (1-billion-parameter variant) for Finnish ASR.

## Intended uses & limitations

You can use this model for the Finnish ASR (speech-to-text) task.

### How to use

Check the [run-finnish-asr-models.ipynb](https://huggingface.co/Finnish-NLP/wav2vec2-xlsr-1b-finnish-lm-v2/blob/main/run-finnish-asr-models.ipynb) notebook in this repository for a detailed example of how to use this model.

### Limitations and bias

This model was fine-tuned on audio samples with a maximum length of 20 seconds, so it most likely works best for fairly short audio of similar length. You can still try it on much longer audio and see how it performs. If you encounter out-of-memory errors with very long audio files, you can use the audio chunking method introduced in [this blog post](https://huggingface.co/blog/asr-chunking).

The vast majority of the fine-tuning data comes from the Finnish Parliament dataset, so this model may not generalize well to very different domains, such as everyday spoken Finnish with dialects. In addition, the audio in the datasets tends to be dominated by adult male speakers, so this model may not work as well for, for example, children's and women's speech.

The Finnish KenLM language model used in the decoding phase was trained on text data from the audio transcriptions and from a subset of Finnish Wikipedia. Thus, the decoder's language model may not generalize to very different language, such as everyday spoken language with dialects (especially because Wikipedia contains mostly formal Finnish). It may be beneficial to train your own KenLM language model on text from your domain and use it in decoding.
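
The chunking approach from the blog post splits long audio into fixed-length windows that overlap by a stride on each side, transcribes each window, and stitches the results together. The sketch below only computes the window boundaries in samples, to illustrate the idea; in practice the `transformers` ASR pipeline does this for you through its `chunk_length_s` and `stride_length_s` arguments. The 16 kHz sampling rate matches wav2vec2 models; the 20 s window and 2 s stride are example values.

```python
def chunk_boundaries(num_samples, sampling_rate=16_000, chunk_length_s=20.0, stride_length_s=2.0):
    """Return (start, end) sample indices of overlapping windows covering the audio.

    Consecutive windows overlap by 2 * stride, so the edges of each window,
    which lack context, can be discarded when stitching the transcriptions.
    """
    chunk = int(chunk_length_s * sampling_rate)
    stride = int(stride_length_s * sampling_rate)
    step = chunk - 2 * stride
    if step <= 0:
        raise ValueError("chunk_length_s must be larger than 2 * stride_length_s")
    boundaries = []
    start = 0
    while True:
        end = min(start + chunk, num_samples)
        boundaries.append((start, end))
        if end == num_samples:
            break
        start += step
    return boundaries


# A 60-second clip at 16 kHz
print(chunk_boundaries(60 * 16_000))
```

Choosing a window no longer than the 20-second training maximum keeps each chunk within the length range the model saw during fine-tuning.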

## Training data

This model was fine-tuned with 275.6 hours of Finnish transcribed speech data from the following datasets:

| Dataset | Hours | % of total hours |
|:------------------------------------------------------------------------------------------------------------------------------ |:--------:|:----------------:|
| [Common Voice 7.0 Finnish train + evaluation + other splits](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0) | 9.70 h | 3.52 % |
| [Finnish parliament session 2](https://b2share.eudat.eu/records/4df422d631544ce682d6af1d4714b2d4) | 0.24 h | 0.09 % |
| [VoxPopuli Finnish](https://github.com/facebookresearch/voxpopuli) | 21.97 h | 7.97 % |
| [CSS10 Finnish](https://github.com/kyubyong/css10) | 10.32 h | 3.74 % |
| [Aalto Finnish Parliament ASR Corpus](http://urn.fi/urn:nbn:fi:lb-2021051903) | 228.00 h | 82.73 % |
| [Finnish Broadcast Corpus](http://urn.fi/urn:nbn:fi:lb-2016042502) | 5.37 h | 1.95 % |

Datasets were filtered to include only audio samples with a maximum length of 20 seconds.
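
The length filter amounts to keeping samples whose duration (number of samples divided by sampling rate) is at most 20 seconds. A minimal sketch, assuming each sample is a dict whose `audio` entry holds a raw `array` and a `sampling_rate`, roughly like the decoded `audio` column of a Hugging Face `datasets` audio dataset:

```python
MAX_DURATION_S = 20.0


def duration_seconds(audio):
    """Duration of a decoded audio sample in seconds."""
    return len(audio["array"]) / audio["sampling_rate"]


def keep_short(samples, max_duration_s=MAX_DURATION_S):
    """Keep only samples of at most max_duration_s seconds."""
    return [s for s in samples if duration_seconds(s["audio"]) <= max_duration_s]


# Two fake 16 kHz samples: 5 s and 25 s of silence
samples = [
    {"audio": {"array": [0.0] * (5 * 16_000), "sampling_rate": 16_000}},
    {"audio": {"array": [0.0] * (25 * 16_000), "sampling_rate": 16_000}},
]
print(len(keep_short(samples)))
```

With `datasets`, the same predicate could be passed to `Dataset.filter` instead of building a Python list.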

## Training procedure

This model was trained during the [Robust Speech Challenge Event](https://discuss.huggingface.co/t/open-to-the-community-robust-speech-recognition-challenge/13614) organized by Hugging Face. Training was done on a Tesla V100 GPU, sponsored by OVHcloud.

The training script was provided by Hugging Face and is available [here](https://github.com/huggingface/transformers/blob/main/examples/research_projects/robust-speech-event/run_speech_recognition_ctc_bnb.py). We only modified its data loading for our custom datasets.

For the KenLM language model training, we followed the [blog post tutorial](https://huggingface.co/blog/wav2vec2-with-ngram) provided by Hugging Face. Training data for the 5-gram KenLM were text transcriptions of the audio training data and 100k random samples of cleaned [Finnish Wikipedia](https://huggingface.co/datasets/wikipedia) (August 2021) dataset.

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 8

- seed: 42

- optimizer: [8-bit Adam](https://github.com/facebookresearch/bitsandbytes) with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 10

- mixed_precision_training: Native AMP
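
With `lr_scheduler_type: linear` and 500 warmup steps, the learning rate rises linearly from 0 to 5e-05 over the first 500 steps and then decays linearly to 0 at the final training step. The sketch below reproduces that shape in plain Python. The total step count is a placeholder, since the card's results table only lists steps up to 29,500.

```python
def linear_warmup_lr(step, base_lr=5e-5, warmup_steps=500, total_steps=30_000):
    """Learning rate at `step` for linear warmup followed by linear decay to zero.

    total_steps is a placeholder; the card does not state the exact value.
    """
    if step < warmup_steps:
        return base_lr * step / warmup_steps
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


for step in (0, 250, 500, 15_250, 30_000):
    print(step, linear_warmup_lr(step))
```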

The pretrained `facebook/wav2vec2-xls-r-1b` model was initialized with the following hyperparameters:

- attention_dropout: 0.094

- hidden_dropout: 0.047

- feat_proj_dropout: 0.04

- mask_time_prob: 0.082

- layerdrop: 0.041

- activation_dropout: 0.055

- ctc_loss_reduction: "mean"

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 0.7778 | 0.17 | 500 | 0.2851 | 0.3572 |

| 0.5506 | 0.34 | 1000 | 0.1595 | 0.2130 |

| 0.6569 | 0.5 | 1500 | 0.1458 | 0.2046 |

| 0.5997 | 0.67 | 2000 | 0.1374 | 0.1975 |

| 0.542 | 0.84 | 2500 | 0.1390 | 0.1956 |

| 0.4815 | 1.01 | 3000 | 0.1266 | 0.1813 |

| 0.6982 | 1.17 | 3500 | 0.1441 | 0.1965 |

| 0.4522 | 1.34 | 4000 | 0.1232 | 0.1822 |

| 0.4655 | 1.51 | 4500 | 0.1209 | 0.1702 |

| 0.4069 | 1.68 | 5000 | 0.1149 | 0.1688 |

| 0.4226 | 1.84 | 5500 | 0.1121 | 0.1560 |

| 0.3993 | 2.01 | 6000 | 0.1091 | 0.1557 |

| 0.406 | 2.18 | 6500 | 0.1115 | 0.1553 |

| 0.4098 | 2.35 | 7000 | 0.1144 | 0.1560 |

| 0.3995 | 2.51 | 7500 | 0.1028 | 0.1476 |

| 0.4101 | 2.68 | 8000 | 0.1129 | 0.1511 |

| 0.3636 | 2.85 | 8500 | 0.1025 | 0.1517 |

| 0.3534 | 3.02 | 9000 | 0.1068 | 0.1480 |

| 0.3836 | 3.18 | 9500 | 0.1072 | 0.1459 |

| 0.3531 | 3.35 | 10000 | 0.0928 | 0.1367 |

| 0.3649 | 3.52 | 10500 | 0.1042 | 0.1426 |

| 0.3645 | 3.69 | 11000 | 0.0979 | 0.1433 |

| 0.3685 | 3.85 | 11500 | 0.0947 | 0.1346 |

| 0.3325 | 4.02 | 12000 | 0.0991 | 0.1352 |

| 0.3497 | 4.19 | 12500 | 0.0919 | 0.1358 |

| 0.3303 | 4.36 | 13000 | 0.0888 | 0.1272 |

| 0.3323 | 4.52 | 13500 | 0.0888 | 0.1277 |

| 0.3452 | 4.69 | 14000 | 0.0894 | 0.1279 |

| 0.337 | 4.86 | 14500 | 0.0917 | 0.1289 |

| 0.3114 | 5.03 | 15000 | 0.0942 | 0.1313 |

| 0.3099 | 5.19 | 15500 | 0.0902 | 0.1239 |

| 0.3079 | 5.36 | 16000 | 0.0871 | 0.1256 |

| 0.3293 | 5.53 | 16500 | 0.0861 | 0.1263 |

| 0.3123 | 5.7 | 17000 | 0.0876 | 0.1203 |

| 0.3093 | 5.86 | 17500 | 0.0848 | 0.1226 |

| 0.2903 | 6.03 | 18000 | 0.0914 | 0.1221 |

| 0.297 | 6.2 | 18500 | 0.0841 | 0.1185 |

| 0.2797 | 6.37 | 19000 | 0.0858 | 0.1165 |

| 0.2878 | 6.53 | 19500 | 0.0874 | 0.1161 |

| 0.2974 | 6.7 | 20000 | 0.0835 | 0.1173 |

| 0.3051 | 6.87 | 20500 | 0.0835 | 0.1178 |

| 0.2941 | 7.04 | 21000 | 0.0852 | 0.1155 |

| 0.258 | 7.21 | 21500 | 0.0832 | 0.1132 |

| 0.2778 | 7.37 | 22000 | 0.0829 | 0.1110 |

| 0.2751 | 7.54 | 22500 | 0.0822 | 0.1069 |

| 0.2887 | 7.71 | 23000 | 0.0819 | 0.1103 |

| 0.2509 | 7.88 | 23500 | 0.0787 | 0.1055 |

| 0.2501 | 8.04 | 24000 | 0.0807 | 0.1076 |

| 0.2399 | 8.21 | 24500 | 0.0784 | 0.1052 |

| 0.2539 | 8.38 | 25000 | 0.0772 | 0.1075 |

| 0.248 | 8.55 | 25500 | 0.0772 | 0.1055 |

| 0.2689 | 8.71 | 26000 | 0.0763 | 0.1027 |

| 0.2855 | 8.88 | 26500 | 0.0756 | 0.1035 |

| 0.2421 | 9.05 | 27000 | 0.0771 | 0.0998 |

| 0.2497 | 9.22 | 27500 | 0.0756 | 0.0971 |

| 0.2367 | 9.38 | 28000 | 0.0741 | 0.0974 |

| 0.2473 | 9.55 | 28500 | 0.0739 | 0.0982 |

| 0.2396 | 9.72 | 29000 | 0.0756 | 0.0991 |

| 0.2602 | 9.89 | 29500 | 0.0737 | 0.0975 |
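
A log like the one above is easier to read once it has been parsed and the best checkpoint picked out programmatically. The sketch below assumes the columns are, in order, training loss, epoch, step, validation loss and WER. The header row is not reproduced in this excerpt. For brevity it only copies the final six rows, so "best" means best among those rows.

```python
# Hypothetical helper: parse Trainer-style markdown rows and pick the best step.
# Column order is ASSUMED to be: training loss | epoch | step | validation loss | WER.
LOG_ROWS = """
| 0.2421 | 9.05 | 27000 | 0.0771 | 0.0998 |
| 0.2497 | 9.22 | 27500 | 0.0756 | 0.0971 |
| 0.2367 | 9.38 | 28000 | 0.0741 | 0.0974 |
| 0.2473 | 9.55 | 28500 | 0.0739 | 0.0982 |
| 0.2396 | 9.72 | 29000 | 0.0756 | 0.0991 |
| 0.2602 | 9.89 | 29500 | 0.0737 | 0.0975 |
"""


def parse_rows(text):
    """Turn '| a | b | c | d | e |' lines into typed tuples, skipping malformed lines."""
    rows = []
    for line in text.strip().splitlines():
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        if len(cells) == 5:
            train_loss, epoch, step, val_loss, wer = cells
            rows.append((float(train_loss), float(epoch), int(step), float(val_loss), float(wer)))
    return rows


def best_by(rows, index):
    """Return the row with the smallest value in column `index`."""
    return min(rows, key=lambda r: r[index])


rows = parse_rows(LOG_ROWS)
best_wer = best_by(rows, 4)
best_val = best_by(rows, 3)
print(f"lowest WER {best_wer[4]} at step {best_wer[2]}")
print(f"lowest validation loss {best_val[3]} at step {best_val[2]}")
```

Within these rows, the lowest WER and the lowest validation loss fall at different steps. This is why a checkpoint is usually selected on the metric that is actually reported.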

### Framework versions

- Transformers 4.17.0.dev0

- Pytorch 1.10.2+cu102

- Datasets 1.18.3

- Tokenizers 0.11.0

## Evaluation results

Evaluation was done with the [Common Voice 7.0 Finnish test split](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0), [Common Voice 9.0 Finnish test split](https://huggingface.co/datasets/mozilla-foundation/common_voice_9_0) and with the [FLEURS ASR Finnish test split](https://huggingface.co/datasets/google/fleurs).

This model's training data includes the training split of Common Voice 7.0, whereas our newer `Finnish-NLP/wav2vec2-base-fi-voxpopuli-v2-finetuned` and `Finnish-NLP/wav2vec2-large-uralic-voxpopuli-v2-finnish` models were trained on Common Voice 9.0, so we ran tests on both Common Voice versions. Note that Common Voice does not appear to keep its test split fixed across dataset versions, so some Common Voice 9.0 training examples may appear in the Common Voice 7.0 test split and vice versa. Common Voice test results are therefore not fully comparable between models trained on different Common Voice versions, although the comparison should still be meaningful.
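
One way to gauge that possible overlap is to compare the clip identifiers in one release's training split with those in the other release's test split. The sketch below runs on plain Python lists. It assumes each example can be identified by its audio file name (for instance the dataset's `path` field). The file names are made up, and loading the real Common Voice splits, which requires accepting the dataset terms on the Hub, is left out.

```python
# Minimal sketch: how many test clips of one Common Voice release also appear
# in the training split of another release? Clip identity is ASSUMED to be the
# audio file name; the example paths below are invented for illustration.
from pathlib import PurePosixPath


def clip_ids(paths):
    """Reduce paths to bare file names so differing directory prefixes still match."""
    return {PurePosixPath(p).name for p in paths}


def overlap_report(train_paths, test_paths):
    """Return the shared clip names and the fraction of test clips they represent."""
    train, test = clip_ids(train_paths), clip_ids(test_paths)
    shared = train & test
    fraction = len(shared) / len(test) if test else 0.0
    return shared, fraction


cv9_train = ["clips/common_voice_fi_001.mp3", "clips/common_voice_fi_002.mp3"]
cv7_test = ["fi/clips/common_voice_fi_002.mp3", "fi/clips/common_voice_fi_003.mp3"]
shared, fraction = overlap_report(cv9_train, cv7_test)
print(sorted(shared), f"{fraction:.0%} of the test clips also occur in training")
```

This check matches on file names only. The same sentence recorded in a different clip would not be caught, so a sentence-level comparison could be added in the same way.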

### Common Voice 7.0 testing

To evaluate this model, run the `eval.py` script in this repository:

```bash

python3 eval.py --model_id Finnish-NLP/wav2vec2-xlsr-1b-finnish-lm-v2 --dataset mozilla-foundation/common_voice_7_0 --config fi --split test

```

This model (the fifth row of the table) achieves the following word error rate (WER) and character error rate (CER), shown alongside our other models and their parameter counts:

| Model | Parameters | WER with LM (%) | WER without LM (%) | CER with LM (%) | CER without LM (%) |
|-------------------------------------------------------|------------------|---------------|------------------|---------------|------------------|
|Finnish-NLP/wav2vec2-base-fi-voxpopuli-v2-finetuned | 95 million |5.85 |13.52 |1.35 |2.44 |
|Finnish-NLP/wav2vec2-large-uralic-voxpopuli-v2-finnish | 300 million |4.13 |**9.66** |0.90 |1.66 |
|Finnish-NLP/wav2vec2-xlsr-300m-finnish-lm | 300 million |8.16 |17.92 |1.97 |3.36 |
|Finnish-NLP/wav2vec2-xlsr-1b-finnish-lm | 1000 million |5.65 |13.11 |1.20 |2.23 |
|Finnish-NLP/wav2vec2-xlsr-1b-finnish-lm-v2 | 1000 million |**4.09** |9.73 |**0.88** |**1.65** |
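
WER and CER are both edit-distance rates. They count the minimum number of substitutions, deletions and insertions needed to turn the model's transcript into the reference. WER divides that count by the number of reference words, and CER divides it by the number of reference characters. The tables report both as percentages. The following is a standalone sketch of that standard definition, not the code used by `eval.py`. It also skips any text normalization, such as lowercasing or punctuation removal, that an evaluation script may apply first.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences; every edit costs 1."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # delete a reference token
                curr[j - 1] + 1,         # insert a hypothesis token
                prev[j - 1] + (r != h),  # substitute (free when tokens match)
            )
        prev = curr
    return prev[-1]


def wer(reference, hypothesis):
    """Word error rate; assumes a non-empty reference."""
    ref_words = reference.split()
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference, hypothesis):
    """Character error rate; assumes a non-empty reference."""
    return edit_distance(list(reference), list(hypothesis)) / len(reference)


ref = "hyvää huomenta kaikille"
hyp = "hyvää huomenta kaikki"
print(f"WER {wer(ref, hyp):.2%}, CER {cer(ref, hyp):.2%}")
```

A single wrong word counts fully toward WER but only partially toward CER. This is why the CER columns are much lower than the WER columns for every model.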

### Common Voice 9.0 testing

To evaluate this model, run the `eval.py` script in this repository:

```bash

python3 eval.py --model_id Finnish-NLP/wav2vec2-xlsr-1b-finnish-lm-v2 --dataset mozilla-foundation/common_voice_9_0 --config fi --split test

```

This model (the fifth row of the table) achieves the following word error rate (WER) and character error rate (CER), shown alongside our other models and their parameter counts:

| Model | Parameters | WER with LM (%) | WER without LM (%) | CER with LM (%) | CER without LM (%) |
|-------------------------------------------------------|------------------|---------------|------------------|---------------|------------------|
|Finnish-NLP/wav2vec2-base-fi-voxpopuli-v2-finetuned | 95 million |5.93 |14.08 |1.40 |2.59 |
|Finnish-NLP/wav2vec2-large-uralic-voxpopuli-v2-finnish | 300 million |4.13 |9.83 |0.92 |1.71 |
|Finnish-NLP/wav2vec2-xlsr-300m-finnish-lm | 300 million |7.42 |16.45 |1.79 |3.07 |
|Finnish-NLP/wav2vec2-xlsr-1b-finnish-lm | 1000 million |5.35 |13.00 |1.14 |2.20 |
|Finnish-NLP/wav2vec2-xlsr-1b-finnish-lm-v2 | 1000 million |**3.72** |**8.96** |**0.80** |**1.52** |

### FLEURS ASR testing

To evaluate this model, run the `eval.py` script in this repository:

```bash

python3 eval.py --model_id Finnish-NLP/wav2vec2-xlsr-1b-finnish-lm-v2 --dataset google/fleurs --config fi_fi --split test

```

This model (the fifth row of the table) achieves the following word error rate (WER) and character error rate (CER), shown alongside our other models and their parameter counts:

| Model | Parameters | WER with LM (%) | WER without LM (%) | CER with LM (%) | CER without LM (%) |
|-------------------------------------------------------|------------------|---------------|------------------|---------------|------------------|
|Finnish-NLP/wav2vec2-base-fi-voxpopuli-v2-finetuned | 95 million |13.99 |17.16 |6.07 |6.61 |
|Finnish-NLP/wav2vec2-large-uralic-voxpopuli-v2-finnish | 300 million |12.44 |**14.63** |5.77 |6.22 |
|Finnish-NLP/wav2vec2-xlsr-300m-finnish-lm | 300 million |17.72 |23.30 |6.78 |7.67 |
|Finnish-NLP/wav2vec2-xlsr-1b-finnish-lm | 1000 million |20.34 |16.67 |6.97 |6.35 |
|Finnish-NLP/wav2vec2-xlsr-1b-finnish-lm-v2 | 1000 million |**12.11** |14.89 |**5.65** |**6.06** |
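
On FLEURS, LM decoding does not help every model equally. For `Finnish-NLP/wav2vec2-xlsr-1b-finnish-lm`, the WER with LM is higher than the WER without it. Computing the relative WER change for each row makes this visible at a glance. The snippet below only reuses the numbers from the FLEURS table and makes no claim about the cause.

```python
# Relative WER change from LM decoding, using the FLEURS numbers from the table.
# Positive = LM decoding lowered WER; negative = it raised WER.
FLEURS_WER = {
    # model: (WER with LM, WER without LM), both in percent
    "wav2vec2-base-fi-voxpopuli-v2-finetuned": (13.99, 17.16),
    "wav2vec2-large-uralic-voxpopuli-v2-finnish": (12.44, 14.63),
    "wav2vec2-xlsr-300m-finnish-lm": (17.72, 23.30),
    "wav2vec2-xlsr-1b-finnish-lm": (20.34, 16.67),
    "wav2vec2-xlsr-1b-finnish-lm-v2": (12.11, 14.89),
}


def relative_reduction(with_lm, without_lm):
    """Fraction of the no-LM word error rate removed by LM decoding."""
    return (without_lm - with_lm) / without_lm


gains = {name: relative_reduction(*wers) for name, wers in FLEURS_WER.items()}
for name, gain in gains.items():
    print(f"{name}: {gain:+.1%}")
```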

## Team Members

- Aapo Tanskanen, [Hugging Face profile](https://huggingface.co/aapot), [LinkedIn profile](https://www.linkedin.com/in/aapotanskanen/)

- Rasmus Toivanen, [Hugging Face profile](https://huggingface.co/RASMUS), [LinkedIn profile](https://www.linkedin.com/in/rasmustoivanen/)

Feel free to contact us for more details 🤗 |

hamzab/roberta-fake-news-classification | 331696d6bd2286f88fe0ec364b3e31ef0b7042b3 | 2022-04-07T13:25:28.000Z | [

"pytorch",

"roberta",

"text-classification",

"en",

"dataset:fake-and-real-news-dataset on kaggle",

"transformers",

"classification",

"license:mit"

]

| text-classification | false | hamzab | null | hamzab/roberta-fake-news-classification | 55 | null | transformers | 5,821 | ---

license: mit

widget:

- text: "Some ninja attacked the White House."
  example_title: "Fake example 1"

language:

- en

tags:

- classification

datasets:

- "fake-and-real-news-dataset on kaggle"

---

## Overview

The model is `roberta-base` fine-tuned on [fake-and-real-news-dataset](https://www.kaggle.com/datasets/clmentbisaillon/fake-and-real-news-dataset). It reaches 100% accuracy on that dataset.

The model takes a news article and predicts whether it is real or fake.

The format of the input should be:

```

<title> TITLE HERE <content> CONTENT HERE <end>

```
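
A small helper can wrap a headline and body in the markers shown above. It follows the template literally, with a space around each marker. The prediction snippet further down joins the markers without spaces, and the card does not say which spacing was used during training, so treat the spacing as an assumption. `build_input` is a hypothetical name, not part of the model's API.

```python
def build_input(title: str, content: str) -> str:
    """Hypothetical helper: format an article as <title> ... <content> ... <end>."""
    # Only leading/trailing whitespace is trimmed; the text itself is left untouched.
    return f"<title> {title.strip()} <content> {content.strip()} <end>"


print(build_input("Some ninja attacked the White House.", "Officials declined to comment."))
```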

## Using this model in your code

To use this model, first load it from the Hugging Face Hub:

```python

from transformers import AutoTokenizer, AutoModelForSequenceClassification

tokenizer = AutoTokenizer.from_pretrained("hamzab/roberta-fake-news-classification")

model = AutoModelForSequenceClassification.from_pretrained("hamzab/roberta-fake-news-classification")

```

Then make a prediction as follows:

```python

import torch

def predict_fake(title, text):
    # Wrap the headline and body in the marker format described above
    input_str = "<title>" + title + "<content>" + text + "<end>"
    inputs = tokenizer(input_str, max_length=512, padding="max_length", truncation=True, return_tensors="pt")
    device = "cuda" if torch.cuda.is_available() else "cpu"
    model.to(device)
    with torch.no_grad():
        output = model(inputs["input_ids"].to(device), attention_mask=inputs["attention_mask"].to(device))
    # Softmax over the class dimension turns the two logits into probabilities
    probs = torch.softmax(output.logits, dim=-1)[0]
    return dict(zip(["Fake", "Real"], [p.item() for p in probs]))

print(predict_fake("HEADLINE HERE", "CONTENT HERE"))

```

You can also use Gradio to test the model in real time:

```python

import gradio as gr

# predict_fake returns a {label: probability} dict, which the "label" output renders
iface = gr.Interface(fn=predict_fake, inputs=[gr.Textbox(lines=1, label="headline"), gr.Textbox(lines=6, label="content")], outputs="label")
iface.launch(share=True)

``` |

NCAI/NCAI-BERT | 889c339b60ab295438ec9c2d6df001c0895f0209 | 2022-04-13T11:27:59.000Z | [

"pytorch",

"lean_albert",

"transformers"

]

| null | false | NCAI | null | NCAI/NCAI-BERT | 55 | null | transformers | 5,822 | Entry not found |

prithivida/bert-for-patents-64d | a337ab997cdb5f9cbf9fc18568e5cd254cc2c1c4 | 2022-06-29T07:47:23.000Z | [

"pytorch",

"tf",

"bert",

"feature-extraction",

"en",

"transformers",

"masked-lm",

"license:apache-2.0"

]