modelId

stringlengths 4

112

| sha

stringlengths 40

40

| lastModified

stringlengths 24

24

| tags

list | pipeline_tag

stringclasses 29

values | private

bool 1

class | author

stringlengths 2

38

⌀ | config

null | id

stringlengths 4

112

| downloads

float64 0

36.8M

⌀ | likes

float64 0

712

⌀ | library_name

stringclasses 17

values | __index_level_0__

int64 0

38.5k

| readme

stringlengths 0

186k

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

softcatala/fullstop-catalan-punctuation-prediction

|

4b26218160a6efed8b99f7f5167269f57dcc25aa

|

2022-04-06T12:45:54.000Z

|

[

"pytorch",

"roberta",

"token-classification",

"ca",

"dataset:softcatala/Europarl-catalan",

"transformers",

"punctuation prediction",

"punctuation",

"autotrain_compatible"

] |

token-classification

| false |

softcatala

| null |

softcatala/fullstop-catalan-punctuation-prediction

| 204 | null |

transformers

| 3,600 |

---

language:

- ca

tags:

- punctuation prediction

- punctuation

datasets: softcatala/Europarl-catalan

widget:

- text: "Els investigadors suggereixen que tot i que es tracta de la cua d'un dinosaure jove la mostra revela un plomatge adult i no pas plomissol"

example_title: "Catalan"

metrics:

- f1

---

This model predicts punctuation for Catalan text.

The model restores the following punctuation markers: **"." "," "?" "-" ":"**

Based on the work https://github.com/oliverguhr/fullstop-deep-punctuation-prediction
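As a rough illustration of how per-word predictions turn into punctuated text, the sketch below attaches one predicted marker to each word. It assumes one label per word and that the label `0` (as in the results table) means "no punctuation"; the function name `restore_punctuation` and the example labels are hypothetical and hand-written, not actual model output.

```python
def restore_punctuation(words, labels):
    """Attach each predicted marker to its word; "0" means no punctuation."""
    if len(words) != len(labels):
        raise ValueError("words and labels must have the same length")
    pieces = []
    for word, label in zip(words, labels):
        pieces.append(word if label == "0" else word + label)
    return " ".join(pieces)


# Hand-written labels for illustration only, not actual model output.
words = ["Hola", "com", "estàs"]
labels = [",", "0", "?"]
restored = restore_punctuation(words, labels)
print(restored)
```

In practice the model works on sub-word tokens, so a real pipeline would first have to merge token-level predictions back into word-level ones.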

## Results

Performance differs across punctuation markers because hyphens and colons are often optional and can be replaced by a comma or a full stop. The model achieves the following F1 scores for Catalan:

| Label | CA |

| ------------- | ----- |

| 0 | 0.99 |

| . | 0.93 |

| , | 0.82 |

| ? | 0.76 |

| - | 0.89 |

| : | 0.64 |

| macro average | 0.84 |
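The macro average in the last row is the unweighted mean of the six per-label scores. A minimal check, using only the values from the table above:

```python
# Per-label F1 scores copied from the table above.
f1_scores = {"0": 0.99, ".": 0.93, ",": 0.82, "?": 0.76, "-": 0.89, ":": 0.64}

# Macro average: every label counts equally, regardless of how frequent it is.
macro_f1 = sum(f1_scores.values()) / len(f1_scores)
print(f"{macro_f1:.2f}")
```

Because every label has the same weight, the weaker scores for `?` and `:` pull the average well below the score for the dominant `0` label.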

## Contact

Jordi Mas <[email protected]>

|

EMBO/BioMegatron345mUncased

|

ab9ac883b103dbb83e55f3b7a416fffcebff4e1b

|

2022-07-26T06:50:35.000Z

|

[

"pytorch",

"megatron-bert",

"en",

"arxiv:2010.06060",

"transformers",

"language model",

"license:cc-by-4.0"

] | null | false |

EMBO

| null |

EMBO/BioMegatron345mUncased

| 204 | 1 |

transformers

| 3,601 |

---

license: cc-by-4.0

language:

- en

thumbnail:

tags:

- language model

---

<!---

# ##############################################################################################

#

# This model has been uploaded to HuggingFace by https://huggingface.co/drAbreu

# The model is based on the NVIDIA checkpoint located at

# https://catalog.ngc.nvidia.com/orgs/nvidia/models/biomegatron345muncased

#

# ##############################################################################################

-->

[BioMegatron](https://arxiv.org/pdf/2010.06060.pdf) is a transformer developed by the Applied Deep Learning Research team at NVIDIA. This particular Megatron model was trained on top of the Megatron-LM model, adding a PubMed corpus to the Megatron-LM corpora (Wikipedia, RealNews, OpenWebText, and CC-Stories). BioMegatron follows an architecture similar (albeit not identical) to BERT and has 345 million parameters:

* 24 layers

* 16 attention heads with a hidden size of 1024.
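To see how these numbers relate to each other, the sketch below derives the per-head dimension and a rough parameter count for the encoder layers alone. The feed-forward width of four times the hidden size is an assumption borrowed from standard BERT and is not stated in this card; embeddings and any other components are not counted, so the result is lower than the 345 million total.

```python
num_layers = 24
num_heads = 16
hidden_size = 1024
ffn_size = 4 * hidden_size  # assumption: BERT-style feed-forward width

# Each attention head works on an equal slice of the hidden vector.
head_dim = hidden_size // num_heads

# Query, key, value and output projections (weights + biases).
attention_params = 4 * (hidden_size * hidden_size + hidden_size)
# Two feed-forward projections (weights + biases).
ffn_params = (hidden_size * ffn_size + ffn_size) + (ffn_size * hidden_size + hidden_size)
# Two layer norms per layer, each with a scale and a shift vector.
layer_norm_params = 2 * (2 * hidden_size)

params_per_layer = attention_params + ffn_params + layer_norm_params
encoder_params = num_layers * params_per_layer

print(head_dim, params_per_layer, encoder_params)
```

Under these assumptions the 24 encoder layers account for roughly 302 million parameters.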

More information is available in the [NVIDIA NGC Catalog](https://catalog.ngc.nvidia.com/orgs/nvidia/models/biomegatron345muncased).

# Running BioMegatron in 🤗 transformers

In this implementation we have followed the commands of the [`nvidia/megatron-bert-uncased-345m`](https://huggingface.co/nvidia/megatron-bert-uncased-345m) repository to make BioMegatron available in 🤗.

However, the file [`convert_megatron_bert_checkpoint.py`](https://github.com/huggingface/transformers/blob/main/src/transformers/models/megatron_bert/convert_megatron_bert_checkpoint.py) needed a modification. The reason is that the Megatron model shown in [`nvidia/megatron-bert-uncased-345m`](https://huggingface.co/nvidia/megatron-bert-uncased-345m) has included head layers, while the weights of the BioMegatron model that we upload to this repository do not contain a head.

The repository provides an alternative version of the [Python script](https://huggingface.co/EMBO/BioMegatron345mUncased/blob/main/convert_biomegatron_checkpoint.py) so that any user can cross-check the validity of the model replicated here.

The code below is a modification of the original [`convert_megatron_bert_checkpoint.py`](https://github.com/huggingface/transformers/blob/main/src/transformers/models/megatron_bert/convert_megatron_bert_checkpoint.py).

```python

import json
import os

import torch

# recursive_print is assumed to be exported by convert_biomegatron_checkpoint,
# as it is defined in the original convert_megatron_bert_checkpoint.py.
from convert_biomegatron_checkpoint import convert_megatron_checkpoint, recursive_print

print_checkpoint_structure = True
path_to_checkpoint = "/path/to/BioMegatron345mUncased/"

# Extract the basename.
basename = os.path.dirname(path_to_checkpoint).split('/')[-1]

# Load the model.
input_state_dict = torch.load(os.path.join(path_to_checkpoint, 'model_optim_rng.pt'), map_location="cpu")

# Convert.
print("Converting")
output_state_dict, output_config = convert_megatron_checkpoint(input_state_dict, head_model=False)

# Print the structure of converted state dict.
if print_checkpoint_structure:
    recursive_print(None, output_state_dict)

# Store the config to file.
output_config_file = os.path.join(path_to_checkpoint, "config.json")
print(f'Saving config to "{output_config_file}"')
with open(output_config_file, "w") as f:
    json.dump(output_config, f)

# Store the state_dict to file.
output_checkpoint_file = os.path.join(path_to_checkpoint, "pytorch_model.bin")
print(f'Saving checkpoint to "{output_checkpoint_file}"')
torch.save(output_state_dict, output_checkpoint_file)

```

BioMegatron can be run with the standard 🤗 script for loading models. Here we show an example identical to that of [`nvidia/megatron-bert-uncased-345m`](https://huggingface.co/nvidia/megatron-bert-uncased-345m).

```python

import torch
from transformers import BertTokenizer, AutoModelForMaskedLM

checkpoint = "EMBO/BioMegatron345mUncased"

# The tokenizer. Megatron was trained with standard tokenizer(s).
tokenizer = BertTokenizer.from_pretrained(checkpoint)

# Load the model from the Hugging Face Hub checkpoint.
model = AutoModelForMaskedLM.from_pretrained(checkpoint)
device = torch.device("cpu")
model.to(device)

# Create inputs (from the BERT example page).
inputs = tokenizer("The capital of France is [MASK]", return_tensors="pt").to(device)
label = tokenizer("The capital of France is Paris", return_tensors="pt")["input_ids"].to(device)

# Run the model without tracking gradients.
with torch.no_grad():
    output = model(**inputs, labels=label)
print(output)

```

# Limitations

This model has not been fine-tuned on any task. It contains only the weights of the official NVIDIA checkpoint and must be trained further before it can perform a downstream task.

# Original code

The original code for Megatron can be found here: [https://github.com/NVIDIA/Megatron-LM](https://github.com/NVIDIA/Megatron-LM).

|

SharpAI/mal_tls

|

735a8d55f96d3bbbb035834ed8c82df7c8ba9ae2

|

2022-07-27T18:04:04.000Z

|

[

"pytorch",

"tf",

"bert",

"text-classification",

"transformers",

"generated_from_keras_callback",

"model-index"

] |

text-classification

| false |

SharpAI

| null |

SharpAI/mal_tls

| 204 | null |

transformers

| 3,602 |

---

tags:

- generated_from_keras_callback

model-index:

- name: mal_tls

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# mal_tls

This model is a fine-tuned version of [](https://huggingface.co/) on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.20.1

- TensorFlow 2.6.4

- Datasets 2.1.0

- Tokenizers 0.12.1

|

PlanTL-GOB-ES/roberta-base-bne-capitel-ner-plus

|

754e83ed79884f3ce74674711f002d95ae5f065e

|

2022-04-06T14:43:21.000Z

|

[

"pytorch",

"roberta",

"token-classification",

"es",

"dataset:bne",

"dataset:capitel",

"arxiv:1907.11692",

"arxiv:2107.07253",

"transformers",

"national library of spain",

"spanish",

"bne",

"capitel",

"ner",

"license:apache-2.0",

"autotrain_compatible"

] |

token-classification

| false |

PlanTL-GOB-ES

| null |

PlanTL-GOB-ES/roberta-base-bne-capitel-ner-plus

| 203 | 4 |

transformers

| 3,603 |

---

language:

- es

license: apache-2.0

tags:

- "national library of spain"

- "spanish"

- "bne"

- "capitel"

- "ner"

datasets:

- "bne"

- "capitel"

metrics:

- "f1"

inference:

parameters:

aggregation_strategy: "first"

---

# Spanish RoBERTa-base trained on BNE finetuned for CAPITEL Named Entity Recognition (NER) dataset.

RoBERTa-base-bne is a transformer-based masked language model for the Spanish language. It is based on the [RoBERTa](https://arxiv.org/abs/1907.11692) base model and has been pre-trained using the largest Spanish corpus known to date, with a total of 570GB of clean and deduplicated text processed for this work, compiled from the web crawlings performed by the [National Library of Spain (Biblioteca Nacional de España)](http://www.bne.es/en/Inicio/index.html) from 2009 to 2019.

Original pre-trained model can be found here: https://huggingface.co/PlanTL-GOB-ES/roberta-base-bne

## Dataset

The dataset used is the one from the [CAPITEL competition at IberLEF 2020](https://sites.google.com/view/capitel2020) (sub-task 1).

**IMPORTANT ABOUT THIS MODEL:** We modified the dataset to make this model more robust to general Spanish input. In Spanish, all named entities are capitalized, and because this dataset was prepared by experts, its capitalization is fully correct. We randomly selected some entities and lower-cased them so that the model learns not only that named entities are capitalized, but also the structure of a sentence that should contain a named entity. For instance, in "My name is [placeholder]", the [placeholder] should be a named entity even if it is not capitalized. The model trained on the original CAPITEL dataset can be found here: https://huggingface.co/PlanTL-GOB-ES/roberta-base-bne-capitel-ner

Examples:

This model:

- "Me llamo asier y vivo en barcelona todo el año." → "Me llamo {asier:S-PER} y vivo en {barcelona:S-LOC} todo el año."

- "Hoy voy a visitar el parc güell tras salir del barcelona supercomputing center." → "Hoy voy a visitar el {parc:B-LOC} {güell:E-LOC} tras salir del {barcelona:B-ORG} {supercomputing:I-ORG} {center:E-ORG}."

Model trained on original data:

- "Me llamo asier y vivo en barcelona todo el año." → "Me llamo asier y vivo en barcelona todo el año." (nothing)

- "Hoy voy a visitar el parc güell tras salir del barcelona supercomputing center." → "Hoy voy a visitar el parc güell tras salir del barcelona supercomputing center." (nothing)
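The lower-casing step described in the Dataset section might look roughly like the sketch below. It is an illustration under stated assumptions, not the authors' actual preprocessing: the function name `lowercase_some_entities`, the `probability` parameter, and the token/tag list format are hypothetical, and the tags are assumed to follow the BIOES-style scheme seen in the examples above (`S-`, `B-`, `I-`, `E-`, with `O` for non-entities).

```python
import random


def lowercase_some_entities(tokens, tags, probability, rng):
    """Lower-case whole entities at random; the tags themselves are unchanged."""
    out = list(tokens)
    i = 0
    while i < len(tokens):
        if tags[i] == "O":
            i += 1
            continue
        # Walk to the end of the current entity (an S- or E- tag closes it).
        j = i
        while j + 1 < len(tokens) and tags[j][0] not in "SE" and tags[j + 1] != "O":
            j += 1
        # Decide once per entity so multi-token names stay consistent.
        if rng.random() < probability:
            for k in range(i, j + 1):
                out[k] = tokens[k].lower()
        i = j + 1
    return out


tokens = ["Me", "llamo", "Asier", "y", "vivo", "en", "Barcelona"]
tags = ["O", "O", "S-PER", "O", "O", "O", "S-LOC"]

rng = random.Random(0)
all_lowered = lowercase_some_entities(tokens, tags, 1.0, rng)
none_lowered = lowercase_some_entities(tokens, tags, 0.0, rng)
print(all_lowered)
```

Deciding once per entity rather than once per token keeps a multi-word name such as an organization either fully capitalized or fully lower-cased.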

## Evaluation and results

F1 Score: 0.8867

For evaluation details visit our [GitHub repository](https://github.com/PlanTL-GOB-ES/lm-spanish).

## Citing

Check out our paper for all the details: https://arxiv.org/abs/2107.07253

```

@article{gutierrezfandino2022,

author = {Asier Gutiérrez-Fandiño and Jordi Armengol-Estapé and Marc Pàmies and Joan Llop-Palao and Joaquin Silveira-Ocampo and Casimiro Pio Carrino and Carme Armentano-Oller and Carlos Rodriguez-Penagos and Aitor Gonzalez-Agirre and Marta Villegas},

title = {MarIA: Spanish Language Models},

journal = {Procesamiento del Lenguaje Natural},

volume = {68},

number = {0},

year = {2022},

issn = {1989-7553},

url = {http://journal.sepln.org/sepln/ojs/ojs/index.php/pln/article/view/6405},

pages = {39--60}

}

```

## Funding

This work was partially funded by the Spanish State Secretariat for Digitalization and Artificial Intelligence (SEDIA) within the framework of the Plan-TL, and the Future of Computing Center, a Barcelona Supercomputing Center and IBM initiative (2020).

## Disclaimer

The models published in this repository are intended for a generalist purpose and are available to third parties. These models may have bias and/or any other undesirable distortions.

When third parties deploy or provide systems and/or services to other parties using any of these models (or using systems based on these models) or become users of the models, they should note that it is their responsibility to mitigate the risks arising from their use and, in any event, to comply with applicable regulations, including regulations regarding the use of artificial intelligence.

In no event shall the owner of the models (SEDIA – State Secretariat for digitalization and artificial intelligence) nor the creator (BSC – Barcelona Supercomputing Center) be liable for any results arising from the use made by third parties of these models.

Los modelos publicados en este repositorio tienen una finalidad generalista y están a disposición de terceros. Estos modelos pueden tener sesgos y/u otro tipo de distorsiones indeseables.

Cuando terceros desplieguen o proporcionen sistemas y/o servicios a otras partes usando alguno de estos modelos (o utilizando sistemas basados en estos modelos) o se conviertan en usuarios de los modelos, deben tener en cuenta que es su responsabilidad mitigar los riesgos derivados de su uso y, en todo caso, cumplir con la normativa aplicable, incluyendo la normativa en materia de uso de inteligencia artificial.

En ningún caso el propietario de los modelos (SEDIA – Secretaría de Estado de Digitalización e Inteligencia Artificial) ni el creador (BSC – Barcelona Supercomputing Center) serán responsables de los resultados derivados del uso que hagan terceros de estos modelos.

|

google/vit-large-patch16-384

|

4b143e77059a54c70b348a76677ab9946f584e13

|

2022-01-28T10:22:26.000Z

|

[

"pytorch",

"tf",

"jax",

"vit",

"image-classification",

"dataset:imagenet",

"dataset:imagenet-21k",

"arxiv:2010.11929",

"arxiv:2006.03677",

"transformers",

"vision",

"license:apache-2.0"

] |

image-classification

| false |

google

| null |

google/vit-large-patch16-384

| 203 | 2 |

transformers

| 3,604 |

---

license: apache-2.0

tags:

- image-classification

- vision

datasets:

- imagenet

- imagenet-21k

---

# Vision Transformer (large-sized model)

Vision Transformer (ViT) model pre-trained on ImageNet-21k (14 million images, 21,843 classes) at resolution 224x224, and fine-tuned on ImageNet 2012 (1 million images, 1,000 classes) at resolution 384x384. It was introduced in the paper [An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale](https://arxiv.org/abs/2010.11929) by Dosovitskiy et al. and first released in [this repository](https://github.com/google-research/vision_transformer). However, the weights were converted from the [timm repository](https://github.com/rwightman/pytorch-image-models) by Ross Wightman, who already converted the weights from JAX to PyTorch. Credits go to him.

Disclaimer: The team releasing ViT did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The Vision Transformer (ViT) is a transformer encoder model (BERT-like) pretrained on a large collection of images in a supervised fashion, namely ImageNet-21k, at a resolution of 224x224 pixels. Next, the model was fine-tuned on ImageNet (also referred to as ILSVRC2012), a dataset comprising 1 million images and 1,000 classes, at a higher resolution of 384x384.

Images are presented to the model as a sequence of fixed-size patches (resolution 16x16), which are linearly embedded. One also adds a [CLS] token to the beginning of a sequence to use it for classification tasks. One also adds absolute position embeddings before feeding the sequence to the layers of the Transformer encoder.
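The patch arithmetic above determines the sequence length the encoder sees. The short sketch below works it out for the two resolutions mentioned in this card; the helper name `vit_sequence_length` is hypothetical.

```python
def vit_sequence_length(image_size, patch_size=16):
    """Number of tokens fed to the encoder: one per patch plus the [CLS] token."""
    patches_per_side = image_size // patch_size
    return patches_per_side ** 2 + 1


pretrain_length = vit_sequence_length(224)   # pre-training resolution
finetune_length = vit_sequence_length(384)   # fine-tuning resolution
print(pretrain_length, finetune_length)
```

Moving from 224x224 to 384x384 roughly triples the number of tokens, which is one reason fine-tuning at the higher resolution costs more compute.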

By pre-training the model, it learns an inner representation of images that can then be used to extract features useful for downstream tasks: if you have a dataset of labeled images for instance, you can train a standard classifier by placing a linear layer on top of the pre-trained encoder. One typically places a linear layer on top of the [CLS] token, as the last hidden state of this token can be seen as a representation of an entire image.

## Intended uses & limitations

You can use the raw model for image classification. See the [model hub](https://huggingface.co/models?search=google/vit) to look for fine-tuned versions on a task that interests you.

### How to use

Here is how to use this model to classify an image of the COCO 2017 dataset into one of the 1,000 ImageNet classes:

```python

from transformers import ViTFeatureExtractor, ViTForImageClassification

from PIL import Image

import requests

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'

image = Image.open(requests.get(url, stream=True).raw)

feature_extractor = ViTFeatureExtractor.from_pretrained('google/vit-large-patch16-384')

model = ViTForImageClassification.from_pretrained('google/vit-large-patch16-384')

inputs = feature_extractor(images=image, return_tensors="pt")

outputs = model(**inputs)

logits = outputs.logits

# model predicts one of the 1000 ImageNet classes

predicted_class_idx = logits.argmax(-1).item()

print("Predicted class:", model.config.id2label[predicted_class_idx])

```

Currently, both the feature extractor and model support PyTorch. Tensorflow and JAX/FLAX are coming soon, and the API of ViTFeatureExtractor might change.

## Training data

The ViT model was pretrained on [ImageNet-21k](http://www.image-net.org/), a dataset consisting of 14 million images and 21k classes, and fine-tuned on [ImageNet](http://www.image-net.org/challenges/LSVRC/2012/), a dataset consisting of 1 million images and 1k classes.

## Training procedure

### Preprocessing

The exact details of preprocessing of images during training/validation can be found [here](https://github.com/google-research/vision_transformer/blob/master/vit_jax/input_pipeline.py).

Images are resized/rescaled to the same resolution (224x224 during pre-training, 384x384 during fine-tuning) and normalized across the RGB channels with mean (0.5, 0.5, 0.5) and standard deviation (0.5, 0.5, 0.5).
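With mean and standard deviation both equal to 0.5, normalization maps channel values into the range [-1, 1]. The sketch below shows this for single 8-bit values; the rescaling factor of 1/255 is an assumption (the card only says the images are rescaled), and the function name `normalize_pixel` is hypothetical.

```python
def normalize_pixel(value, mean=0.5, std=0.5):
    """Rescale an 8-bit channel value to [0, 1], then normalize it."""
    scaled = value / 255.0  # assumption: 8-bit input rescaled by 1/255
    return (scaled - mean) / std


darkest = normalize_pixel(0)
brightest = normalize_pixel(255)
print(darkest, brightest)
```

In practice the feature extractor applies the same operation to every channel of the whole image tensor at once.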

### Pretraining

The model was trained on TPUv3 hardware (8 cores). All model variants are trained with a batch size of 4096 and learning rate warmup of 10k steps. For ImageNet, the authors found it beneficial to additionally apply gradient clipping at global norm 1. Pre-training resolution is 224.

## Evaluation results

For evaluation results on several image classification benchmarks, we refer to tables 2 and 5 of the original paper. Note that for fine-tuning, the best results are obtained with a higher resolution (384x384). Of course, increasing the model size will result in better performance.

### BibTeX entry and citation info

```bibtex

@misc{wu2020visual,

title={Visual Transformers: Token-based Image Representation and Processing for Computer Vision},

author={Bichen Wu and Chenfeng Xu and Xiaoliang Dai and Alvin Wan and Peizhao Zhang and Zhicheng Yan and Masayoshi Tomizuka and Joseph Gonzalez and Kurt Keutzer and Peter Vajda},

year={2020},

eprint={2006.03677},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

```

```bibtex

@inproceedings{deng2009imagenet,

title={Imagenet: A large-scale hierarchical image database},

author={Deng, Jia and Dong, Wei and Socher, Richard and Li, Li-Jia and Li, Kai and Fei-Fei, Li},

booktitle={2009 IEEE conference on computer vision and pattern recognition},

pages={248--255},

year={2009},

organization={Ieee}

}

```

|

mrm8488/bert2bert_shared-spanish-finetuned-paus-x-paraphrasing

|

e5fcd441a0b0e6fc55c87b3c89915623941a9426

|

2021-07-31T05:12:47.000Z

|

[

"pytorch",

"encoder-decoder",

"text2text-generation",

"es",

"dataset:pausx",

"transformers",

"spanish",

"paraphrasing",

"paraphrase",

"autotrain_compatible"

] |

text2text-generation

| false |

mrm8488

| null |

mrm8488/bert2bert_shared-spanish-finetuned-paus-x-paraphrasing

| 203 | 2 |

transformers

| 3,605 |

---

language: es

datasets:

- pausx

tags:

- spanish

- paraphrasing

- paraphrase

widget:

- text: "El pionero suizo John Sutter (1803-1880) llegó a Alta California con otros colonos euroamericanos en agosto de 1839."

---

# Spanish Bert2Bert (shared) fine-tuned on PAUS-X es for paraphrasing

|

HooshvareLab/bert-fa-base-uncased-sentiment-digikala

|

88db8245349b36b47ef1b92e49b8b80428b77d7c

|

2021-05-18T20:59:17.000Z

|

[

"pytorch",

"tf",

"jax",

"bert",

"text-classification",

"fa",

"transformers",

"license:apache-2.0"

] |

text-classification

| false |

HooshvareLab

| null |

HooshvareLab/bert-fa-base-uncased-sentiment-digikala

| 202 | null |

transformers

| 3,606 |

---

language: fa

license: apache-2.0

---

# ParsBERT (v2.0)

A Transformer-based Model for Persian Language Understanding

We rebuilt the vocabulary and fine-tuned ParsBERT v1.1 on new Persian corpora so that ParsBERT can be used in a wider range of applications.

Please follow the [ParsBERT](https://github.com/hooshvare/parsbert) repo for the latest information about previous and current models.

## Persian Sentiment [Digikala, SnappFood, DeepSentiPers]

This task aims to classify text, such as comments, according to the sentiment it expresses. We tested three well-known datasets for this task: `Digikala` user comments, `SnappFood` user comments, and `DeepSentiPers` in both binary and multi-class forms.

### Digikala

Digikala user comments are provided by the [Open Data Mining Program (ODMP)](https://www.digikala.com/opendata/). This dataset contains 62,321 user comments with three labels:

| Label | # |

|:---------------:|:------:|

| no_idea | 10394 |

| not_recommended | 15885 |

| recommended | 36042 |
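The label counts are noticeably imbalanced, which is worth keeping in mind when reading the F1 scores. A quick check of the totals and class shares, using only the numbers from the table above:

```python
# Label counts copied from the table above.
label_counts = {"no_idea": 10394, "not_recommended": 15885, "recommended": 36042}

total = sum(label_counts.values())
majority_label = max(label_counts, key=label_counts.get)

# Print the share of each class in the dataset.
for label, count in label_counts.items():
    print(f"{label}: {count / total:.1%}")
print("total:", total)
```

The counts add up to the 62,321 comments stated above, and `recommended` makes up more than half of them.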

**Download**

You can download the dataset from [here](https://www.digikala.com/opendata/)

## Results

The following table summarizes the F1 score obtained by ParsBERT as compared to other models and architectures.

| Dataset | ParsBERT v2 | ParsBERT v1 | mBERT | DeepSentiPers |

|:------------------------:|:-----------:|:-----------:|:-----:|:-------------:|

| Digikala User Comments | 81.72 | 81.74* | 80.74 | - |

## How to use :hugs:

| Task | Notebook |

|---------------------|---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| Sentiment Analysis | [Open in Colab](https://colab.research.google.com/github/hooshvare/parsbert/blob/master/notebooks/Taaghche_Sentiment_Analysis.ipynb) |

### BibTeX entry and citation info

Please cite in publications as the following:

```bibtex

@article{ParsBERT,

title={ParsBERT: Transformer-based Model for Persian Language Understanding},

author={Mehrdad Farahani and Mohammad Gharachorloo and Marzieh Farahani and Mohammad Manthouri},

journal={ArXiv},

year={2020},

volume={abs/2005.12515}

}

```

## Questions?

Post a GitHub issue on the [ParsBERT Issues](https://github.com/hooshvare/parsbert/issues) page.

|

abhi1nandy2/EManuals_RoBERTa

|

b6fe473485f493db94fe67b8fa9d62bd3d0a43b9

|

2022-05-04T04:57:53.000Z

|

[

"pytorch",

"roberta",

"feature-extraction",

"English",

"transformers",

"EManuals",

"customer support",

"QA"

] |

feature-extraction

| false |

abhi1nandy2

| null |

abhi1nandy2/EManuals_RoBERTa

| 202 | null |

transformers

| 3,607 |

---

language:

- English

tags:

- EManuals

- customer support

- QA

- roberta

---

Refer to https://aclanthology.org/2021.findings-emnlp.392/ for the paper and https://sites.google.com/view/emanualqa/home for the project website

## Citation

Please cite the work if you would like to use it.

```

@inproceedings{nandy-etal-2021-question-answering,

title = "Question Answering over Electronic Devices: A New Benchmark Dataset and a Multi-Task Learning based {QA} Framework",

author = "Nandy, Abhilash and

Sharma, Soumya and

Maddhashiya, Shubham and

Sachdeva, Kapil and

Goyal, Pawan and

Ganguly, Niloy",

booktitle = "Findings of the Association for Computational Linguistics: EMNLP 2021",

month = nov,

year = "2021",

address = "Punta Cana, Dominican Republic",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2021.findings-emnlp.392",

doi = "10.18653/v1/2021.findings-emnlp.392",

pages = "4600--4609",

abstract = "Answering questions asked from instructional corpora such as E-manuals, recipe books, etc., has been far less studied than open-domain factoid context-based question answering. This can be primarily attributed to the absence of standard benchmark datasets. In this paper, we meticulously create a large amount of data connected with E-manuals and develop a suitable algorithm to exploit it. We collect E-Manual Corpus, a huge corpus of 307,957 E-manuals, and pretrain RoBERTa on this large corpus. We create various benchmark QA datasets which include question answer pairs curated by experts based upon two E-manuals, real user questions from Community Question Answering Forum pertaining to E-manuals etc. We introduce EMQAP (E-Manual Question Answering Pipeline) that answers questions pertaining to electronics devices. Built upon the pretrained RoBERTa, it harbors a supervised multi-task learning framework which efficiently performs the dual tasks of identifying the section in the E-manual where the answer can be found and the exact answer span within that section. For E-Manual annotated question-answer pairs, we show an improvement of about 40{\%} in ROUGE-L F1 scores over most competitive baseline. We perform a detailed ablation study and establish the versatility of EMQAP across different circumstances. The code and datasets are shared at https://github.com/abhi1nandy2/EMNLP-2021-Findings, and the corresponding project website is https://sites.google.com/view/emanualqa/home.",

}

```

|

af-ai-center/bert-base-swedish-uncased

|

5c7fb9dbad916c7c9738751e8ee117b186f7da91

|

2021-05-18T23:12:14.000Z

|

[

"pytorch",

"tf",

"jax",

"bert",

"fill-mask",

"transformers",

"autotrain_compatible"

] |

fill-mask

| false |

af-ai-center

| null |

af-ai-center/bert-base-swedish-uncased

| 202 | null |

transformers

| 3,608 |

Entry not found

|

suhasjain/DailoGPT-small-harrypotter

|

27baccd28f5226a79ac26499d6ed6e8feeaba056

|

2021-09-10T08:47:17.000Z

|

[

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

] |

conversational

| false |

suhasjain

| null |

suhasjain/DailoGPT-small-harrypotter

| 202 | null |

transformers

| 3,609 |

---

tags:

- conversational

---

# Harry Potter DialoGPT Model

|

yoshitomo-matsubara/bert-base-uncased-mrpc

|

6a95d3c0ae14ab6bf2dccfaaa5bf6ee16b2a5172

|

2021-05-29T21:47:37.000Z

|

[

"pytorch",

"bert",

"text-classification",

"en",

"dataset:mrpc",

"transformers",

"mrpc",

"glue",

"torchdistill",

"license:apache-2.0"

] |

text-classification

| false |

yoshitomo-matsubara

| null |

yoshitomo-matsubara/bert-base-uncased-mrpc

| 202 | null |

transformers

| 3,610 |

---

language: en

tags:

- bert

- mrpc

- glue

- torchdistill

license: apache-2.0

datasets:

- mrpc

metrics:

- f1

- accuracy

---

`bert-base-uncased` fine-tuned on the MRPC dataset, using [***torchdistill***](https://github.com/yoshitomo-matsubara/torchdistill) and [Google Colab](https://colab.research.google.com/github/yoshitomo-matsubara/torchdistill/blob/master/demo/glue_finetuning_and_submission.ipynb).

The hyperparameters are the same as those in Hugging Face's example and/or the paper of BERT, and the training configuration (including hyperparameters) is available [here](https://github.com/yoshitomo-matsubara/torchdistill/blob/main/configs/sample/glue/mrpc/ce/bert_base_uncased.yaml).

I submitted prediction files to [the GLUE leaderboard](https://gluebenchmark.com/leaderboard), and the overall GLUE score was **77.9**.

|

deepset/gelectra-base-generator

|

ba9ac3432c2c9904460f59343f1fb80d4c0a0740

|

2021-10-21T12:18:27.000Z

|

[

"pytorch",

"tf",

"electra",

"fill-mask",

"de",

"dataset:wikipedia",

"dataset:OPUS",

"dataset:OpenLegalData",

"arxiv:2010.10906",

"transformers",

"license:mit",

"autotrain_compatible"

] |

fill-mask

| false |

deepset

| null |

deepset/gelectra-base-generator

| 201 | 3 |

transformers

| 3,611 |

---

language: de

license: mit

datasets:

- wikipedia

- OPUS

- OpenLegalData

---

# German ELECTRA base generator

Released in October 2020, this is the generator component of the German ELECTRA language model trained collaboratively by the makers of the original German BERT (aka "bert-base-german-cased") and the dbmdz BERT (aka "bert-base-german-dbmdz-cased"). In our [paper](https://arxiv.org/pdf/2010.10906.pdf), we outline the steps taken to train our model.

The generator is useful for performing masking experiments. If you are looking for a regular language model for embedding extraction, or downstream tasks like NER, classification or QA, please use deepset/gelectra-base.

## Overview

**Paper:** [here](https://arxiv.org/pdf/2010.10906.pdf)

**Architecture:** ELECTRA base (generator)

**Language:** German

See also:

deepset/gbert-base

deepset/gbert-large

deepset/gelectra-base

deepset/gelectra-large

deepset/gelectra-base-generator

deepset/gelectra-large-generator

## Authors

Branden Chan: `branden.chan [at] deepset.ai`

Stefan Schweter: `stefan [at] schweter.eu`

Timo Möller: `timo.moeller [at] deepset.ai`

## About us

We bring NLP to the industry via open source!

Our focus: Industry specific language models & large scale QA systems.

Some of our work:

- [German BERT (aka "bert-base-german-cased")](https://deepset.ai/german-bert)

- [GermanQuAD and GermanDPR datasets and models (aka "gelectra-base-germanquad", "gbert-base-germandpr")](https://deepset.ai/germanquad)

- [FARM](https://github.com/deepset-ai/FARM)

- [Haystack](https://github.com/deepset-ai/haystack/)

Get in touch:

[Twitter](https://twitter.com/deepset_ai) | [LinkedIn](https://www.linkedin.com/company/deepset-ai/) | [Slack](https://haystack.deepset.ai/community/join) | [GitHub Discussions](https://github.com/deepset-ai/haystack/discussions) | [Website](https://deepset.ai)

By the way: [we're hiring!](http://www.deepset.ai/jobs)

|

hakurei/lit-125M

|

f1e7a551a28ee8ad2e982d5425a07e1d59b4be65

|

2022-02-17T22:52:19.000Z

|

[

"pytorch",

"gpt_neo",

"text-generation",

"en",

"transformers",

"causal-lm",

"license:mit"

] |

text-generation

| false |

hakurei

| null |

hakurei/lit-125M

| 201 | null |

transformers

| 3,612 |

---

language:

- en

tags:

- pytorch

- causal-lm

license: mit

---

# Lit-125M - A Small Fine-tuned Model For Fictional Storytelling

Lit-125M is a GPT-Neo 125M model fine-tuned on 2GB of a diverse range of light novels, erotica, and annotated literature for the purpose of generating novel-like fictional text.

## Model Description

The model used for fine-tuning is [GPT-Neo 125M](https://huggingface.co/EleutherAI/gpt-neo-125M), a 125 million parameter auto-regressive language model trained on [The Pile](https://pile.eleuther.ai/).

## Training Data & Annotative Prompting

The data used in fine-tuning has been gathered from various sources such as the [Gutenberg Project](https://www.gutenberg.org/). The annotated fiction dataset has prepended tags to assist in generating towards a particular style. Here is an example prompt that shows how to use the annotations.

```

[ Title: The Dunwich Horror; Author: H. P. Lovecraft; Genre: Horror; Tags: 3rdperson, scary; Style: Dark ]

***

When a traveler in north central Massachusetts takes the wrong fork...

```

The annotations can be mixed and matched to help generate towards a specific style.
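A prompt in this format can be assembled programmatically. The sketch below is only an illustration: `build_prompt` is a hypothetical helper, not part of the model or of `transformers`. It assumes that fields are joined with `; ` and that the header, the `***` separator, and the story text each sit on their own line, as in the example above.

```python
def build_prompt(text, annotations):
    """Build an annotated prompt; list values (e.g. Tags) are joined with ', '."""
    fields = []
    for key, value in annotations.items():
        if isinstance(value, (list, tuple)):
            value = ", ".join(value)
        fields.append(f"{key}: {value}")
    header = "[ " + "; ".join(fields) + " ]"
    # Assumed layout: header line, separator line, then the start of the story.
    return f"{header}\n***\n{text}"


prompt = build_prompt(
    "When a traveler",
    {
        "Title": "The Dunwich Horror",
        "Author": "H. P. Lovecraft",
        "Genre": "Horror",
        "Tags": ["3rdperson", "scary"],
        "Style": "Dark",
    },
)
print(prompt)
```

Dropping or reordering keys in the dictionary is one way to mix and match annotations without editing the prompt string by hand.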

## Downstream Uses

This model can be used for entertainment purposes and as a creative writing assistant for fiction writers. Its small size also makes it convenient for debugging or for further development of other models with a similar purpose.

## Example Code

```

from transformers import AutoTokenizer, AutoModelForCausalLM

model = AutoModelForCausalLM.from_pretrained('hakurei/lit-125M')

tokenizer = AutoTokenizer.from_pretrained('hakurei/lit-125M')

prompt = '''[ Title: The Dunwich Horror; Author: H. P. Lovecraft; Genre: Horror ]

***

When a traveler'''

input_ids = tokenizer.encode(prompt, return_tensors='pt')

output = model.generate(input_ids, do_sample=True, temperature=1.0, top_p=0.9, repetition_penalty=1.2, max_length=len(input_ids[0])+100, pad_token_id=tokenizer.eos_token_id)

generated_text = tokenizer.decode(output[0])

print(generated_text)

```

Running this code produces output similar to:

```

[ Title: The Dunwich Horror; Author: H. P. Lovecraft; Genre: Horror ]

***

When a traveler takes a trip through the streets of the world, the traveler feels like a youkai with a whole world inside her mind. It can be very scary for a youkai. When someone goes in the opposite direction and knocks on your door, it is actually the first time you have ever come to investigate something like that.

That's right: everyone has heard stories about youkai, right? If you have heard them, you know what I'm talking about.

It's hard not to say you

```

## Team members and Acknowledgements

- [Anthony Mercurio](https://github.com/harubaru)

- Imperishable_NEET

|

huggingface/funnel-small

|

3829c82f3a863632e1726bfe4ecf950a726689df

|

2020-08-31T21:06:56.000Z

|

[

"pytorch",

"funnel",

"feature-extraction",

"transformers"

] |

feature-extraction

| false |

huggingface

| null |

huggingface/funnel-small

| 201 | null |

transformers

| 3,613 |

Entry not found

|

hyunwoongko/blenderbot-9B

|

20049263e5130cc21015f3327085fb3adbc39939

|

2021-06-17T01:26:34.000Z

|

[

"pytorch",

"blenderbot",

"text2text-generation",

"en",

"dataset:blended_skill_talk",

"arxiv:1907.06616",

"transformers",

"convAI",

"conversational",

"facebook",

"license:apache-2.0",

"autotrain_compatible"

] |

conversational

| false |

hyunwoongko

| null |

hyunwoongko/blenderbot-9B

| 201 | 5 |

transformers

| 3,614 |

---

language:

- en

thumbnail:

tags:

- convAI

- conversational

- facebook

license: apache-2.0

datasets:

- blended_skill_talk

metrics:

- perplexity

---

## Model description

+ Paper: [Recipes for building an open-domain chatbot](https://arxiv.org/abs/2004.13637)

+ [Original PARLAI Code](https://parl.ai/projects/recipes/)

### Abstract

Building open-domain chatbots is a challenging area for machine learning research. While prior work has shown that scaling neural models in the number of parameters and the size of the data they are trained on gives improved results, we show that other ingredients are important for a high-performing chatbot. Good conversation requires a number of skills that an expert conversationalist blends in a seamless way: providing engaging talking points and listening to their partners, both asking and answering questions, and displaying knowledge, empathy and personality appropriately, depending on the situation. We show that large scale models can learn these skills when given appropriate training data and choice of generation strategy. We build variants of these recipes with 90M, 2.7B and 9.4B parameter neural models, and make our models and code publicly available. Human evaluations show our best models are superior to existing approaches in multi-turn dialogue in terms of engagingness and humanness measurements. We then discuss the limitations of this work by analyzing failure cases of our models.

|

lisaterumi/genia-biobert-ent

|

929329e6c4611a8add9409d97e0727ea8cfe9e63

|

2022-07-22T21:40:15.000Z

|

[

"pytorch",

"bert",

"token-classification",

"en",

"dataset:Genia",

"transformers",

"autotrain_compatible"

] |

token-classification

| false |

lisaterumi

| null |

lisaterumi/genia-biobert-ent

| 201 | null |

transformers

| 3,615 |

---

language: "en"

widget:

- text: "Point mutation of a GATA-1 site at -230 reduced promoter activity by 37%."

- text: "Electrophoretic mobility shift assays indicated that the -230 GATA-1 site has a relatively low affinity for GATA-1."

- text: "Accordingly, the effects of the constitutively active PKCs were compared to the effects of mutationally activated p21ras."

- text: "Activated Src and p21ras were able to induce CD69 expression."

datasets:

- Genia

---

# Genia-BioBERT-ENT

## Citation

```

coming soon

```

|

xlm-mlm-17-1280

|

ed2e1c862c37217e1b185c33a282ed8f3ebdc3e2

|

2022-07-22T08:09:41.000Z

|

[

"pytorch",

"tf",

"xlm",

"fill-mask",

"multilingual",

"en",

"fr",

"es",

"de",

"it",

"pt",

"nl",

"sv",

"pl",

"ru",

"ar",

"tr",

"zh",

"ja",

"ko",

"hi",

"vi",

"arxiv:1901.07291",

"arxiv:1911.02116",

"arxiv:1910.09700",

"transformers",

"license:cc-by-nc-4.0",

"autotrain_compatible"

] |

fill-mask

| false | null | null |

xlm-mlm-17-1280

| 200 | 1 |

transformers

| 3,616 |

---

language:

- multilingual

- en

- fr

- es

- de

- it

- pt

- nl

- sv

- pl

- ru

- ar

- tr

- zh

- ja

- ko

- hi

- vi

license: cc-by-nc-4.0

---

# xlm-mlm-17-1280

# Table of Contents

1. [Model Details](#model-details)

2. [Uses](#uses)

3. [Bias, Risks, and Limitations](#bias-risks-and-limitations)

4. [Training](#training)

5. [Evaluation](#evaluation)

6. [Environmental Impact](#environmental-impact)

7. [Technical Specifications](#technical-specifications)

8. [Citation](#citation)

9. [Model Card Authors](#model-card-authors)

10. [How To Get Started With the Model](#how-to-get-started-with-the-model)

# Model Details

xlm-mlm-17-1280 is the XLM model, which was proposed in [Cross-lingual Language Model Pretraining](https://arxiv.org/abs/1901.07291) by Guillaume Lample and Alexis Conneau, trained on text in 17 languages. The model is a transformer pretrained using a masked language modeling (MLM) objective.

## Model Description

- **Developed by:** See [associated paper](https://arxiv.org/abs/1901.07291) and [GitHub Repo](https://github.com/facebookresearch/XLM)

- **Model type:** Language model

- **Language(s) (NLP):** 17 languages, see [GitHub Repo](https://github.com/facebookresearch/XLM#the-17-and-100-languages) for full list.

- **License:** CC-BY-NC-4.0

- **Related Models:** [xlm-mlm-17-1280](https://huggingface.co/xlm-mlm-17-1280)

- **Resources for more information:**

- [Associated paper](https://arxiv.org/abs/1901.07291)

- [GitHub Repo](https://github.com/facebookresearch/XLM#the-17-and-100-languages)

- [Hugging Face Multilingual Models for Inference docs](https://huggingface.co/docs/transformers/v4.20.1/en/multilingual#xlm-with-language-embeddings)

# Uses

## Direct Use

The model is a language model. The model can be used for masked language modeling.

## Downstream Use

To learn more about this task and potential downstream uses, see the Hugging Face [fill mask docs](https://huggingface.co/tasks/fill-mask) and the [Hugging Face Multilingual Models for Inference](https://huggingface.co/docs/transformers/v4.20.1/en/multilingual#xlm-with-language-embeddings) docs. Also see the [associated paper](https://arxiv.org/abs/1901.07291).

## Out-of-Scope Use

The model should not be used to intentionally create hostile or alienating environments for people.

# Bias, Risks, and Limitations

Significant research has explored bias and fairness issues with language models (see, e.g., [Sheng et al. (2021)](https://aclanthology.org/2021.acl-long.330.pdf) and [Bender et al. (2021)](https://dl.acm.org/doi/pdf/10.1145/3442188.3445922)).

## Recommendations

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model.

# Training

This model is the XLM model trained on text in 17 languages. The preprocessing included tokenization and byte-pair-encoding. See the [GitHub repo](https://github.com/facebookresearch/XLM#the-17-and-100-languages) and the [associated paper](https://arxiv.org/pdf/1911.02116.pdf) for further details on the training data and training procedure.

[Conneau et al. (2020)](https://arxiv.org/pdf/1911.02116.pdf) report that this model has 16 layers, a hidden size of 1280, 16 attention heads, and a feed-forward layer dimension of 5120. The vocabulary size is 200k and the total number of parameters is 570M (see Table 7).

# Evaluation

## Testing Data, Factors & Metrics

The model developers evaluated the model on the XNLI cross-lingual classification task (see the [XNLI data card](https://huggingface.co/datasets/xnli) for more details on XNLI) using the metric of test accuracy. See the [associated paper](https://arxiv.org/pdf/1911.02116.pdf) for further details on the testing data, factors and metrics.

## Results

For xlm-mlm-17-1280, the test accuracies on the XNLI cross-lingual classification task in English (en), Spanish (es), German (de), Arabic (ar), and Chinese (zh) are:

| Language | en | es | de | ar | zh |
|:--------:|:--:|:--:|:--:|:--:|:--:|
| Test accuracy (%) | 84.8 | 79.4 | 76.2 | 71.5 | 75 |

See the [GitHub repo](https://github.com/facebookresearch/XLM#ii-cross-lingual-language-model-pretraining-xlm) for further details.

# Environmental Impact

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** More information needed

- **Hours used:** More information needed

- **Cloud Provider:** More information needed

- **Compute Region:** More information needed

- **Carbon Emitted:** More information needed

# Technical Specifications

[Conneau et al. (2020)](https://arxiv.org/pdf/1911.02116.pdf) report that this model has 16 layers, a hidden size of 1280, 16 attention heads, and a feed-forward layer dimension of 5120. The vocabulary size is 200k and the total number of parameters is 570M (see Table 7).
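As a rough sanity check on the 570M figure, the parameter count can be estimated from the architecture numbers. The sketch below is a back-of-the-envelope calculation, not the authors' accounting: it assumes a feed-forward dimension of 5120 (four times the hidden size), counts the token embedding matrix once, and ignores biases, layer norms, and position and language embeddings. The function name is hypothetical.

```python
def estimate_transformer_params(layers, hidden, ffn, vocab):
    """Approximate parameter count of a Transformer encoder (weights only)."""
    embeddings = vocab * hidden          # token embedding matrix
    attention = 4 * hidden * hidden      # query, key, value and output projections
    feed_forward = 2 * hidden * ffn      # two linear maps: hidden -> ffn -> hidden
    return embeddings + layers * (attention + feed_forward)


estimate = estimate_transformer_params(layers=16, hidden=1280, ffn=5120, vocab=200_000)
print(f"{estimate / 1e6:.1f}M")  # 570.6M
```

Under these assumptions, roughly 256M parameters sit in the embedding matrix and about 315M in the 16 Transformer layers.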

# Citation

**BibTeX:**

```bibtex

@article{lample2019cross,

title={Cross-lingual language model pretraining},

author={Lample, Guillaume and Conneau, Alexis},

journal={arXiv preprint arXiv:1901.07291},

year={2019}

}

```

**APA:**

- Lample, G., & Conneau, A. (2019). Cross-lingual language model pretraining. arXiv preprint arXiv:1901.07291.

# Model Card Authors

This model card was written by the team at Hugging Face.

# How to Get Started with the Model

More information needed. See the [ipython notebook](https://github.com/facebookresearch/XLM/blob/main/generate-embeddings.ipynb) in the associated [GitHub repo](https://github.com/facebookresearch/XLM#the-17-and-100-languages) for examples.

|

Helsinki-NLP/opus-mt-en-bg

|

f3d8448586d3626a38582ec5ff9e61f7d5940255

|

2021-01-18T08:05:27.000Z

|

[

"pytorch",

"marian",

"text2text-generation",

"en",

"bg",

"transformers",

"translation",

"license:apache-2.0",

"autotrain_compatible"

] |

translation

| false |

Helsinki-NLP

| null |

Helsinki-NLP/opus-mt-en-bg

| 200 | null |

transformers

| 3,617 |

---

language:

- en

- bg

tags:

- translation

license: apache-2.0

---

### eng-bul

* source group: English

* target group: Bulgarian

* OPUS readme: [eng-bul](https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/eng-bul/README.md)

* model: transformer

* source language(s): eng

* target language(s): bul bul_Latn

* pre-processing: normalization + SentencePiece (spm32k,spm32k)

* a sentence-initial language token is required in the form of `>>id<<` (id = valid target language ID)

* download original weights: [opus-2020-07-03.zip](https://object.pouta.csc.fi/Tatoeba-MT-models/eng-bul/opus-2020-07-03.zip)

* test set translations: [opus-2020-07-03.test.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/eng-bul/opus-2020-07-03.test.txt)

* test set scores: [opus-2020-07-03.eval.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/eng-bul/opus-2020-07-03.eval.txt)
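The sentence-initial language token mentioned in the list above can be added with a small helper before tokenization. This is a minimal sketch under stated assumptions: `add_target_token` and `translate` are hypothetical helpers, the valid IDs are taken to be the two target languages listed above (`bul`, `bul_Latn`), and the model is loaded through the `MarianMTModel` and `MarianTokenizer` classes from `transformers`.

```python
VALID_TARGETS = {"bul", "bul_Latn"}  # target language IDs listed for this model


def add_target_token(text, target="bul"):
    """Prepend the `>>id<<` token that selects the target language."""
    if target not in VALID_TARGETS:
        raise ValueError(f"unknown target language ID: {target!r}")
    return f">>{target}<< {text}"


def translate(sentences, target="bul", model_name="Helsinki-NLP/opus-mt-en-bg"):
    """Translate English sentences; downloads the model on first use."""
    from transformers import MarianMTModel, MarianTokenizer  # imported lazily

    tokenizer = MarianTokenizer.from_pretrained(model_name)
    model = MarianMTModel.from_pretrained(model_name)
    batch = tokenizer(
        [add_target_token(s, target) for s in sentences],
        return_tensors="pt",
        padding=True,
    )
    generated = model.generate(**batch)
    return tokenizer.batch_decode(generated, skip_special_tokens=True)


print(add_target_token("How are you?"))  # >>bul<< How are you?
```

Calling `translate(["How are you?"])` would then run the full pipeline, assuming `transformers`, `sentencepiece`, and PyTorch are installed.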

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| Tatoeba-test.eng.bul | 50.6 | 0.680 |

### System Info:

- hf_name: eng-bul

- source_languages: eng

- target_languages: bul

- opus_readme_url: https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/eng-bul/README.md

- original_repo: Tatoeba-Challenge

- tags: ['translation']

- languages: ['en', 'bg']

- src_constituents: {'eng'}

- tgt_constituents: {'bul', 'bul_Latn'}

- src_multilingual: False

- tgt_multilingual: False

- prepro: normalization + SentencePiece (spm32k,spm32k)

- url_model: https://object.pouta.csc.fi/Tatoeba-MT-models/eng-bul/opus-2020-07-03.zip

- url_test_set: https://object.pouta.csc.fi/Tatoeba-MT-models/eng-bul/opus-2020-07-03.test.txt

- src_alpha3: eng

- tgt_alpha3: bul

- short_pair: en-bg

- chrF2_score: 0.68

- bleu: 50.6

- brevity_penalty: 0.96

- ref_len: 69504.0

- src_name: English

- tgt_name: Bulgarian

- train_date: 2020-07-03

- src_alpha2: en

- tgt_alpha2: bg

- prefer_old: False

- long_pair: eng-bul

- helsinki_git_sha: 480fcbe0ee1bf4774bcbe6226ad9f58e63f6c535

- transformers_git_sha: 2207e5d8cb224e954a7cba69fa4ac2309e9ff30b

- port_machine: brutasse

- port_time: 2020-08-21-14:41

|

Helsinki-NLP/opus-mt-gem-en

|

a0433c2234bd6b0ee27e493c1d47ba46322312bc

|

2021-01-18T08:51:56.000Z

|

[

"pytorch",

"marian",

"text2text-generation",

"da",

"sv",

"af",

"nn",

"fy",

"fo",

"de",

"nb",

"nl",

"is",

"en",

"lb",

"yi",

"gem",

"transformers",

"translation",

"license:apache-2.0",

"autotrain_compatible"

] |

translation

| false |

Helsinki-NLP

| null |

Helsinki-NLP/opus-mt-gem-en

| 200 | 1 |

transformers

| 3,618 |

---

language:

- da

- sv

- af

- nn

- fy

- fo

- de

- nb

- nl

- is

- en

- lb

- yi

- gem

tags:

- translation

license: apache-2.0

---

### gem-eng

* source group: Germanic languages

* target group: English

* OPUS readme: [gem-eng](https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/gem-eng/README.md)

* model: transformer

* source language(s): afr ang_Latn dan deu enm_Latn fao frr fry gos got_Goth gsw isl ksh ltz nds nld nno nob nob_Hebr non_Latn pdc sco stq swe swg yid

* target language(s): eng

* pre-processing: normalization + SentencePiece (spm32k,spm32k)

* download original weights: [opus2m-2020-08-01.zip](https://object.pouta.csc.fi/Tatoeba-MT-models/gem-eng/opus2m-2020-08-01.zip)

* test set translations: [opus2m-2020-08-01.test.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/gem-eng/opus2m-2020-08-01.test.txt)

* test set scores: [opus2m-2020-08-01.eval.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/gem-eng/opus2m-2020-08-01.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| newssyscomb2009-deueng.deu.eng | 27.2 | 0.542 |

| news-test2008-deueng.deu.eng | 26.3 | 0.536 |

| newstest2009-deueng.deu.eng | 25.1 | 0.531 |

| newstest2010-deueng.deu.eng | 28.3 | 0.569 |

| newstest2011-deueng.deu.eng | 26.0 | 0.543 |

| newstest2012-deueng.deu.eng | 26.8 | 0.550 |

| newstest2013-deueng.deu.eng | 30.2 | 0.570 |

| newstest2014-deen-deueng.deu.eng | 30.7 | 0.574 |

| newstest2015-ende-deueng.deu.eng | 32.1 | 0.581 |

| newstest2016-ende-deueng.deu.eng | 36.9 | 0.624 |

| newstest2017-ende-deueng.deu.eng | 32.8 | 0.588 |

| newstest2018-ende-deueng.deu.eng | 40.2 | 0.640 |

| newstest2019-deen-deueng.deu.eng | 36.8 | 0.614 |

| Tatoeba-test.afr-eng.afr.eng | 62.8 | 0.758 |

| Tatoeba-test.ang-eng.ang.eng | 10.5 | 0.262 |

| Tatoeba-test.dan-eng.dan.eng | 61.6 | 0.754 |

| Tatoeba-test.deu-eng.deu.eng | 49.7 | 0.665 |

| Tatoeba-test.enm-eng.enm.eng | 23.9 | 0.491 |

| Tatoeba-test.fao-eng.fao.eng | 23.4 | 0.446 |

| Tatoeba-test.frr-eng.frr.eng | 10.2 | 0.184 |

| Tatoeba-test.fry-eng.fry.eng | 29.6 | 0.486 |

| Tatoeba-test.gos-eng.gos.eng | 17.8 | 0.352 |

| Tatoeba-test.got-eng.got.eng | 0.1 | 0.058 |

| Tatoeba-test.gsw-eng.gsw.eng | 15.3 | 0.333 |

| Tatoeba-test.isl-eng.isl.eng | 51.0 | 0.669 |

| Tatoeba-test.ksh-eng.ksh.eng | 6.7 | 0.266 |

| Tatoeba-test.ltz-eng.ltz.eng | 33.0 | 0.505 |

| Tatoeba-test.multi.eng | 54.0 | 0.687 |

| Tatoeba-test.nds-eng.nds.eng | 33.6 | 0.529 |

| Tatoeba-test.nld-eng.nld.eng | 58.9 | 0.733 |

| Tatoeba-test.non-eng.non.eng | 37.3 | 0.546 |

| Tatoeba-test.nor-eng.nor.eng | 54.9 | 0.696 |

| Tatoeba-test.pdc-eng.pdc.eng | 29.6 | 0.446 |

| Tatoeba-test.sco-eng.sco.eng | 40.5 | 0.581 |

| Tatoeba-test.stq-eng.stq.eng | 14.5 | 0.361 |

| Tatoeba-test.swe-eng.swe.eng | 62.0 | 0.745 |

| Tatoeba-test.swg-eng.swg.eng | 17.1 | 0.334 |

| Tatoeba-test.yid-eng.yid.eng | 19.4 | 0.400 |

### System Info:

- hf_name: gem-eng

- source_languages: gem

- target_languages: eng

- opus_readme_url: https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/gem-eng/README.md

- original_repo: Tatoeba-Challenge

- tags: ['translation']

- languages: ['da', 'sv', 'af', 'nn', 'fy', 'fo', 'de', 'nb', 'nl', 'is', 'en', 'lb', 'yi', 'gem']

- src_constituents: {'ksh', 'enm_Latn', 'got_Goth', 'stq', 'dan', 'swe', 'afr', 'pdc', 'gos', 'nno', 'fry', 'gsw', 'fao', 'deu', 'swg', 'sco', 'nob', 'nld', 'isl', 'eng', 'ltz', 'nob_Hebr', 'ang_Latn', 'frr', 'non_Latn', 'yid', 'nds'}

- tgt_constituents: {'eng'}

- src_multilingual: True

- tgt_multilingual: False

- prepro: normalization + SentencePiece (spm32k,spm32k)

- url_model: https://object.pouta.csc.fi/Tatoeba-MT-models/gem-eng/opus2m-2020-08-01.zip

- url_test_set: https://object.pouta.csc.fi/Tatoeba-MT-models/gem-eng/opus2m-2020-08-01.test.txt

- src_alpha3: gem

- tgt_alpha3: eng

- short_pair: gem-en

- chrF2_score: 0.687

- bleu: 54.0

- brevity_penalty: 0.993

- ref_len: 72120.0

- src_name: Germanic languages

- tgt_name: English

- train_date: 2020-08-01

- src_alpha2: gem

- tgt_alpha2: en

- prefer_old: False

- long_pair: gem-eng

- helsinki_git_sha: 480fcbe0ee1bf4774bcbe6226ad9f58e63f6c535

- transformers_git_sha: 2207e5d8cb224e954a7cba69fa4ac2309e9ff30b

- port_machine: brutasse

- port_time: 2020-08-21-14:41

|

Helsinki-NLP/opus-mt-pa-en

|

e501d2bca4de5b63384fcdafc6b6185433efb4a9

|

2021-09-10T14:00:06.000Z

|

[

"pytorch",

"marian",

"text2text-generation",

"pa",

"en",

"transformers",

"translation",

"license:apache-2.0",

"autotrain_compatible"

] |

translation

| false |

Helsinki-NLP

| null |

Helsinki-NLP/opus-mt-pa-en

| 200 | null |

transformers

| 3,619 |

---

tags:

- translation

license: apache-2.0

---

### opus-mt-pa-en

* source languages: pa

* target languages: en

* OPUS readme: [pa-en](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/pa-en/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-16.zip](https://object.pouta.csc.fi/OPUS-MT-models/pa-en/opus-2020-01-16.zip)

* test set translations: [opus-2020-01-16.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/pa-en/opus-2020-01-16.test.txt)

* test set scores: [opus-2020-01-16.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/pa-en/opus-2020-01-16.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| JW300.pa.en | 20.6 | 0.320 |

| Tatoeba.pa.en | 29.3 | 0.464 |

|

ShannonAI/ChineseBERT-base

|

aa8b6fa9c3427f77b0911b07ab35f2b1b8bf248b

|

2022-06-19T08:14:46.000Z

|

[

"pytorch",

"arxiv:2106.16038"

] | null | false |

ShannonAI

| null |

ShannonAI/ChineseBERT-base

| 200 | 6 | null | 3,620 |

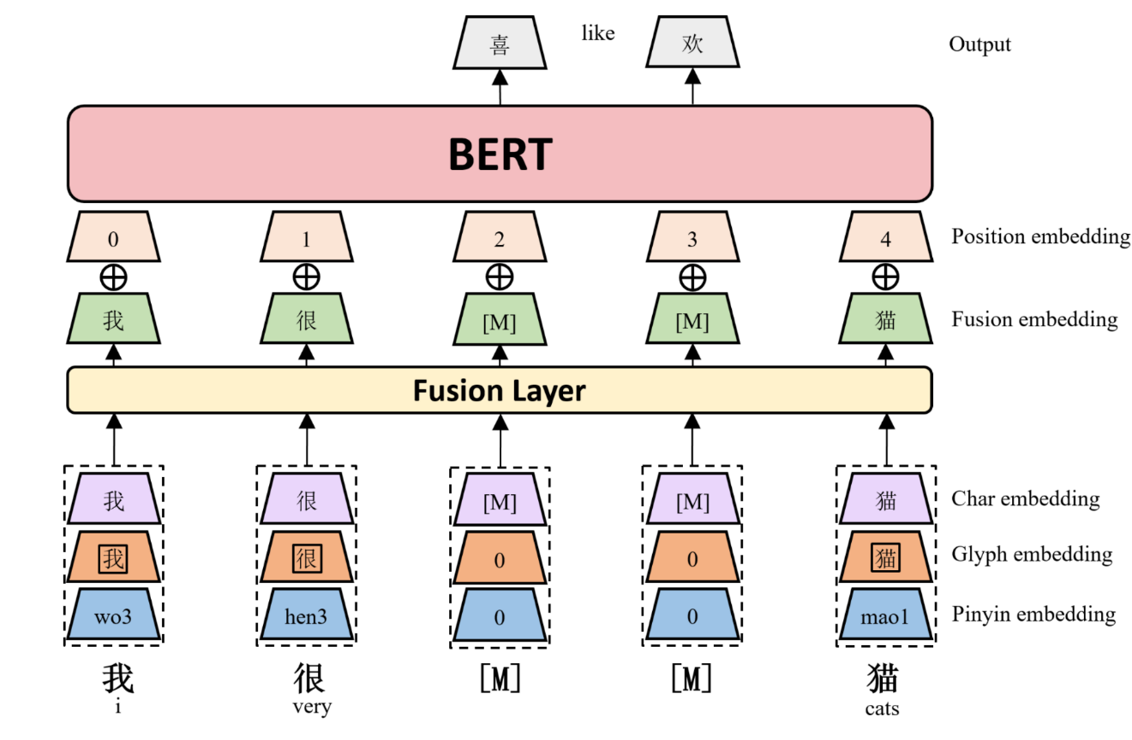

# ChineseBERT-base

This repository contains code, model, dataset for **ChineseBERT** at ACL2021.

paper:

**[ChineseBERT: Chinese Pretraining Enhanced by Glyph and Pinyin Information](https://arxiv.org/abs/2106.16038)**

*Zijun Sun, Xiaoya Li, Xiaofei Sun, Yuxian Meng, Xiang Ao, Qing He, Fei Wu and Jiwei Li*

code:

[ChineseBERT github link](https://github.com/ShannonAI/ChineseBert)

## Model description

We propose ChineseBERT, which incorporates both the glyph and pinyin information of Chinese

characters into language model pretraining.

First, for each Chinese character, we get three kinds of embeddings.

- **Char Embedding:** the same as the original BERT token embedding.

- **Glyph Embedding:** captures visual features based on different fonts of a Chinese character.

- **Pinyin Embedding:** captures phonetic features from the pinyin sequence of a Chinese character.

Then, char embedding, glyph embedding and pinyin embedding

are first concatenated, and mapped to a D-dimensional embedding through a fully

connected layer to form the fusion embedding.

Finally, the fusion embedding is added to the position embedding, and the sum is fed as input to the BERT model.

The following image shows an overview architecture of ChineseBERT model.

ChineseBERT leverages the glyph and pinyin information of Chinese characters to enhance the model's ability to capture context semantics from surface character forms and to disambiguate polyphonic characters in Chinese.
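The fusion step described above (concatenate the three embeddings, project back to D dimensions, add the position embedding) can be sketched in a few lines. This is a dependency-free illustration with random toy values, not the released implementation: the function names, the toy dimension `D = 4`, and the random weights are all assumptions made for the example.

```python
import random


def linear(weights, bias, x):
    """Fully connected layer; `weights` has one row of len(x) values per output."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def fuse(char_emb, glyph_emb, pinyin_emb, weights, bias, position_emb):
    """Concatenate the three embeddings, map them to D dims, add the position."""
    concatenated = char_emb + glyph_emb + pinyin_emb   # length 3 * D
    fusion = linear(weights, bias, concatenated)       # length D
    return [f + p for f, p in zip(fusion, position_emb)]


D = 4                      # toy embedding size
rng = random.Random(0)


def random_vector(n):
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]


char_emb, glyph_emb, pinyin_emb = random_vector(D), random_vector(D), random_vector(D)
position_emb = random_vector(D)
weights = [random_vector(3 * D) for _ in range(D)]     # D x 3D projection
bias = [0.0] * D

model_input = fuse(char_emb, glyph_emb, pinyin_emb, weights, bias, position_emb)
print(len(model_input))  # 4
```

In a real model the projection weights are learned and the three embeddings come from separate lookup or encoder modules; only the shape bookkeeping is shown here.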

|

facebook/wav2vec2-large-xlsr-53-dutch

|

ced00f8603b017d58cb3bcb3883e8c28f19ccdb4

|

2021-07-06T02:35:35.000Z

|

[

"pytorch",

"jax",

"wav2vec2",

"automatic-speech-recognition",

"nl",

"dataset:common_voice",

"transformers",

"speech",

"audio",

"license:apache-2.0"

] |

automatic-speech-recognition

| false |

facebook

| null |

facebook/wav2vec2-large-xlsr-53-dutch

| 200 | null |

transformers

| 3,621 |

---

language: nl

datasets:

- common_voice

tags:

- speech

- audio

- automatic-speech-recognition

license: apache-2.0

---

## Evaluation on Common Voice NL Test

```python

import torchaudio

from datasets import load_dataset, load_metric

from transformers import (

Wav2Vec2ForCTC,

Wav2Vec2Processor,

)

import torch

import re

import sys

model_name = "facebook/wav2vec2-large-xlsr-53-dutch"

device = "cuda"

chars_to_ignore_regex = '[\,\?\.\!\-\;\:\"]' # noqa: W605

model = Wav2Vec2ForCTC.from_pretrained(model_name).to(device)

processor = Wav2Vec2Processor.from_pretrained(model_name)

ds = load_dataset("common_voice", "nl", split="test", data_dir="./cv-corpus-6.1-2020-12-11")

resampler = torchaudio.transforms.Resample(orig_freq=48_000, new_freq=16_000)

def map_to_array(batch):
    speech, _ = torchaudio.load(batch["path"])
    batch["speech"] = resampler.forward(speech.squeeze(0)).numpy()
    batch["sampling_rate"] = resampler.new_freq
    batch["sentence"] = re.sub(chars_to_ignore_regex, '', batch["sentence"]).lower().replace("’", "'")
    return batch

ds = ds.map(map_to_array)

def map_to_pred(batch):
    features = processor(batch["speech"], sampling_rate=batch["sampling_rate"][0], padding=True, return_tensors="pt")
    input_values = features.input_values.to(device)
    attention_mask = features.attention_mask.to(device)
    with torch.no_grad():
        logits = model(input_values, attention_mask=attention_mask).logits
    pred_ids = torch.argmax(logits, dim=-1)
    batch["predicted"] = processor.batch_decode(pred_ids)
    batch["target"] = batch["sentence"]
    return batch

result = ds.map(map_to_pred, batched=True, batch_size=16, remove_columns=list(ds.features.keys()))

wer = load_metric("wer")

print(wer.compute(predictions=result["predicted"], references=result["target"]))

```

**Result**: 21.1 %

|

maxidl/wav2vec2-large-xlsr-german

|

893c0ff874902327f50822f871ff78e4f584fbf5

|

2021-07-06T12:32:21.000Z

|

[

"pytorch",

"jax",

"wav2vec2",

"automatic-speech-recognition",

"de",

"dataset:common_voice",

"transformers",

"audio",

"speech",

"xlsr-fine-tuning-week",

"license:apache-2.0",

"model-index"

] |

automatic-speech-recognition

| false |

maxidl

| null |

maxidl/wav2vec2-large-xlsr-german

| 200 | null |

transformers

| 3,622 |

---

language: de

datasets:

- common_voice

metrics:

- wer

tags:

- audio

- automatic-speech-recognition

- speech

- xlsr-fine-tuning-week

license: apache-2.0

model-index:

- name: {XLSR Wav2Vec2 Large 53 CV-de}

results:

- task:

name: Speech Recognition

type: automatic-speech-recognition

dataset:

name: Common Voice de

type: common_voice

args: de

metrics:

- name: Test WER

type: wer

value: 12.77

---

# Wav2Vec2-Large-XLSR-53-German

Fine-tuned [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on German using the [Common Voice](https://huggingface.co/datasets/common_voice) dataset.

When using this model, make sure that your speech input is sampled at 16kHz.

## Usage

The model can be used directly (without a language model) as follows:

```python

import torch

import torchaudio

from datasets import load_dataset

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

test_dataset = load_dataset("common_voice", "de", split="test[:8]") # use a batch of 8 for demo purposes

processor = Wav2Vec2Processor.from_pretrained("maxidl/wav2vec2-large-xlsr-german")

model = Wav2Vec2ForCTC.from_pretrained("maxidl/wav2vec2-large-xlsr-german")

resampler = torchaudio.transforms.Resample(48_000, 16_000)

"""

Preprocessing the dataset by:

- loading audio files

- resampling to 16kHz

- converting to array

- prepare input tensor using the processor

"""

def speech_file_to_array_fn(batch):
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

inputs = processor(test_dataset["speech"], sampling_rate=16_000, return_tensors="pt", padding=True)

# run forward

with torch.no_grad():
    logits = model(inputs.input_values, attention_mask=inputs.attention_mask).logits

predicted_ids = torch.argmax(logits, dim=-1)

print("Prediction:", processor.batch_decode(predicted_ids))

print("Reference:", test_dataset["sentence"])

"""

Example Result:

Prediction: [

'zieh durch bittet draußen die schuhe aus',

'es kommt zugvorgebauten fo',

'ihre vorterstrecken erschienen it modemagazinen wie der voge karpes basar mariclair',

'fürliepert eine auch für manachen ungewöhnlich lange drittelliste',

'er wurde zu ehren des reichskanzlers otto von bismarck errichtet',

'was solls ich bin bereit',

'das internet besteht aus vielen computern die miteinander verbunden sind',

'der uranus ist der siebinteplanet in unserem sonnensystem s'

]

Reference: [

'Zieht euch bitte draußen die Schuhe aus.',

'Es kommt zum Showdown in Gstaad.',

'Ihre Fotostrecken erschienen in Modemagazinen wie der Vogue, Harper’s Bazaar und Marie Claire.',

'Felipe hat eine auch für Monarchen ungewöhnlich lange Titelliste.',

'Er wurde zu Ehren des Reichskanzlers Otto von Bismarck errichtet.',

'Was solls, ich bin bereit.',

'Das Internet besteht aus vielen Computern, die miteinander verbunden sind.',

'Der Uranus ist der siebente Planet in unserem Sonnensystem.'

]

"""

```

## Evaluation

The model can be evaluated as follows on the German test data of Common Voice:

```python

import re

import torch

import torchaudio

from datasets import load_dataset, load_metric

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

"""

Evaluation on the full test set:

- takes ~20mins (RTX 3090).

- requires ~170GB RAM to compute the WER. Below, we use a chunked implementation of WER to avoid large RAM consumption.

"""

test_dataset = load_dataset("common_voice", "de", split="test") # use "test[:1%]" for 1% sample

wer = load_metric("wer")

processor = Wav2Vec2Processor.from_pretrained("maxidl/wav2vec2-large-xlsr-german")

model = Wav2Vec2ForCTC.from_pretrained("maxidl/wav2vec2-large-xlsr-german")

model.to("cuda")

chars_to_ignore_regex = '[\\,\\?\\.\\!\\-\\;\\:\\"\\“]'

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.
# We need to read the audio files as arrays
def speech_file_to_array_fn(batch):
    batch["sentence"] = re.sub(chars_to_ignore_regex, '', batch["sentence"]).lower()
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

# Run batched inference on the GPU and decode the predicted token ids
def evaluate(batch):
    inputs = processor(batch["speech"], sampling_rate=16_000, return_tensors="pt", padding=True)
    with torch.no_grad():
        logits = model(inputs.input_values.to("cuda"), attention_mask=inputs.attention_mask.to("cuda")).logits
    pred_ids = torch.argmax(logits, dim=-1)
    batch["pred_strings"] = processor.batch_decode(pred_ids)
    return batch

result = test_dataset.map(evaluate, batched=True, batch_size=8) # batch_size=8 -> requires ~14.5GB GPU memory

# non-chunked version:

# print("WER: {:2f}".format(100 * wer.compute(predictions=result["pred_strings"], references=result["sentence"])))

# WER: 12.900291

# Chunked version, see https://discuss.huggingface.co/t/spanish-asr-fine-tuning-wav2vec2/4586/5:

import jiwer

def chunked_wer(targets, predictions, chunk_size=None):
    if chunk_size is None: return jiwer.wer(targets, predictions)
    start = 0
    end = chunk_size
    H, S, D, I = 0, 0, 0, 0
    while start < len(targets):
        chunk_metrics = jiwer.compute_measures(targets[start:end], predictions[start:end])
        H = H + chunk_metrics["hits"]
        S = S + chunk_metrics["substitutions"]
        D = D + chunk_metrics["deletions"]
        I = I + chunk_metrics["insertions"]
        start += chunk_size
        end += chunk_size
    return float(S + D + I) / float(H + S + D)

print("Total (chunk_size=1000), WER: {:2f}".format(100 * chunked_wer(result["pred_strings"], result["sentence"], chunk_size=1000)))

# Total (chunk=1000), WER: 12.768981

```

**Test Result**: WER: 12.77 %
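The chunked evaluation above works because the counts behind WER are additive: substitutions, deletions, insertions, and hits can be summed over chunks and divided once at the end. The sketch below illustrates this in plain Python without `jiwer`. The function names are hypothetical, and it relies on the fact that S + D + I is the word-level edit distance and H + S + D is the number of reference words.

```python
def word_edit_distance(ref_tokens, hyp_tokens):
    """Levenshtein distance between two token lists (equals S + D + I)."""
    prev = list(range(len(hyp_tokens) + 1))
    for i, ref_tok in enumerate(ref_tokens, start=1):
        curr = [i]
        for j, hyp_tok in enumerate(hyp_tokens, start=1):
            cost = 0 if ref_tok == hyp_tok else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def chunked_wer_sketch(references, predictions, chunk_size=1000):
    """Accumulate error and reference-word counts chunk by chunk."""
    errors, ref_words = 0, 0
    for start in range(0, len(references), chunk_size):
        ref_chunk = references[start:start + chunk_size]
        hyp_chunk = predictions[start:start + chunk_size]
        for ref, hyp in zip(ref_chunk, hyp_chunk):
            ref_tokens, hyp_tokens = ref.split(), hyp.split()
            errors += word_edit_distance(ref_tokens, hyp_tokens)
            ref_words += len(ref_tokens)
    return errors / ref_words


refs = ["a b c", "d e"]
hyps = ["a x c", "d e f"]
print(chunked_wer_sketch(refs, hyps, chunk_size=1))  # 0.4
```

Because only running totals are kept, memory use depends on the chunk size rather than on the size of the full test set.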

## Training

The Common Voice German `train` and `validation` splits were used for training.

The script used for training can be found [here](https://github.com/maxidl/wav2vec2).

The model was trained for 50k steps, taking around 30 hours on a single A100.

The arguments used for training this model are:

```

python run_finetuning.py \
    --model_name_or_path="facebook/wav2vec2-large-xlsr-53" \
    --dataset_config_name="de" \
    --output_dir=./wav2vec2-large-xlsr-german \
    --preprocessing_num_workers="16" \
    --overwrite_output_dir \
    --num_train_epochs="20" \
    --per_device_train_batch_size="64" \
    --per_device_eval_batch_size="32" \
    --learning_rate="1e-4" \
    --warmup_steps="500" \
    --evaluation_strategy="steps" \
    --save_steps="5000" \
    --eval_steps="5000" \
    --logging_steps="1000" \
    --save_total_limit="3" \
    --freeze_feature_extractor \
    --activation_dropout="0.055" \
    --attention_dropout="0.094" \
    --feat_proj_dropout="0.04" \
    --layerdrop="0.04" \
    --mask_time_prob="0.08" \
    --gradient_checkpointing="1" \
    --fp16 \
    --do_train \
    --do_eval \
    --dataloader_num_workers="16" \
    --group_by_length

```

|

pierrerappolt/cart

|

e1b8e44a537f4a3355859982f3327d4478eb4797

|

2022-03-27T02:53:44.000Z

|

[

"pytorch",

"roberta",

"question-answering",

"en",

"dataset:squadv2",

"transformers",

"html",

"license:apache-2.0",

"autotrain_compatible"

] |

question-answering

| false |

pierrerappolt

| null |

pierrerappolt/cart

| 200 | null |

transformers

| 3,623 |

---

language: en

tags:

- html

license: apache-2.0

datasets:

- squadv2

inference:

parameters:

handle_impossible_answer: true

---

Txt

|

facebook/deit-tiny-distilled-patch16-224

|

488ad407f8f6bcfc79829ece3507658c46d15563

|

2022-07-13T11:41:55.000Z

|

[

"pytorch",

"tf",

"deit",

"image-classification",

"dataset:imagenet",

"arxiv:2012.12877",

"arxiv:2006.03677",

"transformers",

"vision",

"license:apache-2.0"

] |

image-classification

| false |

facebook

| null |

facebook/deit-tiny-distilled-patch16-224

| 199 | 1 |

transformers

| 3,624 |

---

license: apache-2.0

tags:

- image-classification

- vision

datasets:

- imagenet

---

# Distilled Data-efficient Image Transformer (tiny-sized model)

Distilled data-efficient Image Transformer (DeiT) model pre-trained and fine-tuned on ImageNet-1k (1 million images, 1,000 classes) at resolution 224x224. It was first introduced in the paper [Training data-efficient image transformers & distillation through attention](https://arxiv.org/abs/2012.12877) by Touvron et al. and first released in [this repository](https://github.com/facebookresearch/deit). However, the weights were converted from the [timm repository](https://github.com/rwightman/pytorch-image-models) by Ross Wightman.

Disclaimer: The team releasing DeiT did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

This model is a distilled Vision Transformer (ViT). It uses a distillation token, besides the class token, to effectively learn from a teacher (CNN) during both pre-training and fine-tuning. The distillation token is learned through backpropagation, by interacting with the class ([CLS]) and patch tokens through the self-attention layers.

Images are presented to the model as a sequence of fixed-size patches (resolution 16x16), which are linearly embedded.
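
As a rough illustration of the patching step described above, the following pure-Python sketch counts the patches for a 224x224 input and splits a toy single-channel grid into flattened patches. The helper names `patch_grid` and `split_into_patches` are hypothetical and are not part of the `transformers` API. The sequence length assumes that one class token and one distillation token are added to the patch tokens.

```python
from typing import List, Tuple


def patch_grid(image_size: int = 224, patch_size: int = 16) -> Tuple[int, int]:
    """Return (patches per side, total patches) for a square image."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    per_side = image_size // patch_size
    return per_side, per_side * per_side


def split_into_patches(image: List[List[int]], patch_size: int) -> List[List[int]]:
    """Split a 2D grid (H x W) into a row-major list of flattened patches."""
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


per_side, num_patches = patch_grid(224, 16)
# Assumption: one class token and one distillation token join the patch tokens.
sequence_length = num_patches + 2
print(per_side, num_patches, sequence_length)

# Toy 4x4 "image" with 2x2 patches, only to show the row-major patch order.
toy_image = [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9, 10, 11], [12, 13, 14, 15]]
toy_patches = split_into_patches(toy_image, 2)
print(toy_patches)
```

In the real model each flattened RGB patch holds 16 x 16 x 3 values before the linear embedding is applied.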

## Intended uses & limitations

You can use the raw model for image classification. See the [model hub](https://huggingface.co/models?search=facebook/deit) to look for fine-tuned versions on a task that interests you.

### How to use

Since this model is a distilled ViT model, you can plug it into DeiTModel, DeiTForImageClassification or DeiTForImageClassificationWithTeacher. Note that the model expects the data to be prepared using DeiTFeatureExtractor. Here we use AutoFeatureExtractor, which will automatically use the appropriate feature extractor given the model name.

Here is how to use this model to classify an image of the COCO 2017 dataset into one of the 1,000 ImageNet classes:

```python

from transformers import AutoFeatureExtractor, DeiTForImageClassificationWithTeacher

from PIL import Image

import requests

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'

image = Image.open(requests.get(url, stream=True).raw)

feature_extractor = AutoFeatureExtractor.from_pretrained('facebook/deit-tiny-distilled-patch16-224')

model = DeiTForImageClassificationWithTeacher.from_pretrained('facebook/deit-tiny-distilled-patch16-224')

inputs = feature_extractor(images=image, return_tensors="pt")

outputs = model(**inputs)

logits = outputs.logits

# model predicts one of the 1000 ImageNet classes

predicted_class_idx = logits.argmax(-1).item()

print("Predicted class:", model.config.id2label[predicted_class_idx])

```
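
The example above prints only the top class. If the top few candidates with probabilities are of interest, the logits can be passed through a softmax and ranked. The sketch below does this in plain Python on a small set of hypothetical logits and labels, which are made up for illustration. The helper names `softmax` and `top_k` are also illustrative. With the real model, one option would be to pass `logits[0].tolist()` and `model.config.id2label` instead.

```python
import math
from typing import Dict, List, Tuple


def softmax(logits: List[float]) -> List[float]:
    """Convert raw logits to probabilities, shifting by the max for stability."""
    shift = max(logits)
    exps = [math.exp(value - shift) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


def top_k(logits: List[float], id2label: Dict[int, str], k: int = 5) -> List[Tuple[str, float]]:
    """Return the k most probable (label, probability) pairs, best first."""
    probs = softmax(logits)
    ranked = sorted(range(len(probs)), key=lambda index: probs[index], reverse=True)
    return [(id2label[index], probs[index]) for index in ranked[:k]]


# Hypothetical logits and labels, not real model output.
toy_logits = [2.0, 0.5, -1.0, 3.0]
toy_labels = {0: "tabby", 1: "remote", 2: "sofa", 3: "Egyptian cat"}

for label, prob in top_k(toy_logits, toy_labels, k=3):
    print(f"{label}: {prob:.3f}")
```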

Currently, both the feature extractor and model support PyTorch. TensorFlow and JAX/Flax are coming soon.

## Training data

This model was pretrained and fine-tuned with distillation on [ImageNet-1k](http://www.image-net.org/challenges/LSVRC/2012/), a dataset consisting of 1 million images and 1k classes.

## Training procedure

### Preprocessing

The exact details of preprocessing of images during training/validation can be found [here](https://github.com/facebookresearch/deit/blob/ab5715372db8c6cad5740714b2216d55aeae052e/datasets.py#L78).

At inference time, images are resized/rescaled to the same resolution (256x256), center-cropped at 224x224 and normalized across the RGB channels with the ImageNet mean and standard deviation.
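
The crop and normalization arithmetic can be sketched in a few lines of plain Python. The mean and standard deviation below are the commonly used ImageNet statistics. Treat them as an assumption and check the checkpoint's preprocessor configuration for the exact values. The helper names are hypothetical, and the crop box follows the `(left, upper, right, lower)` convention used by `PIL.Image.crop`.

```python
from typing import Tuple

# Assumption: the widely used ImageNet channel statistics (RGB order).
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def center_crop_box(width: int, height: int, crop: int = 224) -> Tuple[int, int, int, int]:
    """Return the (left, upper, right, lower) box of a centered square crop."""
    left = (width - crop) // 2
    upper = (height - crop) // 2
    return left, upper, left + crop, upper + crop


def normalize_pixel(rgb: Tuple[int, int, int]) -> Tuple[float, ...]:
    """Scale an 8-bit RGB pixel to [0, 1], then standardize each channel."""
    return tuple(
        (value / 255.0 - mean) / std
        for value, mean, std in zip(rgb, IMAGENET_MEAN, IMAGENET_STD)
    )


# A 256x256 resized image is cropped to its central 224x224 region.
box = center_crop_box(256, 256, 224)
print(box)

# A pure white pixel after normalization.
print(normalize_pixel((255, 255, 255)))
```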

### Pretraining

The model was trained on a single 8-GPU node for 3 days. Training resolution is 224. For all hyperparameters (such as batch size and learning rate) we refer to table 9 of the original paper.

## Evaluation results

| Model | ImageNet top-1 accuracy | ImageNet top-5 accuracy | # params | URL |

|---------------------------------------|-------------------------|-------------------------|----------|------------------------------------------------------------------|

| DeiT-tiny | 72.2 | 91.1 | 5M | https://huggingface.co/facebook/deit-tiny-patch16-224 |

| DeiT-small | 79.9 | 95.0 | 22M | https://huggingface.co/facebook/deit-small-patch16-224 |

| DeiT-base | 81.8 | 95.6 | 86M | https://huggingface.co/facebook/deit-base-patch16-224 |

| **DeiT-tiny distilled** | **74.5** | **91.9** | **6M** | **https://huggingface.co/facebook/deit-tiny-distilled-patch16-224** |

| DeiT-small distilled | 81.2 | 95.4 | 22M | https://huggingface.co/facebook/deit-small-distilled-patch16-224 |

| DeiT-base distilled | 83.4 | 96.5 | 87M | https://huggingface.co/facebook/deit-base-distilled-patch16-224 |

| DeiT-base 384 | 82.9 | 96.2 | 87M | https://huggingface.co/facebook/deit-base-patch16-384 |

| DeiT-base distilled 384 (1000 epochs) | 85.2 | 97.2 | 88M | https://huggingface.co/facebook/deit-base-distilled-patch16-384 |

Note that for fine-tuning, the best results are obtained with a higher resolution (384x384). Increasing the model size also improves accuracy, as the table above shows.

### BibTeX entry and citation info

```bibtex

@misc{touvron2021training,

title={Training data-efficient image transformers & distillation through attention},

author={Hugo Touvron and Matthieu Cord and Matthijs Douze and Francisco Massa and Alexandre Sablayrolles and Hervé Jégou},

year={2021},

eprint={2012.12877},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

```

```bibtex

@misc{wu2020visual,

title={Visual Transformers: Token-based Image Representation and Processing for Computer Vision},

author={Bichen Wu and Chenfeng Xu and Xiaoliang Dai and Alvin Wan and Peizhao Zhang and Zhicheng Yan and Masayoshi Tomizuka and Joseph Gonzalez and Kurt Keutzer and Peter Vajda},

year={2020},

eprint={2006.03677},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

```

```bibtex

@inproceedings{deng2009imagenet,

title={Imagenet: A large-scale hierarchical image database},

author={Deng, Jia and Dong, Wei and Socher, Richard and Li, Li-Jia and Li, Kai and Fei-Fei, Li},

booktitle={2009 IEEE conference on computer vision and pattern recognition},

pages={248--255},

year={2009},

organization={IEEE}

}

```

|