| title (string, lengths 2 to 169) | diff (string, lengths 235 to 19.5k) | body (string, lengths 0 to 30.5k) | url (string, lengths 48 to 84) | created_at (string, length 20) | closed_at (string, length 20) | merged_at (string, length 20) | updated_at (string, length 20) | diff_len (float64, 101 to 3.99k) | repo_name (string, 83 classes) | __index_level_0__ (int64, 15 to 52.7k) |
|---|---|---|---|---|---|---|---|---|---|---|

Add TryHackMe

|

diff --git a/sherlock/resources/data.json b/sherlock/resources/data.json

index fea689746..aa7591193 100644

--- a/sherlock/resources/data.json

+++ b/sherlock/resources/data.json

@@ -1652,6 +1652,13 @@

"username_claimed": "blue",

"username_unclaimed": "noonewouldeverusethis7"

},

+ "TryHackMe": {

+ "errorType": "status_code",

+ "url": "https://tryhackme.com/p/{}",

+ "urlMain": "https://tryhackme.com/",

+ "username_claimed": "ashu",

+ "username_unclaimed": "noonewouldeverusethis7"

+ },

"Twitch": {

"errorType": "status_code",

"url": "https://www.twitch.tv/{}",

|

Add new site:

- https://tryhackme.com
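
A minimal sketch of how a `status_code` entry like the one in this diff could be evaluated. The `SITE` dictionary is copied from the diff; `profile_url`, `is_claimed`, the injected `fetch_status` callable, and the "any 2xx means claimed" rule are assumptions made for illustration, not Sherlock's actual implementation.

```python
from typing import Callable

# Entry copied from the diff above.
SITE = {
    "errorType": "status_code",
    "url": "https://tryhackme.com/p/{}",
    "urlMain": "https://tryhackme.com/",
    "username_claimed": "ashu",
    "username_unclaimed": "noonewouldeverusethis7",
}


def profile_url(site: dict, username: str) -> str:
    """Fill the username into the site's URL template."""
    return site["url"].format(username)


def is_claimed(site: dict, username: str, fetch_status: Callable[[str], int]) -> bool:
    """Hypothetical check: treat any 2xx response as 'username exists'.

    fetch_status is an injected callable (URL -> HTTP status code), so the
    sketch does not depend on a particular HTTP library.
    """
    if site["errorType"] != "status_code":
        raise ValueError("this sketch only covers status_code detection")
    status = fetch_status(profile_url(site, username))
    return 200 <= status < 300
```

The `username_claimed` and `username_unclaimed` values give one known-positive and one known-negative input for checking that the entry behaves as intended.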

|

https://api.github.com/repos/sherlock-project/sherlock/pulls/732

|

2020-08-20T02:08:22Z

|

2020-08-20T04:18:33Z

|

2020-08-20T04:18:33Z

|

2020-08-20T18:07:54Z

| 199

|

sherlock-project/sherlock

| 36,216

|

[ie/crunchyroll] Extract `vo_adaptive_hls` formats by default

|

diff --git a/yt_dlp/extractor/crunchyroll.py b/yt_dlp/extractor/crunchyroll.py

index 8d997debf9b..d35e9995abc 100644

--- a/yt_dlp/extractor/crunchyroll.py

+++ b/yt_dlp/extractor/crunchyroll.py

@@ -136,7 +136,7 @@ def _call_api(self, path, internal_id, lang, note='api', query={}):

return result

def _extract_formats(self, stream_response, display_id=None):

- requested_formats = self._configuration_arg('format') or ['adaptive_hls']

+ requested_formats = self._configuration_arg('format') or ['vo_adaptive_hls']

available_formats = {}

for stream_type, streams in traverse_obj(

stream_response, (('streams', ('data', 0)), {dict.items}, ...)):

|

Closes #9439

<details open><summary>Template</summary> <!-- OPEN is intentional -->

### Before submitting a *pull request* make sure you have:

- [x] At least skimmed through [contributing guidelines](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#developer-instructions) including [yt-dlp coding conventions](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#yt-dlp-coding-conventions)

- [x] [Searched](https://github.com/yt-dlp/yt-dlp/search?q=is%3Apr&type=Issues) the bugtracker for similar pull requests

- [x] Checked the code with [flake8](https://pypi.python.org/pypi/flake8) and [ran relevant tests](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#developer-instructions)

### In order to be accepted and merged into yt-dlp each piece of code must be in public domain or released under [Unlicense](http://unlicense.org/). Check all of the following options that apply:

- [x] I am the original author of this code and I am willing to release it under [Unlicense](http://unlicense.org/)

### What is the purpose of your *pull request*?

- [x] Fix or improvement to an extractor (Make sure to add/update tests)

</details>

|

https://api.github.com/repos/yt-dlp/yt-dlp/pulls/9447

|

2024-03-14T16:37:06Z

|

2024-03-14T21:42:36Z

|

2024-03-14T21:42:35Z

|

2024-03-14T21:42:36Z

| 205

|

yt-dlp/yt-dlp

| 8,124

|

ui: accept 'q' as quit character

|

diff --git a/tests/test_ui.py b/tests/test_ui.py

index 5ac78cbc4..90efbac25 100644

--- a/tests/test_ui.py

+++ b/tests/test_ui.py

@@ -25,15 +25,16 @@ def test_read_actions(patch_get_key):

# Ignored:

'x', 'y',

# Up:

- const.KEY_UP,

+ const.KEY_UP, 'k',

# Down:

- const.KEY_DOWN,

+ const.KEY_DOWN, 'j',

# Ctrl+C:

- const.KEY_CTRL_C])

- assert list(islice(ui.read_actions(), 5)) \

+ const.KEY_CTRL_C, 'q'])

+ assert list(islice(ui.read_actions(), 8)) \

== [const.ACTION_SELECT, const.ACTION_SELECT,

- const.ACTION_PREVIOUS, const.ACTION_NEXT,

- const.ACTION_ABORT]

+ const.ACTION_PREVIOUS, const.ACTION_PREVIOUS,

+ const.ACTION_NEXT, const.ACTION_NEXT,

+ const.ACTION_ABORT, const.ACTION_ABORT]

def test_command_selector():

diff --git a/thefuck/ui.py b/thefuck/ui.py

index 417058da8..2a2f84ba7 100644

--- a/thefuck/ui.py

+++ b/thefuck/ui.py

@@ -16,7 +16,7 @@ def read_actions():

yield const.ACTION_PREVIOUS

elif key in (const.KEY_DOWN, 'j'):

yield const.ACTION_NEXT

- elif key == const.KEY_CTRL_C:

+ elif key in (const.KEY_CTRL_C, 'q'):

yield const.ACTION_ABORT

elif key in ('\n', '\r'):

yield const.ACTION_SELECT

|

'q' is the conventional 'quit' key in traditional UNIX environments, so it makes sense to support it, in my opinion.

Added tests and amended the previous commit; also added the missing tests for 'j' and 'k'.
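
The key handling after this change can be sketched as a small generator. The constants below are hypothetical stand-ins for the project's `const` module, and reading keys from an iterable (rather than from the terminal) is an assumption made so the example is self-contained.

```python
from typing import Iterable, Iterator

# Hypothetical stand-ins for the project's const module.
KEY_UP, KEY_DOWN, KEY_CTRL_C = "<up>", "<down>", "<ctrl-c>"
ACTION_SELECT, ACTION_ABORT = "select", "abort"
ACTION_PREVIOUS, ACTION_NEXT = "previous", "next"


def read_actions(keys: Iterable[str]) -> Iterator[str]:
    """Translate raw key presses into UI actions, skipping unknown keys."""
    for key in keys:
        if key in (KEY_UP, "k"):
            yield ACTION_PREVIOUS
        elif key in (KEY_DOWN, "j"):
            yield ACTION_NEXT
        elif key in (KEY_CTRL_C, "q"):
            yield ACTION_ABORT
        elif key in ("\n", "\r"):
            yield ACTION_SELECT


# 'x' is ignored; 'q' now aborts just like Ctrl+C.
actions = list(read_actions(["x", "k", KEY_DOWN, "\n", "q"]))
print(actions)  # ['previous', 'next', 'select', 'abort']
```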

|

https://api.github.com/repos/nvbn/thefuck/pulls/521

|

2016-06-25T10:12:13Z

|

2016-06-27T20:14:16Z

|

2016-06-27T20:14:16Z

|

2016-06-27T20:14:20Z

| 364

|

nvbn/thefuck

| 30,565

|

[`Pix2Struct`] Fix pix2struct cross attention

|

diff --git a/src/transformers/models/pix2struct/modeling_pix2struct.py b/src/transformers/models/pix2struct/modeling_pix2struct.py

index b9cfff26a26ac..015007a9679b9 100644

--- a/src/transformers/models/pix2struct/modeling_pix2struct.py

+++ b/src/transformers/models/pix2struct/modeling_pix2struct.py

@@ -1547,8 +1547,9 @@ def custom_forward(*inputs):

present_key_value_states = present_key_value_states + (present_key_value_state,)

if output_attentions:

- all_attentions = all_attentions + (layer_outputs[2],)

- all_cross_attentions = all_cross_attentions + (layer_outputs[3],)

+ all_attentions = all_attentions + (layer_outputs[3],)

+ if encoder_hidden_states is not None:

+ all_cross_attentions = all_cross_attentions + (layer_outputs[5],)

hidden_states = self.final_layer_norm(hidden_states)

hidden_states = self.dropout(hidden_states)

|

# What does this PR do?

Fixes https://github.com/huggingface/transformers/issues/25175

As pointed out by @leitro on the issue, I can confirm that the cross-attention should be read from `layer_outputs[5]`. This PR also fixes the self-attention output index, which should be `3`: index `2` holds the `position_bias`. Both tensors have the same shape, so we didn't notice the silent bug in the CI tests.

To reproduce:

```python

import requests

import torch

from PIL import Image

from transformers import Pix2StructForConditionalGeneration, Pix2StructProcessor

url = "https://www.ilankelman.org/stopsigns/australia.jpg"

image = Image.open(requests.get(url, stream=True).raw)

model = Pix2StructForConditionalGeneration.from_pretrained("google/pix2struct-textcaps-base")

processor = Pix2StructProcessor.from_pretrained("google/pix2struct-textcaps-base")

input_ids = torch.LongTensor([[0, 2, 3, 4]])

# image only

inputs = processor(images=image, return_tensors="pt")

outputs = model.forward(**inputs, decoder_input_ids=input_ids, output_attentions=True)

print(outputs.cross_attentions[0].shape)

# should be torch.Size([1, 12, 4, 2048])

```
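
The index fix can be illustrated without loading a model. In this sketch each layer output is a plain tuple of labels; indices 2, 3 and 5 follow the description above, while the contents of the other slots and the helper name `collect_attentions` are assumptions made for illustration.

```python
def collect_attentions(all_layer_outputs, has_encoder_states):
    """Gather per-layer self- and cross-attention using the corrected indices."""
    all_attentions = ()
    all_cross_attentions = ()
    for layer_outputs in all_layer_outputs:
        # Index 2 is the position bias, so self-attention is read from index 3.
        all_attentions += (layer_outputs[3],)
        # Cross-attention only exists when encoder hidden states were passed.
        if has_encoder_states:
            all_cross_attentions += (layer_outputs[5],)
    return all_attentions, all_cross_attentions


# Assumed tuple layout for one decoder layer (labels instead of tensors).
layer = (
    "hidden_states",
    "present_key_value",
    "position_bias",
    "self_attention",
    "cross_position_bias",  # assumption: not stated in the PR description
    "cross_attention",
)

self_attn, cross_attn = collect_attentions([layer, layer], has_encoder_states=True)
```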

cc @amyeroberts

|

https://api.github.com/repos/huggingface/transformers/pulls/25200

|

2023-07-31T08:48:55Z

|

2023-08-01T08:56:37Z

|

2023-08-01T08:56:37Z

|

2023-08-01T08:57:08Z

| 239

|

huggingface/transformers

| 12,690

|

[youtube_live_chat] use clickTrackingParams

|

diff --git a/yt_dlp/downloader/youtube_live_chat.py b/yt_dlp/downloader/youtube_live_chat.py

index 5303efd0d42..35e88e36706 100644

--- a/yt_dlp/downloader/youtube_live_chat.py

+++ b/yt_dlp/downloader/youtube_live_chat.py

@@ -44,7 +44,7 @@ def dl_fragment(url, data=None, headers=None):

return self._download_fragment(ctx, url, info_dict, http_headers, data)

def parse_actions_replay(live_chat_continuation):

- offset = continuation_id = None

+ offset = continuation_id = click_tracking_params = None

processed_fragment = bytearray()

for action in live_chat_continuation.get('actions', []):

if 'replayChatItemAction' in action:

@@ -53,28 +53,34 @@ def parse_actions_replay(live_chat_continuation):

processed_fragment.extend(

json.dumps(action, ensure_ascii=False).encode('utf-8') + b'\n')

if offset is not None:

- continuation_id = try_get(

+ continuation = try_get(

live_chat_continuation,

- lambda x: x['continuations'][0]['liveChatReplayContinuationData']['continuation'])

+ lambda x: x['continuations'][0]['liveChatReplayContinuationData'], dict)

+ if continuation:

+ continuation_id = continuation.get('continuation')

+ click_tracking_params = continuation.get('clickTrackingParams')

self._append_fragment(ctx, processed_fragment)

- return continuation_id, offset

+ return continuation_id, offset, click_tracking_params

def try_refresh_replay_beginning(live_chat_continuation):

# choose the second option that contains the unfiltered live chat replay

- refresh_continuation_id = try_get(

+ refresh_continuation = try_get(

live_chat_continuation,

- lambda x: x['header']['liveChatHeaderRenderer']['viewSelector']['sortFilterSubMenuRenderer']['subMenuItems'][1]['continuation']['reloadContinuationData']['continuation'], str)

- if refresh_continuation_id:

+ lambda x: x['header']['liveChatHeaderRenderer']['viewSelector']['sortFilterSubMenuRenderer']['subMenuItems'][1]['continuation']['reloadContinuationData'], dict)

+ if refresh_continuation:

# no data yet but required to call _append_fragment

self._append_fragment(ctx, b'')

- return refresh_continuation_id, 0

+ refresh_continuation_id = refresh_continuation.get('continuation')

+ offset = 0

+ click_tracking_params = refresh_continuation.get('trackingParams')

+ return refresh_continuation_id, offset, click_tracking_params

return parse_actions_replay(live_chat_continuation)

live_offset = 0

def parse_actions_live(live_chat_continuation):

nonlocal live_offset

- continuation_id = None

+ continuation_id = click_tracking_params = None

processed_fragment = bytearray()

for action in live_chat_continuation.get('actions', []):

timestamp = self.parse_live_timestamp(action)

@@ -95,11 +101,12 @@ def parse_actions_live(live_chat_continuation):

continuation_data = try_get(live_chat_continuation, continuation_data_getters, dict)

if continuation_data:

continuation_id = continuation_data.get('continuation')

+ click_tracking_params = continuation_data.get('clickTrackingParams')

timeout_ms = int_or_none(continuation_data.get('timeoutMs'))

if timeout_ms is not None:

time.sleep(timeout_ms / 1000)

self._append_fragment(ctx, processed_fragment)

- return continuation_id, live_offset

+ return continuation_id, live_offset, click_tracking_params

def download_and_parse_fragment(url, frag_index, request_data=None, headers=None):

count = 0

@@ -107,7 +114,7 @@ def download_and_parse_fragment(url, frag_index, request_data=None, headers=None

try:

success, raw_fragment = dl_fragment(url, request_data, headers)

if not success:

- return False, None, None

+ return False, None, None, None

try:

data = ie._extract_yt_initial_data(video_id, raw_fragment.decode('utf-8', 'replace'))

except RegexNotFoundError:

@@ -119,19 +126,19 @@ def download_and_parse_fragment(url, frag_index, request_data=None, headers=None

lambda x: x['continuationContents']['liveChatContinuation'], dict) or {}

if info_dict['protocol'] == 'youtube_live_chat_replay':

if frag_index == 1:

- continuation_id, offset = try_refresh_replay_beginning(live_chat_continuation)

+ continuation_id, offset, click_tracking_params = try_refresh_replay_beginning(live_chat_continuation)

else:

- continuation_id, offset = parse_actions_replay(live_chat_continuation)

+ continuation_id, offset, click_tracking_params = parse_actions_replay(live_chat_continuation)

elif info_dict['protocol'] == 'youtube_live_chat':

- continuation_id, offset = parse_actions_live(live_chat_continuation)

- return True, continuation_id, offset

+ continuation_id, offset, click_tracking_params = parse_actions_live(live_chat_continuation)

+ return True, continuation_id, offset, click_tracking_params

except compat_urllib_error.HTTPError as err:

count += 1

if count <= fragment_retries:

self.report_retry_fragment(err, frag_index, count, fragment_retries)

if count > fragment_retries:

self.report_error('giving up after %s fragment retries' % fragment_retries)

- return False, None, None

+ return False, None, None, None

self._prepare_and_start_frag_download(ctx)

@@ -165,6 +172,7 @@ def download_and_parse_fragment(url, frag_index, request_data=None, headers=None

chat_page_url = 'https://www.youtube.com/live_chat?continuation=' + continuation_id

frag_index = offset = 0

+ click_tracking_params = None

while continuation_id is not None:

frag_index += 1

request_data = {

@@ -173,13 +181,16 @@ def download_and_parse_fragment(url, frag_index, request_data=None, headers=None

}

if frag_index > 1:

request_data['currentPlayerState'] = {'playerOffsetMs': str(max(offset - 5000, 0))}

+ if click_tracking_params:

+ request_data['context']['clickTracking'] = {'clickTrackingParams': click_tracking_params}

headers = ie._generate_api_headers(ytcfg, visitor_data=visitor_data)

headers.update({'content-type': 'application/json'})

fragment_request_data = json.dumps(request_data, ensure_ascii=False).encode('utf-8') + b'\n'

- success, continuation_id, offset = download_and_parse_fragment(

+ success, continuation_id, offset, click_tracking_params = download_and_parse_fragment(

url, frag_index, fragment_request_data, headers)

else:

- success, continuation_id, offset = download_and_parse_fragment(chat_page_url, frag_index)

+ success, continuation_id, offset, click_tracking_params = download_and_parse_fragment(

+ chat_page_url, frag_index)

if not success:

return False

if test:

|

## Please follow the guide below

- You will be asked some questions, please read them **carefully** and answer honestly

- Put an `x` into all the boxes [ ] relevant to your *pull request* (like that [x])

- Use the *Preview* tab to see what your *pull request* will actually look like

---

### Before submitting a *pull request* make sure you have:

- [x] At least skimmed through [adding new extractor tutorial](https://github.com/ytdl-org/youtube-dl#adding-support-for-a-new-site) and [youtube-dl coding conventions](https://github.com/ytdl-org/youtube-dl#youtube-dl-coding-conventions) sections

- [x] [Searched](https://github.com/yt-dlp/yt-dlp/search?q=is%3Apr&type=Issues) the bugtracker for similar pull requests

- [x] Checked the code with [flake8](https://pypi.python.org/pypi/flake8)

### In order to be accepted and merged into youtube-dl each piece of code must be in public domain or released under [Unlicense](http://unlicense.org/). Check one of the following options:

- [x] I am the original author of this code and I am willing to release it under [Unlicense](http://unlicense.org/)

- [ ] I am not the original author of this code but it is in public domain or released under [Unlicense](http://unlicense.org/) (provide reliable evidence)

### What is the purpose of your *pull request*?

- [x] Bug fix

- [ ] Improvement

- [ ] New extractor

- [ ] New feature

---

### Description of your *pull request* and other information

Fixes https://github.com/yt-dlp/yt-dlp/issues/433

I looked at my captures of members-only live chat and noticed that some of the API request payloads contained `clickTracking` while others didn't. Whenever a request used it, the value had been included in the previous response. I still haven't been able to test this with an actual members-only live chat, but I verified that it doesn't break members-only replays or non-members chat, live or replay.

I'm hoping that @Lytexx could test this with cookies on a members live chat, but I might also be able to do that at some point.
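
The request/response hand-off described above can be sketched as a loop that carries `clickTrackingParams` from each response into the next request. The `context.clickTracking.clickTrackingParams` path matches the diff; the rest of the payload shape, the helper names, and the injected `fetch` callable are hypothetical and stand in for the real downloader.

```python
def build_request(continuation_id, click_tracking_params=None):
    """Build the payload for the next live-chat request (assumed shape)."""
    request_data = {"context": {}, "continuation": continuation_id}
    # Only attach click tracking when the previous response supplied it.
    if click_tracking_params:
        request_data["context"]["clickTracking"] = {
            "clickTrackingParams": click_tracking_params
        }
    return request_data


def download_chat(first_continuation, fetch):
    """Follow continuations until none is returned.

    fetch(payload) -> dict is a hypothetical stand-in for the API call; it
    returns the continuation data parsed from the response.
    """
    payloads = []
    continuation_id, click_tracking_params = first_continuation, None
    while continuation_id is not None:
        payload = build_request(continuation_id, click_tracking_params)
        payloads.append(payload)
        continuation = fetch(payload) or {}
        continuation_id = continuation.get("continuation")
        click_tracking_params = continuation.get("clickTrackingParams")
    return payloads
```

Resetting `click_tracking_params` on every iteration means a stale value is never reused when a response omits it.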

|

https://api.github.com/repos/yt-dlp/yt-dlp/pulls/449

|

2021-06-26T15:54:10Z

|

2021-06-26T23:22:32Z

|

2021-06-26T23:22:32Z

|

2021-06-26T23:22:32Z

| 1,596

|

yt-dlp/yt-dlp

| 7,528

|

Bump black from 21.12b0 to 22.1.0

|

diff --git a/poetry.lock b/poetry.lock

index fcfe395fc..38171bea9 100644

--- a/poetry.lock

+++ b/poetry.lock

@@ -79,30 +79,26 @@ python-versions = "*"

[[package]]

name = "black"

-version = "21.12b0"

+version = "22.1.0"

description = "The uncompromising code formatter."

category = "dev"

optional = false

python-versions = ">=3.6.2"

[package.dependencies]

-click = ">=7.1.2"

+click = ">=8.0.0"

dataclasses = {version = ">=0.6", markers = "python_version < \"3.7\""}

mypy-extensions = ">=0.4.3"

-pathspec = ">=0.9.0,<1"

+pathspec = ">=0.9.0"

platformdirs = ">=2"

-tomli = ">=0.2.6,<2.0.0"

+tomli = ">=1.1.0"

typed-ast = {version = ">=1.4.2", markers = "python_version < \"3.8\" and implementation_name == \"cpython\""}

-typing-extensions = [

- {version = ">=3.10.0.0", markers = "python_version < \"3.10\""},

- {version = "!=3.10.0.1", markers = "python_version >= \"3.10\""},

-]

+typing-extensions = {version = ">=3.10.0.0", markers = "python_version < \"3.10\""}

[package.extras]

colorama = ["colorama (>=0.4.3)"]

d = ["aiohttp (>=3.7.4)"]

jupyter = ["ipython (>=7.8.0)", "tokenize-rt (>=3.2.0)"]

-python2 = ["typed-ast (>=1.4.3)"]

uvloop = ["uvloop (>=0.15.2)"]

[[package]]

@@ -1053,7 +1049,7 @@ jupyter = ["ipywidgets"]

[metadata]

lock-version = "1.1"

python-versions = "^3.6.2"

-content-hash = "74159f2d5dbb53418204e0fd27ef544256648663c41d0a2841c11c34589c52f6"

+content-hash = "656a91a327289529d8bb9135fef6c66486a192e7a7e8ed682d7c3e7bf5f7b239"

[metadata.files]

appnope = [

@@ -1104,8 +1100,29 @@ backcall = [

{file = "backcall-0.2.0.tar.gz", hash = "sha256:5cbdbf27be5e7cfadb448baf0aa95508f91f2bbc6c6437cd9cd06e2a4c215e1e"},

]

black = [

- {file = "black-21.12b0-py3-none-any.whl", hash = "sha256:a615e69ae185e08fdd73e4715e260e2479c861b5740057fde6e8b4e3b7dd589f"},

- {file = "black-21.12b0.tar.gz", hash = "sha256:77b80f693a569e2e527958459634f18df9b0ba2625ba4e0c2d5da5be42e6f2b3"},

+ {file = "black-22.1.0-cp310-cp310-macosx_10_9_universal2.whl", hash = "sha256:1297c63b9e1b96a3d0da2d85d11cd9bf8664251fd69ddac068b98dc4f34f73b6"},

+ {file = "black-22.1.0-cp310-cp310-macosx_10_9_x86_64.whl", hash = "sha256:2ff96450d3ad9ea499fc4c60e425a1439c2120cbbc1ab959ff20f7c76ec7e866"},

+ {file = "black-22.1.0-cp310-cp310-macosx_11_0_arm64.whl", hash = "sha256:0e21e1f1efa65a50e3960edd068b6ae6d64ad6235bd8bfea116a03b21836af71"},

+ {file = "black-22.1.0-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:e2f69158a7d120fd641d1fa9a921d898e20d52e44a74a6fbbcc570a62a6bc8ab"},

+ {file = "black-22.1.0-cp310-cp310-win_amd64.whl", hash = "sha256:228b5ae2c8e3d6227e4bde5920d2fc66cc3400fde7bcc74f480cb07ef0b570d5"},

+ {file = "black-22.1.0-cp36-cp36m-macosx_10_9_x86_64.whl", hash = "sha256:b1a5ed73ab4c482208d20434f700d514f66ffe2840f63a6252ecc43a9bc77e8a"},

+ {file = "black-22.1.0-cp36-cp36m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:35944b7100af4a985abfcaa860b06af15590deb1f392f06c8683b4381e8eeaf0"},

+ {file = "black-22.1.0-cp36-cp36m-win_amd64.whl", hash = "sha256:7835fee5238fc0a0baf6c9268fb816b5f5cd9b8793423a75e8cd663c48d073ba"},

+ {file = "black-22.1.0-cp37-cp37m-macosx_10_9_x86_64.whl", hash = "sha256:dae63f2dbf82882fa3b2a3c49c32bffe144970a573cd68d247af6560fc493ae1"},

+ {file = "black-22.1.0-cp37-cp37m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:5fa1db02410b1924b6749c245ab38d30621564e658297484952f3d8a39fce7e8"},

+ {file = "black-22.1.0-cp37-cp37m-win_amd64.whl", hash = "sha256:c8226f50b8c34a14608b848dc23a46e5d08397d009446353dad45e04af0c8e28"},

+ {file = "black-22.1.0-cp38-cp38-macosx_10_9_universal2.whl", hash = "sha256:2d6f331c02f0f40aa51a22e479c8209d37fcd520c77721c034517d44eecf5912"},

+ {file = "black-22.1.0-cp38-cp38-macosx_10_9_x86_64.whl", hash = "sha256:742ce9af3086e5bd07e58c8feb09dbb2b047b7f566eb5f5bc63fd455814979f3"},

+ {file = "black-22.1.0-cp38-cp38-macosx_11_0_arm64.whl", hash = "sha256:fdb8754b453fb15fad3f72cd9cad3e16776f0964d67cf30ebcbf10327a3777a3"},

+ {file = "black-22.1.0-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:f5660feab44c2e3cb24b2419b998846cbb01c23c7fe645fee45087efa3da2d61"},

+ {file = "black-22.1.0-cp38-cp38-win_amd64.whl", hash = "sha256:6f2f01381f91c1efb1451998bd65a129b3ed6f64f79663a55fe0e9b74a5f81fd"},

+ {file = "black-22.1.0-cp39-cp39-macosx_10_9_universal2.whl", hash = "sha256:efbadd9b52c060a8fc3b9658744091cb33c31f830b3f074422ed27bad2b18e8f"},

+ {file = "black-22.1.0-cp39-cp39-macosx_10_9_x86_64.whl", hash = "sha256:8871fcb4b447206904932b54b567923e5be802b9b19b744fdff092bd2f3118d0"},

+ {file = "black-22.1.0-cp39-cp39-macosx_11_0_arm64.whl", hash = "sha256:ccad888050f5393f0d6029deea2a33e5ae371fd182a697313bdbd835d3edaf9c"},

+ {file = "black-22.1.0-cp39-cp39-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:07e5c049442d7ca1a2fc273c79d1aecbbf1bc858f62e8184abe1ad175c4f7cc2"},

+ {file = "black-22.1.0-cp39-cp39-win_amd64.whl", hash = "sha256:373922fc66676133ddc3e754e4509196a8c392fec3f5ca4486673e685a421321"},

+ {file = "black-22.1.0-py3-none-any.whl", hash = "sha256:3524739d76b6b3ed1132422bf9d82123cd1705086723bc3e235ca39fd21c667d"},

+ {file = "black-22.1.0.tar.gz", hash = "sha256:a7c0192d35635f6fc1174be575cb7915e92e5dd629ee79fdaf0dcfa41a80afb5"},

]

bleach = [

{file = "bleach-4.1.0-py2.py3-none-any.whl", hash = "sha256:4d2651ab93271d1129ac9cbc679f524565cc8a1b791909c4a51eac4446a15994"},

diff --git a/pyproject.toml b/pyproject.toml

index d110cf38c..4f7d8dd26 100644

--- a/pyproject.toml

+++ b/pyproject.toml

@@ -40,7 +40,7 @@ jupyter = ["ipywidgets"]

[tool.poetry.dev-dependencies]

pytest = "^7.0.0"

-black = "^21.11b1"

+black = "^22.1"

mypy = "^0.930"

pytest-cov = "^3.0.0"

attrs = "^21.4.0"

|

Bumps [black](https://github.com/psf/black) from 21.12b0 to 22.1.0.

<details>

<summary>Release notes</summary>

<p><em>Sourced from <a href="https://github.com/psf/black/releases">black's releases</a>.</em></p>

<blockquote>

<h2>22.1.0</h2>

<p>At long last, Black is no longer a beta product! This is the first non-beta release and the first release covered by our new stability policy.</p>

<h3>Highlights</h3>

<ul>

<li>Remove Python 2 support (<a href="https://github-redirect.dependabot.com/psf/black/issues/2740">#2740</a>)</li>

<li>Introduce the <code>--preview</code> flag (<a href="https://github-redirect.dependabot.com/psf/black/issues/2752">#2752</a>)</li>

</ul>

<h3>Style</h3>

<ul>

<li>Deprecate <code>--experimental-string-processing</code> and move the functionality under <code>--preview</code> (<a href="https://github-redirect.dependabot.com/psf/black/issues/2789">#2789</a>)</li>

<li>For stubs, one blank line between class attributes and methods is now kept if there's at least one pre-existing blank line (<a href="https://github-redirect.dependabot.com/psf/black/issues/2736">#2736</a>)</li>

<li>Black now normalizes string prefix order (<a href="https://github-redirect.dependabot.com/psf/black/issues/2297">#2297</a>)</li>

<li>Remove spaces around power operators if both operands are simple (<a href="https://github-redirect.dependabot.com/psf/black/issues/2726">#2726</a>)</li>

<li>Work around bug that causes unstable formatting in some cases in the presence of the magic trailing comma (<a href="https://github-redirect.dependabot.com/psf/black/issues/2807">#2807</a>)</li>

<li>Use parentheses for attribute access on decimal float and int literals (<a href="https://github-redirect.dependabot.com/psf/black/issues/2799">#2799</a>)</li>

<li>Don't add whitespace for attribute access on hexadecimal, binary, octal, and complex literals (<a href="https://github-redirect.dependabot.com/psf/black/issues/2799">#2799</a>)</li>

<li>Treat blank lines in stubs the same inside top-level if statements (<a href="https://github-redirect.dependabot.com/psf/black/issues/2820">#2820</a>)</li>

<li>Fix unstable formatting with semicolons and arithmetic expressions (<a href="https://github-redirect.dependabot.com/psf/black/issues/2817">#2817</a>)</li>

<li>Fix unstable formatting around magic trailing comma (<a href="https://github-redirect.dependabot.com/psf/black/issues/2572">#2572</a>)</li>

</ul>

<h3>Parser</h3>

<ul>

<li>Fix mapping cases that contain as-expressions, like <code>case {"key": 1 | 2 as password}</code> (<a href="https://github-redirect.dependabot.com/psf/black/issues/2686">#2686</a>)</li>

<li>Fix cases that contain multiple top-level as-expressions, like <code>case 1 as a, 2 as b</code> (<a href="https://github-redirect.dependabot.com/psf/black/issues/2716">#2716</a>)</li>

<li>Fix call patterns that contain as-expressions with keyword arguments, like <code>case Foo(bar=baz as quux)</code> (<a href="https://github-redirect.dependabot.com/psf/black/issues/2749">#2749</a>)</li>

<li>Tuple unpacking on <code>return</code> and <code>yield</code> constructs now implies 3.8+ (<a href="https://github-redirect.dependabot.com/psf/black/issues/2700">#2700</a>)</li>

<li>Unparenthesized tuples on annotated assignments (e.g <code>values: Tuple[int, ...] = 1, 2, 3</code>) now implies 3.8+ (<a href="https://github-redirect.dependabot.com/psf/black/issues/2708">#2708</a>)</li>

<li>Fix handling of standalone <code>match()</code> or <code>case()</code> when there is a trailing newline or a comment inside of the parentheses. (<a href="https://github-redirect.dependabot.com/psf/black/issues/2760">#2760</a>)</li>

<li><code>from __future__ import annotations</code> statement now implies Python 3.7+ (<a href="https://github-redirect.dependabot.com/psf/black/issues/2690">#2690</a>)</li>

</ul>

<h3>Performance</h3>

<ul>

<li>Speed-up the new backtracking parser about 4X in general (enabled when <code>--target-version</code> is set to 3.10 and higher). (<a href="https://github-redirect.dependabot.com/psf/black/issues/2728">#2728</a>)</li>

<li>Black is now compiled with mypyc for an overall 2x speed-up. 64-bit Windows, MacOS, and Linux (not including musl) are supported. (<a href="https://github-redirect.dependabot.com/psf/black/issues/1009">#1009</a>, <a href="https://github-redirect.dependabot.com/psf/black/issues/2431">#2431</a>)</li>

</ul>

<h3>Configuration</h3>

<ul>

<li>Do not accept bare carriage return line endings in pyproject.toml (<a href="https://github-redirect.dependabot.com/psf/black/issues/2408">#2408</a>)</li>

<li>Add configuration option (<code>python-cell-magics</code>) to format cells with custom magics in Jupyter Notebooks (<a href="https://github-redirect.dependabot.com/psf/black/issues/2744">#2744</a>)</li>

<li>Allow setting custom cache directory on all platforms with environment variable <code>BLACK_CACHE_DIR</code> (<a href="https://github-redirect.dependabot.com/psf/black/issues/2739">#2739</a>).</li>

<li>Enable Python 3.10+ by default, without any extra need to specify -<code>-target-version=py310</code>. (<a href="https://github-redirect.dependabot.com/psf/black/issues/2758">#2758</a>)</li>

<li>Make passing <code>SRC</code> or <code>--code</code> mandatory and mutually exclusive (<a href="https://github-redirect.dependabot.com/psf/black/issues/2804">#2804</a>)</li>

</ul>

<h3>Output</h3>

<ul>

<li>Improve error message for invalid regular expression (<a href="https://github-redirect.dependabot.com/psf/black/issues/2678">#2678</a>)</li>

<li>Improve error message when parsing fails during AST safety check by embedding the underlying SyntaxError (<a href="https://github-redirect.dependabot.com/psf/black/issues/2693">#2693</a>)</li>

<li>No longer color diff headers white as it's unreadable in light themed terminals (<a href="https://github-redirect.dependabot.com/psf/black/issues/2691">#2691</a>)</li>

<li>Text coloring added in the final statistics (<a href="https://github-redirect.dependabot.com/psf/black/issues/2712">#2712</a>)</li>

<li>Verbose mode also now describes how a project root was discovered and which paths will be formatted. (<a href="https://github-redirect.dependabot.com/psf/black/issues/2526">#2526</a>)</li>

</ul>

<h3>Packaging</h3>

<ul>

<li>All upper version bounds on dependencies have been removed (<a href="https://github-redirect.dependabot.com/psf/black/issues/2718">#2718</a>)</li>

<li><code>typing-extensions</code> is no longer a required dependency in Python 3.10+ (<a href="https://github-redirect.dependabot.com/psf/black/issues/2772">#2772</a>)</li>

<li>Set <code>click</code> lower bound to <code>8.0.0</code> as <em>Black</em> crashes on <code>7.1.2</code> (<a href="https://github-redirect.dependabot.com/psf/black/issues/2791">#2791</a>)</li>

</ul>

<!-- raw HTML omitted -->

</blockquote>

<p>... (truncated)</p>

</details>

<details>

<summary>Changelog</summary>

<p><em>Sourced from <a href="https://github.com/psf/black/blob/main/CHANGES.md">black's changelog</a>.</em></p>

<blockquote>

<h2>22.1.0</h2>

<p>At long last, <em>Black</em> is no longer a beta product! This is the first non-beta release

and the first release covered by our new stability policy.</p>

<h3>Highlights</h3>

<ul>

<li><strong>Remove Python 2 support</strong> (<a href="https://github-redirect.dependabot.com/psf/black/issues/2740">#2740</a>)</li>

<li>Introduce the <code>--preview</code> flag (<a href="https://github-redirect.dependabot.com/psf/black/issues/2752">#2752</a>)</li>

</ul>

<h3>Style</h3>

<ul>

<li>Deprecate <code>--experimental-string-processing</code> and move the functionality under

<code>--preview</code> (<a href="https://github-redirect.dependabot.com/psf/black/issues/2789">#2789</a>)</li>

<li>For stubs, one blank line between class attributes and methods is now kept if there's

at least one pre-existing blank line (<a href="https://github-redirect.dependabot.com/psf/black/issues/2736">#2736</a>)</li>

<li>Black now normalizes string prefix order (<a href="https://github-redirect.dependabot.com/psf/black/issues/2297">#2297</a>)</li>

<li>Remove spaces around power operators if both operands are simple (<a href="https://github-redirect.dependabot.com/psf/black/issues/2726">#2726</a>)</li>

<li>Work around bug that causes unstable formatting in some cases in the presence of the

magic trailing comma (<a href="https://github-redirect.dependabot.com/psf/black/issues/2807">#2807</a>)</li>

<li>Use parentheses for attribute access on decimal float and int literals (<a href="https://github-redirect.dependabot.com/psf/black/issues/2799">#2799</a>)</li>

<li>Don't add whitespace for attribute access on hexadecimal, binary, octal, and complex

literals (<a href="https://github-redirect.dependabot.com/psf/black/issues/2799">#2799</a>)</li>

<li>Treat blank lines in stubs the same inside top-level <code>if</code> statements (<a href="https://github-redirect.dependabot.com/psf/black/issues/2820">#2820</a>)</li>

<li>Fix unstable formatting with semicolons and arithmetic expressions (<a href="https://github-redirect.dependabot.com/psf/black/issues/2817">#2817</a>)</li>

<li>Fix unstable formatting around magic trailing comma (<a href="https://github-redirect.dependabot.com/psf/black/issues/2572">#2572</a>)</li>

</ul>

<h3>Parser</h3>

<ul>

<li>Fix mapping cases that contain as-expressions, like <code>case {"key": 1 | 2 as password}</code>

(<a href="https://github-redirect.dependabot.com/psf/black/issues/2686">#2686</a>)</li>

<li>Fix cases that contain multiple top-level as-expressions, like <code>case 1 as a, 2 as b</code>

(<a href="https://github-redirect.dependabot.com/psf/black/issues/2716">#2716</a>)</li>

<li>Fix call patterns that contain as-expressions with keyword arguments, like

<code>case Foo(bar=baz as quux)</code> (<a href="https://github-redirect.dependabot.com/psf/black/issues/2749">#2749</a>)</li>

<li>Tuple unpacking on <code>return</code> and <code>yield</code> constructs now implies 3.8+ (<a href="https://github-redirect.dependabot.com/psf/black/issues/2700">#2700</a>)</li>

<li>Unparenthesized tuples on annotated assignments (e.g

<code>values: Tuple[int, ...] = 1, 2, 3</code>) now implies 3.8+ (<a href="https://github-redirect.dependabot.com/psf/black/issues/2708">#2708</a>)</li>

<li>Fix handling of standalone <code>match()</code> or <code>case()</code> when there is a trailing newline or a

comment inside of the parentheses. (<a href="https://github-redirect.dependabot.com/psf/black/issues/2760">#2760</a>)</li>

<li><code>from __future__ import annotations</code> statement now implies Python 3.7+ (<a href="https://github-redirect.dependabot.com/psf/black/issues/2690">#2690</a>)</li>

</ul>

<h3>Performance</h3>

<ul>

<li>Speed-up the new backtracking parser about 4X in general (enabled when

<code>--target-version</code> is set to 3.10 and higher). (<a href="https://github-redirect.dependabot.com/psf/black/issues/2728">#2728</a>)</li>

<li><em>Black</em> is now compiled with <a href="https://github.com/mypyc/mypyc">mypyc</a> for an overall 2x

speed-up. 64-bit Windows, MacOS, and Linux (not including musl) are supported. (<a href="https://github-redirect.dependabot.com/psf/black/issues/1009">#1009</a>,

<a href="https://github-redirect.dependabot.com/psf/black/issues/2431">#2431</a>)</li>

</ul>

<!-- raw HTML omitted -->

</blockquote>

<p>... (truncated)</p>

</details>

<details>

<summary>Commits</summary>

<ul>

<li>See full diff in <a href="https://github.com/psf/black/commits/22.1.0">compare view</a></li>

</ul>

</details>

<br />


Dependabot will resolve any conflicts with this PR as long as you don't alter it yourself. You can also trigger a rebase manually by commenting `@dependabot rebase`.

[//]: # (dependabot-automerge-start)

[//]: # (dependabot-automerge-end)

---

<details>

<summary>Dependabot commands and options</summary>

<br />

You can trigger Dependabot actions by commenting on this PR:

- `@dependabot rebase` will rebase this PR

- `@dependabot recreate` will recreate this PR, overwriting any edits that have been made to it

- `@dependabot merge` will merge this PR after your CI passes on it

- `@dependabot squash and merge` will squash and merge this PR after your CI passes on it

- `@dependabot cancel merge` will cancel a previously requested merge and block automerging

- `@dependabot reopen` will reopen this PR if it is closed

- `@dependabot close` will close this PR and stop Dependabot recreating it. You can achieve the same result by closing it manually

- `@dependabot ignore this major version` will close this PR and stop Dependabot creating any more for this major version (unless you reopen the PR or upgrade to it yourself)

- `@dependabot ignore this minor version` will close this PR and stop Dependabot creating any more for this minor version (unless you reopen the PR or upgrade to it yourself)

- `@dependabot ignore this dependency` will close this PR and stop Dependabot creating any more for this dependency (unless you reopen the PR or upgrade to it yourself)

</details>

|

https://api.github.com/repos/Textualize/rich/pulls/1892

|

2022-01-31T13:34:24Z

|

2022-02-11T11:09:31Z

|

2022-02-11T11:09:31Z

|

2022-02-11T11:09:32Z

| 2,750

|

Textualize/rich

| 48,213

|

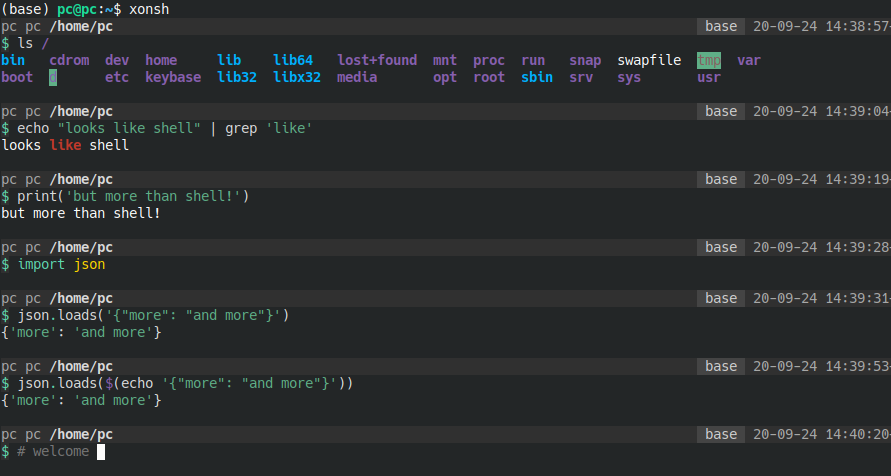

Add xonsh shell is a Python-powered, Unix-gazing shell language and command prompt

|

diff --git a/README.md b/README.md

index baabcb127..d49717f32 100644

--- a/README.md

+++ b/README.md

@@ -77,6 +77,7 @@ Inspired by [awesome-php](https://github.com/ziadoz/awesome-php).

- [Search](#search)

- [Serialization](#serialization)

- [Serverless Frameworks](#serverless-frameworks)

+ - [Shell](#shell)

- [Specific Formats Processing](#specific-formats-processing)

- [Static Site Generator](#static-site-generator)

- [Tagging](#tagging)

@@ -1062,6 +1063,12 @@ Inspired by [awesome-php](https://github.com/ziadoz/awesome-php).

* [python-lambda](https://github.com/nficano/python-lambda) - A toolkit for developing and deploying Python code in AWS Lambda.

* [Zappa](https://github.com/Miserlou/Zappa) - A tool for deploying WSGI applications on AWS Lambda and API Gateway.

+## Shell

+

+*Shells based on Python.*

+

+* [xonsh](https://github.com/xonsh/xonsh/) - A Python-powered, cross-platform, Unix-gazing shell language and command prompt.

+

## Specific Formats Processing

*Libraries for parsing and manipulating specific text formats.*

|

## What is this Python project?

xonsh is a Python-powered, cross-platform, Unix-gazing shell language and command prompt. The language is a superset of Python 3.5+ with additional shell primitives. It is a time-tested and very well documented project.

## What's the difference between this Python project and similar ones?

Xonsh is significantly different from most other shells or shell tools. The following table lists features and capabilities that various tools may or may not share.

<table class="colwidths-given docutils align-default">

<colgroup>

<col style="width: 33%">

<col style="width: 11%">

<col style="width: 11%">

<col style="width: 11%">

<col style="width: 11%">

<col style="width: 11%">

<col style="width: 11%">

</colgroup>

<thead>

<tr class="row-odd"><th class="head stub"></th>

<th class="head"><p>Bash</p></th>

<th class="head"><p>zsh</p></th>

<th class="head"><p>plumbum</p></th>

<th class="head"><p>fish</p></th>

<th class="head"><p>IPython</p></th>

<th class="head"><p>xonsh</p></th>

</tr>

</thead>

<tbody>

<tr class="row-even"><th class="stub"><p>Sane language</p></th>

<td></td>

<td></td>

<td><p>✓</p></td>

<td><p>✓</p></td>

<td><p>✓</p></td>

<td><p>✓</p></td>

</tr>

<tr class="row-odd"><th class="stub"><p>Easily scriptable</p></th>

<td><p>✓</p></td>

<td><p>✓</p></td>

<td><p>✓</p></td>

<td><p>✓</p></td>

<td></td>

<td><p>✓</p></td>

</tr>

<tr class="row-even"><th class="stub"><p>Native cross-platform support</p></th>

<td></td>

<td></td>

<td><p>✓</p></td>

<td><p>✓</p></td>

<td><p>✓</p></td>

<td><p>✓</p></td>

</tr>

<tr class="row-odd"><th class="stub"><p>Meant as a shell</p></th>

<td><p>✓</p></td>

<td><p>✓</p></td>

<td></td>

<td><p>✓</p></td>

<td></td>

<td><p>✓</p></td>

</tr>

<tr class="row-even"><th class="stub"><p>Tab completion</p></th>

<td><p>✓</p></td>

<td><p>✓</p></td>

<td></td>

<td><p>✓</p></td>

<td><p>✓</p></td>

<td><p>✓</p></td>

</tr>

<tr class="row-odd"><th class="stub"><p>Completion from man-page parsing</p></th>

<td></td>

<td></td>

<td></td>

<td><p>✓</p></td>

<td></td>

<td><p>✓</p></td>

</tr>

<tr class="row-even"><th class="stub"><p>Large standard library</p></th>

<td></td>

<td><p>✓</p></td>

<td></td>

<td></td>

<td><p>✓</p></td>

<td><p>✓</p></td>

</tr>

<tr class="row-odd"><th class="stub"><p>Typed variables</p></th>

<td></td>

<td></td>

<td><p>✓</p></td>

<td><p>✓</p></td>

<td><p>✓</p></td>

<td><p>✓</p></td>

</tr>

<tr class="row-even"><th class="stub"><p>Syntax highlighting</p></th>

<td></td>

<td></td>

<td></td>

<td><p>✓</p></td>

<td><p>in notebook</p></td>

<td><p>w/ prompt-toolkit</p></td>

</tr>

<tr class="row-odd"><th class="stub"><p>Pun in name</p></th>

<td><p>✓</p></td>

<td></td>

<td><p>✓</p></td>

<td></td>

<td></td>

<td><p>✓</p></td>

</tr>

<tr class="row-even"><th class="stub"><p>Rich history</p></th>

<td></td>

<td></td>

<td></td>

<td></td>

<td></td>

<td><p>✓</p></td>

</tr>

</tbody>

</table>

|

https://api.github.com/repos/vinta/awesome-python/pulls/1623

|

2020-09-24T11:35:18Z

|

2020-10-27T17:33:21Z

|

2020-10-27T17:33:21Z

|

2020-10-27T17:33:21Z

| 299

|

vinta/awesome-python

| 27,080

|

Fix dnsimple typo

|

diff --git a/certbot-dns-dnsimple/certbot_dns_dnsimple/_internal/dns_dnsimple.py b/certbot-dns-dnsimple/certbot_dns_dnsimple/_internal/dns_dnsimple.py

index 3d1017f0be9..c9ef1cdd186 100644

--- a/certbot-dns-dnsimple/certbot_dns_dnsimple/_internal/dns_dnsimple.py

+++ b/certbot-dns-dnsimple/certbot_dns_dnsimple/_internal/dns_dnsimple.py

@@ -39,7 +39,7 @@ def more_info(self) -> str:

@property

def _provider_name(self) -> str:

- return 'dnssimple'

+ return 'dnsimple'

def _handle_http_error(self, e: HTTPError, domain_name: str) -> errors.PluginError:

hint = None

diff --git a/certbot/CHANGELOG.md b/certbot/CHANGELOG.md

index 1026380217f..4506cb9ca4c 100644

--- a/certbot/CHANGELOG.md

+++ b/certbot/CHANGELOG.md

@@ -14,7 +14,8 @@ Certbot adheres to [Semantic Versioning](https://semver.org/).

### Fixed

-*

+* Fixed a bug that broke the DNS plugin for DNSimple that was introduced in

+ version 2.7.0 of the plugin.

More details about these changes can be found on our GitHub repo.

|

Fixes https://github.com/certbot/certbot/issues/9786.

|

https://api.github.com/repos/certbot/certbot/pulls/9787

|

2023-10-05T17:46:08Z

|

2023-10-05T20:15:30Z

|

2023-10-05T20:15:30Z

|

2023-10-05T20:15:32Z

| 334

|

certbot/certbot

| 3,594

|

Using CRLF as line marker according to http 1.1 definition

|

diff --git a/scrapy/core/downloader/handlers/http11.py b/scrapy/core/downloader/handlers/http11.py

index 52eb35eba7e..617a68ea4cd 100644

--- a/scrapy/core/downloader/handlers/http11.py

+++ b/scrapy/core/downloader/handlers/http11.py

@@ -66,12 +66,11 @@ def __init__(self, reactor, host, port, proxyConf, contextFactory,

def requestTunnel(self, protocol):

"""Asks the proxy to open a tunnel."""

- tunnelReq = 'CONNECT %s:%s HTTP/1.1\n' % (self._tunneledHost,

+ tunnelReq = 'CONNECT %s:%s HTTP/1.1\r\n' % (self._tunneledHost,

self._tunneledPort)

if self._proxyAuthHeader:

- tunnelReq += 'Proxy-Authorization: %s \n\n' % self._proxyAuthHeader

- else:

- tunnelReq += '\n'

+ tunnelReq += 'Proxy-Authorization: %s\r\n' % self._proxyAuthHeader

+ tunnelReq += '\r\n'

protocol.transport.write(tunnelReq)

self._protocolDataReceived = protocol.dataReceived

protocol.dataReceived = self.processProxyResponse

|

According to the HTTP/1.1 definition (http://www.w3.org/Protocols/rfc2616/rfc2616-sec2.html), we should use CRLF as the end-of-line marker. In my case, some of my proxies return 407 if a line does not end with '\r\n'. The same convention can be seen clearly in Twisted's [http client](https://github.com/twisted/twisted/blob/trunk/twisted/web/http.py#L410) implementation.
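
As a rough illustration of the point above, here is a minimal sketch of a CONNECT request whose lines all end in CRLF. The helper name `build_connect_request` and the sample credentials are hypothetical and are not part of Scrapy; only the line-ending convention comes from the PR.

```python
def build_connect_request(host, port, proxy_auth_header=None):
    """Build a CONNECT request with CRLF line endings (hypothetical helper)."""
    lines = ["CONNECT %s:%s HTTP/1.1" % (host, port)]
    if proxy_auth_header:
        # No trailing space before the line ending.
        lines.append("Proxy-Authorization: %s" % proxy_auth_header)
    # Join the lines with CRLF, end the last line with CRLF, and add one
    # more bare CRLF to terminate the header block.
    return ("\r\n".join(lines) + "\r\n\r\n").encode("ascii")


# Sample value only; "dXNlcjpwYXNz" is base64 for "user:pass".
request = build_connect_request("example.com", 443, "Basic dXNlcjpwYXNz")
print(request)
```

Appending the final blank line unconditionally, rather than in an `else` branch, keeps the terminator the same whether or not an authorization header is present.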

|

https://api.github.com/repos/scrapy/scrapy/pulls/787

|

2014-07-08T07:03:20Z

|

2014-07-08T22:37:02Z

|

2014-07-08T22:37:02Z

|

2014-07-09T00:00:19Z

| 296

|

scrapy/scrapy

| 34,990

|

Adds options for grid margins to XYZ Plot and Prompt Matrix

|

diff --git a/modules/images.py b/modules/images.py

index f4b20b2817f..c2ca8849de8 100644

--- a/modules/images.py

+++ b/modules/images.py

@@ -199,7 +199,7 @@ def draw_texts(drawing, draw_x, draw_y, lines, initial_fnt, initial_fontsize):

pad_top = 0 if sum(hor_text_heights) == 0 else max(hor_text_heights) + line_spacing * 2

- result = Image.new("RGB", (im.width + pad_left + margin * (rows-1), im.height + pad_top + margin * (cols-1)), "white")

+ result = Image.new("RGB", (im.width + pad_left + margin * (cols-1), im.height + pad_top + margin * (rows-1)), "white")

for row in range(rows):

for col in range(cols):

@@ -223,7 +223,7 @@ def draw_texts(drawing, draw_x, draw_y, lines, initial_fnt, initial_fontsize):

return result

-def draw_prompt_matrix(im, width, height, all_prompts):

+def draw_prompt_matrix(im, width, height, all_prompts, margin=0):

prompts = all_prompts[1:]

boundary = math.ceil(len(prompts) / 2)

@@ -233,7 +233,7 @@ def draw_prompt_matrix(im, width, height, all_prompts):

hor_texts = [[GridAnnotation(x, is_active=pos & (1 << i) != 0) for i, x in enumerate(prompts_horiz)] for pos in range(1 << len(prompts_horiz))]

ver_texts = [[GridAnnotation(x, is_active=pos & (1 << i) != 0) for i, x in enumerate(prompts_vert)] for pos in range(1 << len(prompts_vert))]

- return draw_grid_annotations(im, width, height, hor_texts, ver_texts)

+ return draw_grid_annotations(im, width, height, hor_texts, ver_texts, margin)

def resize_image(resize_mode, im, width, height, upscaler_name=None):

diff --git a/scripts/prompt_matrix.py b/scripts/prompt_matrix.py

index de921ea84a5..3ee3cbe4c17 100644

--- a/scripts/prompt_matrix.py

+++ b/scripts/prompt_matrix.py

@@ -48,23 +48,17 @@ def ui(self, is_img2img):

gr.HTML('<br />')

with gr.Row():

with gr.Column():

- put_at_start = gr.Checkbox(label='Put variable parts at start of prompt',

- value=False, elem_id=self.elem_id("put_at_start"))

+ put_at_start = gr.Checkbox(label='Put variable parts at start of prompt', value=False, elem_id=self.elem_id("put_at_start"))

+ different_seeds = gr.Checkbox(label='Use different seed for each picture', value=False, elem_id=self.elem_id("different_seeds"))

with gr.Column():

- # Radio buttons for selecting the prompt between positive and negative

- prompt_type = gr.Radio(["positive", "negative"], label="Select prompt",

- elem_id=self.elem_id("prompt_type"), value="positive")

- with gr.Row():

- with gr.Column():

- different_seeds = gr.Checkbox(

- label='Use different seed for each picture', value=False, elem_id=self.elem_id("different_seeds"))

+ prompt_type = gr.Radio(["positive", "negative"], label="Select prompt", elem_id=self.elem_id("prompt_type"), value="positive")

+ variations_delimiter = gr.Radio(["comma", "space"], label="Select joining char", elem_id=self.elem_id("variations_delimiter"), value="comma")

with gr.Column():

- # Radio buttons for selecting the delimiter to use in the resulting prompt

- variations_delimiter = gr.Radio(["comma", "space"], label="Select delimiter", elem_id=self.elem_id(

- "variations_delimiter"), value="comma")

- return [put_at_start, different_seeds, prompt_type, variations_delimiter]

+ margin_size = gr.Slider(label="Grid margins (px)", min=0, max=500, value=0, step=2, elem_id=self.elem_id("margin_size"))

+

+ return [put_at_start, different_seeds, prompt_type, variations_delimiter, margin_size]

- def run(self, p, put_at_start, different_seeds, prompt_type, variations_delimiter):

+ def run(self, p, put_at_start, different_seeds, prompt_type, variations_delimiter, margin_size):

modules.processing.fix_seed(p)

# Raise error if promp type is not positive or negative

if prompt_type not in ["positive", "negative"]:

@@ -106,7 +100,7 @@ def run(self, p, put_at_start, different_seeds, prompt_type, variations_delimite

processed = process_images(p)

grid = images.image_grid(processed.images, p.batch_size, rows=1 << ((len(prompt_matrix_parts) - 1) // 2))

- grid = images.draw_prompt_matrix(grid, p.width, p.height, prompt_matrix_parts)

+ grid = images.draw_prompt_matrix(grid, p.width, p.height, prompt_matrix_parts, margin_size)

processed.images.insert(0, grid)

processed.index_of_first_image = 1

processed.infotexts.insert(0, processed.infotexts[0])

diff --git a/scripts/xyz_grid.py b/scripts/xyz_grid.py

index 3122f6f66db..5982cfbaa7a 100644

--- a/scripts/xyz_grid.py

+++ b/scripts/xyz_grid.py

@@ -205,7 +205,7 @@ def __init__(self, *args, **kwargs):

]

-def draw_xyz_grid(p, xs, ys, zs, x_labels, y_labels, z_labels, cell, draw_legend, include_lone_images, include_sub_grids, first_axes_processed, second_axes_processed):

+def draw_xyz_grid(p, xs, ys, zs, x_labels, y_labels, z_labels, cell, draw_legend, include_lone_images, include_sub_grids, first_axes_processed, second_axes_processed, margin_size):

hor_texts = [[images.GridAnnotation(x)] for x in x_labels]

ver_texts = [[images.GridAnnotation(y)] for y in y_labels]

title_texts = [[images.GridAnnotation(z)] for z in z_labels]

@@ -292,7 +292,7 @@ def index(ix, iy, iz):

end_index = start_index + len(xs) * len(ys)

grid = images.image_grid(image_cache[start_index:end_index], rows=len(ys))

if draw_legend:

- grid = images.draw_grid_annotations(grid, cell_size[0], cell_size[1], hor_texts, ver_texts)

+ grid = images.draw_grid_annotations(grid, cell_size[0], cell_size[1], hor_texts, ver_texts, margin_size)

sub_grids[i] = grid

if include_sub_grids and len(zs) > 1:

processed_result.images.insert(i+1, grid)

@@ -351,10 +351,16 @@ def ui(self, is_img2img):

fill_z_button = ToolButton(value=fill_values_symbol, elem_id="xyz_grid_fill_z_tool_button", visible=False)

with gr.Row(variant="compact", elem_id="axis_options"):

- draw_legend = gr.Checkbox(label='Draw legend', value=True, elem_id=self.elem_id("draw_legend"))

- include_lone_images = gr.Checkbox(label='Include Sub Images', value=False, elem_id=self.elem_id("include_lone_images"))

- include_sub_grids = gr.Checkbox(label='Include Sub Grids', value=False, elem_id=self.elem_id("include_sub_grids"))

- no_fixed_seeds = gr.Checkbox(label='Keep -1 for seeds', value=False, elem_id=self.elem_id("no_fixed_seeds"))

+ with gr.Column():

+ draw_legend = gr.Checkbox(label='Draw legend', value=True, elem_id=self.elem_id("draw_legend"))

+ no_fixed_seeds = gr.Checkbox(label='Keep -1 for seeds', value=False, elem_id=self.elem_id("no_fixed_seeds"))

+ with gr.Column():

+ include_lone_images = gr.Checkbox(label='Include Sub Images', value=False, elem_id=self.elem_id("include_lone_images"))

+ include_sub_grids = gr.Checkbox(label='Include Sub Grids', value=False, elem_id=self.elem_id("include_sub_grids"))

+ with gr.Column():

+ margin_size = gr.Slider(label="Grid margins (px)", min=0, max=500, value=0, step=2, elem_id=self.elem_id("margin_size"))

+

+ with gr.Row(variant="compact", elem_id="swap_axes"):

swap_xy_axes_button = gr.Button(value="Swap X/Y axes", elem_id="xy_grid_swap_axes_button")

swap_yz_axes_button = gr.Button(value="Swap Y/Z axes", elem_id="yz_grid_swap_axes_button")

swap_xz_axes_button = gr.Button(value="Swap X/Z axes", elem_id="xz_grid_swap_axes_button")

@@ -393,9 +399,9 @@ def select_axis(x_type):

(z_values, "Z Values"),

)

- return [x_type, x_values, y_type, y_values, z_type, z_values, draw_legend, include_lone_images, include_sub_grids, no_fixed_seeds]

+ return [x_type, x_values, y_type, y_values, z_type, z_values, draw_legend, include_lone_images, include_sub_grids, no_fixed_seeds, margin_size]

- def run(self, p, x_type, x_values, y_type, y_values, z_type, z_values, draw_legend, include_lone_images, include_sub_grids, no_fixed_seeds):

+ def run(self, p, x_type, x_values, y_type, y_values, z_type, z_values, draw_legend, include_lone_images, include_sub_grids, no_fixed_seeds, margin_size):

if not no_fixed_seeds:

modules.processing.fix_seed(p)

@@ -590,7 +596,8 @@ def cell(x, y, z):

include_lone_images=include_lone_images,

include_sub_grids=include_sub_grids,

first_axes_processed=first_axes_processed,

- second_axes_processed=second_axes_processed

+ second_axes_processed=second_axes_processed,

+ margin_size=margin_size

)

if opts.grid_save and len(sub_grids) > 1:

|

Adds slider options for grid margins in the XYZ Plot and Prompt Matrix. Works with or without legends/text, and a margin of 0 leaves the output as it was before.

Closes #4779

## Changes

- Modifies `draw_prompt_matrix` to also take in margin size

- Fixes slight miscalculation with margin in `draw_grid_annotations`

- Adds a `margin_size` slider to XYZ Plot and Prompt Matrix and slightly rearranges the UI to make room for it. (Default `margin_size` is 0)
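
The margin arithmetic behind the `draw_grid_annotations` fix can be sketched as follows. This is an illustration only: `padded_grid_size` and `cell_origin` are hypothetical helpers, and the cell placement rule is an assumption, since the diff shows only the canvas-size expression.

```python
def padded_grid_size(cell_w, cell_h, cols, rows, margin=0, pad_left=0, pad_top=0):
    """Canvas size for a cols x rows grid with a margin between cells."""
    # Horizontal gaps depend on the number of columns, vertical gaps on the
    # number of rows; the original bug had these two swapped.
    width = cell_w * cols + pad_left + margin * (cols - 1)
    height = cell_h * rows + pad_top + margin * (rows - 1)
    return width, height


def cell_origin(col, row, cell_w, cell_h, margin=0, pad_left=0, pad_top=0):
    """Top-left corner of a cell (assumed placement, not taken from the diff)."""
    return pad_left + col * (cell_w + margin), pad_top + row * (cell_h + margin)


print(padded_grid_size(512, 512, cols=3, rows=2, margin=10))
print(cell_origin(2, 1, 512, 512, margin=10))
```

With `margin=0` the size reduces to the plain `cell_w * cols` by `cell_h * rows` canvas, which matches the statement that a zero margin leaves the grid as before.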

## Screenshots

### XYZ Plot (Note it only affects the X/Y Grids, Z Grids are separated already by column labels)

### Prompt Matrix

## Environment this was tested in

- OS: Windows

- Browser: Firefox

- Graphics card: NVIDIA GTX 1080

|

https://api.github.com/repos/AUTOMATIC1111/stable-diffusion-webui/pulls/7556

|

2023-02-05T08:47:56Z

|

2023-02-05T10:34:36Z

|

2023-02-05T10:34:36Z

|

2023-02-05T10:34:37Z

| 2,330

|

AUTOMATIC1111/stable-diffusion-webui

| 40,067

|

Fixed #24812 -- Fixed app registry RuntimeWarnings in schema and migrations tests.

|

diff --git a/tests/mail/tests.py b/tests/mail/tests.py

index 75d5dd619b089..03dfae7903b6e 100644

--- a/tests/mail/tests.py

+++ b/tests/mail/tests.py

@@ -868,6 +868,9 @@ class FakeSMTPServer(smtpd.SMTPServer, threading.Thread):

def __init__(self, *args, **kwargs):

threading.Thread.__init__(self)

+ # New kwarg added in Python 3.5; default switching to False in 3.6.

+ if sys.version_info >= (3, 5):

+ kwargs['decode_data'] = True

smtpd.SMTPServer.__init__(self, *args, **kwargs)

self._sink = []

self.active = False

diff --git a/tests/migrations/test_state.py b/tests/migrations/test_state.py

index 58bf18921a238..3c6abe3a913ee 100644

--- a/tests/migrations/test_state.py

+++ b/tests/migrations/test_state.py

@@ -396,17 +396,22 @@ def test_add_relations(self):

#24573 - Adding relations to existing models should reload the

referenced models too.

"""

+ new_apps = Apps()

+

class A(models.Model):

class Meta:

app_label = 'something'

+ apps = new_apps

class B(A):

class Meta:

app_label = 'something'

+ apps = new_apps

class C(models.Model):

class Meta:

app_label = 'something'

+ apps = new_apps

project_state = ProjectState()

project_state.add_model(ModelState.from_model(A))

@@ -447,15 +452,19 @@ def test_remove_relations(self):

#24225 - Tests that relations between models are updated while

remaining the relations and references for models of an old state.

"""

+ new_apps = Apps()

+

class A(models.Model):

class Meta:

app_label = "something"

+ apps = new_apps

class B(models.Model):

to_a = models.ForeignKey(A)

class Meta:

app_label = "something"

+ apps = new_apps

def get_model_a(state):

return [mod for mod in state.apps.get_models() if mod._meta.model_name == 'a'][0]

diff --git a/tests/schema/tests.py b/tests/schema/tests.py

index de38f6db9494f..ba20a8a9acb6f 100644

--- a/tests/schema/tests.py

+++ b/tests/schema/tests.py

@@ -765,8 +765,10 @@ class Meta:

app_label = 'schema'

apps = new_apps

- self.local_models = [LocalBookWithM2M]

-

+ self.local_models = [

+ LocalBookWithM2M,

+ LocalBookWithM2M._meta.get_field('tags').remote_field.through,

+ ]

# Create the tables

with connection.schema_editor() as editor:

editor.create_model(Author)

@@ -845,6 +847,7 @@ class Meta:

# Create an M2M field

new_field = M2MFieldClass("schema.TagM2MTest", related_name="authors")

new_field.contribute_to_class(LocalAuthorWithM2M, "tags")

+ self.local_models += [new_field.remote_field.through]

# Ensure there's no m2m table there

self.assertRaises(DatabaseError, self.column_classes, new_field.remote_field.through)

# Add the field

@@ -934,7 +937,10 @@ class Meta:

app_label = 'schema'

apps = new_apps

- self.local_models = [LocalBookWithM2M]

+ self.local_models = [

+ LocalBookWithM2M,

+ LocalBookWithM2M._meta.get_field('tags').remote_field.through,

+ ]

# Create the tables

with connection.schema_editor() as editor:

@@ -955,6 +961,7 @@ class Meta:

old_field = LocalBookWithM2M._meta.get_field("tags")

new_field = M2MFieldClass(UniqueTest)

new_field.contribute_to_class(LocalBookWithM2M, "uniques")

+ self.local_models += [new_field.remote_field.through]

with connection.schema_editor() as editor:

editor.alter_field(LocalBookWithM2M, old_field, new_field)

# Ensure old M2M is gone

|

https://code.djangoproject.com/ticket/24812

|

https://api.github.com/repos/django/django/pulls/4672

|

2015-05-18T13:13:57Z

|

2015-05-18T14:03:15Z

|

2015-05-18T14:03:15Z

|

2015-05-18T14:17:47Z

| 975

|

django/django

| 51,487

|

Fixed doc about domain whitelisting

|

diff --git a/docs/src/content/howto-ignoredomains.md b/docs/src/content/howto-ignoredomains.md

index b1b1483106..902a17bec6 100644

--- a/docs/src/content/howto-ignoredomains.md

+++ b/docs/src/content/howto-ignoredomains.md

@@ -72,8 +72,7 @@ method to do so:

>>> mitmproxy --ignore-hosts ^example\.com:443$

{{< /highlight >}}

-Here are some other examples for ignore

-patterns:

+Here are some other examples for ignore patterns:

{{< highlight none >}}

# Exempt traffic from the iOS App Store (the regex is lax, but usually just works):

@@ -84,15 +83,22 @@ patterns:

# Ignore example.com, but not its subdomains:

--ignore-hosts '^example.com:'

-# Ignore everything but example.com and mitmproxy.org:

---ignore-hosts '^(?!example\.com)(?!mitmproxy\.org)'

-

# Transparent mode:

--ignore-hosts 17\.178\.96\.59:443

# IP address range:

--ignore-hosts 17\.178\.\d+\.\d+:443

{{< / highlight >}}

+This option can also be used to whitelist some domains through negative lookahead expressions. However, ignore patterns are always matched against the IP address of the target before being matched against its domain name. Thus, the pattern must allow any IP addresses using an expression like `^(?![0-9\.]+:)` in order for domains whitelisting to work. Here are examples of such patterns:

+

+{{< highlight none >}}

+# Ignore everything but example.com and mitmproxy.org (not subdomains):

+--ignore-hosts '^(?![0-9\.]+:)(?!example\.com:)(?!mitmproxy\.org:)'

+

+# Ignore everything but example.com and its subdomains:

+--ignore-hosts '^(?![0-9\.]+:)(?!([^\.:]+\.)*example\.com:)'

+{{< / highlight >}}

+

**Footnotes**

1. This stems from an limitation of explicit HTTP proxying: A single connection

|

This PR fixes the documentation on domain whitelisting. The previous pattern did not work; the solution was given by @mhils in #2994.

I don't think this should close the ticket, as there should be a clear way to specify whether a pattern matches the IP address and/or the domain, but at least this PR prevents confusion and gives a workaround.
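
To see how the documented patterns behave, the sketch below runs them through Python's `re` module against a few `host:port` strings. It assumes the pattern is searched against such a string; the helper `is_ignored` and the sample addresses are hypothetical and only illustrate the lookahead logic.

```python
import re

# Patterns copied from the updated documentation.
ONLY_LISTED = re.compile(r"^(?![0-9\.]+:)(?!example\.com:)(?!mitmproxy\.org:)")
WITH_SUBDOMAINS = re.compile(r"^(?![0-9\.]+:)(?!([^\.:]+\.)*example\.com:)")


def is_ignored(pattern, address):
    """Return True if the ignore pattern matches the address (hypothetical helper)."""
    return pattern.search(address) is not None


for address in ("example.com:443", "sub.example.com:443",
                "other.org:443", "17.178.96.59:443"):
    print(address, is_ignored(ONLY_LISTED, address), is_ignored(WITH_SUBDOMAINS, address))
```

The first lookahead makes the pattern fail on bare IP addresses, so the IP check never ignores a connection and the decision falls through to the domain name.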

|

https://api.github.com/repos/mitmproxy/mitmproxy/pulls/3344

|

2018-10-05T02:08:47Z

|

2018-10-10T15:07:17Z

|

2018-10-10T15:07:17Z

|

2018-10-10T15:07:17Z

| 474

|

mitmproxy/mitmproxy

| 28,340

|

[training] SAVE_STATE_WARNING was removed in pytorch

|

diff --git a/src/transformers/trainer_pt_utils.py b/src/transformers/trainer_pt_utils.py

index cb3d4a5bfe5b7..5cb45eb7bd366 100644

--- a/src/transformers/trainer_pt_utils.py

+++ b/src/transformers/trainer_pt_utils.py

@@ -23,7 +23,6 @@

import numpy as np

import torch

-from packaging import version

from torch.utils.data.distributed import DistributedSampler

from torch.utils.data.sampler import RandomSampler, Sampler

@@ -34,10 +33,11 @@

if is_torch_tpu_available():

import torch_xla.core.xla_model as xm

-if version.parse(torch.__version__) <= version.parse("1.4.1"):

- SAVE_STATE_WARNING = ""

-else:

+# this is used to supress an undesired warning emitted by pytorch versions 1.4.2-1.7.0

+try:

from torch.optim.lr_scheduler import SAVE_STATE_WARNING

+except ImportError:

+ SAVE_STATE_WARNING = ""

logger = logging.get_logger(__name__)

|

`SAVE_STATE_WARNING` was removed from PyTorch 3 days ago: pytorch/pytorch#46813

I had to add redundant ()'s to avoid a terrible auto-formatter outcome.

Fixes: #8232

@sgugger, @LysandreJik

|

https://api.github.com/repos/huggingface/transformers/pulls/8979

|

2020-12-08T02:42:29Z

|

2020-12-08T05:59:56Z

|

2020-12-08T05:59:56Z

|

2021-06-08T18:11:11Z

| 240

|

huggingface/transformers

| 12,741

|

Backport PR #36316 on branch 1.1.x (BUG: Don't overflow with large int scalar)

|

diff --git a/doc/source/whatsnew/v1.1.3.rst b/doc/source/whatsnew/v1.1.3.rst

index 25d223418fc92..5cbd160f29d66 100644

--- a/doc/source/whatsnew/v1.1.3.rst

+++ b/doc/source/whatsnew/v1.1.3.rst

@@ -25,6 +25,7 @@ Fixed regressions

Bug fixes

~~~~~~~~~

- Bug in :meth:`Series.str.startswith` and :meth:`Series.str.endswith` with ``category`` dtype not propagating ``na`` parameter (:issue:`36241`)

+- Bug in :class:`Series` constructor where integer overflow would occur for sufficiently large scalar inputs when an index was provided (:issue:`36291`)

.. ---------------------------------------------------------------------------

diff --git a/pandas/core/dtypes/cast.py b/pandas/core/dtypes/cast.py

index e6b4cb598989b..a87bddef481b5 100644

--- a/pandas/core/dtypes/cast.py

+++ b/pandas/core/dtypes/cast.py

@@ -697,6 +697,11 @@ def infer_dtype_from_scalar(val, pandas_dtype: bool = False) -> Tuple[DtypeObj,

else:

dtype = np.dtype(np.int64)

+ try:

+ np.array(val, dtype=dtype)

+ except OverflowError:

+ dtype = np.array(val).dtype

+

elif is_float(val):

if isinstance(val, np.floating):

dtype = np.dtype(type(val))

diff --git a/pandas/tests/series/test_constructors.py b/pandas/tests/series/test_constructors.py

index ce078059479b4..f811806a897ee 100644

--- a/pandas/tests/series/test_constructors.py

+++ b/pandas/tests/series/test_constructors.py

@@ -1474,3 +1474,10 @@ def test_construction_from_ordered_collection(self):

result = Series({"a": 1, "b": 2}.values())

expected = Series([1, 2])

tm.assert_series_equal(result, expected)

+

+ def test_construction_from_large_int_scalar_no_overflow(self):

+ # https://github.com/pandas-dev/pandas/issues/36291

+ n = 1_000_000_000_000_000_000_000

+ result = Series(n, index=[0])

+ expected = Series(n)

+ tm.assert_series_equal(result, expected)

|

Backport PR #36316: BUG: Don't overflow with large int scalar
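
The idea behind the fix can be sketched without NumPy: default to a 64-bit integer dtype, and fall back to a wider choice when the scalar does not fit. The function `infer_int_scalar_dtype` and its string return values are hypothetical stand-ins; the actual patch tries `np.array(val, dtype=dtype)` and lets NumPy pick the dtype on `OverflowError`. The `uint64` branch reflects how NumPy is understood to infer such values and is an assumption here.

```python
INT64_MIN = -(2 ** 63)
INT64_MAX = 2 ** 63 - 1
UINT64_MAX = 2 ** 64 - 1


def infer_int_scalar_dtype(val):
    """Name a dtype that can hold the Python int without overflowing."""
    if INT64_MIN <= val <= INT64_MAX:
        return "int64"
    if 0 <= val <= UINT64_MAX:
        # Assumed to match NumPy's inference for large non-negative ints.
        return "uint64"
    # Anything larger cannot be stored in a fixed-width integer.
    return "object"


print(infer_int_scalar_dtype(1))
print(infer_int_scalar_dtype(1_000_000_000_000_000_000_000))
```

The second call uses the same value as the regression test in the diff, which is too large for any 64-bit integer type.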

|

https://api.github.com/repos/pandas-dev/pandas/pulls/36334

|

2020-09-13T13:29:10Z

|

2020-09-13T15:52:02Z

|

2020-09-13T15:52:02Z

|

2020-09-13T15:52:02Z

| 546

|

pandas-dev/pandas

| 44,803

|

Fix incorrect namespace in sitemap's template.

|

diff --git a/docs/ref/contrib/sitemaps.txt b/docs/ref/contrib/sitemaps.txt

index b89a9a13b02ca..fb3871e58e547 100644

--- a/docs/ref/contrib/sitemaps.txt

+++ b/docs/ref/contrib/sitemaps.txt

@@ -456,7 +456,7 @@ generate a Google News compatible sitemap:

<?xml version="1.0" encoding="UTF-8"?>

<urlset

xmlns="http://www.sitemaps.org/schemas/sitemap/0.9"

- xmlns:news="https://www.google.com/schemas/sitemap-news/0.9">

+ xmlns:news="http://www.google.com/schemas/sitemap-news/0.9">

{% spaceless %}

{% for url in urlset %}

<url>

|

According to Google's webmaster tools, the news namespace in the template is invalid.

|

https://api.github.com/repos/django/django/pulls/7980

|

2017-01-28T19:12:11Z

|

2017-01-30T17:07:15Z

|

2017-01-30T17:07:15Z

|

2017-01-30T17:07:15Z

| 183

|

django/django

| 51,183

|

🔧 Update sponsors, add Jina back as bronze sponsor

|

diff --git a/docs/en/data/sponsors.yml b/docs/en/data/sponsors.yml

index 53cdb9bad1588..6cfd5b5564ba4 100644

--- a/docs/en/data/sponsors.yml

+++ b/docs/en/data/sponsors.yml

@@ -37,3 +37,6 @@ bronze:

- url: https://www.flint.sh

title: IT expertise, consulting and development by passionate people

img: https://fastapi.tiangolo.com/img/sponsors/flint.png

+ - url: https://bit.ly/3JJ7y5C

+ title: Build cross-modal and multimodal applications on the cloud

+ img: https://fastapi.tiangolo.com/img/sponsors/jina2.svg

|

🔧 Update sponsors, add Jina back as bronze sponsor

|

https://api.github.com/repos/tiangolo/fastapi/pulls/10050

|

2023-08-09T13:20:58Z

|

2023-08-09T13:26:33Z

|

2023-08-09T13:26:33Z

|

2023-08-09T13:26:35Z

| 165

|

tiangolo/fastapi

| 22,789

|

remove additional tags in Phind

|

diff --git a/g4f/Provider/Phind.py b/g4f/Provider/Phind.py

index dbf1e7ae36..e71568427d 100644

--- a/g4f/Provider/Phind.py

+++ b/g4f/Provider/Phind.py

@@ -69,6 +69,8 @@ async def create_async_generator(

pass

elif chunk.startswith(b"<PHIND_METADATA>") or chunk.startswith(b"<PHIND_INDICATOR>"):

pass

+ elif chunk.startswith(b"<PHIND_SPAN_BEGIN>") or chunk.startswith(b"<PHIND_SPAN_END>"):

+ pass

elif chunk:

yield chunk.decode()

elif new_line:

|

Phind added new tags to its replies; this commit strips them from the output.

Example additional tags:

```

<PHIND_SPAN_BEGIN>{"id": "g30s0idmy56038rjky0i", "indicator": "Using GPT4", "parent": "qolbkr9srwccc7ir3m5f", "start": 1706418122.9927964, "end": null, "indent": 2, "children": []}</PHIND_SPAN_BEGIN><PHIND_SPAN_END>{"id": "g30s0idmy56038rjky0i", "indicator": "Using GPT4", "end": 1706418122.994516}</PHIND_SPAN_END>

```
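
A minimal sketch of the filtering step, assuming the reply arrives as a sequence of byte chunks. The generator `visible_chunks` and the sample input are hypothetical; only the tag names come from the diff.

```python
# Tag prefixes that mark non-content chunks, as listed in the provider code.
SKIPPED_PREFIXES = (
    b"<PHIND_METADATA>",
    b"<PHIND_INDICATOR>",
    b"<PHIND_SPAN_BEGIN>",
    b"<PHIND_SPAN_END>",
)


def visible_chunks(chunks):
    """Yield decoded text chunks, dropping tagged and empty ones."""
    for chunk in chunks:
        # bytes.startswith accepts a tuple of prefixes.
        if chunk.startswith(SKIPPED_PREFIXES):
            continue
        if chunk:
            yield chunk.decode()


sample = [
    b"Hello",
    b'<PHIND_SPAN_BEGIN>{"id": "x"}</PHIND_SPAN_BEGIN>',
    b"",
    b" world",
]
print("".join(visible_chunks(sample)))
```

Keeping the prefixes in one tuple means a future tag can be handled by adding a single entry instead of another `elif` branch.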

|

https://api.github.com/repos/xtekky/gpt4free/pulls/1522

|

2024-01-28T13:13:19Z

|

2024-01-28T22:57:57Z

|

2024-01-28T22:57:57Z

|

2024-01-28T22:57:57Z

| 154

|

xtekky/gpt4free

| 38,101

|

Add version switch to flask cli

|

diff --git a/flask/cli.py b/flask/cli.py

index cf2c5c0c9c..90eb0353cf 100644

--- a/flask/cli.py

+++ b/flask/cli.py

@@ -18,7 +18,7 @@

from ._compat import iteritems, reraise

from .helpers import get_debug_flag

-

+from . import __version__

class NoAppException(click.UsageError):

"""Raised if an application cannot be found or loaded."""

@@ -108,6 +108,22 @@ def find_default_import_path():

return app

+def get_version(ctx, param, value):

+ if not value or ctx.resilient_parsing:

+ return

+ message = 'Flask %(version)s\nPython %(python_version)s'

+ click.echo(message % {

+ 'version': __version__,

+ 'python_version': sys.version,

+ }, color=ctx.color)

+ ctx.exit()

+

+version_option = click.Option(['--version'],

+ help='Show the flask version',

+ expose_value=False,

+ callback=get_version,

+ is_flag=True, is_eager=True)

+

class DispatchingApp(object):

"""Special application that dispatches to a flask application which

is imported by name in a background thread. If an error happens

@@ -270,12 +286,19 @@ class FlaskGroup(AppGroup):

:param add_default_commands: if this is True then the default run and

shell commands wil be added.

+ :param add_version_option: adds the :option:`--version` option.

:param create_app: an optional callback that is passed the script info

and returns the loaded app.

"""

- def __init__(self, add_default_commands=True, create_app=None, **extra):

- AppGroup.__init__(self, **extra)

+ def __init__(self, add_default_commands=True, create_app=None,

+ add_version_option=True, **extra):

+ params = list(extra.pop('params', None) or ())

+

+ if add_version_option:

+ params.append(version_option)

+

+ AppGroup.__init__(self, params=params, **extra)

self.create_app = create_app

if add_default_commands:

|

re #1828

|

https://api.github.com/repos/pallets/flask/pulls/1848

|

2016-06-02T10:59:01Z

|

2016-06-02T11:53:13Z

|

2016-06-02T11:53:13Z

|

2020-11-14T04:52:48Z

| 492

|

pallets/flask

| 20,568

|

Fix parameter typing for generateCode

|

diff --git a/frontend/src/App.tsx b/frontend/src/App.tsx

index f1301e04..3ae08a91 100644

--- a/frontend/src/App.tsx

+++ b/frontend/src/App.tsx

@@ -2,7 +2,7 @@ import { useEffect, useRef, useState } from "react";

import ImageUpload from "./components/ImageUpload";

import CodePreview from "./components/CodePreview";

import Preview from "./components/Preview";

-import { CodeGenerationParams, generateCode } from "./generateCode";

+import { generateCode } from "./generateCode";

import Spinner from "./components/Spinner";

import classNames from "classnames";

import {

@@ -18,7 +18,13 @@ import { Button } from "@/components/ui/button";

import { Textarea } from "@/components/ui/textarea";

import { Tabs, TabsContent, TabsList, TabsTrigger } from "./components/ui/tabs";

import SettingsDialog from "./components/SettingsDialog";

-import { Settings, EditorTheme, AppState, GeneratedCodeConfig } from "./types";

+import {

+ AppState,

+ CodeGenerationParams,

+ EditorTheme,

+ GeneratedCodeConfig,

+ Settings,

+} from "./types";

import { IS_RUNNING_ON_CLOUD } from "./config";

import { PicoBadge } from "./components/PicoBadge";

import { OnboardingNote } from "./components/OnboardingNote";

diff --git a/frontend/src/generateCode.ts b/frontend/src/generateCode.ts

index 8a05fe73..96fbdc1c 100644

--- a/frontend/src/generateCode.ts

+++ b/frontend/src/generateCode.ts

@@ -1,23 +1,16 @@

import toast from "react-hot-toast";

import { WS_BACKEND_URL } from "./config";

import { USER_CLOSE_WEB_SOCKET_CODE } from "./constants";

+import { FullGenerationSettings } from "./types";

const ERROR_MESSAGE =

"Error generating code. Check the Developer Console AND the backend logs for details. Feel free to open a Github issue.";

const STOP_MESSAGE = "Code generation stopped";

-export interface CodeGenerationParams {

- generationType: "create" | "update";

- image: string;

- resultImage?: string;

- history?: string[];

- // isImageGenerationEnabled: boolean; // TODO: Merge with Settings type in types.ts

-}

-

export function generateCode(

wsRef: React.MutableRefObject<WebSocket | null>,

- params: CodeGenerationParams,

+ params: FullGenerationSettings,

onChange: (chunk: string) => void,

onSetCode: (code: string) => void,

onStatusUpdate: (status: string) => void,

diff --git a/frontend/src/types.ts b/frontend/src/types.ts

index deb370a2..92027456 100644

--- a/frontend/src/types.ts

+++ b/frontend/src/types.ts

@@ -28,3 +28,12 @@ export enum AppState {

CODING = "CODING",

CODE_READY = "CODE_READY",

}

+

+export interface CodeGenerationParams {

+ generationType: "create" | "update";

+ image: string;

+ resultImage?: string;

+ history?: string[];

+}

+

+export type FullGenerationSettings = CodeGenerationParams & Settings;

|

The parameter type was incomplete: the backend also expects the `Settings` fields, so they are now included in the type of `params`.

|

https://api.github.com/repos/abi/screenshot-to-code/pulls/170

|

2023-12-08T00:19:23Z

|

2023-12-11T23:23:18Z

|

2023-12-11T23:23:18Z

|

2023-12-11T23:23:48Z

| 695

|

abi/screenshot-to-code

| 46,907

|

Fast path for disabled template load explain.

|

diff --git a/flask/templating.py b/flask/templating.py

index 8c95a6a706..2da4926d25 100644

--- a/flask/templating.py

+++ b/flask/templating.py

@@ -52,27 +52,36 @@ def __init__(self, app):

self.app = app

def get_source(self, environment, template):

- explain = self.app.config['EXPLAIN_TEMPLATE_LOADING']

+ if self.app.config['EXPLAIN_TEMPLATE_LOADING']:

+ return self._get_source_explained(environment, template)

+ return self._get_source_fast(environment, template)

+

+ def _get_source_explained(self, environment, template):

attempts = []

- tmplrv = None

+ trv = None

for srcobj, loader in self._iter_loaders(template):

try:

rv = loader.get_source(environment, template)

- if tmplrv is None:

- tmplrv = rv

- if not explain:

- break

+ if trv is None:

+ trv = rv

except TemplateNotFound:

rv = None

attempts.append((loader, srcobj, rv))

- if explain:

- from .debughelpers import explain_template_loading_attempts

- explain_template_loading_attempts(self.app, template, attempts)

+ from .debughelpers import explain_template_loading_attempts

+ explain_template_loading_attempts(self.app, template, attempts)

+

+ if trv is not None:

+ return trv

+ raise TemplateNotFound(template)

- if tmplrv is not None:

- return tmplrv

+ def _get_source_fast(self, environment, template):

+ for srcobj, loader in self._iter_loaders(template):

+ try:

+ return loader.get_source(environment, template)

+ except TemplateNotFound:

+ continue

raise TemplateNotFound(template)

def _iter_loaders(self, template):

|

Refs #1792

|

https://api.github.com/repos/pallets/flask/pulls/1814

|

2016-05-22T09:36:57Z

|

2016-05-26T19:34:56Z

|

2016-05-26T19:34:56Z

|

2020-11-14T04:42:50Z

| 428

|

pallets/flask

| 20,741

|

boardd: SPI bulk read + write

|

diff --git a/selfdrive/boardd/panda_comms.h b/selfdrive/boardd/panda_comms.h

index aef7b41d070f7e..f42eadc5b23b64 100644

--- a/selfdrive/boardd/panda_comms.h

+++ b/selfdrive/boardd/panda_comms.h

@@ -34,7 +34,7 @@ class PandaCommsHandle {

virtual int bulk_read(unsigned char endpoint, unsigned char* data, int length, unsigned int timeout=TIMEOUT) = 0;

protected:

- std::mutex hw_lock;

+ std::recursive_mutex hw_lock;

};

class PandaUsbHandle : public PandaCommsHandle {

@@ -74,6 +74,7 @@ class PandaSpiHandle : public PandaCommsHandle {

uint8_t rx_buf[SPI_BUF_SIZE];