full_name

stringlengths 9

72

| url

stringlengths 28

91

| description

stringlengths 3

343

⌀ | readme

stringlengths 1

207k

|

|---|---|---|---|

magiklabs/magik-sdk

|

https://github.com/magiklabs/magik-sdk

|

LLM Testing SDK that helps you write and run tests to monitor your LLM app in production

|

_Magik is an LLM output testing SDK + observability platform that helps you write tests and monitor your app in production_.

<br /><br />

# Overview

Reliability of output is one of the biggest challenges for people trying to use LLM apps in production.<br />

Since LLM outputs are non-deterministic, it’s very hard to measure how good the output is.

Eyeballing the responses from an LLM can work in development, but it’s not a great solution.

> _In production, it’s virtually impossible to eyeball thousands of responses. Which means you have very little visibility into how well your LLM is performing._

- Do you know when your LLM app is hallucinating?

- How do you know how well it's _really_ performing?

- Do you know how often it’s producing a critically bad output?

- How do you know what your users are seeing?

- How do you measure how good your LLM responses are? And if you can’t measure it, how do you improve the accuracy?

<br />

> If these sound like problems to you (today or in the future), please reach out to us at [email protected]. We’d love to hear more!

<img width="1576" alt="llm-screenshot-1" src="https://github.com/magiklabs/magik-sdk/assets/7515552/bc87aefa-505f-4732-84cd-b7fe57857850">

<br /><br /><br />

# Documentation

`pip install magik`

See https://docs.magiklabs.app for instructions on how to write and run tests.

- [Overview](https://docs.magiklabs.app/)

- [Quick Start](https://docs.magiklabs.app/quick-start)

- [Writing Tests](https://docs.magiklabs.app/reference/writing-tests)

- [Evaluator Functions](https://docs.magiklabs.app/reference/writing-tests/evaluator-functions)

- [What kind of tests can I write](https://docs.magiklabs.app/reference/writing-tests/what-kind-of-tests-can-i-write)

- [How does the LLM grader work?](https://docs.magiklabs.app/reference/writing-tests/how-does-the-llm-grader-work)

- [Running Tests](https://docs.magiklabs.app/reference/running-tests)

- [Deploying Tests](https://docs.magiklabs.app/reference/deploying-tests)

- [Logging your production data](https://docs.magiklabs.app/reference/logging-your-production-data)

<br />

# Use Cases

Who is this product meant for?

- If you're in the early stages of building an LLM app:

- If you have an LLM app in production

<br /><br />

### If you're in the early stages of building an LLM app:

---

Test-driven development can speed up your development very nicely, and can help you engineer your prompts to be more robust.

For example, assuming your prompt looks like this:

```

Create some marketing copy for a tweet of less than 280 characters for my app {app_name}.

My app helps people generate sales emails using AI.

Make sure the marketing copy contains a complete and valid link to my app.

Here is the link to my app: https://magiklabs.app.

```

You can write tests like this:

```python

from magik.evaluators import (

contains_none,

contains_link,

contains_valid_link,

is_positive_sentiment,

length_less_than,

)

# Local context - this is used as the "ground truth" data that you can compare against in your tests

test_context = {}

# Define tests here

def define_tests(context: dict):

return [

{

"description": "output contains a link",

"eval": contains_link(),

"prompt_vars": {

"app_name": "Uber",

},

"failure_labels": ["bad_response_format"],

},

{

"description": "output contains a valid link",

"eval": contains_valid_link(),

"prompt_vars": {

"app_name": "Magik",

},

"failure_labels": ["bad_response_format"],

},

{

"description": "output sentiment is positive",

"eval": is_positive_sentiment(),

"prompt_vars": {

"app_name": "Lyft",

},

"failure_labels": ["negative_sentiment"],

},

{

"description": "output length is less than 280 characters",

"eval": length_less_than(280),

"prompt_vars": {

"app_name": "Facebook",

},

"failure_labels": ["negative_sentiment", "critical"],

},

{

"description": "output does not contain hashtags",

"eval": contains_none(['#']),

"prompt_vars": {

"app_name": "Datadog",

},

"failure_labels": ["bad_response_format"],

},

]

```

<br /><br />

### If you have an LLM app in production:

---

You can use our **evaluation & monitoring platform** to:

- Observe the prompt, response pairs in production, and analyze response times, cost, token usage, etc for different prompts and date ranges.

- Evaluate your production responses against your own tests to get a quantifiable understanding of how well your LLM app is performing.

- For example, You can run the tests you defined against the LLM responses you are getting in production to measure how your app is performing with real data.

- Filter by failure labels, severity, prompt, etc to identify different types of errors that are occurring in your LLM outputs.

See https://magiklabs.app for more details, or contact us at [[email protected]](mailto:[email protected])

<br /><br />

### Upcoming Features

---

Soon, you will also be able to:

- Fail bad outputs before they get to your users.

- For example, if the LLM response contains sensitive information like PII, you can detect that in real-time, and cut it off before it reaches the end user.

- Set up alerts to notify you about critical errors in production.

<br /><br />

# Platform

Contact us at [[email protected]](mailto:[email protected]) to get access to our LLM observability platform where you can run the tests you've defined here against your LLM responses in production.

|

nicknochnack/Falcon40B

|

https://github.com/nicknochnack/Falcon40B

| null |

# Using Falcon40B Instruct...and any other Open Source LLMs on GPU via HuggingFace

In this tutorial we're going to be checking out some of the biggest baddest LLMs...but running them on a GPU!

## See it live and in action 📺

[](https://youtu.be/hMJgdVJWQRU 'Tutorial')

# Startup 🚀

1. Create a virtual environment `python -m venv gpullm`

2. Activate it:

- Windows:`.\gpullm\Scripts\activate`

- Mac: `source gpullm/bin/activate`

3. Install PyTorch with CUDA Support

N.B. I've included the lib in the requirements.txt file but this is latest installer as of creating this readme.

`pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu117`

Download and install CUDA (i've used 11.7 for this tutorial): https://developer.nvidia.com/cuda-11-7-0-download-archive

Download and install the matching cuDNN version ( v8.9.1): https://developer.nvidia.com/rdp/cudnn-archive

5. Clone this repo `git clone https://github.com/nicknochnack/Falcon40B`

6. Go into the directory `cd Falcon40B`

7. Startup jupyter by running `jupyter lab` in a terminal or command prompt

8. Hit `Ctrl + Enter` to run through the notebook!

10. Go back to my YouTube channel and like and subscribe 😉...no seriously...please! lol

# Other References 🔗

<p>-<a href="https://pytorch.org/get-started/locally/">PyTorch Installation</a>:main guide leveraged to handle GPU support.</p>

<p>-<a href="https://python.langchain.com/en/latest/modules/models/llms/integrations/huggingface_pipelines.html">Langchain HF Pipelines</a>:the HF Pipelines class is used in order to pass the local LLM to a chain.</p>

<p>-<a href="https://huggingface.co/tiiuae/falcon-40b-instruct">Falcon 40B Instruct Model Card</a>:check out the model details.</p>

# Who, When, Why?

👨🏾💻 Author: Nick Renotte <br />

📅 Version: 1.x<br />

📜 License: This project is licensed under the MIT License </br>

|

muazkadan/switchy-compose

|

https://github.com/muazkadan/switchy-compose

| null |

# Switchy-compose

[](https://jitpack.io/#muazkadan/switchy-compose)

SwitchyCompose is an Android library that helps you to easily create a custom Switches.

## Preview

<img src="/preview/switchy_compose_preview.gif" width="300" >

## Installation

Add the jitpack.io repository:

```groovy

allprojects {

repositories {

jcenter()

maven { url "https://jitpack.io" }

}

}

```

and the dependency

```groovy

dependencies {

implementation "com.github.muazkadan:switchy-compose:Tag:$version"

}

```

## Usage

TextSwitch

```kotlin

var switchValue by rememberSaveable { mutableStateOf(false) }

TextSwitch(

modifier = Modifier

.padding(horizontal = 16.dp),

switchValue = switchValue,

onValueChanged = {

switchValue = it

},

)

```

ColoredSwitch

```kotlin

var switchValue by rememberSaveable { mutableStateOf(false) }

ColoredSwitch(

modifier = Modifier

.fillMaxWidth()

.padding(horizontal = 16.dp),

switchValue = switchValue,

onValueChanged = {

switchValue = it

},

)

```

ISwitch

```kotlin

var switchValue by rememberSaveable { mutableStateOf(false) }

ISwitch(

switchValue = switchValue,

onValueChanged = {

switchValue = it

},

)

```

IconISwitch

```kotlin

var switchValue by rememberSaveable { mutableStateOf(false) }

IconISwitch(

switchValue = switchValue,

onValueChanged = {

switchValue = it

},

)

```

Also, you can customize your own switch or iswitch. Check the demo project for more information.

### License

Copyright 2023 Muaz KADAN

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

|

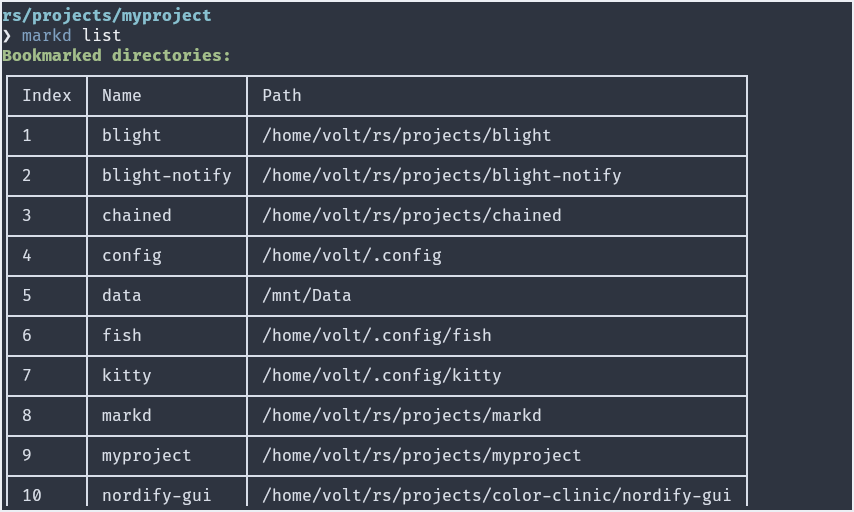

VoltaireNoir/markd

|

https://github.com/VoltaireNoir/markd

|

Bookmark directories for easy directory-hopping in the terminal

|

<div align="center">

[](https://github.com/VoltaireNoir/markd/actions/workflows/rust.yml)

[](https://crates.io/crates/markd)

[](https://crates.io/crates/markd)

</div>

# markd

Bookmark directories for easy directory-hopping in the terminal.

All it takes is one command `markd` to bookmark your current directory, or use the `-p / --path` to specify custom path and `-a / --alias` to set a custom bookmark name. The CLI tool also provides the necessary functionality to search and clean your bookmarks. For example, the `purge` command will check all the paths and remove the ones that no longer exist, and the `list` command supports `--filter`, `--start` and `--end` for advanced searching.

All paths are ensured to be valid ones, relative paths are stored in their expanded forms and names are always lowercase. No duplicate names are allowed (use an alias instead).

Run `markd help` for a full list of supported commands and arguments. Run `markd <COMMAND> --help` to get more info on the command.

> Note: bookmarks are stored in `bookmarks.json` file in the user home directory in the form of `"name":"path"`, which can also be directly edited if necessary.

## Shell Support

Since 'cd' is a built-in shell command, you need to use 'command substitution' to make use of markd to switch directories.

To make it work, simply add a function definition to your shell config file. After adding the necessary code to your shell config, you should be able to jump between directories using the command `goto <bookmark-name>`.

> Note: The function name used here is 'goto' but you can change it to whatever you prefer.

### Fish

- Create a `functions` directory in fish config folder (usually `/home/user/.config/fish`)

- Inside the folder, create a file named `goto.fish`

- Copy and paste the following code and save it

```

function goto

cd $(markd g $argv)

end

```

### Zsh and Bash

- Add the following code to your `.zshrc` or `.bashrc`

```

goto() {

cd $(markd g $1);

}

```

### Powershell (untested)

- Open powershell and open your config file by running `notepad $profile`

- Add the following code and save it

```

function goto([string]$Bookmark) {

cd (markd g $Bookmark)

}

```

## Install

- Using cargo: `cargo install markd`, ensure `$HOME/.cargo/bin` is in path.

- Pre-built binary: download the appropriate pre-built binary from the release section, place the binary in path.

|

as946640/flutter_webrtc_deom

|

https://github.com/as946640/flutter_webrtc_deom

|

flutter 端的 webrtc 基础模板,已经基本完善了推拉流,切换摄像头、分辨率,可实现一人推流多人拉流实现会议功能

|

# flutter_webrtc_demo

这是一个 webrtc 的模板项目,大家拿去即可直接使用

基于 dio、get、flutter_webrtc 插件

后端是 srs :https://github.com/ossrs/srs

帮助到大家的话 请给一个 star,支持开源

项目预览

|

simonw/asgi-proxy-lib

|

https://github.com/simonw/asgi-proxy-lib

|

An ASGI function for proxying to a backend over HTTP

|

# asgi-proxy-lib

[](https://pypi.org/project/asgi-proxy-lib/)

[](https://github.com/simonw/asgi-proxy-lib/releases)

[](https://github.com/simonw/asgi-proxy-lib/blob/main/LICENSE)

An ASGI function for proxying to a backend over HTTP

**⚠️ Warning: this is an early alpha.**

## Installation

Install this library using `pip`:

pip install asgi-proxy-lib

## Usage

This library provides a single ASGI function called `asgi_proxy`. You can use it like this:

```python

from asgi_proxy import asgi_proxy

app = asgi_proxy("https://datasette.io")

```

Now `app` is an ASGI application that will proxy all incoming HTTP requests to the equivalent URL on `https://datasette.io`.

The function takes an optional second argument, `log=` - set this to a Python logger, or any object that has `.info(msg)` and `.error(msg)` methods, and the proxy will log information about each request it proxies.

## CLI tool

You can try this module out like so:

```bash

python -m asgi_proxy https://datasette.io

```

You may need to `pip install uvicorn` first for this to work.

This will start a server on port 8000 that proxies to `https://datasette.io`.

Add `-p PORT` to specify a different port, `--verbose` to see debug logging, and `--host 127.0.0.1` to listen on a different host (the default is `0.0.0.0`).

## Development

To contribute to this library, first checkout the code. Then create a new virtual environment:

cd asgi-proxy-lib

python -m venv venv

source venv/bin/activate

Now install the dependencies and test dependencies:

pip install -e '.[test]'

To run the tests:

pytest

|

mmrobotlab/DailyRobot

|

https://github.com/mmrobotlab/DailyRobot

| null |

# MM-RobotLab 日报

- 本次更新包括了7月4日前精选出的 3 篇 LLM+Robotics+Multi-modal的相关研究文章,所有文章由chatgpt自动筛选,自动生成文章总结,最后由MM-RobotLab社区筛选出质量最高的并进行人工优化。

# P:1 在强化学习中学习调节预训练模型

- 1. Title: Learning to Modulate pre-trained Models in RL

- 2. 论文简介: 该论文由Thomas Schmied等人在ELLIS Unit Linz and LIT AI Lab, Institute for Machine Learning进行研究,提出了一种名为Learning-to-Modulate (L2M)的方法,用于解决强化学习中预训练模型在新任务上适应性不足的问题。

- 3. Authors: Thomas Schmied, Markus Hofmarcher, Fabian Paischer, Razvan Pascanu, Sepp Hochreiter

- 4. Affiliation:

ELLIS Unit Linz and LIT AI Lab, Institute for Machine Learning (奥地利林茨约翰内斯·开普勒大学)

- 5. Keywords: Reinforcement Learning, pre-training, fine-tuning, catastrophic forgetting, Learning-to-Modulate

- 6. Paper: [Link](https://arxiv.org/pdf/2306.14884) Github: None

- 7. 论文总结:

* (1): 该论文的研究背景是强化学习中预训练模型在新任务上适应性不足的问题。

* (2): 过去的方法包括大规模预训练和微调,但往往会导致预训练任务的性能下降。本文提出了一种名为Learning-to-Modulate (L2M)的方法,通过学习可调节的调制池来避免学习技能的退化,从而解决了这个问题。

* (3): 本文提出的L2M方法通过学习可调节的调制池(modulation pool),以一种任务无关的方式高效地调整预训练模型,从而适应新任务。该方法在Continual-World基准测试上取得了最先进的性能,并保持了对预训练任务的性能。

* (4): 本文在Meta-World和DMControl上进行了广泛的评估,比较了多种微调方法的性能,并展示了L2M方法在新任务上的优越性能以及对预训练任务的保持能力。该方法的创新点在于通过学习可调节的调制池来避免学习技能的退化,并且相对于完整模型大小,只引入了很少的额外参数。

**方法部分:**

方法详细介绍:

a. 一句话概览:本文提出了一种名为Learning-to-Modulate (L2M)的方法,通过可学习的调制池来调节预训练模型的信息流,以避免在新任务上学习时导致预训练任务性能下降。

b. 方法的详细步骤:

* (1). 提出Learning-to-Modulate (L2M)方法,该方法结合了参数高效微调和基于提示的调优方法的优点。

* (2). 维护一个调制池,其中包含一组$M$个键,每个键与一组调制矩阵相关联,用于调制预训练模型。

* (3). 对于给定的输入序列,通过查询池中的键,检索出与当前时间步的查询向量最相似的调制矩阵。

* (4). 使用检索到的调制矩阵来调节预训练模型,以改变其行为。

* (5). 使用梯度下降法学习调制模块的权重,同时保持预训练模型冻结。

* (6). 更新键的方法是通过最大化查询向量和键之间的余弦相似度来更新键。

* (7). Learning-to-Modulate (L2M)方法通过保持预训练任务的性能,同时实现高性能和少量可学习参数,提供了一种简单的任务匹配机制,并能够适应大量任务。

* (8). 扩展了Decision Transformer架构,以处理来自具有不同状态/动作空间的多个领域的输入。

* (9). 构建了一个统一的状态空间,包括所有DMControl和Meta-World环境的维度,并使用线性层对状态进行嵌入。

* (10). 对每个动作维度进行分词,并自回归地预测动作标记。

* (11). 使用返回条件的反向强化学习通过交叉熵损失训练Decision Transformer。

* (12). 通过最大观察到的回报设置目标回报,或者使用每个领域的常数代理。

**实验设置:**

- 实验设置(Experimental setting):

本文的实验设置如下:

* (1). 本文考虑了两个不同的基准套件(Meta-World和DMControl),共包含66个不同的任务。

* (2). Meta-World包含50个多样化的机器人操作任务,如抓取、操纵物体、打开/关闭窗户、按按钮、锁定/解锁门和投篮等。DMControl包含16个任务,机器人形态各异,因此状态表示差异较大。

* (3). 通过训练基于Soft Actor Critic (SAC)的任务特定智能体来收集数据集。Meta-World的数据集包含了每个任务的10K个长度为200的轨迹,总共100M个转换。DMControl的数据集包含了每个任务的1000个长度为1000的轨迹,总共16M个转换。

* (4). 评估了各种微调方法在新任务上的性能,并比较了在微调过程中对预训练任务性能的保留程度。

* (5). 在Continual-World基准测试中,L2M方法取得了最先进的性能,并保持了对预训练任务的性能。

**实验结果:**

实验结果和分析:

本研究首先在Meta-World和DMControl两个基准套件的数据集上进行了联合预训练,并评估了多种在自然语言处理中常见的微调方法在新任务上的性能以及对预训练任务性能的保留情况。实验结果表明,大多数微调方法在预训练任务上的性能明显下降。因此,研究提出了一种新的方法,即Learning-to-Modulate (L2M),通过可学习的调制池来调节冻结的预训练模型的信息流,避免了学习技能的退化。该方法在Continual-World基准测试中取得了最先进的性能,并保持了对预训练任务的性能。此外,研究还发布了一个包含50个Meta-World任务和16个DMControl任务的数据集,以促进未来的研究。

具体实验结果如下:

1. 在CW10和DMC6上,FT方法的性能最好,Adapters方法的性能次之,LoRA、 $\text{(IA)}^3$ 和FT-last+head方法的性能较好。PBT和PEFT方法之间存在较大的性能差距,尤其在MT40上。在DMC6上,全面微调和PEFT方法之间的性能差距更大。

2. 在CW10和DMC6上,L2M方法的性能优于其他方法,平均成功率分别为65%和43%。添加任务oracle到L2M方法可以将成功率提高到76%和75%。L2P结合不同的提示方法的性能明显低于L2M。传统的连续强化学习方法EWC在减轻遗忘方面效果不佳。

3. 在微调后评估预训练任务的性能时,FT、L2和EWC方法的性能严重下降,而L2M和L2P方法在微调前后保持了类似的性能水平。

总结起来,本研究通过实验结果验证了Learning-to-Modulate (L2M)方法在连续强化学习任务中的有效性,该方法在新任务上取得了最先进的性能,并保持了对预训练任务的性能。同时,研究还对其他微调方法进行了比较和分析,为未来的研究提供了有价值的数据集。

# P:2 通过源准备增强视觉域自适应

- 1. Title: Enhancing Visual Domain Adaptation with Source Preparation

- 2. 论文简介: 该论文由卡内基梅隆大学的Anirudha Ramesh等人提出,通过提出源准备(Source Preparation)方法,综合考虑源域的特征,解决了机器人感知在低光环境中的挑战。

- 3. Authors: Anirudha Ramesh, Anurag Ghosh, Christoph Mertz, Jeff Schneider

- 4. Affiliation: 卡内基梅隆大学

- 5. Keywords: Robotic Perception, Domain Adaptation, Source Preparation, Unsupervised Domain Adaptation, Supervised Alignment

- 6. Paper: [Link](https://arxiv.org/pdf/2306.10142) Github: None

- 7. 论文总结:

* (1): 该论文的研究背景是机器人在低光环境中的感知问题。

* (2): 过去的方法包括无监督域自适应和半监督域自适应,但它们没有考虑源域本身的特征,导致源模型对源域特征过拟合。本文提出了源准备方法来解决这个问题,并提出了Almost Unsupervised Domain Adaptation (AUDA)框架。

* (3): 本文的研究方法是综合考虑源域特征的源准备方法,并结合无监督域自适应和有限标记数据的监督对齐来实现有效的域自适应。该方法在语义分割任务中取得了显著的性能提升,并且在目标域内具有鲁棒性。

* (4): 本文的方法在语义分割任务中取得了显著的性能提升,mIoU相对于基线提高了最多40.64%。同时,该方法只需要使用目标域的少量标记样本就能显著提高目标域的性能,具有较高的标记效率。

**方法部分:**

方法详细介绍:

a. 一句话概览:本文提出了一种增强视觉领域适应性的方法,通过源域准备(Source Preparation)来减轻源域偏差,结合无监督域适应(Unsupervised Domain Adaptation)和有限标记数据的监督对齐(Supervised Alignment),实现了在低光环境下的语义分割和目标检测任务中的有效领域自适应(Domain Adaptation)。

b. 方法的详细步骤:

* (1). 首先,定义源域 $S$ 和目标域 $T$,其中 $S$ 具有丰富的标记数据,而 $T$ 的标记数据有限或没有。目标是在 $T$ 上构建一个性能良好的模型。为了验证方法的有效性,本文以语义分割为例,使用现有的无监督域适应方法进行改进,但该方法可以扩展到其他任务和形式的领域自适应。

* (2). 定义源域图像集合 $D_s$ 和对应的标签集合 $L_s$,其中 $L_s$ 是源域图像的one-hot标签集合。目标域的定义类似。使用 $f$ 来表示在源域 $S$ 上训练语义分割模型 $\psi_s$ 的过程,其中 $f$ 包括输入数据处理和网络架构。使用无监督域适应方法 $g$ 将 $\psi_s$适应到目标域 $T$,得到目标域的语义分割模型 $\psi_s$。简化表示为 $\psi_s=f\left(D_s, L_s\right), \psi_t=g\left(\psi_s, D_s, L_s, D_t\right)$.。

* (3). 提出了源模型准备(Source Preparation,SP)步骤,将 $f$ 替换为 $f^{\prime}$。新的问题设置为 $\psi_s^{\prime}=f^{\prime}\left(D_s, L_s\right), \psi_t^{\prime}=g\left(\psi_s^{\prime}, D_s, L_s, D_t\right)$。在第3.3节中详细介绍了 $f^{\prime}$ 的设计,但需要注意的是,不提议添加任何额外的参数或显著改变网络架构。

* (4). 最后一步是有限标记数据的监督对齐(Supervised Alignment),通过更新得到最终的目标模型 $\psi_t^{\prime \prime}=h\left(\psi_t^{\prime}, D_t^{\prime}, L_t^{\prime}\right)$,其中 $D_t^{\prime}$ 是有限标记数据集合, $L_t^{\prime}$ 是对应的标签集合。本文提出的方法框架如图2所示,突出了在现有无监督域适应方法基础上构建AUDA的修改。

* (5). 通过实验证明,本文提出的方法在语义分割任务中提高了无监督域适应的效果,跨多个视觉领域的mIoU提高了最多40.64%,同时使目标模型对目标域内的真实世界变化更加鲁棒。同时,本文还展示了AUDA作为一种标签高效的领域自适应框架,在目标域只有少量标记样本的情况下显著提高了目标域的性能。

* (6). 总结:本文提出的方法通过源域准备、无监督域适应和有限标记数据的监督对齐,实现了在低光环境下的语义分割和目标检测任务中的有效领域自适应。实验证明了该方法的有效性和高效性。

**实验设置:**

- 实验设置(Experimental setting):

- 任务(Task): 本文以语义分割为例进行实验,但该方法也适用于其他任务,如全景分割。

- 数据集(Dataset): 在不同的目标领域上测试了提出的方法,包括Cityscapes、DarkZurich、MFNetThermal和CityIntensified。在Cityscapes作为源领域的情况下,通过适应不同的目标领域,包括时间和光照的变化(DarkZurich)、模态的变化(MFNetThermal)以及低光照环境下的目标检测和语义分割(CityIntensified)。评估使用源领域和目标领域共有的标签。其中,CityIntensified和MFNetThermal有用于监督对齐的训练集,而DarkZurich没有。

- 实现细节(Implementation Details): 选择Refign-HRDA*和SegFormer(MiT-B5)作为无监督域自适应(UDA)方法和分割网络。对于Refign,采用与原论文类似的训练方案,但迭代次数增加到原来的1.5倍;对于SegFormer,按照原论文的方法进行训练。在监督对齐(SA)中,对SegFormer进行4000次微调迭代,并将'poly'调度器的预热迭代次数缩减到150。该方法不依赖于特定的UDA方法,可以扩展到其他方法。

**实验结果:**

实验结果和分析:

本文通过一系列实验验证了提出的源准备方法在视觉领域适应中的有效性。实验结果表明,SP方法能够显著提高无监督域自适应(Unsupervised Domain Adaptation, UDA)的性能,并使目标模型对目标域内的真实世界变化更加鲁棒。

在语义分割任务上,作者使用了Cityscapes作为源域,分别适应了DarkZurich、MFNetThermal和CityIntensified等目标域。实验结果显示,SP方法在不同目标域上都能够提升UDA的性能,其中在CS→CI任务中,mIoU相比基线提高了40.64%。此外,作者还发现SP方法能够使目标模型更加鲁棒,对目标域内的真实世界变化更具适应性。

在不同的SP方法中,MixStyle方法在跨模态和跨时间任务中表现最好,对CS→MFNT任务的mIoU提升最高,达到了1.55%。Mixup方法在跨模态任务CS→CI中表现出色,mIoU提升了39.37%。Blur方法在所有源-目标对中都能够提高性能,特别是在CS→CI任务中,mIoU提升了40.64%。

作者还通过实验验证了选择合适的SP方法的指导原则。如果目标域存在大量高频噪声,如雨、雾、雪和运动模糊等,可以选择模糊和mixup等方法来减少对这些噪声的敏感性。如果源域和目标域之间存在显著的风格差异,可以选择MixStyle等方法进行风格正则化。实验结果表明,SP方法不仅能够提高目标域的性能,还能够增加适应后模型对目标域内可能的真实世界变化的鲁棒性。

综上所述,本文的实验结果验证了提出的SP方法在视觉领域适应中的有效性,并提供了选择合适SP方法的指导原则。

# P:3 简化的时间一致性强化学习(ICML' 23)

- 1. Title: Simplified Temporal Consistency Reinforcement Learning

- 2. 论文简介: 本文由芬兰奥尔托大学的Yi Zhao等人提出了一种简化的时间一致性强化学习方法,通过学习潜在动力学模型的潜在时间一致性,解决了强化学习中的样本效率和计算需求问题。

- 3. Authors: Yi Zhao, Wenshuai Zhao, Rinu Boney, Juho Kannala, Joni Pajarinen

- 4. Affiliation: 芬兰奥尔托大学

- 5. Keywords: Reinforcement learning, model-based RL, latent dynamics model, sample efficiency

- 6. Paper: [Paper](https://arxiv.org/pdf/2306.09466) Github: [Github](https://github.com/zhaoyi11/tcrl)

- 7. 论文总结:

* (1):本文研究了潜在时间一致性在基于状态的强化学习中的作用;

* (2):过去的方法主要集中在图像重建和对比学习等自监督学习方法上,但这些方法在状态空间任务中的效果并不明确;

* (3):本文提出了一种简化的方法,通过学习潜在动力学模型的潜在时间一致性,实现了高性能的强化学习。该方法在纯规划和模型无关的强化学习中都取得了良好的效果;

* (4):在实验中,本文的方法在高维运动任务中学习到了准确的动力学模型,并且相比于基于集成的方法,训练速度提高了4.1倍。在高维任务上,与模型无关的方法相比,本文的方法在DeepMind Control Suite中的Humanoid和Dog任务上表现出色,训练速度提高了2.4倍。

**方法部分:**

方法详细介绍:

a. 一句话概览:本文提出了一种简化的时间一致性强化学习方法,通过训练潜在动力学模型来学习高性能的强化学习策略。

b. 方法的详细步骤:

* (1). 模型组成:该方法包括四个组件:编码器、转移函数、值函数和策略函数。

- 编码器将观测映射为潜在状态。

- 转移函数根据潜在状态和动作预测下一个时间步的潜在状态和即时奖励。

- 值函数根据潜在状态和动作估计值。

- 策略函数根据潜在状态生成动作。

* (2). 学习编码器和潜在动力学模型:

- 使用在线编码器将观测映射为潜在表示。

- 使用动作序列和转移函数迭代地预测未来奖励和潜在状态。

- 通过最小化奖励的均方误差和潜在状态的负余弦距离来训练潜在动力学模型。

* (3). 学习策略和值函数:

* 使用深度确定性策略梯度方法(DDPG)和 $n$ 步回报来学习策略和值函数。

* 将潜在状态作为输入,通过最小化值函数的损失来更新值函数。

* 通过最大化Q值来更新策略函数。

* (4). 使用模型进行规划:

* 使用模型预测未来的潜在状态和奖励。

* 使用模型预测的结果进行在线规划,改进策略。

* 通过迭代学习动力学模型来提高规划性能。

* (5). 实验验证:

* 在多个连续控制任务上评估该方法的性能。

* 与其他方法进行比较,包括基于集成模型的方法和基于模型的方法。

* 在高维任务上展示了该方法的优越性能。

* (6). 总结:

- 通过训练潜在动力学模型和使用潜状态进行规划,该方法在高性能强化学习任务中取得了显著的效果。

- 该方法简单且易于实现,同时具有较高的训练速度。

**实验设置:**

- 实验设置:

本文在几个连续的DMC控制任务中评估了TCRL方法。DMC使用了缩放的奖励,所有任务的最大周期性回报都是1000。我们在两个不同的设置中评估了我们的方法:

1. 动力学学习:在第一个设置中,没有涉及值函数或策略。相反,我们直接使用潜在动力学模型进行在线规划,称为TCRL-dynamics。我们的目标是回答是否可以使用时间一致性来学习准确的潜在动力学模型。

2. 策略学习:在第二个设置中,我们以免模型的方式训练策略和值函数,使用潜在状态代替原始观测,称为TCRL。这个实验旨在研究通过时间一致性训练的潜在表示是否有助于策略和值函数的学习。

我们使用模型预测路径积分(MPPI)作为在线规划方法来直接评估学习到的动力学模型的规划结果。在每次迭代中,根据当前策略 $a^j_{t:t+H}$,采样 $N$ 条轨迹。然后,选择具有较高回报 $\sum_{h=0}^H r_{t+h}^j\left(s_{t+h}^j, a_{t+h}^j\right)$ 的 $K$ 条轨迹。接下来,通过对所选的前K条轨迹的回报进行softmax加权平均,计算改进的策略 $a^{j+1}_{t:t+H}$ 。经过 $J$ 次迭代后,执行 $a^{J}_{t:t+H}$ 的第一个动作。

在我们的MPPI实现中,没有涉及值函数或策略网络。相反,我们通过i)通过MPPI规划器收集经验,和ii)通过优化公式2使用收集到的数据来改进动力学模型。通过这种方式,所有采样的动作序列仅通过学习到的潜在动力学模型进行评估,因此规划性能可以反映模型的准确性。我们考虑以下比较方法来学习动力学模型:

- 随机集成模型(PETS):学习一组随机神经网络,预测下一个状态和奖励的均值和标准差。与Chua等人(2018)类似,我们只预测一步未来,因为通过多个时间步骤的不确定性传播不明确。

- 确定集成模型(EnsDet):使用一组确定性神经网络预测多步未来观测和奖励。EnsDet的架构与我们的方法类似,不同之处在于预测下一个观测而不是下一个潜在状态,从而实现了观测预测和潜在空间预测之间的实验比较。

此外,我们还在基于像素的任务上测试了我们的方法,以展示其通用性。在附录A中与PlaNet进行了实验比较,我们的方法在六个常用的基于像素的基准任务上获得了可比或更好的性能。附录A.3中对基于像素的任务进行了详细的消融研究。在图2中,我们将我们的方法与随机集合模型(PETS)和确定性集合模型(EnsDet)在DMC的八个基于状态的任务上进行了比较,包括六个常用的基准任务和两个具有挑战性的高维任务:四足行走和狗行走。尽管简单且没有使用集合,我们的方法在测试任务中要么与比较方法相匹配,要么优于比较方法。高维的动作和观测空间 $\mathcal{A}\in \mathbb{R}^{38},\mathcal{O}\in \mathbb{R}^{223} $ 使得即使对于强大的免模型基线(Haarnoja等人,2018)来说,解决狗行走任务也很困难。事实上,据我们所知,TCRL-dynamics是第一个可以使用学习到的动力学模型进行在线规划来控制法老犬向前行走的方法。Chua等人(2018)讨论了预测任意的(aleatoric)和认识的(aleatoric)不确定性以实现良好规划性能的重要性。然而,在Chua等人(2018)和我们的实验中,算法都在确定性环境中进行了测试,这使得预测任意的不确定性的动机不太明确。事实上,在我们的实验中,TCRL-dynamics和EnsDet在所有任务上都优于PETS。这一证据迫使我们重新思考在这些常用的确定性任务中预测任意的不确定性的作用。此外,与PETS相比,我们的方法和EnsDet都显示了使用多步训练目标的重要性,而这与PETS中使用的随机模型不太兼容,因为正确地在多个时间步骤上传播不确定性是具有挑战性的。与EnsDet相比,我们的结果显示了在潜在空间而不是观测空间中进行预测的优越性。我们在第5节中对此进行了更详细的讨论。此外,我们发现PETS和EnsDet都需要对状态进行归一化。然而,在异策略(off-policy)方法中,状态归一化并不常见,因为这可能会引入额外的不稳定性。我们的假设是,在异策略方法中,回放缓冲区中的数据分布在训练过程中不断变化,这导致归一化状态的均值和标准差不稳定。但是,TCRL-dynamics在没有状态归一化的情况下可以获得良好的性能,这使其成为在基于模型的强化学习中具有吸引力的选择。

总之,本文的实验设置包括两个主要的实验设置:动力学学习和策略学习。在动力学学习中,使用潜在动力学模型进行在线规划。在策略学习中,使用潜在状态代替原始观测进行免模型的策略和值函数学习。实验中使用了模型预测路径积分(MPPI)作为在线规划方法,并与随机集合模型(PETS)和确定性集合模型(EnsDet)进行了比较。此外,还在基于像素的任务上进行了实验比较,并展示了方法的通用性和性能优势。

**实验结果:**

实验结果和分析:

本文通过一系列实验评估了TCRL方法在连续控制任务中的性能。实验分为两个设置进行评估:动力学学习和策略学习。

1. 动力学学习:

在动力学学习设置中,作者使用了潜在动力学模型进行在线规划,称为TCRL-dynamics。实验结果表明,通过仅依赖于通过潜在时间一致性训练的潜在动力学模型,可以学习到准确的动力学模型。与基于集成的方法相比,TCRL-dynamics的训练速度提高了4.1倍,并且在解决具有挑战性的高维运动任务时表现出色。

2. 策略学习:

在策略学习设置中,作者使用潜在状态替代原始观测来以免模型的方式训练策略和值函数,称为TCRL。实验结果显示,通过使用通过时间一致性训练的潜在表示,可以提高策略和值函数的学习效果。特别是在高维任务(如DMC中的Humanoid和Dog任务)上,TCRL方法在免模型的情况下明显优于其他免模型方法,并且在训练速度上比模型基准方法快2.4倍。

3. 模型对比:

作者将TCRL方法与其他模型进行了比较。在连续控制任务中,与PETS和EnsDet方法相比,TCRL方法在多个任务上表现出色,甚至在高维任务中也能取得良好的控制效果。实验结果表明,TCRL-dynamics方法是第一个能够使用学习到的动力学模型进行在线规划的方法。此外,TCRL方法和EnsDet方法在预测潜在空间而不是观测空间方面表现出优越性。

4. 像素任务:

作者还在像素任务上评估了TCRL方法的性能。实验结果显示,TCRL方法在六个视觉控制任务上与PlaNet方法的结果相当,而且训练速度更快。这表明TCRL方法在像素任务和状态任务中都具有竞争力。

综上所述,实验结果表明TCRL方法在连续控制任务中具有较高的性能,并且在动力学学习和策略学习方面都取得了显著的改进。

# 7月3号

# P:1 EmbodiedGPT: 通过具身化思维链进行视觉-语言预训练

- Title: EmbodiedGPT: Vision-Language Pre-Training via Embodied Chain of Thought

- 论文简介: 本文介绍了一种名为EmbodiedGPT的多模态基础模型,用于具有多模态理解和执行能力的具身化人工智能。通过构建一个大规模的具身化规划数据集EgoCOT,采用“思维链”模式生成子目标序列,将7B大型语言模型(LLM)通过前缀调整适应EgoCOT数据集,提出了一种高质量规划生成的高效训练方法。同时,通过从LLM生成的规划查询中提取与任务相关的特征,形成高层规划和低层控制之间的闭环。实验证明了EmbodiedGPT在具身化任务上的有效性,包括具身化规划、具身化控制、视觉字幕生成和视觉问答。特别是,在具身化控制任务中,EmbodiedGPT通过提取更有效的特征,使成功率在Franka Kitchen基准上提高了1.6倍,在Meta-World基准上提高了1.3倍,与使用Ego4D数据集微调的BLIP-2基线相比。

- Authors: Yao Mu, Qinglong Zhang, Mengkang Hu, Wenhai Wang, Mingyu Ding, Jun Jin, Bin Wang, Jifeng Dai, Yu Qiao, Ping Luo

- Affiliation: The University of Hong Kong, OpenGV Lab at Shanghai AI Lab, Huawei

- Keywords: Embodied AI, vision-language pre-training, embodied planning, embodied control, multi-modal understanding

- Paper: [Link](https://arxiv.org/pdf/2305.15021) Github: [None]

- 论文总结:

- (1): 本文的研究背景是具身化人工智能任务,旨在使机器人能够在物理环境中感知、推理和行动,实现长期规划和基于实时观察的自主执行。

- (2): 过去的方法主要集中在大规模语言模型的训练,但在具身化任务中缺乏高质量的数据集和精确的规划生成。本文提出了EgoCOT数据集和EmbodiedGPT模型,通过思维链模式生成子目标序列,并通过前缀调整训练语言模型,从而解决了这些问题。

- (3): 本文提出了一种基于具身化链式思维的视觉-语言预训练框架EmbodiedGPT,包括冻结的视觉模型、冻结的语言模型、具身化模块和策略网络。通过从语言模型生成的规划查询中提取任务相关特征,形成高层规划和低层控制之间的闭环。此外,通过前缀调整训练语言模型,生成更可执行的规划。

- (4): 在具身化任务中,EmbodiedGPT在具身化规划、具身化控制、视觉字幕生成和视觉问答等任务上取得了显著的性能提升。与使用Ego4D数据集微调的BLIP-2基线相比,在Franka Kitchen基准上成功率提高了22.1%,在Meta-World基准上提高了22.5%。这些结果表明了EmbodiedGPT在任务性能上的创新和贡献。

**方法部分:**

方法详细介绍(Method detailed introduction):

a. 一句话概览:本文介绍了一种名为EmbodiedGPT的多模态基础模型,通过视觉-语言预训练实现了具有多模态理解和执行能力的具身化智能体。

b. 方法的详细步骤:

(1). 构建大规模具身化规划数据集EgoCOT:从Ego4D数据集中精选视频,并配以高质量的语言指令,生成具有"Chain of Thoughts"模式的子目标序列,用于有效的具身化规划。

(2). 高质量计划生成的有效训练方法:通过将7B大型语言模型(LLM)适应EgoCOT数据集,通过前缀调整,实现对EmbodiedGPT的高质量计划生成的有效训练。

(3). 从LLM生成的规划查询中提取与任务相关的特征:通过在高层规划和低层控制之间形成闭环,从LLM生成的规划查询中提取与任务相关的特征,用于生成任务执行的低层控制命令。

(4). 引入基于视觉-语言预训练的具身化规划范式:通过将任务描述和具身化规划作为问题和答案,将具身化规划任务形式化为标准的视觉问答(VQA)任务,丰富了具身化规划和标准视觉问答任务的数据,鼓励EmbodiedGPT捕捉更适合具身化控制任务的任务特定特征。

(5). 训练过程分为三个阶段:第一阶段是图像-文本对齐预训练,使用COCO Caption、CC3M和LAION-400M数据集进行预训练;第二阶段是增强模型理解和生成复杂句子的能力,使用"Complex_Reasoning_77k"和"LLaVA_Instruct_150K"数据集进行训练;第三阶段是使用EgoCOT数据集对具身化AI任务进行训练。

(6). 具身化"chain-of-thought"训练:在第三阶段,将预训练的视觉模型转移到视频编码器中,使用Conv3D进行转换,然后引入"chain-of-thought"视觉-语言预训练范式,通过关键帧的视频输入、任务描述、具身化规划和结构化的动词-名词对摘要进行推理。

(7). 后处理:使用CLIP模型评估视频和文本对之间的相似性,筛选出相似性高于阈值的视频-标题-规划对,确保EgoCOT数据集的高质量。同时,创建EgoVQA数据集用于具身化人-物交互视频问答任务的训练。

(8). 其他细节:对于EgoVQA数据集的构建,使用ChatGPT生成与每个标题相关的五个问答对,以丰富训练数据。

(9). 实验结果:EmbodiedGPT在具身化任务中表现出显著的效果,包括具身化规划、具身化控制、视觉字幕和视觉问答。与使用Ego4D数据集进行微调的BLIP-2基线相比,EmbodiedGPT在Franka Kitchen基准上的成功率提高了1.6倍,在Meta-World基准上提高了1.3倍。

**实验结果:**

实验结果和分析:

本文通过对多模态基础模型和EmbodiedGPT进行全面评估,包括视觉字幕生成、具身规划和控制等各种任务。

在图像输入任务的评估中,作者进行了一项用户研究,共有30名参与者。研究包括了来自MS-COCO数据集的10个图像字幕任务,不同具身AI模拟器中的5个具身规划场景,以及5个伴随具身规划任务的真实场景。参与者使用1到10的评分系统,对不同的端到端模型生成的字幕进行了五个维度的评分:物体识别准确性、空间关系理解、答案中的冗余程度、规划的合理性和可执行性。表1显示了所有参与者对不同模型的平均评分。结果表明,尽管EmbodiedGPT在语言模型中只有7B个参数,但在物体识别和空间关系理解方面,它与LLaVA-13B模型达到了可比较的水平。此外,EmbodiedGPT在与具身AI任务相关的内容中生成的冗余内容较少,并且产生了最合理和可执行的规划输出。作者还将EmbodiedGPT与Visual ChatGPT进行了比较,Visual ChatGPT采用分层方法,通过组合多个预训练的视觉模型和语言模型来回答问题。在Virtual-Home基准测试中,Visual ChatGPT由于仅依赖于字幕模型提取视觉信息的局限性,无法找到衣架,导致性能较差。

在具身规划任务的评估中,作者在Franka Kitchen基准和Meta-World基准上与BLIP-2基线进行了比较。结果显示,与使用Ego4D数据集微调的BLIP-2基线相比,EmbodiedGPT在Franka Kitchen基准上的成功率提高了1.6倍,在Meta-World基准上提高了1.3倍。

在具身控制任务的评估中,作者通过提取LLM生成的规划查询的任务相关特征,形成了高层规划和低层控制之间的闭环。实验结果表明,EmbodiedGPT通过提取更有效的特征显著提高了具身控制任务的成功率。

综上所述,实验结果表明EmbodiedGPT在多种任务中表现出良好的性能,包括具身规划、具身控制、视觉字幕生成和视觉问答等。

# P:2 告诉我去哪里:一种用于上下文感知的可组合机器人导航框架

- Title: Tell Me Where to Go: A Composable Framework for Context-Aware Embodied Robot Navigation

- Authors: Harel Biggie, Ajay Narasimha Mopidevi, Dusty Woods, Christoffer Heckman

- Affiliation:

Department of Computer Science, University of Colorado Boulder

- Keywords: Natural language, navigation, contextual navigation

- Paper: [Link to the paper](https://arxiv.org/pdf/2306.09523)

Github: None

- Summary:

- (1): 本文研究背景是人类在陌生环境中通过先前的知识和环境描述进行导航的能力。研究者希望开发一个框架,使机器人能够通过自然语言指令与物理环境进行关联。

- (2): 过去的方法包括使用概率图结构和端到端学习方法将自然语言与物理领域关联起来。然而,这些方法存在一些问题,如泛化能力有限和解释性差。本文的方法通过创建一个中间层来解决这些问题,并利用大型语言模型的先验知识进行导航。

- (3): 本文提出了NavCon框架,它通过将大型语言模型的先验知识转化为Python代码,并与机器人导航框架进行交互,解决了现实世界泛化和透明性问题。该框架利用了LLM先验知识、先进的物体检测器和经典的机器人规划算法,实现了基于自然语言的导航。

- (4): 本文在四个不同的环境和命令类别上评估了NavCon方法,并展示了其解释上下文命令的能力。实验结果表明,该框架能够根据自然语言导航到地标,并从句子的上下文中推断出适当的导航目标。

**方法部分:**

方法详细介绍(Method detailed introduction):

a. 一句话概览:本文提出了一种基于上下文感知的机器人导航框架,通过中间层将大规模语言模型(LLMs)的先验知识转化为机器人可以理解的Python代码,解决了LLMs缺乏现实世界感知和输出不可预测性的问题。

b. 方法的详细步骤:

(1). 导航上下文框架(NavCon)的开发:利用LLMs的丰富上下文先验知识,创建一个可执行的代码层,与运行在具体机器人上的规划算法进行接口交互。该框架采用模块化神经网络和概念学习技术的范例,通过在不同阶段接收输入来完全定义系统的最终导航输出。

(2). 输入表示:将视觉输入表示为RGB图像,可以是半全景视图或三个分别标记的空间图像(左、前、右)。为了进行三维推理和关联不同相机视角与其关联的三维空间关系,需要确定适当的输入表示方法。通过比较将所有视点的拼接图像(半全景)或分别发送每个帧的空间定义(右、前、左),发现在拼接图像上进行空间推理效果最佳。

(3). 中间层:利用最近的代码生成模型,生成中间层代码θ。提供一组功能性的导航指令,以Python API的形式提供给代码生成模型。这些指令包括如何使用类似于[14]中的范例在输入图像上进行视觉推理的规范。此外,还提供了与几何规划器进行接口的API规范,生成基于自然语言提示γc的代码。

(4). 规划:使用基于图的规划器,提供导航终点。具体地,创建一个函数,将图像空间中对象的中心坐标p投影为3D地图上的地标l。规划器使用类似于[59,18]中描述的采样和投影方法来构建计划的图G。当输入3D航点到规划器时,从G中选择最佳路径P供机器人跟随。如果不存在路径,则规划到图的边界并重新采样,直到达到目标。

(5). 通过中间层将航点传递给规划器:具体地,通过执行θ将图像坐标转换为航点,并使用射线投射将结果关联到3D地图m上。在线生成地图m使用[61],而翻译层θ则创建了在输入c、v、m和输出i之间进行转换所需的代码。然后从P中选择最佳路径规划到航点。

(6). 具体步骤详见图3。

综上所述,本文提出了一种基于上下文感知的机器人导航框架,通过中间层将大规模语言模型的先验知识转化为机器人可以理解的Python代码,实现了对自然语言导航指令的解释和执行。该方法在四个不同环境和命令类别上进行了评估,并展示了NavCon解释上下文命令的能力。

**实验设置:**

- 实验设置(Experimental setting):

本文在一台配备了自定义传感套件的Boston Dynamics Spot机器人上进行了两组实验。该套件包括一个3D 64束Ouster激光雷达、一个惯性测量单元(imu)和3个RGB摄像头,提供了环境的半全景视图。第一组实验旨在确定视觉层的最佳输入表示,第二组实验测试了系统在各种真实环境中执行导航的能力。

对于所有实验,本文借鉴了人类概念学习的思想,将句子分为四个类别:通用、具体、关系和上下文。通用句子是指仅暗示“去某处”的句子,例如“走到背包那里”。具体句子包括一个区分性的语言特征,如颜色属性,指导机器人去场景中的一个特定对象。例如,如果有两个背包,一个红色,一个黑色,那么一个具体的句子可能是“开到黑色背包那里”。关系句子是描述场景中物体之间空间关系的句子,例如“移动到椅子上的背包”。上下文示例要求机器人根据背景信息解释导航目标。例如,句子“找一个可以装水的东西”要求机器人知道杯子或桶可以装水。完整的句子列表可以在附录中找到。

为了解决将空间关系编码到没有物理世界概念的模型中的问题,本文通过经验评估确定了将三个不同视点输入到框架中的有效方案。例如,句子“去右边的椅子”仅意味着应该调查总视野的右半部分,但是“去椅子右边的背包”可以在任何图像中。为了解决这些限制,本文使用了两种不同的输入表示方式。第一种方式是将所有三个图像拼接在一起,并在帧之间添加填充(A)。在这种情况下,我们指示LLM按照图像的顺序(左、前、右)进行处理,并让模型处理空间推理。第二种方式是分别处理每个帧(B),并让模型决定要查看哪些帧。我们明确提示模型使用解决“在你的右边”和“右边的”语言风格差异的示例代码片段。在这组实验中,我们评估生成的代码的正确性以及代码是否能够正确识别地图中的对象。这个场景是在教室环境中创建的,光照条件良好,重点是评估输入表示的效果。成功生成代码和对象检测的结果如表1所总结。从表1中我们可以看出,拼接图像的方法明显优于分别发送三个帧。在通用导航案例中,我们能够实现100%的中间层生成和对象识别成功。我们发现,当处理特定对象时,单独处理每个帧的配置(B)开始出现问题。具体来说,当物体出现在多个帧中时,模型无法推理空间关系和物体顺序。例如,对于句子“去中间的插座”,只有当三个插座都出现在同一个摄像头帧中时才有效。如果中间的插座在前面的帧中,而右边的插座在右侧的摄像头帧中,这种方法就会失败。我们在生成的代码中明确看到了这一点。

(1). 实验一:确定视觉层的最佳输入表示。

(2). 实验二:测试系统在各种真实环境中执行导航的能力。

**实验结果:**

- 实验结果和分析(Experimental results and analysis):

本文在一台配备了自定义传感套件的Boston Dynamics Spot机器人上进行了两组实验。第一组实验旨在确定视觉层的最佳输入表示方法,第二组实验测试了系统在各种真实环境中执行导航的能力。

在所有实验中,作者将句子分为四个类别:通用、具体、关系和上下文。通用句子是指只暗示“去某个地方”的句子,例如“走到背包那里”。具体句子包括一个区分性的语言片段,例如一个颜色属性,指导机器人去场景中的一个特定对象。例如,如果有两个背包,一个红色,一个黑色,那么一个具体的句子可能是“开到黑色背包那里”。关系句子是描述场景中物体之间空间关系的句子,例如“移动到椅子上的背包”。上下文示例要求机器人根据背景信息解释导航目标。例如,句子“找一个可以装水的东西”要求机器人知道杯子或桶可以装水。作者在附录中列出了完整的句子列表。

作者通过实证评估确定了将三个不同视点输入到框架中的有效方案。作者使用两种不同的输入表示方法,第一种是将三个图像拼接在一起,并在帧之间添加填充。在这种情况下,作者指示LLM模型图像的顺序(左、前、右),并让模型处理空间推理。第二种情况下,作者逐个处理帧,并让模型决定要查看哪些帧。作者明确提示模型使用示例代码片段解决“在你的右边”和“右边的”语言风格之间的差异。

实验结果表明,将图像拼接在一起的方法明显优于逐个处理三个帧的方法。在通用导航案例中,作者能够实现100%的中间层生成和对象识别成功。然而,在处理特定对象时,逐个处理帧的配置开始出现问题。具体来说,当物体出现在多个帧中时,模型无法推理空间关系和物体顺序。例如,句子“去中间的插座”只有当三个插座都出现在同一帧中时才有效。如果中间的插座在前方帧中,而右边的插座在右侧相机帧中,这种方法就会失败。

总的来说,作者的NavCon框架在四个不同环境和命令类别上的实验中表现出了良好的解释上下文命令的能力。通过将大规模语言模型的先验知识转化为机器人可以理解的Python代码指令,NavCon框架弥补了大规模语言模型缺乏现实世界感知的不足。

# P:3 你的房间不是私密的:深度Q学习的梯度反演攻击

- Title: Your Room is not Private: Gradient Inversion Attack for Deep Q-Learning

- 论文简介: 本文提出了一种利用梯度反演攻击深度Q学习算法的方法,通过梯度反演重构状态、动作和Q值,以揭示在具体决策算法中的隐私泄露问题。该方法利用梯度进行攻击的选择是基于常用的联邦学习技术,这些技术仅利用基于私有用户数据计算的梯度来优化模型,而不会将数据存储或传输到公共服务器。然而,这些梯度包含足够的信息来潜在地暴露私有数据。通过在AI2THOR模拟器上进行实验证明了该方法的有效性。

- Authors: Miao Li, Wenhao Ding, Ding Zhao

- Affiliation:

Carnegie Mellon University (卡内基梅隆大学)

- Keywords: Privacy, Reinforcement Learning, Gradient Inversion

- Paper: [Link to the paper](https://arxiv.org/pdf/2306.09273) Github: None

- 论文总结:

- (1): 本文研究了在具体决策算法中的隐私泄露问题,填补了该领域研究的空白。

- (2): 之前的方法主要集中在联邦学习中的隐私保护,但仍存在梯度反演攻击的漏洞。本文的方法通过梯度反演重构状态、动作和Q值,解决了这一问题,并且在实验中取得了良好的效果。

- (3): 本文提出了一种名为Deep Q-learning Gradient Inversion (QGI)的方法,通过梯度反演来恢复强化学习中的多模态输入信息。该方法通过优化重构状态的梯度与真实梯度之间的余弦相似度,并使用先验项惩罚噪声图像模式,实现了对状态、动作和Q值的准确恢复。

- (4): 本文在AI2THOR模拟器上的主动感知任务中评估了QGI方法,并成功恢复了所有120个房间布局的数据。实验结果表明,该方法在恢复数据方面取得了良好的性能,支持了其目标和创新贡献。

**方法部分:**

方法详细介绍(Method detailed introduction):

a. 一句话概览:本文提出了一种利用梯度反演攻击Deep Q-Learning算法的方法,通过梯度反演重构状态、动作和Q值,从而揭示决策算法中的隐私泄露问题。

b. 方法的详细步骤:

(1). 在第一步中,通过梯度分析确定具有梯度的动作。

(2). 在第二步中,通过两个阶段重构向量状态和图像状态,其中第一阶段重构包括线性层的输入,包括坐标向量和第二阶段重构图像状态。

(3). 在第三步中,通过应用重构的向量来获得Q值Q_rec(s, a),并通过重构误差Q(s, a) - Q(s, a)来估计目标Q值Q_rec。

(4). 当批量大小大于2时,无法直接从批量梯度中识别动作,因此需要进行枚举或优化。

(5). 将状态重构分为两个阶段,首先重构未经卷积层处理的向量状态,然后在固定向量状态的情况下重构需要卷积的图像状态。

(6). 使用梯度匹配损失和总变差损失来优化图像状态的重构。

(7). 使用规则自动计算权重λ来影响总损失中两个项的梯度比率。

(8). 使用梯度更新状态重构。

以上是本文提出的方法的详细步骤。

**实验设置:**

- 实验设置(Experimental setting):

本文在AI2THOR模拟器上进行了实验,评估了提出的算法在具体任务中的效果。实验结果表明,该方法能够成功地从数据中恢复所有信息,并且在所有120个房间布局上都取得了令人信服的效果。

(1). 实验对象:AI2THOR模拟器。

(2). 实验任务:在具体任务中评估算法的效果,特别是在活动感知任务中。

(3). 实验方法:使用梯度反演攻击Deep Q-Learning算法,利用梯度反演重构状态、动作和Q值。

(4). 实验结果:通过定性和定量的实验结果,验证了算法的有效性。在S1设置下,重构的RGB图像和深度图像具有可识别的模式和幅度。在S2设置下,重构的图像具有清晰的边缘和细节。

(5). 实验参数:在S1设置中,使用λ=0.1进行实验;在S2设置中,使用自适应方法进行实验。

(6). 实验数量:共进行了120个房间布局的实验。

(7). 实验对比:与联合优化方法进行对比,结果表明,联合优化在状态重构步骤中的性能下降,而梯度反演方法在所有指标上都取得了较好的效果。

(8). 实验验证:通过实验结果验证了算法的有效性和稳定性。

(9). 实验限制:本文的实验主要集中在AI2THOR模拟器上,对于实际机器人任务的适用性还需要进一步研究。

(10). 实验创新点:本文首次将梯度反演方法应用于机器人任务和多模态数据中,填补了相关领域的研究空白。

(11). 实验结论:实验结果表明,梯度反演攻击可以成功地从梯度中恢复私密数据,这对于机器人任务的隐私保护具有重要意义。

**实验结果:**

- 实验结果和分析:

本文通过在AI2THOR模拟器上进行实验,评估了提出的基于梯度反演的Deep Q-Learning算法攻击的效果。实验结果表明,该方法能够成功地从数据中恢复所有信息,并且在所有120个房间布局中都取得了令人信服的效果。

在S1设置下,使用λ=0.1进行实验,左图中的RGB图像和深度图像都能够被成功重建,并且具有可识别的模式和幅度。RGB图像展现出准确的颜色色调,尽管在局部区域可能存在一些不准确的有色噪声。与RGB图像一起重建的深度图像也显示出类似的噪声模式,但整体连续区域的亮度仍然准确。因此,潜在的对手可以通过这些重建图像获取私人房间的大小。在S2设置下,如右图所示,成功重建的图像展现出清晰的边缘和细节,具有准确性。更多的定性结果请参考附录B。

通过将提出的QGI与联合优化进行比较,评估了QGI的效果。联合优化应用于状态重建步骤,同时优化向量和图像重建。实验结果表明,联合优化的图像状态(RGB+深度)和向量状态(目标坐标)在所有指标上的性能下降。平均IoU低于0.1,而QGI的平均IoU超过0.9。Q值重建也显示出显著的错误,与QGI相比,平均ϵ(Q)增加了40倍。

研究了L img中的自适应权重,将QGI与常数λ=0.1、0.01进行比较。在S1设置中,自适应权重方法在PSNR和SSIM方面略有改善。然而,尽管PSNR和SSIM的标准偏差较大,λ=0.1的视觉质量比自适应λ更稳定。在两个设置中,自适应λ在深度图像方面表现出一致的优势,表1中的结果和图4中的视觉质量都是稳定的,特别是在S2设置中。

综上所述,实验结果表明,提出的基于梯度反演的Deep Q-Learning算法攻击方法在恢复数据方面取得了成功,并且在多个指标上优于联合优化方法。这些结果为深度强化学习中的隐私泄露问题提供了重要的研究基础。

# P:4 通过代理分析改进从LLMs中提取知识以用于机器人任务学习

- Title: Improving Knowledge Extraction from LLMs for Robotic Task Learning through Agent Analysis

- Authors: James R. Kirk, Robert E. Wray, Peter Lindes

- Affiliation: Center for Integrated Cognition at IQMRI

- Keywords: large language models, robotic task learning, prompt engineering, cognitive-agent approach

- Paper: [Link](https://arxiv.org/pdf/2306.06770) Github: None

- Summary:

-(1):本文的研究背景集中在改进用于机器人任务学习的大型语言模型(llm)的知识提取。

-(2):过去的方法,如提示工程,在为机器人代理获取相关的、基于情境的知识方面存在局限性。本文提出的方法旨在扩展和补充提示工程以克服这些局限性。

-(3):本文提出的研究方法是一种认知代理方法,使机器人能够获得与其母语能力、体现、环境和用户相匹配的新任务知识。创新之处在于将智能体分析与提示工程相结合,增强了知识提取能力。

-(4):本文的方法对机器人任务学习进行了评估,所获得的性能支持了机器人代理获取相关的、基于情境的知识的目标。本文的创新和贡献在于认知代理方法,该方法改进了从llm中提取知识用于机器人任务学习的方法。

**方法部分:**

方法详细介绍(Method detailed introduction):

a. 一句话概览:本文提出了一种认知代理方法,通过扩展和补充提示工程,从而提高了从大型语言模型(LLMs)中提取知识用于机器人任务学习的效果。

b. 方法的详细步骤:

(1). 基于交互式任务学习(ITL)代理的扩展:在现有的ITL代理基础上进行扩展,使其能够学习各种不同的任务,并在多个不同的物理和模拟机器人领域中进行学习。

(2). 目标学习流程:当任务的执行策略未知时,使用规划来找到与目标一致的动作序列。如果规划的动作能够实现目标,代理会对执行的动作进行回顾性分析,以学习长期的策略知识,从而在未来能够一次性学习任务目标。这个学习过程在许多领域都取得了成功,但需要大量用户输入来提供可解释和准确的目标描述。

(3). STARS方法:通过扩展基线提示策略,STARS方法通过增加LLMs的响应空间,并在自主机器人中嵌入通用策略来评估、修复和选择LLMs生成的候选响应,从而提高机器人的任务学习效果。具体包括以下三个过程:

- 通过波束搜索检索LLMs的响应树:使用波束搜索策略从单个提示中生成多个高概率的响应,以获得更多的可行响应。

- 分析和修复响应:对从LLMs检索到的响应进行分析和修复,以确保其对机器人是可行的。

- 使用LLMs选择目标响应:通过LLMs选择一个目标响应作为机器人的任务目标,从而减少用户的监督需求。

(4). 可选的监督策略:可以向用户征求反馈,以进一步提高任务学习的效果。

(5). STARS方法流程:根据上述步骤,STARS方法的流程包括基线提示策略和STARS方法的组合,其中STARS方法通过波束搜索、分析和修复响应以及使用LLMs选择目标响应来提高任务学习效果。

以上是本文方法的详细步骤,通过扩展和补充提示工程,使机器人能够从LLMs中获取更多的可行响应,并通过自主分析和修复来提高任务学习的效果。

**实验设置:**

- 实验设置(Experimental setting):

本文描述了一种认知代理方法,通过扩展和补充提示工程,减轻其局限性,并使机器人能够获取与其本地语言能力、体现、环境和用户偏好相匹配的新任务知识。该方法是通过增加LLMs的响应空间,并在自主机器人内部部署一般策略,对LLMs生成的候选响应进行评估、修复和选择。实验通过检索和评估LLMs的多种响应,展示了机器人在无用户监督的情况下能够实现超过75%的一次性学习任务完成率。当提供人类监督时,任务完成率达到100%,同时大大减少了所需的人类监督量。

(1). 实验目标:通过扩展和补充提示工程,使机器人能够从LLMs中获取与其本地语言能力、体现、环境和用户偏好相匹配的新任务知识。

(2). 实验方法:通过增加LLMs的响应空间,并在自主机器人内部部署一般策略,对LLMs生成的候选响应进行评估、修复和选择。

(3). 实验结果:在无用户监督的情况下,机器人能够实现超过75%的一次性学习任务完成率。当提供人类监督时,任务完成率达到100%,同时大大减少了所需的人类监督量。

(4). 实验设计:本实验仅报告了每个条件下的一次运行结果。为了评估可重复性,STARS条件运行了10次。任务完成率在75%到80%之间变化,方差为3.75。

(5). 实验领域:本文的实验测试了单个任务和领域,但计划在其他任务和领域进一步改进和评估STARS方法。

(6). 实验成本:实验中的监督形式包括指令和单词,STARS方法将其减少了403个单词至127个单词。此外,STARS方法提供给用户的响应的准确性也得到了提高。

(7). 实验限制:由于实验成本的限制,本文未能多次运行所有实验。但结果表明,STARS的整体方差较小,对关键结果影响较小。

(8). 实验改进:未来的工作将探索改进LLM选择策略的方法,特别是通过从用户和LLM获取额外的上下文信息。

**实验结果:**

- 实验结果和分析:

本文通过实验结果和分析展示了使用大型语言模型(LLMs)进行机器人任务学习的知识提取的改进方法。以下是实验结果的详细描述:

1. 性能:在实验中,通过对LLMs检索和评估多个响应,机器人可以在一次学习中完成超过75%的任务,无需用户监督。当提供人类监督时,任务完成率达到100%,同时大大减少了需要的人类监督量。

2. 响应质量:通过对LLMs检索到的响应进行分类,发现超过70%的响应是不可行的,只有13%的响应是与情境相关的。使用改进的方法(STARS)可以使机器人获得100%的至少是合理的响应。

3. 成本:使用改进的方法(STARS)可以显著减少用户的指令和输入量,从403个单词减少到127个单词。此外,STARS还提高了响应的准确性,70%的响应被用户接受为正确的。

4. LLM选择策略:实验结果显示,LLM选择策略与基线选择策略相比没有明显的改进。

综上所述,通过实验结果和分析,本文展示了改进的方法(STARS)可以提高机器人从LLMs中提取知识的效果,并减少用户的监督成本。然而,用户的输入仍然是确保任务完成的必要条件。未来的工作可以进一步提高LLM提取的质量,并扩展用户的监督方式。

# P:5 基于语言的场景摘要生成机器人的可执行策略学习

- Title: Embodied Executable Policy Learning with Language-based Scene Summarization

- 论文简介: 本文介绍了一种新的学习范式,通过基于语言的场景摘要生成机器人的可执行动作。该方法利用视觉观察的语言摘要作为连接桥梁,将视觉观察转化为文本形式的可执行动作。该方法不需要人类参与,更适用于实际的机器人学习任务。

- Authors: Jielin Qiu, Mengdi Xu, William Han, Seungwhan Moon, Ding Zhao

- Affiliation:

Carnegie Mellon University (卡内基梅隆大学)

- Keywords: Decision-Making, Embodied Robot Learning, Large Language Model

- Paper: [Link](https://arxiv.org/pdf/2306.05696)

Github: None

- 论文总结:

- (1): 本文的研究背景是利用大型语言模型辅助机器人学习任务,但现有的模型对于基于图像观察的真实世界机器人学习任务的性能依赖于领域特定的文本数据。

- (2): 过去的方法要么使用语言指令,要么结合语言和视觉数据作为输入,但缺乏与非专家环境交互演化的能力。本文的方法通过语言摘要将视觉观察转化为文本形式的可执行动作,避免了需要人类参与的场景摘要过程,更加实用。

- (3): 本文提出的方法包括两个模块:SUM模块和APM模块。SUM模块利用视觉观察解释环境并生成场景的文本摘要,APM模块根据SUM模块提供的自然语言描述生成可执行动作策略。本文通过模仿学习和强化学习两种方法进行微调,以有效适应目标测试任务。

- (4): 在VirtualHome环境的七个房屋布局上进行了大量实验,实验结果表明本文的方法优于现有基线模型,验证了这种新的学习范式的有效性。

**方法部分:**

方法详细介绍:

a. 一句话概览:本文提出了一种新的学习范式,通过基于语言的场景摘要生成机器人的可执行动作,从而将视觉观察和自然语言之间建立联系,实现机器人学习任务的执行。

b. 方法的详细步骤:

(1). 问题定义:考虑一个机器人学习任务,机器人根据连续的视觉观察V生成一系列的动作A。本文旨在探索利用预训练的大型模型在只有视觉观察作为输入的机器人学习任务中的优势,并提出了一种精细调整方法。

(2). SUM:SUM模块的目标是将视觉观察转化为包含可操作特征的语言描述。SUM采用图像字幕模型作为其基础,通过从图像中提取代表性和有意义的视觉表示,并生成连贯和智能的语言描述。SUM模块的输入是像素,经过视觉编码后得到一个或多个特征向量,然后语言模型根据给定的词汇表生成一个词序列。本文采用了具有编码器-解码器架构的预训练语言模型作为SUM的基础。

(3). APM:APM模块的目标是将SUM输出的潜在语言信息转化为可执行的动作计划。APM采用具有编码器-解码器架构的预训练语言模型,其中编码器负责读取和理解SUM的输入语言信息,并创建一个固定长度的上下文向量。解码器将上下文向量作为输入,并生成输出,即可执行的动作计划。由于预训练语言模型的数据分布与新任务之间存在差异,需要在任务特定数据上对模型进行精细调整。本文采用了交叉熵损失和掩码语言模型作为训练策略,并引入了强化学习方法,通过优化序列级指标来进行模型的精细调整。

(4). 训练流程:训练流程包括两个步骤。首先,使用VirtualHome的观察数据对SUM进行精细调整,以使其熟悉任务特定数据中存在的场景类型。然后,加载精细调整后的SUM,并将其输出编码为潜在语言嵌入。将嵌入输入到APM中,并使用不同的精细调整损失目标对APM进行精细调整,以实现最优策略和最大奖励。

以上是本文提出的方法的详细步骤,通过SUM模块将视觉观察转化为语言描述,再通过APM模块将语言信息转化为可执行的动

**实验设置:**

- 实验设置(Experimental setting):

- 环境(Environment): 在实验中使用了VirtualHome作为环境,该环境是一个模拟日常家庭活动的多智能体虚拟平台。共选择了7个不同的VirtualHome环境进行评估。

- 指标(Metrics): 使用了标准的自然语言处理评估指标,包括BLEU、ROUGE、METEOR、CIDEr和SPICE,以及Li等人提出的执行率指标。

- 数据集(Datasets): 使用VirtualHome收集了包括观察、语言指令和动作序列在内的数据。数据集经过增强处理,并包含了超过30,000个可执行程序,每个环境包含超过300个对象和4,000个空间关系。

- 模型比较(Model comparison): 在模型比较中,将提出的方法与MLP、MLP-1和LSTM等基线模型进行比较。结果表明,提出的方法在执行率上优于基线模型。

- 模型性能(Model performance): 在使用专家数据进行迁移学习和强化学习的情况下,对SUM和APM进行了微调,并评估了模型的性能。结果表明,使用专家数据进行微调可以提高模型的性能。同时,使用OFA作为SUM和BART作为APM的组合可以获得最佳性能。

**实验结果:**

实验结果和分析:

本文的实验结果表明,提出的学习范式在机器人学习任务中取得了显著的性能提升。通过使用基于语言的场景总结作为语言模型和视觉观察之间的桥梁,我们的方法能够从纯视觉观察中生成机器人的可执行动作。我们的方法不需要人类参与学习过程中的文本总结,因此更适用于实际的机器人学习任务。

我们的方法包括两个模块:SUM模块和APM模块。SUM模块通过解释环境的视觉观察并生成场景的文本总结,APM模块根据SUM模块提供的自然语言描述生成可执行的动作策略。我们的实验结果表明,我们的方法在VirtualHome环境中的各种SUM/APM模型选择、环境和任务上都优于现有的基线方法,验证了这种新颖学习范式的有效性。

我们使用了标准的自然语言处理评估指标,包括BLEU、ROUGE、METEOR、CIDEr和SPICE,来评估语言模型的性能。此外,我们还引入了执行率作为评估指标,执行率定义为APM输出的动作在整个轨迹上成功执行的概率。我们的实验结果表明,我们的方法在执行率和各项评估指标上均优于现有的基线方法。

我们还进行了进一步的实验,通过使用专家数据进行模型的微调,进一步提高了模型的性能。实验结果表明,使用专家数据进行迁移学习的方法在平均性能和每个环境的性能上都优于使用强化学习进行迁移学习的方法。此外,我们观察到,在SUM模块中使用OFA和在APM模块中使用BART的组合能够在迁移学习后实现最佳性能。

总之,我们的实验结果验证了提出的学习范式的有效性,并证明了该方法在机器人学习任务中的潜力。

# P:6 AlphaBlock: 机器人操作中视觉-语言推理的体现微调

- 1. Title: AlphaBlock: Embodied Finetuning for Vision-Language Reasoning in Robot Manipulation

- 论文简介: 本文提出了一种新颖的框架,用于学习机器人操作任务中的高级认知能力,例如使用积木搭建笑脸。该框架通过自动收集认知机器人数据集AlphaBlock,以及闭环多模态体现规划模型,实现了高级任务的学习和执行。

- 2. Authors: Chuhao Jin, Wenhui Tan, Jiange Yang, Bei Liu, Ruihua Song, Limin Wang, Jianlong Fu

- 3. Affiliation:

Chuhao Jin, Wenhui Tan, Jiange Yang: Renmin University of China

- 4. Keywords: robot manipulation, vision-language reasoning, embodied finetuning, multi-modal planning

- 5. Paper: [Link](https://arxiv.org/pdf/2305.18898) Github: None

- 6. 论文总结:

- (1): 本文研究了机器人操作任务中的高级认知能力学习,旨在解决语言指令和机器人动作之间配对数据有限的挑战。

- (2): 过去的方法采用开环范式将高级指令分解为简单的子任务计划,并使用低级控制模型逐步执行。然而,这些方法在多步推理中缺乏即时观察,导致结果不理想。本文提出了一种闭环多模态体现规划模型,通过自动收集认知机器人数据集AlphaBlock,并利用MiniGPT-4进行微调,实现了对复杂操作任务的精细空间感知。

- (3): 本文提出了一种闭环多模态体现规划模型,通过将图像观察作为输入,自动生成计划。为了有效学习,本文利用MiniGPT-4进行微调,并引入了视觉适配器和Q-former,实现了对操作任务的精细空间感知。实验证明了该方法在机器人任务上的优越性。

- (4): 本文在机器人操作任务中取得了显著的成功率提升,相比于ChatGPT和GPT-4,成功率分别提高了21.4%和14.5%。该方法的创新点在于闭环多模态体现规划模型的设计和AlphaBlock数据集的构建。

**方法部分:**

方法详细介绍(Method detailed introduction):

a. 一句话概览:本文提出了一种新颖的框架,用于在机器人操作任务中学习高级认知能力,通过使用大型语言模型(LLM)自动收集认知机器人数据集AlphaBlock,并提出了闭环多模态体现规划模型CogLoop,通过微调视觉适配器和Q-former实现对细粒度空间感知的有效学习。

b. 方法的详细步骤:

(1). 问题设置:描述了机器人操作任务中的问题设置,包括高级任务指令和低级子任务计划的数据集构建。

(2). 数据集收集:介绍了通过使用LLM和基础机器人模型收集AlphaBlock数据集的方法,包括任务定义、提示设计和自我验证等步骤。

(3). CogLoop模型:详细介绍了闭环多模态体现规划模型CogLoop的架构和训练方法,包括使用MiniGPT-4进行微调和引入视觉适配器和Q-former实现细粒度空间感知。

(4). 实验验证:通过实验验证了CogLoop模型在机器人任务中的优越性,与现有方法相比成功率提高了21.4%和14.5%。

以上是本文的方法详细介绍,通过自动收集AlphaBlock数据集和使用CogLoop模型实现了在机器人操作任务中的高级认知能力学习。

**实验设置:**

- 实验设置(Experimental setting):

本文提出了一种新颖的框架,用于在机器人操作任务中学习高级认知能力,例如使用积木搭建笑脸。这些任务通常涉及复杂的多步推理,由于连接人类指令(例如制作笑脸)和机器人动作(例如末端执行器运动)的配对数据有限,因此存在重大挑战。现有方法通过采用开环范式来缓解这一挑战,将高级指令分解为简单的子任务计划,并使用低级控制模型逐步执行它们。然而,这些方法在多步推理中缺乏即时观察,导致结果不够优化。为了解决这个问题,我们提出通过大型语言模型(LLMs)自动收集认知机器人数据集的方法。得到的AlphaBlock数据集包含35个综合的高级任务,包括多步文本计划和配对的观察序列。为了实现高效的数据获取,我们采用了精心设计的多轮提示设计,有效减轻了人类参与的负担。我们进一步提出了一个闭环多模态体验规划模型,通过将图像观察作为输入,自回归地生成计划。为了实现有效的学习,我们利用带有冻结视觉编码器和LLM的MiniGPT-4,并微调额外的视觉适配器和Q-former,以实现对操作任务的细粒度空间感知。我们进行了实验证明了与现有的开环和闭环方法相比的优越性,并在基于ChatGPT和GPT-4的机器人任务中成功率分别提高了21.4%和14.5%。

**实验结果:**

实验结果和分析:

本文的实验结果表明,我们提出的CogLoop模型在高级认知计划任务中表现出优越性能。与ChatGPT和GPT-4等基线方法相比,CogLoop模型在机器人任务中的成功率分别提高了21.4%和14.5%。具体而言,我们将所有任务分为训练集和测试集,通过比较建筑块的最终位置与真实数据中的位置来评估模型的成功率。如果两者之间的距离小于预定义的阈值(在本实验中为0.08),则任务被认为成功执行。CogLoop模型在相同数量的子任务(15个)和每个子任务的动作步骤(10个)下,取得了较高的成功率。

此外,本文还通过实验结果分析了不同角度的性能。通过采用闭环多模态嵌入规划模型,CogLoop模型能够自动生成计划并根据图像观察进行更新。这种闭环方法相比于开环方法和带有语言观察的闭环方法,在多步推理中能够更好地利用即时观察,从而获得更优的结果。

综上所述,本文的实验结果验证了CogLoop模型在机器人任务中的优越性能,通过融合视觉感知和语言模型,能够实现高水平的认知能力。

# P:7 VoxML作为注释语言的抽象规范

- Title: An Abstract Specification of VoxML as an Annotation Language

- 论文简介: 本文介绍了VoxML作为一种注释语言的抽象规范。

- Authors: Kiyong Lee, Nikhil Krishnaswamy, James Pustejovsky

- Affiliation:

Kiyong Lee: 韩国首尔高丽大学语言学系

Nikhil Krishnaswamy: 美国科罗拉多州立大学计算机科学系

James Pustejovsky: 美国布兰迪斯大学计算机科学系

- Keywords: VoxML, annotation language, modeling language, object affordance, habitat

- Paper: [Link](https://arxiv.org/pdf/2305.13076)

Github: None

- 论文总结:

- (1): 本文的研究背景是VoxML作为一种注释语言的抽象规范。

- (2): 过去的方法存在问题,本文的方法具有很好的动机。

- (3): 本文提出了一种基于VoxML的注释方案,创新性地将其应用于语言数据的标注。

- (4): 本文的方法在标注人-物互动的语言数据上取得了良好的性能,支持了VoxML的建模目的。

**方法部分:**

方法详细介绍(Method detailed introduction):

a. 一句话概览:本文旨在将VoxML作为一种注释语言进行规范化,并展示其在注释表达可视感知的人-物互动的语言数据上的应用。

b. 方法的详细步骤:

(1). 通过图形化的元模型描述标记语言的一般结构,以使注释方案的规范更加透明。

(2). 元模型主要关注实体(物体)和人之间的互动,通过它们的行为触发的动态路径来追踪这些行为的可视感知过程。

(3). VoxML基于注释方案被构建为用于注释表达人-物互动的语言表达,VoxML模型或世界中只包含三类实体:事件(程序):动作、物体和关系。

(4). VoxML的注释语言将语言表达视为可标记的对象,可以通过词语、图像、手势或其他交际行为中的任何东西进行锚定。

(5). 通过抽象语法定义规范语言并严格规定其结构。VoxML基于注释方案的抽象语法被定义为一个集合论元组。

(6). 注释方案根据其抽象语法生成注释结构,这些注释结构包括锚定结构和内容结构。

(7). 锚定结构通过其值来表示,而内容结构则表示为属性-值对。

(8). 通过注释语言的抽象语法生成的注释结构可以用于支持VoxML作为建模语言的建模目的。

以上是本文的方法详细步骤,通过这些步骤,作者规范化了VoxML作为注释语言的使用,并展示了其在注释表达可视感知的人-物互动的语言数据上的应用。

**实验设置:**

- 实验设置(Experimental setting):

该论文旨在将VoxML作为一种注释语言进行规范化。首先,它以一般抽象的方式指定了VoxML,并展示了它在注释表达视觉可感知的人-物互动的语言数据方面的工作原理。生成的注释结构将与VoxML作为建模语言创建的丰富最小模型进行解释,同时支持VoxML的建模目的。该论文使用了一个元模型来图形化地描述标记语言的一般结构。该元模型侧重于实体(物体)和人之间的互动,而由它们的动作触发的动态路径则追踪这些动作的视觉可感知的过程。VoxML基于的注释方案被构建为对表达人-物互动的语言表达进行注释。VoxML模型或世界中只包含三类实体:事件(程序):动作、物体和关系。每个实体都有子类,如图2中的空心三角形所示。该模型代表了一个小的最小世界,侧重于动作、(物理)物体及其相互关系,它们共同构成了更大的本体论,如SUMO(Niles和Pease,2001)。与其他类型的事件不同,代理有意地触发动作,这些代理可以是人类或其他理性代理。这些代理还作为动作的参与者与物体进行交互。关系类别有两个子类,属性和函数。作为一元关系,属性修改实体(物体),例如大桌子。函数是将一个物体映射到另一个物体的特定关系。例如,用于定位的函数loc将物理对象(例如桌子)映射到可以放置其他物体(如苹果)的空间位置。运行时函数τ将事件映射到时间,例如τ(e)指的是事件e的发生时间。还可以引入函数seq,通过对时间t和位置l的有序对t@l进行排序,形成路径。VoxML注释语言没有位置、时间或路径等类别,但可以引入时间点来讨论它们的时间顺序,例如τ(e1)≺τ(e2)。二元或其他n元关系,如in或between,属于关系类别,并且也被引入到VoxML中。VoxML作为一种建模语言,将物理对象和动作视为形成视觉可感知的概念结构voxeme。应用于语言及其组成表达式时,基于VoxML的注释方案将它们视为可标记的,锚定在单词、图像、手势或任何由语言行为组成的东西上。抽象语法定义了一种规范语言并严格地规定了其结构。在构建自然语言语法时,语义注释方案的抽象语法以集合论术语定义为一个元组。VoxML基于的注释方案的抽象语法ASyn voxml也被定义为一个集合论元组。ASyn voxml的定义如下:给定一个有限集D,或数据,其中包含自然语言中的交流片段,VoxML的抽象语法ASyn voxml被定义为一个三元组<M,C,@>,其中:M是D的非空子集,其中包含(可能为空或不连续的)交流片段的字符串,称为可标记物,每个可标记物由基本类别集合B界定;C包括基本类别B和关系类别R:-基本类别B及其子类,如图2所示;对于B中的每个基本类别cat,分配@cat必须具有以下属性列表的值:(3) 扩展BNF中的@cat分配:attributes = identifier,target,type,pred;identifier = categorized prefix + a natural number;target = markable;type = CDATA;pred = CDATA|null;(*谓词内容*)每个类别可能还有其他必需或可选的属性需要分配值。例如,@action的分配要么是process类型,要么是transition类型。类别action具有触发它的@agent属性。

**实验结果:**

实验结果和分析:

本文的实验结果主要是关于VoxML作为注释语言的规范性描述以及其在标注语言数据中的应用。通过使用元模型来描述VoxML的结构,作者展示了VoxML注释方案的构建过程,并将其应用于人-物互动的语言表达。通过生成的注释结构,可以对VoxML作为建模语言所创建的丰富最小模型进行解释,同时支持VoxML的建模目的。

此外,实验结果还展示了VoxML模型中的实体交互,包括事件、对象和关系的子类。其中,动作作为事件的子类在VoxML中起到关键作用,代表了人类或其他理性代理触发的行为。这些代理与对象进行互动,并参与到行为中。关系包括属性和函数两个子类,属性用于修改对象,函数用于将一个对象映射到另一个对象。VoxML注释语言没有位置、时间或路径等类别,但可以引入时间点来讨论它们的时间顺序。

总结来说,本文的实验结果展示了VoxML作为注释语言的规范性描述,并展示了其在标注语言数据中的应用。通过VoxML的注释方案,可以对人-物互动的语言表达进行标注,并解释这些标注结构与VoxML建模语言创建的模型之间的关系。

# P:8 从数据拟合到发现: 通过强化学习解释运动控制的神经动力学

- 1. Title: From Data-Fitting to Discovery: Interpreting the Neural Dynamics of Motor Control through Reinforcement Learning

- 论文简介: 本文通过强化学习解释运动控制的神经动力学,从数据拟合到发现。

- 2. Authors: Eugene R. Rush, Kaushik Jayaram, J. Sean Humbert

- 3. Affiliation:

Eugene R. Rush: 科罗拉多大学博尔德分校机械工程系

Kaushik Jayaram: 科罗拉多大学博尔德分校机械工程系

J. Sean Humbert: 科罗拉多大学博尔德分校机械工程系

- 4. Keywords: motor control, neural dynamics, reinforcement learning, computational neuroscience

- 5. Paper: [Link](https://arxiv.org/pdf/2305.11107) Github: None

- 6. 论文总结:

- (1): 本文研究背景是在运动神经科学中,人工循环神经网络模型通常与动物研究相辅相成。

- (2): 过去的方法主要局限于数据拟合,很少有研究将虚拟机器人代理与强化学习相结合,并直接与生物对应物进行比较。本文的方法在此基础上进行了创新。

- (3): 本文提出了一种研究方法,通过训练虚拟机器人进行步态行走,揭示了神经动力学的结构化活动,这与灵长类动物行走和骑行的实验结果相吻合。通过分析神经轨迹的分离性,本文确定了最大化不同前进、横向和旋转速度条件下平均活动方差的速度轴。

- (4): 本文的方法在步态行走任务中取得了平滑的动力学效果,避免了神经空间中相邻神经轨迹的交叉。这一性质与计算神经科学中的核心原则相吻合。本文的方法对于解释运动控制系统的计算原理具有重要意义,并为神经科学、机器人学和机器学习研究提供了全新的测试平台。

**方法部分:**

方法详细介绍(Method detailed introduction):

a. 一句话概览:本研究通过使用强化学习训练虚拟机器人进行步态运动,揭示了神经动力学的结构化神经活动,与灵长类动物行走和骑行的实验结果相吻合。

b. 方法的详细步骤:

(1). 实验环境和代理:使用NVIDIA Isaac Gym作为高吞吐量的虚拟实验平台,以及Anymal机器人的虚拟模型。代理的动作空间是12维,观测空间是176维。

(2). 代理训练:使用线性体速度误差和角速度误差作为奖励函数,同时考虑膝盖碰撞、关节加速度、扭矩变化和脚空中时间等惩罚项。训练过程中,代理随机接收线性体速度和角速度指令,并逐渐面对更困难的地形障碍。

(3). 数据收集:在数据收集阶段,代理根据实验需求接收指定的线性体速度和角速度指令。收集数据时,噪声参数与训练阶段相同,但除了扰动实验外,扰动被移除。

(4). 模型架构:使用rl_games实现代理的训练,该实现将长短期记忆(LSTM)网络集成到演员和评论家网络中。演员和评论家网络通过多层感知器传递观测向量,并输出动作向量。本研究主要关注RNN潜在状态和执行活动,与灵长类动物的运动皮层和肌肉样EMG记录进行比较。

(5). 训练RNN:使用截断的时间反向传播(BPTT)训练RNN。通过将RNN展开为四层,可以在每个时间步长上将梯度传播回网络。截断的BPTT有助于训练,因为它可以防止长序列导致梯度消失或梯度爆炸问题。

(6). 结果分析:通过比较代理的神经轨迹和灵长类动物的神经轨迹,发现代理的循环层中的神经轨迹比输入驱动的执行层中的神经轨迹更加分散。此外,通过识别最大化不同前向、横向和旋转速度条件下平均活动方差的速度轴,进一步解释了这些椭圆形轨迹的神经分离。

(7). 结论:本研究通过训练虚拟机器人进行步态运动,揭示了神经动力学的结构化神经活动,与灵长类动物行走和骑行的实验结果相吻合。这一研究填补了现有研究中数据拟合和生物对应的强化学习模型之间的差距。

**实验设置:**

- 实验设置(Experimental setting):

本文通过使用神经动力学模型和强化学习方法,研究了虚拟机器人进行腿部运动的神经动力学。实验中,使用NVIDIA Isaac Gym模拟了大量的机器人执行特定策略的情况。实验数据以每20ms记录一次,通过对数据进行聚类和对齐,得到了不同条件下的平均数据。为了提高数据的可解释性,使用了三维可视化和样条插值方法。此外,还计算了神经轨迹的交织度和进行了主成分分析。实验结果显示,机器人的循环状态和驱动状态在神经轨迹的交织度方面存在差异,循环状态较少交织,而驱动状态较多交织。此外,实验还观察了不同运动方向和速度条件下的神经活动和驱动输出的差异。

**实验结果:**

实验结果和分析:

本研究通过对虚拟机器人进行步态运动的训练,揭示了与灵长类动物行走和骑行实验结果相符的结构化神经活动。研究发现,经过训练的机器人表现出的神经轨迹在循环层中的纠缠程度要低于输入驱动的激活层。此外,通过对速度轴的分析,研究人员发现了最大化不同前进、横向和旋转速度条件下平均活动方差的速度轴。实验结果还显示,循环层的神经活动呈现出平滑的、近似圆形的轨迹,而激活层的神经活动则呈现出“八字”形状的轨迹,并且纠缠程度更高。此外,实验还发现,速度的调节会导致循环层和激活层的轨迹发生变化,且随着速度增加,轨迹会发生拉伸。总的来说,本研究的实验结果支持了计算神经科学中的核心原理,并揭示了虚拟机器人的神经动力学特征与生物对应物的相似性。

# P:9 一个安卓机器人头部作为具有体现性的对话代理

- Title: An Android Robot Head as Embodied Conversational Agent

- Authors: Marcel Heisler, Christian Becker-Asano

- Affiliation: Hochschule der Medien, Stuttgart, Germany

- Keywords: humanoid robotics, machine learning, software development, conversational agents

- Paper: [Link](https://arxiv.org/pdf/2305.10945)

- Github: None

- 论文简介: 本文描述了如何将当前的机器学习技术与简单的基于规则的动画程序相结合,使得一个安卓机器人头部成为一个具有ChatGPT作为核心组件的具有体现性的对话代理。介绍了安卓机器人头部的特点,给出了实现唇同步动画的技术细节,并提出了一般的软件设计决策。通过公开演示系统,发现了改进的机会,并采用迭代的实现方法。

- (1): 这篇论文的研究背景是关于安卓机器人的研究进展,探索了安卓机器人在不同应用领域的潜在机会,如社区老年人的互动伴侣、心理研究中的情感交互等。

- (2): 过去的方法通常使用脚本动作或者“巫师奥兹”研究来实现安卓机器人的社交互动。然而,要在实际场景中使用这些机器人,它们需要具备自主行动的能力。本文提出了一种简化的方法,通过结合机器学习模型和规则动画程序,实现了一个具有体现性的安卓机器人头部作为对话代理。

- (3): 本文提出的研究方法是将机器学习模型与手动定义的动画相结合,实现了自主对话的安卓机器人头部。通过使用机器学习模型实现自动语音识别、语音合成、文本对话和自动唇同步等任务,使机器人能够以自然语言进行对话,并通过面部表情和语音输出实现交互。这种方法的创新之处在于将机器学习技术应用于安卓机器人的对话系统,实现了一种具有体现性的对话代理。

- (4): 本文的方法在语音识别、语音合成、文本对话和唇同步等任务上取得了良好的性能。通过使用现有的机器学习模型和手动定义的动画,安卓机器人头部能够以自然语言进行对话,并通过面部表情和语音输出实现交互。这种方法的性能支持了安卓机器人在实际场景中作为对话代理的目标,同时也在机器学习技术在安卓机器人领域的应用方面做出了创新和贡献。

**方法部分:**

方法详细介绍(Method detailed introduction):

a. 一句话概览:本文介绍了如何将当前的机器学习技术与简单的基于规则的动画程序相结合,使得一个安卓机器人头成为一个具有ChatGPT作为核心组件的具身化对话代理。该方法包括自动语音识别(ASR)、语音合成或文本到语音(TTS)、文本对话或对话(chat)和自动唇同步等任务的解决方案。

b. 方法的详细步骤:

(1). ASR:使用Whisper作为当前最先进的开放式可访问的ASR机器学习模型。Whisper采用了现成的编码器-解码器Transformer架构,并且在训练数据量增加时具有良好的扩展性。Whisper的主要创新之一是它在多个任务和多种语言上进行训练,因此一个Whisper模型不仅能够转录一种语言的语音,还能够执行声音检测、语言识别、说话人分离和从不同语言翻译成英语等任务。

(2). TTS:采用VITS作为语音合成的机器学习模型。VITS是第一个实现接近人类质量的自然语音合成的端到端模型。它的端到端方法也提高了合成速度。VITS在多说话人数据集上训练时,还允许在推理时切换不同的说话风格(例如男声或女声)。目前,模拟不同情绪、克隆说话人的声音或将多种语言组合在单个模型中是活跃的研究课题。

(3). Chat:采用ChatGPT作为对话的机器学习模型。ChatGPT是在GPT模型的基础上通过人类反馈进行了微调的新模型。ChatGPT是在对话格式中进行了不同数据收集的微调,以便允许后续问题。

(4). Lip-Sync:使用多个机器学习模型来预测与输入语音信号相对应的面部表情,特别是唇部运动。这些模型通常用于计算机动画,它们在生成的面部表情表示方面存在差异。本文探讨了如何将预测的面部表情应用于动画化安卓机器人头。首先描述了安卓机器人头的当前设置和功能,然后提供了开发过程的一些细节。

(5). 其他细节:本文还介绍了其他相关的机器学习模型和技术,如GPT、DialoGPT和BlenderBot等,以及它们在自然语言处理任务中的应用。

以上是本文方法的详细步骤。

**实验设置:**

- 实验设置(Experimental setting):

本文描述了如何将当前的机器学习(ML)技术与简单的基于规则的动画程序相结合,使得一个安卓机器人头部成为一个具有ChatGPT作为核心组件的具身化对话代理。首先介绍了安卓机器人头部的当前设置和其能力。接下来提供了一些开发过程的细节。

**实验结果:**

实验结果和分析:

本文描述了如何将当前的机器学习技术与简单的基于规则的动画程序相结合,使得一个安卓机器人头部成为一个具有ChatGPT作为核心组件的具身化对话代理。文章介绍了安卓机器人头部的特点,并详细说明了如何实现唇同步动画以及一般软件设计决策。通过对系统进行公开演示,发现了一些改进的机会,并采用迭代的实现方法进行了改进。

(1). 本文通过将机器学习技术与规则动画程序相结合,成功将安卓机器人头部打造成了一个具身化对话代理。

(2). 文章详细介绍了如何实现唇同步动画,使得机器人头部的口型能够与语音内容相匹配。

(3). 文章提出了一些软件设计决策,为安卓机器人头部的开发提供了指导。

(4). 在公开演示中,发现了一些改进的机会,并采用迭代的实现方法进行了改进。

以上是本文的实验结果和分析。

# P:10 通过生成模拟实现通用机器人:一种有前途的范式

Github: None

- Summary:

-(1):本文的研究背景是在现实环境中部署机器人来执行各种任务。

-(2):过去的机器人研究方法主要集中在提高高级认知和低级运动技能。提出的方法旨在通过使用生成模拟和大型基础模型来弥合这两个领域之间的差距。

-(3):本文提出的研究方法是机器人学习的全自动化生成式流水线,称为生成式仿真。它利用大规模的基础模型来生成不同的任务、场景和训练监督,从而实现低级技能学习的规模化和多面手机器人的开发。

-(4):本文的方法旨在实现能够在物理世界中执行广泛技能的通才机器人。这些方法的性能在摘要中没有明确提到,但作者认为,所提出的方法有潜力赋予多面手机器人权力。

**方法部分:**

方法详细介绍(Method detailed introduction):

a. 一句话概览:本文提出了一种通过生成式模拟来实现通用机器人的方法,通过利用大规模基础模型中的知识来生成多样化的任务、场景和训练监督,从而扩大低级技能学习的规模,最终实现赋予通用机器人能力的机器人基础模型。

b. 方法的详细步骤:

(1). 提出生成式模拟的范式:通过在当今的大规模基础模型中提供适当的指令和上下文示例,自动化和扩大任务和场景生成的每个组件,从而实现机器人学习的扩展。生成式模拟可以在最小程度上涉及人类,并且完全自动化地扩大机器人学习的规模。

(2). 子任务生成:给定高级目标,可以查询基础模型来生成逐步细化的子任务描述。通过递归查询基础模型,可以生成细粒度的子任务描述。还可以通过逐步深入细粒度级别的方式,自动化生成许多高级目标。

(3). 场景配置生成:除了基于高级目标生成子任务外,基础模型还可以根据当前场景配置生成潜在的子任务。例如,给定一个厨房场景,基础模型可以考虑场景中物体的可用性,生成一系列子任务,如“打开柜子的中抽屉”或“转动烤箱旋钮”。这可以大大加快探索和技能获取的速度。

(4). 设计奖励函数:为了训练机器人代理执行特定任务,需要设计奖励函数。例如,为了训练机器人代理执行“扫地”的任务,需要考虑扫帚的位置、方向、与碎片的接触以及将碎片移动到目标位置的进展。可以根据任务的要求设计相应的奖励函数。

(5). 实施挑战:建立通用机器人需要大量的计算资源和硬件,单靠学术界的研究团队可能面临资源限制。因此,作者在实现整个愿景时认识到存在挑战。然而,作者相信在这个早期阶段分享他们的想法可以促进讨论,吸引工业界对提出的路径和相关主题的兴趣,并激发该领域的重大技术进步。

(6). 结论:本文提出的生成式模拟方法为实现通用机器人提供了一种可行的路径。通过利用大规模基础模型生成多样化的任务、场景和训练监督,可以扩大低级技能学习的规模,最终实现赋予通用机器人能力的机器人基础模型。作者希望通过分享他们的想法,吸引工业界的兴趣,并在该领域推动重大的技术进步。

**实验设置:**

- 实验设置(Experimental setting):

本文提出了一种通过生成式模拟的方法来实现通用型机器人的潜在路径。该方法利用最新的大规模基础模型来挖掘知识,通过生成多样化的任务、场景和训练监督来扩展低层技能学习,从而实现为机器人提供基础模型的目标。作者正在积极探索这个方向,并意识到构建具有大规模策略训练的通用型机器人的雄心目标需要大量的计算资源和硬件支持。因此,作者希望在这个早期阶段分享他们的想法,以促进讨论,吸引来自工业界的兴趣,并激发该领域的重大技术进步。

(1). 任务和场景生成:通过在现有的多模态基础模型中引入适当的指令和上下文示例,可以实现自动化和扩展化的任务和场景生成。作者称之为生成式模拟。例如,给定一个高级目标,可以查询基础模型来生成逐步细分的子任务描述。此外,还可以根据当前场景配置生成潜在的子任务,考虑到场景中物体的可用性。这种方法可以加快探索和技能获取的速度。

(2). 资源限制:作者意识到构建通用型机器人需要大量的计算资源和硬件支持,而仅靠学术界的研究团队可能面临严重的资源限制。因此,作者希望通过在早期阶段分享他们的想法,吸引工业界的兴趣,并可能推动该领域的重大技术进步。

(3). 实验目标:本文的实验目标是探索通过生成式模拟的方法来实现通用型机器人的潜在路径。通过利用最新的大规模基础模型来生成多样化的任务、场景和训练监督,以扩展低层技能学习,并最终实现为机器人提供基础模型的目标。

(4). 实验方法:本文提出的方法是通过生成式模拟来实现通用型机器人的潜在路径。该方法利用最新的大规模基础模型来生成多样化的任务、场景和训练监督,以扩展低层技能学习。通过适当的指令和上下文示例,可以实现自动化和扩展化的任务和场景生成。

(5). 实验结果:本文还没有具体的实验结果,因为作者正在积极探索这个方向。作者希望通过在早期阶段分享他们的想法,吸引工业界的兴趣,并可能推动该领域的重大技术进步。

**实验结果:**

- 实验结果和分析:

本文提出了一种通过生成式模拟(generative simulation)的方法,以实现通用型机器人的目标。通过使用最新的大规模基础模型来挖掘知识,生成多样化的任务、场景和训练监督,从而扩大低级技能学习的规模,并最终实现赋予通用型机器人能力的机器人基础模型。作者认为这种方法具有潜力,并正在积极探索这个方向。

作者提出的生成式模拟方法可以自动化和扩展任务和场景生成的各个组成部分,并且可以通过适当的指令和上下文示例来实现。通过给出一个示例奖励函数,作者说明了如何为任务“扫地”设计奖励函数,考虑了扫帚的位置、方向、与碎片的接触以及将碎片移动到目标位置的进展。作者还提出了一个可能的流程,包括子任务生成和场景配置生成两个阶段,以展示生成式模拟的应用。

实验结果表明,通过使用生成式模拟方法,可以自动生成细粒度的子任务描述,并且可以根据当前场景配置生成潜在的子任务。这种方法可以加快探索和技能获取的速度,为机器人学习提供更多的可能性。

总的来说,本文提出的生成式模拟方法为实现通用型机器人提供了一种可行的途径,并且在实验中展示了其潜力和效果。这一方法可以通过自动生成任务和场景来扩大机器人学习的规模,并为机器人研究领域带来重要的技术进展。

# P:11 多模态上下文化计划预测用于具身任务完成

- Title: Multimodal Contextualized Plan Prediction for Embodied Task Completion

- Authors: Mert ˙Inan, Aishwarya Padmakumar, Spandana Gella, Patrick Lange, Dilek Hakkani-Tur

- Affiliation:

1. Mert ˙Inan: University of Pittsburgh, Pittsburgh, USA

2. Aishwarya Padmakumar, Spandana Gella, Patrick Lange, Dilek Hakkani-Tur: Amazon Alexa AI

- Keywords: task planning, embodied task completion, multimodal context, plan prediction, plan execution

- Paper: [Link](https://arxiv.org/pdf/2305.06485) Github: None

- Summary:

-(1):本文着重于使用多模态上下文预测嵌入任务完成的更高层次计划表示。

-(2):以前的方法直接预测低级动作序列,但本文认为预测高级计划更容易转移到物理机器人系统。该方法具有良好的动机,因为它旨在改进计划预测和执行模块,并探索计划预测模型的改进范围。

-(3):本文采用情景转换模型来预测对话历史任务的TEACh执行计划。结果表明,将该模型与基于规则的计划执行模块相结合可以产生更成功的计划。本文还提出了对基本et体系结构的修改,以解决计划预测中观察到的问题。

-(4):本文中的方法在TEACh基准数据集上进行了评估。将该方法的性能与人类演示的oracle计划进行了比较。结果表明,基于上下文的多模态计划预测可以产生更好的计划,该分析为具体任务完成的基准和模型的开发提供了见解。

**方法部分:**

方法详细介绍(Method detailed introduction):

a. 一句话概览:本文提出了一种多模态上下文化的计划预测方法,用于完成具体任务,并探讨了计划预测和执行模块之间的依赖关系。

b. 方法的详细步骤:

(1). 本文使用TEACh数据集,该数据集是一个对话语料库,模拟用户(指挥官)和具体任务执行者(跟随者)之间的交互,用于完成家庭任务。指挥官可以访问任务步骤和物体的位置信息,而跟随者只能与环境中的物体进行交互。跟随者通过丰富的语言和视觉上下文来完成需要链式操作和推理物理状态变化的家庭任务。

(2). 本文关注TEACh数据集中的Execution from Dialog History (EDH)任务。给定人机对话和游戏过程中的动作,模型需要预测跟随者在下一个对话动作之前在环境中采取的后续动作。模型通过比较预测的动作序列引起的物体状态变化与真实动作序列引起的状态变化来进行评估。模型预测的动作序列应该可以直接在TEACh模拟器中执行。动作空间包括导航动作(前进、右转、左转、后退、左平移、右平移、向上看、向下看)和物体交互动作(拾取、放置、打开、关闭、打开、关闭、切割、倒出)。当前神经模型在TEACh EDH任务上的成功率最多为10%。

(3). 与此前的研究不同,本文将模型的预测目标修改为“计划”,即一系列物体交互动作与其执行对象的物体类别的组合。通过预测计划,模型可以简单地预测代理需要拾取土豆,然后通过一个独立的计划执行模块(在本文中为启发式模块,但也可以是学习得到的)将代理定位到正确的位置执行动作。本文选择物体交互动作作为抽象的计划级别,因为这种级别的计划可以从真实动作序列自动创建,无需计划的注释。

(4). 由于EDH任务涉及继续进行的部分会话,某个EDH实例可能显示出例如杯子已经装满水的对话。在这种情况下,模型需要预测的计划可能只涉及将水倒入锅中、将锅放在炉子上并加入土豆的最后几个步骤。

(5). 对于许多TEACh任务,任务可能是参数化的,因此计划预测还涉及根据对话识别任务参数。为了解决这个问题,本文使用启发式方法选择最接近代理的所需类型的物体,并使用模拟器的导航图计算到达该物体的最短路径,然后导航到该物体并尝试预测的动作。

(6). 本文修改了Episodic Transformer (E.T.)模型,用于计划预测。E.T.模型包括一个用于语言输入的Transformer编码器(在本文中为EDH对话历史记录)和使用ResNet-50骨干网络编码的图像观察,这些观察被连接并通过多模态Transformer层传递,然后通过线性层转换为动作和物体类别的预测。该模型被设计为预测与图像观察数量相同的动作和物体。在训练时,模型接收整个轨迹的图像观察,并预测整个轨迹的动作。在推理时,模型接收到目前为止完成的动作的图像观察,以及过去的动作作为输入,预测序列的最后一个动作作为下一个时间步的预测动作。为了修改模型以进行计划预测而不是低级动作预测,本文修改了训练数据,仅保留相应时间步的物体交互动作和图像观察。在推理过程中,执行每个计划步骤后,将该执行的最后一个图像观察附加到视觉输入中,以获取下一个计划步骤。

(7). 本文探索了以下E.T.模型的变体:

- E.T.: 基本的E.T.模型。

- E.T. Hierarchical: 在E.T.模型中,每个时间步的动作和物体都有独立的分类器,但可能导致不可行的动作,例如拾取一个橱柜。本文探索了通过将动作分类器的输出与物体分类器的输入进行连接来共享信息的方法。这样做可以为更高级别的物体分类器提供更多信息,以便根据动作-物体对的有效性选择动作。模型可以从有效样本中学习有效的物体-动作对模式,但这并没有通过分类器的硬约束完全实施,可能仍会导致无效的物体-动作组合。

- E.T. + Mask: 作为分层学习的替代方法,本文在推理过程中探索了预测的动作和物体是否构成可执行的计划步骤。如果不是,将动作替换为下一个最有可能在预测的物体上执行的动作。本文主要关注计划预测的建模。

(8). 为了评估预测的计划,本文将其与两种基于规则的计划执行模块配对。本文计划在未来的工作中探索计划执行的机器学习模型的任务。给定由动作和相关物体类型组成的计划步骤,需要确定代理必须操作的特定物体。为了实现这一点,本文使用启发式方法选择最接近代理的所需类型的物体,然后使用模拟器的导航图计算到达该物体的最短路径,导航到该物体并尝试预测的动作。

**实验设置:**

- 实验设置(Experimental setting):

本文的实验设置主要包括以下内容:

(1). 评估提出的计划预测模型在TEACh数据集的EDH任务上的性能。

(2). 对比基线和Oracle条件。

(3). 使用编辑距离和有效计划步骤的分数作为评估指标。

(4). 在不同模型和计划执行条件下进行训练和推理,并使用3个随机种子进行统计显著性检验。

(5). 分析模型在不同任务上的性能差异。

(6). 考察Oracle计划执行失败的原因,特别是CorefOracle的失败情况。

注意:

- 本文的实验主要集中在TEACh数据集的EDH任务上进行。

- 评估指标包括编辑距离、标准化编辑距离和有效计划步骤的比例。

- 对比了基线模型和Oracle条件,以及不同模型和计划执行条件的性能差异。

- 进一步分析了模型在不同任务上的表现,并探讨了Oracle计划执行失败的原因。

**实验结果:**

实验结果和分析:

本文的实验结果主要集中在对提出的计划预测模型进行评估,并与基线模型和Oracle模型进行比较。评估使用了TEACh数据集中的EDH任务,并使用了不同的执行方法。评估指标包括编辑距离、归一化编辑距离和有效计划步骤的比例。

实验结果表明,使用多模态上下文可以更好地预测计划,并且计划预测模块和计划执行模块可能相互依赖,因此完全解耦可能不是理想的选择。此外,通过对Oracle计划的执行进行基准测试,发现计划预测模型仍有改进的空间。

在评估中,除了基线模型外,所有的模型在成功率上都优于TEACh基线模型。最好的模型是E.T. + Mask,在大多数情况下表现最好。此外,辅助执行对于模型的改进要小于对于Oracle模型的改进。

在任务级别的成功率分析中,发现Oracle模型在涉及更多任务步骤或更复杂的放置动作的任务中成功率较低。而E.T.模型在大多数任务中的成功率都有所提高,但E.T. Hierarchical和E.T. + Mask之间的趋势不一致。基线模型在某些任务上表现优于普通的E.T.模型,但几乎从不优于E.T. Hierarchical或E.T. + Mask。

此外,实验结果还发现,E.T.模型在直接执行时预测的无效计划步骤较多,而E.T. + Mask模型通过设计完全纠正了这一问题。E.T. Hierarchical模型的预测无效计划步骤的情况与预期相反。

对于Oracle计划的执行失败原因的分析发现,导航失败的情况很少,占失败案例的不到1%。放置动作特别具有挑战性,特别是当一个物体需要放置在可能已经包含其他物体的物体上时。将物体放在炉子上总是失败的,将物品放在梳妆台和咖啡桌上的失败率超过50%。开关柜子和微波炉的打开和关闭也很具有挑战性,失败主要是因为对于难以校准的微调位置。

综上所述,本文的实验结果表明,使用多模态上下文可以提高计划预测的性能,辅助执行对于提高成功率也起到了重要作用。然而,目前的模型仍有改进的空间,特别是在处理复杂放置动作和微调位置时的性能仍有待提高。

# P:12 基于图神经嵌入的主动语义定位

- 1. Title: Active Semantic Localization with Graph Neural Embedding

- 论文简介: 本文提出了一种轻量级、完全基于CPU的领域自适应语义定位框架,称为图神经定位器。该方法受到了两种最近出现的技术的启发:场景图和图神经网络。通过将场景图与图神经网络相结合,提出了一种新的图神经定位器框架,用于解决语义定位问题。

- 2. Authors: Mitsuki Yoshida, Kanji Tanaka, Ryogo Yamamoto, and Daiki Iwata

- 3. Affiliation:

Mitsuki Yoshida, Kanji Tanaka, Ryogo Yamamoto, and Daiki Iwata隶属于日本福井大学工程学系。

- 4. Keywords: graph neural embeddings, active semantic localization, knowledge transfer, domain adaptation

- 5. Paper: [Paper](https://arxiv.org/pdf/2305.06141) Github: None

- 6. 论文总结:

- (1):本文的研究背景是在最近出现的具有语义图像模态的机器人自我定位中,语义定位是至关重要的。然而,现有的语义定位方法大多集中在 passsive 视觉任务上,没有考虑视点规划,或者依赖于额外的丰富模态。因此,这个问题在很大程度上尚未解决。

- (2):过去的方法主要集中在 passsive 视觉任务上,没有考虑视点规划,或者依赖于额外的丰富模态。本文的方法通过结合场景图和图神经网络,提出了一种新的图神经定位器框架,解决了这个问题。

- (3):本文提出了一种轻量级、完全基于CPU的领域自适应语义定位框架,称为图神经定位器。该方法通过训练图卷积神经网络作为场景图分类器,然后将其知识转移到强化学习规划器,实现了从被动视觉到主动视觉的知识转移。通过在自监督学习和无监督领域自适应的两个场景下进行实验,验证了该方法的有效性。

- (4):本文的方法在自我监督学习和无监督领域自适应的两个场景下进行了实验,使用了一个逼真的Habitat模拟器。实验结果表明,该方法在自我定位性能、计算效率和领域适应性方面优于基线方法。该方法的性能支持了他们的目标,具有创新性和贡献性。

**方法部分:**

方法详细介绍(Method detailed introduction):

a. 一句话概览:本文提出了一种轻量级、完全基于CPU的领域自适应语义定位框架,称为图神经定位器,通过结合场景图和图神经网络的技术,实现了对语义图像模态的机器人自我定位。

b. 方法的详细步骤:

(1). 相似性保持的图像到图形映射:通过两步启发式方法,将图像分割为部分区域(节点)并推断部分之间的关系(边)。对于节点生成步骤,使用语义分割模型将图像分割为部分区域,并用语义标签和边界框表示每个语义区域。为了增强可重现性,将语义标签重新分类为10个更粗略的元类别,并移除面积小于5000像素的虚拟对象。对于边连接步骤,当部分节点对的边界框重叠或欧氏距离在20像素内时,它们之间会连接一条边。

(2). 增强可辨识性的节点特征:使用部分区域的空间属性作为节点特征的附加属性,包括"大小/位置"词。根据边界框的面积,将部分区域分类为三个大小词:"小(0)","中(1)","大(2)"。将边界框的中心位置离散化为3x3=9个单元格的网格,并将单元格ID作为位置词。最终,节点特征定义为语义、大小和位置词的联合空间中的一个270维的独热向量。

(3). 场景图嵌入:使用图卷积神经网络(GCN)将场景图嵌入到状态向量中。GCN的架构与先前的研究相同。嵌入的场景图可以作为被传递的知识。

(4). 机器人工作空间建模:将机器人的工作空间建模为位置-方位空间中的3D区域,并使用2米和30度的分辨率将其分割为规则的地点类别网格。

(5). 知识传递:将GCN输出的类别概率图作为被传递的知识。为了作为特征向量传递,使用倒数排名向量作为特征向量和强化学习的状态向量。

(6). 粒子滤波器:使用粒子滤波器进行增量估计姿态(位置/方位)。为了将粒子的位置属性转换为类别特定的排名值,使用简单的最大池化。

(7). 粒子初始化:使用引导采样策略从接收到最高初始观测似然的地点类别中采样初始粒子。

(8). 训练/测试过程:在训练/测试过程中,使用增量估计的姿态进行多视角自我定位。

(9). 实验验证:通过在自监督学习和无监督领域自适应的两个场景中使用Habitat模拟器进行实验,验证了所提出方法的有效性。

(10). 结果分析:实验结果表明,所提出的方法在语义定位任务中取得了较好的性能,能够实现机器人的主动视觉定位。

(11). 创新点总结:本文提出了一种轻量级、完全基于CPU的领域自适应语义定位框架,通过结合场景图和图神经网络的技术,实现了对语义图像模态的机器人自我定位。该方法在自监督学习和无监督领域自适应的实验中验证了其有效性。

**实验设置:**

- 实验设置(Experimental setting):

本文使用了3D逼真的模拟器Habitat-Sim和数据集HM3D作为训练和测试环境。在训练阶段,使用了来自Habitat-Matterport3D研究数据集的三个数据集,命名为"00800-TEEsavR23oF","00801-HaxA7YrQdEC"和"00809-Qpor2mEya8F"。在每个数据集中,使用了10000个带有类别标签的训练场景来训练图卷积神经网络(GCN)分类器。在训练和测试阶段,机器人从环境中的随机位置开始,进行初始感知,然后执行由长度为4的计划-动作-感知循环组成的episode。NNQL训练和部署的状态由最新的类别特定的倒数排名特征表示。奖励函数根据Top-1预测的位置类别是否正确返回+1或-1的值。进行了10000次NNQL训练迭代,学习率为0.1,折扣因子为0.9,使用ε-greedy策略进行探索。进行了无监督领域自适应(UDA)实验,通过对测试域的小型验证集进行评估,并选择表现最好的模型。机器人的工作空间通过基于网格的分割表示,位置分辨率为2米,方位分辨率为π/6弧度。将提出的主动多视角方法与基准的单视角和被动多视角方法进行比较。在三个数据集上评估了上述三种不同方法的自我定位性能,并在25个不同的Txy和Tθ设置下进行了评估。主要性能指标为Top-1准确率。实验结果表明,提出的方法在各种数据集上显著提高了准确性。

**实验结果:**

实验结果和分析:

该论文通过在两个场景中进行实验,即自监督学习和无监督领域自适应,验证了所提出方法的有效性。在自监督学习中,使用了Habitat-Sim和HM3D数据集进行训练和测试。通过对10,000个训练场景进行GCN分类器的训练,得到了较高的Top-1准确率。在无监督领域自适应实验中,通过对测试域的小型验证集进行评估,发现提出的方法在所有数据集上都优于被动多视角方法。实验结果表明,该方法在各种数据集上显著提高了准确性。

此外,实验还观察到了一些有趣的现象。在成功案例中,当机器人移动到具有密集自然地标物体的位置时,视觉地点识别性能会得到改善。例如,在初始视点,机器人面对墙壁,无法观察到任何有效的地标物体,但通过在下一个视点改变朝向方向,机器人能够检测到门,并且使用该地标物体提高了自我定位的准确性。失败案例中,超过一半的视点都面对着没有特征的物体,如墙壁和窗户。另一个值得注意的趋势是,当视点离物体太近时,识别成功率会降低,这导致视野变窄。

总的来说,实验结果表明,所提出的方法在各种数据集上显著提高了自我定位的准确性,并且在具有密集自然地标物体的位置时表现更好。

# P:13 以控制为中心的视频预测基准

- Title: A Control-Centric Benchmark for Video Prediction

- 论文简介: 本文提出了一个以控制为中心的视频预测基准,用于评估模型在模拟机器人操作中的性能。

- Authors: Stephen Tian, Chelsea Finn, Jiajun Wu

- Affiliation: Stanford University(斯坦福大学)

- Keywords: video prediction, control benchmark, robotic manipulation, planning performance

- Paper: [Link](https://arxiv.org/pdf/2304.13723) Github: None

- 论文总结:

- (1): 本文的研究背景是视频预测模型在机器人控制任务中的应用。

- (2): 过去的方法主要使用人类感知相似度或像素比较等指标来评估视频预测模型的性能,但这些指标在执行成功的预测中可能不可靠。本文提出的基准通过采样规划来评估模型在模拟机器人操作中的性能,解决了现有指标无法准确预测执行成功的问题。

- (3): 本文提出了一个名为VP2的基准,包括模拟环境、任务定义、训练数据集和完整的规划实现。通过使用视觉预测方法进行规划,评估模型在机器人操作中的性能。这个基准的创新之处在于提供了一个简单的接口,可以评估几乎任何基于动作的视频预测模型。

- (4): 本文在机器人操作任务中评估了五种高性能视频预测模型,并发现模型规模、训练数据量和模型集成等因素对性能有影响。实验结果表明,尽管模型规模可以提高感知质量,但不确定性意识等其他属性也可以提高规划性能。本文的创新之处在于提出了一个以控制为中心的视频预测基准,并通过实验分析了不同因素对模型性能的影响。

**方法部分:**

方法详细介绍(Method detailed introduction):

a. 一句话概览:本文提出了一个基于控制的视频预测基准,用于评估模型在模拟机器人操作中的性能,并通过分析五个高性能视频预测模型的效果,发现模型规模、训练数据量和模型集成等因素对规划性能的影响。

b. 方法的详细步骤:

(1). 选择要分析的模型:选择了两个具有竞争性预测性能且适用于规划的变分视频预测模型,分别是FitVid和SVG模型。

(2). 实验环境和任务定义:选择了两个仿真环境,分别是robosuite和RoboDesk,每个环境包含多个任务类别和任务实例定义。

(3). 模型训练:针对每个环境,使用标准像素级重构损失(MSE)和额外的感知损失(LPIPS)训练了三个变体的FitVid和SVG模型,并进行了150K个梯度步骤的训练。

(4). 模型评估:使用FVD、LPIPS和SSIM等指标对模型在验证集上的预测性能进行评估,并通过视觉预测对机器人操作的规划性能进行评估。

(5). 分析结果:通过对比不同指标与控制性能的相关性,发现现有指标在不同任务上与控制性能的相关性存在较大差异。

(6). 提出基准:为了评估视频预测模型的下游控制性能,提出了Video Prediction for Visual Planning (VP 2 )基准,包括环境和任务定义、基于采样的规划器和训练数据集等组成部分。

(7). 基准特点:VP 2 基准具有易于使用、灵活性强和强调模型优势等特点,可以评估模型在多任务规划等方面的能力。

(8). 基准任务:VP 2 基准支持在两个仿真环境上进行11个任务的评估,包括推动、打开抽屉、将物体从桌子上推下等任务。

(9). 实验设置:为了测试模型的泛化能力和对环境变化的鲁棒性,定义了任务实例,每个任务实例由初始状态和目标图像组成。

(10). 实验结果:通过在VP 2 基准上的实验,验证了现有指标在控制性能评估中的局限性,并展示了不同模型在不同任务上的性能差异。

总结:本文通过分析现有指标在控制任务上的表现,提出了一个基于控制的视频预测基准VP 2 ,并通过实验验证了该基准的有效性。该基准可以评估视频预测模型在模拟机器人操作中的性能,并对模型规模、训练数据量和模型集成等因素进行分析。

**实验设置:**

- 实验设置(Experimental setting):

本文介绍了VP 2基准测试,旨在评估视频预测模型的下游控制性能。为了将模型的效果与环境或控制算法的效果区分开来,我们将每个任务的整个控制方案作为基准测试的一部分。VP 2由三个主要组成部分组成:环境和任务定义、基于采样的规划器和训练数据集。基准测试提供了环境和任务定义,同时还提供了一个控制设置,可以根据控制性能直接评分模型。每次基准测试运行由一系列控制试验组成,每个控制试验在特定的任务实例上使用给定的模型执行基于采样的规划。在T个控制步骤结束时,根据模拟器状态判断任务实例的成功或失败。为了使用视觉预测进行规划,在每个步骤中,基于采样的规划器尝试解决以下优化问题,以根据目标图像Ig、上下文帧Ic、成本函数C和视频预测模型fθ规划一个动作序列:min a1,a2,...aT Σi=1 C(f(Ic, a1:T)i, Ig)。然后选择最佳动作,并在每个步骤进行重新规划以减少模型误差的影响。我们在基准测试中使用2个上下文帧,并在整个基准测试中预测T = 10个未来帧。与模型为基础的强化学习中的先前工作一样,我们实现了一个使用MPPI(Williams et al., 2016; Nagabandi et al., 2019)的基于采样的规划器,用于采样候选动作序列,执行前向预测,然后根据这些分数更新采样分布。我们提供了已经调整过以实现与完美动力学模型的强大性能的规划超参数的默认值,可以在附录B中找到更多细节。VP 2还为每个任务类别指定了成本函数C。对于robosuite环境中的任务类别,我们简单地使用像素均方误差(MSE)作为成本。对于RoboDesk任务类别,我们发现额外的任务特定的预训练分类器可以提高规划性能。我们训练深度卷积网络来对每个任务的成功进行分类,并使用MSE和分类器logits的加权组合作为成本函数。我们将这些预训练模型权重作为基准测试的一部分提供。VP 2中的每个环境都配有用于视频预测模型训练的数据集。每个训练数据集包含35个时间步长的轨迹,每个时间步长包含256×256的RGB图像观测和每个步骤采取的动作。每个环境数据集的具体细节如下,附录D中提供了更多细节:

- robosuite桌面环境:我们包括了50K个与手动脚本策略交互的轨迹,以将随机对象在环境中的随机方向上推动。每个轨迹中的对象纹理是随机化的。

- RoboDesk环境:对于每个任务实例,我们包括了使用手动脚本策略收集的5K个轨迹,总共35K个轨迹。为了鼓励数据集包含不同成功率的轨迹,我们在执行脚本策略之前对每个动作的每个维度应用独立的高斯噪声。

**实验结果:**

实验结果和分析:

本文介绍了一个用于视频预测的控制中心基准测试(VP 2)。该基准测试旨在评估视频预测模型在下游控制任务中的性能。为了隔离模型、环境和控制算法的影响,基准测试包括每个任务的完整控制方案。VP 2由环境和任务定义、基于采样的规划器和训练数据集三个主要组成部分构成。基准测试提供了环境和任务定义,同时还提供了一个控制设置,可以根据控制性能直接评分模型。每次基准测试运行包括一系列控制试验,每个控制试验在特定任务实例上使用给定模型执行基于采样的规划。在T个控制步骤结束时,根据模拟器状态判断任务实例的成功或失败。实验结果表明,模型规模的扩大可以提高视觉多样性场景建模的感知质量,而不确定性意识等其他属性也可以提高规划性能。此外,本文还介绍了五种高性能视频预测模型的效果,并分析了模型规模、训练数据量和模型集成对性能的影响。

# P:14 通过增强现实头戴设备实现多模态基于自然语言的任务规划的体验式人工智能

- Title: Multimodal Grounding for Embodied AI via Augmented Reality Headsets for Natural Language Driven Task Planning

- Authors: Selma Wanna, Fabian Parra, Robert Valner, Karl Kruusamäe, Mitch Pryor

- Affiliation:

Selma Wanna - University of Texas at Austin

- Keywords: Natural Language Processing, Foundation Models, Language Grounding, Multimodality, Human Robot Collaboration

- Paper: [Link to the paper](https://arxiv.org/pdf/2304.13676)

Github: None

- Summary:

-(1):本文的研究背景是在非结构化环境中,特别是在工业领域中,利用人机团队中的机器人进行危险的检查和操作任务。

-(2):过去嵌入人工智能研究的方法依赖于硬提示工程技术,在提示设计和鲁棒性方面存在局限性。本文的方法是很有动机的,因为它解决了多模式接地和即时脆弱性的挑战。

-(3):本文提出的研究方法是将嵌入人工智能研究的前期工作应用于AR头显,将AR头显获得的视觉和语言信息注入GPT-3的语言提示符中。创新之处在于使用AR头戴式耳机进行多模式接地,并将EAI应用于工业任务。

-(4):通过使用AR头显在EAI代理和人类操作员之间进行信息交换的共址人机团队演示,对本文中的方法进行了评估。所取得的性能支持为工业领域的人机协作提供直观和最小限制的多模态控制接口的目标。

**方法部分:**

方法详细介绍(Method detailed introduction):

a. 一句话概览:本文介绍了一种通过增强现实头显实现多模态基础的方法,用于自然语言驱动的任务规划,以及在工业领域中应用的可行性和潜在问题。

b. 方法的详细步骤:

(1). 本文首先介绍了最近在生成建模方面的进展,推动了具身人工智能(EAI)领域的复兴。EAI系统通常使用大型语言模型来与环境进行交互的物理系统。本文探索了在工业领域中应用EAI的可能性,并成功地展示了共同定位的人机协作的可行性。

(2). 本文构建了一个实验,其中增强现实(AR)头显在EAI代理和人类操作员之间进行信息交流,用于各种检查任务。这种使用AR头显进行多模态基础和将EAI应用于工业任务的方法在具身人工智能研究中是新颖的贡献。

(3). 本文还通过定量和定性分析,突出了EAI构建中的潜在问题。大多数依赖于硬提示工程的方法使用人工设计的提示,但很少有定量的设计理据。这是有问题的,因为提示工程是一个非稳健的过程,不同但语义等效的提示可能导致任务性能在纯随机和最先进性能之间变化。本文还介绍了PromptCraft,这是一个开源平台,供机器人研究人员共享他们的提示策略。

(4). 本文还介绍了多模态基础模型(如CLIP)的出现,这些模型将图像信息转化为文本,常用于物体检测和场景描述等任务。本文采用了将视觉信息通过图像到文本算法注入到语言提示中的方法,但放弃了图像到文本模型,而是采用人工生成的虚拟现实(VR)标记。

(5). 本文还介绍了一种用于提示设计的穷举搜索算法,该算法在验证集上评估了10个P 2提示的空间。实验结果支持了我们的假设,即即使对于可以使用传统语法或更简单的神经网络解决的简单任务,提示设计也是高度非稳健的。最佳表现的提示设计具有平均BLEU分数为0.662,最高BLEU分数为0.850。

(6). 总结:本文通过增强现实头显实现了多模态基础的方法,用于自然语言驱动的任务规划,并在工业领域中展示了其可行性。同时,本文还突出了EAI构建中的潜在问题,并提供了定量和定性分析。

**实验设置:**

- 实验设置(Experimental setting):

本文的实验设置主要是探索在工业领域中应用具有增强现实(AR)头显的多模态基础的体验人工智能(EAI)系统的可行性。实验中构建了一个场景,通过AR头显在EAI代理和人类操作员之间进行信息交流,完成各种检查任务。实验中使用了一个验证集,对10个P 2提示进行了详尽的搜索算法,并评估了其性能。实验结果表明,即使对于可以使用传统语法或简单神经网络解决的简单任务,提示设计的鲁棒性也非常低。实验还对提示的脆弱性进行了进一步分析,并探讨了语言增强技术对任务性能的影响。实验中使用了多模态语音和增强现实的UMRF图解析器进行演示,展示了远程检查场景的功能。实验中使用了Clearpath Husky和Universal Robots UR5的移动操纵机器人,配备了Intel RealSense D435相机和Microsoft HoloLens 2 AR头显。实验中还使用了Azure Spatial Anchors插件实现了机器人和AR设备的共定位和共享参考框架。实验中的自然语言命令由HoloLens应用程序捕获,并通过ROS Python节点构建提示,并发送给OpenAI进行处理。实验中使用了Robot Operating System (ROS)和RoboFleet进行组件之间的数据分发。实验结果表明,EAI在安全关键环境中的部署存在一定的风险,提示的性能可能会受到微小的语义变化的影响。

**实验结果:**

- 实验结果和分析(Experimental results and analysis):

本文的实验结果表明,在工业领域中,使用增强现实(AR)头显作为多模态基础的EAI系统在人机协作方面是可行的。通过构建一个实验,将AR头显用于EAI代理和人操作员之间的信息交流,针对各种检查任务取得了成功。这是在具体应用中将AR头显用于多模态基础和将EAI应用于工业任务的创新贡献。此外,通过定量和定性分析,本文还指出了EAI构建中的潜在问题。

(1). 实验结果支持了假设,即即使对于可以使用传统语法或更简单的神经网络解决的简单任务,prompt设计的鲁棒性也非常低。在附录A1中清楚地证明了这一点。图4提供了表现最好的十个prompt。

(2). 在信息排序和示例选择方面存在较大的变化(参见图A3和图A2),但表现最好的prompt最常见的组合是示例类型1、4和5(参见表A1)。除一个例外外,示例类型5在序列中作为第一个示例时表现最好。这可能是因为示例5既是最长的,也是最具信息量的训练示例。然而,当它被放置在prompt的末尾时,LLM在开始解码验证查询时可能会感到困惑。

(3). 表现最好的prompt设计是prompt 70,平均BLEU分数为0.662,结构为示例4 + 示例5。BLEU分数最高的prompt(0.850)的结构为示例1 + 示例4。有关前十个prompt的详细设计,请参见表A2。

(4). 对于文本数据增强的进一步实验表明,GPT-3对于随机删除和插入操作具有较强的鲁棒性,但是随机交换单词和同义词替换对性能影响较大。对prompt应用组合增强后,性能变化更大。建议将Text AutoAugment的幅度参数搜索空间限制在小于0.1的范围内。

(5). 在低数据情况下,无法确定给定prompt与GPT-3预训练数据集中的UMRF示例的相似性之间是否存在相关性。

(6). 实验结果表明,即使是看似无害的同义词替换,如将数字表示转换为书面形式,以及替换类似的单词(例如将“向前移动”替换为“接近”和“桌子”替换为“办公桌”),也会明显影响任务性能。对坐标变量“y”进行转换,例如将其替换为“yttrium”或“atomic number 39”,也会对任务性能产生不利影响。

(7). 本文还展示了基于多模态语音和增强现实的UMRF图解析器的功能。通过远程检查场景的演示,展示了配备摄像头的移动机械手(Clearpath Husky + 两个Universal Robots UR5)与操作员之间的协作。操作员使用AR头显捕捉语音命令,并通过手势操作的虚拟标记定义目标位置。检查和任务执行反馈实时叠加在操作员的视野中。通过Azure Spatial Anchors插件实现了机器人和AR设备的共定位和共享参考框架。实验结果还展示了演示软件的设置,包括HoloLens、命令服务器和机器人等三个主要组件。

(8). 通过实验结果,本文还指出了在安全关键环境中部署EAI的潜在危险。具体而言,即使是语义上等效的prompt,在性能上也可能存在很大差异。对于开发在特定领域的机器人系统的研究人员来说,目前还没有明确的解决方案。不能依赖于在LLM预训练数据集中具有更大任务表示的语言任务的鲁棒性技术。

# P:15 AutoNeRF: 使用自主体验的智能体训练隐式场景表示

- Title: AutoNeRF: Training Implicit Scene Representations with Autonomous Agents

- 论文简介: 本文介绍了一种使用自主体验的智能体来收集数据以训练NeRF模型的方法。通过比较不同的探索策略和奖励函数,我们展示了NeRF模型在多个下游任务上的性能表现。

- Authors: Pierre Marza, Laetitia Matignon, Olivier Simonin, Dhruv Batra, Christian Wolf, Devendra Singh Chaplot

- Affiliation:

Pierre Marza: INSA Lyon

- Keywords: Implicit representations, Neural Radiance Fields (NeRF), autonomous agents, exploration strategies, reward functions

- Paper: [Link](https://arxiv.org/pdf/2304.11241) Github: [None]

- 论文总结:

- (1): 本文的研究背景是在未知环境中构建自主导航智能体的探索问题。

- (2): 过去的方法主要集中在训练探索策略以最大化覆盖范围,但对于构建NeRF模型来说,需要人工收集数据。本文提出了一种使用自主体验的智能体来收集数据的方法,并通过比较不同的探索策略和奖励函数来解决这个问题。

- (3): 本文提出了AutoNeRF方法,通过强化学习训练模块化策略,使智能体能够自主探索未知环境并收集数据以训练NeRF模型。我们还提出了多个下游任务来评估NeRF模型的性能,包括几何和语义地图预测准确性、规划准确性和相机姿态优化。

- (4): 通过实验证明,使用自主收集的数据可以训练NeRF模型,并在多个下游任务上取得良好的性能。模块化训练的探索策略相比传统方法有显著的改进。

**方法部分:**

方法详细介绍:

a. 一句话概览:本文提出了AutoNeRF方法,通过使用自主体验的智能体来收集训练NeRF模型所需的数据,实现了对未知环境的高效探索和自主构建隐式地图表示,同时比较了不同的探索策略对模型性能的影响。

b. 方法的详细步骤:

(1). Vanilla Semantic NeRF:使用多层感知器(MLP)预测给定空间中特定3D位置的密度σ、颜色c和语义类别s。通过对射线进行多点采样,使用随机梯度下降法训练NeRF模型,使其在渲染新视角时尽可能接近真实图像。

(2). Enhanced NeRF (Semantic Nerfacto):在Vanilla Semantic NeRF的基础上,引入了学习的外观嵌入e和球谐编码函数sh,通过改进的采样策略和快速查询粗糙密度表示,提高了训练速度和渲染质量。

(3). AutoNeRF方法:分为两个阶段,探索策略训练和NeRF模型训练。在探索策略训练阶段,使用自监督方法在训练环境中训练探索策略,以收集观测数据。在NeRF模型训练阶段,使用训练好的探索策略在未知测试场景中收集数据,并使用这些数据训练NeRF模型。最后,评估训练好的NeRF模型在多个下游任务中的性能。

(4). 探索策略的模块化架构:使用全局策略负责探索,考虑不同的奖励信号来训练全局策略,以适应场景重建任务的需求。奖励信号包括探索区域、障碍物覆盖、语义对象覆盖和视角覆盖。

(5). 奖励信号的计算:根据探索策略构建的度量地图计算不同奖励信号,包括探索区域、障碍物覆盖、语义对象覆盖和视角覆盖。这些奖励信号在自监督方式下计算,用于训练全局策略。

(6). 下游任务评估:使用训练好的NeRF模型在多个下游任务中进行评估,包括新视角渲染、地图重建、规划和姿态优化。

(7). 实验结果:实验证明,使用AutoNeRF方法可以在未知环境中仅通过一次体验收集的数据训练NeRF模型,并在多个机器人任务中取得良好的性能。模块化训练的探索模型明显优于传统基线模型。

(8). 其他细节:文章还介绍了具体的任务设置和实验细节,包括智能体的初始化、可执行的动作、观测数据的组成等。

(9). 结论:AutoNeRF方法通过使用自主体验的智能体来收集数据,实现了对未知环境的高效探索和自主构建隐式地图表示。实验结果表明,该方法在多个下游任务中取得了良好的性能,为机器人任务提供了有效的解决方案。

**实验设置:**

- 实验设置(Experimental setting):

本文介绍了一种使用自主体验的智能体收集数据来训练NeRF(神经辐射场)的方法,称为AutoNeRF。实验中使用了25个Gibson tiny训练集中的场景进行策略训练,并在5个Gibson验证集场景上进行了评估。每个策略在每个场景中进行了5次不同起始位置的回滚,并在每个轨迹数据上训练了一个NeRF模型。策略训练使用了V100 GPU进行了7个GPU天的训练。实验中还使用了预训练的Mask R-CNN模型,该模型在MS COCO数据集上进行了微调。实验结果表明,NeRF可以在自主收集的数据上进行训练,并且可以用于多个下游机器人任务。此外,模块化训练的探索模型明显优于传统基线模型。

**实验结果:**

- 实验结果和分析(Experimental results and analysis):

本文介绍了一种使用自主体验的智能体收集数据来训练NeRF(神经辐射场)的方法,称为AutoNeRF。实验结果表明,AutoNeRF可以在未知环境中仅使用一次体验的数据来训练NeRF,并且可以用于多个下游机器人任务。此外,使用模块化训练的探索模型明显优于传统基线模型。

(1). 不同探索策略的影响:实验比较了不同探索策略的影响,包括手工制作的基于边界的探索和由训练的高级规划器和经典低级路径跟随器组成的模块化方法。结果显示,模块化训练的探索模型在性能上明显优于传统基线模型。

(2). 下游任务的评估:实验评估了使用不同奖励函数训练的模型在四个不同的下游任务上的表现,包括经典视点渲染、地图重建、规划和姿态细化。实验结果表明,NeRF可以在主动收集的数据上进行训练,并且仅使用一次未知环境中的体验即可完成训练。同时,NeRF在多个下游任务上表现出良好的质量。

(3). 复杂大规模环境的重建:实验展示了使用模块化策略训练的连续表示在智能体探索场景时自主重建复杂大规模环境(如公寓或房屋)的可能性。实验结果显示,生成的几何、外观和语义网格在RGB和语义网格方面都具有令人满意的效果。此外,生成的网格可以加载到Habitat模拟器中,实现正确的导航和碰撞计算。

(4). 其他实验发现:实验还发现,NeRF模型可以在Gibson val场景中的不同起始位置上进行多次运行,并且在每个轨迹数据上训练一个NeRF模型。此外,模型训练使用了V100 GPU进行了7个GPU天的训练。

# P:16 学习语义无关和空间感知表示的可推广视听导航

- 1. Title: Learning Semantic-Agnostic and Spatial-Aware Representation for Generalizable Visual-Audio Navigation

- 论文简介: 本文提出了一种学习语义无关和空间感知表示的方法,用于可推广的视听导航任务。

- 2. Authors: Hongcheng Wang, Yuxuan Wang, Fangwei Zhong, Mingdong Wu, Jianwei Zhang, Yizhou Wang, Hao Dong

- 3. Affiliation:

Hongcheng Wang, Mingdong Wu and Hao Dong are with School of Computer Science, Peking University, Beijing, 100871, China.

- 4. Keywords: Vision-Based Navigation, Representation Learning, Reinforcement Learning

- 5. Paper: [Link](https://arxiv.org/pdf/2304.10773) Github: [Link](https://github.com/wwwwwyyyyyxxxxx/SA2GVAN)

- 6. 论文总结:

- (1): 本文的研究背景是视听导航任务,其中智能体需要利用视听观察来搜索和导航到声源位置。

- (2): 过去的方法在两个方面存在限制:对未听过的声音类别的泛化能力较差,训练样本效率低。本文的方法通过设计两个辅助任务来解决这两个问题,并提出了一种脑启发式的插拔式方法,学习语义无关和空间感知的表示。

- (3): 本文的研究方法是基于人类听觉处理机制,通过两个辅助任务来加速学习具有所需特征的表示。其中一个辅助任务使用对抗机制来忽略语义信息,另一个辅助任务利用视听输入的时间信息来预测声音的相对方向,从而增强空间信息。

- (4): 本文在真实的3D场景(Replica和Matterport3D)上进行了实验,结果表明我们的方法在转移到具有未听过的声音和地图的场景时具有更好的泛化性能。

**方法部分:**

方法详细介绍(Method detailed introduction):

a. 一句话概览:本文提出了一种脑启发式的插拔式方法,用于学习语义无关和空间感知的表示,以实现可推广的视听导航。

b. 方法的详细步骤:

(1). 基于人类对声音的定位能力,认为空间信息足以帮助智能体定位和感知声音。因此,提出了学习语义无关表示的辅助任务,通过对抗训练使音频编码器学习到与导航无关的表示。

(2). 引入了一个音频分类器和一个音频编码器之间的对抗机制,通过梯度反转层实现。音频分类器试图区分音频的语义类别,而音频编码器则试图生成与语义类别无关的表示。通过优化参数,使音频编码器学习到语义无关的表示。

(3). 为了提取空间信息并辅助导航策略学习,引入了预测声音位置的辅助任务。使用一个全连接网络作为位置预测器,通过时间序列模型生成的时间特征作为输入,预测声音相对于智能体的俯仰角和偏航角。通过最小二乘损失函数来计算辅助损失,并利用该损失生成的梯度来更新音频编码器、视觉编码器和时间序列模型。

(4). 通过以上两个辅助任务的训练,智能体学习到了包含空间信息的视听输入的表示,从而实现了对未听过的声音类别和地图的泛化能力。

(5). 在实验中,使用真实的3D场景(Replica和Matterport3D)进行验证,结果表明该方法在应用于具有未见地图和未听声音类别的场景时具有更好的泛化性能。

(6). 总结:本文提出的方法通过学习语义无关和空间感知的表示,解决了视听导航中的两个问题:对未听声音类别的泛化能力差和训练样本效率低的问题。通过引入对抗机制和预测声音位置的辅助任务,智能体学习到了泛化性能更好的表示,实现了对未见地图和未听声音类别的导航能力。

**实验设置:**

- 实验设置(Experimental setting):

本文的实验设置主要包括以下几个方面: