id

int64 2.05k

16.6k

| title

stringlengths 5

75

| fromurl

stringlengths 19

185

| date

timestamp[s] | tags

sequencelengths 0

11

| permalink

stringlengths 20

37

| content

stringlengths 342

82.2k

| fromurl_status

int64 200

526

⌀ | status_msg

stringclasses 339

values | from_content

stringlengths 0

229k

⌀ |

|---|---|---|---|---|---|---|---|---|---|

14,535 | 在 Python 中使用机器学习来检测钓鱼链接 | https://www.opensourceforu.com/2022/04/detect-a-phishing-url-using-machine-learning-in-python/ | 2022-05-02T18:06:47 | [

"钓鱼",

"机器学习"

] | https://linux.cn/article-14535-1.html | 在网络钓鱼攻击中,用户会收到一封带有误导性链接的邮件或信息,攻击者可以利用它来收集重要数据,比如你的银行卡密码。本文将会给出一个简短的教程,旨在介绍如何检测这种网络钓鱼的企图。

通过网络钓鱼攻击,攻击者能够获得一些重要凭证,这些凭证可以用来进入你的银行或其他金融账户。攻击者发送的 URL 看起来与我们日常使用的原始应用程序完全相同。这也是人们经常相信它,并在其中输入个人信息的原因。钓鱼网址可以打开一个网页,它看起来与你的银行的原始登录页面相似。最近,这样的网络钓鱼攻击正变得相当普遍,所以,检测钓鱼链接变得非常重要。因此,我将介绍如何在 Python 中使用机器学习来检查一个链接是误导性的还是真实的,因为它可以帮助我们看到网页代码及其输出。注意,本文将使用 Jupyter Notebook。当然,你也可以使用 Google Colab 或 Amazon Sagemaker,如果你对这些更熟悉的话。

### 下载数据集

第一步,我们需要用于训练数据集。你可以从下面的链接中下载数据集。

* 真实的链接:<https://github.com/jishnusaurav/Phishing-attack-PCAP-analysis-using-scapy/blob/master/Phishing-Website-Detection/datasets/legitimate-urls.csv>

* 钓鱼链接:<https://github.com/jishnusaurav/Phishing-attack-PCAP-analysis-using-scapy/blob/master/Phishing-Website-Detection/datasets/phishing-urls.csv>

### 训练机器进行预测

当数据集下载完成,我们需要使用以下几行代码来导入所需的库:

```

import pandas as pd

from sklearn.ensemble import RandomForestClassifier

```

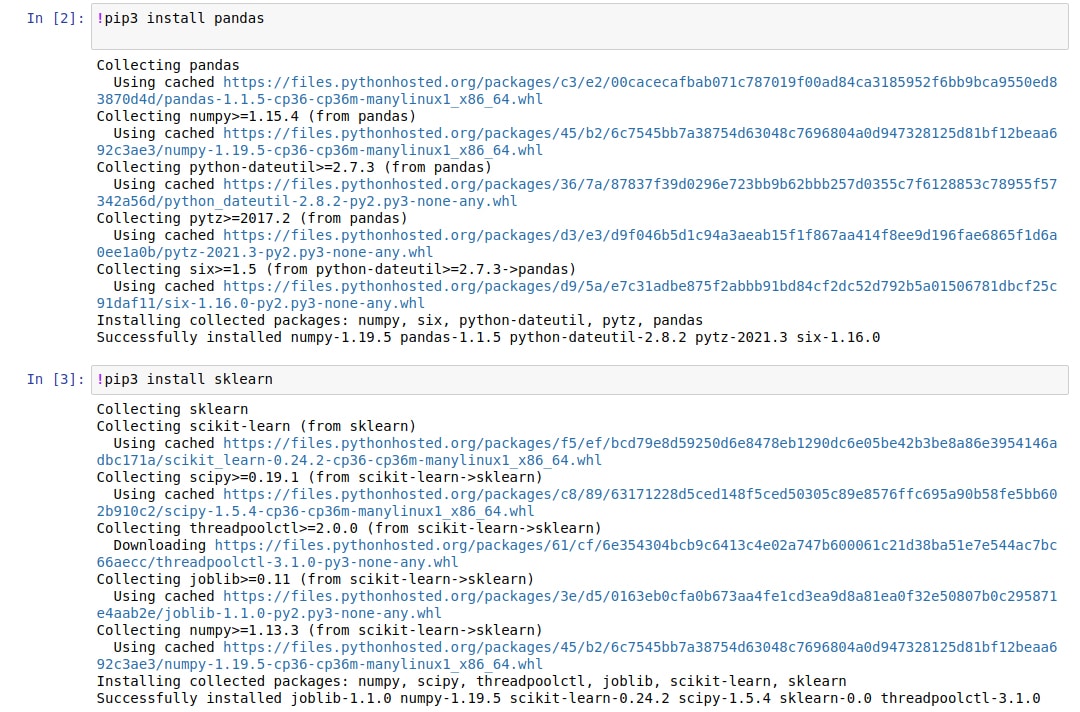

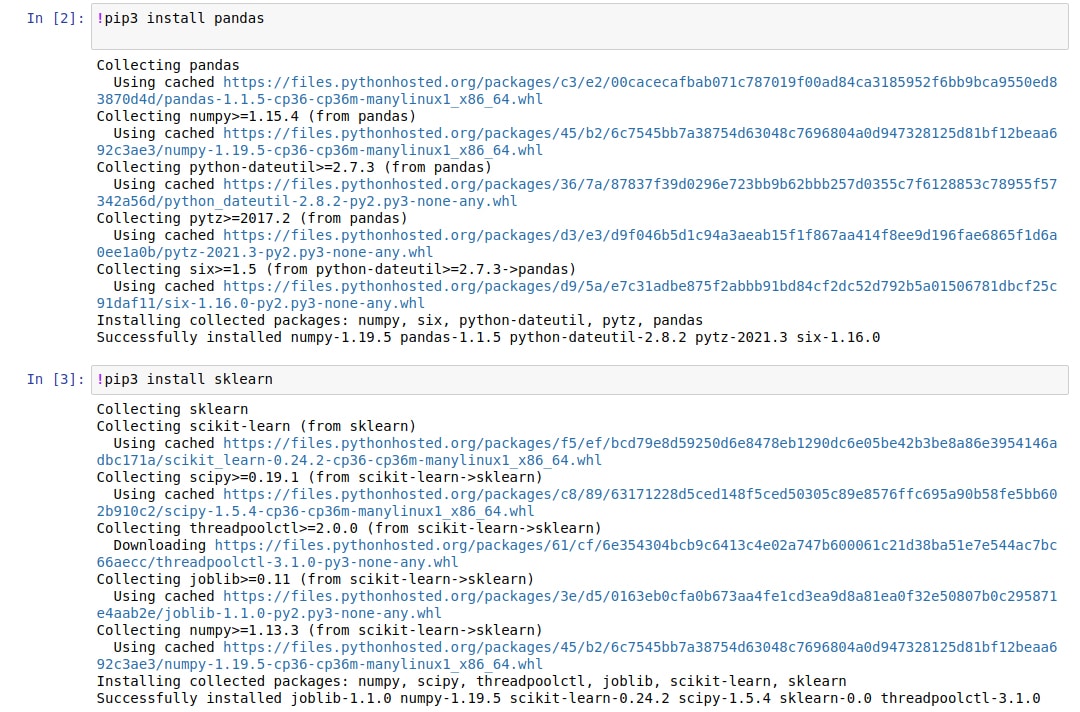

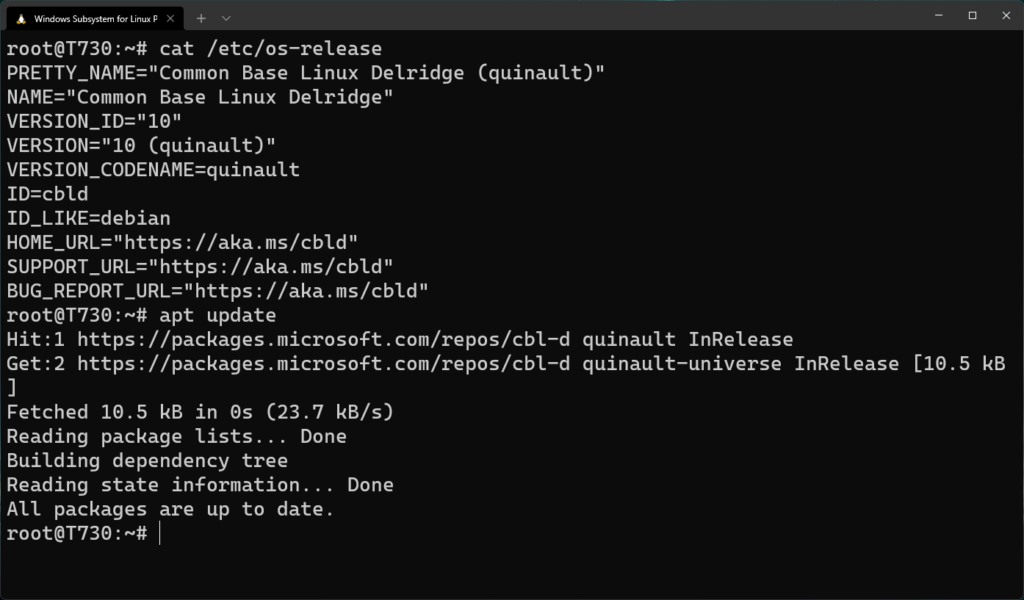

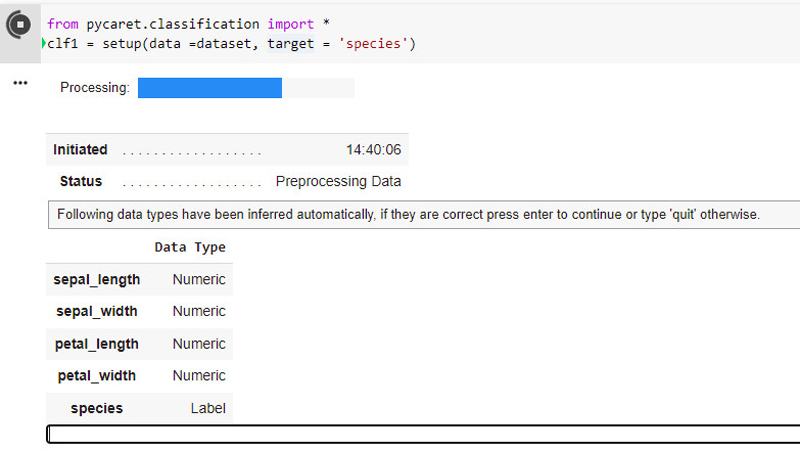

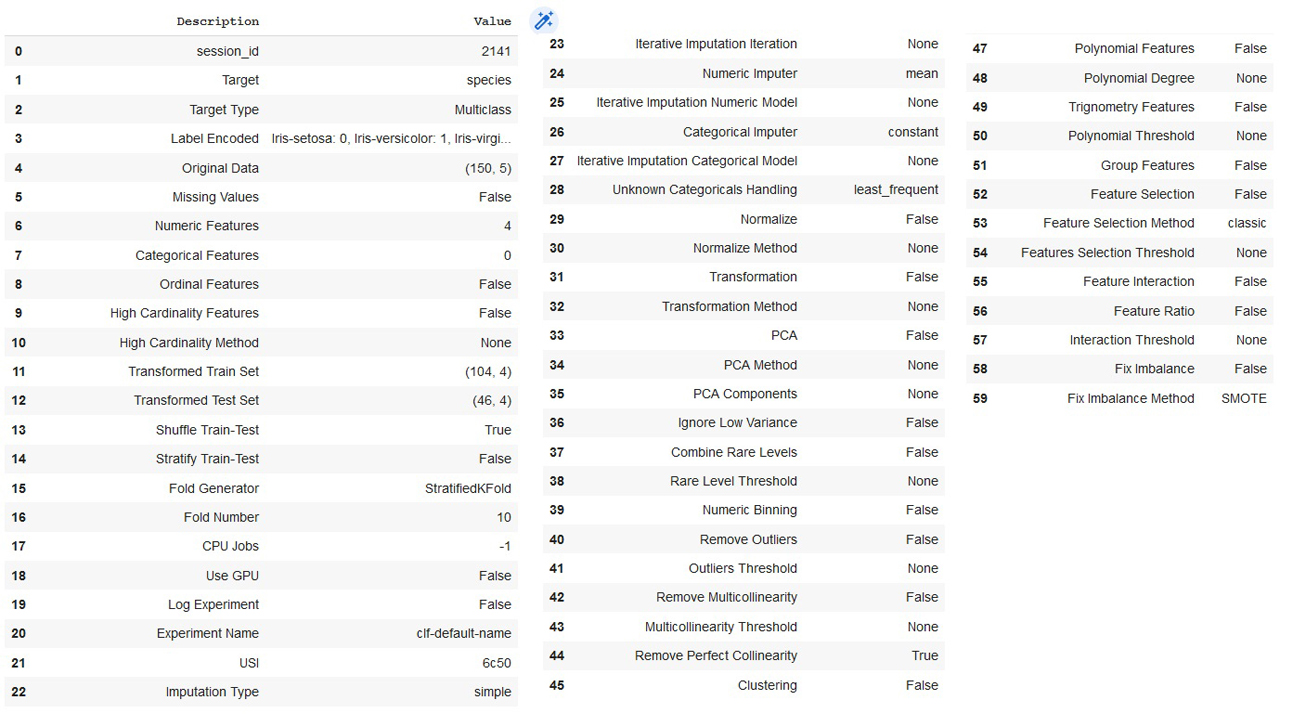

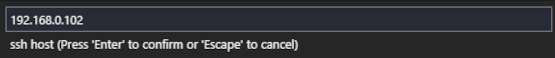

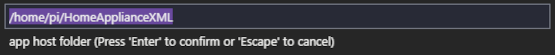

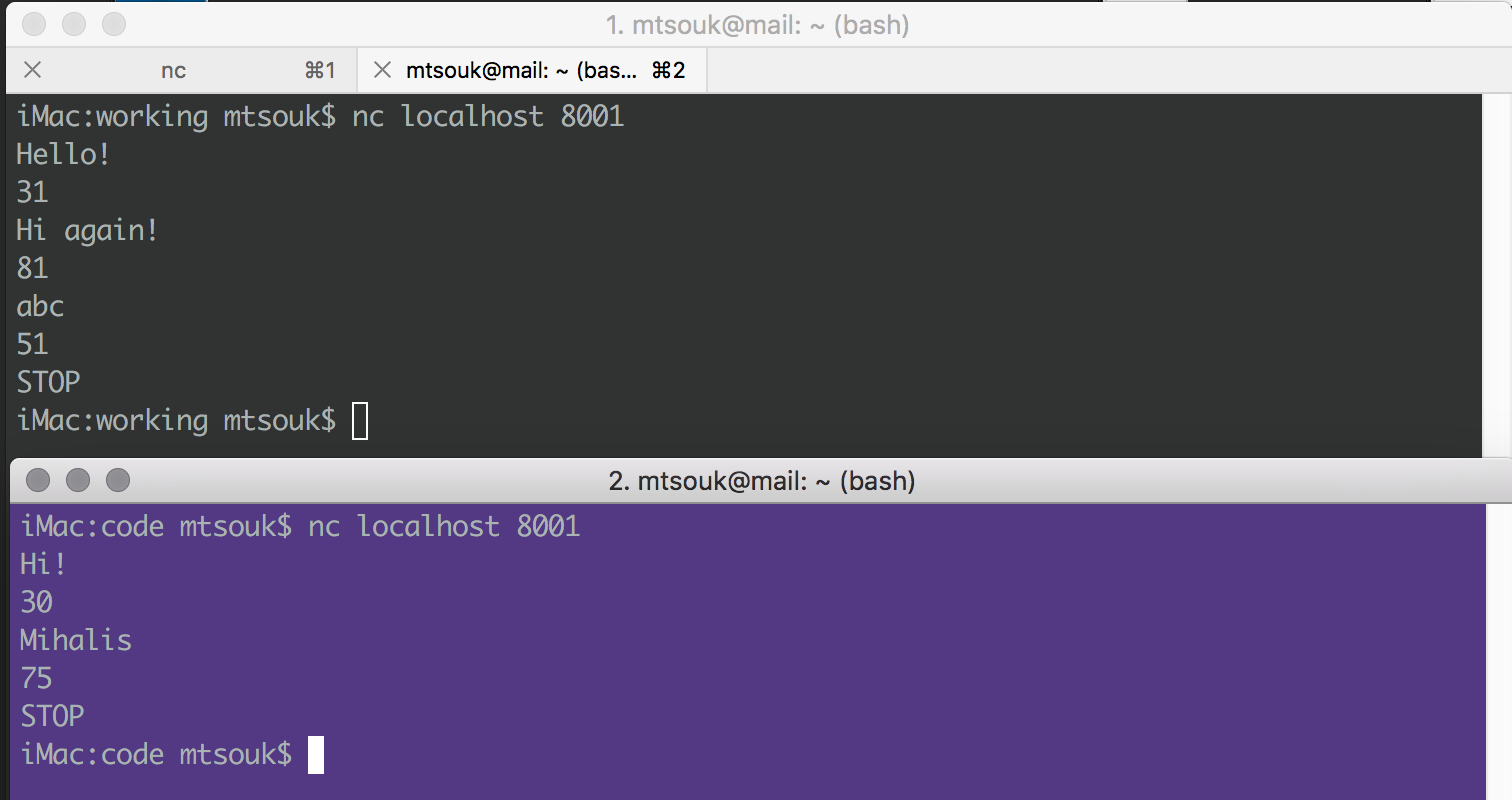

如果你没有这些库,你可以使用 `pip` 工具来安装这些库,如下图所示:

当依赖安装完成,你就可以导入数据集,并将其转换为 `pandas` 数据框架,使用以下几行代码进一步处理:

```

legitimate_urls = pd.read_csv(“/home/jishnusaurav/jupyter/Phishing-Website-Detection/datasets/legitimate-urls.csv”)

phishing_urls = pd.read_csv(“/home/jishnusaurav/jupyter/Phishing-Website-Detection/datasets/phishing-urls.csv”)

```

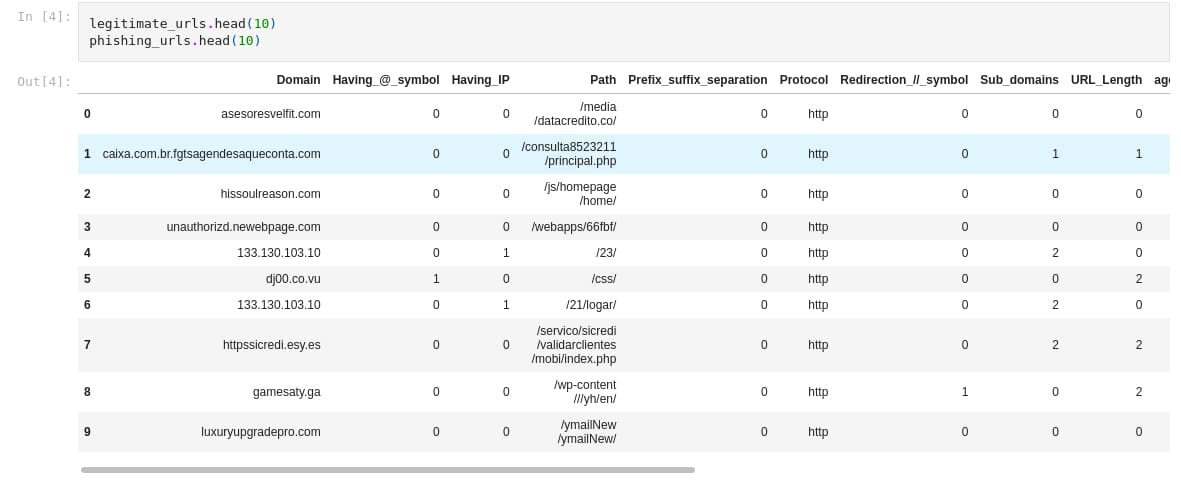

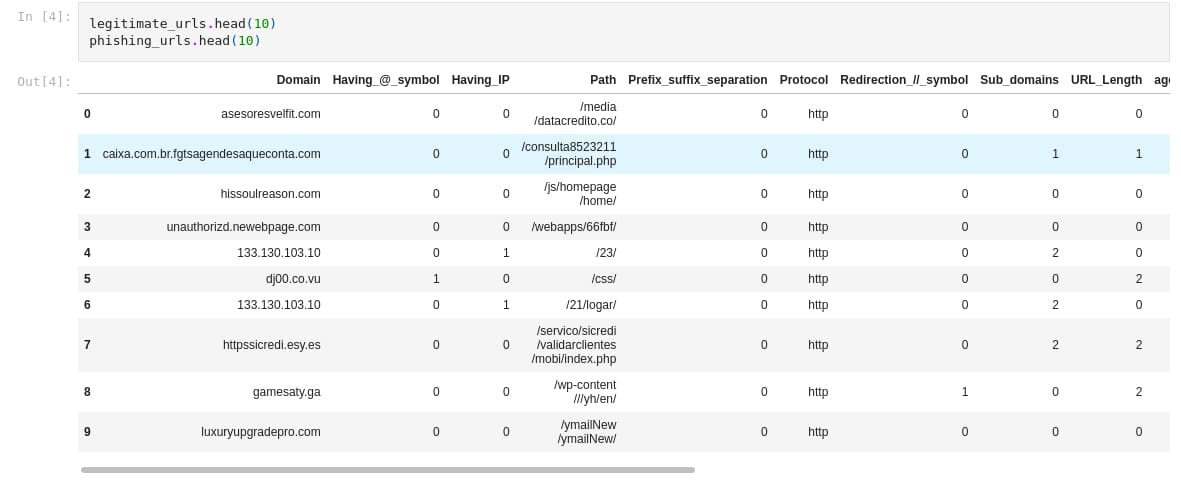

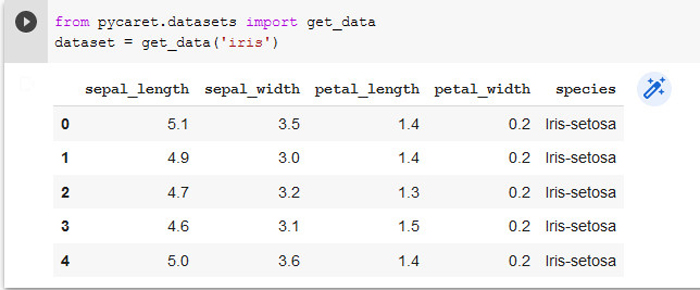

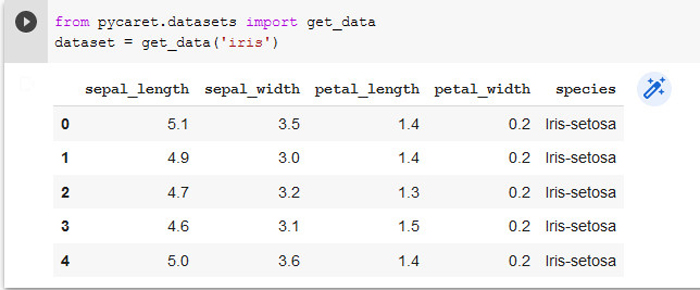

在成功导入后,我们需要把这两个数据集合并,以便形成一个数据集。合并后的数据集的前几行如下图所示:

然后去掉那些我们不需要的列,如路径(`path`)、协议(`protocol`)等,以达到预测的目的:

```

urls = urls.drop(urls.columns[[0,3,5]],axis=1)

```

在这之后,我们需要使用以下代码将数据集分成测试和训练两部分:

```

data_train, data_test, labels_train, labels_test = train_test_split(urls_without_labels, labels, test_size=0.30, random_state=110)

```

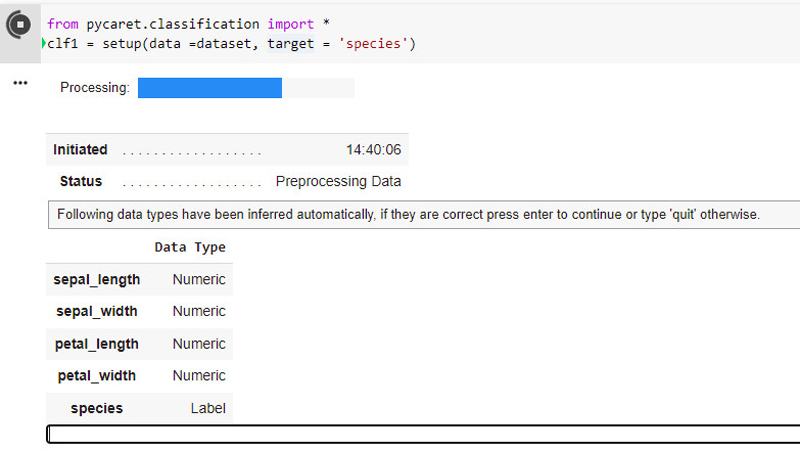

接着,我们使用 `sklearn` 的随机森林分类器建立一个模型,然后使用 `fit` 函数来训练这个模型。

```

random_forest_classifier = RandomForestClassifier()

random_forest_classifier.fit(data_train,labels_train)

```

完成这些后,我们就可以使用 `predict` 函数来最终预测哪些链接是钓鱼链接。下面这行可用于预测:

```

prediction_label = random_forest_classifier.predict(test_data)

```

就是这样啦!你已经建立了一个机器学习模型,它可以预测一个链接是否是钓鱼链接。试一下吧,我相信你会满意的!

---

via: <https://www.opensourceforu.com/2022/04/detect-a-phishing-url-using-machine-learning-in-python/>

作者:[Jishnu Saurav Mittapalli](https://www.opensourceforu.com/author/jishnu-saurav-mittapalli/) 选题:[lkxed](https://github.com/lkxed) 译者:[lkxed](https://github.com/lkxed) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | *In a phishing attack, a user is sent a mail or a message that has a misleading URL, using which the attacker can collect important data like the passwords of the banks your money is in. This article gives a short tutorial on how to detect such phishing attempts.*

Through phishing attacks, attackers acquire important credentials that can be used for getting access to your bank or other financial accounts. The URLs sent by the attacker look exactly the same as the original applications we use on a daily basis. That is why people often believe these and enter their personal details. A phishing URL can open a Web page that looks similar to the original login page of your bank. Detecting such URLs has become very important of late as such phishing attacks are becoming pretty common. So let’s see how we can check whether a URL is a misleading one or a genuine one using machine learning in Python, as it can help us see the code as well as the outputs. We will be using Jupyter Notebook. You can use Google Colab or Amazon Sagemaker too, if you are more comfortable with those.

**Download the data sets**

To start, we will need the data set to work upon. You can download the data sets from the links given below.

**Genuine URLs:** https://github.com/jishnusaurav/Phishing-attack-PCAP-analysis-using-scapy/blob/master/Phishing-Website-Detection/datasets/legitimate-urls.csv

**Phishing URLs:** https://github.com/jishnusaurav/Phishing-attack-PCAP-analysis-using-scapy/blob/master/Phishing-Website-Detection/datasets/phishing-urls.csv

**Training the machine to predict**

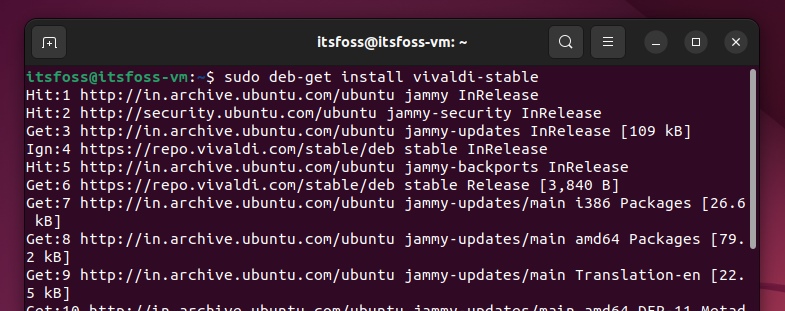

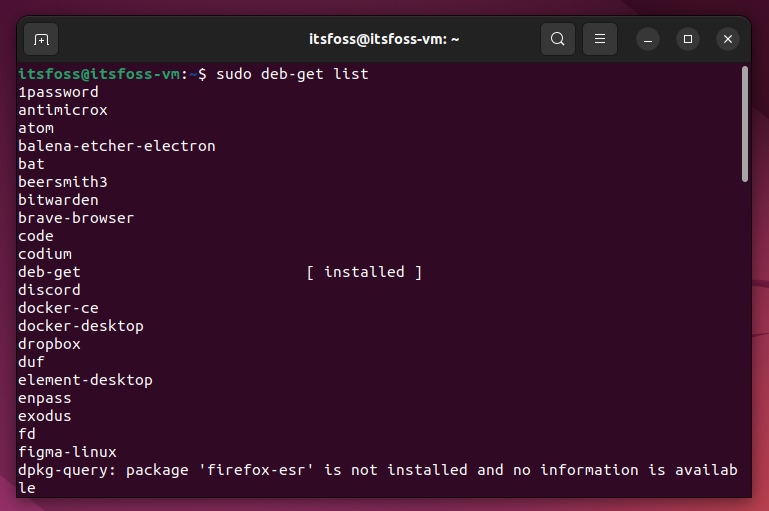

Once we have the data sets, we need to import the required libraries using the following lines of code:

<em>import pandas as pd</em> <em>from sklearn.ensemble import RandomForestClassifier</em>

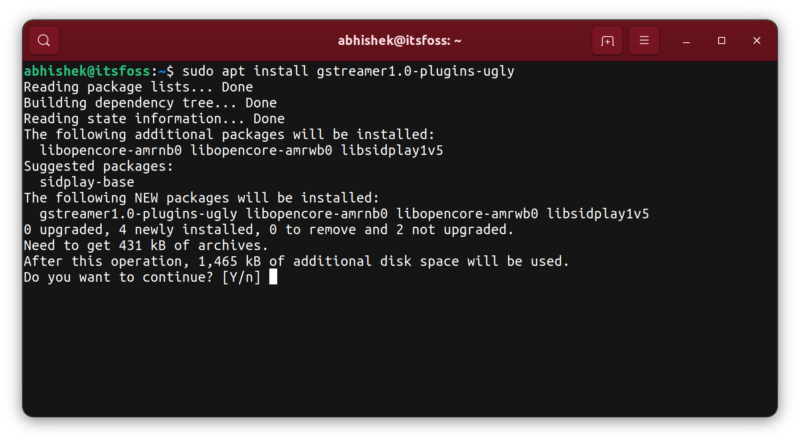

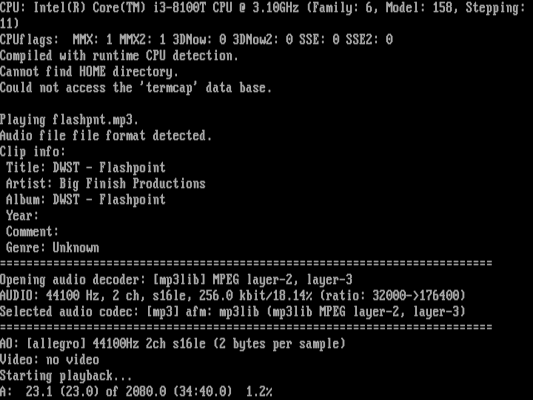

If you do not have the libraries, you can use* pip* to install the libraries, as shown in Figure 1.

Once this is done, you can import the data sets and convert them into pandas dataframe for further processing using the following lines of code:

legitimate_urls = pd.read_csv(“/home/jishnusaurav/jupyter/Phishing-Website-Detection/datasets/legitimate-urls.csv”) phishing_urls = pd.read_cs v(“/home/jishnusaurav/jupyter/Phishing-Website-Detection/datasets/phishing-urls.csv”)

After successful import, we need to merge both the dataframes — the legitimate and the phishing ones, in order to make one data set. The first few lines of the merged data set are shown in Figure 2.

We then drop the columns like path, protocol, etc, that we do not need for the purpose of prediction:

urls = urls.drop(urls.columns[[0,3,5]],axis=1)

After this, we need to split the data set into testing and training parts using the following code:

data_train, data_test, labels_train, labels_test = train_test_split(urls_without_labels, labels, test_size=0.30, random_state=110)

We now make a model using the random forest classifier from sklearn, and then use the fit function to train the model:

random_forest_classifier = RandomForestClassifier() random_forest_classifier.fit(data_train,labels_train)

Once this is done, we can use the predict function to finally predict which URLs are phishing. The following line can be used for the prediction:

prediction_label = random_forest_classifier.predict(test_data)

That is it! You have built a machine learning model that predicts if a URL is a phishing one. Do try it out. I am sure you will have fun. |

14,536 | Archinstall 新的菜单系统让安装 Arch Linux 更容易了 | https://news.itsfoss.com/archinstall-menu/ | 2022-05-02T22:56:58 | [

"Arch Linux"

] | https://linux.cn/article-14536-1.html |

>

> Archinstall 让人们更容易上手 Arch Linux,也应该可以为经验丰富的 Linux 用户节省时间。你觉得呢?

>

>

>

去年的这个时候,Arch Linux [引入了一个引导式的安装程序](https://news.itsfoss.com/arch-linux-easy-install/),使其安装过程更加简单。

你只需要输入 `archinstall`,就可以开始一步步的安装,而不需要自己全部定制。

即使你不是新手用户,它应该也能为你正常安装 Arch Linux 节省一些时间。你可以配置所有基本选项,无论是创建普通用户或 root 用户,还是选择桌面、挑选软件包、选择音频服务器,等等。

如果你感兴趣的话,可以在我们的 [在 VirtualBox 里安装 Arch Linux 的指南](https://itsfoss.com/install-arch-linux-virtualbox/) 中看看 Archinstall 的实际使用。

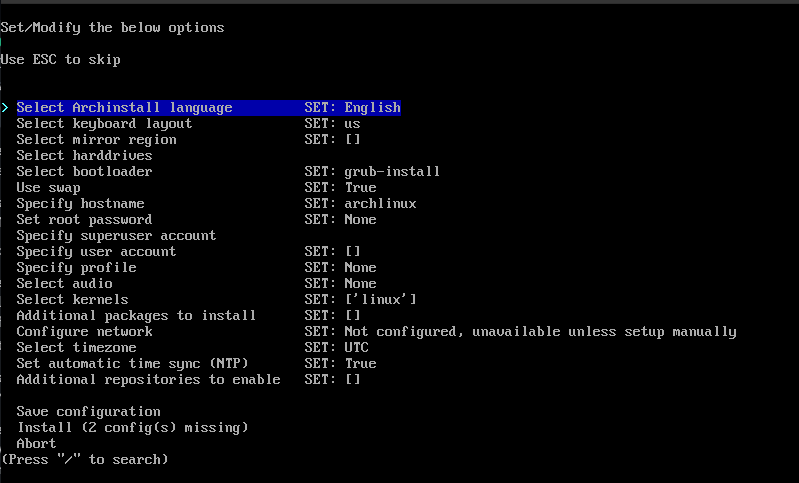

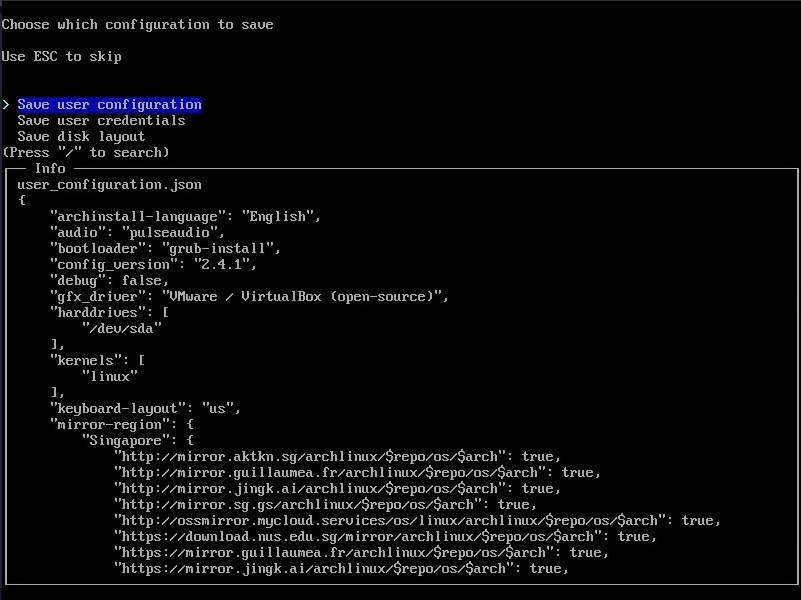

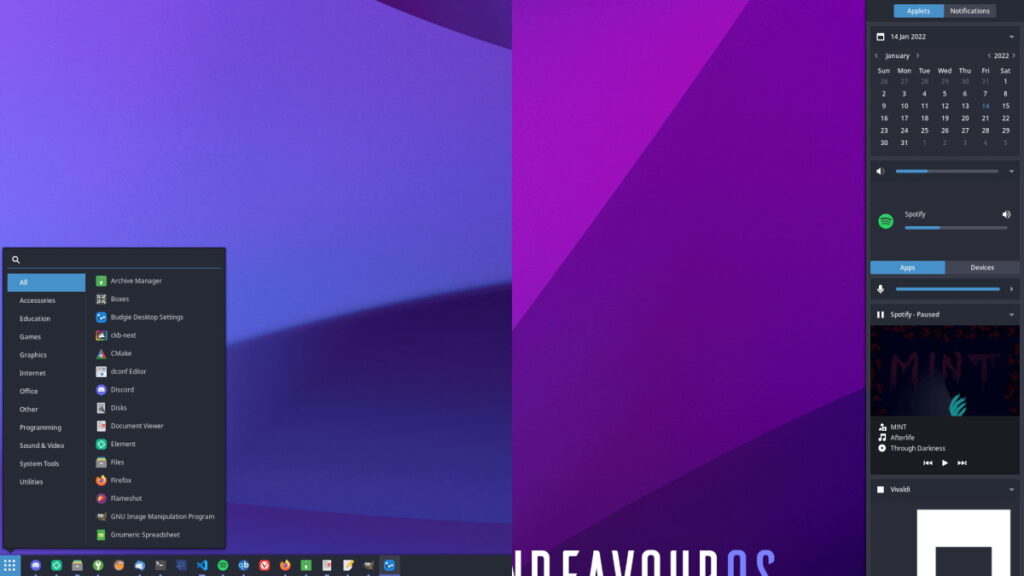

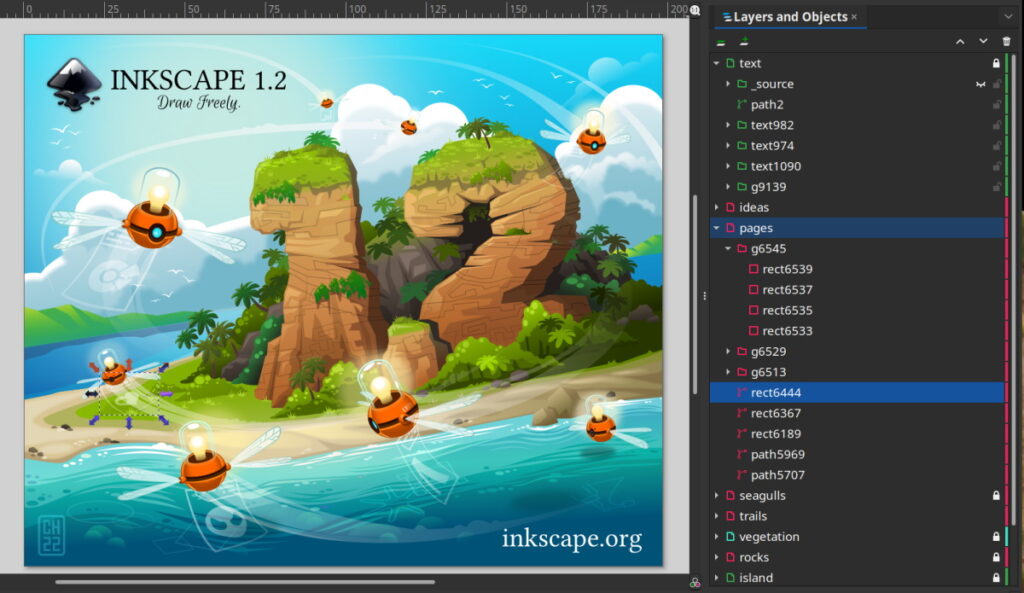

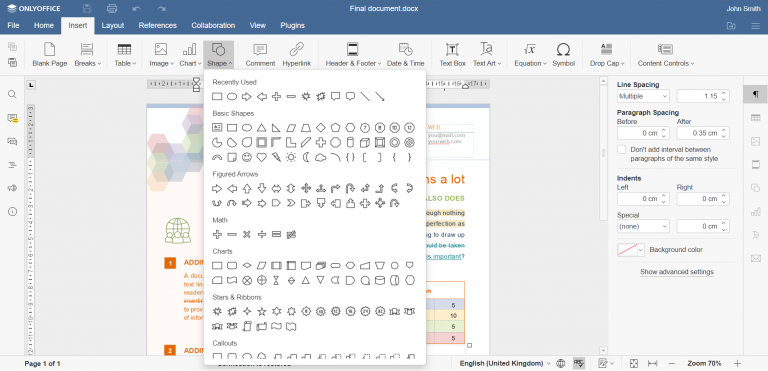

现在,Archinstall v2.4.1 已发布,我们有了新的菜单系统和大量的技术变化。

让我们来看看它是如何工作的吧!

### 新菜单系统及无障碍改进

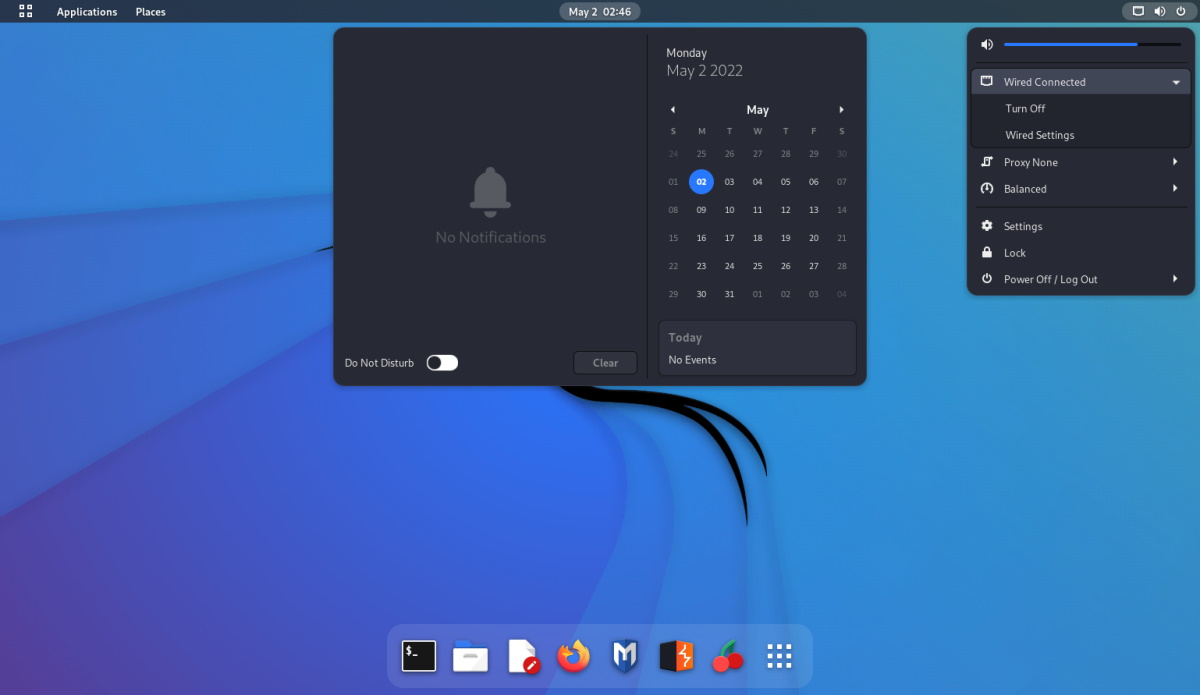

新的菜单系统看起来更有条理了。

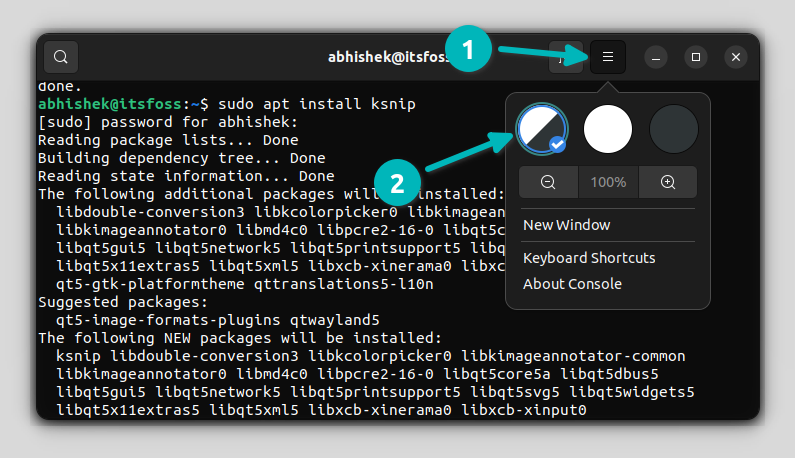

它是以 [simple-term-menu](https://github.com/IngoMeyer441/simple-term-menu) 为基础建立的,simple-term-menu 是一个用于在命令行中创建交互式菜单的软件包。为了避免外部依赖,它与源代码捆绑,这要归功于 Ingo Meyer(开发者)。

另外还得感谢其他开发者,其中包括 [Werner Llácer](https://github.com/wllacer) 和 [Daniel](https://github.com/svartkanin),是他们编写了 1200 多行代码,才让这成为可能。

这个菜单系统也是无障碍的。你可以用数字键盘上的 `*` 把它切换到跟踪选择模式,这应该能让 espeakup 如预期的工作。

在 Archinstall 的未来版本中,它也会支持默认的跟踪模式。

在上面的截图中,你可能会注意到,它支持设置语言、键盘布局、内核、音频服务器、用户、网络和其他基本选项。

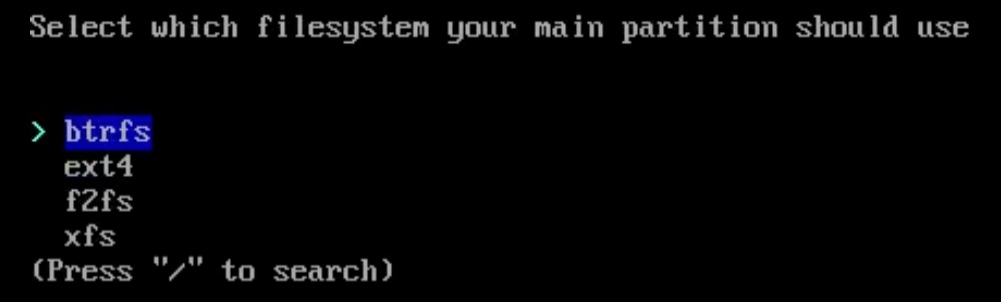

当你选择了硬盘后,菜单将增加另一个选项,让你选择一个“磁盘布局”,你可以在其中选择文件系统的类型。

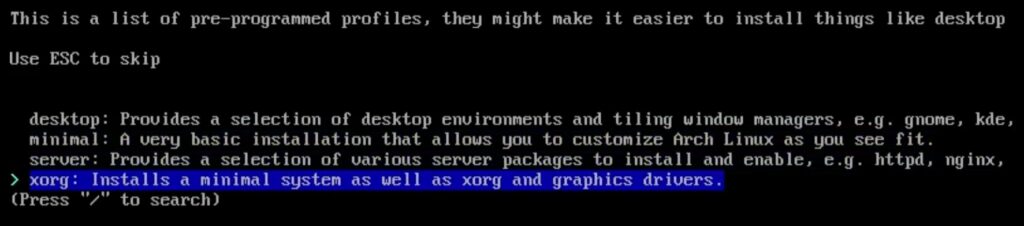

同样,设置每一个选项时,你都可以调整更多细节,比如桌面环境的配置文件。

默认情况下,它会启用一个交换分区。不过,你可以根据你的需要进行调整。总的来说,这应该是一个顺滑的体验,所有的安装先决条件都以菜单形式呈现。

在此感谢我们团队中的 Sreenath,是他测试并提供了这些屏幕截图。

除了这些变化之外,你还可以期待以下改进:

* 如果你选择 btrfs 作为文件系统,会添加一个 BTRFS 压缩选项。

* Archinstall 现在支持同时进行多个网卡配置的手动配置。

* 安装程序可以通过 `archinstall.Installer()` 跟踪哪些软件包已经安装完毕。

要查看所有的技术变化和错误修复,你可以参考 [GitHub 上的发布说明](https://github.com/archlinux/archinstall/releases/tag/v2.4.1)。

**你可以等待最新的 ISO(计划在 5 月 1 日发布),或者从 GitHub 上下载并自己尝试。**

你试过 Arch Linux 上的原来的安装向导吗?还是说,相较于使用安装程序,你更偏向于自己手动配置一切?请在评论区分享你的想法吧!

---

via: <https://news.itsfoss.com/archinstall-menu/>

作者:[Ankush Das](https://news.itsfoss.com/author/ankush/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[lkxed](https://github.com/lkxed) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK |

Last year, this time around, Arch Linux [introduced a guided installer](https://news.itsfoss.com/arch-linux-easy-install/) to make the installation process easier.

All you had to do was type in “**archinstall**” to get started with the step-by-step installation without needing to customize all by yourself.

Not just for new users, it should also save you some time to install Arch Linux in general. You get all the essential options starting from creating username/root user, selecting the desktop, picking software packages, choosing the audio server, and more.

If you are curious, you can take a look at archinstall in action in our [installation guide for Arch Linux on VirtualBox](https://itsfoss.com/install-arch-linux-virtualbox/?ref=news.itsfoss.com).

Now, with archinstall v2.4.1, we have a new menu system and numerous technical changes.

Let us take a look at it and how it works.

### Archinstall’s New Menu System & Accessibility Improvements

The new menu system looks much more organized.

It is built using [simple-term-menu](https://github.com/IngoMeyer441/simple-term-menu?ref=news.itsfoss.com) as its base, which is a package to create interactive menus on the command line. To avoid external dependencies, it has been bundled with the source, all thanks to Ingo Meyer.

Other developers include [Werner Llácer](https://github.com/wllacer?ref=news.itsfoss.com) and [Daniel](https://github.com/svartkanin?ref=news.itsfoss.com) to make this work with 1200+ lines of code.

The menu system is also accessibility friendly. You can switch it to the tracking selection mode using the “numpad *”, which should make espeakup work, as one would expect.

In future archinstall versions, it will honor default tracking mode as well.

As you can notice in the screenshot above, you get to set the language, keyboard layout, kernels, audio server, user, network, and more essential options.

Once you select the hard drive, the menu will add another option to let you choose a “disk layout” where you can select the type of file system.

Similarly, as you proceed with each option, you will get access to more things to tweak like the profiles to set your desktop environment:

By default, you have a swap enabled. But, you can tweak that as you require. Overall, it should be a seamless experience, with all installation prerequisites presented in a menu form.

Thanks to *Sreenath* on our team for testing this through to give you some screenshots:

In addition to these changes, you can also expect the following improvements:

- BTRFS compression option added as an option if you select btrfs as filesystem.

- Archinstall now supports multiple NIC configurations at the same time for manual configuration

- The installer keeps track of which packages have been installed through archinstall.Installer()

To explore all the technical changes and bug fixes, you can refer to the [release notes on GitHub](https://github.com/archlinux/archinstall/releases/tag/v2.4.1?ref=news.itsfoss.com).

**You can wait for the latest ISO (scheduled for May 1) to try it out, or download it from GitHub and try it yourself.**

*Have you tried the original guided installer on Arch Linux? Or do you prefer to configure everything without the installer? Feel free to let me know your thoughts below.*

## More from It's FOSS...

- Support us by opting for

[It's FOSS Plus](https://itsfoss.com/#/portal/signup)membership. - Join our

[community forum](https://itsfoss.community/). - 📩 Stay updated with the latest on Linux and Open Source. Get our

[weekly Newsletter](https://itsfoss.com/newsletter/). |

14,537 | 如何在 Linux 和 Windows 电脑之间共享文件 | https://opensource.com/article/21/4/share-files-linux-windows | 2022-05-02T23:39:00 | [

"共享",

"Samba"

] | https://linux.cn/article-14537-1.html |

>

> 使用 Samba 设置跨平台文件共享。

>

>

>

如果你使用不同的操作系统,能够在它们之间共享文件会让你倍感方便。这篇文章介绍如何使用 [Samba](https://www.samba.org/) 和 [mount.cifs](https://linux.die.net/man/8/mount.cifs) 在 Linux ([Fedora 33](https://getfedora.org/en/workstation/download/))和 Windows 10 之间设置文件共享。

Samba 是 [SMB/CIFS](https://en.wikipedia.org/wiki/Server_Message_Block) 协议的 Linux 实现,允许通过网络连接直接访问共享的文件夹和打印机。 mount.cifs 是 Samba 套件的一部分,可让你在 Linux 下挂载 [CIFS](https://en.wikipedia.org/wiki/Server_Message_Block) 文件系统。

>

> **注意**: 这些说明适用于在你的私有本地网络内,或在 Linux 宿主机和虚拟化的 Windows 访客机之间的虚拟主机专用网络中共享文件。不要将本文视为你公司网络的操作指南,因为本文没有实现必要的网络安全考虑。

>

>

>

### 从 Windows 访问 Linux

本节介绍从 Windows 文件资源管理器访问用户的 Linux 主目录。

#### 1、安装和配置 Samba

进入你的系统安装 Samba:

```

dnf install samba

```

Samba 是一个系统守护进程,其配置文件位于 `/etc/samba/smb.conf`。它的默认配置应该就可以工作。如果不行,下面这个最小化配置应该可以解决问题:

```

[global]

workgroup = SAMBA

server string = %h server (Samba %v)

invalid users = root

security = user

[homes]

comment = Home Directories

browseable = no

valid users = %S

writable = yes

```

你可以在该项目网站的 [smb.conf](https://www.samba.org/samba/docs/current/man-html/smb.conf.5.html) 部分找到参数的详细说明。

#### 2、修改 LinuxSE

如果你的 Linux 发行版受 [SELinux](https://www.redhat.com/en/topics/linux/what-is-selinux) 保护(比如 Fedora),必须通过以下命令才能通过 Samba 共享主目录:

```

setsebool -P samba_enable_home_dirs on

```

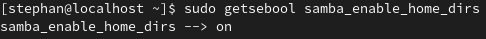

通过以下命令查看这个值:

```

getsebool samba_enable_home_dirs

```

输出如下:

#### 3、启用你的用户

Samba 使用一组用户/密码来管理连接权限。通过键入以下命令将你的 Linux 用户添加到该集合中:

```

smbpasswd -a <你的用户名>

```

系统提示你输入密码。这是一个 *全新* 的密码;而不是你账户的当前密码。请输入你想用来登录 Samba 的密码。

键入以下命令得到有 Samba 使用权限的用户列表:

```

pdbedit -L -v

```

键入以下命令删除一个用户:

```

smbpasswd -x <用户名>

```

#### 4、开启 Samba

既然 Samba 是一个系统守护进程,你可以在 Fedora 上键入以下命令启动它:

```

systemctl start smb

```

这将为当前会话开启 Samba 服务。如果想让它自启动,键入以下命令:

```

systemctl enable smb

```

在某些系统上,Samba 守护进程注册为 `smbd`。

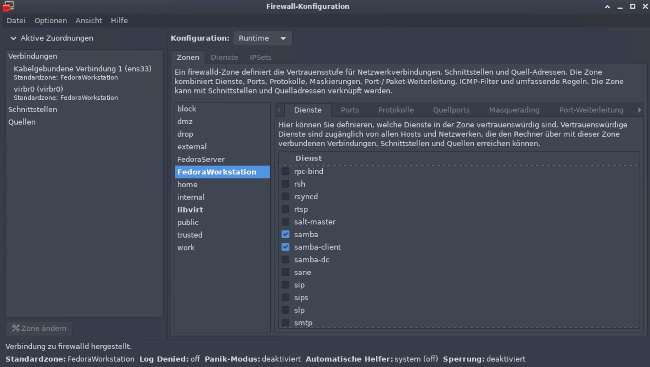

#### 4、配置防火墙

你的防火墙会默认阻拦 Samba。通过配置防火墙允许 Samba 能永久访问网络。

你可以在命令行执行如下操作:

```

firewall-cmd --add-service=samba --permanent

```

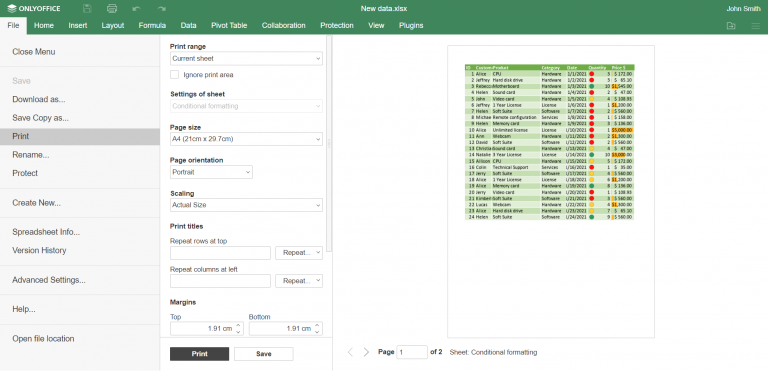

或者,你可以使用 `firewall-config` 工具以图形化方式进行操作:

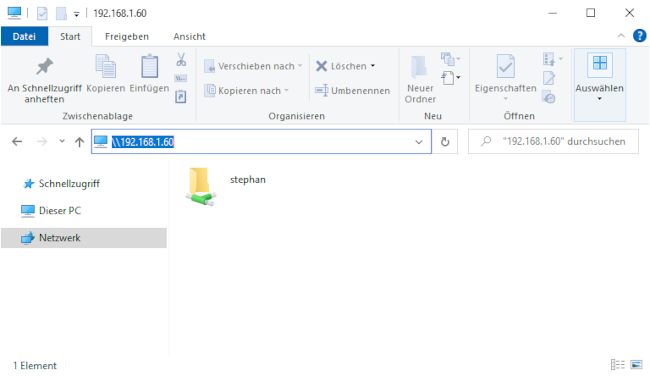

#### 5、从 Windows 访问 Samba

在 Windows 中,打开文件资源管理器。在地址栏中,键入两个反斜杠(`\\`),紧跟你的 Linux 机器的地址(IP 地址或主机名):

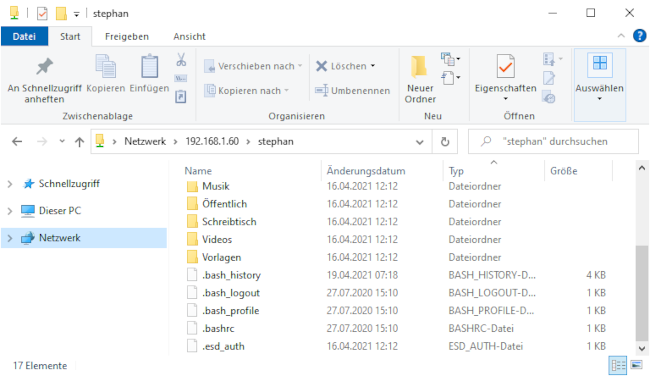

系统将提示你输入登录信息。输入第 3 步中的用户名和密码组合。你现在应该可以访问 Linux 机器上的主目录:

### 从 Linux 访问 Windows

以下步骤说明了如何从 Linux 访问共享的 Windows 文件夹。要实现这一点,需要你的 Windows 用户帐户具有管理员权限。

#### 1、启用文件共享

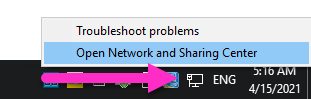

通过点击 “Windows 按钮” > “<ruby> 设置 <rt> Settings </rt></ruby>” > “<ruby> 网络和 Internet <rt> Network & Internet </rt></ruby>” ,或者右键单击任务栏右下角的小监视器图标,<ruby> 打开网络和共享中心 <rt> Open Network an d Sharing Center </rt></ruby>:

在打开的窗口中,找到你要使用的连接并记下其配置文件。我使用了 **以太网 3**,它被标记为 <ruby> 公用网络 <rt> Public Network </rt></ruby>。

>

> **注意**:如果你的 PC 经常连接公用网络,请考虑将本地计算机的连接配置文件更改为 **私有**。

>

>

>

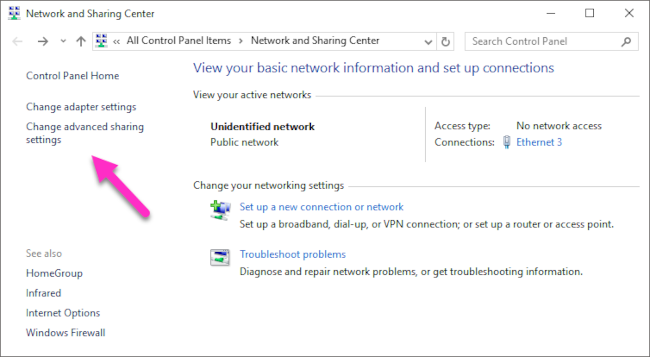

记住你的网络配置,然后单击 <ruby> 更改高级共享设置 <rt> Change advanced sharing settings </rt></ruby>:

选择与你的连接对应的配置文件并打开 <ruby> 网络发现 <rt> network discovery </rt></ruby> 和 <ruby> 文件和打印机共享 <rt> file and printer sharing </rt></ruby>:

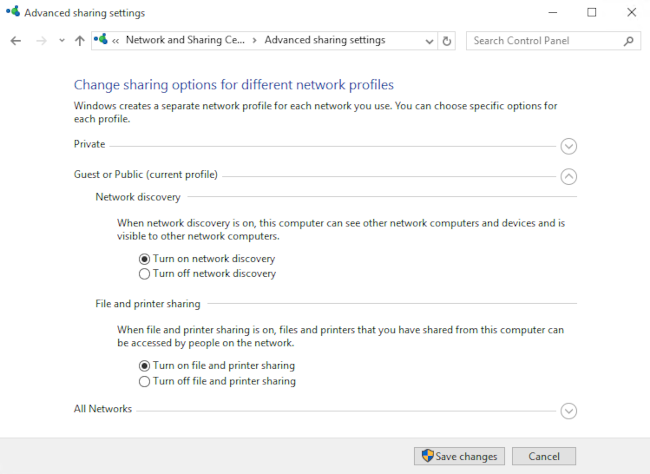

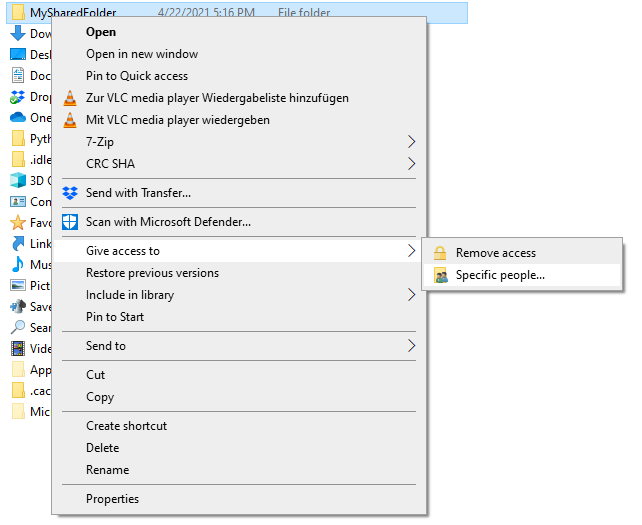

#### 2、定义一个共享文件夹

通过右键单击你要共享的文件夹打开上下文菜单,导航到 <ruby> 授予访问权限 <rt> Give access to </rt></ruby>,然后选择 <ruby> 特定用户... <rt> Specific people... </rt></ruby>:

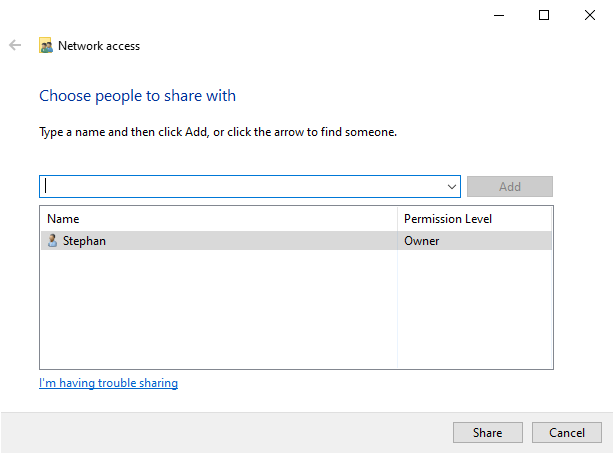

检查你当前的用户名是否在列表中。点击 <ruby> 共享 <rt> Share </rt></ruby> 将此文件夹标记为共享:

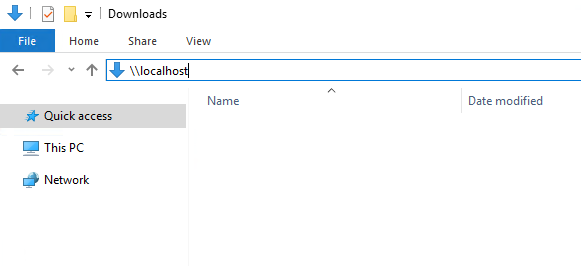

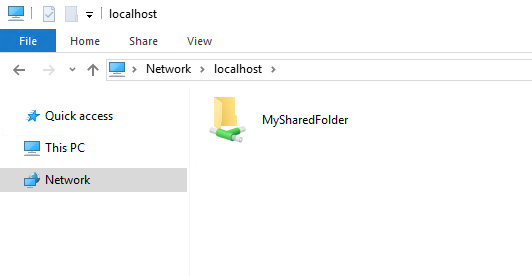

你可以通过在文件资源管理器的地址栏中输入 `\\localhost` 来显示所有共享文件夹的列表:

#### 3、在 Linux 下挂载共享文件夹

回到你的 Linux 系统,打开一个命令行,然后创建一个新文件夹,用于挂载 Windows 共享:

```

mkdir ~/WindowsShare

```

挂载 Windows 共享是使用 `mount.cifs` 完成的,它应该被默认安装。使用如下命令临时挂载你的共享文件夹:

```

sudo mount.cifs //<address-of-windows-pc>/MySharedFolder ~/WindowsShare/ -o user=<Windows-user>,uid=$UID

```

在这个命令里:

* `<address-of-windows-pc>` 是 Windows PC 的地址信息(IP 或主机名)

* `<Windows-user>` 是允许访问共享文件夹的用户(见步骤 2)

系统将提示你输入 Windows 密码。之后,你将能够使用普通 Linux 用户访问 Windows 上的共享文件夹。

要卸载共享文件夹:

```

sudo umount ~/WindowsShare/

```

你还可以在系统启动时挂载 Windows 共享文件夹。按照 [这些步骤](https://timlehr.com/auto-mount-samba-cifs-shares-via-fstab-on-linux/) 相应地配置你的系统。

### 总结

在这里展示了如何建立临时的文件夹共享访问权限,每次重启后都要重新设置,因此修改成永久访问会更便利。我经常在不同的系统之间来回切换,对我而言设置直接文件访问非常实用。

---

via: <https://opensource.com/article/21/4/share-files-linux-windows>

作者:[Stephan Avenwedde](https://opensource.com/users/hansic99) 选题:[lujun9972](https://github.com/lujun9972) 译者:[hanszhao80](https://github.com/hanszhao80) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | If you work with different operating systems, it's handy to be able to share files between them. This article explains how to set up file access between Linux ([Fedora 33](https://getfedora.org/en/workstation/download/)) and Windows 10 using [Samba](https://www.samba.org/) and [mount.cifs](https://linux.die.net/man/8/mount.cifs).

Samba is the Linux implementation of the [SMB/CIFS](https://en.wikipedia.org/wiki/Server_Message_Block) protocol, allowing direct access to shared folders and printers over a network. Mount.cifs is part of the Samba suite and allows you to mount the [CIFS](https://en.wikipedia.org/wiki/Server_Message_Block) filesystem under Linux.

Caution: These instructions are for sharing files within your private local network or in a virtualized host-only network between a Linux host machine and a virtualized Windows guest. Don't consider this article a guideline for your corporate network, as it doesn't implement the necessary cybersecurity considerations.

## Access Linux from Windows

This section explains how to access a user's Linux home directory from Windows File Explorer.

### 1. Install and configure Samba

Start on your Linux system by installing Samba:

`dnf install samba`

Samba is a system daemon, and its configuration file is located in `/etc/samba/smb.conf`

. Its default configuration should work. If not, this minimal configuration should do the job:

```

[global]

workgroup = SAMBA

server string = %h server (Samba %v)

invalid users = root

security = user

[homes]

comment = Home Directories

browseable = no

valid users = %S

writable = yes

```

You can find a detailed description of the parameters in the [smb.conf](https://www.samba.org/samba/docs/current/man-html/smb.conf.5.html) section of the project's website.

### 2. Modify LinuxSE

If your Linux distribution is protected by [SELinux](https://www.redhat.com/en/topics/linux/what-is-selinux) (as Fedora is), you have to enable Samba to be able to access the user's home directory:

`setsebool -P samba_enable_home_dirs on`

Check that the value is set by typing:

`getsebool samba_enable_home_dirs`

Your output should look like this:

(Stephan Avenwedde, CC BY-SA 4.0)

### 3. Enable your user

Samba uses a set of users and passwords that have permission to connect. Add your Linux user to the set by typing:

`smbpasswd -a <your-user>`

You will be prompted for a password. This is a *completely new* password; it is not the current password for your account. Enter the password you want to use to log in to Samba.

To get a list of allowed user types:

`pdbedit -L -v`

Remove a user by typing:

`smbpasswd -x <user-name>`

### 4. Start Samba

Because Samba is a system daemon, you can start it on Fedora with:

`systemctl start smb`

This starts Samba for the current session. If you want Samba to start automatically on system startup, enter:

`systemctl enable smb`

On some systems, the Samba daemon is registered as `smbd`

.

### 4. Configure the firewall

By default, Samba is blocked by your firewall. Allow Samba to access the network permanently by configuring the firewall.

You can do it on the command line with:

`firewall-cmd --add-service=samba --permanent`

Or you do it graphically with the firewall-config tool:

(Stephan Avenwedde, CC BY-SA 4.0)

### 5. Access Samba from Windows

In Windows, open File Explorer. On the address line, type in two backslashes followed by your Linux machine's address (IP address or hostname):

(Stephan Avenwedde, CC BY-SA 4.0)

You will be prompted for your login information. Type in the username and password combination from step 3. You should now be able to access your home directory on your Linux machine:

(Stephan Avenwedde, CC BY-SA 4.0)

## Access Windows from Linux

The following steps explain how to access a shared Windows folder from Linux. To implement them, you need Administrator rights on your Windows user account.

### 1. Enable file sharing

Open the** Network and Sharing Center** either by clicking on the

**Windows Button > Settings > Network & Internet**

or by right-clicking the little monitor icon on the bottom-right of your taskbar:

(Stephan Avenwedde, CC BY-SA 4.0)

In the window that opens, find the connection you want to use and note its profile. I used **Ethernet 3**, which is tagged as a **Public network**.

Caution: Consider changing your local machine's connection profile toPrivateif your PC is frequently connected to public networks.

Remember your network profile and click on **Change advanced sharing settings**:

(Stephan Avenwedde, CC BY-SA 4.0)

Select the profile that corresponds to your connection and turn on **network discovery** and **file and printer sharing**:

(Stephan Avenwedde, CC BY-SA 4.0)

### 2. Define a shared folder

Open the context menu by right-clicking on the folder you want to share, navigate to **Give access to**, and select **Specific people...** :

(Stephan Avenwedde, CC BY-SA 4.0)

Check whether your current username is on the list. Click on **Share** to tag this folder as shared:

(Stephan Avenwedde, CC BY-SA 4.0)

You can display a list of all shared folders by entering `\\localhost`

in File Explorer's address line:

(Stephan Avenwedde, CC BY-SA 4.0)

<p class="rtecenter"><sup>(Stephan Avenwedde, <a href="https://opensource.com/%3Ca%20href%3D"https://creativecommons.org/licenses/by-sa/4.0/" rel="ugc">https://creativecommons.org/licenses/by-sa/4.0/" target="_blank">CC BY-SA 4.0</a>)</sup></p>

### 3. Mount the shared folder under Linux

Go back to your Linux system, open a command shell, and create a new folder where you want to mount the Windows share:

`mkdir ~/WindowsShare`

Mounting Windows shares is done with mount.cifs, which should be installed by default. To mount your shared folder temporarily, use:

`sudo mount.cifs //<address-of-windows-pc>/MySharedFolder ~/WindowsShare/ -o user=<Windows-user>,uid=$UID`

In this command:

`<address-of-windows-pc>`

is the Windows PC's address info (IP or hostname)`<Windows-user>`

is the user that is allowed to access the shared folder (from step 2)

You will be prompted for your Windows password. Enter it, and you will be able to access the shared folder on Windows with your normal Linux user.

To unmount the shared folder:

`sudo umount ~/WindowsShare/`

You can also mount a Windows shared folder on system startup. Follow [these steps](https://timlehr.com/auto-mount-samba-cifs-shares-via-fstab-on-linux/) to configure your system accordingly.

## Summary

This shows how to establish temporary shared folder access that must be renewed after each boot. It is relatively easy to modify this configuration for permanent access. I often switch back and forth between different systems, so I consider it incredibly practical to set up direct file access.

## 2 Comments |

14,538 | Ubuntu 的 Unity 桌面还活着:时隔 6 年后,7.6 测试版发布 | https://news.itsfoss.com/unity-7-6-testing/ | 2022-05-03T17:32:16 | [

"Unity",

"Ubuntu Unity"

] | https://linux.cn/article-14538-1.html |

>

> 虽然 Canonical 已经不再维护 Unity 桌面,但 Ubuntu Unity 的开发者承担了这项重任,发布了一项主要更新(已可用于测试)。

>

>

>

怕你兴奋过头了,先提醒一下,Canonical 并没有回归 Unity 桌面的维护。

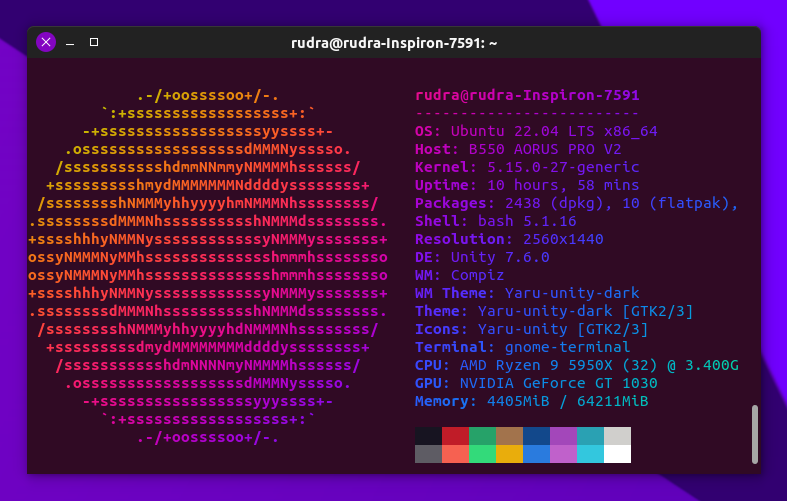

得益于 Ubuntu Unity 发行版开发者(Rudra Saraswat)的不懈努力,时隔 6 年,我们终于看到了 Unity 桌面环境的更新。

补充说一下,[Ubuntu Unity](https://ubuntuunity.org/) 是一款采用 Unity 桌面(而不是 GNOME)的社区项目。因此,如果你想在 Ubuntu 22.04 LTS 上使用 Unity 桌面,那么毋庸置疑,[Ubuntu Unity](https://ubuntuunity.org/) 绝对是你的最佳伙伴。

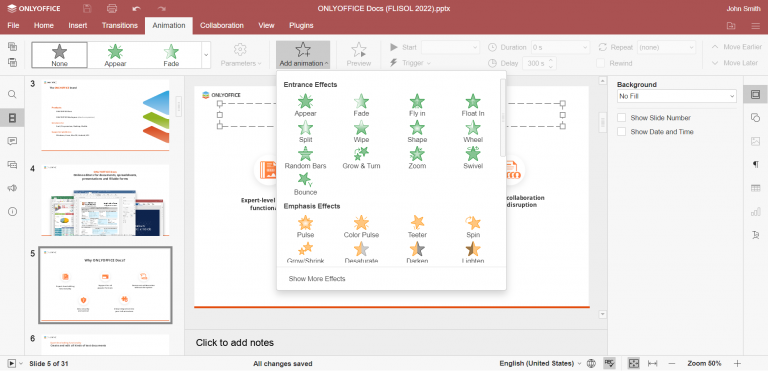

起初,它仅仅提供了经过微调的 Unity 体验,但现在,**Unity 7.6** 的界面得到了一些优化及视觉变更。

下面是你应该了解的东西:

### Unity 7.6:更新了什么?

>

> **提示**:Unity 7.6 是为公共测试而发布的,它不应作为替代其他桌面环境的使用环境。

>

>

>

这不仅仅是面向用户的改进,还有针对开发方面的努力,旨在帮助贡献者,让他们更方便地帮助 Unity7 的开发。

这些改善包括:

#### 用户界面变更

Dash 启动器(应用启动器)与 HUD 现已重新设计,拥有更现代、简洁的视觉。

总的来看,现在的设计看上去更加扁平,但仍旧保留了不错的系统全局模糊效果。

本次引入了一些细微的视觉改进,比如停靠区上的“清空回收站”按钮修改为使用 Nemo(而不是 Nautilus),以及修复了 Dash 预览中的应用详情与评分。

#### 性能改进

在最新的更新中,Unity7 的内存使用量更低,同时你也可以注意到,Ubuntu Unity 22.04 的内存使用量明显降低到约 700-800 MB。

此外,低端显卡模式现在运作得更好,Dash 也比以前更快。

#### 其他变化

Unity7 Shell 的源代码已经完全迁移至 [GitLab](https://gitlab.com/ubuntu-unity)。用于独立测试的 Unity7 启动器已被修复,同时一些有问题的测试项也已被禁用,改善了构建用时(使其大幅缩短)。

发布说明上说,这些改进将帮助 Unity7 的贡献者。

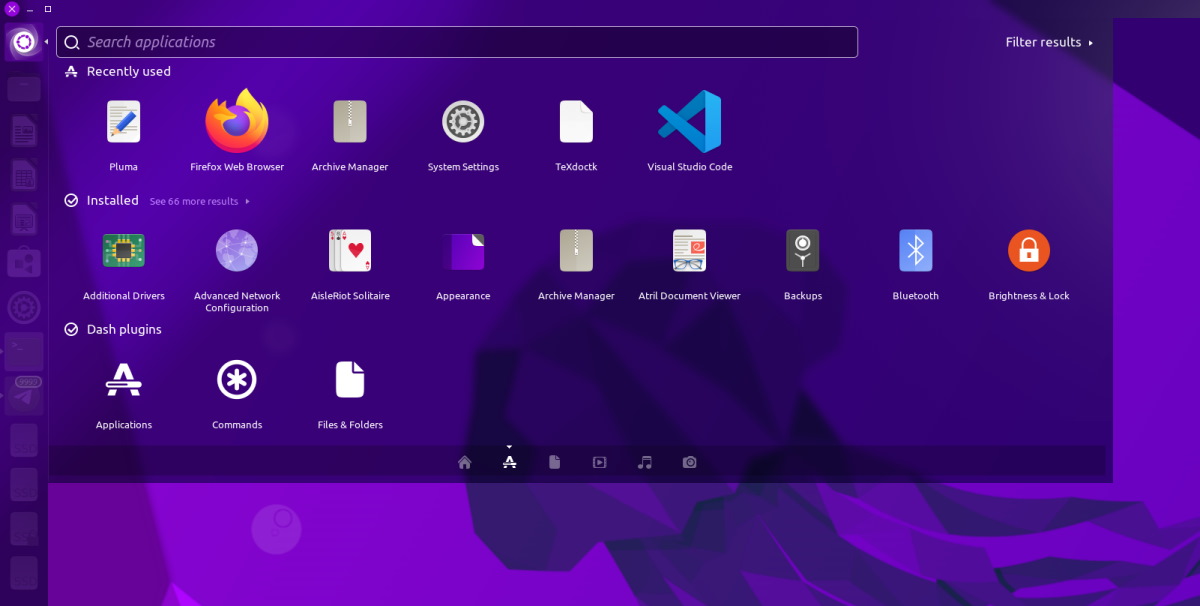

### 测试 Unity 7.6

你可以按照 [官方测试公告](https://unity.ubuntuunity.org/blog/unity-7.6/) 中提到的方式来编译它,并亲自尝试。你也可以前往其官方网站探索更多。

>

> **[Unity 7.6](https://unity.ubuntuunity.org/)**

>

>

>

另一种情况,如果你不想添加测试 PPA 源,你也可以等待 Ubuntu Unity 22.04 的更新。

*你对 Unity 桌面环境的这次更新有什么看法?你喜欢它吗?欢迎在评论区中告诉我你的想法。*

---

via: <https://news.itsfoss.com/unity-7-6-testing/>

作者:[Ankush Das](https://news.itsfoss.com/author/ankush/) 选题:[lkxed](https://github.com/lkxed) 译者:[imgradeone](https://github.com/imgradeone) 校对:[校对者ID](https://github.com/%E6%A0%A1%E5%AF%B9%E8%80%85ID)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK |

Before you get too excited, you should know that Canonical is not coming back to maintain Unity desktop.

Thanks to the developer of the Ubuntu Unity distribution (*Rudra Saraswat*), we get to see an update to the Unity desktop environment after six long years.

In case you did not know, Ubuntu Unity is a community project that utilizes the Unity interface instead of GNOME. So, yes, if you wanted to use Ubuntu 22.04 LTS with Unity desktop, [Ubuntu Unity](https://ubuntuunity.org/?ref=news.itsfoss.com) is your friend.

Initially, it simply offered the Unity experience with a few tweaks. But, now, **Unity 7.6** looks to be getting some improvements and visual changes to the interface.

Here’s what you should know about it:

## Unity 7.6: What’s New?

**Note**: Unity 7.6 is out for public testing and should not be a replacement for other desktop environments.

It is not just about the user-facing side, but there have been development efforts to help contributors conveniently help with Unity7’s development.

Some refinements include:

### User Interface Changes

The dash launcher (app launcher) and HUD have been redesigned for a modern/slick look.

Overall, the design is now much flatter but retains the good-old system-wide blur effect. The dock’s menu and tooltips also received some refreshed modern look.

There are some subtle visual improvements like ‘Empty Trash’ button in the dock using Nemo instead of Nautilus and fixing the app info and ratings in dash preview.

### Performance Improvements

The RAM usage in Unity7 is lower with the latest update. And, you can notice the RAM usage with Ubuntu Unity 22.04 is significantly lower to about 700-800 MB.

Furthermore, the low graphics mode works much better now, making the dash faster than ever.

### Other Changes

The Unity7’s shell source code has been migrated entirely to [GitLab](https://gitlab.com/ubuntu-unity?ref=news.itsfoss.com). The standalone testing Unity7 launcher has been fixed, and the buggy tests have been disabled, improving the build time (making it much shorter).

The release notes say that these changes should help Unity7 contributors.

## Testing Unity 7.6

You can follow the instructions mentioned in the [official testing announcement](https://unity.ubuntuunity.org/blog/unity-7.6/?ref=news.itsfoss.com) to compile it and try it for yourself. You can also head to its official website to explore more.

In either case, you can wait for an update to Ubuntu Unity 22.04, if you would rather not add the testing PPA yet.

*What do you think about this refreshment to the Unity desktop environment? Do you like it? Let me know your thoughts in the comments.*

## More from It's FOSS...

- Support us by opting for

[It's FOSS Plus](https://itsfoss.com/#/portal/signup)membership. - Join our

[community forum](https://itsfoss.community/). - 📩 Stay updated with the latest on Linux and Open Source. Get our

[weekly Newsletter](https://itsfoss.com/newsletter/). |

14,540 | 埃隆·马斯克开源推特算法的计划存在缺陷 | https://www.opensourceforu.com/2022/04/elon-musks-plan-to-open-source-the-twitter-algorithm-has-flaws/ | 2022-05-03T23:59:40 | [

"推特",

"算法"

] | https://linux.cn/article-14540-1.html |

报道称,在推特确认接受收购请求的几个小时后,埃隆·马斯克就明确表示了他对推特的期望。马斯克在一份新闻稿中罗列了他计划做出的重大改变,包括开源“决定用户在推流中看到什么”的算法。

马斯克希望开源推特的算法,是因为他长期以来一直担心该平台有可能进行政治压制。但老实说,即便开源,也不可能达到他的预期效果。专家们担心,这可能反而带来一连串意想不到的问题。

虽然马斯克对权威深恶痛绝,但是他对算法开源的野心和世界各地立法者的愿望不谋而合。近年来,许多政府都将这一原则作为打击大科技公司的基石。

英国社交媒体监管机构 Ofcom 的首席执行官 Melanie Dawes 曾表示,社交媒体公司应当解释其代码的运作方式。此外,欧盟新近通过的《<ruby> 数字服务法案 <rt> Digital Services Act </rt></ruby>(DSA)》于 4 月 23 日获得批准,该法案将责成平台提供更多的公开性。2022 年 2 月,美国的民主党参议员提交了《<ruby> 算法问责法案 <rt> Algorithmic Accountability Act </rt></ruby>(AAA)》的立法申请。这些法案的目标是加强算法的透明度和监督,包括我们在社交媒体上的“<ruby> 时间轴 <rt> timeline </rt></ruby>”和“<ruby> 新闻流 <rt> news feed </rt></ruby>”以及我们生活的其他方面。

允许竞争者看到并修改推特的算法,可能意味着有人会偷取源代码,并提供一个改名的版本。互联网的许多部分都运行在开源软件上,其中最著名的就是 OpenSSL,这是一个被大量在线使用的安全工具包,而它在 2014 年被黑客攻击了。

还有一些已经创建的开源社交网络。Mastodon 是一个微博网络,为回应对 Twitter 主导地位的担忧而创建。它允许用户检查其代码,这些代码可在 GitHub 软件仓库中找到。

然而,阅读一个算法背后的代码,并不总能告诉你它的工作方式,而且对于大部分普通人来说,它也提供不了足够的关于公司组织架构以及开发流程的信息。

Jonathan Gray 是伦敦国王学院/关键基础设施研究的高级讲师,他说:“这有点像只用遗传物质来理解古代生物。是的,它能告诉我们的信息比任何方式都多,但如果说我们因此了解它们的生活方式,那就太夸张了。”

推特同样也不是由单一算法控制的。Catherine Flick 是英国德蒙福特大学/研究计算和社会责任的研究员,她说:“其中一些会决定人们在他们的“时间轴”上看到什么趋势、内容或者推荐关注的人。调节用户“时间轴”上显示哪些信息的算法,将会是人们最感兴趣的。然而,即使如此,如果缺少训练数据,单纯开源算法也没多大用处。”

Cobbe 认为,开源推特算法的危害大于好处。因为计算机代码并没有透露算法是如何开发或评估的:有哪些元素或考虑、在这个过程中的优先级是什么等等。所以开源可能不会使推特的透明度发生重大变化。反而,它可能会带来严重的安全隐患。

---

via: <https://www.opensourceforu.com/2022/04/elon-musks-plan-to-open-source-the-twitter-algorithm-has-flaws/>

作者:[Laveesh Kocher](https://www.opensourceforu.com/author/laveesh-kocher/) 选题:[lkxed](https://github.com/lkxed) 译者:[lkxed](https://github.com/lkxed) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Elon Musk made his aspirations for Twitter obvious just hours after Twitter said it had accepted Elon Musk’s takeover offer. Musk listed the major changes he intends to make in a press release, including opening up the algorithms that govern what users see in their feed.

Musk’s desire to open source Twitter’s algorithms stems from his long-standing concern about the platform’s potential for political repression, but it’s unlikely that doing so will have the desired effect. Experts worry that it may instead bring a slew of unexpected issues.

Although Musk has a deep dislike for authority, his ambition for algorithmic openness coincides with the wishes of legislators all around the world. In recent years, numerous governments have used this principle as a cornerstone of their efforts to combat Big Tech.

Melanie Dawes, the chief executive of Ofcom, the UK regulator of social media, has stated that social media firms would be required to explain how their code operates. In addition, the European Union’s newly passed Digital Services Act, which was approved on April 23, would oblige platforms to provide more openness. In February 2022, Democratic senators in the United States submitted legislation to create an Algorithmic Accountability Act. Their goal is to increase transparency and supervision of the algorithms that regulate our timelines and news feeds, as well as other aspects of our lives.

Allowing competitors to see and adapt Twitter’s algorithm potentially means that someone could just steal the source code and offer a rebranded version. Vast sections of the internet run on open-source software, the most renowned of which is OpenSSL, a security toolkit used by large swaths of the online that was hacked in 2014.

There are also open source social networks that have already been created. Mastodon, a microblogging network created in response to worries about Twitter’s dominant position, allows users to inspect its code, which is available on the GitHub software repository.

However, reading the code behind an algorithm does not always tell you how it works, and it doesn’t provide the typical individual much insight into the corporate structures and processes that go into its development.

“It’s a bit like trying to understand ancient creatures with genetic material alone,” says Jonathan Gray, a senior lecturer in critical infrastructure studies at King’s College London. “It tells us more than nothing, but it would be a stretch to say we know about how they live.”

Twitter is likewise not controlled by a single algorithm. “Some of them will determine what people see on their timelines in terms of trends, content, or suggested followers,” says Catherine Flick, a researcher at De Montfort University in the United Kingdom who studies computing and social responsibility. The algorithms that regulate what information shows in users’ timelines will be the ones that people are most interested in, but even that won’t be very useful without the training data.

Cobbe believes that the hazards outweigh the advantages. Because the computer code doesn’t reveal how algorithms were developed or evaluated, what elements or considerations went into them, or what was prioritised during the process, open-sourcing it may not make a significant change in Twitter’s transparency. In the meantime, it may pose severe security hazards. |

14,541 | 在虚拟机中运行 Linux 的十大优点 | https://itsfoss.com/why-linux-virtual-machine/ | 2022-05-04T09:34:00 | [

"虚拟机"

] | https://linux.cn/article-14541-1.html |

>

> 你可以在虚拟机环境里运行任何操作系统,不论是测试还是为了某种需要。

>

>

>

*开源朗读者 | 淮晋阳*

对于 Linux 而言,它在虚拟环境下的性能会优于其他操作系统。即便你可能会犹豫是否在物理机(裸金属)上安装 Linux 系统,你仍然可以在虚拟机中安装一个性能几乎和物理机一样好的 Linux 系统。

当然,这并不意味着宿主系统为 Linux 时你就不能在虚拟机实例中安装 Linux 了。

更何况,你在虚拟环境下使用 Linux 系统有许多好处。大致如下。

### 在虚拟环境下运行 Linux 之前的注意事项

在虚拟环境下运行 Linux 或许并不是艰巨的任务,但仍有以下几点你需谨记。

* 虚拟机的性能取决于宿主机的性能,如果你并没有足够的系统资源分配给虚拟机,那么虚拟机的使用体验注定不会很好。

* 某些特性仅在物理机(裸金属)上生效,包括硬件加速以及图形(显卡)驱动等。

* 密集的磁盘 I/O 任务性能会十分受限,例如游戏测试场景。

* 用户的 Linux 虚拟机实例体验会根据你所使用的虚拟化程序而发生变化,这些虚拟化程序包括 VMware、VirtualBox、GNOME Boxes 以及 Hyper-V 。

此外,你应当列出你的需求,并根据这些需求选定适当的虚拟化程序来运行你的 Linux 实例。

### 十条在虚拟环境中运行 Linux 的优点

尽管运行虚拟化 Linux 实例极具吸引力,你仍然应当首先考虑当前使用的宿主系统中已有的选择。例如,如果你不需要图形化桌面,或许利用 Windows 操作系统中的 [WSL 安装 Linux](https://itsfoss.com/install-bash-on-windows/) 就可以满足你的需求。

一旦你确定了使用虚拟机,那么这些优点将会如影随形:

#### 1、部署简便

与在传统物理机(裸金属)上安装 Linux 相比,在虚拟机中部署一般会容易许多。

对于基于 Ubuntu 的发行版而言,像 VMware 这样的虚拟化程序会提供一个 **快速安装** 的选项,你仅需输入用户名和密码,其余过程将自动完成而无需其他操作。你无需手动设置分区、引导程序以及更多高级设置。

某些情况下,一些发行版的开发者会同时提供针对特定虚拟机的预构建镜像,只需打开就可使用。这就好像一个便携式虚拟机镜像,随时可以开箱即用。

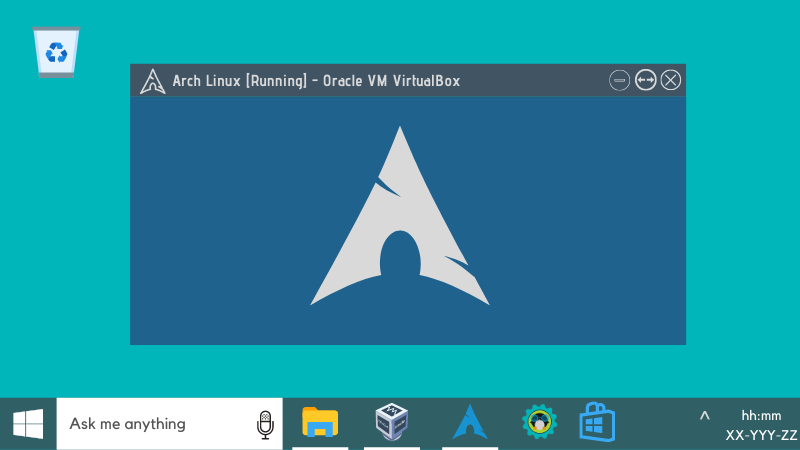

例如,在 [这里](https://itsfoss.com/install-arch-linux-virtualbox/) 你将看到如何在虚拟机中安装 Arch Linux 发行版。

对于其他的发行版,你或许仍需要进行一些配置,但一般都会有快速安装的选项令你可以轻松部署。

#### 2、不会影响宿主机

对于虚拟机,你可以更为随心所欲地使用,因为虚拟机系统和宿主机系统是隔离的。

很多时候,如果你并不熟悉 Linux 系统,你很可能会把配置弄得十分混乱。

所以在虚拟机里,你可以随意尝试而无需担心是否会影响到宿主机系统。换句话说,任何虚拟机的操作都不会影响到宿主机,因为它们是完全隔离的。

故此,虚拟机是你最好的试验场,尤其是对于一些激进或具有破坏性的试验。

#### 3、资源可高效共享

如果你有十分充裕的系统资源,你可以使用虚拟机运行其他任务,从而充分利用起来这部分闲置的系统资源。例如,如果你需要一个十分私密的浏览环境,虚拟机将为你阻挡一切针对宿主机的追踪器。

这可能略显牵强,但这仅仅是一个例子。基于这样的想法你将可以充分利用全部的系统资源。

而对于双启动方案,你需要在单独的磁盘上在 Windows [之后安装 Linux](https://itsfoss.com/dual-boot-hdd-ssd/),或者在 Linux [之后安装 Windows](https://itsfoss.com/install-windows-after-ubuntu-dual-boot/),你需要为你的任务锁定相应的资源。

但利用虚拟机,你无需锁定部分资源也可以使用 Linux ,也不必为了特定的任务而临时共享资源,这样会方便许多。

#### 4、多任务体验更好

有了资源共享机制,多任务会前所未有的容易。

在双启动的场景下,你需要来回重启切换才能使用 Linux 或 Windows 。

但如果使用虚拟机,你几乎不再需要 [双启动](https://itsfoss.com/dual-boot-fedora-windows/),两个系统将无缝协作并完成多任务。

当然,你需要确认你拥有足够的系统资源和额外的硬件(例如双显示器)来更高效地使用。而多任务的潜力也因 Linux 虚拟机的存在而愈发强大。

#### 5、软件测试更为便捷

有了虚拟化,你将可以创建大量的 Linux 实例,来模拟特定的使用场景,并对软件进行测试。

例如,你可以在不同的 Linux 虚拟机中同步测试不同的软件版本。这有丰富的使用场景,包括对开发版软件进行测试以及 Linux 发行版的早期测试等等。

#### 6、开发更为便捷

当你在学习编程或者刚加入一个软件项目的开发的时候,你会希望拥有一个没有任何冲突和错误的开发环境。

在 Linux 虚拟机里,你可以从零开始搭建一个不会与已经存在的环境冲突的开发环境。例如,你可以在 Ubuntu 上 [安装并测试 Flutter](https://itsfoss.com/install-flutter-linux/) 。

如果环境出了问题,你可以轻而易举地删掉这个虚拟机,并重新开始来修正错误。

Linux 虚拟机的隔离环境是一个绝佳的开发和测试环境。

#### 7、学习和研究的好帮手

Linux 值得反复探索。除了基础的计算任务,你可以做许多其他的事情。

你可以学习如何修改你的用户界面,[尝试一些常见的桌面环境](https://itsfoss.com/best-linux-desktop-environments/) 、[安装大量常用软件](https://itsfoss.com/essential-linux-applications/) ,与此同时仍能让一切处于掌控之中。

如果出现问题,新建一个 Linux 虚拟机就可以解决。当然,这并不仅限于日常使用需要,还可以启发系统管理员在其中测试他们所学的知识。

#### 8、更容易复制和迁移

虚拟机可以很容易地复制和迁移。只要其它的宿主机系统支持该虚拟化程序,你就可以很容易地迁移它,而没有特别要求。

不论因何原因,几次简单的点击就可以完成复制和迁移的任务。

#### 9、尝试大量的发行版

你可以在虚拟环境下尝试数以百计的 Linux 发行版。

你或许会认为这和第七条重复了,但是我相信,测试一个发行版是一个巨大的系统性工程,尤其是当你决定切换发行版做为宿主机或其他用途时。

#### 10、便于调试

不论是严肃的开发活动还是一般的研究,在隔离的虚拟环境中调试和除错相对而言会更简单。

你可以快速尝试大量的调试方法而无需考虑影响。同时,如果你的宿主机是 Linux 系统的话,无需宿主机上的 root 权限便可以访问和修改虚拟机中的配置文件。

### 总而言之

如果你不熟悉正在使用的系统或者依赖不同的操作系统工作,虚拟机将是协助你工作的一大利器。

Linux 虚拟机可以广泛用于开发、学习、试验或任何特定用途。

你在虚拟机中使用过 Linux 吗?都有哪些应用场景呢?欢迎留言评论!

---

via: <https://itsfoss.com/why-linux-virtual-machine/>

作者:[Ankush Das](https://itsfoss.com/author/ankush/) 选题:[lkxed](https://github.com/lkxed) 译者:[PeterPan0106](https://github.com/PeterPan0106) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK |

You can run any operating system as a virtual machine to test things out or for a particular use case.

When it comes to Linux, it is usually a better performer as a virtual machine when compared to other operating systems. Even if you hesitate to install Linux on bare metal, you can try setting up a virtual machine that could run as you would expect on a physical machine.

Of course, we don’t rule out the possibility of running Linux distros in VM even when using Linux as your host OS.

Moreover, you get numerous benefits when trying to run Linux on virtual machines. Here, I shall mention all about that.

## Things to Keep in Mind Before Running Linux as a Virtual Machine

It is worth noting that running Linux on a virtual machine may not be a daunting task, but there are a few pointers that you should keep in mind.

Some of them include:

- The virtual machine performance will depend on your host system. If you do not have enough system resources to allocate, the virtual machine experience will not be pleasant.

- Certain features only work well with bare metal (hardware acceleration, graphics drivers, etc.)

- You should not expect intensive disk I/O tasks to work well, like testing games.

- The user experience with Linux virtual machines varies with the program you use. For instance, you can try VMware, VirtualBox, GNOME Boxes, and Hyper-V.

In addition to all these tips, you should also make a list of your requirements before choosing a virtual machine program to run Linux.

While there are perks to using a Linux VM, you should consider the current opportunities available on your host OS. For instance, you may want to [install Linux using WSL on Windows](https://itsfoss.com/install-bash-on-windows/) if you do not require a GUI desktop.

[How to Install Linux Bash Shell on Windows [Complete Guide]Step-by-step screenshot guide to show you how to install bash on Windows 11 and 10.](https://itsfoss.com/install-bash-on-windows/)

Once you are sure that you need a VM, here’s why you should proceed with it:

## 1. Easy Setup

Compared to the traditional installation process on bare metal, setting up a virtual machine is often easier.

For Ubuntu-based distros, programs like VMware offer an **Easy Install** option where you have to type in the required fields for username and password; the rest will proceed without needing additional inputs. You do not need to select a partition, bootloader, or advanced configurations.

In some cases, you can also use prebuilt images offered by Linux distributions for a specific virtual program, where you need to open it to access the system. Think of it as a portable VM image ready to launch wherever you need it.

For example, you can check out how you can use [VirtualBox to install Arch Linux](https://itsfoss.com/install-arch-linux-virtualbox/).

[How to Install Arch Linux on VirtualBox [Beginner’s Guide]Arch Linux is complicated than most other distributions. Installing Arch Linux in VirtualBox is a safer way to try this famed Linux distribution.](https://itsfoss.com/install-arch-linux-virtualbox/)

You may still need to configure things when installing other distros, but there are options where you need minimal effort.

## 2. Does Not Affect the Host OS

With a virtual machine, you get the freedom to do anything you want, and it is because you get an isolated system.

Usually, if you do not know what you’re doing with a Linux system, you could easily end up with a messed-up configuration.

So, if you set up a VM, you can quickly try whatever you want without worrying about affecting the host OS. In other words, your system will not be impacted by any changes to the VM because it’s entirely isolated.

Hence, a VM is the best way to test any of your ambitious or destructive changes that you may want to perform on bare metal.

## 3. Resource Sharing

If you have ample free system resources, you can utilize the rest using a Virtual Machine for any other tasks. For instance, if you want a private browsing experience without leaving any traces on your host, a VM can help.

It can be a far-fetched example, but it is just one of the ideas. In that way, you get to use the resources fully without much hassle.

Also, as opposed to a dual-boot scenario, where you need to [install Linux alongside Windows](https://itsfoss.com/dual-boot-hdd-ssd/) on separate disks or [install Windows after Linux](https://itsfoss.com/install-windows-after-ubuntu-dual-boot/), you need dedicated resources locked on to your tasks.

However, with a VM, you can always use Linux without locking up your resources, rather than temporarily sharing them to get your tasks done, which can be more convenient.

## 4. Multi-Tasking

With the help of resource-sharing, you can easily multitask.

For instance, you need to switch back and forth between a dual-boot setup to access Windows and Linux.

But, with a virtual machine, you can almost eliminate the need for [dual-booting Linux](https://itsfoss.com/dual-boot-fedora-windows/) and multitask with two operating systems seamlessly.

Of course, you need to ensure that you have the required amount of system resources and external hardware (like dual monitors) to effectively use it. Nevertheless, the potential to multitask increases with a Linux VM in place.

## 5. Facilitates Software Testing

With virtualization, you get the freedom to test software on Linux distros by instantly creating various situations.

For instance, you can test different software versions simultaneously on multiple Linux VMs. There can be more use-cases, such as testing a software development build, early build of a Linux distro, etc.

## 6. Great for Development

When you want to learn to code or just get involved in developing something, you want an environment free from any conflicts and errors.

So, a Linux VM is the perfect place to install new packages from scratch without worrying about conflicts with existing ones. For instance, you can [install and set up Flutter](https://itsfoss.com/install-flutter-linux/) to test things on Ubuntu.

If you mess up the system, you can quickly delete the VM and spin up a new one to learn from your mistakes.

You get a perfect isolated environment for development work and testing with a Linux VM.

## 7. Learning or Research

Linux is something to explore. While you could use it for basic computing tasks, there’s so much more that you can do with it.

You can learn how to customize the user interface, try some [popular desktop environments](https://itsfoss.com/best-linux-desktop-environments/), install [various essential apps](https://itsfoss.com/essential-linux-applications/), and take control of your system without worrying about it.

If anything goes wrong, you create a new Linux VM. Of course, it is not just for general-purpose usage, but aspiring system administrators can also take this opportunity to test what they learn.

## 8. Easy to Clone or Migrate

Virtual machines, in general, are easy to clone and migrate. With a Linux VM, as long as the virtual program is supported on another system or host OS, you can easily migrate it without any special requirements.

If you need to clone an existing virtual machine for any reason, that is pretty easy too, and it should take a couple of clicks to get it done.

## 9. Try A Variety of Distros

Of course, with hundreds of Linux distros available, you can try all kinds of distros by creating a Linux virtual machine.

You may consider this a part of learning/research, but I believe trying out different distros is a massive task if you want to test things out before installing them on your system.

## 10. Debugging

Whether it is for fun or serious research, debugging is relatively more straightforward in an isolated environment provided by the Linux VM.

You get the freedom to try various troubleshooting methods without thinking about the outcome. Furthermore, you do not need root access to your host OS (if it’s Linux) to access the system configuration/files in the VM.

## Wrapping Up

If you are not an experienced user or depend on a different host OS, you can benefit from installing Linux using a virtual machine.

A Linux VM should be beneficial for development, learning, experimenting, or any other special use cases.

*💭 Have you used Linux on a virtual machine? What do you use it for? Let me know in the comments below.* |

14,542 | 使用 dnf 进行 Linux 包管理 | https://opensource.com/article/21/6/dnf-linux | 2022-05-04T10:15:39 | [

"dnf",

"软件包管理"

] | /article-14542-1.html |

>

> 了解如何在 Linux 上使用 `dnf` 命令安装软件包,然后下载我们的速查表,让正确的命令触手可及。

>

>

>

在计算机系统上安装应用程序非常简单:就是将档案(如 `.zip` 文件)中的文件复制到目标计算机上,放在操作系统预期放应用程序的位置。因为我们中的许多人习惯于使用花哨的安装“向导”来帮助我们在计算机上安装软件,所以这个过程似乎在技术上应该比实际更复杂。

然而,复杂的是,是什么构成了一个程序?用户认为的单个应用程序实际上包含了分散在操作系统中的软件库的各种依赖代码(例如:Linux 上的 .so 文件、Windows 上的 .dll 文件和 macOS 上的 .dylib 文件)。

为了让用户不必担心这些程序代码之间的复杂的互相依赖关系, Linux 使用 <ruby> 包管理系统 <rt> package management system </rt></ruby> 来跟踪哪些应用程序需要哪些库,哪些库或应用程序有安全或功能更新,以及每个软件会附带安装哪些额外的数据文件。包管理器本质上是一个安装向导。它们易于使用,提供了图形界面和基于终端的界面,让你的生活更轻松。你越了解你的发行版的包管理器,你的生活就会越轻松。

### 在 Linux 上安装应用程序

如果你在使用 Linux 桌面时,偶尔想要安装一个应用程序,那么你可能正在寻找 [GNOME “软件”](https://wiki.gnome.org/Apps/Software),它是一个桌面应用程序浏览器。

它会按你的预期工作:点击它的界面,直到你找到一个看起来有用的应用程序,然后单击 “安装” 按钮。

或者,你可以在 GNOME “软件” 中打开从网络下载的 `.rpm` 或 `.flatpakref` 软件包,以便它进行安装。

但如果你更倾向于使用命令行,请继续阅读。

### 用 dnf 搜索软件

在安装应用程序之前,你可能需要确认它是否存在于你的发行版的服务器上。通常,使用 `dnf` 搜索应用程序的通用名称就足够了。例如,假设你最近阅读了 [一篇关于 Cockpit 的文章](https://opensource.com/article/20/11/cockpit-server-management),并决定尝试一下。你可以搜索 `cockpit` 验证该发行版是否包含它:

```

$ dnf search cockpit

Last metadata expiration check: 0:01:46 ago on Tue 18 May 2021 19:18:15 NZST.

==== Name Exactly Matched: cockpit ====

cockpit.x86_64 : Web Console for Linux servers

==== Name & Summary Matched: cockpit ==

cockpit-bridge.x86_64 : Cockpit bridge server-side component

cockpit-composer.noarch : Composer GUI for use with Cockpit

[...]

```

有一个精确的匹配。上面列出的匹配的软件包名为 `cockpit.x86_64`,但名称中的 `.x86_64` 部分仅表示它兼容该 CPU 架构。默认情况下,你的系统会安装适配当前 CPU 架构的软件包,因此你可以忽略该扩展名。所以你确认你要查找的软件包确实简称为 `cockpit`。

现在你可以放心地使用 `dnf install` 安装它。 此步骤需要管理员权限:

```

$ sudo dnf install cockpit

```

一般来说,这就是典型的 `dnf` 工作流:搜索并安装。

然而,有时 `dnf search` 的结果并不清晰,或者你想要关于一个软件包的更多信息,而不仅仅是它的通用名称。有一些相关的 `dnf` 子命令,具体取决于你想要的信息。

### 软件包的元数据

如果你觉得你的搜索已 *接近* 想要的结果,但还不确定,查看软件包的元数据通常会有所帮助,例如项目的网址和描述。要获取此信息,请使用顾名思义的 `dnf info` 命令:

```

$ dnf info terminator

Available Packages

Name : terminator

Version : 1.92

Release : 2.el8

Architecture : noarch

Size : 526 k

Source : terminator-1.92-2.el8.src.rpm

Repository : epel

Summary : Store and run multiple GNOME terminals in one window

URL : https://github.com/gnome-terminator

License : GPLv2

Description : Multiple GNOME terminals in one window. This is a project to produce

: an efficient way of filling a large area of screen space with

: terminals. This is done by splitting the window into a resizeable

: grid of terminals. As such, you can produce a very flexible

: arrangements of terminals for different tasks.

```

这个信息告诉你可用软件包的版本、在你系统中注册的哪一个存储库提供了它、该项目的网站以及详细的功能描述。

### 哪个软件包提供的这个文件?

软件包名称并不总是与你要查找的内容相匹配。例如,假设你正在阅读的文档告诉你必须安装名为 `qmake-qt5` 的东西:

```

$ dnf search qmake-qt5

No matches found.

```

`dnf` 数据库非常广泛,因此你不要局限于搜索完全匹配的内容。你可以使用 `dnf provides` 命令来了解你正在寻找的东西是否作为某个更大的软件包的一部分而提供:

```

$ dnf provides qmake-qt5

qt5-qtbase-devel-5.12.5-8.el8.i686 : Development files for qt5-qtbase

Repo : appstream

Matched from:

Filename : /usr/bin/qmake-qt5

qt5-qtbase-devel-5.15.2-3.el8.x86_64 : Development files for qt5-qtbase

Repo : appstream

Matched from:

Filename : /usr/bin/qmake-qt5

```

可以确认应用程序 `qmake-qt5` 是名为 `qt5-qtbase-devel` 的软件包的一部分。它还告诉你,该应用程序会安装到 `/usr/bin`,因此你知道了安装后它的确切位置。

### 软件包中包含哪些文件?

有时我发现自己会从完全不同的角度来对待 `dnf`。有时,我已经确认我的系统上安装了一个应用程序;我只是不知道我是怎么得到它的。还有一些时候,我知道我安装了一个特定的软件包,但我不清楚这个软件包到底在我的系统上安装了什么。

如果你需要对包的<ruby> 有效负载 <rt> payload </rt></ruby>进行 “<ruby> 逆向工程 <rt> reverse engineer </rt></ruby>”,可以使用 `dnf repoquery` 命令和 `--list` 选项。这将查看存储库中有关软件包的元数据,并列出该软件包提供的所有文件:

```

$ dnf repoquery --list qt5-qtbase-devel

/usr/bin/fixqt4headers.pl

/usr/bin/moc-qt5

/usr/bin/qdbuscpp2xml-qt5

/usr/bin/qdbusxml2cpp-qt5

/usr/bin/qlalr

/usr/bin/qmake-qt5

/usr/bin/qvkgen

/usr/bin/rcc-qt5

[...]

```

这些列表可能很长,使用 `less` 或你喜欢的分页命令配合管道操作会有所帮助。

### 移除应用程序

如果你决定系统中不再需要某个应用程序,可以使用 `dnf remove` 卸载它,该软件包本身安装的文件以及不再需要的任何依赖项都会被移除:

```

$ dnf remove bigapp

```

有时,你发现随着一个应用程序一起安装的依赖项对后来安装的其他应用程序也有用。如果两个包需要相同的依赖项,`dnf remove` *不会* 删除依赖项。在安装和卸载大量应用程序之后,孤儿软件包散落各处的现象并不少见。大约每年我都要执行一次 `dnf autoremove` 来清除所有未使用的软件包:

```

$ dnf autoremove

```

这不是必需的,但这是一个让我的电脑感觉更好的大扫除步骤。

### 了解 dnf

你对包管理器的工作方式了解得越多,在必要时安装和查询应用程序就越容易。即便你不是 `dnf` 的重度使用者,当你发现自己与基于 RPM 的发行版交互时,了解它也会很有用。

告别 `yum` 后,我最喜欢的包管理器之一是 `dnf` 命令。虽然我不喜欢它的所有子命令,但我发现它是目前最健壮的 <ruby> 包管理系统 <rt> package management system </rt></ruby> 之一。 [下载我们的 dnf 速查表](https://opensource.com/downloads/dnf-cheat-sheet) 习惯该命令,不要害怕尝试一些新技巧。一旦熟悉了它,你可能会发现很难使用其他任何东西替代它。

>

> **[dnf 速查表](https://opensource.com/downloads/dnf-cheat-sheet)**

>

>

>

---

via: <https://opensource.com/article/21/6/dnf-linux>

作者:[Seth Kenlon](https://opensource.com/users/seth) 选题:[lujun9972](https://github.com/lujun9972) 译者:[hanszhao80](https://github.com/hanszhao80) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| null | HTTPSConnectionPool(host='opensource.com', port=443): Read timed out. (read timeout=10) | null |

14,544 | 微软加入开放 3D 基金会,参与开源 3D 开发 | https://www.opensourceforu.com/2022/05/microsoft-joins-the-open-3d-foundation-for-open-source-3d-development-promotion/ | 2022-05-04T20:26:57 | [

"O3DF",

"微软"

] | https://linux.cn/article-14544-1.html |

微软已经以首席成员的身份加入了<ruby> 开放 3D 基金会 <rt> Open 3D Foundation </rt></ruby>(O3DF),其他首席成员是 Adobe、AWS、华为、英特尔和 Niantic。微软的参与为该项目带来了大量的知识和思想引领,这表明了:通过行业合作,创造一个高保真、功能齐全、不受商业条件限制的开源 3D 引擎是多么的关键。

微软首席集团项目经理 Paul Oliver 将加入 O3DF 管理委员会,这表明他将致力于实现基金会的目标,即确保符合开放 3D 社区保持需求与输入的平衡。基金会的战略方向和对 3D 可视化、仿真计划的管理,是由理事会与股东的创新互动来指导的。

“微软在创意方面的根基很深,我们希望帮助所有的创作者,无论他们是谁、在哪里、为哪个平台创作”,Oliver 如是说,“由 Linux 基金会创建的开放 3D 基金会,是朝着帮助更多世界各地的创作者迈出的美妙一步,我们很高兴能成为其中的一员。”

微软不断致力于使游戏制作民主化,并向全世界的游戏创作者提供其工具和技术。加入开放 3D 基金会也反映出这一点。微软去年通过 GitHub 向所有开发者发布了其游戏开发工具包,并正在通过与 O3DF 的新伙伴关系,扩大其向所有人开放技术的承诺。

O3DF 执行董事,兼 Linux 基金会的游戏和数字媒体部总经理 Royal O'Brien 说:“我们很高兴微软以首席成员的身份加入开放 3D 基金会。有像微软这样杰出的行业资深公司做出贡献,并帮助社区推动 3D 引擎的创新,这对开源社区和使用它的公司都是巨大的好处。”

---

via: <https://www.opensourceforu.com/2022/05/microsoft-joins-the-open-3d-foundation-for-open-source-3d-development-promotion/>

作者:[Laveesh Kocher](https://www.opensourceforu.com/author/laveesh-kocher/) 选题:[lkxed](https://github.com/lkxed) 译者:[lkxed](https://github.com/lkxed) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Microsoft has joined the Open 3D Foundation (O3DF) as a Premier member, joining Adobe, AWS, Huawei, Intel, and Niantic. Microsoft’s involvement in the project adds a lot of knowledge and thought leadership to the project, demonstrating how crucial it is for the industry to work together to create a high-fidelity, fully-featured open source 3D engine that is free of commercial conditions.

Paul Oliver, Microsoft’s Principal Group Program Manager, will join the O3DF Governing Board, demonstrating his commitment to the Foundation’s goal of ensuring balanced collaboration and input that fits the needs of the Open 3D community. The Foundation’s strategic direction and stewardship of 3D visualisation and simulation initiatives are guided by the Governing Board’s innovative interactions with stakeholders.

“Microsoft’s roots in creativity run deep and we want to help creators wherever they are, whoever they are, and whatever platform they’re creating for,” said Oliver. “Having the Linux Foundation create the Open 3D Foundation is a fantastic step towards helping more creators everywhere and we are excited to be a part of it.”

Microsoft’s continuous commitment to democratising game production and making its tools and technology available to game creators all over the world is reflected in this step. The company released its Game Development Kit to all developers via GitHub last year. Microsoft is extending its commitment to open up technology to everyone with its new partnership with O3DF.

“We are elated to have Microsoft join the Open 3D Foundation as a Premier member,” said Royal O’Brien, Executive Director of O3DF and General Manager of Games and Digital Media at the Linux Foundation. “Having incredible industry veterans like Microsoft contributing and helping drive innovation with the community for 3D engines is a huge benefit to the open source community and the companies that use it alike.” |

14,545 | 文档并不是开源项目开发的附属品 | https://www.opensourceforu.com/2022/04/documentation-isnt-just-another-aspect-of-open-source-development/ | 2022-05-05T09:00:04 | [

"文档"

] | https://linux.cn/article-14545-1.html | 有些项目长期保持活跃,有些项目却过早消亡 —— 这两者的区别往往在于它们的文档。严谨、聪明的文档可以给你的项目带来它所需要的动力。你应该把文档工作视为一项主要工作,把它与开发相提并论,下面我将说明这么做的理由和正确的做法。

经常会有开发者简单地认为他们的代码的“<ruby> 自我描述 <rt> self-documented </rt></ruby>”已经足够了,继而认为额外的文档是没有必要的。这种过度的自信会让项目付出很大的代价。匮乏或差劲的文档会扼杀你的项目。没有适当的文档,用户将无法理解项目的目标以及正确的工作流程。这可能会导致人们对采用你的开源产品产生一些疑虑。

### 撰写文档,从项目第一天就开始

文档不应该是次要的工作,它应该是与代码开发和管理同等的主要任务。随着内容以 Community Threads、Stack Overflow 和 Quora 问答等形式的广泛传播,文档承担了“<ruby> 信息源 <rt> source of truth </rt></ruby>”的角色。它应该满足那些想参考一手资料的贡献者的需要,并给工程师提供必要的参考支持。它还应该与利益相关者沟通基本计划。一个好的文档可以确保产品的持续改进和发展。

当发布一个软件产品时,我们不仅要发布代码,还要发布好的文档。这给我们带来了一个最重要的概念,大多数良好维护了文档的开源项目都遵循这个概念 —— “<ruby> 文档即代码 <rt> Documentation as code </rt></ruby>”。

### 文档及代码

今天,文档不再被存储为微软 Word 或 PDF 文件。新的需求是版本控制文档,其中所有的文档都是通过版本控制系统添加的,并持续发布。这个概念因 Read the Docs(LCTT 译注:一个文档创建、托管和浏览的平台)而流行,现在已经成为大多数文档团队的内容策略的重要组成部分。

像 Bugzilla 和 GitHub <ruby> 议题 <rt> Issue </rt></ruby>这样的工具可以用来跟踪待处理的文档工作,并从维护者和用户那里获得反馈以验证文档的发布。外部审查可以用来验证文档作品,并持续发布文档。这就保证了除代码外,文档也能不断改进并快速发布。

请记住,如果不遵循规范化的实践,每个文档都会不同。这可能会导致一些混乱,使人们难以获取正确的信息。

哪些东西会被归类为混乱呢?当大多数文件都不遵循规范实践时,不一致就会产生,从而导致更大的混乱!那么,如何整理混乱的开源文档呢?

### 整理混乱的开源文档

遵循一个“文档风格指南”是很重要的。风格指南是创建和展示内容的指导方针的集合。无论你是一个独立的作家还是一个大型文档团队的成员,它都有助于在你的文档中保持一致的风格、口音和语气。

有几个流行的风格指南,如《红帽风格指南》、《谷歌文档风格指南》和《苹果风格指南》。如何选用?首先要从定义你的需求开始。如果你的要求与其他开源项目没有太大区别,你可以遵循一个现成的风格指南,或者你也可以先选一个,然后在它的基础上根据自身需要做一些修改。大多数与语法有关的准则和内容规则可能是通用的,但整体术语可能会有所不同。

你还需要在你的项目中自动采用这些风格指南。为此,你可以使用 Vale,它集成了本地的持续集成(CI)服务,该服务能帮助你确保文档严格遵循风格指南。

>

> **文档类型**

>

>

> * *自述文件*:包含基本的安装和使用说明,这也是任何开源文档中最重要的部分之一。它是潜在的用户/开发者与项目之间的第一个连接点。

> * *参考指南*:可能包括一些基本的参考资料,以便帮助你快速上手,或者是与项目贡献相关的文档。

> * *用户文档*:是最基本的文档,它描述了项目的使用方式。如果没有用户文档,大多数人就会对如何使用该项目感到迷茫。

> * *开发文档*:旨在支持开发团队在项目中不断取得新的进展。它还应该为内部开发工作提供一个良好的途径,并确保功能被很好地传达给股东。

> * *社区内容*:包括基本的博客、视频和外部内容,旨在为那些想进一步了解项目的社区成员提供支持。

>

>

>

通过使用风格指南,文件的整体前提将以统一的语言风格传达给用户。但是,这些文件毕竟是由一个技术作家团队准备的,它们的写作风格可能会冲突,因为写作风格是因人而异的。那么,如何才能使文档规范化呢?

### 规范化文档

当涉及到规范化文档时,有许多方法可以采取。第一个方法显然是创建适用于各种角色的预定义模板。这些模板可以用来记录新的功能、识别错误和问题,以及更新变更日志以适应正在增加的新内容。

如果你采用的是基于 Git 的工作流,试着开发一个规范的工作流程来发布你的文档。最规范的工作流是:<ruby> 复刻 <rt> fork </rt></ruby> 发布文档的仓库,在本地分支上添加你的修改,推送这些修改,提出请求并要求对其进行审查。规范化文档的一个好处就是带来更好的反馈和审查过程。

### 反馈和自动审查

规范化使得你能够得到用户的反馈并生成自动的审查,可以参考这些反馈来改进项目和文档。通过这些反馈,你也可以评估所分享的信息对用户是否有意义。像 GitBook 这样的文档平台会提供合适的反馈服务,这有助于验证文档是否有用。

始终寻求<ruby> 主题专家 <rt> subject matter expert </rt></ruby>(SME)对文档的反馈,他们可以是利益相关者、开发者、工程师,甚至是外部贡献者。你也可以使用自动测试和 CI 来验证你的文档是否遵循风格指南。

### 文档众包

如果你想开源你的文档,最好的方法也许是提供一个快速入门指南。它可以像 `CONTRIBUTING.md` 那样简单,基本上只要说明该如何设置项目并为其作出贡献/单纯使用它即可。

始终开发以用户为中心的文档,标明每个项目的目的。同时,打造学习课程来帮助新的贡献者。

>

> **带着目的编写文档**

>

>

> 始终带着目的编写文档。它是最基本的写作策略之一,它定义了你编写某个特定文档的理由,而非方式。首先回答以下问题:

>

>

> * 这个文档的目标是什么?

> * 需要传递的信息是什么?

> * 你希望用户在这之后采取什么行动?

> * 我与读者分享的价值观是什么?

> * 我的文档风格是否简洁、一致?

>

>

>

### 定义一致的内容策略

一致的内容策略有助于确保文档工作和项目基础设施的长期愿景。它可以围绕以下两个主要方面:

1. 资源:包括项目文档、案例研究和白皮书、项目架构等

2. 品牌内容:博客和特邀帖子、新闻和社区故事、学习课程等

每个开源项目都应该有适当的文档,以说明它能为用户提供的功能,这样用户就可以选择最合适的解决方案。适当的文档可以传达正确的信息,也可以让其他开发者贡献力量来进一步加强和改进项目。虽然听起来很简单,但只有做对了,文档才能成功。而你的项目,反过来,只有在你的文档正确的情况下才能成功,所以永远不要低估它的目标或过程!

策划:Laveesh Kocher

---

via: <https://www.opensourceforu.com/2022/04/documentation-isnt-just-another-aspect-of-open-source-development/>

作者:[Harsh Bardhan Mishra](https://www.opensourceforu.com/author/harsh-bardhan-mishra/) 选题:[lkxed](https://github.com/lkxed) 译者:[lkxed](https://github.com/lkxed) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | *Some projects live on, while some die a premature death – and the difference between the two often lies in the documentation. Meticulous, smart documentation can give your project the boost it needs. Here is why you should consider documentation a primary effort, on par with development, and the right way to go about it!*

Often, developers simply assume that code is self-documented enough and doesn’t need any extra documentation. This overconfidence can cost the project a lot. Insufficient or bad documentation can kill your project. Without proper documentation in place users won’t be able to understand the purpose as well as the proper workflow of the project. That could lead to some apprehensions about adopting your open source product.

**Work on it, right from day one**

Documentation should never be a secondary effort; it should be a primary task on par with code development and management. Documentation acts as the definitive source of truth with the wide redistribution of content in the form of community threads, stack overflows, and quora answers. It should fulfil the need of contributors who would like to refer to the actual resource, and provide the necessary references to support engineers. It should also communicate essential plans with the stakeholders. A good documentation ensures continuous improvement and development of a product.

When releasing a software product, we must not only ship the code but good documentation as well. This brings us to one of the most important concepts that most open source projects with well-maintained documentation follow – ‘documentation as code’.

**Documentation as code**

Today documentation is not being stored in Microsoft Word or PDF files. The new need is version control documentation, wherein all the docs are added over a version control system and released continuously. This concept was popularised by ‘Read the Docs’ and has now become an essential part of the content strategy for most documentation teams.

Tools like Bugzilla and GitHub Issues can be used to track the documentation work that is pending, and take feedback from maintainers and users to validate the release of the documents. External reviews can be used to validate the documentation piece, and to continuously publish it. This ensures that not only code, but the documentation as well, is continuously improved and released quickly.

Keep in mind that no two documentations will ever be the same if they don’t follow any standardised practice. This can lead to some mess, making it hard to fetch the right information.

How exactly do we classify something as messy? When most of the documentation pieces don’t follow standard practices, it leads to inconsistency and hence a big mess! So how do you declutter messy open source documents?

**Decluttering messy open source documentation**

It is important to follow a ‘documentation style guide’. A style guide is a collection of guidelines for creating and presenting content. Whether you’re a standalone writer or part of a large documents team, it helps to keep a consistent style, voice and tone throughout your documentation.

There are several popular style guides available, such as the Red Hat style guide, Google documentation style guide, and Apple style guide. To choose one, first start by defining your requirements. If your requirements do not differ much from other open source projects, you can follow a readily-available style guide, or adapt the same style guide for your own purpose with a few changes here and there. Most of the grammar-related guidelines and content rules may be the same, but overall terminology can vary.

You will also be required to automate the adoption of these style guides within your projects. For this, you can use Vale, which is integrated into the local machinery with a continuous integration (CI) service that will help you to ensure your documentation follows the style guide in a strict manner.

Types of documentation |

README: This contains basic installation and usage instructions, which is also one of the most essential parts of any open source documentation. It is the first point of information and contact between the potential user or developer and the project.

|

By using the style guide, the overall premise of the documentation will be conveyed to the users in a single tone. But since these documents are prepared by a team of technical writers, there can be conflicting writing styles, as these vary from person to person. So how do you standardise the documentation?

**Standardising documentation**

There are many approaches that can be taken when it comes to standardising documentation. The first one is obviously to create predefined templates which can be used for a variety of roles. These can be used for documenting new features, identifying bugs and issues, and updating the change log to accommodate new stuff that is being added.

Try to develop a standard workflow for publishing your documentation if you are following a Git based workflow. The most standard workflow will be to fork the repo where the documentation is published, add your changes on a local branch, push these changes, make your request and ask for reviews on the same. A positive that comes out of standardising your documents is a better feedback and review process.

**Feedback and automated reviews**

Standardisation allows you to get users’ feedback and generate automated reviews, which can be taken into consideration for improving the project and the documentation. With this feedback, you can also evaluate whether the information being shared is making sense to users or not. Having a proper feedback service in place through documentation platforms like GitBook helps to verify if the documentation is useful or not.

Always try to seek out subject matter expert (SME) feedback on the documentation. These SMEs can be stakeholders, developers, engineers, or even external contributors. You can also use automated tests and CI to verify if your documentation is following a style guide or not.

**Crowdsourced documentation efforts**

If you are looking to open source your documentation, perhaps the best way to get started is to provide a quick start guide. The guide can be as simple as ‘CONTRIBUTING.md’; basically, a file showing how a person can set up the project and contribute to or use it.

Always try to develop user-centric documentation that signifies the purpose of each project and build learning courses to help new contributors.

Write with a purpose |

Always look to write with a purpose. It is one of the most essential writing strategies and basically defines ‘why’ you are writing a particular documentation, and not ‘how’ you are writing it. Start by answering the following questions:

|

Defining a consistent content strategy

A consistent content strategy helps to ensure a long-term vision for the documentation efforts and the project infrastructure. This can revolve around two main things:

a.* Resources:* Project docs, case studies and whitepapers, project architecture

b. *Branded content:* Blogs and guest posts, news and community stories, learning courses

Every open source project should have proper documentation stating the functionality it can provide to users, so that they can opt for the most suitable solution. Proper documentation that communicates the right information also allows other developers to put in their efforts to further enhance and improve the project. Simple though it sounds, documentation can only succeed if done right. And your project, in turn, can only succeed if your documentation is right, so never underestimate its purpose or process!

Curated By: Laveesh Kocher |

14,546 | 使 Ubuntu 22.04 LTS 成为史诗版本的 5 个不太流行的功能 | https://www.debugpoint.com/2022/04/ubuntu-22-04-release-unique-feature/ | 2022-05-05T11:27:28 | [

"Ubuntu"

] | /article-14546-1.html |

>

> 这是一份关于 Ubuntu 22.04 LTS 的次要特点的列表,这些特点使它成为迄今为止最好的 LTS 版本之一。

>

>

>

Canonical 的最新 LTS 版本 [Ubuntu 的代号为 “Jammy Jellyfish”](https://www.debugpoint.com/2022/01/ubuntu-22-04-lts/) 受到了全球用户的好评。但是有数百个新的微小功能,以及一些不太流行的功能,没有引起人们的注意。因此,这里有五个 Ubuntu 22.04 的独特功能,我们认为这些功能可以使它成为一个史诗般的版本。

### Ubuntu 22.04 发布 – 五个独特的功能

#### 为数据驱动的方案进行了优化

数据分析和处理是当今每个企业的核心。而要做到这一点,你需要巨大的计算能力。Ubuntu 22.04 LTS 带来了开箱即用的 [英伟达虚拟 GPU(vGPU)](https://docs.xn--cpq367j69h.com/grid/latest/grid-vgpu-release-notes-ubuntu/index.html) 驱动支持。这意味着你可以利用英伟达虚拟 GPU 软件,使你能够在虚拟机中使用从物理 GPU 服务器共享的 GPU 计算能力。

不仅如此,如果你的业务依赖于 SQL Server,Ubuntu LTS for Azure 带来了 Ubuntu 中的 SQL Server,它由 “Micro$oft” 支持,提供优化的性能和可扩展性。

#### 改进的活动目录集成

此外,许多企业在多个工作站中为整个企业用户部署 Ubuntu。而且,部署工作站策略以监测和控制用户访问和各种关键业务控制非常重要。

活动目录实现了基于策略的工作站管理(在 Ubuntu 20.04 中引入),在这个版本中得到了进一步改善。除此之外,这个版本还带来了 [ADsys](https://github.com/ubuntu/adsys) 客户端,它有助于通过命令行远程管理组策略、权限升级和远程脚本执行。从这个版本开始,活动目录现在也支持与高级组策略对象的安装程序集成。

#### 实时内核支持

此外,在 Ubuntu 22.04 LTS 发布期间,Canonical 宣布的一个有趣的消息是,提供“实时”内核选项,现在是测试版。对于电信和其他行业来说,一个低延迟的操作系统对于时间敏感的工作是必需的。因此,考虑到这一点和渗透到这些领域的愿景,Ubuntu 22.04 LTS 带来了一个应用了 PREEMPT\_RT 补丁的实时内核构建。它可用于 x86\_64 和 AArch64 架构。

然而,该 [补丁](https://git.kernel.org/pub/scm/linux/kernel/git/rt/linux-stable-rt.git/) 还没有在主线内核中出现,希望它能很快能出现。

#### 最新的应用、软件包和驱动程序

除了上述变化之外,这个版本还带来了大量的软件包和工具链的升级。例如,这个版本带来了基于各种用途的多种 Linux 内核类型,如 Ubuntu 桌面可以选择使用 [内核 5.17](https://www.debugpoint.com/2022/03/linux-kernel-5-17/),而硬件启用内核仍然是 5.15。

不仅如此,Ubuntu Server 采用长期支持版的 [内核 5.15](https://www.debugpoint.com/2021/11/linux-kernel-5-15/),而 Ubuntu Cloud 镜像可以选择使用与云供应商合作的更优化的内核。

此外,如果你是英伟达用户,值得一提的是,ARM64 上的英伟达驱动的 Linux 限制模块现在已经可用(在 x86\_64 中已经可用)。你可以使用 [ubuntu-drivers](https://launchpad.net/ubuntu/+source/ubuntu-drivers-common) 程序来安装和配置英伟达驱动。

核心模块和子系统构成的完整的操作系统可以完美无缺地工作。因此,考虑到这一点,Ubuntu 22.04 LTS 对它们都进行了仔细的升级,以迎合这个很好的版本。以下是简介:

GNU/Linux 核心:

* GCC 11.2.0

* binutils 2.38

* glibc 2.35

编程工具链:

* Python 3.10.4

* Perl 5.34.0

* LLVM 14

* golang 1.18

* rustc 1.58

* OpenJDK 11(可选使用 OpenJDK 18)

* Ruby 3.0

* PHP 8.1.2

* Apache 2.4.52

* PostgreSQL 14.2

* Django 3.2.12

* MySQL 8.0

* 更新的 NFS 以及 Samba Server

* Systemd 249.11

* OpenSSL 3.0

虚拟化:

* qemu 6.2.0

* libvirt 8.0.0

* virt-manager 4.0.0

#### 性能提升

但这还不是全部。由于一些长期等待的更新,你应该体验到更快的 Ubuntu 22.04 Jammy Jellyfish,这些体验最终会在这个版本中出现。

首先,长期等待的 GNOME 桌面的 [三重缓冲代码] 已经来到。当先前的帧缓冲落后时,三重缓冲会自动启用,它在英特尔和树莓派驱动中产生了更快的桌面性能。不仅如此,代码还监控最后一帧,以便系统不会遇到过量缓冲的情况。

其次,改进的电源管理,在运行时对 AMD 和英伟达的 GPU 起作用,将帮助笔记本电脑用户。

此外,Wayland 现在是大多数系统的默认显示服务器,除了英伟达 GPU 硬件默认为 X11。Wayland 为你提供了更快的跨应用的桌面体验,包括网页浏览器。

最后,定制的 GNOME 42 及其 [独特功能](https://www.debugpoint.com/2022/03/gnome-42-release/)(例如平衡和省电电源配置文件)为重度笔记本电脑用户提供了更多优势。此外,带有浅色/深色外观的新强调色和将选定的 GNOME 模块移植到 GTK4/libadwaita 只是这个史诗般的 Ubuntu 22.04 LTS 版本的一个补充。

### 结论

总而言之,就上述所有内容的变化和许多其他方面而言,我相信这是 Canonical 发布的最好的 LTS 版本之一。

我们希望它得到好评,并在未来能保持稳定。

---

via: <https://www.debugpoint.com/2022/04/ubuntu-22-04-release-unique-feature/>

作者:[Arindam](https://www.debugpoint.com/author/admin1/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| null | ('Connection aborted.', RemoteDisconnected('Remote end closed connection without response')) | null |

14,547 | 最适合程序员的 10 款 Linux 发行版 | https://www.debugpoint.com/2022/03/top-linux-distributions-programmers-2022/ | 2022-05-05T14:36:00 | [

"Linux 发行版"

] | /article-14547-1.html |

>

> 我们为程序员和开发人员总结了 2022 年最好用的 10 款 Linux 发行版,以便他们开展日常工作和个人项目。

>

>

>

由于工作和项目需要,程序员和开发人员会用到各种工具和应用,包括代码编辑器、编译器、插件以及数据库等等。若对现代开发人员的工作流程做一番整理,一般流程如下:

* 创建代码仓库

* 编程

* 调试

* 测试

* 部署

上述工作流程需要用到各种各样的工具,一份标准的工具清单如下:

* 代码编辑器

* 简单的文本编辑器

* 网页浏览器(包括 Web 开发人员使用的各种浏览器)

* 数据库引擎

* 本地服务器

* 各类编程语言的编译器

* 调试器

* 监视或分析工具(客户端或者网页端)

与 Windows 相比,Linux 可以说是编程的最佳平台。之所以这样说,主要是因为 Linux 发行版与 Windows 不同,预装了许多功能强大的包和软件,自行安装也比较容易。在本文中,考虑到一些原因,我不会将 macOS 纳入对比范围之内。

综上,本文将整理出 2022 年最适合程序员的 10 款 Linux 发行版。

### 2022 最适合程序员的 10 款 Linux 发行版

#### 1、Fedora 工作站

在这 10 款 Linux 发行版当中,最优秀的可能就要数 Fedora Linux 了。Fedora 默认的工作站版本精选了一些软件包,为用户带来真正的 GNOME 桌面体验。

Fedora Linux 默认安装了开箱即用的主流开发软件包,包括 PHP、OpenJDK、PostgreSQL、Django、Ruby on Rails 以及 Ansible 等等。

dnf 是 Fedora Linux 的包管理器,有了它,安装代码编辑器以及其他软件就相当容易了。此外,你还可以使用“软件”应用商店一键搜索、安装软件。

Fedora Linux 支持 Snap 和 Flatpak,使用起来会更加灵活方便。你还可以使用 RPM Fusion 仓库,获取大量自由或非自由的软件。因为许可证等一些原因,Fedora Linux 不希望在其主仓库内包括这些包,于是就有了 RPM Fusion。

点击下方链接,了解 Fedora Linux 最新版本。

>

> **[下载 Fedora](https://getfedora.org/)**

>

>

>

#### 2、Ubuntu Linux

在今天,无论是服务器还是个人电脑,使用最为广泛的发行版当属 Ubuntu Linux。Ubuntu 提供长期支持版本,每个长期支持版本官方提供五年的支持(外加五年的维护支持),并且每年为高级用户提供两个短期版本。

由于 Ubuntu 非常流行,各种包与软件的供应商都会提供适用于 Ubuntu 的版本(.deb)。此外,得益于广泛的知名度,Ubuntu 有着更为庞大的论坛群体和更为丰富的帮助文档。所以说,Ubuntu 是开发人员的最佳之选,尤其是在开发过程中陷入难题的时候,Ubuntu 更能发挥其作用。点击下方链接,了解更多。

>

> **[下载 Ubuntu](https://ubuntu.com/download)**

>

>

>

#### 3、openSUSE

openSUSE 是用在全球关键系统中最稳定、最专业的 Linux 发行版之一,是企业界(包括个人电脑、服务器以及瘦客户机)的首选解决方案。

相较于 Ubuntu 和 Fedora,openSUSE 具有一些独特的优势。首先,openSUSE 有两个版本:Leap 和 Tumbleweed。其中,openSUSE Leap 属于长期支持版,为用户带来稳定的升级体验。openSUSE Tumbleweed 属于滚动发行版,有着最新、最尖端的软件包。

如果你想获得最新的包和硬件支持,助力开发工作,你可以选择 openSUSE Tumbleweed;如果你想要的是稳定性,无需频繁维护即可长期运行,openSUSE Leap 会更适合你。

使用 openSUSE 进行开发工作,最大的优势之一就是 YaST 包管理工具。有了 YaST,许多操作可以轻松实现自动化。

此外,openSUSE 获取软件非常方便。它有专属的应用网站,供用户查找、安装包和软件。

如果你有一些 Linux 发行版的使用经验,推荐选择 openSUSE 进行开发工作。

>

> **[下载 openSUSE](https://www.opensuse.org/)**

>

>

>

#### 4、Manjaro Linux

Manjaro Linux 基于 Arch Linux,不过安装起来更容易一些。Manjaro Linux 自身还有许多独特功能,比如带有图形用户界面的安装程序、pamac 软件安装器以及高质量的软件仓库等等。Manjaro 有三个主要的桌面版本:GNOME、KDE Plasma 和 Xfce,足以满足各类用户的需要。

如果你想使用 Arch Linux 及其滚动发行的软件包来满足开发需求,但又不想在安装原版 Arch 上来回折腾,Manjaro 绝对是你的最佳选择。

>

> **[下载 Manjaro](https://manjaro.org/download/)**

>

>

>

#### 5、Arch Linux

尽管有 Manjaro 以及其他基于 Arch Linux 的发行版,而且安装操作非常简单,你可能还是想在自己的定制电脑上折腾一番,亲自动手 [安装原版 Arch](https://www.debugpoint.com/2022/01/archinstall-guide/)。

不过这种选择更适合程序员和开发人员,因为他们想得到更多的掌控权,或者想要定制一个 Linux 操作系统,用于开发项目或满足开发需求。这样一来,他们可能就会安装 Arch Linux,选择自己最喜欢的桌面,设置专用于开发工作的操作系统。

假设你对 Arch Linux 和普通的电脑都比较熟悉,那么 Arch Linux 就是你的最佳选择,因为在自己定制的 Linux 操作系统上,你可以完全控制每一个软件包。

>

> **[下载 Arch Linux](https://archlinux.org/download/)**

>

>

>

#### 6、Pop OS

Pop OS(即 Pop!\_OS)由电脑制造商 System76 针对其系列硬件开发,是一款基于 Ubuntu 的自由开源的发行版。发行周期与 Ubuntu 保持同步,并为用户提供了额外的调整工具和软件包。

Pop OS 基于 Ubuntu,默认支持多种程序语言,所以非常适合程序员使用。Pop OS 的软件中心非常出色,设有开发软件专区,深受计算机科学家和程序员青睐。

此外,Pop OS 的 COSMIC 桌面(GNOME 桌面的定制版)支持窗口自动平铺,具有柔美的调色板、默认的深色模式以及丰富的设置选项,给程序员带来独特的使用体验。

如果你既想要基于 Ubuntu,又想要适合程序员的稳定 Linux 发行版,推荐选择 Pop OS。

>

> **[下载 POP OS](https://pop.system76.com/)**

>

>

>

#### 7、KDE Neon

作为一个程序员,如果你喜欢 KDE Plasma 桌面,又想使用基于 Qt 的开发环境,那么你应该选择 KDE Neon。

KDE Neon 基于 Ubuntu 长期支持版本,兼具最新的 KDE Plasma 桌面和 KDE 框架。因此,使用 KDE Neon,你不仅可以享受 Ubuntu 长期支持版本的稳定性,还能体验基于 Qt 的最新版 KDE 软件。

运行速度快,程序开箱即用,用户界面友好,广泛的社区支持,如你所愿,完美如斯。

>

> **[下载 KDE Neon](https://neon.kde.org/download)**

>

>

>

#### 8、Debian

Debian GUN/Linux 就无需过多介绍了。Debian 的稳定分支是 Ubuntu 及其衍生系统的基础。换句话说,Debian 是最主要、最稳定的 Linux 发行版之一。优秀的稳定性和较长的支持时间使得 Debian 非常适合用做开发环境。

不过,Debian 的稳定分支比较保守,很少使用最新的软件包。毕竟全世界(几乎)都依赖 Debian 的稳定运行,所以维护者在检查、合并软件包时必须十分谨慎。

Debian 不仅能够长期稳定运行,而且维护成本较低,是高级用户和系统管理员绝佳的编程环境。

>

> **[下载 Debian Linux](https://www.debian.org/distrib/)**

>

>

>

#### 9、Kali Linux

Kali Linux 由 Offensive Security 开发,服务对象为道德黑客和查找网络漏洞的渗透测试人员,内置大量黑客软件和工具。

对技术娴熟的程序员和开发人员来说,Kali Linux 堪称最佳之选。如果你精通 Linux,具备解决错误和依赖问题的经验,推荐选择 Kali Linux。

>

> **[下载 Kali Linux](https://www.kali.org/)**

>

>

>

#### 10、Fedora Labs

最后,我们来看看 Fedora Linux 的各种 Fedora Labs 版本。

Fedora Labs 为程序员、科学家、学生等各类人群提供各类专业化的 Linux 发行版,内置各类专业软件、包和工具。很多人并没有意识到 Fedora Labs 的优势,只要经过适当的配置,这些版本都是非常优秀的发行版。

我们来总结一下这些 Fedora Labs:

Fedora Scientific:

* 采用 KDE Plasma 桌面,集成科学和数学领域的各种开源工具

* 软件清单如下:

+ 基于 C/C++ 的 GNU Scientific Library

+ 兼容 MATLAB 的 MGNU Octave

+ LaTeX

+ Gnuplot:用于绘制 2D 与 3D 图像

+ Pandas:用于数据处理的 Python 库

+ IPython

+ Java 和 R 程序语言相关包

>

> **[下载 Fedora Scientific](https://labs.fedoraproject.org/en/scientific/)**

>

>

>

Fedora COMP NEURO:

* 采用 GNOME 桌面环境,预装神经科学领域的各种开源包和应用。

>

> **[下载 Comp Neuro](https://labs.fedoraproject.org/en/comp-neuro/)**

>

>

>

Fedora Robotics Suite:

* 集成各种开源机器人技术包和软件,适合初学者、资深计算机科学家和编程人员。

>

> **[下载 Fedora Robotics](https://labs.fedoraproject.org/en/robotics/)**

>

>

>

除了上述版本,还有 [Fedora Security Labs](https://labs.fedoraproject.org/en/security)、[Fedora Astronomy](https://labs.fedoraproject.org/en/astronomy) 和 [Fedora Python Classroom](https://labs.fedoraproject.org/en/python-classroom) 可供选择。

在编程项目以及科学领域,Fedora Labs 堪称完美之选。

### 总结

那么,怎样才能从以上 10 款 最适合程序员的 Linux 发行版中选出自己最喜欢的呢?

如果你想要一款开发系统,但又不想耗费太多精力,拿不定主意的话,推荐使用 Fedora 工作站或者 Ubuntu。

如果你的空闲时间比较多或者想要进一步掌控自己的系统,乐于尝试并且能够忍受偶尔发生的错误,推荐选择基于 Arch Linux 的系统。

对于刚接触 Linux 生态的新手程序员来说,Pop OS 也是一个不错的选择。如果有特殊需要的话,可以试试 Fedora Labs。

我希望本文能帮助程序员和开发人员选出最喜欢的 Linux 发行版。

祝你好运!

(题图由 [jplenio](https://pixabay.com/zh/users/jplenio-7645255/?utm_source=link-attribution&utm_medium=referral&utm_campaign=image&utm_content=6792527) 在 [Pixabay](https://pixabay.com/zh/?utm_source=link-attribution&utm_medium=referral&utm_campaign=image&utm_content=6792527) 上发布 )

---

via: <https://www.debugpoint.com/2022/03/top-linux-distributions-programmers-2022/>

作者:[Arindam](https://www.debugpoint.com/author/admin1/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[aREversez](https://github.com/aREversez) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出