Multi-GraspLLM: A Multimodal LLM for Multi-Hand Semantic-Guided Grasp Generation

Updates

- 2025.3: Added the Jaco hand data; this is the final version of the dataset.

- 2025.1: Added the Barrett hand data.

- 2024.12: Released the Multi-GraspSet dataset with object meshes.

- 2024.12: Released the Multi-GraspSet dataset with contact annotations.

Overview

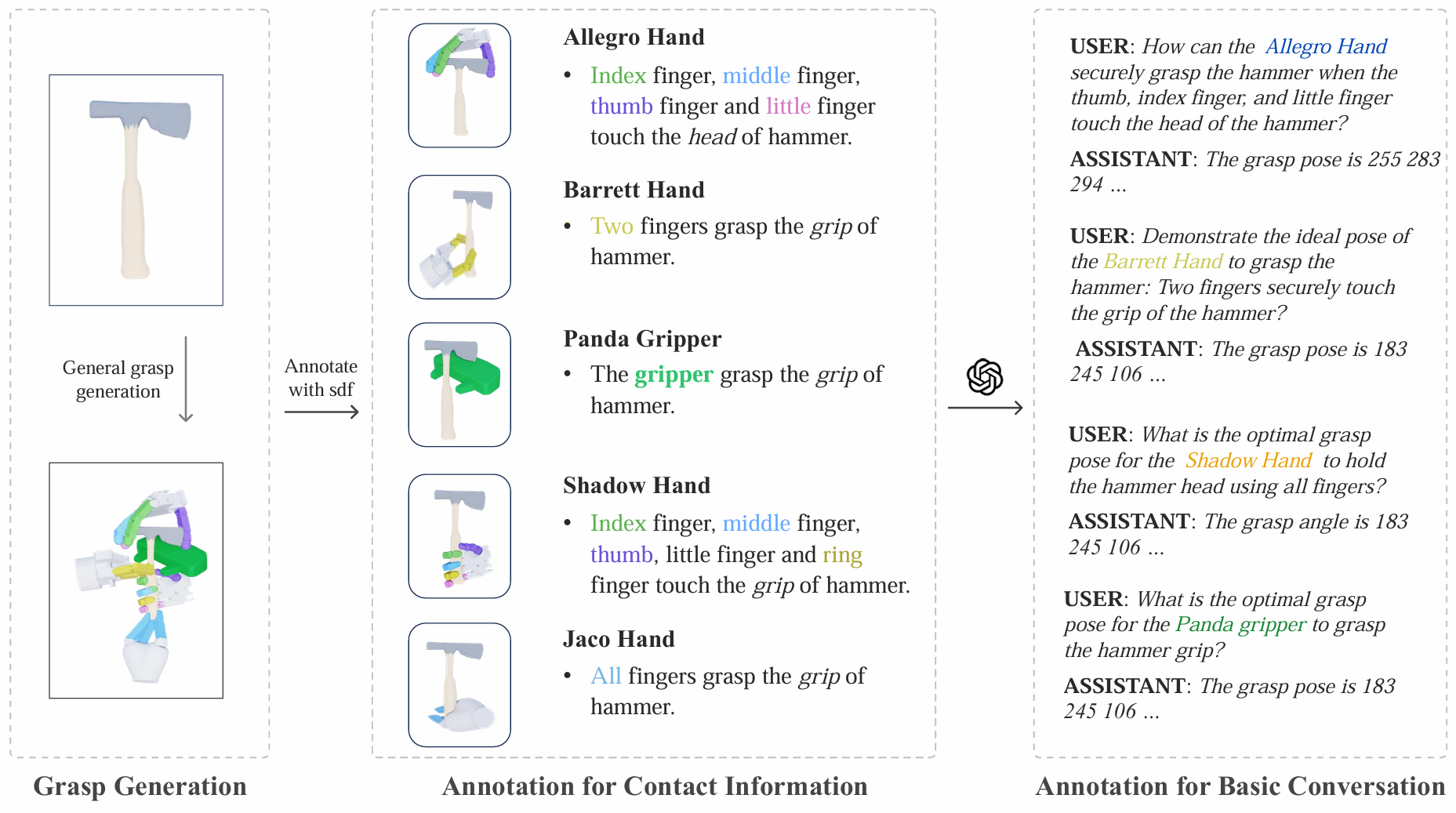

We introduce Multi-GraspSet, the first large-scale multi-hand grasp dataset enriched with automatic contact annotations.

|

|

The Construction process of Multi-GraspSet |

|

|

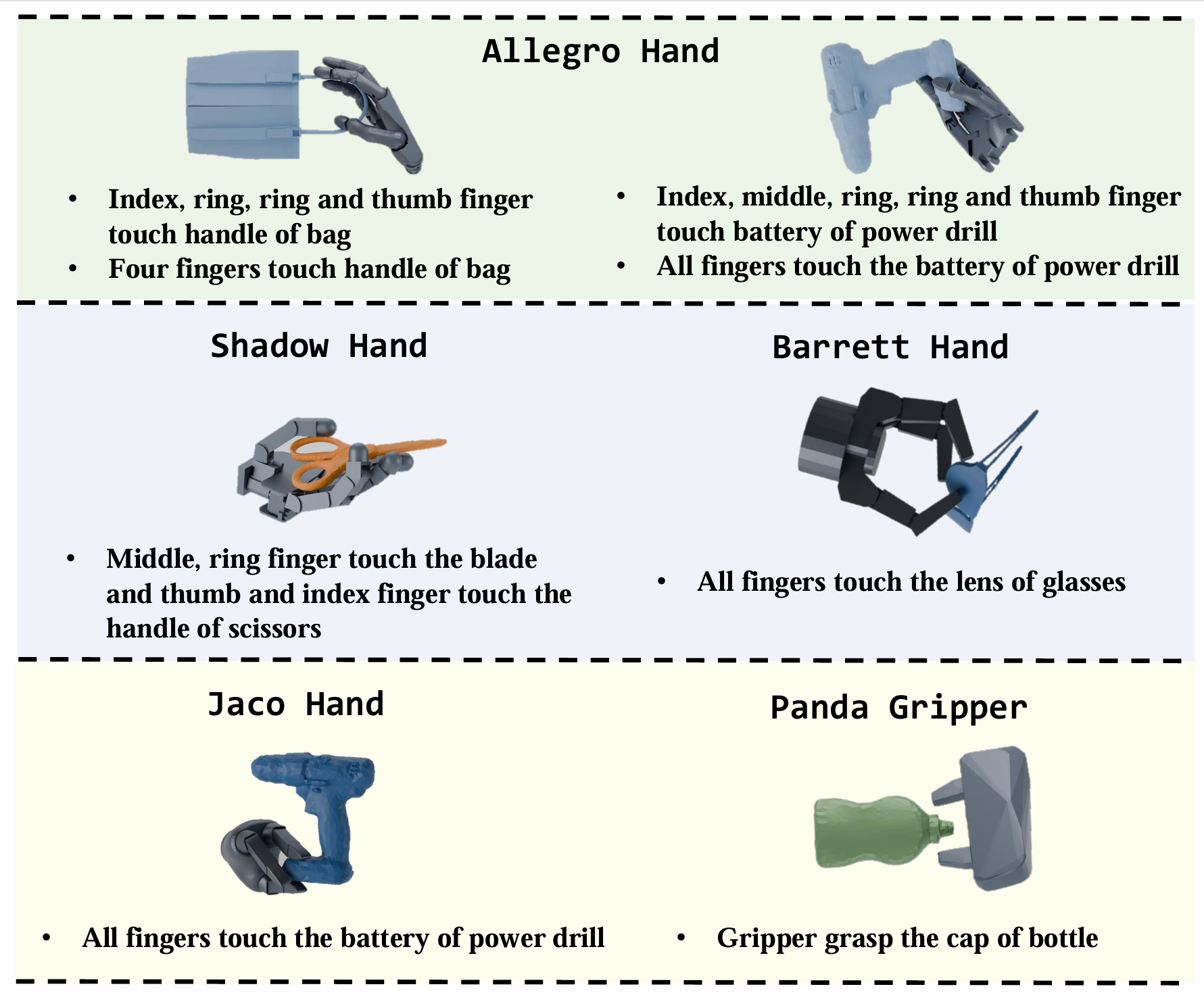

Visualization of Multi-GraspSet with contact annotations |

Installation

Follow these steps to set up the evaluation environment:

Create the Environment

```bash
conda create --name eval python=3.9
conda activate eval
```

Install PyTorch and Dependencies

```bash
pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2
```

⚠️ Ensure your CUDA toolkit version matches the CUDA version your PyTorch build was compiled against.

Install PyTorch Kinematics

```bash
cd ./pytorch_kinematics
pip install -e .
cd ..
```

Install Remaining Requirements

```bash
pip install -r requirements_eval.txt
```
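To confirm that the installation steps took effect, you can run a quick environment check like the one below. This is a minimal sketch and is not shipped with this repository. The helper names (`check_pinned_versions`, `cuda_summary`, `kinematics_importable`) are hypothetical, and the script assumes the bundled package is importable as `pytorch_kinematics`.

```python
"""Sanity-check the evaluation environment (hypothetical helper, not part of this repository)."""
import importlib.util
from importlib import metadata

# Versions pinned in the PyTorch installation step.
PINNED = {"torch": "2.0.1", "torchvision": "0.15.2", "torchaudio": "2.0.2"}


def check_pinned_versions(pinned=PINNED):
    """Return {package: (installed_version_or_None, matches_pin)}."""
    report = {}
    for pkg, want in pinned.items():
        try:
            have = metadata.version(pkg)
        except metadata.PackageNotFoundError:
            have = None
        # Wheels from the PyTorch index may carry a local suffix such as "+cu117";
        # compare only the base version against the pin.
        base = have.split("+")[0] if have else None
        report[pkg] = (have, base == want)
    return report


def cuda_summary():
    """Return the CUDA version PyTorch was built with and whether a GPU is usable."""
    try:
        import torch
    except ImportError:
        return {"torch_cuda": None, "cuda_available": False}
    return {
        "torch_cuda": torch.version.cuda,  # None for CPU-only builds
        "cuda_available": torch.cuda.is_available(),
    }


def kinematics_importable():
    """True if the editable pytorch_kinematics install is on the import path (assumed module name)."""
    return importlib.util.find_spec("pytorch_kinematics") is not None


if __name__ == "__main__":
    for pkg, (have, ok) in check_pinned_versions().items():
        print(f"{pkg:12s} installed={have} matches_pin={ok}")
    print(cuda_summary())
    print("pytorch_kinematics importable:", kinematics_importable())
```

The script reads versions from package metadata instead of importing each library, so it still runs when PyTorch is missing. Compare the reported `torch_cuda` value against the output of `nvcc --version` to check the CUDA note above.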

Visualize the Dataset

Run the Visualization Code

Open and run the `vis_mid_dataset.ipynb` notebook to visualize the dataset.