CoALM-405B: The Largest Open-Source Agentic LLM

🌟 Model Overview

CoALM-405B is the largest fully open-source Conversational Agentic Language Model. It integrates Task-Oriented Dialogue (TOD) capabilities and Language Agent (LA) functionalities in a single model, targeting multi-turn dialogue, tool usage, reasoning, and API execution. It is the best-performing fully open-source LLM on the Berkeley Function Calling Leaderboard V3 (BFCL V3).

Model Sources

- 📝 Paper: https://arxiv.org/abs/2502.08820

- 🌐 Project Page: https://emrecanacikgoz.github.io/CoALM/

- 💻 Repository: https://github.com/oumi-ai/oumi/tree/main/configs/projects/CALM

- 💎 Dataset: https://huggingface.co/datasets/uiuc-convai/CoALM-IT

🚀 Model Details

- Model Name: CoALM-405B

- Developed by: Collaboration between the UIUC Conversational AI Lab and Oumi

- License: cc-by-nc-4.0

- Architecture: Meta-Llama 3.1-405B Instruct

- Training Data: CoALM-IT

- Fine-tuning Framework: Oumi

- Training Hardware: 8 NVIDIA H100 GPUs

- Training Duration: ~6.5 days

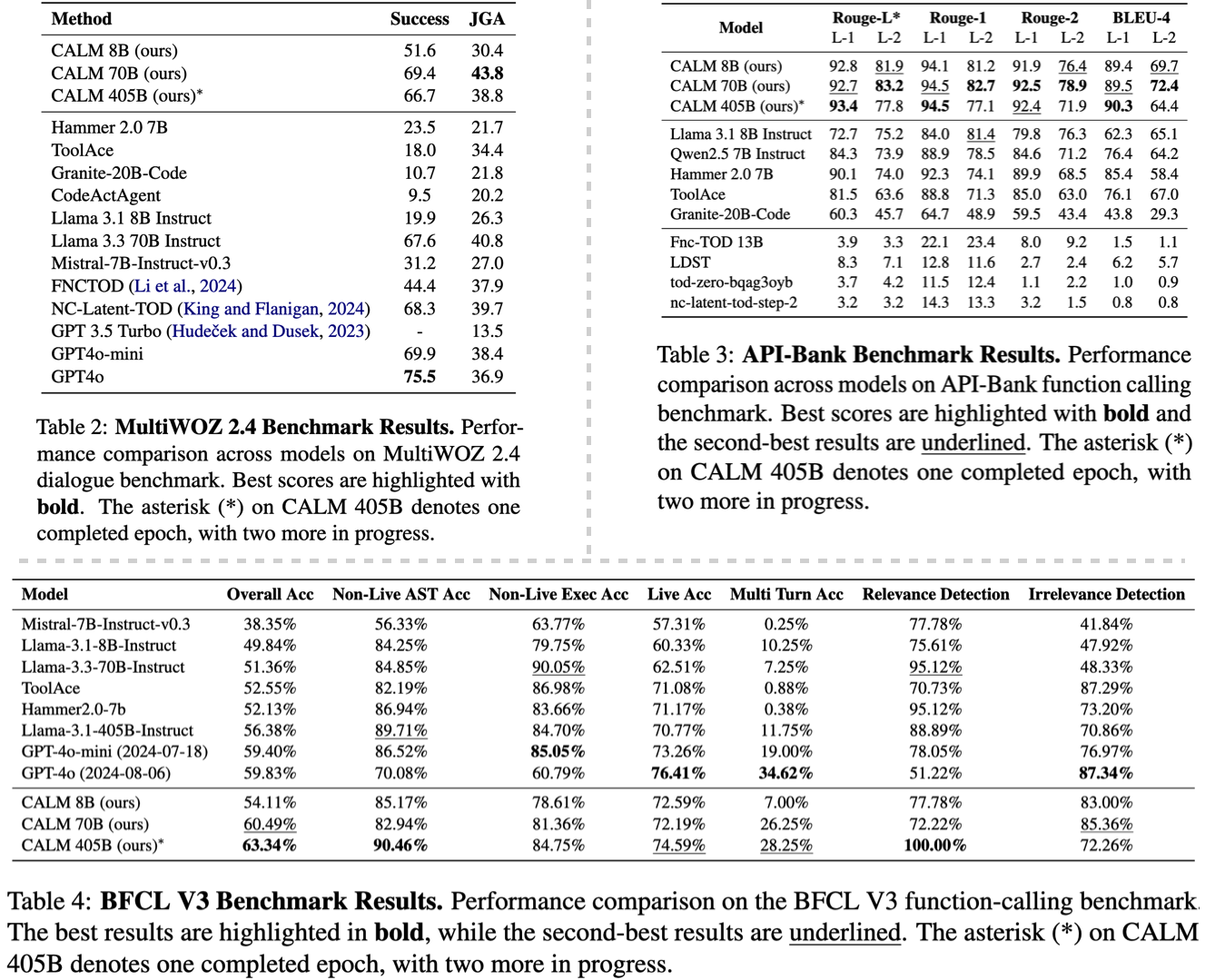

- Evaluation Benchmarks: MultiWOZ 2.4, BFCL V3, API-Bank

- Release Date: February 5, 2025

🏆 Why CoALM-405B is a Game-Changer

- 🚨 Largest Open-Source Agentic LLM: A 405B-parameter model that makes state-of-the-art agentic capabilities openly available.

- 🎯 Best Open-Source Performance on BFCL V3: Outperforms leading proprietary models like GPT-4o, Gemini, and Claude in function-calling tasks.

- 🔍 True Zero-Shot Function Calling: Generalizes to unseen API tasks with unmatched accuracy.

- 🤖 Multi-Turn Dialogue Mastery: Excels at long conversations, task tracking, and complex reasoning.

- 🛠 API Tool Use and Reasoning: Makes precise API calls, interprets responses, and synthesizes coherent multi-step solutions.

- 📜 Fully Open-Source & Reproducible: Released under cc-by-nc-4.0, including model weights, training logs, and datasets.

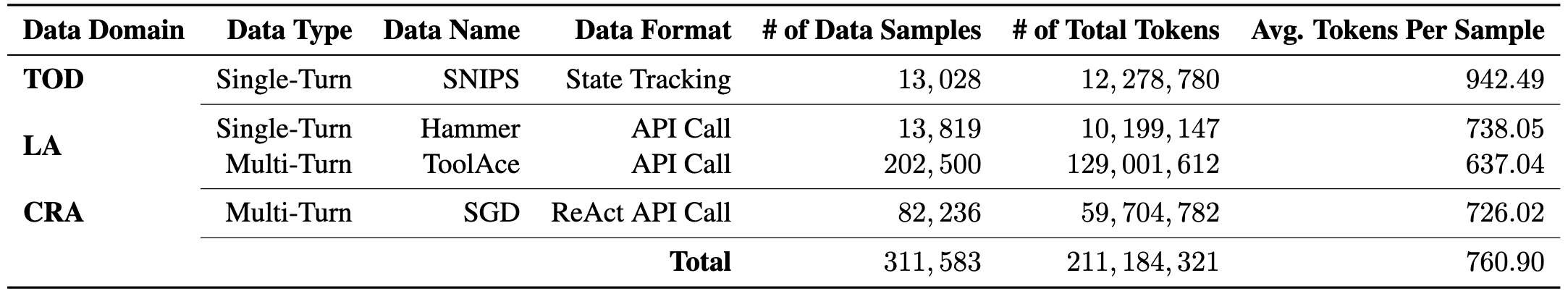

💡 CoALM-IT Dataset

📊 Benchmark Performance

🔧 Training Process

Fine-tuning Stages

- TOD Fine-tuning: Optimized for dialogue state tracking (e.g., augmented SNIPS in instruction-tuned format).

- Function Calling Fine-tuning: Trained to generate highly accurate API calls from LA datasets.

- ReAct-based Fine-tuning: Enhances multi-turn conversations with structured thought-action-observation-response reasoning.
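The thought-action-observation-response structure named in the ReAct stage can be pictured with a small sketch. The field names, the labeled-line layout, and the `find_hotel` call below are hypothetical illustrations; the actual CoALM-IT serialization is not described in this card and may differ.

```python
from dataclasses import dataclass


@dataclass
class ReActTurn:
    """One assistant turn in a thought-action-observation-response layout."""
    thought: str      # free-text reasoning before acting
    action: str       # the API call the model decides to make
    observation: str  # what the API returned
    response: str     # the final reply shown to the user


def render_turn(turn: ReActTurn) -> str:
    """Serialize a turn as four labeled lines (hypothetical layout)."""
    return "\n".join([
        f"Thought: {turn.thought}",
        f"Action: {turn.action}",
        f"Observation: {turn.observation}",
        f"Response: {turn.response}",
    ])


# Illustrative values only; find_hotel is not a real API.
example = ReActTurn(
    thought="The user wants a hotel in the centre, so I should search first.",
    action='find_hotel(area="centre", stars=4)',
    observation='[{"name": "Hotel A", "stars": 4}]',
    response="Hotel A is a 4-star hotel in the centre. Shall I book it?",
)
print(render_turn(example))
```

Keeping the observation as a separate field makes it explicit that the final response is conditioned on the tool output rather than on the model's prior guess.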

Training Hyperparameters

- Base Model: Meta-Llama 3.1-405B Instruct

- LoRA Config: Rank = 16, Scaling Factor = 32

- Batch Size: 2

- Learning Rate: 1e-4

- Optimizer: AdamW (betas = 0.9, 0.999, epsilon = 1e-8)

- Precision: q4

- Warm-up Steps: 500

- Gradient Accumulation Steps: 1
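To make the LoRA and warm-up numbers above concrete, here is a minimal arithmetic sketch. The rank, scaling factor, learning rate, and warm-up steps come from the list; the 16384 x 16384 projection size, the linear warm-up shape, and the constant rate after warm-up are assumptions for illustration, since the card does not state them.

```python
# Values taken from the hyperparameter list above.
LORA_RANK = 16
LORA_ALPHA = 32
PEAK_LR = 1e-4
WARMUP_STEPS = 500


def lora_scale(rank: int, alpha: int) -> float:
    """Multiplier applied to the low-rank update: alpha / rank."""
    return alpha / rank


def lora_extra_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters added by one adapter: A is (rank x d_in), B is (d_out x rank)."""
    return rank * (d_in + d_out)


def warmup_lr(step: int, peak_lr: float = PEAK_LR, warmup_steps: int = WARMUP_STEPS) -> float:
    """Assumed linear warm-up, then constant (the post-warm-up schedule is not stated)."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    return peak_lr


# Assumption: a square 16384 x 16384 projection, used only as an example size.
D = 16384
added = lora_extra_params(D, D, LORA_RANK)
print("LoRA scale:", lora_scale(LORA_RANK, LORA_ALPHA))
print("Adapter params:", added, "vs full matrix:", D * D)
print("Fraction trained:", added / (D * D))
print("LR at step 250:", warmup_lr(250))
```

Under these assumptions the adapter trains well under one percent of the weights in each adapted matrix, which is what makes fine-tuning a 405B model tractable.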

❗️ How to Use CoALM-405B

Inference requires 16 NVIDIA H100 GPUs.

🏗 How to Load the Model using HuggingFace

from transformers import AutoModelForCausalLM, AutoTokenizer

# Load the tokenizer and the model weights from the Hugging Face Hub.
tokenizer = AutoTokenizer.from_pretrained("uiuc-convai/CoALM-405B")

model = AutoModelForCausalLM.from_pretrained("uiuc-convai/CoALM-405B")
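Once the model is loaded, a chat-style generation call might look like the sketch below. It assumes the tokenizer ships a chat template (as Llama 3.1 Instruct tokenizers do), that the repository id matches the model name, and that `accelerate` is installed for `device_map="auto"`. The helper names `build_messages` and `generate_reply` and the system prompt are hypothetical, not part of any CoALM API.

```python
MODEL_ID = "uiuc-convai/CoALM-405B"  # assumption: repo id follows the model name


def build_messages(user_text, system_text="You are a helpful assistant."):
    """Build a chat in the role/content format used by chat templates."""
    return [
        {"role": "system", "content": system_text},
        {"role": "user", "content": user_text},
    ]


def generate_reply(messages, model_id=MODEL_ID, max_new_tokens=256):
    """Load the model and generate one reply; needs enough GPU memory for the weights."""
    # Imported lazily so the helper above can be used without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, device_map="auto", torch_dtype="auto"
    )
    inputs = tokenizer.apply_chat_template(
        messages, add_generation_prompt=True, return_dict=True, return_tensors="pt"
    ).to(model.device)
    output = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode only the newly generated tokens, not the prompt.
    prompt_len = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output[0][prompt_len:], skip_special_tokens=True)


if __name__ == "__main__":
    chat = build_messages("Find me a 4-star hotel in the centre.")
    print(chat)
    # print(generate_reply(chat))  # uncomment on hardware that can hold the model
```

The generation call is left commented out because it downloads and loads the full checkpoint.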

🛠 Example Oumi Inference

Oumi multi-node inference support is under development. CoALM-405B likely requires multi-node inference, since most single nodes provide at most 640 GB of GPU memory. To run multi-node inference, we recommend vLLM.
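The 16-GPU requirement and the 640 GB single-node limit can be related with a rough back-of-the-envelope estimate. The sketch assumes 16-bit weights, 80 GB per H100, and 8 GPUs per node; it counts weights only and ignores KV cache and activation memory, so real requirements are higher.

```python
import math

PARAMS = 405e9        # parameter count, from the model name
BYTES_PER_PARAM = 2   # assumption: 16-bit (bf16/fp16) weights
GPU_MEM_GB = 80       # assumption: 80 GB per H100
GPUS_PER_NODE = 8     # assumption: 8 GPUs per node, i.e. 640 GB per node


def weight_memory_gb(params: float, bytes_per_param: int) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return params * bytes_per_param / 1e9


def nodes_needed(weights_gb: float, node_gb: float) -> int:
    """Smallest number of nodes whose combined GPU memory holds the weights."""
    return math.ceil(weights_gb / node_gb)


weights_gb = weight_memory_gb(PARAMS, BYTES_PER_PARAM)
node_gb = GPU_MEM_GB * GPUS_PER_NODE
nodes = nodes_needed(weights_gb, node_gb)
print(f"Weights: {weights_gb:.0f} GB, per-node capacity: {node_gb} GB")
print(f"Nodes needed: {nodes}, GPUs: {nodes * GPUS_PER_NODE}")
```

Under these assumptions the weights alone exceed a single node's capacity, which is consistent with the two-node, 16-GPU setup stated above.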

🛠 Example Oumi Fine-Tuning

pip install oumi

# See oumi_train.yaml in this model's /oumi/ directory.

oumi train -c ./oumi_train.yaml

More fine-tuning and community-driven optimizations are planned to enhance real-world usability.

Acknowledgements

We'd like to thank the Oumi AI Team for collaborating on training the models using the Oumi platform on Together AI's cloud.

License

This model is licensed under the Creative Commons Attribution-NonCommercial 4.0 International license (CC BY-NC 4.0).

📖 Citation

If you use CoALM-405B in your research, please cite:

@misc{acikgoz2025singlemodelmastermultiturn,

title={Can a Single Model Master Both Multi-turn Conversations and Tool Use? CoALM: A Unified Conversational Agentic Language Model},

author={Emre Can Acikgoz and Jeremiah Greer and Akul Datta and Ze Yang and William Zeng and Oussama Elachqar and Emmanouil Koukoumidis and Dilek Hakkani-Tür and Gokhan Tur},

year={2025},

eprint={2502.08820},

archivePrefix={arXiv},

primaryClass={cs.AI},

url={https://arxiv.org/abs/2502.08820},

}

For more details, visit the project repository or contact [email protected].
