metadata

license: apache-2.0

tags:

- llama.cpp

- gguf

- query-expansion

datasets:

- s-emanuilov/query-expansion

base_model:

- Qwen/Qwen2.5-7B-GGUF

Query Expansion GGUF - based on Qwen2.5-7B

GGUF quantized version of Qwen2.5-7B, fine-tuned for the query expansion task.

Part of a collection of query expansion models available in different architectures and sizes.

Overview

Task: Search query expansion

Base model: Qwen2.5-7B

Training data: Query Expansion Dataset

Quantized Versions

The model is available in multiple quantization formats:

- F16 (Original size)

- Q8_0 (~8-bit quantization)

- Q5_K_M (~5-bit quantization)

- Q4_K_M (~4-bit quantization)

- Q3_K_M (~3-bit quantization)
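To help choose between the formats listed above, here is a rough back-of-the-envelope size estimate. It is a sketch under stated assumptions: the parameter count (about 7.6 billion for Qwen2.5-7B) and the nominal bit widths are assumptions, not figures from this card, and `estimate_size_gb` is a hypothetical helper. Actual files are typically somewhat larger, since these quantization schemes also store scaling data and K-quants mix bit widths across tensors.

```python
# Rough GGUF file-size estimate from nominal bits per weight.
# Assumptions (not from the model card): ~7.6e9 parameters, and the
# nominal bit widths below. Real files are usually somewhat larger.

NOMINAL_BITS = {"F16": 16, "Q8_0": 8, "Q5_K_M": 5, "Q4_K_M": 4, "Q3_K_M": 3}


def estimate_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Return an approximate size in gigabytes (10^9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    n_params = 7.6e9  # assumed parameter count
    for name, bits in NOMINAL_BITS.items():
        print(f"{name}: ~{estimate_size_gb(n_params, bits):.1f} GB")
```

Lower-bit formats trade some output quality for a smaller memory footprint, so the estimate is mainly useful for checking what fits on a given machine.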

Related Models

LoRA Adaptors

GGUF Variants

Details

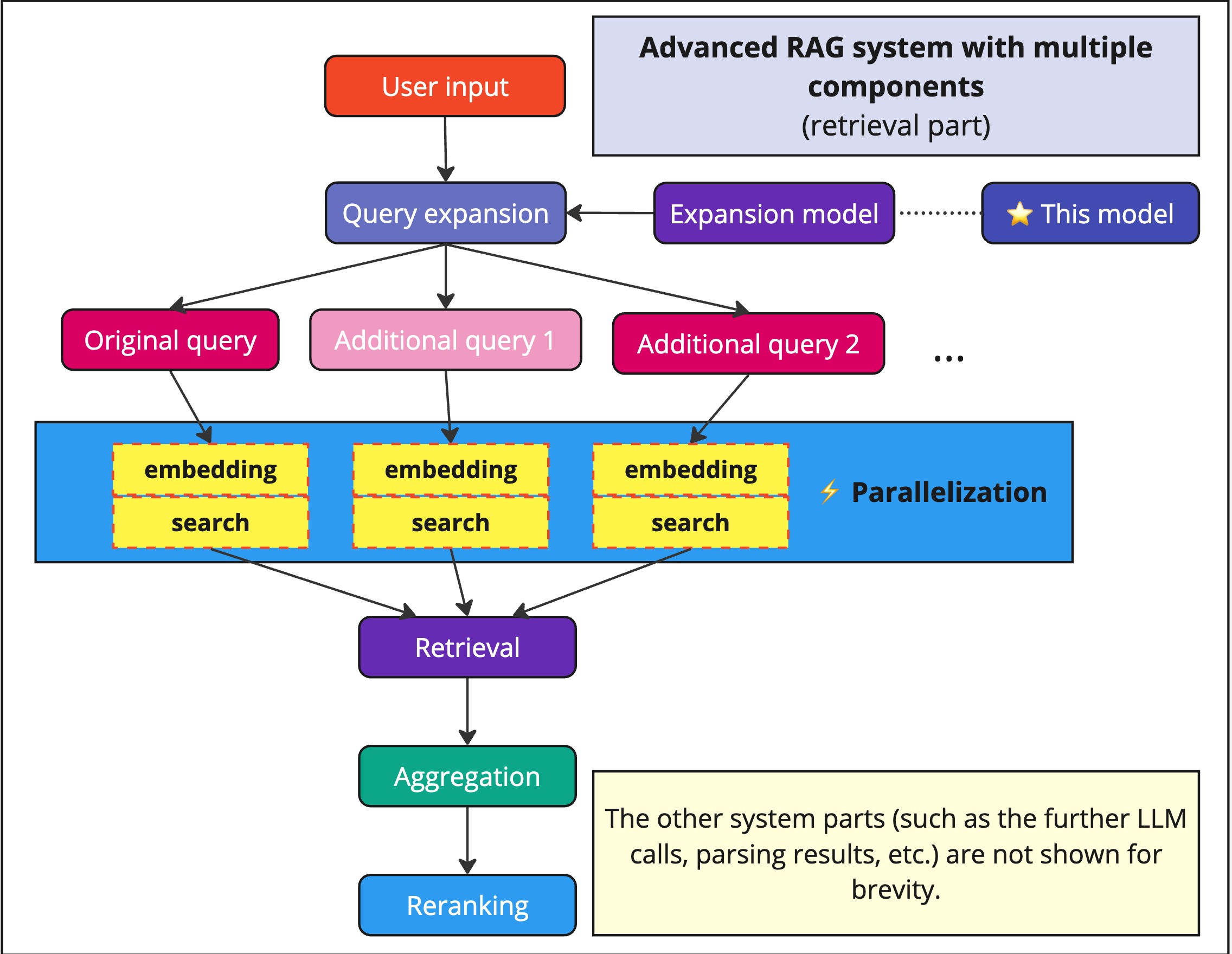

This model is designed for enhancing search and retrieval systems by generating semantically relevant query expansions.

It could be useful for:

- Advanced RAG systems

- Search enhancement

- Query preprocessing

- Low-latency query expansion
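One common way to use expansions in a retrieval or RAG pipeline is to run each expanded query separately and merge the ranked results. The sketch below uses reciprocal rank fusion (RRF) for the merge. This technique is not described in this card; it is shown only as one possible option, and the function name, the document IDs, and the constant `k = 60` are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge several ranked lists of document IDs into a single ranking.

    Each document scores 1 / (k + rank) for every list it appears in
    (rank starts at 1), and the scores are summed across lists.
    """
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest score first; ties are broken by document ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


if __name__ == "__main__":
    # Hypothetical results for the original query and two expansions.
    results = [
        ["doc_a", "doc_b", "doc_c"],
        ["doc_b", "doc_a", "doc_d"],
        ["doc_b", "doc_c"],
    ]
    print(reciprocal_rank_fusion(results))
```

Documents that rank well for several expansions rise to the top, which is the main benefit of querying with more than one phrasing.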

Example

Input: "apple stock"

Expansions:

- "current apple share value"

- "latest updates on apple's market position"

- "how is apple performing in the current market?"

- "what is the latest information on apple's financial standing?"
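Before sending expansions like these to a search backend, it can help to combine them with the original query and drop duplicates. The following is a minimal sketch, assuming the expansions are already available as a list of strings; `build_query_set` is a hypothetical helper and not part of this model or of any library.

```python
from typing import Iterable, List


def build_query_set(query: str, expansions: Iterable[str]) -> List[str]:
    """Return the original query followed by unique, cleaned expansions.

    Cleaning strips surrounding whitespace and double quotes and collapses
    inner whitespace. Duplicates are detected case-insensitively.
    """
    seen = set()
    result: List[str] = []
    for candidate in [query, *expansions]:
        cleaned = " ".join(candidate.strip().strip('"').split())
        key = cleaned.casefold()
        if cleaned and key not in seen:
            seen.add(key)
            result.append(cleaned)
    return result


if __name__ == "__main__":
    expansions = [
        '"current apple share value"',
        "latest updates on apple's market position",
        "Apple Stock",  # duplicate of the original query, differs only in case
    ]
    for q in build_query_set("apple stock", expansions):
        print(q)
```

Keeping the original query first means a downstream system can still give it priority if the expansions drift from the user's intent.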

Citation

If you find my work helpful, please consider citing it.