Stable-Diffusion-v1.5: Optimized for Mobile Deployment

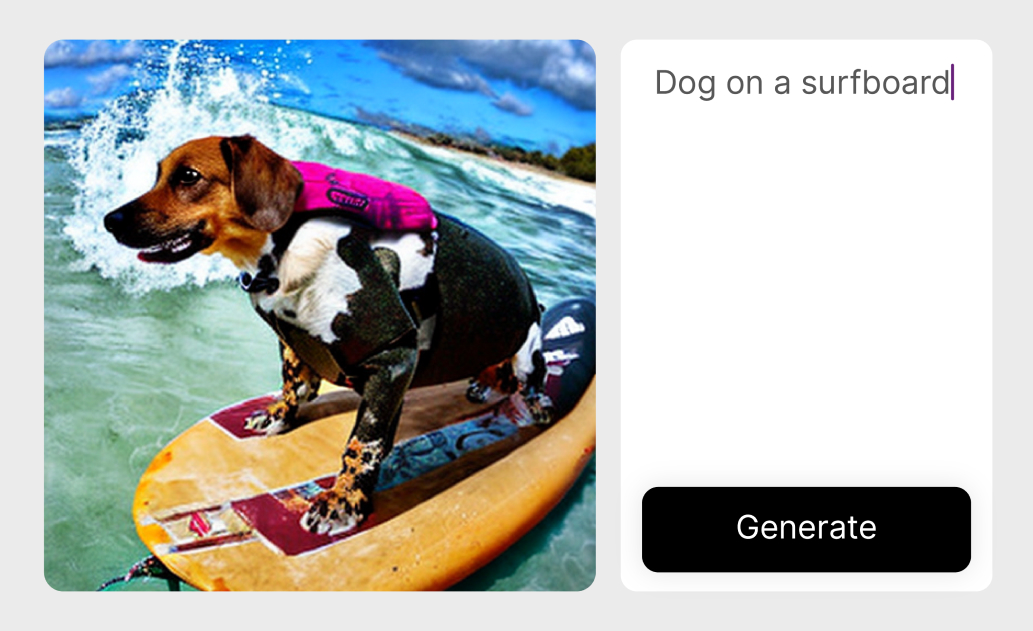

State-of-the-art generative AI model used to generate detailed images conditioned on text descriptions.

Generates high-resolution images from text prompts using a latent diffusion model. The model uses CLIP ViT-L/14 as the text encoder, a U-Net for latent denoising, and a VAE decoder to produce the final image.
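The data flow described above can be traced with a minimal shape-only sketch. The functions below are stand-ins rather than the real networks, and the tensor shapes (a 77×768 text embedding, a 4×64×64 latent, a decoder that upsamples by 8) are the usual ones for Stable Diffusion v1.5 at 512×512, assumed here rather than taken from this export. `num_steps=20` is an illustrative value.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tensor:
    """Shape-only placeholder; it carries no data."""
    name: str
    shape: tuple


def text_encoder(prompt: str) -> Tensor:
    # CLIP ViT-L/14 stand-in: 77 token positions x 768 features (assumed).
    return Tensor("text_embedding", (1, 77, 768))


def unet(latent: Tensor, timestep: int, text_embedding: Tensor) -> Tensor:
    # Predicts noise with the same shape as the latent it is given.
    return Tensor("noise_prediction", latent.shape)


def scheduler_step(latent: Tensor, noise_prediction: Tensor) -> Tensor:
    # A real scheduler would remove a scaled noise estimate; shape is unchanged.
    return Tensor("latent", latent.shape)


def vae_decoder(latent: Tensor) -> Tensor:
    # Decodes the 4-channel latent to RGB, upsampling height and width by 8.
    batch, _, height, width = latent.shape
    return Tensor("image", (batch, 3, height * 8, width * 8))


def generate(prompt: str, num_steps: int = 20) -> Tensor:
    text_embedding = text_encoder(prompt)         # runs once per prompt
    latent = Tensor("latent", (1, 4, 64, 64))     # stands in for random noise
    for timestep in reversed(range(num_steps)):   # U-Net runs once per step
        noise_prediction = unet(latent, timestep, text_embedding)
        latent = scheduler_step(latent, noise_prediction)
    return vae_decoder(latent)                    # runs once at the end


image = generate("a photo of a lighthouse at dusk")
print(image.shape)  # (1, 3, 512, 512)
```

The point of the sketch is the call pattern: the text encoder and VAE decoder each run once per image, while the U-Net runs once per denoising step.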

This model is an implementation of Stable-Diffusion-v1.5 found here.

This repository provides scripts to run Stable-Diffusion-v1.5 on Qualcomm® devices. More details on model performance across various devices can be found here.

Model Details

- Model Type: Image generation

- Model Stats:

  - Input: Text prompt to generate image

  - QNN-SDK: 2.28

  - Text Encoder Number of parameters: 340M

  - UNet Number of parameters: 865M

  - VAE Decoder Number of parameters: 83M

  - Model size: 1 GB
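As a rough sanity check on the stats above, the parameter counts can be turned into a storage estimate. This is a back-of-the-envelope sketch that assumes weight storage is simply parameters × bits per weight; it ignores metadata, activations, and any per-layer precision differences, and the two bit widths are illustrative choices.

```python
# Parameter counts as listed in the model stats above.
PARAMS = {
    "text_encoder": 340_000_000,
    "unet": 865_000_000,
    "vae_decoder": 83_000_000,
}


def estimated_size_gb(num_params: int, bits_per_weight: int) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


total_params = sum(PARAMS.values())
print(f"total parameters: {total_params / 1e6:.0f}M")
for bits in (8, 16):
    print(f"{bits}-bit weights: ~{estimated_size_gb(total_params, bits):.2f} GB")
```

Under these assumptions, 8-bit weights come to roughly 1.3 GB, which is of the same order as the listed model size.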

| Model | Device | Chipset | Target Runtime | Inference Time (ms) | Peak Memory Range (MB) | Precision | Primary Compute Unit | Target Model |
|---|---|---|---|---|---|---|---|---|
| TextEncoder | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 | QNN | 4.561 ms | 0 - 2 MB | FP16 | NPU | Stable-Diffusion-v1.5.so |
| TextEncoder | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 | QNN | 3.296 ms | 0 - 20 MB | FP16 | NPU | Stable-Diffusion-v1.5.so |
| TextEncoder | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite | QNN | 3.924 ms | 0 - 43 MB | FP16 | NPU | Use Export Script |
| TextEncoder | SA7255P ADP | SA7255P | QNN | 30.747 ms | 0 - 10 MB | FP16 | NPU | Use Export Script |
| TextEncoder | SA8255 (Proxy) | SA8255P Proxy | QNN | 4.577 ms | 0 - 2 MB | FP16 | NPU | Use Export Script |
| TextEncoder | SA8650 (Proxy) | SA8650P Proxy | QNN | 4.587 ms | 0 - 6 MB | FP16 | NPU | Use Export Script |
| TextEncoder | SA8775P ADP | SA8775P | QNN | 5.879 ms | 0 - 10 MB | FP16 | NPU | Use Export Script |
| TextEncoder | QCS8275 (Proxy) | QCS8275 Proxy | QNN | 30.747 ms | 0 - 10 MB | FP16 | NPU | Use Export Script |
| TextEncoder | QCS8550 (Proxy) | QCS8550 Proxy | QNN | 4.542 ms | 0 - 2 MB | FP16 | NPU | Use Export Script |
| TextEncoder | QCS9075 (Proxy) | QCS9075 Proxy | QNN | 5.879 ms | 0 - 10 MB | FP16 | NPU | Use Export Script |
| TextEncoder | Snapdragon X Elite CRD | Snapdragon® X Elite | QNN | 4.892 ms | 0 - 0 MB | FP16 | NPU | Use Export Script |
| Unet | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 | QNN | 115.147 ms | 0 - 3 MB | FP16 | NPU | Stable-Diffusion-v1.5.so |
| Unet | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 | QNN | 79.863 ms | 0 - 18 MB | FP16 | NPU | Stable-Diffusion-v1.5.so |
| Unet | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite | QNN | 61.747 ms | 0 - 14 MB | FP16 | NPU | Use Export Script |
| Unet | SA7255P ADP | SA7255P | QNN | 1587.126 ms | 0 - 8 MB | FP16 | NPU | Use Export Script |
| Unet | SA8255 (Proxy) | SA8255P Proxy | QNN | 114.342 ms | 0 - 2 MB | FP16 | NPU | Use Export Script |
| Unet | SA8650 (Proxy) | SA8650P Proxy | QNN | 114.465 ms | 0 - 2 MB | FP16 | NPU | Use Export Script |
| Unet | SA8775P ADP | SA8775P | QNN | 131.691 ms | 0 - 9 MB | FP16 | NPU | Use Export Script |
| Unet | QCS8275 (Proxy) | QCS8275 Proxy | QNN | 1587.126 ms | 0 - 8 MB | FP16 | NPU | Use Export Script |
| Unet | QCS8550 (Proxy) | QCS8550 Proxy | QNN | 113.932 ms | 0 - 2 MB | FP16 | NPU | Use Export Script |
| Unet | QCS9075 (Proxy) | QCS9075 Proxy | QNN | 131.691 ms | 0 - 9 MB | FP16 | NPU | Use Export Script |
| Unet | Snapdragon X Elite CRD | Snapdragon® X Elite | QNN | 116.837 ms | 0 - 0 MB | FP16 | NPU | Use Export Script |
| VaeDecoder | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 | QNN | 274.771 ms | 0 - 2 MB | FP16 | NPU | Stable-Diffusion-v1.5.so |
| VaeDecoder | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 | QNN | 206.532 ms | 0 - 19 MB | FP16 | NPU | Stable-Diffusion-v1.5.so |
| VaeDecoder | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite | QNN | 213.958 ms | 0 - 356 MB | FP16 | NPU | Use Export Script |
| VaeDecoder | SA7255P ADP | SA7255P | QNN | 4460.564 ms | 1 - 10 MB | FP16 | NPU | Use Export Script |
| VaeDecoder | SA8255 (Proxy) | SA8255P Proxy | QNN | 276.946 ms | 0 - 3 MB | FP16 | NPU | Use Export Script |
| VaeDecoder | SA8650 (Proxy) | SA8650P Proxy | QNN | 286.404 ms | 0 - 3 MB | FP16 | NPU | Use Export Script |
| VaeDecoder | SA8775P ADP | SA8775P | QNN | 301.218 ms | 0 - 10 MB | FP16 | NPU | Use Export Script |
| VaeDecoder | QCS8275 (Proxy) | QCS8275 Proxy | QNN | 4460.564 ms | 1 - 10 MB | FP16 | NPU | Use Export Script |
| VaeDecoder | QCS8550 (Proxy) | QCS8550 Proxy | QNN | 275.316 ms | 0 - 4 MB | FP16 | NPU | Use Export Script |
| VaeDecoder | QCS9075 (Proxy) | QCS9075 Proxy | QNN | 301.218 ms | 0 - 10 MB | FP16 | NPU | Use Export Script |
| VaeDecoder | Snapdragon X Elite CRD | Snapdragon® X Elite | QNN | 270.107 ms | 0 - 0 MB | FP16 | NPU | Use Export Script |
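The table reports each component separately, so an end-to-end figure has to be assembled from it. The sketch below uses the Samsung Galaxy S23 rows and assumes the text encoder and VAE decoder run once per image while the U-Net runs once per denoising step. The step count of 20 and the doubled calls for classifier-free guidance are illustrative assumptions, not values from this document, and scheduler, tokenizer, and I/O overhead are ignored.

```python
# Per-component inference times (ms) from the Samsung Galaxy S23 rows above.
S23_MS = {"text_encoder": 4.561, "unet": 115.147, "vae_decoder": 274.771}


def estimate_latency_ms(times_ms, num_steps, unet_calls_per_step=1, text_encoder_calls=1):
    """Sum on-device model time for one image; excludes all non-model overhead."""
    return (
        text_encoder_calls * times_ms["text_encoder"]
        + num_steps * unet_calls_per_step * times_ms["unet"]
        + times_ms["vae_decoder"]
    )


# 20 steps is a hypothetical setting chosen for illustration.
single_pass = estimate_latency_ms(S23_MS, num_steps=20)

# Classifier-free guidance typically evaluates the U-Net twice per step
# (conditional and unconditional) and encodes two prompts.
guided = estimate_latency_ms(
    S23_MS, num_steps=20, unet_calls_per_step=2, text_encoder_calls=2
)

print(f"{single_pass:.1f} ms without guidance, {guided:.1f} ms with guidance")
```

Because the U-Net term is multiplied by the step count, it dominates the total in this estimate even though the VAE decoder is the slowest single call.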

Installation

Install the package via pip:

pip install "qai-hub-models[stable-diffusion-v1-5-w8a16-quantized]" -f https://qaihub-public-python-wheels.s3.us-west-2.amazonaws.com/index.html

Configure Qualcomm® AI Hub to run this model on a cloud-hosted device

Sign in to Qualcomm® AI Hub with your Qualcomm® ID. Once signed in, navigate to Account -> Settings -> API Token.

With this API token, you can configure your client to run models on cloud-hosted devices.

qai-hub configure --api_token API_TOKEN

Navigate to docs for more information.

Demo off target

The package contains a simple end-to-end demo that downloads pre-trained weights and runs this model on a sample input.

python -m qai_hub_models.models.stable_diffusion_v1_5_w8a16_quantized.demo

The demo above runs a reference implementation of pre-processing, model inference, and post-processing.

NOTE: If you are running in a Jupyter Notebook or a Google Colab-like environment, add the following to your cell instead of the command above.

%run -m qai_hub_models.models.stable_diffusion_v1_5_w8a16_quantized.demo

Run model on a cloud-hosted device

In addition to the demo, you can also run the model on a cloud-hosted Qualcomm® device. This script does the following:

- Runs a performance check on a cloud-hosted device.

- Downloads compiled assets that can be deployed on-device for Android.

- Runs an accuracy check between PyTorch and on-device outputs.

python -m qai_hub_models.models.stable_diffusion_v1_5_w8a16_quantized.export

Profiling Results

------------------------------------------------------------

TextEncoder

Device : Samsung Galaxy S23 (13)

Runtime : QNN

Estimated inference time (ms) : 4.6

Estimated peak memory usage (MB): [0, 2]

Total # Ops : 437

Compute Unit(s) : NPU (437 ops)

------------------------------------------------------------

Unet

Device : Samsung Galaxy S23 (13)

Runtime : QNN

Estimated inference time (ms) : 115.1

Estimated peak memory usage (MB): [0, 3]

Total # Ops : 4149

Compute Unit(s) : NPU (4149 ops)

------------------------------------------------------------

VaeDecoder

Device : Samsung Galaxy S23 (13)

Runtime : QNN

Estimated inference time (ms) : 274.8

Estimated peak memory usage (MB): [0, 2]

Total # Ops : 189

Compute Unit(s) : NPU (189 ops)
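If the summary needs to be compared across runs, it can be read into a dictionary. The parser below is a hypothetical helper, not part of `qai_hub_models`; it assumes the text layout shown above (a dashed rule, a component name, then `key : value` lines) and keeps every value as a string.

```python
def parse_profiling_summary(text: str) -> dict:
    """Parse blocks separated by dashed rules into {component: {field: value}}."""
    results = {}
    current = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line:
            continue
        if set(line) == {"-"}:
            current = None  # a dashed rule starts a new component block
            continue
        if current is None:
            current = results.setdefault(line, {})  # first line is the component name
            continue
        key, sep, value = line.partition(":")
        if sep:
            current[key.strip()] = value.strip()
    return results


SAMPLE = """
------------------------------------------------------------
TextEncoder
Device : Samsung Galaxy S23 (13)
Runtime : QNN
Estimated inference time (ms) : 4.6
Estimated peak memory usage (MB): [0, 2]
Total # Ops : 437
Compute Unit(s) : NPU (437 ops)
------------------------------------------------------------
Unet
Device : Samsung Galaxy S23 (13)
Runtime : QNN
Estimated inference time (ms) : 115.1
Estimated peak memory usage (MB): [0, 3]
Total # Ops : 4149
Compute Unit(s) : NPU (4149 ops)
"""

summary = parse_profiling_summary(SAMPLE)
print(summary["Unet"]["Estimated inference time (ms)"])  # 115.1
```

Splitting on the first colon only keeps values such as `[0, 2]` and `NPU (437 ops)` intact.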

Deploying compiled model to Android

The models can be deployed using multiple runtimes:

- TensorFlow Lite (.tflite export): This tutorial provides a guide to deploy the .tflite model in an Android application.

- QNN (.so export): This sample app provides instructions on how to use the .so shared library in an Android application.

View on Qualcomm® AI Hub

Get more details on Stable-Diffusion-v1.5's performance across various devices here. Explore all available models on Qualcomm® AI Hub.

License

- The license for the original implementation of Stable-Diffusion-v1.5 can be found here.

- The license for the compiled assets for on-device deployment can be found here.

Community

- Join our AI Hub Slack community to collaborate, post questions, and learn more about on-device AI.

- For questions or feedback, please reach out to us.