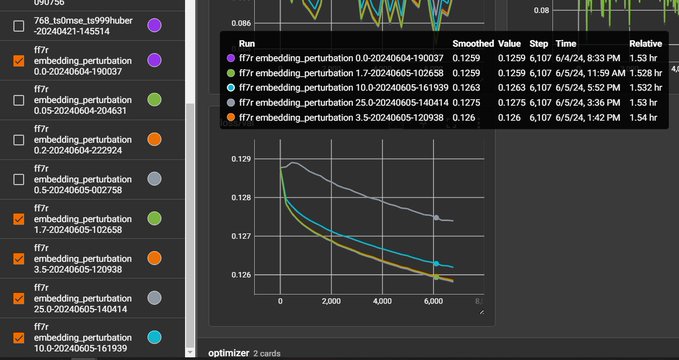

Results from an experiment using Condition Embedding Perturbation. https://arxiv.org/pdf/2405.20494

The code is very simple: during the training loop, add Gaussian noise to the text encoder output (`encoder_hidden_states`) after the text_encoder forward pass and before the Unet prediction:

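```python
import math
import torch

# Per-element std is gamma / sqrt(embed_dim), so each token's noise vector has squared L2 norm gamma^2 in expectation
perturbation_deviation = embedding_perturbation / math.sqrt(encoder_hidden_states.shape[2])
# Gaussian noise with the same shape, dtype and device as the text encoder output
perturbation_delta = torch.randn_like(encoder_hidden_states) * perturbation_deviation
encoder_hidden_states = encoder_hidden_states + perturbation_delta
```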

`embedding_perturbation` is the tunable gamma (γ) from the paper.
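The same perturbation can be wrapped as a small reusable function. This is a minimal sketch, assuming `encoder_hidden_states` has shape (batch, tokens, embed_dim), as the SD1.5 CLIP text encoder output does (e.g. (B, 77, 768)). The name `perturb_embeddings` and the optional `generator` argument are hypothetical, not part of EveryDream2Trainer; the generator only makes the noise reproducible.

```python
import math
from typing import Optional

import torch


def perturb_embeddings(
    encoder_hidden_states: torch.Tensor,
    gamma: float,
    generator: Optional[torch.Generator] = None,
) -> torch.Tensor:
    """Return encoder_hidden_states plus Gaussian noise with std gamma / sqrt(embed_dim)."""
    if gamma == 0.0:
        # gamma = 0 disables the perturbation entirely (baseline behaviour)
        return encoder_hidden_states
    embed_dim = encoder_hidden_states.shape[-1]  # 768 for the SD1.5 text encoder
    std = gamma / math.sqrt(embed_dim)
    # The generator, if given, must live on the same device as the tensor
    noise = torch.randn(
        encoder_hidden_states.shape,
        generator=generator,
        device=encoder_hidden_states.device,
        dtype=encoder_hidden_states.dtype,
    )
    return encoder_hidden_states + noise * std


# Usage: a fake batch of 12 prompts, 77 tokens each, 768-dim embeddings
hidden = torch.zeros(12, 77, 768)
perturbed = perturb_embeddings(hidden, gamma=1.0, generator=torch.Generator().manual_seed(0))
print(perturbed.std().item())                # close to 1 / sqrt(768), about 0.036
print(perturbed.norm(dim=-1).mean().item())  # mean per-token noise norm, close to gamma
```

Scaling by `1 / sqrt(embed_dim)` is what makes gamma roughly the length of the noise vector added to each token embedding, regardless of the embedding width.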

All models are SD1.5, trained for 30 epochs (Unet only) with AdamW8bit, a constant learning rate of 2e-6, and batch size 12. The EveryDream2Trainer config JSON is included.
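To show where the perturbation sits relative to a frozen text encoder and a trained Unet, here is a toy training step. Everything in it is hypothetical: `ToyTextEncoder`, `ToyUnet` and `train_step` are tiny stand-ins, not the real SD1.5 modules or EveryDream2Trainer code; the noisy latents are a simplified stand-in for a real timestep-dependent noise schedule; and `torch.optim.AdamW` is swapped in for bitsandbytes' AdamW8bit to avoid that dependency. The constant learning rate (2e-6, no scheduler) and batch size (12) follow the settings above.

```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F


class ToyTextEncoder(nn.Module):
    """Hypothetical stand-in for the CLIP text encoder: token ids -> (B, T, 768)."""

    def __init__(self, vocab_size: int = 1000, dim: int = 768):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, dim)

    def forward(self, input_ids: torch.Tensor) -> torch.Tensor:
        return self.embed(input_ids)


class ToyUnet(nn.Module):
    """Hypothetical stand-in for the Unet: predicts noise from latents + text condition."""

    def __init__(self, latent_dim: int = 16, cond_dim: int = 768):
        super().__init__()
        self.cond_proj = nn.Linear(cond_dim, latent_dim)
        self.net = nn.Linear(latent_dim, latent_dim)

    def forward(self, noisy_latents, encoder_hidden_states):
        cond = self.cond_proj(encoder_hidden_states.mean(dim=1))  # pool over tokens
        return self.net(noisy_latents + cond)


def train_step(text_encoder, unet, optimizer, input_ids, latents, embedding_perturbation):
    # 1. Text encoder forward; it is frozen ("Unet only"), so no gradients here
    with torch.no_grad():
        encoder_hidden_states = text_encoder(input_ids)

    # 2. Perturb the condition embeddings, between the text encoder and the Unet
    perturbation_deviation = embedding_perturbation / math.sqrt(encoder_hidden_states.shape[2])
    perturbation_delta = torch.randn_like(encoder_hidden_states) * perturbation_deviation
    encoder_hidden_states = encoder_hidden_states + perturbation_delta

    # 3. Unet prediction and loss (simplified: no timestep-dependent noise schedule)
    noise = torch.randn_like(latents)
    noisy_latents = latents + noise
    noise_pred = unet(noisy_latents, encoder_hidden_states)
    loss = F.mse_loss(noise_pred, noise)

    # 4. Update only the Unet parameters
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    return loss.item()


torch.manual_seed(0)
text_encoder = ToyTextEncoder().requires_grad_(False).eval()
unet = ToyUnet()
optimizer = torch.optim.AdamW(unet.parameters(), lr=2e-6)  # constant LR, no scheduler
input_ids = torch.randint(0, 1000, (12, 77))  # batch size 12, 77 tokens per prompt
latents = torch.randn(12, 16)
loss = train_step(text_encoder, unet, optimizer, input_ids, latents, embedding_perturbation=1.0)
print(loss)
```

Only the Unet's parameters are passed to the optimizer, and the text encoder runs under `torch.no_grad()`, so the noise changes what the Unet is conditioned on without touching the text encoder weights.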

```bash
#!/bin/bash

# Every run uses the same base config; --embedding_perturbation sets gamma.
# gamma = 0.0 (no perturbation); this run is commented out:
# python train.py --config train_ff7_emb_pert000.json --project_name "ff7r embedding_perturbation 0.0" --embedding_perturbation 0.0

python train.py --config train_ff7_emb_pert000.json --project_name "ff7r embedding_perturbation 1.0" --embedding_perturbation 1.0
python train.py --config train_ff7_emb_pert000.json --project_name "ff7r embedding_perturbation 1.7" --embedding_perturbation 1.7
python train.py --config train_ff7_emb_pert000.json --project_name "ff7r embedding_perturbation 3.5" --embedding_perturbation 3.5
```
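Because the per-element standard deviation of the noise is gamma / sqrt(d), it can help to see what the swept gamma values mean in absolute terms. A small sketch, assuming d = 768 (the hidden size of the SD1.5 CLIP text encoder, i.e. `encoder_hidden_states.shape[2]`); `perturbation_std` is a hypothetical helper:

```python
import math

EMBED_DIM = 768  # SD1.5 CLIP text encoder hidden size (encoder_hidden_states.shape[2])


def perturbation_std(gamma: float, embed_dim: int = EMBED_DIM) -> float:
    """Per-element standard deviation of the added noise for a given gamma."""
    return gamma / math.sqrt(embed_dim)


for gamma in (0.0, 1.0, 1.7, 3.5):
    print(f"gamma={gamma}: per-element std={perturbation_std(gamma):.4f}")
```

For the 1.0, 1.7 and 3.5 runs this gives per-element standard deviations of roughly 0.036, 0.061 and 0.126.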
