Tarsier Model Card

Introduction

Tarsier2-Recap-7b is built upon Qwen2-VL-7B-Instruct by distilling the video description capabilities of Tarsier2-7b. Specifically, we fine-tuned Qwen2-VL-7B-Instruct on Tarsier2-Recap-585K for 2 epochs with a learning rate of 2e-5. Tarsier2-Recap-7b has video captioning ability similar to that of Tarsier2-7b, reaching an overall F1 score of 40.7% on DREAM-1K, second only to Tarsier2-7b (42.0%) and ahead of GPT-4o (39.2%). See the Tarsier2 technical report for more details.

Model details

- Base Model: Qwen2-VL-7B-Instruct

- Training Data: Tarsier2-Recap-585K

Model date: Tarsier2-Recap-7b was trained in December 2024.

Paper or resources for more information:

- github repo: https://github.com/bytedance/tarsier/tree/tarsier2

- paper link: https://arxiv.org/abs/2501.07888

- leaderboard: https://tarsier-vlm.github.io/

License

Qwen/Qwen2-VL-7B-Instruct license.

Intended use

Primary intended uses: The primary use of Tarsier is research on large multimodal models, especially video description.

Primary intended users: The primary intended users of the model are researchers and hobbyists in computer vision, natural language processing, machine learning, and artificial intelligence.

Model Performance

Video Description

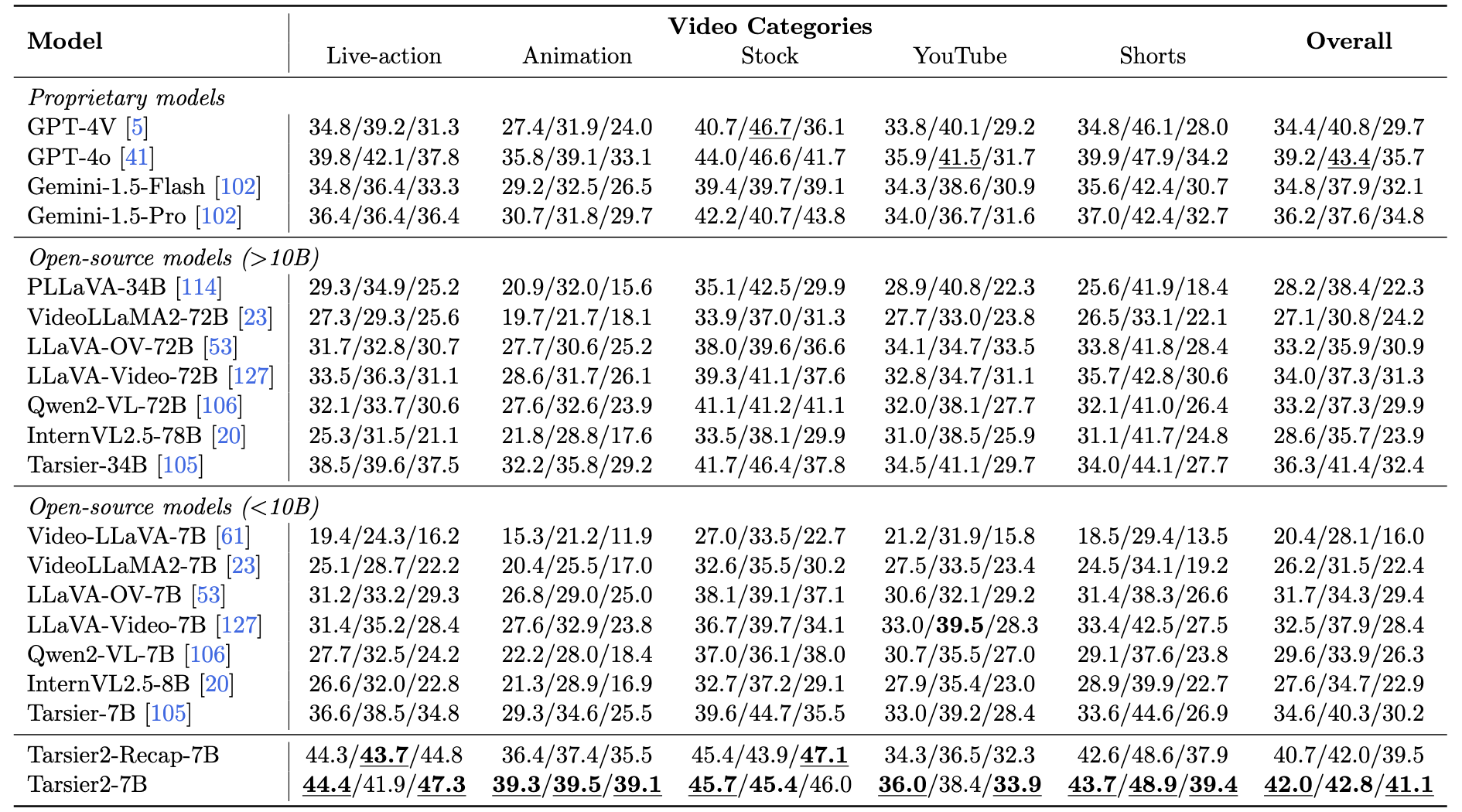

We evaluate Tarsier2-Recap-7b on DREAM-1K, a detailed video description benchmark featuring dynamic and diverse videos, assessing the model’s ability to describe fine-grained actions and events. The evaluation results are shown in the figure above.

Note: The results of Tarsier2-Recap-7b differ from those reported in Table 11 of the Tarsier2 technical report, because this release of Tarsier2-Recap-7b is more fully trained (2 epochs vs. 1 epoch).
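For readers unfamiliar with the metric, the sketch below shows how an F1 score is typically derived, assuming the standard definition as the harmonic mean of precision and recall. The function name `f1_score` and the input values are illustrative placeholders, not the benchmark's own evaluation code or actual per-model numbers.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        # Avoid division by zero when both components are zero.
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Placeholder inputs for illustration only; these are NOT reported results.
example = f1_score(0.4, 0.6)
print(f"F1 = {example:.2f}")
```

Because the harmonic mean is pulled toward the smaller of its two inputs, a model cannot reach a high F1 by describing many events inaccurately or by describing only a few events accurately.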


Video Question-Answering

We evaluate Tarsier2-Recap-7b on TVBench, a novel multiple-choice question-answering benchmark that requires a high level of temporal understanding. Because Tarsier2-Recap-7b is trained only on video caption data, it needs an additional prompt to induce it to perform multiple-choice question answering; see the TVBench samples for an example. Here is the evaluation result:

| Task | Tarsier2-Recap-7b | Tarsier2-7b |
|---|---|---|
| Action Antonym | 91.2 | 94.1 |
| Action Count | 43.1 | 40.5 |
| Action Localization | 42.5 | 37.5 |
| Action Sequence | 70.5 | 72.3 |
| Egocentric Sequence | 22.0 | 24.5 |
| Moving Direction | 37.1 | 33.2 |
| Object Count | 46.6 | 62.8 |
| Object Shuffle | 36.9 | 31.6 |
| Scene Transition | 85.9 | 88.1 |
| Unexpected Action | 28.0 | 41.5 |
| OVERALL | 54.0 | 54.7 |
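Since a caption-only model needs extra prompting to answer multiple-choice questions, the sketch below illustrates one possible way to wrap a question and its options into a prompt and to read an option letter back from the response. This is an assumption for illustration, not the prompt used for the numbers above (refer to the TVBench samples for that); `build_mcq_prompt`, `parse_choice`, and the instruction wording are hypothetical.

```python
import re
import string
from typing import List, Optional


def build_mcq_prompt(question: str, options: List[str]) -> str:
    """Format a question and its options as one text prompt (hypothetical wording)."""
    lines = [f"Question: {question}", "Options:"]
    for letter, option in zip(string.ascii_uppercase, options):
        lines.append(f"({letter}) {option}")
    # Explicit instruction nudging a captioning model toward a short answer.
    lines.append("Answer with the letter of the correct option only.")
    return "\n".join(lines)


def parse_choice(response: str, num_options: int) -> Optional[str]:
    """Return the first standalone uppercase option letter, or None if absent."""
    valid = string.ascii_uppercase[:num_options]
    match = re.search(rf"\b([{valid}])\b", response)
    return match.group(1) if match else None


prompt = build_mcq_prompt(
    "In which direction does the object move?",
    ["left", "right", "up", "down"],
)
print(prompt)
print(parse_choice("(B) right", 4))
```

The parser is deliberately simple: a response that begins with the article "A" would be read as option A, so a real evaluation would likely need stricter answer matching.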

How to Use

See the usage instructions at https://github.com/bytedance/tarsier/tree/tarsier2?tab=readme-ov-file#usage (make sure to use the tarsier2 branch).

Where to send questions or comments about the model: https://github.com/bytedance/tarsier/issues
