👼 Simple Transformer

Author: Eshan Jayasundara

Last Updated: 2nd of March 2025

Created: 28th of February 2025

About:

└── Single-head transformer (an encoder-decoder transformer with single-head self-attention, trained with teacher forcing)

Training:

└── Teacher Forcing (Baseline)

├── During training, the actual ground-truth tokens (from the dataset) are fed as input to the decoder instead of using the model’s own predictions.

├── This speeds up and stabilizes training, because each decoder position is conditioned on the correct previous tokens rather than on possibly wrong predictions.

└── Drawback: at inference time the model does not see ground-truth inputs, so its own errors can accumulate (this is known as exposure bias).
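A minimal sketch of how a teacher-forcing pair can be built from one target sequence. The IDs `SOS_ID = 1` and `EOS_ID = 2` and the helper name `make_teacher_forcing_pair` are hypothetical; the model card does not state the real special-token IDs.

```python
# Hypothetical special-token IDs; the model card does not state the real ones.
SOS_ID = 1
EOS_ID = 2


def make_teacher_forcing_pair(target_ids):
    """Return (decoder_teacher_tokens, decoder_target_tokens) for one sequence.

    The decoder input is the target shifted right by one position (prefixed with <SOS>),
    and the target is the same sequence suffixed with <EOS>, so position i of the
    decoder input is trained to predict position i of the target.
    """
    decoder_teacher_tokens = [SOS_ID] + list(target_ids)
    decoder_target_tokens = list(target_ids) + [EOS_ID]
    return decoder_teacher_tokens, decoder_target_tokens


# "hello i'm fine" encoded with made-up IDs
teacher, target = make_teacher_forcing_pair([10, 11, 12])
print(teacher)  # [1, 10, 11, 12]
print(target)   # [10, 11, 12, 2]
```

Both lists have the same length, which is what lets the loss compare every decoder position against exactly one ground-truth token.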

Vocabulary dataset (from huggingface):

└── "yukiarimo/english-vocabulary"
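The "Remove punctuation" and "Input tokenization" steps in the architecture below can be sketched as a word-level lookup. This is an assumption-laden sketch: the special tokens, the lower-casing, and keeping apostrophes (so "I'm" stays one word) are illustrative choices, and a tiny hand-written word list stands in for the Hugging Face vocabulary dataset, whose exact format is not described here.

```python
import re

# Hypothetical special tokens; the model card does not list the real ones.
SPECIAL_TOKENS = ["<PAD>", "<SOS>", "<EOS>", "<UNK>"]


def remove_punctuation(text):
    """Lower-case and strip punctuation, keeping apostrophes so "I'm" stays one word."""
    return re.sub(r"[^\w\s']", "", text.lower())


def build_vocab(words):
    """Map each special token and each distinct word to an integer ID."""
    vocab = {tok: i for i, tok in enumerate(SPECIAL_TOKENS)}
    for word in words:
        if word not in vocab:
            vocab[word] = len(vocab)
    return vocab


def tokenize(text, vocab):
    """Whitespace-split the cleaned text and look up IDs, falling back to <UNK>."""
    unk = vocab["<UNK>"]
    return [vocab.get(word, unk) for word in remove_punctuation(text).split()]


# Toy word list standing in for the vocabulary dataset
vocab = build_vocab(["hello", "how", "are", "you", "i'm", "fine"])
print(remove_punctuation("Hello, how are you?"))  # hello how are you
print(tokenize("Hello, how are you?", vocab))     # [4, 5, 6, 7]
```

Special tokens such as `<SOS>` should be added after cleaning; otherwise the punctuation filter would strip their angle brackets.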

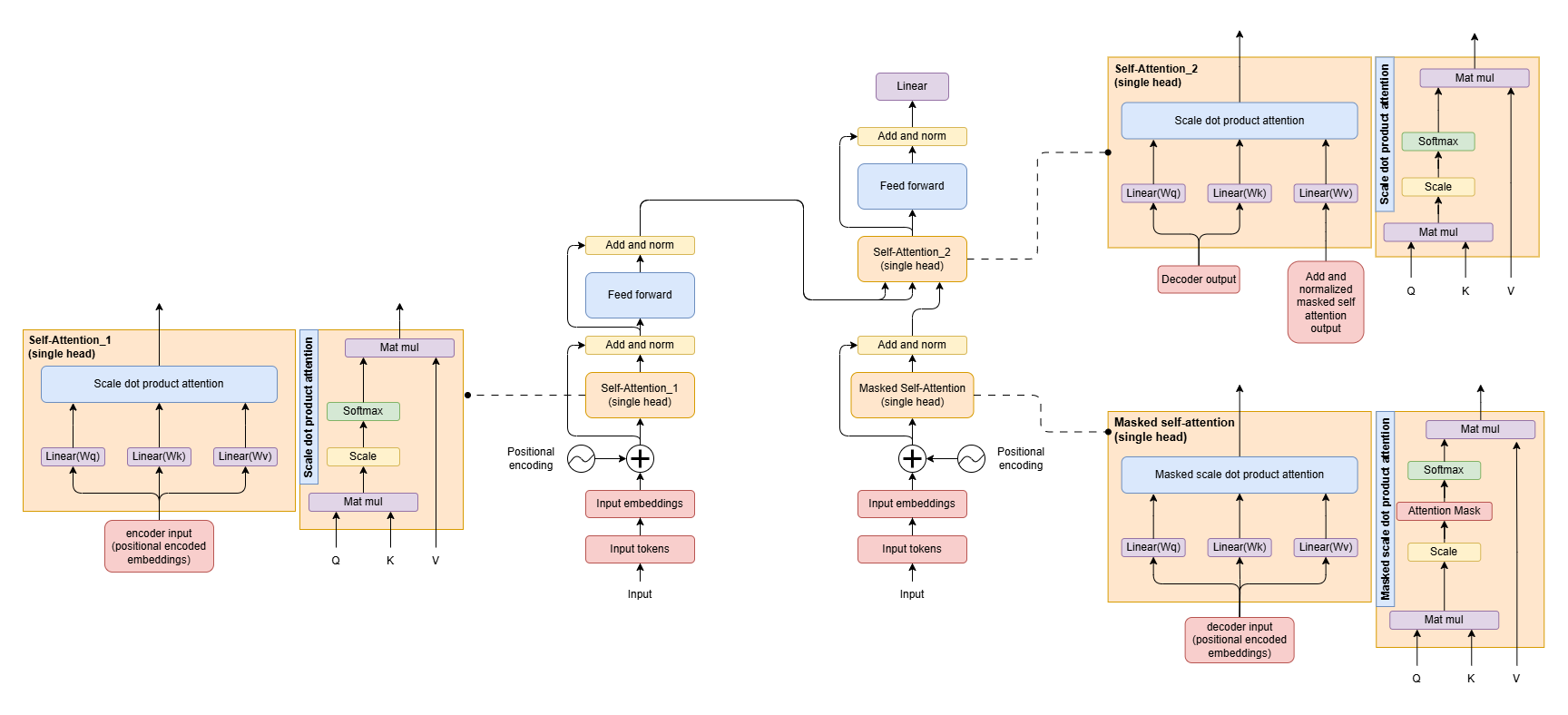

Simple Transformer Architecture:

Encoder

├── Input text

│ └── E.g.: "Hello, how are you?"

├── Remove punctuation from input text

├── Input tokenization

├── Embedding lookup with torch.nn.Embedding

├── Positional encoding (sine, cosine)

├── Self-attention

│ ├── single-head

│ ├── Q = Wq @ Embedding

│ ├── K = Wk @ Embedding

│ └── V = Wv @ Embedding

├── Add and norm

├── Feed forward layer

│ ├── 2 linear layers (one hidden layer)

│ ├── ReLU as the activation in the hidden layer

│ ├── No activation at the output layer

│ └── nn.Linear(in_features=embedding_dim, out_features=d_ff), nn.ReLU(), nn.Linear(in_features=d_ff, out_features=embedding_dim)

├── Add and norm (again)

└── Save the encoder output for use as K and V in the decoder's cross-attention
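The encoder steps above can be sketched in PyTorch roughly as follows. Assumptions: `Wq`, `Wk`, `Wv` are bias-free `nn.Linear` layers (`nn.Linear` computes `x @ W.T`, which matches `Q = Wq @ Embedding` when embeddings are treated as column vectors), attention scores are scaled by `sqrt(d_model)` as in the original transformer, and "Add and norm" is a residual connection followed by the parameter-free mean-variance normalization that the Future Improvements list mentions. Class and function names are hypothetical.

```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F


def sinusoidal_positional_encoding(seq_len, d_model):
    """Sine on even dimensions, cosine on odd ones (assumes an even d_model)."""
    position = torch.arange(seq_len, dtype=torch.float32).unsqueeze(1)  # (seq_len, 1)
    div_term = torch.exp(torch.arange(0, d_model, 2, dtype=torch.float32) * (-math.log(10000.0) / d_model))
    pe = torch.zeros(seq_len, d_model)
    pe[:, 0::2] = torch.sin(position * div_term)
    pe[:, 1::2] = torch.cos(position * div_term)
    return pe


class SelfAttention(nn.Module):
    """Single-head scaled dot-product attention; x_kv may differ from x_q (cross-attention)."""

    def __init__(self, d_model):
        super().__init__()
        self.Wq = nn.Linear(d_model, d_model, bias=False)
        self.Wk = nn.Linear(d_model, d_model, bias=False)
        self.Wv = nn.Linear(d_model, d_model, bias=False)

    def forward(self, x_q, x_kv):
        Q, K, V = self.Wq(x_q), self.Wk(x_kv), self.Wv(x_kv)
        scores = Q @ K.transpose(-2, -1) / math.sqrt(Q.size(-1))
        return F.softmax(scores, dim=-1) @ V


class EncoderLayer(nn.Module):
    def __init__(self, vocab_size, d_model, d_ff):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, d_model)
        self.attention = SelfAttention(d_model)
        self.ffn = nn.Sequential(nn.Linear(d_model, d_ff), nn.ReLU(), nn.Linear(d_ff, d_model))

    @staticmethod
    def add_and_norm(x, sublayer_out, eps=1e-5):
        # Residual connection, then parameter-free mean-variance normalization
        y = x + sublayer_out
        mean = y.mean(dim=-1, keepdim=True)
        var = y.var(dim=-1, keepdim=True, unbiased=False)
        return (y - mean) / torch.sqrt(var + eps)

    def forward(self, tokens):
        d_model = self.embedding.embedding_dim
        x = self.embedding(tokens) + sinusoidal_positional_encoding(tokens.size(-1), d_model)
        x = self.add_and_norm(x, self.attention(x, x))  # self-attention + add and norm
        return self.add_and_norm(x, self.ffn(x))        # feed forward + add and norm


torch.manual_seed(0)
encoder = EncoderLayer(vocab_size=10, d_model=16, d_ff=64)
enc_out = encoder(torch.tensor([4, 5, 6, 7]))  # "hello how are you" with made-up IDs
print(enc_out.shape)  # torch.Size([4, 16])
```

The returned `enc_out` is what the decoder's cross-attention would use for its keys and values.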

Decoder

├── Decoder teacher text (same as the target text but shifted right)

│ ├── E.g.: decoder teacher text - "<SOS> hello, I'm fine."

│ └── E.g.: target text - "hello, I'm fine. <EOS>"

├── Remove punctuation from the text

├── Input tokenization

├── Embedding lookup with torch.nn.Embedding

├── Positional encoding (sine, cosine)

├── Masked self-attention (a separate class is introduced for masked self-attention)

│ ├── single-head

│ ├── causal mask built from a lower-triangular matrix, so each position attends only to itself and earlier positions

│ ├── Q = Wq @ Embedding

│ ├── K = Wk @ Embedding

│ └── V = Wv @ Embedding

├── Add and norm

├── Cross-attention (the same class used for encoder self-attention can be reused)

│ ├── single-head

│ ├── Q = Wq @ (add-and-norm output of the masked self-attention)

│ ├── K = Wk @ Encoder output

│ └── V = Wv @ Encoder output

├── Add and norm

├── Feed forward layer

│ ├── 2 linear layers (one hidden layer)

│ ├── ReLU as the activation in the hidden layer

│ ├── No activation at the output layer

│ └── nn.Linear(in_features=embedding_dim, out_features=d_ff), nn.ReLU(), nn.Linear(in_features=d_ff, out_features=embedding_dim)

├── Add and norm (again)

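└── Linear layer projecting to the vocabulary size (no activation; unlike 'Attention Is All You Need', no softmax is applied here, since the raw logits go to F.cross_entropy, which applies softmax internally)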
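The decoder-specific parts (causal mask, masked self-attention, cross-attention, and the final linear layer) can be sketched as below. Assumptions: embedding lookup and positional encoding are omitted for brevity, so `x` is taken to already contain them; attention uses bias-free `nn.Linear` projections with `sqrt(d_model)` scaling; and "Add and norm" is parameter-free mean-variance normalization. All names are hypothetical.

```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F


def causal_mask(seq_len):
    """True where attention is allowed: position i may only see positions 0..i."""
    return torch.tril(torch.ones(seq_len, seq_len, dtype=torch.bool))


class Attention(nn.Module):
    """Single-head scaled dot-product attention with an optional boolean mask."""

    def __init__(self, d_model):
        super().__init__()
        self.Wq = nn.Linear(d_model, d_model, bias=False)
        self.Wk = nn.Linear(d_model, d_model, bias=False)
        self.Wv = nn.Linear(d_model, d_model, bias=False)

    def forward(self, x_q, x_kv, mask=None):
        Q, K, V = self.Wq(x_q), self.Wk(x_kv), self.Wv(x_kv)
        scores = Q @ K.transpose(-2, -1) / math.sqrt(Q.size(-1))
        if mask is not None:
            scores = scores.masked_fill(~mask, float("-inf"))  # block future positions
        return F.softmax(scores, dim=-1) @ V


def add_and_norm(x, sublayer_out, eps=1e-5):
    # Residual connection, then parameter-free mean-variance normalization
    y = x + sublayer_out
    var = y.var(dim=-1, keepdim=True, unbiased=False)
    return (y - y.mean(dim=-1, keepdim=True)) / torch.sqrt(var + eps)


class DecoderLayer(nn.Module):
    def __init__(self, vocab_size, d_model, d_ff):
        super().__init__()
        self.masked_self_attention = Attention(d_model)
        self.cross_attention = Attention(d_model)  # same class, different inputs
        self.ffn = nn.Sequential(nn.Linear(d_model, d_ff), nn.ReLU(), nn.Linear(d_ff, d_model))
        self.output = nn.Linear(d_model, vocab_size)  # raw logits, no softmax

    def forward(self, x, encoder_out):
        mask = causal_mask(x.size(-2))
        x = add_and_norm(x, self.masked_self_attention(x, x, mask))
        x = add_and_norm(x, self.cross_attention(x, encoder_out))  # Q from decoder, K/V from encoder
        x = add_and_norm(x, self.ffn(x))
        return self.output(x)


torch.manual_seed(0)
layer = DecoderLayer(vocab_size=10, d_model=16, d_ff=32)
logits = layer(torch.randn(5, 16), torch.randn(4, 16))  # 5 decoder positions, 4 encoder positions
print(logits.shape)  # torch.Size([5, 10])
```

Because of the mask, changing a later decoder token cannot change the logits at earlier positions, which is what makes teacher-forced training consistent with one-token-at-a-time generation.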

Optimization

├── Initialize the Adam optimizer with the model’s parameters and a specified learning rate.

│ └── self.optimizer = torch.optim.Adam(params=self.parameters(), lr=learning_rate)

├── Before computing gradients for the current batch, we reset any existing gradients from the previous iteration.

│ └── self.optimizer.zero_grad()

├── The model takes in `input_tokens` and `decoder_teacher_tokens` and performs a forward pass to compute `logits`

│ └── logits = self.forward(input_tokens, decoder_teacher_tokens)

├── The cross-entropy loss

│ ├── Measures the difference between the predicted token distribution (logits) and the actual target tokens (decoder_target_tokens).

│ ├── It expects raw scores (logits), not probabilities, and applies log-softmax internally.

│ └── loss = F.cross_entropy(logits, decoder_target_tokens)

├── Compute the gradients of the loss with respect to all trainable parameters in the model using automatic differentiation (backpropagation).

│ └── loss.backward()

└── Optimizer updates the model's weights using the computed gradients.

  └── self.optimizer.step()
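The optimization steps above fit into a single training-step function like the sketch below. Assumptions: the model is called as `model(input_tokens, decoder_teacher_tokens)` and returns batched logits of shape `(batch, tgt_len, vocab_size)`, so they are flattened before `F.cross_entropy` (which expects `(N, C)` logits and `(N,)` targets). `TinySeq2Seq` is a hypothetical stand-in model used only so the step can run; it is not the transformer described here.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class TinySeq2Seq(nn.Module):
    """Hypothetical stand-in: any module whose forward(input_tokens, decoder_teacher_tokens)
    returns logits of shape (batch, tgt_len, vocab_size) works with train_step."""

    def __init__(self, vocab_size, d_model=16):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, d_model)
        self.output = nn.Linear(d_model, vocab_size)

    def forward(self, input_tokens, decoder_teacher_tokens):
        context = self.embedding(input_tokens).mean(dim=1, keepdim=True)      # (batch, 1, d_model)
        return self.output(self.embedding(decoder_teacher_tokens) + context)  # (batch, tgt_len, vocab)


def train_step(model, optimizer, input_tokens, decoder_teacher_tokens, decoder_target_tokens):
    optimizer.zero_grad()                                 # clear gradients from the previous step
    logits = model(input_tokens, decoder_teacher_tokens)  # forward pass with teacher forcing
    # Flatten batch and time so cross_entropy sees (N, C) logits and (N,) targets
    loss = F.cross_entropy(logits.reshape(-1, logits.size(-1)), decoder_target_tokens.reshape(-1))
    loss.backward()                                       # backpropagation
    optimizer.step()                                      # Adam update
    return loss.item()


torch.manual_seed(0)
model = TinySeq2Seq(vocab_size=10)
optimizer = torch.optim.Adam(params=model.parameters(), lr=1e-2)  # parameters() is a method call
src = torch.tensor([[4, 5, 6, 7]])  # "hello how are you" (made-up IDs)
teacher = torch.tensor([[1, 8, 9]])  # "<SOS> i'm fine"
target = torch.tensor([[8, 9, 2]])   # "i'm fine <EOS>"
losses = [train_step(model, optimizer, src, teacher, target) for _ in range(50)]
print(losses[0], losses[-1])
```

If the model works on a single unbatched sequence instead, logits of shape `(tgt_len, vocab_size)` and targets of shape `(tgt_len,)` can be passed to `F.cross_entropy` directly.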

After training, output tokens (and then text) are produced by autoregressive text generation, one token at a time:

├── Start the decoder input with <SOS>; the encoder input is the `prompt`.

├── Model predicts the next token.

├── Append the predicted token to the sequence.

├── Repeat until an <EOS> token or max length is reached.

└── For illustration, words are shown below instead of tokens (their numerical IDs):

<SOS>

<SOS> hello

<SOS> hello I'm

<SOS> hello I'm good

<SOS> hello I'm good <EOS>
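The generation loop above can be sketched as greedy decoding (always taking the highest-scoring next token; the model card does not say whether greedy or sampled decoding is used). Assumptions: the model is called as `model(prompt_tokens, decoder_tokens)` with a batch dimension of 1 and returns logits of shape `(1, len, vocab_size)`. `ScriptedModel` is a hypothetical stand-in that replays a fixed reply so the loop can run without a trained network.

```python
import torch


@torch.no_grad()
def greedy_generate(model, prompt_tokens, sos_id, eos_id, max_len=20):
    """Feed the prompt to the encoder and grow the decoder input one token at a time."""
    decoder_tokens = [sos_id]
    for _ in range(max_len):
        logits = model(prompt_tokens, torch.tensor([decoder_tokens]))  # (1, len, vocab)
        next_id = int(logits[0, -1].argmax())  # prediction made at the last position
        decoder_tokens.append(next_id)
        if next_id == eos_id:
            break
    return decoder_tokens[1:]  # drop <SOS>


class ScriptedModel:
    """Hypothetical stand-in: position i always 'predicts' reply[i]."""

    def __init__(self, reply, vocab_size):
        self.reply, self.vocab_size = reply, vocab_size

    def __call__(self, prompt_tokens, decoder_tokens):
        length = decoder_tokens.size(1)
        logits = torch.zeros(1, length, self.vocab_size)
        for pos in range(length):
            logits[0, pos, self.reply[min(pos, len(self.reply) - 1)]] = 1.0
        return logits


reply = [4, 8, 11, 2]  # "hello i'm good <EOS>" with made-up IDs
out = greedy_generate(ScriptedModel(reply, vocab_size=12), torch.tensor([[4, 5, 6, 7]]), sos_id=1, eos_id=2)
print(out)  # [4, 8, 11, 2]
```

The loop stops either at `<EOS>` or after `max_len` new tokens, matching the two stopping conditions listed above.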

Future Improvements:

├── Multi-head attention instead of single-head attention.

├── Layer normalization instead of simple mean-variance normalization.

└── Dropout layers for better generalization.
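To make the layer-normalization item concrete: `torch.nn.LayerNorm` performs the same mean-variance normalization over the last dimension but adds a learnable per-feature scale (`weight`) and shift (`bias`), initialized to 1 and 0. The sketch below assumes the current model's "simple mean-variance normalization" uses the biased variance and `eps=1e-5` (the `nn.LayerNorm` defaults); under that assumption the two agree at initialization, and the difference only appears once training updates the scale and shift.

```python
import torch
import torch.nn as nn


def mean_variance_norm(x, eps=1e-5):
    """Parameter-free normalization over the last dimension (assumed current behavior)."""
    mean = x.mean(dim=-1, keepdim=True)
    var = x.var(dim=-1, keepdim=True, unbiased=False)
    return (x - mean) / torch.sqrt(var + eps)


torch.manual_seed(0)
x = torch.randn(4, 16)
layer_norm = nn.LayerNorm(16)  # learnable weight (init 1) and bias (init 0), 16 each
print(torch.allclose(layer_norm(x), mean_variance_norm(x), atol=1e-5))  # True at initialization
print(sum(p.numel() for p in layer_norm.parameters()))                 # 32
```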
