license: apache-2.0

tags:

- T5xxl

- Google FLAN

FLAN-T5-XXL Fused Model

This repository hosts a fused version of the FLAN-T5-XXL model, created by combining the split files from Google's FLAN-T5-XXL repository. The files have been merged for convenience, making it easier to integrate into AI applications, including image generation workflows.
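For readers curious what "fusing" split files can look like, here is a minimal sketch, not the uploader's actual procedure. It assumes the upstream shards are `.safetensors` files and that the `safetensors` and `torch` packages are installed. The glob pattern and the function names are hypothetical.

```python
from pathlib import Path


def merge_state_dicts(shards):
    """Combine per-shard {tensor_name: tensor} dicts into one dict.

    Raises ValueError if the same tensor name appears in two shards.
    """
    merged = {}
    for index, shard in enumerate(shards):
        for name, tensor in shard.items():
            if name in merged:
                raise ValueError(f"duplicate tensor {name!r} in shard {index}")
            merged[name] = tensor
    return merged


def fuse_safetensors(shard_dir, output_file, pattern="model-*.safetensors"):
    """Load every shard matching `pattern` and save them as one file.

    Assumptions: the shards are .safetensors files and `pattern` matches
    their names (hypothetical). All tensors are held in RAM at once.
    """
    # Imported lazily so merge_state_dicts works without the package.
    from safetensors.torch import load_file, save_file

    shard_paths = sorted(Path(shard_dir).glob(pattern))
    if not shard_paths:
        raise FileNotFoundError(f"no shards matching {pattern} in {shard_dir}")
    merged = merge_state_dicts(load_file(str(p)) for p in shard_paths)
    save_file(merged, str(output_file))
    return len(merged)  # number of tensors written
```

Because every tensor is loaded before saving, this approach likely needs at least as much free RAM as the size of the fused file.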

Sample pictures: Base Model blue_pencil-flux1_v0.0.1

Key Features

- Fused for Simplicity: Combines split model files into a single, ready-to-use format.

- Optimized Variants: Available in FP32, FP16, and quantized GGUF formats to balance accuracy and resource usage.

- Enhanced Prompt Accuracy: Follows prompts more closely than the standard T5-XXL v1.1 when used as the text encoder for image generation.

Model Variants

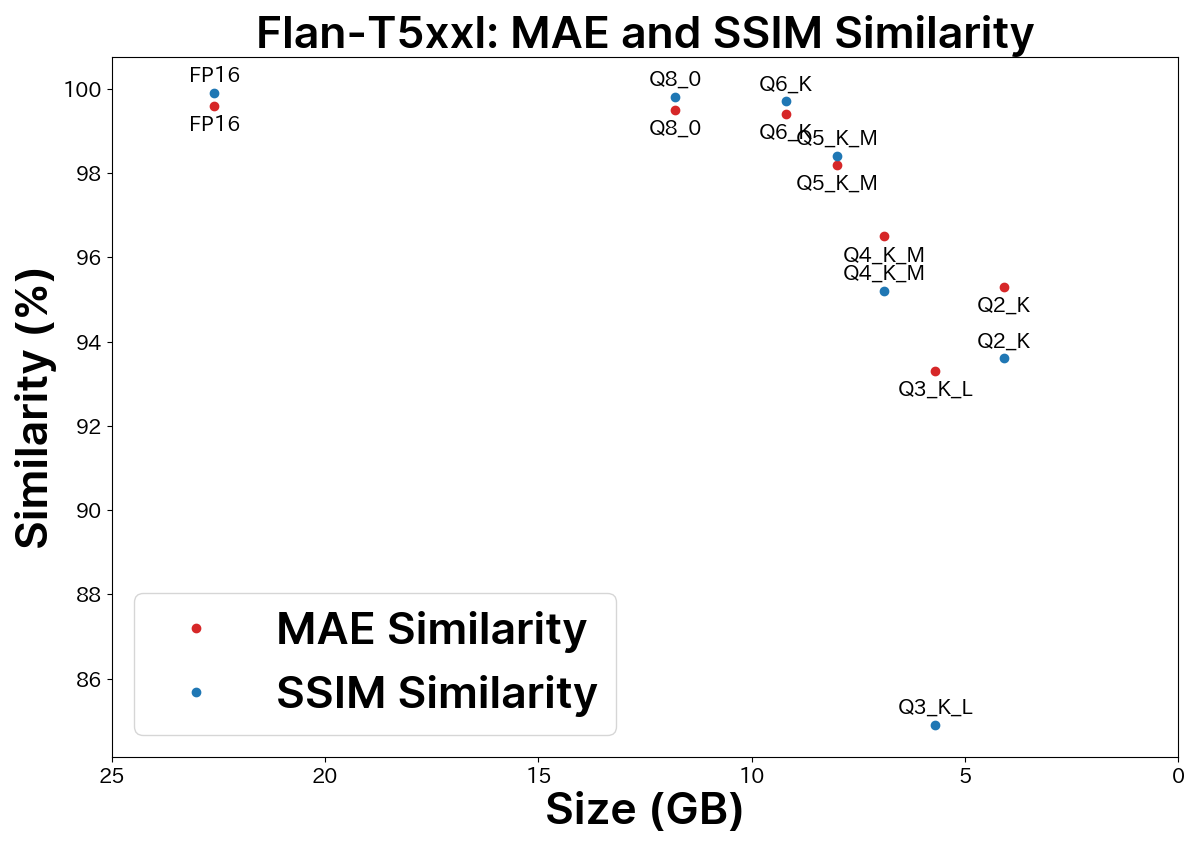

| Model File | Size | Accuracy (SSIM Similarity) | Recommended |
|---|---|---|---|
| flan_t5_xxl_fp32.safetensors | 44.1GB | 100% | |
| flan_t5_xxl_fp16.safetensors | 22.1GB | 99.9% | |
| flan_t5_xxl_TE-only_FP32.safetensors | 18.7GB | 100% | 🔺 |
| flan_t5_xxl_TE-only_FP16.safetensors | 9.4GB | 99.9% | ✅ |
| flan_t5_xxl_TE-only_Q8_0.gguf | 5.5GB | 99.8% | ✅ |
| flan_t5_xxl_TE-only_Q6_K.gguf | 4.4GB | 99.7% | 🔺 |
| flan_t5_xxl_TE-only_Q5_K_M.gguf | 3.8GB | 98.4% | 🔺 |
| flan_t5_xxl_TE-only_Q4_K_M.gguf | 3.2GB | 95.2% | |
| flan_t5_xxl_TE-only_Q3_K_L.gguf | 2.6GB | 84.9% | |
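The size and accuracy trade-off in the table above can be expressed as a small lookup. The sketch below picks the most accurate file that fits a given budget. It assumes file size is a reasonable proxy for the memory needed, which is only approximate, and the function and parameter names are hypothetical.

```python
# (file name, size in GB, SSIM similarity in %) copied from the table above.
VARIANTS = [
    ("flan_t5_xxl_fp32.safetensors", 44.1, 100.0),
    ("flan_t5_xxl_fp16.safetensors", 22.1, 99.9),
    ("flan_t5_xxl_TE-only_FP32.safetensors", 18.7, 100.0),
    ("flan_t5_xxl_TE-only_FP16.safetensors", 9.4, 99.9),
    ("flan_t5_xxl_TE-only_Q8_0.gguf", 5.5, 99.8),
    ("flan_t5_xxl_TE-only_Q6_K.gguf", 4.4, 99.7),
    ("flan_t5_xxl_TE-only_Q5_K_M.gguf", 3.8, 98.4),
    ("flan_t5_xxl_TE-only_Q4_K_M.gguf", 3.2, 95.2),
    ("flan_t5_xxl_TE-only_Q3_K_L.gguf", 2.6, 84.9),
]


def pick_variant(budget_gb, te_only=True):
    """Return the most accurate variant whose file size fits budget_gb.

    Ties on accuracy go to the smaller file. With te_only=True, only files
    whose names contain "TE-only" are considered. Returns None if nothing fits.
    """
    candidates = [
        v for v in VARIANTS
        if v[1] <= budget_gb and (not te_only or "TE-only" in v[0])
    ]
    if not candidates:
        return None
    # Highest accuracy first; negative size breaks ties toward smaller files.
    return max(candidates, key=lambda v: (v[2], -v[1]))


if __name__ == "__main__":
    print(pick_variant(8))
```

This ranks by the table's numbers alone and ignores the Recommended column, so treat the result as a starting point rather than a recommendation.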

Comparison Graph

For a detailed comparison, refer to this blog post.

Usage Instructions

Place the downloaded model files in one of the following directories:

- installation_folder/models/text_encoder

- installation_folder/models/clip

- installation_folder/Models/CLIP
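Which of these directories exists depends on the application you use. As a convenience, the sketch below copies a downloaded file into the first of them that is already present. The helper name `install_model` is hypothetical, and the directory names are taken from the list above.

```python
import shutil
from pathlib import Path

# Candidate sub-directories, relative to the installation folder.
CANDIDATE_DIRS = ("models/text_encoder", "models/clip", "Models/CLIP")


def install_model(model_file, installation_folder):
    """Copy model_file into the first candidate directory that exists.

    Returns the destination path. Raises FileNotFoundError if none of the
    candidate directories is present under installation_folder.
    """
    root = Path(installation_folder)
    for relative in CANDIDATE_DIRS:
        target_dir = root / relative
        if target_dir.is_dir():
            # copy2 returns the path of the newly created file.
            return Path(shutil.copy2(model_file, target_dir))
    raise FileNotFoundError(f"no text encoder directory found under {root}")
```

The function copies rather than moves the file, so the original download stays in place until you delete it.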

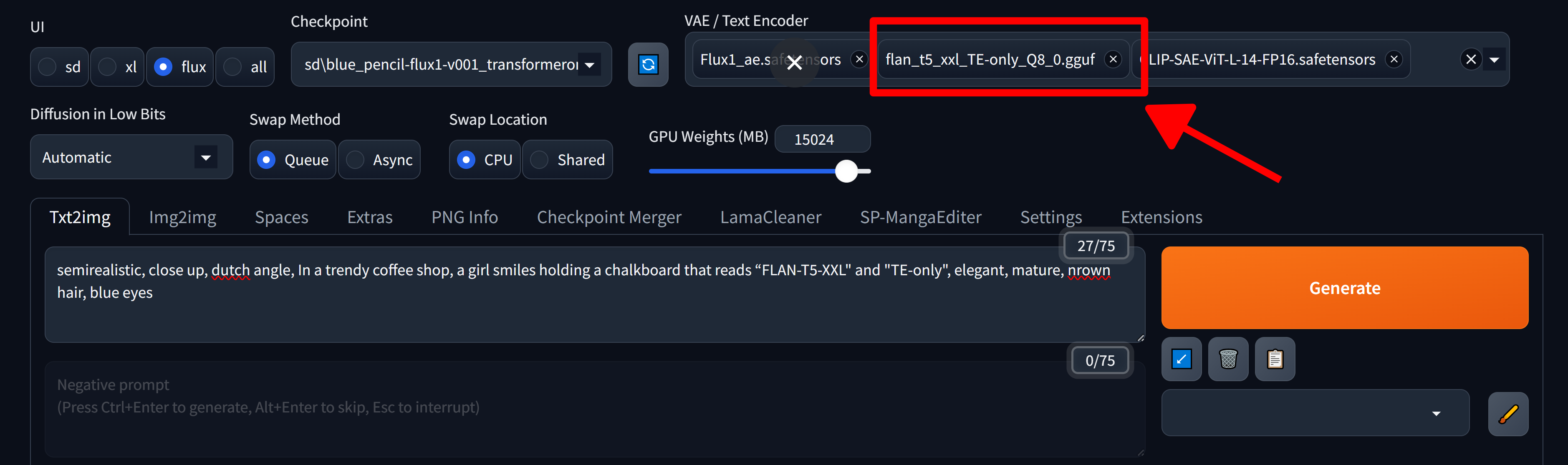

Stable Diffusion WebUI Forge

In Stable Diffusion WebUI Forge, select the FLAN-T5-XXL model instead of the default T5xxl_v1_1 text encoder.

Note: Stable Diffusion WebUI Forge does not support FP32 models. Use FP16 or GGUF formats instead.

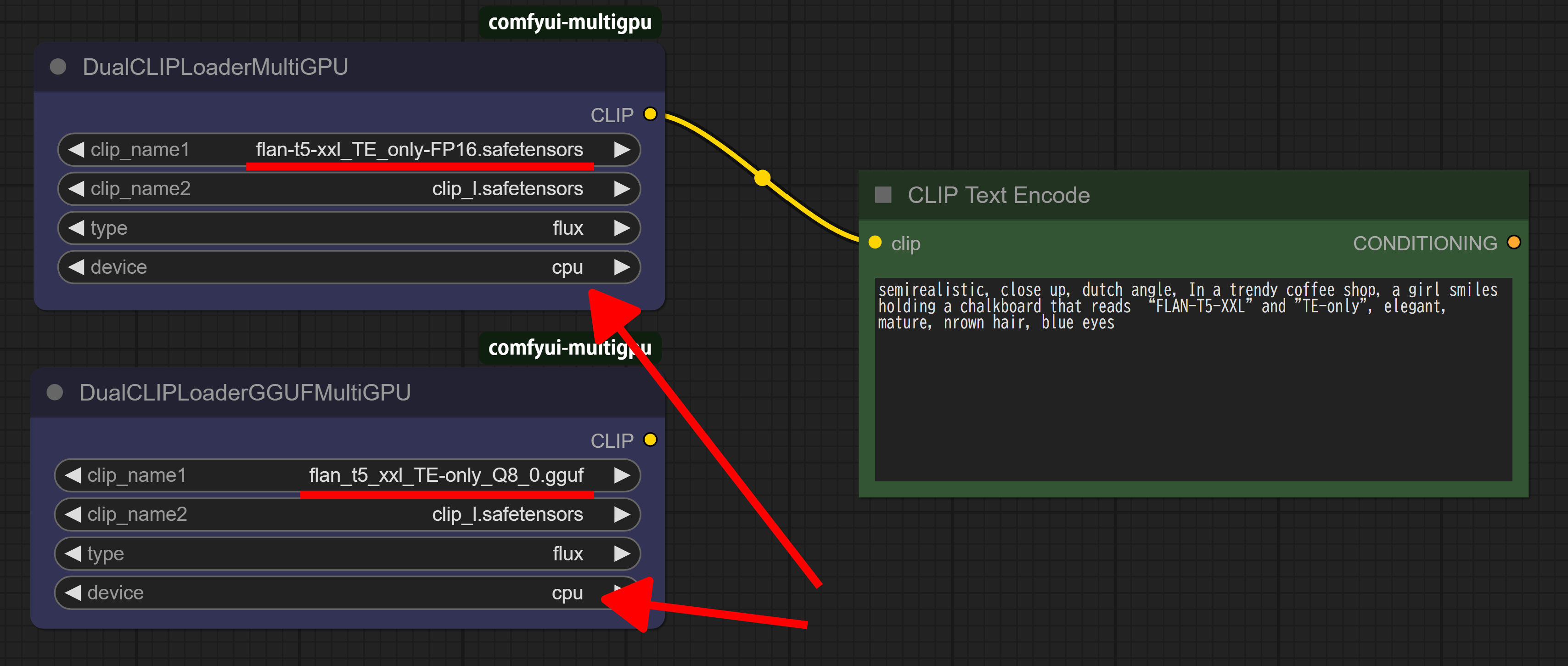

ComfyUI

Sample Workflow

For ComfyUI, we recommend the ComfyUI-MultiGPU custom node, which can load the text encoder into system RAM instead of VRAM.

Use the DualCLIPLoaderMultiGPU or DualCLIPLoaderGGUFMultiGPU node and set its device to cpu.

FP32 Support: To use FP32 text encoders in ComfyUI, launch with the --fp32-text-enc flag.

Comparison: FLAN-T5-XXL vs T5-XXL v1.1

These example images were generated with Flux.1, using FLAN-T5-XXL and T5-XXL v1.1 as the text encoder. FLAN-T5-XXL follows the prompts more accurately.

Further Comparisons

Tip: Upgrade CLIP-L Too

For even better results, consider upgrading the CLIP-L text encoder alongside FLAN-T5-XXL:

- LongCLIP-SAE-ViT-L-14 (ComfyUI only)

- CLIP-SAE-ViT-L-14

Combining FLAN-T5-XXL with an upgraded CLIP-L can further enhance image quality.

License

- This model is distributed under the Apache 2.0 License.

- The uploader claims no ownership or rights over the model.