
notebooks/RedBlackMarble-DP.ipynb


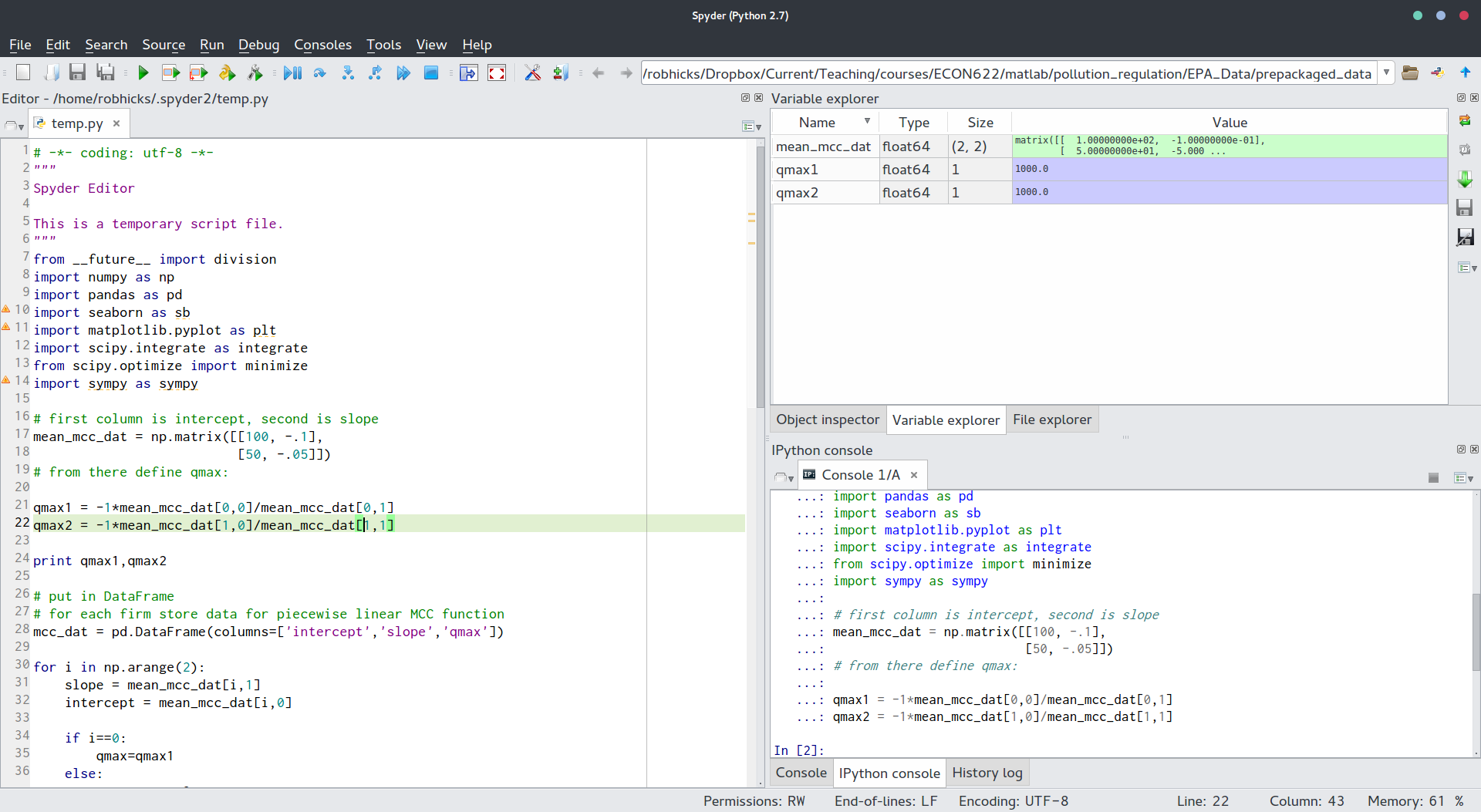

###Markdown

Red Black Marble

**Question:** There are 4 red and 4 black marbles in a bag. You draw them one at a time and may quit at any time. When you quit, if you have drawn more black than red marbles, you win the number of black marbles in excess of red; otherwise you get nothing. What is your optimal strategy?

**Intuition:**

- Because you can quit at any time, you can never lose money: the payoff is at least 0. If at some point you are behind (e.g. 3 red and 1 black drawn), stopping pays nothing, so keep drawing; in the worst case you draw all 8 marbles and finish level, which also pays 0.
- Now think about the strategy. Is it optimal to quit after drawing:
  1. R, R
  2. R, R, B
  3. R, R, B, B
  4. ...

  You don't want to quit in cases 1 and 2, where you are behind. In case 3, stopping pays 0 while drawing on still gives a chance of winning something, so you keep going.
- The option to quit at any time works in our favor ;)
- If you are lucky enough to draw a black first, stopping now pays 1 dollar, which does not seem satisfying, so you consider a second draw. It is black with probability 3/7 (you win 2 dollars) or red with probability 4/7 (you win 0). The expected value of that second draw, $\frac{3}{7}\cdot 2 + \frac{4}{7}\cdot 0 = \frac{6}{7}$, is less than 1. Is it a good time to stop? <!--- No. Even after drawing 1 black and 1 red, when the immediate payoff is 0, the chance of winning later gives a positive expected payoff, so the payoff of 0 used above must be replaced by the value of continuing. From this we see that the decision in a state S(num of red drawn, num of black drawn) does not depend solely on the immediate payoff $$\text{Payoff}_{\text{immediate}} = \max(n_b - n_r, 0)$$ It must be compared with the expected payoff of drawing once more $$\text{Payoff}_{\text{expected}} = p_{\text{red}} \cdot \text{payoff}(n_r + 1, n_b) + p_{\text{black}} \cdot \text{payoff}(n_r, n_b + 1)$$ and we take the max of the two. --->
- This **Dynamic Programming** question can be solved by backward induction. The state space looks like a binomial tree; the state, strategy, and payoff at each node of the tree can be computed, and the same logic applies to many questions of this kind.

Draw a binomial tree
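To make the one-draw lookahead argument concrete, here is a small exact-arithmetic version of the backward induction. It is a sketch, not the notebook's own solution: it assumes, as the `payoff(r, b)` functions below do, that `r` and `b` count the marbles still *in the bag* (so the black-minus-red excess already drawn is `r - b`), and it uses `fractions.Fraction` (my choice, to avoid rounding). After a first black the state is (4, 3); looking all the way ahead instead of one draw shows that continuing is worth 47/35 > 1, so you should not stop there.

```python
from fractions import Fraction
from functools import lru_cache


@lru_cache(maxsize=None)
def value(r, b):
    """Optimal expected winnings with r red and b black marbles left in the bag.

    With equal starting counts, the black-minus-red excess drawn so far is r - b.
    """
    if r == 0:                       # only blacks left: drawing them all ends level
        return Fraction(0)
    if b == 0:                       # only reds left: stop now and keep the excess r
        return Fraction(r)
    stop = Fraction(max(r - b, 0))   # immediate payoff if we quit here
    go = Fraction(r, r + b) * value(r - 1, b) + Fraction(b, r + b) * value(r, b - 1)
    return max(stop, go)             # backward induction: take the better action


one_step_lookahead = Fraction(3, 7) * 2 + Fraction(4, 7) * 0   # the 6/7 from the text
print(one_step_lookahead, value(4, 3), value(4, 4))
```

The full game value `value(4, 4)` comes out exactly 1, which matches the floating-point `0.9999999999999999` printed further down.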

###Code

import matplotlib.pyplot as plt

from IPython.display import Image

Image(url= "https://github.com/dlu-umich/Quant-Lab/blob/master/half_tree.png?raw=true")

###Output

_____no_output_____

###Markdown

Coding - recursion

###Code

def payoff(r, b):
    # r, b: numbers of red and black marbles left in the bag
    if r == 0:
        return 0
    if b == 0:
        return r
    payoff_if_stop = ...
    payoff_if_go = ... ## backward recursion
    return max(payoff_if_stop, payoff_if_go)

print(payoff(0,0))

print(payoff(1,3))

print(payoff(4,4))

import matplotlib.pyplot as plt

n = 12 ## try n = 20 ?

x = range(n)

res = [payoff(i,i) for i in range(n)]

plt.plot(x,res)

plt.title("payoff of game at the initial point")

plt.show()

# payoff of game at the initial point

import timeit

n = 14 ## try n = 20 ?

for i in range(n):
    start = timeit.default_timer()
    res = payoff(i,i)
    stop = timeit.default_timer()
    print('Time: ', stop - start)

# observe that running time grows exponentially fast with n

###Output

Time: 2.318837353501653e-06

Time: 1.0202884355407272e-05

Time: 1.6231861474511533e-05

Time: 4.4057909716531396e-05

Time: 0.00015397080027250985

Time: 0.00047165151770223624

Time: 0.0019237074684649712

Time: 0.007091468394478755

Time: 0.016908961981734055

Time: 0.050692567152370346

Time: 0.2014174588974485

Time: 0.6451585226779974

###Markdown

Complexity analysis: for a bag with n red and n black marbles, how long will the recursion take?

- The initial state S(n,n) makes two function calls, S(n-1,n) and S(n,n-1).
- Every non-terminal node likewise makes 2 function calls. Notice that even though the binomial tree recombines, the function calls do not: without caching, a node reached along different paths is recomputed every time. So going one layer deeper roughly doubles the computing time.
- With n + n marbles the tree has depth up to 2n, so the complexity is $O(2^{2n}) = O(4^n)$.

Coding - recursion (with cache)
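One way to check the exponential claim is to count calls. The sketch below uses two hypothetical helpers, `naive_calls` (counts the calls the uncached recursion makes) and `payoff_memo` (the same recursion cached with `functools.lru_cache`, standing in for a hand-written `payoff_dict`), with the same remaining-count convention as `payoff(r, b)`. The uncached count works out to $2\binom{2n}{n} - 1$, which grows by roughly a factor of 4 per extra pair of marbles, while the cache ends up holding only $n^2 + 2n$ states.

```python
from functools import lru_cache
from math import comb


def naive_calls(r, b):
    """Number of calls the uncached recursion makes when started at state (r, b)."""
    if r == 0 or b == 0:          # base cases return without recursing
        return 1
    return 1 + naive_calls(r - 1, b) + naive_calls(r, b - 1)


@lru_cache(maxsize=None)
def payoff_memo(r, b):
    """Same recursion as payoff(r, b), but each state is computed at most once."""
    if r == 0:
        return 0
    if b == 0:
        return r
    stop = max(r - b, 0)
    go = r / (r + b) * payoff_memo(r - 1, b) + b / (r + b) * payoff_memo(r, b - 1)
    return max(stop, go)


for n in range(1, 9):
    payoff_memo.cache_clear()
    payoff_memo(n, n)
    # n, uncached calls, closed form 2*C(2n, n) - 1, distinct cached states
    print(n, naive_calls(n, n), 2 * comb(2 * n, n) - 1, payoff_memo.cache_info().currsize)
```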

###Code

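payoff_dict = {}  # cache: (r, b) -> payoff

def payoff(r, b):
    if r == 0:
        return 0
    if b == 0:
        return r
    payoff_if_stop = max(r-b, 0)
    ...  # exercise: obtain red_payoff (after drawing a red) and black_payoff (after drawing a black), reusing payoff_dict
    payoff_if_go = r/(r+b) * red_payoff + b/(r+b) * black_payoff
    return max(payoff_if_stop, payoff_if_go)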

print(payoff(0,0))

print(payoff(1,3))

print(payoff(4,4))

###Output

0

0.25

0.9999999999999999

###Markdown

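Coding - tree node as a class object

By making use of the recombining tree and reusing already computed nodes, we can bring the complexity down to $O(n^2)$: each of the $(n+1)^2$ nodes is computed once and has at most two outgoing edges, so the work is proportional to the number of edges.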
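A bottom-up version of the same recursion makes the $O(n^2)$ count explicit: it fills an $(n+1)\times(n+1)$ table once, and each entry reads only its two children. This is an illustrative sketch; the name `solve_table` and the boolean `draw` table are hypothetical, and it keeps the remaining-count convention of `payoff(r, b)`.

```python
def solve_table(n):
    """Bottom-up DP over states (r, b) = marbles left, 0 <= r, b <= n.

    Returns (table, draw): table[r][b] is the optimal expected winning and
    draw[r][b] is True when drawing again is strictly better than stopping.
    """
    table = [[0.0] * (n + 1) for _ in range(n + 1)]
    draw = [[False] * (n + 1) for _ in range(n + 1)]
    for r in range(n + 1):                 # children (r-1, b) and (r, b-1) are filled first
        for b in range(n + 1):
            if r == 0 or b == 0:
                table[r][b] = float(r)     # same base cases as payoff: r == 0 -> 0, b == 0 -> r
                continue
            stop = max(r - b, 0)
            go = r / (r + b) * table[r - 1][b] + b / (r + b) * table[r][b - 1]
            table[r][b] = max(stop, go)
            draw[r][b] = go > stop
    return table, draw


table, draw = solve_table(4)
print(table[4][4], draw[4][3], draw[3][1])
```

Reading the `draw` table row by row gives the full optimal strategy, e.g. with 3 red and 1 black left (you are 2 ahead) you stop.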

###Code

class Node:
    def __init__(self, red, black, game):
        # `game` is passed by Game.node(); it lets a node look up (and share) its children
        self.r = red
        self.b = black
        '''pointer to the next node - up/down'''
        self.next_node_up = ...
        self.next_node_down = ...
        '''backward induction'''
        self.winning_if_stop = ...
        self.winning_if_go = ...

    def winning(self):
        '''return the winning if following the optimal strategy'''
        return ...

    def strategy_query(self):
        '''print the strategy given the current node'''
        print(...)

class Game:
    def __init__(self):
        self.node_dict = {}

    def node(self, red, black):
        # each (red, black) state is created once and then reused
        key = (red, black)
        if key not in self.node_dict:
            self.node_dict[key] = Node(red, black, self)
        return self.node_dict[key]

dp = Game()

x = dp.node(4,4).winning()

print(x)

strategy = dp.node(1,3).strategy_query()

## performance test

dp = Game()

res = []

time = []

for n in range(100):
    start = timeit.default_timer()
    res.append(dp.node(n,n).winning())
    stop = timeit.default_timer()
    time.append(stop - start)

plt.plot(time)

plt.title("running time with calculated nodes")

plt.show()

###Output

_____no_output_____
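###Markdown

For reference, here is one possible way to fill in the `Node` / `Game` template above. It is a sketch under stated assumptions, not the author's solution: `r` and `b` are the marbles left in the bag, "up" is assumed to mean a black is drawn (the excess rises) and "down" a red, and children are built eagerly in `__init__` through `game.node(...)`, so shared states are created only once.

```python
class Node:
    """One state of the game: `red` red and `black` black marbles still in the bag."""

    def __init__(self, red, black, game):
        self.r, self.b = red, black
        total = red + black
        has_children = red > 0 and black > 0
        # assumption: "up" = a black is drawn, "down" = a red is drawn
        self.next_node_up = game.node(red, black - 1) if has_children else None
        self.next_node_down = game.node(red - 1, black) if has_children else None
        # backward induction: the children are already solved at this point
        self.winning_if_stop = max(red - black, 0)
        if has_children:
            self.winning_if_go = (black / total * self.next_node_up.winning()
                                  + red / total * self.next_node_down.winning())
        else:
            self.winning_if_go = self.winning_if_stop   # terminal: drawing cannot help

    def winning(self):
        return max(self.winning_if_stop, self.winning_if_go)

    def strategy_query(self):
        action = "draw" if self.winning_if_go > self.winning_if_stop else "stop"
        print("red left={}, black left={}: {} (expected winning {:.4f})".format(
            self.r, self.b, action, self.winning()))


class Game:
    def __init__(self):
        self.node_dict = {}

    def node(self, red, black):
        key = (red, black)
        if key not in self.node_dict:
            self.node_dict[key] = Node(red, black, self)
        return self.node_dict[key]


dp = Game()
print(dp.node(4, 4).winning())
dp.node(1, 3).strategy_query()
print(len(dp.node_dict))   # number of distinct states that were built
```

Because every node is stored in `node_dict`, the recombining tree is built once and later queries are dictionary lookups.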


Testing/Testing_Final_Model.ipynb


###Markdown

Generating Headlines for Test Dataset using Final Model

**Downloading and Importing required libraries**

###Code

!pip install compress-pickle

!pip install rouge

!python -m spacy download en_core_web_md

!sudo apt install openjdk-8-jdk

!sudo update-alternatives --set java /usr/lib/jvm/java-8-openjdk-amd64/jre/bin/java

!pip install language-check

%tensorflow_version 1.x

import tensorflow as tf

from tensorflow.contrib import rnn

import nltk

from nltk.tokenize import word_tokenize

from nltk.translate.bleu_score import sentence_bleu,SmoothingFunction

from rouge import Rouge

import collections

import compress_pickle as pickle

import re

import bz2

import os

import time

import warnings

import numpy as np

import pandas as pd

from tqdm.notebook import trange,tqdm

import spacy

import en_core_web_md

nltk.download('punkt')

nlp = en_core_web_md.load()

###Output

_____no_output_____

###Markdown

**Necessary Utility functions**

###Code

default_path = "/Testing/"

dataset_path = "/Dataset/"

test_article_path = dataset_path + "abstract.test.bz2"

test_title_path = dataset_path + "title.test.bz2"

def clean_str(sentence):
    # replace runs of '#' and '.' with a single space
    sentence = re.sub("[#.]+", " ", sentence)
    return sentence

def get_text_list(data_path, toy=False, clean=True):
    with bz2.open(data_path, "r") as f:
        if not clean:
            # uncleaned lines: every 5th line from 5000 to 10000
            return [x.decode().strip() for x in f.readlines()[5000:10000:5]]
        if not toy:
            return [clean_str(x.decode().strip()) for x in tqdm(f.readlines())]
        else:
            return [clean_str(x.decode().strip()) for x in tqdm(f.readlines()[:20000])]

def build_dict(step, toy=False, train_article_list=[], train_title_list=[]):
    if step == "test" or os.path.exists(default_path + "word_dict.bz"):
        with open(default_path + "word_dict.bz", "rb") as f:
            word_dict = pickle.load(f, compression='bz2')
    elif step == "train":
        words = list()
        for sentence in tqdm(train_article_list + train_title_list):
            for word in word_tokenize(sentence):
                words.append(word)
        word_counter = collections.Counter(words).most_common(500000)
        # special tokens take the first four ids
        word_dict = dict()
        word_dict["<padding>"] = 0
        word_dict["<unk>"] = 1
        word_dict["<s>"] = 2
        word_dict["</s>"] = 3
        cur_len = 4
        for word, _ in tqdm(word_counter):
            word_dict[word] = cur_len
            cur_len += 1
        pickle.dump(word_dict, default_path + "word_dict", compression='bz2')
    reversed_dict = dict(zip(word_dict.values(), word_dict.keys()))
    article_max_len = 250
    summary_max_len = 15
    return word_dict, reversed_dict, article_max_len, summary_max_len

def batch_iter(inputs, outputs, batch_size, num_epochs):
    inputs = np.array(inputs)
    outputs = np.array(outputs)
    num_batches_per_epoch = (len(inputs) - 1) // batch_size + 1
    for epoch in range(num_epochs):
        for batch_num in range(num_batches_per_epoch):
            start_index = batch_num * batch_size
            end_index = min((batch_num + 1) * batch_size, len(inputs))
            yield inputs[start_index:end_index], outputs[start_index:end_index]

###Output

_____no_output_____
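###Markdown

The helpers above fix the vocabulary layout that the prediction cell relies on: `<padding>` = 0, `<unk>` = 1, `<s>` = 2, `</s>` = 3. The sketch below shows, on a hypothetical toy dictionary, how an article is encoded, truncated, and right-padded the way `generate_title` does it, and how `X_len` is recovered by counting non-padding ids. It splits on whitespace instead of calling `word_tokenize`, so it runs without NLTK; `encode_and_pad` and `num_batches` are illustrative names, not part of the notebook.

```python
def encode_and_pad(tokens, word_dict, max_len):
    """Map tokens to ids (unknown -> <unk>), truncate to max_len, right-pad with <padding>."""
    ids = [word_dict.get(w, word_dict["<unk>"]) for w in tokens][:max_len]
    return ids + [word_dict["<padding>"]] * (max_len - len(ids))


def num_batches(n_items, batch_size):
    # same ceiling division as batch_iter: (len(inputs) - 1) // batch_size + 1
    return (n_items - 1) // batch_size + 1


toy_dict = {"<padding>": 0, "<unk>": 1, "<s>": 2, "</s>": 3, "deep": 4, "learning": 5}
ids = encode_and_pad("deep learning for headline generation".split(), toy_dict, max_len=8)
x_len = len([t for t in ids if t != 0])   # how generate_title derives X_len
print(ids, x_len, num_batches(130, 64))
```

Because padding uses id 0, counting non-zero ids gives the true sequence length fed to `sequence_length=self.X_len`.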

###Markdown

**Title Modification (OOV Replacement and Grammar Check)**

###Code

import language_check
from collections import defaultdict

tool = language_check.LanguageTool('en-US')
smoothing = SmoothingFunction().method0

def get_unk_tokens(word_dict, article):
    # score each out-of-vocabulary token by where it appears in the first 250 tokens
    unk = defaultdict(float)
    tokens = word_tokenize(article)
    n = min(250, len(tokens))
    for i, token in enumerate(tokens[:250]):
        if token not in word_dict:
            unk[token] += get_weight(i, n)
    tup = []
    for i in unk:
        tup.append((unk[i], i))
    # keep the 5 highest-weighted unknown tokens
    return sorted(tup, reverse=True)[:5]

def get_weight(index, token_len):
    # tokens near the start of the article get larger weights
    p = index / token_len
    if p <= 0.1:
        return 0.35
    if p <= 0.2:
        return 0.3
    if p <= 0.4:
        return 0.2
    if p <= 0.7:
        return 0.1
    return 0.05

def correct(text):
    matches = tool.check(text)
    text = language_check.correct(text, matches)
    return text

def update_title(word_dict, article, title):
    # replace the <unk> tokens in the generated title, in order, with the highest-weighted unknown article tokens
    replace_count = 0
    unk_list = get_unk_tokens(word_dict, article)
    for j in range(min(title.count('<unk>'), len(unk_list))):
        title = title.replace('<unk>', unk_list[j][1], 1)
        replace_count += 1
    return correct(title)

def calculate_bleu(title, reference):
    title_tok, reference_tok = word_tokenize(title), [word_tokenize(reference)]
    return sentence_bleu(reference_tok, title_tok, smoothing_function=smoothing)

###Output

_____no_output_____
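###Markdown

The OOV replacement scores every unknown article token by its position (earlier tokens weigh more, and repeated tokens accumulate weight) and fills each `<unk>` in the title with the next best candidate. The sketch below restates that logic without NLTK or `language_check` (whitespace tokenization, no grammar correction), ranking all unknown tokens before keeping the top `k`; the names `position_weight`, `rank_unknown`, and `fill_unk` and the toy article are hypothetical.

```python
from collections import defaultdict


def position_weight(index, token_len):
    # same buckets as get_weight above
    p = index / token_len
    if p <= 0.1:
        return 0.35
    if p <= 0.2:
        return 0.3
    if p <= 0.4:
        return 0.2
    if p <= 0.7:
        return 0.1
    return 0.05


def rank_unknown(tokens, vocab, k=5, max_tokens=250):
    """Accumulate position weights per out-of-vocabulary token; return the top k as (score, token)."""
    scores = defaultdict(float)
    n = min(max_tokens, len(tokens))
    for i, tok in enumerate(tokens[:max_tokens]):
        if tok not in vocab:
            scores[tok] += position_weight(i, n)
    return sorted(((s, t) for t, s in scores.items()), reverse=True)[:k]


def fill_unk(title, ranked):
    # replace <unk> placeholders left to right with the best-ranked candidates
    for _, tok in ranked[:title.count("<unk>")]:
        title = title.replace("<unk>", tok, 1)
    return title


ranked = rank_unknown("Zyx improves Zyx accuracy on Qwv".split(), {"improves", "accuracy", "on"})
print(ranked, fill_unk("<unk> boosts <unk> scores", ranked))
```

Here `Zyx` appears twice near the start, so it outranks `Qwv`, which appears only once near the end.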

###Markdown

**RNN Model Implementation**

###Code

class Model(object):
    def __init__(self, reversed_dict, article_max_len, summary_max_len, args, forward_only=False):
        self.vocabulary_size = len(reversed_dict)
        self.embedding_size = args.embedding_size
        self.num_hidden = args.num_hidden
        self.num_layers = args.num_layers
        self.learning_rate = args.learning_rate
        self.beam_width = args.beam_width
        if not forward_only:
            self.keep_prob = args.keep_prob
        else:
            self.keep_prob = 1.0
        self.cell = tf.nn.rnn_cell.LSTMCell
        with tf.variable_scope("decoder/projection"):
            self.projection_layer = tf.layers.Dense(self.vocabulary_size, use_bias=False)

        self.batch_size = tf.placeholder(tf.int32, (), name="batch_size")
        self.X = tf.placeholder(tf.int32, [None, article_max_len])
        self.X_len = tf.placeholder(tf.int32, [None])
        self.decoder_input = tf.placeholder(tf.int32, [None, summary_max_len])
        self.decoder_len = tf.placeholder(tf.int32, [None])
        self.decoder_target = tf.placeholder(tf.int32, [None, summary_max_len])
        self.global_step = tf.Variable(0, trainable=False)

        with tf.name_scope("embedding"):
            if not forward_only and args.glove:
                # get_init_embedding is not defined in this notebook; this branch only runs when training
                init_embeddings = tf.constant(get_init_embedding(reversed_dict, self.embedding_size), dtype=tf.float32)
            else:
                init_embeddings = tf.random_uniform([self.vocabulary_size, self.embedding_size], -1.0, 1.0)
            self.embeddings = tf.get_variable("embeddings", initializer=init_embeddings)
            # time-major inputs: [time, batch, embedding]
            self.encoder_emb_inp = tf.transpose(tf.nn.embedding_lookup(self.embeddings, self.X), perm=[1, 0, 2])
            self.decoder_emb_inp = tf.transpose(tf.nn.embedding_lookup(self.embeddings, self.decoder_input), perm=[1, 0, 2])

        with tf.name_scope("encoder"):
            fw_cells = [self.cell(self.num_hidden) for _ in range(self.num_layers)]
            bw_cells = [self.cell(self.num_hidden) for _ in range(self.num_layers)]
            # note: keep_prob is not passed here, so these wrappers apply no dropout
            fw_cells = [rnn.DropoutWrapper(cell) for cell in fw_cells]
            bw_cells = [rnn.DropoutWrapper(cell) for cell in bw_cells]
            encoder_outputs, encoder_state_fw, encoder_state_bw = tf.contrib.rnn.stack_bidirectional_dynamic_rnn(
                fw_cells, bw_cells, self.encoder_emb_inp,
                sequence_length=self.X_len, time_major=True, dtype=tf.float32)
            self.encoder_output = tf.concat(encoder_outputs, 2)
            # the decoder starts from the first layer's forward and backward states, concatenated
            encoder_state_c = tf.concat((encoder_state_fw[0].c, encoder_state_bw[0].c), 1)
            encoder_state_h = tf.concat((encoder_state_fw[0].h, encoder_state_bw[0].h), 1)
            self.encoder_state = rnn.LSTMStateTuple(c=encoder_state_c, h=encoder_state_h)

        with tf.name_scope("decoder"), tf.variable_scope("decoder") as decoder_scope:
            decoder_cell = self.cell(self.num_hidden * 2)
            if not forward_only:
                # training: teacher forcing with Bahdanau attention
                attention_states = tf.transpose(self.encoder_output, [1, 0, 2])
                attention_mechanism = tf.contrib.seq2seq.BahdanauAttention(
                    self.num_hidden * 2, attention_states, memory_sequence_length=self.X_len, normalize=True)
                decoder_cell = tf.contrib.seq2seq.AttentionWrapper(decoder_cell, attention_mechanism,
                                                                   attention_layer_size=self.num_hidden * 2)
                initial_state = decoder_cell.zero_state(dtype=tf.float32, batch_size=self.batch_size)
                initial_state = initial_state.clone(cell_state=self.encoder_state)
                helper = tf.contrib.seq2seq.TrainingHelper(self.decoder_emb_inp, self.decoder_len, time_major=True)
                decoder = tf.contrib.seq2seq.BasicDecoder(decoder_cell, helper, initial_state)
                outputs, _, _ = tf.contrib.seq2seq.dynamic_decode(decoder, output_time_major=True, scope=decoder_scope)
                self.decoder_output = outputs.rnn_output
                self.logits = tf.transpose(
                    self.projection_layer(self.decoder_output), perm=[1, 0, 2])
                # pad logits along time up to summary_max_len so they line up with decoder_target
                self.logits_reshape = tf.concat(
                    [self.logits, tf.zeros([self.batch_size, summary_max_len - tf.shape(self.logits)[1], self.vocabulary_size])], axis=1)
            else:
                # inference: beam search over beam_width hypotheses per article
                tiled_encoder_output = tf.contrib.seq2seq.tile_batch(
                    tf.transpose(self.encoder_output, perm=[1, 0, 2]), multiplier=self.beam_width)
                tiled_encoder_final_state = tf.contrib.seq2seq.tile_batch(self.encoder_state, multiplier=self.beam_width)
                tiled_seq_len = tf.contrib.seq2seq.tile_batch(self.X_len, multiplier=self.beam_width)
                attention_mechanism = tf.contrib.seq2seq.BahdanauAttention(
                    self.num_hidden * 2, tiled_encoder_output, memory_sequence_length=tiled_seq_len, normalize=True)
                decoder_cell = tf.contrib.seq2seq.AttentionWrapper(decoder_cell, attention_mechanism,
                                                                   attention_layer_size=self.num_hidden * 2)
                initial_state = decoder_cell.zero_state(dtype=tf.float32, batch_size=self.batch_size * self.beam_width)
                initial_state = initial_state.clone(cell_state=tiled_encoder_final_state)
                decoder = tf.contrib.seq2seq.BeamSearchDecoder(
                    cell=decoder_cell,
                    embedding=self.embeddings,
                    start_tokens=tf.fill([self.batch_size], tf.constant(2)),  # <s>
                    end_token=tf.constant(3),                                 # </s>
                    initial_state=initial_state,
                    beam_width=self.beam_width,
                    output_layer=self.projection_layer
                )
                outputs, _, _ = tf.contrib.seq2seq.dynamic_decode(
                    decoder, output_time_major=True, maximum_iterations=summary_max_len, scope=decoder_scope)
                # [time, batch, beam] -> [batch, beam, time]
                self.prediction = tf.transpose(outputs.predicted_ids, perm=[1, 2, 0])

        with tf.name_scope("loss"):
            if not forward_only:
                crossent = tf.nn.sparse_softmax_cross_entropy_with_logits(
                    logits=self.logits_reshape, labels=self.decoder_target)
                weights = tf.sequence_mask(self.decoder_len, summary_max_len, dtype=tf.float32)
                self.loss = tf.reduce_sum(crossent * weights / tf.cast(self.batch_size, tf.float32))
                params = tf.trainable_variables()
                gradients = tf.gradients(self.loss, params)
                clipped_gradients, _ = tf.clip_by_global_norm(gradients, 5.0)
                optimizer = tf.train.AdamOptimizer(self.learning_rate)
                self.update = optimizer.apply_gradients(zip(clipped_gradients, params), global_step=self.global_step)

###Output

_____no_output_____

###Markdown

**Cell for Title Prediction**

###Code

class args:
    pass

args.num_hidden=200

args.num_layers=3

args.beam_width=10

args.embedding_size=300

args.glove = True

args.learning_rate=1e-3

args.batch_size=64

args.num_epochs=5

args.keep_prob = 0.8

args.toy=True

args.with_model="store_true"

word_dict, reversed_dict, article_max_len, summary_max_len = build_dict("test", args.toy)

def generate_title(article):
    # rebuild the inference graph and restore the latest checkpoint
    tf.reset_default_graph()
    model = Model(reversed_dict, article_max_len, summary_max_len, args, forward_only=True)
    saver = tf.train.Saver(tf.global_variables())
    ckpt = tf.train.get_checkpoint_state(default_path + "saved_model/")
    with warnings.catch_warnings():
        warnings.simplefilter("ignore")
        # tokenize, map to ids, truncate and pad to article_max_len
        x = [word_tokenize(clean_str(article))]
        x = [[word_dict.get(w, word_dict["<unk>"]) for w in d] for d in x]
        x = [d[:article_max_len] for d in x]
        test_x = [d + (article_max_len - len(d)) * [word_dict["<padding>"]] for d in x]
        with tf.Session() as sess:
            saver.restore(sess, ckpt.model_checkpoint_path)
            batches = batch_iter(test_x, [0] * len(test_x), args.batch_size, 1)
            for batch_x, _ in batches:
                batch_x_len = [len([y for y in x if y != 0]) for x in batch_x]
                test_feed_dict = {
                    model.batch_size: len(batch_x),
                    model.X: batch_x,
                    model.X_len: batch_x_len,
                }
                prediction = sess.run(model.prediction, feed_dict=test_feed_dict)
                # keep beam 0 of each article
                prediction_output = [[reversed_dict[y] for y in x] for x in prediction[:, 0, :]]
                summary_array = []
                for line in prediction_output:
                    summary = list()
                    for word in line:
                        if word == "</s>":
                            break
                        if word not in summary:  # drop repeated words
                            summary.append(word)
                    summary_array.append(" ".join(summary))
    return " ".join(summary)

def get_title(text):
    # abstracts with fewer than 10 spaces are rejected
    if text.count(' ') < 10:
        raise Exception("The abstract is too short; the generated title will not be good.")
    title = generate_title(clean_str(text))
    updated_title = update_title(word_dict, text, title)
    return updated_title

###Output

_____no_output_____
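###Markdown

`model.prediction` is transposed to `[batch, beam_width, time]`, and `generate_title` keeps beam 0 of each article, stops at `</s>`, and drops words it has already emitted. The NumPy sketch below isolates that post-processing so it can be checked without TensorFlow; `decode_best_beam`, the toy `reversed_dict`, and the toy id array are hypothetical, and treating beam 0 as the best hypothesis follows what the cell above does.

```python
import numpy as np


def decode_best_beam(predicted_ids, reversed_dict, end_token="</s>"):
    """predicted_ids: integer array shaped [batch, beam_width, time]."""
    titles = []
    for beam_ids in predicted_ids[:, 0, :]:     # beam 0 of every article
        words = []
        for idx in beam_ids:
            word = reversed_dict[int(idx)]
            if word == end_token:                # stop at the end-of-sentence token
                break
            if word not in words:                # drop repeated words, as generate_title does
                words.append(word)
        titles.append(" ".join(words))
    return titles


toy_reversed = {0: "<padding>", 1: "<unk>", 2: "<s>", 3: "</s>",
                4: "neural", 5: "headline", 6: "generation"}
toy_pred = np.array([[[4, 5, 5, 6, 3, 0],       # beam 0
                      [4, 6, 3, 0, 0, 0]]])     # beam 1 (ignored)
print(decode_best_beam(toy_pred, toy_reversed))
```

Note that the word-level de-duplication also removes legitimate repeats (e.g. "New York, New Jersey"), which is a trade-off of this post-processing.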

###Markdown

**Generate Titles for Test Dataset**

###Code

abstract_list = get_text_list(test_article_path)

generated_titles = []

for i in trange(len(abstract_list)):
    generated_titles.append(get_title(abstract_list[i]))

with open(default_path + "result.txt", "w") as f:
    f.write('\n'.join(generated_titles))

###Output

_____no_output_____

###Markdown

**BLEU** and **Rouge** scores calculation
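ROUGE precision divides the n-gram overlap by the length of the *generated* title and recall divides it by the length of the *reference*, so the order of the two strings matters for P and R (F1 is symmetric). The simplified ROUGE-1 below (whitespace tokens, lower-casing only; the `rouge` package normalizes text further) is a hypothetical helper that makes this visible.

```python
from collections import Counter


def rouge1(hypothesis, reference):
    """Simplified ROUGE-1: (precision, recall, F1) from clipped unigram overlap."""
    hyp = Counter(hypothesis.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((hyp & ref).values())          # each word counted at most min(count) times
    p = overlap / max(sum(hyp.values()), 1)      # share of generated words found in the reference
    r = overlap / max(sum(ref.values()), 1)      # share of reference words recovered
    f = 0.0 if overlap == 0 else 2 * p * r / (p + r)
    return p, r, f


generated = "deep learning for titles"
original = "generating titles with deep learning models"
print(rouge1(generated, original), rouge1(original, generated))
```

Swapping the arguments swaps precision and recall while leaving F1 unchanged.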

###Code

rouge = Rouge()

def get_generated_title(path):
    # assumed helper (not defined in the original notebook): read back one title per line of result.txt
    with open(path) as f:
        return f.read().split('\n')

original_title, generated_title = [], []
print("Loading Data...")
original_title = get_text_list(test_title_path)
generated_title = get_generated_title(default_path + "result.txt")
abstract = get_text_list(test_article_path)

print('Tokenizing Data...')
tokens_original = [[word_tokenize(s)] for s in tqdm(original_title)]
tokens_generated = [word_tokenize(s) for s in tqdm(generated_title)]
token_abstract = [word_tokenize(s) for s in tqdm(abstract)]
minmized_abstract = []
for line in token_abstract:
    # keep the first 40 tokens of each abstract for the report
    minmized_abstract.append(' '.join(line[:40]) + '...')

smoothing = SmoothingFunction().method0
print('Calculating BLEU Score')
bleu_score = []
for i in trange(len(tokens_original)):
    bleu_score.append(sentence_bleu(tokens_original[i], tokens_generated[i], smoothing_function=smoothing))
bleu = np.array(bleu_score)
print("BLEU score report")
print("Min Score:", bleu.min(), "Max Score:", bleu.max(), "Avg Score:", bleu.mean())

print('Calculating Rouge Score')
rouge1f, rouge1p, rouge1r = [], [], []
rouge2f, rouge2p, rouge2r = [], [], []
rougelf, rougelp, rougelr = [], [], []
for i in trange(len(tokens_original)):
    # Rouge.get_scores takes (hypothesis, reference)
    score = rouge.get_scores(generated_title[i], original_title[i])
    rouge1f.append(score[0]['rouge-1']['f'])
    rouge1p.append(score[0]['rouge-1']['p'])
    rouge1r.append(score[0]['rouge-1']['r'])
    rouge2f.append(score[0]['rouge-2']['f'])
    rouge2p.append(score[0]['rouge-2']['p'])
    rouge2r.append(score[0]['rouge-2']['r'])
    rougelf.append(score[0]['rouge-l']['f'])
    rougelp.append(score[0]['rouge-l']['p'])
    rougelr.append(score[0]['rouge-l']['r'])

rouge1f,rouge1p,rouge1r = np.array(rouge1f),np.array(rouge1p),np.array(rouge1r)

rouge2f,rouge2p,rouge2r = np.array(rouge2f),np.array(rouge2p),np.array(rouge2r)

rougelf,rougelp,rougelr = np.array(rougelf),np.array(rougelp),np.array(rougelr)

df = pd.DataFrame(zip(minmized_abstract,original_title,generated_title,bleu,rouge1f,rouge1p,rouge1r,rouge2f,rouge2p,rouge2r,rougelf,rougelp,rougelr),columns=['Abstract','Original_Headline','Generated_Headline_x','Bleu_Score_x','Rouge-1_F_x','Rouge-1_P_x','Rouge-1_R_x','Rouge-2_F_x','Rouge-2_P_x','Rouge-2_R_x','Rouge-l_F_x','Rouge-l_P_x','Rouge-l_R_x'])

df.to_csv(default_path+'output.csv',index=False)

print('Done!!')

###Output

_____no_output_____


Experiments/Siamesa_v3/siamesa_v3_sbd.ipynb


###Markdown

GENERAL IMPORTS AND SEED

###Code

import argparse

import torch

import torchvision

from torch import optim

from torchvision import transforms

import os

import os.path as osp

import random

import numpy as np

from pathlib import Path

from torch.utils.data import dataset

import PIL

from PIL import Image

# fix the seed

seed = 1

torch.manual_seed(seed)

torch.cuda.manual_seed(seed)

torch.backends.cudnn.deterministic = True

torch.backends.cudnn.benchmark = False

np.random.seed(seed)

random.seed(seed)

###Output

_____no_output_____

###Markdown

ACCESS TO THE DRIVE FOLDER WHERE THE DATASET HAS BEEN STORED

###Code

from google.colab import drive

drive.mount('/content/gdrive')

root_path = 'gdrive/My Drive/' #change dir to your project folder

###Output

Drive already mounted at /content/gdrive; to attempt to forcibly remount, call drive.mount("/content/gdrive", force_remount=True).

###Markdown

DEFINE ARGUMENTS

###Code

class Args:
    frontal_images_directories = "gdrive/My Drive/dataset-cfp/Protocol/image_list_F.txt"
    profile_images_directories = "gdrive/My Drive/dataset-cfp/Protocol/image_list_P.txt"
    split_main_directory = "gdrive/My Drive/dataset-cfp/Protocol/Split"
    split_traindata = ["01", "02", "03", "04", "05", "06"]
    split_valdata = ["07", "08"]
    split_testdata = ["09", "10"]
    dataset_root = "gdrive/My Drive"
    dataset = "CFPDataset"
    lr = float(1e-3)
    weight_decay = float(0.0005)
    momentum = float(0.9)
    betas = (0.9, 0.999)
    batch_size = int(14)
    workers = int(8)
    start_epoch = int(0)
    epochs = int(40)
    #save_every = int(2)
    pretrained = True
    #siamese_linear = True
    data_aug = True
    resume = "checkpoint_e23_lr1e_3_40e_SGD"

###Output

_____no_output_____

###Markdown

DEFINE DATASET CLASS

###Code

class CFPDataset(dataset.Dataset):
    def __init__(self, path, args, img_transforms=None, dataset_root="",
                 split="train", input_size=(224, 224)):
        super().__init__()
        self.data = []
        self.split = split
        self.load(path, args)
        print("Dataset loaded")
        print("{0} samples in the {1} dataset".format(len(self.data), self.split))
        self.transforms = img_transforms
        self.dataset_root = dataset_root
        self.input_size = input_size

    @staticmethod
    def read_image_list(list_file):
        # one whitespace-separated entry per line; column 1 is the image path
        with open(list_file) as f:
            return [line.strip().split() for line in f]

    def load(self, path, args):
        # read directories for frontal and profile images
        frontal = self.read_image_list(args.frontal_images_directories)
        profile = self.read_image_list(args.profile_images_directories)
        # (protocol folder, pair file, list holding the second image, tag): -1 = different, 1 = same
        pair_files = [("FF", "diff.txt", frontal, -1),
                      ("FF", "same.txt", frontal, 1),
                      ("FP", "diff.txt", profile, -1),
                      ("FP", "same.txt", profile, 1)]
        # read same and different pairs of images for every split and store them as dictionaries
        self.data = []
        for i in path:
            for protocol, filename, second_list, pair_tag in pair_files:
                pair_file = osp.join(args.split_main_directory, protocol, i, filename)
                with open(pair_file) as f:
                    for line in f:
                        img_pair = line.strip().split(',')
                        # image indices in the protocol files are 1-based
                        self.data.append({
                            "img1_path": frontal[int(img_pair[0]) - 1][1],
                            "img2_path": second_list[int(img_pair[1]) - 1][1],
                            "pair_tag": pair_tag,
                        })

    def __len__(self):
        return len(self.data)

    def __getitem__(self, index):
        d = self.data[index]
        image1_path = osp.join(self.dataset_root, 'dataset-cfp', d['img1_path'])
        image2_path = osp.join(self.dataset_root, 'dataset-cfp', d['img2_path'])
        img1 = Image.open(image1_path).convert('RGB')
        img2 = Image.open(image2_path).convert('RGB')
        tag = d['pair_tag']
        if self.transforms is not None:
            # this converts from (HxWxC) to (CxHxW) as well
            img1 = self.transforms(img1)
            img2 = self.transforms(img2)
        return img1, img2, tag

###Output

_____no_output_____

###Markdown

DEFINE DATA LOADERS

###Code

from torch.utils import data

def get_dataloader(datapath, args, img_transforms=None, split="train"):
    # shuffle and drop the last incomplete batch only when training
    if split == 'train':
        shuffle = True
        drop_last = True
    else:
        shuffle = False
        drop_last = False
    dataset = CFPDataset(datapath,
                         args,
                         split=split,
                         img_transforms=img_transforms,
                         dataset_root=osp.expanduser(args.dataset_root))
    data_loader = data.DataLoader(dataset,
                                  batch_size=args.batch_size,
                                  shuffle=shuffle,
                                  num_workers=args.workers,
                                  pin_memory=True,
                                  drop_last=drop_last)
    return data_loader

###Output

_____no_output_____

###Markdown

DEFINE MODEL

###Code

import torch

from torch import nn

from torchvision.models import vgg16_bn

def l2norm(x):
    # scale each row (last dimension) to unit L2 norm
    x = x / torch.sqrt(torch.sum(x**2, dim=-1, keepdim=True))
    return x

class SiameseCosine(nn.Module):
    """
    Siamese network: both images share the VGG16-BN backbone and its first
    classifier layer, and the resulting embeddings are L2-normalised
    """
    def __init__(self, pretrained=False):
        super(SiameseCosine, self).__init__()
        vgg16_model = vgg16_bn(pretrained=pretrained)
        self.feat = vgg16_model.features
        self.linear_classifier = vgg16_model.classifier[0]
        self.avgpool = nn.AdaptiveAvgPool2d((7, 7))

    def forward(self, img1, img2):
        feat_1 = self.feat(img1)
        feat_1 = self.avgpool(feat_1)
        feat_1 = feat_1.view(feat_1.size(0), -1)
        feat_1 = self.linear_classifier(feat_1)
        feat_1 = l2norm(feat_1)
        feat_2 = self.feat(img2)
        feat_2 = self.avgpool(feat_2)
        feat_2 = feat_2.view(feat_2.size(0), -1)
        feat_2 = self.linear_classifier(feat_2)
        feat_2 = l2norm(feat_2)
        return feat_1, feat_2

###Output

_____no_output_____
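###Markdown

Because `SiameseCosine` L2-normalises both embeddings (the 4096-d outputs of VGG-16's first classifier layer, `Linear(25088, 4096)`), the cosine similarity used by `similarity()` and by the loss reduces to a plain dot product of the normalised vectors. The NumPy check below illustrates this on random stand-in features; the helper names and the simplified `eps` handling are my own and not PyTorch's exact implementation.

```python
import numpy as np


def l2norm_np(x):
    # NumPy twin of l2norm: unit L2 norm along the last axis
    return x / np.sqrt(np.sum(x ** 2, axis=-1, keepdims=True))


def cosine_np(a, b, eps=1e-8):
    # row-wise cosine similarity (eps only guards against zero norms)
    denom = np.maximum(np.linalg.norm(a, axis=1) * np.linalg.norm(b, axis=1), eps)
    return np.sum(a * b, axis=1) / denom


rng = np.random.default_rng(0)
f1, f2 = rng.normal(size=(3, 4096)), rng.normal(size=(3, 4096))   # stand-in embeddings
n1, n2 = l2norm_np(f1), l2norm_np(f2)
print(np.allclose(cosine_np(f1, f2), np.sum(n1 * n2, axis=1)))
```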

###Markdown

DEFINE LOSS

###Code

from torch import nn

class RecognitionCriterion(nn.Module):
    def __init__(self):
        super().__init__()
        self.classification_criterion = nn.CosineEmbeddingLoss(margin=0.5).cuda()
        self.cls_loss = None

    def forward(self, *input):
        # input: (embedding_1, embedding_2, label) with label 1 = same, -1 = different
        self.cls_loss = self.classification_criterion(*input)
        return self.cls_loss

###Output

_____no_output_____
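###Markdown

`nn.CosineEmbeddingLoss` is documented as $1 - \cos(x_1, x_2)$ for pairs labelled $1$ and $\max(0, \cos(x_1, x_2) - \text{margin})$ for pairs labelled $-1$, averaged over the batch. With `margin=0.5`, same-identity pairs are pulled toward cosine 1 while different pairs are only penalised once their cosine exceeds 0.5. The NumPy restatement below (a hypothetical helper, default mean reduction) makes the two branches explicit.

```python
import numpy as np


def cosine_embedding_loss(x1, x2, y, margin=0.5):
    """NumPy restatement of the documented CosineEmbeddingLoss formula (mean reduction)."""
    cos = np.sum(x1 * x2, axis=1) / (np.linalg.norm(x1, axis=1) * np.linalg.norm(x2, axis=1))
    per_pair = np.where(y == 1, 1.0 - cos, np.maximum(0.0, cos - margin))
    return per_pair.mean()


x1 = np.array([[1.0, 0.0], [1.0, 0.0], [1.0, 0.0]])
x2 = np.array([[1.0, 0.0], [0.6, 0.8], [0.0, 1.0]])
y = np.array([1, -1, -1])
# pair 1: same, cos = 1 -> 0; pair 2: different, cos = 0.6 -> 0.1; pair 3: different, cos = 0 -> 0
print(cosine_embedding_loss(x1, x2, y))
```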

###Markdown

DEFINE TRAINING AND VALIDATION FUNCTIONS

###Code

import torch

from torchvision import transforms

from torch.nn import functional as nnfunc

import numpy as np

def similarity(vec1, vec2):
    cos = torch.nn.CosineSimilarity(dim=1, eps=1e-8)
    cos_a = cos(vec1, vec2)
    return cos_a

def accuracy(vec1, vec2, y, threshold):
    # a "same" pair (label 1) is correct above the threshold,
    # a "different" pair (label -1) is correct below it
    correct = 0
    total = 0
    similarity_value = similarity(vec1, vec2)
    for value, label in zip(similarity_value, y):
        total += 1
        if value > threshold and label == 1.0:
            correct += 1
        if value < threshold and label == -1.0:
            correct += 1
    return correct / total

def train(model, loss_fn, optimizer, dataloader, epoch, device):
    model.train()
    all_loss = []
    for idx, (img1, img2, prob) in enumerate(dataloader):
        img1 = img1.to(device)
        img2 = img2.to(device)
        prob = prob.float().to(device)  # label
        out1, out2 = model(img1, img2)  # inputs images to model, executes model, returns features
        loss = loss_fn(out1, out2, prob)  # calculates loss
        loss.backward()  # computes gradients
        all_loss.append(loss.item())
        optimizer.step()
        optimizer.zero_grad()
        if idx % 100 == 0:
            message1 = "TRAIN Epoch [{0}]: [{1}/{2}] ".format(epoch, idx, len(dataloader))
            # message2 = "Loss: [{0:.4f}]; Accuracy: [{1}]".format(loss.item(), acc)
            message2 = "Loss: [{0:.4f}]".format(loss.item())
            print(message1, message2)
        torch.cuda.empty_cache()
    return all_loss

def val(model, loss_fn, dataloader, epoch, device):
    model.eval()
    all_loss = []
    with torch.no_grad():  # no gradients are needed for evaluation
        for idx, (img1, img2, prob) in enumerate(dataloader):
            img1 = img1.to(device)
            img2 = img2.to(device)
            prob = prob.float().to(device)  # label
            out1, out2 = model(img1, img2)  # inputs images to model, executes model, returns features
            loss = loss_fn(out1, out2, prob)  # calculates loss
            all_loss.append(loss.item())
            if idx % 100 == 0:
                message1 = "VAL Epoch [{0}]: [{1}/{2}] ".format(epoch, idx, len(dataloader))
                # message2 = "Loss: [{0:.4f}]; Accuracy: [{1:.4f}]".format(loss.item(), acc)
                message2 = "Loss: [{0:.4f}]".format(loss.item())
                print(message1, message2)
            torch.cuda.empty_cache()
    return all_loss

def val_sim_lim(model, dataloader, epoch, device):
    # collect cosine similarities of positive (same) and negative (different) pairs
    model.eval()
    sim_pos_min = 1
    sim_neg_max = -1
    pos_similarities = []
    neg_similarities = []
    with torch.no_grad():
        for idx, (img1, img2, prob) in enumerate(dataloader):
            img1 = img1.to(device)
            img2 = img2.to(device)
            prob = prob.float().to(device)  # label
            out1, out2 = model(img1, img2)  # inputs images to model, executes model, returns features
            sim = similarity(out1, out2)
            for value, label in zip(sim, prob):
                value = float(np.round(value.item(), decimals=3))
                if label == 1:
                    pos_similarities.append(value)
                else:
                    neg_similarities.append(value)
            if idx % 100 == 0:
                message1 = "VAL Epoch [{0}]: [{1}/{2}] ".format(epoch, idx, len(dataloader))
                print(message1)
            torch.cuda.empty_cache()
    return pos_similarities, neg_similarities

def val_tr(model, dataloader, epoch, device, tr):
    # accuracy on the validation split for a given similarity threshold tr
    model.eval()
    all_loss = []
    all_acc = []
    with torch.no_grad():
        for idx, (img1, img2, prob) in enumerate(dataloader):
            img1 = img1.to(device)
            img2 = img2.to(device)
            prob = prob.float().to(device)  # label
            out1, out2 = model(img1, img2)  # inputs images to model, executes model, returns features
            acc = accuracy(out1, out2, prob, tr)
            all_acc.append(acc)
            if idx % 100 == 0:
                message1 = "VAL Epoch [{0}]: [{1}/{2}] ".format(epoch, idx, len(dataloader))
                message2 = "Accuracy: [{0}]".format(acc)
                #message2 = "Loss: [{0:.4f}]".format(loss.item())
                print(message1, message2)
            torch.cuda.empty_cache()
    return all_acc

def test(model, loss_fn, dataloader, epoch, device, tr):
    #model = model.to(device)
    model.eval()
    all_acc = []
    with torch.no_grad():
        for idx, (img1, img2, prob) in enumerate(dataloader):
            img1 = img1.to(device)
            img2 = img2.to(device)
            prob = prob.float().to(device)  # label
            out1, out2 = model(img1, img2)  # inputs images to model, executes model, returns features
            acc = accuracy(out1, out2, prob, tr)
            all_acc.append(acc)
            if idx % 100 == 0:
                message1 = "TEST Epoch [{0}]: [{1}/{2}] ".format(epoch, idx, len(dataloader))
                message2 = "Accuracy: [{0}]".format(acc)
                #message2 = "Loss: [{0:.4f}]".format(loss.item())
                print(message1, message2)
            torch.cuda.empty_cache()
    return all_acc

###Output

_____no_output_____
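###Markdown

`val_sim_lim` returns the similarity values of positive and negative validation pairs, and `val_tr` / `test` take a threshold `tr`; this part of the notebook does not show how `tr` is chosen. One plausible approach, sketched here with a hypothetical `best_threshold` helper, is to scan midpoints between consecutive observed similarities and keep the one that maximizes accuracy under the same strict `>` / `<` rule as `accuracy()`.

```python
import numpy as np


def best_threshold(pos_similarities, neg_similarities):
    """Return (threshold, accuracy) maximizing pair accuracy on the given similarities."""
    pos = np.asarray(pos_similarities, dtype=float)
    neg = np.asarray(neg_similarities, dtype=float)
    vals = np.unique(np.concatenate([pos, neg]))
    # midpoints avoid placing the threshold exactly on an observed value
    candidates = (vals[:-1] + vals[1:]) / 2 if vals.size > 1 else vals
    best_tr, best_acc = None, -1.0
    for tr in candidates:
        correct = np.sum(pos > tr) + np.sum(neg < tr)   # same rule as accuracy()
        acc = correct / (len(pos) + len(neg))
        if acc > best_acc:                             # keep the first best on ties
            best_tr, best_acc = float(tr), float(acc)
    return best_tr, best_acc


tr, acc = best_threshold([0.9, 0.8, 0.4], [0.1, 0.3, 0.5])
print(tr, acc)
```

Choosing `tr` on the validation split and only then calling `test` keeps the test accuracy an unbiased estimate.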

###Markdown

LOAD ARGUMENTS AND DEFINE IMAGE TRANSFORMATIONS

###Code

args = Args()

train_transform=None

if not args.data_aug:
    img_transforms = transforms.Compose([transforms.Resize((224, 224)), transforms.ToTensor()])
else:
    # augmentation: random horizontal flips and rotations of up to 20 degrees
    img_transforms = transforms.Compose([transforms.Resize((224, 224)),
                                         transforms.RandomHorizontalFlip(),
                                         transforms.RandomRotation(20, resample=PIL.Image.BILINEAR),
                                         transforms.ToTensor()])

val_transforms = transforms.Compose([

transforms.Resize((224, 224)),

transforms.ToTensor()

])

###Output

_____no_output_____

###Markdown

LOAD DATASET SPLIT FOR TRAINING

###Code

train_loader = get_dataloader(args.split_traindata, args,

img_transforms=img_transforms)

###Output

Dataset loaded

8400 samples in the train dataset

###Markdown

LOAD DATASET SPLIT FOR VALIDATION

###Code

val_loader = get_dataloader(args.split_valdata, args,

img_transforms=val_transforms, split="val")

torch.cuda.is_available()

###Output

_____no_output_____

###Markdown

SPECIFY DEVICE

###Code

# check for CUDA

if torch.cuda.is_available():

device = torch.device('cuda:0')

else:

device = torch.device('cpu')

###Output

_____no_output_____

###Markdown

LOAD MODEL AND LOSS

###Code

model = SiameseCosine(pretrained=args.pretrained)

model = model.to(device)  # moved here out of train and validation

loss_fn = RecognitionCriterion()

###Output

_____no_output_____

###Markdown

SPECIFY WEIGHTS DIRECTORY

###Code

# directory where we'll store model weights
weights_dir = "gdrive/My Drive/weights"
# create it, and any missing parent directories, if it does not exist yet
os.makedirs(weights_dir, exist_ok=True)

###Output

_____no_output_____

###Markdown

SELECT OPTIMIZER

###Code

#optimizer = torch.optim.Adam(model.parameters(), lr=args.lr,

# weight_decay=args.weight_decay)

optimizer = optim.SGD(model.parameters(), lr=args.lr,

momentum=args.momentum, weight_decay=args.weight_decay)

###Output

_____no_output_____
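###Markdown

With `momentum` and `weight_decay` set, `optim.SGD` applies the update below to each parameter, using PyTorch's defaults `dampening=0` and `nesterov=False`. This NumPy version only illustrates the rule and is not a replacement for the optimizer. `sgd_momentum_step` is a hypothetical helper.

```python
import numpy as np

def sgd_momentum_step(param, grad, buf, lr, momentum, weight_decay):
    """One SGD step with momentum and L2 weight decay (dampening=0, no Nesterov)."""
    d_p = grad + weight_decay * param  # weight decay adds wd * param to the gradient
    buf = momentum * buf + d_p         # velocity buffer, starting from zero
    return param - lr * buf, buf

w = np.array([1.0, -2.0])
buf = np.zeros_like(w)
g = np.array([0.5, 0.5])  # constant illustrative gradient
for _ in range(2):
    w, buf = sgd_momentum_step(w, g, buf, lr=0.1, momentum=0.9, weight_decay=0.0)
print(w)  # approximately [0.855, -2.145]
```

With a constant gradient, the second step is larger than the first (0.095 vs 0.05 per coordinate) because the velocity buffer accumulates.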

###Markdown

DEFINE CHECKPOINT

###Code

def save_checkpoint(state, filename="checkpoint.pth", save_path=weights_dir):
    # create the save directory (and missing parents) if it does not exist yet
    Path(save_path).mkdir(parents=True, exist_ok=True)
    save_path = Path(save_path, filename)
    torch.save(state, str(save_path))

###Output

_____no_output_____

###Markdown

RUN TRAIN

###Code

import matplotlib.pyplot as plt

# train and evaluate for `epochs`
loss_epoch_train = []
loss_epoch_val = []
acc_epoch_train = []
acc_epoch_val = []
best_loss = float('inf')
best_epoch = 0

for epoch in range(args.start_epoch, args.epochs):
    # scheduler.step()
    train_loss = train(model, loss_fn, optimizer, train_loader, epoch, device=device)
    av_loss = np.mean(train_loss)
    loss_epoch_train.append(av_loss)

    val_loss = val(model, loss_fn, val_loader, epoch, device=device)
    av_loss = np.mean(val_loss)
    loss_epoch_val.append(av_loss)

    # keep only the checkpoint with the lowest mean validation loss
    if best_loss > av_loss:
        best_loss = av_loss
        best_epoch = epoch
        save_checkpoint({
            'epoch': epoch + 1,
            'batch_size': val_loader.batch_size,
            'model': model.state_dict(),
            'optimizer': optimizer.state_dict()
        }, filename=str(args.resume) + ".pth",
            save_path=weights_dir)

print("Best Epoch: ", best_epoch, "Best Loss: ", best_loss)

epochs = range(1, len(loss_epoch_train) + 1)

# b is for "solid blue line"

plt.plot(epochs, loss_epoch_train, 'b', label='Training loss')

# r is for "solid red line"

plt.plot(epochs, loss_epoch_val, 'r', label='Validation loss')

plt.title('Training and validation loss')

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.legend()

plt.show()

#epochs = range(1, len(acc_epoch_train) + 1)

# b is for "solid blue line"

#plt.plot(epochs, acc_epoch_train, 'b', label='Training accuracy')

# r is for "solid red line"

#plt.plot(epochs, acc_epoch_val, 'r', label='Validation accuracy')

#plt.title('Training and validation accuracy')

#plt.xlabel('Epochs')

#plt.ylabel('Accuracy')

#plt.legend()

#plt.show()

###Output

TRAIN Epoch [0]: [0/600] Loss: [0.1080]

TRAIN Epoch [0]: [100/600] Loss: [0.1172]

TRAIN Epoch [0]: [200/600] Loss: [0.2114]

TRAIN Epoch [0]: [300/600] Loss: [0.2063]

TRAIN Epoch [0]: [400/600] Loss: [0.2105]

TRAIN Epoch [0]: [500/600] Loss: [0.2178]

VAL Epoch [0]: [0/200] Loss: [0.1021]

VAL Epoch [0]: [100/200] Loss: [0.0672]

TRAIN Epoch [1]: [0/600] Loss: [0.2227]

TRAIN Epoch [1]: [100/600] Loss: [0.1890]

TRAIN Epoch [1]: [200/600] Loss: [0.1801]

TRAIN Epoch [1]: [300/600] Loss: [0.1705]

TRAIN Epoch [1]: [400/600] Loss: [0.1183]

TRAIN Epoch [1]: [500/600] Loss: [0.2071]

VAL Epoch [1]: [0/200] Loss: [0.1376]

VAL Epoch [1]: [100/200] Loss: [0.0603]

TRAIN Epoch [2]: [0/600] Loss: [0.1619]

TRAIN Epoch [2]: [100/600] Loss: [0.2246]

TRAIN Epoch [2]: [200/600] Loss: [0.2350]

TRAIN Epoch [2]: [300/600] Loss: [0.1982]

TRAIN Epoch [2]: [400/600] Loss: [0.1776]

TRAIN Epoch [2]: [500/600] Loss: [0.1553]

VAL Epoch [2]: [0/200] Loss: [0.1658]

VAL Epoch [2]: [100/200] Loss: [0.0575]

TRAIN Epoch [3]: [0/600] Loss: [0.1390]

TRAIN Epoch [3]: [100/600] Loss: [0.2018]

TRAIN Epoch [3]: [200/600] Loss: [0.1600]

TRAIN Epoch [3]: [300/600] Loss: [0.0857]

TRAIN Epoch [3]: [400/600] Loss: [0.1550]

TRAIN Epoch [3]: [500/600] Loss: [0.1412]

VAL Epoch [3]: [0/200] Loss: [0.1716]

VAL Epoch [3]: [100/200] Loss: [0.0648]

TRAIN Epoch [4]: [0/600] Loss: [0.1496]

TRAIN Epoch [4]: [100/600] Loss: [0.1031]

TRAIN Epoch [4]: [200/600] Loss: [0.1194]

TRAIN Epoch [4]: [300/600] Loss: [0.1046]

TRAIN Epoch [4]: [400/600] Loss: [0.1127]

TRAIN Epoch [4]: [500/600] Loss: [0.1216]

VAL Epoch [4]: [0/200] Loss: [0.1805]

VAL Epoch [4]: [100/200] Loss: [0.0665]

TRAIN Epoch [5]: [0/600] Loss: [0.0792]

TRAIN Epoch [5]: [100/600] Loss: [0.1464]

TRAIN Epoch [5]: [200/600] Loss: [0.0892]

TRAIN Epoch [5]: [300/600] Loss: [0.1045]

TRAIN Epoch [5]: [400/600] Loss: [0.1741]

TRAIN Epoch [5]: [500/600] Loss: [0.0976]

VAL Epoch [5]: [0/200] Loss: [0.1767]

VAL Epoch [5]: [100/200] Loss: [0.0650]

TRAIN Epoch [6]: [0/600] Loss: [0.1222]

TRAIN Epoch [6]: [100/600] Loss: [0.1173]

TRAIN Epoch [6]: [200/600] Loss: [0.0695]

TRAIN Epoch [6]: [300/600] Loss: [0.1352]

TRAIN Epoch [6]: [400/600] Loss: [0.0725]

TRAIN Epoch [6]: [500/600] Loss: [0.1326]

VAL Epoch [6]: [0/200] Loss: [0.1910]

VAL Epoch [6]: [100/200] Loss: [0.0563]

TRAIN Epoch [7]: [0/600] Loss: [0.0913]

TRAIN Epoch [7]: [100/600] Loss: [0.1533]

TRAIN Epoch [7]: [200/600] Loss: [0.1380]

TRAIN Epoch [7]: [300/600] Loss: [0.1510]

TRAIN Epoch [7]: [400/600] Loss: [0.1655]

TRAIN Epoch [7]: [500/600] Loss: [0.1792]

VAL Epoch [7]: [0/200] Loss: [0.1928]

VAL Epoch [7]: [100/200] Loss: [0.0540]

TRAIN Epoch [8]: [0/600] Loss: [0.0842]

TRAIN Epoch [8]: [100/600] Loss: [0.0815]

TRAIN Epoch [8]: [200/600] Loss: [0.0863]

TRAIN Epoch [8]: [300/600] Loss: [0.1468]

TRAIN Epoch [8]: [400/600] Loss: [0.1377]

TRAIN Epoch [8]: [500/600] Loss: [0.1844]

VAL Epoch [8]: [0/200] Loss: [0.1989]

VAL Epoch [8]: [100/200] Loss: [0.0599]

TRAIN Epoch [9]: [0/600] Loss: [0.1067]

TRAIN Epoch [9]: [100/600] Loss: [0.0943]

TRAIN Epoch [9]: [200/600] Loss: [0.1090]

TRAIN Epoch [9]: [300/600] Loss: [0.1236]

TRAIN Epoch [9]: [400/600] Loss: [0.1380]

TRAIN Epoch [9]: [500/600] Loss: [0.0926]

VAL Epoch [9]: [0/200] Loss: [0.2002]

VAL Epoch [9]: [100/200] Loss: [0.0534]

TRAIN Epoch [10]: [0/600] Loss: [0.1048]

TRAIN Epoch [10]: [100/600] Loss: [0.1387]

TRAIN Epoch [10]: [200/600] Loss: [0.0861]

TRAIN Epoch [10]: [300/600] Loss: [0.1494]

TRAIN Epoch [10]: [400/600] Loss: [0.0970]

TRAIN Epoch [10]: [500/600] Loss: [0.1105]

VAL Epoch [10]: [0/200] Loss: [0.1978]

VAL Epoch [10]: [100/200] Loss: [0.0530]

TRAIN Epoch [11]: [0/600] Loss: [0.1678]

TRAIN Epoch [11]: [100/600] Loss: [0.0517]

TRAIN Epoch [11]: [200/600] Loss: [0.0889]

TRAIN Epoch [11]: [300/600] Loss: [0.1184]

TRAIN Epoch [11]: [400/600] Loss: [0.0563]

TRAIN Epoch [11]: [500/600] Loss: [0.0887]

VAL Epoch [11]: [0/200] Loss: [0.2000]

VAL Epoch [11]: [100/200] Loss: [0.0513]

TRAIN Epoch [12]: [0/600] Loss: [0.1034]

TRAIN Epoch [12]: [100/600] Loss: [0.1195]

TRAIN Epoch [12]: [200/600] Loss: [0.0435]

TRAIN Epoch [12]: [300/600] Loss: [0.0766]

TRAIN Epoch [12]: [400/600] Loss: [0.0728]

TRAIN Epoch [12]: [500/600] Loss: [0.1355]

VAL Epoch [12]: [0/200] Loss: [0.1936]

VAL Epoch [12]: [100/200] Loss: [0.0508]

TRAIN Epoch [13]: [0/600] Loss: [0.0873]

TRAIN Epoch [13]: [100/600] Loss: [0.0611]

TRAIN Epoch [13]: [200/600] Loss: [0.1784]

TRAIN Epoch [13]: [300/600] Loss: [0.0648]

TRAIN Epoch [13]: [400/600] Loss: [0.0973]

TRAIN Epoch [13]: [500/600] Loss: [0.0638]

VAL Epoch [13]: [0/200] Loss: [0.1963]

VAL Epoch [13]: [100/200] Loss: [0.0484]

TRAIN Epoch [14]: [0/600] Loss: [0.1183]

TRAIN Epoch [14]: [100/600] Loss: [0.0725]

TRAIN Epoch [14]: [200/600] Loss: [0.0861]

TRAIN Epoch [14]: [300/600] Loss: [0.0862]

TRAIN Epoch [14]: [400/600] Loss: [0.0863]

TRAIN Epoch [14]: [500/600] Loss: [0.1047]

VAL Epoch [14]: [0/200] Loss: [0.1999]

VAL Epoch [14]: [100/200] Loss: [0.0432]

TRAIN Epoch [15]: [0/600] Loss: [0.1551]

TRAIN Epoch [15]: [100/600] Loss: [0.0906]

TRAIN Epoch [15]: [200/600] Loss: [0.0483]

TRAIN Epoch [15]: [300/600] Loss: [0.0771]

TRAIN Epoch [15]: [400/600] Loss: [0.0902]

TRAIN Epoch [15]: [500/600] Loss: [0.0372]

VAL Epoch [15]: [0/200] Loss: [0.1999]

VAL Epoch [15]: [100/200] Loss: [0.0408]

TRAIN Epoch [16]: [0/600] Loss: [0.1211]

TRAIN Epoch [16]: [100/600] Loss: [0.1037]

TRAIN Epoch [16]: [200/600] Loss: [0.0667]

TRAIN Epoch [16]: [300/600] Loss: [0.1599]

TRAIN Epoch [16]: [400/600] Loss: [0.0266]

TRAIN Epoch [16]: [500/600] Loss: [0.0484]

VAL Epoch [16]: [0/200] Loss: [0.1868]

VAL Epoch [16]: [100/200] Loss: [0.0421]

TRAIN Epoch [17]: [0/600] Loss: [0.1155]

TRAIN Epoch [17]: [100/600] Loss: [0.1340]

TRAIN Epoch [17]: [200/600] Loss: [0.1036]

TRAIN Epoch [17]: [300/600] Loss: [0.0855]

TRAIN Epoch [17]: [400/600] Loss: [0.0374]

TRAIN Epoch [17]: [500/600] Loss: [0.0546]

VAL Epoch [17]: [0/200] Loss: [0.1859]

VAL Epoch [17]: [100/200] Loss: [0.0413]

TRAIN Epoch [18]: [0/600] Loss: [0.0836]

TRAIN Epoch [18]: [100/600] Loss: [0.0587]

TRAIN Epoch [18]: [200/600] Loss: [0.0810]

TRAIN Epoch [18]: [300/600] Loss: [0.0448]

TRAIN Epoch [18]: [400/600] Loss: [0.0964]

TRAIN Epoch [18]: [500/600] Loss: [0.0511]

VAL Epoch [18]: [0/200] Loss: [0.1893]

VAL Epoch [18]: [100/200] Loss: [0.0354]

TRAIN Epoch [19]: [0/600] Loss: [0.0443]

TRAIN Epoch [19]: [100/600] Loss: [0.0535]

TRAIN Epoch [19]: [200/600] Loss: [0.0960]

TRAIN Epoch [19]: [300/600] Loss: [0.0247]

TRAIN Epoch [19]: [400/600] Loss: [0.0736]

TRAIN Epoch [19]: [500/600] Loss: [0.1098]

VAL Epoch [19]: [0/200] Loss: [0.1886]

VAL Epoch [19]: [100/200] Loss: [0.0380]

TRAIN Epoch [20]: [0/600] Loss: [0.0772]

TRAIN Epoch [20]: [100/600] Loss: [0.1032]

TRAIN Epoch [20]: [200/600] Loss: [0.0459]

TRAIN Epoch [20]: [300/600] Loss: [0.0749]

TRAIN Epoch [20]: [400/600] Loss: [0.0881]

TRAIN Epoch [20]: [500/600] Loss: [0.0948]

VAL Epoch [20]: [0/200] Loss: [0.1870]

VAL Epoch [20]: [100/200] Loss: [0.0333]

TRAIN Epoch [21]: [0/600] Loss: [0.0664]

TRAIN Epoch [21]: [100/600] Loss: [0.0447]

TRAIN Epoch [21]: [200/600] Loss: [0.1013]

TRAIN Epoch [21]: [300/600] Loss: [0.0433]

TRAIN Epoch [21]: [400/600] Loss: [0.0671]

TRAIN Epoch [21]: [500/600] Loss: [0.0419]

VAL Epoch [21]: [0/200] Loss: [0.1847]

VAL Epoch [21]: [100/200] Loss: [0.0400]

TRAIN Epoch [22]: [0/600] Loss: [0.0674]

TRAIN Epoch [22]: [100/600] Loss: [0.0236]

TRAIN Epoch [22]: [200/600] Loss: [0.0406]

TRAIN Epoch [22]: [300/600] Loss: [0.0587]

TRAIN Epoch [22]: [400/600] Loss: [0.0558]

TRAIN Epoch [22]: [500/600] Loss: [0.0364]

VAL Epoch [22]: [0/200] Loss: [0.1755]

VAL Epoch [22]: [100/200] Loss: [0.0380]

TRAIN Epoch [23]: [0/600] Loss: [0.0744]

TRAIN Epoch [23]: [100/600] Loss: [0.0287]

TRAIN Epoch [23]: [200/600] Loss: [0.0912]

TRAIN Epoch [23]: [300/600] Loss: [0.0756]

TRAIN Epoch [23]: [400/600] Loss: [0.0177]

TRAIN Epoch [23]: [500/600] Loss: [0.0233]

VAL Epoch [23]: [0/200] Loss: [0.1887]

VAL Epoch [23]: [100/200] Loss: [0.0375]

TRAIN Epoch [24]: [0/600] Loss: [0.0657]

TRAIN Epoch [24]: [100/600] Loss: [0.0133]

TRAIN Epoch [24]: [200/600] Loss: [0.0445]

TRAIN Epoch [24]: [300/600] Loss: [0.0929]

TRAIN Epoch [24]: [400/600] Loss: [0.0471]

TRAIN Epoch [24]: [500/600] Loss: [0.0817]

VAL Epoch [24]: [0/200] Loss: [0.1893]

VAL Epoch [24]: [100/200] Loss: [0.0372]

TRAIN Epoch [25]: [0/600] Loss: [0.0326]

TRAIN Epoch [25]: [100/600] Loss: [0.0557]

TRAIN Epoch [25]: [200/600] Loss: [0.0420]

TRAIN Epoch [25]: [300/600] Loss: [0.0413]

TRAIN Epoch [25]: [400/600] Loss: [0.0135]

TRAIN Epoch [25]: [500/600] Loss: [0.0518]

VAL Epoch [25]: [0/200] Loss: [0.1824]

VAL Epoch [25]: [100/200] Loss: [0.0341]

TRAIN Epoch [26]: [0/600] Loss: [0.0829]

TRAIN Epoch [26]: [100/600] Loss: [0.0599]

TRAIN Epoch [26]: [200/600] Loss: [0.0900]

TRAIN Epoch [26]: [300/600] Loss: [0.0877]

TRAIN Epoch [26]: [400/600] Loss: [0.0491]

TRAIN Epoch [26]: [500/600] Loss: [0.0499]

VAL Epoch [26]: [0/200] Loss: [0.1803]

VAL Epoch [26]: [100/200] Loss: [0.0344]

TRAIN Epoch [27]: [0/600] Loss: [0.1060]

TRAIN Epoch [27]: [100/600] Loss: [0.0442]

TRAIN Epoch [27]: [200/600] Loss: [0.0620]

TRAIN Epoch [27]: [300/600] Loss: [0.0135]

TRAIN Epoch [27]: [400/600] Loss: [0.1370]

TRAIN Epoch [27]: [500/600] Loss: [0.0726]

VAL Epoch [27]: [0/200] Loss: [0.1813]

VAL Epoch [27]: [100/200] Loss: [0.0338]

TRAIN Epoch [28]: [0/600] Loss: [0.0573]

TRAIN Epoch [28]: [100/600] Loss: [0.0465]

TRAIN Epoch [28]: [200/600] Loss: [0.0872]

TRAIN Epoch [28]: [300/600] Loss: [0.0974]

TRAIN Epoch [28]: [400/600] Loss: [0.1087]

TRAIN Epoch [28]: [500/600] Loss: [0.0451]

VAL Epoch [28]: [0/200] Loss: [0.1909]

VAL Epoch [28]: [100/200] Loss: [0.0338]

TRAIN Epoch [29]: [0/600] Loss: [0.0412]

TRAIN Epoch [29]: [100/600] Loss: [0.0496]

TRAIN Epoch [29]: [200/600] Loss: [0.0626]

TRAIN Epoch [29]: [300/600] Loss: [0.0528]

TRAIN Epoch [29]: [400/600] Loss: [0.0754]

TRAIN Epoch [29]: [500/600] Loss: [0.0422]

VAL Epoch [29]: [0/200] Loss: [0.1870]

VAL Epoch [29]: [100/200] Loss: [0.0343]

TRAIN Epoch [30]: [0/600] Loss: [0.0403]

TRAIN Epoch [30]: [100/600] Loss: [0.0774]

TRAIN Epoch [30]: [200/600] Loss: [0.0762]

TRAIN Epoch [30]: [300/600] Loss: [0.0639]

TRAIN Epoch [30]: [400/600] Loss: [0.0453]

TRAIN Epoch [30]: [500/600] Loss: [0.0311]

VAL Epoch [30]: [0/200] Loss: [0.1813]

VAL Epoch [30]: [100/200] Loss: [0.0340]

TRAIN Epoch [31]: [0/600] Loss: [0.0626]

TRAIN Epoch [31]: [100/600] Loss: [0.0283]

TRAIN Epoch [31]: [200/600] Loss: [0.0645]

TRAIN Epoch [31]: [300/600] Loss: [0.0623]

TRAIN Epoch [31]: [400/600] Loss: [0.0688]

TRAIN Epoch [31]: [500/600] Loss: [0.0216]

VAL Epoch [31]: [0/200] Loss: [0.1824]

VAL Epoch [31]: [100/200] Loss: [0.0336]

TRAIN Epoch [32]: [0/600] Loss: [0.0453]

TRAIN Epoch [32]: [100/600] Loss: [0.0435]

TRAIN Epoch [32]: [200/600] Loss: [0.0720]

TRAIN Epoch [32]: [300/600] Loss: [0.0917]

TRAIN Epoch [32]: [400/600] Loss: [0.0287]

TRAIN Epoch [32]: [500/600] Loss: [0.0343]

VAL Epoch [32]: [0/200] Loss: [0.1786]

VAL Epoch [32]: [100/200] Loss: [0.0331]

TRAIN Epoch [33]: [0/600] Loss: [0.0211]

TRAIN Epoch [33]: [100/600] Loss: [0.0320]

TRAIN Epoch [33]: [200/600] Loss: [0.0646]

TRAIN Epoch [33]: [300/600] Loss: [0.0854]

TRAIN Epoch [33]: [400/600] Loss: [0.0534]

TRAIN Epoch [33]: [500/600] Loss: [0.0254]

VAL Epoch [33]: [0/200] Loss: [0.1806]

VAL Epoch [33]: [100/200] Loss: [0.0324]

TRAIN Epoch [34]: [0/600] Loss: [0.0217]

TRAIN Epoch [34]: [100/600] Loss: [0.0458]

TRAIN Epoch [34]: [200/600] Loss: [0.0475]

TRAIN Epoch [34]: [300/600] Loss: [0.1082]

TRAIN Epoch [34]: [400/600] Loss: [0.0419]

TRAIN Epoch [34]: [500/600] Loss: [0.0620]

VAL Epoch [34]: [0/200] Loss: [0.1824]

VAL Epoch [34]: [100/200] Loss: [0.0335]

TRAIN Epoch [35]: [0/600] Loss: [0.0506]

TRAIN Epoch [35]: [100/600] Loss: [0.0642]

TRAIN Epoch [35]: [200/600] Loss: [0.0616]

TRAIN Epoch [35]: [300/600] Loss: [0.0417]

TRAIN Epoch [35]: [400/600] Loss: [0.0661]

TRAIN Epoch [35]: [500/600] Loss: [0.0433]

VAL Epoch [35]: [0/200] Loss: [0.1773]

VAL Epoch [35]: [100/200] Loss: [0.0332]

TRAIN Epoch [36]: [0/600] Loss: [0.1021]

TRAIN Epoch [36]: [100/600] Loss: [0.0556]

TRAIN Epoch [36]: [200/600] Loss: [0.0849]

TRAIN Epoch [36]: [300/600] Loss: [0.0165]

TRAIN Epoch [36]: [400/600] Loss: [0.0629]

TRAIN Epoch [36]: [500/600] Loss: [0.0211]

VAL Epoch [36]: [0/200] Loss: [0.1728]

VAL Epoch [36]: [100/200] Loss: [0.0343]

TRAIN Epoch [37]: [0/600] Loss: [0.0602]

TRAIN Epoch [37]: [100/600] Loss: [0.0248]

TRAIN Epoch [37]: [200/600] Loss: [0.1107]

TRAIN Epoch [37]: [300/600] Loss: [0.0430]

TRAIN Epoch [37]: [400/600] Loss: [0.0121]

TRAIN Epoch [37]: [500/600] Loss: [0.0385]

VAL Epoch [37]: [0/200] Loss: [0.1886]

VAL Epoch [37]: [100/200] Loss: [0.0383]

TRAIN Epoch [38]: [0/600] Loss: [0.0664]

TRAIN Epoch [38]: [100/600] Loss: [0.0295]

TRAIN Epoch [38]: [200/600] Loss: [0.0134]

TRAIN Epoch [38]: [300/600] Loss: [0.0804]

TRAIN Epoch [38]: [400/600] Loss: [0.0970]

TRAIN Epoch [38]: [500/600] Loss: [0.0405]

VAL Epoch [38]: [0/200] Loss: [0.1787]

VAL Epoch [38]: [100/200] Loss: [0.0356]

TRAIN Epoch [39]: [0/600] Loss: [0.0516]

TRAIN Epoch [39]: [100/600] Loss: [0.0309]

TRAIN Epoch [39]: [200/600] Loss: [0.0969]

TRAIN Epoch [39]: [300/600] Loss: [0.0606]

TRAIN Epoch [39]: [400/600] Loss: [0.0132]

TRAIN Epoch [39]: [500/600] Loss: [0.0098]

VAL Epoch [39]: [0/200] Loss: [0.1835]

VAL Epoch [39]: [100/200] Loss: [0.0335]

Best Epoch: 27 Best Loss: 0.10832797806244343
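###Markdown

The training loop keeps the checkpoint with the lowest mean validation loss and stores `epoch + 1` in it. As a result, the best epoch reported as 27 (0-based) corresponds to a stored `'epoch'` of 28. The sketch below reproduces that bookkeeping on made-up loss values that are not from this run. `select_best_epoch` is a hypothetical helper.

```python
def select_best_epoch(val_losses):
    """Return (best_epoch, best_loss, checkpoint_epoch) for per-epoch mean validation losses.

    best_epoch is 0-based, like the training loop; checkpoint_epoch mirrors the
    'epoch': epoch + 1 entry written by save_checkpoint.
    """
    best_loss = float('inf')
    best_epoch = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:  # strict comparison: ties keep the earlier epoch
            best_loss = loss
            best_epoch = epoch
    return best_epoch, best_loss, best_epoch + 1

print(select_best_epoch([0.30, 0.20, 0.25, 0.20]))  # (1, 0.2, 2)
```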

###Markdown

LOAD WEIGHTS STORED FOR THE BEST EPOCH

###Code

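# matches the 'epoch' stored in the checkpoint (best epoch 27 + 1); only used to label log messages below
epoch = 28

if args.resume:
    weights = osp.join(weights_dir, args.resume + '.pth')
    print(weights)
    checkpoint = torch.load(weights, map_location=device)
    model.load_state_dict(checkpoint['model'])
    # set the start epoch if it has not been set yet
    if not args.start_epoch:
        args.start_epoch = checkpoint['epoch']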

###Output

gdrive/My Drive/weights/checkpoint_e23_lr1e_3_40e_SGD.pth

###Markdown

COMPUTE SIMILARITIES AND PERCENTILES FOR THE VALIDATION SPLIT

###Code

import numpy as np

# View similarities

sim_pos, sim_neg = val_sim_lim(model, val_loader, epoch, device=device)

pos_95 = np.percentile(sim_pos,95)

pos_5 = np.percentile(sim_pos,5)

pos_max = np.amax(sim_pos)

pos_min = np.amin(sim_pos)

print(pos_95, pos_5, pos_max, pos_min)

neg_95 = np.percentile(sim_neg,95)

neg_5 = np.percentile(sim_neg,5)

neg_max = np.amax(sim_neg)

neg_min = np.amin(sim_neg)

print(neg_95, neg_5, neg_max, neg_min)

###Output

VAL Epoch [28]: [0/200]

VAL Epoch [28]: [100/200]

0.9918118447065353 0.5256112039089202 0.9972943663597107 0.07290332764387131

0.9578584372997284 -0.1372625157237053 0.9923917055130005 -0.2736058235168457
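###Markdown

The threshold search below calls `val_tr`, which re-runs the model over the whole validation split for every candidate threshold. Only the threshold changes between these runs. An alternative is to compute the similarities once, as `val_sim_lim` already does for positive and negative pairs, and score every threshold on those arrays.

This is a minimal sketch under a few assumptions:
* A positive pair is counted as correct when `sim > tr`, and a negative pair when `sim <= tr`.
* Accuracy is taken over all pairs rather than averaged per batch as `np.mean(val_acc)` does, so the numbers can differ slightly.

`accuracy_per_threshold` is a hypothetical helper, and the similarities are illustrative.

```python
import numpy as np

def accuracy_per_threshold(sim_pos, sim_neg, thresholds):
    """Pair-level accuracy for each threshold, from precomputed similarities."""
    sim_pos = np.asarray(sim_pos, dtype=float)[:, None]
    sim_neg = np.asarray(sim_neg, dtype=float)[:, None]
    thresholds = np.asarray(thresholds, dtype=float)[None, :]
    # broadcast (pairs, 1) against (1, thresholds) and count correct decisions per threshold
    correct = (sim_pos > thresholds).sum(axis=0) + (sim_neg <= thresholds).sum(axis=0)
    return correct / (sim_pos.shape[0] + sim_neg.shape[0])

sim_pos = [0.95, 0.85, 0.75, 0.65]
sim_neg = [0.05, 0.15, 0.25, 0.45]
thresholds = np.round(np.arange(0.0, 1.0, 0.1), 3)
acc = accuracy_per_threshold(sim_pos, sim_neg, thresholds)
best = thresholds[np.argmax(acc)]  # argmax returns the first of any tied maxima
print(best, acc.max())  # best threshold 0.5, accuracy 1.0
```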

###Markdown

SELECT THRESHOLD

###Code

# Select the similarity threshold with a coarse-to-fine grid search on the validation split:
# first scan [pos_5, neg_95) in steps of 0.1, then refine around the best value so far
# with steps of 0.01 (window +/-0.05) and 0.001 (window +/-0.005).
best_acc = 0
best_tr = 0

for step, half_width in [(0.1, None), (0.01, 0.05), (0.001, 0.005)]:
    if half_width is None:
        inf, sup = np.round(pos_5, decimals=3), np.round(neg_95, decimals=3)
    else:
        inf = np.round(best_tr - half_width, decimals=3)
        sup = np.round(best_tr + half_width, decimals=3)
    for value in np.arange(inf, sup, step):
        value = np.round(value, decimals=3)  # np.round returns a new value, so keep it
        val_acc = val_tr(model, val_loader, epoch, device=device, tr=value)
        av_acc = np.mean(val_acc)
        if av_acc > best_acc:
            best_acc = av_acc
            best_tr = value
            print('Best accuracy:', best_acc, 'Threshold:', best_tr)

# report the best accuracy found, not the accuracy of the last threshold tried
print('Best accuracy:', best_acc, 'Threshold:', best_tr)

###Output

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

Best accuracy: 0.8214285714285714 Treshold: 0.526

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

Best accuracy: 0.8342857142857143 Treshold: 0.626

VAL Epoch [28]: [0/200] Accuracy: [0.5714285714285714]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.7142857142857143]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.7857142857142857]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

Best accuracy: 0.835 Treshold: 0.646

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

Best accuracy: 0.8357142857142857 Treshold: 0.641

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

VAL Epoch [28]: [0/200] Accuracy: [0.5]

VAL Epoch [28]: [100/200] Accuracy: [0.9285714285714286]

Best accuracy: 0.835 Treshold: 0.641
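###Markdown

The three successive scans above are one instance of a coarse-to-fine grid search. The generic sketch below works with any callable `score(tr)`, so it can be tried without a model. `coarse_to_fine` is a hypothetical helper, and the toy score function is made up for illustration.

```python
import numpy as np

def coarse_to_fine(score, lo, hi, steps=(0.1, 0.01, 0.001)):
    """Maximise score(t) on [lo, hi) with successively finer grids.

    After the first pass, each finer pass scans +/- 5 steps of the new size around
    the best threshold so far (+/-0.05 for 0.01, +/-0.005 for 0.001).
    """
    best_t, best_s = None, -np.inf
    for i, step in enumerate(steps):
        if i > 0:
            lo, hi = best_t - 5 * step, best_t + 5 * step
        for t in np.arange(lo, hi, step):
            t = round(float(t), 3)  # avoid float artefacts such as 0.6000000000000001
            s = score(t)
            if s > best_s:
                best_t, best_s = t, s
    return best_t, best_s

# toy score peaked at 0.642
best_t, best_s = coarse_to_fine(lambda t: -abs(t - 0.642), 0.5, 0.95)
print(best_t)  # 0.642
```

A grid search like this assumes the score is roughly unimodal near its peak. If there are several separated maxima, the refinement can lock onto the wrong one.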

###Markdown

LOAD DATASET SPLIT FOR TEST

###Code

test_loader = get_dataloader(args.split_testdata, args,

img_transforms=val_transforms, split="test")

###Output

Dataset loaded

2800 samples in the test dataset

###Markdown

RUN TEST

###Code

# Test

best_tr = 0.641

test_acc = test(model, loss_fn, test_loader, epoch, device=device, tr=best_tr)

av_acc = np.mean(test_acc)

print('Average test accuracy:', av_acc)

###Output

TEST Epoch [28]: [0/200] Accuracy: [0.7857142857142857]

TEST Epoch [28]: [100/200] Accuracy: [0.7857142857142857]

Average test accuracy: 0.8567857142857142


dmu24/dmu24_ELAIS-N1/2_Photo-z_Selection_Function.ipynb


###Markdown

ELAIS-N1 Photo-z selection functions

The goal is to create a selection function for the photometric redshifts that varies spatially across the field. We will use the depth maps for the optical masterlist to find regions of the field that have similar photometric coverage, and then calculate the fraction of sources meeting a given photo-z selection within those pixels.

1. For the optical depth maps: do a clustering analysis to find HEALPix pixels with similar photometric properties.
2. Calculate the selection function within those groups of similar regions as a function of magnitude in a given band.
3. Parametrise the selection function in such a way that it can be easily applied to a given sample of sources or region.

###Code

from herschelhelp_internal import git_version

print("This notebook was run with herschelhelp_internal version: \n{}".format(git_version()))

import datetime

print("This notebook was executed on: \n{}".format(datetime.datetime.now()))

%matplotlib inline

#%config InlineBackend.figure_format = 'svg'

import matplotlib.pyplot as plt

plt.rc('figure', figsize=(10, 6))

import os

import time

from astropy import units as u

from astropy.coordinates import SkyCoord

from astropy.table import Column, Table, join

import numpy as np

from pymoc import MOC

import healpy as hp

#import pandas as pd  # Astropy has a group_by function, so pandas isn't required.

import seaborn as sns

import warnings

#We ignore warnings - this is a little dangerous but a huge number of warnings are generated by empty cells later

warnings.filterwarnings('ignore')

from herschelhelp_internal.utils import inMoc, coords_to_hpidx, flux_to_mag

from herschelhelp_internal.masterlist import find_last_ml_suffix, nb_ccplots

from astropy.io.votable import parse_single_table

from astropy.io import fits

from astropy.stats import binom_conf_interval

from astropy.utils.console import ProgressBar

from astropy.modeling.fitting import LevMarLSQFitter

from sklearn.cluster import MiniBatchKMeans, MeanShift

from collections import Counter

from astropy.modeling import Fittable1DModel, Parameter

class GLF1D(Fittable1DModel):
    """
    Generalised Logistic Function

    y = A + (K - A) / (1 + Q * exp(-B * (x - M)))**(1 / nu)
    """
    inputs = ('x',)
    outputs = ('y',)

    A = Parameter()
    B = Parameter()
    K = Parameter()
    Q = Parameter()
    nu = Parameter()
    M = Parameter()

    @staticmethod
    def evaluate(x, A, B, K, Q, nu, M):
        top = K - A
        bottom = (1 + Q*np.exp(-B*(x-M)))**(1/nu)
        return A + (top/bottom)

    @staticmethod
    def fit_deriv(x, A, B, K, Q, nu, M):
        # Partial derivatives of evaluate() with respect to each parameter,
        # written in terms of E = Q*exp(-B*(x-M)) and D = 1 + E
        E = Q*np.exp(-B*(x-M))
        D = 1 + E
        d_A = 1 - D**(-1/nu)
        d_B = ((K - A) * (x-M) * E) / (nu * D**((1/nu) + 1))
        d_K = D**(-1/nu)
        d_Q = -((K - A) * np.exp(-B*(x-M))) / (nu * D**((1/nu) + 1))
        d_nu = ((K - A) * np.log(D)) / ((nu**2) * D**(1/nu))
        d_M = -((K - A) * B * E) / (nu * D**((1/nu) + 1))
        return [d_A, d_B, d_K, d_Q, d_nu, d_M]

class InverseGLF1D(Fittable1DModel):
    """
    Inverse of the Generalised Logistic Function (GLF1D): returns the x at which GLF1D equals the input value
    """
    inputs = ('x',)
    outputs = ('y',)

    A = Parameter()
    B = Parameter()
    K = Parameter()
    Q = Parameter()
    nu = Parameter()
    M = Parameter()

    @staticmethod
    def evaluate(x, A, B, K, Q, nu, M):
        # solve x = A + (K - A) / (1 + Q*exp(-B*(y - M)))**(1/nu) for y
        return M - (1/B)*(np.log((((K - A)/(x - A))**nu - 1)/Q))

###Output

_____no_output_____
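###Markdown

Analytic `fit_deriv` expressions are easy to get wrong, so it is worth checking them against finite differences. The sketch below re-implements the generalised logistic function and its partial derivatives in plain NumPy. The standalone functions `glf`, `glf_grad` and `inverse_glf` are hypothetical names mirroring `GLF1D.evaluate`, `GLF1D.fit_deriv` and `InverseGLF1D.evaluate`. The sketch compares the analytic derivatives with central differences at arbitrary illustrative parameter values. It also checks that the inverse formula undoes the forward function.

```python
import numpy as np

def glf(x, A, B, K, Q, nu, M):
    """Generalised logistic function, same form as GLF1D.evaluate."""
    return A + (K - A) / (1 + Q * np.exp(-B * (x - M)))**(1 / nu)

def glf_grad(x, A, B, K, Q, nu, M):
    """Analytic partial derivatives with respect to (A, B, K, Q, nu, M)."""
    E = Q * np.exp(-B * (x - M))
    D = 1 + E
    return np.array([
        1 - D**(-1 / nu),                                          # d/dA
        (K - A) * (x - M) * E / (nu * D**(1 / nu + 1)),            # d/dB
        D**(-1 / nu),                                              # d/dK
        -(K - A) * np.exp(-B * (x - M)) / (nu * D**(1 / nu + 1)),  # d/dQ
        (K - A) * np.log(D) / (nu**2 * D**(1 / nu)),               # d/dnu
        -(K - A) * B * E / (nu * D**(1 / nu + 1)),                 # d/dM
    ])

def inverse_glf(y, A, B, K, Q, nu, M):
    """Same expression as InverseGLF1D.evaluate."""
    return M - (1 / B) * np.log((((K - A) / (y - A))**nu - 1) / Q)

x = np.linspace(20.0, 26.0, 7)                      # e.g. magnitudes
params = np.array([0.0, 2.0, 1.0, 0.5, 1.5, 23.0])  # A, B, K, Q, nu, M (illustrative)

analytic = glf_grad(x, *params)
numeric = np.empty_like(analytic)
h = 1e-6
for i in range(len(params)):
    # central difference in parameter i, all other parameters fixed
    up, down = params.copy(), params.copy()
    up[i] += h
    down[i] -= h
    numeric[i] = (glf(x, *up) - glf(x, *down)) / (2 * h)

print(np.max(np.abs(analytic - numeric)))                     # close to zero (round-off only)
print(np.allclose(inverse_glf(glf(x, *params), *params), x))  # True
```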

###Markdown

0 - Set relevant initial parameters

###Code

FIELD = 'ELAIS-N1'

ORDER = 10

OUT_DIR = 'selection_functions'

SUFFIX = 'depths_20171016_photoz_20170725'

DEPTH_MAP = 'ELAIS-N1/depths_elais-n1_20171016.fits'

MASTERLIST = 'ELAIS-N1/master_catalogue_elais-n1_20170627.fits'

PHOTOZS = 'ELAIS-N1/master_catalogue_elais-n1_20170706_photoz_20170725_irac1_optimised.fits'

help(find_last_ml_suffix)

###Output

Help on function find_last_ml_suffix in module herschelhelp_internal.masterlist:

find_last_ml_suffix(directory='./data/')

Find the data prefix of the last masterlist.

This function returns the data prefix to use to get the last master list

from a directory.

###Markdown

I - Find clustering of healpix in the depth maps

###Code

depth_map = Table.read(DEPTH_MAP)

# Get Healpix IDs

hp_idx = depth_map['hp_idx_O_{0}'.format(ORDER)]

# Calculate RA, Dec of depth map Healpix pixels for later plotting etc.

dm_hp_ra, dm_hp_dec = hp.pix2ang(2**ORDER, hp_idx, nest=True, lonlat=True)

###Output

_____no_output_____
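###Markdown

`hp.pix2ang(2**ORDER, ...)` treats the depth-map pixels as a NESTED HEALPix map with $N_{side} = 2^{ORDER}$. As a quick sanity check of what ORDER = 10 means on the sky, the pixel count and area follow from $N_{pix} = 12 N_{side}^2$ and the $4\pi$ sr of the sphere. This sketch uses only the standard library, and `healpix_pixel_area` is a hypothetical helper.

```python
import math

def healpix_pixel_area(order):
    """Return (nside, npix, pixel area in arcmin^2) for a HEALPix order."""
    nside = 2**order
    npix = 12 * nside**2
    sphere_deg2 = 4 * math.pi * (180 / math.pi)**2  # about 41253 deg^2
    area_arcmin2 = sphere_deg2 / npix * 3600
    return nside, npix, area_arcmin2

for order in (10, 13):  # the two orders stored in the depth map
    nside, npix, area = healpix_pixel_area(order)
    # about 11.8 arcmin^2 per pixel at order 10 and about 0.18 arcmin^2 at order 13
    print(order, nside, npix, round(area, 3))
```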

###Markdown

The depth map provides two measures of depth:

###Code

mean_values = Table(depth_map.columns[2::2]) # Mean 1-sigma error within a cell

p90_values = Table(depth_map.columns[3::2]) # 90th percentile of observed fluxes

###Output

_____no_output_____

###Markdown

For the photo-z selection functions we will make use of the mean 1-sigma error, as this can be used to accurately predict the completeness as a function of magnitude. We convert the mean 1-sigma uncertainty to a 3-sigma magnitude upper limit and convert the result to a usable array. When a given flux has no measurement in a HEALPix pixel (and *ferr_mean* is therefore *NaN*), we set the depth to a semi-arbitrary bright limit that is separate from the observed depths:

###Code

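# 3-sigma AB magnitude limit from the mean 1-sigma flux error (fluxes in uJy, AB zero point 23.9)
# (.values replaces DataFrame.as_matrix(), which was removed in pandas 1.0)
dm_clustering = 23.9 - 2.5*np.log10(3*mean_values.to_pandas().values)
# cells without a measurement (NaN) or with zero error (inf) get a bright placeholder depth of 14 mag
dm_clustering[~np.isfinite(dm_clustering)] = 14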

###Output

_____no_output_____
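###Markdown

For reference, the conversion above is the AB magnitude of a flux equal to 3σ, with fluxes in μJy (AB zero point 23.9 mag). The sketch repeats it on a few illustrative error values, including the NaN and zero-error cases that are replaced by the bright placeholder of 14 mag. `sigma_to_depth` is a hypothetical name.

```python
import numpy as np

def sigma_to_depth(ferr_mean, nsigma=3, fill=14.0):
    """nsigma AB magnitude limit from a mean 1-sigma flux error in uJy.

    Non-finite results (missing error -> NaN, zero error -> inf) are set to `fill`.
    """
    ferr_mean = np.asarray(ferr_mean, dtype=float)
    with np.errstate(divide='ignore', invalid='ignore'):
        depth = 23.9 - 2.5 * np.log10(nsigma * ferr_mean)
    depth[~np.isfinite(depth)] = fill
    return depth

# 1 uJy -> about 22.707 mag; 10 uJy -> 2.5 mag brighter; NaN and 0 -> 14
print(sigma_to_depth([1.0, 10.0, np.nan, 0.0]))
```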

###Markdown

To encourage the clustering to group nearby HEALPix pixels together, we also add the RA and Dec of each pixel to the input dataset:

###Code

dm_clustering = np.hstack([dm_clustering, np.array(dm_hp_ra.data, ndmin=2).T, np.array(dm_hp_dec.data, ndmin=2).T])

###Output

_____no_output_____

###Markdown

Next, we find clusters within the depth maps using a simple k-means clustering. As an initial guess, the number of clusters is set to twice the number of input columns (the magnitude depths plus RA and Dec). This produces good initial results but may need further tuning:

###Code

NCLUSTERS = dm_clustering.shape[1]*2

km = MiniBatchKMeans(n_clusters=NCLUSTERS)

km.fit(dm_clustering)

counts = Counter(km.labels_) # Quickly calculate sizes of the clusters for reference

clusters = dict(zip(hp_idx.data, km.labels_))

Fig, Ax = plt.subplots(1,1,figsize=(8,8))

Ax.scatter(dm_hp_ra, dm_hp_dec, c=km.labels_, cmap=plt.cm.tab20, s=6)

Ax.set_xlabel('Right Ascension [deg]')

Ax.set_ylabel('Declination [deg]')

Ax.set_title('{0}'.format(FIELD))

###Output

_____no_output_____
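###Markdown

`MiniBatchKMeans` approximates standard k-means by working on random mini-batches. For intuition, here is a minimal full-batch version of Lloyd's algorithm in NumPy. It is an illustration, not what scikit-learn runs internally, and `lloyd_kmeans` is hypothetical. The algorithm alternates between two steps. First, it assigns each row of the feature matrix (here: depths plus RA/Dec) to its nearest centre. Second, it moves each centre to the mean of the rows assigned to it. The toy values are illustrative only.

```python
import numpy as np

def lloyd_kmeans(X, k, n_iter=20, seed=0):
    """Plain k-means (Lloyd's algorithm). Returns (labels, centres)."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    # initialise the centres with k distinct rows of X
    centres = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        # squared Euclidean distance from every row to every centre
        d2 = ((X[:, None, :] - centres[None, :, :])**2).sum(axis=2)
        labels = d2.argmin(axis=1)
        for j in range(k):
            members = X[labels == j]
            if len(members) > 0:  # leave empty clusters where they are
                centres[j] = members.mean(axis=0)
    return labels, centres

# two well-separated toy groups of "pixels" (depth, RA, Dec)
X = np.array([[24.0, 240.0, 55.0], [24.1, 240.1, 55.1], [24.0, 240.2, 55.0],
              [22.0, 245.0, 54.0], [22.1, 245.1, 54.1], [21.9, 245.2, 54.0]])
labels, centres = lloyd_kmeans(X, k=2)
print(labels)  # the first three rows share one label, the last three the other
```

Because plain Euclidean distance is used, columns with a larger spread carry more weight in the clustering.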

###Markdown

II - Map photo-$z$ and masterlist objects to their corresponding depth cluster

We now load the photometric redshift catalog and keep only the key columns for this selection function.

Note: if using a different photo-$z$ measure than the HELP standard `z1_median`, the relevant columns should be retained instead.

###Code

photoz_catalogue = Table.read(PHOTOZS)

photoz_catalogue.keep_columns(['help_id', 'RA', 'DEC', 'id', 'z1_median', 'z1_min', 'z1_max', 'z1_area'])

###Output

_____no_output_____

###Markdown

Next we load the relevant columns of the masterlist catalog (including the magnitude columns), compute the HEALPix pixel of each source and assign the source to the depth cluster of that pixel. The masterlist is then left-joined with the photo-$z$ catalog, so every masterlist source is kept together with its cluster label.

###Code

masterlist_hdu = fits.open(MASTERLIST, memmap=True)

masterlist = masterlist_hdu[1]

masterlist_catalogue = Table()

masterlist_catalogue['help_id'] = masterlist.data['help_id']

masterlist_catalogue['RA'] = masterlist.data['ra']

masterlist_catalogue['DEC'] = masterlist.data['dec']

for column in masterlist.columns.names:
    # keep all magnitude and magnitude-error columns
    if column.startswith('m_') or column.startswith('merr_'):
        masterlist_catalogue[column] = masterlist.data[column]

masterlist_hpx = coords_to_hpidx(masterlist_catalogue['RA'], masterlist_catalogue['DEC'], ORDER)

masterlist_catalogue["hp_idx_O_{:d}".format(ORDER)] = masterlist_hpx

masterlist_cl_no = np.array([clusters[hpx] for hpx in masterlist_hpx])

masterlist_catalogue['hp_depth_cluster'] = masterlist_cl_no

merged = join(masterlist_catalogue, photoz_catalogue, join_type='left', keys=['help_id', 'RA', 'DEC'])

###Output

_____no_output_____
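###Markdown

The list comprehension `[clusters[hpx] for hpx in masterlist_hpx]` does one dictionary lookup per source. The vectorised alternative sketched below sorts the depth-map pixel IDs once and uses `np.searchsorted`. It assumes every source pixel appears in the depth map. `np.searchsorted` does not raise on unknown pixels the way the dictionary does, so the sketch checks for them explicitly. `lookup_cluster` is a hypothetical helper.

```python
import numpy as np

def lookup_cluster(source_hpx, map_hpx, map_labels):
    """Cluster label of each source pixel, given depth-map pixel IDs and their labels."""
    source_hpx = np.asarray(source_hpx)
    map_hpx = np.asarray(map_hpx)
    map_labels = np.asarray(map_labels)
    order = np.argsort(map_hpx)
    sorted_hpx = map_hpx[order]
    # index of each source pixel in the sorted pixel list (clipped so it stays valid)
    pos = np.clip(np.searchsorted(sorted_hpx, source_hpx), 0, len(sorted_hpx) - 1)
    if not np.all(sorted_hpx[pos] == source_hpx):
        raise KeyError("some source pixels are not in the depth map")
    return map_labels[order][pos]

print(lookup_cluster([7, 3, 7, 12], map_hpx=[12, 3, 7], map_labels=[2, 0, 1]))  # [1 0 1 2]
```

In the notebook this would correspond to `lookup_cluster(masterlist_hpx, hp_idx.data, km.labels_)`.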

###Markdown

Constructing the output selection function table:

The photo-$z$ selection function will be saved in a table that mirrors the format of the input optical depth maps, with matching length.

###Code

pz_depth_map = Table()

pz_depth_map.add_column(depth_map['hp_idx_O_13'])

pz_depth_map.add_column(depth_map['hp_idx_O_10'])

###Output

_____no_output_____

###Markdown

III - Creating the binary photo-$z$ selection function

With the sources now grouped into regions of similar photometric properties, we can calculate the photo-$z$ selection function within each cluster of pixels. To begin with, we want to create the most basic set of photo-$z$ selection functions: a map of the fraction of masterlist sources in a given region that have a photo-$z$ estimate. We will then create more informative selection function maps that make use of the added information from clustering.
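The per-cluster fraction can also be computed without an explicit loop over clusters. `np.bincount` counts the sources per label and, with `weights`, sums a 0/1 "has photo-z" flag per label. The sketch below uses illustrative arrays, and `photoz_fraction_per_cluster` is hypothetical. Empty clusters get a fraction of 0, as in the loop below.

```python
import numpy as np

def photoz_fraction_per_cluster(labels, has_photoz, n_clusters):
    """Fraction of sources with a photo-z in each cluster (0 for empty clusters)."""
    labels = np.asarray(labels)
    n_all = np.bincount(labels, minlength=n_clusters)
    n_z = np.bincount(labels, weights=np.asarray(has_photoz, dtype=float),
                      minlength=n_clusters)
    frac = np.zeros(n_clusters)
    nonempty = n_all > 0
    frac[nonempty] = n_z[nonempty] / n_all[nonempty]
    return frac

labels = [0, 0, 0, 1, 1, 3]  # cluster 2 has no sources
has_z = [True, False, True, False, False, True]
print(photoz_fraction_per_cluster(labels, has_z, n_clusters=4))  # 2/3, 0, 0, 1
```

Indexing the result with the pixel labels, e.g. `frac[km.labels_]`, then gives the per-pixel map in one step.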

###Code

NCLUSTERS  # Fixed during the clustering stage above

cluster_photoz_fraction = np.ones(NCLUSTERS)
pz_frac_cat = np.zeros(len(merged))    # fraction per masterlist source
pz_frac_map = np.zeros(len(dm_hp_ra))  # fraction per depth-map pixel

# Sources with a valid photo-z (values at or below -90 are treated as missing)
has_photoz = (merged['z1_median'] > -90.)

for cluster in range(NCLUSTERS):
    ml_sources = (merged['hp_depth_cluster'] == cluster)

    # np.float was removed in NumPy 1.24; the builtin float is equivalent
    in_ml = float(ml_sources.sum())
    withz = float((ml_sources & has_photoz).sum())

    frac = withz / in_ml if in_ml > 0 else 0.

    cluster_photoz_fraction[cluster] = frac
    print("{0} In cluster: {1:<6.0f} With photo-z: {2:<6.0f} Fraction: {3:<6.3f}"
          .format(cluster, in_ml, withz, frac))

    # Map fraction to catalogue positions for reference
    pz_frac_cat[ml_sources] = frac

    # Map fraction back to depth map healpix
    pz_frac_map[km.labels_ == cluster] = frac

###Output

0 In cluster: 82628 With photo-z: 69189 Fraction: 0.837

1 In cluster: 21592 With photo-z: 2969 Fraction: 0.138

2 In cluster: 14850 With photo-z: 0 Fraction: 0.000

3 In cluster: 26284 With photo-z: 7225 Fraction: 0.275

4 In cluster: 11563 With photo-z: 1514 Fraction: 0.131

5 In cluster: 24064 With photo-z: 0 Fraction: 0.000

6 In cluster: 19702 With photo-z: 3857 Fraction: 0.196

7 In cluster: 1002 With photo-z: 168 Fraction: 0.168

8 In cluster: 6097 With photo-z: 0 Fraction: 0.000

9 In cluster: 4679 With photo-z: 0 Fraction: 0.000

10 In cluster: 3943 With photo-z: 692 Fraction: 0.176

11 In cluster: 8989 With photo-z: 0 Fraction: 0.000

12 In cluster: 8343 With photo-z: 0 Fraction: 0.000

13 In cluster: 11418 With photo-z: 0 Fraction: 0.000

14 In cluster: 17799 With photo-z: 11404 Fraction: 0.641

15 In cluster: 72915 With photo-z: 61350 Fraction: 0.841

16 In cluster: 4 With photo-z: 0 Fraction: 0.000

17 In cluster: 20290 With photo-z: 3299 Fraction: 0.163

18 In cluster: 97935 With photo-z: 77317 Fraction: 0.789

19 In cluster: 988 With photo-z: 368 Fraction: 0.372

20 In cluster: 3947 With photo-z: 940 Fraction: 0.238

21 In cluster: 22728 With photo-z: 0 Fraction: 0.000

22 In cluster: 9536 With photo-z: 2483 Fraction: 0.260

23 In cluster: 112932 With photo-z: 98701 Fraction: 0.874

24 In cluster: 147240 With photo-z: 120714 Fraction: 0.820

25 In cluster: 20206 With photo-z: 3519 Fraction: 0.174

26 In cluster: 8264 With photo-z: 5573 Fraction: 0.674

27 In cluster: 788 With photo-z: 0 Fraction: 0.000

28 In cluster: 3551 With photo-z: 432 Fraction: 0.122

29 In cluster: 39307 With photo-z: 12482 Fraction: 0.318

30 In cluster: 80280 With photo-z: 66654 Fraction: 0.830

31 In cluster: 4204 With photo-z: 831 Fraction: 0.198

32 In cluster: 16910 With photo-z: 12059 Fraction: 0.713

33 In cluster: 7272 With photo-z: 6153 Fraction: 0.846

34 In cluster: 49929 With photo-z: 32971 Fraction: 0.660

35 In cluster: 134366 With photo-z: 115005 Fraction: 0.856

36 In cluster: 3643 With photo-z: 1086 Fraction: 0.298

37 In cluster: 44602 With photo-z: 8412 Fraction: 0.189

38 In cluster: 12260 With photo-z: 0 Fraction: 0.000

39 In cluster: 61382 With photo-z: 31830 Fraction: 0.519

40 In cluster: 1741 With photo-z: 0 Fraction: 0.000

41 In cluster: 39915 With photo-z: 25612 Fraction: 0.642

42 In cluster: 756 With photo-z: 0 Fraction: 0.000

43 In cluster: 144268 With photo-z: 120085 Fraction: 0.832

44 In cluster: 20944 With photo-z: 15889 Fraction: 0.759

45 In cluster: 25398 With photo-z: 21595 Fraction: 0.850

46 In cluster: 45878 With photo-z: 31389 Fraction: 0.684

47 In cluster: 21939 With photo-z: 4246 Fraction: 0.194

48 In cluster: 11224 With photo-z: 1 Fraction: 0.000

49 In cluster: 19402 With photo-z: 0 Fraction: 0.000

50 In cluster: 16194 With photo-z: 3778 Fraction: 0.233

51 In cluster: 2786 With photo-z: 0 Fraction: 0.000

52 In cluster: 3033 With photo-z: 0 Fraction: 0.000

53 In cluster: 23082 With photo-z: 17528 Fraction: 0.759

54 In cluster: 3837 With photo-z: 877 Fraction: 0.229

55 In cluster: 117955 With photo-z: 98807 Fraction: 0.838

56 In cluster: 4729 With photo-z: 0 Fraction: 0.000

57 In cluster: 16861 With photo-z: 0 Fraction: 0.000

58 In cluster: 143652 With photo-z: 89746 Fraction: 0.625

59 In cluster: 5096 With photo-z: 0 Fraction: 0.000

60 In cluster: 1490 With photo-z: 625 Fraction: 0.419

61 In cluster: 5722 With photo-z: 508 Fraction: 0.089

62 In cluster: 22857 With photo-z: 0 Fraction: 0.000

63 In cluster: 88789 With photo-z: 77004 Fraction: 0.867

64 In cluster: 110994 With photo-z: 95905 Fraction: 0.864

65 In cluster: 20218 With photo-z: 3332 Fraction: 0.165

66 In cluster: 11708 With photo-z: 0 Fraction: 0.000

67 In cluster: 15168 With photo-z: 5342 Fraction: 0.352

68 In cluster: 2529 With photo-z: 0 Fraction: 0.000

69 In cluster: 10158 With photo-z: 6738 Fraction: 0.663

70 In cluster: 149171 With photo-z: 121770 Fraction: 0.816

71 In cluster: 47639 With photo-z: 41370 Fraction: 0.868

72 In cluster: 6004 With photo-z: 865 Fraction: 0.144

73 In cluster: 79984 With photo-z: 68404 Fraction: 0.855

74 In cluster: 11476 With photo-z: 8949 Fraction: 0.780

75 In cluster: 114394 With photo-z: 96172 Fraction: 0.841

76 In cluster: 19232 With photo-z: 4625 Fraction: 0.240

77 In cluster: 8769 With photo-z: 215 Fraction: 0.025

78 In cluster: 3172 With photo-z: 67 Fraction: 0.021

79 In cluster: 170650 With photo-z: 133279 Fraction: 0.781

80 In cluster: 2332 With photo-z: 28 Fraction: 0.012

81 In cluster: 2341 With photo-z: 764 Fraction: 0.326

82 In cluster: 25005 With photo-z: 4839 Fraction: 0.194

83 In cluster: 8840 With photo-z: 6909 Fraction: 0.782

84 In cluster: 5812 With photo-z: 0 Fraction: 0.000

85 In cluster: 19158 With photo-z: 13734 Fraction: 0.717

86 In cluster: 38188 With photo-z: 9414 Fraction: 0.247

87 In cluster: 212908 With photo-z: 173463 Fraction: 0.815

88 In cluster: 3457 With photo-z: 792 Fraction: 0.229

89 In cluster: 21175 With photo-z: 4628 Fraction: 0.219

90 In cluster: 7165 With photo-z: 594 Fraction: 0.083

91 In cluster: 30436 With photo-z: 0 Fraction: 0.000

92 In cluster: 4987 With photo-z: 3660 Fraction: 0.734

93 In cluster: 3765 With photo-z: 851 Fraction: 0.226

94 In cluster: 237613 With photo-z: 197277 Fraction: 0.830

95 In cluster: 55873 With photo-z: 42960 Fraction: 0.769

96 In cluster: 320 With photo-z: 0 Fraction: 0.000