| id (int64, 393k to 2.82B) | repo (string, 68 classes) | title (string, length 1 to 936) | body (string, length 0 to 256k, nullable) | labels (string, length 2 to 508) | priority (string, 3 classes) | severity (string, 3 classes) |
|---|---|---|---|---|---|---|

369,287,185 |

angular

|

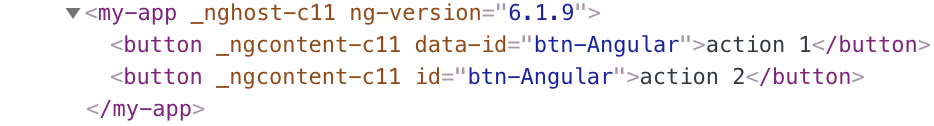

data- prefix gets stripped from bindings such as <div data-id="{{1}}">

|

<!--

PLEASE HELP US PROCESS GITHUB ISSUES FASTER BY PROVIDING THE FOLLOWING INFORMATION.

ISSUES MISSING IMPORTANT INFORMATION MAY BE CLOSED WITHOUT INVESTIGATION.

-->

## I'm submitting a...

<!-- Check one of the following options with "x" -->

<pre><code>

[ ] Regression (a behavior that used to work and stopped working in a new release)

[x] Bug report <!-- Please search GitHub for a similar issue or PR before submitting -->

[ ] Performance issue

[ ] Feature request

[ ] Documentation issue or request

[ ] Support request => Please do not submit support request here, instead see https://github.com/angular/angular/blob/master/CONTRIBUTING.md#question

[x] Other... Please describe: embarrassment

</code></pre>

## Current behavior

```html

<button [attr.data-id]="'btn-' + type">action 1</button>

<button data-id="btn-{{type}}">action 2</button>

```

## Expected behavior

<!-- Describe what the desired behavior would be. -->

Both bindings should behave the same way and produce the same `data-id` attribute.

## Minimal reproduction of the problem with instructions

https://stackblitz.com/edit/angular-w2nsvr?file=src/app/app.component.html

## Environment

<pre><code>

Angular version: 6

|

type: bug/fix,breaking changes,freq1: low,area: core,state: confirmed,core: basic template syntax,core: binding & interpolation,P3

|

low

|

Critical

|

369,291,746 |

rust

|

Can't cast `self as &Trait` in trait default method

|

```rust

trait Blah {

fn test(&self) {

self as &Blah;

}

}

```

```

error[E0277]: the size for values of type `Self` cannot be known at compilation time

--> src/lib.rs:3:9

|

3 | self as &Blah;

| ^^^^ doesn't have a size known at compile-time

|

= help: the trait `std::marker::Sized` is not implemented for `Self`

= note: to learn more, visit <https://doc.rust-lang.org/book/second-edition/ch19-04-advanced-types.html#dynamically-sized-types-and-sized>

= help: consider adding a `where Self: std::marker::Sized` bound

= note: required for the cast to the object type `dyn Blah`

```

[(playground)](https://play.rust-lang.org/?gist=68b51b8defb6bddf8d1c0b6ec1eafa2d&version=stable&mode=debug&edition=2015)

Well, `self` is usually a thin pointer, though it may be a fat pointer if, e.g., it's a slice. However, `Self` is concrete. `Self` may not be sized, but `&Self` certainly is. `mem::size_of` is a const fn, so [this code](https://play.rust-lang.org/?gist=ba7372f0d844c49ea3f4b338180f67d3&version=stable&mode=debug&edition=2015) proves that the size is known at compile time! Well, it may be a matter of precisely when during compilation. Anyway, that code should totally compile.

## Workaround 1

```rust

trait Blah {

fn as_blah(&self) -> &Blah;

…

}

```

## Workaround 2

```rust

trait Blah {

fn test(&self, this: &Blah) {

…

}

}

```

## Workaround 3

Manually construct the reference, somehow.

|

C-enhancement,A-trait-system,T-compiler

|

low

|

Critical

|

369,299,487 |

TypeScript

|

Feature Request: "extends oneof" generic constraint; allows for narrowing type parameters

|

## Search Terms

* generic bounds

* narrow generics

* extends oneof

<!-- List of keywords you searched for before creating this issue. Write them down here so that others can find this suggestion more easily -->

## Suggestion

Add a new kind of generic type bound, similar to `T extends C` but of the form `T extends oneof(A, B, C)`.

(Please bikeshed the semantics, not the syntax. I know this version is not great to write, but it *is* backwards compatible.)

Similar to `T extends C`, when the type parameter is determined (either explicitly or through inference), the compiler would check that the constraint holds. `T extends oneof(A, B, C)` means that *at least one of* `T extends A`, `T extends B`, `T extends C` holds. So, for example, in a function

```ts

function smallest<T extends oneof(string, number)>(x: T[]): T {

if (x.length == 0) {

throw new Error('empty');

}

return x.slice(0).sort()[0];

}

```

Just like today, these would be legal:

```ts

smallest<number>([1, 2, 3]); // legal

smallest<string>(["a", "b", "c"]); // legal

smallest([1, 2, 3]); // legal

smallest(["a", "b", "c"]); // legal

```

But (unlike using `extends`) the following would be **illegal**:

```ts

smallest<string | number>(["a", "b", "c"]); // illegal

// string|number does not extend string

// string|number does not extend number

// Therefore, string|number is not "in" oneof(string, number), so the call fails (at compile time).

// Similarly, these are illegal:

smallest<string | number>([1, 2, 3]); // illegal

smallest([1, "a", 3]); // illegal

```

## Use Cases / Examples

What this would open up is the ability to narrow *generic parameters* by putting type guards on values inside functions:

```ts

function smallestString(xs: string[]): string {

... // e.g. a natural-sort smallest string function

}

function smallestNumber(x: number[]): number {

... // e.g. a sort that compares numbers correctly instead of lexicographically

}

function smallest<T extends oneof(string, number)>(x: T[]): T {

if (x.length == 0) {

throw new Error('empty');

}

const first = x[0]; // first has type "T"

if (typeof first == "string") {

// it is either the case that T extends string or that T extends number.

// typeof (anything extending number) is not "string", so we know at this point that

// T extends string only.

return smallestString(x); // legal

}

// at this point, we know that if T extended string, it would have exited the first if.

// therefore, we can safely call

return smallestNumber(x);

}

```

This can't be safely done using `extends`, since looking at one item (even if there's *only* one item) can't tell you anything about `T`; only about that object's dynamic type.
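For comparison, here is a rough sketch of what can be expressed today without `oneof`, using overload signatures. The bodies of `smallestString` and `smallestNumber` are assumptions (the examples above elide them). The overloads reject a `(string | number)[]` argument at the call site, but the implementation still needs casts, because checking one element does not narrow the array type.

```typescript
// Hypothetical helper bodies; the issue text leaves them elided.
function smallestString(xs: string[]): string {
  return [...xs].sort((a, b) => a.localeCompare(b))[0];
}

function smallestNumber(xs: number[]): number {
  return Math.min(...xs);
}

// Overloads: callers may pass string[] or number[], but not (string | number)[].
function smallest(xs: string[]): string;
function smallest(xs: number[]): number;
function smallest(xs: string[] | number[]): string | number {
  if (xs.length === 0) {
    throw new Error("empty");
  }
  // Inspecting one element does not narrow the array type, so casts are required here.
  return typeof xs[0] === "string"
    ? smallestString(xs as string[])
    : smallestNumber(xs as number[]);
}

const smallestOfNumbers = smallest([10, 9, 100]);
const smallestOfStrings = smallest(["b", "a", "c"]);
```

The casts are exactly the part a `T extends oneof(string, number)` constraint would make unnecessary, and overloads do not scale well once several type parameters are involved.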

## Unresolved: Syntax

The actual syntax isn't really important to me; I just would like to be able to get narrowing of generic types in a principled way.

(EDIT:)

Note: despite the initial appearance, `oneof(...)` is not a type operator. The abstract syntax parse would be more like `T extends_oneof(A, B, C)`; the `oneof` and the `extends` are not separate.

## Checklist

My suggestion meets these guidelines:

* [x] This wouldn't be a breaking change in existing TypeScript / JavaScript code

* [x] This wouldn't change the runtime behavior of existing JavaScript code

* [x] This could be implemented without emitting different JS based on the types of the expressions

* [x] This isn't a runtime feature (e.g. new expression-level syntax)

(any solution will reserve new syntax, so it's not a breaking change, and it only affects flow / type narrowing so no runtime component is needed)

|

Suggestion,In Discussion

|

high

|

Critical

|

369,309,598 |

rust

|

Add filtering to `rustc_on_unimplemented` to avoid misleading suggestion

|

After #54946, the code `let x = [0..10]; for _ in x {}` will cause the following output:

```

error[E0277]: `[std::ops::Range<{integer}>; 1]` is not an iterator

--> $DIR/array-of-ranges.rs:11:14

|

LL | for _ in array_of_range {}

| ^^^^^^^^^^^^^^ if you meant to iterate between two values, remove the square brackets

|

= help: the trait `std::iter::Iterator` is not implemented for `[std::ops::Range<{integer}>; 1]`

= note: `[start..end]` is an array of one `Range`; you might have meant to have a `Range` without the brackets: `start..end`

= note: required by `std::iter::IntoIterator::into_iter`

```

Add a way to identify this case to `rustc_on_unimplemented`, in order to avoid giving this misleading/incorrect diagnostic.

|

C-enhancement,A-diagnostics,T-compiler,F-on_unimplemented

|

low

|

Critical

|

369,320,022 |

terminal

|

WINDOW_BUFFER_SIZE_EVENT generated during window scrolling

|

Windows Version 10.0.17763.1

> [SetConsoleWindowInfo](https://docs.microsoft.com/en-us/windows/console/setconsolewindowinfo) can be used to scroll the contents of the console screen buffer by shifting the position of the window rectangle without changing its size.

Starting from Windows 10 1709 (FCU) such scrolling generates a WINDOW_BUFFER_SIZE_EVENT **even though the console buffer size remains unchanged**.

This breaks our application behaviour and does not make sense for the following reasons:

- The [documentation](https://docs.microsoft.com/en-us/windows/console/window-buffer-size-record-str) explicitly says that WINDOW_BUFFER_SIZE_RECORD "describes *a change in the size* of the console screen buffer", but there's no change in this case.

- The event is generated only if the contents of the console screen buffer are scrolled via the SetConsoleWindowInfo API, but it's useless - the application already knows that the console is being scrolled because the scrolling is initiated by the application itself.

- The event is **not** generated when the user moves the scrollbar manually, so the application does not know that the console is being scrolled in that case.

- It does not happen in Legacy mode and never happened before for 20+ years.

A minimal project to reproduce the issue attached.

[BufferSizeEventBug.zip](https://github.com/Microsoft/console/files/2470845/BufferSizeEventBug.zip)

|

Work-Item,Issue-Feature,Product-Conhost,Area-Server

|

medium

|

Critical

|

369,329,359 |

vscode

|

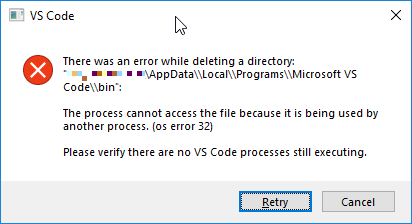

Can't Install local user update due to `\\bin` folder being used by another process

|

> There was an error while deleting a directory: `%LOCALAPPDATA%\Programs\Microsoft VS Code\bin`: the process cannot access the file because it is being used by another process…

- VSCode Version: 1.2.7.2 (user setup)

- OS Version: Windows 10

## Steps to Reproduce:

1. when an update is available, click **Install Update**

**Does this issue occur when all extensions are disabled?:** cannot try this because installation is disabled/destroyed due to incomplete setup

**Workaround:** will go back to system installs instead of [local user installs](https://code.visualstudio.com/updates/v1_26#_user-setup-for-windows).

|

bug,install-update,windows

|

high

|

Critical

|

369,343,061 |

TypeScript

|

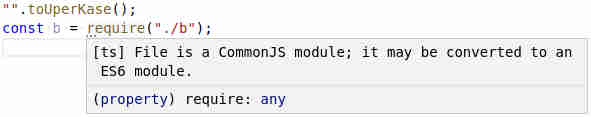

Suggestion diagnostics show in untyped JS code when regular diagnostics don't

|

**TypeScript Version:** 3.2.0-dev.20181011

**Code**

```ts

"".toUperKase();

const b = require("./b");

```

**Expected behavior:**

If we're not showing regular diagnostics, we should probably not show any suggestions either.

**Actual behavior:**

`"".toUperKase()` is fine. `require` is a real problem that we need to let the user know about right away!

|

Suggestion,In Discussion,Domain: Error Messages,Domain: TSServer

|

low

|

Minor

|

369,385,245 |

opencv

|

Poor precision on RGB to L*a*b* color conversion

|

The precision and numerical stability of RGB to L\*a\*b\* color space conversions (and back) is poor when the pixel luminance values are low. For example:

    import cv2
    import numpy as np

    # Float input: a very dark gray pixel collapses to zero
    rgbimg = np.array([[[0.001, 0.001, 0.001]]], np.float32)
    print(rgbimg)  # Outputs [[[0.001 0.001 0.001]]]
    labimg = cv2.cvtColor(rgbimg, cv2.COLOR_RGB2LAB)
    print(labimg)  # Outputs [[[0. 0. 0.]]]
    rgbimg = cv2.cvtColor(labimg, cv2.COLOR_LAB2RGB)
    print(rgbimg)  # Outputs [[[0. 0. 0.]]]

    # 8-bit input: the round trip does not return the original value
    rgbimg = np.array([[[1, 1, 1]]], np.uint8)
    print(rgbimg)  # Outputs [[[1 1 1]]]
    labimg = cv2.cvtColor(rgbimg, cv2.COLOR_RGB2LAB)
    print(labimg)  # Outputs [[[ 1 128 128]]]
    rgbimg = cv2.cvtColor(labimg, cv2.COLOR_LAB2RGB)
    print(rgbimg)  # Outputs [[[2 2 2]]]

Other image processing libraries maintain much better precision in these conversions.
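As a point of reference, the lightness of a gray pixel can be evaluated directly from the standard sRGB and CIE L\* formulas in double precision. The sketch below is not OpenCV's implementation; it assumes the input is sRGB-encoded in [0, 1] and that the white point has Y = 1, and the function names are made up for illustration. For a gray pixel R = G = B, so the relative luminance Y equals the linearized channel value.

```typescript
// sRGB decoding (gamma expansion) for a channel value in [0, 1].
function srgbToLinear(c: number): number {
  return c <= 0.04045 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

// CIE L* from relative luminance Y, assuming the white point has Y = 1.
function lightnessFromLuminance(y: number): number {
  const delta = 6 / 29;
  const f =
    y > delta * delta * delta
      ? Math.pow(y, 1 / 3)
      : y / (3 * delta * delta) + 4 / 29;
  return 116 * f - 16;
}

// Hypothetical convenience wrapper: L* of a gray pixel with R = G = B = v.
function grayLightness(v: number): number {
  return lightnessFromLuminance(srgbToLinear(v));
}

const darkGrayLightness = grayLightness(0.001); // roughly 0.07, small but not zero
```

Under these assumptions, the 0.001 gray pixel has a small but nonzero L\* of roughly 0.07, so a float conversion that returns exactly 0 has lost information.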

|

category: imgproc,priority: low

|

low

|

Minor

|

369,400,171 |

tensorflow

|

Feature Request: GPUOptions for Go binding

|

The current implementation of the Go binding cannot specify options.

The GPUOptions struct is in an internal package, and `go generate` doesn't work for the protobuf directory, so we can't specify GPUOptions for `NewSession`.

|

stat:contribution welcome,type:feature,good first issue

|

high

|

Critical

|

369,432,343 |

node

|

Warn on potentially insecure inspector options (--inspect=0.0.0.0)

|

Extracted from #21774.

By default the inspector is bound to 127.0.0.1, but the suggestion to launch it with `--inspect=0.0.0.0` is widely copy-pasted without a proper understanding of what it does. I've observed that personally in chats; also see [google](https://www.google.ca/search?q="--inspect%3D0.0.0.0").

Binding inspector to 0.0.0.0 (in fact, to anything but the loopback interface ip) allows RCE, which could be catastrophic in cases where the IP is public. The users should be informed of that.

A warning printed to the console (with corresponding documentation change) should at least somewhat mitigate this.

Note: the doc change and the c++ change can come separately.
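The check behind such a warning could look roughly like the sketch below. It is not Node's implementation (the note above says the real change would be in C++); the function names and the warning text are hypothetical, and treating `localhost` as loopback is an assumption about the local resolver.

```typescript
// Hypothetical helper: true when the host only accepts connections from the local machine.
function isLoopbackHost(host: string): boolean {
  const h = host.trim().toLowerCase();
  if (h === "localhost" || h === "::1" || h === "[::1]") {
    return true;
  }
  // The whole 127.0.0.0/8 block is reserved for IPv4 loopback.
  const match = /^127\.(\d{1,3})\.(\d{1,3})\.(\d{1,3})$/.exec(h);
  return match !== null && match.slice(1).every((part) => Number(part) <= 255);
}

// Hypothetical warning text; returns null when no warning is needed.
function inspectorBindWarning(host: string): string | null {
  if (isLoopbackHost(host)) {
    return null;
  }
  return (
    `Warning: inspector is bound to ${host}, which is not a loopback address. ` +
    "Anyone who can reach this address can execute code in this process."
  );
}

const publicBindWarning = inspectorBindWarning("0.0.0.0");
const defaultBindWarning = inspectorBindWarning("127.0.0.1");
```

Here `publicBindWarning` holds the message and `defaultBindWarning` is `null`, matching the idea that only non-loopback bindings should trigger output.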

|

help wanted,doc,security,inspector

|

low

|

Minor

|

369,473,845 |

puppeteer

|

When I try to print `msg.text` in 'console' event with type 'error', I got `JSHandle@error`

|

<!--

STEP 1: Are you in the right place?

- For general technical questions or "how to" guidance, please search StackOverflow for questions tagged "puppeteer" or create a new post.

https://stackoverflow.com/questions/tagged/puppeteer

- For issues or feature requests related to the DevTools Protocol (https://chromedevtools.github.io/devtools-protocol/), file an issue there:

https://github.com/ChromeDevTools/devtools-protocol/issues/new.

- Problem in Headless Chrome? File an issue against Chromium's issue tracker:

https://bugs.chromium.org/p/chromium/issues/entry?components=Internals%3EHeadless&blocking=705916

For issues, feature requests, or setup troubles with Puppeteer, file an issue right here!

-->

### Steps to reproduce

**Tell us about your environment:**

* Puppeteer version: 1.9.0

* Platform / OS version: macos

* URLs (if applicable):

* Node.js version: 8.11.3

**What steps will reproduce the problem?**

_Please include code that reproduces the issue._

1. Simply add this code to your page

```javascript

try {

// try to print a value that doesn't exist

// and it will throw an error

console.log(a)

} catch (e) {

// catch the error and print this with `console.error`

console.error(e)

}

```

2. puppeteer script:

```javascript

...

page.on('console', msg => {

console.log(msg.text())

})

...

```

**What is the expected result?**

The exact error object like `ReferenceError: a is not defined...`

**What happens instead?**

It prints `JSHandle@error`

|

feature,chromium,confirmed

|

medium

|

Critical

|

369,494,510 |

TypeScript

|

Improve `Array.from(tuple)` and `[...tuple]`

|

<!-- 🚨 STOP 🚨 𝗦𝗧𝗢𝗣 🚨 𝑺𝑻𝑶𝑷 🚨

Half of all issues filed here are duplicates, answered in the FAQ, or not appropriate for the bug tracker. Please read the FAQ first, especially the "Common Feature Requests" section.

-->

## Suggestion

<!-- A summary of what you'd like to see added or changed -->

`Array.from(tuple)` and `[...tuple]` should preserve the individual types that make up the tuple.

## Proposal

### `Array.from` (Simple)

Add this overload to `Array.from`:

```typescript

interface ArrayConstructor {

from<T extends any[]> (array: T): T

}

```

[demo 1](https://www.typescriptlang.org/play/#src=interface%20ArrayConstructor%20%7B%0D%0A%20%20%20%20from%3CT%20extends%20any%5B%5D%3E%20(array%3A%20T)%3A%20T%0D%0A%7D%0D%0A%0D%0Aconst%20a%3A%20%5B0%2C%201%2C%202%5D%20%3D%20%5B0%2C%201%2C%202%5D%0D%0Aconst%20b%20%3D%20Array.from(a)%20%2F%2F%20Type%3A%20%5B0%2C%201%2C%202%5D)

**Caveats:**

* The above definition preserves everything, including unrelated properties that do not belong to `Array.prototype`, whilst the actual `Array.from` discards them (for instance, if the input has `foo: 'bar'`, the output array type will also have `foo: 'bar'`).

### `Array.from` (Complete)

Fix above caveats.

```typescript

interface ArrayConstructor {

from<T extends any[]> (array: T): CloneArray<T>

}

type CloneArray<T extends any[]> = {

[i in number & keyof T]: T[i]

} & {

length: T['length']

} & any[]

```

[demo 2](https://www.typescriptlang.org/play/#src=interface%20ArrayConstructor%20%7B%0D%0A%20%20%20%20from%3CT%20extends%20any%5B%5D%3E%20(array%3A%20T)%3A%20CloneArray%3CT%3E%0D%0A%7D%0D%0A%0D%0Atype%20CloneArray%3CT%20extends%20any%5B%5D%3E%20%3D%20%7B%0D%0A%20%20%20%20%5Bi%20in%20number%20%26%20keyof%20T%5D%3A%20T%5Bi%5D%0D%0A%7D%20%26%20%7B%0D%0A%20%20%20%20length%3A%20T%5B'length'%5D%0D%0A%7D%20%26%20any%5B%5D%0D%0A%0D%0Aconst%20a%3A%20%5B0%2C%201%2C%202%5D%20%3D%20%5B0%2C%201%2C%202%5D%0D%0Aconst%20b%20%3D%20Array.from(a)%20%2F%2F%20Type%3A%20CloneArray%3C%5B0%2C%201%2C%202%5D%3E%0D%0Aconst%20l%20%3D%20a.length%20%2F%2F%20Type%3A%203%0D%0Aconst%20%5Bx%2C%20y%2C%20z%5D%20%3D%20a%20%2F%2F%20Type%3A%20%5B0%2C%201%2C%202%5D%0D%0Aa.map(x%20%3D%3E%20x)%20%2F%2F%20(0%20%7C%201%20%7C%202)%5B%5D)

### Spread operator

```typescript

declare const tuple: [0, 1, 2] & { foo: 'bar' }

// $ExpectType [string, string, 0, 1, 2, string, string]

const clone = ['a', 'b', ...tuple, 'c', 'd']

```

Note that `typeof clone` does not contain `{ foo: 'bar' }`.

## Use Cases

<!--

What do you want to use this for?

What shortcomings exist with current approaches?

-->

* Clone a tuple without losing type information.

## Examples

<!-- Show how this would be used and what the behavior would be -->

### Clone a tuple

```typescript

const a: [0, 1, 2] = [0, 1, 2]

// $ExpectType [0, 1, 2]

const b = Array.from(a)

```

### Clone a generic tuple

```typescript

function clone<T extends any[]> (a: T): T {

return Array.from(a)

}

```
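Until `Array.from` itself preserves tuple types, a small user-land helper can approximate the behaviour. The sketch below assumes a compiler with variadic tuple types (TypeScript 4.0 or later, which is newer than the version this suggestion was written against); `cloneTuple` is a hypothetical name, not part of `lib.d.ts`.

```typescript
// Hypothetical helper: copies an array or tuple while keeping the per-element types.
// The return type [...T] carries only the element types, not extra properties on T.
function cloneTuple<T extends readonly unknown[]>(tuple: T): [...T] {
  return [...tuple];
}

const original: [0, 1, 2] = [0, 1, 2];

// Inferred as [0, 1, 2] rather than (0 | 1 | 2)[].
const copy = cloneTuple(original);

// Element access keeps its literal type: `last` has type 2.
const last = copy[2];
```

The copy is a new array at runtime, so mutating `copy` leaves `original` untouched.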

## Checklist

My suggestion meets these guidelines:

* [x] This wouldn't be a breaking change in existing TypeScript / JavaScript code

* [x] This wouldn't change the runtime behavior of existing JavaScript code

* [x] This could be implemented without emitting different JS based on the types of the expressions

* [x] This isn't a runtime feature (e.g. new expression-level syntax)

|

Suggestion,In Discussion,Domain: lib.d.ts

|

low

|

Critical

|

369,521,758 |

react

|

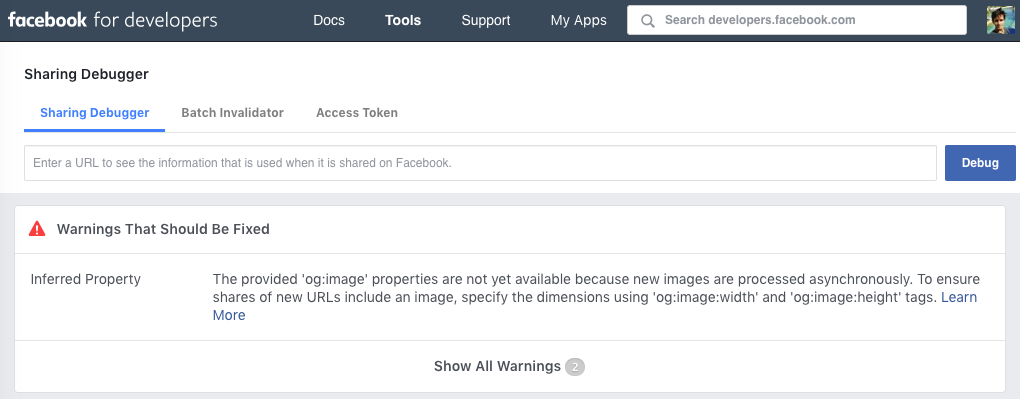

head > meta > content escaping issue

|

<!--

Note: if the issue is about documentation or the website, please file it at:

https://github.com/reactjs/reactjs.org/issues/new

-->

**Do you want to request a *feature* or report a *bug*?**

I'm guessing it's a bug.

**What is the current behavior?**

The following source code,

```jsx

<meta property="og:image" content="https://onepixel.imgix.net/60366a63-1ac8-9626-1df8-9d8d5e5e2601_1000.jpg?auto=format&q=80&mark=watermark%2Fcenter-v5.png&markalign=center%2Cmiddle&h=500&w=500&s=60ec785603e5f71fe944f76b4dacef08" />

```

, is being escaped once server side rendered:

```jsx

<meta property="og:image" content="https://onepixel.imgix.net/60366a63-1ac8-9626-1df8-9d8d5e5e2601_1000.jpg?auto=format&amp;q=80&amp;mark=watermark%2Fcenter-v5.png&amp;markalign=center%2Cmiddle&amp;h=500&amp;w=500&amp;s=60ec785603e5f71fe944f76b4dacef08"/>

```

You can reproduce the behavior like this:

```jsx

const React = require("react");

const ReactDOMServer = require("react-dom/server");

const http = require("http");

const doc = React.createElement("html", {

children: [

React.createElement("head", {

children: React.createElement("meta", {

property: "og:image",

content:

"https://onepixel.imgix.net/60366a63-1ac8-9626-1df8-9d8d5e5e2601_1000.jpg?auto=format&q=80&mark=watermark%2Fcenter-v5.png&markalign=center%2Cmiddle&h=500&w=500&s=60ec785603e5f71fe944f76b4dacef08"

})

}),

React.createElement("body", { children: "og:image" })

]

});

//create a server object:

http

.createServer(function(req, res) {

res.write("<!DOCTYPE html>" + ReactDOMServer.renderToStaticMarkup(doc)); //write a response to the client

res.end(); //end the response

})

.listen(8080); //the server object listens on port 8080

```

editor: https://codesandbox.io/s/my299jk7qp

output : https://my299jk7qp.sse.codesandbox.io/

**What is the expected behavior?**

I would expect the content not being escaped. It's related to https://github.com/zeit/next.js/issues/2006#issuecomment-355917446.
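For context, an HTML parser decodes `&amp;` inside an attribute value back to `&`, so a consumer that parses the markup sees the original URL, while a consumer that reads the raw attribute text without decoding it sees the escaped form. The sketch below illustrates that round trip; both functions are simplified stand-ins written for this example, not React's escaping routine and not a full HTML entity decoder.

```typescript
// Simplified stand-in for attribute escaping during server rendering.
function escapeAttribute(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Simplified stand-in for what an HTML parser does when reading the attribute.
// "&amp;" is decoded last so that "&amp;lt;" is not decoded twice.
function decodeAttribute(value: string): string {
  return value
    .replace(/&quot;/g, '"')
    .replace(/&lt;/g, "<")
    .replace(/&gt;/g, ">")
    .replace(/&amp;/g, "&");
}

const imageUrl = "https://onepixel.imgix.net/example.jpg?auto=format&q=80&h=500&w=500";
const renderedValue = escapeAttribute(imageUrl);
const parsedValue = decodeAttribute(renderedValue);
```

In this sketch `renderedValue` contains `&amp;q=80`, and `parsedValue` is identical to `imageUrl` again. The shortened URL is a placeholder, not the one from the report.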

I'm using the `og:image` meta element so my pages can have nice previews within Facebook :).

**Which versions of React, and which browser / OS are affected by this issue? Did this work in previous versions of React?**

16.5.2

|

Component: Server Rendering,Type: Needs Investigation

|

high

|

Critical

|

369,567,305 |

go

|

cmd/go: allow replacement version to be omitted if the target module has a required version

|

Currently you must add a version when entering a non-filesystem (remote) replacement for a module. A foolish attempt to put `replace github.com/fsnotify/fsnotify => gitmirror.corp.xyz.com/fsnotify/fsnotify` provokes a rebuke from `go mod verify`:

`go.mod:42: replacement module without version must be directory path (rooted or starting with ./ or ../)`

- so you have to fix it to be: `replace github.com/fsnotify/fsnotify => gitmirror.corp.xyz.com/fsnotify/fsnotify v1.4.7`.

However, there _is_ already a version specification for that package in the `require` statement of `go.mod`, for example:

`require github.com/fsnotify/fsnotify v1.4.7`

This seems to be the reasonable default value for the missing version in the `replace` directive for the same package - "if no replacement version is given, use the same as in the `require` directive for that specific package".

What would be especially nice is that when upgrading a package such as github.com/fsnotify/fsnotify to the future v1.4.8 version, one would not need to first run `go get -u github.com/fsnotify/fsnotify` and then look up the new version and manually update the old version to the new one in the `replace` section (or worse, forget to do it and end up with an unintended replacement with the old version).
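The proposed defaulting rule can be sketched as a small lookup. This is only an illustration of the rule, written in TypeScript rather than as a patch to `cmd/go`; the `ReplaceDirective` shape and the function name are hypothetical, and filesystem replacements (which never carry a version) are left out.

```typescript
// Hypothetical model of a remote `replace` directive in go.mod.
interface ReplaceDirective {
  from: string;      // module path being replaced
  to: string;        // replacement module path
  version?: string;  // replacement version, possibly omitted
}

// Proposed rule: when the replacement has no version, fall back to the
// version listed for the original module in the `require` section.
function resolveReplaceVersion(
  requires: Map<string, string>,
  directive: ReplaceDirective
): string {
  if (directive.version !== undefined) {
    return directive.version;
  }
  const required = requires.get(directive.from);
  if (required === undefined) {
    throw new Error(
      `replacement ${directive.to} has no version and ${directive.from} is not required`
    );
  }
  return required;
}

const requires = new Map([["github.com/fsnotify/fsnotify", "v1.4.7"]]);
const mirroredVersion = resolveReplaceVersion(requires, {
  from: "github.com/fsnotify/fsnotify",
  to: "gitmirror.corp.xyz.com/fsnotify/fsnotify",
});
```

With this rule, bumping the `require` line to a newer version would automatically carry over to the mirror, which is the upgrade scenario described above.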

@thepudds said on Slack that he wanted to suggest this as well. @bcmills @rsc - does it seem reasonable to you?

|

NeedsInvestigation,modules

|

medium

|

Critical

|

369,588,783 |

go

|

net/http: add CONNECT bidi example

|

https://go-review.googlesource.com/c/go/+/123156 is removing some documentation from http.Transport that says not to use CONNECT requests with Transport because it's adding CONNECT support.

We should also add examples. CL 123156 has a test which is close at least, but could be cleaned up and be made less unit-testy.

|

Documentation,help wanted,NeedsFix

|

low

|

Major

|

369,601,132 |

TypeScript

|

Computed Properties aren't bound correctly during Object/Class evaluation

|

<!-- 🚨 STOP 🚨 𝗦𝗧𝗢𝗣 🚨 𝑺𝑻𝑶𝑷 🚨

Half of all issues filed here are duplicates, answered in the FAQ, or not appropriate for the bug tracker. Even if you think you've found a *bug*, please read the FAQ first, especially the Common "Bugs" That Aren't Bugs section!

Please help us by doing the following steps before logging an issue:

* Search: https://github.com/Microsoft/TypeScript/search?type=Issues

* Read the FAQ: https://github.com/Microsoft/TypeScript/wiki/FAQ

Please fill in the *entire* template below.

-->

<!-- Please try to reproduce the issue with `typescript@next`. It may have already been fixed. -->

**TypeScript Version:** 3.2.0-dev.20181011

<!-- Search terms you tried before logging this (so others can find this issue more easily) -->

**Search Terms:** computed property expression

**Code**

```ts

const classes = [];

for (let i = 0; i <= 10; ++i) {

classes.push(

class A {

[i] = "my property";

}

);

}

for (const clazz of classes) {

console.log(Object.getOwnPropertyNames(new clazz()));

}

```

**Expected behavior:** The log statements indicate that each class in `classes` has a different property name (`i` should be evaluated at the time of the class evaluation and all instances of that class should have a property name corresponding to that evaluation of `i`).

**Actual behavior:** Compiled code logs:

> [ '10' ]

> [ '10' ]

> [ '10' ]

> [ '10' ]

> [ '10' ]

> [ '10' ]

> [ '10' ]

> [ '10' ]

> [ '10' ]

> [ '10' ]

> [ '10' ]

[**Playground Link**](https://www.typescriptlang.org/play/index.html#src=const%20classes%20%3D%20%5B%5D%3B%0D%0Afor%20(let%20i%20%3D%200%3B%20i%20%3C%3D%2010%3B%20%2B%2Bi)%20%7B%0D%0A%20%20classes.push(%0D%0A%20%20%20%20class%20A%20%7B%0D%0A%20%20%20%20%20%20%5Bi%5D%20%3D%20%22my%20property%22%3B%0D%0A%20%20%20%20%7D%0D%0A%20%20)%3B%0D%0A%7D%0D%0Afor%20(const%20clazz%20of%20classes)%20%7B%0D%0A%20%20console.log(Object.getOwnPropertyNames(new%20clazz()))%3B%0D%0A%7D)

|

Bug,Help Wanted,Effort: Moderate,Domain: Transforms

|

low

|

Critical

|

369,624,255 |

pytorch

|

Request for stripped down / inference only pytorch wheels

|

## 🚀 Feature

Creating a precompiled pytorch wheel file that is trimmed down, inference only version.

## Motivation

Right now pytorch wheels are on average ~400MB zipped -> 1.+ GB unzipped, which is not a big deal for training & prototyping as generally the wheels are only installed once - but that's not the case for productionizing using service providers like sagemaker / algorithmia / etc.

## Pitch

If we can create a trimmed down, potentially inference only capable wheel file - we can directly improve the load time performance of these algorithms in serverless algorithm delivery environments, which could directly improve pytorch's ability to compete in the HPC serverless marketplace.

## Alternatives

We could also provide a clear way for users to create their own wheels, by simplifying and documenting the build process somewhat to enable optional features during the compilation process.

## Additional context

Full disclosure, I'm an employee at Algorithmia and this change would make my life much easier :smile:

cc @malfet @seemethere @walterddr

|

module: build,triaged

|

low

|

Major

|

369,669,027 |

opencv

|

opencv_createsamples app can fail if negative images are different sizes

|

##### System information (version)

- OpenCV => 3.4.3

- Operating System / Platform => Windows 64 Bit

- Compiler => Visual Studio 2017

##### Detailed description

In the opencv_createsamples app, the cvCreateTestSamples() for loop calculates maxscale only once if it is initially passed with a negative value. This works fine if all of the negative training images are the same size, but can cause an assertion to be raised if a smaller image is encountered. (width or height becomes too large and results in x or y being negative)

#####

for( i = 0; i < count; i++ )

{

icvGetNextFromBackgroundData( cvbgdata, cvbgreader );

if( maxscale < 0.0 ) /// maxscale is only < 0 once and is never recalculated

{

maxscale = MIN( 0.7F * cvbgreader->src.cols / winwidth,

0.7F * cvbgreader->src.rows / winheight );

}

if( maxscale < 1.0F ) continue;

scale = theRNG().uniform( 1.0F, (float)maxscale );

width = (int) (scale * winwidth);

height = (int) (scale * winheight);

x = (int) ( theRNG().uniform( 0.1, 0.8 ) * (cvbgreader->src.cols - width));

y = (int) ( theRNG().uniform( 0.1, 0.8 ) * (cvbgreader->src.rows - height));

//////

// During loop execution, cvbgreader->src.cols and cvbgreader->src.rows may

// change, but maxscale does not.

// width or height could thus be greater than src.cols or src.rows resulting in

// negative x or y values which causes an assertion when icvPlaceDistortedSample()

// is called

//////

if( invert == CV_RANDOM_INVERT )

{

inverse = theRNG().uniform( 0, 2 );

}

icvPlaceDistortedSample( cvbgreader->src(Rect(x, y, width, height)), inverse, maxintensitydev,

maxxangle, maxyangle, maxzangle,

1, 0.0, 0.0, &data );
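One possible direction for a fix is to derive the scale limit from each background image instead of reusing the first one. The sketch below shows only that calculation, in TypeScript rather than C++; `effectiveMaxScale` is a hypothetical name, and clamping a user-supplied `maxscale` to the current image is an assumption, not something the report proposes.

```typescript
// Largest scale at which a winWidth x winHeight window still fits inside
// 70% of the current background image (same 0.7 factor as the loop above).
function fitScale(cols: number, rows: number, winWidth: number, winHeight: number): number {
  return Math.min((0.7 * cols) / winWidth, (0.7 * rows) / winHeight);
}

// Hypothetical per-image limit: a negative requested value means "derive it
// from the image"; a non-negative one is clamped to what the image can hold.
function effectiveMaxScale(
  requestedMaxScale: number,
  cols: number,
  rows: number,
  winWidth: number,
  winHeight: number
): number {
  const fit = fitScale(cols, rows, winWidth, winHeight);
  return requestedMaxScale < 0 ? fit : Math.min(requestedMaxScale, fit);
}

// A large image followed by a small one: each gets its own limit.
const largeImageLimit = effectiveMaxScale(-1, 640, 480, 24, 24);
const smallImageLimit = effectiveMaxScale(-1, 30, 30, 24, 24);
```

Because the limit is recomputed for every image, a small background yields a limit below 1.0 and would be skipped by the existing `maxscale < 1.0F` check instead of producing a negative `x` or `y`.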

|

feature,category: apps

|

low

|

Minor

|

369,675,744 |

rust

|

Expected identifier error hides other expected tokens

|

```

error: expected identifier, found `,`

--> src/lib.rs:5:18

|

5 | #[cfg_attr(all(),,)]

| ^ expected identifier

```

I would expect the error message to say ``^ expected identifier or `)` ``. It doesn't because this diagnostic is created by [`expected_ident_found`](https://github.com/rust-lang/rust/blob/master/src/libsyntax/parse/parser.rs#L797) which ignores the other expected tokens. I wonder if the suggestion can be added to [`expect_one_of`](https://github.com/rust-lang/rust/blob/master/src/libsyntax/parse/parser.rs#L681) with the rest of the logic.

The same seems to happen for "expected type" e.g. `impl;` saying only "expected type" instead of also expecting a `<` for generics. I'm not sure if I should open another issue for that.

|

C-enhancement,A-diagnostics,T-compiler,D-papercut

|

low

|

Critical

|

369,754,297 |

flutter

|

Using copyWith in a degenerative scenario

|

NOTE: this feels like it might be a case of "too bad, too late" in terms of getting any changes into flutter, but I want to raise it anyway to see what feedback I can get.

It appears to be impossible to use `copyWith` in a degenerative scenario. Suppose, for instance, you have an `InputDecoration` and you want to ensure the `errorText` is `null`:

```dart

final effectiveDecoration = decoration.copyWith(errorText: null);

```

This won't have the desired effect because the `copyWith` method does this:

```dart

errorText: errorText ?? this.errorText,

```

So it ignores the request because it can't distinguish between wanting to set `errorText` to `null` and not wanting to set it at all. And in this particular case, one can't even work around it by setting `errorText` to `""` because that still causes an (empty) error message to appear.

The only workaround that I can find is to literally copy the entire object and set the `errorText` to `null`:

```dart

final effectiveDecoration = InputDecoration(

errorText: null,

border: decoration.border,

contentPadding: decoration.contentPadding,

counterStyle: decoration.counterStyle,

counterText: decoration.counterText,

disabledBorder: decoration.disabledBorder,

enabled: decoration.enabled,

enabledBorder: decoration.enabledBorder,

errorBorder: decoration.errorBorder,

errorMaxLines: decoration.errorMaxLines,

errorStyle: decoration.errorStyle,

fillColor: decoration.fillColor,

filled: decoration.filled,

focusedBorder: decoration.focusedBorder,

focusedErrorBorder: decoration.focusedErrorBorder,

helperStyle: decoration.helperStyle,

helperText: decoration.helperText,

hintStyle: decoration.hintStyle,

hintText: decoration.hintText,

icon: decoration.icon,

isDense: decoration.isDense,

labelStyle: decoration.labelStyle,

labelText: decoration.labelText,

prefix: decoration.prefix,

prefixIcon: decoration.prefixIcon,

prefixStyle: decoration.prefixStyle,

prefixText: decoration.prefixText,

suffix: decoration.suffix,

suffixIcon: decoration.suffixIcon,

suffixStyle: decoration.suffixStyle,

suffixText: decoration.suffixText,

);

```

This is obviously super clumsy and not future-proof (fields added to `InputDecoration` will not be copied and won't break the build).

Suggestions?
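The underlying ambiguity is that `null` has to mean both "not provided" and "set to null". The sketch below shows the general idea of separating those two cases, in TypeScript rather than Dart; it is an analogy, not a drop-in Flutter fix, since TypeScript can test whether a key is present while Dart would need an explicit sentinel or wrapper value. The `Decoration` type is a made-up two-field stand-in for `InputDecoration`.

```typescript
// Made-up stand-in for InputDecoration with two nullable fields.
interface Decoration {
  errorText: string | null;
  hintText: string | null;
}

// Key presence, not the value, decides whether a field is overridden,
// so an explicit null can clear a field.
function copyWith(base: Decoration, overrides: Partial<Decoration>): Decoration {
  return {
    errorText: "errorText" in overrides ? (overrides.errorText ?? null) : base.errorText,
    hintText: "hintText" in overrides ? (overrides.hintText ?? null) : base.hintText,
  };
}

const decoration: Decoration = { errorText: "Required", hintText: "Name" };

// Explicit null clears the field; omitted fields are kept.
const cleared = copyWith(decoration, { errorText: null });
const unchanged = copyWith(decoration, {});
```

Here `cleared.errorText` is `null` while `cleared.hintText` is still `"Name"`, and `unchanged` keeps both original values.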

|

framework,c: proposal,a: null-safety,P2,team-framework,triaged-framework

|

low

|

Critical

|

369,776,297 |

rust

|

Missed optimization: layout optimized enums produce slow derived code

|

Changing

```rust

const FOO_A: u32 = 0xFFFF_FFFF;

const FOO_B: u32 = 0xFFFF_FFFE;

const BAR_X: u32 = 0;

const BAR_Y: u32 = 1;

const BAR_Z: u32 = 2;

struct Foo { u: u32 }

```

https://play.rust-lang.org/?gist=9d1ff0355fbfabbc0c47f15e78e94687&version=nightly&mode=debug&edition=2015

to

```rust

pub enum Bar {

X, Y, Z

}

enum Foo {

A,

B,

Other(Bar),

}

```

https://play.rust-lang.org/?gist=faf6db37cdc627b1c5f8d582ad5c6779&version=nightly&mode=release&edition=2015

While this will result in pretty much the same layout as before, any derived code on `Foo` will now generate less optimal code. Apparently llvm can't manage to clean that up.

The llvm IR for the first playground link is

```llvm

define zeroext i1 @_ZN10playground3foo17h7604dbf314c89374E(i32, i32) unnamed_addr #0 {

start:

%2 = icmp eq i32 %0, %1

ret i1 %2

}

```

while the one for the second link is

```llvm

define zeroext i1 @_ZN10playground3foo17ha662001f5519a11dE(i32, i32) unnamed_addr #0 {

start:

%2 = add nsw i32 %0, -3

%3 = icmp ult i32 %2, 2

%narrow.i = select i1 %3, i32 %2, i32 2

%4 = add nsw i32 %1, -3

%5 = icmp ult i32 %4, 2

%narrow8.i = select i1 %5, i32 %4, i32 2

%6 = icmp eq i32 %narrow.i, %narrow8.i

br i1 %6, label %bb6.i, label %"_ZN56_$LT$playground..Foo$u20$as$u20$core..cmp..PartialEq$GT$2eq17h647dd5d9c0e8f1fcE.exit"

bb6.i: ; preds = %start

%7 = icmp eq i32 %0, %1

%not.or.cond.i = or i1 %3, %5

%spec.select.i = or i1 %7, %not.or.cond.i

br label %"_ZN56_$LT$playground..Foo$u20$as$u20$core..cmp..PartialEq$GT$2eq17h647dd5d9c0e8f1fcE.exit"

"_ZN56_$LT$playground..Foo$u20$as$u20$core..cmp..PartialEq$GT$2eq17h647dd5d9c0e8f1fcE.exit": ; preds = %start, %bb6.i

%8 = phi i1 [ %spec.select.i, %bb6.i ], [ false, %start ]

ret i1 %8

}

```

We can't improve the derives, because the derives on `Foo` can't see the definition of `Bar`.

|

A-LLVM,I-slow,A-codegen,T-compiler

|

low

|

Critical

|

369,806,614 |

rust

|

Functions still get personality function attached to them when landing pads are disabled

|

Compiling with `-Cpanic=abort` or `-Zno-landing-pads` should make associated personality functions entirely unnecessary, yet they still somehow end up getting attached to functions generated with the "current" CG.

Consider for example this function:

```rust

pub fn fails2(a: &mut u32, b: &mut u32) -> i32 {

::std::mem::swap(a, b);

2 + 2

}

```

which when compiled (with or without optimisations) with `-Cpanic=abort`, will contain no personality functions in `1.27.1` but will contain them starting with `1.28`.

<details>

<summary>1.27.1</summary>

```llvm

define i32 @_ZN7example6fails217h8dd58cf9651f12a5E(i32* noalias nocapture dereferenceable(4) %a, i32* noalias nocapture dereferenceable(4) %b) unnamed_addr #0 !dbg !4 {

start:

%0 = load i32, i32* %a, align 1, !dbg !7, !alias.scope !30, !noalias !33

%1 = load i32, i32* %b, align 1, !dbg !35, !alias.scope !33, !noalias !30

store i32 %1, i32* %a, align 1, !dbg !35, !alias.scope !30, !noalias !33

store i32 %0, i32* %b, align 1, !dbg !36, !alias.scope !33, !noalias !30

ret i32 4, !dbg !37

}

```

</details>

<details>

<summary>1.28</summary>

```llvm

define i32 @_ZN7example6fails217h203cd5a0beec258bE(i32* noalias nocapture dereferenceable(4) %a, i32* noalias nocapture dereferenceable(4) %b) unnamed_addr #0 personality i32 (i32, i32, i64, %"unwind::libunwind::_Unwind_Exception"*, %"unwind::libunwind::_Unwind_Context"*)* @rust_eh_personality !dbg !4 {

start:

%tmp.0.copyload.i.i.i = load i32, i32* %a, align 4, !dbg !7, !alias.scope !21, !noalias !24

%0 = load i32, i32* %b, align 4, !dbg !26, !alias.scope !24, !noalias !21

store i32 %0, i32* %a, align 4, !dbg !26, !alias.scope !21, !noalias !24

store i32 %tmp.0.copyload.i.i.i, i32* %b, align 4, !dbg !28, !alias.scope !24, !noalias !21

ret i32 4, !dbg !31

}

```

</details>

This is technically a codegen regression, albeit a very innocuous one.

|

A-codegen,T-compiler

|

low

|

Minor

|

369,817,335 |

go

|

encoding/json: confusing errors when unmarshaling custom types

|

In go1.11

There is a minor issue with custom type unmarshaling using "encoding/json". As far as I understand the documentation (and also looking through the internal code), the requirements are:

- when processing map keys, the custom type needs to support UnmarshalText

- when processing values, the custom type needs to support UnmarshalJSON

When both of these interfaces are supported, everything is fine. Otherwise, the produced error messages are a bit cryptic and in some cases really confusing. I believe adding a test for `Implements(jsonUnmarshallerType)` inside `encoding/json/decode.go: func (d *decodeState) object(v reflect.Value)` would make things more consistent.

Here is the code (try commenting out `UnmarshalText` and/or/xor `UnmarshalJSON` methods):

``` go

package main

import (

"encoding/json"

"fmt"

)

type Enum int

const (

Enum1 = Enum(iota + 1)

Enum2

)

func (enum Enum) String() string {

switch enum {

case Enum1: return "Enum1"

case Enum2: return "Enum2"

default: return "<INVALID ENUM>"

}

}

func (enum *Enum) unmarshal(b []byte) error {

var s string

err := json.Unmarshal(b, &s)

if err != nil { return err }

switch s {

case "ONE": *enum = Enum1

case "TWO": *enum = Enum2

default: return fmt.Errorf("Invalid Enum value '%s'", s)

}

return nil

}

func (enum *Enum) UnmarshalText(b []byte) error {

return enum.unmarshal(b)

}

func (enum *Enum) UnmarshalJSON(b []byte) error {

return enum.unmarshal(b)

}

func main() {

data := []byte(`{"ONE":"ONE", "TWO":"TWO"}`)

var ss map[string]string

err := json.Unmarshal(data, &ss)

if err != nil { fmt.Println("ss failure:", err) } else { fmt.Println("ss success:", ss) }

var se map[string]Enum

err = json.Unmarshal(data, &se)

if err != nil { fmt.Println("se failure:", err) } else { fmt.Println("se success:", se) }

var es map[Enum]string

err = json.Unmarshal(data, &es)

if err != nil { fmt.Println("es failure:", err) } else { fmt.Println("es success:", es) }

var ee map[Enum]Enum

err = json.Unmarshal(data, &ee)

if err != nil { fmt.Println("ee failure:", err) } else { fmt.Println("ee success:", ee) }

// Output when both UnmarshalText and UnmarshalJSON are defined:

// ss success: map[ONE:ONE TWO:TWO]

// se success: map[ONE:Enum1 TWO:Enum2]

// es success: map[Enum1:ONE Enum2:TWO]

// ee success: map[Enum1:Enum1 Enum2:Enum2]

// Output when UnmarshalJSON is commented out:

// ss success: map[ONE:ONE TWO:TWO]

// se failure: invalid character 'T' looking for beginning of value

// es failure: invalid character 'O' looking for beginning of value

// ee failure: invalid character 'T' looking for beginning of value

// Output when UnmarshalText is commented out:

// ss success: map[ONE:ONE TWO:TWO]

// se success: map[ONE:Enum1 TWO:Enum2]

// es failure: json: cannot unmarshal number ONE into Go value of type main.Enum

// ee failure: json: cannot unmarshal number ONE into Go value of type main.Enum

// In more complex cases, having UnmarshalText undefined also produced this

// error message: JSON decoder out of sync - data changing underfoot?

}

```
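One detail that may explain the `invalid character` errors above: `encoding/json` documents that `UnmarshalText` is called with the unquoted form of a JSON string, whereas `UnmarshalJSON` receives the raw JSON including the quotes, so a shared helper that calls `json.Unmarshal` on its input only fits the latter. A minimal sketch, assuming a hypothetical `Level` type (not the `Enum` from the report) that implements only `UnmarshalText` and parses the bytes directly:

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Level is a hypothetical enum-like type used only for this sketch.
type Level int

const (
	LevelOne Level = iota + 1
	LevelTwo
)

// UnmarshalText receives the unquoted text (ONE, not "ONE"),
// so it compares the bytes directly instead of calling json.Unmarshal.
func (l *Level) UnmarshalText(b []byte) error {
	switch string(b) {
	case "ONE":
		*l = LevelOne
	case "TWO":
		*l = LevelTwo
	default:
		return fmt.Errorf("invalid Level value %q", b)
	}
	return nil
}

// decodeLevels decodes a JSON object whose keys and string values are Levels.
func decodeLevels(data []byte) (map[Level]Level, error) {
	var m map[Level]Level
	err := json.Unmarshal(data, &m)
	return m, err
}

func main() {
	m, err := decodeLevels([]byte(`{"ONE":"TWO","TWO":"ONE"}`))
	fmt.Println(m, err)
}
```

With this shape the same method serves both map keys and string values, which sidesteps the mixed error messages rather than fixing them in the decoder.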

|

NeedsDecision

|

medium

|

Critical

|

369,834,512 |

vscode

|

Open next build error file+line based on regex parsing of build output.

|

Edit: The proposed ability to jump to first/next build error should not be part of the "Problems" explorer system, since this requires code analysis. **The command should be based solely on regex parsing of the build output, and should work in absence of any language support aka extensions.** I shouldn't have to run 300mb and jvm in the background just to jump to build errors.

When I run a build task to compile my code, I would like a keyboard shortcut that will open the location of the first/next compile error by opening the file and jumping to the line.

This command should ignore non-build-related errors and warnings (from linters / IntelliSense). I am not interested in general VSCode problem noise, I just want to be able to quickly and easily address compile errors.

It should start at the first compile error in the terminal, and cycle from there. Basically, just go see how all other editors in the world jump to build errors with a shortcut.....do that.

It's a pretty basic and necessary feature.

1. Hit build.

2. Jump to error....

The problems view is not adequate.

**1. It requires the language support plugin to be installed.** This is totally unnecessary, as regex parsing of the build output is simpler, lighter, and tried tested and true.

**2. It is more than a single hotkey press.** In Sublime, when I build, I press a single shortcut to jump to the error, then I fix the error, then I press a single shortcut to jump to the next error....rinse and repeat.

In VSCode I...

-press a shortcut to focus problems

-I press down to highlight error

-I press ENTER to focus the editor and then fix the error

-I press shortcut to focus problems view again

It's so much more work!

**3. It does not cycle problems in order.** This is important, as when compiling, often the first build error is also causing the subsequent errors.
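The regex-only approach requested here can be sketched independently of any editor. The following is a minimal illustration, not VS Code's task or problem-matcher implementation: it assumes compiler output in the common `file:line:column: message` shape, and the pattern and the names `buildError` and `parseBuildErrors` are hypothetical.

```go
package main

import (
	"bufio"
	"fmt"
	"regexp"
	"strconv"
	"strings"
)

// buildError is a hypothetical record for one location found in build output.
type buildError struct {
	File    string
	Line    int
	Column  int
	Message string
}

// errorPattern assumes "file:line:column: message"; real tools vary,
// so a practical version would make this pattern configurable.
var errorPattern = regexp.MustCompile(`^([^:\s]+):(\d+):(\d+):\s*(.*)$`)

// parseBuildErrors scans the output line by line and returns matches in the
// order they appear, so the first reported error stays first.
func parseBuildErrors(output string) []buildError {
	var errs []buildError
	sc := bufio.NewScanner(strings.NewReader(output))
	for sc.Scan() {
		m := errorPattern.FindStringSubmatch(sc.Text())
		if m == nil {
			continue // not an error location, e.g. progress output
		}
		line, _ := strconv.Atoi(m[2])
		col, _ := strconv.Atoi(m[3])
		errs = append(errs, buildError{File: m[1], Line: line, Column: col, Message: m[4]})
	}
	return errs
}

func main() {
	out := "compiling...\nmain.c:12:5: error: expected ';'\nutil.c:3:1: error: unknown type\n"
	for _, e := range parseBuildErrors(out) {
		fmt.Printf("%s:%d:%d %s\n", e.File, e.Line, e.Column, e.Message)
	}
}
```

A "jump to next error" command would then only need to keep an index into the returned slice and wrap around at the end, which preserves the build order asked for in point 3.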

|

feature-request,tasks

|

medium

|

Critical

|

369,855,222 |

go

|

cmd/go: retry failed fetches

|

```

$ go version

go version go1.11.1 darwin/amd64

```

```go

package main

import (

_ "github.com/google/go-cloud/wire/cmd/wire"

)

func main() {

//

}

```

```

go build -o a.out

go: finding github.com/google/go-cloud/wire/cmd/wire latest

go: finding github.com/google/go-cloud/wire/cmd latest

go: finding github.com/google/go-cloud/wire latest

go: finding google.golang.org/api v0.0.0-20180606215403-8e9de5a6de6d

go: google.golang.org/api@v0.0.0-20180606215403-8e9de5a6de6d: git fetch -f https://code.googlesource.com/google-api-go-client refs/heads/*:refs/heads/* refs/tags/*:refs/tags/* in /Users/chai/go/pkg/mod/cache/vcs/9e62a95b0409d58bc0130bae299bdffbc7b7e74f3abe1ecf897474cc474b8bc0: exit status 128:

error: RPC failed; curl 18 transfer closed with outstanding read data remaining

fatal: The remote end hung up unexpectedly

fatal: early EOF

fatal: index-pack failed

go: error loading module requirements

```

|

NeedsInvestigation,FeatureRequest,early-in-cycle,modules

|

medium

|

Critical

|

369,864,702 |

godot

|

Need 'brush_transfer' func for 3.0/3.1

|

**Godot version:**

3.1 alpha

**Issue description:**

The `Image` class is missing the `brush_transfer` function that was available in Godot 2.1.

|

enhancement,topic:core

|

low

|

Minor

|

369,924,671 |

vscode

|

Centered layout should be per workbench, not per editor area

|

With centered editor layout enabled (with or without zen mode), toggling the side bar on/off resizes the sashes, even though there is enough real estate for the side bar to show without doing so. To illustrate this behavior, look at the left sash in both these screenshots.

1.

2.

This illustrates the "jumpiness" when opening the side bar in this scenario. It would be preferable that the sashes were not moved unless needed.

|

feature-request,layout,workbench-zen

|

high

|

Critical

|

369,926,347 |

create-react-app

|

Check Node version early

|

We need to add better Node version checks. The current one doesn’t cover all requirements (like Node >= 8.9.0).

|

tag: enhancement,difficulty: starter,contributions: claimed,good first issue

|

medium

|

Major

|

369,926,657 |

flutter

|

ListView should have an addKeepAlive field

|

ListView adds AutomaticKeepAlives by default. When things scroll off screen, the list items are deleted. To work around this, I've been calling `KeepAliveNotification(KeepAliveHandle()).dispatch(context)` for every item in the `ListView`. I believe it would be cleaner to have an option for adding a `KeepAlive` instead of an `AutomaticKeepAlive`.

|

c: new feature,framework,f: scrolling,P3,team-framework,triaged-framework

|

low

|

Major

|

369,941,598 |

create-react-app

|

Compile JSX to direct createElement() calls

|

Due to how webpack works today with CommonJS, we pay the cost of *three* object property accesses (`ReactWebpackBinding.default.createElement`) for every JSX call. It doesn't minify well and has a minor effect on runtime performance. It's also a bit clowny.

We should fix this to compile JSX to something like

```js

var createElement = require('react').createElement

createElement(...)

```

Could be a custom Babel transform. Could be a transform that inserts `_createReactElement` into scope and specifies it as the JSX pragma.

|

contributions: up for grabs!,tag: enhancement,difficulty: medium

|

low

|

Major

|

369,941,882 |

three.js

|

ArrayCamera: Compute frustum based on sub-cameras.

|

ArrayCamera only extends and only supports Perspective Cameras.

|

Enhancement

|

low

|

Major

|

369,952,211 |

rust

|

Refiling "Deprecate "implicit ()" by making it a compilation error."

|

Refiled from https://github.com/rust-lang/rfcs/issues/2098#issuecomment-320580803 as a diagnostics issue:

>

> clippy can't do anything right now if a compiler error occurs before our lints run and most of our lints are run after type checking.

>

> I agree though that example A should backtrack the source of the value and see if there's the possibility of removing a semicolon in the presence of `()`.

>

> Note that there are many other situations, e.g. the reverse of the above comparison:

>

> ```rust

> fn main() {

> let v = {

> println!("hacky debug: v is being initialized.");

> 42;

> };

> assert!(42 == v);

> }

> ```

>

> produces

>

> ```

> Compiling playground v0.0.1 (file:///playground)

> error[E0277]: the trait bound `{integer}: std::cmp::PartialEq<()>` is not satisfied

> --> src/main.rs:6:16

> |

> 6 | assert!(42 == v);

> | ^^ can't compare `{integer}` with `()`

> |

> = help: the trait `std::cmp::PartialEq<()>` is not implemented for `{integer}`

> ```

>

> I do not think that this requires an RFC. Simply implementing improved diagnostics and opening a PR is totally fine (I've not seen a denied diagnostic improvement PR so far).

cc @oli-obk @estebank

|

A-frontend,C-enhancement,A-diagnostics,T-compiler,WG-diagnostics

|

low

|

Critical

|

369,955,869 |

godot

|

`KinematicBody.move_and_collide(rel_vec)` appears to return `GridMap` (not a PhysicsBody)

|

<!-- Please search existing issues for potential duplicates before filing yours:

https://github.com/godotengine/godot/issues?q=is%3Aissue

-->

**Godot version:**

`v3.0.6-stable`

**Issue description:**

`KinematicBody.move_and_collide(rel_vec)` appears to return `GridMap` as the `KinematicCollision.collider`. This is an issue if you want to handle **all** collisions by looping through and adding a collision exception after each one is handled. You cannot add a collision exception with `GridMap` since it is not a `PhysicsBody`, so any loop that calls `move_and_collide` will just keep returning `GridMap`.

**Steps to reproduce:**

Cause a collision with a gridmap element that contains a collision shape.

**Minimal reproduction project:**

I'll drop one up here if needed.

I'll take a look at the code soon to see if this is an easy fix. For now I'm just documenting this issue.

|

discussion,topic:core

|

low

|

Minor

|

369,961,475 |

pytorch

|

how to store a bounding box in Tensor?

|

How can I store the bounding box of an image in a caffe2 TensorProto?

|

caffe2

|

low

|

Minor

|

369,965,679 |

pytorch

|

Install Jetson TX2 Max Regcount Error

|

## 🐛 Bug

<!-- A clear and concise description of what the bug is. -->

## To Reproduce

Steps to reproduce the behavior:

1. Attempt to install from source on a fresh Jetpack 3.3 on nVidia Jetson TX2

2. Instead of ```python setup.py install```, install with ```python3 setup.py install``` (Tried with both, same error)

<!-- If you have a code sample, error messages, stack traces, please provide it here as well -->

Errors are:

...about 100 NVLink errors, listing the last few below along with final error log.

nvlink error : entry function '_Z28ncclAllReduceLLKernel_sum_i88ncclColl' with max regcount of 80 calls function '_Z25ncclReduceScatter_max_u64P14CollectiveArgs' with regcount of 96

nvlink error : entry function '_Z29ncclAllReduceLLKernel_sum_i328ncclColl' with max regcount of 80 calls function '_Z25ncclReduceScatter_max_u64P14CollectiveArgs' with regcount of 96

nvlink error : entry function '_Z29ncclAllReduceLLKernel_sum_f168ncclColl' with max regcount of 80 calls function '_Z25ncclReduceScatter_max_u64P14CollectiveArgs' with regcount of 96

nvlink error : entry function '_Z29ncclAllReduceLLKernel_sum_u328ncclColl' with max regcount of 80 calls function '_Z25ncclReduceScatter_max_u64P14CollectiveArgs' with regcount of 96

nvlink error : entry function '_Z29ncclAllReduceLLKernel_sum_f328ncclColl' with max regcount of 80 calls function '_Z25ncclReduceScatter_max_u64P14CollectiveArgs' with regcount of 96

nvlink error : entry function '_Z29ncclAllReduceLLKernel_sum_u648ncclColl' with max regcount of 80 calls function '_Z25ncclReduceScatter_max_u64P14CollectiveArgs' with regcount of 96

nvlink error : entry function '_Z28ncclAllReduceLLKernel_sum_u88ncclColl' with max regcount of 80 calls function '_Z25ncclReduceScatter_max_u64P14CollectiveArgs' with regcount of 96

Makefile:83: recipe for target '/home/nvidia/jetson-reinforcement/build/pytorch/third_party/build/nccl/obj/collectives/device/devlink.o' failed

make[5]: *** [/home/nvidia/jetson-reinforcement/build/pytorch/third_party/build/nccl/obj/collectives/device/devlink.o] Error 255

Makefile:45: recipe for target 'devicelib' failed

make[4]: *** [devicelib] Error 2

Makefile:24: recipe for target 'src.build' failed

make[3]: *** [src.build] Error 2

CMakeFiles/nccl.dir/build.make:60: recipe for target 'lib/libnccl.so' failed

make[2]: *** [lib/libnccl.so] Error 2

CMakeFiles/Makefile2:67: recipe for target 'CMakeFiles/nccl.dir/all' failed

make[1]: *** [CMakeFiles/nccl.dir/all] Error 2

Makefile:127: recipe for target 'all' failed

make: *** [all] Error 2

Failed to run 'bash ../tools/build_pytorch_libs.sh --use-cuda --use-nnpack nccl caffe2 libshm gloo c10d THD'

## Expected behavior

<!-- A clear and concise description of what you expected to happen. -->

Install should work so that I can open a Python 3 console and successfully do: ```import torch```

## Environment

Script does not run.

- PyTorch Version (e.g., 1.0): Latest master

- OS (e.g., Linux): nVidia Jetson TX2 Ubuntu, aarch64 architecture

- How you installed PyTorch (`conda`, `pip`, source): source

- Build command you used (if compiling from source): ```python3 setup.py install```

- Python version: 3.5.3

- CUDA/cuDNN version: 9.0, 7.0

- GPU models and configuration:

- Any other relevant information:

There is no Conda build for aarch64, so have to use standard python libraries.

cc @malfet @seemethere @walterddr

|

needs reproduction,module: build,triaged,module: jetson

|

low

|

Critical

|

369,966,750 |

TypeScript

|

Enforce definite initialization of `static` members

|

Is there some clever reason why TSC can't check this (maybe... related to semantics of JS inheritance?)?

**TypeScript Version:** 3.2.0-dev.20181011

**Search Terms:** strictPropertyInitialization, static, initialized, uninitialized, assigned, property

**Code**

```ts

class A {

static s: number

a() {

A.s * 3 // Should be a compile error

}

}

A.s * 7 // Should be a compile error

```

**Expected behavior:** Both lines should produce compile errors.

**Actual behavior:** Neither line produces a compile error; at runtime `A.s` is `undefined`, so the arithmetic yields `NaN`.

[**Playground Link**](https://www.typescriptlang.org/play/index.html#src=class%20A%20%7B%0D%0A%20%20static%20s%3A%20number%0D%0A%20%20a()%20%7B%0D%0A%20%20%20%20A.s%20*%203%20%2F%2F%20Should%20be%20a%20compile%20error%0D%0A%20%20%7D%0D%0A%7D%0D%0AA.s%20*%207%20%2F%2F%20Should%20be%20an%20compile%20error)

**Related Issues:**

- https://github.com/Microsoft/TypeScript/issues/21976

|

Suggestion,Awaiting More Feedback

|

medium

|

Critical

|

369,967,892 |

gin

|

How to use multiple domains?

|

How can I serve multiple domains on one port?

|

question

|

low

|

Minor

|

369,975,065 |

go

|

x/crypto/acme/autocert: verify the beginning time of an issued cert is not necessary

|

autocert.go line 1095 compares the current time with the time the cert was issued to check whether the cert is valid.

But the server's clock is not always 100% accurate, and if the server time is behind the ACME server time (i.e. the real time), autocert judges the cert as not valid and requests another cert on the next request, which hits the rate limit in a very short time.

### What did you expect to see?

Verifying the start of the cert's validity period is not necessary; only the expiry date needs to be verified. Since an ACME cert is typically valid for 3 months, I don't think any server will have such a huge time difference.

### What did you see instead?

The cert is judged as not valid. More cert requests are then sent (those certs are judged invalid too) until the rate limit is hit. No cert can be successfully issued until the server time is corrected.

|

NeedsInvestigation

|

medium

|

Major

|

369,979,536 |

rust

|

improve diagnostic for trait impl involving (infinite?) type recursion and constants

|

I'm seeing an internal compiler error in something I *think* is related to infinite recursion in the type system while implementing a generic trait.

I tried compiling this code via cargo as a library crate (all of this in `lib.rs`):

```

use std::ops::Mul;

use std::f64::consts::PI;

pub trait Unit: Default + Copy + Clone + PartialOrd + PartialEq {}

#[derive(Default, Copy, Clone, PartialOrd, PartialEq)]

pub struct Quantity<U: Unit>(

pub f64,

pub U,

);

#[derive(Default, Copy, Clone, PartialOrd, PartialEq)]

pub struct Point<T=f64> {

pub x: T,

pub y: T,

}

impl<'b, T> Mul<&'b Point<T>> for f64 where f64: Mul<&'b T> {

type Output = Point<<f64 as Mul<&'b T>>::Output>;

fn mul(self, rhs: &'b Point<T>) -> Self::Output { Point{ x: self*&rhs.x, y: self*&rhs.y } }

}

mod detail { // private with public struct, for use in a public type alias only

use super::Unit;

#[derive(Default, Copy, Clone, PartialOrd, PartialEq)]

pub struct AngleUnit;

impl Unit for AngleUnit {}

} // mod detail

pub type Angle = Quantity<detail::AngleUnit>;

pub const RADIANS: Angle = Quantity(1.0, detail::AngleUnit{});

pub const DEGREES: Angle = Quantity(RADIANS.0*PI/180.0, detail::AngleUnit{});

```

I expected to see some reasonable compiler error; I'm pretty confident the code is bad. In particular, if you comment out the last two lines (the definition of the constants), you get a friendlier error:

```

error[E0275]: overflow evaluating the requirement `_: std::marker::Sized`

```

(followed by some less-friendly recursive messages that do eventually truncate).

With those two `const` lines as above, I get:

```

error: internal compiler error: librustc/traits/structural_impls.rs:178: impossible case reached

thread 'main' panicked at 'Box<Any>', librustc_errors/lib.rs:578:9

note: Run with `RUST_BACKTRACE=1` for a backtrace.

error: aborting due to previous error

note: the compiler unexpectedly panicked. this is a bug.

note: we would appreciate a bug report: https://github.com/rust-lang/rust/blob/master/CONTRIBUTING.md#bug-reports

note: rustc 1.29.1 (b801ae664 2018-09-20) running on x86_64-unknown-linux-gnu

note: compiler flags: -C debuginfo=2 -C incremental --crate-type lib

note: some of the compiler flags provided by cargo are hidden

```

## Meta

(see above for version)

Since I'm not completely confident in my ability to run just `rustc` with the same options, here's the output of `RUST_BACKTRACE=1 cargo test`:

```

error: internal compiler error: librustc/traits/structural_impls.rs:178: impossible case reached

thread 'main' panicked at 'Box<Any>', librustc_errors/lib.rs:578:9

stack backtrace:

0: std::sys::unix::backtrace::tracing::imp::unwind_backtrace

stack backtrace:

0: std::sys::unix::backtrace::tracing::imp::unwind_backtrace

at libstd/sys/unix/backtrace/tracing/gcc_s.rs:49

1: std::sys_common::backtrace::print

at libstd/sys/unix/backtrace/tracing/gcc_s.rs:49

1: std::sys_common::backtrace::print

at libstd/sys_common/backtrace.rs:71

at libstd/sys_common/backtrace.rs:59

2: std::panicking::default_hook::{{closure}}

at libstd/sys_common/backtrace.rs:71

at libstd/sys_common/backtrace.rs:59

2: std::panicking::default_hook::{{closure}}

at libstd/panicking.rs:211

3: std::panicking::default_hook

at libstd/panicking.rs:227

4: rustc::util::common::panic_hook

5: std::panicking::rust_panic_with_hook

at libstd/panicking.rs:479

6: std::panicking::begin_panic

7: rustc_errors::Handler::bug

8: rustc::session::opt_span_bug_fmt::{{closure}}

9: rustc::ty::context::tls::with_opt::{{closure}}

10: rustc::ty::context::tls::with_context_opt

11: rustc::ty::context::tls::with_opt

at libstd/panicking.rs12:: 211rustc

:: session ::3opt_span_bug_fmt:

std::panicking ::13default_hook:

rustc:: session at ::libstd/panicking.rsbug_fmt:

227

14: rustc ::4traits: ::structural_implsrustc::::<utilimpl:: commonrustc::::panic_hookty

::context:: Lift <5': tcx>std ::forpanicking ::rustcrust_panic_with_hook::

traits:: SelectionError at <libstd/panicking.rs':a479>

>::lift_to_tcx

6: 15std: ::rustcpanicking::::tybegin_panic::

context::TyCtxt :: lift_to_global7

: rustc_errors ::16Handler: ::rustcbug::

traits::select :: SelectionContext8::: candidate_from_obligation

rustc::session ::17opt_span_bug_fmt: ::rustc{::{traitsclosure::}select}::

SelectionContext::evaluate_stack

9 : 18rustc: ::rustcty::::tycontext::::contexttls::::tlswith_opt::::with_context{

{closure }19}:

rustc:: dep_graph10::: graph::rustcDepGraph::::tywith_anon_task::

context::tls ::20with_context_opt:

rustc:: traits11::: selectrustc::::SelectionContextty::::evaluate_predicate_recursivelycontext

::tls:: with_opt21

: rustc ::12infer: ::InferCtxtrustc::::probesession

::opt_span_bug_fmt

22 : 13: <&rustc'::asession ::mutbug_fmt

I as14 : core::rustciter::::traitsiterator::::structural_implsIterator::><::implnext

rustc::ty ::23context: ::Lift<<alloc'::tcxvec>:: Vecfor< Trustc>:: traitsas:: SelectionErroralloc<::'veca::>SpecExtend><::Tlift_to_tcx,

I> >15::: from_iter

rustc::ty ::24context: ::TyCtxtrustc::::lift_to_globaltraits

::select ::16SelectionContext: ::rustccandidate_from_obligation_no_cache::

traits::select ::25SelectionContext: ::rustccandidate_from_obligation::

ty::context ::17tls: ::rustcwith_context::

traits::select ::26SelectionContext: ::evaluate_stackrustc

::dep_graph:: graph18::: DepGraph::rustcwith_anon_task::

ty:: context27::: tls::rustcwith_context::

traits::select:: SelectionContext19::: candidate_from_obligation

rustc:: dep_graph28::: graph::rustcDepGraph::::traitswith_anon_task::

select::SelectionContext ::20evaluate_stack:

rustc:: traits29::: selectrustc::::SelectionContextty::::evaluate_predicate_recursivelycontext

::tls ::21with_context:

rustc:: infer30::: InferCtxtrustc::::probedep_graph

::graph ::22DepGraph: ::with_anon_task<

&' a31 : mutrustc ::Itraits ::asselect ::coreSelectionContext::::iterevaluate_predicate_recursively::

iterator:: Iterator32>: ::rustcnext::

infer:: InferCtxt23::: probe<

alloc:: vec33::: Vec<<T&>' aas mutalloc ::vec::ISpecExtend <asT ,core ::Iiter>::>iterator::::from_iterIterator

>::next

24: 34rustc: ::traits<::allocselect::::vecSelectionContext::::Veccandidate_from_obligation_no_cache<

T> 25as: allocrustc::::vecty::::SpecExtendcontext<::Ttls,:: with_contextI

>> ::26from_iter:

rustc:: dep_graph35::: graphrustc::::DepGraphtraits::::with_anon_taskselect

::SelectionContext:: candidate_from_obligation_no_cache27

: rustc ::36traits: ::rustcselect::::tySelectionContext::::contextcandidate_from_obligation::

tls:: with_context28

: rustc ::37traits: ::selectrustc::::SelectionContextdep_graph::::evaluate_stackgraph

::DepGraph ::29with_anon_task:

rustc:: ty38::: context::rustctls::::traitswith_context::

select::SelectionContext ::30candidate_from_obligation:

rustc::dep_graph ::39graph: ::rustcDepGraph::::traitswith_anon_task::

select ::31SelectionContext: ::evaluate_stackrustc

::traits:: select40::: SelectionContextrustc::::evaluate_predicate_recursivelyty

::context ::32tls: ::rustcwith_context::

infer:: InferCtxt41::: probe

rustc ::33dep_graph: ::<graph&::'DepGrapha:: with_anon_taskmut

I 42as: corerustc::::itertraits::::iteratorselect::::IteratorSelectionContext>::::evaluate_predicate_recursivelynext

4334: : rustc::<inferalloc::::InferCtxtvec::::probeVec

<T> 44as: <alloc&::'veca:: SpecExtendmut< TI, asI >core>::::iterfrom_iter::

iterator:: Iterator35>: ::rustcnext::

traits::select ::45SelectionContext: ::candidate_from_obligation_no_cache<

alloc:: vec36::: Vec<rustcT::>ty ::ascontext ::alloctls::::vecwith_context::

SpecExtend< T37,: Irustc>::>dep_graph::::from_itergraph

::DepGraph ::46with_anon_task:

rustc::traits ::38select: ::rustcSelectionContext::::traitscandidate_from_obligation_no_cache::

select ::47SelectionContext: ::rustccandidate_from_obligation::

ty ::39context: ::tlsrustc::::with_contexttraits

::select:: SelectionContext48::: evaluate_stackrustc

::dep_graph ::40graph: ::DepGraphrustc::::with_anon_taskty

::context ::49tls: ::rustcwith_context::

traits::select ::41SelectionContext: ::rustccandidate_from_obligation::

dep_graph::graph ::50DepGraph: ::rustcwith_anon_task::

traits:: select42::: SelectionContextrustc::::evaluate_stacktraits

::select ::51SelectionContext: ::rustcevaluate_predicate_recursively::

ty ::43context: ::rustctls::::inferwith_context::

InferCtxt:: probe52

: rustc ::44dep_graph: ::<graph&::'DepGrapha:: with_anon_taskmut

I as53 : corerustc::::itertraits::::iteratorselect::::IteratorSelectionContext>::::evaluate_predicate_recursivelynext

5445: : <allocrustc::::vecinfer::::VecInferCtxt<::Tprobe>

as 55alloc: ::vec<::&SpecExtend'<aT ,mut II> >as:: from_itercore

:: iter46::: iterator::rustcIterator::>traits::::nextselect

::SelectionContext ::56candidate_from_obligation_no_cache:

<alloc ::47vec: ::rustcVec::<tyT::>context ::astls ::allocwith_context::

vec:: SpecExtend48<: Trustc,:: dep_graphI::>graph>::::DepGraphfrom_iter::

with_anon_task

5749: : rustcrustc::::traitstraits::::selectselect::::SelectionContextSelectionContext::::candidate_from_obligation_no_cachecandidate_from_obligation

5850: : rustc::rustcty::::traitscontext::::selecttls::::SelectionContextwith_context::

evaluate_stack

59: rustc51::: dep_graph::rustcgraph::::tyDepGraph::::contextwith_anon_task::

tls ::60with_context:

rustc ::52traits: ::rustcselect::::dep_graphSelectionContext::::graphcandidate_from_obligation::

DepGraph ::61with_anon_task:

rustc::traits ::53select: ::SelectionContextrustc::::evaluate_stacktraits

::select ::62SelectionContext: ::rustcevaluate_predicate_recursively::

ty:: context54::: tls::rustcwith_context::

infer:: InferCtxt63::: proberustc

::dep_graph ::55graph: ::DepGraph<::&with_anon_task'

a mut64 : Irustc ::astraits ::coreselect::::iterSelectionContext::::iteratorevaluate_predicate_recursively::

Iterator>:: next65

: rustc ::56infer: ::InferCtxt<::allocprobe::

vec:: Vec66<: T>< &as' aalloc ::mutvec ::ISpecExtend <asT ,core ::Iiter>::>iterator::::from_iterIterator

>:: next57

: rustc67::: traits::<selectalloc::::SelectionContextvec::::candidate_from_obligation_no_cacheVec

<T >58 : as rustcalloc::::tyvec::::contextSpecExtend::<tlsT::,with_context

I>> ::59from_iter:

rustc::dep_graph ::68graph: ::rustcDepGraph::::traitswith_anon_task::

select::SelectionContext ::60candidate_from_obligation_no_cache:

rustc:: traits69::: select::rustcSelectionContext::::tycandidate_from_obligation::

context:: tls61::: with_contextrustc

::traits ::70select: ::SelectionContextrustc::::evaluate_stackdep_graph

::graph:: DepGraph62::: with_anon_task

rustc:: ty71::: contextrustc::::tlstraits::::with_contextselect

::SelectionContext ::63candidate_from_obligation:

rustc:: dep_graph72::: graphrustc::::DepGraphtraits::::with_anon_taskselect

::SelectionContext ::64evaluate_stack:

rustc::traits:: select73::: SelectionContext::rustcevaluate_predicate_recursively::

ty::context:: tls65::: with_context

rustc::infer ::74InferCtxt: ::rustcprobe::

dep_graph::graph:: DepGraph66::: with_anon_task

<&' a75 : mut rustcI:: traitsas:: core::iter::selectiterator::::SelectionContextIterator::>evaluate_predicate_recursively::

next

76: rustc67::: infer::<InferCtxtalloc::::probevec

::Vec< T77>: <as& 'alloca:: vecmut:: SpecExtendI< Tas, coreI::>iter>::::iteratorfrom_iter::

Iterator>:: next68

: rustc ::78traits: ::<selectalloc::::SelectionContextvec::::candidate_from_obligation_no_cacheVec

<T> 69as: allocrustc::::vecty::::SpecExtendcontext<::Ttls,:: with_contextI

>> ::70from_iter:

rustc::dep_graph ::79graph: ::rustcDepGraph::::traitswith_anon_task::

select:: SelectionContext71::: candidate_from_obligation_no_cacherustc

::traits:: select80::: SelectionContextrustc::::candidate_from_obligationty

::context ::72tls: ::rustcwith_context::

traits:: select81::: SelectionContextrustc::::evaluate_stackdep_graph

::graph ::73DepGraph: ::rustcwith_anon_task::

ty::context ::82tls: ::rustcwith_context::

traits:: select74::: SelectionContextrustc::::candidate_from_obligationdep_graph

::graph ::83DepGraph: ::rustcwith_anon_task::

traits:: select75::: SelectionContext::rustcevaluate_stack::

traits::select ::SelectionContext84::: evaluate_predicate_recursivelyrustc

::ty ::76context: ::tlsrustc::::with_contextinfer

::InferCtxt:: probe85

: rustc ::77dep_graph: ::graph<::&DepGraph'::awith_anon_task

mut I86 : as rustccore::::traitsiter::::selectiterator::::SelectionContextIterator::>evaluate_predicate_recursively::

next

87 : 78: rustc::<inferalloc::::InferCtxtvec::::probeVec

<T> 88as: <alloc&::'veca:: SpecExtendmut< TI, asI >core>::::iterfrom_iter::

iterator:: Iterator79>: ::rustcnext::

traits::select ::89SelectionContext: ::<candidate_from_obligation_no_cachealloc

::vec ::80Vec: <rustcT::>ty ::ascontext ::alloctls::::vecwith_context::

SpecExtend<T ,81 : I>rustc>::::dep_graphfrom_iter::

graph::DepGraph ::90with_anon_task:

rustc:: traits82::: select::rustcSelectionContext::::traitscandidate_from_obligation_no_cache::

select::SelectionContext ::91candidate_from_obligation:

rustc:: ty83::: contextrustc::::tlstraits::::with_contextselect

::SelectionContext:: evaluate_stack92

: rustc ::84dep_graph: ::graphrustc::::DepGraphty::::with_anon_taskcontext

::tls:: with_context93

: rustc ::85traits: ::selectrustc::::SelectionContextdep_graph::::candidate_from_obligationgraph

::DepGraph:: with_anon_task94

: rustc ::86traits: ::selectrustc::::SelectionContexttraits::::evaluate_stackselect

::SelectionContext:: evaluate_predicate_recursively95

: rustc ::87ty: ::contextrustc::::tlsinfer::::with_contextInferCtxt

::probe

96: rustc88::: dep_graph::<graph&::'DepGrapha:: with_anon_taskmut

I 97as: rustccore::::traitsiter::::selectiterator::::SelectionContextIterator::>evaluate_predicate_recursively::

next

98 : 89rustc: ::infer<::allocInferCtxt::::vecprobe::

Vec<T >99 : as< &alloc'::avec ::mutSpecExtend <IT ,as Icore>::>iter::::from_iteriterator

::Iterator 90: rustc::>traits::::nextselect

::SelectionContext::candidate_from_obligation_no_cache

query stack during panic:

91: rustc::ty::context::tls::with_context

92: rustc::dep_graph::graph::DepGraph::with_anon_task

93: rustc::traits::select::SelectionContext::candidate_from_obligation

94: rustc::traits::select::SelectionContext::evaluate_stack

95: rustc::ty::context::tls::with_context

96: rustc::dep_graph::graph::DepGraph::with_anon_task

97: rustc::traits::select::SelectionContext::evaluate_predicate_recursively

98: rustc::infer::InferCtxt::probe

99: <&'a mut I as core::iter::iterator::Iterator>::next

query stack during panic:

#0 [evaluate_obligation] evaluating trait selection obligation `f64: std::ops::Mul<_>`

#1 [typeck_tables_of] processing `DEGREES`

end of query stack

#0 [evaluate_obligation] evaluating trait selection obligation `f64: std::ops::Mul<_>`

#1 [typeck_tables_of] processing `DEGREES`

end of query stack

```

|

C-enhancement,A-diagnostics,A-trait-system,T-compiler

|

low

|

Critical

|

369,986,009 |

pytorch

|

fail to visualize caffe2 model

|

Following the official Caffe2 website, I tried to visualize a Caffe2 model with this code:

from caffe2.python import net_drawer

from IPython import display

graph=net_drawer.GetPydotGraph(train_model.net.Proto().op,"train_model",rankdir="LR")

display.Image(graph.create_png(),width=800)

I've also tried:

graph = net_drawer.GetPydotGraph(train_model.net.Proto().op, "train_model", rankdir="LR")

graph.write_png('graph.png')

I installed Graphviz and pydot with these commands:

sudo apt-get install graphviz

pip install pydot

But I get the following error:

Traceback (most recent call last):

File "/mnt/xiongcx/R2Plus1D-master/tools/train_net.py", line 535, in <module>

main()

File "/mnt/xiongcx/R2Plus1D-master/tools/train_net.py", line 530, in main

Train(args)

File "/mnt/xiongcx/R2Plus1D-master/tools/train_net.py", line 418, in Train

explog

File "/mnt/xiongcx/R2Plus1D-master/tools/train_net.py", line 144, in RunEpoch

graph = net_drawer.GetPydotGraph(train_model.net.Proto().op, "train", rankdir="TB")

File "/home/xiongcx/anaconda2/envs/r21d/lib/python2.7/site-packages/pydot.py", line 1673, in new_method

encoding=encoding)

File "/home/xiongcx/anaconda2/envs/r21d/lib/python2.7/site-packages/pydot.py", line 1756, in write

s = self.create(prog, format, encoding=encoding)

File "/home/xiongcx/anaconda2/envs/r21d/lib/python2.7/site-packages/pydot.py", line 1884, in create

assert p.returncode == 0, p.returncode

AssertionError: -11

Do you have any ideas for how to solve this, or any other way to visualize a Caffe2 model? Thanks in advance.
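One possible reading of the `AssertionError: -11` in the traceback: pydot appears to assert on the return code of the Graphviz process it launches, and Python's `subprocess` module reports a child terminated by signal N as return code -N on POSIX. The sketch below decodes such a value. `describe_returncode` is a hypothetical helper, not part of pydot or Caffe2, and it assumes Python 3.5+ on a POSIX system (the traceback itself comes from Python 2.7).

```python
import signal


def describe_returncode(returncode):
    """Explain a child-process return code as `subprocess` defines it.

    Hypothetical helper for illustration only.
    """
    if returncode < 0:
        # On POSIX, a return code of -N means the child was killed by signal N.
        return "killed by " + signal.Signals(-returncode).name
    return "exited with status %d" % returncode


# Decode the value reported in the AssertionError.
print(describe_returncode(-11))
```

If that reading holds, the Graphviz `dot` binary itself was terminated by that signal, so a reasonable next step might be to save the DOT source to a file and run `dot -Tpng` on it by hand to see whether the crash reproduces outside Python.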

|

caffe2

|

low

|

Critical

|

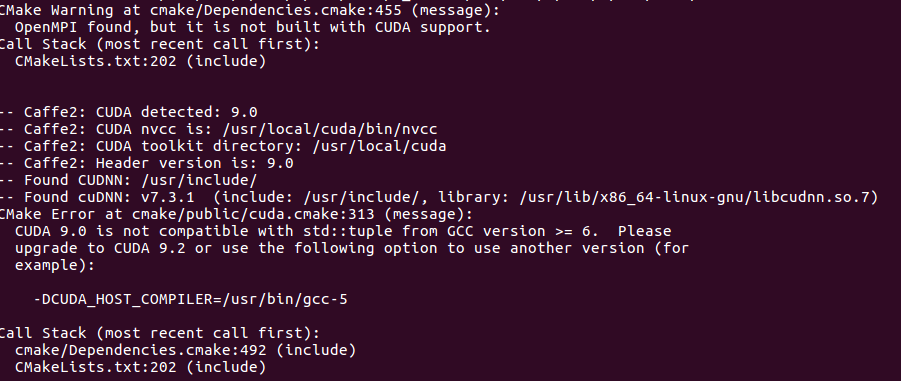

370,106,458 |

pytorch

|

Caffe2 Installation inside Pytorch

|

I get an error message (see the attached image) after running the command "python setup.py install".

I followed this official PyTorch guide: https://caffe2.ai/docs/getting-started.html?platform=ubuntu&configuration=compile.

But the weird thing is that I can print caffe2 successfully.

Does anybody know how to fix this?

|

caffe2

|

low

|

Critical

|

370,132,706 |

rust

|

Unreasonably large stack frames

|

Consider the following function:

```rust
pub struct Tree {
    children: Vec<Tree>,
}

pub fn traverse(t: &Tree) {
    println!();
    for c in &t.children {
        traverse(c);
    }
}
```

When compiled in release mode, it uses 72 bytes of stack per tree level on x86-64, see [Godbolt](https://godbolt.org/z/AyjJM6).

For comparison, the equivalent C++ function

```c++
#include <iostream>
#include <vector>
using namespace std;

struct Tree {
    vector<Tree> children;
};

void traverse(const Tree &t) {
    cout << endl;
    for (const auto &c : t.children) {
        traverse(c);
    }
}
```

uses only 24 bytes per tree level (when compiled with either gcc or clang), see [Godbolt](https://godbolt.org/z/17JCSX).

------

Version info:

I noticed it on a recent nightly

```

rustc 1.31.0-nightly (b2d6ea98b 2018-10-07)

binary: rustc

commit-hash: b2d6ea98b0db53889c5427e5a23cddb3bcb63040

commit-date: 2018-10-07

host: x86_64-pc-windows-msvc

release: 1.31.0-nightly

LLVM version: 8.0

```

But it's the same on stable 1.29.0 as well.

|

C-enhancement,T-compiler,I-heavy

|

low

|

Minor

|

370,140,805 |

rust

|

Support uftrace (and other fentry consumers)

|