| id | repo | title | body | labels | priority | severity |
|---|---|---|---|---|---|---|

358,861,012 |

go

|

internal/bytealg: valgrind reports invalid reads by C.GoString

|

### What version of Go are you using (`go version`)?

`go version go1.11 linux/amd64`

### Does this issue reproduce with the latest release?

Yes.

### What operating system and processor architecture are you using (`go env`)?

<details>

```

GOARCH="amd64"

GOBIN=""

GOCACHE="/home/kivikakk/.cache/go-build"

GOEXE=""

GOFLAGS=""

GOHOSTARCH="amd64"

GOHOSTOS="linux"

GOOS="linux"

GOPATH="/home/kivikakk/go"

GOPROXY=""

GORACE=""

GOROOT="/usr/local/go"

GOTMPDIR=""

GOTOOLDIR="/usr/local/go/pkg/tool/linux_amd64"

GCCGO="gccgo"

CC="gcc"

CXX="g++"

CGO_ENABLED="1"

GOMOD=""

CGO_CFLAGS="-g -O2"

CGO_CPPFLAGS=""

CGO_CXXFLAGS="-g -O2"

CGO_FFLAGS="-g -O2"

CGO_LDFLAGS="-g -O2"

PKG_CONFIG="pkg-config"

GOGCCFLAGS="-fPIC -m64 -pthread -fmessage-length=0 -fdebug-prefix-map=/tmp/go-build515123667=/tmp/go-build -gno-record-gcc-switches"

```

</details>

### What did you do?

```go
package main

/*
#include <string.h>
#include <stdlib.h>
char* s() {
	return strdup("hello");
}
*/
import "C"

import "unsafe"

func main() {
	s := C.s()
	C.GoString(s)
	C.free(unsafe.Pointer(s))
}
```

```console

$ go build

$ valgrind ./sscce

==11241== Memcheck, a memory error detector

==11241== Copyright (C) 2002-2015, and GNU GPL'd, by Julian Seward et al.

==11241== Using Valgrind-3.12.0.SVN and LibVEX; rerun with -h for copyright info

==11241== Command: ./sscce

==11241==

==11241== Warning: ignored attempt to set SIGRT32 handler in sigaction();

==11241== the SIGRT32 signal is used internally by Valgrind

==11241== Warning: ignored attempt to set SIGRT32 handler in sigaction();

==11241== the SIGRT32 signal is used internally by Valgrind

==11241== Warning: client switching stacks? SP change: 0xfff0001b0 --> 0xc0000367d8

==11241== to suppress, use: --max-stackframe=755931244072 or greater

==11241== Warning: client switching stacks? SP change: 0xc000036790 --> 0xfff000260

==11241== to suppress, use: --max-stackframe=755931243824 or greater

==11241== Warning: client switching stacks? SP change: 0xfff000260 --> 0xc000036790

==11241== to suppress, use: --max-stackframe=755931243824 or greater

==11241== further instances of this message will not be shown.

==11241== Conditional jump or move depends on uninitialised value(s)

==11241== at 0x40265B: indexbytebody (/usr/local/go/src/internal/bytealg/indexbyte_amd64.s:151)

==11241==

==11241==

==11241== HEAP SUMMARY:

==11241== in use at exit: 1,200 bytes in 6 blocks

==11241== total heap usage: 10 allocs, 4 frees, 1,310 bytes allocated

==11241==

==11241== LEAK SUMMARY:

==11241== definitely lost: 0 bytes in 0 blocks

==11241== indirectly lost: 0 bytes in 0 blocks

==11241== possibly lost: 1,152 bytes in 4 blocks

==11241== still reachable: 48 bytes in 2 blocks

==11241== suppressed: 0 bytes in 0 blocks

==11241== Rerun with --leak-check=full to see details of leaked memory

==11241==

==11241== For counts of detected and suppressed errors, rerun with: -v

==11241== Use --track-origins=yes to see where uninitialised values come from

==11241== ERROR SUMMARY: 1 errors from 1 contexts (suppressed: 0 from 0)

```

(Ignore the "possibly lost" blocks; they're pthreads started by the Go runtime.)

### What did you expect to see?

No conditional jump/move depending on uninitialised values.

### What did you see instead?

A conditional jump/move depending on uninitialised values.

---

The nature of the issue becomes more apparent if you run Valgrind with `--partial-loads-ok=no`:

```console

$ valgrind --partial-loads-ok=no ./sscce

==11376== Memcheck, a memory error detector

==11376== Copyright (C) 2002-2015, and GNU GPL'd, by Julian Seward et al.

==11376== Using Valgrind-3.12.0.SVN and LibVEX; rerun with -h for copyright info

==11376== Command: ./sscce

==11376==

==11376== Warning: ignored attempt to set SIGRT32 handler in sigaction();

==11376== the SIGRT32 signal is used internally by Valgrind

==11376== Warning: ignored attempt to set SIGRT32 handler in sigaction();

==11376== the SIGRT32 signal is used internally by Valgrind

==11376== Warning: client switching stacks? SP change: 0xfff0001b0 --> 0xc0000367d8

==11376== to suppress, use: --max-stackframe=755931244072 or greater

==11376== Warning: client switching stacks? SP change: 0xc000036790 --> 0xfff000260

==11376== to suppress, use: --max-stackframe=755931243824 or greater

==11376== Warning: client switching stacks? SP change: 0xfff000260 --> 0xc000036790

==11376== to suppress, use: --max-stackframe=755931243824 or greater

==11376== further instances of this message will not be shown.

==11376== Invalid read of size 32

==11376== at 0x40264E: indexbytebody (/usr/local/go/src/internal/bytealg/indexbyte_amd64.s:148)

==11376== Address 0x53f47c0 is 0 bytes inside a block of size 12 alloc'd

==11376== at 0x4C2BBAF: malloc (vg_replace_malloc.c:299)

==11376== by 0x45165D: s (main.go:7)

==11376== by 0x4516A5: _cgo_a004886745c9_Cfunc_s (cgo-gcc-prolog:54)

==11376== by 0x44A0DF: runtime.asmcgocall (/usr/local/go/src/runtime/asm_amd64.s:637)

==11376== by 0x7: ???

==11376== by 0x6C287F: ??? (in /home/kivikakk/sscce/sscce)

==11376== by 0xFFF00024F: ???

==11376== by 0x4462B1: runtime.(*mcache).nextFree.func1 (/usr/local/go/src/runtime/malloc.go:749)

==11376== by 0x448905: runtime.systemstack (/usr/local/go/src/runtime/asm_amd64.s:351)

==11376== by 0x4283BF: ??? (/usr/local/go/src/runtime/proc.go:1146)

==11376== by 0x448798: runtime.rt0_go (/usr/local/go/src/runtime/asm_amd64.s:201)

==11376== by 0x451DEF: ??? (in /home/kivikakk/sscce/sscce)

==11376==

==11376==

==11376== HEAP SUMMARY:

==11376== in use at exit: 1,200 bytes in 6 blocks

==11376== total heap usage: 10 allocs, 4 frees, 1,316 bytes allocated

==11376==

==11376== LEAK SUMMARY:

==11376== definitely lost: 0 bytes in 0 blocks

==11376== indirectly lost: 0 bytes in 0 blocks

==11376== possibly lost: 1,152 bytes in 4 blocks

==11376== still reachable: 48 bytes in 2 blocks

==11376== suppressed: 0 bytes in 0 blocks

==11376== Rerun with --leak-check=full to see details of leaked memory

==11376==

==11376== For counts of detected and suppressed errors, rerun with: -v

==11376== ERROR SUMMARY: 1 errors from 1 contexts (suppressed: 0 from 0)

```

I understand some work has been done to ensure `IndexByte` doesn't run over a page boundary (https://github.com/golang/go/issues/24206), so this is unlikely to have a negative effect. Should I just add a suppression and call it a day?

```

{

indexbytebody_loves_to_read

Memcheck:Addr32

fun:indexbytebody

}

```

|

help wanted,NeedsInvestigation

|

medium

|

Critical

|

358,945,806 |

TypeScript

|

Conditional types don't work with Mapped types when you extend enum keys

|


**TypeScript Version:** 3.0.3, 3.1.0-dev.20180907


**Search Terms:**

mapped, conditional, enum, extends

**Code**

```ts

enum Commands {

one = "one",

two = "two",

three = "three"

}

const SpecialCommandsObj = {

[Commands.one]: Commands.one,

[Commands.two]: Commands.two,

};

// a and b are correct

type a = Commands.one extends keyof typeof SpecialCommandsObj ? null : any; // null - ok

type b = Commands.three extends keyof typeof SpecialCommandsObj ? null : any; // any - ok

interface CommandAction {

execute(element: any): any;

}

interface SpecialCommandAction {

execute(element: null): any;

}

type CommandsActions = {

[Key in keyof typeof Commands]: Key extends keyof typeof SpecialCommandsObj ? SpecialCommandAction : CommandAction

};

const Actions: CommandsActions = {

[Commands.one]: {

// ERROR

execute: (element) => {} // ELEMENT IS ANY, NOT NULL

},

[Commands.two]: {

// ERROR

execute: (element) => {} // ELEMENT IS ANY, NOT NULL

},

[Commands.three]: {

execute: (element) => {}

},

}

```

**Expected behavior:**

In the Actions object the argument of the execute method for Commands.one and Commands.two should be of type `null`, not `any`

**Actual behavior:**

`element` is always `any`.

Type inference for types `a` and `b` is fine, so the problem only occurs when you use mapped types.

It works if you don't use enum members as keys in `SpecialCommandsObj`. Swap your `SpecialCommandsObj` with this one and it will start working:

```ts
const SpecialCommandsObj = {
  "one": Commands.one,
  "two": Commands.two,
};
```

**Playground Link:** https://bit.ly/2QmdTIo

|

Needs Investigation

|

low

|

Critical

|

358,952,723 |

flutter

|

Allow plugins to depend on podspecs outside of CocoaPods specs repo

|

I want to make a plugin that depends on a pod whose source is outside of the CocoaPods Specs repo,

like this in **myplugin.podspec**:

s.dependency 'Hoge', :git => 'https://github.com/hoge/fuga_specs.git'

However, it looks like this is not possible in a podspec.

Reference:

https://stackoverflow.com/questions/22447062/how-do-i-create-a-cocoapods-podspec-that-has-a-dependency-that-exists-outside-of

Is there a way to solve the problem?

Thank you in advance.


|

platform-ios,tool,d: stackoverflow,P3,a: plugins,team-ios,triaged-ios

|

low

|

Critical

|

358,954,088 |

TypeScript

|

Check property declarations in JS

|

Recently I found it helpful to add JSDoc-style comments to hint the type of a variable to my IDE.

For example, if I add a comment to a variable:

```js

function fx(jsonAsset) {

/** @type {import("a-dts-file-describes-the-type-of-the-json-object").Type} */

const typedObject = jsonAsset.json;

// IDE will give the member list of the type

typedObject.

}

```

My IDE (latest Visual Studio Code) correctly identifies the type of ```typedObject```.

So it gives me very nice IntelliSense suggestions. When I enable type checking through ```// @ts-check```, it even reports type errors.

This feature is helpful to me and my project, which is written in **JavaScript** (we will try to rewrite it in TypeScript in the future, but not for now).

We are using Babel with a plugin called ```transform-class-properties```, which means we can declare class members in an ES6 class just like TypeScript does:

```js

class C {

/* @types {HTMLCanvasElement} */

@decorator(/* ... */)

canvas = null;

}

```

But when I add a similar comment to the ```canvas``` declaration, the IDE does not recognize it. The IDE seems to decide the type only from the initializer. Could you support this feature, please?

|

Bug

|

low

|

Critical

|

358,974,323 |

pytorch

|

[feature request] - Allow sequences lengths to be 0 in PackSequence

|

Hi,

### Context

I'm currently working on some NLP stuff which includes working on character-level encoding through RNNs. For this, I'm using pack_padded_sequence/pad_packed_sequence which does the job for word-level encoding, but starts to be a little bit more annoying for the chars.

**_I'm using batch_first=True axis, but the same can be easily applied to batch_first=False_**

The character tensor's shape is [B, W, C, *], where B is the batch axis, W is the word axis and C is the character axis for each word (in our case we flatten the first two axes, B and W, as each word is independent of the others, resulting in a forwarded tensor of shape [B x W, C, *]).

Then, as W already contains some padded indexes, some C entries are themselves padding and so have length = 0, which throws the following error when trying to use pack_padded_sequence:

`ValueError: Length of all samples has to be greater than 0, but found an element in 'lengths' that is <= 0`

It seems that this behaviour comes from the following: https://github.com/pytorch/pytorch/blob/master/aten/src/ATen/native/PackedSequence.cpp#L22

### Proposition

It would be interesting to relax the constraint on the sequence length to be >= 0 in PackSequence, and to handle the "0-case" directly, as is currently done to generate each batch that will go to the RNN.
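Until something like this is supported, one possible workaround is to run the encoder only on the non-empty sequences and scatter the results back. The following is a minimal sketch in plain Python (no torch), not the proposed PackSequence change itself. The names `encode_skipping_empty` and `mean_encoder` are hypothetical; `mean_encoder` merely stands in for the pack/RNN/unpack step, and empty sequences are assumed to map to a zero vector.

```python
from typing import Callable, List, Sequence


def encode_skipping_empty(
    sequences: Sequence[Sequence[float]],
    encode: Callable[[List[Sequence[float]]], List[List[float]]],
    hidden_size: int,
) -> List[List[float]]:
    """Run `encode` on non-empty sequences only; empty ones get a zero vector."""
    # Indices of the sequences that would be accepted (length > 0).
    keep = [i for i, seq in enumerate(sequences) if len(seq) > 0]
    # Pre-fill every output slot with zeros (assumed result for padding entries).
    outputs = [[0.0] * hidden_size for _ in sequences]
    if keep:
        encoded = encode([sequences[i] for i in keep])
        # Scatter the encoded vectors back to their original positions.
        for i, vec in zip(keep, encoded):
            outputs[i] = vec
    return outputs


def mean_encoder(batch: List[Sequence[float]]) -> List[List[float]]:
    """Hypothetical stand-in for the RNN: returns [mean, length] per sequence."""
    return [[sum(seq) / len(seq), float(len(seq))] for seq in batch]


result = encode_skipping_empty([[1.0, 3.0], [], [2.0]], mean_encoder, hidden_size=2)
```

With real tensors the same idea would use a boolean mask over `lengths` instead of a Python list of indices.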

Thanks,

Morgan

cc @albanD @mruberry @jbschlosser @zou3519

|

module: nn,module: rnn,triaged,enhancement

|

low

|

Critical

|

358,999,708 |

go

|

cmd/go: add modvendor sub-command

|

Creating this issue as a follow up to https://github.com/golang/go/issues/26366 (and others).

`go mod vendor` is documented as follows:

```

Vendor resets the main module's vendor directory to include all packages

needed to build and test all the main module's packages.

It does not include test code for vendored packages.

```

Much of the surprise in https://github.com/golang/go/issues/26366 comes about because people are expecting "other" files to also be included in `vendor`.

An alternative to the Go 1.5 `vendor` is to instead "vendor" the module download cache. A proof of concept of this approach is presented here:

https://github.com/myitcv/go-modules-by-example/blob/master/012_modvendor/README.md

Hence I propose `go mod modvendor`, which would be documented as follows:

```

Modvendor resets the main module's modvendor directory to include a

copy of the module download cache required for the main module and its

transitive dependencies.

```

The name and the documentation are clearly not final.

### Benefits (WIP)

* Eliminates any potential confusion around what is in/not in `vendor`

* Easier to contribute patches/fixes to upstream module authors (via something like [`gohack`](https://github.com/rogpeppe/gohack)), because the entire module is available

* The modules included in `modvendor` are an _exact_ copy of the original modules. This makes it easier to check their fidelity at any point in time, with either the source or some other reference (e.g. Athens)

* Makes clear the source of modules, via the use of `GOPROXY=/path/to/modvendor`. No potential for confusion like "will the `modvendor` of my dependencies be used?"

* A single deliverable

* Fully reproducible and high fidelity builds (modules in general gives us this, so just re-emphasising the point)

* ...

### Costs (WIP)

* The above steps are currently manual; tooling (the go tool?) can fix this

* Reviewing "vendored" dependencies is now more involved without further tooling. For example it's no longer possible to simply browse the source of a dependency via a GitHub PR when it is added. Again, tooling could help here. As could some central source of truth for trusted, reviewed modules (Athens? cc @bketelsen @arschles)

* ...

### Related discussion

Somewhat related to discussion in https://github.com/golang/go/issues/27227 (cc @rasky) where it is suggested the existence of `vendor` should imply the `-mod=vendor` flag. The same argument could be applied here, namely the existence of `modvendor` implying the setting of `GOPROXY=/path/to/modvendor`. This presupposes, however, that the idea of `modvendor` makes sense in the first place.

### Background discussion:

https://twitter.com/_myitcv/status/1038885458950934528

cc @StabbyCutyou @fatih

cc @bcmills

|

NeedsDecision,modules

|

medium

|

Major

|

359,065,710 |

rust

|

(Identical) function call with Generic arguments breaks compilation when called from within another function that has unrelated Generic arguments

|

I searched and couldn't find a similar issue.

The following code shows an example of a function ```interpolate_linear``` that, when called with identical (literal) arguments, causes a compiler error in the function ```percentile``` (line 16), but no error in the function ```call_interpolate_linear``` (line 21). I haven't had time to narrow the issue down yet, but nevertheless I think the code is valid.

```

use std::convert::From;

use std::fmt::Debug;

pub fn percentile<T: Debug + Copy>(

data_set: &Vec<T>,

n: f64,

) -> f64

where

f64: From<T>,

{

let rank = n * (data_set.len() as f64 - 1f64);

let below = rank.floor() as usize;

let mut above = rank.floor() as usize + 1;

if above == data_set.len() {

above = data_set.len() - 1;

}

let lower_value = data_set[below];

let higher_value = data_set[above];

interpolate_linear(&1.0, &2.0, 0.5); // this line does not compile

interpolate_linear(&lower_value, &higher_value, n)

}

pub fn call_interpolate_linear() {

interpolate_linear(&1.0, &2.0, 0.5); // this line does compile

}

pub fn interpolate_linear<T: Copy>(

a: &T,

b: &T,

how_far_from_a_to_b: f64,

) -> f64

where

f64: From<T>,

{

let a = f64::from(*a);

let b = f64::from(*b);

a + (how_far_from_a_to_b * (b - a))

}

fn main() {}

```

Here is the compiler error:

```

error[E0308]: mismatched types

--> functions_issue.rs:16:24

|

16 | interpolate_linear(&1.0, &2.0, 0.5); // this line does not compile

| ^^^^ expected type parameter, found floating-point variable

|

= note: expected type `&T`

found type `&{float}`

error[E0308]: mismatched types

--> functions_issue.rs:16:30

|

16 | interpolate_linear(&1.0, &2.0, 0.5); // this line does not compile

| ^^^^ expected type parameter, found floating-point variable

|

= note: expected type `&T`

found type `&{float}`

error: aborting due to 2 previous errors

For more information about this error, try `rustc --explain E0308`.

```

|

C-enhancement,A-diagnostics,T-compiler

|

low

|

Critical

|

359,070,956 |

rust

|

NLL: document specs for (new) semantics in rust ref (incl. deviations from RFC)

|

The [NLL RFC][] provided a specification for what we planned to implement. (Or at least it tried to do so.)

[NLL RFC]: https://github.com/rust-lang/rfcs/blob/master/text/2094-nll.md

Since then, the NLL implementation has deviated in various ways from that specification.

This ticket is just noting that:

1. we did deviate in various ways, and

2. we should plan to document the actual semantics, in a manner suitable for the rust reference.

It would be good to link here any PRs/issues where such deviations were implemented or discussed.

|

A-lifetimes,P-medium,A-borrow-checker,T-compiler,A-NLL,NLL-reference

|

low

|

Major

|

359,071,761 |

electron

|

AppContainer Process Isolation on Windows 10

|

Using tools like [`electron-windows-store`](https://github.com/felixrieseberg/electron-windows-store), Electron can be packaged as an `appx` app and run in the same environment as Windows Store apps, commonly known as UWP apps. They're still `exe` binaries, they're just running as part of a package and with a package identity attached.

While those applications are running within a scoped amount of virtualization (namely, filesystem and registry redirection), they're not actually running in a process isolation sandbox like their proper UWP siblings. Their capability is therefore `<rescap:Capability Name="runFullTrust"/>`.

With RS5 (October 2018 Update), Windows 10 introduces "partial trust", which will allow applications running in the desktop bridge to make use of the same app container process isolation security as proper UWP applications.

### Getting Electron ready for AppContainer

- [ ] **Get final appxmanifest.xml for partial trust applications from Microsoft**

- [ ] **Create a simple test harness to create and test partial trust Electron apps**

- [ ] **Verify which APIs need UWP additions to function within sandbox**

- [ ] **Extend non-functional APIs with UWP APIs**

We know, as an example, that `shell.*` APIs do not work. We'll need to augment those APIs to use UWP counterparts when [`isRunningInDesktopBridge()`](https://github.com/electron/electron/blob/163e2d35272a1269394add1b941ad961ad801c31/brightray/common/application_info_win.cc#L67-L107) returns `true`. We need to audit every single API and make sure that they either work fine or are documented as non-functional.

|

enhancement :sparkles:,platform/windows

|

low

|

Minor

|

359,095,183 |

pytorch

|

at::Device makes it very easy to write buggy code

|

Imagine this method:

```cpp
Tensor Tensor::cuda(Device dev = at::Device(at::DeviceKind::CUDA)) {
  if (this->device() == dev) {
    return *this;
  }
  return ...; // do the transfer
}
```

Can you see the error? I didn't.

<br/>

<br/>

<br/>

<br/>

<br/>

<br/>

<br/>

<br/>

<br/>

<br/>

<br/>

The problem lies in `operator==` of `at::Device`, which enforces strict equality of device kinds and indices. This would be fine if indices were always guaranteed to be valid, but they are in fact optional. On the other hand, the device objects returned from `this->device()` are always guaranteed to have a device set, and so they will always compare as not-equal to the default argument, *always forcing the tensor copy*.
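The pitfall can be reproduced with a toy model. The sketch below is plain Python, not the real `at::Device`: the `Device` dataclass and its `with_index` helper are hypothetical names, and the "currently selected device" is assumed to be index 0. It only illustrates why strict field-wise equality never matches when one side has no index.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Device:
    """Toy stand-in for at::Device: a kind plus an optional index."""
    kind: str
    index: Optional[int] = None

    def with_index(self, current_index: int) -> "Device":
        # Hypothetical helper: fill a missing index with the current device.
        if self.index is not None:
            return self
        return replace(self, index=current_index)


default_arg = Device("cuda")       # like at::Device(at::DeviceKind::CUDA): no index
tensor_device = Device("cuda", 0)  # like this->device(): index always set

# Strict equality compares both kind and index, so this is False
# even though the tensor already lives on the selected CUDA device.
strict_equal = tensor_device == default_arg

# Filling in the index first makes the comparison behave as intended.
normalized_equal = tensor_device == default_arg.with_index(0)
```

In the method above, the `strict_equal` case corresponds to the early `return *this` never being taken.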

It would be great if we could resolve this in some way. I have three proposals:

#### Create another device type

We want to allow people to specify a device type for their scripts, which often involves specifying the kind only and ignoring the index. This is what `at::Device` aims to do. On the other hand, internal library functions are almost always functional, and use the device to decide what to do *right now*. Those use cases pretty much always require us to have a device index bound to that object.

This proposal would be to add `at::FullDevice` (better name ideas welcome), which acts similarly to `at::Device`, but is guaranteed to have a device index set. We would need implicit conversions going in both ways because:

- `at::Device -> at::FullDevice` would be needed when users are calling into library functions (they would take `FullDevice` as arguments now). This conversion would be a no-op if the index is specified, and would retrieve the currently selected CUDA device otherwise.

- `at::FullDevice -> at::Device` this is a trivial conversion. `Tensor::device()` should now return `at::FullDevice`, but user code will always work with `at::Device`, which is why we need the conversion.

#### Do nothing. Add a warning maybe.

Plus a method like `ensure_has_index()`, which would set the index to currently selected device of this kind if it's missing.

#### Change `operator==` of `at::Device` to depend on `thread_local` state

This means that if we have a CUDA device with no index set, inside `operator==` we will assume that it has an index equal to the currently selected device. I don't like this one all that much, because it makes a supposedly simple operation quite complicated, and might break `operator==` invariants when someone e.g. uses a set of devices, and changes the thread local state during its lifetime.

---

cc @goldsborough

|

triaged,better-engineering

|

low

|

Critical

|

359,138,529 |

vue

|

Oddity with JS transition hooks used in combination with CSS

|

### Version

2.5.17

### Reproduction link

[https://codesandbox.io/s/6x4k5vrrkn](https://codesandbox.io/s/6x4k5vrrkn)

### Steps to reproduce

Remove the [unused] `done` parameter from the `leave` callback signature in `SideSheet.vue`.

### What is expected?

The component to transition both on enter and leave.

### What is actually happening?

The component enters immediately (without transitioning).

---

The `done` callback shouldn't be needed if the transition duration is implicit in CSS (as noted in the docs). However, by retaining the `done` parameter in the function signature it is unclear why it should "work" (since it's unused within the function definition itself).

<!-- generated by vue-issues. DO NOT REMOVE -->

|

transition

|

medium

|

Minor

|

359,139,444 |

vue

|

Race condition in transition-group

|

### Version

2.5.17

### Reproduction link

[https://jsfiddle.net/nkovacs/Lskfredn/](https://jsfiddle.net/nkovacs/Lskfredn/)

### Steps to reproduce

1. Click the add button

### What is expected?

the animation should work properly, and animation classes should be cleaned up

### What is actually happening?

the enter animation doesn't work and the new item's element keeps the `list-enter-to` class forever

---

The style tag binding triggers a second rerender of the transition-group component between the transition-group setting `_enterCb` on the entering new child and `nextFrame` triggering its callback. `prevChildren` is updated to include the new item, and `update` calls the pending `_enterCb` callback. Then after that, `nextFrame` triggers, but because `_enterCb` can only be called once, it won't be called, so the `enter-to` class will remain on the element.

This only happens if the transition-group has a move transition.

The bug also occurs if the elements are changed between `update` and `nextFrame`: https://jsfiddle.net/nkovacs/cnjso1h5/

<!-- generated by vue-issues. DO NOT REMOVE -->

|

transition

|

low

|

Critical

|

359,151,138 |

go

|

cmd/compile: automatically stack-allocate small non-escaping slices of dynamic size

|

This commit:

https://github.com/golang/go/commit/95a11c7381e01fdaaf34e25b82db0632081ab74e

shows a real-world performance gain triggered by moving a small non-escaping slice to the stack. It is my understanding that the Go compiler always allocated the slice on the heap because the length was not known at compile time.

Would it make sense to attempt a similar code transformation for many/all non-escaping slices? What would be the cons? Any suggestions on how to identify which slices could benefit from this transformation and which would possibly just create overhead?

|

Performance,NeedsInvestigation,compiler/runtime

|

low

|

Major

|

359,165,290 |

pytorch

|

[JIT][tracer] Slicing shape is specialized to tensor rank

|

Example:

```python
import torch

def fill_row_zero(x):
    x = torch.cat((torch.rand(1, *x.shape[1:]), x[1:]), dim=0)
    return x

traced = torch.jit.trace(fill_row_zero, (torch.rand(3, 4),))
print(traced.graph)
traced(torch.rand(3, 4, 5))
```

```

graph(%0 : Float(3, 4)) {

%4 : int = prim::Constant[value=1]()

%5 : int = aten::size(%0, %4)

%6 : Long() = prim::NumToTensor(%5)

%7 : int = prim::TensorToNum(%6)

%8 : int = prim::Constant[value=1]()

%9 : int[] = prim::ListConstruct(%8, %7)

%10 : int = prim::Constant[value=6]()

%11 : int = prim::Constant[value=0]()

%12 : int[] = prim::Constant[value=[0, -1]]()

%13 : Float(1, 4) = aten::rand(%9, %10, %11, %12)

%14 : int = prim::Constant[value=0]()

%15 : int = prim::Constant[value=1]()

%16 : int = prim::Constant[value=9223372036854775807]()

%17 : int = prim::Constant[value=1]()

%18 : Float(2, 4) = aten::slice(%0, %14, %15, %16, %17)

%19 : Dynamic[] = prim::ListConstruct(%13, %18)

%20 : int = prim::Constant[value=0]()

%21 : Float(3, 4) = aten::cat(%19, %20)

return (%21);

}

```

The `size()` calls we emit (e.g. `%5 : int = aten::size(%0, %4)`) are specialized to the rank of the tensor we called `.shape` on.
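A toy model may make the specialization easier to see. This is a sketch in plain Python and not the actual tracer: `trace_shape_tail` and `replay` are hypothetical names, and the assumption is simply that `x.shape[1:]` gets unrolled into one recorded `size(x, dim)` call per trailing dimension of the example input.

```python
from typing import List, Sequence, Tuple

Op = Tuple[str, int]  # ("size", dim): a recorded size(x, dim) call


def trace_shape_tail(example_shape: Sequence[int]) -> List[Op]:
    """Toy 'trace' of x.shape[1:]: one size op per trailing dim of the example."""
    return [("size", dim) for dim in range(1, len(example_shape))]


def replay(ops: List[Op], shape: Sequence[int]) -> List[int]:
    """Replay the recorded ops against a new input shape."""
    return [shape[dim] for _, dim in ops]


ops = trace_shape_tail((3, 4))     # traced with a rank-2 example input
replayed = replay(ops, (3, 4, 5))  # later called with a rank-3 input
eager = list((3, 4, 5)[1:])        # what x.shape[1:] yields without tracing
```

Because only dimension 1 was recorded, the replay sees a single trailing size where eager execution would see two, which matches the single `aten::size` call in the graph above.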

|

oncall: jit

|

low

|

Minor

|

359,184,495 |

flutter

|

Search widget's text field might not have large enough tap area

|

Currently at 44 height due to the default edgeInsets of the no-border decoration. Android a11y scanner doesn't flag this for some reason; we should determine why.

|

framework,a: accessibility,P2,team-framework,triaged-framework

|

low

|

Minor

|

359,199,107 |

pytorch

|

[CLEANUP] Context functions should return TypeExtendedInterface, not Type

|

See https://github.com/pytorch/pytorch/pull/11461 for information.

CC @ezyang.

|

triaged,better-engineering

|

low

|

Minor

|

359,213,205 |

kubernetes

|

Reduce the set of metrics exposed by the kubelet

|

### Background

In 1.12, the kubelet exposes a number of sources for metrics directly from [cAdvisor](https://github.com/google/cadvisor#cadvisor). This includes:

* [cAdvisor prometheus metrics](https://github.com/google/cadvisor/blob/master/docs/storage/prometheus.md#prometheus-metrics) at [`/metrics/cadvisor`](https://github.com/kubernetes/kubernetes/blob/master/pkg/kubelet/server/server.go#L277)

* [cAdvisor v1 Json API](https://github.com/google/cadvisor/blob/master/info/v1/container.go#L126) at [`/stats/`, `/stats/container`, `/stats/{podName}/{containerName}`, and `/stats/{namespace}/{podName}/{uid}/{containerName}`](https://github.com/kubernetes/kubernetes/blob/master/pkg/kubelet/server/stats/handler.go#L111)

* [cAdvisor machine info](https://github.com/google/cadvisor/blob/master/info/v1/machine.go#L159) at [`/spec`](https://github.com/kubernetes/kubernetes/blob/master/pkg/kubelet/server/server.go#L291)

The kubelet also exposes the [summary API](https://github.com/kubernetes/kubernetes/blob/master/pkg/kubelet/apis/stats/v1alpha1/types.go#L24), which is not exposed directly by cAdvisor, but queries cAdvisor as one of its sources for metrics.

The [Monitoring Architecture](https://github.com/kubernetes/community/blob/master/contributors/design-proposals/instrumentation/monitoring_architecture.md) documentation describes the path for "core" metrics, and for "monitoring" metrics. The [Core Metrics](https://github.com/kubernetes/community/blob/master/contributors/design-proposals/instrumentation/core-metrics-pipeline.md#core-metrics-in-kubelet) proposal describes the set of metrics that we consider core, and their uses. The motivation for the split architecture is:

* To minimize the performance impact of stats collection for core metrics, allowing these to be collected more frequently

* To make the monitoring pipeline replaceable, and extensible.

### Current kubelet metrics that are not included in core metrics

* Pod and Node-level Network Metrics

* Persistent Volume Metrics

* Container-level (Nvidia) GPU Metrics

* Node-Level RLimit Metrics

* Misc Memory Metrics (e.g. PageFaults)

* Container, Pod, and Node-level Inode metrics (for ephemeral storage)

* Container, Pod, and Node-level DiskIO metrics (from cAdvisor)

Deprecating and removing the Summary API will require out-of-tree sources for each of these metrics. "Direct" cAdvisor endpoints are not often used, and have even been broken for multiple releases (https://github.com/kubernetes/kubernetes/pull/62544) without anyone raising an issue.

### Working Items

* [x] [1.13] Introduce Kubelet `pod-resources` grpc endpoint; KEP: https://github.com/kubernetes/community/pull/2454

* [x] [1.14] Introduce Kubelet Resource Metrics API

* [x] [1.15] Deprecate the "direct" cAdvisor API endpoints by adding and deprecating a `--enable-cadvisor-json-endpoints` flag

* [x] [1.18] Default the `--enable-cadvisor-json-endpoints` flag to disabled

* [ ] [1.21] Remove the `--enable-cadvisor-json-endpoints` flag

* [ ] [1.21] Transition Monitoring Server to Kubelet Resource Metrics API ([requires 3 versions skew](https://github.com/kubernetes/kubernetes/pull/67829#issuecomment-416873857))

* [ ] [TBD] Propose out-of-tree replacements for kubelet monitoring endpoints

* [ ] [TBD] Deprecate the Summary API and cAdvisor prometheus endpoints by adding and deprecating a `--enable-container-monitoring-endpoints` flag

* [ ] [TBD+2] Remove "direct" cAdvisor API endpoints

* [ ] [TBD+2] Default the `--enable-container-monitoring-endpoints` flag to disabled

* [ ] [TBD+4] Remove the Summary API, cAdvisor prometheus metrics and remove the `--enable-container-monitoring-endpoints` flag.

### Open Questions

* Should the kubelet be a source for any monitoring metrics?

* For example, metrics about the kubelet itself, or DiskIO metrics for empty-dir volumes (which are "owned" by the kubelet).

* What will provide the metrics listed above, now that the kubelet no longer does?

* cAdvisor can provide Network, RLimit, Misc Memory metrics, Inode metrics, and DiskIO metrics.

* cAdvisor only works for some runtimes, but is a drop-in replacement for "direct" cAdvisor API endpoints

* Container Runtimes can be a source for container-level Memory, Inode, Network and DiskIO metrics.

* NVidia GPU metrics provided by a daemonset published by NVidia

* No source for Persistent Volume metrics?

/sig node

/sig instrumentation

/kind feature

/priority important-longterm

cc @kubernetes/sig-node-proposals @kubernetes/sig-instrumentation-misc

|

sig/node,kind/feature,sig/instrumentation,priority/important-longterm,lifecycle/frozen

|

high

|

Critical

|

359,214,282 |

go

|

cmd/go: do not cache tool output if tools print to stdout/stderr

|

# **Update, Oct 7 2020**: see https://github.com/golang/go/issues/27628#issuecomment-702252564 for most recent proposal in this issue.

### What version of Go are you using (`go version`)?

tip (2e5c32518ce6facc507862f4156d4e6ac776754f), also Go 1.11

### Does this issue reproduce with the latest release?

Yes

### What operating system and processor architecture are you using (`go env`)?

darwin/amd64

### What did you do?

```

$ go build -toolexec=/usr/bin/time hello.go

# command-line-arguments

0.01 real 0.00 user 0.00 sys

# command-line-arguments

0.12 real 0.11 user 0.02 sys

$ go build hello.go

# command-line-arguments

0.01 real 0.00 user 0.00 sys

# command-line-arguments

0.12 real 0.11 user 0.02 sys

$

```

### What did you expect to see?

The second invocation of `go build` doesn't have `-toolexec`, so it should not invoke the toolexec command (which I think it doesn't), nor reprint its output.

### What did you see instead?

toolexec output is reprinted.

In fact, I think it probably should not cache at all if `-toolexec` is specified, since the external command that toolexec invokes may do anything, and may (intentionally) not be reproducible.

cc @dr2chase

|

Proposal,Proposal-Accepted,GoCommand

|

medium

|

Critical

|

359,222,238 |

rust

|

Tracking Issue: Procedural Macro Diagnostics (RFC 1566)

|

This is a tracking issue for diagnostics for procedural macros spawned off from https://github.com/rust-lang/rust/issues/38356.

## Overview

### Current Status

* Implemented under `feature(proc_macro_diagnostic)`

* In use by Rocket, Diesel, Maud

### Next Steps

- [x] https://github.com/rust-lang/rust/pull/44125

- [x] Implement introspection methods (https://github.com/rust-lang/rust/pull/52896)

- [x] Implement multi-span support (https://github.com/rust-lang/rust/pull/52896)

- [ ] Implement lint id for warnings (https://github.com/rust-lang/rust/pull/135432)

- [ ] Document thoroughly

- [ ] Stabilize

## Summary

The initial API was implemented in https://github.com/rust-lang/rust/pull/44125 and is being used by crates like Rocket and Diesel to emit user-friendly diagnostics. Apart from thorough documentation, I see two blockers for stabilization:

1. **Multi-Span Support**

At present, it is not possible to create/emit a diagnostic via `proc_macro` that points to more than one `Span`. The internal diagnostics API makes this possible, and we should expose this as well.

The changes necessary to support this are fairly minor: a `Diagnostic` should encapsulate a `Vec<Span>` as opposed to a `Span`, and the `span_` methods should be made generic such that either a `Span` or a `Vec<Span>` (ideally also a `&[Span]`) can be passed in. This makes it possible for a user to pass in an empty `Vec`, but this case can be handled as if no `Span` was explicitly set.

2. **Lint-Associated Warnings**

At present, if a `proc_macro` emits a warning, it is unconditional as it is not associated with a lint: the user can never silence the warning. I propose that we require proc-macro authors to associate every warning with a lint-level so that the consumer can turn it off.

No API has been formally proposed for this feature. I informally proposed that we allow proc-macros to create lint-levels in an ad-hoc manner; this differs from what happens internally, where all lint-levels have to be known a priori. In code, such an API might look like:

```rust

val.span.warning(lint!(unknown_media_type), "unknown media type");

```

The `lint!` macro might check for uniqueness and generate a (hidden) structure for internal use. Alternatively, the proc-macro author could simply pass in a string: `"unknown_media_type"`.

|

A-diagnostics,T-lang,T-libs-api,B-unstable,C-tracking-issue,A-macros-1.2,Libs-Tracked,I-lang-radar

|

high

|

Minor

|

359,231,336 |

pytorch

|

CrossEntropyLoss, ignore_index does not prevent back-prop if the logits are -inf

|

## Issue description

When using CrossEntropyLoss, I assumed that as long as I ignore a target, its loss would not be calculated and would not be back-propagated. Therefore I passed logits = -float('inf') for the targets I wanted to skip.

However, even though the loss is skipped, loss.backward() still brings the infinity into my gradients.

I think this behavior is at least worth noting in the documentation for CrossEntropyLoss.

## Code example

```

import torch

loss = torch.nn.CrossEntropyLoss(ignore_index=-1)

input = torch.randn(3, 5, requires_grad=True)

target = torch.empty(3).long().fill_(-1)

logits = input - float('inf')

output = loss(logits, target)

output.backward()

input.grad

```
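One way to see how a non-finite value can survive masking is the pure-Python sketch below. It is not PyTorch's implementation: it assumes a max-shifted log-softmax and assumes that the backward pass scales an ignored sample's gradient by a weight of 0 rather than skipping it. The helper names `log_softmax` and `masked_gradient` are hypothetical.

```python
import math

NEG_INF = float("-inf")


def log_softmax(row):
    """Max-shifted log-softmax over one row of logits (illustrative only)."""
    m = max(row)
    # For an all -inf row this computes -inf - (-inf), which is nan.
    shifted = [x - m for x in row]
    log_sum = math.log(sum(math.exp(s) for s in shifted))
    return [s - log_sum for s in shifted]


def masked_gradient(row, weight):
    """Assumed model: per-sample gradient = weight * softmax(row).

    weight is 0.0 for an ignored target; 0.0 * nan is still nan.
    """
    return [weight * math.exp(v) for v in log_softmax(row)]


ignored_row = [NEG_INF] * 5
grad = masked_gradient(ignored_row, weight=0.0)
print(all(math.isnan(g) for g in grad))
```

Under these assumptions, multiplying by a zero weight does not clean up the row, which is consistent with the non-finite values showing up in `input.grad` above.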

## System Info

Collecting environment information...

PyTorch version: 0.4.1

Is debug build: No

CUDA used to build PyTorch: 9.0.176

OS: Ubuntu 16.04.5 LTS

GCC version: (Ubuntu 5.4.0-6ubuntu1~16.04.10) 5.4.0 20160609

CMake version: version 3.5.1

Python version: 3.6

Is CUDA available: Yes

CUDA runtime version: Could not collect

GPU models and configuration:

GPU 0: GeForce GTX 1080 Ti

GPU 1: GeForce GTX 1060 6GB

Nvidia driver version: 396.44

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.7.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_static_v7.a

Versions of relevant libraries:

[pip] Could not collect

[conda] cuda90 1.0 h6433d27_0 pytorch

[conda] pytorch 0.4.1 py36_cuda9.0.176_cudnn7.1.2_1 soumith

[conda] torchvision 0.1.9 py36h7584368_1 soumith

cc @brianjo @mruberry @albanD @jbschlosser

|

module: docs,module: nn,module: loss,triaged

|

low

|

Critical

|

359,267,822 |

go

|

x/build/internal/gophers: improve internal package design

|

## Problem

> _Total mess, but a functional mess, and a starting point for the future._

> — Commit [`891b12dc`](https://github.com/golang/build/commit/891b12dcbdd4ee448d573a78681b2e785daa71ca)

The `gophers` package is currently hard to use and hard to modify. It's not easy to read its [documentation](https://godoc.org/golang.org/x/build/internal/gophers) and start using it:

```Go

// (no documentation)

func GetPerson(id string) *Person

```

I've used and modified it multiple times, and each time, I had to read its internal code to figure out:

- what kind of value can "id" be?

- what is its exact format?

- is leading '@' required for GH usernames? optional? unneeded?

- is it case sensitive or not?

- in what order/what type of information to add to the `addPerson(...)` lines?

Despite being an internal package, `gophers` is an important package providing value to 4 other packages, and potentially becoming used in more places. It's no longer just for computing stats, but also for tracking package owners and assigning reviews. Being internal means we can change it easily (even break the API if needed) if we come to agreement on an improved design.

## Proposed Solution

I think it can be made easier to use by:

- documenting it (so its [godoc](https://godoc.org/golang.org/x/build/internal/gophers) is all you need to use it, no need to read code)

For example:

```Go

// GetPerson looks up a person by id and returns one if found, or nil otherwise.

//

// The id is case insensitive, and may be one of:

// - full name ("Brad Fitzpatrick")

// - GitHub username ("@bradfitz")

// - Gerrit <account ID>@<instance ID> ("5065@62eb7196-b449-3ce5-99f1-c037f21e1705")

// - email ("[email protected]")

func GetPerson(id string) *Person

```

@bradfitz If you prefer not to be used as an example, let me know, and we can use someone else (I'm happy to volunteer) or use a generic name. But I think a well known real user makes for a better example.

Made easier to modify by:

- making its internal `addPerson` logic more explicit rather than implicit

For example, instead of what we have now:

```Go

addPerson("Filippo Valsorda", "", "6195@62eb7196-b449-3ce5-99f1-c037f21e1705")

addPerson("Filippo Valsorda", "[email protected]")

addPerson("Filippo Valsorda", "[email protected]", "11715@62eb7196-b449-3ce5-99f1-c037f21e1705")

addPerson("Filippo Valsorda", "[email protected]", "[email protected]", "@FiloSottile")

// what kind of changes should be done to modify the end result Person struct?

```

It could be something more explicit, along the lines of:

```Go

add(Person{

Name: "Filippo Valsorda",

GitHub: "FiloSottile",

Gerrit: "[email protected]",

GerritIDs: []int{6195, 11715}, // Gerrit account IDs.

GitEmails: []string{

"[email protected]",

"[email protected]",

"[email protected]",

},

gomote: "valsorda", // Gomote user.

})

```

The intention is to make it easy for people to manually add and modify their entries, with predictable results, while still being able to use code generation (à la `gopherstats -mode=find-gerrit-gophers`) to add missing entries.

This is just a quick draft proposal, not necessarily the final API design. If the general direction is well received but there are concerns or improvement suggestions, I'm happy to flesh it out and incorporate feedback. I wouldn't send a CL until I have a solid design.

/cc @bradfitz @andybons

|

Documentation,Builders,NeedsFix

|

low

|

Major

|

359,299,918 |

rust

|

Allow setting breakpoint when Err() is constructed in debug builds

|

This would be really helpful for tracking down the source of errors. It seems the simplest way to allow this would be to emit a no-inline function that's used for setting a particular enum variant.

|

C-feature-request

|

low

|

Critical

|

359,473,423 |

three.js

|

FBXLoader not working with many skeleton animations (e.g., from Mixamo.com)

|

##### Description of the problem

The FBXLoader does not work with many skeleton animations. e.g., from Mixamo.com. One example from Mixamo that fails is the following:

Character: WHITECLOWN N HALLIN

Animation: SAMBA DANCING

You can get this model by downloading it from Mixamo directly but I have also attached it to this issue.

[WhiteClownSambaDancing.zip](https://github.com/mrdoob/three.js/files/2375109/WhiteClownSambaDancing.zip)

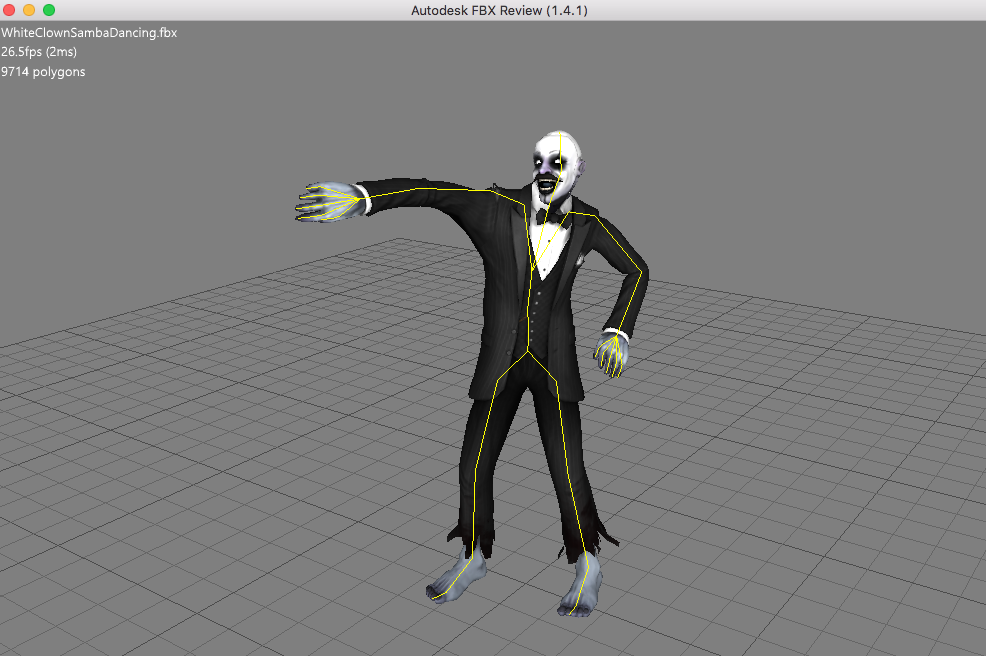

Here is the displayed result using the webgl_loader_fbx.html sample in THREE v96 modified to load the WhiteClownSambaDancing.fbx:

_Screen shot using webgl_loader_fbx.html_

Here is the same FBX file displayed in AutoDesk FBX Review:

_Screen shot using AutoDesk FBX Review_

There are many other Mixamo character/animations pairings that work fine with the FBXLoader but many that do not. It is possible (though not confirmed) that the ones that fail were created with Maya (this has been stated as a possible problem in some other issues).

Also, the WhiteClownSambaDancing.fbx file loads correctly in many other programs, including the Mixamo site itself and [http://www.open3mod.com/](http://www.open3mod.com/). The latter is fully open source, so you can see the exact code they use to perform the node/bone transforms and animations. They actually use AssImp for their conversion and you can see the exact code there. In particular, see the following for their FBX import 3D transform handling:

[https://github.com/assimp/assimp/blob/master/code/FBXConverter.cpp#L644](https://github.com/assimp/assimp/blob/master/code/FBXConverter.cpp#L644)

After digging into the FBXLoader code a bit, it seems there may be several areas where the issue could lie:

- There does not seem to be any code to honor the FBX inherit type on the nodes.

- There does not seem to be any code to implement rotate/scale pivot points on the nodes (NOT the geometry transforms which are implemented).

The following code from the AutoDesk FBX SDK may be of some help for implementing both of the above, esp. the code and comments in CalculateGlobalTransform():

[AutoDesk FBX source code Transformations/main.cxx](http://docs.autodesk.com/FBX/2014/ENU/FBX-SDK-Documentation/index.html?url=cpp_ref/_transformations_2main_8cxx-example.html,topicNumber=cpp_ref__transformations_2main_8cxx_example_htmlfc10a1e1-b18d-4e72-9dc0-70d0f1959f5e)

There are some other THREE.js issues which have not been fully addressed regarding incorrect FBX animations loaded via the FBXLoader (#11895, #13466, #13821). This issue is about improving the FBXLoader, not a particular file or asset pipeline. Please do not suggest use of FBX2GLTF (which just bakes the animations) or other converters.

Also, we are willing to provide some help with design and coding, if need be, but doing a full solution with a PR is beyond our bandwidth at this time (ping @looeee @Kyle-Larson @takahirox ?).

##### Three.js version

- [ ] Dev

- [X] r96

- [ ] ...

##### Browser

- [x] All of them

- [ ] Chrome

- [ ] Firefox

- [ ] Internet Explorer

##### OS

- [x] All of them

- [ ] Windows

- [ ] macOS

- [ ] Linux

- [ ] Android

- [ ] iOS

##### Hardware Requirements (graphics card, VR Device, ...)

|

Bug,Loaders

|

medium

|

Major

|

359,473,497 |

vscode

|

Use the modifier properties on mouse event instead of tracking keydown/keyup

|

Extracted from our conversation at https://github.com/Microsoft/vscode/commit/e82498a544b88f5041ec7f8b531ab8ca6eb29eaf

|

help wanted,debt,editor-drag-and-drop

|

low

|

Minor

|

359,530,410 |

go

|

x/text/message: package level docs about MatchLanguage are unclear

|

Please answer these questions before submitting your issue. Thanks!

### What version of Go are you using (`go version`)?

go1.11 linux/amd64

### Does this issue reproduce with the latest release?

Yes.

### What operating system and processor architecture are you using (`go env`)?

linux/amd64

### What did you do?

I tried to use `message.MatchLanguage("nl")` to obtain `language.Dutch`, as per the example on [its site](https://godoc.org/golang.org/x/text/message).

See the following code for an example:

```

package main

import (

"golang.org/x/text/message"

"golang.org/x/text/language"

"fmt"

)

func main() {

nl := message.MatchLanguage("nl")

fmt.Println(nl) // Prints "und", expected "nl"

fmt.Println(language.Dutch) // Prints "nl" as expected

p := message.NewPrinter(message.MatchLanguage("nl"))

p.Printf("%.2f\n", 5000.00) // Prints "5,000.00", expected "5.000,00"

p2 := message.NewPrinter(message.MatchLanguage("bn"))

p2.Println(123456.78) // Prints "5,000.00", expected "১,২৩,৪৫৬.৭৮"

}

```

### What did you expect to see?

I expected to receive `language.Dutch`, so that when I called `p.Printf("%.2f\n", 5000.00)`, I would get "5.000,00", as Dutch uses "." for thousand separators and "," for decimal separators.

### What did you see instead?

Instead `message.MatchLanguage("nl")` returned the `und Tag` rather than the `Dutch Tag`. And therefore when I called `p.Printf()` as per the example on the package's description, I got "5,000.00", which ironically matches the example in the description, but the example is wrong.

I also tried the third example in the opening example, which also did not seem to produce the expected result.

It seems to me that `message.MatchLanguage()` either does not work or does not work as described.

Note: Making a new `Printer` with `language.Dutch` works as expected.

|

Documentation,NeedsInvestigation

|

low

|

Minor

|

359,536,193 |

You-Dont-Know-JS

|

Calling template literals "interpolated string literals" is misleading

|

Chapter in question: https://github.com/getify/You-Dont-Know-JS/blob/master/es6%20%26%20beyond/ch2.md#template-literals

The suggestion seems to be that template literals are all about strings: there are only examples that result in strings, and phrases like "final string value" and "generating the string from the literal" are used in what should be a general context. But tag functions can return any object, and there are obvious use cases where they'd return regexp objects, DOM nodes, etc.

|

for second edition

|

medium

|

Minor

|

359,548,761 |

flutter

|

Would like to measure/track Flutter's total download size

|

I've seen several claims that our total download is too large, e.g.

https://twitter.com/FerventGeek/status/1038480155990261761

@gspencergoog do you know if we already track this as part of the bundle building?

CC @FerventGeek @mit-mit

|

team,framework,P2,team-framework,triaged-framework

|

low

|

Major

|

359,567,274 |

pytorch

|

Request to import pytest in test/*.py

|

Currently PyTorch uses the builtin `unittest` module for testing. Would it be possible to add a dependency on [pytest](https://docs.pytest.org/en/latest/) so developers can more easily write parametrized tests?

While working on [test/test_distributions.py](https://github.com/pytorch/pytorch/blob/master/test/test_distributions.py), @neerajprad, @alicanb, and @fritzo have found it challenging to write heavily parametrized tests. As a result, test coverage suffers. By contrast in Pyro we use heavily parametrized tests [using pytest](https://docs.pytest.org/en/latest/parametrize.html), and our Pyro tests seem to catch many bugs that aren't caught in PyTorch's own tests (#9917, #9521, #9977, #10241).

In particular, I'd like to be able to gather [xfailing tests](https://docs.pytest.org/en/documentation-restructure/how-to/skipping.html) in batch

```py

DISTRIBUTIONS = [Bernoulli, Beta, Cauchy, ..., Weibull]

@pytest.mark.parametrize('Dist', DISTRIBUTIONS)

def test_that_sometime_fails(Dist):

    dist = Dist(...)

    assert something(dist)

```

and then mark xfailing parameters

```py

DISTRIBUTIONS = [

    Bernoulli,

    Beta,

    pytest.param(Cauchy, marks=[pytest.mark.xfail(reason='schema not found for node')]),

    ...

    Weibull,

]

```

pytest makes it easy to collect xfailing tests in batch (`pytest -v --tb=no`) and to see the entire list of xfailing tests, and makes it easy to run xfailing tests to see which have started passing since the last time tests were run (`pytest -v`, or `pytest --runxfail`). In contrast, `unittest` fixtures typically parametrize via for loops and can report only a single failure on each run. (Apologies if I'm unaware of convenient functionality in `unittest`!)

I think allowing usage of pytest in test/*.py could help improve PyTorch test coverage.

cc @mruberry

|

module: tests,triaged

|

medium

|

Critical

|

359,574,800 |

opencv

|

VideoCapture bug with Acer Switch 5 tablet

|

##### System information (version)

- OpenCV => 3.4.1

- Operating System / Platform => Windows 64 Bit

- Compiler => Visual Studio 2015

##### Detailed description

Camera capture on Acer Switch 5 tablet appears to work, but if I try to manually focus the camera (using the onscreen menu) it actually just applies some kind of sharpen/blur, not focus.

The Windows Camera app allows a very close (macro) focus with the tablet camera, but OpenCV is unable to reproduce this.

It is as if the parameter OpenCV thinks is focus is actually something completely different.

##### Steps to reproduce

```

#include "opencv2/opencv.hpp"

#include <iostream>

using namespace std;

using namespace cv;

int main(){

// Create a VideoCapture object and open the input file

// If the input is the web camera, pass 0 instead of the video file name

VideoCapture cap(0);

// Check if camera opened successfully

if(!cap.isOpened()){

cout << "Error opening video stream or file" << endl;

return -1;

}

cap.set(CV_CAP_PROP_SETTINGS,1); // To display the settings

// Now if the user tries to adjust the focus, something other than focus happens!

// If I leave it on auto-focus I can focus very close up so autofocus still works

// If I switch off autofocus and try to focus using the slider, I cannot focus

// I suspect the parameter being changed by OpenCV is NOT focus, even though it thinks it is

// (Note. I do think the parameter being changed by the auto-focus on/off check box is correct - it switches off auto focus)

while(1){

Mat frame;

// Capture frame-by-frame

cap >> frame;

// If the frame is empty, break immediately

if (frame.empty())

break;

// Display the resulting frame

imshow( "Frame", frame );

// Press ESC on keyboard to exit

char c=(char)waitKey(25);

if(c==27)

break;

}

// When everything done, release the video capture object

cap.release();

// Closes all the frames

destroyAllWindows();

return 0;

}

```

|

priority: low,category: videoio,platform: winrt/uwp

|

low

|

Critical

|

359,579,444 |

rust

|

Confusing error message when wildcard importing two same-named traits

|

Discovered this when using `tokio-async-await` and `futures`. Both define `FutureExt` and `StreamExt` traits in their `preludes`. Both traits are meant to be used at the same time (from what I understand).

```rust

use tokio::prelude::*; // contains StreamExt, FutureExt

use futures::prelude::*; // contains StreamExt, FutureExt

```

After both of these imports, Rust proceeds to act as if _neither_ of the traits was imported, and gives an error hint on any method usage along the lines of "trait not in scope; use `tokio::async_await::stream::StreamExt`".

Here is a Rust playground illustrating the bug: https://play.rust-lang.org/?gist=c8ebd2f79eca354ecb2dd6c828d73ad0&version=nightly&mode=debug&edition=2015

And here is a work-around for it (in nightly): https://play.rust-lang.org/?gist=f28928ef69a1985ee79d321cb58ae7e1&version=nightly&mode=debug&edition=2015

I think the way forward here is a good warning (or error) message when a duplicate trait item import happens between two wildcard imports.

|

C-enhancement,A-diagnostics,A-trait-system,T-compiler

|

low

|

Critical

|

359,608,021 |

bitcoin

|

Test coverage of our networking code

|

Our python functional testing framework is pretty limited for what kinds of p2p behaviors we can test. Basically, we can currently only make manual connections between bitcoind nodes (using the `addnode` rpc), which are treated differently in our code than outbound peers selected using addrman.

While we do have some unit-testing coverage of some of the components (like addrman, and parts of net_processing), I don't believe we currently are able to test the overall logic of how bitcoind uses those components (I recall this coming up when working on #11560, as a specific example).

Anyway I am just mentioning this here as a potential project idea, as this is a material gap in our testing that I think would be valuable to work towards improving, and I wasn't sure how well known this is.

|

Tests

|

medium

|

Critical

|

359,656,898 |

flutter

|

Document how to set SystemChrome brightness properly

|

## Steps to Reproduce

1. Create a simple app with an appbar

2. Try to set the color of the icons manually with `SystemChrome.setSystemUIOverlayStyle(SystemUiOverlayStyle.dark)`

3. The icons' colors don't change

[Here's an example app](https://pastebin.com/k00S4PWs)

[Here's an example video](https://cdn.discordapp.com/attachments/408312522521706496/489544643256516619/2018-09-12_23-15-07.mp4)

I believe the problem stems from [lines 479-497 in app_bar.dart](https://github.com/flutter/flutter/blob/d927c9331005f81157fa39dff7b5dab415ad330b/packages/flutter/lib/src/material/app_bar.dart#L479)

## Logs

```

[√] Flutter (Channel beta, v0.7.3, on Microsoft Windows [Version 10.0.17134.228], locale en-BE)

• Flutter version 0.7.3 at C:\tools\flutter

• Framework revision 3b309bda07 (2 weeks ago), 2018-08-28 12:39:24 -0700

• Engine revision af42b6dc95

• Dart version 2.1.0-dev.1.0.flutter-ccb16f7282

[√] Android toolchain - develop for Android devices (Android SDK 27.0.3)

• Android SDK at C:\Android\android-sdk

• Android NDK location not configured (optional; useful for native profiling support)

• Platform android-27, build-tools 27.0.3

• ANDROID_HOME = C:\Android\android-sdk

• Java binary at: C:\Program Files\Android\Android Studio\jre\bin\java

• Java version OpenJDK Runtime Environment (build 1.8.0_152-release-1024-b02)

• All Android licenses accepted.

[√] Android Studio (version 3.1)

• Android Studio at C:\Program Files\Android\Android Studio

• Flutter plugin version 27.1.1

• Dart plugin version 173.4700

• Java version OpenJDK Runtime Environment (build 1.8.0_152-release-1024-b02)

[√] Connected devices (1 available)

• Android SDK built for x86 64 • emulator-5554 • android-x64 • Android 7.0 (API 24) (emulator)

• No issues found!

```

|

framework,d: api docs,has reproducible steps,P2,found in release: 3.7,found in release: 3.8,team-framework,triaged-framework

|

low

|

Major

|

359,695,769 |

pytorch

|

[feature request] Triangular Matrix Representation

|

I tried searching the documentation, but besides sparse matrices (which in most cases would use _more_ space than a dense matrix), I didn't see any tensor types that take advantage of the knowledge that a tensor is triangular to save space. This would also save time when performing `matmul()`, I'd imagine. For example, my use case is a symmetric matrix (a modification of the [distance matrix](https://en.wikipedia.org/wiki/Distance_matrix)), and I only need the upper half without the diagonal, so performing operations on the other elements would be pointless, and storing them would be a waste of space.
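To make the space saving concrete, here is a minimal pure-Python sketch of packed storage for the strict upper triangle of a symmetric `n x n` matrix. It is not an existing PyTorch type; the class name `StrictUpperTriangular` and its methods are hypothetical. Only `n * (n - 1) / 2` values are stored, and `(i, j)` and `(j, i)` map to the same slot.

```python
class StrictUpperTriangular:
    """Symmetric n x n matrix storing only the entries above the diagonal."""

    def __init__(self, n, fill=0.0):
        self.n = n
        # One slot per unordered pair (i, j) with i < j.
        self.data = [fill] * (n * (n - 1) // 2)

    def _index(self, i, j):
        if not (0 <= i < self.n and 0 <= j < self.n):
            raise IndexError("index out of range")
        if i == j:
            raise IndexError("diagonal entries are not stored")
        if i > j:
            i, j = j, i  # symmetry: (j, i) shares storage with (i, j)
        # Rows 0..i-1 contribute (n-1) + (n-2) + ... + (n-i) slots before row i.
        return i * self.n - i * (i + 1) // 2 + (j - i - 1)

    def __getitem__(self, key):
        i, j = key
        return self.data[self._index(i, j)]

    def __setitem__(self, key, value):
        i, j = key
        self.data[self._index(i, j)] = value


m = StrictUpperTriangular(4)
m[1, 3] = 2.5
print(len(m.data), m[3, 1])
```

For `n = 4` this keeps 6 values instead of 16. A real tensor type would also need kernels such as `matmul()` that understand the packed layout, which this sketch does not attempt.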

Can such a type be implemented? Apologies if I missed something and it already exists.

|

feature,triaged

|

low

|

Major

|

359,707,887 |

godot

|

Audio clipping / static / interference on rapid intervals of sound (fixed in `master`)

|

**Godot version:**

3.1 alpha

**OS/device including version:**

Kubuntu 18.04 and Windows 10

**Issue description:**

Creating a rapidly firing weapon, if sounds are played at intervals less than 0.5 or 0.6 seconds there is a clipping or static sound.

Demo: https://www.dropbox.com/s/q5tx4y6272lgdae/2018-09-12%2017-16-00.mp4?dl=0

Improvement can come from modifying sound files with fade in and fade out at either end, but the issue is still present.

Demo: https://www.dropbox.com/s/hnvlu161x1t62fl/secondtest.mp4?dl=0

In the case of testing with a sine wave sound file, instead of clipping / static there is a sort of interference or modulation sound instead.

Demo: https://www.dropbox.com/s/nd2rcsrdrqxo6sd/2018-09-12%2021-19-52.mp4?dl=0

The clipping / static sound can seemingly be eliminated by setting a LowPassFilter and LowShelfFilter on a sound bus, both with a cutoff of 1000Hz.

These filters don't eliminate the modulation type of sound that comes up when using a sine testing sound.

**Steps to reproduce:**

Create a timer with an interval of about 0.3 seconds and have either a single sound repeat at that interval, or rotate between two different sounds.

Issue present in the editor on Linux and Windows, in builds for Linux and Windows, on 2 PCs in my house, through 3 headphone sets and two speaker sets. Also verified by another user who tested on 2 of their headphone sets.

**Minimal reproduction project:**

[SoundTesting.zip](https://github.com/godotengine/godot/files/2377320/SoundTesting.zip)

**Tests and attempted solutions that didn't help**

Before trying the filters, I also tried:

* Having an AudioStreamPlayer2D as a child of each instantiated missile (this made the effect worse)

* Having the Player character scene play a sound from a child AudioStreamPlayer or AudioStreamPlayer2D

* Creating two child AudioStreamPlayers and alternating between playing one or the other, using the same sound

* Alternating between those two child nodes, but playing two different sounds

* Creating a stand alone scene with an AudioStreamPlayer in it, and instantiating it newly and adding it as a child each time

* Outputting the sound from the two child AudioStreamPlayers to different audio buses

* All three different mix target options

* Reducing volume - tested at -2 dB, -4 dB, -6 dB

* Tested with 9 sound files

* Modified a sound effect with fade in and fade out processing to ensure no clicking in the file itself

* Tried reducing the duration of the sound file to 0.3 seconds, but still had the static effect at intervals of 0.5 seconds or less

* Trying with different sound playback hardware

* Tested on Linux and two installations of Windows

* Tested on 2 PCs

* Tested in editor and in Linux and Windows builds (Windows builds tested on two PCs)

**Extra information**

I spent a few hours with the helpful folks at the Godot discord last night going through various tests to try and isolate the source of the clipping / interference sounds. Our tests did lead us to believe it wasn't an issue localized to my setup alone.

Different people heard different effects from the videos and test project above. Some heard clipping / static on every sound, some heard none at all. One other person heard it as clearly as I did, and confirmed the same on two of their headphone sets.

@starry-abyss had the theory it was something to do with too many high frequency sounds at once, hence we tried the low pass / shelf filters which eliminated the clipping / static sounds.

Given the effect of the filters I'm not sure this is actually an issue as opposed to a sound management technique being required.

If we can confirm this is just about handling sound in a certain way, perhaps I can contribute to the docs with some information for people in the future who run into this problem.

|

bug,confirmed,topic:audio

|

high

|

Critical

|

359,732,707 |

pytorch

|

Add min mode to embedding bags

|

It would be nice to add a `min` mode to `EmbeddingBag`.

Following [this paper](https://arxiv.org/pdf/1803.01400.pdf), it seems like it can produce pretty good results. More generally, adding the power-mean formula would be awesome.
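As a rough illustration of what such a mode would compute, here is a pure-Python sketch of power-mean pooling over one bag of embeddings. It is not the `EmbeddingBag` API; `power_mean` and `pool_bag` are hypothetical helpers, and the sketch assumes `p` is a nonzero integer or `±inf`. With `p = 1` it reduces to the mean, `p = +inf` to the max, and `p = -inf` to the requested min.

```python
import math


def power_mean(values, p):
    """Power mean of a list of floats; p is a nonzero integer or +/-inf."""
    if p == math.inf:
        return max(values)
    if p == -math.inf:
        return min(values)
    mean_pow = sum(v ** p for v in values) / len(values)
    # Sign-preserving root so that odd p still works when the mean is negative.
    return math.copysign(abs(mean_pow) ** (1.0 / p), mean_pow)


def pool_bag(embeddings, p):
    """Pool a bag of equal-length embedding vectors dimension by dimension."""
    return [power_mean(list(column), p) for column in zip(*embeddings)]


bag = [[1.0, -2.0], [3.0, 4.0]]
print(pool_bag(bag, -math.inf))
print(pool_bag(bag, 1))
print(pool_bag(bag, math.inf))
```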

Additionally, the current error `ValueError: mode has to be one of sum or mean` is not correct since it does allow `max`

cc @albanD @mruberry @jbschlosser

|

module: nn,triaged,enhancement

|

low

|

Critical

|

359,784,320 |

rust

|

Exponential type/trait-checking behavior from linearly nested iterator adapters.

|

The following test takes an exponential amount of time to type-check the body of `huge` (at the time of this writing, reported by `-Z time-passes` under "item-types checking"):

```rust

#![crate_type = "lib"]

pub fn unit() -> std::iter::Empty<()> {

std::iter::empty()

}

macro_rules! nest {

($inner:expr) => (unit().flat_map(|_| {

$inner

}).flat_map(|_| unit()))

}

macro_rules! nests {

() => (unit());

($_first:tt $($rest:tt)*) => (nest!(nests!($($rest)*)))

}

pub fn huge() -> impl Iterator<Item = ()> {

nests! {

// 1/x * 6/5 seconds.

4096 2048 1024 512 256 128 64 32 16 8 4 2

// x * 6/5 seconds.

1 2 4 8 // 16 32 64

}

}

```

This has been reduced from an ambiguity-aware parse tree visitor, and you can see a partial reduction here: https://gist.github.com/eddyb/5f20b8f48b68c92f7d4f022a18c374f4#file-repro-rs.

cc @nikomatsakis

|

C-enhancement,A-trait-system,I-compiletime,T-compiler

|

low

|

Minor

|

359,838,986 |

flutter

|

embedder channel apis poorly documented, makes it harder to write custom embedders

|

I'm trying to create an embedder for Flutter, but the documentation around the platform channels is pretty sparse.

documentation I've looked at:

[custom flutter engines](https://github.com/flutter/engine/wiki/Custom-Flutter-Engine-Embedders)

[flutter api docs](https://master-docs-flutter-io.firebaseapp.com/)

[desktop embedder text model](https://github.com/google/flutter-desktop-embedding/blob/master/linux/library/src/internal/text_input_model.cc)

Particularly around `flutter/textinput`, I would love a way to see which platform messages are sent back and forth, from within Android Studio, so I can reverse engineer the protocol and see what the expected messages and responses are. That would be faster than reading the C++ source code.

Is there an easy way to do this?

Otherwise, where can I find the platform channel code for each platform (preferably Android)?

|

engine,d: api docs,e: embedder,P2,team-engine,triaged-engine

|

low

|

Minor

|

359,873,268 |

pytorch

|

DataLoader: Could not wrap an exception in threads

|

## Issue description

It seems that the code in dataloader.py tries to wrap exceptions raised in worker threads and re-raise them with the traceback formatted as a string.

https://github.com/pytorch/pytorch/blob/v0.4.0/torch/utils/data/dataloader.py#L22

https://github.com/pytorch/pytorch/blob/v0.4.0/torch/utils/data/dataloader.py#L303

(The links point to v0.4.0, which matches my environment, but the code is unchanged on the master branch.)

This does not work for custom exceptions whose `__init__` method does not accept a single positional message argument.

I tried to fix it but failed: it is impossible to re-raise an exception (`exc_type` in the code) without knowing the signature of its `__init__` method.

## Code example

```
import torch
import torch.utils.data


class CustomException(BaseException):
    # Accepts keyword arguments only, so exc_type(exc_msg) cannot rebuild it.
    def __init__(self, **kwargs):
        pass


class SomeDataset(torch.utils.data.Dataset):
    def __init__(self, data):
        self.data = data

    def __getitem__(self, index):
        raise CustomException('test')

    def __len__(self):
        return len(self.data)


train_data = list(range(10))  # placeholder data, not from the original report
train_dataset = SomeDataset(train_data)
train_loader = torch.utils.data.DataLoader(train_dataset)

for data in train_loader:
    pass
```

Error Message:

```

Traceback (most recent call last):

File "train.py", line 221, in <module>

for data in train_loader:

File "/usr/local/lib/python3.6/dist-packages/torch/utils/data/dataloader.py", line 286, in __next__

return self._process_next_batch(batch)

File "/usr/local/lib/python3.6/dist-packages/torch/utils/data/dataloader.py", line 307, in _process_next_batch

raise batch.exc_type(batch.exc_msg)

TypeError: __init__() takes 1 positional argument but 2 were given

```
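One possible way around the failing `raise batch.exc_type(batch.exc_msg)` call is to fall back to a generic exception when the original type cannot be rebuilt from a single message. The sketch below is an assumption about how that could look, not PyTorch's actual fix: `rebuild_exception` and `capture` are hypothetical helpers, and `ExceptionWrapper` only mirrors the `exc_type` / `exc_msg` fields visible in the traceback above.

```python
import sys
import traceback


class ExceptionWrapper:
    """Carries an exception type and its formatted traceback out of a worker."""

    def __init__(self, exc_info):
        self.exc_type = exc_info[0]
        self.exc_msg = "".join(traceback.format_exception(*exc_info))


def rebuild_exception(wrapper):
    """Return an exception object that the main thread can raise."""
    try:
        return wrapper.exc_type(wrapper.exc_msg)
    except TypeError:
        # The custom __init__ does not accept one positional message, so fall
        # back to a generic error that still carries the worker traceback.
        return RuntimeError(
            "Caught {} in worker:\n{}".format(wrapper.exc_type.__name__, wrapper.exc_msg)
        )


class CustomException(Exception):
    """Example exception whose __init__ takes keyword arguments only."""

    def __init__(self, **kwargs):
        super().__init__()


def capture():
    """Simulate a worker that catches an error and wraps it."""
    try:
        raise CustomException(code=1)
    except CustomException:
        return ExceptionWrapper(sys.exc_info())
```

Calling `raise rebuild_exception(capture())` then surfaces a `RuntimeError` that names `CustomException` and includes the worker traceback. The trade-off is that the caller can no longer catch the original exception type in that fallback case.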

## System Info

```

# python collect_env.py

Collecting environment information...

PyTorch version: 0.4.0

Is debug build: No

CUDA used to build PyTorch: 9.0.176

OS: Ubuntu 16.04.4 LTS

GCC version: (Ubuntu 5.4.0-6ubuntu1~16.04.10) 5.4.0 20160609

CMake version: version 3.5.1

Python version: 3.6

Is CUDA available: Yes

CUDA runtime version: 9.0.176

GPU models and configuration: GPU 0: GeForce GTX 1080

Nvidia driver version: 390.30

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.7.1.4

/usr/lib/x86_64-linux-gnu/libcudnn_static_v7.a

/usr/local/lib/python3.6/dist-packages/cntk/libs/libcudnn.so.7

Versions of relevant libraries:

[pip] Could not collect

[conda] Could not collect

```

|

module: dataloader,module: error checking,triaged

|

low

|

Critical

|

359,884,864 |

pytorch

|

[Feature request] Advanced indexing in functions like `expand`

|

For functions like `torch.Tensor.expand`, it would be nice to support advanced indexing with symbols like `...` and `:`.

For example,

```

>>> a = torch.randn(2, 3, 4, 1)

>>> a.expand(..., 10).shape

torch.Size([2, 3, 4, 10])

```
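Until something like this is supported, the requested behavior can be approximated by translating `...` and `:` into the `-1` placeholders that `expand` already accepts for "keep this dimension". The helper below is a hypothetical sketch (the name `resolve_expand_sizes` is not part of PyTorch), and it assumes the result has the same number of dimensions as the input tensor.

```python
def resolve_expand_sizes(ndim, sizes):
    """Translate sizes containing `...` or `:` into -1 placeholders.

    ndim  -- number of dimensions of the tensor being expanded
    sizes -- tuple of ints, Ellipsis, or slice(None) (i.e. `:`)
    """
    sizes = list(sizes)
    if sizes.count(Ellipsis) > 1:
        raise ValueError("only one ellipsis is allowed")
    if Ellipsis in sizes:
        i = sizes.index(Ellipsis)
        missing = ndim - (len(sizes) - 1)  # dimensions the ellipsis stands for
        if missing < 0:
            raise ValueError("too many sizes for a tensor with %d dimensions" % ndim)
        sizes[i:i + 1] = [-1] * missing
    # A bare `:` means "leave this dimension unchanged", same as -1.
    return tuple(-1 if s == slice(None) else s for s in sizes)
```

With a tensor `a` of shape `(2, 3, 4, 1)`, `a.expand(*resolve_expand_sizes(a.dim(), (..., 10)))` would be equivalent to `a.expand(-1, -1, -1, 10)`.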

|

triaged,module: advanced indexing

|

low

|

Minor

|

359,885,254 |

TypeScript

|

Inline function refactoring

|

## Search Terms

inline function method refactoring

## Suggestion

I would like a refactoring that inlines a function from

```

1: function foo() { return 42; }

2: function bar() { const meaningOfLife = foo(); }

```

to

```

1: function bar() { const meaningOfLife = 42; }

```

## Use Cases

This is a very common refactoring and thus widely used while cleaning up code.

## Examples

In the above code sample, block 1, line 1: selecting foo, the user should be able to inline this function at every occurrence and optionally delete the function definition.

In the above code sample, block 1, line 2: selecting foo, the user should be able to inline the function at this occurrence and, if it is the only one, optionally delete the function definition.

I am not sure how the option can be handled in VS Code. Eclipse brings up a pop-up with the two options, but as far as I know VS Code tries to be minimalist.

## Checklist

My suggestion meets these guidelines:

* [x] This wouldn't change the runtime behavior of existing JavaScript code

* [x] This wouldn't be a breaking change in existing TypeScript / JavaScript code

* [x] This could be implemented without emitting different JS based on the types of the expressions

* [x] This isn't a runtime feature (e.g. new expression-level syntax)

|

Suggestion,In Discussion,Domain: Refactorings

|

medium

|

Critical

|

359,900,859 |

pytorch

|

undefined reference to caffe2

|

I am trying to cross-compile caffe2 and generate a binary for my platform.

I have successfully generated libcaffe2.so.

But while compiling my code, I am hitting a few linker errors:

```

/tmp/ccIOey60.o: In function `caffe2::Argument::set_name(char const*)':

temp.cpp:(.text._ZN6caffe28Argument8set_nameEPKc[_ZN6caffe28Argument8set_nameEPKc]+0x24): undefined reference to `caffe2::GetEmptyStringAlreadyInited[abi:cxx11]()'

/tmp/ccIOey60.o: In function `caffe2::OperatorDef::set_type(char const*)':

temp.cpp:(.text._ZN6caffe211OperatorDef8set_typeEPKc[_ZN6caffe211OperatorDef8set_typeEPKc]+0x24): undefined reference to `caffe2::GetEmptyStringAlreadyInited[abi:cxx11]()'

/tmp/ccIOey60.o: In function `caffe2::NetDef::name[abi:cxx11]() const':

temp.cpp:(.text._ZNK6caffe26NetDef4nameB5cxx11Ev[_ZNK6caffe26NetDef4nameB5cxx11Ev]+0x18): undefined reference to `caffe2::GetEmptyStringAlreadyInited[abi:cxx11]()'

/tmp/ccIOey60.o: In function `caffe2::NetDef::set_name(char const*)':

temp.cpp:(.text._ZN6caffe26NetDef8set_nameEPKc[_ZN6caffe26NetDef8set_nameEPKc]+0x24): undefined reference to `caffe2::GetEmptyStringAlreadyInited[abi:cxx11]()'

/tmp/ccIOey60.o: In function `std::default_delete<caffe2::ThreadPool>::operator()(caffe2::ThreadPool*) const':

temp.cpp:(.text._ZNKSt14default_deleteIN6caffe210ThreadPoolEEclEPS1_[_ZNKSt14default_deleteIN6caffe210ThreadPoolEEclEPS1_]+0x24): undefined reference to `caffe2::ThreadPool::~ThreadPool()'