| problem_id (string, lengths 18 to 22) | source (string, 1 value) | task_type (string, 1 value) | in_source_id (string, lengths 13 to 58) | prompt (string, lengths 1.71k to 18.9k) | golden_diff (string, lengths 145 to 5.13k) | verification_info (string, lengths 465 to 23.6k) | num_tokens_prompt (int64, 556 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_34867

|

rasdani/github-patches

|

git_diff

|

openstates__openstates-scrapers-3028

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

NH: people scraper broken

State: NH (be sure to include in ticket title)

The NH people scraper is broken, because

http://www.gencourt.state.nh.us/downloads/Members.txt

no longer exists. Instead, there is a

http://www.gencourt.state.nh.us/downloads/Members.csv

I'll own this.

</issue>
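The change the issue describes, reading a comma-separated file instead of a tab-separated one, can be sketched with the standard `csv` module. This is a minimal illustration, not the scraper's actual code: the sample rows are invented, the column names are borrowed from the scraper source below and are only assumed to match the real `Members.csv`, and `parse_members` is a hypothetical helper.

```python
import csv
import io

# Invented sample data; the real Members.csv layout is an assumption.
SAMPLE = (
    "LastName,FirstName,LegislativeBody,District\n"
    '"Doe, Jr.",Jane,H,04\n'
    "Roe,Richard,S,13\n"
)


def parse_members(text: str) -> list:
    """Parse CSV text into one dict per row, keyed by the header line."""
    # csv.DictReader handles quoted fields that contain commas,
    # which a plain str.split(',') would break apart.
    reader = csv.DictReader(io.StringIO(text))
    return list(reader)


rows = parse_members(SAMPLE)
for row in rows:
    print(row["LegislativeBody"], row["LastName"])
```

A CSV-aware reader matters here because a naive split on commas would cut a quoted value such as `"Doe, Jr."` in two.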

<code>

[start of openstates/nh/people.py]

1 import re

2

3 from pupa.scrape import Person, Scraper

4 from openstates.utils import LXMLMixin

5

6

7 class NHPersonScraper(Scraper, LXMLMixin):

8 members_url = 'http://www.gencourt.state.nh.us/downloads/Members.txt'

9 lookup_url = 'http://www.gencourt.state.nh.us/house/members/memberlookup.aspx'

10 house_profile_url = 'http://www.gencourt.state.nh.us/house/members/member.aspx?member={}'

11 senate_profile_url = 'http://www.gencourt.state.nh.us/Senate/members/webpages/district{}.aspx'

12

13 chamber_map = {'H': 'lower', 'S': 'upper'}

14 party_map = {

15 'D': 'Democratic',

16 'R': 'Republican',

17 'I': 'Independent',

18 'L': 'Libertarian',

19 }

20

21 def _get_photo(self, url, chamber):

22 """Attempts to find a portrait in the given legislator profile."""

23 try:

24 doc = self.lxmlize(url)

25 except Exception as e:

26 self.warning("skipping {}: {}".format(url, e))

27 return ""

28

29 if chamber == 'upper':

30 src = doc.xpath('//div[@id="page_content"]//img[contains(@src, '

31 '"images/senators") or contains(@src, "Senator")]/@src')

32 elif chamber == 'lower':

33 src = doc.xpath('//img[contains(@src, "images/memberpics")]/@src')

34

35 if src and 'nophoto' not in src[0]:

36 photo_url = src[0]

37 else:

38 photo_url = ''

39

40 return photo_url

41

42 def _parse_person(self, row, chamber, seat_map):

43 # Capture legislator vitals.

44 first_name = row['FirstName']

45 middle_name = row['MiddleName']

46 last_name = row['LastName']

47 full_name = '{} {} {}'.format(first_name, middle_name, last_name)

48 full_name = re.sub(r'[\s]{2,}', ' ', full_name)

49

50 if chamber == 'lower':

51 district = '{} {}'.format(row['County'], int(row['District'])).strip()

52 else:

53 district = str(int(row['District'])).strip()

54

55 party = self.party_map[row['party'].upper()]

56 email = row['WorkEmail']

57

58 if district == '0':

59 self.warning('Skipping {}, district is set to 0'.format(full_name))

60 return

61

62 # Temporary fix for Kari Lerner

63 if district == 'Rockingham 0' and last_name == 'Lerner':

64 district = 'Rockingham 4'

65

66 # Temporary fix for Casey Conley

67 if last_name == 'Conley':

68 if district == '13':

69 district = 'Strafford 13'

70 elif district == 'Strafford 13':

71 self.info('"Temporary fix for Casey Conley" can be removed')

72

73 person = Person(primary_org=chamber,

74 district=district,

75 name=full_name,

76 party=party)

77

78 extras = {

79 'first_name': first_name,

80 'middle_name': middle_name,

81 'last_name': last_name

82 }

83

84 person.extras = extras

85 if email:

86 office = 'Capitol' if email.endswith('@leg.state.nh.us') else 'District'

87 person.add_contact_detail(type='email', value=email, note=office + ' Office')

88

89 # Capture legislator office contact information.

90 district_address = '{}\n{}\n{}, {} {}'.format(row['Address'],

91 row['address2'],

92 row['city'], row['State'],

93 row['Zipcode']).strip()

94

95 phone = row['Phone'].strip()

96 if not phone:

97 phone = None

98

99 if district_address:

100 office = 'Capitol' if chamber == 'upper' else 'District'

101 person.add_contact_detail(type='address', value=district_address,

102 note=office + ' Office')

103 if phone:

104 office = 'Capitol' if '271-' in phone else 'District'

105 person.add_contact_detail(type='voice', value=phone, note=office + ' Office')

106

107 # Retrieve legislator portrait.

108 profile_url = None

109 if chamber == 'upper':

110 profile_url = self.senate_profile_url.format(row['District'])

111 elif chamber == 'lower':

112 try:

113 seat_number = seat_map[row['seatno']]

114 profile_url = self.house_profile_url.format(seat_number)

115 except KeyError:

116 pass

117

118 if profile_url:

119 person.image = self._get_photo(profile_url, chamber)

120 person.add_source(profile_url)

121

122 return person

123

124 def _parse_members_txt(self):

125 lines = self.get(self.members_url).text.splitlines()

126

127 header = lines[0].split('\t')

128

129 for line in lines[1:]:

130 yield dict(zip(header, line.split('\t')))

131

132 def _parse_seat_map(self):

133 """Get mapping between seat numbers and legislator identifiers."""

134 seat_map = {}

135 page = self.lxmlize(self.lookup_url)

136 options = page.xpath('//select[@id="member"]/option')

137 for option in options:

138 member_url = self.house_profile_url.format(option.attrib['value'])

139 member_page = self.lxmlize(member_url)

140 table = member_page.xpath('//table[@id="Table1"]')

141 if table:

142 res = re.search(r'seat #:(\d+)', table[0].text_content(), re.IGNORECASE)

143 if res:

144 seat_map[res.groups()[0]] = option.attrib['value']

145 return seat_map

146

147 def scrape(self, chamber=None):

148 chambers = [chamber] if chamber is not None else ['upper', 'lower']

149 seat_map = self._parse_seat_map()

150 for chamber in chambers:

151 for row in self._parse_members_txt():

152 print(row['electedStatus'])

153 if self.chamber_map[row['LegislativeBody']] == chamber:

154 person = self._parse_person(row, chamber, seat_map)

155

156 # allow for skipping

157 if not person:

158 continue

159

160 person.add_source(self.members_url)

161 person.add_link(self.members_url)

162 yield person

163

[end of openstates/nh/people.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

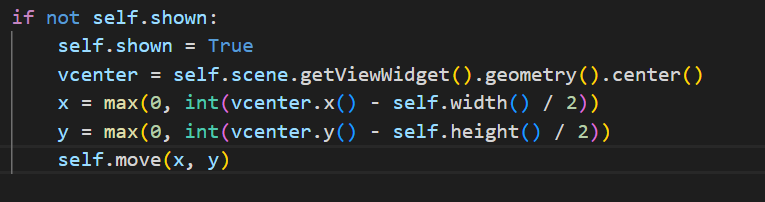

diff --git a/openstates/nh/people.py b/openstates/nh/people.py

--- a/openstates/nh/people.py

+++ b/openstates/nh/people.py

@@ -1,11 +1,12 @@

import re

-

+import csv

from pupa.scrape import Person, Scraper

from openstates.utils import LXMLMixin

+import requests

class NHPersonScraper(Scraper, LXMLMixin):

- members_url = 'http://www.gencourt.state.nh.us/downloads/Members.txt'

+ members_url = 'http://www.gencourt.state.nh.us/downloads/Members.csv'

lookup_url = 'http://www.gencourt.state.nh.us/house/members/memberlookup.aspx'

house_profile_url = 'http://www.gencourt.state.nh.us/house/members/member.aspx?member={}'

senate_profile_url = 'http://www.gencourt.state.nh.us/Senate/members/webpages/district{}.aspx'

@@ -59,17 +60,6 @@

self.warning('Skipping {}, district is set to 0'.format(full_name))

return

- # Temporary fix for Kari Lerner

- if district == 'Rockingham 0' and last_name == 'Lerner':

- district = 'Rockingham 4'

-

- # Temporary fix for Casey Conley

- if last_name == 'Conley':

- if district == '13':

- district = 'Strafford 13'

- elif district == 'Strafford 13':

- self.info('"Temporary fix for Casey Conley" can be removed')

-

person = Person(primary_org=chamber,

district=district,

name=full_name,

@@ -122,12 +112,13 @@

return person

def _parse_members_txt(self):

- lines = self.get(self.members_url).text.splitlines()

+ response = requests.get(self.members_url)

+ lines = csv.reader(response.text.strip().split('\n'), delimiter=',')

- header = lines[0].split('\t')

+ header = next(lines)

- for line in lines[1:]:

- yield dict(zip(header, line.split('\t')))

+ for line in lines:

+ yield dict(zip(header, line))

def _parse_seat_map(self):

"""Get mapping between seat numbers and legislator identifiers."""

|

{"golden_diff": "diff --git a/openstates/nh/people.py b/openstates/nh/people.py\n--- a/openstates/nh/people.py\n+++ b/openstates/nh/people.py\n@@ -1,11 +1,12 @@\n import re\n-\n+import csv\n from pupa.scrape import Person, Scraper\n from openstates.utils import LXMLMixin\n+import requests\n \n \n class NHPersonScraper(Scraper, LXMLMixin):\n- members_url = 'http://www.gencourt.state.nh.us/downloads/Members.txt'\n+ members_url = 'http://www.gencourt.state.nh.us/downloads/Members.csv'\n lookup_url = 'http://www.gencourt.state.nh.us/house/members/memberlookup.aspx'\n house_profile_url = 'http://www.gencourt.state.nh.us/house/members/member.aspx?member={}'\n senate_profile_url = 'http://www.gencourt.state.nh.us/Senate/members/webpages/district{}.aspx'\n@@ -59,17 +60,6 @@\n self.warning('Skipping {}, district is set to 0'.format(full_name))\n return\n \n- # Temporary fix for Kari Lerner\n- if district == 'Rockingham 0' and last_name == 'Lerner':\n- district = 'Rockingham 4'\n-\n- # Temporary fix for Casey Conley\n- if last_name == 'Conley':\n- if district == '13':\n- district = 'Strafford 13'\n- elif district == 'Strafford 13':\n- self.info('\"Temporary fix for Casey Conley\" can be removed')\n-\n person = Person(primary_org=chamber,\n district=district,\n name=full_name,\n@@ -122,12 +112,13 @@\n return person\n \n def _parse_members_txt(self):\n- lines = self.get(self.members_url).text.splitlines()\n+ response = requests.get(self.members_url)\n+ lines = csv.reader(response.text.strip().split('\\n'), delimiter=',')\n \n- header = lines[0].split('\\t')\n+ header = next(lines)\n \n- for line in lines[1:]:\n- yield dict(zip(header, line.split('\\t')))\n+ for line in lines:\n+ yield dict(zip(header, line))\n \n def _parse_seat_map(self):\n \"\"\"Get mapping between seat numbers and legislator identifiers.\"\"\"\n", "issue": "NH: people scraper broken\nState: NH (be sure to include in ticket title)\r\n\r\nThe NH people scraper is broken, because \r\n 
http://www.gencourt.state.nh.us/downloads/Members.txt\r\nno longer exists. Instead, there is a \r\n http://www.gencourt.state.nh.us/downloads/Members.csv\r\n\r\nI'll own this.\n", "before_files": [{"content": "import re\n\nfrom pupa.scrape import Person, Scraper\nfrom openstates.utils import LXMLMixin\n\n\nclass NHPersonScraper(Scraper, LXMLMixin):\n members_url = 'http://www.gencourt.state.nh.us/downloads/Members.txt'\n lookup_url = 'http://www.gencourt.state.nh.us/house/members/memberlookup.aspx'\n house_profile_url = 'http://www.gencourt.state.nh.us/house/members/member.aspx?member={}'\n senate_profile_url = 'http://www.gencourt.state.nh.us/Senate/members/webpages/district{}.aspx'\n\n chamber_map = {'H': 'lower', 'S': 'upper'}\n party_map = {\n 'D': 'Democratic',\n 'R': 'Republican',\n 'I': 'Independent',\n 'L': 'Libertarian',\n }\n\n def _get_photo(self, url, chamber):\n \"\"\"Attempts to find a portrait in the given legislator profile.\"\"\"\n try:\n doc = self.lxmlize(url)\n except Exception as e:\n self.warning(\"skipping {}: {}\".format(url, e))\n return \"\"\n\n if chamber == 'upper':\n src = doc.xpath('//div[@id=\"page_content\"]//img[contains(@src, '\n '\"images/senators\") or contains(@src, \"Senator\")]/@src')\n elif chamber == 'lower':\n src = doc.xpath('//img[contains(@src, \"images/memberpics\")]/@src')\n\n if src and 'nophoto' not in src[0]:\n photo_url = src[0]\n else:\n photo_url = ''\n\n return photo_url\n\n def _parse_person(self, row, chamber, seat_map):\n # Capture legislator vitals.\n first_name = row['FirstName']\n middle_name = row['MiddleName']\n last_name = row['LastName']\n full_name = '{} {} {}'.format(first_name, middle_name, last_name)\n full_name = re.sub(r'[\\s]{2,}', ' ', full_name)\n\n if chamber == 'lower':\n district = '{} {}'.format(row['County'], int(row['District'])).strip()\n else:\n district = str(int(row['District'])).strip()\n\n party = self.party_map[row['party'].upper()]\n email = row['WorkEmail']\n\n if district == 
'0':\n self.warning('Skipping {}, district is set to 0'.format(full_name))\n return\n\n # Temporary fix for Kari Lerner\n if district == 'Rockingham 0' and last_name == 'Lerner':\n district = 'Rockingham 4'\n\n # Temporary fix for Casey Conley\n if last_name == 'Conley':\n if district == '13':\n district = 'Strafford 13'\n elif district == 'Strafford 13':\n self.info('\"Temporary fix for Casey Conley\" can be removed')\n\n person = Person(primary_org=chamber,\n district=district,\n name=full_name,\n party=party)\n\n extras = {\n 'first_name': first_name,\n 'middle_name': middle_name,\n 'last_name': last_name\n }\n\n person.extras = extras\n if email:\n office = 'Capitol' if email.endswith('@leg.state.nh.us') else 'District'\n person.add_contact_detail(type='email', value=email, note=office + ' Office')\n\n # Capture legislator office contact information.\n district_address = '{}\\n{}\\n{}, {} {}'.format(row['Address'],\n row['address2'],\n row['city'], row['State'],\n row['Zipcode']).strip()\n\n phone = row['Phone'].strip()\n if not phone:\n phone = None\n\n if district_address:\n office = 'Capitol' if chamber == 'upper' else 'District'\n person.add_contact_detail(type='address', value=district_address,\n note=office + ' Office')\n if phone:\n office = 'Capitol' if '271-' in phone else 'District'\n person.add_contact_detail(type='voice', value=phone, note=office + ' Office')\n\n # Retrieve legislator portrait.\n profile_url = None\n if chamber == 'upper':\n profile_url = self.senate_profile_url.format(row['District'])\n elif chamber == 'lower':\n try:\n seat_number = seat_map[row['seatno']]\n profile_url = self.house_profile_url.format(seat_number)\n except KeyError:\n pass\n\n if profile_url:\n person.image = self._get_photo(profile_url, chamber)\n person.add_source(profile_url)\n\n return person\n\n def _parse_members_txt(self):\n lines = self.get(self.members_url).text.splitlines()\n\n header = lines[0].split('\\t')\n\n for line in lines[1:]:\n yield 
dict(zip(header, line.split('\\t')))\n\n def _parse_seat_map(self):\n \"\"\"Get mapping between seat numbers and legislator identifiers.\"\"\"\n seat_map = {}\n page = self.lxmlize(self.lookup_url)\n options = page.xpath('//select[@id=\"member\"]/option')\n for option in options:\n member_url = self.house_profile_url.format(option.attrib['value'])\n member_page = self.lxmlize(member_url)\n table = member_page.xpath('//table[@id=\"Table1\"]')\n if table:\n res = re.search(r'seat #:(\\d+)', table[0].text_content(), re.IGNORECASE)\n if res:\n seat_map[res.groups()[0]] = option.attrib['value']\n return seat_map\n\n def scrape(self, chamber=None):\n chambers = [chamber] if chamber is not None else ['upper', 'lower']\n seat_map = self._parse_seat_map()\n for chamber in chambers:\n for row in self._parse_members_txt():\n print(row['electedStatus'])\n if self.chamber_map[row['LegislativeBody']] == chamber:\n person = self._parse_person(row, chamber, seat_map)\n\n # allow for skipping\n if not person:\n continue\n\n person.add_source(self.members_url)\n person.add_link(self.members_url)\n yield person\n", "path": "openstates/nh/people.py"}]}

| 2,338 | 528 |
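The `verification_info` cell above is a JSON string. A rough sketch of reading it follows; the keys `golden_diff`, `issue`, `before_files`, `content` and `path` are taken from the row shown, while the record built here is a made-up stand-in and `summarize` is a hypothetical helper.

```python
import json

# Stand-in record with the same keys as the row above; the values are invented.
raw = json.dumps({
    "golden_diff": "diff --git a/pkg/mod.py b/pkg/mod.py\n--- a/pkg/mod.py\n+++ b/pkg/mod.py\n",
    "issue": "Example issue text",
    "before_files": [{"content": "import re\n", "path": "pkg/mod.py"}],
})


def summarize(verification_info: str) -> dict:
    """Decode a verification_info string and report which files it covers."""
    info = json.loads(verification_info)
    return {
        "paths": [f["path"] for f in info["before_files"]],
        "diff_lines": len(info["golden_diff"].splitlines()),
    }


summary = summarize(raw)
print(summary)
```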

gh_patches_debug_36742

|

rasdani/github-patches

|

git_diff

|

searxng__searxng-2109

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Search query got emphasized even in the middle of another, unrelated word

<!-- PLEASE FILL THESE FIELDS, IT REALLY HELPS THE MAINTAINERS OF SearXNG -->

**Version of SearXNG**

2023.01.09-afd71a6c

**How did you install SearXNG?**

Installed using docker with clone, docker build and run.

**What happened?**

Query letters got emphasized even in the middle of another unrelated word.

**How To Reproduce**

Do some search using alphabetic, English words with all language flag, such as `the :all`, `java :all`, `master :all`.

**Expected behavior**

Emphasization should occur on queries found in standalone words only, such as `java` query only emphasize '**java**' instead of '**java**script', or `master` only in '**Master**' or '**master**' instead of 'grand**master**'.

**Screenshots & Logs**

|  |

| --- |

| In the word 'o**the**rwise' |

|  |

| --- |

| In the word '**The**saurus' and '**the**ir' |

|  |

| --- |

| In the word '**master**ful', '**master**s', 'grand**master**' |

**Additional context**

Likely happened because the regex being used does not isolate the query for occurrences in standalone words and instead it looks for all occurrences in the whole text without requiring the presence of spaces before or after it. This regex actually works well for the emphasization of queries in Chinese characters, for example:

|  |

| --- |

| Query used: ’村上春樹’ |

</issue>
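The behavior requested in the issue, highlighting only standalone words, can be sketched with a word-boundary pattern. This is a minimal illustration under stated assumptions, not SearXNG's implementation: `highlight` is a hypothetical helper, and it deliberately ignores the CJK case the issue mentions, where matching anywhere in the text is the desired behavior.

```python
import re


def highlight(content: str, word: str) -> str:
    """Wrap standalone, case-insensitive occurrences of `word` in a highlight span."""
    # \b requires a word boundary before the match; (?!\w) rejects matches
    # that are immediately followed by another word character.
    pattern = re.compile(r'\b(' + re.escape(word) + r')(?!\w)', flags=re.IGNORECASE)
    return pattern.sub(r'<span class="highlight">\1</span>', content)


print(highlight("Java and JavaScript", "java"))
print(highlight("a grandmaster and a master", "master"))
```

With this pattern, `Java` is wrapped while `JavaScript` and `grandmaster` are left untouched, because the match must start at a word boundary and must not be followed by a word character.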

<code>

[start of searx/webutils.py]

1 # -*- coding: utf-8 -*-

2 import os

3 import pathlib

4 import csv

5 import hashlib

6 import hmac

7 import re

8 import inspect

9 import itertools

10 from datetime import datetime, timedelta

11 from typing import Iterable, List, Tuple, Dict

12

13 from io import StringIO

14 from codecs import getincrementalencoder

15

16 from flask_babel import gettext, format_date

17

18 from searx import logger, settings

19 from searx.engines import Engine, OTHER_CATEGORY

20

21

22 VALID_LANGUAGE_CODE = re.compile(r'^[a-z]{2,3}(-[a-zA-Z]{2})?$')

23

24 logger = logger.getChild('webutils')

25

26

27 class UnicodeWriter:

28 """

29 A CSV writer which will write rows to CSV file "f",

30 which is encoded in the given encoding.

31 """

32

33 def __init__(self, f, dialect=csv.excel, encoding="utf-8", **kwds):

34 # Redirect output to a queue

35 self.queue = StringIO()

36 self.writer = csv.writer(self.queue, dialect=dialect, **kwds)

37 self.stream = f

38 self.encoder = getincrementalencoder(encoding)()

39

40 def writerow(self, row):

41 self.writer.writerow(row)

42 # Fetch UTF-8 output from the queue ...

43 data = self.queue.getvalue()

44 data = data.strip('\x00')

45 # ... and re-encode it into the target encoding

46 data = self.encoder.encode(data)

47 # write to the target stream

48 self.stream.write(data.decode())

49 # empty queue

50 self.queue.truncate(0)

51

52 def writerows(self, rows):

53 for row in rows:

54 self.writerow(row)

55

56

57 def get_themes(templates_path):

58 """Returns available themes list."""

59 return os.listdir(templates_path)

60

61

62 def get_hash_for_file(file: pathlib.Path) -> str:

63 m = hashlib.sha1()

64 with file.open('rb') as f:

65 m.update(f.read())

66 return m.hexdigest()

67

68

69 def get_static_files(static_path: str) -> Dict[str, str]:

70 static_files: Dict[str, str] = {}

71 static_path_path = pathlib.Path(static_path)

72

73 def walk(path: pathlib.Path):

74 for file in path.iterdir():

75 if file.name.startswith('.'):

76 # ignore hidden file

77 continue

78 if file.is_file():

79 static_files[str(file.relative_to(static_path_path))] = get_hash_for_file(file)

80 if file.is_dir() and file.name not in ('node_modules', 'src'):

81 # ignore "src" and "node_modules" directories

82 walk(file)

83

84 walk(static_path_path)

85 return static_files

86

87

88 def get_result_templates(templates_path):

89 result_templates = set()

90 templates_path_length = len(templates_path) + 1

91 for directory, _, files in os.walk(templates_path):

92 if directory.endswith('result_templates'):

93 for filename in files:

94 f = os.path.join(directory[templates_path_length:], filename)

95 result_templates.add(f)

96 return result_templates

97

98

99 def new_hmac(secret_key, url):

100 return hmac.new(secret_key.encode(), url, hashlib.sha256).hexdigest()

101

102

103 def is_hmac_of(secret_key, value, hmac_to_check):

104 hmac_of_value = new_hmac(secret_key, value)

105 return len(hmac_of_value) == len(hmac_to_check) and hmac.compare_digest(hmac_of_value, hmac_to_check)

106

107

108 def prettify_url(url, max_length=74):

109 if len(url) > max_length:

110 chunk_len = int(max_length / 2 + 1)

111 return '{0}[...]{1}'.format(url[:chunk_len], url[-chunk_len:])

112 else:

113 return url

114

115

116 def highlight_content(content, query):

117

118 if not content:

119 return None

120 # ignoring html contents

121 # TODO better html content detection

122 if content.find('<') != -1:

123 return content

124

125 if content.lower().find(query.lower()) > -1:

126 query_regex = '({0})'.format(re.escape(query))

127 content = re.sub(query_regex, '<span class="highlight">\\1</span>', content, flags=re.I | re.U)

128 else:

129 regex_parts = []

130 for chunk in query.split():

131 chunk = chunk.replace('"', '')

132 if len(chunk) == 0:

133 continue

134 elif len(chunk) == 1:

135 regex_parts.append('\\W+{0}\\W+'.format(re.escape(chunk)))

136 else:

137 regex_parts.append('{0}'.format(re.escape(chunk)))

138 query_regex = '({0})'.format('|'.join(regex_parts))

139 content = re.sub(query_regex, '<span class="highlight">\\1</span>', content, flags=re.I | re.U)

140

141 return content

142

143

144 def searxng_l10n_timespan(dt: datetime) -> str: # pylint: disable=invalid-name

145 """Returns a human-readable and translated string indicating how long ago

146 a date was in the past / the time span of the date to the present.

147

148 On January 1st, midnight, the returned string only indicates how many years

149 ago the date was.

150 """

151 # TODO, check if timezone is calculated right # pylint: disable=fixme

152 d = dt.date()

153 t = dt.time()

154 if d.month == 1 and d.day == 1 and t.hour == 0 and t.minute == 0 and t.second == 0:

155 return str(d.year)

156 if dt.replace(tzinfo=None) >= datetime.now() - timedelta(days=1):

157 timedifference = datetime.now() - dt.replace(tzinfo=None)

158 minutes = int((timedifference.seconds / 60) % 60)

159 hours = int(timedifference.seconds / 60 / 60)

160 if hours == 0:

161 return gettext('{minutes} minute(s) ago').format(minutes=minutes)

162 return gettext('{hours} hour(s), {minutes} minute(s) ago').format(hours=hours, minutes=minutes)

163 return format_date(dt)

164

165

166 def is_flask_run_cmdline():

167 """Check if the application was started using "flask run" command line

168

169 Inspect the callstack.

170 See https://github.com/pallets/flask/blob/master/src/flask/__main__.py

171

172 Returns:

173 bool: True if the application was started using "flask run".

174 """

175 frames = inspect.stack()

176 if len(frames) < 2:

177 return False

178 return frames[-2].filename.endswith('flask/cli.py')

179

180

181 DEFAULT_GROUP_NAME = 'others'

182

183

184 def group_engines_in_tab(engines: Iterable[Engine]) -> List[Tuple[str, Iterable[Engine]]]:

185 """Groups an Iterable of engines by their first non tab category"""

186

187 def get_group(eng):

188 non_tab_categories = [

189 c for c in eng.categories if c not in list(settings['categories_as_tabs'].keys()) + [OTHER_CATEGORY]

190 ]

191 return non_tab_categories[0] if len(non_tab_categories) > 0 else DEFAULT_GROUP_NAME

192

193 groups = itertools.groupby(sorted(engines, key=get_group), get_group)

194

195 def group_sort_key(group):

196 return (group[0] == DEFAULT_GROUP_NAME, group[0].lower())

197

198 sorted_groups = sorted(((name, list(engines)) for name, engines in groups), key=group_sort_key)

199

200 def engine_sort_key(engine):

201 return (engine.about.get('language', ''), engine.name)

202

203 return [(groupname, sorted(engines, key=engine_sort_key)) for groupname, engines in sorted_groups]

204

[end of searx/webutils.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

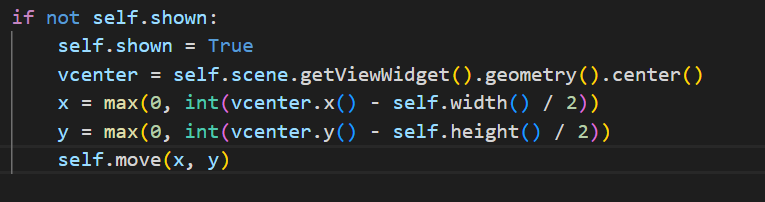

diff --git a/searx/webutils.py b/searx/webutils.py

--- a/searx/webutils.py

+++ b/searx/webutils.py

@@ -113,31 +113,68 @@

return url

+def contains_cjko(s: str) -> bool:

+ """This function check whether or not a string contains Chinese, Japanese,

+ or Korean characters. It employs regex and uses the u escape sequence to

+ match any character in a set of Unicode ranges.

+

+ Args:

+ s (str): string to be checked.

+

+ Returns:

+ bool: True if the input s contains the characters and False otherwise.

+ """

+ unicode_ranges = (

+ '\u4e00-\u9fff' # Chinese characters

+ '\u3040-\u309f' # Japanese hiragana

+ '\u30a0-\u30ff' # Japanese katakana

+ '\u4e00-\u9faf' # Japanese kanji

+ '\uac00-\ud7af' # Korean hangul syllables

+ '\u1100-\u11ff' # Korean hangul jamo

+ )

+ return bool(re.search(fr'[{unicode_ranges}]', s))

+

+

+def regex_highlight_cjk(word: str) -> str:

+ """Generate the regex pattern to match for a given word according

+ to whether or not the word contains CJK characters or not.

+ If the word is and/or contains CJK character, the regex pattern

+ will match standalone word by taking into account the presence

+ of whitespace before and after it; if not, it will match any presence

+ of the word throughout the text, ignoring the whitespace.

+

+ Args:

+ word (str): the word to be matched with regex pattern.

+

+ Returns:

+ str: the regex pattern for the word.

+ """

+ rword = re.escape(word)

+ if contains_cjko(rword):

+ return fr'({rword})'

+ else:

+ return fr'\b({rword})(?!\w)'

+

+

def highlight_content(content, query):

if not content:

return None

+

# ignoring html contents

# TODO better html content detection

if content.find('<') != -1:

return content

- if content.lower().find(query.lower()) > -1:

- query_regex = '({0})'.format(re.escape(query))

- content = re.sub(query_regex, '<span class="highlight">\\1</span>', content, flags=re.I | re.U)

- else:

- regex_parts = []

- for chunk in query.split():

- chunk = chunk.replace('"', '')

- if len(chunk) == 0:

- continue

- elif len(chunk) == 1:

- regex_parts.append('\\W+{0}\\W+'.format(re.escape(chunk)))

- else:

- regex_parts.append('{0}'.format(re.escape(chunk)))

- query_regex = '({0})'.format('|'.join(regex_parts))

- content = re.sub(query_regex, '<span class="highlight">\\1</span>', content, flags=re.I | re.U)

-

+ querysplit = query.split()

+ queries = []

+ for qs in querysplit:

+ qs = qs.replace("'", "").replace('"', '').replace(" ", "")

+ if len(qs) > 0:

+ queries.extend(re.findall(regex_highlight_cjk(qs), content, flags=re.I | re.U))

+ if len(queries) > 0:

+ for q in set(queries):

+ content = re.sub(regex_highlight_cjk(q), f'<span class="highlight">{q}</span>', content)

return content

|

{"golden_diff": "diff --git a/searx/webutils.py b/searx/webutils.py\n--- a/searx/webutils.py\n+++ b/searx/webutils.py\n@@ -113,31 +113,68 @@\n return url\n \n \n+def contains_cjko(s: str) -> bool:\n+ \"\"\"This function check whether or not a string contains Chinese, Japanese,\n+ or Korean characters. It employs regex and uses the u escape sequence to\n+ match any character in a set of Unicode ranges.\n+\n+ Args:\n+ s (str): string to be checked.\n+\n+ Returns:\n+ bool: True if the input s contains the characters and False otherwise.\n+ \"\"\"\n+ unicode_ranges = (\n+ '\\u4e00-\\u9fff' # Chinese characters\n+ '\\u3040-\\u309f' # Japanese hiragana\n+ '\\u30a0-\\u30ff' # Japanese katakana\n+ '\\u4e00-\\u9faf' # Japanese kanji\n+ '\\uac00-\\ud7af' # Korean hangul syllables\n+ '\\u1100-\\u11ff' # Korean hangul jamo\n+ )\n+ return bool(re.search(fr'[{unicode_ranges}]', s))\n+\n+\n+def regex_highlight_cjk(word: str) -> str:\n+ \"\"\"Generate the regex pattern to match for a given word according\n+ to whether or not the word contains CJK characters or not.\n+ If the word is and/or contains CJK character, the regex pattern\n+ will match standalone word by taking into account the presence\n+ of whitespace before and after it; if not, it will match any presence\n+ of the word throughout the text, ignoring the whitespace.\n+\n+ Args:\n+ word (str): the word to be matched with regex pattern.\n+\n+ Returns:\n+ str: the regex pattern for the word.\n+ \"\"\"\n+ rword = re.escape(word)\n+ if contains_cjko(rword):\n+ return fr'({rword})'\n+ else:\n+ return fr'\\b({rword})(?!\\w)'\n+\n+\n def highlight_content(content, query):\n \n if not content:\n return None\n+\n # ignoring html contents\n # TODO better html content detection\n if content.find('<') != -1:\n return content\n \n- if content.lower().find(query.lower()) > -1:\n- query_regex = '({0})'.format(re.escape(query))\n- content = re.sub(query_regex, '<span class=\"highlight\">\\\\1</span>', content, flags=re.I | re.U)\n- 
else:\n- regex_parts = []\n- for chunk in query.split():\n- chunk = chunk.replace('\"', '')\n- if len(chunk) == 0:\n- continue\n- elif len(chunk) == 1:\n- regex_parts.append('\\\\W+{0}\\\\W+'.format(re.escape(chunk)))\n- else:\n- regex_parts.append('{0}'.format(re.escape(chunk)))\n- query_regex = '({0})'.format('|'.join(regex_parts))\n- content = re.sub(query_regex, '<span class=\"highlight\">\\\\1</span>', content, flags=re.I | re.U)\n-\n+ querysplit = query.split()\n+ queries = []\n+ for qs in querysplit:\n+ qs = qs.replace(\"'\", \"\").replace('\"', '').replace(\" \", \"\")\n+ if len(qs) > 0:\n+ queries.extend(re.findall(regex_highlight_cjk(qs), content, flags=re.I | re.U))\n+ if len(queries) > 0:\n+ for q in set(queries):\n+ content = re.sub(regex_highlight_cjk(q), f'<span class=\"highlight\">{q}</span>', content)\n return content\n", "issue": "Search query got emphasized even in the middle of another, unrelated word\n<!-- PLEASE FILL THESE FIELDS, IT REALLY HELPS THE MAINTAINERS OF SearXNG -->\r\n\r\n**Version of SearXNG**\r\n\r\n2023.01.09-afd71a6c\r\n\r\n**How did you install SearXNG?**\r\n\r\nInstalled using docker with clone, docker build and run.\r\n\r\n**What happened?**\r\n\r\nQuery letters got emphasized even in the middle of another unrelated word.\r\n\r\n**How To Reproduce**\r\n\r\nDo some search using alphabetic, English words with all language flag, such as `the :all`, `java :all`, `master :all`. 
\r\n\r\n**Expected behavior**\r\n\r\nEmphasization should occur on queries found in standalone words only, such as `java` query only emphasize '**java**' instead of '**java**script', or `master` only in '**Master**' or '**master**' instead of 'grand**master**'.\r\n\r\n**Screenshots & Logs**\r\n\r\n|  |\r\n| --- |\r\n| In the word 'o**the**rwise' |\r\n \r\n|  |\r\n| --- |\r\n| In the word '**The**saurus' and '**the**ir' |\r\n\r\n|  |\r\n| --- |\r\n| In the word '**master**ful', '**master**s', 'grand**master**' |\r\n\r\n**Additional context**\r\n\r\nLikely happened because the regex being used does not isolate the query for occurrences in standalone words and instead it looks for all occurrences in the whole text without requiring the presence of spaces before or after it. This regex actually works well for the emphasization of queries in Chinese characters, for example:\r\n\r\n|  |\r\n| --- |\r\n| Query used: \u2019\u6751\u4e0a\u6625\u6a39\u2019 |\r\n\r\n\n", "before_files": [{"content": "# -*- coding: utf-8 -*-\nimport os\nimport pathlib\nimport csv\nimport hashlib\nimport hmac\nimport re\nimport inspect\nimport itertools\nfrom datetime import datetime, timedelta\nfrom typing import Iterable, List, Tuple, Dict\n\nfrom io import StringIO\nfrom codecs import getincrementalencoder\n\nfrom flask_babel import gettext, format_date\n\nfrom searx import logger, settings\nfrom searx.engines import Engine, OTHER_CATEGORY\n\n\nVALID_LANGUAGE_CODE = re.compile(r'^[a-z]{2,3}(-[a-zA-Z]{2})?$')\n\nlogger = logger.getChild('webutils')\n\n\nclass UnicodeWriter:\n \"\"\"\n A CSV writer which will write rows to CSV file \"f\",\n which is encoded in the given encoding.\n \"\"\"\n\n def __init__(self, f, dialect=csv.excel, encoding=\"utf-8\", **kwds):\n # Redirect output to a queue\n self.queue = StringIO()\n self.writer = csv.writer(self.queue, dialect=dialect, **kwds)\n self.stream = f\n self.encoder = getincrementalencoder(encoding)()\n\n def writerow(self, row):\n 
self.writer.writerow(row)\n # Fetch UTF-8 output from the queue ...\n data = self.queue.getvalue()\n data = data.strip('\\x00')\n # ... and re-encode it into the target encoding\n data = self.encoder.encode(data)\n # write to the target stream\n self.stream.write(data.decode())\n # empty queue\n self.queue.truncate(0)\n\n def writerows(self, rows):\n for row in rows:\n self.writerow(row)\n\n\ndef get_themes(templates_path):\n \"\"\"Returns available themes list.\"\"\"\n return os.listdir(templates_path)\n\n\ndef get_hash_for_file(file: pathlib.Path) -> str:\n m = hashlib.sha1()\n with file.open('rb') as f:\n m.update(f.read())\n return m.hexdigest()\n\n\ndef get_static_files(static_path: str) -> Dict[str, str]:\n static_files: Dict[str, str] = {}\n static_path_path = pathlib.Path(static_path)\n\n def walk(path: pathlib.Path):\n for file in path.iterdir():\n if file.name.startswith('.'):\n # ignore hidden file\n continue\n if file.is_file():\n static_files[str(file.relative_to(static_path_path))] = get_hash_for_file(file)\n if file.is_dir() and file.name not in ('node_modules', 'src'):\n # ignore \"src\" and \"node_modules\" directories\n walk(file)\n\n walk(static_path_path)\n return static_files\n\n\ndef get_result_templates(templates_path):\n result_templates = set()\n templates_path_length = len(templates_path) + 1\n for directory, _, files in os.walk(templates_path):\n if directory.endswith('result_templates'):\n for filename in files:\n f = os.path.join(directory[templates_path_length:], filename)\n result_templates.add(f)\n return result_templates\n\n\ndef new_hmac(secret_key, url):\n return hmac.new(secret_key.encode(), url, hashlib.sha256).hexdigest()\n\n\ndef is_hmac_of(secret_key, value, hmac_to_check):\n hmac_of_value = new_hmac(secret_key, value)\n return len(hmac_of_value) == len(hmac_to_check) and hmac.compare_digest(hmac_of_value, hmac_to_check)\n\n\ndef prettify_url(url, max_length=74):\n if len(url) > max_length:\n chunk_len = int(max_length / 2 + 
1)\n return '{0}[...]{1}'.format(url[:chunk_len], url[-chunk_len:])\n else:\n return url\n\n\ndef highlight_content(content, query):\n\n if not content:\n return None\n # ignoring html contents\n # TODO better html content detection\n if content.find('<') != -1:\n return content\n\n if content.lower().find(query.lower()) > -1:\n query_regex = '({0})'.format(re.escape(query))\n content = re.sub(query_regex, '<span class=\"highlight\">\\\\1</span>', content, flags=re.I | re.U)\n else:\n regex_parts = []\n for chunk in query.split():\n chunk = chunk.replace('\"', '')\n if len(chunk) == 0:\n continue\n elif len(chunk) == 1:\n regex_parts.append('\\\\W+{0}\\\\W+'.format(re.escape(chunk)))\n else:\n regex_parts.append('{0}'.format(re.escape(chunk)))\n query_regex = '({0})'.format('|'.join(regex_parts))\n content = re.sub(query_regex, '<span class=\"highlight\">\\\\1</span>', content, flags=re.I | re.U)\n\n return content\n\n\ndef searxng_l10n_timespan(dt: datetime) -> str: # pylint: disable=invalid-name\n \"\"\"Returns a human-readable and translated string indicating how long ago\n a date was in the past / the time span of the date to the present.\n\n On January 1st, midnight, the returned string only indicates how many years\n ago the date was.\n \"\"\"\n # TODO, check if timezone is calculated right # pylint: disable=fixme\n d = dt.date()\n t = dt.time()\n if d.month == 1 and d.day == 1 and t.hour == 0 and t.minute == 0 and t.second == 0:\n return str(d.year)\n if dt.replace(tzinfo=None) >= datetime.now() - timedelta(days=1):\n timedifference = datetime.now() - dt.replace(tzinfo=None)\n minutes = int((timedifference.seconds / 60) % 60)\n hours = int(timedifference.seconds / 60 / 60)\n if hours == 0:\n return gettext('{minutes} minute(s) ago').format(minutes=minutes)\n return gettext('{hours} hour(s), {minutes} minute(s) ago').format(hours=hours, minutes=minutes)\n return format_date(dt)\n\n\ndef is_flask_run_cmdline():\n \"\"\"Check if the application was started 
using \"flask run\" command line\n\n Inspect the callstack.\n See https://github.com/pallets/flask/blob/master/src/flask/__main__.py\n\n Returns:\n bool: True if the application was started using \"flask run\".\n \"\"\"\n frames = inspect.stack()\n if len(frames) < 2:\n return False\n return frames[-2].filename.endswith('flask/cli.py')\n\n\nDEFAULT_GROUP_NAME = 'others'\n\n\ndef group_engines_in_tab(engines: Iterable[Engine]) -> List[Tuple[str, Iterable[Engine]]]:\n \"\"\"Groups an Iterable of engines by their first non tab category\"\"\"\n\n def get_group(eng):\n non_tab_categories = [\n c for c in eng.categories if c not in list(settings['categories_as_tabs'].keys()) + [OTHER_CATEGORY]\n ]\n return non_tab_categories[0] if len(non_tab_categories) > 0 else DEFAULT_GROUP_NAME\n\n groups = itertools.groupby(sorted(engines, key=get_group), get_group)\n\n def group_sort_key(group):\n return (group[0] == DEFAULT_GROUP_NAME, group[0].lower())\n\n sorted_groups = sorted(((name, list(engines)) for name, engines in groups), key=group_sort_key)\n\n def engine_sort_key(engine):\n return (engine.about.get('language', ''), engine.name)\n\n return [(groupname, sorted(engines, key=engine_sort_key)) for groupname, engines in sorted_groups]\n", "path": "searx/webutils.py"}]}

| 3,414 | 852 |

gh_patches_debug_23663

|

rasdani/github-patches

|

git_diff

|

pantsbuild__pants-17663

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

`deploy_jar` attempts to build Java source files that do not exist in the package

Attempting to build a `deploy_jar` results in:

```

FileNotFoundError: [Errno 2] No such file or directory: '/Users/chrisjrn/src/pants/src/python/pants/jvm/jar_tool/src/java/org/pantsbuild/args4j'

```

Took a look through the unzipped pants wheel, and the relevant files are nowhere to be found.
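
A minimal sketch of how one might check whether a bundled resource directory is actually present before listing it, assuming only the standard-library `importlib.resources` API (Python 3.9+). The helper name `list_resource_dir` and the use of the stdlib `json` package as a stand-in are illustrative assumptions, not part of Pants:

```python
from importlib import resources


def list_resource_dir(package: str, relative_dir: str) -> list[str]:
    """Return entry names under `relative_dir` inside `package`, or [] if it is absent."""
    target = resources.files(package)
    # Walk down one path segment at a time; Traversable supports the `/` operator.
    for part in relative_dir.split("/"):
        target = target / part
    if not target.is_dir():
        # The directory was not shipped with the package, so report nothing
        # instead of raising FileNotFoundError.
        return []
    return sorted(entry.name for entry in target.iterdir())


# Hypothetical check against a directory that the `json` package does not contain.
print(list_resource_dir("json", "src/org/pantsbuild/args4j"))
```

An empty result here would point at a packaging problem (the sources never made it into the wheel) rather than at the code that reads them.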

</issue>

<code>

[start of src/python/pants/jvm/jar_tool/jar_tool.py]

1 # Copyright 2022 Pants project contributors (see CONTRIBUTORS.md).

2 # Licensed under the Apache License, Version 2.0 (see LICENSE).

3

4 from __future__ import annotations

5

6 import os

7 from dataclasses import dataclass

8 from enum import Enum, unique

9 from typing import Iterable, Mapping

10

11 import pkg_resources

12

13 from pants.base.glob_match_error_behavior import GlobMatchErrorBehavior

14 from pants.core.goals.generate_lockfiles import DEFAULT_TOOL_LOCKFILE, GenerateToolLockfileSentinel

15 from pants.engine.fs import (

16 CreateDigest,

17 Digest,

18 DigestEntries,

19 DigestSubset,

20 Directory,

21 FileContent,

22 FileEntry,

23 MergeDigests,

24 PathGlobs,

25 RemovePrefix,

26 )

27 from pants.engine.process import ProcessResult

28 from pants.engine.rules import Get, MultiGet, collect_rules, rule

29 from pants.engine.unions import UnionRule

30 from pants.jvm.jdk_rules import InternalJdk, JvmProcess

31 from pants.jvm.resolve.coursier_fetch import ToolClasspath, ToolClasspathRequest

32 from pants.jvm.resolve.jvm_tool import GenerateJvmLockfileFromTool

33 from pants.util.frozendict import FrozenDict

34 from pants.util.logging import LogLevel

35 from pants.util.meta import frozen_after_init

36 from pants.util.ordered_set import FrozenOrderedSet

37

38

39 @unique

40 class JarDuplicateAction(Enum):

41 SKIP = "skip"

42 REPLACE = "replace"

43 CONCAT = "concat"

44 CONCAT_TEXT = "concat_text"

45 THROW = "throw"

46

47

48 @dataclass(unsafe_hash=True)

49 @frozen_after_init

50 class JarToolRequest:

51 jar_name: str

52 digest: Digest

53 main_class: str | None

54 classpath_entries: tuple[str, ...]

55 manifest: str | None

56 jars: tuple[str, ...]

57 file_mappings: FrozenDict[str, str]

58 default_action: JarDuplicateAction | None

59 policies: tuple[tuple[str, JarDuplicateAction], ...]

60 skip: tuple[str, ...]

61 compress: bool

62 update: bool

63

64 def __init__(

65 self,

66 *,

67 jar_name: str,

68 digest: Digest,

69 main_class: str | None = None,

70 classpath_entries: Iterable[str] | None = None,

71 manifest: str | None = None,

72 jars: Iterable[str] | None = None,

73 file_mappings: Mapping[str, str] | None = None,

74 default_action: JarDuplicateAction | None = None,

75 policies: Iterable[tuple[str, str | JarDuplicateAction]] | None = None,

76 skip: Iterable[str] | None = None,

77 compress: bool = False,

78 update: bool = False,

79 ) -> None:

80 self.jar_name = jar_name

81 self.digest = digest

82 self.main_class = main_class

83 self.manifest = manifest

84 self.classpath_entries = tuple(classpath_entries or ())

85 self.jars = tuple(jars or ())

86 self.file_mappings = FrozenDict(file_mappings or {})

87 self.default_action = default_action

88 self.policies = tuple(JarToolRequest.__parse_policies(policies or ()))

89 self.skip = tuple(skip or ())

90 self.compress = compress

91 self.update = update

92

93 @staticmethod

94 def __parse_policies(

95 policies: Iterable[tuple[str, str | JarDuplicateAction]]

96 ) -> Iterable[tuple[str, JarDuplicateAction]]:

97 return [

98 (

99 pattern,

100 action

101 if isinstance(action, JarDuplicateAction)

102 else JarDuplicateAction(action.lower()),

103 )

104 for (pattern, action) in policies

105 ]

106

107

108 _JAR_TOOL_MAIN_CLASS = "org.pantsbuild.tools.jar.Main"

109

110

111 class JarToolGenerateLockfileSentinel(GenerateToolLockfileSentinel):

112 resolve_name = "jar_tool"

113

114

115 @dataclass(frozen=True)

116 class JarToolCompiledClassfiles:

117 digest: Digest

118

119

120 @rule

121 async def run_jar_tool(

122 request: JarToolRequest, jdk: InternalJdk, jar_tool: JarToolCompiledClassfiles

123 ) -> Digest:

124 output_prefix = "__out"

125 output_jarname = os.path.join(output_prefix, request.jar_name)

126

127 lockfile_request, empty_output_digest = await MultiGet(

128 Get(GenerateJvmLockfileFromTool, JarToolGenerateLockfileSentinel()),

129 Get(Digest, CreateDigest([Directory(output_prefix)])),

130 )

131

132 tool_classpath = await Get(ToolClasspath, ToolClasspathRequest(lockfile=lockfile_request))

133

134 toolcp_prefix = "__toolcp"

135 jartoolcp_prefix = "__jartoolcp"

136 input_prefix = "__in"

137 immutable_input_digests = {

138 toolcp_prefix: tool_classpath.digest,

139 jartoolcp_prefix: jar_tool.digest,

140 input_prefix: request.digest,

141 }

142

143 policies = ",".join(

144 f"{pattern}={action.value.upper()}" for (pattern, action) in request.policies

145 )

146 file_mappings = ",".join(

147 f"{os.path.join(input_prefix, fs_path)}={jar_path}"

148 for fs_path, jar_path in request.file_mappings.items()

149 )

150

151 tool_process = JvmProcess(

152 jdk=jdk,

153 argv=[

154 _JAR_TOOL_MAIN_CLASS,

155 output_jarname,

156 *((f"-main={request.main_class}",) if request.main_class else ()),

157 *(

158 (f"-classpath={','.join(request.classpath_entries)}",)

159 if request.classpath_entries

160 else ()

161 ),

162 *(

163 (f"-manifest={os.path.join(input_prefix, request.manifest)}",)

164 if request.manifest

165 else ()

166 ),

167 *(

168 (f"-jars={','.join([os.path.join(input_prefix, jar) for jar in request.jars])}",)

169 if request.jars

170 else ()

171 ),

172 *((f"-files={file_mappings}",) if file_mappings else ()),

173 *(

174 (f"-default_action={request.default_action.value.upper()}",)

175 if request.default_action

176 else ()

177 ),

178 *((f"-policies={policies}",) if policies else ()),

179 *((f"-skip={','.join(request.skip)}",) if request.skip else ()),

180 *(("-compress",) if request.compress else ()),

181 *(("-update",) if request.update else ()),

182 ],

183 classpath_entries=[*tool_classpath.classpath_entries(toolcp_prefix), jartoolcp_prefix],

184 input_digest=empty_output_digest,

185 extra_immutable_input_digests=immutable_input_digests,

186 extra_nailgun_keys=immutable_input_digests.keys(),

187 description=f"Building jar {request.jar_name}",

188 output_directories=(output_prefix,),

189 level=LogLevel.DEBUG,

190 )

191

192 result = await Get(ProcessResult, JvmProcess, tool_process)

193 return await Get(Digest, RemovePrefix(result.output_digest, output_prefix))

194

195

196 _JAR_TOOL_SRC_PACKAGES = ["org.pantsbuild.args4j", "org.pantsbuild.tools.jar"]

197

198

199 def _load_jar_tool_sources() -> list[FileContent]:

200 result = []

201 for package in _JAR_TOOL_SRC_PACKAGES:

202 pkg_path = package.replace(".", os.path.sep)

203 relative_folder = os.path.join("src", pkg_path)

204 for basename in pkg_resources.resource_listdir(__name__, relative_folder):

205 result.append(

206 FileContent(

207 path=os.path.join(pkg_path, basename),

208 content=pkg_resources.resource_string(

209 __name__, os.path.join(relative_folder, basename)

210 ),

211 )

212 )

213 return result

214

215

216 # TODO(13879): Consolidate compilation of wrapper binaries to common rules.

217 @rule

218 async def build_jar_tool(jdk: InternalJdk) -> JarToolCompiledClassfiles:

219 lockfile_request, source_digest = await MultiGet(

220 Get(GenerateJvmLockfileFromTool, JarToolGenerateLockfileSentinel()),

221 Get(

222 Digest,

223 CreateDigest(_load_jar_tool_sources()),

224 ),

225 )

226

227 dest_dir = "classfiles"

228 materialized_classpath, java_subset_digest, empty_dest_dir = await MultiGet(

229 Get(ToolClasspath, ToolClasspathRequest(prefix="__toolcp", lockfile=lockfile_request)),

230 Get(

231 Digest,

232 DigestSubset(

233 source_digest,

234 PathGlobs(

235 ["**/*.java"],

236 glob_match_error_behavior=GlobMatchErrorBehavior.error,

237 description_of_origin="jar tool sources",

238 ),

239 ),

240 ),

241 Get(Digest, CreateDigest([Directory(path=dest_dir)])),

242 )

243

244 merged_digest, src_entries = await MultiGet(

245 Get(

246 Digest,

247 MergeDigests([materialized_classpath.digest, source_digest, empty_dest_dir]),

248 ),

249 Get(DigestEntries, Digest, java_subset_digest),

250 )

251

252 compile_result = await Get(

253 ProcessResult,

254 JvmProcess(

255 jdk=jdk,

256 classpath_entries=[f"{jdk.java_home}/lib/tools.jar"],

257 argv=[

258 "com.sun.tools.javac.Main",

259 "-cp",

260 ":".join(materialized_classpath.classpath_entries()),

261 "-d",

262 dest_dir,

263 *[entry.path for entry in src_entries if isinstance(entry, FileEntry)],

264 ],

265 input_digest=merged_digest,

266 output_directories=(dest_dir,),

267 description="Compile jar-tool sources using javac.",

268 level=LogLevel.DEBUG,

269 use_nailgun=False,

270 ),

271 )

272

273 stripped_classfiles_digest = await Get(

274 Digest, RemovePrefix(compile_result.output_digest, dest_dir)

275 )

276 return JarToolCompiledClassfiles(digest=stripped_classfiles_digest)

277

278

279 @rule

280 async def generate_jartool_lockfile_request(

281 _: JarToolGenerateLockfileSentinel,

282 ) -> GenerateJvmLockfileFromTool:

283 return GenerateJvmLockfileFromTool(

284 artifact_inputs=FrozenOrderedSet(

285 {

286 "args4j:args4j:2.33",

287 "com.google.code.findbugs:jsr305:3.0.2",

288 "com.google.guava:guava:18.0",

289 }

290 ),

291 artifact_option_name="n/a",

292 lockfile_option_name="n/a",

293 resolve_name=JarToolGenerateLockfileSentinel.resolve_name,

294 read_lockfile_dest=DEFAULT_TOOL_LOCKFILE,

295 write_lockfile_dest="src/python/pants/jvm/jar_tool/jar_tool.lock",

296 default_lockfile_resource=("pants.jvm.jar_tool", "jar_tool.lock"),

297 )

298

299

300 def rules():

301 return [

302 *collect_rules(),

303 UnionRule(GenerateToolLockfileSentinel, JarToolGenerateLockfileSentinel),

304 ]

305

[end of src/python/pants/jvm/jar_tool/jar_tool.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/src/python/pants/jvm/jar_tool/jar_tool.py b/src/python/pants/jvm/jar_tool/jar_tool.py

--- a/src/python/pants/jvm/jar_tool/jar_tool.py

+++ b/src/python/pants/jvm/jar_tool/jar_tool.py

@@ -193,20 +193,20 @@

return await Get(Digest, RemovePrefix(result.output_digest, output_prefix))

-_JAR_TOOL_SRC_PACKAGES = ["org.pantsbuild.args4j", "org.pantsbuild.tools.jar"]

+_JAR_TOOL_SRC_PACKAGES = ["args4j", "jar_tool_source"]

def _load_jar_tool_sources() -> list[FileContent]:

result = []

for package in _JAR_TOOL_SRC_PACKAGES:

- pkg_path = package.replace(".", os.path.sep)

- relative_folder = os.path.join("src", pkg_path)

- for basename in pkg_resources.resource_listdir(__name__, relative_folder):

+ # pkg_path = package.replace(".", os.path.sep)

+ # relative_folder = os.path.join("src", pkg_path)

+ for basename in pkg_resources.resource_listdir(__name__, package):

result.append(

FileContent(

- path=os.path.join(pkg_path, basename),

+ path=os.path.join(package, basename),

content=pkg_resources.resource_string(

- __name__, os.path.join(relative_folder, basename)

+ __name__, os.path.join(package, basename)

),

)

)

|

{"golden_diff": "diff --git a/src/python/pants/jvm/jar_tool/jar_tool.py b/src/python/pants/jvm/jar_tool/jar_tool.py\n--- a/src/python/pants/jvm/jar_tool/jar_tool.py\n+++ b/src/python/pants/jvm/jar_tool/jar_tool.py\n@@ -193,20 +193,20 @@\n return await Get(Digest, RemovePrefix(result.output_digest, output_prefix))\n \n \n-_JAR_TOOL_SRC_PACKAGES = [\"org.pantsbuild.args4j\", \"org.pantsbuild.tools.jar\"]\n+_JAR_TOOL_SRC_PACKAGES = [\"args4j\", \"jar_tool_source\"]\n \n \n def _load_jar_tool_sources() -> list[FileContent]:\n result = []\n for package in _JAR_TOOL_SRC_PACKAGES:\n- pkg_path = package.replace(\".\", os.path.sep)\n- relative_folder = os.path.join(\"src\", pkg_path)\n- for basename in pkg_resources.resource_listdir(__name__, relative_folder):\n+ # pkg_path = package.replace(\".\", os.path.sep)\n+ # relative_folder = os.path.join(\"src\", pkg_path)\n+ for basename in pkg_resources.resource_listdir(__name__, package):\n result.append(\n FileContent(\n- path=os.path.join(pkg_path, basename),\n+ path=os.path.join(package, basename),\n content=pkg_resources.resource_string(\n- __name__, os.path.join(relative_folder, basename)\n+ __name__, os.path.join(package, basename)\n ),\n )\n )\n", "issue": "`deploy_jar` attempts to build Java source files that do not exist in the package\nAttempting to build a `deploy_jar` results in:\r\n\r\n```\r\nFileNotFoundError: [Errno 2] No such file or directory: '/Users/chrisjrn/src/pants/src/python/pants/jvm/jar_tool/src/java/org/pantsbuild/args4j'\r\n```\r\n\r\nTook a look through the unzipped pants wheel, and the relevant files are nowhere to be found.\n", "before_files": [{"content": "# Copyright 2022 Pants project contributors (see CONTRIBUTORS.md).\n# Licensed under the Apache License, Version 2.0 (see LICENSE).\n\nfrom __future__ import annotations\n\nimport os\nfrom dataclasses import dataclass\nfrom enum import Enum, unique\nfrom typing import Iterable, Mapping\n\nimport pkg_resources\n\nfrom 
pants.base.glob_match_error_behavior import GlobMatchErrorBehavior\nfrom pants.core.goals.generate_lockfiles import DEFAULT_TOOL_LOCKFILE, GenerateToolLockfileSentinel\nfrom pants.engine.fs import (\n CreateDigest,\n Digest,\n DigestEntries,\n DigestSubset,\n Directory,\n FileContent,\n FileEntry,\n MergeDigests,\n PathGlobs,\n RemovePrefix,\n)\nfrom pants.engine.process import ProcessResult\nfrom pants.engine.rules import Get, MultiGet, collect_rules, rule\nfrom pants.engine.unions import UnionRule\nfrom pants.jvm.jdk_rules import InternalJdk, JvmProcess\nfrom pants.jvm.resolve.coursier_fetch import ToolClasspath, ToolClasspathRequest\nfrom pants.jvm.resolve.jvm_tool import GenerateJvmLockfileFromTool\nfrom pants.util.frozendict import FrozenDict\nfrom pants.util.logging import LogLevel\nfrom pants.util.meta import frozen_after_init\nfrom pants.util.ordered_set import FrozenOrderedSet\n\n\n@unique\nclass JarDuplicateAction(Enum):\n SKIP = \"skip\"\n REPLACE = \"replace\"\n CONCAT = \"concat\"\n CONCAT_TEXT = \"concat_text\"\n THROW = \"throw\"\n\n\n@dataclass(unsafe_hash=True)\n@frozen_after_init\nclass JarToolRequest:\n jar_name: str\n digest: Digest\n main_class: str | None\n classpath_entries: tuple[str, ...]\n manifest: str | None\n jars: tuple[str, ...]\n file_mappings: FrozenDict[str, str]\n default_action: JarDuplicateAction | None\n policies: tuple[tuple[str, JarDuplicateAction], ...]\n skip: tuple[str, ...]\n compress: bool\n update: bool\n\n def __init__(\n self,\n *,\n jar_name: str,\n digest: Digest,\n main_class: str | None = None,\n classpath_entries: Iterable[str] | None = None,\n manifest: str | None = None,\n jars: Iterable[str] | None = None,\n file_mappings: Mapping[str, str] | None = None,\n default_action: JarDuplicateAction | None = None,\n policies: Iterable[tuple[str, str | JarDuplicateAction]] | None = None,\n skip: Iterable[str] | None = None,\n compress: bool = False,\n update: bool = False,\n ) -> None:\n self.jar_name = jar_name\n 
self.digest = digest\n self.main_class = main_class\n self.manifest = manifest\n self.classpath_entries = tuple(classpath_entries or ())\n self.jars = tuple(jars or ())\n self.file_mappings = FrozenDict(file_mappings or {})\n self.default_action = default_action\n self.policies = tuple(JarToolRequest.__parse_policies(policies or ()))\n self.skip = tuple(skip or ())\n self.compress = compress\n self.update = update\n\n @staticmethod\n def __parse_policies(\n policies: Iterable[tuple[str, str | JarDuplicateAction]]\n ) -> Iterable[tuple[str, JarDuplicateAction]]:\n return [\n (\n pattern,\n action\n if isinstance(action, JarDuplicateAction)\n else JarDuplicateAction(action.lower()),\n )\n for (pattern, action) in policies\n ]\n\n\n_JAR_TOOL_MAIN_CLASS = \"org.pantsbuild.tools.jar.Main\"\n\n\nclass JarToolGenerateLockfileSentinel(GenerateToolLockfileSentinel):\n resolve_name = \"jar_tool\"\n\n\n@dataclass(frozen=True)\nclass JarToolCompiledClassfiles:\n digest: Digest\n\n\n@rule\nasync def run_jar_tool(\n request: JarToolRequest, jdk: InternalJdk, jar_tool: JarToolCompiledClassfiles\n) -> Digest:\n output_prefix = \"__out\"\n output_jarname = os.path.join(output_prefix, request.jar_name)\n\n lockfile_request, empty_output_digest = await MultiGet(\n Get(GenerateJvmLockfileFromTool, JarToolGenerateLockfileSentinel()),\n Get(Digest, CreateDigest([Directory(output_prefix)])),\n )\n\n tool_classpath = await Get(ToolClasspath, ToolClasspathRequest(lockfile=lockfile_request))\n\n toolcp_prefix = \"__toolcp\"\n jartoolcp_prefix = \"__jartoolcp\"\n input_prefix = \"__in\"\n immutable_input_digests = {\n toolcp_prefix: tool_classpath.digest,\n jartoolcp_prefix: jar_tool.digest,\n input_prefix: request.digest,\n }\n\n policies = \",\".join(\n f\"{pattern}={action.value.upper()}\" for (pattern, action) in request.policies\n )\n file_mappings = \",\".join(\n f\"{os.path.join(input_prefix, fs_path)}={jar_path}\"\n for fs_path, jar_path in request.file_mappings.items()\n )\n\n 
tool_process = JvmProcess(\n jdk=jdk,\n argv=[\n _JAR_TOOL_MAIN_CLASS,\n output_jarname,\n *((f\"-main={request.main_class}\",) if request.main_class else ()),\n *(\n (f\"-classpath={','.join(request.classpath_entries)}\",)\n if request.classpath_entries\n else ()\n ),\n *(\n (f\"-manifest={os.path.join(input_prefix, request.manifest)}\",)\n if request.manifest\n else ()\n ),\n *(\n (f\"-jars={','.join([os.path.join(input_prefix, jar) for jar in request.jars])}\",)\n if request.jars\n else ()\n ),\n *((f\"-files={file_mappings}\",) if file_mappings else ()),\n *(\n (f\"-default_action={request.default_action.value.upper()}\",)\n if request.default_action\n else ()\n ),\n *((f\"-policies={policies}\",) if policies else ()),\n *((f\"-skip={','.join(request.skip)}\",) if request.skip else ()),\n *((\"-compress\",) if request.compress else ()),\n *((\"-update\",) if request.update else ()),\n ],\n classpath_entries=[*tool_classpath.classpath_entries(toolcp_prefix), jartoolcp_prefix],\n input_digest=empty_output_digest,\n extra_immutable_input_digests=immutable_input_digests,\n extra_nailgun_keys=immutable_input_digests.keys(),\n description=f\"Building jar {request.jar_name}\",\n output_directories=(output_prefix,),\n level=LogLevel.DEBUG,\n )\n\n result = await Get(ProcessResult, JvmProcess, tool_process)\n return await Get(Digest, RemovePrefix(result.output_digest, output_prefix))\n\n\n_JAR_TOOL_SRC_PACKAGES = [\"org.pantsbuild.args4j\", \"org.pantsbuild.tools.jar\"]\n\n\ndef _load_jar_tool_sources() -> list[FileContent]:\n result = []\n for package in _JAR_TOOL_SRC_PACKAGES:\n pkg_path = package.replace(\".\", os.path.sep)\n relative_folder = os.path.join(\"src\", pkg_path)\n for basename in pkg_resources.resource_listdir(__name__, relative_folder):\n result.append(\n FileContent(\n path=os.path.join(pkg_path, basename),\n content=pkg_resources.resource_string(\n __name__, os.path.join(relative_folder, basename)\n ),\n )\n )\n return result\n\n\n# TODO(13879): 
Consolidate compilation of wrapper binaries to common rules.\n@rule\nasync def build_jar_tool(jdk: InternalJdk) -> JarToolCompiledClassfiles:\n lockfile_request, source_digest = await MultiGet(\n Get(GenerateJvmLockfileFromTool, JarToolGenerateLockfileSentinel()),\n Get(\n Digest,\n CreateDigest(_load_jar_tool_sources()),\n ),\n )\n\n dest_dir = \"classfiles\"\n materialized_classpath, java_subset_digest, empty_dest_dir = await MultiGet(\n Get(ToolClasspath, ToolClasspathRequest(prefix=\"__toolcp\", lockfile=lockfile_request)),\n Get(\n Digest,\n DigestSubset(\n source_digest,\n PathGlobs(\n [\"**/*.java\"],\n glob_match_error_behavior=GlobMatchErrorBehavior.error,\n description_of_origin=\"jar tool sources\",\n ),\n ),\n ),\n Get(Digest, CreateDigest([Directory(path=dest_dir)])),\n )\n\n merged_digest, src_entries = await MultiGet(\n Get(\n Digest,\n MergeDigests([materialized_classpath.digest, source_digest, empty_dest_dir]),\n ),\n Get(DigestEntries, Digest, java_subset_digest),\n )\n\n compile_result = await Get(\n ProcessResult,\n JvmProcess(\n jdk=jdk,\n classpath_entries=[f\"{jdk.java_home}/lib/tools.jar\"],\n argv=[\n \"com.sun.tools.javac.Main\",\n \"-cp\",\n \":\".join(materialized_classpath.classpath_entries()),\n \"-d\",\n dest_dir,\n *[entry.path for entry in src_entries if isinstance(entry, FileEntry)],\n ],\n input_digest=merged_digest,\n output_directories=(dest_dir,),\n description=\"Compile jar-tool sources using javac.\",\n level=LogLevel.DEBUG,\n use_nailgun=False,\n ),\n )\n\n stripped_classfiles_digest = await Get(\n Digest, RemovePrefix(compile_result.output_digest, dest_dir)\n )\n return JarToolCompiledClassfiles(digest=stripped_classfiles_digest)\n\n\n@rule\nasync def generate_jartool_lockfile_request(\n _: JarToolGenerateLockfileSentinel,\n) -> GenerateJvmLockfileFromTool:\n return GenerateJvmLockfileFromTool(\n artifact_inputs=FrozenOrderedSet(\n {\n \"args4j:args4j:2.33\",\n \"com.google.code.findbugs:jsr305:3.0.2\",\n 
\"com.google.guava:guava:18.0\",\n }\n ),\n artifact_option_name=\"n/a\",\n lockfile_option_name=\"n/a\",\n resolve_name=JarToolGenerateLockfileSentinel.resolve_name,\n read_lockfile_dest=DEFAULT_TOOL_LOCKFILE,\n write_lockfile_dest=\"src/python/pants/jvm/jar_tool/jar_tool.lock\",\n default_lockfile_resource=(\"pants.jvm.jar_tool\", \"jar_tool.lock\"),\n )\n\n\ndef rules():\n return [\n *collect_rules(),\n UnionRule(GenerateToolLockfileSentinel, JarToolGenerateLockfileSentinel),\n ]\n", "path": "src/python/pants/jvm/jar_tool/jar_tool.py"}]}

| 3,741 | 324 |

gh_patches_debug_27381

|

rasdani/github-patches

|

git_diff

|

strawberry-graphql__strawberry-1985

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Regression using mutable default values in arguments.

<!-- Provide a general summary of the bug in the title above. -->

<!--- This template is entirely optional and can be removed, but is here to help both you and us. -->

<!--- Anything on lines wrapped in comments like these will not show up in the final text. -->

## Describe the Bug

Prior to v0.115

```python

@strawberry.input

class Options:

flag: str = ''

@strawberry.type

class Query:

@strawberry.field

def field(self, x: list[str] = [], y: Options = {}) -> str:

return f'{x} {y}'

```

would correctly resolve to

```graphql

type Query {

field(x: [String!]! = [], y: Options! = {}): String!

}

```

As of v0.115 it raises

```python

File "lib/python3.10/site-packages/strawberry/types/fields/resolver.py", line 87, in find

resolver._resolved_annotations[parameter] = resolved_annotation

File "/lib/python3.10/inspect.py", line 2740, in __hash__

return hash((self.name, self.kind, self.annotation, self.default))

TypeError: unhashable type: 'list'

```

For lists, there is a workaround to use a tuple instead, but it's not ideal because GraphQL type coercion will correctly supply a list. For objects, there's no clean workaround; one would have to use the equivalent of a `frozendict`.
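
The failure can be reproduced with the standard library alone. This is a minimal sketch, assuming only `inspect`; the names `resolver`, `is_hashable`, and `annotations_by_name` are illustrative and not Strawberry's API. As the traceback shows, `inspect.Parameter.__hash__` includes the default value, so a parameter with a list or dict default cannot be used as a dictionary key, whereas keying by parameter name avoids hashing the default:

```python
import inspect


def resolver(x: list = [], y: dict = {}) -> str:
    return f"{x} {y}"


parameters = inspect.signature(resolver).parameters


def is_hashable(parameter: inspect.Parameter) -> bool:
    """Report whether the parameter can be hashed (and so used as a dict key)."""
    try:
        hash(parameter)
    except TypeError:
        return False
    return True


# Both parameters carry mutable defaults, so neither can be hashed.
print(is_hashable(parameters["x"]), is_hashable(parameters["y"]))

# Keying by name (a str) sidesteps hashing the default value entirely.
annotations_by_name = {name: p.annotation for name, p in parameters.items()}
print(annotations_by_name)
```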

## System Information

- Strawberry version 0.115

</issue>

<code>

[start of strawberry/types/fields/resolver.py]

1 from __future__ import annotations as _

2

3 import builtins

4 import inspect

5 import sys

6 import warnings

7 from inspect import isasyncgenfunction, iscoroutinefunction

8 from typing import ( # type: ignore[attr-defined]

9 Any,

10 Callable,

11 Dict,

12 ForwardRef,

13 Generic,

14 List,

15 Mapping,

16 NamedTuple,

17 Optional,

18 Tuple,

19 Type,

20 TypeVar,

21 Union,

22 _eval_type,

23 )

24

25 from backports.cached_property import cached_property

26 from typing_extensions import Annotated, Protocol, get_args, get_origin

27

28 from strawberry.annotation import StrawberryAnnotation

29 from strawberry.arguments import StrawberryArgument

30 from strawberry.exceptions import MissingArgumentsAnnotationsError

31 from strawberry.type import StrawberryType

32 from strawberry.types.info import Info

33

34

35 class ReservedParameterSpecification(Protocol):

36 def find(

37 self, parameters: Tuple[inspect.Parameter, ...], resolver: StrawberryResolver

38 ) -> Optional[inspect.Parameter]:

39 """Finds the reserved parameter from ``parameters``."""

40

41

42 class ReservedName(NamedTuple):

43 name: str

44

45 def find(

46 self, parameters: Tuple[inspect.Parameter, ...], _: StrawberryResolver

47 ) -> Optional[inspect.Parameter]:

48 return next((p for p in parameters if p.name == self.name), None)

49

50

51 class ReservedNameBoundParameter(NamedTuple):

52 name: str

53

54 def find(

55 self, parameters: Tuple[inspect.Parameter, ...], _: StrawberryResolver

56 ) -> Optional[inspect.Parameter]:

57 if parameters: # Add compatibility for resolvers with no arguments

58 first_parameter = parameters[0]

59 return first_parameter if first_parameter.name == self.name else None

60 else:

61 return None

62

63

64 class ReservedType(NamedTuple):

65 """Define a reserved type by name or by type.

66

67 To preserve backwards-compatibility, if an annotation was defined but does not match

68 :attr:`type`, then the name is used as a fallback.

69 """

70

71 name: str

72 type: Type

73

74 def find(

75 self, parameters: Tuple[inspect.Parameter, ...], resolver: StrawberryResolver

76 ) -> Optional[inspect.Parameter]:

77 for parameter in parameters:

78 annotation = parameter.annotation

79 try:

80 resolved_annotation = _eval_type(

81 ForwardRef(annotation)

82 if isinstance(annotation, str)

83 else annotation,

84 resolver._namespace,

85 None,

86 )

87 resolver._resolved_annotations[parameter] = resolved_annotation

88 except NameError:

89 # Type-annotation could not be resolved

90 resolved_annotation = annotation

91 if self.is_reserved_type(resolved_annotation):

92 return parameter

93

94 # Fallback to matching by name

95 reserved_name = ReservedName(name=self.name).find(parameters, resolver)

96 if reserved_name:

97 warning = DeprecationWarning(

98 f"Argument name-based matching of '{self.name}' is deprecated and will "

99 "be removed in v1.0. Ensure that reserved arguments are annotated with "

100 "their respective types (i.e. use value: 'DirectiveValue[str]' instead "

101 "of 'value: str' and 'info: Info' instead of a plain 'info')."

102 )

103 warnings.warn(warning)

104 return reserved_name

105 else:

106 return None

107

108 def is_reserved_type(self, other: Type) -> bool:

109 if get_origin(other) is Annotated:

110 # Handle annotated arguments such as Private[str] and DirectiveValue[str]

111 return any(isinstance(argument, self.type) for argument in get_args(other))

112 else:

113 # Handle both concrete and generic types (i.e Info, and Info[Any, Any])

114 return other is self.type or get_origin(other) is self.type

115

116

117 SELF_PARAMSPEC = ReservedNameBoundParameter("self")

118 CLS_PARAMSPEC = ReservedNameBoundParameter("cls")

119 ROOT_PARAMSPEC = ReservedName("root")

120 INFO_PARAMSPEC = ReservedType("info", Info)

121

122 T = TypeVar("T")

123

124

125 class StrawberryResolver(Generic[T]):

126

127 RESERVED_PARAMSPEC: Tuple[ReservedParameterSpecification, ...] = (

128 SELF_PARAMSPEC,

129 CLS_PARAMSPEC,

130 ROOT_PARAMSPEC,

131 INFO_PARAMSPEC,

132 )

133

134 def __init__(

135 self,

136 func: Union[Callable[..., T], staticmethod, classmethod],

137 *,

138 description: Optional[str] = None,

139 type_override: Optional[Union[StrawberryType, type]] = None,

140 ):

141 self.wrapped_func = func

142 self._description = description

143 self._type_override = type_override

144 """Specify the type manually instead of calculating from wrapped func

145

146 This is used when creating copies of types w/ generics

147 """

148 self._resolved_annotations: Dict[inspect.Parameter, Any] = {}

149 """Populated during reserved parameter determination.

150

151 Caching resolved annotations this way prevents evaling them repeatedly.

152 """

153

154 # TODO: Use this when doing the actual resolving? How to deal with async resolvers?

155 def __call__(self, *args, **kwargs) -> T:

156 if not callable(self.wrapped_func):

157 raise UncallableResolverError(self)

158 return self.wrapped_func(*args, **kwargs)

159

160 @cached_property

161 def signature(self) -> inspect.Signature:

162 return inspect.signature(self._unbound_wrapped_func)

163

164 @cached_property

165 def reserved_parameters(

166 self,

167 ) -> Dict[ReservedParameterSpecification, Optional[inspect.Parameter]]:

168 """Mapping of reserved parameter specification to parameter."""

169 parameters = tuple(self.signature.parameters.values())

170 return {spec: spec.find(parameters, self) for spec in self.RESERVED_PARAMSPEC}

171

172 @cached_property

173 def arguments(self) -> List[StrawberryArgument]:

174 """Resolver arguments exposed in the GraphQL Schema."""

175 parameters = self.signature.parameters.values()

176 reserved_parameters = set(self.reserved_parameters.values())

177

178 missing_annotations = set()

179 arguments = []

180 user_parameters = (p for p in parameters if p not in reserved_parameters)

181 for param in user_parameters:

182 annotation = self._resolved_annotations.get(param, param.annotation)

183 if annotation is inspect.Signature.empty:

184 missing_annotations.add(param.name)

185 else:

186 argument = StrawberryArgument(

187 python_name=param.name,

188 graphql_name=None,

189 type_annotation=StrawberryAnnotation(

190 annotation=annotation, namespace=self._namespace

191 ),

192 default=param.default,

193 )

194 arguments.append(argument)

195 if missing_annotations:

196 raise MissingArgumentsAnnotationsError(self.name, missing_annotations)

197 return arguments

198

199 @cached_property

200 def info_parameter(self) -> Optional[inspect.Parameter]:

201 return self.reserved_parameters.get(INFO_PARAMSPEC)

202

203 @cached_property

204 def root_parameter(self) -> Optional[inspect.Parameter]:

205 return self.reserved_parameters.get(ROOT_PARAMSPEC)

206

207 @cached_property

208 def self_parameter(self) -> Optional[inspect.Parameter]:

209 return self.reserved_parameters.get(SELF_PARAMSPEC)

210

211 @cached_property

212 def name(self) -> str:

213 # TODO: What to do if resolver is a lambda?

214 return self._unbound_wrapped_func.__name__

215

216 @cached_property

217 def annotations(self) -> Dict[str, object]:

218 """Annotations for the resolver.

219

220 Does not include special args defined in `RESERVED_PARAMSPEC` (e.g. self, root,

221 info)

222 """

223 reserved_parameters = self.reserved_parameters

224 reserved_names = {p.name for p in reserved_parameters.values() if p is not None}

225

226 annotations = self._unbound_wrapped_func.__annotations__

227 annotations = {

228 name: annotation

229 for name, annotation in annotations.items()

230 if name not in reserved_names

231 }

232

233 return annotations

234

235 @cached_property

236 def type_annotation(self) -> Optional[StrawberryAnnotation]:

237 return_annotation = self.signature.return_annotation

238 if return_annotation is inspect.Signature.empty:

239 return None

240 else:

241 type_annotation = StrawberryAnnotation(

242 annotation=return_annotation, namespace=self._namespace

243 )

244 return type_annotation

245

246 @property

247 def type(self) -> Optional[Union[StrawberryType, type]]:

248 if self._type_override:

249 return self._type_override

250 if self.type_annotation is None:

251 return None

252 return self.type_annotation.resolve()

253

254 @cached_property

255 def is_async(self) -> bool:

256 return iscoroutinefunction(self._unbound_wrapped_func) or isasyncgenfunction(

257 self._unbound_wrapped_func

258 )

259

260 def copy_with(

261 self, type_var_map: Mapping[TypeVar, Union[StrawberryType, builtins.type]]

262 ) -> StrawberryResolver: