| problem_id (string, length 18 to 22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, length 13 to 58) | prompt (string, length 1.71k to 18.9k) | golden_diff (string, length 145 to 5.13k) | verification_info (string, length 465 to 23.6k) | num_tokens_prompt (int64, 556 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_8080

|

rasdani/github-patches

|

git_diff

|

keras-team__keras-nlp-195

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Why does the docstring say vocab size should be no larger than 999?

https://github.com/keras-team/keras-nlp/blob/e3adddaa98bbe1aee071117c01678fe3017dae80/keras_nlp/layers/token_and_position_embedding.py#L30

Seems like a very small vocab to me

</issue>

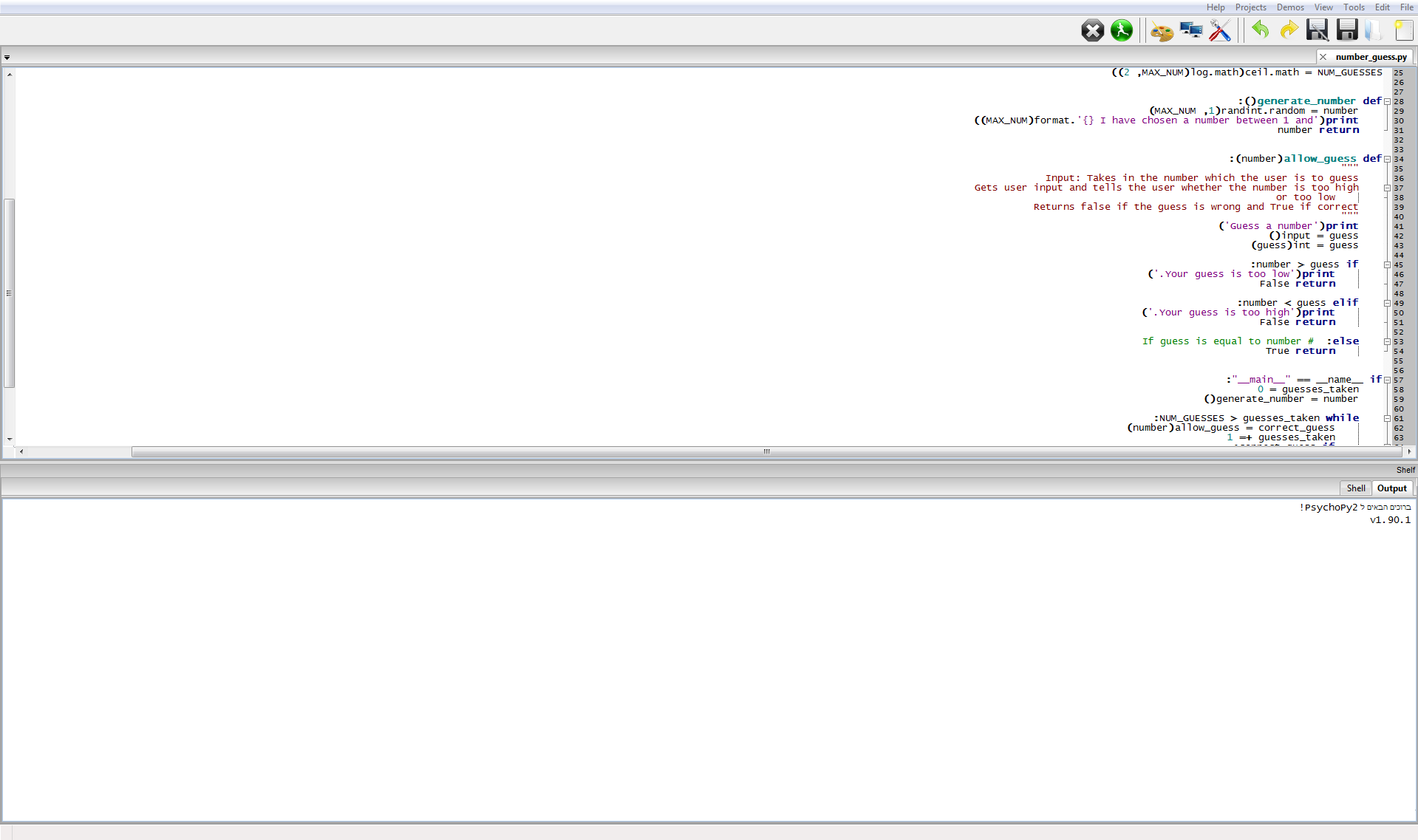

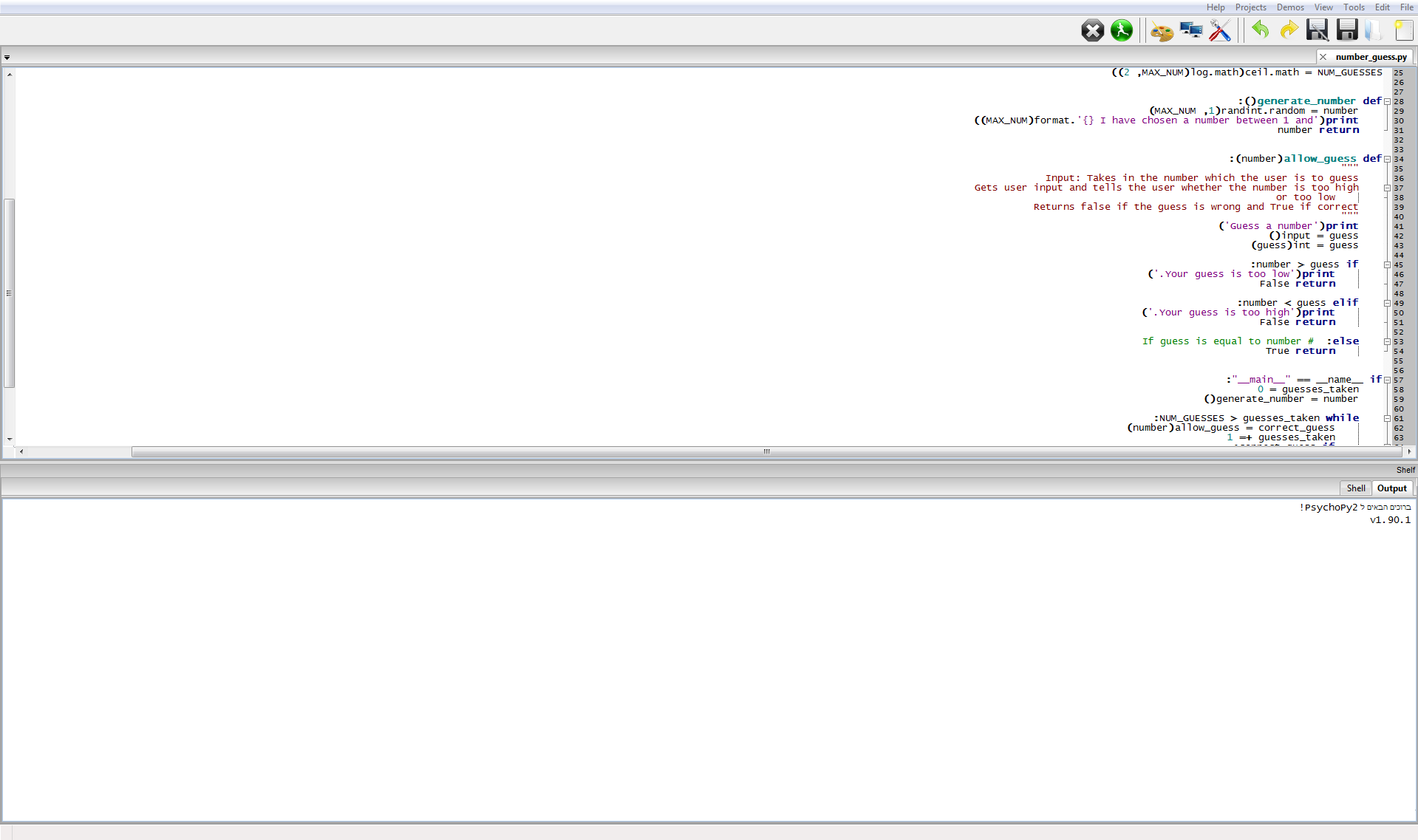

<code>

[start of keras_nlp/layers/token_and_position_embedding.py]

1 # Copyright 2022 The KerasNLP Authors

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # https://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14

15 """Creates an Embedding Layer and adds Positional Embeddings"""

16

17 from tensorflow import keras

18

19 import keras_nlp.layers

20

21

22 class TokenAndPositionEmbedding(keras.layers.Layer):

23 """A layer which sums a token and position embedding.

24

25 This layer assumes that the last dimension in the input corresponds

26 to the sequence dimension.

27

28 Args:

29 vocabulary_size: The size of the vocabulary (should be no larger

30 than 999)

31 sequence_length: The maximum length of input sequence

32 embedding_dim: The output dimension of the embedding layer

33 embeddings_initializer: The initializer to use for the Embedding

34 Layers

35 mask_zero: Boolean, whether or not the input value 0 is a special

36 "padding" value that should be masked out.

37 This is useful when using recurrent layers which may take variable

38 length input. If this is True, then all subsequent layers in the

39 model need to support masking or an exception will be raised.

40 If mask_zero` is set to True, as a consequence, index 0 cannot be

41 used in the vocabulary

42 (input_dim should equal size of vocabulary + 1).

43

44 Examples:

45 ```python

46 seq_length = 50

47 vocab_size = 5000

48 embed_dim = 128

49 inputs = keras.Input(shape=(seq_length,))

50 embedding_layer = keras_nlp.layers.TokenAndPositionEmbedding(

51 vocabulary_size=vocab_size,

52 sequence_length=seq_length,

53 embedding_dim=embed_dim,

54 )

55 outputs = embedding_layer(inputs)

56 ```

57 """

58

59 def __init__(

60 self,

61 vocabulary_size,

62 sequence_length,

63 embedding_dim,

64 embeddings_initializer="glorot_uniform",

65 mask_zero=False,

66 **kwargs

67 ):

68 super().__init__(**kwargs)

69 if vocabulary_size is None:

70 raise ValueError(

71 "`vocabulary_size` must be an Integer, received `None`."

72 )

73 if sequence_length is None:

74 raise ValueError(

75 "`sequence_length` must be an Integer, received `None`."

76 )

77 if embedding_dim is None:

78 raise ValueError(

79 "`embedding_dim` must be an Integer, received `None`."

80 )

81 self.vocabulary_size = int(vocabulary_size)

82 self.sequence_length = int(sequence_length)

83 self.embedding_dim = int(embedding_dim)

84 self.token_embedding = keras.layers.Embedding(

85 vocabulary_size,

86 embedding_dim,

87 embeddings_initializer=embeddings_initializer,

88 mask_zero=mask_zero,

89 )

90 self.position_embedding = keras_nlp.layers.PositionEmbedding(

91 sequence_length=sequence_length,

92 initializer=embeddings_initializer,

93 )

94 self.supports_masking = self.token_embedding.supports_masking

95

96 def get_config(self):

97 config = super().get_config()

98 config.update(

99 {

100 "vocabulary_size": self.vocabulary_size,

101 "sequence_length": self.sequence_length,

102 "embedding_dim": self.embedding_dim,

103 "embeddings_initializer": keras.initializers.serialize(

104 self.token_embedding.embeddings_initializer

105 ),

106 "mask_zero": self.token_embedding.mask_zero,

107 },

108 )

109 return config

110

111 def call(self, inputs):

112 embedded_tokens = self.token_embedding(inputs)

113 embedded_positions = self.position_embedding(embedded_tokens)

114 outputs = embedded_tokens + embedded_positions

115 return outputs

116

117 def compute_mask(self, inputs, mask=None):

118 return self.token_embedding.compute_mask(inputs, mask=mask)

119

[end of keras_nlp/layers/token_and_position_embedding.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/keras_nlp/layers/token_and_position_embedding.py b/keras_nlp/layers/token_and_position_embedding.py

--- a/keras_nlp/layers/token_and_position_embedding.py

+++ b/keras_nlp/layers/token_and_position_embedding.py

@@ -26,8 +26,7 @@

to the sequence dimension.

Args:

- vocabulary_size: The size of the vocabulary (should be no larger

- than 999)

+ vocabulary_size: The size of the vocabulary.

sequence_length: The maximum length of input sequence

embedding_dim: The output dimension of the embedding layer

embeddings_initializer: The initializer to use for the Embedding

|

{"golden_diff": "diff --git a/keras_nlp/layers/token_and_position_embedding.py b/keras_nlp/layers/token_and_position_embedding.py\n--- a/keras_nlp/layers/token_and_position_embedding.py\n+++ b/keras_nlp/layers/token_and_position_embedding.py\n@@ -26,8 +26,7 @@\n to the sequence dimension.\n \n Args:\n- vocabulary_size: The size of the vocabulary (should be no larger\n- than 999)\n+ vocabulary_size: The size of the vocabulary.\n sequence_length: The maximum length of input sequence\n embedding_dim: The output dimension of the embedding layer\n embeddings_initializer: The initializer to use for the Embedding\n", "issue": "Why does the docstring say vocab size should be no larger than 999?\nhttps://github.com/keras-team/keras-nlp/blob/e3adddaa98bbe1aee071117c01678fe3017dae80/keras_nlp/layers/token_and_position_embedding.py#L30\r\n\r\nSeems like a very small vocab to me\n", "before_files": [{"content": "# Copyright 2022 The KerasNLP Authors\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# https://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\n\"\"\"Creates an Embedding Layer and adds Positional Embeddings\"\"\"\n\nfrom tensorflow import keras\n\nimport keras_nlp.layers\n\n\nclass TokenAndPositionEmbedding(keras.layers.Layer):\n \"\"\"A layer which sums a token and position embedding.\n\n This layer assumes that the last dimension in the input corresponds\n to the sequence dimension.\n\n Args:\n vocabulary_size: The size of the vocabulary (should be no larger\n than 999)\n sequence_length: The maximum length of input 
sequence\n embedding_dim: The output dimension of the embedding layer\n embeddings_initializer: The initializer to use for the Embedding\n Layers\n mask_zero: Boolean, whether or not the input value 0 is a special\n \"padding\" value that should be masked out.\n This is useful when using recurrent layers which may take variable\n length input. If this is True, then all subsequent layers in the\n model need to support masking or an exception will be raised.\n If mask_zero` is set to True, as a consequence, index 0 cannot be\n used in the vocabulary\n (input_dim should equal size of vocabulary + 1).\n\n Examples:\n ```python\n seq_length = 50\n vocab_size = 5000\n embed_dim = 128\n inputs = keras.Input(shape=(seq_length,))\n embedding_layer = keras_nlp.layers.TokenAndPositionEmbedding(\n vocabulary_size=vocab_size,\n sequence_length=seq_length,\n embedding_dim=embed_dim,\n )\n outputs = embedding_layer(inputs)\n ```\n \"\"\"\n\n def __init__(\n self,\n vocabulary_size,\n sequence_length,\n embedding_dim,\n embeddings_initializer=\"glorot_uniform\",\n mask_zero=False,\n **kwargs\n ):\n super().__init__(**kwargs)\n if vocabulary_size is None:\n raise ValueError(\n \"`vocabulary_size` must be an Integer, received `None`.\"\n )\n if sequence_length is None:\n raise ValueError(\n \"`sequence_length` must be an Integer, received `None`.\"\n )\n if embedding_dim is None:\n raise ValueError(\n \"`embedding_dim` must be an Integer, received `None`.\"\n )\n self.vocabulary_size = int(vocabulary_size)\n self.sequence_length = int(sequence_length)\n self.embedding_dim = int(embedding_dim)\n self.token_embedding = keras.layers.Embedding(\n vocabulary_size,\n embedding_dim,\n embeddings_initializer=embeddings_initializer,\n mask_zero=mask_zero,\n )\n self.position_embedding = keras_nlp.layers.PositionEmbedding(\n sequence_length=sequence_length,\n initializer=embeddings_initializer,\n )\n self.supports_masking = self.token_embedding.supports_masking\n\n def get_config(self):\n 
config = super().get_config()\n config.update(\n {\n \"vocabulary_size\": self.vocabulary_size,\n \"sequence_length\": self.sequence_length,\n \"embedding_dim\": self.embedding_dim,\n \"embeddings_initializer\": keras.initializers.serialize(\n self.token_embedding.embeddings_initializer\n ),\n \"mask_zero\": self.token_embedding.mask_zero,\n },\n )\n return config\n\n def call(self, inputs):\n embedded_tokens = self.token_embedding(inputs)\n embedded_positions = self.position_embedding(embedded_tokens)\n outputs = embedded_tokens + embedded_positions\n return outputs\n\n def compute_mask(self, inputs, mask=None):\n return self.token_embedding.compute_mask(inputs, mask=mask)\n", "path": "keras_nlp/layers/token_and_position_embedding.py"}]}

| 1,757 | 153 |

gh_patches_debug_4313

|

rasdani/github-patches

|

git_diff

|

fossasia__open-event-server-352

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

HTML Template rendered when page doesn't exist in API

If a paginated API endpoint is called with a non-existant page number, a template is rendered which should never happen in case of REST APIs.

```

http http://localhost:5000/api/v1/event/page/2

HTTP/1.0 404 NOT FOUND

Content-Length: 1062

Content-Type: text/html; charset=utf-8

Date: Sat, 21 May 2016 07:51:38 GMT

Server: Werkzeug/0.11.7 Python/2.7.10

<!DOCTYPE html>

<html>

<head lang="en">

<meta charset="UTF-8">

<title>You got 404'd</title>

<link href="/admin/static/bootstrap/bootstrap3/css/bootstrap.min.css" rel="stylesheet">

<link href="/static/admin/css/roboto.css" rel="stylesheet">

<link href="/static/admin/css/material-custom.css" rel="stylesheet">

</head>

<body>

<div class="container">

<div class="row">

<div class="col-md-push-3 col-md-6" style="margin-top: 20px;">

<div class="jumbotron">

<h2 style="font-weight: 100; ">Page Not Found</h2>

<p class="lead">Oops, the page you're looking for does not exist.</p>

<p style="font-size: 14px;">

You may want to head back to the homepage and restart your journey.

</p>

<a href="/" class="btn btn-large btn-info" style="background-color: #3f51b5;">

<i class="glyphicon glyphicon-home"></i> Take Me Home

</a>

</div>

</div>

</div>

</div>

</body>

</html>

```

</issue>

<code>

[start of open_event/helpers/object_formatter.py]

1 """Copyright 2015 Rafal Kowalski"""

2 from flask import jsonify

3

4 from .query_filter import QueryFilter

5

6

7 PER_PAGE = 20

8

9

10 class ObjectFormatter(object):

11 """Object formatter class"""

12 @staticmethod

13 def get_json(name, query, request, page=None):

14 """Returns formatted json"""

15 objects = QueryFilter(request.args, query).get_filtered_data()

16 count = objects.count()

17 if not page:

18 return jsonify(

19 {name: [

20 table_object.serialize

21 for table_object in

22 objects]})

23 else:

24 pagination = objects.paginate(page, PER_PAGE)

25 return jsonify({

26 name: [

27 table_object.serialize

28 for table_object in

29 pagination.items

30 ],

31 'total_pages': pagination.pages,

32 'page': pagination.page

33 })

34

[end of open_event/helpers/object_formatter.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/open_event/helpers/object_formatter.py b/open_event/helpers/object_formatter.py

--- a/open_event/helpers/object_formatter.py

+++ b/open_event/helpers/object_formatter.py

@@ -21,6 +21,8 @@

for table_object in

objects]})

else:

+ if count <= ((page-1) * PER_PAGE): # no results possible

+ return jsonify({})

pagination = objects.paginate(page, PER_PAGE)

return jsonify({

name: [

|

{"golden_diff": "diff --git a/open_event/helpers/object_formatter.py b/open_event/helpers/object_formatter.py\n--- a/open_event/helpers/object_formatter.py\n+++ b/open_event/helpers/object_formatter.py\n@@ -21,6 +21,8 @@\n for table_object in\n objects]})\n else:\n+ if count <= ((page-1) * PER_PAGE): # no results possible\n+ return jsonify({})\n pagination = objects.paginate(page, PER_PAGE)\n return jsonify({\n name: [\n", "issue": "HTML Template rendered when page doesn't exist in API\nIf a paginated API endpoint is called with a non-existant page number, a template is rendered which should never happen in case of REST APIs.\n\n```\nhttp http://localhost:5000/api/v1/event/page/2\nHTTP/1.0 404 NOT FOUND\nContent-Length: 1062\nContent-Type: text/html; charset=utf-8\nDate: Sat, 21 May 2016 07:51:38 GMT\nServer: Werkzeug/0.11.7 Python/2.7.10\n\n<!DOCTYPE html>\n<html>\n<head lang=\"en\">\n <meta charset=\"UTF-8\">\n <title>You got 404'd</title>\n <link href=\"/admin/static/bootstrap/bootstrap3/css/bootstrap.min.css\" rel=\"stylesheet\">\n <link href=\"/static/admin/css/roboto.css\" rel=\"stylesheet\">\n <link href=\"/static/admin/css/material-custom.css\" rel=\"stylesheet\">\n</head>\n<body>\n<div class=\"container\">\n <div class=\"row\">\n <div class=\"col-md-push-3 col-md-6\" style=\"margin-top: 20px;\">\n <div class=\"jumbotron\">\n <h2 style=\"font-weight: 100; \">Page Not Found</h2>\n <p class=\"lead\">Oops, the page you're looking for does not exist.</p>\n <p style=\"font-size: 14px;\">\n You may want to head back to the homepage and restart your journey.\n </p>\n <a href=\"/\" class=\"btn btn-large btn-info\" style=\"background-color: #3f51b5;\">\n <i class=\"glyphicon glyphicon-home\"></i> Take Me Home\n </a>\n </div>\n </div>\n </div>\n</div>\n</body>\n</html>\n```\n\n", "before_files": [{"content": "\"\"\"Copyright 2015 Rafal Kowalski\"\"\"\nfrom flask import jsonify\n\nfrom .query_filter import QueryFilter\n\n\nPER_PAGE = 20\n\n\nclass 
ObjectFormatter(object):\n \"\"\"Object formatter class\"\"\"\n @staticmethod\n def get_json(name, query, request, page=None):\n \"\"\"Returns formatted json\"\"\"\n objects = QueryFilter(request.args, query).get_filtered_data()\n count = objects.count()\n if not page:\n return jsonify(\n {name: [\n table_object.serialize\n for table_object in\n objects]})\n else:\n pagination = objects.paginate(page, PER_PAGE)\n return jsonify({\n name: [\n table_object.serialize\n for table_object in\n pagination.items\n ],\n 'total_pages': pagination.pages,\n 'page': pagination.page\n })\n", "path": "open_event/helpers/object_formatter.py"}]}

| 1,186 | 104 |

gh_patches_debug_23197

|

rasdani/github-patches

|

git_diff

|

mlcommons__GaNDLF-860

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

[BUG] Noise and Blur enhanced fail for certain images

### Describe the bug

The variable blur and noise augmentations [[ref](https://github.com/mlcommons/GaNDLF/blob/master/GANDLF/data/augmentation/blur_enhanced.py),[ref](https://github.com/mlcommons/GaNDLF/blob/master/GANDLF/data/augmentation/noise_enhanced.py)] fail for certain images that do not have a float pixel type.

### To Reproduce

Steps to reproduce the behavior:

1. Use png data for training.

2. Use either of one of these 2 augmentations.

3. See error.

### Expected behavior

It should run. A simple fix is to add `image.data.float()` before calling `torch.std` for both.

### Media

N.A.

### Environment information

<!-- Put the output of the following command:

python ./gandlf_debugInfo

-->

N.A.

### Additional context

N.A.

</issue>

<code>

[start of GANDLF/data/augmentation/blur_enhanced.py]

1 # adapted from https://github.com/fepegar/torchio/blob/main/src/torchio/transforms/augmentation/intensity/random_blur.py

2

3 from collections import defaultdict

4 from typing import Union, Tuple

5

6 import torch

7

8 from torchio.typing import TypeTripletFloat, TypeSextetFloat

9 from torchio.data.subject import Subject

10 from torchio.transforms import IntensityTransform

11 from torchio.transforms.augmentation import RandomTransform

12 from torchio.transforms.augmentation.intensity.random_blur import Blur

13

14

15 class RandomBlurEnhanced(RandomTransform, IntensityTransform):

16 r"""Blur an image using a random-sized Gaussian filter.

17

18 Args:

19 std: Tuple :math:`(a_1, b_1, a_2, b_2, a_3, b_3)` representing the

20 ranges (in mm) of the standard deviations

21 :math:`(\sigma_1, \sigma_2, \sigma_3)` of the Gaussian kernels used

22 to blur the image along each axis, where

23 :math:`\sigma_i \sim \mathcal{U}(a_i, b_i)`.

24 If two values :math:`(a, b)` are provided,

25 then :math:`\sigma_i \sim \mathcal{U}(a, b)`.

26 If only one value :math:`x` is provided,

27 then :math:`\sigma_i \sim \mathcal{U}(0, x)`.

28 If three values :math:`(x_1, x_2, x_3)` are provided,

29 then :math:`\sigma_i \sim \mathcal{U}(0, x_i)`.

30 **kwargs: See :class:`~torchio.transforms.Transform` for additional

31 keyword arguments.

32 """

33

34 def __init__(self, std: Union[float, Tuple[float, float]] = None, **kwargs):

35 super().__init__(**kwargs)

36 self.std_original = std

37

38 def apply_transform(self, subject: Subject) -> Subject:

39 arguments = defaultdict(dict)

40 for name, image in self.get_images_dict(subject).items():

41 self.std_ranges = self.calculate_std_ranges(image)

42 arguments["std"][name] = self.get_params(self.std_ranges)

43 transform = Blur(**self.add_include_exclude(arguments))

44 transformed = transform(subject)

45 return transformed

46

47 def get_params(self, std_ranges: TypeSextetFloat) -> TypeTripletFloat:

48 std = self.sample_uniform_sextet(std_ranges)

49 return std

50

51 def calculate_std_ranges(self, image: torch.Tensor) -> Tuple[float, float]:

52 std_ranges = self.std_original

53 if self.std_original is None:

54 # calculate the default std range based on 1.5% of the input image std - https://github.com/mlcommons/GaNDLF/issues/518

55 std_ranges = (0, 0.015 * torch.std(image.data).item())

56 return self.parse_params(std_ranges, None, "std", min_constraint=0)

57

[end of GANDLF/data/augmentation/blur_enhanced.py]

[start of GANDLF/data/augmentation/noise_enhanced.py]

1 # adapted from https://github.com/fepegar/torchio/blob/main/src/torchio/transforms/augmentation/intensity/random_noise.py

2

3 from collections import defaultdict

4 from typing import Tuple, Union

5

6 import torch

7 from torchio.data.subject import Subject

8 from torchio.transforms import IntensityTransform

9 from torchio.transforms.augmentation import RandomTransform

10 from torchio.transforms.augmentation.intensity.random_noise import Noise

11

12

13 class RandomNoiseEnhanced(RandomTransform, IntensityTransform):

14 r"""Add Gaussian noise with random parameters.

15

16 Add noise sampled from a normal distribution with random parameters.

17

18 Args:

19 mean: Mean :math:`\mu` of the Gaussian distribution

20 from which the noise is sampled.

21 If two values :math:`(a, b)` are provided,

22 then :math:`\mu \sim \mathcal{U}(a, b)`.

23 If only one value :math:`d` is provided,

24 :math:`\mu \sim \mathcal{U}(-d, d)`.

25 std: Standard deviation :math:`\sigma` of the Gaussian distribution

26 from which the noise is sampled.

27 If two values :math:`(a, b)` are provided,

28 then :math:`\sigma \sim \mathcal{U}(a, b)`.

29 If only one value :math:`d` is provided,

30 :math:`\sigma \sim \mathcal{U}(0, d)`.

31 **kwargs: See :class:`~torchio.transforms.Transform` for additional

32 keyword arguments.

33 """

34

35 def __init__(

36 self,

37 mean: Union[float, Tuple[float, float]] = 0,

38 std: Union[float, Tuple[float, float]] = (0, 0.25),

39 **kwargs

40 ):

41 super().__init__(**kwargs)

42 self.mean_range = self._parse_range(mean, "mean")

43 self.std_original = std

44

45 def apply_transform(self, subject: Subject) -> Subject:

46 arguments = defaultdict(dict)

47 for name, image in self.get_images_dict(subject).items():

48 self.std_range = self.calculate_std_ranges(image)

49 mean, std, seed = self.get_params(self.mean_range, self.std_range)

50 arguments["mean"][name] = mean

51 arguments["std"][name] = std

52 arguments["seed"][name] = seed

53 transform = Noise(**self.add_include_exclude(arguments))

54 transformed = transform(subject)

55 return transformed

56

57 def get_params(

58 self, mean_range: Tuple[float, float], std_range: Tuple[float, float]

59 ) -> Tuple[float, float]:

60 mean = self.sample_uniform(*mean_range)

61 std = self.sample_uniform(*std_range)

62 seed = self._get_random_seed()

63 return mean, std, seed

64

65 def calculate_std_ranges(self, image: torch.Tensor) -> Tuple[float, float]:

66 std_ranges = self.std_original

67 if self.std_original is None:

68 # calculate the default std range based on 1.5% of the input image std - https://github.com/mlcommons/GaNDLF/issues/518

69 std_ranges = (0, 0.015 * torch.std(image.data).item())

70 return self._parse_range(std_ranges, "std", min_constraint=0)

71

[end of GANDLF/data/augmentation/noise_enhanced.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/GANDLF/data/augmentation/blur_enhanced.py b/GANDLF/data/augmentation/blur_enhanced.py

--- a/GANDLF/data/augmentation/blur_enhanced.py

+++ b/GANDLF/data/augmentation/blur_enhanced.py

@@ -52,5 +52,5 @@

std_ranges = self.std_original

if self.std_original is None:

# calculate the default std range based on 1.5% of the input image std - https://github.com/mlcommons/GaNDLF/issues/518

- std_ranges = (0, 0.015 * torch.std(image.data).item())

+ std_ranges = (0, 0.015 * torch.std(image.data.float()).item())

return self.parse_params(std_ranges, None, "std", min_constraint=0)

diff --git a/GANDLF/data/augmentation/noise_enhanced.py b/GANDLF/data/augmentation/noise_enhanced.py

--- a/GANDLF/data/augmentation/noise_enhanced.py

+++ b/GANDLF/data/augmentation/noise_enhanced.py

@@ -66,5 +66,5 @@

std_ranges = self.std_original

if self.std_original is None:

# calculate the default std range based on 1.5% of the input image std - https://github.com/mlcommons/GaNDLF/issues/518

- std_ranges = (0, 0.015 * torch.std(image.data).item())

+ std_ranges = (0, 0.015 * torch.std(image.data.float()).item())

return self._parse_range(std_ranges, "std", min_constraint=0)

|

{"golden_diff": "diff --git a/GANDLF/data/augmentation/blur_enhanced.py b/GANDLF/data/augmentation/blur_enhanced.py\n--- a/GANDLF/data/augmentation/blur_enhanced.py\n+++ b/GANDLF/data/augmentation/blur_enhanced.py\n@@ -52,5 +52,5 @@\n std_ranges = self.std_original\n if self.std_original is None:\n # calculate the default std range based on 1.5% of the input image std - https://github.com/mlcommons/GaNDLF/issues/518\n- std_ranges = (0, 0.015 * torch.std(image.data).item())\n+ std_ranges = (0, 0.015 * torch.std(image.data.float()).item())\n return self.parse_params(std_ranges, None, \"std\", min_constraint=0)\ndiff --git a/GANDLF/data/augmentation/noise_enhanced.py b/GANDLF/data/augmentation/noise_enhanced.py\n--- a/GANDLF/data/augmentation/noise_enhanced.py\n+++ b/GANDLF/data/augmentation/noise_enhanced.py\n@@ -66,5 +66,5 @@\n std_ranges = self.std_original\n if self.std_original is None:\n # calculate the default std range based on 1.5% of the input image std - https://github.com/mlcommons/GaNDLF/issues/518\n- std_ranges = (0, 0.015 * torch.std(image.data).item())\n+ std_ranges = (0, 0.015 * torch.std(image.data.float()).item())\n return self._parse_range(std_ranges, \"std\", min_constraint=0)\n", "issue": "[BUG] Noise and Blur enhanced fail for certain images\n### Describe the bug\r\nThe variable blur and noise augmentations [[ref](https://github.com/mlcommons/GaNDLF/blob/master/GANDLF/data/augmentation/blur_enhanced.py),[ref](https://github.com/mlcommons/GaNDLF/blob/master/GANDLF/data/augmentation/noise_enhanced.py)] fail for certain images that do not have a float pixel type.\r\n\r\n### To Reproduce\r\nSteps to reproduce the behavior:\r\n1. Use png data for training.\r\n2. Use either of one of these 2 augmentations.\r\n3. See error.\r\n\r\n### Expected behavior\r\nIt should run. 
A simple fix is to add `image.data.float()` before calling `torch.std` for both.\r\n\r\n### Media\r\nN.A.\r\n\r\n### Environment information\r\n<!-- Put the output of the following command:\r\npython ./gandlf_debugInfo\r\n-->\r\nN.A.\r\n\r\n### Additional context\r\nN.A.\r\n\n", "before_files": [{"content": "# adapted from https://github.com/fepegar/torchio/blob/main/src/torchio/transforms/augmentation/intensity/random_blur.py\n\nfrom collections import defaultdict\nfrom typing import Union, Tuple\n\nimport torch\n\nfrom torchio.typing import TypeTripletFloat, TypeSextetFloat\nfrom torchio.data.subject import Subject\nfrom torchio.transforms import IntensityTransform\nfrom torchio.transforms.augmentation import RandomTransform\nfrom torchio.transforms.augmentation.intensity.random_blur import Blur\n\n\nclass RandomBlurEnhanced(RandomTransform, IntensityTransform):\n r\"\"\"Blur an image using a random-sized Gaussian filter.\n\n Args:\n std: Tuple :math:`(a_1, b_1, a_2, b_2, a_3, b_3)` representing the\n ranges (in mm) of the standard deviations\n :math:`(\\sigma_1, \\sigma_2, \\sigma_3)` of the Gaussian kernels used\n to blur the image along each axis, where\n :math:`\\sigma_i \\sim \\mathcal{U}(a_i, b_i)`.\n If two values :math:`(a, b)` are provided,\n then :math:`\\sigma_i \\sim \\mathcal{U}(a, b)`.\n If only one value :math:`x` is provided,\n then :math:`\\sigma_i \\sim \\mathcal{U}(0, x)`.\n If three values :math:`(x_1, x_2, x_3)` are provided,\n then :math:`\\sigma_i \\sim \\mathcal{U}(0, x_i)`.\n **kwargs: See :class:`~torchio.transforms.Transform` for additional\n keyword arguments.\n \"\"\"\n\n def __init__(self, std: Union[float, Tuple[float, float]] = None, **kwargs):\n super().__init__(**kwargs)\n self.std_original = std\n\n def apply_transform(self, subject: Subject) -> Subject:\n arguments = defaultdict(dict)\n for name, image in self.get_images_dict(subject).items():\n self.std_ranges = self.calculate_std_ranges(image)\n arguments[\"std\"][name] = 
self.get_params(self.std_ranges)\n transform = Blur(**self.add_include_exclude(arguments))\n transformed = transform(subject)\n return transformed\n\n def get_params(self, std_ranges: TypeSextetFloat) -> TypeTripletFloat:\n std = self.sample_uniform_sextet(std_ranges)\n return std\n\n def calculate_std_ranges(self, image: torch.Tensor) -> Tuple[float, float]:\n std_ranges = self.std_original\n if self.std_original is None:\n # calculate the default std range based on 1.5% of the input image std - https://github.com/mlcommons/GaNDLF/issues/518\n std_ranges = (0, 0.015 * torch.std(image.data).item())\n return self.parse_params(std_ranges, None, \"std\", min_constraint=0)\n", "path": "GANDLF/data/augmentation/blur_enhanced.py"}, {"content": "# adapted from https://github.com/fepegar/torchio/blob/main/src/torchio/transforms/augmentation/intensity/random_noise.py\n\nfrom collections import defaultdict\nfrom typing import Tuple, Union\n\nimport torch\nfrom torchio.data.subject import Subject\nfrom torchio.transforms import IntensityTransform\nfrom torchio.transforms.augmentation import RandomTransform\nfrom torchio.transforms.augmentation.intensity.random_noise import Noise\n\n\nclass RandomNoiseEnhanced(RandomTransform, IntensityTransform):\n r\"\"\"Add Gaussian noise with random parameters.\n\n Add noise sampled from a normal distribution with random parameters.\n\n Args:\n mean: Mean :math:`\\mu` of the Gaussian distribution\n from which the noise is sampled.\n If two values :math:`(a, b)` are provided,\n then :math:`\\mu \\sim \\mathcal{U}(a, b)`.\n If only one value :math:`d` is provided,\n :math:`\\mu \\sim \\mathcal{U}(-d, d)`.\n std: Standard deviation :math:`\\sigma` of the Gaussian distribution\n from which the noise is sampled.\n If two values :math:`(a, b)` are provided,\n then :math:`\\sigma \\sim \\mathcal{U}(a, b)`.\n If only one value :math:`d` is provided,\n :math:`\\sigma \\sim \\mathcal{U}(0, d)`.\n **kwargs: See :class:`~torchio.transforms.Transform` 
for additional\n keyword arguments.\n \"\"\"\n\n def __init__(\n self,\n mean: Union[float, Tuple[float, float]] = 0,\n std: Union[float, Tuple[float, float]] = (0, 0.25),\n **kwargs\n ):\n super().__init__(**kwargs)\n self.mean_range = self._parse_range(mean, \"mean\")\n self.std_original = std\n\n def apply_transform(self, subject: Subject) -> Subject:\n arguments = defaultdict(dict)\n for name, image in self.get_images_dict(subject).items():\n self.std_range = self.calculate_std_ranges(image)\n mean, std, seed = self.get_params(self.mean_range, self.std_range)\n arguments[\"mean\"][name] = mean\n arguments[\"std\"][name] = std\n arguments[\"seed\"][name] = seed\n transform = Noise(**self.add_include_exclude(arguments))\n transformed = transform(subject)\n return transformed\n\n def get_params(\n self, mean_range: Tuple[float, float], std_range: Tuple[float, float]\n ) -> Tuple[float, float]:\n mean = self.sample_uniform(*mean_range)\n std = self.sample_uniform(*std_range)\n seed = self._get_random_seed()\n return mean, std, seed\n\n def calculate_std_ranges(self, image: torch.Tensor) -> Tuple[float, float]:\n std_ranges = self.std_original\n if self.std_original is None:\n # calculate the default std range based on 1.5% of the input image std - https://github.com/mlcommons/GaNDLF/issues/518\n std_ranges = (0, 0.015 * torch.std(image.data).item())\n return self._parse_range(std_ranges, \"std\", min_constraint=0)\n", "path": "GANDLF/data/augmentation/noise_enhanced.py"}]}

| 2,380 | 381 |

gh_patches_debug_2103

|

rasdani/github-patches

|

git_diff

|

streamlit__streamlit-2499

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

st.number_input doesn't accept reasonable int arguments

# Summary

Using `st.number_input` in a very reasonable way:

```python

x = st.number_input("x", 0, 10)

```

causes an exception to be thrown

```

StreamlitAPIException: All arguments must be of the same type. value has float type. min_value has int type. max_value has int type.

Traceback:

x = st.number_input("x", 0, 10)

```

## Expected behavior:

This should "just work," in the sense that it should create a number input that accepts `int`s between 0 and 10, with an initial default value of 0.

## Actual behavior:

You get the exception above. You can "trick" Streamlit into providing the right behavior by forcing the `value` parameter to have type `int` as follows:

```python

x = st.number_input("x", 0, 10, 0)

```

But I think this should just work without that extra parameter.

## Is this a regression?

??

# Debug info

- Streamlit version: `Streamlit, version 0.73.0`

- Python version: `Python 3.8.5`

- Python environment: `pipenv, version 2020.11.4`

- OS version: `Ubuntu 20.04.1 LTS`

</issue>

<code>

[start of lib/streamlit/elements/number_input.py]

1 # Copyright 2018-2020 Streamlit Inc.

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14

15 import numbers

16 from typing import cast

17

18 import streamlit

19 from streamlit.errors import StreamlitAPIException

20 from streamlit.js_number import JSNumber, JSNumberBoundsException

21 from streamlit.proto.NumberInput_pb2 import NumberInput as NumberInputProto

22 from .utils import register_widget, NoValue

23

24

25 class NumberInputMixin:

26 def number_input(

27 self,

28 label,

29 min_value=None,

30 max_value=None,

31 value=NoValue(),

32 step=None,

33 format=None,

34 key=None,

35 ):

36 """Display a numeric input widget.

37

38 Parameters

39 ----------

40 label : str or None

41 A short label explaining to the user what this input is for.

42 min_value : int or float or None

43 The minimum permitted value.

44 If None, there will be no minimum.

45 max_value : int or float or None

46 The maximum permitted value.

47 If None, there will be no maximum.

48 value : int or float or None

49 The value of this widget when it first renders.

50 Defaults to min_value, or 0.0 if min_value is None

51 step : int or float or None

52 The stepping interval.

53 Defaults to 1 if the value is an int, 0.01 otherwise.

54 If the value is not specified, the format parameter will be used.

55 format : str or None

56 A printf-style format string controlling how the interface should

57 display numbers. Output must be purely numeric. This does not impact

58 the return value. Valid formatters: %d %e %f %g %i

59 key : str

60 An optional string to use as the unique key for the widget.

61 If this is omitted, a key will be generated for the widget

62 based on its content. Multiple widgets of the same type may

63 not share the same key.

64

65 Returns

66 -------

67 int or float

68 The current value of the numeric input widget. The return type

69 will match the data type of the value parameter.

70

71 Example

72 -------

73 >>> number = st.number_input('Insert a number')

74 >>> st.write('The current number is ', number)

75 """

76

77 if isinstance(value, NoValue):

78 if min_value:

79 value = min_value

80 else:

81 value = 0.0 # We set a float as default

82

83 int_value = isinstance(value, numbers.Integral)

84 float_value = isinstance(value, float)

85

86 if value is None:

87 raise StreamlitAPIException(

88 "Default value for number_input should be an int or a float."

89 )

90 else:

91 if format is None:

92 format = "%d" if int_value else "%0.2f"

93

94 if format in ["%d", "%u", "%i"] and float_value:

95 # Warn user to check if displaying float as int was really intended.

96 import streamlit as st

97

98 st.warning(

99 "Warning: NumberInput value below is float, but format {} displays as integer.".format(

100 format

101 )

102 )

103

104 if step is None:

105 step = 1 if int_value else 0.01

106

107 try:

108 float(format % 2)

109 except (TypeError, ValueError):

110 raise StreamlitAPIException(

111 "Format string for st.number_input contains invalid characters: %s"

112 % format

113 )

114

115 # Ensure that all arguments are of the same type.

116 args = [min_value, max_value, step]

117

118 int_args = all(

119 map(

120 lambda a: (

121 isinstance(a, numbers.Integral) or isinstance(a, type(None))

122 ),

123 args,

124 )

125 )

126 float_args = all(

127 map(lambda a: (isinstance(a, float) or isinstance(a, type(None))), args)

128 )

129

130 if not int_args and not float_args:

131 raise StreamlitAPIException(

132 "All arguments must be of the same type."

133 "\n`value` has %(value_type)s type."

134 "\n`min_value` has %(min_type)s type."

135 "\n`max_value` has %(max_type)s type."

136 % {

137 "value_type": type(value).__name__,

138 "min_type": type(min_value).__name__,

139 "max_type": type(max_value).__name__,

140 }

141 )

142

143 # Ensure that the value matches arguments' types.

144 all_ints = int_value and int_args

145 all_floats = float_value and float_args

146

147 if not all_ints and not all_floats:

148 raise StreamlitAPIException(

149 "All numerical arguments must be of the same type."

150 "\n`value` has %(value_type)s type."

151 "\n`min_value` has %(min_type)s type."

152 "\n`max_value` has %(max_type)s type."

153 "\n`step` has %(step_type)s type."

154 % {

155 "value_type": type(value).__name__,

156 "min_type": type(min_value).__name__,

157 "max_type": type(max_value).__name__,

158 "step_type": type(step).__name__,

159 }

160 )

161

162 if (min_value and min_value > value) or (max_value and max_value < value):

163 raise StreamlitAPIException(

164 "The default `value` of %(value)s "

165 "must lie between the `min_value` of %(min)s "

166 "and the `max_value` of %(max)s, inclusively."

167 % {"value": value, "min": min_value, "max": max_value}

168 )

169

170 # Bounds checks. JSNumber produces human-readable exceptions that

171 # we simply re-package as StreamlitAPIExceptions.

172 try:

173 if all_ints:

174 if min_value is not None:

175 JSNumber.validate_int_bounds(min_value, "`min_value`")

176 if max_value is not None:

177 JSNumber.validate_int_bounds(max_value, "`max_value`")

178 if step is not None:

179 JSNumber.validate_int_bounds(step, "`step`")

180 JSNumber.validate_int_bounds(value, "`value`")

181 else:

182 if min_value is not None:

183 JSNumber.validate_float_bounds(min_value, "`min_value`")

184 if max_value is not None:

185 JSNumber.validate_float_bounds(max_value, "`max_value`")

186 if step is not None:

187 JSNumber.validate_float_bounds(step, "`step`")

188 JSNumber.validate_float_bounds(value, "`value`")

189 except JSNumberBoundsException as e:

190 raise StreamlitAPIException(str(e))

191

192 number_input_proto = NumberInputProto()

193 number_input_proto.data_type = (

194 NumberInputProto.INT if all_ints else NumberInputProto.FLOAT

195 )

196 number_input_proto.label = label

197 number_input_proto.default = value

198

199 if min_value is not None:

200 number_input_proto.min = min_value

201 number_input_proto.has_min = True

202

203 if max_value is not None:

204 number_input_proto.max = max_value

205 number_input_proto.has_max = True

206

207 if step is not None:

208 number_input_proto.step = step

209

210 if format is not None:

211 number_input_proto.format = format

212

213 ui_value = register_widget("number_input", number_input_proto, user_key=key)

214

215 return_value = ui_value if ui_value is not None else value

216 return self.dg._enqueue("number_input", number_input_proto, return_value)

217

218 @property

219 def dg(self) -> "streamlit.delta_generator.DeltaGenerator":

220 """Get our DeltaGenerator."""

221 return cast("streamlit.delta_generator.DeltaGenerator", self)

222

[end of lib/streamlit/elements/number_input.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/lib/streamlit/elements/number_input.py b/lib/streamlit/elements/number_input.py

--- a/lib/streamlit/elements/number_input.py

+++ b/lib/streamlit/elements/number_input.py

@@ -75,7 +75,7 @@

"""

if isinstance(value, NoValue):

- if min_value:

+ if min_value is not None:

value = min_value

else:

value = 0.0 # We set a float as default

|

{"golden_diff": "diff --git a/lib/streamlit/elements/number_input.py b/lib/streamlit/elements/number_input.py\n--- a/lib/streamlit/elements/number_input.py\n+++ b/lib/streamlit/elements/number_input.py\n@@ -75,7 +75,7 @@\n \"\"\"\n \n if isinstance(value, NoValue):\n- if min_value:\n+ if min_value is not None:\n value = min_value\n else:\n value = 0.0 # We set a float as default\n", "issue": "st.number_input doesn't accept reasonable int arguments\n# Summary\r\n\r\nUsing `st.number_input` in a very reasonable way:\r\n\r\n```python\r\nx = st.number_input(\"x\", 0, 10)\r\n```\r\n\r\ncauses an exception to be thrown\r\n\r\n```\r\nStreamlitAPIException: All arguments must be of the same type. value has float type. min_value has int type. max_value has int type.\r\n\r\nTraceback:\r\n x = st.number_input(\"x\", 0, 10)\r\n```\r\n\r\n## Expected behavior:\r\n\r\nThis should \"just work,\" in the sense that it should create a number input that accepts `int`s between 0 and 10, with an initial default value of 0.\r\n\r\n## Actual behavior:\r\n\r\nYou get the exception above. 
You can \"trick\" Streamlit into providing the right behavior by forcing the `value` parameter to have type `int` as follows:\r\n\r\n```python\r\nx = st.number_input(\"x\", 0, 10, 0)\r\n```\r\n\r\nBut I think this should just work without that extra parameter.\r\n\r\n## Is this a regression?\r\n\r\n??\r\n\r\n# Debug info\r\n\r\n- Streamlit version: `Streamlit, version 0.73.0`\r\n- Python version: `Python 3.8.5`\r\n- Python environment: `pipenv, version 2020.11.4`\r\n- OS version: `Ubuntu 20.04.1 LTS`\r\n\r\n\n", "before_files": [{"content": "# Copyright 2018-2020 Streamlit Inc.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\nimport numbers\nfrom typing import cast\n\nimport streamlit\nfrom streamlit.errors import StreamlitAPIException\nfrom streamlit.js_number import JSNumber, JSNumberBoundsException\nfrom streamlit.proto.NumberInput_pb2 import NumberInput as NumberInputProto\nfrom .utils import register_widget, NoValue\n\n\nclass NumberInputMixin:\n def number_input(\n self,\n label,\n min_value=None,\n max_value=None,\n value=NoValue(),\n step=None,\n format=None,\n key=None,\n ):\n \"\"\"Display a numeric input widget.\n\n Parameters\n ----------\n label : str or None\n A short label explaining to the user what this input is for.\n min_value : int or float or None\n The minimum permitted value.\n If None, there will be no minimum.\n max_value : int or float or None\n The maximum permitted value.\n If None, there will be no maximum.\n value : int or float or 
None\n The value of this widget when it first renders.\n Defaults to min_value, or 0.0 if min_value is None\n step : int or float or None\n The stepping interval.\n Defaults to 1 if the value is an int, 0.01 otherwise.\n If the value is not specified, the format parameter will be used.\n format : str or None\n A printf-style format string controlling how the interface should\n display numbers. Output must be purely numeric. This does not impact\n the return value. Valid formatters: %d %e %f %g %i\n key : str\n An optional string to use as the unique key for the widget.\n If this is omitted, a key will be generated for the widget\n based on its content. Multiple widgets of the same type may\n not share the same key.\n\n Returns\n -------\n int or float\n The current value of the numeric input widget. The return type\n will match the data type of the value parameter.\n\n Example\n -------\n >>> number = st.number_input('Insert a number')\n >>> st.write('The current number is ', number)\n \"\"\"\n\n if isinstance(value, NoValue):\n if min_value:\n value = min_value\n else:\n value = 0.0 # We set a float as default\n\n int_value = isinstance(value, numbers.Integral)\n float_value = isinstance(value, float)\n\n if value is None:\n raise StreamlitAPIException(\n \"Default value for number_input should be an int or a float.\"\n )\n else:\n if format is None:\n format = \"%d\" if int_value else \"%0.2f\"\n\n if format in [\"%d\", \"%u\", \"%i\"] and float_value:\n # Warn user to check if displaying float as int was really intended.\n import streamlit as st\n\n st.warning(\n \"Warning: NumberInput value below is float, but format {} displays as integer.\".format(\n format\n )\n )\n\n if step is None:\n step = 1 if int_value else 0.01\n\n try:\n float(format % 2)\n except (TypeError, ValueError):\n raise StreamlitAPIException(\n \"Format string for st.number_input contains invalid characters: %s\"\n % format\n )\n\n # Ensure that all arguments are of the same type.\n args = 
[min_value, max_value, step]\n\n int_args = all(\n map(\n lambda a: (\n isinstance(a, numbers.Integral) or isinstance(a, type(None))\n ),\n args,\n )\n )\n float_args = all(\n map(lambda a: (isinstance(a, float) or isinstance(a, type(None))), args)\n )\n\n if not int_args and not float_args:\n raise StreamlitAPIException(\n \"All arguments must be of the same type.\"\n \"\\n`value` has %(value_type)s type.\"\n \"\\n`min_value` has %(min_type)s type.\"\n \"\\n`max_value` has %(max_type)s type.\"\n % {\n \"value_type\": type(value).__name__,\n \"min_type\": type(min_value).__name__,\n \"max_type\": type(max_value).__name__,\n }\n )\n\n # Ensure that the value matches arguments' types.\n all_ints = int_value and int_args\n all_floats = float_value and float_args\n\n if not all_ints and not all_floats:\n raise StreamlitAPIException(\n \"All numerical arguments must be of the same type.\"\n \"\\n`value` has %(value_type)s type.\"\n \"\\n`min_value` has %(min_type)s type.\"\n \"\\n`max_value` has %(max_type)s type.\"\n \"\\n`step` has %(step_type)s type.\"\n % {\n \"value_type\": type(value).__name__,\n \"min_type\": type(min_value).__name__,\n \"max_type\": type(max_value).__name__,\n \"step_type\": type(step).__name__,\n }\n )\n\n if (min_value and min_value > value) or (max_value and max_value < value):\n raise StreamlitAPIException(\n \"The default `value` of %(value)s \"\n \"must lie between the `min_value` of %(min)s \"\n \"and the `max_value` of %(max)s, inclusively.\"\n % {\"value\": value, \"min\": min_value, \"max\": max_value}\n )\n\n # Bounds checks. 
JSNumber produces human-readable exceptions that\n # we simply re-package as StreamlitAPIExceptions.\n try:\n if all_ints:\n if min_value is not None:\n JSNumber.validate_int_bounds(min_value, \"`min_value`\")\n if max_value is not None:\n JSNumber.validate_int_bounds(max_value, \"`max_value`\")\n if step is not None:\n JSNumber.validate_int_bounds(step, \"`step`\")\n JSNumber.validate_int_bounds(value, \"`value`\")\n else:\n if min_value is not None:\n JSNumber.validate_float_bounds(min_value, \"`min_value`\")\n if max_value is not None:\n JSNumber.validate_float_bounds(max_value, \"`max_value`\")\n if step is not None:\n JSNumber.validate_float_bounds(step, \"`step`\")\n JSNumber.validate_float_bounds(value, \"`value`\")\n except JSNumberBoundsException as e:\n raise StreamlitAPIException(str(e))\n\n number_input_proto = NumberInputProto()\n number_input_proto.data_type = (\n NumberInputProto.INT if all_ints else NumberInputProto.FLOAT\n )\n number_input_proto.label = label\n number_input_proto.default = value\n\n if min_value is not None:\n number_input_proto.min = min_value\n number_input_proto.has_min = True\n\n if max_value is not None:\n number_input_proto.max = max_value\n number_input_proto.has_max = True\n\n if step is not None:\n number_input_proto.step = step\n\n if format is not None:\n number_input_proto.format = format\n\n ui_value = register_widget(\"number_input\", number_input_proto, user_key=key)\n\n return_value = ui_value if ui_value is not None else value\n return self.dg._enqueue(\"number_input\", number_input_proto, return_value)\n\n @property\n def dg(self) -> \"streamlit.delta_generator.DeltaGenerator\":\n \"\"\"Get our DeltaGenerator.\"\"\"\n return cast(\"streamlit.delta_generator.DeltaGenerator\", self)\n", "path": "lib/streamlit/elements/number_input.py"}]}

| 3,161 | 111 |
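The golden diff above hinges on Python truthiness. `if min_value:` treats an explicit `min_value=0` the same as an omitted argument, so `value` falls back to the float `0.0` while `min_value` and `max_value` stay ints, which trips the same-type check. A minimal sketch contrasting the two checks, using hypothetical helper names `default_truthy` and `default_explicit` (these are not Streamlit APIs):

```python
def default_truthy(min_value=None):
    # Original pattern: `if min_value:` is False for both None and 0,
    # so min_value=0 silently falls back to the float 0.0.
    return min_value if min_value else 0.0


def default_explicit(min_value=None):
    # Patched pattern: fall back only when min_value was not supplied.
    return min_value if min_value is not None else 0.0


# With min_value=0 the two checks disagree on the type of the default.
print(type(default_truthy(0)).__name__)    # float
print(type(default_explicit(0)).__name__)  # int
```

The range check further down (`min_value and min_value > value`) uses the same truthiness pattern, so an explicit `min_value=0` (or `max_value=0`) also skips that bound check; the golden diff leaves that line unchanged.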

gh_patches_debug_2905

|

rasdani/github-patches

|

git_diff

|

mabel-dev__opteryx-1689

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

🪲 VIEWs load error should be in debug mode only

### Thank you for taking the time to report a problem with Opteryx.

_To help us to respond to your request we ask that you try to provide the below detail about the bug._

**Describe the bug** _A clear and specific description of what the bug is. What the error, incorrect or unexpected behaviour was._

**Expected behaviour** _A clear and concise description of what you expected to happen._

**Sample Code/Statement** _If you can, please submit the SQL statement or Python code snippet, or a representative example using the sample datasets._

~~~sql

~~~

**Additional context** _Add any other context about the problem here, for example what you have done to try to diagnose or workaround the problem._

</issue>

<code>

[start of opteryx/planner/views/__init__.py]

1 # Licensed under the Apache License, Version 2.0 (the "License");

2 # you may not use this file except in compliance with the License.

3 # You may obtain a copy of the License at

4 #

5 # http://www.apache.org/licenses/LICENSE-2.0

6 #

7 # Unless required by applicable law or agreed to in writing, software

8 # distributed under the License is distributed on an "AS IS" BASIS,

9 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

10 # See the License for the specific language governing permissions and

11 # limitations under the License.

12

13 import orjson

14

15 from opteryx.planner.logical_planner import LogicalPlan

16

17

18 def _load_views():

19 try:

20 with open("views.json", "rb") as defs:

21 return orjson.loads(defs.read())

22 except Exception as err:

23 print(f"[OPTERYX] Unable to open views definition file. {err}")

24 return {}

25

26

27 VIEWS = _load_views()

28

29

30 def is_view(view_name: str) -> bool:

31 return view_name in VIEWS

32

33

34 def view_as_plan(view_name: str) -> LogicalPlan:

35 from opteryx.planner.logical_planner import do_logical_planning_phase

36 from opteryx.third_party import sqloxide

37 from opteryx.utils.sql import clean_statement

38 from opteryx.utils.sql import remove_comments

39

40 operation = VIEWS.get(view_name)["statement"]

41

42 clean_sql = clean_statement(remove_comments(operation))

43 parsed_statements = sqloxide.parse_sql(clean_sql, dialect="mysql")

44 logical_plan, _, _ = next(do_logical_planning_phase(parsed_statements))

45

46 return logical_plan

47

[end of opteryx/planner/views/__init__.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/opteryx/planner/views/__init__.py b/opteryx/planner/views/__init__.py

--- a/opteryx/planner/views/__init__.py

+++ b/opteryx/planner/views/__init__.py

@@ -20,7 +20,7 @@

with open("views.json", "rb") as defs:

return orjson.loads(defs.read())

except Exception as err:

- print(f"[OPTERYX] Unable to open views definition file. {err}")

+ # DEBUG:: log (f"[OPTERYX] Unable to open views definition file. {err}")

return {}

|

{"golden_diff": "diff --git a/opteryx/planner/views/__init__.py b/opteryx/planner/views/__init__.py\n--- a/opteryx/planner/views/__init__.py\n+++ b/opteryx/planner/views/__init__.py\n@@ -20,7 +20,7 @@\n with open(\"views.json\", \"rb\") as defs:\n return orjson.loads(defs.read())\n except Exception as err:\n- print(f\"[OPTERYX] Unable to open views definition file. {err}\")\n+ # DEBUG:: log (f\"[OPTERYX] Unable to open views definition file. {err}\")\n return {}\n", "issue": "\ud83e\udeb2 VIEWs load error should be in debug mode only\n### Thank you for taking the time to report a problem with Opteryx.\r\n_To help us to respond to your request we ask that you try to provide the below detail about the bug._\r\n\r\n**Describe the bug** _A clear and specific description of what the bug is. What the error, incorrect or unexpected behaviour was._\r\n\r\n\r\n**Expected behaviour** _A clear and concise description of what you expected to happen._\r\n\r\n\r\n**Sample Code/Statement** _If you can, please submit the SQL statement or Python code snippet, or a representative example using the sample datasets._\r\n\r\n~~~sql\r\n\r\n~~~\r\n\r\n**Additional context** _Add any other context about the problem here, for example what you have done to try to diagnose or workaround the problem._\r\n\n", "before_files": [{"content": "# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\nimport orjson\n\nfrom opteryx.planner.logical_planner import LogicalPlan\n\n\ndef _load_views():\n try:\n 
with open(\"views.json\", \"rb\") as defs:\n return orjson.loads(defs.read())\n except Exception as err:\n print(f\"[OPTERYX] Unable to open views definition file. {err}\")\n return {}\n\n\nVIEWS = _load_views()\n\n\ndef is_view(view_name: str) -> bool:\n return view_name in VIEWS\n\n\ndef view_as_plan(view_name: str) -> LogicalPlan:\n from opteryx.planner.logical_planner import do_logical_planning_phase\n from opteryx.third_party import sqloxide\n from opteryx.utils.sql import clean_statement\n from opteryx.utils.sql import remove_comments\n\n operation = VIEWS.get(view_name)[\"statement\"]\n\n clean_sql = clean_statement(remove_comments(operation))\n parsed_statements = sqloxide.parse_sql(clean_sql, dialect=\"mysql\")\n logical_plan, _, _ = next(do_logical_planning_phase(parsed_statements))\n\n return logical_plan\n", "path": "opteryx/planner/views/__init__.py"}]}

| 1,155 | 137 |
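The golden diff silences the message by commenting out the `print`. A sketch of one alternative, assuming the standard-library `logging` module is acceptable in this codebase: emit the message at DEBUG level so it only shows up when debug logging is enabled. The logger name `opteryx.views` is hypothetical, and stdlib `json` stands in for `orjson` to keep the example self-contained.

```python
import json
import logging

# Hypothetical logger name; messages are hidden unless DEBUG is enabled.
logger = logging.getLogger("opteryx.views")


def load_views(path: str = "views.json") -> dict:
    """Load view definitions, returning {} if the file is missing or invalid."""
    try:
        with open(path, "rb") as defs:
            # json.loads accepts bytes, mirroring orjson.loads in the original.
            return json.loads(defs.read())
    except Exception as err:
        # Logged at DEBUG instead of printed unconditionally.
        logger.debug("Unable to open views definition file. %s", err)
        return {}
```

A missing `views.json` then yields an empty mapping silently under the default WARNING level, while `logging.basicConfig(level=logging.DEBUG)` makes the message visible again for diagnosis.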

gh_patches_debug_18343

|

rasdani/github-patches

|

git_diff

|

ManimCommunity__manim-3528

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

`--save_last_frame` appears twice in options

## Description of bug / unexpected behavior

<!-- Add a clear and concise description of the problem you encountered. -->

When using `manim render --help`, you can see `--save_last_frame` appearing twice, once marked as deprecated:

```

-s, --save_last_frame

...

-s, --save_last_frame Save last frame as png (Deprecated).

```

This is also visible in the [reference manual](https://docs.manim.community/en/stable/guides/configuration.html#:~:text=webm%7Cmov%5D%0A%20%20%2Ds%2C%20%2D%2D-,save_last_frame,-%2Dq%2C%20%2D%2Dquality%20%5Bl).

## Expected behavior

<!-- Add a clear and concise description of what you expected to happen. -->

The option appears exactly once

## How to reproduce the issue

<!-- Provide a piece of code illustrating the undesired behavior. -->

<details><summary>Code for reproducing the problem</summary>

```bash

> manim render --help | grep 'save_last_frame'

```

</details>

## Additional media files

<!-- Paste in the files manim produced on rendering the code above. -->

<details><summary>Images/GIFs</summary>

<!-- PASTE MEDIA HERE -->

</details>

## Logs

<details><summary>Terminal output</summary>

<!-- Add "-v DEBUG" when calling manim to generate more detailed logs -->

```

PASTE HERE OR PROVIDE LINK TO https://pastebin.com/ OR SIMILAR

```

<!-- Insert screenshots here (only when absolutely necessary, we prefer copy/pasted output!) -->

</details>

## System specifications

<details><summary>System Details</summary>

- OS (with version, e.g., Windows 10 v2004 or macOS 10.15 (Catalina)):

- RAM:

- Python version (`python/py/python3 --version`):

- Installed modules (provide output from `pip list`):

```

PASTE HERE

```

</details>

<details><summary>LaTeX details</summary>

+ LaTeX distribution (e.g. TeX Live 2020):

+ Installed LaTeX packages:

<!-- output of `tlmgr list --only-installed` for TeX Live or a screenshot of the Packages page for MikTeX -->

</details>

<details><summary>FFMPEG</summary>

Output of `ffmpeg -version`:

```

PASTE HERE

```

</details>

## Additional comments

<!-- Add further context that you think might be relevant for this issue here. -->

This is probably a code cleanup issue, as you can clearly see the option being declared twice in [`render_options.py`](https://github.com/ManimCommunity/manim/blob/main/manim/cli/render/render_options.py):

```py

# line 61

option("-s", "--save_last_frame", is_flag=True, default=None),

# line 125:131

option(

"-s",

"--save_last_frame",

default=None,

is_flag=True,

help="Save last frame as png (Deprecated).",

),

```

I can create a PR to remove one of them; I just need to know which one should be removed: the first one without help, or the second one marking it deprecated (easiest would be to drop the "Deprecated" note from the second one, since it's formatted nicely, and get rid of the first one).

Should I also report it as a bug in [cloup](https://github.com/janLuke/cloup) that it doesn't check for duplicates?

</issue>

<code>

[start of manim/cli/render/render_options.py]
1 from __future__ import annotations
2 
3 import re
4 
5 from cloup import Choice, option, option_group
6 
7 from manim.constants import QUALITIES, RendererType
8 
9 from ... import logger
10 
11 
12 def validate_scene_range(ctx, param, value):
13     try:
14         start = int(value)
15         return (start,)
16     except Exception:
17         pass
18 
19     if value:
20         try:
21             start, end = map(int, re.split(r"[;,\-]", value))
22             return start, end
23         except Exception:
24             logger.error("Couldn't determine a range for -n option.")
25             exit()
26 
27 
28 def validate_resolution(ctx, param, value):
29     if value:
30         try:
31             start, end = map(int, re.split(r"[;,\-]", value))
32             return (start, end)
33         except Exception:
34             logger.error("Resolution option is invalid.")
35             exit()
36 
37 
38 render_options = option_group(
39     "Render Options",
40     option(
41         "-n",
42         "--from_animation_number",
43         callback=validate_scene_range,
44         help="Start rendering from n_0 until n_1. If n_1 is left unspecified, "
45         "renders all scenes after n_0.",
46         default=None,
47     ),
48     option(
49         "-a",
50         "--write_all",
51         is_flag=True,
52         help="Render all scenes in the input file.",
53         default=None,
54     ),
55     option(
56         "--format",
57         type=Choice(["png", "gif", "mp4", "webm", "mov"], case_sensitive=False),
58         default=None,
59     ),
60     option("-s", "--save_last_frame", is_flag=True, default=None),
61     option(
62         "-q",
63         "--quality",
64         default=None,
65         type=Choice(
66             list(reversed([q["flag"] for q in QUALITIES.values() if q["flag"]])),  # type: ignore
67             case_sensitive=False,
68         ),
69         help="Render quality at the follow resolution framerates, respectively: "
70         + ", ".join(
71             reversed(
72                 [
73                     f'{q["pixel_width"]}x{q["pixel_height"]} {q["frame_rate"]}FPS'
74                     for q in QUALITIES.values()
75                     if q["flag"]
76                 ]
77             )
78         ),
79     ),
80     option(
81         "-r",
82         "--resolution",
83         callback=validate_resolution,
84         default=None,
85         help='Resolution in "W,H" for when 16:9 aspect ratio isn\'t possible.',
86     ),
87     option(
88         "--fps",
89         "--frame_rate",
90         "frame_rate",
91         type=float,
92         default=None,
93         help="Render at this frame rate.",
94     ),
95     option(
96         "--renderer",
97         type=Choice(
98             [renderer_type.value for renderer_type in RendererType],
99             case_sensitive=False,
100         ),
101         help="Select a renderer for your Scene.",
102         default="cairo",
103     ),
104     option(
105         "-g",
106         "--save_pngs",
107         is_flag=True,
108         default=None,
109         help="Save each frame as png (Deprecated).",
110     ),
111     option(
112         "-i",
113         "--save_as_gif",
114         default=None,
115         is_flag=True,
116         help="Save as a gif (Deprecated).",
117     ),
118     option(
119         "--save_sections",
120         default=None,
121         is_flag=True,
122         help="Save section videos in addition to movie file.",
123     ),
124     option(
125         "-s",
126         "--save_last_frame",
127         default=None,
128         is_flag=True,
129         help="Save last frame as png (Deprecated).",
130     ),
131     option(
132         "-t",
133         "--transparent",
134         is_flag=True,
135         help="Render scenes with alpha channel.",
136     ),
137     option(
138         "--use_projection_fill_shaders",
139         is_flag=True,
140         help="Use shaders for OpenGLVMobject fill which are compatible with transformation matrices.",
141         default=None,
142     ),
143     option(
144         "--use_projection_stroke_shaders",
145         is_flag=True,
146         help="Use shaders for OpenGLVMobject stroke which are compatible with transformation matrices.",
147         default=None,
148     ),
149 )
150 
[end of manim/cli/render/render_options.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>
diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points
</patch>

|

diff --git a/manim/cli/render/render_options.py b/manim/cli/render/render_options.py
--- a/manim/cli/render/render_options.py
+++ b/manim/cli/render/render_options.py
@@ -57,7 +57,13 @@
         type=Choice(["png", "gif", "mp4", "webm", "mov"], case_sensitive=False),
         default=None,
     ),
-    option("-s", "--save_last_frame", is_flag=True, default=None),
+    option(
+        "-s",
+        "--save_last_frame",
+        default=None,
+        is_flag=True,
+        help="Render and save only the last frame of a scene as a PNG image.",
+    ),
     option(
         "-q",
         "--quality",
@@ -121,13 +127,6 @@
         is_flag=True,
         help="Save section videos in addition to movie file.",
     ),
-    option(
-        "-s",
-        "--save_last_frame",
-        default=None,
-        is_flag=True,
-        help="Save last frame as png (Deprecated).",
-    ),
     option(
         "-t",
         "--transparent",

|