| problem_id (string, 18 to 22 chars) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, 13 to 58 chars) | prompt (string, 1.71k to 18.9k chars) | golden_diff (string, 145 to 5.13k chars) | verification_info (string, 465 to 23.6k chars) | num_tokens_prompt (int64, 556 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |
|---|---|---|---|---|---|---|---|---|

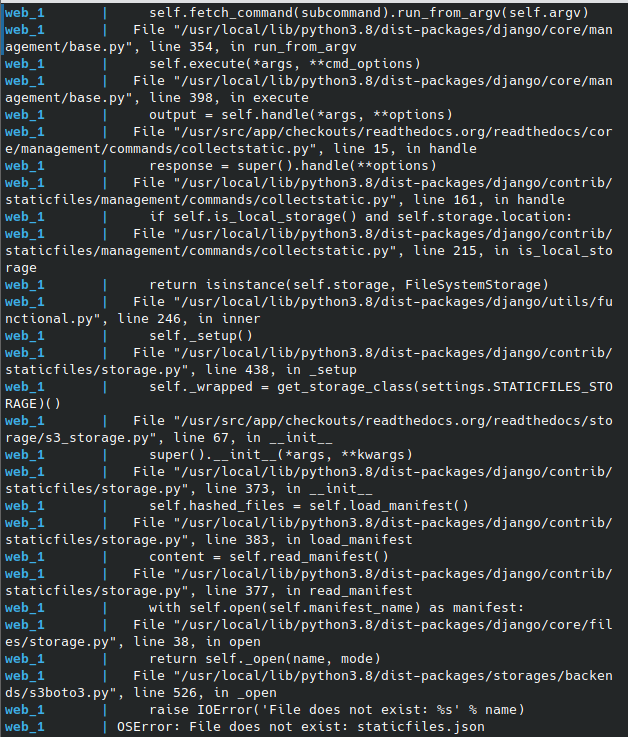

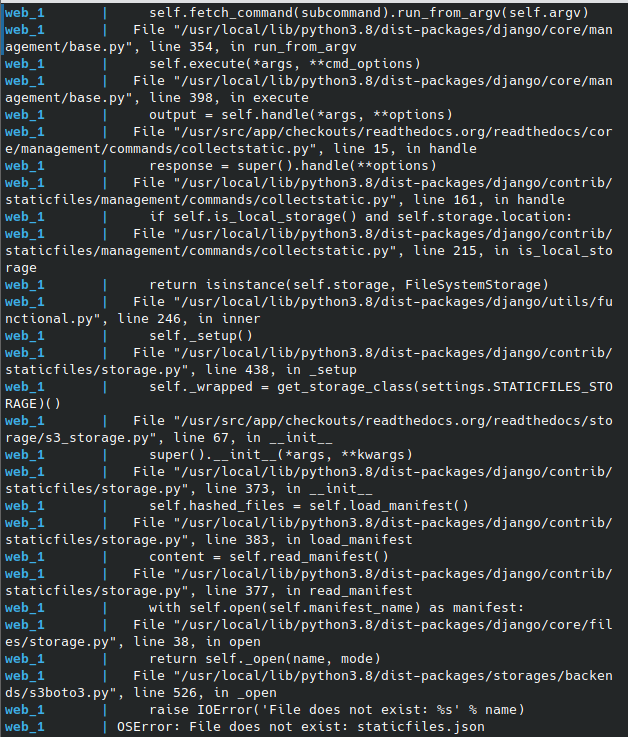

gh_patches_debug_4242 | rasdani/github-patches | git_diff | kivy__python-for-android-1995 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

TestGetSystemPythonExecutable.test_virtualenv test fail

The `TestGetSystemPythonExecutable.test_virtualenv` and `TestGetSystemPythonExecutable.test_venv` tests started failing all of a sudden.

Error was:

```
ModuleNotFoundError: No module named \'pytoml\'\n'
```

This can be reproduced locally via:

```sh
pytest tests/test_pythonpackage_basic.py::TestGetSystemPythonExecutable::test_virtualenv
```

</issue>
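The error means the interpreter inside the test's virtualenv cannot import `pytoml`. You can check this outside pytest with a minimal sketch like the one below, which asks a given interpreter to import a module in a child process. `module_importable` is a hypothetical helper written for illustration. It is not part of python-for-android or its test suite.

```python
import subprocess
import sys


def module_importable(python_exe, module_name):
    """Return True if `module_name` can be imported by the interpreter at `python_exe`.

    The import runs in a child process, so the result reflects that
    interpreter's site-packages (for example a freshly created virtualenv)
    rather than those of the current process.
    """
    result = subprocess.run(
        [python_exe, "-c", "import {}".format(module_name)],
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
    )
    return result.returncode == 0


if __name__ == "__main__":
    # sys.executable is a placeholder: pass the venv's interpreter path instead.
    print(module_importable(sys.executable, "pytoml"))
```

Running the import in a subprocess, rather than calling `importlib` in-process, matters here because the failing tests exercise a different interpreter than the one running pytest.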

<code>

[start of setup.py]

1
2 import glob
3 from io import open # for open(..,encoding=...) parameter in python 2
4 from os import walk
5 from os.path import join, dirname, sep
6 import os
7 import re
8 from setuptools import setup, find_packages
9
10 # NOTE: All package data should also be set in MANIFEST.in
11
12 packages = find_packages()
13
14 package_data = {'': ['*.tmpl',
15                      '*.patch', ], }
16
17 data_files = []
18
19
20
21 # must be a single statement since buildozer is currently parsing it, refs:
22 # https://github.com/kivy/buildozer/issues/722
23 install_reqs = [
24     'appdirs', 'colorama>=0.3.3', 'jinja2', 'six',
25     'enum34; python_version<"3.4"', 'sh>=1.10; sys_platform!="nt"',
26     'pep517', 'pytoml', 'virtualenv'
27 ]
28 # (pep517, pytoml and virtualenv are used by pythonpackage.py)
29
30 # By specifying every file manually, package_data will be able to
31 # include them in binary distributions. Note that we have to add
32 # everything as a 'pythonforandroid' rule, using '' apparently doesn't
33 # work.
34 def recursively_include(results, directory, patterns):
35     for root, subfolders, files in walk(directory):
36         for fn in files:
37             if not any([glob.fnmatch.fnmatch(fn, pattern) for pattern in patterns]):
38                 continue
39             filename = join(root, fn)
40             directory = 'pythonforandroid'
41             if directory not in results:
42                 results[directory] = []
43             results[directory].append(join(*filename.split(sep)[1:]))
44
45 recursively_include(package_data, 'pythonforandroid/recipes',
46                     ['*.patch', 'Setup*', '*.pyx', '*.py', '*.c', '*.h',
47                      '*.mk', '*.jam', ])
48 recursively_include(package_data, 'pythonforandroid/bootstraps',
49                     ['*.properties', '*.xml', '*.java', '*.tmpl', '*.txt', '*.png',
50                      '*.mk', '*.c', '*.h', '*.py', '*.sh', '*.jpg', '*.aidl',
51                      '*.gradle', '.gitkeep', 'gradlew*', '*.jar', "*.patch", ])
52 recursively_include(package_data, 'pythonforandroid/bootstraps',
53                     ['sdl-config', ])
54 recursively_include(package_data, 'pythonforandroid/bootstraps/webview',
55                     ['*.html', ])
56 recursively_include(package_data, 'pythonforandroid',
57                     ['liblink', 'biglink', 'liblink.sh'])
58
59 with open(join(dirname(__file__), 'README.md'),
60           encoding="utf-8",
61           errors="replace",
62          ) as fileh:
63     long_description = fileh.read()
64
65 init_filen = join(dirname(__file__), 'pythonforandroid', '__init__.py')
66 version = None
67 try:
68     with open(init_filen,
69               encoding="utf-8",
70               errors="replace"
71              ) as fileh:
72         lines = fileh.readlines()
73 except IOError:
74     pass
75 else:
76     for line in lines:
77         line = line.strip()
78         if line.startswith('__version__ = '):
79             matches = re.findall(r'["\'].+["\']', line)
80             if matches:
81                 version = matches[0].strip("'").strip('"')
82                 break
83 if version is None:
84     raise Exception('Error: version could not be loaded from {}'.format(init_filen))
85
86 setup(name='python-for-android',
87       version=version,
88       description='Android APK packager for Python scripts and apps',
89       long_description=long_description,
90       long_description_content_type='text/markdown',
91       author='The Kivy team',
92       author_email='[email protected]',
93       url='https://github.com/kivy/python-for-android',
94       license='MIT',
95       install_requires=install_reqs,
96       entry_points={
97           'console_scripts': [
98               'python-for-android = pythonforandroid.entrypoints:main',
99               'p4a = pythonforandroid.entrypoints:main',
100           ],
101           'distutils.commands': [
102               'apk = pythonforandroid.bdistapk:BdistAPK',
103           ],
104       },
105       classifiers = [
106           'Development Status :: 5 - Production/Stable',
107           'Intended Audience :: Developers',
108           'License :: OSI Approved :: MIT License',
109           'Operating System :: Microsoft :: Windows',
110           'Operating System :: OS Independent',
111           'Operating System :: POSIX :: Linux',
112           'Operating System :: MacOS :: MacOS X',
113           'Operating System :: Android',
114           'Programming Language :: C',
115           'Programming Language :: Python :: 3',
116           'Topic :: Software Development',
117           'Topic :: Utilities',
118       ],
119       packages=packages,
120       package_data=package_data,
121       )
122

[end of setup.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch> | diff --git a/setup.py b/setup.py
--- a/setup.py
+++ b/setup.py
@@ -23,7 +23,7 @@
 install_reqs = [
     'appdirs', 'colorama>=0.3.3', 'jinja2', 'six',
     'enum34; python_version<"3.4"', 'sh>=1.10; sys_platform!="nt"',
-    'pep517', 'pytoml', 'virtualenv'
+    'pep517<0.7.0"', 'pytoml', 'virtualenv'
 ]
 # (pep517, pytoml and virtualenv are used by pythonpackage.py)

| {"golden_diff": "diff --git a/setup.py b/setup.py\n--- a/setup.py\n+++ b/setup.py\n@@ -23,7 +23,7 @@\n install_reqs = [\n 'appdirs', 'colorama>=0.3.3', 'jinja2', 'six',\n 'enum34; python_version<\"3.4\"', 'sh>=1.10; sys_platform!=\"nt\"',\n- 'pep517', 'pytoml', 'virtualenv'\n+ 'pep517<0.7.0\"', 'pytoml', 'virtualenv'\n ]\n # (pep517, pytoml and virtualenv are used by pythonpackage.py)\n", "issue": "TestGetSystemPythonExecutable.test_virtualenv test fail\nThe `TestGetSystemPythonExecutable.test_virtualenv` and `TestGetSystemPythonExecutable.test_venv` tests started failing all of a sudden.\r\nError was:\r\n```\r\nModuleNotFoundError: No module named \\'pytoml\\'\\n'\r\n```\r\nThis ca be reproduced in local via:\r\n```sh\r\npytest tests/test_pythonpackage_basic.py::TestGetSystemPythonExecutable::test_virtualenv\r\n```\r\n\r\n\n", "before_files": [{"content": "\nimport glob\nfrom io import open # for open(..,encoding=...) parameter in python 2\nfrom os import walk\nfrom os.path import join, dirname, sep\nimport os\nimport re\nfrom setuptools import setup, find_packages\n\n# NOTE: All package data should also be set in MANIFEST.in\n\npackages = find_packages()\n\npackage_data = {'': ['*.tmpl',\n '*.patch', ], }\n\ndata_files = []\n\n\n\n# must be a single statement since buildozer is currently parsing it, refs:\n# https://github.com/kivy/buildozer/issues/722\ninstall_reqs = [\n 'appdirs', 'colorama>=0.3.3', 'jinja2', 'six',\n 'enum34; python_version<\"3.4\"', 'sh>=1.10; sys_platform!=\"nt\"',\n 'pep517', 'pytoml', 'virtualenv'\n]\n# (pep517, pytoml and virtualenv are used by pythonpackage.py)\n\n# By specifying every file manually, package_data will be able to\n# include them in binary distributions. 
Note that we have to add\n# everything as a 'pythonforandroid' rule, using '' apparently doesn't\n# work.\ndef recursively_include(results, directory, patterns):\n for root, subfolders, files in walk(directory):\n for fn in files:\n if not any([glob.fnmatch.fnmatch(fn, pattern) for pattern in patterns]):\n continue\n filename = join(root, fn)\n directory = 'pythonforandroid'\n if directory not in results:\n results[directory] = []\n results[directory].append(join(*filename.split(sep)[1:]))\n\nrecursively_include(package_data, 'pythonforandroid/recipes',\n ['*.patch', 'Setup*', '*.pyx', '*.py', '*.c', '*.h',\n '*.mk', '*.jam', ])\nrecursively_include(package_data, 'pythonforandroid/bootstraps',\n ['*.properties', '*.xml', '*.java', '*.tmpl', '*.txt', '*.png',\n '*.mk', '*.c', '*.h', '*.py', '*.sh', '*.jpg', '*.aidl',\n '*.gradle', '.gitkeep', 'gradlew*', '*.jar', \"*.patch\", ])\nrecursively_include(package_data, 'pythonforandroid/bootstraps',\n ['sdl-config', ])\nrecursively_include(package_data, 'pythonforandroid/bootstraps/webview',\n ['*.html', ])\nrecursively_include(package_data, 'pythonforandroid',\n ['liblink', 'biglink', 'liblink.sh'])\n\nwith open(join(dirname(__file__), 'README.md'),\n encoding=\"utf-8\",\n errors=\"replace\",\n ) as fileh:\n long_description = fileh.read()\n\ninit_filen = join(dirname(__file__), 'pythonforandroid', '__init__.py')\nversion = None\ntry:\n with open(init_filen,\n encoding=\"utf-8\",\n errors=\"replace\"\n ) as fileh:\n lines = fileh.readlines()\nexcept IOError:\n pass\nelse:\n for line in lines:\n line = line.strip()\n if line.startswith('__version__ = '):\n matches = re.findall(r'[\"\\'].+[\"\\']', line)\n if matches:\n version = matches[0].strip(\"'\").strip('\"')\n break\nif version is None:\n raise Exception('Error: version could not be loaded from {}'.format(init_filen))\n\nsetup(name='python-for-android',\n version=version,\n description='Android APK packager for Python scripts and apps',\n 
long_description=long_description,\n long_description_content_type='text/markdown',\n author='The Kivy team',\n author_email='[email protected]',\n url='https://github.com/kivy/python-for-android',\n license='MIT',\n install_requires=install_reqs,\n entry_points={\n 'console_scripts': [\n 'python-for-android = pythonforandroid.entrypoints:main',\n 'p4a = pythonforandroid.entrypoints:main',\n ],\n 'distutils.commands': [\n 'apk = pythonforandroid.bdistapk:BdistAPK',\n ],\n },\n classifiers = [\n 'Development Status :: 5 - Production/Stable',\n 'Intended Audience :: Developers',\n 'License :: OSI Approved :: MIT License',\n 'Operating System :: Microsoft :: Windows',\n 'Operating System :: OS Independent',\n 'Operating System :: POSIX :: Linux',\n 'Operating System :: MacOS :: MacOS X',\n 'Operating System :: Android',\n 'Programming Language :: C',\n 'Programming Language :: Python :: 3',\n 'Topic :: Software Development',\n 'Topic :: Utilities',\n ],\n packages=packages,\n package_data=package_data,\n )\n", "path": "setup.py"}]} | 1,898 | 150 |

gh_patches_debug_23758 | rasdani/github-patches | git_diff | holoviz__panel-705 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Broken image link in Django user guide

The link https://panel.pyviz.org/apps/django2/sliders.png in https://panel.pyviz.org/user_guide/Django_Apps.html doesn't seem to go anywhere; was that meant to point to https://parambokeh.pyviz.org/assets/sliders.png ?

</issue>

<code>

[start of panel/util.py]

1 """
2 Various general utilities used in the panel codebase.
3 """
4 from __future__ import absolute_import, division, unicode_literals
5
6 import re
7 import sys
8 import inspect
9 import numbers
10 import datetime as dt
11
12 from datetime import datetime
13 from six import string_types
14 from collections import defaultdict, OrderedDict
15 try:
16     from collections.abc import MutableSequence, MutableMapping
17 except ImportError: # support for python>3.8
18     from collections import MutableSequence, MutableMapping
19
20 import param
21 import numpy as np
22
23 datetime_types = (np.datetime64, dt.datetime, dt.date)
24
25 if sys.version_info.major > 2:
26     unicode = str
27
28
29 def hashable(x):
30     if isinstance(x, MutableSequence):
31         return tuple(x)
32     elif isinstance(x, MutableMapping):
33         return tuple([(k,v) for k,v in x.items()])
34     else:
35         return x
36
37
38 def isIn(obj, objs):
39     """
40     Checks if the object is in the list of objects safely.
41     """
42     for o in objs:
43         if o is obj:
44             return True
45         try:
46             if o == obj:
47                 return True
48         except:
49             pass
50     return False
51
52
53 def indexOf(obj, objs):
54     """
55     Returns the index of an object in a list of objects. Unlike the
56     list.index method this function only checks for identity not
57     equality.
58     """
59     for i, o in enumerate(objs):
60         if o is obj:
61             return i
62         try:
63             if o == obj:
64                 return i
65         except:
66             pass
67     raise ValueError('%s not in list' % obj)
68
69
70 def as_unicode(obj):
71     """
72     Safely casts any object to unicode including regular string
73     (i.e. bytes) types in python 2.
74     """
75     if sys.version_info.major < 3 and isinstance(obj, str):
76         obj = obj.decode('utf-8')
77     return unicode(obj)
78
79
80 def param_name(name):
81     """
82     Removes the integer id from a Parameterized class name.
83     """
84     match = re.match(r'(.)+(\d){5}', name)
85     return name[:-5] if match else name
86
87
88 def unicode_repr(obj):
89     """
90     Returns a repr without the unicode prefix.
91     """
92     if sys.version_info.major == 2 and isinstance(obj, unicode):
93         return repr(obj)[1:]
94     return repr(obj)
95
96
97 def abbreviated_repr(value, max_length=25, natural_breaks=(',', ' ')):
98     """
99     Returns an abbreviated repr for the supplied object. Attempts to
100     find a natural break point while adhering to the maximum length.
101     """
102     vrepr = repr(value)
103     if len(vrepr) > max_length:
104         # Attempt to find natural cutoff point
105         abbrev = vrepr[max_length//2:]
106         natural_break = None
107         for brk in natural_breaks:
108             if brk in abbrev:
109                 natural_break = abbrev.index(brk) + max_length//2
110                 break
111         if natural_break and natural_break < max_length:
112             max_length = natural_break + 1
113
114         end_char = ''
115         if isinstance(value, list):
116             end_char = ']'
117         elif isinstance(value, OrderedDict):
118             end_char = '])'
119         elif isinstance(value, (dict, set)):
120             end_char = '}'
121         return vrepr[:max_length+1] + '...' + end_char
122     return vrepr
123
124
125 def param_reprs(parameterized, skip=None):
126     """
127     Returns a list of reprs for parameters on the parameterized object.
128     Skips default and empty values.
129     """
130     cls = type(parameterized).__name__
131     param_reprs = []
132     for p, v in sorted(parameterized.get_param_values()):
133         if v is parameterized.param[p].default: continue
134         elif v is None: continue
135         elif isinstance(v, string_types) and v == '': continue
136         elif isinstance(v, list) and v == []: continue
137         elif isinstance(v, dict) and v == {}: continue
138         elif (skip and p in skip) or (p == 'name' and v.startswith(cls)): continue
139         param_reprs.append('%s=%s' % (p, abbreviated_repr(v)))
140     return param_reprs
141
142
143 def full_groupby(l, key=lambda x: x):
144     """
145     Groupby implementation which does not require a prior sort
146     """
147     d = defaultdict(list)
148     for item in l:
149         d[key(item)].append(item)
150     return d.items()
151
152
153 def get_method_owner(meth):
154     """
155     Returns the instance owning the supplied instancemethod or
156     the class owning the supplied classmethod.
157     """
158     if inspect.ismethod(meth):
159         if sys.version_info < (3,0):
160             return meth.im_class if meth.im_self is None else meth.im_self
161         else:
162             return meth.__self__
163
164
165 def is_parameterized(obj):
166     """
167     Whether an object is a Parameterized class or instance.
168     """
169     return (isinstance(obj, param.Parameterized) or
170             (isinstance(obj, type) and issubclass(obj, param.Parameterized)))
171
172
173 def isdatetime(value):
174     """
175     Whether the array or scalar is recognized datetime type.
176     """
177     if isinstance(value, np.ndarray):
178         return (value.dtype.kind == "M" or
179                 (value.dtype.kind == "O" and len(value) and
180                  isinstance(value[0], datetime_types)))
181     elif isinstance(value, list):
182         return all(isinstance(d, datetime_types) for d in value)
183     else:
184         return isinstance(value, datetime_types)
185
186 def value_as_datetime(value):
187     """
188     Retrieve the value tuple as a tuple of datetime objects.
189     """
190     if isinstance(value, numbers.Number):
191         value = datetime.utcfromtimestamp(value / 1000)
192     return value
193
194
195 def value_as_date(value):
196     if isinstance(value, numbers.Number):
197         value = datetime.utcfromtimestamp(value / 1000).date()
198     elif isinstance(value, datetime):
199         value = value.date()
200     return value
201

[end of panel/util.py]

[start of doc/conf.py]

1 # -*- coding: utf-8 -*-
2
3 from nbsite.shared_conf import *
4
5 project = u'Panel'
6 authors = u'Panel contributors'
7 copyright = u'2019 ' + authors
8 description = 'High-level dashboarding for python visualization libraries'
9
10 import panel
11 version = release = str(panel.__version__)
12
13 html_static_path += ['_static']
14 html_theme = 'sphinx_ioam_theme'
15 html_theme_options = {
16     'logo': 'logo_horizontal.png',
17     'favicon': 'favicon.ico',
18     'css': 'site.css'
19 }
20
21 extensions += ['nbsite.gallery']
22
23 nbsite_gallery_conf = {
24     'github_org': 'pyviz',
25     'github_project': 'panel',
26     'galleries': {
27         'gallery': {
28             'title': 'Gallery',
29             'sections': [
30                 {'path': 'demos',
31                  'title': 'Demos',
32                  'description': 'A set of sophisticated apps built to demonstrate the features of Panel.'},
33                 {'path': 'simple',
34                  'title': 'Simple Apps',
35                  'description': 'Simple example apps meant to provide a quick introduction to Panel.'},
36                 {'path': 'apis',
37                  'title': 'APIs',
38                  'description': ('Examples meant to demonstrate the usage of different Panel APIs '
39                                  'such as interact and reactive functions.')},
40                 {'path': 'layout',
41                  'title': 'Layouts',
42                  'description': 'How to leverage Panel layout components to achieve complex layouts.'},
43                 {'path': 'dynamic',
44                  'title': 'Dynamic UIs',
45                  'description': ('Examples demonstrating how to build dynamic UIs with components that'
46                                  'are added or removed interactively.')},
47                 {'path': 'param',
48                  'title': 'Param based apps',
49                  'description': 'Using the Param library to express UIs independently of Panel.'},
50                 {'path': 'links',
51                  'title': 'Linking',
52                  'description': ('Using Javascript based links to define interactivity without '
53                                  'without requiring a live kernel.')},
54                 {'path': 'external',
55                  'title': 'External libraries',
56                  'description': 'Wrapping external libraries with Panel.'}
57             ]
58         },
59         'reference': {
60             'title': 'Reference Gallery',
61             'sections': [
62                 'panes',
63                 'layouts',
64                 'widgets'
65             ]
66         }
67     },
68     'thumbnail_url': 'https://assets.holoviews.org/panel/thumbnails',
69     'deployment_url': 'https://panel-gallery.pyviz.demo.anaconda.com/'
70 }
71
72 _NAV = (
73     ('Getting started', 'getting_started/index'),
74     ('User Guide', 'user_guide/index'),
75     ('Gallery', 'gallery/index'),
76     ('Reference Gallery', 'reference/index'),
77     ('Developer Guide', 'developer_guide/index'),
78     ('FAQ', 'FAQ'),
79     ('About', 'about')
80 )
81
82 templates_path = ['_templates']
83
84 html_context.update({
85     'PROJECT': project,
86     'DESCRIPTION': description,
87     'AUTHOR': authors,
88     'VERSION': version,
89     'WEBSITE_URL': 'https://panel.pyviz.org',
90     'WEBSITE_SERVER': 'https://panel.pyviz.org',
91     'VERSION': version,
92     'NAV': _NAV,
93     'LINKS': _NAV,
94     'SOCIAL': (
95         ('Gitter', '//gitter.im/pyviz/pyviz'),
96         ('Github', '//github.com/pyviz/panel'),
97     )
98 })
99
100 nbbuild_patterns_to_take_along = ["simple.html"]
101

[end of doc/conf.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch> | diff --git a/doc/conf.py b/doc/conf.py
--- a/doc/conf.py
+++ b/doc/conf.py
@@ -69,7 +69,7 @@
     'deployment_url': 'https://panel-gallery.pyviz.demo.anaconda.com/'
 }
 
-_NAV = (
+_NAV = (
     ('Getting started', 'getting_started/index'),
     ('User Guide', 'user_guide/index'),
     ('Gallery', 'gallery/index'),
@@ -88,7 +88,6 @@
     'VERSION': version,
     'WEBSITE_URL': 'https://panel.pyviz.org',
     'WEBSITE_SERVER': 'https://panel.pyviz.org',
-    'VERSION': version,
     'NAV': _NAV,
     'LINKS': _NAV,
     'SOCIAL': (
diff --git a/panel/util.py b/panel/util.py
--- a/panel/util.py
+++ b/panel/util.py
@@ -12,9 +12,9 @@
 from datetime import datetime
 from six import string_types
 from collections import defaultdict, OrderedDict
-try:
+try: # python >= 3.3
     from collections.abc import MutableSequence, MutableMapping
-except ImportError: # support for python>3.8
+except ImportError:
     from collections import MutableSequence, MutableMapping
 
 import param

| {"golden_diff": "diff --git a/doc/conf.py b/doc/conf.py\n--- a/doc/conf.py\n+++ b/doc/conf.py\n@@ -69,7 +69,7 @@\n 'deployment_url': 'https://panel-gallery.pyviz.demo.anaconda.com/'\n }\n \n-_NAV = (\n+_NAV = (\n ('Getting started', 'getting_started/index'),\n ('User Guide', 'user_guide/index'),\n ('Gallery', 'gallery/index'),\n@@ -88,7 +88,6 @@\n 'VERSION': version,\n 'WEBSITE_URL': 'https://panel.pyviz.org',\n 'WEBSITE_SERVER': 'https://panel.pyviz.org',\n- 'VERSION': version,\n 'NAV': _NAV,\n 'LINKS': _NAV,\n 'SOCIAL': (\ndiff --git a/panel/util.py b/panel/util.py\n--- a/panel/util.py\n+++ b/panel/util.py\n@@ -12,9 +12,9 @@\n from datetime import datetime\n from six import string_types\n from collections import defaultdict, OrderedDict\n-try:\n+try: # python >= 3.3\n from collections.abc import MutableSequence, MutableMapping\n-except ImportError: # support for python>3.8\n+except ImportError:\n from collections import MutableSequence, MutableMapping\n \n import param\n", "issue": "Broken image link in Django user guide\nThe link https://panel.pyviz.org/apps/django2/sliders.png in https://panel.pyviz.org/user_guide/Django_Apps.html doesn't seem to go anywhere; was that meant to point to https://parambokeh.pyviz.org/assets/sliders.png ?\nBroken image link in Django user guide\nThe link https://panel.pyviz.org/apps/django2/sliders.png in https://panel.pyviz.org/user_guide/Django_Apps.html doesn't seem to go anywhere; was that meant to point to https://parambokeh.pyviz.org/assets/sliders.png ?\n", "before_files": [{"content": "\"\"\"\nVarious general utilities used in the panel codebase.\n\"\"\"\nfrom __future__ import absolute_import, division, unicode_literals\n\nimport re\nimport sys\nimport inspect\nimport numbers\nimport datetime as dt\n\nfrom datetime import datetime\nfrom six import string_types\nfrom collections import defaultdict, OrderedDict\ntry:\n from collections.abc import MutableSequence, MutableMapping\nexcept ImportError: # support for 
python>3.8\n from collections import MutableSequence, MutableMapping\n\nimport param\nimport numpy as np\n\ndatetime_types = (np.datetime64, dt.datetime, dt.date)\n\nif sys.version_info.major > 2:\n unicode = str\n\n\ndef hashable(x):\n if isinstance(x, MutableSequence):\n return tuple(x)\n elif isinstance(x, MutableMapping):\n return tuple([(k,v) for k,v in x.items()])\n else:\n return x\n\n\ndef isIn(obj, objs):\n \"\"\"\n Checks if the object is in the list of objects safely.\n \"\"\"\n for o in objs:\n if o is obj:\n return True\n try:\n if o == obj:\n return True\n except:\n pass\n return False\n\n\ndef indexOf(obj, objs):\n \"\"\"\n Returns the index of an object in a list of objects. Unlike the\n list.index method this function only checks for identity not\n equality.\n \"\"\"\n for i, o in enumerate(objs):\n if o is obj:\n return i\n try:\n if o == obj:\n return i\n except:\n pass\n raise ValueError('%s not in list' % obj)\n\n\ndef as_unicode(obj):\n \"\"\"\n Safely casts any object to unicode including regular string\n (i.e. bytes) types in python 2.\n \"\"\"\n if sys.version_info.major < 3 and isinstance(obj, str):\n obj = obj.decode('utf-8')\n return unicode(obj)\n\n\ndef param_name(name):\n \"\"\"\n Removes the integer id from a Parameterized class name.\n \"\"\"\n match = re.match(r'(.)+(\\d){5}', name)\n return name[:-5] if match else name\n\n\ndef unicode_repr(obj):\n \"\"\"\n Returns a repr without the unicode prefix.\n \"\"\"\n if sys.version_info.major == 2 and isinstance(obj, unicode):\n return repr(obj)[1:]\n return repr(obj)\n\n\ndef abbreviated_repr(value, max_length=25, natural_breaks=(',', ' ')):\n \"\"\"\n Returns an abbreviated repr for the supplied object. 
Attempts to\n find a natural break point while adhering to the maximum length.\n \"\"\"\n vrepr = repr(value)\n if len(vrepr) > max_length:\n # Attempt to find natural cutoff point\n abbrev = vrepr[max_length//2:]\n natural_break = None\n for brk in natural_breaks:\n if brk in abbrev:\n natural_break = abbrev.index(brk) + max_length//2\n break\n if natural_break and natural_break < max_length:\n max_length = natural_break + 1\n\n end_char = ''\n if isinstance(value, list):\n end_char = ']'\n elif isinstance(value, OrderedDict):\n end_char = '])'\n elif isinstance(value, (dict, set)):\n end_char = '}'\n return vrepr[:max_length+1] + '...' + end_char\n return vrepr\n\n\ndef param_reprs(parameterized, skip=None):\n \"\"\"\n Returns a list of reprs for parameters on the parameterized object.\n Skips default and empty values.\n \"\"\"\n cls = type(parameterized).__name__\n param_reprs = []\n for p, v in sorted(parameterized.get_param_values()):\n if v is parameterized.param[p].default: continue\n elif v is None: continue\n elif isinstance(v, string_types) and v == '': continue\n elif isinstance(v, list) and v == []: continue\n elif isinstance(v, dict) and v == {}: continue\n elif (skip and p in skip) or (p == 'name' and v.startswith(cls)): continue\n param_reprs.append('%s=%s' % (p, abbreviated_repr(v)))\n return param_reprs\n\n\ndef full_groupby(l, key=lambda x: x):\n \"\"\"\n Groupby implementation which does not require a prior sort\n \"\"\"\n d = defaultdict(list)\n for item in l:\n d[key(item)].append(item)\n return d.items()\n\n\ndef get_method_owner(meth):\n \"\"\"\n Returns the instance owning the supplied instancemethod or\n the class owning the supplied classmethod.\n \"\"\"\n if inspect.ismethod(meth):\n if sys.version_info < (3,0):\n return meth.im_class if meth.im_self is None else meth.im_self\n else:\n return meth.__self__\n\n\ndef is_parameterized(obj):\n \"\"\"\n Whether an object is a Parameterized class or instance.\n \"\"\"\n return (isinstance(obj, 
param.Parameterized) or\n (isinstance(obj, type) and issubclass(obj, param.Parameterized)))\n\n\ndef isdatetime(value):\n \"\"\"\n Whether the array or scalar is recognized datetime type.\n \"\"\"\n if isinstance(value, np.ndarray):\n return (value.dtype.kind == \"M\" or\n (value.dtype.kind == \"O\" and len(value) and\n isinstance(value[0], datetime_types)))\n elif isinstance(value, list):\n return all(isinstance(d, datetime_types) for d in value)\n else:\n return isinstance(value, datetime_types)\n\ndef value_as_datetime(value):\n \"\"\"\n Retrieve the value tuple as a tuple of datetime objects.\n \"\"\"\n if isinstance(value, numbers.Number):\n value = datetime.utcfromtimestamp(value / 1000)\n return value\n\n\ndef value_as_date(value):\n if isinstance(value, numbers.Number):\n value = datetime.utcfromtimestamp(value / 1000).date()\n elif isinstance(value, datetime):\n value = value.date()\n return value\n", "path": "panel/util.py"}, {"content": "# -*- coding: utf-8 -*-\n\nfrom nbsite.shared_conf import *\n\nproject = u'Panel'\nauthors = u'Panel contributors'\ncopyright = u'2019 ' + authors\ndescription = 'High-level dashboarding for python visualization libraries'\n\nimport panel\nversion = release = str(panel.__version__)\n\nhtml_static_path += ['_static']\nhtml_theme = 'sphinx_ioam_theme'\nhtml_theme_options = {\n 'logo': 'logo_horizontal.png',\n 'favicon': 'favicon.ico',\n 'css': 'site.css' \n}\n\nextensions += ['nbsite.gallery']\n\nnbsite_gallery_conf = {\n 'github_org': 'pyviz',\n 'github_project': 'panel',\n 'galleries': {\n 'gallery': {\n 'title': 'Gallery',\n 'sections': [\n {'path': 'demos',\n 'title': 'Demos',\n 'description': 'A set of sophisticated apps built to demonstrate the features of Panel.'},\n {'path': 'simple',\n 'title': 'Simple Apps',\n 'description': 'Simple example apps meant to provide a quick introduction to Panel.'},\n {'path': 'apis',\n 'title': 'APIs',\n 'description': ('Examples meant to demonstrate the usage of different Panel 
APIs '\n 'such as interact and reactive functions.')},\n {'path': 'layout',\n 'title': 'Layouts',\n 'description': 'How to leverage Panel layout components to achieve complex layouts.'},\n {'path': 'dynamic',\n 'title': 'Dynamic UIs',\n 'description': ('Examples demonstrating how to build dynamic UIs with components that'\n 'are added or removed interactively.')},\n {'path': 'param',\n 'title': 'Param based apps',\n 'description': 'Using the Param library to express UIs independently of Panel.'},\n {'path': 'links',\n 'title': 'Linking',\n 'description': ('Using Javascript based links to define interactivity without '\n 'without requiring a live kernel.')},\n {'path': 'external',\n 'title': 'External libraries',\n 'description': 'Wrapping external libraries with Panel.'}\n ]\n },\n 'reference': {\n 'title': 'Reference Gallery',\n 'sections': [\n 'panes',\n 'layouts',\n 'widgets'\n ]\n }\n },\n 'thumbnail_url': 'https://assets.holoviews.org/panel/thumbnails',\n 'deployment_url': 'https://panel-gallery.pyviz.demo.anaconda.com/'\n}\n\n_NAV = (\n ('Getting started', 'getting_started/index'),\n ('User Guide', 'user_guide/index'),\n ('Gallery', 'gallery/index'),\n ('Reference Gallery', 'reference/index'),\n ('Developer Guide', 'developer_guide/index'),\n ('FAQ', 'FAQ'),\n ('About', 'about')\n)\n\ntemplates_path = ['_templates']\n\nhtml_context.update({\n 'PROJECT': project,\n 'DESCRIPTION': description,\n 'AUTHOR': authors,\n 'VERSION': version,\n 'WEBSITE_URL': 'https://panel.pyviz.org',\n 'WEBSITE_SERVER': 'https://panel.pyviz.org',\n 'VERSION': version,\n 'NAV': _NAV,\n 'LINKS': _NAV,\n 'SOCIAL': (\n ('Gitter', '//gitter.im/pyviz/pyviz'),\n ('Github', '//github.com/pyviz/panel'),\n )\n})\n\nnbbuild_patterns_to_take_along = [\"simple.html\"]\n", "path": "doc/conf.py"}]} | 3,392 | 283 |

gh_patches_debug_11585 | rasdani/github-patches | git_diff | ibis-project__ibis-4602 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

bug: `.visualize(label_edges=True)` case ops.NodeList we get ValueError tuple.index(x): x not in tuple

Hi,

There's still one small thing that needs fixing in the great `.visualize(label_edges=True)` feature 😄 :

When running...

```py

import ibis

t = ibis.table((("a", "int32"), ("b", "string")))

expr = t[(t["a"] == 1) & (t["b"] == "x")]

expr.visualize(label_edges=True)

```

...I get:

```

Exception has occurred: ValueError - tuple.index(x): x not in tuple

```

at the following line:

https://github.com/ibis-project/ibis/blob/2c9cfea15fc4d5f61e9099c3b270ea61498b5e45/ibis/expr/visualize.py#L117

This is happening when `v` is an `ops.NodeList` and thus its `v.args` is a tuple `of tuples` and NOT a tuple of nodes.

Given that on the next line we have special logic for `ops.NodeList`, maybe one quick fix could be to use the `.values`/`.args[0]` in such cases:

Thank you.

</issue>

<code>

[start of ibis/expr/visualize.py]

1 import sys

2 import tempfile

3 from html import escape

4

5 import graphviz as gv

6

7 import ibis

8 import ibis.common.exceptions as com

9 import ibis.expr.operations as ops

10 from ibis.common.graph import Graph

11

12

13 def get_type(node):

14 try:

15 return str(node.output_dtype)

16 except (AttributeError, NotImplementedError):

17 pass

18

19 try:

20 schema = node.schema

21 except (AttributeError, NotImplementedError):

22 # TODO(kszucs): this branch should be removed

23 try:

24 # As a last resort try get the name of the output_type class

25 return node.output_type.__name__

26 except (AttributeError, NotImplementedError):

27 return '\u2205' # empty set character

28 except com.IbisError:

29 assert isinstance(node, ops.Join)

30 left_table_name = getattr(node.left, 'name', None) or ops.genname()

31 left_schema = node.left.schema

32 right_table_name = getattr(node.right, 'name', None) or ops.genname()

33 right_schema = node.right.schema

34 pairs = [

35 (f'{left_table_name}.{left_column}', type)

36 for left_column, type in left_schema.items()

37 ] + [

38 (f'{right_table_name}.{right_column}', type)

39 for right_column, type in right_schema.items()

40 ]

41 schema = ibis.schema(pairs)

42

43 return (

44 ''.join(

45 '<BR ALIGN="LEFT" /> <I>{}</I>: {}'.format(

46 escape(name), escape(str(type))

47 )

48 for name, type in zip(schema.names, schema.types)

49 )

50 + '<BR ALIGN="LEFT" />'

51 )

52

53

54 def get_label(node):

55 typename = get_type(node) # Already an escaped string

56 name = type(node).__name__

57 nodename = (

58 node.name

59 if isinstance(

60 node, (ops.Literal, ops.TableColumn, ops.Alias, ops.PhysicalTable)

61 )

62 else None

63 )

64 if nodename is not None:

65 if isinstance(node, ops.TableNode):

66 label_fmt = '<<I>{}</I>: <B>{}</B>{}>'

67 else:

68 label_fmt = '<<I>{}</I>: <B>{}</B><BR ALIGN="LEFT" />:: {}>'

69 label = label_fmt.format(escape(nodename), escape(name), typename)

70 else:

71 if isinstance(node, ops.TableNode):

72 label_fmt = '<<B>{}</B>{}>'

73 else:

74 label_fmt = '<<B>{}</B><BR ALIGN="LEFT" />:: {}>'

75 label = label_fmt.format(escape(name), typename)

76 return label

77

78

79 DEFAULT_NODE_ATTRS = {'shape': 'box', 'fontname': 'Deja Vu Sans Mono'}

80 DEFAULT_EDGE_ATTRS = {'fontname': 'Deja Vu Sans Mono'}

81

82

83 def to_graph(expr, node_attr=None, edge_attr=None, label_edges: bool = False):

84 graph = Graph.from_bfs(expr.op())

85

86 g = gv.Digraph(

87 node_attr=node_attr or DEFAULT_NODE_ATTRS,

88 edge_attr=edge_attr or DEFAULT_EDGE_ATTRS,

89 )

90

91 g.attr(rankdir='BT')

92

93 seen = set()

94 edges = set()

95

96 for v, us in graph.items():

97 if isinstance(v, ops.NodeList) and not v:

98 continue

99

100 vhash = str(hash(v))

101 if v not in seen:

102 g.node(vhash, label=get_label(v))

103 seen.add(v)

104

105 for u in us:

106 if isinstance(u, ops.NodeList) and not u:

107 continue

108

109 uhash = str(hash(u))

110 if u not in seen:

111 g.node(uhash, label=get_label(u))

112 seen.add(u)

113 if (edge := (u, v)) not in edges:

114 if not label_edges:

115 label = None

116 else:

117 index = v.args.index(u)

118 if isinstance(v, ops.NodeList):

119 arg_name = f"values[{index}]"

120 else:

121 arg_name = v.argnames[index]

122 label = f"<.{arg_name}>"

123

124 g.edge(uhash, vhash, label=label)

125 edges.add(edge)

126 return g

127

128

129 def draw(graph, path=None, format='png', verbose: bool = False):

130 if verbose:

131 print(graph.source, file=sys.stderr)

132

133 piped_source = graph.pipe(format=format)

134

135 if path is None:

136 with tempfile.NamedTemporaryFile(

137 delete=False, suffix=f'.{format}', mode='wb'

138 ) as f:

139 f.write(piped_source)

140 return f.name

141 else:

142 with open(path, mode='wb') as f:

143 f.write(piped_source)

144 return path

145

146

147 if __name__ == '__main__':

148 from argparse import ArgumentParser

149

150 from ibis import _

151

152 p = ArgumentParser(

153 description="Render a GraphViz SVG of an example ibis expression."

154 )

155

156 p.add_argument(

157 "-v",

158 "--verbose",

159 action="count",

160 default=0,

161 help="Print GraphViz DOT code to stderr.",

162 )

163 p.add_argument(

164 "-l",

165 "--label-edges",

166 action="store_true",

167 help="Show operation inputs as edge labels.",

168 )

169

170 args = p.parse_args()

171

172 left = ibis.table(dict(a="int64", b="string"), name="left")

173 right = ibis.table(dict(b="string", c="int64", d="string"), name="right")

174 expr = (

175 left.inner_join(right, "b")

176 .select(left.a, b=right.c, c=right.d)

177 .filter((_.a + _.b * 2 * _.b / _.b**3 > 4) & (_.b > 5))

178 .groupby(_.c)

179 .having(_.a.mean() > 0.0)

180 .aggregate(a_mean=_.a.mean(), b_sum=_.b.sum())

181 )

182 expr.visualize(verbose=args.verbose > 0, label_edges=args.label_edges)

183

[end of ibis/expr/visualize.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/ibis/expr/visualize.py b/ibis/expr/visualize.py

--- a/ibis/expr/visualize.py

+++ b/ibis/expr/visualize.py

@@ -114,10 +114,11 @@

if not label_edges:

label = None

else:

- index = v.args.index(u)

if isinstance(v, ops.NodeList):

+ index = v.values.index(u)

arg_name = f"values[{index}]"

else:

+ index = v.args.index(u)

arg_name = v.argnames[index]

label = f"<.{arg_name}>"

| {"golden_diff": "diff --git a/ibis/expr/visualize.py b/ibis/expr/visualize.py\n--- a/ibis/expr/visualize.py\n+++ b/ibis/expr/visualize.py\n@@ -114,10 +114,11 @@\n if not label_edges:\n label = None\n else:\n- index = v.args.index(u)\n if isinstance(v, ops.NodeList):\n+ index = v.values.index(u)\n arg_name = f\"values[{index}]\"\n else:\n+ index = v.args.index(u)\n arg_name = v.argnames[index]\n label = f\"<.{arg_name}>\"\n", "issue": "bug: `.visualize(label_edges=True)` case ops.NodeList we get ValueError tuple.index(x): x not in tuple\nHi,\r\n\r\nThere's still one small thing that needs fixing in the great `.visualize(label_edges=True)` feature \ud83d\ude04 :\r\n\r\nWhen running...\r\n```py\r\nimport ibis\r\nt = ibis.table(((\"a\", \"int32\"), (\"b\", \"string\")))\r\nexpr = t[(t[\"a\"] == 1) & (t[\"b\"] == \"x\")]\r\n\r\nexpr.visualize(label_edges=True)\r\n```\r\n...I get:\r\n```\r\nException has occurred: ValueError - tuple.index(x): x not in tuple\r\n```\r\nat the following line:\r\nhttps://github.com/ibis-project/ibis/blob/2c9cfea15fc4d5f61e9099c3b270ea61498b5e45/ibis/expr/visualize.py#L117\r\n\r\nThis is happening when `v` is an `ops.NodeList` and thus its `v.args` is a tuple `of tuples` and NOT a tuple of nodes.\r\n\r\nGiven that on the next line we have special logic for `ops.NodeList`, maybe one quick fix could be to use the `.values`/`.args[0]` in such cases:\r\n\r\n\r\n\r\nThank you.\n", "before_files": [{"content": "import sys\nimport tempfile\nfrom html import escape\n\nimport graphviz as gv\n\nimport ibis\nimport ibis.common.exceptions as com\nimport ibis.expr.operations as ops\nfrom ibis.common.graph import Graph\n\n\ndef get_type(node):\n try:\n return str(node.output_dtype)\n except (AttributeError, NotImplementedError):\n pass\n\n try:\n schema = node.schema\n except (AttributeError, NotImplementedError):\n # TODO(kszucs): this branch should be removed\n try:\n # As a last resort try get the name of the output_type class\n return 
node.output_type.__name__\n except (AttributeError, NotImplementedError):\n return '\\u2205' # empty set character\n except com.IbisError:\n assert isinstance(node, ops.Join)\n left_table_name = getattr(node.left, 'name', None) or ops.genname()\n left_schema = node.left.schema\n right_table_name = getattr(node.right, 'name', None) or ops.genname()\n right_schema = node.right.schema\n pairs = [\n (f'{left_table_name}.{left_column}', type)\n for left_column, type in left_schema.items()\n ] + [\n (f'{right_table_name}.{right_column}', type)\n for right_column, type in right_schema.items()\n ]\n schema = ibis.schema(pairs)\n\n return (\n ''.join(\n '<BR ALIGN=\"LEFT\" /> <I>{}</I>: {}'.format(\n escape(name), escape(str(type))\n )\n for name, type in zip(schema.names, schema.types)\n )\n + '<BR ALIGN=\"LEFT\" />'\n )\n\n\ndef get_label(node):\n typename = get_type(node) # Already an escaped string\n name = type(node).__name__\n nodename = (\n node.name\n if isinstance(\n node, (ops.Literal, ops.TableColumn, ops.Alias, ops.PhysicalTable)\n )\n else None\n )\n if nodename is not None:\n if isinstance(node, ops.TableNode):\n label_fmt = '<<I>{}</I>: <B>{}</B>{}>'\n else:\n label_fmt = '<<I>{}</I>: <B>{}</B><BR ALIGN=\"LEFT\" />:: {}>'\n label = label_fmt.format(escape(nodename), escape(name), typename)\n else:\n if isinstance(node, ops.TableNode):\n label_fmt = '<<B>{}</B>{}>'\n else:\n label_fmt = '<<B>{}</B><BR ALIGN=\"LEFT\" />:: {}>'\n label = label_fmt.format(escape(name), typename)\n return label\n\n\nDEFAULT_NODE_ATTRS = {'shape': 'box', 'fontname': 'Deja Vu Sans Mono'}\nDEFAULT_EDGE_ATTRS = {'fontname': 'Deja Vu Sans Mono'}\n\n\ndef to_graph(expr, node_attr=None, edge_attr=None, label_edges: bool = False):\n graph = Graph.from_bfs(expr.op())\n\n g = gv.Digraph(\n node_attr=node_attr or DEFAULT_NODE_ATTRS,\n edge_attr=edge_attr or DEFAULT_EDGE_ATTRS,\n )\n\n g.attr(rankdir='BT')\n\n seen = set()\n edges = set()\n\n for v, us in graph.items():\n if isinstance(v, 
ops.NodeList) and not v:\n continue\n\n vhash = str(hash(v))\n if v not in seen:\n g.node(vhash, label=get_label(v))\n seen.add(v)\n\n for u in us:\n if isinstance(u, ops.NodeList) and not u:\n continue\n\n uhash = str(hash(u))\n if u not in seen:\n g.node(uhash, label=get_label(u))\n seen.add(u)\n if (edge := (u, v)) not in edges:\n if not label_edges:\n label = None\n else:\n index = v.args.index(u)\n if isinstance(v, ops.NodeList):\n arg_name = f\"values[{index}]\"\n else:\n arg_name = v.argnames[index]\n label = f\"<.{arg_name}>\"\n\n g.edge(uhash, vhash, label=label)\n edges.add(edge)\n return g\n\n\ndef draw(graph, path=None, format='png', verbose: bool = False):\n if verbose:\n print(graph.source, file=sys.stderr)\n\n piped_source = graph.pipe(format=format)\n\n if path is None:\n with tempfile.NamedTemporaryFile(\n delete=False, suffix=f'.{format}', mode='wb'\n ) as f:\n f.write(piped_source)\n return f.name\n else:\n with open(path, mode='wb') as f:\n f.write(piped_source)\n return path\n\n\nif __name__ == '__main__':\n from argparse import ArgumentParser\n\n from ibis import _\n\n p = ArgumentParser(\n description=\"Render a GraphViz SVG of an example ibis expression.\"\n )\n\n p.add_argument(\n \"-v\",\n \"--verbose\",\n action=\"count\",\n default=0,\n help=\"Print GraphViz DOT code to stderr.\",\n )\n p.add_argument(\n \"-l\",\n \"--label-edges\",\n action=\"store_true\",\n help=\"Show operation inputs as edge labels.\",\n )\n\n args = p.parse_args()\n\n left = ibis.table(dict(a=\"int64\", b=\"string\"), name=\"left\")\n right = ibis.table(dict(b=\"string\", c=\"int64\", d=\"string\"), name=\"right\")\n expr = (\n left.inner_join(right, \"b\")\n .select(left.a, b=right.c, c=right.d)\n .filter((_.a + _.b * 2 * _.b / _.b**3 > 4) & (_.b > 5))\n .groupby(_.c)\n .having(_.a.mean() > 0.0)\n .aggregate(a_mean=_.a.mean(), b_sum=_.b.sum())\n )\n expr.visualize(verbose=args.verbose > 0, label_edges=args.label_edges)\n", "path": "ibis/expr/visualize.py"}]} | 
2,641 | 147 |
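The ibis report above turns on one detail. For an `ops.NodeList`, `v.args` wraps the child nodes in one more tuple, so `v.args.index(u)` can never find `u`. The golden diff fixes this by indexing `v.values` instead. The sketch below shows that failure mode and the branch-specific lookup. It does not import ibis: `HypotheticalNodeList`, `HypotheticalBinaryOp` and `edge_label` are illustrative stand-ins, and they assume only what the issue and diff describe (`args` is a tuple of tuples, `values` holds the nodes, and other ops expose `argnames`).

```python
class HypotheticalNodeList:
    """Stand-in for ops.NodeList (assumption): args wraps values in a tuple."""

    def __init__(self, *values):
        self.values = tuple(values)
        # Mirrors the report: args is a tuple *of tuples*, not of nodes.
        self.args = (self.values,)


class HypotheticalBinaryOp:
    """Stand-in for an ordinary op whose args line up with argnames."""

    argnames = ("left", "right")

    def __init__(self, left, right):
        self.args = (left, right)


def edge_label(v, u):
    """Build an edge label the way the patched to_graph() does."""
    if isinstance(v, HypotheticalNodeList):
        # Search the flattened values, not the nested args tuple.
        index = v.values.index(u)
        return f"<.values[{index}]>"
    index = v.args.index(u)
    return f"<.{v.argnames[index]}>"


nl = HypotheticalNodeList("eq_a", "eq_b")
try:
    nl.args.index("eq_b")  # the unpatched lookup raises ValueError
except ValueError as exc:
    print("unpatched lookup failed:", exc)

print(edge_label(nl, "eq_b"))
print(edge_label(HypotheticalBinaryOp("x", "y"), "y"))
```

Only the `NodeList` branch changes. Ordinary operations still map positions in `args` to `argnames`, which is why the diff moves the `index` computation into each branch rather than altering it globally.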

gh_patches_debug_37107 | rasdani/github-patches | git_diff | opsdroid__opsdroid-1355 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Telegram connector needs update for event message

# Description

The telegram connector uses message.target['id'] instead of message.target. This leads to problems when trying to use default_target per normal configuration and usage of the event flow logic.

## Steps to Reproduce

Setup telegram as the default connector... call the telegram connector.send with a target...

```

await self.opsdroid.send(Message(text='hello', target='<useridhere>')

```

The default_target is also always None.

```

await self.opsdroid.send(Message(text='hello')

```

You can hack around it with..

```

sillytarget = { 'id': <useridhere> }

await self.opsdroid.send(Message(text='hello', target=sillytarget)

```

## Expected Functionality

message.target should work like the other core connectors

## Experienced Functionality

Errors out.

```

opsdroid | ERROR opsdroid.connector.telegram.send_message(): Unable to respond.

```

## Versions

- opsdroid: latest/stable

- python 3.7.6

- docker image: opsdroid/opsdroid:latest

## Configuration File

```yaml

connectors:

## Telegram (core)

telegram:

token: "......"

# optional

update-interval: 0.5 # Interval between checking for messages

whitelisted-users: # List of users who can speak to the bot, if not set anyone can speak

- ......

```

## Additional Details

None

<!-- Love opsdroid? Please consider supporting our collective:

+👉 https://opencollective.com/opsdroid/donate -->

</issue>

<code>

[start of opsdroid/connector/telegram/__init__.py]

1 """A connector for Telegram."""

2 import asyncio

3 import logging

4 import aiohttp

5 from voluptuous import Required

6

7 from opsdroid.connector import Connector, register_event

8 from opsdroid.events import Message, Image

9

10

11 _LOGGER = logging.getLogger(__name__)

12 CONFIG_SCHEMA = {

13 Required("token"): str,

14 "update-interval": float,

15 "default-user": str,

16 "whitelisted-users": list,

17 }

18

19

20 class ConnectorTelegram(Connector):

21 """A connector the the char service Telegram."""

22

23 def __init__(self, config, opsdroid=None):

24 """Create the connector.

25

26 Args:

27 config (dict): configuration settings from the

28 file config.yaml.

29 opsdroid (OpsDroid): An instance of opsdroid.core.

30

31 """

32 _LOGGER.debug(_("Loaded Telegram Connector"))

33 super().__init__(config, opsdroid=opsdroid)

34 self.name = "telegram"

35 self.opsdroid = opsdroid

36 self.latest_update = None

37 self.default_target = None

38 self.listening = True

39 self.default_user = config.get("default-user", None)

40 self.whitelisted_users = config.get("whitelisted-users", None)

41 self.update_interval = config.get("update-interval", 1)

42 self.session = None

43 self._closing = asyncio.Event()

44 self.loop = asyncio.get_event_loop()

45

46 try:

47 self.token = config["token"]

48 except (KeyError, AttributeError):

49 _LOGGER.error(

50 _(

51 "Unable to login: Access token is missing. Telegram connector will be unavailable."

52 )

53 )

54

55 @staticmethod

56 def get_user(response):

57 """Get user from response.

58

59 The API response is different depending on how

60 the bot is set up and where the message is coming

61 from. This method was created to keep if/else

62 statements to a minium on _parse_message.

63

64 Args:

65 response (dict): Response returned by aiohttp.ClientSession.

66

67 """

68 user = None

69 user_id = None

70

71 if "username" in response["message"]["from"]:

72 user = response["message"]["from"]["username"]

73

74 elif "first_name" in response["message"]["from"]:

75 user = response["message"]["from"]["first_name"]

76 user_id = response["message"]["from"]["id"]

77

78 return user, user_id

79

80 def handle_user_permission(self, response, user):

81 """Handle user permissions.

82

83 This will check if the user that tried to talk with

84 the bot is allowed to do so. It will also work with

85 userid to improve security.

86

87 """

88 user_id = response["message"]["from"]["id"]

89

90 if (

91 not self.whitelisted_users

92 or user in self.whitelisted_users

93 or user_id in self.whitelisted_users

94 ):

95 return True

96

97 return False

98

99 def build_url(self, method):

100 """Build the url to connect to the API.

101

102 Args:

103 method (string): API call end point.

104

105 Return:

106 String that represents the full API url.

107

108 """

109 return "https://api.telegram.org/bot{}/{}".format(self.token, method)

110

111 async def delete_webhook(self):

112 """Delete Telegram webhook.

113

114 The Telegram api will thrown an 409 error when an webhook is

115 active and a call to getUpdates is made. This method will

116 try to request the deletion of the webhook to make the getUpdate

117 request possible.

118

119 """

120 _LOGGER.debug(_("Sending deleteWebhook request to Telegram..."))

121 resp = await self.session.get(self.build_url("deleteWebhook"))

122

123 if resp.status == 200:

124 _LOGGER.debug(_("Telegram webhook deleted successfully."))

125 else:

126 _LOGGER.debug(_("Unable to delete webhook."))

127

128 async def connect(self):

129 """Connect to Telegram.

130

131 This method is not an authorization call. It basically

132 checks if the API token was provided and makes an API

133 call to Telegram and evaluates the status of the call.

134

135 """

136

137 _LOGGER.debug(_("Connecting to Telegram."))

138 self.session = aiohttp.ClientSession()

139

140 resp = await self.session.get(self.build_url("getMe"))

141

142 if resp.status != 200:

143 _LOGGER.error(_("Unable to connect."))

144 _LOGGER.error(_("Telegram error %s, %s."), resp.status, resp.text)

145 else:

146 json = await resp.json()

147 _LOGGER.debug(json)

148 _LOGGER.debug(_("Connected to Telegram as %s."), json["result"]["username"])

149

150 async def _parse_message(self, response):

151 """Handle logic to parse a received message.

152

153 Since everyone can send a private message to any user/bot

154 in Telegram, this method allows to set a list of whitelisted

155 users that can interact with the bot. If any other user tries

156 to interact with the bot the command is not parsed and instead

157 the bot will inform that user that he is not allowed to talk

158 with the bot.

159

160 We also set self.latest_update to +1 in order to get the next

161 available message (or an empty {} if no message has been received

162 yet) with the method self._get_messages().

163

164 Args:

165 response (dict): Response returned by aiohttp.ClientSession.

166

167 """

168 for result in response["result"]:

169 _LOGGER.debug(result)

170 if result.get("edited_message", None):

171 result["message"] = result.pop("edited_message")

172 if "channel" in result["message"]["chat"]["type"]:

173 _LOGGER.debug(

174 _("Channel message parsing not supported " "- Ignoring message.")

175 )

176 elif "message" in result and "text" in result["message"]:

177 user, user_id = self.get_user(result)

178 message = Message(

179 text=result["message"]["text"],

180 user=user,

181 user_id=user_id,

182 target=result["message"]["chat"],

183 connector=self,

184 )

185

186 if self.handle_user_permission(result, user):

187 await self.opsdroid.parse(message)

188 else:

189 message.text = (

190 "Sorry, you're not allowed " "to speak with this bot."

191 )

192 await self.send(message)

193 self.latest_update = result["update_id"] + 1

194 elif (

195 "message" in result

196 and "sticker" in result["message"]

197 and "emoji" in result["message"]["sticker"]

198 ):

199 self.latest_update = result["update_id"] + 1

200 _LOGGER.debug(

201 _("Emoji message parsing not supported - Ignoring message.")

202 )

203 else:

204 _LOGGER.error(_("Unable to parse the message."))

205

206 async def _get_messages(self):

207 """Connect to the Telegram API.

208

209 Uses an aiohttp ClientSession to connect to Telegram API

210 and get the latest messages from the chat service.

211

212 The data["offset"] is used to consume every new message, the API

213 returns an int - "update_id" value. In order to get the next

214 message this value needs to be increased by 1 the next time

215 the API is called. If no new messages exists the API will just

216 return an empty {}.

217

218 """

219 data = {}

220 if self.latest_update is not None:

221 data["offset"] = self.latest_update

222

223 await asyncio.sleep(self.update_interval)

224 resp = await self.session.get(self.build_url("getUpdates"), params=data)

225

226 if resp.status == 409:

227 _LOGGER.info(

228 _(

229 "Can't get updates because previous webhook is still active. Will try to delete webhook."

230 )

231 )

232 await self.delete_webhook()

233

234 if resp.status != 200:

235 _LOGGER.error(_("Telegram error %s, %s."), resp.status, resp.text)

236 self.listening = False

237 else:

238 json = await resp.json()

239

240 await self._parse_message(json)

241

242 async def get_messages_loop(self):

243 """Listen for and parse new messages.

244

245 The bot will always listen to all opened chat windows,

246 as long as opsdroid is running. Since anyone can start

247 a new chat with the bot is recommended that a list of

248 users to be whitelisted be provided in config.yaml.

249

250 The method will sleep asynchronously at the end of

251 every loop. The time can either be specified in the

252 config.yaml with the param update-interval - this

253 defaults to 1 second.

254

255 """

256 while self.listening:

257 await self._get_messages()

258

259 async def listen(self):

260 """Listen method of the connector.

261

262 Every connector has to implement the listen method. When an

263 infinite loop is running, it becomes hard to cancel this task.

264 So we are creating a task and set it on a variable so we can

265 cancel the task.

266

267 """

268 message_getter = self.loop.create_task(await self.get_messages_loop())

269 await self._closing.wait()

270 message_getter.cancel()

271

272 @register_event(Message)

273 async def send_message(self, message):

274 """Respond with a message.

275

276 Args:

277 message (object): An instance of Message.

278

279 """

280 _LOGGER.debug(_("Responding with: %s."), message.text)

281

282 data = dict()

283 data["chat_id"] = message.target["id"]

284 data["text"] = message.text

285 resp = await self.session.post(self.build_url("sendMessage"), data=data)

286 if resp.status == 200:

287 _LOGGER.debug(_("Successfully responded."))

288 else:

289 _LOGGER.error(_("Unable to respond."))

290

291 @register_event(Image)

292 async def send_image(self, file_event):

293 """Send Image to Telegram.

294

295 Gets the chat id from the channel and then

296 sends the bytes of the image as multipart/form-data.

297

298 """

299 data = aiohttp.FormData()

300 data.add_field(

301 "chat_id", str(file_event.target["id"]), content_type="multipart/form-data"

302 )

303 data.add_field(

304 "photo",

305 await file_event.get_file_bytes(),

306 content_type="multipart/form-data",

307 )

308

309 resp = await self.session.post(self.build_url("sendPhoto"), data=data)

310 if resp.status == 200:

311 _LOGGER.debug(_("Sent %s image successfully."), file_event.name)

312 else:

313 _LOGGER.debug(_("Unable to send image - Status Code %s."), resp.status)

314

315 async def disconnect(self):

316 """Disconnect from Telegram.

317

318 Stops the infinite loop found in self._listen(), closes

319 aiohttp session.

320

321 """

322 self.listening = False

323 self._closing.set()

324 await self.session.close()

325

[end of opsdroid/connector/telegram/__init__.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/opsdroid/connector/telegram/__init__.py b/opsdroid/connector/telegram/__init__.py

--- a/opsdroid/connector/telegram/__init__.py

+++ b/opsdroid/connector/telegram/__init__.py

@@ -34,9 +34,9 @@

self.name = "telegram"

self.opsdroid = opsdroid

self.latest_update = None

- self.default_target = None

self.listening = True

self.default_user = config.get("default-user", None)

+ self.default_target = self.default_user

self.whitelisted_users = config.get("whitelisted-users", None)

self.update_interval = config.get("update-interval", 1)

self.session = None

@@ -167,9 +167,13 @@

"""

for result in response["result"]:

_LOGGER.debug(result)

+

if result.get("edited_message", None):

result["message"] = result.pop("edited_message")

- if "channel" in result["message"]["chat"]["type"]:

+ if result.get("channel_post", None) or result.get(

+ "edited_channel_post", None

+ ):

+ self.latest_update = result["update_id"] + 1

_LOGGER.debug(

_("Channel message parsing not supported " "- Ignoring message.")

)

@@ -179,7 +183,7 @@

text=result["message"]["text"],

user=user,

user_id=user_id,

- target=result["message"]["chat"],

+ target=result["message"]["chat"]["id"],

connector=self,

)

@@ -277,10 +281,12 @@

message (object): An instance of Message.

"""

- _LOGGER.debug(_("Responding with: %s."), message.text)

+ _LOGGER.debug(

+ _("Responding with: '%s' at target: '%s'"), message.text, message.target

+ )

data = dict()

- data["chat_id"] = message.target["id"]

+ data["chat_id"] = message.target

data["text"] = message.text

resp = await self.session.post(self.build_url("sendMessage"), data=data)

if resp.status == 200: