problem_id

stringlengths 18

22

| source

stringclasses 1

value | task_type

stringclasses 1

value | in_source_id

stringlengths 13

58

| prompt

stringlengths 1.71k

18.9k

| golden_diff

stringlengths 145

5.13k

| verification_info

stringlengths 465

23.6k

| num_tokens_prompt

int64 556

4.1k

| num_tokens_diff

int64 47

1.02k

|

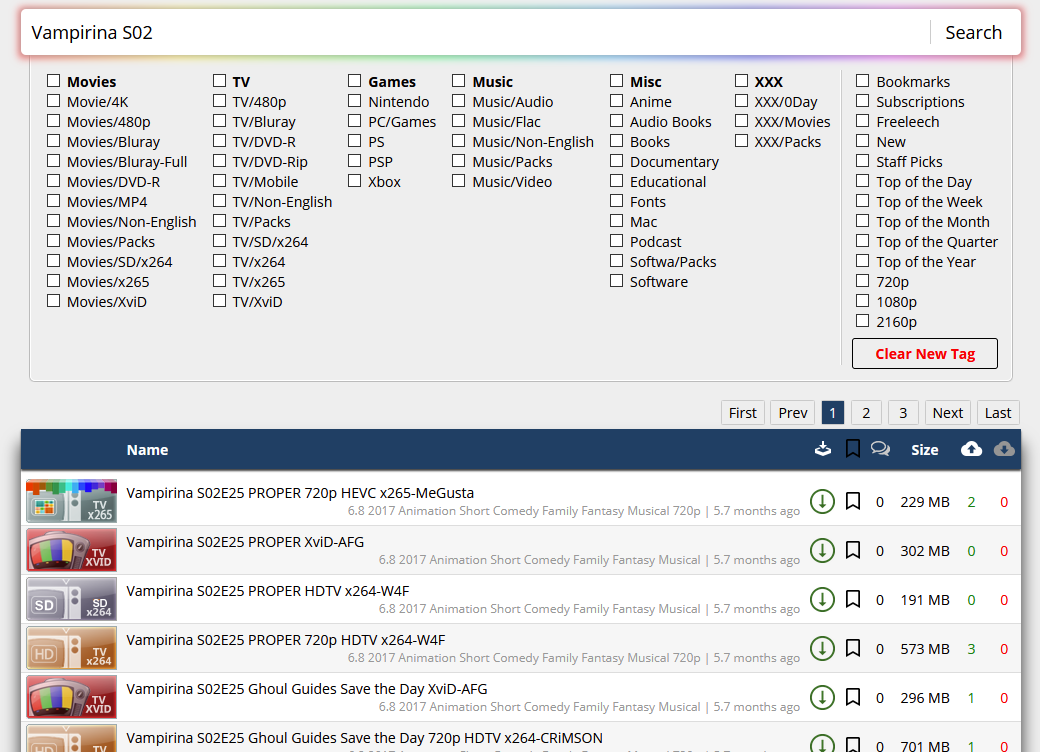

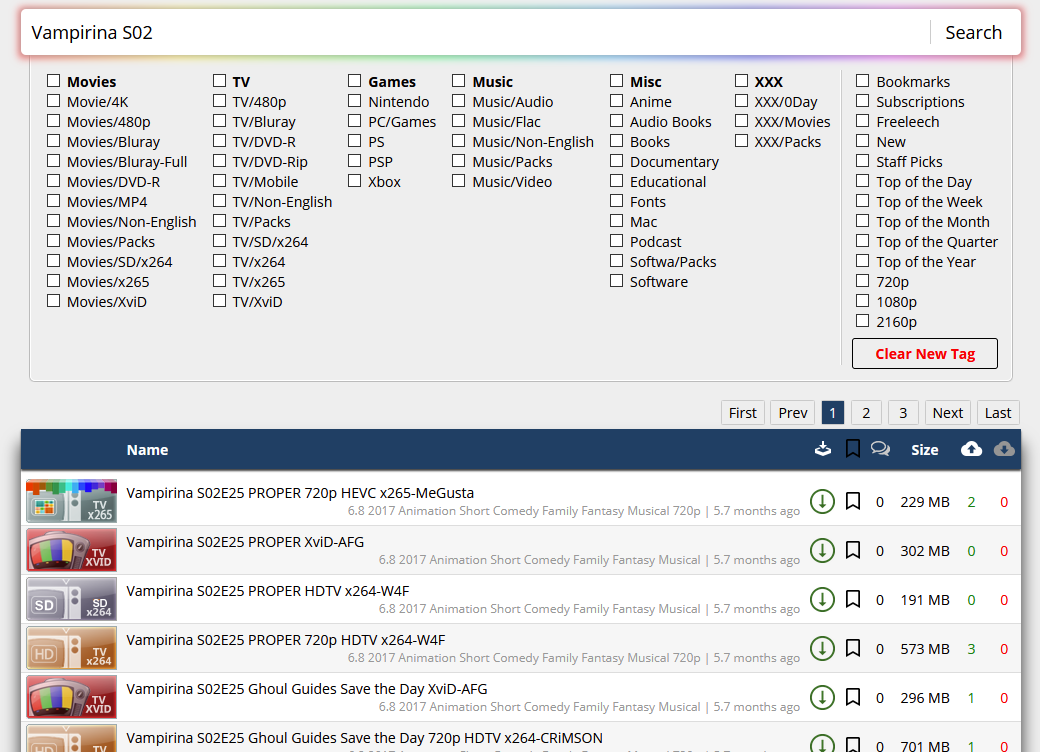

|---|---|---|---|---|---|---|---|---|

gh_patches_debug_61378 | rasdani/github-patches | git_diff | Lightning-AI__torchmetrics-1288 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

inplace operation in pairwise_cosine_similarity

## 🐛 Bug

Hello !

The x, y values are modified inplace in the `pairwise_cosine_similarity` function.

This is not documented and may cause bugs that are difficult to find.

Thank you.

### To Reproduce

```python

import torch
from torchmetrics.functional import pairwise_cosine_similarity
x = torch.tensor([[2, 3], [3, 5], [5, 8]], dtype=torch.float32)
y = torch.tensor([[1, 0], [2, 1]], dtype=torch.float32)
print("Result:", pairwise_cosine_similarity(x, y))
print("X:", x)
print("Y:", y)
"""Out[0]
Result: tensor([[0.5547, 0.8682],
        [0.5145, 0.8437],
        [0.5300, 0.8533]])
X: tensor([[0.5547, 0.8321],
        [0.5145, 0.8575],
        [0.5300, 0.8480]])
Y: tensor([[1.0000, 0.0000],
        [0.8944, 0.4472]])
"""

```

### Environment

torchmetrics==0.10.0

</issue>

<code>

[start of src/torchmetrics/functional/pairwise/cosine.py]

1 # Copyright The PyTorch Lightning team.
2 #
3 # Licensed under the Apache License, Version 2.0 (the "License");
4 # you may not use this file except in compliance with the License.
5 # You may obtain a copy of the License at
6 #
7 # http://www.apache.org/licenses/LICENSE-2.0
8 #
9 # Unless required by applicable law or agreed to in writing, software
10 # distributed under the License is distributed on an "AS IS" BASIS,
11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
12 # See the License for the specific language governing permissions and
13 # limitations under the License.
14 from typing import Optional
15
16 import torch
17 from torch import Tensor
18 from typing_extensions import Literal
19
20 from torchmetrics.functional.pairwise.helpers import _check_input, _reduce_distance_matrix
21 from torchmetrics.utilities.compute import _safe_matmul
22
23
24 def _pairwise_cosine_similarity_update(
25     x: Tensor, y: Optional[Tensor] = None, zero_diagonal: Optional[bool] = None
26 ) -> Tensor:
27     """Calculates the pairwise cosine similarity matrix.
28
29     Args:
30         x: tensor of shape ``[N,d]``
31         y: tensor of shape ``[M,d]``
32         zero_diagonal: determines if the diagonal of the distance matrix should be set to zero
33     """
34     x, y, zero_diagonal = _check_input(x, y, zero_diagonal)
35
36     norm = torch.norm(x, p=2, dim=1)
37     x /= norm.unsqueeze(1)
38     norm = torch.norm(y, p=2, dim=1)
39     y /= norm.unsqueeze(1)
40
41     distance = _safe_matmul(x, y)
42     if zero_diagonal:
43         distance.fill_diagonal_(0)
44     return distance
45
46
47 def pairwise_cosine_similarity(
48     x: Tensor,
49     y: Optional[Tensor] = None,
50     reduction: Literal["mean", "sum", "none", None] = None,
51     zero_diagonal: Optional[bool] = None,
52 ) -> Tensor:
53     r"""Calculates pairwise cosine similarity:
54
55     .. math::
56         s_{cos}(x,y) = \frac{<x,y>}{||x|| \cdot ||y||}
57                      = \frac{\sum_{d=1}^D x_d \cdot y_d }{\sqrt{\sum_{d=1}^D x_i^2} \cdot \sqrt{\sum_{d=1}^D y_i^2}}
58
59     If both :math:`x` and :math:`y` are passed in, the calculation will be performed pairwise
60     between the rows of :math:`x` and :math:`y`.
61     If only :math:`x` is passed in, the calculation will be performed between the rows of :math:`x`.
62
63     Args:
64         x: Tensor with shape ``[N, d]``
65         y: Tensor with shape ``[M, d]``, optional
66         reduction: reduction to apply along the last dimension. Choose between `'mean'`, `'sum'`
67             (applied along column dimension) or `'none'`, `None` for no reduction
68         zero_diagonal: if the diagonal of the distance matrix should be set to 0. If only :math:`x` is given
69             this defaults to ``True`` else if :math:`y` is also given it defaults to ``False``
70
71     Returns:
72         A ``[N,N]`` matrix of distances if only ``x`` is given, else a ``[N,M]`` matrix
73
74     Example:
75         >>> import torch
76         >>> from torchmetrics.functional import pairwise_cosine_similarity
77         >>> x = torch.tensor([[2, 3], [3, 5], [5, 8]], dtype=torch.float32)
78         >>> y = torch.tensor([[1, 0], [2, 1]], dtype=torch.float32)
79         >>> pairwise_cosine_similarity(x, y)
80         tensor([[0.5547, 0.8682],
81                 [0.5145, 0.8437],
82                 [0.5300, 0.8533]])
83         >>> pairwise_cosine_similarity(x)
84         tensor([[0.0000, 0.9989, 0.9996],
85                 [0.9989, 0.0000, 0.9998],
86                 [0.9996, 0.9998, 0.0000]])
87     """
88     distance = _pairwise_cosine_similarity_update(x, y, zero_diagonal)
89     return _reduce_distance_matrix(distance, reduction)
90

[end of src/torchmetrics/functional/pairwise/cosine.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/src/torchmetrics/functional/pairwise/cosine.py b/src/torchmetrics/functional/pairwise/cosine.py

--- a/src/torchmetrics/functional/pairwise/cosine.py
+++ b/src/torchmetrics/functional/pairwise/cosine.py
@@ -34,9 +34,9 @@
     x, y, zero_diagonal = _check_input(x, y, zero_diagonal)
 
     norm = torch.norm(x, p=2, dim=1)
-    x /= norm.unsqueeze(1)
+    x = x / norm.unsqueeze(1)
     norm = torch.norm(y, p=2, dim=1)
-    y /= norm.unsqueeze(1)
+    y = y / norm.unsqueeze(1)
 
     distance = _safe_matmul(x, y)
     if zero_diagonal:

| {"golden_diff": "diff --git a/src/torchmetrics/functional/pairwise/cosine.py b/src/torchmetrics/functional/pairwise/cosine.py\n--- a/src/torchmetrics/functional/pairwise/cosine.py\n+++ b/src/torchmetrics/functional/pairwise/cosine.py\n@@ -34,9 +34,9 @@\n x, y, zero_diagonal = _check_input(x, y, zero_diagonal)\n \n norm = torch.norm(x, p=2, dim=1)\n- x /= norm.unsqueeze(1)\n+ x = x / norm.unsqueeze(1)\n norm = torch.norm(y, p=2, dim=1)\n- y /= norm.unsqueeze(1)\n+ y = y / norm.unsqueeze(1)\n \n distance = _safe_matmul(x, y)\n if zero_diagonal:\n", "issue": "inplace operation in pairwise_cosine_similarity\n## \ud83d\udc1b Bug\r\nHello !\r\nThe x, y values are modified inplace in the `pairwise_cosine_similarity` function. \r\nThis is not documented and may cause bugs that are difficult to find. \r\nThank you.\r\n\r\n### To Reproduce\r\n\r\n```python\r\nimport torch\r\nfrom torchmetrics.functional import pairwise_cosine_similarity\r\nx = torch.tensor([[2, 3], [3, 5], [5, 8]], dtype=torch.float32)\r\ny = torch.tensor([[1, 0], [2, 1]], dtype=torch.float32)\r\nprint(\"Result:\", pairwise_cosine_similarity(x, y))\r\nprint(\"X:\", x)\r\nprint(\"Y:\", y)\r\n\"\"\"Out[0]\r\nResult: tensor([[0.5547, 0.8682],\r\n [0.5145, 0.8437],\r\n [0.5300, 0.8533]])\r\nX: tensor([[0.5547, 0.8321],\r\n [0.5145, 0.8575],\r\n [0.5300, 0.8480]])\r\nY: tensor([[1.0000, 0.0000],\r\n [0.8944, 0.4472]])\r\n\"\"\"\r\n```\r\n\r\n### Environment\r\ntorchmetrics==0.10.0\r\n\r\n\n", "before_files": [{"content": "# Copyright The PyTorch Lightning team.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# 
See the License for the specific language governing permissions and\n# limitations under the License.\nfrom typing import Optional\n\nimport torch\nfrom torch import Tensor\nfrom typing_extensions import Literal\n\nfrom torchmetrics.functional.pairwise.helpers import _check_input, _reduce_distance_matrix\nfrom torchmetrics.utilities.compute import _safe_matmul\n\n\ndef _pairwise_cosine_similarity_update(\n x: Tensor, y: Optional[Tensor] = None, zero_diagonal: Optional[bool] = None\n) -> Tensor:\n \"\"\"Calculates the pairwise cosine similarity matrix.\n\n Args:\n x: tensor of shape ``[N,d]``\n y: tensor of shape ``[M,d]``\n zero_diagonal: determines if the diagonal of the distance matrix should be set to zero\n \"\"\"\n x, y, zero_diagonal = _check_input(x, y, zero_diagonal)\n\n norm = torch.norm(x, p=2, dim=1)\n x /= norm.unsqueeze(1)\n norm = torch.norm(y, p=2, dim=1)\n y /= norm.unsqueeze(1)\n\n distance = _safe_matmul(x, y)\n if zero_diagonal:\n distance.fill_diagonal_(0)\n return distance\n\n\ndef pairwise_cosine_similarity(\n x: Tensor,\n y: Optional[Tensor] = None,\n reduction: Literal[\"mean\", \"sum\", \"none\", None] = None,\n zero_diagonal: Optional[bool] = None,\n) -> Tensor:\n r\"\"\"Calculates pairwise cosine similarity:\n\n .. math::\n s_{cos}(x,y) = \\frac{<x,y>}{||x|| \\cdot ||y||}\n = \\frac{\\sum_{d=1}^D x_d \\cdot y_d }{\\sqrt{\\sum_{d=1}^D x_i^2} \\cdot \\sqrt{\\sum_{d=1}^D y_i^2}}\n\n If both :math:`x` and :math:`y` are passed in, the calculation will be performed pairwise\n between the rows of :math:`x` and :math:`y`.\n If only :math:`x` is passed in, the calculation will be performed between the rows of :math:`x`.\n\n Args:\n x: Tensor with shape ``[N, d]``\n y: Tensor with shape ``[M, d]``, optional\n reduction: reduction to apply along the last dimension. Choose between `'mean'`, `'sum'`\n (applied along column dimension) or `'none'`, `None` for no reduction\n zero_diagonal: if the diagonal of the distance matrix should be set to 0. 
If only :math:`x` is given\n this defaults to ``True`` else if :math:`y` is also given it defaults to ``False``\n\n Returns:\n A ``[N,N]`` matrix of distances if only ``x`` is given, else a ``[N,M]`` matrix\n\n Example:\n >>> import torch\n >>> from torchmetrics.functional import pairwise_cosine_similarity\n >>> x = torch.tensor([[2, 3], [3, 5], [5, 8]], dtype=torch.float32)\n >>> y = torch.tensor([[1, 0], [2, 1]], dtype=torch.float32)\n >>> pairwise_cosine_similarity(x, y)\n tensor([[0.5547, 0.8682],\n [0.5145, 0.8437],\n [0.5300, 0.8533]])\n >>> pairwise_cosine_similarity(x)\n tensor([[0.0000, 0.9989, 0.9996],\n [0.9989, 0.0000, 0.9998],\n [0.9996, 0.9998, 0.0000]])\n \"\"\"\n distance = _pairwise_cosine_similarity_update(x, y, zero_diagonal)\n return _reduce_distance_matrix(distance, reduction)\n", "path": "src/torchmetrics/functional/pairwise/cosine.py"}]} | 2,044 | 185 |
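
Editorial sketch (not part of the dataset row): the record above hinges on the difference between normalizing in place (`x /= ...`) and building a new value (`x = x / ...`). The following pure-Python illustration assumes plain nested lists instead of tensors, and the helper names `_normalize` and `cosine_rows` are hypothetical. It mirrors the patched behaviour by creating new normalized rows, so the caller's inputs stay as they were.

```python
import math


def _normalize(rows):
    """Return new unit-length rows; the input rows are left untouched.

    Assumes every row has a non-zero norm (a zero row would divide by zero).
    """
    out = []
    for row in rows:
        norm = math.sqrt(sum(v * v for v in row))
        out.append([v / norm for v in row])
    return out


def cosine_rows(x, y):
    """Pairwise cosine similarity between the rows of x and the rows of y."""
    xn = _normalize(x)
    yn = _normalize(y)
    return [[sum(a * b for a, b in zip(rx, ry)) for ry in yn] for rx in xn]


# Same inputs as the reproduction in the issue text.
x = [[2.0, 3.0], [3.0, 5.0], [5.0, 8.0]]
y = [[1.0, 0.0], [2.0, 1.0]]
result = cosine_rows(x, y)
```

After the call, `x` and `y` still hold their original values, which is the property the issue asks for.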

gh_patches_debug_1038 | rasdani/github-patches | git_diff | mathesar-foundation__mathesar-341 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Individually run API tests don't build tables database

## Description

Running a individual test in `mathesar` that doesn't use the `engine` or `test_db` fixture will not have the tables databases built for the test. As a result, many will error when trying to access the tables database.

## Expected behavior

The tables database should always be built.

## To Reproduce

Run any test in `mathesar` that doesn't use `engine` or `test_db`. Ex:

```

docker exec mathesar_web_1 pytest mathesar/tests/views/api/test_schema_api.py::test_schema_update

```

## Additional context

Introduced due to the changes in #329, since `pytest-django` no longer creates the tables db for us.

</issue>

<code>

[start of conftest.py]

1 """
2 This file should provide utilities for setting up test DBs and the like. It's
3 intended to be the containment zone for anything specific about the testing
4 environment (e.g., the login info for the Postgres instance for testing)
5 """
6 import pytest
7 from sqlalchemy import create_engine, text
8 from config.settings import DATABASES
9
10 TEST_DB = "mathesar_db_test"
11
12
13 @pytest.fixture(scope="session")
14 def test_db_name():
15     return TEST_DB
16
17
18 @pytest.fixture(scope="session")
19 def test_db():
20     superuser_engine = _get_superuser_engine()
21     with superuser_engine.connect() as conn:
22         conn.execution_options(isolation_level="AUTOCOMMIT")
23         conn.execute(text(f"DROP DATABASE IF EXISTS {TEST_DB} WITH (FORCE)"))
24         conn.execute(text(f"CREATE DATABASE {TEST_DB}"))
25     yield TEST_DB
26     with superuser_engine.connect() as conn:
27         conn.execution_options(isolation_level="AUTOCOMMIT")
28         conn.execute(text(f"DROP DATABASE {TEST_DB} WITH (FORCE)"))
29
30
31 @pytest.fixture(scope="session")
32 def engine(test_db):
33     return create_engine(
34         _get_connection_string(
35             DATABASES["default"]["USER"],
36             DATABASES["default"]["PASSWORD"],
37             DATABASES["default"]["HOST"],
38             test_db,
39         ),
40         future=True,
41     )
42
43
44 def _get_superuser_engine():
45     return create_engine(
46         _get_connection_string(
47             username=DATABASES["default"]["USER"],
48             password=DATABASES["default"]["PASSWORD"],
49             hostname=DATABASES["default"]["HOST"],
50             database=DATABASES["default"]["NAME"],
51         ),
52         future=True,
53     )
54
55
56 def _get_connection_string(username, password, hostname, database):
57     return f"postgresql://{username}:{password}@{hostname}/{database}"
58

[end of conftest.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/conftest.py b/conftest.py

--- a/conftest.py
+++ b/conftest.py
@@ -15,7 +15,7 @@
     return TEST_DB
 
 
-@pytest.fixture(scope="session")
+@pytest.fixture(scope="session", autouse=True)
 def test_db():
     superuser_engine = _get_superuser_engine()
     with superuser_engine.connect() as conn:

| {"golden_diff": "diff --git a/conftest.py b/conftest.py\n--- a/conftest.py\n+++ b/conftest.py\n@@ -15,7 +15,7 @@\n return TEST_DB\n \n \n-@pytest.fixture(scope=\"session\")\n+@pytest.fixture(scope=\"session\", autouse=True)\n def test_db():\n superuser_engine = _get_superuser_engine()\n with superuser_engine.connect() as conn:\n", "issue": "Individually run API tests don't build tables database\n## Description\r\nRunning a individual test in `mathesar` that doesn't use the `engine` or `test_db` fixture will not have the tables databases built for the test. As a result, many will error when trying to access the tables database.\r\n\r\n## Expected behavior\r\nThe tables database should always be built.\r\n\r\n## To Reproduce\r\nRun any test in `mathesar` that doesn't use `engine` or `test_db`. Ex:\r\n```\r\ndocker exec mathesar_web_1 pytest mathesar/tests/views/api/test_schema_api.py::test_schema_update\r\n```\r\n\r\n## Additional context\r\nIntroduced due to the changes in #329, since `pytest-django` no longer creates the tables db for us.\r\n\n", "before_files": [{"content": "\"\"\"\nThis file should provide utilities for setting up test DBs and the like. 
It's\nintended to be the containment zone for anything specific about the testing\nenvironment (e.g., the login info for the Postgres instance for testing)\n\"\"\"\nimport pytest\nfrom sqlalchemy import create_engine, text\nfrom config.settings import DATABASES\n\nTEST_DB = \"mathesar_db_test\"\n\n\n@pytest.fixture(scope=\"session\")\ndef test_db_name():\n return TEST_DB\n\n\n@pytest.fixture(scope=\"session\")\ndef test_db():\n superuser_engine = _get_superuser_engine()\n with superuser_engine.connect() as conn:\n conn.execution_options(isolation_level=\"AUTOCOMMIT\")\n conn.execute(text(f\"DROP DATABASE IF EXISTS {TEST_DB} WITH (FORCE)\"))\n conn.execute(text(f\"CREATE DATABASE {TEST_DB}\"))\n yield TEST_DB\n with superuser_engine.connect() as conn:\n conn.execution_options(isolation_level=\"AUTOCOMMIT\")\n conn.execute(text(f\"DROP DATABASE {TEST_DB} WITH (FORCE)\"))\n\n\n@pytest.fixture(scope=\"session\")\ndef engine(test_db):\n return create_engine(\n _get_connection_string(\n DATABASES[\"default\"][\"USER\"],\n DATABASES[\"default\"][\"PASSWORD\"],\n DATABASES[\"default\"][\"HOST\"],\n test_db,\n ),\n future=True,\n )\n\n\ndef _get_superuser_engine():\n return create_engine(\n _get_connection_string(\n username=DATABASES[\"default\"][\"USER\"],\n password=DATABASES[\"default\"][\"PASSWORD\"],\n hostname=DATABASES[\"default\"][\"HOST\"],\n database=DATABASES[\"default\"][\"NAME\"],\n ),\n future=True,\n )\n\n\ndef _get_connection_string(username, password, hostname, database):\n return f\"postgresql://{username}:{password}@{hostname}/{database}\"\n", "path": "conftest.py"}]} | 1,179 | 90 |

gh_patches_debug_23746 | rasdani/github-patches | git_diff | mitmproxy__mitmproxy-3114 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

tcp_message script not working

Hi,

I tried to execute the TCP message replace script from the doc but it seems is not working. I don't know if this is a issue with the doc script or with mitmproxy.

The script was unchanged.

##### Steps to reproduce the problem:

1. mitmdump --mode transparent --tcp-host ".*" -k -s examples/complex/tcp_message.py

Loading script: examples/tcp_message.py

Proxy server listening at http://*:8080

192.168.1.241:37604: clientconnect

::ffff:192.168.1.241:37604: Certificate verification error for None: hostname 'no-hostname' doesn't match either of '*.local.org', 'local.org'

::ffff:192.168.1.241:37604: Ignoring server verification error, continuing with connection

Addon error: Traceback (most recent call last):

File "examples/tcp_message.py", line 16, in tcp_message

modified_msg = tcp_msg.message.replace("foo", "bar")

AttributeError: 'TCPFlow' object has no attribute 'message'

192.168.1.241:37604 -> tcp -> 10.0.0.2:5443

Addon error: Traceback (most recent call last):

File "examples/tcp_message.py", line 16, in tcp_message

modified_msg = tcp_msg.message.replace("foo", "bar")

AttributeError: 'TCPFlow' object has no attribute 'message'

192.168.1.241:37604 <- tcp <- 10.0.0.2:5443

##### System information

<!-- Paste the output of "mitmproxy --version" here. -->

mitmdump --version

Mitmproxy: 3.0.4

Python: 3.6.0

OpenSSL: OpenSSL 1.1.0h 27 Mar 2018

Platform: Linux-3.19.0-65-generic-x86_64-with-debian-jessie-sid

<!-- Please use the mitmproxy forums (https://discourse.mitmproxy.org/) for support/how-to questions. Thanks! :) -->

</issue>

<code>

[start of examples/complex/tcp_message.py]

1 """
2 tcp_message Inline Script Hook API Demonstration
3 ------------------------------------------------
4
5 * modifies packets containing "foo" to "bar"
6 * prints various details for each packet.
7
8 example cmdline invocation:
9 mitmdump -T --host --tcp ".*" -q -s examples/tcp_message.py
10 """
11 from mitmproxy.utils import strutils
12 from mitmproxy import ctx
13
14
15 def tcp_message(tcp_msg):
16     modified_msg = tcp_msg.message.replace("foo", "bar")
17
18     is_modified = False if modified_msg == tcp_msg.message else True
19     tcp_msg.message = modified_msg
20
21     ctx.log.info(
22         "[tcp_message{}] from {} {} to {} {}:\r\n{}".format(
23             " (modified)" if is_modified else "",
24             "client" if tcp_msg.sender == tcp_msg.client_conn else "server",
25             tcp_msg.sender.address,
26             "server" if tcp_msg.receiver == tcp_msg.server_conn else "client",
27             tcp_msg.receiver.address, strutils.bytes_to_escaped_str(tcp_msg.message))
28     )
29

[end of examples/complex/tcp_message.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/examples/complex/tcp_message.py b/examples/complex/tcp_message.py

--- a/examples/complex/tcp_message.py
+++ b/examples/complex/tcp_message.py
@@ -6,23 +6,22 @@
 * prints various details for each packet.
 
 example cmdline invocation:
-mitmdump -T --host --tcp ".*" -q -s examples/tcp_message.py
+mitmdump --rawtcp --tcp-host ".*" -s examples/complex/tcp_message.py
 """
 from mitmproxy.utils import strutils
 from mitmproxy import ctx
+from mitmproxy import tcp
 
 
-def tcp_message(tcp_msg):
-    modified_msg = tcp_msg.message.replace("foo", "bar")
-
-    is_modified = False if modified_msg == tcp_msg.message else True
-    tcp_msg.message = modified_msg
+def tcp_message(flow: tcp.TCPFlow):
+    message = flow.messages[-1]
+    old_content = message.content
+    message.content = old_content.replace(b"foo", b"bar")
 
     ctx.log.info(
-        "[tcp_message{}] from {} {} to {} {}:\r\n{}".format(
-            " (modified)" if is_modified else "",
-            "client" if tcp_msg.sender == tcp_msg.client_conn else "server",
-            tcp_msg.sender.address,
-            "server" if tcp_msg.receiver == tcp_msg.server_conn else "client",
-            tcp_msg.receiver.address, strutils.bytes_to_escaped_str(tcp_msg.message))
+        "[tcp_message{}] from {} to {}:\n{}".format(
+            " (modified)" if message.content != old_content else "",
+            "client" if message.from_client else "server",
+            "server" if message.from_client else "client",
+            strutils.bytes_to_escaped_str(message.content))
     )

| {"golden_diff": "diff --git a/examples/complex/tcp_message.py b/examples/complex/tcp_message.py\n--- a/examples/complex/tcp_message.py\n+++ b/examples/complex/tcp_message.py\n@@ -6,23 +6,22 @@\n * prints various details for each packet.\n \n example cmdline invocation:\n-mitmdump -T --host --tcp \".*\" -q -s examples/tcp_message.py\n+mitmdump --rawtcp --tcp-host \".*\" -s examples/complex/tcp_message.py\n \"\"\"\n from mitmproxy.utils import strutils\n from mitmproxy import ctx\n+from mitmproxy import tcp\n \n \n-def tcp_message(tcp_msg):\n- modified_msg = tcp_msg.message.replace(\"foo\", \"bar\")\n-\n- is_modified = False if modified_msg == tcp_msg.message else True\n- tcp_msg.message = modified_msg\n+def tcp_message(flow: tcp.TCPFlow):\n+ message = flow.messages[-1]\n+ old_content = message.content\n+ message.content = old_content.replace(b\"foo\", b\"bar\")\n \n ctx.log.info(\n- \"[tcp_message{}] from {} {} to {} {}:\\r\\n{}\".format(\n- \" (modified)\" if is_modified else \"\",\n- \"client\" if tcp_msg.sender == tcp_msg.client_conn else \"server\",\n- tcp_msg.sender.address,\n- \"server\" if tcp_msg.receiver == tcp_msg.server_conn else \"client\",\n- tcp_msg.receiver.address, strutils.bytes_to_escaped_str(tcp_msg.message))\n+ \"[tcp_message{}] from {} to {}:\\n{}\".format(\n+ \" (modified)\" if message.content != old_content else \"\",\n+ \"client\" if message.from_client else \"server\",\n+ \"server\" if message.from_client else \"client\",\n+ strutils.bytes_to_escaped_str(message.content))\n )\n", "issue": "tcp_message script not working\nHi,\r\n\r\nI tried to execute the TCP message replace script from the doc but it seems is not working. I don't know if this is a issue with the doc script or with mitmproxy.\r\n\r\nThe script was unchanged.\r\n\r\n##### Steps to reproduce the problem:\r\n\r\n1. 
mitmdump --mode transparent --tcp-host \".*\" -k -s examples/complex/tcp_message.py\r\n\r\nLoading script: examples/tcp_message.py\r\nProxy server listening at http://*:8080\r\n192.168.1.241:37604: clientconnect\r\n::ffff:192.168.1.241:37604: Certificate verification error for None: hostname 'no-hostname' doesn't match either of '*.local.org', 'local.org'\r\n::ffff:192.168.1.241:37604: Ignoring server verification error, continuing with connection\r\nAddon error: Traceback (most recent call last):\r\n File \"examples/tcp_message.py\", line 16, in tcp_message\r\n modified_msg = tcp_msg.message.replace(\"foo\", \"bar\")\r\nAttributeError: 'TCPFlow' object has no attribute 'message'\r\n\r\n192.168.1.241:37604 -> tcp -> 10.0.0.2:5443\r\nAddon error: Traceback (most recent call last):\r\n File \"examples/tcp_message.py\", line 16, in tcp_message\r\n modified_msg = tcp_msg.message.replace(\"foo\", \"bar\")\r\nAttributeError: 'TCPFlow' object has no attribute 'message'\r\n\r\n192.168.1.241:37604 <- tcp <- 10.0.0.2:5443\r\n\r\n##### System information\r\n\r\n<!-- Paste the output of \"mitmproxy --version\" here. -->\r\n\r\nmitmdump --version\r\nMitmproxy: 3.0.4 \r\nPython: 3.6.0\r\nOpenSSL: OpenSSL 1.1.0h 27 Mar 2018\r\nPlatform: Linux-3.19.0-65-generic-x86_64-with-debian-jessie-sid\r\n\r\n<!-- Please use the mitmproxy forums (https://discourse.mitmproxy.org/) for support/how-to questions. Thanks! 
:) -->\r\n\n", "before_files": [{"content": "\"\"\"\ntcp_message Inline Script Hook API Demonstration\n------------------------------------------------\n\n* modifies packets containing \"foo\" to \"bar\"\n* prints various details for each packet.\n\nexample cmdline invocation:\nmitmdump -T --host --tcp \".*\" -q -s examples/tcp_message.py\n\"\"\"\nfrom mitmproxy.utils import strutils\nfrom mitmproxy import ctx\n\n\ndef tcp_message(tcp_msg):\n modified_msg = tcp_msg.message.replace(\"foo\", \"bar\")\n\n is_modified = False if modified_msg == tcp_msg.message else True\n tcp_msg.message = modified_msg\n\n ctx.log.info(\n \"[tcp_message{}] from {} {} to {} {}:\\r\\n{}\".format(\n \" (modified)\" if is_modified else \"\",\n \"client\" if tcp_msg.sender == tcp_msg.client_conn else \"server\",\n tcp_msg.sender.address,\n \"server\" if tcp_msg.receiver == tcp_msg.server_conn else \"client\",\n tcp_msg.receiver.address, strutils.bytes_to_escaped_str(tcp_msg.message))\n )\n", "path": "examples/complex/tcp_message.py"}]} | 1,321 | 387 |
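
Editorial sketch (not part of the dataset row): the golden diff above swaps the string literals `"foo"` and `"bar"` for bytes literals when rewriting the message content. The small pure-Python example below shows why that matters. It assumes nothing about mitmproxy itself, and the helper name `replace_payload` is hypothetical.

```python
def replace_payload(content: bytes, old: bytes = b"foo", new: bytes = b"bar"):
    """Return (new_content, was_modified); the input bytes object is not changed."""
    updated = content.replace(old, new)
    return updated, updated != content


modified, changed = replace_payload(b"GET /foo HTTP/1.1")
untouched, unchanged = replace_payload(b"no match here")

# Mixing bytes and str arguments is rejected by Python 3, which is why
# a payload held as bytes has to be edited with bytes literals.
try:
    b"foo".replace("foo", "bar")
    mixed_types_ok = True
except TypeError:
    mixed_types_ok = False
```

Comparing the content before and after the replacement, as the patched example does, is enough to tell whether anything was modified.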

gh_patches_debug_13366 | rasdani/github-patches | git_diff | ansible__awx-13022 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Generated certificate in install bundle for Execution node is valid only for 10 days

### Please confirm the following

- [X] I agree to follow this project's [code of conduct](https://docs.ansible.com/ansible/latest/community/code_of_conduct.html).

- [X] I have checked the [current issues](https://github.com/ansible/awx/issues) for duplicates.

- [X] I understand that AWX is open source software provided for free and that I might not receive a timely response.

### Bug Summary

The certificate for receptor in install bundle (`*.tar.gz`) for execution node that generated by AWX is valid only for 10 days.

In my understanding currently there is no automated certificate renewal feature in both AWX and Receptor, so I guess the execution environment will be invalidated after 10 days.

Therefore frequently renewal (generating bundle and invoke playbook again) by hand might be required.

```bash

$ ls -l
total 24
-rw-rw-r--. 1 kuro kuro 11278 Oct 7 05:12 ansible.log
drwxrwxr-x. 2 kuro kuro 21 Oct 7 05:09 group_vars
-rw-r--r--. 1 kuro kuro 406 Jan 1 1970 install_receptor.yml
-rw-r--r--. 1 kuro kuro 159 Oct 7 05:10 inventory.yml
drwxrwxr-x. 3 kuro kuro 44 Oct 7 05:09 receptor
-rw-r--r--. 1 kuro kuro 137 Jan 1 1970 requirements.yml
$ openssl x509 -text -in receptor/tls/receptor.crt -noout | grep Not
Not Before: Oct 6 20:09:21 2022 GMT
Not After : Oct 16 20:09:21 2022 GMT 👈👈👈

```

The installer (playbook) just places the certificate on the execution node without any modification, so the deployed execution node uses the certificate included in the install bundle as is.

I have no idea if this is a _bug_ or _designed behavior_, but just in case I'm creating this issue.

### AWX version

21.7.0

### Select the relevant components

- [ ] UI

- [X] API

- [ ] Docs

- [ ] Collection

- [ ] CLI

- [ ] Other

### Installation method

kubernetes

### Modifications

no

### Ansible version

N/A

### Operating system

CentOS 8 Stream

### Web browser

Chrome

### Steps to reproduce

1. Deploy AWX 21.7.0 on any platform

2. Add new instance and generate install bundle for the instance

3. Extract install bundle and ensure `Not After` in the `receptor.crt` by `openssl x509 -text -in receptor/tls/receptor.crt -noout`

### Expected results

Certificate and execution environment are valid for enough life time.

### Actual results

Not tested enough, I guess my execution environment will be expired in 10 days if I won't make any manual renewal for the certs.

### Additional information

@TheRealHaoLiu @jbradberry

Thanks for excellent feature; execution node! It works as expected in my environment in this few days :)

Sorry for annoying you by this mention, but I want to confirm that this is designed or not.

I found that there is hard-coded `days=10` in your commit.

https://github.com/ansible/awx/blob/150c55c72a4d1d896474c9d3aaaceeb1b69ee253/awx/api/views/instance_install_bundle.py#L181

According to your comment on `awx-operator` repository, it seems that you've designed it as _10 years_ instead of _10 days_ I guess...?; https://github.com/ansible/awx-operator/pull/1012#issuecomment-1208423340

Please let me know it's not recommended that trying to use generated certs in the long term, or if you have an easier way to renew certs.

</issue>

<code>

[start of awx/api/views/instance_install_bundle.py]

1 # Copyright (c) 2018 Red Hat, Inc.
2 # All Rights Reserved.
3
4 import datetime
5 import io
6 import ipaddress
7 import os
8 import tarfile
9
10 import asn1
11 from awx.api import serializers
12 from awx.api.generics import GenericAPIView, Response
13 from awx.api.permissions import IsSystemAdminOrAuditor
14 from awx.main import models
15 from cryptography import x509
16 from cryptography.hazmat.primitives import hashes, serialization
17 from cryptography.hazmat.primitives.asymmetric import rsa
18 from cryptography.x509 import DNSName, IPAddress, ObjectIdentifier, OtherName
19 from cryptography.x509.oid import NameOID
20 from django.http import HttpResponse
21 from django.template.loader import render_to_string
22 from django.utils.translation import gettext_lazy as _
23 from rest_framework import status
24
25 # Red Hat has an OID namespace (RHANANA). Receptor has its own designation under that.
26 RECEPTOR_OID = "1.3.6.1.4.1.2312.19.1"
27
28 # generate install bundle for the instance
29 # install bundle directory structure
30 # ├── install_receptor.yml (playbook)
31 # ├── inventory.yml
32 # ├── group_vars
33 # │   └── all.yml
34 # ├── receptor
35 # │   ├── tls
36 # │   │   ├── ca
37 # │   │   │   └── receptor-ca.crt
38 # │   │   ├── receptor.crt
39 # │   │   └── receptor.key
40 # │   └── work-public-key.pem
41 # └── requirements.yml
42 class InstanceInstallBundle(GenericAPIView):
43
44     name = _('Install Bundle')
45     model = models.Instance
46     serializer_class = serializers.InstanceSerializer
47     permission_classes = (IsSystemAdminOrAuditor,)
48
49     def get(self, request, *args, **kwargs):
50         instance_obj = self.get_object()
51
52         if instance_obj.node_type not in ('execution',):
53             return Response(
54                 data=dict(msg=_('Install bundle can only be generated for execution nodes.')),
55                 status=status.HTTP_400_BAD_REQUEST,
56             )
57
58         with io.BytesIO() as f:
59             with tarfile.open(fileobj=f, mode='w:gz') as tar:
60                 # copy /etc/receptor/tls/ca/receptor-ca.crt to receptor/tls/ca in the tar file
61                 tar.add(
62                     os.path.realpath('/etc/receptor/tls/ca/receptor-ca.crt'), arcname=f"{instance_obj.hostname}_install_bundle/receptor/tls/ca/receptor-ca.crt"

63 )

64

65 # copy /etc/receptor/signing/work-public-key.pem to receptor/work-public-key.pem

66 tar.add('/etc/receptor/signing/work-public-key.pem', arcname=f"{instance_obj.hostname}_install_bundle/receptor/work-public-key.pem")

67

68 # generate and write the receptor key to receptor/tls/receptor.key in the tar file

69 key, cert = generate_receptor_tls(instance_obj)

70

71 key_tarinfo = tarfile.TarInfo(f"{instance_obj.hostname}_install_bundle/receptor/tls/receptor.key")

72 key_tarinfo.size = len(key)

73 tar.addfile(key_tarinfo, io.BytesIO(key))

74

75 cert_tarinfo = tarfile.TarInfo(f"{instance_obj.hostname}_install_bundle/receptor/tls/receptor.crt")

76 cert_tarinfo.size = len(cert)

77 tar.addfile(cert_tarinfo, io.BytesIO(cert))

78

79 # generate and write install_receptor.yml to the tar file

80 playbook = generate_playbook().encode('utf-8')

81 playbook_tarinfo = tarfile.TarInfo(f"{instance_obj.hostname}_install_bundle/install_receptor.yml")

82 playbook_tarinfo.size = len(playbook)

83 tar.addfile(playbook_tarinfo, io.BytesIO(playbook))

84

85 # generate and write inventory.yml to the tar file

86 inventory_yml = generate_inventory_yml(instance_obj).encode('utf-8')

87 inventory_yml_tarinfo = tarfile.TarInfo(f"{instance_obj.hostname}_install_bundle/inventory.yml")

88 inventory_yml_tarinfo.size = len(inventory_yml)

89 tar.addfile(inventory_yml_tarinfo, io.BytesIO(inventory_yml))

90

91 # generate and write group_vars/all.yml to the tar file

92 group_vars = generate_group_vars_all_yml(instance_obj).encode('utf-8')

93 group_vars_tarinfo = tarfile.TarInfo(f"{instance_obj.hostname}_install_bundle/group_vars/all.yml")

94 group_vars_tarinfo.size = len(group_vars)

95 tar.addfile(group_vars_tarinfo, io.BytesIO(group_vars))

96

97 # generate and write requirements.yml to the tar file

98 requirements_yml = generate_requirements_yml().encode('utf-8')

99 requirements_yml_tarinfo = tarfile.TarInfo(f"{instance_obj.hostname}_install_bundle/requirements.yml")

100 requirements_yml_tarinfo.size = len(requirements_yml)

101 tar.addfile(requirements_yml_tarinfo, io.BytesIO(requirements_yml))

102

103 # respond with the tarfile

104 f.seek(0)

105 response = HttpResponse(f.read(), status=status.HTTP_200_OK)

106 response['Content-Disposition'] = f"attachment; filename={instance_obj.hostname}_install_bundle.tar.gz"

107 return response

108

109

110 def generate_playbook():

111 return render_to_string("instance_install_bundle/install_receptor.yml")

112

113

114 def generate_requirements_yml():

115 return render_to_string("instance_install_bundle/requirements.yml")

116

117

118 def generate_inventory_yml(instance_obj):

119 return render_to_string("instance_install_bundle/inventory.yml", context=dict(instance=instance_obj))

120

121

122 def generate_group_vars_all_yml(instance_obj):

123 return render_to_string("instance_install_bundle/group_vars/all.yml", context=dict(instance=instance_obj))

124

125

126 def generate_receptor_tls(instance_obj):

127 # generate private key for the receptor

128 key = rsa.generate_private_key(public_exponent=65537, key_size=2048)

129

130 # encode receptor hostname to asn1

131 hostname = instance_obj.hostname

132 encoder = asn1.Encoder()

133 encoder.start()

134 encoder.write(hostname.encode(), nr=asn1.Numbers.UTF8String)

135 hostname_asn1 = encoder.output()

136

137 san_params = [

138 DNSName(hostname),

139 OtherName(ObjectIdentifier(RECEPTOR_OID), hostname_asn1),

140 ]

141

142 try:

143 san_params.append(IPAddress(ipaddress.IPv4Address(hostname)))

144 except ipaddress.AddressValueError:

145 pass

146

147 # generate certificate for the receptor

148 csr = (

149 x509.CertificateSigningRequestBuilder()

150 .subject_name(

151 x509.Name(

152 [

153 x509.NameAttribute(NameOID.COMMON_NAME, hostname),

154 ]

155 )

156 )

157 .add_extension(

158 x509.SubjectAlternativeName(san_params),

159 critical=False,

160 )

161 .sign(key, hashes.SHA256())

162 )

163

164 # sign csr with the receptor ca key from /etc/receptor/tls/ca/receptor-ca.key

165 with open('/etc/receptor/tls/ca/receptor-ca.key', 'rb') as f:

166 ca_key = serialization.load_pem_private_key(

167 f.read(),

168 password=None,

169 )

170

171 with open('/etc/receptor/tls/ca/receptor-ca.crt', 'rb') as f:

172 ca_cert = x509.load_pem_x509_certificate(f.read())

173

174 cert = (

175 x509.CertificateBuilder()

176 .subject_name(csr.subject)

177 .issuer_name(ca_cert.issuer)

178 .public_key(csr.public_key())

179 .serial_number(x509.random_serial_number())

180 .not_valid_before(datetime.datetime.utcnow())

181 .not_valid_after(datetime.datetime.utcnow() + datetime.timedelta(days=10))

182 .add_extension(

183 csr.extensions.get_extension_for_class(x509.SubjectAlternativeName).value,

184 critical=csr.extensions.get_extension_for_class(x509.SubjectAlternativeName).critical,

185 )

186 .sign(ca_key, hashes.SHA256())

187 )

188

189 key = key.private_bytes(

190 encoding=serialization.Encoding.PEM,

191 format=serialization.PrivateFormat.TraditionalOpenSSL,

192 encryption_algorithm=serialization.NoEncryption(),

193 )

194

195 cert = cert.public_bytes(

196 encoding=serialization.Encoding.PEM,

197 )

198

199 return key, cert

200

[end of awx/api/views/instance_install_bundle.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/awx/api/views/instance_install_bundle.py b/awx/api/views/instance_install_bundle.py

--- a/awx/api/views/instance_install_bundle.py

+++ b/awx/api/views/instance_install_bundle.py

@@ -178,7 +178,7 @@

.public_key(csr.public_key())

.serial_number(x509.random_serial_number())

.not_valid_before(datetime.datetime.utcnow())

- .not_valid_after(datetime.datetime.utcnow() + datetime.timedelta(days=10))

+ .not_valid_after(datetime.datetime.utcnow() + datetime.timedelta(days=3650))

.add_extension(

csr.extensions.get_extension_for_class(x509.SubjectAlternativeName).value,

critical=csr.extensions.get_extension_for_class(x509.SubjectAlternativeName).critical,

| {"golden_diff": "diff --git a/awx/api/views/instance_install_bundle.py b/awx/api/views/instance_install_bundle.py\n--- a/awx/api/views/instance_install_bundle.py\n+++ b/awx/api/views/instance_install_bundle.py\n@@ -178,7 +178,7 @@\n .public_key(csr.public_key())\n .serial_number(x509.random_serial_number())\n .not_valid_before(datetime.datetime.utcnow())\n- .not_valid_after(datetime.datetime.utcnow() + datetime.timedelta(days=10))\n+ .not_valid_after(datetime.datetime.utcnow() + datetime.timedelta(days=3650))\n .add_extension(\n csr.extensions.get_extension_for_class(x509.SubjectAlternativeName).value,\n critical=csr.extensions.get_extension_for_class(x509.SubjectAlternativeName).critical,\n", "issue": "Genrated certificate in install bundle for Execution node is valid only for 10 days\n### Please confirm the following\r\n\r\n- [X] I agree to follow this project's [code of conduct](https://docs.ansible.com/ansible/latest/community/code_of_conduct.html).\r\n- [X] I have checked the [current issues](https://github.com/ansible/awx/issues) for duplicates.\r\n- [X] I understand that AWX is open source software provided for free and that I might not receive a timely response.\r\n\r\n### Bug Summary\r\n\r\nThe certificate for receptor in install bundle (`*.tar.gz`) for execution node that generated by AWX is valid only for 10 days.\r\n\r\nIn my understanding currently there is no automated certificate renewal feature in both AWX and Receptor, so I guess the execution environment will be invalidated after 10 days. \r\n\r\nTherefore frequently renewal (generating bundle and invoke playbook again) by hand might be required.\r\n\r\n```bash\r\n$ ls -l\r\ntotal 24\r\n-rw-rw-r--. 1 kuro kuro 11278 Oct 7 05:12 ansible.log\r\ndrwxrwxr-x. 2 kuro kuro 21 Oct 7 05:09 group_vars\r\n-rw-r--r--. 1 kuro kuro 406 Jan 1 1970 install_receptor.yml\r\n-rw-r--r--. 1 kuro kuro 159 Oct 7 05:10 inventory.yml\r\ndrwxrwxr-x. 3 kuro kuro 44 Oct 7 05:09 receptor\r\n-rw-r--r--. 
1 kuro kuro 137 Jan 1 1970 requirements.yml\r\n\r\n$ openssl x509 -text -in receptor/tls/receptor.crt -noout | grep Not\r\n Not Before: Oct 6 20:09:21 2022 GMT\r\n Not After : Oct 16 20:09:21 2022 GMT \ud83d\udc48\ud83d\udc48\ud83d\udc48\r\n```\r\n\r\nInstaller (playbook) just place certificate on execution node without any modification, so deployed execution node uses certificate that included in the install bandle as is. \r\n\r\nI have no idea if this is a _bug_ or _designed behavior_, but just in case I'm creating this issue.\r\n\r\n### AWX version\r\n\r\n21.7.0\r\n\r\n### Select the relevant components\r\n\r\n- [ ] UI\r\n- [X] API\r\n- [ ] Docs\r\n- [ ] Collection\r\n- [ ] CLI\r\n- [ ] Other\r\n\r\n### Installation method\r\n\r\nkubernetes\r\n\r\n### Modifications\r\n\r\nno\r\n\r\n### Ansible version\r\n\r\nN/A\r\n\r\n### Operating system\r\n\r\nCentOS 8 Stream\r\n\r\n### Web browser\r\n\r\nChrome\r\n\r\n### Steps to reproduce\r\n\r\n1. Deploy AWX 21.7.0 on any platform\r\n2. Add new instance and generate install bundle for the instance\r\n3. Extract install bundle and ensure `Not After` in the `receptor.crt` by `openssl x509 -text -in receptor/tls/receptor.crt -noout`\r\n\r\n### Expected results\r\n\r\nCertificate and execution environment are valid for enough life time.\r\n\r\n### Actual results\r\n\r\nNot tested enough, I guess my execution environment will be expired in 10 days if I won't make any manual renewal for the certs.\r\n\r\n### Additional information\r\n\r\n@TheRealHaoLiu @jbradberry \r\nThanks for excellent feature; execution node! 
It works as expected in my environment in this few days :)\r\nSorry for annoying you by this mention, but I want to confirm that this is designed or not.\r\n\r\nI found that there is hard-coded `days=10` in your commit.\r\nhttps://github.com/ansible/awx/blob/150c55c72a4d1d896474c9d3aaaceeb1b69ee253/awx/api/views/instance_install_bundle.py#L181\r\n\r\nAccording to your comment on `awx-operator` repository, it seems that you've designed it as _10 years_ instead of _10 days_ I guess...?; https://github.com/ansible/awx-operator/pull/1012#issuecomment-1208423340\r\n\r\nPlease let me know it's not recommended that trying to use generated certs in the long term, or if you have an easier way to renew certs.\n", "before_files": [{"content": "# Copyright (c) 2018 Red Hat, Inc.\n# All Rights Reserved.\n\nimport datetime\nimport io\nimport ipaddress\nimport os\nimport tarfile\n\nimport asn1\nfrom awx.api import serializers\nfrom awx.api.generics import GenericAPIView, Response\nfrom awx.api.permissions import IsSystemAdminOrAuditor\nfrom awx.main import models\nfrom cryptography import x509\nfrom cryptography.hazmat.primitives import hashes, serialization\nfrom cryptography.hazmat.primitives.asymmetric import rsa\nfrom cryptography.x509 import DNSName, IPAddress, ObjectIdentifier, OtherName\nfrom cryptography.x509.oid import NameOID\nfrom django.http import HttpResponse\nfrom django.template.loader import render_to_string\nfrom django.utils.translation import gettext_lazy as _\nfrom rest_framework import status\n\n# Red Hat has an OID namespace (RHANANA). 
Receptor has its own designation under that.\nRECEPTOR_OID = \"1.3.6.1.4.1.2312.19.1\"\n\n# generate install bundle for the instance\n# install bundle directory structure\n# \u251c\u2500\u2500 install_receptor.yml (playbook)\n# \u251c\u2500\u2500 inventory.yml\n# \u251c\u2500\u2500 group_vars\n# \u2502 \u2514\u2500\u2500 all.yml\n# \u251c\u2500\u2500 receptor\n# \u2502 \u251c\u2500\u2500 tls\n# \u2502 \u2502 \u251c\u2500\u2500 ca\n# \u2502 \u2502 \u2502 \u2514\u2500\u2500 receptor-ca.crt\n# \u2502 \u2502 \u251c\u2500\u2500 receptor.crt\n# \u2502 \u2502 \u2514\u2500\u2500 receptor.key\n# \u2502 \u2514\u2500\u2500 work-public-key.pem\n# \u2514\u2500\u2500 requirements.yml\nclass InstanceInstallBundle(GenericAPIView):\n\n name = _('Install Bundle')\n model = models.Instance\n serializer_class = serializers.InstanceSerializer\n permission_classes = (IsSystemAdminOrAuditor,)\n\n def get(self, request, *args, **kwargs):\n instance_obj = self.get_object()\n\n if instance_obj.node_type not in ('execution',):\n return Response(\n data=dict(msg=_('Install bundle can only be generated for execution nodes.')),\n status=status.HTTP_400_BAD_REQUEST,\n )\n\n with io.BytesIO() as f:\n with tarfile.open(fileobj=f, mode='w:gz') as tar:\n # copy /etc/receptor/tls/ca/receptor-ca.crt to receptor/tls/ca in the tar file\n tar.add(\n os.path.realpath('/etc/receptor/tls/ca/receptor-ca.crt'), arcname=f\"{instance_obj.hostname}_install_bundle/receptor/tls/ca/receptor-ca.crt\"\n )\n\n # copy /etc/receptor/signing/work-public-key.pem to receptor/work-public-key.pem\n tar.add('/etc/receptor/signing/work-public-key.pem', arcname=f\"{instance_obj.hostname}_install_bundle/receptor/work-public-key.pem\")\n\n # generate and write the receptor key to receptor/tls/receptor.key in the tar file\n key, cert = generate_receptor_tls(instance_obj)\n\n key_tarinfo = tarfile.TarInfo(f\"{instance_obj.hostname}_install_bundle/receptor/tls/receptor.key\")\n key_tarinfo.size = len(key)\n tar.addfile(key_tarinfo, 
io.BytesIO(key))\n\n cert_tarinfo = tarfile.TarInfo(f\"{instance_obj.hostname}_install_bundle/receptor/tls/receptor.crt\")\n cert_tarinfo.size = len(cert)\n tar.addfile(cert_tarinfo, io.BytesIO(cert))\n\n # generate and write install_receptor.yml to the tar file\n playbook = generate_playbook().encode('utf-8')\n playbook_tarinfo = tarfile.TarInfo(f\"{instance_obj.hostname}_install_bundle/install_receptor.yml\")\n playbook_tarinfo.size = len(playbook)\n tar.addfile(playbook_tarinfo, io.BytesIO(playbook))\n\n # generate and write inventory.yml to the tar file\n inventory_yml = generate_inventory_yml(instance_obj).encode('utf-8')\n inventory_yml_tarinfo = tarfile.TarInfo(f\"{instance_obj.hostname}_install_bundle/inventory.yml\")\n inventory_yml_tarinfo.size = len(inventory_yml)\n tar.addfile(inventory_yml_tarinfo, io.BytesIO(inventory_yml))\n\n # generate and write group_vars/all.yml to the tar file\n group_vars = generate_group_vars_all_yml(instance_obj).encode('utf-8')\n group_vars_tarinfo = tarfile.TarInfo(f\"{instance_obj.hostname}_install_bundle/group_vars/all.yml\")\n group_vars_tarinfo.size = len(group_vars)\n tar.addfile(group_vars_tarinfo, io.BytesIO(group_vars))\n\n # generate and write requirements.yml to the tar file\n requirements_yml = generate_requirements_yml().encode('utf-8')\n requirements_yml_tarinfo = tarfile.TarInfo(f\"{instance_obj.hostname}_install_bundle/requirements.yml\")\n requirements_yml_tarinfo.size = len(requirements_yml)\n tar.addfile(requirements_yml_tarinfo, io.BytesIO(requirements_yml))\n\n # respond with the tarfile\n f.seek(0)\n response = HttpResponse(f.read(), status=status.HTTP_200_OK)\n response['Content-Disposition'] = f\"attachment; filename={instance_obj.hostname}_install_bundle.tar.gz\"\n return response\n\n\ndef generate_playbook():\n return render_to_string(\"instance_install_bundle/install_receptor.yml\")\n\n\ndef generate_requirements_yml():\n return 
render_to_string(\"instance_install_bundle/requirements.yml\")\n\n\ndef generate_inventory_yml(instance_obj):\n return render_to_string(\"instance_install_bundle/inventory.yml\", context=dict(instance=instance_obj))\n\n\ndef generate_group_vars_all_yml(instance_obj):\n return render_to_string(\"instance_install_bundle/group_vars/all.yml\", context=dict(instance=instance_obj))\n\n\ndef generate_receptor_tls(instance_obj):\n # generate private key for the receptor\n key = rsa.generate_private_key(public_exponent=65537, key_size=2048)\n\n # encode receptor hostname to asn1\n hostname = instance_obj.hostname\n encoder = asn1.Encoder()\n encoder.start()\n encoder.write(hostname.encode(), nr=asn1.Numbers.UTF8String)\n hostname_asn1 = encoder.output()\n\n san_params = [\n DNSName(hostname),\n OtherName(ObjectIdentifier(RECEPTOR_OID), hostname_asn1),\n ]\n\n try:\n san_params.append(IPAddress(ipaddress.IPv4Address(hostname)))\n except ipaddress.AddressValueError:\n pass\n\n # generate certificate for the receptor\n csr = (\n x509.CertificateSigningRequestBuilder()\n .subject_name(\n x509.Name(\n [\n x509.NameAttribute(NameOID.COMMON_NAME, hostname),\n ]\n )\n )\n .add_extension(\n x509.SubjectAlternativeName(san_params),\n critical=False,\n )\n .sign(key, hashes.SHA256())\n )\n\n # sign csr with the receptor ca key from /etc/receptor/ca/receptor-ca.key\n with open('/etc/receptor/tls/ca/receptor-ca.key', 'rb') as f:\n ca_key = serialization.load_pem_private_key(\n f.read(),\n password=None,\n )\n\n with open('/etc/receptor/tls/ca/receptor-ca.crt', 'rb') as f:\n ca_cert = x509.load_pem_x509_certificate(f.read())\n\n cert = (\n x509.CertificateBuilder()\n .subject_name(csr.subject)\n .issuer_name(ca_cert.issuer)\n .public_key(csr.public_key())\n .serial_number(x509.random_serial_number())\n .not_valid_before(datetime.datetime.utcnow())\n .not_valid_after(datetime.datetime.utcnow() + datetime.timedelta(days=10))\n .add_extension(\n 
csr.extensions.get_extension_for_class(x509.SubjectAlternativeName).value,\n critical=csr.extensions.get_extension_for_class(x509.SubjectAlternativeName).critical,\n )\n .sign(ca_key, hashes.SHA256())\n )\n\n key = key.private_bytes(\n encoding=serialization.Encoding.PEM,\n format=serialization.PrivateFormat.TraditionalOpenSSL,\n encryption_algorithm=serialization.NoEncryption(),\n )\n\n cert = cert.public_bytes(\n encoding=serialization.Encoding.PEM,\n )\n\n return key, cert\n", "path": "awx/api/views/instance_install_bundle.py"}]} | 3,783 | 175 |

gh_patches_debug_758 | rasdani/github-patches | git_diff | vllm-project__vllm-2337 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

[v0.2.7] Release Tracker

**ETA**: Jan 3rd - 4th

## Major changes

TBD

## PRs to be merged before the release

- [x] #2221

- [ ] ~~#2293~~ (deferred)

</issue>

<code>

[start of vllm/__init__.py]

1 """vLLM: a high-throughput and memory-efficient inference engine for LLMs"""

2

3 from vllm.engine.arg_utils import AsyncEngineArgs, EngineArgs

4 from vllm.engine.async_llm_engine import AsyncLLMEngine

5 from vllm.engine.llm_engine import LLMEngine

6 from vllm.engine.ray_utils import initialize_cluster

7 from vllm.entrypoints.llm import LLM

8 from vllm.outputs import CompletionOutput, RequestOutput

9 from vllm.sampling_params import SamplingParams

10

11 __version__ = "0.2.6"

12

13 __all__ = [

14 "LLM",

15 "SamplingParams",

16 "RequestOutput",

17 "CompletionOutput",

18 "LLMEngine",

19 "EngineArgs",

20 "AsyncLLMEngine",

21 "AsyncEngineArgs",

22 "initialize_cluster",

23 ]

24

[end of vllm/__init__.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/vllm/__init__.py b/vllm/__init__.py

--- a/vllm/__init__.py

+++ b/vllm/__init__.py

@@ -8,7 +8,7 @@

from vllm.outputs import CompletionOutput, RequestOutput

from vllm.sampling_params import SamplingParams

-__version__ = "0.2.6"

+__version__ = "0.2.7"

__all__ = [

"LLM",

| {"golden_diff": "diff --git a/vllm/__init__.py b/vllm/__init__.py\n--- a/vllm/__init__.py\n+++ b/vllm/__init__.py\n@@ -8,7 +8,7 @@\n from vllm.outputs import CompletionOutput, RequestOutput\n from vllm.sampling_params import SamplingParams\n \n-__version__ = \"0.2.6\"\n+__version__ = \"0.2.7\"\n \n __all__ = [\n \"LLM\",\n", "issue": "[v0.2.7] Release Tracker\n**ETA**: Jan 3rd - 4th\r\n\r\n## Major changes\r\n\r\nTBD\r\n\r\n## PRs to be merged before the release\r\n\r\n- [x] #2221 \r\n- [ ] ~~#2293~~ (deferred)\n", "before_files": [{"content": "\"\"\"vLLM: a high-throughput and memory-efficient inference engine for LLMs\"\"\"\n\nfrom vllm.engine.arg_utils import AsyncEngineArgs, EngineArgs\nfrom vllm.engine.async_llm_engine import AsyncLLMEngine\nfrom vllm.engine.llm_engine import LLMEngine\nfrom vllm.engine.ray_utils import initialize_cluster\nfrom vllm.entrypoints.llm import LLM\nfrom vllm.outputs import CompletionOutput, RequestOutput\nfrom vllm.sampling_params import SamplingParams\n\n__version__ = \"0.2.6\"\n\n__all__ = [\n \"LLM\",\n \"SamplingParams\",\n \"RequestOutput\",\n \"CompletionOutput\",\n \"LLMEngine\",\n \"EngineArgs\",\n \"AsyncLLMEngine\",\n \"AsyncEngineArgs\",\n \"initialize_cluster\",\n]\n", "path": "vllm/__init__.py"}]} | 819 | 108 |

gh_patches_debug_12904 | rasdani/github-patches | git_diff | netket__netket-611 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Floating Point Error in Lattice.py

I tried generating a periodic Kagome lattice using lattice.py:

>>> kagome = nk.graph.Lattice(basis_vectors=[[2.,0.],[1.,np.sqrt(3)]],extent=[2,2],atoms_coord=[[0.,0.],[1./2.,np.sqrt(3)/2.],[1.,0.]])

Only half of the edges appeared:

>>> kagome.n_edges()

12

The bug is related to floating-point precision: some distances are registered as 0.99999... and others as 1.0.

</issue>
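
The floating-point mismatch described in the issue can be sketched in isolation. The example below is a minimal illustration, not necessarily how NetKet resolved the problem: the helper `nearest_neighbours`, the tolerance value, and the sample distances are all assumptions made for this sketch. It contrasts selecting minimum distances by exact equality with selecting them through `math.isclose`.

```python
import math


def nearest_neighbours(distances, rel_tol=1e-9):
    """Return indices whose distance matches the minimum within a tolerance."""
    d_min = min(distances)
    return [
        i for i, d in enumerate(distances)
        if math.isclose(d, d_min, rel_tol=rel_tol)
    ]


# Two distances that should both be 1 but differ in the last bit,
# plus one genuinely longer distance (illustrative values only).
distances = [1.0, 0.9999999999999999, 1.7320508075688772]

# Exact comparison keeps only the entry that is bit-for-bit minimal.
exact = [i for i, d in enumerate(distances) if d == min(distances)]

# Tolerant comparison keeps both entries that are equal up to rounding.
tolerant = nearest_neighbours(distances)

print("exact   :", exact)
print("tolerant:", tolerant)
```

With exact equality, an edge whose length rounds to just below 1 shadows the edges of length exactly 1, which is consistent with only part of the expected edges appearing in the report above.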

<code>

[start of netket/graph/lattice.py]

1 # Copyright 2020, 2021 The NetKet Authors - All rights reserved.

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14

15 from .graph import NetworkX

16 from scipy.spatial import cKDTree

17 from scipy.sparse import find, triu

18 import numpy as _np

19 import itertools

20 import networkx as _nx

21 import warnings

22

23

24 def get_edges(atoms_positions, cutoff):

25 kdtree = cKDTree(atoms_positions)

26 dist_matrix = kdtree.sparse_distance_matrix(kdtree, cutoff)

27 id1, id2, values = find(triu(dist_matrix))

28 pairs = []

29 min_dists = {} # keys are nodes, values are min dists

30 for node in _np.unique(_np.concatenate((id1, id2))):

31 min_dist = _np.min(values[(id1 == node) | (id2 == node)])

32 min_dists[node] = min_dist

33 for node in _np.unique(id1):

34 min_dist = _np.min(values[id1 == node])

35 mask = (id1 == node) & (values == min_dist)

36 first = id1[mask]

37 second = id2[mask]

38 for pair in zip(first, second):

39 if min_dist == min_dists[pair[0]] and min_dist == min_dists[pair[1]]:

40 pairs.append(pair)

41 return pairs

42

43

44 def create_points(basis_vectors, extent, atom_coords, pbc):

45 shell_vec = _np.zeros(extent.size, dtype=int)

46 shift_vec = _np.zeros(extent.size, dtype=int)

47 # note: by modifying these, the number of shells can be tuned.

48 shell_vec[pbc] = 2

49 shift_vec[pbc] = 1

50 ranges = tuple([list(range(ex)) for ex in extent + shell_vec])

51 atoms = []

52 cellANDlabel_to_site = {}

53 for s_cell in itertools.product(*ranges):

54 s_coord_cell = _np.asarray(s_cell) - shift_vec

55 if _np.any(s_coord_cell < 0) or _np.any(s_coord_cell > (extent - 1)):

56 inside = False

57 else:

58 inside = True

59 atom_count = len(atoms)

60 for i, atom_coord in enumerate(atom_coords):

61 s_coord_atom = s_coord_cell + atom_coord

62 r_coord_atom = _np.matmul(basis_vectors.T, s_coord_atom)

63 atoms.append(

64 {

65 "Label": i,

66 "cell": s_coord_cell,

67 "r_coord": r_coord_atom,

68 "inside": inside,

69 }

70 )

71 if tuple(s_coord_cell) not in cellANDlabel_to_site.keys():

72 cellANDlabel_to_site[tuple(s_coord_cell)] = {}

73 cellANDlabel_to_site[tuple(s_coord_cell)][i] = atom_count + i

74 return atoms, cellANDlabel_to_site

75

76

77 def get_true_edges(basis_vectors, atoms, cellANDlabel_to_site, extent):

78 atoms_positions = dicts_to_array(atoms, "r_coord")

79 naive_edges = get_edges(

80 atoms_positions, _np.linalg.norm(basis_vectors, axis=1).max()

81 )

82 true_edges = []

83 for node1, node2 in naive_edges:

84 atom1 = atoms[node1]

85 atom2 = atoms[node2]

86 if atom1["inside"] and atom2["inside"]:

87 true_edges.append((node1, node2))

88 elif atom1["inside"] or atom2["inside"]:

89 cell1 = atom1["cell"] % extent

90 cell2 = atom2["cell"] % extent

91 node1 = cellANDlabel_to_site[tuple(cell1)][atom1["Label"]]

92 node2 = cellANDlabel_to_site[tuple(cell2)][atom2["Label"]]

93 edge = (node1, node2)

94 if edge not in true_edges and (node2, node1) not in true_edges:

95 true_edges.append(edge)

96 return true_edges

97

98

99 def dicts_to_array(dicts, key):

100 result = []

101 for d in dicts:

102 result.append(d[key])

103 return _np.asarray(result)

104

105

106 class Lattice(NetworkX):

107 """A lattice built translating a unit cell and adding edges between nearest neighbours sites.

108

109 The unit cell is defined by the ``basis_vectors`` and it can contain an arbitrary number of atoms.

110 Each atom is located at an arbitrary position and is labelled by an integer number,

111 meant to distinguish between the different atoms within the unit cell.

112 Periodic boundary conditions can also be imposed along the desired directions.

113 There are three different ways to refer to the lattice sites. A site can be labelled

114 by a simple integer number (the site index) or by its coordinates (actual position in space).

115 """

116

117 def __init__(self, basis_vectors, extent, *, pbc: bool = True, atoms_coord=[]):

118 """

119 Constructs a new ``Lattice`` given its side length and the features of the unit cell.

120

121 Args:

122 basis_vectors: The basis vectors of the unit cell.

123 extent: The number of copies of the unit cell.

124 pbc: If ``True`` then the constructed lattice

125 will have periodic boundary conditions, otherwise

126 open boundary conditions are imposed (default=``True``).

127 atoms_coord: The coordinates of different atoms in the unit cell (default=one atom at the origin).

128

129 Examples:

130 Constructs a rectangular 3X4 lattice with periodic boundary conditions.

131

132 >>> import netket

133 >>> g=netket.graph.Lattice(basis_vectors=[[1,0],[0,1]],extent=[3,4])

134 >>> print(g.n_nodes)

135 12

136

137 """

138

139 self._basis_vectors = _np.asarray(basis_vectors)

140 if self._basis_vectors.ndim != 2:

141 raise ValueError("Every vector must have the same dimension.")

142 if self._basis_vectors.shape[0] != self._basis_vectors.shape[1]:

143 raise ValueError(

144 "basis_vectors must be a basis for the N-dimensional vector space you chose"

145 )

146

147 if not atoms_coord:

148 atoms_coord = [_np.zeros(self._basis_vectors.shape[0])]

149 atoms_coord = _np.asarray(atoms_coord)

150 atoms_coord_fractional = _np.asarray(

151 [

152 _np.matmul(_np.linalg.inv(self._basis_vectors.T), atom_coord)

153 for atom_coord in atoms_coord

154 ]

155 )

156 if atoms_coord_fractional.min() < 0 or atoms_coord_fractional.max() >= 1:

157 # Maybe there is another way to state this. I want to avoid that there exists the possibility that two atoms from different cells are at the same position:

158 raise ValueError(

159 "atoms must reside inside their corresponding unit cell, which includes only the 0-faces in fractional coordinates."

160 )

161 uniques = _np.unique(atoms_coord, axis=0)

162 if len(atoms_coord) != uniques.shape[0]:

163 atoms_coord = _np.asarray(uniques)

164 warnings.warn(

165 f"Some atom positions are not unique. Duplicates were dropped, and now atom positions are {atoms_coord}",

166 UserWarning,

167 )

168

169 self._atoms_coord = atoms_coord

170

171 if isinstance(pbc, bool):

172 self._pbc = [pbc] * self._basis_vectors.shape[1]

173 elif (

174 not isinstance(pbc, list)

175 or len(pbc) != self._basis_vectors.shape[1]

176 or sum([1 for pbci in pbc if isinstance(pbci, bool)])

177 != self._basis_vectors.shape[1]

178 ):

179 raise ValueError(

180 "pbc must be either a boolean or a list of booleans with the same dimension as the vector space you chose."

181 )

182 else:

183 self._pbc = pbc

184

185 extent = _np.asarray(extent)

186 self.extent = extent

187

188 atoms, cellANDlabel_to_site = create_points(

189 self._basis_vectors, extent, atoms_coord_fractional, pbc

190 )

191 edges = get_true_edges(self._basis_vectors, atoms, cellANDlabel_to_site, extent)

192 graph = _nx.MultiGraph(edges)

193

194 # Rename atoms

195 old_nodes = sorted(set([node for edge in edges for node in edge]))

196 self._atoms = [atoms[old_node] for old_node in old_nodes]

197 self._coord_to_site = {

198 tuple(atom["r_coord"]): new_site

199 for new_site, atom in enumerate(self._atoms)

200 }

201 new_nodes = {old_node: new_node for new_node, old_node in enumerate(old_nodes)}

202 graph = _nx.relabel_nodes(graph, new_nodes)

203

204 # Order node names

205 nodes = sorted(graph.nodes())

206 edges = list(graph.edges())

207 graph = _nx.MultiGraph()

208 graph.add_nodes_from(nodes)

209 graph.add_edges_from(edges)

210

211 super().__init__(graph)

212

213 @property

214 def basis_vectors(self):

215 return self._basis_vectors

216

217 @property

218 def atoms_coord(self):

219 """

220 Coordinates of atoms in the unit cell.

221 """

222 return self._atoms_coord

223

224 def atom_label(self, site):

225 return self._atoms[site]["Label"]

226

227 def site_to_coord(self, site):

228 return self._atoms[site]["r_coord"]

229

230 def coord_to_site(self, coord):

231 return self._coord_to_site[tuple(coord)]

232

233 def site_to_vector(self, site):

234 return self._atoms[site]["cell"]

235

236 def vector_to_coord(self, vector):

237 return _np.matmul(self._basis_vectors, vector)

238

239 def __repr__(self):

240 return "Lattice(n_nodes={})\n extent={}\n basis_vectors={}".format(

241 self.n_nodes, self.extent.tolist(), self.basis_vectors.tolist()

242 )

243

[end of netket/graph/lattice.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/netket/graph/lattice.py b/netket/graph/lattice.py

--- a/netket/graph/lattice.py

+++ b/netket/graph/lattice.py

@@ -32,11 +32,13 @@

min_dists[node] = min_dist

for node in _np.unique(id1):

min_dist = _np.min(values[id1 == node])

- mask = (id1 == node) & (values == min_dist)

+ mask = (id1 == node) & (_np.isclose(values, min_dist))

first = id1[mask]

second = id2[mask]

for pair in zip(first, second):

- if min_dist == min_dists[pair[0]] and min_dist == min_dists[pair[1]]:

+ if _np.isclose(min_dist, min_dists[pair[0]]) and _np.isclose(

+ min_dist, min_dists[pair[1]]

+ ):

pairs.append(pair)

return pairs