| problem_id | source | task_type | in_source_id | prompt | golden_diff | verification_info | num_tokens_prompt | num_tokens_diff |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_37597 | rasdani/github-patches | git_diff | streamlit__streamlit-3548 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

File uploader does not return multiple files

From: https://discuss.streamlit.io/t/issue-with-file-uploader-and-streamlit-version-0-84-0/14812

---

Hi everyone,

I'm new here. First of all, thank you very much for your great work in this forum. It has helped me out multiple times. But now I have encountered an issue that I couldn't find a thread on yet.

With the latest version (0.84.0) I'm experiencing a problem with the file_uploader widget.

In the prior version (0.82.0) it was very handy, for my case, to select one file after another and drag and drop it onto the widget. With the latest version this doesn't seem to be possible anymore. Once one or more files have been uploaded, the next files dragged and dropped onto the widget are not accessible, even though they appear in the interactive list below the widget.

I want to use st.session_state in the app I'm working on, and for that reason version 0.84.0 is necessary, to my understanding.

In order for you to reproduce the situation I made up this example:

```
import streamlit as st

uploaded_files = st.file_uploader('Select files',type=['txt'],accept_multiple_files=True)
file_lst = [uploaded_file.name for uploaded_file in uploaded_files]
st.write(file_lst)
```

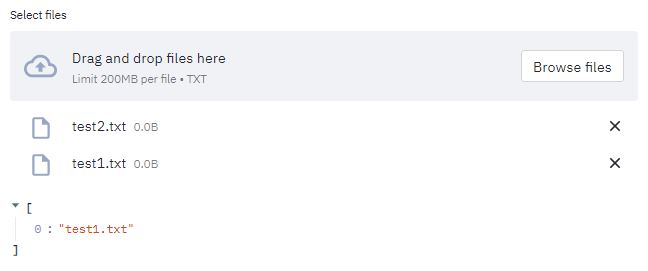

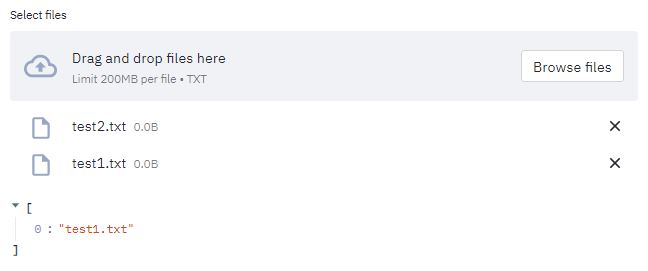

Assuming I want to upload the two files test1.txt and test2.txt one after another. For the first file (test1.txt) the behavior is as expected and equivalent for both versions:

Then later I want to upload another file in this case test2.txt.

The expected behavior can be seen with version 0.82.0. Both files are shown in the interactive list below the widget as well as in the written file_lst.

With version 0.84.0 only the interactive list below the widget shows both files. The written file_lst shows only test1.txt.

Has anyone had a similar issue? I apologize if the solution is obvious, but I got stuck and can't figure out how to solve it.

</issue>

<code>

[start of lib/streamlit/elements/file_uploader.py]

1 # Copyright 2018-2021 Streamlit Inc.
2 #
3 # Licensed under the Apache License, Version 2.0 (the "License");
4 # you may not use this file except in compliance with the License.
5 # You may obtain a copy of the License at
6 #
7 #     http://www.apache.org/licenses/LICENSE-2.0
8 #
9 # Unless required by applicable law or agreed to in writing, software
10 # distributed under the License is distributed on an "AS IS" BASIS,
11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
12 # See the License for the specific language governing permissions and
13 # limitations under the License.
14
15 from typing import cast, List, Optional, Union
16
17 import streamlit
18 from streamlit import config
19 from streamlit.logger import get_logger
20 from streamlit.proto.FileUploader_pb2 import FileUploader as FileUploaderProto
21 from streamlit.report_thread import get_report_ctx
22 from streamlit.state.widgets import register_widget, NoValue
23 from .form import current_form_id
24 from ..proto.Common_pb2 import SInt64Array
25 from ..uploaded_file_manager import UploadedFile, UploadedFileRec
26 from .utils import check_callback_rules, check_session_state_rules
27
28 LOGGER = get_logger(__name__)
29
30
31 class FileUploaderMixin:
32     def file_uploader(
33         self,
34         label,
35         type=None,
36         accept_multiple_files=False,
37         key=None,
38         help=None,
39         on_change=None,
40         args=None,
41         kwargs=None,
42     ):
43         """Display a file uploader widget.
44         By default, uploaded files are limited to 200MB. You can configure
45         this using the `server.maxUploadSize` config option.
46
47         Parameters
48         ----------
49         label : str
50             A short label explaining to the user what this file uploader is for.
51
52         type : str or list of str or None
53             Array of allowed extensions. ['png', 'jpg']
54             The default is None, which means all extensions are allowed.
55
56         accept_multiple_files : bool
57             If True, allows the user to upload multiple files at the same time,
58             in which case the return value will be a list of files.
59             Default: False
60
61         key : str
62             An optional string to use as the unique key for the widget.
63             If this is omitted, a key will be generated for the widget
64             based on its content. Multiple widgets of the same type may
65             not share the same key.
66
67         help : str
68             A tooltip that gets displayed next to the file uploader.
69
70         on_change : callable
71             An optional callback invoked when this file_uploader's value
72             changes.
73
74         args : tuple
75             An optional tuple of args to pass to the callback.
76
77         kwargs : dict
78             An optional dict of kwargs to pass to the callback.
79
80         Returns
81         -------
82         None or UploadedFile or list of UploadedFile
83             - If accept_multiple_files is False, returns either None or
84               an UploadedFile object.
85             - If accept_multiple_files is True, returns a list with the
86               uploaded files as UploadedFile objects. If no files were
87               uploaded, returns an empty list.
88
89             The UploadedFile class is a subclass of BytesIO, and therefore
90             it is "file-like". This means you can pass them anywhere where
91             a file is expected.
92
93         Examples
94         --------
95         Insert a file uploader that accepts a single file at a time:
96
97         >>> uploaded_file = st.file_uploader("Choose a file")
98         >>> if uploaded_file is not None:
99         ...     # To read file as bytes:
100         ...     bytes_data = uploaded_file.getvalue()
101         ...     st.write(bytes_data)
102         >>>
103         ...     # To convert to a string based IO:
104         ...     stringio = StringIO(uploaded_file.getvalue().decode("utf-8"))
105         ...     st.write(stringio)
106         >>>
107         ...     # To read file as string:
108         ...     string_data = stringio.read()
109         ...     st.write(string_data)
110         >>>
111         ...     # Can be used wherever a "file-like" object is accepted:
112         ...     dataframe = pd.read_csv(uploaded_file)
113         ...     st.write(dataframe)
114
115         Insert a file uploader that accepts multiple files at a time:
116
117         >>> uploaded_files = st.file_uploader("Choose a CSV file", accept_multiple_files=True)
118         >>> for uploaded_file in uploaded_files:
119         ...     bytes_data = uploaded_file.read()
120         ...     st.write("filename:", uploaded_file.name)
121         ...     st.write(bytes_data)
122         """
123         check_callback_rules(self.dg, on_change)
124         check_session_state_rules(default_value=None, key=key, writes_allowed=False)
125
126         if type:
127             if isinstance(type, str):
128                 type = [type]
129
130             # May need a regex or a library to validate file types are valid
131             # extensions.
132             type = [
133                 file_type if file_type[0] == "." else f".{file_type}"
134                 for file_type in type
135             ]
136
137         file_uploader_proto = FileUploaderProto()
138         file_uploader_proto.label = label
139         file_uploader_proto.type[:] = type if type is not None else []
140         file_uploader_proto.max_upload_size_mb = config.get_option(
141             "server.maxUploadSize"
142         )
143         file_uploader_proto.multiple_files = accept_multiple_files
144         file_uploader_proto.form_id = current_form_id(self.dg)
145         if help is not None:
146             file_uploader_proto.help = help
147
148         def deserialize_file_uploader(
149             ui_value: List[int], widget_id: str
150         ) -> Optional[Union[List[UploadedFile], UploadedFile]]:
151             file_recs = self._get_file_recs(widget_id, ui_value)
152             if len(file_recs) == 0:
153                 return_value: Optional[Union[List[UploadedFile], UploadedFile]] = (
154                     [] if accept_multiple_files else None
155                 )
156             else:
157                 files = [UploadedFile(rec) for rec in file_recs]
158                 return_value = files if accept_multiple_files else files[0]
159             return return_value
160
161         def serialize_file_uploader(
162             files: Optional[Union[List[UploadedFile], UploadedFile]]
163         ) -> List[int]:
164             if not files:
165                 return []
166             if isinstance(files, list):
167                 ids = [f.id for f in files]
168             else:
169                 ids = [files.id]
170             ctx = get_report_ctx()
171             if ctx is None:
172                 return []
173             max_id = ctx.uploaded_file_mgr._file_id_counter
174             return [max_id] + ids
175
176         # FileUploader's widget value is a list of file IDs
177         # representing the current set of files that this uploader should
178         # know about.
179         widget_value, _ = register_widget(
180             "file_uploader",
181             file_uploader_proto,
182             user_key=key,
183             on_change_handler=on_change,
184             args=args,
185             kwargs=kwargs,
186             deserializer=deserialize_file_uploader,
187             serializer=serialize_file_uploader,
188         )
189
190         self.dg._enqueue("file_uploader", file_uploader_proto)
191         return widget_value
192
193     @staticmethod
194     def _get_file_recs(
195         widget_id: str, widget_value: Optional[List[int]]
196     ) -> List[UploadedFileRec]:
197         if widget_value is None:
198             return []
199
200         ctx = get_report_ctx()
201         if ctx is None:
202             return []
203
204         if len(widget_value) == 0:
205             # Sanity check
206             LOGGER.warning(
207                 "Got an empty FileUploader widget_value. (We expect a list with at least one value in it.)"
208             )
209             return []
210
211         # The first number in the widget_value list is 'newestServerFileId'
212         newest_file_id = widget_value[0]
213         active_file_ids = list(widget_value[1:])
214
215         # Grab the files that correspond to our active file IDs.
216         file_recs = ctx.uploaded_file_mgr.get_files(
217             session_id=ctx.session_id,
218             widget_id=widget_id,
219             file_ids=active_file_ids,
220         )
221
222         # Garbage collect "orphaned" files.
223         ctx.uploaded_file_mgr.remove_orphaned_files(
224             session_id=ctx.session_id,
225             widget_id=widget_id,
226             newest_file_id=newest_file_id,
227             active_file_ids=active_file_ids,
228         )
229
230         return file_recs
231
232     @property
233     def dg(self) -> "streamlit.delta_generator.DeltaGenerator":
234         """Get our DeltaGenerator."""
235         return cast("streamlit.delta_generator.DeltaGenerator", self)
236

[end of lib/streamlit/elements/file_uploader.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/lib/streamlit/elements/file_uploader.py b/lib/streamlit/elements/file_uploader.py
--- a/lib/streamlit/elements/file_uploader.py
+++ b/lib/streamlit/elements/file_uploader.py
@@ -167,10 +167,15 @@
                 ids = [f.id for f in files]
             else:
                 ids = [files.id]
+
             ctx = get_report_ctx()
             if ctx is None:
                 return []
-            max_id = ctx.uploaded_file_mgr._file_id_counter
+
+            # ctx.uploaded_file_mgr._file_id_counter stores the id to use for
+            # the next uploaded file, so the current highest file id is the
+            # counter minus 1.
+            max_id = ctx.uploaded_file_mgr._file_id_counter - 1
             return [max_id] + ids
 
         # FileUploader's widget value is a list of file IDs
@@ -187,6 +192,22 @@
             serializer=serialize_file_uploader,
         )
 
+        ctx = get_report_ctx()
+        if ctx is not None and widget_value:
+            serialized = serialize_file_uploader(widget_value)
+
+            # The first number in the serialized widget_value list is the id
+            # of the most recently uploaded file.
+            newest_file_id = serialized[0]
+            active_file_ids = list(serialized[1:])
+
+            ctx.uploaded_file_mgr.remove_orphaned_files(
+                session_id=ctx.session_id,
+                widget_id=file_uploader_proto.id,
+                newest_file_id=newest_file_id,
+                active_file_ids=active_file_ids,
+            )
+
         self.dg._enqueue("file_uploader", file_uploader_proto)
         return widget_value
 
@@ -208,27 +229,15 @@
             )
             return []
 
-        # The first number in the widget_value list is 'newestServerFileId'
-        newest_file_id = widget_value[0]
         active_file_ids = list(widget_value[1:])
 
         # Grab the files that correspond to our active file IDs.
-        file_recs = ctx.uploaded_file_mgr.get_files(
+        return ctx.uploaded_file_mgr.get_files(
             session_id=ctx.session_id,
             widget_id=widget_id,
             file_ids=active_file_ids,
         )
 
-        # Garbage collect "orphaned" files.
-        ctx.uploaded_file_mgr.remove_orphaned_files(
-            session_id=ctx.session_id,
-            widget_id=widget_id,
-            newest_file_id=newest_file_id,
-            active_file_ids=active_file_ids,
-        )
-
-        return file_recs
-
     @property
     def dg(self) -> "streamlit.delta_generator.DeltaGenerator":
         """Get our DeltaGenerator."""

| {"golden_diff": "diff --git a/lib/streamlit/elements/file_uploader.py b/lib/streamlit/elements/file_uploader.py\n--- a/lib/streamlit/elements/file_uploader.py\n+++ b/lib/streamlit/elements/file_uploader.py\n@@ -167,10 +167,15 @@\n ids = [f.id for f in files]\n else:\n ids = [files.id]\n+\n ctx = get_report_ctx()\n if ctx is None:\n return []\n- max_id = ctx.uploaded_file_mgr._file_id_counter\n+\n+ # ctx.uploaded_file_mgr._file_id_counter stores the id to use for\n+ # the next uploaded file, so the current highest file id is the\n+ # counter minus 1.\n+ max_id = ctx.uploaded_file_mgr._file_id_counter - 1\n return [max_id] + ids\n \n # FileUploader's widget value is a list of file IDs\n@@ -187,6 +192,22 @@\n serializer=serialize_file_uploader,\n )\n \n+ ctx = get_report_ctx()\n+ if ctx is not None and widget_value:\n+ serialized = serialize_file_uploader(widget_value)\n+\n+ # The first number in the serialized widget_value list is the id\n+ # of the most recently uploaded file.\n+ newest_file_id = serialized[0]\n+ active_file_ids = list(serialized[1:])\n+\n+ ctx.uploaded_file_mgr.remove_orphaned_files(\n+ session_id=ctx.session_id,\n+ widget_id=file_uploader_proto.id,\n+ newest_file_id=newest_file_id,\n+ active_file_ids=active_file_ids,\n+ )\n+\n self.dg._enqueue(\"file_uploader\", file_uploader_proto)\n return widget_value\n \n@@ -208,27 +229,15 @@\n )\n return []\n \n- # The first number in the widget_value list is 'newestServerFileId'\n- newest_file_id = widget_value[0]\n active_file_ids = list(widget_value[1:])\n \n # Grab the files that correspond to our active file IDs.\n- file_recs = ctx.uploaded_file_mgr.get_files(\n+ return ctx.uploaded_file_mgr.get_files(\n session_id=ctx.session_id,\n widget_id=widget_id,\n file_ids=active_file_ids,\n )\n \n- # Garbage collect \"orphaned\" files.\n- ctx.uploaded_file_mgr.remove_orphaned_files(\n- session_id=ctx.session_id,\n- widget_id=widget_id,\n- newest_file_id=newest_file_id,\n- active_file_ids=active_file_ids,\n- 
)\n-\n- return file_recs\n-\n @property\n def dg(self) -> \"streamlit.delta_generator.DeltaGenerator\":\n \"\"\"Get our DeltaGenerator.\"\"\"\n", "issue": "File uploader does not return multiple files\nFrom: https://discuss.streamlit.io/t/issue-with-file-uploader-and-streamlit-version-0-84-0/14812\r\n\r\n---\r\n\r\nHi everyone,\r\n\r\nI'm new here. First of all thank you really much for your great work in this forum. It helped me out multiple times. But now I encountered an issue where I couldn't find a thread on, yet.\r\n\r\nWith the latest version (0.84.0) I'm experiencing a problem with the file_uploader widget.\r\n\r\nIn the prior version (0.82.0) it was very handy, for my case, to select one file after another and drag&drop it to the widget. With the latest version this doesn't seems possible anymore. When one or more files were uploaded the next file/s draged and droped to the widget are not accessible, even though these files appear in the interactive list below the widget. \r\nI want to use st.session_state is the app I'm working on and for that reason the version 0.84.0 is necessary to my understanding.\r\n\r\nIn order for you to reproduce the situation I made up this example:\r\n\r\n```\r\nimport streamlit as st\r\n\r\nuploaded_files = st.file_uploader('Select files',type=['txt'],accept_multiple_files=True)\r\nfile_lst = [uploaded_file.name for uploaded_file in uploaded_files]\r\nst.write(file_lst)\r\n```\r\n\r\nAssuming I want to upload the two files test1.txt and test2.txt one after another. For the first file (test1.txt) the behavior is as expected and equivalent for both versions:\r\n\r\n\r\n\r\nThen later I want to upload another file in this case test2.txt. \r\nThe expected behavior can be seen with version 0.82.0. Both files are shown in the interactive list below the widget as well as in the written file_lst.\r\n\r\n\r\n\r\nWith version 0.84.0 only the interactive list below the widget shows both files. 
The written file_lst shows only test1.txt.\r\n\r\n\r\n\r\nAnyone had a similar issue? I apologize, if the solution is obvious, but I got stuck with it and can't figure out, how to solve the issue.\n", "before_files": [{"content": "# Copyright 2018-2021 Streamlit Inc.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\nfrom typing import cast, List, Optional, Union\n\nimport streamlit\nfrom streamlit import config\nfrom streamlit.logger import get_logger\nfrom streamlit.proto.FileUploader_pb2 import FileUploader as FileUploaderProto\nfrom streamlit.report_thread import get_report_ctx\nfrom streamlit.state.widgets import register_widget, NoValue\nfrom .form import current_form_id\nfrom ..proto.Common_pb2 import SInt64Array\nfrom ..uploaded_file_manager import UploadedFile, UploadedFileRec\nfrom .utils import check_callback_rules, check_session_state_rules\n\nLOGGER = get_logger(__name__)\n\n\nclass FileUploaderMixin:\n def file_uploader(\n self,\n label,\n type=None,\n accept_multiple_files=False,\n key=None,\n help=None,\n on_change=None,\n args=None,\n kwargs=None,\n ):\n \"\"\"Display a file uploader widget.\n By default, uploaded files are limited to 200MB. You can configure\n this using the `server.maxUploadSize` config option.\n\n Parameters\n ----------\n label : str\n A short label explaining to the user what this file uploader is for.\n\n type : str or list of str or None\n Array of allowed extensions. 
['png', 'jpg']\n The default is None, which means all extensions are allowed.\n\n accept_multiple_files : bool\n If True, allows the user to upload multiple files at the same time,\n in which case the return value will be a list of files.\n Default: False\n\n key : str\n An optional string to use as the unique key for the widget.\n If this is omitted, a key will be generated for the widget\n based on its content. Multiple widgets of the same type may\n not share the same key.\n\n help : str\n A tooltip that gets displayed next to the file uploader.\n\n on_change : callable\n An optional callback invoked when this file_uploader's value\n changes.\n\n args : tuple\n An optional tuple of args to pass to the callback.\n\n kwargs : dict\n An optional dict of kwargs to pass to the callback.\n\n Returns\n -------\n None or UploadedFile or list of UploadedFile\n - If accept_multiple_files is False, returns either None or\n an UploadedFile object.\n - If accept_multiple_files is True, returns a list with the\n uploaded files as UploadedFile objects. If no files were\n uploaded, returns an empty list.\n\n The UploadedFile class is a subclass of BytesIO, and therefore\n it is \"file-like\". This means you can pass them anywhere where\n a file is expected.\n\n Examples\n --------\n Insert a file uploader that accepts a single file at a time:\n\n >>> uploaded_file = st.file_uploader(\"Choose a file\")\n >>> if uploaded_file is not None:\n ... # To read file as bytes:\n ... bytes_data = uploaded_file.getvalue()\n ... st.write(bytes_data)\n >>>\n ... # To convert to a string based IO:\n ... stringio = StringIO(uploaded_file.getvalue().decode(\"utf-8\"))\n ... st.write(stringio)\n >>>\n ... # To read file as string:\n ... string_data = stringio.read()\n ... st.write(string_data)\n >>>\n ... # Can be used wherever a \"file-like\" object is accepted:\n ... dataframe = pd.read_csv(uploaded_file)\n ... 
st.write(dataframe)\n\n Insert a file uploader that accepts multiple files at a time:\n\n >>> uploaded_files = st.file_uploader(\"Choose a CSV file\", accept_multiple_files=True)\n >>> for uploaded_file in uploaded_files:\n ... bytes_data = uploaded_file.read()\n ... st.write(\"filename:\", uploaded_file.name)\n ... st.write(bytes_data)\n \"\"\"\n check_callback_rules(self.dg, on_change)\n check_session_state_rules(default_value=None, key=key, writes_allowed=False)\n\n if type:\n if isinstance(type, str):\n type = [type]\n\n # May need a regex or a library to validate file types are valid\n # extensions.\n type = [\n file_type if file_type[0] == \".\" else f\".{file_type}\"\n for file_type in type\n ]\n\n file_uploader_proto = FileUploaderProto()\n file_uploader_proto.label = label\n file_uploader_proto.type[:] = type if type is not None else []\n file_uploader_proto.max_upload_size_mb = config.get_option(\n \"server.maxUploadSize\"\n )\n file_uploader_proto.multiple_files = accept_multiple_files\n file_uploader_proto.form_id = current_form_id(self.dg)\n if help is not None:\n file_uploader_proto.help = help\n\n def deserialize_file_uploader(\n ui_value: List[int], widget_id: str\n ) -> Optional[Union[List[UploadedFile], UploadedFile]]:\n file_recs = self._get_file_recs(widget_id, ui_value)\n if len(file_recs) == 0:\n return_value: Optional[Union[List[UploadedFile], UploadedFile]] = (\n [] if accept_multiple_files else None\n )\n else:\n files = [UploadedFile(rec) for rec in file_recs]\n return_value = files if accept_multiple_files else files[0]\n return return_value\n\n def serialize_file_uploader(\n files: Optional[Union[List[UploadedFile], UploadedFile]]\n ) -> List[int]:\n if not files:\n return []\n if isinstance(files, list):\n ids = [f.id for f in files]\n else:\n ids = [files.id]\n ctx = get_report_ctx()\n if ctx is None:\n return []\n max_id = ctx.uploaded_file_mgr._file_id_counter\n return [max_id] + ids\n\n # FileUploader's widget value is a list of 
file IDs\n # representing the current set of files that this uploader should\n # know about.\n widget_value, _ = register_widget(\n \"file_uploader\",\n file_uploader_proto,\n user_key=key,\n on_change_handler=on_change,\n args=args,\n kwargs=kwargs,\n deserializer=deserialize_file_uploader,\n serializer=serialize_file_uploader,\n )\n\n self.dg._enqueue(\"file_uploader\", file_uploader_proto)\n return widget_value\n\n @staticmethod\n def _get_file_recs(\n widget_id: str, widget_value: Optional[List[int]]\n ) -> List[UploadedFileRec]:\n if widget_value is None:\n return []\n\n ctx = get_report_ctx()\n if ctx is None:\n return []\n\n if len(widget_value) == 0:\n # Sanity check\n LOGGER.warning(\n \"Got an empty FileUploader widget_value. (We expect a list with at least one value in it.)\"\n )\n return []\n\n # The first number in the widget_value list is 'newestServerFileId'\n newest_file_id = widget_value[0]\n active_file_ids = list(widget_value[1:])\n\n # Grab the files that correspond to our active file IDs.\n file_recs = ctx.uploaded_file_mgr.get_files(\n session_id=ctx.session_id,\n widget_id=widget_id,\n file_ids=active_file_ids,\n )\n\n # Garbage collect \"orphaned\" files.\n ctx.uploaded_file_mgr.remove_orphaned_files(\n session_id=ctx.session_id,\n widget_id=widget_id,\n newest_file_id=newest_file_id,\n active_file_ids=active_file_ids,\n )\n\n return file_recs\n\n @property\n def dg(self) -> \"streamlit.delta_generator.DeltaGenerator\":\n \"\"\"Get our DeltaGenerator.\"\"\"\n return cast(\"streamlit.delta_generator.DeltaGenerator\", self)\n", "path": "lib/streamlit/elements/file_uploader.py"}]} | 3,693 | 615 |
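
The off-by-one corrected by the golden diff for `streamlit__streamlit-3548` can be sketched in isolation. This is only an illustration under stated assumptions: `ToyFileManager` and `newest_file_id` are hypothetical names, not Streamlit's `UploadedFileManager` API, and the starting counter value of 1 is assumed. The one fact taken from the diff comment is that `_file_id_counter` holds the id the *next* upload will receive.

```python
class ToyFileManager:
    """Hypothetical stand-in for an upload manager (not Streamlit's API)."""

    def __init__(self):
        # Assumption: ids start at 1. The counter always holds the id
        # that will be handed to the NEXT uploaded file.
        self._file_id_counter = 1
        self.files = {}

    def add_file(self, name):
        """Store a file under the next free id and advance the counter."""
        file_id = self._file_id_counter
        self.files[file_id] = name
        self._file_id_counter += 1
        return file_id


def newest_file_id(mgr):
    """Highest id issued so far: one less than the counter."""
    return mgr._file_id_counter - 1


mgr = ToyFileManager()
first = mgr.add_file("test1.txt")
second = mgr.add_file("test2.txt")

buggy = mgr._file_id_counter  # pre-patch value: an id no file has yet
fixed = newest_file_id(mgr)   # post-patch value: the id of test2.txt
print(buggy, fixed)           # prints: 3 2
```

In this toy, the pre-patch value points one past the last upload, while the patched value matches the most recent file. How that mismatch leads to the missing `test2.txt` inside Streamlit's orphan cleanup is not shown here, because `remove_orphaned_files` itself is not part of the listing above.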

gh_patches_debug_3516 | rasdani/github-patches | git_diff | getmoto__moto-2446 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

STS: Invalid xmlns in response from STS

I got the error below when trying to AssumeRole with the AWS SDK (for PHP).

```
Error parsing XML: xmlns: 'https:\/\/sts.amazonaws.com\/doc\/ 2011-06-15\/' is not a valid URI
```

</issue>

<code>

[start of moto/sts/responses.py]

1 from __future__ import unicode_literals
2
3 from moto.core.responses import BaseResponse
4 from moto.iam.models import ACCOUNT_ID
5 from moto.iam import iam_backend
6 from .exceptions import STSValidationError
7 from .models import sts_backend
8
9 MAX_FEDERATION_TOKEN_POLICY_LENGTH = 2048
10
11
12 class TokenResponse(BaseResponse):
13
14     def get_session_token(self):
15         duration = int(self.querystring.get('DurationSeconds', [43200])[0])
16         token = sts_backend.get_session_token(duration=duration)
17         template = self.response_template(GET_SESSION_TOKEN_RESPONSE)
18         return template.render(token=token)
19
20     def get_federation_token(self):
21         duration = int(self.querystring.get('DurationSeconds', [43200])[0])
22         policy = self.querystring.get('Policy', [None])[0]
23
24         if policy is not None and len(policy) > MAX_FEDERATION_TOKEN_POLICY_LENGTH:
25             raise STSValidationError(
26                 "1 validation error detected: Value "
27                 "'{\"Version\": \"2012-10-17\", \"Statement\": [...]}' "
28                 "at 'policy' failed to satisfy constraint: Member must have length less than or "
29                 " equal to %s" % MAX_FEDERATION_TOKEN_POLICY_LENGTH
30             )
31
32         name = self.querystring.get('Name')[0]
33         token = sts_backend.get_federation_token(
34             duration=duration, name=name, policy=policy)
35         template = self.response_template(GET_FEDERATION_TOKEN_RESPONSE)
36         return template.render(token=token, account_id=ACCOUNT_ID)
37
38     def assume_role(self):
39         role_session_name = self.querystring.get('RoleSessionName')[0]
40         role_arn = self.querystring.get('RoleArn')[0]
41
42         policy = self.querystring.get('Policy', [None])[0]
43         duration = int(self.querystring.get('DurationSeconds', [3600])[0])
44         external_id = self.querystring.get('ExternalId', [None])[0]
45
46         role = sts_backend.assume_role(
47             role_session_name=role_session_name,
48             role_arn=role_arn,
49             policy=policy,
50             duration=duration,
51             external_id=external_id,
52         )
53         template = self.response_template(ASSUME_ROLE_RESPONSE)
54         return template.render(role=role)
55
56     def assume_role_with_web_identity(self):
57         role_session_name = self.querystring.get('RoleSessionName')[0]
58         role_arn = self.querystring.get('RoleArn')[0]
59
60         policy = self.querystring.get('Policy', [None])[0]
61         duration = int(self.querystring.get('DurationSeconds', [3600])[0])
62         external_id = self.querystring.get('ExternalId', [None])[0]
63
64         role = sts_backend.assume_role_with_web_identity(
65             role_session_name=role_session_name,
66             role_arn=role_arn,
67             policy=policy,
68             duration=duration,
69             external_id=external_id,
70         )
71         template = self.response_template(ASSUME_ROLE_WITH_WEB_IDENTITY_RESPONSE)
72         return template.render(role=role)
73
74     def get_caller_identity(self):
75         template = self.response_template(GET_CALLER_IDENTITY_RESPONSE)
76
77         # Default values in case the request does not use valid credentials generated by moto
78         user_id = "AKIAIOSFODNN7EXAMPLE"
79         arn = "arn:aws:sts::{account_id}:user/moto".format(account_id=ACCOUNT_ID)
80
81         access_key_id = self.get_current_user()
82         assumed_role = sts_backend.get_assumed_role_from_access_key(access_key_id)
83         if assumed_role:
84             user_id = assumed_role.user_id
85             arn = assumed_role.arn
86
87         user = iam_backend.get_user_from_access_key_id(access_key_id)
88         if user:
89             user_id = user.id
90             arn = user.arn
91
92         return template.render(account_id=ACCOUNT_ID, user_id=user_id, arn=arn)
93
94
95 GET_SESSION_TOKEN_RESPONSE = """<GetSessionTokenResponse xmlns="https://sts.amazonaws.com/doc/2011-06-15/">
96   <GetSessionTokenResult>
97     <Credentials>
98       <SessionToken>AQoEXAMPLEH4aoAH0gNCAPyJxz4BlCFFxWNE1OPTgk5TthT+FvwqnKwRcOIfrRh3c/LTo6UDdyJwOOvEVPvLXCrrrUtdnniCEXAMPLE/IvU1dYUg2RVAJBanLiHb4IgRmpRV3zrkuWJOgQs8IZZaIv2BXIa2R4OlgkBN9bkUDNCJiBeb/AXlzBBko7b15fjrBs2+cTQtpZ3CYWFXG8C5zqx37wnOE49mRl/+OtkIKGO7fAE</SessionToken>
99       <SecretAccessKey>wJalrXUtnFEMI/K7MDENG/bPxRfiCYzEXAMPLEKEY</SecretAccessKey>
100       <Expiration>{{ token.expiration_ISO8601 }}</Expiration>
101       <AccessKeyId>AKIAIOSFODNN7EXAMPLE</AccessKeyId>
102     </Credentials>
103   </GetSessionTokenResult>
104   <ResponseMetadata>
105     <RequestId>58c5dbae-abef-11e0-8cfe-09039844ac7d</RequestId>
106   </ResponseMetadata>
107 </GetSessionTokenResponse>"""
108
109
110 GET_FEDERATION_TOKEN_RESPONSE = """<GetFederationTokenResponse xmlns="https://sts.amazonaws.com/doc/
111 2011-06-15/">
112   <GetFederationTokenResult>
113     <Credentials>
114       <SessionToken>AQoDYXdzEPT//////////wEXAMPLEtc764bNrC9SAPBSM22wDOk4x4HIZ8j4FZTwdQWLWsKWHGBuFqwAeMicRXmxfpSPfIeoIYRqTflfKD8YUuwthAx7mSEI/qkPpKPi/kMcGdQrmGdeehM4IC1NtBmUpp2wUE8phUZampKsburEDy0KPkyQDYwT7WZ0wq5VSXDvp75YU9HFvlRd8Tx6q6fE8YQcHNVXAkiY9q6d+xo0rKwT38xVqr7ZD0u0iPPkUL64lIZbqBAz+scqKmlzm8FDrypNC9Yjc8fPOLn9FX9KSYvKTr4rvx3iSIlTJabIQwj2ICCR/oLxBA==</SessionToken>
115       <SecretAccessKey>wJalrXUtnFEMI/K7MDENG/bPxRfiCYzEXAMPLEKEY</SecretAccessKey>
116       <Expiration>{{ token.expiration_ISO8601 }}</Expiration>
117       <AccessKeyId>AKIAIOSFODNN7EXAMPLE</AccessKeyId>
118     </Credentials>
119     <FederatedUser>
120       <Arn>arn:aws:sts::{{ account_id }}:federated-user/{{ token.name }}</Arn>
121       <FederatedUserId>{{ account_id }}:{{ token.name }}</FederatedUserId>
122     </FederatedUser>
123     <PackedPolicySize>6</PackedPolicySize>
124   </GetFederationTokenResult>
125   <ResponseMetadata>
126     <RequestId>c6104cbe-af31-11e0-8154-cbc7ccf896c7</RequestId>
127   </ResponseMetadata>
128 </GetFederationTokenResponse>"""
129
130
131 ASSUME_ROLE_RESPONSE = """<AssumeRoleResponse xmlns="https://sts.amazonaws.com/doc/
132 2011-06-15/">
133   <AssumeRoleResult>
134     <Credentials>
135       <SessionToken>{{ role.session_token }}</SessionToken>
136       <SecretAccessKey>{{ role.secret_access_key }}</SecretAccessKey>
137       <Expiration>{{ role.expiration_ISO8601 }}</Expiration>
138       <AccessKeyId>{{ role.access_key_id }}</AccessKeyId>
139     </Credentials>
140     <AssumedRoleUser>
141       <Arn>{{ role.arn }}</Arn>
142       <AssumedRoleId>{{ role.user_id }}</AssumedRoleId>
143     </AssumedRoleUser>
144     <PackedPolicySize>6</PackedPolicySize>
145   </AssumeRoleResult>
146   <ResponseMetadata>
147     <RequestId>c6104cbe-af31-11e0-8154-cbc7ccf896c7</RequestId>
148   </ResponseMetadata>
149 </AssumeRoleResponse>"""
150
151
152 ASSUME_ROLE_WITH_WEB_IDENTITY_RESPONSE = """<AssumeRoleWithWebIdentityResponse xmlns="https://sts.amazonaws.com/doc/2011-06-15/">
153   <AssumeRoleWithWebIdentityResult>
154     <Credentials>
155       <SessionToken>{{ role.session_token }}</SessionToken>
156       <SecretAccessKey>{{ role.secret_access_key }}</SecretAccessKey>
157       <Expiration>{{ role.expiration_ISO8601 }}</Expiration>
158       <AccessKeyId>{{ role.access_key_id }}</AccessKeyId>
159     </Credentials>
160     <AssumedRoleUser>
161       <Arn>{{ role.arn }}</Arn>
162       <AssumedRoleId>ARO123EXAMPLE123:{{ role.session_name }}</AssumedRoleId>
163     </AssumedRoleUser>
164     <PackedPolicySize>6</PackedPolicySize>
165   </AssumeRoleWithWebIdentityResult>
166   <ResponseMetadata>
167     <RequestId>c6104cbe-af31-11e0-8154-cbc7ccf896c7</RequestId>
168   </ResponseMetadata>
169 </AssumeRoleWithWebIdentityResponse>"""
170
171
172 GET_CALLER_IDENTITY_RESPONSE = """<GetCallerIdentityResponse xmlns="https://sts.amazonaws.com/doc/2011-06-15/">
173   <GetCallerIdentityResult>
174     <Arn>{{ arn }}</Arn>
175     <UserId>{{ user_id }}</UserId>
176     <Account>{{ account_id }}</Account>
177   </GetCallerIdentityResult>
178   <ResponseMetadata>
179     <RequestId>c6104cbe-af31-11e0-8154-cbc7ccf896c7</RequestId>
180   </ResponseMetadata>
181 </GetCallerIdentityResponse>
182 """
183

[end of moto/sts/responses.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/moto/sts/responses.py b/moto/sts/responses.py

--- a/moto/sts/responses.py

+++ b/moto/sts/responses.py

@@ -128,8 +128,7 @@

</GetFederationTokenResponse>"""

-ASSUME_ROLE_RESPONSE = """<AssumeRoleResponse xmlns="https://sts.amazonaws.com/doc/

-2011-06-15/">

+ASSUME_ROLE_RESPONSE = """<AssumeRoleResponse xmlns="https://sts.amazonaws.com/doc/2011-06-15/">

<AssumeRoleResult>

<Credentials>

<SessionToken>{{ role.session_token }}</SessionToken>

| {"golden_diff": "diff --git a/moto/sts/responses.py b/moto/sts/responses.py\n--- a/moto/sts/responses.py\n+++ b/moto/sts/responses.py\n@@ -128,8 +128,7 @@\n </GetFederationTokenResponse>\"\"\"\n \n \n-ASSUME_ROLE_RESPONSE = \"\"\"<AssumeRoleResponse xmlns=\"https://sts.amazonaws.com/doc/\n-2011-06-15/\">\n+ASSUME_ROLE_RESPONSE = \"\"\"<AssumeRoleResponse xmlns=\"https://sts.amazonaws.com/doc/2011-06-15/\">\n <AssumeRoleResult>\n <Credentials>\n <SessionToken>{{ role.session_token }}</SessionToken>\n", "issue": "STS: Invalid xmlns in response from STS\nI got error below, when trying to AssumeRole by AWS SDK (for PHP).\r\n```\r\nError parsing XML: xmlns: 'https:\\/\\/sts.amazonaws.com\\/doc\\/ 2011-06-15\\/' is not a valid URI\r\n```\r\n\n", "before_files": [{"content": "from __future__ import unicode_literals\n\nfrom moto.core.responses import BaseResponse\nfrom moto.iam.models import ACCOUNT_ID\nfrom moto.iam import iam_backend\nfrom .exceptions import STSValidationError\nfrom .models import sts_backend\n\nMAX_FEDERATION_TOKEN_POLICY_LENGTH = 2048\n\n\nclass TokenResponse(BaseResponse):\n\n def get_session_token(self):\n duration = int(self.querystring.get('DurationSeconds', [43200])[0])\n token = sts_backend.get_session_token(duration=duration)\n template = self.response_template(GET_SESSION_TOKEN_RESPONSE)\n return template.render(token=token)\n\n def get_federation_token(self):\n duration = int(self.querystring.get('DurationSeconds', [43200])[0])\n policy = self.querystring.get('Policy', [None])[0]\n\n if policy is not None and len(policy) > MAX_FEDERATION_TOKEN_POLICY_LENGTH:\n raise STSValidationError(\n \"1 validation error detected: Value \"\n \"'{\\\"Version\\\": \\\"2012-10-17\\\", \\\"Statement\\\": [...]}' \"\n \"at 'policy' failed to satisfy constraint: Member must have length less than or \"\n \" equal to %s\" % MAX_FEDERATION_TOKEN_POLICY_LENGTH\n )\n\n name = self.querystring.get('Name')[0]\n token = sts_backend.get_federation_token(\n 
duration=duration, name=name, policy=policy)\n template = self.response_template(GET_FEDERATION_TOKEN_RESPONSE)\n return template.render(token=token, account_id=ACCOUNT_ID)\n\n def assume_role(self):\n role_session_name = self.querystring.get('RoleSessionName')[0]\n role_arn = self.querystring.get('RoleArn')[0]\n\n policy = self.querystring.get('Policy', [None])[0]\n duration = int(self.querystring.get('DurationSeconds', [3600])[0])\n external_id = self.querystring.get('ExternalId', [None])[0]\n\n role = sts_backend.assume_role(\n role_session_name=role_session_name,\n role_arn=role_arn,\n policy=policy,\n duration=duration,\n external_id=external_id,\n )\n template = self.response_template(ASSUME_ROLE_RESPONSE)\n return template.render(role=role)\n\n def assume_role_with_web_identity(self):\n role_session_name = self.querystring.get('RoleSessionName')[0]\n role_arn = self.querystring.get('RoleArn')[0]\n\n policy = self.querystring.get('Policy', [None])[0]\n duration = int(self.querystring.get('DurationSeconds', [3600])[0])\n external_id = self.querystring.get('ExternalId', [None])[0]\n\n role = sts_backend.assume_role_with_web_identity(\n role_session_name=role_session_name,\n role_arn=role_arn,\n policy=policy,\n duration=duration,\n external_id=external_id,\n )\n template = self.response_template(ASSUME_ROLE_WITH_WEB_IDENTITY_RESPONSE)\n return template.render(role=role)\n\n def get_caller_identity(self):\n template = self.response_template(GET_CALLER_IDENTITY_RESPONSE)\n\n # Default values in case the request does not use valid credentials generated by moto\n user_id = \"AKIAIOSFODNN7EXAMPLE\"\n arn = \"arn:aws:sts::{account_id}:user/moto\".format(account_id=ACCOUNT_ID)\n\n access_key_id = self.get_current_user()\n assumed_role = sts_backend.get_assumed_role_from_access_key(access_key_id)\n if assumed_role:\n user_id = assumed_role.user_id\n arn = assumed_role.arn\n\n user = iam_backend.get_user_from_access_key_id(access_key_id)\n if user:\n user_id = user.id\n 
arn = user.arn\n\n return template.render(account_id=ACCOUNT_ID, user_id=user_id, arn=arn)\n\n\nGET_SESSION_TOKEN_RESPONSE = \"\"\"<GetSessionTokenResponse xmlns=\"https://sts.amazonaws.com/doc/2011-06-15/\">\n <GetSessionTokenResult>\n <Credentials>\n <SessionToken>AQoEXAMPLEH4aoAH0gNCAPyJxz4BlCFFxWNE1OPTgk5TthT+FvwqnKwRcOIfrRh3c/LTo6UDdyJwOOvEVPvLXCrrrUtdnniCEXAMPLE/IvU1dYUg2RVAJBanLiHb4IgRmpRV3zrkuWJOgQs8IZZaIv2BXIa2R4OlgkBN9bkUDNCJiBeb/AXlzBBko7b15fjrBs2+cTQtpZ3CYWFXG8C5zqx37wnOE49mRl/+OtkIKGO7fAE</SessionToken>\n <SecretAccessKey>wJalrXUtnFEMI/K7MDENG/bPxRfiCYzEXAMPLEKEY</SecretAccessKey>\n <Expiration>{{ token.expiration_ISO8601 }}</Expiration>\n <AccessKeyId>AKIAIOSFODNN7EXAMPLE</AccessKeyId>\n </Credentials>\n </GetSessionTokenResult>\n <ResponseMetadata>\n <RequestId>58c5dbae-abef-11e0-8cfe-09039844ac7d</RequestId>\n </ResponseMetadata>\n</GetSessionTokenResponse>\"\"\"\n\n\nGET_FEDERATION_TOKEN_RESPONSE = \"\"\"<GetFederationTokenResponse xmlns=\"https://sts.amazonaws.com/doc/\n2011-06-15/\">\n <GetFederationTokenResult>\n <Credentials>\n <SessionToken>AQoDYXdzEPT//////////wEXAMPLEtc764bNrC9SAPBSM22wDOk4x4HIZ8j4FZTwdQWLWsKWHGBuFqwAeMicRXmxfpSPfIeoIYRqTflfKD8YUuwthAx7mSEI/qkPpKPi/kMcGdQrmGdeehM4IC1NtBmUpp2wUE8phUZampKsburEDy0KPkyQDYwT7WZ0wq5VSXDvp75YU9HFvlRd8Tx6q6fE8YQcHNVXAkiY9q6d+xo0rKwT38xVqr7ZD0u0iPPkUL64lIZbqBAz+scqKmlzm8FDrypNC9Yjc8fPOLn9FX9KSYvKTr4rvx3iSIlTJabIQwj2ICCR/oLxBA==</SessionToken>\n <SecretAccessKey>wJalrXUtnFEMI/K7MDENG/bPxRfiCYzEXAMPLEKEY</SecretAccessKey>\n <Expiration>{{ token.expiration_ISO8601 }}</Expiration>\n <AccessKeyId>AKIAIOSFODNN7EXAMPLE</AccessKeyId>\n </Credentials>\n <FederatedUser>\n <Arn>arn:aws:sts::{{ account_id }}:federated-user/{{ token.name }}</Arn>\n <FederatedUserId>{{ account_id }}:{{ token.name }}</FederatedUserId>\n </FederatedUser>\n <PackedPolicySize>6</PackedPolicySize>\n </GetFederationTokenResult>\n <ResponseMetadata>\n <RequestId>c6104cbe-af31-11e0-8154-cbc7ccf896c7</RequestId>\n 
</ResponseMetadata>\n</GetFederationTokenResponse>\"\"\"\n\n\nASSUME_ROLE_RESPONSE = \"\"\"<AssumeRoleResponse xmlns=\"https://sts.amazonaws.com/doc/\n2011-06-15/\">\n <AssumeRoleResult>\n <Credentials>\n <SessionToken>{{ role.session_token }}</SessionToken>\n <SecretAccessKey>{{ role.secret_access_key }}</SecretAccessKey>\n <Expiration>{{ role.expiration_ISO8601 }}</Expiration>\n <AccessKeyId>{{ role.access_key_id }}</AccessKeyId>\n </Credentials>\n <AssumedRoleUser>\n <Arn>{{ role.arn }}</Arn>\n <AssumedRoleId>{{ role.user_id }}</AssumedRoleId>\n </AssumedRoleUser>\n <PackedPolicySize>6</PackedPolicySize>\n </AssumeRoleResult>\n <ResponseMetadata>\n <RequestId>c6104cbe-af31-11e0-8154-cbc7ccf896c7</RequestId>\n </ResponseMetadata>\n</AssumeRoleResponse>\"\"\"\n\n\nASSUME_ROLE_WITH_WEB_IDENTITY_RESPONSE = \"\"\"<AssumeRoleWithWebIdentityResponse xmlns=\"https://sts.amazonaws.com/doc/2011-06-15/\">\n <AssumeRoleWithWebIdentityResult>\n <Credentials>\n <SessionToken>{{ role.session_token }}</SessionToken>\n <SecretAccessKey>{{ role.secret_access_key }}</SecretAccessKey>\n <Expiration>{{ role.expiration_ISO8601 }}</Expiration>\n <AccessKeyId>{{ role.access_key_id }}</AccessKeyId>\n </Credentials>\n <AssumedRoleUser>\n <Arn>{{ role.arn }}</Arn>\n <AssumedRoleId>ARO123EXAMPLE123:{{ role.session_name }}</AssumedRoleId>\n </AssumedRoleUser>\n <PackedPolicySize>6</PackedPolicySize>\n </AssumeRoleWithWebIdentityResult>\n <ResponseMetadata>\n <RequestId>c6104cbe-af31-11e0-8154-cbc7ccf896c7</RequestId>\n </ResponseMetadata>\n</AssumeRoleWithWebIdentityResponse>\"\"\"\n\n\nGET_CALLER_IDENTITY_RESPONSE = \"\"\"<GetCallerIdentityResponse xmlns=\"https://sts.amazonaws.com/doc/2011-06-15/\">\n <GetCallerIdentityResult>\n <Arn>{{ arn }}</Arn>\n <UserId>{{ user_id }}</UserId>\n <Account>{{ account_id }}</Account>\n </GetCallerIdentityResult>\n <ResponseMetadata>\n <RequestId>c6104cbe-af31-11e0-8154-cbc7ccf896c7</RequestId>\n 
</ResponseMetadata>\n</GetCallerIdentityResponse>\n\"\"\"\n", "path": "moto/sts/responses.py"}]} | 3,335 | 153 |

gh_patches_debug_42444 | rasdani/github-patches | git_diff | geopandas__geopandas-1160 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Lazy Loading Dataframes

There have been times when I wanted to use geopandas to get an idea of what was in a large file. But, it took a long time to load it in. So, I used `fiona` instead. Are there any plans (or desires) to do lazy loading of the geopandas data?

This is library I recently saw is related:

https://vaex.readthedocs.io/en/latest/index.html

https://github.com/vaexio/vaex

</issue>

<code>

[start of geopandas/io/file.py]

1 from distutils.version import LooseVersion

2

3 import numpy as np

4 import pandas as pd

5

6 import fiona

7

8 from geopandas import GeoDataFrame, GeoSeries

9

10 try:

11 from fiona import Env as fiona_env

12 except ImportError:

13 from fiona import drivers as fiona_env

14 # Adapted from pandas.io.common

15 from urllib.request import urlopen as _urlopen

16 from urllib.parse import urlparse as parse_url

17 from urllib.parse import uses_relative, uses_netloc, uses_params

18

19

20 _FIONA18 = LooseVersion(fiona.__version__) >= LooseVersion("1.8")

21 _VALID_URLS = set(uses_relative + uses_netloc + uses_params)

22 _VALID_URLS.discard("")

23

24

25 def _is_url(url):

26 """Check to see if *url* has a valid protocol."""

27 try:

28 return parse_url(url).scheme in _VALID_URLS

29 except Exception:

30 return False

31

32

33 def read_file(filename, bbox=None, **kwargs):

34 """

35 Returns a GeoDataFrame from a file or URL.

36

37 Parameters

38 ----------

39 filename: str

40 Either the absolute or relative path to the file or URL to

41 be opened.

42 bbox : tuple | GeoDataFrame or GeoSeries, default None

43 Filter features by given bounding box, GeoSeries, or GeoDataFrame.

44 CRS mis-matches are resolved if given a GeoSeries or GeoDataFrame.

45 **kwargs:

46 Keyword args to be passed to the `open` or `BytesCollection` method

47 in the fiona library when opening the file. For more information on

48 possible keywords, type:

49 ``import fiona; help(fiona.open)``

50

51 Examples

52 --------

53 >>> df = geopandas.read_file("nybb.shp")

54

55 Returns

56 -------

57 geodataframe : GeoDataFrame

58

59 Notes

60 -----

61 The format drivers will attempt to detect the encoding of your data, but

62 may fail. In this case, the proper encoding can be specified explicitly

63 by using the encoding keyword parameter, e.g. ``encoding='utf-8'``.

64 """

65 if _is_url(filename):

66 req = _urlopen(filename)

67 path_or_bytes = req.read()

68 reader = fiona.BytesCollection

69 else:

70 path_or_bytes = filename

71 reader = fiona.open

72

73 with fiona_env():

74 with reader(path_or_bytes, **kwargs) as features:

75

76 # In a future Fiona release the crs attribute of features will

77 # no longer be a dict, but will behave like a dict. So this should

78 # be forwards compatible

79 crs = (

80 features.crs["init"]

81 if features.crs and "init" in features.crs

82 else features.crs_wkt

83 )

84

85 if bbox is not None:

86 if isinstance(bbox, GeoDataFrame) or isinstance(bbox, GeoSeries):

87 bbox = tuple(bbox.to_crs(crs).total_bounds)

88 assert len(bbox) == 4

89 f_filt = features.filter(bbox=bbox)

90 else:

91 f_filt = features

92

93 columns = list(features.meta["schema"]["properties"]) + ["geometry"]

94 gdf = GeoDataFrame.from_features(f_filt, crs=crs, columns=columns)

95

96 return gdf

97

98

99 def to_file(df, filename, driver="ESRI Shapefile", schema=None, index=None, **kwargs):

100 """

101 Write this GeoDataFrame to an OGR data source

102

103 A dictionary of supported OGR providers is available via:

104 >>> import fiona

105 >>> fiona.supported_drivers

106

107 Parameters

108 ----------

109 df : GeoDataFrame to be written

110 filename : string

111 File path or file handle to write to.

112 driver : string, default 'ESRI Shapefile'

113 The OGR format driver used to write the vector file.

114 schema : dict, default None

115 If specified, the schema dictionary is passed to Fiona to

116 better control how the file is written. If None, GeoPandas

117 will determine the schema based on each column's dtype

118 index : bool, default None

119 If True, write index into one or more columns (for MultiIndex).

120 Default None writes the index into one or more columns only if

121 the index is named, is a MultiIndex, or has a non-integer data

122 type. If False, no index is written.

123

124 .. versionadded:: 0.7

125 Previously the index was not written.

126

127 The *kwargs* are passed to fiona.open and can be used to write

128 to multi-layer data, store data within archives (zip files), etc.

129 The path may specify a fiona VSI scheme.

130

131 Notes

132 -----

133 The format drivers will attempt to detect the encoding of your data, but

134 may fail. In this case, the proper encoding can be specified explicitly

135 by using the encoding keyword parameter, e.g. ``encoding='utf-8'``.

136 """

137 if index is None:

138 # Determine if index attribute(s) should be saved to file

139 index = list(df.index.names) != [None] or type(df.index) not in (

140 pd.RangeIndex,

141 pd.Int64Index,

142 )

143 if index:

144 df = df.reset_index(drop=False)

145 if schema is None:

146 schema = infer_schema(df)

147 with fiona_env():

148 crs_wkt = None

149 try:

150 gdal_version = fiona.env.get_gdal_release_name()

151 except AttributeError:

152 gdal_version = "2.0.0" # just assume it is not the latest

153 if LooseVersion(gdal_version) >= LooseVersion("3.0.0") and df.crs:

154 crs_wkt = df.crs.to_wkt()

155 elif df.crs:

156 crs_wkt = df.crs.to_wkt("WKT1_GDAL")

157 with fiona.open(

158 filename, "w", driver=driver, crs_wkt=crs_wkt, schema=schema, **kwargs

159 ) as colxn:

160 colxn.writerecords(df.iterfeatures())

161

162

163 def infer_schema(df):

164 from collections import OrderedDict

165

166 # TODO: test pandas string type and boolean type once released

167 types = {"Int64": "int", "string": "str", "boolean": "bool"}

168

169 def convert_type(column, in_type):

170 if in_type == object:

171 return "str"

172 if in_type.name.startswith("datetime64"):

173 # numpy datetime type regardless of frequency

174 return "datetime"

175 if str(in_type) in types:

176 out_type = types[str(in_type)]

177 else:

178 out_type = type(np.zeros(1, in_type).item()).__name__

179 if out_type == "long":

180 out_type = "int"

181 if not _FIONA18 and out_type == "bool":

182 raise ValueError(

183 'column "{}" is boolean type, '.format(column)

184 + "which is unsupported in file writing with fiona "

185 "< 1.8. Consider casting the column to int type."

186 )

187 return out_type

188

189 properties = OrderedDict(

190 [

191 (col, convert_type(col, _type))

192 for col, _type in zip(df.columns, df.dtypes)

193 if col != df._geometry_column_name

194 ]

195 )

196

197 if df.empty:

198 raise ValueError("Cannot write empty DataFrame to file.")

199

200 # Since https://github.com/Toblerity/Fiona/issues/446 resolution,

201 # Fiona allows a list of geometry types

202 geom_types = _geometry_types(df)

203

204 schema = {"geometry": geom_types, "properties": properties}

205

206 return schema

207

208

209 def _geometry_types(df):

210 """

211 Determine the geometry types in the GeoDataFrame for the schema.

212 """

213 if _FIONA18:

214 # Starting from Fiona 1.8, schema submitted to fiona to write a gdf

215 # can have mixed geometries:

216 # - 3D and 2D shapes can coexist in inferred schema

217 # - Shape and MultiShape types can (and must) coexist in inferred

218 # schema

219 geom_types_2D = df[~df.geometry.has_z].geometry.geom_type.unique()

220 geom_types_2D = [gtype for gtype in geom_types_2D if gtype is not None]

221 geom_types_3D = df[df.geometry.has_z].geometry.geom_type.unique()

222 geom_types_3D = ["3D " + gtype for gtype in geom_types_3D if gtype is not None]

223 geom_types = geom_types_3D + geom_types_2D

224

225 else:

226 # Before Fiona 1.8, schema submitted to write a gdf should have

227 # one single geometry type whenever possible:

228 # - 3D and 2D shapes cannot coexist in inferred schema

229 # - Shape and MultiShape can not coexist in inferred schema

230 geom_types = _geometry_types_back_compat(df)

231

232 if len(geom_types) == 0:

233 # Default geometry type supported by Fiona

234 # (Since https://github.com/Toblerity/Fiona/issues/446 resolution)

235 return "Unknown"

236

237 if len(geom_types) == 1:

238 geom_types = geom_types[0]

239

240 return geom_types

241

242

243 def _geometry_types_back_compat(df):

244 """

245 for backward compatibility with Fiona<1.8 only

246 """

247 unique_geom_types = df.geometry.geom_type.unique()

248 unique_geom_types = [gtype for gtype in unique_geom_types if gtype is not None]

249

250 # merge single and Multi types (eg Polygon and MultiPolygon)

251 unique_geom_types = [

252 gtype

253 for gtype in unique_geom_types

254 if not gtype.startswith("Multi") or gtype[5:] not in unique_geom_types

255 ]

256

257 if df.geometry.has_z.any():

258 # declare all geometries as 3D geometries

259 unique_geom_types = ["3D " + type for type in unique_geom_types]

260 # by default, all geometries are 2D geometries

261

262 return unique_geom_types

263

[end of geopandas/io/file.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/geopandas/io/file.py b/geopandas/io/file.py

--- a/geopandas/io/file.py

+++ b/geopandas/io/file.py

@@ -4,17 +4,21 @@

import pandas as pd

import fiona

-

-from geopandas import GeoDataFrame, GeoSeries

+from shapely.geometry import mapping

+from shapely.geometry.base import BaseGeometry

try:

from fiona import Env as fiona_env

except ImportError:

from fiona import drivers as fiona_env

+

+from geopandas import GeoDataFrame, GeoSeries

+

+

# Adapted from pandas.io.common

from urllib.request import urlopen as _urlopen

from urllib.parse import urlparse as parse_url

-from urllib.parse import uses_relative, uses_netloc, uses_params

+from urllib.parse import uses_netloc, uses_params, uses_relative

_FIONA18 = LooseVersion(fiona.__version__) >= LooseVersion("1.8")

@@ -30,18 +34,29 @@

return False

-def read_file(filename, bbox=None, **kwargs):

+def read_file(filename, bbox=None, mask=None, rows=None, **kwargs):

"""

Returns a GeoDataFrame from a file or URL.

+ .. versionadded:: 0.7.0 mask, rows

+

Parameters

----------

filename: str

Either the absolute or relative path to the file or URL to

be opened.

- bbox : tuple | GeoDataFrame or GeoSeries, default None

- Filter features by given bounding box, GeoSeries, or GeoDataFrame.

+ bbox: tuple | GeoDataFrame or GeoSeries | shapely Geometry, default None

+ Filter features by given bounding box, GeoSeries, GeoDataFrame or a

+ shapely geometry. CRS mis-matches are resolved if given a GeoSeries

+ or GeoDataFrame. Cannot be used with mask.

+ mask: dict | GeoDataFrame or GeoSeries | shapely Geometry, default None

+ Filter for features that intersect with the given dict-like geojson

+ geometry, GeoSeries, GeoDataFrame or shapely geometry.

CRS mis-matches are resolved if given a GeoSeries or GeoDataFrame.

+ Cannot be used with bbox.

+ rows: int or slice, default None

+ Load in specific rows by passing an integer (first `n` rows) or a

+ slice() object.

**kwargs:

Keyword args to be passed to the `open` or `BytesCollection` method

in the fiona library when opening the file. For more information on

@@ -54,7 +69,7 @@

Returns

-------

- geodataframe : GeoDataFrame

+ :obj:`geopandas.GeoDataFrame`

Notes

-----

@@ -82,11 +97,29 @@

else features.crs_wkt

)

+ # handle loading the bounding box

if bbox is not None:

- if isinstance(bbox, GeoDataFrame) or isinstance(bbox, GeoSeries):

+ if isinstance(bbox, (GeoDataFrame, GeoSeries)):

bbox = tuple(bbox.to_crs(crs).total_bounds)

+ elif isinstance(bbox, BaseGeometry):

+ bbox = bbox.bounds

assert len(bbox) == 4

- f_filt = features.filter(bbox=bbox)

+ # handle loading the mask

+ elif isinstance(mask, (GeoDataFrame, GeoSeries)):

+ mask = mapping(mask.to_crs(crs).unary_union)

+ elif isinstance(mask, BaseGeometry):

+ mask = mapping(mask)

+ # setup the data loading filter

+ if rows is not None:

+ if isinstance(rows, int):

+ rows = slice(rows)

+ elif not isinstance(rows, slice):

+ raise TypeError("'rows' must be an integer or a slice.")

+ f_filt = features.filter(

+ rows.start, rows.stop, rows.step, bbox=bbox, mask=mask

+ )

+ elif any((bbox, mask)):

+ f_filt = features.filter(bbox=bbox, mask=mask)

else:

f_filt = features

| {"golden_diff": "diff --git a/geopandas/io/file.py b/geopandas/io/file.py\n--- a/geopandas/io/file.py\n+++ b/geopandas/io/file.py\n@@ -4,17 +4,21 @@\n import pandas as pd\n \n import fiona\n-\n-from geopandas import GeoDataFrame, GeoSeries\n+from shapely.geometry import mapping\n+from shapely.geometry.base import BaseGeometry\n \n try:\n from fiona import Env as fiona_env\n except ImportError:\n from fiona import drivers as fiona_env\n+\n+from geopandas import GeoDataFrame, GeoSeries\n+\n+\n # Adapted from pandas.io.common\n from urllib.request import urlopen as _urlopen\n from urllib.parse import urlparse as parse_url\n-from urllib.parse import uses_relative, uses_netloc, uses_params\n+from urllib.parse import uses_netloc, uses_params, uses_relative\n \n \n _FIONA18 = LooseVersion(fiona.__version__) >= LooseVersion(\"1.8\")\n@@ -30,18 +34,29 @@\n return False\n \n \n-def read_file(filename, bbox=None, **kwargs):\n+def read_file(filename, bbox=None, mask=None, rows=None, **kwargs):\n \"\"\"\n Returns a GeoDataFrame from a file or URL.\n \n+ .. versionadded:: 0.7.0 mask, rows\n+\n Parameters\n ----------\n filename: str\n Either the absolute or relative path to the file or URL to\n be opened.\n- bbox : tuple | GeoDataFrame or GeoSeries, default None\n- Filter features by given bounding box, GeoSeries, or GeoDataFrame.\n+ bbox: tuple | GeoDataFrame or GeoSeries | shapely Geometry, default None\n+ Filter features by given bounding box, GeoSeries, GeoDataFrame or a\n+ shapely geometry. CRS mis-matches are resolved if given a GeoSeries\n+ or GeoDataFrame. 
Cannot be used with mask.\n+ mask: dict | GeoDataFrame or GeoSeries | shapely Geometry, default None\n+ Filter for features that intersect with the given dict-like geojson\n+ geometry, GeoSeries, GeoDataFrame or shapely geometry.\n CRS mis-matches are resolved if given a GeoSeries or GeoDataFrame.\n+ Cannot be used with bbox.\n+ rows: int or slice, default None\n+ Load in specific rows by passing an integer (first `n` rows) or a\n+ slice() object.\n **kwargs:\n Keyword args to be passed to the `open` or `BytesCollection` method\n in the fiona library when opening the file. For more information on\n@@ -54,7 +69,7 @@\n \n Returns\n -------\n- geodataframe : GeoDataFrame\n+ :obj:`geopandas.GeoDataFrame`\n \n Notes\n -----\n@@ -82,11 +97,29 @@\n else features.crs_wkt\n )\n \n+ # handle loading the bounding box\n if bbox is not None:\n- if isinstance(bbox, GeoDataFrame) or isinstance(bbox, GeoSeries):\n+ if isinstance(bbox, (GeoDataFrame, GeoSeries)):\n bbox = tuple(bbox.to_crs(crs).total_bounds)\n+ elif isinstance(bbox, BaseGeometry):\n+ bbox = bbox.bounds\n assert len(bbox) == 4\n- f_filt = features.filter(bbox=bbox)\n+ # handle loading the mask\n+ elif isinstance(mask, (GeoDataFrame, GeoSeries)):\n+ mask = mapping(mask.to_crs(crs).unary_union)\n+ elif isinstance(mask, BaseGeometry):\n+ mask = mapping(mask)\n+ # setup the data loading filter\n+ if rows is not None:\n+ if isinstance(rows, int):\n+ rows = slice(rows)\n+ elif not isinstance(rows, slice):\n+ raise TypeError(\"'rows' must be an integer or a slice.\")\n+ f_filt = features.filter(\n+ rows.start, rows.stop, rows.step, bbox=bbox, mask=mask\n+ )\n+ elif any((bbox, mask)):\n+ f_filt = features.filter(bbox=bbox, mask=mask)\n else:\n f_filt = features\n", "issue": "Lazy Loading Dataframes\nThere have been times when I wanted to use geopandas to get an idea of what was in a large file. But, it took a long time to load it in. So, I used `fiona` instead. 
Are there any plans (or desires) to do lazy loading of the geopandas data?\r\n\r\nThis is library I recently saw is related:\r\nhttps://vaex.readthedocs.io/en/latest/index.html\r\nhttps://github.com/vaexio/vaex\n", "before_files": [{"content": "from distutils.version import LooseVersion\n\nimport numpy as np\nimport pandas as pd\n\nimport fiona\n\nfrom geopandas import GeoDataFrame, GeoSeries\n\ntry:\n from fiona import Env as fiona_env\nexcept ImportError:\n from fiona import drivers as fiona_env\n# Adapted from pandas.io.common\nfrom urllib.request import urlopen as _urlopen\nfrom urllib.parse import urlparse as parse_url\nfrom urllib.parse import uses_relative, uses_netloc, uses_params\n\n\n_FIONA18 = LooseVersion(fiona.__version__) >= LooseVersion(\"1.8\")\n_VALID_URLS = set(uses_relative + uses_netloc + uses_params)\n_VALID_URLS.discard(\"\")\n\n\ndef _is_url(url):\n \"\"\"Check to see if *url* has a valid protocol.\"\"\"\n try:\n return parse_url(url).scheme in _VALID_URLS\n except Exception:\n return False\n\n\ndef read_file(filename, bbox=None, **kwargs):\n \"\"\"\n Returns a GeoDataFrame from a file or URL.\n\n Parameters\n ----------\n filename: str\n Either the absolute or relative path to the file or URL to\n be opened.\n bbox : tuple | GeoDataFrame or GeoSeries, default None\n Filter features by given bounding box, GeoSeries, or GeoDataFrame.\n CRS mis-matches are resolved if given a GeoSeries or GeoDataFrame.\n **kwargs:\n Keyword args to be passed to the `open` or `BytesCollection` method\n in the fiona library when opening the file. For more information on\n possible keywords, type:\n ``import fiona; help(fiona.open)``\n\n Examples\n --------\n >>> df = geopandas.read_file(\"nybb.shp\")\n\n Returns\n -------\n geodataframe : GeoDataFrame\n\n Notes\n -----\n The format drivers will attempt to detect the encoding of your data, but\n may fail. In this case, the proper encoding can be specified explicitly\n by using the encoding keyword parameter, e.g. 
``encoding='utf-8'``.\n \"\"\"\n if _is_url(filename):\n req = _urlopen(filename)\n path_or_bytes = req.read()\n reader = fiona.BytesCollection\n else:\n path_or_bytes = filename\n reader = fiona.open\n\n with fiona_env():\n with reader(path_or_bytes, **kwargs) as features:\n\n # In a future Fiona release the crs attribute of features will\n # no longer be a dict, but will behave like a dict. So this should\n # be forwards compatible\n crs = (\n features.crs[\"init\"]\n if features.crs and \"init\" in features.crs\n else features.crs_wkt\n )\n\n if bbox is not None:\n if isinstance(bbox, GeoDataFrame) or isinstance(bbox, GeoSeries):\n bbox = tuple(bbox.to_crs(crs).total_bounds)\n assert len(bbox) == 4\n f_filt = features.filter(bbox=bbox)\n else:\n f_filt = features\n\n columns = list(features.meta[\"schema\"][\"properties\"]) + [\"geometry\"]\n gdf = GeoDataFrame.from_features(f_filt, crs=crs, columns=columns)\n\n return gdf\n\n\ndef to_file(df, filename, driver=\"ESRI Shapefile\", schema=None, index=None, **kwargs):\n \"\"\"\n Write this GeoDataFrame to an OGR data source\n\n A dictionary of supported OGR providers is available via:\n >>> import fiona\n >>> fiona.supported_drivers\n\n Parameters\n ----------\n df : GeoDataFrame to be written\n filename : string\n File path or file handle to write to.\n driver : string, default 'ESRI Shapefile'\n The OGR format driver used to write the vector file.\n schema : dict, default None\n If specified, the schema dictionary is passed to Fiona to\n better control how the file is written. If None, GeoPandas\n will determine the schema based on each column's dtype\n index : bool, default None\n If True, write index into one or more columns (for MultiIndex).\n Default None writes the index into one or more columns only if\n the index is named, is a MultiIndex, or has a non-integer data\n type. If False, no index is written.\n\n .. 
versionadded:: 0.7\n Previously the index was not written.\n\n The *kwargs* are passed to fiona.open and can be used to write\n to multi-layer data, store data within archives (zip files), etc.\n The path may specify a fiona VSI scheme.\n\n Notes\n -----\n The format drivers will attempt to detect the encoding of your data, but\n may fail. In this case, the proper encoding can be specified explicitly\n by using the encoding keyword parameter, e.g. ``encoding='utf-8'``.\n \"\"\"\n if index is None:\n # Determine if index attribute(s) should be saved to file\n index = list(df.index.names) != [None] or type(df.index) not in (\n pd.RangeIndex,\n pd.Int64Index,\n )\n if index:\n df = df.reset_index(drop=False)\n if schema is None:\n schema = infer_schema(df)\n with fiona_env():\n crs_wkt = None\n try:\n gdal_version = fiona.env.get_gdal_release_name()\n except AttributeError:\n gdal_version = \"2.0.0\" # just assume it is not the latest\n if LooseVersion(gdal_version) >= LooseVersion(\"3.0.0\") and df.crs:\n crs_wkt = df.crs.to_wkt()\n elif df.crs:\n crs_wkt = df.crs.to_wkt(\"WKT1_GDAL\")\n with fiona.open(\n filename, \"w\", driver=driver, crs_wkt=crs_wkt, schema=schema, **kwargs\n ) as colxn:\n colxn.writerecords(df.iterfeatures())\n\n\ndef infer_schema(df):\n from collections import OrderedDict\n\n # TODO: test pandas string type and boolean type once released\n types = {\"Int64\": \"int\", \"string\": \"str\", \"boolean\": \"bool\"}\n\n def convert_type(column, in_type):\n if in_type == object:\n return \"str\"\n if in_type.name.startswith(\"datetime64\"):\n # numpy datetime type regardless of frequency\n return \"datetime\"\n if str(in_type) in types:\n out_type = types[str(in_type)]\n else:\n out_type = type(np.zeros(1, in_type).item()).__name__\n if out_type == \"long\":\n out_type = \"int\"\n if not _FIONA18 and out_type == \"bool\":\n raise ValueError(\n 'column \"{}\" is boolean type, '.format(column)\n + \"which is unsupported in file writing with fiona \"\n 
\"< 1.8. Consider casting the column to int type.\"\n )\n return out_type\n\n properties = OrderedDict(\n [\n (col, convert_type(col, _type))\n for col, _type in zip(df.columns, df.dtypes)\n if col != df._geometry_column_name\n ]\n )\n\n if df.empty:\n raise ValueError(\"Cannot write empty DataFrame to file.\")\n\n # Since https://github.com/Toblerity/Fiona/issues/446 resolution,\n # Fiona allows a list of geometry types\n geom_types = _geometry_types(df)\n\n schema = {\"geometry\": geom_types, \"properties\": properties}\n\n return schema\n\n\ndef _geometry_types(df):\n \"\"\"\n Determine the geometry types in the GeoDataFrame for the schema.\n \"\"\"\n if _FIONA18:\n # Starting from Fiona 1.8, schema submitted to fiona to write a gdf\n # can have mixed geometries:\n # - 3D and 2D shapes can coexist in inferred schema\n # - Shape and MultiShape types can (and must) coexist in inferred\n # schema\n geom_types_2D = df[~df.geometry.has_z].geometry.geom_type.unique()\n geom_types_2D = [gtype for gtype in geom_types_2D if gtype is not None]\n geom_types_3D = df[df.geometry.has_z].geometry.geom_type.unique()\n geom_types_3D = [\"3D \" + gtype for gtype in geom_types_3D if gtype is not None]\n geom_types = geom_types_3D + geom_types_2D\n\n else:\n # Before Fiona 1.8, schema submitted to write a gdf should have\n # one single geometry type whenever possible:\n # - 3D and 2D shapes cannot coexist in inferred schema\n # - Shape and MultiShape can not coexist in inferred schema\n geom_types = _geometry_types_back_compat(df)\n\n if len(geom_types) == 0:\n # Default geometry type supported by Fiona\n # (Since https://github.com/Toblerity/Fiona/issues/446 resolution)\n return \"Unknown\"\n\n if len(geom_types) == 1:\n geom_types = geom_types[0]\n\n return geom_types\n\n\ndef _geometry_types_back_compat(df):\n \"\"\"\n for backward compatibility with Fiona<1.8 only\n \"\"\"\n unique_geom_types = df.geometry.geom_type.unique()\n unique_geom_types = [gtype for gtype in 
unique_geom_types if gtype is not None]\n\n # merge single and Multi types (eg Polygon and MultiPolygon)\n unique_geom_types = [\n gtype\n for gtype in unique_geom_types\n if not gtype.startswith(\"Multi\") or gtype[5:] not in unique_geom_types\n ]\n\n if df.geometry.has_z.any():\n # declare all geometries as 3D geometries\n unique_geom_types = [\"3D \" + type for type in unique_geom_types]\n # by default, all geometries are 2D geometries\n\n return unique_geom_types\n", "path": "geopandas/io/file.py"}]} | 3,527 | 903 |

gh_patches_debug_32143 | rasdani/github-patches | git_diff | bridgecrewio__checkov-321 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

crashing on GCP EGRESS firewall rule which doesn't have allow field defined

**Describe the bug**

Checkov crashes on a GCP EGRESS firewall rule that has no allow field defined. Terraform version: 0.12.26. Google provider version: 3.23.

**To Reproduce**

Steps to reproduce the behavior:

1. Define the resource as:

```

resource "google_compute_firewall" "default_deny_egress" {

name = "deny-all-egress-all"

description = "Prevent all egress traffic by default"

disabled = true

network = google_compute_network.vpc_network.name

enable_logging = true

priority = 65534

direction = "EGRESS"

destination_ranges = ["0.0.0.0/0"]

deny { protocol = "all" }

}

```

2. Run the CLI command: checkov -d folder

3. See error

```

ERROR:checkov.terraform.checks.resource.gcp.GoogleComputeFirewallUnrestrictedIngress3389:Failed to run check: Ensure Google compute firewall ingress does not allow unrestricted rdp access for configuration: {'name': ['${var.env}-deny-all-egress-all'], 'description': ['Prevent all egress traffic by default'], 'disabled': [True], 'network': ['${google_compute_network.vpc_network.name}'], 'enable_logging': [True], 'priority': [65534], 'direction': ['EGRESS'], 'destination_ranges': [['0.0.0.0/0']], 'deny': [{'protocol': {'all': {}}}]} at file: /../../modules/network/firewalls.tf

Traceback (most recent call last):

File "/Users/jakub/Development/GIT_REPO/tfenv/bin/checkov", line 5, in <module>

run()

File "/Users/jakub/Development/GIT_REPO/tfenv/lib/python3.7/site-packages/checkov/main.py", line 76, in run

files=file)

File "/Users/jakub/Development/GIT_REPO/tfenv/lib/python3.7/site-packages/checkov/common/runners/runner_registry.py", line 26, in run

runner_filter=self.runner_filter)

File "/Users/jakub/Development/GIT_REPO/tfenv/lib/python3.7/site-packages/checkov/terraform/runner.py", line 50, in run

self.check_tf_definition(report, root_folder, runner_filter)

File "/Users/jakub/Development/GIT_REPO/tfenv/lib/python3.7/site-packages/checkov/terraform/runner.py", line 91, in check_tf_definition

block_type, runner_filter)

File "/Users/jakub/Development/GIT_REPO/tfenv/lib/python3.7/site-packages/checkov/terraform/runner.py", line 113, in run_block

results = registry.scan(scanned_file, entity, skipped_checks, runner_filter)

File "/Users/jakub/Development/GIT_REPO/tfenv/lib/python3.7/site-packages/checkov/common/checks/base_check_registry.py", line 64, in scan

result = self.run_check(check, entity_configuration, entity_name, entity_type, scanned_file, skip_info)

File "/Users/jakub/Development/GIT_REPO/tfenv/lib/python3.7/site-packages/checkov/common/checks/base_check_registry.py", line 71, in run_check

entity_name=entity_name, entity_type=entity_type, skip_info=skip_info)

File "/Users/jakub/Development/GIT_REPO/tfenv/lib/python3.7/site-packages/checkov/common/checks/base_check.py", line 44, in run

raise e

File "/Users/jakub/Development/GIT_REPO/tfenv/lib/python3.7/site-packages/checkov/common/checks/base_check.py", line 33, in run

check_result['result'] = self.scan_entity_conf(entity_configuration)

File "/Users/jakub/Development/GIT_REPO/tfenv/lib/python3.7/site-packages/checkov/terraform/checks/resource/base_resource_check.py", line 20, in scan_entity_conf

return self.scan_resource_conf(conf)

File "/Users/jakub/Development/GIT_REPO/tfenv/lib/python3.7/site-packages/checkov/terraform/checks/resource/gcp/**GoogleComputeFirewallUnrestrictedIngress3389.py**", line 22, in scan_resource_conf

allow_blocks = conf['allow']

KeyError: 'allow'

```

**Expected behavior**

Checkov should not crash when the resource has a valid specification.

**Desktop (please complete the following information):**

- OS: Mac OS

- Checkov Version: 1.0.346

</issue>
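
The traceback in the issue ends in `KeyError: 'allow'`, which suggests the check indexes `conf['allow']` directly even though a deny-only rule has no such key. Below is a minimal, standalone sketch of one possible guard. The function name `scan_firewall_conf` and the plain string results are hypothetical stand-ins for the check's `scan_resource_conf` method and `CheckResult` values, and this is not necessarily the fix adopted upstream.

```python
PORT = "22"


def scan_firewall_conf(conf, port=PORT):
    """Hypothetical stand-in for scan_resource_conf; returns "PASSED" or "FAILED"."""
    # A deny-only rule (such as the EGRESS rule in the issue) has no 'allow'
    # key, so fall back to an empty list instead of indexing conf['allow'].
    for block in conf.get("allow", []):
        # The parsed configuration wraps each attribute value in a list.
        ports = block.get("ports", [[]])[0]
        if port in ports:
            source_ranges = conf.get("source_ranges", [[]])[0]
            if "0.0.0.0/0" in source_ranges:
                return "FAILED"
    return "PASSED"


# Trimmed version of the configuration printed in the issue's error message.
deny_only = {
    "direction": ["EGRESS"],
    "destination_ranges": [["0.0.0.0/0"]],
    "deny": [{"protocol": {"all": {}}}],
}

# Assumed shape of an ingress rule that exposes the port to any source.
open_ssh = {
    "allow": [{"protocol": ["tcp"], "ports": [["22"]]}],
    "source_ranges": [["0.0.0.0/0"]],
}

print(scan_firewall_conf(deny_only))
print(scan_firewall_conf(open_ssh))
```

Using `dict.get` with a default keeps the loop body unchanged for rules that do define `allow`, while a rule without it simply skips the loop and passes.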

<code>

[start of checkov/terraform/checks/resource/gcp/GoogleComputeFirewallUnrestrictedIngress22.py]

1 from checkov.common.models.enums import CheckResult, CheckCategories

2 from checkov.terraform.checks.resource.base_resource_check import BaseResourceCheck

3

4 PORT = '22'

5

6

7 class GoogleComputeFirewallUnrestrictedIngress22(BaseResourceCheck):

8 def __init__(self):

9 name = "Ensure Google compute firewall ingress does not allow unrestricted ssh access"

10 id = "CKV_GCP_2"

11 supported_resources = ['google_compute_firewall']

12 categories = [CheckCategories.NETWORKING]

13 super().__init__(name=name, id=id, categories=categories, supported_resources=supported_resources)

14

15 def scan_resource_conf(self, conf):

16 """

17 Looks for password configuration at google_compute_firewall:

18 https://www.terraform.io/docs/providers/google/r/compute_firewall.html

19 :param conf: azure_instance configuration

20 :return: <CheckResult>

21 """

22 allow_blocks = conf['allow']

23 for block in allow_blocks:

24 if 'ports' in block.keys():

25 if PORT in block['ports'][0]:

26 if 'source_ranges' in conf.keys():

27 source_ranges = conf['source_ranges'][0]

28 if "0.0.0.0/0" in source_ranges:

29 return CheckResult.FAILED

30 return CheckResult.PASSED

31

32

33 check = GoogleComputeFirewallUnrestrictedIngress22()

34