| problem_id (string, lengths 18 to 22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, lengths 13 to 58) | prompt (string, lengths 1.71k to 18.9k) | golden_diff (string, lengths 145 to 5.13k) | verification_info (string, lengths 465 to 23.6k) | num_tokens_prompt (int64, 556 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |

|---|---|---|---|---|---|---|---|---|

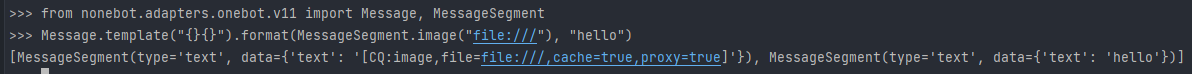

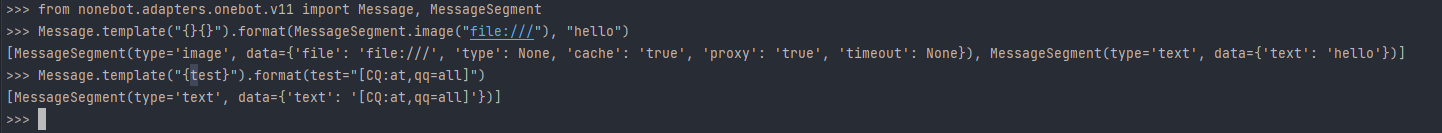

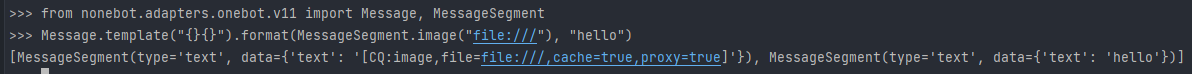

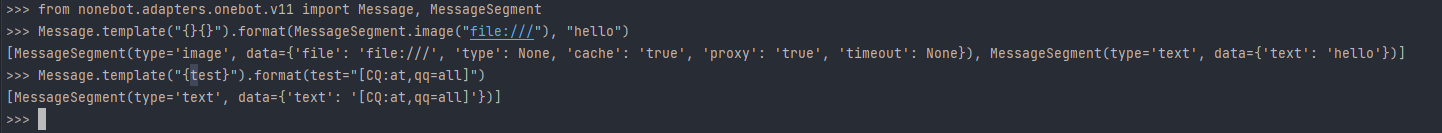

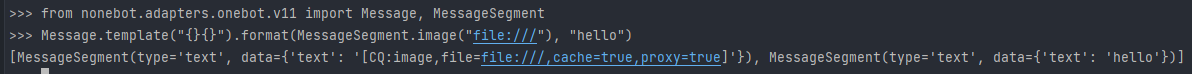

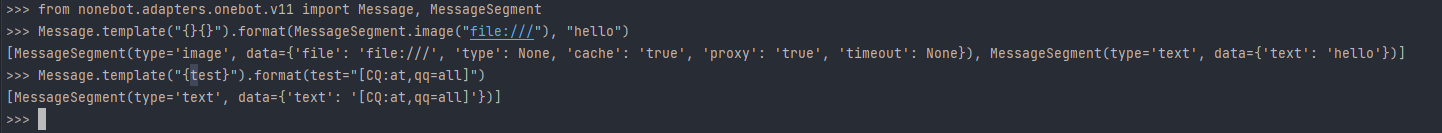

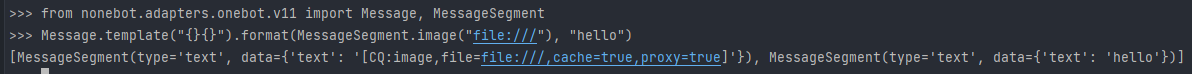

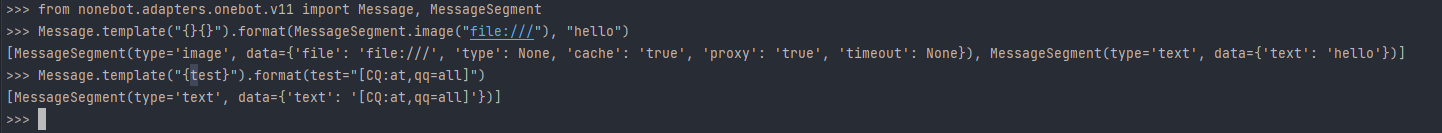

gh_patches_debug_10958 | rasdani/github-patches | git_diff | python-telegram-bot__python-telegram-bot-1760 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

[BUG] v12.4 breaks PicklePersistence

<!--

Thanks for reporting issues of python-telegram-bot!

Use this template to notify us if you found a bug.

To make it easier for us to help you please enter detailed information below.

Please note, we only support the latest version of python-telegram-bot and

master branch. Please make sure to upgrade & recreate the issue on the latest

version prior to opening an issue.

-->

### Steps to reproduce

1. Have a bot using PicklePersistence with singlefile=True

2. Upgrade to v12.4

3. restart bot

### Expected behaviour

pickled file is read correctly

### Actual behaviour

key error `bot_data` is thrown

### Current workaround:

Add an empty dict `bot_data` to the file manually. Quick and dirty script:

```
import pickle

filename = 'my_pickle_persistence_file'

with (open(filename, 'rb')) as file:
    data = pickle.load(file)

data['bot_data'] = {}

with open(filename, 'wb') as f:
    pickle.dump(data, f)
```

Will be closed by #1760

</issue>
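
The failure described in the issue can be reproduced without a running bot. The following is a minimal sketch, assuming a single pickle file written by a release that did not yet store a `bot_data` key. The helper name `load_single_file` and the sample data are hypothetical and not part of python-telegram-bot; the helper only mirrors the shape of `load_singlefile` and reads the possibly missing key with `dict.get` so that older files still load.

```python
import pickle
import tempfile
from collections import defaultdict


def load_single_file(path):
    """Hypothetical stand-in for load_singlefile that tolerates a missing 'bot_data' key."""
    with open(path, "rb") as f:
        stored = pickle.load(f)
    return {
        "user_data": defaultdict(dict, stored["user_data"]),
        "chat_data": defaultdict(dict, stored["chat_data"]),
        # Files written before bot_data existed have no such key,
        # so fall back to an empty dict instead of raising KeyError.
        "bot_data": stored.get("bot_data", {}),
        "conversations": stored["conversations"],
    }


# Simulate a persistence file from an older release: no 'bot_data' entry.
legacy = {"user_data": {1: {"a": 1}}, "chat_data": {}, "conversations": {}}
with tempfile.NamedTemporaryFile(suffix=".pickle", delete=False) as tmp:
    pickle.dump(legacy, tmp)

restored = load_single_file(tmp.name)
```

Indexing the loaded dictionary with `stored['bot_data']` instead would raise `KeyError` for such a file, which matches the behaviour reported above.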

<code>

[start of telegram/ext/picklepersistence.py]

1 #!/usr/bin/env python

2 #

3 # A library that provides a Python interface to the Telegram Bot API

4 # Copyright (C) 2015-2020

5 # Leandro Toledo de Souza <[email protected]>

6 #

7 # This program is free software: you can redistribute it and/or modify

8 # it under the terms of the GNU Lesser Public License as published by

9 # the Free Software Foundation, either version 3 of the License, or

10 # (at your option) any later version.

11 #

12 # This program is distributed in the hope that it will be useful,

13 # but WITHOUT ANY WARRANTY; without even the implied warranty of

14 # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

15 # GNU Lesser Public License for more details.

16 #

17 # You should have received a copy of the GNU Lesser Public License

18 # along with this program. If not, see [http://www.gnu.org/licenses/].

19 """This module contains the PicklePersistence class."""

20 import pickle

21 from collections import defaultdict

22 from copy import deepcopy

23

24 from telegram.ext import BasePersistence

25

26

27 class PicklePersistence(BasePersistence):

28 """Using python's builtin pickle for making you bot persistent.

29

30 Attributes:

31 filename (:obj:`str`): The filename for storing the pickle files. When :attr:`single_file`

32 is false this will be used as a prefix.

33 store_user_data (:obj:`bool`): Optional. Whether user_data should be saved by this

34 persistence class.

35 store_chat_data (:obj:`bool`): Optional. Whether user_data should be saved by this

36 persistence class.

37 store_bot_data (:obj:`bool`): Optional. Whether bot_data should be saved by this

38 persistence class.

39 single_file (:obj:`bool`): Optional. When ``False`` will store 3 sperate files of

40 `filename_user_data`, `filename_chat_data` and `filename_conversations`. Default is

41 ``True``.

42 on_flush (:obj:`bool`, optional): When ``True`` will only save to file when :meth:`flush`

43 is called and keep data in memory until that happens. When ``False`` will store data

44 on any transaction *and* on call fo :meth:`flush`. Default is ``False``.

45

46 Args:

47 filename (:obj:`str`): The filename for storing the pickle files. When :attr:`single_file`

48 is false this will be used as a prefix.

49 store_user_data (:obj:`bool`, optional): Whether user_data should be saved by this

50 persistence class. Default is ``True``.

51 store_chat_data (:obj:`bool`, optional): Whether user_data should be saved by this

52 persistence class. Default is ``True``.

53 store_bot_data (:obj:`bool`, optional): Whether bot_data should be saved by this

54 persistence class. Default is ``True`` .

55 single_file (:obj:`bool`, optional): When ``False`` will store 3 sperate files of

56 `filename_user_data`, `filename_chat_data` and `filename_conversations`. Default is

57 ``True``.

58 on_flush (:obj:`bool`, optional): When ``True`` will only save to file when :meth:`flush`

59 is called and keep data in memory until that happens. When ``False`` will store data

60 on any transaction *and* on call fo :meth:`flush`. Default is ``False``.

61 """

62

63 def __init__(self, filename,

64 store_user_data=True,

65 store_chat_data=True,

66 store_bot_data=True,

67 single_file=True,

68 on_flush=False):

69 super(PicklePersistence, self).__init__(store_user_data=store_user_data,

70 store_chat_data=store_chat_data,

71 store_bot_data=store_bot_data)

72 self.filename = filename

73 self.single_file = single_file

74 self.on_flush = on_flush

75 self.user_data = None

76 self.chat_data = None

77 self.bot_data = None

78 self.conversations = None

79

80 def load_singlefile(self):

81 try:

82 filename = self.filename

83 with open(self.filename, "rb") as f:

84 all = pickle.load(f)

85 self.user_data = defaultdict(dict, all['user_data'])

86 self.chat_data = defaultdict(dict, all['chat_data'])

87 self.bot_data = all['bot_data']

88 self.conversations = all['conversations']

89 except IOError:

90 self.conversations = {}

91 self.user_data = defaultdict(dict)

92 self.chat_data = defaultdict(dict)

93 self.bot_data = {}

94 except pickle.UnpicklingError:

95 raise TypeError("File {} does not contain valid pickle data".format(filename))

96 except Exception:

97 raise TypeError("Something went wrong unpickling {}".format(filename))

98

99 def load_file(self, filename):

100 try:

101 with open(filename, "rb") as f:

102 return pickle.load(f)

103 except IOError:

104 return None

105 except pickle.UnpicklingError:

106 raise TypeError("File {} does not contain valid pickle data".format(filename))

107 except Exception:

108 raise TypeError("Something went wrong unpickling {}".format(filename))

109

110 def dump_singlefile(self):

111 with open(self.filename, "wb") as f:

112 all = {'conversations': self.conversations, 'user_data': self.user_data,

113 'chat_data': self.chat_data, 'bot_data': self.bot_data}

114 pickle.dump(all, f)

115

116 def dump_file(self, filename, data):

117 with open(filename, "wb") as f:

118 pickle.dump(data, f)

119

120 def get_user_data(self):

121 """Returns the user_data from the pickle file if it exsists or an empty defaultdict.

122

123 Returns:

124 :obj:`defaultdict`: The restored user data.

125 """

126 if self.user_data:

127 pass

128 elif not self.single_file:

129 filename = "{}_user_data".format(self.filename)

130 data = self.load_file(filename)

131 if not data:

132 data = defaultdict(dict)

133 else:

134 data = defaultdict(dict, data)

135 self.user_data = data

136 else:

137 self.load_singlefile()

138 return deepcopy(self.user_data)

139

140 def get_chat_data(self):

141 """Returns the chat_data from the pickle file if it exsists or an empty defaultdict.

142

143 Returns:

144 :obj:`defaultdict`: The restored chat data.

145 """

146 if self.chat_data:

147 pass

148 elif not self.single_file:

149 filename = "{}_chat_data".format(self.filename)

150 data = self.load_file(filename)

151 if not data:

152 data = defaultdict(dict)

153 else:

154 data = defaultdict(dict, data)

155 self.chat_data = data

156 else:

157 self.load_singlefile()

158 return deepcopy(self.chat_data)

159

160 def get_bot_data(self):

161 """Returns the bot_data from the pickle file if it exsists or an empty dict.

162

163 Returns:

164 :obj:`defaultdict`: The restored bot data.

165 """

166 if self.bot_data:

167 pass

168 elif not self.single_file:

169 filename = "{}_bot_data".format(self.filename)

170 data = self.load_file(filename)

171 if not data:

172 data = {}

173 self.bot_data = data

174 else:

175 self.load_singlefile()

176 return deepcopy(self.bot_data)

177

178 def get_conversations(self, name):

179 """Returns the conversations from the pickle file if it exsists or an empty defaultdict.

180

181 Args:

182 name (:obj:`str`): The handlers name.

183

184 Returns:

185 :obj:`dict`: The restored conversations for the handler.

186 """

187 if self.conversations:

188 pass

189 elif not self.single_file:

190 filename = "{}_conversations".format(self.filename)

191 data = self.load_file(filename)

192 if not data:

193 data = {name: {}}

194 self.conversations = data

195 else:

196 self.load_singlefile()

197 return self.conversations.get(name, {}).copy()

198

199 def update_conversation(self, name, key, new_state):

200 """Will update the conversations for the given handler and depending on :attr:`on_flush`

201 save the pickle file.

202

203 Args:

204 name (:obj:`str`): The handlers name.

205 key (:obj:`tuple`): The key the state is changed for.

206 new_state (:obj:`tuple` | :obj:`any`): The new state for the given key.

207 """

208 if self.conversations.setdefault(name, {}).get(key) == new_state:

209 return

210 self.conversations[name][key] = new_state

211 if not self.on_flush:

212 if not self.single_file:

213 filename = "{}_conversations".format(self.filename)

214 self.dump_file(filename, self.conversations)

215 else:

216 self.dump_singlefile()

217

218 def update_user_data(self, user_id, data):

219 """Will update the user_data (if changed) and depending on :attr:`on_flush` save the

220 pickle file.

221

222 Args:

223 user_id (:obj:`int`): The user the data might have been changed for.

224 data (:obj:`dict`): The :attr:`telegram.ext.dispatcher.user_data` [user_id].

225 """

226 if self.user_data.get(user_id) == data:

227 return

228 self.user_data[user_id] = data

229 if not self.on_flush:

230 if not self.single_file:

231 filename = "{}_user_data".format(self.filename)

232 self.dump_file(filename, self.user_data)

233 else:

234 self.dump_singlefile()

235

236 def update_chat_data(self, chat_id, data):

237 """Will update the chat_data (if changed) and depending on :attr:`on_flush` save the

238 pickle file.

239

240 Args:

241 chat_id (:obj:`int`): The chat the data might have been changed for.

242 data (:obj:`dict`): The :attr:`telegram.ext.dispatcher.chat_data` [chat_id].

243 """

244 if self.chat_data.get(chat_id) == data:

245 return

246 self.chat_data[chat_id] = data

247 if not self.on_flush:

248 if not self.single_file:

249 filename = "{}_chat_data".format(self.filename)

250 self.dump_file(filename, self.chat_data)

251 else:

252 self.dump_singlefile()

253

254 def update_bot_data(self, data):

255 """Will update the bot_data (if changed) and depending on :attr:`on_flush` save the

256 pickle file.

257

258 Args:

259 data (:obj:`dict`): The :attr:`telegram.ext.dispatcher.bot_data`.

260 """

261 if self.bot_data == data:

262 return

263 self.bot_data = data.copy()

264 if not self.on_flush:

265 if not self.single_file:

266 filename = "{}_bot_data".format(self.filename)

267 self.dump_file(filename, self.bot_data)

268 else:

269 self.dump_singlefile()

270

271 def flush(self):

272 """ Will save all data in memory to pickle file(s).

273 """

274 if self.single_file:

275 if self.user_data or self.chat_data or self.conversations:

276 self.dump_singlefile()

277 else:

278 if self.user_data:

279 self.dump_file("{}_user_data".format(self.filename), self.user_data)

280 if self.chat_data:

281 self.dump_file("{}_chat_data".format(self.filename), self.chat_data)

282 if self.bot_data:

283 self.dump_file("{}_bot_data".format(self.filename), self.bot_data)

284 if self.conversations:

285 self.dump_file("{}_conversations".format(self.filename), self.conversations)

286

[end of telegram/ext/picklepersistence.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/telegram/ext/picklepersistence.py b/telegram/ext/picklepersistence.py

--- a/telegram/ext/picklepersistence.py

+++ b/telegram/ext/picklepersistence.py

@@ -84,7 +84,8 @@

all = pickle.load(f)

self.user_data = defaultdict(dict, all['user_data'])

self.chat_data = defaultdict(dict, all['chat_data'])

- self.bot_data = all['bot_data']

+ # For backwards compatibility with files not containing bot data

+ self.bot_data = all.get('bot_data', {})

self.conversations = all['conversations']

except IOError:

self.conversations = {}

| {"golden_diff": "diff --git a/telegram/ext/picklepersistence.py b/telegram/ext/picklepersistence.py\n--- a/telegram/ext/picklepersistence.py\n+++ b/telegram/ext/picklepersistence.py\n@@ -84,7 +84,8 @@\n all = pickle.load(f)\n self.user_data = defaultdict(dict, all['user_data'])\n self.chat_data = defaultdict(dict, all['chat_data'])\n- self.bot_data = all['bot_data']\n+ # For backwards compatibility with files not containing bot data\n+ self.bot_data = all.get('bot_data', {})\n self.conversations = all['conversations']\n except IOError:\n self.conversations = {}\n", "issue": "[BUG] v12.4 breaks PicklePersistence\n<!--\r\nThanks for reporting issues of python-telegram-bot!\r\n\r\nUse this template to notify us if you found a bug.\r\n\r\nTo make it easier for us to help you please enter detailed information below.\r\n\r\nPlease note, we only support the latest version of python-telegram-bot and\r\nmaster branch. Please make sure to upgrade & recreate the issue on the latest\r\nversion prior to opening an issue.\r\n-->\r\n### Steps to reproduce\r\n1. Have a bot using PicklePersistence with singlefile=True\r\n\r\n2. Upgrade to v12.4\r\n\r\n3. restart bot\r\n\r\n### Expected behaviour\r\npickled file is read correctly\r\n\r\n### Actual behaviour\r\nkey error `bot_data` is thrown\r\n\r\n### Current workaround:\r\nAdd an empty dict `bot_data` to the file manually. 
Quick and dirty script:\r\n```\r\nimport pickle\r\n\r\nfilename = 'my_pickle_persistence_file'\r\n\r\nwith (open(filename, 'rb')) as file:\r\n data = pickle.load(file)\r\n\r\ndata['bot_data'] = {}\r\n\r\nwith open(filename, 'wb') as f:\r\n pickle.dump(data, f)\r\n```\r\n\r\nWill be closed by #1760 \n", "before_files": [{"content": "#!/usr/bin/env python\n#\n# A library that provides a Python interface to the Telegram Bot API\n# Copyright (C) 2015-2020\n# Leandro Toledo de Souza <[email protected]>\n#\n# This program is free software: you can redistribute it and/or modify\n# it under the terms of the GNU Lesser Public License as published by\n# the Free Software Foundation, either version 3 of the License, or\n# (at your option) any later version.\n#\n# This program is distributed in the hope that it will be useful,\n# but WITHOUT ANY WARRANTY; without even the implied warranty of\n# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the\n# GNU Lesser Public License for more details.\n#\n# You should have received a copy of the GNU Lesser Public License\n# along with this program. If not, see [http://www.gnu.org/licenses/].\n\"\"\"This module contains the PicklePersistence class.\"\"\"\nimport pickle\nfrom collections import defaultdict\nfrom copy import deepcopy\n\nfrom telegram.ext import BasePersistence\n\n\nclass PicklePersistence(BasePersistence):\n \"\"\"Using python's builtin pickle for making you bot persistent.\n\n Attributes:\n filename (:obj:`str`): The filename for storing the pickle files. When :attr:`single_file`\n is false this will be used as a prefix.\n store_user_data (:obj:`bool`): Optional. Whether user_data should be saved by this\n persistence class.\n store_chat_data (:obj:`bool`): Optional. Whether user_data should be saved by this\n persistence class.\n store_bot_data (:obj:`bool`): Optional. Whether bot_data should be saved by this\n persistence class.\n single_file (:obj:`bool`): Optional. 
When ``False`` will store 3 sperate files of\n `filename_user_data`, `filename_chat_data` and `filename_conversations`. Default is\n ``True``.\n on_flush (:obj:`bool`, optional): When ``True`` will only save to file when :meth:`flush`\n is called and keep data in memory until that happens. When ``False`` will store data\n on any transaction *and* on call fo :meth:`flush`. Default is ``False``.\n\n Args:\n filename (:obj:`str`): The filename for storing the pickle files. When :attr:`single_file`\n is false this will be used as a prefix.\n store_user_data (:obj:`bool`, optional): Whether user_data should be saved by this\n persistence class. Default is ``True``.\n store_chat_data (:obj:`bool`, optional): Whether user_data should be saved by this\n persistence class. Default is ``True``.\n store_bot_data (:obj:`bool`, optional): Whether bot_data should be saved by this\n persistence class. Default is ``True`` .\n single_file (:obj:`bool`, optional): When ``False`` will store 3 sperate files of\n `filename_user_data`, `filename_chat_data` and `filename_conversations`. Default is\n ``True``.\n on_flush (:obj:`bool`, optional): When ``True`` will only save to file when :meth:`flush`\n is called and keep data in memory until that happens. When ``False`` will store data\n on any transaction *and* on call fo :meth:`flush`. 
Default is ``False``.\n \"\"\"\n\n def __init__(self, filename,\n store_user_data=True,\n store_chat_data=True,\n store_bot_data=True,\n single_file=True,\n on_flush=False):\n super(PicklePersistence, self).__init__(store_user_data=store_user_data,\n store_chat_data=store_chat_data,\n store_bot_data=store_bot_data)\n self.filename = filename\n self.single_file = single_file\n self.on_flush = on_flush\n self.user_data = None\n self.chat_data = None\n self.bot_data = None\n self.conversations = None\n\n def load_singlefile(self):\n try:\n filename = self.filename\n with open(self.filename, \"rb\") as f:\n all = pickle.load(f)\n self.user_data = defaultdict(dict, all['user_data'])\n self.chat_data = defaultdict(dict, all['chat_data'])\n self.bot_data = all['bot_data']\n self.conversations = all['conversations']\n except IOError:\n self.conversations = {}\n self.user_data = defaultdict(dict)\n self.chat_data = defaultdict(dict)\n self.bot_data = {}\n except pickle.UnpicklingError:\n raise TypeError(\"File {} does not contain valid pickle data\".format(filename))\n except Exception:\n raise TypeError(\"Something went wrong unpickling {}\".format(filename))\n\n def load_file(self, filename):\n try:\n with open(filename, \"rb\") as f:\n return pickle.load(f)\n except IOError:\n return None\n except pickle.UnpicklingError:\n raise TypeError(\"File {} does not contain valid pickle data\".format(filename))\n except Exception:\n raise TypeError(\"Something went wrong unpickling {}\".format(filename))\n\n def dump_singlefile(self):\n with open(self.filename, \"wb\") as f:\n all = {'conversations': self.conversations, 'user_data': self.user_data,\n 'chat_data': self.chat_data, 'bot_data': self.bot_data}\n pickle.dump(all, f)\n\n def dump_file(self, filename, data):\n with open(filename, \"wb\") as f:\n pickle.dump(data, f)\n\n def get_user_data(self):\n \"\"\"Returns the user_data from the pickle file if it exsists or an empty defaultdict.\n\n Returns:\n :obj:`defaultdict`: The 
restored user data.\n \"\"\"\n if self.user_data:\n pass\n elif not self.single_file:\n filename = \"{}_user_data\".format(self.filename)\n data = self.load_file(filename)\n if not data:\n data = defaultdict(dict)\n else:\n data = defaultdict(dict, data)\n self.user_data = data\n else:\n self.load_singlefile()\n return deepcopy(self.user_data)\n\n def get_chat_data(self):\n \"\"\"Returns the chat_data from the pickle file if it exsists or an empty defaultdict.\n\n Returns:\n :obj:`defaultdict`: The restored chat data.\n \"\"\"\n if self.chat_data:\n pass\n elif not self.single_file:\n filename = \"{}_chat_data\".format(self.filename)\n data = self.load_file(filename)\n if not data:\n data = defaultdict(dict)\n else:\n data = defaultdict(dict, data)\n self.chat_data = data\n else:\n self.load_singlefile()\n return deepcopy(self.chat_data)\n\n def get_bot_data(self):\n \"\"\"Returns the bot_data from the pickle file if it exsists or an empty dict.\n\n Returns:\n :obj:`defaultdict`: The restored bot data.\n \"\"\"\n if self.bot_data:\n pass\n elif not self.single_file:\n filename = \"{}_bot_data\".format(self.filename)\n data = self.load_file(filename)\n if not data:\n data = {}\n self.bot_data = data\n else:\n self.load_singlefile()\n return deepcopy(self.bot_data)\n\n def get_conversations(self, name):\n \"\"\"Returns the conversations from the pickle file if it exsists or an empty defaultdict.\n\n Args:\n name (:obj:`str`): The handlers name.\n\n Returns:\n :obj:`dict`: The restored conversations for the handler.\n \"\"\"\n if self.conversations:\n pass\n elif not self.single_file:\n filename = \"{}_conversations\".format(self.filename)\n data = self.load_file(filename)\n if not data:\n data = {name: {}}\n self.conversations = data\n else:\n self.load_singlefile()\n return self.conversations.get(name, {}).copy()\n\n def update_conversation(self, name, key, new_state):\n \"\"\"Will update the conversations for the given handler and depending on :attr:`on_flush`\n 
save the pickle file.\n\n Args:\n name (:obj:`str`): The handlers name.\n key (:obj:`tuple`): The key the state is changed for.\n new_state (:obj:`tuple` | :obj:`any`): The new state for the given key.\n \"\"\"\n if self.conversations.setdefault(name, {}).get(key) == new_state:\n return\n self.conversations[name][key] = new_state\n if not self.on_flush:\n if not self.single_file:\n filename = \"{}_conversations\".format(self.filename)\n self.dump_file(filename, self.conversations)\n else:\n self.dump_singlefile()\n\n def update_user_data(self, user_id, data):\n \"\"\"Will update the user_data (if changed) and depending on :attr:`on_flush` save the\n pickle file.\n\n Args:\n user_id (:obj:`int`): The user the data might have been changed for.\n data (:obj:`dict`): The :attr:`telegram.ext.dispatcher.user_data` [user_id].\n \"\"\"\n if self.user_data.get(user_id) == data:\n return\n self.user_data[user_id] = data\n if not self.on_flush:\n if not self.single_file:\n filename = \"{}_user_data\".format(self.filename)\n self.dump_file(filename, self.user_data)\n else:\n self.dump_singlefile()\n\n def update_chat_data(self, chat_id, data):\n \"\"\"Will update the chat_data (if changed) and depending on :attr:`on_flush` save the\n pickle file.\n\n Args:\n chat_id (:obj:`int`): The chat the data might have been changed for.\n data (:obj:`dict`): The :attr:`telegram.ext.dispatcher.chat_data` [chat_id].\n \"\"\"\n if self.chat_data.get(chat_id) == data:\n return\n self.chat_data[chat_id] = data\n if not self.on_flush:\n if not self.single_file:\n filename = \"{}_chat_data\".format(self.filename)\n self.dump_file(filename, self.chat_data)\n else:\n self.dump_singlefile()\n\n def update_bot_data(self, data):\n \"\"\"Will update the bot_data (if changed) and depending on :attr:`on_flush` save the\n pickle file.\n\n Args:\n data (:obj:`dict`): The :attr:`telegram.ext.dispatcher.bot_data`.\n \"\"\"\n if self.bot_data == data:\n return\n self.bot_data = data.copy()\n if not 
self.on_flush:\n if not self.single_file:\n filename = \"{}_bot_data\".format(self.filename)\n self.dump_file(filename, self.bot_data)\n else:\n self.dump_singlefile()\n\n def flush(self):\n \"\"\" Will save all data in memory to pickle file(s).\n \"\"\"\n if self.single_file:\n if self.user_data or self.chat_data or self.conversations:\n self.dump_singlefile()\n else:\n if self.user_data:\n self.dump_file(\"{}_user_data\".format(self.filename), self.user_data)\n if self.chat_data:\n self.dump_file(\"{}_chat_data\".format(self.filename), self.chat_data)\n if self.bot_data:\n self.dump_file(\"{}_bot_data\".format(self.filename), self.bot_data)\n if self.conversations:\n self.dump_file(\"{}_conversations\".format(self.filename), self.conversations)\n", "path": "telegram/ext/picklepersistence.py"}]} | 3,966 | 146 |

gh_patches_debug_33779 | rasdani/github-patches | git_diff | CTFd__CTFd-1911 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

IP to City Database

I think we can provide an IP to city database now instead of just showing country.

</issue>

<code>

[start of CTFd/utils/initialization/__init__.py]

1 import datetime

2 import logging

3 import os

4 import sys

5

6 from flask import abort, redirect, render_template, request, session, url_for

7 from sqlalchemy.exc import IntegrityError, InvalidRequestError

8 from werkzeug.middleware.dispatcher import DispatcherMiddleware

9

10 from CTFd.cache import clear_user_recent_ips

11 from CTFd.exceptions import UserNotFoundException, UserTokenExpiredException

12 from CTFd.models import Tracking, db

13 from CTFd.utils import config, get_config, markdown

14 from CTFd.utils.config import (

15 can_send_mail,

16 ctf_logo,

17 ctf_name,

18 ctf_theme,

19 integrations,

20 is_setup,

21 )

22 from CTFd.utils.config.pages import get_pages

23 from CTFd.utils.dates import isoformat, unix_time, unix_time_millis

24 from CTFd.utils.events import EventManager, RedisEventManager

25 from CTFd.utils.humanize.words import pluralize

26 from CTFd.utils.modes import generate_account_url, get_mode_as_word

27 from CTFd.utils.plugins import (

28 get_configurable_plugins,

29 get_registered_admin_scripts,

30 get_registered_admin_stylesheets,

31 get_registered_scripts,

32 get_registered_stylesheets,

33 )

34 from CTFd.utils.security.auth import login_user, logout_user, lookup_user_token

35 from CTFd.utils.security.csrf import generate_nonce

36 from CTFd.utils.user import (

37 authed,

38 get_current_team_attrs,

39 get_current_user_attrs,

40 get_current_user_recent_ips,

41 get_ip,

42 is_admin,

43 )

44

45

46 def init_template_filters(app):

47 app.jinja_env.filters["markdown"] = markdown

48 app.jinja_env.filters["unix_time"] = unix_time

49 app.jinja_env.filters["unix_time_millis"] = unix_time_millis

50 app.jinja_env.filters["isoformat"] = isoformat

51 app.jinja_env.filters["pluralize"] = pluralize

52

53

54 def init_template_globals(app):

55 from CTFd.constants import JINJA_ENUMS

56 from CTFd.constants.config import Configs

57 from CTFd.constants.plugins import Plugins

58 from CTFd.constants.sessions import Session

59 from CTFd.constants.static import Static

60 from CTFd.constants.users import User

61 from CTFd.constants.teams import Team

62 from CTFd.forms import Forms

63 from CTFd.utils.config.visibility import (

64 accounts_visible,

65 challenges_visible,

66 registration_visible,

67 scores_visible,

68 )

69 from CTFd.utils.countries import get_countries, lookup_country_code

70 from CTFd.utils.countries.geoip import lookup_ip_address

71

72 app.jinja_env.globals.update(config=config)

73 app.jinja_env.globals.update(get_pages=get_pages)

74 app.jinja_env.globals.update(can_send_mail=can_send_mail)

75 app.jinja_env.globals.update(get_ctf_name=ctf_name)

76 app.jinja_env.globals.update(get_ctf_logo=ctf_logo)

77 app.jinja_env.globals.update(get_ctf_theme=ctf_theme)

78 app.jinja_env.globals.update(get_configurable_plugins=get_configurable_plugins)

79 app.jinja_env.globals.update(get_registered_scripts=get_registered_scripts)

80 app.jinja_env.globals.update(get_registered_stylesheets=get_registered_stylesheets)

81 app.jinja_env.globals.update(

82 get_registered_admin_scripts=get_registered_admin_scripts

83 )

84 app.jinja_env.globals.update(

85 get_registered_admin_stylesheets=get_registered_admin_stylesheets

86 )

87 app.jinja_env.globals.update(get_config=get_config)

88 app.jinja_env.globals.update(generate_account_url=generate_account_url)

89 app.jinja_env.globals.update(get_countries=get_countries)

90 app.jinja_env.globals.update(lookup_country_code=lookup_country_code)

91 app.jinja_env.globals.update(lookup_ip_address=lookup_ip_address)

92 app.jinja_env.globals.update(accounts_visible=accounts_visible)

93 app.jinja_env.globals.update(challenges_visible=challenges_visible)

94 app.jinja_env.globals.update(registration_visible=registration_visible)

95 app.jinja_env.globals.update(scores_visible=scores_visible)

96 app.jinja_env.globals.update(get_mode_as_word=get_mode_as_word)

97 app.jinja_env.globals.update(integrations=integrations)

98 app.jinja_env.globals.update(authed=authed)

99 app.jinja_env.globals.update(is_admin=is_admin)

100 app.jinja_env.globals.update(get_current_user_attrs=get_current_user_attrs)

101 app.jinja_env.globals.update(get_current_team_attrs=get_current_team_attrs)

102 app.jinja_env.globals.update(get_ip=get_ip)

103 app.jinja_env.globals.update(Configs=Configs)

104 app.jinja_env.globals.update(Plugins=Plugins)

105 app.jinja_env.globals.update(Session=Session)

106 app.jinja_env.globals.update(Static=Static)

107 app.jinja_env.globals.update(Forms=Forms)

108 app.jinja_env.globals.update(User=User)

109 app.jinja_env.globals.update(Team=Team)

110

111 # Add in JinjaEnums

112 # The reason this exists is that on double import, JinjaEnums are not reinitialized

113 # Thus, if you try to create two jinja envs (e.g. during testing), sometimes

114 # an Enum will not be available to Jinja.

115 # Instead we can just directly grab them from the persisted global dictionary.

116 for k, v in JINJA_ENUMS.items():

117 # .update() can't be used here because it would use the literal value k

118 app.jinja_env.globals[k] = v

119

120

121 def init_logs(app):

122 logger_submissions = logging.getLogger("submissions")

123 logger_logins = logging.getLogger("logins")

124 logger_registrations = logging.getLogger("registrations")

125

126 logger_submissions.setLevel(logging.INFO)

127 logger_logins.setLevel(logging.INFO)

128 logger_registrations.setLevel(logging.INFO)

129

130 log_dir = app.config["LOG_FOLDER"]

131 if not os.path.exists(log_dir):

132 os.makedirs(log_dir)

133

134 logs = {

135 "submissions": os.path.join(log_dir, "submissions.log"),

136 "logins": os.path.join(log_dir, "logins.log"),

137 "registrations": os.path.join(log_dir, "registrations.log"),

138 }

139

140 try:

141 for log in logs.values():

142 if not os.path.exists(log):

143 open(log, "a").close()

144

145 submission_log = logging.handlers.RotatingFileHandler(

146 logs["submissions"], maxBytes=10485760, backupCount=5

147 )

148 login_log = logging.handlers.RotatingFileHandler(

149 logs["logins"], maxBytes=10485760, backupCount=5

150 )

151 registration_log = logging.handlers.RotatingFileHandler(

152 logs["registrations"], maxBytes=10485760, backupCount=5

153 )

154

155 logger_submissions.addHandler(submission_log)

156 logger_logins.addHandler(login_log)

157 logger_registrations.addHandler(registration_log)

158 except IOError:

159 pass

160

161 stdout = logging.StreamHandler(stream=sys.stdout)

162

163 logger_submissions.addHandler(stdout)

164 logger_logins.addHandler(stdout)

165 logger_registrations.addHandler(stdout)

166

167 logger_submissions.propagate = 0

168 logger_logins.propagate = 0

169 logger_registrations.propagate = 0

170

171

172 def init_events(app):

173 if app.config.get("CACHE_TYPE") == "redis":

174 app.events_manager = RedisEventManager()

175 elif app.config.get("CACHE_TYPE") == "filesystem":

176 app.events_manager = EventManager()

177 else:

178 app.events_manager = EventManager()

179 app.events_manager.listen()

180

181

182 def init_request_processors(app):

183 @app.url_defaults

184 def inject_theme(endpoint, values):

185 if "theme" not in values and app.url_map.is_endpoint_expecting(

186 endpoint, "theme"

187 ):

188 values["theme"] = ctf_theme()

189

190 @app.before_request

191 def needs_setup():

192 if is_setup() is False:

193 if request.endpoint in (

194 "views.setup",

195 "views.integrations",

196 "views.themes",

197 "views.files",

198 ):

199 return

200 else:

201 return redirect(url_for("views.setup"))

202

203 @app.before_request

204 def tracker():

205 if request.endpoint == "views.themes":

206 return

207

208 if authed():

209 user_ips = get_current_user_recent_ips()

210 ip = get_ip()

211

212 track = None

213 if (ip not in user_ips) or (request.method != "GET"):

214 track = Tracking.query.filter_by(

215 ip=get_ip(), user_id=session["id"]

216 ).first()

217

218 if track:

219 track.date = datetime.datetime.utcnow()

220 else:

221 track = Tracking(ip=get_ip(), user_id=session["id"])

222 db.session.add(track)

223

224 if track:

225 try:

226 db.session.commit()

227 except (InvalidRequestError, IntegrityError):

228 db.session.rollback()

229 db.session.close()

230 logout_user()

231 else:

232 clear_user_recent_ips(user_id=session["id"])

233

234 @app.before_request

235 def banned():

236 if request.endpoint == "views.themes":

237 return

238

239 if authed():

240 user = get_current_user_attrs()

241 team = get_current_team_attrs()

242

243 if user and user.banned:

244 return (

245 render_template(

246 "errors/403.html", error="You have been banned from this CTF"

247 ),

248 403,

249 )

250

251 if team and team.banned:

252 return (

253 render_template(

254 "errors/403.html",

255 error="Your team has been banned from this CTF",

256 ),

257 403,

258 )

259

260 @app.before_request

261 def tokens():

262 token = request.headers.get("Authorization")

263 if token and request.content_type == "application/json":

264 try:

265 token_type, token = token.split(" ", 1)

266 user = lookup_user_token(token)

267 except UserNotFoundException:

268 abort(401)

269 except UserTokenExpiredException:

270 abort(401, description="Your access token has expired")

271 except Exception:

272 abort(401)

273 else:

274 login_user(user)

275

276 @app.before_request

277 def csrf():

278 try:

279 func = app.view_functions[request.endpoint]

280 except KeyError:

281 abort(404)

282 if hasattr(func, "_bypass_csrf"):

283 return

284 if request.headers.get("Authorization"):

285 return

286 if not session.get("nonce"):

287 session["nonce"] = generate_nonce()

288 if request.method not in ("GET", "HEAD", "OPTIONS", "TRACE"):

289 if request.content_type == "application/json":

290 if session["nonce"] != request.headers.get("CSRF-Token"):

291 abort(403)

292 if request.content_type != "application/json":

293 if session["nonce"] != request.form.get("nonce"):

294 abort(403)

295

296 application_root = app.config.get("APPLICATION_ROOT")

297 if application_root != "/":

298

299 @app.before_request

300 def force_subdirectory_redirect():

301 if request.path.startswith(application_root) is False:

302 return redirect(

303 application_root + request.script_root + request.full_path

304 )

305

306 app.wsgi_app = DispatcherMiddleware(app.wsgi_app, {application_root: app})

307

[end of CTFd/utils/initialization/__init__.py]

[start of CTFd/utils/countries/geoip.py]

1 import geoacumen

2 import maxminddb

3 from flask import current_app

4

5 IP_ADDR_LOOKUP = maxminddb.open_database(

6 current_app.config.get("GEOIP_DATABASE_PATH", geoacumen.db_path)

7 )

8

9

10 def lookup_ip_address(addr):

11 try:

12 response = IP_ADDR_LOOKUP.get(addr)

13 return response["country"]["iso_code"]

14 except (KeyError, ValueError):

15 return None

16

[end of CTFd/utils/countries/geoip.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/CTFd/utils/countries/geoip.py b/CTFd/utils/countries/geoip.py

--- a/CTFd/utils/countries/geoip.py

+++ b/CTFd/utils/countries/geoip.py

@@ -1,9 +1,9 @@

-import geoacumen

+import geoacumen_city

import maxminddb

from flask import current_app

IP_ADDR_LOOKUP = maxminddb.open_database(

- current_app.config.get("GEOIP_DATABASE_PATH", geoacumen.db_path)

+ current_app.config.get("GEOIP_DATABASE_PATH", geoacumen_city.db_path)

)

@@ -11,5 +11,13 @@

try:

response = IP_ADDR_LOOKUP.get(addr)

return response["country"]["iso_code"]

- except (KeyError, ValueError):

+ except (KeyError, ValueError, TypeError):

+ return None

+

+

+def lookup_ip_address_city(addr):

+ try:

+ response = IP_ADDR_LOOKUP.get(addr)

+ return response["city"]["names"]["en"]

+ except (KeyError, ValueError, TypeError):

return None

diff --git a/CTFd/utils/initialization/__init__.py b/CTFd/utils/initialization/__init__.py

--- a/CTFd/utils/initialization/__init__.py

+++ b/CTFd/utils/initialization/__init__.py

@@ -67,7 +67,7 @@

scores_visible,

)

from CTFd.utils.countries import get_countries, lookup_country_code

- from CTFd.utils.countries.geoip import lookup_ip_address

+ from CTFd.utils.countries.geoip import lookup_ip_address, lookup_ip_address_city

app.jinja_env.globals.update(config=config)

app.jinja_env.globals.update(get_pages=get_pages)

@@ -89,6 +89,7 @@

app.jinja_env.globals.update(get_countries=get_countries)

app.jinja_env.globals.update(lookup_country_code=lookup_country_code)

app.jinja_env.globals.update(lookup_ip_address=lookup_ip_address)

+ app.jinja_env.globals.update(lookup_ip_address_city=lookup_ip_address_city)

app.jinja_env.globals.update(accounts_visible=accounts_visible)

app.jinja_env.globals.update(challenges_visible=challenges_visible)

app.jinja_env.globals.update(registration_visible=registration_visible)

| {"golden_diff": "diff --git a/CTFd/utils/countries/geoip.py b/CTFd/utils/countries/geoip.py\n--- a/CTFd/utils/countries/geoip.py\n+++ b/CTFd/utils/countries/geoip.py\n@@ -1,9 +1,9 @@\n-import geoacumen\n+import geoacumen_city\n import maxminddb\n from flask import current_app\n \n IP_ADDR_LOOKUP = maxminddb.open_database(\n- current_app.config.get(\"GEOIP_DATABASE_PATH\", geoacumen.db_path)\n+ current_app.config.get(\"GEOIP_DATABASE_PATH\", geoacumen_city.db_path)\n )\n \n \n@@ -11,5 +11,13 @@\n try:\n response = IP_ADDR_LOOKUP.get(addr)\n return response[\"country\"][\"iso_code\"]\n- except (KeyError, ValueError):\n+ except (KeyError, ValueError, TypeError):\n+ return None\n+\n+\n+def lookup_ip_address_city(addr):\n+ try:\n+ response = IP_ADDR_LOOKUP.get(addr)\n+ return response[\"city\"][\"names\"][\"en\"]\n+ except (KeyError, ValueError, TypeError):\n return None\ndiff --git a/CTFd/utils/initialization/__init__.py b/CTFd/utils/initialization/__init__.py\n--- a/CTFd/utils/initialization/__init__.py\n+++ b/CTFd/utils/initialization/__init__.py\n@@ -67,7 +67,7 @@\n scores_visible,\n )\n from CTFd.utils.countries import get_countries, lookup_country_code\n- from CTFd.utils.countries.geoip import lookup_ip_address\n+ from CTFd.utils.countries.geoip import lookup_ip_address, lookup_ip_address_city\n \n app.jinja_env.globals.update(config=config)\n app.jinja_env.globals.update(get_pages=get_pages)\n@@ -89,6 +89,7 @@\n app.jinja_env.globals.update(get_countries=get_countries)\n app.jinja_env.globals.update(lookup_country_code=lookup_country_code)\n app.jinja_env.globals.update(lookup_ip_address=lookup_ip_address)\n+ app.jinja_env.globals.update(lookup_ip_address_city=lookup_ip_address_city)\n app.jinja_env.globals.update(accounts_visible=accounts_visible)\n app.jinja_env.globals.update(challenges_visible=challenges_visible)\n app.jinja_env.globals.update(registration_visible=registration_visible)\n", "issue": "IP to City Database\nI think we can provide an IP to city 
database now instead of just showing country. \n", "before_files": [{"content": "import datetime\nimport logging\nimport os\nimport sys\n\nfrom flask import abort, redirect, render_template, request, session, url_for\nfrom sqlalchemy.exc import IntegrityError, InvalidRequestError\nfrom werkzeug.middleware.dispatcher import DispatcherMiddleware\n\nfrom CTFd.cache import clear_user_recent_ips\nfrom CTFd.exceptions import UserNotFoundException, UserTokenExpiredException\nfrom CTFd.models import Tracking, db\nfrom CTFd.utils import config, get_config, markdown\nfrom CTFd.utils.config import (\n can_send_mail,\n ctf_logo,\n ctf_name,\n ctf_theme,\n integrations,\n is_setup,\n)\nfrom CTFd.utils.config.pages import get_pages\nfrom CTFd.utils.dates import isoformat, unix_time, unix_time_millis\nfrom CTFd.utils.events import EventManager, RedisEventManager\nfrom CTFd.utils.humanize.words import pluralize\nfrom CTFd.utils.modes import generate_account_url, get_mode_as_word\nfrom CTFd.utils.plugins import (\n get_configurable_plugins,\n get_registered_admin_scripts,\n get_registered_admin_stylesheets,\n get_registered_scripts,\n get_registered_stylesheets,\n)\nfrom CTFd.utils.security.auth import login_user, logout_user, lookup_user_token\nfrom CTFd.utils.security.csrf import generate_nonce\nfrom CTFd.utils.user import (\n authed,\n get_current_team_attrs,\n get_current_user_attrs,\n get_current_user_recent_ips,\n get_ip,\n is_admin,\n)\n\n\ndef init_template_filters(app):\n app.jinja_env.filters[\"markdown\"] = markdown\n app.jinja_env.filters[\"unix_time\"] = unix_time\n app.jinja_env.filters[\"unix_time_millis\"] = unix_time_millis\n app.jinja_env.filters[\"isoformat\"] = isoformat\n app.jinja_env.filters[\"pluralize\"] = pluralize\n\n\ndef init_template_globals(app):\n from CTFd.constants import JINJA_ENUMS\n from CTFd.constants.config import Configs\n from CTFd.constants.plugins import Plugins\n from CTFd.constants.sessions import Session\n from CTFd.constants.static 
import Static\n from CTFd.constants.users import User\n from CTFd.constants.teams import Team\n from CTFd.forms import Forms\n from CTFd.utils.config.visibility import (\n accounts_visible,\n challenges_visible,\n registration_visible,\n scores_visible,\n )\n from CTFd.utils.countries import get_countries, lookup_country_code\n from CTFd.utils.countries.geoip import lookup_ip_address\n\n app.jinja_env.globals.update(config=config)\n app.jinja_env.globals.update(get_pages=get_pages)\n app.jinja_env.globals.update(can_send_mail=can_send_mail)\n app.jinja_env.globals.update(get_ctf_name=ctf_name)\n app.jinja_env.globals.update(get_ctf_logo=ctf_logo)\n app.jinja_env.globals.update(get_ctf_theme=ctf_theme)\n app.jinja_env.globals.update(get_configurable_plugins=get_configurable_plugins)\n app.jinja_env.globals.update(get_registered_scripts=get_registered_scripts)\n app.jinja_env.globals.update(get_registered_stylesheets=get_registered_stylesheets)\n app.jinja_env.globals.update(\n get_registered_admin_scripts=get_registered_admin_scripts\n )\n app.jinja_env.globals.update(\n get_registered_admin_stylesheets=get_registered_admin_stylesheets\n )\n app.jinja_env.globals.update(get_config=get_config)\n app.jinja_env.globals.update(generate_account_url=generate_account_url)\n app.jinja_env.globals.update(get_countries=get_countries)\n app.jinja_env.globals.update(lookup_country_code=lookup_country_code)\n app.jinja_env.globals.update(lookup_ip_address=lookup_ip_address)\n app.jinja_env.globals.update(accounts_visible=accounts_visible)\n app.jinja_env.globals.update(challenges_visible=challenges_visible)\n app.jinja_env.globals.update(registration_visible=registration_visible)\n app.jinja_env.globals.update(scores_visible=scores_visible)\n app.jinja_env.globals.update(get_mode_as_word=get_mode_as_word)\n app.jinja_env.globals.update(integrations=integrations)\n app.jinja_env.globals.update(authed=authed)\n app.jinja_env.globals.update(is_admin=is_admin)\n 
app.jinja_env.globals.update(get_current_user_attrs=get_current_user_attrs)\n app.jinja_env.globals.update(get_current_team_attrs=get_current_team_attrs)\n app.jinja_env.globals.update(get_ip=get_ip)\n app.jinja_env.globals.update(Configs=Configs)\n app.jinja_env.globals.update(Plugins=Plugins)\n app.jinja_env.globals.update(Session=Session)\n app.jinja_env.globals.update(Static=Static)\n app.jinja_env.globals.update(Forms=Forms)\n app.jinja_env.globals.update(User=User)\n app.jinja_env.globals.update(Team=Team)\n\n # Add in JinjaEnums\n # The reason this exists is that on double import, JinjaEnums are not reinitialized\n # Thus, if you try to create two jinja envs (e.g. during testing), sometimes\n # an Enum will not be available to Jinja.\n # Instead we can just directly grab them from the persisted global dictionary.\n for k, v in JINJA_ENUMS.items():\n # .update() can't be used here because it would use the literal value k\n app.jinja_env.globals[k] = v\n\n\ndef init_logs(app):\n logger_submissions = logging.getLogger(\"submissions\")\n logger_logins = logging.getLogger(\"logins\")\n logger_registrations = logging.getLogger(\"registrations\")\n\n logger_submissions.setLevel(logging.INFO)\n logger_logins.setLevel(logging.INFO)\n logger_registrations.setLevel(logging.INFO)\n\n log_dir = app.config[\"LOG_FOLDER\"]\n if not os.path.exists(log_dir):\n os.makedirs(log_dir)\n\n logs = {\n \"submissions\": os.path.join(log_dir, \"submissions.log\"),\n \"logins\": os.path.join(log_dir, \"logins.log\"),\n \"registrations\": os.path.join(log_dir, \"registrations.log\"),\n }\n\n try:\n for log in logs.values():\n if not os.path.exists(log):\n open(log, \"a\").close()\n\n submission_log = logging.handlers.RotatingFileHandler(\n logs[\"submissions\"], maxBytes=10485760, backupCount=5\n )\n login_log = logging.handlers.RotatingFileHandler(\n logs[\"logins\"], maxBytes=10485760, backupCount=5\n )\n registration_log = logging.handlers.RotatingFileHandler(\n 
logs[\"registrations\"], maxBytes=10485760, backupCount=5\n )\n\n logger_submissions.addHandler(submission_log)\n logger_logins.addHandler(login_log)\n logger_registrations.addHandler(registration_log)\n except IOError:\n pass\n\n stdout = logging.StreamHandler(stream=sys.stdout)\n\n logger_submissions.addHandler(stdout)\n logger_logins.addHandler(stdout)\n logger_registrations.addHandler(stdout)\n\n logger_submissions.propagate = 0\n logger_logins.propagate = 0\n logger_registrations.propagate = 0\n\n\ndef init_events(app):\n if app.config.get(\"CACHE_TYPE\") == \"redis\":\n app.events_manager = RedisEventManager()\n elif app.config.get(\"CACHE_TYPE\") == \"filesystem\":\n app.events_manager = EventManager()\n else:\n app.events_manager = EventManager()\n app.events_manager.listen()\n\n\ndef init_request_processors(app):\n @app.url_defaults\n def inject_theme(endpoint, values):\n if \"theme\" not in values and app.url_map.is_endpoint_expecting(\n endpoint, \"theme\"\n ):\n values[\"theme\"] = ctf_theme()\n\n @app.before_request\n def needs_setup():\n if is_setup() is False:\n if request.endpoint in (\n \"views.setup\",\n \"views.integrations\",\n \"views.themes\",\n \"views.files\",\n ):\n return\n else:\n return redirect(url_for(\"views.setup\"))\n\n @app.before_request\n def tracker():\n if request.endpoint == \"views.themes\":\n return\n\n if authed():\n user_ips = get_current_user_recent_ips()\n ip = get_ip()\n\n track = None\n if (ip not in user_ips) or (request.method != \"GET\"):\n track = Tracking.query.filter_by(\n ip=get_ip(), user_id=session[\"id\"]\n ).first()\n\n if track:\n track.date = datetime.datetime.utcnow()\n else:\n track = Tracking(ip=get_ip(), user_id=session[\"id\"])\n db.session.add(track)\n\n if track:\n try:\n db.session.commit()\n except (InvalidRequestError, IntegrityError):\n db.session.rollback()\n db.session.close()\n logout_user()\n else:\n clear_user_recent_ips(user_id=session[\"id\"])\n\n @app.before_request\n def banned():\n if 
request.endpoint == \"views.themes\":\n return\n\n if authed():\n user = get_current_user_attrs()\n team = get_current_team_attrs()\n\n if user and user.banned:\n return (\n render_template(\n \"errors/403.html\", error=\"You have been banned from this CTF\"\n ),\n 403,\n )\n\n if team and team.banned:\n return (\n render_template(\n \"errors/403.html\",\n error=\"Your team has been banned from this CTF\",\n ),\n 403,\n )\n\n @app.before_request\n def tokens():\n token = request.headers.get(\"Authorization\")\n if token and request.content_type == \"application/json\":\n try:\n token_type, token = token.split(\" \", 1)\n user = lookup_user_token(token)\n except UserNotFoundException:\n abort(401)\n except UserTokenExpiredException:\n abort(401, description=\"Your access token has expired\")\n except Exception:\n abort(401)\n else:\n login_user(user)\n\n @app.before_request\n def csrf():\n try:\n func = app.view_functions[request.endpoint]\n except KeyError:\n abort(404)\n if hasattr(func, \"_bypass_csrf\"):\n return\n if request.headers.get(\"Authorization\"):\n return\n if not session.get(\"nonce\"):\n session[\"nonce\"] = generate_nonce()\n if request.method not in (\"GET\", \"HEAD\", \"OPTIONS\", \"TRACE\"):\n if request.content_type == \"application/json\":\n if session[\"nonce\"] != request.headers.get(\"CSRF-Token\"):\n abort(403)\n if request.content_type != \"application/json\":\n if session[\"nonce\"] != request.form.get(\"nonce\"):\n abort(403)\n\n application_root = app.config.get(\"APPLICATION_ROOT\")\n if application_root != \"/\":\n\n @app.before_request\n def force_subdirectory_redirect():\n if request.path.startswith(application_root) is False:\n return redirect(\n application_root + request.script_root + request.full_path\n )\n\n app.wsgi_app = DispatcherMiddleware(app.wsgi_app, {application_root: app})\n", "path": "CTFd/utils/initialization/__init__.py"}, {"content": "import geoacumen\nimport maxminddb\nfrom flask import 
current_app\n\nIP_ADDR_LOOKUP = maxminddb.open_database(\n current_app.config.get(\"GEOIP_DATABASE_PATH\", geoacumen.db_path)\n)\n\n\ndef lookup_ip_address(addr):\n try:\n response = IP_ADDR_LOOKUP.get(addr)\n return response[\"country\"][\"iso_code\"]\n except (KeyError, ValueError):\n return None\n", "path": "CTFd/utils/countries/geoip.py"}]} | 3,951 | 520 |

gh_patches_debug_39078 | rasdani/github-patches | git_diff | ranaroussi__yfinance-1297 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Scraper error "TypeError: string indices must be integers" - Yahoo decrypt fail

## Updates

### 2023 January 13

At the time of posting the issue (2023 January 12), the issue only occurred sometimes. The library is now (2023 January 13) completely broken and I am unable to retrieve any stock information.

### 2023 January 14

Fix has been merged to the branch `dev`

## Info about your system:

yfinance version: 0.2.3

Operating system: macOS Monterey 12.0.1

### Snippet that can recreate the error

```

stock = yf.Ticker("^GSPC")

info = stock.info

```

## Error

Message:`TypeError: string indices must be integers`

It seems to be a problem where the scraper is not scraping the correct information, leading to a crash.
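
A minimal sketch of how this message can arise, assuming the scraped payload is still a plain string (for example, data that was never decoded or decrypted) at the point where the code expects a dict. The name `json_data` mirrors the traceback below; the helper functions and sample values are hypothetical and are not part of yfinance:

```python
# Hypothetical illustration: indexing a str with a string key raises TypeError,
# which is the failure reported in this issue.

def raises_type_error(payload):
    """Return True if payload["QuoteSummaryStore"] raises TypeError."""
    try:
        payload["QuoteSummaryStore"]
    except TypeError:
        return True
    return False


def get_store(json_data):
    """Defensive lookup: return the store, or None when the payload is not a dict."""
    if not isinstance(json_data, dict):
        return None
    return json_data.get("QuoteSummaryStore")
```

A guard like `get_store` only hides the symptom; the underlying cause would still be that the page data was not turned into a dict.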

### Traceback:

```

Traceback (most recent call last):

File "/home/2022/szhang139/.local/lib/python3.10/site-packages/apscheduler/executors/base_py3.py", line 30, in run_coroutine_job

retval = await job.func(*job.args, **job.kwargs)

File "/home/2022/szhang139/repos/STONK/src/main.py", line 61, in notify

market = get_major_index(f'Market Close - {daytime.today_date()}')

File "/home/2022/szhang139/repos/STONK/src/market_info.py", line 63, in get_major_index

sp500 = get_stock('^GSPC')

File "/home/2022/szhang139/repos/STONK/src/market_info.py", line 41, in get_stock

stock_info = get_stock_info(stock_name)

File "/home/2022/szhang139/repos/STONK/src/market_info.py", line 8, in get_stock_info

info = stock.info

File "/home/2022/szhang139/.local/lib/python3.10/site-packages/yfinance/ticker.py", line 138, in info

return self.get_info()

File "/home/2022/szhang139/.local/lib/python3.10/site-packages/yfinance/base.py", line 894, in get_info

data = self._quote.info

File "/home/2022/szhang139/.local/lib/python3.10/site-packages/yfinance/scrapers/quote.py", line 27, in info

self._scrape(self.proxy)

File "/home/2022/szhang139/.local/lib/python3.10/site-packages/yfinance/scrapers/quote.py", line 58, in _scrape

quote_summary_store = json_data['QuoteSummaryStore']

```

### Frequency

The error occurs in no apparent pattern. Every time it occurs, it seems to persist for some time before it recovers.


</issue>

<code>

[start of yfinance/data.py]

1 import functools

2 from functools import lru_cache

3

4 import hashlib

5 from base64 import b64decode

6 usePycryptodome = False # slightly faster

7 # usePycryptodome = True

8 if usePycryptodome:

9 from Crypto.Cipher import AES

10 from Crypto.Util.Padding import unpad

11 else:

12 from cryptography.hazmat.primitives import padding

13 from cryptography.hazmat.primitives.ciphers import Cipher, algorithms, modes

14

15 import requests as requests

16 import re

17

18 from frozendict import frozendict

19

20 try:

21 import ujson as json

22 except ImportError:

23 import json as json

24

25 cache_maxsize = 64

26

27

28 def lru_cache_freezeargs(func):

29 """

30 Decorator transforms mutable dictionary and list arguments into immutable types

31 Needed so lru_cache can cache method calls what has dict or list arguments.

32 """

33

34 @functools.wraps(func)

35 def wrapped(*args, **kwargs):

36 args = tuple([frozendict(arg) if isinstance(arg, dict) else arg for arg in args])

37 kwargs = {k: frozendict(v) if isinstance(v, dict) else v for k, v in kwargs.items()}

38 args = tuple([tuple(arg) if isinstance(arg, list) else arg for arg in args])

39 kwargs = {k: tuple(v) if isinstance(v, list) else v for k, v in kwargs.items()}

40 return func(*args, **kwargs)

41

42 # copy over the lru_cache extra methods to this wrapper to be able to access them

43 # after this decorator has been applied

44 wrapped.cache_info = func.cache_info

45 wrapped.cache_clear = func.cache_clear

46 return wrapped

47

48

49 def decrypt_cryptojs_aes(data):

50 encrypted_stores = data['context']['dispatcher']['stores']

51 _cs = data["_cs"]

52 _cr = data["_cr"]

53

54 _cr = b"".join(int.to_bytes(i, length=4, byteorder="big", signed=True) for i in json.loads(_cr)["words"])

55 password = hashlib.pbkdf2_hmac("sha1", _cs.encode("utf8"), _cr, 1, dklen=32).hex()

56

57 encrypted_stores = b64decode(encrypted_stores)

58 assert encrypted_stores[0:8] == b"Salted__"

59 salt = encrypted_stores[8:16]

60 encrypted_stores = encrypted_stores[16:]

61

62 def EVPKDF(password, salt, keySize=32, ivSize=16, iterations=1, hashAlgorithm="md5") -> tuple:

63 """OpenSSL EVP Key Derivation Function

64 Args:

65 password (Union[str, bytes, bytearray]): Password to generate key from.

66 salt (Union[bytes, bytearray]): Salt to use.

67 keySize (int, optional): Output key length in bytes. Defaults to 32.

68 ivSize (int, optional): Output Initialization Vector (IV) length in bytes. Defaults to 16.

69 iterations (int, optional): Number of iterations to perform. Defaults to 1.

70 hashAlgorithm (str, optional): Hash algorithm to use for the KDF. Defaults to 'md5'.

71 Returns:

72 key, iv: Derived key and Initialization Vector (IV) bytes.

73

74 Taken from: https://gist.github.com/rafiibrahim8/0cd0f8c46896cafef6486cb1a50a16d3

75 OpenSSL original code: https://github.com/openssl/openssl/blob/master/crypto/evp/evp_key.c#L78

76 """

77

78 assert iterations > 0, "Iterations can not be less than 1."

79

80 if isinstance(password, str):

81 password = password.encode("utf-8")

82

83 final_length = keySize + ivSize

84 key_iv = b""

85 block = None

86

87 while len(key_iv) < final_length:

88 hasher = hashlib.new(hashAlgorithm)

89 if block:

90 hasher.update(block)

91 hasher.update(password)

92 hasher.update(salt)

93 block = hasher.digest()

94 for _ in range(1, iterations):

95 block = hashlib.new(hashAlgorithm, block).digest()

96 key_iv += block

97

98 key, iv = key_iv[:keySize], key_iv[keySize:final_length]

99 return key, iv

100

101 key, iv = EVPKDF(password, salt, keySize=32, ivSize=16, iterations=1, hashAlgorithm="md5")

102

103 if usePycryptodome:

104 cipher = AES.new(key, AES.MODE_CBC, iv=iv)

105 plaintext = cipher.decrypt(encrypted_stores)

106 plaintext = unpad(plaintext, 16, style="pkcs7")

107 else:

108 cipher = Cipher(algorithms.AES(key), modes.CBC(iv))

109 decryptor = cipher.decryptor()

110 plaintext = decryptor.update(encrypted_stores) + decryptor.finalize()

111 unpadder = padding.PKCS7(128).unpadder()

112 plaintext = unpadder.update(plaintext) + unpadder.finalize()

113 plaintext = plaintext.decode("utf-8")

114

115 decoded_stores = json.loads(plaintext)

116 return decoded_stores

117

118

119 _SCRAPE_URL_ = 'https://finance.yahoo.com/quote'

120

121

122 class TickerData:

123 """

124 Have one place to retrieve data from Yahoo API in order to ease caching and speed up operations

125 """

126 user_agent_headers = {

127 'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_10_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36'}

128

129 def __init__(self, ticker: str, session=None):

130 self.ticker = ticker

131 self._session = session or requests

132

133 def get(self, url, user_agent_headers=None, params=None, proxy=None, timeout=30):

134 proxy = self._get_proxy(proxy)

135 response = self._session.get(

136 url=url,

137 params=params,

138 proxies=proxy,

139 timeout=timeout,

140 headers=user_agent_headers or self.user_agent_headers)

141 return response

142

143 @lru_cache_freezeargs

144 @lru_cache(maxsize=cache_maxsize)

145 def cache_get(self, url, user_agent_headers=None, params=None, proxy=None, timeout=30):

146 return self.get(url, user_agent_headers, params, proxy, timeout)

147

148 def _get_proxy(self, proxy):

149 # setup proxy in requests format

150 if proxy is not None:

151 if isinstance(proxy, dict) and "https" in proxy:

152 proxy = proxy["https"]

153 proxy = {"https": proxy}

154 return proxy

155

156 @lru_cache_freezeargs

157 @lru_cache(maxsize=cache_maxsize)

158 def get_json_data_stores(self, sub_page: str = None, proxy=None) -> dict:

159 '''

160 get_json_data_stores returns a python dictionary of the data stores in yahoo finance web page.

161 '''

162 if sub_page:

163 ticker_url = "{}/{}/{}".format(_SCRAPE_URL_, self.ticker, sub_page)

164 else:

165 ticker_url = "{}/{}".format(_SCRAPE_URL_, self.ticker)

166

167 html = self.get(url=ticker_url, proxy=proxy).text

168

169 # The actual json-data for stores is in a javascript assignment in the webpage

170 try:

171 json_str = html.split('root.App.main =')[1].split(

172 '(this)')[0].split(';\n}')[0].strip()

173 except IndexError:

174 # Fetch failed, probably because Yahoo spam triggered

175 return {}

176

177 data = json.loads(json_str)

178

179 if "_cs" in data and "_cr" in data:

180 data = decrypt_cryptojs_aes(data)

181

182 if "context" in data and "dispatcher" in data["context"]:

183 # Keep old code, just in case

184 data = data['context']['dispatcher']['stores']

185

186 # return data

187 new_data = json.dumps(data).replace('{}', 'null')

188 new_data = re.sub(

189 r'{[\'|\"]raw[\'|\"]:(.*?),(.*?)}', r'\1', new_data)

190

191 return json.loads(new_data)

192

[end of yfinance/data.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/yfinance/data.py b/yfinance/data.py

--- a/yfinance/data.py

+++ b/yfinance/data.py

@@ -46,13 +46,33 @@

return wrapped

-def decrypt_cryptojs_aes(data):

+def decrypt_cryptojs_aes_stores(data):

encrypted_stores = data['context']['dispatcher']['stores']

- _cs = data["_cs"]

- _cr = data["_cr"]

- _cr = b"".join(int.to_bytes(i, length=4, byteorder="big", signed=True) for i in json.loads(_cr)["words"])

- password = hashlib.pbkdf2_hmac("sha1", _cs.encode("utf8"), _cr, 1, dklen=32).hex()

+ if "_cs" in data and "_cr" in data:

+ _cs = data["_cs"]

+ _cr = data["_cr"]

+ _cr = b"".join(int.to_bytes(i, length=4, byteorder="big", signed=True) for i in json.loads(_cr)["words"])

+ password = hashlib.pbkdf2_hmac("sha1", _cs.encode("utf8"), _cr, 1, dklen=32).hex()

+ else:

+ # Currently assume one extra key in dict, which is password. Print error if

+ # more extra keys detected.

+ new_keys = [k for k in data.keys() if k not in ["context", "plugins"]]

+ l = len(new_keys)

+ if l == 0:

+ return None

+ elif l == 1 and isinstance(data[new_keys[0]], str):

+ password_key = new_keys[0]

+ else:

+ msg = "Yahoo has again changed data format, yfinance now unsure which key(s) is for decryption:"

+ k = new_keys[0]

+ k_str = k if len(k) < 32 else k[:32-3]+"..."

+ msg += f" '{k_str}'->{type(data[k])}"

+ for i in range(1, len(new_keys)):

+ msg += f" , '{k_str}'->{type(data[k])}"

+ raise Exception(msg)

+ password_key = new_keys[0]

+ password = data[password_key]

encrypted_stores = b64decode(encrypted_stores)

assert encrypted_stores[0:8] == b"Salted__"

@@ -98,7 +118,10 @@

key, iv = key_iv[:keySize], key_iv[keySize:final_length]

return key, iv

- key, iv = EVPKDF(password, salt, keySize=32, ivSize=16, iterations=1, hashAlgorithm="md5")

+ try:

+ key, iv = EVPKDF(password, salt, keySize=32, ivSize=16, iterations=1, hashAlgorithm="md5")

+ except:

+ raise Exception("yfinance failed to decrypt Yahoo data response")

if usePycryptodome:

cipher = AES.new(key, AES.MODE_CBC, iv=iv)

@@ -176,15 +199,16 @@

data = json.loads(json_str)

- if "_cs" in data and "_cr" in data:

- data = decrypt_cryptojs_aes(data)

-

- if "context" in data and "dispatcher" in data["context"]:

- # Keep old code, just in case

- data = data['context']['dispatcher']['stores']

+ stores = decrypt_cryptojs_aes_stores(data)

+ if stores is None:

+ # Maybe Yahoo returned old format, not encrypted

+ if "context" in data and "dispatcher" in data["context"]:

+ stores = data['context']['dispatcher']['stores']

+ if stores is None:

+ raise Exception(f"{self.ticker}: Failed to extract data stores from web request")

# return data

- new_data = json.dumps(data).replace('{}', 'null')

+ new_data = json.dumps(stores).replace('{}', 'null')

new_data = re.sub(

r'{[\'|\"]raw[\'|\"]:(.*?),(.*?)}', r'\1', new_data)