| problem_id (string, length 18–22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, length 13–58) | prompt (string, length 1.1k–25.4k) | golden_diff (string, length 145–5.13k) | verification_info (string, length 582–39.1k) | num_tokens (int64, 271–4.1k) | num_tokens_diff (int64, 47–1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_643

|

rasdani/github-patches

|

git_diff

|

pex-tool__pex-1925

|

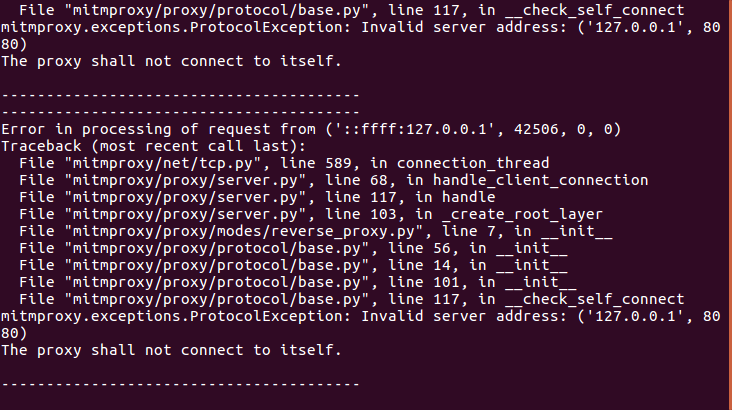

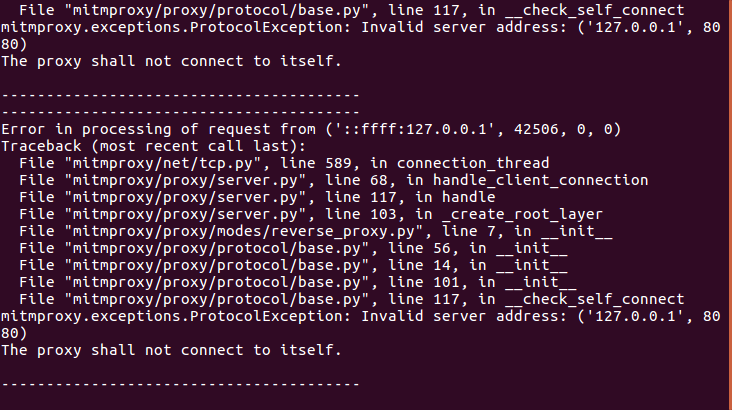

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Release 2.1.107

On the docket:

+ [x] `git` username replaced with `****` redaction in lockfile for `git+ssh` direct references #1918

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `pex/version.py`

Content:

```
1 # Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).
2 # Licensed under the Apache License, Version 2.0 (see LICENSE).
3
4 __version__ = "2.1.106"
5
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/pex/version.py b/pex/version.py
--- a/pex/version.py
+++ b/pex/version.py
@@ -1,4 +1,4 @@
 # Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).
 # Licensed under the Apache License, Version 2.0 (see LICENSE).
 
-__version__ = "2.1.106"
+__version__ = "2.1.107"

|

{"golden_diff": "diff --git a/pex/version.py b/pex/version.py\n--- a/pex/version.py\n+++ b/pex/version.py\n@@ -1,4 +1,4 @@\n # Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).\n # Licensed under the Apache License, Version 2.0 (see LICENSE).\n \n-__version__ = \"2.1.106\"\n+__version__ = \"2.1.107\"\n", "issue": "Release 2.1.107\nOn the docket:\r\n+ [x] `git` username replaced with `****` redaction in lockfile for `git+ssh` direct references #1918\n", "before_files": [{"content": "# Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).\n# Licensed under the Apache License, Version 2.0 (see LICENSE).\n\n__version__ = \"2.1.106\"\n", "path": "pex/version.py"}], "after_files": [{"content": "# Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).\n# Licensed under the Apache License, Version 2.0 (see LICENSE).\n\n__version__ = \"2.1.107\"\n", "path": "pex/version.py"}]}

| 355 | 98 |
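The `verification_info` column pairs each `golden_diff` with `before_files` and `after_files`. Assume that applying the diff to the "before" content should reproduce the "after" content; this is an interpretation, not something the table documents. Under that assumption, a row can be sanity-checked with a small patch applier.

The sketch below uses the `pex/version.py` data from the row above. `apply_unified_diff` is a hypothetical helper, not part of any library. It handles only single-file diffs and assumes the files end with a newline.

```python
import re

# Matches hunk headers such as "@@ -1,4 +1,4 @@" and captures the old start line.
HUNK_HEADER = re.compile(r"^@@ -(\d+)(?:,\d+)? \+\d+(?:,\d+)? @@")


def apply_unified_diff(old_text, diff_text):
    """Apply a single-file unified diff to old_text and return the patched text.

    Hypothetical helper: raises ValueError if a context or removed line
    does not match the original content.
    """
    old = old_text.splitlines()
    new = []
    pos = 0  # 0-based index of the next unconsumed line in `old`
    lines = diff_text.splitlines()
    i = 0
    while i < len(lines):
        match = HUNK_HEADER.match(lines[i])
        if not match:
            i += 1  # skip the "diff --git", "---" and "+++" header lines
            continue
        start = int(match.group(1)) - 1
        new.extend(old[pos:start])  # copy untouched lines before this hunk
        pos = start
        i += 1
        while i < len(lines) and not lines[i].startswith("@@"):
            tag, body = lines[i][:1], lines[i][1:]
            if tag in (" ", ""):  # context line (a blank one may have lost its space)
                if old[pos] != body:
                    raise ValueError("context mismatch at old line %d" % (pos + 1))
                new.append(body)
                pos += 1
            elif tag == "-":  # removed line: must match, then is dropped
                if old[pos] != body:
                    raise ValueError("removal mismatch at old line %d" % (pos + 1))
                pos += 1
            elif tag == "+":  # added line
                new.append(body)
            i += 1
    new.extend(old[pos:])  # copy the tail after the last hunk
    return "\n".join(new) + "\n"


HEADER = (
    "# Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).\n"
    "# Licensed under the Apache License, Version 2.0 (see LICENSE).\n"
    "\n"
)
BEFORE = HEADER + '__version__ = "2.1.106"\n'
AFTER = HEADER + '__version__ = "2.1.107"\n'
GOLDEN_DIFF = (
    "diff --git a/pex/version.py b/pex/version.py\n"
    "--- a/pex/version.py\n"
    "+++ b/pex/version.py\n"
    "@@ -1,4 +1,4 @@\n"
    " # Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).\n"
    " # Licensed under the Apache License, Version 2.0 (see LICENSE).\n"
    " \n"
    '-__version__ = "2.1.106"\n'
    '+__version__ = "2.1.107"\n'
)

print(apply_unified_diff(BEFORE, GOLDEN_DIFF) == AFTER)  # True
```

A real checker would more likely shell out to `git apply` or use a patch library. The hand-rolled version here keeps the example dependency-free and makes the context and removal checks explicit.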

gh_patches_debug_37259

|

rasdani/github-patches

|

git_diff

|

ansible__ansible-25990

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

cloud/vmware/vmware_vswitch.py nic_name should be optional

##### ISSUE TYPE

- Bug Report

##### COMPONENT NAME

cloud/vmware/vmware_vswitch.py

##### ANSIBLE VERSION

```
ansible 2.3.1.0
  config file = /etc/ansible/ansible.cfg
  configured module search path = Default w/o overrides
  python version = 2.7.12 (default, Nov 19 2016, 06:48:10) [GCC 5.4.0 20160609]
```

##### CONFIGURATION

n/a

##### OS / ENVIRONMENT

n/a

##### SUMMARY

The vmware_vswitch module erroneously assumes that 'nic_name' is required. It is valid (and sometimes desired) to make a vmware virtual switch that does not have any uplink nics at all - the use case is multiple isolated port-groups for isolated networking.

After the vswitch is created, we create a port-group with VLAN 4095 (all vlans), with network policy permitting mac changes, forged transmit, and promiscuous all enabled.

In /ansible/modules/cloud/vmware/vmware_vswitch.py , we can omit this line if nic_name is not specified and the port-group is created as desired.

```python
if self.nic_name:
    vss_spec.bridge = vim.host.VirtualSwitch.BondBridge(nicDevice=[self.nic_name])
```

##### STEPS TO REPRODUCE

Run ansible-playbook against a task using the vmware_vswitch module, omitting nic_name.

```
- name: add test_switch
  local_action:
    module: vmware_vswitch
    hostname: esxi_host
    username: esxi_username
    password: esxi_password
    switch_name: item
    mtu: 9000
    validate_certs: no
    number_of_ports: 8
    #nic_name: 'null'
```

##### EXPECTED RESULTS

I expect the vmware vswitch to be created, but without any uplink nics.

##### ACTUAL RESULTS

```
fatal: [localhost -> localhost]: FAILED! => {"changed": false, "failed": true, "msg": "missing required arguments: nic_name"}
```

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `lib/ansible/modules/cloud/vmware/vmware_vswitch.py`

Content:

```

1 #!/usr/bin/python
2 # -*- coding: utf-8 -*-
3
4 # (c) 2015, Joseph Callen <jcallen () csc.com>
5 #
6 # This file is part of Ansible
7 #
8 # Ansible is free software: you can redistribute it and/or modify
9 # it under the terms of the GNU General Public License as published by
10 # the Free Software Foundation, either version 3 of the License, or
11 # (at your option) any later version.
12 #
13 # Ansible is distributed in the hope that it will be useful,
14 # but WITHOUT ANY WARRANTY; without even the implied warranty of
15 # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
16 # GNU General Public License for more details.
17 #
18 # You should have received a copy of the GNU General Public License
19 # along with Ansible. If not, see <http://www.gnu.org/licenses/>.
20
21 ANSIBLE_METADATA = {'metadata_version': '1.0',
22                     'status': ['preview'],
23                     'supported_by': 'community'}
24
25
26 DOCUMENTATION = '''
27 ---
28 module: vmware_vswitch
29 short_description: Add a VMware Standard Switch to an ESXi host
30 description:
31     - Add a VMware Standard Switch to an ESXi host
32 version_added: 2.0
33 author: "Joseph Callen (@jcpowermac), Russell Teague (@mtnbikenc)"
34 notes:
35     - Tested on vSphere 5.5
36 requirements:
37     - "python >= 2.6"
38     - PyVmomi
39 options:
40     switch_name:
41         description:
42             - vSwitch name to add
43         required: True
44     nic_name:
45         description:
46             - vmnic name to attach to vswitch
47         required: True
48     number_of_ports:
49         description:
50             - Number of port to configure on vswitch
51         default: 128
52         required: False
53     mtu:
54         description:
55             - MTU to configure on vswitch
56         required: False
57     state:
58         description:
59             - Add or remove the switch
60         default: 'present'
61         choices:
62             - 'present'
63             - 'absent'
64         required: False
65 extends_documentation_fragment: vmware.documentation
66 '''
67
68 EXAMPLES = '''
69 # Example from Ansible playbook
70
71     - name: Add a VMware vSwitch
72       local_action:
73         module: vmware_vswitch
74         hostname: esxi_hostname
75         username: esxi_username
76         password: esxi_password
77         switch_name: vswitch_name
78         nic_name: vmnic_name
79         mtu: 9000
80 '''
81
82 try:
83     from pyVmomi import vim, vmodl
84     HAS_PYVMOMI = True
85 except ImportError:
86     HAS_PYVMOMI = False
87
88
89 def find_vswitch_by_name(host, vswitch_name):
90     for vss in host.config.network.vswitch:
91         if vss.name == vswitch_name:
92             return vss
93     return None
94
95
96 class VMwareHostVirtualSwitch(object):
97
98     def __init__(self, module):
99         self.host_system = None
100         self.content = None
101         self.vss = None
102         self.module = module
103         self.switch_name = module.params['switch_name']
104         self.number_of_ports = module.params['number_of_ports']
105         self.nic_name = module.params['nic_name']
106         self.mtu = module.params['mtu']
107         self.state = module.params['state']
108         self.content = connect_to_api(self.module)
109
110     def process_state(self):
111         try:
112             vswitch_states = {
113                 'absent': {
114                     'present': self.state_destroy_vswitch,
115                     'absent': self.state_exit_unchanged,
116                 },
117                 'present': {
118                     'update': self.state_update_vswitch,
119                     'present': self.state_exit_unchanged,
120                     'absent': self.state_create_vswitch,
121                 }
122             }
123
124             vswitch_states[self.state][self.check_vswitch_configuration()]()
125
126         except vmodl.RuntimeFault as runtime_fault:
127             self.module.fail_json(msg=runtime_fault.msg)
128         except vmodl.MethodFault as method_fault:
129             self.module.fail_json(msg=method_fault.msg)
130         except Exception as e:
131             self.module.fail_json(msg=str(e))
132
133
134     # Source from
135     # https://github.com/rreubenur/pyvmomi-community-samples/blob/patch-1/samples/create_vswitch.py
136
137     def state_create_vswitch(self):
138         vss_spec = vim.host.VirtualSwitch.Specification()
139         vss_spec.numPorts = self.number_of_ports
140         vss_spec.mtu = self.mtu
141         vss_spec.bridge = vim.host.VirtualSwitch.BondBridge(nicDevice=[self.nic_name])
142         self.host_system.configManager.networkSystem.AddVirtualSwitch(vswitchName=self.switch_name, spec=vss_spec)
143         self.module.exit_json(changed=True)
144
145     def state_exit_unchanged(self):
146         self.module.exit_json(changed=False)
147
148     def state_destroy_vswitch(self):
149         config = vim.host.NetworkConfig()
150
151         for portgroup in self.host_system.configManager.networkSystem.networkInfo.portgroup:
152             if portgroup.spec.vswitchName == self.vss.name:
153                 portgroup_config = vim.host.PortGroup.Config()
154                 portgroup_config.changeOperation = "remove"
155                 portgroup_config.spec = vim.host.PortGroup.Specification()
156                 portgroup_config.spec.name = portgroup.spec.name
157                 portgroup_config.spec.name = portgroup.spec.name
158                 portgroup_config.spec.vlanId = portgroup.spec.vlanId
159                 portgroup_config.spec.vswitchName = portgroup.spec.vswitchName
160                 portgroup_config.spec.policy = vim.host.NetworkPolicy()
161                 config.portgroup.append(portgroup_config)
162
163         self.host_system.configManager.networkSystem.UpdateNetworkConfig(config, "modify")
164         self.host_system.configManager.networkSystem.RemoveVirtualSwitch(self.vss.name)
165         self.module.exit_json(changed=True)
166
167     def state_update_vswitch(self):
168         self.module.exit_json(changed=False, msg="Currently not implemented.")
169
170     def check_vswitch_configuration(self):
171         host = get_all_objs(self.content, [vim.HostSystem])
172         if not host:
173             self.module.fail_json(msg="Unable to find host")
174
175         self.host_system = host.keys()[0]
176         self.vss = find_vswitch_by_name(self.host_system, self.switch_name)
177
178         if self.vss is None:
179             return 'absent'
180         else:
181             return 'present'
182
183
184 def main():
185     argument_spec = vmware_argument_spec()
186     argument_spec.update(dict(switch_name=dict(required=True, type='str'),
187                               nic_name=dict(required=True, type='str'),
188                               number_of_ports=dict(required=False, type='int', default=128),
189                               mtu=dict(required=False, type='int', default=1500),
190                               state=dict(default='present', choices=['present', 'absent'], type='str')))
191
192     module = AnsibleModule(argument_spec=argument_spec, supports_check_mode=False)
193
194     if not HAS_PYVMOMI:
195         module.fail_json(msg='pyvmomi is required for this module')
196
197     host_virtual_switch = VMwareHostVirtualSwitch(module)
198     host_virtual_switch.process_state()
199
200 from ansible.module_utils.vmware import *
201 from ansible.module_utils.basic import *
202
203 if __name__ == '__main__':
204     main()
205

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/lib/ansible/modules/cloud/vmware/vmware_vswitch.py b/lib/ansible/modules/cloud/vmware/vmware_vswitch.py
--- a/lib/ansible/modules/cloud/vmware/vmware_vswitch.py
+++ b/lib/ansible/modules/cloud/vmware/vmware_vswitch.py
@@ -44,7 +44,7 @@
     nic_name:
         description:
             - vmnic name to attach to vswitch
-        required: True
+        required: False
     number_of_ports:
         description:
             - Number of port to configure on vswitch
@@ -66,17 +66,24 @@
 '''
 
 EXAMPLES = '''
-# Example from Ansible playbook
-
-    - name: Add a VMware vSwitch
-      local_action:
-        module: vmware_vswitch
-        hostname: esxi_hostname
-        username: esxi_username
-        password: esxi_password
-        switch_name: vswitch_name
-        nic_name: vmnic_name
-        mtu: 9000
+- name: Add a VMware vSwitch
+  local_action:
+    module: vmware_vswitch
+    hostname: esxi_hostname
+    username: esxi_username
+    password: esxi_password
+    switch_name: vswitch_name
+    nic_name: vmnic_name
+    mtu: 9000
+
+- name: Add a VMWare vSwitch without any physical NIC attached
+  vmware_vswitch:
+    hostname: 192.168.10.1
+    username: admin
+    password: password123
+    switch_name: vswitch_0001
+    mtu: 9000
+
 '''
 
 try:
@@ -138,7 +145,8 @@
         vss_spec = vim.host.VirtualSwitch.Specification()
         vss_spec.numPorts = self.number_of_ports
         vss_spec.mtu = self.mtu
-        vss_spec.bridge = vim.host.VirtualSwitch.BondBridge(nicDevice=[self.nic_name])
+        if self.nic_name:
+            vss_spec.bridge = vim.host.VirtualSwitch.BondBridge(nicDevice=[self.nic_name])
         self.host_system.configManager.networkSystem.AddVirtualSwitch(vswitchName=self.switch_name, spec=vss_spec)
         self.module.exit_json(changed=True)
 
@@ -184,7 +192,7 @@
 def main():
     argument_spec = vmware_argument_spec()
     argument_spec.update(dict(switch_name=dict(required=True, type='str'),
-                              nic_name=dict(required=True, type='str'),
+                              nic_name=dict(required=False, type='str'),
                               number_of_ports=dict(required=False, type='int', default=128),
                               mtu=dict(required=False, type='int', default=1500),
                               state=dict(default='present', choices=['present', 'absent'], type='str')))

|

{"golden_diff": "diff --git a/lib/ansible/modules/cloud/vmware/vmware_vswitch.py b/lib/ansible/modules/cloud/vmware/vmware_vswitch.py\n--- a/lib/ansible/modules/cloud/vmware/vmware_vswitch.py\n+++ b/lib/ansible/modules/cloud/vmware/vmware_vswitch.py\n@@ -44,7 +44,7 @@\n nic_name:\n description:\n - vmnic name to attach to vswitch\n- required: True\n+ required: False\n number_of_ports:\n description:\n - Number of port to configure on vswitch\n@@ -66,17 +66,24 @@\n '''\n \n EXAMPLES = '''\n-# Example from Ansible playbook\n-\n- - name: Add a VMware vSwitch\n- local_action:\n- module: vmware_vswitch\n- hostname: esxi_hostname\n- username: esxi_username\n- password: esxi_password\n- switch_name: vswitch_name\n- nic_name: vmnic_name\n- mtu: 9000\n+- name: Add a VMware vSwitch\n+ local_action:\n+ module: vmware_vswitch\n+ hostname: esxi_hostname\n+ username: esxi_username\n+ password: esxi_password\n+ switch_name: vswitch_name\n+ nic_name: vmnic_name\n+ mtu: 9000\n+\n+- name: Add a VMWare vSwitch without any physical NIC attached\n+ vmware_vswitch:\n+ hostname: 192.168.10.1\n+ username: admin\n+ password: password123\n+ switch_name: vswitch_0001\n+ mtu: 9000\n+\n '''\n \n try:\n@@ -138,7 +145,8 @@\n vss_spec = vim.host.VirtualSwitch.Specification()\n vss_spec.numPorts = self.number_of_ports\n vss_spec.mtu = self.mtu\n- vss_spec.bridge = vim.host.VirtualSwitch.BondBridge(nicDevice=[self.nic_name])\n+ if self.nic_name:\n+ vss_spec.bridge = vim.host.VirtualSwitch.BondBridge(nicDevice=[self.nic_name])\n self.host_system.configManager.networkSystem.AddVirtualSwitch(vswitchName=self.switch_name, spec=vss_spec)\n self.module.exit_json(changed=True)\n \n@@ -184,7 +192,7 @@\n def main():\n argument_spec = vmware_argument_spec()\n argument_spec.update(dict(switch_name=dict(required=True, type='str'),\n- nic_name=dict(required=True, type='str'),\n+ nic_name=dict(required=False, type='str'),\n number_of_ports=dict(required=False, type='int', default=128),\n mtu=dict(required=False, 
type='int', default=1500),\n state=dict(default='present', choices=['present', 'absent'], type='str')))\n", "issue": "cloud/vmware/vmware_vswitch.py nic_name should be optional\n##### ISSUE TYPE\r\n - Bug Report\r\n\r\n##### COMPONENT NAME\r\ncloud/vmware/vmware_vswitch.py\r\n\r\n##### ANSIBLE VERSION\r\n```\r\nansible 2.3.1.0\r\n config file = /etc/ansible/ansible.cfg\r\n configured module search path = Default w/o overrides\r\n python version = 2.7.12 (default, Nov 19 2016, 06:48:10) [GCC 5.4.0 20160609]\r\n\r\n```\r\n\r\n##### CONFIGURATION\r\nn/a\r\n##### OS / ENVIRONMENT\r\nn/a\r\n##### SUMMARY\r\nThe vmware_vswitch module erroneously assumes that 'nic_name' is required. It is valid (and sometimes desired) to make a vmware virtual switch that does not have any uplink nics at all - the use case is multiple isolated port-groups for isolated networking. \r\n\r\nAfter the vswitch is created, we create a port-group with VLAN 4095 (all vlans), with network policy permitting mac changes, forged transmit, and promiscuous all enabled.\r\n\r\nIn /ansible/modules/cloud/vmware/vmware_vswitch.py , we can omit this line if nic_name is not specified and the port-group is created as desired.\r\n\r\n```python\r\nif self.nic_name:\r\n vss_spec.bridge = vim.host.VirtualSwitch.BondBridge(nicDevice=[self.nic_name])\r\n```\r\n\r\n##### STEPS TO REPRODUCE\r\n\r\nRun ansible-playbook against a task using the vmware_vswitch module, omitting nic_name.\r\n```\r\n - name: add test_switch\r\n local_action:\r\n module: vmware_vswitch\r\n hostname: esxi_host\r\n username: esxi_username\r\n password: esxi_password\r\n switch_name: item\r\n mtu: 9000\r\n validate_certs: no\r\n number_of_ports: 8\r\n #nic_name: 'null'\r\n\r\n```\r\n\r\n##### EXPECTED RESULTS\r\nI expect the vmware vswitch to be created, but without any uplink nics.\r\n\r\n##### ACTUAL RESULTS\r\n```\r\nfatal: [localhost -> localhost]: FAILED! 
=> {\"changed\": false, \"failed\": true, \"msg\": \"missing required arguments: nic_name\"}\r\n```\n", "before_files": [{"content": "#!/usr/bin/python\n# -*- coding: utf-8 -*-\n\n# (c) 2015, Joseph Callen <jcallen () csc.com>\n#\n# This file is part of Ansible\n#\n# Ansible is free software: you can redistribute it and/or modify\n# it under the terms of the GNU General Public License as published by\n# the Free Software Foundation, either version 3 of the License, or\n# (at your option) any later version.\n#\n# Ansible is distributed in the hope that it will be useful,\n# but WITHOUT ANY WARRANTY; without even the implied warranty of\n# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the\n# GNU General Public License for more details.\n#\n# You should have received a copy of the GNU General Public License\n# along with Ansible. If not, see <http://www.gnu.org/licenses/>.\n\nANSIBLE_METADATA = {'metadata_version': '1.0',\n 'status': ['preview'],\n 'supported_by': 'community'}\n\n\nDOCUMENTATION = '''\n---\nmodule: vmware_vswitch\nshort_description: Add a VMware Standard Switch to an ESXi host\ndescription:\n - Add a VMware Standard Switch to an ESXi host\nversion_added: 2.0\nauthor: \"Joseph Callen (@jcpowermac), Russell Teague (@mtnbikenc)\"\nnotes:\n - Tested on vSphere 5.5\nrequirements:\n - \"python >= 2.6\"\n - PyVmomi\noptions:\n switch_name:\n description:\n - vSwitch name to add\n required: True\n nic_name:\n description:\n - vmnic name to attach to vswitch\n required: True\n number_of_ports:\n description:\n - Number of port to configure on vswitch\n default: 128\n required: False\n mtu:\n description:\n - MTU to configure on vswitch\n required: False\n state:\n description:\n - Add or remove the switch\n default: 'present'\n choices:\n - 'present'\n - 'absent'\n required: False\nextends_documentation_fragment: vmware.documentation\n'''\n\nEXAMPLES = '''\n# Example from Ansible playbook\n\n - name: Add a VMware vSwitch\n local_action:\n module: 
vmware_vswitch\n hostname: esxi_hostname\n username: esxi_username\n password: esxi_password\n switch_name: vswitch_name\n nic_name: vmnic_name\n mtu: 9000\n'''\n\ntry:\n from pyVmomi import vim, vmodl\n HAS_PYVMOMI = True\nexcept ImportError:\n HAS_PYVMOMI = False\n\n\ndef find_vswitch_by_name(host, vswitch_name):\n for vss in host.config.network.vswitch:\n if vss.name == vswitch_name:\n return vss\n return None\n\n\nclass VMwareHostVirtualSwitch(object):\n\n def __init__(self, module):\n self.host_system = None\n self.content = None\n self.vss = None\n self.module = module\n self.switch_name = module.params['switch_name']\n self.number_of_ports = module.params['number_of_ports']\n self.nic_name = module.params['nic_name']\n self.mtu = module.params['mtu']\n self.state = module.params['state']\n self.content = connect_to_api(self.module)\n\n def process_state(self):\n try:\n vswitch_states = {\n 'absent': {\n 'present': self.state_destroy_vswitch,\n 'absent': self.state_exit_unchanged,\n },\n 'present': {\n 'update': self.state_update_vswitch,\n 'present': self.state_exit_unchanged,\n 'absent': self.state_create_vswitch,\n }\n }\n\n vswitch_states[self.state][self.check_vswitch_configuration()]()\n\n except vmodl.RuntimeFault as runtime_fault:\n self.module.fail_json(msg=runtime_fault.msg)\n except vmodl.MethodFault as method_fault:\n self.module.fail_json(msg=method_fault.msg)\n except Exception as e:\n self.module.fail_json(msg=str(e))\n\n\n # Source from\n # https://github.com/rreubenur/pyvmomi-community-samples/blob/patch-1/samples/create_vswitch.py\n\n def state_create_vswitch(self):\n vss_spec = vim.host.VirtualSwitch.Specification()\n vss_spec.numPorts = self.number_of_ports\n vss_spec.mtu = self.mtu\n vss_spec.bridge = vim.host.VirtualSwitch.BondBridge(nicDevice=[self.nic_name])\n self.host_system.configManager.networkSystem.AddVirtualSwitch(vswitchName=self.switch_name, spec=vss_spec)\n self.module.exit_json(changed=True)\n\n def 
state_exit_unchanged(self):\n self.module.exit_json(changed=False)\n\n def state_destroy_vswitch(self):\n config = vim.host.NetworkConfig()\n\n for portgroup in self.host_system.configManager.networkSystem.networkInfo.portgroup:\n if portgroup.spec.vswitchName == self.vss.name:\n portgroup_config = vim.host.PortGroup.Config()\n portgroup_config.changeOperation = \"remove\"\n portgroup_config.spec = vim.host.PortGroup.Specification()\n portgroup_config.spec.name = portgroup.spec.name\n portgroup_config.spec.name = portgroup.spec.name\n portgroup_config.spec.vlanId = portgroup.spec.vlanId\n portgroup_config.spec.vswitchName = portgroup.spec.vswitchName\n portgroup_config.spec.policy = vim.host.NetworkPolicy()\n config.portgroup.append(portgroup_config)\n\n self.host_system.configManager.networkSystem.UpdateNetworkConfig(config, \"modify\")\n self.host_system.configManager.networkSystem.RemoveVirtualSwitch(self.vss.name)\n self.module.exit_json(changed=True)\n\n def state_update_vswitch(self):\n self.module.exit_json(changed=False, msg=\"Currently not implemented.\")\n\n def check_vswitch_configuration(self):\n host = get_all_objs(self.content, [vim.HostSystem])\n if not host:\n self.module.fail_json(msg=\"Unable to find host\")\n\n self.host_system = host.keys()[0]\n self.vss = find_vswitch_by_name(self.host_system, self.switch_name)\n\n if self.vss is None:\n return 'absent'\n else:\n return 'present'\n\n\ndef main():\n argument_spec = vmware_argument_spec()\n argument_spec.update(dict(switch_name=dict(required=True, type='str'),\n nic_name=dict(required=True, type='str'),\n number_of_ports=dict(required=False, type='int', default=128),\n mtu=dict(required=False, type='int', default=1500),\n state=dict(default='present', choices=['present', 'absent'], type='str')))\n\n module = AnsibleModule(argument_spec=argument_spec, supports_check_mode=False)\n\n if not HAS_PYVMOMI:\n module.fail_json(msg='pyvmomi is required for this module')\n\n host_virtual_switch = 
VMwareHostVirtualSwitch(module)\n host_virtual_switch.process_state()\n\nfrom ansible.module_utils.vmware import *\nfrom ansible.module_utils.basic import *\n\nif __name__ == '__main__':\n main()\n", "path": "lib/ansible/modules/cloud/vmware/vmware_vswitch.py"}], "after_files": [{"content": "#!/usr/bin/python\n# -*- coding: utf-8 -*-\n\n# (c) 2015, Joseph Callen <jcallen () csc.com>\n#\n# This file is part of Ansible\n#\n# Ansible is free software: you can redistribute it and/or modify\n# it under the terms of the GNU General Public License as published by\n# the Free Software Foundation, either version 3 of the License, or\n# (at your option) any later version.\n#\n# Ansible is distributed in the hope that it will be useful,\n# but WITHOUT ANY WARRANTY; without even the implied warranty of\n# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the\n# GNU General Public License for more details.\n#\n# You should have received a copy of the GNU General Public License\n# along with Ansible. 
If not, see <http://www.gnu.org/licenses/>.\n\nANSIBLE_METADATA = {'metadata_version': '1.0',\n 'status': ['preview'],\n 'supported_by': 'community'}\n\n\nDOCUMENTATION = '''\n---\nmodule: vmware_vswitch\nshort_description: Add a VMware Standard Switch to an ESXi host\ndescription:\n - Add a VMware Standard Switch to an ESXi host\nversion_added: 2.0\nauthor: \"Joseph Callen (@jcpowermac), Russell Teague (@mtnbikenc)\"\nnotes:\n - Tested on vSphere 5.5\nrequirements:\n - \"python >= 2.6\"\n - PyVmomi\noptions:\n switch_name:\n description:\n - vSwitch name to add\n required: True\n nic_name:\n description:\n - vmnic name to attach to vswitch\n required: False\n number_of_ports:\n description:\n - Number of port to configure on vswitch\n default: 128\n required: False\n mtu:\n description:\n - MTU to configure on vswitch\n required: False\n state:\n description:\n - Add or remove the switch\n default: 'present'\n choices:\n - 'present'\n - 'absent'\n required: False\nextends_documentation_fragment: vmware.documentation\n'''\n\nEXAMPLES = '''\n- name: Add a VMware vSwitch\n local_action:\n module: vmware_vswitch\n hostname: esxi_hostname\n username: esxi_username\n password: esxi_password\n switch_name: vswitch_name\n nic_name: vmnic_name\n mtu: 9000\n\n- name: Add a VMWare vSwitch without any physical NIC attached\n vmware_vswitch:\n hostname: 192.168.10.1\n username: admin\n password: password123\n switch_name: vswitch_0001\n mtu: 9000\n\n'''\n\ntry:\n from pyVmomi import vim, vmodl\n HAS_PYVMOMI = True\nexcept ImportError:\n HAS_PYVMOMI = False\n\n\ndef find_vswitch_by_name(host, vswitch_name):\n for vss in host.config.network.vswitch:\n if vss.name == vswitch_name:\n return vss\n return None\n\n\nclass VMwareHostVirtualSwitch(object):\n\n def __init__(self, module):\n self.host_system = None\n self.content = None\n self.vss = None\n self.module = module\n self.switch_name = module.params['switch_name']\n self.number_of_ports = module.params['number_of_ports']\n 
self.nic_name = module.params['nic_name']\n self.mtu = module.params['mtu']\n self.state = module.params['state']\n self.content = connect_to_api(self.module)\n\n def process_state(self):\n try:\n vswitch_states = {\n 'absent': {\n 'present': self.state_destroy_vswitch,\n 'absent': self.state_exit_unchanged,\n },\n 'present': {\n 'update': self.state_update_vswitch,\n 'present': self.state_exit_unchanged,\n 'absent': self.state_create_vswitch,\n }\n }\n\n vswitch_states[self.state][self.check_vswitch_configuration()]()\n\n except vmodl.RuntimeFault as runtime_fault:\n self.module.fail_json(msg=runtime_fault.msg)\n except vmodl.MethodFault as method_fault:\n self.module.fail_json(msg=method_fault.msg)\n except Exception as e:\n self.module.fail_json(msg=str(e))\n\n\n # Source from\n # https://github.com/rreubenur/pyvmomi-community-samples/blob/patch-1/samples/create_vswitch.py\n\n def state_create_vswitch(self):\n vss_spec = vim.host.VirtualSwitch.Specification()\n vss_spec.numPorts = self.number_of_ports\n vss_spec.mtu = self.mtu\n if self.nic_name:\n vss_spec.bridge = vim.host.VirtualSwitch.BondBridge(nicDevice=[self.nic_name])\n self.host_system.configManager.networkSystem.AddVirtualSwitch(vswitchName=self.switch_name, spec=vss_spec)\n self.module.exit_json(changed=True)\n\n def state_exit_unchanged(self):\n self.module.exit_json(changed=False)\n\n def state_destroy_vswitch(self):\n config = vim.host.NetworkConfig()\n\n for portgroup in self.host_system.configManager.networkSystem.networkInfo.portgroup:\n if portgroup.spec.vswitchName == self.vss.name:\n portgroup_config = vim.host.PortGroup.Config()\n portgroup_config.changeOperation = \"remove\"\n portgroup_config.spec = vim.host.PortGroup.Specification()\n portgroup_config.spec.name = portgroup.spec.name\n portgroup_config.spec.name = portgroup.spec.name\n portgroup_config.spec.vlanId = portgroup.spec.vlanId\n portgroup_config.spec.vswitchName = portgroup.spec.vswitchName\n portgroup_config.spec.policy = 
vim.host.NetworkPolicy()\n config.portgroup.append(portgroup_config)\n\n self.host_system.configManager.networkSystem.UpdateNetworkConfig(config, \"modify\")\n self.host_system.configManager.networkSystem.RemoveVirtualSwitch(self.vss.name)\n self.module.exit_json(changed=True)\n\n def state_update_vswitch(self):\n self.module.exit_json(changed=False, msg=\"Currently not implemented.\")\n\n def check_vswitch_configuration(self):\n host = get_all_objs(self.content, [vim.HostSystem])\n if not host:\n self.module.fail_json(msg=\"Unable to find host\")\n\n self.host_system = host.keys()[0]\n self.vss = find_vswitch_by_name(self.host_system, self.switch_name)\n\n if self.vss is None:\n return 'absent'\n else:\n return 'present'\n\n\ndef main():\n argument_spec = vmware_argument_spec()\n argument_spec.update(dict(switch_name=dict(required=True, type='str'),\n nic_name=dict(required=False, type='str'),\n number_of_ports=dict(required=False, type='int', default=128),\n mtu=dict(required=False, type='int', default=1500),\n state=dict(default='present', choices=['present', 'absent'], type='str')))\n\n module = AnsibleModule(argument_spec=argument_spec, supports_check_mode=False)\n\n if not HAS_PYVMOMI:\n module.fail_json(msg='pyvmomi is required for this module')\n\n host_virtual_switch = VMwareHostVirtualSwitch(module)\n host_virtual_switch.process_state()\n\nfrom ansible.module_utils.vmware import *\nfrom ansible.module_utils.basic import *\n\nif __name__ == '__main__':\n main()\n", "path": "lib/ansible/modules/cloud/vmware/vmware_vswitch.py"}]}

| 2,835 | 654 |
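The golden diff for `ansible__ansible-25990` makes two changes. First, `nic_name` is no longer required by the argument spec. Second, `state_create_vswitch` only sets `vss_spec.bridge` when a NIC name was given.

The sketch below models both changes without pyVmomi or an ESXi host. `check_required` and `build_vswitch_spec` are hypothetical stand-ins, not Ansible's or pyVmomi's APIs. A plain dict replaces `vim.host.VirtualSwitch.Specification`. The error string copies the one quoted in the issue rather than reproducing Ansible's actual validation code.

```python
def check_required(argument_spec, params):
    """Hypothetical stand-in for AnsibleModule's required-argument check."""
    missing = [name for name, spec in argument_spec.items()
               if spec.get("required") and params.get(name) is None]
    if missing:
        return "missing required arguments: %s" % ", ".join(missing)
    return None


def build_vswitch_spec(params):
    """Model of state_create_vswitch after the patch, using a dict as the spec."""
    spec = {
        "numPorts": params.get("number_of_ports", 128),
        "mtu": params.get("mtu", 1500),
    }
    nic_name = params.get("nic_name")
    if nic_name:  # attach an uplink bond bridge only when a vmnic was given
        spec["bridge"] = {"nicDevice": [nic_name]}
    return spec


# Argument specs before and after the patch (only the relevant keys).
BEFORE_SPEC = {"switch_name": {"required": True}, "nic_name": {"required": True}}
AFTER_SPEC = {"switch_name": {"required": True}, "nic_name": {"required": False}}

# Task parameters like the issue's reproduction, with nic_name omitted.
task = {"switch_name": "vswitch_0001", "mtu": 9000, "number_of_ports": 8}

print(check_required(BEFORE_SPEC, task))  # missing required arguments: nic_name
print(check_required(AFTER_SPEC, task))   # None
print(build_vswitch_spec(task))           # {'numPorts': 8, 'mtu': 9000}
```

With the patch applied, the same task passes validation and produces a spec with no `bridge` key. This corresponds to the isolated, uplink-free vSwitch the issue asks for.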

gh_patches_debug_1877

|

rasdani/github-patches

|

git_diff

|

conan-io__conan-2921

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

local cache inconsistent after enabling short_paths in a recipe

To help us debug your issue please explain:

- [x] I've read the [CONTRIBUTING guide](https://raw.githubusercontent.com/conan-io/conan/develop/.github/CONTRIBUTING.md).

- [x] I've specified the Conan version, operating system version and any tool that can be relevant.

- [x] I've explained the steps to reproduce the error or the motivation/use case of the question/suggestion.

Conan Version 1.3.3

Windows 10

With a package in the local cache whose recipe does NOT have `short_paths=True`, modify the recipe in the normal development folder to set `short_paths=True`, then run `conan create`.

Folders in the local cache become inconsistent, showing both the folders from the previous `conan create` run and `.conan_link` files pointing to the short-path folders.

This does not seem to affect Conan's behavior when running commands, and it works well if `short_paths` is removed again.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `conans/util/windows.py`

Content:

```

1 import os
2 import subprocess
3
4 from conans.util.files import load, mkdir, save, rmdir
5 import tempfile
6
7
8 CONAN_LINK = ".conan_link"
9
10
11 def conan_expand_user(path):
12     """ wrapper to the original expanduser function, to workaround python returning
13     verbatim %USERPROFILE% when some other app (git for windows) sets HOME envvar
14     """
15     # In win these variables should exist and point to user directory, which
16     # must exist. Using context to avoid permanent modification of os.environ
17     old_env = dict(os.environ)
18     try:
19         home = os.environ.get("HOME")
20         # Problematic cases of wrong HOME variable
21         # - HOME = %USERPROFILE% verbatim, as messed by some other tools
22         # - MSYS console, that defines a different user home in /c/mingw/msys/users/xxx
23         # In these cases, it is safe to remove it and rely on USERPROFILE directly
24         if home and (not os.path.exists(home) or
25                      (os.getenv("MSYSTEM") and os.getenv("USERPROFILE"))):
26             del os.environ["HOME"]
27         result = os.path.expanduser(path)
28     finally:
29         os.environ.clear()
30         os.environ.update(old_env)
31     return result
32
33
34 def path_shortener(path, short_paths):
35     """ short_paths is 4-state:
36     False: Never shorten the path
37     True: Always shorten the path, create link if not existing
38     None: Use shorten path only if already exists, not create
39     """
40     if short_paths is False or os.getenv("CONAN_USER_HOME_SHORT") == "None":
41         return path
42     link = os.path.join(path, CONAN_LINK)
43     if os.path.exists(link):
44         return load(link)
45     elif short_paths is None:
46         return path
47
48     short_home = os.getenv("CONAN_USER_HOME_SHORT")
49     if not short_home:
50         drive = os.path.splitdrive(path)[0]
51         short_home = drive + "/.conan"
52     mkdir(short_home)
53
54     # Workaround for short_home living in NTFS file systems. Give full control permission to current user to avoid
55     # access problems in cygwin/msys2 windows subsystems when using short_home folder
56     try:
57         username = os.getenv("USERDOMAIN")
58         domainname = "%s\%s" % (username, os.environ["USERNAME"]) if username else os.environ["USERNAME"]
59         cmd = r'cacls %s /E /G "%s":F' % (short_home, domainname)
60         subprocess.check_output(cmd, stderr=subprocess.STDOUT)  # Ignoring any returned output, make command quiet
61     except subprocess.CalledProcessError:
62         # cmd can fail if trying to set ACL in non NTFS drives, ignoring it.
63         pass
64
65     redirect = tempfile.mkdtemp(dir=short_home, prefix="")
66     # This "1" is the way to have a non-existing directory, so commands like
67     # shutil.copytree() to it, works. It can be removed without compromising the
68     # temp folder generator and conan-links consistency
69     redirect = os.path.join(redirect, "1")
70     save(link, redirect)
71     return redirect
72
73
74 def ignore_long_path_files(src_folder, build_folder, output):
75     def _filter(src, files):
76         filtered_files = []
77         for the_file in files:
78             source_path = os.path.join(src, the_file)
79             # Without storage path, just relative
80             rel_path = os.path.relpath(source_path, src_folder)
81             dest_path = os.path.normpath(os.path.join(build_folder, rel_path))
82             # it is NOT that "/" is counted as "\\" so it counts double
83             # seems a bug in python, overflows paths near the limit of 260,
84             if len(dest_path) >= 249:
85                 filtered_files.append(the_file)
86                 output.warn("Filename too long, file excluded: %s" % dest_path)
87         return filtered_files
88     return _filter
89
90
91 def rm_conandir(path):
92     """removal of a directory that might contain a link to a short path"""
93     link = os.path.join(path, CONAN_LINK)
94     if os.path.exists(link):
95         short_path = load(link)
96         rmdir(os.path.dirname(short_path))
97     rmdir(path)

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()

```

|

diff --git a/conans/util/windows.py b/conans/util/windows.py
--- a/conans/util/windows.py
+++ b/conans/util/windows.py
@@ -45,6 +45,9 @@
     elif short_paths is None:
         return path
 
+    if os.path.exists(path):
+        rmdir(path)
+
     short_home = os.getenv("CONAN_USER_HOME_SHORT")
     if not short_home:
         drive = os.path.splitdrive(path)[0]
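To illustrate why removing the stale directory fixes the inconsistent cache, here is a minimal, self-contained sketch of the patched logic. `shorten` is a hypothetical simplification of `path_shortener(path, short_paths=True)`: it uses `shutil.rmtree` and plain file I/O in place of Conan's `rmdir`/`load`/`save` helpers, and it skips the `cacls` permission step and the `CONAN_USER_HOME_SHORT` lookup.

```python
import os
import shutil
import tempfile

CONAN_LINK = ".conan_link"  # marker file name, as in conans/util/windows.py


def shorten(path, short_home):
    """Hypothetical stand-in for path_shortener(path, True), not Conan's API.

    Returns the redirected short path, creating the .conan_link marker on
    first use. Like the patch, it first deletes whatever an earlier
    short_paths=False build left in `path`.
    """
    link = os.path.join(path, CONAN_LINK)
    if os.path.exists(link):
        with open(link) as f:
            return f.read()
    # The fix: drop leftovers from a previous non-shortened build.
    if os.path.exists(path):
        shutil.rmtree(path)
    os.makedirs(short_home, exist_ok=True)
    # "1" is a not-yet-existing child of a unique temp dir, as in Conan.
    redirect = os.path.join(tempfile.mkdtemp(dir=short_home), "1")
    os.makedirs(path, exist_ok=True)  # Conan's save() also creates parents
    with open(link, "w") as f:
        f.write(redirect)
    return redirect


root = tempfile.mkdtemp()
pkg = os.path.join(root, "data", "package")
os.makedirs(os.path.join(pkg, "lib"))  # leftover from a short_paths=False build
redirect = shorten(pkg, os.path.join(root, "short"))
print(os.listdir(pkg))  # ['.conan_link']
print(shorten(pkg, os.path.join(root, "short")) == redirect)  # True
```

Deleting the `shutil.rmtree` call reproduces the reported state: `os.listdir(pkg)` would then contain both `lib` and `.conan_link`.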

|

{"golden_diff": "diff --git a/conans/util/windows.py b/conans/util/windows.py\n--- a/conans/util/windows.py\n+++ b/conans/util/windows.py\n@@ -45,6 +45,9 @@\n elif short_paths is None:\n return path\n \n+ if os.path.exists(path):\n+ rmdir(path)\n+\n short_home = os.getenv(\"CONAN_USER_HOME_SHORT\")\n if not short_home:\n drive = os.path.splitdrive(path)[0]\n", "issue": "local cache inconsistent after enabling short_paths in a recipe\nTo help us debug your issue please explain:\r\n\r\n- [x] I've read the [CONTRIBUTING guide](https://raw.githubusercontent.com/conan-io/conan/develop/.github/CONTRIBUTING.md).\r\n- [x] I've specified the Conan version, operating system version and any tool that can be relevant.\r\n- [x] I've explained the steps to reproduce the error or the motivation/use case of the question/suggestion.\r\n\r\nConan Version 1.3.3\r\nWindows 10\r\n\r\nWith a package in local cache whose recipe does NOT have `short_paths=True`, modify in normal development folder the recipe and set `short_paths=True` and run conan create.\r\n\r\nFolders in local cache become inconsistent showing both folders from previous conan create run and .conan_link files pointing to the short paths folders.\r\n\r\nThis seems no not affect conan tool behavior when running commands and works well if `short_paths` is removed once again.\r\n\r\n\n", "before_files": [{"content": "import os\nimport subprocess\n\nfrom conans.util.files import load, mkdir, save, rmdir\nimport tempfile\n\n\nCONAN_LINK = \".conan_link\"\n\n\ndef conan_expand_user(path):\n \"\"\" wrapper to the original expanduser function, to workaround python returning\n verbatim %USERPROFILE% when some other app (git for windows) sets HOME envvar\n \"\"\"\n # In win these variables should exist and point to user directory, which\n # must exist. 
Using context to avoid permanent modification of os.environ\n old_env = dict(os.environ)\n try:\n home = os.environ.get(\"HOME\")\n # Problematic cases of wrong HOME variable\n # - HOME = %USERPROFILE% verbatim, as messed by some other tools\n # - MSYS console, that defines a different user home in /c/mingw/msys/users/xxx\n # In these cases, it is safe to remove it and rely on USERPROFILE directly\n if home and (not os.path.exists(home) or\n (os.getenv(\"MSYSTEM\") and os.getenv(\"USERPROFILE\"))):\n del os.environ[\"HOME\"]\n result = os.path.expanduser(path)\n finally:\n os.environ.clear()\n os.environ.update(old_env)\n return result\n\n\ndef path_shortener(path, short_paths):\n \"\"\" short_paths is 4-state:\n False: Never shorten the path\n True: Always shorten the path, create link if not existing\n None: Use shorten path only if already exists, not create\n \"\"\"\n if short_paths is False or os.getenv(\"CONAN_USER_HOME_SHORT\") == \"None\":\n return path\n link = os.path.join(path, CONAN_LINK)\n if os.path.exists(link):\n return load(link)\n elif short_paths is None:\n return path\n\n short_home = os.getenv(\"CONAN_USER_HOME_SHORT\")\n if not short_home:\n drive = os.path.splitdrive(path)[0]\n short_home = drive + \"/.conan\"\n mkdir(short_home)\n\n # Workaround for short_home living in NTFS file systems. 
Give full control permission to current user to avoid\n # access problems in cygwin/msys2 windows subsystems when using short_home folder\n try:\n username = os.getenv(\"USERDOMAIN\")\n domainname = \"%s\\%s\" % (username, os.environ[\"USERNAME\"]) if username else os.environ[\"USERNAME\"]\n cmd = r'cacls %s /E /G \"%s\":F' % (short_home, domainname)\n subprocess.check_output(cmd, stderr=subprocess.STDOUT) # Ignoring any returned output, make command quiet\n except subprocess.CalledProcessError:\n # cmd can fail if trying to set ACL in non NTFS drives, ignoring it.\n pass\n\n redirect = tempfile.mkdtemp(dir=short_home, prefix=\"\")\n # This \"1\" is the way to have a non-existing directory, so commands like\n # shutil.copytree() to it, works. It can be removed without compromising the\n # temp folder generator and conan-links consistency\n redirect = os.path.join(redirect, \"1\")\n save(link, redirect)\n return redirect\n\n\ndef ignore_long_path_files(src_folder, build_folder, output):\n def _filter(src, files):\n filtered_files = []\n for the_file in files:\n source_path = os.path.join(src, the_file)\n # Without storage path, just relative\n rel_path = os.path.relpath(source_path, src_folder)\n dest_path = os.path.normpath(os.path.join(build_folder, rel_path))\n # it is NOT that \"/\" is counted as \"\\\\\" so it counts double\n # seems a bug in python, overflows paths near the limit of 260,\n if len(dest_path) >= 249:\n filtered_files.append(the_file)\n output.warn(\"Filename too long, file excluded: %s\" % dest_path)\n return filtered_files\n return _filter\n\n\ndef rm_conandir(path):\n \"\"\"removal of a directory that might contain a link to a short path\"\"\"\n link = os.path.join(path, CONAN_LINK)\n if os.path.exists(link):\n short_path = load(link)\n rmdir(os.path.dirname(short_path))\n rmdir(path)\n", "path": "conans/util/windows.py"}], "after_files": [{"content": "import os\nimport subprocess\n\nfrom conans.util.files import load, mkdir, save, 
rmdir\nimport tempfile\n\n\nCONAN_LINK = \".conan_link\"\n\n\ndef conan_expand_user(path):\n \"\"\" wrapper to the original expanduser function, to workaround python returning\n verbatim %USERPROFILE% when some other app (git for windows) sets HOME envvar\n \"\"\"\n # In win these variables should exist and point to user directory, which\n # must exist. Using context to avoid permanent modification of os.environ\n old_env = dict(os.environ)\n try:\n home = os.environ.get(\"HOME\")\n # Problematic cases of wrong HOME variable\n # - HOME = %USERPROFILE% verbatim, as messed by some other tools\n # - MSYS console, that defines a different user home in /c/mingw/msys/users/xxx\n # In these cases, it is safe to remove it and rely on USERPROFILE directly\n if home and (not os.path.exists(home) or\n (os.getenv(\"MSYSTEM\") and os.getenv(\"USERPROFILE\"))):\n del os.environ[\"HOME\"]\n result = os.path.expanduser(path)\n finally:\n os.environ.clear()\n os.environ.update(old_env)\n return result\n\n\ndef path_shortener(path, short_paths):\n \"\"\" short_paths is 4-state:\n False: Never shorten the path\n True: Always shorten the path, create link if not existing\n None: Use shorten path only if already exists, not create\n \"\"\"\n if short_paths is False or os.getenv(\"CONAN_USER_HOME_SHORT\") == \"None\":\n return path\n link = os.path.join(path, CONAN_LINK)\n if os.path.exists(link):\n return load(link)\n elif short_paths is None:\n return path\n\n if os.path.exists(path):\n rmdir(path)\n\n short_home = os.getenv(\"CONAN_USER_HOME_SHORT\")\n if not short_home:\n drive = os.path.splitdrive(path)[0]\n short_home = drive + \"/.conan\"\n mkdir(short_home)\n\n # Workaround for short_home living in NTFS file systems. 
Give full control permission to current user to avoid\n # access problems in cygwin/msys2 windows subsystems when using short_home folder\n try:\n username = os.getenv(\"USERDOMAIN\")\n domainname = \"%s\\%s\" % (username, os.environ[\"USERNAME\"]) if username else os.environ[\"USERNAME\"]\n cmd = r'cacls %s /E /G \"%s\":F' % (short_home, domainname)\n subprocess.check_output(cmd, stderr=subprocess.STDOUT) # Ignoring any returned output, make command quiet\n except subprocess.CalledProcessError:\n # cmd can fail if trying to set ACL in non NTFS drives, ignoring it.\n pass\n\n redirect = tempfile.mkdtemp(dir=short_home, prefix=\"\")\n # This \"1\" is the way to have a non-existing directory, so commands like\n # shutil.copytree() to it, works. It can be removed without compromising the\n # temp folder generator and conan-links consistency\n redirect = os.path.join(redirect, \"1\")\n save(link, redirect)\n return redirect\n\n\ndef ignore_long_path_files(src_folder, build_folder, output):\n def _filter(src, files):\n filtered_files = []\n for the_file in files:\n source_path = os.path.join(src, the_file)\n # Without storage path, just relative\n rel_path = os.path.relpath(source_path, src_folder)\n dest_path = os.path.normpath(os.path.join(build_folder, rel_path))\n # it is NOT that \"/\" is counted as \"\\\\\" so it counts double\n # seems a bug in python, overflows paths near the limit of 260,\n if len(dest_path) >= 249:\n filtered_files.append(the_file)\n output.warn(\"Filename too long, file excluded: %s\" % dest_path)\n return filtered_files\n return _filter\n\n\ndef rm_conandir(path):\n \"\"\"removal of a directory that might contain a link to a short path\"\"\"\n link = os.path.join(path, CONAN_LINK)\n if os.path.exists(link):\n short_path = load(link)\n rmdir(os.path.dirname(short_path))\n rmdir(path)\n", "path": "conans/util/windows.py"}]}

| 1,576 | 100 |

gh_patches_debug_28256

|

rasdani/github-patches

|

git_diff

|

meltano__meltano-8355

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

feature: Install multiple plugins of any type

### Feature scope

CLI (options, error messages, logging, etc.)

### Description

Currently, you can only leverage `meltano install` in parallel for all plugin types or all plugins of a specific type:

```sh
# all plugins
meltano install

# all extractors
meltano install [extractor|extractors]

# all loaders
meltano install [loader|loaders]
```

It would be great if you could install multiple plugins of any type - something like:

```sh
meltano install <extractor> <loader> <transformer> <utility>
```

This change would remove the need to specify a plugin type at all, since a plugin name is already unique to a Meltano project. This is currently not possible without a breaking change however, since a plugin type is required as the first argument to `meltano install` when specifying plugin names. #8228 introduced the `--from-file` option for `meltano config <plugin> set`, which accepts a special character `-` to refer to stdin - `meltano install` could reuse this or a similar concept to skip the plugin type argument and leverage parallel install:

```sh
meltano install - <extractor> <loader> <transformer> <utility>
```

Once a convention is established, this feature could be extended to `meltano remove` as well.

I have a POC of this working for `meltano install` locally, so happy to open a PR.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `src/meltano/cli/install.py`

Content:

```

1 """CLI command `meltano install`."""
2
3 from __future__ import annotations
4
5 import typing as t
6
7 import click
8 import structlog
9
10 from meltano.cli.params import pass_project
11 from meltano.cli.utils import CliError, PartialInstrumentedCmd, install_plugins
12 from meltano.core.block.parser import BlockParser
13 from meltano.core.plugin import PluginType
14 from meltano.core.schedule_service import ScheduleService
15 from meltano.core.tracking.contexts import CliEvent, PluginsTrackingContext
16
17 if t.TYPE_CHECKING:
18     from meltano.core.project import Project
19     from meltano.core.tracking import Tracker
20
21 logger = structlog.getLogger(__name__)
22
23
24 @click.command(cls=PartialInstrumentedCmd, short_help="Install project dependencies.")
25 @click.argument(
26     "plugin_type",
27     type=click.Choice(PluginType.cli_arguments()),
28     required=False,
29 )
30 @click.argument("plugin_name", nargs=-1, required=False)
31 @click.option(
32     "--clean",
33     is_flag=True,
34     help="Completely reinstall a plugin rather than simply upgrading if necessary.",
35 )
36 @click.option(
37     "--parallelism",
38     "-p",
39     type=click.INT,
40     default=None,
41     help=(
42         "Limit the number of plugins to install in parallel. "
43         "Defaults to the number of cores."
44     ),
45 )
46 @click.option(
47     "--force",
48     "-f",
49     is_flag=True,
50     help="Ignore the required Python version declared by the plugins.",
51 )
52 @click.option(
53     "--schedule",
54     "-s",
55     "schedule_name",
56     help="Install all plugins from the given schedule.",
57 )
58 @click.pass_context
59 @pass_project(migrate=True)
60 def install(  # noqa: C901
61     project: Project,
62     ctx: click.Context,
63     plugin_type: str,
64     plugin_name: str,
65     clean: bool,
66     parallelism: int,
67     force: bool,
68     schedule_name: str,
69 ):
70     """
71     Install all the dependencies of your project based on the meltano.yml file.
72
73     \b\nRead more at https://docs.meltano.com/reference/command-line-interface#install
74     """
75     tracker: Tracker = ctx.obj["tracker"]
76     try:
77         if plugin_type:
78             plugin_type = PluginType.from_cli_argument(plugin_type)
79             plugins = project.plugins.get_plugins_of_type(plugin_type)
80             if plugin_name:
81                 plugins = [plugin for plugin in plugins if plugin.name in plugin_name]
82         else:
83             plugins = list(project.plugins.plugins())
84
85         if schedule_name:
86             schedule_plugins = _get_schedule_plugins(
87                 ctx.obj["project"],
88                 schedule_name,
89             )
90             plugins = list(set(plugins) & set(schedule_plugins))
91     except Exception:
92         tracker.track_command_event(CliEvent.aborted)
93         raise
94
95     click.echo(f"Installing {len(plugins)} plugins...")
96     tracker.add_contexts(
97         PluginsTrackingContext([(candidate, None) for candidate in plugins]),
98     )
99     tracker.track_command_event(CliEvent.inflight)
100
101     success = install_plugins(
102         project,
103         plugins,
104         parallelism=parallelism,
105         clean=clean,
106         force=force,
107     )
108     if not success:
109         tracker.track_command_event(CliEvent.failed)
110         raise CliError("Failed to install plugin(s)")  # noqa: EM101
111     tracker.track_command_event(CliEvent.completed)
112
113
114 def _get_schedule_plugins(project: Project, schedule_name: str):
115     schedule_service = ScheduleService(project)
116     schedule_obj = schedule_service.find_schedule(schedule_name)
117     schedule_plugins = set()
118     if schedule_obj.elt_schedule:
119         for plugin_name in (schedule_obj.extractor, schedule_obj.loader):
120             schedule_plugins.add(project.plugins.find_plugin(plugin_name))
121     else:
122         task_sets = schedule_service.task_sets_service.get(schedule_obj.job)
123         for blocks in task_sets.flat_args_per_set:
124             parser = BlockParser(logger, project, blocks)
125             for plugin in parser.plugins:
126                 schedule_plugins.add(
127                     project.plugins.find_plugin(plugin.info.get("name"))
128                     if plugin.type == PluginType.MAPPERS
129                     else plugin,
130                 )
131     return schedule_plugins

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()

```

|

diff --git a/src/meltano/cli/install.py b/src/meltano/cli/install.py
--- a/src/meltano/cli/install.py
+++ b/src/meltano/cli/install.py
@@ -18,13 +18,15 @@
     from meltano.core.project import Project
     from meltano.core.tracking import Tracker
 
+ANY = "-"
+
 logger = structlog.getLogger(__name__)
 
 
 @click.command(cls=PartialInstrumentedCmd, short_help="Install project dependencies.")
 @click.argument(
     "plugin_type",
-    type=click.Choice(PluginType.cli_arguments()),
+    type=click.Choice((*PluginType.cli_arguments(), ANY)),
     required=False,
 )
 @click.argument("plugin_name", nargs=-1, required=False)
@@ -74,14 +76,15 @@
     """
     tracker: Tracker = ctx.obj["tracker"]
     try:
-        if plugin_type:
+        if plugin_type and plugin_type != ANY:
             plugin_type = PluginType.from_cli_argument(plugin_type)
             plugins = project.plugins.get_plugins_of_type(plugin_type)
-            if plugin_name:
-                plugins = [plugin for plugin in plugins if plugin.name in plugin_name]
         else:
             plugins = list(project.plugins.plugins())
 
+        if plugin_name:
+            plugins = [plugin for plugin in plugins if plugin.name in plugin_name]
+
         if schedule_name:
             schedule_plugins = _get_schedule_plugins(
                 ctx.obj["project"],
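The key change is that name filtering moves out of the `if plugin_type:` branch, so names are honoured even when the type is the `-` wildcard. The framework-free sketch below models just that selection step; `select_plugins` is hypothetical, and plugins are modeled as names grouped under CLI type strings (an assumption standing in for `PluginType` and `project.plugins`).

```python
from __future__ import annotations

ANY = "-"  # wildcard plugin type, as introduced by the patch


def select_plugins(
    plugins_by_type: dict[str, list[str]],
    plugin_type: str | None,
    plugin_names: tuple[str, ...],
) -> list[str]:
    """Hypothetical model of the patched selection in install(), not Meltano's API."""
    if plugin_type and plugin_type != ANY:
        # A concrete type narrows the candidates first.
        candidates = list(plugins_by_type.get(plugin_type, []))
    else:
        # No type, or the "-" wildcard: start from every plugin in the project.
        candidates = [name for names in plugins_by_type.values() for name in names]
    # After the patch, name filtering applies in both branches.
    if plugin_names:
        candidates = [name for name in candidates if name in plugin_names]
    return candidates


project = {
    "extractors": ["tap-csv", "tap-github"],
    "loaders": ["target-jsonl"],
    "utilities": ["sqlfluff"],
}
# Models: meltano install - tap-csv target-jsonl sqlfluff
print(select_plugins(project, ANY, ("tap-csv", "target-jsonl", "sqlfluff")))
# ['tap-csv', 'target-jsonl', 'sqlfluff']
```

Before the patch, the equivalent of `select_plugins(project, None, names)` ignored `names` entirely, and `-` was rejected by `click.Choice`.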

|

{"golden_diff": "diff --git a/src/meltano/cli/install.py b/src/meltano/cli/install.py\n--- a/src/meltano/cli/install.py\n+++ b/src/meltano/cli/install.py\n@@ -18,13 +18,15 @@\n from meltano.core.project import Project\n from meltano.core.tracking import Tracker\n \n+ANY = \"-\"\n+\n logger = structlog.getLogger(__name__)\n \n \n @click.command(cls=PartialInstrumentedCmd, short_help=\"Install project dependencies.\")\n @click.argument(\n \"plugin_type\",\n- type=click.Choice(PluginType.cli_arguments()),\n+ type=click.Choice((*PluginType.cli_arguments(), ANY)),\n required=False,\n )\n @click.argument(\"plugin_name\", nargs=-1, required=False)\n@@ -74,14 +76,15 @@\n \"\"\"\n tracker: Tracker = ctx.obj[\"tracker\"]\n try:\n- if plugin_type:\n+ if plugin_type and plugin_type != ANY:\n plugin_type = PluginType.from_cli_argument(plugin_type)\n plugins = project.plugins.get_plugins_of_type(plugin_type)\n- if plugin_name:\n- plugins = [plugin for plugin in plugins if plugin.name in plugin_name]\n else:\n plugins = list(project.plugins.plugins())\n \n+ if plugin_name:\n+ plugins = [plugin for plugin in plugins if plugin.name in plugin_name]\n+\n if schedule_name:\n schedule_plugins = _get_schedule_plugins(\n ctx.obj[\"project\"],\n", "issue": "feature: Install multiple plugins of any type\n### Feature scope\r\n\r\nCLI (options, error messages, logging, etc.)\r\n\r\n### Description\r\n\r\nCurrently, you can only leverage `meltano install` in parallel for all plugin types or all plugins of a specific type:\r\n\r\n```sh\r\n# all plugins\r\nmeltano install\r\n\r\n# all extractors\r\nmeltano install [extractor|extractors]\r\n\r\n# all loaders\r\nmeltano install [loader|loaders]\r\n```\r\n\r\nIt would be great if you could install multiple plugins of any type - something like:\r\n\r\n```sh\r\nmeltano install <extractor> <loader> <transformer> <utility>\r\n```\r\n\r\nThis change would remove the need to specify a plugin type at all, since a plugin name is already unique to a 
Meltano project. This is currently not possible without a breaking change however, since a plugin type is required as the first argument to `meltano install` when specifying plugin names. #8228 introduced the `--from-file` option for `meltano config <plugin> set`, which accepts a special character `-` to refer to stdin - `meltano install` could reuse this or a similar concept to skip the plugin type argument and leverage parallel install:\r\n\r\n```sh\r\nmeltano install - <extractor> <loader> <transformer> <utility>\r\n```\r\n\r\nOnce a convention is established, this feature could be extended to `meltano remove` as well.\r\n\r\nI have a POC of this working for `meltano install` locally, so happy to open a PR.\n", "before_files": [{"content": "\"\"\"CLI command `meltano install`.\"\"\"\n\nfrom __future__ import annotations\n\nimport typing as t\n\nimport click\nimport structlog\n\nfrom meltano.cli.params import pass_project\nfrom meltano.cli.utils import CliError, PartialInstrumentedCmd, install_plugins\nfrom meltano.core.block.parser import BlockParser\nfrom meltano.core.plugin import PluginType\nfrom meltano.core.schedule_service import ScheduleService\nfrom meltano.core.tracking.contexts import CliEvent, PluginsTrackingContext\n\nif t.TYPE_CHECKING:\n from meltano.core.project import Project\n from meltano.core.tracking import Tracker\n\nlogger = structlog.getLogger(__name__)\n\n\n@click.command(cls=PartialInstrumentedCmd, short_help=\"Install project dependencies.\")\n@click.argument(\n \"plugin_type\",\n type=click.Choice(PluginType.cli_arguments()),\n required=False,\n)\n@click.argument(\"plugin_name\", nargs=-1, required=False)\n@click.option(\n \"--clean\",\n is_flag=True,\n help=\"Completely reinstall a plugin rather than simply upgrading if necessary.\",\n)\n@click.option(\n \"--parallelism\",\n \"-p\",\n type=click.INT,\n default=None,\n help=(\n \"Limit the number of plugins to install in parallel. 
\"\n \"Defaults to the number of cores.\"\n ),\n)\n@click.option(\n \"--force\",\n \"-f\",\n is_flag=True,\n help=\"Ignore the required Python version declared by the plugins.\",\n)\n@click.option(\n \"--schedule\",\n \"-s\",\n \"schedule_name\",\n help=\"Install all plugins from the given schedule.\",\n)\n@click.pass_context\n@pass_project(migrate=True)\ndef install( # noqa: C901\n project: Project,\n ctx: click.Context,\n plugin_type: str,\n plugin_name: str,\n clean: bool,\n parallelism: int,\n force: bool,\n schedule_name: str,\n):\n \"\"\"\n Install all the dependencies of your project based on the meltano.yml file.\n\n \\b\\nRead more at https://docs.meltano.com/reference/command-line-interface#install\n \"\"\"\n tracker: Tracker = ctx.obj[\"tracker\"]\n try:\n if plugin_type:\n plugin_type = PluginType.from_cli_argument(plugin_type)\n plugins = project.plugins.get_plugins_of_type(plugin_type)\n if plugin_name:\n plugins = [plugin for plugin in plugins if plugin.name in plugin_name]\n else:\n plugins = list(project.plugins.plugins())\n\n if schedule_name:\n schedule_plugins = _get_schedule_plugins(\n ctx.obj[\"project\"],\n schedule_name,\n )\n plugins = list(set(plugins) & set(schedule_plugins))\n except Exception:\n tracker.track_command_event(CliEvent.aborted)\n raise\n\n click.echo(f\"Installing {len(plugins)} plugins...\")\n tracker.add_contexts(\n PluginsTrackingContext([(candidate, None) for candidate in plugins]),\n )\n tracker.track_command_event(CliEvent.inflight)\n\n success = install_plugins(\n project,\n plugins,\n parallelism=parallelism,\n clean=clean,\n force=force,\n )\n if not success:\n tracker.track_command_event(CliEvent.failed)\n raise CliError(\"Failed to install plugin(s)\") # noqa: EM101\n tracker.track_command_event(CliEvent.completed)\n\n\ndef _get_schedule_plugins(project: Project, schedule_name: str):\n schedule_service = ScheduleService(project)\n schedule_obj = schedule_service.find_schedule(schedule_name)\n 
schedule_plugins = set()\n if schedule_obj.elt_schedule:\n for plugin_name in (schedule_obj.extractor, schedule_obj.loader):\n schedule_plugins.add(project.plugins.find_plugin(plugin_name))\n else:\n task_sets = schedule_service.task_sets_service.get(schedule_obj.job)\n for blocks in task_sets.flat_args_per_set:\n parser = BlockParser(logger, project, blocks)\n for plugin in parser.plugins:\n schedule_plugins.add(\n project.plugins.find_plugin(plugin.info.get(\"name\"))\n if plugin.type == PluginType.MAPPERS\n else plugin,\n )\n return schedule_plugins\n", "path": "src/meltano/cli/install.py"}], "after_files": [{"content": "\"\"\"CLI command `meltano install`.\"\"\"\n\nfrom __future__ import annotations\n\nimport typing as t\n\nimport click\nimport structlog\n\nfrom meltano.cli.params import pass_project\nfrom meltano.cli.utils import CliError, PartialInstrumentedCmd, install_plugins\nfrom meltano.core.block.parser import BlockParser\nfrom meltano.core.plugin import PluginType\nfrom meltano.core.schedule_service import ScheduleService\nfrom meltano.core.tracking.contexts import CliEvent, PluginsTrackingContext\n\nif t.TYPE_CHECKING:\n from meltano.core.project import Project\n from meltano.core.tracking import Tracker\n\nANY = \"-\"\n\nlogger = structlog.getLogger(__name__)\n\n\n@click.command(cls=PartialInstrumentedCmd, short_help=\"Install project dependencies.\")\n@click.argument(\n \"plugin_type\",\n type=click.Choice((*PluginType.cli_arguments(), ANY)),\n required=False,\n)\n@click.argument(\"plugin_name\", nargs=-1, required=False)\n@click.option(\n \"--clean\",\n is_flag=True,\n help=\"Completely reinstall a plugin rather than simply upgrading if necessary.\",\n)\n@click.option(\n \"--parallelism\",\n \"-p\",\n type=click.INT,\n default=None,\n help=(\n \"Limit the number of plugins to install in parallel. 
\"\n \"Defaults to the number of cores.\"\n ),\n)\n@click.option(\n \"--force\",\n \"-f\",\n is_flag=True,\n help=\"Ignore the required Python version declared by the plugins.\",\n)\n@click.option(\n \"--schedule\",\n \"-s\",\n \"schedule_name\",\n help=\"Install all plugins from the given schedule.\",\n)\n@click.pass_context\n@pass_project(migrate=True)\ndef install( # noqa: C901\n project: Project,\n ctx: click.Context,\n plugin_type: str,\n plugin_name: str,\n clean: bool,\n parallelism: int,\n force: bool,\n schedule_name: str,\n):\n \"\"\"\n Install all the dependencies of your project based on the meltano.yml file.\n\n \\b\\nRead more at https://docs.meltano.com/reference/command-line-interface#install\n \"\"\"\n tracker: Tracker = ctx.obj[\"tracker\"]\n try:\n if plugin_type and plugin_type != ANY:\n plugin_type = PluginType.from_cli_argument(plugin_type)\n plugins = project.plugins.get_plugins_of_type(plugin_type)\n else:\n plugins = list(project.plugins.plugins())\n\n if plugin_name:\n plugins = [plugin for plugin in plugins if plugin.name in plugin_name]\n\n if schedule_name:\n schedule_plugins = _get_schedule_plugins(\n ctx.obj[\"project\"],\n schedule_name,\n )\n plugins = list(set(plugins) & set(schedule_plugins))\n except Exception:\n tracker.track_command_event(CliEvent.aborted)\n raise\n\n click.echo(f\"Installing {len(plugins)} plugins...\")\n tracker.add_contexts(\n PluginsTrackingContext([(candidate, None) for candidate in plugins]),\n )\n tracker.track_command_event(CliEvent.inflight)\n\n success = install_plugins(\n project,\n plugins,\n parallelism=parallelism,\n clean=clean,\n force=force,\n )\n if not success:\n tracker.track_command_event(CliEvent.failed)\n raise CliError(\"Failed to install plugin(s)\") # noqa: EM101\n tracker.track_command_event(CliEvent.completed)\n\n\ndef _get_schedule_plugins(project: Project, schedule_name: str):\n schedule_service = ScheduleService(project)\n schedule_obj = 
schedule_service.find_schedule(schedule_name)\n schedule_plugins = set()\n if schedule_obj.elt_schedule:\n for plugin_name in (schedule_obj.extractor, schedule_obj.loader):\n schedule_plugins.add(project.plugins.find_plugin(plugin_name))\n else:\n task_sets = schedule_service.task_sets_service.get(schedule_obj.job)\n for blocks in task_sets.flat_args_per_set:\n parser = BlockParser(logger, project, blocks)\n for plugin in parser.plugins:\n schedule_plugins.add(\n project.plugins.find_plugin(plugin.info.get(\"name\"))\n if plugin.type == PluginType.MAPPERS\n else plugin,\n )\n return schedule_plugins\n", "path": "src/meltano/cli/install.py"}]}

| 1,742 | 309 |

gh_patches_debug_31034

|

rasdani/github-patches

|

git_diff

|

python-telegram-bot__python-telegram-bot-688

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Encode error when downloading files with non-ASCII filenames.

<!--

Thanks for reporting issues of python-telegram-bot!

To make it easier for us to help you please enter detailed information below.

Please note, we only support the latest version of python-telegram-bot and

master branch. Please make sure to upgrade & recreate the issue on the latest

version prior to opening an issue.

-->

### Steps to reproduce

1. `head /dev/random > 凵冂工匚口わ巨` and send the file to a bot.

2.

```python
import telegram
b = telegram.Bot(TOKEN)
file_id = b.getUpdates()[0].message.document.file_id
b.getFile(file_id).download("./storage")
```

### Expected behaviour

Tell us what should happen

Download the file to specified directory.

### Actual behaviour

Tell us what happens instead

```
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/usr/local/lib/python3.6/site-packages/telegram/file.py", line 106, in download
    self.bot.request.download(url, filename, timeout=timeout)
  File "/usr/local/lib/python3.6/site-packages/telegram/utils/request.py", line 284, in download
    buf = self.retrieve(url, timeout=timeout)
  File "/usr/local/lib/python3.6/site-packages/telegram/utils/request.py", line 270, in retrieve
    return self._request_wrapper('GET', url, **urlopen_kwargs)
  File "/usr/local/lib/python3.6/site-packages/telegram/utils/request.py", line 174, in _request_wrapper
    resp = self._con_pool.request(*args, **kwargs)
  File "/usr/local/lib/python3.6/site-packages/telegram/vendor/ptb_urllib3/urllib3/request.py", line 66, in request
    **urlopen_kw)
  File "/usr/local/lib/python3.6/site-packages/telegram/vendor/ptb_urllib3/urllib3/request.py", line 87, in request_encode_url
    return self.urlopen(method, url, **extra_kw)
  File "/usr/local/lib/python3.6/site-packages/telegram/vendor/ptb_urllib3/urllib3/poolmanager.py", line 244, in urlopen
    response = conn.urlopen(method, u.request_uri, **kw)
  File "/usr/local/lib/python3.6/site-packages/telegram/vendor/ptb_urllib3/urllib3/connectionpool.py", line 617, in urlopen
    chunked=chunked)
  File "/usr/local/lib/python3.6/site-packages/telegram/vendor/ptb_urllib3/urllib3/connectionpool.py", line 390, in _make_request
    conn.request(method, url, **httplib_request_kw)
  File "/usr/local/Cellar/python3/3.6.1/Frameworks/Python.framework/Versions/3.6/lib/python3.6/http/client.py", line 1239, in request
    self._send_request(method, url, body, headers, encode_chunked)
  File "/usr/local/Cellar/python3/3.6.1/Frameworks/Python.framework/Versions/3.6/lib/python3.6/http/client.py", line 1250, in _send_request
    self.putrequest(method, url, **skips)
  File "/usr/local/Cellar/python3/3.6.1/Frameworks/Python.framework/Versions/3.6/lib/python3.6/http/client.py", line 1117, in putrequest
    self._output(request.encode('ascii'))
UnicodeEncodeError: 'ascii' codec can't encode characters in position 69-75: ordinal not in range(128)
'ascii' codec can't encode characters in position 69-75: ordinal not in range(128)
```

### Configuration

**Operating System:**

Tested on:

- Mac OS X 10.11

- Ubuntu 16.04

**Version of Python, python-telegram-bot & dependencies:**

``$ python -m telegram``

```
python-telegram-bot 6.0.3
urllib3 1.21.1
certifi 2017.04.17
future 0.16.0
Python 3.6.1 (default, Mar 23 2017, 16:49:01)  [GCC 4.2.1 Compatible Apple LLVM 8.0.0 (clang-800.0.42.1)]
```

### Possible Solution

URL escape the "download path" given by `getFile`, then download.
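The suggested fix is to percent-encode only the path part of the download URL and keep everything else unchanged. The sketch below shows that idea using the Python 3 standard-library `urllib.parse` module rather than any python-telegram-bot internals. The helper name `quote_url_path` and the `example.org` URL are hypothetical placeholders. The readable, unescaped name is still used for the local file.

```python
from os.path import basename
from urllib.parse import quote, urlsplit, urlunsplit


def quote_url_path(file_url):
    """Hypothetical helper: percent-encode only the path of file_url.

    quote() encodes non-ASCII characters as UTF-8 %XX escapes and, with its
    default safe='/', leaves the path separators untouched.
    """
    parts = urlsplit(file_url)
    return urlunsplit((parts.scheme, parts.netloc, quote(parts.path),
                       parts.query, parts.fragment))


# Placeholder download URL; the host and path layout are assumptions.
file_path = "https://example.org/file/documents/凵冂工匚口わ巨"

url = quote_url_path(file_path)   # ASCII-only, safe for the HTTP request line
filename = basename(file_path)    # local name stays human-readable

print(url)
```

Deriving `filename` from the unescaped path avoids saving the file under a name full of `%XX` escapes.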

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `telegram/files/file.py`

Content:

```
1 #!/usr/bin/env python
2 #
3 # A library that provides a Python interface to the Telegram Bot API
4 # Copyright (C) 2015-2017
5 # Leandro Toledo de Souza <[email protected]>
6 #
7 # This program is free software: you can redistribute it and/or modify
8 # it under the terms of the GNU Lesser Public License as published by
9 # the Free Software Foundation, either version 3 of the License, or
10 # (at your option) any later version.
11 #
12 # This program is distributed in the hope that it will be useful,
13 # but WITHOUT ANY WARRANTY; without even the implied warranty of
14 # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
15 # GNU Lesser Public License for more details.
16 #
17 # You should have received a copy of the GNU Lesser Public License
18 # along with this program. If not, see [http://www.gnu.org/licenses/].
19 """This module contains an object that represents a Telegram File."""
20 
21 from os.path import basename
22 
23 from telegram import TelegramObject
24 
25 
26 class File(TelegramObject):
27     """This object represents a Telegram File.
28 
29     Attributes:
30         file_id (str):
31         file_size (str):
32         file_path (str):
33 
34     Args:
35         file_id (str):
36         bot (telegram.Bot):
37         file_size (Optional[int]):
38         file_path (Optional[str]):
39         **kwargs (dict): Arbitrary keyword arguments.
40 
41     """
42 
43     def __init__(self, file_id, bot, file_size=None, file_path=None, **kwargs):
44         # Required
45         self.file_id = str(file_id)
46 
47         # Optionals
48         self.file_size = file_size
49         if file_path:
50             self.file_path = str(file_path)
51 
52         self.bot = bot
53 
54         self._id_attrs = (self.file_id,)
55 
56     @staticmethod
57     def de_json(data, bot):
58         """
59         Args:
60             data (dict):
61             bot (telegram.Bot):
62 
63         Returns:
64             telegram.File:
65         """
66         if not data:
67             return None
68 
69         return File(bot=bot, **data)
70 
71     def download(self, custom_path=None, out=None, timeout=None):
72         """
73         Download this file. By default, the file is saved in the current working directory with its
74         original filename as reported by Telegram. If a ``custom_path`` is supplied, it will be
75         saved to that path instead. If ``out`` is defined, the file contents will be saved to that
76         object using the ``out.write`` method. ``custom_path`` and ``out`` are mutually exclusive.
77 
78         Args:
79             custom_path (Optional[str]): Custom path.
80             out (Optional[object]): A file-like object. Must be opened in binary mode, if
81                 applicable.
82             timeout (Optional[int|float]): If this value is specified, use it as the read timeout
83                 from the server (instead of the one specified during creation of the connection
84                 pool).
85 
86         Raises:
87             ValueError: If both ``custom_path`` and ``out`` are passed.
88 
89         """
90 
91         if custom_path is not None and out is not None:
92             raise ValueError('custom_path and out are mutually exclusive')
93 
94         url = self.file_path
95 
96         if out:
97             buf = self.bot.request.retrieve(url)
98             out.write(buf)
99 
100         else:
101             if custom_path:
102                 filename = custom_path
103             else:
104                 filename = basename(url)
105 
106             self.bot.request.download(url, filename, timeout=timeout)
107 
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/telegram/files/file.py b/telegram/files/file.py
--- a/telegram/files/file.py
+++ b/telegram/files/file.py
@@ -17,9 +17,10 @@
 # You should have received a copy of the GNU Lesser Public License
 # along with this program. If not, see [http://www.gnu.org/licenses/].
 """This module contains an object that represents a Telegram File."""
-
 from os.path import basename
 
+from future.backports.urllib import parse as urllib_parse
+
 from telegram import TelegramObject
 
 
@@ -46,8 +47,7 @@
 
         # Optionals
         self.file_size = file_size
-        if file_path:
-            self.file_path = str(file_path)
+        self.file_path = file_path
 
         self.bot = bot
 
@@ -91,7 +91,10 @@
         if custom_path is not None and out is not None:
             raise ValueError('custom_path and out are mutually exclusive')
 
-        url = self.file_path
+        # Convert any UTF-8 char into a url encoded ASCII string.
+        sres = urllib_parse.urlsplit(self.file_path)
+        url = urllib_parse.urlunsplit(urllib_parse.SplitResult(
+            sres.scheme, sres.netloc, urllib_parse.quote(sres.path), sres.query, sres.fragment))
 
         if out:
             buf = self.bot.request.retrieve(url)
@@ -101,6 +104,6 @@
             if custom_path:
                 filename = custom_path
             else:
-                filename = basename(url)
+                filename = basename(self.file_path)
 
             self.bot.request.download(url, filename, timeout=timeout)

|