| problem_id (string, lengths 18–22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, lengths 13–58) | prompt (string, lengths 1.1k–25.4k) | golden_diff (string, lengths 145–5.13k) | verification_info (string, lengths 582–39.1k) | num_tokens (int64, 271–4.1k) | num_tokens_diff (int64, 47–1.02k) |
|---|---|---|---|---|---|---|---|---|

| gh_patches_debug_16805 | rasdani/github-patches | git_diff | cython__cython-5332 |

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Fatal Python error: PyEval_SaveThread when creating ufunc with nogil [BUG]

### Describe the bug

I'm generating ufuncs for my fortran library by the new version 3.0.0b1 of cython, using the @cython.ufunc function decorator.

I declare the function as nogil and call it from a Python script. It works fine when the input array length is less than 500. When the input array length is greater than 500, it stops and throws a thread error.

```

Fatal Python error: PyEval_SaveThread: the function must be called with the GIL held, but the GIL is released (the current Python thread state is NULL)

Python runtime state: initialized

```

I'm using the mingw-w64 compiler provided by msys2 on windows, but that doesn't seem to be the cause of the error. I transferred the program to an ubuntu machine and found the same error.

### Code to reproduce the behaviour:

```cython
# test_ufunc.pyx
cimport cython

@cython.ufunc
cdef double add_one(double x) nogil:
    return x+1
```

```python
import test_ufunc
import numpy as np

x=np.linspace(-1,1,501)
x_add=test_ufunc.add_one(x)
print(x_add)
```

### Expected behaviour

No errors, result returns successfully.

### OS

Ubuntu and Windows

### Python version

3.10.6 (Ubuntu), 3.11.2 (Windows)

### Cython version

3.0.0b1

### Additional context

_No response_

--- END ISSUE ---

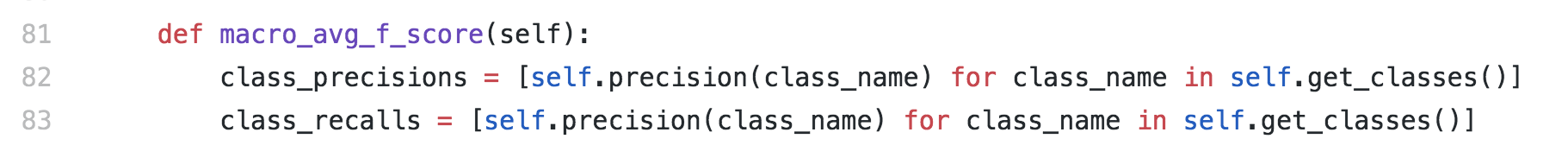

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `Cython/Compiler/UFuncs.py`

Content:

```
1 from . import (
2     Nodes,
3     ExprNodes,
4     FusedNode,
5     TreeFragment,
6     Pipeline,
7     ParseTreeTransforms,
8     Naming,
9     UtilNodes,
10 )
11 from .Errors import error
12 from . import PyrexTypes
13 from .UtilityCode import CythonUtilityCode
14 from .Code import TempitaUtilityCode, UtilityCode
15 from .Visitor import PrintTree, TreeVisitor, VisitorTransform
16
17 numpy_int_types = [
18     "NPY_BYTE",
19     "NPY_INT8",
20     "NPY_SHORT",
21     "NPY_INT16",
22     "NPY_INT",
23     "NPY_INT32",
24     "NPY_LONG",
25     "NPY_LONGLONG",
26     "NPY_INT64",
27 ]
28 numpy_uint_types = [tp.replace("NPY_", "NPY_U") for tp in numpy_int_types]
29 # note: half float type is deliberately omitted
30 numpy_numeric_types = (
31     numpy_int_types
32     + numpy_uint_types
33     + [
34         "NPY_FLOAT",
35         "NPY_FLOAT32",
36         "NPY_DOUBLE",
37         "NPY_FLOAT64",
38         "NPY_LONGDOUBLE",
39     ]
40 )
41
42
43 def _get_type_constant(pos, type_):
44     if type_.is_complex:
45         # 'is' checks don't seem to work for complex types
46         if type_ == PyrexTypes.c_float_complex_type:
47             return "NPY_CFLOAT"
48         elif type_ == PyrexTypes.c_double_complex_type:
49             return "NPY_CDOUBLE"
50         elif type_ == PyrexTypes.c_longdouble_complex_type:
51             return "NPY_CLONGDOUBLE"
52     elif type_.is_numeric:
53         postfix = type_.empty_declaration_code().upper().replace(" ", "")
54         typename = "NPY_%s" % postfix
55         if typename in numpy_numeric_types:
56             return typename
57     elif type_.is_pyobject:
58         return "NPY_OBJECT"
59     # TODO possible NPY_BOOL to bint but it needs a cast?
60     # TODO NPY_DATETIME, NPY_TIMEDELTA, NPY_STRING, NPY_UNICODE and maybe NPY_VOID might be handleable
61     error(pos, "Type '%s' cannot be used as a ufunc argument" % type_)
62
63
64 class _FindCFuncDefNode(TreeVisitor):
65     """
66     Finds the CFuncDefNode in the tree
67
68     The assumption is that there's only one CFuncDefNode
69     """
70
71     found_node = None
72
73     def visit_Node(self, node):
74         if self.found_node:
75             return
76         else:
77             self.visitchildren(node)
78
79     def visit_CFuncDefNode(self, node):
80         self.found_node = node
81
82     def __call__(self, tree):
83         self.visit(tree)
84         return self.found_node
85
86
87 def get_cfunc_from_tree(tree):
88     return _FindCFuncDefNode()(tree)
89
90
91 class _ArgumentInfo(object):
92     """
93     Everything related to defining an input/output argument for a ufunc
94
95     type - PyrexType
96     type_constant - str such as "NPY_INT8" representing numpy dtype constants
97     """
98
99     def __init__(self, type, type_constant):
100         self.type = type
101         self.type_constant = type_constant
102
103
104 class UFuncConversion(object):
105     def __init__(self, node):
106         self.node = node
107         self.global_scope = node.local_scope.global_scope()
108
109         self.in_definitions = self.get_in_type_info()
110         self.out_definitions = self.get_out_type_info()
111
112     def get_in_type_info(self):
113         definitions = []
114         for n, arg in enumerate(self.node.args):
115             type_const = _get_type_constant(self.node.pos, arg.type)
116             definitions.append(_ArgumentInfo(arg.type, type_const))
117         return definitions
118
119     def get_out_type_info(self):
120         if self.node.return_type.is_ctuple:
121             components = self.node.return_type.components
122         else:
123             components = [self.node.return_type]
124         definitions = []
125         for n, type in enumerate(components):
126             definitions.append(
127                 _ArgumentInfo(type, _get_type_constant(self.node.pos, type))
128             )
129         return definitions
130
131     def generate_cy_utility_code(self):
132         arg_types = [a.type for a in self.in_definitions]
133         out_types = [a.type for a in self.out_definitions]
134         inline_func_decl = self.node.entry.type.declaration_code(
135             self.node.entry.cname, pyrex=True
136         )
137         self.node.entry.used = True
138
139         ufunc_cname = self.global_scope.next_id(self.node.entry.name + "_ufunc_def")
140
141         context = dict(
142             func_cname=ufunc_cname,
143             in_types=arg_types,
144             out_types=out_types,
145             inline_func_call=self.node.entry.cname,
146             inline_func_declaration=inline_func_decl,
147             nogil=self.node.entry.type.nogil,
148         )
149
150         code = CythonUtilityCode.load(
151             "UFuncDefinition",
152             "UFuncs.pyx",
153             context=context,
154             outer_module_scope=self.global_scope,
155         )
156
157         tree = code.get_tree(entries_only=True)
158         return tree
159
160     def use_generic_utility_code(self):
161         # use the invariant C utility code
162         self.global_scope.use_utility_code(
163             UtilityCode.load_cached("UFuncsInit", "UFuncs_C.c")
164         )
165         self.global_scope.use_utility_code(
166             UtilityCode.load_cached("NumpyImportUFunc", "NumpyImportArray.c")
167         )
168
169
170 def convert_to_ufunc(node):
171     if isinstance(node, Nodes.CFuncDefNode):
172         if node.local_scope.parent_scope.is_c_class_scope:
173             error(node.pos, "Methods cannot currently be converted to a ufunc")
174             return node
175         converters = [UFuncConversion(node)]
176         original_node = node
177     elif isinstance(node, FusedNode.FusedCFuncDefNode) and isinstance(
178         node.node, Nodes.CFuncDefNode
179     ):
180         if node.node.local_scope.parent_scope.is_c_class_scope:
181             error(node.pos, "Methods cannot currently be converted to a ufunc")
182             return node
183         converters = [UFuncConversion(n) for n in node.nodes]
184         original_node = node.node
185     else:
186         error(node.pos, "Only C functions can be converted to a ufunc")
187         return node
188
189     if not converters:
190         return  # this path probably shouldn't happen
191
192     del converters[0].global_scope.entries[original_node.entry.name]
193     # the generic utility code is generic, so there's no reason to do it multiple times
194     converters[0].use_generic_utility_code()
195     return [node] + _generate_stats_from_converters(converters, original_node)
196
197
198 def generate_ufunc_initialization(converters, cfunc_nodes, original_node):
199     global_scope = converters[0].global_scope
200     ufunc_funcs_name = global_scope.next_id(Naming.pyrex_prefix + "funcs")
201     ufunc_types_name = global_scope.next_id(Naming.pyrex_prefix + "types")
202     ufunc_data_name = global_scope.next_id(Naming.pyrex_prefix + "data")
203     type_constants = []
204     narg_in = None
205     narg_out = None
206     for c in converters:
207         in_const = [d.type_constant for d in c.in_definitions]
208         if narg_in is not None:
209             assert narg_in == len(in_const)
210         else:
211             narg_in = len(in_const)
212         type_constants.extend(in_const)
213         out_const = [d.type_constant for d in c.out_definitions]
214         if narg_out is not None:
215             assert narg_out == len(out_const)
216         else:
217             narg_out = len(out_const)
218         type_constants.extend(out_const)
219
220     func_cnames = [cfnode.entry.cname for cfnode in cfunc_nodes]
221
222     context = dict(
223         ufunc_funcs_name=ufunc_funcs_name,
224         func_cnames=func_cnames,
225         ufunc_types_name=ufunc_types_name,
226         type_constants=type_constants,
227         ufunc_data_name=ufunc_data_name,
228     )
229     global_scope.use_utility_code(
230         TempitaUtilityCode.load("UFuncConsts", "UFuncs_C.c", context=context)
231     )
232
233     pos = original_node.pos
234     func_name = original_node.entry.name
235     docstr = original_node.doc
236     args_to_func = '%s(), %s, %s(), %s, %s, %s, PyUFunc_None, "%s", %s, 0' % (
237         ufunc_funcs_name,
238         ufunc_data_name,
239         ufunc_types_name,
240         len(func_cnames),
241         narg_in,
242         narg_out,
243         func_name,
244         docstr.as_c_string_literal() if docstr else "NULL",
245     )
246
247     call_node = ExprNodes.PythonCapiCallNode(
248         pos,
249         function_name="PyUFunc_FromFuncAndData",
250         # use a dummy type because it's honestly too fiddly
251         func_type=PyrexTypes.CFuncType(
252             PyrexTypes.py_object_type,
253             [PyrexTypes.CFuncTypeArg("dummy", PyrexTypes.c_void_ptr_type, None)],
254         ),
255         args=[
256             ExprNodes.ConstNode(
257                 pos, type=PyrexTypes.c_void_ptr_type, value=args_to_func
258             )
259         ],
260     )
261     lhs_entry = global_scope.declare_var(func_name, PyrexTypes.py_object_type, pos)
262     assgn_node = Nodes.SingleAssignmentNode(
263         pos,
264         lhs=ExprNodes.NameNode(
265             pos, name=func_name, type=PyrexTypes.py_object_type, entry=lhs_entry
266         ),
267         rhs=call_node,
268     )
269     return assgn_node
270
271
272 def _generate_stats_from_converters(converters, node):
273     stats = []
274     for converter in converters:
275         tree = converter.generate_cy_utility_code()
276         ufunc_node = get_cfunc_from_tree(tree)
277         # merge in any utility code
278         converter.global_scope.utility_code_list.extend(tree.scope.utility_code_list)
279         stats.append(ufunc_node)
280
281     stats.append(generate_ufunc_initialization(converters, stats, node))
282     return stats
283
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/Cython/Compiler/UFuncs.py b/Cython/Compiler/UFuncs.py
--- a/Cython/Compiler/UFuncs.py
+++ b/Cython/Compiler/UFuncs.py
@@ -138,6 +138,9 @@
 
         ufunc_cname = self.global_scope.next_id(self.node.entry.name + "_ufunc_def")
 
+        will_be_called_without_gil = not (any(t.is_pyobject for t in arg_types) or
+            any(t.is_pyobject for t in out_types))
+
         context = dict(
             func_cname=ufunc_cname,
             in_types=arg_types,
@@ -145,6 +148,7 @@
             inline_func_call=self.node.entry.cname,
             inline_func_declaration=inline_func_decl,
             nogil=self.node.entry.type.nogil,
+            will_be_called_without_gil=will_be_called_without_gil,
         )
 
         code = CythonUtilityCode.load(

|

{"golden_diff": "diff --git a/Cython/Compiler/UFuncs.py b/Cython/Compiler/UFuncs.py\n--- a/Cython/Compiler/UFuncs.py\n+++ b/Cython/Compiler/UFuncs.py\n@@ -138,6 +138,9 @@\n \n ufunc_cname = self.global_scope.next_id(self.node.entry.name + \"_ufunc_def\")\n \n+ will_be_called_without_gil = not (any(t.is_pyobject for t in arg_types) or\n+ any(t.is_pyobject for t in out_types))\n+\n context = dict(\n func_cname=ufunc_cname,\n in_types=arg_types,\n@@ -145,6 +148,7 @@\n inline_func_call=self.node.entry.cname,\n inline_func_declaration=inline_func_decl,\n nogil=self.node.entry.type.nogil,\n+ will_be_called_without_gil=will_be_called_without_gil,\n )\n \n code = CythonUtilityCode.load(\n", "issue": "Fatal Python error: PyEval_SaveThread when creating ufunc with nogil [BUG] \n### Describe the bug\n\nI'm generating ufuncs for my fortran library by the new version 3.0.0b1 of cython, using the @cython.ufunc function decorator.\r\nI declare the function as nogil. and call it in python script. It works fine when the input array length is less than 500. When the input array length is greater than 500, it stops and throws a thread error.\r\n```\r\nFatal Python error: PyEval_SaveThread: the function must be called with the GIL held, but the GIL is released (the current Python thread state is NULL)\r\nPython runtime state: initialized\r\n```\r\nI'm using the mingw-w64 compiler provided by msys2 on windows, but that doesn't seem to be the cause of the error. 
I transferred the program to an ubuntu machine and found the same error.\n\n### Code to reproduce the behaviour:\n\n```cython\r\n# test_ufunc.pyx\r\ncimport cython\r\n\r\n@cython.ufunc\r\ncdef double add_one(double x) nogil:\r\n return x+1\r\n```\r\n\r\n```python\r\nimport test_ufunc\r\nimport numpy as np\r\n\r\nx=np.linspace(-1,1,501)\r\nx_add=test_ufunc.add_one(x)\r\nprint(x_add)\r\n```\n\n### Expected behaviour\n\nNo errors, result returns successfully.\n\n### OS\n\nUbuntu and Windows\n\n### Python version\n\n3.10.6 (Ubuntu), 3.11.2 (Windows)\n\n### Cython version\n\n3.0.0b1\n\n### Additional context\n\n_No response_\n", "before_files": [{"content": "from . import (\n Nodes,\n ExprNodes,\n FusedNode,\n TreeFragment,\n Pipeline,\n ParseTreeTransforms,\n Naming,\n UtilNodes,\n)\nfrom .Errors import error\nfrom . import PyrexTypes\nfrom .UtilityCode import CythonUtilityCode\nfrom .Code import TempitaUtilityCode, UtilityCode\nfrom .Visitor import PrintTree, TreeVisitor, VisitorTransform\n\nnumpy_int_types = [\n \"NPY_BYTE\",\n \"NPY_INT8\",\n \"NPY_SHORT\",\n \"NPY_INT16\",\n \"NPY_INT\",\n \"NPY_INT32\",\n \"NPY_LONG\",\n \"NPY_LONGLONG\",\n \"NPY_INT64\",\n]\nnumpy_uint_types = [tp.replace(\"NPY_\", \"NPY_U\") for tp in numpy_int_types]\n# note: half float type is deliberately omitted\nnumpy_numeric_types = (\n numpy_int_types\n + numpy_uint_types\n + [\n \"NPY_FLOAT\",\n \"NPY_FLOAT32\",\n \"NPY_DOUBLE\",\n \"NPY_FLOAT64\",\n \"NPY_LONGDOUBLE\",\n ]\n)\n\n\ndef _get_type_constant(pos, type_):\n if type_.is_complex:\n # 'is' checks don't seem to work for complex types\n if type_ == PyrexTypes.c_float_complex_type:\n return \"NPY_CFLOAT\"\n elif type_ == PyrexTypes.c_double_complex_type:\n return \"NPY_CDOUBLE\"\n elif type_ == PyrexTypes.c_longdouble_complex_type:\n return \"NPY_CLONGDOUBLE\"\n elif type_.is_numeric:\n postfix = type_.empty_declaration_code().upper().replace(\" \", \"\")\n typename = \"NPY_%s\" % postfix\n if typename in numpy_numeric_types:\n 
return typename\n elif type_.is_pyobject:\n return \"NPY_OBJECT\"\n # TODO possible NPY_BOOL to bint but it needs a cast?\n # TODO NPY_DATETIME, NPY_TIMEDELTA, NPY_STRING, NPY_UNICODE and maybe NPY_VOID might be handleable\n error(pos, \"Type '%s' cannot be used as a ufunc argument\" % type_)\n\n\nclass _FindCFuncDefNode(TreeVisitor):\n \"\"\"\n Finds the CFuncDefNode in the tree\n\n The assumption is that there's only one CFuncDefNode\n \"\"\"\n\n found_node = None\n\n def visit_Node(self, node):\n if self.found_node:\n return\n else:\n self.visitchildren(node)\n\n def visit_CFuncDefNode(self, node):\n self.found_node = node\n\n def __call__(self, tree):\n self.visit(tree)\n return self.found_node\n\n\ndef get_cfunc_from_tree(tree):\n return _FindCFuncDefNode()(tree)\n\n\nclass _ArgumentInfo(object):\n \"\"\"\n Everything related to defining an input/output argument for a ufunc\n\n type - PyrexType\n type_constant - str such as \"NPY_INT8\" representing numpy dtype constants\n \"\"\"\n\n def __init__(self, type, type_constant):\n self.type = type\n self.type_constant = type_constant\n\n\nclass UFuncConversion(object):\n def __init__(self, node):\n self.node = node\n self.global_scope = node.local_scope.global_scope()\n\n self.in_definitions = self.get_in_type_info()\n self.out_definitions = self.get_out_type_info()\n\n def get_in_type_info(self):\n definitions = []\n for n, arg in enumerate(self.node.args):\n type_const = _get_type_constant(self.node.pos, arg.type)\n definitions.append(_ArgumentInfo(arg.type, type_const))\n return definitions\n\n def get_out_type_info(self):\n if self.node.return_type.is_ctuple:\n components = self.node.return_type.components\n else:\n components = [self.node.return_type]\n definitions = []\n for n, type in enumerate(components):\n definitions.append(\n _ArgumentInfo(type, _get_type_constant(self.node.pos, type))\n )\n return definitions\n\n def generate_cy_utility_code(self):\n arg_types = [a.type for a in self.in_definitions]\n 
out_types = [a.type for a in self.out_definitions]\n inline_func_decl = self.node.entry.type.declaration_code(\n self.node.entry.cname, pyrex=True\n )\n self.node.entry.used = True\n\n ufunc_cname = self.global_scope.next_id(self.node.entry.name + \"_ufunc_def\")\n\n context = dict(\n func_cname=ufunc_cname,\n in_types=arg_types,\n out_types=out_types,\n inline_func_call=self.node.entry.cname,\n inline_func_declaration=inline_func_decl,\n nogil=self.node.entry.type.nogil,\n )\n\n code = CythonUtilityCode.load(\n \"UFuncDefinition\",\n \"UFuncs.pyx\",\n context=context,\n outer_module_scope=self.global_scope,\n )\n\n tree = code.get_tree(entries_only=True)\n return tree\n\n def use_generic_utility_code(self):\n # use the invariant C utility code\n self.global_scope.use_utility_code(\n UtilityCode.load_cached(\"UFuncsInit\", \"UFuncs_C.c\")\n )\n self.global_scope.use_utility_code(\n UtilityCode.load_cached(\"NumpyImportUFunc\", \"NumpyImportArray.c\")\n )\n\n\ndef convert_to_ufunc(node):\n if isinstance(node, Nodes.CFuncDefNode):\n if node.local_scope.parent_scope.is_c_class_scope:\n error(node.pos, \"Methods cannot currently be converted to a ufunc\")\n return node\n converters = [UFuncConversion(node)]\n original_node = node\n elif isinstance(node, FusedNode.FusedCFuncDefNode) and isinstance(\n node.node, Nodes.CFuncDefNode\n ):\n if node.node.local_scope.parent_scope.is_c_class_scope:\n error(node.pos, \"Methods cannot currently be converted to a ufunc\")\n return node\n converters = [UFuncConversion(n) for n in node.nodes]\n original_node = node.node\n else:\n error(node.pos, \"Only C functions can be converted to a ufunc\")\n return node\n\n if not converters:\n return # this path probably shouldn't happen\n\n del converters[0].global_scope.entries[original_node.entry.name]\n # the generic utility code is generic, so there's no reason to do it multiple times\n converters[0].use_generic_utility_code()\n return [node] + _generate_stats_from_converters(converters, 
original_node)\n\n\ndef generate_ufunc_initialization(converters, cfunc_nodes, original_node):\n global_scope = converters[0].global_scope\n ufunc_funcs_name = global_scope.next_id(Naming.pyrex_prefix + \"funcs\")\n ufunc_types_name = global_scope.next_id(Naming.pyrex_prefix + \"types\")\n ufunc_data_name = global_scope.next_id(Naming.pyrex_prefix + \"data\")\n type_constants = []\n narg_in = None\n narg_out = None\n for c in converters:\n in_const = [d.type_constant for d in c.in_definitions]\n if narg_in is not None:\n assert narg_in == len(in_const)\n else:\n narg_in = len(in_const)\n type_constants.extend(in_const)\n out_const = [d.type_constant for d in c.out_definitions]\n if narg_out is not None:\n assert narg_out == len(out_const)\n else:\n narg_out = len(out_const)\n type_constants.extend(out_const)\n\n func_cnames = [cfnode.entry.cname for cfnode in cfunc_nodes]\n\n context = dict(\n ufunc_funcs_name=ufunc_funcs_name,\n func_cnames=func_cnames,\n ufunc_types_name=ufunc_types_name,\n type_constants=type_constants,\n ufunc_data_name=ufunc_data_name,\n )\n global_scope.use_utility_code(\n TempitaUtilityCode.load(\"UFuncConsts\", \"UFuncs_C.c\", context=context)\n )\n\n pos = original_node.pos\n func_name = original_node.entry.name\n docstr = original_node.doc\n args_to_func = '%s(), %s, %s(), %s, %s, %s, PyUFunc_None, \"%s\", %s, 0' % (\n ufunc_funcs_name,\n ufunc_data_name,\n ufunc_types_name,\n len(func_cnames),\n narg_in,\n narg_out,\n func_name,\n docstr.as_c_string_literal() if docstr else \"NULL\",\n )\n\n call_node = ExprNodes.PythonCapiCallNode(\n pos,\n function_name=\"PyUFunc_FromFuncAndData\",\n # use a dummy type because it's honestly too fiddly\n func_type=PyrexTypes.CFuncType(\n PyrexTypes.py_object_type,\n [PyrexTypes.CFuncTypeArg(\"dummy\", PyrexTypes.c_void_ptr_type, None)],\n ),\n args=[\n ExprNodes.ConstNode(\n pos, type=PyrexTypes.c_void_ptr_type, value=args_to_func\n )\n ],\n )\n lhs_entry = global_scope.declare_var(func_name, 
PyrexTypes.py_object_type, pos)\n assgn_node = Nodes.SingleAssignmentNode(\n pos,\n lhs=ExprNodes.NameNode(\n pos, name=func_name, type=PyrexTypes.py_object_type, entry=lhs_entry\n ),\n rhs=call_node,\n )\n return assgn_node\n\n\ndef _generate_stats_from_converters(converters, node):\n stats = []\n for converter in converters:\n tree = converter.generate_cy_utility_code()\n ufunc_node = get_cfunc_from_tree(tree)\n # merge in any utility code\n converter.global_scope.utility_code_list.extend(tree.scope.utility_code_list)\n stats.append(ufunc_node)\n\n stats.append(generate_ufunc_initialization(converters, stats, node))\n return stats\n", "path": "Cython/Compiler/UFuncs.py"}], "after_files": [{"content": "from . import (\n Nodes,\n ExprNodes,\n FusedNode,\n TreeFragment,\n Pipeline,\n ParseTreeTransforms,\n Naming,\n UtilNodes,\n)\nfrom .Errors import error\nfrom . import PyrexTypes\nfrom .UtilityCode import CythonUtilityCode\nfrom .Code import TempitaUtilityCode, UtilityCode\nfrom .Visitor import PrintTree, TreeVisitor, VisitorTransform\n\nnumpy_int_types = [\n \"NPY_BYTE\",\n \"NPY_INT8\",\n \"NPY_SHORT\",\n \"NPY_INT16\",\n \"NPY_INT\",\n \"NPY_INT32\",\n \"NPY_LONG\",\n \"NPY_LONGLONG\",\n \"NPY_INT64\",\n]\nnumpy_uint_types = [tp.replace(\"NPY_\", \"NPY_U\") for tp in numpy_int_types]\n# note: half float type is deliberately omitted\nnumpy_numeric_types = (\n numpy_int_types\n + numpy_uint_types\n + [\n \"NPY_FLOAT\",\n \"NPY_FLOAT32\",\n \"NPY_DOUBLE\",\n \"NPY_FLOAT64\",\n \"NPY_LONGDOUBLE\",\n ]\n)\n\n\ndef _get_type_constant(pos, type_):\n if type_.is_complex:\n # 'is' checks don't seem to work for complex types\n if type_ == PyrexTypes.c_float_complex_type:\n return \"NPY_CFLOAT\"\n elif type_ == PyrexTypes.c_double_complex_type:\n return \"NPY_CDOUBLE\"\n elif type_ == PyrexTypes.c_longdouble_complex_type:\n return \"NPY_CLONGDOUBLE\"\n elif type_.is_numeric:\n postfix = type_.empty_declaration_code().upper().replace(\" \", \"\")\n typename = \"NPY_%s\" % 
postfix\n if typename in numpy_numeric_types:\n return typename\n elif type_.is_pyobject:\n return \"NPY_OBJECT\"\n # TODO possible NPY_BOOL to bint but it needs a cast?\n # TODO NPY_DATETIME, NPY_TIMEDELTA, NPY_STRING, NPY_UNICODE and maybe NPY_VOID might be handleable\n error(pos, \"Type '%s' cannot be used as a ufunc argument\" % type_)\n\n\nclass _FindCFuncDefNode(TreeVisitor):\n \"\"\"\n Finds the CFuncDefNode in the tree\n\n The assumption is that there's only one CFuncDefNode\n \"\"\"\n\n found_node = None\n\n def visit_Node(self, node):\n if self.found_node:\n return\n else:\n self.visitchildren(node)\n\n def visit_CFuncDefNode(self, node):\n self.found_node = node\n\n def __call__(self, tree):\n self.visit(tree)\n return self.found_node\n\n\ndef get_cfunc_from_tree(tree):\n return _FindCFuncDefNode()(tree)\n\n\nclass _ArgumentInfo(object):\n \"\"\"\n Everything related to defining an input/output argument for a ufunc\n\n type - PyrexType\n type_constant - str such as \"NPY_INT8\" representing numpy dtype constants\n \"\"\"\n\n def __init__(self, type, type_constant):\n self.type = type\n self.type_constant = type_constant\n\n\nclass UFuncConversion(object):\n def __init__(self, node):\n self.node = node\n self.global_scope = node.local_scope.global_scope()\n\n self.in_definitions = self.get_in_type_info()\n self.out_definitions = self.get_out_type_info()\n\n def get_in_type_info(self):\n definitions = []\n for n, arg in enumerate(self.node.args):\n type_const = _get_type_constant(self.node.pos, arg.type)\n definitions.append(_ArgumentInfo(arg.type, type_const))\n return definitions\n\n def get_out_type_info(self):\n if self.node.return_type.is_ctuple:\n components = self.node.return_type.components\n else:\n components = [self.node.return_type]\n definitions = []\n for n, type in enumerate(components):\n definitions.append(\n _ArgumentInfo(type, _get_type_constant(self.node.pos, type))\n )\n return definitions\n\n def generate_cy_utility_code(self):\n 
arg_types = [a.type for a in self.in_definitions]\n out_types = [a.type for a in self.out_definitions]\n inline_func_decl = self.node.entry.type.declaration_code(\n self.node.entry.cname, pyrex=True\n )\n self.node.entry.used = True\n\n ufunc_cname = self.global_scope.next_id(self.node.entry.name + \"_ufunc_def\")\n\n will_be_called_without_gil = not (any(t.is_pyobject for t in arg_types) or\n any(t.is_pyobject for t in out_types))\n\n context = dict(\n func_cname=ufunc_cname,\n in_types=arg_types,\n out_types=out_types,\n inline_func_call=self.node.entry.cname,\n inline_func_declaration=inline_func_decl,\n nogil=self.node.entry.type.nogil,\n will_be_called_without_gil=will_be_called_without_gil,\n )\n\n code = CythonUtilityCode.load(\n \"UFuncDefinition\",\n \"UFuncs.pyx\",\n context=context,\n outer_module_scope=self.global_scope,\n )\n\n tree = code.get_tree(entries_only=True)\n return tree\n\n def use_generic_utility_code(self):\n # use the invariant C utility code\n self.global_scope.use_utility_code(\n UtilityCode.load_cached(\"UFuncsInit\", \"UFuncs_C.c\")\n )\n self.global_scope.use_utility_code(\n UtilityCode.load_cached(\"NumpyImportUFunc\", \"NumpyImportArray.c\")\n )\n\n\ndef convert_to_ufunc(node):\n if isinstance(node, Nodes.CFuncDefNode):\n if node.local_scope.parent_scope.is_c_class_scope:\n error(node.pos, \"Methods cannot currently be converted to a ufunc\")\n return node\n converters = [UFuncConversion(node)]\n original_node = node\n elif isinstance(node, FusedNode.FusedCFuncDefNode) and isinstance(\n node.node, Nodes.CFuncDefNode\n ):\n if node.node.local_scope.parent_scope.is_c_class_scope:\n error(node.pos, \"Methods cannot currently be converted to a ufunc\")\n return node\n converters = [UFuncConversion(n) for n in node.nodes]\n original_node = node.node\n else:\n error(node.pos, \"Only C functions can be converted to a ufunc\")\n return node\n\n if not converters:\n return # this path probably shouldn't happen\n\n del 
converters[0].global_scope.entries[original_node.entry.name]\n # the generic utility code is generic, so there's no reason to do it multiple times\n converters[0].use_generic_utility_code()\n return [node] + _generate_stats_from_converters(converters, original_node)\n\n\ndef generate_ufunc_initialization(converters, cfunc_nodes, original_node):\n global_scope = converters[0].global_scope\n ufunc_funcs_name = global_scope.next_id(Naming.pyrex_prefix + \"funcs\")\n ufunc_types_name = global_scope.next_id(Naming.pyrex_prefix + \"types\")\n ufunc_data_name = global_scope.next_id(Naming.pyrex_prefix + \"data\")\n type_constants = []\n narg_in = None\n narg_out = None\n for c in converters:\n in_const = [d.type_constant for d in c.in_definitions]\n if narg_in is not None:\n assert narg_in == len(in_const)\n else:\n narg_in = len(in_const)\n type_constants.extend(in_const)\n out_const = [d.type_constant for d in c.out_definitions]\n if narg_out is not None:\n assert narg_out == len(out_const)\n else:\n narg_out = len(out_const)\n type_constants.extend(out_const)\n\n func_cnames = [cfnode.entry.cname for cfnode in cfunc_nodes]\n\n context = dict(\n ufunc_funcs_name=ufunc_funcs_name,\n func_cnames=func_cnames,\n ufunc_types_name=ufunc_types_name,\n type_constants=type_constants,\n ufunc_data_name=ufunc_data_name,\n )\n global_scope.use_utility_code(\n TempitaUtilityCode.load(\"UFuncConsts\", \"UFuncs_C.c\", context=context)\n )\n\n pos = original_node.pos\n func_name = original_node.entry.name\n docstr = original_node.doc\n args_to_func = '%s(), %s, %s(), %s, %s, %s, PyUFunc_None, \"%s\", %s, 0' % (\n ufunc_funcs_name,\n ufunc_data_name,\n ufunc_types_name,\n len(func_cnames),\n narg_in,\n narg_out,\n func_name,\n docstr.as_c_string_literal() if docstr else \"NULL\",\n )\n\n call_node = ExprNodes.PythonCapiCallNode(\n pos,\n function_name=\"PyUFunc_FromFuncAndData\",\n # use a dummy type because it's honestly too fiddly\n func_type=PyrexTypes.CFuncType(\n 
PyrexTypes.py_object_type,\n [PyrexTypes.CFuncTypeArg(\"dummy\", PyrexTypes.c_void_ptr_type, None)],\n ),\n args=[\n ExprNodes.ConstNode(\n pos, type=PyrexTypes.c_void_ptr_type, value=args_to_func\n )\n ],\n )\n lhs_entry = global_scope.declare_var(func_name, PyrexTypes.py_object_type, pos)\n assgn_node = Nodes.SingleAssignmentNode(\n pos,\n lhs=ExprNodes.NameNode(\n pos, name=func_name, type=PyrexTypes.py_object_type, entry=lhs_entry\n ),\n rhs=call_node,\n )\n return assgn_node\n\n\ndef _generate_stats_from_converters(converters, node):\n stats = []\n for converter in converters:\n tree = converter.generate_cy_utility_code()\n ufunc_node = get_cfunc_from_tree(tree)\n # merge in any utility code\n converter.global_scope.utility_code_list.extend(tree.scope.utility_code_list)\n stats.append(ufunc_node)\n\n stats.append(generate_ufunc_initialization(converters, stats, node))\n return stats\n", "path": "Cython/Compiler/UFuncs.py"}]}

| 3,535 | 213 |
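
The predicate added by the golden diff in the row above can be sketched in isolation. This is a minimal illustration, not Cython's code: `FakeType` is a hypothetical stand-in that exposes only the `is_pyobject` attribute the diff reads, and how the `UFuncDefinition` template consumes the resulting flag is not shown in this row. As the variable name suggests, the flag is true only when no input or output type is a Python object.

```python
from dataclasses import dataclass


@dataclass
class FakeType:
    """Hypothetical stand-in for a PyrexTypes type; only is_pyobject is modelled."""
    name: str
    is_pyobject: bool = False


def will_be_called_without_gil(arg_types, out_types):
    # Same boolean expression as the golden diff: the flag is False as soon as
    # any input or output type is a Python object.
    return not (any(t.is_pyobject for t in arg_types) or
                any(t.is_pyobject for t in out_types))


c_double = FakeType("double")
py_object = FakeType("object", is_pyobject=True)

# add_one(double) -> double from the issue: no object types on either side.
print(will_be_called_without_gil([c_double], [c_double]))
# A ufunc that takes or returns Python objects.
print(will_be_called_without_gil([py_object], [c_double]))
```

Under these assumptions, the `add_one` signature from the issue (`double` in, `double` out) yields a true flag, while any object-typed argument or return value yields false.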

| gh_patches_debug_12302 | rasdani/github-patches | git_diff | open-telemetry__opentelemetry-python-contrib-381 |

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

aiopg instrumentation creating nested traces

**Describe your environment**

Python 3.6.12

macOS 11.1

opentelemetry-python-contrib: Tried released version, as well as `main`.

aiohttp==3.7.3

aiopg==1.1.0

Describe any aspect of your environment relevant to the problem, including your Python version, [platform](https://docs.python.org/3/library/platform.html), version numbers of installed dependencies, information about your cloud hosting provider, etc. If you're reporting a problem with a specific version of a library in this repo, please check whether the problem has been fixed on main.

**Steps to reproduce**

I am trying the `aiopg` instrumentation. I am using it alongside aiohttp. This may be a usage issue, but I have created a minimal example [here](https://github.com/cdvv7788/aiopg-instrumentor-bug) (just install the requirements, run it, and visit `localhost:4000`; you will need a Postgres database named test and Jaeger running somewhere).

The trace will keep growing with every request, nesting new traces every time:

**What is the expected behavior?**

Every connection has a single trace (no nesting)

**What is the actual behavior?**

Every time a request is made, a new trace is nested and sends the whole stack to jaeger

**Notes**

This may be a bad usage issue. If that is the case, please let me know what may be causing it and how to use this properly.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `instrumentation/opentelemetry-instrumentation-aiopg/src/opentelemetry/instrumentation/aiopg/aiopg_integration.py`

Content:

```

1 import typing

2

3 import wrapt

4 from aiopg.utils import _ContextManager, _PoolAcquireContextManager

5

6 from opentelemetry.instrumentation.dbapi import (

7 CursorTracer,

8 DatabaseApiIntegration,

9 )

10 from opentelemetry.trace import SpanKind

11

12

13 # pylint: disable=abstract-method

14 class AsyncProxyObject(wrapt.ObjectProxy):

15 def __aiter__(self):

16 return self.__wrapped__.__aiter__()

17

18 async def __anext__(self):

19 result = await self.__wrapped__.__anext__()

20 return result

21

22 async def __aenter__(self):

23 return await self.__wrapped__.__aenter__()

24

25 async def __aexit__(self, exc_type, exc_val, exc_tb):

26 return await self.__wrapped__.__aexit__(exc_type, exc_val, exc_tb)

27

28 def __await__(self):

29 return self.__wrapped__.__await__()

30

31

32 class AiopgIntegration(DatabaseApiIntegration):

33 async def wrapped_connection(

34 self,

35 connect_method: typing.Callable[..., typing.Any],

36 args: typing.Tuple[typing.Any, typing.Any],

37 kwargs: typing.Dict[typing.Any, typing.Any],

38 ):

39 """Add object proxy to connection object."""

40 connection = await connect_method(*args, **kwargs)

41 # pylint: disable=protected-access

42 self.get_connection_attributes(connection._conn)

43 return get_traced_connection_proxy(connection, self)

44

45 async def wrapped_pool(self, create_pool_method, args, kwargs):

46 pool = await create_pool_method(*args, **kwargs)

47 async with pool.acquire() as connection:

48 # pylint: disable=protected-access

49 self.get_connection_attributes(connection._conn)

50 return get_traced_pool_proxy(pool, self)

51

52

53 def get_traced_connection_proxy(

54 connection, db_api_integration, *args, **kwargs

55 ):

56 # pylint: disable=abstract-method

57 class TracedConnectionProxy(AsyncProxyObject):

58 # pylint: disable=unused-argument

59 def __init__(self, connection, *args, **kwargs):

60 super().__init__(connection)

61

62 def cursor(self, *args, **kwargs):

63 coro = self._cursor(*args, **kwargs)

64 return _ContextManager(coro)

65

66 async def _cursor(self, *args, **kwargs):

67 # pylint: disable=protected-access

68 cursor = await self.__wrapped__._cursor(*args, **kwargs)

69 return get_traced_cursor_proxy(cursor, db_api_integration)

70

71 return TracedConnectionProxy(connection, *args, **kwargs)

72

73

74 def get_traced_pool_proxy(pool, db_api_integration, *args, **kwargs):

75 # pylint: disable=abstract-method

76 class TracedPoolProxy(AsyncProxyObject):

77 # pylint: disable=unused-argument

78 def __init__(self, pool, *args, **kwargs):

79 super().__init__(pool)

80

81 def acquire(self):

82 """Acquire free connection from the pool."""

83 coro = self._acquire()

84 return _PoolAcquireContextManager(coro, self)

85

86 async def _acquire(self):

87 # pylint: disable=protected-access

88 connection = await self.__wrapped__._acquire()

89 return get_traced_connection_proxy(

90 connection, db_api_integration, *args, **kwargs

91 )

92

93 return TracedPoolProxy(pool, *args, **kwargs)

94

95

96 class AsyncCursorTracer(CursorTracer):

97 async def traced_execution(

98 self,

99 cursor,

100 query_method: typing.Callable[..., typing.Any],

101 *args: typing.Tuple[typing.Any, typing.Any],

102 **kwargs: typing.Dict[typing.Any, typing.Any]

103 ):

104 name = ""

105 if args:

106 name = self.get_operation_name(cursor, args)

107

108 if not name:

109 name = (

110 self._db_api_integration.database

111 if self._db_api_integration.database

112 else self._db_api_integration.name

113 )

114

115 with self._db_api_integration.get_tracer().start_as_current_span(

116 name, kind=SpanKind.CLIENT

117 ) as span:

118 self._populate_span(span, cursor, *args)

119 return await query_method(*args, **kwargs)

120

121

122 def get_traced_cursor_proxy(cursor, db_api_integration, *args, **kwargs):

123 _traced_cursor = AsyncCursorTracer(db_api_integration)

124

125 # pylint: disable=abstract-method

126 class AsyncCursorTracerProxy(AsyncProxyObject):

127

128 # pylint: disable=unused-argument

129 def __init__(self, cursor, *args, **kwargs):

130 super().__init__(cursor)

131

132 async def execute(self, *args, **kwargs):

133 result = await _traced_cursor.traced_execution(

134 self, self.__wrapped__.execute, *args, **kwargs

135 )

136 return result

137

138 async def executemany(self, *args, **kwargs):

139 result = await _traced_cursor.traced_execution(

140 self, self.__wrapped__.executemany, *args, **kwargs

141 )

142 return result

143

144 async def callproc(self, *args, **kwargs):

145 result = await _traced_cursor.traced_execution(

146 self, self.__wrapped__.callproc, *args, **kwargs

147 )

148 return result

149

150 return AsyncCursorTracerProxy(cursor, *args, **kwargs)

151

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/instrumentation/opentelemetry-instrumentation-aiopg/src/opentelemetry/instrumentation/aiopg/aiopg_integration.py b/instrumentation/opentelemetry-instrumentation-aiopg/src/opentelemetry/instrumentation/aiopg/aiopg_integration.py

--- a/instrumentation/opentelemetry-instrumentation-aiopg/src/opentelemetry/instrumentation/aiopg/aiopg_integration.py

+++ b/instrumentation/opentelemetry-instrumentation-aiopg/src/opentelemetry/instrumentation/aiopg/aiopg_integration.py

@@ -86,9 +86,11 @@

async def _acquire(self):

# pylint: disable=protected-access

connection = await self.__wrapped__._acquire()

- return get_traced_connection_proxy(

- connection, db_api_integration, *args, **kwargs

- )

+ if not isinstance(connection, AsyncProxyObject):

+ connection = get_traced_connection_proxy(

+ connection, db_api_integration, *args, **kwargs

+ )

+ return connection

return TracedPoolProxy(pool, *args, **kwargs)
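
The guard in the patch above can be illustrated in isolation. The following is a minimal sketch, not the library's code: `Proxy`, `wrap_once`, and `nesting_depth` are hypothetical stand-ins (for `wrapt.ObjectProxy` and `get_traced_connection_proxy`), and it assumes the nesting arises because a connection handed out by the pool may already be wrapped from an earlier acquire.

```python
class Proxy:
    """Hypothetical stand-in for wrapt.ObjectProxy: holds one wrapped object."""

    def __init__(self, wrapped):
        self.wrapped = wrapped


def wrap_once(obj):
    """Wrap obj in a Proxy only if it is not already a Proxy."""
    if not isinstance(obj, Proxy):
        obj = Proxy(obj)
    return obj


def nesting_depth(obj):
    """Count how many Proxy layers surround the innermost object."""
    depth = 0
    while isinstance(obj, Proxy):
        obj = obj.wrapped
        depth += 1
    return depth


raw_connection = object()

# With the isinstance guard: repeated acquires keep a single proxy layer.
conn = raw_connection
for _ in range(3):
    conn = wrap_once(conn)
guarded_depth = nesting_depth(conn)

# Without the guard: each acquire adds another layer around the last one.
conn = raw_connection
for _ in range(3):
    conn = Proxy(conn)
unguarded_depth = nesting_depth(conn)
```

In this sketch the unguarded loop adds one layer per acquire, which would match the ever-growing trace described in the issue if each layer starts its own span.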

|

{"golden_diff": "diff --git a/instrumentation/opentelemetry-instrumentation-aiopg/src/opentelemetry/instrumentation/aiopg/aiopg_integration.py b/instrumentation/opentelemetry-instrumentation-aiopg/src/opentelemetry/instrumentation/aiopg/aiopg_integration.py\n--- a/instrumentation/opentelemetry-instrumentation-aiopg/src/opentelemetry/instrumentation/aiopg/aiopg_integration.py\n+++ b/instrumentation/opentelemetry-instrumentation-aiopg/src/opentelemetry/instrumentation/aiopg/aiopg_integration.py\n@@ -86,9 +86,11 @@\n async def _acquire(self):\n # pylint: disable=protected-access\n connection = await self.__wrapped__._acquire()\n- return get_traced_connection_proxy(\n- connection, db_api_integration, *args, **kwargs\n- )\n+ if not isinstance(connection, AsyncProxyObject):\n+ connection = get_traced_connection_proxy(\n+ connection, db_api_integration, *args, **kwargs\n+ )\n+ return connection\n \n return TracedPoolProxy(pool, *args, **kwargs)\n", "issue": "aiopg instrumentation creating nested traces\n**Describe your environment**\r\nPython 3.6.12\r\nMacos 11.1\r\nopentelemetry-python-contrib: Tried released version, as well as `main`.\r\naiohttp==3.7.3\r\naiopg==1.1.0\r\n\r\n Describe any aspect of your environment relevant to the problem, including your Python version, [platform](https://docs.python.org/3/library/platform.html), version numbers of installed dependencies, information about your cloud hosting provider, etc. If you're reporting a problem with a specific version of a library in this repo, please check whether the problem has been fixed on main.\r\n\r\n**Steps to reproduce**\r\nI am trying the `aiopg` instrumentation. I am using it along aiohttp. 
This may be an usage issue, but I have created a minimal example in [here](https://github.com/cdvv7788/aiopg-instrumentor-bug) (Just install requirements, run, and visit `localhost:4000`...you will need a postgres database named test and jaeger running somewhere).\r\nThe trace will keep growing with every request, nesting new traces every time:\r\n\r\n\r\n\r\n**What is the expected behavior?**\r\nEvery connection has a single trace (no nesting)\r\n\r\n**What is the actual behavior?**\r\nEvery time a request is made, a new trace is nested and sends the whole stack to jaeger\r\n\r\n**Notes**\r\nThis may be a bad usage issue. If that is the case, please let me know what may be causing it and how to use this properly.\r\n\n", "before_files": [{"content": "import typing\n\nimport wrapt\nfrom aiopg.utils import _ContextManager, _PoolAcquireContextManager\n\nfrom opentelemetry.instrumentation.dbapi import (\n CursorTracer,\n DatabaseApiIntegration,\n)\nfrom opentelemetry.trace import SpanKind\n\n\n# pylint: disable=abstract-method\nclass AsyncProxyObject(wrapt.ObjectProxy):\n def __aiter__(self):\n return self.__wrapped__.__aiter__()\n\n async def __anext__(self):\n result = await self.__wrapped__.__anext__()\n return result\n\n async def __aenter__(self):\n return await self.__wrapped__.__aenter__()\n\n async def __aexit__(self, exc_type, exc_val, exc_tb):\n return await self.__wrapped__.__aexit__(exc_type, exc_val, exc_tb)\n\n def __await__(self):\n return self.__wrapped__.__await__()\n\n\nclass AiopgIntegration(DatabaseApiIntegration):\n async def wrapped_connection(\n self,\n connect_method: typing.Callable[..., typing.Any],\n args: typing.Tuple[typing.Any, typing.Any],\n kwargs: typing.Dict[typing.Any, typing.Any],\n ):\n \"\"\"Add object proxy to connection object.\"\"\"\n connection = await connect_method(*args, **kwargs)\n # pylint: disable=protected-access\n self.get_connection_attributes(connection._conn)\n return get_traced_connection_proxy(connection, 
self)\n\n async def wrapped_pool(self, create_pool_method, args, kwargs):\n pool = await create_pool_method(*args, **kwargs)\n async with pool.acquire() as connection:\n # pylint: disable=protected-access\n self.get_connection_attributes(connection._conn)\n return get_traced_pool_proxy(pool, self)\n\n\ndef get_traced_connection_proxy(\n connection, db_api_integration, *args, **kwargs\n):\n # pylint: disable=abstract-method\n class TracedConnectionProxy(AsyncProxyObject):\n # pylint: disable=unused-argument\n def __init__(self, connection, *args, **kwargs):\n super().__init__(connection)\n\n def cursor(self, *args, **kwargs):\n coro = self._cursor(*args, **kwargs)\n return _ContextManager(coro)\n\n async def _cursor(self, *args, **kwargs):\n # pylint: disable=protected-access\n cursor = await self.__wrapped__._cursor(*args, **kwargs)\n return get_traced_cursor_proxy(cursor, db_api_integration)\n\n return TracedConnectionProxy(connection, *args, **kwargs)\n\n\ndef get_traced_pool_proxy(pool, db_api_integration, *args, **kwargs):\n # pylint: disable=abstract-method\n class TracedPoolProxy(AsyncProxyObject):\n # pylint: disable=unused-argument\n def __init__(self, pool, *args, **kwargs):\n super().__init__(pool)\n\n def acquire(self):\n \"\"\"Acquire free connection from the pool.\"\"\"\n coro = self._acquire()\n return _PoolAcquireContextManager(coro, self)\n\n async def _acquire(self):\n # pylint: disable=protected-access\n connection = await self.__wrapped__._acquire()\n return get_traced_connection_proxy(\n connection, db_api_integration, *args, **kwargs\n )\n\n return TracedPoolProxy(pool, *args, **kwargs)\n\n\nclass AsyncCursorTracer(CursorTracer):\n async def traced_execution(\n self,\n cursor,\n query_method: typing.Callable[..., typing.Any],\n *args: typing.Tuple[typing.Any, typing.Any],\n **kwargs: typing.Dict[typing.Any, typing.Any]\n ):\n name = \"\"\n if args:\n name = self.get_operation_name(cursor, args)\n\n if not name:\n name = (\n 
self._db_api_integration.database\n if self._db_api_integration.database\n else self._db_api_integration.name\n )\n\n with self._db_api_integration.get_tracer().start_as_current_span(\n name, kind=SpanKind.CLIENT\n ) as span:\n self._populate_span(span, cursor, *args)\n return await query_method(*args, **kwargs)\n\n\ndef get_traced_cursor_proxy(cursor, db_api_integration, *args, **kwargs):\n _traced_cursor = AsyncCursorTracer(db_api_integration)\n\n # pylint: disable=abstract-method\n class AsyncCursorTracerProxy(AsyncProxyObject):\n\n # pylint: disable=unused-argument\n def __init__(self, cursor, *args, **kwargs):\n super().__init__(cursor)\n\n async def execute(self, *args, **kwargs):\n result = await _traced_cursor.traced_execution(\n self, self.__wrapped__.execute, *args, **kwargs\n )\n return result\n\n async def executemany(self, *args, **kwargs):\n result = await _traced_cursor.traced_execution(\n self, self.__wrapped__.executemany, *args, **kwargs\n )\n return result\n\n async def callproc(self, *args, **kwargs):\n result = await _traced_cursor.traced_execution(\n self, self.__wrapped__.callproc, *args, **kwargs\n )\n return result\n\n return AsyncCursorTracerProxy(cursor, *args, **kwargs)\n", "path": "instrumentation/opentelemetry-instrumentation-aiopg/src/opentelemetry/instrumentation/aiopg/aiopg_integration.py"}], "after_files": [{"content": "import typing\n\nimport wrapt\nfrom aiopg.utils import _ContextManager, _PoolAcquireContextManager\n\nfrom opentelemetry.instrumentation.dbapi import (\n CursorTracer,\n DatabaseApiIntegration,\n)\nfrom opentelemetry.trace import SpanKind\n\n\n# pylint: disable=abstract-method\nclass AsyncProxyObject(wrapt.ObjectProxy):\n def __aiter__(self):\n return self.__wrapped__.__aiter__()\n\n async def __anext__(self):\n result = await self.__wrapped__.__anext__()\n return result\n\n async def __aenter__(self):\n return await self.__wrapped__.__aenter__()\n\n async def __aexit__(self, exc_type, exc_val, exc_tb):\n return 
await self.__wrapped__.__aexit__(exc_type, exc_val, exc_tb)\n\n def __await__(self):\n return self.__wrapped__.__await__()\n\n\nclass AiopgIntegration(DatabaseApiIntegration):\n async def wrapped_connection(\n self,\n connect_method: typing.Callable[..., typing.Any],\n args: typing.Tuple[typing.Any, typing.Any],\n kwargs: typing.Dict[typing.Any, typing.Any],\n ):\n \"\"\"Add object proxy to connection object.\"\"\"\n connection = await connect_method(*args, **kwargs)\n # pylint: disable=protected-access\n self.get_connection_attributes(connection._conn)\n return get_traced_connection_proxy(connection, self)\n\n async def wrapped_pool(self, create_pool_method, args, kwargs):\n pool = await create_pool_method(*args, **kwargs)\n async with pool.acquire() as connection:\n # pylint: disable=protected-access\n self.get_connection_attributes(connection._conn)\n return get_traced_pool_proxy(pool, self)\n\n\ndef get_traced_connection_proxy(\n connection, db_api_integration, *args, **kwargs\n):\n # pylint: disable=abstract-method\n class TracedConnectionProxy(AsyncProxyObject):\n # pylint: disable=unused-argument\n def __init__(self, connection, *args, **kwargs):\n super().__init__(connection)\n\n def cursor(self, *args, **kwargs):\n coro = self._cursor(*args, **kwargs)\n return _ContextManager(coro)\n\n async def _cursor(self, *args, **kwargs):\n # pylint: disable=protected-access\n cursor = await self.__wrapped__._cursor(*args, **kwargs)\n return get_traced_cursor_proxy(cursor, db_api_integration)\n\n return TracedConnectionProxy(connection, *args, **kwargs)\n\n\ndef get_traced_pool_proxy(pool, db_api_integration, *args, **kwargs):\n # pylint: disable=abstract-method\n class TracedPoolProxy(AsyncProxyObject):\n # pylint: disable=unused-argument\n def __init__(self, pool, *args, **kwargs):\n super().__init__(pool)\n\n def acquire(self):\n \"\"\"Acquire free connection from the pool.\"\"\"\n coro = self._acquire()\n return _PoolAcquireContextManager(coro, self)\n\n async def 
_acquire(self):\n # pylint: disable=protected-access\n connection = await self.__wrapped__._acquire()\n if not isinstance(connection, AsyncProxyObject):\n connection = get_traced_connection_proxy(\n connection, db_api_integration, *args, **kwargs\n )\n return connection\n\n return TracedPoolProxy(pool, *args, **kwargs)\n\n\nclass AsyncCursorTracer(CursorTracer):\n async def traced_execution(\n self,\n cursor,\n query_method: typing.Callable[..., typing.Any],\n *args: typing.Tuple[typing.Any, typing.Any],\n **kwargs: typing.Dict[typing.Any, typing.Any]\n ):\n name = \"\"\n if args:\n name = self.get_operation_name(cursor, args)\n\n if not name:\n name = (\n self._db_api_integration.database\n if self._db_api_integration.database\n else self._db_api_integration.name\n )\n\n with self._db_api_integration.get_tracer().start_as_current_span(\n name, kind=SpanKind.CLIENT\n ) as span:\n self._populate_span(span, cursor, *args)\n return await query_method(*args, **kwargs)\n\n\ndef get_traced_cursor_proxy(cursor, db_api_integration, *args, **kwargs):\n _traced_cursor = AsyncCursorTracer(db_api_integration)\n\n # pylint: disable=abstract-method\n class AsyncCursorTracerProxy(AsyncProxyObject):\n\n # pylint: disable=unused-argument\n def __init__(self, cursor, *args, **kwargs):\n super().__init__(cursor)\n\n async def execute(self, *args, **kwargs):\n result = await _traced_cursor.traced_execution(\n self, self.__wrapped__.execute, *args, **kwargs\n )\n return result\n\n async def executemany(self, *args, **kwargs):\n result = await _traced_cursor.traced_execution(\n self, self.__wrapped__.executemany, *args, **kwargs\n )\n return result\n\n async def callproc(self, *args, **kwargs):\n result = await _traced_cursor.traced_execution(\n self, self.__wrapped__.callproc, *args, **kwargs\n )\n return result\n\n return AsyncCursorTracerProxy(cursor, *args, **kwargs)\n", "path": 
"instrumentation/opentelemetry-instrumentation-aiopg/src/opentelemetry/instrumentation/aiopg/aiopg_integration.py"}]}

| 2,165 | 257 |

gh_patches_debug_39156

|

rasdani/github-patches

|

git_diff

|

nilearn__nilearn-576

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

plot_stat_map (and probably plot_*) fails when display_mode axis has singleton dimension

Fuzzing the plotting code, I find the following testcase fails

```

for axis, direction in enumerate("xyz"):

shape = [5, 6, 7]

shape[axis] = 1

img = nibabel.Nifti1Image(np.ones(shape), np.eye(4))

plot_stat_map(img, None, display_mode=direction)

```

`ValueError: Too many cuts requested for the data: n_cuts=7, data size=1`

The culprit is the find_cut_slices function.

A fix would be to return all the slices along the axis if there are at least as many requested n_slices as there are slices.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `nilearn/plotting/find_cuts.py`

Content:

```

1 """

2 Tools to find activations and cut on maps

3 """

4

5 # Author: Gael Varoquaux

6 # License: BSD

7

8 import numpy as np

9 from scipy import ndimage

10

11 # Local imports

12 from .._utils.ndimage import largest_connected_component

13 from .._utils import new_img_like

14 from .._utils.extmath import fast_abs_percentile

15 from .._utils.numpy_conversions import as_ndarray

16 from ..image.resampling import get_mask_bounds, coord_transform

17 from ..image.image import _smooth_array

18

19 ################################################################################

20 # Functions for automatic choice of cuts coordinates

21 ################################################################################

22

23

24 def find_xyz_cut_coords(img, mask=None, activation_threshold=None):

25 """ Find the center of the largest activation connected component.

26

27 Parameters

28 -----------

29 img : 3D Nifti1Image

30 The brain map.

31 mask : 3D ndarray, boolean, optional

32 An optional brain mask.

33 activation_threshold : float, optional

34 The lower threshold to the positive activation. If None, the

35 activation threshold is computed using the 80% percentile of

36 the absolute value of the map.

37

38 Returns

39 -------

40 x : float

41 the x world coordinate.

42 y : float

43 the y world coordinate.

44 z : float

45 the z world coordinate.

46 """

47 data = img.get_data()

48 # To speed up computations, we work with partial views of the array,

49 # and keep track of the offset

50 offset = np.zeros(3)

51

52 # Deal with masked arrays:

53 if hasattr(data, 'mask'):

54 not_mask = np.logical_not(data.mask)

55 if mask is None:

56 mask = not_mask

57 else:

58 mask *= not_mask

59 data = np.asarray(data)

60

61 # Get rid of potential memmapping

62 data = as_ndarray(data)

63 my_map = data.copy()

64 if mask is not None:

65 slice_x, slice_y, slice_z = ndimage.find_objects(mask)[0]

66 my_map = my_map[slice_x, slice_y, slice_z]

67 mask = mask[slice_x, slice_y, slice_z]

68 my_map *= mask

69 offset += [slice_x.start, slice_y.start, slice_z.start]

70

71 # Testing min and max is faster than np.all(my_map == 0)

72 if (my_map.max() == 0) and (my_map.min() == 0):

73 return .5 * np.array(data.shape)

74 if activation_threshold is None:

75 activation_threshold = fast_abs_percentile(my_map[my_map != 0].ravel(),

76 80)

77 mask = np.abs(my_map) > activation_threshold - 1.e-15

78 # mask may be zero everywhere in rare cases

79 if mask.max() == 0:

80 return .5 * np.array(data.shape)

81 mask = largest_connected_component(mask)

82 slice_x, slice_y, slice_z = ndimage.find_objects(mask)[0]

83 my_map = my_map[slice_x, slice_y, slice_z]

84 mask = mask[slice_x, slice_y, slice_z]

85 my_map *= mask

86 offset += [slice_x.start, slice_y.start, slice_z.start]

87

88 # For the second threshold, we use a mean, as it is much faster,

89 # althought it is less robust

90 second_threshold = np.abs(np.mean(my_map[mask]))

91 second_mask = (np.abs(my_map) > second_threshold)

92 if second_mask.sum() > 50:

93 my_map *= largest_connected_component(second_mask)

94 cut_coords = ndimage.center_of_mass(np.abs(my_map))

95 x_map, y_map, z_map = cut_coords + offset

96

97 # Return as a list of scalars

98 return np.asarray(coord_transform(x_map, y_map, z_map,

99 img.get_affine())).tolist()

100

101

102 def _get_auto_mask_bounds(img):

103 """ Compute the bounds of the data with an automaticaly computed mask

104 """

105 data = img.get_data().copy()

106 affine = img.get_affine()

107 if hasattr(data, 'mask'):

108 # Masked array

109 mask = np.logical_not(data.mask)

110 data = np.asarray(data)

111 else:

112 # The mask will be anything that is fairly different

113 # from the values in the corners

114 edge_value = float(data[0, 0, 0] + data[0, -1, 0]

115 + data[-1, 0, 0] + data[0, 0, -1]

116 + data[-1, -1, 0] + data[-1, 0, -1]

117 + data[0, -1, -1] + data[-1, -1, -1]

118 )

119 edge_value /= 6

120 mask = np.abs(data - edge_value) > .005*data.ptp()

121 xmin, xmax, ymin, ymax, zmin, zmax = \

122 get_mask_bounds(new_img_like(img, mask, affine))

123 return (xmin, xmax), (ymin, ymax), (zmin, zmax)

124

125

126 def find_cut_slices(img, direction='z', n_cuts=12, spacing='auto'):

127 """ Find 'good' cross-section slicing positions along a given axis.

128

129 Parameters

130 ----------

131 img: 3D Nifti1Image

132 the brain map

133 direction: string, optional (default "z")

134 sectional direction; possible values are "x", "y", or "z"

135 n_cuts: int, optional (default 12)

136 number of cuts in the plot

137 spacing: 'auto' or int, optional (default 'auto')

138 minimum spacing between cuts (in voxels, not milimeters)

139 if 'auto', the spacing is .5 / n_cuts * img_length

140

141 Returns

142 -------

143 cut_coords: 1D array of length n_cuts

144 the computed cut_coords

145

146 Notes

147 -----

148 This code works by iteratively locating peak activations that are

149 separated by a distance of at least 'spacing'. If n_cuts is very

150 large and all the activated regions are covered, cuts with a spacing

151 less than 'spacing' will be returned.

152 """

153

154 assert direction in 'xyz'

155

156 axis = 'xyz'.index(direction)

157

158 affine = img.get_affine()

159 orig_data = np.abs(img.get_data())

160 this_shape = orig_data.shape[axis]

161 if n_cuts > this_shape:

162 raise ValueError('Too many cuts requested for the data: '

163 'n_cuts=%i, data size=%i' % (n_cuts, this_shape))

164

165 data = orig_data.copy()

166 if data.dtype.kind == 'i':

167 data = data.astype(np.float)

168

169 data = _smooth_array(data, affine, fwhm='fast')

170

171 if spacing == 'auto':

172 spacing = max(int(.5 / n_cuts * data.shape[axis]), 1)

173

174 slices = [slice(None, None), slice(None, None), slice(None, None)]

175

176 cut_coords = list()

177

178 for _ in range(n_cuts):

179 # Find a peak

180 max_along_axis = np.unravel_index(np.abs(data).argmax(),

181 data.shape)[axis]

182

183 # cancel out the surroundings of the peak

184 start = max(0, max_along_axis - spacing)

185 stop = max_along_axis + spacing

186 slices[axis] = slice(start, stop)

187 # We don't actually fully zero the neighborhood, to avoid ending

188 # up with fully zeros if n_cuts is too big: we can do multiple

189 # passes on the data

190 data[slices] *= 1.e-3

191

192 cut_coords.append(max_along_axis)

193

194 # We sometimes get duplicated cuts, so we add cuts at the beginning

195 # and the end

196 cut_coords = np.unique(cut_coords).tolist()

197 while len(cut_coords) < n_cuts:

198 # Candidates for new cuts:

199 slice_below = min(cut_coords) - 2

200 slice_above = max(cut_coords) + 2

201 candidates = [slice_above]

202 # One slice where there is the biggest gap in the existing

203 # cut_coords

204 if len(cut_coords) > 1:

205 middle_idx = np.argmax(np.diff(cut_coords))

206 slice_middle = int(.5 * (cut_coords[middle_idx]

207 + cut_coords[middle_idx + 1]))

208 if not slice_middle in cut_coords:

209 candidates.append(slice_middle)

210 if slice_below >= 0:

211 # We need positive slice to avoid having negative

212 # indices, which would work, but not the way we think of them

213 candidates.append(slice_below)

214 best_weight = -10

215 for candidate in candidates:

216 if candidate >= this_shape:

217 this_weight = 0

218 else:

219 this_weight = np.sum(np.rollaxis(orig_data, axis)[candidate])

220 if this_weight > best_weight:

221 best_candidate = candidate

222 best_weight = this_weight

223

224 cut_coords.append(best_candidate)

225 cut_coords = np.unique(cut_coords).tolist()

226

227 cut_coords = np.array(cut_coords)

228 cut_coords.sort()

229

230 # Transform this back in image space

231 kwargs = dict()

232 for name in 'xyz':

233 kwargs[name] = np.zeros(len(cut_coords))

234 kwargs[direction] = cut_coords

235 kwargs['affine'] = affine

236

237 cut_coords = coord_transform(**kwargs)[axis]

238 # We need to atleast_1d to make sure that when n_cuts is 1 we do

239 # get an iterable

240 return np.atleast_1d(cut_coords)

241

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/nilearn/plotting/find_cuts.py b/nilearn/plotting/find_cuts.py

--- a/nilearn/plotting/find_cuts.py

+++ b/nilearn/plotting/find_cuts.py

@@ -5,6 +5,7 @@

# Author: Gael Varoquaux

# License: BSD

+import warnings

import numpy as np

from scipy import ndimage

@@ -123,6 +124,39 @@

return (xmin, xmax), (ymin, ymax), (zmin, zmax)

+def _transform_cut_coords(cut_coords, direction, affine):

+ """Transforms cut_coords back in image space

+

+ Parameters

+ ----------

+ cut_coords: 1D array of length n_cuts

+ The coordinates to be transformed.

+

+ direction: string, optional (default "z")

+ sectional direction; possible values are "x", "y", or "z"

+

+ affine: 2D array of shape (4, 4)

+ The affine for the image.

+

+ Returns

+ -------

+ cut_coords: 1D array of length n_cuts

+ The original cut_coords transformed image space.

+ """

+ # make kwargs

+ axis = 'xyz'.index(direction)

+ kwargs = {}

+ for name in 'xyz':

+ kwargs[name] = np.zeros(len(cut_coords))

+ kwargs[direction] = cut_coords

+ kwargs['affine'] = affine

+

+ # We need atleast_1d to make sure that when n_cuts is 1 we do

+ # get an iterable

+ cut_coords = coord_transform(**kwargs)[axis]

+ return np.atleast_1d(cut_coords)

+

+

def find_cut_slices(img, direction='z', n_cuts=12, spacing='auto'):

""" Find 'good' cross-section slicing positions along a given axis.

@@ -151,16 +185,23 @@

less than 'spacing' will be returned.

"""

- assert direction in 'xyz'

-

+ # misc

+ if not direction in 'xyz':

+ raise ValueError(

+ "'direction' must be one of 'x', 'y', or 'z'. Got '%s'" % (

+ direction))

axis = 'xyz'.index(direction)

-

affine = img.get_affine()

orig_data = np.abs(img.get_data())

this_shape = orig_data.shape[axis]

+

+ # BF issue #575: Return all the slices along and axis if this axis

+ # is the display mode and there are at least as many requested

+ # n_slices as there are slices.

if n_cuts > this_shape:

- raise ValueError('Too many cuts requested for the data: '

- 'n_cuts=%i, data size=%i' % (n_cuts, this_shape))

+ warnings.warn('Too many cuts requested for the data: '

+ 'n_cuts=%i, data size=%i' % (n_cuts, this_shape))

+ return _transform_cut_coords(np.arange(this_shape), direction, affine)

data = orig_data.copy()

if data.dtype.kind == 'i':

@@ -227,14 +268,4 @@

cut_coords = np.array(cut_coords)

cut_coords.sort()

- # Transform this back in image space

- kwargs = dict()

- for name in 'xyz':

- kwargs[name] = np.zeros(len(cut_coords))

- kwargs[direction] = cut_coords

- kwargs['affine'] = affine

-

- cut_coords = coord_transform(**kwargs)[axis]

- # We need to atleast_1d to make sure that when n_cuts is 1 we do

- # get an iterable

- return np.atleast_1d(cut_coords)

+ return _transform_cut_coords(cut_coords, direction, affine)
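
The early-return behaviour introduced by the patch above can be sketched without nilearn or NumPy. This is an illustration under stated assumptions: `choose_cut_indices` is a hypothetical helper, it works on voxel indices only (no affine transform), and the evenly spaced branch is a placeholder, not nilearn's peak-finding logic.

```python
import warnings


def choose_cut_indices(n_slices, n_cuts):
    """Hypothetical helper: pick voxel indices to cut along one axis.

    If more cuts are requested than slices exist, warn and return every
    slice index instead of raising, mirroring the fallback in the patch.
    """
    if n_cuts > n_slices:
        warnings.warn(
            "Too many cuts requested for the data: "
            "n_cuts=%i, data size=%i" % (n_cuts, n_slices)
        )
        return list(range(n_slices))
    # Placeholder selection (assumption): evenly spaced indices.
    step = n_slices / n_cuts
    return [int(i * step) for i in range(n_cuts)]


# A singleton axis, as in the issue's test case, now yields its one slice.
singleton_cuts = choose_cut_indices(1, 7)
regular_cuts = choose_cut_indices(6, 3)
```

Warning instead of raising keeps the plotting call usable on thin volumes while still telling the caller that fewer cuts than requested were produced.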

|