| problem_id (string, lengths 18-22) | source (string, 1 distinct value) | task_type (string, 1 distinct value) | in_source_id (string, lengths 13-58) | prompt (string, lengths 1.1k-25.4k) | golden_diff (string, lengths 145-5.13k) | verification_info (string, lengths 582-39.1k) | num_tokens (int64, 271-4.1k) | num_tokens_diff (int64, 47-1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_6236

|

rasdani/github-patches

|

git_diff

|

engnadeau__pybotics-18

|

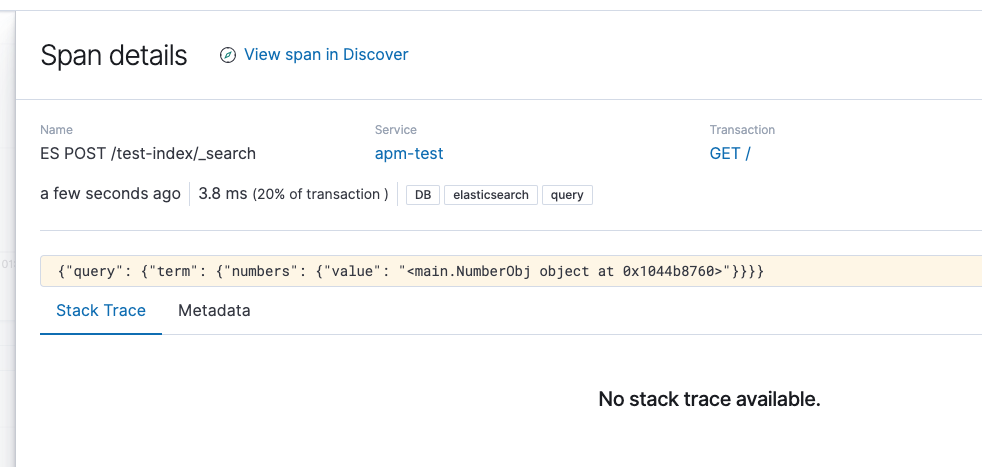

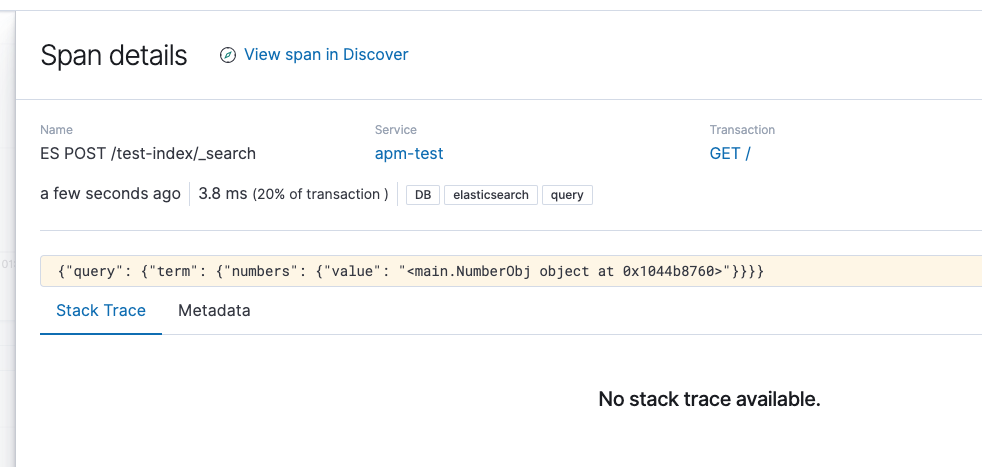

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Update examples

Examples are now out of sync with current codebase. Potential use for iPython?

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `examples/example_robot.py`

Content:

```
1 import copy
2
3 import pybotics as py
4 import numpy as np
5
6 # set numpy print options
7 np.set_printoptions(precision=3)
8 np.set_printoptions(suppress=True)
9
10 # create robot
11 model = np.loadtxt('ur10-mdh.csv', delimiter=',')
12 robot = py.Robot(model)
13
14 print('Robot Model:\n{}\n'.format(robot.robot_model))
15
16 # demonstrate forward kinematics
17 joints = [0] * robot.num_dof()
18 pose = robot.fk(joints)
19
20 print('Pose:\n{}\n'.format(pose))
21
22 # demonstrate inverse kinematics
23 new_joints = robot.ik(pose)
24 print('Solved Joints:\n{}\n'.format(new_joints))
25
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/examples/example_robot.py b/examples/example_robot.py

deleted file mode 100644

--- a/examples/example_robot.py

+++ /dev/null

@@ -1,24 +0,0 @@

-import copy

-

-import pybotics as py

-import numpy as np

-

-# set numpy print options

-np.set_printoptions(precision=3)

-np.set_printoptions(suppress=True)

-

-# create robot

-model = np.loadtxt('ur10-mdh.csv', delimiter=',')

-robot = py.Robot(model)

-

-print('Robot Model:\n{}\n'.format(robot.robot_model))

-

-# demonstrate forward kinematics

-joints = [0] * robot.num_dof()

-pose = robot.fk(joints)

-

-print('Pose:\n{}\n'.format(pose))

-

-# demonstrate inverse kinematics

-new_joints = robot.ik(pose)

-print('Solved Joints:\n{}\n'.format(new_joints))

|

{"golden_diff": "diff --git a/examples/example_robot.py b/examples/example_robot.py\ndeleted file mode 100644\n--- a/examples/example_robot.py\n+++ /dev/null\n@@ -1,24 +0,0 @@\n-import copy\n-\n-import pybotics as py\n-import numpy as np\n-\n-# set numpy print options\n-np.set_printoptions(precision=3)\n-np.set_printoptions(suppress=True)\n-\n-# create robot\n-model = np.loadtxt('ur10-mdh.csv', delimiter=',')\n-robot = py.Robot(model)\n-\n-print('Robot Model:\\n{}\\n'.format(robot.robot_model))\n-\n-# demonstrate forward kinematics\n-joints = [0] * robot.num_dof()\n-pose = robot.fk(joints)\n-\n-print('Pose:\\n{}\\n'.format(pose))\n-\n-# demonstrate inverse kinematics\n-new_joints = robot.ik(pose)\n-print('Solved Joints:\\n{}\\n'.format(new_joints))\n", "issue": "Update examples\nExamples are now out of sync with current codebase. Potential use for iPython?\n", "before_files": [{"content": "import copy\n\nimport pybotics as py\nimport numpy as np\n\n# set numpy print options\nnp.set_printoptions(precision=3)\nnp.set_printoptions(suppress=True)\n\n# create robot\nmodel = np.loadtxt('ur10-mdh.csv', delimiter=',')\nrobot = py.Robot(model)\n\nprint('Robot Model:\\n{}\\n'.format(robot.robot_model))\n\n# demonstrate forward kinematics\njoints = [0] * robot.num_dof()\npose = robot.fk(joints)\n\nprint('Pose:\\n{}\\n'.format(pose))\n\n# demonstrate inverse kinematics\nnew_joints = robot.ik(pose)\nprint('Solved Joints:\\n{}\\n'.format(new_joints))\n", "path": "examples/example_robot.py"}], "after_files": [{"content": null, "path": "examples/example_robot.py"}]}

| 474 | 212 |

gh_patches_debug_10739

|

rasdani/github-patches

|

git_diff

|

easybuilders__easybuild-easyblocks-2326

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

R package modules have priority over user-installed packages

R has multiple variables that control the library path, `R_LIBS`, `R_LIBS_USER` and `R_LIBS_SITE`.

The path can be inspected with `.libPaths()` and is made up of in order of the entries in `R_LIBS`, `R_LIBS_USER` and `R_LIBS_SITE` and last the R installation itself.

Our R package modules currently prepend their path to `R_LIBS` similar to how `PYTHONPATH` is handled, but this means that the user's directory (typically specified in `~/.Renviron` or the default) is not at the front of the library path.

This means that users cannot override system-installed packages and more importantly, `install.packages` only ever considers the first entry in the library path unless you explicitly specify a `lib=` to install to. Some installation functions like `install_github` doesn't even have that customization point.

I suggest that we alter our R packages to prepend to `R_LIBS_SITE` instead, it seems to yield the preferred order for an end-user.

Our R installations do not have a bundled `site-library` directory so the environment variable is currently unused.

Upstream documentation on how the paths are constructed: https://stat.ethz.ch/R-manual/R-devel/library/base/html/libPaths.html

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `easybuild/easyblocks/generic/rpackage.py`

Content:

```
1 ##
2 # Copyright 2009-2021 Ghent University
3 #
4 # This file is part of EasyBuild,
5 # originally created by the HPC team of Ghent University (http://ugent.be/hpc/en),
6 # with support of Ghent University (http://ugent.be/hpc),
7 # the Flemish Supercomputer Centre (VSC) (https://www.vscentrum.be),
8 # Flemish Research Foundation (FWO) (http://www.fwo.be/en)
9 # and the Department of Economy, Science and Innovation (EWI) (http://www.ewi-vlaanderen.be/en).
10 #
11 # https://github.com/easybuilders/easybuild
12 #
13 # EasyBuild is free software: you can redistribute it and/or modify
14 # it under the terms of the GNU General Public License as published by
15 # the Free Software Foundation v2.
16 #
17 # EasyBuild is distributed in the hope that it will be useful,
18 # but WITHOUT ANY WARRANTY; without even the implied warranty of
19 # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
20 # GNU General Public License for more details.
21 #
22 # You should have received a copy of the GNU General Public License
23 # along with EasyBuild. If not, see <http://www.gnu.org/licenses/>.
24 ##
25 """
26 EasyBuild support for building and installing R packages, implemented as an easyblock
27
28 @author: Stijn De Weirdt (Ghent University)
29 @author: Dries Verdegem (Ghent University)
30 @author: Kenneth Hoste (Ghent University)
31 @author: Jens Timmerman (Ghent University)
32 @author: Toon Willems (Ghent University)
33 @author: Balazs Hajgato (Vrije Universiteit Brussel)
34 """
35 import os
36
37 from easybuild.easyblocks.r import EXTS_FILTER_R_PACKAGES, EB_R
38 from easybuild.easyblocks.generic.configuremake import check_config_guess, obtain_config_guess
39 from easybuild.framework.easyconfig import CUSTOM
40 from easybuild.framework.extensioneasyblock import ExtensionEasyBlock
41 from easybuild.tools.build_log import EasyBuildError
42 from easybuild.tools.filetools import mkdir, copy_file
43 from easybuild.tools.run import run_cmd, parse_log_for_error
44
45
46 def make_R_install_option(opt, values, cmdline=False):
47     """
48     Make option list for install.packages, to specify in R environment.
49     """
50     txt = ""
51     if values:
52         if cmdline:
53             txt = " --%s=\"%s" % (opt, values[0])
54         else:
55             txt = "%s=c(\"%s" % (opt, values[0])
56         for i in values[1:]:
57             txt += " %s" % i
58         if cmdline:
59             txt += "\""
60         else:
61             txt += "\")"
62     return txt
63
64
65 class RPackage(ExtensionEasyBlock):
66     """
67     Install an R package as a separate module, or as an extension.
68     """
69
70     @staticmethod
71     def extra_options(extra_vars=None):
72         """Extra easyconfig parameters specific to RPackage."""
73         extra_vars = ExtensionEasyBlock.extra_options(extra_vars=extra_vars)
74         extra_vars.update({
75             'exts_subdir': ['', "Subdirectory where R extensions should be installed info", CUSTOM],
76             'unpack_sources': [False, "Unpack sources before installation", CUSTOM],
77         })
78         return extra_vars
79
80     def __init__(self, *args, **kwargs):
81         """Initliaze RPackage-specific class variables."""
82
83         super(RPackage, self).__init__(*args, **kwargs)
84
85         self.configurevars = []
86         self.configureargs = []
87         self.ext_src = None
88
89     def make_r_cmd(self, prefix=None):
90         """Create a command to run in R to install an R package."""
91         confvars = "confvars"
92         confargs = "confargs"
93         confvarslist = make_R_install_option(confvars, self.configurevars)
94         confargslist = make_R_install_option(confargs, self.configureargs)
95         confvarsstr = ""
96         if confvarslist:
97             confvarslist = confvarslist + "; names(%s)=\"%s\"" % (confvars, self.name)
98             confvarsstr = ", configure.vars=%s" % confvars
99         confargsstr = ""
100         if confargslist:
101             confargslist = confargslist + "; names(%s)=\"%s\"" % (confargs, self.name)
102             confargsstr = ", configure.args=%s" % confargs
103
104         if prefix:
105             prefix = '"%s", ' % prefix
106         else:
107             prefix = ''
108
109         r_cmd = """
110         options(repos=c(CRAN="http://www.freestatistics.org/cran"))
111         %s
112         %s
113         install.packages("%s", %s dependencies = FALSE %s%s)
114         """ % (confvarslist, confargslist, self.name, prefix, confvarsstr, confargsstr)
115         cmd = "%s R -q --no-save %s" % (self.cfg['preinstallopts'], self.cfg['installopts'])
116
117         self.log.debug("make_r_cmd returns %s with input %s" % (cmd, r_cmd))
118
119         return (cmd, r_cmd)
120
121     def make_cmdline_cmd(self, prefix=None):
122         """Create a command line to install an R package."""
123         confvars = ""
124         if self.configurevars:
125             confvars = make_R_install_option("configure-vars", self.configurevars, cmdline=True)
126         confargs = ""
127         if self.configureargs:
128             confargs = make_R_install_option("configure-args", self.configureargs, cmdline=True)
129
130         if prefix:
131             prefix = '--library=%s' % prefix
132         else:
133             prefix = ''
134
135         if self.start_dir:
136             loc = os.path.join(self.ext_dir or os.path.sep, self.start_dir)
137         else:
138             loc = self.ext_dir or self.ext_src
139
140         cmd = ' '.join([
141             self.cfg['preinstallopts'],
142             "R CMD INSTALL",
143             loc,
144             confargs,
145             confvars,
146             prefix,
147             '--no-clean-on-error',
148             self.cfg['installopts'],
149         ])
150
151         self.log.debug("make_cmdline_cmd returns %s" % cmd)
152         return cmd, None
153
154     def configure_step(self):
155         """No configuration for installing R packages."""
156         pass
157
158     def build_step(self):
159         """No separate build step for R packages."""
160         pass
161
162     def install_R_package(self, cmd, inp=None):
163         """Install R package as specified, and check for errors."""
164
165         cmdttdouterr, _ = run_cmd(cmd, log_all=True, simple=False, inp=inp, regexp=False)
166
167         cmderrors = parse_log_for_error(cmdttdouterr, regExp="^ERROR:")
168         if cmderrors:
169             cmd = "R -q --no-save"
170             stdin = """
171             remove.library(%s)
172             """ % self.name
173             # remove package if errors were detected
174             # it's possible that some of the dependencies failed, but the package itself was installed
175             run_cmd(cmd, log_all=False, log_ok=False, simple=False, inp=stdin, regexp=False)
176             raise EasyBuildError("Errors detected during installation of R package %s!", self.name)
177         else:
178             self.log.debug("R package %s installed succesfully" % self.name)
179
180     def update_config_guess(self, path):
181         """Update any config.guess found in specified directory"""
182         for config_guess_dir in (root for root, _, files in os.walk(path) if 'config.guess' in files):
183             config_guess = os.path.join(config_guess_dir, 'config.guess')
184             if not check_config_guess(config_guess):
185                 updated_config_guess = obtain_config_guess()
186                 if updated_config_guess:
187                     self.log.debug("Replacing outdated %s with more recent %s", config_guess, updated_config_guess)
188                     copy_file(updated_config_guess, config_guess)
189                 else:
190                     raise EasyBuildError("Failed to obtain updated config.guess")
191
192     def install_step(self):
193         """Install procedure for R packages."""
194         # Update config.guess if the package was extracted
195         if self.start_dir:
196             self.update_config_guess(self.start_dir)
197         cmd, stdin = self.make_cmdline_cmd(prefix=os.path.join(self.installdir, self.cfg['exts_subdir']))
198         self.install_R_package(cmd, inp=stdin)
199
200     def run(self):
201         """Install R package as an extension."""
202
203         # determine location
204         if isinstance(self.master, EB_R):
205             # extension is being installed as part of an R installation/module
206             (out, _) = run_cmd("R RHOME", log_all=True, simple=False)
207             rhome = out.strip()
208             lib_install_prefix = os.path.join(rhome, 'library')
209         else:
210             # extension is being installed in a separate installation prefix
211             lib_install_prefix = os.path.join(self.installdir, self.cfg['exts_subdir'])
212             mkdir(lib_install_prefix, parents=True)
213
214         if self.src:
215             super(RPackage, self).run(unpack_src=True)
216             self.ext_src = self.src
217             self.update_config_guess(self.ext_dir)
218             self.log.debug("Installing R package %s version %s." % (self.name, self.version))
219             cmd, stdin = self.make_cmdline_cmd(prefix=lib_install_prefix)
220         else:
221             if self.patches:
222                 raise EasyBuildError("Cannot patch R package %s as no explicit source is given!", self.name)
223             self.log.debug("Installing most recent version of R package %s (source not found)." % self.name)
224             cmd, stdin = self.make_r_cmd(prefix=lib_install_prefix)
225
226         self.install_R_package(cmd, inp=stdin)
227
228     def sanity_check_step(self, *args, **kwargs):
229         """
230         Custom sanity check for R packages
231         """
232         return super(RPackage, self).sanity_check_step(EXTS_FILTER_R_PACKAGES, *args, **kwargs)
233
234     def make_module_extra(self):
235         """Add install path to R_LIBS"""
236         # prepend R_LIBS with install path
237         extra = self.module_generator.prepend_paths("R_LIBS", [self.cfg['exts_subdir']])
238         return super(RPackage, self).make_module_extra(extra)
239
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/easybuild/easyblocks/generic/rpackage.py b/easybuild/easyblocks/generic/rpackage.py

--- a/easybuild/easyblocks/generic/rpackage.py

+++ b/easybuild/easyblocks/generic/rpackage.py

@@ -232,7 +232,7 @@

return super(RPackage, self).sanity_check_step(EXTS_FILTER_R_PACKAGES, *args, **kwargs)

def make_module_extra(self):

- """Add install path to R_LIBS"""

- # prepend R_LIBS with install path

- extra = self.module_generator.prepend_paths("R_LIBS", [self.cfg['exts_subdir']])

+ """Add install path to R_LIBS_SITE"""

+ # prepend R_LIBS_SITE with install path

+ extra = self.module_generator.prepend_paths("R_LIBS_SITE", [self.cfg['exts_subdir']])

return super(RPackage, self).make_module_extra(extra)

|

{"golden_diff": "diff --git a/easybuild/easyblocks/generic/rpackage.py b/easybuild/easyblocks/generic/rpackage.py\n--- a/easybuild/easyblocks/generic/rpackage.py\n+++ b/easybuild/easyblocks/generic/rpackage.py\n@@ -232,7 +232,7 @@\n return super(RPackage, self).sanity_check_step(EXTS_FILTER_R_PACKAGES, *args, **kwargs)\n \n def make_module_extra(self):\n- \"\"\"Add install path to R_LIBS\"\"\"\n- # prepend R_LIBS with install path\n- extra = self.module_generator.prepend_paths(\"R_LIBS\", [self.cfg['exts_subdir']])\n+ \"\"\"Add install path to R_LIBS_SITE\"\"\"\n+ # prepend R_LIBS_SITE with install path\n+ extra = self.module_generator.prepend_paths(\"R_LIBS_SITE\", [self.cfg['exts_subdir']])\n return super(RPackage, self).make_module_extra(extra)\n", "issue": "R package modules have priority over user-installed packages\nR has multiple variables that control the library path, `R_LIBS`, `R_LIBS_USER` and `R_LIBS_SITE`.\r\n\r\nThe path can be inspected with `.libPaths()` and is made up of in order of the entries in `R_LIBS`, `R_LIBS_USER` and `R_LIBS_SITE` and last the R installation itself.\r\n\r\nOur R package modules currently prepend their path to `R_LIBS` similar to how `PYTHONPATH` is handled, but this means that the user's directory (typically specified in `~/.Renviron` or the default) is not at the front of the library path.\r\n\r\nThis means that users cannot override system-installed packages and more importantly, `install.packages` only ever considers the first entry in the library path unless you explicitly specify a `lib=` to install to. 
Some installation functions like `install_github` doesn't even have that customization point.\r\n\r\nI suggest that we alter our R packages to prepend to `R_LIBS_SITE` instead, it seems to yield the preferred order for an end-user.\r\n\r\nOur R installations do not have a bundled `site-library` directory so the environment variable is currently unused.\r\n\r\nUpstream documentation on how the paths are constructed: https://stat.ethz.ch/R-manual/R-devel/library/base/html/libPaths.html\n", "before_files": [{"content": "##\n# Copyright 2009-2021 Ghent University\n#\n# This file is part of EasyBuild,\n# originally created by the HPC team of Ghent University (http://ugent.be/hpc/en),\n# with support of Ghent University (http://ugent.be/hpc),\n# the Flemish Supercomputer Centre (VSC) (https://www.vscentrum.be),\n# Flemish Research Foundation (FWO) (http://www.fwo.be/en)\n# and the Department of Economy, Science and Innovation (EWI) (http://www.ewi-vlaanderen.be/en).\n#\n# https://github.com/easybuilders/easybuild\n#\n# EasyBuild is free software: you can redistribute it and/or modify\n# it under the terms of the GNU General Public License as published by\n# the Free Software Foundation v2.\n#\n# EasyBuild is distributed in the hope that it will be useful,\n# but WITHOUT ANY WARRANTY; without even the implied warranty of\n# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the\n# GNU General Public License for more details.\n#\n# You should have received a copy of the GNU General Public License\n# along with EasyBuild. 
If not, see <http://www.gnu.org/licenses/>.\n##\n\"\"\"\nEasyBuild support for building and installing R packages, implemented as an easyblock\n\n@author: Stijn De Weirdt (Ghent University)\n@author: Dries Verdegem (Ghent University)\n@author: Kenneth Hoste (Ghent University)\n@author: Jens Timmerman (Ghent University)\n@author: Toon Willems (Ghent University)\n@author: Balazs Hajgato (Vrije Universiteit Brussel)\n\"\"\"\nimport os\n\nfrom easybuild.easyblocks.r import EXTS_FILTER_R_PACKAGES, EB_R\nfrom easybuild.easyblocks.generic.configuremake import check_config_guess, obtain_config_guess\nfrom easybuild.framework.easyconfig import CUSTOM\nfrom easybuild.framework.extensioneasyblock import ExtensionEasyBlock\nfrom easybuild.tools.build_log import EasyBuildError\nfrom easybuild.tools.filetools import mkdir, copy_file\nfrom easybuild.tools.run import run_cmd, parse_log_for_error\n\n\ndef make_R_install_option(opt, values, cmdline=False):\n \"\"\"\n Make option list for install.packages, to specify in R environment.\n \"\"\"\n txt = \"\"\n if values:\n if cmdline:\n txt = \" --%s=\\\"%s\" % (opt, values[0])\n else:\n txt = \"%s=c(\\\"%s\" % (opt, values[0])\n for i in values[1:]:\n txt += \" %s\" % i\n if cmdline:\n txt += \"\\\"\"\n else:\n txt += \"\\\")\"\n return txt\n\n\nclass RPackage(ExtensionEasyBlock):\n \"\"\"\n Install an R package as a separate module, or as an extension.\n \"\"\"\n\n @staticmethod\n def extra_options(extra_vars=None):\n \"\"\"Extra easyconfig parameters specific to RPackage.\"\"\"\n extra_vars = ExtensionEasyBlock.extra_options(extra_vars=extra_vars)\n extra_vars.update({\n 'exts_subdir': ['', \"Subdirectory where R extensions should be installed info\", CUSTOM],\n 'unpack_sources': [False, \"Unpack sources before installation\", CUSTOM],\n })\n return extra_vars\n\n def __init__(self, *args, **kwargs):\n \"\"\"Initliaze RPackage-specific class variables.\"\"\"\n\n super(RPackage, self).__init__(*args, **kwargs)\n\n self.configurevars 
= []\n self.configureargs = []\n self.ext_src = None\n\n def make_r_cmd(self, prefix=None):\n \"\"\"Create a command to run in R to install an R package.\"\"\"\n confvars = \"confvars\"\n confargs = \"confargs\"\n confvarslist = make_R_install_option(confvars, self.configurevars)\n confargslist = make_R_install_option(confargs, self.configureargs)\n confvarsstr = \"\"\n if confvarslist:\n confvarslist = confvarslist + \"; names(%s)=\\\"%s\\\"\" % (confvars, self.name)\n confvarsstr = \", configure.vars=%s\" % confvars\n confargsstr = \"\"\n if confargslist:\n confargslist = confargslist + \"; names(%s)=\\\"%s\\\"\" % (confargs, self.name)\n confargsstr = \", configure.args=%s\" % confargs\n\n if prefix:\n prefix = '\"%s\", ' % prefix\n else:\n prefix = ''\n\n r_cmd = \"\"\"\n options(repos=c(CRAN=\"http://www.freestatistics.org/cran\"))\n %s\n %s\n install.packages(\"%s\", %s dependencies = FALSE %s%s)\n \"\"\" % (confvarslist, confargslist, self.name, prefix, confvarsstr, confargsstr)\n cmd = \"%s R -q --no-save %s\" % (self.cfg['preinstallopts'], self.cfg['installopts'])\n\n self.log.debug(\"make_r_cmd returns %s with input %s\" % (cmd, r_cmd))\n\n return (cmd, r_cmd)\n\n def make_cmdline_cmd(self, prefix=None):\n \"\"\"Create a command line to install an R package.\"\"\"\n confvars = \"\"\n if self.configurevars:\n confvars = make_R_install_option(\"configure-vars\", self.configurevars, cmdline=True)\n confargs = \"\"\n if self.configureargs:\n confargs = make_R_install_option(\"configure-args\", self.configureargs, cmdline=True)\n\n if prefix:\n prefix = '--library=%s' % prefix\n else:\n prefix = ''\n\n if self.start_dir:\n loc = os.path.join(self.ext_dir or os.path.sep, self.start_dir)\n else:\n loc = self.ext_dir or self.ext_src\n\n cmd = ' '.join([\n self.cfg['preinstallopts'],\n \"R CMD INSTALL\",\n loc,\n confargs,\n confvars,\n prefix,\n '--no-clean-on-error',\n self.cfg['installopts'],\n ])\n\n self.log.debug(\"make_cmdline_cmd returns %s\" % cmd)\n 
return cmd, None\n\n def configure_step(self):\n \"\"\"No configuration for installing R packages.\"\"\"\n pass\n\n def build_step(self):\n \"\"\"No separate build step for R packages.\"\"\"\n pass\n\n def install_R_package(self, cmd, inp=None):\n \"\"\"Install R package as specified, and check for errors.\"\"\"\n\n cmdttdouterr, _ = run_cmd(cmd, log_all=True, simple=False, inp=inp, regexp=False)\n\n cmderrors = parse_log_for_error(cmdttdouterr, regExp=\"^ERROR:\")\n if cmderrors:\n cmd = \"R -q --no-save\"\n stdin = \"\"\"\n remove.library(%s)\n \"\"\" % self.name\n # remove package if errors were detected\n # it's possible that some of the dependencies failed, but the package itself was installed\n run_cmd(cmd, log_all=False, log_ok=False, simple=False, inp=stdin, regexp=False)\n raise EasyBuildError(\"Errors detected during installation of R package %s!\", self.name)\n else:\n self.log.debug(\"R package %s installed succesfully\" % self.name)\n\n def update_config_guess(self, path):\n \"\"\"Update any config.guess found in specified directory\"\"\"\n for config_guess_dir in (root for root, _, files in os.walk(path) if 'config.guess' in files):\n config_guess = os.path.join(config_guess_dir, 'config.guess')\n if not check_config_guess(config_guess):\n updated_config_guess = obtain_config_guess()\n if updated_config_guess:\n self.log.debug(\"Replacing outdated %s with more recent %s\", config_guess, updated_config_guess)\n copy_file(updated_config_guess, config_guess)\n else:\n raise EasyBuildError(\"Failed to obtain updated config.guess\")\n\n def install_step(self):\n \"\"\"Install procedure for R packages.\"\"\"\n # Update config.guess if the package was extracted\n if self.start_dir:\n self.update_config_guess(self.start_dir)\n cmd, stdin = self.make_cmdline_cmd(prefix=os.path.join(self.installdir, self.cfg['exts_subdir']))\n self.install_R_package(cmd, inp=stdin)\n\n def run(self):\n \"\"\"Install R package as an extension.\"\"\"\n\n # determine location\n if 
isinstance(self.master, EB_R):\n # extension is being installed as part of an R installation/module\n (out, _) = run_cmd(\"R RHOME\", log_all=True, simple=False)\n rhome = out.strip()\n lib_install_prefix = os.path.join(rhome, 'library')\n else:\n # extension is being installed in a separate installation prefix\n lib_install_prefix = os.path.join(self.installdir, self.cfg['exts_subdir'])\n mkdir(lib_install_prefix, parents=True)\n\n if self.src:\n super(RPackage, self).run(unpack_src=True)\n self.ext_src = self.src\n self.update_config_guess(self.ext_dir)\n self.log.debug(\"Installing R package %s version %s.\" % (self.name, self.version))\n cmd, stdin = self.make_cmdline_cmd(prefix=lib_install_prefix)\n else:\n if self.patches:\n raise EasyBuildError(\"Cannot patch R package %s as no explicit source is given!\", self.name)\n self.log.debug(\"Installing most recent version of R package %s (source not found).\" % self.name)\n cmd, stdin = self.make_r_cmd(prefix=lib_install_prefix)\n\n self.install_R_package(cmd, inp=stdin)\n\n def sanity_check_step(self, *args, **kwargs):\n \"\"\"\n Custom sanity check for R packages\n \"\"\"\n return super(RPackage, self).sanity_check_step(EXTS_FILTER_R_PACKAGES, *args, **kwargs)\n\n def make_module_extra(self):\n \"\"\"Add install path to R_LIBS\"\"\"\n # prepend R_LIBS with install path\n extra = self.module_generator.prepend_paths(\"R_LIBS\", [self.cfg['exts_subdir']])\n return super(RPackage, self).make_module_extra(extra)\n", "path": "easybuild/easyblocks/generic/rpackage.py"}], "after_files": [{"content": "##\n# Copyright 2009-2021 Ghent University\n#\n# This file is part of EasyBuild,\n# originally created by the HPC team of Ghent University (http://ugent.be/hpc/en),\n# with support of Ghent University (http://ugent.be/hpc),\n# the Flemish Supercomputer Centre (VSC) (https://www.vscentrum.be),\n# Flemish Research Foundation (FWO) (http://www.fwo.be/en)\n# and the Department of Economy, Science and Innovation (EWI) 
(http://www.ewi-vlaanderen.be/en).\n#\n# https://github.com/easybuilders/easybuild\n#\n# EasyBuild is free software: you can redistribute it and/or modify\n# it under the terms of the GNU General Public License as published by\n# the Free Software Foundation v2.\n#\n# EasyBuild is distributed in the hope that it will be useful,\n# but WITHOUT ANY WARRANTY; without even the implied warranty of\n# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the\n# GNU General Public License for more details.\n#\n# You should have received a copy of the GNU General Public License\n# along with EasyBuild. If not, see <http://www.gnu.org/licenses/>.\n##\n\"\"\"\nEasyBuild support for building and installing R packages, implemented as an easyblock\n\n@author: Stijn De Weirdt (Ghent University)\n@author: Dries Verdegem (Ghent University)\n@author: Kenneth Hoste (Ghent University)\n@author: Jens Timmerman (Ghent University)\n@author: Toon Willems (Ghent University)\n@author: Balazs Hajgato (Vrije Universiteit Brussel)\n\"\"\"\nimport os\n\nfrom easybuild.easyblocks.r import EXTS_FILTER_R_PACKAGES, EB_R\nfrom easybuild.easyblocks.generic.configuremake import check_config_guess, obtain_config_guess\nfrom easybuild.framework.easyconfig import CUSTOM\nfrom easybuild.framework.extensioneasyblock import ExtensionEasyBlock\nfrom easybuild.tools.build_log import EasyBuildError\nfrom easybuild.tools.filetools import mkdir, copy_file\nfrom easybuild.tools.run import run_cmd, parse_log_for_error\n\n\ndef make_R_install_option(opt, values, cmdline=False):\n \"\"\"\n Make option list for install.packages, to specify in R environment.\n \"\"\"\n txt = \"\"\n if values:\n if cmdline:\n txt = \" --%s=\\\"%s\" % (opt, values[0])\n else:\n txt = \"%s=c(\\\"%s\" % (opt, values[0])\n for i in values[1:]:\n txt += \" %s\" % i\n if cmdline:\n txt += \"\\\"\"\n else:\n txt += \"\\\")\"\n return txt\n\n\nclass RPackage(ExtensionEasyBlock):\n \"\"\"\n Install an R package as a separate module, or as 
an extension.\n \"\"\"\n\n @staticmethod\n def extra_options(extra_vars=None):\n \"\"\"Extra easyconfig parameters specific to RPackage.\"\"\"\n extra_vars = ExtensionEasyBlock.extra_options(extra_vars=extra_vars)\n extra_vars.update({\n 'exts_subdir': ['', \"Subdirectory where R extensions should be installed info\", CUSTOM],\n 'unpack_sources': [False, \"Unpack sources before installation\", CUSTOM],\n })\n return extra_vars\n\n def __init__(self, *args, **kwargs):\n \"\"\"Initliaze RPackage-specific class variables.\"\"\"\n\n super(RPackage, self).__init__(*args, **kwargs)\n\n self.configurevars = []\n self.configureargs = []\n self.ext_src = None\n\n def make_r_cmd(self, prefix=None):\n \"\"\"Create a command to run in R to install an R package.\"\"\"\n confvars = \"confvars\"\n confargs = \"confargs\"\n confvarslist = make_R_install_option(confvars, self.configurevars)\n confargslist = make_R_install_option(confargs, self.configureargs)\n confvarsstr = \"\"\n if confvarslist:\n confvarslist = confvarslist + \"; names(%s)=\\\"%s\\\"\" % (confvars, self.name)\n confvarsstr = \", configure.vars=%s\" % confvars\n confargsstr = \"\"\n if confargslist:\n confargslist = confargslist + \"; names(%s)=\\\"%s\\\"\" % (confargs, self.name)\n confargsstr = \", configure.args=%s\" % confargs\n\n if prefix:\n prefix = '\"%s\", ' % prefix\n else:\n prefix = ''\n\n r_cmd = \"\"\"\n options(repos=c(CRAN=\"http://www.freestatistics.org/cran\"))\n %s\n %s\n install.packages(\"%s\", %s dependencies = FALSE %s%s)\n \"\"\" % (confvarslist, confargslist, self.name, prefix, confvarsstr, confargsstr)\n cmd = \"%s R -q --no-save %s\" % (self.cfg['preinstallopts'], self.cfg['installopts'])\n\n self.log.debug(\"make_r_cmd returns %s with input %s\" % (cmd, r_cmd))\n\n return (cmd, r_cmd)\n\n def make_cmdline_cmd(self, prefix=None):\n \"\"\"Create a command line to install an R package.\"\"\"\n confvars = \"\"\n if self.configurevars:\n confvars = make_R_install_option(\"configure-vars\", 
self.configurevars, cmdline=True)\n confargs = \"\"\n if self.configureargs:\n confargs = make_R_install_option(\"configure-args\", self.configureargs, cmdline=True)\n\n if prefix:\n prefix = '--library=%s' % prefix\n else:\n prefix = ''\n\n if self.start_dir:\n loc = os.path.join(self.ext_dir or os.path.sep, self.start_dir)\n else:\n loc = self.ext_dir or self.ext_src\n\n cmd = ' '.join([\n self.cfg['preinstallopts'],\n \"R CMD INSTALL\",\n loc,\n confargs,\n confvars,\n prefix,\n '--no-clean-on-error',\n self.cfg['installopts'],\n ])\n\n self.log.debug(\"make_cmdline_cmd returns %s\" % cmd)\n return cmd, None\n\n def configure_step(self):\n \"\"\"No configuration for installing R packages.\"\"\"\n pass\n\n def build_step(self):\n \"\"\"No separate build step for R packages.\"\"\"\n pass\n\n def install_R_package(self, cmd, inp=None):\n \"\"\"Install R package as specified, and check for errors.\"\"\"\n\n cmdttdouterr, _ = run_cmd(cmd, log_all=True, simple=False, inp=inp, regexp=False)\n\n cmderrors = parse_log_for_error(cmdttdouterr, regExp=\"^ERROR:\")\n if cmderrors:\n cmd = \"R -q --no-save\"\n stdin = \"\"\"\n remove.library(%s)\n \"\"\" % self.name\n # remove package if errors were detected\n # it's possible that some of the dependencies failed, but the package itself was installed\n run_cmd(cmd, log_all=False, log_ok=False, simple=False, inp=stdin, regexp=False)\n raise EasyBuildError(\"Errors detected during installation of R package %s!\", self.name)\n else:\n self.log.debug(\"R package %s installed succesfully\" % self.name)\n\n def update_config_guess(self, path):\n \"\"\"Update any config.guess found in specified directory\"\"\"\n for config_guess_dir in (root for root, _, files in os.walk(path) if 'config.guess' in files):\n config_guess = os.path.join(config_guess_dir, 'config.guess')\n if not check_config_guess(config_guess):\n updated_config_guess = obtain_config_guess()\n if updated_config_guess:\n self.log.debug(\"Replacing outdated %s with more 
recent %s\", config_guess, updated_config_guess)\n copy_file(updated_config_guess, config_guess)\n else:\n raise EasyBuildError(\"Failed to obtain updated config.guess\")\n\n def install_step(self):\n \"\"\"Install procedure for R packages.\"\"\"\n # Update config.guess if the package was extracted\n if self.start_dir:\n self.update_config_guess(self.start_dir)\n cmd, stdin = self.make_cmdline_cmd(prefix=os.path.join(self.installdir, self.cfg['exts_subdir']))\n self.install_R_package(cmd, inp=stdin)\n\n def run(self):\n \"\"\"Install R package as an extension.\"\"\"\n\n # determine location\n if isinstance(self.master, EB_R):\n # extension is being installed as part of an R installation/module\n (out, _) = run_cmd(\"R RHOME\", log_all=True, simple=False)\n rhome = out.strip()\n lib_install_prefix = os.path.join(rhome, 'library')\n else:\n # extension is being installed in a separate installation prefix\n lib_install_prefix = os.path.join(self.installdir, self.cfg['exts_subdir'])\n mkdir(lib_install_prefix, parents=True)\n\n if self.src:\n super(RPackage, self).run(unpack_src=True)\n self.ext_src = self.src\n self.update_config_guess(self.ext_dir)\n self.log.debug(\"Installing R package %s version %s.\" % (self.name, self.version))\n cmd, stdin = self.make_cmdline_cmd(prefix=lib_install_prefix)\n else:\n if self.patches:\n raise EasyBuildError(\"Cannot patch R package %s as no explicit source is given!\", self.name)\n self.log.debug(\"Installing most recent version of R package %s (source not found).\" % self.name)\n cmd, stdin = self.make_r_cmd(prefix=lib_install_prefix)\n\n self.install_R_package(cmd, inp=stdin)\n\n def sanity_check_step(self, *args, **kwargs):\n \"\"\"\n Custom sanity check for R packages\n \"\"\"\n return super(RPackage, self).sanity_check_step(EXTS_FILTER_R_PACKAGES, *args, **kwargs)\n\n def make_module_extra(self):\n \"\"\"Add install path to R_LIBS_SITE\"\"\"\n # prepend R_LIBS_SITE with install path\n extra = 
self.module_generator.prepend_paths(\"R_LIBS_SITE\", [self.cfg['exts_subdir']])\n return super(RPackage, self).make_module_extra(extra)\n", "path": "easybuild/easyblocks/generic/rpackage.py"}]}

| 3,343 | 210 |

gh_patches_debug_31739

|

rasdani/github-patches

|

git_diff

|

streamlink__streamlink-1863

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Remove 9anime.to

As discussed over here: https://github.com/streamlink/streamlink/issues/1110#issuecomment-400687075 9anime.to isn't worth supporting at this point and is broken so I'm proposing we remove it.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `src/streamlink/plugins/nineanime.py`

Content:

```

1 import re

2 from streamlink.plugin import Plugin

3 from streamlink.plugin.api import http

4 from streamlink.plugin.api import useragents

5 from streamlink.plugin.api import validate

6 from streamlink.stream import HTTPStream

7 from streamlink.compat import urlparse

8

9

10 class NineAnime(Plugin):

11 _episode_info_url = "//9anime.to/ajax/episode/info"

12

13 _info_schema = validate.Schema({

14 "grabber": validate.url(),

15 "params": {

16 "id": validate.text,

17 "token": validate.text,

18 "options": validate.text,

19 }

20 })

21

22 _streams_schema = validate.Schema({

23 "token": validate.text,

24 "error": None,

25 "data": [{

26 "label": validate.text,

27 "file": validate.url(),

28 "type": "mp4"

29 }]

30 })

31

32 _url_re = re.compile(r"https?://9anime.to/watch/(?:[^.]+?\.)(\w+)/(\w+)")

33

34 @classmethod

35 def can_handle_url(cls, url):

36 return cls._url_re.match(url) is not None

37

38 def add_scheme(self, url):

39 # update the scheme for the grabber url if required

40 if url.startswith("//"):

41 url = "{0}:{1}".format(urlparse(self.url).scheme, url)

42 return url

43

44 @Plugin.broken(1110)

45 def _get_streams(self):

46 match = self._url_re.match(self.url)

47 film_id, episode_id = match.groups()

48

49 headers = {

50 "Referer": self.url,

51 "User-Agent": useragents.FIREFOX

52 }

53

54 # Get the info about the Episode, including the Grabber API URL

55 info_res = http.get(self.add_scheme(self._episode_info_url),

56 params=dict(update=0, film=film_id, id=episode_id),

57 headers=headers)

58 info = http.json(info_res, schema=self._info_schema)

59

60 # Get the data about the streams from the Grabber API

61 grabber_url = self.add_scheme(info["grabber"])

62 stream_list_res = http.get(grabber_url, params=info["params"], headers=headers)

63 stream_data = http.json(stream_list_res, schema=self._streams_schema)

64

65 for stream in stream_data["data"]:

66 yield stream["label"], HTTPStream(self.session, stream["file"])

67

68

69 __plugin__ = NineAnime

70

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/src/streamlink/plugins/nineanime.py b/src/streamlink/plugins/nineanime.py

--- a/src/streamlink/plugins/nineanime.py

+++ b/src/streamlink/plugins/nineanime.py

@@ -1,69 +1 @@

-import re

-from streamlink.plugin import Plugin

-from streamlink.plugin.api import http

-from streamlink.plugin.api import useragents

-from streamlink.plugin.api import validate

-from streamlink.stream import HTTPStream

-from streamlink.compat import urlparse

-

-

-class NineAnime(Plugin):

- _episode_info_url = "//9anime.to/ajax/episode/info"

-

- _info_schema = validate.Schema({

- "grabber": validate.url(),

- "params": {

- "id": validate.text,

- "token": validate.text,

- "options": validate.text,

- }

- })

-

- _streams_schema = validate.Schema({

- "token": validate.text,

- "error": None,

- "data": [{

- "label": validate.text,

- "file": validate.url(),

- "type": "mp4"

- }]

- })

-

- _url_re = re.compile(r"https?://9anime.to/watch/(?:[^.]+?\.)(\w+)/(\w+)")

-

- @classmethod

- def can_handle_url(cls, url):

- return cls._url_re.match(url) is not None

-

- def add_scheme(self, url):

- # update the scheme for the grabber url if required

- if url.startswith("//"):

- url = "{0}:{1}".format(urlparse(self.url).scheme, url)

- return url

-

- @Plugin.broken(1110)

- def _get_streams(self):

- match = self._url_re.match(self.url)

- film_id, episode_id = match.groups()

-

- headers = {

- "Referer": self.url,

- "User-Agent": useragents.FIREFOX

- }

-

- # Get the info about the Episode, including the Grabber API URL

- info_res = http.get(self.add_scheme(self._episode_info_url),

- params=dict(update=0, film=film_id, id=episode_id),

- headers=headers)

- info = http.json(info_res, schema=self._info_schema)

-

- # Get the data about the streams from the Grabber API

- grabber_url = self.add_scheme(info["grabber"])

- stream_list_res = http.get(grabber_url, params=info["params"], headers=headers)

- stream_data = http.json(stream_list_res, schema=self._streams_schema)

-

- for stream in stream_data["data"]:

- yield stream["label"], HTTPStream(self.session, stream["file"])

-

-

-__plugin__ = NineAnime

+# Plugin removed - https://github.com/streamlink/streamlink/issues/1862

|

{"golden_diff": "diff --git a/src/streamlink/plugins/nineanime.py b/src/streamlink/plugins/nineanime.py\n--- a/src/streamlink/plugins/nineanime.py\n+++ b/src/streamlink/plugins/nineanime.py\n@@ -1,69 +1 @@\n-import re\n-from streamlink.plugin import Plugin\n-from streamlink.plugin.api import http\n-from streamlink.plugin.api import useragents\n-from streamlink.plugin.api import validate\n-from streamlink.stream import HTTPStream\n-from streamlink.compat import urlparse\n-\n-\n-class NineAnime(Plugin):\n- _episode_info_url = \"//9anime.to/ajax/episode/info\"\n-\n- _info_schema = validate.Schema({\n- \"grabber\": validate.url(),\n- \"params\": {\n- \"id\": validate.text,\n- \"token\": validate.text,\n- \"options\": validate.text,\n- }\n- })\n-\n- _streams_schema = validate.Schema({\n- \"token\": validate.text,\n- \"error\": None,\n- \"data\": [{\n- \"label\": validate.text,\n- \"file\": validate.url(),\n- \"type\": \"mp4\"\n- }]\n- })\n-\n- _url_re = re.compile(r\"https?://9anime.to/watch/(?:[^.]+?\\.)(\\w+)/(\\w+)\")\n-\n- @classmethod\n- def can_handle_url(cls, url):\n- return cls._url_re.match(url) is not None\n-\n- def add_scheme(self, url):\n- # update the scheme for the grabber url if required\n- if url.startswith(\"//\"):\n- url = \"{0}:{1}\".format(urlparse(self.url).scheme, url)\n- return url\n-\n- @Plugin.broken(1110)\n- def _get_streams(self):\n- match = self._url_re.match(self.url)\n- film_id, episode_id = match.groups()\n-\n- headers = {\n- \"Referer\": self.url,\n- \"User-Agent\": useragents.FIREFOX\n- }\n-\n- # Get the info about the Episode, including the Grabber API URL\n- info_res = http.get(self.add_scheme(self._episode_info_url),\n- params=dict(update=0, film=film_id, id=episode_id),\n- headers=headers)\n- info = http.json(info_res, schema=self._info_schema)\n-\n- # Get the data about the streams from the Grabber API\n- grabber_url = self.add_scheme(info[\"grabber\"])\n- stream_list_res = http.get(grabber_url, params=info[\"params\"], 
headers=headers)\n- stream_data = http.json(stream_list_res, schema=self._streams_schema)\n-\n- for stream in stream_data[\"data\"]:\n- yield stream[\"label\"], HTTPStream(self.session, stream[\"file\"])\n-\n-\n-__plugin__ = NineAnime\n+# Plugin removed - https://github.com/streamlink/streamlink/issues/1862\n", "issue": "Remove 9anime.to\nAs discussed over here: https://github.com/streamlink/streamlink/issues/1110#issuecomment-400687075 9anime.to isn't worth supporting at this point and is broken so I'm proposing we remove it.\r\n\n", "before_files": [{"content": "import re\nfrom streamlink.plugin import Plugin\nfrom streamlink.plugin.api import http\nfrom streamlink.plugin.api import useragents\nfrom streamlink.plugin.api import validate\nfrom streamlink.stream import HTTPStream\nfrom streamlink.compat import urlparse\n\n\nclass NineAnime(Plugin):\n _episode_info_url = \"//9anime.to/ajax/episode/info\"\n\n _info_schema = validate.Schema({\n \"grabber\": validate.url(),\n \"params\": {\n \"id\": validate.text,\n \"token\": validate.text,\n \"options\": validate.text,\n }\n })\n\n _streams_schema = validate.Schema({\n \"token\": validate.text,\n \"error\": None,\n \"data\": [{\n \"label\": validate.text,\n \"file\": validate.url(),\n \"type\": \"mp4\"\n }]\n })\n\n _url_re = re.compile(r\"https?://9anime.to/watch/(?:[^.]+?\\.)(\\w+)/(\\w+)\")\n\n @classmethod\n def can_handle_url(cls, url):\n return cls._url_re.match(url) is not None\n\n def add_scheme(self, url):\n # update the scheme for the grabber url if required\n if url.startswith(\"//\"):\n url = \"{0}:{1}\".format(urlparse(self.url).scheme, url)\n return url\n\n @Plugin.broken(1110)\n def _get_streams(self):\n match = self._url_re.match(self.url)\n film_id, episode_id = match.groups()\n\n headers = {\n \"Referer\": self.url,\n \"User-Agent\": useragents.FIREFOX\n }\n\n # Get the info about the Episode, including the Grabber API URL\n info_res = http.get(self.add_scheme(self._episode_info_url),\n 
params=dict(update=0, film=film_id, id=episode_id),\n headers=headers)\n info = http.json(info_res, schema=self._info_schema)\n\n # Get the data about the streams from the Grabber API\n grabber_url = self.add_scheme(info[\"grabber\"])\n stream_list_res = http.get(grabber_url, params=info[\"params\"], headers=headers)\n stream_data = http.json(stream_list_res, schema=self._streams_schema)\n\n for stream in stream_data[\"data\"]:\n yield stream[\"label\"], HTTPStream(self.session, stream[\"file\"])\n\n\n__plugin__ = NineAnime\n", "path": "src/streamlink/plugins/nineanime.py"}], "after_files": [{"content": "# Plugin removed - https://github.com/streamlink/streamlink/issues/1862\n", "path": "src/streamlink/plugins/nineanime.py"}]}

| 968 | 632 |

gh_patches_debug_8550

|

rasdani/github-patches

|

git_diff

|

zulip__zulip-12755

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

portico/docs: Improve OpenAPI descriptions and titles.

Followups to https://github.com/zulip/zulip/pull/11045.

- [ ] Currently we only use the first paragraph of text for the description. It would be better to concatenate the first few paragraphs, and truncate. Basically it's just using bs.find_all('p') and joining things together in a loop until it gets too long.

One catch is that we probably want to stop at the first non-paragraph block so we don't cross a list or something.

- [ ] Currently, we've just tested our openAPI things with /api and /help. We should extend to the /integrations pages, and also figure out what we want to do for the top-10 major portico pages (especially /features, /why-zulip, and /for/open-source), which could benefit from a similar treatment.

To work on this I would start by skimming the code from #11045.

--- END ISSUE ---
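
For illustration only, the first checklist item of the issue (join the leading paragraphs, stop at the first non-paragraph block, truncate) could be sketched as below. This is a hedged sketch under stated assumptions, not the project's implementation: the issue suggests `bs.find_all('p')`, whereas this version uses the standard-library `html.parser` to stay dependency-free, and the names `LeadingParagraphs`, `summarize`, `BLOCK_TAGS`, and the `max_length` default are all hypothetical.

```python
from html.parser import HTMLParser

# Hypothetical set of block-level tags that end the run of leading paragraphs.
BLOCK_TAGS = {"ul", "ol", "table", "pre", "blockquote", "div",
              "h1", "h2", "h3", "h4", "h5", "h6"}


class LeadingParagraphs(HTMLParser):
    """Collect the text of the <p> elements that come before the first
    non-paragraph block following them (assumed stopping rule)."""

    def __init__(self):
        super().__init__()
        self.paragraphs = []
        self._in_p = False
        self._seen_p = False
        self._done = False

    def handle_starttag(self, tag, attrs):
        if self._done:
            return
        if tag == "p":
            self._in_p = True
            self._seen_p = True
            self.paragraphs.append("")
        elif tag in BLOCK_TAGS and self._seen_p:
            # A list, table, heading, etc. after the first paragraph: stop here.
            self._done = True

    def handle_endtag(self, tag):
        if tag == "p":
            self._in_p = False

    def handle_data(self, data):
        if self._in_p and not self._done:
            self.paragraphs[-1] += data


def summarize(html, max_length=200):
    """Join leading paragraphs until the text gets too long, then truncate."""
    parser = LeadingParagraphs()
    parser.feed(html)
    parser.close()

    parts = []
    length = 0
    for paragraph in parser.paragraphs:
        text = " ".join(paragraph.split())  # collapse runs of whitespace
        if not text:
            continue
        parts.append(text)
        length += len(text) + 1
        if length > max_length:
            break

    description = " ".join(parts)
    if len(description) > max_length:
        description = description[:max_length - 3].rstrip() + "..."
    return description
```

A heading before the first paragraph (such as the article title) is skipped rather than treated as a stopping point, since the assumed rule only applies once a paragraph has been seen.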

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `zerver/views/documentation.py`

Content:

```

1 from typing import Any, Dict, Tuple

2 from collections import OrderedDict

3 from django.views.generic import TemplateView

4 from django.conf import settings

5 from django.http import HttpRequest, HttpResponse, HttpResponseNotFound

6 from django.template import loader

7

8 import os

9 import random

10 import re

11

12 from zerver.lib.integrations import CATEGORIES, INTEGRATIONS, HubotIntegration, \

13 WebhookIntegration, EmailIntegration

14 from zerver.lib.request import has_request_variables, REQ

15 from zerver.lib.subdomains import get_subdomain

16 from zerver.models import Realm

17 from zerver.templatetags.app_filters import render_markdown_path

18 from zerver.context_processors import zulip_default_context

19

20 def add_api_uri_context(context: Dict[str, Any], request: HttpRequest) -> None:

21 context.update(zulip_default_context(request))

22

23 subdomain = get_subdomain(request)

24 if (subdomain != Realm.SUBDOMAIN_FOR_ROOT_DOMAIN

25 or not settings.ROOT_DOMAIN_LANDING_PAGE):

26 display_subdomain = subdomain

27 html_settings_links = True

28 else:

29 display_subdomain = 'yourZulipDomain'

30 html_settings_links = False

31

32 display_host = Realm.host_for_subdomain(display_subdomain)

33 api_url_scheme_relative = display_host + "/api"

34 api_url = settings.EXTERNAL_URI_SCHEME + api_url_scheme_relative

35 zulip_url = settings.EXTERNAL_URI_SCHEME + display_host

36

37 context['external_uri_scheme'] = settings.EXTERNAL_URI_SCHEME

38 context['api_url'] = api_url

39 context['api_url_scheme_relative'] = api_url_scheme_relative

40 context['zulip_url'] = zulip_url

41

42 context["html_settings_links"] = html_settings_links

43 if html_settings_links:

44 settings_html = '<a href="/#settings">Zulip settings page</a>'

45 subscriptions_html = '<a target="_blank" href="/#streams">streams page</a>'

46 else:

47 settings_html = 'Zulip settings page'

48 subscriptions_html = 'streams page'

49 context['settings_html'] = settings_html

50 context['subscriptions_html'] = subscriptions_html

51

52 class ApiURLView(TemplateView):

53 def get_context_data(self, **kwargs: Any) -> Dict[str, str]:

54 context = super().get_context_data(**kwargs)

55 add_api_uri_context(context, self.request)

56 return context

57

58 class APIView(ApiURLView):

59 template_name = 'zerver/api.html'

60

61

62 class MarkdownDirectoryView(ApiURLView):

63 path_template = ""

64

65 def get_path(self, article: str) -> Tuple[str, int]:

66 http_status = 200

67 if article == "":

68 article = "index"

69 elif article == "include/sidebar_index":

70 pass

71 elif "/" in article:

72 article = "missing"

73 http_status = 404

74 elif len(article) > 100 or not re.match('^[0-9a-zA-Z_-]+$', article):

75 article = "missing"

76 http_status = 404

77

78 path = self.path_template % (article,)

79 try:

80 loader.get_template(path)

81 return (path, http_status)

82 except loader.TemplateDoesNotExist:

83 return (self.path_template % ("missing",), 404)

84

85 def get_context_data(self, **kwargs: Any) -> Dict[str, Any]:

86 article = kwargs["article"]

87 context = super().get_context_data() # type: Dict[str, Any]

88 (context["article"], http_status_ignored) = self.get_path(article)

89

90 # For disabling the "Back to home" on the homepage

91 context["not_index_page"] = not context["article"].endswith("/index.md")

92 if self.path_template == '/zerver/help/%s.md':

93 context["page_is_help_center"] = True

94 context["doc_root"] = "/help/"

95 (sidebar_index, http_status_ignored) = self.get_path("include/sidebar_index")

96 # We want the sliding/collapsing behavior for /help pages only

97 sidebar_class = "sidebar slide"

98 title_base = "Zulip Help Center"

99 else:

100 context["page_is_api_center"] = True

101 context["doc_root"] = "/api/"

102 (sidebar_index, http_status_ignored) = self.get_path("sidebar_index")

103 sidebar_class = "sidebar"

104 title_base = "Zulip API Documentation"

105

106 # The following is a somewhat hacky approach to extract titles from articles.

107 # Hack: `context["article"] has a leading `/`, so we use + to add directories.

108 article_path = os.path.join(settings.DEPLOY_ROOT, 'templates') + context["article"]

109 if os.path.exists(article_path):

110 with open(article_path) as article_file:

111 first_line = article_file.readlines()[0]

112 # Strip the header and then use the first line to get the article title

113 article_title = first_line.strip().lstrip("# ")

114 if context["not_index_page"]:

115 context["OPEN_GRAPH_TITLE"] = "%s (%s)" % (article_title, title_base)

116 else:

117 context["OPEN_GRAPH_TITLE"] = title_base

118 self.request.placeholder_open_graph_description = (

119 "REPLACMENT_OPEN_GRAPH_DESCRIPTION_%s" % (int(2**24 * random.random()),))

120 context["OPEN_GRAPH_DESCRIPTION"] = self.request.placeholder_open_graph_description

121

122 context["sidebar_index"] = sidebar_index

123 context["sidebar_class"] = sidebar_class

124 # An "article" might require the api_uri_context to be rendered

125 api_uri_context = {} # type: Dict[str, Any]

126 add_api_uri_context(api_uri_context, self.request)

127 api_uri_context["run_content_validators"] = True

128 context["api_uri_context"] = api_uri_context

129 return context

130

131 def get(self, request: HttpRequest, article: str="") -> HttpResponse:

132 (path, http_status) = self.get_path(article)

133 result = super().get(self, article=article)

134 if http_status != 200:

135 result.status_code = http_status

136 return result

137

138 def add_integrations_context(context: Dict[str, Any]) -> None:

139 alphabetical_sorted_categories = OrderedDict(sorted(CATEGORIES.items()))

140 alphabetical_sorted_integration = OrderedDict(sorted(INTEGRATIONS.items()))

141 enabled_integrations_count = len(list(filter(lambda v: v.is_enabled(), INTEGRATIONS.values())))

142 # Subtract 1 so saying "Over X integrations" is correct. Then,

143 # round down to the nearest multiple of 10.

144 integrations_count_display = ((enabled_integrations_count - 1) // 10) * 10

145 context['categories_dict'] = alphabetical_sorted_categories

146 context['integrations_dict'] = alphabetical_sorted_integration

147 context['integrations_count_display'] = integrations_count_display

148

149 def add_integrations_open_graph_context(context: Dict[str, Any], request: HttpRequest) -> None:

150 path_name = request.path.rstrip('/').split('/')[-1]

151 description = ('Zulip comes with over a hundred native integrations out of the box, '

152 'and integrates with Zapier, IFTTT, and Hubot to provide hundreds more. '

153 'Connect the apps you use everyday to Zulip.')

154

155 if path_name in INTEGRATIONS:

156 integration = INTEGRATIONS[path_name]

157 context['OPEN_GRAPH_TITLE'] = 'Connect {name} to Zulip'.format(name=integration.display_name)

158 context['OPEN_GRAPH_DESCRIPTION'] = description

159

160 elif path_name in CATEGORIES:

161 category = CATEGORIES[path_name]

162 context['OPEN_GRAPH_TITLE'] = 'Connect your {category} tools to Zulip'.format(category=category)

163 context['OPEN_GRAPH_DESCRIPTION'] = description

164

165 class IntegrationView(ApiURLView):

166 template_name = 'zerver/integrations/index.html'

167

168 def get_context_data(self, **kwargs: Any) -> Dict[str, Any]:

169 context = super().get_context_data(**kwargs) # type: Dict[str, Any]

170 add_integrations_context(context)

171 add_integrations_open_graph_context(context, self.request)

172 return context

173

174

175 @has_request_variables

176 def integration_doc(request: HttpRequest, integration_name: str=REQ(default=None)) -> HttpResponse:

177 if not request.is_ajax():

178 return HttpResponseNotFound()

179 try:

180 integration = INTEGRATIONS[integration_name]

181 except KeyError:

182 return HttpResponseNotFound()

183

184 context = {} # type: Dict[str, Any]

185 add_api_uri_context(context, request)

186

187 context['integration_name'] = integration.name

188 context['integration_display_name'] = integration.display_name

189 if hasattr(integration, 'stream_name'):

190 context['recommended_stream_name'] = integration.stream_name

191 if isinstance(integration, WebhookIntegration):

192 context['integration_url'] = integration.url[3:]

193 if isinstance(integration, HubotIntegration):

194 context['hubot_docs_url'] = integration.hubot_docs_url

195 if isinstance(integration, EmailIntegration):

196 context['email_gateway_example'] = settings.EMAIL_GATEWAY_EXAMPLE

197

198 doc_html_str = render_markdown_path(integration.doc, context)

199

200 return HttpResponse(doc_html_str)

201

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/zerver/views/documentation.py b/zerver/views/documentation.py

--- a/zerver/views/documentation.py

+++ b/zerver/views/documentation.py

@@ -162,6 +162,10 @@

context['OPEN_GRAPH_TITLE'] = 'Connect your {category} tools to Zulip'.format(category=category)

context['OPEN_GRAPH_DESCRIPTION'] = description

+ elif path_name == 'integrations':

+ context['OPEN_GRAPH_TITLE'] = 'Connect the tools you use to Zulip'

+ context['OPEN_GRAPH_DESCRIPTION'] = description

+

class IntegrationView(ApiURLView):

template_name = 'zerver/integrations/index.html'

|

{"golden_diff": "diff --git a/zerver/views/documentation.py b/zerver/views/documentation.py\n--- a/zerver/views/documentation.py\n+++ b/zerver/views/documentation.py\n@@ -162,6 +162,10 @@\n context['OPEN_GRAPH_TITLE'] = 'Connect your {category} tools to Zulip'.format(category=category)\n context['OPEN_GRAPH_DESCRIPTION'] = description\n \n+ elif path_name == 'integrations':\n+ context['OPEN_GRAPH_TITLE'] = 'Connect the tools you use to Zulip'\n+ context['OPEN_GRAPH_DESCRIPTION'] = description\n+\n class IntegrationView(ApiURLView):\n template_name = 'zerver/integrations/index.html'\n", "issue": "portico/docs: Improve OpenAPI descriptions and titles.\nFollowups to https://github.com/zulip/zulip/pull/11045.\r\n- [ ] Currently we only use the first paragraph of text for the description. It would be better to concatenate the first few paragraphs, and truncate. Basically it's just using bs.find_all('p') and joining things together in a loop until it gets too long. \r\nOne catch is that is that we probably want to stop at the first non-paragraph block so we don't cross a list or something.\r\n- [ ] Currently, we've just tested our openAPI things with /api and /help. We should extend to the /integrations pages, and also figure out what we want to do for the top-10 major portico pages (especially /features, /why-zulip, and /for/open-source), which could benefit from a similar treatment.\r\n\r\nTo work on this I would start by skimming the code from #11045. 
\n", "before_files": [{"content": "from typing import Any, Dict, Tuple\nfrom collections import OrderedDict\nfrom django.views.generic import TemplateView\nfrom django.conf import settings\nfrom django.http import HttpRequest, HttpResponse, HttpResponseNotFound\nfrom django.template import loader\n\nimport os\nimport random\nimport re\n\nfrom zerver.lib.integrations import CATEGORIES, INTEGRATIONS, HubotIntegration, \\\n WebhookIntegration, EmailIntegration\nfrom zerver.lib.request import has_request_variables, REQ\nfrom zerver.lib.subdomains import get_subdomain\nfrom zerver.models import Realm\nfrom zerver.templatetags.app_filters import render_markdown_path\nfrom zerver.context_processors import zulip_default_context\n\ndef add_api_uri_context(context: Dict[str, Any], request: HttpRequest) -> None:\n context.update(zulip_default_context(request))\n\n subdomain = get_subdomain(request)\n if (subdomain != Realm.SUBDOMAIN_FOR_ROOT_DOMAIN\n or not settings.ROOT_DOMAIN_LANDING_PAGE):\n display_subdomain = subdomain\n html_settings_links = True\n else:\n display_subdomain = 'yourZulipDomain'\n html_settings_links = False\n\n display_host = Realm.host_for_subdomain(display_subdomain)\n api_url_scheme_relative = display_host + \"/api\"\n api_url = settings.EXTERNAL_URI_SCHEME + api_url_scheme_relative\n zulip_url = settings.EXTERNAL_URI_SCHEME + display_host\n\n context['external_uri_scheme'] = settings.EXTERNAL_URI_SCHEME\n context['api_url'] = api_url\n context['api_url_scheme_relative'] = api_url_scheme_relative\n context['zulip_url'] = zulip_url\n\n context[\"html_settings_links\"] = html_settings_links\n if html_settings_links:\n settings_html = '<a href=\"/#settings\">Zulip settings page</a>'\n subscriptions_html = '<a target=\"_blank\" href=\"/#streams\">streams page</a>'\n else:\n settings_html = 'Zulip settings page'\n subscriptions_html = 'streams page'\n context['settings_html'] = settings_html\n context['subscriptions_html'] = subscriptions_html\n\nclass 
ApiURLView(TemplateView):\n def get_context_data(self, **kwargs: Any) -> Dict[str, str]:\n context = super().get_context_data(**kwargs)\n add_api_uri_context(context, self.request)\n return context\n\nclass APIView(ApiURLView):\n template_name = 'zerver/api.html'\n\n\nclass MarkdownDirectoryView(ApiURLView):\n path_template = \"\"\n\n def get_path(self, article: str) -> Tuple[str, int]:\n http_status = 200\n if article == \"\":\n article = \"index\"\n elif article == \"include/sidebar_index\":\n pass\n elif \"/\" in article:\n article = \"missing\"\n http_status = 404\n elif len(article) > 100 or not re.match('^[0-9a-zA-Z_-]+$', article):\n article = \"missing\"\n http_status = 404\n\n path = self.path_template % (article,)\n try:\n loader.get_template(path)\n return (path, http_status)\n except loader.TemplateDoesNotExist:\n return (self.path_template % (\"missing\",), 404)\n\n def get_context_data(self, **kwargs: Any) -> Dict[str, Any]:\n article = kwargs[\"article\"]\n context = super().get_context_data() # type: Dict[str, Any]\n (context[\"article\"], http_status_ignored) = self.get_path(article)\n\n # For disabling the \"Back to home\" on the homepage\n context[\"not_index_page\"] = not context[\"article\"].endswith(\"/index.md\")\n if self.path_template == '/zerver/help/%s.md':\n context[\"page_is_help_center\"] = True\n context[\"doc_root\"] = \"/help/\"\n (sidebar_index, http_status_ignored) = self.get_path(\"include/sidebar_index\")\n # We want the sliding/collapsing behavior for /help pages only\n sidebar_class = \"sidebar slide\"\n title_base = \"Zulip Help Center\"\n else:\n context[\"page_is_api_center\"] = True\n context[\"doc_root\"] = \"/api/\"\n (sidebar_index, http_status_ignored) = self.get_path(\"sidebar_index\")\n sidebar_class = \"sidebar\"\n title_base = \"Zulip API Documentation\"\n\n # The following is a somewhat hacky approach to extract titles from articles.\n # Hack: `context[\"article\"] has a leading `/`, so we use + to add 
directories.\n article_path = os.path.join(settings.DEPLOY_ROOT, 'templates') + context[\"article\"]\n if os.path.exists(article_path):\n with open(article_path) as article_file:\n first_line = article_file.readlines()[0]\n # Strip the header and then use the first line to get the article title\n article_title = first_line.strip().lstrip(\"# \")\n if context[\"not_index_page\"]:\n context[\"OPEN_GRAPH_TITLE\"] = \"%s (%s)\" % (article_title, title_base)\n else:\n context[\"OPEN_GRAPH_TITLE\"] = title_base\n self.request.placeholder_open_graph_description = (\n \"REPLACMENT_OPEN_GRAPH_DESCRIPTION_%s\" % (int(2**24 * random.random()),))\n context[\"OPEN_GRAPH_DESCRIPTION\"] = self.request.placeholder_open_graph_description\n\n context[\"sidebar_index\"] = sidebar_index\n context[\"sidebar_class\"] = sidebar_class\n # An \"article\" might require the api_uri_context to be rendered\n api_uri_context = {} # type: Dict[str, Any]\n add_api_uri_context(api_uri_context, self.request)\n api_uri_context[\"run_content_validators\"] = True\n context[\"api_uri_context\"] = api_uri_context\n return context\n\n def get(self, request: HttpRequest, article: str=\"\") -> HttpResponse:\n (path, http_status) = self.get_path(article)\n result = super().get(self, article=article)\n if http_status != 200:\n result.status_code = http_status\n return result\n\ndef add_integrations_context(context: Dict[str, Any]) -> None:\n alphabetical_sorted_categories = OrderedDict(sorted(CATEGORIES.items()))\n alphabetical_sorted_integration = OrderedDict(sorted(INTEGRATIONS.items()))\n enabled_integrations_count = len(list(filter(lambda v: v.is_enabled(), INTEGRATIONS.values())))\n # Subtract 1 so saying \"Over X integrations\" is correct. 
Then,\n # round down to the nearest multiple of 10.\n integrations_count_display = ((enabled_integrations_count - 1) // 10) * 10\n context['categories_dict'] = alphabetical_sorted_categories\n context['integrations_dict'] = alphabetical_sorted_integration\n context['integrations_count_display'] = integrations_count_display\n\ndef add_integrations_open_graph_context(context: Dict[str, Any], request: HttpRequest) -> None:\n path_name = request.path.rstrip('/').split('/')[-1]\n description = ('Zulip comes with over a hundred native integrations out of the box, '\n 'and integrates with Zapier, IFTTT, and Hubot to provide hundreds more. '\n 'Connect the apps you use everyday to Zulip.')\n\n if path_name in INTEGRATIONS:\n integration = INTEGRATIONS[path_name]\n context['OPEN_GRAPH_TITLE'] = 'Connect {name} to Zulip'.format(name=integration.display_name)\n context['OPEN_GRAPH_DESCRIPTION'] = description\n\n elif path_name in CATEGORIES:\n category = CATEGORIES[path_name]\n context['OPEN_GRAPH_TITLE'] = 'Connect your {category} tools to Zulip'.format(category=category)\n context['OPEN_GRAPH_DESCRIPTION'] = description\n\nclass IntegrationView(ApiURLView):\n template_name = 'zerver/integrations/index.html'\n\n def get_context_data(self, **kwargs: Any) -> Dict[str, Any]:\n context = super().get_context_data(**kwargs) # type: Dict[str, Any]\n add_integrations_context(context)\n add_integrations_open_graph_context(context, self.request)\n return context\n\n\n@has_request_variables\ndef integration_doc(request: HttpRequest, integration_name: str=REQ(default=None)) -> HttpResponse:\n if not request.is_ajax():\n return HttpResponseNotFound()\n try:\n integration = INTEGRATIONS[integration_name]\n except KeyError:\n return HttpResponseNotFound()\n\n context = {} # type: Dict[str, Any]\n add_api_uri_context(context, request)\n\n context['integration_name'] = integration.name\n context['integration_display_name'] = integration.display_name\n if hasattr(integration, 
'stream_name'):\n context['recommended_stream_name'] = integration.stream_name\n if isinstance(integration, WebhookIntegration):\n context['integration_url'] = integration.url[3:]\n if isinstance(integration, HubotIntegration):\n context['hubot_docs_url'] = integration.hubot_docs_url\n if isinstance(integration, EmailIntegration):\n context['email_gateway_example'] = settings.EMAIL_GATEWAY_EXAMPLE\n\n doc_html_str = render_markdown_path(integration.doc, context)\n\n return HttpResponse(doc_html_str)\n", "path": "zerver/views/documentation.py"}], "after_files": [{"content": "from typing import Any, Dict, Tuple\nfrom collections import OrderedDict\nfrom django.views.generic import TemplateView\nfrom django.conf import settings\nfrom django.http import HttpRequest, HttpResponse, HttpResponseNotFound\nfrom django.template import loader\n\nimport os\nimport random\nimport re\n\nfrom zerver.lib.integrations import CATEGORIES, INTEGRATIONS, HubotIntegration, \\\n WebhookIntegration, EmailIntegration\nfrom zerver.lib.request import has_request_variables, REQ\nfrom zerver.lib.subdomains import get_subdomain\nfrom zerver.models import Realm\nfrom zerver.templatetags.app_filters import render_markdown_path\nfrom zerver.context_processors import zulip_default_context\n\ndef add_api_uri_context(context: Dict[str, Any], request: HttpRequest) -> None:\n context.update(zulip_default_context(request))\n\n subdomain = get_subdomain(request)\n if (subdomain != Realm.SUBDOMAIN_FOR_ROOT_DOMAIN\n or not settings.ROOT_DOMAIN_LANDING_PAGE):\n display_subdomain = subdomain\n html_settings_links = True\n else:\n display_subdomain = 'yourZulipDomain'\n html_settings_links = False\n\n display_host = Realm.host_for_subdomain(display_subdomain)\n api_url_scheme_relative = display_host + \"/api\"\n api_url = settings.EXTERNAL_URI_SCHEME + api_url_scheme_relative\n zulip_url = settings.EXTERNAL_URI_SCHEME + display_host\n\n context['external_uri_scheme'] = settings.EXTERNAL_URI_SCHEME\n 
context['api_url'] = api_url\n context['api_url_scheme_relative'] = api_url_scheme_relative\n context['zulip_url'] = zulip_url\n\n context[\"html_settings_links\"] = html_settings_links\n if html_settings_links:\n settings_html = '<a href=\"/#settings\">Zulip settings page</a>'\n subscriptions_html = '<a target=\"_blank\" href=\"/#streams\">streams page</a>'\n else:\n settings_html = 'Zulip settings page'\n subscriptions_html = 'streams page'\n context['settings_html'] = settings_html\n context['subscriptions_html'] = subscriptions_html\n\nclass ApiURLView(TemplateView):\n def get_context_data(self, **kwargs: Any) -> Dict[str, str]:\n context = super().get_context_data(**kwargs)\n add_api_uri_context(context, self.request)\n return context\n\nclass APIView(ApiURLView):\n template_name = 'zerver/api.html'\n\n\nclass MarkdownDirectoryView(ApiURLView):\n path_template = \"\"\n\n def get_path(self, article: str) -> Tuple[str, int]:\n http_status = 200\n if article == \"\":\n article = \"index\"\n elif article == \"include/sidebar_index\":\n pass\n elif \"/\" in article:\n article = \"missing\"\n http_status = 404\n elif len(article) > 100 or not re.match('^[0-9a-zA-Z_-]+$', article):\n article = \"missing\"\n http_status = 404\n\n path = self.path_template % (article,)\n try:\n loader.get_template(path)\n return (path, http_status)\n except loader.TemplateDoesNotExist:\n return (self.path_template % (\"missing\",), 404)\n\n def get_context_data(self, **kwargs: Any) -> Dict[str, Any]:\n article = kwargs[\"article\"]\n context = super().get_context_data() # type: Dict[str, Any]\n (context[\"article\"], http_status_ignored) = self.get_path(article)\n\n # For disabling the \"Back to home\" on the homepage\n context[\"not_index_page\"] = not context[\"article\"].endswith(\"/index.md\")\n if self.path_template == '/zerver/help/%s.md':\n context[\"page_is_help_center\"] = True\n context[\"doc_root\"] = \"/help/\"\n (sidebar_index, http_status_ignored) = 
self.get_path(\"include/sidebar_index\")\n # We want the sliding/collapsing behavior for /help pages only\n sidebar_class = \"sidebar slide\"\n title_base = \"Zulip Help Center\"\n else:\n context[\"page_is_api_center\"] = True\n context[\"doc_root\"] = \"/api/\"\n (sidebar_index, http_status_ignored) = self.get_path(\"sidebar_index\")\n sidebar_class = \"sidebar\"\n title_base = \"Zulip API Documentation\"\n\n # The following is a somewhat hacky approach to extract titles from articles.\n # Hack: `context[\"article\"] has a leading `/`, so we use + to add directories.\n article_path = os.path.join(settings.DEPLOY_ROOT, 'templates') + context[\"article\"]\n if os.path.exists(article_path):\n with open(article_path) as article_file:\n first_line = article_file.readlines()[0]\n # Strip the header and then use the first line to get the article title\n article_title = first_line.strip().lstrip(\"# \")\n if context[\"not_index_page\"]:\n context[\"OPEN_GRAPH_TITLE\"] = \"%s (%s)\" % (article_title, title_base)\n else:\n context[\"OPEN_GRAPH_TITLE\"] = title_base\n self.request.placeholder_open_graph_description = (\n \"REPLACMENT_OPEN_GRAPH_DESCRIPTION_%s\" % (int(2**24 * random.random()),))\n context[\"OPEN_GRAPH_DESCRIPTION\"] = self.request.placeholder_open_graph_description\n\n context[\"sidebar_index\"] = sidebar_index\n context[\"sidebar_class\"] = sidebar_class\n # An \"article\" might require the api_uri_context to be rendered\n api_uri_context = {} # type: Dict[str, Any]\n add_api_uri_context(api_uri_context, self.request)\n api_uri_context[\"run_content_validators\"] = True\n context[\"api_uri_context\"] = api_uri_context\n return context\n\n def get(self, request: HttpRequest, article: str=\"\") -> HttpResponse:\n (path, http_status) = self.get_path(article)\n result = super().get(self, article=article)\n if http_status != 200:\n result.status_code = http_status\n return result\n\ndef add_integrations_context(context: Dict[str, Any]) -> None:\n 
alphabetical_sorted_categories = OrderedDict(sorted(CATEGORIES.items()))\n alphabetical_sorted_integration = OrderedDict(sorted(INTEGRATIONS.items()))\n enabled_integrations_count = len(list(filter(lambda v: v.is_enabled(), INTEGRATIONS.values())))\n # Subtract 1 so saying \"Over X integrations\" is correct. Then,\n # round down to the nearest multiple of 10.\n integrations_count_display = ((enabled_integrations_count - 1) // 10) * 10\n context['categories_dict'] = alphabetical_sorted_categories\n context['integrations_dict'] = alphabetical_sorted_integration\n context['integrations_count_display'] = integrations_count_display\n\ndef add_integrations_open_graph_context(context: Dict[str, Any], request: HttpRequest) -> None:\n path_name = request.path.rstrip('/').split('/')[-1]\n description = ('Zulip comes with over a hundred native integrations out of the box, '\n 'and integrates with Zapier, IFTTT, and Hubot to provide hundreds more. '\n 'Connect the apps you use everyday to Zulip.')\n\n if path_name in INTEGRATIONS:\n integration = INTEGRATIONS[path_name]\n context['OPEN_GRAPH_TITLE'] = 'Connect {name} to Zulip'.format(name=integration.display_name)\n context['OPEN_GRAPH_DESCRIPTION'] = description\n\n elif path_name in CATEGORIES:\n category = CATEGORIES[path_name]\n context['OPEN_GRAPH_TITLE'] = 'Connect your {category} tools to Zulip'.format(category=category)\n context['OPEN_GRAPH_DESCRIPTION'] = description\n\n elif path_name == 'integrations':\n context['OPEN_GRAPH_TITLE'] = 'Connect the tools you use to Zulip'\n context['OPEN_GRAPH_DESCRIPTION'] = description\n\nclass IntegrationView(ApiURLView):\n template_name = 'zerver/integrations/index.html'\n\n def get_context_data(self, **kwargs: Any) -> Dict[str, Any]:\n context = super().get_context_data(**kwargs) # type: Dict[str, Any]\n add_integrations_context(context)\n add_integrations_open_graph_context(context, self.request)\n return context\n\n\n@has_request_variables\ndef integration_doc(request: 
HttpRequest, integration_name: str=REQ(default=None)) -> HttpResponse:\n if not request.is_ajax():\n return HttpResponseNotFound()\n try:\n integration = INTEGRATIONS[integration_name]\n except KeyError:\n return HttpResponseNotFound()\n\n context = {} # type: Dict[str, Any]\n add_api_uri_context(context, request)\n\n context['integration_name'] = integration.name\n context['integration_display_name'] = integration.display_name\n if hasattr(integration, 'stream_name'):\n context['recommended_stream_name'] = integration.stream_name\n if isinstance(integration, WebhookIntegration):\n context['integration_url'] = integration.url[3:]\n if isinstance(integration, HubotIntegration):\n context['hubot_docs_url'] = integration.hubot_docs_url\n if isinstance(integration, EmailIntegration):\n context['email_gateway_example'] = settings.EMAIL_GATEWAY_EXAMPLE\n\n doc_html_str = render_markdown_path(integration.doc, context)\n\n return HttpResponse(doc_html_str)\n", "path": "zerver/views/documentation.py"}]}

| 2,942 | 144 |

gh_patches_debug_5172

|

rasdani/github-patches

|

git_diff

|