problem_id

stringlengths 18

22

| source

stringclasses 1

value | task_type

stringclasses 1

value | in_source_id

stringlengths 13

58

| prompt

stringlengths 1.1k

25.4k

| golden_diff

stringlengths 145

5.13k

| verification_info

stringlengths 582

39.1k

| num_tokens

int64 271

4.1k

| num_tokens_diff

int64 47

1.02k

|

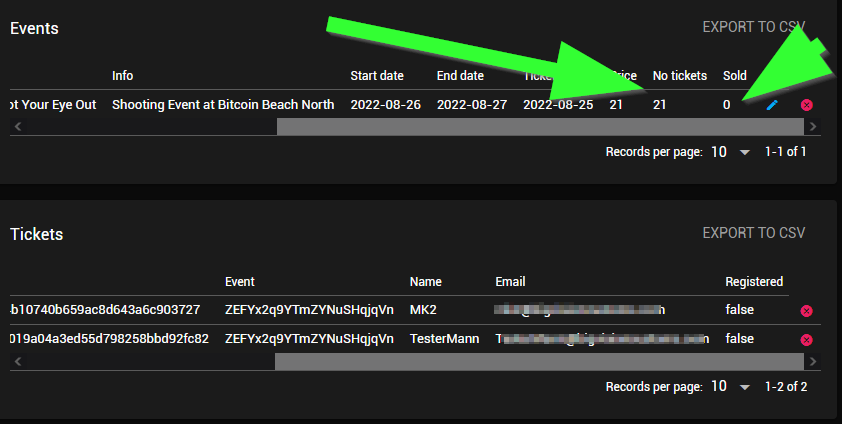

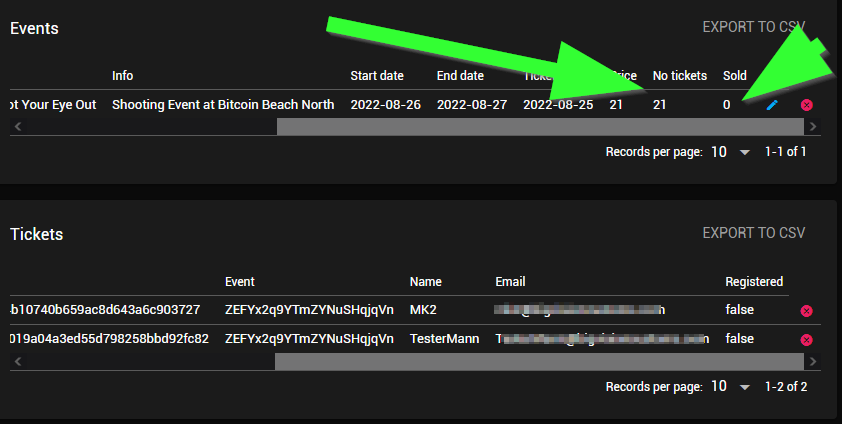

|---|---|---|---|---|---|---|---|---|

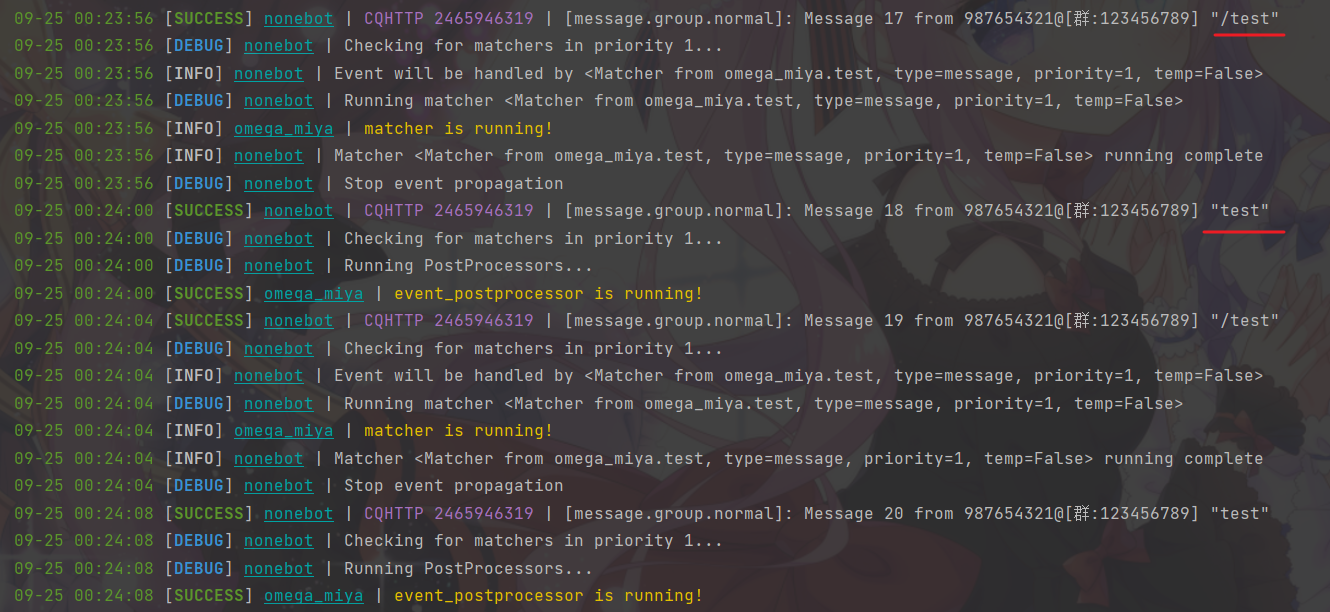

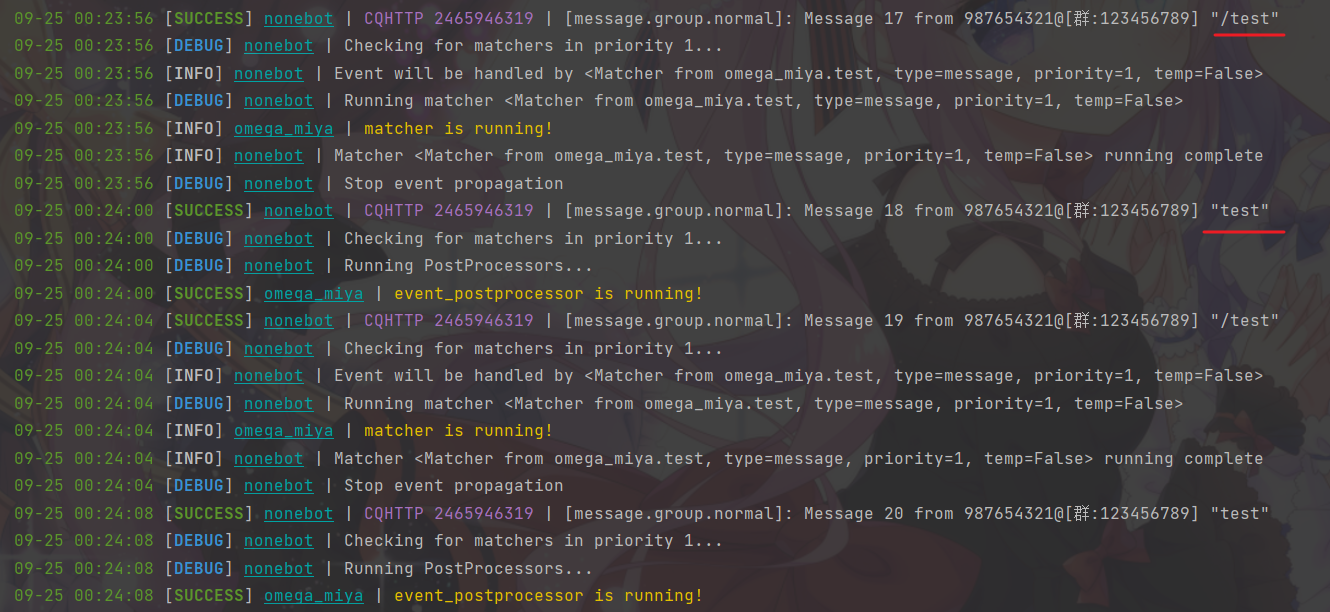

gh_patches_debug_31412 | rasdani/github-patches | git_diff | nonebot__nonebot2-539 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Bug: event_postprocessor does not run properly after a matcher runs

**Describe the problem:**

event_postprocessor does not run properly after a matcher runs

**How to reproduce?**

Write the following plugin

```python
from nonebot import on_command, logger
from nonebot.message import event_postprocessor
from nonebot.typing import T_State
from nonebot.adapters.cqhttp.event import Event, MessageEvent
from nonebot.adapters.cqhttp.bot import Bot


test = on_command('test')


@test.handle()
async def handle_test(bot: Bot, event: MessageEvent, state: T_State):
    logger.opt(colors=True).info('<ly>matcher is running!</ly>')


@event_postprocessor
async def handle_event_postprocessor(bot: Bot, event: Event, state: T_State):
    logger.opt(colors=True).success('<ly>event_postprocessor is running!</ly>')
```

**Expected result**

event_postprocessor should run properly in all cases

In practice, event_postprocessor is skipped once a matcher has run

**Additional information**

The `return result` on line 250 directly causes event_postprocessor to be skipped after a matcher runs

https://github.com/nonebot/nonebot2/blob/0b35d5e724a2fc7fc1e7d90499aea8a9c27e821d/nonebot/message.py#L238-L262

**Environment:**

- OS: Windows 10 21H1

- Python Version: 3.9.6

- Nonebot Version: 2.0.0a15

**Protocol client information:**

- Protocol client: none

- Protocol client version: none

**Screenshots or logs**

See above

--- END ISSUE ---
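
The failure mode the issue describes can be sketched in isolation. The snippet below is a minimal illustration, not NoneBot code: `StopPropagation` is a local stand-in class, and `blocking_matcher`, `idle_matcher`, and `dispatch` are hypothetical names invented for this example. It assumes the early `return` is reached once a matcher has run and stopped propagation, and shows that the post-processing stage is then never reached.

```python
import asyncio


class StopPropagation(Exception):
    """Local stand-in for the exception a blocking matcher raises (hypothetical)."""


async def blocking_matcher():
    # Mimics a matcher that ran and then stopped further propagation.
    raise StopPropagation


async def idle_matcher():
    # Mimics a matcher whose rule did not match the event.
    return None


async def dispatch(return_early):
    """Return the list of stages that ran for one simulated event."""
    stages = []
    results = await asyncio.gather(
        blocking_matcher(), idle_matcher(), return_exceptions=True)
    for result in results:
        if not isinstance(result, Exception):
            continue
        stages.append("matcher stopped propagation")
        if return_early:
            # Leaving the function here skips the post-processing stage below.
            return stages
    stages.append("event_postprocessor")
    return stages


buggy = asyncio.run(dispatch(return_early=True))
fixed = asyncio.run(dispatch(return_early=False))
print(buggy)
print(fixed)
```

With `return_early=False` the loop only records what happened and control falls through to the post-processing stage, which is the behaviour the issue asks for.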

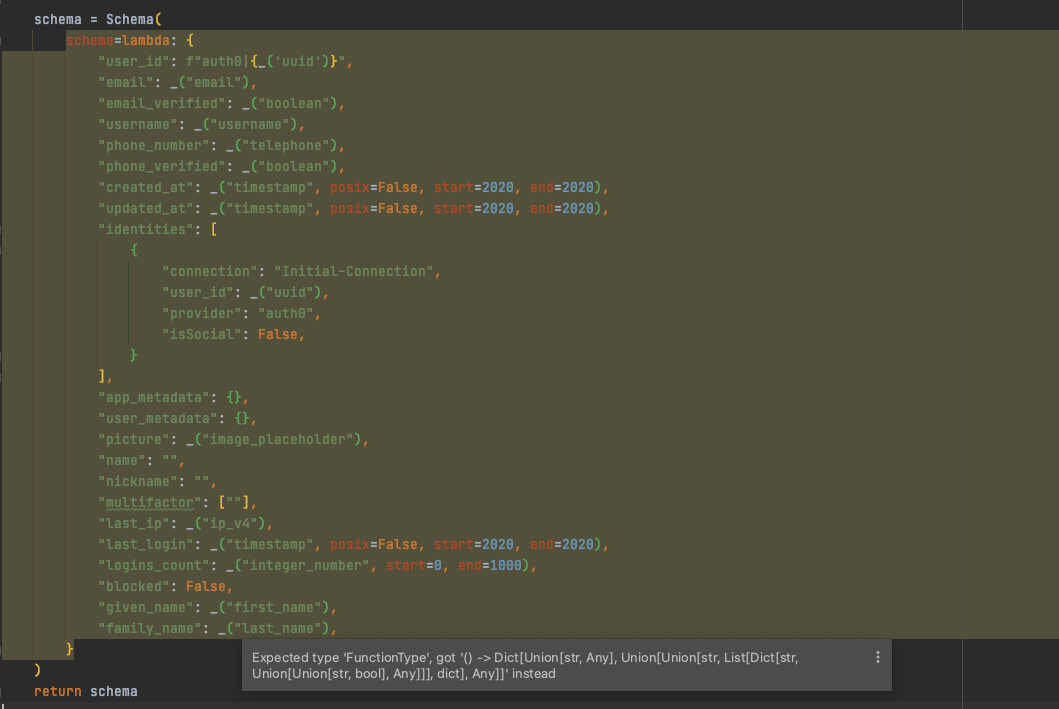

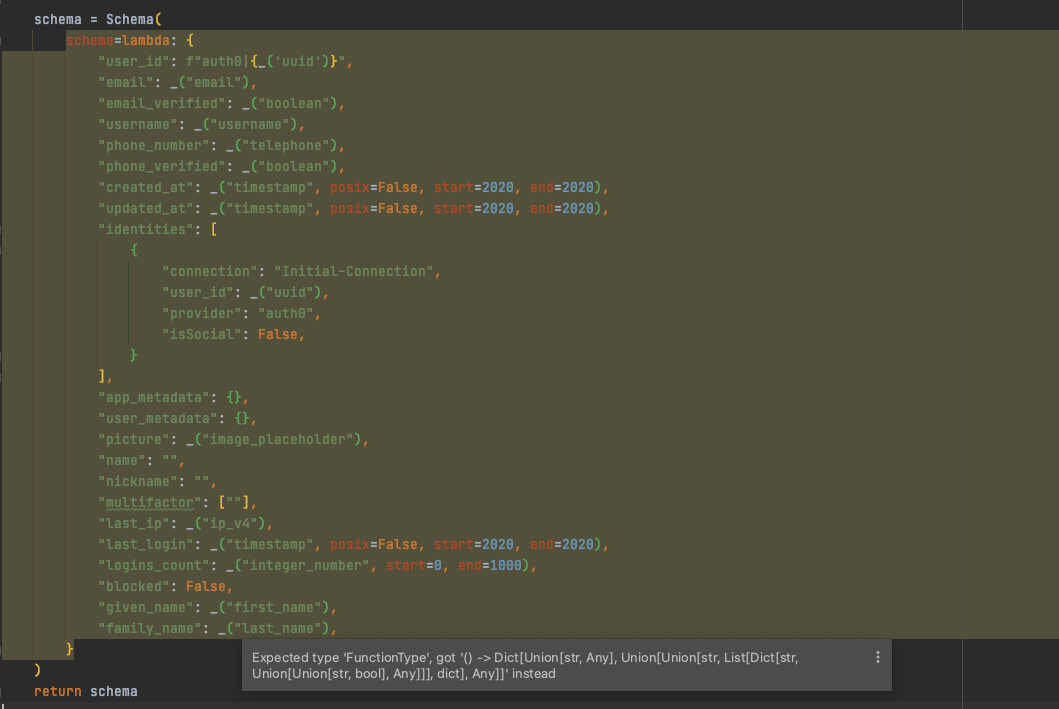

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `nonebot/message.py`

Content:

```
1 """
2 事件处理
3 ========
4
5 NoneBot 内部处理并按优先级分发事件给所有事件响应器,提供了多个插槽以进行事件的预处理等。
6 """
7
8 import asyncio
9 from datetime import datetime
10 from typing import TYPE_CHECKING, Set, Type, Optional
11
12 from nonebot.log import logger
13 from nonebot.rule import TrieRule
14 from nonebot.utils import escape_tag
15 from nonebot.matcher import Matcher, matchers
16 from nonebot.exception import NoLogException, StopPropagation, IgnoredException
17 from nonebot.typing import (T_State, T_RunPreProcessor, T_RunPostProcessor,
18                             T_EventPreProcessor, T_EventPostProcessor)
19
20 if TYPE_CHECKING:
21     from nonebot.adapters import Bot, Event
22
23 _event_preprocessors: Set[T_EventPreProcessor] = set()
24 _event_postprocessors: Set[T_EventPostProcessor] = set()
25 _run_preprocessors: Set[T_RunPreProcessor] = set()
26 _run_postprocessors: Set[T_RunPostProcessor] = set()
27
28
29 def event_preprocessor(func: T_EventPreProcessor) -> T_EventPreProcessor:
30     """
31     :说明:
32
33       事件预处理。装饰一个函数,使它在每次接收到事件并分发给各响应器之前执行。
34
35     :参数:
36
37       事件预处理函数接收三个参数。
38
39       * ``bot: Bot``: Bot 对象
40       * ``event: Event``: Event 对象
41       * ``state: T_State``: 当前 State
42     """
43     _event_preprocessors.add(func)
44     return func
45
46
47 def event_postprocessor(func: T_EventPostProcessor) -> T_EventPostProcessor:
48     """
49     :说明:
50
51       事件后处理。装饰一个函数,使它在每次接收到事件并分发给各响应器之后执行。
52
53     :参数:
54
55       事件后处理函数接收三个参数。
56
57       * ``bot: Bot``: Bot 对象
58       * ``event: Event``: Event 对象
59       * ``state: T_State``: 当前事件运行前 State
60     """
61     _event_postprocessors.add(func)
62     return func
63
64
65 def run_preprocessor(func: T_RunPreProcessor) -> T_RunPreProcessor:
66     """
67     :说明:
68
69       运行预处理。装饰一个函数,使它在每次事件响应器运行前执行。
70
71     :参数:
72
73       运行预处理函数接收四个参数。
74
75       * ``matcher: Matcher``: 当前要运行的事件响应器
76       * ``bot: Bot``: Bot 对象
77       * ``event: Event``: Event 对象
78       * ``state: T_State``: 当前 State
79     """
80     _run_preprocessors.add(func)
81     return func
82
83
84 def run_postprocessor(func: T_RunPostProcessor) -> T_RunPostProcessor:
85     """
86     :说明:
87
88       运行后处理。装饰一个函数,使它在每次事件响应器运行后执行。
89
90     :参数:
91
92       运行后处理函数接收五个参数。
93
94       * ``matcher: Matcher``: 运行完毕的事件响应器
95       * ``exception: Optional[Exception]``: 事件响应器运行错误(如果存在)
96       * ``bot: Bot``: Bot 对象
97       * ``event: Event``: Event 对象
98       * ``state: T_State``: 当前 State
99     """
100     _run_postprocessors.add(func)
101     return func
102
103
104 async def _check_matcher(priority: int, Matcher: Type[Matcher], bot: "Bot",
105                          event: "Event", state: T_State) -> None:
106     if Matcher.expire_time and datetime.now() > Matcher.expire_time:
107         try:
108             matchers[priority].remove(Matcher)
109         except Exception:
110             pass
111         return
112
113     try:
114         if not await Matcher.check_perm(
115                 bot, event) or not await Matcher.check_rule(bot, event, state):
116             return
117     except Exception as e:
118         logger.opt(colors=True, exception=e).error(
119             f"<r><bg #f8bbd0>Rule check failed for {Matcher}.</bg #f8bbd0></r>")
120         return
121
122     if Matcher.temp:
123         try:
124             matchers[priority].remove(Matcher)
125         except Exception:
126             pass
127
128     await _run_matcher(Matcher, bot, event, state)
129
130
131 async def _run_matcher(Matcher: Type[Matcher], bot: "Bot", event: "Event",
132                        state: T_State) -> None:
133     logger.info(f"Event will be handled by {Matcher}")
134
135     matcher = Matcher()
136
137     coros = list(
138         map(lambda x: x(matcher, bot, event, state), _run_preprocessors))
139     if coros:
140         try:
141             await asyncio.gather(*coros)
142         except IgnoredException:
143             logger.opt(colors=True).info(
144                 f"Matcher {matcher} running is <b>cancelled</b>")
145             return
146         except Exception as e:
147             logger.opt(colors=True, exception=e).error(
148                 "<r><bg #f8bbd0>Error when running RunPreProcessors. "
149                 "Running cancelled!</bg #f8bbd0></r>")
150             return
151
152     exception = None
153
154     try:
155         logger.debug(f"Running matcher {matcher}")
156         await matcher.run(bot, event, state)
157     except Exception as e:
158         logger.opt(colors=True, exception=e).error(
159             f"<r><bg #f8bbd0>Running matcher {matcher} failed.</bg #f8bbd0></r>"
160         )
161         exception = e
162
163     coros = list(
164         map(lambda x: x(matcher, exception, bot, event, state),
165             _run_postprocessors))
166     if coros:
167         try:
168             await asyncio.gather(*coros)
169         except Exception as e:
170             logger.opt(colors=True, exception=e).error(
171                 "<r><bg #f8bbd0>Error when running RunPostProcessors</bg #f8bbd0></r>"
172             )
173
174     if matcher.block:
175         raise StopPropagation
176     return
177
178
179 async def handle_event(bot: "Bot", event: "Event") -> Optional[Exception]:
180     """
181     :说明:
182
183       处理一个事件。调用该函数以实现分发事件。
184
185     :参数:
186
187       * ``bot: Bot``: Bot 对象
188       * ``event: Event``: Event 对象
189
190     :示例:
191
192     .. code-block:: python
193
194         import asyncio
195         asyncio.create_task(handle_event(bot, event))
196     """
197     show_log = True
198     log_msg = f"<m>{escape_tag(bot.type.upper())} {escape_tag(bot.self_id)}</m> | "
199     try:
200         log_msg += event.get_log_string()
201     except NoLogException:
202         show_log = False
203     if show_log:
204         logger.opt(colors=True).success(log_msg)
205
206     state = {}
207     coros = list(map(lambda x: x(bot, event, state), _event_preprocessors))
208     if coros:
209         try:
210             if show_log:
211                 logger.debug("Running PreProcessors...")
212             await asyncio.gather(*coros)
213         except IgnoredException as e:
214             logger.opt(colors=True).info(
215                 f"Event {escape_tag(event.get_event_name())} is <b>ignored</b>")
216             return e
217         except Exception as e:
218             logger.opt(colors=True, exception=e).error(
219                 "<r><bg #f8bbd0>Error when running EventPreProcessors. "
220                 "Event ignored!</bg #f8bbd0></r>")
221             return e
222
223     # Trie Match
224     _, _ = TrieRule.get_value(bot, event, state)
225
226     break_flag = False
227     for priority in sorted(matchers.keys()):
228         if break_flag:
229             break
230
231         if show_log:
232             logger.debug(f"Checking for matchers in priority {priority}...")
233
234         pending_tasks = [
235             _check_matcher(priority, matcher, bot, event, state.copy())
236             for matcher in matchers[priority]
237         ]
238
239         results = await asyncio.gather(*pending_tasks, return_exceptions=True)
240
241         for result in results:
242             if not isinstance(result, Exception):
243                 continue
244             if isinstance(result, StopPropagation):
245                 break_flag = True
246                 logger.debug("Stop event propagation")
247             else:
248                 logger.opt(colors=True, exception=result).error(
249                     "<r><bg #f8bbd0>Error when checking Matcher.</bg #f8bbd0></r>"
250                 )
251             return result
252
253     coros = list(map(lambda x: x(bot, event, state), _event_postprocessors))
254     if coros:
255         try:
256             if show_log:
257                 logger.debug("Running PostProcessors...")
258             await asyncio.gather(*coros)
259         except Exception as e:
260             logger.opt(colors=True, exception=e).error(
261                 "<r><bg #f8bbd0>Error when running EventPostProcessors</bg #f8bbd0></r>"
262             )
263             return e
264
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/nonebot/message.py b/nonebot/message.py
--- a/nonebot/message.py
+++ b/nonebot/message.py
@@ -176,7 +176,7 @@
     return
 
 
-async def handle_event(bot: "Bot", event: "Event") -> Optional[Exception]:
+async def handle_event(bot: "Bot", event: "Event") -> None:
     """
     :说明:
 
@@ -213,12 +213,12 @@
         except IgnoredException as e:
             logger.opt(colors=True).info(
                 f"Event {escape_tag(event.get_event_name())} is <b>ignored</b>")
-            return e
+            return
         except Exception as e:
             logger.opt(colors=True, exception=e).error(
                 "<r><bg #f8bbd0>Error when running EventPreProcessors. "
                 "Event ignored!</bg #f8bbd0></r>")
-            return e
+            return
 
     # Trie Match
     _, _ = TrieRule.get_value(bot, event, state)
@@ -248,7 +248,6 @@
                 logger.opt(colors=True, exception=result).error(
                     "<r><bg #f8bbd0>Error when checking Matcher.</bg #f8bbd0></r>"
                 )
-            return result
 
     coros = list(map(lambda x: x(bot, event, state), _event_postprocessors))
     if coros:
@@ -260,4 +259,3 @@
             logger.opt(colors=True, exception=e).error(
                 "<r><bg #f8bbd0>Error when running EventPostProcessors</bg #f8bbd0></r>"
             )
-            return e

| {"golden_diff": "diff --git a/nonebot/message.py b/nonebot/message.py\n--- a/nonebot/message.py\n+++ b/nonebot/message.py\n@@ -176,7 +176,7 @@\n return\n \n \n-async def handle_event(bot: \"Bot\", event: \"Event\") -> Optional[Exception]:\n+async def handle_event(bot: \"Bot\", event: \"Event\") -> None:\n \"\"\"\n :\u8bf4\u660e:\n \n@@ -213,12 +213,12 @@\n except IgnoredException as e:\n logger.opt(colors=True).info(\n f\"Event {escape_tag(event.get_event_name())} is <b>ignored</b>\")\n- return e\n+ return\n except Exception as e:\n logger.opt(colors=True, exception=e).error(\n \"<r><bg #f8bbd0>Error when running EventPreProcessors. \"\n \"Event ignored!</bg #f8bbd0></r>\")\n- return e\n+ return\n \n # Trie Match\n _, _ = TrieRule.get_value(bot, event, state)\n@@ -248,7 +248,6 @@\n logger.opt(colors=True, exception=result).error(\n \"<r><bg #f8bbd0>Error when checking Matcher.</bg #f8bbd0></r>\"\n )\n- return result\n \n coros = list(map(lambda x: x(bot, event, state), _event_postprocessors))\n if coros:\n@@ -260,4 +259,3 @@\n logger.opt(colors=True, exception=e).error(\n \"<r><bg #f8bbd0>Error when running EventPostProcessors</bg #f8bbd0></r>\"\n )\n- return e\n", "issue": "Bug: event_postprocessor \u4e0d\u80fd\u5728 matcher \u8fd0\u884c\u540e\u6b63\u5e38\u6267\u884c\n**\u63cf\u8ff0\u95ee\u9898\uff1a**\r\n\r\nevent_postprocessor \u4e0d\u80fd\u5728 matcher \u8fd0\u884c\u540e\u6b63\u5e38\u6267\u884c\r\n\r\n**\u5982\u4f55\u590d\u73b0\uff1f**\r\n\r\n\u7f16\u5199\u4ee5\u4e0b\u63d2\u4ef6\r\n```python\r\nfrom nonebot import on_command, logger\r\nfrom nonebot.message import event_postprocessor\r\nfrom nonebot.typing import T_State\r\nfrom nonebot.adapters.cqhttp.event import Event, MessageEvent\r\nfrom nonebot.adapters.cqhttp.bot import Bot\r\n\r\n\r\ntest = on_command('test')\r\n\r\n\r\[email protected]()\r\nasync def handle_test(bot: Bot, event: MessageEvent, state: T_State):\r\n logger.opt(colors=True).info('<ly>matcher is 
running!</ly>')\r\n\r\n\r\n@event_postprocessor\r\nasync def handle_event_postprocessor(bot: Bot, event: Event, state: T_State):\r\n logger.opt(colors=True).success('<ly>event_postprocessor is running!</ly>')\r\n```\r\n\r\n**\u671f\u671b\u7684\u7ed3\u679c**\r\n\r\nevent_postprocessor \u80fd\u5728\u4efb\u610f\u60c5\u51b5\u4e0b\u6b63\u5e38\u6267\u884c\r\n\r\n\u5b9e\u9645\u4e0a\u5f53 matcher \u8fd0\u884c\u540e event_postprocessor \u4f1a\u88ab\u8df3\u8fc7\r\n\r\n\r\n\r\n**\u5176\u4ed6\u4fe1\u606f**\r\n\r\n\u7b2c 250 \u884c `return result` \u5c06\u76f4\u63a5\u5bfc\u81f4 matcher \u8fd0\u884c\u540e event_postprocessor \u88ab\u8df3\u8fc7\r\n\r\nhttps://github.com/nonebot/nonebot2/blob/0b35d5e724a2fc7fc1e7d90499aea8a9c27e821d/nonebot/message.py#L238-L262\r\n\r\n**\u73af\u5883\u4fe1\u606f\uff1a**\r\n\r\n - OS: Windows 10 21H1\r\n - Python Version: 3.9.6\r\n - Nonebot Version: 2.0.0a15\r\n\r\n**\u534f\u8bae\u7aef\u4fe1\u606f\uff1a**\r\n\r\n - \u534f\u8bae\u7aef: \u65e0\r\n - \u534f\u8bae\u7aef\u7248\u672c: \u65e0\r\n\r\n**\u622a\u56fe\u6216\u65e5\u5fd7**\r\n\r\n\u5982\u4e0a\r\n\n", "before_files": [{"content": "\"\"\"\n\u4e8b\u4ef6\u5904\u7406\n========\n\nNoneBot \u5185\u90e8\u5904\u7406\u5e76\u6309\u4f18\u5148\u7ea7\u5206\u53d1\u4e8b\u4ef6\u7ed9\u6240\u6709\u4e8b\u4ef6\u54cd\u5e94\u5668\uff0c\u63d0\u4f9b\u4e86\u591a\u4e2a\u63d2\u69fd\u4ee5\u8fdb\u884c\u4e8b\u4ef6\u7684\u9884\u5904\u7406\u7b49\u3002\n\"\"\"\n\nimport asyncio\nfrom datetime import datetime\nfrom typing import TYPE_CHECKING, Set, Type, Optional\n\nfrom nonebot.log import logger\nfrom nonebot.rule import TrieRule\nfrom nonebot.utils import escape_tag\nfrom nonebot.matcher import Matcher, matchers\nfrom nonebot.exception import NoLogException, StopPropagation, IgnoredException\nfrom nonebot.typing import (T_State, T_RunPreProcessor, T_RunPostProcessor,\n T_EventPreProcessor, T_EventPostProcessor)\n\nif TYPE_CHECKING:\n from nonebot.adapters import Bot, Event\n\n_event_preprocessors: Set[T_EventPreProcessor] = 
set()\n_event_postprocessors: Set[T_EventPostProcessor] = set()\n_run_preprocessors: Set[T_RunPreProcessor] = set()\n_run_postprocessors: Set[T_RunPostProcessor] = set()\n\n\ndef event_preprocessor(func: T_EventPreProcessor) -> T_EventPreProcessor:\n \"\"\"\n :\u8bf4\u660e:\n\n \u4e8b\u4ef6\u9884\u5904\u7406\u3002\u88c5\u9970\u4e00\u4e2a\u51fd\u6570\uff0c\u4f7f\u5b83\u5728\u6bcf\u6b21\u63a5\u6536\u5230\u4e8b\u4ef6\u5e76\u5206\u53d1\u7ed9\u5404\u54cd\u5e94\u5668\u4e4b\u524d\u6267\u884c\u3002\n\n :\u53c2\u6570:\n\n \u4e8b\u4ef6\u9884\u5904\u7406\u51fd\u6570\u63a5\u6536\u4e09\u4e2a\u53c2\u6570\u3002\n\n * ``bot: Bot``: Bot \u5bf9\u8c61\n * ``event: Event``: Event \u5bf9\u8c61\n * ``state: T_State``: \u5f53\u524d State\n \"\"\"\n _event_preprocessors.add(func)\n return func\n\n\ndef event_postprocessor(func: T_EventPostProcessor) -> T_EventPostProcessor:\n \"\"\"\n :\u8bf4\u660e:\n\n \u4e8b\u4ef6\u540e\u5904\u7406\u3002\u88c5\u9970\u4e00\u4e2a\u51fd\u6570\uff0c\u4f7f\u5b83\u5728\u6bcf\u6b21\u63a5\u6536\u5230\u4e8b\u4ef6\u5e76\u5206\u53d1\u7ed9\u5404\u54cd\u5e94\u5668\u4e4b\u540e\u6267\u884c\u3002\n\n :\u53c2\u6570:\n\n \u4e8b\u4ef6\u540e\u5904\u7406\u51fd\u6570\u63a5\u6536\u4e09\u4e2a\u53c2\u6570\u3002\n\n * ``bot: Bot``: Bot \u5bf9\u8c61\n * ``event: Event``: Event \u5bf9\u8c61\n * ``state: T_State``: \u5f53\u524d\u4e8b\u4ef6\u8fd0\u884c\u524d State\n \"\"\"\n _event_postprocessors.add(func)\n return func\n\n\ndef run_preprocessor(func: T_RunPreProcessor) -> T_RunPreProcessor:\n \"\"\"\n :\u8bf4\u660e:\n\n \u8fd0\u884c\u9884\u5904\u7406\u3002\u88c5\u9970\u4e00\u4e2a\u51fd\u6570\uff0c\u4f7f\u5b83\u5728\u6bcf\u6b21\u4e8b\u4ef6\u54cd\u5e94\u5668\u8fd0\u884c\u524d\u6267\u884c\u3002\n\n :\u53c2\u6570:\n\n \u8fd0\u884c\u9884\u5904\u7406\u51fd\u6570\u63a5\u6536\u56db\u4e2a\u53c2\u6570\u3002\n\n * ``matcher: Matcher``: \u5f53\u524d\u8981\u8fd0\u884c\u7684\u4e8b\u4ef6\u54cd\u5e94\u5668\n * ``bot: Bot``: Bot \u5bf9\u8c61\n * ``event: Event``: Event \u5bf9\u8c61\n * ``state: 
T_State``: \u5f53\u524d State\n \"\"\"\n _run_preprocessors.add(func)\n return func\n\n\ndef run_postprocessor(func: T_RunPostProcessor) -> T_RunPostProcessor:\n \"\"\"\n :\u8bf4\u660e:\n\n \u8fd0\u884c\u540e\u5904\u7406\u3002\u88c5\u9970\u4e00\u4e2a\u51fd\u6570\uff0c\u4f7f\u5b83\u5728\u6bcf\u6b21\u4e8b\u4ef6\u54cd\u5e94\u5668\u8fd0\u884c\u540e\u6267\u884c\u3002\n\n :\u53c2\u6570:\n\n \u8fd0\u884c\u540e\u5904\u7406\u51fd\u6570\u63a5\u6536\u4e94\u4e2a\u53c2\u6570\u3002\n\n * ``matcher: Matcher``: \u8fd0\u884c\u5b8c\u6bd5\u7684\u4e8b\u4ef6\u54cd\u5e94\u5668\n * ``exception: Optional[Exception]``: \u4e8b\u4ef6\u54cd\u5e94\u5668\u8fd0\u884c\u9519\u8bef\uff08\u5982\u679c\u5b58\u5728\uff09\n * ``bot: Bot``: Bot \u5bf9\u8c61\n * ``event: Event``: Event \u5bf9\u8c61\n * ``state: T_State``: \u5f53\u524d State\n \"\"\"\n _run_postprocessors.add(func)\n return func\n\n\nasync def _check_matcher(priority: int, Matcher: Type[Matcher], bot: \"Bot\",\n event: \"Event\", state: T_State) -> None:\n if Matcher.expire_time and datetime.now() > Matcher.expire_time:\n try:\n matchers[priority].remove(Matcher)\n except Exception:\n pass\n return\n\n try:\n if not await Matcher.check_perm(\n bot, event) or not await Matcher.check_rule(bot, event, state):\n return\n except Exception as e:\n logger.opt(colors=True, exception=e).error(\n f\"<r><bg #f8bbd0>Rule check failed for {Matcher}.</bg #f8bbd0></r>\")\n return\n\n if Matcher.temp:\n try:\n matchers[priority].remove(Matcher)\n except Exception:\n pass\n\n await _run_matcher(Matcher, bot, event, state)\n\n\nasync def _run_matcher(Matcher: Type[Matcher], bot: \"Bot\", event: \"Event\",\n state: T_State) -> None:\n logger.info(f\"Event will be handled by {Matcher}\")\n\n matcher = Matcher()\n\n coros = list(\n map(lambda x: x(matcher, bot, event, state), _run_preprocessors))\n if coros:\n try:\n await asyncio.gather(*coros)\n except IgnoredException:\n logger.opt(colors=True).info(\n f\"Matcher {matcher} running is <b>cancelled</b>\")\n 
return\n except Exception as e:\n logger.opt(colors=True, exception=e).error(\n \"<r><bg #f8bbd0>Error when running RunPreProcessors. \"\n \"Running cancelled!</bg #f8bbd0></r>\")\n return\n\n exception = None\n\n try:\n logger.debug(f\"Running matcher {matcher}\")\n await matcher.run(bot, event, state)\n except Exception as e:\n logger.opt(colors=True, exception=e).error(\n f\"<r><bg #f8bbd0>Running matcher {matcher} failed.</bg #f8bbd0></r>\"\n )\n exception = e\n\n coros = list(\n map(lambda x: x(matcher, exception, bot, event, state),\n _run_postprocessors))\n if coros:\n try:\n await asyncio.gather(*coros)\n except Exception as e:\n logger.opt(colors=True, exception=e).error(\n \"<r><bg #f8bbd0>Error when running RunPostProcessors</bg #f8bbd0></r>\"\n )\n\n if matcher.block:\n raise StopPropagation\n return\n\n\nasync def handle_event(bot: \"Bot\", event: \"Event\") -> Optional[Exception]:\n \"\"\"\n :\u8bf4\u660e:\n\n \u5904\u7406\u4e00\u4e2a\u4e8b\u4ef6\u3002\u8c03\u7528\u8be5\u51fd\u6570\u4ee5\u5b9e\u73b0\u5206\u53d1\u4e8b\u4ef6\u3002\n\n :\u53c2\u6570:\n\n * ``bot: Bot``: Bot \u5bf9\u8c61\n * ``event: Event``: Event \u5bf9\u8c61\n\n :\u793a\u4f8b:\n\n .. code-block:: python\n\n import asyncio\n asyncio.create_task(handle_event(bot, event))\n \"\"\"\n show_log = True\n log_msg = f\"<m>{escape_tag(bot.type.upper())} {escape_tag(bot.self_id)}</m> | \"\n try:\n log_msg += event.get_log_string()\n except NoLogException:\n show_log = False\n if show_log:\n logger.opt(colors=True).success(log_msg)\n\n state = {}\n coros = list(map(lambda x: x(bot, event, state), _event_preprocessors))\n if coros:\n try:\n if show_log:\n logger.debug(\"Running PreProcessors...\")\n await asyncio.gather(*coros)\n except IgnoredException as e:\n logger.opt(colors=True).info(\n f\"Event {escape_tag(event.get_event_name())} is <b>ignored</b>\")\n return e\n except Exception as e:\n logger.opt(colors=True, exception=e).error(\n \"<r><bg #f8bbd0>Error when running EventPreProcessors. 
\"\n \"Event ignored!</bg #f8bbd0></r>\")\n return e\n\n # Trie Match\n _, _ = TrieRule.get_value(bot, event, state)\n\n break_flag = False\n for priority in sorted(matchers.keys()):\n if break_flag:\n break\n\n if show_log:\n logger.debug(f\"Checking for matchers in priority {priority}...\")\n\n pending_tasks = [\n _check_matcher(priority, matcher, bot, event, state.copy())\n for matcher in matchers[priority]\n ]\n\n results = await asyncio.gather(*pending_tasks, return_exceptions=True)\n\n for result in results:\n if not isinstance(result, Exception):\n continue\n if isinstance(result, StopPropagation):\n break_flag = True\n logger.debug(\"Stop event propagation\")\n else:\n logger.opt(colors=True, exception=result).error(\n \"<r><bg #f8bbd0>Error when checking Matcher.</bg #f8bbd0></r>\"\n )\n return result\n\n coros = list(map(lambda x: x(bot, event, state), _event_postprocessors))\n if coros:\n try:\n if show_log:\n logger.debug(\"Running PostProcessors...\")\n await asyncio.gather(*coros)\n except Exception as e:\n logger.opt(colors=True, exception=e).error(\n \"<r><bg #f8bbd0>Error when running EventPostProcessors</bg #f8bbd0></r>\"\n )\n return e\n", "path": "nonebot/message.py"}], "after_files": [{"content": "\"\"\"\n\u4e8b\u4ef6\u5904\u7406\n========\n\nNoneBot \u5185\u90e8\u5904\u7406\u5e76\u6309\u4f18\u5148\u7ea7\u5206\u53d1\u4e8b\u4ef6\u7ed9\u6240\u6709\u4e8b\u4ef6\u54cd\u5e94\u5668\uff0c\u63d0\u4f9b\u4e86\u591a\u4e2a\u63d2\u69fd\u4ee5\u8fdb\u884c\u4e8b\u4ef6\u7684\u9884\u5904\u7406\u7b49\u3002\n\"\"\"\n\nimport asyncio\nfrom datetime import datetime\nfrom typing import TYPE_CHECKING, Set, Type, Optional\n\nfrom nonebot.log import logger\nfrom nonebot.rule import TrieRule\nfrom nonebot.utils import escape_tag\nfrom nonebot.matcher import Matcher, matchers\nfrom nonebot.exception import NoLogException, StopPropagation, IgnoredException\nfrom nonebot.typing import (T_State, T_RunPreProcessor, T_RunPostProcessor,\n T_EventPreProcessor, 
T_EventPostProcessor)\n\nif TYPE_CHECKING:\n from nonebot.adapters import Bot, Event\n\n_event_preprocessors: Set[T_EventPreProcessor] = set()\n_event_postprocessors: Set[T_EventPostProcessor] = set()\n_run_preprocessors: Set[T_RunPreProcessor] = set()\n_run_postprocessors: Set[T_RunPostProcessor] = set()\n\n\ndef event_preprocessor(func: T_EventPreProcessor) -> T_EventPreProcessor:\n \"\"\"\n :\u8bf4\u660e:\n\n \u4e8b\u4ef6\u9884\u5904\u7406\u3002\u88c5\u9970\u4e00\u4e2a\u51fd\u6570\uff0c\u4f7f\u5b83\u5728\u6bcf\u6b21\u63a5\u6536\u5230\u4e8b\u4ef6\u5e76\u5206\u53d1\u7ed9\u5404\u54cd\u5e94\u5668\u4e4b\u524d\u6267\u884c\u3002\n\n :\u53c2\u6570:\n\n \u4e8b\u4ef6\u9884\u5904\u7406\u51fd\u6570\u63a5\u6536\u4e09\u4e2a\u53c2\u6570\u3002\n\n * ``bot: Bot``: Bot \u5bf9\u8c61\n * ``event: Event``: Event \u5bf9\u8c61\n * ``state: T_State``: \u5f53\u524d State\n \"\"\"\n _event_preprocessors.add(func)\n return func\n\n\ndef event_postprocessor(func: T_EventPostProcessor) -> T_EventPostProcessor:\n \"\"\"\n :\u8bf4\u660e:\n\n \u4e8b\u4ef6\u540e\u5904\u7406\u3002\u88c5\u9970\u4e00\u4e2a\u51fd\u6570\uff0c\u4f7f\u5b83\u5728\u6bcf\u6b21\u63a5\u6536\u5230\u4e8b\u4ef6\u5e76\u5206\u53d1\u7ed9\u5404\u54cd\u5e94\u5668\u4e4b\u540e\u6267\u884c\u3002\n\n :\u53c2\u6570:\n\n \u4e8b\u4ef6\u540e\u5904\u7406\u51fd\u6570\u63a5\u6536\u4e09\u4e2a\u53c2\u6570\u3002\n\n * ``bot: Bot``: Bot \u5bf9\u8c61\n * ``event: Event``: Event \u5bf9\u8c61\n * ``state: T_State``: \u5f53\u524d\u4e8b\u4ef6\u8fd0\u884c\u524d State\n \"\"\"\n _event_postprocessors.add(func)\n return func\n\n\ndef run_preprocessor(func: T_RunPreProcessor) -> T_RunPreProcessor:\n \"\"\"\n :\u8bf4\u660e:\n\n \u8fd0\u884c\u9884\u5904\u7406\u3002\u88c5\u9970\u4e00\u4e2a\u51fd\u6570\uff0c\u4f7f\u5b83\u5728\u6bcf\u6b21\u4e8b\u4ef6\u54cd\u5e94\u5668\u8fd0\u884c\u524d\u6267\u884c\u3002\n\n :\u53c2\u6570:\n\n \u8fd0\u884c\u9884\u5904\u7406\u51fd\u6570\u63a5\u6536\u56db\u4e2a\u53c2\u6570\u3002\n\n * ``matcher: Matcher``: 
\u5f53\u524d\u8981\u8fd0\u884c\u7684\u4e8b\u4ef6\u54cd\u5e94\u5668\n * ``bot: Bot``: Bot \u5bf9\u8c61\n * ``event: Event``: Event \u5bf9\u8c61\n * ``state: T_State``: \u5f53\u524d State\n \"\"\"\n _run_preprocessors.add(func)\n return func\n\n\ndef run_postprocessor(func: T_RunPostProcessor) -> T_RunPostProcessor:\n \"\"\"\n :\u8bf4\u660e:\n\n \u8fd0\u884c\u540e\u5904\u7406\u3002\u88c5\u9970\u4e00\u4e2a\u51fd\u6570\uff0c\u4f7f\u5b83\u5728\u6bcf\u6b21\u4e8b\u4ef6\u54cd\u5e94\u5668\u8fd0\u884c\u540e\u6267\u884c\u3002\n\n :\u53c2\u6570:\n\n \u8fd0\u884c\u540e\u5904\u7406\u51fd\u6570\u63a5\u6536\u4e94\u4e2a\u53c2\u6570\u3002\n\n * ``matcher: Matcher``: \u8fd0\u884c\u5b8c\u6bd5\u7684\u4e8b\u4ef6\u54cd\u5e94\u5668\n * ``exception: Optional[Exception]``: \u4e8b\u4ef6\u54cd\u5e94\u5668\u8fd0\u884c\u9519\u8bef\uff08\u5982\u679c\u5b58\u5728\uff09\n * ``bot: Bot``: Bot \u5bf9\u8c61\n * ``event: Event``: Event \u5bf9\u8c61\n * ``state: T_State``: \u5f53\u524d State\n \"\"\"\n _run_postprocessors.add(func)\n return func\n\n\nasync def _check_matcher(priority: int, Matcher: Type[Matcher], bot: \"Bot\",\n event: \"Event\", state: T_State) -> None:\n if Matcher.expire_time and datetime.now() > Matcher.expire_time:\n try:\n matchers[priority].remove(Matcher)\n except Exception:\n pass\n return\n\n try:\n if not await Matcher.check_perm(\n bot, event) or not await Matcher.check_rule(bot, event, state):\n return\n except Exception as e:\n logger.opt(colors=True, exception=e).error(\n f\"<r><bg #f8bbd0>Rule check failed for {Matcher}.</bg #f8bbd0></r>\")\n return\n\n if Matcher.temp:\n try:\n matchers[priority].remove(Matcher)\n except Exception:\n pass\n\n await _run_matcher(Matcher, bot, event, state)\n\n\nasync def _run_matcher(Matcher: Type[Matcher], bot: \"Bot\", event: \"Event\",\n state: T_State) -> None:\n logger.info(f\"Event will be handled by {Matcher}\")\n\n matcher = Matcher()\n\n coros = list(\n map(lambda x: x(matcher, bot, event, state), _run_preprocessors))\n if 
coros:\n try:\n await asyncio.gather(*coros)\n except IgnoredException:\n logger.opt(colors=True).info(\n f\"Matcher {matcher} running is <b>cancelled</b>\")\n return\n except Exception as e:\n logger.opt(colors=True, exception=e).error(\n \"<r><bg #f8bbd0>Error when running RunPreProcessors. \"\n \"Running cancelled!</bg #f8bbd0></r>\")\n return\n\n exception = None\n\n try:\n logger.debug(f\"Running matcher {matcher}\")\n await matcher.run(bot, event, state)\n except Exception as e:\n logger.opt(colors=True, exception=e).error(\n f\"<r><bg #f8bbd0>Running matcher {matcher} failed.</bg #f8bbd0></r>\"\n )\n exception = e\n\n coros = list(\n map(lambda x: x(matcher, exception, bot, event, state),\n _run_postprocessors))\n if coros:\n try:\n await asyncio.gather(*coros)\n except Exception as e:\n logger.opt(colors=True, exception=e).error(\n \"<r><bg #f8bbd0>Error when running RunPostProcessors</bg #f8bbd0></r>\"\n )\n\n if matcher.block:\n raise StopPropagation\n return\n\n\nasync def handle_event(bot: \"Bot\", event: \"Event\") -> None:\n \"\"\"\n :\u8bf4\u660e:\n\n \u5904\u7406\u4e00\u4e2a\u4e8b\u4ef6\u3002\u8c03\u7528\u8be5\u51fd\u6570\u4ee5\u5b9e\u73b0\u5206\u53d1\u4e8b\u4ef6\u3002\n\n :\u53c2\u6570:\n\n * ``bot: Bot``: Bot \u5bf9\u8c61\n * ``event: Event``: Event \u5bf9\u8c61\n\n :\u793a\u4f8b:\n\n .. 
code-block:: python\n\n import asyncio\n asyncio.create_task(handle_event(bot, event))\n \"\"\"\n show_log = True\n log_msg = f\"<m>{escape_tag(bot.type.upper())} {escape_tag(bot.self_id)}</m> | \"\n try:\n log_msg += event.get_log_string()\n except NoLogException:\n show_log = False\n if show_log:\n logger.opt(colors=True).success(log_msg)\n\n state = {}\n coros = list(map(lambda x: x(bot, event, state), _event_preprocessors))\n if coros:\n try:\n if show_log:\n logger.debug(\"Running PreProcessors...\")\n await asyncio.gather(*coros)\n except IgnoredException as e:\n logger.opt(colors=True).info(\n f\"Event {escape_tag(event.get_event_name())} is <b>ignored</b>\")\n return\n except Exception as e:\n logger.opt(colors=True, exception=e).error(\n \"<r><bg #f8bbd0>Error when running EventPreProcessors. \"\n \"Event ignored!</bg #f8bbd0></r>\")\n return\n\n # Trie Match\n _, _ = TrieRule.get_value(bot, event, state)\n\n break_flag = False\n for priority in sorted(matchers.keys()):\n if break_flag:\n break\n\n if show_log:\n logger.debug(f\"Checking for matchers in priority {priority}...\")\n\n pending_tasks = [\n _check_matcher(priority, matcher, bot, event, state.copy())\n for matcher in matchers[priority]\n ]\n\n results = await asyncio.gather(*pending_tasks, return_exceptions=True)\n\n for result in results:\n if not isinstance(result, Exception):\n continue\n if isinstance(result, StopPropagation):\n break_flag = True\n logger.debug(\"Stop event propagation\")\n else:\n logger.opt(colors=True, exception=result).error(\n \"<r><bg #f8bbd0>Error when checking Matcher.</bg #f8bbd0></r>\"\n )\n\n coros = list(map(lambda x: x(bot, event, state), _event_postprocessors))\n if coros:\n try:\n if show_log:\n logger.debug(\"Running PostProcessors...\")\n await asyncio.gather(*coros)\n except Exception as e:\n logger.opt(colors=True, exception=e).error(\n \"<r><bg #f8bbd0>Error when running EventPostProcessors</bg #f8bbd0></r>\"\n )\n", "path": "nonebot/message.py"}]} | 
3,375 | 382 |

gh_patches_debug_37 | rasdani/github-patches | git_diff | nextcloud__appstore-67 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

After clicking confirm button I got a 404

- click the confirm link in the email

- click the button on that page

- getting redirected to https://.../accounts/login/ instead of https://.../login/ which is not available

cc @BernhardPosselt @adsworth

--- END ISSUE ---
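
As background for the redirect described above: Django's `LOGIN_URL` setting defaults to `/accounts/login/`, so a project that serves its login page elsewhere and does not override the setting can send users to a path that does not exist. The fragment below is only a sketch of such an override, not the project's actual fix; the value `'/login/'` is an assumption taken from the path mentioned in the issue.

```python
# Hypothetical settings fragment, not taken from the repository.
# Django falls back to '/accounts/login/' when LOGIN_URL is not set.
DJANGO_DEFAULT_LOGIN_URL = '/accounts/login/'

# Assumed override: point login redirects at the path the issue says
# users should reach instead.
LOGIN_URL = '/login/'
```

`LOGIN_URL` also accepts a named URL pattern, which avoids hard-coding the path when the URL configuration changes.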

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `nextcloudappstore/settings/base.py`

Content:

```
1 """
2 Django settings for nextcloudappstore project.
3
4 Generated by 'django-admin startproject' using Django 1.9.6.
5
6 For more information on this file, see
7 https://docs.djangoproject.com/en/1.9/topics/settings/
8
9 For the full list of settings and their values, see
10 https://docs.djangoproject.com/en/1.9/ref/settings/
11 """
12
13 from os.path import dirname, abspath, join, pardir, realpath
14
15 # Build paths inside the project like this: os.path.join(BASE_DIR, ...)
16 from django.conf.global_settings import LANGUAGES
17
18 BASE_DIR = realpath(join(dirname(dirname(abspath(__file__))), pardir))
19
20 # Quick-start development settings - unsuitable for production
21 # See https://docs.djangoproject.com/en/1.9/howto/deployment/checklist/
22
23 # Application definition
24
25 INSTALLED_APPS = [
26     'nextcloudappstore.core.apps.CoreConfig',
27     'parler',
28     'captcha',
29     'rest_framework',
30     'corsheaders',
31     'allauth',
32     'allauth.account',
33     'allauth.socialaccount',
34     'allauth.socialaccount.providers.github',
35     'allauth.socialaccount.providers.bitbucket',
36     'django.contrib.admin',
37     'django.contrib.auth',
38     'django.contrib.contenttypes',
39     'django.contrib.sessions',
40     'django.contrib.messages',
41     'django.contrib.sites',
42     'django.contrib.staticfiles',
43 ]
44
45 MIDDLEWARE_CLASSES = [
46     'django.middleware.security.SecurityMiddleware',
47     'django.contrib.sessions.middleware.SessionMiddleware',
48     'corsheaders.middleware.CorsMiddleware',
49     'django.middleware.common.CommonMiddleware',
50     'django.middleware.csrf.CsrfViewMiddleware',
51     'django.contrib.auth.middleware.AuthenticationMiddleware',
52     'django.contrib.auth.middleware.SessionAuthenticationMiddleware',
53     'django.contrib.messages.middleware.MessageMiddleware',
54     'django.middleware.clickjacking.XFrameOptionsMiddleware',
55 ]
56
57 ROOT_URLCONF = 'nextcloudappstore.urls'
58
59 TEMPLATES = [
60     {
61         'BACKEND': 'django.template.backends.django.DjangoTemplates',
62         'DIRS': [],
63         'APP_DIRS': True,
64         'OPTIONS': {
65             'context_processors': [
66                 'django.template.context_processors.debug',
67                 'django.template.context_processors.request',
68                 'django.contrib.auth.context_processors.auth',
69                 'django.contrib.messages.context_processors.messages',
70             ],
71         },
72     },
73 ]
74
75 WSGI_APPLICATION = 'nextcloudappstore.wsgi.application'
76
77 # Database
78 # https://docs.djangoproject.com/en/1.9/ref/settings/#databases
79
80 DATABASES = {
81     'default': {
82         'ENGINE': 'django.db.backends.sqlite3',
83         'NAME': join(BASE_DIR, 'db.sqlite3'),
84         'TEST': {
85             'NAME': join(BASE_DIR, 'test.sqlite3'),
86         }
87     }
88 }
89
90 AUTHENTICATION_BACKENDS = (
91     # Needed to login by username in Django admin, regardless of `allauth`
92     'django.contrib.auth.backends.ModelBackend',
93
94     # `allauth` specific authentication methods, such as login by e-mail
95     'allauth.account.auth_backends.AuthenticationBackend',
96 )
97
98 # Password validation
99 # https://docs.djangoproject.com/en/1.9/ref/settings/#auth-password-validators
100
101 AUTH_PASSWORD_VALIDATORS = [
102     {
103         'NAME': 'django.contrib.auth.password_validation'
104                 '.UserAttributeSimilarityValidator',
105     },
106     {
107         'NAME': 'django.contrib.auth.password_validation'
108                 '.MinimumLengthValidator',
109     },
110     {
111         'NAME': 'django.contrib.auth.password_validation'
112                 '.CommonPasswordValidator',
113     },
114     {
115         'NAME': 'django.contrib.auth.password_validation'
116                 '.NumericPasswordValidator',
117     },
118 ]
119
120 REST_FRAMEWORK = {
121     'DEFAULT_RENDERER_CLASSES': (
122         'djangorestframework_camel_case.render.CamelCaseJSONRenderer',
123     ),
124     'DEFAULT_PARSER_CLASSES': (
125         'djangorestframework_camel_case.parser.CamelCaseJSONParser',
126     ),
127     'DEFAULT_THROTTLE_RATES': {
128         'app_upload': '100/day'
129     }
130 }
131
132 SITE_ID = 1
133
134 # Allauth configuration
135 # http://django-allauth.readthedocs.io/en/latest/configuration.html
136 ACCOUNT_EMAIL_REQUIRED = True
137 ACCOUNT_EMAIL_VERIFICATION = "mandatory"
138 ACCOUNT_LOGOUT_ON_GET = True
139 ACCOUNT_LOGOUT_REDIRECT_URL = 'home'
140 ACCOUNT_SESSION_REMEMBER = True
141 ACCOUNT_SIGNUP_FORM_CLASS = \
142     'nextcloudappstore.core.user.forms.SignupFormRecaptcha'
143
144 # Internationalization
145 # https://docs.djangoproject.com/en/1.9/topics/i18n/
146 LANGUAGE_CODE = 'en-us'
147 TIME_ZONE = 'UTC'
148 USE_I18N = True
149 USE_L10N = True
150 USE_TZ = True
151
152 PARLER_LANGUAGES = {
153     1: [{'code': code} for code, trans in LANGUAGES],
154     'default': {
155         'fallbacks': ['en'],
156         'hide_untranslated': False,
157     }
158 }
159
160 # Static files (CSS, JavaScript, Images)
161 # https://docs.djangoproject.com/en/1.9/howto/static-files/
162 MEDIA_ROOT = join(BASE_DIR, 'media')
163 RELEASE_DOWNLOAD_ROOT = None
164 STATIC_URL = '/static/'
165 MEDIA_URL = '/media/'
166
167 # Default security settings
168 SECURE_BROWSER_XSS_FILTER = True
169 SECURE_CONTENT_TYPE_NOSNIFF = True
170 CORS_ORIGIN_ALLOW_ALL = True
171 CORS_URLS_REGEX = r'^/api/.*$'
172 CORS_ALLOW_HEADERS = (
173     'x-requested-with',
174     'content-type',
175     'accept',
176     'origin',
177     'authorization',
178     'x-csrftoken',
179     'if-none-match',
180 )
181 CORS_EXPOSE_HEADERS = (
182     'etag',
183     'x-content-type-options',
184     'content-type',
185 )
186
187 # use modern no Captcha reCaptcha
188 NOCAPTCHA = True
189
190 LOGIN_REDIRECT_URL = 'home'
191
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/nextcloudappstore/settings/base.py b/nextcloudappstore/settings/base.py
--- a/nextcloudappstore/settings/base.py
+++ b/nextcloudappstore/settings/base.py
@@ -188,3 +188,4 @@
 NOCAPTCHA = True
 
 LOGIN_REDIRECT_URL = 'home'
+LOGIN_URL = 'account_login'

| {"golden_diff": "diff --git a/nextcloudappstore/settings/base.py b/nextcloudappstore/settings/base.py\n--- a/nextcloudappstore/settings/base.py\n+++ b/nextcloudappstore/settings/base.py\n@@ -188,3 +188,4 @@\n NOCAPTCHA = True\n \n LOGIN_REDIRECT_URL = 'home'\n+LOGIN_URL = 'account_login'\n", "issue": "After clicking confirm button I got a 404\n- click the confirm link in the email\n- click the button on that page\n- getting redirected to https://.../accounts/login/ instead of https://.../login/ which is not available\n\ncc @BernhardPosselt @adsworth \n\n", "before_files": [{"content": "\"\"\"\nDjango settings for nextcloudappstore project.\n\nGenerated by 'django-admin startproject' using Django 1.9.6.\n\nFor more information on this file, see\nhttps://docs.djangoproject.com/en/1.9/topics/settings/\n\nFor the full list of settings and their values, see\nhttps://docs.djangoproject.com/en/1.9/ref/settings/\n\"\"\"\n\nfrom os.path import dirname, abspath, join, pardir, realpath\n\n# Build paths inside the project like this: os.path.join(BASE_DIR, ...)\nfrom django.conf.global_settings import LANGUAGES\n\nBASE_DIR = realpath(join(dirname(dirname(abspath(__file__))), pardir))\n\n# Quick-start development settings - unsuitable for production\n# See https://docs.djangoproject.com/en/1.9/howto/deployment/checklist/\n\n# Application definition\n\nINSTALLED_APPS = [\n 'nextcloudappstore.core.apps.CoreConfig',\n 'parler',\n 'captcha',\n 'rest_framework',\n 'corsheaders',\n 'allauth',\n 'allauth.account',\n 'allauth.socialaccount',\n 'allauth.socialaccount.providers.github',\n 'allauth.socialaccount.providers.bitbucket',\n 'django.contrib.admin',\n 'django.contrib.auth',\n 'django.contrib.contenttypes',\n 'django.contrib.sessions',\n 'django.contrib.messages',\n 'django.contrib.sites',\n 'django.contrib.staticfiles',\n]\n\nMIDDLEWARE_CLASSES = [\n 'django.middleware.security.SecurityMiddleware',\n 'django.contrib.sessions.middleware.SessionMiddleware',\n 
'corsheaders.middleware.CorsMiddleware',\n 'django.middleware.common.CommonMiddleware',\n 'django.middleware.csrf.CsrfViewMiddleware',\n 'django.contrib.auth.middleware.AuthenticationMiddleware',\n 'django.contrib.auth.middleware.SessionAuthenticationMiddleware',\n 'django.contrib.messages.middleware.MessageMiddleware',\n 'django.middleware.clickjacking.XFrameOptionsMiddleware',\n]\n\nROOT_URLCONF = 'nextcloudappstore.urls'\n\nTEMPLATES = [\n {\n 'BACKEND': 'django.template.backends.django.DjangoTemplates',\n 'DIRS': [],\n 'APP_DIRS': True,\n 'OPTIONS': {\n 'context_processors': [\n 'django.template.context_processors.debug',\n 'django.template.context_processors.request',\n 'django.contrib.auth.context_processors.auth',\n 'django.contrib.messages.context_processors.messages',\n ],\n },\n },\n]\n\nWSGI_APPLICATION = 'nextcloudappstore.wsgi.application'\n\n# Database\n# https://docs.djangoproject.com/en/1.9/ref/settings/#databases\n\nDATABASES = {\n 'default': {\n 'ENGINE': 'django.db.backends.sqlite3',\n 'NAME': join(BASE_DIR, 'db.sqlite3'),\n 'TEST': {\n 'NAME': join(BASE_DIR, 'test.sqlite3'),\n }\n }\n}\n\nAUTHENTICATION_BACKENDS = (\n # Needed to login by username in Django admin, regardless of `allauth`\n 'django.contrib.auth.backends.ModelBackend',\n\n # `allauth` specific authentication methods, such as login by e-mail\n 'allauth.account.auth_backends.AuthenticationBackend',\n)\n\n# Password validation\n# https://docs.djangoproject.com/en/1.9/ref/settings/#auth-password-validators\n\nAUTH_PASSWORD_VALIDATORS = [\n {\n 'NAME': 'django.contrib.auth.password_validation'\n '.UserAttributeSimilarityValidator',\n },\n {\n 'NAME': 'django.contrib.auth.password_validation'\n '.MinimumLengthValidator',\n },\n {\n 'NAME': 'django.contrib.auth.password_validation'\n '.CommonPasswordValidator',\n },\n {\n 'NAME': 'django.contrib.auth.password_validation'\n '.NumericPasswordValidator',\n },\n]\n\nREST_FRAMEWORK = {\n 'DEFAULT_RENDERER_CLASSES': (\n 
'djangorestframework_camel_case.render.CamelCaseJSONRenderer',\n ),\n 'DEFAULT_PARSER_CLASSES': (\n 'djangorestframework_camel_case.parser.CamelCaseJSONParser',\n ),\n 'DEFAULT_THROTTLE_RATES': {\n 'app_upload': '100/day'\n }\n}\n\nSITE_ID = 1\n\n# Allauth configuration\n# http://django-allauth.readthedocs.io/en/latest/configuration.html\nACCOUNT_EMAIL_REQUIRED = True\nACCOUNT_EMAIL_VERIFICATION = \"mandatory\"\nACCOUNT_LOGOUT_ON_GET = True\nACCOUNT_LOGOUT_REDIRECT_URL = 'home'\nACCOUNT_SESSION_REMEMBER = True\nACCOUNT_SIGNUP_FORM_CLASS = \\\n 'nextcloudappstore.core.user.forms.SignupFormRecaptcha'\n\n# Internationalization\n# https://docs.djangoproject.com/en/1.9/topics/i18n/\nLANGUAGE_CODE = 'en-us'\nTIME_ZONE = 'UTC'\nUSE_I18N = True\nUSE_L10N = True\nUSE_TZ = True\n\nPARLER_LANGUAGES = {\n 1: [{'code': code} for code, trans in LANGUAGES],\n 'default': {\n 'fallbacks': ['en'],\n 'hide_untranslated': False,\n }\n}\n\n# Static files (CSS, JavaScript, Images)\n# https://docs.djangoproject.com/en/1.9/howto/static-files/\nMEDIA_ROOT = join(BASE_DIR, 'media')\nRELEASE_DOWNLOAD_ROOT = None\nSTATIC_URL = '/static/'\nMEDIA_URL = '/media/'\n\n# Default security settings\nSECURE_BROWSER_XSS_FILTER = True\nSECURE_CONTENT_TYPE_NOSNIFF = True\nCORS_ORIGIN_ALLOW_ALL = True\nCORS_URLS_REGEX = r'^/api/.*$'\nCORS_ALLOW_HEADERS = (\n 'x-requested-with',\n 'content-type',\n 'accept',\n 'origin',\n 'authorization',\n 'x-csrftoken',\n 'if-none-match',\n)\nCORS_EXPOSE_HEADERS = (\n 'etag',\n 'x-content-type-options',\n 'content-type',\n)\n\n# use modern no Captcha reCaptcha\nNOCAPTCHA = True\n\nLOGIN_REDIRECT_URL = 'home'\n", "path": "nextcloudappstore/settings/base.py"}], "after_files": [{"content": "\"\"\"\nDjango settings for nextcloudappstore project.\n\nGenerated by 'django-admin startproject' using Django 1.9.6.\n\nFor more information on this file, see\nhttps://docs.djangoproject.com/en/1.9/topics/settings/\n\nFor the full list of settings and their values, 
see\nhttps://docs.djangoproject.com/en/1.9/ref/settings/\n\"\"\"\n\nfrom os.path import dirname, abspath, join, pardir, realpath\n\n# Build paths inside the project like this: os.path.join(BASE_DIR, ...)\nfrom django.conf.global_settings import LANGUAGES\n\nBASE_DIR = realpath(join(dirname(dirname(abspath(__file__))), pardir))\n\n# Quick-start development settings - unsuitable for production\n# See https://docs.djangoproject.com/en/1.9/howto/deployment/checklist/\n\n# Application definition\n\nINSTALLED_APPS = [\n 'nextcloudappstore.core.apps.CoreConfig',\n 'parler',\n 'captcha',\n 'rest_framework',\n 'corsheaders',\n 'allauth',\n 'allauth.account',\n 'allauth.socialaccount',\n 'allauth.socialaccount.providers.github',\n 'allauth.socialaccount.providers.bitbucket',\n 'django.contrib.admin',\n 'django.contrib.auth',\n 'django.contrib.contenttypes',\n 'django.contrib.sessions',\n 'django.contrib.messages',\n 'django.contrib.sites',\n 'django.contrib.staticfiles',\n]\n\nMIDDLEWARE_CLASSES = [\n 'django.middleware.security.SecurityMiddleware',\n 'django.contrib.sessions.middleware.SessionMiddleware',\n 'corsheaders.middleware.CorsMiddleware',\n 'django.middleware.common.CommonMiddleware',\n 'django.middleware.csrf.CsrfViewMiddleware',\n 'django.contrib.auth.middleware.AuthenticationMiddleware',\n 'django.contrib.auth.middleware.SessionAuthenticationMiddleware',\n 'django.contrib.messages.middleware.MessageMiddleware',\n 'django.middleware.clickjacking.XFrameOptionsMiddleware',\n]\n\nROOT_URLCONF = 'nextcloudappstore.urls'\n\nTEMPLATES = [\n {\n 'BACKEND': 'django.template.backends.django.DjangoTemplates',\n 'DIRS': [],\n 'APP_DIRS': True,\n 'OPTIONS': {\n 'context_processors': [\n 'django.template.context_processors.debug',\n 'django.template.context_processors.request',\n 'django.contrib.auth.context_processors.auth',\n 'django.contrib.messages.context_processors.messages',\n ],\n },\n },\n]\n\nWSGI_APPLICATION = 'nextcloudappstore.wsgi.application'\n\n# Database\n# 
https://docs.djangoproject.com/en/1.9/ref/settings/#databases\n\nDATABASES = {\n 'default': {\n 'ENGINE': 'django.db.backends.sqlite3',\n 'NAME': join(BASE_DIR, 'db.sqlite3'),\n 'TEST': {\n 'NAME': join(BASE_DIR, 'test.sqlite3'),\n }\n }\n}\n\nAUTHENTICATION_BACKENDS = (\n # Needed to login by username in Django admin, regardless of `allauth`\n 'django.contrib.auth.backends.ModelBackend',\n\n # `allauth` specific authentication methods, such as login by e-mail\n 'allauth.account.auth_backends.AuthenticationBackend',\n)\n\n# Password validation\n# https://docs.djangoproject.com/en/1.9/ref/settings/#auth-password-validators\n\nAUTH_PASSWORD_VALIDATORS = [\n {\n 'NAME': 'django.contrib.auth.password_validation'\n '.UserAttributeSimilarityValidator',\n },\n {\n 'NAME': 'django.contrib.auth.password_validation'\n '.MinimumLengthValidator',\n },\n {\n 'NAME': 'django.contrib.auth.password_validation'\n '.CommonPasswordValidator',\n },\n {\n 'NAME': 'django.contrib.auth.password_validation'\n '.NumericPasswordValidator',\n },\n]\n\nREST_FRAMEWORK = {\n 'DEFAULT_RENDERER_CLASSES': (\n 'djangorestframework_camel_case.render.CamelCaseJSONRenderer',\n ),\n 'DEFAULT_PARSER_CLASSES': (\n 'djangorestframework_camel_case.parser.CamelCaseJSONParser',\n ),\n 'DEFAULT_THROTTLE_RATES': {\n 'app_upload': '100/day'\n }\n}\n\nSITE_ID = 1\n\n# Allauth configuration\n# http://django-allauth.readthedocs.io/en/latest/configuration.html\nACCOUNT_EMAIL_REQUIRED = True\nACCOUNT_EMAIL_VERIFICATION = \"mandatory\"\nACCOUNT_LOGOUT_ON_GET = True\nACCOUNT_LOGOUT_REDIRECT_URL = 'home'\nACCOUNT_SESSION_REMEMBER = True\nACCOUNT_SIGNUP_FORM_CLASS = \\\n 'nextcloudappstore.core.user.forms.SignupFormRecaptcha'\n\n# Internationalization\n# https://docs.djangoproject.com/en/1.9/topics/i18n/\nLANGUAGE_CODE = 'en-us'\nTIME_ZONE = 'UTC'\nUSE_I18N = True\nUSE_L10N = True\nUSE_TZ = True\n\nPARLER_LANGUAGES = {\n 1: [{'code': code} for code, trans in LANGUAGES],\n 'default': {\n 'fallbacks': ['en'],\n 
'hide_untranslated': False,\n }\n}\n\n# Static files (CSS, JavaScript, Images)\n# https://docs.djangoproject.com/en/1.9/howto/static-files/\nMEDIA_ROOT = join(BASE_DIR, 'media')\nRELEASE_DOWNLOAD_ROOT = None\nSTATIC_URL = '/static/'\nMEDIA_URL = '/media/'\n\n# Default security settings\nSECURE_BROWSER_XSS_FILTER = True\nSECURE_CONTENT_TYPE_NOSNIFF = True\nCORS_ORIGIN_ALLOW_ALL = True\nCORS_URLS_REGEX = r'^/api/.*$'\nCORS_ALLOW_HEADERS = (\n 'x-requested-with',\n 'content-type',\n 'accept',\n 'origin',\n 'authorization',\n 'x-csrftoken',\n 'if-none-match',\n)\nCORS_EXPOSE_HEADERS = (\n 'etag',\n 'x-content-type-options',\n 'content-type',\n)\n\n# use modern no Captcha reCaptcha\nNOCAPTCHA = True\n\nLOGIN_REDIRECT_URL = 'home'\nLOGIN_URL = 'account_login'\n", "path": "nextcloudappstore/settings/base.py"}]} | 2,020 | 79 |

gh_patches_debug_5843 | rasdani/github-patches | git_diff | svthalia__concrexit-2277 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

True personal Agenda ICal Feed

### Problem

I believe it is annoying that when exporting the Personal iCal Feed to the agenda, you get the events your registered for + the open events + the events with optional registration. In practice this is very annoying as you don't want all these open events you might not go to in your agenda.

### Solution

That is why I suggest:

- Creating a 3rd button "iCal feed (personal)", which exports an iCal feed only containing the events you actually registered for.

- Renaming the current "iCal feed personal" to "iCal feed personal + open events"

### Motivation

A better user experience

### Describe alternatives you've considered

If this is not possible, I would consider adding an "add to agenda" button to the event pages so you can add events to your agenda individually.

--- END ISSUE ---
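
The difference between the current "personal" feed and the one the issue asks for can be modelled without Django. The following is only a minimal sketch under stated assumptions: the `Event` dataclass, the `EVENTS` list, and both helper functions are hypothetical stand-ins for illustration, not the project's real model or query, and the `published` check is left out for brevity.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Event:
    # Hypothetical stand-in for the real events model.
    title: str
    # None models an event that has no registration at all ("open" event).
    registration_start: Optional[str] = None
    # Members with a registration that has not been cancelled.
    active_registrations: Set[str] = field(default_factory=set)


def personal_feed_with_open_events(events: List[Event], member: str) -> List[str]:
    """Behaviour described in the issue: open events OR events the member joined."""
    return [
        e.title
        for e in events
        if e.registration_start is None or member in e.active_registrations
    ]


def personal_feed_registered_only(events: List[Event], member: str) -> List[str]:
    """Requested behaviour: only events the member actually registered for."""
    return [e.title for e in events if member in e.active_registrations]


EVENTS = [
    Event("Open lecture"),
    Event("Workshop", "2022-03-01", {"alice"}),
    Event("Party", "2022-03-02", {"bob"}),
]

print(personal_feed_with_open_events(EVENTS, "alice"))
print(personal_feed_registered_only(EVENTS, "alice"))
```

In this toy model the first helper also returns the open lecture for `alice`, while the second returns only the workshop she registered for, which is the distinction the issue is about.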

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `website/events/feeds.py`

Content:

```
1 """The feeds defined by the events package."""
2 from django.conf import settings
3 from django.db.models.query_utils import Q
4 from django.urls import reverse
5 from django.utils.translation import activate
6 from django.utils.translation import gettext as _
7 from django_ical.views import ICalFeed
8
9 from events.models import Event, FeedToken
10
11
12 class EventFeed(ICalFeed):
13     """Output an iCal feed containing all published events."""
14
15     def __init__(self, lang="en"):
16         super().__init__()
17         self.lang = lang
18         self.user = None
19
20     def __call__(self, request, *args, **kwargs):
21         if "u" in request.GET:
22             self.user = FeedToken.get_member(request.GET["u"])
23         else:
24             self.user = None
25
26         return super().__call__(request, args, kwargs)
27
28     def product_id(self):
29         return f"-//{settings.SITE_DOMAIN}//EventCalendar//{self.lang.upper()}"
30
31     def file_name(self):
32         return f"thalia_{self.lang}.ics"
33
34     def title(self):
35         activate(self.lang)
36         return _("Study Association Thalia event calendar")
37
38     def items(self):
39         query = Q(published=True)
40
41         if self.user:
42             query &= Q(registration_start__isnull=True) | (
43                 Q(eventregistration__member=self.user)
44                 & Q(eventregistration__date_cancelled=None)
45             )
46
47         return Event.objects.filter(query).order_by("-start")
48
49     def item_title(self, item):
50         return item.title
51
52     def item_description(self, item):
53         return f'{item.description} <a href="' f'{self.item_link(item)}">Website</a>'
54
55     def item_start_datetime(self, item):
56         return item.start
57
58     def item_end_datetime(self, item):
59         return item.end
60
61     def item_link(self, item):
62         return settings.BASE_URL + reverse("events:event", kwargs={"pk": item.id})
63
64     def item_location(self, item):
65         return f"{item.location} - {item.map_location}"
66
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/website/events/feeds.py b/website/events/feeds.py
--- a/website/events/feeds.py
+++ b/website/events/feeds.py
@@ -39,9 +39,8 @@
         query = Q(published=True)
 
         if self.user:
-            query &= Q(registration_start__isnull=True) | (
-                Q(eventregistration__member=self.user)
-                & Q(eventregistration__date_cancelled=None)
+            query &= Q(eventregistration__member=self.user) & Q(
+                eventregistration__date_cancelled=None
             )
 
         return Event.objects.filter(query).order_by("-start")

| {"golden_diff": "diff --git a/website/events/feeds.py b/website/events/feeds.py\n--- a/website/events/feeds.py\n+++ b/website/events/feeds.py\n@@ -39,9 +39,8 @@\n query = Q(published=True)\n \n if self.user:\n- query &= Q(registration_start__isnull=True) | (\n- Q(eventregistration__member=self.user)\n- & Q(eventregistration__date_cancelled=None)\n+ query &= Q(eventregistration__member=self.user) & Q(\n+ eventregistration__date_cancelled=None\n )\n \n return Event.objects.filter(query).order_by(\"-start\")\n", "issue": "True personal Agenda ICal Feed\n### Problem\r\nI believe it is annoying that when exporting the Personal iCal Feed to the agenda, you get the events your registered for + the open events + the events with optional registration. In practice this is very annoying as you don't want all these open events you might not go to in your agenda.\r\n\r\n### Solution\r\nThat is why I suggest:\r\n- Creating a 3rd button \"iCal feed (personal)\", which exports an iCal feed only containing the events you actually registered for.\r\n- Renaming the current \"iCal feed personal\" to \"iCal feed personal + open events\"\r\n\r\n### Motivation\r\nA better user experience \r\n\r\n### Describe alternatives you've considered\r\nIf this is not possible, I would consider adding an \"add to agenda\" button to the event pages so you can add events to your agenda individually.\r\n\n", "before_files": [{"content": "\"\"\"The feeds defined by the events package.\"\"\"\nfrom django.conf import settings\nfrom django.db.models.query_utils import Q\nfrom django.urls import reverse\nfrom django.utils.translation import activate\nfrom django.utils.translation import gettext as _\nfrom django_ical.views import ICalFeed\n\nfrom events.models import Event, FeedToken\n\n\nclass EventFeed(ICalFeed):\n \"\"\"Output an iCal feed containing all published events.\"\"\"\n\n def __init__(self, lang=\"en\"):\n super().__init__()\n self.lang = lang\n self.user = None\n\n def __call__(self, 
request, *args, **kwargs):\n if \"u\" in request.GET:\n self.user = FeedToken.get_member(request.GET[\"u\"])\n else:\n self.user = None\n\n return super().__call__(request, args, kwargs)\n\n def product_id(self):\n return f\"-//{settings.SITE_DOMAIN}//EventCalendar//{self.lang.upper()}\"\n\n def file_name(self):\n return f\"thalia_{self.lang}.ics\"\n\n def title(self):\n activate(self.lang)\n return _(\"Study Association Thalia event calendar\")\n\n def items(self):\n query = Q(published=True)\n\n if self.user:\n query &= Q(registration_start__isnull=True) | (\n Q(eventregistration__member=self.user)\n & Q(eventregistration__date_cancelled=None)\n )\n\n return Event.objects.filter(query).order_by(\"-start\")\n\n def item_title(self, item):\n return item.title\n\n def item_description(self, item):\n return f'{item.description} <a href=\"' f'{self.item_link(item)}\">Website</a>'\n\n def item_start_datetime(self, item):\n return item.start\n\n def item_end_datetime(self, item):\n return item.end\n\n def item_link(self, item):\n return settings.BASE_URL + reverse(\"events:event\", kwargs={\"pk\": item.id})\n\n def item_location(self, item):\n return f\"{item.location} - {item.map_location}\"\n", "path": "website/events/feeds.py"}], "after_files": [{"content": "\"\"\"The feeds defined by the events package.\"\"\"\nfrom django.conf import settings\nfrom django.db.models.query_utils import Q\nfrom django.urls import reverse\nfrom django.utils.translation import activate\nfrom django.utils.translation import gettext as _\nfrom django_ical.views import ICalFeed\n\nfrom events.models import Event, FeedToken\n\n\nclass EventFeed(ICalFeed):\n \"\"\"Output an iCal feed containing all published events.\"\"\"\n\n def __init__(self, lang=\"en\"):\n super().__init__()\n self.lang = lang\n self.user = None\n\n def __call__(self, request, *args, **kwargs):\n if \"u\" in request.GET:\n self.user = FeedToken.get_member(request.GET[\"u\"])\n else:\n self.user = None\n\n return 
super().__call__(request, args, kwargs)\n\n def product_id(self):\n return f\"-//{settings.SITE_DOMAIN}//EventCalendar//{self.lang.upper()}\"\n\n def file_name(self):\n return f\"thalia_{self.lang}.ics\"\n\n def title(self):\n activate(self.lang)\n return _(\"Study Association Thalia event calendar\")\n\n def items(self):\n query = Q(published=True)\n\n if self.user:\n query &= Q(eventregistration__member=self.user) & Q(\n eventregistration__date_cancelled=None\n )\n\n return Event.objects.filter(query).order_by(\"-start\")\n\n def item_title(self, item):\n return item.title\n\n def item_description(self, item):\n return f'{item.description} <a href=\"' f'{self.item_link(item)}\">Website</a>'\n\n def item_start_datetime(self, item):\n return item.start\n\n def item_end_datetime(self, item):\n return item.end\n\n def item_link(self, item):\n return settings.BASE_URL + reverse(\"events:event\", kwargs={\"pk\": item.id})\n\n def item_location(self, item):\n return f\"{item.location} - {item.map_location}\"\n", "path": "website/events/feeds.py"}]} | 996 | 139 |

gh_patches_debug_19675 | rasdani/github-patches | git_diff | python-pillow__Pillow-6178 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

"box" parameter of PIL.ImageTk.PhotoImage.paste method appears to be dysfunctional

The "**box**" 4-tuple parameter of the **PIL.ImageTk.PhotoImage.paste** method is intended to allow a PIL Image to be pasted onto a Tkinter compatible PhotoImage within the specified box coordinates, but "box" appears to be dysfunctional, and I can't see anything in the source code of the method to implement its function. Smaller images pasted to larger images appear top-left and ignore any "box" value.

The documentation detailing the "box" parameter is here:

https://pillow.readthedocs.io/en/stable/reference/ImageTk.html#PIL.ImageTk.PhotoImage.paste

The source code of the paste method includes "box" as a parameter and has a docstring for it:

https://github.com/python-pillow/Pillow/blob/main/src/PIL/ImageTk.py#L178

Test code. A smaller blue box pasted into a larger yellow box always appears top-left and ignores the paste coordinates:

```python
import tkinter as tk
from PIL import Image, ImageTk

root = tk.Tk()

pil_img1 = Image.new("RGB",(400, 200), "yellow")
tk_img1 = ImageTk.PhotoImage(pil_img1)
tk.Label(root, image=tk_img1).pack()

pil_img2 = Image.new("RGB",(200, 100), "blue")
tk_img1.paste(pil_img2, box=(100, 50, 200, 150))

root.mainloop()
```

Tested with Windows 10, Python 3.10.4, Pillow 9.0.1

and with Ubuntu 21.10, Python 3.9.7, Pillow 8.1.2

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `src/PIL/ImageTk.py`

Content:

```

1 #
2 # The Python Imaging Library.
3 # $Id$
4 #
5 # a Tk display interface
6 #
7 # History:
8 # 96-04-08 fl Created
9 # 96-09-06 fl Added getimage method
10 # 96-11-01 fl Rewritten, removed image attribute and crop method
11 # 97-05-09 fl Use PyImagingPaste method instead of image type
12 # 97-05-12 fl Minor tweaks to match the IFUNC95 interface
13 # 97-05-17 fl Support the "pilbitmap" booster patch
14 # 97-06-05 fl Added file= and data= argument to image constructors
15 # 98-03-09 fl Added width and height methods to Image classes
16 # 98-07-02 fl Use default mode for "P" images without palette attribute
17 # 98-07-02 fl Explicitly destroy Tkinter image objects
18 # 99-07-24 fl Support multiple Tk interpreters (from Greg Couch)
19 # 99-07-26 fl Automatically hook into Tkinter (if possible)
20 # 99-08-15 fl Hook uses _imagingtk instead of _imaging
21 #
22 # Copyright (c) 1997-1999 by Secret Labs AB
23 # Copyright (c) 1996-1997 by Fredrik Lundh
24 #
25 # See the README file for information on usage and redistribution.
26 #
27
28 import tkinter
29 from io import BytesIO
30
31 from . import Image
32
33 # --------------------------------------------------------------------
34 # Check for Tkinter interface hooks
35
36 _pilbitmap_ok = None
37
38
39 def _pilbitmap_check():
40     global _pilbitmap_ok
41     if _pilbitmap_ok is None:
42         try:
43             im = Image.new("1", (1, 1))
44             tkinter.BitmapImage(data=f"PIL:{im.im.id}")
45             _pilbitmap_ok = 1
46         except tkinter.TclError:
47             _pilbitmap_ok = 0
48     return _pilbitmap_ok
49
50
51 def _get_image_from_kw(kw):
52     source = None
53     if "file" in kw:
54         source = kw.pop("file")
55     elif "data" in kw:
56         source = BytesIO(kw.pop("data"))
57     if source:
58         return Image.open(source)
59
60
61 def _pyimagingtkcall(command, photo, id):
62     tk = photo.tk
63     try:
64         tk.call(command, photo, id)
65     except tkinter.TclError:
66         # activate Tkinter hook
67         # may raise an error if it cannot attach to Tkinter
68         from . import _imagingtk
69
70         try:
71             if hasattr(tk, "interp"):
72                 # Required for PyPy, which always has CFFI installed
73                 from cffi import FFI
74
75                 ffi = FFI()
76
77                 # PyPy is using an FFI CDATA element
78                 # (Pdb) self.tk.interp
79                 # <cdata 'Tcl_Interp *' 0x3061b50>
80                 _imagingtk.tkinit(int(ffi.cast("uintptr_t", tk.interp)), 1)
81             else:
82                 _imagingtk.tkinit(tk.interpaddr(), 1)
83         except AttributeError:
84             _imagingtk.tkinit(id(tk), 0)
85         tk.call(command, photo, id)
86
87
88 # --------------------------------------------------------------------
89 # PhotoImage
90
91
92 class PhotoImage:
93     """
94     A Tkinter-compatible photo image. This can be used
95     everywhere Tkinter expects an image object. If the image is an RGBA
96     image, pixels having alpha 0 are treated as transparent.
97
98     The constructor takes either a PIL image, or a mode and a size.
99     Alternatively, you can use the ``file`` or ``data`` options to initialize
100     the photo image object.
101
102     :param image: Either a PIL image, or a mode string. If a mode string is
103                   used, a size must also be given.
104     :param size: If the first argument is a mode string, this defines the size
105                  of the image.
106     :keyword file: A filename to load the image from (using
107                    ``Image.open(file)``).
108     :keyword data: An 8-bit string containing image data (as loaded from an
109                    image file).
110     """
111
112     def __init__(self, image=None, size=None, **kw):
113
114         # Tk compatibility: file or data
115         if image is None:
116             image = _get_image_from_kw(kw)
117
118         if hasattr(image, "mode") and hasattr(image, "size"):
119             # got an image instead of a mode
120             mode = image.mode
121             if mode == "P":
122                 # palette mapped data
123                 image.load()
124                 try:
125                     mode = image.palette.mode
126                 except AttributeError:
127                     mode = "RGB" # default
128             size = image.size
129             kw["width"], kw["height"] = size
130         else:
131             mode = image
132             image = None
133
134         if mode not in ["1", "L", "RGB", "RGBA"]:
135             mode = Image.getmodebase(mode)
136
137         self.__mode = mode
138         self.__size = size
139         self.__photo = tkinter.PhotoImage(**kw)
140         self.tk = self.__photo.tk
141         if image:
142             self.paste(image)
143
144     def __del__(self):
145         name = self.__photo.name
146         self.__photo.name = None
147         try:
148             self.__photo.tk.call("image", "delete", name)
149         except Exception:
150             pass # ignore internal errors
151
152     def __str__(self):
153         """
154         Get the Tkinter photo image identifier. This method is automatically
155         called by Tkinter whenever a PhotoImage object is passed to a Tkinter
156         method.
157
158         :return: A Tkinter photo image identifier (a string).
159         """
160         return str(self.__photo)
161
162     def width(self):
163         """
164         Get the width of the image.
165
166         :return: The width, in pixels.
167         """
168         return self.__size[0]
169
170     def height(self):
171         """
172         Get the height of the image.
173
174         :return: The height, in pixels.
175         """
176         return self.__size[1]
177
178     def paste(self, im, box=None):
179         """
180         Paste a PIL image into the photo image. Note that this can
181         be very slow if the photo image is displayed.
182
183         :param im: A PIL image. The size must match the target region. If the
184                    mode does not match, the image is converted to the mode of
185                    the bitmap image.
186         :param box: A 4-tuple defining the left, upper, right, and lower pixel
187                     coordinate. See :ref:`coordinate-system`. If None is given
188                     instead of a tuple, all of the image is assumed.
189         """
190
191         # convert to blittable
192         im.load()
193         image = im.im
194         if image.isblock() and im.mode == self.__mode:
195             block = image
196         else:
197             block = image.new_block(self.__mode, im.size)
198             image.convert2(block, image) # convert directly between buffers
199
200         _pyimagingtkcall("PyImagingPhoto", self.__photo, block.id)
201
202
203 # --------------------------------------------------------------------
204 # BitmapImage
205
206
207 class BitmapImage:
208     """
209     A Tkinter-compatible bitmap image. This can be used everywhere Tkinter
210     expects an image object.
211
212     The given image must have mode "1". Pixels having value 0 are treated as
213     transparent. Options, if any, are passed on to Tkinter. The most commonly
214     used option is ``foreground``, which is used to specify the color for the
215     non-transparent parts. See the Tkinter documentation for information on
216     how to specify colours.
217
218     :param image: A PIL image.
219     """
220
221     def __init__(self, image=None, **kw):
222
223         # Tk compatibility: file or data
224         if image is None:
225             image = _get_image_from_kw(kw)
226
227         self.__mode = image.mode
228         self.__size = image.size
229
230         if _pilbitmap_check():
231             # fast way (requires the pilbitmap booster patch)
231 # fast way (requires the pilbitmap booster patch)

232 image.load()

233 kw["data"] = f"PIL:{image.im.id}"

234 self.__im = image # must keep a reference

235 else:

236 # slow but safe way

237 kw["data"] = image.tobitmap()

238 self.__photo = tkinter.BitmapImage(**kw)

239

240 def __del__(self):

241 name = self.__photo.name

242 self.__photo.name = None

243 try:

244 self.__photo.tk.call("image", "delete", name)

245 except Exception:

246 pass # ignore internal errors

247

248 def width(self):

249 """

250 Get the width of the image.

251

252 :return: The width, in pixels.

253 """

254 return self.__size[0]

255

256 def height(self):

257 """

258 Get the height of the image.

259

260 :return: The height, in pixels.

261 """

262 return self.__size[1]

263

264 def __str__(self):

265 """

266 Get the Tkinter bitmap image identifier. This method is automatically

267 called by Tkinter whenever a BitmapImage object is passed to a Tkinter

268 method.

269

270 :return: A Tkinter bitmap image identifier (a string).

271 """

272 return str(self.__photo)

273

274

275 def getimage(photo):

276 """Copies the contents of a PhotoImage to a PIL image memory."""

277 im = Image.new("RGBA", (photo.width(), photo.height()))

278 block = im.im

279

280 _pyimagingtkcall("PyImagingPhotoGet", photo, block.id)

281

282 return im

283

284

285 def _show(image, title):

286 """Helper for the Image.show method."""

287

288 class UI(tkinter.Label):

289 def __init__(self, master, im):

290 if im.mode == "1":

291 self.image = BitmapImage(im, foreground="white", master=master)

292 else:

293 self.image = PhotoImage(im, master=master)

294 super().__init__(master, image=self.image, bg="black", bd=0)

295

296 if not tkinter._default_root:

297 raise OSError("tkinter not initialized")

298 top = tkinter.Toplevel()

299 if title:

300 top.title(title)

301 UI(top, image).pack()

302

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/src/PIL/ImageTk.py b/src/PIL/ImageTk.py

--- a/src/PIL/ImageTk.py

+++ b/src/PIL/ImageTk.py

@@ -26,6 +26,7 @@

#

import tkinter

+import warnings

from io import BytesIO

from . import Image

@@ -183,11 +184,15 @@

:param im: A PIL image. The size must match the target region. If the

mode does not match, the image is converted to the mode of

the bitmap image.

- :param box: A 4-tuple defining the left, upper, right, and lower pixel

- coordinate. See :ref:`coordinate-system`. If None is given

- instead of a tuple, all of the image is assumed.

"""

+ if box is not None:

+ warnings.warn(

+ "The box parameter is deprecated and will be removed in Pillow 10 "

+ "(2023-07-01).",

+ DeprecationWarning,

+ )

+

# convert to blittable

im.load()

image = im.im