| problem_id (string, lengths 18 to 22) | source (string, 1 distinct value) | task_type (string, 1 distinct value) | in_source_id (string, lengths 13 to 58) | prompt (string, lengths 1.1k to 25.4k) | golden_diff (string, lengths 145 to 5.13k) | verification_info (string, lengths 582 to 39.1k) | num_tokens (int64, 271 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_4181

|

rasdani/github-patches

|

git_diff

|

learningequality__kolibri-4903

|

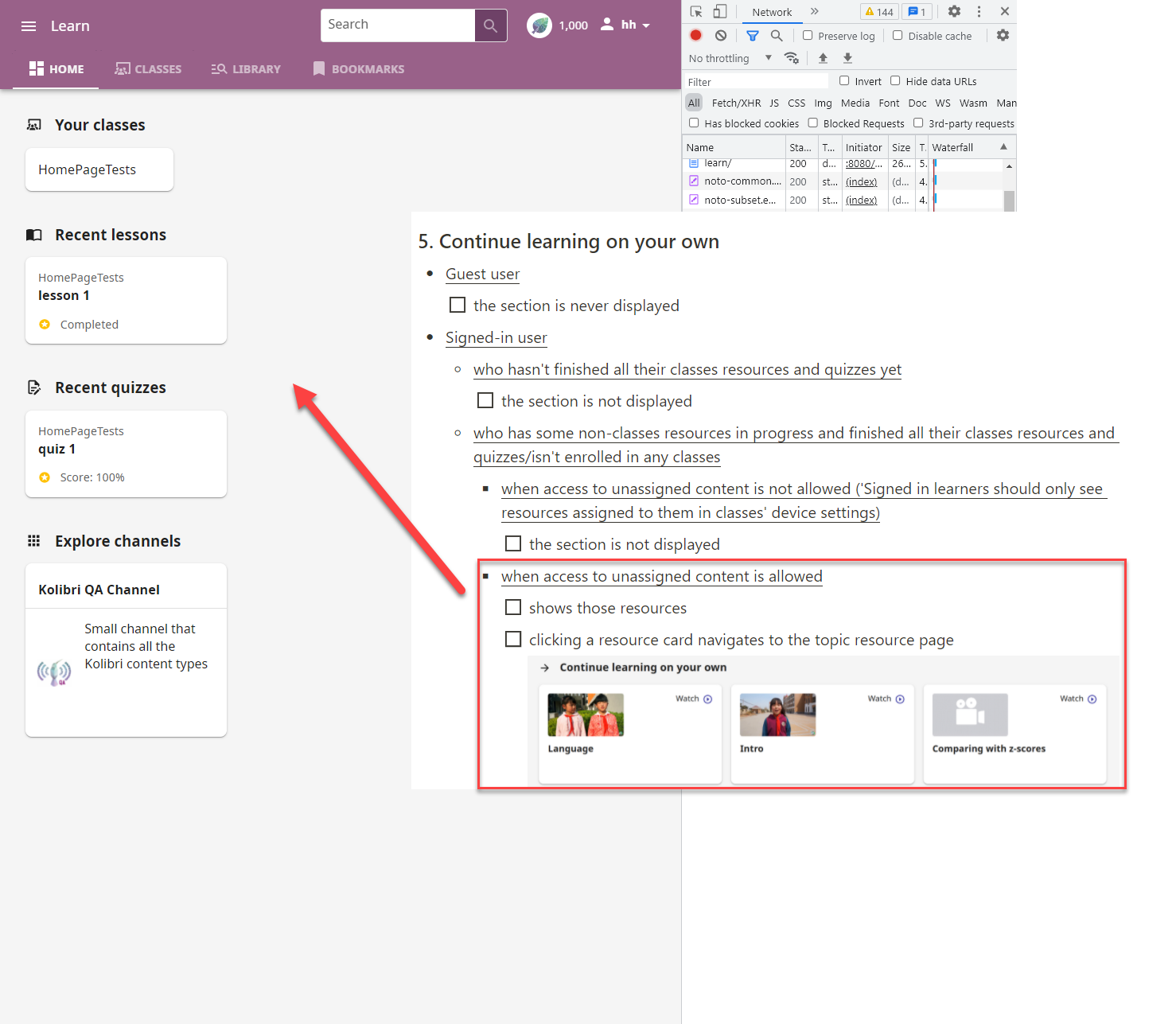

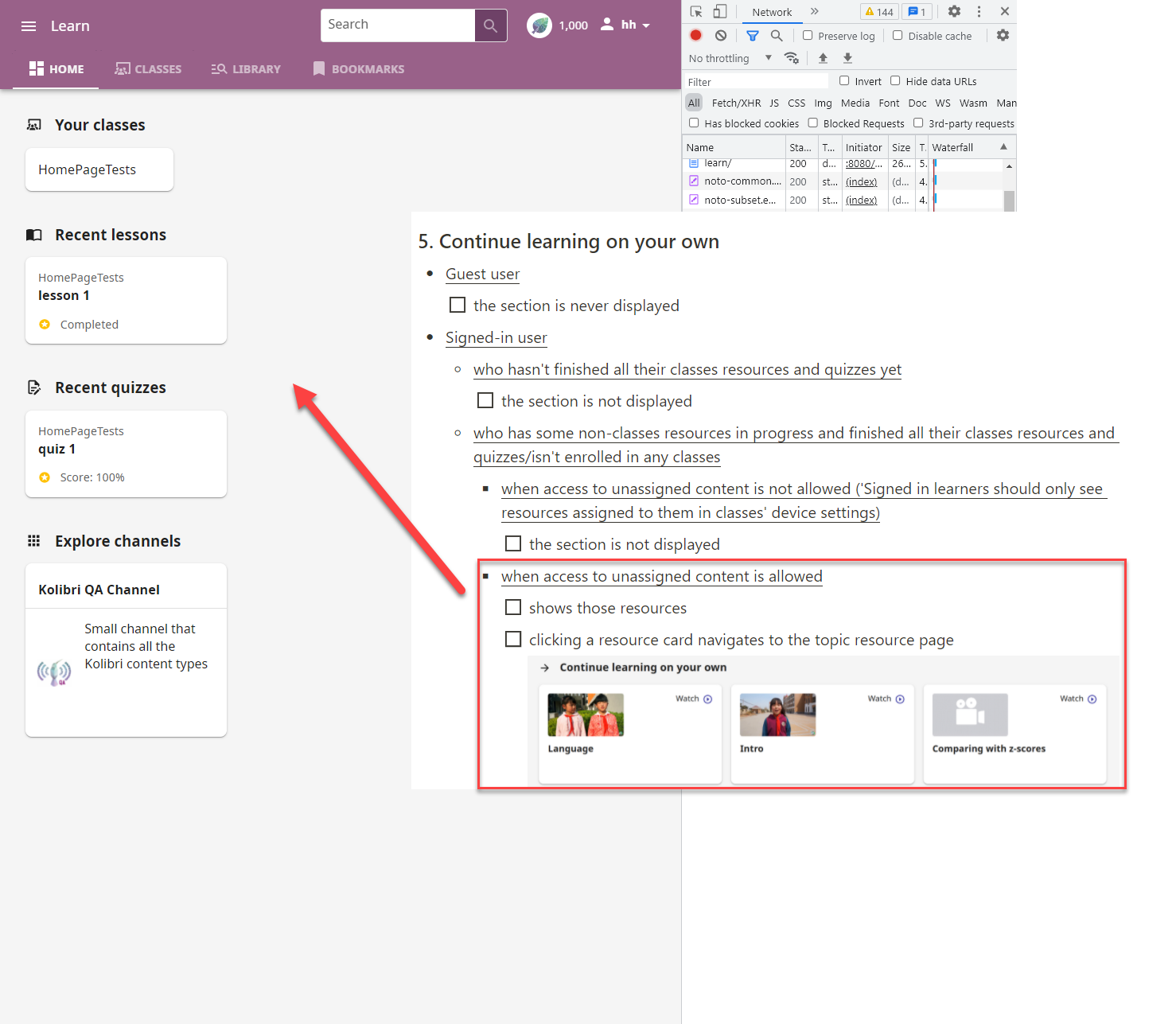

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

When starting Exams, Notifications and Attempt logs can be out of sync

### Observed behavior

1. As a learner, start an exam, but do not do any of the questions

1. After some time, a Notification will be recorded for the exam with a status of "Started"

1. However, querying the class summary API, the 'exam learner status' for that exam will have a status of "NotStarted".

This can lead to an inconsistency, where the Notification will update the in-memory classSummary data and cause the dashboard and reports to show that 1 learner has "started" an exam, but if you were to refresh (and get the on-server class summary data without the updating notification), it will revert to showing 0 learners starting the exam.

### Expected behavior

Since the UI intends to use Notifications to patch the class summary in real time, the two notions of "Exam Started" should match to avoid situations like the one described above.

### User-facing consequences

inconsistent / fluctuating values in reports

### Steps to reproduce

<!--

Precise steps that someone else can follow in order to see this behavior

-->

…

### Context

0.12.0 a 7

--- END ISSUE ---
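The inconsistency described in the issue can be reproduced in miniature. The following is a minimal sketch, not Kolibri's actual client code: the dictionary layout, the `apply_notification` and `count_started` helpers, and the `exam-1` / `learner-1` identifiers are all hypothetical and exist only to illustrate how a notification patch and a server refresh can disagree.

```python
# Hypothetical in-memory class summary: maps (exam_id, learner_id) to a status string.
# This layout is an assumption made for illustration; it is not Kolibri's data model.


def apply_notification(summary, notification):
    """Return a copy of the summary patched with the status carried by a notification."""
    patched = dict(summary)
    key = (notification["exam_id"], notification["learner_id"])
    patched[key] = notification["status"]
    return patched


def count_started(summary, exam_id):
    """Count the learners whose status for the given exam is "Started"."""
    return sum(
        1
        for (eid, _learner), status in summary.items()
        if eid == exam_id and status == "Started"
    )


# The server derives "NotStarted" because the learner has answered no questions yet.
server_summary = {("exam-1", "learner-1"): "NotStarted"}

# The notification, recorded when the exam was opened, reports "Started".
notification = {"exam_id": "exam-1", "learner_id": "learner-1", "status": "Started"}

in_memory = apply_notification(server_summary, notification)
after_refresh = dict(server_summary)  # a refresh discards the notification patch

print("started (patched in memory):", count_started(in_memory, "exam-1"))
print("started (after refresh):", count_started(after_refresh, "exam-1"))
```

The two counts differ for the same learner, which is the fluctuation the issue reports; making the server-side status agree with the notification removes the disagreement.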

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `kolibri/plugins/coach/class_summary_api.py`

Content:

```

1 from django.db.models import Max

2 from django.db.models import Sum

3 from django.shortcuts import get_object_or_404

4 from rest_framework import serializers

5 from rest_framework import viewsets

6 from rest_framework.response import Response

7

8 from kolibri.core.auth import models as auth_models

9 from kolibri.core.content.models import ContentNode

10 from kolibri.core.exams.models import Exam

11 from kolibri.core.lessons.models import Lesson

12 from kolibri.core.logger import models as logger_models

13 from kolibri.core.notifications.models import LearnerProgressNotification

14 from kolibri.core.notifications.models import NotificationEventType

15

16

17 # Intended to match NotificationEventType

18 NOT_STARTED = "NotStarted"

19 STARTED = "Started"

20 HELP_NEEDED = "HelpNeeded"

21 COMPLETED = "Completed"

22

23

24 def content_status_serializer(lesson_data, learners_data, classroom):

25

26 # First generate a unique set of content node ids from all the lessons

27 lesson_node_ids = set()

28 for lesson in lesson_data:

29 lesson_node_ids |= set(lesson.get("node_ids"))

30

31 # Now create a map of content_id to node_id so that we can map between lessons, and notifications

32 # which use the node id, and summary logs, which use content_id

33 content_map = {n[0]: n[1] for n in ContentNode.objects.filter(id__in=lesson_node_ids).values_list("content_id", "id")}

34

35 # Get all the values we need from the summary logs to be able to summarize current status on the

36 # relevant content items.

37 content_log_values = logger_models.ContentSummaryLog.objects.filter(

38 content_id__in=set(content_map.keys()), user__in=[learner["id"] for learner in learners_data]

39 ).values("user_id", "content_id", "end_timestamp", "time_spent", "progress")

40

41 # In order to make the lookup speedy, generate a unique key for each user/node that we find

42 # listed in the needs help notifications that are relevant. We can then just check

43 # existence of this key in the set in order to see whether this user has been flagged as needing

44 # help.

45 lookup_key = "{user_id}-{node_id}"

46 needs_help = {

47 lookup_key.format(user_id=n[0], node_id=n[1]): n[2] for n in LearnerProgressNotification.objects.filter(

48 classroom_id=classroom.id,

49 notification_event=NotificationEventType.Help,

50 lesson_id__in=[lesson["id"] for lesson in lesson_data],

51 ).values_list("user_id", "contentnode_id", "timestamp")

52 }

53

54 # In case a previously flagged learner has since completed an exercise, check all the completed

55 # notifications also

56 completed = {

57 lookup_key.format(user_id=n[0], node_id=n[1]): n[2] for n in LearnerProgressNotification.objects.filter(

58 classroom_id=classroom.id,

59 notification_event=NotificationEventType.Completed,

60 lesson_id__in=[lesson["id"] for lesson in lesson_data],

61 ).values_list("user_id", "contentnode_id", "timestamp")

62 }

63

64 def get_status(log):

65 """

66 Read the dict from a content summary log values query and return the status

67 In the case that we have found a needs help notification for the user and content node

68 in question, return that they need help, otherwise return status based on their

69 current progress.

70 """

71 content_id = log["content_id"]

72 if content_id in content_map:

73 # Don't try to lookup anything if we don't know the content_id

74 # node_id mapping - might happen if a channel has since been deleted

75 key = lookup_key.format(user_id=log["user_id"], node_id=content_map[content_id])

76 if key in needs_help:

77 # Now check if we have not already registered completion of the content node

78 # or if we have and the timestamp is earlier than that on the needs_help event

79 if key not in completed or completed[key] < needs_help[key]:

80 return HELP_NEEDED

81 if log["progress"] == 1:

82 return COMPLETED

83 elif log["progress"] == 0:

84 return NOT_STARTED

85 return STARTED

86

87 def map_content_logs(log):

88 """

89 Parse the content logs to return objects in the expected format.

90 """

91 return {

92 "learner_id": log["user_id"],

93 "content_id": log["content_id"],

94 "status": get_status(log),

95 "last_activity": log["end_timestamp"],

96 "time_spent": log["time_spent"],

97 }

98

99 return map(map_content_logs, content_log_values)

100

101

102 class ExamStatusSerializer(serializers.ModelSerializer):

103 status = serializers.SerializerMethodField()

104 exam_id = serializers.PrimaryKeyRelatedField(source="exam", read_only=True)

105 learner_id = serializers.PrimaryKeyRelatedField(source="user", read_only=True)

106 last_activity = serializers.CharField()

107 num_correct = serializers.SerializerMethodField()

108

109 def get_status(self, exam_log):

110 if exam_log.closed:

111 return COMPLETED

112 elif exam_log.attemptlogs.values_list("item").count() > 0:

113 return STARTED

114 return NOT_STARTED

115

116 def get_num_correct(self, exam_log):

117 return (

118 exam_log.attemptlogs.values_list('item')

119 .order_by('completion_timestamp')

120 .distinct()

121 .aggregate(Sum('correct'))

122 .get('correct__sum')

123 )

124

125 class Meta:

126 model = logger_models.ExamLog

127 fields = ("exam_id", "learner_id", "status", "last_activity", "num_correct")

128

129

130 class GroupSerializer(serializers.ModelSerializer):

131 member_ids = serializers.SerializerMethodField()

132

133 def get_member_ids(self, group):

134 return group.get_members().values_list("id", flat=True)

135

136 class Meta:

137 model = auth_models.LearnerGroup

138 fields = ("id", "name", "member_ids")

139

140

141 class UserSerializer(serializers.ModelSerializer):

142 name = serializers.CharField(source="full_name")

143

144 class Meta:

145 model = auth_models.FacilityUser

146 fields = ("id", "name", "username")

147

148

149 class LessonNodeIdsField(serializers.Field):

150 def to_representation(self, values):

151 return [value["contentnode_id"] for value in values]

152

153

154 class LessonAssignmentsField(serializers.RelatedField):

155 def to_representation(self, assignment):

156 return assignment.collection.id

157

158

159 class LessonSerializer(serializers.ModelSerializer):

160 active = serializers.BooleanField(source="is_active")

161 node_ids = LessonNodeIdsField(default=[], source="resources")

162

163 # classrooms are in here, and filtered out later

164 groups = LessonAssignmentsField(

165 many=True, read_only=True, source="lesson_assignments"

166 )

167

168 class Meta:

169 model = Lesson

170 fields = ("id", "title", "active", "node_ids", "groups")

171

172

173 class ExamQuestionSourcesField(serializers.Field):

174 def to_representation(self, values):

175 return values

176

177

178 class ExamAssignmentsField(serializers.RelatedField):

179 def to_representation(self, assignment):

180 return assignment.collection.id

181

182

183 class ExamSerializer(serializers.ModelSerializer):

184

185 question_sources = ExamQuestionSourcesField(default=[])

186

187 # classes are in here, and filtered out later

188 groups = ExamAssignmentsField(many=True, read_only=True, source="assignments")

189

190 class Meta:

191 model = Exam

192 fields = ("id", "title", "active", "question_sources", "groups")

193

194

195 class ContentSerializer(serializers.ModelSerializer):

196 node_id = serializers.CharField(source="id")

197

198 class Meta:

199 model = ContentNode

200 fields = ("node_id", "content_id", "title", "kind")

201

202

203 def data(Serializer, queryset):

204 return Serializer(queryset, many=True).data

205

206

207 class ClassSummaryViewSet(viewsets.ViewSet):

208 def retrieve(self, request, pk):

209 classroom = get_object_or_404(auth_models.Classroom, id=pk)

210 query_learners = classroom.get_members()

211 query_lesson = Lesson.objects.filter(collection=pk)

212 query_exams = Exam.objects.filter(collection=pk)

213 query_exam_logs = logger_models.ExamLog.objects.filter(

214 exam__in=query_exams

215 ).annotate(last_activity=Max("attemptlogs__end_timestamp"))

216

217 lesson_data = data(LessonSerializer, query_lesson)

218 exam_data = data(ExamSerializer, query_exams)

219

220 # filter classes out of exam assignments

221 for exam in exam_data:

222 exam["groups"] = [g for g in exam["groups"] if g != pk]

223

224 # filter classes out of lesson assignments

225 for lesson in lesson_data:

226 lesson["groups"] = [g for g in lesson["groups"] if g != pk]

227

228 all_node_ids = set()

229 for lesson in lesson_data:

230 all_node_ids |= set(lesson.get("node_ids"))

231 for exam in exam_data:

232 exam_node_ids = [question['exercise_id'] for question in exam.get("question_sources")]

233 all_node_ids |= set(exam_node_ids)

234

235 query_content = ContentNode.objects.filter(id__in=all_node_ids)

236

237 learners_data = data(UserSerializer, query_learners)

238

239 output = {

240 "id": pk,

241 "name": classroom.name,

242 "coaches": data(UserSerializer, classroom.get_coaches()),

243 "learners": learners_data,

244 "groups": data(GroupSerializer, classroom.get_learner_groups()),

245 "exams": exam_data,

246 "exam_learner_status": data(ExamStatusSerializer, query_exam_logs),

247 "content": data(ContentSerializer, query_content),

248 "content_learner_status": content_status_serializer(lesson_data, learners_data, classroom),

249 "lessons": lesson_data,

250 }

251

252 return Response(output)

253

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()

```

|

diff --git a/kolibri/plugins/coach/class_summary_api.py b/kolibri/plugins/coach/class_summary_api.py
--- a/kolibri/plugins/coach/class_summary_api.py
+++ b/kolibri/plugins/coach/class_summary_api.py
@@ -108,9 +108,8 @@
     def get_status(self, exam_log):
         if exam_log.closed:
             return COMPLETED
-        elif exam_log.attemptlogs.values_list("item").count() > 0:
+        else:
             return STARTED
-        return NOT_STARTED
 
     def get_num_correct(self, exam_log):
         return (
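The effect of the diff above can be seen by comparing the two decision rules side by side. This is a simplified sketch under stated assumptions: the real method receives an `ExamLog` instance and queries its attempt logs, whereas the hypothetical functions `status_before_patch` and `status_after_patch` below take a plain `closed` flag and an `attempt_count` integer so the example runs without Django.

```python
# Status strings as defined at the top of class_summary_api.py in the listing.
NOT_STARTED = "NotStarted"
STARTED = "Started"
COMPLETED = "Completed"


def status_before_patch(closed, attempt_count):
    """Original rule: an open exam counts as started only once an attempt exists."""
    if closed:
        return COMPLETED
    elif attempt_count > 0:
        return STARTED
    return NOT_STARTED


def status_after_patch(closed):
    """Patched rule: any existing exam log that is not closed counts as started."""
    if closed:
        return COMPLETED
    else:
        return STARTED


# (closed, attempt_count) cases: opened only, partly answered, submitted.
for closed, attempts in [(False, 0), (False, 3), (True, 3)]:
    print(
        closed,
        attempts,
        status_before_patch(closed, attempts),
        status_after_patch(closed),
    )
```

Only the first case, an exam that was opened but has no attempts, changes between the two rules, and that is the case the issue describes.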

|

{"golden_diff": "diff --git a/kolibri/plugins/coach/class_summary_api.py b/kolibri/plugins/coach/class_summary_api.py\n--- a/kolibri/plugins/coach/class_summary_api.py\n+++ b/kolibri/plugins/coach/class_summary_api.py\n@@ -108,9 +108,8 @@\n def get_status(self, exam_log):\n if exam_log.closed:\n return COMPLETED\n- elif exam_log.attemptlogs.values_list(\"item\").count() > 0:\n+ else:\n return STARTED\n- return NOT_STARTED\n \n def get_num_correct(self, exam_log):\n return (\n", "issue": "When starting Exams, Notifications and Attempt logs can be out of sync\n\r\n### Observed behavior\r\n\r\n1. As a learner, start an exam, but do not do any of the questions\r\n1. After some time, a Notification will be recorded for the exam with a status of \"Started\"\r\n1. However, querying the class summary API, the 'exam learner status' for that exam will have a status of \"NotStarted\".\r\n\r\nThis can lead to an inconsistency, where the Notification will update the in-memory classSummary data and cause the dashboard and reports to show that 1 learner has \"started\" an exam, but if you were to refresh (and get the on-server class summary data without the updating notification), it will revert to showing 0 learners starting the exam.\r\n\r\n### Expected behavior\r\n\r\nSince, the UI intends to use Notifications to patch class summary in real time, the two notions of \"Exam Started\" should match to avoid situations like the on described above.\r\n\r\n\r\n### User-facing consequences\r\n\r\ninconsistent / fluctuating values in reports\r\n\r\n\r\n### Steps to reproduce\r\n<!--\r\nPrecise steps that someone else can follow in order to see this behavior\r\n-->\r\n\r\n\u2026\r\n\r\n### Context\r\n\r\n0.12.0 a 7\n", "before_files": [{"content": "from django.db.models import Max\nfrom django.db.models import Sum\nfrom django.shortcuts import get_object_or_404\nfrom rest_framework import serializers\nfrom rest_framework import viewsets\nfrom rest_framework.response import 
Response\n\nfrom kolibri.core.auth import models as auth_models\nfrom kolibri.core.content.models import ContentNode\nfrom kolibri.core.exams.models import Exam\nfrom kolibri.core.lessons.models import Lesson\nfrom kolibri.core.logger import models as logger_models\nfrom kolibri.core.notifications.models import LearnerProgressNotification\nfrom kolibri.core.notifications.models import NotificationEventType\n\n\n# Intended to match NotificationEventType\nNOT_STARTED = \"NotStarted\"\nSTARTED = \"Started\"\nHELP_NEEDED = \"HelpNeeded\"\nCOMPLETED = \"Completed\"\n\n\ndef content_status_serializer(lesson_data, learners_data, classroom):\n\n # First generate a unique set of content node ids from all the lessons\n lesson_node_ids = set()\n for lesson in lesson_data:\n lesson_node_ids |= set(lesson.get(\"node_ids\"))\n\n # Now create a map of content_id to node_id so that we can map between lessons, and notifications\n # which use the node id, and summary logs, which use content_id\n content_map = {n[0]: n[1] for n in ContentNode.objects.filter(id__in=lesson_node_ids).values_list(\"content_id\", \"id\")}\n\n # Get all the values we need from the summary logs to be able to summarize current status on the\n # relevant content items.\n content_log_values = logger_models.ContentSummaryLog.objects.filter(\n content_id__in=set(content_map.keys()), user__in=[learner[\"id\"] for learner in learners_data]\n ).values(\"user_id\", \"content_id\", \"end_timestamp\", \"time_spent\", \"progress\")\n\n # In order to make the lookup speedy, generate a unique key for each user/node that we find\n # listed in the needs help notifications that are relevant. 
We can then just check\n # existence of this key in the set in order to see whether this user has been flagged as needing\n # help.\n lookup_key = \"{user_id}-{node_id}\"\n needs_help = {\n lookup_key.format(user_id=n[0], node_id=n[1]): n[2] for n in LearnerProgressNotification.objects.filter(\n classroom_id=classroom.id,\n notification_event=NotificationEventType.Help,\n lesson_id__in=[lesson[\"id\"] for lesson in lesson_data],\n ).values_list(\"user_id\", \"contentnode_id\", \"timestamp\")\n }\n\n # In case a previously flagged learner has since completed an exercise, check all the completed\n # notifications also\n completed = {\n lookup_key.format(user_id=n[0], node_id=n[1]): n[2] for n in LearnerProgressNotification.objects.filter(\n classroom_id=classroom.id,\n notification_event=NotificationEventType.Completed,\n lesson_id__in=[lesson[\"id\"] for lesson in lesson_data],\n ).values_list(\"user_id\", \"contentnode_id\", \"timestamp\")\n }\n\n def get_status(log):\n \"\"\"\n Read the dict from a content summary log values query and return the status\n In the case that we have found a needs help notification for the user and content node\n in question, return that they need help, otherwise return status based on their\n current progress.\n \"\"\"\n content_id = log[\"content_id\"]\n if content_id in content_map:\n # Don't try to lookup anything if we don't know the content_id\n # node_id mapping - might happen if a channel has since been deleted\n key = lookup_key.format(user_id=log[\"user_id\"], node_id=content_map[content_id])\n if key in needs_help:\n # Now check if we have not already registered completion of the content node\n # or if we have and the timestamp is earlier than that on the needs_help event\n if key not in completed or completed[key] < needs_help[key]:\n return HELP_NEEDED\n if log[\"progress\"] == 1:\n return COMPLETED\n elif log[\"progress\"] == 0:\n return NOT_STARTED\n return STARTED\n\n def map_content_logs(log):\n \"\"\"\n Parse the 
content logs to return objects in the expected format.\n \"\"\"\n return {\n \"learner_id\": log[\"user_id\"],\n \"content_id\": log[\"content_id\"],\n \"status\": get_status(log),\n \"last_activity\": log[\"end_timestamp\"],\n \"time_spent\": log[\"time_spent\"],\n }\n\n return map(map_content_logs, content_log_values)\n\n\nclass ExamStatusSerializer(serializers.ModelSerializer):\n status = serializers.SerializerMethodField()\n exam_id = serializers.PrimaryKeyRelatedField(source=\"exam\", read_only=True)\n learner_id = serializers.PrimaryKeyRelatedField(source=\"user\", read_only=True)\n last_activity = serializers.CharField()\n num_correct = serializers.SerializerMethodField()\n\n def get_status(self, exam_log):\n if exam_log.closed:\n return COMPLETED\n elif exam_log.attemptlogs.values_list(\"item\").count() > 0:\n return STARTED\n return NOT_STARTED\n\n def get_num_correct(self, exam_log):\n return (\n exam_log.attemptlogs.values_list('item')\n .order_by('completion_timestamp')\n .distinct()\n .aggregate(Sum('correct'))\n .get('correct__sum')\n )\n\n class Meta:\n model = logger_models.ExamLog\n fields = (\"exam_id\", \"learner_id\", \"status\", \"last_activity\", \"num_correct\")\n\n\nclass GroupSerializer(serializers.ModelSerializer):\n member_ids = serializers.SerializerMethodField()\n\n def get_member_ids(self, group):\n return group.get_members().values_list(\"id\", flat=True)\n\n class Meta:\n model = auth_models.LearnerGroup\n fields = (\"id\", \"name\", \"member_ids\")\n\n\nclass UserSerializer(serializers.ModelSerializer):\n name = serializers.CharField(source=\"full_name\")\n\n class Meta:\n model = auth_models.FacilityUser\n fields = (\"id\", \"name\", \"username\")\n\n\nclass LessonNodeIdsField(serializers.Field):\n def to_representation(self, values):\n return [value[\"contentnode_id\"] for value in values]\n\n\nclass LessonAssignmentsField(serializers.RelatedField):\n def to_representation(self, assignment):\n return 
assignment.collection.id\n\n\nclass LessonSerializer(serializers.ModelSerializer):\n active = serializers.BooleanField(source=\"is_active\")\n node_ids = LessonNodeIdsField(default=[], source=\"resources\")\n\n # classrooms are in here, and filtered out later\n groups = LessonAssignmentsField(\n many=True, read_only=True, source=\"lesson_assignments\"\n )\n\n class Meta:\n model = Lesson\n fields = (\"id\", \"title\", \"active\", \"node_ids\", \"groups\")\n\n\nclass ExamQuestionSourcesField(serializers.Field):\n def to_representation(self, values):\n return values\n\n\nclass ExamAssignmentsField(serializers.RelatedField):\n def to_representation(self, assignment):\n return assignment.collection.id\n\n\nclass ExamSerializer(serializers.ModelSerializer):\n\n question_sources = ExamQuestionSourcesField(default=[])\n\n # classes are in here, and filtered out later\n groups = ExamAssignmentsField(many=True, read_only=True, source=\"assignments\")\n\n class Meta:\n model = Exam\n fields = (\"id\", \"title\", \"active\", \"question_sources\", \"groups\")\n\n\nclass ContentSerializer(serializers.ModelSerializer):\n node_id = serializers.CharField(source=\"id\")\n\n class Meta:\n model = ContentNode\n fields = (\"node_id\", \"content_id\", \"title\", \"kind\")\n\n\ndef data(Serializer, queryset):\n return Serializer(queryset, many=True).data\n\n\nclass ClassSummaryViewSet(viewsets.ViewSet):\n def retrieve(self, request, pk):\n classroom = get_object_or_404(auth_models.Classroom, id=pk)\n query_learners = classroom.get_members()\n query_lesson = Lesson.objects.filter(collection=pk)\n query_exams = Exam.objects.filter(collection=pk)\n query_exam_logs = logger_models.ExamLog.objects.filter(\n exam__in=query_exams\n ).annotate(last_activity=Max(\"attemptlogs__end_timestamp\"))\n\n lesson_data = data(LessonSerializer, query_lesson)\n exam_data = data(ExamSerializer, query_exams)\n\n # filter classes out of exam assignments\n for exam in exam_data:\n exam[\"groups\"] = [g for g 
in exam[\"groups\"] if g != pk]\n\n # filter classes out of lesson assignments\n for lesson in lesson_data:\n lesson[\"groups\"] = [g for g in lesson[\"groups\"] if g != pk]\n\n all_node_ids = set()\n for lesson in lesson_data:\n all_node_ids |= set(lesson.get(\"node_ids\"))\n for exam in exam_data:\n exam_node_ids = [question['exercise_id'] for question in exam.get(\"question_sources\")]\n all_node_ids |= set(exam_node_ids)\n\n query_content = ContentNode.objects.filter(id__in=all_node_ids)\n\n learners_data = data(UserSerializer, query_learners)\n\n output = {\n \"id\": pk,\n \"name\": classroom.name,\n \"coaches\": data(UserSerializer, classroom.get_coaches()),\n \"learners\": learners_data,\n \"groups\": data(GroupSerializer, classroom.get_learner_groups()),\n \"exams\": exam_data,\n \"exam_learner_status\": data(ExamStatusSerializer, query_exam_logs),\n \"content\": data(ContentSerializer, query_content),\n \"content_learner_status\": content_status_serializer(lesson_data, learners_data, classroom),\n \"lessons\": lesson_data,\n }\n\n return Response(output)\n", "path": "kolibri/plugins/coach/class_summary_api.py"}], "after_files": [{"content": "from django.db.models import Max\nfrom django.db.models import Sum\nfrom django.shortcuts import get_object_or_404\nfrom rest_framework import serializers\nfrom rest_framework import viewsets\nfrom rest_framework.response import Response\n\nfrom kolibri.core.auth import models as auth_models\nfrom kolibri.core.content.models import ContentNode\nfrom kolibri.core.exams.models import Exam\nfrom kolibri.core.lessons.models import Lesson\nfrom kolibri.core.logger import models as logger_models\nfrom kolibri.core.notifications.models import LearnerProgressNotification\nfrom kolibri.core.notifications.models import NotificationEventType\n\n\nNOT_STARTED = \"not_started\"\nSTARTED = \"started\"\nHELP_NEEDED = \"help_needed\"\nCOMPLETED = \"completed\"\n\n\ndef content_status_serializer(lesson_data, learners_data, 
classroom):\n\n # First generate a unique set of content node ids from all the lessons\n lesson_node_ids = set()\n for lesson in lesson_data:\n lesson_node_ids |= set(lesson.get(\"node_ids\"))\n\n # Now create a map of content_id to node_id so that we can map between lessons, and notifications\n # which use the node id, and summary logs, which use content_id\n content_map = {n[0]: n[1] for n in ContentNode.objects.filter(id__in=lesson_node_ids).values_list(\"content_id\", \"id\")}\n\n # Get all the values we need from the summary logs to be able to summarize current status on the\n # relevant content items.\n content_log_values = logger_models.ContentSummaryLog.objects.filter(\n content_id__in=set(content_map.keys()), user__in=[learner[\"id\"] for learner in learners_data]\n ).values(\"user_id\", \"content_id\", \"end_timestamp\", \"time_spent\", \"progress\")\n\n # In order to make the lookup speedy, generate a unique key for each user/node that we find\n # listed in the needs help notifications that are relevant. 
We can then just check\n # existence of this key in the set in order to see whether this user has been flagged as needing\n # help.\n lookup_key = \"{user_id}-{node_id}\"\n needs_help = {\n lookup_key.format(user_id=n[0], node_id=n[1]): n[2] for n in LearnerProgressNotification.objects.filter(\n classroom_id=classroom.id,\n notification_event=NotificationEventType.Help,\n lesson_id__in=[lesson[\"id\"] for lesson in lesson_data],\n ).values_list(\"user_id\", \"contentnode_id\", \"timestamp\")\n }\n\n # In case a previously flagged learner has since completed an exercise, check all the completed\n # notifications also\n completed = {\n lookup_key.format(user_id=n[0], node_id=n[1]): n[2] for n in LearnerProgressNotification.objects.filter(\n classroom_id=classroom.id,\n notification_event=NotificationEventType.Completed,\n lesson_id__in=[lesson[\"id\"] for lesson in lesson_data],\n ).values_list(\"user_id\", \"contentnode_id\", \"timestamp\")\n }\n\n def get_status(log):\n \"\"\"\n Read the dict from a content summary log values query and return the status\n In the case that we have found a needs help notification for the user and content node\n in question, return that they need help, otherwise return status based on their\n current progress.\n \"\"\"\n content_id = log[\"content_id\"]\n if content_id in content_map:\n # Don't try to lookup anything if we don't know the content_id\n # node_id mapping - might happen if a channel has since been deleted\n key = lookup_key.format(user_id=log[\"user_id\"], node_id=content_map[content_id])\n if key in needs_help:\n # Now check if we have not already registered completion of the content node\n # or if we have and the timestamp is earlier than that on the needs_help event\n if key not in completed or completed[key] < needs_help[key]:\n return HELP_NEEDED\n if log[\"progress\"] == 1:\n return COMPLETED\n elif log[\"progress\"] == 0:\n return NOT_STARTED\n return STARTED\n\n def map_content_logs(log):\n \"\"\"\n Parse the 
content logs to return objects in the expected format.\n \"\"\"\n return {\n \"learner_id\": log[\"user_id\"],\n \"content_id\": log[\"content_id\"],\n \"status\": get_status(log),\n \"last_activity\": log[\"end_timestamp\"],\n \"time_spent\": log[\"time_spent\"],\n }\n\n return map(map_content_logs, content_log_values)\n\n\nclass ExamStatusSerializer(serializers.ModelSerializer):\n status = serializers.SerializerMethodField()\n exam_id = serializers.PrimaryKeyRelatedField(source=\"exam\", read_only=True)\n learner_id = serializers.PrimaryKeyRelatedField(source=\"user\", read_only=True)\n last_activity = serializers.CharField()\n num_correct = serializers.SerializerMethodField()\n\n def get_status(self, exam_log):\n if exam_log.closed:\n return COMPLETED\n else:\n return STARTED\n\n def get_num_correct(self, exam_log):\n return (\n exam_log.attemptlogs.values_list('item')\n .order_by('completion_timestamp')\n .distinct()\n .aggregate(Sum('correct'))\n .get('correct__sum')\n )\n\n class Meta:\n model = logger_models.ExamLog\n fields = (\"exam_id\", \"learner_id\", \"status\", \"last_activity\", \"num_correct\")\n\n\nclass GroupSerializer(serializers.ModelSerializer):\n member_ids = serializers.SerializerMethodField()\n\n def get_member_ids(self, group):\n return group.get_members().values_list(\"id\", flat=True)\n\n class Meta:\n model = auth_models.LearnerGroup\n fields = (\"id\", \"name\", \"member_ids\")\n\n\nclass UserSerializer(serializers.ModelSerializer):\n name = serializers.CharField(source=\"full_name\")\n\n class Meta:\n model = auth_models.FacilityUser\n fields = (\"id\", \"name\", \"username\")\n\n\nclass LessonNodeIdsField(serializers.Field):\n def to_representation(self, values):\n return [value[\"contentnode_id\"] for value in values]\n\n\nclass LessonAssignmentsField(serializers.RelatedField):\n def to_representation(self, assignment):\n return assignment.collection.id\n\n\nclass LessonSerializer(serializers.ModelSerializer):\n active = 
serializers.BooleanField(source=\"is_active\")\n node_ids = LessonNodeIdsField(default=[], source=\"resources\")\n\n # classrooms are in here, and filtered out later\n groups = LessonAssignmentsField(\n many=True, read_only=True, source=\"lesson_assignments\"\n )\n\n class Meta:\n model = Lesson\n fields = (\"id\", \"title\", \"active\", \"node_ids\", \"groups\")\n\n\nclass ExamQuestionSourcesField(serializers.Field):\n def to_representation(self, values):\n return values\n\n\nclass ExamAssignmentsField(serializers.RelatedField):\n def to_representation(self, assignment):\n return assignment.collection.id\n\n\nclass ExamSerializer(serializers.ModelSerializer):\n\n question_sources = ExamQuestionSourcesField(default=[])\n\n # classes are in here, and filtered out later\n groups = ExamAssignmentsField(many=True, read_only=True, source=\"assignments\")\n\n class Meta:\n model = Exam\n fields = (\"id\", \"title\", \"active\", \"question_sources\", \"groups\")\n\n\nclass ContentSerializer(serializers.ModelSerializer):\n node_id = serializers.CharField(source=\"id\")\n\n class Meta:\n model = ContentNode\n fields = (\"node_id\", \"content_id\", \"title\", \"kind\")\n\n\ndef data(Serializer, queryset):\n return Serializer(queryset, many=True).data\n\n\nclass ClassSummaryViewSet(viewsets.ViewSet):\n def retrieve(self, request, pk):\n classroom = get_object_or_404(auth_models.Classroom, id=pk)\n query_learners = classroom.get_members()\n query_lesson = Lesson.objects.filter(collection=pk)\n query_exams = Exam.objects.filter(collection=pk)\n query_exam_logs = logger_models.ExamLog.objects.filter(\n exam__in=query_exams\n ).annotate(last_activity=Max(\"attemptlogs__end_timestamp\"))\n\n lesson_data = data(LessonSerializer, query_lesson)\n exam_data = data(ExamSerializer, query_exams)\n\n # filter classes out of exam assignments\n for exam in exam_data:\n exam[\"groups\"] = [g for g in exam[\"groups\"] if g != pk]\n\n # filter classes out of lesson assignments\n for lesson in 
lesson_data:\n lesson[\"groups\"] = [g for g in lesson[\"groups\"] if g != pk]\n\n all_node_ids = set()\n for lesson in lesson_data:\n all_node_ids |= set(lesson.get(\"node_ids\"))\n for exam in exam_data:\n exam_node_ids = [question['exercise_id'] for question in exam.get(\"question_sources\")]\n all_node_ids |= set(exam_node_ids)\n\n query_content = ContentNode.objects.filter(id__in=all_node_ids)\n\n learners_data = data(UserSerializer, query_learners)\n\n output = {\n \"id\": pk,\n \"name\": classroom.name,\n \"coaches\": data(UserSerializer, classroom.get_coaches()),\n \"learners\": learners_data,\n \"groups\": data(GroupSerializer, classroom.get_learner_groups()),\n \"exams\": exam_data,\n \"exam_learner_status\": data(ExamStatusSerializer, query_exam_logs),\n \"content\": data(ContentSerializer, query_content),\n \"content_learner_status\": content_status_serializer(lesson_data, learners_data, classroom),\n \"lessons\": lesson_data,\n }\n\n return Response(output)\n", "path": "kolibri/plugins/coach/class_summary_api.py"}]}

| 3,248 | 133 |

gh_patches_debug_692

|

rasdani/github-patches

|

git_diff

|

hylang__hy-2312

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

New release

It's time for a new release soon. Here are the things I'd like to get done, or at least try to get done, first. If you think you'll make a PR soon that you'd also like to get in for this release, mention that, too. Volunteers to take these tasks on are also welcome.

- ~#2291~; ~#2292~ - These are more difficult than I thought. I don't think I'm going to make the release wait for them.

- Install bytecode (for Hy and for Hyrule): hylang/hyrule#42; at least partly addresses #1747

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `docs/conf.py`

Content:

```

1 # This file is execfile()d with the current directory set to its containing dir.

2

3 import html

4 import os

5 import re

6 import sys

7 import time

8

9 sys.path.insert(0, os.path.abspath(".."))

10

11 extensions = [

12 "sphinx.ext.napoleon",

13 "sphinx.ext.intersphinx",

14 "sphinx.ext.autodoc",

15 "sphinx.ext.viewcode",

16 "sphinxcontrib.hydomain",

17 ]

18

19 from get_version import __version__ as hy_version

20

21 # Read the Docs might dirty its checkout, so strip the dirty flag.

22 hy_version = re.sub(r"[+.]dirty\Z", "", hy_version)

23

24 templates_path = ["_templates"]

25 source_suffix = ".rst"

26

27 master_doc = "index"

28

29 # General information about the project.

30 project = "hy"

31 copyright = "%s the authors" % time.strftime("%Y")

32

33 # The version info for the project you're documenting, acts as replacement for

34 # |version| and |release|, also used in various other places throughout the

35 # built documents.

36 #

37 # The short X.Y version.

38 version = ".".join(hy_version.split(".")[:-1])

39 # The full version, including alpha/beta/rc tags.

40 release = hy_version

41 hy_descriptive_version = html.escape(hy_version)

42 if "+" in hy_version:

43 hy_descriptive_version += " <strong style='color: red;'>(unstable)</strong>"

44

45 exclude_patterns = ["_build", "coreteam.rst"]

46 add_module_names = True

47

48 pygments_style = "sphinx"

49

50 import sphinx_rtd_theme

51

52 html_theme = "sphinx_rtd_theme"

53 html_theme_path = [sphinx_rtd_theme.get_html_theme_path()]

54

55 # Add any paths that contain custom static files (such as style sheets) here,

56 # relative to this directory. They are copied after the builtin static files,

57 # so a file named "default.css" will overwrite the builtin "default.css".

58 html_static_path = ["_static"]

59

60 html_use_smartypants = False

61 html_show_sphinx = False

62

63 html_context = dict(

64 hy_descriptive_version=hy_descriptive_version,

65 has_active_alpha=True,

66 )

67

68 highlight_language = "clojure"

69

70 intersphinx_mapping = dict(

71 py=("https://docs.python.org/3/", None),

72 py3_10=("https://docs.python.org/3.10/", None),

73 hyrule=("https://hyrule.readthedocs.io/en/master/", None),

74 )

75 # ** Generate Cheatsheet

76 import json

77 from itertools import zip_longest

78 from pathlib import Path

79

80

81 def refize(spec):

82 role = ":hy:func:"

83 if isinstance(spec, dict):

84 _name = spec["name"]

85 uri = spec["uri"]

86 if spec.get("internal"):

87 role = ":ref:"

88 else:

89 uri = spec

90 _name = str.split(uri, ".")[-1]

91 return "{}`{} <{}>`".format(role, _name, uri)

92

93

94 def format_refs(refs, indent):

95 args = [iter(map(refize, refs))]

96 ref_groups = zip_longest(*args, fillvalue="")

97 return str.join(

98 " \\\n" + " " * (indent + 3),

99 [str.join(" ", ref_group) for ref_group in ref_groups],

100 )

101

102

103 def format_row(category, divider_loc):

104 return "{title: <{width}} | {methods}".format(

105 width=divider_loc,

106 title=category["name"],

107 methods=format_refs(category["methods"], divider_loc),

108 )

109

110

111 def format_table(table_spec):

112 table_name = table_spec["name"]

113 categories = table_spec["categories"]

114 longest_cat_name = max(len(category["name"]) for category in categories)

115 table = [

116 table_name,

117 "-" * len(table_name),

118 "",

119 "=" * longest_cat_name + " " + "=" * 25,

120 *(format_row(category, longest_cat_name) for category in categories),

121 "=" * longest_cat_name + " " + "=" * 25,

122 "",

123 ]

124 return "\n".join(table)

125

126

127 # Modifications to the cheatsheet should be added in `cheatsheet.json`

128 cheatsheet_spec = json.loads(Path("./docs/cheatsheet.json").read_text())

129 cheatsheet = [

130 "..",

131 " DO NOT MODIFY THIS FILE. IT IS AUTO GENERATED BY ``conf.py``",

132 " If you need to change or add methods, modify ``cheatsheet_spec`` in ``conf.py``",

133 "",

134 ".. _cheatsheet:",

135 "",

136 "Cheatsheet",

137 "==========",

138 "",

139 *map(format_table, cheatsheet_spec),

140 ]

141 Path("./docs/cheatsheet.rst").write_text("\n".join(cheatsheet))

142

143

144 # ** Sphinx App Setup

145

146

147 def setup(app):

148 app.add_css_file("overrides.css")

149

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/docs/conf.py b/docs/conf.py

--- a/docs/conf.py

+++ b/docs/conf.py

@@ -61,9 +61,7 @@

html_show_sphinx = False

html_context = dict(

- hy_descriptive_version=hy_descriptive_version,

- has_active_alpha=True,

-)

+ hy_descriptive_version=hy_descriptive_version)

highlight_language = "clojure"

|

{"golden_diff": "diff --git a/docs/conf.py b/docs/conf.py\n--- a/docs/conf.py\n+++ b/docs/conf.py\n@@ -61,9 +61,7 @@\n html_show_sphinx = False\n \n html_context = dict(\n- hy_descriptive_version=hy_descriptive_version,\n- has_active_alpha=True,\n-)\n+ hy_descriptive_version=hy_descriptive_version)\n \n highlight_language = \"clojure\"\n", "issue": "New release\nIt's time for a new release soon. Here are the things I'd like to get done, or at least try to get done, first. If you think you'll make a PR soon that you'd also like to get in for this release, mention that, too. Volunteers to take these tasks on are also welcome.\r\n\r\n- ~#2291~; ~#2292~ - These are more difficult than I thought. I don't think I'm going to make the release wait for them.\r\n- Install bytecode (for Hy and for Hyrule): hylang/hyrule#42; at least partly addresses #1747\n", "before_files": [{"content": "# This file is execfile()d with the current directory set to its containing dir.\n\nimport html\nimport os\nimport re\nimport sys\nimport time\n\nsys.path.insert(0, os.path.abspath(\"..\"))\n\nextensions = [\n \"sphinx.ext.napoleon\",\n \"sphinx.ext.intersphinx\",\n \"sphinx.ext.autodoc\",\n \"sphinx.ext.viewcode\",\n \"sphinxcontrib.hydomain\",\n]\n\nfrom get_version import __version__ as hy_version\n\n# Read the Docs might dirty its checkout, so strip the dirty flag.\nhy_version = re.sub(r\"[+.]dirty\\Z\", \"\", hy_version)\n\ntemplates_path = [\"_templates\"]\nsource_suffix = \".rst\"\n\nmaster_doc = \"index\"\n\n# General information about the project.\nproject = \"hy\"\ncopyright = \"%s the authors\" % time.strftime(\"%Y\")\n\n# The version info for the project you're documenting, acts as replacement for\n# |version| and |release|, also used in various other places throughout the\n# built documents.\n#\n# The short X.Y version.\nversion = \".\".join(hy_version.split(\".\")[:-1])\n# The full version, including alpha/beta/rc tags.\nrelease = hy_version\nhy_descriptive_version = 
html.escape(hy_version)\nif \"+\" in hy_version:\n hy_descriptive_version += \" <strong style='color: red;'>(unstable)</strong>\"\n\nexclude_patterns = [\"_build\", \"coreteam.rst\"]\nadd_module_names = True\n\npygments_style = \"sphinx\"\n\nimport sphinx_rtd_theme\n\nhtml_theme = \"sphinx_rtd_theme\"\nhtml_theme_path = [sphinx_rtd_theme.get_html_theme_path()]\n\n# Add any paths that contain custom static files (such as style sheets) here,\n# relative to this directory. They are copied after the builtin static files,\n# so a file named \"default.css\" will overwrite the builtin \"default.css\".\nhtml_static_path = [\"_static\"]\n\nhtml_use_smartypants = False\nhtml_show_sphinx = False\n\nhtml_context = dict(\n hy_descriptive_version=hy_descriptive_version,\n has_active_alpha=True,\n)\n\nhighlight_language = \"clojure\"\n\nintersphinx_mapping = dict(\n py=(\"https://docs.python.org/3/\", None),\n py3_10=(\"https://docs.python.org/3.10/\", None),\n hyrule=(\"https://hyrule.readthedocs.io/en/master/\", None),\n)\n# ** Generate Cheatsheet\nimport json\nfrom itertools import zip_longest\nfrom pathlib import Path\n\n\ndef refize(spec):\n role = \":hy:func:\"\n if isinstance(spec, dict):\n _name = spec[\"name\"]\n uri = spec[\"uri\"]\n if spec.get(\"internal\"):\n role = \":ref:\"\n else:\n uri = spec\n _name = str.split(uri, \".\")[-1]\n return \"{}`{} <{}>`\".format(role, _name, uri)\n\n\ndef format_refs(refs, indent):\n args = [iter(map(refize, refs))]\n ref_groups = zip_longest(*args, fillvalue=\"\")\n return str.join(\n \" \\\\\\n\" + \" \" * (indent + 3),\n [str.join(\" \", ref_group) for ref_group in ref_groups],\n )\n\n\ndef format_row(category, divider_loc):\n return \"{title: <{width}} | {methods}\".format(\n width=divider_loc,\n title=category[\"name\"],\n methods=format_refs(category[\"methods\"], divider_loc),\n )\n\n\ndef format_table(table_spec):\n table_name = table_spec[\"name\"]\n categories = table_spec[\"categories\"]\n longest_cat_name = 
max(len(category[\"name\"]) for category in categories)\n table = [\n table_name,\n \"-\" * len(table_name),\n \"\",\n \"=\" * longest_cat_name + \" \" + \"=\" * 25,\n *(format_row(category, longest_cat_name) for category in categories),\n \"=\" * longest_cat_name + \" \" + \"=\" * 25,\n \"\",\n ]\n return \"\\n\".join(table)\n\n\n# Modifications to the cheatsheet should be added in `cheatsheet.json`\ncheatsheet_spec = json.loads(Path(\"./docs/cheatsheet.json\").read_text())\ncheatsheet = [\n \"..\",\n \" DO NOT MODIFY THIS FILE. IT IS AUTO GENERATED BY ``conf.py``\",\n \" If you need to change or add methods, modify ``cheatsheet_spec`` in ``conf.py``\",\n \"\",\n \".. _cheatsheet:\",\n \"\",\n \"Cheatsheet\",\n \"==========\",\n \"\",\n *map(format_table, cheatsheet_spec),\n]\nPath(\"./docs/cheatsheet.rst\").write_text(\"\\n\".join(cheatsheet))\n\n\n# ** Sphinx App Setup\n\n\ndef setup(app):\n app.add_css_file(\"overrides.css\")\n", "path": "docs/conf.py"}], "after_files": [{"content": "# This file is execfile()d with the current directory set to its containing dir.\n\nimport html\nimport os\nimport re\nimport sys\nimport time\n\nsys.path.insert(0, os.path.abspath(\"..\"))\n\nextensions = [\n \"sphinx.ext.napoleon\",\n \"sphinx.ext.intersphinx\",\n \"sphinx.ext.autodoc\",\n \"sphinx.ext.viewcode\",\n \"sphinxcontrib.hydomain\",\n]\n\nfrom get_version import __version__ as hy_version\n\n# Read the Docs might dirty its checkout, so strip the dirty flag.\nhy_version = re.sub(r\"[+.]dirty\\Z\", \"\", hy_version)\n\ntemplates_path = [\"_templates\"]\nsource_suffix = \".rst\"\n\nmaster_doc = \"index\"\n\n# General information about the project.\nproject = \"hy\"\ncopyright = \"%s the authors\" % time.strftime(\"%Y\")\n\n# The version info for the project you're documenting, acts as replacement for\n# |version| and |release|, also used in various other places throughout the\n# built documents.\n#\n# The short X.Y version.\nversion = 
\".\".join(hy_version.split(\".\")[:-1])\n# The full version, including alpha/beta/rc tags.\nrelease = hy_version\nhy_descriptive_version = html.escape(hy_version)\nif \"+\" in hy_version:\n hy_descriptive_version += \" <strong style='color: red;'>(unstable)</strong>\"\n\nexclude_patterns = [\"_build\", \"coreteam.rst\"]\nadd_module_names = True\n\npygments_style = \"sphinx\"\n\nimport sphinx_rtd_theme\n\nhtml_theme = \"sphinx_rtd_theme\"\nhtml_theme_path = [sphinx_rtd_theme.get_html_theme_path()]\n\n# Add any paths that contain custom static files (such as style sheets) here,\n# relative to this directory. They are copied after the builtin static files,\n# so a file named \"default.css\" will overwrite the builtin \"default.css\".\nhtml_static_path = [\"_static\"]\n\nhtml_use_smartypants = False\nhtml_show_sphinx = False\n\nhtml_context = dict(\n hy_descriptive_version=hy_descriptive_version)\n\nhighlight_language = \"clojure\"\n\nintersphinx_mapping = dict(\n py=(\"https://docs.python.org/3/\", None),\n py3_10=(\"https://docs.python.org/3.10/\", None),\n hyrule=(\"https://hyrule.readthedocs.io/en/master/\", None),\n)\n# ** Generate Cheatsheet\nimport json\nfrom itertools import zip_longest\nfrom pathlib import Path\n\n\ndef refize(spec):\n role = \":hy:func:\"\n if isinstance(spec, dict):\n _name = spec[\"name\"]\n uri = spec[\"uri\"]\n if spec.get(\"internal\"):\n role = \":ref:\"\n else:\n uri = spec\n _name = str.split(uri, \".\")[-1]\n return \"{}`{} <{}>`\".format(role, _name, uri)\n\n\ndef format_refs(refs, indent):\n args = [iter(map(refize, refs))]\n ref_groups = zip_longest(*args, fillvalue=\"\")\n return str.join(\n \" \\\\\\n\" + \" \" * (indent + 3),\n [str.join(\" \", ref_group) for ref_group in ref_groups],\n )\n\n\ndef format_row(category, divider_loc):\n return \"{title: <{width}} | {methods}\".format(\n width=divider_loc,\n title=category[\"name\"],\n methods=format_refs(category[\"methods\"], divider_loc),\n )\n\n\ndef 
format_table(table_spec):\n table_name = table_spec[\"name\"]\n categories = table_spec[\"categories\"]\n longest_cat_name = max(len(category[\"name\"]) for category in categories)\n table = [\n table_name,\n \"-\" * len(table_name),\n \"\",\n \"=\" * longest_cat_name + \" \" + \"=\" * 25,\n *(format_row(category, longest_cat_name) for category in categories),\n \"=\" * longest_cat_name + \" \" + \"=\" * 25,\n \"\",\n ]\n return \"\\n\".join(table)\n\n\n# Modifications to the cheatsheet should be added in `cheatsheet.json`\ncheatsheet_spec = json.loads(Path(\"./docs/cheatsheet.json\").read_text())\ncheatsheet = [\n \"..\",\n \" DO NOT MODIFY THIS FILE. IT IS AUTO GENERATED BY ``conf.py``\",\n \" If you need to change or add methods, modify ``cheatsheet_spec`` in ``conf.py``\",\n \"\",\n \".. _cheatsheet:\",\n \"\",\n \"Cheatsheet\",\n \"==========\",\n \"\",\n *map(format_table, cheatsheet_spec),\n]\nPath(\"./docs/cheatsheet.rst\").write_text(\"\\n\".join(cheatsheet))\n\n\n# ** Sphinx App Setup\n\n\ndef setup(app):\n app.add_css_file(\"overrides.css\")\n", "path": "docs/conf.py"}]}

| 1,780 | 89 |

gh_patches_debug_37323

|

rasdani/github-patches

|

git_diff

|

pyro-ppl__pyro-743

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Default guide for `Importance` gives non-zero `log_weight`

The default `guide` in `Importance` blocks _all_ sites (and not just those observed). This leads to the `guide_trace` being empty (except for the input and return values). As a result, no samples are reused when replayed on the model _and_ the `guide_trace.log_pdf()` evaluates to `0.0`. The `log_weight` is then equal to the `model_trace.log_pdf()` (which is also evaluated on different samples), which I believe is unintended and incorrect.

The program below illustrates this for a simple univariate Gaussian, where my proposal and target distribution are identical and I would expect the log of the weights to be `0.0`. The latter is only the case when the sites are _explicitly_ exposed.

```python

import torch

from torch.autograd import Variable

import pyro

from pyro import distributions as dist

from pyro import infer

from pyro import poutine

def gaussian():

return pyro.sample('x', dist.normal,

Variable(torch.Tensor([0.0])),

Variable(torch.Tensor([1.0])))

# Using `Importance` with the default `guide`, the `log_weight` is equal to the

# `model_trace.log_pdf()`. That is, the `guide_trace.log_pdf()` (evaluated

# internally) is incorrectly `0.0`.

print('importance_default_guide:')

importance_default_guide = infer.Importance(gaussian, num_samples=10)

for model_trace, log_weight in importance_default_guide._traces():

model_trace_log_pdf = model_trace.log_pdf()

are_equal = log_weight.data[0] == model_trace_log_pdf.data[0]

print(log_weight.data[0], are_equal)

# However, setting the `guide` to expose `x` ensures that it is replayed so

# that the `log_weight` is exactly zero for each sample.

print('importance_exposed_guide:')

importance_exposed_guide = infer.Importance(

gaussian,

guide=poutine.block(gaussian, expose=['x']),

num_samples=10)

for model_trace, log_weight in importance_exposed_guide._traces():

print(log_weight.data[0])

```

```

importance_default_guide:

-0.9368391633033752 True

-1.3421428203582764 True

-0.9189755320549011 True

-2.1423826217651367 True

-2.301940679550171 True

-1.142196774482727 True

-0.9449963569641113 True

-2.7146053314208984 True

-3.420013904571533 True

-1.7994171380996704 True

importance_exposed_guide:

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

```

--- END ISSUE ---
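
As a minimal sketch of the expectation stated in the issue, assume the usual importance-sampling definition, where the log weight is the target log-density minus the proposal log-density at the same sample. The helpers `normal_log_pdf` and `log_weight` below are hypothetical names written for this illustration using only the standard library; they are not Pyro APIs. With identical target and proposal the log weight is zero, while treating the proposal term as `0.0` (the situation the issue describes for an empty guide trace) leaves only the target log-density.

```python
import math


def normal_log_pdf(x, mu=0.0, sigma=1.0):
    """Log-density of a univariate normal distribution at x."""
    return -0.5 * math.log(2.0 * math.pi * sigma ** 2) - (x - mu) ** 2 / (2.0 * sigma ** 2)


def log_weight(x, target=(0.0, 1.0), proposal=(0.0, 1.0)):
    """Importance log weight: log p(x) - log q(x), both evaluated at the same x."""
    return normal_log_pdf(x, *target) - normal_log_pdf(x, *proposal)


if __name__ == "__main__":
    x = 0.3
    # Identical target and proposal: the two terms cancel.
    print(log_weight(x))
    # If the proposal term were dropped (treated as 0.0), only the target
    # log-density would remain, which is generally non-zero.
    print(normal_log_pdf(x))
```

The values printed for the second case are of the same kind as the non-zero `log_weight` entries reported in the issue output above.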

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `pyro/poutine/block_poutine.py`

Content:

```

1 from __future__ import absolute_import, division, print_function

2

3 from .poutine import Poutine

4

5

6 class BlockPoutine(Poutine):

7 """

8 This Poutine selectively hides pyro primitive sites from the outside world.

9

10 For example, suppose the stochastic function fn has two sample sites "a" and "b".

11 Then any poutine outside of BlockPoutine(fn, hide=["a"])

12 will not be applied to site "a" and will only see site "b":

13

14 >>> fn_inner = TracePoutine(fn)

15 >>> fn_outer = TracePoutine(BlockPoutine(TracePoutine(fn), hide=["a"]))

16 >>> trace_inner = fn_inner.get_trace()

17 >>> trace_outer = fn_outer.get_trace()

18 >>> "a" in trace_inner

19 True

20 >>> "a" in trace_outer

21 False

22 >>> "b" in trace_inner

23 True

24 >>> "b" in trace_outer

25 True

26

27 BlockPoutine has a flexible interface that allows users

28 to specify in several different ways

29 which sites should be hidden or exposed.

30 See the constructor for details.

31 """

32

33 def __init__(self, fn,

34 hide_all=True, expose_all=False,

35 hide=None, expose=None,

36 hide_types=None, expose_types=None):

37 """

38 :param bool hide_all: hide all sites

39 :param bool expose_all: expose all sites normally

40 :param list hide: list of site names to hide, rest will be exposed normally

41 :param list expose: list of site names to expose, rest will be hidden

42 :param list hide_types: list of site types to hide, rest will be exposed normally

43 :param list expose_types: list of site types to expose normally, rest will be hidden

44

45 Constructor for blocking poutine

46 Default behavior: block everything (hide_all == True)

47

48 A site is hidden if at least one of the following holds:

49 1. msg["name"] in hide

50 2. msg["type"] in hide_types

51 3. msg["name"] not in expose and msg["type"] not in expose_types

52 4. hide_all == True

53 """

54 super(BlockPoutine, self).__init__(fn)

55 # first, some sanity checks:

56 # hide_all and expose_all intersect?

57 assert (hide_all is False and expose_all is False) or \

58 (hide_all != expose_all), "cannot hide and expose a site"

59

60 # hide and expose intersect?

61 if hide is None:

62 hide = []

63 else:

64 hide_all = False

65

66 if expose is None:

67 expose = []

68 assert set(hide).isdisjoint(set(expose)), \

69 "cannot hide and expose a site"

70

71 # hide_types and expose_types intersect?

72 if hide_types is None:

73 hide_types = []

74 if expose_types is None:

75 expose_types = []

76 assert set(hide_types).isdisjoint(set(expose_types)), \

77 "cannot hide and expose a site type"

78

79 # now set stuff

80 self.hide_all = hide_all

81 self.expose_all = expose_all

82 self.hide = hide

83 self.expose = expose

84 self.hide_types = hide_types

85 self.expose_types = expose_types

86

87 def _block_up(self, msg):

88 """

89 :param msg: current message at a trace site, after all execution finished.

90 :returns: boolean decision to hide or expose site.

91

92 A site is hidden if at least one of the following holds:

93 1. msg["name"] in self.hide

94 2. msg["type"] in self.hide_types

95 3. msg["name"] not in self.expose and msg["type"] not in self.expose_types

96 4. self.hide_all == True

97 """

98 # handle observes

99 if msg["type"] == "sample" and msg["is_observed"]:

100 msg_type = "observe"

101 else:

102 msg_type = msg["type"]

103

104 # decision rule for hiding:

105 if (msg["name"] in self.hide) or \

106 (msg_type in self.hide_types) or \

107 ((msg["name"] not in self.expose) and

108 (msg_type not in self.expose_types) and self.hide_all): # noqa: E129

109

110 return True

111 # otherwise expose

112 else:

113 return False

114

115 def _pyro_sample(self, msg):

116 """

117 :param msg: current message at a trace site

118 :returns: a sample from the stochastic function at the site.

119

120 Default sample behavior with a side effect.

121 Applies self._block_up to decide whether to hide the site.

122 """

123 ret = super(BlockPoutine, self)._pyro_sample(msg)

124 msg["stop"] = self._block_up(msg)

125 return ret

126

127 def _pyro_param(self, msg):

128 """

129 :param msg: current message at a trace site

130 :returns: the result of querying the parameter store.

131

132 Default param behavior with a side effect.

133 Applies self._block_up to decide whether to hide the site.

134 """

135 ret = super(BlockPoutine, self)._pyro_param(msg)

136 msg["stop"] = self._block_up(msg)

137 return ret

138

```

--- END FILES ---
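
As a rough illustration of the hiding rule in the `BlockPoutine` listing above, the sketch below restates the constructor defaults and the `_block_up` decision as one standalone function. The name `is_hidden` and the plain-dict messages are assumptions made for this example and are not part of Pyro; only the decision logic is taken from the listing as shown, so it reflects that listing's behavior and nothing beyond it.

```python
def is_hidden(msg, hide_all=True, hide=None, expose=None,
              hide_types=None, expose_types=None):
    """Return True if the site described by msg would be hidden."""
    # As in the listed constructor: passing `hide` switches hide_all off.
    if hide is None:
        hide = []
    else:
        hide_all = False
    expose = [] if expose is None else expose
    hide_types = [] if hide_types is None else hide_types
    expose_types = [] if expose_types is None else expose_types

    # Observed sample sites are treated as type "observe".
    if msg["type"] == "sample" and msg.get("is_observed", False):
        msg_type = "observe"
    else:
        msg_type = msg["type"]

    # Decision rule copied from the listed _block_up method.
    return (msg["name"] in hide
            or msg_type in hide_types
            or (msg["name"] not in expose
                and msg_type not in expose_types
                and hide_all))


if __name__ == "__main__":
    site_x = {"name": "x", "type": "sample", "is_observed": False}
    print(is_hidden(site_x))                # default arguments
    print(is_hidden(site_x, expose=["x"]))  # site named in expose
    print(is_hidden(site_x, hide=["a"]))    # only "a" requested hidden
```

With default arguments every site is hidden, which matches the empty guide trace described in the issue; naming a site in `expose` is what makes it visible.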

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/pyro/poutine/block_poutine.py b/pyro/poutine/block_poutine.py

--- a/pyro/poutine/block_poutine.py

+++ b/pyro/poutine/block_poutine.py

@@ -49,7 +49,7 @@

1. msg["name"] in hide

2. msg["type"] in hide_types

3. msg["name"] not in expose and msg["type"] not in expose_types

- 4. hide_all == True

+ 4. hide_all == True and hide, hide_types, and expose_types are all None

"""

super(BlockPoutine, self).__init__(fn)

# first, some sanity checks:

@@ -65,14 +65,23 @@

if expose is None:

expose = []

+ else:

+ hide_all = True

+

assert set(hide).isdisjoint(set(expose)), \

"cannot hide and expose a site"

# hide_types and expose_types intersect?

if hide_types is None:

hide_types = []

+ else:

+ hide_all = False

+

if expose_types is None:

expose_types = []

+ else:

+ hide_all = True

+

assert set(hide_types).isdisjoint(set(expose_types)), \

"cannot hide and expose a site type"

@@ -93,7 +102,7 @@

1. msg["name"] in self.hide

2. msg["type"] in self.hide_types

3. msg["name"] not in self.expose and msg["type"] not in self.expose_types

- 4. self.hide_all == True

+ 4. self.hide_all == True and hide, hide_types, and expose_types are all None

"""

# handle observes

if msg["type"] == "sample" and msg["is_observed"]:

@@ -101,11 +110,13 @@

else:

msg_type = msg["type"]

+ is_not_exposed = (msg["name"] not in self.expose) and \

+ (msg_type not in self.expose_types)

+

# decision rule for hiding:

if (msg["name"] in self.hide) or \

(msg_type in self.hide_types) or \

- ((msg["name"] not in self.expose) and

- (msg_type not in self.expose_types) and self.hide_all): # noqa: E129

+ (is_not_exposed and self.hide_all): # noqa: E129

return True

# otherwise expose

|

{"golden_diff": "diff --git a/pyro/poutine/block_poutine.py b/pyro/poutine/block_poutine.py\n--- a/pyro/poutine/block_poutine.py\n+++ b/pyro/poutine/block_poutine.py\n@@ -49,7 +49,7 @@\n 1. msg[\"name\"] in hide\n 2. msg[\"type\"] in hide_types\n 3. msg[\"name\"] not in expose and msg[\"type\"] not in expose_types\n- 4. hide_all == True\n+ 4. hide_all == True and hide, hide_types, and expose_types are all None\n \"\"\"\n super(BlockPoutine, self).__init__(fn)\n # first, some sanity checks:\n@@ -65,14 +65,23 @@\n \n if expose is None:\n expose = []\n+ else:\n+ hide_all = True\n+\n assert set(hide).isdisjoint(set(expose)), \\\n \"cannot hide and expose a site\"\n \n # hide_types and expose_types intersect?\n if hide_types is None:\n hide_types = []\n+ else:\n+ hide_all = False\n+\n if expose_types is None:\n expose_types = []\n+ else:\n+ hide_all = True\n+\n assert set(hide_types).isdisjoint(set(expose_types)), \\\n \"cannot hide and expose a site type\"\n \n@@ -93,7 +102,7 @@\n 1. msg[\"name\"] in self.hide\n 2. msg[\"type\"] in self.hide_types\n 3. msg[\"name\"] not in self.expose and msg[\"type\"] not in self.expose_types\n- 4. self.hide_all == True\n+ 4. self.hide_all == True and hide, hide_types, and expose_types are all None\n \"\"\"\n # handle observes\n if msg[\"type\"] == \"sample\" and msg[\"is_observed\"]:\n@@ -101,11 +110,13 @@\n else:\n msg_type = msg[\"type\"]\n \n+ is_not_exposed = (msg[\"name\"] not in self.expose) and \\\n+ (msg_type not in self.expose_types)\n+\n # decision rule for hiding:\n if (msg[\"name\"] in self.hide) or \\\n (msg_type in self.hide_types) or \\\n- ((msg[\"name\"] not in self.expose) and\n- (msg_type not in self.expose_types) and self.hide_all): # noqa: E129\n+ (is_not_exposed and self.hide_all): # noqa: E129\n \n return True\n # otherwise expose\n", "issue": "Default guide for `Importance` gives non-zero `log_weight`\nThe default `guide` in `Importance` blocks _all_ sites (and not just those observed). 
This leads to the `guide_trace` being empty (except for the input and return values). As a result, no samples are reused when replayed on the model _and_ the `guide_trace.log_pdf()` evaluates to `0.0`. The `log_weight` is then equal to the `model_trace.log_pdf()` (which is also evaluated on different samples), which I believe is unintended and incorrect.\r\n\r\nThe program below illustrates this for a simple univariate Gaussian, where my proposal and target distribution are identical and I would expect the log of the weights to be `0.0`. The latter is only the case when the sites are _explicitly_ exposed.\r\n\r\n```python\r\nimport torch\r\nfrom torch.autograd import Variable\r\n\r\nimport pyro\r\nfrom pyro import distributions as dist\r\nfrom pyro import infer\r\nfrom pyro import poutine\r\n\r\n\r\ndef gaussian():\r\n return pyro.sample('x', dist.normal,\r\n Variable(torch.Tensor([0.0])),\r\n Variable(torch.Tensor([1.0])))\r\n\r\n\r\n# Using `Importance` with the default `guide`, the `log_weight` is equal to the\r\n# `model_trace.log_pdf()`. 
That is, the `guide_trace.log_pdf()` (evaluated\r\n# internally) is incorrectly `0.0`.\r\nprint('importance_default_guide:')\r\nimportance_default_guide = infer.Importance(gaussian, num_samples=10)\r\nfor model_trace, log_weight in importance_default_guide._traces():\r\n model_trace_log_pdf = model_trace.log_pdf()\r\n are_equal = log_weight.data[0] == model_trace_log_pdf.data[0]\r\n print(log_weight.data[0], are_equal)\r\n\r\n# However, setting the `guide` to expose `x` ensures that it is replayed so\r\n# that the `log_weight` is exactly zero for each sample.\r\nprint('importance_exposed_guide:')\r\nimportance_exposed_guide = infer.Importance(\r\n gaussian,\r\n guide=poutine.block(gaussian, expose=['x']),\r\n num_samples=10)\r\nfor model_trace, log_weight in importance_exposed_guide._traces():\r\n print(log_weight.data[0])\r\n```\r\n```\r\nimportance_default_guide:\r\n-0.9368391633033752 True\r\n-1.3421428203582764 True\r\n-0.9189755320549011 True\r\n-2.1423826217651367 True\r\n-2.301940679550171 True\r\n-1.142196774482727 True\r\n-0.9449963569641113 True\r\n-2.7146053314208984 True\r\n-3.420013904571533 True\r\n-1.7994171380996704 True\r\nimportance_exposed_guide:\r\n0.0\r\n0.0\r\n0.0\r\n0.0\r\n0.0\r\n0.0\r\n0.0\r\n0.0\r\n0.0\r\n0.0\r\n```\n", "before_files": [{"content": "from __future__ import absolute_import, division, print_function\n\nfrom .poutine import Poutine\n\n\nclass BlockPoutine(Poutine):\n \"\"\"\n This Poutine selectively hides pyro primitive sites from the outside world.\n\n For example, suppose the stochastic function fn has two sample sites \"a\" and \"b\".\n Then any poutine outside of BlockPoutine(fn, hide=[\"a\"])\n will not be applied to site \"a\" and will only see site \"b\":\n\n >>> fn_inner = TracePoutine(fn)\n >>> fn_outer = TracePoutine(BlockPoutine(TracePoutine(fn), hide=[\"a\"]))\n >>> trace_inner = fn_inner.get_trace()\n >>> trace_outer = fn_outer.get_trace()\n >>> \"a\" in trace_inner\n True\n >>> \"a\" in trace_outer\n False\n >>> 
\"b\" in trace_inner\n True\n >>> \"b\" in trace_outer\n True\n\n BlockPoutine has a flexible interface that allows users\n to specify in several different ways\n which sites should be hidden or exposed.\n See the constructor for details.\n \"\"\"\n\n def __init__(self, fn,\n hide_all=True, expose_all=False,\n hide=None, expose=None,\n hide_types=None, expose_types=None):\n \"\"\"\n :param bool hide_all: hide all sites\n :param bool expose_all: expose all sites normally\n :param list hide: list of site names to hide, rest will be exposed normally\n :param list expose: list of site names to expose, rest will be hidden\n :param list hide_types: list of site types to hide, rest will be exposed normally\n :param list expose_types: list of site types to expose normally, rest will be hidden\n\n Constructor for blocking poutine\n Default behavior: block everything (hide_all == True)\n\n A site is hidden if at least one of the following holds:\n 1. msg[\"name\"] in hide\n 2. msg[\"type\"] in hide_types\n 3. msg[\"name\"] not in expose and msg[\"type\"] not in expose_types\n 4. 
hide_all == True\n \"\"\"\n super(BlockPoutine, self).__init__(fn)\n # first, some sanity checks:\n # hide_all and expose_all intersect?\n assert (hide_all is False and expose_all is False) or \\\n (hide_all != expose_all), \"cannot hide and expose a site\"\n\n # hide and expose intersect?\n if hide is None:\n hide = []\n else:\n hide_all = False\n\n if expose is None:\n expose = []\n assert set(hide).isdisjoint(set(expose)), \\\n \"cannot hide and expose a site\"\n\n # hide_types and expose_types intersect?\n if hide_types is None:\n hide_types = []\n if expose_types is None:\n expose_types = []\n assert set(hide_types).isdisjoint(set(expose_types)), \\\n \"cannot hide and expose a site type\"\n\n # now set stuff\n self.hide_all = hide_all\n self.expose_all = expose_all\n self.hide = hide\n self.expose = expose\n self.hide_types = hide_types\n self.expose_types = expose_types\n\n def _block_up(self, msg):\n \"\"\"\n :param msg: current message at a trace site, after all execution finished.\n :returns: boolean decision to hide or expose site.\n\n A site is hidden if at least one of the following holds:\n 1. msg[\"name\"] in self.hide\n 2. msg[\"type\"] in self.hide_types\n 3. msg[\"name\"] not in self.expose and msg[\"type\"] not in self.expose_types\n 4. 
self.hide_all == True\n \"\"\"\n # handle observes\n if msg[\"type\"] == \"sample\" and msg[\"is_observed\"]:\n msg_type = \"observe\"\n else:\n msg_type = msg[\"type\"]\n\n # decision rule for hiding:\n if (msg[\"name\"] in self.hide) or \\\n (msg_type in self.hide_types) or \\\n ((msg[\"name\"] not in self.expose) and\n (msg_type not in self.expose_types) and self.hide_all): # noqa: E129\n\n return True\n # otherwise expose\n else:\n return False\n\n def _pyro_sample(self, msg):\n \"\"\"\n :param msg: current message at a trace site\n :returns: a sample from the stochastic function at the site.\n\n Default sample behavior with a side effect.\n Applies self._block_up to decide whether to hide the site.\n \"\"\"\n ret = super(BlockPoutine, self)._pyro_sample(msg)\n msg[\"stop\"] = self._block_up(msg)\n return ret\n\n def _pyro_param(self, msg):\n \"\"\"\n :param msg: current message at a trace site\n :returns: the result of querying the parameter store.\n\n Default param behavior with a side effect.\n Applies self._block_up to decide whether to hide the site.\n \"\"\"\n ret = super(BlockPoutine, self)._pyro_param(msg)\n msg[\"stop\"] = self._block_up(msg)\n return ret\n", "path": "pyro/poutine/block_poutine.py"}], "after_files": [{"content": "from __future__ import absolute_import, division, print_function\n\nfrom .poutine import Poutine\n\n\nclass BlockPoutine(Poutine):\n \"\"\"\n This Poutine selectively hides pyro primitive sites from the outside world.\n\n For example, suppose the stochastic function fn has two sample sites \"a\" and \"b\".\n Then any poutine outside of BlockPoutine(fn, hide=[\"a\"])\n will not be applied to site \"a\" and will only see site \"b\":\n\n >>> fn_inner = TracePoutine(fn)\n >>> fn_outer = TracePoutine(BlockPoutine(TracePoutine(fn), hide=[\"a\"]))\n >>> trace_inner = fn_inner.get_trace()\n >>> trace_outer = fn_outer.get_trace()\n >>> \"a\" in trace_inner\n True\n >>> \"a\" in trace_outer\n False\n >>> \"b\" in trace_inner\n True\n 
>>> \"b\" in trace_outer\n True\n\n BlockPoutine has a flexible interface that allows users\n to specify in several different ways\n which sites should be hidden or exposed.\n See the constructor for details.\n \"\"\"\n\n def __init__(self, fn,\n hide_all=True, expose_all=False,\n hide=None, expose=None,\n hide_types=None, expose_types=None):\n \"\"\"\n :param bool hide_all: hide all sites\n :param bool expose_all: expose all sites normally\n :param list hide: list of site names to hide, rest will be exposed normally\n :param list expose: list of site names to expose, rest will be hidden\n :param list hide_types: list of site types to hide, rest will be exposed normally\n :param list expose_types: list of site types to expose normally, rest will be hidden\n\n Constructor for blocking poutine\n Default behavior: block everything (hide_all == True)\n\n A site is hidden if at least one of the following holds:\n 1. msg[\"name\"] in hide\n 2. msg[\"type\"] in hide_types\n 3. msg[\"name\"] not in expose and msg[\"type\"] not in expose_types\n 4. 
hide_all == True and hide, hide_types, and expose_types are all None\n \"\"\"\n super(BlockPoutine, self).__init__(fn)\n # first, some sanity checks:\n # hide_all and expose_all intersect?\n assert (hide_all is False and expose_all is False) or \\\n (hide_all != expose_all), \"cannot hide and expose a site\"\n\n # hide and expose intersect?\n if hide is None:\n hide = []\n else:\n hide_all = False\n\n if expose is None:\n expose = []\n else:\n hide_all = True\n\n assert set(hide).isdisjoint(set(expose)), \\\n \"cannot hide and expose a site\"\n\n # hide_types and expose_types intersect?\n if hide_types is None:\n hide_types = []\n else:\n hide_all = False\n\n if expose_types is None:\n expose_types = []\n else:\n hide_all = True\n\n assert set(hide_types).isdisjoint(set(expose_types)), \\\n \"cannot hide and expose a site type\"\n\n # now set stuff\n self.hide_all = hide_all\n self.expose_all = expose_all\n self.hide = hide\n self.expose = expose\n self.hide_types = hide_types\n self.expose_types = expose_types\n\n def _block_up(self, msg):\n \"\"\"\n :param msg: current message at a trace site, after all execution finished.\n :returns: boolean decision to hide or expose site.\n\n A site is hidden if at least one of the following holds:\n 1. msg[\"name\"] in self.hide\n 2. msg[\"type\"] in self.hide_types\n 3. msg[\"name\"] not in self.expose and msg[\"type\"] not in self.expose_types\n 4. 
self.hide_all == True and hide, hide_types, and expose_types are all None\n \"\"\"\n # handle observes\n if msg[\"type\"] == \"sample\" and msg[\"is_observed\"]:\n msg_type = \"observe\"\n else:\n msg_type = msg[\"type\"]\n\n is_not_exposed = (msg[\"name\"] not in self.expose) and \\\n (msg_type not in self.expose_types)\n\n # decision rule for hiding:\n if (msg[\"name\"] in self.hide) or \\\n (msg_type in self.hide_types) or \\\n (is_not_exposed and self.hide_all): # noqa: E129\n\n return True\n # otherwise expose\n else:\n return False\n\n def _pyro_sample(self, msg):\n \"\"\"\n :param msg: current message at a trace site\n :returns: a sample from the stochastic function at the site.\n\n Default sample behavior with a side effect.\n Applies self._block_up to decide whether to hide the site.\n \"\"\"\n ret = super(BlockPoutine, self)._pyro_sample(msg)\n msg[\"stop\"] = self._block_up(msg)\n return ret\n\n def _pyro_param(self, msg):\n \"\"\"\n :param msg: current message at a trace site\n :returns: the result of querying the parameter store.\n\n Default param behavior with a side effect.\n Applies self._block_up to decide whether to hide the site.\n \"\"\"\n ret = super(BlockPoutine, self)._pyro_param(msg)\n msg[\"stop\"] = self._block_up(msg)\n return ret\n", "path": "pyro/poutine/block_poutine.py"}]}

| 2,453 | 583 |

gh_patches_debug_65907

|

rasdani/github-patches

|

git_diff

|

ipython__ipython-3338

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

find_cmd test failure on Windows

I think this is caused by #3301. The [Windows implementation of find_cmd](https://github.com/ipython/ipython/blob/master/IPython/utils/_process_win32.py#L74) expects a command name without an extension, but the test now uses 'python.exe'.

I think that 'python.exe' is a valid command on Windows, so I think we should modify `find_cmd` to allow passing a command with an extension. Alternatively, we could modify the test to strip the extension.

```

======================================================================

ERROR: Make sure we find sys.exectable for python.

----------------------------------------------------------------------

Traceback (most recent call last):

File "S:\Users\slave\Jenkins\shiningpanda\jobs\d5f643a2\virtualenvs\ff035a1d\lib\site-packages\nose\case.py", line 197, in runTest

self.test(*self.arg)

File "S:\Users\slave\Jenkins\shiningpanda\jobs\d5f643a2\virtualenvs\ff035a1d\lib\site-packages\ipython-1.0.dev-py2.7.egg\IPython\utils\tests\test_process.py", line 36, in test_find_cmd_python

nt.assert_equal(find_cmd(python), sys.executable)

File "S:\Users\slave\Jenkins\shiningpanda\jobs\d5f643a2\virtualenvs\ff035a1d\lib\site-packages\ipython-1.0.dev-py2.7.egg\IPython\utils\process.py", line 67, in find_cmd

raise FindCmdError('command could not be found: %s' % cmd)

FindCmdError: command could not be found: python.exe

```

--- END ISSUE ---
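
The first option raised in the issue, letting `find_cmd` accept a command that already carries an extension, can be sketched as below. This is a minimal illustration, not necessarily the change that was adopted: `candidate_names` and `KNOWN_EXTENSIONS` are hypothetical names introduced here, and the extension list is simply copied from the `_find_cmd` listing that follows. The idea is to search for the name as given when it already ends in a known extension, and to append each extension in turn otherwise.

```python
import os

# Extensions the Windows lookup tries, copied from the listing in this prompt.
KNOWN_EXTENSIONS = ['.exe', '.com', '.bat', '.py']


def candidate_names(cmd, extensions=KNOWN_EXTENSIONS):
    """Return the file names to search for, in order (hypothetical helper)."""
    _, ext = os.path.splitext(cmd)
    if ext.lower() in extensions:
        # The caller already supplied an extension, e.g. 'python.exe',
        # so search for the name unchanged.
        return [cmd]
    # No recognised extension: try each known extension in turn.
    return [cmd + e for e in extensions]
```

Each name returned by such a helper could then be passed to the search call in place of `cmd + ext`, so a bare `python` and an explicit `python.exe` would both be looked up under a valid file name.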

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `IPython/utils/_process_win32.py`

Content:

```

1 """Windows-specific implementation of process utilities.

2

3 This file is only meant to be imported by process.py, not by end-users.

4 """

5

6 #-----------------------------------------------------------------------------

7 # Copyright (C) 2010-2011 The IPython Development Team

8 #

9 # Distributed under the terms of the BSD License. The full license is in

10 # the file COPYING, distributed as part of this software.

11 #-----------------------------------------------------------------------------

12

13 #-----------------------------------------------------------------------------

14 # Imports

15 #-----------------------------------------------------------------------------

16 from __future__ import print_function

17

18 # stdlib

19 import os

20 import sys

21 import ctypes

22 import msvcrt

23

24 from ctypes import c_int, POINTER

25 from ctypes.wintypes import LPCWSTR, HLOCAL

26 from subprocess import STDOUT

27

28 # our own imports

29 from ._process_common import read_no_interrupt, process_handler, arg_split as py_arg_split

30 from . import py3compat

31 from .encoding import DEFAULT_ENCODING

32

33 #-----------------------------------------------------------------------------

34 # Function definitions

35 #-----------------------------------------------------------------------------

36

37 class AvoidUNCPath(object):

38 """A context manager to protect command execution from UNC paths.

39

40 In the Win32 API, commands can't be invoked with the cwd being a UNC path.

41 This context manager temporarily changes directory to the 'C:' drive on

42 entering, and restores the original working directory on exit.

43

44 The context manager returns the starting working directory *if* it made a

45 change and None otherwise, so that users can apply the necessary adjustment

46 to their system calls in the event of a change.

47

48 Example

49 -------

50 ::

51 cmd = 'dir'

52 with AvoidUNCPath() as path:

53 if path is not None:

54 cmd = '"pushd %s &&"%s' % (path, cmd)

55 os.system(cmd)

56 """

57 def __enter__(self):

58 self.path = os.getcwdu()

59 self.is_unc_path = self.path.startswith(r"\\")

60 if self.is_unc_path:

61 # change to c drive (as cmd.exe cannot handle UNC addresses)

62 os.chdir("C:")

63 return self.path

64 else:

65 # We return None to signal that there was no change in the working

66 # directory

67 return None

68

69 def __exit__(self, exc_type, exc_value, traceback):

70 if self.is_unc_path:

71 os.chdir(self.path)

72

73

74 def _find_cmd(cmd):

75 """Find the full path to a .bat or .exe using the win32api module."""

76 try:

77 from win32api import SearchPath

78 except ImportError:

79 raise ImportError('you need to have pywin32 installed for this to work')

80 else:

81 PATH = os.environ['PATH']

82 extensions = ['.exe', '.com', '.bat', '.py']

83 path = None

84 for ext in extensions:

85 try:

86 path = SearchPath(PATH, cmd + ext)[0]

87 except:

88 pass

89 if path is None:

90 raise OSError("command %r not found" % cmd)

91 else:

92 return path

93

94