| problem_id (string, length 18–22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, length 13–58) | prompt (string, length 1.1k–25.4k) | golden_diff (string, length 145–5.13k) | verification_info (string, length 582–39.1k) | num_tokens (int64, 271–4.1k) | num_tokens_diff (int64, 47–1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_20873 | rasdani/github-patches | git_diff | GeotrekCE__Geotrek-admin-2223 |

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Fix translations in package

The compilemessages step for geotrek and mapentity is missing somewhere

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

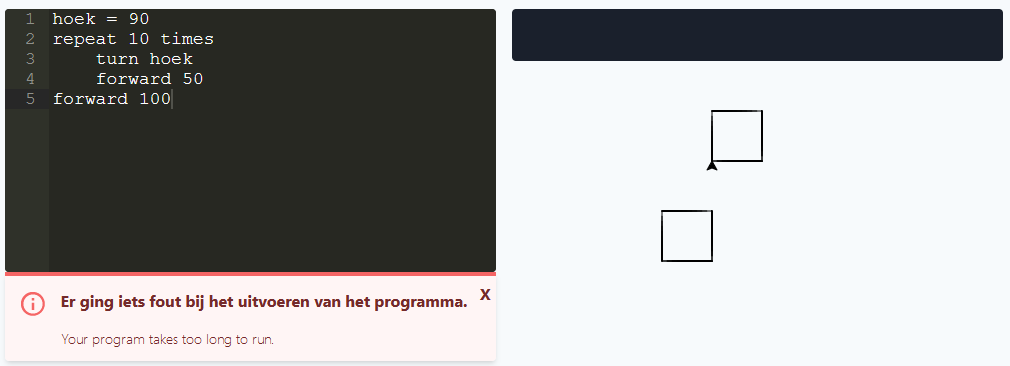

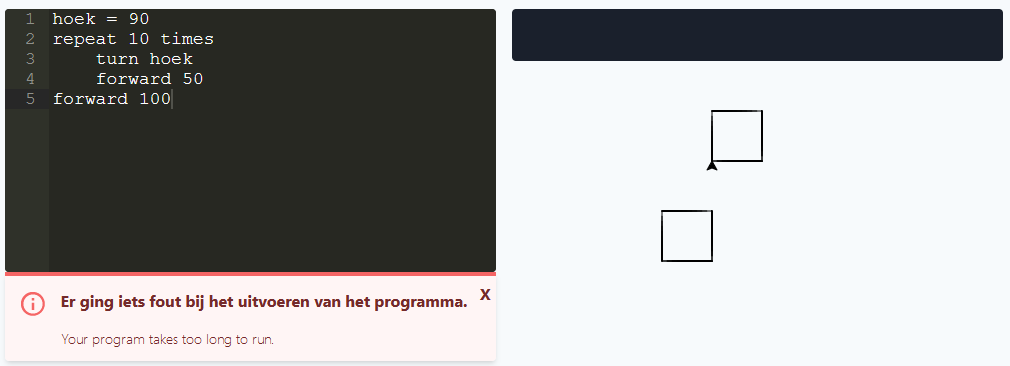

Path: `setup.py`

Content:

```
1 #!/usr/bin/python3
2 import os
3 import distutils.command.build
4 from setuptools import setup, find_packages
5
6 here = os.path.abspath(os.path.dirname(__file__))
7
8
9 class BuildCommand(distutils.command.build.build):
10     def run(self):
11         print("before")
12         distutils.command.build.build.run(self)
13         print("after")
14         from django.core.management import call_command
15         curdir = os.getcwd()
16         os.chdir(os.path.join(curdir, 'geotrek'))
17         call_command('compilemessages')
18         os.chdir(os.path.join(curdir, 'mapentity'))
19         call_command('compilemessages')
20         os.chdir(curdir)
21
22
23 setup(
24     name='geotrek',
25     version=open(os.path.join(here, 'VERSION')).read().strip(),
26     author='Makina Corpus',
27     author_email='[email protected]',
28     url='http://makina-corpus.com',
29     description="Geotrek",
30     long_description=(open(os.path.join(here, 'README.rst')).read() + '\n\n'
31                       + open(os.path.join(here, 'docs', 'changelog.rst')).read()),
32     scripts=['manage.py'],
33     install_requires=[
34         # pinned by requirements.txt
35         'psycopg2',
36         'docutils',
37         'GDAL',
38         'Pillow',
39         'easy-thumbnails',
40         'simplekml',
41         'pygal',
42         'django-extended-choices',
43         'django-multiselectfield',
44         'geojson',
45         'tif2geojson',
46         'pytz',
47         'djangorestframework-gis',
48         'drf-dynamic-fields',
49         'django-rest-swagger',
50         'django-embed-video',
51         'xlrd',
52         'landez',
53         'redis',
54         'celery',
55         'django-celery-results',
56         'requests[security]',
57         'drf-extensions',
58         'django-colorfield',
59         'factory_boy',
60     ],
61     cmdclass={"build": BuildCommand},
62     include_package_data=True,
63     license='BSD, see LICENSE file.',
64     packages=find_packages(),
65     classifiers=['Natural Language :: English',
66                  'Environment :: Web Environment',
67                  'Framework :: Django',
68                  'Development Status :: 5 - Production/Stable',
69                  'Programming Language :: Python :: 2.7'],
70 )
71
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

```diff
diff --git a/setup.py b/setup.py
--- a/setup.py
+++ b/setup.py
@@ -1,23 +1,24 @@
 #!/usr/bin/python3
 import os
 import distutils.command.build
+from pathlib import Path
 from setuptools import setup, find_packages
+from shutil import copy
 
 here = os.path.abspath(os.path.dirname(__file__))
 
 
 class BuildCommand(distutils.command.build.build):
     def run(self):
-        print("before")
         distutils.command.build.build.run(self)
-        print("after")
         from django.core.management import call_command
         curdir = os.getcwd()
-        os.chdir(os.path.join(curdir, 'geotrek'))
-        call_command('compilemessages')
-        os.chdir(os.path.join(curdir, 'mapentity'))
-        call_command('compilemessages')
-        os.chdir(curdir)
+        for subdir in ('geotrek', 'mapentity'):
+            os.chdir(subdir)
+            call_command('compilemessages')
+            for path in Path('.').rglob('*.mo'):
+                copy(path, os.path.join(curdir, self.build_lib, subdir, path))
+            os.chdir(curdir)
 
 
 setup(
```

|

{"golden_diff": "diff --git a/setup.py b/setup.py\n--- a/setup.py\n+++ b/setup.py\n@@ -1,23 +1,24 @@\n #!/usr/bin/python3\n import os\n import distutils.command.build\n+from pathlib import Path\n from setuptools import setup, find_packages\n+from shutil import copy\n \n here = os.path.abspath(os.path.dirname(__file__))\n \n \n class BuildCommand(distutils.command.build.build):\n def run(self):\n- print(\"before\")\n distutils.command.build.build.run(self)\n- print(\"after\")\n from django.core.management import call_command\n curdir = os.getcwd()\n- os.chdir(os.path.join(curdir, 'geotrek'))\n- call_command('compilemessages')\n- os.chdir(os.path.join(curdir, 'mapentity'))\n- call_command('compilemessages')\n- os.chdir(curdir)\n+ for subdir in ('geotrek', 'mapentity'):\n+ os.chdir(subdir)\n+ call_command('compilemessages')\n+ for path in Path('.').rglob('*.mo'):\n+ copy(path, os.path.join(curdir, self.build_lib, subdir, path))\n+ os.chdir(curdir)\n \n \n setup(\n", "issue": "Fix translations in package\nThe compilemessages step for geotrek and mapentity is missing somewhere\n", "before_files": [{"content": "#!/usr/bin/python3\nimport os\nimport distutils.command.build\nfrom setuptools import setup, find_packages\n\nhere = os.path.abspath(os.path.dirname(__file__))\n\n\nclass BuildCommand(distutils.command.build.build):\n def run(self):\n print(\"before\")\n distutils.command.build.build.run(self)\n print(\"after\")\n from django.core.management import call_command\n curdir = os.getcwd()\n os.chdir(os.path.join(curdir, 'geotrek'))\n call_command('compilemessages')\n os.chdir(os.path.join(curdir, 'mapentity'))\n call_command('compilemessages')\n os.chdir(curdir)\n\n\nsetup(\n name='geotrek',\n version=open(os.path.join(here, 'VERSION')).read().strip(),\n author='Makina Corpus',\n author_email='[email protected]',\n url='http://makina-corpus.com',\n description=\"Geotrek\",\n long_description=(open(os.path.join(here, 'README.rst')).read() + '\\n\\n'\n + 
open(os.path.join(here, 'docs', 'changelog.rst')).read()),\n scripts=['manage.py'],\n install_requires=[\n # pinned by requirements.txt\n 'psycopg2',\n 'docutils',\n 'GDAL',\n 'Pillow',\n 'easy-thumbnails',\n 'simplekml',\n 'pygal',\n 'django-extended-choices',\n 'django-multiselectfield',\n 'geojson',\n 'tif2geojson',\n 'pytz',\n 'djangorestframework-gis',\n 'drf-dynamic-fields',\n 'django-rest-swagger',\n 'django-embed-video',\n 'xlrd',\n 'landez',\n 'redis',\n 'celery',\n 'django-celery-results',\n 'requests[security]',\n 'drf-extensions',\n 'django-colorfield',\n 'factory_boy',\n ],\n cmdclass={\"build\": BuildCommand},\n include_package_data=True,\n license='BSD, see LICENSE file.',\n packages=find_packages(),\n classifiers=['Natural Language :: English',\n 'Environment :: Web Environment',\n 'Framework :: Django',\n 'Development Status :: 5 - Production/Stable',\n 'Programming Language :: Python :: 2.7'],\n)\n", "path": "setup.py"}], "after_files": [{"content": "#!/usr/bin/python3\nimport os\nimport distutils.command.build\nfrom pathlib import Path\nfrom setuptools import setup, find_packages\nfrom shutil import copy\n\nhere = os.path.abspath(os.path.dirname(__file__))\n\n\nclass BuildCommand(distutils.command.build.build):\n def run(self):\n distutils.command.build.build.run(self)\n from django.core.management import call_command\n curdir = os.getcwd()\n for subdir in ('geotrek', 'mapentity'):\n os.chdir(subdir)\n call_command('compilemessages')\n for path in Path('.').rglob('*.mo'):\n copy(path, os.path.join(curdir, self.build_lib, subdir, path))\n os.chdir(curdir)\n\n\nsetup(\n name='geotrek',\n version=open(os.path.join(here, 'VERSION')).read().strip(),\n author='Makina Corpus',\n author_email='[email protected]',\n url='http://makina-corpus.com',\n description=\"Geotrek\",\n long_description=(open(os.path.join(here, 'README.rst')).read() + '\\n\\n'\n + open(os.path.join(here, 'docs', 'changelog.rst')).read()),\n scripts=['manage.py'],\n 
install_requires=[\n # pinned by requirements.txt\n 'psycopg2',\n 'docutils',\n 'GDAL',\n 'Pillow',\n 'easy-thumbnails',\n 'simplekml',\n 'pygal',\n 'django-extended-choices',\n 'django-multiselectfield',\n 'geojson',\n 'tif2geojson',\n 'pytz',\n 'djangorestframework-gis',\n 'drf-dynamic-fields',\n 'django-rest-swagger',\n 'django-embed-video',\n 'xlrd',\n 'landez',\n 'redis',\n 'celery',\n 'django-celery-results',\n 'requests[security]',\n 'drf-extensions',\n 'django-colorfield',\n 'factory_boy',\n ],\n cmdclass={\"build\": BuildCommand},\n include_package_data=True,\n license='BSD, see LICENSE file.',\n packages=find_packages(),\n classifiers=['Natural Language :: English',\n 'Environment :: Web Environment',\n 'Framework :: Django',\n 'Development Status :: 5 - Production/Stable',\n 'Programming Language :: Python :: 2.7'],\n)\n", "path": "setup.py"}]}

| 898 | 255 |
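In the golden patch for this row, `compilemessages` still runs after `distutils.command.build.build.run(self)`. That call appears to have already copied the package files into `self.build_lib`, so the `.mo` catalogs generated afterwards stay in the source tree unless they are copied over too.

The sketch below isolates that copy step. It is a minimal illustration under stated assumptions, not the patch itself:

- The helper name `copy_compiled_catalogs` is hypothetical.
- It resolves paths from an explicit source root instead of calling `os.chdir`.
- It skips app directories that do not exist.
- It creates missing target directories, which the patch does not do.

```python
import shutil
from pathlib import Path


def copy_compiled_catalogs(src_root, build_lib, subdirs):
    """Copy every compiled gettext catalog (*.mo) found under
    src_root/<subdir> to the same relative location under
    build_lib/<subdir>.

    Hypothetical helper illustrating the copy loop from the patch.
    Returns the list of destination paths that were written.
    """
    copied = []
    for subdir in subdirs:
        src_dir = Path(src_root) / subdir
        if not src_dir.is_dir():
            # Nothing to copy for an app directory that does not exist
            continue
        for mo_file in src_dir.rglob("*.mo"):
            # Keep the path relative to the app directory,
            # e.g. locale/fr/LC_MESSAGES/django.mo
            relative = mo_file.relative_to(src_dir)
            target = Path(build_lib) / subdir / relative
            target.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy(mo_file, target)
            copied.append(target)
    return copied
```

One possible way to wire it into a `build` subclass would be to call `copy_compiled_catalogs(os.getcwd(), self.build_lib, ('geotrek', 'mapentity'))` after `compilemessages` has run. That wiring is also an assumption.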

gh_patches_debug_26330 | rasdani/github-patches | git_diff | streamlink__streamlink-1583 |

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Vaughnlive changed IP's to break Streamlink

This will be a very brief bug report... As of tonight the head vaughnlive.py references IPs which were disconnected by vaughn to thwart streamlinking. I've observed vaughn serving video now from "66.90.93.44","66.90.93.35" and have personally gotten it to work overwriting the IP's in rtmp_server_map with those two alternating. I would submit the commit but I think some more testing is needed as I only use streamlink with one occasional stream and don't know how far those IPs will get more frequent SL users.

#1187 contains lengthy discussion on the history of the war vaughn has waged against streamlink, this is probably not the last time the IPs will change.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `src/streamlink/plugins/vaughnlive.py`

Content:

```
1 import random
2 import re
3 import itertools
4 import ssl
5 import websocket
6
7 from streamlink.plugin import Plugin
8 from streamlink.plugin.api import useragents, http
9 from streamlink.stream import RTMPStream
10
11 _url_re = re.compile(r"""
12     http(s)?://(\w+\.)?
13     (?P<domain>vaughnlive|breakers|instagib|vapers|pearltime).tv
14     (/embed/video)?
15     /(?P<channel>[^/&?]+)
16 """, re.VERBOSE)
17
18
19 class VLWebSocket(websocket.WebSocket):
20     def __init__(self, **_):
21         self.session = _.pop("session")
22         self.logger = self.session.logger.new_module("plugins.vaughnlive.websocket")
23         sslopt = _.pop("sslopt", {})
24         sslopt["cert_reqs"] = ssl.CERT_NONE
25         super(VLWebSocket, self).__init__(sslopt=sslopt, **_)
26
27     def send(self, payload, opcode=websocket.ABNF.OPCODE_TEXT):
28         self.logger.debug("Sending message: {0}", payload)
29         return super(VLWebSocket, self).send(payload + "\n\x00", opcode)
30
31     def recv(self):
32         d = super(VLWebSocket, self).recv().replace("\n", "").replace("\x00", "")
33         return d.split(" ", 1)
34
35
36 class VaughnLive(Plugin):
37     servers = ["wss://sapi-ws-{0}x{1:02}.vaughnlive.tv".format(x, y) for x, y in itertools.product(range(1, 3),
38                                                                                                    range(1, 6))]
39     origin = "https://vaughnlive.tv"
40     rtmp_server_map = {
41         "594140c69edad": "66.90.93.42",
42         "585c4cab1bef1": "66.90.93.34",
43         "5940d648b3929": "66.90.93.42",
44         "5941854b39bc4": "198.255.0.10"
45     }
46     name_remap = {"#vl": "live", "#btv": "btv", "#pt": "pt", "#igb": "instagib", "#vtv": "vtv"}
47     domain_map = {"vaughnlive": "#vl", "breakers": "#btv", "instagib": "#igb", "vapers": "#vtv", "pearltime": "#pt"}
48
49     @classmethod
50     def can_handle_url(cls, url):
51         return _url_re.match(url)
52
53     def api_url(self):
54         return random.choice(self.servers)
55
56     def parse_ack(self, action, message):
57         if action.endswith("3"):
58             channel, _, viewers, token, server, choked, is_live, chls, trns, ingest = message.split(";")
59             is_live = is_live == "1"
60             viewers = int(viewers)
61             self.logger.debug("Viewers: {0}, isLive={1}", viewers, is_live)
62             domain, channel = channel.split("-", 1)
63             return is_live, server, domain, channel, token, ingest
64         else:
65             self.logger.error("Unhandled action format: {0}", action)
66
67     def _get_info(self, stream_name):
68         server = self.api_url()
69         self.logger.debug("Connecting to API: {0}", server)
70         ws = websocket.create_connection(server,
71                                          header=["User-Agent: {0}".format(useragents.CHROME)],
72                                          origin=self.origin,
73                                          class_=VLWebSocket,
74                                          session=self.session)
75         ws.send("MVN LOAD3 {0}".format(stream_name))
76         action, message = ws.recv()
77         return self.parse_ack(action, message)
78
79     def _get_rtmp_streams(self, server, domain, channel, token):
80         rtmp_server = self.rtmp_server_map.get(server, server)
81
82         url = "rtmp://{0}/live?{1}".format(rtmp_server, token)
83
84         yield "live", RTMPStream(self.session, params={
85             "rtmp": url,
86             "pageUrl": self.url,
87             "playpath": "{0}_{1}".format(self.name_remap.get(domain, "live"), channel),
88             "live": True
89         })
90
91     def _get_streams(self):
92         m = _url_re.match(self.url)
93         if m:
94             stream_name = "{0}-{1}".format(self.domain_map[(m.group("domain").lower())],
95                                            m.group("channel"))
96
97             is_live, server, domain, channel, token, ingest = self._get_info(stream_name)
98
99             if not is_live:
100                 self.logger.info("Stream is currently off air")
101             else:
102                 for s in self._get_rtmp_streams(server, domain, channel, token):
103                     yield s
104
105
106 __plugin__ = VaughnLive
107
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

```diff
diff --git a/src/streamlink/plugins/vaughnlive.py b/src/streamlink/plugins/vaughnlive.py
--- a/src/streamlink/plugins/vaughnlive.py
+++ b/src/streamlink/plugins/vaughnlive.py
@@ -38,10 +38,10 @@
                                                                                                     range(1, 6))]
     origin = "https://vaughnlive.tv"
     rtmp_server_map = {
-        "594140c69edad": "66.90.93.42",
-        "585c4cab1bef1": "66.90.93.34",
-        "5940d648b3929": "66.90.93.42",
-        "5941854b39bc4": "198.255.0.10"
+        "594140c69edad": "192.240.105.171:1935",
+        "585c4cab1bef1": "192.240.105.171:1935",
+        "5940d648b3929": "192.240.105.171:1935",
+        "5941854b39bc4": "192.240.105.171:1935"
     }
     name_remap = {"#vl": "live", "#btv": "btv", "#pt": "pt", "#igb": "instagib", "#vtv": "vtv"}
     domain_map = {"vaughnlive": "#vl", "breakers": "#btv", "instagib": "#igb", "vapers": "#vtv", "pearltime": "#pt"}
@@ -99,6 +99,7 @@
             if not is_live:
                 self.logger.info("Stream is currently off air")
             else:
+                self.logger.info("Stream powered by VaughnSoft - remember to support them.")
                 for s in self._get_rtmp_streams(server, domain, channel, token):
                     yield s
```

|

{"golden_diff": "diff --git a/src/streamlink/plugins/vaughnlive.py b/src/streamlink/plugins/vaughnlive.py\n--- a/src/streamlink/plugins/vaughnlive.py\n+++ b/src/streamlink/plugins/vaughnlive.py\n@@ -38,10 +38,10 @@\n range(1, 6))]\n origin = \"https://vaughnlive.tv\"\n rtmp_server_map = {\n- \"594140c69edad\": \"66.90.93.42\",\n- \"585c4cab1bef1\": \"66.90.93.34\",\n- \"5940d648b3929\": \"66.90.93.42\",\n- \"5941854b39bc4\": \"198.255.0.10\"\n+ \"594140c69edad\": \"192.240.105.171:1935\",\n+ \"585c4cab1bef1\": \"192.240.105.171:1935\",\n+ \"5940d648b3929\": \"192.240.105.171:1935\",\n+ \"5941854b39bc4\": \"192.240.105.171:1935\"\n }\n name_remap = {\"#vl\": \"live\", \"#btv\": \"btv\", \"#pt\": \"pt\", \"#igb\": \"instagib\", \"#vtv\": \"vtv\"}\n domain_map = {\"vaughnlive\": \"#vl\", \"breakers\": \"#btv\", \"instagib\": \"#igb\", \"vapers\": \"#vtv\", \"pearltime\": \"#pt\"}\n@@ -99,6 +99,7 @@\n if not is_live:\n self.logger.info(\"Stream is currently off air\")\n else:\n+ self.logger.info(\"Stream powered by VaughnSoft - remember to support them.\")\n for s in self._get_rtmp_streams(server, domain, channel, token):\n yield s\n", "issue": "Vaughnlive changed IP's to break Streamlink\nThis will be a very brief bug report... As of tonight the head vaughnlive.py references IPs which were disconnected by vaughn to thwart streamlinking. I've observed vaughn serving video now from \"66.90.93.44\",\"66.90.93.35\" and have personally gotten it to work overwriting the IP's in rtmp_server_map with those two alternating. 
I would submit the commit but I think some more testing is needed as I only use streamlink with one occasional stream and don't know how far those IPs will get more frequent SL users.\r\n\r\n #1187 contains lengthy discussion on the history of the war vaughn has waged against streamlink, this is probably not the last time the IPs will change.\n", "before_files": [{"content": "import random\nimport re\nimport itertools\nimport ssl\nimport websocket\n\nfrom streamlink.plugin import Plugin\nfrom streamlink.plugin.api import useragents, http\nfrom streamlink.stream import RTMPStream\n\n_url_re = re.compile(r\"\"\"\n http(s)?://(\\w+\\.)?\n (?P<domain>vaughnlive|breakers|instagib|vapers|pearltime).tv\n (/embed/video)?\n /(?P<channel>[^/&?]+)\n\"\"\", re.VERBOSE)\n\n\nclass VLWebSocket(websocket.WebSocket):\n def __init__(self, **_):\n self.session = _.pop(\"session\")\n self.logger = self.session.logger.new_module(\"plugins.vaughnlive.websocket\")\n sslopt = _.pop(\"sslopt\", {})\n sslopt[\"cert_reqs\"] = ssl.CERT_NONE\n super(VLWebSocket, self).__init__(sslopt=sslopt, **_)\n\n def send(self, payload, opcode=websocket.ABNF.OPCODE_TEXT):\n self.logger.debug(\"Sending message: {0}\", payload)\n return super(VLWebSocket, self).send(payload + \"\\n\\x00\", opcode)\n\n def recv(self):\n d = super(VLWebSocket, self).recv().replace(\"\\n\", \"\").replace(\"\\x00\", \"\")\n return d.split(\" \", 1)\n\n\nclass VaughnLive(Plugin):\n servers = [\"wss://sapi-ws-{0}x{1:02}.vaughnlive.tv\".format(x, y) for x, y in itertools.product(range(1, 3),\n range(1, 6))]\n origin = \"https://vaughnlive.tv\"\n rtmp_server_map = {\n \"594140c69edad\": \"66.90.93.42\",\n \"585c4cab1bef1\": \"66.90.93.34\",\n \"5940d648b3929\": \"66.90.93.42\",\n \"5941854b39bc4\": \"198.255.0.10\"\n }\n name_remap = {\"#vl\": \"live\", \"#btv\": \"btv\", \"#pt\": \"pt\", \"#igb\": \"instagib\", \"#vtv\": \"vtv\"}\n domain_map = {\"vaughnlive\": \"#vl\", \"breakers\": \"#btv\", \"instagib\": \"#igb\", \"vapers\": 
\"#vtv\", \"pearltime\": \"#pt\"}\n\n @classmethod\n def can_handle_url(cls, url):\n return _url_re.match(url)\n\n def api_url(self):\n return random.choice(self.servers)\n\n def parse_ack(self, action, message):\n if action.endswith(\"3\"):\n channel, _, viewers, token, server, choked, is_live, chls, trns, ingest = message.split(\";\")\n is_live = is_live == \"1\"\n viewers = int(viewers)\n self.logger.debug(\"Viewers: {0}, isLive={1}\", viewers, is_live)\n domain, channel = channel.split(\"-\", 1)\n return is_live, server, domain, channel, token, ingest\n else:\n self.logger.error(\"Unhandled action format: {0}\", action)\n\n def _get_info(self, stream_name):\n server = self.api_url()\n self.logger.debug(\"Connecting to API: {0}\", server)\n ws = websocket.create_connection(server,\n header=[\"User-Agent: {0}\".format(useragents.CHROME)],\n origin=self.origin,\n class_=VLWebSocket,\n session=self.session)\n ws.send(\"MVN LOAD3 {0}\".format(stream_name))\n action, message = ws.recv()\n return self.parse_ack(action, message)\n\n def _get_rtmp_streams(self, server, domain, channel, token):\n rtmp_server = self.rtmp_server_map.get(server, server)\n\n url = \"rtmp://{0}/live?{1}\".format(rtmp_server, token)\n\n yield \"live\", RTMPStream(self.session, params={\n \"rtmp\": url,\n \"pageUrl\": self.url,\n \"playpath\": \"{0}_{1}\".format(self.name_remap.get(domain, \"live\"), channel),\n \"live\": True\n })\n\n def _get_streams(self):\n m = _url_re.match(self.url)\n if m:\n stream_name = \"{0}-{1}\".format(self.domain_map[(m.group(\"domain\").lower())],\n m.group(\"channel\"))\n\n is_live, server, domain, channel, token, ingest = self._get_info(stream_name)\n\n if not is_live:\n self.logger.info(\"Stream is currently off air\")\n else:\n for s in self._get_rtmp_streams(server, domain, channel, token):\n yield s\n\n\n__plugin__ = VaughnLive\n", "path": "src/streamlink/plugins/vaughnlive.py"}], "after_files": [{"content": "import random\nimport re\nimport 
itertools\nimport ssl\nimport websocket\n\nfrom streamlink.plugin import Plugin\nfrom streamlink.plugin.api import useragents, http\nfrom streamlink.stream import RTMPStream\n\n_url_re = re.compile(r\"\"\"\n http(s)?://(\\w+\\.)?\n (?P<domain>vaughnlive|breakers|instagib|vapers|pearltime).tv\n (/embed/video)?\n /(?P<channel>[^/&?]+)\n\"\"\", re.VERBOSE)\n\n\nclass VLWebSocket(websocket.WebSocket):\n def __init__(self, **_):\n self.session = _.pop(\"session\")\n self.logger = self.session.logger.new_module(\"plugins.vaughnlive.websocket\")\n sslopt = _.pop(\"sslopt\", {})\n sslopt[\"cert_reqs\"] = ssl.CERT_NONE\n super(VLWebSocket, self).__init__(sslopt=sslopt, **_)\n\n def send(self, payload, opcode=websocket.ABNF.OPCODE_TEXT):\n self.logger.debug(\"Sending message: {0}\", payload)\n return super(VLWebSocket, self).send(payload + \"\\n\\x00\", opcode)\n\n def recv(self):\n d = super(VLWebSocket, self).recv().replace(\"\\n\", \"\").replace(\"\\x00\", \"\")\n return d.split(\" \", 1)\n\n\nclass VaughnLive(Plugin):\n servers = [\"wss://sapi-ws-{0}x{1:02}.vaughnlive.tv\".format(x, y) for x, y in itertools.product(range(1, 3),\n range(1, 6))]\n origin = \"https://vaughnlive.tv\"\n rtmp_server_map = {\n \"594140c69edad\": \"192.240.105.171:1935\",\n \"585c4cab1bef1\": \"192.240.105.171:1935\",\n \"5940d648b3929\": \"192.240.105.171:1935\",\n \"5941854b39bc4\": \"192.240.105.171:1935\"\n }\n name_remap = {\"#vl\": \"live\", \"#btv\": \"btv\", \"#pt\": \"pt\", \"#igb\": \"instagib\", \"#vtv\": \"vtv\"}\n domain_map = {\"vaughnlive\": \"#vl\", \"breakers\": \"#btv\", \"instagib\": \"#igb\", \"vapers\": \"#vtv\", \"pearltime\": \"#pt\"}\n\n @classmethod\n def can_handle_url(cls, url):\n return _url_re.match(url)\n\n def api_url(self):\n return random.choice(self.servers)\n\n def parse_ack(self, action, message):\n if action.endswith(\"3\"):\n channel, _, viewers, token, server, choked, is_live, chls, trns, ingest = message.split(\";\")\n is_live = is_live == \"1\"\n viewers 
= int(viewers)\n self.logger.debug(\"Viewers: {0}, isLive={1}\", viewers, is_live)\n domain, channel = channel.split(\"-\", 1)\n return is_live, server, domain, channel, token, ingest\n else:\n self.logger.error(\"Unhandled action format: {0}\", action)\n\n def _get_info(self, stream_name):\n server = self.api_url()\n self.logger.debug(\"Connecting to API: {0}\", server)\n ws = websocket.create_connection(server,\n header=[\"User-Agent: {0}\".format(useragents.CHROME)],\n origin=self.origin,\n class_=VLWebSocket,\n session=self.session)\n ws.send(\"MVN LOAD3 {0}\".format(stream_name))\n action, message = ws.recv()\n return self.parse_ack(action, message)\n\n def _get_rtmp_streams(self, server, domain, channel, token):\n rtmp_server = self.rtmp_server_map.get(server, server)\n\n url = \"rtmp://{0}/live?{1}\".format(rtmp_server, token)\n\n yield \"live\", RTMPStream(self.session, params={\n \"rtmp\": url,\n \"pageUrl\": self.url,\n \"playpath\": \"{0}_{1}\".format(self.name_remap.get(domain, \"live\"), channel),\n \"live\": True\n })\n\n def _get_streams(self):\n m = _url_re.match(self.url)\n if m:\n stream_name = \"{0}-{1}\".format(self.domain_map[(m.group(\"domain\").lower())],\n m.group(\"channel\"))\n\n is_live, server, domain, channel, token, ingest = self._get_info(stream_name)\n\n if not is_live:\n self.logger.info(\"Stream is currently off air\")\n else:\n self.logger.info(\"Stream powered by VaughnSoft - remember to support them.\")\n for s in self._get_rtmp_streams(server, domain, channel, token):\n yield s\n\n\n__plugin__ = VaughnLive\n", "path": "src/streamlink/plugins/vaughnlive.py"}]}

| 1,737 | 518 |
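The patch for this row changes only the values in `rtmp_server_map`. The lookup in `_get_rtmp_streams` is unchanged:

- The server id returned by the websocket API is mapped to a host, which now includes port 1935.
- An id missing from the map is used verbatim.

The standalone sketch below reproduces that lookup and the `rtmp`/`playpath` construction as a plain function, so the logic can be checked without network access. The names `build_rtmp_params`, `RTMP_SERVER_MAP` and `NAME_REMAP` are hypothetical stand-ins for the plugin's method and class attributes. No `RTMPStream` is created.

```python
# Hypothetical module-level copies of the plugin's class attributes (post-patch values)
RTMP_SERVER_MAP = {
    "594140c69edad": "192.240.105.171:1935",
    "585c4cab1bef1": "192.240.105.171:1935",
    "5940d648b3929": "192.240.105.171:1935",
    "5941854b39bc4": "192.240.105.171:1935",
}
NAME_REMAP = {"#vl": "live", "#btv": "btv", "#pt": "pt", "#igb": "instagib", "#vtv": "vtv"}


def build_rtmp_params(server, domain, channel, token, page_url):
    """Mirror the plugin's RTMP parameter construction without any I/O."""
    # Unknown server ids fall through unchanged, like dict.get(server, server)
    rtmp_server = RTMP_SERVER_MAP.get(server, server)
    return {
        "rtmp": "rtmp://{0}/live?{1}".format(rtmp_server, token),
        "pageUrl": page_url,
        # Unknown domain prefixes default to the "live" playpath prefix
        "playpath": "{0}_{1}".format(NAME_REMAP.get(domain, "live"), channel),
        "live": True,
    }
```

Because of the `dict.get(server, server)` fallback, an id the map does not cover produces a URL that points directly at whatever value the API returned. That is the behaviour the reporter relied on when swapping IPs by hand.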

gh_patches_debug_26363 | rasdani/github-patches | git_diff | mathesar-foundation__mathesar-786 |

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Implement showing and changing a column's type

## Problem

<!-- Please provide a clear and concise description of the problem that this feature request is designed to solve.-->

Users might want to change the data type of an existing column on their table.

## Proposed solution

<!-- A clear and concise description of your proposed solution or feature. -->

The ["Working with Columns" design spec](https://wiki.mathesar.org/en/design/specs/working-with-columns) has a solution for showing and changing column types, which we need to implement on the frontend.

Please note that we're only implementing changing the Mathesar data type in this milestone. Options specific to individual data types will be implemented in the next milestone.

Number data types should save as `NUMERIC`.

Text data types should save as `VARCHAR`.

Date/time data types can be disabled for now since they're not fully implemented on the backend.

## Additional context

<!-- Add any other context or screenshots about the feature request here.-->

- Backend work:
  - #532 to get the list of types
  - #199 to get valid target types and change types
- Design issue: #324
- Design discussion: #436
- #269

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `mathesar/views.py`

Content:

```
1 from django.shortcuts import render, redirect, get_object_or_404
2
3 from mathesar.models import Database, Schema, Table
4 from mathesar.api.serializers.databases import DatabaseSerializer
5 from mathesar.api.serializers.schemas import SchemaSerializer
6 from mathesar.api.serializers.tables import TableSerializer
7
8
9 def get_schema_list(request, database):
10     schema_serializer = SchemaSerializer(
11         Schema.objects.filter(database=database),
12         many=True,
13         context={'request': request}
14     )
15     return schema_serializer.data
16
17
18 def get_database_list(request):
19     database_serializer = DatabaseSerializer(
20         Database.objects.all(),
21         many=True,
22         context={'request': request}
23     )
24     return database_serializer.data
25
26
27 def get_table_list(request, schema):
28     if schema is None:
29         return []
30     table_serializer = TableSerializer(
31         Table.objects.filter(schema=schema),
32         many=True,
33         context={'request': request}
34     )
35     return table_serializer.data
36
37
38 def get_common_data(request, database, schema=None):
39     return {
40         'current_db': database.name if database else None,
41         'current_schema': schema.id if schema else None,
42         'schemas': get_schema_list(request, database),
43         'databases': get_database_list(request),
44         'tables': get_table_list(request, schema)
45     }
46
47
48 def get_current_database(request, db_name):
49     # if there's a DB name passed in, try to retrieve the database, or return a 404 error.
50     if db_name is not None:
51         return get_object_or_404(Database, name=db_name)
52     else:
53         try:
54             # Try to get the first database available
55             return Database.objects.order_by('id').first()
56         except Database.DoesNotExist:
57             return None
58
59
60 def get_current_schema(request, schema_id, database):
61     # if there's a schema ID passed in, try to retrieve the schema, or return a 404 error.
62     if schema_id is not None:
63         return get_object_or_404(Schema, id=schema_id)
64     else:
65         try:
66             # Try to get the first schema in the DB
67             return Schema.objects.filter(database=database).order_by('id').first()
68         except Schema.DoesNotExist:
69             return None
70
71
72 def render_schema(request, database, schema):
73     # if there's no schema available, redirect to the schemas page.
74     if not schema:
75         return redirect('schemas', db_name=database.name)
76     else:
77         # We are redirecting so that the correct URL is passed to the frontend.
78         return redirect('schema_home', db_name=database.name, schema_id=schema.id)
79
80
81 def home(request):
82     database = get_current_database(request, None)
83     schema = get_current_schema(request, None, database)
84     return render_schema(request, database, schema)
85
86
87 def db_home(request, db_name):
88     database = get_current_database(request, db_name)
89     schema = get_current_schema(request, None, database)
90     return render_schema(request, database, schema)
91
92
93 def schema_home(request, db_name, schema_id):
94     database = get_current_database(request, db_name)
95     schema = get_current_schema(request, schema_id, database)
96     return render(request, 'mathesar/index.html', {
97         'common_data': get_common_data(request, database, schema)
98     })
99
100
101 def schemas(request, db_name):
102     database = get_current_database(request, db_name)
103     schema = get_current_schema(request, None, database)
104     return render(request, 'mathesar/index.html', {
105         'common_data': get_common_data(request, database, schema)
106     })
107
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

```diff
diff --git a/mathesar/views.py b/mathesar/views.py
--- a/mathesar/views.py
+++ b/mathesar/views.py
@@ -1,7 +1,7 @@
 from django.shortcuts import render, redirect, get_object_or_404
 
 from mathesar.models import Database, Schema, Table
-from mathesar.api.serializers.databases import DatabaseSerializer
+from mathesar.api.serializers.databases import DatabaseSerializer, TypeSerializer
 from mathesar.api.serializers.schemas import SchemaSerializer
 from mathesar.api.serializers.tables import TableSerializer
 
@@ -35,13 +35,25 @@
     return table_serializer.data
 
 
+def get_type_list(request, database):
+    if database is None:
+        return []
+    type_serializer = TypeSerializer(
+        database.supported_types,
+        many=True,
+        context={'request': request}
+    )
+    return type_serializer.data
+
+
 def get_common_data(request, database, schema=None):
     return {
         'current_db': database.name if database else None,
         'current_schema': schema.id if schema else None,
         'schemas': get_schema_list(request, database),
         'databases': get_database_list(request),
-        'tables': get_table_list(request, schema)
+        'tables': get_table_list(request, schema),
+        'abstract_types': get_type_list(request, database)
     }
```

|

{"golden_diff": "diff --git a/mathesar/views.py b/mathesar/views.py\n--- a/mathesar/views.py\n+++ b/mathesar/views.py\n@@ -1,7 +1,7 @@\n from django.shortcuts import render, redirect, get_object_or_404\n \n from mathesar.models import Database, Schema, Table\n-from mathesar.api.serializers.databases import DatabaseSerializer\n+from mathesar.api.serializers.databases import DatabaseSerializer, TypeSerializer\n from mathesar.api.serializers.schemas import SchemaSerializer\n from mathesar.api.serializers.tables import TableSerializer\n \n@@ -35,13 +35,25 @@\n return table_serializer.data\n \n \n+def get_type_list(request, database):\n+ if database is None:\n+ return []\n+ type_serializer = TypeSerializer(\n+ database.supported_types,\n+ many=True,\n+ context={'request': request}\n+ )\n+ return type_serializer.data\n+\n+\n def get_common_data(request, database, schema=None):\n return {\n 'current_db': database.name if database else None,\n 'current_schema': schema.id if schema else None,\n 'schemas': get_schema_list(request, database),\n 'databases': get_database_list(request),\n- 'tables': get_table_list(request, schema)\n+ 'tables': get_table_list(request, schema),\n+ 'abstract_types': get_type_list(request, database)\n }\n", "issue": "Implement showing and changing a column's type\n## Problem\r\n<!-- Please provide a clear and concise description of the problem that this feature request is designed to solve.-->\r\nUsers might want to change the data type of an existing column on their table.\r\n\r\n## Proposed solution\r\n<!-- A clear and concise description of your proposed solution or feature. -->\r\nThe [\"Working with Columns\" design spec](https://wiki.mathesar.org/en/design/specs/working-with-columns) has a solution for showing and changing column types, which we need to implement on the frontend.\r\n\r\nPlease note that we're only implementing changing the Mathesar data type in this milestone. 
Options specific to individual data types will be implemented in the next milestone.\r\n\r\nNumber data types should save as `NUMERIC`.\r\nText data types should save as `VARCHAR`.\r\nDate/time data types can be disabled for now since they're not fully implemented on the backend.\r\n\r\n## Additional context\r\n<!-- Add any other context or screenshots about the feature request here.-->\r\n- Backend work:\r\n - #532 to get the list of types \r\n - #199 to get valid target types and change types \r\n- Design issue: #324 \r\n- Design discussion: #436\r\n- #269 \n", "before_files": [{"content": "from django.shortcuts import render, redirect, get_object_or_404\n\nfrom mathesar.models import Database, Schema, Table\nfrom mathesar.api.serializers.databases import DatabaseSerializer\nfrom mathesar.api.serializers.schemas import SchemaSerializer\nfrom mathesar.api.serializers.tables import TableSerializer\n\n\ndef get_schema_list(request, database):\n schema_serializer = SchemaSerializer(\n Schema.objects.filter(database=database),\n many=True,\n context={'request': request}\n )\n return schema_serializer.data\n\n\ndef get_database_list(request):\n database_serializer = DatabaseSerializer(\n Database.objects.all(),\n many=True,\n context={'request': request}\n )\n return database_serializer.data\n\n\ndef get_table_list(request, schema):\n if schema is None:\n return []\n table_serializer = TableSerializer(\n Table.objects.filter(schema=schema),\n many=True,\n context={'request': request}\n )\n return table_serializer.data\n\n\ndef get_common_data(request, database, schema=None):\n return {\n 'current_db': database.name if database else None,\n 'current_schema': schema.id if schema else None,\n 'schemas': get_schema_list(request, database),\n 'databases': get_database_list(request),\n 'tables': get_table_list(request, schema)\n }\n\n\ndef get_current_database(request, db_name):\n # if there's a DB name passed in, try to retrieve the database, or return a 404 error.\n if 
db_name is not None:\n return get_object_or_404(Database, name=db_name)\n else:\n try:\n # Try to get the first database available\n return Database.objects.order_by('id').first()\n except Database.DoesNotExist:\n return None\n\n\ndef get_current_schema(request, schema_id, database):\n # if there's a schema ID passed in, try to retrieve the schema, or return a 404 error.\n if schema_id is not None:\n return get_object_or_404(Schema, id=schema_id)\n else:\n try:\n # Try to get the first schema in the DB\n return Schema.objects.filter(database=database).order_by('id').first()\n except Schema.DoesNotExist:\n return None\n\n\ndef render_schema(request, database, schema):\n # if there's no schema available, redirect to the schemas page.\n if not schema:\n return redirect('schemas', db_name=database.name)\n else:\n # We are redirecting so that the correct URL is passed to the frontend.\n return redirect('schema_home', db_name=database.name, schema_id=schema.id)\n\n\ndef home(request):\n database = get_current_database(request, None)\n schema = get_current_schema(request, None, database)\n return render_schema(request, database, schema)\n\n\ndef db_home(request, db_name):\n database = get_current_database(request, db_name)\n schema = get_current_schema(request, None, database)\n return render_schema(request, database, schema)\n\n\ndef schema_home(request, db_name, schema_id):\n database = get_current_database(request, db_name)\n schema = get_current_schema(request, schema_id, database)\n return render(request, 'mathesar/index.html', {\n 'common_data': get_common_data(request, database, schema)\n })\n\n\ndef schemas(request, db_name):\n database = get_current_database(request, db_name)\n schema = get_current_schema(request, None, database)\n return render(request, 'mathesar/index.html', {\n 'common_data': get_common_data(request, database, schema)\n })\n", "path": "mathesar/views.py"}], "after_files": [{"content": "from django.shortcuts import render, redirect, 
get_object_or_404\n\nfrom mathesar.models import Database, Schema, Table\nfrom mathesar.api.serializers.databases import DatabaseSerializer, TypeSerializer\nfrom mathesar.api.serializers.schemas import SchemaSerializer\nfrom mathesar.api.serializers.tables import TableSerializer\n\n\ndef get_schema_list(request, database):\n schema_serializer = SchemaSerializer(\n Schema.objects.filter(database=database),\n many=True,\n context={'request': request}\n )\n return schema_serializer.data\n\n\ndef get_database_list(request):\n database_serializer = DatabaseSerializer(\n Database.objects.all(),\n many=True,\n context={'request': request}\n )\n return database_serializer.data\n\n\ndef get_table_list(request, schema):\n if schema is None:\n return []\n table_serializer = TableSerializer(\n Table.objects.filter(schema=schema),\n many=True,\n context={'request': request}\n )\n return table_serializer.data\n\n\ndef get_type_list(request, database):\n if database is None:\n return []\n type_serializer = TypeSerializer(\n database.supported_types,\n many=True,\n context={'request': request}\n )\n return type_serializer.data\n\n\ndef get_common_data(request, database, schema=None):\n return {\n 'current_db': database.name if database else None,\n 'current_schema': schema.id if schema else None,\n 'schemas': get_schema_list(request, database),\n 'databases': get_database_list(request),\n 'tables': get_table_list(request, schema),\n 'abstract_types': get_type_list(request, database)\n }\n\n\ndef get_current_database(request, db_name):\n # if there's a DB name passed in, try to retrieve the database, or return a 404 error.\n if db_name is not None:\n return get_object_or_404(Database, name=db_name)\n else:\n try:\n # Try to get the first database available\n return Database.objects.order_by('id').first()\n except Database.DoesNotExist:\n return None\n\n\ndef get_current_schema(request, schema_id, database):\n # if there's a schema ID passed in, try to retrieve the schema, or return 
a 404 error.\n if schema_id is not None:\n return get_object_or_404(Schema, id=schema_id)\n else:\n try:\n # Try to get the first schema in the DB\n return Schema.objects.filter(database=database).order_by('id').first()\n except Schema.DoesNotExist:\n return None\n\n\ndef render_schema(request, database, schema):\n # if there's no schema available, redirect to the schemas page.\n if not schema:\n return redirect('schemas', db_name=database.name)\n else:\n # We are redirecting so that the correct URL is passed to the frontend.\n return redirect('schema_home', db_name=database.name, schema_id=schema.id)\n\n\ndef home(request):\n database = get_current_database(request, None)\n schema = get_current_schema(request, None, database)\n return render_schema(request, database, schema)\n\n\ndef db_home(request, db_name):\n database = get_current_database(request, db_name)\n schema = get_current_schema(request, None, database)\n return render_schema(request, database, schema)\n\n\ndef schema_home(request, db_name, schema_id):\n database = get_current_database(request, db_name)\n schema = get_current_schema(request, schema_id, database)\n return render(request, 'mathesar/index.html', {\n 'common_data': get_common_data(request, database, schema)\n })\n\n\ndef schemas(request, db_name):\n database = get_current_database(request, db_name)\n schema = get_current_schema(request, None, database)\n return render(request, 'mathesar/index.html', {\n 'common_data': get_common_data(request, database, schema)\n })\n", "path": "mathesar/views.py"}]}

| 1,490 | 295 |

gh_patches_debug_34062

|

rasdani/github-patches

|

git_diff

|

mampfes__hacs_waste_collection_schedule-1871

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

[Bug]: Chichester District Council is not working

### I Have A Problem With:

A specific source

### What's Your Problem

The source has stopped working since Tuesday 13th February 2024. All the collection days no longer show on the calendar at all. The Chichester District Council website still shows me the days.

### Source (if relevant)

chichester_gov_uk

### Logs

```Shell

This error originated from a custom integration.

Logger: waste_collection_schedule.source_shell

Source: custom_components/waste_collection_schedule/waste_collection_schedule/source_shell.py:136

Integration: waste_collection_schedule (documentation)

First occurred: 11:36:47 (1 occurrences)

Last logged: 11:36:47

fetch failed for source Chichester District Council: Traceback (most recent call last): File "/config/custom_components/waste_collection_schedule/waste_collection_schedule/source_shell.py", line 134, in fetch entries = self._source.fetch() ^^^^^^^^^^^^^^^^^^^^ File "/config/custom_components/waste_collection_schedule/waste_collection_schedule/source/chichester_gov_uk.py", line 37, in fetch form_url = form["action"] ~~~~^^^^^^^^^^ TypeError: 'NoneType' object is not subscriptable

```

### Relevant Configuration

```YAML

waste_collection_schedule:

sources:

- name: chichester_gov_uk

args:

uprn: 10002466648

```

### Checklist Source Error

- [X] Use the example parameters for your source (often available in the documentation) (don't forget to restart Home Assistant after changing the configuration)

- [X] Checked that the website of your service provider is still working

- [X] Tested my attributes on the service provider website (if possible)

- [X] I have tested with the latest version of the integration (master) (for HACS in the 3 dot menu of the integration click on "Redownload" and choose master as version)

### Checklist Sensor Error

- [X] Checked in the Home Assistant Calendar tab if the event names match the types names (if types argument is used)

### Required

- [X] I have searched past (closed AND opened) issues to see if this bug has already been reported, and it hasn't been.

- [X] I understand that people give their precious time for free, and thus I've done my very best to make this problem as easy as possible to investigate.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `custom_components/waste_collection_schedule/waste_collection_schedule/source/chichester_gov_uk.py`

Content:

```

1 from datetime import datetime

2

3 import requests

4 from bs4 import BeautifulSoup

5 from waste_collection_schedule import Collection

6

7 TITLE = "Chichester District Council"

8 DESCRIPTION = "Source for chichester.gov.uk services for Chichester"

9 URL = "chichester.gov.uk"

10

11 TEST_CASES = {

12 "Test_001": {"uprn": "010002476348"},

13 "Test_002": {"uprn": "100062612654"},

14 "Test_003": {"uprn": "100061745708"},

15 }

16

17 ICON_MAP = {

18 "General Waste": "mdi:trash-can",

19 "Recycling": "mdi:recycle",

20 "Garden Recycling": "mdi:leaf",

21 }

22

23

24 class Source:

25 def __init__(self, uprn):

26 self._uprn = uprn

27

28 def fetch(self):

29 session = requests.Session()

30 # Start a session

31 r = session.get("https://www.chichester.gov.uk/checkyourbinday")

32 r.raise_for_status()

33 soup = BeautifulSoup(r.text, features="html.parser")

34

35 # Extract form submission url

36 form = soup.find("form", attrs={"id": "WASTECOLLECTIONCALENDARV2_FORM"})

37 form_url = form["action"]

38

39 # Submit form

40 form_data = {

41 "WASTECOLLECTIONCALENDARV2_FORMACTION_NEXT": "Submit",

42 "WASTECOLLECTIONCALENDARV2_CALENDAR_UPRN": self._uprn,

43 }

44 r = session.post(form_url, data=form_data)

45 r.raise_for_status()

46

47 # Extract collection dates

48 soup = BeautifulSoup(r.text, features="html.parser")

49 entries = []

50 data = soup.find_all("div", attrs={"class": "bin-days"})

51 for bin in data:

52 if "print-only" in bin["class"]:

53 continue

54

55 type = bin.find("span").contents[0].replace("bin", "").strip().title()

56 list_items = bin.find_all("li")

57 if list_items:

58 for item in list_items:

59 date = datetime.strptime(item.text, "%d %B %Y").date()

60 entries.append(

61 Collection(

62 date=date,

63 t=type,

64 icon=ICON_MAP.get(type),

65 )

66 )

67

68 return entries

69

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/custom_components/waste_collection_schedule/waste_collection_schedule/source/chichester_gov_uk.py b/custom_components/waste_collection_schedule/waste_collection_schedule/source/chichester_gov_uk.py

--- a/custom_components/waste_collection_schedule/waste_collection_schedule/source/chichester_gov_uk.py

+++ b/custom_components/waste_collection_schedule/waste_collection_schedule/source/chichester_gov_uk.py

@@ -33,13 +33,13 @@

soup = BeautifulSoup(r.text, features="html.parser")

# Extract form submission url

- form = soup.find("form", attrs={"id": "WASTECOLLECTIONCALENDARV2_FORM"})

+ form = soup.find("form", attrs={"id": "WASTECOLLECTIONCALENDARV5_FORM"})

form_url = form["action"]

# Submit form

form_data = {

- "WASTECOLLECTIONCALENDARV2_FORMACTION_NEXT": "Submit",

- "WASTECOLLECTIONCALENDARV2_CALENDAR_UPRN": self._uprn,

+ "WASTECOLLECTIONCALENDARV5_FORMACTION_NEXT": "Submit",

+ "WASTECOLLECTIONCALENDARV5_CALENDAR_UPRN": self._uprn,

}

r = session.post(form_url, data=form_data)

r.raise_for_status()

@@ -47,16 +47,18 @@

# Extract collection dates

soup = BeautifulSoup(r.text, features="html.parser")

entries = []

- data = soup.find_all("div", attrs={"class": "bin-days"})

- for bin in data:

- if "print-only" in bin["class"]:

- continue

-

- type = bin.find("span").contents[0].replace("bin", "").strip().title()

- list_items = bin.find_all("li")

- if list_items:

- for item in list_items:

- date = datetime.strptime(item.text, "%d %B %Y").date()

+ tables = soup.find_all("table", attrs={"class": "bin-collection-dates"})

+ # Data is presented in two tables side-by-side

+ for table in tables:

+ # Each collection is a table row

+ data = table.find_all("tr")

+ for bin in data:

+ cells = bin.find_all("td")

+ # Ignore the header row

+ if len(cells) == 2:

+ date = datetime.strptime(cells[0].text, "%d %B %Y").date()

+ # Maintain backwards compatibility - it used to be General Waste and now it is General waste

+ type = cells[1].text.title()

entries.append(

Collection(

date=date,

|

{"golden_diff": "diff --git a/custom_components/waste_collection_schedule/waste_collection_schedule/source/chichester_gov_uk.py b/custom_components/waste_collection_schedule/waste_collection_schedule/source/chichester_gov_uk.py\n--- a/custom_components/waste_collection_schedule/waste_collection_schedule/source/chichester_gov_uk.py\n+++ b/custom_components/waste_collection_schedule/waste_collection_schedule/source/chichester_gov_uk.py\n@@ -33,13 +33,13 @@\n soup = BeautifulSoup(r.text, features=\"html.parser\")\n \n # Extract form submission url\n- form = soup.find(\"form\", attrs={\"id\": \"WASTECOLLECTIONCALENDARV2_FORM\"})\n+ form = soup.find(\"form\", attrs={\"id\": \"WASTECOLLECTIONCALENDARV5_FORM\"})\n form_url = form[\"action\"]\n \n # Submit form\n form_data = {\n- \"WASTECOLLECTIONCALENDARV2_FORMACTION_NEXT\": \"Submit\",\n- \"WASTECOLLECTIONCALENDARV2_CALENDAR_UPRN\": self._uprn,\n+ \"WASTECOLLECTIONCALENDARV5_FORMACTION_NEXT\": \"Submit\",\n+ \"WASTECOLLECTIONCALENDARV5_CALENDAR_UPRN\": self._uprn,\n }\n r = session.post(form_url, data=form_data)\n r.raise_for_status()\n@@ -47,16 +47,18 @@\n # Extract collection dates\n soup = BeautifulSoup(r.text, features=\"html.parser\")\n entries = []\n- data = soup.find_all(\"div\", attrs={\"class\": \"bin-days\"})\n- for bin in data:\n- if \"print-only\" in bin[\"class\"]:\n- continue\n-\n- type = bin.find(\"span\").contents[0].replace(\"bin\", \"\").strip().title()\n- list_items = bin.find_all(\"li\")\n- if list_items:\n- for item in list_items:\n- date = datetime.strptime(item.text, \"%d %B %Y\").date()\n+ tables = soup.find_all(\"table\", attrs={\"class\": \"bin-collection-dates\"})\n+ # Data is presented in two tables side-by-side\n+ for table in tables:\n+ # Each collection is a table row\n+ data = table.find_all(\"tr\")\n+ for bin in data:\n+ cells = bin.find_all(\"td\")\n+ # Ignore the header row\n+ if len(cells) == 2:\n+ date = datetime.strptime(cells[0].text, \"%d %B %Y\").date()\n+ # Maintain backwards 
compatibility - it used to be General Waste and now it is General waste\n+ type = cells[1].text.title()\n entries.append(\n Collection(\n date=date,\n", "issue": "[Bug]: Chichester District Council is not working\n### I Have A Problem With:\n\nA specific source\n\n### What's Your Problem\n\nThe source has stopped working since Tuesday 13th February 2024. All the collection days no longer show on the calendar at all. The Chichester District Council website still shows me the days.\n\n### Source (if relevant)\n\nchichester_gov_uk\n\n### Logs\n\n```Shell\nThis error originated from a custom integration.\r\n\r\nLogger: waste_collection_schedule.source_shell\r\nSource: custom_components/waste_collection_schedule/waste_collection_schedule/source_shell.py:136\r\nIntegration: waste_collection_schedule (documentation)\r\nFirst occurred: 11:36:47 (1 occurrences)\r\nLast logged: 11:36:47\r\n\r\nfetch failed for source Chichester District Council: Traceback (most recent call last): File \"/config/custom_components/waste_collection_schedule/waste_collection_schedule/source_shell.py\", line 134, in fetch entries = self._source.fetch() ^^^^^^^^^^^^^^^^^^^^ File \"/config/custom_components/waste_collection_schedule/waste_collection_schedule/source/chichester_gov_uk.py\", line 37, in fetch form_url = form[\"action\"] ~~~~^^^^^^^^^^ TypeError: 'NoneType' object is not subscriptable\n```\n\n\n### Relevant Configuration\n\n```YAML\nwaste_collection_schedule:\r\n sources:\r\n - name: chichester_gov_uk\r\n args:\r\n uprn: 10002466648\n```\n\n\n### Checklist Source Error\n\n- [X] Use the example parameters for your source (often available in the documentation) (don't forget to restart Home Assistant after changing the configuration)\n- [X] Checked that the website of your service provider is still working\n- [X] Tested my attributes on the service provider website (if possible)\n- [X] I have tested with the latest version of the integration (master) (for HACS in the 3 dot menu of the 
integration click on \"Redownload\" and choose master as version)\n\n### Checklist Sensor Error\n\n- [X] Checked in the Home Assistant Calendar tab if the event names match the types names (if types argument is used)\n\n### Required\n\n- [X] I have searched past (closed AND opened) issues to see if this bug has already been reported, and it hasn't been.\n- [X] I understand that people give their precious time for free, and thus I've done my very best to make this problem as easy as possible to investigate.\n", "before_files": [{"content": "from datetime import datetime\n\nimport requests\nfrom bs4 import BeautifulSoup\nfrom waste_collection_schedule import Collection\n\nTITLE = \"Chichester District Council\"\nDESCRIPTION = \"Source for chichester.gov.uk services for Chichester\"\nURL = \"chichester.gov.uk\"\n\nTEST_CASES = {\n \"Test_001\": {\"uprn\": \"010002476348\"},\n \"Test_002\": {\"uprn\": \"100062612654\"},\n \"Test_003\": {\"uprn\": \"100061745708\"},\n}\n\nICON_MAP = {\n \"General Waste\": \"mdi:trash-can\",\n \"Recycling\": \"mdi:recycle\",\n \"Garden Recycling\": \"mdi:leaf\",\n}\n\n\nclass Source:\n def __init__(self, uprn):\n self._uprn = uprn\n\n def fetch(self):\n session = requests.Session()\n # Start a session\n r = session.get(\"https://www.chichester.gov.uk/checkyourbinday\")\n r.raise_for_status()\n soup = BeautifulSoup(r.text, features=\"html.parser\")\n\n # Extract form submission url\n form = soup.find(\"form\", attrs={\"id\": \"WASTECOLLECTIONCALENDARV2_FORM\"})\n form_url = form[\"action\"]\n\n # Submit form\n form_data = {\n \"WASTECOLLECTIONCALENDARV2_FORMACTION_NEXT\": \"Submit\",\n \"WASTECOLLECTIONCALENDARV2_CALENDAR_UPRN\": self._uprn,\n }\n r = session.post(form_url, data=form_data)\n r.raise_for_status()\n\n # Extract collection dates\n soup = BeautifulSoup(r.text, features=\"html.parser\")\n entries = []\n data = soup.find_all(\"div\", attrs={\"class\": \"bin-days\"})\n for bin in data:\n if \"print-only\" in bin[\"class\"]:\n 
continue\n\n type = bin.find(\"span\").contents[0].replace(\"bin\", \"\").strip().title()\n list_items = bin.find_all(\"li\")\n if list_items:\n for item in list_items:\n date = datetime.strptime(item.text, \"%d %B %Y\").date()\n entries.append(\n Collection(\n date=date,\n t=type,\n icon=ICON_MAP.get(type),\n )\n )\n\n return entries\n", "path": "custom_components/waste_collection_schedule/waste_collection_schedule/source/chichester_gov_uk.py"}], "after_files": [{"content": "from datetime import datetime\n\nimport requests\nfrom bs4 import BeautifulSoup\nfrom waste_collection_schedule import Collection\n\nTITLE = \"Chichester District Council\"\nDESCRIPTION = \"Source for chichester.gov.uk services for Chichester\"\nURL = \"chichester.gov.uk\"\n\nTEST_CASES = {\n \"Test_001\": {\"uprn\": \"010002476348\"},\n \"Test_002\": {\"uprn\": \"100062612654\"},\n \"Test_003\": {\"uprn\": \"100061745708\"},\n}\n\nICON_MAP = {\n \"General Waste\": \"mdi:trash-can\",\n \"Recycling\": \"mdi:recycle\",\n \"Garden Recycling\": \"mdi:leaf\",\n}\n\n\nclass Source:\n def __init__(self, uprn):\n self._uprn = uprn\n\n def fetch(self):\n session = requests.Session()\n # Start a session\n r = session.get(\"https://www.chichester.gov.uk/checkyourbinday\")\n r.raise_for_status()\n soup = BeautifulSoup(r.text, features=\"html.parser\")\n\n # Extract form submission url\n form = soup.find(\"form\", attrs={\"id\": \"WASTECOLLECTIONCALENDARV5_FORM\"})\n form_url = form[\"action\"]\n\n # Submit form\n form_data = {\n \"WASTECOLLECTIONCALENDARV5_FORMACTION_NEXT\": \"Submit\",\n \"WASTECOLLECTIONCALENDARV5_CALENDAR_UPRN\": self._uprn,\n }\n r = session.post(form_url, data=form_data)\n r.raise_for_status()\n\n # Extract collection dates\n soup = BeautifulSoup(r.text, features=\"html.parser\")\n entries = []\n tables = soup.find_all(\"table\", attrs={\"class\": \"bin-collection-dates\"})\n # Data is presented in two tables side-by-side\n for table in tables:\n # Each collection is a table row\n 
data = table.find_all(\"tr\")\n for bin in data:\n cells = bin.find_all(\"td\")\n # Ignore the header row\n if len(cells) == 2:\n date = datetime.strptime(cells[0].text, \"%d %B %Y\").date()\n # Maintain backwards compatibility - it used to be General Waste and now it is General waste\n type = cells[1].text.title()\n entries.append(\n Collection(\n date=date,\n t=type,\n icon=ICON_MAP.get(type),\n )\n )\n\n return entries\n", "path": "custom_components/waste_collection_schedule/waste_collection_schedule/source/chichester_gov_uk.py"}]}

| 1,465 | 596 |
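The Chichester patch above switches from scraping `div.bin-days` lists to reading the two-cell rows of every `table.bin-collection-dates`. Below is a minimal, stdlib-only sketch of that same row-parsing idea. It uses `html.parser.HTMLParser` instead of BeautifulSoup so that it runs without third-party packages. The class name `BinTableParser`, the function `parse_collections`, and the sample HTML are hypothetical illustrations. The sketch assumes the markup the patch expects: a date cell and a type cell per row, header rows built from `<th>`, and no nested tables.

```python
from datetime import date, datetime
from html.parser import HTMLParser


class BinTableParser(HTMLParser):
    """Collect the <td> texts of each <tr> inside tables whose class list
    contains "bin-collection-dates" (hypothetical helper; assumes no nested tables)."""

    def __init__(self):
        super().__init__()
        self.rows = []          # one list of cell texts per table row
        self._in_table = False  # True while inside a matching <table>
        self._row = None        # cells of the row being read
        self._cell = None       # text fragments of the cell being read

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "table" and "bin-collection-dates" in (attrs.get("class") or "").split():
            self._in_table = True
        elif self._in_table and tag == "tr":
            self._row = []
        elif self._in_table and tag == "td" and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag == "table":
            self._in_table = False
        elif tag == "td" and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        # Only keep text that appears inside a <td> of a matching table.
        if self._cell is not None:
            self._cell.append(data)


def parse_collections(html: str) -> list[tuple[date, str]]:
    parser = BinTableParser()
    parser.feed(html)
    entries = []
    for cells in parser.rows:
        # Header rows use <th>, so they yield no <td> cells and are skipped here,
        # mirroring the patch's `if len(cells) == 2` check.
        if len(cells) != 2:
            continue
        when = datetime.strptime(cells[0], "%d %B %Y").date()
        # .title() keeps the old "General Waste" spelling so ICON_MAP keys still match.
        entries.append((when, cells[1].title()))
    return entries


SAMPLE = """
<table class="bin-collection-dates">
  <tr><th>Date</th><th>Type</th></tr>
  <tr><td>14 February 2024</td><td>General waste</td></tr>
  <tr><td>21 February 2024</td><td>Recycling</td></tr>
</table>
"""

print(parse_collections(SAMPLE))
```

BeautifulSoup's `find_all("table", attrs={"class": ...})`, as used in the patch, is more forgiving of malformed markup. The stdlib version only trades that robustness for zero dependencies.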

gh_patches_debug_899

|

rasdani/github-patches

|

git_diff

|

python-telegram-bot__python-telegram-bot-1063

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

User.full_name doesn't handle non-ASCII (in Python 2?)

### Steps to reproduce

```python

updater = ext.Updater(token=settings.telegram_token())

def F(bot, update):

user = update.effective_user

print repr(user.first_name), repr(user.last_name)

print '%s %s' % (user.first_name, user.last_name)

print user.full_name

updater.dispatcher.add_handler(ext.MessageHandler(0, F))

updater.start_polling()

updater.idle()

```

### Expected behaviour

```

u'Dan\u2022iel' u'Reed'

Dan•iel Reed

Dan•iel Reed

```

### Actual behaviour

```

u'Dan\u2022iel' u'Reed'

Dan•iel Reed

ERROR dispatcher.py:301] An uncaught error was raised while processing the update

Traceback (most recent call last):

File "local/lib/python2.7/site-packages/telegram/ext/dispatcher.py", line 279, in process_update

handler.handle_update(update, self)

File "local/lib/python2.7/site-packages/telegram/ext/messagehandler.py", line 169, in handle_update

return self.callback(dispatcher.bot, update, **optional_args)

File "<stdin>", line 5, in F

File "local/lib/python2.7/site-packages/telegram/user.py", line 91, in full_name

return '{} {}'.format(self.first_name, self.last_name)

UnicodeEncodeError: 'ascii' codec can't encode character u'\u2022' in position 3: ordinal not in range(128)

```

### Configuration

**Operating System:**

**Version of Python, python-telegram-bot & dependencies:**

```

python-telegram-bot 10.0.1

certifi 2018.01.18

future 0.16.0

Python 2.7.14 (default, Sep 23 2017, 22:06:14) [GCC 7.2.0]

```

I'm a little rushed, but this works for me:

```python

@property

def full_name(self):

"""

:obj:`str`: Convenience property. The user's :attr:`first_name`, followed by (if available)

:attr:`last_name`.

"""

if self.last_name:

! return u'{} {}'.format(self.first_name, self.last_name)

return self.first_name

```

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `telegram/user.py`

Content:

```

1 #!/usr/bin/env python

2 # pylint: disable=C0103,W0622

3 #

4 # A library that provides a Python interface to the Telegram Bot API

5 # Copyright (C) 2015-2018

6 # Leandro Toledo de Souza <[email protected]>

7 #

8 # This program is free software: you can redistribute it and/or modify

9 # it under the terms of the GNU Lesser Public License as published by

10 # the Free Software Foundation, either version 3 of the License, or

11 # (at your option) any later version.

12 #

13 # This program is distributed in the hope that it will be useful,

14 # but WITHOUT ANY WARRANTY; without even the implied warranty of

15 # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

16 # GNU Lesser Public License for more details.

17 #

18 # You should have received a copy of the GNU Lesser Public License

19 # along with this program. If not, see [http://www.gnu.org/licenses/].

20 """This module contains an object that represents a Telegram User."""

21

22 from telegram import TelegramObject

23 from telegram.utils.helpers import mention_html as util_mention_html

24 from telegram.utils.helpers import mention_markdown as util_mention_markdown

25

26

27 class User(TelegramObject):

28 """This object represents a Telegram user or bot.

29

30 Attributes:

31 id (:obj:`int`): Unique identifier for this user or bot.

32 is_bot (:obj:`bool`): True, if this user is a bot

33 first_name (:obj:`str`): User's or bot's first name.

34 last_name (:obj:`str`): Optional. User's or bot's last name.

35 username (:obj:`str`): Optional. User's or bot's username.

36 language_code (:obj:`str`): Optional. IETF language tag of the user's language.

37 bot (:class:`telegram.Bot`): Optional. The Bot to use for instance methods.

38

39 Args:

40 id (:obj:`int`): Unique identifier for this user or bot.

41 is_bot (:obj:`bool`): True, if this user is a bot

42 first_name (:obj:`str`): User's or bot's first name.

43 last_name (:obj:`str`, optional): User's or bot's last name.

44 username (:obj:`str`, optional): User's or bot's username.

45 language_code (:obj:`str`, optional): IETF language tag of the user's language.

46 bot (:class:`telegram.Bot`, optional): The Bot to use for instance methods.

47

48 """

49

50 def __init__(self,

51 id,

52                  first_name,
53                  is_bot,
54                  last_name=None,
55                  username=None,
56                  language_code=None,
57                  bot=None,
58                  **kwargs):
59         # Required
60         self.id = int(id)
61         self.first_name = first_name
62         self.is_bot = is_bot
63         # Optionals
64         self.last_name = last_name
65         self.username = username
66         self.language_code = language_code
67
68         self.bot = bot
69
70         self._id_attrs = (self.id,)
71
72     @property
73     def name(self):
74         """
75         :obj:`str`: Convenience property. If available, returns the user's :attr:`username`
76             prefixed with "@". If :attr:`username` is not available, returns :attr:`full_name`.
77
78         """
79         if self.username:
80             return '@{}'.format(self.username)
81         return self.full_name
82
83     @property
84     def full_name(self):
85         """
86         :obj:`str`: Convenience property. The user's :attr:`first_name`, followed by (if available)
87             :attr:`last_name`.
88
89         """
90         if self.last_name:
91             return '{} {}'.format(self.first_name, self.last_name)
92         return self.first_name
93
94     @classmethod
95     def de_json(cls, data, bot):
96         if not data:
97             return None
98
99         data = super(User, cls).de_json(data, bot)
100
101         return cls(bot=bot, **data)
102
103     def get_profile_photos(self, *args, **kwargs):
104         """
105         Shortcut for::
106
107             bot.get_user_profile_photos(update.message.from_user.id, *args, **kwargs)
108
109         """
110
111         return self.bot.get_user_profile_photos(self.id, *args, **kwargs)
112
113     @classmethod
114     def de_list(cls, data, bot):
115         if not data:
116             return []
117
118         users = list()
119         for user in data:
120             users.append(cls.de_json(user, bot))
121
122         return users
123
124     def mention_markdown(self, name=None):
125         """
126         Args:
127             name (:obj:`str`): If provided, will overwrite the user's name.
128
129         Returns:
130             :obj:`str`: The inline mention for the user as markdown.
131         """
132         if not name:
133             return util_mention_markdown(self.id, self.name)
134         else:
135             return util_mention_markdown(self.id, name)
136
137     def mention_html(self, name=None):
138         """
139         Args:
140             name (:obj:`str`): If provided, will overwrite the user's name.
141
142         Returns:
143             :obj:`str`: The inline mention for the user as HTML.
144         """
145         if not name:
146             return util_mention_html(self.id, self.name)
147         else:
148             return util_mention_html(self.id, name)
149
150     def send_message(self, *args, **kwargs):
151         """Shortcut for::
152
153             bot.send_message(User.chat_id, *args, **kwargs)
154
155         Where User is the current instance.
156
157         Returns:
158             :class:`telegram.Message`: On success, instance representing the message posted.
159
160         """
161         return self.bot.send_message(self.id, *args, **kwargs)
162
163     def send_photo(self, *args, **kwargs):
164         """Shortcut for::
165
166             bot.send_photo(User.chat_id, *args, **kwargs)
167
168         Where User is the current instance.
169
170         Returns:
171             :class:`telegram.Message`: On success, instance representing the message posted.
172
173         """
174         return self.bot.send_photo(self.id, *args, **kwargs)
175
176     def send_audio(self, *args, **kwargs):
177         """Shortcut for::
178
179             bot.send_audio(User.chat_id, *args, **kwargs)
180
181         Where User is the current instance.
182
183         Returns:
184             :class:`telegram.Message`: On success, instance representing the message posted.
185
186         """
187         return self.bot.send_audio(self.id, *args, **kwargs)
188
189     def send_document(self, *args, **kwargs):
190         """Shortcut for::
191
192             bot.send_document(User.chat_id, *args, **kwargs)
193
194         Where User is the current instance.
195
196         Returns:
197             :class:`telegram.Message`: On success, instance representing the message posted.
198
199         """
200         return self.bot.send_document(self.id, *args, **kwargs)
201
202     def send_sticker(self, *args, **kwargs):
203         """Shortcut for::
204
205             bot.send_sticker(User.chat_id, *args, **kwargs)
206
207         Where User is the current instance.
208
209         Returns:
210             :class:`telegram.Message`: On success, instance representing the message posted.
211
212         """
213         return self.bot.send_sticker(self.id, *args, **kwargs)
214
215     def send_video(self, *args, **kwargs):
216         """Shortcut for::
217
218             bot.send_video(User.chat_id, *args, **kwargs)
219
220         Where User is the current instance.
221
222         Returns:
223             :class:`telegram.Message`: On success, instance representing the message posted.
224
225         """
226         return self.bot.send_video(self.id, *args, **kwargs)
227
228     def send_video_note(self, *args, **kwargs):
229         """Shortcut for::
230
231             bot.send_video_note(User.chat_id, *args, **kwargs)
232
233         Where User is the current instance.
234
235         Returns:
236             :class:`telegram.Message`: On success, instance representing the message posted.
237
238         """
239         return self.bot.send_video_note(self.id, *args, **kwargs)
240
241     def send_voice(self, *args, **kwargs):
242         """Shortcut for::
243
244             bot.send_voice(User.chat_id, *args, **kwargs)
245
246         Where User is the current instance.
247
248         Returns:
249             :class:`telegram.Message`: On success, instance representing the message posted.
250
251         """
252         return self.bot.send_voice(self.id, *args, **kwargs)
253
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/telegram/user.py b/telegram/user.py
--- a/telegram/user.py
+++ b/telegram/user.py
@@ -88,7 +88,7 @@
 
         """
         if self.last_name:
-            return '{} {}'.format(self.first_name, self.last_name)
+            return u'{} {}'.format(self.first_name, self.last_name)
         return self.first_name
 
     @classmethod

|